Abstract

This paper presents modified memoryless quasi-Newton methods based on the spectral-scaling Broyden family on Riemannian manifolds. The method involves adding one parameter to the search direction of the memoryless self-scaling Broyden family on the manifold. Moreover, it uses a general map instead of vector transport. This idea has already been proposed within a general framework of Riemannian conjugate gradient methods where one can use vector transport, scaled vector transport, or an inverse retraction. We show that the search direction satisfies the sufficient descent condition under some assumptions on the parameters. In addition, we show global convergence of the proposed method under the Wolfe conditions. We numerically compare it with existing methods, including Riemannian conjugate gradient methods and the memoryless spectral-scaling Broyden family. The numerical results indicate that the proposed method with the BFGS formula is suitable for solving an off-diagonal cost function minimization problem on an oblique manifold.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Riemannian optimization has recently attracted a great deal of attention and has been used in many applications, including low-rank tensor completion [10, 30], machine learning [17], and shape analysis [8].

Iterative methods for solving unconstrained optimization problems on the Euclidean space have been studied for a long time [18]. Quasi-Newton methods and nonlinear conjugate gradient methods are the especially important ones and have been implemented in various software packages.

Here, quasi-Newton methods need to store dense matrices, so it is difficult to apply them to large-scale problems. Shanno [27] proposed a memoryless quasi-Newton method as a way to deal with this problem. This method [9, 12,13,14,15] has proven effective at solving large-scale unconstrained optimization problems. The concept is simple: an approximate matrix is updated by using the identity matrix instead of the previous approximate matrix. Similar to the case of nonlinear conjugate gradient methods, the search direction can be computed without having to use matrices and simply by taking the inner product without matrices.

Kou and Dai [9] proposed a modified memoryless spectral-scaling BFGS method. Their method involves adding one parameter to the search direction of the memoryless self-scaling BFGS method. In [13], Nakayama used this technique to devise a memoryless spectral-scaling Broyden family. In addition, he showed that the search direction is a sufficient descent direction and has the global convergence property. Nakayama, Narushima, and Yabe [15] proposed memoryless quasi-Newton methods based on the spectral-scaling Broyden family [3]. Their methods generate a sufficient descent direction and have the global convergence property.

Many useful iterative methods for solving unconstrained optimization problems on manifolds have been studied (see [2, 24]). They have been obtained by extending iterative methods in Euclidean space by using the concepts of retraction and vector transport. For example, Riemannian quasi-Newton methods [6, 7] and Riemannian conjugate gradient methods [20, 24, 26, 34] have been developed. Sato and Iwai [26] introduced scaled vector transport [26, Definition 2.2] in order to remove the assumption of isometric vector transport from the convergence analysis. Zhu and Sato [34] proposed Riemannian conjugate gradient methods that use an inverse retraction instead of vector transport. In [24], Sato proposed a general framework of Riemannian conjugate gradient methods. This framework uses a general map instead of vector transport and utilizes the existing Riemannian conjugate gradient methods such as ones that use vector transport, scaled vector transport [26], or inverse retraction [34].

In [19], Ring and Wirth proposed the BFGS method, which has a global convergence property under some convexity assumptions. Narushima et al. [16] proposed memoryless quasi-Newton methods based on the spectral-scaling Broyden family on Riemannian manifolds. They extended the memoryless spectral-scaling Broyden family in Euclidean space to Riemannian manifolds with an additional modification to ensure a sufficient descent condition. Moreover, they presented a global convergence analysis under the Wolfe conditions. In particular, they did not assume convexity of the objective function or isometric vector transport. The results of the previous studies are summarized in Tables 1 and 2.

In this paper, we propose a modified memoryless quasi-Newton method based on the spectral-scaling Broyden family on Riemannian manifolds, exploiting the idea used in the paper [13]. In the case of Euclidean space, Nakayama [13] reported that the modified memoryless quasi-Newton method based on the spectral-scaling Broyden family shows good experimental performance with parameter tuning. Therefore, it is worth extending it to Riemannian manifolds. Our method is based on the memoryless quasi-Newton methods on Riemannian manifolds proposed by Narushima et al. [16] as well as on the modification by Kou and Dai [9]. It uses a general map to transport vectors similarly to the general framework of Riemannian conjugate gradient methods [25]. This generalization allows us to use maps such as an inverse retraction [34] instead of vector transport. We show that our method generates a search direction satisfying the sufficient descent condition under some assumptions on the parameters (see Proposition 4.1). Moreover, we present global convergence analyses under the Wolfe conditions (see Theorem 4.2). Furthermore, we describe the results of numerical experiments comparing our method with the existing ones, including Riemannian conjugate gradient methods [20] and the memoryless spectral-scaling Broyden family on Riemannian manifolds [16]. The key advantages of the proposed methods are the added parameter \(\xi _{k-1}\) and support for maps other than vector transports. As shown in the numerical experiments, the proposed method may outperform the existing methods depending on how the parameter \(\xi _{k-1}\) is chosen. It has an advantage over [16] in that it can use a map such as an inverse retraction, which is not applicable in [16].

This paper is organized as follows. Section 2 reviews the fundamentals of Riemannian geometry and Riemannian optimization. Section 3 proposes the modified memoryless quasi-Newton method based on the spectral-scaling Broyden family. Section 4 gives a global convergence analysis. Section 5 compares the proposed method with the existing methods through numerical experiments. Section 6 concludes the paper.

2 Mathematical Preliminaries

Let M be a Riemannian manifold with Riemannian metric g. \(T_xM\) denotes the tangent space of M at a point \(x\in M\). The tangent bundle of M is denoted by TM. A Riemannian metric at \(x\in M\) is denoted by \(\langle \cdot ,\cdot \rangle _x:T_xM\times T_xM\rightarrow \mathbb {R}\). The induced norm of a tangent vector \(\eta \in T_xM\) is defined by \(\Vert \eta \Vert _x:=\sqrt{\langle \eta ,\eta \rangle _x}\). For a given tangent vector \(\eta \in T_xM\), \(\eta ^\flat \) represents the flat of \(\eta \), i.e., \(\eta ^\flat :T_xM\rightarrow \mathbb {R}:\xi \mapsto \langle \eta ,\xi \rangle _x\). Let \(F:M\rightarrow N\) be a smooth map between smooth manifolds; then, the derivative of F at \(x\in M\) is denoted by \(\textrm{D}F(x):T_xM\rightarrow T_{F(x)}N\). For a smooth function \(f:M\rightarrow \mathbb {R}\), \(\textrm{grad}f(x)\) denotes the Riemannian gradient at \(x\in M\), i.e., a unique element of \(T_xM\) satisfying

for all \(\eta \in T_xM\). \(\textrm{Hess}f(x)\) denotes the Riemannian Hessian at \(x\in M\), which is defined as

where \(\nabla \) denotes the Levi-Civita connection of M (see [2]).

Definition 2.1

Any smooth map \(R:TM\rightarrow M\) is called a retraction on M if it has the following properties.

-

\(R_x(0_x)=x\), where \(0_x\) denotes the zero element of \(T_xM\);

-

\(\textrm{D}R_x(0_x)=\textrm{id}_{T_xM}\) with the canonical identification \(T_{0_x}(T_xM)\simeq T_xM\),

where \(R_x\) denotes the restriction of R to \(T_xM\).

Definition 2.2

Any smooth map \(\mathcal {T}:TM\oplus TM\rightarrow TM\) is called a vector transport on M if it has the following properties.

-

There exists a retraction R such that \(\mathcal {T}_\eta (\xi )\in T_{R_x(\eta )}M\) for all \(x\in M\) and \(\eta ,\xi \in T_xM\);

-

\(\mathcal {T}_{0_x}(\xi )=\xi \) for all \(x\in M\) and \(\xi \in T_xM\);

-

\(\mathcal {T}_\eta (a\xi +b\zeta )=a\mathcal {T}_\eta (\xi )+b\mathcal {T}_\eta (\zeta )\) for all \(x\in M\), \(a,b\in \mathbb {R}\) and \(\eta ,\xi ,\zeta \in T_xM\),

where \(\mathcal {T}_\eta (\xi ):=\mathcal {T}(\eta ,\xi )\).

Let us consider an iterative method in Riemannian optimization. For an initial point \(x_0\in M\), step size \(\alpha _k>0\), and search direction \(\eta _k\in T_{x_k}M\), the k-th approximation to the solution is described as

where R is a retraction. We define \(g_k:=\textrm{grad}f(x_k)\). Various algorithms have been developed to determine the search direction \(\eta _k\). We say that \(\eta _k\) is a sufficient descent direction if the sufficient descent condition,

holds for some constant \(\kappa >0\).

In [6, 7, 16], the search direction \(\eta _k\in T_{x_k}M\) of Riemannian quasi-Newton methods is computed as

where \(\mathcal {H}_k:T_{x_k}M\rightarrow T_{x_k}M\) is a symmetric approximation to \(\textrm{Hess}f(x_k)^{-1}\).

In [25], Sato proposed a general framework of Riemannian conjugate gradient methods by using a map \(\mathscr {T}^{(k-1)}:T_{x_{k-1}}M\rightarrow T_{x_k}M\) which satisfies Assumption 2.1, to transport \(\eta _{k-1}\in T_{x_{k-1}}M\) to \(T_{x_k}M\); i.e., the search direction \(\eta _k\) is computed as

where \(\beta _k\in \mathbb {R}\), and \(\sigma _{k-1}\) is a scaling parameter (see [25, Section 4.1]) satisfying

Assumption 2.1

There exist \(C \ge 0\) and \(K\subset \mathbb {N}\), such that for all \(k\in K\),

and for all \(k\in \mathbb {N}-K\),

Note that inequality (5) is weaker than (4). For k satisfying the stronger condition (4), the assumption of Theorem 4.1 can be weakened. Further details can be found in [25, Remark 4.3]. Assumption 2.1 requires that \(\mathscr {T}^{(k)}\) is an approximation of the differentiated retraction. Therefore, the differentiated retraction clearly satisfies the conditions of Assumption 2.1. In [25, Example 4.5] and [25, Example 4.6], Sato gives examples of maps \(\mathscr {T}^{(k)}\) satisfying Assumption 2.1 in the case of the unit sphere and Grassmann manifolds, respectively. In [34, Proposition 1], Zhu and Sato proved that the inverse of the retraction satisfies Assumption 2.1.

Sato [25, Section 4.3] generalized the parameter \(\beta _k\) (i.e., Fletcher–Reeves (FR) [26], Dai–Yuan (DY) [23], Polak–Ribière–Polyak (PRP) and Hestenes–Stiefel (HS) methods) as follows:

where \(l_{k-1}>0\) and \(\mathscr {S}^{(k-1)}:T_{x_{k-1}}M\rightarrow T_{x_k}M\) is an appropriate mapping. Therefore, we can use the Hager-Zhang (HZ) methods [22, Section 3] generalized by the above techniques, as follows:

where \(\mu >1/4\).

We suppose that the search direction \(\eta _k\in T_{x_k}M\) is a descent direction. In [25, Section 4.4], Sato introduced the Riemannian version of the Wolfe conditions with a \(\mathscr {T}^{(k)}:T_{x_k}M\rightarrow T_{x_{k+1}}M\), called \(\mathscr {T}^{(k)}\)-Wolfe conditions. \(\mathscr {T}^{(k)}\)-Wolfe conditions are written as

where \(0<c_1<c_2<1\). Note that the existence of a step size \(\alpha _k>0\) satisfying the \(\mathscr {T}^{(k)}\)-Wolfe conditions is discussed in [25, Section 4.4]. Moreover, algorithms [21, Algorithm 3] and [23, Section 5.1] exist for finding step sizes which satisfy the \(\mathscr {T}^{(k)}\)-Wolfe conditions.

3 Memoryless Spectral-Scaling Broyden Family

Let us start by reviewing the memoryless spectral-scaling Broyden family in Euclidean space. In the Euclidean setting, an iterative optimization algorithm updates the current iterate \(x_k\) to the next iterate \(x_{k+1}\) with the updating formula,

where \(\alpha _k>0\) is a positive step size. One often chooses a step size \(\alpha _k>0\) to satisfy the Wolfe conditions (see [31, 32]),

where \(0<c_1<c_2<1\). The search direction \(d_k\) of the quasi-Newton methods is defined by

where \(g_k=\nabla f(x_k)\) and \(H_k\) is a symmetric approximation to \(\nabla ^2f(x_k)^{-1}\). In this paper, we will focus on the Broyden family, written as

where

\(s_{k-1}=x_k-x_{k-1}\) and \(y_{k-1}=\nabla f(x_k)-\nabla f(x_{k-1})\). \(\phi _{k-1}\) is a parameter, which becomes the DFP formula when \(\phi _{k-1}=0\) or the BFGS formula when \(\phi _{k-1}=1\) (see [18, 28]). Here, if \(\phi _{k-1}\in [0,1]\), then (11) is a convex combination of the DFP formula and the BFGS formula; we call this interval the convex class. Zhang and Tewarson [33] found a better choice in the case \(\phi _{k-1}>1\); we call this interval the preconvex class. In [3], Chen and Cheng proposed the Broyden family based on the spectral-scaling secant condition [4] as follows:

where \(\tau _{k-1}>0\) is a spectral-scaling parameter.

Shanno [27] proposed memoryless quasi-Newton methods in which \(H_{k-1}\) is replaced with the identity matrix in (11). Memoryless quasi-Newton methods avoid having to make memory storage for matrices and can solve large-scale unconstrained optimization problems. In addition, Nakayama, Narushima and Yabe [15] proposed memoryless quasi-Newton methods based on the spectral-scaling Broyden family by replacing \(H_{k-1}\) with the identity matrix in (12), i.e.,

where

From (10) and (12), the search direction \(d_k\) of memoryless quasi-Newton methods based on the spectral-scaling Broyden family can be computed as

In [15], they also proved global convergence for step sizes satisfying the Wolfe conditions (see [15, Theorem 3.1] and [15, Theorem 3.6]). In [9], Kou and Dai proposed a modified memoryless self-scaling BFGS method and showed that it generates a search direction satisfying the sufficient descent condition. Moreover, Nakayama [13] used the modification by Kou and Dai and proposed a search direction \(d_k\) defined by

where \(\xi _{k-1}\in [0,1]\) is a parameter.

3.1 Memoryless Spectral-Scaling Broyden Family on Riemannian Manifolds

We define \(s_{k-1}=\mathcal {T}_{\alpha _{k-1}\eta _{k-1}}(\alpha _{k-1}\eta _{k-1})\) and \(y_{k-1}=g_k-\mathcal {T}_{\alpha _{k-1}\eta _{k-1}}(g_{k-1})\). The Riemannian quasi-Newton method with the spectral-scaling Broyden family [16, (23)] is written as

where

and

Here, \(\phi _{k-1}\ge 0\) is a parameter, and \(\tau _{k-1}>0\) is a spectral-scaling parameter.

The idea of behind the memoryless spectral-scaling Broyden family is very simple: replace \(\tilde{\mathcal {H}}_{k-1}\) with \(\textrm{id}_{T_{x_{k-1}}M}\). In [16], a memoryless spectral-scaling Broyden family on a Riemannian manifold is proposed by replacing \(\tilde{\mathcal {H}}_{k-1}\) with \(\textrm{id}_{T_{x_k}M}\). To guarantee global convergence, they replaced \(y_{k-1}\) by \(z_{k-1}\in T_{x_k}M\) satisfying the following conditions [16, (27)]: for positive constants \(\underline{\nu },\overline{\nu }>0\),

Here, we can choose \(z_{k-1}\) by using Li-Fukushima regularization [11], which is a Levenberg-Marquardt type of regularization, and set

where

and \(\hat{\nu }>0\). We can also use Powell’s damping technique [18], which sets

where \(\hat{\nu }\in (0,1)\) and

The proof that these choices satisfy conditions (15) and (16) is given in [16, Proposition 4.1]. Thus, a memoryless spectral-scaling Broyden family on a Riemannian manifold [16, (28)] can be described as

where

Here, \(\gamma _{k-1}>0\) is a sizing parameter. From (3), we can compute the search direction of the memoryless spectral-scaling Broyden family on a Riemannian manifold as follows:

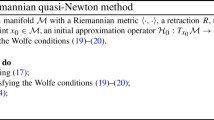

3.2 Proposed Algorithm

Let \(\mathscr {T}^{(k-1)}:T_{x_{k-1}}M\rightarrow T_{x_k}M\) be a map which satisfies Assumption 2.1. Furthermore, we define \(y_{k-1}=g_k-\mathscr {T}^{(k-1)}(g_{k-1})\) and \(s_{k-1}=\mathscr {T}^{(k-1)}(\alpha _{k-1}\eta _{k-1})\). We propose the following search direction of the modified memoryless spectral-scaling Broyden family on a Riemannian manifold:

where \(\xi _{k-1}\in [0,1]\) is a parameter, and \(z_{k-1}\in T_{x_k}M\) is a tangent vector satisfying (15) and (16). Note that equation (21) has not only added \(\xi _{k-1}\), but also changed the definition of the two tangent vectors \(y_{k-1}\) and \(s_{k-1}\) for determining \(z_{k-1}\). The proposed algorithm is listed in Algorithm 1. Note that Algorithm 1 is a generalization of memoryless quasi-Newton methods based on the spectral-scaling Broyden family proposed in [16]. In fact, if \(\xi _{k-1}=1\) and \(\mathscr {T}^{(k-1)}=\mathcal {T}_{\alpha _{k-1}\eta _{k-1}}(\cdot )\), then Algorithm 1 coincides with it.

4 Convergence Analysis

Assumption 4.1

Let \(f:M\rightarrow \mathbb {R}\) be a smooth, bounded below function with the following property: there exists \(L>0\) such that

for all \(\eta \le T_xM\), \(\Vert \eta \Vert _x=1\), \(x\in M\) and \(t\ge 0\).

Assumption 4.2

We suppose that there exists \(\Gamma >0\) such that

for all k.

Zoutendijk’s theorem about the \(\mathscr {T}^{(k)}\)-Wolfe conditions [25, Theorem 5.3], is described as follows:

Theorem 4.1

Suppose that Assumptions 2.1 and 4.1 hold. Let \((x_k)_{k=0,1,\cdots }\) be a sequence generated by an iterative method of the form (1). We assume that the step size \(\alpha _k\) satisfies the \(\mathscr {T}^{(k)}\)-Wolfe conditions (8) and (9). If the search direction \(\eta _k\) is a descent direction and there exists \(\mu >0\), such that \(\eta _k\) satisfies \(\Vert g_k\Vert _{x_k}\le \mu \Vert \eta _k\Vert _{x_k}\) for all \(k\in \mathbb {N}-K\), then the following holds:

where K is the subset of \(\mathbb {N}\) in Assumption 2.1.

We present a proof that the search direction (21) satisfies the sufficient descent condition (2), which involves generalizing the Euclidean case in [13, Proposition 3.1] and [15, Proposition 2.1].

Proposition 4.1

Assume that \(0<\underline{\gamma }\le \gamma _{k-1}\) and \(0\le \phi _{k-1}\le \overline{\phi }^2\) hold, where \(1<\overline{\phi }<2\). The search direction (21) with

where \(0\le \overline{\xi }<1\), satisfies the sufficient descent condition (2) with

Proof

The proof involves extending the discussion in [13, Proposition 3.1] to the case of Riemannian manifolds. For convenience, let us set

From the definition of the search direction (2), we have

From the relation \(2\langle u,v\rangle \le \Vert u\Vert ^2+\Vert v\Vert ^2\) for any vectors u and v in an inner product space, we obtain

From (15) (i.e., \(0<\underline{\nu }\Vert s_{k-1}\Vert _{x_k}^2\le s_{k-1}^\flat z_{k-1}\)), we have

Here, we consider the case \(0\le \phi _{k-1}\le 1\). From \(\xi _{k-1}(1-\phi _{k-1})\ge 0\) and the Cauchy-Schwarz inequality, we obtain

From \(0\le \xi _{k-1}\le \overline{\xi }<1\) and \(0\le \underline{\gamma }\le \gamma _{k-1}\), we have

Next, let us consider the case \(1<\phi _{k-1}<\overline{\phi }\). From \(\xi _{k-1}(1-\phi _{k-1})\le 0\) and \(0\le \underline{\gamma }\le \gamma _{k-1}\), we obtain

Therefore, the search direction (21) satisfies the sufficient descent condition (2), i.e., \(\langle g_k,\eta _k\rangle _{x_k}\le -\kappa \Vert g_k\Vert _{x_k}^2\), where

\(\square \)

Now let us show the global convergence of Algorithm 1.

Theorem 4.2

Suppose that Assumptions 2.1,4.1 and 4.2 are satisfied. Assume further that \(0<\underline{\gamma }\le \gamma _{k-1}\le \overline{\gamma }\), \(\underline{\tau }\le \tau _{k-1}\) and \(0\le \phi _{k-1}\le \overline{\phi }^2\) hold, where \(\underline{\tau }>0\) and \(1<\overline{\phi }<2\). Moreover, suppose that \(\xi _k\in [0,1]\) satisfies (22). Let \((x_k)_{k=0,1,\cdots }\) be a sequence generated by Algorithm 1, and let the step size \(\alpha _k\) satisfy the \(\mathscr {T}^{(k)}\)-Wolfe conditions (8) and (9). Then, Algorithm 1 converges in the sense that

holds.

Proof

For convenience, let us set

From (21) and the triangle inequality, we have

Here, from the Cauchy-Schwarz inequality, we obtain

which together with (15) (i.e., \(\underline{\nu }\Vert s_{k-1}\Vert _{x_k}^2\le s_{k-1}^\flat z_{k-1}\)) and \(0\le \phi _{k-1}<4\), gives

From (16) (i.e., \(\Vert z_{k-1}\Vert _{x_k}\le \overline{\nu }\Vert s_{k-1}\Vert _{x_k}\)), \(0\le \xi _{k-1}\le 1\), and \(\gamma _{k-1}\le \overline{\gamma }\), we have

which, together with \(\Vert g_k\Vert _{x_k}\le \Gamma \), give

To prove convergence by contradiction, suppose that there exists a positive constant \(\varepsilon >0\) such that

for all k. From Proposition 4.1,

where

It follows from the above inequalities that

This contradicts the Zoutendijk theorem (Theorem 4.1) and thus completes the proof.\(\square \)

5 Numerical Experiments

We compared the proposed method with existing methods, including the Riemannian conjugate gradient methods and memoryless spectral-scaling Broyden family. In the experiments, we implemented the proposed method as an optimizer of pymanopt (see [29]) and solved two Riemannian optimization problems (Problems 5.1 and 5.2).

Problem 5.1 is the Rayleigh-quotient minimization problem on the unit sphere [2, Chapter 4.6].

Problem 5.1

Let \(A\in \mathbb {R}^{n\times n}\) be a symmetric matrix,

where \(\Vert \cdot \Vert \) denotes the Euclidean norm.

In the experiments, we set \(n=100\) and generated a matrix \(B\in \mathbb {R}^{n\times n}\) with randomly chosen elements by using numpy.random.randn. Then, we set a symmetric matrix \(A=(B+B^\top )/2\).

Absil and Gallivan [1, Section 3] introduced an off-diagonal cost function. Problem 5.2 is an off-diagonal cost function minimization problem on an oblique manifold.

Problem 5.2

Let \(C_i\in \mathbb {R}^{n\times n}\) \((i=1,\cdots ,N)\) be symmetric matrices,

where \(\Vert \cdot \Vert _F\) denotes the Frobenius norm and \(\textrm{ddiag}(M)\) denotes a diagonal matrix M with all its off-diagonal elements set to zero.

In the experiments, we set \(N=5\), \(n=10\), and \(p=5\) and generated five matrices \(B_i\in \mathbb {R}^{n\times n}\) \((i=1,\cdots ,5)\) with randomly chosen elements by using numpy.random.randn. Then, we set symmetric matrices \(C_i=(B_i+B_i^\top )/2\) \((i=1,\cdots ,5)\).

The experiments used a MacBook Air (M1, 2020) with version 12.2 of the macOS Monterey operating system. The algorithms were written in Python 3.11.3 with the NumPy 1.25.0 package and the Matplotlib 3.7.1 package. Python implementations of the methods used in the numerical experiments are available at https://github.com/iiduka-researches/202307-memoryless.

We considered that a sequence had converged to an optimal solution when the stopping condition,

was satisfied. We set \(\mathscr {T}^{(k-1)}=\mathcal {T}_{\alpha _{k-1}\eta _{k-1}}^R(\cdot )\),

We compared the proposed methods with the existing Riemannian optimization algorithms, including Riemannian conjugate gradient methods. Moreover, we compared twelve versions of the proposed method with different parameters, i.e., \(\phi _{k-1}\), \(z_{k-1}\) and \(\xi _{k-1}\). We compared the BFGS formula \(\phi _{k-1}=1\) and the preconvex class \(\phi _{k-1}\in [0,\infty )\). For the preconvex class (see [16, (43)]), we used

where

Moreover, we compared Li-Fukushima regularization (17) and (18) with \(\hat{\nu }=10^{-6}\) and Powell’s damping technique (19) and (20) with \(\hat{\nu }=0.1\). In addition, we used a constant parameter \(\xi _{k-1}=\xi \in [0,1]\) and compared our methods with \(\xi =1\) (i.e., the existing methods when \(\mathscr {T}^{(k)}=\mathcal {T}_{\alpha _{k-1}\eta _{k-1}}(\cdot )\)), \(\xi =0.8\), and \(\xi =0.1\). For comparison, we also tested two Riemannian conjugate gradient methods, i.e., DY (6) and HZ (7).

As the measure for these comparisons, we calculated the performance profile \(P_s:\mathbb {R}\rightarrow [0,1]\) [5] defined as follows: let \(\mathcal {P}\) and \(\mathcal {S}\) be the sets of problems and solvers, respectively. For each \(p\in \mathcal {P}\) and \(s\in \mathcal {S}\),

We defined the performance ratio \(r_{p,s}\) as

Next, we defined the performance profile \(P_s\) for all \(\tau \in \mathbb {R}\) as

where \(|A|\) denotes the number of elements in a set A. In the experiments, we set \(|\mathcal {P}|=100\) for Problems 5.1 and 5.2, respectively.

Figures 1–4 plot the results of our experiments. In particular, Fig. 1 shows the numerical results for Problem 5.1 with Li-Fukushima regularization (17) and (18). It shows that Algorithm 1 with \(\xi =0.1\) has much higher performance than that of Algorithm 1 with \(\xi =1\) (i.e., the existing method) regardless of whether the BFGS formula or the preconvex class is used. In addition, we can see that Algorithm 1 with \(\xi =0.8\) and \(\xi =1\) have about the same performance.

Figure 2 shows the numerical results for solving Problem 5.1 with Powell’s damping technique (19) and (20). It shows that Algorithm 1 with \(\xi =0.1\) is superior to Algorithm 1 with \(\xi =1\) (i.e., the existing method), regardless of whether the BFGS formula or the preconvex class is used. Moreover, it can be seen that Algorithm 1 with \(\xi =0.8\) and \(\xi =1\) has about the same performance.

Figure 3 shows numerical results for Problem 5.1 with Li-Fukushima regularization (17) and (18). It shows that if we use the BFGS formula (i.e., \(\phi _k=1\)), then Algorithm 1 with \(\xi =0.8\) and the HZ method outperform the others. However, Algorithm 1 with the preconvex class is not compatible with is an off-diagonal cost function minimization problem on an oblique manifold.

Figure 4 shows the numerical results for solving Problem 5.1 with Powell’s damping technique (19) and (20). It shows that if we use the BFGS formula (i.e., \(\phi _k=1\)), then Algorithm 1 with \(\xi =0.8\) or \(\xi =1\) is superior to the others. However, Algorithm 1 with the preconvex class is not compatible with an off-diagonal cost function minimization problem on an oblique manifold. Therefore, we can see that Algorithm 1 with the BFGS formula (i.e., \(\phi _k=1\)) is suitable for solving an off-diagonal cost function minimization problem on an oblique manifold.

6 Conclusion

This paper presented a modified memoryless quasi-Newton method with the spectral-scaling Broyden family on Riemannian manifolds, i.e., Algorithm 1. Algorithm 1 is a generalization of the memoryless self-scaling Broyden family on Riemannian manifolds. Specifically, it involves adding one parameter to the search direction. We use a general map instead of vector transport, similarly to the general framework of Riemannian conjugate gradient methods. Therefore, we can utilize methods that use vector transport, scaled vector transport, or an inverse retraction. Moreover, we proved that the search direction satisfies the sufficient descent condition, and the method globally converges under the Wolfe conditions. Moreover, the numerical experiments indicated that the proposed method with the BFGS formula is suitable for solving an off-diagonal cost function minimization problem on an oblique manifold.

Data availability

Data sharing is not applicable to this article as no datasets were generated or analysed during the current study.

References

Absil, P.-A., Gallivan, K. A.: Joint diagonalization on the oblique manifold for independent component analysis. In 2006 IEEE International Conference on Acoustics Speech and Signal Processing Proceedings, volume 5, pages V–V. IEEE, (2006)

Absil, P.-A., Mahony, R., Sepulchre, R.: Optimization Algorithms on Matrix Manifolds. Princeton University Press, (2008)

Chen, Z., Cheng, W.: Spectral-scaling quasi-Newton methods with updates from the one parameter of the Broyden family. J. Comput. Appl. Math. 248, 88–98 (2013)

Cheng, W., Li, D.: Spectral scaling BFGS method. J. Opt. Theor. Appl. 146(2), 305–319 (2010)

Dolan, E.D., Moré, J.J.: Benchmarking optimization software with performance profiles. Math. Prog. 91(2), 201–213 (2002)

Huang, W., Absil, P.-A., Gallivan, K.A.: A riemannian BFGS method without differentiated retraction for nonconvex optimization problems. SIAM J. Opt. 28(1), 470–495 (2018)

Huang, W., Gallivan, K.A., Absil, P.-A.: A Broyden class of quasi-Newton methods for Riemannian optimization. SIAM J. Opt. 25(3), 1660–1685 (2015)

Huang, W., Gallivan, K.A., Srivastava, A., Absil, P.-A.: Riemannian optimization for registration of curves in elastic shape analysis. J. Math. Imaging Vis. 54, 320–343 (2016)

Kou, C.-X., Dai, Y.-H.: A modified self-scaling memoryless Broyden-Fletcher-Goldfarb-Shanno method for unconstrained optimization. J. Opt. Theor. Appl. 165, 209–224 (2015)

Kressner, D., Steinlechner, M., Vandereycken, B.: Low-rank tensor completion by Riemannian optimization. BIT Num. Math. 54, 447–468 (2014)

Li, D.-H., Fukushima, M.: A modified BFGS method and its global convergence in nonconvex minimization. J. Comput. Appl. Math. 129(1–2), 15–35 (2001)

Moyi, A.U., Leong, W.J.: A sufficient descent three-term conjugate gradient method via symmetric rank-one update for large-scale optimization. Optimization 65(1), 121–143 (2016)

Nakayama, S.: A hybrid method of three-term conjugate gradient method and memoryless quasi-Newton method for unconstrained optimization. SUT J. Math. 54(1), 79–98 (2018)

Nakayama, S., Narushima, Y., Yabe, H.: A memoryless symmetric rank-one method with sufficient descent property for unconstrained optimization. J. Operat. Res. Soc. Japan 61(1), 53–70 (2018)

Nakayama, S., Narushima, Y., Yabe, H.: Memoryless quasi-Newton methods based on spectral-scaling Broyden family for unconstrained optimization. J. Indust. Manage. Opt. 15(4), 1773–1793 (2019)

Narushima, Y., Nakayama, S., Takemura, M., Yabe, H.: Memoryless quasi-Newton methods based on the spectral-scaling Broyden family for Riemannian optimization. J. Opt. Theor. Appl. 197(2), 639–664 (2023)

Nickel, M., Kiela, D.: Poincaré embeddings for learning hierarchical representations. Advances in Neural Information Processing Systems, 30, (2017)

Nocedal, J., Wright, S. J.: Numerical Optimization. Springer, (1999)

Ring, W., Wirth, B.: Optimization methods on Riemannian manifolds and their application to shape space. SIAM J. Opt. 22(2), 596–627 (2012)

Sakai, H., Iiduka, H.: Hybrid Riemannian conjugate gradient methods with global convergence properties. Comput. Opt. Appl. 77, 811–830 (2020)

Sakai, H., Iiduka, H.: Sufficient descent Riemannian conjugate gradient methods. J. Opt. Theor. Appl. 190(1), 130–150 (2021)

Sakai, H., Sato, H., Iiduka, H.: Global convergence of Hager-Zhang type Riemannian conjugate gradient method. Appl. Math. Comput. 441, 127685 (2023)

Sato, H.: A Dai-Yuan-type Riemannian conjugate gradient method with the weak Wolfe conditions. Comput. Opt. Appl. 64(1), 101–118 (2016)

Sato, H.: Riemannian Optimization and Its Applications. Springer, (2021)

Sato, H.: Riemannian conjugate gradient methods: General framework and specific algorithms with convergence analyses. SIAM J. Opt. 32(4), 2690–2717 (2022)

Sato, H., Iwai, T.: A new, globally convergent Riemannian conjugate gradient method. Optimization 64(4), 1011–1031 (2015)

Shanno, D.F.: Conjugate gradient methods with inexact searches. Math. Operat. Res. 3(3), 244–256 (1978)

Sun, W., Yuan, Y.-X.: Optimization Theory and Methods: Nonlinear Programming, volume 1. Springer, (2006)

Townsend, J., Koep, N., Weichwald, S.: Pymanopt: a python toolbox for optimization on manifolds using automatic differentiation. J. Mach. Learn. Res. 17(1), 4755–4759 (2016)

Vandereycken, B.: Low-rank matrix completion by Riemannian optimization. SIAM J. Opt. 23(2), 1214–1236 (2013)

Wolfe, P.: Convergence conditions for ascent methods. SIAM Rev. 11(2), 226–235 (1969)

Wolfe, P.: Convergence conditions for ascent methods II: Some corrections. SIAM Rev. 13(2), 185–188 (1971)

Zhang, Y., Tewarson, R.: Quasi-Newton algorithms with updates from the preconvex part of Broyden’s family. IMA J. Num. Anal. 8(4), 487–509 (1988)

Zhu, X., Sato, H.: Riemannian conjugate gradient methods with inverse retraction. Comput. Opt. Appl. 77, 779–810 (2020)

Acknowledgements

This work was supported by a JSPS KAKENHI Grant Number JP23KJ2003.

Funding

Open Access funding provided by Meiji University.

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Alexandru Kristály.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sakai, H., Iiduka, H. Modified Memoryless Spectral-Scaling Broyden Family on Riemannian Manifolds. J Optim Theory Appl (2024). https://doi.org/10.1007/s10957-024-02449-8

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10957-024-02449-8

Keywords

- Riemannian optimization

- Memoryless quasi-Newton method

- Riemannian geometry

- Sufficient descent condition