Abstract

We consider optimal control problems involving two constraint sets: one comprised of linear ordinary differential equations with the initial and terminal states specified and the other defined by the control variables constrained by simple bounds. When the intersection of these two sets is empty, typically because the bounds on the control variables are too tight, the problem becomes infeasible. In this paper, we prove that, under a controllability assumption, the “best approximation” optimal control minimizing the distance (and thus finding the “gap”) between the two sets is of bang–bang type, with the “gap function” playing the role of a switching function. The critically feasible control solution (the case when one has the smallest control bound for which the problem is feasible) is also shown to be of bang–bang type. We present the full analytical solution for the critically feasible problem involving the (simple but rich enough) double integrator. We illustrate the overall results numerically on various challenging example problems.

1 Introduction

Optimal control problems are infinite-dimensional optimization problems, involving processes evolving with time. Infeasibility in optimal control arises in many situations, most typically when resources for a process are overly limited: for example, insufficient amount of insecticides for a dengue epidemic [32] or a highly restricted driving motor capacity of a vehicle [36]. Infeasibility can also arise when one aims to achieve initial or terminal states which are not realistic or when there are state constraints which are too restrictive [38]. In this paper, we consider infeasible and critically feasible optimal control problems, where the dynamics are governed by linear ordinary differential (state) equations with initial and end states specified and the control variables constrained by simple bounds.

Infeasibility is also widely encountered in finite-dimensional optimization problems: errors in measurements, for example the noise in images taken during computed tomography, may give rise to an inconsistent set of equations in the associated optimization model, making the problem infeasible [33]. In [15], and in its extension [14], algorithms incorporating sequential quadratic programming methods are proposed for infeasible finite-dimensional nonconvex optimization problems. Under a set of conditions, these algorithms are shown to converge to an infeasible stationary point, minimizing a measure of infeasibility [14, 15].

In their paper [6], Bauschke and Moursi study the Douglas–Rachford (DR) algorithm for finding a point in the intersection of two nonempty closed and convex sets in (possibly infinite-dimensional) Hilbert spaces. They show that, when the intersection of the two sets is empty, i.e., when the problem is infeasible, the DR algorithm finds a pair of points in the respective sets which minimize (as a measure of infeasibility) the distance between the two sets; in other words, the DR algorithm finds the “gap” between the two constraint sets, assuming that the gap is attained. The work in [6] has been further generalized in [7]. Indeed, the authors of [7] proved that, under mild assumptions (see also [26]), the DR algorithm can find a generalized solution (also known as a normal solution; see [4, Definition 3.7]) for inconsistent convex optimization problems, i.e., when the solution set is empty.

The results in [7] are applicable to infinite-dimensional problems (e.g., optimal control problems). Moreover, if one of the constraints is hard, that is, if a particular constraint must be satisfied, then a solution satisfying the hard constraint, in other words an implementable solution, can be returned. In the present paper, we use the idea of minimizing the distance between the two sets, namely finding the “gap” between the two sets (assuming it is attained), as our motivation in finding a best approximation solution to infeasible optimal control problems so that the optimal control we find is also implementable.

The optimal control problems we consider have two constraint sets: one involves the ODE with specified initial and end states (this set is an affine subspace, which is closed and convex) and the other involves the box constraint on the control (this set is a box, which is also closed and convex). We pose the problem of finding a best approximation pair to the infeasible problem as one of minimizing the distance between these two constraint sets and finding the “gap”. In practical optimal control problems, the control variable is expected to satisfy the simple bounds imposed on it; therefore, we regard the box as a hard constraint set.

A best approximation pair of Problem (Pf) below (see Eq. (10) below) can be expressed as a solution of a minimization problem which is strongly convex w.r.t. one of the variables (see Lemmas 1–2).

We prove that, under a controllability assumption, the control variable that belongs to the box and solves the best approximation problem is of bang–bang type, i.e., the value of the control variable switches between its lower and upper bounds. Interestingly, the sign of a gap function component determines which bound value the corresponding control variable component in the box must take; in other words, a gap function component plays the role of a switching function. We also formulate the problem of finding a critically feasible solution, i.e., a solution for the least bound on the control resulting in a nonempty intersection of the two constraint sets. We prove that the critically feasible optimal control is also of bang–bang type. For the case of a double integrator problem, which is often employed as part of case studies for optimal control, we derive the full analytical solution for the critically feasible optimal control problem.

For a numerical illustration of the results, both for the critically feasible and infeasible cases, we study example problems involving (i) a double integrator, (ii) a damped oscillator and (iii) a machine tool manipulator, in the order of increasing numerical difficulty.

The paper is organized as follows. In Sect. 2, we introduce the optimal control problem and define the two constraint sets, namely the affine space and the box. In Sect. 3, we define the problem of best approximation, provide the maximum principle, discuss controllability and existing results, and derive the first main result of the paper on infeasible problems in Theorem 3. In Sect. 4, we introduce the concept of critical feasibility and provide the second main result in Theorem 4. We also provide the full critically feasible solution for a problem involving the double integrator in Theorem 5. In Sect. 5, we carry out numerical experiments on various example problems to illustrate the results of the paper. Finally in Sect. 6, we provide concluding remarks and comment on future lines of research.

2 Preliminaries

We consider optimal control problems where the aim is to find a control u which minimizes a general functional

\[ \int _0^1 f_0(x(t),u(t),t)\,dt \tag{1} \]

subject to the differential equation constraints

\[ \dot{x}(t) = A(t)\,x(t) + B(t)\,u(t), \quad \text{for a.e. } t\in [0,1], \tag{2} \]

with \(\dot{x}:= dx/dt\), and the boundary conditions

\[ \varphi (x(0),x(1)) = 0. \tag{3} \]

In the optimal control problem above the time horizon is set to be [0, 1], but without loss of generality it can be taken as any interval \([t_0,t_f]\), with \(t_0\) and \(t_f\) specified. The integrand function \(f_0:\mathrm{{I\!R}}^n\times \mathrm{{I\!R}}^m\times [0,1]\rightarrow \mathrm{{I\!R}}_+\) is continuous. We define the state variable vector \(x:[0,1]\rightarrow \mathrm{{I\!R}}^n\) with \(x(t):= (x_1(t),\ldots ,x_n(t))\in \mathrm{{I\!R}}^n\) and the control variable vector \(u:[0,1]\rightarrow \mathrm{{I\!R}}^m\) with \(u(t):= (u_1(t),\ldots ,u_m(t))\in \mathrm{{I\!R}}^m\). The time-varying matrices \(A:[0,1]\rightarrow \mathrm{{I\!R}}^{n\times n}\) and \(B:[0,1]\rightarrow \mathrm{{I\!R}}^{n\times m}\) are continuous. The vector function \(\varphi :\mathrm{{I\!R}}^{2n}\rightarrow \mathrm{{I\!R}}^r\), with \(\varphi (x(0),x(1)):= (\varphi _1(x(0),x(1)),\ldots ,\varphi _r(x(0),x(1)))\in \mathrm{{I\!R}}^r\), is affine.

It is realistic, especially in practical situations, to consider restrictions on the values u is allowed to take. In many applications, it is common practice to impose simple bounds on the components of u(t); namely,

\[ \underline{a}_i(t) \le u_i(t) \le \overline{a}_i(t), \quad \text{for a.e. } t\in [0,1],\ \ i = 1,\ldots ,m, \tag{4} \]

where, respectively, the lower and upper bound functions \(\underline{a}_i,\overline{a}_i:[0,1]\rightarrow \mathrm{{I\!R}}\) are continuous and satisfy \(\underline{a}_i(t) \le \overline{a}_i(t)\), for all \(t\in [0,1]\), \(i = 1,\ldots ,m\). We define for convenience \(\underline{a}:=(\underline{a}_1,\ldots ,\underline{a}_m)\) and \(\overline{a}:=(\overline{a}_1,\ldots ,\overline{a}_m)\), and write in concise form \(\underline{a}(t) \le u(t) \le \overline{a}(t)\); in other words, we formally state

as an expression alternative but equivalent to (4).

The objective functional in (1) and the constraints in (2)–(3) and (4) can be put together to present the optimal control problem as follows.

We split the constraints of Problem (P) into two sets:

We assume that the control system \(\dot{x}(t) = A(t)x(t) + B(t)u(t)\) is controllable (see the precise definition in Sect. 3.3). Then there exists a (possibly not unique) \(u(\cdot )\) such that, when this \(u(\cdot )\) is substituted, the boundary-value problem given in \({\mathcal {A}}\) has a solution \(x(\cdot )\). In other words, \({\mathcal {A}} \ne \varnothing \). Also, clearly, \({\mathcal {B}} \ne \varnothing \). Recall that \(\varphi \) is affine, so the constraint set \({\mathcal {A}}\) is an affine subspace. We note that by [10, Corollary 1],

Given that \({\mathcal {B}}\) is a box, the constraints turn out to be two convex sets in Hilbert space. Moreover, we note that \({\mathcal {B}}\) is closed in \(L^2(0,1;\mathrm{{I\!R}}^m)\). It will be convenient to use the expression

where \(b_i(t)\) is the ith column of the matrix B(t), interpreted as the column vector associated with the ith control component \(u_i\).

If \({\mathcal {A}} \cap {\mathcal {B}} \ne \varnothing \), then one has a feasible LQ optimal control problem. The feasibility problem is posed as one of finding an element in \({\mathcal {A}} \cap {\mathcal {B}}\), namely:

If, however, \({\mathcal {A}} \cap {\mathcal {B}} = \varnothing \), then the problem is said to be infeasible. The feasibility problem in (9) obviously has no solution in this case, but in Sect. 3 we will pose the problem of finding (in some sense) a best approximation solution.

3 Best Approximation Solution to the Infeasible Problem

Consider the case when \({\mathcal {A}} \cap {\mathcal {B}} = \varnothing \). We define the best approximation pair as \((u_{\mathcal {A}}^*, u_{\mathcal {B}}^*) \in {\mathcal {A}}\times {\mathcal {B}}\) which minimizes the squared distance between the two sets. Namely \((u_{\mathcal {A}}^*, u_{\mathcal {B}}^*)\) is in this case required to solve

where \(\Vert \cdot \Vert _{L^2}\) is the \({L^2}\) norm. In other words, we want to minimize the “gap” between the two sets. Observe that \({\mathcal {A}}-{\mathcal {B}}\) is convex, closed (by, e.g., [5, Proposition 3.42]), and nonempty. Therefore, it follows from [2, Sect. 2] and the fact that \({{\mathcal {A}}-{\mathcal {B}}}\) is closed that

We define the gap (function) vector (see [6])

Using \(u_{\mathcal {A}} = v + u_{\mathcal {B}}\) and the definitions of \({\mathcal {A}}\) and \({\mathcal {B}}\) in (6)–(7), the problem in (10) can be rewritten in the format of a classical, or standard, optimal control problem as follows.

where \(\Vert \cdot \Vert _2\) is the Euclidean norm. Problem (Pf) is an optimal control problem with two control variable vectors, namely v and \(u_{\mathcal {B}}\), where \(v(t):=(v_1(t),\ldots ,v_m(t))\in \mathrm{{I\!R}}^m\) and \(u_{\mathcal {B}}(t):= (u_{{\mathcal {B}},1}(t),\ldots ,u_{{\mathcal {B}},m}(t))\in \mathrm{{I\!R}}^m\).

3.1 Properties of Problem (Pf)

Denote by \(S_f\) the set of solutions of Problem (Pf). Recall that, for any given set C of a Hilbert space H, the indicator function of C, denoted by \(\iota _C:H\rightarrow \mathbb {R}\cup \{+\infty \}\), is defined as \(\iota _C(x)=0\) for every \(x\in C\), and \(\iota _C(x)=+\infty \) for every \(x\not \in C\). We show in this section the main properties of Problem (Pf).

Lemma 1

The constraint set of Problem (Pf) is convex and (strongly and weakly) closed (i.e., closed w.r.t. the norm topology and w.r.t. the weak topology in \(L^2\)).

Proof

The constraint set of Problem (Pf) can be written as follows:

where \({\mathcal {A}},\,{\mathcal {B}}\) are as in (6) and (7), respectively. Consider the map

defined by \(\Psi (z,x):=(z+x,x)\). By construction, we have that \(\Psi ({\mathcal {D}})= {\mathcal {A}}\times {\mathcal {B}}\). The map \(\Psi \) is a linear bijection which is continuous in \(L^2\). The convexity of \({\mathcal {D}}\) now follows from the fact that \(\Psi ^{-1}\) is linear and \({\mathcal {A}}\times {\mathcal {B}}\) (being the product of an affine set and a box), is convex. Note also that \({\mathcal {A}}\times {\mathcal {B}}\) is closed because each factor is closed. Indeed, \({\mathcal {A}}\) is closed by (8). The set \({\mathcal {B}}\) is closed in \(L^{2}([0,1];\mathrm{{I\!R}}^m)\) because every sequence converging in \(L^{2}([0,1];\mathrm{{I\!R}}^m)\) has a subsequence converging a.e. in [0, 1]. The latter implies that every limit in the topology of \(L^2\) must belong to \({\mathcal {B}}\). Altogether, the set \({\mathcal {A}}\times {\mathcal {B}}\) is closed in \(L^{2}([0,1];\mathrm{{I\!R}}^m)\) and therefore \({\mathcal {D}}\) is closed because it is the preimage of a closed set by a continuous function. The fact that the closedness holds for both the strong and weak topology follows from convexity (see Fact 1(ii) below). \(\square \)

Lemma 2 below makes use of some results from Functional Analysis which are summarized in Fact 1 for the reader’s convenience. Fact 1(i) is a corollary of the Bourbaki–Alaoglu theorem [9, Corollary 3.22]. Fact 1(ii) is [9, Theorem 3.7] (see also [5, Theorem 3.34]), and Fact 1(iii) is [9, Corollary 3.9] (see also [5, Theorem 9.1]). Fact 1(ii) and (iii) both follow from the Hahn–Banach theorem.

Fact 1

Let X be a reflexive Banach space.

(i) Let \(K \subset X\) be bounded, closed, and convex. Then K is weakly compact (i.e., K is compact w.r.t. the weak topology in X).

(ii) Let C be a convex subset of X. Then C is closed in the weak topology if and only if it is closed in the strong topology.

(iii) If \(\varphi :X\rightarrow \mathrm{{I\!R}}\cup \{+\infty \}\) is convex and lower-semicontinuous in the strong topology, then it is weakly lower-semicontinuous.

Lemma 2

The solution set of Problem (Pf) is not empty. Moreover, if \((v_1,u_1),\, (v_2,u_2)\) solve Problem (Pf), then \(v_1=v_2\) (i.e., the coordinate v of any solution to Problem (Pf) is unique).

Proof

Note first that (Pf) can be equivalently written with the objective function

where \(\iota _{\mathcal {B}}\) is the indicator function of the set \({\mathcal {B}}\) and \({\mathcal {B}}\) is as in (7). Now the second statement follows directly from the fact that h is strongly convex in the variable v. We proceed next to prove the first statement. Since the functions \(\underline{a},\, \overline{a}\) are continuous, the set \({\mathcal {B}}\) is bounded, and hence h is coercive in both variables. Consider the set \({\mathcal {D}}\) as in the proof of Lemma 1. The coercivity of h allows us to find a closed ball B[0, R] such that a solution of (Pf) (if any) must be in \(D_0:={\mathcal {D}}\cap B[0,R]\). By Lemma 1, \(D_0\) is convex and closed. It is also bounded because it is contained in the ball B[0, R]. By Fact 1(i), \(D_0\) is weakly compact. Since the function \(h_1(v):=\frac{1}{2}\int _0^1 \Vert v(t)\Vert _2^2 \, dt\) is continuous and convex, by Fact 1(iii), it is weakly lower-semicontinuous. Recall that the set \({\mathcal {B}}\) is closed and convex, and hence by Fact 1(ii), it is weakly closed and convex. Therefore, the function \(h_2:=\iota _{\mathcal {B}}\) is weakly lower-semicontinuous. Altogether, \(h= h_1+h_2\) is weakly lower-semicontinuous. We can now consider the problem

By construction, the solution set of Problem (PD) is \(S_f\). Since h is weakly lower-semicontinuous and \(D_0\) is weakly compact, Problem (PD) has a solution, and hence Problem (Pf) has (the same) solution(s). \(\square \)

3.2 Maximum Principle for Problem (Pf)

In what follows we will derive the necessary conditions of optimality for Problem (Pf), using the maximum principle. Various forms of the maximum principle and their proofs can be found in a number of reference books—see, for example, [30, Theorem 1], [21, Chapter 7], [35, Theorem 6.4.1], [28, Theorem 6.37], and [18, Theorem 22.2]. We will state the maximum principle suitably utilizing these references for our setting and notation.

First, define the Hamiltonian function \(H:\mathrm{{I\!R}}^n \times \mathrm{{I\!R}}^m \times \mathrm{{I\!R}}^m \times \mathrm{{I\!R}}^n \times [0,1]\rightarrow \mathrm{{I\!R}}\) for Problem (Pf) as

where \(\lambda _0 \ge 0\), and \(\lambda (t):= (\lambda _1(t),\ldots ,\lambda _n(t))\in \mathrm{{I\!R}}^n\) is the adjoint variable (or costate) vector such that

i.e.,

where the transversality conditions involving \(\lambda (0)\) and \(\lambda (1)\) depend on the boundary condition \(\varphi (x(t_0),x(t_f)) = 0\), but are not expressed here. In (14), the dependence of variables on t is not shown for clarity in appearance, as often done in the optimal control literature.

Maximum Principle. Suppose that the triplet

is optimal for Problem (Pf). Then there exist a number \(\lambda _0 \ge 0\) and a continuous adjoint variable vector \(\lambda \in W^{1,\infty }([0,1];\mathrm{{I\!R}}^n)\) as defined in (15), such that \((\lambda _0,\lambda (t))\ne \textbf{0}\) for all \(t\in [0,1]\), and that, for a.e. \(t\in [0,1]\),

and

for \(i = 1,\ldots ,m\). Condition (16) can in turn be rewritten as

for \(i = 1,\ldots ,m\), i.e.,

for all \(t\in [0,1]\). On the other hand, Condition (17) results in, also by incorporating (18),

for a.e. \(t\in [0,1]\), \(i = 1,\ldots ,m\).
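
As a hedged sketch of how conditions of this kind arise, suppose the Hamiltonian has the standard form below. This form is an assumption made here for illustration (with the minimizing sign convention); it is chosen to be consistent with the state equation \(\dot{x} = A x + B (v + u_{\mathcal {B}})\) of Problem (Pf) and with the expression \(v(t) = -B^T(t)\lambda (t)\) appearing in Theorem 3.

\[
\begin{aligned}
H(x,v,u_{\mathcal {B}},\lambda ,t) &= \frac{\lambda _0}{2}\,\Vert v\Vert _2^2 + \lambda ^T\big (A(t)\,x + B(t)\,(v + u_{\mathcal {B}})\big ), \\
\dot{\lambda } = -\frac{\partial H}{\partial x} &= -A^T(t)\,\lambda , \\
\frac{\partial H}{\partial v_i} = 0 \quad &\Longrightarrow \quad \lambda _0\, v_i(t) = -b_i^T(t)\,\lambda (t), \\
u_{{\mathcal {B}},i}(t) \in \mathop{\mathrm{argmin}}_{\underline{a}_i(t)\le w\le \overline{a}_i(t)} \ \lambda ^T(t)\,b_i(t)\,w \quad &\Longrightarrow \quad
u_{{\mathcal {B}},i}(t) =
\begin{cases}
\overline{a}_i(t), & \text{if } \lambda _0\,v_i(t) > 0,\\
\underline{a}_i(t), & \text{if } \lambda _0\,v_i(t) < 0,\\
\text{undetermined}, & \text{if } \lambda _0\,v_i(t) = 0.
\end{cases}
\end{aligned}
\]

In the last line, the stationarity relation was used to write \(\lambda ^T b_i\, w = -\lambda _0 v_i\, w\), so that minimizing over the interval selects the upper bound when \(\lambda _0 v_i > 0\) and the lower bound when \(\lambda _0 v_i < 0\).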

The expression in (20) prompts two types of optimal control that are widely studied in the optimal control literature, as elaborated next.

Bang–Bang and Singular Types of Optimal Control. If \(v_i(t) \ne 0\) for a.e. \(t\in [t',t'']\subset [0,1]\) with \(t' < t''\), then the optimal control \(u_{{\mathcal {B}},i}(t)\) in (20) is said to be of bang–bang type in the interval \([t',t'']\). In this case, the optimal control might switch from \(u_{{\mathcal {B}},i}(t) = \overline{a}_i(t)\) to \(u_{{\mathcal {B}},i}(t) = \underline{a}_i(t)\), or vice versa, at finitely many switching times in \([t',t'']\). However, if \(v_i(t) = 0\) for a.e. \(t\in [s',s'']\subset [0,1]\), \(s'<s''\), then the optimal control is said to be of singular type in the interval \([s',s'']\). Note that in general the optimal control might also switch from a bang arc to a singular arc, and vice versa.
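
The distinction between the two types can be illustrated with a small sketch. The helper name `best_approx_control` and the tolerance `tol` are hypothetical, and the sign rule used (upper bound for a positive gap component, lower bound for a negative one) is the one that follows from \(v = u_{\mathcal {A}} - u_{\mathcal {B}}\) with \(u_{\mathcal {B}}\) being the point of the box closest to \(u_{\mathcal {A}}\).

```python
def best_approx_control(v, lower, upper, tol=1e-12):
    """Pick the bound selected by the sign of the gap component v_i(t)."""
    if v > tol:
        return upper      # gap points upward: the control sits at the upper bound
    if v < -tol:
        return lower      # gap points downward: the control sits at the lower bound
    return None           # v = 0: the sign rule does not determine the control


# Three sample instants with bounds [-1, 1] (illustrative values only)
samples = [(-0.3, -1.0, 1.0), (0.0, -1.0, 1.0), (0.7, -1.0, 1.0)]
controls = [best_approx_control(v, lo, hi) for v, lo, hi in samples]
print(controls)
```

A `None` entry corresponds to an instant at which the switching function vanishes; on a bang arc this happens only at isolated switching times, whereas on a singular arc it would persist over an interval.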

The optimality conditions we have just derived in (19)–(20) for Problem (Pf) give rise to Theorem 3 stated further below. If the dynamical control system is controllable, a definition of which is provided next, then the theorem rules out singularity for \(u_{{\mathcal {B}},i}\), i.e., the condition \(v_i(t) = 0\) in (20) can hold only at isolated time instants, and it expresses the optimal \(u_{{\mathcal {B}},i}(\cdot )\) as a control of bang–bang type.

Before stating Theorem 3 on the best approximation solution we first discuss the concept of controllability and some existing results.

3.3 Controllability

The state equation, or the control system,

\[ \dot{x}(t) = A(t)\,x(t) + B(t)\,u(t), \tag{21} \]

is said to be controllable on a finite interval \([t_0,t_f]\) if given any initial state \(x(t_0) = x_0\) there exists a continuous control \(u(\cdot )\) such that the corresponding solution of (21) satisfies \(x(t_f) = 0\).

The solution of the (uncontrolled) system \(\dot{x}(t) = A(t)x(t)\), with \(x(t_0) = x_0\), is given by \(x(t) = \Phi _A(t,t_0)\,x_0\). Recall that \(\Phi _A(t,t_0)\) is the state transition matrix, or the fundamental matrix, of the differential equation.

Theorem 1

(Controllability via a Gramian test matrix [31, Theorem 9.2]) The system in (21) is controllable on \([t_0,t_f]\) if and only if the \(n\times n\) (Gramian) matrix

\[ W(t_0,t_f):= \int _{t_0}^{t_f} \Phi _A(t_0,t)\,B(t)\,B^T(t)\,\Phi _A^T(t_0,t)\,dt \]

is invertible.
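
As a minimal sketch of the Gramian test, the snippet below approximates the Gramian for the double integrator \(\dot{x}_1 = x_2\), \(\dot{x}_2 = u\) on [0, 1] by the midpoint rule. It assumes the standard definition \(W(t_0,t_f) = \int _{t_0}^{t_f} \Phi _A(t_0,t)B(t)B^T(t)\Phi _A^T(t_0,t)\,dt\); the function name and the number of quadrature steps are illustrative choices.

```python
def gramian_double_integrator(t0=0.0, tf=1.0, steps=2000):
    """Midpoint-rule approximation of W(t0, tf) for x1' = x2, x2' = u."""
    h = (tf - t0) / steps
    w11 = w12 = w22 = 0.0
    for k in range(steps):
        t = t0 + (k + 0.5) * h
        # Phi_A(t0, t) = [[1, t0 - t], [0, 1]] and b = (0, 1),
        # hence Phi_A(t0, t) b = (t0 - t, 1)
        g1, g2 = t0 - t, 1.0
        w11 += g1 * g1 * h
        w12 += g1 * g2 * h
        w22 += g2 * g2 * h
    return [[w11, w12], [w12, w22]]


W = gramian_double_integrator()
det_W = W[0][0] * W[1][1] - W[0][1] * W[1][0]
print(W, det_W)
```

For this system the integrals can also be evaluated by hand, giving entries 1/3, -1/2 and 1 and a determinant of 1/12, so the Gramian is invertible and the double integrator is controllable on [0, 1]. For general time-varying systems the transition matrix is rarely available in closed form, which is the practical difficulty noted in the text.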

The matrix \(W(t_0,t_f)\) defined above is called the controllability Gramian, and in general it is not easy to compute, making Theorem 1 rather impractical. Hence, we present next a computable version of this result. Suppose that \(A(\cdot )\) and \(B(\cdot )\) are not only continuous but also “sufficiently” smooth. Let

\[ K_0(t):= B(t), \tag{22a} \]

\[ K_j(t):= -A(t)\,K_{j-1}(t) + \dot{K}_{j-1}(t), \quad j = 1,2,\ldots \tag{22b} \]

With these definitions, a (much more easily) computable version of Theorem 1 can be given as follows.

Theorem 2

(Controllability via a more easily computable test matrix) ([31, Theorem 9.4], [34]) Suppose q is a positive integer such that, on \([t_0,t_f]\), B(t) is q-times continuously differentiable, and A(t) is \((q-1)\)-times continuously differentiable. Then the system in (21) is controllable on \([t_0,t_f]\) if for some \(t_c\in [t_0,t_f]\),

\[ {{\,\textrm{rank}\,}}\big [\,K_0(t_c)\ \ K_1(t_c)\ \ \cdots \ \ K_q(t_c)\,\big ] = n, \tag{23} \]

with \(K_j(t_c)\), \(j = 1,\ldots ,q\), computed using (22a)–(22b).

Checking (23) is in general far easier than checking the invertibility of \(W(t_0,t_f)\).

Component-wise Controllability. We call the control system in (21) controllable w.r.t. \(u_i\) on \([t_0,t_f]\) if given any initial state \(x(t_0) = x_0\) there exists a continuous ith component \(u_i(\cdot )\) of the control \(u(\cdot )\) such that the corresponding solution of

\[ \dot{x}(t) = A(t)\,x(t) + b_i(t)\,u_i(t) \tag{24} \]

satisfies \(x(t_f) = 0\). Then clearly Theorems 1 and 2, with B(t) replaced by \(b_i(t)\), hold for the system in (24). Note that the component-wise definition of controllability is stronger than the more general definition we gave originally.

3.4 Best Approximation Solution

Next, we provide in a theorem the best approximation solution in the set \({\mathcal {B}}\), in the case when the constraint sets \({\mathcal {A}}\) and \({\mathcal {B}}\) are disjoint.

Theorem 3

(Gap vector and the best approximation control in \({\mathcal {B}}\)) With the notation of Problem \(\mathrm{(Pf)}\), assume that \({\mathcal {A}} \cap {\mathcal {B}} = \varnothing \). Moreover, suppose that \(A(\cdot )\) and \(B(\cdot )\) are sufficiently smooth and that the control system (21) is controllable w.r.t. \(u_i\) on any \([t',t'']\subset [0,1]\), \(t'<t''\), for some \(i = 1,\ldots ,m\). Then the optimal gap vector is given by \(v(t) = -B^T(t)\lambda (t)\), for all \(t\in [0,1]\), where \(\lambda (\cdot )\) solves (15), and, for a.e. \(t\in [0,1]\),

\[ u_{{\mathcal {B}},i}(t) = \begin{cases} \overline{a}_i(t), & \text{if } v_i(t) > 0, \\ \underline{a}_i(t), & \text{if } v_i(t) < 0. \end{cases} \tag{25} \]

In other words, such \(u_{{\mathcal {B}},i}\) is of bang–bang type.

Proof

For contradiction purposes, suppose that, for the index i as in the hypothesis (i.e., satisfying the controllability assumption), the solution is not (only) bang–bang. Using (20), this means that \(v_i(t) = 0\) for a.e. \(t\in [t',t'']\subset [0,1]\), \(t'<t''\). Then the kth derivative of \(v_i(\cdot )\) is also zero over this nontrivial interval; namely, \(v_i^{(k)}(t) = 0\), for a.e. \(t\in (t',t'')\), and all \(1\le k\le n-1\). Note that using (15) and (18) one has, for a.e. \(t\in (t',t'')\),

Let \(p_{i,0}(t):= b_i(t)\). Equations (26a)–(26c) can be rewritten as

where

Note that \(p_{i,k}(t)\), \(k = 1,2,\ldots \), are the same as \(K_j(t)\), \(j = 1,2,\ldots \), in (22a)–(22b), but with B(t) replaced by \(b_i(t)\). From (27a)–(27b), one gets

where

Suppose that the control system is controllable w.r.t. \(u_i\) on \([t',t'']\). Then by Theorem 2 there exists some \(t_c\in [t',t'']\) such that \({{\,\textrm{rank}\,}}Q_c^i(t_c) = n\). This implies from (29) that \(\lambda (t_c) = 0\), and that in turn implies by the ODE in (15) that \(\lambda (t) = 0\) for all \(t\in [0,1]\). Consequently, \(\lambda _0 = 0\) from (19), since \(v(t) \ne 0\) for some \(t\in [0,1]\) because \(\mathcal{A} \cap \mathcal{B} = \varnothing \). This results in \((\lambda _0, \lambda (t)) = 0\) for all \(t\in [0,1]\), which is not allowed by the maximum principle. Therefore, one cannot have \(v_i(t) = 0\) for a.e. \(t\in [t',t'']\subset [0,1]\), implying that \(\lambda _0 \ne 0\) (i.e., Problem (Pf) is normal). As a result, one can choose \(\lambda _0 = 1\) without loss of generality, yielding \(v(t) = -B^T(t)\lambda (t)\), for all \(t\in [0,1]\), and giving rise to (25). \(\square \)
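
The statement of Theorem 3 can be observed numerically on a discretized example. The sketch below is not the method of the paper or of [6]: it uses plain alternating projections between a discretized affine set and a box, for the double integrator \(\dot{x}_1 = x_2\), \(\dot{x}_2 = u\) with \(x(0) = (0,1)\), \(x(1) = (0,0)\) and the bound \(|u(t)|\le 1.5\). The grid size, the bound, the iteration count and all names are assumptions made for illustration.

```python
N, a = 100, 1.5                       # grid size and control bound (illustrative)
h = 1.0 / N
t = [(i + 0.5) * h for i in range(N)]
g0 = [1.0] * N                        # x2(1) = v0 + int_0^1 u dt
g1 = [1.0 - ti for ti in t]           # x1(1) = s0 + v0 + int_0^1 (1 - t) u dt
d0, d1 = -1.0, -1.0                   # required integrals for s0 = 0, v0 = 1, x(1) = 0

# Gram matrix of the two constraint functions in the discrete L2 inner product
g00 = h * sum(x * x for x in g0)
g01 = h * sum(x * y for x, y in zip(g0, g1))
g11 = h * sum(y * y for y in g1)
det = g00 * g11 - g01 * g01


def project_affine(u):
    """Projection onto the discretized affine set (both integral constraints)."""
    r0 = d0 - h * sum(x * w for x, w in zip(g0, u))
    r1 = d1 - h * sum(y * w for y, w in zip(g1, u))
    c0 = (r0 * g11 - r1 * g01) / det
    c1 = (g00 * r1 - g01 * r0) / det
    return [w + c0 * x + c1 * y for w, x, y in zip(u, g0, g1)]


def project_box(u):
    """Projection onto the box: componentwise clipping to [-a, a]."""
    return [min(a, max(-a, w)) for w in u]


u_B = [0.0] * N
for _ in range(2000):
    u_A = project_affine(u_B)
    u_B = project_box(u_A)

v = [p - q for p, q in zip(u_A, u_B)]                 # discrete gap vector
gap = (h * sum(w * w for w in v)) ** 0.5              # discrete L2 norm of the gap
at_bound = sum(1 for w in u_B if abs(abs(w) - a) < 1e-12) / N
sign_rule_ok = all(q == (a if w > 0 else -a) for w, q in zip(v, u_B) if w != 0.0)
print(gap, at_bound, sign_rule_ok)
```

In this sketch the computed `u_B` sits at one of the two bounds on all but a few grid points near the sign change of `v`, and wherever `v` is nonzero the bound is the one selected by the sign of `v`, which is the discrete counterpart of the switching-function role of the gap vector.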

Remark 1

(The best approximation control in \({\mathcal {A}}\)) Consider the expressions for the ith component of the optimal gap vector \(v(\cdot )\) and the ith component of the best approximation control \(u_{{\mathcal {B}}}(\cdot )\), given as in (12) and (25), respectively. One can then simply express the ith component of the best approximation control in the affine set \({\mathcal {A}}\) as

for a.e. \(t\in [0,1]\). We observe that while \(v_i(\cdot )\) as given in (18) is continuous, \(u_{{\mathcal {A}},i}(\cdot )\) is piecewise continuous. \(\square \)

Remark 2

(Time-invariant systems) Suppose that the control system in (21) is time-invariant; namely that \(A(t) = A\) and \(B(t) = B\), A and B constant matrices, for all \(t\in [0,1]\). This is a widely encountered case in control theory although the time-varying case is more general. We note that, in (28a)–(28b), \(\dot{p}_{i,k-1} = 0\) and so we can write

\[ p_{i,k} = -A\,p_{i,k-1} = (-A)^k\, b_i, \quad k = 1,\ldots ,n-1. \tag{31} \]

Since \(p_{i,k}(\cdot )\) is constant, write \(Q_c^i:= Q_c^i(t_c)\). In turn the rank condition \({{\,\textrm{rank}\,}}Q_c^i = n\) can explicitly be stated as

\[ {{\,\textrm{rank}\,}}\big [\,b_i\ \ A\,b_i\ \ \cdots \ \ A^{n-1}b_i\,\big ] = n. \tag{32} \]

The condition in (32) for time-invariant control systems is referred to as the Kalman controllability rank condition [31] in control theory. In conclusion, for time-invariant systems, if the rank condition in (32) holds then the control component \(u_{{\mathcal {B}},i}\) for the infeasible optimal control problem is of bang–bang type as given in (25). \(\square \)
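
The Kalman rank condition is straightforward to check by machine. The sketch below builds the matrix with columns \(b_i, A b_i, \ldots , A^{n-1} b_i\) and computes its rank exactly over the rationals; the helper names and the two test systems (the double integrator and a deliberately uncontrollable pair) are illustrative assumptions.

```python
from fractions import Fraction


def mat_vec(A, x):
    """Matrix-vector product for a matrix given as a list of rows."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def kalman_matrix(A, b):
    """Matrix with columns b, Ab, ..., A^(n-1) b, returned as a list of rows."""
    n = len(A)
    cols = [list(b)]
    for _ in range(n - 1):
        cols.append(mat_vec(A, cols[-1]))
    return [[cols[j][i] for j in range(n)] for i in range(n)]


def rank(M):
    """Rank by exact Gaussian elimination over the rationals."""
    M = [[Fraction(x) for x in row] for row in M]
    r = 0
    for c in range(len(M[0])):
        pivot = next((i for i in range(r, len(M)) if M[i][c] != 0), None)
        if pivot is None:
            continue
        M[r], M[pivot] = M[pivot], M[r]
        for i in range(r + 1, len(M)):
            f = M[i][c] / M[r][c]
            M[i] = [x - f * y for x, y in zip(M[i], M[r])]
        r += 1
    return r


A_di, b_di = [[0, 1], [0, 0]], [0, 1]        # double integrator
A_bad, b_bad = [[1, 0], [0, 1]], [1, 0]      # second state is never influenced
rank_di = rank(kalman_matrix(A_di, b_di))
rank_bad = rank(kalman_matrix(A_bad, b_bad))
print(rank_di, rank_bad)
```

The double integrator attains full rank 2, so the remark applies to it, while the second pair has rank 1 and fails the condition. Replacing the columns by \((-A)^k b_i\) only changes signs of columns and therefore does not affect the rank.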

Remark 3

(Feasible problem) If \({\mathcal {A}} \cap {\mathcal {B}} \ne \varnothing \) then the gap vector function satisfies \(v(t)=0\) for all \(t\in [0,1]\), and, in contrast to Theorem 3, the optimal control is not necessarily of bang–bang type; see, for example, [3, 10, 11, 13]. An exception is when the feasibility is “critical,” which is elaborated in the next section. \(\square \)

4 Critical Feasibility

Suppose that \(\overline{a}_i(t) = a > 0\) and \(\underline{a}_i(t) = -a\) for all \(t\in [0,1]\) and \(i = 1,\ldots ,m\). Since it is assumed that \({\mathcal {A}} \ne \varnothing \), if \(a = \infty \) or a is large enough, the optimal control problem given in (1)–(4) is feasible, i.e., \({\mathcal {A}} \cap {\mathcal {B}} \ne \varnothing \). By the same token, if a is small enough, the problem is infeasible for some specified initial and terminal states, i.e., \({\mathcal {A}} \cap {\mathcal {B}} = \varnothing \). In fact, from the geometry of the sets \({\mathcal {A}}\) and \({\mathcal {B}}_a\), where \({\mathcal {B}}_a\) indicates the explicit dependence of \({\mathcal {B}}\) on a, there exists a critical bound \(a_c\) such that for all \(a < a_c\), \({\mathcal {A}} \cap {\mathcal {B}}_a = \varnothing \), since \({\mathcal {B}}_a\) is strictly contained in (or strictly smaller than) \({\mathcal {B}}_{a_c}\). By this definition, when \(a=a_c\) we say that the problem is critically feasible.

We are interested in knowing when a problem becomes critically feasible. In other words, we want to find the smallest value \(a_c\) of a for which the problem is feasible. We can pose this question as a new (parametric) optimal control problem, where the parameter a is to be minimized subject to the constraint sets \({\mathcal {A}}\) and \({\mathcal {B}}_a\):

the optimal value of which will be \(a_c\).

Remark 4

We observe that \(|u_i(t)| \le a\), \(i = 1,\ldots ,m\), can be written as \(\Vert u(t)\Vert _{\infty }\le a\), where \(\Vert \cdot \Vert _{\infty }\) is the \(\ell _{\infty }\)-norm in \(\mathrm{{I\!R}}^m\). By also observing that the problem of “minimizing the value of the variable a subject to \(\Vert u(t)\Vert _{\infty }\le a\), for a.e. \(t\in [0,1]\),” is equivalent to “minimizing the \(L^\infty \)-norm of u,” Problem (Pcf) can be re-written as follows.

It is interesting to note that Problem (Pcf1) is a generalized form of the problem studied in [24]. In what follows we will use the procedure in [24]. \(\square \)

Before we apply the maximum principle, it is convenient to re-write Problem (Pcf) as an optimal control problem in standard (or classical) form. First, we define a new state variable \(y(t):= a\) and a new control variable

Problem (Pcf) can then be re-cast using these new variables as

The Hamiltonian function \(H:\mathrm{{I\!R}}^n \times \mathrm{{I\!R}}\times \mathrm{{I\!R}}^m \times \mathrm{{I\!R}}^n \times \mathrm{{I\!R}}\times [0,1] \rightarrow \mathrm{{I\!R}}\) for the critical feasibility problem (Pcf2) can be written as

where \(\lambda (t):= (\lambda _1(t),\ldots ,\lambda _n(t))\in \mathrm{{I\!R}}^n\) is the adjoint variable solving (15), and \(\mu (t)\) is an additional adjoint variable such that

so that

4.1 Maximum Principle for Problem (Pcf2)

Suppose that the triplet

is optimal for Problem (Pcf2). Then there exist a continuous adjoint variable vector \(\lambda \in W^{1,\infty }([0,1];\mathrm{{I\!R}}^n)\) as defined in (15) and an additional continuous adjoint variable \(\mu \in W^{1,\infty }([0,1];\mathrm{{I\!R}})\) as defined in (35) such that \((\lambda (t),\mu (t))\ne \textbf{0}\) for all \(t\in [0,1]\), and that, for a.e. \(t\in [0,1]\),

for \(i = 1,\ldots ,m\). Condition (36) results in

for a.e. \(t\in [0,1]\), \(i = 1,\ldots ,m\).

We show next that the solution of (Pcf) is bang–bang. Namely, there is no nontrivial subinterval of [0, 1] where \(b_i^T(t)\lambda (t)\) vanishes almost everywhere.

4.2 Solution to the Critically Feasible Problem

Theorem 4

(Critically feasible control) Suppose that the system and control matrices \(A(\cdot )\) and \(B(\cdot )\) are sufficiently smooth. Assume that, for some \(i = 1,\ldots ,m\), the control system (21) is controllable w.r.t. \(u_i\) on any \([s',s'']\subset [0,1]\), \(s'<s''\). Then, the ith component \(u_i(\cdot )\) of the critically feasible control for the optimal control problem in (1)–(4), with \(\overline{a}_i(t) = a_c\) and \(\underline{a}_i(t) = -a_c\), is given as

for a.e. \(t\in [0,1]\), where \(\lambda (\cdot )\) solves (15). In other words, such \(u_i\) is of bang–bang type.

Proof

Suppose that the control system (21) is controllable w.r.t. \(u_i\) on any \([s',s'']\subset [0,1]\), \(s'<s''\). For contradiction purposes, suppose that \(w_i\) in (37) is singular, i.e., \(\lambda ^T(t)\,b_i(t) = 0\) for all \(t\in [t',t'']\subset [0,1]\), with \(t' < t''\). Then, as in the proof of Theorem 3, the consecutive time-derivatives of \(\lambda ^T(t)\,b_i(t)\) will also be equal to zero for all \(t\in [t',t'']\). Defining \(p_{i,0}(t):= b_i(t)\) and \(p_{i,k}(t):= -A(t)\,p_{i,k-1}(t) + \dot{p}_{i,k-1}(t)\) as before, for \(k = 1,2,\ldots ,n-1\), one similarly gets (29) (with a sign difference) and (30). Then by means of the same arguments using the controllability of (21), as in the proof of Theorem 3, \(\lambda (t) = 0\) for all \(t\in [0,1]\). Then the differential equation in (35) with the initial condition \(\mu (0) = 0\) in (35) yields \(\mu (t) = 0\) for all \(t\in [0,1]\), contradicting the terminal condition \(\mu (1) = 1\) in (35), and thus furnishing the theorem. \(\square \)
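
To make the bang–bang structure concrete, consider the double integrator \(\dot{x}_1 = x_2\), \(\dot{x}_2 = u\) with \(x(0) = (0,1)\) and \(x(1) = (0,0)\). Assuming the adjoint equation has the usual form \(\dot{\lambda } = -A^T\lambda \), the function \(b^T\lambda = \lambda _2\) is affine in t for this system, so a bang–bang control can switch at most once. The sketch below, written under this one-switch assumption and with hypothetical helper names, finds by bisection the switching time and the bound for which the control \(u = -a\) followed by \(u = +a\) meets both terminal conditions.

```python
def terminal_state(a, tau, s0=0.0, v0=1.0):
    """Exact state at t = 1 when u = -a on [0, tau) and u = +a on [tau, 1]."""
    v_mid = v0 - a * tau
    s_mid = s0 + v0 * tau - 0.5 * a * tau ** 2
    rest = 1.0 - tau
    return s_mid + v_mid * rest + 0.5 * a * rest ** 2, v_mid + a * rest


def bound_for_switch(tau, v0=1.0):
    """Bound a that brings the velocity to zero at t = 1 for a given switch time."""
    return v0 / (2.0 * tau - 1.0)


# Bisection on the switching time so that the terminal position is also zero
lo, hi = 0.55, 0.95
for _ in range(60):
    tau = 0.5 * (lo + hi)
    s_end, v_unused = terminal_state(bound_for_switch(tau), tau)
    if s_end < 0.0:
        lo = tau
    else:
        hi = tau

tau = 0.5 * (lo + hi)
a_c = bound_for_switch(tau)
s_end, v_end = terminal_state(a_c, tau)
print(tau, a_c, s_end, v_end)
```

Eliminating a from the two terminal conditions by hand gives \(\tau ^2 = 1/2\), so the computed values agree with \(\tau = 1/\sqrt{2}\) and \(a = 1 + \sqrt{2}\) for this instance. These numbers are derived here for illustration and are not quoted from the text.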

Remark 5

We note that, in the critically feasible case, \(u_{\mathcal {A}} = u_{\mathcal {B}} = u_i\) and so \(v_i = 0\), for all \(i = 1,\ldots ,m\), and thus \(v_i\) does not serve as the switching function for \(u_i\). \(\square \)

4.3 A Double Integrator Problem

References [3, 11] studied applications of splitting and projection methods to the feasible problem of finding the so-called minimum-energy control of the double integrator, the problem stated as

Although Problem (PDI) constitutes a relatively simple instance of an optimal control problem, a solution to it can, in general, only be found numerically. This is the first reason why we find it interesting. Secondly, (PDI) acts as a building block in, for example, the problem of finding cubic spline interpolants with constrained acceleration, an active area of research in numerical analysis and approximation theory. A much wider range of optimal control problems involving the double integrator has been studied in the relatively recent book [25]; however, that book does not include Problem (PDI). Problem (PDI) is simple and yet rich enough to serve for introducing and illustrating many basic and new concepts, or for testing new numerical approaches in optimal control; see, in addition to [3, 11, 25], also [12, 22].

With a large enough \(a\) (so that the constraint \(|u(t)|\le a\) never becomes active for any \(t\in [0,1]\)), Problem (PDI) can be solved analytically to find a cubic curve \(x_1(t)\), satisfying the initial point and velocity \(s_0\) and \(v_0\), and the terminal point and velocity \(s_f\) and \(v_f\), respectively; see [3] for the working of such an unconstrained solution. A small enough \(a\), on the other hand, restricts the values the function \(u\) can take, which rules out an analytical solution and necessitates a numerical procedure for finding an approximate solution. This illustrates the practical significance of such a simple-looking problem as (PDI).

Critical bound \(a_c\): going from feasible to infeasible. For the numerical experiments in [3], the special case when \(s_0 = s_f = v_f = 0\) and \(v_0 = 1\) was considered. The feasible optimal control for this instance of Problem (PDI) is continuous, given by

where \(0 \le t_1 < t_2 \le 1\) are the so-called junction times. When the value of \(a\) is too small, Problem (PDI) becomes inconsistent. Namely, there exists a critical value \(a_c>0\) such that Problem (PDI) is infeasible when \(a < a_c\). When \(a\in [a_c, 4)\), the problem is consistent (feasible) and the control constraint is active. If \(a\ge 4\), then \(t_1 = 0\) and \(t_2 = 1\), and the solution is the same as that of the case when \(u\) is unconstrained; in other words, the bound constraint on \(u\) becomes superfluous. When \(a\in (a_c,4)\), the solution \(u\) of Problem (PDI) given in (39) is continuous over the time horizon \([0, 1]\). On the other hand, when \(a=a_c\), Theorem 4 states that the control solution has to be of bang–bang type, and thus discontinuous. In Remark 2.1 of [3], it is observed that

based on the numerical experiments conducted, without elaborating further. It is also reported in the same remark that when \(a = a_c\) the unique feasible solution appears to be bang–bang, i.e., in particular, u(t) switches once from \(-a_c\) to \(a_c\) at the switching time

confirming our statement above that the optimal control u in this case is discontinuous.

How to find the solution for \(a_c\) and \(t_c\). The Hamiltonian function \(H:\mathrm{{I\!R}}^3 \times \mathrm{{I\!R}}\times \mathrm{{I\!R}}^3 \rightarrow \mathrm{{I\!R}}\) for Problem (Pcf2) emanating from Problem (PDI) is

where \((\lambda _1(t),\lambda _2(t),\mu (t))\in \mathrm{{I\!R}}^3\) is the adjoint variable (or costate) vector such that

with the transversality conditions

This leads to the solutions

where \(c_1\) and \(c_2\) are unknown real constants.

The following is a straightforward corollary to Theorem 4 for the double integrator problem.

Corollary 1

(Critically feasible control) The critically feasible optimal control \(u_c\) for the double integrator problem is of bang–bang type with at most one switching; namely

where \(t_c\) is the switching time.

Proof

The corollary follows from the expression in (38) in Theorem 4 and the linearity of \(\lambda _2\) in (43) (which implies that \(\lambda _2\) can change sign at most once). \(\square \)

A similar line of proof with \(a < a_c\) (the infeasible case) results in the following corollary to Theorem 3.

Corollary 2

(Best approximation control in \({\mathcal {B}}\)) The best approximation optimal control \(u_{\mathcal {B}}\) for the double integrator problem is of bang–bang type with at most one switching; namely

where \(t_s\) is the switching time.

The theorem we present below provides the full analytical solution to Problem (PDI) when \(a = a_c\).

Theorem 5

(Full critically feasible solution to problem (PDI))

(a) If \(s_f - s_0 \ne (v_0 + v_f)/2\), then the critical control is given by

$$\begin{aligned} u_c(t) = \left\{ \begin{array}{rl} r\,, &{}\ \ \hbox {if}\ \ 0\le t < t_c\,, \\ -r\,, &{}\ \ \hbox {if}\ \ t_c\le t \le 1\,, \end{array} \right. \end{aligned} \tag{46}$$

and

$$\begin{aligned} a_c = |r|\,, \end{aligned} \tag{47}$$

with \(r\) and the switching time \(t_c\) given in the following two cases.

(i) \(v_0 \ne v_f\):

$$\begin{aligned} r = \frac{v_f - v_0}{2\,t_c - 1}\, \end{aligned} \tag{48}$$

and \(t_c\) solves the quadratic equation

$$\begin{aligned} (v_f - v_0)\,t_c^2 + 2\,(s_f - s_0 - v_f)\, t_c + \frac{1}{2}\,(v_0 + v_f) - (s_f - s_0) = 0\,. \end{aligned} \tag{49}$$

(ii) \(v_0 = v_f\):

$$\begin{aligned} r = 4\,(s_f - s_0 - v_0)\quad \text{ and }\quad t_c = \frac{1}{2}\,. \end{aligned} \tag{50}$$

(b) If \(s_f - s_0 = (v_0 + v_f)/2\), then \(t_c = 0\) or \(1\). Furthermore, the critical control is given by

$$\begin{aligned} u_c(t) = \left\{ \begin{array}{rl} v_f - v_0\,, &{}\ \ \hbox {if}\ \ t_c = 1\,, \\ v_0 - v_f\,, &{}\ \ \hbox {if}\ \ t_c = 0\,, \end{array} \right. \end{aligned} \tag{51}$$

for all \(t\in [0,1]\), and so

$$\begin{aligned} a_c = |v_f - v_0|\,. \end{aligned} \tag{52}$$

Proof

Recall from (43) that \(\lambda _2(t) = -c_1\,t - c_2\), for all \(t\in [0,1]\). Also note that \(c_1\) and \(c_2\) cannot both be zero, otherwise, with \(\mu (0) = 0\) in (42) and continuity of \(\mu \), it leads to \(\mu (t) = 0\) for all \(t\in [0,1]\), a contradiction with the fact that \(\mu (1) = 1\) in (42). Therefore in the rest of the proof we examine three cases:

Case (I): Suppose that \(c_2 = 0\). Then \(\lambda _2(t) = -c_1\,t\), \(c_1 \ne 0\), and by (44) \(u(t) = {{\,\textrm{sgn}\,}}(c_1)\,a_c\), for all \(t\in [0,1]\) (no switching). By solving the state equations with this u(t) substituted, one gets \(x_2(t) = {{\,\textrm{sgn}\,}}(c_1)\,a_c\,t + v_0\) and \(x_1(t) = {{\,\textrm{sgn}\,}}(c_1)\,a_c\,t^2/2 + v_0\,t + s_0\), and subsequently \(x_2(1) = v_f = {{\,\textrm{sgn}\,}}(c_1)\,a_c + v_0\) and \(x_1(1) = s_f = {{\,\textrm{sgn}\,}}(c_1)\,a_c/2 + v_0 + s_0\). Now, from these solutions, \({{\,\textrm{sgn}\,}}(c_1)\,a_c = v_f - v_0\), and thus \(s_f = (v_f - v_0)/2 + v_0 + s_0\), resulting in \(s_f - s_0 = (v_0 + v_f)/2\), which is nothing but the case in part (b) of the theorem. Finally one gets \(u(t) = {{\,\textrm{sgn}\,}}(c_1)\,a_c = v_f - v_0\) and thus \(a_c = |v_f - v_0|\) as required by (51) and (52).

Case (II): Suppose that \(c_1 = 0\). Then \(\lambda _2(t) = -c_2\), \(c_2 \ne 0\), and by (44) \(u(t) = {{\,\textrm{sgn}\,}}(c_2)\,a_c\), for all \(t\in [0,1]\) (no switching). The rest of the argument follows as in the case \(c_2 = 0\) above, simply by replacing \(c_1\) with \(c_2\) in the expressions. This case also corresponds to and proves part (b) of the theorem.

Case (III): Finally suppose that \(\lambda _2(t) = -c_1\,t - c_2\) with both \(c_1 \ne 0\) and \(c_2 \ne 0\). Then by Corollary 1, observing that \(\lambda _2(0) = -c_2\),

where

verifying (46). Next substitute \(u(t) = u_c(t)\) into the differential equations in Problem (Pc). The respective solutions of \(\dot{x}_2(t) = r\) with \(x_2(0) = v_0\), and \(\dot{x}_1(t) = x_2(t)\) with \(x_1(0) = s_0\), for \(0\le t < t_c\), are simply

Furthermore, the respective solutions of \(\dot{x}_2(t) = -r\) with \(x_2(1) = v_f\), and \(\dot{x}_1(t) = x_2(t)\) with \(x_1(1) = s_f\), for \(t_c\le t \le 1\), can be obtained as

Since \(x_i\) are continuous, \(\lim _{t\rightarrow t_c^-} x_i(t) = \lim _{t\rightarrow t_c^+} x_i(t)\), \(i = 1,2\). In other words,

Case (III)(ii): Suppose that \(v_0 = v_f\). Then, since \(r \ne 0\), \(t_c = 1/2\) is the unique solution. Substitution of \(t_c = 1/2\) and \(v_0 = v_f\) into (55) and re-arrangements yield \(r = 4\,(s_f - s_0 - v_0)\), or \(a_c = 4\,|s_f - s_0 - v_0|\), verifying (50).

Case (III)(i): Suppose that \(v_0 \ne v_f\). Then, Eq. (54) results in

verifying (48). After algebraic manipulations and re-arranging, (55) can be rewritten as

Substituting the expression for r in (48) into the above equation and multiplying both sides by \((2\,t_c - 1)\) give

Further algebraic manipulations reduce the above equation to (49), as required. The proof is complete. \(\square \)
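The matching conditions used in Case (III) can be written out explicitly. The following is a sketch obtained by integrating \(\dot{x}_1 = x_2\), \(\dot{x}_2 = u_c\) with \(u_c\) as in (46), forward from \(t = 0\) and backward from \(t = 1\); it is a reconstruction from the statement of Theorem 5, so the arrangement of terms may differ from the displayed equations referenced in the proof.

$$\begin{aligned} x_2(t) &= r\,t + v_0\,, & x_1(t) &= \tfrac{1}{2}\,r\,t^2 + v_0\,t + s_0\,, && 0\le t < t_c\,, \\ x_2(t) &= v_f + r\,(1 - t)\,, & x_1(t) &= s_f - v_f\,(1 - t) - \tfrac{1}{2}\,r\,(1 - t)^2\,, && t_c\le t \le 1\,. \end{aligned}$$

Continuity of \(x_2\) and \(x_1\) at \(t = t_c\) then gives, respectively,

$$\begin{aligned} r\,(2\,t_c - 1) &= v_f - v_0\,, \\ \tfrac{1}{2}\,r\,\big [t_c^2 + (1 - t_c)^2\big ] &= s_f - s_0 - v_0\,t_c - v_f\,(1 - t_c)\,. \end{aligned}$$

With \(v_0 = v_f\) and \(t_c = 1/2\), the second condition reads \(r/4 = s_f - s_0 - v_0\), which agrees with (50). With \(v_0 \ne v_f\), substituting \(r\) from the first condition into the second and multiplying by \((2\,t_c - 1)\) leads, after collecting terms, to the quadratic in (49).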

Remark 6

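Suppose that \(s_0 = s_f = v_f = 0\) and \(v_0 = 1\), as in the numerical example studied in [3]. Then one has the case Theorem 5(a)(i): Equation (49) reduces to

$$\begin{aligned} -t_c^2 + \frac{1}{2} = 0\,, \end{aligned}$$

yielding \(t_c = 1/\sqrt{2}\). Then using (48), one gets \(r = -1/(\sqrt{2} - 1) = -(1 + \sqrt{2})\), or \(a_c = 1 + \sqrt{2}\). Finally, the optimal control can simply be written from (46) as

$$\begin{aligned} u_c(t) = \left\{ \begin{array}{rl} -(1 + \sqrt{2})\,, &{}\ \ \hbox {if}\ \ 0\le t < 1/\sqrt{2}\,, \\ 1 + \sqrt{2}\,, &{}\ \ \hbox {if}\ \ 1/\sqrt{2}\le t \le 1\,. \end{array} \right. \end{aligned} \tag{57}$$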

The numerical observations made in [3], re-iterated in (40)–(41), agree with the result in (57): \(a_c \approx 2.4142\) and \(t_c \approx 0.7071\), correct to four decimal places.
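The formulas of Theorem 5 are simple enough to evaluate directly. The following is a minimal Python sketch, not code from the paper: the function names `critical_bang_bang` and `terminal_state` and the tolerance `tol` are illustrative assumptions. In case (a)(i) the sketch keeps the root of (49) lying in \((0,1)\); the quadratic takes values of opposite sign at \(t_c = 0\) and \(t_c = 1\) in that case, so exactly one such root exists.

```python
import math


def critical_bang_bang(s0, v0, sf, vf, tol=1e-12):
    """Return (a_c, r, t_c) for the double integrator, following Theorem 5.

    The control is u(t) = r on [0, t_c) and u(t) = -r on [t_c, 1].
    `tol` is a hypothetical tolerance for the equality tests on the data.
    """
    ds = sf - s0
    if abs(ds - 0.5 * (v0 + vf)) <= tol:
        # Part (b): constant control, written here with the t_c = 1 convention.
        r, t_c = vf - v0, 1.0
    elif abs(vf - v0) <= tol:
        # Part (a)(ii): equal boundary velocities, see (50).
        r, t_c = 4.0 * (ds - v0), 0.5
    else:
        # Part (a)(i): coefficients of the quadratic (49) in t_c.
        qa = vf - v0
        qb = 2.0 * (ds - vf)
        qc = 0.5 * (v0 + vf) - ds
        root = math.sqrt(qb * qb - 4.0 * qa * qc)
        candidates = [(-qb + root) / (2.0 * qa), (-qb - root) / (2.0 * qa)]
        # Keep the root that is an admissible switching time.
        t_c = next(t for t in candidates if 0.0 < t < 1.0)
        r = (vf - v0) / (2.0 * t_c - 1.0)  # Equation (48)
    return abs(r), r, t_c


def terminal_state(s0, v0, r, t_c):
    """Integrate x1' = x2, x2' = u exactly under the bang-bang control."""
    x2_switch = v0 + r * t_c
    x1_switch = s0 + v0 * t_c + 0.5 * r * t_c ** 2
    rest = 1.0 - t_c
    x2_end = x2_switch - r * rest
    x1_end = x1_switch + x2_switch * rest - 0.5 * r * rest ** 2
    return x1_end, x2_end


if __name__ == "__main__":
    # Data of Remark 6: s0 = sf = vf = 0 and v0 = 1.
    a_c, r, t_c = critical_bang_bang(0.0, 1.0, 0.0, 0.0)
    print(a_c, t_c, terminal_state(0.0, 1.0, r, t_c))
```

For the data of Remark 6, the returned values can be compared with \(a_c = 1 + \sqrt{2}\) and \(t_c = 1/\sqrt{2}\), and `terminal_state` gives a quick check that the boundary conditions \(x(1) = (s_f, v_f)\) are met.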

5 Numerical Experiments

To solve the three problems in Sects. 5.1–5.3 numerically, we employ the AMPL–Ipopt computational suite: AMPL is an optimization modelling language [19] and Ipopt is an Interior Point Optimization software [37] (version 3.12.13 is used here). The suite is commonly used to solve discretized optimal control problems. We discretize the optimal control problems (Pf) and (Pcf) using the Euler scheme, with the number of time discretization nodes (or time partition points) set to 2000 in most cases. The number of nodes is increased (as reported in situ) only when \(a_c\) (in the case of the critically feasible solution) needs to be reported with higher accuracy. The Euler scheme is more suitable than higher-order Runge–Kutta discretizations for these problems, since the solutions exhibit bang–bang controls, which make the state variable solutions only of class \(C^0\). Numerical chatter is evident when a higher-order discretization scheme, such as the trapezoidal rule, is used. With 2000 grid points, the resulting large-scale finite-dimensional problems have about 6000 variables and 4000 constraints for the double integrator and the damped oscillator problems, and 16,000 variables and 4000 constraints for the machine tool manipulator problem. We set the Ipopt tolerance tol to \(10^{-8}\) in all problems. We note that AMPL can also be paired with other optimization software, such as Knitro [16], SNOPT [20] or TANGO [1, 8].
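To make the Euler scheme concrete, the following minimal Python sketch applies forward Euler to the double integrator state equations \(\dot{x}_1 = x_2\), \(\dot{x}_2 = u\) under the bang–bang control of Remark 6. It is only an illustration of the discretization, not the AMPL–Ipopt model used for the experiments; the names `euler_double_integrator` and `bang_bang` are assumptions made for this example.

```python
import math

A_C = 1.0 + math.sqrt(2.0)   # critical bound a_c from Remark 6
T_C = 1.0 / math.sqrt(2.0)   # switching time t_c from Remark 6


def bang_bang(t):
    """Critically feasible control of Remark 6: -a_c before t_c, +a_c after."""
    return -A_C if t < T_C else A_C


def euler_double_integrator(control, x0, n_steps):
    """Forward-Euler integration of x1' = x2, x2' = u(t) on [0, 1]."""
    h = 1.0 / n_steps
    x1, x2 = x0
    for k in range(n_steps):
        u = control(k * h)
        # Both updates use the state at the start of the step.
        x1, x2 = x1 + h * x2, x2 + h * u
    return x1, x2


if __name__ == "__main__":
    for n in (2000, 10000):
        print(n, euler_double_integrator(bang_bang, (0.0, 1.0), n))
```

The terminal state should approach \(x(1) = (0, 0)\) as the grid is refined, with a first-order error that is dominated by the grid cell containing the switching time.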

All three example problems in Sects. 5.1–5.3 have a single control variable and the constraint on the control is given as

where a is a positive constant. The optimality condition (25) can then be written for this particular case as

for a.e. \(t\in [0,1]\). We will conveniently verify the optimality of the numerical results using (58).

5.1 Double Integrator

From the double integrator problem (PDI) in Sect. 4.3, one simply has

using the notation in Problem (Pf) in Sect. 3 and Problem (Pcf) in Sect. 4. As in Remark 6, we take \(s_0 = s_f = v_f = 0\) and \(v_0 = 1\). In other words, the boundary conditions in \(\varphi (x(0),x(1)) = 0\) are expressed as \(x(0) = (0, 1)\) and \(x(1) = (0, 0)\).

First of all, we establish that the double integrator control system is controllable since \({{\,\textrm{rank}\,}}Q_c = {{\,\textrm{rank}\,}}[b\ |\ Ab] = 2 = n\).

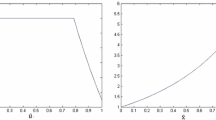

We have solved Problem (Pcf) to find the critically feasible solution depicted in Fig. 1, where the solution curves for \(u_{\mathcal {A}}\), \(u_{\mathcal {B}}\) and \(v\) are graphed. With 10,000 time partition points, we obtained \(a_c \approx 2.414\) (2000 time partition points yield only \(a_c \approx 2.4\)), which reconfirms the analytical solution \(a_c = 1 + \sqrt{2} \approx 2.4142\) that was reported in Remark 6. We also observe (after zooming into the plot) that \(t_c \approx 0.707\), which agrees with \(t_c = 1/\sqrt{2} \approx 0.7071\) in Remark 6 up to three decimal places. The control \(u_{\mathcal {A}}\) overlaps \(u_{\mathcal {B}}\) since, in the critically feasible case, \({\mathcal {A}}\cap {\mathcal {B}} \ne \varnothing \) and so the gap function \(v\) is the zero function. The graph of \(u = u_{\mathcal {A}} = u_{\mathcal {B}}\) in Fig. 1a in turn verifies the analytical expression in (57).

For the infeasible case, i.e., when \(a < a_c\), it is no longer possible to get a solution analytically, even for the relatively simple-looking double integrator problem. In Fig. 1b–d, the solution plots for \(a=2, 1.5\) and 1 are shown, respectively. The solution for \(u_{\mathcal {B}}\) is of bang–bang type with one switching, verifying Corollary 2. The role of \(v\) as a switching function is clear from these plots. We recall the fact that \(v = -\lambda _2\) by Theorem 3, and point out that \(v\) appears as a straight line in the plots since \(\lambda (t)\) is linear in \(t\). The switching time for each case shown in Fig. 1b–d is found graphically as: (b) \(t_s \approx 0.701\), (c) \(t_s \approx 0.693\) and (d) \(t_s \approx 0.685\). Further numerical experiments with even smaller \(a\) suggest that as \(a\rightarrow 0\), \(t_s\rightarrow 2/3\).

5.2 Damped Oscillator

While the ODE underlying the double integrator problem is \(\ddot{z}(t) = u(t)\), the ODE underlying the damped oscillator problem is \(\ddot{z}(t) + 2\,\zeta \,\omega _n\,\dot{z}(t) + \omega _n^2\,z(t) = u(t)\), with the damping and stiffness terms added, where the parameter \(\omega _n>0\) is the natural frequency and the parameter \(\zeta \ge 0\) is the damping ratio of the system. When \(\zeta = 0\) the system is referred to as the (simple) harmonic oscillator. Defining the state variables \(x_1:= z\) and \(x_2:= \dot{z}\) (as in the case of the double integrator), one gets, for the case of the damped oscillator,

again using the notation in Problems (Pf) and (Pcf). As the time interval of the problem we take [0, 1], and set the boundary conditions to be the same as those of the double integrator problem: \(x(0) = (0, 1)\) and \(x(1) = (0, 0)\). We set the values of the parameters as \(\omega _n = 20\) and \(\zeta = 0.1\).

First, we can assert that the damped oscillator control system is controllable since \({{\,\textrm{rank}\,}}Q_c = {{\,\textrm{rank}\,}}[b\ |\ Ab] = 2 = n\).
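The rank conditions for the double integrator and the damped oscillator can be checked with a few lines of Python. The sketch below is illustrative only: the matrices are an assumption, written down from the stated ODEs with \(x_1 = z\) and \(x_2 = \dot{z}\), and the helper name `controllability_det` is hypothetical. For a two-state, single-input system, \({{\,\textrm{rank}\,}}[b\ |\ Ab] = 2\) exactly when the determinant of \([b\ |\ Ab]\) is nonzero.

```python
def controllability_det(A, b):
    """Determinant of Q_c = [b | Ab] for a two-state, single-input system."""
    Ab = [A[0][0] * b[0] + A[0][1] * b[1],
          A[1][0] * b[0] + A[1][1] * b[1]]
    # Q_c has columns b and Ab; a nonzero determinant means rank 2.
    return b[0] * Ab[1] - b[1] * Ab[0]


# Assumed state-space data, derived from z'' = u and
# z'' + 2*zeta*omega_n*z' + omega_n**2 * z = u, respectively.
omega_n, zeta = 20.0, 0.1
b = [0.0, 1.0]
A_double_integrator = [[0.0, 1.0], [0.0, 0.0]]
A_damped_oscillator = [[0.0, 1.0], [-omega_n ** 2, -2.0 * zeta * omega_n]]

if __name__ == "__main__":
    print(controllability_det(A_double_integrator, b))
    print(controllability_det(A_damped_oscillator, b))
```

In both cases the second column is \(Ab = (1, \cdot )^T\), so the determinant does not depend on \(\omega _n\) or \(\zeta \), which is consistent with controllability holding for any parameter values.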

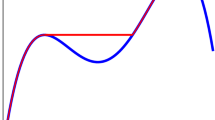

Numerical solutions to Problems (Pf) and (Pcf) are depicted in Fig. 2: The critically feasible solution to (Pcf) appears in Fig. 2a and the infeasible solutions to (Pf) appear in Fig. 2b–d. With \(2\times 10^5\) time partition points, we have obtained \(a_c \approx 0.475\), correct to three decimal places. No analytical solution is available. As expected from Theorem 4, the control \(u_{\mathcal {B}}\) is of bang–bang type, and it overlaps with \(u_{\mathcal {A}}\). The control \(u_{\mathcal {B}}\) appears to be periodic with six switchings. Further experiments with various other boundary conditions result not only in a different \(a_c\) but also in a different number of switchings; the control \(u_{\mathcal {B}}\), however, still appears to be periodic.

In Fig. 2b–d, we provide the respective solution plots for \(a=0.4, 0.3\) and 0.2. The solution for \(u_{\mathcal {B}}\) is of bang–bang type as asserted by Theorem 3. It is observed that not only does the control \(u_{\mathcal {B}}\) appear to be periodic, but the switching times also seem to remain the same as those in the critically feasible solution in Fig. 2a. The role of the gap function \(v\) as a switching function is clear from these plots, verifying (58).

5.3 Machine Tool Manipulator

A linear ODE model and an associated optimal control problem for a machine tool manipulator are described in [17]. Using the notation in Problems (Pf) and (Pcf), one has that

Clearly, the control system has seven state variables and one control variable. In [17], the time interval for the dynamics is chosen to be [0, 0.0522], and the boundary conditions are imposed as \(x(0)=(0,0,0,0,0,0,0)\), \(x(0.0522)=(0,0.0027,0,0,0.1,0,0)\). Moreover, the control variable is constrained as \(-2000 \le u(t) \le 2000\), under which the problem is feasible. A minimum-energy control model for this machine tool manipulator has also subsequently been studied in [10, 11, 13].

It can easily be verified that the machine tool manipulator control system is controllable as \({{\,\textrm{rank}\,}}Q_c = {{\,\textrm{rank}\,}}[b\ |\ Ab\ |\ A^2b\ |\ \cdots |\ A^6b] = 7 = n\).

Numerical solutions to Problems (Pf) and (Pcf) are depicted in Fig. 3: The critically feasible solution to (Pcf) is depicted in Fig. 3a and the infeasible solutions to (Pf) appear in Fig. 3b–d. With 10,000 time partition points, and using SNOPT (instead of Ipopt) with AMPL, we obtained \(a_c \approx 1769.46\); on this occasion only, Ipopt did not succeed in finding a solution. As asserted by Theorem 4, the control \(u_{\mathcal {B}}\) is of bang–bang type, and it overlaps with \(u_{\mathcal {A}}\). The control \(u_{\mathcal {B}}\) appears to have five switchings.

In Fig. 3b–d, we provide the solutions for \(a=1500, 1000\) and 500. The solutions for \(u_{\mathcal {B}}\) are of bang–bang type as asserted by Theorem 3. We observe that the number of switchings decreases with decreasing a: With \(a = 500\), and by other experiments with \(a < 500\), numerical solutions suggest that there is only one switching. The role of the gap function v as a switching function is clear from these plots for this example as well, verifying (58).

6 Conclusion

We have studied a class of infeasible and critically feasible optimal control problems and proved that the best approximation control in the box constraint set is of bang–bang type for each problem. We presented a full analytical solution for the critically feasible double integrator problem. We numerically illustrated these results on three increasingly difficult example problems. For numerical computations, we discretized the example problems and solved large-scale optimization problems using popular optimization software.

The numerical scheme described in this paper can further be improved: Since the solution structure is known to be of bang–bang type, one can solve problems discretized over a coarse time grid first, and then, once there is a rough idea about the number of switchings and the places of the switchings, a switching time parameterization technique (see [23, 27, 29]) can be implemented to find the switching times accurately.

The paper [7] motivated us to look at infeasible optimal control problems and to study the properties of the gap (function) vector. Reference [7] also studies, in a theoretical setting, an application of the Douglas–Rachford algorithm to infinite-dimensional infeasible optimization problems in Hilbert space. A next step would be to employ the Douglas–Rachford algorithm to solve the infeasible optimal control problems considered in the present paper. It would also be interesting to employ and test the Peaceman–Rachford algorithm [5, Sect. 26.4 and Proposition 28.8], which is another projection type method, for the class of problems we have studied.

It would be interesting to extend the applications in this paper to the case of infeasible and critically feasible nonconvex optimal control problems, including those with state constraints, and carry out numerical experiments, although no theory is available yet for such more general classes of problems in infinite dimensions.

Data Availability

The full resolution Matlab graph/plot files that support the findings of this study are available from the corresponding author upon request.

References

1. Andreani, R., Birgin, E.G., Martínez, J.M., Schuverdt, M.L.: On augmented Lagrangian methods with general lower-level constraints. SIAM J. Optim. 18, 1286–1309 (2008). https://doi.org/10.1137/060654797

2. Bauschke, H.H., Borwein, J.M.: Dykstra’s alternating projection algorithm for two sets. J. Approx. Theory 79, 418–443 (1994). https://doi.org/10.1006/jath.1994.1136

3. Bauschke, H.H., Burachik, R.S., Kaya, C.Y.: Constraint splitting and projection methods for optimal control of double integrator. In: Bauschke, H.H., Burachik, R.S., Luke, D.R. (eds.) Splitting Algorithms, Monotone Operator Theory, and Applications, pp. 45–68. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-25939-6_2

4. Bauschke, H.H., Hare, W.L., Moursi, W.M.: Generalized solutions for the sum of two maximally monotone operators. SIAM J. Control. Optim. 52, 1034–1047 (2014). https://doi.org/10.1137/130924214

5. Bauschke, H.H., Combettes, P.L.: Convex Analysis and Monotone Operator Theory in Hilbert Spaces, 2nd edn. Springer, Cham (2017)

6. Bauschke, H.H., Moursi, W.M.: On the Douglas-Rachford algorithm. Math. Program (Ser. A) 164, 263–284 (2017). https://doi.org/10.1007/s10107-016-1086-3

7. Bauschke, H.H., Moursi, W.M.: On the Douglas-Rachford algorithm for solving possibly inconsistent optimization problems. Math. Oper. Res. (2023). https://doi.org/10.1287/moor.2022.1347

8. Birgin, E.G., Martínez, J.M.: Practical Augmented Lagrangian Methods for Constrained Optimization. SIAM Publications, Philadelphia (2014)

9. Brezis, H.: Functional Analysis, Sobolev Spaces and Partial Differential Equations. Springer, Berlin (2011)

10. Burachik, R.S., Caldwell, B.I., Kaya, C.Y.: Douglas–Rachford algorithm for control-constrained minimum-energy control problems. To appear in ESAIM Control Optim Calc Var. (2024). https://doi.org/10.48550/arXiv.2210.17279, arXiv:2210.17279v2

11. Burachik, R.S., Caldwell, B.I., Kaya, C.Y., Moursi, W.M.: Optimal control duality and the Douglas-Rachford algorithm. SIAM J. Control. Optim. 62, 680–698 (2024). https://doi.org/10.1137/23M1558549

12. Burachik, R.S., Kaya, C.Y., Liu, X.: A primal–dual algorithm as applied to optimal control problems. Pure Appl. Funct. Anal. 8, 1301–1331 (2023). http://yokohamapublishers.jp/online2/oppafa/vol8/p1301.html

13. Burachik, R.S., Kaya, C.Y., Majeed, S.N.: A duality approach for solving control-constrained linear-quadratic optimal control problems. SIAM J. Control. Optim. 52, 1771–1782 (2014). https://doi.org/10.1137/130910221

14. Burke, J.V., Curtis, F.E., Wang, H.: A sequential quadratic optimization algorithm with rapid infeasibility detection. SIAM J. Optim. 24, 839–872 (2014). https://doi.org/10.1137/120880045

15. Byrd, R.H., Curtis, F.E., Nocedal, J.: Infeasibility detection and SQP methods for nonlinear optimization. SIAM J. Optim. 20, 2281–2299 (2010). https://doi.org/10.1137/080738222

16. Byrd, R.H., Nocedal, J., Waltz, R.A.: KNITRO: An integrated package for nonlinear optimization. In: di Pillo, G., Roma, M. (eds.) Large-Scale Nonlinear Optimization, pp. 35–59. Springer, New York (2006). https://doi.org/10.1007/0-387-30065-1_4

17. Christiansen, B., Maurer, H., Zirn, O.: Optimal control of machine tool manipulators. In: Diehl, M., Glineur, F., Jarlebring, E., Michiels, W. (eds.) Recent Advances in Optimization and Its Applications in Engineering, pp. 451–460. Springer, Berlin (2010). https://doi.org/10.1007/978-3-642-12598-0_39

18. Clarke, F.: Functional Analysis, Calculus of Variations and Optimal Control. Springer, London (2013). https://doi.org/10.1007/978-1-4471-4820-3

19. Fourer, R., Gay, D.M., Kernighan, B.W.: AMPL: A Modeling Language for Mathematical Programming, 2nd edn. Brooks/Cole Publishing Company/Cengage Learning, Boston (2003)

20. Gill, P.E., Murray, W., Saunders, M.A.: SNOPT: an SQP algorithm for large-scale constrained optimization. SIAM Rev. 47, 99–131 (2005). https://doi.org/10.1137/S1052623499350013

21. Hestenes, M.R.: Calculus of Variations and Optimal Control Theory. Wiley, New York (1966)

22. Kaya, C.Y.: Optimal control of the double integrator with minimum total variation. J. Optim. Theory Appl. 185, 966–981 (2020). https://doi.org/10.1007/s10957-020-01671-4

23. Kaya, C.Y., Noakes, J.L.: Computational method for time-optimal switching control. J. Optim. Theory Appl. 117, 69–92 (2003). https://doi.org/10.1023/A:1023600422807

24. Kaya, C.Y., Noakes, J.L.: Finding interpolating curves minimizing \(L^\infty \) acceleration in the Euclidean space via optimal control theory. SIAM J. Control. Optim. 51, 442–464 (2013). https://doi.org/10.1137/12087880X

25. Locatelli, A.: Optimal Control of a Double Integrator: A Primer on Maximum Principle. Springer, Switzerland (2017). https://doi.org/10.1007/978-3-319-42126-1

26. Moursi, W.M.: The range of the Douglas–Rachford operator in infinite-dimensional Hilbert spaces. (2022). https://arxiv.org/pdf/2206.07204.pdf

27. Maurer, H., Büskens, C., Kim, J.-H.R., Kaya, C.Y.: Optimization methods for the verification of second-order sufficient conditions for bang-bang controls. Optim. Contr. Appl. Meth. 26, 129–156 (2005). https://doi.org/10.1002/oca.756

28. Mordukhovich, B.S.: Variational Analysis and Generalized Differentiation II: Applications. Springer, Berlin (2006). https://doi.org/10.1007/3-540-31246-3

29. Osmolovskii, N.P., Maurer, H.: Applications to Regular and Bang-Bang Control: Second-Order Necessary and Sufficient Conditions in Calculus of Variations and Optimal Control. SIAM Publications, Philadelphia (2012)

30. Pontryagin, L.S., Boltyanskii, V.G., Gamkrelidze, R.V., Mishchenko, E.F.: The Mathematical Theory of Optimal Processes. Wiley, New York (1962). https://doi.org/10.1002/zamm.19630431023

31. Rugh, W.J.: Linear System Theory, 2nd edn. Prentice-Hall, Upper Saddle River, NJ (1996)

32. Sepulveda-Salcedo, L.S., Vasilieva, O., Svinin, M.: Optimal control of dengue epidemic outbreaks under limited resources. Stud. Appl. Math. 144, 185–212 (2020). https://doi.org/10.1111/sapm.12295

33. Sidky, E.Y., Jørgensen, J.S., Pan, X.: First-order convex feasibility algorithms for x-ray CT. Med. Phys. 40, 031115-1–031115-15 (2013). https://doi.org/10.1118/1.4790698

34. Silverman, L.M., Meadows, H.E.: Controllability and observability in time-variable linear systems. SIAM J. Control 5, 64–73 (1967). https://doi.org/10.1137/0305005

35. Vinter, R.B.: Optimal Control. Birkhäuser, Boston (2000)

36. Xiao, W., Cassandras, G.C., Belta, C.: Safety-critical optimal control for autonomous systems. J. Syst. Sci. Complex. 34, 1723–1742 (2021). https://doi.org/10.1007/s11424-021-1230-x

37. Wächter, A., Biegler, L.T.: On the implementation of a primal-dual interior point filter line search algorithm for large-scale nonlinear programming. Math. Program. 106, 25–57 (2006). https://doi.org/10.1007/s10107-004-0559-y

38. Zauner, C., Gattringer, H., Müller, A.: Multistage approach for trajectory optimization for a wheeled inverted pendulum passing under an obstacle. Robotica 41, 2298–2313 (2023). https://doi.org/10.1017/S0263574723000401

Acknowledgements

The authors would like to thank the two anonymous reviewers whose comments and suggestions improved the paper.

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions.

Ethics declarations

Conflict of interest

The authors have no competing, or conflict of, interests to declare that are relevant to the content of this article.

Additional information

Communicated by Giovanni Colombo.

Dedicated to Boris S. Mordukhovich on the Occasion of his 75th Birthday.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Burachik, R.S., Kaya, C.Y. & Moursi, W.M. Infeasible and Critically Feasible Optimal Control. J Optim Theory Appl (2024). https://doi.org/10.1007/s10957-024-02419-0

DOI: https://doi.org/10.1007/s10957-024-02419-0