Abstract

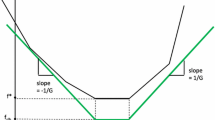

Conditional gradient algorithms (aka Frank–Wolfe algorithms) form a classical set of methods for constrained smooth convex minimization due to their simplicity, the absence of projection steps, and competitive numerical performance. While the vanilla Frank–Wolfe algorithm only ensures a worst-case rate of \({\mathcal {O}}(1/\epsilon )\), various recent results have shown that for strongly convex functions on polytopes, the method can be slightly modified to achieve linear convergence. However, this still leaves a huge gap between sublinear \({\mathcal {O}}(1/\epsilon )\) convergence and linear \({\mathcal {O}}(\log 1/\epsilon )\) convergence to reach an \(\epsilon \)-approximate solution. Here, we present a new variant of conditional gradient algorithms that can dynamically adapt to the function’s geometric properties using restarts and smoothly interpolates between the sublinear and linear regimes. These interpolated convergence rates are obtained when the optimization problem satisfies a new type of error bounds, which we call strong Wolfe primal bounds. They combine geometric information on the constraint set with Hölderian error bounds on the objective function.

Similar content being viewed by others

References

Attouch, H., Bolte, J., Redont, P., Soubeyran, A.: Proximal alternating minimization and projection methods for nonconvex problems: An approach based on the Kurdyka–Łojasiewicz inequality. Math. Oper. Res. 35(2), 438–457 (2010)

Attouch, H., Buttazzo, G., Michaille, G.: Variational Analysis in Sobolev and BV Spaces: Applications to PDEs and oPtimization, vol. 17. SIAM, Philadelphia (2014)

Bashiri, M.A., Zhang, X.: Decomposition-invariant conditional gradient for general polytopes with line search. In: Guyon, I., et al. (eds.) Advances in Neural Information Processing Systems, pp. 2687–2697 (2017)

Bauschke, H.H., Bolte, J., Teboulle, M.: A descent lemma beyond Lipschitz gradient continuity: first-order methods revisited and applications. Math. Oper. Res. 42(2), 330–348 (2016)

Bolte, J., Daniilidis, A., Lewis, A.: The Łojasiewicz inequality for nonsmooth subanalytic functions with applications to subgradient dynamical systems. SIAM J. Optim. 17(4), 1205–1223 (2007)

Bolte, J., Nguyen, T.P., Peypouquet, J., Suter, B.W.: From error bounds to the complexity of first-order descent methods for convex functions. Math. Program. 165(2), 471–507 (2017)

Braun, G., Pokutta, S., Zink, D.: Lazifying conditional gradient algorithms. In: Precup, D., Teh, Y.W. (eds.) International Conference on Machine Learning, pp. 566–575 (2017)

Braun, G., Pokutta, S., Tu, D., Wright, S.: Blended conditonal gradients. In: Chaudhuri, K., Salakhutdinov, R. (eds.) International Conference on Machine Learning, pp. 735–743 (2019)

Canon, M.D., Cullum, C.D.: A tight upper bound on the rate of convergence of Frank–Wolfe algorithm. SIAM J. Control 6(4), 509–516 (1968)

Chen, Z., Xu, Y., Chen, E., Yang, T.: Sadagrad: strongly adaptive stochastic gradient methods. In: Dy, J., Krause, A. (eds.) International Conference on Machine Learning, pp. 912–920 (2018)

Combettes, C., Pokutta, S.: Boosting Frank–Wolfe by chasing gradients. In: Daumé, H. III, Singh, A. (eds.) International Conference on Machine Learning, pp. 2111–2121 (2020)

Diakonikolas, J., Carderera, A., Pokutta, S.: Locally accelerated conditional gradients. In: Chiappa, S., Calandra, R. (eds.) International Conference on Artificial Intelligence and Statistics, pp. 1737–1747 (2020)

Fercoq, O., Qu, Z.: Restarting accelerated gradient methods with a rough strong convexity estimate. arXiv preprint arXiv:1609.07358 (2016)

Frank, M., Wolfe, P.: An algorithm for quadratic programming. Naval Res. Logist. Q. 3(1–2), 95–110 (1956)

Frankel, P., Garrigos, G., Peypouquet, J.: Splitting methods with variable metric for Kurdyka–Łojasiewicz functions and general convergence rates. J. Optim. Theory Appl. 165(3), 874–900 (2015)

Freund, R.M., Grigas, P.: New analysis and results for the Frank–Wolfe method. Math. Program. 155(1), 199–230 (2016)

Freund, R.M., Grigas, P., Mazumder, R.: An extended Frank–Wolfe method with “in-face” directions, and its application to low-rank matrix completion. SIAM J. Optim. 27(1), 319–346 (2017)

Garber, D., Hazan, E.: Faster rates for the Frank–Wolfe method over strongly-convex sets. In: Bach, F., Blei, D. (eds.) Proceedings of the 32th International Conference on Machine Learning (2015)

Garber, D., Hazan, E.: A linearly convergent variant of the conditional gradient algorithm under strong convexity, with applications to online and stochastic optimization. SIAM J. Optim. 26(3), 1493–1528 (2016)

Garber, D., Meshi, O.: Linear-memory and decomposition-invariant linearly convergent conditional gradient algorithm for structured polytopes. In: Lee, D., Sugiyama, M., Luxburg, U., Guyon, I., Garnett, R. (eds.) Advances in Neural Information Processing Systems (2016)

Giselsson, P., Boyd, S.: Monotonicity and restart in fast gradient methods. In: 53rd IEEE Conference on Decision and Control, pp. 5058–5063. IEEE (2014)

Guélat, J., Marcotte, P.: Some comments on Wolfe’s ‘away step’. Math. Program. 35(1), 110–119 (1986)

Hoffman, A.J.: On approximate solutions of systems of linear inequalities. J. Res. Natl. Bur. Stand. 49(4) (1952)

Jaggi, M.: Revisiting Frank–Wolfe: projection-free sparse convex optimization. In: Dasgupta, S., McAllester, D. (eds.) Proceedings of the 30th international conference on machine learning, pp. 427–435 (2013)

Joulin, A., Tang, K., Fei-Fei, L.: Efficient image and video co-localization with Frank–Wolfe algorithm. In: Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) European Conference on Computer Vision, pp. 253–268. Springer (2014)

Karimi, H., Nutini, J., Schmidt, M.: Linear convergence of gradient and proximal-gradient methods under the Polyak–Łojasiewicz condition. In: Joint European Conference on Machine Learning and Knowledge Discovery in Databases, pp. 795–811. Springer (2016)

Kerdreux, T., Pedregosa, F., d’Aspremont, A.: Frank–Wolfe with subsampling oracle. In: Dy, J., Krause, A. (eds.) International Conference on Machine Learning, pp. 2591–2600 (2018)

Kerdreux, T., d’Aspremont, A., Pokutta, S.: Restarting Frank–Wolfe. In: Chaudhuri, K., Sugiyama, M. (eds.) The 22nd International Conference on Artificial Intelligence and Statistics, pp. 1275–1283 (2019)

Kerdreux, T., d’Aspremont, A., Pokutta, S.: Projection-free optimization on uniformly convex sets. In: International Conference on Artificial Intelligence and Statistics, pp. 19–27 (2021)

Lacoste-Julien, S., Jaggi, M.: On the global linear convergence of Frank–Wolfe optimization variants. In: Cortes, C., Lawrence, N., Lee, D., Sugiyama, M., Garnett, R. (eds.) Advances in Neural Information Processing Systems, vol. 28, pp. 496–504 (2015)

Lacoste-Julien, S., Jaggi, M., Schmidt, M., Pletscher, P.: Block-coordinate Frank–Wolfe optimization for structural SVMs. In: Dasgupta, S., McAllester, D. (eds.) International Conference on Machine Learning, pp. 53–61 (2013)

Lan, G., Zhou, Y.: Conditional gradient sliding for convex optimization. SIAM J. Optim. 26(2), 1379–1409 (2016)

Lan, G., Pokutta, S., Zhou, Y., Zink, D.: Conditional accelerated lazy stochastic gradient descent. In: Precup, D., Teh, Y.W. (eds.) International Conference on Machine Learning, pp. 1965–1974 (2017)

Levitin, E.S., Polyak, B.T.: Constrained minimization methods. USSR Comput. Math. Math. Phys. 6(5), 1–50 (1966)

Li, G., Pong, T.K.: Calculus of the exponent of Kurdyka–Łojasiewicz inequality and its applications to linear convergence of first-order methods. Found. Comput. Math. 18(5), 1199–1232 (2018)

Locatello, F., Khanna, R., Tschannen, M., Jaggi, M.: A unified optimization view on generalized matching pursuit and Frank–Wolfe. In: Singh, A., Zhu, J. (eds.) Artificial Intelligence and Statistics, pp. 860–868 (2017)

Locatello, F., Tschannen, M., Rätsch, G., Jaggi, M.: Greedy algorithms for cone constrained optimization with convergence guarantees. In: Guyon, I., et al. (eds.) Advances in Neural Information Processing Systems, pp. 773–784 (2017)

Lojasiewicz, S.: Ensembles semi-analytiques. Institut des Hautes Études Scientifiques (1965)

Lojasiewicz, S.: Sur la géométrie semi-et sous-analytique. Ann. Inst. Fourier 43(5), 1575–1595 (1993)

Miech, A., Alayrac, J.-B., Bojanowski, P., Laptev, I., Sivic, J.: Learning from video and text via large-scale discriminative clustering. In: 2017 IEEE International Conference on Computer Vision (ICCV), pp. 5267–5276. IEEE (2017)

Nemirovskii, A., Nesterov, Y.E.: Optimal methods of smooth convex minimization. USSR Comput. Math. Math. Phys. 25(2), 21–30 (1985)

O’Donoghue, B., Candes, E.: Adaptive restart for accelerated gradient schemes. Found. Comput. Math. 15(3), 715–732 (2015)

Osokin, A., Alayrac, J.-B., Lukasewitz, I., Dokania, P.K., Lacoste-Julien, S.: Minding the gaps for block Frank–Wolfe optimization of structured SVMs. In: Balcan, M.F., Weinberger, K.Q. (eds.) International Conference on Machine Learning (2016)

Pena, J., Rodriguez, D.: Polytope conditioning and linear convergence of the Frank–Wolfe algorithm. Math. Oper. Res. 44, 1–18 (2018)

Roulet, V., d’Aspremont, A.: Sharpness, restart, and acceleration. SIAM J. Optim. 30(1), 262–289 (2020)

Shah, N., Kolmogorov, V., Lampert, C.H.: A multi-plane block-coordinate Frank–Wolfe algorithm for training structural SVMs with a costly max-oracle. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2737–2745 (2015)

Xu, Y., Yang, T.: Frank–Wolfe method is automatically adaptive to error bound condition. arXiv:1810.04765 (2018)

Acknowledgements

This research was partially funded by Deutsche Forschungsgemeinschaft (DFG) through the DFG Cluster of Excellence MATH+ and the Research Campus Modal funded by the German Federal Ministry of Education and Research (fund numbers 05M14ZAM,05M20ZBM). Research reported in this paper was partially supported by NSF CAREER Award CMMI-1452463. TK acknowledges funding from the CFM-ENS chaire les modèles et sciences des données. AA is at CNRS & département d’informatique, École normale supérieure, UMR CNRS 8548, 45 rue d’Ulm 75005 Paris, France, INRIA and PSL Research University. The authors would like to acknowledge support from the ML & Optimization joint research initiative with the fonds AXA pour la recherche and Kamet Ventures, funding by the French government under management of Agence Nationale dela Recherche as part of the “Investissements d’avenir” program, reference ANR-19-P3IA-0001 (PRAIRIE3IA Institute), as well as a Google focused award. This paper is a journal version of the conference version [28]. The authors are also thankful to Jérôme Bolte for insights about subanalycity of the strong Wolfe gap when \({\mathcal {C}}\) is a polytope.

Author information

Authors and Affiliations

Additional information

Communicated by Jérôme Bolte.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A. One Shot Application of the Fractional Away-Step Frank–Wolfe

Fractional Away-step Frank–Wolfe output a point \(x_t\in {\mathcal {C}}\) s.t. \(w(x_t) \le e^{-\gamma }w_0\). Hence, running once fractional Away-step Frank–Wolfe with a large value of \(\gamma \) allows finding an approximate minimizer with the desired precision. The following lemma proves a sublinear \({\mathcal {O}}(1/T)\) convergence rate which corresponds to the convergence rate of the Frank–Wolfe algorithm. Importantly, the rate does not depend on r. Hence, there is no hope of observing linear convergence for the strongly convex case.

Lemma A.1

Let f be a smooth convex function, \(\epsilon > 0\) be a target accuracy, and \(x_0 \in {\mathcal {C}}\) be an initial point. Then for any \(\gamma > \ln \frac{w(x_0)}{ \epsilon }\), Algorithm 1 satisfies:

for \(T \ge \frac{2C_f^{{\mathcal {A}}}}{\epsilon }\).

Proof

We can stop the algorithm as soon as the criterion \(w(x_t) < \epsilon \) in step 2 is met or we observe an away step, whichever comes first. In former case, we have \(f(x_t) - f^* \le w(t) < \epsilon \), in the latter it holds

Thus, when the algorithms stops, we have achieved the target accuracy and it suffices to bound the number of iterations required to achieve that accuracy. Moreover, while running, the algorithm only executes Frank–Wolfe and we drop the FW superscript in the directions; otherwise, we would have stopped.

From the proof of Proposition 4.1, we have each Frank–Wolfe step ensures progress of the form

For convenience, let \(h_t \triangleq f(x_t) - f^*\). By convexity, we have \(h_t \le \langle -\nabla f(x_t), \, d_t \rangle \), so that the above becomes

and moreover observe that the second case can only happen in the very first step: \(h_{1} \le h_0 - (h_0 - C_f^{{\mathcal {A}}} / 2) = C_f^{{\mathcal {A}}} / 2 \le 2 C_f^{{\mathcal {A}}} / t\) for \(t=1\) providing the start of the following induction: we claim \(h_t \le \frac{2C_f^{{\mathcal {A}}}}{t}\).

Suppose we have established the bound for t, then for \(t+1\), we have

The induction is complete and it follows that the algorithm requires \(T \ge \frac{2C_f^{{\mathcal {A}}}}{\epsilon }\) to reach \(\epsilon \)-accuracy. \(\square \)

Appendix B. Non-Standard Recurrence Relation

This is a technical Lemma derived in [47, proof of Theorem 1. ], and we repeat it here for the sake of completeness. It is used in Sect. 7.

Lemma B.1

(Recurrence and sub-linear rates) Consider a sequence \((h_n)\) of non-negative numbers. Assume there exists \(M>0\) and \(0 < \beta \le 1\) s.t.

then \(h_T = {\mathcal {O}}\big (1/T^{1/\beta } \big )\). More precisely for all \(t\ge 0\),

with (k, C) such that \(\frac{2 - 2^{\beta }}{2^\beta -1} \le k\) and \( \text {max}\{h_0 k^{1/\beta }, 2(C^\prime /M)^{1/\beta }\} \le C\), with \(C^\prime \ge \frac{1}{\beta -(1-\beta )(2^\beta -1)}\).

Proof

Let (k, C) satisfying the condition in Lemma B.1. Let us show by induction that

For \(t=0\), it is true because we assumed \(h_0 k^{1/\beta } \le C\). Let \(t \ge 1\). Consider the case where the maximum in the right hand side of (20) is obtained with \(\frac{1}{2}\), then

because \(k\ge \frac{2 - 2^{\beta }}{2^\beta - 1}\). Otherwise we have

If \(h_t \le \frac{C}{2(t+k)^{1/\beta }}\), conclusion holds as before. Otherwise, assume \(\frac{C}{2(t+k)^{1/\beta }} \le h_t \le \frac{C}{(t+k)^{1/\beta }}\) and (20) implies

From Lemma B.2, for \(C\ge 2(C^\prime /M)^{1/\beta }\) with \(C^\prime \ge \frac{1}{\beta -(1-\beta )(2^\beta -1)}\), we have

which proves the induction. \(\square \)

Lemma B.2

For any \(t\ge 1\), we have

where \(k\ge \frac{2 - 2^{\beta }}{2^\beta - 1}\), \(\beta \in ]0,1]\), \(C^\prime \ge \frac{1}{\beta -(1-\beta )(2^\beta -1)}\) and C such that \(C\ge 2 (C^\prime /M)^{1/\beta }\).

Proof

Write \(x = \frac{1}{t+k}\). Because \(t\ge 1\) and \(k\ge \frac{2 - 2^{\beta }}{2^\beta - 1}\), we have \(x\in ]0, 2^{\beta }-1]\). (21) is equivalent to

Choosing C greater or equal to \(2 \Big (C^\prime /M\Big )^{1/\beta }\) ensures that \(\log (1 - M \Big (\frac{C}{2}\Big )^\beta x)\le \log (1 - C^\prime x)\). Also for \(C^\prime \ge \frac{1}{\beta -(1-\beta )(2^\beta -1)}\), the function \(h(x)\triangleq \log (1 + C^\prime x) - \frac{1}{\beta }\log (1 + x)\) is non-decreasing (and hence nonnegative) on \(]0,2^\beta -1]\). Reciprocally for \(C^\prime \ge \frac{1}{\beta -(1-\beta )(2^\beta -1)}\) and \(C\ge \Big (C^\prime /M\Big )^{1/\beta }\), (21) holds. \(\square \)

Rights and permissions

About this article

Cite this article

Kerdreux, T., d’Aspremont, A. & Pokutta, S. Restarting Frank–Wolfe: Faster Rates under Hölderian Error Bounds. J Optim Theory Appl 192, 799–829 (2022). https://doi.org/10.1007/s10957-021-01989-7

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10957-021-01989-7