Abstract

In earlier works (Tits et al. SIAM J. Optim., 17(1):119–146, 2006; Winternitz et al. Comput. Optim. Appl., 51(3):1001–1036, 2012), the present authors and their collaborators proposed primal–dual interior-point (PDIP) algorithms for linear optimization that, at each iteration, use only a subset of the (dual) inequality constraints in constructing the search direction. For problems with many more variables than constraints in primal form, this can yield a major speedup in the computation of search directions. However, in order for the Newton-like PDIP steps to be well defined, it is necessary that the gradients of the constraints included in the working set span the full dual space. In practice, in particular in the case of highly sparse problems, this often results in an undesirably large working set—or in an expensive trial-and-error process for its selection. In this paper, we present two approaches that remove this non-degeneracy requirement, while retaining the convergence results obtained in the earlier work.
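The non-degeneracy requirement described in the abstract can be made concrete with a small sketch. It assumes the dual-form setting of the earlier works, where the search direction comes from normal equations whose matrix is \(A D A^{\mathrm{T}}\) with diagonal scaling \(d_i = x_i/s_i\). That scaling is not restated in this section, so treat it as an assumption. A constraint-reduced method forms the same product over a working set Q only, costing roughly \(m^2|Q|\) rather than \(m^2 n\) operations. When the columns \(a_i\), \(i\in Q\), fail to span \(\mathbb{R}^m\), the reduced matrix is singular and the Newton-like step is undefined. The function names and the test matrix are hypothetical illustrations, not the authors' code.

```python
import numpy as np


def reduced_normal_matrix(A, x, s, Q):
    """Return A_Q diag(x_Q / s_Q) A_Q^T, built from the columns of A indexed by Q only.

    Assumes x > 0 and s > 0 (interior iterates). Cost is O(m^2 |Q|) rather than O(m^2 n).
    """
    A_Q = A[:, Q]
    d_Q = x[Q] / s[Q]           # diagonal scaling restricted to the working set
    return (A_Q * d_Q) @ A_Q.T  # scale columns of A_Q, then form the m-by-m product


def spans_dual_space(A, Q, tol=None):
    """True if the gradients a_i, i in Q, span R^m, i.e. A_Q has full row rank m."""
    return np.linalg.matrix_rank(A[:, Q], tol=tol) == A.shape[0]


# Hypothetical 2-by-4 example (m = 2, n = 4), using 0-based column indices.
A = np.array([[1.0, 2.0, 0.0, 1.0],
              [0.0, 0.0, 1.0, 1.0]])
x = np.ones(4)
s = 2.0 * np.ones(4)

for Q in ([0, 1], [0, 2]):
    M_Q = reduced_normal_matrix(A, x, s, Q)
    print(Q, spans_dual_space(A, Q), np.linalg.matrix_rank(M_Q))
# [0, 1] False 1   (parallel columns: M_Q is singular)
# [0, 2] True 2
```

The first working set has only two columns, as does the second, yet only the second yields a nonsingular reduced matrix. This is why enforcing full rank can force a larger working set than the problem would otherwise need.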

Notes

The “min” in the top portion of (22) places a positive lower bound on \(x_{i}^{+}, i\in Q\), preventing these components from coming too close to zero away from a solution point; a small value of ξ>0 ensures that this lower bound is not too large. The bottom portion of (22) promotes centrality while keeping \(x_{i}^{+}, i\not\in Q\), bounded. The second argument of the “max” in (23) is needed to allow local quadratic convergence, which is also the motivation for the right-hand side of (26).

The proof in [3] contains an unfortunate typographical error: In three places, in the text that precedes equation (76), \(\Delta s^{{\rm a},k}\) should be \(\Delta s_{{\scriptscriptstyle{Q}}}^{{\rm a},k}\).

Note that Assumption 4.2 does not rule out rank degeneracy away from the solution, nor even close to the solution unless \(|Q_k|\) is exactly equal to m.

This follows from Assumption 4.2 because solvable linear programs always have at least one strictly complementary solution [1, p. 28].

More generally, we could define a parametrized measure d(A,M) as the average of \(\dim \mathcal{N}(A_{{\scriptscriptstyle{Q}}} ^{\mathrm{T}})\) over all \(Q\subseteq{\bf n}\) with |Q|=M, where M might notionally be set closer to the average size of Q used in the algorithms. After some experimentation, we found the measure to be most accurate in estimating the “tube-dimension” and the counts of kernel-like steps when M was set equal to m; we therefore used M=m and dropped the parameter from the definition. The more general version may still be useful in future work.
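The measure d(A,M) is straightforward to evaluate directly on small instances. By rank–nullity, \(\dim \mathcal{N}(A_{\scriptscriptstyle{Q}}^{\mathrm{T}}) = m - \operatorname{rank}(A_{\scriptscriptstyle{Q}})\), because \(A_{\scriptscriptstyle{Q}}^{\mathrm{T}}\) maps \(\mathbb{R}^m\) to \(\mathbb{R}^{|Q|}\). The sketch below enumerates all \(\binom{n}{M}\) subsets, which is feasible only for small n. It also includes a Monte Carlo estimate, which is our own hypothetical shortcut and not something the text describes. Indices are 0-based, the function names are illustrative, and numerical rank depends on the `tol` passed to `numpy.linalg.matrix_rank`.

```python
from itertools import combinations

import numpy as np


def rank_degeneracy_measure(A, M=None, tol=None):
    """Average of dim N(A_Q^T) = m - rank(A_Q) over all Q in {0..n-1} with |Q| = M.

    Exhaustive enumeration of C(n, M) subsets, so only practical for small n.
    """
    A = np.asarray(A, dtype=float)
    m, n = A.shape
    M = m if M is None else M  # default to the choice M = m made in the text
    dims = [m - np.linalg.matrix_rank(A[:, list(Q)], tol=tol)
            for Q in combinations(range(n), M)]
    return float(np.mean(dims))


def sampled_rank_degeneracy_measure(A, M=None, samples=1000, seed=0, tol=None):
    """Monte Carlo estimate of the same average, drawing each Q uniformly without replacement."""
    A = np.asarray(A, dtype=float)
    m, n = A.shape
    M = m if M is None else M
    rng = np.random.default_rng(seed)
    dims = [m - np.linalg.matrix_rank(A[:, rng.choice(n, size=M, replace=False)], tol=tol)
            for _ in range(samples)]
    return float(np.mean(dims))


A = np.array([[1.0, 0.0, 1.0],
              [0.0, 1.0, 0.0]])
# Of the three 2-column subsets, only Q = {0, 2} gives a rank-deficient A_Q.
print(rank_degeneracy_measure(A))  # 0.3333333333333333
```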

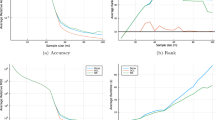

In that figure, for Regularized rPDAS, the total time appears almost independent of |Q| once |Q|/n exceeds about 0.1. This is because these problems are rather small, so the total time is dominated by overhead.

For these two problems, to overcome numerical difficulties observed near the solution, the algorithms in [3] used an ad-hoc, fixed regularization of the normal equations, as in (16) with \(\delta=10^{-5}\). Furthermore, when numerical difficulties prevented factorization of the normal matrix even after the working set was enlarged to \(Q={\bf n}\), the algorithm, following [2], would fall back on Matlab’s cholinc(⋅,'inf'). This problem was observed in the current investigation as well, but here we instead fall back on the symmetric-indefinite factorization of the augmented system (14), computed with Matlab’s ldl command, rather than rely on cholinc.
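The regularize-then-fall-back strategy described above can be sketched as follows. Equations (14) and (16) are not reproduced in this section, so the sketch assumes generic forms. The regularized normal equations are taken to be \((A_{\scriptscriptstyle{Q}} D_{\scriptscriptstyle{Q}} A_{\scriptscriptstyle{Q}}^{\mathrm{T}} + \delta I)\,\Delta y = r\) with diagonal \(D_{\scriptscriptstyle{Q}} \succ 0\). The corresponding augmented system is \(\begin{bmatrix} -D_{\scriptscriptstyle{Q}}^{-1} & A_{\scriptscriptstyle{Q}}^{\mathrm{T}} \\ A_{\scriptscriptstyle{Q}} & \delta I \end{bmatrix} \begin{bmatrix} u \\ \Delta y \end{bmatrix} = \begin{bmatrix} 0 \\ r \end{bmatrix}\). Eliminating \(u = D_{\scriptscriptstyle{Q}} A_{\scriptscriptstyle{Q}}^{\mathrm{T}} \Delta y\) recovers the normal equations. NumPy has no symmetric-indefinite LDLᵀ routine, so a dense LU solve (`numpy.linalg.solve`) stands in for Matlab's ldl. The function names are hypothetical, and this is not the authors' Matlab code.

```python
import numpy as np


def solve_augmented(A_Q, d_Q, r, delta=1e-5):
    """Solve [[-diag(1/d_Q), A_Q^T], [A_Q, delta*I]] [u; dy] = [0; r] and return dy.

    Dense LU is used here as a stand-in for a symmetric-indefinite LDL^T factorization.
    """
    m, q = A_Q.shape
    K = np.block([[-np.diag(1.0 / d_Q), A_Q.T],
                  [A_Q, delta * np.eye(m)]])
    rhs = np.concatenate([np.zeros(q), r])
    return np.linalg.solve(K, rhs)[q:]


def solve_regularized_normal_eqs(A_Q, d_Q, r, delta=1e-5):
    """Solve (A_Q diag(d_Q) A_Q^T + delta*I) dy = r by Cholesky, else via the augmented system."""
    m = A_Q.shape[0]
    M = (A_Q * d_Q) @ A_Q.T + delta * np.eye(m)
    try:
        L = np.linalg.cholesky(M)       # raises LinAlgError if M is not numerically PD
        z = np.linalg.solve(L, r)       # forward solve L z = r (generic dense solve)
        return np.linalg.solve(L.T, z)  # back solve L^T dy = z
    except np.linalg.LinAlgError:
        return solve_augmented(A_Q, d_Q, r, delta)


# Rank-deficient working set: without delta the normal matrix would be singular.
A_Q = np.array([[1.0, 2.0],
                [0.0, 0.0]])
d_Q = np.array([0.5, 0.5])
r = np.array([1.0, 1.0])
dy = solve_regularized_normal_eqs(A_Q, d_Q, r)
print(np.allclose(dy, solve_augmented(A_Q, d_Q, r)))  # True
```

Solving the augmented system avoids forming the product \(A_{\scriptscriptstyle{Q}} D_{\scriptscriptstyle{Q}} A_{\scriptscriptstyle{Q}}^{\mathrm{T}}\) explicitly. The cost is a larger, indefinite system, which is why a symmetric-indefinite factorization rather than Cholesky is the natural choice for it.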

References

[1] Wright, S.J.: Primal–Dual Interior-Point Methods. SIAM, Philadelphia (1997)

[2] Tits, A., Absil, P., Woessner, W.: Constraint reduction for linear programs with many constraints. SIAM J. Optim. 17, 119–146 (2006)

[3] Winternitz, L.B., Nicholls, S.O., Tits, A.L., O’Leary, D.P.: A constraint reduced variant of Mehrotra’s predictor-corrector algorithm. Comput. Optim. Appl. 51, 1001–1036 (2012)

[4] He, M.Y., Tits, A.L.: Infeasible constraint-reduced interior-point methods for linear optimization. Optim. Methods Softw. 27, 801–825 (2012)

[5] Winternitz, L.: Primal–dual interior point algorithms for linear programs with many inequality constraints. Ph.D. thesis, University of Maryland (2010)

[6] Bertsimas, D., Tsitsiklis, J.: Introduction to Linear Optimization. Athena, Belmont (1997)

[7] Dantzig, G., Ye, Y.: A build-up interior-point method for linear programming: Affine scaling form. Working paper, Department of Management Science, University of Iowa (1991)

[8] Saunders, M.A., Tomlin, J.A.: Solving regularized linear programs using barrier methods and KKT systems. Technical report, Stanford University, Department of EES (1996)

[9] Friedlander, M., Orban, D.: A primal–dual regularized interior-point method for convex quadratic programming. Math. Program. Comput. 4, 71–107 (2012)

[10] Friedlander, M.P., Orban, D.: Exact primal–dual regularization of linear programs. Presentation given at ICCOPT Hamilton, Ontario (2007)

[11] Tits, A.L., Absil, P.A., O’Leary, D.P.: Constraint reduction for certain degenerate linear programs. In: 19th ISMP, July 30–August 4 (2006)

[12] Dikin, I.: On convergence of an iterative process. Upr. Syst. 12, 54–60 (1974) (in Russian)

[13] Stewart, G.W.: On scaled projections and pseudo-inverses. Linear Algebra Appl. 112, 189–194 (1989)

[14] Saigal, R.: A simple proof of a primal affine scaling method. Ann. Oper. Res. 62, 303–324 (1996)

[15] Mehrotra, S.: On the implementation of a primal–dual interior point method. SIAM J. Optim. 2, 575–601 (1992)

[16] Netlib linear programming test problems. See http://www-fp.mcs.anl.gov/OTC/Guide/TestProblems/LPtest/

Acknowledgements

The work of the first two authors was supported in part by DOE grant DESC0002218. The work of the third author was supported by the Belgian Network DYSCO (Dynamical Systems, Control, and Optimization), funded by the Interuniversity Attraction Poles Programme initiated by the Belgian Science Policy Office.

The authors wish to thank Dianne O’Leary for helpful discussions, and Ming-Tse Paul Laiu for running some numerical comparisons.

Additional information

Communicated by Johannes O. Royset.

About this article

Cite this article

Winternitz, L.B., Tits, A.L. & Absil, PA. Addressing Rank Degeneracy in Constraint-Reduced Interior-Point Methods for Linear Optimization. J Optim Theory Appl 160, 127–157 (2014). https://doi.org/10.1007/s10957-013-0323-7

DOI: https://doi.org/10.1007/s10957-013-0323-7