Abstract

The advent of generative artificial intelligence (AI) offers transformative potential in the field of education. The study explores three main areas: (1) How did ChatGPT answer questions related to science education? (2) What are some ways educators could utilise ChatGPT in their science pedagogy? and (3) How has ChatGPT been utilised in this study, and what are my reflections about its use as a research tool? This exploratory research applies a self-study methodology to investigate the technology. Impressively, ChatGPT’s output often aligned with key themes in the research. However, as it currently stands, ChatGPT runs the risk of positioning itself as the ultimate epistemic authority, where a single truth is assumed without a proper grounding in evidence or presented with sufficient qualifications. Key ethical concerns associated with AI include its potential environmental impact, issues related to content moderation, and the risk of copyright infringement. It is important for educators to model responsible use of ChatGPT, prioritise critical thinking, and be clear about expectations. ChatGPT is likely to be a useful tool for educators designing science units, rubrics, and quizzes. Educators should critically evaluate any AI-generated resource and adapt it to their specific teaching contexts. ChatGPT was used as a research tool for assistance with editing and to experiment with making the research narrative clearer. The intention of the paper is to act as a catalyst for a broader conversation about the use of generative AI in science education.

Artificial Intelligence and the Field of Education

Artificial intelligence (AI) is playing a crucial role in the ever-increasing digitisation of society. AI’s capability to automate tasks, process large quantities of data, and provide predictive insights will increasingly revolutionise various aspects of our daily lives (Yang, 2022). Previously, AI has been described as technology that has the capacity to mimic human-like responses, such as reasoning, exercising judgement, and exhibiting intentionality (Shubhendu & Vijay, 2013). Technological advancements such as machine learning and neural networks have sparked further discussion about how to define AI (Wang, 2019). The challenge … “is to specify the parameters of artificiality, or the ways in which computers are unlike human intelligence. They are much less than human intelligence—they can only calculate. And they are much more—they can calculate larger numbers and faster than humans. We have cause to be in awe at the super-human brilliance of their feats of calculation” (Cope et al., 2021, p. 1230). Discourse about the potential societal impact of AI has recently been attracting significant attention. For instance, there have been increasing concerns about significant job losses and debates about classifying AI output as art (e.g. DALL-E 2, Midjourney) (Pavlik, 2023). Discussions around the use of AI in education, like those in other fields, are mostly in the early stages. Celik (2023) claims that educators have not maximised the potential of AI in teaching and learning contexts. AI in education has even been described as the “Cinderella of the AI story”, alluding to its underdeveloped and often ignored status (Lameras & Arnab, 2021). Despite calls for further uptake, some educators are resistant to using software that collects large amounts of student data and, moreover, sceptical of companies that present technology as a panacea for all educational issues (Stockman & Nottingham et al., 2022). This research focuses on an AI platform called ChatGPT, which has garnered worldwide attention and captured the public zeitgeist.

ChatGPT

Large language models are a form of generative AI that produces human-like language (OpenAI, 2023a). OpenAI trains its text-generating models using machine learning algorithms on large amounts of text, including books, news articles, websites, and Wikipedia (Scharth, 2022). By processing terabytes of data, models acquire the patterns and structure of language, enabling them to provide users with relevant and meaningful content in response to their requests. When I asked ChatGPT to describe itself (prompt: Describe ChatGPT), it generated the following italicised text:

ChatGPT is a language model developed by OpenAI. It is a variant of the GPT (Generative Pre-training Transformer) model, which is trained on a massive amount of text data to generate human-like text. It can be used for a variety of natural language processing tasks, such as language translation, text summarization, question answering, and also for chatbot applications. It can understand and respond to human inputs in a conversational way, making it well suited for interactive applications. ChatGPT can be fine-tuned on specific data sets and task, which means it can be customized to perform specific task and generate outputs that are more accurate and useful.

ChatGPT can… “write stories, give life advice, even compose poems and code computer programs” (Scharth, 2022, para. 2). There have been reports however that it… “produces fake citations and reproduces biases that exist in the literature” (Gleason, 2022, para. 7). According to cited ChatGPT output, “AI systems can perpetuate biases that are present in the data they are trained on. This can lead to unequal and unfair outcomes for students, particularly if the bias is related to factors such as race, gender, or socio-economic status” (Zhai, 2022, p. 7). OpenAI (2023b) acknowledge in their advice to educators that… “ChatGPT may produce content that perpetuates harmful biases and stereotypes, sometimes in subtle ways… the model is generally skewed towards content that reflects Western perspectives and people” (para. 18).

ChatGPT has gone viral on social media, and anecdotal evidence suggests that students are already familiar with it. It is safe to assume that undergraduate students are using ChatGPT or similar software. Given ChatGPT’s apparent usage and stakeholder concerns, it is crucial to evaluate its output about science education. As far as I am aware, no prior research has examined the text generated by ChatGPT in relation to science education topics or its potential for creating science teaching resources. There is also little written about its use as a research tool, especially in STEM education contexts. This is a significant and timely research gap to address.

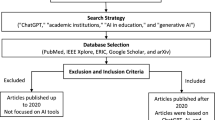

Methodology

Inspired by Pavlik’s (2023) exploration of ChatGPT in the field of journalism and media studies, research question 1 of this study asks: How did ChatGPT answer questions related to science education? For example, what are key characteristics of effective science teaching? Should I use chalk and talk to teach science or embrace more student-centred pedagogies? What happens if a student is failing in science, should I care?

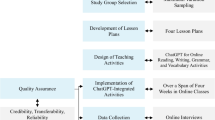

Research question 2 of this study asks: What are some ways educators could utilise ChatGPT in their science pedagogy? For example, how might it design a science unit using the 5Es model, or how could it design a rubric to use in my science classroom? How might it generate a multiple-choice quiz with an answer key?

Research question 3 of this study asks: How has ChatGPT been utilised in this study, and what are my reflections about its use as a research tool? “All tools do more than human minds and bodies can achieve unaided, which is why we create and use them” (Cope et al., 2021, p. 1230). Positioning the AI as a research tool, I will reflect on its use in the present study.

This is exploratory research; the intention is to document my experiences with ChatGPT and to reflect on its possible implications. This exploratory study applies a self-study methodology to investigate the technology (Hamilton et al., 2009). Through this study, I acknowledge that aspects of my educator and researcher identities have influenced the kinds of AI prompts I am interested in posing. As part of my exploration, I critically analyse ChatGPT output about science education, comparing it to key themes in the research. Moreover, I am eager to investigate the practical applications of using it for the classroom. Anecdotally, educators are already utilising ChatGPT to teach science. As discussed earlier, I am also interested in the application of ChatGPT as a tool for researchers.

The text italicised below has been directly copied from ChatGPT. It is important to remember that the following results are a moment-in-time response: the same questions entered into ChatGPT again may produce a different response. The responses below were collected in January 2023.

Results

[ChatGPT Prompt 1]: What Are Key Characteristics of Effective Science Teaching?

1. Active and inquiry-based learning: Effective science teaching encourages students to actively engage in the learning process through hands-on activities, experiments, and inquiry-based projects. This approach allows students to make connections between what they are learning and the world around them.
2. Real-world connections: Effective science teaching makes connections between what students are learning in the classroom and real-world applications. This helps to make the subject more relevant and engaging for students.
3. Differentiation: Effective science teaching takes into account the diverse needs and learning styles of students. Teachers use a variety of strategies to differentiate instruction, such as providing multiple ways to access information and using a variety of assessment methods.
4. Collaboration: Effective science teaching fosters a collaborative learning environment where students work together to solve problems and share ideas. This helps students to develop important skills such as communication, problem-solving and teamwork.
5. Use of technology: Effective science teaching incorporates technology to enhance student learning. This can include using simulations and virtual labs, online resources, and other digital tools to support the learning process.
6. Assessment: Effective science teaching uses a variety of assessment methods to measure student understanding and provide feedback to guide instruction. This includes formative and summative assessments, as well as performance-based assessments.
7. Professional development: Effective science teaching requires ongoing professional development for teachers to stay current with the latest research, best practices and new technologies in the field of science education.

Let us compare ChatGPT’s response above to seminal research discussing characteristics of effective science teaching. Hackling and Prain (2005) report six elements including:

1. Students experience a curriculum that is relevant to their lives and interests.
2. Classroom science is linked with the broader community.
3. Students are actively engaged with inquiry, ideas, and evidence.
4. Students are challenged to develop and extend meaningful conceptual understandings.
5. Assessment facilitates learning and focuses on outcomes that contribute to scientific literacy.
6. Information and communication technologies are exploited to enhance the learning of science with opportunities to interpret and construct multimodal representations (p. 19).

Both lists stress the importance of inquiry-based learning, real-world connections, differentiation of instruction, the use of technology to enhance science learning, the importance of effective assessments, and student-led, collaborative science learning. The first list, by ChatGPT, mentions the importance of professional development, while the second list, by Hackling and Prain, makes no explicit mention of this. The second list does, however, stress the importance of connecting science learning with the broader community, which is less explicit in the ChatGPT response (e.g. real-world connections). Overall, there seems to be strong alignment between the two lists.

[ChatGPT Prompt 2]: Should I Use Chalk and Talk to Teach Science or Embrace More Student-Centred Pedagogies?

Both “chalk and talk” teaching, where the teacher primarily lectures and writes on the board, and student-centred pedagogies, which put the focus on the student’s own learning and discovery, have their own advantages and disadvantages.

“Chalk and talk” teaching can be effective for introducing new concepts and providing a clear structure for the material. However, it can also be less engaging for students and may not allow for enough student interaction and active learning.

On the other hand, student-centred pedagogies such as inquiry-based learning, project-based learning and problem-based learning, allow students to take an active role in their own learning, encouraging them to ask questions, explore, and discover new concepts on their own. This type of teaching has been shown to be more engaging for students and can lead to better retention of the material.

It is important to note that a good science teaching should aim to be a balance between the teacher-centred and student-centred pedagogies.

It can be beneficial to use a variety of teaching methods in the classroom, such as incorporating hands-on activities, group work, and discussions to supplement traditional lectures. This can help to keep students engaged and promote active learning. Additionally, allowing for student choice and incorporating real-world connections can help to make the material more relevant and interesting to students.

The response balances the different pedagogical approaches to teaching science. Its output defines “chalk and talk” as a process whereby the teacher primarily lectures and writes on the board and notes that “chalk and talk” teaching can be effective for introducing new concepts. This approach is closely aligned with a teaching model that emphasises direct instruction (Kirschner et al., 2006). The output also details the benefits of inquiry-based, student-centred models of science education (Rannikmäe et al., 2020), stating that this type of teaching has been shown to be more engaging for students and can lead to better retention of the material. Later, ChatGPT discusses the importance of aiming for a balance between teacher-centred and student-centred pedagogies. Where is the evidence base to support a balance? I also wonder about the importance of context (e.g. individual student needs, school profile/priorities). I will elaborate on these points later.

[ChatGPT Prompt 3]: What Happens if a Student Is Failing in Science, Should I Care?

Yes, as a teacher it’s important to care if a student is failing in science because it can have a significant impact on their academic and future career opportunities. Failing in science can indicate that a student may be struggling with the material and may need additional support or different teaching methods.

It’s important to identify the reasons why the student is failing and address the underlying issues. This could include providing additional support such as tutoring, adjusting teaching methods to better suit the student’s learning style, or providing opportunities for hands-on, inquiry-based learning.

It’s also important to communicate with the student’s parents or guardians and work together to develop a plan to support the student’s success in science. Additionally, creating a positive and encouraging classroom environment can help to motivate the student to improve their performance in science.

In addition, it’s important to recognize that students may fail for a variety of reasons, such as lack of interest, lack of prior knowledge or skills, or other personal issues. Therefore, it’s important to be understanding and supportive and not to blame the student for their failure but instead, help them to overcome the obstacles and achieve their goals.

There are several helpful suggestions in the ChatGPT response that align with the research literature. These include ensuring more opportunities for student-centred learning (Kang & Keinonen, 2017), working with guardians or caregivers to support student learning (Archer et al., 2013; Gorard & See, 2009), and fostering a supportive learning environment (Du & Wong, 2019).

[ChatGPT Prompt 4]: Create a Teaching Unit Using the 5Es Model that Is Challenging for Students Who Have a Strong Understanding of Renewable and Non-renewable Energy Sources at a Year 7 Level. Also Provide Support and Scaffolding for Students Who Are Struggling with the Material

Engage:

- Begin the unit by posing a real-world problem related to energy sources such as “How can we ensure a sustainable future for our community?”
- Show a video or pictures of a community facing energy challenges, such as a power outage during a heatwave, or an oil spill
- Encourage students to brainstorm and share their prior knowledge about energy sources

Explore:

- Divide the class into small groups and assign each group a different renewable or non-renewable energy source to research.
- Provide students with a set of guiding questions to help them focus their research. (e.g. What are the advantages and disadvantages of this energy source? How is it produced? What are the environmental impacts?)
- Give students time to conduct their research and gather information.
- Students can use a variety of resources such as books, articles, videos, and websites to research their topic.
- Provide additional support and resources for students who are struggling with the material, such as providing a more simplified version of the guiding questions or offering extra time for research.

Explain:

- Have each group present their findings to the class, using visual aids and hands-on activities to help explain the concepts
- Encourage class discussion and questions
- Provide additional support and resources for students who are struggling with the material, such as providing a graphic organiser or a template for the presentation

Elaborate:

- Students will work in groups again to create a proposal for a sustainable energy plan for their community.
- Provide a list of criteria that their plan must meet such as cost-effectiveness, environmental impact, and reliability.
- Provide additional support and resources for students who are struggling with the material, such as providing a sample proposal or a step-by-step guide to creating the plan.

Evaluate:

- Have students self-assess their understanding and participation in the unit using a rubric
- Have students peer-assess the presentations and plans of other groups
- Have the teacher provide feedback and grades on the final plan.
- Finalise the unit with a class debate about which energy source is the best for the community

This teaching unit allows students to actively engage in the learning process, explore and research the subject, explain their findings, elaborate a plan, evaluate their understanding and participation and debate about the best energy source for the community. The unit provides support and scaffolding for students who are struggling with the material, while also challenging students who have a strong understanding of the topic to think critically and apply their knowledge to real-world scenarios.

The 5Es model (Bybee, 2002) is a popular teaching model used by schools and influential in pre-service teacher courses globally. One might imagine how ChatGPT could be used by an educator as a catalyst for ideas, to move beyond a blank page, when designing a science unit. Example learning experiences in the output above seem mostly underpinned by a social-constructivist pedagogy (e.g. students sharing prior knowledge, working in groups to create a proposal, classroom debates, and opportunities for students to assess their own and peer presentations). As discussed, context is crucial. I can see it as a helpful starting point for teachers, who should then consider their own teaching contexts (e.g. student needs, school profile, curriculum, access to resources). It is important that educators are critical about the ChatGPT output, deleting parts that are not helpful and building on elements that are. While the unit certainly needs refining, I suspect many educators will find this feature in ChatGPT helpful. Early career teachers and pre-service teachers may find ChatGPT especially helpful for generating teaching resources because they are less likely to have an extensive bank of trusted resources.

[ChatGPT Prompt 5]: Create a Rubric that Year 7 Students Can Use to Self-assess Their Learning About Renewable and Non-renewable Energy Sources (Note I Asked ChatGPT a Follow-Up Formatting Request: Make This Rubric Easier to Copy to Word in a Tidy Format)

This rubric can be used by students to self-assess their learning about renewable and non-renewable energy sources, it can be used to evaluate their understanding of the concepts, research, critical thinking, and participation. Each criteria will have a scale of four levels: exemplary, proficient, developing and emerging. The students can check the level that they think they have reached on each criteria and then have a teacher or peer review and feedback on their self-assessment.

I see some potential for the use of ChatGPT to help with rubric design (Table 1). As you can see from the question I asked ChatGPT, it self-identified rubric criteria (e.g. understanding, research, participation). The rubric categories make a distinction between levels (e.g. exemplary, proficient) but are too vague to identify specific indicators in each category. This might suit some educators. Others might add further detail and use the ChatGPT response as a starting point, in a similar way to the unit design discussed earlier.

[ChatGPT Prompt 6]: Generate a Quiz on the Concept on Renewable and Non-renewable Energy Sources at a Year 7 Level and Include Answer Key

Renewable and Non-Renewable Energy Sources Quiz

What is a renewable energy source?

(a) An energy source that can never be depleted
(b) An energy source that is not replenished by natural processes
(c) An energy source that can be replenished by natural processes
(d) An energy source that is not produced by natural processes

What are the three main types of renewable energy sources?

(a) Solar, wind, and hydro
(b) Coal, oil, and natural gas
(c) Nuclear, geothermal, and biomass
(d) Gasoline, propane, and diesel

What are the advantages of using renewable energy sources?

(a) They are non-polluting, they do not contribute to climate change, and they are sustainable.
(b) They are cheaper, they are easy to find, and they are easy to extract.
(c) They are polluting, they contribute to climate change, and they are not sustainable.

What is a non-renewable energy source?

(a) An energy source that can be replenished by natural processes
(b) An energy source that is not replenished by natural processes
(c) An energy source that is not produced by natural processes
(d) An energy source that can never be depleted

What are the three main types of non-renewable energy sources?

(a) Solar, wind, and hydro
(b) Coal, oil, and natural gas
(c) Nuclear, geothermal, and biomass
(d) Gasoline, propane, and diesel

Answer key:

1. c
2. a
3. a
4. b
5. b

This quiz assesses the student’s understanding of the concept of Renewable and Non-Renewable Energy Sources, it includes multiple-choice questions that test their knowledge of the definition of Renewable and Non-renewable energy sources.

Discussion

RQ1: How Did ChatGPT Answer Questions Related to Science Education?

I asked ChatGPT a series of questions broadly related to science education. It is worth acknowledging that its capacity to emulate human-like responses is nothing short of extraordinary. Broadly speaking, the ChatGPT response commonly aligned with key research themes in the literature. As discussed, the AI output in both prompts 1 and 3 highlighted strong synergies between the ChatGPT response and key themes in the research. Despite this, a major criticism of its current design is the absence of evidence to support its output. As it currently stands, ChatGPT runs the risk of positioning itself as the ultimate epistemic authority, where a single truth is assumed, without a proper grounding in evidence or presented with sufficient qualifications. The response to prompt 2 highlights the problematic absence of evidence, where the AI stated it was important to aim for a balance between teacher-centred and student-centred pedagogies. As stated earlier, where is the recognition of context? Where is the evidence base? Even when ChatGPT is prompted to provide references, it has been reported that… “it continually provides false and misleading references. To make matters worse, it will often provide correct references to papers that do exist and mix these in with incorrect references and references to non-existent papers… The question is, when does it give good answers and when does it give garbage answers?” (Buchanan, 2023, para. 1–3). Science educators, who prioritise evidence-based explanations in their own teaching, may find the current design of ChatGPT problematic.

Beyond its narrow framing of truth, its output is based on… “argumentum ad populum-it considers to be true what is repeated the most” (Darics & Poppel, 2023, para. 4). As discussed, the model is generally skewed towards content that reflects Western perspectives and people. Whose voices are silenced by the algorithm? Who is the author, and what is their bias? These are critical questions for educators, as well as students, to think carefully about.

Although this paper does not extensively probe the ethical implications related to ChatGPT, it may be valuable to discuss the matters listed below with students who are exploring its use or AI in general. One consideration is the potential environmental impact of AI platforms. Although the information is not readily available on ChatGPT, machine learning models require substantial processing power, and data centres hosting cloud networks must be effectively cooled (Boudreau, 2023; Wu et al., 2022). It was also reported that Kenyan workers were paid about $2 per hour to work as content moderators for systems associated with the creation of ChatGPT, sifting through disturbing content like sexual abuse, hate speech, and violence (Perrigo, 2023). The use of large language models such as ChatGPT also raises questions about the potential for copyright infringement when generated text resembles or copies existing content (Karim, 2023). Apart from these broader concerns, there are other considerations for how students use AI. For instance, is it okay for students to reference ChatGPT verbatim in an assessment? Given my previous discussion about its lack of evidence, I have instructed students in my classes not to. Students may first generate essay text in ChatGPT and subsequently insert key references mentioned in class. I do not know how I feel about this; it does not quite sit well with me. Instead of allowing research to drive the argument, it seems more like an essay hack: matching an AI-generated narrative with research to legitimise it.

I am interested in the potential of ChatGPT to be used as (1) a scaffold for learning new concepts (before supporting students to engage with more traditional stimuli, such as an academic journal or textbook) and (2) a support for students who are not strong writers. For instance, I have modelled prompts students can enter to get a broad overview of a concept (e.g. ChatGPT prompt: Imagine I am an undergrad student, make some bullet points about [phenomena]). I suspect that for students who find it difficult to write, it will be helpful in overcoming writer’s block. To demonstrate to students the advantages of using ChatGPT to improve their writing, I have again modelled prompts (e.g. ChatGPT prompt: rewrite: [paste your text here]). It is important for stakeholders to carefully consider how AI impacts the design and completion of assessments, and pre-service teacher programmes more broadly. Prioritising students’ critical thinking, critiquing ethical issues related to the use of AI systems, modelling its responsible use, and being clear about expectations for its use in assessments seem like a good place to start a broader conversation. Beyond large language models, educators need to consider generative AI more broadly (e.g. image, audio, and video). The ability to think critically as an educator is now more important than ever, and it is an essential element of a science teacher’s toolkit. In an age of social media echo chambers, climate change scepticism, and uncertainty about sources of evidence and “truth(s)”, the emergence of generative AI introduces further complexity.

RQ2: What Are Some Ways Educators Could Utilise ChatGPT in Their Science Pedagogy?

In this part of the study, I was interested in exploring how educators might draw on ChatGPT. Its output to prompts 4, 5, and 6 illustrates ways ChatGPT can help generate ideas when designing science units, rubrics, and quizzes. I was particularly impressed by its capacity to generate a science unit underpinned by the 5Es model, even if some of the output seemed a little generic and in need of further refinement. The output embedded the science topic (renewable and non-renewable energy sources) within a pedagogical framework (the 5Es). Again, however, educators need to critically evaluate any resource and adapt it to their specific context. Teachers’ expertise, experience, and understanding of their students remain key to making sound pedagogical decisions. AI does not replace the expertise of the science teacher (yet).

RQ3: How Has ChatGPT Been Utilised in This Study, and What Are My Reflections About its Use as a Research Tool?

As part of my research exploration, I was interested in using ChatGPT as a research tool in the present study. It has been reported that some scientists are already… “using chatbots as research assistants- to help organize their thinking, generate feedback on their work, assist with writing code and summarize research literature” (Nature, 2023, p. 612). The bulk of its use in this research was assistance with editing. There were sentences that I asked ChatGPT to rewrite (ChatGPT prompt: rewrite: [paste sentence]) at different stages of the paper to help with phrasing, flow, and word choice. Researchers who tend to write excessively long or complicated sentences could use ChatGPT to clarify their message. Certain sentences, however, had better phrasing prior to being entered into ChatGPT, while others were improved after a rewrite by the AI. When composing this paper, I kept a browser window open, experimenting with the possibilities of making my research narrative clearer.

There is presently a debate among journal editors, researchers, and publishers regarding the role of such AI tools in published literature and whether it is acceptable to attribute authorship to the bot (Stokel-Walker, 2023). Nature, along with all Springer Nature journals, has added two key principles to its existing guidelines for authors in response to the rise of ChatGPT. The first principle is that no large language model will be recognised as an accredited author, because attribution carries accountability for the work and AI tools cannot take such responsibility (Nature, 2023). “If ChatGPT deserves authorship, Microsoft Word deserves it, too, for providing us with the platform to organise and write documents more efficiently…. Excel, R, or Python deserve to be co-authors for calculating statistics or analysing data for a quantitative scientific publication” (Karim, 2023, para. 5). The second principle added to Nature’s author guidelines is that researchers need to disclose their use of large language models in the methods or acknowledgements sections (Nature, 2023). Similar to how journals require statements about data availability or ethical research, authors may soon have the option to disclose their use of large language models or AI during the journal submission process. I suspect formatting guidelines about the use of ChatGPT input and output will become clearer soon as well. The addition of AI to the research process commonly means new rules and processes for investigators. Ultimately, transparency and clearer guidelines about the use of AI platforms in research are essential for advancing scientific knowledge.

Conclusion

The emergence of generative AI is already having far-reaching implications for science educators. It seems like we are in the early stages of a seismic shift. This article is in no way intended to be a comprehensive discussion of its use; it is merely an exploratory study that hopefully acts as a catalyst for a broader conversation: how do generative AI tools, such as ChatGPT, fit into our research and teaching pedagogy? How do we feel about them? What feels right, and what does not? How will generative AI platforms evolve? And what are the potential future implications for science educators? At this stage, I have more questions than answers.

Availability of Data and Materials

All raw data are published in the paper.

References

Archer, L., DeWitt, J., Osborne, J., Dillon, J., Willis, B., & Wong, B. (2013). ‘Not girly, not sexy, not glamorous’: Primary school girls’ and parents’ constructions of science aspirations. Pedagogy, Culture & Society, 21(1), 171–194. https://doi.org/10.1080/14681366.2012.748676

Boudreau, C. (2023). I asked ChatGPT about its carbon footprint and it didn’t have a real answer. Insider. https://www.businessinsider.com/chatgpt-openai-carbon-footprint-ai-climate-crisis-2023-2

Buchanan, J. (2023). ChatGPT cites economics papers that do not exist. Economist Writing Every Day. https://economistwritingeveryday.com/2023/01/21/chatgpt-cites-economics-papers-that-do-not-exist/

Bybee, R. W. (2002). Learning science and the science of learning: Science educators’ essay collection. NSTA Press.

Celik, I. (2023). Towards Intelligent-TPACK: An empirical study on teachers’ professional knowledge to ethically integrate artificial intelligence (AI)-based tools into education. Computers in Human Behavior, 138, 107468. https://doi.org/10.1016/j.chb.2022.107468

Cope, B., Kalantzis, M., & Searsmith, D. (2021). Artificial intelligence for education: Knowledge and its assessment in AI-enabled learning ecologies. Educational Philosophy and Theory, 53(12), 1229–1245. https://doi.org/10.1080/00131857.2020.1728732

Darics, E., & Poppel, L. (2023). Debate: ChatGPT offers unseen opportunities to sharpen students’ critical skills. The Conversation. https://theconversation.com/debate-chatgpt-offers-unseen-opportunities-to-sharpen-students-critical-skills-199264

Du, X., & Wong, B. (2019). Science career aspiration and science capital in China and UK: A comparative study using PISA data. International Journal of Science Education, 1–20. https://doi.org/10.1080/09500693.2019.1662135

Gleason, N. (2022). ChatGPT and the rise of AI writers: How should higher education respond? Times Higher Education. https://www.timeshighereducation.com/campus/chatgpt-and-rise-ai-writers-how-should-higher-education-respond

Gorard, S., & See, B. (2009). The impact of socio-economic status on participation and attainment in science. Studies in Science Education, 45(1), 93–129. https://doi.org/10.1080/03057260802681821

Hamilton, M. L., Smith, L., & Worthington, K. (2009). Fitting the methodology with the research: An exploration of narrative, self-study and auto-ethnography. Studying Teacher Education, 4(1), 17–28. https://doi.org/10.1080/17425960801976321

Hackling, M. W., & Prain, V. (2005). Primary Connections – Stage 2 Trial: Research Report. A Research Report prepared for the Australian Academy of Science. http://www.qualityteaching.dest.gov.au/Content/

Kang, J., & Keinonen, T. (2017). The effect of student-centered approaches on students’ interest and achievement in science: Relevant topic-based, open and guided inquiry-based, and discussion-based approaches. Research in Science Education, 48(4), 865–885. https://doi.org/10.1007/s11165-016-9590-2

Karim, R. (2023). ChatGPT: Old AI problems in a new guise, new problems in disguise. Monash Lens. https://lens.monash.edu/@politics-society/2023/02/13/1385448/chatgpt-old-ai-problems-in-a-new-guise-new-problems-in-disguise

Kirschner, P. A., Sweller, J., & Clark, R. E. (2006). Why minimal guidance during instruction does not work: An analysis of the failure of constructivist, discovery, problem-based, experiential, and inquiry-based teaching. Educational Psychologist, 41(2), 75–86. https://doi.org/10.1207/s15326985ep4102_1

Lameras, P., & Arnab, S. (2021). Power to the teachers: An exploratory review on artificial intelligence in education. Information, 13(1), 14. https://doi.org/10.3390/info13010014

Nature. (2023). Tools such as ChatGPT threaten transparent science; here are our ground rules for their use. https://www.nature.com/articles/d41586-023-00191-1

OpenAI. (2023a). ChatGPT: Optimizing language models for dialogue. https://openai.com/blog/chatgpt/

OpenAI. (2023b). Educator considerations for ChatGPT. https://platform.openai.com/docs/chatgpt-education

Pavlik, J. V. (2023). Collaborating with ChatGPT: Considering the implications of generative artificial intelligence for journalism and media education. Journalism & Mass Communication Educator, 107769582211495. https://doi.org/10.1177/10776958221149577

Perrigo, B. (2023). Exclusive: The $2 per hour workers who made ChatGPT safer. Time. https://time.com/6247678/openai-chatgpt-kenya-workers/

Rannikmäe, M., Holbrook, J., & Soobard, R. (2020). Social constructivism—Jerome Bruner. In B. Akpan & T. J. Kennedy (Eds.), Science education in theory and practice: An introductory guide to learning theory (pp. 259–275). Springer Nature.

Scharth, M. (2022). The ChatGPT chatbot is blowing people away with its writing skills. The University of Sydney. https://www.sydney.edu.au/news-opinion/news/2022/12/08/the-chatgpt-chatbot-is-blowing-people-away-with-its-writing-skil.html

Shubhendu, S., & Vijay, J. F. (2013). Applicability of artificial intelligence in different fields of life. https://www.semanticscholar.org/paper/Applicability-of-Artificial-Intelligence-in-Fields-Shubhendu-Vijay/2480a71ef5e5a2b1f4a9217a0432c0c974c6c28c

Stockman, C., & Nottingham, E. (2022). Surveillance capitalism in schools: What’s the problem? Digital Culture & Education, 14(1), 1–15.

Stokel-Walker, C. (2023). ChatGPT listed as author on research papers: Many scientists disapprove. Nature. https://www.nature.com/articles/d41586-023-00107-z

Wang, P. (2019). On defining artificial intelligence. Journal of Artificial General Intelligence, 11(2). https://doi.org/10.2478/jagi-2019-0002

Wu, C. J., Raghavendra, R., Gupta, U., Acun, B., Ardalani, N., Maeng, K., & Hazelwood, K. (2022). Sustainable AI: Environmental implications, challenges and opportunities. Proceedings of Machine Learning and Systems, 4, 795–813.

Yang, W. (2022). Artificial intelligence education for young children: Why, what, and how in curriculum design and implementation. Computers and Education: Artificial Intelligence, 3, 100061. https://doi.org/10.1016/j.caeai.2022.100061

Zhai, X. (2022). ChatGPT User Experience: Implications for Education. https://doi.org/10.2139/ssrn.4312418

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions.

Ethics declarations

Ethical Approval

Not applicable.

Consent to Participate

Informed consent was obtained from all individual participants included in the study.

Consent for Publication

The participant has consented to the submission of the case report to the journal.

Competing Interests

The author declares no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cooper, G. Examining Science Education in ChatGPT: An Exploratory Study of Generative Artificial Intelligence. J Sci Educ Technol 32, 444–452 (2023). https://doi.org/10.1007/s10956-023-10039-y

DOI: https://doi.org/10.1007/s10956-023-10039-y