Abstract

We solve the Random Euclidean Matching problem with exponent 2 for the Gaussian distribution defined on the plane. Previous works by Ledoux and Talagrand determined the leading behavior of the average cost up to a multiplicative constant. We explicitly determine the constant, showing that the average cost is proportional to \((\log N)^2\), where N is the number of points. Our approach relies on a geometric decomposition that allows an explicit computation of the constant. Our results illustrate the potential for exact solutions of random matching problems for many distributions defined on unbounded domains in the plane.


1 Introduction

Here we consider the Random Euclidean Matching problem with exponent 2 for some distributions with unbounded support in the plane. The case of unbounded distributions is important from both a mathematical and an applied point of view.

In particular, we consider the Gaussian and the Maxwellian cases, and we give some hints on the case of other unbounded exponentially decaying densities.

Random Euclidean Matching is a combinatorial optimization problem in which N red points and N blue points extracted independently from the same probability distribution are paired, a red point with a single blue point and vice versa, in order to minimize the sum of the distances (the distance raised to a certain power) between the points. The problem is equivalent to calculating the Wasserstein distance between the two empirical measures associated with the points or, which is the same, finding the optimal transport between the two empirical measures.

Apart from the great mathematical interest in the subject, in recent years applications of Matching and Optimal Transport in statistical learning, and more generally in applied mathematics, have increased enormously. In particular we refer to [12, 18, 25] and references therein for applications of Optimal Transport to statistical learning, computer graphics, image processing, shape analysis, pattern recognition, particle systems and more. For general reviews on Optimal Transport we refer to [30] and, with a more applied aim, to [26], while for a general review of results, tools and methods in probability, with applications to the matching problem, we refer the reader to [28].

Turning to the mathematical problem, there has recently been considerable activity on this topic, prompted by sharp progress that started from a conjecture by Caracciolo et al., which shows how the problem can be rephrased in terms of PDEs.

Let \(\mu \) be a probability distribution defined on \(\Lambda \subset {\mathbb {R}}^2.\) Let us consider two sets \(X^N =\{X_i\}_{i=1}^N\) and \(Y^N = \{Y_i\}_{i=1}^N\) of N points independently sampled from the distribution \(\mu \). The Euclidean Matching problem with exponent 2 consists in finding the matching \(i\rightarrow \pi _i\), i.e. the permutation \(\pi \) of \(\{1,\dots , N\}\) which minimizes the sum of the squares of the distances between \(X_i\) and \(Y_{\pi _i}\), that is

The cost defined above can be seen, up to a constant factor N, as the square of the 2-Wasserstein distance between two probability measures. In fact, the p-Wasserstein distance \(W_p(\mu ,\nu )\), with exponent \(p\ge 1\), between two probability measures \(\mu \) and \(\nu \) is defined by

where the infimum is taken on all the joint probability distributions \(dJ_{\mu ,\nu }(x,y)\) with marginals with respect to dx and dy given by \(\mu \) and \(\nu \), respectively. Defining the empirical measures

it is possible to show that

(see for instance [13]). In the sequel we will shorten \(C_N: = C_N(X^N,Y^N)\).
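As a small illustration of the definitions above, the following sketch computes the matching cost by brute force over all permutations and derives from it the squared 2-Wasserstein distance between the two empirical measures, using the relation \(C_N=N\,W_2^2(\mu ^N,\nu ^N)\). The function names and the sample points are illustrative assumptions, not taken from the paper, and the exhaustive search is only feasible for very small N.

```python
from itertools import permutations


def sq_dist(p, q):
    """Squared Euclidean distance between two points of the plane."""
    return (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2


def matching_cost(xs, ys):
    """Brute-force C_N: minimum over permutations of the total squared distance.

    Illustrative only: the search visits all N! permutations.
    """
    n = len(xs)
    return min(
        sum(sq_dist(xs[i], ys[pi[i]]) for i in range(n))
        for pi in permutations(range(n))
    )


# Hypothetical sample: three red and three blue points.
red = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
blue = [(0.0, 1.0), (0.0, 0.0), (1.0, 1.0)]

cost = matching_cost(red, blue)   # C_N
w2_squared = cost / len(red)      # W_2^2 between the empirical measures
```

In this example the blue point (1, 1) is at squared distance at least 1 from every red point, and the other two blue points coincide with red points, so the optimal cost is 1.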

The first general result on Random Euclidean Matching was obtained by Ajtai et al. by combinatorial arguments in [1]. In particular, in the case of dimension 2 and exponent 2, assuming that \(X_i\) and \(Y_i\) are independently sampled with uniform density on the unit square Q, they prove that \(\mathbb {E}_\sigma [W_2^2(\mu ^N,\nu ^N)] \) behaves like \(\frac{\log N}{N}\), where \(\mathbb {E}_\sigma \) denotes the expected value with respect to the uniform distribution \(d\sigma (x) = dx\) of the points \(\{X_i\}\) and \(\{Y_i\}\).

In the challenging paper [15], Caracciolo et al. conjecture that

where we say that \(f\sim g\) if \(\lim _{N\rightarrow +\infty } f(N)/g(N) =1\). In terms of \(W_2^2\) the conjecture is equivalent to

Furthermore, in [15] it is conjectured that asymptotically the expected value of \(W_2^2(\mu ^N,\sigma )\) between the empirical measure \(\mu ^N\) of the points \(X^N\) and the uniform probability measure \(\sigma \) on Q is given by

The above conjectures were proved by Ambrosio et al. [4]. In [2] more precise estimates are given and it is proved that the result can be extended to the case where particles are sampled from the uniform measure on a two-dimensional Riemannian compact manifold. In [3] it is shown that the optimal transport map for \(W_2(\mu ^N,\sigma )\) can be approximated as conjectured in [15].

We notice that, by simple scaling arguments, if we consider a square or a manifold \(\Lambda \) of measure \(|\Lambda |\), the cost has to be multiplied by \(|\Lambda |\).
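The scaling argument can be spelled out as follows; this is a sketch in which \(\lambda >0\) is an auxiliary dilation factor introduced here for illustration. The cost is quadratic in the distances, so dilating all points by \(\lambda \) gives

\[
C_N(\lambda X^N,\lambda Y^N)=\min _{\pi }\sum _{i=1}^N|\lambda X_i-\lambda Y_{\pi _i}|^2=\lambda ^2\,C_N(X^N,Y^N).
\]

For a square \(\Lambda \) of side \(\lambda \) one has \(|\Lambda |=\lambda ^2\), so a leading term \(\frac{1}{2\pi }\log N\) on the unit square becomes \(\frac{|\Lambda |}{2\pi }\log N\) on \(\Lambda \).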

Then, in [8] it has been conjectured that, if the points are sampled from a smooth and strictly positive density \(\rho \) in a regular set \(\Lambda ,\) then the result is the same, i.e. the leading term of the expected value of the cost is \( \frac{|\Lambda |}{2\pi }\log N.\) The conjecture is based on a linearization of the Monge–Ampère equation close to a non-uniform density, and a proof of the estimate from above is given when \(\Lambda \) is a square. The conjecture has been proved by Ambrosio et al. [5]. In particular, they generalize the result to Hölder continuous positive densities in bounded regular sets and in Riemannian manifolds.

Summarizing, if the density \(\sigma =\rho dx ,\) is supported in a bounded regular set \(\Lambda \) where \(\rho \) is Hölder continuous, and if there exist constants a and b such that \(0< a<\rho <b,\) then

In [9], in the case of constant densities, the correction to the leading behavior has been studied. In particular it is conjectured that the correction is given in terms of the regularized trace of the inverse of the Laplace operator in the set. This conjecture seems very hard to prove. A related question is whether in a generic domain \(\mathbb {E}_\sigma [C_N] \sim \frac{|\Lambda |}{2\pi }\log N +O(1).\) Recently Goldman et al. [21] proved that \(\mathbb {E}_\sigma [C_N] \sim \frac{|\Lambda |}{2\pi }\log N +O(\log \log N).\)

1.1 Main Results

Interestingly (1.5) implies that the limiting average cost is not continuous in the space of densities, even in \(L_{\infty }\) norm.

Indeed, if we consider a sequence of smooth strictly positive densities \(\rho _k\) on a disk of radius 2, converging, as \(k\rightarrow \infty \) to \(\rho =\frac{1}{\pi }1_{|x|<1},\) that is the uniform density on the disk of radius 1, we get that for any k : \(\mathbb {E}_{\rho _k}[C_N] \sim 2\log N,\) while for the limiting density \(\rho \) we get \(\mathbb {E}_\rho [C_N] \sim \frac{1}{2}\log N.\)
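The two constants follow directly from the leading term \(\frac{|\Lambda |}{2\pi }\log N\) recalled above, applied to the support of each density:

\[
\frac{|\{|x|<2\}|}{2\pi }=\frac{4\pi }{2\pi }=2,\qquad \frac{|\{|x|<1\}|}{2\pi }=\frac{\pi }{2\pi }=\frac{1}{2}.
\]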

It is therefore natural to ask whether it is possible to define sequences of densities \(\rho _N\), positive on the whole disk of radius 2, that converge to the density \(\rho =\frac{1}{\pi }1_{|x|<1},\) and such that \(\mathbb {E}_{\rho _N}[C_N] \sim c\log N,\) where \(c\in (\frac{1}{2},2).\) The answer is yes.

For instance, consider, in the disk of radius 2, the sequence of N-dependent “multiscaling” densities

where \(0< \alpha <1.\) That is, on average, there are \(N-N^\alpha \) points in the disk of radius 1 and \(N^\alpha \) points in the surrounding annulus (Fig. 1).

In this case if \(d\sigma (x)=\rho _N(x)dx\), as we prove in Sect. 3, the average of the cost is given by
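As a consistency check, one can evaluate on this example the general formula described in Sect. 3, where the leading term of the bipartite cost is \(\frac{\log N}{2\pi }\sum _l\alpha _l|C_l|\). The identification \(C_0=\{|x|<1\}\) with exponent 1 and \(C_1=\{1\le |x|<2\}\) with exponent \(\alpha \) is assumed here, so the computation below is a sketch rather than the statement of the result:

\[
\frac{1}{2\pi }\big (|C_0|+\alpha |C_1|\big )\log N=\frac{\pi +3\pi \alpha }{2\pi }\log N=\frac{1+3\alpha }{2}\log N .
\]

As \(\alpha \) ranges over (0, 1), the constant \(\frac{1+3\alpha }{2}\) ranges over \((\frac{1}{2},2)\), in agreement with the interval discussed above.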

Here we consider three problems.

The first is a generalization of the example seen above to any finite number of circular annuli. As we shall see this can be considered as a toy model for the Gaussian case.

Under suitable monotonicity conditions we will prove, see Theorem 3.1, that the average cost is given by

The second case is the case of the Gaussian distribution, that is \(d\mu (x)=\rho (x)dx\) and

In this case Talagrand proved [29] that the average cost, for large N satisfies

An estimate from above proportional to \((\log N)^2\) was previously proved by Ledoux in [23], see also [22] where an estimate from below is proved using PDE techniques as in [4].

In this case we prove, see Theorem 4.1, that the average cost is

The third case is when the density is given by

and again \(d\mu (x)=\rho (x)dx\). This density, interpreting \(x_2\) as a velocity, is simply the Maxwellian distribution for a gas in the box (the segment) [0, 1].

In this case we prove, see Theorem 5.1, that the limiting average cost is

As we shall see, the problem of the Gaussian and the problem of the Maxwellian can be considered as limits of a suitable sequence of multiscaling densities. In particular, in both cases we obtain that

For other radially symmetric exponentially decaying densities, that is, for densities proportional to \(e^{-|x|^\alpha }\), Talagrand showed that the leading behavior is proportional to \((\log N)^{1+2/\alpha }.\) We think that it would be possible to modify the proofs given here to deal with these cases and to obtain the exact leading behavior there as well (i.e. determining also the multiplicative constant). More precisely, by (1.7) we would get

Note that for \(\alpha = 2\) we find the constant \(\frac{1}{4}\) instead of \(\frac{1}{2}\) because here we are considering the Gaussian \(e^{-|x|^2}\) and not \(e^{-|x|^2/2}.\)

Proving this is beyond the scope of the present paper, and the technique would be slightly different, because here we make use of the fact that the Gaussian is a product measure.

As for probability distributions that decay as a power of the distance, for instance \(\frac{1}{1+|x|^\alpha },\) we do not dare to make conjectures. In that case the slow decay of the distribution does not allow us to apply techniques similar to those used in this work.

1.2 Further Questions and Literature

Now we briefly review what is known, to the best of our knowledge, on Random Euclidean Matching in dimension \(d\ne 2,\) with particular attention to the case of the constant distribution in the unit cube and of the Gaussian distribution.

In dimension 1 the Random Euclidean Matching problem is almost completely characterized, for any \(p\ge 1.\) This is due to the fact that the best matching between two sets of points on a line is monotone, see for instance [11, 14, 16], where a general discussion of the one-dimensional case, including non-constant densities, is given. In particular, for a segment of length 1 and for \(p=2:\) \(\mathbb {E}[C_N]\rightarrow 1/3\) as \(N\rightarrow \infty .\) For the normal distribution in dimension 1, in [10] it is proved that \(\mathbb {E}[C_N]\sim \log \log N,\) while in [11] estimates from below and from above proportional to \(\log \log N\) were given.
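The monotonicity of the optimal matching on the line can be illustrated with a minimal sketch for \(p=2\): pairing the sorted red points with the sorted blue points gives the same cost as an exhaustive search over all permutations. The function names, the seed and the sample size are illustrative assumptions.

```python
import random
from itertools import permutations


def sorted_matching_cost(xs, ys):
    """Cost of the monotone matching: i-th smallest x paired with i-th smallest y."""
    return sum((x - y) ** 2 for x, y in zip(sorted(xs), sorted(ys)))


def brute_force_cost(xs, ys):
    """Minimum of the quadratic cost over all permutations (tiny N only)."""
    n = len(xs)
    return min(
        sum((xs[i] - ys[pi[i]]) ** 2 for i in range(n))
        for pi in permutations(range(n))
    )


rng = random.Random(0)                    # fixed seed, for reproducibility
xs = [rng.random() for _ in range(6)]     # red points on the segment [0, 1]
ys = [rng.random() for _ in range(6)]     # blue points on the segment [0, 1]

monotone = sorted_matching_cost(xs, ys)
exhaustive = brute_force_cost(xs, ys)
```

For uniform points on the unit segment the order statistics have Beta distributions, which gives \(\mathbb {E}[C_N]=\frac{N}{3(N+1)}\) for the monotone matching, consistent with the limit 1/3 quoted above.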

In dimension \(d\ge 3,\) for the constant density in a cube, it has been proved that \(\mathbb {E}[C_N]\) behaves as \(N^{1-p/d},\) for any \(p\ge 1\) (see [17, 23, 27]). In [20] the existence of the limit of \(\frac{\mathbb {E}[C_N]}{N^{1-p/d}}\) has been proved for any \(p\ge 1\).

In dimension \(d\ge 3\), the case of unbounded densities, and in particular the Gaussian case, has been widely studied, see [6, 17, 24, 29]. In particular, in [6], it has been proved that \(\mathbb {E}[C_N]\) behaves as \(N^{1-p/d},\) for any \(0< p < d/2,\) and an explicit expression for the constant multiplying \(N^{1-p/d}\) is conjectured, while in [24], it has been proved that \(\mathbb {E}[C_N]\) behaves as \(N^{1-p/d},\) for any \(1\le p < d.\) General results on Random Euclidean Matching, including the case \(p > d\) and the case of unbounded densities, are given in [19].

The structure of the paper is the following.

In Sect. 2 we give some general results on Wasserstein distance that we use in the sequel.

In Sect. 3 we consider the case of multiscaling densities, in Sect. 4 the case of the Gaussian density and in Sect. 5 the case of the Maxwellian density.

2 Useful Results and Notations

In this section, we recall some preliminary results that we will need later.

The following Lemma links the cost of the semidiscrete problem to the cost of the bipartite one.

Lemma 2.1

([4], Proposition 4.8) Let \(\rho \) be any probability density on \(\mathbb {R}^2\) and let \(X_1,\dots ,X_N\) and \(Y_1,\dots ,Y_N\) be independent random variables in \(\mathbb {R}^2\) with common distribution \(\rho \). Then

We will also use the following property for the upper bounds of the leading terms, which is a consequence of the Benamou–Brenier formula.

Theorem 2.1

([7], Benamou–Brenier formula) If \(\mu \) and \(\nu \) are probability measures on \(\Omega \subseteq \mathbb {R}^d\), then

The result we will use is the following.

Theorem 2.2

([2], Corollary 4.4) If \(\mu \) and \(\nu \) are probability densities on \(\Omega \subseteq \mathbb {R}^d\) and \(\phi \) is a weak solution of \(\Delta \phi =\mu -\nu \) with Neumann boundary conditions, then

Remark 2.1

Above \(\Omega \) is a regular convex domain. Otherwise, the distance between the points is understood as the geodesic distance and not the Euclidean distance.

We will also use a result by Talagrand, that relates the Wasserstein distance with the relative entropy, that is the following.

Theorem 2.3

([27], Talagrand inequality) Let \(\rho \) be the Gaussian density in \(\mathbb {R}^d\), that is \(\rho (x)=\frac{1}{\sqrt{2\pi }^d}e^{-\frac{|x|^2}{2}}\). If \(\mu \) is another density on \(\mathbb {R}^d\), then we have
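For reference, the transport-entropy inequality of Talagrand for the standard Gaussian is usually written as below; the way the relative entropy is written is an assumption of this sketch, since the display is not reproduced here.

\[
W_2^2(\mu ,\rho )\le 2\int _{\mathbb {R}^d}\mu (x)\log \frac{\mu (x)}{\rho (x)}\,dx .
\]

A case that is easy to evaluate is the restriction of \(\rho \) to a measurable set E with \(\rho (E)>0\): if \(\mu =\frac{\rho \mathbbm {1}_E}{\rho (E)}\), then \(\log \frac{\mu }{\rho }=-\log \rho (E)\) on E, and the inequality reads

\[
W_2^2\Big (\frac{\rho \mathbbm {1}_E}{\rho (E)},\rho \Big )\le 2\log \frac{1}{\rho (E)} .
\]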

Then, when proving the convergence, while for the upper bound we will use the canonical Wasserstein distance, for the lower bound we will use, as in [5], a distance between non-negative measures introduced in [18], that is

In the following Theorem we denote by \(W_{2,*}\) either the canonical \(W_2\) or its boundary version \(Wb_2\), and we collect some known results that we will need later.

Theorem 2.4

([5], Theorems 1.1 & 1.2, Propositions 3.1 & 3.2) Let \(\Omega \subseteq \mathbb {R}^2\) be a bounded connected domain with Lipschitz boundary and let \(\rho \) be a Hölder continuous probability density on \(\Omega \) uniformly strictly positive and bounded from above. Given iid random variables \(\{X_i\}_{i=1}^N\) and \(\{Y_i\}_{i=1}^M\) with common distribution \(\rho \), we have

Finally, we specify that hereafter we will denote the expected value conditioned on a random variable X as \(\mathbb {E}_{X}[\cdot ]:=\mathbb {E}[\cdot |X]\).

In the sequel, we denote with the same symbol a probability measure absolutely continuous with respect to Lebesgue measure and its density.

3 A Piecewise Multiscaling Density

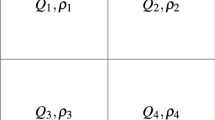

In this section, we examine the transportation cost of a random matching problem when \(X_1,\dots ,X_N\) and \(Y_1,\dots ,Y_N\) are independent random variables in the disk \(C=\{|x|\le S\}\) with common distribution \(\rho ^L_N\), defined by

where we have chosen the exponents \(\alpha _l\) strictly positive and decreasing with the index l, \(\alpha _0:=1,\) and where the annuli \(C_l\) are defined by

This density is piecewise constant on the annuli \(C_l\), it depends on the number of particles we are considering, and it yields, in expectation, \(N^{\alpha _l}\) particles (or \(N-\sum _{l=1}^{L-1}N^{\alpha _l}\) if \(l=0\)) in the annulus \(C_l\). Here we prove the following theorem.
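The description above determines the density once the annuli are fixed. The sketch below computes the expected particle counts and the constant value of the density on each annulus; it assumes that \(C_l=\{r_l\le |x|<r_{l+1}\}\) for an increasing list of radii with \(r_0=0\) and \(r_L=S\), which is a hypothetical parametrization, and the function name is illustrative.

```python
import math


def multiscaling_density(n_points, alphas, radii):
    """Expected counts and density values on the annuli C_0, ..., C_{L-1}.

    alphas[l] is the exponent of annulus l (alphas[0] == 1, then decreasing).
    radii has L + 1 increasing entries; annulus l is {radii[l] <= |x| < radii[l+1]}
    (an assumed parametrization of the annuli).
    """
    # Expected number of particles: N^alpha_l for l >= 1, the remainder for l = 0.
    counts = [n_points ** a for a in alphas[1:]]
    counts.insert(0, n_points - sum(counts))
    areas = [
        math.pi * (radii[l + 1] ** 2 - radii[l] ** 2) for l in range(len(alphas))
    ]
    # Constant value of the probability density on each annulus.
    density = [c / (n_points * area) for c, area in zip(counts, areas)]
    return counts, areas, density


counts, areas, density = multiscaling_density(10_000, [1.0, 0.5], [0.0, 1.0, 2.0])
total_mass = sum(d * a for d, a in zip(density, areas))
```

With N = 10000 and exponents (1, 1/2), the outer annulus carries about 100 particles on average and the inner disk the remaining 9900, and the density integrates to 1.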

Theorem 3.1

If \(X_1,\dots ,X_N\) and \(Y_1,\dots ,Y_N\) are iid random variables with common distribution \(\rho ^L_N\), it holds

Let us notice that, even though \(\rho ^L_N\) is supported on the whole disk C, the asymptotic cost of the problem (except for a factor \(2\pi \) or \(4\pi \)) is multiplied by \(\sum _{l=0}^{L-1}\alpha _l|C_l|\), and

therefore the cost is strictly smaller than the cost of the problem with particles distributed with a density bounded from below by a positive constant, as proved in [5]. This happens because \(\rho ^L_N\) is not bounded from below: outside the disk \(C_0\) it tends to zero everywhere as N grows (Fig. 2).

We can also notice that

while the total cost is strictly larger than the cost of the problem when the particles are distributed with measure \(\mu _0\).

Finally, let us notice that the second statement of Theorem 3.1 is equivalent to

First we prove the following Lemma, similar to propositions proved in [5, 8]. It allows us to compute the total cost as the sum of the costs of the problems on the annuli. The argument used to estimate the Wasserstein distance between two measures that are not bounded from below is that, when we use the Benamou–Brenier formula, we find a divergent term due to a vanishing denominator. This term in the annulus \(C_l\) is balanced by the numerator, which involves the fluctuations of the particles in \(C_l\) and in \(\cup _{l=0}^{L-1}C_l\), whose order is the same thanks to the choice of the exponents \(\alpha _l\).

Lemma 3.1

There exists a constant \(c>0\) such that if \(X_1,\dots ,X_N\) and \(Y_1,\dots ,Y_N\) are independent random variables in C with common distribution \(\rho ^L_N\), \(N_l\) and \(M_l\) are respectively the number of points \(X_i\) and \(Y_i\) in \(C_l\), i.e. \(N_l:=\sum _{i=1}^N\mathbbm {1}(X_i\in C_l)\) and \(M_l:=\sum _{i=1}^N\mathbbm {1}(Y_i\in C_l)\), \(\theta \) small enough and

it holds

Proof

Since the proofs are very similar, we only focus on (3.2) and then we explain how to obtain (3.3) and (3.4).

Let \(f_N\) be the weak solution of

then by Theorem 2.2 we get

As explained before, now we are going to prove that, when we take the expectation, the divergent term due to the vanishing density is balanced by the small fluctuations of the particles.

We can find \(f_N\) depending only on |x|, i.e. in the form

Then, if we define

and we observe that the factor \(2\pi s\) is exactly what we need to write the integral in polar coordinates, we have

where in the last inequality we have used that if \(l=0\) the first summand disappears since \(P_l=N\rho ^L_N(B_l)=0\) almost everywhere and therefore the function \(2\pi r\) in the denominator is multiplied by \(r^4\), thus it is integrable. Moreover, thanks to the choice of \(\{\alpha _l\}_{l=0}^{L-1}\) we have

where c depends on L. Therefore

while

Finally from (3.5), (3.6) and (3.7) we get

Then, the proof of (3.3) is exactly the same as (3.2).

To obtain (3.4) it is sufficient to observe that in \(A_{\theta }\)

and then we use (3.2) and (3.3). \(\square \)

Now we can prove Theorem 3.1.

Thanks to Lemma 2.1 it is sufficient to prove the upper bound for semidiscrete matching, in Proposition 3.1, and the lower bound for bipartite matching, in Proposition 3.2.

The structure of the proofs is the same as Theorem 1 in [8] and Theorems 1.1 and 1.2 in [5]. First, we use the fact that the total transportation cost on the disk C is estimated by the sum of the costs on the annuli \(C_l\). This is possible thanks to Lemma 3.1. Then we use the fact that the problem on the annulus \(C_l\) has been solved in [5] (it is a particular case of Theorem 1.1 and 1.2) because the probability density \(\rho ^L_N\) is piecewise constant on the annuli \(C_l\) (and, thus, piecewise bounded from below). Therefore, if \(N_l\) is the number of particles in \(C_l\), each annulus contributes to the total cost with a term approximated by

except for a factor \(4\pi \) or \(2\pi \) in semidiscrete and bipartite matching respectively. The total cost is a convex combination of all these terms, so the main contribution (omitting the factors \(4\pi \) and \(2\pi \)) turns out to be

Proposition 3.1

Let \(X_1,\dots ,X_N\) be independent random variables in C with common distribution \(\rho ^L_N\). Then

Proof

Let \(N_l\) be the number of points \(X_i\) in \(C_l\), i.e. \(N_l:=\sum _{i=1}^N\mathbbm {1}(X_i\in C_l)\). Then, we define

Hence, thanks to triangle inequality and convexity of quadratic Wasserstein distance, if \(\beta >0\)

and, since \(\beta >0\) is arbitrary, combining (3.8) with (3.2) of Lemma 3.1 we get

Let now \(A_l\) be defined as

We can compute the expected value in (3.9) separately in the sets \(A_l^c\) and \(A_l\).

In \(A_l^c\) we have the bound

while

Using (3.10) and (3.11) we get

Therefore we can limit ourselves to consider the expected value in \(A_l\): we use the properties of the conditioned expected value and (2.2) of Theorem 2.4.

Indeed, since \(\min _{l=0,\dots ,L-1}N\rho ^L_N(C_l)\xrightarrow [N\rightarrow \infty ]{}\infty \), there exists a function \(\omega (N)\xrightarrow [N\rightarrow \infty ]{}0\) such that

Finally, we use the concavity of the function \(\log x\) to observe that

Thus, using (3.12), (3.13) and (3.14) we obtain

and combining this with (3.9) we obtain the thesis. \(\square \)

Proposition 3.2

Let \(X_1,\dots ,X_N\) and \(Y_1,\dots ,Y_N\) be independent random variables in C with common distribution \(\rho ^L_N\). Then it holds

Proof

(Sketch) Since the proof is quite similar to the previous one, we only explain the differences.

First, we can restrict to a special set

where \(\theta =\frac{1}{\sqrt{\log N}}\). Its complement has small probability. Then, if \(N_l\) and \(M_l\) are respectively the number of particles \(X_i\) and \(Y_i\) in \(C_l\), we rename

Using the superadditivity of \(Wb_2^2\), we obtain

The main contribution is given by the term in (3.15), and as in the previous Theorem it can be estimated using (2.3) of Theorem 2.4.

To prove that the term in (3.16) is negligible, it is sufficient to recall Lemmas 6.2 and 3.1.

Letting \(\beta \rightarrow 0\), we get the thesis. \(\square \)

Now we have proved Theorem 3.1. Let us observe that we can choose the exponents \(\alpha _l\) and the annuli \(C_l\) in an interesting way. If

and

we obtain

that is a Riemann sum for the function \(f(x)=\pi S^2x\). Therefore, if we decrease \(\max _{l=0,\dots ,L-1}\{\alpha _l-\alpha _{l+1}\}\), in the limit we obtain
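The limit can be checked numerically. The sketch below assumes that the annuli are chosen so that \(|C_l|=\pi S^2(\alpha _l-\alpha _{l+1})\) with \(\alpha _L=0\), which is one choice that makes \(\sum _l\alpha _l|C_l|\) a Riemann sum for \(f(x)=\pi S^2x\) on [0, 1]; the function names and the equispaced exponents are illustrative.

```python
def uniform_exponents(num_annuli):
    """Equispaced exponents alpha_0 = 1 > alpha_1 > ... > alpha_L = 0."""
    return [1 - l / num_annuli for l in range(num_annuli + 1)]


def normalized_riemann_sum(alphas):
    """Sum of alpha_l * (alpha_l - alpha_{l+1}), i.e. sum_l alpha_l |C_l| / (pi S^2).

    Assumes |C_l| = pi S^2 (alpha_l - alpha_{l+1}) (a hypothetical choice of annuli).
    """
    return sum(
        alphas[l] * (alphas[l] - alphas[l + 1]) for l in range(len(alphas) - 1)
    )


coarse = normalized_riemann_sum(uniform_exponents(4))
fine = normalized_riemann_sum(uniform_exponents(1000))
```

For L equispaced exponents the normalized sum equals \(\frac{L+1}{2L}\), which decreases to \(\int _0^1x\,dx=\frac{1}{2}\); under the stated assumption this corresponds to \(\sum _l\alpha _l|C_l|\rightarrow \frac{\pi S^2}{2}\).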

In particular, the case \(S=\sqrt{2\log N}\) introduces us to Sect. 4, indeed the Gaussian density has the following property: if \(\alpha _0=1>\alpha _1>\dots >\alpha _L=0\), the annulus \(A_l\), defined by

has measure \(2\pi (\alpha _l-\alpha _{l+1})\log N\). Moreover, the average number of particles in \(A_l\) is \(N^{\alpha _l}-N^{\alpha _{l+1}}\sim N^{\alpha _l}\), therefore it contributes to the total cost with

and by summing over \(l=0,\dots ,L-1\) and decreasing \(\max _{l=0,\dots ,L-1}\{\alpha _l-\alpha _{l+1}\}\) we get
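The two properties of the Gaussian density used here can be verified directly. The explicit form of \(A_l\) below is an assumption consistent with the stated measure, and the constant \(\frac{1}{2\pi }\) is the bipartite normalization recalled in the Introduction.

\[
A_l=\Big \{\sqrt{2(1-\alpha _l)\log N}\le |x|<\sqrt{2(1-\alpha _{l+1})\log N}\Big \},\qquad |A_l|=\pi \big (2(1-\alpha _{l+1})-2(1-\alpha _l)\big )\log N=2\pi (\alpha _l-\alpha _{l+1})\log N .
\]

Since \(\rho (\{|x|>r\})=e^{-r^2/2}\) for the standard Gaussian in the plane, the expected number of particles beyond the radius \(\sqrt{2(1-\alpha )\log N}\) is \(Ne^{-(1-\alpha )\log N}=N^{\alpha }\), which gives the count \(N^{\alpha _l}-N^{\alpha _{l+1}}\) in \(A_l\). Each annulus then contributes

\[
\frac{|A_l|}{2\pi }\log N^{\alpha _l}=\alpha _l(\alpha _l-\alpha _{l+1})(\log N)^2,
\]

and the sum over l is a Riemann sum for \(\int _0^1x\,dx=\frac{1}{2}\), which suggests a leading term \(\frac{1}{2}(\log N)^2\) for the bipartite cost.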

Nevertheless, the case of the Gaussian density presents some further difficulties.

The first one is that what we have proved in the case of the multiscaling density depends on the number of the annuli we are considering, while we are currently not able to approximate the Gaussian density with a piecewise multiscaling density on a finite number of annuli. Otherwise, if we consider a countable set of annuli, we need a uniform bound for the cost of the problem on an annulus. Instead, when dividing the disk of radius \(\sqrt{2\log N}\) into squares, using a rescaling argument, we only need a bound for the cost of the problem on a square (and we already have it from [4]). Moreover, the main property we use here is not that the Gaussian density is a radial density, but rather that it is a product measure, i.e. a function of \(x_1\) times a function of \(x_2.\)

4 The Gaussian Density

This section concerns the problem of \(X_1,\dots ,X_N\) and \(Y_1,\dots ,Y_N\) independent random variables in \(\mathbb {R}^2\) distributed according to Gaussian measure \(\rho \), that is

In Subsect. 4.2 we prove the following theorem.

Theorem 4.1

If \(X_1,\dots ,X_N\) and \(Y_1,\dots ,Y_N\) are iid random variables distributed with the Gaussian density \(\rho \), it holds

First, we underline that, even though the Gaussian density has unbounded support, the number of particles in a cube of side dx is \(Ne^{-{\frac{|x|^2}{2}}}dx\), and we can notice that \(Ne^{-\frac{|x|^2}{2}}\) is strictly smaller than 1 when \(|x|>\sqrt{2\log N}\). Therefore, using the results in [5, 8], we can expect that the cost for semidiscrete and bipartite matching is

except for a factor \(\frac{1}{4\pi }\) or \(\frac{1}{2\pi }\) respectively.
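A numerical sanity check of this heuristic is sketched below. It assumes that the quantity in question is the integral of \(\log (Ne^{-|x|^2/2})\) over the disk \(\{|x|\le \sqrt{2\log N}\}\), which is the continuous counterpart of the annulus computation at the end of Sect. 3; in polar coordinates this integral equals \(\pi (\log N)^2\). The function name and the midpoint discretization are illustrative choices.

```python
import math


def heuristic_cost_integral(n_points, steps=100_000):
    """Midpoint rule for the integral of log(N e^{-|x|^2/2}) over |x| <= sqrt(2 log N).

    In polar coordinates the integrand is (log N - r^2 / 2) * 2 * pi * r.
    """
    log_n = math.log(n_points)
    radius = math.sqrt(2 * log_n)
    h = radius / steps
    total = 0.0
    for i in range(steps):
        r = (i + 0.5) * h
        total += (log_n - r * r / 2) * 2 * math.pi * r * h
    return total


n_points = 10 ** 6
numeric = heuristic_cost_integral(n_points)
closed_form = math.pi * math.log(n_points) ** 2
```

Dividing the closed form by \(2\pi \) gives \(\frac{1}{2}(\log N)^2\), and dividing by \(4\pi \) gives \(\frac{1}{4}(\log N)^2\).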

To achieve this result, we apply a cut-off and we replace \(\rho \) with a density, which we call \(\rho _N\), whose support is contained in \(\{|x|\le \sqrt{2\log N}\}\). To define \(\rho _N\), we proceed in the following way. We cannot reach \(\sqrt{2\log N}\) exactly, since otherwise there would be too few particles close to the boundary of \(\{|x|\le \sqrt{2\log N}\}\); therefore we define

and we construct a collection of squares that covers \(\{|x|\le r_N\}\), in this way

\(\mathcal{J}\) is a set of intervals in direction \(x_1\), while \(\mathcal{K}\) is a set of intervals in direction \(x_2\). Now we define a set of squares that covers \(\{|x|\le r_N\}\), as follows. First, we denote by \(k_{\min }\) and \(k_{\max }\) and by \(j_k^{\min }\) and \(j_k^{\max }\)

and then we define \(\mathcal{Q}\) as the minimal set of squares that covers \(\{|x|\le r_N\}\), that is

Before going on, here we can notice that, thanks to the choice of the squares (Fig. 3), if \(N^j_k\) is the (random) number of points in the square \(Q^j_k\) when the distribution of the particles is Gaussian (after having applied the cut-off, the expectation of this number can only increase) we have

Let also \(\mathcal{R}\) be the set of horizontal rectangles that cover \(\{|x|\le r_N\}\), that is

(Fig. 4) with projections \(J^k\) on the axis \(x_1\), where each \(J^k\) is defined by

Finally, we define \(E_N\) as

and \(\rho _N\) is the Gaussian measure restricted to \(E_N\), that is

Hereafter, \({\tilde{X}}_1,\dots ,{\tilde{X}}_N\) and \(\tilde{Y}_1,\dots ,{\tilde{Y}}_N\) are independent and identically distributed with measure \(\rho _N,\) and we define

as the number of points \({\tilde{X}}_i\) and \({\tilde{Y}}_i\) in the square \(Q^j_k,\) respectively.

Finally, where not specified, we denote \(\sum _{j,k}:=\sum _{k=k_{\min }}^{k_{\max }}\sum _{j=j_k^{\min }}^{j_k^{\max }}\).

In Subsect. 4.1 we prove some bounds that we will need for the proof of Theorem 4.1 in Subsect. 4.2.

4.1 Preliminary Estimates

This first result proves that we can substitute N independent random variables with common distribution \(\rho \) with N independent random variables with common distribution \(\rho _N\).

Lemma 4.1

Let \(\rho \) and \(\rho _N\) be defined as before, let \(X_1,\dots ,X_N\) be independent random variables in \(\mathbb {R}^2\) with common distribution \(\rho \), and let \(T:\mathbb {R}^2\rightarrow \mathbb {R}^2\) be the optimal map that transports \(\rho \) to \(\rho _N\). Then we have

Proof

For (4.1) we use again Theorem 2.3 to write

while as for (4.2), if T is the optimal map that transports \(\rho \) in \(\rho _N\), that is

we have

that is the thesis thanks to (4.1). \(\square \)

The following Proposition allows us to compute the total cost of the problem as the sum of the costs of the problems on the squares \(Q^j_k\). We have to bound the expectation of the distance between the Gaussian measure and the same Gaussian measure modified on the squares \(Q^j_k\) by a factor \(\frac{N^j_k}{N\rho (Q^j_k)}\). Therefore the two measures we are considering are \(\rho \) and \(\sum _{j,k}\frac{N^j_k}{N}\frac{\rho \mathbbm {1}_{Q^j_k}}{\rho (Q^j_k)}\).

The reason why these measures should be similar is that \(N^j_k\) is very close to its expectation, that is \(\mathbb {E}(N^j_k)=N\rho (Q^j_k)\). To prove it, we proceed in two steps and use the triangle inequality between the two measures involved and a third measure, that is \( \sum _k\frac{N_k}{N}\frac{\rho \mathbbm {1}_{R_k}}{\rho (R_k)}\), where \(N_k\) is the number of points \({\tilde{X}}_i\) in the rectangle \(R_k\).

As for the distance between \(\sum _{j,k}\frac{N^j_k}{N}\frac{\rho \mathbbm {1}_{Q^j_k}}{\rho (Q^j_k)}\) and \(\sum _k\frac{N_k}{N}\frac{\rho \mathbbm {1}_{R_k}}{\rho (R_k)}\), first we use convexity of Wasserstein distance to restrict the problem to the rectangles, indeed we have

Then, we argue as in Lemma 3.1: when using the Benamou–Brenier formula there is a vanishing density in the denominator, and this causes a divergent term. But this divergent term is completely balanced by the fluctuations of the particles, which are very few.

Then, we use again Talagrand inequality to bound the distance between \(\sum _k\frac{N_k}{N}\frac{\rho \mathbbm {1}_{R_k}}{\rho (R_k)}\) and \(\rho \).

Proposition 4.1

There exists a constant \(c>0\) such that if \({\tilde{X}}_1,\dots ,\tilde{X}_N\) and \({\tilde{Y}}_1,\dots ,{\tilde{Y}}_N\) are independent random variables with common distribution \(\rho _N\) and if

then

Proof

We only prove (4.3) and (4.5), since (4.4) is analogous to (4.3).

We start by proving (4.3), therefore we define

so that \(N_k\) is the number of particles \(X_i\) in the whole rectangle \(R_k\) and

Therefore \(P^j_k\) is the number of particles \(X_i\) in \(R_k\), counted not over the whole rectangle but only up to \(a_j\).

As for (4.4), the only difference in the proof is that where we have summands involving \(N^j_k\) we will find the same terms involving \(M^j_k+\frac{N^j_k-M^j_k}{\theta }\) (if \(M^j_k\) is the number of points \(Y_i\) in the square \(Q^j_k\)).

We proceed in two steps. First, we focus on the distance between the density modified on all the squares \(Q^j_k\) and the one modified only on the rectangles \(R_k\); then, we study the distance between the measure modified on the rectangles \(R_k\) and the Gaussian measure itself.

Using first the triangle inequality and then the convexity of quadratic Wasserstein distance we get

As for the term in (4.6), we observe that we are considering again product measures in the rectangle \(R_k\) whose marginals coincide in the direction \(x_2\), indeed we have

therefore we just have a one dimensional problem: thanks to Lemma 6.1 we get

To bound this term we argue as in Lemma 3.1: we define \(f:J^k\rightarrow \mathbb {R}\) such that if \(x_1\in (a_j,a_{j+1})\)

so that, thanks to \(\mathbb {E}_{N_k}(N^j_k)=N_k\frac{\mu (a_j,a_{j+1})}{\mu (J^k)}\), f is the weak solution of

that is the difference between the densities we are considering.

Then, thanks to Theorem 2.2 we have

where in the last inequality we have used that, thanks to the choice of the points \(a_j\), we have \(|a_{j+1}^2-a_j^2|\le c\).

We are going to estimate these two terms using the fact that where the density is small there are also small fluctuations of the particles, so that there will be a balance between the fluctuations and the divergent terms. In particular, our aim is to show that all the terms in the last two sums perfectly balance, and only \((a_{j+1}-a_j)\) remains (Fig. 5).

Thus, to bound (4.9) and (4.10) we observe that we can condition on the number of particles \(X_i\) in \(R_k\) (that is \(N_k\)) to obtain

Before going on, here we observe that, when proving (4.4), to bound (4.9) and (4.10) at this point we should condition on both \(N_k\) and \(M_k\) (and not only on \(N_k\)). In this way instead of \(\frac{1}{N_k}\) in (4.11) and (4.12), up to a constant we would obtain

and this term can be estimated (in \(A_{\theta }\), and but for multiplicative constants) by

Finally, combining (4.8) with (4.9) and (4.10) we get

Fig. 5 A graphical representation of the proof. \(N^j_k\) is the number of particles in \(Q^j_k\) (the first square in blue), while \(P^j_k\) is the number of particles in the red rectangle. Once fixed \(N_k\), that is the number of particles in \(R_k\), the fluctuations of the particles in the red rectangle are exactly the fluctuations of the particles in the blue one (Color figure online)

Now for (4.13) we claim that there exists a constant \(c>0\) such that \(\forall j\)

The first inequality follows from

while the second one is a consequence of

Thus the claim is proved and we have

Applying (4.14) to (4.13) and using the properties of the conditioned expected value we get

Now we have bounded the expectation of (4.6).

To estimate (4.7) we argue in the following way: thanks to Theorem 2.3 and using \(\log x\le x-1\) and \(\mathbb {E}(N_k)=N\rho _N(R_k)=N\frac{\rho (R_k)}{\rho (E_N)},\) we have

Therefore

Before concluding the proof we can observe that for (4.4) at this point we would obtain the following term

which is analogous to the previous one but with a restriction to the set \(A_{\theta }\): we can bound the expectation computed in \(A_{\theta }\) with the expectation computed everywhere because this term is everywhere positive (indeed the function \(f(x)=x^2-x\) is convex and we are considering a convex combination of the summands).

Finally, combining (4.6) with (4.15) and (4.7) with (4.16) we obtain (4.3).

To prove (4.5) we observe that in \(A_{\theta }\)

and this implies the claim thanks to (4.3) and (4.4). \(\square \)

With the following Lemma we prove that, thanks to the choice of the squares \(Q^j_k\), we can transport \(\frac{\rho \mathbbm {1}_{Q^j_k}}{\rho (Q^j_k)}\) to the uniform measure. This implies that the problem on the square \(Q^j_k\) is (approximately) a random matching problem in the square with the uniform measure, which is solved in [4].

Lemma 4.2

There exists a function \(\epsilon (N)\xrightarrow [N\rightarrow \infty ]{}2\epsilon \) such that if \({\tilde{X}}_1,\dots ,{\tilde{X}}_{N^j_k}\) and \({\tilde{Y}}_1,\dots ,\tilde{Y}_{M^j_k}\) are independent random variables in \(Q^j_k\) with common distribution \(\frac{\rho _N\mathbbm {1}_{Q^j_k}}{\rho _N(Q^j_k)}\) and \(Z_1,\dots ,Z_{N^j_k}\) and \(W_1,\dots ,W_{M^j_k}\) are independent random variables in \(Q^j_k\) with common distribution \(\frac{\mathbbm {1}_{Q^j_k}}{|Q^j_k|}\), then

Proof

To prove the statement, arguing as in [8], it suffices to find a suitable map \(S:Q^j_k\rightarrow Q^j_k\) which transports \(\frac{\rho _N\mathbbm {1}_{Q^j_k}}{\rho _N(Q^j_k)}\) to \(\frac{1}{|Q^j_k|}\). Let \(S_j:(a_j,a_{j+1})\rightarrow (a_j,a_{j+1})\) and \(S^k:(b_k,b_{k+1})\rightarrow (b_k,b_{k+1})\) be defined by

Then the map \(S:Q^j_k\ni x\mapsto (S_j(x_1),S^k(x_2))\in Q^j_k\) and its inverse switch the measures we are considering and fix the boundary of \(Q^j_k\), and since

if \(\epsilon (N):=2\epsilon +\frac{\epsilon ^2}{r_N^2}\), we find

Therefore

and this concludes the proof. \(\square \)
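The role of the one-dimensional maps can be illustrated numerically. The explicit formula for \(S_j\) is not reproduced in this text, so the sketch below assumes the standard monotone rearrangement built from the Gaussian distribution function; the names `make_map`, `Phi` and `phi` and the interval \((1, 1.05)\) are hypothetical choices made for illustration only. Under this assumption the map fixes the endpoints of the interval, and its derivative differs from 1 by at most \(e^{|a_{j+1}^2-a_j^2|/2}-1\), which is small precisely when \(|a_{j+1}^2-a_j^2|\) is small.

```python
import math

def Phi(x):
    """Standard normal distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def phi(x):
    """Standard normal density."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)

def make_map(a, b):
    """Return (S, dS): the monotone map on (a, b) that sends the normalised
    Gaussian restricted to (a, b) to the uniform law on (a, b), and its
    derivative. This CDF-based form is an assumption, not the paper's formula."""
    mass = Phi(b) - Phi(a)

    def S(x):
        return a + (b - a) * (Phi(x) - Phi(a)) / mass

    def dS(x):
        return (b - a) * phi(x) / mass

    return S, dS

# Hypothetical short interval, standing in for (a_j, a_{j+1}).
a, b = 1.0, 1.05
S, dS = make_map(a, b)
grid = [a + (b - a) * i / 200 for i in range(201)]

# Largest deviation of S' from 1 on the interval.
max_dev = max(abs(dS(x) - 1.0) for x in grid)
# Elementary bound on that deviation in terms of |b^2 - a^2|.
dev_bound = math.exp((b * b - a * a) / 2.0) - 1.0
```

Here `max_dev` stays below `dev_bound`, so on short intervals the map is a small perturbation of the identity, which is the mechanism that keeps the cost of replacing the Gaussian density by the uniform one under control.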

The following Lemma allows us to restrict to a good event in the bound from below for bipartite matching. It only uses the Chernoff bound, as in [5].

Lemma 4.3

Let \({\tilde{X}}_1,\dots ,{\tilde{X}}_N\) and \({\tilde{Y}}_1,\dots ,{\tilde{Y}}_N\) be independent random variables with common distribution \(\rho _N\). Then if \(\theta =\theta (N):=\frac{1}{(\log N)^{\xi }}\), \(0<\xi<\frac{\alpha -1}{2}<\frac{1}{2},\) and

it holds

Proof

Thanks to the Chernoff bound we have

therefore

which implies the claim. \(\square \)
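As a numerical illustration of the concentration step, the sketch below compares the exact two-sided tail of a binomial count with the textbook multiplicative Chernoff bound \(2e^{-\theta ^2\mu /3}\), valid for \(0<\theta <1\), where \(\mu \) is the mean. This is the standard form of the bound and may differ by constants from the one used in the proof; the function names and the values of `n`, `p` and `theta` are hypothetical. The bound is useful as soon as \(\theta ^2\mu \rightarrow \infty \), which is consistent with the constraint \(\xi <\frac{\alpha -1}{2}\) when the expected number of particles in a square is of order \((\log N)^{\alpha -1}\).

```python
import math

def log_binom_pmf(n, p, k):
    """Logarithm of P(X = k) for X ~ Binomial(n, p), computed via lgamma
    to avoid overflow in the binomial coefficient."""
    return (math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
            + k * math.log(p) + (n - k) * math.log1p(-p))

def two_sided_tail(n, p, theta):
    """Exact P(|X - n p| >= theta * n p) for X ~ Binomial(n, p)."""
    mean = n * p
    return sum(math.exp(log_binom_pmf(n, p, k))
               for k in range(n + 1)
               if abs(k - mean) >= theta * mean)

def chernoff_bound(n, p, theta):
    """Standard multiplicative Chernoff bound, valid for 0 < theta < 1."""
    return 2.0 * math.exp(-theta ** 2 * n * p / 3.0)

# Hypothetical values: 2000 points, a cell of mass 0.05, relative deviation 0.3.
n, p, theta = 2000, 0.05, 0.3
exact = two_sided_tail(n, p, theta)
bound = chernoff_bound(n, p, theta)
```

In this example `exact` is smaller than `bound`, as expected; the bound is not sharp, but its exponential decay in \(\theta ^2\mu \) is all that a union bound over the cells requires.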

Finally, this last Proposition collects all the contributions to the total cost coming from each square. It makes rigorous the idea explained at the beginning of this section.

Proposition 4.2

Let \({\tilde{X}}_1,\dots ,{\tilde{X}}_N\) be independent random variables with common distribution \(\rho _N\). Then we have

Proof

First, we focus on the estimate from above, therefore we choose \(0=\alpha _0<\alpha _1<\dots <\alpha _L=1\) and we define

so that

and

These last two properties imply

therefore

Now we recognize in the right hand side of this inequality a Riemann sum for the function \(f(x)=1-x\) which verifies

and since our choice of \(\{\alpha _l\}_{l=0}^{L}\) was arbitrary in [0, 1] we have

As for the estimate from below, since the function \(\log x\) is concave, for a suitable function \(\zeta (N)\xrightarrow [N\rightarrow \infty ]{}0\) we have

therefore

\(\square \)
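The Riemann-sum step in the proof above can be checked numerically. The sketch below evaluates the upper and lower sums of \(f(x)=1-x\) on a partition \(0=\alpha _0<\dots <\alpha _L=1\) and shows that both approach \(\int _0^1(1-x)\,dx=\frac{1}{2}\) as the mesh shrinks. The non-uniform partition \(\alpha _l=(l/L)^2\) is a hypothetical choice, made only to stress that the partition need not be uniform.

```python
def riemann_sums(alphas):
    """Lower and upper Riemann sums of f(x) = 1 - x on the given partition.
    Since f is decreasing, the upper sum uses left endpoints and the lower
    sum uses right endpoints."""
    pairs = list(zip(alphas, alphas[1:]))
    upper = sum((1.0 - lo) * (hi - lo) for lo, hi in pairs)
    lower = sum((1.0 - hi) * (hi - lo) for lo, hi in pairs)
    return lower, upper

def partition(L, power=2.0):
    """Hypothetical non-uniform partition alpha_l = (l / L) ** power of [0, 1]."""
    return [(l / L) ** power for l in range(L + 1)]

# Both sums bracket 1/2 and their gap is controlled by the mesh size.
lower, upper = riemann_sums(partition(1000))
```

The gap `upper - lower` equals \(\sum _l(\alpha _{l+1}-\alpha _l)^2\), which is at most the mesh of the partition, so any sequence of partitions with vanishing mesh gives the same limit.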

4.2 Convergence Theorems

In this subsection we prove Theorem 4.1.

Thanks to Lemma 2.1 it is sufficient to prove the upper bound for semidiscrete matching, in Theorem 4.2, and the lower bound for bipartite matching, in Theorem 4.3. The structure of the proof is the same as that of Theorem 1 in [8] and Theorems 1.1 and 1.2 in [5].

Both in Theorem 4.2 and in Theorem 4.3, the first step consists in substituting N independent random variables with common distribution \(\rho \) with N independent random variables with common distribution \(\rho _N\). This is possible thanks to Lemma 4.1.

Then we have to bound the distance between two measures, one of which is \(\rho \) (in the semidiscrete case, the first one) or the empirical measure on N independent random variables with distribution \(\rho _N\) (in the bipartite case, the second one), while the other is the same measure as the first, multiplied in each square \(Q^j_k\) by \(\frac{N^j_k}{N\rho (Q^j_k)}\). This factor is expected to be very close to one, and this is the reason why the two measures involved are similar. This is possible thanks to Proposition 4.1.

At this point, we are allowed to compute the total cost of the problem as the sum of the costs on the squares. When proving the upper bound we use a subadditivity argument while for the lower bound we use, as in [5], the distance introduced in [18], that is (2.1). This distance is superadditive.

Then, since we need the number of particles in each square \(Q^j_k\) to increase with N, in Theorem 4.2 we consider separately two events: the event in which the number of particles in the square is close to its expected value, and its complement, and we show that the contribution of the second event is negligible. In Theorem 4.3 we simply restrict to a good event (that is \(A_{\theta }\)), whose probability is close to 1 thanks to Lemma 4.3.

Once these assumptions are made, thanks to Lemma 4.2 we can approximate the probability measure on the square \(Q^j_k\) whose density is \(\frac{\rho _N\mathbbm {1}_{Q^j_k}}{\rho _N(Q^j_k)}\) with the uniform measure on the square itself. Therefore, using the results obtained in [4, 5], up to a factor \(4\pi \) or \(2\pi \) the cost with uniform measure (on the square \(Q^j_k\)) is bounded from above and below by a term close to

Finally, the total cost is a convex combination of all these contributions and the main term in the estimate turns out to be

divided by \(4\pi \) in Theorem 4.2 and \(2\pi \) in Theorem 4.3. We have already examined it in Proposition 4.2, and this concludes both proofs.

Theorem 4.2

If \(X_1,\dots ,X_N\) are independent random variables with common distribution \(\rho \), it holds

Proof

As explained before, first we substitute \(X_1,\dots ,X_N\) with N independent random variables distributed with the probability measure whose density is \(\rho _N\). If \(\gamma >0\) and if \(T:\mathbb {R}^2\rightarrow \mathbb {R}^2\) is the map that transports \(\rho \) in \(\rho _N\), we denote \({\tilde{X}}_i:=T(X_i)\). Using the triangle inequality we have

The first term in the sum in (4.19) gives the main contribution, while we have a bound for the second and the third one thanks to (4.2) of Lemma 4.1 and (4.3) of Proposition 4.1. So we only focus on the first term.

To estimate it first we exclude the events with few particles in any square \(Q^j_k\), therefore we define

and we observe that the contributions in the events \({A^j_k}^c\) are negligible, indeed

and therefore

Then, we use (4.17) of Lemma 4.2, therefore if \(Z_i:=S({\tilde{X}}_i)\) where S is the map that transports \(\frac{\rho \mathbbm {1}_{Q^j_k}}{\rho (Q^j_k)}\) in the uniform measure on the square \(Q^j_k\), we have

for a suitable function \(\epsilon (N)\xrightarrow [N\rightarrow \infty ]{}2\epsilon \).

Moreover, since in the event \(A^j_k\), \(N^j_k\ge \frac{\mathbb {E}(N^j_k)}{2}\ge \frac{\epsilon ^2}{r_N^2}\frac{e^{-\frac{r_N^2}{2}}}{2\sqrt{2\pi }}\ge c\epsilon ^2(\log N)^{\alpha -1}\), we can use (2.2) of Theorem 2.4 to find a function \(\omega (N)\xrightarrow [N\rightarrow \infty ]{}0\) such that in \(A^j_k\)

which leads to

Finally, combining (4.19), (4.20) and (4.21) and using Lemma 4.1 and Proposition 4.1, we have

Using Proposition 4.2 we find

and letting \(\gamma ,\epsilon \rightarrow 0\) we conclude. \(\square \)

Theorem 4.3

If \(X_1,\dots ,X_N\) and \(Y_1,\dots ,Y_N\) are independent random variables with common distribution \(\rho \), we have

Proof

(Sketch) Except for some steps, the proof is very similar to the previous one, therefore we only underline the differences. Once the substitution of \(\{X_i,Y_i\}_{i=1}^N\) with \(\{{\tilde{X}}_i,{\tilde{Y}}_i\}_{i=1}^N\) is made, we restrict to the set

where \(\theta =\frac{1}{(\log N)^{\xi }}\) and \(0<\xi<\frac{\alpha -1}{2}<\frac{1}{2}\).

Then, if we rename

we apply the triangle inequality and the superadditivity of \(Wb_2^2\) to obtain

The main term is (4.22) and it can be estimated with (4.18) and (2.3) of Theorem 2.4, as in the previous theorem.

Then, as proved in Lemma 6.2 and Proposition 4.1, up to a constant, the term in (4.23) is bounded by

and therefore, sending \(\delta \) and \(\epsilon \) (which is hidden in (4.22)) to 0 we conclude. \(\square \)

5 The Maxwellian Density

In this section we briefly focus on the Maxwellian density, that is

where \(\rho \) is uniform in one direction and Gaussian in the other one.

We consider \(X_1,\dots ,X_N\) and \(Y_1,\dots ,Y_N\) independent random variables with values in \((0,1)\times \mathbb {R}\) with density \(\rho \).

Arguing as in the case of the Gaussian density, we can prove the following result

Theorem 5.1

If \(X_1,\dots ,X_N\) and \(Y_1,\dots ,Y_N\) are independent random variables in \(\mathbb {R}^2\) with common distribution \(\rho \), we have

The idea is again that the number of particles \(X_i\) or \(Y_i\) close to a point \(x\in (0,1)\times \mathbb {R}\) is \(Ne^{-\frac{x_2^2}{2}}dx\), which is strictly smaller than 1 when \(|x_2|>\sqrt{2\log N}\). Therefore, even though the density \(\rho \) has unbounded support, we expect all the particles to be in the domain \((0,1)\times (-\sqrt{2\log N},\sqrt{2\log N})\). As in the case of the Gaussian density, we expect the total cost to be estimated by

once the factor \(\frac{1}{2\pi }\) or \(\frac{1}{4\pi }\) is omitted.
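The heuristic on the effective support can be checked with a short computation. Since \(\rho \) is uniform in \(x_1\) and Gaussian in \(x_2\), the expected number of sample points with \(|x_2|>\sqrt{2\log N}\) is \(N\,\mathbb {P}(|Z|>\sqrt{2\log N})\) for a standard Gaussian variable \(Z\). The sketch below evaluates this quantity; the function name `expected_outside` and the chosen values of N are hypothetical, and the standard normalisation \(\frac{1}{\sqrt{2\pi }}\) of the Gaussian factor is assumed.

```python
import math

def expected_outside(N):
    """Expected number of points, among N samples whose second coordinate
    is standard Gaussian, with |x_2| > sqrt(2 log N)."""
    r = math.sqrt(2.0 * math.log(N))
    # For a standard normal Z, P(|Z| > r) = erfc(r / sqrt(2)).
    return N * math.erfc(r / math.sqrt(2.0))

# Hypothetical sample sizes.
sizes = (10**3, 10**6, 10**9)
values = [expected_outside(N) for N in sizes]
```

For these sample sizes every entry of `values` is below 1 and the entries decrease with N, in agreement with the Gaussian tail estimate \(\mathbb {P}(|Z|>r)\le \frac{2}{r\sqrt{2\pi }}e^{-\frac{r^2}{2}}\), which gives an expected count of order \(\frac{1}{\sqrt{\log N}}\).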

To make this rigorous we substitute again \(\rho \) with a density (that we will call \(\rho _N\)) whose support is compact but increases with N. To define \(\rho _N\), first we define \(r_N\) and \({\tilde{r}}_N\) as

We define \({\tilde{r}}_N\) in this way because multiplying it by \(m\lfloor r_N\rfloor \) gives an integer, and therefore the set can easily be covered by an integer number of squares, while \(\frac{{\tilde{r}}_N}{r_N}\xrightarrow [N\rightarrow \infty ]{}1\). Moreover, m will be sent to \(\infty \) in the end.

Then we apply the cut-off:

Finally we cover \((0,1)\times (-{\tilde{r}}_N,{\tilde{r}}_N)\) with squares \(\{Q^j_k\}_{j,k}\) whose side is \(\frac{1}{m\lfloor r_N\rfloor }\), and we also define the horizontal rectangles \(\{R_k\}_{k}:=\{\cup _j Q^j_k\}_k\), which will be used in the analogue of Proposition 4.1 (Fig. 6).

Using this partition the arguments used are analogous to the Gaussian case, and in this way we can prove Theorem 5.1.

Data Availability

Data sharing not applicable to this article as no datasets were generated or analysed during the current study.

References

Ajtai, M., Komlós, J., Tusnády, G.: On optimal matchings. Combinatorica 4, 259–264 (1984)

Ambrosio, L., Glaudo, F.: Finer estimates on the \(2\)-dimensional matching problem. J. Éc. Polytech. Math. 6, 737–765 (2019)

Ambrosio, L., Glaudo, F., Trevisan, D.: On the optimal map in the \(2\)-dimensional random matching problem. Discrete Contin. Dyn. Syst. 39(12), 7291–7308 (2019)

Ambrosio, L., Stra, F., Trevisan, D.: A PDE approach to a 2-dimensional matching problem. Probab. Theory Relat. Fields 173(1–2), 433–477 (2019)

Ambrosio, L., Goldman, M., Trevisan, D.: On the quadratic random matching problem in two-dimensional domains. Electron. J. Probab. 27, 35. Id/No 54 (2022)

Barthe, F., Bordenave, C.: Combinatorial optimization over two random point sets. In: Séminaire de Probabilités XLV, pp. 483–535. Springer, New York (2013)

Benamou, J.D., Brenier, Y.: A computational fluid mechanics solution to the Monge-Kantorovich mass transfer problem. Numer. Math. 84(3), 375–393 (2000)

Benedetto, D., Caglioti, E.: Euclidean random matching in 2d for non-constant densities. J. Stat. Phys. 181(3), 854–869 (2020)

Benedetto, D., Caglioti, E., Caracciolo, S., D’Achille, M., Sicuro, G., Sportiello, A.: Random assignment problems on \(2d\) manifolds. J. Stat. Phys. 183(2), 40. Id/No 34 (2021)

Berthet, P., Fort, J.C.: Exact rate of convergence of the expected \(W_2\) distance between the empirical and true Gaussian distribution. Electron. J. Probab. 25, 1–16 (2020)

Bobkov, S., Ledoux, M.: One-dimensional empirical measures, order statistics, and Kantorovich transport distances. Paperback Mem. Am. Math. Soc. 1259 (2019)

Boissard, E., Le Gouic, T., Loubes, J.M.: Distribution’s template estimate with Wasserstein metrics. Bernoulli 21(2), 740–759 (2015)

Brezis, H.: Remarks on the Monge-Kantorovich problem in the discrete setting. Comptes Rendus Math. 356, 207–213 (2018)

Caracciolo, S., Sicuro, G.: One dimensional Euclidean matching problem: exact solutions, correlation functions and universality. Phys. Rev. E 90, 4 (2014)

Caracciolo, S., Lucibello, C., Parisi, G., Sicuro, G.: Scaling hypothesis for the Euclidean bipartite matching problem. Phys. Rev. E 90, 012118 (2014)

Caracciolo, S., D’Achille, M., Sicuro, G.: Anomalous scaling of the optimal cost in the one-dimensional random assignment problem. J. Stat. Phys. 174, 846–864 (2019)

Dobrić, V., Yukich, J.E.: Asymptotics for transportation cost in high dimensions. J. Theor. Probab. 8, 97–118 (1995)

Figalli, A., Gigli, N.: A new transportation distance between non-negative measures, with applications to gradients flows with Dirichlet boundary conditions. J. Math. Pures Appl. (9) 94(2), 107–130 (2010)

Fournier, N., Guillin, A.: On the rate of convergence in Wasserstein distance of the empirical measure. Probab. Theory Relat. Fields 162(3–4), 707–738 (2015)

Goldman, M., Trevisan, D.: Convergence of asymptotic costs for random Euclidean matching problems. Prob. Math. Phys. 2(2), 341–362 (2021)

Goldman, M., Huesmann, M., Otto, F.: Almost sharp rates of convergence for the average cost and displacement in the optimal matching problem. arXiv:2312.07995 (2024)

Ledoux, M.: On optimal matching of Gaussian samples II (2018)

Ledoux, M.: On optimal matching of Gaussian samples. J. Math. Sci. NY 238(4), 495–522 (2019)

Ledoux, M., Zhu, J.-X.: On optimal matching of Gaussian samples III. Probab. Math. Stat. 41, 237–265 (2021)

Peyré, G., Cuturi, M.: Computational optimal transport: with applications to data science. Found. Trends Mach. Learn. 11(5–6), 355–607 (2019)

Santambrogio, F.: Optimal Transport for Applied Mathematicians, vol. 55, no. 58–63, p. 94. Birkhäuser, New York (2015)

Talagrand, M.: Transportation cost for Gaussian and other product measures. Geom. Funct. Anal. 6(3), 587–600 (1996)

Talagrand, M.: Upper and Lower Bounds for Stochastic Processes. Springer, New York (2014)

Talagrand, M.: Scaling and non-standard matching theorems. C. R. Math. Acad. Sci. Paris 356(6), 692–695 (2018)

Villani, C.: Optimal Transport: Old and New. Springer, Berlin (2009)

Acknowledgements

This work has been partially supported by the grant “Progetti di ricerca di Ateneo 2021” by Sapienza University, Rome and by PNRR MUR project PE0000013-FAIR, and GNFM - INdAM. The authors warmly thank the referees for their useful suggestions and comments.

Funding

Open access funding provided by Università degli Studi di Roma La Sapienza within the CRUI-CARE Agreement.

Ethics declarations

Conflict of interest

The authors have no competing interests to declare that are relevant to the content of this article.

Additional information

Communicated by Hal Tasaki.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Lemma 6.1

Let \(\mu \) and \(\lambda \) be two probability measures on \(\mathbb {R}\) absolutely continuous with respect to Lebesgue measure, and let \(\nu \) be any probability measure on \(\mathbb {R}\). Then

Proof

If \(S:\mathbb {R}\rightarrow \mathbb {R}\) is the optimal map that transports \(\mu \) in \(\lambda \), i.e.

the map \(T:\mathbb {R}\times \mathbb {R}\ni (x_1,x_2)\mapsto (S(x_1),x_2)\in \mathbb {R}\times \mathbb {R}\) transports \(\mu \otimes \nu \) in \(\lambda \otimes \nu \), indeed using (6.1) we have

therefore, using (6.2)

\(\square \)

Lemma 6.2

([5], Proof of Theorem 1.2, Step 3) Let \(\rho \) (also depending on N) be any of the probability densities we are considering and \(\Omega \subseteq \mathbb {R}^2\) its support (\(\Omega \) depending on N too). Let \(\{X_i\}_{i=1}^N\) and \(\{Y_i\}_{i=1}^N\) be iid random variables with distribution \(\rho \).

Let \(\{\Omega _h\}_h\) be the partition of \(\Omega \) we have considered. We denote by \(N_h\) and \(M_h\) respectively the number of \(\{X_i\}_{i=1}^N\) and \(\{Y_i\}_{i=1}^N\) in \(\Omega _h\) and

For \(\theta \in (0,1)\) let \(A_{\theta }\) be the set

Then

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Caglioti, E., Pieroni, F. Random Matching in 2D with Exponent 2 for Gaussian Densities. J Stat Phys 191, 62 (2024). https://doi.org/10.1007/s10955-024-03275-y

DOI: https://doi.org/10.1007/s10955-024-03275-y