Abstract

We study the long time behaviour of a Brownian particle evolving in a dynamic random environment. Recently, Cannizzaro et al. (Ann Probab 50(6):2475–2498, 2022) proved sharp \(\sqrt{\log }\)-super diffusive bounds for a Brownian particle in the curl of (a regularisation of) the 2-D Gaussian Free Field (GFF) \(\underline{\omega }\). We consider a one parameter family of Markovian and Gaussian dynamic environments which are reversible with respect to the law of \(\underline{\omega }\). Adapting their method, we show that if \(s\ge 1\), with \(s=1\) corresponding to the standard stochastic heat equation, then the particle stays \(\sqrt{\log }\)-super diffusive, whereas if \(s<1\), corresponding to a fractional heat equation, then the particle becomes diffusive. In fact, for \(s<1\), we show that this is a particular case of Komorowski and Olla (J Funct Anal 197(1):179–211, 2003), which yields an invariance principle through a Sector Condition result. Our main results agree with the Alder–Wainwright scaling argument (see Alder and Wainwright in Phys Rev Lett 18:988–990, 1967; Alder and Wainwright in Phys Rev A 1:18–21, 1970; Alder et al. in Phys Rev A 4:233–237, 1971; Forster et al. in Phys Rev A 16:732–749, 1977) used originally in Tóth and Valkó (J Stat Phys 147(1):113–131, 2012) to predict the \(\log \)-corrections to diffusivity. We also provide examples which display \(\log ^a\)-super diffusive behaviour for \(a\in (0,1/2]\).



1 Introduction and Main Result

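We study the motion of a Brownian particle in \(\mathbb {R}^2\), evolving in a dynamic random environment (DRE), given by the solution to the Itô SDE

\[ {\text {d}}\! X(t) = \omega _t(X(t))\,{\text {d}}\! t + \sqrt{2}\,{\text {d}}\! B(t), \qquad X(0) = 0, \tag{1} \]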

where \((B(t))_{t \ge 0}\) is a standard two-dimensional Brownian motion and \((\omega _t(x))_{t\ge 0,x\in \mathbb {R}^2}\) is a time-dependent random field which is independent of \((B(t))_{t \ge 0}\). We take \((\omega _t(x))_{t\ge 0,x\in \mathbb {R}^2}\) to be a regularised version of the curl of the solution to the (fractional) stochastic heat equation with additive noise in \(\mathbb {R}^2\) and initial condition given by the curl of the regularised Gaussian Free Field (GFF) \(\underline{\omega }\). The coordinates of \(\omega _t = (\omega _t^1,\omega _t^2)\) satisfy

where \(s \in [0,\infty )\) and \((\partial ^\perp _1,\partial ^\perp _2):= (\partial _{x_2},-\partial _{x_1})\). Here, W is a mollified (in space) space-time white noise, with covariance \({\textbf{E}}[W_r(x)W_t(y)] = \min \{r,t\} V(x-y)\), and \(\underline{\omega }\) is distributed according to the law of the curl of a mollified GFF. More precisely, for every \(k,l = 1,2\), \(r,t \ge 0\) and \(x,y \in \mathbb {R}^2\), \(W_t^s(x):= (-\Delta )^{\frac{s-1}{2}}W_t(x)\) and \(\underline{\omega }\) have mean zero and covariance

where \(*\) denotes the convolution over \(\mathbb {R}^2\) and, for every \(\varphi _1,\varphi _2 \in {\mathcal {S}}(\mathbb {R}^2)\), the space of Schwartz functions over \(\mathbb {R}^2\),

The smooth function V is given by

for a \(U \in C^\infty (\mathbb {R}^2)\), radially symmetric, decaying exponentially fast at infinity and with \(\int _{\mathbb {R}^2} U(x) {\text {d}}\! x = 1\). To simplify some computations, we may also assume that U has Fourier transform supported in the ball of radius 1. Also, the kernel \(g_r: \mathbb {R}^2 \setminus \{0\} \rightarrow \mathbb {R}\) in (3) and (5) is given by

where \(\Gamma \) denotes the Gamma function. In other words, \(g_r\) is the Green’s function of \((-\Delta )^r\) in \(\mathbb {R}^2\), for \(r \in [0,1]\). Also, the fractional Laplacian \((-\Delta )^{s-1}\) for \(s > 1\) can be defined in terms of its Fourier multiplier, as \(\widehat{(-\Delta )^{s-1}f}(p) = |p|^{2(s-1)} \widehat{f}(p)\).
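The Fourier-multiplier definition of \((-\Delta )^{s-1}\) given above can be mimicked numerically. The sketch below is only an illustration under an assumption of ours, not the paper's: \(\mathbb {R}^2\) is replaced by the periodic box \([0,2\pi )^2\) sampled on an \(N\times N\) grid, so that the multiplier \(|p|^{2(s-1)}\) acts on discrete Fourier modes. The zero mode is set to zero, since the multiplier is singular at \(p=0\) when \(s<1\). The function name `fractional_power` is hypothetical.

```python
import numpy as np


def fractional_power(f, s, box=2 * np.pi):
    """Apply (-Delta)^(s-1) to samples f of a periodic function on an N x N grid.

    Each discrete Fourier mode p is multiplied by |p|^(2(s-1)), mirroring the
    Fourier-multiplier definition in the text. The mode p = 0 is set to zero,
    because the multiplier is singular there when s < 1.
    """
    n = f.shape[0]
    wave_numbers = 2 * np.pi * np.fft.fftfreq(n, d=box / n)  # angular frequencies
    p1, p2 = np.meshgrid(wave_numbers, wave_numbers, indexing="ij")
    norm_p = np.hypot(p1, p2)
    multiplier = np.zeros_like(norm_p)
    nonzero = norm_p > 0
    multiplier[nonzero] = norm_p[nonzero] ** (2 * (s - 1))
    return np.real(np.fft.ifft2(multiplier * np.fft.fft2(f)))


# Usage: sin(2 x_1) is an eigenfunction with |p| = 2, so s = 1.5 doubles it.
n = 64
x = np.arange(n) * 2 * np.pi / n
x1, x2 = np.meshgrid(x, x, indexing="ij")
f = np.sin(2 * x1)
print(np.max(np.abs(fractional_power(f, 1.5) - 2 * f)))
```

A trigonometric polynomial is the natural test case, since each mode is an eigenfunction of the multiplier; on \(\mathbb {R}^2\) the same operator acts on the continuous Fourier transform instead.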

Remark 1

Note that expressions (3)–(5) make sense due to the presence of the smooth function V. Plugging, e.g., the right-hand side of (5) into (6), we get

which is equal to the expression in the middle of (5). Furthermore, even though the GFF in the full space is only defined up to a constant (since it is obtained by inverting the Laplacian \(\Delta \)), taking the derivatives \(\partial ^\perp _k\partial ^\perp _l\) of its regularisation makes the object well defined without ambiguity. The same reasoning applies to the definition of the noise \(\partial ^\perp _k(-\Delta )^{\frac{s-1}{2}}W_t\) when \(s=0\).

Remark 2

As we show in Proposition 10, the dynamics (2) leave the law of \(\underline{\omega }\) invariant. The case \(s=1\) corresponds to the standard stochastic heat equation (SHE), whereas \(s=0\) is the infinite dimensional Ornstein–Uhlenbeck process, as defined, for example, in [16, Chapter 1.4]. The parameter \(s \in [0,\infty )\) controls the speed of the environment on different scales: smaller values of s correspond to faster movement of the larger scales.
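The statement about speeds on different scales can be made concrete mode by mode. Under our reading of Proposition 10, \({\mathcal {L}}_0^s\) acts on the first chaos as the Fourier multiplier \(-|p|^{2s}\). Each Fourier mode of \(\omega _t\) then behaves like a one-dimensional Ornstein–Uhlenbeck process with relaxation rate \(|p|^{2s}\), while its stationary variance is fixed by the law of \(\underline{\omega }\) and does not depend on s. The sketch below uses hypothetical names and an arbitrary placeholder variance. It compares relaxation times at the large scale \(|p| = 0.01\) and uses the exact Gaussian update of such an OU process.

```python
import numpy as np


def relaxation_time(p_norm, s):
    """Relaxation time 1 / |p|^(2s) of the Fourier mode with wave number |p|."""
    return 1.0 / p_norm ** (2 * s)


def ou_exact_step(x, rate, var, dt, rng):
    """Exact update over time dt of dX = -rate * X dt + sqrt(2 * rate * var) dW.

    The stationary law N(0, var) depends on var only, not on rate:
    changing s changes how fast a mode relaxes, not its stationary variance.
    """
    decay = np.exp(-rate * dt)
    noise_sd = np.sqrt(var * (1.0 - decay ** 2))
    return decay * x + noise_sd * rng.standard_normal(np.shape(x))


# Large scales (|p| << 1) relax much faster for smaller s.
for s in (0.5, 1.0, 2.0):
    print(f"s = {s}: relaxation time at |p| = 0.01 is {relaxation_time(0.01, s):.0e}")
```

At \(|p|=0.01\) the relaxation time is \(10^2\) for \(s=1/2\) but \(10^4\) for \(s=1\). This is the scale separation behind the different behaviours discussed below.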

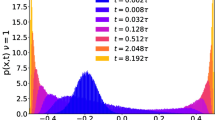

By definition, the drift field \(\omega _t(x)\) in (1) is divergence-free. Brownian particles evolving in stationary divergence-free random fields have been considered as a toy model for anomalous diffusions in inhomogeneous media, such as the motion of a tracer particle in an incompressible turbulent flow. See e.g. the surveys [9, Chapter 11] and [13]. Depending on the decay of the spatial correlations of the drift field, the particle could behave either diffusively or superdiffusively, meaning that the mean square displacement satisfies for large t

Here, \({\mathbb {E}}\) denotes the expectation under the joint law of B and \(\omega \), see Sect. 2. If the correlations of the environment decay fast enough (see e.g. [9, Chapter 11]), one gets diffusive behaviour, and if the decay is too slow (see [10]), one gets superdiffusive behaviour. There is, however, an intermediate regime for which the correlations decay in such a way that D(t) diverges only as \((\log t)^\gamma \), for \(\gamma > 0\). These logarithmic corrections are expected for two-dimensional Brownian particles evolving in isotropic random drift fields. Indeed, by the Alder–Wainwright scaling argument (see [1,2,3, 7]), in 2d, if the displacement of the particle scales faster than the correlations of the environment field, then the (only) expected behaviour for the mean square displacement of the particle is of order \(t \sqrt{\log t}\). We briefly elaborate on this, following the Appendix of [19]. Let \(K(t,x):= {\textbf{E}}[\omega _0(0)\omega _t(x)]\). Now, assume that \({\mathbb {P}}(X(t) \in {\text {d}}\! x) \approx \alpha (t)^{-2} \varphi (\alpha (t)^{-1}x) {\text {d}}\! x\), where \(\varphi \) is a density and \(\alpha (t) = t^\nu (\log t)^\gamma \) for some \(\nu , \gamma \ge 0\). If we also assume that \(K(t,x) \approx \beta (t)^{-2}\psi (\beta (t)^{-1}x)\), for another density \(\psi \), then if

we must have \(\nu = 1/2\) and \(\gamma = 1/4\), which yields \(X(t) \approx t^\frac{1}{2} (\log t)^\frac{1}{4}\). We emphasise that this argument, although instructive, is not mathematically rigorous. The \(\sqrt{\log }\) correction was nevertheless recently established rigorously by Cannizzaro et al. [5], who showed that for a time-independent drift field \(\omega \) distributed according to the law of \(\underline{\omega }\), one has

up to \(\log \log t\) corrections, confirming a conjecture made by Tóth and Valkó [19] based on this scaling argument. The result was obtained in the Tauberian sense, i.e., in terms of the Laplace transform of the mean square displacement

Note that in the case considered by [5, 19], the correlations of the drift field do not scale in time since the drift field is time-independent, so (9) is trivially satisfied. Moving to the time-dependent case treated in the present work, if we take \(s\ge 1\) in (2), the correlations of the drift field still do not scale fast enough: \(t^{\frac{1}{2s}}\) for \(\omega \) vs. \(t^{\frac{1}{2}} (\log t)^{\frac{1}{4}}\) for X. Therefore, we should still expect the particle X to behave \(\sqrt{\log }\)-superdiffusively, since condition (9) remains true. However, if we move to the case \(s < 1\) in (2), the picture changes substantially and condition (9) is no longer satisfied, since \(t^{\frac{1}{2}} (\log t)^{\frac{1}{4}}<< t^{\frac{1}{2s}}\) for \(t>> 1\). Theorems 3 and 4 below rigorously establish the expected abrupt transition between superdiffusive and diffusive behaviour depending on the exponent s, in agreement with the scaling argument.
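The self-consistency behind the scaling argument can be checked numerically in a crude form. The sketch below is our own illustration and is not taken from [19]. It assumes that, when the correlations live on scales much smaller than the displacement (the regime where (9) holds), the particle at time u meets the support of \(K(u,\cdot )\) with probability of order \(\alpha (u)^{-2}\). The growth of \(a(t) := \alpha (t)^2\) then obeys \(a'(t) = 1 + \int _1^t a(u)^{-1}{\text {d}}\! u\), with every constant set to one (an assumption), where 1 stands for the Brownian part and the integral for the drift. With \(\tau = \log t\) and \(b(\tau ) := a(e^\tau )e^{-\tau }\), this becomes \(b' = 1 + I - b\), \(I' = 1/b\). Its solution should grow like \(\sqrt{2\tau }\), i.e. \(\alpha (t)^2 \approx \sqrt{2}\, t\sqrt{\log t}\), which is the \(\nu = 1/2\), \(\gamma = 1/4\) scaling. The function name is hypothetical.

```python
import math


def solve_scaling_ode(tau_max, dtau=1e-2):
    """Forward-Euler solution of the toy self-consistency system
        b' = 1 + I - b,   I' = 1 / b,   b(0) = 1, I(0) = 0,
    where b(tau) = alpha(t)^2 / t and tau = log t (all constants set to 1).
    """
    b, integral = 1.0, 0.0
    for _ in range(int(round(tau_max / dtau))):
        db = (1.0 + integral) - b  # a'(t) = 1 + I, minus a(t) / t = b
        d_integral = 1.0 / b       # I(tau) = int_1^t du / alpha(u)^2
        b += dtau * db
        integral += dtau * d_integral
    return b


# b(tau) / sqrt(2 tau) should approach 1, i.e. alpha(t)^2 ~ sqrt(2) t sqrt(log t).
for tau in (1e2, 1e3, 1e4):
    b = solve_scaling_ode(tau)
    print(f"tau = {tau:.0e}: b / sqrt(2 tau) = {b / math.sqrt(2 * tau):.4f}")
```

Working in the variable \(\tau = \log t\) keeps the number of steps moderate even though the relevant times \(t = e^{10^4}\) are astronomically large.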

Theorem 3

If \(s \ge 1\) in (2), then, for every \(\varepsilon > 0\), there exist constants \(A_\varepsilon , B_\varepsilon > 0\), depending only on \(\varepsilon \) and s, such that, for \(\lambda \in (0,1)\), we have

For the case \(s \in [0,1)\) we can apply a sector condition result of Komorowski and Olla [11] to obtain the following invariance principle.

Theorem 4

If \(s \in [0,1)\) in (2), then there exist constants \(A, B > 4\), such that, for all \(t \ge 0\), we have

Furthermore, let \((Q^\omega _\varepsilon )_{\varepsilon \in (0,1]}\) denote the laws of \((\varepsilon X(\frac{t}{\varepsilon ^2}))_{t\ge 0}\), over \(C[0,\infty )\), for \(\varepsilon \in (0,1]\), given the initial configuration \(\omega _0 = \omega \). Then \((Q^\omega _\varepsilon )_{\varepsilon \in (0,1]}\) converge weakly, with respect to the law of \(\underline{\omega }\), as \(\varepsilon \downarrow 0\), to the law of a Brownian motion with deterministic covariance matrix D, which only depends on s. The covariance matrix D is defined in (66).

The asymptotic behaviour of \(D_T(\lambda )\) in (12) reflects the fact that the dynamics provided by the SHE (with the full Laplacian) does not mix the environment fast enough to produce a scaling of the correlations which is faster than the scaling of the displacement of the particle, as discussed above. On the other hand, the result in (13) confirms that the fractional dynamics on the environment changes the behaviour of the particle dramatically. Nonetheless, the fact that \(A>4\), with 4t being the mean square displacement of the Brownian part \(\sqrt{2}B(t)\), shows that the drift has a non-negligible effect on large scales. Moreover, the estimates in (12) are exactly the same as the ones obtained in [5], and our proof is an adaptation of theirs, which is based on Yau’s method [20] of recursive estimates of iterative truncations of the resolvent equation in (21). Indeed, when \(s\ge 1\), the dominant terms in the estimates are the ones coming from the stationary drift field, which are the same as for the static case. What we show is that the additional terms coming from the dynamics of the environment can be removed from the estimates while maintaining the same asymptotic behaviour. However, when \(s<1\), the dominant terms are precisely the ones coming from the dynamics of the environment. The effect can already be seen in the first upper bound obtained by the first truncation of (21), and it suffices to show (13) in Theorem 4, see Remark 13.

If we now consider intermediate regimes between \(s=1\) and \(s<1\), obtained by only adding a logarithmic divergence to the operator \(\Delta \) in (2), we obtain behaviour which was not predicted by the Alder–Wainwright scaling argument. Namely, for any given \(a \in (0,\frac{1}{2}]\), we can find an interpolation between the regimes \(s=1\) and \(s<1\) for which we prove corrections to diffusivity of order \((\log t)^a\). More precisely, suppose that the coordinates of \(\omega _t = (\omega _t^1,\omega _t^2)\) satisfy

for a parameter \(\gamma > 0\). Then we can show the following.

Theorem 5

If \((X(t))_{t\ge 0}\) is the solution to (1) with \((\omega _t)_{t\ge 0}\) solution to (14), then, for every \(\gamma \in [\frac{1}{2},\infty )\), there exist constants \(A,B>0\), only depending on \(\gamma \), such that:

If \(\gamma \in [\frac{1}{2},1)\), then for \(\lambda \in (0,1)\),

If \(\gamma = 1\), then for \(\lambda \in (0,1)\),

Furthermore, if \(\gamma > 1\), we have

with \(A,B>4\).

Remark 6

The correlations of the field \((\omega _t)_{t\ge 0}\) solution to (14) should scale as \(\beta (t)\approx t^\frac{1}{2} (\log t)^\frac{\gamma }{2}\), providing a finer tuning of scaling than \(t^\frac{1}{2s}\) described by (2). While the regime \(\gamma \in (1/2,1]\) showcases behaviours of X(t) beyond the scope of the Alder–Wainwright scaling argument, the case \(\gamma = 1/2\) is borderline for the condition (9), for which the expected behaviour \(X(t)\approx t^\frac{1}{2} (\log t)^\frac{1}{4}\) is obtained. We do not treat the case \(\gamma \in [0,1/2)\) here, but we do expect a similar behaviour to the one in (12) for the case \(s=1\) [i.e. \(\gamma = 0\) in (14)].

Remark 7

The model described by (14) is a toy model to investigate the behaviour near the critical scaling exponent \(s=1\) in (2). However, recent results in [6] suggest that the scaling \(\beta (t)\approx t^\frac{1}{2} (\log t)^\frac{1}{4}\) arises in the 2-D stochastic Navier–Stokes equations.

1.1 Structure of the Paper

In Sect. 2 we define the environment seen from the particle process as a technical tool. In Sect. 3 we derive the action of the infinitesimal generator of the environment seen from the particle on Fock space, and show that the law of \(\underline{\omega }\) is invariant under the family of dynamics given by (2). Section 4 contains the proof of the main recursive estimates through an iterative analysis of the resolvent equation in (21) and a proof of (13) in Theorem 4 using only the first truncation of the resolvent equation. In Sect. 5 we prove Theorem 3 by using the recursive estimates obtained in Sect. 4. In Sect. 6, we present a general overview of the method in [11] of homogenisation of diffusions in divergence-free, Gaussian and Markovian fields and show that for \(s<1\) we may apply their results to get Theorem 4. In Sect. 7 we prove Theorem 5. Appendices A and B gather important ingredients from Cannizzaro et al. [5], together with some generalisations to the present setting, needed in Sects. 4 and 5; Appendix C presents the final argument for the proof of Theorem 3, taken from [5].

2 Setting and preliminaries

Let \({\mathscr {T}}_0:= (\Omega , {\mathcal {B}}, {\textbf{P}})\) be a probability space supporting \(\underline{\omega }\) and an independent Wiener process W as defined between displays (2) and (3). Let \({\mathscr {T}}_1:= (\Sigma , {\mathcal {F}}, Q)\) be another probability space supporting a standard 2d Brownian motion B. We consider solutions to the system (1), (2) on \(\Omega \times \Sigma \) equipped with the product measure \({\mathbb {P}}= {\textbf{P}}\otimes Q\). The law of \((X(t))_{t\ge 0}\) under \({\mathbb {P}}\) is called the annealed law. Note that under \({\mathbb {P}}\), the process \((X(t))_{t\ge 0}\) alone is not Markovian. Nevertheless, we may define a different Markov process, the so-called environment seen from the particle, which takes values in the larger space of functions over \(\mathbb {R}^2\) [12]. It is obtained by spatially shifting the environment by the position of the walker at each time \(t\ge 0\). Precisely, we set

The law of X is rotationally invariant, and therefore we have that \({\mathbb {E}}[|X(t)|^2] = {\mathbb {E}}[X_1(t)^2 + X_2(t)^2] = 2{\mathbb {E}}[X_1(t)^2]\). Hence we may focus on its first coordinate only. Furthermore, \({\mathbb {E}}[X(t)] = 0\). Formula (18) allows us to write

where \({\mathcal {V}}(\omega ):= \omega ^1(0)\), for \(\omega = (\omega ^1,\omega ^2)\). Using the so-called Yaglom-reversibility (see Section 1.4 of [18]), we get that, for every \(0 \le s < t\), the random variables \(B(t) - B(s)\) and \(\int _s^t{\mathcal {V}}(\eta _r) {\text {d}}\! r\) are uncorrelated, so that

This in turn implies that we can rewrite (11) as \(D_T(\lambda ) = D_B(\lambda ) + D_{\mathcal {V}}(\lambda )\), where for all \(\lambda > 0\),

and therefore, we may focus on \(D_{\mathcal {V}}(\lambda )\), which requires a good understanding of the process \((\eta _t)_{t\ge 0}\). Since the drift field is stationary (see Proposition 10) and divergence-free, the law of \(\underline{\omega }\) is invariant also for \((\eta _t)_{t\ge 0}\) (see e.g. Chapter 11 in [9]). This ensures that, by Lemma 5.1 in [4], we can write

where \({\mathcal {L}}^s\) denotes the infinitesimal generator of \((\eta _t)_{t\ge 0}\), defined in (32) below, and with a slight abuse of notation we use \({\textbf{E}}\) to denote the expectation with respect to the law of \(\underline{\omega }\).

3 Operators on Fock Space

In order to analyse expression (21), we describe the infinitesimal generator of the infinite dimensional Markov process \(t \mapsto \omega _t\). With a small abuse of notation, let \({\textbf{P}}\) denote the law of \(\underline{\omega }\) and consider \(F \in L^2({\textbf{P}})\) of the form \(F(\omega ) = f(\omega ^{i_1}(x_1), \dots , \omega ^{i_n}(x_n))\) for arbitrary points \(x_1, \dots , x_n \in \mathbb {R}^2\) and for an \(f \in C_p^2(\mathbb {R}^n,\mathbb {R})\), the \(C^2\) functions with polynomially growing partial derivatives of order at most 2. In this section, to emphasise its dependence on \(s \in [0,\infty )\), let us denote by \({\mathcal {L}}_0^s\) the infinitesimal generator of \((\omega _t)_{t\ge 0}\). For every \(s \in [0,\infty )\), an application of Itô’s formula gives

where \((\cdot ,\cdot )_V\) is given by (6), \(\partial _kf\) denotes the function \( y = (y_1,\dots ,y_n) \mapsto \partial _{y_k}f(y)\) and for every \(x \in \mathbb {R}^2\), the expression with \(\delta _x\) is well defined by Remark 1.

Let us introduce the Wiener chaos with respect to \({\textbf{P}}\), following the same convention and notation as [5]. Let \(x_{1:n}:= (x_1, \dots , x_n)\), \({\textbf {i}}:= (i_1,\dots , i_n)\) and let \(:\cdots :\) denote the Wick product with respect to \({\textbf{P}}\). Define \(H_0\) as the set of constant random variables and for \(n \ge 1\) let \(H_n\) be the set

where the functions \(f_{{\textbf {i}}}\) are symmetric and such that

satisfies

Here, \((p^\perp _{k,1}, p^\perp _{k,2}):= (p_{k,2}, -p_{k,1})\) for \(p_k = (p_{k,1}, p_{k,2})\) and \(\hat{f_{{\textbf {i}}}}\) denotes the Fourier transform of \(f_{{\textbf {i}}}\), given by

where \(x_{1:n} \cdot p_{1:n}\) denotes the canonical inner product in \(\mathbb {R}^{2n}\) and \(\iota = \sqrt{-1}\).

Remark 8

Note that since the noise is mollified, the objects \(f_{{\textbf {i}}}\) can be distributions of any negative regularity, such as the Dirac delta distribution. The random variable which we are most interested in here, namely \({\mathcal {V}}(\omega ) = \omega ^1(0)\), defined in the previous section, can be seen as

Furthermore, \(\hat{{\mathcal {V}}}(p) = p_2\) for \(p = (p_1,p_2)\).

It is well known, see e.g. Nualart [16] or Janson [8], that

and for \(F^i \in L^2({\textbf{P}}), \; i=1,2\) given by \(F^i = \sum _{n=0}^{\infty } \psi _n^i\), for \(\psi _n^i \in H_n\), the expectation \({\textbf{E}}[F^1F^2]\) can be written as

Remark 9

Henceforth we will implicitly identify a random variable \(F \in H_n \subset L^2({\textbf{P}})\) of the form (23) with its kernel \(\widehat{\psi _n}\) in Fourier space. In the same spirit, we will identify linear operators acting on \(L^2({\textbf{P}})\) with the corresponding operators acting on Fock space \(\bigoplus _n L^2_{sym}(\mathbb {R}^{2n})\), and denote them by the same symbol.

Now we are ready to prove

Proposition 10

The action of the infinitesimal generator \({\mathcal {L}}_0^s\) in (22) is diagonal in Fock space (\({\mathcal {L}}_0^s: H_n \rightarrow H_n\)), and is given by

on Wick monomials, and in Fourier variables by

Furthermore, the law of \(\underline{\omega }\) is invariant under the dynamics governed by \({\mathcal {L}}_0^s\), i.e., the infinite dimensional Markov process \((\omega _t)_{t\ge 0}\) is stationary and it is distributed according to the law of \(\underline{\omega }\) for every \(t \ge 0\).

Proof

By the definition of Wick monomials, we have that

where  for \(a,b,c \in \mathbb {R}\). Now, the above applied to (22) with \(F =~:\,\omega ^{i_1}(x_1) \cdots \omega ^{i_n}(x_n):\) gives


Note that on Wick monomials, multiplication by \(\omega ^{i_k}(x_k)\), as in (30), produces both a term in one higher homogeneous chaos and a term in one lower homogeneous chaos. Precisely, for each \(1 \le k \le n\) in (30) we have

where \(((-\Delta )^{-1}(-(-\Delta )^s)\partial ^\perp _{i_k}\delta _{x_k}, \partial ^\perp _{i_l}\delta _{x_l})_V = {\textbf{E}}[(-(-\Delta )^s) \omega ^{i_k}(x_k), \omega ^{i_l}(x_l)]\). Summing over k, the first term after the equality sign gives (28), and the second term cancels out with (31). Equation (29) is a direct consequence of (28) and (24). We now turn to the invariance of the law of \(\underline{\omega }\). It is known that a necessary and sufficient condition for this is that \(({\mathcal {L}}_0^{s})^* {\textbf {1}} = 0\), where \(({\mathcal {L}}_0^{s})^*\) denotes the adjoint of the operator \({\mathcal {L}}_0^s\) in \(L^2({\textbf{P}})\) and \({\textbf {1}}\) denotes the constant function equal to 1, see e.g. [14, Theorem 3.37]. Moreover, by (26), it suffices to consider \(F =~:\omega ^{i_1}(x_1) \cdots \omega ^{i_n}(x_n):\), so that

completes the proof. \(\square \)

We have now gathered all the ingredients necessary to characterise the full generator \({\mathcal {L}}=: {\mathcal {L}}^s\) of \((\eta _t)_{t\ge 0}\). Combining the generator \({\mathcal {L}}_0^s\) of the environmental process \((\omega _t)_{t\ge 0}\) from Proposition 10 with the arguments in Section 2.1 of Tóth and Valkó [19] and the main result of Komorowski [12], we get that the generator \({\mathcal {L}}^s\) is given by

where \({\mathcal {V}}\nabla := {\mathcal {V}}_1D_1 + {\mathcal {V}}_2D_2\), with \({\mathcal {V}}_i(\omega ) = \omega ^i(0)\) and \(D_i\) the infinitesimal generator of the spatial shifts in the canonical directions of \(\mathbb {R}^2\), for \(i=1,2\), see [12]. Also, \({\mathcal {V}}\nabla = {\mathscr {A}}_+ - {\mathscr {A}}_+^*\) can be decomposed into creation and annihilation parts, one being minus the adjoint of the other; this term comes from the drift part of (1), i.e., the environment, while \(\Delta = \nabla ^2\) comes from the Brownian part in (1), see [19]. We have that

As noted in Cannizzaro et al. [5], adopting the conventions on Fock space discussed earlier, one has

where  and for \(p,q \in \mathbb {R}^2\), \(p \times q\) denotes the scalar given by the third coordinate of the cross product of p with q, when thought of as vectors in \(\mathbb {R}^3\); precisely, \(p \times q = p_1q_2 - p_2q_1 = |p||q| \sin \theta \), where \(\theta \) is the angle between p and q.

Remark 11

Here we can see that if \(s=1\) in (29), the difference between the operators \(\Delta \) and \({\mathcal {L}}_0^1\) is simply the cross terms in (33). The most important observation here is that if \(s\ge 1\) and \(|p|\le 1\), in view of (21) and Remark 8, for any function \(\psi _1 \in H_1\), we have that

This is good evidence for (12), as can be further seen in Remark 13. Also, a good heuristic for the drastic change in behaviour in s between Theorems 3 and 4 is that, in Fourier variables, the operator \({\mathcal {L}}_0^s\) acts much more severely on large scales when \(s<1\) than when \(s\ge 1\), since \(|p|^{2s}<< |p|^{2s^\prime }\) for \(|p|<< 1\), if \(s^\prime < s\).
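The heuristic of Remark 11 can be tested on a model integral. Based on our reading of (29) and of the notation used in Sect. 4, we assume that \(S = \lambda - \Delta - {\mathcal {L}}_0^s\) acts on \(H_n\) as the Fourier multiplier \(\lambda + |\sum _i p_i|^2 + \sum _i |p_i|^{2s}\). For \(s=1\) this differs from \(\lambda + 2\sum _i|p_i|^2\) only by the cross terms \(2\sum _{i<j} p_i\cdot p_j\). In the first chaos, we replace the exact weight of the first-level bound (not reproduced here) by \(p_2^2/|p|^2\) restricted to \(|p|\le 1\); this is an assumption made only for illustration. The resulting quantity \(\int _{|p|\le 1} \frac{p_2^2/|p|^2}{\lambda + |p|^2 + |p|^{2s}}\,{\text {d}}\! p = \pi \int _0^1 \frac{r\,{\text {d}}\! r}{\lambda + r^2 + r^{2s}}\) grows like \(|\log \lambda |\) for \(s\ge 1\) and stays bounded as \(\lambda \downarrow 0\) for \(s<1\). Both helper names are hypothetical.

```python
import numpy as np


def s_multiplier(lam, momenta, s):
    """Assumed Fourier multiplier of S = lambda - Delta - L_0^s on H_n.

    momenta has shape (n, 2): one momentum p_i in R^2 per chaos variable.
    """
    total = momenta.sum(axis=0)
    return lam + total @ total + np.sum(np.linalg.norm(momenta, axis=1) ** (2 * s))


def first_level_integral(lam, s, r_min=1e-15, num=200_001):
    """pi * int_0^1 r dr / (lam + r^2 + r^(2s)), via the substitution r = e^u."""
    u = np.linspace(np.log(r_min), 0.0, num)
    r = np.exp(u)
    integrand = r ** 2 / (lam + r ** 2 + r ** (2 * s))  # r dr = r^2 du
    # trapezoidal rule written out explicitly
    return np.pi * np.sum(0.5 * (integrand[1:] + integrand[:-1]) * np.diff(u))


# Log-divergence for s >= 1, saturation for s < 1.
for s in (0.5, 1.0, 2.0):
    values = [first_level_integral(lam, s) for lam in (1e-4, 1e-8, 1e-12)]
    print(s, np.round(values, 3))
```

For \(s=1\) the model integral has the closed form \(\frac{\pi }{4}\log \frac{\lambda +2}{\lambda }\), which serves as a check of the quadrature.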

Now we proceed to the analysis of the resolvent equation in (21).

4 Iterative Analysis of the Resolvent Equation

We can write \({\textbf{E}}[{\mathcal {V}}(\lambda - {\mathcal {L}}^s)^{-1}{\mathcal {V}}]\) as \({\textbf{E}}[{\mathcal {V}}{\textbf{V}}]\), where \({\textbf{V}}\) is the solution to the resolvent equation \((\lambda - {\mathcal {L}}^s){\textbf{V}} = {\mathcal {V}}\). Note, however, that since \({\mathcal {V}}\in H_1\) is in the first Wiener chaos and the operator \({\mathcal {L}}^s\) maps \(H_n\) to \(H_{n-1} \oplus H_{n} \oplus H_{n+1}\), one should expect the solution \({\textbf{V}}\) to the resolvent equation to have non-trivial components in all Wiener chaoses. Following the idea introduced by Landim et al. [15], we truncate the generator \({\mathcal {L}}^s\) by using \({\mathcal {L}}_n^s:= P_{\le n} {\mathcal {L}}^s P_{\le n}\), where \(P_{\le n}\) denotes the orthogonal projection onto the inhomogeneous chaos of order n, i.e., \(P_{\le n}: L^2({\textbf{P}}) \rightarrow \bigoplus _{k=0}^n H_k\). Denote by \({\textbf{V}}^n \in \bigoplus _{k=0}^n H_k\) the solution to the resolvent equation truncated at level n, i.e.,

Writing one equation for each of the components of \({\textbf{V}}^n\), we see that the equation above is equivalent to the system of equations

Note that, as observed in [19, Section 2], \({\mathscr {A}}_+^*F = 0\) for every \(F \in H_1\), so that \({\textbf{V}}^n_0 = 0\) and we do not write an equation for it. Moreover, since \({\mathcal {V}}\in H_1\), only the component in the first Wiener chaos, \({\textbf{V}}^n_1\), is needed to evaluate (21) at the level of the truncation. Solving the system above shows that

where

It is important to note that \({\mathscr {H}}_k: H_n \rightarrow H_n\) for every \(k,n \in \mathbb {N}\). Recall that by (27) we can write \({\textbf{E}}[{\mathcal {V}}(\lambda - {\mathcal {L}}_n^s)^{-1}{\mathcal {V}}] = \langle {\mathcal {V}}, {\textbf{V}}^n_1 \rangle \). As first noticed in [15, Eq. (2.4)], the following monotonicity formula follows from the fact that \(\lambda - \Delta - {\mathcal {L}}_0^s\) is a positive operator.

Lemma 12

Let \(S:= \lambda - \Delta - {\mathcal {L}}_0^s\), then, for every \(n \ge 1\), we get the bounds

Remark 13

Let us look at the first upper bound obtained by taking \(n=1\) in Lemma 12 above. Recall that \({\mathcal {V}}\in H_1\) and that \(\hat{{\mathcal {V}}}(p) = p_2\) for \(p = (p_1,p_2)\). Thus, by considering the solution \({\textbf{V}}^1\) to the truncation at the first level, we arrive at

for a constant \(C>0\). Note now that for the case \(s<1\), the inequalities in (37) imply the diffusive bounds (13) in Theorem 4, see (63) in Sect. 6 and the discussion that follows. On the other hand, for the case \(s \ge 1\), the estimates in (37), together with the first lower bound obtained with \(n=1\) in Lemma 12 and the same argument as for the lower bound in Sect. 7 in the case \(\gamma =1\), give

for constants \(A,B>0\). These are precisely the estimates obtained in [19] for the static case. In particular, this already shows that the SHE dynamics are not enough to remove the superdiffusivity caused by the random environment.
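The alternating upper and lower bounds produced by truncation, as in Lemma 12, have a transparent finite-dimensional analogue. We include it only as an illustration; it is not the operator setting of the paper. Each chaos \(H_k\) is replaced by a single level carrying a positive number \(S_k\), which plays the role of S, and a creation coefficient \(a_k\) maps level k to level \(k+1\). Then \(\lambda - {\mathcal {L}}\) becomes the tridiagonal matrix \(S - (A - A^{T})\), with A the creation part. Truncating at n levels, the (1,1) entry of the inverse is a finite continued fraction, mirroring the nested inverses behind the operators \({\mathscr {H}}_k\). Its odd truncations decrease and its even truncations increase, bracketing the untruncated value. All names and numbers are hypothetical.

```python
import numpy as np


def truncated_resolvent(S, a, n):
    """(1,1) entry of (S - (A - A^T))^{-1} restricted to the first n levels.

    S[k] > 0 plays the role of the symmetric part on level k and a[k] the
    creation coefficient from level k to level k+1 (toy scalar model).
    """
    A = np.zeros((n, n))
    for k in range(n - 1):
        A[k + 1, k] = a[k]          # creation: level k -> level k+1
    M = np.diag(S[:n]) - (A - A.T)  # "lambda - L" truncated at level n
    e1 = np.zeros(n)
    e1[0] = 1.0
    return np.linalg.solve(M, e1)[0]


def continued_fraction(S, a, n):
    """Same quantity via nested inverses, evaluated from the deepest level up."""
    h = 0.0
    for k in range(n - 1, 0, -1):
        h = a[k - 1] ** 2 / (S[k] + h)
    return 1.0 / (S[0] + h)


S = np.array([1.0, 2.0, 0.5, 3.0, 1.5, 2.5, 1.0, 0.7])
a = np.array([1.2, 0.8, 2.0, 1.1, 0.9, 1.3, 0.6])
values = [truncated_resolvent(S, a, n) for n in range(1, 9)]
print(np.round(values, 4))  # odd n decrease, even n increase
```

The antisymmetric coupling enters only through \(a_k^2\), which is why each extra level pushes the estimate in the opposite direction to the previous one.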

The estimates in (37) can be iterated to higher levels and improved at each step. Indeed, to get (12), it is necessary to use Lemma 12 in full, letting the level k diverge as \(\lambda \downarrow 0\). Moreover, the estimates at every level must be understood, and for that it suffices to analyse the operators \({\mathscr {H}}_k\). For this, we make use of the following three lemmas, taken from Cannizzaro et al. [5]. In what follows, \({\mathcal {S}}\) is an operator which acts diagonally in Fock space with Fourier multiplier denoted by \(\sigma \), such that \(\widehat{{\mathcal {S}}\psi _n}(p_{1:n}) = \sigma _n(p_{1:n})\widehat{\psi _n}(p_{1:n})\) for any \(\psi _n \in H_n\), which will later be taken to be \({\mathcal {S}} = S + {\mathscr {H}}_n\), for \(n \ge 1\).

Lemma 14

For any \(\psi _n \in H_n\), it holds that

where

and

Lemma 15

If for every \(n \in \mathbb {N}\) and any \(p_{1:n} \in \mathbb {R}^{2n}\) with \(\sum _{k=1}^{n} p_k \ne 0\)

with \(\theta \) the angle between q and \(\sum _{k=1}^{n} p_k\), then for every \(\psi _n\)

where \(\tilde{{\mathcal {S}}}\) is the diagonal operator whose Fourier multiplier is \(\tilde{\sigma }\). If the inequality in (38) is \(\ge \), then (39) holds with \(\ge \) as well.

Lemma 16

If for every \(n \in \mathbb {N}\) and any \(p_{1:n} \in \mathbb {R}^{2n}\)

with \(\theta \) the angle between q and \(\sum _{k=1}^{n} p_k\), then for every \(\psi _n\)

where \(\tilde{{\mathcal {S}}}\) is the diagonal operator whose Fourier multiplier is \(\tilde{\sigma }\).

Here are some preliminary definitions, needed to state and prove the next theorem. Expressions (40) and (41) arise naturally when iterating the estimates for different levels k in Lemma 12. For \(k \in \mathbb {N}, x > 0\) and \(z \ge 0\), let \(\textrm{L}, \textrm{LB}_k\) and \(\textrm{UB}_k\) be given by

and, for \(k \ge 1\), define \(\sigma _k\) as

We have that \(\sigma _k \equiv 1\). Also, for \(n \in \mathbb {N}\), let

where \(K_1,K_2\) are constants to be chosen sufficiently large later and \(\varepsilon \) is the small positive constant appearing in the main Theorem 3. Now, for \(k \ge 1\), let \(\delta _k\) be an operator such that its Fourier multiplier is \(\sigma _k\), meaning

where \({\mathcal {N}}\) denotes the so-called Number Operator, the infinitesimal generator of \(\partial _tu = -u + \sqrt{2}(-\Delta )^{-\frac{1}{2}}\xi \), which acts diagonally on the n-th Wiener chaos by multiplying by n: \({\mathcal {N}}\psi _n = n \psi _n\) for every \(\psi _n \in H_n\).

Remark 17

Note that the functions \(\textrm{L}, \textrm{LB}_k\) and \(\textrm{UB}_k\) are the same as in Cannizzaro et al. [5], while the operators \(\delta _k\) carry the generator \({\mathcal {L}}_0^s\), which is the difference between the dynamic and the static settings.

With these definitions at hand, we can state the next theorem.

Theorem 18

If \(s\ge 1\) in (2), then for every \(\varepsilon > 0\), we may choose \(K_1\) and \(K_2\) in (42) to be large enough so that, for \(0 < \lambda \le 1\) and \(k \ge 1\), the following operator estimates hold true.

and

where \(c_1 = 1\) and

Remark 19

We shall emphasise here that the sequences \(c_{2k}\) and \(c_{2k+1}\) in (45) do converge to finite, strictly positive constants, as \(k \rightarrow \infty \), provided that \(\varepsilon > 0\). Furthermore, the limits are strictly greater than \(2\pi \) and strictly smaller than 1, respectively. This can be seen, e.g. for the even sequence, \(\frac{c_{2k+2}}{c_{2k}} = (1 + \frac{1}{k^{1 + \varepsilon }})(1 - \frac{1}{(k+1)^{1 + \varepsilon }})^{-1} > 1\) and \(c_2 = 2\pi \). Also, by iterating the definition for \(c_{2k}\), it can be shown that convergence of the sequence is equivalent to the convergence of \(\sum _{l=1}^\infty l^{-(1+\varepsilon )}\), which only holds when \(\varepsilon >0\).
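The convergence claim for the even sequence can be checked directly from the ratio given above. The sketch below (function name hypothetical) accumulates \(\log c_{2K}\) starting from \(c_2 = 2\pi \) and multiplying by the ratios \(c_{2k+2}/c_{2k}\), \(k = 1, \dots , K-1\). For \(\varepsilon = 0\) the product telescopes, since each ratio equals \(((k+1)/k)^2\), which gives \(c_{2K} = 2\pi K^2 \rightarrow \infty \). For \(\varepsilon > 0\) the values settle down to a finite limit above \(2\pi \). The odd sequence is not treated here.

```python
import numpy as np


def c_even(K, eps):
    """Return c_{2K}, built from c_2 = 2*pi and the ratio c_{2k+2}/c_{2k} of Remark 19."""
    k = np.arange(1, K, dtype=float)  # k = 1, ..., K-1
    log_ratios = np.log1p(k ** -(1 + eps)) - np.log1p(-(k + 1) ** -(1 + eps))
    return 2 * np.pi * np.exp(np.sum(log_ratios))


# eps = 0 blows up like 2*pi*K^2; eps = 0.5 stabilises.
for eps in (0.0, 0.5):
    print(eps, [round(c_even(K, eps), 3) for K in (10, 100, 1000)])
```

Summing logarithms with `log1p` avoids the loss of precision that a direct product of factors close to 1 would incur for large k.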

We now prove Theorem 18 by induction on k. The induction alternates between the lower bounds (43) and the upper bounds (44), each being a consequence of the previous one.

Proof of the Lower Bound (43)

Recall that \(s \ge 1\). For \(k=1\) we note that, by definition, \({\mathscr {H}}_1 = 0\) and \(\delta _1\) is non-positive if we choose the constant \(K_2\) in (42) to be large enough.

We now show (43) with \(2k+1\) for \(k\ge 1\), assuming by induction that (44) holds for 2k:

For every \(\psi \in H_n\), we use Lemma 14 with \({\mathcal {S}} = (\lambda - \Delta (1 + c_{2k}\delta _{2k}) - {\mathcal {L}}_0^s)^{-1}\) to separate

into a diagonal and an off-diagonal part, and we treat each separately. For the diagonal part, we apply Lemma 15 for which it suffices to lower bound

where \(p = \sum _{i=1}^{n} p_i\) and \(|p_{1:n}|^{2\,s}:= \sum _{i=1}^{n} |p_i|^{2\,s}\), for \(p_1,\dots , p_n \in \mathbb {R}^2\), and \(\theta \) is the angle between p and q. Clearly, \(|p_{1:n}|^{2s}\) is different from \(|p|^2\) even for \(s=1\). Naturally, the argument of \(z_{2k},f_{2k}\) is \(n+1\) since \({\mathscr {A}}_+\psi \in H_{n+1}\), but by (42) we get \(z_{2k}(n+1) = z_{2k+1}(n)\) and \(f_{2k}(n+1) = f_{2k+1}(n)\), and henceforth we drop the argument n to lighten the notation. We may upper bound the denominator in (48) by

where for both inequalities we have used that \(c_{2k}, f_{2k+1}, \textrm{UB}_{k-1} \ge 1\) and the monotonicity of \(\textrm{UB}_{k-1}\). Therefore, we may consider

By Lemma 24, we obtain for the integral above the lower bound

By (76) the primitive of the integral above is \(-2\textrm{LB}_k(\varrho ,z_{2k+1})\), hence the expression above equals

where in the last inequality we have again used Lemma 20 and chosen the constant \(K_2\) in (42) large enough so that for all \(k,n \in \mathbb {N}\), it holds that

So by Lemma 15 we get that the diagonal part of (47) is lower bounded by \(\langle \psi , (-\Delta ) {\tilde{S}} \psi \rangle \), where

Here we have twice lower bounded \(z_{2k+1} = z_{2k+1}(n) \ge z_{2k+1}(1)\) and \(f_{2k+1} = f_{2k+1}(n) \ge f_{2k+1}(1)\).

For the off-diagonal part of (47) we use Lemma 16. To this end, denote \(p = \sum _{i=1}^{n} p_i\) and \(p^\prime = \sum _{i=1}^{n-1} p_i\); we must upper bound

where in the last inequality we have used the monotonicity of \(\textrm{UB}_{k-1}\) and that since \(\hat{V}\) is supported on \(|q| \le 1\), we have that \(|q|^{2\,s} \le |q|^2\) if \(s\ge 1\). Thanks to Lemma 22 the functions \(f(x,z) = c_{2k}f_{2k+1}\textrm{UB}_{k-1}(x,z)\) and \(g(x,z) = \frac{1}{c_{2k}f_{2k+1}}\textrm{LB}_{k-1}(x,z)\) satisfy the assumptions of Lemma 25 and we obtain the upper bound

where we have used that \(\textrm{LB}_{k-1} \le \textrm{LB}_{k} \), the definition of \(z_{2k+1} = z_{2k+1}(n)\) in (42) and the fact that

Altogether, combining Lemmas 15 and 16 with expressions (51) and (53), we obtain that the operator \({\mathscr {A}}_+^*(\lambda - \Delta (1 + c_{2k}\delta _{2k}) - {\mathcal {L}}_0^s)^{-1} {\mathscr {A}}_+\) is lower bounded by

where

which by (46) is also a lower bound for \({\mathscr {H}}_{2k+1}\). Again, making the constants \(K_1\) and \(K_2\) in (42) as large as necessary, we obtain that

which combined with the definition of \(c_{2k+1}\) in (45) concludes the proof of the lower bound in (43). \(\square \)

Proof of the Upper Bound (44)

For \(k \ge 1\), by the induction hypothesis, we have that

As before, for every \(\psi \in H_n\), we use Lemma 14 with \({\mathcal {S}} = (\lambda - \Delta (1 + c_{2k-1}\delta _{2k-1}) - {\mathcal {L}}_0^s)^{-1}\) to separate

into a diagonal and an off-diagonal part, and we treat each separately. For the diagonal part, we apply Lemma 15, but this time we want to upper bound

since \(f_{2k-1}(n+1) = f_{2k}(n)\), \(z_{2k-1}(n+1) = z_{2k}(n)\). The first inequality is due to the fact that \(c_{2k-1} < 1\) and \(f_{2k} > 1\) and the second inequality is a consequence of

Note that the above is equivalent to

Thanks to (78) and the Mean Value Theorem, we have that, for every \(k \in \mathbb {N}\) and \(x<y \in \mathbb {R}\)

The above applied to the difference in (57), which is positive and hence equals its absolute value, yields

To upper bound the integral in (56) we make use of Lemmas 21 and 22 considering \(\tilde{\lambda }:= \lambda + |p_{1:n}|^{2s}\) instead of \(\lambda \), to obtain the upper bound

The integral above, by Lemmas 20 and 23, is controlled by

We deal with the off-diagonal term in the same fashion as in (52), upper estimating

Further, we make use of Lemma 25, this time with \(f = \textrm{LB}_{k-1}\) and \(g = \textrm{UB}_{k-1}\), to get the upper bound

Putting all the estimates together and noting that \(z_{2k}(n) > z_{2k}(1)\), we establish that \({\mathscr {A}}_+^*(\lambda - \Delta (1 + c_{2k-1}\delta _{2k-1}) - {\mathcal {L}}_0^s)^{-1}{\mathscr {A}}_+\) is upper bounded by

where, by choosing \(K_1\) as big as necessary, we obtain

This is enough to see that (44) holds with \(c_{2k}\) defined in (45). \(\square \)

5 Proof of (12) in Theorem 3

In this section we finish proving Theorem 3 by using the full power of the iterative estimates provided by Lemma 12. This is done by choosing the level of the truncation depending on \(\lambda \), i.e., as \(\lambda \rightarrow 0\), \(n \rightarrow \infty \) in Lemma 12. Again, C denotes a constant, which may change from line to line, but is independent of \(p,z,\lambda \) and k.

Proof of Theorem 3 for \(s\ge 1\). Recall that for \(p = (p_1,p_2) \in \mathbb {R}^2\), \(\hat{{\mathcal {V}}}(p) = p_2\) and that \({\mathcal {V}}\in H_1\) implies that the multiplier of \(-\Delta - {\mathcal {L}}_0^s\) is \(|p|^2 + |p|^{2s}\). Let us start with the upper bound. By Lemma 12 and (21) we get that

which by (43) in Theorem 18 is upper bounded by

where we have used (57). Note that since \({\mathcal {V}}\in H_1\), the arguments in \(f_{2k+1}\) and \(z_{2k+1}\) are both 1 and therefore they are constants which only depend on k. Now we conclude exactly as in [5], since the expression above is equal to expression (5.1) in their paper. We include the missing steps in Appendix C for completeness.

Now we proceed to the lower bound. Again, by Lemma 12 and (21), we get that

which in turn, by Theorem 18, is lower bounded by

where

we have substituted \(c_{2k}\) by its limit as \(k \rightarrow \infty \) and used the monotonicity of \(\textrm{UB}_{k-1}\).

Now, note that since all the functions in (59) except \(p \mapsto p_2^2\) are rotationally invariant, the integral has exactly the same value if we replace \(p \mapsto p_2^2\) with \(p \mapsto p_1^2\). Summing the integrals with \(p \mapsto p_2^2\) and \(p \mapsto p_1^2\) and dividing by two, we get that expression (59) is equal to (the factor 1/2 is merged into C)

Thus, an application of (83) gives the lower bound

where the second inequality is a consequence of (76) in Lemma 20. Once again, expression (60) above reduces to exactly the third line in display (5.7) in [5], and thus we include the end of the proof in Appendix C for completeness.

\(\square \)
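The symmetrisation step applied to (59) only uses that every factor other than \(p_2^2\) is radial. As a minimal numerical sketch, with the radial weight \(g(|p|) = e^{-|p|^2}\) chosen purely for illustration (a stand-in, not the actual integrand of (59), which involves \(\hat V\) and \(\textrm{UB}_{k-1}\)), the \(p_1^2\)- and \(p_2^2\)-weighted integrals coincide and each equals half of the \(|p|^2\)-weighted one, namely \(\pi /2\). The function name is ours.

```python
import math


def weighted_integrals(g, L=6.0, n=400):
    """Midpoint rule on [-L, L]^2 for the integrals of p_1^2 g(|p|) and
    p_2^2 g(|p|), where g is a radial weight (an illustrative stand-in)."""
    h = 2 * L / n
    i1 = i2 = 0.0
    for a in range(n):
        x = -L + (a + 0.5) * h
        for b in range(n):
            y = -L + (b + 0.5) * h
            w = g(math.hypot(x, y)) * h * h
            i1 += x * x * w      # p_1^2 weight
            i2 += y * y * w      # p_2^2 weight
    return i1, i2


if __name__ == "__main__":
    i1, i2 = weighted_integrals(lambda r: math.exp(-r * r))
    # both should be close to pi/2, i.e. half of the |p|^2-weighted integral pi
    print(i1, i2, math.pi / 2)
```

Since the grid is symmetric under swapping the two coordinates, the two sums agree up to rounding, which mirrors the exact symmetry used in the proof.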

6 Proof of Theorem 4

In this section, we show that our model for \(s<1\) is a particular case of the theory developed in Komorowski and Olla [11] of homogenisation for diffusions in divergence free, Gaussian and Markovian random environments. See also Chapters 11 and 12 of the monograph [9].

Let us consider here the function \({\mathcal {V}}(\omega ):= \omega (0) = (\omega ^1(0), \omega ^2(0)) = ({\mathcal {V}}^1(\omega ), {\mathcal {V}}^2(\omega ))\). In view of Remark 8, we see that \({\mathcal {V}}^i \in H_1, i=1,2\). Now, we may write

and focus on the additive functionals of \((\eta _t)_{t\ge 0}\) given by \(\int {\mathcal {V}}^i(\eta _s) {\text {d}}\! s\), for \(i=1,2\), since \(\varepsilon B(t/\varepsilon ^2) \overset{d}{=}\ B(t)\) for every \(\varepsilon >0\) and \(t\ge 0\). Let \({\mathscr {C}}:= \bigcup _n \oplus _{k \le n} H_k\) be a core for \({\mathcal {L}}^s\) and \(({\mathcal {L}}^s)^*\). Let \({\mathcal {S}}:= ({\mathcal {L}}^s + ({\mathcal {L}}^s)^*)/2 = {\mathcal {L}}_0^s + \Delta \) be the symmetric part of the generator \({\mathcal {L}}^s\). For every \(\psi \in {\mathscr {C}}\), let \(\Vert \psi \Vert _1^2:= \langle \psi , -{\mathcal {L}}^s \psi \rangle = \langle \psi , -{\mathcal {S}} \psi \rangle \) be a norm and

be another norm. By [17, Theorem 2.2], for every \(t\ge 0\), it holds that

where the last inequality is a consequence of

since \(s<1\), as discussed previously in (36) in Remark 13 for \(i=1\). Note that (63) and (64) prove the upper bound (13) in Theorem 4. Now recall that \({\mathbb {E}}[|X(t)|^2] = 2{\mathbb {E}}[X_1(t)^2]\), so the lower bound follows from the Yaglom-reversibility (19), since the Brownian motion provides \(A\ge 4\) and the contribution from the drift is non-negative. In order to show that the drift contributes strictly positively (i.e. \(A>4\)), we use the Laplace transform (20) and (21). By taking \(n=1\) in Lemma 12, we already know that \({\textbf{E}}[{\mathcal {V}}(\lambda - {\mathcal {L}}^s)^{-1}{\mathcal {V}}] \le \Vert {\mathcal {V}}^i\Vert _{-1}^2 < \infty \), so let us now show that \({\textbf{E}}[{\mathcal {V}}(\lambda - {\mathcal {L}}^s)^{-1}{\mathcal {V}}]>0\). Indeed, we consider the lower bound corresponding to \(n=1\) in Lemma 12 and note that, since \(s<1\), an adaptation of the proof of Lemma 25 shows that the off-diagonal part of this estimate is bounded by a constant. Thus, with \(\theta \) denoting the angle between \(p,q \in \mathbb {R}^2\) below, we obtain

Therefore, by (20) and (21) we get (see [18, Lemma 1] or [5, Remark 2.3])

which shows that \(A>4\).

To conclude, we show that our model, for \(s<1\), is a particular case of the general framework of divergence-free, Gaussian and Markovian environments treated in [11, Section 6].

Proof of Theorem 4

In Section 6 of [11], the same SDE as in (1) is considered, with a dynamic random environment \((\omega _t)_{t\ge 0}\) which is divergence-free, Gaussian and Markovian. Moreover, they assume that, in \(d=2\), the space-time correlations of the drift field \(\omega \) satisfy expression (1.2) on p. 181, which reads

where \(a: [0,\infty ) \rightarrow [0,\infty )\) is a compactly supported and bounded cut-off function, \(\beta \ge 0\) and \(\alpha < 1\). Also, \(p \otimes p\) denotes the tensor product of p with itself in \(\mathbb {R}^2\) and \({\textbf{I}}\) the \(2 \times 2\) identity matrix. Since here we consider the dynamics in (2), we identify \(\beta \) in (65) with s. Also, since \(\hat{V}\) is rotationally invariant and has compact support, we may identify a(|p|) in (65) with \(\hat{V}(p)\). Now, note that, for \(p = (p_1,p_2) \in \mathbb {R}^2\),

In view of (27) and (24), we get that, for every \(\psi ^j \in H_1\), \(j=1,2\), given by \(\psi ^j(\omega ) = \int _{\mathbb {R}^2} f^j_1(x) \omega ^1(x) {\text {d}}\! x + \int _{\mathbb {R}^2} f^j_2(x) \omega ^2(x) {\text {d}}\! x\), (in what follows we suppress p from \(\hat{f^i_j}(p)\))

With this observation, we see that our model corresponds to \(\alpha = 0\) in (65), which satisfies \(\alpha < 1\). With the same argument, we conclude that the law of \(\underline{\omega }\) satisfies assumption (E) in Section 6 of [11] with \(\alpha = 0\). Therefore, since \(s = \beta < 1\), by [11, Theorem 6.3], we get Theorem 4 with the covariance matrix D given by

where the objects \(\psi _*^i\), for \(i=1,2\) satisfy \(\lim _{\lambda \downarrow 0} \Vert \psi _\lambda ^i - \psi _*^i\Vert _1 = 0\) for \(\psi _\lambda ^i\) solution to the resolvent equations

The inner product \(\langle \cdot , \cdot \rangle _1\) is defined through polarisation by

\(\square \)
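Behind the identification with (65) lies the transversal structure of a curl field: in Fourier space \(\nabla ^\perp \) acts through \(p^\perp = (-p_2,p_1)\) (up to a factor \(i\) and the sign convention), and \(p^\perp \otimes p^\perp = |p|^2{\textbf{I}} - p\otimes p\), a matrix that annihilates \(p\), which is the divergence-free condition. We assume, without reproducing the omitted display, that this standard identity is what the observation following (65) rests on; the sketch below (helper names are ours) merely checks it numerically for random \(p\).

```python
import random


def outer(u, v):
    """Tensor product u ⊗ v of two vectors in R^2, as a 2x2 nested list."""
    return [[u[i] * v[j] for j in range(2)] for i in range(2)]


def transversal_check(p):
    """For p in R^2 return (largest entry-wise gap between p_perp ⊗ p_perp and
    |p|^2 I - p ⊗ p, largest absolute entry of (|p|^2 I - p ⊗ p) p)."""
    p_perp = (-p[1], p[0])
    norm2 = p[0] ** 2 + p[1] ** 2
    lhs = outer(p_perp, p_perp)
    pp = outer(p, p)
    rhs = [[(norm2 if i == j else 0.0) - pp[i][j] for j in range(2)]
           for i in range(2)]
    gap = max(abs(lhs[i][j] - rhs[i][j]) for i in range(2) for j in range(2))
    image = [sum(rhs[i][j] * p[j] for j in range(2)) for i in range(2)]
    return gap, max(abs(c) for c in image)


if __name__ == "__main__":
    rng = random.Random(0)
    for _ in range(5):
        p = (rng.uniform(-1, 1), rng.uniform(-1, 1))
        print(p, transversal_check(p))   # both numbers at rounding level
```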

7 Proof of Theorem 5

In this section we prove Theorem 5 by making use of the first upper and lower bounds provided by Lemma 12, i.e., the estimates obtained for \(n=1\). When \(\omega _t = \omega _t^\gamma \) is the solution to (14), the dominant terms in the estimates are once again the ones coming from the dynamics of the environment, as in the case of \(s<1\) in Theorem 3. This is why matching upper and lower bounds can be obtained from the first two estimates alone.

Since \((-\Delta )\) is a self-adjoint, positive operator, we can make sense of the operator \((\log (e + (-\Delta )^{-1}))^\gamma \) for every \(\gamma > 0\) through its Fourier multiplier, in the spirit of Proposition 10, given by

Expression (67) is associated with the generator \({\mathcal {L}}_0^\gamma \) of the process \((\omega _t^\gamma )_{t\ge 0}\) solution to (14). Therefore, since we have the correction \((\log (e + (-\Delta )^{-1}))^\frac{\gamma }{2}\) in front of the noise in (14), Proposition 10 holds true with \(-(-\Delta )^s\) replaced by \((\log (e + (-\Delta )^{-1}))^\gamma \Delta \), and thus the dynamics in (14) preserves the law of \(\underline{\omega }\) as invariant measure for every \(\gamma > 0\). Note also that \((\log (e + x^{-1}))^\gamma > 1\) for every \(x > 0\).
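For concreteness, the replacement above means that \(-{\mathcal {L}}_0^\gamma \) acts in Fourier space by multiplication with \(|p|^2(\log (e + |p|^{-2}))^\gamma \), so the environment is damped faster than under the heat equation (\(s = 1\)), by a logarithmic factor that blows up as \(|p| \rightarrow 0\). The sketch below (the function name `log_corrected_symbol` is ours) evaluates this multiplier and checks that the correction factor exceeds 1 for \(p \ne 0\).

```python
import math


def log_corrected_symbol(p_abs, gamma):
    """|p|^2 * (log(e + |p|^{-2}))^gamma, i.e. minus the Fourier multiplier
    of (log(e + (-Delta)^{-1}))^gamma Delta, evaluated at |p| = p_abs > 0."""
    x = p_abs ** 2
    return x * math.log(math.e + 1.0 / x) ** gamma


if __name__ == "__main__":
    for gamma in (0.5, 1.0, 2.0):
        for p_abs in (1e-3, 1e-1, 1.0, 10.0):
            factor = log_corrected_symbol(p_abs, gamma) / p_abs ** 2
            print(gamma, p_abs, factor)   # the log factor, always > 1
```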

We start with some elementary calculus estimates, analogous to those in Lemma 20. For every \(\gamma >0\), \(\gamma \ne 1\), the following holds:

Proof of Theorem 5

We denote \({\mathcal {L}}^\gamma = {\mathcal {L}}_0^\gamma + {\mathscr {A}}_+ - {\mathscr {A}}_+^* + \Delta \). Here again we only consider \({\mathcal {V}}:= {\mathcal {V}}^1 \in H_1\) given by \({\mathcal {V}}(\omega ):= \omega ^1(0)\), as in Theorem 3. Recall that \(\hat{{\mathcal {V}}}(p) = p_2\) for \(p = (p_1,p_2)\). Thus, by taking \(n=1\) in Lemma 12, we arrive at

Now, adapting (81) in Lemma 21 and (93) in Lemma 24, we have that expression (70) is upper bounded by

Therefore, by (69) and (21), we see that

Note that if \(\gamma > 1\), (72) is bounded by a constant and this is enough to show the diffusive bounds in (17) with \(A>4\) in the same fashion as in Sect. 6 for the case of \(s<1\) in Theorem 4. Also, if \(\gamma = 1\) in (71), then by (76) with \(k=0\), we get the upper bound in (16).

Now, let us proceed to the lower bound, again taking \(n=1\) in Lemma 12, for the case \(\gamma \in [\frac{1}{2},1]\). First, note that by adapting Lemma 25, the off-diagonal term in the first lower estimate is bounded above by a constant C. Also, note that by adapting (80) in Lemma 21 and using (69) again, we see that, for a constant \(D > 0\)

The third inequality follows from the same argument as in (59); the fourth inequality holds because \(\gamma \in [\frac{1}{2},1] \Rightarrow 1-\gamma \le \gamma \), and thus we may absorb the lower order terms into \(|p|^2(\log (e + |p|^{-2}))^\gamma \) by changing the constant C. The fifth inequality is due to an application of the Mean Value Theorem together with the inequality in (68), in the same spirit as (57). Once again, by adapting (81) in Lemma 21, we get that (73) is lower bounded by

Therefore, if \(\gamma \in [\frac{1}{2},1)\), by (69) and (21), we get that

and if \(\gamma = 1\), by (76) with \(k=0\), we get the lower bound in (16), which concludes the proof of Theorem 5. \(\square \)

References

[1] Alder, B.J., Wainwright, T.E.: Velocity autocorrelations for hard spheres. Phys. Rev. Lett. 18, 988–990 (1967)

[2] Alder, B.J., Wainwright, T.E.: Decay of the velocity autocorrelation function. Phys. Rev. A 1, 18–21 (1970)

[3] Alder, B.J., Wainwright, T.E., Gass, D.M.: Decay of time correlations in two dimensions. Phys. Rev. A 4, 233–237 (1971)

[4] Cannizzaro, G., Erhard, D., Schönbauer, P.: 2D anisotropic KPZ at stationarity: scaling, tightness and nontriviality. Ann. Probab. 49(1), 122–156 (2021)

[5] Cannizzaro, G., Haunschmid-Sibitz, L., Toninelli, F.: \(\sqrt{\log t}\)-Superdiffusivity for a Brownian particle in the curl of the 2D GFF. Ann. Probab. 50(6), 2475–2498 (2022)

[6] Cannizzaro, G., Kiedrowski, J.: Stationary stochastic Navier–Stokes on the plane at and above criticality. Stoch. Partial Differ. Equ.: Anal. Comput. (2023). https://doi.org/10.1007/s40072-022-00283-5

[7] Forster, D., Nelson, D.R., Stephen, M.J.: Large-distance and long-time properties of a randomly stirred fluid. Phys. Rev. A 16, 732–749 (1977)

[8] Janson, S.: Gaussian Hilbert Spaces. Cambridge University Press, Cambridge (1997)

[9] Komorowski, T., Landim, C., Olla, S.: Fluctuations in Markov Processes: Time Symmetry and Martingale Approximation. Springer-Verlag, Berlin, Heidelberg (2012)

[10] Komorowski, T., Olla, S.: On the superdiffusive behavior of passive tracer with a Gaussian drift. J. Stat. Phys. 108, 647–668 (2002)

[11] Komorowski, T., Olla, S.: On the sector condition and homogenization of diffusions with a Gaussian drift. J. Funct. Anal. 197(1), 179–211 (2003)

[12] Komorowski, T.: An abstract Lagrangian process related to convection-diffusion of a passive tracer in a Markovian flow. Bull. Pol. Acad. Sci. Math. 48(4), 413–427 (2000)

[13] Kozlov, S.M.: The method of averaging and walks in inhomogeneous environments. Russ. Math. Surv. 40(2), 73–145 (1985)

[14] Liggett, T.M.: Continuous Time Markov Processes. An Introduction. Graduate Studies in Mathematics, vol. 113. American Mathematical Society, Providence (2010)

[15] Landim, C., Quastel, J., Salmhofer, M., Yau, H.T.: Superdiffusivity of asymmetric exclusion process in dimensions one and two. Commun. Math. Phys. 244, 455–481 (2004)

[16] Nualart, D.: The Malliavin Calculus and Related Topics. Springer-Verlag, Berlin, Heidelberg (2006)

[17] Sethuraman, S., Varadhan, S., Yau, H.: Diffusive limit of a tagged particle in asymmetric simple exclusion process. Comm. Pure Appl. Math. 53, 972–1006 (2000)

[18] Tarrès, P., Tóth, B., Valkó, B.: Diffusivity bounds for 1D Brownian polymers. Ann. Probab. 40(2), 695–713 (2012)

[19] Tóth, B., Valkó, B.: Superdiffusive bounds on self-repellent Brownian polymers and diffusion in the curl of the Gaussian free field in d = 2. J. Stat. Phys. 147(1), 113–131 (2012)

[20] Yau, H.-T.: \((\log t)^{2/3}\) law of the two dimensional asymmetric simple exclusion process. Ann. Math. 159(2), 377–405 (2004)

Acknowledgements

GF would like to thank Bálint Tóth for introducing him to the paper [11] and for inspiring discussions. GF also gratefully acknowledges funding via the EPSRC Studentship 2432406 in EP/V520305/1. HW acknowledges financial support by the Royal Society through the University Research Fellowship UF140187. Moreover, GF and HW are funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) under Germany’s Excellence Strategy EXC 2044 -390685587, Mathematics Münster: Dynamics-Geometry-Structure.


Additional information

Communicated by Stefano Olla.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Technical Lemmas I

In this section, for completeness, we list some important technical lemmas used throughout the estimates in the proofs of Theorems 18 and 3, all of them due to Cannizzaro et al. [5].

Lemma 20

For \(k \in \mathbb {N}\) let \(\textrm{L}\), \(\textrm{LB}_{k}\) and \(\textrm{UB}_k\) be the functions defined in (40) and (41). Then all three are decreasing in the first variable and increasing in the second. For every \(x > 0\) and \(z \ge 1\), the following holds true

Furthermore, for every \(0< a < b\), one has

Finally, it also holds that

Lemma 21

Let V be as in (7). Let \(z > 1\) and \(f(\cdot , z): [0,\infty ) \to [1,\infty )\) be a strictly decreasing and differentiable function, such that

and let \(g(\cdot , z): [0,\infty ) \to [1,\infty )\) be a strictly decreasing function such that \(g(x,z)f(x,z) \ge z\). Then, there exists a constant \(C_{\textit{Diag}} > 0\) such that, for all \(z > 1\), one gets the bound

where \(p = \sum _{i=1}^{n} p_i\) for some \(n \in \mathbb {N}\) and \(p_1,\dots , p_n \in \mathbb {R}^2\) and \(\theta \) is the angle between p and q. The second integral is zero if \(\lambda + |p|^2 \ge 1\). Moreover, for \(\lambda \le 1\),

Lemma 22

The functions \(\textrm{UB}_k(\cdot , z)\) and \(\textrm{LB}_k(\cdot , z)\) satisfy the conditions of the previous lemmas.

Lemma 23

For every \(z\ge 1\), \(\lambda \in \mathbb {R}_+\) and \(p \in \mathbb {R}^2\) such that \(\lambda + |p|^2 \le 1\), one has

Technical Lemmas II

The two lemmas in this section are modifications of Lemma 21 and Lemma A.3 in Cannizzaro et al. [5]. Throughout this section we use a generic constant C which may change from line to line, but is always independent of \(p, q, z, \lambda , k\) and n.

Lemma 24

Let \(s\ge 1\) in (2) and \(\tilde{\lambda }:= \lambda + |p_{1:n}|^{2s}\). Then, there exists a constant \(C_{\textit{Diag}} > 0\) such that, for all \(z > 1\) and every \(k \ge 0\), we get the bound

where \(p = \sum _{i=1}^{n} p_i\) for some \(n \in \mathbb {N}\) and \(p_1,\dots , p_n \in \mathbb {R}^2\) and \(\theta \) is the angle between p and q. The second integral is zero if \(\tilde{\lambda } + |p|^2 \ge 1\). Moreover, for \(\lambda \le 1\),

Proof

Since z is fixed, we suppress the dependence of \(\textrm{UB}_k\) and \(\textrm{LB}_k\) on it. A fact used multiple times here is that for all \(a,b > 0\) and \(z \ge 1\) we have

First, we separate the left hand side of (82) into three terms

Note that (85) and (87) have the same flavour as (A.11) and (A.12) in [5, Lemma A.2], respectively.

In fact, we handle them almost identically, and we include the proof here for completeness. The main difference lies in the term (86). We start with (85). Note that under the restriction \(|p+q| < |p|\), we may bound each integral individually. For the first, we use \((\sin \theta )^2 \le \frac{|p+q|^2}{|p|^2}\) to get

For the second, we see that \(|p+q|< |p| \Rightarrow |q| < 2|p|\) and therefore \((\tilde{\lambda } + |p|^2 + |q|^2)\textrm{UB}_k(\tilde{\lambda } + |p|^2 + |q|^2) + |q|^{2\,s} \ge |p|^2\textrm{UB}_k(\tilde{\lambda } + 5|p|^2)\) and again \(\int _{|p+q| < |p|} {\text {d}}\! q \le C|p|^2\).

For the region \(|p+q| \ge |p|\), let \(h(x) = x\textrm{UB}_k(x)\), which by Lemma 22 satisfies (79) and thus \(|h^\prime (x)| \le 2|\textrm{UB}_k(x)|\). So by the Mean Value Theorem,

Note that, by discarding both terms \(|q|^{2s} \ge 0\) in the denominators of the difference in (85), over \(|p+q| \ge |p|\) the difference is bounded by

where the last inequality is a consequence of the integral in the last line being of order \(|p|^{-1}\). To see that, further divide the integral into the regions \(|q| \ge 2|p|\) and \(|q| < 2|p|\). For the first, note that \(|q| \ge 2|p| \Rightarrow |p+q| \ge \frac{|q|}{2}\)

while for the second

This concludes the estimate of the first term.
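The elementary geometric facts used in this estimate, namely \(|p+q| < |p| \Rightarrow |q| < 2|p|\) (hence \(|p|^2+|q|^2 < 5|p|^2\)) and \(|q| \ge 2|p| \Rightarrow |p+q| \ge \frac{|q|}{2}\), are consequences of the triangle inequality. As a quick sanity check, not a proof, the sketch below (function name ours) samples random pairs \((p,q)\) and counts violations of either implication.

```python
import math
import random


def check_lemma24_regions(samples=100_000, seed=0):
    """Randomised sanity check (not a proof) of the triangle-inequality facts
    used for the term (85). Returns the number of sampled pairs (p, q) that
    violate one of them; 0 is expected."""
    rng = random.Random(seed)
    violations = 0
    for _ in range(samples):
        p = (rng.uniform(-2, 2), rng.uniform(-2, 2))
        q = (rng.uniform(-4, 4), rng.uniform(-4, 4))
        abs_p, abs_q = math.hypot(*p), math.hypot(*q)
        abs_pq = math.hypot(p[0] + q[0], p[1] + q[1])
        # |p+q| < |p|  implies  |q| < 2|p|  and  |p|^2 + |q|^2 < 5|p|^2
        if abs_pq < abs_p and not (abs_q < 2 * abs_p
                                   and abs_p**2 + abs_q**2 < 5 * abs_p**2):
            violations += 1
        # |q| >= 2|p|  implies  |p+q| >= |q|/2
        if abs_q >= 2 * abs_p and not abs_pq >= abs_q / 2:
            violations += 1
    return violations


if __name__ == "__main__":
    print(check_lemma24_regions())
```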

Now, we move to (87) and conclude with (86) at the end since we will need (87) for its proof. Here, we consider the first integral over the region \(|q|^2 \ge 1 - (\tilde{\lambda } + |p|^2)\), which implies \((\tilde{\lambda } + |p|^2 + |q|^2)^{-1} \le 1\). Using (84), we obtain the following upper bound

Still considering the first integral in (87), but now on the complement of the previous region, we first observe that since \(\hat{V}\) is smooth and rotationally invariant, there exists a constant \(C > 0\) such that \(|\hat{V}(q) - \hat{V}(0)| < C|q|^2\) for \(|q| \le 1\). Then, we may rewrite that integral as

Passing the integral in (89) into polar coordinates and then setting \(u = \tilde{\lambda } + |p|^2 + r^2\) (we avoid the letter s, which denotes the exponent in (2)), we get

Lastly, we control the integral in (90) using \(|\hat{V}(q) - \hat{V}(0)| < C|q|^2\) for \(|q| \le 1\) and (84)

The estimate of the third term is then concluded.

Finally, we deal with (86). Although it is not strictly necessary, we treat the cases \(s>1\) and \(s=1\) separately, to emphasise the influence of the exponent 2s. Consider first \(s>1\). We see that the difference in (86) is equal to

where in the first inequality we have used that \(|q|^{2s} \ge 0\) and that \(\textrm{UB}_k \ge 1\), and the integral in the last line is of order \(\int _0^1 r^{2s-3} {\text {d}}\! r \le C\) since \(s>1\). Now, we treat \(s=1\). The difference in (86) is equal to

where in the last inequality we have used (75). Now, by the estimate obtained for (87), we have the following upper bound

Now, note that

Therefore, (92) is upper bounded by

where the last inequality is a consequence of (76) in Lemma 20. The result follows by collecting all the estimates so far. \(\square \)
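The reason the case \(s>1\) of (86) is straightforward, while \(s=1\) needs the additional argument above, is the elementary integral below: it is finite exactly when \(s>1\) and diverges logarithmically at \(s = 1\).

```latex
\int_0^1 r^{2s-3}\,\mathrm{d}r = \frac{1}{2s-2} < \infty \quad (s>1),
\qquad
\int_\delta^1 r^{-1}\,\mathrm{d}r = \log\frac{1}{\delta} \longrightarrow \infty
\quad (\delta \downarrow 0,\ s=1).
```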

Lemma 25

Let the assumptions of Lemma 21 hold and let \(\tilde{\lambda } = \lambda + |p_{1:n}|^{2s}\), for \(s\ge 1\). Then, there exists a constant \(C_{\textit{Off}} > 0\) such that

where \(p = \sum _{i=1}^{n} p_i\), \(p^\prime = \sum _{i=1}^{n-1} p_i\) and \(|p_{1:n}|^{2s} = \sum _{i=1}^{n} |p_i|^{2s}\).

Proof

We split \(\mathbb {R}^2\) into three regions, \(\Omega _1 = \{q: |p + q| < \frac{|p|}{2}\}\), \(\Omega _2 = \{q: |p^\prime + q| < \frac{|p|}{2}\}\) and \(\Omega _3 = \mathbb {R}^2 {\setminus } (\Omega _1 \cup \Omega _2)\). Note that since we are looking for an upper bound, it is irrelevant whether \(\Omega _1\) intersects \(\Omega _2\) or not. In \(\Omega _1\), note that \(|p + q|< \frac{|p|}{2} \Rightarrow |q|< \frac{3}{2}|p| \Rightarrow |p + q|^2 + |q|^2 < \frac{5}{2}|p|^2\), so use the monotonicity of f to get \(f(\tilde{\lambda } + |p+q|^2 + |q|^2) \ge f(\tilde{\lambda } + \frac{5}{2}|p|^2)\) and also \((\sin \theta )^2 \le \frac{|p+q|^2}{|p|^2}\) to obtain

since by assumption \(f(x,z) \ge \frac{z}{g(x,z)}\) and g is decreasing in x. Also, since in \(\Omega _1\) we have \(|p + q|< \frac{|p|}{2} \Rightarrow |q| < \frac{3|p|}{2}\), the last integral is of order |p|. Indeed, note that denoting \(B_R(a)\) the ball of radius R centred at a,

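since \(q \mapsto |q|^{-1}\) is radially decreasing with its singularity at zero, so that among balls of a fixed radius its integral is largest over the ball centred at the origin. For the region \(\Omega _2\) we use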

where \(a \vee b:= \max \{a,b\}\). This holds since, for \(|p^\prime | \le 2|p|\), it is a weaker estimate than the previous one, and for \(|p^\prime | > 2|p|\) it can be shown that, in the region \(\Omega _2\), the right-hand side is always greater than or equal to 1 (see [5, (A.13)]). Inserting this into the integral, it follows that

Note that, in \(\Omega _2\), we have that \(|p+q|^2 + |q|^2 \le (\frac{3}{2}|p| + |p^\prime |)^2 + (\frac{1}{2}|p| + |p^\prime |)^2 \le 2(\frac{3}{2}|p| + |p^\prime |)^2\), so using the monotonicity of f we obtain the upper bound

In order to estimate the last term, we maximise in \(|p^\prime |\) (here we regard \(p^\prime \) as an arbitrary vector in \(\mathbb {R}^2\)). It is easily seen that it is monotonically increasing for \(|p^\prime | < 2|p|\). For \(|p^\prime | \ge 2|p|\) we show that it is monotonically decreasing: since f satisfies (79),

for any \(a,b \ge 0\), it holds that

where the argument of f and \(f^\prime \) is always \(a+ 2(b + r)^2\). Therefore, the maximum over \(p^\prime \) of the right hand side of (94) is attained at \(|p^\prime | = 2|p|\) and is equal to

The final part of the proof is to consider the region \(\Omega _3\), for which we use \((\sin \theta )^2 \le 1\) and apply Hölder's inequality with exponents \(\frac{3}{2}\) and 3 to the functions \([\tilde{\lambda } + |p+q|^2f(\tilde{\lambda } + |p+q|^2 + |q|^2)]^{-1}\) and \(|p^\prime + q|^{-1}\) with respect to the measure \(\hat{V}(q) {\text {d}}\! q\), to get

Since in \(\Omega _3\) we have that \(|p^\prime + q| \ge \frac{|p|}{2}\), the second term in (95) is bounded by a constant times \(|p|^{-\frac{1}{3}}\).
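To make the exponent explicit: the Hölder exponents are conjugate, since \(\frac{2}{3} + \frac{1}{3} = 1\), and, bounding \(\hat V\) by a constant and passing to polar coordinates centred at \(-p^\prime \), the second factor in (95) satisfies (a sketch of this step, with C changing from line to line)

```latex
\Big(\int_{\Omega_3} |p^\prime+q|^{-3}\,\hat V(q)\,\mathrm{d}q\Big)^{1/3}
\le C\Big(\int_{|p|/2}^{\infty} r^{-3}\, r\,\mathrm{d}r\Big)^{1/3}
= C\Big(\frac{2}{|p|}\Big)^{1/3}
\le C\,|p|^{-1/3}.
```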

Moving to the integral inside the first parenthesis in (95), note that in \(\Omega _3\) we have that \(|p + q| \ge \frac{|p|}{2} \Rightarrow |q| \le 3|p+q|\) and then by the monotonicity of f we get the upper bound

where the last inequality is obtained by bounding \(\hat{V}(q)\) by a constant, setting \({\tilde{q}} = p + q\) and passing to polar coordinates. Now, we divide the domain of integration \(\frac{|p|}{2} \le r < \infty \) into two regions, \(\tilde{\lambda } < r^2\) and its possibly empty complement \(\tilde{\lambda } \ge r^2\). In the first, it holds that

Using that by assumption \(f(x,z) \ge \frac{z}{g(x,z)}\) and that g is decreasing in x, together with (97), we can control (96) by

The last step is to consider the region \(\tilde{\lambda } \ge r^2\). For that, we have

Inserting all the estimates for the region \(\Omega _3\) into (95) we obtain the desired upper bound

which completes the proof. \(\square \)
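The region bounds used in this proof are again consequences of the triangle inequality: on \(\Omega _1\), \(|p+q|^2 + |q|^2 < \frac{5}{2}|p|^2\); on \(\Omega _2\), \(|p+q|^2 + |q|^2 \le 2(\frac{3}{2}|p| + |p^\prime |)^2\); and \(|p+q| \ge \frac{|p|}{2} \Rightarrow |q| \le 3|p+q|\), which is what is used on \(\Omega _3\). The sketch below (function names ours) is a randomised sanity check of these three implications, not a proof.

```python
import math
import random


def _norm(v):
    return math.hypot(v[0], v[1])


def check_lemma25_regions(samples=100_000, seed=0):
    """Randomised sanity check (not a proof) of the bounds used on Omega_1,
    Omega_2 and Omega_3 in the proof of Lemma 25. Returns the number of
    sampled triples (p, p', q) violating one of them; 0 is expected."""
    rng = random.Random(seed)
    violations = 0
    for _ in range(samples):
        p, p_prime, q = [(rng.uniform(-3, 3), rng.uniform(-3, 3)) for _ in range(3)]
        a, b, abs_q = _norm(p), _norm(p_prime), _norm(q)
        abs_pq = _norm((p[0] + q[0], p[1] + q[1]))                # |p + q|
        abs_ppq = _norm((p_prime[0] + q[0], p_prime[1] + q[1]))  # |p' + q|
        sq_sum = abs_pq ** 2 + abs_q ** 2                         # |p+q|^2 + |q|^2
        # Omega_1: |p+q| < |p|/2  implies  |p+q|^2 + |q|^2 < (5/2)|p|^2
        if abs_pq < a / 2 and not sq_sum < 2.5 * a ** 2:
            violations += 1
        # Omega_2: |p'+q| < |p|/2  implies  |p+q|^2 + |q|^2 <= 2((3/2)|p| + |p'|)^2
        if abs_ppq < a / 2 and not sq_sum <= 2 * (1.5 * a + b) ** 2:
            violations += 1
        # used on Omega_3: |p+q| >= |p|/2  implies  |q| <= 3|p+q|
        if abs_pq >= a / 2 and not abs_q <= 3 * abs_pq:
            violations += 1
    return violations


if __name__ == "__main__":
    print(check_lemma25_regions())
```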

End of Proof of Theorem 3

End of Proof of (12) in Theorem 3

We start with the end of the proof of the upper bound. Note that the sequence \(c_{2k+1}\) in (58) is monotonically decreasing and convergent, so we may replace it by its limit and merge it into the constant C below. By (81), expression (58) is bounded by

where we have used Lemma 23 for the first inequality, (77) in Lemma 20 for the second and that \(\textrm{LB}_k\) is increasing in z for the last. Now we invoke the Central Limit Theorem applied to Poisson random variables of rate one to get

which yields, upon the choice

and recalling the definition of \(\textrm{LB}_k\) in (41), for \(\lambda \) small enough, the bound

Inserting the above into (99) and using the definitions of \(z_{2k+1} = z_{2k+1}(1)\) and \(f_{2k+1} = f_{2k+1}(1)\) in (42), we arrive at

which completes the proof of the upper bound, since

Moving to the lower bound, recall (60)

where we use (50) and that, for k large enough, \(1 - \frac{1}{\sqrt{z_{2k+1}}} \ge c > 0\). Also, the \(-f_{2k+1}\) term only produces a constant contribution, which can be absorbed by reducing C if \(\lambda \) is sufficiently small. Using (100) with the same choice of k, we obtain

which, together with the definition of \(f_{2k+1} = f_{2k+1}(1)\) in (42), shows that

Therefore, (12) follows from (20) and (21) and the proof of Theorem 3 is concluded. \(\square \)
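The Central Limit Theorem step used for the upper bound rests on the classical fact that, for a Poisson random variable \(N_m\) with mean \(m \in \mathbb {N}\), \({\mathbb {P}}(N_m \le m) = e^{-m}\sum _{j=0}^{m} m^j/j! \rightarrow \frac{1}{2}\) as \(m \rightarrow \infty \). We assume that truncated exponential series of this form are what the definition of \(\textrm{LB}_k\) in (41) produces (that definition is not reproduced here). The following sketch (function name ours) evaluates the probability term by term in log-space, via `math.lgamma`, to avoid overflow.

```python
import math


def poisson_cdf_at_mean(m):
    """P(N <= m) for N ~ Poisson(m), m a positive integer:
    e^{-m} * sum_{j=0}^{m} m^j / j!, each term computed in log-space."""
    log_m = math.log(m)
    return sum(math.exp(j * log_m - m - math.lgamma(j + 1))
               for j in range(m + 1))


if __name__ == "__main__":
    for m in (10, 100, 1_000, 10_000):
        print(m, poisson_cdf_at_mean(m))   # tends to 1/2 as m grows
```

The deviation from \(\frac{1}{2}\) is of order \(m^{-1/2}\), consistent with the normal approximation behind the Central Limit Theorem.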

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

de Lima Feltes, G., Weber, H. Brownian Particle in the Curl of 2-D Stochastic Heat Equations. J Stat Phys 191, 16 (2024). https://doi.org/10.1007/s10955-023-03224-1


DOI: https://doi.org/10.1007/s10955-023-03224-1