Abstract

We study an energy-constrained random walker on a length-N interval of the one-dimensional integer lattice, with boundary reflection. The walker consumes one unit of energy for every step taken in the interior, and energy is replenished up to a capacity of M on each boundary visit. We establish large N, M distributional asymptotics for the lifetime of the walker, i.e., the first time at which the walker runs out of energy while in the interior. Three phases are exhibited. When \(M \ll N^2\) (energy is scarce), we show that there is an M-scale limit distribution related to a Darling–Mandelbrot law, while when \(M \gg N^2\) (energy is plentiful) we show that there is an exponential limit distribution on a stretched-exponential scale. In the critical case where \(M / N^2 \rightarrow \rho \in (0,\infty )\), we show that there is an M-scale limit in terms of an infinitely-divisible distribution expressed via certain theta functions.

1 Introduction

This paper is motivated by a number of problems in mathematical ecology. The motion of individual animals and the space-time statistics of animal populations are of central interest in ecology, crucial to the understanding of population structure and dynamics, dispersion patterns, foraging, herding, territoriality, and other aspects of the behaviour of animals and their interactions with and responses to their environment and broader ecosystem [22, 26, 33, 35, 40]. Movement ultimately bears on large-scale (in space and time) phenomena, such as biological fitness, genetic variability, and inter-species dynamics.

Random walks, diffusions, and related processes have been used for over a century to model animal movement: see e.g. [15, 27, 40] for an introduction to the extensive literature on these topics. Assuming Fickian diffusion leads to reaction–diffusion models in which dynamics is driven by smooth gradients of resource or habitat, such as chemotactic or haptotactic dynamics in microbiology [37], but fails in several important respects to reflect the real-world behaviour of animals [21, 27]. Various modelling paradigms attempt to incorporate more realistic aspects of animal behaviour, such as memory and persistence [24], intermittent rest periods [39], or anomalous diffusion [27].

A basic aspect of population dynamics is the flow of energy: individuals consume resource and subsequently expend energy in somatic growth, maintenance, reproduction, foraging, and so on; penguins must balance feeding and swimming, for example [11]. The distribution of scarce food or water in the environment imposes constraints on animal movement, as is seen, for instance, in flights of butterflies between flowers, elk movements between feeding craters, and elephants moving between water sources in the dry season [24, 41]. An important modelling challenge is to incorporate resource heterogeneity in space and time, to model the distribution of food and shelter, consumption of resources, and seasonality; in bounded domains, one must provide a well-motivated choice of boundary conditions.

Traditionally, mathematical ecology modelling of spatially distributed processes in bounded domains employs Dirichlet or Neumann boundary conditions, which do not do justice to the wealth of behaviour that can be exhibited at the boundary of a domain occupied by an animal population. Clearly, availability of resources can be different in the interior of the domain and at the boundary, as can the nature of interactions among members of the population. It is not impossible that an animal may want to spend some time staying at, or diffusing along the boundary. An example of the differences in behaviours, this time of a population of molecules, is provided in the pregnancy-test model of [32], where the species only diffuse in the bulk of the sample, but participate in reactions with the coating of the vessel where the test takes place. Another area in which reaction–diffusion systems are applied and in which boundary conditions enter in a crucial way is porous-medium transport [13, 38].

Random walk models have been proposed in which arrival at the boundary terminates the walk; see [6] for a review. In the present paper we take a “dual” view, in which staying in the interior of the domain leads ultimately to the demise of the walker, while arrival at the boundary provides viaticum to replenish the walker’s energy and allows the walk to continue. For example, an island in a lake may support animals that roam the interior but must return to shore to drink. Adjacent models include the “mortal random walks” of [7] and the “starving random walks” of [42], but in neither of these does the walker carry an internal energy state. Our model differs from models that incorporate resource depletion by feeding [8,9,10, 14, 23] in that, for us, energy replenishment only occurs on the boundary and the resource is inexhaustible. Comparison of our results to those for models including resource depletion, in which the domain in which the walk is in danger of extinction grows over time, is a topic we hope to address in future work.

Section 2 describes our mathematical set-up and our main results, starting, in Sect. 2.1, with the introduction of a class of Markov models of energy-constrained random walks with boundary replenishment.

2 Model and Main Results

2.1 Energy-Constrained Random Walk

For \(N \in \mathbb {N}:= \{1,2,3,\ldots \}\), denote the finite discrete interval \(I_N:= \{ x \in \mathbb {Z}: 0 \le x \le N \}\). Also define the semi-infinite interval by \(I_\infty := {\mathbb {Z}}_+:= \{ x \in \mathbb {Z}: x \ge 0 \}\). We write \({\overline{\mathbb {N}}}:= \mathbb {N}\cup \{ \infty \}\) and then \(I_N, N \in {\overline{\mathbb {N}}}\) includes both finite and infinite cases. The boundary \(\partial I_N\) of \(I_N\) is defined as \(\partial I_N:= \{0,N\}\) for \(N \in \mathbb {N}\), and \(\partial I_\infty := \{0\}\) for \(N = \infty \); the interior is \(I^{{{{\circ }}}}_N:= I_N {\setminus } \partial I_N\). We suppose \(N \ge 2\), so that \(I^{{{{\circ }}}}_N\) is non-empty. Over \({\overline{\mathbb {N}}}\) we define simple arithmetic and function evaluations in the way consistent with taking limits over \(\mathbb {N}\), e.g., \(1/\infty := 0\), \(\exp \{ -\infty \}:= 0\), and so on.

We define a class of discrete-time Markov chains \(\zeta := (\zeta _0, \zeta _1, \ldots )\), where \(\zeta _n:= (X_n,\eta _n) \in I_N \times {\mathbb {Z}}_+\), whose transition law is determined by an energy update (stochastic) matrix P over \({\mathbb {Z}}_+\), i.e., a function \(P: {\mathbb {Z}}_+^2 \rightarrow [0,1]\) with \(\sum _{j \in {\mathbb {Z}}_+} P(i,j) = 1\) for all \(i \in {\mathbb {Z}}_+\). The coordinate \(X_n\) represents the location of a random walker, and \(\eta _n\) its current energy level. Informally, the dynamics of the process are as follows. As long as it has positive energy and is in the interior, the walker performs simple random walk steps; each step uses one unit of energy. If the walker runs out of energy while in the interior, the walk terminates. If the process is at the boundary \(\partial I_N\) with current energy level i, P(i, j) is the probability that the energy changes to level j; the walk reflects into the interior.

Formally, the transition law is as follows.

- Energy-consuming random walk in the interior: If \(i \in \mathbb {N}\) and \(x \in I^{{{{\circ }}}}_N\), then

$$\begin{aligned} {{\,\mathrm{\mathbb {P}}\,}}( X_{n+1} = X_n + e, \, \eta _{n+1} = \eta _n - 1 \mid X_n = x, \eta _n = i ) = \frac{1}{2}, \quad e \in \{-1,+1\}.\end{aligned}$$(2.1)

- Extinction through exhaustion: If \(x \in I^{{{{\circ }}}}_N\), then

$$\begin{aligned} {{\,\mathrm{\mathbb {P}}\,}}( X_{n+1} = x, \, \eta _{n+1} = 0 \mid X_n = x, \eta _n = 0 ) = 1.\end{aligned}$$(2.2)

- Boundary reflection and energy transition: If \(i \in {\mathbb {Z}}_+\), \(x \in \partial I_N\), and \(y \in I^{{{{\circ }}}}_N\) is the unique y such that \(|y-x| = 1\), then

$$\begin{aligned} {{\,\mathrm{\mathbb {P}}\,}}( X_{n+1} = y, \, \eta _{n+1} = j \mid X_n = x, \eta _n =i ) = P ( i, j). \end{aligned}$$(2.3)

In the present paper, we focus on a model with finite energy capacity and maximal energy replenishment at the boundary. This corresponds to a specific choice of P, namely \(P(i, M)=1\), as described in the next section. Other choices of the update matrix P are left for future work.

2.2 Finite Energy Capacity

Our finite-capacity model has a parameter \(M \in \mathbb {N}\), representing the maximum energy capacity of the walker. The boundary energy update rule that we take is that energy is always replenished up to the maximal level M. Here is the definition.

Definition 2.1

For \(N \in {\overline{\mathbb {N}}}, M \in \mathbb {N}\), and \(z \in I_N \times I_M\), the finite-capacity (N, M, z)-model is the Markov chain \(\zeta \) with initial state \(\zeta _0 = z\) and with transition law defined through (2.1)–(2.3) with energy update matrix P given by \(P(i,M) =1\) for all \(i \in {\mathbb {Z}}_+\).

For the (N, M, z) model from Definition 2.1, with \(z = (x,y) \in I_N \times I_M\), we write \({{{\,\mathrm{\mathbb {P}}\,}}}^{N,M}_z\) and \({{{\,\mathrm{\mathbb {E}}\,}}}^{N,M}_z\) for probability and expectation under the law of the corresponding Markov chain \(\zeta \) with spatial domain \(I_N\), energy capacity M, initial location \(X_0=x \in I_N\), and initial energy \(\eta _0=y \in I_M\). The main quantity of interest for us here is the total lifetime (i.e., time before extinction) of the process, defined by

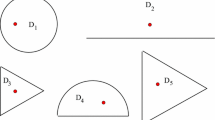

where we adopt the usual convention that \(\min \emptyset :=+\infty \). The process \(\zeta \in I_N \times I_M\) can be viewed as a two-dimensional random walk with a reflecting/absorbing boundary, in which the interior drift (negative in the energy component) competes against the (positive in energy) boundary reflection: see Fig. 1 for a schematic.

Fig. 1 Schematic of the transitions for the reflecting random walk \(\zeta _n = (X_n, \eta _n)\) in the rectangle \(I_N \times I_M\). The arrows indicate some possible transitions: in the interior, energy decreases and the particle executes a nearest-neighbour random walk, while the boundary states provide energy replenishment. The larger (red-filled) circles indicate the absorbing states (zero energy, but not at the boundary).
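The dynamics of the finite-capacity model can be made concrete with a short simulation. The following Python sketch is illustrative only: the function name `simulate_lifetime` and its arguments are our own, it assumes finite \(N \ge 3\), and it takes the lifetime to be the first time the walker has zero energy while in the interior.

```python
import random


def simulate_lifetime(N, M, x0, y0, rng):
    """Run one trajectory of the finite-capacity (N, M, z)-model, z = (x0, y0).

    Returns the first time n at which the walker is in the interior of
    {0, ..., N} with zero energy. Hypothetical helper, finite N only.
    """
    x, energy, n = x0, y0, 0
    while True:
        at_boundary = (x == 0 or x == N)
        if not at_boundary and energy == 0:
            return n  # exhausted in the interior: extinction
        if at_boundary:
            # Rule (2.3) with P(i, M) = 1: reflect into the interior, refill to M.
            x = 1 if x == 0 else N - 1
            energy = M
        else:
            # Rule (2.1): symmetric nearest-neighbour step costing one unit.
            x += rng.choice((-1, 1))
            energy -= 1
        n += 1


if __name__ == "__main__":
    rng = random.Random(2024)
    samples = [simulate_lifetime(30, 100, 1, 100, rng) for _ in range(1000)]
    print("empirical mean lifetime / M:", sum(samples) / (100 * len(samples)))
```

Averaging many such runs gives a crude empirical view of the lifetime at moderate \(N, M\); it is not a substitute for the limit theorems below.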

If for initial state \(z = (x,y) \in I_N \times I_M\) it holds that \(N > M+x\) (including \(N = \infty \)), then the energy constraint and the fact that \(X_0 =x\) ensure that \(X_n\) can never exceed \(M+x\), so \(X_n\) is constrained to the finite interval \(I_{M+x}\). In other words, every (N, M, z) model with \(N > M+x\) is equivalent to the \((\infty ,M,z)\) model, for any \(y \in I_M\). Since we start with \(\eta _0 = y\) and energy decreases by at most one unit per unit time, \({{{\,\mathrm{\mathbb {P}}\,}}}^{N,M}_z( \lambda \ge y ) = 1\). The following ‘irreducibility’ result, proved in Sect. 4.1, shows that extinction is certain, i.e., \({{{\,\mathrm{\mathbb {P}}\,}}}^{N,M}_z(\lambda < \infty ) =1\), provided there are at least two sites in \(I^{{{{\circ }}}}_N\).

Lemma 2.2

Suppose that \(N \in {\overline{\mathbb {N}}}\) with \(N \ge 3\), that \(M \in \mathbb {N}\), and that \(\zeta _0 = z = (x,y)\). Under \({{{\,\mathrm{\mathbb {P}}\,}}}^{N,M}_z\), the process \(\zeta \) is a time-homogeneous Markov chain on the finite state space \(\Lambda _{N,M}:= I_{N \wedge (M+x)} \times I_M\). Moreover, there exists \(\delta >0\) (depending only on M) such that \(\sup _{z \in I_N \times I_M} {{{\,\mathrm{\mathbb {E}}\,}}}^{N,M}_z[ \textrm{e}^{\delta \lambda } ] < \infty \).

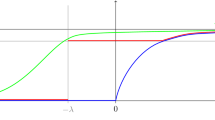

Our main results concern distributional asymptotics for the random variable \(\lambda \) as \(N, M \rightarrow \infty \). We will demonstrate a phase transition depending on the relative growth of M and N. Roughly speaking, since in time M a simple random walk typically travels distance of order \(\sqrt{M}\), it turns out that if \(M \gg N^2\) the random walk will visit the boundary many times, and \(\lambda \) grows exponentially in \(M/N^2\), while if \(M \ll N^2\) then there are relatively few (in fact, of order \(\sqrt{M}\)) visits to the boundary before extinction, and \(\lambda \) is of order M. The case \(M \ll N^2\), where the capacity constraint dominates, we call the meagre-capacity limit, and we treat this case first; in the limit law appears the relatively unusual Darling–Mandelbrot distribution (see Sect. 2.3). The case \(M \gg N^2\), where energy is plentiful, we call the confined-space limit, and (see Sect. 2.4) the limit law is exponential, as might be expected due to the small rate of extinction in that case. The critical case, where \(M /N^2 \rightarrow \rho \in (0,\infty )\) is dealt with in Sect. 2.5. Section 2.6 gives an outline, at the level of heuristics, of the proofs.

2.3 The Meagre-Capacity Limit

Our first result looks at the case where N, M are both large but \(M \in \mathbb {N}\) is small (in a sense we quantify) with respect to \(N \in {\overline{\mathbb {N}}}\). This will include all the \((\infty ,M,z)\) models, which, as remarked above, coincide with the (N, M, z) models for \(N > M+x\). However, it turns out that the \((\infty ,M,z)\) behaviour is also asymptotically replicated in (N, M, z) models for \(N \gg \sqrt{M}\). The formal statement is Theorem 2.3 below.

To describe the limit distribution in the theorem, we define, for \(t \in \mathbb {R}\),

Then \(t_0 \approx 0.8540326566\) (see Lemma A.1 below), and

defines the moment generating function of a distribution on \({\mathbb {R}}_+\) known as the Darling–Mandelbrot distribution with parameter 1/2. We write \(\xi \sim \textrm{DM}(1/2)\) to mean that \(\xi \) is an \({\mathbb {R}}_+\)-valued random variable with \({{\,\mathrm{\mathbb {E}}\,}}[ \textrm{e}^{t \xi } ] = \varphi _{\textrm{DM}}(t)\), \(t < t_0\). We refer to Sect. 2.6 for a heuristic explanation of the appearance of the \(\textrm{DM}(1/2)\) distribution in our Theorem 2.3 below, based on its role in the theory of heavy-tailed random sums. We also refer to Lemma A.3 for the moments of \(\textrm{DM}(1/2)\), the first of which is \({{\,\mathrm{\mathbb {E}}\,}}\xi = 1\).

The result in this section is stated for a sequence of \((N_M,M,z_M)\) models, indexed by \(M \in \mathbb {N}\), with \(z_M = (x_M,y_M) \in I_{N_M} \times I_M\) and \(N_M \in {\overline{\mathbb {N}}}\) satisfying the meagre-capacity assumption that, for some \(a \in [0,\infty ]\) and \(u \in (0,1]\),

Included in (2.7) is any sequence \(N_M\) for which \(N_M = \infty \) eventually.

For \(b = (b_t, t\in {\mathbb {R}}_+)\) a standard Brownian motion on \(\mathbb {R}\) started from \(b_0=0\), define \(\tau ^{{\tiny BM }}_1:= \inf \{ t \in {\mathbb {R}}_+: b_t = 1\}\). Then (see Remarks 2.4(d) below) \(\tau ^{{\tiny BM }}_1\) has a positive (1/2)-stable (Lévy) distribution:

Write \(\Phi (s):= {{\,\mathrm{\mathbb {P}}\,}}( b_1 \le s)\) and \({\overline{\Phi }}(s):= 1 - \Phi (s) = {{\,\mathrm{\mathbb {P}}\,}}( b_1 > s)\), \(s \in \mathbb {R}\), for the standard normal distribution and tail functions. Define

Here is the limit theorem in the meagre-capacity case. The case \(N_M=\infty \), \(a=0\) of (2.10) can be read off from results of [6] (see Sect. 2.6); the other cases, we believe, are new. We use ‘\(\overset{\textrm{d}}{\longrightarrow }\)’ to denote convergence in distribution under the implicit probability measure (in the following theorem, namely \({{{\,\mathrm{\mathbb {P}}\,}}}^{N_M,M}_{z_M}\)).

Theorem 2.3

Consider the \((N_M,M,z_M)\) model with \(M \in \mathbb {N}\), \(N_M \in {\overline{\mathbb {N}}}\) and \(z_M \in I_{N_M} \times I_M\) such that (2.7) holds. Then, if \(\xi \sim \textrm{DM}(1/2)\) and \(\tau ^{{\tiny BM }}_1\sim \textrm{S}_+(1/2)\) are independent with distributions given by (2.6) and (2.8) respectively, it holds that

where g is defined at (2.9). In particular, if \(a=0\), then the limits in (2.10) and (2.11) are equal to \(1+\xi \) and \(2 = 1 + {{\,\mathrm{\mathbb {E}}\,}}\xi \), respectively, while for \(a=\infty \) they are both u.

Remarks 2.4

- (a) For every \(0 < u \le 1\), the function \(a \mapsto g(a,u)\) on the right-hand side of (2.9) is strictly decreasing: see Lemma 4.4 below.

- (b) If \(a >0\), then the distribution of the limit in (2.10) has an atom at value u of mass \({{\,\mathrm{\mathbb {P}}\,}}( a \tau ^{{\tiny BM }}_1\ge u ) = 2 \Phi ( \sqrt{a /u} ) -1\), as given by (2.12) below; on the other hand, if \(a=0\), it has a density (since \(\xi \) does, as explained in the next remark). The atom at u represents the possibility that the random walk runs out of energy before ever reaching the boundary.

- (c) The \(\textrm{DM}(1/2)\) distribution specified by (2.6) appears in a classical result of Darling [17] on maxima and sums of positive \(\alpha \)-stable random variables in the case \(\alpha =1/2\), and more recently in the analysis of anticipated rejection algorithms [6, 29, 31], where it has become known as the Darling–Mandelbrot distribution with parameter 1/2. Darling [17, p. 103] works with the characteristic function (Fourier transform); Feller [20, p. 465] gives the \(t<0\) Laplace transform (2.6). The \(\textrm{DM}(1/2)\) distribution has a probability density which is continuous on \((0,\infty )\), is non-analytic at integer points, and has no elementary closed form, but has an infinite series representation, can be efficiently approximated, and its asymptotic properties are known: see [6, 29, 30].

- (d) To justify (2.8), recall that, by the reflection principle, for \(t \in (0,\infty )\),

$$\begin{aligned} {{\,\mathrm{\mathbb {P}}\,}}( \tau ^{{\tiny BM }}_1> t ) = {{\,\mathrm{\mathbb {P}}\,}}\biggl ( \sup _{0 \le s \le t} b_s< 1 \biggr )= 2 {{\,\mathrm{\mathbb {P}}\,}}( b_t < 1 ) -1 = 2 \Phi ( t^{-1/2} ) -1; \end{aligned}$$(2.12)
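The tail formula (2.12) and the atom mass in Remark (b) are easy to evaluate numerically. The sketch below uses only the Python standard library; the function names are our own, and \(\Phi \) is computed from the error function via \(\Phi (s) = \tfrac{1}{2}(1 + \textrm{erf}(s/\sqrt{2}))\).

```python
import math


def std_normal_cdf(s):
    """Standard normal distribution function Phi(s)."""
    return 0.5 * (1.0 + math.erf(s / math.sqrt(2.0)))


def hitting_time_tail(t):
    """P(tau_1 > t) = 2 Phi(t^{-1/2}) - 1 for t > 0, as in (2.12)."""
    return 2.0 * std_normal_cdf(t ** -0.5) - 1.0


def atom_mass(a, u):
    """Mass of the atom at u from Remark (b): P(a tau_1 >= u), for a, u > 0."""
    return 2.0 * std_normal_cdf(math.sqrt(a / u)) - 1.0


if __name__ == "__main__":
    print(hitting_time_tail(1.0))   # probability of not hitting level 1 by time 1
    print(atom_mass(0.5, 1.0))      # atom mass for a = 1/2, u = 1
```

For large \(t\) the tail behaves like \(\sqrt{2/(\pi t)}\), which is the (1/2)-stable decay that recurs in the random walk estimates of Sect. 3.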

2.4 The Confined-Space Limit

We now turn to the second limiting regime. The result in this section is stated for a sequence of \((N_M,M,z_M)\) models, indexed by \(M \in \mathbb {N}\), with \(z_M = (x_M, y_M) \in I_{N_M} \times I_M\) and \(N_M \in \mathbb {N}\) (note \(N_M < \infty \) now) satisfying the confined-space assumption

Let \({\mathcal {E}_1}\) denote a unit-mean exponential random variable. Here is the limit theorem in this case; in contrast to Theorem 2.3, the initial location \(x_M\) is unimportant for the limit in (2.14), and the initial energy \(y_M\) only enters through the lower bound in (2.13).

Theorem 2.5

Consider the \((N_M,M,z_M)\) model with \(M \in \mathbb {N}\), \(N_M \in \mathbb {N}\) and \(z_M \in I_{N_M} \times I_M\) such that (2.13) holds. Then, as \(M \rightarrow \infty \),

Remarks 2.6

- (a) The appearance of the exponential distribution in the limit (2.14) is a consequence of the fact that it is a rare event for the random walk to spend time \(\gg N^2\) in the interior of the interval \(I_N\), and can be viewed as a manifestation of the Poisson clumping heuristic for Markov chain hitting times [1, §B] or of the metastability of the system that arises from the fact that extinction is certain but unlikely on any individual excursion.

- (b) Since \(\log \cos \theta = - (\theta ^2/2) + O (\theta ^4)\) as \(\theta \rightarrow 0\), we can re-write (2.14), in the case where M does not grow too fast compared to \(N_M^2\), as

$$\begin{aligned} \frac{4\lambda }{N_M^2} \exp \left\{ - \frac{\pi ^2 M}{2N_M^2} \right\} \overset{\textrm{d}}{\longrightarrow }{\mathcal {E}_1}, \text { if } \lim _{M \rightarrow \infty } \frac{M}{N_M^4} = 0.\end{aligned}$$
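The role of the condition \(M / N_M^4 \rightarrow 0\) in Remark (b) can be checked numerically: the error in replacing \(M \log \cos (\pi /N)\) by \(-\pi ^2 M / (2N^2)\) is of order \(M/N^4\). The following sketch (function names are ours) compares the two exponents.

```python
import math


def log_scale_exact(N, M):
    """Exponent M * log cos(pi / N) appearing via cos^M(pi / N)."""
    return M * math.log(math.cos(math.pi / N))


def log_scale_approx(N, M):
    """Leading-order exponent -pi^2 M / (2 N^2) from log cos(theta) ~ -theta^2 / 2."""
    return -math.pi ** 2 * M / (2.0 * N ** 2)


def exponent_error(N, M):
    """Difference of the two exponents; roughly -pi^4 M / (12 N^4)."""
    return log_scale_exact(N, M) - log_scale_approx(N, M)


if __name__ == "__main__":
    print(exponent_error(1000, 10**7))  # M / N^4 = 1e-5: negligible error
    print(exponent_error(100, 10**8))   # M / N^4 = 1: error of order one
```

When the error in the exponent does not vanish, the two normalisations differ by a non-trivial multiplicative factor, so the simplified form cannot be used.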

2.5 The Critical Case

Finally, we treat the critical case in which there exists \(\rho \in (0,\infty )\) such that

Define the decreasing function \(H: (0,\infty ) \rightarrow {\mathbb {R}}_+\) by

Since \(H(y) \sim 1/\sqrt{8\pi y}\) as \(y \downarrow 0\) (see Lemma 4.8 below), for every \(\rho >0\) and \(s \in \mathbb {R}\),

is finite. For fixed \(\rho >0\), \(s \mapsto G(\rho ,s)\) is strictly increasing for \(s \in \mathbb {R}\), and \(G (\rho , 0 ) = 0\). For \(\rho >0\), define \(s_\rho := \sup \{ s > 0: G(\rho , s) < 1 \}\), and then set

Finally, define \(\mu : (0, \infty ) \rightarrow {\mathbb {R}}_+\) by

Here is our result in the critical case. For simplicity of presentation, we restrict to the case where \(\zeta _0 = (1,M)\); one could permit initial conditions similar to those in (2.7), but this would complicate the statement and lengthen the proofs (see Remarks 2.8(d) below).

Theorem 2.7

Consider the \((N_M,M,z_M)\) model with \(M \in \mathbb {N}\), \(N_M \in \mathbb {N}\) and \(z_M = (1,M)\) such that (2.15) holds. Then, as \(M \rightarrow \infty \),

where \(\xi _\rho \) has moment generating function \({{\,\mathrm{\mathbb {E}}\,}}[ \textrm{e}^{s \xi _\rho } ] = \phi _\rho (s)\), \(s < s_\rho \), and expectation \({{\,\mathrm{\mathbb {E}}\,}}\xi _\rho = \mu (\rho )\). Moreover,

Remarks 2.8

- (a) The function H defined at (2.16) is in the family of theta functions, which arise throughout the distributional theory of one-dimensional Brownian motion on an interval, which is the origin of their appearance here: see e.g. [20, pp. 340–343] or Appendix 1 of [12], and Sect. 3.3 below.

- (b) The \(\rho \rightarrow \infty \) asymptotics in (2.21) are consistent with Remark 2.6(b), in the sense that both are consistent with, loosely speaking, the claim that

$$\begin{aligned} {{{\,\mathrm{\mathbb {E}}\,}}}^{N_M,M}_{(1,M)}\lambda \sim \frac{N_M^2}{4} \exp \left\{ \frac{\pi ^2 M}{2N_M^2} \right\} , \text { when } N_M^2 \ll M \ll N_M^4, \end{aligned}$$

although neither of those results formally establishes this.

- (c) An integration by parts shows that G defined at (2.17) has the representation

$$\begin{aligned} G(\rho , s)&= \int _0^\infty ( \textrm{e}^{sx} -1) \frac{m_\rho (x)}{x} \textrm{d}x, \text { where } \\ m_{\rho }(x)&:= \frac{\pi ^2 \rho x}{2 H(\rho )} \mathbbm {1}_{[0,1]} (x) \sum _{k=1}^\infty (2k-1)^2 h_k (\rho x), \end{aligned}$$

which shows that \(\phi _\rho \) corresponds to an infinitely divisible distribution (see e.g. [36, p. 91]); since \(m_\rho \) is compactly supported, however, the distribution is not compound exponential [36, p. 100].

- (d) It is possible to extend Theorem 2.7 to initial states \(z_M = (x_M, y_M)\) satisfying \(y_M/M \rightarrow u \in (0,1]\) and \(x_M^2/M \rightarrow a \in [0,\infty ]\); the limit statement (2.20) would then need to be modified to include additional terms similar to those in Theorem 2.3, but with \(\tau ^{{\tiny BM }}_1\) replaced by a two-sided exit time. We do not pursue this extension here.

2.6 Organization and Heuristics Behind the Proofs

The rest of the paper presents proofs of Theorems 2.3, 2.5, and 2.7. The basic ingredients are provided by some results on excursions of simple symmetric random walk on \(\mathbb {Z}\), given in Sect. 3; some of this material is classical, but the core estimates that we need are, in parts, quite intricate and we were unable to find them in the literature. The main body of the proofs is presented in Sect. 4. First, we establish a renewal framework that provides the structure for the proofs, which, together with a generating-function analysis involving the excursion estimates from Sect. 3, yields the results. In Sect. 5 we comment on some possible future directions. Appendix A briefly presents some necessary facts about the Darling–Mandelbrot distribution appearing in Theorem 2.3. Here we outline the main ideas behind the proofs, and make some links to the literature.

It is helpful to first imagine a walker that is “immortal”, i.e., has an unlimited supply of energy. The energy-constrained walker is indistinguishable from the immortal walker until the first moment that the time since its most recent visit to the boundary exceeds \(M+1\), at which point the energy-constrained walker becomes extinct. Let \(s_1< s_2 < \cdots \) denote the successive times of visits to \(\partial I_N\) by the immortal walker, and let \(u_k = s_k - s_{k-1}\) denote the duration of the kth excursion (\(k \in \mathbb {N}\), with \(s_0:=0\)). The energy-constrained walker starts \(\kappa \in \mathbb {N}\) excursions before becoming extinct, where \(\kappa = \inf \{ k \in \mathbb {N}: u_k > M+1\}\). At every boundary visit at which the energy-constrained walk is still active, there is a probability \(\theta (N, M) = {{\,\mathrm{{\textbf{P}}}\,}}_1 ( \tau _{0,N} > M+1 )\) that the walk will run out of energy on the next excursion, where \(\tau _{0,N}\) is the hitting time of \(\partial I_N\) by the random walk, and \({{\,\mathrm{{\textbf{P}}}\,}}_1\) indicates we start from site 1 (equivalently, site \(N-1\)). Each time the walker visits \(\partial I_N\) there is a “renewal”, because energy is topped up to level M and the next excursion begins, started from one step away from the boundary. If the walk starts at time 0 in a state other than at full energy, next to the boundary, then the very first excursion has a different distribution from the rest, and this plays a role in some of our results, but is a second-order consideration for the present heuristics. The key consequence of the renewal structure is that the excursions have a (conditional) independence property, and the number \(\kappa \) of excursions has a geometric distribution with parameter given by the extinction probability \(\theta (N,M)\) (see Lemma 4.1 below for a formal statement).

Suppose first that \(N = \infty \), the simplest case of the meagre-capacity limit. We indicate the relevance of Darling’s result on the maxima of stable variables [17, Theorem 5.1] to this model, which gives some intuition for the appearance of the \(\textrm{DM}(1/2)\) distribution in Theorem 2.3. First we describe Darling’s result. Suppose that \(Z_1, Z_2, \ldots \) are i.i.d. \({\mathbb {R}}_+\)-valued random variables in the domain of attraction of a (positive) stable law with index \(\alpha \in (0,1)\), and let \(S_n:= \sum _{i=1}^n Z_i\) and \(T_n:= \max _{1 \le i \le n} Z_i\). Darling’s theorem says that \(S_n / T_n\) converges in distribution to \(1 +\xi _\alpha \), as \(n \rightarrow \infty \), where \(\xi _\alpha \sim \textrm{DM} (\alpha )\). A generalization to other order statistics is [3, Corollary 4].

In the case where \(N = \infty \), the durations \(u_1, u_2, \ldots \) of excursions of simple symmetric random walk on \({\mathbb {Z}}_+\) away from 0 satisfy the \(\alpha =1/2\) case of Darling’s result, so that \(T_n:= \sum _{i=1}^n u_i\) and \(M_n:= \max _{1 \le i \le n} u_i\) satisfy \(T_n / M_n \overset{\textrm{d}}{\longrightarrow }1 + \xi \) where \(\xi \sim \textrm{DM}(1/2)\). Replacing n by \(\kappa \), the number of excursions up to extinction, for which \(\kappa \rightarrow \infty \) in probability as \(M, N \rightarrow \infty \), it is plausible that \(T_\kappa / M_\kappa \overset{\textrm{d}}{\longrightarrow }1 + \xi \) also. But \(T_\kappa \) is essentially \(\lambda \), while \(M_\kappa \) will be very close to M, the upper bound on \(u_i\), \(i < \kappa \). This heuristic argument is not far from a proof of the case \(N = \infty \) of Theorem 2.3. More precisely, one can express \(\lambda \) in terms of the threshold sum process [6], and then the \(N=\infty \), \(a=0\) case of Theorem 2.3 is a consequence of Theorem 1 of [6]. Another way to access intuition behind the M-scale result for \(\lambda \) is that in this case \(\theta (N, M)\) is of order \(M^{-1/2}\) (see Proposition 4.2 below), so there are of order \(M^{1/2}\) completed excursions before extinction, while the expected duration of an excursion of length less than M is of order \(M^{1/2}\) (due to the 1/2-stable tail). Our proof below (Sect. 4.2) covers the full regime \(M \ll N^2\). That this is the relevant scale is due to the fact that simple random walk travels distance about \(\sqrt{M}\) in time M, so if \(N^2 \gg M\) it is rare for the random walk to encounter the opposite end of the boundary from which it started.

Consider now the confined-space regime, where \(M \gg N^2\). Now it is very likely that the random walk will traverse the whole of \(I_N\) many times before it runs out of energy, and so there will be many excursions before extinction. Indeed, the key quantitative result in this case, Proposition 4.5 below, shows that \(\theta (N, M) \sim (4/N) \cos ^M (\pi /N)\), which is small. Each excursion has mean duration about N (\({{\,\mathrm{{\textbf{E}}}\,}}_1 \tau _{0,N} = N-1\); see Lemma 3.3). Roughly speaking, the law of large numbers ensures that \(\lambda \approx \kappa N\), and then

which is essentially the exponential convergence result in Theorem 2.5.

The case that is most delicate is the critical case where \(M \sim \rho N^2\). The extinction probability estimate, given in Proposition 4.7 below, is now \(\theta (N, M) \sim (4/N) H(\rho )\), where H is given by (2.16); the delicate nature is because, on the critical scale, the two-boundary nature of the problem has an impact (unlike the meagre-capacity regime), while extinction is sufficiently likely that the largest individual excursion fluctuations are on the same scale as the total lifetime (unlike the confined-space regime). Since \(\theta (N, M)\) is of order 1/N, both the number of excursions before extinction, and the duration of a typical excursion, are of order N (i.e., \(M^{1/2}\)), similarly to the meagre-capacity case, and so again there is an M-scale limit for \(\lambda \), but the (universal) Darling–Mandelbrot distribution is replaced by the curious distribution exhibited in Theorem 2.7, which we have not seen elsewhere.
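The two extinction-probability asymptotics quoted above can be compared with exact values for moderate parameters. The following sketch computes \({{\,\mathrm{{\textbf{P}}}\,}}_1 ( \tau _{0,N} > n )\) by propagating the sub-probability mass of the killed walk; the function name `survival_prob` and the parameter choices are ours, and the comparison is a numerical illustration rather than part of the proofs.

```python
import math


def survival_prob(N, n):
    """Exact P_1(tau_{0,N} > n) for simple symmetric random walk started at 1.

    mass[x] holds the probability that the walk is at interior site x and
    has not yet visited 0 or N.
    """
    mass = [0.0] * (N + 1)
    mass[1] = 1.0
    for _ in range(n):
        new = [0.0] * (N + 1)
        for x in range(1, N):
            if mass[x]:
                half = 0.5 * mass[x]
                if x - 1 >= 1:
                    new[x - 1] += half
                if x + 1 <= N - 1:
                    new[x + 1] += half
        mass = new
    return sum(mass)


if __name__ == "__main__":
    # Meagre-capacity scale (n << N^2): compare with sqrt(2 / (pi n)).
    n = 401
    print(survival_prob(1000, n) / math.sqrt(2.0 / (math.pi * n)))
    # Confined-space scale (M >> N^2): compare with (4 / N) cos^M(pi / N).
    N, M = 50, 10000
    print(survival_prob(N, M + 1) / ((4.0 / N) * math.cos(math.pi / N) ** M))
```

Both ratios should be close to 1 for these parameter values, in line with the heuristics; the critical regime would additionally require the function H from (2.16).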

3 Excursions of Simple Random Walks

3.1 Notation and Preliminaries

For \(x \in \mathbb {Z}\), let \({{\,\mathrm{{\textbf{P}}}\,}}_x\) denote the law of a simple symmetric random walk on \(\mathbb {Z}\) with initial state x. We denote by \(S_0, S_1, S_2, \ldots \) the trajectory of the random walk with law \({{\,\mathrm{{\textbf{P}}}\,}}_x\), realised on a suitable probability space, so that \({{\,\mathrm{{\textbf{P}}}\,}}_x (S_0 = x) =1\) and \({{\,\mathrm{{\textbf{P}}}\,}}_x ( S_{n+1} - S_n = + 1 \mid S_0, \ldots , S_n ) = {{\,\mathrm{{\textbf{P}}}\,}}_x ( S_{n+1} - S_n = - 1 \mid S_0, \ldots , S_n) = 1/2\) for all \(n \in {\mathbb {Z}}_+\). Let \({{\,\mathrm{{\textbf{E}}}\,}}_x\) denote the expectation corresponding to \({{\,\mathrm{{\textbf{P}}}\,}}_x\). We sometimes write simply \({{\,\mathrm{{\textbf{P}}}\,}}\) and \({{\,\mathrm{{\textbf{E}}}\,}}\) in the case where the initial state plays no role.

For \(y \in \mathbb {Z}\), let \(\tau _y:= \inf \{ n \in {\mathbb {Z}}_+: S_n = y \}\), the hitting time of y. As usual, we set \(\inf \emptyset := +\infty \); the recurrence of the random walk says that \({{\,\mathrm{{\textbf{P}}}\,}}_x ( \tau _y < \infty ) = 1\) for all \(x, y \in \mathbb {Z}\). Also define \(\tau _{0,\infty }:= \tau _0\) and, for \(N \in \mathbb {N}\), \(\tau _{0,N}:= \tau _0 \wedge \tau _N = \inf \{ n \in {\mathbb {Z}}_+: S_n \in \{0,N\}\}\).

The number of \((2n+1)\)-step simple random walk paths that start at 1 and visit 0 for the first time at time \(2n+1\) is the same as the number of \((2n+1)\)-step paths that start at 0, finish at 1, and never return to 0, which, by the classical ballot theorem [19, p. 73], is \(\frac{1}{2n+1} \left( {\begin{array}{c}2n+1\\ n+1\end{array}}\right) = \frac{1}{n+1} \left( {\begin{array}{c}2n\\ n\end{array}}\right) \). Hence, by Stirling’s formula (cf. [19, p. 90]),

Similarly, since \({{\,\mathrm{{\textbf{P}}}\,}}_1( \tau _0 \ge 2n+1 ) = {{\,\mathrm{{\textbf{P}}}\,}}_1( S_1>0, \ldots , S_{2n-1}> 0 ) = 2{{\,\mathrm{{\textbf{P}}}\,}}_0 ( S_1> 0, \ldots , S_{2n} > 0)\) (cf. [19, pp. 75–77]) we have that

The distribution of \(\tau _{0,N}\) is more complicated; there is an exact formula (see e.g. [19, p. 369]) that will be needed when we look at larger time-scales (see Theorem 3.4 below), but for shorter time-scales, it will suffice to approximate the two-sided exit time \(\tau _{0,N}\) in terms of the (simpler) one-sided exit time \(\tau _0\). This is the subject of the next subsection.
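The ballot-theorem count used above can be confirmed by brute force for small n. The sketch below (function names are ours) enumerates all \((2n+1)\)-step paths started at 1 and counts those that visit 0 for the first time at the final step, then compares with \(\frac{1}{n+1} \left( {\begin{array}{c}2n\\ n\end{array}}\right) \).

```python
from itertools import product
from math import comb


def count_first_passage_paths(n):
    """Count (2n+1)-step +/-1 paths from 1 that first visit 0 at time 2n+1."""
    steps = 2 * n + 1
    count = 0
    for path in product((-1, 1), repeat=steps):
        pos = 1
        first_hit = None
        for t, e in enumerate(path, start=1):
            pos += e
            if pos == 0:
                first_hit = t
                break
        if first_hit == steps:
            count += 1
    return count


def ballot_count(n):
    """Closed form (1 / (n + 1)) * binom(2n, n) from the ballot theorem."""
    return comb(2 * n, n) // (n + 1)


if __name__ == "__main__":
    for n in range(6):
        print(n, count_first_passage_paths(n), ballot_count(n))
```

Dividing the count by \(2^{2n+1}\) gives \({{\,\mathrm{{\textbf{P}}}\,}}_1 ( \tau _0 = 2n+1 )\); the enumeration is exponential in n and is meant only as a sanity check.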

3.2 Short-Time Approximation by One-Sided Exit Times

The next lemma studies the duration of excursions that are constrained to be short.

Lemma 3.1

(i) For all \(N \in {\overline{\mathbb {N}}}\), all \(x \in I_N\), and all \(n \in {\mathbb {Z}}_+\),

$$\begin{aligned} \left| {{\,\mathrm{{\textbf{P}}}\,}}_x ( \tau _{0,N}> n ) - {{\,\mathrm{{\textbf{P}}}\,}}_x ( \tau _{0} > n ) \right| \le \frac{x}{N}.\end{aligned}$$(3.3)

(ii) Let \(\varepsilon >0\). Then there exists \(n_\varepsilon \in \mathbb {N}\), depending only on \(\varepsilon \), such that, for all \(N \in {\overline{\mathbb {N}}}\),

$$\begin{aligned} \left| {{\,\mathrm{{\textbf{P}}}\,}}_1( \tau _{0,N} > n ) - n^{-1/2} \sqrt{ {2} / {\pi }} \right| \le \varepsilon n^{-1/2} + \frac{1}{N}, \text { for all } n \ge n_\varepsilon .\end{aligned}$$(3.4)

(iii) Fix \(\varepsilon >0\). Then there exist \(n_\varepsilon \in \mathbb {N}\) and \(\delta _\varepsilon \in (0,\infty )\) such that

$$\begin{aligned} \sup _{n_\varepsilon \le n < \delta _\varepsilon N^2 } \left| n^{1/2} {{\,\mathrm{{\textbf{P}}}\,}}_1 ( \tau _{0,N} > n ) - \sqrt{ {2} / {\pi }} \right| \le \varepsilon , \text { for all } N \in {\overline{\mathbb {N}}}. \end{aligned}$$(3.5)

Proof

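Suppose that \(N \in \mathbb {N}\) and \(x \in I_N\). Since \(\tau _0 \ne \tau _{0,N}\) if and only if \(\tau _N < \tau _0\),

$$\begin{aligned} \left| {{\,\mathrm{{\textbf{P}}}\,}}_x ( \tau _{0,N}> n ) - {{\,\mathrm{{\textbf{P}}}\,}}_x ( \tau _{0} > n ) \right| \le {{\,\mathrm{{\textbf{P}}}\,}}_x ( \tau _N < \tau _0 ) = \frac{x}{N}, \end{aligned}$$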

by the classical gambler’s ruin result for symmetric random walk; if \(N = \infty \) then \({{\,\mathrm{{\textbf{P}}}\,}}_x ( \tau _{0,N}> n) = {{\,\mathrm{{\textbf{P}}}\,}}_x (\tau _0 > n)\) by definition. This verifies (3.3). Then (3.4) follows from the \(x=1\) case of (3.3) together with (3.2). Finally, for a given \(\varepsilon >0\), the bound (3.4) implies that there exists \(n_\varepsilon \in \mathbb {N}\) such that, for all \(n_\varepsilon \le n \le \delta N^2\),

$$\begin{aligned} \left| n^{1/2} {{\,\mathrm{{\textbf{P}}}\,}}_1 ( \tau _{0,N} > n ) - \sqrt{ {2} / {\pi }} \right| \le \varepsilon + \frac{n^{1/2}}{N} \le \varepsilon + \delta ^{1/2} = 2 \varepsilon , \end{aligned}$$

if \(\delta = \varepsilon ^2\), say. This yields (3.5) (suitably adjusting \(\varepsilon \)). \(\square \)
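As an illustration of Lemma 3.1(i), the gap between the two-sided and one-sided survival probabilities can be computed exactly for small parameters. The sketch below is illustrative only and its helper names are assumptions: it propagates the law of the walk killed at 0 and N, and obtains the one-sided law by placing the upper barrier beyond the reach of the walk within the time horizon.

```python
def survival_two_sided(x, N, n_max):
    """Return [P_x(tau_{0,N} > n) for n = 0..n_max] for the walk killed at 0 and N."""
    mass = [0.0] * (N + 1)
    mass[x] = 1.0
    out = []
    for _ in range(n_max + 1):
        out.append(sum(mass[1:N]))  # mass still strictly inside the interval
        new = [0.0] * (N + 1)
        for i in range(1, N):
            half = 0.5 * mass[i]
            new[i - 1] += half
            new[i + 1] += half
        mass = new  # mass that landed on 0 or N is never propagated again
    return out


def survival_one_sided(x, n_max):
    """P_x(tau_0 > n) for n <= n_max: a barrier at x + n_max + 1 is never reached."""
    return survival_two_sided(x, x + n_max + 1, n_max)


def max_gap(x, N, n_max):
    """Largest |P_x(tau_{0,N} > n) - P_x(tau_0 > n)| over 0 <= n <= n_max."""
    two = survival_two_sided(x, N, n_max)
    one = survival_one_sided(x, n_max)
    return max(abs(a - b) for a, b in zip(two, one))


if __name__ == "__main__":
    for x in (1, 3, 7):
        print(x, max_gap(x, 20, 150), "bound:", x / 20)
```

For each starting point the computed gap should stay below x/N, in line with (3.3).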

Lemma 3.2 gives a limit result for the duration of a simple random walk excursion started with initial condition \(x_M\) of order \(\sqrt{M}\); we will apply this to study the initial excursion of the energy-constrained walker. Recall that \(b = (b_t, t\in {\mathbb {R}}_+)\) denotes standard Brownian motion started at \(b_0 =0\), and \(\tau ^{{\tiny BM }}_1\) its first time of hitting level 1.

Lemma 3.2

Let \(a \in [0,\infty ]\). Suppose that \(M, N_M \in \mathbb {N}\) and \(x_M \in I_{N_M}\) are such that \(x^2_M / M \rightarrow a\) and \(x_M / N_M \rightarrow 0\) as \(M \rightarrow \infty \). Then for every \(y \in {\mathbb {R}}_+\),

where the right-hand side of (3.6) is to be interpreted as 0 whenever \(a \in \{0,\infty \}\). In particular, for every \(\beta \in {\mathbb {R}}_+\) and all \(y \in {\mathbb {R}}_+\),

Proof

Suppose that \(0 \le a < \infty \). Let \(\mathcal {C}\) denote the space of continuous functions \(f: {\mathbb {R}}_+\rightarrow \mathbb {R}\), endowed with the uniform metric \(\Vert f - g \Vert _\infty := \sup _{t \in {\mathbb {R}}_+} | f(t) - g(t) |\). Define the re-scaled and interpolated random walk trajectory \(z_M \in \mathcal {C}\) by

Then \(S_0 / M^{1/2} \rightarrow \sqrt{a}\), and, by Donsker’s theorem (see e.g. Theorem 8.1.4 of [18]), \(z_M\) converges weakly in \(\mathcal {C}\) to \((b_t)_{t \in {\mathbb {R}}_+}\), where b is standard Brownian motion started from 0. For \(f \in \mathcal {C}\), let \(T_a (f):= \inf \{ t \in {\mathbb {R}}_+: f(t) \le -\sqrt{a} \}\). By Brownian scaling, \(T_a (b)\) has the same distribution as \(a \tau ^{{\tiny BM }}_1\). With probability 1, \(T_a(b)< \infty \) and b has no intervals of constancy. Hence (cf. [18, p. 395]) the set \(\mathcal {C}':= \{ f \in \mathcal {C}: T_a \text { is finite and continuous at } f \}\) has \({{\,\mathrm{\mathbb {P}}\,}}( b \in \mathcal {C}' ) = 1\) and hence, by the continuous mapping theorem, \(T_a ( z_M) \rightarrow T_a (b)\) in distribution. If \(a = \infty \) this says \(T_\infty (z_M) \rightarrow \infty \) in probability, and if \(a=0\) it says \(T_0 (z_M) \rightarrow 0\) in probability; otherwise, \({{\,\mathrm{\mathbb {P}}\,}}( a \tau ^{{\tiny BM }}_1> y )\) is continuous for all \(y \in {\mathbb {R}}_+\) and \(\tau _0 / M = T_a ( z_M + M^{-1/2} S_0 - \sqrt{a} )\) has the same limit as \(T_a (z_M)\). Hence we conclude that

where the right-hand side is equal to 0 if \(a=0\) and 1 if \(a=\infty \). It follows from (3.3) that (3.8) also holds for \(\tau _{0,N_M}\) in place of \(\tau _0\), provided that \(x_M / N_M \rightarrow 0\). Hence, under the conditions of the lemma, we have

If random variables \(X, X_n\) satisfy \(X_n \overset{\textrm{d}}{\longrightarrow }X\), then, for every \(y \in {\mathbb {R}}_+\), \(X_n {\mathbbm {1}\hspace{-0.83328pt}}{\{ X_n \le y \}} \overset{\textrm{d}}{\longrightarrow }X {\mathbbm {1}\hspace{-0.83328pt}}{\{ X \le y \}}\); this follows from the fact that

and \(x <y\) is a continuity point of \({{\,\mathrm{\mathbb {P}}\,}}( X {\mathbbm {1}\hspace{-0.83328pt}}{\{ X \le y \}} \le x)\) if and only if it is a continuity point of \({{\,\mathrm{\mathbb {P}}\,}}(X \le x)\). Hence (3.9) implies (3.6). The bounded convergence theorem then yields (3.7).

\(\square \)

3.3 Long Time-Scale Asymptotics for Two-Sided Exit Times

The following result gives the expectation and variance of the duration of the classical gambler’s ruin game; the expectation can be found, for example, in [19, pp. 348–349], while the variance is computed in [2, 5].

Lemma 3.3

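For every \(N \in \mathbb {N}\) and every \(x \in I_N\), we have

$$\begin{aligned} {{\,\mathrm{{\textbf{E}}}\,}}_x \tau _{0,N} = x (N-x), \quad \text {and} \quad {{\,\mathrm{{\textbf{V}\!ar}}\,}}_x \tau _{0,N} = \frac{x (N-x)}{3} \left( x^2 + (N-x)^2 - 2 \right) . \end{aligned}$$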

Note that although \({{\,\mathrm{{\textbf{E}}}\,}}_1\tau _{0,N} = N-1\), \({{\,\mathrm{{\textbf{V}\!ar}}\,}}_1 \tau _{0,N} \sim N^3/3\) is much greater than the square of the mean, which reflects the fact that while (under \({{\,\mathrm{{\textbf{P}}}\,}}_1\)) \(\tau _{0,N}\) is frequently very small, with probability about 1/N it exceeds \(N^2\) (consider reaching around N/2 before 0). Theorem 3.4 below gives asymptotic estimates for the tails \({{\,\mathrm{{\textbf{P}}}\,}}_1( \tau _{0,N} > n )\). These estimates are informative when n is at least of order \(N^2\), i.e., at least the scale for \(\tau _{0,N}\) that contributes to most of the variance.
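The values \({{\,\mathrm{{\textbf{E}}}\,}}_1\tau _{0,N} = N-1\) and \({{\,\mathrm{{\textbf{V}\!ar}}\,}}_1 \tau _{0,N} = N(N-1)(N-2)/3\) can be confirmed numerically for a small interval. The following sketch is illustrative (the function name and the truncation tolerance are assumptions): it accumulates the first two moments of the absorption time by propagating the killed walk until the surviving mass is negligible.

```python
def exit_time_moments(x, N, tol=1e-15):
    """Mean and variance of tau_{0,N} under P_x, for an interior start 0 < x < N."""
    mass = [0.0] * (N + 1)
    mass[x] = 1.0
    n = 0
    first, second = 0.0, 0.0
    alive = 1.0
    while alive > tol:
        new = [0.0] * (N + 1)
        for i in range(1, N):
            half = 0.5 * mass[i]
            new[i - 1] += half
            new[i + 1] += half
        n += 1
        absorbed = new[0] + new[N]  # probability that tau_{0,N} equals n
        first += n * absorbed
        second += n * n * absorbed
        new[0] = new[N] = 0.0
        mass = new
        alive = sum(mass)
    return first, second - first * first


if __name__ == "__main__":
    N = 12
    mean, var = exit_time_moments(1, N)
    print(mean, N - 1)
    print(var, N * (N - 1) * (N - 2) / 3)
```

The variance is already much larger than the squared mean at this small size, which matches the heavy-tail heuristic described above.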

Define the trigonometric sum

Theorem 3.4

Suppose that \(k_0 \in \mathbb {N}\). Then, there exists \(N_0 \in \mathbb {N}\) (depending only on \(k_0\)) such that, for all \(N \ge N_0\) and all \(n \in {\mathbb {Z}}_+\),

where the function \(\Delta \) satisfies

Before giving the proof of Theorem 3.4, we state two important consequences. Part (i) of Corollary 3.5 will be our key estimate in studying the confined space regime (\(M \gg N^2\)), while part (ii) is required for the critical regime (\(M \sim \rho N^2\)).

Corollary 3.5

(i) If \(n / N^2 \rightarrow \infty \) as \(n \rightarrow \infty \), then

$$\begin{aligned} {{\,\mathrm{{\textbf{P}}}\,}}_1( \tau _{0,N} > n ) = \frac{4}{N} (1 + o(1) ) \cos ^n ( \pi / N). \end{aligned}$$(3.13)

Moreover, for every \(\beta \in {\mathbb {R}}_+\),

$$\begin{aligned} \lim _{n \rightarrow \infty } \frac{{{\,\mathrm{{\textbf{E}}}\,}}_1[ \tau ^\beta _{0,N} \mid \tau _{0,N} \le n ]}{{{\,\mathrm{{\textbf{E}}}\,}}_1[ \tau ^\beta _{0,N} ]} = 1.\end{aligned}$$(3.14)

(ii) Let \(y_0 \in (0,\infty )\). Then, with H defined in (2.16),

$$\begin{aligned} \lim _{N \rightarrow \infty } \sup _{y \ge y_0} \left| \frac{N}{4} {{\,\mathrm{{\textbf{P}}}\,}}_1( \tau _{0,N} > y N^2 ) - H(y) \right| = 0.\end{aligned}$$
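The tail estimate (3.13) can be illustrated by comparing the exact survival probability with the leading term \((4/N) \cos ^n (\pi /N)\) at a time n well beyond \(N^2\). The sketch below is a numerical illustration under assumed parameter choices (N = 30 and n = 10 N^2), with hypothetical helper names; it is not a substitute for the quantitative bounds of Theorem 3.4.

```python
from math import cos, pi


def survival_prob(N, n):
    """Exact P_1(tau_{0,N} > n), by propagating the walk killed at 0 and N."""
    mass = [0.0] * (N + 1)
    mass[1] = 1.0
    for _ in range(n):
        new = [0.0] * (N + 1)
        for i in range(1, N):
            half = 0.5 * mass[i]
            new[i - 1] += half
            new[i + 1] += half
        new[0] = new[N] = 0.0  # discard absorbed mass
        mass = new
    return sum(mass)


def leading_term(N, n):
    """Right-hand side of (3.13) without the (1 + o(1)) factor."""
    return (4.0 / N) * cos(pi / N) ** n


if __name__ == "__main__":
    N = 30
    n = 10 * N * N
    print(survival_prob(N, n) / leading_term(N, n))
```

The printed ratio should be close to 1; repeating the computation with n of order \(N^2\) or smaller shows the ratio drifting away, which is where the additional terms in Theorem 3.4 matter.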

Remark 3.6

We are not able to obtain the conclusions of Corollary 3.5 directly from Brownian scaling arguments. Indeed, an appealing approximation is to say \({{\,\mathrm{{\textbf{P}}}\,}}_1( \tau _{0,N}> n ) \approx {{\,\mathrm{\mathbb {P}}\,}}_{1/N} ( \tau ^{{\tiny BM }}_{0,1}> n/N^2)\), where \(\tau ^{{\tiny BM }}_{0,1}\) is the first hitting time of \(\{0,1\}\) for Brownian motion started at 1/N. This approximation does not, however, achieve the correct asymptotics for the full range of n, N, even if the error is quantified. In particular (see e.g. [20, p. 342] or [12, p. 126]) we have

which for \(n \gg N^2\) leads to the conclusion that

This agrees asymptotically with (3.13) only when \(N^2 \ll n \ll N^4\); cf. Remark 2.6(b). Hence we develop the quantitative estimates in Theorem 3.4.

To end this section, we complete the proofs of Theorem 3.4 and Corollary 3.5.

Proof of Theorem 3.4

A classical result, whose origins Feller traces to Laplace [19, p. 353], yields

(The expression in (3.15) is 0 if N and n are both even.) Define \(m_N:= \big \lceil \frac{N-1}{2} \big \rceil \) and note that \(N - 2m_N = \delta _N \in \{0,1\}\), where

Also define

Then, from (3.15), and the notation introduced in (3.10), we have

The proof of the theorem requires several key estimates. The first claim is that

for all \(m_N > k_0 \in \mathbb {N}\), where \(\mathcal {S}_1\) is defined at (3.17), and

The second claim is that, for every \(k_0 \in \mathbb {N}\),

Take the bounds in (3.19) and (3.20) as given, for now; then from (3.18) and (3.19),

and then applying (3.20) we obtain

where the terms \(\Delta _2, \Delta _3\) satisfy

Now, there exists \(\theta _0 > 0\) (\(\theta _0=1\) will do) for which \(\log \cos \theta \ge - \theta ^2\) for all \(| \theta | \le \theta _0\). Hence

for all N sufficiently large (as a function of \(k_0 \in \mathbb {N}\)), and all \(n \in \mathbb {N}\). Hence, by (3.23),

and, together with (3.21) and (3.22), this implies (3.11).

It remains to verify (3.19) and (3.20). For the former, write

using the change of variable \(\ell = m-k+1\) and the fact that \(\cos ( \pi - \theta ) = -\cos \theta \). Since \(\log \cos \theta \le - \theta ^2/2\) for \(| \theta | < \pi /2\), we have

and, similarly, since \(N - 2m_N = \delta _N \in \{0,1\}\),

From (3.24), we obtain that, for any \(k_0 \in \mathbb {N}\), since \(\lfloor m_N /2 \rfloor \le N/4\),

using the inequality \((2\ell + 2k_0 + 1)^2 \ge 8 (1+k_0) \ell + (2k_0 +1)^2\). Since

we get

Similarly, from (3.25), we get

This verifies (3.19). Finally, we have from (3.10) that

for all N sufficiently large (depending only on \(k_0\)), since \(| 1 - \cos \theta | \le \theta ^2\) for all \(\theta \in \mathbb {R}\). Similarly,

where, again, N must be large enough. This verifies (3.20). \(\square \)

Proof of Corollary 3.5

First, suppose that \(n / N^2 \rightarrow \infty \). Then we can take \(k_0 =1\) in Theorem 3.4 to see that \({{\,\mathrm{{\textbf{P}}}\,}}_1( \tau _{0,N} > n ) = (4/N) (1+ \Delta (N, 1, n) ) \cos ^n ( \pi /N)\), where, by (3.12), \(| \Delta (N,1,n) | = o(1)\). This proves (3.13). Since \(\log \cos \theta \le - \theta ^2/2\) for \(|\theta | < \pi /2\), it follows from (3.13) that

For any random variable \(X \ge 0\), and \(\beta \in {\mathbb {R}}_+\), and any \(r \in {\mathbb {R}}_+\), one has

Hence (3.26) shows that there is a constant \(C_\beta \in {\mathbb {R}}_+\) such that, for all n sufficiently large,

using the change of variable \(t = s/N^2\). Since \(n /N^2 \rightarrow \infty \), this means that

In particular, the \(\beta =0\) case of (3.27) shows that \(\lim _{n \rightarrow \infty } {{\,\mathrm{{\textbf{P}}}\,}}_1( \tau _{0,N} \le n ) =1\). A consequence of (3.5) is that \({{\,\mathrm{{\textbf{P}}}\,}}_1( \tau _{0,N} > \delta N^2 ) \ge \delta /N\) for some \(\delta >0\), and hence \({{\,\mathrm{{\textbf{E}}}\,}}_1[ \tau ^\beta _{0,N} ] \ge c_\beta N^{2\beta -1}\) for some \(c_\beta >0\); the conclusion in (3.14) then follows from (3.27). This proves part (i).

Finally, fix \(y \ge y_0 > 0\) and suppose that \(n / N^2 \rightarrow y\). Then, uniformly in \(k \le N^{1/2}\),

as \(n \rightarrow \infty \), where \(h_k\) is defined at (2.16) and \(\sum _{k=1}^\infty h_k (y) = H(y)\) satisfies \(\sup _{y \ge y_0} H(y) < \infty \). In particular, for any \(\varepsilon >0\) we can choose \(k_0 \in \mathbb {N}\) large enough so that \(\sup _{y \ge y_0} |\sum _{k=1}^{k_0} h_k (y) - H(y) | < \varepsilon \). From (3.28) it follows that, for any \(\varepsilon >0\),

for all n sufficiently large. Hence, for every \(\varepsilon >0\),

for all n sufficiently large. By (3.12), there exist \(k_0, n_0 \in \mathbb {N}\) such that, for every \(y \ge y_0\),

for all \(n \ge n_0\) (given \(\varepsilon \) and \(y_0\), first take \(k_0\) large, and then N large). From (3.11) with (3.29), (3.30), and the fact that \(\sup _{y \ge y_0} H(y) < \infty \), we verify part (ii). \(\square \)

4 Proofs of Main Results

4.1 Excursions, Renewals, and Extinction

We start by giving the proof of the irreducibility result, Lemma 2.2, stated in Sect. 2.2.

Proof of Lemma 2.2

Let \(\mathcal {F}_n:= \sigma ( \zeta _0, \ldots , \zeta _n)\), the \(\sigma \)-algebra generated by the first \(n \in {\mathbb {Z}}_+\) steps of the Markov chain \(\zeta \). Then, given \(\mathcal {F}_n\), at least one of the two neighbouring sites of \(\mathbb {Z}\) to \(X_n\) is in \(I^{{{{\circ }}}}_N\); take \(y \in I^{{{{\circ }}}}_N\) to be any site such that \(| y - X_n | = 1\). If \(X_n \in \partial I_N\), then the walker will move to y with probability 1, and the energy level will be refreshed to M:

Otherwise, \(X_n \in I^{{{{\circ }}}}_N\). If \(\eta _n \ge 1\), then the walker can, with probability 1/2, take a step to y on the next move, which uses 1 unit of energy. On the other hand, if \(\eta _n = 0\), then \(X_{n+1} = X_n\) must remain in \(I^{{{{\circ }}}}_N\). Thus, writing \(x^+:= x {\mathbbm {1}\hspace{-0.83328pt}}{\{ x > 0\}}\),

In particular, since \(\eta _n \le M\), we can combine (4.1) and (4.2) (applied M times) to get

Thus

repeated application of which implies that

uniformly over \(N \in {\overline{\mathbb {N}}}\). Every \(n \in {\mathbb {Z}}_+\) has \(k (M+1) \le n < (k+1)(M+1)\) for some \(k = k(n) \in {\mathbb {Z}}_+\), and so

This verifies that \(\sup _{z \in I_N \times I_M} {{{\,\mathrm{\mathbb {E}}\,}}}^{N,M}_z[ \textrm{e}^{\delta \lambda } ] < \infty \) for any \(\delta \in (0, \frac{2^{-M}}{M+1} )\). \(\square \)

We denote by \(\sigma _1< \sigma _2< \cdots < \sigma _\kappa \) the successive times of visiting the boundary before time \(\lambda \); formally, set \(\sigma _0:= 0\) and

with the usual convention that \(\inf \emptyset := \infty \). Then \(\sigma _k < \infty \) if and only if \(\sigma _k < \lambda \). The number of complete excursions is then

i.e., the number of visits to \(\partial I_N\) before extinction. Since \(\lambda < \infty \), a.s. (by Lemma 2.2), \(\kappa \in {\mathbb {Z}}_+\) is a.s. finite.

For \(k \in \mathbb {N}\), define the excursion duration \(\nu _k:= \sigma _k - \sigma _{k-1}\) as long as \(\sigma _k <\infty \); otherwise, set \(\nu _k = \infty \). We claim that

To see (4.4), observe that, if \(\sigma _{k-1} < \lambda \) then \(\eta _{\sigma _{k-1}+1} = M\) and \(X_n \in I^{{{{\circ }}}}_N\) for all \(\sigma _{k-1}< n < \sigma _k\). Hence \(\eta _{\sigma _{k-1} + i} = M+1 -i\) for all \(1 \le i \le \nu _k\) if \(\nu _k < \infty \). In particular, if \(\nu _k < \infty \), then \(0 \le \eta _{\sigma _k} = M + 1 - \nu _k\), which implies that \(\nu _k \le M+1\). This verifies (4.4).

For simplicity, we write \(\nu := \nu _1\). By the strong Markov property, for all \(k, n \in {\mathbb {Z}}_+\),

meaning that excursions subsequent to the first are identically distributed. On the other hand, \({{{\,\mathrm{\mathbb {P}}\,}}}^{N,M}_z( \nu _1 = n ) = {{{\,\mathrm{\mathbb {P}}\,}}}^{N,M}_z( \nu = n)\) for \(n \in {\mathbb {Z}}_+\), so that if \(z \ne (1,M)\) the first excursion may have a different distribution.

After the final visit to the boundary at time \(\sigma _\kappa \), the energy \(\eta _{\sigma _\kappa +1} =M\) decreases one unit at a time until \(\eta _{\sigma _\kappa + M + 1} = 0\) achieves extinction. Thus, by (4.4),

$$\begin{aligned} \lambda = M + 1 + \sigma _\kappa = M + 1 + \sum _{i=1}^\kappa \nu _i . \end{aligned}$$(4.6)

In the terminology of renewal theory, \(\nu _1, \nu _2, \ldots \) is a renewal sequence that is delayed (since \(\nu _1\) may have a different distribution from the subsequent terms) and terminating, since \({{\,\mathrm{\mathbb {P}}\,}}(\nu _k = \infty ) >0\). A key quantity is the per-excursion extinction probability

the probability that the process started in state z terminates before reaching the boundary. Set \(\theta (N,M):= \theta _{(1,M)} (N,M)\) for the case where \(z=(1,M)\), which plays a special role, due to (4.5). The following basic result exhibits the probabilistic structure associated with the renewal set-up. Recall the definitions of the number of completed excursions \(\kappa \) and extinction probability \(\theta _z(N,M)\) from (4.3) and (4.7), respectively.

Lemma 4.1

Let \(N \in {\overline{\mathbb {N}}}\) and \(M \in \mathbb {N}\). Then, for all \(k \in \mathbb {N}\),

and

Moreover, given that \(\kappa =k \in \mathbb {N}\), the random variables \(\nu _1, \ldots \nu _k\) are (conditionally) independent, and satisfy, for every \(n_1, \ldots , n_k \in I_{M+1}\),

Proof

By definition, \(\sigma _0 = 0\). Let \(k \in \mathbb {N}\). From (4.3), we have that \(\kappa \ge k\) if and only if \(\sigma _k < \lambda \). If \(\sigma _k < \lambda \), then \(|X_{\sigma _k+1} - y | = 1\) for some \(y \in \partial I_N\), and \(\eta _{\sigma _k+1} = M\). Hence, by (4.7) and the strong Markov property applied at the stopping time \(\sigma _k+1\), for \(k \in \mathbb {N}\),

Hence \({{{\,\mathrm{\mathbb {P}}\,}}}^{N,M}_z( \kappa \ge k+1 \mid \kappa \ge k ) = 1 - \theta (N,M)\) for \(k \in \mathbb {N}\), and, since \({{{\,\mathrm{\mathbb {P}}\,}}}^{N,M}_z( \kappa \ge 1) = {{{\,\mathrm{\mathbb {P}}\,}}}^{N,M}_z( \sigma _1 < \lambda ) = 1 - \theta _z (N,M)\), we obtain (4.8). Moreover, for \(n_1, \ldots , n_k \le M+1\),

by repeated application of (4.5). Similarly,

Dividing the expression in (4.10) by that in (4.11) gives (4.9), since, by (4.7), \( {{{\,\mathrm{\mathbb {P}}\,}}}^{N,M}_z( \nu < \infty ) = 1 - \theta _z (N,M)\). \(\square \)

4.2 The Meagre-Capacity Limit

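In this section we present the proof of Theorem 2.3; at several points we appeal to the results from Sect. 3 on simple random walk. Consider the generating function

$$\begin{aligned} \psi _M (s):= {{{\,\mathrm{\mathbb {E}}\,}}}^{N_M,M}_{(1,M)}[ \textrm{e}^{s \nu } \mid \nu < \infty ], \text { for } s \in \mathbb {R}. \end{aligned}$$(4.12)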

Define for \(t \in \mathbb {R}\),

For each \(t \in \mathbb {R}\), the series in (4.13) converges absolutely, and, indeed, \(| 1 - K(t) | \le \textrm{e}^{|t|}\) for all \(t \in \mathbb {R}\). The series for K defined by (4.13) compared to equation (13.1.2) in [4] identifies K as the Kummer (confluent hypergeometric) function \(K(t) = M\big (-\frac{1}{2}, \frac{1}{2}, t \big )\); see Appendix A for some of its properties.
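For readers who want to experiment with K, the following sketch evaluates the Kummer function \(M\big (-\frac{1}{2}, \frac{1}{2}, t \big )\) from its hypergeometric series. It assumes only the standard definition of the confluent hypergeometric series, for which the ratio of Pochhammer symbols reduces to \(-1/(2n-1)\), giving \(K(t) = 1 - \sum _{n \ge 1} t^n / ((2n-1) n!)\). As a cross-check for \(t \le 0\) it uses the standard closed form \(\textrm{e}^{t} + \sqrt{\pi |t|} \, \textrm{erf} (\sqrt{|t|})\), which is an identity brought in for illustration and is not taken from the text. Function names are illustrative.

```python
from math import erf, exp, pi, sqrt


def kummer_series(t, terms=80):
    """M(-1/2, 1/2, t) = 1 - sum_{n>=1} t^n / ((2n-1) n!), truncated series."""
    total = 1.0
    power_over_factorial = 1.0  # running value of t^n / n!
    for n in range(1, terms + 1):
        power_over_factorial *= t / n
        total -= power_over_factorial / (2 * n - 1)
    return total


def kummer_closed_form_nonpositive(t):
    """Closed form for t <= 0: exp(t) + sqrt(pi |t|) erf(sqrt(|t|))."""
    x = sqrt(-t)
    return exp(t) + sqrt(pi) * x * erf(x)


if __name__ == "__main__":
    for t in (-3.0, -1.0, -0.5, 0.0):
        print(t, kummer_series(t), kummer_closed_form_nonpositive(t))
    for t in (-2.0, 0.5, 2.0):
        print(t, abs(1 - kummer_series(t)), "<=", exp(abs(t)))
```

The second loop illustrates the bound \(| 1 - K(t) | \le \textrm{e}^{|t|}\) stated above.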

The following result gives asymptotics for \(\theta (N_M, M) = \theta _{(1,M)} (N_M, M)\) as defined at (4.7), and for the generating function \(\psi _M\) as defined at (4.12).

Proposition 4.2

Consider the \((N_M,M,z_M)\) model with \(M \in \mathbb {N}\) and \(N_M \in {\overline{\mathbb {N}}}\) such that \(\lim _{M \rightarrow \infty } (M / N_M^2 ) = 0\). Then

Moreover,

uniformly over \(t \in R\), for any compact \(R \subset \mathbb {R}\).

In the proof of this result, and later, we will use the following integration by parts formula for restricted expectations, which is a slight generalization of Theorem 2.12.3 of [25, p. 76].

Lemma 4.3

For any real-valued random variable X and every \(a, b \in \mathbb {R}\) with \(a \le b\), and any monotone and differentiable \(g: \mathbb {R}\rightarrow \mathbb {R}\),

Proof

Suppose that \(g: \mathbb {R}\rightarrow \mathbb {R}\) is differentiable. Write \(F_X (y):={{\,\mathrm{\mathbb {P}}\,}}( X \le y)\) for the distribution function of X. If g is monotone non-decreasing, then

where the first equality is e.g. Theorem 2.7.1 of [25, p. 60] and the second follows from Theorem 2.9.3 of [25, p. 66]; this yields (4.16). If g is monotone non-increasing, then the same result follows by considering \({\tilde{g}} (y):= g(b) - g(y)\). \(\square \)

Proof of Proposition 4.2

For ease of notation, write \(\tau := \tau _{0,N_M}\) throughout this proof. Apply Lemma 3.1 with \(n = M+1\) to get

Since \(M = o( N_M^2 )\), the \(O(1/N_M)\) term here can be neglected asymptotically; this verifies (4.14).

We apply (4.16) with \(X = \tau /M\), \(a=0\), \(b=1\), and \(g (y) = \textrm{e}^{ty} -1\), (\(t \ne 0\)) to get

Fix \(\varepsilon >0\), and let \(n_\varepsilon \in \mathbb {N}\) and \(\delta _\varepsilon > 0\) be as in Lemma 3.1(iii). Since \(M / N_M^2 \rightarrow 0\), we have \(M < \delta _\varepsilon N_M^2\) for all M sufficiently large. Hence from (3.5) we have that

for all M sufficiently large. It follows from (4.18) that, as \(M \rightarrow \infty \),

using the definition of \(\mathcal {I}\) from (2.5); here the o(1) is uniform for \(t \in R\) for a given compact \(R \subset \mathbb {R}\). Similarly, from (4.18) again, uniformly for \(t \in R\) (compact),

Combining (4.19) and (4.20), since \(\varepsilon >0\) was arbitrary, we obtain

as \(M \rightarrow \infty \), uniformly for \(t \in R\), R compact. Together with the asymptotics for \({{\,\mathrm{{\textbf{P}}}\,}}_1( \tau > M+1 ) = \theta (N_M, M )\) from (4.14), we thus conclude from (4.17) that

uniformly for \(t \in R\), R compact, from which (4.15) follows, since \(1 - \textrm{e}^t + \mathcal {I}( t) = 1-K(t)\) by (A.1), and, from (4.12),

where \({{\,\mathrm{{\textbf{P}}}\,}}_1( \tau \le M+1) = 1 - \theta (N_M, M ) \rightarrow 1\), by (4.14). \(\square \)

To avoid burdening notation with conditioning, let \(Y_M\) denote a random variable taking values in \(I_{M+1}\) (enriching the underlying probability space if necessary) such that

Given \(Y_M\) as constructed through (4.21), it is the case that \(\psi _M\) as defined at (4.12) can be represented via \(\psi _M(s) = {{{\,\mathrm{\mathbb {E}}\,}}}^{N_M,M}_{(1,M)}[ \textrm{e}^{s Y_M} ]\), \(s \in \mathbb {R}\).

The next lemma proves that the limit distribution in Theorem 2.3 has expectation given by the function g defined at (2.9), and establishes some of the key properties of g.

Lemma 4.4

Suppose that \(\xi \sim \textrm{DM}(1/2)\) and \(\tau ^{{\tiny BM }}_1\sim \textrm{S}_+(1/2)\) are independent with distributions given by (2.6) and (2.8) respectively. Let \(a \in {\mathbb {R}}_+\) and \(u \in (0,\infty )\). The random variable \(\zeta _{a,u}:= \min ( u, a \tau ^{{\tiny BM }}_1) + (1+ \xi ) {\mathbbm {1}\hspace{-0.83328pt}}{\{ a \tau ^{{\tiny BM }}_1< u\}}\) has mean given by \({{\,\mathrm{\mathbb {E}}\,}}\zeta _{a,u} = g(a,u)\), where g is defined at (2.9). Moreover, for every \(u\in (0,1]\), the function \(a \mapsto g(a,u)\) is strictly decreasing and infinitely differentiable on \((0,\infty )\), with \(g(0,u)=2\) and \(\lim _{a \rightarrow \infty } g(a,u)=u\).

Proof

If \(a=0 <u\), then \(\zeta _{0,u} = 1 +\xi \), a.s., and the result is true because \(g(0,u) = 2 = 1 +{{\,\mathrm{\mathbb {E}}\,}}\xi \). Suppose that \(a, u \in (0,\infty )\). From (2.8), we have that

using the substitutions \(y = u/(at)\) in the first integral and \(s^2 = 1/t\) in the second. Here

Moreover, an integration by parts followed by the substitution \(s^2 = ry\) gives

Hence

Finally, by (2.12), \({{\,\mathrm{\mathbb {P}}\,}}( a \tau ^{{\tiny BM }}_1< u ) = {{\,\mathrm{\mathbb {P}}\,}}( \tau ^{{\tiny BM }}_1< u/a) = 2 {\overline{\Phi }}(\sqrt{a/u} )\), and then using the independence of \(\xi \) and \(\tau ^{{\tiny BM }}_1\), and the fact that \({{\,\mathrm{\mathbb {E}}\,}}\xi = 1\), gives

which is equal to g(a, u) as defined at (2.9).

Write \(\phi (z):= (2\pi )^{-1/2} \textrm{e}^{-z^2/2}\) for the standard normal density, so that \({\overline{\Phi }}' ( z) = - \phi (z)\) and \(\phi '(z) = - z \phi (z)\). The formula (2.9) can then be expressed as

which, on differentiation, yields, for \(u >0\),

Thus \(g'(a,u) < 0\) for all \(a \in (0,\infty )\) and all \(u \in (0,1]\), with \(\lim _{a \rightarrow 0} g'(a,u) = -\infty \) for \(u \in (0,1)\) and \(\lim _{a \rightarrow 0} g'(a,1) = -1\). In particular, \(a \mapsto g(a,u)\) is strictly decreasing. \(\square \)

Proof of Theorem 2.3

To simplify notation, set \(\theta _M:= \theta (N_M, M)\). First suppose that \(X_0 = 1\) and \(\eta _0 =M\). Then in the representation \(\sigma _\kappa = \sum _{i=1}^\kappa \nu _i\), Lemma 4.1 shows that, given \(\kappa = k \in \mathbb {N}\), \(\nu _1, \ldots , \nu _k\) are i.i.d. with the law of \(Y_M\) as given by (4.21), and the law of \(\kappa \) is \({{{\,\mathrm{\mathbb {P}}\,}}}^{N_M,M}_{(1,M)}( \kappa = k ) = (1-\theta _M)^k \theta _M\), for \(k \in {\mathbb {Z}}_+\). In particular, for \(| r (1-\theta _M ) | < 1\),

Hence, by (conditional) independence,

For \(s=t/M\), we have from Proposition 4.2 that, for \(t \in \mathbb {R}\),

as \(M \rightarrow \infty \). In particular, for \(t \in \mathcal {T}\), with \(\mathcal {T}\) defined at (4.13), the asymptotics in (4.24) show that \(( 1 - \theta _M ) \psi _M( t / M ) < 1\) for all M sufficiently large. Hence from (4.23) applied at \(s = t/M\), \(t \in \mathcal {T}\), with (4.24) and another application of (4.14), we get

Since \(\lambda = M +1 + \sigma _\kappa \), by (4.6), it follows from (4.25), and the fact that convergence of moment generating functions in a neighbourhood of 0 implies convergence in distribution, and convergence of all moments (see e.g. [25, p. 242]), that \(\lambda /M \overset{\textrm{d}}{\longrightarrow }1+\xi \) where \({{\,\mathrm{\mathbb {E}}\,}}[ \textrm{e}^{t \xi } ] = 1 / K(t)\) for \(t \in \mathcal {T}\), and \(M^{-1} {{{\,\mathrm{\mathbb {E}}\,}}}^{N_M,M}_{(1,M)}[ \sigma _\kappa ] \rightarrow {{\,\mathrm{\mathbb {E}}\,}}\xi = 1\). The form for \({{\,\mathrm{\mathbb {E}}\,}}[ \textrm{e}^{t \xi }]\) given in (2.6) is obtained using the relation (A.1). This establishes both (2.10) and (2.11) in the case \(X_0 = x_M \equiv 1\) and \(\eta _0 = y_M \equiv M\), where (2.7) is satisfied for \(a=0\) and \(u=1\).

More generally, suppose that \((X_0,\eta _0) = ( x_M,y_M)\) satisfying (2.7). Then

by the strong Markov property, where the \(Z, \nu _1\) on the right are independent, and Z has the distribution of \(\sigma _\kappa \) under \({{{\,\mathrm{\mathbb {P}}\,}}}^{N_M,M}_{(1,M)}\). Here \(\sigma _\kappa / M \overset{\textrm{d}}{\longrightarrow }\xi \) and, by Lemma 3.2,

and \(M^{-1} \nu _1 {\mathbbm {1}\hspace{-0.83328pt}}{\{ \nu _1 < \infty \}}\) converges in distribution to \(a \tau ^{{\tiny BM }}_1{\mathbbm {1}\hspace{-0.83328pt}}{\{ a \tau ^{{\tiny BM }}_1\le u \}}\). This completes the proof of (2.10). Taking expectations in (4.26), and using the stated independence, we get

where \({{{\,\mathrm{\mathbb {P}}\,}}}^{N_M,M}_{z_M}( \nu < \infty ) = 1 - \theta _{z_M} (N_M, M) = {{\,\mathrm{\mathbb {P}}\,}}( a \tau ^{{\tiny BM }}_1\le u) + o(1)\), by the \(\beta =0\) case of (3.7) and hypothesis (2.7). It follows that

using the fact that \(\lim _{M \rightarrow \infty } M^{-1} {{{\,\mathrm{\mathbb {E}}\,}}}^{N_M,M}_{(1,M)}[ \sigma _\kappa ] = 1\), as established via (4.25) above. Moreover, using (2.7), we have from Lemma 3.2 that

Thus we conclude that, as \(M \rightarrow \infty \),

where \(\zeta _{a,u}\) is as defined in Lemma 4.4. This establishes (2.11), and completes the proof of the theorem. \(\square \)

4.3 The Confined-Space Limit

In this section we present the proof of Theorem 2.5. As in the previous section, we start with an asymptotic estimate on \(\theta (N_M, M):= \theta _{(1,M)} (N_M, M)\) as defined at (4.7).

Proposition 4.5

Suppose that (2.13) holds. Then, as \(M \rightarrow \infty \),

Moreover, as \(M \rightarrow \infty \),

Proof

This follows from Corollary 3.5(i). Indeed, (4.27) is (3.13) applied to \(\theta (N_M, M ) = {{\,\mathrm{{\textbf{P}}}\,}}_1( \tau _{0,N_M} > M+1 )\). Similarly, (4.28) is a consequence of (3.14) together with the facts that \({{\,\mathrm{{\textbf{E}}}\,}}_1\tau _{0,N} = N-1\) and \({{\,\mathrm{{\textbf{V}\!ar}}\,}}_1 \tau _{0,N} = N (N-1)(N-2)/3\), given in Lemma 3.3. \(\square \)

We will use the following exponential convergence result for triangular arrays.

Lemma 4.6

Let \(K_M \in {\mathbb {Z}}_+\) satisfy \({{\,\mathrm{\mathbb {P}}\,}}( K_M = k) = (1-p_M)^{k} p_M\) for \(k \in {\mathbb {Z}}_+\), where \(p_M \in (0,1)\), and \(\lim _{M \rightarrow \infty } p_M = 0\). Suppose also that \(Y_M,Y_{M,1}, Y_{M,2}, \ldots \) are i.i.d., \({\mathbb {R}}_+\)-valued, and independent of \(K_M\), with \({{\,\mathrm{\mathbb {E}}\,}}[ Y_M^2 ] = \sigma _M^2 < \infty \) and \({{\,\mathrm{\mathbb {E}}\,}}Y_M = \mu _M >0\). Let \(Z_M:= \sum _{i=1}^{K_M} Y_{M,i}\). Assuming that

it is the case that, as \(M \rightarrow \infty \),

Lemma 4.6 can be deduced from e.g. Theorem 3.2.4 of [28, p. 85], by verifying that the condition (4.29) implies the ‘uniformly weighted family’ condition from [28, p. 44]. For convenience, we include a direct proof here.

Proof of Lemma 4.6

Let \(r_M:= 1/p_M \in (0,\infty )\). For \(s \in (0,\infty )\), we have

Write \(\psi _M (t):= {{\,\mathrm{\mathbb {E}}\,}}[ \textrm{e}^{ i t Y_M} ]\), the characteristic function of \(Y_M\). Then, for \(t \in \mathbb {R}\),

Set \(a_M:= r_M \mu _M\). Thus, for \(t \in \mathbb {R}\),

Fix \(t_0 \in (0,\infty )\). The \(n=1\) case of (3.3.3) in [18, p. 135], together with the facts that \({{\,\mathrm{\mathbb {E}}\,}}[ Y_M^2 ] = \sigma _M^2 < \infty \) and \({{\,\mathrm{\mathbb {E}}\,}}Y_M = \mu _M\), yields

which tends to zero as \(M \rightarrow \infty \), by (4.29). Since \(r_M \rightarrow \infty \), it follows that \(1 - (r_M-1) ( \psi _M (t/a_M) - 1) = 1 - i t + o(1)\), uniformly in \(| t | \le t_0\), and so, by (4.30)

for all t in an open interval containing 0, and since \({{\,\mathrm{\mathbb {E}}\,}}[ \textrm{e}^{i t {\mathcal {E}_1}} ] = (1-it)^{-1}\) is the characteristic function of the unit-mean exponential distribution, this completes the proof. \(\square \)
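The mechanism in this proof can be illustrated with a concrete family. The sketch below is a hedged example, not the authors' computation: it assumes \(Y_M\) is uniform on \(\{1, \ldots , m\}\) (an arbitrary illustrative choice), uses the standard geometric-sum identity \({{\,\mathrm{\mathbb {E}}\,}}[ \psi ^{K} ] = p / (1 - (1-p) \psi )\) for \({{\,\mathrm{\mathbb {P}}\,}}(K = k) = (1-p)^k p\), and compares the characteristic function of \(Z_M / a_M\), with \(a_M = \mu _M / p_M\), to the limit \((1 - it)^{-1}\).

```python
import cmath


def char_fn_uniform(t, m):
    """Characteristic function of Y uniform on {1, ..., m} (illustrative choice)."""
    return sum(cmath.exp(1j * t * j) for j in range(1, m + 1)) / m


def char_fn_geometric_sum(t, p, m):
    """E[exp(i t Z)] for Z = Y_1 + ... + Y_K with P(K = k) = (1 - p)^k p, k >= 0."""
    psi = char_fn_uniform(t, m)
    return p / (1 - (1 - p) * psi)


def char_fn_rescaled(t, p, m):
    """Characteristic function of Z / a, where a = mu / p and mu = E[Y] = (m + 1) / 2."""
    mu = (m + 1) / 2
    return char_fn_geometric_sum(t * p / mu, p, m)


if __name__ == "__main__":
    for p in (1e-2, 1e-4, 1e-6):
        gap = max(abs(char_fn_rescaled(t, p, 5) - 1 / (1 - 1j * t))
                  for t in (-2.0, 0.5, 3.0))
        print(p, gap)
```

The printed gaps should shrink as p decreases, consistent with convergence to the unit-mean exponential law.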

Proof of Theorem 2.5

To simplify notation, we write \(\theta _M:= \theta (N_M, M)\). First suppose that \(X_0 = 1\). Then in the representation \(\sigma _\kappa = \sum _{i=1}^\kappa \nu _i\), Lemma 4.1 shows that, given \(\kappa = k \in \mathbb {N}\), \(\nu _1, \ldots , \nu _k\) are i.i.d. with the law of \(Y_M\) as given by (4.21), and the law of \(\kappa \) is \({{{\,\mathrm{\mathbb {P}}\,}}}^{N_M,M}_{(1,M)}( \kappa = k ) = (1-\theta _M)^k \theta _M\), for \(k \in {\mathbb {Z}}_+\). Thus Lemma 4.6 applies to show that \(\sigma _\kappa \rightarrow {\mathcal {E}_1}\) in distribution, provided that (4.29) holds, where \(p_M = \theta _M\) satisfies (4.27), and, by (4.28), \(\mu _M = {{{\,\mathrm{\mathbb {E}}\,}}}^{N_M,M}_{(1,M)}[ \nu \mid \nu < \infty ] \sim N_M\) and \(\sigma ^2_M = {{{\,\mathrm{\mathbb {V}ar}\,}}}^{N_M,M}_{(1,M)}[ \nu \mid \nu < \infty ] \sim N_M^3/3\). Hence the quantity in (4.29) satisfies

which tends to 0 provided that (2.13) holds. Lemma 4.6 then establishes (2.14) in the case where \(\zeta _0 = (1,M)\). For general \(z_M\) we have (by Lemma 3.3) that \({{{\,\mathrm{\mathbb {E}}\,}}}^{N_M,M}_{z_M}[ \nu \mid \nu < \infty ] = O ( N_M^2)\), and, since, by (2.13), \(M /N_M^2 \rightarrow \infty \) and \(y_M \ge \varepsilon M\) for some \(\varepsilon >0\) and all M large enough, it follows from the \(a=0\), \(y = \varepsilon \) case of (3.8) that \(\theta _{z_M} (N_M, M) \rightarrow 0\) as \(M \rightarrow \infty \). Hence the first excursion does not change the limit behaviour. \(\square \)

4.4 The Critical Case

Recall the definition of H from (2.16).

Proposition 4.7

Suppose that (2.15) holds. Then,

Moreover, for any \(s_0 \in (0,\infty )\), as \(M \rightarrow \infty \), uniformly for \(s \in (0,s_0]\),

Proof

We have from Corollary 3.5(ii) that \({{\,\mathrm{{\textbf{P}}}\,}}_1( \tau _{0,N}/N^2 > y ) = (4/N) (1+o(1)) H (y)\), as \(N \rightarrow \infty \), uniformly in \(y \ge y_0 >0\), where H is defined at (2.16). In particular, under condition (2.15), it follows from continuity of H that \(\theta (N_M,M) = {{\,\mathrm{{\textbf{P}}}\,}}_1( \tau _{0,N_M}/N_M^2> (M +1)/N_M^2 ) = (1+o(1)) {{\,\mathrm{{\textbf{P}}}\,}}_1( \tau _{0,N_M}/N_M^2 > \rho )\) satisfies (4.31).

Let \(\varepsilon \in (0,\rho )\). Note that, for every \(s \in {\mathbb {R}}_+\),

To estimate the second term on the right-hand side of (4.33), we apply (4.16) with \(X = \tau _{0,N_M} / N_M^2\), \(g(y) = \textrm{e}^{sy} - 1\), \(a = 0\), and \(b = \varepsilon \) to obtain, for every \(s \in (0,\infty )\),

Here, it follows from (3.4) that there exists \(C < \infty \) such that, for all \(N \in \mathbb {N}\),

Thus, for \(0 \le s \le s_0 < \infty \) and all \(\varepsilon \in (0,1)\),

where \(C(s_0) < \infty \) depends on \(s_0\) only. To estimate the first term on the right-hand side of (4.33), we apply (4.16) with \(X = \tau _{0,N_M} / N_M^2\), \(g(y) = \textrm{e}^{sy} - 1\), \(a = \varepsilon \), and \(b = \rho _M:= (M+1)/N_M^2 = \rho + o(1)\) to obtain

note that \(\rho _M > \varepsilon \) for all M sufficiently large, since \(\varepsilon < \rho \). Since there is some \(C < \infty \) for which \(\textrm{e}^{s\varepsilon }-1 \le C s_0 \varepsilon \) for all \(s \le s_0\) and all \(\varepsilon \in (0,1)\), we have from (4.34) that the first term on the right-hand side of (4.36) satisfies

where, again, \(C(s_0) < \infty \) depends on \(s_0\) only. Corollary 3.5(ii) implies that, for any fixed \(\varepsilon \in (0,1)\),

and

Hence, combining (4.33) with (4.35) and (4.36), and taking \(\varepsilon \downarrow 0\), we obtain

Finally, we observe that

and \({{{\,\mathrm{\mathbb {P}}\,}}}^{N_M,M}_{(1,M)}( \nu < \infty ) = 1- \theta (N_M,M) \rightarrow 1\), by (4.7) and (4.31). Now (4.32) follows. \(\square \)
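The tail asymptotic quoted from Corollary 3.5(ii) at the start of this proof can be checked numerically for moderate N. The sketch below computes \({{\,\mathrm{{\textbf{P}}}\,}}_1( \tau _{0,N} > n )\) exactly by propagating the sub-probability mass of a simple symmetric random walk killed at 0 and N, and compares it with \((4/N) H(\rho )\), taking H in the series form \(H(y) = \sum _{k \ge 1} \textrm{e}^{-\pi ^2 (2k-1)^2 y/2}\). The function names, the truncation of the series, and the choice \(n = \lfloor \rho N^2 \rfloor \) are illustrative assumptions.

```python
import math


def H(y, terms=200):
    """H(y) = sum_{k>=1} exp(-pi^2 (2k-1)^2 y / 2), truncated after `terms` terms."""
    c = math.pi ** 2 / 2.0
    return sum(math.exp(-c * (2 * k - 1) ** 2 * y) for k in range(1, terms + 1))


def survival_from_one(N, n):
    """Exact P_1(tau_{0,N} > n) for simple symmetric random walk on {0, ..., N}."""
    # mass[x] = probability of being at x after the current number of steps
    # without having visited 0 or N; the endpoints never hold any mass.
    mass = [0.0] * (N + 1)
    mass[1] = 1.0
    for _ in range(n):
        new = [0.0] * (N + 1)
        for x in range(1, N):
            half = 0.5 * mass[x]
            if x - 1 >= 1:
                new[x - 1] += half
            if x + 1 <= N - 1:
                new[x + 1] += half
        mass = new
    return sum(mass)


def ratio(N, rho):
    """N * P_1(tau > rho N^2) / (4 H(rho)); expected to be close to 1 for large N."""
    n = int(rho * N * N)
    return N * survival_from_one(N, n) / (4.0 * H(rho))


if __name__ == "__main__":
    for N in (20, 40, 60):
        print(N, ratio(N, 0.5))
```

The printed ratios should approach 1 as N grows, in line with the \((1+o(1))\) factor in the corollary.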

Lemma 4.8

The function H from (2.16) satisfies the following.

(i) As \(y \downarrow 0\), \( 2\,H(y) \sqrt{2 \pi y} \rightarrow 1\).

(ii) As \(y \rightarrow \infty \), \(H(y) = (1+o(1)) \textrm{e}^{-\pi ^2 y /2}\).

Proof

Write \(c:= \pi ^2 /2\), so that (2.16) reads \(H(y) = \sum _{k=1}^\infty \textrm{e}^{-c (2k-1)^2 y}\). Then,

and hence \(0 \le H(y) - \textrm{e}^{-\pi ^2 y /2} \le \textrm{e}^{- 4 \pi ^2 y}\) for all y sufficiently large. This yields part (ii). On the other hand, since \(k \mapsto h_k (y)\) is strictly decreasing for fixed \(y >0\),

where, by the change of variable \(z = (2t-1) \sqrt{2 cy}\),

as \(y \downarrow 0\), which gives part (i). \(\square \)
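Both parts of Lemma 4.8 are easy to sanity-check numerically from the series \(H(y) = \sum _{k=1}^\infty \textrm{e}^{-c (2k-1)^2 y}\) with \(c = \pi ^2/2\). The sketch below is only an illustration; the truncation level and the function names are assumptions made for the example.

```python
import math


def H(y, terms=5000):
    """Truncated series H(y) = sum_{k>=1} exp(-c (2k-1)^2 y) with c = pi^2 / 2."""
    c = math.pi ** 2 / 2.0
    return sum(math.exp(-c * (2 * k - 1) ** 2 * y) for k in range(1, terms + 1))


def small_y_ratio(y):
    """2 H(y) sqrt(2 pi y), which part (i) says tends to 1 as y decreases to 0."""
    return 2.0 * H(y) * math.sqrt(2.0 * math.pi * y)


def large_y_ratio(y):
    """H(y) / exp(-pi^2 y / 2), which part (ii) says tends to 1 as y grows."""
    return H(y) / math.exp(-math.pi ** 2 * y / 2.0)


if __name__ == "__main__":
    for y in (0.1, 0.01, 0.001):
        print("small y:", y, small_y_ratio(y))
    for y in (0.2, 0.5, 1.0):
        print("large y:", y, large_y_ratio(y))
```

For very small y the truncation level must grow roughly like \(y^{-1/2}\) for the truncated sum to remain accurate; the default of 5000 terms is ample for the values shown.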

Proof of Theorem 2.7

Let \(s > 0\), and recall the definition of \(\psi _M(s)= {{{\,\mathrm{\mathbb {E}}\,}}}^{N_M,M}_{(1,M)}[ \textrm{e}^{s \nu } \mid \nu < \infty ]\) from (4.12). Then, as at (4.23),

where \(\theta _M:= \theta (N_M, M)\). Combining (4.31) and (4.32), with the fact that \(M \sim \rho N_M^2\), by (2.15), we conclude that

where G is defined at (2.17). Since \(\lambda = M +1 + \sigma _\kappa \), by (4.6), it follows from (4.37) that \(\lambda / M\) converges in distribution to \(1 + \xi _\rho \), where \({{\,\mathrm{\mathbb {E}}\,}}[ \textrm{e}^{s\xi _\rho } ] = \phi _\rho (s)\) as defined at (2.18).

From (2.17), we have that, for fixed \(\rho >0\), \(s \mapsto G(\rho , s)\) is differentiable on \(s \in (0,\infty )\), with derivative \(G' ( \rho , s):= \frac{\textrm{d}}{\textrm{d}s} G (\rho , s)\) satisfying

as given by (2.19), as we see from the change of variable \(y = \rho v\). Hence \(\phi _\rho \) is differentiable for \(s \in (0,s_\rho )\), with derivative \(\phi '_\rho (s) = \frac{G'(\rho ,s)}{(1-G(\rho ,s))^2}\), and

The convergence of the moment generating function in (4.37) in the region \(s \in (0,s_0)\) implies convergence also of the mean, \(\lim _{M \rightarrow \infty } M^{-1} {{{\,\mathrm{\mathbb {E}}\,}}}^{N_M,M}_{(1,M)}\sigma _\kappa = \phi '_\rho (0^+) = \mu (\rho )\), and hence \(\lim _{M \rightarrow \infty } M^{-1} {{{\,\mathrm{\mathbb {E}}\,}}}^{N_M,M}_{(1,M)}\lambda = 1 + \mu (\rho )\). The asymptotics for \(\mu \) stated in (2.21) follow from (2.19) with the asymptotics for H in Lemma 4.8, together with the observation that

using Fubini’s theorem. This completes the proof. \(\square \)

5 Concluding Remarks

To conclude, we identify a number of potentially interesting open problems.

- Staying in the context of the M-capacity models, we expect that at least the non-critical results of the present paper are rather universal, and should extend to more general domains, and to more general diffusion dynamics within a suitable class. For example, we expect that for a large class of energy-constrained random walk models in a domain of diameter N with energy capacity M, the regimes \(M \ll N^2\) (meagre capacity) and \(M \gg N^2\) (confined space) should lead to Darling–Mandelbrot and exponential limits, respectively, for the total lifetime.

- The total lifetime is just one statistic associated with the model: other quantities that it would be of interest to study include the location of the walker on extinction.

- The model presented in Sect. 2.1 includes several other natural models, in addition to the particular finite-capacity model with total replenishment that we study here. For example, one could consider a model of infinite capacity and a random replenishment distribution. In models with unbounded energy, there may be positive probability that the walker survives for ever (i.e., transience).

Data Availability

Data sharing not applicable to this article as no datasets were generated or analysed during the current study.

References

Aldous, D.: Probability Approximations via the Poisson Clumping Heuristic. Springer, New York (1989)

Anděl, J., Hudecová, S.: Variance of the game duration in the gambler’s ruin problem. Stat. Probab. Lett. 82, 1750–1754 (2012)

Arov, D.Z., Bobrov, A.A.: The extreme terms of a sample and their role in the sum of independent variables. Theory Probab. Appl. 5, 377–396 (1960)

Abramowitz, M., Stegun, I.A. (eds.): Handbook of Mathematical Functions. National Bureau of Standards, Applied Mathematics Series, vol. 55. U.S. Government Printing Office, Washington (1965)

Bach, E.: Moments in the duration of play. Stat. Probab. Lett. 36, 1–7 (1997)

Bacher, A., Sportiello, A.: Complexity of anticipated rejection algorithms and the Darling-Mandelbrot distribution. Algorithmica 75, 812–831 (2016)

Balakrishnan, V., Abad, E., Abil, T., Kozak, J.J.: First-passage properties of mortal random walks: ballistic behavior, effective reduction of dimensionality, and scaling functions for hierarchical graphs. Phys. Rev. E 99, 062110 (2019)

Bénichou, O., Bhat, U., Krapivsky, P.L., Redner, S.: Optimally frugal foraging. Phys. Rev. E 97, 022110 (2018)

Bénichou, O., Chupeau, M., Redner, S.: Role of depletion on the dynamics of a diffusing forager. J. Phys. A 49, 394003 (2016)

Berbert, J.M., Lewis, M.A.: Superdiffusivity due to resource depletion in random searches. Ecol. Complex. 33, 41–48 (2018)

Boersma, P.D., Rebstock, G.A., Frere, E., Moore, S.E.: Following the fish: penguins and productivity in the South Atlantic. Ecol. Monogr. 79, 59–76 (2009)

Borodin, A.N., Salminen, P.: Handbook of Brownian Motion—Facts and Formulae, 2nd edn. Birkhäuser, Basel (2002)

Caffarelli, L.A., Roquejoffre, J.-M.: A nonlinear oblique derivative boundary value problem for the heat equation: analogy with the porous medium equation. Ann. I. H. Poincaré 19, 41–80 (2002)

Chupeau, M., Bénichou, O., Redner, S.: Universality classes of foraging with resource renewal. Phys. Rev. E 93, 032403 (2016)

Codling, E.A., Plank, M.J., Benhamou, S.: Random walk models in biology. J. R. Soc. Interface 5, 813–834 (2008)

Csáki, E., Erdős, P., Révész, P.: On the length of the longest excursion. Z. Wahrsch. Verw. Gebiete 68, 365–382 (1985)

Darling, D.A.: The influence of the maximum term in the addition of independent random variables. Trans. Am. Math. Soc. 73, 95–107 (1952)

Durrett, R.: Probability: Theory and Examples, 5th edn. Cambridge University Press, Cambridge (2019)

Feller, W.: An Introduction to Probability Theory and Its Applications, vol. I, 3rd edn. Wiley, New York (1968)

Feller, W.: An Introduction to Probability Theory and Its Applications, vol. II, 2nd edn. Wiley, New York (1971)

Garlick, M.J., Powell, J.A., Hooten, M.B., McFarlane, L.R.: Homogenization of large-scale movement models in ecology. Bull. Math. Biol. 73, 2088–2108 (2011)

Giuggioli, L., Bartumeus, F.: Animal movement, search strategies, and behavioural ecology: a cross-disciplinary way forward. J. Animal Ecol. 79, 906–909 (2010)

Grebenkov, D.S.: Depletion of resources by a population of diffusing species. Phys. Rev. E 105, 054402 (2022)

Gurarie, E., Fleming, C.H., Fagan, W.F., Laidre, K.L., Hernández-Pliego, J., Ovaskainen, O.: Correlated velocity models as a fundamental unit of animal movement: synthesis and applications. Mov. Ecol. 5, 13 (2017)

Gut, A.: Probability: A Graduate Course. Springer, New York (2005)

Hooten, M.B., Johnson, D.S., McClintock, B.T., Morales, J.M.: Animal Movement: Statistical Models for Telemetry Data. CRC Press, Boca Raton (2017)

James, A., Plank, M.J., Edwards, A.M.: Assessing Lévy walks as models of animal foraging. J. R. Soc. Interface 8, 1233–1247 (2011)

Kalashnikov, V.: Geometric Sums: Bounds for Rare Events with Applications. Kluwer, Dordrecht (1997)

Lew, J.S.: On the Darling-Mandelbrot probability density and the zeros of some incomplete Gamma functions. Constr. Approx. 10, 15–30 (1994)

Lindell, A., Holst, L.: Distributions of the longest excursions in a tied down simple random walk and in a Brownian bridge. J. Appl. Probab. 44, 1056–1067 (2007)

Louchard, G.: Asymptotic properties of some underdiagonal walks generation algorithms. Theoret. Comput. Sci. 218, 249–262 (1999)

Merino, S., Grinfeld, M., McKee, S.: A degenerate reaction diffusion system modelling an optical biosensor. Z. Angew. Math. Phys. 49, 46–85 (1998)

Prins, H.H.T., Langevelde, F. (eds.): Resource Ecology. Springer, New York (2008)

Révész, P.: Long excursions and iterated processes. In: Szyszkowicz, B. (ed.) Asymptotic Methods in Probability and Statistics, pp. 243–249. North-Holland, Amsterdam (1998)

Stephens, D.W., Brown, J.S., Ydenberg, R.C.: Foraging: Behavior and Ecology. University of Chicago Press, Chicago (2007)

Steutel, F.W., van Harn, K.: Infinite Divisibility of Probability Distributions on the Real Line. Marcel Dekker, New York (2004)

Tao, Y., Winkler, M.: Global classical solutions to a doubly haptotactic cross-diffusion system modeling oncolytic virotherapy. J. Differ. Equ. 268, 4973–4997 (2020)

Tartakovsky, D.M., Dentz, M.: Diffusion in porous media: phenomena and mechanisms. Transp. Porous Med. 130, 105–127 (2019)

Tilles, P.F.C., Petrovskii, S.V., Natti, P.L.: A random walk description of individual animal movement accounting for periods of rest. R. Soc. Open Sci. 3, 160566 (2016)

Viswanathan, G.M., da Luz, M.G.E., Raposo, E.P., Stanley, H.E.: The Physics of Foraging. Cambridge University Press, Cambridge (2011)

Wato, Y.A., Prins, H.H.T., Heitkönig, I.M.A., Wahungu, G.M., Ngene, S.M., Njumbi, S., van Langevelde, F.: Movement patterns of African elephants (Loxodonta africana) in a semi-arid savanna suggest that they have information on the location of dispersed water sources. Front. Ecol. Evol. 6, 167 (2018)

Yeakel, J.D., Kempes, C.P., Redner, S.: Dynamics of starvation and recovery predict extinction risk and both Damuth’s law and Cope’s rule. Nat. Commun. 9, 657 (2018)

Ethics declarations

Conflict of interest

The authors have no conflict of interest to declare.

Additional information

Communicated by Deepak Dhar.

Appendix A: Darling–Mandelbrot Distribution

Recall the definition of the function \(K: \mathbb {R}\rightarrow \mathbb {R}\) and the set \(\mathcal {T}\) from (4.13). Write M(a, b, t) for the Kummer function defined for \(a, b \notin -\mathbb {N}\) by the convergent series

$$\begin{aligned} M (a, b, t) = \sum _{n=0}^\infty \frac{(a)_n}{(b)_n} \frac{t^n}{n!}, \end{aligned}$$

where \((a)_n:= a (a+1) \cdots (a+n-1)\) and \((a)_0:= 1\); see [4, Chapter 13] or [12, pp. 647–8]. Then, by (4.13), \(K (t) = M (-\frac{1}{2}, \frac{1}{2}, t)\). This identification is the basis for the following facts.

Lemma A.1

(a) As \(t \rightarrow \infty \), we have \(K(-t) \sim \sqrt{\pi t}\) and \(K(t) \sim - \textrm{e}^t/(2t)\).

(b) The function K is infinitely differentiable, with \(K^{(\ell )} (t):= (\textrm{d}^\ell / \textrm{d}t^\ell ) K (t) < 0\) for every \(\ell \in \mathbb {N}\) and all \(t \in \mathbb {R}\), and \(K^{(\ell )} (0) = -1/(2\ell -1)\).

(c) We have that \(\mathcal {T}= (-\infty , t_0)\) where \(t_0\) is uniquely determined by \(\sum _{\ell =1}^\infty \frac{t_0^\ell }{(2\ell -1) \cdot \ell !} =1\); numerically, \(t_0 \approx 0.8540326566\).

(d) With \(\mathcal {I}\) as defined at (2.5), it is the case that

$$\begin{aligned} K(t) = \textrm{e}^t - \mathcal {I}( t ), \text { for all } t \in \mathbb {R}. \end{aligned} \tag{A.1}$$
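The numerical value of \(t_0\) in part (c), and the first asymptotic in part (a), can be checked directly from the Taylor series of K. Since \(K(0) = 1\) and \(K^{(\ell )} (0) = -1/(2\ell -1)\), one has \(K(t) = 1 - \sum _{\ell \ge 1} t^\ell / ((2\ell -1) \cdot \ell !)\). The following sketch evaluates this series and locates its zero by bisection; the function names, the truncation level, and the bracketing interval \([0, 2]\) are illustrative assumptions.

```python
import math


def K(t, terms=200):
    """K(t) = 1 - sum_{l>=1} t^l / ((2l - 1) l!), the Taylor series of M(-1/2, 1/2, t)."""
    total = 1.0
    power_over_factorial = 1.0  # holds t^l / l! after the l-th update
    for l in range(1, terms + 1):
        power_over_factorial *= t / l
        total -= power_over_factorial / (2 * l - 1)
    return total


def find_t0(lo=0.0, hi=2.0, iterations=80):
    """Bisection for the zero of the decreasing function K, assuming K(lo) > 0 > K(hi)."""
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if K(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    print("t_0 ~", find_t0())
    # Part (a): K(-t) / sqrt(pi t) should be close to 1 for large t.
    print("K(-10) / sqrt(10 pi) =", K(-10.0) / math.sqrt(10.0 * math.pi))
```

The series is alternating for negative t, so direct summation in floating point loses accuracy when \(|t|\) is large; the moderate value \(t = 10\) is used for that reason.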

Remark A.2

The constant \(t_0\) in Lemma A.1(c) also appears in relation to asymptotics of \(T_n\), the maximum excursion duration over the first n steps of a simple symmetric random walk on \(\mathbb {Z}\). It was shown by Csáki, Erdős and Révész [16, Thm. 1] that

See also [30, 34] for some neighbouring results.

Proof of Lemma A.1

The \(t \rightarrow \pm \infty \) asymptotics can be read off from (13.1.4) and (13.1.5) in [4]. The derivatives of K are obtained from equation (13.4.9) in [4] as

$$\begin{aligned} K^{(\ell )} (t) = - \frac{1}{2\ell -1} M \bigl ( \ell - \tfrac{1}{2}, \ell + \tfrac{1}{2}, t \bigr ) . \end{aligned}$$

Thus \(K^{(\ell )} (0) = -1/(2\ell -1)\). For \(b> a > 0\), the integral representation (13.2.1) in [4] shows that \(M (a,b,t) > 0\) for all \(t \in \mathbb {R}\), and hence \((\textrm{d}^\ell / \textrm{d}t^\ell ) K (t) < 0\) for all \(\ell \in \mathbb {N}\), as claimed. In particular, K is monotone decreasing, with \(K(0) = 1\), so there is a unique \(t_0 \in \mathbb {R}\) with \(K(t_0) = 0\), one has \(t_0 > 0\), and \(K(t) > 0\) if and only if \(t < t_0\). Finally, equations (13.4.4) and (13.6.12) in [4] show that