Abstract

We investigate and prove the mathematical properties of a general class of one-dimensional unimodal smooth maps perturbed with a heteroscedastic noise. Specifically, we investigate the stability of the associated Markov chain, show the weak convergence of the unique stationary measure to the invariant measure of the map, and show that the average Lyapunov exponent depends continuously on the Markov chain parameters. Representing the Markov chain in terms of random transformations enables us to state and prove the Central Limit Theorem, the large deviation principle, and the Berry-Esséen inequality. We perform a multifractal analysis for the invariant and the stationary measures, and we prove Gumbel’s law for the Markov chain with an extreme index equal to 1. In addition, we present an example linked to the financial concepts of systemic risk and leverage cycle, and we use the model to investigate the finite-sample properties of our asymptotic results.

1 Introduction

In this paper, we investigate and prove some mathematical properties—detailed below—for the following discrete-time random dynamical system:

$$\begin{aligned} \phi _t = T(\phi _{t-1}) + \sigma _{\mathbbm {n}}(\phi _{t-1})Y_{t-1}. \end{aligned} \qquad (1)$$

Here \(\phi _t\), \(t \in \mathbb {N}_{\ge 1}\)Footnote 1, is a sequence of real numbers in a bounded interval of \(\mathbb {R}\), and T is a deterministic map on [0, 1] perturbed with the additive and heteroscedasticFootnote 2 noise \(\sigma _{\mathbbm {n}}(\phi _{t-1})Y_{t-1}\), where \(\mathbbm {n}\in \mathbb {N}_{\ge 1}\) is a parameter that modulates the intensity of the noise; \(\mathbbm {n}\) is such that one retrieves the deterministic dynamics as \(\mathbbm {n}\rightarrow \infty \). Finally, \(Y_t\), \(t \in \mathbb {N}_{\ge 1}\), is a sequence of independent and identically distributed (i.i.d.) real-valued random variables defined on some filtered probability space \((\Omega , \mathcal {F}, (\mathcal {F}_{t})_{t\ge 0}, \mathbb {P})\) satisfying the usual conditions. The precise assumptions on T, \(\sigma _{\mathbbm {n}}\), and \(Y_t\), \(t \in \mathbb {N}_{{\ge 1}}\), will be given in Sect. 2. The peculiarity of the model in Eq. (1) is that the law of the random perturbation, particularly its variance, depends on the position \(\phi _{t-1}\) of the point, and therefore on its iterates under the dynamics. The model in (1) can be used to describe situations where a slow deterministic dynamics interacts with a fast random one and, more generally, situations where the two systems interact with a separation of time scales; in such a description, the parameter \(1/\mathbbm {n}\ll 1\) is the fast-to-slow timescale ratio. Data from physical dynamical systems commonly exhibit multiple (separated) time scales. In particular, many problems in science, including physics, chemistry, and biology (e.g., [12]), meteorology (e.g., [44]), neuroscience (e.g., [33]), and econometrics and mathematical finance (e.g., [67]), can be described as systems possessing motions on two time scales, especially slow-fast dynamical systems with random perturbations (e.g., [9, 18]). In this respect, we cite chemical reaction dynamics models (e.g., [39]), cell modelling (e.g., [40]), and laser systems (e.g., [19]). Because of this popularity, in the first part of the present paper, we put (1) in a very general setting. In Sect. 7, instead, we present an example taken from a specific financial problem whose dynamics can be brought back to (1).
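
As an illustration of the dynamics described above, the following is a minimal Python sketch, not the authors' implementation. It assumes that Eq. (1) has the form \(\phi _t = T(\phi _{t-1}) + \sigma _{\mathbbm {n}}(\phi _{t-1})Y_{t-1}\) suggested by the description of the noise term. The logistic-type map `T`, the amplitude `sigma`, the constants `R` and `A`, and all function names are hypothetical choices made only for illustration; the noise is a standard Gaussian truncated to \([-A, A]\), a simplification of the smooth density introduced in Sect. 2.

```python
import math
import random

R = 3.8   # hypothetical map parameter: T(x) = R x (1 - x), so max T = R/4 < 1
A = 1.0   # hypothetical truncation level of the noise: |Y_t| <= A


def T(x):
    """Illustrative unimodal map on [0, 1] with T(0) = 0."""
    return R * x * (1.0 - x)


def sigma(x, n):
    """Illustrative state-dependent noise amplitude; tends to 0 as n grows."""
    return x * (1.0 - x) / math.sqrt(n)


def sample_noise(rng):
    """Standard Gaussian conditioned on [-A, A], drawn by rejection."""
    while True:
        y = rng.gauss(0.0, 1.0)
        if abs(y) <= A:
            return y


def simulate(phi0, n, steps, seed=0):
    """Iterate phi_t = T(phi_{t-1}) + sigma_n(phi_{t-1}) * Y_{t-1}."""
    rng = random.Random(seed)
    orbit = [phi0]
    for _ in range(steps):
        phi = orbit[-1]
        orbit.append(T(phi) + sigma(phi, n) * sample_noise(rng))
    return orbit
```

With these particular choices, \(T(x) \pm A\,\sigma _{\mathbbm {n}}(x) = (R \pm A/\sqrt{\mathbbm {n}})\,x(1-x)\), so the orbit stays in [0, 1] whenever \(\mathbbm {n}\ge 25\), and for very large \(\mathbbm {n}\) the simulated orbit shadows the deterministic one over short horizons.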

To study the mathematical properties of (1), we describe the dynamics of \(\phi _{t}\), \(t\in \mathbb {N}_{\ge 1}\), using a Markov chain parametrized by \(\mathbbm {n}\); we will study the regime of finite \(\mathbbm {n}\) and the limit for \(\mathbbm {n}\rightarrow \infty \). As far as we know, Markov chains with the kind of heteroscedastic noise we introduce are new (see [24] for another type of heteroscedastic nonlinear auto-regressive process applied to financial time series). Since the paper is unavoidably technical and presents several rigorous mathematical derivations, we provide hereafter a detailed description of the main results.

The paper is divided into two parts: in the first part, we present several results concerning the properties of the generic discrete-time random dynamical system of Eq. (1), whereas in the second part we consider a specific example relevant to the modeling of financial systemic risk and leverage dynamics. Apart from the specific interest of this model, we use it to perform numerical simulations and to test the finite-size effects of some asymptotic results presented in the first part.

More specifically, in the first part of the paper, we prove the following results:

- Stationary measures. We investigate the stability of the Markov chain. Some specific properties of the stochastic kernel that defines our model do not allow us to apply general results available in the literature, e.g., [3, 48]. For instance, we do not know whether our chain is Harris recurrent. We look, instead, at the spectral properties of the Markov operator associated with the chain on suitable Banach spaces and prove the quasi-compactness of such an operator. This result allows us to get finitely many stationary measures with bounded variation densities. The uniqueness of the stationary measure is achieved when the chain perturbs a map T which is either topologically transitive on a compact subset of [0, 1] or has an attracting periodic orbit.

- Convergence to the invariant measure. We show the weak convergence of the unique stationary measure to the invariant measure of the map. This step is not trivial because the stochastic kernel becomes singular in the limit of large \(\mathbbm {n}\).

- Lyapunov exponent and Central Limit Theorem. We introduce the average Lyapunov exponent by integrating the logarithm of the derivative of the map T with respect to the stationary measure and show that the average Lyapunov exponent depends continuously on the Markov chain parameters. This result hinges on the explicit construction of a sequence of random transformations close to T, which allows us to replace the deterministic orbit of T with a random orbit given by the concatenation of the maps randomly chosen in the sequence. Representing the Markov chain in terms of random transformations enables us to state and prove some important limit theorems, such as the Central Limit Theorem, the large deviation principle, and the Berry-Esséen inequality.

- Multifractal analysis. For the class of unimodal maps T of the chaotic type, we perform a multifractal analysis for the invariant and the stationary measures. In particular, the detailed analysis of the multifractal structure of a set invariant for a chaotic dynamical system allows one to obtain a more refined description of the chaotic behavior than the description based upon purely stochastic characteristics. Multifractal signals satisfy a power-law scaling invariance (e.g., [59]) and exhibit singularity exponents associated with subsets of points of different fractal dimensions (cf. Eq. (24)). In our case, the signal is the distribution of the probability measure which rules our system at equilibrium, namely the invariant measure for the deterministic system and the stationary measure for the randomly perturbed system. The invariant measure of a deterministic map usually has fine properties which reveal themselves in a fractal or multifractal structure of the density. Instead, the equilibrium measures of Markov chains are usually more uniform and indistinguishable from absolutely continuous measures with bounded densities. In this respect, the multifractal analysis of the equilibrium measure might be useful as one of the approaches for discriminating between the chaotic motion associated with a deterministic map and the randomness inherent to a Markov chain.

- Extreme Value Theory. Finally, we develop an Extreme Value Theory (EVT, henceforth) for our Markov chain with finite values of the parameter \(\mathbbm {n}\). EVT was originally introduced by Fisher and Tippett [21] and later formalized by Gnedenko [25]. The attention of researchers to extreme value theory is growing. Indeed, this theory is crucial in a wide class of applications for defining risk factors such as those related to instabilities in the financial markets (e.g., [20]) and to natural hazards related to seismic, climatic and hydrological extreme events (e.g., [10, 46]). In the context of (possibly random) dynamical systems, an application of EVT informed by the dynamics of the system allows the formalization of two questions related to the recurrence statistics of specific system states. Let U be a set of small measure in the phase space, and thus called a rare set or the location of an extreme event. The first question is: What is the probability that the first visit of our physical system to U occurs later than some prescribed time? Suppose now that the system has entered the set U. The second question is: What is the probability that it resides there for a predetermined time within a prescribed time interval? These two very simple questions allow one to quantify the rarity of an event and its persistence, both essential characteristics for understanding extremes in several circumstances of relevant physical nature, for instance in geophysics and climate. In addition to the above EVT-related references, we refer to the book [43] for a presentation and a discussion of such systems. In the present work, we prove Gumbel’s law for the Markov chain with an extreme index equal to 1. Such an index can be related to a local persistence indicator [49], suitable to estimate the average cluster size of the trajectories in the set U. Notice that an EVT for Markov chains with the spectral techniques we will use is, as far as we know, a new result. In particular, it allows one to treat dynamical systems perturbed with a noise more general than the additive noise, which is the standard way of adding randomness to the system.
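
The average Lyapunov exponent described in the list above, i.e., the integral of \(\log |T'|\) with respect to the stationary measure, can be approximated numerically by a time average along a simulated orbit, provided that time averages converge to the stationary average. The following Python sketch relies on that assumption and is not the authors' procedure: the logistic-type map `T`, the amplitude `sigma`, the constants `R` and `A`, and the hard truncation of the Gaussian noise are all hypothetical choices made for illustration.

```python
import math
import random

R = 3.8   # hypothetical map parameter: T(x) = R x (1 - x)
A = 1.0   # hypothetical truncation level of the noise


def T(x):
    return R * x * (1.0 - x)


def dT(x):
    """Derivative of the illustrative map T."""
    return R * (1.0 - 2.0 * x)


def sigma(x, n):
    """Illustrative state-dependent noise amplitude."""
    return x * (1.0 - x) / math.sqrt(n)


def truncated_gauss(rng):
    """Standard Gaussian conditioned on [-A, A], drawn by rejection."""
    while True:
        y = rng.gauss(0.0, 1.0)
        if abs(y) <= A:
            return y


def lyapunov_estimate(n, steps=100_000, burn_in=1_000, seed=1):
    """Time average of log|T'(x_t)| along one noisy orbit."""
    rng = random.Random(seed)
    x, total, count = 0.3, 0.0, 0
    for t in range(burn_in + steps):
        if t >= burn_in:
            slope = abs(dT(x))
            if slope > 0.0:          # skip an exact hit of the critical point
                total += math.log(slope)
                count += 1
        x = T(x) + sigma(x, n) * truncated_gauss(rng)
    return total / count
```

Since \(|T'(x)| \le R\) on [0, 1] for this map, any such estimate is bounded above by \(\log R\); repeating the computation for increasing \(\mathbbm {n}\) gives a rough numerical view of how the estimate varies with the noise intensity.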

In the second part of the paper, we present an example linked to the financial concept of systemic risk to which our theory applies. In this setting, \(\phi _t\) in (1) represents the suitably scaled financial leverage of a representative investor (a bank) that invests in a risky asset. At each point in time, the scaling is a linear function of the leverage itself. The bank’s risk management consists of two components. First, the bank uses past market data to estimate the future volatility (the risk) of its investment in the risky asset. Second, the bank uses the estimated volatility to set its desired leverage. However, the bank is allowed a maximum leverage, which is a function of its perceived risk because of the Value-at-Risk (VaR) capital requirement policy. More specifically, the representative bank updates its expectation of risk at time intervals of unitary length, say \((t, t + 1]\) with \(t\in \mathbb {N}_{\ge 1}\), and, accordingly, it makes new decisions about the leverage. Moreover, the model assumes that over the unitary time interval \((t, t + 1]\) the representative bank re-balances its portfolio to target the leverage without changing the risk expectations. The re-balancing takes place in \(\mathbbm {n}\) sub-intervals within \((t, t + 1]\). In particular, the considered model is a discrete-time slow-fast dynamical system, as described in the first paragraph of the Introduction. After showing that the dynamics of the scaled leverage follows, under suitable approximations, a deterministic unimodal map on [0, 1] perturbed with additive and heteroscedastic noise of the type of Eq. (1), we perform a detailed numerical analysis in support of our theory. The numerical analysis also investigates the finite-size validity of some of our asymptotic results. In addition, we provide a financial discussion of the results. Notice that the example presented is a non-trivial extension of the models in [14, 42, 47], where the scaling of the leverage is constant. In particular, in [42], the authors were also able to show that the constant-scaled leverage follows a (different) deterministic unimodal map with heteroscedastic noise. They were also able to prove the existence of a unique stationary density with bounded variation, the stochastic stability of the process, and the almost sure existence and continuity of the Lyapunov exponent for the stationary measure. In the present paper, we prove and extend these results for a more general class of maps, and, as said, we generalize the model in [42].

Organization of the paper. Section 2 presents and discusses the working assumptions on the dynamics in (1). Section 3 details the construction of the Markov chain. Section 4 represents our model in terms of random transformations. In Sect. 5, we investigate the mathematical properties of our model. An EVT for the Markov chain of Sect. 3 is provided in Sect. 6. In Sect. 7, we present the financial model of a representative bank managing its leverage. We show that the model leads to a slow-fast deterministic random dynamical system which can be recast into a unimodal deterministic map with heteroscedastic noise of the type of Eq. (1). We present and discuss some numerical investigations of this system in connection with our theory.

2 Assumptions

In this section, we define and discuss assumptions on T, \(\sigma _{\mathbbm {n}}\) and \(Y_t\), \(t \in \mathbb {N}_{\ge 1}\), as in Eq. (1).

- (A1) The map T satisfies the following assumptions:

  - (a) T is a continuous map of the unit interval \(I\overset{\text {def}}{=}[0,1]\) with a unique maximum at the point \(\mathfrak {c}\) such that \(\Delta \overset{\text {def}}{=} T(\mathfrak {c}) < 1\).

  - (b) There exists a closed interval \([d_1, d_2] \subset I\) which is forward invariant for the map and upon which T and all its powers \(T^{t},\,t\in \mathbb {N}_{\ge 1}\), are topologically transitive.Footnote 3

  - (c) T preserves a unique Borel probability measure \(\eta \), which is absolutely continuous with respect to the Lebesgue measure.

Assumption (A1)–(b) is needed to prove the mathematical properties in Sect. 5; in general, one could allow several transitive components, but this would be an additional technicality that would not add to the conceptual advancements of the present work. Assumption (A1)–(c) is used only in the proof of the stochastic stability; see Sect. 5.1. We now give the following important example.

Example 2.1

An important class of maps likely to satisfy (A1) is the class of unimodal maps T [15, 63] with negative Schwarzian derivativeFootnote 4; notice that in this case, one has to require that the maps are at least \(C^{3}\) on the interval I. Moreover, if T satisfies \(T(\Delta )< \mathfrak {c} < \Delta \), then the interval \([T(\Delta ), \Delta ]\), called the dynamical core, is mapped onto itself and absorbs all initial conditions; in particular, \([d_1,d_2]\) in (A1)–(b) coincides with the dynamical core. The latter could exhibit motions other than simply attracting fixed points or 2-cycles. In the general class of unimodal maps T with negative Schwarzian derivative, one can distinguish two types:

- (i) T is periodic if there is a globally attracting fixed point or a globally attracting cycle.

- (ii) T is chaotic if (A1)–(b) and (A1)–(c) hold.

Perturbations of unimodal maps with uniform additive noise were studied in [4, 6]. As we already mentioned in the Introduction, [42], instead, studies the perturbations of unimodal maps with heteroscedastic noise.

Before presenting the assumptions on \(\sigma _{\mathbbm {n}}\), we need to introduce some quantities to which we will refer in the following. First, we introduce

$$\begin{aligned} \Gamma \overset{\text {def}}{=} 1-\Delta = 1 - T(\mathfrak {c}), \end{aligned} \qquad (2)$$

i.e., the gap between \(T(\mathfrak {c})\) and 1. Second, we define a positive constant a satisfying one of the following two bounds:

or

where \(\sigma _{\max } \overset{\text {def}}{=} \max _{x \in I}\sigma _{\mathbbm {n}}(x)\), and the positive constant q is the intercept of the map T at zero, when it is nonzero (see Assumption (B1.2)).

- (B1) The function \(\sigma _{\mathbbm {n}}\) is non-negative and differentiable on (0, 1), and \(\sigma _{\mathbbm {n}}(x)\rightarrow 0\) as \(\mathbbm {n}\rightarrow \infty \) for every x.

Note that \(\sigma _{\mathbbm {n}}(x)\) modulates the effect of the noise. In particular, \(1/\mathbbm {n}\) can be interpreted as the time-scale separation parameter, while \(\sigma _{\mathbbm {n}}\) dictates the size of the noise; see the first paragraph of the Introduction.

We distinguish the following two sub-cases of (B1):

- (B1.1) \(T(0)=0\) and \(\sigma _{\mathbbm {n}}(0)=0\). In this case, we assume that for any fixed \(\mathbbm {n}\in \mathbb {N}_{\ge 1}\) there exists \(\varepsilon _{\mathbbm {n}} \in \mathbb {R}\) such that:

  - \(T(x) - a \sigma _{\mathbbm {n}}(x) > 0\) for \(x \in (0, 1-\Gamma /2]\).

  - \(T(x) - a \sigma _{\mathbbm {n}}(x) > x\) for \(x \in (0, \varepsilon _{\mathbbm {n}})\) (in particular, \(T^{'}(0)>0\)).

  - \(T(x) - a \sigma _{\mathbbm {n}}(x) > \varepsilon _{\mathbbm {n}}\) for \(x \in (\varepsilon _{\mathbbm {n}}, 1-\Gamma /2)\).

- (B1.2) \(T(0)=q>0\). In this case, the positive multiplicative constant a in (B1.1) satisfies (4).

The following remark better clarifies Assumptions (B1.1)–(B1.2).

Remark 2.2

The objective of Assumptions (B1.1)–(B1.2) is twofold:

- (i) They allow us to define the transition probabilities for constructing our Markov chain. Indeed, the probability density \(p_{\mathbbm {n}}(x,\,\cdot \,)\) defining those probabilities will be supported on \([s_{a,-}(x), s_{a,+}(x)]\) with \(s_{a,\pm }(x) = T(x)\pm a\sigma _{\mathbbm {n}}(x)\); see Sect. 3. Moreover, notice that (B1.1) requires T to be \(C^{1}\).

- (ii) They enable us to determine precisely the support of the stationary measure \(\mu _{\mathbbm {n}}\); see Sect. 5.1. In particular, the example presented in Sect. 7 satisfies condition (B1.2), which simply requires choosing the parameter a small enough to satisfy the bound (4). Instead, condition (B1.1) covers the case studied in our previous paper [42]: since both T and \(\sigma _{\mathbbm {n}}\) vanish at 0, we need to compare them in a neighborhood of 0, and this explains the introduction of the quantity \(\varepsilon _{\mathbbm {n}}\). The role of \(\varepsilon _{\mathbbm {n}}\) appears clearly in the construction of the support of the stationary measure in Sect. 5.1, since it determines a measurable set where the chain is not recurrent.

Finally, we have that

- (C1) \(Y_t\), \(t \in \mathbb {N}_{\ge 1}\), is a sequence of i.i.d. real-valued random variables defined on some filtered probability space \((\Omega , \mathcal {F}, (\mathcal {F}_{t})_{t\ge 0}, \mathbb {P})\) satisfying the usual conditions. Their density \(g_{a}\), depending on the parameter a in (B1), is such that, for all \(\omega \in \Omega \) and \(x \in \tilde{I}\), with \(\tilde{I} \supset I\), we have \(T(x)+\sigma _{\mathbbm {n}}(x)Y_{1} \in \tilde{I}\). The interval \(\tilde{I}\) is slightly larger than I and will be precisely determined later. Accordingly, the map T will be extended to \(\tilde{I}\). The density \(g_a\) has the following form:

$$\begin{aligned} g_{a}(y)\overset{\text {def}}{=} c_{a} \chi _{a}(y)e^{-\frac{y^2}{2}},\quad y \in \mathbb {R}, \end{aligned} \qquad (5)$$

where \(\chi _{a}\) is a \(C^{\infty }\) bump functionFootnote 5 on \([-a,a]\) and

$$\begin{aligned} c_a = \left( \int _{\mathbb {R}} \chi _{a}(y)e^{-\frac{y^2}{2}}\,dy\right) ^{-1}. \end{aligned}$$

Assumption (C1) has two main objectives. On the one hand, the perturbation should not be too strong so that T admits an extension to some compact interval \(\tilde{I} \supset I\). On the other hand, the stochastic kernel associated with our Markov chain must be uniformly bounded on some interval to prove the Markov operator’s quasi-compactness. As said in the Introduction, the Markov operator’s quasi-compactness will provide stationary measures for the chain.
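
To make the noise density of (C1) concrete, the following Python sketch computes the normalising constant \(c_a\) by numerical quadrature and draws samples from \(g_a\) by rejection. It is only an illustration under stated assumptions: the specific bump function `chi` (equal to 1 at the origin and vanishing smoothly at \(\pm a\)) is a hypothetical choice and need not coincide with the bump function used in the paper, and all function names are ours.

```python
import math
import random


def chi(y, a):
    """Hypothetical C-infinity bump supported on [-a, a], with chi(0) = 1."""
    u = (y / a) ** 2
    if u >= 1.0:
        return 0.0
    return math.exp(1.0 - 1.0 / (1.0 - u))


def unnormalised_g(y, a):
    """chi_a(y) * exp(-y^2 / 2); takes values in [0, 1]."""
    return chi(y, a) * math.exp(-0.5 * y * y)


def normalising_constant(a, m=20_000):
    """Midpoint rule for c_a = 1 / integral of chi_a(y) exp(-y^2/2) dy."""
    h = 2.0 * a / m
    integral = h * sum(unnormalised_g(-a + (k + 0.5) * h, a) for k in range(m))
    return 1.0 / integral


def g(y, a, c_a):
    """Density g_a(y) = c_a * chi_a(y) * exp(-y^2 / 2)."""
    return c_a * unnormalised_g(y, a)


def sample_g(a, rng):
    """Rejection sampling from g_a with a uniform proposal on [-a, a]."""
    while True:
        y = rng.uniform(-a, a)
        if rng.random() <= unnormalised_g(y, a):
            return y
```

Because the unnormalised density is bounded by 1, the rejection step needs no knowledge of \(c_a\); the constant is required only when the density itself is evaluated.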

From now on, we will denote by

the triple composed of a map T satisfying (A1), perturbed with an additive heteroscedastic noise in which the variance-like function \(\sigma _{\mathbbm {n}}\) and the noise satisfy (B1) and (C1), respectively.

Under (A1), (B1), and (C1), we define the following stochastic process:

In addition, the random orbit associated with our initial difference equation is given by:

Before proceeding, the following observation is in order. In Sects. 3, we will see that several results valid under Assumption (A1) could also be extended to the class of maps in Example 2.1 that are periodic. This will be particularly relevant for the leading example in Sect. 7. The validity of (A1) is much easier to verify for uniformly or even piecewise continuous maps. In principle, one could also consider multimodal maps, i.e., maps with several critical points. However, in the latter case, one has to handle the construction of the stationary measure as outlined in Sect. 5. Notice that such a construction is also based on Assumptions (B1) and (C1).

3 Markov Chain

In this section, we define a Markov chain that describes our model. We obtain it as a deterministic map T satisfying Assumption (A1) perturbed with an additive noise as in Assumptions (B1)-(C1). In particular, for fixed T we parametrize the chain by the intensity of the noise \(\mathbbm {n}\), consequently indexing with \(\mathbbm {n}\) the chain \((X_t^{(\mathbbm {n})})\), the transition probabilities \(P_{x}^{(\mathbbm {n})}\), and the stochastic kernel \(p_{\mathbbm {n}}(x, y)\). According to the theory of random transformations, a Markov chain can be constructed as follows; see, e.g., [38]. Take an initial point \(x \in I\)Footnote 6 and define the following stochastic process for any \(t \in \mathbb {N}_{\ge 1}\):

Then, for \(x \in I\) the transition probabilities are defined as:

because all the \(F_t^{(\mathbbm {n})}\), \(t \in \mathbb {N}_{\ge 1}\), have the same distribution. By Assumption (C1), and \(\forall x\,:\,\sigma _{\mathbbm {n}}(x)>0\), we have:

where \(p_{\mathbbm {n}}(x,y)\) is the stochastic kernel and, in the third equality, we use the change of variable \(z=T(x)+\sigma _{\mathbbm {n}}(x)y\). If, instead, \(\sigma _{\mathbbm {n}}(x)=0\) for some x, the transition probability satisfies \(P_{x}(A)=1_{A}(T(x))\) (meaning \(P_{x} = \delta _{T(x)}\), where \(\delta _{T(x)}\) is the Dirac mass at T(x)). So, the stochastic kernel is

$$\begin{aligned} p_{\mathbbm {n}}(x,z) = \frac{1}{\sigma _{\mathbbm {n}}(x)}\, g_{a}\left( \frac{z-T(x)}{\sigma _{\mathbbm {n}}(x)}\right) , \end{aligned} \qquad (12)$$

with \(\int p_{\mathbbm {n}}(x,y)\,dy=1\) for every \(x \in I\) with \(\sigma _{\mathbbm {n}}(x)>0\). Therefore, \(z \in [s_{a,-}, s_{a,+}]\) with \(s_{a,\pm }=T(x) \pm a \sigma _{\mathbbm {n}}(x)\).
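
The two properties just stated (unit mass and support in \([s_{a,-}, s_{a,+}]\)) can be checked numerically. The Python sketch below assumes that the kernel is the one produced by the change of variable \(z=T(x)+\sigma _{\mathbbm {n}}(x)y\), namely \(p_{\mathbbm {n}}(x,z) = g_a\big ((z-T(x))/\sigma _{\mathbbm {n}}(x)\big )/\sigma _{\mathbbm {n}}(x)\). The map `T`, the amplitude `sigma`, the bump inside `g_unnormalised`, and the constants `R`, `A`, `N` are hypothetical choices for illustration only.

```python
import math

R = 3.8    # hypothetical map parameter: T(x) = R x (1 - x)
A = 1.0    # noise supported on [-A, A]
N = 100    # hypothetical value of the noise parameter n


def T(x):
    return R * x * (1.0 - x)


def sigma(x):
    """Illustrative noise amplitude sigma_n(x) for n = N."""
    return x * (1.0 - x) / math.sqrt(N)


def g_unnormalised(y):
    """Hypothetical bump on [-A, A] times the Gaussian factor."""
    u = (y / A) ** 2
    if u >= 1.0:
        return 0.0
    return math.exp(1.0 - 1.0 / (1.0 - u)) * math.exp(-0.5 * y * y)


def midpoint_integral(f, lo, hi, m=4000):
    h = (hi - lo) / m
    return h * sum(f(lo + (k + 0.5) * h) for k in range(m))


C_A = 1.0 / midpoint_integral(g_unnormalised, -A, A)


def kernel(x, z):
    """Stochastic kernel p_n(x, z); undefined as a density when sigma(x) = 0."""
    s = sigma(x)
    if s == 0.0:
        raise ValueError("sigma(x) = 0: the transition is the Dirac mass at T(x)")
    return C_A * g_unnormalised((z - T(x)) / s) / s


def support(x):
    """End points s_{a,-}(x) and s_{a,+}(x) of the support of p_n(x, .)."""
    return T(x) - A * sigma(x), T(x) + A * sigma(x)
```

For instance, integrating `kernel(0.3, z)` over the interval returned by `support(0.3)` with `midpoint_integral` returns a value numerically equal to 1, while the kernel vanishes outside that interval.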

Since the noise varies in a neighborhood of 0, we need to enlarge the domain of definition of the map T to take into account the action of the noise. More precisely, we extend the domain of T to the larger interval \(\tilde{I} \overset{\text {def}}{=} [-\Gamma , 1]\). On the interval \([-\Gamma ,0]\), T is extended continuously and decreasing with \(T(-\Gamma ) < \Delta \) and with the same slope as T restricted to the interval \([0,\varepsilon _{\mathbbm {n}}]\), where \(\varepsilon _{\mathbbm {n}}\) is given in (B1.1). With an abuse of language and notation, we continue to call T the map after its redefinition, and we put \(I = \tilde{I}\). We have the following remark.

Remark 3.1

The authors of [6] consider a similar extension to allow perturbations with additive noise; in particular, they suppose that T admits an extension to some compact interval \(J \supset I\), preserving all the previous properties and satisfying \(T(\partial J) \subset \partial J\). Notice that, in our case and with these extensions, the map T could lose smoothness at 0. However, this regularity persists on the interval (0, 1), and this will be enough for the subsequent considerations, particularly for the construction of the stationary measure, whose support will be strictly included in (0, 1).

We look at the Markov operator corresponding to the transition probabilities. To this aim, we denote by \(\mathcal {M}\) the space of (real-valued) Radon measures on \(\tilde{I}\), and by \(\mathcal {L}\,:\,\mathcal {M}\rightarrow \mathcal {M}\) the Markov operator acting by

for every Borel set \(A \subset I\), or, equivalently,

for all \(\varphi \in C^{0}\), where \(C^{0}\) denotes the Banach space of continuous functions on I with the sup norm. In our case, \(\sigma _{\mathbbm {n}}(\tilde{x})=0\) in at most two points, \(\tilde{x}=0,1\). Therefore, in such a case, we could write

We note that \(\mathcal {L}:L^{1} \rightarrow L^{1}\) is an isometry, where \(L^{1}\) is intended, from now on, with respect to the Lebesgue measure. In Sect. 5.1, we will be interested in stationary measures \(\rho \), which are absolutely continuous with respect to the Lebesgue measure and, therefore, non-atomic. If we denote by \(h \in L^{1}\) the density of such a measure, it will be a fixed point of the operator \(\mathcal {L}\,:L^{1} \rightarrow L^{1}\), i.e.,

where \(p_{\mathbbm {n}}\) is the stochastic kernel in formula (12). In particular, it should satisfy

We will return to the previous formula in Sect. 5. Now, in the next section, we present a slightly different, yet equivalent (see, e.g., [38]), approach for representing the model in Eq. (1), namely the random transformation approach.
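
A crude numerical counterpart of the fixed-point problem for the stationary density can be obtained by discretising the Markov operator on a grid of cells. The Python sketch below assumes that the density h satisfies \(h(z)=\int p_{\mathbbm {n}}(x,z)\,h(x)\,dx\) with the kernel obtained from the change of variable \(z=T(x)+\sigma _{\mathbbm {n}}(x)y\). It is not the spectral argument used in this paper: the map `T`, the amplitude `sigma`, the bump inside `weight`, the constants, the cell-centre quadrature, and the lazy power iteration are all hypothetical choices made for illustration.

```python
import math

R, A, N_NOISE = 3.8, 1.0, 100   # hypothetical map, noise support and n


def T(x):
    return R * x * (1.0 - x)


def sigma(x):
    return x * (1.0 - x) / math.sqrt(N_NOISE)


def weight(y):
    """Unnormalised noise density: hypothetical bump on [-A, A] times Gaussian."""
    u = (y / A) ** 2
    if u >= 1.0:
        return 0.0
    return math.exp(1.0 - 1.0 / (1.0 - u)) * math.exp(-0.5 * y * y)


def build_columns(cells):
    """Column j lists (target cell, probability) for mass starting in cell j."""
    dx = 1.0 / cells
    columns = []
    for j in range(cells):
        x = (j + 0.5) * dx
        s = sigma(x)
        lo = max(0, int((T(x) - A * s) / dx))
        hi = min(cells - 1, int((T(x) + A * s) / dx))
        entries = [(i, weight(((i + 0.5) * dx - T(x)) / s)) for i in range(lo, hi + 1)]
        total = sum(w for _, w in entries)
        if total > 0.0:
            columns.append([(i, w / total) for i, w in entries if w > 0.0])
        else:
            # Kernel narrower than one cell: send all mass to the cell containing T(x).
            columns.append([(min(cells - 1, int(T(x) / dx)), 1.0)])
    return columns


def push_forward(columns, mass):
    """One step of the discretised Markov operator acting on cell masses."""
    out = [0.0] * len(mass)
    for j, column in enumerate(columns):
        if mass[j]:
            for i, w in column:
                out[i] += w * mass[j]
    return out


def stationary_density(cells=100, iterations=3000):
    """Lazy power iteration m <- (m + L m) / 2, started from the uniform law."""
    columns = build_columns(cells)
    mass = [1.0 / cells] * cells
    for _ in range(iterations):
        image = push_forward(columns, mass)
        mass = [0.5 * (m + q) for m, q in zip(mass, image)]
    return [m * cells for m in mass], columns
```

The lazy step has the same fixed points as the discretised operator and damps possible periodic components. With these parameters, no cell centre is mapped above \(1-\Gamma /2 = 0.975\), so the approximate density vanishes near 1, in line with the discussion of the support of the stationary measure in Sect. 5.1.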

4 Random Transformations

We consider the following identity:

$$\begin{aligned} T_{\eta }(x) \overset{\text {def}}{=} T(x)+\sigma _{\mathbbm {n}}(x)\eta ,\qquad \eta \in [-a,a]. \end{aligned} \qquad (15)$$

Assumption (C1) implies that \(T_{\eta }\) can be seen as a family of random maps of I into itself. Let \(\theta (\eta ) \overset{\text {def}}{=} g_{a}(\eta )\,d\eta \) be the probability measure of \(\eta \) with density \(g_{a}\). Now, let \(\rho \in \mathcal {M}\) (see Sect. 3 for the definition of \(\mathcal {M}\)) be a measure with density \(h \in L^{1}\). By requiring its invariance, we have that:

In addition, by using the definition of \(\rho (A)\), we have

where \(\mathcal {L}_{\eta }:L^{1}\rightarrow L^{1}\) is the Perron-Frobenius operator associated with the map \(T_{\eta }\)Footnote 7. By changing the order of integration again, we finally get that the Markov operator in Eq. (13) satisfies, for any \(h \in L^1\), the following identity

We now present a correlation integral that we will use to derive some statistical properties of our model. In order to do this, let \((\eta _t)_{t\ge 1}\) be an i.i.d. stochastic process where each \(\eta _t\) has distribution \(\theta \), \(\bar{\eta }_t \overset{\text {def}}{=} (\eta _{1}, \ldots , \eta _{t})\), and \(\theta ^{t}(\bar{\eta }_t)\overset{\text {def}}{=}\theta (\eta _1)\times \ldots \times \theta (\eta _t)\) the product measure. We call random transformation the concatenation, or composition, of randomly chosen maps \(T_{\eta _t} \circ \ldots \circ T_{\eta _1}\), where \((\eta _s)_{s=1}^{t}\) are i.i.d. with distribution \(\theta \). In particular, the above-mentioned correlation integral reads as

where \(h \in L^{1}\) and \(g \in L^{\infty }\). Notice that in [42], the authors use the Lebesgue measure instead of the probability measure \(\theta \). By using the latter, we do not need to modify the map T as in the Lebesgue measure case.

5 Mathematical Properties of the Model

In this section, we investigate some mathematical properties of our model. In Sect. 5.1, we show the existence and uniqueness of an absolutely continuous stationary measure and establish its convergence to the invariant measure of the deterministic map. This result allows us to define the Lyapunov exponent and prove its continuity with respect to the model parameters. We also discuss some limit theorems in Sect. 5.3. Finally, Sect. 7.3 concerns a multi-fractal analysis of our model.

5.1 Stationary Measure and Stochastic Stability

In this subsection, we establish the existence of a unique stationary measure for the Markov chain associated with our model.

In Sect. 3, we extended the domain of definition of the map T to the set \(\tilde{I}= [-\Gamma , 1]\). In particular, if the constant a satisfies the bound in Eq. (4), then the support of the stationary measure \(\mu _{\mathbbm {n}}\) satisfies \(\text {supp}(\mu _{\mathbbm {n}}) \subset I_{\Gamma }\), where

Indeed, on the one hand, if we take a point \(z \in \left( 1-\frac{\Gamma }{2}, 1\right] \), then it will be surely greater than \(T(x) \pm a\sigma _{\mathbbm {n}}(x)\), \(x \in I\). In order to understand the left-hand side of the interval in Eq. (18), suppose first that \(T(0)=q>T\left( 1-\frac{\Gamma }{2}\right) \). If \(z \in \text {supp}(h)\), being h the density of \(\mu _{\mathbbm {n}}\), then \(T(x)\in [\tilde{s}_{a,-},\tilde{s}_{a,+}]\) with \(\tilde{s}_{a,\pm }=z \pm a\sigma _{\mathbbm {n}}(x)\), where \(x\in \text {supp}(\mu _{\mathbbm {n}})\) too. If z is in a neighborhood of 0, then the values of x, which could contribute in T(x) are smaller than \(\left( 1-\frac{\Gamma }{2}\right) \) by choice of a. So, if we take \(z < \frac{1}{2}T\left( 1-\frac{\Gamma }{2}\right) \) and we require that \(a\sigma _{\mathbbm {n}}(x)< \frac{1}{2}T\left( 1-\frac{\Gamma }{2}\right) \), then \(z\notin \text {supp}(h)\).

If, instead, the constant a satisfies Eq. (3), then the interval \(I_{\varepsilon _{\mathbbm {n}},\Gamma }\overset{\text {def}}{=}\left[ \varepsilon _{\mathbbm {n}},1-\frac{\Gamma }{2}\right] \) is invariant for \(T_{\eta }\), \(\forall \eta \in [-a,a]\) (see Eq. (15)). In particular, if \(x \in I_{\varepsilon _{\mathbbm {n}},\Gamma }^{c}\), where \(I_{\varepsilon _{\mathbbm {n}},\Gamma }^{c}\) is the complement of \(I_{\varepsilon _{\mathbbm {n}},\Gamma }\), then the orbit of x spends only finitely many steps in \(I_{\varepsilon _{\mathbbm {n}},\Gamma }^{c}\); note that \(x=0\) is a fixed point. Consequently, the chain \(X_t^{(\mathbbm {n})}\) visits any open set K in \(I_{\varepsilon _{\mathbbm {n}},\Gamma }^{c}\) only finitely many times. Therefore, the chain is not recurrent there and \(\mu _{\mathbbm {n}}(K)=0\).

The above considerations imply that the subspace \(\{h \in L^{1}\,:\,\text {supp}(h) \subset I_{\Gamma }\}\) (resp. \(\{h \in L^{1}\,:\,\text {supp}(h) \subset I_{\varepsilon _{\mathbbm {n}},\Gamma }\}\)) is \(\mathcal {L}\)-invariant, and that the stochastic kernel \(p_{\mathbbm {n}}(x,z)\) has total variation of order \(\frac{1}{\sigma _{\mathbbm {n}}(x)}\). Therefore, it is uniformly boundedFootnote 8 when restricted to \(I_{\Gamma }\times I_{\Gamma }\) (resp. \(I_{\varepsilon _{\mathbbm {n}},\Gamma }\times I_{\varepsilon _{\mathbbm {n}},\Gamma }\)). In particular, we can apply Proposition 4.2 and Theorem 4.3 in [42] to conclude that the following proposition holds.

Proposition 5.1

The random system in Eq. (6) admits a unique stationary measure \(\mu _{\mathbbm {n}}\) with density \(h_{\mathbbm {n}}\) of bounded variation and such that \([d_1, d_2] \subset \text {supp}(h_{\mathbbm {n}})\). Moreover, for any observables \(f \in L^{1}\), \(g \in BV\), there exist \(0<r<1\) and \(C>0\), depending only on the system, such that, for all \(t \in \mathbb {N}_{\ge 0}\), we have

Proof

Let BV be the Banach space of bounded variation functions on \(I_{\Gamma }\) (or \(I_{\varepsilon _{\mathbbm {n}},\Gamma }\)) equipped with the complete norm

where \(|f|_{TV}\) is the total variation of the function \(f \in L^{1}\). Because the stochastic kernel has uniformly bounded variation on \(I_{\Gamma }\times I_{\Gamma }\) (or on \(I_{\varepsilon _{\mathbbm {n}},\Gamma } \times I_{\varepsilon _{\mathbbm {n}},\Gamma }\)), we have

see Lemma 4.1 in [42]. By the previous equation, we have

for any \(\eta <1\); this is the Lasota-Yorke inequality for the operator \(\mathcal {L}\). The latter, plus the fact that BV is compactly embedded in \(L^1\), implies that the operator \(\mathcal {L}\) has the following spectral decomposition

where all \(v_i\) are eigenvalues of \(\mathcal {L}\) of modulus 1, \(\Pi _{i}\) are finite-rank projectors onto the associated eigenspaces, Q is a bounded operator with a spectral radius strictly less than one. They satisfy the following properties:

Standard techniques show that 1 is an eigenvalue, and therefore the chain admits finitely many absolutely continuous ergodic stationary measures, with supports that are mutually disjoint up to sets of zero Lebesgue measure. Moreover, the peripheral spectrum is completely cyclic. We require that 1 is a simple eigenvalue of \(\mathcal {L}\) and that there is no other peripheral eigenvalue; this implies that our Markov chain is mixing and therefore that the norm \(\Vert \mathcal {L}^{t} f\Vert _{BV}\) goes exponentially fast to zero as \(t\rightarrow \infty \), for \(f \in BV\) with \(\int _{\mathbb {R}} f\,dx=0\) (exponential decay of correlations). These properties, which are consequences of the Ionescu-Tulcea-Marinescu theorem, are summarized by saying that the operator \(\mathcal {L}\) acting on BV is quasi-compact, see, e.g., [30]; we will implicitly assume in the following that the operator has the mixing property too. In order to prove that 1 is a simple eigenvalue of \(\mathcal {L}\) and that there is no other peripheral eigenvalue, we first observe that the peripheral spectrum of \(\mathcal {L}\) consists of a finite union of finite cyclic groups; hence there exists \(t \in \mathbb {N}_{\ge 1}\) such that 1 is the unique peripheral eigenvalue of \(\mathcal {L}^{t}\). It then suffices to show that the corresponding eigenspace is one-dimensional. Standard arguments show that there exists a basis of positive eigenvectors for this subspace, with disjoint supports. At this point we use a simple generalization of Theorem 4.3 in [42] for the powers \(\mathcal {L}^{{t}}\), plus the assumption on the topological transitivity of \(T^{{t}}, t\ge 1\) on \([d_1, d_2]\), to conclude that the basis consists of a single element. \(\square \)

We now investigate the stochastic stability of the system, that is, we determine whether a sequence of stationary measures converges weakly (Footnote 9) to the invariant measure of the unperturbed map. In our case, the sequence of probability measures is given by \(\mu _{\mathbbm {n}}\overset{\text {def}}{=}h_{\mathbbm {n}}\,dx\). Notice that \(h_{\mathbbm {n}} \in L^{\infty },\,\forall \,\mathbbm {n}\), because they have finite total variation. Nonetheless, to prove the above-mentioned stochastic stability, we need the following assumption

- (A\(_{p}\)): There exist \(p>1\) and \(C_p > 0\) such that for all \(\mathbbm {n}\ge 1\) we have \(\Vert h_{\mathbbm {n}}\Vert _{p} \le C_p\); the \(L^{p}\) norm is taken again with respect to the Lebesgue measure.

We have the following

Proposition 5.2

For the random system in Eq. (6), under Assumption (A\(_{p}\)), the sequence of stationary measures \(\mu _{\mathbbm {n}}\) converges weakly to the unique T-invariant probability \(\mu \) as \(\mathbbm {n}\rightarrow \infty \), in the sense that for any real-valued function \(g \in C^{0}(I)\), we have

Proof

See Theorem 5.3 in [42]. \(\square \)

We will see in the next section that with the preceding assumption we can prove the convergence of the Lyapunov exponent (Proposition 5.5) and then verify it numerically on the examples in Sect. 7, which is an indirect indication of the validity of \((A_p).\)

We conclude this section with the following observations.

Observation 5.3

Proposition 5.2 is proved by using the representation of the Markov chain in terms of random transformation; in particular, one uses the correlation integral in Eq. (17) and the continuity of the map \(\eta \rightarrow F_{\eta } \in C^{0}(I)\).

Observation 5.4

Proposition 5.2 can be extended to periodic unimodal maps under the following additional assumption:

- (Ap.1): \(\forall \,\mathbbm {n}\) sufficiently large and \(\forall \,x\in \text {supp}(\mu _{\mathbbm {n}})\) we have that \(|T^{'}(x)|<1\).

In particular, if T has a globally attracting periodic orbit carrying the discrete measure \(\mu \) and satisfies (Ap.1), then the sequence \(\mu _{\mathbbm {n}}\) converges to \(\mu \) in the weak-\(^{\star }\)topology as \(\mathbbm {n}\rightarrow \infty \). This requirement can be strengthened by adding the following assumption

- (Ap.2): If T is a unimodal periodic map (see Example 2.1) and the critical point of the map \(\mathfrak {c}\) does not belong to the attracting periodic orbit, then \(h_{\mathbbm {n}} \rightarrow 0\) uniformly in a neighbourhood of \(\mathfrak {c}\) as \(\mathbbm {n}\rightarrow \infty \).

5.2 Lyapunov Exponent

As done in Sect. 4.3 of [42], we define the so-called average Lyapunov exponent; see [23, 52]. If the chain admits a unique stationary measure \(\mu _{\mathbbm {n}}\), then the average Lyapunov exponent is defined as:

In particular, because the stationary measure \(\mu _{\mathbbm {n}}\) has density of bounded variation, it is enough that \(\log |T^{'}| \in L^{p}(\mu _{\mathbbm {n}})\) for some \(p \ge 1\). For instance, this is the case when T is a chaotic or periodic unimodal map (see Example 2.1) with a non-flat critical point (Footnote 10).
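As a numerical illustration, the following Python sketch estimates an average Lyapunov exponent as the Birkhoff average of \(\log |T^{'}|\) along a single noisy orbit. It is only a sketch under stated assumptions: the logistic map with parameter `A = 3.8`, the noise amplitude `sigma`, and the uniform noise on \([-1,1]\) are hypothetical stand-ins chosen so that the orbit stays in (0, 1); they are not the map, the \(\sigma _{\mathbbm {n}}\), or the noise law of this paper.

```python
import math
import random

A = 3.8  # hypothetical logistic parameter (chaotic regime), not from the paper


def T(x):
    """Stand-in unimodal map on [0, 1]."""
    return A * x * (1.0 - x)


def dT(x):
    """Derivative of the stand-in map."""
    return A * (1.0 - 2.0 * x)


def sigma(x, n):
    """Hypothetical state-dependent noise amplitude, vanishing as n grows."""
    return min(x, 1.0 - x) / (10.0 * math.sqrt(n))


def average_lyapunov(n, steps=100_000, burn_in=1_000, seed=0):
    """Birkhoff average of log|T'| along one noisy orbit."""
    rng = random.Random(seed)
    x = 0.3
    total = 0.0
    for k in range(burn_in + steps):
        if k >= burn_in:
            # the tiny floor guards against log(0) at the critical point
            total += math.log(max(abs(dT(x)), 1e-300))
        # bounded noise in [-1, 1] keeps the orbit inside (0, 1)
        x = T(x) + sigma(x, n) * rng.uniform(-1.0, 1.0)
    return total / steps


# Estimates for increasing n (decreasing noise intensity).
lyap = {n: average_lyapunov(n) for n in (10, 100, 1000)}
```

Comparing the entries of `lyap` for increasing `n` gives a rough finite-sample picture of how the estimate behaves as the noise intensity decreases.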

The average Lyapunov exponent in Eq. (19) is introduced to prove that it converges to the analogous quantity computed with respect to the invariant measure \(\mu \) of T. The following proposition holds.

Proposition 5.5

Suppose that one of the following conditions is satisfied:

- (a) The random system in Eq. (6) verifies (A\(_{p}\)) with the additional assumption that \(\log |T^{'}| \in L^{p}(\mu _{\mathbbm {n}})\) for some \(p \ge 1\), where \(\mu _{\mathbbm {n}}\) is the unique stationary measure of the associated Markov chain.

- (b) The deterministic map T is a unimodal periodic map (see Example 2.1) and verifies Assumptions (Ap.1) and (Ap.2).

Then, the average Lyapunov exponent in Eq. (19) converges to the Lyapunov exponent of the deterministic map T as \(\mathbbm {n}\rightarrow \infty \). Moreover, for \(\mathbbm {n}\) large enough, it is positive if T verifies (A1), and negative if T is a periodic unimodal map (see Example 2.1, (i)).

Proof

See [42], Appendix B, Sect. B.5. \(\square \)

The average Lyapunov exponent was associated with the phenomenon of noise induced order [61], which happens when the perturbed system admits a unique stationary measure depending on some parameter, say \(\theta \), and the Lyapunov exponent depends on \(\theta \) and exhibits a transition from positive to negative values. Denote by \(\Theta \overset{\text {def}}{=}\{\theta \in \tilde{\Theta }\,|\,\tilde{\Theta }\,\text {is open and}\,\max T_{\theta } < 1\}\) the (extended) parameter space of the map T. We use the term “extended” because the parameter \(\mathbbm {n}\) also belongs to \(\Theta \). Moreover, let us index the map T as \(T_{\theta }\) to make explicit the dependence on the parameters. Suppose that \(T_{\theta }(x) \in C^{3}(\tilde{\Theta } \times I)\) and \(p_{\theta }(x,y) \in C^{2}(\tilde{\Theta } \times I^2)\), and let \(\bar{\Theta } \subset \tilde{\Theta }\) be the set of parameters for which there exists a unique stationary measure \(\mu _{\mathbbm {n}}\) with a density of bounded variation. We can now state the following

Theorem 5.6

The mapping \(\bar{\Theta }\ni \theta \mapsto \Lambda _{\theta }\in \mathbb {R}\) is continuous.

Proof

See [42], Theorem 4.12. \(\square \)

5.3 Limit Theorems

We will take advantage of the Markov chain description of our model to state a few important limit theorems for fixed \(\mathbbm {n}\). These limit theorems are relatively easy to obtain for a fixed \(\mathbbm {n}\), but they could become very technical for the unperturbed map T because they depend in a non-obvious way on the parameters defining T; see, e.g., [63, 66] for a discussion in the case of unimodal maps.

As observed above, if T satisfies Assumption (A1), then the Markov operator \(\mathcal {L}\) associated with the Markov chain is quasi-compact on the Banach space BV of bounded variation functions. The adjoint operator \(\mathcal {U}\) of \(\mathcal {L}\) acts in the following way

where \(f_1 \in L^{\infty }\) and \(f_2\in L^{1}\). In particular

where \(\theta (\eta )\) is as in Sect. 4. In particular, we can write the correlation integral in Eq. (17) in terms of the adjoint operator:

We take now a function \(g \in BV\) such that \(\int _{\mathbb {R}} g d\mu _{\mathbbm {n}} = 0\). In addition, let \(W_k(\bar{\eta }_k, x) = g(T_{\eta _k} \circ \ldots \circ T_{\eta _1})(x)\), where \(\bar{\eta }_k=(\eta _1,\ldots ,\eta _k)\), and

We now apply the Nagaev-Guivarc’h perturbative approach [26, 51]. This technique enables us to get our limit theorems by twisting the transfer operator \(\mathcal {L}\); see [30]. Before stating the results, we specify that the underlying probability is \(\widetilde{\mathbb {P}}_{\mathbbm {n}}\overset{\text {def}}{=}\theta ^{\otimes \mathbb {N}}\otimes \mu _{\mathbbm {n}}\). If we use this probability, then we should choose a realization \((\eta _t)_{t\ge 1}\) where any \(\eta _t \overset{d}{\sim }\eta \), and the initial condition \(x \in I\) is chosen \(\mu _{\mathbbm {n}}\)-a.s. The following theorem holds:

Theorem 5.7

Suppose the deterministic map T satisfies Assumption (A1) and \(g \in BV\). In addition, let \(T_{\eta }\) be the random transformation in Eq. (15). Then, we have:

- (e1) The limit \(\iota ^{2} \overset{def}{=}\ \lim _{t\rightarrow \infty } \frac{1}{t}\mathbb {E}_{\widetilde{\mathbb {P}}_{\mathbbm {n}}}(S^2_t)\) exists and is equal to

$$\begin{aligned} \iota ^2 = \int _{I} g^2\,d\mu _{\mathbbm {n}} + 2 \sum _{t=1}^{\infty } \int _{I} g (\mathcal {U}^{t} g)\,d\mu _{\mathbbm {n}}. \end{aligned}\tag{22}$$

- (e2) (Central Limit Theorem). Suppose \(\iota > 0\). The process \(\left( \frac{S_t}{\sqrt{t}}\right) _{t \ge 1}\) converges in law to \(\mathcal {N}(0,\iota ^2)\) under the probability \(\widetilde{\mathbb {P}}_{\mathbbm {n}}\).

- (e3) (Large Deviation Principle). There exists a non-negative rate function \(\mathcal {R}\), continuous, strictly convex, vanishing only at 0, such that for every \(\varepsilon \) sufficiently small we have

$$\begin{aligned} \lim _{t\rightarrow \infty } \frac{1}{t}\log \widetilde{\mathbb {P}}_{\mathbbm {n}}(S_t > t \varepsilon ) = - \mathcal {R}(\varepsilon ). \end{aligned}$$

- (e4) (Berry-Esséen inequality). There exists \(D>0\) such that

$$\begin{aligned} \sup _{r \in \mathbb {R}}\Big | \widetilde{\mathbb {P}}_{\mathbbm {n}}\left( \frac{S_t}{\sqrt{t}} \le r\right) -\frac{1}{\iota \sqrt{2\pi }}\int _{-\infty }^{r}e^{-\frac{u^2}{2\iota ^2}}\,du\Big | \le \frac{D\Vert h_{\mathbbm {n}}\Vert _{BV}}{\sqrt{t}} \end{aligned}\tag{23}$$

Proof

See [2], Sect. 3. \(\square \)

We conclude this section with the following

Remark 5.8

The previous theorem hinges on the following exponential decay of correlations (see Proposition 5.1), which is a consequence of the spectral gap prescribed by the Markov operator’s quasi-compactness and the uniqueness and mixing property of the absolutely continuous stationary measure. For any observables \(f \in L^{1}\) and \(g \in BV\), there exist \(0<v<1\) and \(C>0\), depending only on the system, such that, for all \(k \ge 0\),

5.4 A Multifractal Analysis

We now focus on unimodal maps T of chaotic type, as defined in Example 2.1, preserving a unique absolutely continuous invariant measure \(\mu \). Its density is not essentially bounded, but it belongs to \(L^{p}(\mu )\), for some \(p\ge 1\). The presence of divergent values for the density could generate a non-trivial multifractal spectrum for the measure \(\mu \).

We start with a few reminders about multifractal theory; see, e.g., [7, 32, 34, 55, 56]. Let \(\mu \) be a probability measure, and B(x, r) the ball of center x and radius r on the interval I. We denote by

the local dimension of the measure \(\mu \) at the point x, provided that the limit exists. Then, the generalized dimension \(D_q(\mu )\), or simply \(D_q\), where \(q \in \mathbb {Z}\) is obtained as

where \(f(\alpha )\) denotes the Hausdorff dimension of the set of points for which \(d_{\mu }(x)=\alpha \). Interest in the generalized dimensions originated in the 1980s (e.g., [27, 57]), primarily for the study of chaotic attractors and fully developed turbulence (e.g., [8, 54]), and rapidly became important also from the mathematical viewpoint. The measure \(\mu \) is called multifractal when \(\tau (q)\) is not affine, or \(D_q\) is not a constant; otherwise it is simply named fractal, where the latter attribute is always reserved for non-integer values of the dimension. The quantity \(\tau (q)\), also called Legendre transformation, can be linked to the scaling exponent of a suitable correlation integral. In fact, for several dynamical systems \((M, \mu , T)\), where M is a metric space, we have that the following limit

exists and coincides with \(\tau (q)\) in Eq. (24). Notice that for \(q=1\), the limit in Eq. (25) is replaced by

by an application of L’Hôpital’s rule. For unimodal maps of Benedicks-Carleson type (Footnote 11) preserving an absolutely continuous invariant measure \(\mu \), it is possible to compute the spectrum of generalized dimensions. The authors of [5] prove the remarkable result that the density h of \(\mu \) has the form

with \(\psi _0\in C^1\), \(\phi _k\in C^1\) is such that \(||\phi _k||_{\infty } \le e^{-a k}\) for some \(a>0\) and \(\chi _k = 1_{[-1, z_k]}\) if \(f^k\) has a local maximum at \(z_0\), while \(\chi _k = 1_{[z_k,1]}\) if \(f^k\) has a local minimum at \(z_0\). For such a measure, one can explicitly compute the generalized dimensions via the definition in Eq. (24) (see [11]):

We think that a similar result holds for the class of unimodal maps considered in Example 2.1. We say similar, and not the same, because the non-constant part of \(D_q\) depends on the order of divergence of the density at the singular points \(z_k\), which for the Benedicks-Carleson type maps behaves like \(|x-z_k|^{-1/2}\). The values of \(D_q\) are constant for negative q whenever the invariant density h is bounded away from zero, see [11]; in this case it is also very easy to see that all the dimensions are less than or equal to 1.

Now, it becomes interesting to explore the spectrum of the generalized dimensions for randomly perturbed orbits. We do not expect any multifractal structure for the stationary measure when its density is essentially bounded, so \(D_q=1\), \(q \in \mathbb {R}\). Nevertheless the density could become locally very large when \(\mathbbm {n}\rightarrow \infty \) making it numerically indistinguishable from the unbounded density of the deterministic map on the orbit of the critical point. To study the dimensions for the stationary measure it is convenient to adopt the point of view of random transformations (see Sect. 4), and consider a realization \(T_{\eta _t} \circ \ldots \circ T_{\eta _1}\) of a random orbit producing the following empirical measure for a given \(\mathbbm {n}\):

where \(\tilde{\eta }_t = T_{\eta _{t-1}}\circ \ldots \circ T_{\eta _{1}}(x)\) for a suitable point x (see below). Again, each \(\eta _k\) has distribution \(\theta \). From the ergodic theorem for random transformations, it now follows that

where \(\mu _{\mathbbm {n}}\) is the stationary measure, \(g \in L^{1}(\mu _{\mathbbm {n}})\), the point x is chosen \(\mu _{\mathbbm {n}}\)-a.e., and the sequence \((\eta _t)_{t\ge 1}\) is chosen \(\theta ^{\otimes \mathbb {N}}\)-a.e. Since the support of \(\mu _{\mathbbm {n}}\) contains the dynamical core, by taking an arbitrary point x in such a core and by fixing a realization \((\eta _t)_{t\ge 1}\), the generalized dimensions of the stationary measure \(\mu _{\mathbbm {n}}\) can be computed directly via the correlation integral formula (25) by using the empirical measure (28) for large t; see, e.g., [11].
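The numerical procedure just described can be sketched in Python for \(q=2\). The estimator below is the usual pair-counting correlation sum, which we assume plays the role of the correlation integral at \(q=2\); the function names, the choice of radii, and the uniform test sample are illustrative assumptions, not part of the paper. The slope of \(\log C(r)\) against \(\log r\) is taken as an estimate of \(D_2\).

```python
import bisect
import math
import random


def correlation_integral(points, r):
    """Fraction of distinct pairs of points at distance at most r."""
    xs = sorted(points)
    n = len(xs)
    pairs = 0
    for i, x in enumerate(xs):
        # number of indices j > i with xs[j] <= x + r
        pairs += bisect.bisect_right(xs, x + r) - i - 1
    return pairs / (n * (n - 1) / 2)


def estimate_d2(points, radii):
    """Least-squares slope of log C(r) against log r."""
    lx = [math.log(r) for r in radii]
    ly = [math.log(correlation_integral(points, r)) for r in radii]
    mx = sum(lx) / len(lx)
    my = sum(ly) / len(ly)
    num = sum((a - mx) * (b - my) for a, b in zip(lx, ly))
    den = sum((a - mx) ** 2 for a in lx)
    return num / den


# Sanity check on a sample with bounded density (uniform on [0, 1]),
# for which the estimated dimension should be close to 1.
rng = random.Random(1)
sample = [rng.random() for _ in range(2000)]
radii = [0.01 * 1.5 ** k for k in range(6)]
d2_uniform = estimate_d2(sample, radii)
```

To apply the sketch to a random orbit, one would pass the stored points of the orbit as `points`; the range of radii then has to be tuned to the length of the orbit.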

6 Extreme Values Distribution

In this section, we develop an EVT for the Markov chain defined in 3 for finite values of the parameter \(\mathbbm {n}\). In particular, we consider the chain \((X_t^{(\mathbbm {n})})_{t \ge 1}\) with the stochastic kernel \(p_{\mathbbm {n}}(x,y)\), endowed with the canonical probability \(\mathbb {P}_{\mathbbm {n}}\) having initial distribution \(\mu _{\mathbbm {n}}=h_{\mathbbm {n}}\,dx\). We focus on the derivation of the Gumbel law for a particular observable by deriving the distribution of the first entrance of the chain in a small set, which we name rare set (Footnote 12). To this aim, we index with t the rare set defined as a ball of center \(z \in I\) and radius \(e^{-u_t}\), \(B_{t}(z)\overset{\text {def}}{=}B(z,e^{-u_t})\), where \(u_t\) is a sequence of so-called boundary levels such that \(u_t\rightarrow \infty \) as \(t\rightarrow \infty \), and verifying

where \(\tau \in \mathbb {R}_{>0}\). Then, we consider the observable

where \(x \in I\), and \(\text {dist}(\,\cdot \,)\) denotes the usual distance on \(\mathbb {R}\). Then, we define the following random variable with values in I

We will be interested in the distribution \(\mathbb {P}_{\mathbbm {n}}(M_t^{(\mathbbm {n})}\le u_t)\) as \(t\rightarrow \infty \). In particular, by the stationarity of the Markov chain, this distribution is equivalent to the probability that the first entrance of the chain into the ball \(B_{t}(z)\) is larger than t.

Condition (30) enables us to get verifiable prescriptions on the sequence of boundary levels \(u_t\). If the stationary measure \(\mu _{\mathbbm {n}}\) is non-atomic, then the measure of a ball is a continuous function of the radius. Therefore, for any given \(\tau \in \mathbb {R}_{+}\) and \(t\in \mathbb {N}_{\ge 1}\), we can find \(u_t\) such that \(\mu _{\mathbbm {n}}(B(z,e^{-u_t}))=\frac{\tau }{t}\). Now, we denote by \(B_t^{c}(z)\) the complement of the ball \(B_t(z)\), and define the perturbed operator \(\tilde{\mathcal {L}}_{(t)}\) for \(g \in BV\) as
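In practice, the boundary level \(u_t\) can be approximated from a sample of the stationary measure. The Python sketch below picks the radius whose empirical ball around z contains a fraction \(\tau /t\) of the sample and returns \(u_t=-\log (\text {radius})\). The function name `boundary_level` and the uniform sample are hypothetical; the uniform sample only stands in for points drawn from \(\mu _{\mathbbm {n}}\).

```python
import math
import random


def boundary_level(sample, z, tau, t):
    """Return u_t such that the empirical measure of B(z, exp(-u_t)) is about tau / t."""
    dists = sorted(abs(x - z) for x in sample)
    # number of sample points the ball should contain
    k = max(1, math.ceil(tau * len(sample) / t))
    radius = dists[k - 1]
    return -math.log(radius)


rng = random.Random(2)
# stand-in for a stationary sample (assumption: uniform on [0, 1])
sample = [rng.random() for _ in range(100_000)]
u_100 = boundary_level(sample, z=0.5, tau=1.0, t=100)
```

For the uniform stand-in, the ball of measure \(\tau /t = 1/100\) around \(z=0.5\) has radius close to 0.005, so `u_100` should be close to \(\log 200\).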

It is straightforward to check that

We now show that the operator \(\tilde{\mathcal {L}}_{(t)}\) approaches \(\mathcal {L}\) in a precise sense that allows us to control the asymptotic behavior of the integral in (34). In order to make the argument rigorous, we need more assumptions on the operator \(\mathcal {L}\), in addition to the quasi-compactness. The same quasi-compactness property is shared by the operator \(\tilde{\mathcal {L}}_{(t)}\), provided that t is large enough, and provided that \(\tilde{\mathcal {L}}_{(t)}\) is close to \(\mathcal {L}\) in the following sense

where \(c(t)\rightarrow \infty \) as \(t\rightarrow \infty \). Indeed, we have

because the space BV is continuously embedded into \(L^{\infty }\) with constant equal to one. We can apply the perturbation theorem of Keller-Liverani, which gives the asymptotic behavior of the top eigenvalue of \(\tilde{\mathcal {L}}_{(t)}\) around one; see [36, 37]. In addition, see, e.g., [43], Chapter 7, for an application of that theory to Markov chains. At this point, we need a further assumption:

- (E1) The density \(h_{\mathbbm {n}}\) of the stationary measure is bounded away from zero on the rare set \(B_{t}(z)\).

Therefore, we can prove that

where the so-called extremal index (EI) \(\theta \) satisfies

with \(q_{k}=\lim _{t\rightarrow \infty } q_{k,t}\), provided that the limit exists, with:

Namely, \(q_{k,t}\) is the probability that the \(\mu _{\mathbbm {n}}\)-distributed stationary chain starts in \(B_t(z)\) and then returns to it after exactly \((k+1)\) steps. It is now easy to show that all the \(q_{k,t}\) vanish in the limit as \(t\rightarrow \infty \) since we have

where, to estimate the right-hand side of (39), we use the fact that for a fixed \(\mathbbm {n}\), the stochastic kernel \(p_{\mathbbm {n}}(x,y)\) is uniformly bounded by a constant \(c_{\mathbbm {n}}\). In particular, the right-hand side converges to zero as \(t\rightarrow \infty \). We have just proved the following

Proposition 6.1

Suppose that our Markov chain is constructed upon a map T verifying Assumption (A1), and that \(\mathbb {P}_{\mathbbm {n}}\) is the canonical probability with initial distribution \(\mu _{\mathbbm {n}}=h_{\mathbbm {n}} dx\). Then, we get Gumbel’s law:

where \(M_t^{(\mathbbm {n})}\) is defined in Eq. (32), \(\varphi (\cdot )\) in Eq. (31), the boundary level \(u_t\) verifies \(\mu _{\mathbbm {n}}(B(z,e^{-u_t}))=\frac{\tau }{t}\), and on the set \(B(z,e^{-u_t})\) the density \(h_{\mathbbm {n}}\) of the stationary measure is bounded away from zero for large t (Assumption (E1)).

Our Markov chain visits infinitely often the neighborhood \(B_t(z)\) of any point z. Therefore, we expect that the exponential law \(e^{-\tau }\) given by the extreme value distribution describes the time between successive events in a Poisson process. To formalize this, we introduce the random variable

and we consider the following distribution

We have the following

Proposition 6.2

Suppose that our Markov chain is constructed upon a map T verifying Assumption (A1), and that \(\mathbb {P}_{\mathbbm {n}}\) is the canonical probability with initial distribution \(\mu _{\mathbbm {n}}=h_{\mathbbm {n}} dx\). Then, we have:

where the density \(h_{\mathbbm {n}}\) of the stationary measure is bounded away from zero for large t on the set \(B(z,e^{-u_t})\) (Assumption (E1)).

Proof

See [28]. \(\square \)

In particular, we have shown that the EI is equal to 1. Such an index is less than one when clusters of successive recurrences happen, which is the case, for instance, when the target point z is periodic. Our heteroscedastic noise breaks periodicity, so we expect an EI equal to one.
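A rough finite-sample check of the exponential law with EI equal to one can be sketched as follows. We stress the assumptions: the chain is a hypothetical stand-in (a logistic map with parameter 3.8 and a state-dependent bounded noise), the target point `z = 0.3` is arbitrary, and the stationary measure of the ball is replaced by its empirical frequency along one long orbit. The sketch splits the orbit into blocks of length t and counts the fraction of blocks that never enter the ball, which should be close to \(e^{-\tau }\) if the law holds with EI equal to one.

```python
import math
import random

A = 3.8  # hypothetical stand-in map parameter, not from the paper


def step(x, n, rng):
    """One step of a stand-in noisy unimodal chain on (0, 1)."""
    noise = min(x, 1.0 - x) / (10.0 * math.sqrt(n)) * rng.uniform(-1.0, 1.0)
    return A * x * (1.0 - x) + noise


def no_entrance_frequency(z=0.3, tau=1.0, t=200, blocks=1000, n=100, seed=3):
    """Fraction of length-t blocks of one orbit that never enter B(z, radius)."""
    rng = random.Random(seed)
    x = 0.3
    for _ in range(1000):  # burn-in towards the stationary regime
        x = step(x, n, rng)
    orbit = []
    for _ in range(t * blocks):
        orbit.append(x)
        x = step(x, n, rng)
    # radius such that the empirical measure of the ball is tau / t
    dists = sorted(abs(y - z) for y in orbit)
    radius = dists[math.ceil(tau * len(orbit) / t) - 1]
    empty = 0
    for b in range(blocks):
        block = orbit[b * t:(b + 1) * t]
        if all(abs(y - z) > radius for y in block):
            empty += 1
    return empty / blocks


freq = no_entrance_frequency()  # to be compared with exp(-tau) = exp(-1)
```

A value of `freq` markedly below or above \(e^{-1}\) would point to clustering of recurrences or to a poorly calibrated radius rather than to a failure of the asymptotic statement, since t and the number of blocks are finite here.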

We conclude this section with the following observation and example.

Observation 6.3

While we rigorously prove an EVT for the Markov chain, we are still determining if a similar result holds for the deterministic map T with respect to its invariant measure. Moreover, there are, in fact, only a few results on EVT for unimodal maps; see, for instance, [13, 22, 50].

7 An Application to Systemic Risk

This section presents a stylized model of the leverage dynamics to which our theory applies. Apart from providing a potential application of the models considered in this paper, we will use the specific model to perform numerical simulations of the maps and to test the finite size effect of some asymptotic results presented above. The model is an extension of the one presented in [42] since we add here a possible relation between liquidity and leverage, whereas in [42] liquidity was considered constant. The description of the model follows the same lines as the presentation in [42].

A representative financial institution (hereafter a bank) takes investment decisions at discrete times \(t \in \mathbb {Z}\), which define the slow time scale of the model. At each time the bank’s balance sheet is characterized by the asset \(A_t\) and equity \(E_t\), which together define the leverage \(\lambda _t := A_t/E_t\). The bank wants to maximize leverage (by taking more debt) to increase profits, but regulation constrains the bank’s Value-at-Risk (VaR) in such a way that \(\lambda _t = \frac{1}{\alpha \sigma _{e,t}}\), where \(\alpha \) depends on the return distribution and VaR constraint (Footnote 13), and \(\sigma _{e,t}\) is the expected volatility at time t of the asset, which in this model is composed of a representative risky investment. Thus at each time t the bank recomputes \(\sigma _{e,t}\) and chooses \(\lambda _t\). Then, in the interval \([t,t+1]\) the bank trades the risky investment to keep the leverage close to the target \(\lambda _t\). The trading process occurs on the points of a grid obtained by subdividing \([t,t+1]\) into \(\mathbbm {n}\) subintervals of length \(1/\mathbbm {n}\) (the fast time scale). The dynamics of the investment return can be written as

where \(\varepsilon _{t+k/\mathbbm {n}}\) and \(e_{t+(k-1)/\mathbbm {n}}\) are, respectively, the exogenous and endogenous components of the return. The former is a white noise term with variance \(\sigma ^2_\epsilon \), while the latter depends on the banks’ demand for the risky investment in the previous step. For each bank, the demand for the risky investment at time \(t+k/\mathbbm {n}\) is the difference between the target value of \(A_t\) to reach \(\lambda _t\) and its actual value. Since the bank’s asset is composed of the risky investment, an investment return \(r_{t+k/\mathbbm {n}}\) modifies \(A_t\) and the bank trades at each grid point to reach the target leverage. It is possible to show (see [14, 47]) that to achieve this, at each time \(t+k/\mathbbm {n}\) the bank’s demand for the risky investment is

where \(A^*_{t+(k-1)/\mathbbm {n}}\) is the target asset size in the previous step. If there are M identical banks, the aggregated demand is \(MD_{t+k/\mathbbm {n}}\). The endogenous component of returns \(e_{t+k/\mathbbm {n}}\) is determined by the aggregated demand by the equation

where \(C_{t+k/\mathbbm {n}}=MA^*_{t+(k-1)/\mathbbm {n}}\) is a proxy of the market capitalization of the risky asset, and \(\gamma _t\) is a parameter measuring at each point in time the investment liquidity. Notice that in [42] this parameter is considered constant. Using the above expression, we obtain

and thus in the period \([t, t+1]\) the return \(r_{t+k/\mathbbm {n}}\) follows an AR(1) process with autoregression parameter \(\phi _t=(\lambda _t-1)/\gamma _t\) and idiosyncratic variance \(\sigma ^2_\epsilon \). In the present paper, we assume that \(\gamma _t\) is linked to the level of the leverage \(\lambda _t\) by the following relation:

where \(\gamma _0\) is a positive constant, and \(|c|\le 1\). As far as we know, there is no consensus in the literature on the type (linear or not) and the sign of the relationship between the market (Footnote 14) leverage and liquidity. For instance, [64] states, “The relationship between market leverage ratio and liquidity risk in the long term is negative and statistically significant only for commercial banks belonging to the old EU countries”. In particular, it seems that there is no universal statement on the sign of c. As regards the type of dependence, we opt for a linear relationship. Admittedly, the linear relationship may seem too crude, but a nonlinear dependence would be an additional technicality that would not add to the present work’s conceptual advancements.

To close the model, we specify how the bank forms expectations \(\sigma _{e,t}\) on future volatility at time t. We assume that the bank uses adaptive expectations, which implies that

where \(\omega \in [0,1]\) is a parameter weighting between the expectation at \(t-1\) and the estimation \({\hat{\sigma }}^2_{e,t}\) of volatility obtained by the return data in \([t-1,t]\). As done in practice, this is obtained by estimating the sample variance of the returns in \([t-1,t]\), i.e.

where the last expression gives the aggregated variance of an AR(1) process as a function of the AR estimated parameters \(\hat{\phi }_{t-1}\) and \({\hat{\sigma }}^2_\epsilon \). In the following we will assume that these are the Maximum Likelihood Estimators (MLE). We recall that when \(\mathbbm {n}\) is large, \({\hat{\phi }}_{t-1}\) is a Gaussian distributed variable with mean \(\phi _{t-1}\) and variance \((1-\phi ^2_{t-1})/\mathbbm {n}\).
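The large-\(\mathbbm {n}\) behaviour of the estimator can be checked by simulation. The Python sketch below simulates a zero-mean Gaussian AR(1) process and estimates the autoregression parameter by conditional least squares, which we use here as a stand-in for the MLE (an assumption: the two coincide for the conditional Gaussian likelihood, and the paper does not specify its estimator in more detail). The values `phi = 0.5`, `n = 500`, and the number of replications are arbitrary illustrative choices.

```python
import math
import random


def simulate_ar1(phi, sigma_eps, n, rng):
    """Simulate n steps of r_k = phi * r_{k-1} + eps_k, started from the stationary law."""
    r = rng.gauss(0.0, sigma_eps / math.sqrt(1.0 - phi ** 2))
    path = [r]
    for _ in range(n):
        r = phi * r + rng.gauss(0.0, sigma_eps)
        path.append(r)
    return path


def estimate_phi(path):
    """Conditional least-squares estimate of the autoregression parameter."""
    num = sum(a * b for a, b in zip(path[1:], path[:-1]))
    den = sum(b * b for b in path[:-1])
    return num / den


rng = random.Random(4)
phi, n, reps = 0.5, 500, 400
estimates = [estimate_phi(simulate_ar1(phi, 0.01, n, rng)) for _ in range(reps)]
mean_hat = sum(estimates) / reps
var_hat = sum((e - mean_hat) ** 2 for e in estimates) / (reps - 1)
var_theory = (1.0 - phi ** 2) / n  # asymptotic variance quoted in the text
```

Comparing `var_hat` with `var_theory` for several values of `n` gives a sense of how large \(\mathbbm {n}\) must be before the Gaussian approximation becomes accurate.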

In conclusion, the leverage dynamics is described by the following equations:

Since slow variables evolve depending on averages of the fast variables, the model is a slow-fast deterministic-random dynamical system. By using the expression above for the variance, we can rewrite the equation for the slow component only as

where the estimator \({\hat{\sigma }}^2_{e,t}\) can be seen as a stochastic term depending on \(\lambda _{t-1}\) and whose variance goes to zero when \(\mathbbm {n}\rightarrow \infty \).

If \(\mathbbm {n}\) is large, the above map reduces to

When \(\mathbbm {n}\) changes, \(\sigma ^2_\epsilon \) changes as well, since the AR(1) process can be seen as the discretization of a continuous time stochastic process (namely an Ornstein-Uhlenbeck process). A simple scaling argument shows that the quantity \(\Sigma _{\epsilon }=\sigma ^2_\epsilon \mathbbm {n}\) is instead constant and independent of the discretization step \(1/\mathbbm {n}\). With abuse of notation, we set: \(\Sigma _{\epsilon }\overset{\text {def}}{=}\lim _{\mathbbm {n}\rightarrow \infty } \mathbbm {n}\hat{\sigma }^2_{\epsilon }\), and we define \(\overline{\Sigma }_{\epsilon }\overset{def}{=}(1-\omega )\alpha ^2\Sigma _{\epsilon }\). At this point, we observe that since in the large \(\mathbbm {n}\) limit the MLE \(\hat{\phi }_{t-1}\) is a Gaussian variable with mean \(\phi _{t-1}\) and variance \((1-\phi ^2_{t-1})/\mathbbm {n}\), we can write

By using the definition of \(\gamma _t\) in Eq. (45), by defining \(\phi _t\overset{\text {def}}{=}\frac{\lambda _t-1}{\gamma _t}\), and by introducing the function \(V : \mathbb {R}^2\rightarrow \mathbb {R}\) given for any \((u,v)\in \mathbb {R}^2\) by

we get

If the noise \(\eta _{t-1}\) is small (i.e., \(\mathbbm {n}\) is large), we can perform a series expansion, obtaining:

where

Accordingly, Eq. (49) becomes:

By performing, again, a series expansion we obtain:

with \(\tilde{\eta }_{t-1}\overset{d}{\sim }\mathcal {N}(0,1)\), \(t \in \mathbb {N}_{\ge 1}\). Notice that we performed a series expansion for \(\eta _{t-1}\) small, which is justified whenever the variable \(\phi \) stays far from one. Because we are going to iterate the map \(\mathcal {F}\) in Eq. (49) for \(\phi \in [0,1]\) and \(|\eta |\ll 1\), it is enough to show that:

because in this case all the successive iterates \(|\mathcal {F}^{t}(\phi ,\eta )|\), \(t\in \mathbb {N}_{\ge 1}\), satisfy the same bound. It is not difficult to see that the bound holds true provided that \(\gamma _0\) is sufficiently large.

Now, we study the deterministic map T in Eq. (50), which is the deterministic component of \(\mathcal {F}\) for \(\eta \) small. By arguing as above, we have that

provided that \(\gamma _0\) is sufficiently large.

Fig. 1 Plot of the deterministic component \(T(\phi )\), \(\gamma _0=15.969\), \(\alpha =1.64\), \(\Sigma _{\epsilon }=2.7\times 10^{-5}\). The value for \(\gamma _0\) is taken from the empirical analysis in [42], Sect. 7.2 (where it is denoted simply by \(\gamma \)). The value \(\alpha =1.64\) corresponds to a VaR constraint of \(5\%\) in case of a Gaussian distribution for the returns. The value \(\Sigma _{\epsilon }=2.7\times 10^{-5}\) is taken from [47], Table 1, and corresponds to the exogenous idiosyncratic volatility at the time scale of portfolio decisions. The values for \(\omega \) and c are randomly sampled from the dynamical core, once the other parameters are fixed. The Blue dot indicates the critical point \(\mathfrak {c}\), the Green dot the intersection between the map and the horizontal axis, the left-hand Red dot indicates the image of 0, the right-hand Red dot indicates \(\lim _{\phi \rightarrow 1^{-}}T(\phi )=-\frac{1}{\gamma _0}\). The support of the invariant density belongs to the so-called dynamical core \([T(\Delta ),\Delta ]\) (Color figure online)

Figure 1 shows the map T for some suitably chosen parameters:

- \(\gamma _0=15.969\); this value is taken from the empirical analysis in [42], Sect. 7.2 (where it is denoted simply by \(\gamma \)). It corresponds to the maximum value of the leverage computed over 4,389 time series of US Commercial Banks and Saving and Loans Associations; see [42], Sect. 7.1, for a detailed description of the dataset.

- \(\alpha =1.64\); it corresponds to a VaR constraint of \(5\%\) in case of a Gaussian distribution for the returns.

- \(\Sigma _{\epsilon }=2.7\times 10^{-5}\); this value is taken from the numerical analysis in [47], Table 1, and corresponds to the exogenous idiosyncratic volatility at the time scale of portfolio decisions.

- The values for \(\omega \) and c are free parameters and are randomly sampled (e.g., from the dynamical core).
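The correspondence between \(\alpha =1.64\) and a \(5\%\) VaR constraint for Gaussian returns can be checked with the standard normal quantile function. The short Python sketch below computes the one-sided quantile and the resulting target leverage \(\lambda _t = 1/(\alpha \sigma _{e,t})\); the helper `target_leverage` and the volatility value `0.05` are hypothetical and serve only as an illustration.

```python
from statistics import NormalDist

var_level = 0.05  # VaR constraint quoted in the text
# one-sided standard Gaussian quantile at level 1 - var_level
alpha = NormalDist().inv_cdf(1.0 - var_level)


def target_leverage(sigma_e, alpha=alpha):
    """Target leverage 1 / (alpha * sigma_e) implied by the VaR constraint."""
    return 1.0 / (alpha * sigma_e)


# hypothetical expected volatility of 5%, for illustration only
lam_example = target_leverage(0.05)
```

The computed quantile is approximately 1.645, which is consistent with the value 1.64 used above.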

The figure shows that T is a unimodal map with a negative Schwarzian derivative (see below). In the figure, \(\Delta \) is the iterate of the unique critical point (Blue dot) \(\mathfrak {c}\) of T, i.e., \(\Delta =T(\mathfrak {c})\). Therefore, if we take the initial condition \(\phi _0\) in the interval \([\Delta , 1]\), then all the successive iterates \(|T^{t}(\phi _0)|\), \(t\in \mathbb {N}_{>1}\), will stay in \([0, \Delta ]\).

By definition, the (re-scaled) leverage of the representative bank is a positive quantity. However, as one can also notice from the graph in Fig. 1, we have that \(\lim _{\phi \rightarrow 1^{-}} T(\phi ) = -\frac{1}{\gamma _0}\). Therefore, we need to slightly modify the definition of our map by restricting it to the interval [0, b], where b is the point of intersection between the map and the horizontal axis (Green dot in Fig. 1). Notice that this definition makes sense when \(\Delta< b < 1\). In addition, as we verified numerically, if we take the initial condition in the interval \([\Delta , b]\), then all the other iterates will stay in \([0,\Delta ]\). In particular, the previous redefinition is legitimate also if we consider the effect of the noise. Indeed, it is clear from the considerations in Sect. 2 that if we reflect the graph of T on the interval [b, 1] about the horizontal axis to make it positive, then the equilibrium state for the chain, precisely its unique stationary measure, has support that does not intersect the interval [b, 1] if a satisfies the bound in Eq. (4). Also, we verified numerically that the condition \(\Delta< b < 1\) holds for \(\gamma _0\) sufficiently large. We continue to denote by T the map after this redefinition. We now modify the map T by enlarging its domain of definition on the left to take into account the action of the additive noise. To do so, we first notice that

see the left-hand Red dot in Fig. 1. With abuse of notation, (re)define (Footnote 15) \(\Gamma \overset{\text {def}}{=} b-\Delta \), and extend the domain of definition of T to the larger interval \([-\Gamma , b]\) so that T is continuous at 0 and, on \([-\Gamma ,0)\), is \(C^{4}\) smooth, positive and decreasing, with \(T(-\Gamma )<\Delta \). Again, with abuse of notation, we will still denote by T the map after this second redefinition and, hereafter, write \(I \overset{\text {def}}{=}[-\Gamma ,b]\). The map T just defined satisfies Assumption (B1.2), and we choose the distribution of the random variables \((\tilde{\eta }_{t})_{t \ge 1}\) so as to satisfy Assumption (C1). It remains to verify Assumption (A1)-(c). In order to do so, we verify numerically the following important result taken from [35] (see also [30], Theorem 12). Define the number

Suppose T is a unimodal map with a negative Schwarzian derivative and a non-flat critical point. If \(\ell _{T}(x)=\kappa >0\) for Leb-almost all \(x \in I\), then T admits a unique absolutely continuous invariant probability measure \(\nu \). In this case, \(\kappa \) is the Lyapunov exponent of the map T with respect to \(\nu \). Figure 2 represents the value of \(\ell _{T}\) in the same parameter configuration as Fig. 1.
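The numerical check of the condition \(\ell _{T}(x)=\kappa >0\) can be illustrated with a minimal sketch. It rests on two assumptions that are not taken from this section: first, that \(\ell _{T}(x)\) is the usual pointwise Lyapunov exponent, i.e., the limit (superior) of \(\frac{1}{n}\log |(T^{n})'(x)|\); second, since Eq. (50) is not reproduced here, the map \(x \mapsto 4x(1-x)\) is used as a stand-in unimodal map. The function names are hypothetical.

```python
import math


def stand_in_map(x):
    """Stand-in unimodal map x -> 4x(1-x); NOT the map T of Eq. (50)."""
    return 4.0 * x * (1.0 - x)


def stand_in_derivative(x):
    """Derivative of the stand-in map."""
    return 4.0 * (1.0 - 2.0 * x)


def pointwise_lyapunov(x0, n_iter=200_000):
    """Finite-time average (1/n) * sum_k log|T'(T^k x0)|, a proxy for ell_T(x0)."""
    x = x0
    total = 0.0
    for _ in range(n_iter):
        # Guard against log(0) if the orbit lands exactly on the critical point.
        total += math.log(max(abs(stand_in_derivative(x)), 1e-300))
        x = stand_in_map(x)
    return total / n_iter


# Several initial conditions should give (nearly) the same value kappa.
estimates = [pointwise_lyapunov(x0) for x0 in (0.1234, 0.3141, 0.7071)]
```

For this stand-in map the exponent is known to equal \(\log 2\), so the three estimates should cluster around that value; for the map of the paper one would substitute T and its derivative and inspect whether the estimates agree across initial conditions.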

Once we have verified that our systemic risk model in Eq. (50) satisfies Assumptions (A1), (B1), and (C1), we proceed to investigate whether it satisfies, as it should, the mathematical properties in Sect. 5 and the EVT in Sect. 6. The results are presented in the same order as in those sections.

7.1 Dynamics Properties of the Map

The bifurcation diagram of a dynamical system shows how the asymptotic distribution of a typical orbit varies as a function of a parameter. For our map, either the memory parameter \(\omega \) or the parameter c can be employed as the bifurcation parameter. Figure 3 shows the bifurcation diagram as a function of \(c \in [-1,1]\). For this plot we fix \(\omega =0.669\), a value for which a specific pair \((c,\omega )\) is in the dynamical core.

We now comment on Fig. 3. Moving backward, between 1 and 0.3 there is an attracting fixed point. Then, as c gets smaller and smaller, the period-one behaviour splits into period two and the two values move further apart. The situation is more complex for c in \([-0.48,0.3]\), as small parameter variations can change the dynamics from chaotic to periodic and back. Finally, when c is between \(-0.48\) and \(-1\) there is an attracting fixed point. However, in this range, \(\phi _t\) takes negative values and this does not make sense in our financial application, since it would correspond to negative leverage. Figure 4 shows how the graph of the map T changes as a function of c, reflecting the description of the bifurcation diagram.
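The construction of a bifurcation diagram can be sketched as follows: for each parameter value, iterate past a transient and record the tail of the orbit. Because the map of Eq. (50) is not reproduced in this section, the sketch uses the family \(x \mapsto r x(1-x)\) as a stand-in, with r playing the role of the bifurcation parameter c; all names are hypothetical.

```python
def stand_in_family(x, r):
    """Stand-in one-parameter family x -> r x (1 - x); NOT the paper's map."""
    return r * x * (1.0 - x)


def asymptotic_orbit(r, x0=0.4, transient=2_000, keep=64):
    """Iterate past a transient and return the next `keep` orbit points."""
    x = x0
    for _ in range(transient):
        x = stand_in_family(x, r)
    tail = []
    for _ in range(keep):
        x = stand_in_family(x, r)
        tail.append(x)
    return tail


def count_distinct(tail, decimals=6):
    """Number of distinct orbit values after rounding; small for periodic attractors."""
    return len({round(x, decimals) for x in tail})


def bifurcation_diagram(r_values):
    """Map each parameter value to the tail of a typical orbit."""
    return {r: asymptotic_orbit(r) for r in r_values}


# One value in each regime of the stand-in family: fixed point, period two, chaos.
diagram = bifurcation_diagram([2.8, 3.2, 3.9])
periods = {r: count_distinct(tail) for r, tail in diagram.items()}
```

Plotting the recorded tails against the parameter gives the diagram; counting distinct values is only a quick way to tell an attracting fixed point or a period-two orbit from a more complex regime.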

To identify more precisely the signature of a chaotic behaviour, we compute the Lyapunov exponent as a function of c. For the deterministic map, the Lyapunov exponent is positive if and only if T admits an absolutely continuous invariant measure. Figure 5, from top to bottom, shows the estimated Lyapunov exponent for the deterministic map, as well as for the random system for different intensities of the noise. The Lyapunov exponent is not displayed for some values of the parameter c because of numerical issues we encountered in determining the intersection between the map and the horizontal axis. For this reason, it is not possible to fully appreciate that the exponent becomes a smooth function of c when we add even a small amount of noise, in agreement with Theorem 5.6. Figure 5 also shows the validity of Proposition 5.5 and therefore, indirectly, of Assumption (A\(_{p}\)).
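A parameter scan of the Lyapunov exponent for the noisy system could look like the sketch below. Everything specific in it is an assumption made for illustration: the stand-in map \(T(x)=r x(1-x)\), the noise amplitude \(\sigma _{\mathbbm {n}}(x)=x(1-x)/\mathbbm {n}\), the uniform law of the noise, and the reading of the average Lyapunov exponent as the Birkhoff average of \(\log |T'|\) along the chain. With these choices the orbit stays in (0, 1) for the parameter values used.

```python
import math
import random


def noisy_step(x, r, n, rng):
    """One step of a stand-in chain T(x) + sigma_n(x) * Y.

    T(x) = r x (1 - x), sigma_n(x) = x (1 - x) / n, Y ~ Uniform(-1, 1).
    All three choices are illustrative assumptions, not the paper's model.
    """
    y = rng.uniform(-1.0, 1.0)
    return r * x * (1.0 - x) + (x * (1.0 - x) / n) * y


def average_lyapunov(r, n, n_iter=100_000, burn_in=1_000, seed=0):
    """Birkhoff average of log|T'| along one orbit of the noisy chain."""
    rng = random.Random(seed)
    x = 0.4
    for _ in range(burn_in):
        x = noisy_step(x, r, n, rng)
    total = 0.0
    for _ in range(n_iter):
        # Guard against log(0) at the critical point x = 1/2.
        total += math.log(max(abs(r * (1.0 - 2.0 * x)), 1e-300))
        x = noisy_step(x, r, n, rng)
    return total / n_iter


# Scan a few parameter values at a fixed noise level n.
scan = {r: average_lyapunov(r, n=1_000) for r in (2.8, 3.5, 3.9)}
```

In the attracting-fixed-point regime of the stand-in family the estimate is negative, whereas in the chaotic regime it is positive; repeating the scan on a fine parameter grid and for several values of n would give curves of the kind described for Fig. 5.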

Finally, Fig. 6 displays the random map in Eq. (15) together with the quantiles of the distribution of the graphs of the maps associated with the random maps. Notice that we use a different set for the parameters to emphasize the effect of the noise.

Random maps in Eq. (15) together with the quantiles of the distribution of the graphs of the maps associated with the random maps; \(\mathbbm {n}=10, 100, 10000\)

7.2 Limit Theorems

Here we investigate the validity of the Central Limit Theorem in Theorem 5.7-(e2). We proceed in the following way. First, as a function \(g \in BV\) such that \(\int _{\mathbb {R}}g\,d\mu _{\mathbbm {n}}=0\), we choose the function

Notice that in principle we would like to have a function g with null average with respect to the unknown measure \(\mu _{\mathbbm {n}}\); the function in the previous equation verifies this property with respect to the Lebesgue measure. Nonetheless, we verify numerically the validity of the cited property also for \(\mu _{\mathbbm {n}}\). Then, we generate 20,000 orbits of length 10,000 by using the random transformation. In this way, for each \(t \in \{1,\ldots , 10000\}\) we have a sample of the quantity \(S_t\) in Eq. (21). Therefore, we can test whether the distribution of \(\frac{S_t}{\sqrt{t}}\) becomes more and more Gaussian as t increases. In order to do so, we apply three normality tests, namely the Shapiro–Wilk test [62], the normality test of D’Agostino and Pearson [16, 17], and the Jarque–Bera test [31]. They all test the null hypothesis that a sample comes from a normal distribution. Table 1 reports the results. Within each row, the two subrows are the value of the test statistic and, in brackets, the p-value. From the table it is clear that the distribution of \(\frac{S_t}{\sqrt{t}}\) becomes more and more Gaussian as t increases, confirming the Central Limit Theorem stated above.
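The procedure just described (many independent orbits, a sample of \(S_t/\sqrt{t}\) for each t, then a normality test) can be sketched with the standard library only. The sketch below is not the authors' code: it implements only the Jarque–Bera statistic, and it replaces the chain of the paper by a stand-in, the noisy doubling map \(X_k = (2X_{k-1}+\varepsilon _k) \bmod 1\), whose stationary law is Lebesgue, with \(g(x)=\cos (2\pi x)\). The definition \(S_t = g(X_1)+\cdots +g(X_t)\) is likewise an assumption, since Eq. (21) is not reproduced here.

```python
import math
import random


def jarque_bera(sample):
    """Jarque-Bera statistic and its asymptotic chi-square(2) p-value."""
    n = len(sample)
    mean = sum(sample) / n
    m2 = sum((v - mean) ** 2 for v in sample) / n
    m3 = sum((v - mean) ** 3 for v in sample) / n
    m4 = sum((v - mean) ** 4 for v in sample) / n
    skew = m3 / m2 ** 1.5
    kurt = m4 / m2 ** 2
    jb = n / 6.0 * (skew ** 2 + (kurt - 3.0) ** 2 / 4.0)
    # The survival function of a chi-square law with 2 degrees of freedom is exp(-x/2).
    return jb, math.exp(-jb / 2.0)


def normalized_sums(times, n_orbits=2_000, seed=0):
    """Return {t: sample of S_t / sqrt(t)} over independent orbits.

    Stand-in chain (an assumption, not the paper's model):
    X_k = (2 X_{k-1} + eps_k) mod 1 with eps_k ~ Uniform(-0.05, 0.05),
    and g(x) = cos(2 pi x), which has zero Lebesgue mean.
    """
    rng = random.Random(seed)
    t_max = max(times)
    samples = {t: [] for t in times}
    for _ in range(n_orbits):
        x = rng.random()
        s = 0.0
        for k in range(1, t_max + 1):
            x = (2.0 * x + rng.uniform(-0.05, 0.05)) % 1.0
            s += math.cos(2.0 * math.pi * x)
            if k in samples:
                samples[k].append(s / math.sqrt(k))
    return samples


samples = normalized_sums(times=(1, 10, 200))
results = {t: jarque_bera(v) for t, v in samples.items()}
```

For t = 1 the sample is far from Gaussian and the test rejects strongly, while for larger t the statistic drops to values compatible with normality. The other two tests mentioned in the text would be applied to the same samples.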

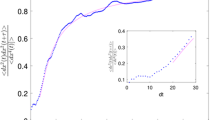

Spectrum of the generalized dimension \(D_q\), for different values of the noise level \(\mathbbm {n}\). The gray line is derived from Eq. (27). Notice that for our unimodal map the picture shows the order of divergence as the square root of the singular points as for the Benedicks-Carleson type

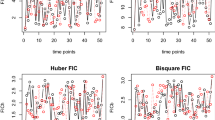

Top panel: the sequence \(u_t\) as a function of t; Bottom panel: estimated \(\mathbb {P}_{\mathbbm {n}}(M_t^{(\mathbbm {n})} \le u_t)\), where \(M_t^{(\mathbbm {n})}\) is defined in Eq. (32), as a function of \(\log t\), together with the theoretical value \(e^{-\tau }\) (Red horizontal line) (Color figure online)

7.3 Multifractal Analysis

In this subsection, we compute the spectrum of the generalized dimension \(D_q\) as in Sect. 7.3 by combining Eq. (24) with Eqs. (25) and (26). The results are displayed in Fig. 7. The gray line represents the value for the comparison as computed in Eq. (27). In order to compute the other lines we proceed in the following way. For \(q>1\) we approximate the integral in Eq. (25) by considering the so-called partition sums

where the sum runs over all intervals B of size r. In particular, we follow, e.g., [45, 58] and we restrict the variable r to a sequence \(r_n\) in order to give meaningful results. Our values of r are defined by: \(r = \text {np.linspace}(0.5\times 10^{-5}, 10^{-5},100)\). For a fixed r, the occupation number \(n_{i}(r)\) of the i-th interval is defined as the number of sample points it contains out of N sample points from the trajectory of our dynamical system. The measure \(\mu _i\) of the interval \(B_i\) is the fraction of time which a generic trajectory on the attractor spends in the i-th interval \(B_i\) and is roughly equal to \(n_i(r)/N\). Therefore, we compute D(q) as the slope of a linear fit of

against \(\log r\); note that we have dropped the normalization factor \(N = \sum _{i} n_i(r)\) since it is independent of r. The computation of D(1) follows the same logic. Instead, for negative q we follow [60] and we replace the occupation numbers \(n_i(r)\) by the extended occupation numbers \(n^{*}_i(r)\) which are defined by

that is, the number of sample points contained in the interval \(B_i\) and its neighboring boxes. Looking again at Fig. 7, it is interesting to see that for \(\mathbbm {n}=10\) the quantity \(D_q\) for the random maps becomes almost a constant equal to 0.8–0.9, whereas this quantity changes more for higher values of \(\mathbbm {n}\) and, in particular, for the unperturbed map (proxied by \(\mathbbm {n}=10^{15}\)). In particular, as we suspected in Sect. 7.3, the generalized dimension \(D_q\) becomes more and more pronounced when \(\mathbbm {n}\) grows as the stationary measure converges (weakly) to the invariant measure of the deterministic map. In this respect, we think, as pointed out in the introduction, that the previous multifractal analysis could help us in discriminating between chaotic and random behaviors. Indeed, the invariant measure of our unimodal map T has a multifractal structure due to the presence of countably many singularities for its density h. Instead, the equilibrium measure of the Markov chain is absolutely continuous with bounded density. Notice that the curves in Fig. 7 resemble the curves in Fig. 3 in [65], where the authors investigate the multifractal features of liquidity in China’s stock market. They claim that the liquidity time series at the studied time is no longer subject to a standard random walk process, but subject to a fractal biased random walk process. This shows that, theoretically, it is feasible to predict the liquidity of the Chinese securities market.
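The box-counting estimate of \(D_q\) described in this subsection can be sketched as follows. The displayed formulas are not reproduced here, so the sketch assumes the standard forms: for \(q \ne 1\) the slope against \(\log r\) of \(\frac{1}{q-1}\log \sum _i \mu _i^{q}\), for \(q=1\) the slope of \(\sum _i \mu _i \log \mu _i\), and, for negative q, extended occupation numbers \(n^{*}_i = n_{i-1}+n_i+n_{i+1}\). The normalization by N is kept for readability; it does not affect the slope. Function names are hypothetical.

```python
import math
from collections import Counter


def occupation_numbers(points, r):
    """Count sample points in each box [i*r, (i+1)*r)."""
    return Counter(int(math.floor(x / r)) for x in points)


def extended_occupation_numbers(counts):
    """n*_i = n_{i-1} + n_i + n_{i+1} for every non-empty box i (assumed form)."""
    return {i: counts.get(i - 1, 0) + n + counts.get(i + 1, 0)
            for i, n in counts.items()}


def log_partition_term(points, r, q):
    """Quantity whose slope against log r estimates D_q."""
    counts = occupation_numbers(points, r)
    if q < 0:
        counts = extended_occupation_numbers(counts)
    n_total = len(points)
    mu = [n / n_total for n in counts.values()]
    if q == 1:
        return sum(m * math.log(m) for m in mu)
    return math.log(sum(m ** q for m in mu)) / (q - 1)


def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx


def generalized_dimension(points, radii, q):
    """Slope of the partition term against log r over the given box sizes."""
    xs = [math.log(r) for r in radii]
    ys = [log_partition_term(points, r, q) for r in radii]
    return ols_slope(xs, ys)


# Sanity check on a uniform grid in [0, 1], for which D_q should be close to 1.
points = [(i + 0.5) / 4096 for i in range(4096)]
radii = [2.0 ** -k for k in range(4, 9)]
dims = {q: generalized_dimension(points, radii, q) for q in (-2, 1, 2)}
```

On an orbit of the Markov chain one would replace `points` by the sampled trajectory and `radii` by the sequence of box sizes r given in the text.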

7.4 Extreme Value Theory

Finally, we verify the validity of Proposition 6.1 on EVT. We proceed in the following way. We fix values for the parameters characterizing the map \((\gamma _0, \omega , c, \alpha , \Sigma _{\epsilon })\) as described above, for the initial point of each orbit \(x_0\) and z chosen randomly in the dynamical core (\(x_0=0.38\) and \(z=0.80\)), for the parameter \(\tau \) (\(\tau =\log (10)\)), and for the intensity of the noise (\(\mathbbm {n}=10^{3}\)). Then, we determine numerically the sequence \(u_t\) in such a way that \(\mu _{\mathbbm {n}}(B(z,e^{-u_t})) = \frac{\tau }{t}\), where \(\mu _{\mathbbm {n}}\) is estimated from the histogram constructed with a very long orbit. The Left Panel of Fig. 8 shows the sequence \(u_t\) as a function of t and the Right Panel displays the estimated \(\mathbb {P}_{\mathbbm {n}}(M_t^{(\mathbbm {n})} \le u_t)\), where \(M_t^{(\mathbbm {n})}\) is defined in Eq. (32), as a function of t, together with the theoretical value \(e^{-\tau }\) (Red horizontal line). The estimated probability converges to the theoretical value, confirming our EVT results. From a financial point of view, this means that we are able to compute the probability that, given an initial leverage, the first time the leverage is “close” to a given target is larger than t.
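The two numerical steps above (calibrating \(u_t\) on a long orbit, then estimating \(\mathbb {P}_{\mathbbm {n}}(M_t^{(\mathbbm {n})} \le u_t)\) over many orbits) can be illustrated with the following sketch. It is built on assumptions: the chain is a stand-in (a noisy doubling map rather than the model of the paper), each orbit starts from a uniformly drawn point rather than a fixed \(x_0\), and \(M_t\) is taken to be \(\max _{k<t}(-\log |X_k-z|)\), which is our reading of Eq. (32). Names are hypothetical.

```python
import math
import random


def chain_step(x, rng):
    """Stand-in chain (assumption): noisy doubling map on [0, 1)."""
    return (2.0 * x + rng.uniform(-0.1, 0.1)) % 1.0


def radius_for_level(z, level, n_long=200_000, seed=0):
    """Radius rho with empirical measure mu(B(z, rho)) ~ level, from one long orbit."""
    rng = random.Random(seed)
    x = rng.random()
    dists = []
    for _ in range(n_long):
        x = chain_step(x, rng)
        dists.append(abs(x - z))
    dists.sort()
    return dists[max(int(level * n_long) - 1, 0)]


def prob_max_below(z, t, tau, n_orbits=2_000, seed=1):
    """Estimate P(M_t <= u_t) with u_t = -log(rho_t), mu(B(z, rho_t)) = tau / t."""
    rho = radius_for_level(z, tau / t)
    u_t = -math.log(rho)
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_orbits):
        x = rng.random()
        closest = abs(x - z)
        for _ in range(t - 1):
            x = chain_step(x, rng)
            closest = min(closest, abs(x - z))
        # M_t <= u_t is equivalent to min_k |X_k - z| >= rho_t.
        if closest >= rho:
            hits += 1
    return u_t, hits / n_orbits


tau = math.log(10.0)
u_t, estimate = prob_max_below(z=0.8, t=200, tau=tau)
```

With \(\tau =\log (10)\) the estimate is to be compared with \(e^{-\tau }=0.1\); repeating the computation for increasing t gives a curve of the kind shown in Fig. 8.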

Data Availability

The authors will provide the code to reproduce the results of the article upon request.

Notes

In this paper, the symbol \(\mathbb {N}_{\ge 1}\) denotes the set of natural numbers greater than or equal to one.

A sequence of random variables is heteroscedastic if the variance is not constant.

A map T on a topological space X is called topologically transitive if for all nonempty open sets \(U, V \subset X\) there exists some positive integer t such that \(T^{t}(U) \cap V \ne \emptyset \). Notice that the topological transitivity of \(T^{t}\), \(t\in \mathbb {N}_{\ge 1}\) will be substantially used in Sects. 5.2, 5.3, and 7.3.

The Schwarzian derivative S(T) of the map T is defined as \(S(T):=\frac{T^{'''}}{T^{'}}-\frac{3}{2}\left( \frac{T^{''}}{T^{'}}\right) ^{2}\).

Essentially a smooth version of a step function. See [42] for a precise definition and example.

One could also consider the initial point as a random variable \(X_0\) independent of the \(Y_t\); in this case, the measurable and bounded initial distribution is \(\rho _0(A)=\mathbb {P}(X_0 \in A )\).

The Perron–Frobenius operator associated with the map \(T_{\eta }\) is defined by the duality relationship \(\int _{\mathbb {R}}\mathcal {L}_{\eta }h\,g\,dx=\int _{\mathbb {R}}hg \circ T_{\eta }(x)\,dx\), where \(h \in L^{1}\) and \(g \in L^{\infty }\).

In general, we say that a stochastic kernel p(x, y) has uniformly bounded variation if \(|p(x,\cdot )|_{TV} \in L^{\infty }\), i.e., there is \(C>0\) such that \(|p(x,\cdot )|_{TV}\le C\) for almost every \(x \in I\).

Notice that this result could be strengthened by showing that \(\Vert h_{\mathbbm {n}}-h\Vert _{1}\rightarrow 0\), which is called strong stochastic stability.