Abstract

We derive a macroscopic heat equation for the temperature of a pinned harmonic chain subject to a periodic force at its right side and in contact with a heat bath at its left side. The microscopic dynamics in the bulk is given by the Hamiltonian equations of motion plus a reversal of the velocity of each particle, occurring independently for each particle at exponential times with rate \(\gamma \). The latter produces a finite heat conductivity. Starting with an initial probability distribution for a chain of n particles, we compute the current and the local temperature, given by the expected value of the local energy. Scaling space and time diffusively yields, in the \(n\rightarrow +\infty \) limit, the heat equation for the macroscopic temperature profile T(t, u), \(t>0\), \(u \in [0,1]\). It is to be solved for given initial conditions T(0, u), with the boundary value \(T(t,0)=T_-\), the temperature of the left heat reservoir, and a fixed heat flux J entering the system at \(u=1\). |J| equals the work done by the periodic force, which is computed explicitly for each n.


1 Introduction

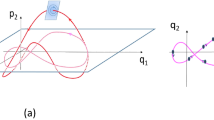

The emergence of the heat equation from microscopic dynamics after a diffusive rescaling of space and time is a challenging mathematical problem in non-equilibrium statistical mechanics [6]. Here we study this problem in the context of the conversion of work into heat in a simple model: a pinned harmonic chain. The system is in contact at its left end with a thermal reservoir at temperature \(T_-\), which acts on the leftmost particle via a Langevin force (Ornstein–Uhlenbeck process). The rightmost particle is acted on by a deterministic periodic force, which does work on the system. This work pumps energy into the system, and the energy then flows into the reservoir in the form of heat.

To describe this flow we need to know the heat conductivity of the system. As is well known, the harmonic crystal has infinite heat conductivity [19]. To model realistic systems with finite heat conductivity, we add a random velocity reversal to the harmonic dynamics. It models the various dissipative mechanisms of real systems in a simple way and produces a finite conductivity (cf. [1, 5]).

In [14], the first part of the present work, we studied this system in the limit \(t\rightarrow \infty \); see Sect. 2.1 for rigorous statements of the main results obtained there. In this limit the probability distribution of the phase space configurations is periodic, with the period of the external force, see Theorem 2.1 below. We also showed that, with a proper scaling of the force and its period, the averaged temperature profile satisfies the stationary heat equation with an explicitly given heat current. In the present paper we study the time-dependent evolution of the system on the diffusive time scale, starting from a specified initial distribution, and derive a heat equation for its temperature profile.

The periodic forcing generates a Neumann-type boundary condition for the macroscopic heat equation: the temperature gradient at the boundary must satisfy Fourier's law, with the boundary energy current given by the work of the periodic forcing (see (2.35) below). On the left side the boundary condition is given by the prescribed value \(T_-\), the temperature of the heat bath. As \(t\rightarrow \infty \) the profile converges to the macroscopic profile obtained in [14].

Energy diffusion in the harmonic chain on a finite lattice, with energy-conserving noise and Langevin heat baths at different temperatures at the boundaries, has been considered previously [2,3,4, 13, 18]. However, complete mathematical results describing the time evolution of the macroscopic temperature profile have been obtained only for unpinned chains [4, 13].

This article gives the first proof of the heat equation for the pinned chain in a finite interval, and the method can be applied with different boundary conditions (see Remark 2.12). Investigations of energy transport in anharmonic chains under periodic forcing can be found in [10, 11] and, very recently, in [20]. In the review article [16] we considered various extensions of the present results to unpinned, multidimensional and anharmonic dynamics.

1.1 Structure of the Article

We start Sect. 2 with the precise description of the dynamics of the oscillator chain. Then, as already mentioned, in Sect. 2.1 we give an account of results obtained in [14]. In Sect. 2.2 we formulate our two main theorems: Theorem 2.5 about the limit current generated at the boundary by a periodic force, and Theorem 2.10 about the convergence of the energy profile to the solution of the heat equation with mixed boundary conditions.

In Sect. 3 we obtain, by an entropy argument, a uniform bound on the total energy at any macroscopic time. As a corollary (cf. Corollary 3.3) we obtain a bound on the time-integrated energy current that is uniform with respect to the size of the system.

Section 4 contains the proof of the equipartition of energy: Proposition 4.1 shows that the limit profiles of the kinetic and potential energy are equal. It also contains the fluctuation-dissipation relation (4.5), which gives an exact decomposition of the energy currents into a dissipative term (the gradient of a local function) and a fluctuation term (the generator of the dynamics applied to a local function).

The fluctuation-dissipation relation (4.5) and the equipartition of energy (4.1) are two of the ingredients of the proof of the main Theorem 2.10. The third is a local equilibrium result for the limit covariance of the positions integrated in time. It is formulated in Proposition 5.1 for the covariances in the bulk, and in Proposition 5.2 for the boundaries. The local equilibrium property allows us to identify the thermal diffusivity correctly in the proof of Theorem 2.10, see Sect. 5.

The technical part of the argument is presented in the appendices: the proof of the local equilibrium is given in Appendix D, after the analysis, carried out in Appendix C, of the time evolution of the matrix of time-integrated covariances of positions and momenta. In both Appendix C and Appendix D we use results proven in [14] whenever possible. Appendix B contains the proof of the current asymptotics (Theorem 2.5), which involves only the dynamics of the averages of the configurations. Appendix E contains the proof of the uniqueness of measure-valued solutions of the Dirichlet-Neumann initial-boundary value problem for the heat equation satisfied by the limiting energy profile. Finally, in Appendix F we present an argument for the relative entropy inequality stated in Proposition 3.1.

2 Description of the Model

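We consider a pinned chain of \(n+1\) harmonic oscillators in contact on the left with a Langevin heat bath at temperature \(T_-\), and with a periodic force acting on the last particle on the right. The configuration of particle positions and momenta is specified by

\[ {\textbf{q}}=(q_0,\ldots ,q_n),\qquad {\textbf{p}}=(p_0,\ldots ,p_n),\qquad ({\textbf{q}},{\textbf{p}})\in \Omega _n:={\mathbb R}^{n+1}\times {\mathbb R}^{n+1}. \tag{2.1} \]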

The position \(q_x\) should be thought of as the displacement of particle x from its reference site x in the finite lattice \(\{0,1,\ldots ,n\}\). The total energy of the chain is given by the Hamiltonian \(\mathcal {H}_n ({\textbf{q}}, {\textbf{p}}):= \sum _{x=0}^n {\mathcal {E}}_x ({\textbf{q}}, {\textbf{p}}),\) where the energy of particle x is defined by

\[ {\mathcal {E}}_x ({\textbf{q}}, {\textbf{p}}):=\frac{p_x^2}{2}+\frac{1}{2}\left( q_x-q_{x-1}\right) ^2+\frac{\omega _0^2 q_x^2}{2},\qquad x=0,\ldots ,n, \tag{2.2} \]

where \(\omega _0>0\) is the pinning strength. We adopt the convention that \(q_{-1}:=q_0\).

The microscopic dynamics of the process \(\{({\textbf{q}}(t), {\textbf{p}}(t))\}_{t\geqslant 0}\) describing the total chain is given in the bulk by

and at the boundaries by

Here \(\Delta \) is the Neumann discrete laplacian, corresponding to the choice \(q_{n+1}:=q_n\) and \(q_{-1}:= q_0\), see (A.1) below. The processes \(\{N_x(t), x=1,\ldots ,n\}\) are independent Poisson processes of intensity 1, while \({\widetilde{w}}_-(t)\) is a standard one-dimensional Wiener process, independent of the Poisson processes. The parameter \(\gamma >0\) regulates the intensity of both the random perturbations and the Langevin thermostat. We have chosen the same parameter to simplify notation; this choice does not affect the results concerning the macroscopic properties of the dynamics.
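To make the dynamics concrete, the following pure-Python sketch integrates one trajectory of the chain. It is a numerical illustration under stated assumptions, not the scheme used in this paper. We assume the bulk equations \(\dot q_x = p_x\), \(\textrm{d}p_x = (\Delta q_x-\omega _0^2 q_x)\textrm{d}t - 2p_x(t^-)\textrm{d}N_x(\gamma t)\); a thermostat on \(p_0\) of Ornstein-Uhlenbeck form with friction \(2\gamma \) and noise \(\sqrt{4\gamma T_-}\,\textrm{d}{\widetilde{w}}_-(t)\) (chosen so that the Gaussian of variance \(T_-\) is invariant); and the force \({\mathcal {F}}_n(t)\) added to the equation for \(p_n\). The Hamiltonian part uses velocity Verlet, the thermostat an exact Ornstein-Uhlenbeck step, and each velocity at sites \(1,\ldots ,n\) is reversed with the probability that a Poisson clock of rate \(\gamma \) rings an odd number of times during one step. The names `simulate`, `energy` and `force` are hypothetical.

```python
import math
import random


def neumann_laplacian(q):
    """Neumann discrete laplacian with the conventions q_{-1} := q_0, q_{n+1} := q_n."""
    n = len(q) - 1
    return [
        (q[x - 1] if x > 0 else q[0]) + (q[x + 1] if x < n else q[n]) - 2.0 * q[x]
        for x in range(n + 1)
    ]


def energy(q, p, omega0):
    """Sum of p_x^2/2 + (q_x - q_{x-1})^2/2 + omega0^2 q_x^2/2, with q_{-1} := q_0."""
    total = 0.0
    for x in range(len(q)):
        prev = q[x - 1] if x > 0 else q[0]
        total += 0.5 * p[x] ** 2 + 0.5 * (q[x] - prev) ** 2 + 0.5 * omega0**2 * q[x] ** 2
    return total


def simulate(q, p, omega0, gamma, T_minus, force, dt, steps, seed=0):
    """Integrate the chain for `steps` steps of size dt; returns (q, p, final time)."""
    rng = random.Random(seed)
    q, p = list(q), list(p)
    n = len(q) - 1
    t = 0.0
    flip_prob = 0.5 * (1.0 - math.exp(-2.0 * gamma * dt))  # P(odd number of rings)
    ou_decay = math.exp(-2.0 * gamma * dt)
    ou_std = math.sqrt(T_minus * (1.0 - math.exp(-4.0 * gamma * dt)))

    def accel(q, t):
        # Harmonic force Delta q - omega0^2 q, plus the periodic force on the last particle.
        a = [lap - omega0**2 * qx for lap, qx in zip(neumann_laplacian(q), q)]
        a[n] += force(t)
        return a

    for _ in range(steps):
        # Velocity Verlet step for the Hamiltonian part with the external force.
        a = accel(q, t)
        p = [px + 0.5 * dt * ax for px, ax in zip(p, a)]
        q = [qx + dt * px for qx, px in zip(q, p)]
        t += dt
        a = accel(q, t)
        p = [px + 0.5 * dt * ax for px, ax in zip(p, a)]
        # Exact Ornstein-Uhlenbeck step for the thermostatted momentum p_0.
        p[0] = ou_decay * p[0] + ou_std * rng.gauss(0.0, 1.0)
        # Velocity reversals at sites 1..n.
        for x in range(1, n + 1):
            if rng.random() < flip_prob:
                p[x] = -p[x]
    return q, p, t
```

For example, `simulate(q, p, 1.0, 1.0, 1.0, lambda t: math.sin(2 * math.pi * t), 0.01, 1000)` runs the chain with a hypothetical 1-periodic force up to time 10. With `gamma=0` and zero force the scheme approximately conserves `energy`, which is a convenient sanity check.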

We assume that the forcing \({\mathcal {F}}_n(t)\) is given by

where \( {\mathcal {F}}(t)\) is a 1-periodic function such that

Here

are the Fourier coefficients of the force. Note that by (2.6) we have \({\widehat{{\mathcal {F}}}}(0)=0\).

For a given function \(f:\{0,\ldots ,n\}\rightarrow {\mathbb R}\) define the Neumann laplacian

\[ \Delta f_x:=f_{x+1}+f_{x-1}-2f_x,\qquad x=0,\ldots ,n, \tag{2.8} \]

with the convention \(f_{-1}:=f_0\) and \(f_{n+1}:=f_n\). The generator of the dynamics can then be written as

where

and

Here \(F:{\mathbb R}^{2(n+1)}\rightarrow {\mathbb R}\) is a bounded and measurable function, \({\textbf{p}}^x\) is the velocity configuration with sign flipped at the x component, i.e. \({\textbf{p}}^x=(p_0^x,\ldots ,p_n^x)\), with \(p_y^x=p_y\), \(y\not =x\) and \(p_x^x=-p_x\). Furthermore,

The microscopic energy currents are given by

with \(\mathcal {E}_x(t):={\mathcal {E}}_x \big ({\textbf{q}}(t), \textbf{p}(t)\big )\) and

and at the boundaries

2.1 Summary of Results Concerning Periodic Stationary State

This section presents the results of [14] (some additional facts can be found in [15]). They concern the case when the chain is in its (periodic) stationary state. More precisely, we say that the family of probability measures \(\{\mu _t^P, t\in [0,+\infty )\}\) constitutes a periodic stationary state for the chain described by (2.3) and (2.4) if it is a solution of the forward equation: for any function F in the domain of \(\mathcal {G}_t\):

such that \(\mu _{t + \theta }^P=\mu _{t}^P\).

Given a measurable function \(F:{\mathbb R}^{2(n+1)}\rightarrow {\mathbb R}\) we denote

provided that \(|F({\textbf{q}},{\textbf{p}})|\) is integrable w.r.t. the respective product measure.

It has been shown, see [14, Theorem 1.1, Proposition A.1] and also [15, Theorem A.2], that there exists a unique periodic stationary state.

Theorem 2.1

For a fixed \(n\geqslant 1\) there exists a unique periodic stationary state \(\{\mu _s^P,s\in [0,+\infty )\}\) for the system (2.3)–(2.4). The measures \(\mu _s^P\) are absolutely continuous with respect to the Lebesgue measure \(\textrm{d}{\textbf{q}}\textrm{d}{\textbf{p}}\) and the respective densities \(\mu _s^P(\textrm{d}{\textbf{q}},\textrm{d}{\textbf{p}})=f_s^P({\textbf{q}},{\textbf{p}}) \textrm{d}{\textbf{q}}\textrm{d}{\textbf{p}}\) are strictly positive. The time averages of all the second moments \(\langle \langle p_xp_y\rangle \rangle \), \(\langle \langle p_xq_y\rangle \rangle \) and \(\langle \langle q_xq_y\rangle \rangle \) are finite and \(\min _x \langle \langle p_x^2\rangle \rangle \geqslant T_-\). Furthermore, given an arbitrary initial probability distribution \(\mu \) on \({\mathbb R}^{2(n+1)}\) and \((\mu _t)\) the solution of (2.15) such that \(\mu _{0}=\mu \), we have

Here \(\Vert \cdot \Vert _{\textrm{TV}}\) denotes the total variation norm.

In the periodic stationary state the time averaged energy current \( J_n=\langle \langle j_{x,x+1}\rangle \rangle \) is constant for \(x=-1,\ldots ,n\). In particular

where \({\overline{p}}_x(s):= \int _{{\mathbb R}^{2(n+1)}}p_x \mu _s^P(\textrm{d}{\textbf{q}},\textrm{d}{\textbf{p}})\). It turns out that the stationary current is of size O(1/n) as can be seen from the following.

Theorem 2.2

(see Theorem 3.1 of [14]) Suppose that \(\mathcal {F}(\cdot )\) satisfies (2.6) and, in addition, \(\sum _{\ell \in {\mathbb Z}}\ell ^2|\widehat{\mathcal {F}}(\ell )|^2<+\infty \). Then,

with \(\mathcal {Q}(\ell )\) given by,

In the more general case when the forcing \({\mathcal {F}}_n(t)\) is \(\theta _n\)-periodic, with the period \(\theta _n=n^b\theta \) and the amplitude \(n^{a}\), i.e. \( {\mathcal {F}}_n(t)= \;n^{a} {\mathcal {F}}\left( \frac{t}{ \theta _n}\right) , \) and

the convergence in (2.19) still holds. However, then

Concerning the convergence of the energy profile we have shown the following, see [14, Theorem 3.4].

Theorem 2.3

Under the assumptions of Theorem 2.2 we have

with

for any \(\varphi \in C[0,1]\). Here J is given by (2.19) and

where \(G_{\omega _0}(\ell )\) is the Green function defined in (A.2).

Concerning the time variance of the average kinetic energy we have shown the following.

Theorem 2.4

(Theorem 9.1, [14]) Suppose that the forcing \(\mathcal {F}_n(\cdot )\) is given by (2.5), where \(\mathcal {F}(\cdot )\) satisfies the hypotheses made in Theorem 2.2. Then, there exists a constant \(C>0\) such that

Here \( \overline{p_x^2}(t):=\int _{{\mathbb R}^{2(n+1)}}p_x^2 \mu _t^P(\textrm{d}{\textbf{q}},\textrm{d}{\textbf{p}})\).

2.2 Statements of the Main Results

2.2.1 Macroscopic Energy Current Due to Work

The first result concerns the work done by the forcing in a diffusive limit, i.e.

where \( {\mathbb {E}}_{\mu }\) denotes the expectation of the process with the initial configuration \(({\textbf{q}},{\textbf{p}})\) distributed according to a probability measure \(\mu \). We write \(J_n(t, \textbf{q},\textbf{p})\) when the initial configuration \((\textbf{q},\textbf{p})\) is deterministic, i.e. when \(\mu =\delta _{\textbf{q},\textbf{p}}\) is the \(\delta \)-measure that gives probability 1 to this configuration.

Assume furthermore that \((\mu _n)\) is a sequence of initial distributions, each \(\mu _n\) being a probability measure on \({\mathbb R}^{n+1}\times {\mathbb R}^{n+1}\). We suppose that there exist \(C>0\) and \(\delta \in [0,2)\) such that for any integer \(n\geqslant 1\)

Here \(({\overline{{\textbf{q}}}}_n, {\overline{{\textbf{p}}}}_n)\) is the vector of the averages of the configuration with respect to \(\mu _n\). We are mainly interested in the case \(\delta =1\), but Theorem 2.5 is valid for any \(\delta <2\). In Proposition B.1 we prove that, in the diffusive time scaling, the energy due to the averages (2.28) becomes negligible at any time \(t>0\).

In Section B.2 of the Appendix we prove the following.

Theorem 2.5

Under the assumptions listed above, we have

where J is given by (2.19).

Remark 2.6

The asymptotic current J is the same as in the stationary state (cf. [14]) and it does not depend on the initial configuration.

Remark 2.7

Analogously to the stationary case, if the period \(\theta \) and the strength of the force are rescaled with n in such a way that

then Theorem 2.5 still holds, but with a different value of the current. Namely, J is given by (2.19) with \(\mathcal {Q}(\ell )\) defined by (2.22). Formula (2.22) corresponds to (2.20) with \(\theta = \infty \). If \(b-a\not =1/2\), the macroscopic current \(nJ_n\) is not of order O(1), which leads to an anomalous behavior of the heat conductivity of the chain (it vanishes if \(b-a>1/2\) and becomes unbounded if \(b-a<1/2\)). The assumption \(a\leqslant 0\) guarantees that the force acting on the system does not become infinite as \(n\rightarrow +\infty \).

Remark 2.8

Using contour integration it is possible to calculate the quantities appearing in (2.20) and (2.22), see [15, Appendix D]. In the case of (2.20) we obtain

with

Furthermore, in the case of (2.22) we have

2.2.2 Macroscopic Energy Profile

Let \(\nu _{T_-} (\textrm{d}{\textbf{q}},\textrm{d}{\textbf{p}})\) be defined as the product Gaussian measure on \(\Omega _n\) (see (2.1)) of zero average and variance \(T_->0\) given by

where Z is the normalizing constant. Let \(f({\textbf{q}},{\textbf{p}})\) be a probability density with respect to \(\nu _{T_-}\). We denote the relative entropy

We assume now that the initial distribution \(\mu _n\) has density \(f_n(0, {\textbf{q}},{\textbf{p}})\), with respect to \(\nu _{T_-}\), such that there exists a constant \(C>0\) for which

For example, it can be verified that local Gibbs measures of the form

with \(\inf _{x,n} T_{x,n} >0\) satisfy (2.33). At this point we only remark that, due to the entropy inequality (see the proof of Corollary 3.2 below), assumption (2.33) implies

Furthermore, since the Hamiltonian \(\mathcal {H}_n(\cdot ,\cdot )\) is a convex function, by Jensen's inequality

so (2.28) is satisfied with \(\delta =1\).
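The Jensen step uses only that \(\mathcal {H}_n\) is a positive semidefinite quadratic form, hence convex, so that \(\mathcal {H}_n(\overline{{\textbf{q}}}_n,\overline{{\textbf{p}}}_n)\leqslant {\mathbb E}_{\mu _n}[\mathcal {H}_n]\). The sketch below checks this numerically for an empirical measure of random configurations; since Jensen's inequality holds for any probability measure, including an empirical one, the gap must be non-negative up to rounding. It assumes the nearest-neighbour energy with pinning, \(\mathcal {E}_x = p_x^2/2+(q_x-q_{x-1})^2/2+\omega _0^2q_x^2/2\) with \(q_{-1}:=q_0\); the names `hamiltonian` and `jensen_gap` are hypothetical.

```python
import random


def hamiltonian(q, p, omega0):
    """H_n = sum_x p_x^2/2 + (q_x - q_{x-1})^2/2 + omega0^2 q_x^2/2, with q_{-1} := q_0."""
    h = 0.0
    for x in range(len(q)):
        prev = q[x - 1] if x > 0 else q[0]
        h += 0.5 * p[x] ** 2 + 0.5 * (q[x] - prev) ** 2 + 0.5 * omega0**2 * q[x] ** 2
    return h


def jensen_gap(samples, omega0):
    """E[H_n] - H_n(mean configuration) for the empirical measure of (q, p) samples."""
    m = len(samples)
    size = len(samples[0][0])
    q_bar = [sum(s[0][x] for s in samples) / m for x in range(size)]
    p_bar = [sum(s[1][x] for s in samples) / m for x in range(size)]
    mean_h = sum(hamiltonian(q, p, omega0) for q, p in samples) / m
    return mean_h - hamiltonian(q_bar, p_bar, omega0)


# Hypothetical random configurations with a non-zero mean displacement.
rng = random.Random(0)
samples = [
    ([rng.gauss(1.0, 0.5) for _ in range(6)], [rng.gauss(0.0, 1.0) for _ in range(6)])
    for _ in range(200)
]
gap = jensen_gap(samples, omega0=1.0)  # non-negative by convexity of H_n
```

The gap equals the average energy of the fluctuations around the mean, which is why only the energy of the averages, and not the full energy, enters (2.28).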

Denote by \(\mathcal {M}_{\textrm{fin}}([0,1])\) (resp. \(\mathcal {M}_{+}([0,1])\)) the space of finite signed (resp. positive) Borel measures on the interval [0, 1], endowed with the weak topology. Before formulating the main result we introduce the notion of a measure-valued solution of the following initial-boundary value problem

Here J and D are defined by (2.19) and (2.25), respectively and \(T_0\in \mathcal {M}_{\textrm{fin}}([0,1])\).

Definition 2.9

We say that a function \(T:[0,+\infty )\rightarrow \mathcal {M}_{\textrm{fin}}([0,1])\) is a weak (measure-valued) solution of (2.35) if it belongs to \(C\Big ([0,+\infty ); \mathcal {M}_{\textrm{fin}}([0,1])\Big )\) and for any \(\varphi \in C^2[0,1]\) such that \(\varphi (0)=\varphi '(1)=0\) we have

The proof of the uniqueness of the solution of (2.36) is quite routine; for the sake of completeness we present it in Appendix E.

Theorem 2.10

Suppose that the initial configurations \((\mu _n)\) satisfy (2.33). Assume furthermore that there exists \(T_0\in \mathcal {M}_+([0,1])\) such that

for any function \(\varphi \in C[0,1]\), the space of continuous functions on [0, 1]. Here \(\mathcal {E}_x(t) = {\mathcal E}_x({\textbf{q}}(t),{\textbf{p}}(t))\). Then,

Here \(T(t, \textrm{d}u)\) is the unique weak solution of (2.35), with the initial data given by measure \(T_0\) in (2.37).

Remark 2.11

The initial energy \(\mathcal {E}_x(0)\) can be represented as the sum \(\mathcal {E}_x^{\textrm{th}}+\mathcal {E}_x^{\textrm{mech}}\) of the thermal energy

and the mechanical energy

Here \(q'_x = q_x - {\overline{q}}_x\) and \(p'_x = p_x - \overline{p}_x\), with \({\overline{p}}_x:= \int _{\Omega _n}p_x \mu _n(\textrm{d}{\textbf{q}},\textrm{d}{\textbf{p}})\) and \({\overline{q}}_x:= \int _{\Omega _n}q_x \mu _n(\textrm{d}{\textbf{q}},\textrm{d}{\textbf{p}})\).

If \(\mathcal {E}_x^{\textrm{mech}}\not =0\) and satisfies (2.28) with \(\delta =1\), then the initial measure \(T_0(du)\) is the macroscopic distribution of the total energy and not of the temperature, the latter being understood as the thermal energy. Nevertheless, as a consequence of Proposition B.1, at any positive macroscopic time the entire mechanical energy has already been transformed into thermal energy, so that \(T(t, \textrm{d}u)\) for \(t>0\) can be seen as the macroscopic temperature distribution. The situation is different for the unpinned dynamics (\(\omega _0 = 0\)), where the transfer of mechanical energy to thermal energy happens slowly at macroscopic times (see [13]).

Remark 2.12

Concerning Theorem 2.10, a similar proof applies in the case where, in the absence of the periodic forcing, two Langevin heat baths at temperatures \(T_-\) and \(T_+\) are placed at the boundaries. In this case the macroscopic equation is the same, but with boundary conditions \(T(t,0) = T_-\) and \(T (t,1) =T_+\).

Also, in the absence of any heat bath, we could apply two periodic forces \(\mathcal {F}_n^{(0)}(t)\) and \(\mathcal {F}_n^{(1)}(t)\) at the left and right boundary, respectively. They generate two incoming energy currents, \(J^{(0)}>0\) on the left and \(J^{(1)}<0\) on the right, given by the corresponding formula (2.19), and we obtain the same equation but with boundary conditions \(\partial _u T (t,0) = -\frac{4\gamma J^{(0)}}{D}\) and \(\partial _u T (t,1) = -\frac{4\gamma J^{(1)}}{D}\). Of course, in this case the total energy increases in time and periodic stationary states do not exist.

If both a heat bath and a periodic force are present on the same side, say at the right endpoint, then the resulting macroscopic boundary condition is \(T (t,1) =T_+\), where \(T_+\) is the temperature of that bath; i.e. the periodic forcing is ineffective on the macroscopic level, and all the energy generated by its work flows into the heat bath. It would be interesting to investigate what happens when the amplitude of the forcing is larger than considered here (\(-1/2<a\leqslant 0\) in (2.30)); at present it is not clear to us what occurs in this case.

Remark 2.13

If the initial data \(T_0\) is \(C^1\) smooth and satisfies the boundary condition in (2.35), then the initial-boundary value problem (2.35) has a unique strong solution T(t, u) that belongs to the intersection of the spaces \(C\big ([0,+\infty )\times [0,1]\big )\) and \( C^{1,2}\big ((0,+\infty )\times (0,1)\big )\), the space of functions that are continuously differentiable once in the first variable and twice in the second, see e.g. [8, Corollary 5.3.2, p.147]. This solution then coincides with the unique weak solution in the sense of Definition 2.9.
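For smooth data the strong solution can also be approximated numerically. The sketch below is an explicit finite-difference scheme for a problem of the type (2.35), written under assumptions: we take the equation as \(\partial _t T = \kappa \,\partial _u^2 T\) with a constant diffusivity \(\kappa >0\) (the boundary conditions quoted in Remark 2.12 suggest \(\kappa = D/(4\gamma )\)), the Dirichlet condition \(T(t,0)=T_-\), and the flux condition \(\kappa \,\partial _u T(t,1) = -J\), with currents counted positive from left to right as in Remark 2.12. The function name `solve_heat` and all numerical values are hypothetical. The right boundary uses a ghost node, so the linear stationary profile \(T_- - (J/\kappa )u\) is an exact fixed point of the scheme.

```python
import math


def solve_heat(T0, T_minus, J, kappa, t_final, N=20):
    """Explicit finite differences for T_t = kappa * T_uu on [0, 1] with
    T(t, 0) = T_minus and kappa * T_u(t, 1) = -J (assumed sign convention:
    currents positive from left to right). Returns grid values at t_final."""
    h = 1.0 / N
    steps = max(1, math.ceil(t_final / (0.4 * h * h / kappa)))  # keeps r <= 0.4 < 1/2
    dt = t_final / steps
    r = kappa * dt / (h * h)
    T = [T0(i * h) for i in range(N + 1)]
    T[0] = T_minus  # Dirichlet condition at the thermostatted end
    for _ in range(steps):
        ghost = T[N - 1] - 2.0 * h * J / kappa  # ghost node enforcing the flux condition
        new = T[:]
        for i in range(1, N):
            new[i] = T[i] + r * (T[i + 1] - 2.0 * T[i] + T[i - 1])
        new[N] = T[N] + r * (ghost - 2.0 * T[N] + T[N - 1])
        T = new
    return T


# Hypothetical parameters: start from the bath temperature everywhere.
T_minus, J, kappa = 1.0, -0.5, 1.0
T = solve_heat(lambda u: T_minus, T_minus, J, kappa, t_final=5.0)
stationary = [T_minus - (J / kappa) * i / 20 for i in range(21)]
```

With \(J=-0.5\) (an incoming current at \(u=1\) in this sign convention) the computed profile relaxes to the increasing linear profile \(T_-+0.5\,u/\kappa \), in line with the convergence to the stationary profile mentioned in the Introduction.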

Remark 2.14

In the proof of Theorem 2.10 we need to show a result about the equipartition of energy (cf. Proposition 4.1). As a consequence the limit profile of the energy equals the limit profile of the temperature, i.e. we have

for any compactly supported test function.

3 Entropy, Energy and Currents Bounds

We first prove that the initial entropy bound (2.33) holds for all times.

Proposition 3.1

Suppose that the law of the initial configuration admits a density \( f_n(0, {\textbf{q}}, {\textbf{p}})\) w.r.t. the Gibbs measure \(\nu _{T_-}\) that satisfies (2.33). Then, for any \(t>0\) there exists \( f_n(t, {\textbf{q}}, {\textbf{p}})\), the density of the law of the configuration \(\big ({\textbf{q}}(t), {\textbf{p}}(t)\big )\). In addition, for any t there exists a constant C independent of n such that

Proof

For simplicity, we present here a proof under the additional assumption that \( f_n(t,{\textbf{q}}, {\textbf{p}})\) is a smooth function such that \(\mu _t(\textrm{d}{\textbf{q}},\textrm{d}{\textbf{p}})= f_n(t,{\textbf{q}}, {\textbf{p}}) \textrm{d}{\textbf{q}}\textrm{d}{\textbf{p}}\) is the solution of the forward equation (2.15). The general case is treated in Appendix F. Using (2.9) for the generator \(\mathcal {G}_t\) we conclude that

with

We have \( {\textrm{I}}_n\leqslant 0\), because \(\mathcal {S}_{\textrm{flip}}\) and \(\mathcal {S}_{-}\) are symmetric, non-positive operators with respect to the measure \(\nu _{T_-}\).

The only positive contribution comes from the second term where the boundary work defined by (2.27) appears:

where \(\textrm{d}\mu _0:=f_n(0) \textrm{d}\nu _{T_-}\). Therefore

The conclusion of the proposition then follows from a direct application of (2.33) and Theorem 2.5. \(\square \)

To abbreviate the notation, we omit the subscript of the expectation sign that indicates the initial condition.

Corollary 3.2

(Energy bound) For any \(t_* \geqslant 0\) we have

Proof

It follows from the entropy inequality, see e.g. [9, p. 338], that for \(\alpha >0\) small enough we can find \(C_\alpha >0\) such that

\(\square \)
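For orientation, here is a sketch of how the entropy inequality produces an energy bound linear in n. It assumes, as the surrounding text indicates, that the relative entropy of the law at the relevant times with respect to \(\nu _{T_-}\) is at most \(Cn\) (Proposition 3.1), and that \(\nu _{T_-}(\textrm{d}{\textbf{q}},\textrm{d}{\textbf{p}}) = Z^{-1}e^{-\mathcal {H}_n/T_-}\textrm{d}{\textbf{q}}\textrm{d}{\textbf{p}}\); the exact form of (3.2) is not reproduced here.

```latex
% Entropy inequality: for a probability density f w.r.t. \nu_{T_-} and 0 < \alpha < 1/T_-,
\[
\int \mathcal H_n\, f\,\mathrm d\nu_{T_-}
 \leqslant \frac{1}{\alpha}\Big(\int f\log f\,\mathrm d\nu_{T_-}
 + \log\int e^{\alpha\mathcal H_n}\,\mathrm d\nu_{T_-}\Big).
\]
% Since \omega_0>0, \mathcal H_n is a positive definite quadratic form in 2(n+1) variables:
\[
\int e^{\alpha\mathcal H_n}\,\mathrm d\nu_{T_-}
 = \frac{\int e^{-(T_-^{-1}-\alpha)\mathcal H_n}\,\mathrm d\mathbf q\,\mathrm d\mathbf p}
        {\int e^{-T_-^{-1}\mathcal H_n}\,\mathrm d\mathbf q\,\mathrm d\mathbf p}
 = (1-\alpha T_-)^{-(n+1)} .
\]
% Hence a relative entropy bound \int f\log f\,\mathrm d\nu_{T_-}\leqslant Cn gives
\[
\int \mathcal H_n\, f\,\mathrm d\nu_{T_-}
 \leqslant \frac{1}{\alpha}\Big(Cn+(n+1)\log\frac{1}{1-\alpha T_-}\Big)\leqslant C_\alpha\, n .
\]
```

The only model-specific input is the Gaussian integral in the middle line, which is why any \(\alpha <1/T_-\) works.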

From Theorem 2.5 and Corollary 3.2 we immediately conclude the following.

Corollary 3.3

(Current size) For any \(t_* \geqslant 0\) there exists \(C>0\) such that

In particular, for any \(t>0\) there exists \(C>0\) such that

Proof

By the local conservation of energy

Therefore

and bound (3.4) follows directly from estimates (2.29) and (3.2). Estimate (3.5) is a consequence of the definition of \(j_{-1,0}\) (see (2.14)) and (3.4). \(\square \)
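The energy conservation step is a telescoping sum. As a sketch, assume the local conservation law takes the form \(\frac{\textrm{d}}{\textrm{d}t}{\mathbb E}\,\mathcal {E}_x(t) = {\mathbb E}\,j_{x-1,x}(t) - {\mathbb E}\,j_{x,x+1}(t)\) for \(x=0,\ldots ,n\), where \(j_{-1,0}\) and \(j_{n,n+1}\) are the boundary currents, and write \(\mathcal {H}_n(t)\) for the total energy at time t. Then

```latex
\[
\frac{\mathrm d}{\mathrm dt}\,\mathbb E\,\mathcal H_n(t)
 =\sum_{x=0}^{n}\Big(\mathbb E\,j_{x-1,x}(t)-\mathbb E\,j_{x,x+1}(t)\Big)
 =\mathbb E\,j_{-1,0}(t)-\mathbb E\,j_{n,n+1}(t),
\]
\[
\mathbb E\int_0^{\tau} j_{-1,0}(s)\,\mathrm ds
 =\mathbb E\,\mathcal H_n(\tau)-\mathbb E\,\mathcal H_n(0)
 +\mathbb E\int_0^{\tau} j_{n,n+1}(s)\,\mathrm ds .
\]
```

With \(\tau =n^2t\), the two energy terms are of order n by Corollary 3.2, and the work term is of order n because the boundary current is of order \(1/n\) (Theorem 2.5); hence the time-integrated current through the left boundary is of order n as well.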

4 Equipartition of Energy and Fluctuation-Dissipation Relations

4.1 Equipartition of the Energy

In the present section we show the equipartition property of the energy.

Proposition 4.1

Suppose that \(\varphi \in {C^1}[0,1]\) is such that \(\textrm{supp}\,\varphi \subset (0,1)\). Then,

Proof

After a simple calculation we obtain the following fluctuation-dissipation relation: for \(x= 1, \dots , n-1\),

where the discrete gradient \(\nabla \) and its adjoint \(\nabla ^\star \) are defined in (A.1) below.

Therefore,

After summing up against the test function \(\varphi \) (that has compact support strictly contained in (0, 1)) and using the energy bound (3.2) we conclude (4.1). \(\square \)
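The fluctuation-dissipation identity behind Proposition 4.1 can be seen from a short generator computation. As a sketch, assume that for \(1\leqslant x\leqslant n-1\) the generator acts on functions of \((q_x,p_x)\) through the Hamiltonian part, \(\dot q_x = p_x\), \(\dot p_x = \Delta q_x-\omega _0^2q_x\), and through velocity reversals at rate \(\gamma \), which send \(p_x\mapsto -p_x\) (so they change the sign of \(q_xp_x\) and leave \(q_x^2\) unchanged); the thermostat and the forcing do not act on these sites. Then

```latex
\[
\mathcal G_t (q_xp_x) = p_x^2 + q_x\Delta q_x - \omega_0^2 q_x^2 - 2\gamma\, q_xp_x ,
\qquad
\mathcal G_t (q_x^2) = 2\,q_xp_x ,
\]
\[
\text{hence}\qquad
p_x^2-\big(\omega_0^2 q_x^2 - q_x\Delta q_x\big) = \mathcal G_t\big(q_xp_x+\gamma\, q_x^2\big),
\qquad 1\leqslant x\leqslant n-1 .
\]
```

A summation by parts gives \(-\sum _x\varphi _x q_x\Delta q_x = \sum _x\varphi _x(q_x-q_{x-1})^2 + \sum _x(\varphi _x-\varphi _{x-1})q_{x-1}(q_x-q_{x-1})\) for \(\varphi \) supported away from the boundary; with \(\varphi _x = \varphi (x/(n+1))\) and \(\varphi \in C^1\), the second sum is of order \(n^{-1}\) times the total energy. The time integral of the generator term reduces to its values at the initial and final times, which the energy bound (3.2) controls. This is how twice the kinetic energy, \(p_x^2\), and twice the potential energy, \((q_x-q_{x-1})^2+\omega _0^2q_x^2\), end up with the same limit profile.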

4.2 Fluctuation-Dissipation Relation

In analogy with [14, Section 5.1], define

with the convention that \(q_{-1} = q_{0}\) and \(q_{n+1}=q_{n}\). Then

5 Local Equilibrium and the Proof of Theorem 2.10

The fundamental ingredients in the proof of Theorem 2.10 are the identification of the work done at the boundary, given by Theorem 2.5, the equipartition of energy and the fluctuation-dissipation relation contained in Sect. 4, and the following local equilibrium results. In the bulk we have the following:

Proposition 5.1

Suppose that \(\varphi \in C[0,1]\) is such that \(\textrm{supp}\,\varphi \subset (0,1)\). Then

for \(\ell =0,1,2\). Here \(G_{\omega _0}(\ell )\) is the Green’s function of \(-\Delta _{{\mathbb Z}} + \omega ^2_0\), where \(\Delta _{{\mathbb Z}}\) is the lattice laplacian, see (A.2).
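The Green's function and the identity \(2G_{\omega _0}(1)-G_{\omega _0}(0)-G_{\omega _0}(2)=-\omega _0^2G_{\omega _0}(1)\) used below are easy to check numerically. The sketch assumes the Fourier representation \(G_{\omega _0}(\ell )=\int _0^1 \cos (2\pi k\ell )\,(\omega _0^2+4\sin ^2(\pi k))^{-1}\textrm{d}k\) and the equivalent closed form \(G_{\omega _0}(\ell )=z^{|\ell |}/(\omega _0\sqrt{\omega _0^2+4})\) with \(z=1+\omega _0^2/2-\omega _0\sqrt{\omega _0^2+4}/2\); both follow from solving \((-\Delta _{\mathbb Z}+\omega _0^2)G=\delta _0\), but the precise way (A.2) is written is not reproduced here. Function names are hypothetical. The identity is simply the equation \((-\Delta _{\mathbb Z}+\omega _0^2)G_{\omega _0}(\ell )=0\) at \(\ell =1\).

```python
import math


def green_closed_form(ell, omega0):
    """Green's function of -Delta_Z + omega0^2 on Z, via the decaying solution z^{|ell|}."""
    root = omega0 * math.sqrt(omega0**2 + 4.0)
    z = 1.0 + 0.5 * omega0**2 - 0.5 * root  # root of z + 1/z = 2 + omega0^2 with 0 < z < 1
    return z ** abs(ell) / root


def green_fourier(ell, omega0, m=4000):
    """Midpoint rule for int_0^1 cos(2 pi k ell) / (omega0^2 + 4 sin^2(pi k)) dk.
    The integrand is smooth and 1-periodic, so the rule converges very fast."""
    h = 1.0 / m
    total = 0.0
    for i in range(m):
        k = (i + 0.5) * h
        total += math.cos(2.0 * math.pi * k * ell) / (omega0**2 + 4.0 * math.sin(math.pi * k) ** 2)
    return total * h


omega0 = 1.0
G = [green_closed_form(ell, omega0) for ell in range(3)]
identity_residual = 2 * G[1] - G[0] - G[2] + omega0**2 * G[1]  # should vanish
```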

At the left boundary the situation is slightly different, due to the convention \(q_{-1}=q_0\), and we have

Proposition 5.2

We have

The proofs of Propositions 5.1 and 5.2 require the analysis of the evolution of the covariance matrix of the position and momenta vector and will be done in Appendix D. As a consequence, recalling definition (4.4), the bound (3.5) and the identity \(2G_{\omega _0} (1)-G_{\omega _0} (0) -G_{\omega _0} (2) =-\omega _0^2G_{\omega _0} (1)\) we have the following corollary

Corollary 5.3

For any \(t>0\) and \(\varphi \in C[0,1]\) such that \(\textrm{supp}\,\varphi \subset (0,1)\) we have

and

Here D is defined in (2.25).

5.1 Proof of Theorem 2.10

Consider the subset \(\mathcal {M}_{+,E_*}([0,1])\) of \(\mathcal {M}_+([0,1])\) (the space of all positive, finite Borel measures on [0, 1]) consisting of measures with total mass less than or equal to \(E_*\). It is compact in the topology of weak convergence of measures. In addition, the topology is metrizable when restricted to this set.

For any \(t\in [0,t_*]\) and \(\varphi \in C[0,1]\) define

for any \(\varphi \in C[0,1]\). Since the momentum flips do not affect the energies \({\mathcal {E}}_x\), we have \(\xi _n \in C\left( [0,t_*], \mathcal {M}_+([0,1])\right) \). As a consequence of Corollary 3.2, for any \(t_*>0\) the total energy is bounded by \(E_* = E(t_*)\) (see (3.2)), so that \(\xi _n \in C\left( [0,t_*], \mathcal {M}_{+,E_*}([0,1])\right) \). The latter space is endowed with the topology of uniform convergence.

5.2 Compactness

Since \(\mathcal {M}_{+,E_*}([0,1])\) is compact, in order to show that \((\xi _n)\) is compact we only need to control the modulus of continuity in time of \(\xi _n(t, \varphi )\) for any \(\varphi \in C^1[0,1]\), see e.g. [12, p. 234]. This will be a consequence of the following proposition.

Proposition 5.4

The proof of Proposition 5.4 is postponed until Sect. 5.4; we first use it to proceed with the limit identification argument.

5.3 Limit Identification

Consider a smooth test function \(\varphi \in C^2[0,1]\) such that

In what follows we use the following notation. For a given function \(\varphi :[0,1]\rightarrow {\mathbb R}\) and \(n=1,2,\ldots \) we define discrete approximations of the function itself and of its gradient, respectively by

We use the convention \(\varphi (-\tfrac{1}{n+1})=\varphi (0)\). Let \(0<t_* <+\infty \) be fixed. In what follows we show that, for any \(t\in [0,t_*]\)

Here, and in what follows, \(o_n\) denotes a quantity satisfying \(\lim _{n\rightarrow +\infty }o_n=0\). Thus any limit point of \(\big (\xi _n(t)\big )\) has to be the unique weak solution of (2.36), which proves Theorem 2.10.

By an approximation argument we can restrict ourselves to the case when \(\textrm{supp}\,\varphi ''\subset (0,1)\). Then as in (5.14) we have

By Theorem 2.5 the last term converges to \(- \varphi (1) J t\). On the other hand from (4.5) we have

where

It is easy to see from Corollary 3.2 that \( \textrm{I}_{n,2} ={\overline{o}}_n(t).\) Here the symbol \({\overline{o}}_n(t)\) stands for a quantity that satisfies

Using the fact that \(\varphi '(1)=0\) and the estimate (B.15), we also conclude that \(\textrm{I}_{n,3} ={\overline{o}}_n(t)\). Thanks to Corollary 3.2 and (5.7) we have

Since \({\textrm{supp}}\,\varphi ''\subset (0,1)\), by Corollary 5.3 and the equipartition property (Proposition 4.1) for a fixed \(t\in [0,t_*]\) we have

Concluding, we have obtained

Thus (5.9) follows. \(\square \)

5.4 Proof of Proposition 5.4

From the calculation made in (5.10)–(5.13) we conclude that for any function \(\varphi \in C^2[0,1]\) satisfying (5.7) we have

for any \( 0\leqslant s<t \leqslant t_*\) and (5.6) follows immediately. A density argument completes the proof.

Data Availability

Data sharing is not applicable to this article as no datasets were generated or analysed during the current study.

References

Basile, G., Bernardin, C., Jara, M., Komorowski, T., Olla, S.: Thermal conductivity in harmonic lattices with random collisions. In: Lepri, S. (ed.) Thermal Transport in Low Dimensions: From Statistical Physics to Nanoscale Heat Transfer. Lecture Notes in Physics, vol. 921. Springer, Berlin (2016)

Bernardin, C., Kannan, V., Lebowitz, J.L., Lukkarinen, J.: Non-equilibrium stationary states of harmonic chains with bulk noises. Eur. Phys. J. B 84, 685 (2011)

Bernardin, C., Kannan, V., Lebowitz, J.L., Lukkarinen, J.: Harmonic systems with bulk noises. J. Stat. Phys. 146, 800 (2012)

Bernardin, C., Olla, S.: Fourier law and fluctuations for a microscopic model of heat conduction. J. Stat. Phys. 118(3/4), 271–289 (2005). https://doi.org/10.1007/s10955-005-7578-9

Bernardin, C., Olla, S.: Transport properties of a chain of anharmonic oscillators with random flip of velocities. J. Stat. Phys. 145, 1224–1255 (2011). https://doi.org/10.1007/s10955-011-0385-6

Bonetto, F., Lebowitz, J.L., Rey-Bellet, L.: Fourier’s law: A challenge to theorists. In: Fokas, A., Grigoryan, A., Kibble, T., Zegarlinski, B. (eds.) Mathematical Physics, pp. 128–150. Imperial College Press, London (2000)

Bonetto, F., Lebowitz, J.L., Lukkarinen, J.: Fourier’s law for a harmonic crystal with self-consistent stochastic reservoirs. J. Stat. Phys. 116, 783–813 (2004)

Friedman, A.: Partial Differential Equations of Parabolic Type. Prentice-Hall Inc, Englewood Cliffs, NJ (1964)

Kipnis, C., Landim, C.: Scaling Limits of Interacting Particle Systems. Grundlehren der Mathematischen Wissenschaften, vol. 320. Springer, Berlin (1999)

Johansson, M., Kopidakis, G., Lepri, S., Aubry, S.: Transmission thresholds in time-periodically driven non-linear disordered systems. EPL 86, 10009 (2009)

Geniet, F., Leon, J.: Energy transmission in the forbidden band gap of a nonlinear chain. Phys. Rev. Lett. 89, 134102 (2002). https://doi.org/10.1103/PhysRevLett.89.134102

Kelley, J.L.: General Topology. Springer-Verlag (1991). ISBN 978-0-387-90125-1

Komorowski, T., Olla, S., Simon, M.: Hydrodynamic limit for a chain with thermal and mechanical boundary forces. Electron. J. Probab. 26, 1–49 (2021). https://doi.org/10.1214/21-EJP581

Komorowski, T., Lebowitz, J.L., Olla, S.: Heat flow in a periodically forced, thermostatted chain. Commun. Math. Phys. (2023). https://doi.org/10.1007/s00220-023-04654-4

Komorowski, T., Lebowitz, J.L., Olla, S.: Heat flow in a periodically forced, thermostatted chain - with internet supplement. arXiv:2205.03839

Komorowski, T., Lebowitz, J.L., Olla, S., Simon, M.: On the Conversion of Work into Heat: Microscopic Models and Macroscopic Equations (2022). https://doi.org/10.48550/arXiv.2212.00093

Ray, K.: Green’s Function on Lattices. arXiv:1409.7806

Lukkarinen, J.: Thermalization in harmonic particle chains with velocity flips. J. Stat. Phys. 155(6), 1143–1177 (2014)

Rieder, Z., Lebowitz, J.L., Lieb, E.: Properties of harmonic crystal in a stationary non-equilibrium state. J. Math. Phys. 8, 1073–1078 (1967)

Prem, A., Bulchandani, V.B., Sondhi, S.L.: Dynamics and transport in the boundary-driven dissipative Klein-Gordon chain. arXiv:2209.03977v1 (2022)

Acknowledgements

The authors would like to thank both anonymous referees for their careful reading of the manuscript and for many helpful remarks that led to improvements of the paper.

Funding

The work of J.L.L. was supported in part by the A.F.O.S.R. He thanks the Institute for Advanced Studies for its hospitality. T.K. acknowledges the support of the NCN grant 2020/37/B/ST1/00426.


Ethics declarations

Conflict of interest

The authors have no conflicts of interest to declare that are relevant to the content of this article.

Additional information

Communicated by Herbert Spohn.


Appendices

Appendix A: The Discrete Laplacian

A.1: Discrete Gradient and Laplacian

Recall that the lattice gradient, its adjoint and the laplacian of any \(f:{\mathbb Z}\rightarrow {\mathbb R}\) are defined as \(\nabla f_x=f_{x+1}-f_x\), \(\nabla ^\star f_x=f_{x-1}-f_x\) and \(\Delta _{\mathbb Z}f_x=-\nabla ^\star \nabla f_x=f_{x+1}+ f_{x-1}-2 f_{x}\), \(x\in {\mathbb Z}\), respectively.
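The identity \(\Delta _{\mathbb Z}=-\nabla ^\star \nabla \) can be checked on finitely supported sequences. The Python sketch below assumes the forward-difference convention \(\nabla f_x=f_{x+1}-f_x\), whose \(\ell ^2({\mathbb Z})\)-adjoint is \(\nabla ^\star f_x=f_{x-1}-f_x\); the helper names are illustrative and not taken from the paper.

```python
import random

# Finitely supported sequences on Z are stored as dicts {x: f_x}; absent keys mean f_x = 0.


def grad(f):
    """Forward difference (assumed convention): (grad f)_x = f_{x+1} - f_x."""
    support = set(f) | {x - 1 for x in f}
    return {x: f.get(x + 1, 0.0) - f.get(x, 0.0) for x in support}


def grad_adj(g):
    """l^2(Z)-adjoint of grad: (grad* g)_x = g_{x-1} - g_x."""
    support = set(g) | {x + 1 for x in g}
    return {x: g.get(x - 1, 0.0) - g.get(x, 0.0) for x in support}


def laplacian(f):
    """Lattice Laplacian: (Delta f)_x = f_{x+1} + f_{x-1} - 2 f_x."""
    support = set(f) | {x - 1 for x in f} | {x + 1 for x in f}
    return {x: f.get(x + 1, 0.0) + f.get(x - 1, 0.0) - 2.0 * f.get(x, 0.0) for x in support}


def inner(f, g):
    """l^2(Z) inner product of two finitely supported sequences."""
    return sum(value * g.get(x, 0.0) for x, value in f.items())


random.seed(0)
f = {x: random.uniform(-1.0, 1.0) for x in range(-5, 6)}
g = {x: random.uniform(-1.0, 1.0) for x in range(-3, 8)}

# Adjointness <grad f, g> = <f, grad* g>, and Delta = -grad* grad site by site.
adj_gap = abs(inner(grad(f), g) - inner(f, grad_adj(g)))
minus_lap = grad_adj(grad(f))
lap = laplacian(f)
lap_gap = max(abs(lap[x] + minus_lap.get(x, 0.0)) for x in lap)
print(adj_gap, lap_gap)  # both at round-off level
```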

Suppose that \(\omega _0>0\). Consider the Green’s function of \(-\Delta _{{\mathbb Z}} + \omega ^2_0\), where \(\Delta _{{\mathbb Z}}\) is the laplacian on the integer lattice \({\mathbb Z}\). It is given by, see e.g. [17, (27)],
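For reference, the ansatz \(G(x)=C r^{|x|}\) with \(0<r<1\) gives \(r+r^{-1}=2+\omega _0^2\) away from the origin and \(C=\big (\omega _0\sqrt{\omega _0^2+4}\big )^{-1}\) from the equation at \(x=0\), so that \(r=1+\omega _0^2/2-\omega _0\sqrt{1+\omega _0^2/4}\). This closed form is our own elementary derivation and may be written differently in [17, (27)]; the Python sketch below only checks the defining equation numerically, and its function names are illustrative.

```python
import math


def green(x, omega0):
    """Decaying solution of (-Delta_Z + omega0^2) G = delta_0 on Z (derived closed form)."""
    root = math.sqrt(omega0 ** 2 + 4.0)
    r = (2.0 + omega0 ** 2 - omega0 * root) / 2.0  # root of r + 1/r = 2 + omega0^2 lying in (0, 1)
    return r ** abs(x) / (omega0 * root)


def residual(x, omega0):
    """((-Delta_Z + omega0^2) G)(x) - delta_{x,0}; vanishes identically for the exact G."""
    lap = green(x + 1, omega0) + green(x - 1, omega0) - 2.0 * green(x, omega0)
    return -lap + omega0 ** 2 * green(x, omega0) - (1.0 if x == 0 else 0.0)


omega0 = 0.7
worst = max(abs(residual(x, omega0)) for x in range(-20, 21))
print(worst)  # round-off level
```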

A.2: Discrete Neumann Laplacian \(-\Delta \)

Let \(\lambda _j\) and \(\psi _j\), \(j=0,\ldots ,n\) be the respective eigenvalues and eigenfunctions for the discrete Neumann laplacian \(-\Delta \) defined in (2.8). They are given by

The eigenvalues of \(\omega _0^2-\Delta \) are given by
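Assuming that (2.8) is the free-end convention \(\Delta f_x=f_{x+1}+f_{x-1}-2f_x\), \(x=0,\ldots ,n\), with \(f_{-1}:=f_0\) and \(f_{n+1}:=f_n\), the eigenpairs are those of the discrete cosine transform: \(\lambda _j=4\sin ^2\big (\frac{\pi j}{2(n+1)}\big )\), \(\psi _j(x)=\big (\frac{2-\delta _{j,0}}{n+1}\big )^{1/2}\cos \big (\frac{\pi j(2x+1)}{2(n+1)}\big )\), and \(\mu _j=\omega _0^2+\lambda _j\in [\omega _0^2,4+\omega _0^2)\), in line with the interval used in Section C.2. The Python sketch below checks \(-\Delta \psi _j=\lambda _j\psi _j\) under this assumed convention; the names are illustrative.

```python
import math


def minus_neumann_laplacian(f):
    """(-Delta f)_x for x = 0..n, with the assumed reflecting ends f_{-1} = f_0, f_{n+1} = f_n."""
    n = len(f) - 1
    ext = [f[0]] + list(f) + [f[n]]  # ext[x + 1] == f_x for x = -1, ..., n + 1
    return [2.0 * ext[x + 1] - ext[x] - ext[x + 2] for x in range(n + 1)]


def eigenpair(j, n):
    """Candidate eigenvalue lambda_j and normalised eigenvector psi_j of -Delta."""
    theta = math.pi * j / (2.0 * (n + 1))
    lam = 4.0 * math.sin(theta) ** 2
    norm = math.sqrt((2.0 - (1.0 if j == 0 else 0.0)) / (n + 1))
    psi = [norm * math.cos(theta * (2 * x + 1)) for x in range(n + 1)]
    return lam, psi


n, omega0 = 12, 0.7
max_err = 0.0
mus = []
for j in range(n + 1):
    lam, psi = eigenpair(j, n)
    lhs = minus_neumann_laplacian(psi)
    max_err = max(max_err, max(abs(a - lam * b) for a, b in zip(lhs, psi)))
    mus.append(omega0 ** 2 + lam)  # eigenvalue mu_j of omega0^2 - Delta
print(max_err, min(mus), max(mus))
```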

Appendix B: The Dynamics of the Means

Let \(\mu \) be a Borel probability measure on \({\mathbb R}^{2(n+1)}\) and let \((\overline{{\textbf{q}}}, \overline{{\textbf{p}}})\) be the vector of the \(\mu \)-averages of the initial data. In the following we denote by \( \left( \begin{array}{c}\overline{{\textbf{q}}}(t)\\ \overline{{\textbf{p}}}(t) \end{array}\right) \) the vector of the means of positions and momenta, with components \({\overline{q}}_x(t) = {\mathbb {E}}_{{\textbf{q}},{\textbf{p}}}(q_x(t))\) and \({\overline{p}}_x(t) = {\mathbb {E}}_{{\textbf{q}},{\textbf{p}}}(p_x(t))\). Let \(\textbf{e}_{2(n+1)}\) be the \(2(n+1)\)-dimensional vector defined by \(\textrm{e}_{2(n+1),j}= \delta _{2(n+1),j}\). Then, averaging (2.3) and (2.4), we obtain the evolution equation for the means. Its solution is given by

Here A is a \(2\times 2\) block matrix made of \((n+1)\times (n+1)\) matrices of the form

where \({\textrm{Id}}_{n+1}\) is the \((n+1)\times (n+1)\) identity matrix.

Using the expansion

and defining

we can write

To find the components \(\overline{u}_x(t)\), \({\overline{v}}_x(t)\), \(x=0,\ldots ,n\), of the vector \(\left( \begin{array}{c}\overline{\textbf{u}}(t)\\ \overline{\textbf{v}}(t) \end{array}\right) :=e^{-At}\left( \begin{array}{c}\overline{\textbf{q}}\\ \overline{{\textbf{p}}} \end{array}\right) \), it is convenient to use the Fourier coordinates in the basis \(\psi _j\) of eigenvectors of the Neumann laplacian \(\Delta \), see (A.3). Let \({\widetilde{u}}_j(t) =\sum _{x=0}^n{\overline{u}}_x(t)\psi _j(x)\) and \({\widetilde{v}}_j(t) =\sum _{x=0}^n{\overline{v}}_x(t)\psi _j(x)\) be the Fourier coordinates of the vector \(\left( \overline{\textbf{u}}(t), \overline{\textbf{v}}(t) \right) \). Likewise, we let \({\widetilde{q}}_j =\sum _{x=0}^n {\overline{q}}_x\psi _j(x)\) and \({\widetilde{p}}_j =\sum _{x=0}^n {\overline{p}}_x\psi _j(x)\), with \({\overline{q}}_x\), \(\overline{p}_x\), \(x=0,\ldots ,n\), the components of \((\overline{\textbf{q}}, \overline{\textbf{p}})\).
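Because \(\{\psi _j\}\) is an orthonormal basis of \({\mathbb R}^{n+1}\), the Fourier coordinates are inverted by \({\overline{u}}_x(t)=\sum _{j=0}^n{\widetilde{u}}_j(t)\psi _j(x)\), and \(\sum _x{\overline{u}}_x^2(t)=\sum _j{\widetilde{u}}_j^2(t)\) (Plancherel). The Python sketch below checks both identities numerically. It assumes the cosine form of \(\psi _j\) associated with the free-end Neumann convention, which is an assumption about (A.3), and the function names are illustrative.

```python
import math
import random


def psi(j, x, n):
    """Assumed Neumann eigenvector psi_j(x) (cosine basis, orthonormal on {0, ..., n})."""
    norm = math.sqrt((2.0 - (1.0 if j == 0 else 0.0)) / (n + 1))
    return norm * math.cos(math.pi * j * (2 * x + 1) / (2.0 * (n + 1)))


def to_fourier(u):
    """Fourier coordinates u~_j = sum_x u_x psi_j(x)."""
    n = len(u) - 1
    return [sum(u[x] * psi(j, x, n) for x in range(n + 1)) for j in range(n + 1)]


def from_fourier(ut):
    """Inverse relation u_x = sum_j u~_j psi_j(x)."""
    n = len(ut) - 1
    return [sum(ut[j] * psi(j, x, n) for j in range(n + 1)) for x in range(n + 1)]


random.seed(1)
u = [random.uniform(-1.0, 1.0) for _ in range(11)]
ut = to_fourier(u)
recon_err = max(abs(a - b) for a, b in zip(u, from_fourier(ut)))
plancherel_gap = abs(sum(v * v for v in u) - sum(v * v for v in ut))
print(recon_err, plancherel_gap)  # both at round-off level
```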

Let

be the two solutions of the equation

Note that \( \lambda _{j,+}\lambda _{j,-}=\mu _j. \) Then,

and

in the case when \(\mu _j\not =\gamma ^2\). When \(\gamma ^2=\mu _j\) (then \(\lambda _{j,\pm }=\gamma \)) we have

Then, by (B.7) and (B.8), we conclude that the components of \(e^{-A t}\textbf{e}_{2(n+1)}\) equal

in the case when \(\mu _j\not =\gamma ^2\). In the case that \(\gamma ^2=\mu _j\) (then \(\lambda _{j,\pm }=\gamma \)) we have \({\widetilde{y}}_j (t)= \psi _j(n) te^{-\gamma t}\) and \({\widetilde{z}}_j (t)= \psi _j(n) (1-\gamma t)e^{-\gamma t}\).
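The two cases above can be cross-checked numerically. The sketch below assumes, consistently with \(\lambda _{j,+}\lambda _{j,-}=\mu _j\) and with \(\lambda _{j,\pm }=\gamma \) when \(\gamma ^2=\mu _j\), that \(\lambda _{j,\pm }\) are the roots of \(\lambda ^2-2\gamma \lambda +\mu _j=0\), i.e. that each Fourier mode obeys \({\widetilde{y}}_j'={\widetilde{z}}_j\), \({\widetilde{z}}_j'=-\mu _j{\widetilde{y}}_j-2\gamma {\widetilde{z}}_j\) with \({\widetilde{y}}_j(0)=0\), \({\widetilde{z}}_j(0)=\psi _j(n)\). In the non-degenerate case this gives \({\widetilde{y}}_j(t)=\psi _j(n)\frac{e^{-\lambda _{j,-}t}-e^{-\lambda _{j,+}t}}{\lambda _{j,+}-\lambda _{j,-}}\) and \({\widetilde{z}}_j(t)=\psi _j(n)\frac{\lambda _{j,+}e^{-\lambda _{j,+}t}-\lambda _{j,-}e^{-\lambda _{j,-}t}}{\lambda _{j,+}-\lambda _{j,-}}\), with complex-conjugate roots when \(\mu _j>\gamma ^2\). The Python code compares these formulas, and the degenerate ones quoted above, with a Runge-Kutta integration; the names are illustrative.

```python
import cmath
import math


def mode(t, mu, gamma, psi_n=1.0):
    """Closed-form (y~_j(t), z~_j(t)) for y' = z, z' = -mu*y - 2*gamma*z, y(0) = 0, z(0) = psi_n."""
    if mu == gamma ** 2:  # degenerate case: lambda_{j,+} = lambda_{j,-} = gamma
        decay = math.exp(-gamma * t)
        return psi_n * t * decay, psi_n * (1.0 - gamma * t) * decay
    disc = cmath.sqrt(gamma ** 2 - mu)   # purely imaginary when mu > gamma^2
    lp, lm = gamma + disc, gamma - disc  # roots of lambda^2 - 2*gamma*lambda + mu = 0
    y = psi_n * (cmath.exp(-lm * t) - cmath.exp(-lp * t)) / (lp - lm)
    z = psi_n * (lp * cmath.exp(-lp * t) - lm * cmath.exp(-lm * t)) / (lp - lm)
    return y.real, z.real                # imaginary parts vanish up to round-off


def rk4(t_end, mu, gamma, psi_n=1.0, steps=20000):
    """Reference solution of the same linear system by the classical Runge-Kutta scheme."""
    def rhs(y, z):
        return z, -mu * y - 2.0 * gamma * z

    h = t_end / steps
    y, z = 0.0, psi_n
    for _ in range(steps):
        k1 = rhs(y, z)
        k2 = rhs(y + 0.5 * h * k1[0], z + 0.5 * h * k1[1])
        k3 = rhs(y + 0.5 * h * k2[0], z + 0.5 * h * k2[1])
        k4 = rhs(y + h * k3[0], z + h * k3[1])
        y += h / 6.0 * (k1[0] + 2.0 * k2[0] + 2.0 * k3[0] + k4[0])
        z += h / 6.0 * (k1[1] + 2.0 * k2[1] + 2.0 * k3[1] + k4[1])
    return y, z


gamma = 0.5
errors = []
for mu in (0.09, 0.25, 2.0):  # mu < gamma^2, mu = gamma^2, mu > gamma^2
    closed, numeric = mode(3.0, mu, gamma), rk4(3.0, mu, gamma)
    errors.append(max(abs(a - b) for a, b in zip(closed, numeric)))
print(errors)  # all tiny
```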

Elementary calculations lead to the following bounds

Hence, there exists \(C>0\) such that

for all \(t\geqslant 0\), \(j=0,\ldots ,n\), \(n=1,2,\ldots \). By the Plancherel identity, (B.7) and (B.8) we conclude also that there exist constants \(C,C'>0\) such that, for all \(t\geqslant 0\) and \(n\in \mathbb N\),

B.1: \(L^2\) Norms of the Means

By (B.4), the triangle inequality and the Plancherel theorem

The constants appearing below do not depend on t or n. Using (2.6), (B.11) and (B.12), we therefore conclude that

From (B.14) we therefore obtain the following.

Proposition B.1

Assume that the hypotheses of Theorem 2.5 are in force. Then, there exists \(C>0\) such that

for all \(t\geqslant 0\), \(n=1,2,\ldots \). Here \(\kappa =\min \{2-\delta ,1\}\) and \(\delta \) is as in (2.28). If the hypotheses of Theorem 2.10 hold, then \(\delta =1\) and (B.15) is satisfied with \(\kappa =1\).

B.2: The Proof of Theorem 2.5

We show (2.29) and (2.19). Recall that the initial configuration \(({\textbf{q}},{\textbf{p}})\) is distributed according to \(\mu _n\). For the work done we have

We have \(J_n(t;\mu )=-W_n(n^2t)/n\), see (2.27).

Using (B.1), the rightmost expression can be rewritten in the form \(W_{n,i}(t)+W_{n,f}(t) \), where

with \({\widetilde{v}}_j(s)\) and \({\widetilde{z}}_j(s')\) defined in (B.8) and (B.9).

Thanks to the last estimate of (2.6) and the Cauchy-Schwarz inequality we conclude from (B.12)

Thanks to (2.28) \(\lim _{n\rightarrow +\infty }|W_{n,i}(n^2t)|/n=0\). Using (B.9) we have

so that we can decompose the work done as \(W_{n,f}(t) =W_{n,f}^{(1)} (t)+W_{n,f}^{(2)} (t),\) where

and

Using (B.6) and integrating over the s variable we conclude that

Here we have used the fact that

Recalling (B.10) we obtain that \(\frac{1}{n}W_{n,f}^{(2)}(n^2t) =O\Big (\frac{1}{n^2}\Big )\) for each \(t>0\).

Concerning \(W_{n,f}^{(1)} (t)\), we use (B.6) and obtain

After substituting for \(\psi _j(n)\) and \(\mu _j\) from (A.3) and (A.4), respectively, we obtain

so that

and Theorem 2.5 follows. \(\square \)

Appendix C: The Evolution of the Covariance Matrix

C.1: Dynamics of Fluctuations

Denote

for \(x=0,\ldots ,n\). From (2.3) and (2.4) we get

and at the left boundary

Here \( {\widetilde{N}}_x(t):=N_x(t)-t\). Let \(\textbf{X}'(t)=[q_0'(t),\ldots ,q_n'(t), p_0'(t),\ldots ,p_n'(t)]\). Denote by \(S_n(t)\) the covariance matrix

where

C.2: Structure of the Covariance Matrix

Given a vector \(\mathfrak {y} = (y_0, y_1, \dots , y_n)\), define also the matrix-valued function

Let \(\Sigma ({\mathfrak {y}}) \) be the \(2\times 2\) block matrix

Here \(0_{n+1}\) is the \((n+1)\times (n+1)\) null matrix. From (C.2) and (C.3) we conclude

where A is given by (B.2) and \(\overline{\mathfrak {\textbf{p}}^2}(s)=[{\mathbb {E}}_{\mu _n}p_1^2(s),\ldots , {\mathbb {E}}_{\mu _n}p_n^2(s)]\). Consequently

Denoting

we have, by integrating in (C.8),

In the following \(\{\psi _j(x), \mu _{j'}\}_{x,j,j'=0,\dots ,n}\) are the eigenfunctions and eigenvalues of \(\omega _0^2 - \Delta \), given in (A.3) and (A.4) in Appendix A.
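For orientation on the covariance equations of this subsection, the generic structure is recalled here. Assume that the fluctuation vector \(\textbf{X}'(t)\) solves a linear system \(\textrm{d}\textbf{X}'(t)=-A\textbf{X}'(t)\,\textrm{d}t+\textrm{d}\textbf{M}(t)\), where \(\textbf{M}\) is a martingale whose instantaneous covariance is a matrix \(Q(t)\), presumably of the form \(\Sigma (\cdot )\) above evaluated at \(\overline{\mathfrak {\textbf{p}}^2}(t)\) up to constants; \(\textbf{M}\) and \(Q\) are notation introduced only for this sketch. Then \(S_n(t)\) satisfies a Lyapunov-type equation, and variation of constants gives its integrated form:

```latex
\frac{\textrm{d}}{\textrm{d}t}S_n(t) = -A\,S_n(t) - S_n(t)\,A^{T} + Q(t),
\qquad
S_n(t) = e^{-At}S_n(0)\,e^{-A^{T}t}
       + \int_0^t e^{-A(t-s)}\,Q(s)\,e^{-A^{T}(t-s)}\,\textrm{d}s .
```

Differentiating the second identity in t and using \(\frac{\textrm{d}}{\textrm{d}t}e^{-At}=-Ae^{-At}\) recovers the first.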

Given a matrix \([B_{x,x'}]_{x,x'=0,\ldots ,n}\) we define its Fourier transform

Then we have the inverse relations

Following algebraic calculations analogous to those of [14, Section 6.3] (see also Section C.4 below for the details), we obtain

where

and

Concerning \({\widetilde{R}}^{(p)}_{j,j'}(t)\), it is of the form

where Z is the three-element set of indices p, q and pq, and \(\Xi ^{(p)}_\iota \) are \(C^\infty \) functions defined on \([\omega _0^2,4+\omega _0^2]\times [\omega _0^2,4+\omega _0^2]\).

We also have

and

where the matrices \({\widetilde{R}}^{(q)}_{j,j'}(t)\) and \(\widetilde{R}^{(q,p)}_{j,j'}(t)\) are given by analogues of (C.15).
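The matrix transform used in this subsection can be illustrated as follows, assuming it is taken with respect to \(\psi _j\) in both indices, \({\widetilde{B}}_{j,j'}=\sum _{x,x'}B_{x,x'}\psi _j(x)\psi _{j'}(x')\), with inverse \(B_{x,x'}=\sum _{j,j'}{\widetilde{B}}_{j,j'}\psi _j(x)\psi _{j'}(x')\). As a check, the transform of the matrix of \(\omega _0^2-\Delta \) should be diagonal with entries \(\mu _j\). The cosine form of \(\psi _j\) and the free-end Neumann convention are assumptions, and the helper names are illustrative.

```python
import math


def psi_matrix(n):
    """Rows are the assumed eigenvectors psi_j(0..n) of the Neumann Laplacian."""
    def psi(j, x):
        norm = math.sqrt((2.0 - (1.0 if j == 0 else 0.0)) / (n + 1))
        return norm * math.cos(math.pi * j * (2 * x + 1) / (2.0 * (n + 1)))
    return [[psi(j, x) for x in range(n + 1)] for j in range(n + 1)]


def transform(B, P):
    """B~_{j,j'} = sum_{x,x'} B_{x,x'} psi_j(x) psi_{j'}(x')."""
    m = len(P)
    return [[sum(B[x][y] * P[j][x] * P[k][y] for x in range(m) for y in range(m))
             for k in range(m)] for j in range(m)]


def inverse(Bt, P):
    """B_{x,x'} = sum_{j,j'} B~_{j,j'} psi_j(x) psi_{j'}(x')."""
    m = len(P)
    return [[sum(Bt[j][k] * P[j][x] * P[k][y] for j in range(m) for k in range(m))
             for y in range(m)] for x in range(m)]


n, omega0 = 8, 0.7
P = psi_matrix(n)
# Matrix of omega0^2 - Delta with free (Neumann) ends: diagonal 1 + omega0^2 at x = 0, n, else 2 + omega0^2.
M = [[0.0] * (n + 1) for _ in range(n + 1)]
for x in range(n + 1):
    M[x][x] = omega0 ** 2 + (1.0 if x in (0, n) else 2.0)
    if x > 0:
        M[x][x - 1] = -1.0
    if x < n:
        M[x][x + 1] = -1.0

Mt = transform(M, P)
mu = [omega0 ** 2 + 4.0 * math.sin(math.pi * j / (2.0 * (n + 1))) ** 2 for j in range(n + 1)]
diag_err = max(abs(Mt[j][j] - mu[j]) for j in range(n + 1))
offdiag = max(abs(Mt[j][k]) for j in range(n + 1) for k in range(n + 1) if j != k)
recon_err = max(abs(a - b) for ra, rb in zip(M, inverse(Mt, P)) for a, b in zip(ra, rb))
print(diag_err, offdiag, recon_err)  # all at round-off level
```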

C.3: Some Bounds on the Kinetic Energy

From (C.12) we have

where \( \langle \langle S^{(p)}_{x,x} \rangle \rangle _t =\int _0^t[p_x'(s)]^2\textrm{d}s, \)

and

The latter satisfy the following estimates: for each \(t>0\) there exists \(C>0\) such that

The proof of (C.21) can be found in Section C.4.1 below.

It has been shown in [14, Appendix A] that

Recall that \(\langle \langle p_x^2 \rangle \rangle _t=\langle \langle S^{(p)}_{x,x} \rangle \rangle _t+ \int _0^t{\overline{p}}_x^2(n^2\,s)\textrm{d}s\). Under the assumptions of Theorem 2.10 we may take \(\delta =1\) in the conclusion of Proposition B.1. Thanks to (B.15) we conclude that for each \(t>0\) there exists \(C>0\) such that

From (C.23), (C.21) and (3.5) we therefore infer that

where \(\rho _{x}(t)\) satisfies: for any \(t>0\) there exists \(C>0\) such that

The following lower bound on the matrix \([M_{x,y}]\) comes from [14, Proposition 7.1] (see also [7]).

Proposition C.1

There exists \(c_*>0\) such that

Multiplying both sides of (C.24) by \(\langle \langle p_x^2\rangle \rangle _t\), summing over x and using Proposition C.1 together with estimate (C.25), we immediately conclude the following.

Corollary C.2

For any \(t>0\) there exists \(C>0\) such that

Proposition C.3

For any \(t>0\) there exists \(C>0\) such that

Proof

As a direct consequence of (3.2) and Corollary C.2 we have: for any \(t>0\) there exists \(C>0\) such that

and

Indeed, estimate (C.29) is obvious in light of (C.27). To prove (C.30) note that by the Cauchy-Schwarz inequality

and (C.30) follows, thanks to (3.5).

From (C.24) and (C.25) we conclude that for any \(t>0\) we can find \(C>0\) such that

Using the Cauchy-Schwarz inequality we conclude

Denote \( D_n:=\sum _{x=0}^{n-1}\Big (\langle \langle p_x^2\rangle \rangle _t-\langle \langle p_{x+1}^2\rangle \rangle _t\Big )^2. \) We can summarize the inequalities obtained as follows: for any \(t>0\) there exists \(C>0\) such that

for all \(n=1,2,\ldots \). Thus the second estimate of (C.28) follows, which in turn implies the first estimate of (C.28) as well. \(\square \)

C.4: Calculation of \({\widetilde{R}}^{(p)}(t)\), \({\widetilde{R}}^{(q)}(t)\) and \({\widetilde{R}}^{(q,p)}(t)\)

Equation (C.10) leads to the following equations (see (B.2) and (C.6)):

Adding and subtracting the second and third equations, we can rewrite (C.34) as follows

where \({\widetilde{F}}_{j,j'}(t)\) is given by (C.13) and

Hence,

with \(\Theta (\cdot ,\cdot )\) given by (C.14) and

C.4.1: Proof of (C.21)

Using (C.41) and (C.15) we can write

Here Z is a set consisting of indices p, q and pq and \(\Xi ^{(p)}_\iota \) are some \(C^\infty \) smooth functions. In what follows we show that for any \(t>0\) there exists \(C>0\) such that

This, in light of (D.12), clearly implies (C.21).

Consider only the case \(\iota =p\), as the other cases can be argued in the same manner. Then,

Using (A.3) and elementary trigonometric identities we obtain

Using [14, Lemma B.1] we conclude that there exists \(C>0\) such that

We conclude therefore that

The supremum extends over all real-valued sequences \(h=(h_0,\ldots ,h_n)\) with \(\Vert h\Vert _{\ell ^2}^2=\sum _{x=0}^nh_x^2=1\). Using the elementary inequality \(h_xp_y'(t)\leqslant h_x^2/2+[p_y'(t)]^2/2\), we can bound the right hand side of (C.41) by

where \(K=\sup _{x,n} \sum _{y=0}^n\Big (k_n(x,y) +k_n(y,x)\Big )\). Estimate (C.39) for \(\iota =p\) is then a straightforward consequence of the energy bound (3.2).
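The elementary bound above can be sanity-checked numerically. Assuming \(k_n(x,y)\geqslant 0\), which the step requires since the inequality \(h_xp_y'\leqslant h_x^2/2+[p_y']^2/2\) is multiplied by \(k_n(x,y)\) and summed, one has \(\sum _{x,y}k_n(x,y)h_xa_y\leqslant \frac{K}{2}\big (\Vert h\Vert _{\ell ^2}^2+\sum _ya_y^2\big )\) for every real vector a. The Python sketch below tests this with a randomly generated nonnegative kernel; the kernel and the vector a, which stands in for \(p'_y(t)\), are hypothetical.

```python
import random


def worst_violation(n, trials=200, seed=2):
    """Largest value of lhs - bound over random trials; it should never be positive."""
    rng = random.Random(seed)
    size = n + 1
    # Hypothetical nonnegative kernel decaying away from the diagonal.
    k = [[rng.random() / (1 + abs(x - y)) ** 2 for y in range(size)] for x in range(size)]
    K = max(sum(k[x][y] + k[y][x] for y in range(size)) for x in range(size))
    worst = float("-inf")
    for _ in range(trials):
        h = [rng.gauss(0.0, 1.0) for _ in range(size)]
        norm = sum(v * v for v in h) ** 0.5
        h = [v / norm for v in h]                       # ||h||_{l^2} = 1, as in the supremum
        a = [rng.gauss(0.0, 1.0) for _ in range(size)]  # stands in for p'_y(t)
        lhs = sum(k[x][y] * h[x] * a[y] for x in range(size) for y in range(size))
        bound = 0.5 * K * (1.0 + sum(v * v for v in a))
        worst = max(worst, lhs - bound)
    return worst


print(worst_violation(30))  # a negative number: the bound holds in every trial
```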

Appendix D: Proof of Local Equilibrium

We prove here Propositions 5.1 and 5.2.

D.1: Proof of Proposition 5.1

Suppose that \(\rho \in (0,1/2)\) is such that \(\textrm{supp}\,\varphi \subset (\rho ,1-\rho )\). Let

For a fixed integer \(\ell \) define

By [14, Lemma B.1], for a given \(\ell \) there exists \(C>0\) such that

for \(n=1,2,\ldots .\) It has been shown in Section 8.1 of [14] that for any \(\rho \in (0,1/2)\) there exists \(C>0\) such that

for \(n=1,2,\ldots \).

By virtue of (B.15) we have

It suffices therefore to prove that

We prove (D.6) for \(\ell =0\); the argument for other values of \(\ell \) is similar. By (C.16) we have

with (cf. (C.16))

Using (3.2) and (3.5) we conclude that \(\lim _{n\rightarrow +\infty }\sup _{x}\Big |B_{n}(t,x)\Big |=0\). Likewise, by (C.16) and (C.37), we conclude that \(\lim _{n\rightarrow +\infty }\sup _{x}\Big |r_{n,x}^{(q)}(t)\Big |=0\).

Furthermore, by (D.4), if \(\rho n\leqslant x \leqslant (1-\rho )n\),

where, for any fixed \(t>0\), we have \(\lim _{n\rightarrow +\infty }\sup _{\rho n\leqslant x \leqslant (1-\rho )n}\Big |o_{n,x}(t)\Big |=0\). Then

Here and below \(\lim _{n\rightarrow +\infty }o_n(t)=0\) for each \(t>0\). We have

It follows from [14, Lemma B.1] that there exists \(C>0\) such that

Using the Cauchy-Schwarz inequality and (C.28), we conclude that the right hand side of (D.11) is bounded by

for some constant \(C'\) independent of \(x=0,\ldots ,n\) and \(n=1,2,\ldots \). Proposition 5.1 therefore follows for \(\ell =0\). \(\square \)

D.2: Proof of Proposition 5.2

From Proposition B.1 we have

It suffices therefore to calculate \( \int _0^{t} \mathbb {E}\big (q_0'(s)^2\big ) \textrm{d}s = \langle \langle S^{(q)}_{0,0}\rangle \rangle _t\).

We have, see (C.16) and (D.1),

and

The coefficients \(H_y^{(n)} \) have the property

Using [14, Lemma B.1] we conclude that there exists \(C>0\) such that

Then, proceeding as in (D.11)–(D.12), by using the Cauchy-Schwarz inequality, the first estimate of (C.28) and (D.16), we conclude that

Hence

\(\square \)

Appendix E: Uniqueness of Solutions to (2.35)

Theorem E.1

Suppose that \(T_0\in \mathcal {M}_{\textrm{fin}}\Big ([0,1]\Big )\). Then, the initial-boundary value problem (2.35) has a unique weak solution in the sense of Definition 2.9.

Proof

Let \({\overline{T}}(s,du)\) be the signed measure given by the difference of two solutions with the same initial and boundary data. It satisfies the equation

for any \(\varphi \in C^2[0,1]\) such that \(\varphi (0)=\varphi '(1)=0\).

The above implies that also

for any \(\varphi \in C^{1,2}([0,+\infty )\times [0,1])\) such that \(\varphi (t,0)=\partial _u\varphi (t,1)=0\), \(t\geqslant 0\). Suppose now that \(\varphi _0\in C^1[0,1]\) satisfies

and \(\varphi (t,u)\) is the strong solution of

Such a solution exists and is unique, see e.g. [8, Corollary 5.3.2, p. 147], and it belongs to \(C^{1,2}((-\infty ,t]\times [0,1])\). We conclude that

for any \(\varphi _0\in C^1[0,1]\) satisfying (E.3).

Consider now an arbitrary \(\psi \in C[0,1]\). Let \(\varphi _0(u):=-u\int _u^1\psi (u')\textrm{d}u'-\int _0^u u'\psi (u')\textrm{d}u'\). Then \(\varphi _0'(u)=-\int _u^1\psi (u')\textrm{d}u'\), so \(\varphi _0\) satisfies (E.3) and \(\varphi ''_0(u)=\psi (u)\); thus

which ends the proof of uniqueness. \(\square \)
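A quick numerical check of the test function used in the last step: for continuous \(\psi \), \(\varphi _0(u)=-u\int _u^1\psi (u')\textrm{d}u'-\int _0^u u'\psi (u')\textrm{d}u'\) has \(\varphi _0'(u)=-\int _u^1\psi (u')\textrm{d}u'\), hence \(\varphi _0''=\psi \), \(\varphi _0(0)=0\) and \(\varphi _0'(1)=0\). The Python sketch below evaluates the integrals by Simpson's rule for one arbitrarily chosen \(\psi \) and compares finite differences of \(\varphi _0\) with \(\psi \); the tolerances reflect the finite-difference step, not the theory.

```python
import math


def simpson(g, a, b, m=400):
    """Composite Simpson rule on [a, b] with an even number m of panels."""
    if a == b:
        return 0.0
    h = (b - a) / m
    total = g(a) + g(b)
    total += 4.0 * sum(g(a + (2 * i - 1) * h) for i in range(1, m // 2 + 1))
    total += 2.0 * sum(g(a + 2 * i * h) for i in range(1, m // 2))
    return total * h / 3.0


def psi(u):
    """Arbitrary continuous test function."""
    return math.cos(3.0 * u) + u ** 2


def phi0(u):
    """phi_0(u) = -u * int_u^1 psi - int_0^u u' psi(u') du'."""
    return -u * simpson(psi, u, 1.0) - simpson(lambda v: v * psi(v), 0.0, u)


step = 1e-3
second_diff_err = max(
    abs((phi0(u + step) - 2.0 * phi0(u) + phi0(u - step)) / step ** 2 - psi(u))
    for u in (0.2, 0.5, 0.8)
)
left_bc = abs(phi0(0.0))                               # phi_0(0) = 0
right_bc = abs((phi0(1.0) - phi0(1.0 - step)) / step)  # one-sided estimate of phi_0'(1)
print(second_diff_err, left_bc, right_bc)
```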

Appendix F: Proof of Proposition 3.1

Proof of Proposition 3.1 in the General Case

Denote by \(\mathcal {P}_{s,t}\), \(s<t\), the evolution family corresponding to the transition probabilities of the Markov family generated by the dynamics (2.3) and (2.4). Let \(\mu \mathcal {P}_{s,t}\) be the probability distribution obtained by transporting the distribution \(\mu \) at time s by the random flow \(\mathcal {S}_{s,t}\). Using the calculation performed in [5, p. 1232], we conclude that the relative entropy satisfies the following inequality

where \(\textrm{d}\mu _0=f_n(0)\textrm{d}\nu _{T_-}\), \(\mathcal {G}_t^*\) is the adjoint with respect to \(\nu _{T_-}\) and the infimum is taken over all smooth densities \(\psi \), w.r.t. the Gaussian measure \(\nu _{T_-}\), that are bounded away from 0. Arguing as in the proof of Proposition 3.1 in the smooth initial data case, we conclude that for any \(\psi \) under the infimum the right hand side of (F.1) is less than or equal to

From this point on the proof follows from an application of (2.33) and Theorem 2.5. \(\square \)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Komorowski, T., Lebowitz, J.L. & Olla, S. Heat Flow in a Periodically Forced, Thermostatted Chain II. J Stat Phys 190, 87 (2023). https://doi.org/10.1007/s10955-023-03103-9
