Abstract

We study the symmetric inclusion process (SIP) in the condensation regime. We obtain an explicit scaling for the variance of the density field in this regime, when initially started from a homogeneous product measure. This provides relevant new information on the coarsening dynamics of condensing interacting particle systems on the infinite lattice. We obtain our result by proving convergence to sticky Brownian motion for the difference of positions of two SIP particles in the sense of Mosco convergence of Dirichlet forms. Our approach implies the convergence of the probability that two SIP particles are at the same site at time t. This, combined with self-duality, allows us to obtain the explicit scaling for the variance of the fluctuation field.



1 Introduction

The symmetric inclusion process (SIP) is an interacting particle system in which each particle performs a symmetric continuous-time random walk on the lattice \({\mathbb {Z}}\) with rates \(k p(i,j)=k p(j,i)\) (\(k>0\)), and in which particles attract each other (see below for the precise definition) at rate \(p(i,j) \eta _i \eta _j\), where \(\eta _i\) is the number of particles at site i. The parameter k regulates the relative strength of diffusion with respect to attraction between particles. The symmetric inclusion process is self-dual, and many results on its macroscopic behavior can be obtained via this property. Self-duality implies that the expected number of particles at a site can be computed from the dynamics of a single dual particle. In particular, because one dual particle scales to Brownian motion in the diffusive scaling, the hydrodynamic limit of SIP is the heat equation. The next step is to understand the variance of the density field, which requires two dual particles.

It is well-known that in the regime \(k\rightarrow 0\) the SIP manifests condensation (the attractive interaction dominates), and via the self-duality of SIP more information can be obtained about this condensation process than for a generic process (such as zero-range processes). Indeed, in [1] two of the authors of this paper in collaboration with C. Giardinà have obtained an explicit formula for the Fourier-Laplace transform of two-particle transition probabilities for interacting particle systems such as the simple symmetric exclusion and the simple symmetric inclusion process, where simple refers to nearest-neighbor in dimension 1. From this formula, the authors were able to extract information about the variance of the time-dependent density field started from a homogeneous product measure. With the help of duality this reduces to the study of the scaling behavior of two dual particles. In particular, for the inclusion process in the condensation regime, from the study of the scaling behavior of the time-dependent variance of the density field, one can extract information about the coarsening process. It turned out that the scaling limit of two particles is in that case a pair of sticky Brownian motions. From this one can infer the qualitative picture that in the condensation regime, when started from a homogeneous product measure, large piles of particles are formed which move as Brownian motion, and interact with each other as sticky Brownian motions.

The whole analysis in [1] is based on the exact formula for the Fourier-Laplace transform of the transition probabilities of two SIP particles mentioned above. This exact computation relies on the fact that the underlying random walk is nearest-neighbor, and therefore the results are restricted to that case. However, we expect that for the SIP in the condensation regime, sticky Brownian motion appears as a scaling limit in much greater generality in dimension 1. The exact formula in [1] yields convergence of semigroups, and therefore convergence of finite-dimensional distributions. However, because of the rescaling in the condensation regime, one cannot expect convergence of generators, but rather a convergence result in the spirit of slow-fast systems, i.e., a convergence of gamma-convergence type. Moreover, the difference of two SIP particles is not simply a random walk slowed down when it is at the origin as in, e.g., [2]. Instead, it is a random walk which is pulled towards the origin when it is close to it, which only in the scaling limit leads to a slow-down at the origin, i.e., sticky Brownian motion.

In this paper, we obtain a precise scaling behavior of the variance of the density field in the condensation regime. We find the explicit scaling form for this variance in real time (as opposed to the Laplace-transformed result in [1]), thus giving more insight into the coarsening process when initially started from a homogeneous product measure of density \(\rho \). This is the first rigorous result on coarsening dynamics in interacting particle systems directly on infinite lattices, for a general class of underlying random walks. There exist important results on condensation that are either heuristic on the infinite lattice or rigorous but constrained to finite lattices. For example, [3] heuristically discusses the effective motion of clusters in the coarsening process for the TASIP on infinite lattices, and [4] studies the coarsening regime of the explosive condensation model based on heuristic mean-field arguments. On the other hand, on finite lattices, [5] studies the evolution of a condensing zero-range process via martingale techniques. In the context of the SIP on a finite lattice, the authors of [6] showed the emergence of condensates as the parameter \(k \rightarrow 0\) and rigorously characterized their dynamics. We also mention the recent work [7], where the structure of the condensed phase in SIP is analyzed in stationarity, in the thermodynamic limit. More recently, in [8], condensation was proven for a large class of inclusion processes for which there is no explicit form of the invariant measures. The work in [8] also derived rigorous results on the metastable behavior of non-reversible inclusion processes.

Our main result is obtained by proving that the difference of two SIP particles converges, after a suitable rescaling defined below in Sect. 2.4.1, to a two-sided sticky Brownian motion in the sense of Mosco convergence of Dirichlet forms, a notion originally introduced in [9] and extended to the case of varying state spaces in [10]. This notion of convergence implies convergence of semigroups in the \(L^2\)-space of the reversible measure, which for the sticky Brownian motion with stickiness parameter \(\gamma >0\) is \(dx + \gamma \delta _0\). Since this measure gives positive mass \(\gamma \) to the origin, the indicator of \(\{0\}\) is a nonzero element of this \(L^2\)-space, so the convergence of semigroups also yields convergence of transition probabilities of the form \(p_t(x,0)\). This, together with self-duality, allows us to obtain the limiting variance of the fluctuation field explicitly. Technically speaking, the main difficulty in our approach is that we have to define carefully how to transform functions defined on the discretized rescaled lattices into functions on the continuous limit space, in order to obtain convergence of the relevant Hilbert spaces and, at the same time, the second condition of Mosco convergence. Mosco convergence is a weak form of convergence which is not frequently used in the probabilistic context. In our context, however, it is exactly the form of convergence which we need to study the variance of the density field. As mentioned above, since it is closely related to gamma-convergence, it is also a natural form of convergence in a setting reminiscent of slow-fast systems.

The rest of our paper is organized as follows. In Sect. 2 we deal with some preliminary notions: we introduce both the inclusion process and the difference process in terms of their infinitesimal generators. In this section we also introduce the concept of duality and describe the regime in which condensation manifests itself. Our main result is stated in Sect. 3, where we present non-trivial information about the variance of the time-dependent density field in the condensation regime and provide some heuristics for the dynamics described by this result. Section 4 deals with the basic notions of Dirichlet forms. In the same section we also introduce the notion of Mosco convergence on varying Hilbert spaces, together with some useful simplifications in our setting. In Sect. 5 we present the proof of our main result and show that the finite-range difference process converges, in the sense of Mosco convergence of Dirichlet forms, to the two-sided sticky Brownian motion. Finally, as supplementary material in the Appendix, we construct the two-sided sticky Brownian motion at zero via stochastic time changes of Dirichlet forms, and we deal with the convergence of independent random walkers to standard Brownian motion. This last result, despite being basic, is a cornerstone for our results of Sect. 5.

2 Preliminaries

2.1 The Model: Inclusion Process

The Symmetric Inclusion Process of parameter k (SIP(k)) is an interacting particle system where particles randomly hop on the lattice \({\mathbb {Z}}\) with attractive interaction and no restrictions on the number of particles per site. Configurations are denoted by \(\eta \) and are elements of \(\Omega ={\mathbb {N}}^{{\mathbb {Z}}}\) (where \({\mathbb {N}}\) denotes the set of natural numbers including zero). We denote by \(\eta _x\) the number of particles at position \(x \in {\mathbb {Z}}\) in the configuration \(\eta \in \Omega \). The generator working on local functions \(f:\Omega \rightarrow {\mathbb {R}}\) is of the type

$$\begin{aligned} {\mathscr {L}} f(\eta ) = \sum _{i,j \in {\mathbb {Z}}} p(j-i)\, \eta _i \left( k + \eta _j \right) \left( f(\eta ^{i,j}) - f(\eta ) \right) \end{aligned}$$(1)

where \(\eta ^{i,j}\) denotes the configuration obtained from \(\eta \) by removing a particle from i and putting it at j. For the associated Markov process on \(\Omega \), we use the notation \(\{\eta (t):t\ge 0\}\), i.e., \(\eta _x(t)\) denotes the number of particles at time t at location \(x \in {\mathbb {Z}}\). Additionally, we assume that the function \(p:{\mathbb {R}}\rightarrow [0,\infty )\) satisfies the following properties:

1. Symmetry: \(p(r)=p(-r)\) for all \(r\in {\mathbb {R}}\).

2. Finite range: there exists \(R>0\) such that \(p(r)=0\) for all \(|r|>R\).

3. Irreducibility: for all \(x,y\in {\mathbb {Z}}\) there exist \(n\in {\mathbb {N}}\) and \(x=i_1, i_2, \ldots ,i_{n-1},i_n=y\) such that \(\prod \nolimits _{\ell =1}^{n-1} p(i_{\ell +1}-i_\ell )>0\).
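For a finite-range symmetric p, assumptions 1-3 can be checked mechanically. Because the step set is symmetric, the sites reachable from x are exactly \(x + g{\mathbb {Z}}\), where g is the greatest common divisor of the support of p, so irreducibility holds if and only if \(g = 1\). The sketch below encodes p as a Python dict \(r \mapsto p(r)\) on integer steps; the helper name `check_transition_function` is hypothetical and only meant as an illustration.

```python
from functools import reduce
from math import gcd


def check_transition_function(p, R, tol=1e-12):
    """Check assumptions 1-3 for a transition function p given as {r: p(r)}.

    Hypothetical helper for illustration: p is only specified on integer steps r,
    and R is the claimed range. Returns one boolean per assumption.
    """
    support = [r for r, weight in p.items() if weight > 0]
    # 1. Symmetry: p(r) == p(-r) for every step that appears in the dict.
    symmetric = all(abs(p.get(r, 0.0) - p.get(-r, 0.0)) <= tol for r in p)
    # 2. Finite range: no positive weight beyond distance R.
    finite_range = all(abs(r) <= R for r in support)
    # 3. Irreducibility: with a symmetric step set, the sites reachable from x
    #    are x + g*Z with g the gcd of the support, so we need g == 1.
    g = reduce(gcd, (abs(r) for r in support), 0)
    return {"symmetry": symmetric, "finite_range": finite_range, "irreducibility": g == 1}


# Usage: steps of size 2 and 3 together generate Z, steps of size 2 alone do not.
print(check_transition_function({2: 0.3, -2: 0.3, 3: 0.2, -3: 0.2}, R=3))
print(check_transition_function({2: 0.5, -2: 0.5}, R=2))
```

The gcd criterion relies on symmetry: for a non-symmetric step set (for instance, only positive steps) it would not be sufficient, which is why assumption 1 is checked separately.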

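It is known that these particle systems have a one-parameter family of homogeneous (w.r.t. translations) reversible and ergodic product measures \(\mu _{\rho }, \rho >0\), with marginals

$$\begin{aligned} \mu _\rho (\eta _x = n) = \frac{1}{n!} \frac{\Gamma (k+n)}{\Gamma (k)} \left( \frac{\rho }{k+\rho }\right) ^{n} \left( \frac{k}{k+\rho }\right) ^{k}, \qquad n \in {\mathbb {N}}. \end{aligned}$$

This family of measures is indexed by the density of particles, i.e.,

$$\begin{aligned} \int \eta _x \, d\mu _\rho = \rho . \end{aligned}$$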

Remark 2.1

Notice that for these systems the initial configuration has to be chosen in a subset of configurations such that the process \(\{\eta (t):t\ge 0\}\) is well-defined. A possible such subset is the set of tempered configurations, i.e., the set of configurations \(\eta \) for which there exist \(C, \beta \in {\mathbb {R}}\) such that \( |\eta (x)| \le C (1+|x|)^\beta \) for all \(x\in {\mathbb {Z}}\). With slight abuse of notation we still denote this set by \(\Omega \), because we will always start the process from such configurations, and this set has \(\mu _{\rho }\)-measure 1 for all \(\rho >0\). Since we are working mostly in \(L^2(\mu _{\rho })\) spaces, this is not a restriction.
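As a numerical sanity check on the density parametrization, the sketch below assumes the negative-binomial form of the marginals, \(\mu _\rho (\eta _x=n) = \frac{\Gamma (k+n)}{\Gamma (k)\, n!}\theta ^n (1-\theta )^k\) with \(\theta = \rho /(k+\rho )\) (the form that detailed balance for generator (1) forces on a product measure), and computes the mean and variance by truncating the series. It recovers mean \(\rho \) and variance \(\rho + \rho ^2/k\). With \(k = k_N = 1/(\sqrt{2}\gamma N)\) from Sect. 2.4.1 the variance becomes \(\rho + \sqrt{2}\gamma \rho ^2 N\), i.e. of order N, which is the growth of the single-site variance mentioned in Sect. 2.4.2. Function names are hypothetical.

```python
from math import exp, lgamma, log


def sip_marginal_pmf(n, k, rho):
    """Assumed negative-binomial marginal mu_rho(eta_x = n) of SIP(k)."""
    theta = rho / (k + rho)
    # Work with logarithms of Gamma(k+n) / (Gamma(k) n!) theta^n (1-theta)^k
    # to avoid overflow for large n.
    logp = (lgamma(k + n) - lgamma(k) - lgamma(n + 1)
            + n * log(theta) + k * log(1.0 - theta))
    return exp(logp)


def mean_and_variance(k, rho, n_max=20000):
    """Mean and variance of the marginal, truncating the series at n_max."""
    probs = [sip_marginal_pmf(n, k, rho) for n in range(n_max + 1)]
    mean = sum(n * q for n, q in enumerate(probs))
    second_moment = sum(n * n * q for n, q in enumerate(probs))
    return mean, second_moment - mean ** 2


# Usage: in the condensation regime k_N = 1/(sqrt(2) gamma N) the variance grows like N.
gamma, rho = 1.0, 1.0
for N in (10, 100):
    k_N = 1.0 / (2 ** 0.5 * gamma * N)
    print(N, mean_and_variance(k_N, rho), rho + rho ** 2 / k_N)
```

The truncation level `n_max` is an illustrative choice; it must be large compared with \(1/\log (1/\theta )\), which itself grows like \(1/k\) as \(k \rightarrow 0\).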

2.2 Self-duality

Let us denote by \(\Omega _f \subseteq \Omega \) the set of configurations with a finite number of particles. We then have the following definition:

Definition 2.1

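We say that the process \(\{ \eta _t : t \ge 0 \}\) is self-dual with self-duality function \(D:\Omega _f\times \Omega \rightarrow {\mathbb {R}}\) if

$$\begin{aligned} {\mathbb {E}}_\eta \left[ D(\xi ,\eta _t)\right] = {\mathbb {E}}_\xi \left[ D(\xi _t,\eta )\right] \end{aligned}$$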

for all \( t \ge 0\) and \(\xi \in \Omega _f, \eta \in \Omega \).

In the definition above, \({\mathbb {E}}_\eta \) and \({\mathbb {E}}_\xi \) denote expectation when the processes \(\{ \eta _t : t \ge 0 \}\) and \(\{ \xi _t : t \ge 0 \}\) are initialized from the configurations \(\eta \) and \(\xi \), respectively. Additionally, we require the duality functions to be of factorized form, i.e.,

$$\begin{aligned} D(\xi ,\eta ) = \prod _{x \in {\mathbb {Z}}} d(\xi _x,\eta _x). \end{aligned}$$

In our case the single-site duality function \(d(m,\cdot )\) is a polynomial of degree m, more precisely

One important consequence of the self-duality property is that the dynamics of m dual particles provides relevant information about the time-dependent correlation functions of degree m. As an example, we now state the following proposition (Proposition 5.1 in [1]), which illustrates this for two particles:

Proposition 2.1

Let \(\{\eta (t): t\ge 0\}\) be a process with generator (1), then

where \(\nu \) is assumed to be a homogeneous product measure with \(\rho \) and \(\sigma \) given by

and \(X_t\) and \(Y_t\) denote the positions at time \(t >0\) of two dual particles started at x and y respectively and \({\mathbb {E}}_{x,y}\) the corresponding expectation.

Proof

We refer to [1] for the proof. \(\square \)

Remark 2.2

Notice that Proposition 2.1 shows that the two-point correlation functions depend on the two-particle dynamics via the indicator function \(\mathbb {1}_{ \{ X_t = Y_t \}}\). More precisely, these correlations can be expressed in terms of the difference of the positions of two dual particles and the model parameters.

Motivated by Remark 2.2, and for reasons that will become clear later, we will study in the next section the stochastic process obtained from the generator (1) by following the evolution in time of the difference of the positions of two dual particles.

2.3 The Difference Process

We are interested in a process obtained from the dynamics of the process \(\{\eta (t):t\ge 0\}\) with generator (1), initialized with two labeled particles. More precisely, if we denote by \((x_1(t),x_2(t))\) the particle positions at time \(t \ge 0\), from the generator (1) we can deduce the generator for the evolution of these two particles; that is, for \(f:{\mathbb {Z}}^2 \rightarrow {\mathbb {R}}\) and \( {\mathbf {x}} \in {\mathbb {Z}}^2\) we have

$$\begin{aligned} {\mathscr {L}}^{(2)} f({\mathbf {x}}) = \sum _{i=1}^{2} \sum _{r \in {\mathbb {Z}}} p(r) \left( k + \mathbb {1}_{\{ x^{3-i} = x^i + r\}} \right) \left( f({\mathbf {x}}^{i,r}) - f({\mathbf {x}}) \right) \end{aligned}$$

where \({\mathbf {x}}^{i,r}\) results from changing the position of particle i from the site \(x^i\) to the site \(x^i +r\).

Given this dynamics, we are interested in the process given by the difference

$$\begin{aligned} w(t) := x_1(t) - x_2(t), \qquad t \ge 0. \end{aligned}$$

Notice that the labels of the particles are fixed at time zero and do not change thereafter. This process was studied for the first time in [11] and later in [1]. In contrast to [1], however, we do not restrict ourselves to the nearest-neighbor case; hence, whenever a particle moves, the value of w(t) can change by r units, with \(r \in A:= [-R,R] \cap {\mathbb {Z}}\setminus \{0\}\).

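Using the symmetry and translation invariance properties of the transition function, we obtain the following operator as generator for the difference process:

$$\begin{aligned} {\mathscr {L}}^{\mathrm {dif}} f(w) = \sum _{r \in A} 2 p(r) \left( k + \mathbb {1}_{\{w+r=0\}} \right) \left( f(w+r) - f(w) \right) \end{aligned}$$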

where we used that \(p(0)=0\) and \(p(-r)=p(r)\).

Let \(\mu \) denote the discrete counting measure and \(\delta _0\) the Dirac measure at the origin, then we have the following result:

Proposition 2.2

The difference process is reversible with respect to the measure \(\nu _k\) given by

$$\begin{aligned} \nu _k = \mu + \frac{1}{k}\, \delta _0 . \end{aligned}$$(9)

Proof

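By detailed balance, see for example Proposition 4.3 in [12], we obtain that any reversible measure \(\nu \) should satisfy the following:

$$\begin{aligned} \nu (w) \left( k + \mathbb {1}_{\{w+r=0\}}\right) = \nu (w+r) \left( k + \mathbb {1}_{\{w=0\}}\right) , \qquad w \in {\mathbb {Z}},\ r \in A, \end{aligned}$$(10)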

where, due to the symmetry of the transition function, we have cancelled the factor \(\tfrac{p(-r)}{p(r)}\). In order to verify that \(\nu _k\) satisfies (10) we have to consider three possible cases: Firstly \(w \notin \{0, -r\}\), secondly \(w = 0\) and finally \(w = -r\). For \(w \notin \{0, -r\}\), (10) reads \(\nu _k(w)=\nu _k(w+r)\) which is clearly satisfied by (9). For \(w=0\) and for \(w=-r\), (10) reads \(\nu _k(0)=(1+\frac{1}{k})\nu _k(r)\) which is also satisfied by (9). \(\square \)
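Relation (10) can also be checked numerically. The sketch below assumes that the difference process jumps from w to \(w+r\) at rate \(2p(r)(k + \mathbb {1}_{\{w+r=0\}})\): each of the two particles contributes \(p(r)(k + \mathbb {1}_{\{w+r=0\}})\) once the symmetry \(p(-r)=p(r)\) is used, and the extra 1 is the attraction term when the jump brings the two particles together. It then tests detailed balance of \(\nu _k\) from (9) on a finite window of sites and shows that the plain counting measure fails. All function names are hypothetical.

```python
def difference_rate(w, r, p, k):
    """Assumed rate of the jump w -> w + r of the difference process.

    Both particles contribute p(r) * (k + 1{w + r == 0}) after using p(-r) == p(r);
    the extra 1 is the inclusion (attraction) term when the difference hits 0.
    """
    return 2.0 * p.get(r, 0.0) * (k + (1.0 if w + r == 0 else 0.0))


def nu_k(w, k):
    """Reversible measure (9): counting measure plus (1/k) * delta_0."""
    return 1.0 + (1.0 / k if w == 0 else 0.0)


def detailed_balance_holds(p, k, window=10, measure=nu_k, tol=1e-12):
    """Check measure(w) c(w, w+r) == measure(w+r) c(w+r, w) for all |w| <= window."""
    for w in range(-window, window + 1):
        for r in p:
            forward = measure(w, k) * difference_rate(w, r, p, k)
            backward = measure(w + r, k) * difference_rate(w + r, -r, p, k)
            if abs(forward - backward) > tol * max(1.0, abs(forward)):
                return False
    return True


p = {1: 0.5, -1: 0.5, 2: 0.25, -2: 0.25}
print(detailed_balance_holds(p, k=0.3))                            # True
print(detailed_balance_holds(p, k=0.3, measure=lambda w, k: 1.0))  # False
```

The counting measure fails exactly at the pairs \(\{0, r\}\): the rate into the origin carries the extra factor \(k+1\), which only the additional mass \(1/k\) at the origin can compensate, in line with the case analysis in the proof.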

Remark 2.3

Notice that in the case of a symmetric transition function the reversible measures \(\nu _k\) are independent of the range of the transition function.

2.4 Condensation and Coarsening

2.4.1 The Condensation Regime

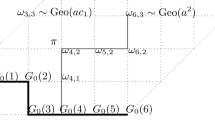

It has been shown in [13] that the inclusion process with generator (1) can exhibit a condensation transition in the limit of a vanishing diffusion parameter k. The parameter k controls the rate at which particles perform random walks, hence in the limit \(k \rightarrow 0\) the interaction due to inclusion becomes dominant which leads to condensation. The type of condensation in the SIP is different from other particle systems such as zero-range processes, see [14] and [15] for example, because in the SIP the critical density is zero.

In the symmetric inclusion process we can achieve condensation by the following rescaling:

1. First, by making the parameter k of order 1/N, more precisely

$$\begin{aligned} k_N = \tfrac{1}{\sqrt{2} \gamma N} \end{aligned}$$

for \(\gamma >0\).

2. Second, by rescaling space by 1/N.

3. Third, by rescaling time by a factor of order \(N^3\), more precisely \({N^3 \gamma }/{\sqrt{2}}\).

We refer to this simultaneous rescaling as the condensation regime. In this regime the generator (1) becomes

Notice that by splitting the generator (11) as follows:

where

and

we can indeed see two forces competing with each other. On the one hand, with a multiplicative factor \(\frac{N^2 }{2}\), we see the diffusive action of the generator (12). On the other hand, with the much larger factor \(\frac{N^3 \gamma }{\sqrt{2}} \), we see the action of the infinitesimal operator (13), which makes particles condense. Therefore the sum of the two generators has the flavor of a slow-fast system. This suggests that for the associated process we cannot expect convergence of the generators. Instead, as will become clear later, we will work with Dirichlet forms.
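The two prefactors can be checked directly from the choice of \(k_N\). The second line below also records the rescaled reversible measure (9) of the difference process on \(N^{-1}{\mathbb {Z}}\), under our assumption that the counting measure \(\mu \) is normalised by the mesh size 1/N:

$$\begin{aligned} \frac{N^3 \gamma }{\sqrt{2}}\, k_N&= \frac{N^3 \gamma }{\sqrt{2}} \cdot \frac{1}{\sqrt{2} \gamma N} = \frac{N^2}{2},\\ \frac{1}{N}\, \nu _{k_N}&= \frac{1}{N}\, \mu + \frac{1}{N k_N}\, \delta _0 = \frac{1}{N}\, \mu + \sqrt{2}\gamma \, \delta _0 . \end{aligned}$$

The first identity accounts for the factor \(N^2/2\) in front of (12). In the second, \(\frac{1}{N}\mu \) approximates Lebesgue measure while the mass at the origin stays fixed as \(N \rightarrow \infty \). This is consistent with a limiting reversible measure \(dx + \sqrt{2}\gamma \, \delta _0\), i.e. a sticky Brownian motion with stickiness parameter \(\sqrt{2}\gamma \) as in the Introduction and Sect. 3.1.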

2.4.2 Coarsening and the Density Fluctuation Field

It was found in [13] that in the condensation regime (when started from a homogeneous product measure with density \(\rho >0\)) sites are either empty with very high probability, or contain a large number of particles to match the fixed expected value of the density. We also know that in this regime the variance of the particle number is of order N and hence a rigorous hydrodynamical description of the coarsening process, by means of standard techniques, becomes inaccessible. Nevertheless, as it was already hinted in [1] at the level of the Fourier-Laplace transform, a rigorous description at the level of fluctuations might be possible. Therefore we introduce the density fluctuation in the condensation regime, i.e.

defined for any \(\varphi \) in the space of Schwartz functions:

Remark 2.4

Notice that the scaling in (14) differs from the standard setting of fluctuation fields, given for example in Chapter 11 of [12]. In our setting, due to the exploding variances (coarsening), it is necessary to rescale the fields by an additional factor of \(\tfrac{1}{\sqrt{N}}\).

3 Main Result: Time-Dependent Variances of the Density Field

Let us initialize the nearest-neighbor SIP configuration process from a spatially homogeneous product measure \(\nu \) parametrized by its mean \(\rho \) and such that

We have the following result concerning the time-dependent variances of the density field (14):

Theorem 3.1

Let \(\{ \eta _{\alpha (N,t)} : t \ge 0\}\) be the time-rescaled inclusion process, with infinitesimal generator (11), in configuration space. Consider the fluctuation field \({{\mathscr {X}}}_N(\eta ,\varphi , t)\) given by (14). Let \(\nu _{\rho }\) be an initial homogeneous product measure parametrized by its mean \(\rho \) and satisfying (16). Then the limiting time-dependent variance of the density field is given by:

where the error function is:

3.1 Heuristics of the Coarsening Process

In this section we give some intuition about the limiting behavior of the density field, as found in Theorem 3.1. More concretely, we show that Theorem 3.1 is consistent with the following “coarsening picture”. Under the condensation regime, and started from an initial homogeneous product measure \(\nu \) with density \(\rho \), over time large piles are created which are typically at distances of order N and of size \(\rho N\). The locations of these piles evolve on the appropriate time scale according to a diffusion process. If we focus on two piles, this diffusion process is of the form (X(t), Y(t)), where \(X(t)-Y(t)\) is a sticky Brownian motion \(B^{\text {sbm}}(t)\), and the sum \(X(t)+Y(t)\) is an independent Brownian motion \({\overline{B}}(t)\), time-changed via the inverse local time at the origin \(\tau (t)\) of the sticky Brownian motion \(B^{\text {sbm}}(t)\), namely \(X(t)+ Y(t)= {\overline{B}} (2t- \tau (t))\).

In the following we denote by \(p_t^{\text {sbm}}(x,dy)\) the transition kernel of a sticky Brownian motion with stickiness parameter \(\sqrt{2} \gamma \). This kernel consists of a first term that is absolutely continuous w.r.t. the Lebesgue measure and a second term that is a Dirac delta at the origin times the probability mass at zero. With a slight abuse of notation we will denote by

where \(p_t^{\text {sbm}}(x,y)\) for \(y\ne 0\) denotes the probability density of arriving at y at time t when started from x, and, for \(y=0\), the probability of being at zero at time t when started from x. See equation (2.15) in [16] for an explicit formula for (18).

Let us now make this heuristics more precise. Define the non-centered field

then one has, using that at every time \(t>0\) and \(x\in {\mathbb {Z}}\), \({\mathbb {E}}_\nu (\eta _t(x))=\rho \):

and

As we will see later in the proof of our main theorem, the RHS of (17) can be written as

and hence, we have that

where

In the second line we used the change of variables \(x = \tfrac{u+v}{2}\), \(y = \tfrac{u-v}{2}\).

We now want to describe a “macroscopic” time-dependent random field \({{\mathscr {Z}}}(\varphi , t)\) that is consistent with the limiting expectation and second moment computed in (20) and (21). This macroscopic field describes intuitively the positions of the piles formed from the initial homogeneous background.

For any fixed \(m\in {\mathbb {N}}\) we define a family of \({\mathbb {R}}^m\)-valued diffusion processes \(\{X^{{\mathbf {x}}}(t), \, t\ge 0\}_{{\mathbf {x}}\in {\mathbb {R}}^m}\) on a common probability space \(\Omega \). Here \({\mathbf {x}}=(x_1,\ldots , x_m)\) is the vector of initial positions: \(X^{\mathbf {x}}(0)={\mathbf {x}}\). We denote by \(X_i^{{\mathbf {x}}}(t)\), \(i=1,\ldots , m\), the i-th component of \(X^{{\mathbf {x}}}(t)=(X_1^{{\mathbf {x}}}(t),\ldots , X_m^{{\mathbf {x}}}(t))\), i.e., the trajectory started from \(x_i\), the i-th component of \({\mathbf {x}}\). Then, for any fixed \(\omega \in \Omega \), we define the macroscopic field \({{\mathscr {Z}}}^{(m)}(\cdot ,t)(\omega )\) acting on test functions \(\varphi :{\mathbb {R}}\rightarrow {\mathbb {R}}\) as follows:

We want to find the conditions on the probability law of the trajectories \(\{X^{{\mathbf {x}}}_i(t), \, t\ge 0\}\) and on their couplings that make the macroscopic field \({{\mathscr {Z}}}(\varphi , t)\) compatible with the limiting expectation (20) and second moment (21) of the microscopic field. We will see that, to achieve this, it is sufficient to define the laws of the one-component marginals \(\{X^{{\mathbf {x}}}_i(t), \, t\ge 0\}\) and of the two-component marginals \(\{(X^{{\mathbf {x}}}_i(t), X^{{\mathbf {x}}}_j(t)), \, t\ge 0\}\).

We assume that the family of processes \(\{X^{{\mathbf {x}}}(t), \, t\ge 0\}_{{\mathbf {x}}\in {\mathbb {R}}^m}\) is such that, for all \({\mathbf {x}}=(x_1,\ldots , x_m)\),

a) For all \(i=1,\ldots , m\), the marginal \(X^{{\mathbf {x}}}_i(t)\) is a Brownian motion with diffusion constant \(\chi /2 \) started from \(x_i\).

b) For all \(i,j=1,\ldots , m\), the pair \(\{(X^{{\mathbf {x}}}_i(t),X^{{\mathbf {x}}}_j(t)), \, t\ge 0\}\) is a couple of sticky Brownian motions starting from \((x_i,x_j)\), i.e., at any fixed time \(t\ge 0\) it is distributed in such a way that the difference-sum process is given by

$$\begin{aligned} (X^{{\mathbf {x}}}_i(t)-X^{{\mathbf {x}}}_j(t),X^{{\mathbf {x}}}_i(t)+X^{{\mathbf {x}}}_j(t))= (B^{\text {sbm}, x_i-x_j}(t),{\bar{B}}^{x_i+x_j}(2t-\tau (t))). \end{aligned}$$(24)

Here \(B^{\text {sbm}, x_i-x_j}(t)\) is a sticky Brownian motion with stickiness at 0, stickiness parameter \(\sqrt{2} \gamma \) and diffusion constant \(\chi \), started from \(x_i-x_j\); \(\tau (t)\) is the corresponding local time-change defined in (113); and \({\bar{B}}^{x_i+x_j}\) is another Brownian motion with diffusion constant \(\chi \), started from \(x_i+x_j\) and independent of \(B^{\text {sbm}}(t)\).

Remark 3.1

For an example of a coupling satisfying requirements a) and b) above, we refer the reader to the family of processes introduced in [17].

We will see that, for any fixed m, the field \({{\mathscr {Z}}}^{(m)}(\varphi , t)\) reproduces correctly the first and second moments of (20) and (21).

For the expectation we have, using item a) above

where \(p_t^{\text {bm}}(\cdot , \cdot )\) is the transition kernel of Brownian motion, and the last identity follows from the symmetry: \(p_t^{\text {bm}}(x_i,x)=p_t^{\text {bm}}(x,x_i)\). Notice that indeed the RHS of (25) coincides with (20).

On the other hand, for the second moment, using item b) above

Then, from our assumptions,

Here \(p_t(x_i,y_j;dx,dy)\) is the transition probability kernel of the pair \((X^{{\mathbf {x}}}_i(t),X^{{\mathbf {y}}}_j(t))\). Denoting now by \({\tilde{p}}_t(v_0,u_0;dv,du)\) the transition probability kernel of the pair \((X_i^{{\mathbf {x}}}(t)-X^{{\mathbf {y}}}_j(t),X^{\mathbf {x}}_i(t)+X^{\mathbf {y}}_j(t))\), and by \(\pi _t\) the law of the time change \(\tau (t)\) at time t, we have

(where, for \(i=1,2\), \( {\tilde{p}}^{(i)}_t(\cdot ,\cdot | s)\) are the transition probability density functions of the Brownian motions B(t) and \({\bar{B}}(t)\), respectively, conditioned on \(\tau (t)=s\)). This factorization holds because, by (24), the difference and sum processes are independent conditionally on the realization \(s=\tau (t)\). Now we have that

hence

where the second identity follows from the symmetry of \(p^{\text {bm}}(\cdot ,\cdot )\). Then, from the change of variables \(v_0:=x_i-y_j\), \(u_0=x_i+y_j\), and \(v=x-y\), \(u=x+y\), and since \(dv_0\,du_0=2 dx_i\,dy_j\), it follows that

As a consequence

which is exactly the same expression as (21).
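For completeness, the factor 2 in \(dv_0\,du_0=2\, dx_i\,dy_j\) (and the factor 1/2 behind the change of variables \(x = \tfrac{u+v}{2}\), \(y = \tfrac{u-v}{2}\) used earlier in Sect. 3.1) is the absolute Jacobian determinant of the corresponding linear maps:

$$\begin{aligned} \left| \det \frac{\partial (v_0,u_0)}{\partial (x_i,y_j)} \right| = \left| \det \begin{pmatrix} 1 & -1 \\ 1 & 1 \end{pmatrix} \right| = 2, \qquad \left| \det \frac{\partial (x,y)}{\partial (u,v)} \right| = \left| \det \begin{pmatrix} \tfrac{1}{2} & \tfrac{1}{2} \\ \tfrac{1}{2} & -\tfrac{1}{2} \end{pmatrix} \right| = \frac{1}{2}. \end{aligned}$$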

Remark 3.2

In order to match the first two moments of the limiting density field, it suffices to take any \(m \ge 2\) in (23). We believe that in order to match all moments up to order n we need \(m \ge n\), and so the limiting field would correspond to taking the limit \(m \rightarrow \infty \). However, because in the current paper we can only deal with two particles, we cannot say more about higher moments.

4 Basic Tools

Before proving the main result, in this section we introduce some notions and tools that will be useful in the proof of Theorem 3.1. These notions include the concept of Dirichlet forms and the notion of convergence of Dirichlet forms that we will use: Mosco convergence of Dirichlet forms. The reader familiar with these notions can skip this section and move directly to Sect. 5.

4.1 Dirichlet Forms

A Dirichlet form on a Hilbert space is a symmetric form which is closed and Markovian. The importance of Dirichlet forms in the theory of Markov processes is that the Markovian nature of the former corresponds to the Markovian properties of the associated semigroups and resolvents on the same space. Related to the present work, probably one of the best examples of this connection is the work of Umberto Mosco. In [9] Mosco introduced a type of convergence of quadratic forms, Mosco convergence, which is equivalent to strong convergence of the corresponding semigroups. Before defining this notion of convergence, we recall the precise definition of a Dirichlet form.

Definition 4.1

(Dirichlet forms) Let H be a Hilbert space of the form \(L^2(E;m)\) for some \(\sigma \)-finite measure space \((E,{{\mathscr {B}}}(E),m)\), endowed with the inner product \( \langle \cdot ,\cdot \rangle _H\). A Dirichlet form \({\mathscr {E}}(f,g)\), or \(({\mathscr {E}},D({\mathscr {E}}))\), on H is a nonnegative definite symmetric bilinear form such that the following conditions hold:

1. The domain \(D({\mathscr {E}})\) is a dense linear subspace of H.

2. The form is closed, i.e., the domain \( D({\mathscr {E}})\) is complete with respect to the norm induced by the inner product

$$\begin{aligned} {\mathscr {E}}_1 (f,g) = {\mathscr {E}}(f,g) + \langle f,g \rangle _H . \end{aligned}$$

3. The unit contraction operates on \({\mathscr {E}}\), i.e., for \( f \in D({\mathscr {E}})\), if we set \( g := ( 0 \vee f ) \wedge 1\), then \(g \in D({\mathscr {E}})\) and \({\mathscr {E}}(g,g) \le {\mathscr {E}}(f,f)\).

When the third condition is satisfied we say that the form \({\mathscr {E}}\) is Markovian. We refer the reader to [18] for a comprehensive introduction to the subject of Dirichlet forms. For the purposes of this work, the key property of Dirichlet forms is that there exists a natural one-to-one correspondence between Dirichlet forms on H and the (nonpositive, self-adjoint) generators of symmetric Markov semigroups on H (cf. Appendix 6.2.2). In other words, to a reversible Markov process we can always associate a Dirichlet form, which is given by

$$\begin{aligned} {\mathscr {E}}(f,g) = - \langle f, L g \rangle _H, \qquad f \in D({\mathscr {E}}),\ g \in D(L), \end{aligned}$$

where the operator L is the infinitesimal generator of the corresponding symmetric Markov process. As an example of this relation, consider Brownian motion on \({\mathbb {R}}\), whose infinitesimal generator is given by the Laplacian (up to a constant factor that depends on the normalization). Taking \(L = \Delta \), its Dirichlet form is

$$\begin{aligned} {\mathscr {E}}(f,g) = \int _{{\mathbb {R}}} f'(x)\, g'(x)\, dx, \qquad D({\mathscr {E}}) = H^1({\mathbb {R}}), \end{aligned}$$

i.e., its domain is the Sobolev space of order 1.
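A finite-dimensional sketch of this correspondence, and of condition 3 of Definition 4.1, can be built from the difference process. It assumes the jump rates \(2p(r)(k + \mathbb {1}_{\{w+r=0\}})\) for \(w \rightarrow w+r\) (our reading of the difference generator in Sect. 2.3) and truncates \({\mathbb {Z}}\) to a window \(\{-M,\ldots ,M\}\) by suppressing jumps that would leave it. This truncation is an illustrative choice that keeps the chain reversible with respect to \(\nu _k\). The code checks that \(-\langle f, Lf\rangle _{\nu _k}\) coincides with the edge sum \(\tfrac{1}{2}\sum \nu _k(w) L(w,w')(f(w')-f(w))^2\), and that the unit contraction \(g=(0\vee f)\wedge 1\) does not increase the form. All names are hypothetical.

```python
import numpy as np


def difference_generator(M, p, k):
    """Generator matrix of the difference process restricted to {-M, ..., M}.

    Assumed rates: w -> w + r at rate 2 p(r) (k + 1{w + r == 0}); jumps that would
    leave the window are simply suppressed (an illustrative truncation).
    """
    sites = np.arange(-M, M + 1)
    Q = np.zeros((len(sites), len(sites)))
    for a, w in enumerate(sites):
        for r, pr in p.items():
            b = a + r  # index shift equals site shift on consecutive sites
            if 0 <= b < len(sites):
                Q[a, b] += 2.0 * pr * (k + (1.0 if w + r == 0 else 0.0))
        Q[a, a] = -Q[a].sum()  # rows of a generator sum to zero
    return sites, Q


def nu_weights(sites, k):
    """Weights of nu_k = counting measure + (1/k) delta_0 on the window."""
    return 1.0 + (sites == 0) / k


def dirichlet_form(f, g, sites, Q, k):
    """E(f, g) = -<f, Q g> in L^2(nu_k)."""
    return -np.sum(nu_weights(sites, k) * f * (Q @ g))


def edge_form(f, sites, Q, k):
    """The same quantity as 1/2 * sum_{w != w'} nu_k(w) Q(w, w') (f(w') - f(w))^2."""
    off_diagonal = Q - np.diag(np.diag(Q))
    increments = f[None, :] - f[:, None]  # increments[a, b] = f(b) - f(a)
    return 0.5 * np.sum(nu_weights(sites, k)[:, None] * off_diagonal * increments ** 2)


sites, Q = difference_generator(M=15, p={1: 0.5, -1: 0.5, 2: 0.25, -2: 0.25}, k=0.2)
f = np.random.default_rng(0).normal(scale=2.0, size=len(sites))
g = np.clip(f, 0.0, 1.0)  # unit contraction (0 v f) ^ 1
print(dirichlet_form(f, f, sites, Q, 0.2), edge_form(f, sites, Q, 0.2))
print(dirichlet_form(g, g, sites, Q, 0.2) <= dirichlet_form(f, f, sites, Q, 0.2))
```

The equality of the two expressions uses reversibility (the matrix \(\nu _k(w) Q(w,w')\) is symmetric). The contraction inequality then follows because every edge term has a nonnegative weight and \(|g(w')-g(w)| \le |f(w')-f(w)|\).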

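From now on we will mostly deal with the quadratic form \( {\mathscr {E}}(f,f)\), which we can view as a functional defined on the entire Hilbert space H by setting

$$\begin{aligned} {\mathscr {E}}(f) = {\left\{ \begin{array}{ll} {\mathscr {E}}(f,f), & f \in D({\mathscr {E}}),\\ +\infty , & f \in H \setminus D({\mathscr {E}}), \end{array}\right. } \end{aligned}$$

which is lower semicontinuous if and only if the form \(({\mathscr {E}}, D({\mathscr {E}})) \) is closed.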

4.2 Mosco Convergence

We now introduce the framework needed to define properly the mode of convergence we are interested in. The idea is that we want to approximate a Dirichlet form on the continuum by a sequence of Dirichlet forms indexed by a scaling parameter N. In this context, the problem with the convergence introduced in [9] is that it requires all forms to be defined on the same Hilbert space, whereas the approximating Dirichlet forms live on different Hilbert spaces. The work [10] deals with this issue. We also refer to [19] for a more complete treatment and a further generalization to infinite-dimensional spaces. In order to introduce this mode of convergence, we first define the concept of convergence of Hilbert spaces.

4.3 Convergence of Hilbert Spaces

We start with the definition of the notion of convergence of spaces:

Definition 4.2

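(Convergence of Hilbert spaces) A sequence of Hilbert spaces \(\{ H_N \}_{N \ge 0}\) converges to a Hilbert space H if there exist a dense subset \(C \subseteq H\) and a family of linear maps \(\{ \Phi _N : C \rightarrow H_N \}_N\) such that:

$$\begin{aligned} \lim _{N \rightarrow \infty } \Vert \Phi _N f \Vert _{H_N} = \Vert f \Vert _H \qquad \text {for all } f \in C. \end{aligned}$$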

It is also necessary to introduce the concepts of strong and weak convergence of vectors living on a convergent sequence of Hilbert spaces. Hence in Definitions 4.3, 4.4 and 4.6 we assume that the spaces \(\{ H_N \}_{N \ge 0}\) converge to the space H, in the sense we just defined, with the dense set \(C \subset H\) and the sequence of operators \(\{ \Phi _N : C \rightarrow H_N \}_N\) witnessing the convergence.
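A toy instance of Definition 4.2 shows why the limit space carries an atom at the origin. For illustration only, we assume \(H_N = L^2(N^{-1}{\mathbb {Z}}, N^{-1}\nu _{k_N})\) with \(k_N = 1/(\sqrt{2}\gamma N)\), \(H = L^2({\mathbb {R}}, dx + \sqrt{2}\gamma \delta _0)\), C a class of smooth, rapidly decaying functions, and \(\Phi _N\) the restriction of f to the grid. The maps actually used in this work have to be chosen more carefully (see the Introduction) and may differ. Under these assumptions \(\Vert \Phi _N f\Vert ^2_{H_N}\) is a Riemann sum plus the fixed atom \(\sqrt{2}\gamma f(0)^2\), so it converges to \(\Vert f\Vert ^2_H\). The function names are hypothetical.

```python
import math


def phi_n_norm_sq(f, N, gamma, cutoff=10.0):
    """||Phi_N f||^2 in H_N = L^2(N^{-1}Z, (1/N) nu_{k_N}), with Phi_N f = f on the grid.

    With k_N = 1/(sqrt(2) gamma N), the site w/N carries weight
    (1/N) * (1 + 1{w == 0} / k_N) = 1/N + sqrt(2) * gamma * 1{w == 0}.
    Sites with |w/N| > cutoff are ignored (f is assumed negligible there).
    """
    k_N = 1.0 / (math.sqrt(2.0) * gamma * N)
    total = 0.0
    for w in range(-int(cutoff * N), int(cutoff * N) + 1):
        weight = (1.0 + (1.0 / k_N if w == 0 else 0.0)) / N
        total += weight * f(w / N) ** 2
    return total


def limit_norm_sq(integral_of_f_sq, f_at_zero, gamma):
    """||f||^2 in H = L^2(R, dx + sqrt(2) gamma delta_0)."""
    return integral_of_f_sq + math.sqrt(2.0) * gamma * f_at_zero ** 2


def gaussian(x):
    """Test function with int f^2 dx = sqrt(pi / 2) and f(0) = 1."""
    return math.exp(-x * x)


target = limit_norm_sq(math.sqrt(math.pi / 2.0), 1.0, gamma=1.0)
for N in (2, 5, 20, 100):
    print(N, phi_n_norm_sq(gaussian, N, gamma=1.0), target)
```

For a Gaussian the Riemann sum converges very quickly, so already small N reproduce the limit closely. The point of the example is the term \(\sqrt{2}\gamma f(0)^2\), which survives only because the weight \(1/k_N\) at the origin grows like N.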

Definition 4.3

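(Strong convergence on Hilbert spaces) A sequence of vectors \(\{ f_N \}\) with \(f_N\) in \(H_N\) is said to converge strongly to a vector \(f \in H\) if there exists a sequence \(\{ \tilde{f}_M \} \subset C\) such that

$$\begin{aligned} \lim _{M \rightarrow \infty } \Vert \tilde{f}_M - f \Vert _H = 0 \end{aligned}$$

and

$$\begin{aligned} \lim _{M \rightarrow \infty } \limsup _{N \rightarrow \infty } \Vert \Phi _N \tilde{f}_M - f_N \Vert _{H_N} = 0. \end{aligned}$$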

Definition 4.4

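(Weak convergence on Hilbert spaces) A sequence of vectors \(\{ f_N \}\) with \(f_N \in H_N\) is said to converge weakly to a vector f in a Hilbert space H if

$$\begin{aligned} \lim _{N \rightarrow \infty } \langle f_N, g_N \rangle _{H_N} = \langle f, g \rangle _H \end{aligned}$$

for every sequence \(\{g_N \}\) strongly convergent to \(g \in H\).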

Remark 4.1

Notice that, as expected, strong convergence implies weak convergence, and, for any \(f \in C\), the sequence \(\Phi _N f \) strongly-converges to f.

Given these notions of convergence, we can also introduce related notions of convergence for operators. More precisely, if we denote by L(H) the set of all bounded linear operators on H, we have the following definition:

Definition 4.5

(Convergence of bounded operators on Hilbert spaces) A sequence of bounded operators \(\{ T_N \}\) with \(T_N \in L(H_N)\) is said to strongly (resp. weakly) converge to an operator T in L(H) if for every sequence \(\{ f_N \}\), \(f_N \in H_N\), strongly (resp. weakly) converging to \(f \in H\), the sequence \(\{ T_N f_N \}\) strongly (resp. weakly) converges to Tf.

We are now ready to introduce Mosco convergence.

4.4 Definition of Mosco Convergence

In this section we assume the Hilbert convergence of a sequence of Hilbert spaces \(\{ H_N \}_N\) to a space H.

Definition 4.6

(Mosco convergence) A sequence of Dirichlet forms \(\{ ({\mathscr {E}}_N, D({\mathscr {E}}_N))\}_N \) on Hilbert spaces \(H_N\) Mosco-converges to a Dirichlet form \(({\mathscr {E}}, D({\mathscr {E}})) \) in some Hilbert space H if:

- Mosco I: For every sequence of \(f_N \in H_N\) weakly converging to f in H,

$$\begin{aligned} {\mathscr {E}}( f ) \le \liminf _{N \rightarrow \infty } {\mathscr {E}}_N ( f_N ). \end{aligned}$$(36)

- Mosco II: For every \(f \in H\), there exists a sequence \( f_N \in H_N\) strongly converging to f in H such that

$$\begin{aligned} {\mathscr {E}}( f) = \lim _{N \rightarrow \infty } {\mathscr {E}}_N ( f_N ). \end{aligned}$$(37)

The following theorem from [10], which relates Mosco convergence with convergence of semigroups and resolvents, is one of the main ingredients of our work:

Theorem 4.1

Let \(\{ ({\mathscr {E}}_N, D({\mathscr {E}}_N))\}_N \) be a sequence of Dirichlet forms on Hilbert spaces \(H_N\) and let \(({\mathscr {E}}, D({\mathscr {E}})) \) be a Dirichlet form in some Hilbert space H. The following statements are equivalent:

1. \(\{ ({\mathscr {E}}_N, D({\mathscr {E}}_N))\}_N \) Mosco-converges to \(({\mathscr {E}}, D({\mathscr {E}}))\).
2. The associated sequence of semigroups \(\{ T_{N} (t) \}_N \) strongly converges to the semigroup T(t) for every \(t >0\).
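Theorem 4.1 can be illustrated in the simplest case treated in Appendix 6.3, a single random walker converging to Brownian motion. The sketch below rests on assumptions of our own, not on the paper's normalization: nearest-neighbour jumps at rate 1 to each side, so that the rescaled generator is \(N^2\) times the discrete Laplacian and the limit has generator \(d^2/dx^2\); a lattice truncated to \([-6,6]\) with killing at the ends; and the test function \(f(x)=e^{-x^2}\), for which \(T_t f(x) = e^{-x^2/(1+4t)}/\sqrt{1+4t}\). It compares \(T_N(t)f\) with \(T_t f\) at the grid points, which is a pointwise sanity check rather than the Hilbert-space strong convergence of Definition 4.5.

```python
import numpy as np


def rw_semigroup(f_vals: np.ndarray, n: int, t: float) -> np.ndarray:
    """exp(t L_N) f on the grid, with L_N = n^2 * (discrete Laplacian), killed at both ends."""
    m = f_vals.size
    lap = -2.0 * np.eye(m) + np.eye(m, k=1) + np.eye(m, k=-1)
    gen = n**2 * lap                         # rate-1 jumps to each neighbour, time sped up by n^2
    evals, evecs = np.linalg.eigh(gen)       # gen is symmetric, so exp(t * gen) follows from eigh
    return evecs @ (np.exp(t * evals) * (evecs.T @ f_vals))


def heat_semigroup_gaussian(x: np.ndarray, t: float) -> np.ndarray:
    """Exact T_t f for f(x) = exp(-x^2) when the limiting generator is d^2/dx^2."""
    return np.exp(-x**2 / (1.0 + 4.0 * t)) / np.sqrt(1.0 + 4.0 * t)


def sup_error(n: int, t: float = 0.1, half_width: int = 6) -> float:
    """Largest gap between T_N(t) f and T_t f over the grid (1/n)Z intersected with [-L, L]."""
    x = np.arange(-half_width * n, half_width * n + 1) / n
    approx = rw_semigroup(np.exp(-x**2), n, t)
    return float(np.max(np.abs(approx - heat_semigroup_gaussian(x, t))))


for n in (5, 10, 20):
    print(n, sup_error(n))
```

The gap decreases roughly like \(N^{-2}\), the order of the truncation error of the discrete Laplacian.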

4.5 Mosco Convergence and Dual Forms

The difficulty in proving condition Mosco I lies in the fact that (36) has to hold for all weakly convergent sequences, i.e., we cannot choose a particular class of sequences.

In this section we show how one can avoid this difficulty by passing to the dual form. Indeed, we prove that Mosco I for the original form is implied by a condition similar to Mosco II for the dual form (Assumption 1).

4.5.1 Mosco I

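Consider a sequence of Dirichlet forms \(( {\mathscr {E}}_N, D({\mathscr {E}}_N) )_N\) on Hilbert spaces \(H_N\), and an additional quadratic form \(({\mathscr {E}}, D({\mathscr {E}}))\) on a Hilbert space H. We assume convergence of Hilbert spaces, i.e. that there exists a dense set \(C\subset H\) and a sequence of maps \(\Phi _N: C\rightarrow H_N\) such that \(\lim _{N\rightarrow \infty } \Vert \Phi _N f\Vert _{H_N} =\Vert f\Vert _H\). Extending each form by \({\mathscr {E}}(f)=+\infty \) for \(f\notin D({\mathscr {E}})\), the dual quadratic form is defined via

$$\begin{aligned} {\mathscr {E}}^*(g) := \sup _{f \in H} \left\{ \langle f, g\rangle _H - {\mathscr {E}}(f) \right\} , \qquad g \in H, \end{aligned}$$

and analogously \({\mathscr {E}}_N^*\) on \(H_N\).

Since the form is convex and lower semicontinuous (the latter being equivalent to its closedness), the Fenchel–Moreau theorem shows that this transform is involutive, i.e., \(({\mathscr {E}}^*)^*={\mathscr {E}}\). We now assume that the following holds: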

Assumption 1

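For all \( g \in H\), there exists a sequence \( g_N \in H_N \) strongly converging to g such that

$$\begin{aligned} \lim _{N\rightarrow \infty } {\mathscr {E}}_N^*(g_N) = {\mathscr {E}}^*(g). \end{aligned}$$(38)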

We show now that, under Assumption 1, the first condition of Mosco convergence is satisfied.

Proposition 4.1

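Assumption 1 implies Mosco I, i.e.

$$\begin{aligned} {\mathscr {E}}(f) \le \liminf _{N\rightarrow \infty } {\mathscr {E}}_N(f_N) \end{aligned}$$

for all \(f_N \in H_N \) weakly converging to \(f \in H\).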

Proof

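Let \(f_N\rightarrow f\) weakly. By Assumption 1, for any \(g\in H\) there exists a sequence \(g_N \in H_N\) such that \( g_N\rightarrow g\) strongly and (38) is satisfied. By Fenchel's inequality, which follows directly from the definition of the dual form, we obtain

$$\begin{aligned} {\mathscr {E}}_N(f_N) \ge \langle f_N, g_N\rangle _{H_N} - {\mathscr {E}}_N^*(g_N). \end{aligned}$$

By the fact that \(f_N \rightarrow f\) weakly, \( g_N\rightarrow g\) strongly, and (38), we obtain

$$\begin{aligned} \liminf _{N\rightarrow \infty } {\mathscr {E}}_N(f_N) \ge \langle f, g\rangle _H - {\mathscr {E}}^*(g). \end{aligned}$$

Since this holds for all \(g \in H\), we can take the supremum over H and use the involutive nature of the form:

$$\begin{aligned} \liminf _{N\rightarrow \infty } {\mathscr {E}}_N(f_N) \ge \sup _{g\in H}\left\{ \langle f, g\rangle _H - {\mathscr {E}}^*(g)\right\} = ({\mathscr {E}}^*)^*(f) = {\mathscr {E}}(f). \end{aligned}$$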

This concludes the proof. \(\square \)

In other words, in order to prove condition Mosco I all we have to show is that Assumption 1 is satisfied.
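The duality used above can be checked in a finite-dimensional toy setting chosen here for illustration: \(H={\mathbb {R}}^d\) with the standard inner product and \({\mathscr {E}}(f)=\langle f, Af\rangle \) for a symmetric positive definite matrix A, a stand-in for a Dirichlet form. Maximising \(\langle f,g\rangle - \langle f,Af\rangle \) over f gives the maximiser \(f=A^{-1}g/2\) and \({\mathscr {E}}^*(g)=\frac{1}{4}\langle g, A^{-1}g\rangle \). The NumPy sketch below verifies this closed form, Fenchel's inequality, and the involution \(({\mathscr {E}}^*)^*={\mathscr {E}}\); the matrix and vectors are random and purely hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
d = 4
B = rng.standard_normal((d, d))
A = B @ B.T + d * np.eye(d)              # symmetric positive definite matrix


def energy(f: np.ndarray) -> float:
    """Toy quadratic form E(f) = <f, A f> on H = R^d."""
    return float(f @ A @ f)


def dual_energy(g: np.ndarray) -> float:
    """Closed form of E*(g) = sup_f { <f, g> - E(f) } = <g, A^{-1} g> / 4."""
    return float(0.25 * g @ np.linalg.solve(A, g))


# The supremum defining E*(g) is attained at f = A^{-1} g / 2.
g = rng.standard_normal(d)
f_opt = 0.5 * np.linalg.solve(A, g)
print(f_opt @ g - energy(f_opt), dual_energy(g))

# Fenchel's inequality <f, h> <= E(f) + E*(h) on random pairs.
fenchel_ok = all(
    f @ h <= energy(f) + dual_energy(h) + 1e-9
    for f, h in (rng.standard_normal((2, d)) for _ in range(1000))
)

# Involution: sup_g { <f, g> - E*(g) } is attained at g = 2 A f and equals E(f).
f = rng.standard_normal(d)
g_opt = 2.0 * A @ f
print(fenchel_ok, f @ g_opt - dual_energy(g_opt), energy(f))
```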

4.5.2 Mosco II

For the second condition, we recall a result from [20] in which a weaker notion of Mosco convergence is proposed. In this new notion, condition Mosco I is unchanged whereas condition Mosco II is relaxed to functions living in a core of the limiting Dirichlet form. Let us first introduce the concept of core:

Definition 4.7

Let \(({\mathscr {E}},D({\mathscr {E}}))\) and H be as in Definition 4.1. A set \(K \subset D({\mathscr {E}}) \cap C_c(E)\) is said to be a core of \(({\mathscr {E}},D({\mathscr {E}}))\) if it is dense both in \((D({\mathscr {E}}),\left\Vert \cdot \right\Vert _{{\mathscr {E}}_1})\) and \((C_c(E),\left\Vert \cdot \right\Vert _{\infty })\), where \(C_c(E)\) denotes the set of continuous functions with compact support.

We now state the weaker notion from [20]:

Assumption 2

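There exists a core \(K \subset D({\mathscr {E}})\) of \({\mathscr {E}}\) such that, for every \(f \in K\), there exists a sequence \(\{ f_N \}\) strongly converging to f such that

$$\begin{aligned} \lim _{N\rightarrow \infty } {\mathscr {E}}_N(f_N) = {\mathscr {E}}(f). \end{aligned}$$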

Despite being weaker, this relaxed notion also implies strong convergence of semigroups, as shown by the authors of [20]. We refer the reader to Section 3 of [20] for details of the proof.

5 Proof of Main Result

Our main theorem, Theorem 3.1, is a consequence of self-duality and of Theorem 5.1 below, which concerns the convergence in the Mosco sense of the sequence of Dirichlet forms associated to the difference process to the Dirichlet form corresponding to the so-called two-sided sticky Brownian motion (see the Appendix for details on this process). Before stating Theorem 5.1, let us introduce the relevant setting for this convergence:

The convergence of the difference process to sticky Brownian motion takes place in the condensation regime introduced earlier in Sect. 2.4.1. In this regime the corresponding scaled difference process is given by:

with infinitesimal generator

for \(w \in \frac{1}{N} {\mathbb {Z}}\), with

Notice that by Proposition 2.2 the difference processes are reversible with respect to the measures \(\nu _{\gamma ,N} \) given by

and by (29) the corresponding sequence of Dirichlet forms is given by

Remark 5.1

The choice of the reversible measures \(\nu _{\gamma ,N}\) determines the sequence of approximating Hilbert spaces given by \(H_N^{\text {sip}} :=L^2( \frac{1}{N}{\mathbb {Z}}, \nu _{\gamma ,N})\), \(N\in {\mathbb {N}}\). Here for \(f, g \in H_N^{\text {sip}}\) their inner product is given by

where

is the inner product of Sect. 6.3.

On the other hand, the two-sided sticky Brownian motion with stickiness parameter \(\gamma >0\) can be described in terms of the Dirichlet form \(\left( {\mathscr {E}}_{\text {sbm}}, D({\mathscr {E}}_{\text {sbm}}) \right) \) given by

whose domain is

Convergence of Hilbert spaces. As we already mentioned in Remark 5.1, by choosing the reversible measures \(\nu _{\gamma ,N}\) we have determined the convergent sequence of Hilbert spaces and, as a consequence, we have also set the limiting Hilbert space \(H^{\text {sbm}} \) to be \(L^2( {\mathbb {R}}, {\bar{\nu }})\) with \({\bar{\nu }}\) as in (48). From the regularity of this measure, by Theorem 13.21 in [21] and standard arguments, the set \(C_k^{\infty } ({\mathbb {R}})\) of smooth compactly supported test functions is dense in \(L^2( {\mathbb {R}}, {\bar{\nu }})\). Moreover, the set \(C^0 ( {\mathbb {R}}\setminus \{ 0\})\) of all functions that are continuous on \({\mathbb {R}}\setminus \{ 0 \}\) and have a finite value at 0 is also dense in \(L^2( {\mathbb {R}}, {\bar{\nu }})\).

Before stating our convergence result, we have to define the right “embedding” operators \(\{ \Phi _N \}_{N \ge 1}\) (cf. Definition 4.2), chosen so as to guarantee not only the convergence of Hilbert spaces \(H_N \rightarrow H\) but also Mosco convergence. We define these operators as follows:

Proposition 5.1

The sequence of spaces \(H_N^{\text {sip}}=L^2( \frac{1}{N}{\mathbb {Z}}, \nu _{\gamma ,N} )\), \(N\in {\mathbb {N}}\), converges, in the sense of Definition 4.2, to the space \(H^{\text {sbm}} =L^2( {\mathbb {R}}, {\bar{\nu }})\).

Proof

The statement follows from the definition of \(\{ \Phi _N \}_{N \ge 1}\). \(\square \)

5.1 Mosco Convergence of the Difference Process

In the context described above, we have the following theorem:

Theorem 5.1

The sequence of Dirichlet forms \(\{ {\mathscr {E}}_N, D({\mathscr {E}}_N)\}_{N \ge 1}\) given by (45) converges in the Mosco sense to the form \(\left( {\mathscr {E}}_{\text {sbm}}, D({\mathscr {E}}_{\text {sbm}}) \right) \) given by (47) and (48). As a consequence, if we denote by \(T_N(t)\) and \(T_t\) the semigroups associated to the difference process \(w_N(t)\) and the sticky Brownian motion \(B_t^{\text {sbm}}\), we have that \(T_N(t) \rightarrow T_t\) strongly in the sense of Definition 4.5.

In the following section we show how to use this result to prove Theorem 3.1. The proof of Theorem 5.1 is postponed to Sect. 5.3.

5.2 Proof of Main Theorem: Theorem 3.1

We denote by \(T_N(t)\) and \(T_t\) the semigroups associated to the difference process \(w_N(t)\) and the sticky Brownian motion \(B_t^{\text {sbm}}\). We will see that the strong convergence of semigroups implies the convergence of the probability mass functions at 0.

Proposition 5.2

For all \(t >0\) denote by \(p_{t}^N (w, 0)\) the probability that the difference process started from \(w \in \frac{1}{N} {\mathbb {Z}}\) is at 0 at time t. Then the sequence \(p_{t}^N (\cdot , 0)\) converges strongly to \(p_t^{\text {sbm}}(\cdot ,0)\) with respect to \(H_N^{\text {sip}}\)-Hilbert convergence.

Proof

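From the fact that \(\{ T_N(t) \}_{N \ge 1}\) converges strongly to \(T_t\), we have that for all \(f_N\) strongly converging to f, the sequence \(\{ T_N(t) f_N \}_{N \ge 1}\), with \(T_N(t) f_N \in H_N^{\text {sip}}\), converges strongly to \(T_t f\). In particular, for \(f_N = \mathbb {1}_{\{0\}}\), which converges strongly to \(\mathbb {1}_{\{0\}}\) by Proposition 5.6, we have that the sequence

$$\begin{aligned} T_N(t)\mathbb {1}_{\{0\}}(w) = p_t^N(w,0), \qquad w \in \tfrac{1}{N}{\mathbb {Z}}, \end{aligned}$$

converges strongly to

$$\begin{aligned} T_t\mathbb {1}_{\{0\}}(w) = {\mathbb {E}}_w^{\text {sbm}}\left[ \mathbb {1}_{\{0\}}(B_t^{\text {sbm}})\right] = p_t^{\text {sbm}}(w,0), \end{aligned}$$

where \({\mathbb {E}}_w^{\text {sbm}}\) denotes expectation with respect to the sticky Brownian motion started at w. \(\square \)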

Remark 5.2

Although Proposition 5.2 is not a pointwise statement, we can still say something more when we start our process at zero:

$$\begin{aligned} \lim _{N\rightarrow \infty } p_t^N(0,0) = p_t^{\text {sbm}}(0,0). \end{aligned}$$

The reason is that the strongly converging sequence \(p_{t}^N (\cdot , 0)\) is in particular weakly converging, so we can pair it with the strongly converging sequence \(f_N = \mathbb {1}_{\{0\}}\) (cf. Proposition 5.7).

Proof of Theorem 3.1

Let \(\rho \) and \(\sigma \) be given by (6); then we can write

where, from Proposition 5.1 in [1], using self-duality, we can simplify the integral above as

Notice that the expectation in the RHS of (54) can be re-written in terms of our difference process as follows:

where \(p_{\alpha (N, t)}\) is the transition function \(p_t^N\) under the space-time rescaling defined in (14), since in the condensation regime we have, as in Sect. 2.4.1, \(k_N = \frac{1}{\sqrt{2} \gamma N}\). We then obtain:

At this point we have three non-vanishing contributions:

where we already know:

and, by Remark 5.2,

To analyze the first contribution we use the change of variables \(u = x+y\), \(v = x-y\) from which we obtain:

Hence by (46), \(C_N^{(1)}\) can be re-written as

with \(F_N\) given by

We then have the following proposition:

Proposition 5.3

The sequence of functions \(\{ F_N \}_{N \ge 1} \in H_N^{\text {sip}}\), given by (61), converges strongly to \(F \in H^{\text {sbm}}\) given by

Proof

For simplicity, let us deal with the case \(\varphi \in C_k^{\infty }({\mathbb {R}})\). The case \(\varphi \in {{\mathscr {S}}}({\mathbb {R}}) \setminus C_k^{\infty }({\mathbb {R}})\) can be handled by standard approximations using a combination of truncation and convolution with a kernel (see for example the proof of Proposition 6.1 in the Appendix).

In the language of Definition 4.3, we set the following sequence of reference functions:

for all \(x \in {\mathbb {R}}\).

Then we have:

where in the last line we used the convergence

Moreover, a similar expansion (replacing integrals by sums) gives:

Expanding the square we obtain:

Therefore, in order to conclude

we can use (65) and the convergence:

\(\square \)

From the strong convergence \(F_N \rightarrow F\), Proposition 5.2, and Remark 5.2 we conclude

Substituting the limits of the contributions we obtain

where in the third equality we used the reversibility of SBM with respect to the measure \( {\hat{\nu }}(dv)=dv + \sqrt{2} \gamma \delta _0(dv)\). Then (17) follows, after a change of variable, using the expression (2.15) given in [16] for the transition probability measure \(p_t^{\text {sbm}}(0,dv)\) of the sticky Brownian motion (with \(\theta = \sqrt{2}\gamma \)), namely

This concludes the proof. \(\square \)

Remark 5.3

Using the expression of the Laplace transform of \(p_t^{\text {sbm}}(0,dv)\) given in Section 2.4 of [16], it is possible to verify that the Laplace transform of (17) (using (69)) coincides with the expression in Theorem 2.18 of [1].

5.3 Proof of Theorem 5.1: Mosco convergence for inclusion dynamics

In this section we prove Theorem 5.1, namely the Mosco convergence of the Dirichlet forms associated to the difference process \(\{w_N(t), \, t\ge 0\}\) to the Dirichlet form, given by (47) and (48), corresponding to the two-sided sticky Brownian motion \(\{B_t^{\text {sbm}}, \, t\ge 0\}\).

By Proposition 5.1 we have already determined the relevant notions of weak and strong convergence of vectors living in the approximating sequence of Hilbert spaces (the spaces \(H_N^{\text {sip}}\)). We can then move directly to the verification of conditions Mosco I and Mosco II in the definition of Mosco convergence. We do this in Sects. 5.3.1 and 5.3.7, respectively.

5.3.1 Mosco I

We divide our task into two steps. First, we compare the inclusion Dirichlet form with a random walk Dirichlet form and show that the former dominates the latter. We then use this bound, together with the fact that the random walk Dirichlet form satisfies Mosco I, to prove that Mosco I also holds for inclusion particles.

We call \(\{v(t), \;t\ge 0\}\) the random walk on \({\mathbb {Z}}\) with jump range \(A=[-R,R]\cap {\mathbb {Z}}\setminus \{0\}\) (see Appendix 6.3 where similar notation is used for the nearest neighbor case). Thus we denote by \(L^{rw}\) the infinitesimal generator:

Hence, in the diffusive scaling, the N-infinitesimal generator is given by:

where \(A_N^+ := \{ |r |: r \in A_N \}\). This is the generator of the process \(v_N(t):=\tfrac{1}{N} v(N^2t)\), \(t\ge 0\); we denote by \(({{\mathscr {R}}}_N,D({{\mathscr {R}}}_N))\) the associated Dirichlet form.
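The constant \(\chi = \sum _{r=1}^R p(r) r^2\), which appears in the limiting Dirichlet form at the end of this section, can already be seen at the level of generators. Assuming for illustration that the rescaled generator acts as \(N^2 \sum _{r\in A} p(r)\,(f(x+r/N)-f(x))\) with p symmetric (the paper's exact normalization may differ by a constant factor), a second-order Taylor expansion gives convergence to \(\chi f''(x)\), because the first-order terms of the jumps \(\pm r\) cancel. The sketch checks this for a hypothetical choice of p with R = 3 and \(f=\sin \).

```python
import math

R = 3
p = {1: 0.5, 2: 0.3, 3: 0.2}   # hypothetical weights, with p(-r) = p(r)
chi = sum(p[r] * r**2 for r in range(1, R + 1))


def scaled_generator(f, x: float, n: int) -> float:
    """n^2 * sum_{r in A} p(r) (f(x + r/n) - f(x)), with A = {-R, ..., R} without 0."""
    total = 0.0
    for r in range(1, R + 1):
        # jumps +r and -r carry the same weight by symmetry of p
        total += p[r] * (f(x + r / n) - f(x)) + p[r] * (f(x - r / n) - f(x))
    return n**2 * total


x0 = 0.7
limit = chi * (-math.sin(x0))          # chi * f''(x0) for f = sin
for n in (10, 100, 1000):
    print(n, scaled_generator(math.sin, x0, n) - limit)
```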

5.3.2 Comparing RW and SIP Dirichlet Forms

The key idea to prove Mosco I is to transfer the difficulties coming from the SIP interaction to independent random walkers. This is done by means of the following observation:

Proposition 5.4

For any \(f_N \in H_N^{\text {sip}} \) we have

Proof

Rearranging (45) and using the symmetry of \( p(\cdot )\) allows us to write:

and the result follows from the fact that the RHS of this identity is nonnegative. \(\square \)

5.3.3 Strong and Weak Convergence in \(H_N^{\text {rw}}\) and \(H_N^{\text {sip}}\) Compared

Proposition 5.5

The sequence \(\{ h_N =\mathbb {1}_{\{0\}} \}_{ N \ge 1}\), with \(h_N \in H_N^{\text {rw}}\), converges strongly to \(h=0 \in H^{\text {bm}}\) with respect to \(H_N^{\text {rw}}\)-Hilbert convergence.

Proof

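In the language of Definition 4.3 we set \(\tilde{h}_M\equiv 0 \). With this choice we immediately have \(\Vert \tilde{h}_M - h\Vert _{H^{\text {bm}}} = 0\) and, since \(\Phi _N 0 = 0\),

$$\begin{aligned} \Vert \Phi _N \tilde{h}_M - h_N\Vert ^2_{H_N^{\text {rw}}} = \Vert \mathbb {1}_{\{0\}}\Vert ^2_{H_N^{\text {rw}}} = \frac{1}{N} \longrightarrow 0, \end{aligned}$$

which concludes the proof. \(\square \)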

Proposition 5.6

The sequence \(\{ h_N =\mathbb {1}_{\{0\}} \}_{ N \ge 1}\), with \(h_N \in H_N^{\text {sip}}\), converges strongly to \(h=\mathbb {1}_{\{0\}} \in H^{\text {sbm}}\) with respect to \(H_N^{\text {sip}}\)-Hilbert convergence.

Proof

In the language of Definition 4.3 we set \(\tilde{h}_M\equiv \mathbb {1}_{\{0\}} \). With this choice we immediately have

which concludes the proof. \(\square \)

A consequence of Proposition 5.6 is that any sequence which converges weakly with respect to \(H_N^{\text {sip}}\)-Hilbert convergence also converges at zero.

Proposition 5.7

Let \(\{ f_N \}_{N \ge 1}\) in \(\{ H_N^{\text {sip}} \}_{N \ge 1}\) be a sequence converging weakly to \(f \in H^{\text {sbm}}\) with respect to \(H_N^{\text {sip}}\)-Hilbert convergence, then \(\lim _{N \rightarrow \infty } f_N(0) = f(0)\).

Proof

By Proposition 5.6 we know that \(\{ h_N =\mathbb {1}_{\{0\}} \}_{ N \ge 1}\) converges strongly to \(h=\mathbb {1}_{\{0\}}\) with respect to \(H_N^{\text {sip}}\)-Hilbert convergence. This, together with the fact that \(\{ f_N \}_{N \ge 1}\) converges weakly, implies:

but by (46)

which, together with (77), implies the statement. \(\square \)

To further contrast the two notions of convergence, note that Proposition 5.5 has a weaker implication:

Proposition 5.8

Let \(\{ g_N \}_{N \ge 1}\) in \(\{ H_N^{\text {rw}} \}_{N \ge 1}\) be a sequence converging weakly to \(g \in H^{\text {bm}}\) with respect to \(H_N^{\text {rw}}\)-Hilbert convergence, then \(\lim _{N \rightarrow \infty } \tfrac{1}{N} g_N(0) = 0\).

Proof

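By Proposition 5.5 we know that \(\{ h_N =\mathbb {1}_{\{0\}} \}_{ N \ge 1}\) converges strongly to \(h=0\) with respect to \(H_N^{\text {rw}}\)-Hilbert convergence. This, together with the fact that \(\{ g_N \}_{N \ge 1}\) converges weakly, implies:

$$\begin{aligned} \lim _{N\rightarrow \infty } \langle g_N, h_N\rangle _{H_N^{\text {rw}}} = \langle g, 0\rangle _{H^{\text {bm}}} = 0, \end{aligned}$$(79)

but we know

$$\begin{aligned} \langle g_N, h_N\rangle _{H_N^{\text {rw}}} = \frac{1}{N}\, g_N(0), \end{aligned}$$

which together with (79) concludes the proof. \(\square \)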

5.3.4 From \(H_N^{\text {rw}}\) Strong Convergence to \(H_N^{\text {sip}}\) Strong Convergence

Proposition 5.9

Let \(\{ g_N \}_{N \ge 1}\) in \(\{ H_N^{\text {rw}} \}_{N \ge 1}\) be a sequence converging strongly to \(g \in H^{\text {bm}}\) with respect to \(H_N^{\text {rw}}\)-Hilbert convergence. For all \(N\ge 1\) define the sequence

Then \(\{ {\hat{g}}_N \}_{N \ge 1}\) also converges strongly with respect to \(H_N^{\text {sip}}\)-Hilbert convergence to \({\hat{g}}\) given by:

Proof

From the strong convergence in the \(H_N^{\text {rw}} \)-Hilbert convergence sense, we know that there exists a sequence \(\tilde{g}_M \in C_k^{\infty } ({\mathbb {R}})\) such that

and

For each M we define the function \({\hat{g}}_M \) given by

Notice that:

and hence \({\hat{g}}_M\) belongs to both \(C^0 ( {\mathbb {R}}\setminus \{ 0\})\) and \(H^{\text {sbm}}\).

As before, we have the relation:

which shows that indeed we have

For the second requirement of strong convergence we can estimate as follows

Relation (84) allows us to see that the RHS of the equality above vanishes. This, together with (87), concludes the proof of the Proposition. \(\square \)

5.3.5 From \(H_N^{\text {sip}}\) Weak Convergence to \(H_N^{\text {rw}}\) Weak Convergence

The following proposition shows that, for weak convergence, the implication goes in the opposite direction:

Proposition 5.10

Let \(\{ f_N \}_{N \ge 1}\) in \(\{ H_N^{\text {sip}} \}_{N \ge 1}\) be a sequence converging weakly to \(f \in H^{\text {sbm}}\) with respect to \(H_N^{\text {sip}}\)-Hilbert convergence. Then it also converges weakly with respect to \(H_N^{\text {rw}}\)-Hilbert convergence.

Proof

Let \(\{ f_N \}_{N \ge 1}\) in \(\{ H_N^{\text {sip}} \}_{N \ge 1}\) be as in the Proposition. In order to show that it also converges weakly with respect to \(H_N^{\text {rw}}\)-Hilbert convergence, we need to show that for any sequence \(\{ g_N \}_{N \ge 1}\) in \(\{ H_N^{\text {rw}} \}_{N \ge 1}\) converging strongly to some \(g \in H^{\text {bm}}\) we have

$$\begin{aligned} \lim _{N\rightarrow \infty } \langle f_N, g_N\rangle _{H_N^{\text {rw}}} = \langle f, g\rangle _{H^{\text {bm}}}. \end{aligned}$$

Consider such a sequence \(\{ g_N \}_{N \ge 1}\). By Proposition 5.9 we know that the sequence \(\{ {\hat{g}}_N \}_{N \ge 1}\) also converges strongly with respect to \(H_N^{\text {sip}}\)-Hilbert convergence to \({\hat{g}}\) defined as in (82). Then we have:

which can be re-written as:

and together with Propositions 5.7 and 5.8 implies that:

and the proof is done. \(\square \)

5.3.6 Conclusion of Proof of Mosco I

In order to see that condition Mosco I is satisfied, we combine Proposition 5.4, Proposition 5.10 and the Mosco convergence of random walkers to Brownian motion (cf. Appendix 6.3) to obtain that, for all \(f \in H^{\text {sbm}}\) and all \(f_N \in H_N^{\text {sip}}\) converging weakly to f, we have

where the last equality comes from equation (117) and Remark 6.4 in the Appendix.

5.3.7 Mosco II

We are going to prove that Assumption 2 is satisfied. We use the set of compactly supported smooth functions \(C_k^\infty ({\mathbb {R}})\), which by the regularity of the measure \(dx+ \delta _0\) is dense in \(H=L^2(dx+ \delta _0)\).

5.3.8 The Recovering Sequence

For every \(f \in C_k^\infty ({\mathbb {R}})\), we need to find a sequence \(f_N\) strongly converging to f and such that

$$\begin{aligned} \lim _{N\rightarrow \infty } {\mathscr {E}}_N(f_N) = {\mathscr {E}}_{\text {sbm}}(f). \end{aligned}$$(92)

The obvious choice \(f_N = \Phi _N f\) does not work in this case: in the limit, a non-vanishing term containing \(f^{\prime }(0)\) emerges. Instead, our candidate is the sequence \(\{ \Psi _N f \}_{N \ge 1}\) given by

where \(A_N\) is as in (43).

Remark 5.4

The sequence \(\{ \Psi _N f \}_{N \ge 1}\) is chosen in such a way that the SIP part of the Dirichlet form, i.e. the right-hand side of (74), vanishes at \(\Psi _N f\) for all N. See below for the details.

Our goal is to show that the sequence \(\{ \Psi _N f \}_{N \ge 1}\) indeed satisfies (92). First of all we need to show that \(\Psi _N f \rightarrow f\) strongly.

Proposition 5.11

For all \(f \in C_k^\infty ({\mathbb {R}}) \subset L^2(dx+ \delta _0)\), the sequence \(\{ \Psi _N f \}_{N \ge 1}\) in \(H_N^{\text {sip}}\) strongly converges to f with respect to \(H_N^{\text {sip}}\)-Hilbert space convergence.

Proof

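In the language of Definition 4.3 we set \(\tilde{f}_M\equiv f \). Hence the first condition is trivially satisfied:

$$\begin{aligned} \Vert \tilde{f}_M - f\Vert _{H^{\text {sbm}}} = 0 \quad \text {for all } M. \end{aligned}$$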

Moreover

where we used the boundedness of f and the fact that the cardinality of the set \(A_N\) is finite and does not depend on N. \(\square \)

5.3.9 Preliminary Simplifications

To continue the proof of (92), the first thing to notice is that the Dirichlet form \({\mathscr {E}}_N\) evaluated at \(\Psi _N f\) can be substantially simplified:

where, from the observation that for \(i = -r\) and \(r \in A_N\), via (93) we get

and therefore the whole second sum in line (95) vanishes. Then, using the expression (44) for \(\nu _{\gamma ,N}\), we are left with

By (93) we have \( (\Psi _N f(r)- \Psi _N f(0)) = 0 \) for \(r\in A_N\), and therefore our Dirichlet form becomes

which we split as follows

5.3.10 Identification of the Limiting Dirichlet Form

First we show that \(S_N\) vanishes as \(N \rightarrow \infty \). For \(i \in \frac{1}{N} {\mathbb {Z}}\), we define the sets

Notice that for \( r \in A_N^i\) we have \((\Psi _N f(i+r)- \Psi _N f(0)) = 0\) and hence

where we used the symmetry of \(p(\cdot )\) and the fact that \(r \in A_N \setminus A_N^i\) if and only if \(-r \in A_N \setminus A_N^{-i}\). We conclude that \(S_N\) vanishes by recalling that by a Taylor expansion the factor \(( f(i+r)- 2 f(0) + f(-i-r) )\) is of order \(N^{-2}\).
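The order \(N^{-2}\) of a symmetric second difference whose step is a fixed multiple of 1/N follows from Taylor's formula: for smooth f and a fixed integer s, \(f(s/N) - 2f(0) + f(-s/N) = f''(0)\, s^2/N^2 + O(N^{-4})\). A quick numerical check, with \(f=\cos \) and s = 3 chosen only for illustration, shows \(N^2\) times the second difference approaching \(s^2 f''(0)\).

```python
import math


def second_difference(f, step: float) -> float:
    """Symmetric second difference f(step) - 2 f(0) + f(-step)."""
    return f(step) - 2.0 * f(0.0) + f(-step)


s = 3                                   # hypothetical fixed integer multiple of 1/N
for n in (10, 100, 1000):
    scaled = n**2 * second_difference(math.cos, s / n)
    print(n, scaled)                    # approaches s^2 * cos''(0) = -9
```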

As for the remaining term in (98), exploiting the symmetry of the transition function \(p(\cdot )\), we can rearrange it into

Let us define the following set \(B_N =\frac{1}{N} \{ -2R,-2R +1, \dots ,2R-1, 2R \}\) and split the sum above as follows

This splitting allows us to isolate the first term, for which the issue \(\Psi _N f( i +r ) = f(0)\) never arises, so that Taylor expanding around the points \(i \in \frac{1}{N}{\mathbb {Z}}\) poses no complications.

We now show that the second term in the RHS of (100) vanishes as N goes to infinity:

Take a positive \( i \in B_N \setminus A_N\); then for \( r \in A_N^i\) we have \(\Psi _N f (i+r) = f(0)\).

Remark 5.5

Notice that, for \(-i \in B_N \setminus A_N\), the set \(A_N^{i}=- A_N^{-i}\) is such that

Remark 5.6

We will omit the analysis for \( r\notin A_N^i\) because for those terms we can Taylor expand f around the point i and show that the factors containing the discrete Laplacian are of order \(N^{-2}\).

We now consider the contribution that each pair \((i,-i)\) gives to the second sum in the RHS of (100). Let \( i \in (B_N \setminus A_N )^+\), then

Taylor expanding around zero the terms inside the square brackets in the RHS of (102) gives

Analogously, for the contribution \(C_N(-i)\) we obtain

Summing both contributions over all \(i >0\) we obtain

where we used that the cardinality of the sets \(A_N^i\) and \((B_N \setminus A_N )^+\) does not depend on N. Then we can write

which indeed by a Taylor expansion gives the limit

with \(\chi = \sum _{r=1}^R p(r) r^2 \).

This concludes the proof of Mosco II. \(\square \)
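The Taylor expansion behind the limit can be illustrated on a bulk sum alone. Assuming, for illustration only, a sum of the form \(\frac{1}{N}\sum _{i\in \frac{1}{N}{\mathbb {Z}}} \sum _{r=1}^R p(r)\, N^2 (f(i+r/N)-f(i))^2\) (a normalization chosen here, not necessarily the one in (100)), each difference satisfies \(f(i+r/N)-f(i)\approx r f'(i)/N\), so the sum is a Riemann sum for \(\chi \int f'(x)^2\,dx\). The sketch uses a hypothetical p with R = 3 and the Gaussian \(f(x)=e^{-x^2}\) (rapidly decaying rather than compactly supported, for simplicity), for which \(\int f'(x)^2\,dx = \sqrt{\pi /2}\).

```python
import math

p = {1: 0.5, 2: 0.3, 3: 0.2}            # hypothetical symmetric jump weights p(r), r = 1..R
chi = sum(w * r**2 for r, w in p.items())


def f(x: float) -> float:
    return math.exp(-x * x)


def bulk_form(n: int, half_width: float = 10.0) -> float:
    """(1/n) sum_i sum_r p(r) n^2 (f(i + r/n) - f(i))^2 over i in (1/n)Z with |i| <= half_width."""
    total = 0.0
    for k in range(-int(half_width * n), int(half_width * n) + 1):
        i = k / n
        total += sum(w * n**2 * (f(i + r / n) - f(i)) ** 2 for r, w in p.items())
    return total / n


limit = chi * math.sqrt(math.pi / 2.0)   # chi * integral of f'(x)^2 dx, since f' = -2x exp(-x^2)
for n in (10, 50, 250):
    print(n, bulk_form(n) - limit)
```

The first correction is of order \(N^{-2}\), because the odd third-order term integrates to zero.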

Remark 5.7

Notice that in the second line of (104) we are using the fact that \(f \in C^{\infty }({\mathbb {R}})\), and hence \(f^{\prime }(0^-)=f^{\prime }(0^+)\).

References

[1] Carinci, G., Giardinà, C., Redig, F.: Exact formulas for two interacting particles and applications in particle systems with duality. arXiv preprint arXiv:1711.11283 (2017)

[2] Amir, M.: Sticky Brownian motion as the strong limit of a sequence of random walks. Stoch. Process. Appl. 39(2), 221–237 (1991)

[3] Cao, J., Chleboun, P., Grosskinsky, S.: Dynamics of condensation in the totally asymmetric inclusion process. J. Stat. Phys. 155(3), 523–543 (2014)

[4] Chau, Y.-X., Connaughton, C., Grosskinsky, S.: Explosive condensation in symmetric mass transport models. J. Stat. Mech. Theory Exp. 2015(11), P11031 (2015)

[5] Beltrán, J., Jara, M., Landim, C.: A martingale problem for an absorbed diffusion: the nucleation phase of condensing zero range processes. Probab. Theory Relat. Fields 169(3–4), 1169–1220 (2017)

[6] Grosskinsky, S., Redig, F., Vafayi, K.: Dynamics of condensation in the symmetric inclusion process. Electron. J. Probab. 18 (2013)

[7] Jatuviriyapornchai, W., Chleboun, P., Grosskinsky, S.: Structure of the condensed phase in the inclusion process. J. Stat. Phys. 178(3), 682–710 (2020)

[8] Kim, S., Seo, I.: Condensation and metastable behavior of non-reversible inclusion processes. arXiv preprint arXiv:2007.05202 (2020)

[9] Mosco, U.: Composite media and asymptotic Dirichlet forms. J. Funct. Anal. 123(2), 368–421 (1994)

[10] Kuwae, K., Shioya, T.: Convergence of spectral structures: a functional analytic theory and its applications to spectral geometry. Commun. Anal. Geom. 11(4), 599–674 (2003)

[11] Opoku, A., Redig, F.: Coupling and hydrodynamic limit for the inclusion process. J. Stat. Phys. 160(3), 532–547 (2015)

[12] Kipnis, C., Landim, C.: Scaling Limits of Interacting Particle Systems, vol. 320. Springer, New York (2013)

[13] Grosskinsky, S., Redig, F., Vafayi, K.: Condensation in the inclusion process and related models. J. Stat. Phys. 142(5), 952–974 (2011)

[14] Grosskinsky, S., Schütz, G.M., Spohn, H.: Condensation in the zero range process: stationary and dynamical properties. J. Stat. Phys. 113, 389–410 (2003)

[15] Evans, M.R., Hanney, T.: Nonequilibrium statistical mechanics of the zero-range process and related models. J. Phys. A 38(19), R195 (2005)

[16] Howitt, C.J.: Stochastic flows and sticky Brownian motion. Ph.D. thesis, University of Warwick (2007)

[17] Howitt, C., Warren, J.: Consistent families of Brownian motions and stochastic flows of kernels. Ann. Probab. 37(4), 1237–1272 (2009)

[18] Fukushima, M.: Dirichlet Forms and Markov Processes. North-Holland Publishing Company, Amsterdam (1980)

[19] Kolesnikov, A.V.: Mosco convergence of Dirichlet forms in infinite dimensions with changing reference measures. J. Funct. Anal. 230(2), 382–418 (2006)

[20] Andres, S., von Renesse, M.-K.: Particle approximation of the Wasserstein diffusion. J. Funct. Anal. 258(11), 3879–3905 (2010)

[21] Hewitt, E., Stromberg, K.: Real and Abstract Analysis: A Modern Treatment of the Theory of Functions of a Real Variable (1975)

[22] Chen, Z.-Q., Fukushima, M.: Symmetric Markov Processes, Time Change, and Boundary Theory (LMS-35), vol. 35. Princeton University Press, Princeton (2012)

[23] Fukushima, M., Oshima, Y., Takeda, M.: Dirichlet Forms and Symmetric Markov Processes, vol. 19. Walter de Gruyter, New York (2011)

[24] Kato, T.: Perturbation Theory for Linear Operators, vol. 132. Springer, New York (2013)

[25] Borodin, A.N., Salminen, P.: Handbook of Brownian Motion: Facts and Formulae. Birkhäuser, Basel (2012)

[26] Folland, G.B.: Introduction to Partial Differential Equations. Princeton University Press, Princeton (1995)

[27] Lawler, G.F., Limic, V.: Random Walk: A Modern Introduction, vol. 123. Cambridge University Press, Cambridge (2010)

Acknowledgements

The authors would like to thank Mark Peletier for helpful discussions and the anonymous reviewers for their valuable comments. M. Ayala acknowledges financial support from the Mexican Council on Science and Technology (CONACYT) via the scholarship 457347.

Additional information

Communicated by Alessandro Giuliani.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

In this Appendix we present some results, in the context of Dirichlet forms, that are necessary for the proof of Theorem 5.1. First, in Sect. 6.1, we introduce the basic notions related to time changes of Markov processes and their Dirichlet forms. Then, in Sect. 6.2, we use the machinery from Sect. 6.1 to compute the Dirichlet form of the two-sided sticky Brownian motion at zero. Finally in Sect. 6.3 we show the convergence, in the sense of Mosco convergence of Dirichlet forms, of a single random walker to the standard Brownian motion.

6.1 Revuz Measures, PCAFs and Time Changes of Dirichlet Forms

In this section we present some basic notions in the context of time changes of Markov processes and their Dirichlet forms. The content of this section follows [22]. In particular, we refer the reader to Chapter 5 and the Appendix of [22] for more details and the necessary background.

Let \(M=(\Omega ,{{\mathscr {M}}},M_t,\zeta ,{\mathbb {P}})\) be a right-continuous Markov process on a Lusin space \((E,{{\mathscr {B}}}(E))\), where the relevant probability space is given by the triple \((\Omega ,{{\mathscr {M}}},{\mathbb {P}})\), and where for every \(\omega \in \Omega \) the random variable \(\zeta (\omega )\) denotes the lifetime of the sample path of \(\omega \), i.e.,

$$\begin{aligned} \zeta (\omega ) = \inf \{ t \ge 0 : M_t(\omega ) = \partial \} , \end{aligned}$$

for \(\partial \) the cemetery point.

Furthermore, we assume that for each \(t \ge 0\) there exists a map \(\theta _t:\Omega \rightarrow \Omega \) such that

$$\begin{aligned} M_s(\theta _t \omega ) = M_{s+t}(\omega ) \end{aligned}$$

for every \(s \ge 0\). Moreover, we have \(\theta _0 \omega = \omega \), and \(\theta _{\infty } \omega = [\partial ]\), where \([\partial ]\) denotes a specific element of \(\Omega \) such that \(M_t([\partial ])=\partial \).

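In addition, we denote by \(\{ {{\mathscr {F}}}_t \}_{t \ge 0}\) the filtration generated by the Markov process \(M_t\), i.e., for \(t < \infty \):

$$\begin{aligned} {{\mathscr {F}}}_t = \sigma \{ M_s : 0 \le s \le t \} . \end{aligned}$$

For convenience we extend the parameter t of the filtration to \([0,\infty ]\) by setting:

$$\begin{aligned} {{\mathscr {F}}}_\infty = \sigma \Big ( \bigcup _{t \ge 0} {{\mathscr {F}}}_t \Big ) . \end{aligned}$$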

We now define the notion of a positive continuous additive functional (PCAF):

Definition 6.1

(PCAF) A function \(A_t (\omega )\) of the two variables \(t\ge 0\) and \(\omega \in \Omega \) is called a positive continuous additive functional of \(M_t\) if there exist a set \(\Lambda \in {{\mathscr {F}}}_\infty \) and a \(\mu \)-inessential set \(N \subset E\) with

$$\begin{aligned} {\mathbb {P}}_x(\Lambda ) = 1 \ \text {for all } x \in E\setminus N, \qquad \theta _t \Lambda \subset \Lambda \ \text {for all } t > 0, \end{aligned}$$

such that the following conditions are satisfied:

- (i) For each \( t \ge 0\), \(A_t \mid _{\Lambda }\) is \({{\mathscr {F}}}_t\)-measurable.
- (ii) For any \(\omega \in \Lambda \), \(A_{\cdot } (\omega )\) is right continuous on \([0,\infty )\) and has left limits on \((0,\zeta (\omega ))\), \(A_0 (\omega )=0\), \(|A_t (\omega ) |< \infty \) for \(t < \zeta (\omega )\), and \(A_t (\omega )= A_{\zeta (\omega )} (\omega )\) for all \( t \ge \zeta (\omega )\).
- (iii) The additivity property is satisfied, i.e.,

$$\begin{aligned} A_{t+s} (\omega ) = A_{t} (\omega ) + A_{s} (\theta _t \omega ) \text { for all } t,s \ge 0, \ \omega \in \Lambda . \end{aligned}$$(107)

- (iv) For any \(\omega \in \Lambda \), the map \(t \mapsto A_t(\omega )\) is nonnegative and continuous on \([0,\infty )\).
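The simplest example of a PCAF is \(A_t = \int _0^t f(M_s)\, ds\) with \(f \ge 0\) (its Revuz measure is \(f\,d\mu \)). For it, additivity reads \(A_{t+s}(\omega ) = A_t(\omega ) + A_s(\theta _t \omega )\): the second term is the same functional evaluated along the path shifted by \(\theta _t\). The sketch below checks this identity on a discretized path. The Brownian sample path, the test function f and the helper names `additive_functional` and `shift` are hypothetical illustrations, not objects from [22].

```python
import numpy as np


def additive_functional(path, dt, f):
    """Left Riemann-sum approximation of A_t = int_0^t f(M_s) ds.

    Entry k of the returned array approximates A_{k*dt}.
    """
    increments = f(path[:-1]) * dt
    return np.concatenate(([0.0], np.cumsum(increments)))


def shift(path, k):
    """Discrete shift theta_{k*dt}: the shifted path is (path[k], path[k+1], ...)."""
    return path[k:]


def f(x):
    # A nonnegative Borel function, so A_t is nondecreasing in t.
    return np.exp(-x ** 2)


rng = np.random.default_rng(0)
dt = 1e-3
# Hypothetical sample path: a discretized Brownian path started at 0.
path = np.concatenate(([0.0], np.cumsum(rng.normal(0.0, np.sqrt(dt), 2000))))

A = additive_functional(path, dt, f)
k_t, k_s = 700, 900  # t = 0.7, s = 0.9
A_after_shift = additive_functional(shift(path, k_t), dt, f)

lhs = A[k_t + k_s]                  # A_{t+s}(omega)
rhs = A[k_t] + A_after_shift[k_s]   # A_t(omega) + A_s(theta_t omega)
print(lhs, rhs)
```

Note that \(A_t + A_s\) without the shift would in general differ from \(A_{t+s}\); the shift is what makes the functional "additive" along trajectories.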

If we denote by \({{\mathscr {A}}}_c^{+}\) the set of all PCAFs, it turns out that there is a one-to-one correspondence (up to equivalence) between \({{\mathscr {A}}}_c^{+}\) and a special class of Borel measures on E, which we now introduce:

Definition 6.2

(Smooth measures) Let \(\nu \) be a positive measure on \((E, {{\mathscr {B}}}(E))\). We say that \(\nu \) is smooth if

1. it does not charge any \({\mathscr {E}}_M\)-polar set;
2. there exists a nest \(\{ F_k \}_{k \ge 1}\) such that \(\nu (F_k) < \infty \) for all \(k \ge 1\).

Remark 6.1

All Dirichlet-form-related concepts (for example, the \({\mathscr {E}}_M\)-capacity) are defined in terms of the Dirichlet space \(({\mathscr {E}}_M,D({\mathscr {E}}_M))\) corresponding to the symmetric Markov process \(M_t\).

We denote by S(E) the set of all smooth measures on E. The correspondence we mentioned above is between \({{\mathscr {A}}}_c^{+}\) and S(E). Formally, this correspondence is given by the following result:

Theorem 6.1

(PCAF and smooth measures) For \(A \in {{\mathscr {A}}}_c^+\) we denote by \(\nu _A\) the measure in Revuz correspondence with A, i.e., the measure that satisfies, for any \( f \in {{\mathscr {B}}}_{+} (E)\),

$$\begin{aligned} \int _E f \, d\nu _A = \lim _{t \downarrow 0} \frac{1}{t}\, E_\mu \left[ \int _0^t f(M_s)\, dA_s \right] , \end{aligned}$$(108)

where \(E_\mu [\cdot ] = \int _E E_x[\cdot ]\, \mu (dx)\), i.e., the expectation on the right-hand side of (108) is taken over the trajectory of the process \(M_s\) and integrated over its starting point with respect to the invariant measure \(\mu \).

Then the following hold:

- (i) For any \(A \in {{\mathscr {A}}}_c^+\), \(\nu _A \in S(E)\).
- (ii) For any \(\nu \in S(E)\), there exists \(A \in {{\mathscr {A}}}_c^+\) such that \(\nu _A = \nu \); such an A is unique up to \(\mu \)-equivalence.

Proof

This is part of Theorem 4.1.1 in [22], where a proof is given. \(\square \)

It is well known that there is a one-to-one correspondence between (suitably regular) symmetric Markov processes and Dirichlet forms [23]. Given a PCAF \(A_t\), we can therefore describe the process time-changed by the generalized inverse of \(A_t\) through its corresponding Dirichlet form. More precisely:

Theorem 6.2

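Let \(M_t\) be a symmetric Markov process with Dirichlet space \(({\mathscr {E}}_M,D({\mathscr {E}}_M))\), and let \(A_t\) be a PCAF whose Revuz measure \(\nu _A\) has full quasi support. Denote by \(\tilde{M}_t = M_{\tau _t}\) the time-changed process, where \(\tau _t = \inf \{ s > 0 : A_s > t \}\) is the generalized inverse of \(A_t\). Then the Dirichlet space \(({\mathscr {E}}_{\tilde{M}},D({\mathscr {E}}_{\tilde{M}}))\) of \(\tilde{M}_t\) on \(L^2(E,\nu _A)\) is given by

$$\begin{aligned} D({\mathscr {E}}_{\tilde{M}}) = D_e({\mathscr {E}}_M) \cap L^2(E,\nu _A), \qquad {\mathscr {E}}_{\tilde{M}}(f,g) = {\mathscr {E}}_M(f,g) \quad \forall f,g \in D({\mathscr {E}}_{\tilde{M}}), \end{aligned}$$

where \(D_e({\mathscr {E}}_M)\) denotes the extended Dirichlet space of \({\mathscr {E}}_M\) (see [22]).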

Proof

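This theorem is a special case of Theorem 5.2.2 in [22], which describes the Dirichlet form of the time-changed process for a general PCAF in terms of the quasi support of its Revuz measure. The specialization consists in assuming that the Revuz measure \(\nu _A\) has full quasi support, i.e.,

$$\begin{aligned} {\mathbb {P}}_x(\sigma _F = 0) = 1 \quad \text {for } {\mathscr {E}}_M\text {-q.e. } x \in E, \end{aligned}$$

where F is the quasi support of \(\nu _A\) and \(\sigma _F = \inf \{ t > 0 : M_t \in F \}\) is its hitting time. We refer the reader to page 176 of [22] for more details. \(\square \)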
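A finite-state analogue may help to see what Theorem 6.2 says. If \(A_t = \int _0^t a(M_s)\, ds\) with a strictly positive function a, its Revuz measure is \(\nu _A = a\,\mu \) (which has full support), and the time change by the inverse of \(A_t\) divides the jump rates out of each state x by \(a(x)\). The Dirichlet form is then unchanged; only the reference Hilbert space changes from \(L^2(\mu )\) to \(L^2(\nu _A)\). The sketch below checks this on a hypothetical 4-state reversible chain; the conductances, the measure `mu` and the function `a` are illustrative assumptions, and this is not the general construction of [22].

```python
import numpy as np

# Hypothetical symmetric conductances c(x, y) = c(y, x) on 4 states
# and a reversible measure mu.
c = np.array([[0.0, 1.0, 0.0, 0.3],
              [1.0, 0.0, 2.0, 0.0],
              [0.0, 2.0, 0.0, 1.0],
              [0.3, 0.0, 1.0, 0.0]])
mu = np.array([1.0, 2.0, 1.5, 0.5])

# Generator of M: jump rate x -> y is c(x, y) / mu(x); rows sum to zero.
L = c / mu[:, None]
np.fill_diagonal(L, -L.sum(axis=1))


def dirichlet_form(gen, measure, f, g):
    """E(f, g) = -<gen f, g> in L^2(measure), as in (119)."""
    return -np.sum(measure * (gen @ f) * g)


# PCAF A_t = int_0^t a(M_s) ds with a > 0, so nu_A = a * mu has full support.
a = np.array([0.5, 3.0, 1.0, 2.0])
nu = a * mu
# Time change by the inverse of A_t: jump rates out of x are divided by a(x).
L_tilde = L / a[:, None]

f = np.array([1.0, -2.0, 0.5, 3.0])
g = np.array([0.0, 1.0, 4.0, -1.0])
E_M = dirichlet_form(L, mu, f, g)
E_time_changed = dirichlet_form(L_tilde, nu, f, g)
print(E_M, E_time_changed)
```

The time-changed generator is reversible with respect to \(\nu _A\) because \(\nu _A(x) \tilde{L}(x,y) = c(x,y)\) is still symmetric.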

1.2 Sticky Brownian Motion and its Dirichlet Form

In this appendix we provide some background on two-sided sticky Brownian motion in the context of Dirichlet forms. By means of an example, we apply the machinery of Dirichlet forms to stochastic time changes of Markov processes. The example constructed at the end of this section plays the role of the limiting process for the difference process. Throughout, we mostly follow the approach of Chapter 5 of [22].

1.2.1 Two-Sided Sticky Brownian Motion

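The traditional approach to constructing sticky Brownian motion (SBM) on the real line uses local times and the time changes associated with them. Suppose that we are in dimension one and want to build Brownian motion sticky at zero. We consider a standard Brownian motion \(\{ B_t \}_{ t \ge 0}\) with values in \({\mathbb {R}}\) and define its local time at zero by

$$\begin{aligned} L_t^0 = \lim _{\varepsilon \downarrow 0} \frac{1}{2\varepsilon } \int _0^t {\mathbf {1}}_{(-\varepsilon ,\varepsilon )}(B_s)\, ds. \end{aligned}$$

Given this local time and \(\gamma > 0\), we consider the functional

$$\begin{aligned} T_t = t + \gamma L_t^0 \end{aligned}$$(112)

and denote by \(\tau \) its generalized inverse, i.e.,

$$\begin{aligned} \tau (t) = \inf \{ s \ge 0 : T_s > t \}. \end{aligned}$$(113)

The process given by the time change

$$\begin{aligned} B_t^{\text {sbm}} = B_{\tau (t)} \end{aligned}$$

is known in the literature as two-sided sticky Brownian motion.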

Remark 6.2

The idea behind (112) is to add some “extra time” at zero: through the time change by the inverse (113), the new process is slowed down whenever it is at 0. The parameter \(\gamma \) controls how strongly time is slowed down at zero.
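The slowing-down mechanism of (112) and (113) can be seen in a crude simulation. The sketch below assumes a discretization of our own choosing, not taken from the text: B is sampled on a grid of mesh `dt`, the local time is replaced by the occupation time of the window \((-\varepsilon ,\varepsilon )\) divided by \(2\varepsilon \), and \(\tau (t)\) is replaced by the last grid time s with \(T_s \le t\). The function name `sticky_bm_by_time_change` and all numerical parameters are hypothetical.

```python
import numpy as np


def sticky_bm_by_time_change(gamma, t_max=1.0, dt=1e-4, eps=1e-2, seed=0):
    """Discrete sketch of B^sbm_t = B_{tau(t)} with T_t = t + gamma * L^0_t.

    Hypothetical discretization choices (not from the text):
      * B is a standard Brownian motion (Var B_t = t) sampled with mesh dt;
      * L^0_t is replaced by (1 / (2 eps)) * |{s <= t : |B_s| < eps}|;
      * tau(t) is replaced by the last grid time s with T_s <= t.
    Returns the new time grid, tau on that grid, and B_{tau(t)}.
    """
    rng = np.random.default_rng(seed)
    n = int(round(t_max / dt))
    B = np.concatenate(([0.0], np.cumsum(rng.normal(0.0, np.sqrt(dt), n))))
    s = np.arange(n + 1) * dt
    near_zero = (np.abs(B[:-1]) < eps).astype(float)
    L0 = np.concatenate(([0.0], np.cumsum(near_zero) * dt / (2.0 * eps)))
    T = s + gamma * L0                    # discrete version of (112), increasing
    t_grid = np.arange(0.0, T[-1], dt)    # the new ("sticky") clock
    # searchsorted gives the first index i with T_i > t; step back by one.
    idx = np.minimum(np.searchsorted(T, t_grid, side="right"), n) - 1
    return t_grid, s[idx], B[idx]


t0, tau0, X0 = sticky_bm_by_time_change(gamma=0.0)
t1, tau1, X1 = sticky_bm_by_time_change(gamma=1.0)
# Fraction of (new) time spent within eps of the sticky point 0.
frac_plain = np.mean(np.abs(X0) < 1e-2)
frac_sticky = np.mean(np.abs(X1) < 1e-2)
print(frac_plain, frac_sticky)
```

With the same underlying Brownian path, the time-changed process spends a visibly larger fraction of its (longer) clock near 0 when \(\gamma > 0\), while \(\tau (t) \le t\) because \(T_s \ge s\).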

As expected, stochastic time changes of this kind can also be performed in the context of Dirichlet forms. Our goal for this section is to describe the Dirichlet-form approach to the time changes we are interested in. Two ingredients are needed:

1. A symmetric Markov process \(M_t\) on the state space E, with a reversible measure \(\mu \) supported on E.
2. A PCAF that, in a sense made precise below, plays the role of the local time.

Remark 6.3

In the same way that the local time \(L_t^0\) implicitly defines the point \(\{0\}\) as the “sticky region”, the PCAF of the second ingredient above determines a “sticky region” for the new process.

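In this setting, the time change of Brownian motion by the inverse of the functional \(T_t\) defined in (112) becomes easier to characterize. One-dimensional Brownian motion \(\{ B_t \}_{t \ge 0}\) on the real line is reversible with respect to the Lebesgue measure dx, and dx is in Revuz correspondence with the trivial PCAF \(A_t^1=t\). Furthermore, the following computation shows that the PCAF \(L_t^0\) is in Revuz correspondence with the Dirac measure at zero \(\delta _0\): for \(f \in {{\mathscr {B}}}_{+}({\mathbb {R}})\),

$$\begin{aligned} \lim _{t \downarrow 0} \frac{1}{t}\, E_{dx}\left[ \int _0^t f(B_s)\, dL_s^0 \right] = f(0) \lim _{t \downarrow 0} \frac{1}{t} \int _{{\mathbb {R}}} E_x\left[ L_t^0 \right] dx = f(0) = \int _{{\mathbb {R}}} f\, d\delta _0 , \end{aligned}$$

where we used that \(L^0\) increases only when \(B_s = 0\), and that \(\int _{{\mathbb {R}}} E_x[L_t^0]\, dx = t\) by the occupation-time formula.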

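Hence the measure \(\nu = dx + \gamma \delta _0\), which has full quasi support since it dominates dx, is in Revuz correspondence with the PCAF \(T_t\), and by Theorem 6.2 the Dirichlet form of one-dimensional sticky Brownian motion \(\{ B_t^{\text {sbm}} \}_{ t \ge 0}\) is given by

$$\begin{aligned} D({\mathscr {E}}_{B^{\text {sbm}}}) = D({\mathscr {E}}_{B}) \cap L^2({\mathbb {R}}, dx + \gamma \delta _0), \qquad {\mathscr {E}}_{B^{\text {sbm}}}(f,g) = {\mathscr {E}}_{B}(f,g) \quad \forall f,g \in D({\mathscr {E}}_{B^{\text {sbm}}}), \end{aligned}$$(116)

where \(({\mathscr {E}}_{B}, D({\mathscr {E}}_{B}))\) is given as in (30).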

In particular for the quadratic functional \({\mathscr {E}}_{B^{\text {sbm}}}(f)\), given by (31), we have:

for \(f \in H^1({\mathbb {R}},dx) \cap L^2 ({\mathbb {R}},dx + \gamma \delta _0)\).

Remark 6.4

Notice that with an abuse of notation, and taking advantage of the fact that the Lebesgue measure assigns zero mass to the point zero, we can write the equality

for any \(f \in D({\mathscr {E}}_{B^{\text {sbm}}})\).

1.2.2 Domain of the Infinitesimal Generator

In this section we use the correspondence between Dirichlet forms and Markov generators to describe the generator of sticky Brownian motion with parameter \(\gamma \). Let us expand a bit on what we mentioned before equation (29); the two directions of the correspondence are given as follows:

- (a) From forms \({\mathscr {E}}\) to generators L: the correspondence is defined by

$$\begin{aligned} D(L) \subset D({\mathscr {E}}), \quad {\mathscr {E}}(f,g)= -\langle Lf, g\rangle \quad \forall f \in D(L), \, g \in D({\mathscr {E}}). \end{aligned}$$(119)

- (b) From generators L to forms \({\mathscr {E}}\): the correspondence is given by

$$\begin{aligned} D({\mathscr {E}})=D(\sqrt{-L}), \quad {\mathscr {E}}(f,g)= \langle \sqrt{-L}f, \sqrt{-L}g\rangle \quad \forall f, g \in D({\mathscr {E}}). \end{aligned}$$(120)

We can think of these relations as the first and second representation theorems for Dirichlet forms, in the spirit of Kato [24] for sesquilinear forms. For Dirichlet forms in particular, more details, including the connection to semigroups and resolvents, can be found in the Appendix of [22].
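In finite dimensions, where every operator is bounded and all domains coincide, the two representations (119) and (120) can be compared directly. The sketch below uses a hypothetical nearest-neighbour walk on the path \(\{0,\dots ,4\}\), reversible with respect to the counting measure, and computes \(\sqrt{-L}\) through the spectral theorem; it is a finite-dimensional illustration only.

```python
import numpy as np

# Hypothetical example: nearest-neighbour random walk on {0, ..., 4},
# reversible w.r.t. counting measure, so <u, v> is the plain dot product.
n = 5
L = np.zeros((n, n))
for x in range(n - 1):
    L[x, x + 1] = L[x + 1, x] = 1.0
np.fill_diagonal(L, -L.sum(axis=1))

# sqrt(-L) via the spectral theorem; -L is symmetric positive semi-definite,
# and clipping removes tiny negative round-off in the zero eigenvalue.
evals, evecs = np.linalg.eigh(-L)
sqrt_minus_L = evecs @ np.diag(np.sqrt(np.clip(evals, 0.0, None))) @ evecs.T

rng = np.random.default_rng(1)
f, g = rng.normal(size=n), rng.normal(size=n)

form_119 = -np.dot(L @ f, g)                           # -<Lf, g>
form_120 = np.dot(sqrt_minus_L @ f, sqrt_minus_L @ g)  # <sqrt(-L) f, sqrt(-L) g>
form_edges = np.sum(np.diff(f) * np.diff(g))           # sum over edges
print(form_119, form_120, form_edges)
```

All three numbers agree; the last one is the familiar "sum of squared gradients" form of a random-walk Dirichlet form.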

Remark 6.5

Notice that the time-changed process behaves like Brownian motion on \({\mathbb {R}}\setminus \{ 0\}\) and differently (sticky behavior) when it visits 0. We therefore expect the new generator \(L_{B^{\text {sbm}}}\) to act as the Laplace operator on \({\mathbb {R}}\setminus \{ 0\}\), i.e.

$$\begin{aligned} L_{B^{\text {sbm}}} f(x) = \Delta f(x), \qquad x \in {\mathbb {R}}\setminus \{ 0\}, \end{aligned}$$

together with some additional constraints at the point zero.

The idea is to assume that the generator \(L_{B^{\text {sbm}}}\) is the Laplacian at all points, and to use the properties of the time-changed process to determine the additional constraints at zero.

For \(f \in D({\mathscr {E}}_{B^{\text {sbm}}})\), thanks to (120) we can re-write (116) in terms of \(L_{B^{\text {sbm}}}\) in the following way:

for all \(g \in D({\mathscr {E}}_{B^{\text {sbm}}})\).

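On the other hand, for \( f \in D(L_{B^{\text {sbm}}})\) and \(g \in D({\mathscr {E}}_{B^{\text {sbm}}})\) we have

$$\begin{aligned} {\mathscr {E}}_{B^{\text {sbm}}}(f,g)&= -\langle L_{B^{\text {sbm}}} f, g \rangle _{L^2({\mathbb {R}}, dx+\gamma \delta _0)} \\ &= -\int _{{\mathbb {R}}} L_{B^{\text {sbm}}} f(x)\, g(x)\, dx - \gamma \, L_{B^{\text {sbm}}} f(0)\, g(0) \\ &= -\int _{{\mathbb {R}}\setminus \{0\}} L_{B^{\text {sbm}}} f(x)\, g(x)\, dx - \gamma \, L_{B^{\text {sbm}}} f(0)\, g(0), \end{aligned}$$(123)

where in the first line we used (119), and in the third line we used the fact that the Lebesgue measure assigns zero mass to the singleton \(\{0\}\).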

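Let us split the first term on the r.h.s. of (123) into two regions. Since \(L_{B^{\text {sbm}}} f = f''\) on \({\mathbb {R}}\setminus \{0\}\), we have

$$\begin{aligned} \int _{{\mathbb {R}}\setminus \{0\}} L_{B^{\text {sbm}}} f(x)\, g(x)\, dx = \int _{-\infty }^{0} f''(x)\, g(x)\, dx + \int _{0}^{\infty } f''(x)\, g(x)\, dx. \end{aligned}$$(124)

Integrating by parts in the first integral on the r.h.s. of (124) we obtain

$$\begin{aligned} \int _{-\infty }^{0} f''(x)\, g(x)\, dx = f'(0^-)\, g(0) - \int _{-\infty }^{0} f'(x)\, g'(x)\, dx, \end{aligned}$$(125)

where

$$\begin{aligned} f'(0^\pm ) = \lim _{x \rightarrow 0^\pm } f'(x). \end{aligned}$$(126)

Similarly, we obtain

$$\begin{aligned} \int _{0}^{\infty } f''(x)\, g(x)\, dx = -f'(0^+)\, g(0) - \int _{0}^{\infty } f'(x)\, g'(x)\, dx. \end{aligned}$$(127)

Substituting (124), (125) and (127) into (123), and using that by (116) \({\mathscr {E}}_{B^{\text {sbm}}}(f,g) = \int _{{\mathbb {R}}} f'(x)\, g'(x)\, dx\), we obtain for every \(g \in D({\mathscr {E}}_{B^{\text {sbm}}})\):

$$\begin{aligned} g(0) \left[ f'(0^+) - f'(0^-) - \gamma \, L_{B^{\text {sbm}}} f(0) \right] = 0, \end{aligned}$$(128)

which gives

$$\begin{aligned} \gamma \, L_{B^{\text {sbm}}} f(0) = f'(0^+) - f'(0^-) \end{aligned}$$(129)

for every \(f \in D(L_{B^{\text {sbm}}})\).

We then indeed have, from (129), that for every \(f \in D(L_{B^{\text {sbm}}})\):

$$\begin{aligned} L_{B^{\text {sbm}}} f(x) = {\left\{ \begin{array}{ll} f''(x), & x \ne 0, \\ \frac{1}{\gamma }\left( f'(0^+) - f'(0^-)\right) , & x = 0. \end{array}\right. } \end{aligned}$$(130)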

Remark 6.6

Notice that condition (129) coincides with what one expects for two-sided sticky Brownian motion; see, for instance, Appendix 1 in [25].
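The boundary condition at zero can be tested numerically. Assuming the normalization \({\mathscr {E}}_{B}(f,g) = \int f' g'\, dx\) with generator \(f''\) away from zero (the constant in (30) is not restated here, and the condition is unchanged by a common factor), a function f belongs to the domain of the sticky generator only if \({\mathscr {E}}(f,g) + \langle Lf, g\rangle _{L^2(dx+\gamma \delta _0)} = 0\) for test functions g, which forces \(\gamma f''(0) = f'(0^+) - f'(0^-)\). The sketch uses the hypothetical family \(f(x) = e^{-x^2/2}(1 + a|x|)\), for which \(f'(0^\pm ) = \pm a\) and \(f''(0^\pm ) = -1\), and a hypothetical test function g.

```python
import numpy as np

gamma = 0.7


def derivatives_of_f(a):
    """Derivatives of f(x) = exp(-x^2/2) (1 + a|x|); note f'(0+/-) = +/- a."""
    def f_prime(x):    # valid for x != 0
        return np.exp(-x ** 2 / 2) * (-x * (1 + a * np.abs(x)) + a * np.sign(x))

    def f_second(x):   # valid for x != 0; both one-sided limits at 0 equal -1
        return np.exp(-x ** 2 / 2) * (x ** 2 * (1 + a * np.abs(x)) - 1 - 3 * a * np.abs(x))

    return f_prime, f_second


def g(x):
    return np.exp(-(x - 0.3) ** 2)


def g_prime(x):
    return -2 * (x - 0.3) * g(x)


def midpoint(func, lo, hi, m=120_000):
    """Midpoint rule; never evaluates the endpoints (in particular not x = 0)."""
    h = (hi - lo) / m
    x = lo + (np.arange(m) + 0.5) * h
    return h * np.sum(func(x))


def form_gap(a):
    """E(f, g) + <L f, g> in L^2(dx + gamma delta_0), with E(f, g) = int f' g' dx."""
    f_prime, f_second = derivatives_of_f(a)
    halves = [(-12, 0), (0, 12)]
    energy = sum(midpoint(lambda x: f_prime(x) * g_prime(x), lo, hi) for lo, hi in halves)
    lf_g = sum(midpoint(lambda x: f_second(x) * g(x), lo, hi) for lo, hi in halves)
    lf_at_0 = -1.0  # f''(0+) = f''(0-) = -1 for every a
    return energy + lf_g + gamma * lf_at_0 * g(0.0)


gap_sticky = form_gap(a=-gamma / 2)  # gamma f''(0) = f'(0+) - f'(0-) holds
gap_smooth = form_gap(a=0.0)         # smooth Gaussian: condition violated
print(gap_sticky, gap_smooth)
```

The gap vanishes only for the function satisfying the sticky condition; for the smooth Gaussian it equals \(-\gamma g(0)\), so smooth functions with \(f''(0) \ne 0\) are not in the domain of the sticky generator.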

1.3 Mosco Convergence for the Random Walk

In this section we consider the difference of the positions of two particles performing independent nearest-neighbor symmetric random walks. This process, which we denote by \(\{v(t), t\ge 0\}\), is itself a random walk on \({\mathbb {Z}}\), and its convergence to standard Brownian motion under diffusive scaling is well known, where convergence is meant in the sense of convergence of generators. In this section we prove Mosco convergence of the Dirichlet forms of v(t).

As can be seen in Sect. 5.2, the proof of Mosco convergence for inclusion walkers relies strongly on the result for independent walkers (in particular, in the proof of Mosco I). We treat the independent case to illustrate the Dirichlet-form approach in a setting simpler than that of inclusion dynamics.

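The generator of \(\{v(t), \; t\ge 0\}\) is given by the discrete Laplacian \(\Delta _1\):

$$\begin{aligned} \Delta _1 f(x) = f(x+1) + f(x-1) - 2f(x), \qquad x \in {\mathbb {Z}}. \end{aligned}$$(131)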

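This is simply the generator of a random walk on \({\mathbb {Z}}\). Speeding up time by a factor \( N^2 \) and scaling the lattice spacing by a factor \(\tfrac{1}{N}\), we obtain the generator of the scaled process:

$$\begin{aligned} \Delta _N f(x) = N^2 \left( f\big (x+\tfrac{1}{N}\big ) + f\big (x-\tfrac{1}{N}\big ) - 2f(x)\right) , \qquad x \in \tfrac{1}{N}{\mathbb {Z}}. \end{aligned}$$(132)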

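We denote by \(({{\mathscr {R}}}_N,D({{\mathscr {R}}}_N))\) the Dirichlet form associated to the generator (132), which is given by

$$\begin{aligned} {{\mathscr {R}}}_N(f,g) = -\langle f, \Delta _N g \rangle _{L^2(\frac{1}{N}{\mathbb {Z}}, \mu _N)}, \qquad D({{\mathscr {R}}}_N) = L^2\big (\tfrac{1}{N}{\mathbb {Z}}, \mu _N\big ), \end{aligned}$$(133)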

where \(\mu _N\) is the counting measure on \(\frac{1}{N} {\mathbb {Z}}\) normalized by the lattice spacing, i.e.,

$$\begin{aligned} \mu _N = \frac{1}{N} \sum _{x \in \frac{1}{N}{\mathbb {Z}}} \delta _x , \end{aligned}$$(134)

which is reversible for the dynamics. We are going to prove Mosco convergence of the sequence of Dirichlet forms \(\{({{\mathscr {R}}}_N,D({{\mathscr {R}}}_N))\}_N\) to the Dirichlet form \(({\mathscr {E}}_{\text {bm}},D({\mathscr {E}}_{\text {bm}}))\), i.e., the Dirichlet form associated to standard Brownian motion on \({\mathbb {R}}\).
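For a smooth function, the discrete forms converge to the continuum energy. Under the assumptions \(\Delta _1 f(x) = f(x+1)+f(x-1)-2f(x)\) and \(\mu _N = \frac{1}{N}\sum _x \delta _x\) (our reading of (131) and (134)), summation by parts gives \({{\mathscr {R}}}_N(f) = N \sum _{x} (f(x+\frac{1}{N}) - f(x))^2\), whose limit is \(\int (f')^2\, dx\). The sketch below checks both facts for \(f(x) = e^{-x^2/2}\), where \(\int (f')^2 dx = \sqrt{\pi }/2\); the truncation `half_width` and the helper names are hypothetical choices.

```python
import numpy as np


def R_N(f, N, half_width=10.0):
    """R_N(f) = N * sum_{x in Z/N} (f(x + 1/N) - f(x))^2, truncated to |x| <= half_width."""
    x = np.arange(-half_width * N, half_width * N + 1) / N
    return N * np.sum((f(x + 1.0 / N) - f(x)) ** 2)


def minus_pairing(f, N, half_width=10.0):
    """-<f, Delta_N f> in L^2(Z/N, mu_N) with mu_N = (1/N) * counting measure."""
    x = np.arange(-half_width * N, half_width * N + 1) / N
    lap = N ** 2 * (f(x + 1.0 / N) + f(x - 1.0 / N) - 2.0 * f(x))
    return -np.sum(f(x) * lap) / N


def f(x):
    return np.exp(-x ** 2 / 2)


limit = np.sqrt(np.pi) / 2  # int (f'(x))^2 dx = int x^2 exp(-x^2) dx
for N in (5, 20, 80, 320):
    print(N, R_N(f, N), minus_pairing(f, N), limit)
```

The two discrete expressions agree (up to negligible truncation terms), and the error with respect to the limit decays like \(N^{-2}\) for this smooth f.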

1.4 Proof of Mosco Convergence for RW

1.4.1 Convergence of Hilbert Spaces

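For the sequence of Hilbert spaces

$$\begin{aligned} H^{\text {rw}}_N = L^2\big (\tfrac{1}{N}{\mathbb {Z}}, \mu _N\big ), \end{aligned}$$

where \(\mu _N\) is as in (134), it is easy to see that the convergence of \(\{ H^{\text {rw}}_N \}_{N \ge 1}\) to the Hilbert space

$$\begin{aligned} L^2({\mathbb {R}}, dx), \end{aligned}$$

i.e., the space of Lebesgue square-integrable functions on \({\mathbb {R}}\), is guaranteed by means of the restriction operators

$$\begin{aligned} C_k^{\infty }({\mathbb {R}}) \ni f \longmapsto f\big |_{\frac{1}{N}{\mathbb {Z}}} \in H^{\text {rw}}_N, \qquad \lim _{N\rightarrow \infty } \big \Vert f\big |_{\frac{1}{N}{\mathbb {Z}}}\big \Vert _{H^{\text {rw}}_N} = \Vert f\Vert _{L^2({\mathbb {R}},dx)}. \end{aligned}$$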
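The norm condition behind this convergence of Hilbert spaces is easy to check for a compactly supported smooth function. Assuming \(\mu _N = \frac{1}{N}\sum _x \delta _x\) as in (134), the squared norm of the restriction is a Riemann sum of \(f^2\); with the unnormalized counting measure it would instead grow linearly in N. The bump function and the helper `restricted_norm_sq` below are hypothetical illustrations, and a very fine lattice serves as a proxy for the integral.

```python
import numpy as np


def bump(x):
    """A C_k^infty(R) function: exp(-1/(1-x^2)) on (-1, 1), zero outside."""
    x = np.asarray(x, dtype=float)
    out = np.zeros_like(x)
    inside = np.abs(x) < 1.0
    out[inside] = np.exp(-1.0 / (1.0 - x[inside] ** 2))
    return out


def restricted_norm_sq(f, N, half_width=2.0):
    """Squared H_N^rw norm of f restricted to Z/N, with mu_N = (1/N) * counting measure."""
    x = np.arange(-half_width * N, half_width * N + 1) / N
    return np.sum(f(x) ** 2) / N


reference = restricted_norm_sq(bump, 20_000)  # fine lattice as a proxy for int f^2 dx
for N in (2, 5, 10, 50):
    print(N, restricted_norm_sq(bump, N), reference)
```

For smooth compactly supported functions these Riemann sums converge faster than any power of \(1/N\), which is one reason why \(C_k^{\infty }({\mathbb {R}})\) is a convenient dense set.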

Remark 6.7

The choice of the space \(C:=C_k^{\infty }({\mathbb {R}})\) of compactly supported smooth functions as a dense set for our Hilbert space is particularly convenient, since it is a core for the Dirichlet form associated to Brownian motion. As a consequence, we can also use the same set to prove that (41) is satisfied.

1.4.2 RW: Mosco I

In order to prove that Assumption 1 is satisfied, it is convenient to split the proof into two cases, depending on whether or not f belongs to the effective domain of \((-\Delta )^{-1/2}\). It is then sufficient to prove Propositions 6.1 and 6.2 below:

Proposition 6.1

For any \(f \in D((-\Delta )^{-1/2})\), there exists a sequence \( f_N \in H_N^{\text {rw}}\) converging strongly to f such that:

Proof

Let us proceed by cases:

Case I \(f \in C_k^\infty ({\mathbb {R}})\)

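In this case the approximating sequence \(f_N\) is simply given by the restriction

$$\begin{aligned} f_N(x) = f(x), \qquad x \in \tfrac{1}{N}{\mathbb {Z}}, \end{aligned}$$

which converges strongly to f.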

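Let G(x) be the Green’s function of the Laplacian in \({\mathbb {R}}\), i.e., the fundamental solution to the problem \(\Delta G =\delta _0\), which we take to be \(G(x) = -|x |\) (in the sense of distributions \(\frac{d^2}{dx^2}(-|x|) = -2\delta _0\), so this choice solves \(\Delta G = \delta _0\) only up to a multiplicative constant that depends on the normalization of \(\Delta \)). We refer the reader to [26] for more details on Green’s functions. Let f be as in the statement; then, by standard variational arguments, we know that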

Analogously, for the discrete case, we can write

where \(G_N(\cdot )\) is the Green’s function of the discrete Laplacian \(\Delta _N\) in \(\tfrac{1}{N}{\mathbb {Z}}\), i.e. the solution of the discrete problem:

we refer to Chapter 5 in [27] for more details on discrete Green’s functions. Notice that