Abstract

This paper contains the latest installment of the authors’ project on developing ensemble based data assimilation methodology for high dimensional fluid dynamics models. The algorithm presented here is a particle filter that combines model reduction, tempering, jittering, and nudging. The methodology is tested on a two-layer quasi-geostrophic model for a \(\beta \)-plane channel flow with \(O(10^6)\) degrees of freedom out of which only a minute fraction are noisily observed. The model is reduced by following the stochastic variational approach for geophysical fluid dynamics introduced in (Holm in Proc R Soc A 41:20140963, 2015) as a framework for deriving stochastic parameterisations for unresolved scales. The reduction is substantial: the computations are done only for \(O(10^4)\) degrees of freedom. We introduce a stochastic time-stepping scheme for the two-layer model and prove its consistency in time. Then, we analyze the effect of the different procedures (tempering combined with jittering and nudging) on the performance of the data assimilation procedure using the reduced model, as well as how the dimension of the observational data (the number of “weather stations”) and the data assimilation step affect the accuracy and uncertainty of the results.

1 Introduction

In recent years, there has been an increased scientific effort in developing ensemble based data assimilation as an alternative to variational data assimilation, which is currently used in operational centres for numerical weather prediction (Footnote 1). Such methods can be better suited to fully nonlinear systems and complex observation operators. The work presented in this paper is part of this wider effort (see the survey paper [23] and the references therein for recent developments in this direction).

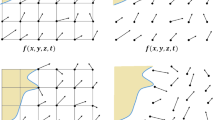

The cornerstone of the current work is the introduction of stochastic parametrization to model uncertainty via the so-called Stochastic Advection by Lie Transport (SALT) approach [12]. The stochasticity is introduced into the advection part of the dynamics via a constrained variational principle. This is a general approach for deriving stochastic partial differential equation (SPDE) models for geophysical fluid dynamics (GFD). In this work we apply it to the N-layer quasi-geostrophic model (see Sect. 2 for details). By adding stochasticity into the advection operator, one can model uncertain transport behaviour. The uncertainty in our case arises because we assimilate data obtained by observing a high resolution model, but use low resolution realisations of the model. This model reduction is crucial as it enables us to complete the task by using fairly modest computational resources (Footnote 2). The stochastic term used to model the missing uncertainties is calibrated by using a data driven approach as described in [6].

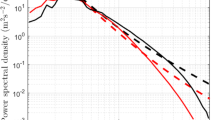

The series of snapshots of PV anomaly q shows the dependence of the solution on the resolution. All the fields are given in units of \([s^{-1}f^{-1}_0]\), where \(f_0=0.83\times 10^{-4}\, \mathrm{s^{-1}}\) is the Coriolis parameter. In order to visualize all the solutions on the same color scale, we have multiplied the ones in the second layer by a factor of 5.

This paper complements the work done in [7] where the “true state” is chosen to be the solution of the Euler equation with forcing and damping. We choose the quasi-geostrophic (QG) equations for this work because they behave qualitatively differently from the Euler equation in [7]: as one can see in Fig. 1, the solutions exhibit multiple large-scale zonally elongated jets as well as small-scale vortices. Indeed, one of the findings of our work is that the formation of jets is heavily influenced by the size of the grid: the coarser the grid, the fewer jets are formed. Nevertheless, once data assimilation is applied to the coarser model (stochastically parametrized and properly calibrated), the number of jets can be preserved. The occurrence of the jets makes the data assimilation problem harder. Whilst in [7] it sufficed to use only tempering and jittering to assimilate the data, in the current work we obtained far better results only after we added the nudging procedure to the two already used in [7]. For the sake of convenience, we give a brief description of these procedures here, and refer the reader to Sects. 5.2 and 6.2 for more detail. Tempering artificially flattens the posterior distribution (which can be highly singular in high dimensions) by rescaling the log likelihoods by a factor \(\phi \in (0,1]\). Jittering is a procedure that improves the diversity of the ensemble by recomputing the ensemble members that have been duplicated during resampling. Nudging corrects the solution of an SPDE to keep ensemble members closer to the true state. Another difference from the work done in [7] is the choice of the initial ensemble, which is chosen here as a set of independent realizations of the solution of the stochastically perturbed QG equation. We found this to be a more natural alternative to the one used in [7].

The use of the combination of tempering and jittering is theoretically justified. Indeed in [4] it is shown that the use of the two procedures can produce particle filters suitable for solving high dimensional problems. More precisely, it is proved that the effective sample size of the ensemble of particles remains under control as the dimension d of the underlying system increases with a computational cost that is at most quadratic in d. By contrast, a generic (bootstrap) particle filter would require a computational cost that is exponential in d.
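The tempering idea can be illustrated with a short sketch. It assumes a common adaptive rule, which is not taken from this paper: pick the largest \(\phi \in (0,1]\) for which the effective sample size of the tempered weights stays above a threshold. The names `ess_tempered`, `find_temperature` and `n_star` are hypothetical, and the log likelihoods are synthetic.

```python
import numpy as np

def ess_tempered(loglik, phi):
    """Effective sample size of weights proportional to exp(phi * loglik)."""
    z = phi * (loglik - loglik.max())   # shift by the max for numerical stability
    w = np.exp(z)
    w /= w.sum()
    return 1.0 / np.sum(w ** 2)

def find_temperature(loglik, n_star, tol=1e-6):
    """Bisection for the largest phi in (0, 1] with ESS(phi) >= n_star.

    Relies on ESS(phi) being non-increasing in phi, with ESS(0) = N.
    """
    if ess_tempered(loglik, 1.0) >= n_star:
        return 1.0                      # no flattening needed
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if ess_tempered(loglik, mid) >= n_star:
            lo = mid
        else:
            hi = mid
    return lo

# Synthetic log likelihoods for an ensemble of 100 particles (illustrative only).
rng = np.random.default_rng(0)
loglik = -25.0 * rng.standard_normal(100) ** 2
phi = find_temperature(loglik, n_star=80)
```

With sharply peaked likelihoods the returned `phi` is well below one, so the tempered weights are much flatter than the untempered ones.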

As is usually the case in data assimilation, the particle filter proceeds by alternating between forecast and analysis cycles. In each analysis step, observations of the current (and possibly past) state of a system are combined with the results from a prediction model (the forecast) to produce an analysis. The tempering and jittering are used to complete the analysis step, whilst the nudging procedure is used in the forecast step. In the absence of nudging, the ensemble particles have trajectories that are independent solutions of the stochastic QG equations. Nudging consists of adding a drift to the trajectories of the particles with the aim of maximising the likelihood of their positions given the observation data. This introduces a bias in the system that is corrected at the analysis step. Consequently, the nudging procedure is also theoretically justified through a standard convergence argument, see for example [9]. It follows that the data assimilation algorithm presented in this paper gives an asymptotically (as the number of particles increases) consistent approximation of the posterior distribution of the state given the data. That does not mean that the empirical distribution of a small ensemble is a good approximation of the posterior. The size of the ensemble is 100, and this is certainly not enough to approximate a posterior distribution in a state space of dimension \(O(10^4)\). However, the asymptotic result offers a sound theoretical basis for the algorithms presented here. We give further details of this issue in Sect. 5.

The paper is structured as follows. In Sect. 2 we describe the Hamiltonian formulation for the deterministic N-layer QG equations and its modified Hamiltonian for the stochastic multi-layer QG model. Section 3 presents the modifications of the deterministic and stochastic QG equations derived in the previous section which are necessary for numerical simulation and discusses the numerical integration methods for the 2-layer QG model investigated below. In Sect. 4 we prove that the stochastic CABARET scheme is consistent with the stochastic QG equation in the mean square sense in time (see [14] for the deterministic CABARET scheme). In Sect. 5 we discuss different procedures used for uncertainty quantification. In particular, we discuss Bootstrap Particle Filter, jittering, tempering and nudging procedures. In Sect. 6 we present and discuss the numerical experiments and results, and study how the data assimilation methods influence the quality of the forecast given by the stochastic QG model. The following is a summary of the main numerical experiments contained in this paper:

- Dependence of the relative bias and the ensemble mean \(l_2\)-norm relative error between the true deterministic solution and its stochastic parameterisation on the data assimilation step (Figs. 4, 6), grid resolution (Fig. 8), data assimilation methods (Figs. 10, 12), and the number of weather stations (Figs. 14, 15).
- Analysis of how uncertainty of the stochastic spread is influenced by the data assimilation step (Figs. 5, 7), grid resolution (Fig. 9), data assimilation methods (Figs. 11, 13), and the number of weather stations (Figs. 16, 17).
- Forecast reliability rank histograms for the stochastic QG model with and without the data assimilation procedure (Fig. 18).

Finally, Sect. 7 concludes the present work and discusses the outlook for future research.

2 Hamiltonian Equations of Motion for a Multi-layer Fluid

This section lays out the fundamental Hamiltonian geometric framework which forms the basis for deriving the stochastic transport theory developed in this paper. Section 2.1 lays out the Lie–Poisson bracket structure for the deterministic Hamiltonian theory. Then Sect. 2.2 explains how the stochasticity we derive arises in the Hamiltonian setting. Namely, the deterministic Hamiltonian is augmented by an additional Stratonovich stochastic Hamiltonian. Applying the Lie–Poisson bracket of the deterministic case to the sum of this stochastic Hamiltonian and the deterministic Hamiltonian has the effect of making the transport velocity stochastic in the Stratonovich sense. The resulting stochastic transport is still Hamiltonian and it still conserves the potential vorticity Lagrangian invariants. However, it does not conserve an energy. Later sections below will perturb the fundamental stochastic transport equations derived in this section by introducing deterministic forcing, as well as viscous dissipation and friction damping. This latter step is required for numerical simulation purposes.

2.1 The Deterministic N-Layer Quasi-Geostrophic (NLQG) Equations

Consider a stratified fluid of N superimposed layers of constant densities \(\rho _1< \dots <\rho _N\); the layers being stacked according to increasing density, such that the density of the upper layer is \(\rho _1\). The quasi-geostrophic (QG) approximation assumes that the velocity field is constant in the vertical direction and that in the horizontal direction the motion obeys a system of coupled incompressible shallow water equations. We shall denote by \({\mathbf {u}}_i = (- \,\partial _y\psi _i, \partial _x\psi _i) = {\hat{\mathbf {z}}}\times \nabla \psi _i\) the velocity field of the \(i^{th}\) layer, where \(\psi _i\) is its stream function, and the layers are numbered from the top to the bottom. We define the potential vorticity of the \(i^{th}\) layer as

where the elliptic operator \(E_{ij}\) defines the layer vorticity

and the constant parameters \(\alpha _i \), \(f_i \), \(f_0\), \(\beta \), \(f_N\) are

where g is the gravitational acceleration, \(\rho _0 = (1/N)(\rho _1 + \dots + \rho _N)\) is the mean density, \(D_i\) is the mean thickness of the \(i^{th}\) layer, R is the Earth’s radius, \(\varOmega \) is the Earth’s angular velocity, \(\phi _0\) is the reference latitude, and d(y) is the shape of the bottom. The \(N \times N\) symmetric tri-diagonal matrix \(T_{ij}\) represents the second-order difference operator,

so that

With these standard notations, the motion of the NLQG fluid is given by

where \({\hat{\mathbf {z}}}\) is the vertical unit vector, \({\mathbf {u}}_i = {\hat{\mathbf {z}}} \times \nabla \psi _i \) is the horizontal flow velocity in the \(i^{th}\) layer, and the brackets in

denote the usual xy canonical Poisson bracket in \({\mathbb {R}}^2\). The boundary conditions in a compact domain \(D\subset {\mathbb {R}}^2\) with smooth boundary \(\cup _{j}\partial {D}_j\) are \(\psi _j|_{(\partial {D}_j)} = constant\), whereas in the entire \({\mathbb {R}}^2\) they are \(\lim _{(x, y)\rightarrow \pm \infty } \nabla \psi _j=0\). The space of variables with canonical Poisson bracket in (6) consists of N-tuples \((q_1,\dots , q_N)\) of real-valued functions on D with the above boundary conditions and certain smoothness properties that guarantee that solutions are at least of class \(C^1\). The Hamiltonian for the N-layer vorticity dynamics in (5) is the total energy

with stream function \(\psi _i\) determined from vorticity \(\omega _i\) by solving the elliptic equation (1) for \(q_i=\omega _i-f_i\) with the boundary conditions discussed above. Hence, we find that

where \(E^{-1}_{ij}*q_j = \psi _i\) denotes convolution with the Green's function \(E^{-1}_{ij}\) for the symmetric elliptic operator \(E_{ij}\). The relation (8) means that \(\delta H/\delta q_i = \psi _i\) for the variational derivative of the Hamiltonian functional H with respect to the function \(q_i\).

Remark 1

(Lie–Poisson bracket) Equations (5) are Hamiltonian with respect to the following sum over layers of Lie–Poisson vorticity brackets

for arbitrary functionals of layer vorticities F and H, provided the domain of flow D is simply connected (Footnote 3).

The Lie algebra bracket in the integrand of (9) corresponds to the canonical Poisson bracket \(\{\psi _1,\psi _2\}_{xy}\) of stream functions, (with the standard sign conventions), so when we write the sum over layer vorticity brackets in the form (9) we may regard \(q_i + f_i(x)\), \({\delta F}/{\delta q_i}\) and \({\delta H}/{\delta q_i}\) all as functions on D, and regard the bracket inside the integral as the “ordinary” two-dimensional Poisson bracket on the symplectic manifold D.

The motion equations (5) for \(q_i\) now follow from the Lie–Poisson bracket (9) after an integration by parts to write it equivalently as

and recalling that \(\delta H/\delta q_i =-E^{-1}_{ij}*q_j=- \,\psi _i\), \(i=1,2,\dots ,N\), so that Eqs. (5) follow.

Remark 2

(Constants of motion) According to Eq. (5), the material time derivative of \(\omega _i(t, x, y)\) vanishes along the flow lines of the divergence-free horizontal velocity \({\mathbf {u}}_i = {\hat{\mathbf {z}}}\times \nabla \psi _i \). Consequently, for every differentiable function \(\varPhi _i: {\mathbb {R}}\rightarrow {\mathbb {R}}\) the functional

is a conserved quantity for the system (5) for \(i =1,\dots ,N\), provided the integrals exist. By Kelvin’s circulation theorem, the following integrals over an advected domain S(t) in the plane are also conserved,

where \({\hat{\mathbf {n}}}\) is the horizontal outward unit normal and ds is the arclength parameter of the closed curve \(\partial S(t)\) bounding the domain S(t) moving with the flow.

2.2 Hamiltonian Formulation for the Stochastic NLQG Fluid

Having understood the geometric structure (Lie–Poisson bracket, constants of motion and Kelvin circulation theorem) for the deterministic case, we can introduce the stochastic versions of equations (5) by simply making the Hamiltonian stochastic while preserving the previous geometric structure, as done in the previous section. Namely, we choose

so that

where the \(\zeta ^k_i(x,y)\), \(k =1,\dots ,K\) represent the correlations of the Stratonovich noise we have introduced in (14).

For this stochastic Hamiltonian, the Lie–Poisson bracket (9) leads to the following stochastic process for the transport of the N-layer generalised vortices,

where we have defined the stochastic transport velocity in the \(i^{th}\) layer

in terms of its stochastic stream function

determined from the variational derivative of the stochastic Hamiltonian in (14) with respect to the generalised vorticity \(q_i\) in the \(i^{th}\) layer.

Remark 3

(Constants of motion) The constants of motion \(C_{\varPhi _i}\) in (11) and the Kelvin circulation theorem for the integrals \(I_i\) in (12) persist for the stochastic generalised vorticity equations in (15). This is because both of these properties follow from the Lie–Poisson bracket in (9). However, the stochastic Hamiltonian in (14) is not conserved, since it depends explicitly on time, t, through its Stratonovich noise term.

3 The Two-Dimensional Multilayer Quasi-Geostrophic Model

3.1 Deterministic Case

The two-layer deterministic QG equations for the potential vorticity (PV) anomaly q in a domain \(\varOmega \) are given by the PV material conservation law augmented with forcing and dissipation [17, 22]:

where \(\psi \) is the stream function, \(\beta \) is the planetary vorticity gradient, \(\mu \) is the bottom friction parameter, \(\nu \) is the lateral eddy viscosity, and \({\mathbf {u}}=(u,v)\) is the velocity vector. The computational domain \(\varOmega =[0,L_x]\times [0,L_y]\times [0,H]\) is a horizontally periodic flat-bottom channel of depth \(H=H_1+H_2\) given by two stacked isopycnal fluid layers of depth \(H_1\) and \(H_2\). The existence and uniqueness theorem for a mollified version of the QG model can be found in [11].

Forcing in (18) is introduced via a vertically sheared, baroclinically unstable background flow (e.g., [3])

where the parameters \(U_i\) are background-flow zonal velocities.

The PV anomaly and stream function are related through two elliptic equations:

with stratification parameters \(s_1\), \(s_2\).

System (18)–(20) is augmented by the integral mass conservation constraint [16]

by the periodic horizontal boundary conditions,

and no-slip boundary conditions

set at northern and southern boundaries of the domain.

3.1.1 Numerical Method

The QG model (18)–(23) is solved with the CABARET method, which is based on a second-order, non-dissipative and low-dispersive, conservative advection scheme [14]. The CABARET scheme can simulate large-Reynolds-number flow regimes at lower computational costs compared to conventional methods (see, e.g., [2, 13, 21, 24]), since the scheme is low dispersive and non-oscillatory.

The CABARET method is a predictor-corrector scheme in which the components of the conservative variables are updated at half time steps. Algorithm 1 illustrates the principal steps of the CABARET method adopted from [14]. To make the notation more concise, we introduce the forward difference operators in space

and omit spatial and layer indices wherever possible, unless stated otherwise. The time step of the CABARET scheme is denoted by \(\varDelta t\).

3.2 Stochastic Case

The stochastic version of the QG Eq. (18) is given by [12]:

The stochastic terms (marked in red) are the only difference from the deterministic QG model (18); all other equations remain the same as in the deterministic case. However, the CABARET scheme in the stochastic case differs from the deterministic version, and therefore its use can only be justified if it is consistent with the stochastic QG model. In other words, the CABARET scheme should be in the Stratonovich form.

3.2.1 Numerical Method

The CABARET scheme for the stochastic QG system (24) is given by Algorithm 2 (with the stochastic terms highlighted in red). To the best of our knowledge, the CABARET scheme has not been applied to the stochastic QG equations, and is used in this work for the first time.

In order to show that the CABARET scheme is consistent with the stochastic QG model, we rewrite the scheme as the improved Euler method (also known as Heun’s method) [15],

which solves stochastic differential equations (SDEs) in the form of Stratonovich.

In doing so, we omit the space indices for the potential vorticity anomaly q to emphasize the functional dependence on q, and introduce an extra variable

which allows us to recast (25) and (26) (see Algorithm 2) in the form

Substitution of (27a) into (27b) and (25) into the forcing term \(F_{\mathrm{visc}}\left( \psi \left( q^{n+\frac{1}{2}}\right) \right) \) leads to

where

and

Retaining the terms up to order \(\varDelta t\) in (28) we get

where \(G_{\beta }\) does not depend on \(q^n\), and H.O.T. denotes higher order terms. Thus we have shown that the CABARET scheme is in Stratonovich form up to order \((\varDelta t)^{3/2}\).
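To illustrate the improved Euler (Heun) method mentioned above, the following minimal sketch applies it to a scalar Stratonovich SDE \(dq = a(q)\,dt + b(q)\circ dW\). It is not the CABARET scheme: the test equation \(dq = \sigma q\circ dW\), the function name `heun_step` and all parameter values are assumptions chosen because the exact solution \(q_0 e^{\sigma W_t}\) is known.

```python
import numpy as np

def heun_step(q, dt, dW, drift, diffusion):
    """One stochastic Heun step for dq = drift(q) dt + diffusion(q) o dW."""
    # Predictor: explicit Euler step using the same Brownian increment.
    q_pred = q + drift(q) * dt + diffusion(q) * dW
    # Corrector: average the coefficients at the start and predicted states.
    return (q
            + 0.5 * (drift(q) + drift(q_pred)) * dt
            + 0.5 * (diffusion(q) + diffusion(q_pred)) * dW)

# Test problem (an assumption for illustration): dq = sigma * q o dW, q(0) = 1.
rng = np.random.default_rng(1)
sigma, dt, n_steps = 0.5, 1e-3, 1000
q, w_total = 1.0, 0.0
for _ in range(n_steps):
    dW = np.sqrt(dt) * rng.standard_normal()
    q = heun_step(q, dt, dW,
                  drift=lambda x: 0.0 * x,
                  diffusion=lambda x: sigma * x)
    w_total += dW

exact = np.exp(sigma * w_total)   # Stratonovich solution along the same path
rel_err = abs(q - exact) / exact
```

Averaging the diffusion coefficient between the current and predicted states is what makes the scheme converge to the Stratonovich rather than the Itô solution; a plain Euler–Maruyama step would instead approximate \(e^{\sigma W_t - \sigma ^2 t/2}\).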

4 Consistency in Time of the Stochastic CABARET Scheme

In this section we prove that the stochastic CABARET scheme (29) is consistent with the stochastic QG Eq. (24) in the mean square sense in time, since its consistency in space is guaranteed by its second order approximation [14]. We consider a Stratonovich process \(q=q(t,{\mathbf {x}})\), \({\mathbf {x}}=(x,y)\) satisfying the SPDE

and rewrite it in the Itô form

or alternatively

with the deterministic and stochastic parts defined as \(\displaystyle q_d:=a_t+\frac{1}{2}\sum \limits ^{K}_{i=1}b_{i,t}(b_{i,t})\) and \(\displaystyle {q^i_{s,t}:=b_{i,t}}\), respectively. Note that the subindex s in Theorem 1 refers only to time.

We define consistency for SPDE (30) as follows

Definition 1

We say that a discrete time-space approximation \(q^n=q^n_d+{q^n_s}\) of \(q=q_d+{q_s}\) with the time step \(\varDelta t\) and space steps \(\varDelta {\mathbf {x}}=(\varDelta x_1,\varDelta x_2,\ldots ,\varDelta x_d)\) is consistent in mean square of order \(\alpha >1\) and \(\beta >1\) in time and space with respect to (30) if there exists a nonnegative function \(c=c((\varDelta t)^\alpha ,(\varDelta {\mathbf {x}})^\beta )\) with \(\lim \limits _{\begin{array}{c} \varDelta t\rightarrow 0 \\ \varDelta {\mathbf {x}}\rightarrow 0 \end{array}}c((\varDelta t)^\alpha ,(\varDelta {\mathbf {x}})^\beta )=0\) such that

for all fixed values \(q^n\), time \(n=0,1,2,\ldots \) and space indices.

Since our focus in this section is on consistency in time, we have to prove that the following estimate holds:

Theorem 1

Assuming that there exists a constant \({\widetilde{C}}>0\) such that the following assumptions hold

- A1. \({\mathbb {E}}\left[ \left\| a_r-a_s\right\| _{L^2(\varOmega )}\right] \le {\widetilde{C}}\sqrt{r-s}\),
- A2. \({\mathbb {E}}\left[ \left\| \sum \limits ^{K}_{i=1}(b_{i,r}-b_{i,s})\right\| _{L^2(\varOmega )}\right] \le {\widetilde{C}}\sqrt{r-s}\),
- A3. \({\mathbb {E}}\left[ \left\| \sum \limits ^{K}_{i=1}\sum \limits ^{K}_{j=1}b_{i,s}(b_{j,s})\right\| _{L^2(\varOmega )}\right] \le {\widetilde{C}}\), for \(i,j=1,2,\ldots ,m\),
- A4. \({\mathbb {E}}\left[ \left\| \sum \limits ^{K}_{i=1}(b_{i,r}(b_{i,r})-b_{i,s}(b_{i,s}))\right\| _{L^2(\varOmega )}\right] \le {\widetilde{C}}\sqrt{r-s}\),
- A5. \({\mathbb {E}}\left[ \left\| {H.O.T.}\right\| \right] \le {\widetilde{C}}(r-s)^{3/2}\),

with \(\left| r-s\right| \le \varDelta t\), the stochastic CABARET scheme (29) is consistent in mean square with \(c(\varDelta t)=(\varDelta t)^2\).

The proof of the theorem is given in the Appendix.

5 Data Assimilation Methods

We find it useful to describe the framework and the data assimilation methodology through the language of nonlinear filtering. For this purpose, let us consider a probability space \((\varOmega ,{\mathcal {F}},{\mathbb {P}})\) on which we define a pair of processes Z and Y. The process Z is normally called the signal process, and the process Y models the observational data and is called the observation process. In the context of this work the signal process, also called the true state, is given by the solution of the deterministic QG equation (18) computed on a fine grid \(G_f=2049\times 1025\) and projected onto a coarse grid, denoted by \(G_s\) (details below), by spatially averaging the high-resolution stream function \(\psi ^f\) over the corresponding coarse grid cells.
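The projection by spatial averaging can be sketched as a block average. This is a minimal illustration under stated assumptions: the field is stored as cell values (so the \(2049\times 1025\) and \(129\times 65\) node grids correspond to \(2048\times 1024\) and \(128\times 64\) cells, a coarsening factor of 16), the fine field is synthetic, and the name `coarse_grain` is hypothetical.

```python
import numpy as np

def coarse_grain(psi_fine: np.ndarray, factor: int) -> np.ndarray:
    """Average a fine-grid field over non-overlapping factor x factor blocks."""
    ny, nx = psi_fine.shape
    if ny % factor or nx % factor:
        raise ValueError("fine grid shape must be divisible by the coarsening factor")
    # Split each axis into (coarse index, index within block), then average
    # over the two within-block axes.
    blocks = psi_fine.reshape(ny // factor, factor, nx // factor, factor)
    return blocks.mean(axis=(1, 3))

# Synthetic stand-in for the high-resolution stream function (illustrative only).
rng = np.random.default_rng(0)
psi_f = rng.standard_normal((1024, 2048))
psi_c = coarse_grain(psi_f, 16)   # one value per coarse cell
```

Block averaging preserves the domain mean of the field, which is one reason it is a natural choice of projection from the fine grid onto the coarse one.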

The filtering problem consists in computing/approximating the posterior distribution of the signal \(Z_t\), denoted by \(\pi _t\) given the observations \(Y_s\), \(s\in [0,t]\). In our context, the observations consist of noisy measurements of the true state recorded at discrete times (every 2 or 4 h) and are taken at locations (called weather stations) on a data grid \(G_d\) defined below. The data assimilation is performed at these times, which we call the assimilation times.

The most basic particle filter, called the bootstrap particle filter (see Sect. 5.1 for details), uses ensembles of, say, N particles that evolve according to the law of the signal between assimilation times. At the data assimilation times, each particle is weighted according to the likelihood of its position given the new data. A new set of particles is then obtained by sampling N times (with replacement) from the set of weighted particles. The end result is that particles with high likelihoods (close to the true trajectory) are kept and possibly multiplied, and particles with small likelihoods (far from the true trajectory) are eliminated. As a result, the ensemble of particles should stay closer to the true trajectory when compared to the particles that evolve according to the signal distribution. The bootstrap particle filter described below uses multiple copies of the signal which, in our case, would require solving the deterministic QG equation (18) on a fine grid \(G_f=2049\times 1025\). Each run of the particle filter at such a high resolution is computationally very expensive (one data assimilation step takes approximately 15 min). Taking into account that we assimilate data over thousands of steps, the computational resources needed are too large. For this reason we replace the true state with a proxy. We use a process X defined on the same probability space whose sample paths are much cheaper to simulate. In our case, the process X will be the solution of the stochastic QG equation (24) computed on the (signal) grid \(G_s\) (each run of the process X requires around 20 seconds). This replacement is a form of model reduction: we reduce the dimension of the underlying state from \(G_f=2049\times 1025\) to \(G_s=129\times 65\). This model reduction is critical to the successful implementation of the data assimilation procedure. It is also rigorously justified, as we now explain.

The posterior distribution \(\pi _t\) depends continuously on two constituents: the (prior) distribution of the signal and the observation data. That means that if we replace the original signal distribution with a proxy distribution, we will still obtain a good approximation of \(\pi _t\) provided the original and the proxy distributions are close to each other in some suitably chosen topology on the space of distributions.

For the current work, the way in which we ensure that the two distributions (original and proxy) remain close to each other is by adding the right type of stochasticity to the model and “in the right directions”. This is done through the Stochastic Advection by Lie Transport (SALT) approach [12]. The stochasticity is calibrated to match the fluctuations of X as explained in [6, 8]. We emphasize that one does not seek a pathwise approximation of the true state, but only an approximation of its distribution.

In our case, the true state is deterministic, and the process X is random. As we saw in [6, 8], one can visualise the distribution of X through ensembles of particles with trajectories that are solutions of the stochastic QG equation (24) computed on the grid \(G_s\) and driven by independent families of Brownian motions. In the language of uncertainty quantification, the difference between the two distributions is interpreted as the “uncertainty of the model”. Typically, the ensemble of particles is a spread “around” the true trajectory, the size of the spread measuring the (model) uncertainty. To visualise this one can look at projections of the true trajectory and the ensembles of particles at various grid points. Of course, the more refined the grid \(G_s\) is, the closer the two distributions are, and the smaller the spread. However, refining the grid \(G_s\) leads to an increase in the computational effort of generating the particle trajectories. One of the roles of data assimilation is to reduce the spread (the uncertainty) without refining the grid.

The average position of the ensemble of particles obtained through the data assimilation, denoted by \({\widehat{Z}}_t\), is a pointwise estimate of the true state \(Z_t\), whilst the spread of the ensemble is a measure of the approximation error \(Z_t-{\widehat{Z}}_t\). As explained in the introduction, the data assimilation methodology presented here is asymptotically consistent: the empirical distribution of the particles converges, as \(N\rightarrow \infty \), to the posterior distribution \(\pi _t\) [9]. As a consequence, \({\widehat{Z}}_t\) converges to the conditional expectation of the true state \(Z_t\) given the observations, and the empirical covariance matrix converges to the conditional expectation of \((Z_t-{\widehat{Z}}_t)(Z_t-{\widehat{Z}}_t)^{T}\) given the observations (Footnote 4). This is true if the particles evolve according to the original distribution of the signal. In our case we use a proxy distribution, so the limit will be an approximation of \(\pi _t\), the difference between this approximation and \(\pi _t\) being controlled by the choice of the signal grid \(G_s\).

The data assimilation methodology described below consists of a combination of the bootstrap particle filter and three additional procedures: nudging, tempering and jittering. The bootstrap particle filter cannot be used on its own to solve the data assimilation problem. The reason is that the particle likelihoods vary wildly from each other, because the particles themselves stray away from the true state rapidly and in different directions. This is reflected in the observation data. One particle likelihood, or a small number of such likelihoods, will become much higher than the rest, and only the corresponding particle(s) will be selected and multiplied. This will not offer a good representation of the posterior distribution. As we will explain below, the additional procedures eliminate this effect, ensuring a reasonably spread set of particles.

In the next subsections we study how each of these individual procedures influences the accuracy of the estimation \({\widehat{Z}}_t\) and the quality of the forecast given by the stochastic QG model. In order to study how the dimension of the observation process Y (the number of weather stations) affects data assimilation, we consider two different data grids \(G_d=\{4\times 4,8\times 4\}\). We also study stochastic solutions on two different signal grids \(G_s=\{129\times 65,257\times 129\}\) in order to highlight the effect of more accurate proxy distributions on the results.

As stated above, we will use ensembles \({\mathcal {S}}\) of solutions of the stochastic QG equation (24) driven by independent realizations of the Brownian noise W. For the purpose of this paper, the size of the ensemble is taken to be \(N=100\) and the number of Brownian motions (independent sources of stochasticity) is taken to be \(K=32\); as already stated, the elements of the ensembles will be called particles. It was numerically shown in [6] that \(N=100\) and \(K=32\) are enough to reasonably approximate the fluctuations of the original distribution. Through numerical experiments, we showed that the spread of the ensemble will not increase substantially by taking more particles and/or sources of noise (Brownian motions).

The observation data \(Y_t\) is, in our case, an M-dimensional process that consists of noisy measurements of the velocity field \({\mathbf {u}}\) taken at points belonging to the data grid \(G_d\):

where \(\mathrm{P}^s_d:G_s\rightarrow G_d\) is a projection operator from the signal grid \(G_s\) to the data grid \(G_d\), and \(\eta \sim {\mathcal {N}}({\mathbf {0}},I_\sigma )\) is a normally distributed random vector with mean vector \({\mathbf {0}}=(0,\ldots ,0)\) and diagonal covariance matrix \(I_\sigma =diag(\sigma _1^2,\ldots ,\sigma _M^2)\). Rather than choosing an arbitrary \(\sigma =(\sigma _1,\ldots ,\sigma _M)\) for the standard deviation of the noise, we use the standard deviation of the velocity field computed over the coarse grid cell of the signal grid. This is because the coarse model should be thought of as only modelling the part of the solution that is resolved on the coarse grid, i.e. a local spatial average at that scale. Since the observations are point values from the fine solution, we treat the observation noise as modelling the difference between the fine solution point value and the cell average. Assuming that the small scale fluctuations are sufficiently ergodic, this justifies modelling the point value as the cell average plus a random fluctuation. It is assumed that this is the main source of observation error.
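A minimal sketch of this observation model follows, assuming a single velocity component stored as cell values, a coarsening factor of 16, and stations placed at hypothetical coarse-grid indices. The names `block_stats` and `make_observations` are illustrative, and the fine field is synthetic.

```python
import numpy as np

def block_stats(field, factor):
    """Per-coarse-cell mean and standard deviation of a fine-grid field."""
    ny, nx = field.shape
    blocks = field.reshape(ny // factor, factor, nx // factor, factor)
    return blocks.mean(axis=(1, 3)), blocks.std(axis=(1, 3))

def make_observations(u_fine, factor, stations, rng):
    """Cell average at each station plus Gaussian noise with the sub-cell std."""
    mean, sigma = block_stats(u_fine, factor)
    iy, ix = stations                       # coarse-grid indices of the stations
    sig = sigma[iy, ix]
    y = mean[iy, ix] + sig * rng.standard_normal(sig.shape)
    return y, sig

# Synthetic fine-grid velocity component and a 4 x 4 grid of stations
# (station positions are an assumption for illustration).
rng = np.random.default_rng(0)
u_f = rng.standard_normal((1024, 2048))
iy, ix = np.meshgrid(np.arange(8, 64, 16), np.arange(16, 128, 32), indexing="ij")
y_obs, sigma_obs = make_observations(u_f, 16, (iy.ravel(), ix.ravel()), rng)
```

Here each \(\sigma _i\) is the standard deviation of the fine field inside the coarse cell containing station i, so the noise level adapts to how much unresolved variability there is at that location.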

We introduce the likelihood-weight function

with M being the number of grid points (weather stations). In order to measure the variability of the weights (32) of particles we use the effective sample size:

which is close to the ensemble size N if the particles have weights that are close to each other, and decays to one as the ensemble degenerates (i.e. there are fewer and fewer particles with large weights and the rest have small weights). One should resample from the weighted ensemble if the ESS drops below a given threshold, \(N^*\),

We chose \(N^*=80\) to be our threshold.
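As an illustration of the resampling criterion, the following minimal Python sketch computes the effective sample size from a set of log-weights. It assumes the standard definition \(\mathrm{ESS}=\big(\sum _n w_n^2\big)^{-1}\) for normalised weights \(w_n\), which has the limiting behaviour described above; the function names and the two test ensembles are hypothetical and serve only to show the two extremes.

```python
import math

def normalised_weights(log_w):
    """Normalise log-weights stably by subtracting the maximum first."""
    m = max(log_w)
    w = [math.exp(lw - m) for lw in log_w]
    total = sum(w)
    return [x / total for x in w]

def effective_sample_size(weights):
    """Standard ESS = 1 / sum_n w_n^2 for normalised weights (assumed form)."""
    return 1.0 / sum(x * x for x in weights)

N, N_STAR = 100, 80  # ensemble size and threshold quoted in the text

# Equal weights: the ESS equals the ensemble size.
uniform = normalised_weights([0.0] * N)
# One dominant particle: the ESS decays towards one.
degenerate = normalised_weights([0.0] + [-50.0] * (N - 1))

ess_uniform = effective_sample_size(uniform)
ess_degenerate = effective_sample_size(degenerate)
needs_resampling = ess_degenerate < N_STAR
```

Working with log-weights avoids underflow when the likelihood is sharply peaked, which is the regime of interest for accurate observations.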

5.1 Bootstrap Particle Filter

In this section we consider the most basic particle filter, called the bootstrap particle filter or Sampling Importance Resampling filter [10]. This method works as follows.

Given an initial distribution of particles, each particle is propagated forward according to the stochastic QG equation. Then, based on partial observations \({\mathbf {Y}}_{t_{j+1}}\) of the true state, the weights of the new particles are computed, and if the effective sample size drops below the critical value \(N^*\), the particles are resampled to remove particles with small weights.
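The propagate, weight, and resample cycle just described can be sketched as follows. This is a toy illustration under stated assumptions, not the implementation used in the paper: the state is a scalar, the hypothetical `propagate` callable stands in for the stochastic QG solver, the likelihood is Gaussian, and systematic resampling is one common choice among several.

```python
import math
import random

def systematic_resample(weights, rng):
    """Return particle indices drawn by systematic resampling."""
    n = len(weights)
    u0 = rng.random() / n
    indices, cumulative, i = [], weights[0], 0
    for k in range(n):
        u = u0 + k / n
        while u > cumulative and i < n - 1:
            i += 1
            cumulative += weights[i]
        indices.append(i)
    return indices

def bootstrap_step(particles, observation, sigma, n_star, rng, propagate):
    """One analysis cycle: propagate, weight, resample if ESS < n_star."""
    # 1. Propagate each particle with the (stand-in) stochastic model.
    particles = [propagate(x, rng) for x in particles]
    # 2. Gaussian log-likelihood weights (assumed form), normalised stably.
    log_w = [-0.5 * ((x - observation) / sigma) ** 2 for x in particles]
    m = max(log_w)
    w = [math.exp(lw - m) for lw in log_w]
    total = sum(w)
    w = [x / total for x in w]
    # 3. Resample only when the effective sample size is too small.
    ess = 1.0 / sum(x * x for x in w)
    if ess < n_star:
        idx = systematic_resample(w, rng)
        particles = [particles[i] for i in idx]
        w = [1.0 / len(particles)] * len(particles)
    return particles, w, ess

rng = random.Random(0)
random_walk = lambda x, r: x + r.gauss(0.0, 0.1)  # hypothetical toy dynamics
particles = [rng.gauss(0.0, 1.0) for _ in range(100)]
particles, w, ess = bootstrap_step(particles, 0.5, 0.1, 80, rng, random_walk)
```

With an accurate observation (small `sigma`) the ESS typically falls far below the threshold after a single step, which is the degeneracy discussed in the next paragraph.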

For a high dimensional problem such as the one studied in this paper, the effective sample size drops very quickly to 1 as the sample degenerates rapidly. The reason for this is that particles travel very quickly away from the true state, and this is picked up by the observation data (unless the measurement noise is large, which is not the case here: the observations are accurate). To counteract this, the resampling procedure would need to be performed unreasonably frequently or a large number of particles would need to be used.

To maintain the diversity of the ensemble we use instead three additional procedures: the tempering technique and jittering based on the Metropolis-Hastings Markov chain Monte Carlo (MCMC) method, and nudging. We explain each of these procedures in the following sub-sections.

5.2 Tempering and Jittering

We briefly explain the usage of these two procedures; see [7] for further details. The idea behind tempering is to artificially flatten the weights by rescaling the log-likelihoods by a factor \(\phi \in (0,1]\), which is called the temperature. Once this is done, resampling can be applied. This gives a much more diverse ensemble, as the ESS will have more reasonable values (the temperature is specifically chosen to ensure this). However, some of the resulting particles will still have duplicates. To eliminate these, one uses jittering.

Jittering is another technique which improves the diversity of the ensemble by replacing particles which have been duplicated during resampling with new ones. There are different ways to diversify the ensemble. For example, one can jitter the particles by simply adding some random perturbations to them. However, in this case, the perturbed particles are not solutions of the stochastic QG equation, which, in turn, can lead to nonphysical behaviour of the model. Instead, we compute new particles by solving the stochastic QG equation driven by the Brownian motion \(\rho W+\sqrt{1-\rho ^2}\,{\widetilde{W}}\), where W is the original Brownian motion and \({\widetilde{W}}\) is a new Brownian motion independent of W; the initial conditions for this equation are the same as for the stochastic QG equation (24). The perturbation parameter \(\rho \) is chosen so that particles are not placed too far from the original position, yet far enough to ensure the diversity of the sample. In our experiments, we use \(\rho =0.9999\). Each new proposal for the position of the particle is then accepted/rejected through a standard Metropolis-Hastings method, in which \(M_1\) stands for the number of iterations; we choose \(M_1=20\). This ensures that the perturbations do not change the sought distribution. However, this fixed number of jittering steps may not be optimal. Therefore, we plan to study how to optimize it in conjunction with the size of the perturbation parameter \(\rho \). We also note that the wall time of one data assimilation step (computed on a workstation with 128GB of RAM and 2x6-core Intel Xeon E5-2643v4 3.4GHz processors) is approximately 3 and 8 minutes for the grids of size \(129\times 65\) and \(257\times 129\), respectively.
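The jittering proposal and its accept/reject step can be sketched in Python as below. This is a minimal sketch under assumptions: the particle is represented only by its vector of Brownian increments, the hypothetical callable `log_likelihood` stands in for re-solving the SPDE and evaluating the weight, and the acceptance rule is the one that follows for a proposal that leaves the Gaussian law of the increments invariant (so only the tempered likelihood ratio enters). The toy likelihood and the parameter names are illustrative.

```python
import math
import random

def jitter(dW, log_likelihood, phi, rho, dt, n_iter, rng):
    """Metropolis-Hastings jittering of Brownian increments dW ~ N(0, dt).

    log_likelihood is a hypothetical stand-in: in practice it would
    re-solve the SPDE with the proposed noise and evaluate the weight.
    """
    current = list(dW)
    current_ll = log_likelihood(current)
    accepted = 0
    for _ in range(n_iter):
        # rho*W + sqrt(1 - rho^2)*W_tilde has the same N(0, dt) law as W.
        fresh = [rng.gauss(0.0, math.sqrt(dt)) for _ in current]
        proposal = [rho * w + math.sqrt(1.0 - rho * rho) * f
                    for w, f in zip(current, fresh)]
        proposal_ll = log_likelihood(proposal)
        # Accept with probability min(1, (L_new / L_old)^phi).
        log_alpha = min(0.0, phi * (proposal_ll - current_ll))
        if rng.random() < math.exp(log_alpha):
            current, current_ll = proposal, proposal_ll
            accepted += 1
    return current, accepted

rng = random.Random(0)
dt, K = 0.01, 32
dW = [rng.gauss(0.0, math.sqrt(dt)) for _ in range(K)]
# Toy likelihood: the "observation" is the sum of the increments.
toy_ll = lambda inc: -0.5 * ((sum(inc) - 0.3) / 0.1) ** 2
new_dW, n_accepted = jitter(dW, toy_ll, phi=1.0, rho=0.9999,
                            dt=dt, n_iter=20, rng=rng)
```

A value of \(\rho \) close to one gives small moves with a high acceptance rate, while smaller values give bolder proposals that are rejected more often; this is the trade-off behind the choice of \(\rho \) and \(M_1\).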

Of course, after the first tempering-jittering cycle has finished, the particles in the resulting ensemble are samples from the altered distribution, which is not what we desire. Therefore, the procedure is repeated by finding the next temperature value in the range \((\phi ,1]\) that offers a reasonable ESS. This is repeated until the temperature scaling is 1, so that the original distribution is recovered. The tempering-jittering methodology is given by Algorithm 4 below.
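The adaptive choice of temperatures can be illustrated with the following Python sketch. It is not Algorithm 4 itself: it assumes that the next temperature is found by bisection on the ESS of the incremental weights, uses multinomial resampling, and treats jittering as an optional hypothetical hook; the scalar particles and the Gaussian log-likelihood are toy stand-ins.

```python
import math
import random

def ess_at(log_lik, delta):
    """ESS of weights proportional to exp(delta * log_lik)."""
    m = max(log_lik)
    w = [math.exp(delta * (l - m)) for l in log_lik]
    s = sum(w)
    return s * s / sum(x * x for x in w)

def next_temperature(log_lik, phi_old, ess_target, tol=1e-6):
    """Largest step (up to tol) keeping the ESS at or above the target."""
    if ess_at(log_lik, 1.0 - phi_old) >= ess_target:
        return 1.0
    lo, hi = 0.0, 1.0 - phi_old  # ESS(lo) >= target > ESS(hi)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if ess_at(log_lik, mid) >= ess_target:
            lo = mid
        else:
            hi = mid
    return phi_old + (lo if lo > 0.0 else hi)

def tempering_schedule(particles, log_likelihood, ess_target, rng, jitter=None):
    """Raise the temperature from 0 to 1, resampling at every stage."""
    phi, schedule = 0.0, []
    while phi < 1.0:
        log_lik = [log_likelihood(x) for x in particles]
        phi_new = next_temperature(log_lik, phi, ess_target)
        m = max(log_lik)
        w = [math.exp((phi_new - phi) * (l - m)) for l in log_lik]
        particles = rng.choices(particles, weights=w, k=len(particles))
        if jitter is not None:  # hypothetical hook for the MCMC jittering
            particles = [jitter(x, phi_new, rng) for x in particles]
        phi = phi_new
        schedule.append(phi)
    return particles, schedule

rng = random.Random(1)
ensemble = [rng.gauss(0.0, 1.0) for _ in range(100)]
toy_ll = lambda x: -0.5 * ((x - 2.0) / 0.1) ** 2
final, schedule = tempering_schedule(ensemble, toy_ll, 80, rng)
```

Without the jittering hook the ensemble collapses onto a few duplicated particles as the temperature rises, which is precisely why tempering is paired with jittering.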

5.3 Nudging

Tempering combined with jittering is a powerful technique which can correctly narrow the stochastic spread in the presence of informative data, while also maintaining the diversity of the ensemble over a long time period. Their combined success depends crucially on the quality of the original sample proposals. This quality is produced by evolving the particles using the SPDE (the proxy distribution) and not the true distribution. To reduce the discrepancy introduced in this way, one can use nudging. The idea of nudging is to correct the solution of SPDE (24) so as to keep the particles closer to the true state. To do so, we add a ‘nudging term’ (marked in blue) to SPDE (24),

Note that q depends on the parameter \(\lambda \). The trajectories of the particles will be solutions of this perturbed SPDE (34). To account for the perturbation, the particles will have new weights according to Girsanov’s theorem, given by

As explained above, these weights measure the likelihood of the position of the particles given the observation, and the last term accounts for the change of probability distribution from q to \(q(\lambda )\). It therefore makes sense to choose weights that maximize these likelihoods. In other words, we could look to solve the equivalent minimization problem

together with (34). In general this is a challenging nonlinear optimisation problem, especially if one allows the \(\lambda _k\)’s to vary in time.

To simplify the problem, we perturb only the corrector stage of the final timestep before \(t_{j+1}\). Then the (discrete version of the) minimization problem (36) becomes

where \(\delta t\) is the time step. Let us re-write

where \(q_{t_{j+1/2}}\) and \({\tilde{q}}_{t_{j+1}}\) are computed in the prediction and the extrapolation steps, respectively (see Algorithm 2 for details).

We can then re-write the minimisation problem (37) as

where

with

and

This is a quadratic minimization problem with the optimal value \(\lambda \) depending (linearly) on the increments \(\varDelta W_{1},...,\varDelta W_{K}\). This optimal choice is not admissible, as the parameter \(\lambda \) can only be a function of the approximations \({\tilde{q}}_{t_{j+1}}\), \(q_{t_{j+1/2}}\) and of \({\mathbf {Y}}_{t_{j+1}}\) (since it needs to be adapted to the forward filtration of the set of Brownian motions \(\{W_{k}\}\)). To ensure that this constraint is satisfied, we minimise the conditional expectation of \( {\mathcal {V}}({{\mathbf {q}}}(\lambda ),{\mathbf {Y}},\lambda )\) given \({\tilde{q}}_{t_{j+1}}\), \(q_{t_{j+1/2}}\) and \({\mathbf {Y}}_{t_{j+1}}\), that is \( \displaystyle \min \limits _{\lambda _{k},\ k\in [1..K]}{\mathbb {E}}\left[ {\mathcal {V}}({ {\mathbf {q}}}(\lambda ),{\mathbf {Y}},\lambda )|{\tilde{q}}_{t_{j+1}}, q_{t_{j+1/2}},{\mathbf {Y}} _{t_{j+1}}\right] . \) We note that Q is independent of \(\lambda \) and does not play any role in the minimization operation.

Also \(\displaystyle {\mathbb {E}}\left[ Q_{2}(\lambda ,\varDelta W_{1},...,\varDelta W_{K})|{\tilde{q}}_{t_{j+1}}, q_{t_{j+1/2}},{\mathbf {Y}} _{t_{j+1}}\right] \) is independent of \(\lambda \). Finally, \(Q_{1}(\lambda )\) is measurable with respect to \({\tilde{q}}_{t_{j+1}}, q_{t_{j+1/2}}, {\mathbf {Y}}_{t_{j+1}}\), that is \( \displaystyle {\mathbb {E}}\left[ Q_{1}(\lambda )|{\tilde{q}}_{t_{j+1}}, q_{t_{j+1/2}},{\mathbf {Y}}_{t_{j+1}}\right] =Q_{1}(\lambda ). \) Consequently, we only need to minimize \(Q_{1}(\lambda )\). This functional is quadratic in \(\lambda \), and hence the optimization can be done by solving a linear system. This is the approach that we use in the present work. We note that this approximation remains asymptotically consistent as the number of ensemble members tends to infinity.
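To illustrate how a quadratic functional of this kind reduces to a linear system, the following Python sketch minimises a generic stand-in for \(Q_1\). The specific form used here, \(\tfrac{1}{2}\delta t\,|\lambda |^2+\tfrac{1}{2}\sum _i\big ((a_i+(B\lambda )_i-Y_i)/\sigma _i\big )^2\), is an assumption made for illustration only: the vector `a` (observed part of the un-nudged state) and the matrix `B` (sensitivity of the observed state to each \(\lambda _k\)) are hypothetical names, and the small numbers are arbitrary.

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x

def gradient(lam, a, B, Y, sigma, dt):
    """Gradient of 0.5*dt*|lam|^2 + 0.5*sum_i ((a+B lam-Y)_i/sigma_i)^2."""
    resid = [a[i] + sum(B[i][k] * lam[k] for k in range(len(lam))) - Y[i]
             for i in range(len(Y))]
    return [dt * lam[k]
            + sum(B[i][k] * resid[i] / sigma[i] ** 2 for i in range(len(Y)))
            for k in range(len(lam))]

def optimal_nudging(a, B, Y, sigma, dt):
    """Solve the normal equations (dt*I + B^T S^-1 B) lam = B^T S^-1 (Y - a)."""
    n_obs, K = len(Y), len(B[0])
    A = [[(dt if k == l else 0.0)
          + sum(B[i][k] * B[i][l] / sigma[i] ** 2 for i in range(n_obs))
          for l in range(K)] for k in range(K)]
    rhs = [sum(B[i][k] * (Y[i] - a[i]) / sigma[i] ** 2 for i in range(n_obs))
           for k in range(K)]
    return solve_linear(A, rhs)

a = [0.0, 1.0, 2.0]
B = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
Y = [1.0, 1.0, 1.0]
sigma = [0.5, 0.5, 1.0]
lam = optimal_nudging(a, B, Y, sigma, dt=0.1)
grad = gradient(lam, a, B, Y, sigma, dt=0.1)
```

The penalty term proportional to \(\delta t\) keeps the system well conditioned even when the number of observations is smaller than K.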

6 Numerical Results

We consider a horizontally periodic flat-bottom channel \(\varOmega =[0,L_x]\times [0,L_y]\times [0,H]\) with \(L_x=3840\, \mathrm{km}\), \(L_y=L_x/2\), and total depth \(H=H_1+H_2\), with \(H_1=1.0\, \mathrm{km}\), \(H_2=3.0\, \mathrm{km}\). The governing parameters of the QG model are typical of a mid-latitude setting, i.e. the planetary vorticity gradient \(\beta =2\times 10^{-11}\, \mathrm{m^{-1}\, s^{-1}}\), lateral eddy viscosity \(\nu =3.125\,\mathrm{m^2\, s^{-1}}\), and the bottom friction parameter \(\mu =4\times 10^{-8}\). The background-flow zonal velocities in (19) are given by \(U=[6.0,0.0]\,\mathrm{cm\, s^{-1}}\), and the stratification parameters in (20) are \(s_1=4.22\cdot 10^{-3}\,\mathrm{km^{-2}}\), \(s_2=1.41\cdot 10^{-3}\,\mathrm{km^{-2}}\), chosen so that the first Rossby deformation radius is \(Rd_1=25\, \mathrm{km}\). In order to ensure that the numerical solutions are statistically equilibrated, the model is initially spun up from the state of rest to \(t=0\) over the time interval \(T_{spin}=[-50,0]\, \mathrm{years}\). The numerical solutions of the deterministic QG model (18) at different resolutions are presented in Fig. 1.

We would like to draw the reader’s attention to the fact that the solution depends significantly on the resolution (see Fig. 1). Namely, the number of jets for the highest resolution \(2049\times 1025\) (Fig. 1a) is four (four red striations in the interior of the computational domain; the boundary layers on the top and bottom boundaries are not counted as such). However, there are only two jets for the lower-resolution flows: \(G=1025\times 513\) (Fig. 1b), \(G=513\times 257\) (Fig. 1c), \(G=257\times 129\) (Fig. 1d). Moreover, the lowest resolution flow (computed on the grid \(G=129\times 65\), Fig. 1e) shows no jets at all, and this is the flow that we parameterise and to which we then apply the data assimilation methods described above. We also use a finer grid \(G=257\times 129\) to study the effect of the resolution on the data assimilation methods. It is important to note that there is no smooth transition between solutions at different resolutions like, for instance, in the double-gyre problem (e.g. [19]), and this makes lower-resolution solutions much harder to parameterise, since the parameterisation should compensate not only for the information lost because of coarse-graining, but also for the missing physical effects. For example, in the channel flow, the backscatter mechanism (e.g. [20]) at low resolutions is very weak, and thus it is not capable of cascading energy up to larger scales to maintain the jets.

In the following, we compare the dependence of the performance of the various procedures discussed above on the following parameters: the resolution of the signal grid, the number of observations (also referred to as weather stations), and the size of the data assimilation step; note that weather stations are located in both layers. The methods will be applied to the parameterised QG model (24) which has been studied at length in [6].

Before going into further details, we remind the reader how we compute the true solution, which is denoted as \(q^a\), and also referred to as the truth or the true state. For the purpose of this paper, we have computed two versions of the true solution \(q^a\). The first one is computed on a signal grid \(G_s=257\times 129\) (\(dx\approx dy\approx 15\, \mathrm{km}\)), and the other one is computed on a signal grid \(G_s=129\times 65\) (\(dx\approx dy\approx 30\, \mathrm{km}\)) (Fig. 2). Each true solution is computed as the solution of the elliptic equation (20) with the stream function \(\psi ^a\), where \(\psi ^a\) is computed by spatially averaging the high-resolution stream function \(\psi ^f\) (computed on the fine grid \(G_f=2049\times 1025,\, dx\approx dy\approx 1.9\, \mathrm{km}\)) over the coarse grid cell of \(G_s\). From now on, we focus only on the first layer, since the flow in the first layer is more energetic and exhibits interesting dynamics, including small-scale vortices and large-scale zonally elongated jets.
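The grid spacings quoted for the three grids can be checked with a few lines of Python. The sketch assumes that a grid of \(n_x\times n_y\) nodes includes both end points of the channel, so that the spacing is \(L_x/(n_x-1)\); the dictionary keys are labels chosen here for illustration.

```python
L_X_KM = 3840.0          # channel length from the model setup
L_Y_KM = L_X_KM / 2.0    # channel width

grids = {
    "fine 2049x1025": (2049, 1025),
    "signal 257x129": (257, 129),
    "signal 129x65": (129, 65),
}

# Spacing in km, assuming nodes sit on both ends of the interval.
spacing = {name: (L_X_KM / (nx - 1), L_Y_KM / (ny - 1))
           for name, (nx, ny) in grids.items()}

for name, (dx, dy) in spacing.items():
    print(f"{name}: dx = {dx} km, dy = {dy} km")
```

Under this assumption the spacings are 1.875 km, 15 km, and 30 km, so the coarsest signal grid resolves the 25 km first Rossby deformation radius only marginally.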

In order to study how the number of weather stations influences the accuracy of data assimilation methods, we will consider two different setups, with \(M=16\) and \(M=32\) weather stations. Clearly, the location of weather stations can be optimized so as to give the most accurate data assimilation results. For the purpose of this work, we locate the weather stations at the nodes of equidistant Eulerian grids \(G_d=\{4\times 4,8\times 4\}\) shown in Fig. 3. In all numerical experiments we use \(N=100\) particles (ensemble members) and \(K=32\) (the number of \(\xi \)’s); the choice of these parameters has been justified in [6]. It is worth noting that the initial conditions for the stochastic model have been computed over the spin up period \(T_{\mathrm{spin}}=[-8,0]\) hours. The method of computing physically consistent initial conditions for the stochastic model is given in [6].

Snapshot of PV anomaly \(q^f_1\) and location of weather stations for the data grids \(G_d=\{4\times 4\}\) (green squares) and \(G_d=\{8\times 4\}\) (black squares); the PV anomaly field is given in units of \([s^{-1}f^{-1}_0]\), where \(f_0=0.83\times 10^{-4}\, \mathrm{s^{-1}}\) is the Coriolis parameter (Color figure online)

6.1 Tempering and Jittering

We start with the study of the data assimilation algorithm that uses tempering and jittering, but not nudging (Algorithm 4). In the first experiment we run the stochastic QG model at the coarsest resolution (\(G_s=129\times 65\)) and use \(M=16\) weather stations. We compare the results of the data assimilation methodology with the forward run of the stochastic model. For this experiment we take the data assimilation step to be \(\varDelta t=4\) h (the data assimilation step is the time between two consecutive analysis cycles).

The results are presented in Fig. 4 in terms of the relative bias (RB) and the ensemble mean \(l_2\)-norm relative error (EME) given by

with \({\mathbf {u}}^p_n\) being the n-th member of the stochastic ensemble and \({\mathbf {u}}^a\) the true solution.

Evolution of the ensemble mean relative \(l_2\)-norm error (EME) and relative bias (RB) for a all weather stations and b the whole domain; \({\mathbf {u}}^a\) is the true solution, \({\mathbf {u}}^p\) is the stochastic solution. In order to assimilate data we use tempering and jittering (Algorithm 4); the data is assimilated from \(M=16\) weather stations every 4 hours; the grid size is \(G_s=129\times 65\). Note that ”No DA—Algorithm 2“ means a free run of the SPDE over 20 days, ignoring the observations

As Fig. 4 shows, the data assimilation method presented by Algorithm 4 (blue line) offers little improvement over the SPDE run without data assimilation (red line), both in terms of the relative bias and in terms of the EME, at the weather stations (Fig. 4a) and in the whole domain (Fig. 4b) throughout the time period of 20 days. As we will see later, the situation improves as we decrease the data assimilation step and/or increase the resolution of the signal grid. But first, let us look at the uncertainty quantification results for this particular setting. As expected, the spread for the stochastic QG model (24) decreases (to a certain extent) after the application of the data assimilation methodology. We illustrate the shrinkage of the stochastic spreads in Fig. 5 for the velocity computed at weather stations located in the slow flow region (blue stripes) and fast flow region (red jets) in Fig. 3.

Shown are typical stochastic spreads for velocity \({\mathbf {u}}^p_1=(u^p_1,v^p_1)\) at weather stations located in the slow flow region (upper row) and fast flow region (lower row); the grid size is \(G_s=129\times 65\). The vertical axis represents the velocity (given in units \(\mathrm {m}\cdot \mathrm {s}^{-1}\)) at the weather station location (Color figure online)

Figure 5 shows that the truth (green line) is contained within the stochastic spread computed with and without the data assimilation method. Moreover, the spread computed with the data assimilation method (blue spread) is narrower than that computed only with the SPDE (red spread). To reduce it further, one can vary the data assimilation step \(\varDelta t\). In particular, halving \(\varDelta t\) brings further reduction in both RB and EME (Fig. 6) and also reduces the uncertainty of the stochastic solution (Fig. 7) (the spread is narrower).

The same as in Fig. 4, but for the data assimilation step \(\varDelta t=2h\)

The same as in Fig. 5, but for the data assimilation step \(\varDelta t=2h\)

Further substantial improvements in the performance of the data assimilation methodology are obtained when the resolution of the signal grid gets higher (\(G_s=257\times 129\)). In particular, the results are much more accurate both at the observation points (weather stations) (Fig. 8a) and over the whole domain (Fig. 8b). Moreover, the spread of the sample reduces dramatically, as shown in Fig. 9.

The same as in Fig. 4, but for the signal grid \(G_s=257\times 129\)

The same as in Fig. 5, but for the signal grid \(G_s=257\times 129\)

Based on the above results, we conclude that both the reduction of the data assimilation step and the increase of the resolution of the signal grid can enhance the accuracy of the stochastic solution and reduce the spread of the stochastic ensemble. The effect of the increase of the resolution on both the accuracy and uncertainty appears to be much more pronounced compared to that of the reduction of the data assimilation step.

6.2 Nudging

We will now look at the performance of the data assimilation methodology that includes nudging (Algorithm 5) compared with the one that does not (Algorithm 4). As above, we start with the stochastic QG model at the coarsest resolution (\(G_s=129\times 65\)) and use \(M=16\) weather stations. We present the results in Figs. 10 and 11. The improvements are immediately apparent. The average of the stochastic spread becomes closer to the true solution compared with the same case without the nudging procedure (Fig. 10). However, in some cases, the true solution leaves the spread of the ensemble (Fig. 11). We do not have a clear explanation for this behaviour.

Evolution of the ensemble mean relative \(l_2\)-norm error (EME) and relative bias (RB) for a all weather stations and b the whole domain; \({\mathbf {u}}^a\) is the true solution, \({\mathbf {u}}^p\) is the stochastic solution. In order to assimilate data we use tempering, jittering, and nudging (Algorithm 5); the data is assimilated from \(M=16\) weather stations every 4 hours; the grid size is \(G_s=129\times 65\)

Shown are typical stochastic spreads of velocity \({\mathbf {u}}^p_1=(u^p_1,v^p_1)\) at weather stations located in the slow flow region (upper row) and fast flow region (lower row) for the SPDE without (red) and with (light blue) using tempering, jittering, and nudging (Algorithm 5); Algorithm 4 (blue) is given for ease of comparison. The green line is the true solution; the grid size is \(G_s=129\times 65\). The vertical axis represents the velocity (given in units \(\mathrm {m}\cdot \mathrm {s}^{-1}\)) at the weather station location (Color figure online)

Again, at the higher resolution (\(G_s=257\times 129\)), the nudged solution is even more accurate than its low-resolution version (Fig. 12), and the uncertainty is further reduced (Fig. 13).

The same as in Fig. 10, but for the signal grid \(G_s=257\times 129\)

The same as in Fig. 11, but for the signal grid \(G_s=257\times 129\)

It is important to note that the number of observation locations (weather stations) used in the simulations above is only 0.19% and 0.05% of all degrees of freedom for the grids \(G_s=129\times 65\) and \(G_s=257\times 129\), respectively. Obviously, this number of weather stations is not enough to significantly reduce the uncertainty and decrease the error between the true solution and its parameterisation. Therefore, in the next simulation we double the number of observation locations (\(M=32\)) and compare how Algorithm 5 (data assimilation with the nudging method) performs when more observational data is available.
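The percentages quoted above can be reproduced with the short Python check below. It assumes that "degrees of freedom" here means the number of nodes of the signal grid in one layer, since that convention reproduces the stated figures; this reading is our assumption.

```python
M = 16  # number of weather stations

signal_grids = {"129x65": (129, 65), "257x129": (257, 129)}

# Observed fraction in percent, counting one layer of grid nodes.
observed_percent = {name: 100.0 * M / (nx * ny)
                    for name, (nx, ny) in signal_grids.items()}

for name, pct in observed_percent.items():
    print(f"G_s = {name}: {pct:.2f}% of grid nodes observed")
```

Doubling the number of stations to \(M=32\) doubles these fractions, which still leaves well under one percent of the grid nodes observed.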

As can be seen in Figs. 14 and 15, adding more weather stations does not significantly influence the results for either the low-resolution (Fig. 14) or the higher-resolution (Fig. 15) simulation.

Evolution of the ensemble mean relative \(l_2\)-norm error (EME) and relative bias (RB) for a all weather stations and b the whole domain; \({\mathbf {u}}^a\) is the true solution, \({\mathbf {u}}^p\) is the stochastic solution. In order to assimilate data we use tempering, jittering, and nudging (Algorithm 5); the data is assimilated from \(M=32\) weather stations every 4 h; the grid size is \(G_s=129\times 65\). We note that the EME and RB are close for Algorithm 5 indicating reliability

The same as in Fig. 14, but for the signal grid \(G_s=257\times 129\)

When more observational data is available (32 weather stations), the uncertainty of the stochastic solution remains virtually the same compared with the case of using 16 weather stations for both \(G_s=129\times 65\) (Figs. 11, 16) and \(G_s=257\times 129\) (Fig. 17).

Shown are typical stochastic spreads of velocity \({\mathbf {u}}^p_1=(u^p_1,v^p_1)\) at the weather stations located in the slow flow region (upper row) and fast flow region (lower row) for the SPDE without (red) and with (blue) using tempering, jittering, and nudging (Algorithm 5). The green line is the true solution; the grid size is \(G_s=129\times 65\). The vertical axis represents the velocity (given in units \(\mathrm {m}\cdot \mathrm {s}^{-1}\)) at the weather station location

The same as in Fig. 16, but for the signal grid \(G_s=257\times 129\)

Rank histograms for velocity \({\mathbf {u}}^p_1=(u^p_1,v^p_1)\) at six different locations (not shown). Three observation points are located in the fast flow within red jets (first three upper rows in the plot), and the other three observation points are located in the slow flow between the jets (next three rows in the plot). Each histogram is based on 1000 forecast-observation pairs generated by solving the stochastic QG model without (a) and with (b) using data assimilation. For simulating the stochastic QG model we use \(N=100\) (ensemble size), \(K=32\) (number of \(\xi \)’s); for the data assimilation method we use Algorithm 5 (tempering, jittering, and nudging), the number of observation locations \(M=32\), the data assimilation step \(\varDelta t=4h\). Each ensemble member for the rank histogram is selected randomly from the ensemble of 100 members every 4 h. According to the definition in [5], the lead time here is 1

Again, we conclude that Algorithm 4 improves the accuracy and reduces the uncertainty of the stochastic spread. The three parameters that we studied (the data assimilation step, the size of the grid, and the number of weather stations) influence the performance of the data assimilation methodology. In particular, the smaller the data assimilation step is, the higher the accuracy becomes. We have found that the number of weather stations has a minor effect on the accuracy. This outcome is somewhat surprising. Normally, additional observations should result in improved forecast skill. It could be that the effective dimension is small. Alternatively, it could be due to model misspecification, or possibly that the coarse-grained information is in the jets.

If the resolution of the signal grid increases, so does the accuracy of the solution computed using the data assimilation methodology. Moreover, increasing the resolution of the signal grid \(G_s\) dramatically reduces the uncertainty. The same conclusions are true for Algorithm 5, which incorporates the nudging method. Moreover, the nudging procedure gives even more accurate solutions and further reduces the spread when compared with Algorithm 4.

We have also assessed the ensemble reliability on the grid \(G_s=257\times 129\) by analysing the rank histograms (see, e.g. [1]) computed by simulating the stochastic model (without using the data assimilation methodology) (Fig. 18a) and compared them with the rank histograms for the ensemble produced by Algorithm 5 (Fig. 18b). For this, we have used the methodology analyzed in [5]. The histograms are produced by randomly selecting 10 particles out of the total of 100 particles immediately after the DA step, running them forward for \(\varDelta t =4h\), and comparing the new observations with the particle locations perturbed by noise with the same amplitude as the measurement noise.

As the plots show, the stochastic ensemble, computed without the regular corrections made through the data assimilation method, has a bias (the rank histograms are not flat; see Fig. 18a) at many observation locations. As a result, the ensemble prediction is unreliable. The situation changes when one uses the data assimilation method based on tempering, jittering, and nudging (Algorithm 5); see Fig. 18b. All the rank histograms now show no sign of a pronounced bias and demonstrate a well-calibrated ensemble. Moreover, following [5], the chi-squared test can be applied as the ranks are independent, because the lead time is 1. For all but one of the weather stations analysed, the chi-squared test provides no evidence to reject the null hypothesis of forecast reliability at the \(5\%\) significance level. In addition, Table 1 shows the corresponding p-values for the velocity field forecast with/without data assimilation. It is clear from the table that the DA greatly improves the flatness of the histograms (with one exception); the p-values therefore offer additional quantitative evidence of the forecast reliability. In other words, we can assert that the application of the data assimilation methodology proposed in the paper corrects the bias introduced by the parameterisation and produces a reliable forecast.
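The construction of a rank histogram and of the chi-squared flatness statistic can be illustrated by the following Python sketch. It uses synthetic scalar data rather than the QG ensemble: the "reliable" ensemble is drawn from the same distribution as the observation and the "biased" one is shifted, both of which are hypothetical stand-ins. With 10 members there are 11 rank bins and hence 10 degrees of freedom, for which the \(5\%\) critical value of the chi-squared distribution is about 18.31.

```python
import random

def rank_of_observation(observation, ensemble):
    """Number of ensemble members strictly below the observation."""
    return sum(1 for x in ensemble if x < observation)

def rank_histogram(pairs, n_members):
    """Counts of observation ranks over all forecast-observation pairs."""
    counts = [0] * (n_members + 1)
    for observation, ensemble in pairs:
        counts[rank_of_observation(observation, ensemble)] += 1
    return counts

def chi_squared_statistic(counts):
    """Pearson statistic against a flat (uniform) rank histogram."""
    expected = sum(counts) / len(counts)
    return sum((c - expected) ** 2 / expected for c in counts)

def synthetic_pairs(n_pairs, n_members, bias, rng):
    """Observation ~ N(0,1); ensemble members ~ N(bias,1) (toy data)."""
    return [(rng.gauss(0.0, 1.0),
             [rng.gauss(bias, 1.0) for _ in range(n_members)])
            for _ in range(n_pairs)]

rng = random.Random(42)
N_PAIRS, N_MEMBERS = 1000, 10
CRITICAL_5_PERCENT_DF10 = 18.307  # chi-squared quantile, 10 degrees of freedom

reliable = rank_histogram(synthetic_pairs(N_PAIRS, N_MEMBERS, 0.0, rng), N_MEMBERS)
biased = rank_histogram(synthetic_pairs(N_PAIRS, N_MEMBERS, 1.0, rng), N_MEMBERS)

stat_reliable = chi_squared_statistic(reliable)
stat_biased = chi_squared_statistic(biased)
```

A statistic above the critical value rejects flatness at the \(5\%\) level. In the setting of the paper the ensemble members would additionally be perturbed by noise with the measurement-noise amplitude before ranking, a step omitted in this toy example.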

Finally, we have compared the true PV anomaly fields q with those computed using the data assimilation methodology based on tempering, jittering, and nudging (Algorithm 5); see Fig. 19.

The series of snapshots shows the true deterministic solution \(q^a_1\) and parameterised solution \({\bar{q}}^p_1\) (averaged over the stochastic ensemble of size \(N=100\)) computed with the data assimilation Algorithm 5. All the solutions were computed on the same coarse grid \(G^c=257\times 129\), have the same initial condition, and the parameterised solution uses 32 leading EOFs, and 32 weather stations. All the fields are given in units of \([s^{-1}f^{-1}_0]\), where \(f_0=0.83\times 10^{-4}\, \mathrm{s^{-1}}\) is the Coriolis parameter

As Fig. 19 shows, the parameterised solution \({\bar{q}}^p_1\) using the data assimilation methodology based on tempering, jittering, and nudging (Algorithm 5) gives an accurate forecast of the true state \(q^a_1\) even with a very small number of weather stations.

7 Conclusion and Future Work

In this paper, we have reported on our recent progress in developing an ensemble based data assimilation methodology for high dimensional fluid dynamics models. Our methodology involves a particle filter which combines model reduction, tempering, jittering, and nudging. The methodology has been tested on the two-layer quasi-geostrophic model with \(O(10^6)\) degrees of freedom. Only a minute fraction of these are noisily observed (16 and 32 weather stations). The model is reduced by following the stochastic variational approach for geophysical fluid dynamics introduced in [12]. We have also introduced a stochastic time-stepping scheme for the quasi-geostrophic model and have proved its consistency in time. In addition, we have analyzed the effect of different procedures (tempering, jittering, and nudging) on the accuracy and uncertainty of the stochastic spread. Our main findings are as follows:

- The tempering and jittering procedure (Algorithm 4) improves the accuracy by reducing the uncertainty of the stochastic spread;

- The nudging procedure (Algorithm 5) brings major improvements to the combination of tempering and jittering (Algorithm 4), both in terms of the relative bias (RB) and the ensemble mean relative \(l_2\)-norm error (EME);

- The number of weather stations has a minor effect on the RB and EME;

- The size of the data assimilation step has a substantial effect; namely, the smaller the data assimilation step, the higher the accuracy;

- Increasing the resolution of the signal grid significantly improves the accuracy and reduces the uncertainty of the stochastic spread;

- The proposed data assimilation methodology corrects the bias introduced by the parameterisation and produces a reliable forecast.

We regard the data assimilation method based on tempering and jittering combined with the nudging method proposed here as a potentially valuable addition to data assimilation methodologies. We also expect it to be useful in developing data assimilation methodologies for larger, more comprehensive ocean models. The combination of the four components presented here (model reduction, tempering, jittering and nudging) can be enhanced by further improvements including localization, space-time data assimilation, etc. Applications of these combined components will form the subject of subsequent work.

Notes

However, see [18] for a recent application of particle filters within an operational framework.

We used a stand-alone workstation with 128GB of RAM and 2x6-core Intel Xeon E5-2643v4 3.4GHz processors.

If the domain D is not simply connected, then variational derivatives such as \(\delta H/\delta q_i\) must be interpreted with care, because in that case the boundary conditions on \(\psi _i\) will come into play [16].

\((Z_t-{\widehat{Z}}_t)^{T}\) is the transpose of \((Z_t-{\widehat{Z}}_t)\)

References

Anderson, J.: A method for producing and evaluating probabilistic forecasts from ensemble model integrations. J. Clim. 9, 1518–1530 (1996)

Arakawa, A.: Computational design for long-term numerical integration of the equations of fluid motion: two-dimensional incompressible flow. Part I. J. Comput. Phys. 1, 119–143 (1966)

Berloff, P., Kamenkovich, I.: On spectral analysis of mesoscale eddies. Part I: linear analysis. J. Phys. Oceanogr. 43, 2505–2527 (2013)

Beskos, A., Crisan, D., Jasra, A.: On the stability of sequential Monte Carlo methods in high dimensions. Ann. Appl. Prob. 24, 1396–1445 (2014)

Bröcker, J.: Assessing the reliability of ensemble forecasting systems under serial dependence. Q. J. R. Meteorol. Soc. 144, 2666–2675 (2018)

Cotter, C., Crisan, D., Holm, D., Pan, W., Shevchenko, I.: Modelling uncertainty using circulation-preserving stochastic transport noise in a 2-layer quasi-geostrophic model. arXiv:1802.05711 [physics.flu-dyn] (2018)

Cotter, C., Crisan, D., Holm, D., Pan, W., Shevchenko, I.: A Particle Filter for Stochastic Advection by Lie Transport (SALT): A case study for the damped and forced incompressible 2D Euler equation. arXiv:1907.11884 [stat.AP] (2019)

Cotter, C., Crisan, D., Holm, D., Pan, W., Shevchenko, I.: Numerically modelling stochastic Lie transport in fluid dynamics. Multiscale Model. Simul. 17, 192–232 (2019)

Crisan, D., Doucet, A.: A survey of convergence results on particle filtering methods for practitioners. IEEE Trans. Signal Process. 50, 736–746 (2002)

Doucet, A., Freitas, N., Gordon, N.: Sequential Monte Carlo Methods in Practice. Springer, New York (2001)

Farhat, A., Panetta, R.L., Titi, E.S., Ziane, M.: Long-time behavior of a two-layer model of baroclinic quasi-geostrophic turbulence. J. Math. Phys. 53, 1–24 (2012)

Holm, D.: Variational principles for stochastic fluids. Proc. R. Soc. A 471, 20140963 (2015)

Hundsdorfer, W., Koren, B., van Loon, M., Verwer, J.: A positive finite-difference advection scheme. J. Comput. Phys. 117, 35–46 (1995)

Karabasov, S., Berloff, P., Goloviznin, V.: CABARET in the ocean gyres. Ocean Model. 2–3, 155–168 (2009)

Kloeden, P., Platen, E.: Numerical Solution of Stochastic Differential Equations. Springer-Verlag, Berlin Heidelberg (1999)

McWilliams, J.: A note on a consistent quasigeostrophic model in a multiply connected domain. Dyn. Atmos. Oceans 5, 427–441 (1977)

Pedlosky, J.: Geophysical Fluid Dynamics. Springer, New York (1987)

Potthast, R., Walter, A., Rhodin, A.: A localized adaptive particle filter within an operational NWP framework. Mon. Weather Rev. 147, 345–362 (2019)

Shevchenko, I., Berloff, P.: Multi-layer quasi-geostrophic ocean dynamics in eddy-resolving regimes. Ocean Model. 94, 1–14 (2015)

Shevchenko, I., Berloff, P.: Eddy backscatter and counter-rotating gyre anomalies of midlatitude ocean dynamics. Fluids 1, 1–16 (2016)

Shu, C., Osher, S.: Efficient implementation of essentially non-oscillatory shock-capturing schemes. J. Comput. Phys. 77, 439–471 (1988)

Vallis, G.: Atmospheric and Oceanic Fluid Dynamics: Fundamentals and Large-Scale Circulation. Cambridge University Press, Cambridge (2006)

van Leeuwen, P., Künsch, H., Nerger, L., Potthast, R., Reich, S.: Particle filters for high-dimensional geoscience applications: a review. Q. J. R. Meteorol. Soc. 145, 1–31 (2019)

Woodward, P., Colella, P.: The numerical simulation of two-dimensional fluid flow with strong shocks. J. Comput. Phys. 54, 115–173 (1984)

Acknowledgements

The authors thank the Engineering and Physical Sciences Research Council for supporting this work through grant EP/N023781/1. The work of the second author has been partially supported by a UC3M-Santander Chair of Excellence grant held at the Universidad Carlos III de Madrid.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Communicated by Valerio Lucarini.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix: The Proof of Theorem 1

Proof

Integration of (30) with respect to time over the interval [s, t] gives

where \(t:=s+\varDelta t\).

Substitution of (29) and (41) into (31) leads to

By combining the terms in (42), we get

where

with

Applying the triangle and Young’s inequalities to (43) we arrive at

Using A2, the Cauchy–Schwarz inequality and A1, we estimate the first term as

Estimation of the second term is given by

To estimate the term C in (43), we use the triangle inequality to get

and then separately estimate each term on the right hand side.

Applying the Cauchy–Schwarz inequality and A4 to \(C_1\), we get the following estimation

The term \(C_2\) is estimated as

Using A3 for \(C_3\) leads to

Finally, we arrive at the following estimation

which proves the theorem. \(\square \)
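
For reference, the inequalities invoked in the proof can be written in the following generic form. This is a sketch in illustrative notation only: the Hilbert space \(H\) with inner product \(\langle \cdot ,\cdot \rangle \) and norm \(\Vert \cdot \Vert \), the elements \(a,b,A,B,C\in H\), the integrand \(f\) and the parameter \(\varepsilon \) are assumptions made here and need not match the norms and constants used in the estimates above.

\[
\begin{aligned}
\Vert a+b\Vert &\le \Vert a\Vert +\Vert b\Vert && \text{(triangle)},\\
2\,\Vert a\Vert \,\Vert b\Vert &\le \varepsilon \Vert a\Vert ^2+\varepsilon ^{-1}\Vert b\Vert ^2,\quad \varepsilon >0 && \text{(Young)},\\
|\langle a,b\rangle | &\le \Vert a\Vert \,\Vert b\Vert && \text{(Cauchy–Schwarz)}.
\end{aligned}
\]

Combining the first two with \(\varepsilon =1\) gives \(\Vert A+B+C\Vert ^2\le 3\left( \Vert A\Vert ^2+\Vert B\Vert ^2+\Vert C\Vert ^2\right) \), which is the usual way a sum of three terms is split into separately estimated squares. Likewise, the Cauchy–Schwarz inequality applied in time over \([s,t]\) with \(t=s+\varDelta t\) yields \(\left\Vert \int _s^t f(r)\,dr\right\Vert ^2\le \varDelta t\int _s^t\Vert f(r)\Vert ^2\,dr\), which is how a power of \(\varDelta t\) typically enters a bound on a deterministic time integral.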

Remark 4

For sufficiently large p and for all \(T>0\), conditions A1–A5 are satisfied and the SPDE (30) is well-posed if the stochastic QG equation (24) has a solution in \(W^{2p,2}\) such that \({\mathbb {E}}\left[ \sup \limits _{t\in [0,T]}||q_i||^2_{W^{2p,2}}\right] <\infty \), \(i=1,2\).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cotter, C., Crisan, D., Holm, D. et al. Data Assimilation for a Quasi-Geostrophic Model with Circulation-Preserving Stochastic Transport Noise. J Stat Phys 179, 1186–1221 (2020). https://doi.org/10.1007/s10955-020-02524-0

DOI: https://doi.org/10.1007/s10955-020-02524-0