Abstract

We construct martingale observables for systems of multiple SLE curves by applying screening techniques within the CFT framework recently developed by Kang and Makarov, extended to admit multiple SLEs. We illustrate this approach by rigorously establishing explicit expressions for the Green’s function and Schramm’s formula in the case of two curves growing towards infinity. In the special case when the curves are “fused” and start at the same point, some of the formulas we prove were predicted in the physics literature.


1 Introduction

Schramm–Loewner evolution (SLE) processes are universal scaling limits, as the lattice spacing tends to zero, of interfaces in critical planar lattice models. SLE with parameter \(\kappa > 0\) is a random continuous curve constructed using Loewner’s differential equation driven by Brownian motion with speed \(\kappa \). Solving the Loewner equation gives a continuous family of conformal maps, and the SLE curve is obtained by tracking the image of the singularity of the equation. Various geometric observables are useful and important in SLE theory. To name a few examples: the SLE Green’s function, i.e., the renormalized probability that the interface passes near a given point, is important in connection with the Minkowski content parametrization [28]; Smirnov proved Cardy’s formula for the probability of a crossing event in critical percolation, which then entails conformal invariance [37]; left or right passage probabilities, known as Schramm formulae [36], were recently used in connection with finite Loewner energy curves [39]; and observables involving derivative moments of the SLE conformal maps are important in the study of fractal and regularity properties, see, e.g., [10, 29]. By the Markov property of SLE, such observables give rise to martingales with respect to the natural SLE filtration. Conversely, it is sometimes possible to construct martingales carrying specific geometric information about the SLE.

Assuming sufficient regularity, SLE martingale observables satisfy differential equations which can be derived using Itô calculus. If a solution of these differential equations with the correct boundary values can be found, it is sometimes possible to apply a probabilistic argument based on the solution’s boundary behavior to show that the solution actually represents the desired quantity. In the simplest case, the differential equation is an ODE. Generically, however, in the case of multipoint correlations, it is a semi-elliptic PDE in several variables, and it is difficult to construct solutions with the desired boundary data. (But see [10, 15, 24].) It therefore seems worthwhile to seek new ways of constructing explicit solutions and new methods for extracting information from them.
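For a single chordal \(\hbox {SLE}_\kappa \), the Itô computation behind this takes only a few lines. The sketch below assumes the normalization of Sect. 3.1, \(\partial _t g_t(z) = a/(g_t(z) - \xi _t)\) with \(a = 2/\kappa \) and \(\xi _t = B_t\) a standard Brownian motion. It writes \(Z_t = g_t(z) - \xi _t = X_t + iY_t\). A smooth function F gives a local martingale \(F(X_t,Y_t)\) exactly when the drift in Itô's formula vanishes:

\[
\begin{aligned}
dX_t &= \frac{aX_t}{X_t^2+Y_t^2}\,dt - dB_t, \qquad dY_t = -\frac{aY_t}{X_t^2+Y_t^2}\,dt,\\
0 &= \frac{1}{2}\,\partial _x^2 F + \frac{a}{x^2+y^2}\,\bigl (x\,\partial _x F - y\,\partial _y F\bigr ).
\end{aligned}
\]

Looking for a scale-invariant solution \(F(x,y) = h(x/y)\) reduces this to an ODE in \(t = x/y\). Its solution with \(h(-\infty ) = 0\) and \(h(+\infty ) = 1\) is Schramm's formula [36] for the probability that the curve passes to the left of z:

\[
\frac{1}{2}\,h''(t) + \frac{2a\,t}{1+t^2}\,h'(t) = 0, \qquad
h(t) = \frac{1}{2} + \frac{\Gamma (4/\kappa )}{\sqrt{\pi }\,\Gamma \bigl (\frac{8-\kappa }{2\kappa }\bigr )}\int _0^t (1+s^2)^{-4/\kappa }\,ds .
\]

With several curves, the same computation produces a PDE in more variables, and this is where explicit solutions become hard to find.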

It was observed early on that the differential equations that arise in this way in SLE theory also arise in conformal field theory (CFT) as so-called level two degeneracy equations satisfied by certain correlation functions, see [6, 7, 9, 11, 16]. As a consequence, a clear probabilistic and geometric interpretation of the degeneracy equations is obtained via SLE theory. On the other hand, CFT is a source of ideas and methods for how to systematically construct solutions of these equations, cf. [7, 8, 22]. Thus CFT provides a natural setting for the construction of martingale observables for SLE processes.

In [22], a rigorous Coulomb gas framework was developed in which CFTs are modeled using families of fields built from the Gaussian free field (GFF). SLE martingales for any \(\kappa \) can then be represented as GFF correlation functions involving special fields inserted in the bulk or on the boundary. By making additional, carefully chosen, field insertions, the scaling behavior at the insertion points can in some cases be prescribed. In this way many chordal SLE martingale observables were recovered in [22] as CFT correlation functions.

Multiple SLE arises, e.g., when considering scaling limits of models with alternating boundary conditions that force the existence of several interacting interfaces. See [14] for several examples and results closely related to those of the present paper. Many single-path observables generalize to this setting, but when several paths are considered, additional boundary points need to be marked, which increases the dimensionality of the problem. One purpose of this paper is to suggest and explore a method for the explicit construction of at least some martingale observables for multiple SLE, starting from single-path observables and exploiting ideas from CFT. Boundary insertions are easier to handle than insertions in the bulk, so multiple SLE provides a natural first arena in which to consider the extension of one-point functions to multipoint correlations.

The method involves three steps:

(1) The first step uses screening techniques and ideas from CFT to generate a non-rigorous prediction for the observable [4, 13, 22] (see also [12, 24]). The prediction is expressed in terms of a contour integral with an explicit integrand. We refer to these integrals as Dotsenko-Fateev integrals (after [13]) or sometimes simply as screening integrals. The main difficulty is to choose the appropriate integration contour, but this choice can be simplified by considering appropriate limits.

(2) The second step is to prove that the prediction from Step 1 satisfies the correct boundary conditions. This technical step involves the computation of rather complicated integral asymptotics. In a separate paper [32], we present an approach for computing such asymptotics and carry out the estimates required for this paper.

(3) The last step is to use probabilistic methods together with the estimates of Step 2 to rigorously establish that the prediction from Step 1 gives the correct quantity.

Remark

Step 1 can be viewed as a way to “add” a commuting SLE curve to a known observable by first inserting an appropriate marked boundary point and then employing screening to readjust the boundary conditions.

Remark

We stress that we do not need to use a priori information on the regularity of the observables under consideration. Such information would be needed, e.g., if one worked directly with the differential equations.

1.1 Two Examples

We illustrate the method by presenting two examples in detail. Both examples involve a system of two SLEs aiming for infinity with one marked point in the interior, but we will indicate how the arguments may be generalized to more complicated configurations.

The first example concerns the probability that the system of SLEs passes to the right of a given interior point; that is, the analogue of Schramm’s formula [36]. This probability obviously depends only on the behavior of the leftmost curve. (So it is really an \(\hbox {SLE}_{\kappa }(2)\) quantity.) The main difficulty in this case lies in implementing Steps 1 and 2; the latter step is discussed in detail in [32].
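For comparison, the classical single-curve quantity that Theorem 2.1 generalizes can be evaluated numerically. The sketch below is not the paper's formula (2.6). It computes Schramm's formula [36] for chordal \(\hbox {SLE}_\kappa \) from 0 to \(\infty \) with no force point, i.e., the probability that the curve passes to the left of \(z = x + iy\). It uses the integral representation \(h(x/y) = 1/2 + C_\kappa \int _0^{x/y} (1+s^2)^{-4/\kappa }\,ds\) with \(C_\kappa = \Gamma (4/\kappa )/(\sqrt{\pi }\,\Gamma (4/\kappa - 1/2))\). The function name and the Simpson-rule quadrature are illustrative choices.

```python
import math


def schramm_left_probability(x, y, kappa, n=2000):
    """Probability that chordal SLE_kappa in H from 0 to infinity passes to the
    left of z = x + iy (single curve, no force point), for 0 < kappa < 8.

    Evaluates h(t) = 1/2 + C * int_0^t (1 + s^2)^(-4/kappa) ds at t = x/y, with
    C = Gamma(4/kappa) / (sqrt(pi) * Gamma(4/kappa - 1/2)), by composite
    Simpson's rule with n (even) subintervals.
    """
    if not 0 < kappa < 8 or y <= 0:
        raise ValueError("need 0 < kappa < 8 and y > 0")
    b = 4.0 / kappa
    t = x / y
    c = math.gamma(b) / (math.sqrt(math.pi) * math.gamma(b - 0.5))
    step = t / n  # negative when x < 0, which gives the signed integral
    total = 1.0 + (1.0 + t * t) ** (-b)  # endpoint values at s = 0 and s = t
    for k in range(1, n):
        s = k * step
        total += (4 if k % 2 else 2) * (1.0 + s * s) ** (-b)
    return 0.5 + c * total * step / 3.0
```

For \(\kappa = 4\) the integrand is \(1/(1+s^2)\), and the function reduces to \(1 - \arg z/\pi \). The paper's \(P(z,\xi )\) concerns the complementary event (z to the left of the curve) and includes the force point at \(\xi \).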

The second example concerns the limiting renormalized probability that the system of SLEs passes near a given point, that is, the Green’s function. We first check that this Green’s function actually exists as a limit. The main step is to verify existence in the case when only one of the two curves grows. We complete this step by establishing the existence of the \(\hbox {SLE}_\kappa (\rho )\) Green’s function when the force point lies on the boundary and \(\rho \) belongs to a certain interval. The proof gives a representation formula in terms of an expectation for two-sided radial SLE stopped at its target point; the formula is similar to that obtained in the main result of [2]. In Step 1, we make a prediction for the observable by choosing an appropriate linear combination of the screening integrals such that the leading order terms in the asymptotics cancel (thereby matching the asymptotics we expect). In Step 2, which is detailed in [32], we carefully analyze the candidate solution and estimate its boundary behavior. Lastly, given these estimates, we show that the candidate observable enjoys the same probabilistic representation as the Green’s function defined as a limit—so they must agree.

For both examples, the asymptotic analysis of the screening integrals in Step 2 is quite involved. The integrals are natural generalizations of hypergeometric functions and belong to a class sometimes referred to as Dotsenko-Fateev integrals. Even though Dotsenko-Fateev integrals and other generalized hypergeometric functions have been considered before in related contexts (see, e.g., [13, 15, 20, 23, 24]), we have not been able to find the required analytic estimates in the literature. We discuss these issues in a separate paper [32] which also includes details of the precise estimates needed here.

1.2 Fusion

By letting the seed points of the SLEs collapse, we obtain rigorous proofs of fused formulas as corollaries. One can verify by direct computation that the limiting one-point observables satisfy specific third-order ODEs which can be alternatively obtained from the non-rigorous fusion rules of CFT, cf. [12]. In fact, in the case of the Schramm probability, the formulas we prove here were predicted using fusion in [18]. The formulas we derive for the fused Green’s functions appear to be new.

The interpretation of fusion in SLE theory as the successive merging of seeds was described in [16]. In [16], the difficult fact that fused one-point observables actually do satisfy higher order ODEs was also established. The ODEs for the Schramm formula for several fused paths were derived rigorously in [16] and the two-path formula in the special case \(\kappa =8/3\) (also allowing for two interior points) was established in [5]. We do not need to use any of these results in this paper.

Regarding the solution of the equation corresponding to Schramm’s formula for two SLE curves started from two distinct points \(x_1,x_2 \in {\mathbb {R}}\), it was noted in [18] that “explicit analytic progress is only feasible in the limit when \(\delta = x_2 - x_1 \rightarrow 0\)”, that is, in the fusion limit. It is only by applying the screening techniques mentioned above that we are able, in this paper, to obtain explicit expressions for Schramm’s formula in the case of two distinct points \(x_1 \ne x_2\).

1.3 Outline of the Paper

The main results of the paper are stated in Sect. 2, while we review some aspects of \(\hbox {SLE}_\kappa \) and \(\hbox {SLE}_\kappa (\rho )\) processes in Sect. 3.

In Sect. 4, we review and use ideas from CFT to generate predictions for Schramm’s formula and Green’s function for multiple SLEs with two curves growing toward infinity in terms of screening integrals.

In Sect. 5, we prove rigorously that the predicted Schramm’s formula indeed gives the probability that a given point lies to the left of both curves. The proof relies on a number of technical asymptotic estimates; proofs of these estimates are given in [32].

In Sect. 6, we give a rigorous proof that the predicted Green’s function equals the renormalized probability that the system passes near a given point. The proof relies both on pure SLE estimates (established in Sects. 6–7) and on asymptotic estimates for contour integrals (established in [32]).

In Sect. 7, we prove a lemma which expresses the fact that it is very unlikely that both curves in a commuting system get near a given point.

In Sect. 8, we consider the special case of two fused curves, i.e., the case when both curves in the commuting system start at the same point. In the case of Schramm’s formula, this provides rigorous proofs of some predictions for Schramm’s formula due to Gamsa and Cardy [18].

In the appendix, we consider the Green’s function when \(8/\kappa \) is an integer and derive explicit formulas in terms of elementary functions in a few cases.

2 Main Results

Before stating the main results, we briefly review some relevant definitions.

Consider first a system of two SLE paths \(\{\gamma _j\}_1^2\) in the upper half-plane \(\mathbb {H} := \{{{\mathrm{Im}}\,}z > 0\}\) growing toward infinity. Let \(0 < \kappa \leqslant 4\). Let \((\xi ^1, \xi ^2) \in {\mathbb {R}}^2\) with \(\xi ^1 \ne \xi ^2\). The Loewner equation corresponding to two growing curves is

where \(\xi _t^1\) and \(\xi _t^2\), \(t \geqslant 0\), are the driving terms for the two curves and the growth speeds \(\lambda _j \) satisfy \(\lambda _j \geqslant 0\). The solution of (2.1) is a family of conformal maps \((g_t(z))_{t \geqslant 0}\) called the Loewner chain of \((\xi _t^1, \xi _t^2)_{t \geqslant 0}\). The multiple SLE system started from \((\xi ^1,\xi ^2)\) is obtained by taking \(\xi _t^1\) and \(\xi _t^2\) as solutions of the system of SDEs

where \(B_t^{1}\) and \(B_t^2\) are independent standard Brownian motions with respect to some measure \(\mathbf {P}= \mathbf {P}_{\xi ^1, \xi ^2}\). The paths are defined by

For \(j = 1,2\), \(\gamma _{j,\infty }\) is a continuous curve from \(\xi ^j\) to \(\infty \) in \(\mathbb {H}\). It can be shown that the system (2.1) is commuting in the sense that the order in which the two curves are grown does not matter [15]. Since our theorems only concern the distribution of the fully grown curves \(\gamma _{1,\infty }\) and \(\gamma _{2,\infty }\), the choice of growth speeds is irrelevant. When growing one single curve we will often choose the growth rate to equal \(a:=2/\kappa \).
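For a single curve grown at rate a, a standard way to visualize the Loewner chain is to freeze the driving term on short time intervals, where the equation can be solved exactly. The sketch assumes the normalization \(\partial _t g_t(z) = a/(g_t(z) - \xi _t)\), \(g_0(z) = z\), with \(a = 2/\kappa \) and \(\xi _t\) a standard Brownian motion, so that the chain is \(\hbox {SLE}_\kappa \). Freezing \(\xi \) on an interval of length \(\Delta t\) gives the slit map \(\phi (z) = \xi + \sqrt{(z-\xi )^2 + 2a\,\Delta t}\), and the tip is recovered by composing the inverse maps. This crude scheme is not a method used in the paper, and the step size and function names are illustrative.

```python
import cmath
import math
import random


def slit_inverse(w, xi, dt, a):
    """Inverse of phi(z) = xi + sqrt((z - xi)^2 + 2*a*dt), the exact flow of
    d/dt g = a / (g - xi) over time dt with the driving value frozen at xi.
    The square-root branch is chosen so that the result lies in the closed
    upper half-plane (on the same side of xi as w if the result is real)."""
    s = cmath.sqrt((w - xi) * (w - xi) - 2 * a * dt)
    if s.imag < 0 or (s.imag == 0 and s.real * (w - xi).real < 0):
        s = -s
    return xi + s


def loewner_trace(driving, dt, a):
    """Approximate tips gamma(t_1), ..., gamma(t_n), t_k = k*dt, for a driving
    function that equals driving[k] on [k*dt, (k+1)*dt)."""
    trace = []
    for n in range(1, len(driving) + 1):
        w = complex(driving[n - 1])       # the current tip is mapped to xi
        for k in range(n - 1, -1, -1):    # undo the slit maps, newest first
            w = slit_inverse(w, driving[k], dt, a)
        trace.append(w)
    return trace


def sle_trace(kappa, n_steps, dt, seed=0):
    """Crude SLE_kappa sample: Brownian driving term and a = 2/kappa."""
    rng = random.Random(seed)
    xi, driving = 0.0, []
    for _ in range(n_steps):
        driving.append(xi)
        xi += math.sqrt(dt) * rng.gauss(0.0, 1.0)
    return loewner_trace(driving, dt, 2.0 / kappa)
```

With a driving term identically 0, the trace is the vertical segment from 0 to \(i\sqrt{2at}\), which gives a simple check. Each tip costs O(n) map evaluations, so a trace of n points costs O(n²) evaluations. This is adequate for small pictures but too slow and too crude for quantitative work.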

Let us also recall the definition of (chordal) \(\hbox {SLE}_\kappa (\rho )\) for a single path \(\gamma _1\) in \(\mathbb {H}\) growing toward infinity. Let \(0< \kappa < 8\), \(\rho \in {\mathbb {R}}\), and let \((\xi ^1, \xi ^2)\in {\mathbb {R}}^2\) with \(\xi ^1 \ne \xi ^2\). Let \(W_t\) be a standard Brownian motion with respect to some measure \(\mathbf {P}^{\rho }\). Then \(\hbox {SLE}_\kappa (\rho )\) started from \((\xi ^1,\xi ^2)\) is defined by the equations

Depending on the choice of parameters, a solution may not exist for some range of t. When referring to \(\hbox {SLE}_\kappa (\rho )\) started from \((\xi ^1,\xi ^2)\), we always assume that the curve starts from the first point of the tuple \((\xi ^1, \xi ^2)\) while the second point (in this case \(\xi ^2\)) is the force point. The \(\hbox {SLE}_\kappa (\rho )\) path \(\gamma _1\) is defined as in (2.3), assuming existence of the limit. In general, \(\hbox {SLE}_\kappa (\rho )\) need not be generated by a curve, but it is in all the cases considered in this paper.

The marginal law of either of the SLEs in a commuting system is that of an \(\hbox {SLE}_\kappa (\rho ), \, \rho =2,\) with the force point at the seed of the other curve. A similar statement also holds for stopped portions of the curve(s), see [15].
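For the marginal \(\hbox {SLE}_\kappa (2)\) just described, a short computation shows why the driving term never meets the force point (the seed of the other curve). The sketch assumes that the defining equations of \(\hbox {SLE}_\kappa (\rho )\) take the usual form in the a-normalization of Sect. 3.1. Under that assumption, the gap \(X_t = \xi _t^2 - \xi _t^1\) is a Bessel process:

\[
d\xi _t^1 = \frac{\rho a/2}{\xi _t^1-\xi _t^2}\,dt + dW_t, \qquad
d\xi _t^2 = \frac{a}{\xi _t^2-\xi _t^1}\,dt
\quad \Longrightarrow \quad
dX_t = \frac{a(1+\rho /2)}{X_t}\,dt - dW_t .
\]

Matching with the Bessel SDE \(dX_t = \frac{\delta -1}{2X_t}\,dt + d\tilde{W}_t\), where \(\tilde{W} = -W\), gives the dimension

\[
\delta = 1 + 2a + a\rho = 1 + \frac{2(\rho +2)}{\kappa }.
\]

For \(\rho = 2\) this gives \(\delta = 1 + 8/\kappa \geqslant 3\) when \(\kappa \leqslant 4\). A Bessel process of dimension \(\delta \geqslant 2\) started away from 0 never hits 0.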

2.1 Schramm’s Formula

Our first result provides an explicit expression for the probability that an \(\hbox {SLE}_\kappa (2)\) path passes to the right of a given point. (See below for a precise definition of this event.) The probability is expressed in terms of the function \(\mathcal {M}(z, \xi )\) defined for \(z = x + iy \in \mathbb {H}\) and \(\xi >0\) by

where \(\alpha = 8/\kappa > 1\) and the integration contour from \(\bar{z}\) to z passes to the right of \(\xi \), see Fig. 1. (Unless otherwise stated, we always consider complex powers defined using the principal branch of the complex logarithm.)

Fig. 1 The integration contour used in the definition (2.5) of \(\mathcal {M}(z, \xi )\) is a path from \(\bar{z}\) to z which passes to the right of \(\xi \).

Theorem 2.1

(Schramm’s formula for \(\hbox {SLE}_\kappa (2)\)) Let \(0 < \kappa \leqslant 4\). Let \(\xi > 0\) and consider chordal \(\hbox {SLE}_\kappa (2)\) started from \((0,\xi )\). Then the probability \(P(z, \xi )\) that a given point \(z = x + iy \in \mathbb {H}\) lies to the left of the curve is given by

where the normalization constant \(c_\alpha \in {\mathbb {R}}\) is defined by

The proof of Theorem 2.1 will be given in Sect. 5. The formula (2.6) for \(P(z,\xi )\) is motivated by the CFT and screening considerations of Sect. 4.

A point \(z \in \mathbb {H}\) lies to the left of both curves in a commuting system iff it lies to the left of the leftmost curve. Since each of the two curves of a commuting process has the distribution of an \(\hbox {SLE}_\kappa (2)\) (see Sect. 3.1.2), Theorem 2.1 can be interpreted as the following result for multiple SLE.

Corollary 2.2

(Schramm’s formula for multiple SLE) Let \(0 < \kappa \leqslant 4\). Let \(\xi > 0\) and consider a multiple \(\hbox {SLE}_\kappa \) system in \(\mathbb {H}\) started from \((0,\xi )\) and growing toward infinity. Then the probability \(P(z, \xi )\) that a given point \(z = x + iy \in \mathbb {H}\) lies to the left of both curves is given by (2.6).

Corollary 2.2 together with translation invariance immediately yields an expression for the probability that a point z lies to the left of a system of two SLEs started from two arbitrary points \((\xi ^1, \xi ^2)\) in \({\mathbb {R}}\). The probabilities that z lies to the right of or between the two curves then follow by symmetry. For completeness, we formulate this as another corollary.
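The reduction can be made explicit under two assumptions: the law of the system is invariant under real translations, and it is invariant under the reflection \(w \mapsto -\bar{w}\). The reflection maps the seeds \((\xi ^1,\xi ^2)\) to \((-\xi ^2,-\xi ^1)\) and exchanges “left of both curves” with “right of both curves”. A sketch:

\[
\begin{aligned}
P_{left}(z,\xi ^1,\xi ^2) &= P(z-\xi ^1,\ \xi ^2-\xi ^1),\\
P_{right}(z,\xi ^1,\xi ^2) &= P_{left}(-\bar{z},\,-\xi ^2,\,-\xi ^1) = P(\xi ^2-\bar{z},\ \xi ^2-\xi ^1),\\
P_{middle}(z,\xi ^1,\xi ^2) &= 1 - P_{left}(z,\xi ^1,\xi ^2) - P_{right}(z,\xi ^1,\xi ^2).
\end{aligned}
\]

The last line uses that, almost surely, z lies on neither curve. The three events therefore partition the probability space.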

Corollary 2.3

Let \(0 < \kappa \leqslant 4\). Suppose \(-\infty< \xi ^1< \xi ^2 < \infty \) and consider a multiple \(\hbox {SLE}_\kappa \) system in \(\mathbb {H}\) started from \((\xi ^1, \xi ^2)\) and growing toward infinity. Let \(P(z, \xi )\) denote the function in (2.6). Then the probability \(P_{left}(z, \xi ^1, \xi ^2)\) that a given point \(z = x + iy \in \mathbb {H}\) lies to the left of both curves is given by

the probability \(P_{right}(z, \xi ^1, \xi ^2)\) that a point \(z \in \mathbb {H}\) lies to the right of both curves is

and the probability \(P_{middle}(z, \xi ^1, \xi ^2)\) that z lies between the two curves is given by

By letting \(\xi \rightarrow 0+\) in (2.6), we obtain proofs of formulas for “fused” paths. See Sect. 8 for a derivation of the following corollary.

Corollary 2.4

Let \(0 < \kappa \leqslant 4 \) and define \(P_{fusion}(z) = \lim _{\xi \downarrow 0} P(z,\xi )\), where \(P(z,\xi )\) is as in (2.6). Then

where the real-valued function S(t) is defined by

Corollary 2.4 provides a proof of the predictions of [18], where the formula (2.8) was derived by solving a third-order ODE obtained from so-called fusion rules. (We prove the result for \(\kappa \leqslant 4\), but the formulas match those from [18] in general.) Even given the explicit predictions of [18], it is not clear how to verify them rigorously without additional non-trivial information. Indeed, as soon as the evolution starts, the tips of the curves are separated and the system leaves the fused state. However, [16] provides a different rigorous approach, exploiting the hypoellipticity of the PDEs to show that the fused observables satisfy the higher-order ODEs. In the special case \(\kappa =8/3\), the formula for \(P_{fusion}(z)\) was proved in [5] using Cardy and Simmons’ prediction [38] for a two-point Schramm formula.

2.2 The Green’s Function

Our second main result provides an explicit expression for the Green’s function for \(\hbox {SLE}_\kappa (2)\).

Let \(\alpha = 8/\kappa \). Define the function \(I(z,\xi ^1, \xi ^2)\) for \(z \in \mathbb {H}\) and \(-\infty< \xi ^1< \xi ^2 < \infty \) by

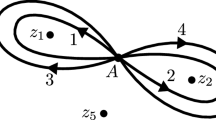

where \(A = (z + \xi ^2)/2\) is a base point and the Pochhammer integration contour is displayed in Fig. 2. More precisely, the integration contour begins at the base point A and encircles the point z once in the positive (counterclockwise) sense before returning to A. It then encircles \(\xi ^2\) once in the positive sense and returns to A. Finally, it encircles z and \(\xi ^2\) once each in the negative sense, in that order, and returns to A. The points \(\bar{z}\) and \(\xi ^1\) are exterior to all loops. The factors in the integrand take their principal values at the starting point and are then analytically continued along the contour. Although the integrand involves (multiple-valued) non-integer powers, the Pochhammer contour ensures that it is analytic everywhere on the contour.

Fig. 2 The Pochhammer integration contour in (2.9) is the composition of four loops based at the point \(A = (z + \xi ^2)/2\) halfway between z and \(\xi ^2\). The loop denoted by 1 is traversed first, then the loop denoted by 2, and so on.
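The Pochhammer construction can be tested in the simplest one-dimensional case, the Euler Beta integral, where the answer is classical. Take a contour based at a point between 0 and 1 that encircles 1 positively, then 0 positively, then 1 negatively, then 0 negatively, with the integrand taking its principal value at the base point. Then \(\oint t^{a-1}(1-t)^{b-1}\,dt = (1-e^{2\pi i a})(1-e^{2\pi i b})\,\Gamma (a)\Gamma (b)/\Gamma (a+b)\) whenever the Gamma factors are finite. The sketch below uses hypothetical helpers, not code from the paper. It integrates along four circles of radius 1/2 based at \(t = 1/2\), continues both logarithms along the path in the same way as described for (2.9), and compares the result with this closed form.

```python
import cmath
import math


def _continue_log(prev_log, z):
    """Branch of log(z) closest to prev_log, i.e. analytic continuation of the
    logarithm along a path that is sampled finely enough."""
    log_z = cmath.log(z)
    k = round((prev_log.imag - log_z.imag) / (2 * math.pi))
    return complex(log_z.real, log_z.imag + 2 * math.pi * k)


def pochhammer_beta(a, b, n=4000):
    """Integrate t^(a-1) (1-t)^(b-1) over the Pochhammer contour (1+, 0+, 1-, 0-)
    based at t = 1/2, using composite Simpson's rule on each circular loop."""
    base, radius = 0.5, 0.5
    loops = [(1.0, 1), (0.0, 1), (1.0, -1), (0.0, -1)]  # (centre, orientation)
    log_t = complex(math.log(base))         # principal values at the base point
    log_1mt = complex(math.log(1 - base))
    step = 2 * math.pi / n                  # n must be even for Simpson's rule
    total = 0j
    for centre, orientation in loops:
        phi0 = cmath.phase(base - centre)   # each loop starts and ends at the base
        values = []
        for k in range(n + 1):
            e = cmath.exp(1j * (phi0 + orientation * k * step))
            t = centre + radius * e
            dt_dtheta = 1j * orientation * radius * e
            log_t = _continue_log(log_t, t)
            log_1mt = _continue_log(log_1mt, 1 - t)
            values.append(cmath.exp((a - 1) * log_t + (b - 1) * log_1mt) * dt_dtheta)
        inner = sum((4 if k % 2 else 2) * values[k] for k in range(1, n))
        total += (values[0] + values[-1] + inner) * step / 3
    return total


def pochhammer_beta_closed_form(a, b):
    """(1 - e^{2 pi i a}) (1 - e^{2 pi i b}) Gamma(a) Gamma(b) / Gamma(a + b)."""
    beta = math.gamma(a) * math.gamma(b) / math.gamma(a + b)
    return (1 - cmath.exp(2j * math.pi * a)) * (1 - cmath.exp(2j * math.pi * b)) * beta
```

The contour integral is finite even when \(a\) or \(b\) is negative, where the ordinary Beta integral diverges. The prefactor vanishes when \(a\) or \(b\) is a positive integer: the corresponding endpoint is then no longer a branch point, and the four loops cancel. This illustrates how normalizing constants built from Pochhammer integrals can vanish at integer exponents (compare the remark on \(\hat{c}\) above), although we do not claim that the mechanism in (2.10) is identical.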

For \(\alpha \in (1, \infty ) \smallsetminus {\mathbb {Z}}\), we define the function \({\mathcal {G}}(z, \xi ^1, \xi ^2)\) for \(z = x+iy \in \mathbb {H}\) and \(\xi ^1 < \xi ^2\) by

where the constant \(\hat{c} = \hat{c}(\kappa )\) is given by

We extend this definition of \({\mathcal {G}}(z,\xi ^1,\xi ^2)\) to all \(\alpha > 1\) by continuity. The following lemma shows that even though \(\hat{c}\) vanishes as \(\alpha \) approaches an integer, the function \({\mathcal {G}}(z,\xi ^1,\xi ^2)\) has a continuous extension to integer values of \(\alpha \).

Lemma 2.5

For each \(z \in \mathbb {H}\) and each \((\xi ^1, \xi ^2) \in {\mathbb {R}}^2\) with \(\xi ^1 < \xi ^2\), \({\mathcal {G}}(z,\xi ^1,\xi ^2)\) can be extended to a continuous function of \(\alpha \in (1, \infty )\).

Proof

See Appendix A. \(\square \)

The CFT and screening considerations described in Sect. 4 suggest that \({\mathcal {G}}\) is the Green’s function for \(\hbox {SLE}_\kappa (2)\) started from \((\xi ^1, \xi ^2)\); that is, that \({\mathcal {G}}(z, \xi ^1, \xi ^2)\) provides the normalized probability that an \(\hbox {SLE}_\kappa (2)\) path originating from \(\xi ^1\) with force point \(\xi ^2\) passes through an infinitesimal neighborhood of z. Our next theorem establishes this rigorously.

In the following statements, \(\Upsilon _\infty (z)\) denotes 1/2 times the conformal radius, seen from z, of the complement in \(\mathbb {H}\) of the curve(s) under consideration (as indicated by the probability measure). For example, in the case of two paths, the conformal radius is that of the component of \(\mathbb {H} \smallsetminus (\gamma _{1,\infty } \cup \gamma _{2,\infty })\) containing z.
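For a concrete feel for \(\Upsilon \), recall two facts. The conformal radius of \(\mathbb {H}\) seen from w is \(2\,{{\mathrm{Im}}\,}w\), and conformal radii transform by the factor \(|f'|\). Hence, for any conformal map \(f: D \rightarrow \mathbb {H}\), half the conformal radius of D seen from z equals \({{\mathrm{Im}}\,}f(z)/|f'(z)|\). The sketch below evaluates this quantity for a user-supplied map. The helper names are hypothetical, and for the random domains in Theorem 2.6 the map f is not available in closed form.

```python
def half_conformal_radius(f, f_prime, z):
    """Half the conformal radius of a simply connected domain D seen from z,
    computed from a conformal map f: D -> H as Im f(z) / |f'(z)|."""
    return f(z).imag / abs(f_prime(z))


def disk_to_half_plane(w):
    """Conformal map of the unit disk onto H sending 0 to i."""
    return 1j * (1 + w) / (1 - w)


def disk_to_half_plane_prime(w):
    """Derivative of disk_to_half_plane."""
    return 2j / (1 - w) ** 2
```

For the unit disk the conformal radius seen from w is \(1 - |w|^2\), so the function returns \((1-|w|^2)/2\). For \(D = \mathbb {H}\) with f the identity, it returns \({{\mathrm{Im}}\,}z\).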

Theorem 2.6

(Green’s function for \(\hbox {SLE}_\kappa (2)\)) Let \(0< \kappa \leqslant 4\). Let \(-\infty< \xi ^1< \xi ^2 < \infty \) and consider chordal \(\hbox {SLE}_\kappa (2)\) started from \((\xi ^1,\xi ^2)\). Then, for each \(z = x + iy \in \mathbb {H}\),

where \(d=1+\kappa /8\), \(\mathbf {P}^2\) is the \(\hbox {SLE}_\kappa (2)\) measure, the function \({\mathcal {G}}\) is defined in (2.10), and the constant \(c_* = c_*(\kappa )\) is defined by

The proof of Theorem 2.6 will be presented in Sect. 6.

Remark

The function \({\mathcal {G}}(z, \xi ^1, \xi ^2)\) can be written as

where h is a function of \(\theta ^1 = \arg (z - \xi ^1)\) and \(\theta ^2 = \arg (z - \xi ^2)\). This is consistent with the expected translation invariance and scale covariance of the Green’s function.
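Suppose, as for the chordal Green's function \(G(z) = ({\text {Im}} z)^{d-2} \sin ^{4a-1}( \arg \, z)\), that the prefactor in this representation is \(({\text {Im}}\,z)^{d-2}\). Under that assumption, both properties can be checked directly:

\[
\mathcal {G}(z,\xi ^1,\xi ^2) = ({\text {Im}}\,z)^{d-2}\,h(\theta ^1,\theta ^2)
\;\Longrightarrow \;
\begin{cases}
\mathcal {G}(z+c,\,\xi ^1+c,\,\xi ^2+c) = \mathcal {G}(z,\xi ^1,\xi ^2), & c \in {\mathbb {R}},\\
\mathcal {G}(\lambda z,\,\lambda \xi ^1,\,\lambda \xi ^2) = \lambda ^{d-2}\,\mathcal {G}(z,\xi ^1,\xi ^2), & \lambda > 0,
\end{cases}
\]

This holds because \(\arg ((z+c)-(\xi ^j+c)) = \arg (\lambda (z-\xi ^j)) = \theta ^j\), while \({\text {Im}}(z+c) = {\text {Im}}\,z\) and \({\text {Im}}(\lambda z) = \lambda \,{\text {Im}}\,z\).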

In the appendix, we derive formulas for \({\mathcal {G}}(z, \xi ^1, \xi ^2)\) when \(\alpha \) is an integer. In particular, we obtain a proof of the following proposition which provides explicit formulas for the \(\hbox {SLE}_\kappa (2)\) Green’s function in the case of \(\kappa = 4\), \(\kappa = 8/3\), and \(\kappa = 2\).

Proposition 2.7

For \(\kappa = 4\), \(\kappa = 8/3\), and \(\kappa = 2\) (i.e. for \(\alpha = 2,3,4\)), the \(\hbox {SLE}_\kappa (2)\) Green’s function is given by Eq. (2.14) where \(h(\theta ^1, \theta ^2)\) is given explicitly by

and

It is possible to derive an explicit expression for the Green’s function for a system of two multiple SLEs as a consequence of Theorem 2.6. To this end, we need a correlation estimate which expresses the intuitive fact that it is very unlikely that both curves pass near a given point \(z \in \mathbb {H}\).

Lemma 2.8

Let \(0< \kappa \leqslant 4\). Then,

where \(\mathbf {P}_{\xi ^1, \xi ^2}\) denotes the law of a system of two multiple \(\hbox {SLE}_\kappa \) in \(\mathbb {H}\) started from \((\xi ^1, \xi ^2)\) and aiming for \(\infty \), and \(\mathbf {P}^2_{\xi ^1, \xi ^2}\) denotes the law of chordal \(\hbox {SLE}_\kappa (2)\) in \(\mathbb {H}\) started from \((\xi ^1, \xi ^2)\).

The proof of Lemma 2.8 will be given in Sect. 7.

Assuming Lemma 2.8, it follows immediately from Theorem 2.6 that the Green’s function for a system of multiple SLEs started from \((-\xi , \xi )\) is given by

In other words, given a system of two multiple \(\hbox {SLE}_\kappa \) paths started from \(-\xi \) and \(\xi \) respectively, \(G_\xi (z)\) provides the normalized probability that at least one of the two curves passes through an infinitesimal neighborhood of z. We formulate this as a corollary.
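Heuristically, the formula arises as follows (a sketch, not the proof). By Lemma 2.8, the event that both curves come close to z is negligible. To leading order, the normalized probability is therefore the sum of the contributions of the two curves.

The left curve is an \(\hbox {SLE}_\kappa (2)\) started from \((-\xi ,\xi )\), and by Theorem 2.6 it contributes \({\mathcal {G}}(z,-\xi ,\xi )\). The right curve is an \(\hbox {SLE}_\kappa (2)\) started from \((\xi ,-\xi )\). Its mirror image under \(w \mapsto -\bar{w}\) is again an \(\hbox {SLE}_\kappa (2)\) started from \((-\xi ,\xi )\), and \(\Upsilon _\infty \) is unchanged by the reflection, so its contribution is \({\mathcal {G}}(-\bar{z},-\xi ,\xi )\). This suggests

\[
G_\xi (z) = {\mathcal {G}}(z,-\xi ,\xi ) + {\mathcal {G}}(-\bar{z},-\xi ,\xi ),
\]

which should be compared with the precise definition of \(G_\xi \) in Corollary 2.9.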

Corollary 2.9

(Green’s function for multiple SLE) Let \(0< \kappa \leqslant 4\). Let \(\xi > 0\) and consider a system of two multiple \(\hbox {SLE}_\kappa \) paths in \(\mathbb {H}\) started from \((-\xi , \xi )\) and growing towards \(\infty \). Then, for each \(z = x + iy \in \mathbb {H}\),

where \(d=1+\kappa /8\), the constant \(c_* = c_*(\kappa )\) is given by (2.13), and the function \(G_\xi \) is defined for \(z \in \mathbb {H}\) and \(\xi > 0\) by

Remark 2.10

If the system is started from two arbitrary points \((\xi ^1, \xi ^2) \in {\mathbb {R}}^2\) with \(\xi ^1 < \xi ^2\), then it follows immediately from (2.18) and translation invariance that

We will prove Theorem 2.6 by establishing two independent propositions, which when combined imply Theorem 2.6. The first of these propositions (Proposition 2.11) establishes existence of a Green’s function for \(\hbox {SLE}_\kappa (\rho )\) and provides a representation for this Green’s function in terms of an expectation with respect to two-sided radial \(\hbox {SLE}_\kappa \). For the proof of Theorem 2.6, we only need this proposition for \(\rho = 2\). However, since it is no more difficult to state and prove it for a suitable range of positive \(\rho \), we consider the general case.

Proposition 2.11

(Existence and representation of Green’s function for \(\hbox {SLE}_\kappa (\rho )\)) Let \(0 < \kappa \leqslant 4\) and \(0 \leqslant \rho < 8-\kappa \). Given two points \(\xi ^1, \xi ^2 \in {\mathbb {R}}\) with \(\xi ^1 < \xi ^2\), consider chordal \(\hbox {SLE}_\kappa (\rho )\) started from \((\xi ^1, \xi ^2)\). Then, for each \(z \in \mathbb {H}\),

where the \(\hbox {SLE}_\kappa (\rho )\) Green’s function \(G^{\rho }\) is given by

Here \(G(z) = ({\text {Im}} z)^{d-2} \sin ^{4a-1}( \arg \, z)\) is the Green’s function for chordal \(\hbox {SLE}_\kappa \) in \(\mathbb {H}\) from 0 to \(\infty \), the martingale \(M^{(\rho )}\) is defined in (3.8), \(\mathbf {E}^*_{\xi ^1,z}\) denotes expectation with respect to two-sided radial \(\hbox {SLE}_\kappa \) from \(\xi ^1\) through z, stopped at T, the hitting time of z, and the constant \(c_*\) is given by (2.13).
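The chordal Green's function G appearing here is explicit, so its basic symmetries are easy to check numerically. The sketch below uses only the formula \(G(z) = ({\text {Im}} z)^{d-2} \sin ^{4a-1}( \arg \, z)\) with \(a = 2/\kappa \) and \(d = 1 + \kappa /8\). The function name is hypothetical, and the constant \(c_*\) is not included.

```python
import cmath
import math


def chordal_green(z, kappa):
    """G(z) = (Im z)^(d-2) * sin(arg z)^(4a-1) for chordal SLE_kappa in H from
    0 to infinity, with a = 2/kappa and d = 1 + kappa/8 (constant c_* omitted)."""
    if not 0 < kappa < 8:
        raise ValueError("kappa must lie in (0, 8)")
    if z.imag <= 0:
        raise ValueError("z must lie in the upper half-plane")
    a = 2.0 / kappa
    d = 1.0 + kappa / 8.0
    return z.imag ** (d - 2) * math.sin(cmath.phase(z)) ** (4 * a - 1)
```

Scaling gives \(G(\lambda z) = \lambda ^{d-2} G(z)\) for \(\lambda > 0\), and \(\sin (\pi - \theta ) = \sin \theta \) gives the reflection symmetry \(G(-\bar{z}) = G(z)\). For a curve started at \(\xi \in {\mathbb {R}}\), the Green's function is \(G(z - \xi )\).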

We expect that the analogous result is true for \(\kappa \in (0,8)\) and for a wider range of \(\rho \). We restrict to the stated ranges for simplicity, as these assumptions simplify some of the arguments, e.g., due to the relationship between the boundary exponent and the dimension of the path.

The next result (Proposition 2.12) shows that the function \({\mathcal {G}}(z, \xi ^1, \xi ^2)\) predicted by CFT and defined in (2.10) can be represented in terms of an expectation with respect to two-sided radial \(\hbox {SLE}_\kappa \). Since this representation coincides with the representation in (2.19), Theorem 2.6 will follow immediately once we establish Propositions 2.11 and 2.12.

Proposition 2.12

(Representation of \({\mathcal {G}}\)) Let \(0 < \kappa \leqslant 4\) and let \(\xi ^1, \xi ^2 \in {\mathbb {R}}\) with \(\xi ^1 < \xi ^2\). The function \({\mathcal {G}}(z,\xi ^1,\xi ^2)\) defined in (2.10) satisfies

where \(G(z) = ({\text {Im}} z)^{d-2} \sin ^{4a-1}( \arg \, z)\) is the Green’s function for chordal \(\hbox {SLE}_\kappa \) in \(\mathbb {H}\) from 0 to \(\infty \) and \(\mathbf {E}^*_{\xi ^1,z}\) denotes expectation with respect to two-sided radial \(\hbox {SLE}_\kappa \) from \(\xi ^1\) through z, stopped at T, the hitting time of z.

Remark

Note that Eq. (2.20) gives a formula for the two-sided radial SLE observable,

and as a consequence we obtain smoothness and the fact that it satisfies the expected PDE.

The proofs of Propositions 2.11 and 2.12 are presented in Sects. 6.1 and 6.2, respectively.

In Sect. 8.2, we obtain fusion formulas by letting \(\xi \rightarrow 0+\). The formulas simplify for some values of \(\kappa \). In particular, we will prove the following result.

Proposition 2.13

(Fused Green’s functions) Suppose \(\kappa = 4, 8/3\), or 2. Consider a system of two fused multiple \(\hbox {SLE}_\kappa \) paths in \(\mathbb {H}\) started from 0 and growing toward \(\infty \). Then, for each \(z = x + iy \in \mathbb {H}\),

where \(d=1+\kappa /8\), the constant \(c_* = c_*(\kappa )\) is given by (2.13), and the function \({\mathcal {G}}_f\) is defined by

with \(h_f(\theta )\) given explicitly by

2.3 Remarks

We end this section by making a few remarks.

- We believe the method used in this paper will generalize to produce analogous results for observables for \(N \geqslant 3\) multiple SLE paths depending on one interior point. This would require \(N-1\) screening insertions, and the integrals will then be \(N-1\) iterated contour integrals.

- In [23, 24] screening integrals for SLE boundary observables (such as the ordered multipoint boundary Green’s function) are given and shown to be closely related to a particular quantum group. In fact, this algebraic structure is used to systematically make the difficult choices of integration contours. It seems reasonable to expect that a similar connection exists in our setting as well, allowing for an efficient generalization to several commuting SLE curves, but we will not pursue this here.

- Another way of viewing the system of two multiple SLEs growing towards \(\infty \) is as one SLE path conditioned to hit a boundary point, also known as two-sided chordal SLE. Indeed, the extra \(\rho =2\) at the second seed point forces a \(\rho = \kappa -8\) at \(\infty \).

- Suppose one has an \(\hbox {SLE}_{\kappa }\) martingale and wants to construct a similar martingale for \(\hbox {SLE}_{\kappa }(\rho )\). The first idea that comes to mind is to try to “compensate” the \(\hbox {SLE}_\kappa \) martingale by multiplying by a differentiable process. In the cases we consider this method does not give the correct observables (the boundary behavior is not correct), but rather corresponds to a change of coordinates moving the target point at \(\infty \).

3 Preliminaries

Unless specified otherwise, all complex powers are defined using the principal branch of the logarithm, that is, \(z^\alpha = e^{\alpha (\ln |z| + i {{\mathrm{Arg}}\,}z)}\) where \({{\mathrm{Arg}}\,}z \in (-\pi , \pi ]\). We write \(z = x + iy\) and let

We let \(\mathbb {H} = \{z \in {\mathbb {C}}: {{\mathrm{Im}}\,}z > 0\}\) and \(\mathbb {D} = \{z \in {\mathbb {C}}: |z| < 1\}\) denote the open upper half-plane and the open unit disk, respectively. The open disk of radius \(\epsilon > 0\) centered at \(z \in {\mathbb {C}}\) will be denoted by \(\mathcal {B}_\epsilon (z) = \{w \in {\mathbb {C}}: |w-z| < \epsilon \}\). Throughout the paper, \(c > 0\) and \(C > 0\) will denote generic constants which may change within a computation.

Let D be a simply connected domain with two distinct boundary points p, q (prime ends). There is a conformal transformation \(f:D \rightarrow \mathbb {H}\) taking p to 0 and q to \(\infty \), and f is unique up to a final scaling. We choose one such f; the quantities we define do not depend on this choice. Given \(z \in D\), we define the conformal radius \(r_D(z)\) of D seen from z by letting

Schwarz’ lemma and Koebe’s 1/4 theorem give the distortion estimates

We define

and note that this is a conformal invariant. Suppose D is a Jordan domain and that \(J_-,J_+\) are the boundary arcs \(f^{-1}(\mathbb {R}_-)\) and \(f^{-1}(\mathbb {R}_+)\), respectively. Let \(\omega _D(z, E)\) denote the harmonic measure of \(E \subset \partial D\) in D from z. Then it is easy to see that

with the implicit constants universal. By conformal invariance an analogous statement holds for any simply connected domain different from \(\mathbb {C}\). We will use this relation several times without explicitly saying so in order to estimate \(S_{D,p,q}\).

In many places we will estimate harmonic measure using the following lemma often referred to as the Beurling estimate. It is derived from Beurling’s projection theorem, see for example Theorem 9.2 and Corollary 9.3 of [19].

Lemma 3.1

(Beurling estimate) There is a constant \(C < \infty \) such that the following holds. Suppose K is a connected set in \(\overline{\mathbb {D}}\) such that \(K \cap \partial \mathbb {D} \ne \emptyset \). Then

3.1 Schramm–Loewner Evolution

Let \(0< \kappa < 8\). Throughout the paper we will use the following parameters:

We will also sometimes write \(\alpha = 4a\). The assumption \(\kappa = 2/a< 8\) implies that \(\alpha > 1\).

We will work with the \(\kappa \)-dependent Loewner equation

where \(\xi _t\), \(t \geqslant 0\), is the (continuous) Loewner driving term. The solution is a family of conformal maps \((g_t(z))_{t \geqslant 0}\) called the Loewner chain of \((\xi _t)_{t \geqslant 0}\). The \(\hbox {SLE}_\kappa \) Loewner chain is obtained by taking the driving term to be a standard Brownian motion and \(a=2/\kappa \). The chordal \(\hbox {SLE}_\kappa \) path is the continuous curve connecting 0 with \(\infty \) in \(\mathbb {H}\) defined by

We write \(H_t\) for the simply connected domain given by taking the unbounded component of \(\mathbb {H}\smallsetminus \gamma _t\). Given a simply connected domain D with distinct boundary points p, q, we define chordal \(\hbox {SLE}_\kappa \) in D from p to q by conformal invariance. We write

We will make use of the following sharp one-point estimate which also defines the Green’s function for chordal \(\hbox {SLE}_\kappa \), see Lemma 2.10 of [30].

Lemma 3.2

(Green’s function for chordal \(\hbox {SLE}_\kappa \)) Suppose \(0< \kappa < 8\). There exists a constant \(c > 0\) such that the following holds. Let \(\gamma \) be \(\hbox {SLE}_\kappa \) in D from p to q, where D is a simply connected domain with distinct boundary points (prime ends) p, q. As \(\epsilon \rightarrow 0\) the following estimate holds uniformly with respect to all \(z \in D\) satisfying \({\text {dist}}(z,\partial D) \geqslant 2\epsilon \):

where, by definition,

is the Green’s function for \(\hbox {SLE}_\kappa \) from p to q in D, and \(c_*\) is the constant defined in (2.13).

Remark

Using the relation between \(\Upsilon _\infty (z)\) and Euclidean distance, Lemma 3.2 shows that \(\mathbf {P}\left( {\text {dist}}(z, \gamma ) \leqslant \epsilon \right) \leqslant C \epsilon ^{2-d}G_D(z;p,q)\). In fact, the statement of Lemma 3.2 holds with \(\Upsilon _\infty (z)\) replaced by Euclidean distance, with another constant in place of \(c_{*}\).
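The covariance built into Lemma 3.2 is easy to check in the reference case \(D = \mathbb {H}\), \(p = 0\), \(q = \infty \). The displays defining \(G_D\) are not reproduced in this section, so the Python sketch below assumes the usual normalization \(G_{\mathbb {H}}(z;0,\infty ) = ({{\mathrm{Im}}\,}z)^{d-2} \sin ^{4a-1}(\arg z)\) with \(d = 1 + \kappa /8\). The function name `green_H` is purely illustrative. The sketch verifies the scaling rule \(G(\lambda z) = \lambda ^{d-2} G(z)\), which is what makes \(\epsilon ^{2-d}G_D(z;p,q)\) scale-invariant.

```python
import cmath
import math


def green_H(z: complex, kappa: float) -> float:
    """Chordal SLE_kappa Green's function in H from 0 to infinity.

    Assumed normalization (the defining display is not reproduced here):
    G(z) = (Im z)^(d - 2) * sin(arg z)^(4a - 1), a = 2/kappa, d = 1 + kappa/8.
    """
    if not 0.0 < kappa < 8.0:
        raise ValueError("need 0 < kappa < 8")
    if z.imag <= 0.0:
        raise ValueError("z must lie in the upper half-plane")
    a = 2.0 / kappa
    d = 1.0 + kappa / 8.0
    beta = 4.0 * a - 1.0  # same exponent as in Lemma 3.3
    return z.imag ** (d - 2.0) * math.sin(cmath.phase(z)) ** beta


# Scaling covariance: G(lam * z) = lam^(d - 2) * G(z) for every lam > 0.
kappa, z, lam = 8.0 / 3.0, 0.3 + 0.7j, 5.0
d = 1.0 + kappa / 8.0
print(math.isclose(green_H(lam * z, kappa), lam ** (d - 2.0) * green_H(z, kappa)))
# True
```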

We also need to use a boundary estimate for SLE which is convenient to express in terms of extremal distance, see Chap. IV of [19] for the definition and basic properties we use here. For a domain D with \(E,F \subset \overline{D}\), we write \(d_D(E,F)\) for the conformally invariant extremal distance between E and F in D. Recall that if \(D = \{z: \, r< |z| < R\}\) is the round annulus and E, F are the two boundary components, then \(d_D(E,F) = \ln (R/r)/(2\pi )\). A crosscut \(\eta \) of D is an open Jordan arc in D with the property that the closure of \(\eta \) equals \(\eta \cup \{x,y\}\), where \(x,y \in \partial D\), and x, y may coincide.

Fig. 3. The domain D and the crosscut \(\eta \) of Lemma 3.3

Lemma 3.3

Let \(0< \kappa < 8\). Suppose D is a simply connected Jordan domain and let \(p,q \in \partial D\) be two distinct boundary points. Write \(J_{-}, J_{+}\) for the boundary arcs connecting q with p and p with q in the counterclockwise direction, respectively. Suppose \(\eta \) is a crosscut of D starting and ending on \(J_{+}\), see Fig. 3. Then, if \(\gamma \) is chordal \(\hbox {SLE}_{\kappa }\) in D from p to q,

where \(\beta = 4a-1\) and the constant \(C \in (0, \infty )\) is independent of D, p, q, and \(\eta \).

Proof

By conformal invariance, we may assume \(D = \mathbb {H}, p=0, q = \infty \) and that \(\eta \) separates 1 from \(\infty \). Set \(\delta = \max \{|z-1|: z \in \eta \}\) and let \(w \in \eta \) be a point such that \(|w-1| = \delta \). Then the unbounded annulus A whose boundary components are \({\mathbb {R}}_-\) and \(\eta \cup \bar{\eta }\) separates w and 1 from \({\mathbb {R}}_-\). Hence, first using the symmetry rule for extremal distance and then Teichmüller’s module theorem (see Chap. II.1.3 and Eq. II.2.10 of [31]) and the relation between extremal distance and module

(Note that [31] defines the module of a round annulus by \(\ln (R/r)\), i.e., without the \(1/2\pi \) factor.) Hence

On the other hand, comparing with a half-disk of radius \(\delta \) about 1, the standard boundary estimate for \(\hbox {SLE}_{\kappa }\) in the upper half-plane [1, Theorem 1.1] implies that \(\mathbf {P}(\gamma \cap \eta \ne \emptyset ) \leqslant C \,\delta ^{\beta }\), which together with (3.6) gives the desired bound. \(\square \)

3.1.1 \(\hbox {SLE}_{\kappa }(\rho )\)

Let \(0< \kappa < 8\). We will work with \(\hbox {SLE}_\kappa (\rho )\), for \(\rho \in \mathbb {R}\) chosen appropriately, as defined by weighting \(\hbox {SLE}_\kappa \) by a local martingale via Girsanov’s theorem; we will explain this below. Let \((\xi ^1, \xi ^2) \in {\mathbb {R}}^2\) be given with \( \xi ^1 < \xi ^2\). Suppose \((\xi ^1_t)_{t \geqslant 0}\) is Brownian motion started from \(\xi ^1\) under the measure \(\mathbf {P}\), with filtration \(\mathcal {F}_t\). We refer to \(\mathbf {P}\) as the \(\hbox {SLE}_\kappa \) measure. Let \((g_t)_{t \geqslant 0}\) be the \(\hbox {SLE}_\kappa \) Loewner flow defined by Eq. (3.3) with \(\xi _t = \xi _t^1\) and set

We call \(\xi ^2\) the force point. Define

Note that \(\zeta \geqslant 0\) whenever \(0< \kappa \leqslant 4\) and \(r \geqslant 0\). Itô’s formula shows that

is a local \(\mathbf {P}\)-martingale for any \(\rho \in {\mathbb {R}}\), where \(r=\rho /\kappa \). In fact,

The \(\hbox {SLE}_\kappa (\rho )\) measure \(\mathbf {P}^{\rho } = \mathbf {P}_{\xi ^1, \xi ^2}^{\rho }\) is defined by weighting \(\mathbf {P}\) by the martingale \(M^{(\rho )}\), that is,

Then, using Girsanov’s theorem, the equation for \((\xi ^1_t)_{t \geqslant 0}\) changes to

where \((W_t)_{t \geqslant 0}\) is \(\mathbf {P}^{\rho }\)-Brownian motion. This is the defining equation for the driving term of \(\hbox {SLE}_\kappa (\rho )\). (Since \(M^{(\rho )}\) is a local martingale, we need to stop the process before \(M^{(\rho )}\) blows up; we will not always be explicit about this. We will not need to consider \(\hbox {SLE}_\kappa (\rho )\) after the time the path hits or swallows the force point.) We refer to the Loewner chain driven by \((\xi ^1_t)_{t \geqslant 0}\) under \(\mathbf {P}^{\rho }\) as \(\hbox {SLE}_\kappa (\rho )\) started from \((\xi ^1, \xi ^2)\). If \(\rho \) is sufficiently negative, the \(\hbox {SLE}_{\kappa }(\rho )\) path will almost surely hit the force point. In this case it can be useful to reparametrize so that the quantity

decays deterministically; this is called the radial parametrization in this context. Here \(O_t\) is defined as the image under \(g_t\) of the rightmost point in the hull at time t; in particular, \(O_t = g_{t}(0+)\) if \(0 < \kappa \leqslant 4\), see, e.g., [3]. Geometrically \(C_{t}\) equals (1/4 times) the conformal radius seen from \(\xi ^{2}\) in \(H_{t}\) after Schwarz reflection. We define a time-change s(t) so that \(\hat{C}_{t}:=C_{s(t)} = e^{-at}\). A computation shows that if

then \(s'(t) = (\hat{\xi }^2_t-\hat{\xi }^1_t)^2(\hat{A}_t^{-1}-1)\), where \(\hat{A}_t = A_{s(t)}\), see, e.g., Sect. 2.2 of [3]. An important fact is that \((\hat{A}_t)_{t \geqslant 0}\) is positive recurrent with respect to \(\hbox {SLE}_{\kappa }(\rho )\) if \(\rho \) is chosen appropriately.

Lemma 3.4

Suppose \(0< \kappa < 8\) and \( \rho < \kappa /2 - 4\). Consider \(\hbox {SLE}_{\kappa }(\rho )\) started from (0, 1). Then \(\hat{A}_{t}\) is positive recurrent with invariant density

In fact, there is \(\alpha > 0\) such that if f is integrable with respect to the density \(\pi _A\), then as \(t \rightarrow \infty \),

Proof

See, e.g., Sect. 5.3 of [26]. \(\square \)

3.1.2 Relationship Between Multiple SLE and \(\hbox {SLE}_\kappa (\rho )\)

Suppose \(0 < \kappa \leqslant 4\) and consider a system of two multiple chordal SLE curves started from \((\xi ^1, \xi ^2)\), both aiming at \(\infty \); recall (2.1) and (2.2). Suppose we first grow \(\gamma _2\) up to a fixed capacity time t. The conditional law of \(g_t \circ \gamma _1\) is then that of an \(\hbox {SLE}_\kappa (2)\) in \(\mathbb {H}\) started from \((\xi ^1_t, \xi ^2_t)\). In particular, the marginal law of \(\gamma _1\) is that of an \(\hbox {SLE}_\kappa (2)\) started from \(\xi ^1\) with force point \(\xi ^2\). Indeed, if we choose the particular growth speeds \(\lambda _1 = a\) and \(\lambda _2 = 0\), then the defining Eqs. (2.1) and (2.2) reduce to

where \((B_t^1)_{t \geqslant 0}\) is \(\mathbf {P}\)-Brownian motion. Evaluating the equation for \(g_t(z)\) at \(z = \xi ^2\) we infer that \(\xi _t^2 = g_t(\xi ^2)\). Comparing this with Eqs. (3.7) and (3.10) defining \(\hbox {SLE}_\kappa (\rho )\), we conclude that \(\gamma _1\) has the same distribution under the multiple \(\hbox {SLE}_\kappa \) measure \(\mathbf {P}\) as it has under the \(\hbox {SLE}_\kappa (2)\)-measure \(\mathbf {P}^2\) started from \((\xi ^1, \xi ^2)\).
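Here is a minimal Euler–Maruyama sketch of the driving pair \((\xi ^1_t, \xi ^2_t)\) for experimentation. It assumes that (3.3) reads \(\partial _t g_t(z) = a/(g_t(z) - \xi _t)\) and that, under \(\mathbf {P}^{\rho }\), \(d\xi ^1_t = \frac{r}{\xi ^1_t - \xi ^2_t}\,dt + dW_t\) with \(r = \rho /\kappa \). This is the drift Girsanov's theorem produces from a factor \(|\xi ^2_t - \xi ^1_t|^{r}\) in \(M^{(\rho )}\). Neither display is reproduced here, so both are our reading. The force point then moves by \(d\xi ^2_t = \frac{a}{\xi ^2_t - \xi ^1_t}\,dt\). For \(\rho = 2\) one has \(r = a\), the case relevant for the two-curve system above. The function name is hypothetical.

```python
import math
import random


def sle_kappa_rho_driving(kappa, rho, xi1, xi2, t_max, dt, rng=None):
    """Euler-Maruyama sketch for the SLE_kappa(rho) driving pair.

    Assumed dynamics (a = 2/kappa, r = rho/kappa):
        d xi1 = r / (xi1 - xi2) dt + dW,    d xi2 = a / (xi2 - xi1) dt.
    With rng=None the Brownian increment is dropped (deterministic skeleton).
    The loop stops early if the gap xi2 - xi1 collapses (force point reached).
    """
    a, r = 2.0 / kappa, rho / kappa
    sqrt_dt = math.sqrt(dt)
    path = [(0.0, xi1, xi2)]
    for k in range(1, int(round(t_max / dt)) + 1):
        gap = xi2 - xi1
        if gap <= 1e-9:
            break
        dw = rng.gauss(0.0, sqrt_dt) if rng is not None else 0.0
        xi1, xi2 = xi1 - r / gap * dt + dw, xi2 + a / gap * dt
        path.append((k * dt, xi1, xi2))
    return path


# Without noise the gap D = xi2 - xi1 solves dD/dt = (a + r)/D, so
# D(t)^2 = D(0)^2 + 2(a + r)t; for kappa = 4, rho = 2 this gives D(1)^2 = 3.
t, x1, x2 = sle_kappa_rho_driving(4.0, 2.0, 0.0, 1.0, t_max=1.0, dt=1e-4)[-1]
print(round(t, 6), round((x2 - x1) ** 2, 2))
# 1.0 3.0

# A noisy sample path (no expected output: it depends on the seed).
sample = sle_kappa_rho_driving(8.0 / 3.0, 2.0, 0.0, 1.0, 0.5, 1e-3, random.Random(0))
```

Switching the noise off gives an exact check of the drift terms. The scheme reproduces \(\frac{d}{dt}(\xi ^2_t - \xi ^1_t)^2 = 2(a+r)\) up to an \(O(dt)\) error.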

3.1.3 Two-Sided Radial SLE and Radial Parametrization

Recall that if \(z \in \mathbb {H}\) is fixed then the \(\hbox {SLE}_{\kappa }\) Green’s function in \(H_{t}\) equals

which is a covariant \(\mathbf {P}\)-martingale. Two-sided radial SLE in \(\mathbb {H}\) through z is the process obtained by weighting chordal \(\hbox {SLE}_\kappa \) by G. (This is the same as \(\hbox {SLE}_{\kappa }(\kappa -8)\) with force point \(z \in \mathbb {H}\).) Since two-sided radial SLE approaches its target point, it is natural to parametrize so that the conformal radius (seen from z) decays deterministically. More precisely, we change time so that \(\Upsilon _{s(t)}(z) = e^{-2at}\); this parametrization depends on z. The Loewner equation implies

Hence \(s'(t) = |\tilde{z}_{t}|^{4}/\tilde{y}_{t}^{2}\), where \(\tilde{S}_{t} = S_{s(t)}\), \(\tilde{z}_{t} = z_{s(t)}\), etc., denote the time-changed processes. If \(\Theta _t = \arg z_t\), then using that

we find that \(\tilde{\Theta }_{t} = \Theta _{s(t)}\) satisfies

where \((\tilde{W}_{t})_{t \geqslant 0}\) is standard \(\mathbf {P}\)-Brownian motion. The time-changed martingale can be written

The measure \(\mathbf {P}^{*} = \mathbf {P}_z^{*}\) is defined by weighting chordal \(\hbox {SLE}_{\kappa }\) by \(\tilde{G}\), that is,

This produces two-sided radial \(\hbox {SLE}_{\kappa }\) in the radial parametrization.
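The time change \(s'(t) = |\tilde{z}_{t}|^{4}/\tilde{y}_{t}^{2}\) rests on the identity \(\partial _t \log \Upsilon _t(z) = -2a\,y_t^2/|z_t|^4\), where \(z_t = g_t(z) - \xi _t = x_t + i y_t\). The displays are not reproduced here, so the Python sketch below assumes \(\partial _t g_t(z) = a/(g_t(z)-\xi _t)\) and \(\Upsilon _t(z) = {{\mathrm{Im}}\,}g_t(z)/|g_t'(z)|\). Since \(\xi _t\) is real, it drops out of \(\partial _t \log {{\mathrm{Im}}\,}g_t(z)\) and \(\partial _t \log |g_t'(z)|\), so the identity can be checked pointwise in \(z_t\). The helper names are illustrative.

```python
import math


def dlog_upsilon_dt(z: complex, a: float) -> float:
    """d/dt log Upsilon_t(z) read off from the Loewner flow at z_t = z.

    Assumes d/dt g_t(z) = a / (g_t(z) - xi_t) and Upsilon_t = Im g_t(z) / |g_t'(z)|.
    """
    dlog_im = (a / z).imag / z.imag     # d/dt log Im g_t(z)
    dlog_abs_deriv = (-a / z**2).real   # d/dt log |g_t'(z)|
    return dlog_im - dlog_abs_deriv


def dlog_upsilon_closed_form(z: complex, a: float) -> float:
    return -2.0 * a * z.imag**2 / abs(z) ** 4


a = 2.0 / 3.0  # kappa = 3
for z in (0.2 + 1.0j, -3.0 + 0.5j, 1.0 + 1.0j):
    rate = dlog_upsilon_dt(z, a)
    assert math.isclose(rate, dlog_upsilon_closed_form(z, a))
    # After the time change with s'(t) = |z|^4 / y^2 the rate is exactly -2a.
    assert math.isclose(rate * abs(z) ** 4 / z.imag**2, -2.0 * a)
print("ok")
```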

Since \(d\tilde{G}_t = \beta \tilde{G}_t \cot (\tilde{\Theta }_t) d\tilde{W}_t\), Girsanov’s theorem implies that the equation for \(\tilde{\Theta }_{t}\) changes to the radial Bessel equation under the new measure \(\mathbf {P}^{*}\):

where \(\tilde{B}_{t}\) is \(\mathbf {P}^{*}\)-standard Brownian motion.

We will use the following lemma about the radial Bessel equation, see, e.g., Sect. 3 of [27].

Lemma 3.5

Let \(0< \kappa < 8, \, a = 2/\kappa \) and suppose the process \((\Theta _t)_{t \geqslant 0}\) is a solution to the SDE

Then \((\Theta _t)_{t \geqslant 0}\) is positive recurrent with invariant density

where \(c_*\) is the constant in (2.13). In fact, there is \(\alpha > 0\) such that if f is integrable with respect to the density \(\psi \), then as \(t \rightarrow \infty \),

where the error term does not depend on \(\Theta _0\).
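Lemma 3.5 can be illustrated numerically. The SDE in the lemma is not reproduced here, and we assume it is \(d\Theta _t = 2a\cot \Theta _t\,dt + dB_t\). This is our reconstruction. Under \(\mathbf {P}\), one finds from the Loewner equation that \(d\tilde{\Theta }_t = (1-2a)\cot \tilde{\Theta }_t\,dt + d\tilde{W}_t\). Weighting by \(\tilde{G}\) then adds the drift \(\beta \cot \tilde{\Theta }_t\) with \(\beta = 4a-1\). The invariant density is proportional to \(\sin ^{4a}\theta \). The crude Euler–Maruyama sketch below compares a long time average with the stationary mean. It uses hypothetical helper names and reflects at 0 and \(\pi \) purely as a numerical safeguard.

```python
import math
import random


def radial_bessel_time_average(a, f, theta0=math.pi / 2, t_max=500.0,
                               dt=1e-3, seed=0):
    """Time average of f(Theta_t) for d Theta = 2a cot(Theta) dt + dB.

    Crude Euler-Maruyama with reflection back into (0, pi): good enough to
    illustrate ergodicity, not a production-quality scheme.
    """
    rng = random.Random(seed)
    sqrt_dt = math.sqrt(dt)
    eps = 1e-12
    n_steps = int(round(t_max / dt))
    theta, total = theta0, 0.0
    for _ in range(n_steps):
        drift = 2.0 * a * math.cos(theta) / math.sin(theta)
        theta += drift * dt + rng.gauss(0.0, sqrt_dt)
        # Fold an overshooting step back into [0, pi].
        theta = math.fmod(theta, 2.0 * math.pi)
        if theta < 0.0:
            theta += 2.0 * math.pi
        if theta > math.pi:
            theta = 2.0 * math.pi - theta
        theta = min(max(theta, eps), math.pi - eps)
        total += f(theta)
    return total / n_steps


def stationary_mean(a, f, n=20000):
    """Mean of f under the density proportional to sin^(4a) on (0, pi)."""
    h = math.pi / n
    xs = [(k + 0.5) * h for k in range(n)]  # midpoint rule
    w = [math.sin(x) ** (4.0 * a) for x in xs]
    return sum(wi * f(x) for wi, x in zip(w, xs)) / sum(w)


# kappa = 4, i.e. a = 1/2: the stationary mean of sin^2 is (3pi/8)/(pi/2) = 3/4.
sin2 = lambda t: math.sin(t) ** 2
print(round(stationary_mean(0.5, sin2), 6))    # 0.75
print(radial_bessel_time_average(0.5, sin2))   # close to 0.75
```

With the default settings, the time average should land near 3/4. Its exact digits depend on the seed and step size.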

4 Martingale Observables as CFT Correlation Functions

4.1 Screening

The CFT framework of Kang and Makarov [22] can be used to generate SLE martingale observables; see in particular Lecture 14 of [22]. In [4], the ideas of [22] were extended to systems of several SLE curves started from different points. (See also, e.g., [8, 18] for related work.) We will also make use of the screening method [13], which produces observables in the form of contour integrals that we call Dotsenko–Fateev integrals. From the CFT perspective (in the sense of [22]), one starts from a CFT correlation function whose field insertions give a corresponding (known) \(\hbox {SLE}_{\kappa }\) martingale. Adding further paths amounts to inserting additional boundary fields, which creates observables for the system of SLEs. In the cases we consider, however, the extra fields change the boundary behavior, so the new observable no longer encodes the desired geometric information. To remedy this, carefully chosen auxiliary fields are inserted and then integrated out along contours. (The mismatched “charges” are “screened” by the contours.) The correct choice of insertions and integration contours depends on the particular problem, and different choices correspond to solutions with different boundary behavior.

Remark

We mention in passing that from a different point of view, it is known that the Gaussian free field with suitable boundary data can be coupled with SLE paths as local sets for the field [33]. Jumps in boundary conditions for the GFF are implemented by vertex operator insertions on the boundary. By the nature of the coupling, correlation functions for the field will give rise to SLE martingales.

In what follows, we briefly summarize how we used these ideas to arrive at the explicit expressions (2.6) and (2.10) for the Schramm probability \(P(z, \xi )\) and the Green’s function \({\mathcal {G}}(z, \xi ^1, \xi ^2)\), respectively. We refer to [4, 22] for an introduction to the underlying CFT framework and we will use notation from these references. Since the discussion is purely motivational, we make no attempt in this section to be complete or rigorous. This is in contrast to the other sections of the paper which are rigorous. Indeed, we shall only use the results of this section as guesses for solutions to be studied more closely later on.

Consider a system of two multiple SLEs started from \((\xi ^1, \xi ^2) \in {\mathbb {R}}^2\). If \(\lambda _1\) and \(\lambda _2\) denote the growth speeds of the two curves, the evolution of the system is described by equations (2.1) and (2.2). In the language of [22], the presence of two multiple SLE curves in \(\mathbb {H}\) started from \(\xi ^1\) and \(\xi ^2\) corresponds to the insertion of the operator

where \(V_{\star ,(b)}^{i\sigma }(z)\) denotes a rooted vertex field inserted at z (see [22], p. 96) and the parameter b satisfies the relation

Notice that we define \(a=2/\kappa \) while [22] defines “a” by \(\sqrt{2/\kappa }\). The framework of [22] (or rather an extension of this framework to the case of multiple curves [4]) suggests that if \(\{z_j\}_1^n \subset {\mathbb {C}}\) are points and \(\{X_j\}_1^n\) are fields satisfying certain properties, then the correlation function

is a (local) martingale observable for the system when evaluated in the “Loewner charts” \((g_t)\), where for each t the map \(g_t\) “removes” the whole system of curves which is growing at constant capacity speed. It turns out that the observables relevant for Schramm’s formula and for the Green’s function belong to a class of correlation functions of the form

where \(z \in \mathbb {H}\), \(u \in {\mathbb {C}}\), and \(\sigma _1, \sigma _2, s \in {\mathbb {R}}\) are real constants. We will later integrate out the variable u, but it is essential to include the screening field \((V_{\star , (b)}^{is}||g_t^{-1})(u)\) in the definition (4.3) in order to arrive at observables with the appropriate conformal dimensions at z and at infinity. The observable \(M_t^{(z,u)}\) can be written as

where \(Z_t = g_t(z)\), \(U_t = g_t(u)\), and the function \(A(z,\xi ^1, \xi ^2, u)\) is defined by

Itô’s formula implies that the CFT-generated observable \(M_t^{(z,u)}\) is indeed a local martingale for any choice of \(z,u \in \mathbb {H}\) and \(\sigma _1,\sigma _2,s \in {\mathbb {R}}\).

Since (4.4) is a local martingale for each value of the screening variable u, and the observable transforms as a one-form in u, we expect the integrated observable

to be a local martingale for any choice of \(z \in \mathbb {H}\), \(\sigma _1,\sigma _2,s \in {\mathbb {R}}\), and of the integration contour \(\gamma \), at least as long as the integral in (4.6) converges and the branches of the complex powers in (4.5) are consistently defined. The integral in (4.6) is referred to as a “screening” integral.

By choosing \(\lambda _2 = 0\), we expect the observable \(\mathcal {M}_t^{(z)}\) defined in (4.6) to be a local martingale for \(\hbox {SLE}_\kappa (2)\) started from \((\xi ^1, \xi ^2)\). We later check these facts in the cases of interest by direct computation, see Propositions 5.2 and 6.4. We next describe how the martingales relevant for Schramm’s formula and for the Green’s function for \(\hbox {SLE}_\kappa (2)\) arise as special cases of \(\mathcal {M}_t^{(z)}\) corresponding to particular choices of \(\sigma _1,\sigma _2,s \in {\mathbb {R}}\) and of the contour \(\gamma \).

4.2 Prediction of Schramm’s Formula

In order to obtain the local martingale relevant for Schramm’s formula we choose the following values for the parameters (“charges”) in (4.4):

The choice (4.7) can be motivated as follows. First of all, by choosing \(s = -2\sqrt{a}\) we ensure that \(s^2/2 -s b = 1\) (see (4.1)). This implies that \(\mathcal {M}_t^{(z)}\) involves the one-form \(g_t'(u)^{s^2/2-s b}du = g_t'(u) du\). After integration with respect to du this leads to a conformally invariant screening integral. To motivate the choices of \(\sigma _1\) and \(\sigma _2\), let \(P(z, \xi ^1, \xi ^2)\) denote the probability that the point \(z\in \mathbb {H}\) lies to the left of an \(\hbox {SLE}_\kappa (2)\)-path started from \((\xi ^1,\xi ^2)\). Then we expect \(\partial _zP\) to be a martingale observable with conformal dimensions

The parameters in (4.7) are chosen so that the observable \(\mathcal {M}_t^{(z)}\) in (4.6) has the conformal dimensions in (4.8). We emphasize that it is the inclusion of the screening field in (4.3) that makes it possible to obtain these dimensions. In particular, by including it we can have \(\lambda _{\infty } = 0\). We have considered the derivative \(\partial _zP\) instead of P because then we are able to construct a nontrivial martingale with the correct dimensions.
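The claim that \(s = -2\sqrt{a}\) turns the screening field into a one-form can be checked by direct arithmetic. Relation (4.1) is not reproduced here, so the Python sketch below assumes the Kang–Makarov convention \(b = \sqrt{2/\kappa }\,(\kappa /4 - 1)\). In the present notation \(a = 2/\kappa \), this reads \(b = \frac{1}{2\sqrt{a}} - \sqrt{a}\). Under this assumption, \(s^2/2 - sb = 1\) has exactly two roots, \(s = -2\sqrt{a}\) and \(s = 1/\sqrt{a}\). These are the two screening charges singled out in the Remark of Sect. 4.3. The helper names are illustrative.

```python
import math


def background_charge(kappa: float) -> float:
    """b written with a = 2/kappa; assumes the Kang-Makarov convention
    b = a_KM * (kappa/4 - 1) with a_KM = sqrt(2/kappa)."""
    a = 2.0 / kappa
    return 1.0 / (2.0 * math.sqrt(a)) - math.sqrt(a)


def screening_charges(kappa: float):
    """The two real roots s of s**2/2 - s*b = 1 (charges giving a one-form)."""
    b = background_charge(kappa)
    disc = math.sqrt(b * b + 2.0)
    return b - disc, b + disc


kappa = 6.0
a = 2.0 / kappa
b = background_charge(kappa)
s_minus, s_plus = screening_charges(kappa)
print(math.isclose(s_minus, -2.0 * math.sqrt(a)),
      math.isclose(s_plus, 1.0 / math.sqrt(a)),
      math.isclose(s_minus**2 / 2 - s_minus * b, 1.0))
# True True True
```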

In the special case when the parameters \(\sigma _1,\sigma _2,s\) are given by (4.7), the local martingale (4.6) takes the form

We expect from the above discussion that there exists an appropriate choice of the integration contour \(\gamma \) in (4.6) such that \(\partial _z P(z, \xi ^1, \xi ^2) = \text {const} \times \mathcal {M}_0^{(z)}\), that is, we expect

where \(c(\kappa )\) is a complex constant and \(y = {{\mathrm{Im}}\,}z\). Setting \(\xi ^1= 0\) and \(\xi ^2 = \xi \) in this formula, we arrive at the prediction (2.6) for the Schramm probability \(P(z,\xi )\). Indeed, the integration with respect to x in (2.6) recovers P from \(\partial _z P\) and ensures that P tends to zero as \({{\mathrm{Re}}\,}z \rightarrow \infty \). On the other hand, the choice of the integration contour from \(\bar{z}\) to z in (2.5) is mandated by the requirement that \(P(z, \xi )\) should satisfy the correct boundary conditions as z approaches the real axis. See [22, Lecture 15] (see also, e.g., [21] and the references therein). Moreover, \(P(z,\xi )\) must be a real-valued function tending to 1 as \({{\mathrm{Re}}\,}z \rightarrow -\infty \); this fixes the constant \(c(\kappa )\).

4.3 Prediction of the Green’s Function

In order to obtain the local martingale relevant for the \(\hbox {SLE}_\kappa (2)\) Green’s function, we choose the following values for the parameters in (4.4):

As in the case of Schramm’s formula, the choice \(s = -2\sqrt{a}\) ensures that \(\mathcal {M}_t^{(z)}\) involves the one-form \(g_t'(u) du\). Moreover, if we let \({\mathcal {G}}(z, \xi ^1, \xi ^2)\) denote the Green’s function for \(\hbox {SLE}_\kappa (2)\) started from \((\xi ^1,\xi ^2)\), then we expect \({\mathcal {G}}\) to have the conformal dimensions (cf. page 124 in [22])

The parameters \(\sigma _1\) and \(\sigma _2\) in (4.10) are determined so that the observable \(\mathcal {M}_t^{(z)}\) in (4.6) has the conformal dimensions in (4.11). For example, a generalization of Proposition 15.5 in [22] to the case of two curves implies that \(\lambda _\infty = (2\sqrt{a}-b)\Sigma + \frac{\Sigma ^2}{2} = 0\) where \(\Sigma = \sigma _1 + \sigma _2 - 2\sqrt{a}\).

Remark

We can see here that the choice \(\rho =2\) is special: we have only two possible ways to add one screening field, corresponding to \(s=-2\sqrt{a}\) or \(s=1/\sqrt{a}\). But the extra \(\rho = 2\) corresponds to additional charges \(\sigma = \sigma _* = 2/\sqrt{8 \kappa }\) (we are using \(\sigma = \rho /\sqrt{8 \kappa }\)), so at infinity we have an additional charge \(\sigma + \sigma _* = 2 \sqrt{a}\). Consequently, the \(\rho = 2\) charge can be screened by only one screening field. If we add more \(\rho \) insertions, they can be screened by one screening field if their charges sum up to \(2\sqrt{a}\). This suggests that every \(\hbox {SLE}_\kappa \) observable with \(\lambda _q=0\) gives an \(\hbox {SLE}_\kappa (2)\) observable with \(\lambda _q=0\) after screening. Similarly, since adding n additional \(\rho _{j} =2\) gives additional charges at \(\infty \) of \(2n\sqrt{a}\), one could expect that one can construct a martingale for a system of n SLEs by adding n screening charges.

In the special case when the parameters \(\sigma _1,\sigma _2,s\) are given by (4.10), the local martingale (4.6) takes the form

where

We expect from the above discussion that there exists an appropriate choice of the integration contour \(\gamma \) in (4.6) such that \({\mathcal {G}}(z, \xi ^1, \xi ^2) = \text {const} \times \mathcal {M}_0^{(z)}\), that is, we expect

where

and \(c(\kappa )\) is a complex constant. By requiring that G satisfy the correct boundary conditions, we arrive at the prediction (2.10) for the Green’s function for \(\hbox {SLE}_\kappa (2)\). The trickiest step is determining the appropriate screening contour \(\gamma \). This contour must be chosen so that the Green’s function satisfies the appropriate boundary conditions as \((z,\xi ^1, \xi ^2)\) approaches the boundary of the domain \(\mathbb {H} \times \{-\infty< \xi ^1< \xi ^2 < \infty \}\). The complete verification that the Pochhammer integration contour in (2.9) leads to the correct boundary behavior is presented in Lemma 6.3 and relies on a detailed analysis of integral asymptotics. We first arrived at the Pochhammer contour in (2.9) via the following simpler argument.

Let \({\mathcal {G}}_\xi (z) = {\mathcal {G}}(z, -\xi ,\xi )\), \(J_\xi (z) = J(z, -\xi ,\xi )\). Let also \(I_\xi (z) = I(z, -\xi ,\xi )\) where I is the function defined in (2.9), i.e.,

We make the ansatz that

where the contours \(\{\gamma _i\}_1^4\) are Pochhammer contours surrounding the pairs \((\xi , z)\), \((\xi , \bar{z})\), \((-\xi , z)\), and \((-\xi , \bar{z})\), respectively. The integral involving the pair \((\xi , z)\) is \(I_\xi (z)\). The integrals involving the pairs \((\pm \xi , z)\) are related via complex conjugation to the integrals involving the pairs \((\pm \xi , \bar{z})\). Moreover, by performing the change of variables \(u \mapsto -\bar{u}\), we see that the integral involving the pair \((-\xi , z)\) can be expressed in terms of \(I_\xi (-\bar{z})\). Thus, using the requirement that \(J_\xi (z)\) be real-valued, we can without loss of generality assume that \(J_\xi (z)\) is a real linear combination of the real and imaginary parts of \(I_\xi (z)\) and \(I_\xi (-\bar{z})\).

At this stage it is convenient, for simplicity, to assume \(4< \kappa < 8\), so that \(1< \alpha < 2\). Then we can collapse the contour in the definition (4.15) of \(I_\xi (z)\) onto a curve from \(\xi \) to z; this gives

where \(\hat{I}_\xi (z)\) is defined by

Since \(\hat{I}\) obeys the symmetry \({{\mathrm{Im}}\,}\hat{I}_\xi (z) = {{\mathrm{Im}}\,}\hat{I}_\xi (-\bar{z})\), our ansatz takes the form

where \(A_j = A_j(\kappa )\), \(j = 1,2,3\), are real constants.

Remark

It is not necessary to include further contours surrounding pairs such as \((z, \bar{z})\) and \((-\xi , \xi )\) in the ansatz (4.16) for \(J_\xi (z)\), because the contributions from such pairs can be obtained as linear combinations of the contributions from the four pairs already included. This is most easily seen in the case \(1< \alpha < 2\) where each Pochhammer contour can be collapsed to a single curve connecting the two points in the pair.

Up to factors which are independent of y, we expect the Green’s function \({\mathcal {G}}_\xi (z)\) to satisfy

Indeed, since the influence of the force point \(\xi ^2\) goes to zero as \({{\mathrm{Im}}\,}\gamma (t)\) becomes large, the first relation follows by comparison with \(\hbox {SLE}_\kappa \). The second relation can be motivated by noticing that the boundary exponent for \(\hbox {SLE}_\kappa (\rho )\) at the force point \(\xi ^2\) is \(\beta + \rho a\), see Lemma 7.1. In terms of \(J_\xi (z)\), the estimates (4.18) translate into

We will use these conditions to fix the values of the \(A_j\)’s.

We obtain one constraint on the \(A_j\)’s by considering the asymptotics of \(J_\xi (iy)\) as \(y \rightarrow \infty \). Indeed, for \(x = 0\) we have

where \({}_2F_1\) denotes the standard hypergeometric function. This implies

Substituting this expansion into (4.17), we find an expression for \(J_\xi (iy)\) involving two terms which are proportional to \(y^{2(\alpha - 1)}\) and \(y^{\alpha -1}\), respectively, as \(y \rightarrow \infty \). In order to satisfy the condition (4.19a), we must choose the \(A_j\) so that the coefficient of the larger term involving \(y^{2(\alpha - 1)}\) vanishes. This leads to the relation

We obtain a second constraint on the \(A_j\)’s by considering the asymptotics of \(J_\xi (\xi + iy)\) as \(y \rightarrow 0\). Indeed, for \(x = \xi \) we have

Hence

Similarly, for \(x = -\xi \), we have

Hence

Substituting the expansions (4.21) and (4.22) into (4.17), we find an expression for \(J_\xi (\xi + iy)\) involving two terms which are of order \(O(y^{\frac{3 \alpha }{2}-1})\) and O(1), respectively, as \(y \rightarrow 0\). In order to satisfy the condition (4.19b), we must choose the \(A_j\) so that the coefficient of the larger term of O(1) vanishes. This implies

Using the constraints (4.20) and (4.23), the expression (4.17) becomes

where \(B_j = B_j(\kappa )\), \(j = 1,2\), are real constants. Recalling (4.14), this gives the following expression for \({\mathcal {G}}_\xi (z) = {\mathcal {G}}(z, -\xi ,\xi )\):

where \(\hat{c}(\kappa )\) is an overall real constant yet to be determined. Using translation invariance to extend this expression to an arbitrary starting point \((\xi ^1, \xi ^2)\), we find (2.10). The derivation here used that \(4< \kappa < 8\), but by analytic continuation we expect the same formula to hold for \(0 < \kappa \leqslant 4\).

Remark

We remark here that the non-screened martingale obtained via Girsanov has the conformal dimensions

5 Schramm’s Formula

This section proves Theorem 2.1. The strategy is the same as in Schramm’s original argument [36]. Assume \(0 < \kappa \leqslant 4\), i.e., \(\alpha = 8/\kappa \geqslant 2\). We write the function \(\mathcal {M}(z, \xi )\) defined in (2.5) as

where \(z = x+iy\) and \(J(z, \xi )\) is defined by

and the contour from \(\bar{z}\) to z passes to the right of \(\xi \) as in Fig. 1. We want to prove that the probability that the system started from \((0,\xi )\) passes to the right of \(z = x+iy\) is given by

The idea is to apply Itô’s formula and a stopping time argument to prove that the prediction is correct. Once we have proved Theorem 2.1, we easily obtain fusion formulas by simply collapsing the seeds.

5.1 Proof of Theorem 2.1

In [32], we carefully analyze the function \(P(z,\xi )\) and show that it is well-defined, smooth, and fulfills the correct boundary conditions. We summarize these facts here and then use them to give the short proof of Theorem 2.1.

Lemma 5.1

The function \(P(z, \xi )\) defined in (2.6) is a well-defined smooth function of \((z, \xi ) \in \mathbb {H} \times (0, \infty )\) which satisfies

Proof

See [32]. \(\square \)

Proposition 5.2

(PDE for Schramm’s formula) Let \(\alpha >1\). The function \(\tilde{\mathcal {M}}\) defined by

where \(\mathcal {M}\) is given by (2.5), satisfies the two linear PDEs

where the differential operators \(\mathcal {A}_j\) are defined by

Moreover, the function \(\tilde{P}\) defined by

where \(P(z,\xi )\) is defined by (2.6), satisfies the linear PDEs

Proof

Let \(z = x+iy\) and \(\bar{z} = x-iy\). We have

where the integrand m is given by

Let

A long but straightforward computation shows that m obeys the equations

Suppose first that \(\alpha > 2\). Then we can take the differential operator \(\mathcal {B}_j\) inside the integral when computing \(\mathcal {B}_j\mathcal {M}\) without any extra terms being generated by the variable endpoints. Hence (5.7) implies

An integration by parts with respect to u shows that the integral on the right-hand side vanishes. This shows (5.4) for \(\alpha > 2\). The equations in (5.4) follow in the same way for \(\alpha \in (1,2)\) if we first replace the contour from \(\bar{z}\) to z in (5.6) by a Pochhammer contour:

If \(\alpha = 2\), then

and (5.4) can be verified by a direct computation.

It remains to check the last assertion. We have

where \(\tilde{m} = \frac{1}{c_\alpha } {\text {Re}} \tilde{\mathcal {M}}\). Write

where

and we have only indicated the dependence on x explicitly. Since \(c_\alpha \in {\mathbb {R}}\) and \(\mathcal {A}_j\) has real coefficients, we have

Employing (5.8) twice, we find

Using (5.4) to replace \(\mathcal {A}_j(x')\) and integrating by parts in the term involving \(f_j(x')\), it follows that the right-hand side of (5.9) equals

Since

is purely imaginary and \(g_j(x)\) is real-valued, this yields

Since \(\partial _y{\text {Re}} \tilde{\mathcal {M}}(x') = -\partial _{x'} {\text {Im}} \tilde{\mathcal {M}}(x')\) (see Lemma 7.8 in [32]), we can integrate by parts again to see that

Combining (5.10) and (5.11), we conclude that \(\mathcal {A}_j \tilde{P}=0 \). \(\square \)

Consider a system of multiple SLEs in \(\mathbb {H}\) started from 0 and \(\xi > 0\), respectively. Write \(\xi _t^1\) and \(\xi _t^2\) for the Loewner driving terms of the system and let \(g_t\) denote the solution of (2.1) which uniformizes the whole system at capacity t. Then \(\xi _t^1\) and \(\xi _t^2\) are the images of the tips of the two curves under the conformal map \(g_t\). Given a point \(z \in \mathbb {H}\), let \(Z_t = g_t(z) \) and let \(\tau (z)\) denote the time that \({\text {Im}} g_t(z)\) first reaches 0.

A point \(z \in \mathbb {H}\) lies to the left of both curves if and only if it lies to the left of the leftmost curve \(\gamma _1\) started from 0. Moreover, since the system is commuting, its distribution is independent of the order in which the two curves are grown. Hence we may assume that the growth speeds are \(\lambda _1 = 1\) and \(\lambda _2 =0\), although this assumption is not essential. We are therefore in the setting of \(\hbox {SLE}_\kappa (2)\) started from \(\xi ^1\) with force point \(\xi ^2\).

Lemma 5.3

Let \(z \in \mathbb {H}\). Define \(P_t(z)\) by

Then \(P_t(z)\) is an \(\hbox {SLE}_\kappa (2)\) martingale.

Proof

Itô’s formula combined with Proposition 5.2 immediately implies that \(P_t\) is a local martingale for the \(\hbox {SLE}_\kappa (2)\) flow; the drift term vanishes. Since P is bounded by Lemma 5.1, it follows that \(P_t\) is actually a martingale. \(\square \)

Lemma 5.4

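Let \(z \in \mathbb {H}\) and \(\Theta _t^1 = \arg (Z_t - \xi _t^1)\). Then \(\lim _{t \uparrow \tau (z)} \Theta _t^1 = 0\) (resp. \(\lim _{t \uparrow \tau (z)} \Theta _t^1 = \pi \)) if and only if z lies to the right (resp. left) of the curve \(\gamma _1\) starting at 0.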

Proof

See the proof of Lemma 3 in [36]. \(\square \)

Lemma 5.5

Let \(\tilde{P}(z, \xi )\) be the probability that the point \(z \in \mathbb {H}\) lies to the left of the two curves starting at 0 and \(\xi > 0\), respectively. Then \(\tilde{P}(z,\xi ) = P(z,\xi )\), where \(P(z,\xi )\) is the function defined in (2.6).

Proof

By Lemma 5.4, the angle \(\Theta _t^1 = \arg (Z_t - \xi _t^1)\) approaches \(\pi \) as \(t \uparrow \tau (z)\) on the event that \(z \in \mathbb {H}\) lies to the left of both curves. But (5.3b) shows that

Consequently, on the event that z lies to the left of both curves, \(P_t(z) \rightarrow 1\) as \(t \uparrow \tau (z)\). A similar argument relying on (5.3a) shows that, on the event that \(z \in \mathbb {H}\) lies between or to the right of the two curves, \(P_t(z) \rightarrow 0\) as \(t \uparrow \tau (z)\).

Let \(\tau _n(z)\) be the stopping time defined by

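Since \(P_t(z)\) is a martingale, we have \(\mathbf {E}[P_{\tau _n(z)}(z)] = P_0(z)\) for every n.

By the dominated convergence theorem (P is bounded by Lemma 5.1), \(\tilde{P}(z,\xi ) = \lim _{n \rightarrow \infty } \mathbf {E}[P_{\tau _n(z)}(z)] = P_0(z)\).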

Since \(P_0(z) = P(z, \xi )\), this concludes the proof of the lemma and of Theorem 2.1. \(\square \)

If \(\alpha = \frac{8}{\kappa } > 1\) is an integer, the integral (5.2) defining \(J(z, \xi )\) can be computed explicitly. However, the formulas quickly get very complicated as \(\alpha \) increases. We consider here the simplest case of \(\alpha =2\) (i.e. \(\kappa = 4\)). We remark that this case is particularly simple for one curve as well; indeed, the probability that an \(\hbox {SLE}_4\) path passes to the right of z equals \((\arg z)/\pi \).
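The one-curve statement can be checked against Schramm's formula [36] for chordal \(\hbox {SLE}_\kappa \) from 0 to \(\infty \). For \(z = x+iy\), the probability that the curve passes to the left of z is \(\frac{1}{2} + C_\kappa \frac{x}{y}\, {}_2F_1(\frac{1}{2}, \frac{4}{\kappa }; \frac{3}{2}; -\frac{x^2}{y^2})\), where \(C_\kappa = \Gamma (4/\kappa )/(\sqrt{\pi }\, \Gamma ((8-\kappa )/(2\kappa )))\). Euler's integral representation turns \(\frac{x}{y}\,{}_2F_1\) into \(\int _0^{x/y} (1+v^2)^{-4/\kappa }\, dv\). For \(\kappa = 4\) this integral is \(\arctan (x/y)\) and \(C_4 = 1/\pi \), so the right-passage probability is \(\frac{1}{2} - \arctan (x/y)/\pi = (\arg z)/\pi \). The sketch below evaluates the integral form numerically. The function name is hypothetical, and the Simpson quadrature is our choice rather than anything taken from the paper.

```python
import math


def schramm_left_probability(z: complex, kappa: float, n: int = 2000) -> float:
    """P(chordal SLE_kappa from 0 to infinity in H passes to the left of z).

    Integral form of Schramm's formula:
        1/2 + C * integral_0^{x/y} (1 + v^2)^(-4/kappa) dv,
        C = Gamma(4/kappa) / (sqrt(pi) * Gamma((8 - kappa) / (2 * kappa))),
    with the integral approximated by composite Simpson's rule on n (even) panels.
    """
    if not 0.0 < kappa < 8.0:
        raise ValueError("Schramm's formula needs 0 < kappa < 8")
    if z.imag <= 0.0 or n % 2:
        raise ValueError("need Im z > 0 and an even number of panels")
    a = 4.0 / kappa
    c = math.gamma(a) / (math.sqrt(math.pi) * math.gamma(a - 0.5))
    u = z.real / z.imag
    h = u / n  # signed step: u < 0 yields a negative integral

    def integrand(v: float) -> float:
        return (1.0 + v * v) ** (-a)

    total = integrand(0.0) + integrand(u)
    total += sum((4.0 if k % 2 else 2.0) * integrand(k * h) for k in range(1, n))
    return 0.5 + c * total * h / 3.0
```

For \(\kappa = 4\) and \(z = 1 + i\), this returns approximately 0.75, so the right-passage probability is \(1/4 = (\arg z)/\pi \). Writing the hypergeometric function as an elementary integral keeps the sketch within the standard library.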

Proposition 5.6

Let \(\kappa = 4\). Then the function \(P(z,\xi )\) in (2.6) is given explicitly by

Proof

Let \(\alpha = 2\). Then \(c_\alpha = -2\pi ^2\) and an explicit evaluation of the integral in (5.2) gives

Using that

it follows that the function \(\mathcal {M}\) in (2.5) can be expressed as

for \(z = x + iy \in \mathbb {H}\) and \(\xi > 0\). Taking the real part of this expression and integrating with respect to x, we find that the function \(P(z,\xi )\) in (2.6) is given by

The expression (5.12) follows. \(\square \)

Remark

In the fusion limit, Eq. (5.12) is consistent with the results of [18]. Indeed, in the limit \(\xi \downarrow 0\) the expression (5.12) for \(P(z, \xi )\) reduces to

which is Eq. (25) in [18].

6 The Green’s Function

In this section we prove Theorem 2.6. We recall from the discussion in Sect. 2 that the proof reduces to proving Propositions 2.11 and 2.12. Proposition 2.11 establishes existence of a Green’s function for \(\hbox {SLE}_\kappa (\rho )\) and provides a representation for this Green’s function in terms of an expectation with respect to two-sided radial SLE. Proposition 2.12 then shows that the CFT prediction \({\mathcal {G}}_\xi (z)\) defined in (2.10) satisfies this representation in the case \(\rho = 2\).

6.1 Existence of the Green’s Function: Proof of Proposition 2.11

The basic idea of the proof of existence is similar to the “standard” one for the Green’s function for \(\hbox {SLE}_\kappa \) in \(\mathbb {H}\) which we now briefly recall. (See, e.g., [28] for further discussion.) Consider chordal \(\hbox {SLE}_\kappa \) from 0 to \(\infty \) in \(\mathbb {H}\). If \(\tau = \tau _\epsilon = \inf \{t\geqslant 0 : \Upsilon _t(z) \leqslant \epsilon \}\), we have

Here \(G(z) = \Upsilon (z)^{d-2} S(z)^\beta \) is the \(\hbox {SLE}_\kappa \) Green’s function and \(\mathbf {E}^*\) refers to the two-sided radial SLE measure with marked point \(z \in \mathbb {H}\). The computation uses that \(\mathbf {P}^*(\tau < \infty ) = 1\) and knowledge of the invariant distribution of S under \(\mathbf {P}^*\).
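As a small illustration of the one-curve Green's function \(G(z) = \Upsilon (z)^{d-2} S(z)^\beta \), the sketch below evaluates it up to a multiplicative constant. It assumes the usual conventions, which are not restated in this section: \(\Upsilon (z) = {\text {Im}}\, z\) and \(S(z) = \sin (\arg z)\) at time zero, \(d = 1 + \kappa /8\), and \(\beta = 4a - 1\) with \(a = 2/\kappa \). The helper name is hypothetical.

```python
import cmath
import math


def sle_green_function(z: complex, kappa: float) -> float:
    """Chordal SLE_kappa Green's function in H, up to a multiplicative constant.

    Hypothetical helper assuming Upsilon(z) = Im z, S(z) = sin(arg z),
    d = 1 + kappa / 8, a = 2 / kappa and beta = 4a - 1, so that
    G(z) = Upsilon(z)^(d - 2) * S(z)^beta.
    """
    if not 0.0 < kappa < 8.0:
        raise ValueError("need 0 < kappa < 8")
    if z.imag <= 0.0:
        raise ValueError("z must lie in the upper half-plane")
    d = 1.0 + kappa / 8.0
    beta = 4.0 * (2.0 / kappa) - 1.0
    upsilon = z.imag                  # Upsilon at time zero: Im z
    s = math.sin(cmath.phase(z))      # S at time zero: sin(arg z), in (0, 1]
    return upsilon ** (d - 2.0) * s ** beta
```

Since \(\Upsilon \) scales linearly and S is scale invariant, this gives \(G(rz) = r^{d-2} G(z)\) for \(r > 0\). Along the imaginary axis, \(G(iy) = y^{d-2}\).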

In the present setting, there are several complications. The \(\hbox {SLE}_\kappa \) measure \(\mathbf {P}\) is now weighted by a local martingale (6.1) to accommodate the boundary force point, and one of the main hurdles is to control the magnitude of this weight. Moreover, the expression corresponding to \(\mathbf {E}^*[S_\tau (z)^{-\beta }]\) involves an extra weight. Proving convergence is therefore significantly harder and our argument requires control of crossing events for paths near the marked interior point (Lemma 6.1).

Now let us proceed to the proof. Let \(0 < \kappa \leqslant 4\) and \(0 \leqslant \rho < 8-\kappa \) and consider \(\hbox {SLE}_\kappa (\rho )\) started from \((\xi ^1, \xi ^2)\) with \(\xi ^1 < \xi ^2\). We recall our parameters

and the normalized local martingale

by which we can weight \(\hbox {SLE}_{\kappa }\) in order to obtain \(\hbox {SLE}_{\kappa }(\rho )\), see Sect. 3. Let us first derive a few simple estimates on \(M_t^{(\rho )}\). Note that for our parameter choices we have \(r, \zeta \geqslant 0\). The identity

which follows from (2.4a) shows that

Moreover, if \(E_t\) denotes the interval \(E_t = (\xi _t^1, \xi _t^2) \subset {\mathbb {R}}\) and \(\gamma \) the curve generating the Loewner chain \((g_t)_{t \geqslant 0}\), then conformal invariance of harmonic measure gives

Since the left-hand side is bounded above by a constant times \(1 + {\text {diam}}(\gamma [0,t])\), this gives the estimate

which together with (6.3) shows that \(M_t^{(\rho )}\) only gets large when \({\text {diam}}(\gamma [0,t])\) gets large.
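The observation that \(r, \zeta \geqslant 0\) for \(0 < \kappa \leqslant 4\) and \(0 \leqslant \rho < 8-\kappa \) can be checked on a grid. The sketch assumes the standard exponents of the \(\hbox {SLE}_\kappa (\rho )\) local martingale \(g_t'(\xi ^2)^{\zeta } (\xi _t^2 - \xi _t^1)^{r}\) before normalization, namely \(r = \rho /\kappa \) and \(\zeta = \rho (\rho + 4 - \kappa )/(4\kappa )\). This is an assumption: the parameters recalled above may be written in a different but equivalent form. The helper names are hypothetical.

```python
def sle_kappa_rho_exponents(kappa: float, rho: float):
    """Hypothetical helper: exponents (r, zeta) of the standard SLE_kappa(rho)
    local martingale g_t'(xi^2)^zeta * (xi_t^2 - xi_t^1)^r."""
    r = rho / kappa
    zeta = rho * (rho + 4.0 - kappa) / (4.0 * kappa)
    return r, zeta


def exponents_nonnegative(n: int = 200) -> bool:
    """Scan a grid of 0 < kappa <= 4 and 0 <= rho < 8 - kappa for r, zeta >= 0."""
    for i in range(1, n + 1):
        kappa = 4.0 * i / n
        for j in range(n):
            rho = (8.0 - kappa) * j / n
            r, zeta = sle_kappa_rho_exponents(kappa, rho)
            if r < 0.0 or zeta < 0.0:
                return False
    return True
```

Under these formulas \(\zeta \geqslant 0\) is equivalent to \(\rho + 4 - \kappa \geqslant 0\) when \(\rho > 0\), which is automatic for \(\kappa \leqslant 4\). For \(\kappa > 4\) it can fail: \(\kappa = 6\) and \(\rho = 1\) give \(\zeta = -1/24\).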

We will also need a geometric regularity estimate. In order to state it, let \(z \in \mathbb {H}\) and \(0<\epsilon _1< \epsilon _2 < {\text {Im}} z\). Let \(\gamma : (0,1] \rightarrow \mathbb {H}\) be a simple curve such that

Write \(H = \mathbb {H}\smallsetminus \gamma \) where \(\gamma = \gamma [0,1]\). For \(\epsilon > 0\), let \(\mathcal {B}_\epsilon = \mathcal {B}_\epsilon (z)\) be the disk of radius \(\epsilon \) about z, and let U be the connected component of \(\mathcal {B}_{\epsilon _2} \cap H\) containing z. The set \(\partial \mathcal {B}_{\epsilon _2} \cap \partial U\) consists of crosscuts of H. There is a unique outermost one which separates z from \(\infty \) in H, and we denote this crosscut by

Outermost means that \(\ell \) separates z and any other such crosscut from \(\infty \). See Fig. 4.

Lemma 6.1

Let \(0 < \kappa \leqslant 4\). There exists \(C < \infty \) such that the following holds. Let \(z \in \mathbb {H}\) and \(0< \epsilon _1< \epsilon _2 < {\text {Im}} z\). For \(\epsilon >0\) define the stopping times

If

where

is as in (6.5), then for \(0< 10\epsilon < \epsilon _1\), on the event \(\{\tau _{\epsilon _1}' < \infty \}\),

where \(\beta = 4a -1\).

Fig. 4 Schematic picture of the curve \(\gamma \) (solid), the open set V (shaded), and the crosscut \(\ell \) defined in (6.5). If the path re-enters V and hits \(\mathcal {B}_\epsilon (z)\), the “bad” event \(\lambda< \tau < \infty \) occurs; the probability of this event is estimated in Lemma 6.1. The path \(\gamma '=\gamma [\sigma , \lambda ]\) is a crosscut of V that may or may not separate z from \(\partial V \smallsetminus \ell \). In either case, the function \(S_{\lambda }(z)\) defined in (3.4) can be estimated by the Beurling estimate, since only one “side” of the curve faces z inside V.

Proof

Let \(\mathcal {B}_1 = \mathcal {B}_{\epsilon _1}(z)\) and \(\mathcal {B}_2 = \mathcal {B}_{\epsilon _2}(z)\). Given \(\gamma _{\tau _{\epsilon _1}'}\), we consider the outermost separating crosscut \(\ell = \ell (z, \gamma _{\tau _{\epsilon _1}'}, \epsilon _2)\). Let \(\sigma = \max \{t \leqslant \tau _{\epsilon _1}': \gamma (t) \in \ell \}\). This is not a stopping time, but almost surely \(\ell \) is a crosscut of \(H_\sigma \) which separates z from \(\infty \). Write V for the simply connected component of \(H_{\sigma } \smallsetminus \ell \) containing z. Because one of the endpoints of \(\ell \) is the tip \(\gamma (\sigma )\), the set \(g_\sigma (\partial V \smallsetminus \ell ) - W_\sigma \) is a bounded open interval I contained in either the positive or the negative real axis. (This also uses that \(\ell \) is a crosscut.) Almost surely, the curve \(\gamma '=\gamma [\sigma , \lambda ]\) is a crosscut of V starting and ending in \(\ell \). Note that \(g_\sigma (\gamma ') -W_\sigma \) is a curve in \(\mathbb {H}\) connecting 0 with \(g_\sigma (\ell )-W_\sigma \), which in turn is a crosscut of \(\mathbb {H}\) separating I and the point \(g_\sigma (z) - W_\sigma \) from \(\infty \) in \(\mathbb {H}\). Therefore, there is one “side” of the curve \(\gamma \) (i.e., one of \(g_\sigma ^{-1}(W_\sigma \pm \mathbb {R}_+)\)) such that any curve connecting z with it must intersect \(\ell \). Writing \(\delta := {\text {dist}}(\gamma _\lambda , z) \leqslant \epsilon _1\), we can use (3.2), the maximum principle, and then the Beurling estimate (Lemma 3.1) to see that (see Fig. 4), on the event \(\tau _{\epsilon _1}' < \infty \),

Consequently, on the event that \(\tau _{\epsilon _1}' < \infty \) and \(\delta \geqslant 2 \epsilon \), the one-point estimate (Lemma 3.2) and the distortion estimate (3.1) show that

Since \(\delta \leqslant \epsilon _1\) and

the right-hand side gets larger if \(\delta \) is replaced by \(\epsilon _1\). Thus, on the event that \(\tau _{\epsilon _1}' < \infty \) and \(\delta \geqslant 2 \epsilon \),

which proves (6.9) for all curves with \(\delta \geqslant 2 \epsilon \).

On the event that \(\tau _{\epsilon _1}' < \infty \) and \(\epsilon < \delta \leqslant 2\epsilon \), we can use the boundary estimate of Lemma 3.3 as follows. We may view \(\partial \mathcal {B}_\delta (z)\) as a crosscut of \(\mathbb {H}\smallsetminus \gamma [0, \lambda ]\), possibly after restricting to a subarc. Consider the extremal distance in \(\mathbb {H} \smallsetminus \gamma [0, \lambda ]\) between \(\mathcal {B}_\delta (z)\) and \(g^{-1}_{\lambda }(W_\lambda + \mathbb {R}_+)\), or \(g^{-1}_{\lambda }(W_\lambda - \mathbb {R}_+)\) if that is the one which does not intersect \(\partial \mathcal {B}_\delta (z)\) viewed as a crosscut. We use the extension rule (see Chap. IV of [19]) to bound this extremal distance from below by the extremal distance between \(\partial \mathcal {B}_2\) and \(\mathcal {B}_\delta (z)\) in \(\mathcal {B}_2 \cap V\). By comparison with the round annulus \(\{\delta< |w - z| < \epsilon _2\}\), the latter is at least \(\ln (\epsilon _2/\delta )/(2\pi ) \geqslant \ln (\epsilon _2/(2\epsilon ))/(2\pi )\). Therefore, Lemma 3.3 gives

It follows from (6.10) that, on the event that \(\tau _{\epsilon _1}' < \infty \) and \(\epsilon < \delta \leqslant 2\epsilon \),

which proves (6.9) also for curves with \(\epsilon < \delta \leqslant 2\epsilon \). \(\square \)

6.1.1 Proof of Proposition 2.11

We may without loss of generality assume \(\xi ^{1}=0\) and \(|z| = 1\). Constants are allowed to depend on z and \(\xi ^2\) as well as on \(\kappa \) and \(\rho \).

We will apply Lemma 6.1 with

By choosing \(\epsilon \) sufficiently small, we may assume that \(\epsilon _2 = \epsilon ^{1/4} < {\text {Im}} z\). For \(\epsilon > 0\), let \(\tau = \tau _\epsilon \), \(\tau ' = \tau _\epsilon '\), and \(\lambda = \lambda _{\epsilon _1, \epsilon _2}\) be defined by (6.6) and (6.7). Let \(\ell = \ell (z, \gamma _{\tau '_{\epsilon _1}} , \epsilon _2)\) denote the separating crosscut in (6.8). Let \( p \in (0, 1/4)\) be a constant (to be chosen later) and set \(\Sigma =\Sigma _{\epsilon } = \inf \{t \geqslant 0: |\gamma (t)| \geqslant \epsilon ^{-p}\}\).

We define a “good” event \(E = E_{\epsilon }\) by

where

We claim that

where \(E^c\) denotes the complement of E. To prove (6.11), it is clearly enough to show that

and

Let \(\sigma _{0}=0\) and define \(\sigma _k\) and \(U_k\) for \(k=1,2,\ldots ,\) by

Using (3.8), (6.3), and (6.4), we obtain the estimates

We will use (6.14) to prove (6.12) and (6.13).

We first prove (6.12). Lemma 6.1 and Lemma 3.2 yield

We next estimate the series on the right-hand side of (6.14). We first consider \(i=1\). For this, given \(j = -1, \ldots , J\), where \(J := \lfloor \log _2(\epsilon ^{-1}) \rfloor +1\), define

The distortion estimate (3.1) implies \(\tau \leqslant \tau _{\epsilon 2^{-1}}'\). Also, \(\tau '_{\epsilon 2^J} = 0\), because \(|z| = 1\) while \(\epsilon 2^J > 1\) by the choice of J. Hence, \(U_k = \cup _{j=-1}^J (U_k \cap V_j^k)\), and so

Let us first assume \(-1 \leqslant j \leqslant \lfloor \frac{1}{2}\log _2 \epsilon ^{-1} \rfloor \). We claim that, on the event \(V_{j}^k \cap \{\sigma _{k-1} < \infty \}\),