Abstract

For real analytic expanding interval maps, a novel method is given for rigorously approximating the diffusion coefficient of real analytic observables. As a theoretical algorithm, our approximation scheme is shown to give quadratic exponential convergence to the diffusion coefficient. The method for converting this rapid convergence into explicit high precision rigorous bounds is illustrated in the setting of Lanford’s map \(x\mapsto 2x +\frac{1}{2}x(1-x) \pmod 1 \).

1 Introduction

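For a real analytic [Footnote 1] expanding interval map \(T:X\rightarrow X\) with absolutely continuous invariant probability measure \(\mu \), and a real analytic function \(g:X\rightarrow \mathbb {R}\), the corresponding diffusion coefficient (or variance) is the quantity \(\sigma _\mu ^2(g)\) defined by

$$\sigma _\mu ^2(g) := \lim _{n\rightarrow \infty } \frac{1}{n} \int \left( \sum _{i=0}^{n-1} g\circ T^i - n\int g\, d\mu \right) ^2 d\mu . \tag{1}$$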

The quantity \(\sigma _\mu ^2(g)\) plays a role in the central limit theorem: it is well known (see e.g. [11]) that provided g is not equal to a coboundary plus a constant then

$$\frac{1}{\sqrt{n}} \left( \sum _{i=0}^{n-1} g\circ T^i - n\int g\, d\mu \right) $$

converges in law to a normal distribution with mean zero and variance \(\sigma ^2_\mu (g)>0\). A difficult problem of practical interest is to calculate, or to approximate, the diffusion coefficient \(\sigma ^2_\mu (g)\), noting that (1) is only rarely amenable to direct evaluation. Bahsoun et al. [2] recently gave a method for the rigorous approximation of diffusion coefficients, including error bounds, based on Ulam’s method. They illustrated this approach with the particular map

$$T(x) = 2x + \frac{1}{2}x(1-x) \pmod 1 \tag{2}$$

introduced by Lanford [8], and the function \(g(x)=x^2\), showing that \(0.3458 \le \sigma ^2_\mu (g) \le 0.4152\).

In this paper we develop an alternative algorithm for approximating diffusion coefficients of expanding interval maps. In general the method uses the periodic points of T, and exploits the real analyticity of the map T and the function g. The method gives highly accurate approximations to the diffusion coefficient, both at the level of a theoretical algorithm converging with a given asymptotic speed (namely quadratic exponential convergence, as described in Theorem 1 below), and, most importantly, at the level of completely rigorous certified error bounds (see Theorems 2 and 3). The real analyticity assumptions will be crucial in establishing both the theoretical asymptotics and the concrete error bounds, since explicit use is made of the holomorphic extensions of the function g and (the inverse branches of) the map T to certain regions of the complex plane. The general asymptotic speed of our algorithm is as follows:

Theorem 1

Let \(T:X\rightarrow X\) be a real analytic expanding interval map with absolutely continuous invariant probability measure \(\mu \), and suppose \(g:X\rightarrow \mathbb {R}\) is real analytic. There exists a sequence \(\sigma _n^2 \rightarrow \sigma _\mu ^2(g)\), where each \(\sigma _n^2\) can be explicitly computed in terms of periodic points of period up to n. The rate of convergence is quadratic exponential, in the sense that there exist constants \(C>0\) and \(\kappa \in (0,1)\) such that

$$|\sigma ^2_\mu (g) - \sigma _n^2| \le C\kappa ^{n^2} \quad \text {for all } n\ge 1. \tag{3}$$

The constants C and \(\kappa \) of Theorem 1 can be rendered explicit, a procedure which involves consideration of holomorphic extensions to regions in the complex plane. A more challenging problem, in the context of a specific map T and function g, is to establish effective error bounds on \(|\sigma ^2_\mu (g) - \sigma ^2_n|\), preferably of very high accuracy; a key purpose of this article is to show that in such practical settings there is considerable scope for sharpening our optimal version of the simple asymptotic form (3) so as to obtain effective high quality bounds on the diffusion coefficient. As a model case we shall orient our discussion of this problem around the specific example considered in [2], namely Lanford’s map T, and the function \(g(x)=x^2\), both of which are real analytic; henceforth we refer to this as the model problem. The problem of obtaining high accuracy rigorous estimates on \(\sigma ^2_\mu (g)\) involves both theoretical and computer programming elements, and any proof of such bounds will invariably be computer-assisted. As a starting point we note that, using only a modern desktop computer, it is possible to locate all the periodic points of the Lanford map T up to period P, for some \(20\le P\le 30\) [Footnote 2]. Choosing maximum period \(P=25\) yields the sequence of approximations to \(\sigma ^2_\mu (g)\) given in Table 2, which at the level of non-rigorous empirical observation suggests that

and indeed a more optimistic interpretation of Table 2 suggests the slightly more accurate

The task is now to harness these computed approximate values \(\sigma _n^2\) (and in particular the last of these computed approximations, \(\sigma _P^2\)) so as to produce a fully rigorous approximation to \(\sigma ^2_\mu (g)\), together with an error bound. Any naive expectation that the theoretical asymptotic (3), together with specific values for \(\kappa \) and C, would automatically yield an effective error bound on \(|\sigma ^2_\mu (g) - \sigma ^2_n|\) is tempered by the realisation that, for the model problem, \(\kappa \) is reasonably close to the value 1 [Footnote 3], and C is extremely large [Footnote 4]. Although, as noted above, the value \(P=25\) is deemed to be the maximum such that all \((\sigma _n^2)_{n=1}^P\) can be explicitly evaluated, a finer analysis [Footnote 5] of the estimates yielding the asymptotic (3) suggests that a good quality rigorous effective estimate on \(\sigma ^2_\mu (g)\) remains out of reach for \(P\le 30\).

In order to obtain high quality effective estimates on \(|\sigma ^2_\mu (g) - \sigma ^2_n|\) we therefore develop a hybrid approach, consisting of three distinct types of computation, the first type being the exact evaluation of \(\sigma ^2_n\) (see Sect. 3 for the formulae defining \(\sigma _n^2\)) for all sufficiently small values of n (i.e. for all \(1\le n\le P\), where e.g. \(P=25\) for the model problem), using exact locations of periodic points (i.e. evaluated to a given precision, typically several hundred decimal places). We next make the observation (see Corollary 1(b)) that \(\sigma ^2_\mu (g)\) can be expressed in terms of certain infinite series; it turns out that there are five such series, which for convenience we denote here [Footnote 6] as \(\sum _{n=1}^\infty s_n^{(j)}\), for \(1\le j\le 5\), where it can be shown that each sequence \((s_n^{(j)})_{n=1}^\infty \) is \(O(\kappa ^{n^2})\) as \(n\rightarrow \infty \). The error \(|\sigma ^2_\mu (g) - \sigma ^2_P|\) can then be expressed in terms of the tails \(\sum _{n=P+1}^\infty s_n^{(j)}\) of these series, and each of these is \(O(\kappa ^{P^2})\) as \(P\rightarrow \infty \), a result which incidentally leads to the proof of Theorem 1. A consequence is that the task of obtaining a concrete bound on \(|\sigma ^2_\mu (g) - \sigma ^2_P|\) reduces to bounding each tail \(\sum _{n=P+1}^\infty s_n^{(j)}\), and here we note that the previously described difficulties in bounding \(|\sigma ^2_\mu (g) - \sigma ^2_P|\) (for e.g. \(P=25\) in our model problem) stem from the natural upper bounds on the terms \(s_n^{(j)}\) being insufficiently sharp for \(n\approx P\).

Our resolution of this problem of insufficiently sharp bounds consists of splitting the tails \(\sum _{n=P+1}^\infty s_n^{(j)}\) into two parts, whose estimation can be tackled by distinct methods. Choosing some value \(Q>P\) [Footnote 7] (e.g. in our model example we take \(Q=40\)) we consider separately the intermediate sum \(\sum _{n=P+1}^Q s_n^{(j)}\) and the deep tail \(\sum _{n=Q+1}^\infty s_n^{(j)}\). The terms in the deep tail can be effectively bounded, essentially by a simple estimate of the form \(|s_n^{(j)}|\le C\kappa ^{n^2}\), the idea being that \(n>Q\) is large enough for the smallness of \(\kappa ^{n^2}\) to dominate the largeness of C, to the extent that the whole deep tail is extremely small.

For the purpose of estimating the intermediate (finite) sum \(\sum _{n=P+1}^Q s_n^{(j)}\) we require some new techniques, whose justification (see Sect. 6) stems from the theory of eigenvalues and approximation numbers applied to a certain auxiliary (transfer) operator; these techniques require a non-trivial amount of computation, though a key point is that the computational effort is relatively light in comparison to that required for locating the \(2^n\) period-n points for some high value of n (e.g. \(n\approx P\)). The coefficients \(s_n^{(j)}\) are related to the Taylor series for the determinant of the transfer operator, and can be bounded in terms of the approximation numbers of the operator. These approximation numbers can in turn be bounded by making a judicious choice of basis for an underlying Hilbert space whose inner product is defined by Lebesgue integration, and explicitly computing the norms of the images of (finitely many of) these basis elements under the transfer operator yields a bound on the approximation numbers which implies a bound on the \(s_n^{(j)}\) for \(P+1\le n\le Q\) (see Sect. 7 for further details).

In Sect. 8 we combine all of these various ingredients, in the context of the model problem, to obtain the following rigorous bound on the diffusion coefficient, noting that it represents a significant improvement [Footnote 8] on the estimate \(0.3458 \le \sigma ^2_\mu (g) \le 0.4152\) established in [2] for the same combination of function \(g(x)=x^2\) and Lanford map T.

Theorem 2

For the Lanford map T, with absolutely continuous invariant probability measure \(\mu \), if \(g(x)=x^2\) then the corresponding diffusion coefficient \(\sigma ^2_\mu (g)\) satisfies

The organisation of this article is as follows. Section 2 consists of preliminary material drawn from the ergodic theory of expanding maps, thermodynamic formalism, and Hilbert spaces of holomorphic functions. Our algorithm is described in Sect. 3, together with various reformulations of the diffusion coefficient. The rapid convergence of the algorithm is illustrated in Sect. 4 for certain cases where \(\sigma ^2_\mu (g)\) is known explicitly, and in Sect. 5 for the model problem, where \(\sigma ^2_\mu (g)\) does not have a (known) closed form. The key theoretical tools for deriving rigorous error estimates, based on the theory of eigenvalues and approximation numbers, are developed in Sects. 6 and 7. These tools are then applied in detail to the model problem in Sect. 8, proving a result (Theorem 3) that is slightly stronger than Theorem 2, and concluding with a proof of Theorem 1. Some of the numerical data used in the proof of Theorem 3 is collected as an Appendix.

2 Preliminaries

2.1 Ergodic Theory of Expanding Interval Maps

Suppose the unit interval \(X=[0,1]\) is partitioned as \(X=X_1\cup \cdots \cup X_l\), \(l\ge 2\), where \(X_i=[x_{i-1},x_i]\), and \(0=x_0<x_1<\cdots <x_l=1\). Given \(T:X\rightarrow X\), we shall always assume that \(T|_{X_i}\) is real analytic, for each \(1\le i\le l\). We say T is piecewise expanding if there exists \(\lambda >1\) such that \(|T'|\, |_{X_i} \ge \lambda \) for all \(1\le i\le l\). We say that T is Markov if there exists an \(l\times l\) matrix A (the transition matrix for T) with each entry either 0 or 1, such that \(T(X_i)=\cup _{j:A(i,j)=1} X_j\) for each \(1\le i\le l\). The collection \(\{X_i\}_{i=1}^l\) is called the Markov partition for T. T is topologically mixing if some power of the transition matrix A is a strictly positive matrix.
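The mixing criterion just stated can be checked mechanically for a given 0-1 transition matrix. The following is a minimal sketch, assuming the matrix is supplied as a list of lists; the function name `is_topologically_mixing` is illustrative and does not come from the paper. It relies on Wielandt's classical bound that a primitive \(l\times l\) matrix already has a strictly positive power of exponent at most \((l-1)^2+1\), so only finitely many powers need to be inspected.

```python
def is_topologically_mixing(A):
    """Return True if some power of the square 0-1 matrix A is strictly positive."""
    l = len(A)
    base = [[1 if A[i][j] else 0 for j in range(l)] for i in range(l)]
    power = [row[:] for row in base]
    # Wielandt's bound: if A is primitive then A^k > 0 for k = (l-1)^2 + 1,
    # so it suffices to inspect the powers A^1, ..., A^((l-1)^2 + 1).
    for _ in range((l - 1) ** 2 + 1):
        if all(all(row) for row in power):
            return True
        # Boolean matrix product: only the pattern of zeros matters here.
        power = [
            [1 if any(power[i][k] and base[k][j] for k in range(l)) else 0
             for j in range(l)]
            for i in range(l)
        ]
    return False
```

For a full branch map every entry of A equals 1, so the check succeeds at the first power; a permutation matrix such as `[[0, 1], [1, 0]]` fails it.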

It is well known (see [9]) that any topologically mixing piecewise \(C^\omega \) expanding Markov map admits a unique ergodic absolutely continuous invariant probability measure, and we shall denote this measure by \(\mu \). Our results are valid for all such maps, though to simplify the exposition we shall always assume that T is a full branch expanding map. In other words, each \(T|_{X_i}\) is assumed to be a surjection onto X, or, equivalently, every entry of the corresponding transition matrix A is a 1. For each \(1\le i\le l\) we write \(\tau _i:= (T|_{X_i})^{-1}\), referring to \(\{\tau _i\}_{i=1}^l\) as the collection of inverse branches of T. Since T is expanding, each inverse branch is a contraction mapping on X; indeed the real analyticity [Footnote 9] of T ensures that the inverse branches have a holomorphic extension to some common complex neighbourhood of X on which they are all contraction mappings.

Notation 1

Let \(\mathcal {O}_n:=\{\underline{x}=(x,T(x),\ldots ,T^{n-1}(x))\in X^n: T^n(x)=x\}\) denote the collection of periodic orbits of (not necessarily least) period n, considered as ordered n-tuples. For \(\underline{x}\in \mathcal {O}_n\) and \(g:X\rightarrow \mathbb {R}\), define

and for \(n\ge 1\), \(t\in \mathbb {C}\), define

For a continuous function \(f:X\rightarrow \mathbb {R}\), its pressure \(P(f)=P(f,T)\) is defined (see e.g. [13]) by

2.2 The Diffusion Coefficient

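Suppose \(g:X\rightarrow \mathbb {R}\) is real analytic. Its diffusion coefficient (or variance) \(\sigma ^2_\mu (g)\) is defined by

$$\sigma ^2_\mu (g) := \lim _{n\rightarrow \infty } \frac{1}{n} \int \left( \sum _{i=0}^{n-1} g\circ T^i - n\int g\, d\mu \right) ^2 d\mu .$$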

The diffusion coefficient can be expressed in terms of pressure as follows:

Lemma 1

Let \(T:X\rightarrow X\) be a real analytic expanding interval map with absolutely continuous invariant probability measure \(\mu \), and suppose \(g:X\rightarrow \mathbb {R}\) is real analytic. If \(p(t):=P(tg-\log |T'|)\), then the integral of g with respect to \(\mu \) is given by

$$\int g\, d\mu = p'(0), \tag{5}$$

and the diffusion coefficient is given by

$$\sigma ^2_\mu (g) = p''(0). \tag{6}$$

Proof

For (5) see e.g. [11, p. 60], [13, p. 133], and for (6) see e.g. [11, p. 61], [13, p. 133]. \(\square \)

2.3 Holomorphic Extensions

As noted in Sect. 2.1, the inverse branches of the real analytic expanding map T extend as contraction mappings to some common (simply connected) complex neighbourhood U of X. If \(g:X\rightarrow \mathbb {R}\) is real analytic then U may be chosen so that g is holomorphic on a neighbourhood of \(\overline{U}\). By the Riemann mapping theorem, no generality is lost by assuming that U can be chosen to be a disc D, and henceforth we make this assumption: an open disc \(D\subset {\mathbb {C}}\) containing X will be called admissible (for the map T and function g) if g has a holomorphic extension to a neighbourhood of \(\overline{D}\), and each inverse branch \(\tau _i\) has a holomorphic extension to D such that \(\overline{\cup _{i=1}^l \tau _i(D)} \subset D\). This will allow consideration of transfer operators acting on certain Hilbert spaces of holomorphic functions.

Let \(D\subset {\mathbb {C}}\) be an open disc of radius r, centred at c. The Hardy space \(H^2(D)\) consists of those holomorphic functions \(\varphi :D\rightarrow \mathbb {C}\) with \(\sup _{\varrho<r} \int _0^{1} |\varphi (c+\varrho e^{2\pi it})|^2\, dt<\infty \). This is a Hilbert space, with inner product given by \((\varphi ,\psi )= \int _0^{1} \varphi (c+r e^{2\pi it}) \overline{\psi (c+r e^{2\pi it})}\, dt\), which is well-defined since members of \(H^2(D)\) extend as \(L^2\) functions on the boundary \(\partial D\); the norm of \(\varphi \in H^2(D)\) will be written as \(\Vert \varphi \Vert =(\varphi ,\varphi )^{1/2}\). Equivalently, \(H^2(D)\) is the set of those holomorphic functions \(\varphi \) on D such that if \(m_k(z)=r^{-k}(z-c)^k\) for \(k\ge 0\), then \(\{(\varphi ,m_k)\}\in l^2(\mathbb {C})\) (see e.g. [14]), so that \( \Vert \varphi \Vert = (\sum _{k=0}^\infty |(\varphi ,m_k)|^2)^{1/2} \).
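The equivalence of the two descriptions of the norm can be illustrated numerically for a polynomial. If \(\varphi (z)=\sum _k a_k (z-c)^k\) then \((\varphi ,m_k)=a_k r^k\), so the norm is \((\sum _k |a_k|^2 r^{2k})^{1/2}\). The sketch below compares this with a direct quadrature of the boundary integral; the function names and the sample data are illustrative choices, not taken from the paper.

```python
import cmath
import math

def hardy_norm_from_coeffs(coeffs, r):
    """Norm of phi(z) = sum_k coeffs[k] (z - c)^k via the l^2 sum of |(phi, m_k)|^2."""
    return math.sqrt(sum(abs(a) ** 2 * r ** (2 * k) for k, a in enumerate(coeffs)))

def hardy_norm_by_quadrature(phi, c, r, samples=256):
    """Norm of phi via the boundary integral of |phi(c + r e^{2 pi i t})|^2 over [0, 1].

    The equally spaced rule is exact for trigonometric polynomials whose
    degree is smaller than the number of samples.
    """
    total = 0.0
    for j in range(samples):
        z = c + r * cmath.exp(2j * math.pi * j / samples)
        total += abs(phi(z)) ** 2
    return math.sqrt(total / samples)

# Illustrative data: a quadratic polynomial on the disc of radius 0.8 centred at 0.5.
centre, radius = 0.5, 0.8
coefficients = [1.0, -2.0, 0.5j]

def example_phi(z):
    return sum(a * (z - centre) ** k for k, a in enumerate(coefficients))

norm_series = hardy_norm_from_coeffs(coefficients, radius)
norm_boundary = hardy_norm_by_quadrature(example_phi, centre, radius)
```

The two values agree up to rounding, which is the finite-dimensional shadow of the identity \(\Vert \varphi \Vert = (\sum _{k=0}^\infty |(\varphi ,m_k)|^2)^{1/2}\).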

2.4 Transfer Operators and Determinants

For a real analytic function \(g:X\rightarrow \mathbb {R}\), an important ingredient in our method of approximating the diffusion coefficient \(\sigma ^2_\mu (g)\) is the function \(\Delta _g:{\mathbb {C}}^2\rightarrow {\mathbb {C}}\) defined by

$$\Delta _g(z,t) := \exp \left( -\sum _{n=1}^\infty a_n(t) z^n\right) \tag{7}$$

for sufficiently small values of z, and by analytic continuation to the whole of \(\mathbb {C}^2\). It can be shown that (7) defines an entire function (see Corollary 4), with Taylor series expansion

$$\Delta _g(z,t) = 1+\sum _{n=1}^\infty c_n(t) z^n \tag{8}$$

(where we write \(c_n(t)\) for \(c_{g,n}(t)\) whenever g is understood), from which we deduce the recurrence relation

$$c_n(t) = -\frac{1}{n}\sum _{k=1}^{n} k\, a_k(t)\, c_{n-k}(t), \qquad c_0(t):=1. \tag{9}$$

For T and g with holomorphic extensions to D as in Sect. 2.3, the corresponding transfer operator \(\mathcal {L}_{g,t}:H^2(D)\rightarrow H^2(D)\) is defined by

$$(\mathcal {L}_{g,t}\varphi )(z) = \sum _{i=1}^l e^{f(\tau _i(z))}\, \varphi (\tau _i(z))$$

for \(z\in X\), and by holomorphic continuation for \(z\in D\), where \(f= tg - \log |T'|\). The function \(\Delta _g\) is the determinant \(\det (I-z\mathcal {L}_{g,t})\) (see [12]), and its zeros are precisely the reciprocals of the eigenvalues of \(\mathcal {L}_{g,t}\). The leading (i.e. largest in modulus) eigenvalue of \(\mathcal {L}_{g,t}\) is \(e^{p(t)}=e^{P(tg-\log |T'|)}\).

3 The Algorithm

3.1 The Diffusion Coefficient in Terms of Derivatives of the Determinant

The reformulation (6) of the diffusion coefficient \(\sigma _\mu ^2(g)\) in terms of pressure, together with the fact that \(e^{-p(t)}\) is a zero of \(\Delta _g(\cdot ,t)\), suggests the possibility of representing \(\sigma _\mu ^2(g)\) in terms of partial derivatives of \(\Delta _g\). In order to establish such a representation, as Proposition 1 below, we first adopt the following notational conventions:

Notation 2

We write first partial derivatives as

and second partial derivatives as

Proposition 1

If \(T:X\rightarrow X\) is a real analytic expanding map with absolutely continuous invariant probability measure \(\mu \), the function \(g:X\rightarrow \mathbb {R}\) is real analytic, and the determinant \( \Delta _g \) is defined by (7), then the diffusion coefficient \(\sigma ^2_\mu (g)\) can be expressed as

Proof

Let \(z(t)=e^{-p(t)}\) where \(p(t)=P(tg-\log |T'|)\), so that \( p(0)=0 \) and therefore

Differentiating gives

so Lemma 1 gives

Differentiating again gives

so evaluating at \(t=0\) and using Lemma 1 gives

or in other words

The zeros of \(\Delta _g(\cdot ,t)\) are the reciprocals of the eigenvalues of \(\mathcal {L}_{g,t}\), and since \(e^{p(t)}=z(t)^{-1}\) is the leading eigenvalue of \(\mathcal {L}_{g,t}\) then

so differentiating (15) with respect to t gives

and therefore

Differentiating (16) with respect to t gives

and evaluating this at \(t=0\) then using (11), (12) and (14), gives

in other words

which is the required expression (10). \(\square \)
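The chain of identities used in this proof can be summarised as follows. This is a sketch reconstructed from Lemma 1 and from the formula in Corollary 1(a), not a transcription of the original displays; it writes \(z(t)=e^{-p(t)}\), leaves the original equation numbers aside, and assumes \(D_1\Delta _g(1,0)\ne 0\), as is implicit in the statement.

$$\begin{aligned} z(0)&= 1, \qquad z'(0) = -p'(0) = -\int g\, d\mu , \qquad z''(0) = p'(0)^2 - p''(0) = \left( \int g\, d\mu \right) ^2 - \sigma ^2_\mu (g),\\ 0&= \Delta _g(z(t),t),\\ 0&= D_1\Delta _g(z(t),t)\, z'(t) + D_2\Delta _g(z(t),t),\\ 0&= D_{11}\Delta _g(z(t),t)\, z'(t)^2 + 2D_{12}\Delta _g(z(t),t)\, z'(t) + D_{22}\Delta _g(z(t),t) + D_1\Delta _g(z(t),t)\, z''(t). \end{aligned}$$

Evaluating the third line at \(t=0\) gives \(\int g\, d\mu = D_2\Delta _g(1,0)/D_1\Delta _g(1,0)\), and substituting the values of \(z'(0)\) and \(z''(0)\) into the fourth line gives

$$\sigma ^2_\mu (g) = \left( \int g\, d\mu \right) ^2 + \frac{D_{11}\Delta _g(1,0)\left( \int g\, d\mu \right) ^2 - 2D_{12}\Delta _g(1,0)\int g\, d\mu + D_{22}\Delta _g(1,0)}{D_1\Delta _g(1,0)},$$

which agrees with Corollary 1(a) once \(\int g\, d\mu \) is replaced by the quotient \(D_2\Delta _g(1,0)/D_1\Delta _g(1,0)\).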

Definition 1

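If \(g:X\rightarrow \mathbb {R}\) is real analytic, with \(\Delta _g(z,t)=1+\sum _{n=1}^\infty c_n(t)z^n\), then for \(N\ge 1\) define

$$\begin{aligned} \sigma ^2_N&:= \left( \frac{\sum _{n=1}^N c_n'(0)}{\sum _{n=1}^N nc_n(0)}\right) ^2 \\&\quad + \frac{\sum _{n=1}^N n(n-1)c_n(0)\left( \frac{\sum _{n=1}^N c_n'(0)}{\sum _{n=1}^N nc_n(0)}\right) ^2 - 2 \sum _{n=1}^N nc_n'(0)\left( \frac{\sum _{n=1}^N c_n'(0)}{\sum _{n=1}^N nc_n(0)}\right) + \sum _{n=1}^N c_n''(0)}{\sum _{n=1}^N nc_n(0)}. \end{aligned} \tag{20}$$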

Corollary 1

Under the same hypotheses as Proposition 1,

(a) The diffusion coefficient \(\sigma ^2_\mu (g)\) can be expressed as

$$\begin{aligned} \sigma ^2_\mu (g)&= \left( \frac{D_2\Delta _g(1,0)}{D_1\Delta _g(1,0)}\right) ^2\\&\quad + \frac{D_{11}\Delta _g(1,0)\left( \frac{D_2\Delta _g(1,0)}{D_1\Delta _g(1,0)}\right) ^2 - 2 D_{12}\Delta _g(1,0)\left( \frac{D_2\Delta _g(1,0)}{D_1\Delta _g(1,0)}\right) + D_{22}\Delta _g(1,0)}{D_1\Delta _g(1,0)}. \end{aligned}$$

(b) The diffusion coefficient \(\sigma ^2_\mu (g)\) can be expressed as

$$\begin{aligned} \sigma ^2_\mu (g)&= \left( \frac{\sum _{n=1}^\infty c_n'(0)}{\sum _{n=1}^\infty nc_n(0)}\right) ^2 \\&\quad + \frac{\sum _{n=1}^\infty n(n-1)c_n(0)\left( \frac{\sum _{n=1}^\infty c_n'(0)}{\sum _{n=1}^\infty nc_n(0)}\right) ^2 - 2 \sum _{n=1}^\infty nc_n'(0)\left( \frac{\sum _{n=1}^\infty c_n'(0)}{\sum _{n=1}^\infty nc_n(0)}\right) + \sum _{n=1}^\infty c_n''(0)}{\sum _{n=1}^\infty nc_n(0)}. \end{aligned}$$

(c) The sequence of approximations (20) converges, with \(\sigma ^2_N \rightarrow \sigma ^2_\mu (g)\) as \(N\rightarrow \infty \).

(d) If \(g:X\rightarrow \mathbb {R}\) is real analytic such that \(\int g\, d\mu =0\), then \(\sigma ^2_\mu (g)\) can be expressed as

$$\begin{aligned} \sigma ^2_\mu (g) = \frac{D_{22}\Delta _g(1,0)}{D_1\Delta _g(1,0)} = \frac{\sum _{n=1}^\infty c_n''(0)}{\sum _{n=1}^\infty nc_n(0)} . \end{aligned}$$

(e) If \(g:X\rightarrow \mathbb {R}\) is real analytic such that \(\int g\, d\mu =0\), and \(\hat{\sigma }^2_N\) is defined by

$$\begin{aligned} \hat{\sigma }^2_N := \frac{\sum _{n=1}^N c_n''(0)}{\sum _{n=1}^N nc_n(0)} , \end{aligned} \tag{21}$$

then \(\hat{\sigma }^2_N \rightarrow \sigma ^2_\mu (g)\) as \(N\rightarrow \infty \).

Proof

Part (a) follows from Proposition 1, by substituting (18) into (10). Since the Taylor series around 0 for \(\Delta _g(\cdot , t)\) is written (cf. (8)) as \( \Delta _g(z,t) = 1+\sum _{n=1}^\infty c_n(t) z^n \), termwise differentiation yields (b). Part (d) is a special case of formula (10) in Proposition 1, together with (b), while parts (c) and (e) follow directly from the definitions of \(\sigma ^2_N\) and \(\hat{\sigma }^2_N\). \(\square \)

Remark 1

A consequence of Corollary 1 is that if g is known to have integral zero with respect to the absolutely continuous invariant probability measure \(\mu \), then there is a choice of sequence of approximants to the corresponding diffusion coefficient: both the sequence \(\sigma ^2_N\) and the sequence \(\hat{\sigma }^2_N\) converge to \(\sigma ^2_\mu (g)\).

3.2 Periodic Orbit Formulae

The quantities \(\sigma ^2_N\) approximating the diffusion coefficient \(\sigma ^2_\mu (g)\) are accessible to us in terms of those periodic points of T of period up to N. Recall from (4) that

so that the kth order derivative \(a^{(k)}_n(t)\) is given by

We are interested in derivatives up to order 2, evaluated at \(t=0\), so for \(n\ge 1\) define

$$\alpha _n := a_n(0),\qquad \beta _n := a_n'(0),\qquad \gamma _n := a_n''(0),$$

in other words

3.3 Computer Implementation

Although in certain special cases (e.g. the doubling map of Sect. 4) the periodic points of T are rational and known explicitly, more generally a non-trivial aspect of our algorithm is to locate these periodic points (to within a specified precision) [Footnote 10]. For this, note that for \(1\le i\le l\) the inverse branch \(\tau _i:X\rightarrow X_i\), defined as \(\tau _i = (T|_{X_i})^{-1}\), is uniformly contracting. For each \(\xi \in \{1,\ldots , l\}^n\) the composition \(\tau :=\tau _{\xi _1}\circ \ldots \circ \tau _{\xi _n}\) is also uniformly contracting, and the set of period-n points for T is precisely the set of fixed points of such compositions \(\tau \). The fixed point for the contraction mapping \(\tau \) can be determined using standard techniques (e.g. choose \(x_0\in X\) and evaluate \(x:=\tau ^k(x_0)\) for suitably large k, such that \(|\tau (x)-x|<\delta \), where \(\delta \) is appropriately small; provided \(\tau (x+\varepsilon )-\tau (x)>\eta \) and \(\tau (x)-\tau (x-\varepsilon )>\eta \) for \(\varepsilon ,\eta >0\) satisfying \(\eta >\delta +\varepsilon \), an intermediate value argument guarantees that x is within \(\varepsilon \) of the true fixed point of \(\tau \)).
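The fixed point iteration described above can be sketched for the Lanford map \(x\mapsto 2x+\frac{1}{2}x(1-x) \pmod 1\). The inverse branches used below are obtained by solving the quadratic \(\frac{1}{2}(5x-x^2)=y+i\) for the root lying in [0, 1]; the branch labels 0 and 1, the function names, and the use of double precision floats are all simplifying assumptions made for this sketch. In particular it omits the a posteriori intermediate value check and the multi-hundred-digit arithmetic that a rigorous computation would need.

```python
import math
from itertools import product

def lanford_branch(i, x):
    """Branch i (0 or 1) of the Lanford map, written without the reduction mod 1."""
    return 2 * x + 0.5 * x * (1 - x) - i

def tau(i, y):
    """Inverse of branch i: the root in [0, 1] of (5x - x^2)/2 = y + i."""
    return (5 - math.sqrt(25 - 8 * (y + i))) / 2

def periodic_point(word, iterations=200, x0=0.5):
    """Approximate the fixed point of tau_{w_1} o ... o tau_{w_n} by iteration."""
    x = x0
    for _ in range(iterations):
        # The composition applies the last inverse branch first.
        for i in reversed(word):
            x = tau(i, x)
    return x

def period_n_points(n):
    """Map each word in {0, 1}^n to the corresponding period-n point."""
    return {word: periodic_point(word) for word in product((0, 1), repeat=n)}
```

Each branch contracts by a factor of at most 2/3, so the iteration converges geometrically. Applying the forward branches \(\xi _1,\ldots ,\xi _n\) in turn to the computed point should return it to itself up to rounding error, which gives a quick non-rigorous sanity check.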

Having located the period-n points of T, and formed the collection \(\mathcal {O}_n\), for all \(1\le n\le N\), the calculation of orbit sums \(a_n(0)\) and their derivatives \(a_n^\prime (0)\), \(a_n^{\prime \prime }(0)\) is then possible (using (4) and (22)) for \(n=1,\ldots ,N\). Differentiation of the recurrence relation (9) yields recurrence relations for the derivatives \(c_n^\prime (0)\) and \(c_n^{\prime \prime }(0)\) which can then be computed for \(n=1,\ldots ,N\), and substitution into (20) gives the approximant \(\sigma _N^2\).
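The steps above can be sketched for the doubling map of Sect. 4, where the period-n points \(k/(2^n-1)\) are rational and exact arithmetic is available. The sketch assumes that the orbit sums have the form \(a_n(t)=\frac{1}{n}\sum _{\underline{x}\in \mathcal {O}_n} e^{t g_{\underline{x}}}/(m_{\underline{x}}-1)\), where \(g_{\underline{x}}\) denotes the sum of g along the orbit and \(m_{\underline{x}}=2^n\); this form is an assumption, chosen to agree with the value \(\alpha _n = 2^n/(n(2^n-1))\) appearing in the proof of Proposition 2. It also takes \(\sigma ^2_N\) to be the truncation to N terms of the series in Corollary 1(b). The function names are illustrative.

```python
from fractions import Fraction
from itertools import product

def orbit_sums(n, g):
    """Return (a_n(0), a_n'(0), a_n''(0)) for the doubling map, under the assumed
    formula a_n(t) = (1/n) * sum over period-n points of exp(t*g_x) / (2^n - 1)."""
    denom = 2 ** n - 1
    a0 = a1 = a2 = Fraction(0)
    for word in product((0, 1), repeat=n):
        w = list(word)
        gx = Fraction(0)
        for _ in range(n):
            # The point with repeating binary expansion w is int(w) / (2^n - 1);
            # the doubling map acts on it as a cyclic shift of the word.
            k = int("".join(map(str, w)), 2)
            gx += g(Fraction(k, denom))
            w = w[1:] + w[:1]
        weight = Fraction(1, n * denom)
        a0 += weight
        a1 += weight * gx
        a2 += weight * gx * gx
    return a0, a1, a2

def sigma2(N, g):
    """Approximant sigma_N^2 built from periodic points of period up to N."""
    a = [None] + [orbit_sums(n, g) for n in range(1, N + 1)]
    c = [(Fraction(1), Fraction(0), Fraction(0))]  # c_0 = 1, c_0' = c_0'' = 0
    for n in range(1, N + 1):
        s0 = s1 = s2 = Fraction(0)
        for k in range(1, n + 1):
            a0, a1, a2 = a[k]
            c0, c1, c2 = c[n - k]
            # n*c_n = -sum_k k*a_k*c_{n-k}, differentiated twice in t (Leibniz rule).
            s0 += k * a0 * c0
            s1 += k * (a1 * c0 + a0 * c1)
            s2 += k * (a2 * c0 + 2 * a1 * c1 + a0 * c2)
        c.append((-s0 / n, -s1 / n, -s2 / n))
    idx = range(1, N + 1)
    sum_c1 = sum(c[n][1] for n in idx)
    sum_nc = sum(n * c[n][0] for n in idx)
    sum_nnc = sum(n * (n - 1) * c[n][0] for n in idx)
    sum_nc1 = sum(n * c[n][1] for n in idx)
    sum_c2 = sum(c[n][2] for n in idx)
    ratio = sum_c1 / sum_nc
    return ratio ** 2 + (sum_nnc * ratio ** 2 - 2 * sum_nc1 * ratio + sum_c2) / sum_nc
```

With \(g(x)=x^2\), `sigma2(1, lambda x: x * x)` returns `Fraction(1, 4)`, in agreement with the value \(\sigma ^2_1=1/4\) quoted in Sect. 4.2. The cost grows like \(2^N\), so this pure Python sketch is only practical for small N; for a map such as the Lanford map the rational points would be replaced by high precision approximations of the periodic points.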

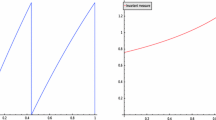

4 Test Cases: Approximation of Known Diffusion Coefficients

For certain combinations of map T and function g, the diffusion coefficient is known exactly. While for these cases there is clearly no need for a numerical algorithm to approximate \(\sigma ^2_\mu (g)\), it is nonetheless instructive to consider them, by way of a warm-up exercise.

4.1 Perfect Approximation of the Diffusion Coefficient via Periodic Orbits

As a simple first example we describe here an expanding map T and function g whose diffusion coefficient \(\sigma ^2_\mu (g)\) is exceedingly well approximated by the sequence \(\sigma ^2_n\): in fact it turns out that each \(\sigma ^2_n\) is equal to \(\sigma ^2_\mu (g)\).

Let \(T:X\rightarrow X\) be the doubling map, defined by \(T(x)=2x \pmod 1\) on [0, 1) and \(T(1)=1\), its absolutely continuous invariant probability measure \(\mu \) being Lebesgue measure itself. Consider the function \(g: X\rightarrow \mathbb {R}\) defined by \(g(x)=2x-1\), which clearly satisfies \(\int g\, d\mu =0\). In fact g is cohomologous to the function h defined by

and it is easily seen that \(\frac{1}{n} \int (\sum _{i=0}^{n-1}h\circ T^i)^2\, d\mu =1\) for all \(n\ge 1\), so the corresponding diffusion coefficient is given by the exact formula

$$\sigma ^2_\mu (g) = \sigma ^2_\mu (h) = 1.$$

While the existence of an exact formula for \(\sigma ^2_\mu (g)\) means there is no need for numerical approximations, this example has the noteworthy feature that our approximations \(\sigma ^2_n\) are perfect for each value of n:

Proposition 2

For \(T:X\rightarrow X\) the doubling map, and \(g(x)=2x-1\),

so in particular

Proof

If \(n\ge 1\) and \(\underline{x}\in \mathcal {O}_n\) then \(m_{\underline{x}}=2^n\), and \(\mathcal {O}_n\) has cardinality \(2^n\), so \( \alpha _n = \frac{2^n}{n(2^n-1)} \). Since \(g(1-x)= - g(x)\), and the set \(\mathcal {O}_n\) is invariant under \(x\mapsto 1-x\), (23) implies that

while

Now \(\Delta _g(z,t) = \exp \left( - \sum _{n=1}^\infty a_{g,n}(t)z^n\right) \) for z of sufficiently small modulus, therefore

and setting \(t=0\), so that \(a_{g,n}'(0)=\beta _n=0\) for all \(n\ge 1\) by (26), gives

for z of sufficiently small modulus.

Now \( \frac{\partial }{\partial z}\Delta _g(z,t) = \left( - \sum _{n=1}^\infty na_{g,n}(t)z^{n-1}\right) \Delta _g(z,t) \), so that by (27),

Comparing (28) and (29), which are valid for z of sufficiently small modulus, gives

which is in fact valid for all \(z\in {\mathbb {C}}\), by analytic continuation, since both sides of the equation are entire functions of z. Writing \(\Delta _g(z,t)=1+\sum _{n=1}^\infty c_{g,n}(t)z^n\) we deduce from (30) that

and the required equality (24), and hence (25), follows by comparing coefficients. \(\square \)

Remark 2

The setting of Proposition 2 allows an explicit illustration of the quadratic exponential decay of the coefficients \(c_n(0)\) (and hence of the \(c_n''(0)=nc_n(0)\)). Writing

we see that

and therefore [Footnote 11]

so in particular

4.2 Rapid Approximation

Suppose, as in Sect. 4.1, that \(T:X\rightarrow X\) is the doubling map, and now define \(g:X\rightarrow \mathbb {R}\) by \(g(x)=x^2\). Clearly the integral of g is known explicitly, namely \(\int g\, d\mu =1/3\), and if \(f=g-1/3\) then \(\int f\, d\mu =0\), and \(\sigma _\mu ^2(g)= \sigma _\mu ^2(f)\), and the equivalent form of the diffusion coefficient \(\sigma ^2_\mu (f)= \int f^2\, d\mu + 2\sum _{n=1}^\infty \int f\circ T^n f \, d\mu \) (see e.g. [3]) gives

$$\sigma ^2_\mu (g) = \frac{7}{27}.$$

More generally, for T the doubling map, we note in passing that there are exact formulae for the diffusion coefficient of monomials \(x^k\) (e.g. for \(g(x)=x^3\) it can be shown that \(\sigma ^2_\mu (g) = \frac{2783}{11760}\)), and indeed for general polynomials, which can be derived from the following result:

Proposition 3

Let \(T:X\rightarrow X\) be the doubling map, and \(\mu \) Lebesgue measure. If \(B_k\) denotes the k-th Bernoulli polynomial, then its diffusion coefficient is given by

where \(\beta _{2k}=B_{2k}(0)\) is the 2k-th Bernoulli number.

Proof

The Bernoulli polynomial \(B_k\) is an eigenvector of the Perron-Frobenius operator \(\mathcal {L}\), with corresponding eigenvalue \(2^{-k}\), since it is readily checked that the generating function

satisfies \(\mathcal {L}G(x,y) = G(x,y/2)\) (see [6]). Now \(\sigma ^2_\mu (B_k)=\int B_k^2\, d\mu + 2\sum _{n=1}^\infty \int B_k\circ T^n B_k\, d\mu \), and

since \(\int B_k^2\, d\mu = \frac{(k!)^2}{(2k)!} |\beta _{2k}|\) (see e.g. [1]), so the result follows. \(\square \)

For the purpose of observing the speed of approximation of our algorithm, the first six approximations [Footnote 12] \(\sigma ^2_n\) are \( \sigma ^2_1 = 1/4 \), \( \sigma ^2_2\approx 0.200617 \), \( \sigma ^2_3\approx 0.321554 \), \( \sigma ^2_4\approx 0.191905 \), \(\sigma ^2_5\approx 0.262566 \), \( \sigma ^2_6\approx 0.259167 \), which after a slow start show signs of approaching \(\sigma ^2=7/27=0.259\dot{2}5\dot{9}\). The successive approximants shown in Table 1 illustrate the quadratic exponential convergence, which as in Sect. 4.1 is \(O(\kappa ^{n^2})\) as \(n\rightarrow \infty \) for any \(\kappa >1/\sqrt{2}\), with the integer parts \(l_n\) of \(\log _{1/\sqrt{2}}|\sigma ^2_n - \sigma ^2_\mu (g)|\) also tabulated, and observed to satisfy \(n^2-11\le l_n\le n^2-8\) for \(7\le n\le 20\).

5 The Lanford Map: Computed Approximations to the Diffusion Coefficient

Let \(T:X\rightarrow X\) be the Lanford map, introduced in [8] and defined by

$$T(x) = 2x + \frac{1}{2}x(1-x) \pmod 1.$$

As in [2], we shall be interested in approximating the diffusion coefficient \(\sigma _\mu ^2(g)\) where the function \(g:X\rightarrow \mathbb {R}\) is defined by \(g(x)=x^2\). Table 2 gives the sequence of approximations \(\sigma _n^2\) to \(\sigma _\mu ^2(g)\), using points of period up to \(n=25\).

We note that \(|\sigma _{25}^2-\sigma _{24}^2|\le 10^{-50}\), strongly suggesting that \(|\sigma _{25}^2-\sigma _\mu ^2(g)|\le 10^{-50}\), though of course this does not constitute a rigorous proof. The remainder of this article is devoted to the development of techniques for rigorously deriving an error bound for approximations of this kind; the approach is valid in the general context of real analytic T and g, and in the particular case of our model problem (the Lanford map T, and \(g(x)=x^2\)), it turns out that we can rigorously prove \(|\sigma _{25}^2-\sigma _\mu ^2(g)| < 1.48 \times 10^{-18}\) (see Theorem 3).

Notation 3

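Writing the Lanford map T as

$$T(x) = \frac{1}{2}\left( 5x - x^2\right) \pmod 1,$$

we see that its real analytic inverse branches \(\tau _i:X\rightarrow X_i\) are given by [Footnote 13]

$$\tau _1(x) = \frac{5-\sqrt{25-8x}}{2}, \qquad \tau _2(x) = \frac{5-\sqrt{17-8x}}{2}.$$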

Remark 3

(a) As mentioned in Sect. 3.3, the fact that the \(\tau _i\) are contractions facilitates the location of the period-n points for T, since they are fixed points of suitable compositions \(\tau _{\xi _1}\circ \cdots \circ \tau _{\xi _n}\). The computational procedure for locating the collection of period-n points is very swift for smaller values of n; high level software packages such as Mathematica or Matlab may be used for this purpose, though the exponential growth in the number of period-n points makes it advantageous to use an imperative programming language for larger n. For this paper the location of periodic points for the Lanford map was carried out on a personal computer with Fortran F07 compiler using MPFUN MPFR packages by D. Bailey (allowing for thread-safe arbitrary precision computations), for all periods n up to \(n=25\), following the algorithm as described in Sect. 3.

(b) It is noteworthy that in all cases studied (those of Sect. 4 as well as this section), the approximations to \(\sigma ^2_\mu (g)\) are rather inaccurate (e.g. not correct to 2 decimal places) until points of period at least 5 are incorporated into the approximation.

(c) In [2, §4.6], a non-rigorous experiment is performed, which seems to suggest that \(\sigma ^2_\mu (g)\) lies in [0.361, 0.363]. This contrasts with our sequence of approximations in Table 2, and in particular with our best approximation \(\sigma _{25}^2\). It follows from Theorem 3 that the approximation error of the experiment in [2] is at least \(10^{-3}\).

(d) Note that \(\min _{x\in X} T'(x)= T'(1)=3/2 \), corresponding to the fact that 2/3 is the largest value attained on X by the derivatives of the inverse branches \(\tau _i\). The value 2/3 appears to be significant concerning the rate at which the approximants \(\sigma _n^2\) approach the diffusion coefficient \(\sigma _\mu ^2(g)\). Assuming \(\sigma ^2_{25}\) to be approximately equal to \(\sigma _\mu ^2(g)\), so that \(\delta _n :=|\sigma ^2_n - \sigma ^2_{25}| \approx |\sigma ^2_n - \sigma ^2_{\mu }(g)|\), we note that the values \(\varepsilon _n := \exp ( n^{-2} \log \delta _n)\) are close to \(\sqrt{2/3}\) (e.g. \(\varepsilon _{22}\approx \sqrt{ 0.668617}\), \(\varepsilon _{23}\approx \sqrt{0.667478}\), \(\varepsilon _{24}\approx \sqrt{0.666508}\)), and we therefore tabulate \(l_n:=[\log _{\sqrt{2/3}}|\sigma ^2_n - \sigma ^2_{25}|]\) in Table 2 to illustrate the quadratic exponential convergence. In view of this, it is unsurprising that the value \(\sqrt{2/3}\) (or some value rather close to it) also appears to dictate the quadratic exponential decay of the coefficients \(c_n(t)\) of the determinants \(\Delta _g(z, t)=1+\sum _{n=1}^\infty c_n(t)z^n\): for example the \(c_n(0)\) in Appendix Table 7 are such that the terms \(\kappa _n:=\exp (n^{-2}\log |c_n(0)|)\) appear to be converging to a value close to, or equal to, \(\sqrt{2/3}\) (e.g. for \(20\le n\le 25\) the \(\kappa _n\) are approximately \(\sqrt{0.674246}\), \(\sqrt{0.673346}\), \(\sqrt{0.672570}\), \(\sqrt{0.671899}\), \(\sqrt{0.671313}\), \(\sqrt{0.670801}\) respectively). On the basis of the observed behaviour for the Lanford map, and for the doubling map in Sect. 4, one might speculate that for more general real analytic maps \(T:X\rightarrow X\) and functions \(g:X\rightarrow \mathbb {R}\), if \(\kappa _T:= (\inf \{m_{\underline{x}}^{1/n}:\underline{x}\in \mathcal {O}_n, n\in \mathbb {N}\})^{-1/2}\) then \(|\sigma ^2_n-\sigma ^2_\mu (g)|=O(\kappa ^{n^2})\) as \(n\rightarrow \infty \) for all \(\kappa >\kappa _T\), and that \(\lim _{n\rightarrow \infty } \exp (n^{-2}\log |c_{g,n}(t)|)=\kappa _T\) for all \(t\in \mathbb {C}\). This would constitute a strengthening of our results that if \(\theta \in (\kappa _T^2,1)\) is the contraction ratio for an admissible disc, then \(|\sigma ^2_n-\sigma ^2_\mu (g)|=O(\kappa ^{n^2})\) as \(n\rightarrow \infty \) for all \(\kappa >\theta ^{1/2}\), and \(\limsup _{n\rightarrow \infty } \exp (n^{-2}\log |c_{g,n}(t)|)\le \theta ^{1/2}\) for all \(t\in \mathbb {C}\).

6 Eigenvalues and Approximation Numbers

In this section we recall the definition of approximation numbers \(s_n(\mathcal {L}_{g,t})\) for the transfer operator \(\mathcal {L}_{g,t}\), and introduce a sequence \(\alpha _n(t)\) of upper bounds for \(s_n(\mathcal {L}_{g,t})\), which we call approximation bounds. By then defining the associated contraction ratio \(\theta \in (0,1)\) we are able to establish (see Corollary 2) the exponential bound \(s_n(\mathcal {L}_{g,t}) \le \alpha _n(t) \le K_t \theta ^n\), for a certain explicit constant \(K_t>0\), which in particular will facilitate (see Corollary 3 in Sect. 7) a proof of the quadratic exponential decay of the Taylor coefficients for the associated determinant.

Let \(D\subset \mathbb {C}\) be an open disc of radius \(\varrho \) centred at c, and let \(\{\lambda _n(t)\}_{n=1}^\infty \) denote the eigenvalue sequence for the operator \(\mathcal {L}_{g,t}:H^2(D)\rightarrow H^2(D)\), with the convention that eigenvalues are ordered by decreasing modulus and repeated according to their algebraic multiplicities. The Taylor coefficients \(c_n(t)\) of \(\Delta _g(\cdot ,t)\) then satisfy (see e.g. [15, Lem. 3.3]) the identity

\[ c_n(t) = (-1)^n \sum_{i_1 < \ldots < i_n} \prod_{j=1}^n \lambda_{i_j}(t). \]

For \(i\ge 1\), the ith approximation number of \(\mathcal {L}_{g,t}:H^2(D)\rightarrow H^2(D)\) is defined to be the value

\[ s_i(\mathcal{L}_{g,t}) := \inf \left\{ \Vert \mathcal{L}_{g,t} - F \Vert : F:H^2(D)\rightarrow H^2(D) \text{ bounded, } \mathrm{rank}(F) \le i-1 \right\}, \]

and the well known relation \( \left| \sum _{i_1< \ldots< i_n} \prod _{j=1}^n \lambda _{i_j}(t) \right| \le \sum _{i_1< \ldots < i_n} \prod _{j=1}^n s_{i_j}(\mathcal {L}_{g,t}) \) (see e.g. [7, Cor. VI.2.6]) implies that

\[ |c_n(t)| \le \sum_{i_1 < \ldots < i_n} \prod_{j=1}^n s_{i_j}(\mathcal{L}_{g,t}). \tag{36} \]

If, for \(k\ge 0\), we define \(m_k:D\rightarrow \mathbb {C}\) by

\[ m_k(z) = \varrho^{-k} (z-c)^k, \tag{37} \]

then \(\{m_k\}_{k=0}^\infty \) constitutes an orthonormal basis for \(H^2(D)\). For \(n\ge 1\) we can define the corresponding nth approximation bound \(\alpha _n(t)\) by

\[ \alpha_n(t) := \left( \sum_{k=n-1}^\infty \Vert \mathcal{L}_{g,t}(m_k) \Vert^2 \right)^{1/2}, \tag{38} \]

and these values yield a simple upper bound on the approximation numbers of the transfer operator:

Lemma 2

For \(n\ge 1\), the nth approximation number of \(\mathcal {L}_{g,t}:H^2(D)\rightarrow H^2(D)\) satisfies

\[ s_n(\mathcal{L}_{g,t}) \le \alpha_n(t). \tag{39} \]

Proof

If \(f\in H^2(D)\) then \(\{(f,m_k)\}_{k=0}^\infty \in l^2(\mathbb {C})\). Defining \(\mathcal {L}_{g,t}^{(n)} := \mathcal {L}_{g,t} P_n \), where \(P_n:H^2(D)\rightarrow H^2(D)\) is defined by \( P_n(f) = \sum _{k=0}^{n-2} (f,m_k)\, m_k \), we obtain the estimate

\[ \Vert (\mathcal{L}_{g,t} - \mathcal{L}_{g,t}^{(n)}) f \Vert = \left\Vert \sum_{k=n-1}^\infty (f,m_k)\, \mathcal{L}_{g,t}(m_k) \right\Vert \le \sum_{k=n-1}^\infty |(f,m_k)|\, \Vert \mathcal{L}_{g,t}(m_k) \Vert , \]

and the Cauchy–Schwarz inequality then implies

\[ \Vert (\mathcal{L}_{g,t} - \mathcal{L}_{g,t}^{(n)}) f \Vert \le \left( \sum_{k=n-1}^\infty |(f,m_k)|^2 \right)^{1/2} \left( \sum_{k=n-1}^\infty \Vert \mathcal{L}_{g,t}(m_k) \Vert^2 \right)^{1/2} \le \Vert f \Vert \left( \sum_{k=n-1}^\infty \Vert \mathcal{L}_{g,t}(m_k) \Vert^2 \right)^{1/2}, \]

and hence \(\Vert \mathcal {L}_{g,t}- \mathcal {L}_{g,t}^{(n)} \Vert \le \left( \sum _{k=n-1}^\infty \Vert \mathcal {L}_{g,t}(m_k) \Vert ^2 \right) ^{1/2} = \alpha _n(t)\). But \(\mathcal {L}_{g,t}^{(n)}\) has rank \(n-1\), so the required inequality (39) follows. \(\square \)

Definition 2

Let \(D'\) be the smallest disc, concentric with D, such that \(\cup _{i=1}^l \tau _i(D)\subset D'\), and \(\varrho , \varrho '\) the respective radii of \(D, D'\). The corresponding contraction ratio \(\theta =\theta _{D}\) is defined to be \( \theta = \theta _{D} := \varrho '/\varrho \).

Lemma 3

Let D be an admissible disc, with contraction ratio \(\theta =\theta _{D}\). If \(g:(0,1)\rightarrow \mathbb {R}\) has a holomorphic continuation to a bounded function on D, and each \(|\tau _i'(\cdot )|\) has a holomorphic continuation to a bounded function on D, then for all \(k\ge 0\),

\[ \Vert \mathcal{L}_{g,t}(m_k) \Vert \le \left( \sum_{i=1}^l \Vert w_{i,t} \Vert_\infty \right) \theta^k, \tag{40} \]

where \(w_{i,t}(z) = e^{tg(\tau _i(z))}|\tau _i'(z)|\).

Proof

Defining \(w_{i,t}:D\rightarrow {\mathbb {C}}\) by \(w_{i,t}(z) = e^{tg(\tau _i(z))}|\tau _i'(z)|\), we can write \( \mathcal {L}_{g,t} = \sum _{i=1}^l M_{i,t} C_i \), where \(C_i, M_{i,t}:H^2(D)\rightarrow H^2(D)\) are given by \( C_i f := f\circ \tau _i \) and \(M_{i,t} f := w_{i,t} f\). It follows that

\[ \Vert \mathcal{L}_{g,t}(m_k) \Vert \le \sum_{i=1}^l \Vert M_{i,t} C_i (m_k) \Vert \le \sum_{i=1}^l \Vert w_{i,t} \Vert_\infty \Vert C_i(m_k) \Vert . \tag{41} \]

Now each \(\tau _i(D)\) is contained in the disc \(D'\), with the same centre c as D, and of radius \(\varrho '=\theta \varrho \), therefore \(|C_i(m_k)(z)| = \varrho ^{-k} |\tau _i(z)-c|^k < \varrho ^{-k} (\varrho ')^k = \theta ^k\) for all \(z\in D\). It follows that \( \Vert C_i(m_k)\Vert \le \theta ^k \) and combining this with (41) gives the required inequality (40). \(\square \)

Corollary 2

Under the hypotheses of Lemma 3, if \( K_t := \frac{ \sum _{i=1}^l \Vert w_{i,t}\Vert _\infty }{ \theta \sqrt{1 - \theta ^2}} \) then

\[ s_n(\mathcal{L}_{g,t}) \le \alpha_n(t) \le K_t \theta^n \quad \text{for all } n\ge 1. \tag{42} \]

Proof

Combining (38) and Lemma 3 gives

\[ \alpha_n(t) \le \left( \sum_{i=1}^l \Vert w_{i,t} \Vert_\infty \right) \left( \sum_{k=n-1}^\infty \theta^{2k} \right)^{1/2} = \left( \sum_{i=1}^l \Vert w_{i,t} \Vert_\infty \right) \frac{\theta^{n-1}}{\sqrt{1-\theta^2}} = K_t \theta^n, \]

while the inequality \(s_n(\mathcal {L}_{g,t}) \le \alpha _n(t)\) is the content of Lemma 2. \(\square \)

7 Euler Bounds and Computed Bounds

In this section we introduce two different kinds of bound on the Taylor series coefficients of the determinant \(\Delta _g(\cdot ,t)\). The first of these, the Euler bound, has a simple closed form and is readily seen to converge to zero at a quadratic exponential rate. This implies the quadratic exponential decay of the Taylor coefficients (see Corollary 3), and hence that the determinant is an entire function (see Corollary 4); importantly, the inequality proved in Corollary 3 is subsequently used in Sect. 8 to rigorously bound one part of the error term in our diffusion coefficient approximation. The second kind of bound on the Taylor coefficients of the determinant is based on the approximation bounds \(\alpha _n(t)\) introduced in Sect. 6, and motivated by the recognition (see the comments in Sect. 1) that despite the quadratic exponential decay of the Euler bounds, for practical purposes they may be insufficiently sharp even for moderately large values of n. By first defining an upper computed approximation bound \(\alpha _{n,N,+}(t)\) (the large integer N plays the role of a proxy for \(\infty \) in the definition (38) of \(\alpha _n(t)\)), the inequality (36) then motivates our definition of the upper computed Taylor bound (53), and the resulting Taylor coefficient bound in Proposition 4 provides a key ingredient for the validated approximation of the diffusion coefficient \(\sigma _\mu ^2(g)\) described in Sect. 8.

Let us write

\[ E_n(\theta) := \sum_{i_1 < \ldots < i_n} \theta^{i_1+\ldots+i_n} = \frac{\theta^{n(n+1)/2}}{\prod_{i=1}^n (1-\theta^i)}. \tag{43} \]

In view of the following bound (44), and the fact that the identity in (43) was first given by Euler (cf. [5, Ch. 16]), we shall refer to the quantity \(K_t^n E_n(\theta )\) as the Euler bound on the \(n^{th}\) Taylor coefficient of the determinant \(\Delta _g(\cdot ,t)\).
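
As a numerical illustration of the Euler bound, the following sketch evaluates the closed form \(E_n(\theta ) = \theta ^{n(n+1)/2} / \prod _{i=1}^n (1-\theta ^i)\) (the form quoted in the Notes) and compares it with a truncated version of the sum \(\sum _{i_1< \ldots < i_n} \theta ^{i_1+\ldots +i_n}\). The function names and the truncation parameter `max_index` are illustrative choices, not part of the paper, and the comparison is a floating-point sanity check rather than a proof of Euler's identity.

```python
from itertools import combinations
from math import prod


def euler_closed_form(theta: float, n: int) -> float:
    """E_n(theta) = theta^(n(n+1)/2) / prod_{i=1}^n (1 - theta^i)."""
    return theta ** (n * (n + 1) // 2) / prod(1.0 - theta ** i for i in range(1, n + 1))


def euler_truncated_sum(theta: float, n: int, max_index: int) -> float:
    """Sum of theta^(i_1+...+i_n) over 1 <= i_1 < ... < i_n <= max_index.

    This truncates the infinite sum; max_index is a hypothetical cut-off.
    """
    return sum(theta ** sum(idx) for idx in combinations(range(1, max_index + 1), n))


def euler_bound(K: float, theta: float, n: int) -> float:
    """The Euler bound K^n * E_n(theta) on the n-th Taylor coefficient."""
    return K ** n * euler_closed_form(theta, n)


if __name__ == "__main__":
    for n in (1, 2, 3):
        print(n, euler_closed_form(0.5, n), euler_truncated_sum(0.5, n, 60))
```

For a ratio such as \(\theta =1/2\) the truncated sum and the closed form agree to machine precision once `max_index` is a few dozen; for \(\theta \) close to 1 a much larger cut-off would be needed, which is one reason the closed form is the convenient object to compute with.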

Corollary 3

Under the hypotheses of Lemma 3, if \(\Delta _{g}(z,t) =1+\sum _{n=1}^\infty c_n(t)z^n\) then

\[ |c_n(t)| \le K_t^n E_n(\theta) \quad \text{for all } n\ge 1. \tag{44} \]

Proof

From (36) and (42), \( |c_n(t)| \le \sum _{i_1< \ldots< i_n} \prod _{j=1}^n s_{i_j}(\mathcal {L}_{g,t}) \le K_t^n \sum _{i_1< \ldots < i_n} \theta ^{i_1+\ldots +i_n} \). \(\square \)

Corollary 4

Under the hypotheses of Lemma 3, if \(\Delta _{g}(z,t) =1+\sum _{n=1}^\infty c_n(t)z^n\), and \(\kappa \in (\theta ^{1/2},1)\), then

\[ |c_n(t)| = O(\kappa^{n^2}) \quad \text{as } n\rightarrow \infty \tag{45} \]

for all \(t\in \mathbb {C}\), and in particular the determinant \(\Delta _g(\cdot ,t)\) is an entire function.

Proof

The asymptotic (45) is immediate from (44), and this in particular implies that the Taylor coefficients of \(\Delta _g(\cdot ,t)\) tend to zero faster than any exponential, hence the function is entire. \(\square \)

In order to exploit Lemma 2, which asserts that

\[ s_n(\mathcal{L}_{g,t}) \le \alpha_n(t) = \left( \sum_{k=n-1}^\infty \Vert \mathcal{L}_{g,t}(m_k) \Vert^2 \right)^{1/2}, \]

we require a practical means of computing the approximation bound \(\alpha _n(t)\). This will consist of bounding \(\sum _{k=n-1}^\infty \Vert \mathcal {L}_{g,t} (m_k)\Vert ^2\) by the sum of an exactly computed long finite sum \(\sum _{k=n-1}^N \Vert \mathcal {L}_{g,t} (m_k)\Vert ^2\) (the \(H^2(D)\) norms of the summands can be evaluated using numerical integration (see Footnote 14), since each \(\mathcal {L}_{g,t}m_k\) is known in closed form) and a rigorous upper bound on \(\sum _{k=N+1}^\infty \Vert \mathcal {L}_{g,t} (m_k)\Vert ^2\) using (40).

With this in mind, for \(n,N\in \mathbb {N}\) with \(n\le N\), we define the lower computed approximation bound

\[ \alpha_{n,N,-}(t) := \left( \sum_{k=n-1}^N \Vert \mathcal{L}_{g,t}(m_k) \Vert^2 \right)^{1/2}, \]

and the upper computed approximation bound

\[ \alpha_{n,N,+}(t) := \left( \sum_{k=n-1}^N \Vert \mathcal{L}_{g,t}(m_k) \Vert^2 + \left( \sum_{i=1}^l \Vert w_{i,t} \Vert_\infty \right)^2 \frac{\theta^{2(N+1)}}{1-\theta^2} \right)^{1/2}. \tag{47} \]

Lemma 4

For \(t\in \mathbb {C}\), and \(n,N\in \mathbb {N}\) with \(n\le N\),

\[ \alpha_{n,N,-}(t) \le \alpha_n(t) \le \alpha_{n,N,+}(t) \le K_t \left( 1 + \theta^{2(N+2-n)} \right)^{1/2} \theta^n. \tag{48} \]

Proof

The inequality \(\alpha _{n,N,-}(t) \le \alpha _n(t)\) is clear. To prove \(\alpha _n(t) \le \alpha _{n,N,+}(t)\) we use (40) to give \(\alpha _n(t)^2 = \sum _{k=n-1}^N \Vert \mathcal {L}_{g,t}(m_k)\Vert ^2 + \sum _{k=N+1}^\infty \Vert \mathcal {L}_{g,t}(m_k)\Vert ^2 \le \sum _{k=n-1}^N \Vert \mathcal {L}_{g,t}(m_k)\Vert ^2 + \left( \sum _{i=1}^l \Vert w_{i,t}\Vert _\infty \right) ^2 \frac{\theta ^{2(N+1)}}{1-\theta ^2} \), and the inequality follows. To prove that \(\alpha _{n,N,+}(t) \le K_t (1 + \theta ^{2(N+2-n)})^{1/2} \theta ^n\), note that combining (42) with \(\alpha _{n,N,-}(t) \le \alpha _n(t)\) gives \( \alpha _{n,N,-}(t) \le K_t \theta ^n \), so (47) gives

\[ \alpha_{n,N,+}(t)^2 = \alpha_{n,N,-}(t)^2 + \left( \sum_{i=1}^l \Vert w_{i,t} \Vert_\infty \right)^2 \frac{\theta^{2(N+1)}}{1-\theta^2} \le K_t^2 \theta^{2n} + K_t^2 \theta^{2(N+2)} = K_t^2 \theta^{2n} \left( 1 + \theta^{2(N+2-n)} \right), \]

and the result follows. \(\square \)
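
The computed approximation bounds can be illustrated for the Lanford map with a short floating-point sketch. Several ingredients below are assumptions rather than statements from the text: the inverse branches \(\tau _i(z) = (5-\sqrt{25-8(z+i)})/2\) are derived here from \(T(x)=2x+\frac{1}{2}x(1-x) \pmod 1\), the \(H^2(D)\) norm is taken to be the normalised boundary integral \((\frac{1}{2\pi }\int _0^{2\pi }|f(c+\varrho e^{i\phi })|^2\, d\phi )^{1/2}\), the quadrature is a plain trapezoidal rule with a hypothetical resolution `N_QUAD`, and \(N=60\) is used instead of the value 400 from Sect. 8 to keep the run short. The disc parameters \(c=0.664\), \(\varrho =0.87\) are those of Sect. 8.1. Nothing here is validated numerics; it only mirrors the structure of the definitions.

```python
import cmath
import math

C, RHO = 0.664, 0.87   # centre and radius of the disc D (Sect. 8.1)
N_QUAD = 2048          # hypothetical number of quadrature nodes on the boundary circle
N = 60                 # proxy for infinity (the paper uses N = 400)
T_PARAM = 0.0          # the parameter t; 1/20 is the other case of interest


def tau(i, z):
    """Inverse branch i in {0, 1} of the Lanford map (derived, see lead-in)."""
    return (5 - cmath.sqrt(25 - 8 * (z + i))) / 2


def dtau(i, z):
    """Derivative of tau(i, .), the holomorphic continuation of |tau_i'|."""
    return 2 / cmath.sqrt(25 - 8 * (z + i))


def monomial_image_norms_sq(t, n_terms):
    """Trapezoidal approximation of ||L_{g,t} m_k||^2 for 0 <= k <= n_terms, with g(x) = x^2."""
    rows = []
    for q in range(N_QUAD):
        z = C + RHO * cmath.exp(2j * math.pi * q / N_QUAD)
        row = []
        for i in (0, 1):
            x = tau(i, z)
            # (weight w_{i,t}(z), value (tau_i(z) - c) / rho whose k-th power is m_k(tau_i(z)))
            row.append((cmath.exp(t * x * x) * dtau(i, z), (x - C) / RHO))
        rows.append(row)
    return [
        sum(abs(sum(wi * ui ** k for wi, ui in row)) ** 2 for row in rows) / N_QUAD
        for k in range(n_terms + 1)
    ]


def computed_bounds(norms_sq, n, n_terms, sup_w_sum, theta):
    """Return (alpha_{n,N,-}, alpha_{n,N,+}) from the squared norms and the tail estimate."""
    partial = sum(norms_sq[n - 1 : n_terms + 1])
    tail = sup_w_sum ** 2 * theta ** (2 * (n_terms + 1)) / (1 - theta ** 2)
    return math.sqrt(partial), math.sqrt(partial + tail)


THETA = (tau(1, C + RHO).real - C) / RHO
# Sup norms of the weights, evaluated at z = c + rho as described in Sect. 8.1.
SUP_W = sum(abs(cmath.exp(T_PARAM * tau(i, C + RHO) ** 2) * dtau(i, C + RHO)) for i in (0, 1))
K = SUP_W / (THETA * math.sqrt(1 - THETA ** 2))
NORMS_SQ = monomial_image_norms_sq(T_PARAM, N)

if __name__ == "__main__":
    for n in range(1, 6):
        lower, upper = computed_bounds(NORMS_SQ, n, N, SUP_W, THETA)
        print(n, lower, upper, K * THETA ** n)
```

Printing the last column alongside the computed bounds shows how much sharper \(\alpha _{n,N,+}(t)\) can be than the a priori estimate \(K_t\theta ^n\), which is the motivation for using computed bounds at all.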

The \(\alpha _{n,N,+}(t)\) can now be used to give rigorous upper bounds on the Taylor coefficients of \(\Delta _g(\cdot , t)\). For \(t\in \mathbb {C}\), and \(n,M,N\in \mathbb {N}\) with \(n\le M\le N\), define the Taylor bound \(\beta _{n,N,+}^{M}(t)\) by

\[ \beta_{n,N,+}^{M}(t) := \sum_{\underline{i}} \prod_{j=1}^n \alpha_{i_j,N,+}^{M}(t), \tag{49} \]

where the sum is over those \(\underline{i}=(i_1,\ldots ,i_n)\in \mathbb {N}^n\) which satisfy \(i_1< i_2< \ldots < i_n\), and the sequence \((\alpha _{n,N,+}^{M}(t) )_{n=1}^\infty \) is defined by:

\[ \alpha_{n,N,+}^{M}(t) := \begin{cases} \alpha_{n,N,+}(t) & \text{for } 1\le n\le M, \\ K_t \theta^n & \text{for } n> M. \end{cases} \tag{50} \]

Note that from (42), (48) and (50) we have \( s_n(\mathcal {L}_{g,t}) \le \alpha _{n,N,+}^{M}(t) \), which combined with (36) establishes that the Taylor bounds \(\beta _{n,N,+}^{M}(t)\) are indeed bounds on the modulus of the \(n^{th}\) Taylor coefficient of \(\Delta _g(\cdot ,t)\):

\[ |c_n(t)| \le \beta_{n,N,+}^{M}(t). \tag{51} \]

As computable approximations to \(\beta _{n,N,+}^{M}(t)\) we then define the lower computed Taylor bound by

\[ \beta_{n,N,+}^{M,-}(t) := \sum_{i_1 < \ldots < i_n \le M} \prod_{j=1}^n \alpha_{i_j,N,+}(t), \tag{52} \]

and for \(Q\in \mathbb {N}\) with \(n\le Q\le M\le N\) we define the upper computed Taylor bound by

\[ \beta_{n,N,+}^{M,+}(t) := \beta_{n,N,+}^{M,-}(t) + \sum_{l=0}^{n-1} J_{Q,N,t}^{n-l}\, \beta_{l,N,+}^{M,-}(t)\, \theta^{M(n-l)} E_{n-l}(\theta), \qquad J_{Q,N,t} := K_t \left( 1+\theta^{2(N+2-Q)} \right)^{1/2}. \tag{53} \]

In practice the sum on the right-hand side of (53) will be extremely small, but including it is what guarantees that the upper computed Taylor bound is an upper bound on \(|c_n(t)|\):

Proposition 4

For \(t\in \mathbb {C}\), and \(Q,M,N\in \mathbb {N}\) with \(Q\le M\le N\),

\[ |c_n(t)| \le \beta_{n,N,+}^{M,+}(t). \tag{54} \]

Proof

If \( \mathcal {I}_n := \{ \underline{i}= (i_1,\ldots ,i_n) \in \mathbb {N}^n: i_1<\ldots < i_n\} \) then \(\mathcal {I}_n = \bigcup _{l=0}^n \mathcal {I}_n^{(l)}\) is a disjoint union, where the \(\mathcal {I}_n^{(l)}\) are defined by \(\mathcal {I}_n^{(l)} =\{ \underline{i}= (i_1,\ldots ,i_n)\in \mathcal {I}_n: i_l \le M < i_{l+1}\}\) for \(1\le l \le n-1\) and \( \mathcal {I}_n^{(0)} = \{ \underline{i}= (i_1,\ldots ,i_n)\in \mathcal {I}_n: M < i_{1}\}\), \(\mathcal {I}_n^{(n)} = \{ \underline{i}= (i_1,\ldots ,i_n)\in \mathcal {I}_n: i_n \le M \} \). If we define \( \beta _{n,N,+}^{M, (l)}(t) := \sum _{\underline{i}\in \mathcal {I}_n^{(l)}} \prod _{j=1}^n \alpha _{i_j,N,+}^{M}(t) \) for \(0\le l\le n\), so that \( \beta _{n,N,+}^{M, (n)}(t) = \beta _{n,N,+}^{M, -}(t) \), we obtain

\[ \beta_{n,N,+}^{M}(t) = \sum_{l=0}^n \beta_{n,N,+}^{M,(l)}(t) = \beta_{n,N,+}^{M,-}(t) + \sum_{l=0}^{n-1} \beta_{n,N,+}^{M,(l)}(t). \tag{55} \]

Setting \(J := K_t \left( 1+\theta ^{2(N+2-Q)}\right) ^{1/2} \), Lemma 4 gives \( \alpha _{n,N,+}(t) \le J \theta ^n \) for all \(1\le n\le Q\), and this can be used to bound each \( \beta _{n,N,+}^{M, (l)}(t) = \sum _{\underline{i}\in \mathcal {I}_n^{(l)}} \prod _{j=1}^n \alpha _{i_j,N,+}^{M}(t) \le J^{n-l} \sum _{\underline{i}\in \mathcal {I}_n^{(l)}} \theta ^{i_{l+1} +\ldots + i_n} \prod _{j=1}^l \alpha _{i_j,N,+}^{M}(t) , \) or in other words \(\beta _{n,N,+}^{M, (l)}(t) \le J^{n-l} (\sum _{\underline{i}\in \mathcal {I}_l^{(l)}} \prod _{j=1}^l \alpha _{i_j,N,+}^{M}(t) ) ( \sum _{\underline{\iota }\in \mathcal {I}_{n-l}} \theta ^{(n-l)M} \theta ^{\iota _1+\ldots +\iota _{n-l} } ) \), and therefore \(\beta _{n,N,+}^{M, (l)}(t) \le J^{n-l} \beta _{l,N,+}^{M,-}(t)\, \theta ^{M(n-l)} \ E_{n-l}(\theta ) \), and substituting these bounds into (55) gives

\[ \beta_{n,N,+}^{M}(t) \le \beta_{n,N,+}^{M,-}(t) + \sum_{l=0}^{n-1} J^{n-l}\, \beta_{l,N,+}^{M,-}(t)\, \theta^{M(n-l)} E_{n-l}(\theta). \tag{56} \]

Now (53) and (56) together give \( \beta _{n,N,+}^{M}(t) \le \beta _{n,N,+}^{M,+}(t) \), which combined with (51) gives (54). \(\square \)
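
Since the lower computed Taylor bound is a sum of products over strictly increasing index tuples bounded by M, it coincides with the nth elementary symmetric polynomial in \(\alpha _{1,N,+}(t),\ldots ,\alpha _{M,N,+}(t)\), which can be accumulated without enumerating tuples. The sketch below uses that observation together with the formula for the upper computed Taylor bound. The function names are illustrative, the convention \(\beta _{0,N,+}^{M,-}(t)=1\) (empty product) is an assumption, and the inputs in the usage lines are synthetic values \(\alpha _i = K\theta ^i\) rather than data from the paper.

```python
import math


def elementary_symmetric(values, n_max):
    """Return [e_0, ..., e_{n_max}] for the given values via the one-pass recurrence."""
    e = [1.0] + [0.0] * n_max
    for a in values:
        # Update from high degree to low so each value is used at most once per product.
        for n in range(n_max, 0, -1):
            e[n] += a * e[n - 1]
    return e


def euler_closed_form(theta, n):
    """E_n(theta) = theta^(n(n+1)/2) / prod_{i=1}^n (1 - theta^i)."""
    return theta ** (n * (n + 1) // 2) / math.prod(1.0 - theta ** i for i in range(1, n + 1))


def computed_taylor_bounds(alpha_upper, K, theta, n, Q, N):
    """Return (lower, upper) computed Taylor bounds for the n-th coefficient.

    alpha_upper[i - 1] plays the role of alpha_{i,N,+}(t) for 1 <= i <= M = len(alpha_upper).
    """
    M = len(alpha_upper)
    e = elementary_symmetric(alpha_upper, n)
    J = K * math.sqrt(1.0 + theta ** (2 * (N + 2 - Q)))
    lower = e[n]
    correction = sum(
        J ** (n - l) * e[l] * theta ** (M * (n - l)) * euler_closed_form(theta, n - l)
        for l in range(n)
    )
    return lower, lower + correction


if __name__ == "__main__":
    K, theta = 2.0, 0.5                                  # synthetic constants
    alphas = [K * theta ** i for i in range(1, 31)]      # synthetic bounds, M = 30
    print(computed_taylor_bounds(alphas, K, theta, n=3, Q=10, N=40))
    print(K ** 3 * euler_closed_form(theta, 3))          # Euler bound for comparison
```

With the synthetic choice \(\alpha _i = K\theta ^i\) the two computed bounds bracket the Euler bound \(K^nE_n(\theta )\); with genuinely computed \(\alpha _{i,N,+}(t)\) the point of the construction is that both fall well below it.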

8 Validated Numerics: The Lanford Map

With the theory of Sects. 6 and 7 in hand, we are finally in a position to rigorously justify the quality of our computed approximation (see Sect. 5) to the diffusion coefficient \(\sigma ^2_\mu (g)\) in the case of our model problem, namely the case of T the Lanford map, \(\mu \) its absolutely continuous invariant probability measure, and \(g:X\rightarrow \mathbb {R}\) the function \(g(x)=x^2\). In Sect. 8.1 we choose a suitable disc D, compute the associated contraction ratio \(\theta \) and constants \(K_t\), and make choices of the natural numbers M, N, Q which arise in connection with the computed Taylor bounds of Sect. 7. In Sect. 8.2 we establish (see Proposition 5) rigorous bounds on the tails of five series which arise in the formula for \(\sigma ^2_\mu (g)\) derived in Corollary 1; each series represents a certain derivative of the determinant, and the bounds are established via our Euler bounds and computed Taylor bounds on its Taylor coefficients \(c_n(t)\). In Sect. 8.3 these tail estimates are combined with the exact evaluations of the corresponding truncated series obtained via periodic point calculations (as described in Sect. 5) to prove a rigorous bound on \(\sigma ^2_\mu (g)\) (see Theorem 3). In Sect. 8.4 we prove Theorems 1 and 2, which were stated in Sect. 1; Theorem 2 is seen to be a minor variant of Theorem 3, while the more abstract Theorem 1 is established by combining the techniques used to prove Theorem 3 with the Taylor series asymptotic (45) from Corollary 4.

8.1 Computed Approximation Bounds and Computed Taylor Bounds

Choosing D (see Footnote 15) to be the open disc centred at \(c=0.664\), of radius \(\varrho =0.87\), we note that both image discs \(\tau _0(D)\) and \(\tau _1(D)\) are contained in the disc \(D'\) centred at c, of radius \(\varrho '=\tau _1(c+\varrho )-c\), and the corresponding contraction ratio can be computed as

\[ \theta = \frac{\varrho '}{\varrho } = \frac{\tau _1(c+\varrho )-c}{\varrho } \approx 0.860691. \tag{57} \]

For \(i=0,1\) we have

We shall be particularly interested in the choices \(t=0\) and \(t=1/20\) (see Footnote 16), and in these cases the supremum norm on D for both functions \(w_{0,t}\) and \(w_{1,t}\) is attained by evaluating at \(z=c+\varrho \),

We can then compute \(K_0\) and \(K_{1/20}\) to be

\[ K_0 \approx 3.378, \qquad K_{1/20} \approx 3.631. \]

We know that \(|c_n(t)|\le K_t^n E_n(\theta )\) for all \(n\ge 1\), and will be interested in those n which are large enough for this bound to be effective, for the cases \(t=0\) and \(t=1/20\). It is easily computed that in both of these cases, the Euler bound \(K_t^n E_n(\theta )\) does not even become smaller than 1 until \(n>20\), and when \(n=26\) (the smallest value of n for which we do not have access to the period-n points) it is of the order of \(10^{-5}\) for small t, so the Euler bound by itself would only permit a bound on \(\sigma _\mu ^2(g)\) which is accurate to around 1 decimal place. It is therefore crucial that we use the computed Taylor bounds in order to yield the high accuracy bound on \(\sigma _\mu ^2(g)\) given in Theorem 3, and in the proof of that result we use the Euler bounds only for \(n>40\).
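
The constants of this subsection, and the size of the resulting Euler bounds, can be reproduced approximately in floating point. The sketch below is not the authors' code: the inverse branch formula \(\tau _i(z) = (5-\sqrt{25-8(z+i)})/2\) is derived here from \(T(x)=2x+\frac{1}{2}x(1-x) \pmod 1\), the weights are taken to be \(w_{i,t}(z)=e^{t\tau _i(z)^2}\tau _i'(z)\) in line with the definition in the proof of Lemma 3 and \(g(x)=x^2\), and the inclusion \(\tau _i(D)\subset D'\) is only spot-checked on 720 sampled boundary points. All function names are illustrative.

```python
import cmath
import math

C, RHO = 0.664, 0.87  # centre c and radius of the disc D from this subsection


def tau(i, z):
    """Inverse branch i in {0, 1}: solves (5/2)x - x^2/2 = z + i (derived, see lead-in)."""
    return (5 - cmath.sqrt(25 - 8 * (z + i))) / 2


def dtau(i, z):
    """Derivative of tau(i, .), the holomorphic continuation of |tau_i'|."""
    return 2 / cmath.sqrt(25 - 8 * (z + i))


def sup_weight(i, t):
    """|w_{i,t}(c + rho)|; the text states the sup over D is attained there for t = 0, 1/20."""
    z = C + RHO
    return abs(cmath.exp(t * tau(i, z) ** 2) * dtau(i, z))


def euler_bound(K, theta, n):
    """K^n * E_n(theta), with E_n(theta) = theta^(n(n+1)/2) / prod_{i<=n} (1 - theta^i)."""
    return K ** n * theta ** (n * (n + 1) // 2) / math.prod(1 - theta ** i for i in range(1, n + 1))


RHO_PRIME = tau(1, C + RHO).real - C
THETA = RHO_PRIME / RHO
K0, K1 = (
    sum(sup_weight(i, t) for i in (0, 1)) / (THETA * math.sqrt(1 - THETA ** 2))
    for t in (0.0, 1 / 20)
)

# Spot check (sampling only, not a proof) that both image discs lie in D'.
MAX_DIST = max(
    abs(tau(i, C + RHO * cmath.exp(2j * math.pi * q / 720)) - C)
    for i in (0, 1)
    for q in range(720)
)

if __name__ == "__main__":
    print("theta =", THETA, " K_0 =", K0, " K_1/20 =", K1)
    print("max sampled |tau_i(z) - c| =", MAX_DIST, " rho' =", RHO_PRIME)
    for n in (20, 25, 26):
        print(n, euler_bound(K0, THETA, n), euler_bound(K1, THETA, n))
```

Running the loop for a range of n makes the point of the preceding paragraph visible: the factor \(K_t^n\) dominates until n is a little above 20, and only then does the quadratic exponential factor \(\theta ^{n(n+1)/2}\) take over.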

Henceforth let \(Q=40\), \(M=300\), \(N=400\) (so that in particular \(Q\le M\le N\), as was assumed throughout Sect. 7), and consider the two cases \(t=0\) and \(t=1/20\).

We first evaluate the \(H^2(D)\) norms of the monomial images \(\mathcal {L}_{g,t} (m_k)\) for \(0\le k\le N=400\). These norms are decreasing in k, and Appendix Table 3 contains the first few evaluations, for \(0\le k\le 10\). Using these norms \(\Vert \mathcal {L}_{g,t}(m_k)\Vert \) we then evaluate, for \(1\le n\le M=300\), the upper computed approximation bounds \(\alpha _{n,N,+}(t) = \alpha _{n,400,+}(t)\) defined (cf. (47), and see Footnote 17) by

\[ \alpha_{n,400,+}(t) = \left( \sum_{k=n-1}^{400} \Vert \mathcal{L}_{g,t}(m_k) \Vert^2 + \left( \sum_{i=0}^{1} \Vert w_{i,t} \Vert_\infty \right)^2 \frac{\theta^{802}}{1-\theta^2} \right)^{1/2}. \]

These bounds are decreasing in n; Appendix Table 4 contains the first few evaluations, for \(1\le n\le 10\).

The upper computed approximation bounds \(\alpha _{n,400,+}(t)\) are then used to form the upper computed Taylor bounds (see Footnote 18) \(\beta _{n,N,+}^{M,+}(t) = \beta _{n,N,+}^{M,-}(t) + \sum _{l=0}^{n-1} J_{Q,N,t}^{n-l}\, \beta _{l,N,+}^{M,-}(t)\, \theta ^{M(n-l)} E_{n-l}(\theta )\), where

\[ J_{Q,N,t} = K_t \left( 1+\theta^{2(N+2-Q)} \right)^{1/2}, \]

which are listed in Appendix Tables 5 and 6.

8.2 A Tale of Five Tails: The Ingredients for Validating the Diffusion Coefficient

The following Proposition 5 gives rigorous bounds on the tails of five series appearing in the formula for \(\sigma ^2_\mu (g)\) derived in Corollary 1.

Proposition 5

Proof

Now \(|c_n(0)|\le \beta _{n,N,+}^{M,+}(0)\), and \(|c_n(0)| \le K_0^nE_n(\theta )\), therefore

and using the values in Appendix Table 5 we readily compute the finite sum (see Footnote 19)

while the closed form expression for the Euler bound \(K_0^n E_n(\theta )\) means we also readily compute that

which is the required (58).

In a similar way, Appendix Table 5 gives the finite sum

while the closed form expression for \(K_0^n E_n(\theta )\) means we also readily compute that

so adding the above two quantities gives

which is the required bound (59).

Next we require an estimate on the terms \(c_n'(0)\). From Cauchy’s integral formula

\[ c_n'(0) = \frac{1}{2\pi i} \int_{\Gamma_p} \frac{c_n(t)}{t^2}\, dt, \]

where \(\Gamma _p\) is the positively oriented circle of radius p centred at 0, we see that \( |c_n'(0)| \le \frac{1}{p}\max _{t\in \Gamma _p}|c_n(t)| \), and making the choice \(p=1/20\) gives \( |c_n'(0)| \le 20\max _{t\in \Gamma _{1/20}}|c_n(t)| \). Therefore

and using the values in Appendix Table 6 we can evaluate the finite sum

while the closed form expression for \(K_{1/20}^n E_n(\theta )\) allows the computation

which is the required bound (60).

Similarly,

and the values in Appendix Table 6 give

while the closed form expression for \(K_{1/20}^n E_n(\theta )\) allows the computation

which is the bound (61).

To bound \(c_n''(0)\), Cauchy’s integral formula gives

so \( |c_n''(0)| \le \frac{1}{p^2}\max _{t\in \Gamma _p}|c_n(t)| \), and again choosing \(p=1/20\) we have

so that

The values in Appendix Table 6 then give

while (70) implies

which is the required bound (62). \(\square \)

8.3 The Rigorous Bound on the Diffusion Coefficient

In the proof of Theorem 3 we shall make repeated use of the following simple lemma, in settings where A and B are quantities which cannot be computed precisely, but where a and b are computable approximations, and errors \(\alpha \) and \(\beta \) can be derived.

Lemma 5

If \(A,B,a,b\in \mathbb {R}\) and \(\alpha ,\beta >0\) satisfy \(|A-a|\le \alpha \) and \(|B-b|\le \beta \), then

and

We can now justify the quality of our computed approximation to \(\sigma ^2_\mu (g)\) as follows:

Theorem 3

If T is the Lanford map, \(\mu \) is its absolutely continuous invariant probability measure, and \(g(x)=x^2\), then the diffusion coefficient \(\sigma ^2_\mu (g)\) can be approximated by \(\sigma ^2_{25}\), which is derived using T-periodic points of period up to 25, so that

\[ | \sigma^2_\mu(g) - \sigma^2_{25} | < 1.48 \times 10^{-18}. \tag{79} \]

Proof

For economy of notation, let us write

where for our purposes N will equal either 25 or \(\infty \), so in particular Corollary 1(b) gives

Our periodic orbit calculations (as described in Sect. 5) yield the following:

Using (78) with \(A=R_\infty \) and \(a=R_{25}\),

Combining (88) with (85) and (60), and using (77), we obtain

Using (77) again gives

Using (77) again we see that

Using (77) again we see that

Writing

we use (91) and (92) to see that

and hence

Now

so from (90) and (94) we deduce

and the desired bound (79) follows. \(\square \)

8.4 Conclusion

We conclude by proving the two theorems stated in Sect. 1, beginning with Theorem 2, which follows readily from Theorem 3:

Proof of Theorem 2

Our algorithm (see Table 2) gives

and Theorem 3 gives \(| \sigma ^2_\mu (g) - \sigma ^2_{25}| < 1.48 \times 10^{-18}\), therefore

which in particular implies the required result.

Finally, the more abstract Theorem 1 can be proved using ideas similar to those used in the proof of Theorem 3:

Proof of Theorem 1

Writing \(\Delta _{g}(z,t) =1+\sum _{n=1}^\infty c_n(t)z^n\), the asymptotic (45) implies that each of \(c_n(0)\), \(c_n'(0)\) and \(c_n''(0)\) is \(O(\kappa ^{n^2})\) as \(n\rightarrow \infty \), for some \(\kappa \in (0,1)\). For the sums defined in (80), (81), it then follows that each of the five tails \(|R_\infty - R_n|\), \(|S_\infty - S_n|\), \(|T_\infty - T_n|\), \(|U_\infty - U_n|\), \(|V_\infty - V_n|\) is \(O(\kappa ^{n^2})\) as \(n\rightarrow \infty \). Using Lemma 5 we then successively deduce, via arguments analogous to those used in the proof of Theorem 3, that the intermediate quantities \(|1/R_\infty - 1/R_n|\), \(|T_\infty /R_\infty - T_n/R_n|\), \(|(T_\infty /R_\infty )^2 - (T_n/R_n)^2|\), \(|S_\infty (T_\infty /R_\infty )^2 - S_n(T_n/R_n)^2|\), \(|U_\infty (T_\infty /R_\infty ) - U_n(T_n/R_n)|\), \(|W_\infty - W_n|\), \(|W_\infty /R_\infty - W_n/R_n|\) are also all \(O(\kappa ^{n^2})\) as \(n\rightarrow \infty \). Since

we then deduce that \(|\sigma ^2_\mu (g) - \sigma ^2_n| = O(\kappa ^{n^2})\) as \(n\rightarrow \infty \), as required. \(\square \)
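
The repeated propagation of errors through products and reciprocals, as used in the proofs of Theorems 3 and 1, can be sketched as follows. The two inequalities implemented here are the standard perturbation bounds \(|AB-ab|\le |a|\beta +|b|\alpha +\alpha \beta \) and \(|1/A-1/a|\le \alpha /(|a|(|a|-\alpha ))\) for \(\alpha <|a|\); they are stand-ins chosen for illustration and may differ in form from the precise statements of Lemma 5. The function names and the sample numbers are hypothetical.

```python
def product_error(a, alpha, b, beta):
    """Bound on |A*B - a*b| given |A - a| <= alpha and |B - b| <= beta."""
    return abs(a) * beta + abs(b) * alpha + alpha * beta


def reciprocal_error(a, alpha):
    """Bound on |1/A - 1/a| given |A - a| <= alpha, valid only when alpha < |a|."""
    if alpha >= abs(a):
        raise ValueError("error radius must be smaller than |a|")
    return alpha / (abs(a) * (abs(a) - alpha))


def quotient_error(t, t_err, r, r_err):
    """Bound on |T/R - t/r|, obtained by writing T/R = T * (1/R) and chaining the two rules."""
    return product_error(t, t_err, 1.0 / r, reciprocal_error(r, r_err))


if __name__ == "__main__":
    # Hypothetical approximations t, r with error radii of the size of a fast-decaying tail.
    t, r = 0.75, -1.25
    print(quotient_error(t, 1e-18, r, 1e-18))
```

Because each rule returns a bound that is linear in the input error radii to leading order, chaining finitely many of them preserves an \(O(\kappa ^{n^2})\) rate, which is the mechanism behind the final step of the proof of Theorem 1.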

Notes

By convention we say that T is real analytic whenever it is piecewise real analytic, i.e. the interval X admits a partition into intervals, with T real analytic on each partition piece.

In general the specific value of P will depend on available hardware, on the computer programming implementation of our algorithm, and on the time available to make the computation. For the Lanford map T we found it possible to locate points up to period 20 in less than an hour, while locating points of period up to 25 took around a day (computations were performed in an arbitrary precision environment, giving several hundred correct decimal digits); note that since T is a 2-branch map, incrementing the maximum period by one entails an approximate doubling of the computer run time.

For any two branch expanding map, our techniques yield a value of \(\kappa \) lying in the range \([2^{-1/2},1)\), while for the Lanford map itself our optimal value is \(\kappa \approx 0.927734\) (this is the square root of the quantity \(\theta \) defined in (57)). Note that although the term \(\kappa ^{n^2}\) is approximately \(4.3 \times 10^{-21}\) when \(n=25\), the value of C in (3) is too large for the asymptotic estimate \(|\sigma ^2_\mu (g) - \sigma ^2_n| \le C\kappa ^{n^2}\) to be effectively used until n is significantly larger (and, crucially, above the maximum value of n for which all \(2^n\) period-n points can be located using the computational resources at our disposal).

The size of C will depend on \(\kappa \), and C becomes larger the closer \(\kappa \) is chosen to the optimal value of approximately 0.927734 (see Footnote 3). As an indication of its order of magnitude, we use the fact (see Footnote 5) that \(|\sigma ^2_\mu (g) - \sigma ^2_n|\) is related to (and in fact somewhat larger than) the quantity \(K_{1/20}^nE_n(\theta ) = K_{1/20}^n (\prod _{i=1}^n (1-\theta ^i))^{-1} \theta ^{n(n+1)/2}\) , where \(\theta \approx 0.860691\), \(K_{1/20}\approx 3.631\). We are at liberty to work with any \(\kappa \in (\theta ^{1/2},1)=(0.927734,1)\), and for example with the concrete choice \(\kappa =0.95\) we can compute \(\sup _{n\in \mathbb {N}} K_{1/20}^nE_n(\theta )/0.95^{n^2}\approx 4.440429\times 10^{10}\) (the supremum is attained at \(n=26\)), so that \(K_{1/20}^nE_n(\theta ) \le C'\kappa ^{n^2}\) for \(C'=4.5 \times 10^{10}\). It follows, after some additional calculations (along the lines of those detailed in Sect. 8), that the value of C in (3) could be chosen to be of the order of \(10^{11}\) when \(\kappa =0.95\).

This finer analysis consists of using what we call Euler bounds, with the quality of the estimate on \(|\sigma ^2_\mu (g) - \sigma ^2_n|\) closely related to the size of the quantities \(K_t^nE_n(\theta ) = K_t^n (\prod _{i=1}^n (1-\theta ^i))^{-1} \theta ^{n(n+1)/2}\) given in Appendix Tables 5 and 6 (for \(t=0\) and \(t=1/20\) respectively), where \(\theta =\kappa ^2\approx 0.860691\), \(K_0\approx 3.378\), \(K_{1/20}\approx 3.631\). We note that for sufficiently small values of n, the quadratic exponential decay of the term \( \theta ^{n(n+1)/2}\) is swamped by the exponential increase of the term \(K_t^n\), and the strong increase of \((\prod _{i=1}^n (1-\theta ^i))^{-1}\) (though this latter term is bounded, by \((\prod _{i=1}^\infty (1-\theta ^i))^{-1}\approx 8876.45\)). In particular, for \(n=20\) both \(K_t^nE_n(\theta )\) terms are greater than 1 (hence \(n=20\) represents a hopeless case for this naive method), while if \(n=25\) then \(K_0^nE_n(\theta )\approx 0.000084\) and \(K_{1/20}^nE_n(\theta )\approx 0.00051\), which in fact can be used (via arguments similar to those used in the proof of Theorem 3) to justify only a single decimal digit of \(\sigma ^2_\mu (g)\).

In terms of the later notation, these series correspond (see Corollary 1(b)) to \(\sum _{n=1}^\infty nc_n(0)\), \(\sum _{n=1}^\infty n(n-1)c_n(0)\), \(\sum _{n=1}^\infty c_n'(0)\), \(\sum _{n=1}^\infty nc_n'(0)\), and \(\sum _{n=1}^\infty c_n''(0)\), which themselves correspond to partial derivatives of the determinant of a (transfer) operator.

As will become clear, one virtue of this method is that it is perfectly feasible, from a computational point of view, to choose Q rather large (e.g. some value well over 100), a choice which may be important for expanding maps T for which the expansion is rather mild, corresponding to significant inertia in the quadratic exponential decay of the terms \(s_n^{(j)}\), stemming from a value \(\kappa \in (0,1)\) being close to 1.

While the rigorous estimate of [2] is less accurate than that of Theorem 2, the general strategy of [2] is based on Ulam’s discretization method [16] and can be applied to a wider class of maps T and functions g for which there is no analyticity assumption (see [2] for details and references, and e.g. [10] for a further guide to the literature on numerical computations in the context of piecewise expanding maps).

As noted previously, by this we mean that T is piecewise real analytic, i.e. each \(T|_{X_i}\) is real analytic, or in other words each \(\tau _i\) is real analytic.

Specifically, we say that the chosen precision is \(10^{-m}\) if any number \(\varepsilon \) such that \(|\varepsilon |<10^{-m}\) is assumed to be zero; in particular, if we are working with precision \(10^{-m}\) then x is declared to be a point of period n for T if \(|T^n(x)-x|<10^{-m}\). In our computer programs the various data (T, g, and the \(\tau _i\)) are approximated with very high precision, of \(10^{-999}\), and this precision is maintained during the process of locating periodic points; the points themselves are computed with guaranteed precision of \(10^{-250}\).

In fact a slight sharpening of (31) gives \(|c_n(0)|\le K (1/\sqrt{2})^{n^2-n}\) for \(K=\prod _{i=1}^\infty (1-2^{-i})^{-1}\approx 3.462746\).

In this example we could equally well exploit the fact that \(\int g\, d\mu = 1/3\) is known precisely, and use the approximations \(\hat{\sigma }^2_n\) given in Corollary 1(e), for the function \(f = g-1/3\) (which has zero mean). For example \(\hat{\sigma }^2_1 \approx 0.27777\), \(\hat{\sigma }^2_2 \approx 0.43827\), \(\hat{\sigma }^2_3 \approx 0.38515\), \(\hat{\sigma }^2_{4} \approx 0.22228\), \(\hat{\sigma }^2_{5} \approx 0.26163\), \(\hat{\sigma }^2_6 \approx 0.25918\), \(\hat{\sigma }^2_7 \approx 0.259260\), \(\hat{\sigma }^2_8\approx 0.2592592530\), \(\hat{\sigma }^2_9\approx 0.259259259277\), \(\hat{\sigma }^2_{10}\approx 0.259259259259232\), and more generally the sequences \(\sigma ^2_n\) and \(\hat{\sigma }^2_n\) converge to \(\sigma ^2=7/27\) at the same rate.

Note that when discussing the Lanford map, our indexing of the intervals \(X_i\) and inverse branches \(\tau _i\) differs from that used in the rest of the article (where \(i=1,\ldots , l\) for some \(l\ge 2\)).

e.g. for the computations in Sect. 8 these integrals were computed with 70 digit accuracy using Mathematica.

We make this choice so as to minimise the error estimates arising from the computed Taylor bounds.

The choice \(t=1/20\) is close to optimal for the purpose of estimating \(c_n'(0)\) and \(c_n''(0)\) via Cauchy’s integral formula in the proof of Proposition 5. This involves, respectively, the integration of \(c_n(\zeta )\zeta ^{-2}\) and \(c_n(\zeta )\zeta ^{-3}\) over a circular contour centred at 0, and for both integrands there is a tension between the bound on \(|c_n(\zeta )|\), which increases with \(|\zeta |\), and the bound on \(|\zeta ^{-k}|\) (for \(k=2,3\)), which decreases with \(|\zeta |\).

Note that \(\theta \approx 0.860691\) and \(N=400\), so \(\frac{\theta ^{2(N+1)}}{1-\theta ^2} < 2.2 \times 10^{-52}\). Moreover \(\sum _{i=1}^2 \Vert w_{i,t}\Vert _\infty < 1.7\) for both \(t=0\) and \(t=1/20\), thus \( (\sum _{i=1}^2 \Vert w_{i,t}\Vert _\infty )^2 \frac{\theta ^{2(N+1)}}{1-\theta ^2} < 6.4 \times 10^{-52}\). Combining these bounds with the values taken by \(\alpha _{n,N,+}(t) \), it follows that for \(1\le n\le 300\), the approximation bound \(\alpha _n(t) = ( \sum _{k=n-1}^\infty \Vert \mathcal {L}_{g,t} (m_k)\Vert ^2 )^{1/2}\) agrees with both computed approximation bounds \(\alpha _{n,N,-}(t)\) and \(\alpha _{n,N,+}(t)\) to well beyond the desired 70 decimal place precision used in these calculations.

The difference \( \beta _{n,N,+}^{M,+}(t) - \beta _{n,N,+}^{M,-}(t) = \sum _{l=0}^{n-1} J_{Q,N,t}^{n-l}\, \beta _{l,N,+}^{M,-}(t)\, \theta ^{M(n-l)} E_{n-l}(\theta )\) is small enough that the upper and lower computed Taylor bounds, and the Taylor bound \(\beta _{n,N,+}^M(t)\), agree to well beyond the 70 decimal place precision used in these computations.

Note that the \(n=26\) term dominates, since \(26 \times \beta _{26,N,+}^{M,+}(0) \approx 26 \cdot (2.572\ldots ) \cdot 10^{-23} \approx 6.687 \times 10^{-22}\).

References

Apostol, T.: Introduction to analytic number theory. Springer, New York (1976)

Bahsoun, W., Galatolo, S., Nisoli, I., Niu, X.: Rigorous approximation of diffusion coefficients for expanding maps. J. Stat. Phys. 163, 1486–1503 (2016)

Collet, P.: Some ergodic properties of maps of the interval. In: Dynamical Systems (Temuco, 1991/1992), 55–91, Travaux en Cours, 52, Hermann, Paris (1996)

Collet, P., Eckmann, J.-P.: Iterated Maps on the Interval as Dynamical Systems. Birkhäuser, Boston (1980)

Euler, L.: Introductio in Analysin Infinitorum. Marcum-Michaelem Bousquet, Lausannae (1748)

Gaspard, P.: \(r\)-adic one-dimensional maps and the Euler summation formula. J. Phys. A 25, L483–L485 (1992)

Gohberg, I., Goldberg, S., Kaashoek, M.A.: Classes of Linear Operators, vol. 1. Birkhäuser, Berlin (1990)

Lanford III, O.E.: Informal remarks on the orbit structure of discrete approximations to chaotic maps. Exp. Math. 7, 317–324 (1998)

Lasota, A., Yorke, J.A.: On the existence of invariant measures for piecewise monotonic transformations. Trans. Am. Math. Soc. 186, 481–488 (1973)

Liverani, C.: Rigorous numerical investigation of the statistical properties of piecewise expanding maps. A feasibility study. Nonlinearity 14, 463–490 (2001)

Parry, W., Pollicott, M.: Zeta functions and the periodic orbit structure of hyperbolic dynamics. Astérisque 187–188 (1990)

Ruelle, D.: Zeta-functions for expanding maps and Anosov flows. Invent. Math. 34, 231–242 (1976)

Ruelle, D.: Thermodynamic Formalism. Addison-Wesley, Reading, MA (1978)

Shapiro, J.H.: Composition Operators and Classical Function Theory. Springer, New York (1993)

Simon, B.: Trace Ideals and Their Applications. LMS Lecture Note Series, vol. 35. Cambridge University Press, Cambridge (1979)

Ulam, S.: Problems in Modern Mathematics. Interscience, New York (1960)

Appendix: Numerical Data for the Model Problem

Here we include data (presented in truncated form) for various quantities used in the computation of the diffusion coefficient \(\sigma ^2_\mu (g)\) for T the Lanford map and \(g(x)=x^2\).

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Cite this article

Jenkinson, O., Pollicott, M. & Vytnova, P. Rigorous Computation of Diffusion Coefficients for Expanding Maps. J Stat Phys 170, 221–253 (2018). https://doi.org/10.1007/s10955-017-1930-8
