Abstract

We construct classes of homogeneous random fields on a three-dimensional Euclidean space that take values in linear spaces of tensors of a fixed rank and are isotropic with respect to a fixed orthogonal representation of the group of \(3\times 3\) orthogonal matrices. The constructed classes depend on finitely many isotropic spectral densities. We say that such a field belongs to the Matérn (resp. dual Matérn) class if all of the above densities are Matérn (resp. dual Matérn). Several examples are considered.



1 Introduction

Random functions of more than one variable, or random fields, were introduced in the 1920s as mathematical models of physical phenomena like turbulence, see, e.g., [9, 20, 39]. To explain how random fields appear in continuum physics, consider the following example.

Example 1

Let \(E=E^3\) be a three-dimensional Euclidean point space, and let V be the translation space of E with an inner product \((\varvec{\cdot }, \varvec{\cdot })\). Following [43], the elements A of E are called the places in E. The symbol \(B-A\) is the vector in V that translates A into B.

Let \(\mathcal {B}\subset E\) be a subset of E occupied by a material, e.g., a turbulent fluid or a deformable body. The temperature is a rank 0 tensor-valued function \(T:\mathcal {B}\rightarrow \mathbb {R}^1\). The velocity of a fluid is a rank 1 tensor-valued function \(\mathbf {v}:\mathcal {B}\rightarrow V\). The strain tensor is a rank 2 tensor-valued function \(\varepsilon :\mathcal {B}\rightarrow \mathsf {S}^2(V)\), where \(\mathsf {S}^2(V)\) is the linear space of symmetric rank 2 tensors over V. The piezoelectricity tensor is a rank 3 tensor-valued function \(\mathsf {D}:\mathcal {B}\rightarrow \mathsf {S} ^2(V)\otimes V\). The elastic modulus is a rank 4 tensor-valued function \( \mathsf {C}:\mathcal {B}\rightarrow \mathsf {S}^2(\mathsf {S}^2(V))\). Denote the range of any of the above functions by \(\mathsf {V}\). For ranks 2, 3, and 4, physicists call \(\mathsf {V}\) the constitutive tensor space. It is a subspace of the tensor power \(V^{\otimes r}\), where r is a nonnegative integer. The form

\[(\mathbf {x}_1\otimes \cdots \otimes \mathbf {x}_r,\mathbf {y}_1\otimes \cdots \otimes \mathbf {y}_r)=(\mathbf {x}_1,\mathbf {y}_1)\cdots (\mathbf {x}_r,\mathbf {y}_r)\]

can be extended by linearity to an inner product on \(V^{\otimes r}\) and then restricted to \(\mathsf {V}\).

At microscopic length scales, spatial randomness of the material needs to be taken into account. Mathematically, there is a probability space \((\Omega ,\mathfrak {F},\mathsf {P})\) and a function \(\mathsf {T}(A,\omega ):\mathcal {B}\times \Omega \rightarrow \mathsf {V}\) such that for any fixed \(A_0\in \mathcal {B}\) and for any Borel set \(B\subseteq \mathsf {V}\) the inverse image \(\mathsf {T}^{-1}(A_0,B)\) is an event. The map \(\mathsf {T}(A,\omega )\) is a random field.

Translate the whole body \(\mathcal {B}\) by a vector \(\mathbf {x}\in V\). The random fields \(\mathsf {T}(A+\mathbf {x})\) and \(\mathsf {T}(A)\) have the same finite-dimensional distributions. It is therefore convenient to assume that there is a random field defined on all of E such that its restriction to \(\mathcal {B}\) is equal to \(\mathsf {T}(A)\). For brevity, denote the new field by the same symbol \(\mathsf {T}(A)\) (but this time \(A\in E\)). The random field \(\mathsf {T}(A)\) is strictly homogeneous, that is, the random fields \(\mathsf {T}(A+\mathbf {x})\) and \(\mathsf {T}(A)\) have the same finite-dimensional distributions. In other words, for each positive integer n, for each \(\mathbf {x}\in V\), and for all distinct places \(A_1\), ..., \(A_n\in E\) the random elements \(\mathsf {T}(A_1)\oplus \cdots \oplus \mathsf {T}(A_n)\) and \(\mathsf {T}(A_1+\mathbf {x})\oplus \cdots \oplus \mathsf {T} (A_n+\mathbf {x})\) of the direct sum of n copies of the space \(\mathsf {V}\) have the same probability distribution.

Let K be the material symmetry group of the material body \(\mathcal {B}\) acting in V. The group K is a subgroup of the orthogonal group \(\mathrm{O }(V)\). For simplicity, we assume that the material is fully symmetric, that is, \(K=\mathrm{O}(V)\). Fix a place \(O\in \mathcal {B}\) and identify E with V by the map f that maps \(A\in E\) to \(A-O\in V\). Then K acts in E and rotates the body \(\mathcal {B}\) by \(g\cdot A=f^{-1}(gf(A))=O+g(A-O)\), \(g\in K\).

Let U be the restriction of the orthogonal representation \(g\mapsto g^{\otimes r}\) of the group \(\mathrm{O}(V)\) to the subspace \(\mathsf {V}\) of the space \(V^{\otimes r}\). The group K acts in \(\mathsf {V}\) by \(\mathsf {v}\mapsto U(g)\mathsf {v}\), \(g\in K\). Under the action of K in E, the point \(A_0\) becomes \(g\cdot A_0\). Under the action of K in \(\mathsf {V}\), the random tensor \(\mathsf {T}(A_0)\) becomes \(U(g)\mathsf {T}(A_0)\). The random fields \( \mathsf {T}(g\cdot A)\) and \(U(g)\mathsf {T}(A)\) must have the same finite-dimensional distributions, because \(g\cdot A_0\) is the same material point in a different place. Note that this property does not depend on a particular choice of the place O, because the field is strictly homogeneous. We call such a field strictly isotropic.

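Assume that the random field \(\mathsf {T}(A)\) is second-order, that is, \(\mathsf {E}[\Vert \mathsf {T}(A)\Vert ^2]<\infty \) for all \(A\in E\).

Define the one-point correlation tensor of the field \(\mathsf {T}(A)\) by

\[\langle \mathsf {T}(A)\rangle =\mathsf {E}[\mathsf {T}(A)]\]

and its two-point correlation tensor by

\[\langle \mathsf {T}(A),\mathsf {T}(B)\rangle =\mathsf {E}[(\mathsf {T}(A)-\langle \mathsf {T}(A)\rangle )\otimes (\mathsf {T}(B)-\langle \mathsf {T}(B)\rangle )].\]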

Assume that the field \(\mathsf {T}(A)\) is mean-square continuous, that is, its two-point correlation tensor \(\langle \mathsf {T}(A),\mathsf {T} (B)\rangle :E\times E\rightarrow \mathsf {V}\otimes \mathsf {V}\) is a continuous function.

Note that [35] showed that any finite-variance isotropic random field on a compact group is necessarily mean-square continuous under standard measurability assumptions, and hence its covariance function is continuous. In a related setting, the covariance functions of real homogeneous isotropic random fields in d-dimensional Euclidean space were characterised in the classical paper [40], where it was conjectured that the only possible discontinuity of such a function occurs at the origin. This conjecture was proved by [7] for \(d\ge 2\). The result has been widely used in geostatistics (see, e.g., [13], among others), where it is argued that a homogeneous and isotropic random field can be decomposed into a mean-square continuous component and a so-called “nugget effect”, i.e., a purely discontinuous component. In fact, the latter component is necessarily non-measurable (see, e.g., [18, Example 1.2.5]). The relation between measurability and mean-square continuity in the non-compact setting remains unclear even for scalar random fields. That is why in this paper we assume that our random fields are mean-square continuous, and hence that their covariance functions are continuous.

If the field \(\mathsf {T}(A)\) is strictly homogeneous, then its one-point correlation tensor is a constant tensor in \(\mathsf {V}\), while its two-point correlation tensor is a function of the vector \(B-A\), i.e., a function on V. A field with these two properties is called wide-sense homogeneous.

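Similarly, if the field \(\mathsf {T}(A)\) is strictly isotropic, then we have

\[\langle \mathsf {T}(g\cdot A)\rangle =U(g)\langle \mathsf {T}(A)\rangle ,\qquad \langle \mathsf {T}(g\cdot A),\mathsf {T}(g\cdot B)\rangle =(U\otimes U)(g)\langle \mathsf {T}(A),\mathsf {T}(B)\rangle ,\qquad g\in K.\tag{1}\]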

Definition 1

A random field \(\mathsf {T}(A)\) is called wide-sense isotropic if its one-point and two-point correlation tensors satisfy (1).

For simplicity, identify the field \(\{\,\mathsf {T}(A):A\in E\,\}\) defined on E with the field \(\{\,\mathsf {T}^{\prime }(\mathbf {x}):\mathbf {x}\in V\,\}\) defined by \(\mathsf {T}^{\prime }(\mathbf {x})=\mathsf {T} (O+\mathbf {x})\). Introduce the Cartesian coordinate system (x, y, z) in V. Use the introduced system to identify V with the coordinate space \(\mathbb { R}^3\) and \(\mathrm{O}(V)\) with \(\mathrm{O}(3)\). Call \(\mathbb {R}^3\) the space domain. The action of \(\mathrm{O}(3)\) on \(\mathbb {R}^3\) is the matrix-vector multiplication.

Definition 1 was used by many authors including [36, 42, 46].

There is another definition of isotropy.

Definition 2

[46] A random field \(\mathsf {T}(A)\) is called multidimensional scalar wide-sense isotropic if its one-point correlation tensor is constant, while its two-point correlation tensor \(\langle \mathsf {T}(\mathbf {x}),\mathsf {T}(\mathbf {y})\rangle \) depends only on \(\Vert \mathbf {y}- \mathbf {x}\Vert \).

It is easy to see that Definition 2 is a particular case of Definition 1 when the representation U is trivial, that is, maps all elements \(g\in K\) to the identity operator.

In the case of \(r=0\), the complete description of the two-point correlation functions of scalar homogeneous and isotropic random fields is as follows. Recall that a measure \(\mu \) defined on the Borel \(\sigma \)-field of a Hausdorff topological space X is called a Borel measure.

Theorem 1

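Formula

\[\langle T(\mathbf {x}),T(\mathbf {y})\rangle =\int _0^{\infty }\frac{\sin (\lambda \Vert \mathbf {y}-\mathbf {x}\Vert )}{\lambda \Vert \mathbf {y}-\mathbf {x}\Vert }\,\mathrm{d}\mu (\lambda ),\tag{2}\]

where the integrand is set equal to 1 when \(\lambda \Vert \mathbf {y}-\mathbf {x}\Vert =0\), establishes a one-to-one correspondence between the set of two-point correlation functions of homogeneous and isotropic random fields \(T(\mathbf {x })\) on the space domain \(\mathbb {R}^3\) and the set of all finite Borel measures \(\mu \) on the interval \([0,\infty )\).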

Theorem 1 is a translation of the result proved by [40] to the language of random fields. This translation is performed as follows. Assume that \(B(\mathbf {x})\) is a two-point correlation function of a homogeneous and isotropic random field \(T(\mathbf {x})\). Let n be a positive integer, let \(\mathbf {x}_1, \dots , \mathbf {x}_n\) be n distinct points in \(\mathbb {R}^3\), and let \(c_1, \dots , c_n\) be n complex numbers. Consider the random variable \(X=\sum ^n_{j=1}c_j[T(\mathbf {x} _j)-\langle T(\mathbf {x}_j)\rangle ]\). Its variance is non-negative:

\[\mathsf {E}|X|^2=\sum _{j=1}^n\sum _{k=1}^nc_j\overline{c_k}\langle T(\mathbf {x}_j),T(\mathbf {x}_k)\rangle \ge 0.\]

In other words, the two-point correlation function \(\langle T(\mathbf {x}),T( \mathbf {y})\rangle \) is a non-negative-definite function. Moreover, it is continuous, because the random field \(T(\mathbf {x})\) is mean-square continuous, and it depends only on the distance \(\Vert \mathbf {y}-\mathbf {x}\Vert \) between the points \(\mathbf {x}\) and \(\mathbf {y}\), because the field is homogeneous and isotropic. [40] proved that Eq. (2) describes all such functions.
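The non-negative definiteness argument can be illustrated numerically. The sketch below is not part of the cited proof: it assumes a hypothetical measure \(\mu \) made of three point masses, so that the correlation function \(\int _0^\infty \frac{\sin (\lambda \rho )}{\lambda \rho }\,\mathrm{d}\mu (\lambda )\), \(\rho =\Vert \mathbf {y}-\mathbf {x}\Vert \), becomes a finite sum of kernels. It then checks that the resulting matrix at 40 random points has no negative eigenvalues beyond rounding error. The wavenumbers, weights and helper names are our own choices.

```python
import numpy as np

def radial_kernel(lam, rho):
    """sin(lam*rho)/(lam*rho), equal to 1 when lam*rho = 0."""
    # np.sinc(t) = sin(pi t)/(pi t), so divide the argument by pi
    return np.sinc(lam * rho / np.pi)

def correlation_matrix(points, lams, weights):
    """Correlation matrix for mu = sum_k weights[k] * (point mass at lams[k])."""
    diff = points[:, None, :] - points[None, :, :]
    rho = np.linalg.norm(diff, axis=-1)            # pairwise distances
    return sum(w * radial_kernel(lam, rho) for lam, w in zip(lams, weights))

rng = np.random.default_rng(0)
points = rng.uniform(-2.0, 2.0, size=(40, 3))     # 40 random points in R^3
lams = [0.0, 0.7, 2.5]                            # hypothetical atoms of mu
weights = [0.2, 1.0, 0.5]                         # hypothetical masses of mu

B = correlation_matrix(points, lams, weights)
min_eig = np.linalg.eigvalsh(B).min()
print(f"smallest eigenvalue: {min_eig:.2e}")      # non-negative up to rounding
```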

Conversely, assume that the function \(\langle T(\mathbf {x}),T(\mathbf {y} )\rangle \) is described by Eq. (2). Then the centred Gaussian random field with the two-point correlation function (2) is homogeneous and isotropic. Thus, there is a link between the theory of random fields and the theory of positive-definite functions.

In what follows, we consider the fields with absolutely continuous spectrum.

Definition 3

([17]) A homogeneous and isotropic random field \(T(\mathbf {x})\) has an absolutely continuous spectrum if the measure \(\mu \) is absolutely continuous with respect to the measure \(4\pi \lambda ^2\,\mathrm{d}\lambda \), i.e., there exists a nonnegative measurable function \(f(\lambda )\) such that

\[\int _0^{\infty }\lambda ^2f(\lambda )\,\mathrm{d}\lambda <\infty \]

and \(\mathrm{d}\mu (\lambda )=4\pi \lambda ^2f(\lambda )\,\mathrm{d}\lambda \). The function \(f(\lambda )\) is called the isotropic spectral density of the random field \(T(\mathbf {x})\).

Example 2

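(The Matérn two-point correlation function) Consider a two-point correlation function of a scalar random field \(T(\mathbf {x})\) of the form

\[\langle T(\mathbf {x}),T(\mathbf {y})\rangle =\frac{\sigma ^2}{2^{\nu -1}\varGamma (\nu )}\left( a\Vert \mathbf {y}-\mathbf {x}\Vert \right) ^{\nu }K_{\nu }\left( a\Vert \mathbf {y}-\mathbf {x}\Vert \right) ,\tag{3}\]

where \(\sigma ^{2}>0\), \(a>0\), \(\nu >0\), and \(K_{\nu }(z)\) is the modified Bessel function of the second kind of order \(\nu \). Here, the parameter \(\nu \) measures the differentiability of the random field, \(\sigma ^2\) is its variance, and the parameter a measures how quickly the correlation function of the random field decays with distance. The corresponding isotropic spectral density is

\[f_{\nu ,a,\sigma ^2}(\lambda )=\frac{\sigma ^2\varGamma (\nu +\frac{3}{2})a^{2\nu }}{\pi ^{3/2}\varGamma (\nu )(a^2+\lambda ^2)^{\nu +3/2}}.\]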
Note that Example 2 demonstrates another link, this time between the theory of random fields and the theory of special functions.
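The pairing between the Matérn correlation function and its spectral density can be checked by quadrature. The sketch below assumes the normalisation \(\sigma ^2 2^{1-\nu }\varGamma (\nu )^{-1}(a\rho )^{\nu }K_{\nu }(a\rho )\) for the correlation function and \(f(\lambda )=\sigma ^2\varGamma (\nu +\frac{3}{2})a^{2\nu }/(\pi ^{3/2}\varGamma (\nu )(a^2+\lambda ^2)^{\nu +3/2})\) for the density, so that \(\mathrm{d}\mu (\lambda )=4\pi \lambda ^2f(\lambda )\,\mathrm{d}\lambda \) in the representation of Theorem 1. The parameter values are arbitrary. SciPy's quad with weight="sin" (the QUADPACK routine QAWF) handles the oscillatory integral over \([0,\infty )\).

```python
import numpy as np
from scipy.integrate import quad
from scipy.special import gamma, kv

def matern_cov(rho, sigma2, a, nu):
    """sigma2 * 2^(1-nu)/Gamma(nu) * (a rho)^nu * K_nu(a rho)."""
    if rho == 0.0:
        return sigma2                       # limit of the expression as rho -> 0
    z = a * rho
    return sigma2 * 2.0 ** (1.0 - nu) / gamma(nu) * z ** nu * kv(nu, z)

def matern_density(lam, sigma2, a, nu):
    """Isotropic spectral density on R^3 paired with matern_cov."""
    return (sigma2 * gamma(nu + 1.5) * a ** (2.0 * nu)
            / (np.pi ** 1.5 * gamma(nu) * (a * a + lam * lam) ** (nu + 1.5)))

def cov_from_density(rho, sigma2, a, nu):
    """Integrate sin(lam rho)/(lam rho) against d mu = 4 pi lam^2 f(lam) d lam."""
    if rho == 0.0:
        value, _ = quad(lambda lam: 4.0 * np.pi * lam ** 2
                        * matern_density(lam, sigma2, a, nu), 0.0, np.inf)
        return value
    # Integrand written as g(lam) * sin(lam * rho); QAWF treats the sine weight.
    g = lambda lam: 4.0 * np.pi * lam * matern_density(lam, sigma2, a, nu) / rho
    value, _ = quad(g, 0.0, np.inf, weight="sin", wvar=rho)
    return value

sigma2, a, nu = 2.0, 1.3, 0.8               # arbitrary test parameters
for rho in (0.0, 0.4, 1.0, 3.0):
    print(rho, matern_cov(rho, sigma2, a, nu), cov_from_density(rho, sigma2, a, nu))
```

Agreement at each \(\rho \) is numerical evidence, not a proof, that the two normalisations match.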

In this paper, we consider the following problem: how should the Matérn two-point correlation tensor be defined in the case of \(r>0\)? A partial answer to this question can be formulated as follows.

Example 3

(Parsimonious Matérn model, [12]) We assume that the vector random field

\[\mathbf {T}(\mathbf {x})=(T_1(\mathbf {x}),\dots ,T_m(\mathbf {x}))^{\top },\qquad \mathbf {x}\in \mathbb {R}^3,\]

has the two-point correlation tensor \(B\left( \mathbf {x},\mathbf {y}\right) =(B_{ij}(\mathbf {x},\mathbf {y})) _{1\le i,j\le m}\). It is not straightforward to specify the cross-covariance functions \( B_{ij}\left( \mathbf {x},\mathbf {y}\right) \), \(1\le i,j\le m\), \(i\ne j\), as non-trivial, valid parametric models, because of the requirement of their non-negative definiteness. In the multivariate Matérn model, each marginal covariance function \(B_{ii}(\mathbf {x},\mathbf {y})\), \(1\le i\le m\), is of type (3) with the isotropic spectral density \( f_{ii}(\lambda )=f_{\nu _{i},a_{i},\sigma _{i}^{2}}\left( \lambda \right) \).

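Each cross-covariance function \(B_{ij}(\mathbf {x},\mathbf {y})\), \(i\ne j\), is also a Matérn function with co-location correlation coefficient \( b_{ij}\), smoothness parameter \(\nu _{ij}\), and scale parameter \(a_{ij}\). The spectral densities are

\[f_{ij}(\lambda )=b_{ij}\sigma _i\sigma _j\frac{\varGamma (\nu _{ij}+\frac{3}{2})a_{ij}^{2\nu _{ij}}}{\pi ^{3/2}\varGamma (\nu _{ij})(a_{ij}^2+\lambda ^2)^{\nu _{ij}+3/2}}.\]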

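The question then is to determine the values of \(\nu _{ij}\), \(a_{ij}\), and \( b_{ij}\) for which the non-negative definiteness condition is satisfied. Let \( m\ge 2\). Suppose that

\[\nu _{ij}=\frac{\nu _i+\nu _j}{2},\qquad 1\le i,j\le m,\ i\ne j,\]

and that there is a common scale parameter in the sense that there exists an \(a>0\) such that

\[a_1=\cdots =a_m=a\quad \text{and}\quad a_{ij}=a,\qquad 1\le i,j\le m,\ i\ne j.\]

Then the multivariate Matérn model provides a valid second-order structure in \(\mathbb {R}^{3}\) if

\[b_{ij}=\beta _{ij}\,\frac{\varGamma (\nu _i+\frac{3}{2})^{1/2}}{\varGamma (\nu _i)^{1/2}}\,\frac{\varGamma (\nu _j+\frac{3}{2})^{1/2}}{\varGamma (\nu _j)^{1/2}}\,\frac{\varGamma (\nu _{ij})}{\varGamma (\nu _{ij}+\frac{3}{2})}\]

for \(1\le i,j\le m\), \(i\ne j\), where the matrix \(\left( \beta _{ij}\right) _{i,j=1,\ldots ,m}\) has diagonal elements \(\beta _{ii}=1\) for \(i=1,\ldots ,m\), and off-diagonal elements \(\beta _{ij}\), \(1\le i,j\le m\), \(i\ne j\), chosen so that it is symmetric and non-negative definite.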
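The parsimonious condition can be sanity-checked numerically. As we transcribe [12, Theorem 1] for dimension 3, it reads \(b_{ij}=\beta _{ij}\sqrt{\varGamma (\nu _i+\frac{3}{2})\varGamma (\nu _j+\frac{3}{2})/(\varGamma (\nu _i)\varGamma (\nu _j))}\,\varGamma (\nu _{ij})/\varGamma (\nu _{ij}+\frac{3}{2})\) with \(\nu _{ij}=(\nu _i+\nu _j)/2\) and a common scale a. The sketch assumes cross-spectral densities \(f_{ij}(\lambda )=b_{ij}\sigma _i\sigma _j\varGamma (\nu _{ij}+\frac{3}{2})a^{2\nu _{ij}}/(\pi ^{3/2}\varGamma (\nu _{ij})(a^2+\lambda ^2)^{\nu _{ij}+3/2})\) and checks that the matrix \(F(\lambda )=(f_{ij}(\lambda ))\) is non-negative definite on a grid of wavenumbers. All parameter values are hypothetical.

```python
import numpy as np
from scipy.special import gamma

def unit_matern_density(lam, a, nu):
    """Matérn spectral density on R^3 with unit variance (broadcasts over nu)."""
    return (gamma(nu + 1.5) * a ** (2.0 * nu)
            / (np.pi ** 1.5 * gamma(nu) * (a * a + lam * lam) ** (nu + 1.5)))

def parsimonious_b(nu, beta):
    """Co-location coefficients b_ij of the parsimonious model in dimension 3."""
    nu_ij = 0.5 * (nu[:, None] + nu[None, :])
    root = np.sqrt(gamma(nu + 1.5) / gamma(nu))
    return beta * np.outer(root, root) * gamma(nu_ij) / gamma(nu_ij + 1.5)

def spectral_matrix(lam, nu, sigma, a, b):
    """F(lam) with entries b_ij sigma_i sigma_j f_{nu_ij, a, 1}(lam)."""
    nu_ij = 0.5 * (nu[:, None] + nu[None, :])
    return b * np.outer(sigma, sigma) * unit_matern_density(lam, a, nu_ij)

nu = np.array([0.5, 1.2, 2.0])                  # hypothetical smoothness parameters
sigma = np.array([1.0, 0.6, 2.0])               # hypothetical standard deviations
beta = np.array([[1.0, 0.8, -0.3],
                 [0.8, 1.0, 0.1],
                 [-0.3, 0.1, 1.0]])             # symmetric, positive definite, unit diagonal
a = 1.5                                         # common scale parameter

b = parsimonious_b(nu, beta)
ratios = []
for lam in np.linspace(0.0, 20.0, 201):
    F = spectral_matrix(lam, nu, sigma, a, b)
    ratios.append(np.linalg.eigvalsh(F).min() / np.abs(F).max())
print("b =", np.round(b, 3))
print("worst relative eigenvalue:", min(ratios))
```

Substituting \(b_{ij}\) shows why the check succeeds: \(f_{ij}(\lambda )=\pi ^{-3/2}\beta _{ij}w_i(\lambda )w_j(\lambda )\) with \(w_i(\lambda )=\sigma _i\sqrt{\varGamma (\nu _i+\frac{3}{2})/\varGamma (\nu _i)}\,a^{\nu _i}(a^2+\lambda ^2)^{-(\nu _i+3/2)/2}\), so \(F(\lambda )\) is a congruence transform of \((\beta _{ij})\).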

Example 4

(Flexible Matérn model) Consider the vector random field \(\mathbf {T}(\mathbf {x})\in \mathbb {R}^{m}, \mathbf {x}\in \mathbb {R}^{3}\) with the two-point covariance tensor

where again

Denote by \(\mathcal {N}\) the set of all nonnegative-definite matrices. Assume that the matrix \(\Sigma =(\sigma _{ij})_{1\le i,j\le m}=(\sigma _{ij})\in \mathcal {N}\), and denote \(\sigma _{i}^{2}=\sigma _{ii} \), \(i=1, \dots , m\).

Then the spectral density \(F=(f_{ij})_{1\le i,j\le m}\) has the entries

We need to find some conditions on parameters \(a_{ij}>0,\nu _{ij}>0,\) under which \(F\in \mathcal {N}\). The general conditions can be found in [2, 8].

Recall that a symmetric, real \(m\times m\) matrix \(\Theta =(\theta _{ij})_{1\le i,j\le m}\) is said to be conditionally negative definite [3] if the inequality

\[\sum _{i=1}^{m}\sum _{j=1}^{m}c_ic_j\theta _{ij}\le 0\]

holds for any real numbers \(c_{1},\ldots ,c_{m}\) subject to

\[\sum _{i=1}^{m}c_i=0.\]

In general, a necessary condition for the above inequality is

\[\theta _{ii}+\theta _{jj}\le 2\theta _{ij},\qquad 1\le i,j\le m,\]

which implies that all entries of a conditionally negative definite matrix are nonnegative whenever its diagonal entries are non-negative. If all its diagonal entries vanish, a conditionally negative definite matrix is also called a Euclidean distance matrix. It is known that \(\Theta =(\theta _{ij})_{1\le i,j\le m}\) is conditionally negative definite if and only if the \(m\times m\) matrix S with entries \(\exp \{-\theta _{ij}u\}\) is non-negative definite for every fixed \(u\ge 0\) (cf. [3, Theorem 4.1.3]), that is, \(S=e^{-u\Theta }\), where \(e^{\Lambda }\) denotes the Hadamard (entrywise) exponential of a matrix \(\Lambda \).

Some simple examples of conditionally negative definite matrices, for real numbers \(\theta _1,\ldots ,\theta _m\), are

(i) \(\theta _{ij}=\theta _{i}+\theta _{j}\);

(ii) \(\theta _{ij}=\mathrm{const}\);

(iii) \(\theta _{ij}=\left| \theta _{i}-\theta _{j}\right| \);

(iv) \(\theta _{ij}=\left| \theta _{i}-\theta _{j}\right| ^{2}\);

(v) \(\theta _{ij}=\max \{\theta _{i},\theta _{j}\}\);

(vi) \(\theta _{ij}=-\theta _{i}\theta _{j}\).
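The examples (i)–(vi) can be tested numerically in two equivalent ways. The direct way checks that the compression \(P\Theta P\) of \(\Theta \) to the hyperplane \(\sum _ic_i=0\), with \(P=I-\frac{1}{m}\mathbf {1}\mathbf {1}^{\top }\), has no positive eigenvalues. The second way uses the criterion quoted above and checks that the entrywise exponential \(e^{-u\Theta }\) is non-negative definite for a few values of u. This is a sketch under our own choices: the values \(\theta _i\), the sample of u, and the function names are hypothetical, and a finite sample of u only illustrates the criterion.

```python
import numpy as np

def is_cnd(theta, tol=1e-10):
    """True if c^T theta c <= 0 for every real c with sum(c) = 0."""
    m = theta.shape[0]
    P = np.eye(m) - np.ones((m, m)) / m          # orthogonal projector onto {sum(c) = 0}
    scale = max(1.0, np.abs(theta).max())
    return np.linalg.eigvalsh(P @ theta @ P).max() <= tol * scale

def schoenberg_ok(theta, us=(0.1, 0.5, 1.0, 5.0), tol=1e-10):
    """True if the entrywise exponential exp(-u * theta) is NND for each sampled u."""
    for u in us:
        S = np.exp(-u * theta)
        if np.linalg.eigvalsh(S).min() < -tol * max(1.0, np.abs(S).max()):
            return False
    return True

rng = np.random.default_rng(1)
t = rng.uniform(0.0, 2.0, size=5)                 # hypothetical theta_1, ..., theta_5
examples = {
    "(i)": t[:, None] + t[None, :],
    "(ii)": np.full((5, 5), 0.7),
    "(iii)": np.abs(t[:, None] - t[None, :]),
    "(iv)": (t[:, None] - t[None, :]) ** 2,
    "(v)": np.maximum(t[:, None], t[None, :]),
    "(vi)": -np.outer(t, t),
}
for name, theta in examples.items():
    print(name, is_cnd(theta), schoenberg_ok(theta))
```

The identity matrix, for which \(c^{\top }Ic=\Vert c\Vert ^2>0\), serves as a negative control: is_cnd(np.eye(5)) returns False.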

Recall that the Hadamard product of two matrices A and B is the matrix \( A\circ B=(A_{ij}\cdot B_{ij})_{1\le i,j\le m}\). By the Schur product theorem, if \(A\in \mathcal {N}\) and \(B\in \mathcal {N}\), then \(A\circ B\in \mathcal {N}\).
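A quick numerical illustration of the Schur product theorem, using randomly generated matrices. One standard proof observes that \(A\circ B\) is a principal submatrix of the Kronecker product \(A\otimes B\), whose eigenvalues are products of eigenvalues of A and B and hence non-negative.

```python
import numpy as np

rng = np.random.default_rng(2)
X = rng.standard_normal((5, 2))
Y = rng.standard_normal((5, 3))
A = X @ X.T                      # non-negative definite, rank <= 2
B = Y @ Y.T                      # non-negative definite, rank <= 3
H = A * B                        # Hadamard (entrywise) product of A and B

print("min eigenvalues of A, B:", np.linalg.eigvalsh(A).min(), np.linalg.eigvalsh(B).min())
print("min eigenvalue of the Hadamard product:", np.linalg.eigvalsh(H).min())
```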

Then

where one needs to find conditions under which

We first consider case 1, in which we assume that

Then

if and only if the matrix

is conditionally negative definite (see examples (i)–(vi) above). For such \((-\nu _{ij})_{1\le i,j\le m}\), we then have to check that the matrix \(C=(\varGamma (\nu _{ij}+\frac{3}{2})/\varGamma (\nu _{ij}))_{1\le i,j\le m}\) is non-negative definite. This class is not empty, since it includes the case of the so-called parsimonious model \(\nu _{ij}=\frac{\nu _{i}+\nu _{j}}{2}\) (see Example 3).

Recall that a Hermitian matrix \(A=(a_{ij})_{i,j=1,\ldots ,p}\) is conditionally non-negative if \(\mathbf {x}^{\top }A\mathbf {x}^{*}\ge 0\) for all \(\mathbf {x}\in \mathbb {C}^{p}\) such that \(\sum \nolimits _{i=1}^{p}x_{i}=0\), where \(\mathbf {x}^{*}\) is the complex conjugate of \(\mathbf {x}\).

Thus, in case 1, the multivariate Matérn model is valid under the following conditions (see [2, 8]):

(A1) Assume that

(i) \(a_{ij}=a\), \(1\le i,j\le m\);

(ii) the numbers \(-\nu _{ij}\), \(1\le i,j\le m\), form a conditionally non-negative matrix;

(iii) the numbers \(\sigma _{ij}\frac{\varGamma (\nu _{ij}+\frac{3}{2})}{\varGamma (\nu _{ij})}\), \(1\le i,j\le m\), form a non-negative definite matrix.
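A minimal sketch of checking (A1) in code. It rests on two assumptions beyond the text as extracted: the entries of F in Example 4 are taken to be \(f_{ij}(\lambda )=\sigma _{ij}\varGamma (\nu _{ij}+\frac{3}{2})a_{ij}^{2\nu _{ij}}/(\pi ^{3/2}\varGamma (\nu _{ij})(a_{ij}^2+\lambda ^2)^{\nu _{ij}+3/2})\), and condition (ii) is read as “the matrix \((\nu _{ij})\) is conditionally negative definite”. The names is_cnd, is_nnd and check_A1 are hypothetical. The parameters are built so that (A1) holds: \(\nu _{ij}=(\nu _i+\nu _j)/2\) is conditionally negative definite by example (i), and \(\sigma _{ij}=s_is_j\rho _{ij}\varGamma (\nu _{ij})/\varGamma (\nu _{ij}+\frac{3}{2})\) with a positive definite \((\rho _{ij})\) makes the matrix in (iii) equal to \((s_is_j\rho _{ij})\).

```python
import numpy as np
from scipy.special import gamma

def is_cnd(theta, tol=1e-10):
    """c^T theta c <= 0 for all c with sum(c) = 0 (checked via the projected matrix)."""
    m = theta.shape[0]
    P = np.eye(m) - np.ones((m, m)) / m
    return np.linalg.eigvalsh(P @ theta @ P).max() <= tol * max(1.0, np.abs(theta).max())

def is_nnd(mat, tol=1e-10):
    """Non-negative definiteness up to a relative tolerance."""
    return np.linalg.eigvalsh(mat).min() >= -tol * max(1.0, np.abs(mat).max())

def check_A1(nu_ij, sigma_ij):
    """(A1)(ii) and (A1)(iii); the common scale of (A1)(i) is imposed by the caller."""
    return is_cnd(nu_ij) and is_nnd(sigma_ij * gamma(nu_ij + 1.5) / gamma(nu_ij))

def spectral_matrix(lam, a, nu_ij, sigma_ij):
    """Assumed Matérn cross-spectral densities f_ij(lam) with common scale a."""
    return (sigma_ij * gamma(nu_ij + 1.5) * a ** (2.0 * nu_ij)
            / (np.pi ** 1.5 * gamma(nu_ij) * (a * a + lam * lam) ** (nu_ij + 1.5)))

nu = np.array([0.5, 1.0, 2.5])                       # hypothetical marginal smoothness
nu_ij = 0.5 * (nu[:, None] + nu[None, :])            # CND by example (i)
s = np.array([1.0, 0.5, 2.0])
rho = np.array([[1.0, 0.6, 0.2],
                [0.6, 1.0, -0.4],
                [0.2, -0.4, 1.0]])                   # positive definite
sigma_ij = np.outer(s, s) * rho * gamma(nu_ij) / gamma(nu_ij + 1.5)
a = 0.8

ok = check_A1(nu_ij, sigma_ij)
worst = min(np.linalg.eigvalsh(F).min() / np.abs(F).max()
            for F in (spectral_matrix(lam, a, nu_ij, sigma_ij)
                      for lam in np.linspace(0.0, 30.0, 301)))
print("(A1) holds:", ok, " worst relative eigenvalue:", worst)
```

The grid test is only evidence. The underlying argument is short: with a common scale, \(a^{2\nu _{ij}}(a^2+\lambda ^2)^{-\nu _{ij}}=\exp \{-\nu _{ij}\log (1+\lambda ^2/a^2)\}\) is non-negative definite by the criterion quoted above, and the Schur product theorem combines it with the matrix of condition (iii).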

Consider the case 2:

Then the multivariate Matérn model is valid under the following conditions [2]:

(A2) either

(a) the numbers \(-a_{ij}^{2}\), \(1\le i,j\le m\), form a conditionally non-negative matrix and the numbers \(\sigma _{ij}a_{ij}^{2\nu }\), \(1\le i,j\le m\), form a non-negative definite matrix; or

(b) the numbers \(-a_{ij}^{-2}\), \(1\le i,j\le m\), form a conditionally non-negative matrix and the numbers \(\sigma _{ij}/a_{ij}^{3}\), \(1\le i,j\le m\), form a non-negative definite matrix.
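Condition (A2)(a) can be checked in the same way. The sketch assumes, as we read case 2, a common smoothness parameter \(\nu _{ij}=\nu \), and the Matérn form \(f_{ij}(\lambda )=\sigma _{ij}\varGamma (\nu +\frac{3}{2})a_{ij}^{2\nu }/(\pi ^{3/2}\varGamma (\nu )(a_{ij}^2+\lambda ^2)^{\nu +3/2})\). The hypothetical choice \(a_{ij}^2=(a_i^2+a_j^2)/2\) is conditionally negative definite by example (i), and \(\sigma _{ij}=s_is_j\rho _{ij}a_{ij}^{-2\nu }\) makes \((\sigma _{ij}a_{ij}^{2\nu })\) equal to the positive definite matrix \((s_is_j\rho _{ij})\).

```python
import numpy as np
from scipy.special import gamma

def is_cnd(theta, tol=1e-10):
    """c^T theta c <= 0 for all c with sum(c) = 0."""
    m = theta.shape[0]
    P = np.eye(m) - np.ones((m, m)) / m
    return np.linalg.eigvalsh(P @ theta @ P).max() <= tol * max(1.0, np.abs(theta).max())

def is_nnd(mat, tol=1e-10):
    """Non-negative definiteness up to a relative tolerance."""
    return np.linalg.eigvalsh(mat).min() >= -tol * max(1.0, np.abs(mat).max())

def check_A2a(a_ij, sigma_ij, nu):
    """(A2)(a): (a_ij^2) is CND and (sigma_ij * a_ij^(2 nu)) is NND."""
    return is_cnd(a_ij ** 2) and is_nnd(sigma_ij * a_ij ** (2.0 * nu))

def spectral_matrix(lam, a_ij, sigma_ij, nu):
    """Assumed Matérn cross-spectral densities with common smoothness nu."""
    return (sigma_ij * gamma(nu + 1.5) * a_ij ** (2.0 * nu)
            / (np.pi ** 1.5 * gamma(nu) * (a_ij ** 2 + lam ** 2) ** (nu + 1.5)))

nu = 1.2                                              # hypothetical common smoothness
a = np.array([0.5, 1.0, 3.0])                         # hypothetical marginal scales
a_ij = np.sqrt(0.5 * (a[:, None] ** 2 + a[None, :] ** 2))
s = np.array([1.0, 2.0, 0.7])
rho = np.array([[1.0, 0.5, 0.3],
                [0.5, 1.0, 0.2],
                [0.3, 0.2, 1.0]])                     # positive definite
sigma_ij = np.outer(s, s) * rho / a_ij ** (2.0 * nu)

ok = check_A2a(a_ij, sigma_ij, nu)
worst = min(np.linalg.eigvalsh(F).min() / np.abs(F).max()
            for F in (spectral_matrix(lam, a_ij, sigma_ij, nu)
                      for lam in np.linspace(0.0, 30.0, 301)))
print("(A2)(a) holds:", ok, " worst relative eigenvalue:", worst)
```

The mechanism is again the Schoenberg criterion plus the Schur product theorem: \(a_{ij}^2+\lambda ^2\) is conditionally negative definite for each \(\lambda \), so \((a_{ij}^2+\lambda ^2)^{-(\nu +3/2)}=\varGamma (\nu +\frac{3}{2})^{-1}\int _0^{\infty }t^{\nu +1/2}e^{-t(a_{ij}^2+\lambda ^2)}\,\mathrm{d}t\) is a non-negative combination of non-negative definite matrices.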

These classes of Matérn models are not empty since, for the parsimonious model, they are consistent with [12, Theorem 1]. For the parsimonious choice \(\nu _{ij}=\frac{\nu _{ii}+\nu _{jj}}{2}\), \(1\le i,j\le m\), the multivariate Matérn model is valid under the following conditions:

(A3) either

(a) \(\nu _{ij}=\frac{\nu _{ii}+\nu _{jj}}{2}\), \(a_{ij}^{2}=\frac{a_{ii}^{2}+a_{jj}^{2}}{2}\), \(1\le i,j\le m\), and the numbers \(\sigma _{ij}a_{ij}^{2\nu _{ij}}/\varGamma (\nu _{ij})\), \(1\le i,j\le m\), form a non-negative definite matrix; or

(b) \(\nu _{ij}=\frac{\nu _{ii}+\nu _{jj}}{2}\), \(a_{ij}^{-2}=\frac{a_{ii}^{-2}+a_{jj}^{-2}}{2}\), \(1\le i,j\le m\), and the numbers \(\sigma _{ij}/(a_{ij}^{3}\varGamma (\nu _{ij}))\), \(1\le i,j\le m\), form a non-negative definite matrix.

The most general conditions and new examples can be found in [2, 8]. The paper by [11] reviews the main approaches to building multivariate correlation and covariance structures, including the multivariate Matérn models.

Example 5

(Dual Matérn models) Adapting the so-called duality theorem (see, e.g., [10]), one can show that under the conditions A1, A2 or A3

where

is a valid spectral density of the vector random field with correlation structure \(((1+\left\| \mathbf {h}\right\| ^{2})^{-(\nu _{ij}+\frac{3}{2 })})_{1\le i,j\le m}=(D_{ij}(\mathbf {h}))_{1\le i,j\le m}\). We call it the dual Matérn model.

Note that for the Matérn models

This condition is known as short-range dependence, while for the dual Matérn model long-range dependence is possible:

When \(m=3\), the random field of Example 3 is multidimensional scalar isotropic in the sense of Definition 2 but, in general, not isotropic in the sense of Definition 1. How can one construct examples of homogeneous and isotropic vector and tensor random fields with Matérn two-point correlation tensors?

To solve this problem, we sketch a general theory of homogeneous and isotropic tensor-valued random fields in Sect. 2. This theory was developed in [30, 33]. In particular, we explain two further links: one leads from the theory of random fields to classical invariant theory; the other, established recently, leads from the theory of random fields to the theory of convex compacta.

In Sect. 3, we give examples of Matérn homogeneous and isotropic tensor-valued random fields. Finally, in the Appendices we briefly describe the mathematical terminology that is not always familiar to specialists in probability: tensors, group representations, and classical invariant theory. For other aspects of the theory of random fields, see also [24, 25].

2 A Sketch of a General Theory

Let r be a nonnegative integer, let \(\mathsf {V}\) be an invariant subspace of the representation \(g\mapsto g^{\otimes r}\) of the group \(\mathrm{O}(3)\), and let U be the restriction of the above representation to \(\mathsf {V}\). Consider a homogeneous \(\mathsf {V}\)-valued random field \(\mathsf {T}(\mathbf {x })\), \(\mathbf {x}\in \mathbb {R}^3\). Assume it is isotropic, that is, satisfies (1). It is easy to see that its one-point correlation tensor \( \langle \mathsf {T}(\mathbf {x})\rangle \) is an arbitrary element of the isotypic subspace of the space \(\mathsf {V}\) that corresponds to the trivial representation. In particular, in the case of \(r=0\) the representation U is trivial, and \(\langle \mathsf {T}(\mathbf {x})\rangle \) is an arbitrary real number. In the case of \(r=1\) we have \(U(g)=g\). This representation does not contain a trivial component, therefore \(\langle \mathsf {T}(\mathbf {x})\rangle = \mathbf {0}\). In the case of \(r=2\) and \(U(g)=\mathsf {S}^2(g)\) the isotypic subspace that corresponds to the trivial representation is described in Example 12, we have \(\langle \mathsf {T}(\mathbf {x})\rangle =CI\), where C is an arbitrary real number, and I is the identity operator in \( \mathbb {R}^3\), and so on.
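The projection onto the isotypic subspace of the trivial representation is the average of U(g) over the Haar measure of \(\mathrm{O}(3)\). For \(r=2\) and \(U(g)=\mathsf {S}^2(g)\), this average sends a symmetric matrix X to \(\frac{1}{3}(\mathrm{tr}\,X)I\), in agreement with \(\langle \mathsf {T}(\mathbf {x})\rangle =CI\). The following Monte Carlo sketch, which samples Haar-distributed orthogonal matrices with SciPy's ortho_group, illustrates this; the sample size and seeds are arbitrary.

```python
import numpy as np
from scipy.stats import ortho_group

rng = np.random.default_rng(3)
M = rng.standard_normal((3, 3))
X = 0.5 * (M + M.T)                           # an arbitrary element of S^2(R^3)

# Monte Carlo Haar average of g X g^T over O(3), i.e. the Reynolds operator
gs = ortho_group.rvs(dim=3, size=20000, random_state=12345)
avg = np.einsum("nij,jk,nlk->il", gs, X, gs) / len(gs)

expected = np.trace(X) / 3.0 * np.eye(3)      # C * I with C = tr(X) / 3
print(np.round(avg, 3))
print(np.round(expected, 3))
```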

Can the two-point correlation tensor be described in the same way? Yes. Indeed, the second equation in (1) means that \(\langle \mathsf {T}(\mathbf {x}),\mathsf {T}(\mathbf {y})\rangle \) is a measurable covariant of the pair (g, U). The integrity basis for polynomial invariants of the defining representation contains one element \(I_1=\Vert \mathbf {x}\Vert ^2\). By the Wineman–Pipkin theorem (Appendix A, Theorem 6), we obtain

where \(\mathsf {T}_l(\mathbf {y}-\mathbf {x})\) are the basic covariant tensors of the representation U.

For example, when \(r=1\), the basic covariant tensors of the defining representation are \(\delta _{ij}\) and \(x_ix_j\) by the result of [44] mentioned in Appendix C. We obtain the result by [39]:

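When \(r=2\) and \(U(g)=\mathsf {S}^2(g)\), the three rank 4 isotropic tensors are \(\delta _{ij}\delta _{kl}\), \(\delta _{ik}\delta _{jl}\), and \( \delta _{il}\delta _{jk}\). Consider the group \(\Sigma \) of order 8 of the permutations of the symbols i, j, k, and l generated by the transpositions (ij), (kl), and the product (ik)(jl). The group \(\Sigma \) acts on the set of rank 4 isotropic tensors and has two orbits, \(\{\delta _{ij}\delta _{kl}\}\) and \(\{\delta _{ik}\delta _{jl},\delta _{il}\delta _{jk}\}\). The sums of the elements of each orbit are the basis isotropic tensors:

\[\delta _{ij}\delta _{kl},\qquad \delta _{ik}\delta _{jl}+\delta _{il}\delta _{jk}.\]

Consider the case of degree 2 and of order 4. For the pair of representations \( (g^{\otimes 4},(\mathbb {R}^3)^{\otimes 4})\) and \((g,\mathbb {R}^3)\) we have 6 covariant tensors:

\[\delta _{ij}x_kx_l,\quad \delta _{ik}x_jx_l,\quad \delta _{il}x_jx_k,\quad \delta _{jk}x_ix_l,\quad \delta _{jl}x_ix_k,\quad \delta _{kl}x_ix_j.\]

The action of the group \(\Sigma \) has 2 orbits, and the symmetric covariant tensors are

\[\delta _{ij}x_kx_l+\delta _{kl}x_ix_j,\qquad \delta _{ik}x_jx_l+\delta _{il}x_jx_k+\delta _{jk}x_ix_l+\delta _{jl}x_ix_k.\]

In the case of degree 4 and of order 4 we have only one covariant:

\[x_ix_jx_kx_l.\]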

The result by [28]

easily follows.

The case of \(r=3\) will be considered in detail elsewhere.
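The isotropy claims for \(r=1\) and \(r=2\) can be checked numerically. The sketch below verifies that a tensor \(\varphi _1(\rho )\delta _{ij}+\varphi _2(\rho )r_ir_j\) satisfies \(B(g\mathbf {r})=gB(\mathbf {r})g^{\top }\) for an orthogonal g, and that the two orbit sums \(\delta _{ij}\delta _{kl}\) and \(\delta _{ik}\delta _{jl}+\delta _{il}\delta _{jk}\) are invariant both under \(g^{\otimes 4}\) and under the generators of \(\Sigma \). The radial profiles \(\varphi _1\), \(\varphi _2\) are hypothetical placeholders; isotropy does not depend on their form.

```python
import numpy as np

rng = np.random.default_rng(4)
g, _ = np.linalg.qr(rng.standard_normal((3, 3)))    # an orthogonal 3x3 matrix
d = np.eye(3)

# r = 1: phi1(rho) delta_ij + phi2(rho) r_i r_j with hypothetical radial profiles
phi1 = lambda rho: np.exp(-rho)
phi2 = lambda rho: rho * np.exp(-2.0 * rho)

def rank1_tensor(vec_r):
    rho = np.linalg.norm(vec_r)
    return phi1(rho) * d + phi2(rho) * np.outer(vec_r, vec_r)

vec_r = rng.standard_normal(3)
rank1_ok = np.allclose(rank1_tensor(g @ vec_r), g @ rank1_tensor(vec_r) @ g.T)

# r = 2: the two orbit sums under the group Sigma
T1 = np.einsum("ij,kl->ijkl", d, d)
T2 = np.einsum("ik,jl->ijkl", d, d) + np.einsum("il,jk->ijkl", d, d)

def act4(g, T):
    """Action of the fourth tensor power of g on a rank 4 tensor."""
    return np.einsum("ia,jb,kc,ld,abcd->ijkl", g, g, g, g, T)

def sigma_symmetric(T):
    """Invariance under the generators (ij), (kl) and (ik)(jl) of Sigma."""
    return (np.allclose(T, T.transpose(1, 0, 2, 3))
            and np.allclose(T, T.transpose(0, 1, 3, 2))
            and np.allclose(T, T.transpose(2, 3, 0, 1)))

rank2_ok = all(np.allclose(act4(g, T), T) and sigma_symmetric(T) for T in (T1, T2))
print(rank1_ok, rank2_ok)
# A single orbit element such as delta_ik delta_jl is isotropic but not Sigma-symmetric:
print(sigma_symmetric(np.einsum("ik,jl->ijkl", d, d)))
```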

When \(r=4\) and \(U(g)=\mathsf {S}^2(S^2(g))\), the situation is more delicate: linear relations between isotropic tensors, called syzygies, appear. There are 8 symmetric isotropic tensors connected by 1 syzygy, 13 basic covariant tensors of degree 2 and of order 8 connected by 3 syzygies, 10 basic covariant tensors of degree 4 and of order 8 connected by 2 syzygies, 3 basic covariant tensors of degree 6 and of order 8, and 1 basic covariant tensor of degree 8 and of order 8, see [31, 32] for details. It follows that there are

\[(8-1)+(13-3)+(10-2)+3+1=29\]

linearly independent basic covariant tensors. The result by [29] includes only 15 of them and is therefore incomplete.

How to find the functions \(\varphi _m\)? In the case of \(r=0\), the answer is given by Theorem 1:

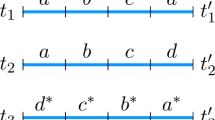

In the case of \(r=1\), the answer has been found by [46]:

where \(\rho =\Vert \mathbf {y}-\mathbf {x}\Vert \), \(j_n\) are the spherical Bessel functions, and \(\varPhi _1\) and \(\varPhi _2\) are two finite measures on \([0,\infty )\) with \(\varPhi _1(\{0\})=\varPhi _2(\{0\})\).

In the general case, we proceed in steps. The main idea is simple. We describe all homogeneous random fields and throw away those that are not isotropic. The homogeneous random fields are described by the following result.

Theorem 2

Formula

establishes a one-to-one correspondence between the set of the two-point correlation tensors of homogeneous random fields \(\mathsf {T}(\mathbf {x})\) on the space domain \(\mathbb {R}^3\) with values in a complex finite-dimensional space \(\mathsf {V}_{\mathbb {C}}\) and the set of all measures \(\mu \) on the Borel \(\sigma \)-field \(\mathfrak {B}(\hat{\mathbb {R}}^3)\) of the wavenumber domain \(\hat{\mathbb {R}}^3\) with values in the cone of nonnegative-definite Hermitian operators in \(\mathsf {V}_{\mathbb {C}}\).

This theorem was proved by [22, 23] for one-dimensional stochastic processes. Kolmogorov’s results have been further developed by [4,5,6, 27] among others.

We would like to write as many formulae as possible in a coordinate-free form, like (5). To do that, let J be a real structure in the space \(\mathsf {V}_{\mathbb {C}}\), that is, a map \(J:\mathsf {V}_{ \mathbb {C}}\rightarrow \mathsf {V}_{\mathbb {C}}\) with

- \(J(\mathsf {x}+\mathsf {y})=J(\mathsf {x})+J(\mathsf {y})\), \(\mathsf {x}\), \( \mathsf {y}\in \mathsf {V}_{\mathbb {C}}\);
- \(J(\alpha \mathsf {x})=\overline{\alpha }J(\mathsf {x})\), \(\mathsf {x}\in \mathsf {V}_{\mathbb {C}}\), \(\alpha \in \mathbb {C}\);
- \(J(J(\mathsf {x}))=\mathsf {x}\), \(\mathsf {x}\in \mathsf {V}_{\mathbb {C}}\).

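Any tensor \(\mathsf {x}\in \mathsf {V}_{\mathbb {C}}\) can be written as \(\mathsf {x}=\mathsf {x}^++\mathsf {x}^-\), where

\[\mathsf {x}^{\pm }=\frac{1}{2}\left( \mathsf {x}\pm J(\mathsf {x})\right) .\]

Denote

\[\mathsf {V}^{\pm }=\{\,\mathsf {x}\in \mathsf {V}_{\mathbb {C}}:J(\mathsf {x})=\pm \mathsf {x}\,\}.\]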

Both sets \(\mathsf {V}^+\) and \(\mathsf {V}^-\) are real vector spaces. If the values of the random field \(\mathsf {T}(\mathbf {x})\) lie in \(\mathsf {V}^+\), then the measure \(\mu \) satisfies the condition

for all Borel subsets \(A\subseteq \hat{\mathbb {R}}^3\), where \(-A=\{\,-\mathbf { p}:\mathbf {p}\in A\,\}\).
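For a concrete instance, take \(\mathsf {V}_{\mathbb {C}}=\mathbb {C}^n\) and let J be entrywise complex conjugation, so that \(\mathsf {V}^+=\mathbb {R}^n\); this choice is an assumption made for illustration. The sketch checks the three defining properties of a real structure and the decomposition \(\mathsf {x}=\mathsf {x}^++\mathsf {x}^-\) with \(\mathsf {x}^{\pm }=\frac{1}{2}(\mathsf {x}\pm J(\mathsf {x}))\), the standard choice, which we assume is the one intended here.

```python
import numpy as np

def J(x):
    """Hypothetical real structure on C^n: entrywise complex conjugation."""
    return np.conj(x)

rng = np.random.default_rng(6)
x = rng.standard_normal(4) + 1j * rng.standard_normal(4)
y = rng.standard_normal(4) + 1j * rng.standard_normal(4)
alpha = 0.3 - 1.7j

x_plus = 0.5 * (x + J(x))      # component in V^+ (here the real part)
x_minus = 0.5 * (x - J(x))     # component in V^- (here i times the imaginary part)

checks = {
    "additive": np.allclose(J(x + y), J(x) + J(y)),
    "antilinear": np.allclose(J(alpha * x), np.conj(alpha) * J(x)),
    "involution": np.allclose(J(J(x)), x),
    "decomposition": np.allclose(x_plus + x_minus, x),
    "J fixes x_plus": np.allclose(J(x_plus), x_plus),
    "J negates x_minus": np.allclose(J(x_minus), -x_minus),
}
print(checks)
```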

Next, the following Lemma can be proved. Let \(\mathbf {p}=(\lambda ,\varphi _{ \mathbf {p}},\theta _{\mathbf {p}})\) be the spherical coordinates in the wavenumber domain.

Lemma 1

A homogeneous random field described by (5) and (6) is isotropic if and only if its two-point correlation tensor has the form

where \(\nu \) is a finite measure on the interval \([0,\infty )\), and where f is a measurable function taking values in the set of all symmetric nonnegative-definite operators on \(\mathsf {V}^+\) with unit trace and satisfying the condition

When \(\lambda =0\), condition (8) gives \(f(\mathbf {0})=\mathsf {S} ^2(U)(g)f(\mathbf {0})\) for all \(g\in \mathrm{O}(3)\). In other words, the tensor \(f(\mathbf {0})\) lies in the isotypic subspace of the space \(\mathsf {S} ^2(\mathsf {V^+})\) that corresponds to the trivial representation of the group \(\mathrm{O}(3)\), call it \(\mathsf {H}_1\). The intersection of \(\mathsf {H }_1\) with the set of all symmetric nonnegative-definite operators on \( \mathsf {V}^+\) with unit trace is a convex compact set, call it \(\mathcal {C} _1 \).

When \(\lambda >0\), condition (8) gives \(f(\lambda ,0,0)=\mathsf {S} ^2(U)(g)f(\lambda ,0,0)\) for all \(g\in \mathrm{O}(2)\), because \(\mathrm{O}(2)\) is the subgroup of \(\mathrm{O}(3)\) that fixes the point \((\lambda ,0,0)\). In other words, consider the restriction of the representation \(\mathsf {S}^2(U)\) to the subgroup \(\mathrm{O}(2)\). The tensor \(f(\lambda ,0,0)\) lies in the isotypic subspace of the space \(\mathsf {S}^2(\mathsf {V^+})\) that corresponds to the trivial representation of the group \(\mathrm{O}(2)\), call it \(\mathsf { H}_0\). We have \(\mathsf {H}_1\subset \mathsf {H}_0\), because \(\mathrm{O}(2)\) is a subgroup of \(\mathrm{O}(3)\). The intersection of \(\mathsf {H}_0\) with the set of all symmetric nonnegative-definite operators on \(\mathsf {V}^+\) with unit trace is a convex compact set, call it \(\mathcal {C}_0\).

Fix an orthonormal basis \(\mathsf {T}^{0,1,0}\), ..., \(\mathsf {T}^{0,n_0,0}\) of the space \(\mathsf {H}_1\). Assume that the space \(\mathsf {H}_0\ominus \mathsf {H}_1\) has nonzero intersection with the spaces of \(n_1\) copies of the irreducible representation \(U^{2g}\), \(n_2\) copies of the irreducible representation \(U^{4g}\), ..., \(n_r\) copies of the irreducible representation \(U^{2rg}\) of the group \(\mathrm{O}(3)\), and let \(\mathsf {T} ^{2\ell ,n,m}\), \(-2\ell \le m\le 2\ell \), be the tensors of the Gordienko basis of the nth copy of the representation \(U^{2\ell g}\). We have

with \(f_{\ell n}(0)=0\) for \(\ell >0\) and \(1\le n\le n_{\ell }\). By (8) we obtain

Equation (7) takes the form

where we used the relation

Substitute the Rayleigh expansion

into (10). We obtain

where \(\mathbf {r}=\mathbf {y}-\mathbf {x}\). Returning to the matrix entries \(U^{2\ell g}_{m0}(\varphi _{\mathbf {r}},\theta _{\mathbf {r}})\), we have

where

It is easy to check that the function \(M^{2\ell ,n}(\varphi _{\mathbf {r} },\theta _{\mathbf {r}})\) is a covariant of degree \(2\ell \) and of order 2r. Therefore, the M-function is a linear combination of basic symmetric covariant tensors, or L-functions:

where \(q_{kr}\) is the number of linearly independent symmetric covariant tensors of degree 2k and of order 2r. The right-hand side is indeed a polynomial in sines and cosines of the angles \(\varphi _{\mathbf {r}}\) and \( \theta _{\mathbf {r}}\). Equation (11) takes the form

Recall that \(f_{\ell n}(\lambda )\) are measurable functions such that the tensor (9) lies in \(\mathcal {C}_1\) for \(\lambda =0\) and in \( \mathcal {C}_0\) for \(\lambda >0\). The final form of the two-point correlation tensor of the random field \(\mathsf {T}(\mathbf {x})\) is determined by the geometry of the convex compacta \(\mathcal {C}_0\) and \(\mathcal {C}_1\). For example, in the case of \(r=1\) the set \(\mathcal {C}_0\) is an interval (see [33]), while \(\mathcal {C}_1\) is a one-point set inside this interval. The set \(\mathcal {C}_0\) has two extreme points, and the corresponding random field is a sum of two uncorrelated components given by Eq. (12) below. Since the one-point set \(\mathcal {C}_1\) lies at the midpoint of the interval, the condition \(\varPhi _1(\{0\})=\varPhi _2(\{0\})\) follows. In the case of \(r=2\), the set of extreme points of the set \( \mathcal {C}_0\) has three connected components: two one-point sets and an ellipse, see [33], and the corresponding random field is a sum of three uncorrelated components.

In general, the two-point correlation tensor of the field has the simplest form when the set \(\mathcal {C}_0\) is a simplex. We use this idea in Examples 6 and 8 below.
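The Rayleigh expansion used above expands a plane wave in spherical Bessel functions. Its Legendre form, \(e^{\mathrm{i}\lambda \rho \cos \gamma }=\sum _{\ell =0}^{\infty }(2\ell +1)\mathrm{i}^{\ell }j_{\ell }(\lambda \rho )P_{\ell }(\cos \gamma )\), where \(\gamma \) is the angle between \(\mathbf {p}\) and \(\mathbf {r}\), is equivalent to the spherical-harmonic form by the addition theorem. The sketch below checks a truncation numerically with SciPy's spherical_jn and eval_legendre; the argument values and the number of terms are arbitrary.

```python
import numpy as np
from scipy.special import eval_legendre, spherical_jn

def plane_wave_partial_sum(lam_rho, cos_gamma, n_terms):
    """Partial sum of sum_l (2l+1) i^l j_l(lam_rho) P_l(cos_gamma)."""
    ells = np.arange(n_terms)
    terms = ((2 * ells + 1) * (1j ** ells)
             * spherical_jn(ells, lam_rho) * eval_legendre(ells, cos_gamma))
    return terms.sum()

lam_rho, cos_gamma = 5.0, 0.3            # arbitrary test values
approx = plane_wave_partial_sum(lam_rho, cos_gamma, 40)
exact = np.exp(1j * lam_rho * cos_gamma)
print(abs(approx - exact))               # truncation error of the 40-term sum
```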

3 Examples of Matérn Homogeneous and Isotropic Random Fields

Example 6

Consider a centred homogeneous scalar isotropic random field \(T(\mathbf {x})\) on the space \(\mathbb {R}^3\) with values in the two-dimensional space \(\mathbb {R}^2\). It is easy to see that both \(\mathcal {C }_0\) and \(\mathcal {C}_1\) are equal to the set of all symmetric nonnegative-definite \(2\times 2\) matrices with unit trace. Every such matrix has the form

\[\begin{pmatrix} x & y\\ y & 1-x \end{pmatrix}\]

with \(x\in [0,1]\) and \(y^2\le x(1-x)\). Geometrically, \(\mathcal {C}_0\) and \( \mathcal {C}_1\) are both equal to the disc

\[\left( x-\tfrac{1}{2}\right) ^2+y^2\le \tfrac{1}{4}.\]

Inscribe an equilateral triangle with vertices

into the above ball. The function \(f(\mathbf {p})\) takes the form

where \(a_m(\Vert \mathbf {p}\Vert )\) are the barycentric coordinates of the point \(f( \mathbf {p})\) inside the triangle. The two-point correlation tensor of the field takes the form

where \(\mathrm{d}\varPhi _m(\lambda )=a_m(\lambda )\mathrm{d}\nu (\lambda )\) are three finite measures on \([0,\infty )\), and \(\nu \) is the measure of Eq. (7). Let the measures \(\varPhi _m\) be given by the Matérn spectral densities of Example 2 (resp. the dual Matérn spectral densities of Example 5). We obtain a scalar homogeneous and isotropic Matérn (resp. dual Matérn) random field.
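A sketch of the barycentric representation in Example 6. It assumes a hypothetical equilateral triangle inscribed in the disc \(y^2\le x(1-x)\), that is, \((x-\frac{1}{2})^2+y^2\le \frac{1}{4}\), with vertices at angles 90°, 210° and 330° from the centre of the disc. A point (x, y) of the disc is identified with the matrix \(\begin{pmatrix} x & y\\ y & 1-x\end{pmatrix}\); because this map is affine, the barycentric coordinates \(a_m\) of a point also represent the corresponding matrix f(p) as a convex combination of the three vertex matrices.

```python
import numpy as np

def to_matrix(x, y):
    """Symmetric unit-trace matrix [[x, y], [y, 1 - x]] for a point (x, y)."""
    return np.array([[x, y], [y, 1.0 - x]])

# Hypothetical equilateral triangle inscribed in the disc (x - 1/2)^2 + y^2 <= 1/4
angles = np.deg2rad([90.0, 210.0, 330.0])
verts = np.column_stack([0.5 + 0.5 * np.cos(angles), 0.5 * np.sin(angles)])

def barycentric(point, verts):
    """Coordinates a_m with sum a_m * verts[m] = point and sum a_m = 1.

    All coordinates are positive when the point lies inside the triangle.
    """
    system = np.vstack([verts.T, np.ones(3)])     # 3x3 linear system
    return np.linalg.solve(system, np.append(point, 1.0))

p = np.array([0.55, 0.05])                         # a point inside the triangle
bary = barycentric(p, verts)
f_p = sum(w * to_matrix(*v) for w, v in zip(bary, verts))
print(np.round(bary, 4), np.allclose(f_p, to_matrix(*p)))
```

The vertices lie on the boundary circle, so the vertex matrices are rank-one projections, the extreme points of the set of unit-trace non-negative definite matrices.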

Example 7

Using (4) and the well-known formulae

we write the two-point correlation tensor of a rank 1 homogeneous and isotropic random field in the form

where \(\mathbf {r}=\mathbf {y}-\mathbf {x}\), and

Now assume that the measures \(\varPhi _1\) and \(\varPhi _2\) are described by Matérn densities:

It is possible to substitute these densities into (12) and to calculate the integrals using [37, Eq. 2.5.9.1]. We obtain rather long expressions that include the generalised hypergeometric function \({}_1F_2\).
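Such integrals can be cross-checked numerically. The Python sketch below (NumPy and SciPy assumed) treats only the scalar building block: it evaluates the three-dimensional radial Fourier transform \(C(r)=\frac{4\pi }{r}\int _0^{\infty }\lambda f(\lambda )\sin (\lambda r)\,\mathrm{d}\lambda \) of a Matérn spectral density with `scipy.integrate.quad`, whose `weight="sin"` option handles the oscillatory integral over \([0,\infty )\), and compares it with the closed-form covariance. It assumes the common parametrisation \(C(r)=\sigma ^2\frac{2^{1-\nu }}{\Gamma (\nu )}(ar)^{\nu }K_{\nu }(ar)\) with density \(f(\lambda )=\frac{\sigma ^2\Gamma (\nu +3/2)a^{2\nu }}{\pi ^{3/2}\Gamma (\nu )(a^2+\lambda ^2)^{\nu +3/2}}\); the normalisation of Example 2 may differ by constant factors.

```python
import numpy as np
from scipy.integrate import quad
from scipy.special import gamma, kv


def matern_cov(r, nu, a, sigma2=1.0):
    """Matern covariance sigma2 * 2^{1-nu} / Gamma(nu) * (a r)^nu * K_nu(a r), r > 0."""
    ar = a * r
    return sigma2 * 2.0 ** (1.0 - nu) / gamma(nu) * ar ** nu * kv(nu, ar)


def matern_density_3d(lam, nu, a, sigma2=1.0):
    """Isotropic spectral density on R^3 in the assumed normalisation (see lead-in)."""
    return (sigma2 * gamma(nu + 1.5) * a ** (2.0 * nu)
            / (np.pi ** 1.5 * gamma(nu) * (a * a + lam * lam) ** (nu + 1.5)))


def cov_from_density(r, nu, a, sigma2=1.0):
    """C(r) = (4 pi / r) * int_0^inf lam f(lam) sin(lam r) d lam (3-D radial transform)."""
    val, _ = quad(lambda lam: lam * matern_density_3d(lam, nu, a, sigma2),
                  0.0, np.inf, weight="sin", wvar=r)
    return 4.0 * np.pi / r * val
```

For example, `cov_from_density(1.0, 0.5, 1.0)` should agree with \(\mathrm{e}^{-1}\), the exponential covariance obtained for \(\nu =1/2\).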

The situation is different for the dual model:

Using [38, Eqs. 2.16.14.3, 2.16.14.4], we obtain

where

Example 8

Consider the case when \(r=2\) and \(U(g)=\mathsf {S}^2(g)\). In order to write down symmetric rank 4 tensors in a compressed matrix form, consider an orthogonal operator \(\tau \) acting from \(\mathsf {S}^2( \mathsf {S}^2(\mathbb {R}^3))\) to \(\mathsf {S}^2(\mathbb {R}^6)\) as follows:

see [16, Eq. (44)]. It is possible to prove the following. The matrix \(\tau f_{ijkl}(\mathbf {0})\) lies in the interval \(\mathcal {C}_1\) with extreme points \(C^1\) and \(C^2\), where the nonzero elements of the symmetric matrix \(C^1\) lying on and over the main diagonal are as follows:

while those of the matrix \(C^2\) are

The matrix \(\tau f_{ijkl}(\lambda ,0,0)\) with \(\lambda >0\) lies in the convex compact set \(\mathcal {C}_0\). The set of extreme points of \(\mathcal {C}_0\) contains three connected components. The first component is the one-point set \(\{D^1\}\) with

The second component is the one-point set \(\{D^2\}\) with

The third component is the ellipse \(\{\,D^{\theta }:0\le \theta <2\pi \,\} \) with

Choose three points \(D^3\), \(D^4\), \(D^5\) lying on the above ellipse. If we allow the matrix \(\tau f_{ijkl}(\lambda ,0,0)\) with \(\lambda >0\) to take values in the simplex with vertices \(D^i\), \(1\le i\le 5\), then the two-point correlation tensor of the random field \(\varepsilon (\mathbf {x})\) is the sum of five integrals. The larger the four-dimensional Lebesgue measure of the simplex relative to that of \(\mathcal {C}_0\), the wider the class of random fields described.
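The comparison of Lebesgue measures can be made concrete with the Gram-determinant formula for the \(k\)-dimensional volume of a simplex with vertices \(\mathbf {v}_0,\dots ,\mathbf {v}_k\) lying in a space of higher dimension: \(\mathrm{vol}_k=\sqrt{\det (EE^{\top })}/k!\), where the rows of \(E\) are the edge vectors \(\mathbf {v}_i-\mathbf {v}_0\). The Python sketch below (NumPy assumed) implements this formula; applying it to \(D^1,\dots ,D^5\) would mean flattening the \(6\times 6\) matrices into vectors, which amounts to measuring them with the Frobenius inner product (an assumption of this sketch).

```python
import numpy as np
from math import factorial


def simplex_volume(vertices):
    """k-dimensional volume of the simplex whose k+1 vertices are the rows of
    `vertices` (points in R^n, n >= k), via the Gram determinant of its edges."""
    v = np.asarray(vertices, dtype=float)
    edges = v[1:] - v[0]              # k x n matrix of edge vectors
    k = edges.shape[0]
    gram = edges @ edges.T            # k x k Gram matrix
    # Clip tiny negative determinants caused by rounding for degenerate simplices.
    return float(np.sqrt(max(np.linalg.det(gram), 0.0)) / factorial(k))
```

With hypothetical vertex matrices `D1, ..., D5`, one would call `simplex_volume([D.ravel() for D in (D1, D2, D3, D4, D5)])`.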

Note that the simplex should contain the set \(\mathcal {C}_1\). The matrix \( C^1 \) lies on the ellipse and corresponds to the value \(\theta =2\arcsin ( \sqrt{2/3})\). Since \(C^1\) is an extreme point of \(\mathcal {C}_0\), a simplex contained in \(\mathcal {C}_0\) can contain \(C^1\) only if \(C^1\) is one of its vertices. It follows that one of the above points, say \(D^3\), must be equal to \(C^1\). If we choose \(D^4\) to correspond to the value \( \theta =2(\pi -\arcsin (\sqrt{2/3}))\), that is,

then

and \(C^2\) lies in the simplex. Finally, choose \(D^5\) to correspond to the value \(\theta =\pi \), that is,

The constructed simplex is not the one with the maximal possible Lebesgue measure, but the coefficients in the resulting formulas are simple.
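A common way to make such a compression operator orthogonal is the Mandel-type map: components indexed by a repeated pair are kept, and those indexed by mixed pairs are multiplied by \(\sqrt{2}\). The Python sketch below (NumPy assumed) implements this map under the assumed index ordering 11, 22, 33, 23, 13, 12, which may differ from the ordering of [16, Eq. (44)]. It checks that the Frobenius norm is preserved and that the symmetrised identity tensor is sent to the \(6\times 6\) identity matrix.

```python
import numpy as np

# Assumed ordering of index pairs (0-based): 11, 22, 33, 23, 13, 12.
PAIRS = [(0, 0), (1, 1), (2, 2), (1, 2), (0, 2), (0, 1)]
WEIGHTS = np.array([1.0, 1.0, 1.0, np.sqrt(2.0), np.sqrt(2.0), np.sqrt(2.0)])


def to_mandel(c):
    """6x6 matrix of a rank-4 tensor with minor and major symmetries."""
    m = np.empty((6, 6))
    for I, (i, j) in enumerate(PAIRS):
        for J, (k, l) in enumerate(PAIRS):
            m[I, J] = WEIGHTS[I] * WEIGHTS[J] * c[i, j, k, l]
    return m


def symmetrise(a):
    """Project an arbitrary 3x3x3x3 array onto S^2(S^2(R^3))."""
    a = 0.5 * (a + a.transpose(1, 0, 2, 3))     # minor symmetry in (i, j)
    a = 0.5 * (a + a.transpose(0, 1, 3, 2))     # minor symmetry in (k, l)
    return 0.5 * (a + a.transpose(2, 3, 0, 1))  # major symmetry (ij) <-> (kl)


delta = np.eye(3)
# Identity on S^2(R^3): 0.5 * (delta_ik delta_jl + delta_il delta_jk).
identity4 = 0.5 * (np.einsum("ik,jl->ijkl", delta, delta)
                   + np.einsum("il,jk->ijkl", delta, delta))
```

The \(\sqrt{2}\) weights compensate for the two index pairs \((i,j)\) and \((j,i)\) that collapse into one matrix entry, which is what makes the map norm-preserving.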

Theorem 3

Let \(\varepsilon (\mathbf {x})\) be a random field that describes the strain tensor of a deformable body. The following conditions are equivalent.

1. The matrix \(\tau f_{ijkl}(\lambda ,0,0)\) with \(\lambda >0\) takes values in the simplex described above.

2. The correlation tensor of the field has the spectral expansion

where the non-zero functions \(\tilde{N}_{nq}(\lambda ,r)\) are given in Table 1, and where \(\varPhi _n(\lambda )\) are five finite measures on \( [0,\infty )\) with

Assume that all measures \(\varPhi _n\) are absolutely continuous and their densities are either the Matérn or the dual Matérn densities. The two-point correlation tensors of the corresponding random fields can be calculated in exactly the same way as in Example 7.

Introduce the following notation:

where \(g^{n[n_1,n_2]}_{N[N_1,N_2]}\) are the so-called Godunov–Gordienko coefficients described in [14]. Further, introduce the following notation:

Consider the five nonnegative-definite matrices \(A^n\), \(1\le n\le 5\), with the following matrix entries:

and let \(L^n\) be the infinite lower triangular matrices from the Cholesky factorisations \(A^n=L^n(L^n)^{\top }\).
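The role of the Cholesky factors can be seen on a finite truncation: if the entries of a vector \(\mathbf {z}\) are uncorrelated with unit variance, then \(L\mathbf {z}\) has covariance matrix \(LL^{\top }=A\), and lower triangularity means that each entry of \(L\mathbf {z}\) involves only entries of \(\mathbf {z}\) with smaller or equal index. The Python sketch below (NumPy assumed) uses a small hypothetical positive-definite matrix in place of a truncation of \(A^n\). Note that `numpy.linalg.cholesky` requires strict positive definiteness, so a singular nonnegative-definite truncation would need a different factorisation.

```python
import numpy as np

# Hypothetical positive-definite stand-in for a finite truncation of some A^n.
A = np.array([[4.0, 2.0, 0.0],
              [2.0, 3.0, 1.0],
              [0.0, 1.0, 2.0]])
L = np.linalg.cholesky(A)             # lower triangular, A = L @ L.T

rng = np.random.default_rng(0)
z = rng.standard_normal((3, 200_000))  # uncorrelated, unit-variance samples
y = L @ z                              # y_i depends only on z_k with k <= i
sample_cov = np.cov(y)                 # should be close to A
```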

Theorem 4

The following conditions are equivalent.

1. The matrix \(\tau f_{ijkl}(\lambda ,0,0)\) with \(\lambda >0\) takes values in the simplex described above.

2. The field \(\varepsilon (\mathbf {x})\) has the form

$$\begin{aligned} \varepsilon _{ij}(\rho ,\theta ,\varphi )=C\delta _{ij}+2\sqrt{\pi } \sum _{n=1}^{5}\sum _{\ell =0}^{\infty } \sum _{m=-\ell }^{\ell }\int _{0}^{\infty }j_{\ell }(\lambda \rho )\,\mathrm{d} Z^{n^{\prime }}_{\ell mij}(\lambda )S^m_{\ell }(\theta ,\varphi ), \end{aligned}$$where

$$\begin{aligned} Z^{n^{\prime }}_{\ell mij}(A)=\sum _{(\ell ^{\prime },m^{\prime },k,l)\le (\ell ,m,i,j)}L^n_{\ell mij,\ell ^{\prime }m^{\prime }kl}Z^n_{\ell ^{ \prime }m^{\prime }kl}(A), \end{aligned}$$and where \(Z^n_{\ell ^{\prime }m^{\prime }kl}\) is a sequence of uncorrelated scattered random measures on \([0,\infty )\) with control measures \(\varPhi _n\).

The idea of the proof is as follows. Write down the Rayleigh expansions for \( \mathrm{e}^{\mathrm{i}(\mathbf {p},\mathbf {x})}\) and for \(\mathrm{e}^{- \mathrm{i}(\mathbf {p},\mathbf {y})}\) separately, substitute both expansions into (10), and use the following result, known as the Gaunt integral:

This result can be proved in exactly the same way as its complex counterpart, see, for example, [34]. Then apply Karhunen’s theorem, see [19].
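Both ingredients of this proof sketch can be checked numerically. The Python sketch below (NumPy and SciPy assumed) verifies a truncation of the Rayleigh expansion \(\mathrm{e}^{\mathrm{i}kr\cos \gamma }=\sum _{\ell =0}^{\infty }\mathrm{i}^{\ell }(2\ell +1)j_{\ell }(kr)P_{\ell }(\cos \gamma )\), written here with Legendre polynomials (equivalent to the spherical-harmonic form by the addition theorem). It also computes the Gaunt integral \(\int _{S^2}Y^{m_1}_{\ell _1}Y^{m_2}_{\ell _2}Y^{m_3}_{\ell _3}\,\mathrm{d}\Omega \) by Gauss–Legendre quadrature. It treats the complex counterpart, not the real harmonics \(S^m_{\ell }\) of Theorem 4, and assumes the Condon–Shortley phase convention built into `scipy.special.lpmv`.

```python
import numpy as np
from math import factorial, pi
from scipy.special import eval_legendre, lpmv, spherical_jn


def rayleigh_partial_sum(kr, cos_gamma, lmax):
    """sum_{l=0}^{lmax} i^l (2l+1) j_l(kr) P_l(cos gamma) ~ exp(i kr cos gamma)."""
    ell = np.arange(lmax + 1)
    terms = (1j ** ell) * (2 * ell + 1) * spherical_jn(ell, kr) * eval_legendre(ell, cos_gamma)
    return np.sum(terms)


def _theta_factor(l, m, x):
    """theta-dependent factor of the complex spherical harmonic Y_l^m at x = cos(theta)."""
    if abs(m) > l:
        return np.zeros_like(x)
    if m < 0:
        # Y_l^{-m} = (-1)^m conj(Y_l^m), and the theta factor is real.
        return (-1) ** (-m) * _theta_factor(l, -m, x)
    norm = np.sqrt((2 * l + 1) / (4 * pi) * factorial(l - m) / factorial(l + m))
    return norm * lpmv(m, l, x)


def gaunt(l1, l2, l3, m1, m2, m3):
    """Integral over S^2 of Y_{l1}^{m1} Y_{l2}^{m2} Y_{l3}^{m3} dOmega."""
    if m1 + m2 + m3 != 0:
        return 0.0  # the phi-integral of exp(i (m1 + m2 + m3) phi) vanishes
    # The theta integrand is then a polynomial in x of degree <= l1 + l2 + l3,
    # so this many Gauss-Legendre nodes integrate it exactly.
    x, w = np.polynomial.legendre.leggauss(l1 + l2 + l3 + 1)
    prod = _theta_factor(l1, m1, x) * _theta_factor(l2, m2, x) * _theta_factor(l3, m3, x)
    return 2 * pi * float(np.dot(w, prod))
```

For instance, `gaunt(1, 0, 1, 1, 0, -1)` should return \(-1/(2\sqrt{\pi })\), and the integral vanishes whenever \(\ell _1+\ell _2+\ell _3\) is odd.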

In order to simulate such fields numerically, one can use simulation algorithms based on spectral expansions. One such algorithm is described in [21] and implemented in MATLAB®; see also the references therein. Other software, such as R, may be used as well. Compared with [21], only one new problem arises, namely the calculation of the Godunov–Gordienko coefficients \(g^{n[n_1,n_2]}_{N[N_1,N_2]}\). An algorithm for calculating these coefficients is given in [41]. It was implemented in MATLAB by the second-named author and used to calculate the syzygies and spectral expansions.
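For orientation, the following Python sketch (NumPy assumed) implements the simplest spectral simulation method for a scalar centred homogeneous isotropic field with Matérn covariance: a randomised sum of cosines whose wave vectors are drawn from the normalised spectral density. It is a generic textbook technique, not the algorithm of [21]. It relies on the fact that a density proportional to \((a^2+\Vert \mathbf {p}\Vert ^2)^{-(\nu +3/2)}\) on \(\mathbb {R}^3\) is a multivariate Student \(t\) law with \(2\nu \) degrees of freedom, so that \(\mathbf {p}=a\mathbf {G}/\sqrt{\chi ^2_{2\nu }}\) with \(\mathbf {G}\) standard normal. The function names are hypothetical.

```python
import numpy as np


def sample_matern_wavevectors(n, nu, a, rng):
    """n wave vectors in R^3 from the density proportional to (a^2 + |p|^2)^{-(nu + 3/2)}:
    a multivariate Student t law with 2 nu degrees of freedom."""
    g = rng.standard_normal((n, 3))
    chi2 = rng.chisquare(2.0 * nu, size=n)
    return a * g / np.sqrt(chi2)[:, None]


def simulate_matern_field(points, nu, a, sigma2=1.0, n_waves=2000, rng=None):
    """One realisation at `points` (shape (m, 3)) of
    T(x) = sqrt(2 sigma2 / N) * sum_k cos((p_k, x) + phi_k),  phi_k ~ U[0, 2 pi).
    E[T(x) T(y)] = sigma2 * E cos((p, x - y)), which is the Matern covariance."""
    rng = np.random.default_rng() if rng is None else rng
    p = sample_matern_wavevectors(n_waves, nu, a, rng)
    phases = rng.uniform(0.0, 2.0 * np.pi, size=n_waves)
    return np.sqrt(2.0 * sigma2 / n_waves) * np.cos(points @ p.T + phases).sum(axis=1)
```

The covariance is exact for every number of waves, but the field is only approximately Gaussian, with the approximation improving as `n_waves` grows.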

The significance of the Matérn class of tensor-valued random fields follows from the fact that scalar random fields with such correlation structures are solutions of fractional analogues of the stochastic Helmholtz equations. Hence they are widely used in applications, both for isotropic random fields on Euclidean space and for spherical random fields obtained as restrictions of isotropic random fields to the sphere, see [26, Example 2]. For applications of spherical tensor random fields to the estimation of parameters of the Cosmic Microwave Background, one can also propose an analogue of the Matérn class of tensor-valued correlation structures; a paper by the authors is currently in preparation.

References

Andrews, D.L., Ghoul, W.A.: Irreducible fourth-rank Cartesian tensors. Phys. Rev. A 25, 2647–2657 (1982). doi:10.1103/PhysRevA.25.2647

Apanasovich, T.V., Genton, M.G., Sun, Y.: A valid Matérn class of cross-covariance functions for multivariate random fields with any number of components. J. Am. Stat. Assoc. 107(497), 180–193 (2012). doi:10.1080/01621459.2011.643197

Bapat, R.B., Raghavan, T.E.S.: Nonnegative Matrices and Applications. Encyclopedia of Mathematics and Its Applications. vol. 64. Cambridge University Press, Cambridge (1997). doi:10.1017/CBO9780511529979

Blanc-Lapierre, A., Fortet, R.: Résultats sur la décomposition spectrale des fonctions aléatoires stationnaires d’ordre 2. C. R. Acad. Sci. Paris 222, 713–714 (1946)

Blanc-Lapierre, A., Fortet, R.: Sur la décomposition spectrale des fonctions aléatoires stationnaires d’ordre deux. C. R. Acad. Sci. Paris 222, 467–468 (1946)

Cramér, H.: On harmonic analysis in certain functional spaces. Ark. Mat. Astr. Fys. 28B(12), 7 (1942)

Crum, M.M.: On positive-definite functions. Proc. Lond. Math. Soc. 3(6), 548–560 (1956)

Du, J., Leonenko, N., Ma, C., Shu, H.: Hyperbolic vector random fields with hyperbolic direct and cross covariance functions. Stoch. Anal. Appl. 30(4), 662–674 (2012). doi:10.1080/07362994.2012.684325

Friedmann, A.A., Keller, L.P.: Differentialgleichungen für Turbulente Bewegung einer Kompressiblen Flüssigkeit. In: Proceedings of the First International Congress for Applied Mechanics, Delft, pp. 395–405 (1924)

Fung, T., Seneta, E.: Extending the multivariate generalised \(t\) and generalised VG distributions. J. Multivar. Anal. 101(1), 154–164 (2010). doi:10.1016/j.jmva.2009.06.006

Genton, M.G., Kleiber, W.: Cross-covariance functions for multivariate geostatistics. Stat. Sci. 30(2), 147–163 (2015). doi:10.1214/14-STS487

Gneiting, T., Sasvári, Z.: The characterization problem for isotropic covariance functions. Math. Geol. 31(1), 105–111 (1999)

Gneiting, T., Kleiber, W., Schlather, M.: Matérn cross-covariance functions for multivariate random fields. J. Am. Stat. Assoc. 105(491), 1167–1177 (2010). doi:10.1198/jasa.2010.tm09420

Godunov, S.K., Gordienko, V.M.: Clebsch-Gordan coefficients in the case of various choices of bases of unitary and orthogonal representations of the groups SU(2) and SO(3). Sibirsk. Mat. Zh. 45(3), 540–557 (2004). doi:10.1023/B:SIMJ.0000028609.97557.b8

Gordan, P.: Beweis dass jede Covariante und invariante einer binären Form eine ganze Function mit numerischen Coefficienten einer endlichen Anzahl solcher Formen ist. J. Reine Angew. Math. 69, 323–354 (1868)

Helnwein, P.: Some remarks on the compressed matrix representation of symmetric second-order and fourth-order tensors. Comput. Methods Appl. Mech. Eng. 190(22–23), 2753–2770 (2001). doi:10.1016/S0045-7825(00)00263-2

Ivanov, A.V., Leonenko, N.N.: Statistical Analysis of Random Fields. Mathematics and its Applications (Soviet Series), vol. 28. Kluwer, Dordrecht (1989). doi:10.1007/978-94-009-1183-3. With a preface by A. V. Skorokhod, Translated from the Russian by A. I. Kochubinskiĭ

Kallianpur, G.: Stochastic Filtering Theory. Applications of Mathematics, vol. 13. Springer, New York (1980)

Karhunen, K.: Über lineare Methoden in der Wahrscheinlichkeitsrechnung. Ann. Acad. Sci. Fenn. A. 1947(37), 79 (1947)

von Kármán, T., Howarth, L.: On the statistical theory of isotropic turbulence. Proc. R. Soc. Lond. A 164, 192–215 (1938)

Katafygiotis, L.S., Zerva, A., Malyarenko, A.A.: Simulation of homogeneous and partially isotropic random fields. J. Eng. Mech. 125(10), 1180–1189 (1999). doi:10.1061/(ASCE)0733-9399(1999)125:10(1180)

Kolmogorov, A.N.: Kurven im Hilbertschen Raum, die gegenüber einer einparametrigen Gruppe von Bewegungen invariant sind. C. R. (Doklady) Acad. Sci. URSS (N.S.) 26, 6–9 (1940)

Kolmogorov, A.N.: Wienersche Spiralen und einige andere interessante Kurven im Hilbertschen Raum. C. R. (Doklady) Acad. Sci. URSS (N.S.) 26, 115–118 (1940)

Leonenko, N.: Limit Theorems for Random Fields with Singular Spectrum. Mathematics and its Applications, vol. 465. Kluwer, Dordrecht (1999). doi:10.1007/978-94-011-4607-4

Leonenko, N., Sakhno, L.: On spectral representations of tensor random fields on the sphere. Stoch. Anal. Appl. 30(1), 44–66 (2012). doi:10.1080/07362994.2012.628912

Leonenko, N.N., Taqqu, M.S., Terdik, G.: Estimation of the covariance function of Gaussian isotropic random fields on spheres, related Rosenblatt-type distributions and the cosmic variance problem (2017 submitted)

Loève, M.: Analyse harmonique générale d’une fonction aléatoire. C. R. Acad. Sci. Paris 220, 380–382 (1945)

Lomakin, V.A.: Statistical description of the stressed state of a body under deformation. Dokl. Akad. Nauk SSSR 155(6), 1274–1277 (1964)

Lomakin, V.A.: Deformation of microscopically nonhomogeneous elastic bodies. Appl. Math. Mech. 29(5), 888–893 (1965)

Malyarenko, A., Ostoja-Starzewski, M.: Statistically isotropic tensor random fields: correlation structures. Math. Mech. Complex Syst. 2(2), 209–231 (2014). doi:10.2140/memocs.2014.2.209

Malyarenko, A., Ostoja-Starzewski, M.: A random field formulation of Hooke’s law in all elasticity classes. arXiv:1602.09066 [math-ph] (2016)

Malyarenko, A., Ostoja-Starzewski, M.: A random field formulation of Hooke’s law in all elasticity classes. J. Elast. 127(2), 269–302 (2016). doi:10.1007/s10659-016-9613-2

Malyarenko, A., Ostoja-Starzewski, M.: Spectral expansions of homogeneous and isotropic tensor-valued random fields. Z. Angew. Math. Phys. 67(3), Art. 59, 20 (2016). doi:10.1007/s00033-016-0657-8

Marinucci, D., Peccati, G.: Random Fields on the Sphere. Representation, Limit Theorems and Cosmological Applications. London Mathematical Society Lecture Note Series, vol. 389. Cambridge University Press, Cambridge (2011). doi:10.1017/CBO9780511751677

Marinucci, D., Peccati, G.: Mean-square continuity on homogeneous spaces of compact groups. Electron. Commun. Probab. 18(37), 10 (2013). doi:10.1214/ECP.v18-2400

Monin, A.S., Yaglom, A.M.: Statistical Fluid Mechanics: Mechanics of Turbulence, vol. 2. Dover, Mineola (2007). Translated from the 1965 Russian original. English edition updated, augmented and revised by the authors. Edited and with a preface by John L. Lumley. Reprinted from the 1975 edition

Prudnikov, A.P., Brychkov, Y.A., Marichev, O.I.: Integrals and Series, vol. 1. Gordon & Breach Science Publishers, New York (1986). Elementary functions. Translated from the Russian and with a preface by N.M. Queen

Prudnikov, A.P., Brychkov, Y.A., Marichev, O.I.: Integrals and Series, vol. 2, 2nd edn. Gordon & Breach Science Publishers, New York (1988). Special functions. Translated from the Russian by N.M. Queen

Robertson, H.P.: The invariant theory of isotropic turbulence. Proc. Camb. Philos. Soc. 36, 209–223 (1940)

Schoenberg, I.J.: Metric spaces and completely monotone functions. Ann. Math. (2) 39(4), 811–841 (1938). doi:10.2307/1968466

Selivanova, S.: Computing Clebsch-Gordan matrices with applications in elasticity theory. In: Brattka, V., Diener, H., Spreen, D. (eds.) Logic, Computation, Hierarchies. Ontos Mathematical Logic, vol. 4, pp. 273–295. De Gruyter, Berlin (2014)

Sobczyk, K., Kirkner, D.: Stochastic Modeling of Microstructures. Modeling and Simulation in Science, Engineering and Technology. Birkhäuser, Boston (2012)

Truesdell III, C.A.: A First Course in Rational Continuum Mechanics, vol. 1. General Concepts. Pure and Applied Mathematics, vol. 71. Academic Press, Inc., Boston (1991)

Weyl, H.: The Classical Groups, Their Invariants and Representations. Princeton Landmarks in Mathematics. Princeton University Press, Princeton (1997). Fifteenth printing, Princeton Paperbacks

Wineman, A.S., Pipkin, A.C.: Material symmetry restrictions on constitutive equations. Arch. Ration. Mech. Anal. 17, 184–214 (1964)

Yaglom, A.M.: Certain types of random fields in \(n\)-dimensional space similar to stationary stochastic processes. Teor. Veroyatnost. i Primenen 2, 292–338 (1957)

Acknowledgements

Anatoliy Malyarenko is grateful to Professor Martin Ostoja-Starzewski for introducing him to probabilistic models of continuum physics and for fruitful discussions. Nikolai N. Leonenko was supported in part by Projects MTM2012-32674 (co-funded by European Regional Development Funds) and MTM2015-71839-P, MINECO, Spain. This research was also supported under the Australian Research Council’s Discovery Projects funding scheme (Project Number DP160101366), and under the Cardiff Incoming Visiting Fellowship Scheme and International Collaboration Seedcorn Fund.


Appendices

Appendix A: Tensors

There are several equivalent definitions of tensors. Surprisingly, the most abstract of them is useful in the theory of random fields.

Let r be a nonnegative integer, and let \(V_1, \ldots , V_r\) be linear spaces over the same field \(\mathbb {K}\). When \(r=0\), define the tensor product of the empty family of spaces as \(\mathbb {K}^1\), the one-dimensional linear space over \(\mathbb {K}\).

Theorem 5

(The universal mapping property) There exist a linear space \(V_1\otimes \cdots \otimes V_r\) and a multilinear map \(\tau :V_1\times V_2\times \cdots \times V_r\rightarrow V_1\otimes \cdots \otimes V_r\), unique up to a unique isomorphism, that satisfy the universal mapping property: for any linear space W and for any multilinear map \(\beta :V_1\times V_2\times \cdots \times V_r\rightarrow W\), there exists a unique linear operator \(B:V_1\otimes \cdots \otimes V_r\rightarrow W\) such that \( \beta =B\circ \tau \):

In other words, the construction of the tensor product of linear spaces reduces the study of multilinear maps to the study of linear ones.

Remark 1

In mathematical jargon, the above diagram is commutative: all directed paths with the same start and endpoints lead to the same result by composition. That is: \(\beta =B\circ \tau \).

The tensor product \(\mathbf {v}_1\otimes \cdots \otimes \mathbf {v}_r\) of the vectors \(\mathbf {v}_i\in V_i\), \(1\le i\le r\), is defined by \(\mathbf {v}_1\otimes \cdots \otimes \mathbf {v}_r:=\tau (\mathbf {v}_1,\dots ,\mathbf {v}_r)\).

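Let \(V_1, \dots , V_r\), \(W_1, \dots , W_r\) be finite-dimensional linear spaces, and let \(A_i\in L(V_i,W_i)\) for \(1\le i\le r\). The tensor product of linear operators, \(A_1\otimes \cdots \otimes A_r\), is the unique element of the space \(L(V_1\otimes \cdots \otimes V_r,W_1\otimes \cdots \otimes W_r)\) such that \((A_1\otimes \cdots \otimes A_r)(\mathbf {v}_1\otimes \cdots \otimes \mathbf {v}_r)=A_1\mathbf {v}_1\otimes \cdots \otimes A_r\mathbf {v}_r\) for all \(\mathbf {v}_i\in V_i\).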

If all the spaces \(V_i\), \(1\le i\le r\), are copies of the same space V, then we write \(V^{\otimes r}\) for the r-fold tensor product of V with itself, and \(\mathbf {v}^{\otimes r}\) for the tensor product of r copies of a vector \(\mathbf {v}\in V\). Similarly, for \(A\in L(V,V)\) we write \( A^{\otimes r}\) for the r-fold tensor product of A with itself. Note that \(A^{\otimes 0}\) is the identity operator in the space \(\mathbb {K}^1\).
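In coordinates, these tensor products are realised by the Kronecker product: with the product basis \(\mathbf {e}_i\otimes \mathbf {f}_j\) ordered lexicographically, \(\mathbf {v}\otimes \mathbf {w}\) becomes `np.kron(v, w)` and \(A\otimes B\) becomes `np.kron(A, B)`. The Python sketch below (NumPy assumed, random test data) checks the identity \((A\otimes B)(\mathbf {v}\otimes \mathbf {w})=A\mathbf {v}\otimes B\mathbf {w}\) and builds the tensor power \(A^{\otimes r}\) with the convention \(A^{\otimes 0}=1\) on \(\mathbb {K}^1\); `kron_power` is a hypothetical helper name.

```python
import numpy as np
from functools import reduce

rng = np.random.default_rng(1)
A = rng.standard_normal((2, 3))   # A in L(R^3, R^2)
B = rng.standard_normal((4, 2))   # B in L(R^2, R^4)
v = rng.standard_normal(3)
w = rng.standard_normal(2)

lhs = np.kron(A, B) @ np.kron(v, w)   # (A ⊗ B)(v ⊗ w)
rhs = np.kron(A @ v, B @ w)           # A v ⊗ B w


def kron_power(a, r):
    """r-fold tensor power of a matrix; r = 0 gives the 1x1 identity on K^1."""
    return reduce(np.kron, [a] * r, np.eye(1))
```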

Appendix B: Group Representations

Let G be a topological group. A finite-dimensional representation of G is a pair \((\rho ,V)\), where V is a finite-dimensional linear space, and \(\rho :G\rightarrow \mathrm{GL}(V)\) is a continuous group homomorphism. Here \(\mathrm{GL}(V)\) is the general linear group of V, that is, the group of all invertible linear operators on V; after a choice of basis in an n-dimensional space V, it becomes the group \(\mathrm{GL}(n,\mathbb {K})\) of all invertible \(n\times n\) matrices. In what follows, we omit the word “finite-dimensional” unless infinite-dimensional representations are under consideration.

In a coordinate form, a representation of G is a pair consisting of the space \( \mathbb {K}^n\) and a continuous group homomorphism \(\rho :G\rightarrow \mathrm{GL}(n,\mathbb {K})\).

Let \(W\subseteq V\) be a linear subspace of the space V. W is called an invariant subspace of the representation \((\rho ,V)\) if \(\rho (g) \mathbf {w}\in W\) for all \(g\in G\) and \(\mathbf {w}\in W\). The restriction of \( \rho \) to W is then a representation \((\sigma ,W)\) of G. The formula \(\tau (g)(\mathbf {v}+W):=\rho (g)\mathbf {v}+W\) defines a representation \((\tau ,V/W)\) of G in the quotient space V / W.

In a coordinate form, take a basis for W and complete it to a basis for V. The matrix of \(\rho (g)\) relative to the above basis is

Let \((\rho ,V)\) and \((\tau ,W)\) be representations of G. An operator \(A\in L(V,W)\) is called an intertwining operator if

The intertwining operators form a linear space \(L_G(V,W)\) over \(\mathbb {K}\).

The representations \((\rho ,V)\) and \((\tau ,W)\) are called equivalent if the space \(L_G(V,W)\) contains an invertible operator. Let A be such an operator. Multiply (14) by \(A^{-1}\) from the right. We obtain

In a coordinate form, \(\tau (g)\) and \(\rho (g)\) are matrices of the same representation, written in two different bases, and A is the transition matrix between the bases.

A representation \((\rho ,V)\) with \(V\ne \{\mathbf {0}\}\) is called reducible if there exists an invariant subspace \(W\notin \{\{\mathbf {0} \},V\}\). In a coordinate form, all blocks of the matrix (13) are nonempty. Otherwise, the representation is called irreducible.

Example 9

Let \(G=\mathrm{O}(3)\). The mapping \(g\mapsto g^{\otimes r}\) is a representation of the group G in the space \((\mathbb {R}^3)^{\otimes r}\). When \(r=0\), this representation is called trivial, when \(r=1\), it is called defining. When \(r\ge 2\), this representation is reducible.

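From now on we suppose that the topological group G is compact. Then there exists an inner product \((\varvec{\cdot },\varvec{\cdot })\) on V such that \((\rho (g)\mathbf {u},\rho (g)\mathbf {v})=(\mathbf {u},\mathbf {v})\) for all \(g\in G\) and \(\mathbf {u},\mathbf {v}\in V\).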

In a coordinate form, we can choose an orthonormal basis in V. If V is a complex linear space, then the representation \((\rho ,V)\) takes values in \( \mathrm{U}(n)\), the group of \(n\times n\) unitary matrices, and we speak of a unitary representation. If V is a real linear space, then the representation \((\rho ,V)\) takes values in \(\mathrm{O}(n)\), and we speak of an orthogonal representation.
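Such an inner product is usually obtained by averaging an arbitrary inner product over the group with respect to the Haar measure. For a finite group the Haar measure is the normalised counting measure, and the Python sketch below (NumPy assumed) carries out this averaging for a deliberately non-orthogonal representation of the cyclic group of order 3 (a hypothetical example). It then uses a Cholesky factor of the averaged Gram matrix to pass to a basis in which every \(\rho (g)\) becomes an orthogonal matrix.

```python
import numpy as np

# Rotation by 120 degrees, conjugated by a non-orthogonal matrix S:
# a non-orthogonal representation of the cyclic group of order 3.
c, s = np.cos(2 * np.pi / 3), np.sin(2 * np.pi / 3)
rot = np.array([[c, -s], [s, c]])
S = np.array([[1.0, 2.0], [0.0, 1.0]])
gen = S @ rot @ np.linalg.inv(S)
group = [np.linalg.matrix_power(gen, k) for k in range(3)]

# Average of the standard Gram matrix over the group: (u, v)_M = u^T M v
# satisfies (g u, g v)_M = (u, v)_M for every g in the group.
M = sum(g.T @ g for g in group) / len(group)

# Orthonormal coordinates for (., .)_M: with M = C C^T, the matrices
# C^T g C^{-T} are orthogonal.
C = np.linalg.cholesky(M)
orthogonal_group = [C.T @ g @ np.linalg.inv(C.T) for g in group]
```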

Let \((\pi ,V)\) and \((\rho ,W)\) be representations of G. The direct sum of representations is the representation \((\pi \oplus \rho ,V\oplus W)\) acting by

In a coordinate form, we have

Consider the action \(\pi \otimes \rho \) of the group G on the set of tensor products \(\mathbf {v}\otimes \mathbf {w}\) defined by

This action may be extended by linearity to the tensor product of representations \((\pi \otimes \rho ,V\otimes W)\). In a coordinate form, \( (\pi \otimes \rho )(g)\) is a rank 4 tensor with components

A representation \((\sigma ,V)\) of a group G is called completely reducible if for every invariant subspace \(W\subset V\) there exists an invariant subspace \(U\subset V\) such that \(V=W\oplus U\). In a coordinate form, any basis \(\{\mathbf {w}_1,\dots ,\mathbf {w}_p\}\) for W can be completed to a basis \(\{\mathbf {w}_1,\dots ,\mathbf {w}_p,\mathbf {u}_1,\dots , \mathbf {u}_q\}\) for V such that the span of the vectors \(\mathbf {u}_1 ,\dots ,\mathbf {u}_q\) is invariant. The matrix \(\sigma (g)\) in the above basis has the form (15). Any representation of a compact group is completely reducible.

Let \((\rho ,V)\) be an irreducible representation of a group G. Denote by \( [\rho ]\) the equivalence class of all representations of G equivalent to \( (\rho ,V)\) and by \(\hat{G}\) the set of all equivalence classes of irreducible representations of G. For any finite-dimensional representation \( (\sigma ,V) \) of G, there exist finitely many equivalence classes \([\rho _1], \dots , [\rho _k]\in \hat{G}\) and uniquely determined positive integers \(m_1 , \dots , m_k\) such that \((\sigma ,V)\) is equivalent to the direct sum of \( m_1\) copies of the representation \((\rho _1,V_1), \ldots , m_k\) copies of the representation \((\rho _k,V_k)\). The direct sum \(m_iV_i\) of \(m_i\) copies of the linear space \(V_i\) is called the isotypic subspace of the space V that corresponds to the representation \((\rho _i,V_i)\). The numbers \(m_i\) are called the multiplicities of the irreducible representation \( (\rho _i,V_i)\) in \((\sigma ,V)\). The decompositions \(V=\bigoplus m_iV_i\) and \( \sigma =\bigoplus m_i\rho _i\) are called the isotypic decompositions.

Assume that a compact group G is simply reducible. This means that for any three irreducible representations \((\rho ,V)\), \((\sigma ,W)\), and \( (\tau ,U)\) of G the multiplicity \(m_{\tau }\) of \(\tau \) in \(\rho \otimes \sigma \) is equal to either 0 or 1. For example, the group \(\mathrm{O}(3)\) is simply reducible. Assume \(m_{\tau }=1\). Let \(\{\,\mathbf {e}^{\rho }_i:1\le i\le \dim \rho \,\}\) be an orthonormal basis in V, and similarly for \( \sigma \) and \(\tau \). There are two natural bases in the space \(V\otimes W\). The coupled basis is

The uncoupled basis is

In a coordinate form, the elements of the space \(V\otimes W\) are matrices with \(\dim \rho \) rows and \(\dim \sigma \) columns. The coupled basis consists of matrices having 1 in the ith row and jth column, and all other entries equal to 0. Denote by \(c^{k[i,j]}_{\tau [\rho ,\sigma ]}\) the coefficients of the expansion of the vectors of the uncoupled basis in the coupled basis:

The numbers \(c^{k[i,j]}_{\tau [\rho ,\sigma ]}\) are called the Clebsch–Gordan coefficients of the group G. In the coupled basis, the vectors of the uncoupled basis are matrices \(c^k_{\tau [\rho ,\sigma ]}\) with matrix entries \(c^{k[i,j]}_{\tau [\rho ,\sigma ]}\), the Clebsch–Gordan matrices.

Example 10

(Irreducible unitary representations of \(\mathrm{SU}(2)\)) Let \(\ell \) be a nonnegative integer or a half-integer (half of an odd integer). Let \((\rho _0,\mathbb {C}^1)\) be the trivial representation, and let \((\rho _{1/2},\mathbb {C}^2)\) be the defining representation of \( \mathrm{SU}(2)\). The representation \((\rho _{\ell },\mathbb {C}^{2\ell +1})\) with \(\ell =1, 3/2, 2, \dots \) is the symmetric tensor power \(\rho _{\ell }= \mathsf {S}^{2\ell }(\rho _{1/2})\). Up to equivalence, no other irreducible unitary representations exist.

We may realise the representations \(\rho _{\ell }\) in the space \(\mathcal {P} ^{2\ell }(\mathbb {C}^2)\) of homogeneous polynomials of degree \(2\ell \) in two formal complex variables \(\xi \) and \(\eta \) over the two-dimensional complex linear space \(\mathbb {C}^2\). The group \(\mathrm{SU}(2)\) consists of the matrices

The representation \(\rho _{\ell }\) acts as follows:

Note that \(\rho _{\ell }(-E)=E\) if and only if \(\ell \) is an integer.

The Wigner orthonormal basis in the space \(\mathcal {P}^{2\ell }(\mathbb {C}^2)\) is as follows:

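The matrix entries of the operators \(\rho _{\ell }(g)\) in the above basis are called Wigner D functions and are denoted by \(D^{\ell }_{mn}(g)\). The tensor product \(\rho _{\ell _1}\otimes \rho _{\ell _2}\) decomposes as follows (the Clebsch–Gordan series):

$$\begin{aligned} \rho _{\ell _1}\otimes \rho _{\ell _2}=\bigoplus _{\ell =|\ell _1-\ell _2|}^{\ell _1+\ell _2}\rho _{\ell }, \end{aligned}$$where \(\ell \) runs over \(|\ell _1-\ell _2|, |\ell _1-\ell _2|+1, \dots , \ell _1+\ell _2\).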
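The Clebsch–Gordan series \(\rho _{\ell _1}\otimes \rho _{\ell _2}=\bigoplus _{\ell =|\ell _1-\ell _2|}^{\ell _1+\ell _2}\rho _{\ell }\) can be sanity-checked by counting dimensions: \(\sum _{\ell }(2\ell +1)=(2\ell _1+1)(2\ell _2+1)\). The Python sketch below (standard library only) uses exact fractions so that half-integer \(\ell \) are handled without rounding; the function names are illustrative.

```python
from fractions import Fraction


def clebsch_gordan_series(l1, l2):
    """The weights ell = |l1 - l2|, |l1 - l2| + 1, ..., l1 + l2 occurring in rho_{l1} ⊗ rho_{l2}."""
    l1, l2 = Fraction(l1), Fraction(l2)
    series, ell = [], abs(l1 - l2)
    while ell <= l1 + l2:
        series.append(ell)
        ell += 1
    return series


def dim(ell):
    """Dimension 2 ell + 1 of the representation rho_ell."""
    return int(2 * Fraction(ell) + 1)
```

For example, `clebsch_gordan_series(Fraction(1, 2), Fraction(1, 2))` gives the weights 0 and 1, that is, \(\mathbb {C}^2\otimes \mathbb {C}^2=\mathbb {C}^1\oplus \mathbb {C}^3\).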

Example 11

(Irreducible unitary representations of \(\mathrm{SO}(3)\) and \( \mathrm{O}(3)\)) Realise the linear space \(\mathbb {R}^3\) with coordinates \(x_{-1}\), \(x_0\), and \(x_1\) as the set of traceless Hermitian matrices over \(\mathbb {C}^2\) with entries

The matrix (17) acts on the so realised \(\mathbb {R}^3\) as follows:

The mapping \(\pi \) is a homomorphism of \(\mathrm{SU}(2)\) onto \(\mathrm{SO}(3)\) with kernel \(\{E,-E\}\). Assume that \((\rho ,V)\) is an irreducible unitary representation of \(\mathrm{SO}(3)\). Then \((\rho \circ \pi ,V)\) is an irreducible unitary representation of \(\mathrm{SU}(2)\) whose kernel contains \(-E\). Since \(\rho _{\ell }(-E)=E\) only for integer \(\ell \), we have \(\rho \circ \pi =\rho _{\ell }\) for some integer \(\ell \). In other words, every irreducible unitary representation \((\rho _{\ell },V)\) of \( \mathrm{SU}(2)\) with integer \(\ell \) gives rise to an irreducible unitary representation of \(\mathrm{SO}(3)\), and no other irreducible unitary representations exist. We denote the above representation of \(\mathrm{SO}(3)\) again by \((\rho _{\ell },V)\).
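The covering homomorphism \(\pi \) can be written down explicitly. The Python sketch below (NumPy assumed) uses the Pauli matrices as a basis of the traceless Hermitian \(2\times 2\) matrices. This is an assumption: the coordinates \(x_{-1}\), \(x_0\), \(x_1\) of the text may correspond to a differently ordered or scaled basis. The sketch computes \(\pi (g)_{ij}=\tfrac{1}{2}\mathrm{tr}(\sigma _ig\sigma _jg^{*})\), the matrix of \(X\mapsto gXg^{*}\), and checks that \(\pi (g)\in \mathrm{SO}(3)\), that \(\pi \) is a homomorphism, and that \(\pi (-g)=\pi (g)\).

```python
import numpy as np

# Pauli matrices: an (assumed) basis of the traceless Hermitian 2x2 matrices.
PAULI = (np.array([[0, 1], [1, 0]], dtype=complex),
         np.array([[0, -1j], [1j, 0]], dtype=complex),
         np.array([[1, 0], [0, -1]], dtype=complex))


def random_su2(rng):
    """Random element [[alpha, beta], [-conj(beta), conj(alpha)]] with |alpha|^2 + |beta|^2 = 1."""
    q = rng.standard_normal(4)
    q /= np.linalg.norm(q)
    alpha, beta = complex(q[0], q[1]), complex(q[2], q[3])
    return np.array([[alpha, beta], [-beta.conjugate(), alpha.conjugate()]])


def covering_map(g):
    """3x3 matrix of X -> g X g^* in the Pauli basis: R_ij = tr(sigma_i g sigma_j g^*) / 2."""
    gh = g.conj().T
    return np.array([[0.5 * np.trace(si @ g @ sj @ gh).real for sj in PAULI]
                     for si in PAULI])


rng = np.random.default_rng(5)
g1, g2 = random_su2(rng), random_su2(rng)
R = covering_map(g1)
```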

Let \(\mathrm{SO}(2)\) be the subgroup of \(\mathrm{SO}(3)\) that leaves the vector \((0,0,1)^{\top }\) fixed. The restriction of \(\rho _{\ell }\) to \(\mathrm{SO}(2)\) is equivalent to the direct sum of the irreducible unitary representations \((\mathrm{e}^{\mathrm{i}m\varphi },\mathbb {C}^1)\), \(-\ell \le m\le \ell \), of \(\mathrm{SO}(2)\). Moreover, the space of the representation \(( \mathrm{e}^{\mathrm{i}m\varphi },\mathbb {C}^1)\) is spanned by the vector \( \mathbf {e}_m(\xi ,\eta )\) of the Wigner basis (18). This explains why the vectors of the Wigner basis are enumerated by m.

The group O(3) is the direct product of its normal subgroups \(\mathrm{SO }(3)\) and \(\{I,-I\}\). The elements of \(\mathrm{SO}(3)\) are rotations, while the nonidentity element \(-I\) of the second factor is the central inversion. Therefore, any irreducible unitary representation of O(3) is the outer tensor product of some \((\rho _{\ell },V)\) and an irreducible unitary representation of \(\{I,-I\}\). The latter group has two irreducible unitary representations: the trivial one \((\rho _+,\mathbb {C}^1)\) and the determinant \((\rho _-,\mathbb {C}^1)\). Denote \(\rho _{\ell ,+}:=\rho _{\ell } \hat{\otimes }\rho _+\) and \(\rho _{\ell ,-}:=\rho _{\ell }\hat{\otimes }\rho _-\). These are all the irreducible unitary representations of O(3).

Introduce coordinates on \(\mathrm{SO}(3)\), the Euler angles. Any rotation g may be performed by three successive rotations:

- rotation \(g_0(\psi )\) about the \(x_0\)-axis through an angle \(\psi \), \( 0\le \psi <2\pi \);
- rotation \(g_{-1}(\theta )\) about the \(x_{-1}\)-axis through an angle \( \theta \), \(0\le \theta \le \pi \);
- rotation \(g_0(\varphi )\) about the \(x_0\)-axis through an angle \(\varphi \), \(0\le \varphi <2\pi \).

The angles \(\psi \), \(\theta \), and \(\varphi \) are the Euler angles. The Wigner D functions are \(D^{\ell }_{mn}(\varphi ,\theta ,\psi )\). The Wigner D functions \(D^{\ell }_{m0}\) do not depend on \(\psi \) and may be written as \( D^{\ell }_{m0}(\varphi ,\theta )\). The spherical harmonics \(Y_{\ell }^m\) are defined by

Let \((r,\theta ,\varphi )\) be the spherical coordinates in \(\mathbb {R}^3\):

The measure \(\mathrm{d}\Omega :=\sin \theta \,\mathrm{d}\varphi \,\mathrm{d} \theta \) is the Lebesgue measure on the unit sphere \(S^2:=\{\,\mathbf {x}\in \mathbb {R}^3:\Vert \mathbf {x} \Vert =1\,\}\). The spherical harmonics are orthonormal:

Example 12

(Expansions of tensor representations of the group \(\mathrm{O} (3)\)) Let \(r\ge 2\) be an integer, and let \(\Sigma _r\) be the permutation group of the numbers \(1, 2, \dots , r\). The action

may be extended by linearity to an orthogonal representation of the group \( \Sigma _r\) in the space \((\mathbb {R}^3)^{\otimes r}\), call it \((\rho _r,( \mathbb {R}^3)^{\otimes r})\). Consider the orthogonal representation \((\tau ,( \mathbb {R}^3)^{\otimes r})\) of the group \(\mathrm{O}(3)\times \Sigma _r\) acting by

The representation \((\tau ,(\mathbb {R}^3)^{\otimes 2})\) of the group \(\mathrm{O}(3)\times \Sigma _2\) is the direct sum of three irreducible components

where \(\tau _+\) is the trivial representation of the group \(\Sigma _2\), while \( \varepsilon \) is its non-trivial representation. The one-dimensional space of the first component is the span of the identity matrix and consists of scalars. The three-dimensional space of the second component is the space \( \mathsf {\Lambda }^2(\mathbb {R}^3)\) of \(3\times 3\) skew-symmetric matrices. Its elements are three-dimensional pseudo-vectors. Finally, the five-dimensional space of the third component consists of \(3\times 3\) traceless symmetric matrices (deviators). The second component is \((\mathsf { \Lambda }^2(g),\mathsf {\Lambda }^2(\mathbb {R}^3))\), and the direct sum of the first and third components is \((\mathsf {S}^2(g),\mathsf {S}^2(\mathbb {R}^3))\).
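The decomposition of \((\mathbb {R}^3)^{\otimes 2}\) into components of dimensions \(1+3+5=9\) is the familiar splitting of a \(3\times 3\) matrix into its isotropic part, skew-symmetric part, and deviator. The Python sketch below (NumPy assumed, random test data, illustrative function names) performs the splitting and checks that the parts are mutually orthogonal and transform equivariantly. It also shows the pseudo-vector behaviour of the skew part: its axial vector transforms as \(\mathbf {w}\mapsto \det (g)\,g\mathbf {w}\) for \(g\in \mathrm{O}(3)\).

```python
import numpy as np


def decompose(a):
    """Split a 3x3 matrix into isotropic (scalar), skew-symmetric and deviatoric parts."""
    iso = np.trace(a) / 3.0 * np.eye(3)
    skew = 0.5 * (a - a.T)
    dev = 0.5 * (a + a.T) - iso
    return iso, skew, dev


def axial_vector(skew):
    """Vector w with skew @ x = w x x (cross product) for every x."""
    return np.array([skew[2, 1], skew[0, 2], skew[1, 0]])


rng = np.random.default_rng(4)
a = rng.standard_normal((3, 3))
iso, skew, dev = decompose(a)
# Random orthogonal matrix (QR with sign fix), composed with -I so that
# g is an improper orthogonal matrix whenever the QR factor is proper.
q, r = np.linalg.qr(rng.standard_normal((3, 3)))
g = -(q * np.sign(np.diag(r)))
```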

In general, the representation \((\tau ,(\mathbb {R}^3)^{\otimes r})\) is reducible and decomposes into the direct sum of irreducible representations as follows:

where q is called the seniority index of the component \(U^{\ell x}(g)\rho _q(\sigma )\), see [1], and where \(x=g\) for even r and \(x=u\) for odd r. The number \(N^{\ell }_r\) of copies of the representation \(U^{\ell x}\) is given by
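Independently of a closed formula, the multiplicities \(N^{\ell }_r\) can be computed by coupling one copy of the defining representation at a time, using \(\rho _{\ell }\otimes \rho _1=\bigoplus _{k=|\ell -1|}^{\ell +1}\rho _k\). The Python sketch below (standard library only) performs this recursion for \(\mathrm{SO}(3)\). For \(\mathrm{O}(3)\) the counts are the same, because \(-I\) acts on \((\mathbb {R}^3)^{\otimes r}\) as \((-1)^r\), so every copy carries the same parity (\(g\) for even r, \(u\) for odd r). This is an illustrative alternative, not the formula of the text.

```python
from collections import Counter


def tensor_power_multiplicities(r):
    """Multiplicities N_r^ell of rho_ell in the r-th tensor power of the defining
    representation rho_1 of SO(3), via rho_l ⊗ rho_1 = ⊕_{k=|l-1|}^{l+1} rho_k."""
    mult = Counter({0: 1})  # r = 0: the trivial representation
    for _ in range(r):
        new = Counter()
        for ell, m in mult.items():
            for k in range(abs(ell - 1), ell + 2):
                new[k] += m
        mult = new
    return dict(sorted(mult.items()))
```

For \(r=4\) this gives \(N^0_4=3\), \(N^1_4=6\), \(N^2_4=6\), \(N^3_4=3\), \(N^4_4=1\), and the dimensions add up to \(3^4=81\).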

Appendix C: Classical Invariant Theory

Let V and W be two finite-dimensional linear spaces over the same field \( \mathbb {K}\). Let \((\rho ,V)\) and \((\sigma ,W)\) be two representations of a group G. A mapping \(h:W\rightarrow V\) is called a covariant, or form-invariant, or a covariant tensor of the pair of representations \( (\rho ,V)\) and \((\sigma ,W)\) if \(h(\sigma (g)\mathbf {w})=\rho (g)h(\mathbf {w})\) for all \(g\in G\) and \(\mathbf {w}\in W\).

In other words, the diagram

is commutative, as explained in Remark 1.

If \(V=\mathbb {K}^1\) and \(\rho \) is the trivial representation of G, then the corresponding covariant scalars are called absolute invariants (or just invariants) of the representation \((\sigma ,W)\), hence the name Invariant Theory. Note that the set \(\mathbb {K}[W]^G\) of invariants is an algebra over the field \(\mathbb {K}\), that is, a linear space over \(\mathbb {K}\) with a bilinear multiplication and a multiplicative identity 1. The product of a covariant \(h:W\rightarrow V\) and an invariant \(f\in \mathbb {K}[W]^G\) is again a covariant. In other words, the covariant tensors of the pair of representations \((\rho ,V)\) and \((\sigma ,W)\) form a module over the algebra of invariants of the representation \( (\sigma ,W)\).
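As a small illustration of this module structure, take \(G=\mathrm{O}(3)\) acting on \(W=\mathbb {R}^3\) by the defining representation and on \(V=(\mathbb {R}^3)^{\otimes 2}\) by \(g\mapsto g^{\otimes 2}\). Then \(f(\mathbf {x})=\Vert \mathbf {x}\Vert ^2\) is an invariant, \(h(\mathbf {x})=\mathbf {x}\otimes \mathbf {x}\) is a covariant, and so is their product. The Python sketch below (NumPy assumed; the functions are hypothetical examples) checks these identities for a random orthogonal matrix.

```python
import numpy as np


def random_orthogonal(rng):
    """Random element of O(3) via QR of a Gaussian matrix, with a sign correction."""
    q, r = np.linalg.qr(rng.standard_normal((3, 3)))
    return q * np.sign(np.diag(r))


def f(x):
    """Invariant: f(g x) = f(x)."""
    return float(x @ x)


def h(x):
    """Covariant of order 2: h(g x) = g h(x) g^T."""
    return np.outer(x, x)


def fh(x):
    """Product of an invariant and a covariant, again a covariant."""
    return f(x) * h(x)


rng = np.random.default_rng(2)
g = random_orthogonal(rng)
x = rng.standard_normal(3)
```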

A mapping \(h:W\rightarrow V\) is called a homogeneous polynomial mapping of degree d if for any \(\mathbf {v}\in V\) the mapping \(\mathbf {w}\mapsto (h( \mathbf {w}),\mathbf {v})\) is a homogeneous polynomial of degree d in \(\dim W \) variables. The mapping h is called a polynomial covariant of degree d if it is a homogeneous polynomial mapping of degree d and a covariant.

Let \((\sigma ,W)\) be the defining representation of G, and let \((\rho ,V)\) be the rth tensor power of the defining representation. The corresponding covariant tensors are said to have order r. The covariant tensors of degree 0 and of order r of the group \(\mathrm{O}(n)\) are known as isotropic tensors.
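For \(\mathrm{O}(3)\), the isotropic tensors of order 2 are the multiples of \(\delta _{ij}\), and those of order 4 are the linear combinations of \(\delta _{ij}\delta _{kl}\), \(\delta _{ik}\delta _{jl}\) and \(\delta _{il}\delta _{jk}\). The Python sketch below (NumPy assumed; `act` is an illustrative helper) checks their invariance under \(g^{\otimes r}\). It also shows that the Levi-Civita symbol \(\varepsilon _{ijk}\) is multiplied by \(\det g\), so it is isotropic for \(\mathrm{SO}(3)\) but not for \(\mathrm{O}(3)\).

```python
import numpy as np


def act(g, t):
    """Apply g^{⊗r} to a rank-r tensor: t_{i1..ir} -> g_{i1 a1} ... g_{ir ar} t_{a1..ar}."""
    for axis in range(t.ndim):
        # Contract g with the current axis, then move the new index back into place.
        t = np.moveaxis(np.tensordot(g, t, axes=([1], [axis])), 0, axis)
    return t


delta = np.eye(3)
order4 = [np.einsum("ij,kl->ijkl", delta, delta),
          np.einsum("ik,jl->ijkl", delta, delta),
          np.einsum("il,jk->ijkl", delta, delta)]

levi_civita = np.zeros((3, 3, 3))
for i, j, k in [(0, 1, 2), (1, 2, 0), (2, 0, 1)]:
    levi_civita[i, j, k], levi_civita[j, i, k] = 1.0, -1.0   # even / odd permutations

rng = np.random.default_rng(3)
q, r = np.linalg.qr(rng.standard_normal((3, 3)))
g = (q * np.sign(np.diag(r))) @ np.diag([1.0, 1.0, -1.0])  # random element of O(3)
```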

The algebra of invariants and the module of covariant tensors have been objects of intensive research. The first general result was obtained in [15], where it was proved that, for any finite-dimensional complex representation of the group \(G=\mathrm{SL}(2,\mathbb {C})\), the algebra of invariants and the module of covariant tensors are finitely generated. In other words, there exists an integrity basis: a finite set of invariant homogeneous polynomials \(I_1, \dots , I_N\) such that every polynomial invariant can be written as a polynomial in \(I_1, \dots , I_N\). An integrity basis is called minimal if none of its elements can be expressed as a polynomial in the others. A minimal integrity basis is not necessarily unique, but all minimal integrity bases have the same number of elements of each degree.

The algebra of invariants is not necessarily free: polynomial relations between the generators, called syzygies, may exist.
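A standard small example of a syzygy, chosen for illustration and not taken from the text: the group \(G=\{I,-I\}\) acts on \(\mathbb {R}^2\) by \(\mathbf {w}\mapsto \pm \mathbf {w}\). A polynomial is invariant exactly when all its monomials have even total degree, so \(I_1=x^2\), \(I_2=xy\), \(I_3=y^2\) form an integrity basis, and the three generators are algebraically dependent through the syzygy \(I_1I_3-I_2^2=0\). The Python sketch checks these facts with exact integer arithmetic on a small grid; the function names are hypothetical.

```python
import itertools


def invariants(x: int, y: int) -> tuple[int, int, int]:
    """Integrity basis I1 = x^2, I2 = xy, I3 = y^2 for the group {I, -I} on R^2."""
    return x * x, x * y, y * y


def express_monomial(a: int, b: int) -> tuple[int, int, int]:
    """Exponents (p, q, s) with x^a y^b = I1^p * I2^q * I3^s; requires a + b even."""
    if (a + b) % 2:
        raise ValueError("x^a y^b is not invariant when a + b is odd")
    q = a % 2  # one factor xy when both exponents are odd
    return (a - q) // 2, q, (b - q) // 2


def check(points=range(-3, 4), max_deg: int = 6) -> bool:
    for x, y in itertools.product(points, repeat=2):
        i1, i2, i3 = invariants(x, y)
        # Invariance under w -> -w.
        if invariants(-x, -y) != (i1, i2, i3):
            return False
        # The syzygy I1 * I3 - I2^2 = 0 holds identically.
        if i1 * i3 - i2 ** 2 != 0:
            return False
        # Every invariant monomial is a polynomial in I1, I2, I3.
        for a in range(max_deg + 1):
            for b in range(max_deg + 1 - a):
                if (a + b) % 2 == 0:
                    p, q, s = express_monomial(a, b)
                    if x ** a * y ** b != i1 ** p * i2 ** q * i3 ** s:
                        return False
    return True


if __name__ == "__main__":
    print(check())
```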

The importance of polynomial invariants is explained by the following result. Let G be a closed subgroup of the group \(\mathrm{O}(3)\), the group of symmetries of a material. Let \((\rho ,\mathsf {V})\), \((\rho _1,\mathsf {V}_1), \dots , (\rho _N,\mathsf {V}_N)\) be finitely many orthogonal representations of G in real finite-dimensional spaces. Let \(\mathsf {T}:\mathsf {V}_1\oplus \cdots \oplus \mathsf {V}_N\rightarrow \mathsf {V}\) be an arbitrary (say, measurable) covariant of the pair \(\rho \) and \(\rho _1\oplus \cdots \oplus \rho _N\). Let \(\{\,I_k:1\le k\le K\,\}\) be an integrity basis for polynomial invariants of the representation \(\rho _1\oplus \cdots \oplus \rho _N\), and let \(\{\,\mathsf {T}_l:1\le l\le L\,\}\) be an integrity basis for polynomial covariant tensors of the pair \(\rho \) and \(\rho _1\oplus \cdots \oplus \rho _N\). Following [45], we call \(\mathsf {T}_l\) basic covariant tensors.

Theorem 6

[45] A function \(\mathsf {T}:\mathsf {V}_1\oplus \cdots \oplus \mathsf {V}_N\rightarrow \mathsf {V}\) is a measurable covariant of the pair \(\rho \) and \(\rho _1\oplus \cdots \oplus \rho _N\) if and only if it has the form

$$ \mathsf {T}=\sum _{l=1}^{L}\varphi _l(I_1,\dots ,I_K)\,\mathsf {T}_l, $$

where \(\varphi _l\) are real-valued measurable functions of the elements of an integrity basis.
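To illustrate Theorem 6 in the simplest case, assume \(G=\mathrm{O}(3)\), \(N=1\), \(\mathsf {V}_1=\mathbb {R}^3\) with the defining representation, and \(\mathsf {V}=\mathsf {S}^2(\mathbb {R}^3)\) with \(\rho (g)A=gAg^{\top }\). Under these assumptions the integrity basis of invariants is \(\{|\mathbf {x}|^2\}\) and the basic covariant tensors are \(\delta _{ij}\) and \(x_ix_j\), so every measurable covariant has the form \(\mathsf {T}(\mathbf {x})=\varphi _1(|\mathbf {x}|^2)\delta +\varphi _2(|\mathbf {x}|^2)\mathbf {x}\mathbf {x}^{\top }\). The Python sketch builds such a covariant from two functions of our own choosing (one of them deliberately discontinuous, to stress that \(\varphi _l\) need only be measurable) and checks covariance numerically; all names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(2)


def random_orthogonal(n: int = 3) -> np.ndarray:
    """Random n x n orthogonal matrix (QR of a Gaussian matrix, signs fixed)."""
    q, r = np.linalg.qr(rng.standard_normal((n, n)))
    return q * np.sign(np.diag(r))


def phi1(s: float) -> float:
    """Hypothetical measurable coefficient with a jump at s = 2."""
    return 1.0 if s < 2.0 else -0.5


def phi2(s: float) -> float:
    """Hypothetical smooth coefficient."""
    return float(np.exp(-s))


def T(x: np.ndarray) -> np.ndarray:
    """T(x) = phi1(|x|^2) delta + phi2(|x|^2) x x^T, a symmetric rank 2 tensor."""
    s = float(x @ x)  # the only element of the integrity basis
    return phi1(s) * np.eye(3) + phi2(s) * np.outer(x, x)


def check_covariance(trials: int = 200) -> bool:
    """Check T(g x) == g T(x) g^T at random g in O(3) and random x."""
    for _ in range(trials):
        g, x = random_orthogonal(), rng.standard_normal(3)
        if not np.allclose(T(g @ x), g @ T(x) @ g.T):
            return False
    return True


if __name__ == "__main__":
    print(check_covariance())
```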

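In 1939, in the first edition of [44], Weyl proved that any polynomial covariant of degree d and order r of the group \(\mathrm{O}(n)\) is a linear combination, with coefficients that are polynomials in \(|\mathbf {x}|^2=x_1^2+\cdots +x_n^2\), of products of Kronecker's deltas \(\delta _{ij}\) and components \(x_i\). For even r, the components can be grouped in pairs, that is, into second degree homogeneous polynomials \(x_ix_j\).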
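By the first fundamental theorem of invariant theory for \(\mathrm{O}(n)\), the polynomial covariants of order r are spanned by tensor products in which each of the r index slots either is paired with another slot by a Kronecker delta or carries one component \(x_i\), multiplied by powers of \(|\mathbf {x}|^2\). The Python sketch below enumerates these products for a given r (for even r the free components can be grouped in pairs \(x_ix_j\)) and checks their covariance numerically for \(n=3\). The names `partial_pairings`, `basic_term`, and `all_covariant` are hypothetical.

```python
import string

import numpy as np

rng = np.random.default_rng(3)


def random_orthogonal(n: int) -> np.ndarray:
    """Random n x n orthogonal matrix (QR of a Gaussian matrix, signs fixed)."""
    q, r = np.linalg.qr(rng.standard_normal((n, n)))
    return q * np.sign(np.diag(r))


def partial_pairings(slots):
    """Yield all ways to pair some index slots; unpaired slots stay free."""
    if not slots:
        yield []
        return
    first, rest = slots[0], slots[1:]
    # Either the first slot carries a component x_i ...
    yield from partial_pairings(rest)
    # ... or it is joined to a later slot by a Kronecker delta.
    for k, other in enumerate(rest):
        for tail in partial_pairings(rest[:k] + rest[k + 1:]):
            yield [(first, other)] + tail


def basic_term(x: np.ndarray, r: int, pairs) -> np.ndarray:
    """Tensor product of deltas on paired slots and x on free slots."""
    if not 1 <= r <= 26:
        raise ValueError("this sketch supports 1 <= r <= 26")
    letters = string.ascii_lowercase[:r]
    paired = {s for p in pairs for s in p}
    subs, ops = [], []
    for a, b in pairs:
        subs.append(letters[a] + letters[b])
        ops.append(np.eye(len(x)))
    for c in range(r):
        if c not in paired:
            subs.append(letters[c])
            ops.append(x)
    return np.einsum(",".join(subs) + "->" + letters, *ops)


def act(g: np.ndarray, t: np.ndarray) -> np.ndarray:
    """Apply the r-th tensor power of the defining representation to t."""
    for axis in range(t.ndim):
        t = np.moveaxis(np.tensordot(g, t, axes=([1], [axis])), 0, axis)
    return t


def all_covariant(r: int, trials: int = 20) -> bool:
    """Check basic_term(g x) == g^{(x) r} basic_term(x) for every term of order r."""
    terms = list(partial_pairings(list(range(r))))
    for _ in range(trials):
        g, x = random_orthogonal(3), rng.standard_normal(3)
        for pairs in terms:
            lhs = basic_term(g @ x, r, pairs)
            rhs = act(g, basic_term(x, r, pairs))
            if not np.allclose(lhs, rhs):
                return False
    return True


if __name__ == "__main__":
    for r in range(1, 5):
        print(r, len(list(partial_pairings(list(range(r))))), all_covariant(r))
```

For r = 2 the two terms are \(x_ix_j\) and \(\delta _{ij}\); the number of terms for r = 1, ..., 5 is 1, 2, 4, 10, 26.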

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Leonenko, N., Malyarenko, A. Matérn Class Tensor-Valued Random Fields and Beyond. J Stat Phys 168, 1276–1301 (2017). https://doi.org/10.1007/s10955-017-1847-2
