Abstract

We prove the large deviation principle (LDP) for the trajectory of a broad class of finite state mean-field interacting Markov jump processes via a general analytic approach based on viscosity solutions. Examples include generalized Ehrenfest models as well as Curie–Weiss spin flip dynamics with singular jump rates. The main step in the proof of the LDP, which is of independent interest, is the proof of the comparison principle for an associated collection of Hamilton–Jacobi equations. Additionally, we show that the LDP provides a general method to identify a Lyapunov function for the associated McKean–Vlasov equation.

1 Introduction

We consider two models of Markov jump processes with mean-field interaction. In both cases, we have n particles or spins that evolve as a pure jump process, where the jump rates of the individual particles depend on the empirical distribution of all n particles.

We prove the large deviation principle (LDP) for the trajectory of these empirical quantities, with Lagrangian rate function, via a proof that an associated Hamilton–Jacobi equation has a unique viscosity solution. The uniqueness is a consequence of the comparison principle, and the proof of this principle is the main novel contribution of this paper.

The first set of models that we consider are conservative models that generalize the Ehrenfest model. In the one-dimensional setting, this model can also be interpreted as the Moran model without mutation or selection.

We consider d-dimensional spins \(\sigma (1),\ldots ,\sigma (n)\) taking their values in \(\{-1,1\}^d\). The quantity of interest is the empirical magnetisation \(x_n = (x_{n,1},\ldots ,x_{n,d}) \in E_1 := [-1,1]^d\), where \(x_{n,i} = x_{n,i}(\sigma ) = \frac{1}{n} \sum _{j=1}^n \sigma _i(j)\).

The second class of models are jump processes \((\sigma (1),\ldots ,\sigma (n))\) on a finite state space \(\{1,\ldots ,d\}\). As an example, we can consider Glauber type dynamics, such as Curie–Weiss spin flip dynamics. In this case, the empirical measure \(\mu _n(t) \in E_2 := \mathcal {P}(\{1,\ldots ,d\})\) is given by

$$\begin{aligned} \mu _n(t) = \frac{1}{n} \sum _{i \le n} \delta _{\sigma _i(t)}, \end{aligned}$$

where \(\sigma _i(t) \in \{1,\ldots ,d\}\) is the state of the ith spin at time t.

Under some appropriate conditions, the trajectory \(x_n(t)\) or \(\mu _n(t)\) converges as \(n \rightarrow \infty \) to x(t), or \(\mu (t)\), the solution of a McKean–Vlasov equation, which is a generalization of the linear Kolmogorov forward equation which would appear in the case of independent particles.

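For these sets of models, we obtain an LDP for the trajectory of these empirical measures on the space \(D_{E_i}(\mathbb {R}^+)\), \(i \in \{1,2\}\), of càdlàg paths on \(E_i\) of the form

$$\begin{aligned} \mathbb {P}\left[ \{x_n(t)\}_{t \ge 0} \approx \{\gamma (t)\}_{t \ge 0}\right] \approx e^{-n I(\gamma )}, \end{aligned}$$

where

$$\begin{aligned} I(\gamma ) = I_0(\gamma (0)) + \int _0^\infty \mathcal {L}(\gamma (s),\dot{\gamma }(s)) \, \mathrm {d}s \end{aligned}$$

for trajectories \(\gamma \) that are absolutely continuous and \(I(\gamma ) = \infty \) otherwise. In particular, \(I(\gamma ) = 0\) for the solution \(\gamma \) of the limiting McKean–Vlasov equation. The Lagrangian \(\mathcal {L}: E_i \times \mathbb {R}^d \rightarrow \mathbb {R}^+\) is defined as the Legendre transform of a Hamiltonian \(H : E_i \times \mathbb {R}^d \rightarrow \mathbb {R}\) that can be obtained via a limiting procedure

$$\begin{aligned} Hf(x) = H(x,\nabla f(x)) = \lim _{n \rightarrow \infty } \frac{1}{n} e^{-nf(x)} A_n e^{nf}(x). \end{aligned} \tag{1.1}$$

Here \(A_n\) is the generator of the Markov process of \(\{x_n(t)\}_{t \ge 0}\) or \(\{\mu _n(t)\}_{t \ge 0}\). More details on the models and definitions follow shortly in Sect. 2.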

Recent applications of the path-space LDP are found in the study of mean-field Gibbs-non-Gibbs transitions, see e.g. [20, 29] or the microscopic origin of gradient flow structures, see e.g. [1, 27]. Other authors have considered the path-space LDP in various contexts before, see for example [3, 9, 13, 19, 23, 24, 26]. A comparison with these results follows in Sect. 2.6.

The novel aspect of this paper with respect to large deviations for jump processes is an approach via a class of Hamilton–Jacobi equations. In [22], a general strategy is proposed for the study of large deviations of trajectories, which is based on an extension of the theory of convergence of non-linear semigroups by the theory of viscosity solutions. As in the theory of weak convergence of Markov processes, this program is carried out in three steps. First, one proves convergence of the generators, i.e. (1.1). Second, one shows that H is indeed the generator of a semigroup. The third step is the verification of the exponential compact containment condition, which is immediate for our compact state-spaces and which yields, given the convergence of generators, exponential tightness on the Skorokhod space. This final step reduces the proof of the large deviation principle on the Skorokhod space to that of the finite dimensional distributions, which can then be proven via the first two steps.

Showing that H generates a semigroup is non-trivial and follows for example by showing that the Hamilton–Jacobi equation

$$\begin{aligned} f(x) - \lambda H(x,\nabla f(x)) - h(x) = 0 \end{aligned} \tag{1.2}$$

has a unique solution f for all \(h \in C(E_i)\) and \(\lambda >0\) in the viscosity sense. As mentioned above, it is exactly this problem that is the main focus of the paper. A bonus of this approach is that the conditions on the Markov processes for finite n are weaker than in previous studies, and allow for singular behaviour in the jump rate if the empirical quantity is close to the boundary.

This approach via the Hamilton–Jacobi equation has been carried out in [22] for Lévy processes on \(\mathbb {R}^d\), systems with multiple time scales and for stochastic equations in infinite dimensions. In [16], the LDP for a diffusion process on \((0,\infty )\) is treated with singular behaviour close to 0.

As a direct consequence of our LDP, we obtain a straightforward method to find Lyapunov functions for the limiting McKean–Vlasov equation. If \(A_n\) is the linear generator of the empirical quantity of interest of the n-particle process, the operator A obtained by \(Af = \lim _n A_n f\) can be represented by \(Af(x) = \langle \nabla f(x),\mathbf {F}(x)\rangle \) for some vector field \(\mathbf {F}\). If solutions to

$$\begin{aligned} \dot{x}(t) = \mathbf {F}(x(t)) \end{aligned} \tag{1.3}$$

are unique for a given starting point and if the empirical quantity \(x_n(0)\) (or \(\mu _n(0)\), in the setting of the second model) converges to x(0), the empirical quantities \(\{x_n(t)\}_{t \ge 0}\) converge almost surely to a solution \(\{x(t)\}_{t \ge 0}\) of (1.3). In Sect. 2.4, we will show that if the stationary measures of \(A_n\) satisfy an LDP on \(E_i\) with rate function \(I_0\), then \(I_0\) is a Lyapunov function for (1.3).

The paper is organised as follows. In Sect. 2, we introduce the models and state our results. Additionally, we give some examples to show how to apply the theorems. In Sect. 3, we recall the main results from [22] that relate the Hamilton–Jacobi equations (1.2) to the large deviation problem. Additionally, we verify conditions from [22] that are necessary to obtain our large deviation result with a rate function in Lagrangian form, in the case that we have uniqueness of solutions to the Hamilton–Jacobi equations. Finally, in Sect. 4 we prove uniqueness of viscosity solutions to (1.2).

2 Main Results

2.1 Two Models of Interacting Jump Processes

We do a large deviation analysis of the trajectory of the empirical magnetization or distribution for two models of interacting spin-flip systems.

2.1.1 Generalized Ehrenfest Model in d-Dimensions

Consider d-dimensional spins \(\sigma = (\sigma (1),\ldots ,\sigma (n)) \in (\{-1,1\}^d)^n\). For example, we can interpret this as n individuals with d types, either being \(-1\) or 1. For \(k \le n\), we denote the ith coordinate of \(\sigma (k)\) by \(\sigma _i(k)\). Set \(x_n = (x_{n,1},\ldots ,x_{n,d}) \in E_1 := [-1,1]^d\), where \(x_{n,i} = x_{n,i}(\sigma ) = \frac{1}{n} \sum _{j=1}^n \sigma _i(j)\) is the empirical magnetisation in the ith spin. For later convenience, denote by \(E_{1,n}\) the discrete subspace of \(E_1\) which is the image of \((\{-1,1\}^d)^n\) under the map \(\sigma \mapsto x_n(\sigma )\). The spins evolve according to mean-field Markovian dynamics with generator \(\mathcal {A}_n\):

$$\begin{aligned} \mathcal {A}_n f(\sigma ) = \sum _{i=1}^d \sum _{j=1}^n \left\{ \mathbb {1}_{\{\sigma _i(j) = -1\}} r_{n,+}^i(x_n(\sigma )) + \mathbb {1}_{\{\sigma _i(j) = 1\}} r_{n,-}^i(x_n(\sigma )) \right\} \left[ f(\sigma ^{i,j}) - f(\sigma )\right] . \end{aligned}$$

The configuration \(\sigma ^{i,j}\) is obtained by flipping the ith coordinate of the jth spin. The functions \(r_{n,+}^i,r_{n,-}^i\) are non-negative and represent the rates at which the ith coordinate of a spin flips from \(-1\) to 1 and from 1 to \(-1\), respectively.

The empirical magnetisation \(x_n\) is itself a Markov process. To motivate the form of the generator of the \(x_n\) process, we turn to the transition semigroups. For \(g \in C((\{-1,1\}^d)^n)\), denote

As the rates in the generator of \(\sigma _n\) only depend on the empirical magnetization, we find that for \(f \in C(E_{1,n})\)

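only depends on x and not on \(\sigma _n(0)\). Therefore, we can denote this object by \(S^2_n(t)f(x)\). Additionally, we see that \(S^1_n(t)(f \circ x_n)(\sigma _n) = S^2_n(t) f(x_n(\sigma _n))\). Thus, the empirical magnetization has generator \(A_n : C(E_{1,n}) \rightarrow C(E_{1,n})\) which satisfies \(A_n f(x_n(\sigma )) := \mathcal {A}_n (f \circ x_n)(\sigma )\) and is given by

$$\begin{aligned} A_n f(x) = \sum _{i=1}^d \left\{ n \frac{1-x_i}{2} r_{n,+}^i(x) \left[ f\left( x + \frac{2}{n} e_i\right) - f(x)\right] + n \frac{1+x_i}{2} r_{n,-}^i(x) \left[ f\left( x - \frac{2}{n} e_i\right) - f(x)\right] \right\} , \end{aligned}$$

where \(e_i\) is the vector consisting of 0s, and a 1 in the ith component.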

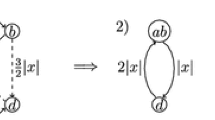

Note that \(A_n\) can also be obtained from \(\mathcal {A}_n\) intuitively. A change from \(-1\) to 1 in the ith coordinate induces a change \(+ \frac{2}{n} e_i\) in the empirical magnetisation. This happens at a rate \(r_{n,+}^i(x_n(\sigma ))\) multiplied by the number of spins that satisfy \(\sigma _i = -1\), i.e. \(n \frac{1 - x_{n,i}(\sigma )}{2}\).
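The jump mechanism just described can be turned into a small simulation. The following is a minimal sketch for \(d = 1\), assuming that the magnetisation jumps by \(+\frac{2}{n}\) at rate \(n\frac{1-x}{2} r_{n,+}(x)\) and by \(-\frac{2}{n}\) at rate \(n\frac{1+x}{2} r_{n,-}(x)\); the function name, its arguments and the constant rates in the usage line are illustrative choices, not part of the paper.

```python
import random

def simulate_magnetisation(n, x0, r_plus, r_minus, t_max, seed=0):
    """Gillespie-type simulation of the 1-d empirical magnetisation.

    Assumed dynamics: x -> x + 2/n at rate n*(1-x)/2 * r_plus(x),
                      x -> x - 2/n at rate n*(1+x)/2 * r_minus(x).
    """
    rng = random.Random(seed)
    # Track the number k of +1 spins as an integer to avoid rounding drift.
    k = round(n * (1 + x0) / 2)
    t = 0.0
    path = [(t, 2 * k / n - 1)]
    while True:
        x = 2 * k / n - 1
        up = (n - k) * r_plus(x)     # (number of -1 spins) * flip rate
        down = k * r_minus(x)        # (number of +1 spins) * flip rate
        total = up + down
        if total <= 0:
            break                    # no jump is possible any more
        t += rng.expovariate(total)  # exponential waiting time
        if t > t_max:
            break
        k += 1 if rng.random() * total < up else -1
        path.append((t, 2 * k / n - 1))
    return path

# Illustrative usage: independent spins, i.e. constant flip rates.
path = simulate_magnetisation(n=200, x0=0.5,
                              r_plus=lambda x: 1.0,
                              r_minus=lambda x: 1.0,
                              t_max=2.0)
print(len(path), path[-1])
```

Working with the integer count of \(+1\) spins keeps the path exactly on the lattice \(E_{1,n}\), and the boundary points \(\pm 1\) are never left because the corresponding jump rate vanishes there.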

Under suitable conditions on the rates \(r_{n,+}^i\) and \(r_{n,-}^i\), we will derive an LDP for the trajectory \(\{x_n(t)\}_{t \ge 0}\) in the Skorokhod space \(D_{E_1}(\mathbb {R}^+)\) of right continuous \(E_1\) valued paths that have left limits.

2.1.2 Systems of Glauber Type with d States

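We will also study the large deviation behaviour of copies of a Markov process on \(\{1,\ldots ,d\}\) that evolve under the influence of some mean-field interaction. Here \(\sigma = (\sigma (1),\ldots ,\sigma (n)) \in \{1,\ldots ,d\}^n\) and the empirical distribution \(\mu _n\) is given by \(\mu _n(\sigma ) = \frac{1}{n} \sum _{i \le n} \delta _{\sigma (i)}\) which takes values in

$$\begin{aligned} E_{2,n} := \left\{ \mu \in \mathcal {P}(\{1,\ldots ,d\}) \, \Big | \, \mu = \frac{1}{n} \sum _{i=1}^n \delta _{x_i} \text { for some } x_i \in \{1,\ldots ,d\} \right\} . \end{aligned}$$

Of course, this set can be seen as a discrete subset of \(E_2 := \mathcal {P}(\{1,\ldots ,d\}) = \{\mu \in \mathbb {R}^d \, | \, \mu _i \ge 0, \sum _i \mu _i = 1\}\). We take some n-dependent family of jump kernels \(r_n : \{1,\ldots , d\}\times \{1,\ldots , d\}\times E_{2,n} \rightarrow \mathbb {R}^+\) and define Markovian evolutions for \(\sigma \) by

$$\begin{aligned} \mathcal {A}_n f(\sigma ) = \sum _{i=1}^n \sum _{b=1}^d r_n\left( \sigma (i),b,\mu _n(\sigma )\right) \left[ f(\sigma ^{i,b}) - f(\sigma )\right] , \end{aligned}$$

where \(\sigma ^{i,b}\) is the configuration obtained from \(\sigma \) by changing the ith coordinate to b. Again, we have an effective evolution for \(\mu _n\), which is governed by the generator

$$\begin{aligned} A_n f(\mu ) = \sum _{a,b} n \mu (a) r_n(a,b,\mu ) \left[ f\left( \mu - \frac{1}{n} \delta _a + \frac{1}{n} \delta _b\right) - f(\mu )\right] . \end{aligned}$$

As in the first model, we will prove, under suitable conditions on the jump kernels \(r_n\), an LDP in n for \(\{\mu _n(t)\}_{t \ge 0}\) in the Skorokhod space \(D_{E_2}(\mathbb {R}^+)\).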

2.2 Large Deviation Principles

The main results in this paper are the two large deviation principles for the two sets of models introduced above. To be precise, we say that the sequence \(x_n \in D_{E_1}(\mathbb {R}^+)\), or for the second case \(\mu _n \in D_{E_2}(\mathbb {R}^+)\), satisfies the large deviation principle with rate function \(I : D_{E_1}(\mathbb {R}^+) \rightarrow [0,\infty ]\) if I is lower semi-continuous and the following two inequalities hold:

(a) For all closed sets \(G \subseteq D_{E_1}(\mathbb {R}^+)\), we have

$$\begin{aligned} \limsup _{n \rightarrow \infty } \frac{1}{n} \log \mathbb {P}[\{x_n(t)\}_{t \ge 0} \in G] \le - \inf _{\gamma \in G} I(\gamma ). \end{aligned}$$

(b) For all open sets \(U \subseteq D_{E_1}(\mathbb {R}^+)\), we have

$$\begin{aligned} \liminf _{n \rightarrow \infty } \frac{1}{n} \log \mathbb {P}[\{x_n(t)\}_{t \ge 0} \in U] \ge - \inf _{\gamma \in U} I(\gamma ). \end{aligned}$$

For the definition of the Skorokhod topology defined on \(D_{E_1}(\mathbb {R}^+)\), see for example [21]. We say that I is good if the level sets \(I^{-1}[0,a]\) are compact for all \(a \ge 0\).
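For intuition, the two bounds can be checked numerically in a much simpler static situation than the path-space setting of this paper. The sketch below assumes n independent uniform \(\pm 1\) spins, the closed set \([a,1]\) for their magnetisation, and the classical Cramér rate function \(\frac{1+x}{2}\log (1+x) + \frac{1-x}{2}\log (1-x)\); the function names are illustrative.

```python
import math

def cramer_rate(x):
    """Cramer rate function for the mean of i.i.d. uniform +-1 spins."""
    def xlogx(u):
        return 0.0 if u <= 0 else u * math.log(u)
    return 0.5 * (xlogx(1 + x) + xlogx(1 - x))

def empirical_rate(n, a):
    """-(1/n) log P[magnetisation >= a], computed with exact binomials."""
    k_min = math.ceil(n * (1 + a) / 2)  # minimal number of +1 spins
    count = sum(math.comb(n, k) for k in range(k_min, n + 1))
    return -(math.log(count) - n * math.log(2)) / n

# The finite-n decay rate approaches the rate function from above.
for n in (100, 1000, 2000):
    print(n, empirical_rate(n, 0.5), cramer_rate(0.5))
```

Here the infimum of the rate function over \([a,1]\) is attained at a, so the printed pairs should approach each other as n grows.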

Carrying out the procedure in (1.1) for our two sets of models, we obtain, see Lemma 1 below, operators \((H,\mathcal {D}(H))\), \(\mathcal {D}(H) = C^1(E)\) that are of the form \(Hf(x) = H(x,\nabla f(x))\), \(H:E \times \mathbb {R}^d \rightarrow \mathbb {R}\). These are the Hamiltonians that appear in Theorems 1 and 2.
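The limiting procedure can be illustrated numerically. The following sketch assumes \(d = 1\), the generator of the magnetisation described in Sect. 2.1.1 (jumps of size \(\pm \frac{2}{n}\) at rates \(n\frac{1 \mp x}{2} r_{n,\pm }(x)\)), constant rates \(r_{n,\pm } = 1\), and a limiting Hamiltonian of the form \(H(x,p) = v_+(x)[e^{2p}-1] + v_-(x)[e^{-2p}-1]\), which is what a formal Taylor expansion of this generator suggests. The test function \(f(x) = x^2/2\) and all names are illustrative.

```python
import math

def H_n(f, x, n, r_plus=lambda x: 1.0, r_minus=lambda x: 1.0):
    """(1/n) e^{-n f(x)} A_n e^{n f}(x) for the assumed 1-d generator A_n."""
    h = 2.0 / n
    up = (1 - x) / 2 * r_plus(x) * (math.exp(n * (f(x + h) - f(x))) - 1)
    down = (1 + x) / 2 * r_minus(x) * (math.exp(n * (f(x - h) - f(x))) - 1)
    return up + down

def H(x, p, v_plus=lambda x: (1 - x) / 2, v_minus=lambda x: (1 + x) / 2):
    """Assumed limiting Hamiltonian in d = 1."""
    return v_plus(x) * (math.exp(2 * p) - 1) + v_minus(x) * (math.exp(-2 * p) - 1)

f = lambda x: 0.5 * x * x   # illustrative test function
df = lambda x: x            # its derivative

x = 0.3
for n in (10, 100, 1000, 10000):
    print(n, abs(H_n(f, x, n) - H(x, df(x))))
```

The printed differences should shrink roughly like \(1/n\), which reflects that \(n[f(x \pm \frac{2}{n}) - f(x)]\) converges to \(\pm 2 f'(x)\).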

For a trajectory \(\gamma \in D_{E_1}(\mathbb {R}^+)\), we say that \(\gamma \in \mathcal {A}\mathcal {C}\) if the trajectory is absolutely continuous. For the d-dimensional Ehrenfest model, we have the following result.

Theorem 1

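Suppose that

$$\begin{aligned} \lim _{n \rightarrow \infty } \sup _{x \in E_{1,n}} \sum _{i=1}^d \left\{ \left| \frac{1-x_i}{2} r_{n,+}^i(x) - v_+^i(x)\right| + \left| \frac{1+x_i}{2} r_{n,-}^i(x) - v_-^i(x)\right| \right\} = 0 \end{aligned} \tag{2.1}$$

for some family of continuous functions \(v_+^i, v_-^i : E_1 \rightarrow \mathbb {R}^+\), \(1 \le i \le d\) with the following properties.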

The rate \(v_+^i\) is identically zero or we have the following set of conditions.

(a) \(v_+^i(x) > 0\) if \(x_i \ne 1\).

(b) For \(z \in [-1,1]^d\) such that \(z_i = 1\), we have \(v_+^i(z) = 0\) and for every such z there exists a neighbourhood \(U_z\) of z on which there exists a decomposition \(v_+^i(x) = v_{+,z,\dagger }^i(x_i) v_{+,z,\ddagger }^i(x)\), where \(v_{+,z,\dagger }^i\) is decreasing and where \(v_{+,z,\ddagger }^i\) is continuous and satisfies \(v_{+,z,\ddagger }^i(z) \ne 0\).

The rate \(v_-^i\) is identically zero or we have the following set of conditions.

(a) \(v_-^i(x) > 0\) if \(x_i \ne -1\).

(b) For \(z \in [-1,1]^d\) such that \(z_i = -1\), we have \(v_-^i(z) = 0\) and for every such z there exists a neighbourhood \(U_z\) of z on which there exists a decomposition \(v_-^i(x) = v_{-,z,\dagger }^i(x_i) v_{-,z,\ddagger }^i(x)\), where \(v_{-,z,\dagger }^i\) is increasing and where \(v_{-,z,\ddagger }^i\) is continuous and satisfies \(v_{-,z,\ddagger }^i(z) \ne 0\).

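Furthermore, suppose that \(\{x_n(0)\}_{n \ge 1}\) satisfies the LDP on \(E_1\) with good rate function \(I_0\). Then, \(\{x_n\}_{n \ge 1}\) satisfies the LDP on \(D_{E_1}(\mathbb {R}^+)\) with good rate function I given by

$$\begin{aligned} I(\gamma ) = \begin{cases} I_0(\gamma (0)) + \int _0^\infty \mathcal {L}(\gamma (s),\dot{\gamma }(s)) \, \mathrm {d}s &amp; \text {if } \gamma \in \mathcal {A}\mathcal {C}, \\ \infty &amp; \text {otherwise}, \end{cases} \end{aligned}$$

where the Lagrangian \(\mathcal {L}(x,v) : E_1 \times \mathbb {R}^d \rightarrow \mathbb {R}\) is given by the Legendre transform \(\mathcal {L}(x,v) = \sup _{p \in \mathbb {R}^d} \langle p,v\rangle - H(x,p)\) of the Hamiltonian \(H: E_1 \times \mathbb {R}^d \rightarrow \mathbb {R}\), defined by

$$\begin{aligned} H(x,p) = \sum _{i=1}^d \left\{ v_+^i(x) \left[ e^{2p_i} - 1\right] + v_-^i(x) \left[ e^{-2p_i} - 1\right] \right\} . \end{aligned} \tag{2.2}$$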

Remark 1

Note that the functions \(v_+^i\) and \(v_-^i\) do not have to be of the form \(v_+^i(x) = \frac{1-x_i}{2} r_+^i(x)\), \(v_-^i(x) = \frac{1+x_i}{2}r_-^i(x)\) for some bounded functions \(r_+^i,r_-^i\). We call this singular behaviour, as the LDP for such rates cannot be obtained from the LDP for independent particles via Varadhan’s lemma and the contraction principle as in [26] or [13].
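The Legendre transform in Theorem 1 can be evaluated in closed form in one dimension. The sketch below assumes \(d = 1\) and a Hamiltonian of the form \(H(x,p) = a[e^{2p}-1] + b[e^{-2p}-1]\) with \(a = v_+(x) > 0\) and \(b = v_-(x) > 0\) held fixed; this form is an assumption that is consistent with the identity \(H_p(x,0) = 2[v_+(x) - v_-(x)]\) used in Example 3. The function name and the sample values of a and b are illustrative.

```python
import math

def lagrangian(v, a, b):
    """sup_p [p*v - a(e^{2p} - 1) - b(e^{-2p} - 1)] for a, b > 0.

    The maximiser solves v = 2a e^{2p} - 2b e^{-2p}; with y = e^{2p}
    this is the quadratic 2a y^2 - v y - 2b = 0 with positive root y.
    """
    y = (v + math.sqrt(v * v + 16 * a * b)) / (4 * a)
    p = 0.5 * math.log(y)
    return p * v - a * (y - 1) - b * (1 / y - 1)

a, b = 0.35, 0.65            # illustrative values of v_+(x), v_-(x)
print(lagrangian(2 * (a - b), a, b))   # zero-cost velocity
print(lagrangian(1.0, a, b))           # strictly positive cost
```

The cost vanishes exactly at the velocity \(v = 2(a - b)\), where the maximiser is \(p = 0\), and is positive for every other velocity because \(p \mapsto H(x,p)\) is strictly convex.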

Theorem 2

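Suppose that

$$\begin{aligned} \lim _{n \rightarrow \infty } \sup _{\mu \in E_{2,n}} \sum _{a,b} \left| \mu (a) r_n(a,b,\mu ) - v(a,b,\mu )\right| = 0 \end{aligned} \tag{2.3}$$

for some continuous function \(v : \{1,\ldots ,d\}\times \{1,\ldots ,d\} \times E_2 \rightarrow \mathbb {R}^+\) with the following properties.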

For each a, b, the map \(\mu \mapsto v(a,b,\mu )\) is either identically equal to zero or satisfies the following two properties.

(a) \(v(a,b,\mu ) > 0\) for all \(\mu \) such that \(\mu (a) > 0\).

(b) For \(\nu \) such that \(\nu (a) = 0\), there exists a neighbourhood \(U_\nu \) of \(\nu \) on which there exists a decomposition \(v(a,b,\mu ) = v_{\nu ,\dagger }(a,b,\mu (a)) v_{\nu ,\ddagger }(a,b,\mu )\) such that \(v_{\nu ,\dagger }\) is increasing in the third coordinate and such that \(v_{\nu ,\ddagger }(a,b,\cdot )\) is continuous and satisfies \(v_{\nu ,\ddagger }(a,b,\nu ) \ne 0\).

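Additionally, suppose that \(\{\mu _n(0)\}_{n \ge 1}\) satisfies the LDP on \(E_2\) with good rate function \(I_0\). Then, \(\{\mu _n\}_{n \ge 1}\) satisfies the LDP on \(D_{E_2}(\mathbb {R}^+)\) with good rate function I given by

$$\begin{aligned} I(\gamma ) = \begin{cases} I_0(\gamma (0)) + \int _0^\infty \mathcal {L}(\gamma (s),\dot{\gamma }(s)) \, \mathrm {d}s &amp; \text {if } \gamma \in \mathcal {A}\mathcal {C}, \\ \infty &amp; \text {otherwise}, \end{cases} \end{aligned}$$

where \(\mathcal {L}: E_2 \times \mathbb {R}^d \rightarrow \mathbb {R}^+\) is the Legendre transform of \(H : E_2 \times \mathbb {R}^d \rightarrow \mathbb {R}\) given by

$$\begin{aligned} H(\mu ,p) = \sum _{a,b} v(a,b,\mu ) \left[ e^{p_b - p_a} - 1\right] . \end{aligned} \tag{2.4}$$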

2.3 The Comparison Principle

The main results in this paper are the two large deviation principles as stated above. However, the main step in the proof of these principles is the verification of the comparison principle for a set of Hamilton–Jacobi equations. As this result is of independent interest, we state these results here as well, and leave explanation on why the comparison principle is relevant for the large deviation principles for later. We start with some definitions.

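Let E be equal to \(E_1\) or \(E_2\), and let \(H : E \times \mathbb {R}^d \rightarrow \mathbb {R}\) be some continuous map. For \(\lambda > 0\) and \(h \in C(E)\), define \(F_{\lambda ,h} : E \times \mathbb {R}\times \mathbb {R}^d \rightarrow \mathbb {R}\) by

$$\begin{aligned} F_{\lambda ,h}(x,\eta ,p) = \eta - \lambda H(x,p) - h(x). \end{aligned}$$

We will solve the Hamilton–Jacobi equation

$$\begin{aligned} F_{\lambda ,h}(x,f(x),\nabla f(x)) = f(x) - \lambda H(x,\nabla f(x)) - h(x) = 0, \qquad x \in E, \end{aligned} \tag{2.5}$$

in the viscosity sense.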

Definition 1

We say that u is a (viscosity) subsolution of Eq. (2.5) if u is bounded, upper semi-continuous and if for every \(f \in C^{1}(E)\) and \(x \in E\) such that \(u - f\) has a maximum at x, we have

$$\begin{aligned} F_{\lambda ,h}(x,u(x),\nabla f(x)) \le 0. \end{aligned}$$

We say that u is a (viscosity) supersolution of Eq. (2.5) if u is bounded, lower semi-continuous and if for every \(f \in C^{1}(E)\) and \(x \in E\) such that \(u - f\) has a minimum at x, we have

$$\begin{aligned} F_{\lambda ,h}(x,u(x),\nabla f(x)) \ge 0. \end{aligned}$$

We say that u is a (viscosity) solution of Eq. (2.5) if it is both a subsolution and a supersolution.

There are various other definitions of viscosity solutions in the literature. This definition is the standard one for continuous H and compact state-space E.

Definition 2

We say that Eq. (2.5) satisfies the comparison principle if for a subsolution u and supersolution v we have \(u \le v\).

Note that if the comparison principle is satisfied, then a viscosity solution is unique.
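The argument behind this remark is short and can be written out as follows; the names \(u_1, u_2\) for two viscosity solutions of the same equation are introduced here only for illustration.

```latex
% u_1, u_2: two viscosity solutions of Eq. (2.5) (illustrative names).
% Each is both a subsolution and a supersolution, so the comparison
% principle can be applied twice with the roles exchanged.
\begin{aligned}
u_1 \text{ subsolution},\ u_2 \text{ supersolution}
  &\;\Longrightarrow\; u_1 \le u_2, \\
u_2 \text{ subsolution},\ u_1 \text{ supersolution}
  &\;\Longrightarrow\; u_2 \le u_1,
\end{aligned}
\qquad \text{hence } u_1 = u_2 .
```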

Theorem 3

Suppose that \(H : E_1 \times \mathbb {R}^d \rightarrow \mathbb {R}\) is given by (2.2) and that the family of functions \(v_+^i, v_-^i : E_1 \rightarrow \mathbb {R}^+\), \(1 \le i \le d\), satisfy the conditions of Theorem 1.

Then, for every \(\lambda > 0\) and \(h \in C(E_1)\), the comparison principle holds for \(f(x) - \lambda H(x,\nabla f(x)) - h(x) = 0\).

Theorem 4

Suppose that \(H : E_2 \times \mathbb {R}^d \rightarrow \mathbb {R}\) is given by (2.4) and that the function \(v : \{1,\ldots ,d\}\times \{1,\ldots ,d\} \times E_2 \rightarrow \mathbb {R}^+\) satisfies the conditions of Theorem 2.

Then, for every \(\lambda > 0\) and \(h \in C(E_2)\), the comparison principle holds for \(f(\mu ) - \lambda H(\mu ,\nabla f(\mu )) - h(\mu ) = 0\).

The main consequence of the comparison principle for the Hamilton–Jacobi equations stems from the fact, as we will see below, that the operator H generates a strongly continuous contraction semigroup on C(E).

The proof of the LDP is, in a sense, a problem of semigroup convergence. At least for linear semigroups, it is well known that semigroup convergence can be proven via the convergence of their generators. The main issue in this approach is to prove that the limiting generator H generates a semigroup. It is exactly this issue that the comparison principle takes care of.

Hence, the independent interest of the comparison principle comes from the fact that we have semigroup convergence whatever the approximating semigroups are, as long as their generators converge to H, i.e. this holds not just for the specifically chosen approximating semigroups that we consider in Sect. 3.

2.4 A Lyapunov Function for the Limiting Dynamics

As a corollary to the large deviation results, we show how to obtain a Lyapunov function for the solutions of

$$\begin{aligned} \dot{x}(t) = \mathbf {F}(x(t)), \end{aligned} \tag{2.6}$$

where \(\mathbf {F}(x) := H_p(x,0)\) for a Hamiltonian as in (2.4) or (2.2). Here \(H_p(x,p)\) is interpreted as the vector of partial derivatives of H in the second coordinate.

We will see in Example 3 that the trajectories solving this differential equation are the trajectories with 0 Lagrangian cost: \(\dot{x} = \mathbf {F}(x)\) if and only if \(\mathcal {L}(x,\dot{x}) = 0\). Additionally, the limiting operator \((A,C^1(E))\) obtained by

$$\begin{aligned} \sup _{x \in E_n \cap K} \left| A_n f(x) - Af(x)\right| \rightarrow 0 \end{aligned}$$

for all \(f \in C^1(E)\) and compact sets \(K \subseteq E\) has the form \(Af(x) = \langle \nabla f(x),\mathbf {F}(x)\rangle \) for the same vector field \(\mathbf {F}\). This implies that the 0-cost trajectories are solutions to the McKean–Vlasov equation (2.6). Solutions to (2.6) are not necessarily unique, see Example 3. Uniqueness holds for example under a one-sided Lipschitz condition: there exists \(M > 0 \) such that \(\langle \mathbf {F}(x) - \mathbf {F}(y),x-y\rangle \le M |x-y|^2\) for all \(x,y \in E\).

For non-interacting systems, it is well known that the relative entropy with respect to the stationary measure is a Lyapunov function for solutions of (2.6). The large deviation principle explains this fact and gives a method to obtain a suitable Lyapunov function, also for interacting dynamics.

Proposition 1

Suppose the conditions for Theorems 1 or 2 are satisfied. Suppose there exist measures \(\nu _n \in \mathcal {P}(E_n) \subseteq \mathcal {P}(E)\) that are invariant for the dynamics generated by \(A_n\). Furthermore, suppose that the measures \(\nu _n\) satisfy the large deviation principle on E with good rate function \(I_0\).

Then \(I_0\) is decreasing along any solution of \(\dot{x}(t) = \mathbf {F}(x(t))\).

Note that we do not assume that (2.6) has a unique solution for a given starting point.
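A quick numerical check of this monotonicity is possible in the simplest non-interacting case. The sketch below assumes the one-dimensional Ehrenfest model with constant flip rates equal to 1, so that \(v_+(x) = \frac{1-x}{2}\), \(v_-(x) = \frac{1+x}{2}\) and \(\mathbf {F}(x) = 2[v_+(x) - v_-(x)] = -2x\). In that case the invariant law is that of the magnetisation of independent uniform \(\pm 1\) spins, whose rate function is the Cramér function used below. All names are illustrative.

```python
import math

def I0(x):
    """Rate function of the magnetisation of i.i.d. uniform +-1 spins."""
    def xlogx(u):
        return 0.0 if u <= 0 else u * math.log(u)
    return 0.5 * (xlogx(1 + x) + xlogx(1 - x))

def flow(x0, t):
    """Explicit solution of x' = F(x) = -2x started at x0."""
    return x0 * math.exp(-2 * t)

times = [0.05 * k for k in range(60)]
values = [I0(flow(0.9, t)) for t in times]
print(values[0], values[-1])
```

Along the explicit solution \(x(t) = x_0 e^{-2t}\) the printed value of \(I_0\) should drop from its initial value towards 0, in line with \(I_0\) being a Lyapunov function.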

2.5 Examples

We give a series of examples to show the extent of Theorems 1 and 2.

For the Ehrenfest model, we start with the basic case, of spins flipping under the influence of some mean-field potential.

Example 1

To be precise, fix some continuously differentiable \(V : [-1,1]^d \rightarrow \mathbb {R}\) and set for every \(n \ge 1\) and \(i \in \{1,\ldots ,d\}\) the rates

The limiting objects \(v_+^i\) and \(v_-^i\) are given by

which already have the decomposition as required in the conditions of Theorem 1. For example, condition (b) for \(v_+^i\) is satisfied by

For \(d = 1\), we give two extra notable examples. The first one exhibits unbounded jump rates for the individual spins if the empirical magnetisation is close to one of the boundary points. The second example shows a case where we have multiple trajectories \(\gamma \) with \(I(\gamma ) = 0\) that start from \(x_0 = 0\).

As \(d = 1\), we drop all sub- and super-scripts \(i \in \{1,\ldots ,d\}\) for these two examples.

Example 2

Consider the one-dimensional Ehrenfest model with

Set \(v_+(x) = \sqrt{1-x}\), \(v_-(x) = \sqrt{1+x}\). By Dini’s theorem, we have

Additionally, conditions (a) and (b) of Theorem 1 are satisfied, e.g. take \(v_{+,1,\dagger }(x) = \sqrt{1-x}\), \(v_{+,1,\ddagger }(x) = 1\).

Example 3

Consider the one-dimensional Ehrenfest model with some rates \(r_{n,+}\), \(r_{n,-}\) and functions \(v_+(x)> 0, v_-(x) > 0\) such that \(\frac{1}{2}(1-x)r_{n,+}(x) \rightarrow v_+(x)\) and \(\frac{1}{2}(1+x)r_{n,-}(x) \rightarrow v_-(x)\) uniformly in \(x \in [-1,1]\).

Now suppose that there is a neighbourhood U of 0 on which \(v_+,v_-\) have the form

Consider the family of trajectories \(t \mapsto \gamma _a(t)\), \(a \ge 0\), defined by

Let \(T > 0\) be small enough such that \(\gamma _0(t) \in U\), and hence \(\gamma _a(t) \in U\), for all \(t \le T\). A straightforward calculation yields \(\int _0^T \mathcal {L}(\gamma _a(t),\dot{\gamma }_a(t)) \mathrm {d}t = 0\) for all \(a \ge 0\). So we find multiple trajectories starting at 0 that have zero Lagrangian cost.

Indeed, note that \(\mathcal {L}(x,v) = 0\) is equivalent to \(v = H_p(x,0) = 2\left[ v_+(x) - v_-(x) \right] = 2\sqrt{x}\). This yields that trajectories that have 0 Lagrangian cost are the trajectories, at least in U, that solve

$$\begin{aligned} \dot{x}(t) = 2\sqrt{x(t)}, \end{aligned}$$

which is the well-known example of a differential equation that allows for multiple solutions.
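The non-uniqueness can be verified numerically. The sketch below assumes the standard family of solutions of \(\dot{x} = 2\sqrt{x}\) started at 0, namely \(\gamma _a(t) = 0\) for \(t \le a\) and \(\gamma _a(t) = (t-a)^2\) for \(t \ge a\); this explicit form is an assumption made for illustration, and the function names are hypothetical.

```python
import math

def gamma(a, t):
    """Assumed solution family: wait at 0 until time a, then (t - a)^2."""
    return 0.0 if t <= a else (t - a) ** 2

def residual(a, t, h=1e-6):
    """Central-difference derivative of gamma_a minus 2*sqrt(gamma_a)."""
    deriv = (gamma(a, t + h) - gamma(a, t - h)) / (2 * h)
    return deriv - 2 * math.sqrt(gamma(a, t))

# Every member of the family solves the equation up to discretisation
# error, although all of them start at gamma_a(0) = 0.
for a in (0.0, 0.5, 1.0):
    worst = max(abs(residual(a, 0.01 * k)) for k in range(200))
    print(a, worst)
```

Each waiting time a gives a different trajectory with the same starting point, which is exactly the multiplicity of zero-cost paths described in Example 3.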

We end with an example for Theorem 2 and Proposition 1 in the spirit of Example 1.

Example 4

(Glauber dynamics for the Potts-model) Fix some continuously differentiable function \(V : \mathbb {R}^d \rightarrow \mathbb {R}\). Define the Gibbs measures

on \(\{1,\ldots ,d\}^n\), where \(P^{\otimes ,n}\) is the n-fold product measure of the uniform measure P on \(\{1,\ldots ,d\}\) and where \(Z_n\) are normalizing constants.

Let \(S(\mu \, | \, P)\) denote the relative entropy of \(\mu \in \mathcal {P}(\{1,\ldots ,d\})\) with respect to P:

$$\begin{aligned} S(\mu \, | \, P) = \sum _{a=1}^d \mu (a) \log \frac{\mu (a)}{P(a)}. \end{aligned}$$

By Sanov’s theorem and Varadhan’s lemma, the empirical measures under the laws \(\nu _n\) satisfy an LDP with rate function \(I_0(\mu ) = S(\mu \, | \, P) + V(\mu )\).

Now fix some function \(r : \{1,\ldots ,d\}\times \{1,\ldots ,d\} \rightarrow \mathbb {R}^+\). Set

As n goes to infinity, we have uniform convergence of \(\mu (a)r_n(a,b,\mu )\) to

where \(\nabla _a V(\mu )\) is the derivative of V in the ath coordinate. As in Example 1, condition (b) of Theorem 2 is satisfied by using the obvious decomposition.

By Proposition 1, we obtain that \(S(\mu \, | \, P) + V(\mu )\) is a Lyapunov function for

2.6 Discussion and Comparison to the Existing Literature

We discuss our results in the context of the existing literature that covers our situation. Additionally, we consider a few cases where the LDP is proven for diffusion processes, because the proof techniques could possibly be applied in this setting.

2.6.1 LDP: Approach Via Non-interacting Systems, Varadhan’s Lemma and the Contraction Principle

In [3, 13, 26], the first step towards the LDP of the trajectory of some mean-field statistic of n interacting particles is the LDP for non-interacting particles on some large product space obtained via Sanov’s theorem. Varadhan’s lemma then gives the LDP in this product space for interacting particles, after which the contraction principle gives the LDP on the desired trajectory space. In [13, 26], the set-up is more general compared to ours in the sense that in [26] the behaviour of the particles depends on their spatial location, and in [13] the behaviour of a particle depends on some external random variable.

On the other hand, systems as in Example 2 fall outside of the conditions imposed in the three papers, if we disregard spatial dependence or external randomness.

The approach via Varadhan’s lemma, which needs control over the size of the perturbation, does not work, at least naively, for the situation where the jump rate for individual particles is diverging to \(\infty \), or converging to 0, if the mean is close to the boundary, see Remark 1.

2.6.2 LDP: Explicit Control on the Probabilities

For another approach considering interacting spins that have a spatial location, see [8]. The jump rates are taken to be explicit and the LDP is proven via explicit control on the Radon–Nikodym derivatives. This method should in principle also work in the case of singular v. The approach via the generators \(H_n\) in this paper avoids arguments based on explicit control. This is an advantage for processes where the functions \(r_n\) and v are not very regular. The classical Freidlin–Wentzell approach [24] for dynamical systems with Gaussian noise also uses the explicit form of the Radon–Nikodym derivatives to prove the LDP.

2.6.3 LDP: Direct Comparison to a Process of Independent Particles

The main reference concerning large deviations for the trajectory of the empirical mean for interacting diffusion processes on \(\mathbb {R}^d\) is [14]. In this paper, the large deviation principle is also first established for non-interacting particles. An explicit rate function is obtained by showing that the desired rate is in between the rate function obtained via Sanov’s theorem and the contraction principle and the projective limit approach. The LDP for interacting particles is then obtained via comparing the interacting process with a non-interacting process that has a suitably chosen drift. For related approaches, see [23] for large deviations of interacting jump processes on \(\mathbb {N}\), where the interaction is unbounded and depends on the average location of the particles. See [4] for mean-field jump processes on \(\mathbb {R}^d\).

Again, the comparison with non-interacting processes would fail in our setting due to the singular interaction terms.

2.6.4 LDP: Variational Representation of Poisson Random Measure

A proof of the large deviation principle for mean-field interacting jump processes has been given recently in [19]. The setting is similar to that of Theorem 2, but the proof of [19] allows for the possibility of more particles changing their state at the same time. The result is based on a variational representation for the Poisson random measure that can be used to establish bounds for trajectories in the interior of the simplex and perturbation arguments that show that trajectories that hit the boundary can be sufficiently well approximated by trajectories in the interior. For these arguments it is assumed that the rates are Lipschitz and ergodic. These are two conditions that are not necessary for our proof. On the other hand, the proof of the comparison principle using the methods from this paper fails in the context where multiple particles can change their state at the same time.

2.6.5 LDP: Proof Via Operator Convergence and the Comparison Principle

Regarding our approach based on the comparison principle, see Feng and Kurtz [22, Sect. 13.3], for an approach based on the comparison principle in the setting of Dawson and Gärtner [14] and Budhiraja et al. [5]. See Deng et al. [16] for an example of large deviations of a diffusion process on \((0,\infty )\) with vanishing diffusion term and singular behaviour at the boundary. The methods to prove the comparison principle in Sects. 9.2 and 9.3 in [22] do not apply in our setting due to the different nature of our Hamiltonians.

2.6.6 LDP: Comparison of the Approaches

The method of obtaining exponential tightness in [22], and thus employed for this paper, is via density of the domain of the limiting generator \((H,\mathcal {D}(H))\). Like in the theory of weak convergence, functions \(f \in \mathcal {D}(H)\) in the domain of the generator, and functions \(f_n \in \mathcal {D}(H_n)\) that converge to f uniformly, can be used to bound the fluctuations in the Skorokhod space. This method is similar to the approaches taken in [9, 14, 24].

The approach using operator convergence is based on a result by Feng and Kurtz, analogous to the projective limit theorem, that allows one, given exponential tightness on the Skorokhod space, to establish an LDP via the large deviation principle for all finite dimensional distributions. This is done via the convergence of the logarithmic moment generating functions for the finite dimensional distributions. The Markov property reduces this to the convergence of the logarithmic moment generating function for time 0 and convergence of the conditional moment generating functions, which form a semigroup \(V_n(t)f(x) = \frac{1}{n} \log \mathbb {E}[e^{nf(X_n(t))} \, | \, X_n(0) = x]\). Thus, the problem is reduced to proving convergence of semigroups \(V_n(t)f \rightarrow V(t)f\). As in the theory of linear semigroups, this comes down to two steps. First, one proves convergence of the generators \(H_n \rightarrow H\). Then, one shows that the limiting operator H generates a semigroup. The verification of the comparison principle implies that the domain of the limiting operator is sufficiently large to pin down a limiting semigroup.

This can be compared to the same problem for linear semigroups and the martingale problem. If the domain of a limiting linear generator is too small, multiple solutions to the martingale problem can be found, giving rise to multiple semigroups, see Chap. 12 in [28] or Sect. 4.5 in [21].

The convergence of \(V_n(t)f(x) \rightarrow V(t)f(x)\) uniformly in x corresponds to having sufficient control on the Doob h-transforms corresponding to the change of measures

where \(\mathbb {P}_{n,x}\) is the measure corresponding to the process \(X_n\) started in x at time 0. An argument based on the projective limit theorem and control on the Doob h-transforms for independent particles is also used in [14], whereas the methods in [9, 24] are based on direct calculation of the probabilities of being close to a target trajectory.

2.6.7 Large Deviations for Large Excursions in Large Time

A notable second area of comparison is the study of large excursions in large time in the context of queuing systems, see e.g. [2, 17, 18] and references therein. Here, it is shown that the rate functions themselves, varying in space and time, are solutions to a Hamilton–Jacobi equation. As in our setting, one of the main problems is the verification of the comparison principle. The notable difficulty in these papers is a discontinuity of the Hamiltonian at the boundary, but in their interior the rates are uniformly bounded away from infinity and zero.

2.6.8 Lyapunov Functions

In [6, 7], Lyapunov functions are obtained for the McKean–Vlasov equation corresponding to interacting Markov processes in a setting similar to the setting of Theorem 2. Their discussion goes much beyond Proposition 1, which is perhaps best compared to Theorem 4.3 in [7]. However, the proof of Proposition 1 is interesting in its own right, as it gives an intuitive explanation for finding a relative entropy as a Lyapunov functional and is not based on explicit calculations. In particular, the proof of Proposition 1 in principle works for any setting where the path-space large deviation principle holds.

3 Large Deviation Principle Via an Associated Hamilton–Jacobi Equation

In this section, we will summarize the main results of [22]. Additionally, we will verify the main conditions of their results, except for the comparison principle of an associated Hamilton–Jacobi equation. This verification needs to be done for each individual model separately and this is the main contribution of this paper. We verify the comparison principle for our two models in Sect. 4.

3.1 Operator Convergence

Let \(E_n\) and E denote either of the spaces \(E_{n,1}, E_1\) or \(E_{n,2}, E_2\). Furthermore, denote by C(E) the continuous functions on E and by \(C^1(E)\) the functions that are continuously differentiable on a neighbourhood of E in \(\mathbb {R}^d\).

Assume that for each \(n \in \mathbb {N}\), we have a jump process \(X_n\) on \(E_n\), generated by a bounded infinitesimal generator \(A_n\). For the two examples, this process is either \(x_n\) or \(\mu _n\). We denote by \(\{S_n(t)\}_{t \ge 0}\) the transition semigroups \(S_n(t)f(y) = \mathbb {E}\left[ f(X_n(t)) \, | \, X_n(0) = y \right] \) on \(C(E_n)\). Define for each n the exponential semigroup

\[ V_n(t)f(y) := \frac{1}{n} \log S_n(t) e^{nf}(y) = \frac{1}{n} \log \mathbb{E}\left[ e^{nf(X_n(t))} \, \middle| \, X_n(0) = y \right]. \]

As in the theory of weak convergence, given that the processes \(X_n\) satisfy an exponential compact containment condition on the Skorokhod space, which in this setting is immediate, [22] show that the existence of a strongly continuous limiting semigroup \(\{V(t)\}_{t \ge 0}\) on C(E) in the sense that for all \(f \in C(E)\) and \(T \ge 0\), we have

\[ \lim_{n \rightarrow \infty} \sup_{t \le T} \sup_{x \in E_n} \left| V(t)f(x) - V_n(t)f(x) \right| = 0, \tag{3.1} \]

allows us to study the large deviation behaviour of the process \(X_n\). We will consider this question from the point of view of the generators \(H_n\) of \(\{V_n(t)\}_{t \ge 0}\), where \(H_n f\) is defined by the norm limit of \(t^{-1} (V_n(t)f - f)\) as \(t \downarrow 0\). Note that \(H_n f = n^{-1} e^{-nf} A_n e^{nf}\), which for our first model yields

For our second model, we have

In particular, Feng and Kurtz show that, as in the theory of weak convergence of Markov processes, the existence of a limiting operator \((H,\mathcal {D}(H))\), such that for all \(f \in \mathcal {D}(H)\)

and for which one can show that \((H,\mathcal {D}(H))\) generates a semigroup \(\{V(t)\}_{t \ge 0}\) on C(E) via the Crandall-Liggett theorem [11], implies (3.1).

Lemma 1

For either of our two models, assuming (2.1) or (2.3), we find that \(H_n f \rightarrow Hf\), as in (3.2), holds for \(f \in C^1(E)\), where Hf is given by \(Hf(x) := H(x,\nabla f(x))\) and where H(x, p) is defined in (2.2) or (2.4).

The proof of the lemma is straightforward using the assumptions and the fact that f is continuously differentiable.
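The convergence in Lemma 1 can be checked numerically in a simple case. The following is a minimal sketch, not the general statement: it assumes the one-dimensional Ehrenfest-type rates \(v_+(x) = (1-x)/2\) and \(v_-(x) = (1+x)/2\), jumps of size 2/n, and the test function \(f(x) = x^2/2\); all function names are illustrative.

```python
import math

# Assumed Ehrenfest-type rates (illustrative, not taken from the text):
# up-jumps of size 2/n occur at rate n * v_plus(x), down-jumps at rate n * v_minus(x).
def v_plus(x):
    return (1.0 - x) / 2.0

def v_minus(x):
    return (1.0 + x) / 2.0

def f(x):
    return 0.5 * x * x

def df(x):
    return x

def H(x, p):
    """Limiting Hamiltonian H(x, p) = v_+(x)(e^{2p} - 1) + v_-(x)(e^{-2p} - 1)."""
    return v_plus(x) * math.expm1(2.0 * p) + v_minus(x) * math.expm1(-2.0 * p)

def H_n(n, x):
    """H_n f(x) = n^{-1} e^{-n f(x)} (A_n e^{n f})(x) for the jump process above."""
    up = n * (f(x + 2.0 / n) - f(x))
    down = n * (f(x - 2.0 / n) - f(x))
    return v_plus(x) * math.expm1(up) + v_minus(x) * math.expm1(down)

def sup_error(n):
    """Largest |H_n f(x) - H(x, f'(x))| over the lattice {-1, -1 + 2/n, ..., 1}."""
    grid = (-1.0 + 2.0 * k / n for k in range(n + 1))
    return max(abs(H_n(n, x) - H(x, df(x))) for x in grid)

errors = {n: sup_error(n) for n in (10, 100, 1000, 10000)}
for n, err in errors.items():
    print(n, err)
```

For this particular choice the uniform error decays at rate of order 1/n, which is in line with the first-order Taylor expansion of f used in the proof.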

Thus, the problem is reduced to proving that \((H,C^1(E))\) generates a semigroup. The verification of the conditions of the Crandall-Liggett theorem is in general very hard, or even impossible. Two conditions need to be verified. The first is the dissipativity of H, which can be checked via the positive maximum principle. The second is the range condition: one needs to show that for \(\lambda > 0\), the range of \((\mathbbm {1}- \lambda H)\) is dense in C(E). In other words, for \(\lambda > 0\) and sufficiently many fixed \(h \in C(E)\), we need to solve \(f - \lambda H f = h\) with \(f \in C^1(E)\). An alternative is to solve this equation in the viscosity sense. If a viscosity solution exists and is unique, we denote it by \(\tilde{R}(\lambda )h\). Using these solutions, we can extend the domain of the operator \((H,C^1(E))\) by adding all pairs of the form \((\tilde{R}(\lambda )h, \lambda ^{-1}(\tilde{R}(\lambda )h - h))\) to the graph of H to obtain an operator \(\hat{H}\) that satisfies the conditions for the Crandall-Liggett theorem. This is part of the content of Theorem 5 stated below.

As a remark, note that any concept of weak solutions could be used to extend the operator. However, viscosity solutions are special in the sense that the extended operator remains dissipative.

The next result is a direct corollary of Theorem 6.14 in [22].

Theorem 5

For either of our two models, assume that (2.1) or (2.3) holds. Additionally, assume that the comparison principle is satisfied for (2.5) for all \(\lambda > 0\) and \(h \in C(E)\).

Then, the operator

generates a semigroup \(\{V(t)\}_{t \ge 0}\) as in the Crandall-Liggett theorem and we have (3.1).

Additionally, suppose that \(\{X_n(0)\}\) satisfies the large deviation principle on E with good rate function \(I_0\). Then \(X_n\) satisfies the LDP on \(D_E(\mathbb {R}^+)\) with good rate function I given by

where \(I_s(y \, | \, x) := \sup _{f \in C(E)} f(y) - V(s)f(x)\).

Note that to prove Theorem 6.14 in [22], one needs to check that viscosity sub- and super-solutions to (2.5) exist. Feng and Kurtz construct these sub- and super-solutions explicitly, using the approximating operators \(H_n\), see the proof of Lemma 6.9 in [22].

Proof

We check the conditions for Theorem 6.14 in [22]. In our models, the maps \(\eta _n : E_n \rightarrow E\) are simply the embedding maps. Condition (a) is satisfied as all our generators \(A_n\) are bounded. The conditions for convergence of the generators follow by Lemma 1.

The additional assumptions in Theorems 1 and 2 are there to make sure we are able to verify the comparison principle. This is the major contribution of the paper and will be carried out in Sect. 4.

The final step to obtain Theorems 1 and 2 is to express the rate function as the integral over a Lagrangian. This is also based on results in Chap. 8 of [22].

3.2 Variational Semigroups

In this section, we introduce the Nisio semigroup \(\mathbf {V}(t)\), which we will show to be equal to V(t) on C(E). This semigroup is given as a variational problem where one optimises a payoff \(f(\gamma (t))\) that depends on the state \(\gamma (t) \in E\), but where a cost is paid that depends on the whole trajectory \(\{\gamma (s)\}_{0 \le s \le t}\). The cost is accumulated over time and is given by a ‘Lagrangian’. Given the continuous and convex operator \(Hf(x) = H(x,\nabla f(x))\), we define this Lagrangian by taking the Legendre-Fenchel transform:

\[ \mathcal{L}(x,v) := \sup_{p \in \mathbb{R}^d} \left\{ \langle p,v\rangle - H(x,p) \right\}. \]

As \(p \mapsto H(x,p)\) is convex and continuous, it follows by the Fenchel-Moreau theorem that also

\[ H(x,p) = \sup_{v \in \mathbb{R}^d} \left\{ \langle p,v\rangle - \mathcal{L}(x,v) \right\}. \]

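Using \(\mathcal {L}\), we define the Nisio semigroup for measurable functions f on E:

\[ \mathbf{V}(t)f(x) := \sup_{\gamma \in \mathcal{A}\mathcal{C}, \, \gamma(0) = x} \left\{ f(\gamma(t)) - \int_0^t \mathcal{L}(\gamma(s),\dot{\gamma}(s)) \, \mathrm{d}s \right\}. \tag{3.3} \]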

To be able to apply the results from Chap. 8 in [22], we need to verify Conditions 8.9 and 8.11 of [22].

For the semigroup to be well behaved, we need to verify Condition 8.9 in [22]. In particular, this condition implies Proposition 8.13 in [22] that ensures that the Nisio semigroup is in fact a semigroup on the upper semi-continuous functions that are bounded above. Additionally, it implies that all absolutely continuous trajectories up to time T, that have uniformly bounded Lagrangian cost, are a compact set in \(D_E([0,T])\).
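The Legendre-Fenchel transform that defines the Lagrangian can be evaluated explicitly in one dimension. The sketch below assumes a Hamiltonian of the form \(H(x,p) = a(e^{2p}-1) + b(e^{-2p}-1)\) with the illustrative rates \(a = (1-x)/2\) and \(b = (1+x)/2\), and compares a closed-form expression for \(\mathcal{L}\) with a direct numerical maximisation; the function names are hypothetical.

```python
import math

# Illustrative one-dimensional Hamiltonian H(x, p) = a (e^{2p} - 1) + b (e^{-2p} - 1),
# with a = v_+(x) and b = v_-(x); the rates below are an assumed Ehrenfest-type choice.
def rates(x):
    return (1.0 - x) / 2.0, (1.0 + x) / 2.0

def H(x, p):
    a, b = rates(x)
    return a * math.expm1(2.0 * p) + b * math.expm1(-2.0 * p)

def lagrangian(x, v):
    """L(x, v) = sup_p { p v - H(x, p) } in closed form, valid for a, b > 0."""
    a, b = rates(x)
    # The maximiser solves v = 2a e^{2p} - 2b e^{-2p}, a quadratic in y = e^{2p}.
    y = (v + math.sqrt(v * v + 16.0 * a * b)) / (4.0 * a)
    p = 0.5 * math.log(y)
    return p * v - H(x, p)

def lagrangian_numeric(x, v, lo=-20.0, hi=20.0, iters=200):
    """Same supremum by ternary search; valid because p -> p v - H(x, p) is concave."""
    def phi(p):
        return p * v - H(x, p)
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        if phi(m1) < phi(m2):
            lo = m1
        else:
            hi = m2
    return phi(0.5 * (lo + hi))

def drift(x):
    """F(x) = H_p(x, 0) = 2 (v_+(x) - v_-(x)), the velocity at which L vanishes."""
    a, b = rates(x)
    return 2.0 * (a - b)

print(lagrangian(0.3, 0.7), lagrangian_numeric(0.3, 0.7))
print(lagrangian(0.3, drift(0.3)))
```

Since \(H(x,0) = 0\), the Lagrangian is non-negative, and in this example it vanishes exactly at the velocity \(H_p(x,0)\).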

Lemma 2

For the Hamiltonians in (2.2) and (2.4), Condition 8.9 in [22] is satisfied.

Proof

For (1), take \(U = \mathbb {R}^d\) and set \(Af(x,v) = \langle \nabla f(x),v\rangle \). Considering Definition 8.1 in [22], if \(\gamma \in \mathcal {A}\mathcal {C}\), then

\[ f(\gamma(t)) - f(\gamma(0)) = \int_0^t Af(\gamma(s),\dot{\gamma}(s)) \, \mathrm{d}s \]

by definition of A. In Definition 8.1, however, relaxed controls are considered, i.e. instead of a fixed speed \(\dot{\gamma }(s)\), one considers a measure \(\lambda \in \mathcal {M}(\mathbb {R}^d \times \mathbb {R}^+)\), such that \(\lambda (\mathbb {R}^d \times [0,t]) = t\) for all \( t \ge 0\) and

These relaxed controls are then used to define the Nisio semigroup in Eq. (8.10). Note however, that by convexity of H in the second coordinate, also \(\mathcal {L}\) is convex in the second coordinate. It follows that a deterministic control \(\lambda ( \mathrm {d}v, \mathrm {d}t) = \delta _{v(t)}( \mathrm {d}v) \mathrm {d}t\) is always the control with the smallest cost by Jensen’s inequality. We conclude that we can restrict the definition (8.10) to curves in \(\mathcal {A}\mathcal {C}\). This motivates our changed definition in Eq. (3.3).

For this paper, it suffices to set \(\Gamma = E \times \mathbb {R}^d\), so that (2) is satisfied. By compactness of E, (4) is clear.

We are left to prove (3) and (5). For (3), note that \(\mathcal {L}\) is lower semi-continuous by construction. We also have to prove compactness of the level sets. By lower semi-continuity, it is sufficient to show that the level sets \(\{\mathcal {L}\le c\}\) are contained in a compact set.

Set \(\mathcal {N}:= \cap _{x \in E} \left\{ p \in \mathbb {R}^d \, | \, H(x,p) \le 1 \right\} \). First, we show that \(\mathcal {N}\) has non-empty interior, i.e. there is some \(\varepsilon > 0\) such that the open ball \(B(0,\varepsilon )\) of radius \(\varepsilon \) around 0 is contained in \(\mathcal {N}\). Suppose not, then there exist \(x_n\) and \(p_n\) such that \(p_n \rightarrow 0\) and for all n: \(H(x_n,p_n) = 1\). By compactness of E and continuity of H, we find some \(x \in E\) such that \(H(x,0) = 1\), which contradicts our definitions of H, where \(H(y,0) = 0\) for all \(y \in E\).

Let \((x,v) \in \{\mathcal {L}\le c\}\), then

\[ \langle p,v\rangle \le \mathcal{L}(x,v) + H(x,p) \le c + 1 \]

for all \(p \in B(0,\varepsilon ) \subseteq \mathcal {N}\). It follows that v is contained in some bounded ball in \(\mathbb {R}^d\), so that \(\{\mathcal {L}\le c\}\) is contained in some compact set by the Heine-Borel theorem.

Finally, (5) can be proven as Lemma 10.21 in [22] or Lemma 4.29 in [25].

The last property necessary for the equality of V(t)f and \(\mathbf {V}(t)f\) on C(E) is the verification of Condition 8.11 in [22]. This condition is key to proving that a variational resolvent, see Eq. (8.22), is a viscosity super-solution to (2.5). As the variational resolvent is also a sub-solution to (2.5) by Young’s inequality, the variational resolvent is a viscosity solution to this equation. If viscosity solutions are unique, this yields, after an approximation argument, that \(V(t) = \mathbf {V}(t)\).

Lemma 3

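Condition 8.11 in [22] is satisfied. In other words, for all \(g \in C^{1}(E)\) and \(x_0 \in E\), there exists a trajectory \(\gamma \in \mathcal {A}\mathcal {C}\) such that \(\gamma (0) = x_0\) and for all \(T \ge 0\):

\[ \int_0^T Hg(\gamma(t)) \, \mathrm{d}t = \int_0^T \langle \nabla g(\gamma(t)),\dot{\gamma}(t)\rangle - \mathcal{L}(\gamma(t),\dot{\gamma}(t)) \, \mathrm{d}t. \tag{3.4} \]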

Proof

Fix \(T > 0\), \(g \in C^{1}(E)\) and \(x_0 \in E\). We introduce a vector field \(\mathbf {F}^g : E \rightarrow \mathbb {R}^d\), by

\[ \mathbf{F}^g(x) := H_p(x,\nabla g(x)), \]

where \(H_p(x,p)\) is the vector of partial derivatives of H in the second coordinate. Note that in our examples, H is continuously differentiable in the p-coordinates. For example, for the \(d=1\) case of Theorem 1, we obtain

\[ \mathbf{F}^g(x) = 2 v_+(x) e^{2\nabla g(x)} - 2 v_-(x) e^{-2\nabla g(x)}. \]

As \(\mathbf {F}^g\) is a continuous vector field, we can find a local solution \(\gamma ^g(t)\) in E to the differential equation

\[ \dot{\gamma}^g(t) = \mathbf{F}^g(\gamma^g(t)), \qquad \gamma^g(0) = x_0, \]

by an extended version of Peano’s theorem [10]. The result in [10] is local; however, the length of the interval on which the solution is constructed depends inversely on the norm of the vector field, see Eq. (2) in [10]. As our vector fields are globally bounded in size, we can iterate the construction in [10] to obtain a global existence result, such that \(\dot{\gamma }^g(t) = \mathbf {F}^g(\gamma ^g(t))\) for almost all times in \([0,\infty )\).

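We conclude that on a subset of full measure of [0, T]

\[ \mathcal{L}(\gamma^g(t),\dot{\gamma}^g(t)) = \sup_{p \in \mathbb{R}^d} \left\{ \langle p,\mathbf{F}^g(\gamma^g(t))\rangle - H(\gamma^g(t),p) \right\} = \sup_{p \in \mathbb{R}^d} \left\{ \langle p,H_p(\gamma^g(t),\nabla g(\gamma^g(t)))\rangle - H(\gamma^g(t),p) \right\}. \]

By differentiating the final expression with respect to p, we find that the supremum is attained at \(p = \nabla g(\gamma ^g(t))\). In other words, we find

\[ \mathcal{L}(\gamma^g(t),\dot{\gamma}^g(t)) = \langle \nabla g(\gamma^g(t)),\dot{\gamma}^g(t)\rangle - Hg(\gamma^g(t)). \]

Integrating over time, and using that the null set does not contribute to the integral, we find (3.4).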
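The identity behind Lemma 3 can be illustrated numerically. The sketch below is only an illustration under stated assumptions: it uses the one-dimensional Hamiltonian \(H(x,p) = v_+(x)(e^{2p}-1) + v_-(x)(e^{-2p}-1)\) with the assumed rates \(v_\pm(x) = (1 \mp x)/2\), the hypothetical test function \(g(x) = x^2/2\), and a plain Euler scheme for \(\dot{\gamma} = H_p(\gamma,\nabla g(\gamma))\). It accumulates both sides of the integral identity along the computed path.

```python
import math

def rates(x):
    # Assumed rates v_+(x) = (1 - x)/2 and v_-(x) = (1 + x)/2 (illustrative choice).
    return (1.0 - x) / 2.0, (1.0 + x) / 2.0

def H(x, p):
    a, b = rates(x)
    return a * math.expm1(2.0 * p) + b * math.expm1(-2.0 * p)

def H_p(x, p):
    """Derivative of H in the momentum variable p."""
    a, b = rates(x)
    return 2.0 * a * math.exp(2.0 * p) - 2.0 * b * math.exp(-2.0 * p)

def lagrangian(x, v):
    """Closed-form Legendre transform of p -> H(x, p), valid for -1 < x < 1."""
    a, b = rates(x)
    y = (v + math.sqrt(v * v + 16.0 * a * b)) / (4.0 * a)
    p = 0.5 * math.log(y)
    return p * v - H(x, p)

def dg(x):
    # Gradient of the hypothetical test function g(x) = x^2 / 2.
    return x

def integrate(x0, T=2.0, steps=2000):
    """Euler scheme for gamma' = H_p(gamma, g'(gamma)); returns the end point and both integrals."""
    dt = T / steps
    x, lhs, rhs = x0, 0.0, 0.0
    for _ in range(steps):
        v = H_p(x, dg(x))                          # velocity F^g(x)
        lhs += H(x, dg(x)) * dt                    # integral of Hg along the path
        rhs += (dg(x) * v - lagrangian(x, v)) * dt # integral of <grad g, v> - L
        x += v * dt
    return x, lhs, rhs

x_end, lhs, rhs = integrate(0.5)
print(x_end, lhs, rhs)
```

The two accumulated sums agree up to rounding because, at the velocity \(H_p(x,q)\), the supremum defining \(\mathcal{L}\) is attained at \(p = q\).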

The following result follows from Corollary 8.29 in [22].

Theorem 6

For either of our two models, assume that (2.1) or (2.3) holds. Assume that the comparison principle is satisfied for (2.5) for all \(\lambda > 0\) and \(h \in C(E)\). Finally, suppose that \(\{X_n(0)\}\) satisfies the large deviation principle on E with good rate function \(I_0\).

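Then, we have \(V(t)f = \mathbf {V}(t)f\) for all \(f \in C(E)\) and \(t \ge 0\). Also, \(X_n\) satisfies the LDP on \(D_E(\mathbb {R}^+)\) with good rate function I given by

\[ I(\gamma) = \begin{cases} I_0(\gamma(0)) + \int_0^\infty \mathcal{L}(\gamma(s),\dot{\gamma}(s)) \, \mathrm{d}s & \text{if } \gamma \in \mathcal{A}\mathcal{C}, \\ \infty & \text{otherwise.} \end{cases} \]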

Proof

We check the conditions for Corollary 8.29 in [22]. Note that in our setting \(H = \mathbf {H}\). Therefore, condition (a) of Corollary 8.29 is trivially satisfied. Furthermore, we have to check the conditions for Theorems 6.14 and 8.27. For the first theorem, these conditions were checked already in the proof of our Theorem 5. For Theorem 8.27, we need to check Conditions 8.9, 8.10 and 8.11 in [22]. As \(H1 = 0\), Condition 8.10 follows from Condition 8.11. Conditions 8.9 and 8.11 have been verified in Lemmas 2 and 3.

The last theorem shows us that we have Theorems 1 and 2 if we can verify the comparison principle, i.e. Theorems 3 and 4. This will be done in the section below.

Proof of Theorems 1 and 2

The comparison principles for equation (2.5) are verified in Theorems 3 and 4. The two theorems now follow from Theorem 6. \(\square \)

Proof of Proposition 1

We give the proof for the system considered in Theorem 1. Fix \(t \ge 0\) and some starting point \(x_0\). Let x(t) be any solution of \(\dot{x}(t) = \mathbf {F}(x(t))\) with \(x(0) = x_0\). We show that \(I_0(x(t)) \le I_0(x_0)\).

Let \(X_n(0)\) be distributed as \(\nu _n\). Then it follows by Theorem 1 that the LDP holds for \(\{X_n\}_{n \ge 0}\) on \(D_E(\mathbb {R}^+)\).

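As \(\nu _n\) is invariant for the Markov process generated by \(A_n\), also the sequence \(\{X_n(t)\}_{n \ge 0}\) satisfies the large deviation principle on E with good rate function \(I_0\). Combining these two facts, the contraction principle [15, Theorem 4.2.1] yields

\[ I_0(x(t)) = \inf_{\gamma \in \mathcal{A}\mathcal{C} : \, \gamma(t) = x(t)} \left\{ I_0(\gamma(0)) + \int_0^t \mathcal{L}(\gamma(s),\dot{\gamma}(s)) \, \mathrm{d}s \right\} \le I_0(x(0)) + \int_0^t \mathcal{L}(x(s),\dot{x}(s)) \, \mathrm{d}s = I_0(x(0)). \]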

Note that \(\mathcal {L}(x(s),\dot{x}(s)) = 0\) for all s as was shown in Example 3. \(\square \)
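The Lyapunov property can be observed directly in the simplest example. The following sketch assumes the classical Ehrenfest dynamics in one dimension, in which n spins flip independently at rate 1, so that \(v_\pm(x) = (1 \mp x)/2\), the limiting equation is \(\dot{x} = -2x\), and the stationary measures are binomial with rate function \(I_0(x) = \frac{1+x}{2}\log (1+x) + \frac{1-x}{2}\log (1-x)\). These choices are assumptions made for illustration and are not taken from the statement of Proposition 1.

```python
import math

def drift(x):
    # F(x) = H_p(x, 0) = 2 (v_+(x) - v_-(x)) = -2x for the assumed rates
    # v_+(x) = (1 - x)/2 and v_-(x) = (1 + x)/2.
    return -2.0 * x

def I0(x):
    """Rate function of the binomial stationary measures (relative entropy w.r.t. a fair coin)."""
    def xlogx(s):
        return s * math.log(s) if s > 0.0 else 0.0
    return 0.5 * (xlogx(1.0 + x) + xlogx(1.0 - x))

def trajectory(x0, T=3.0, steps=3000):
    """Euler approximation of the solution of x' = F(x) started at x0."""
    dt = T / steps
    xs = [x0]
    for _ in range(steps):
        xs.append(xs[-1] + dt * drift(xs[-1]))
    return xs

values = [I0(x) for x in trajectory(0.9)]
print(values[0], values[len(values) // 2], values[-1])
```

Along the computed trajectory the values of \(I_0\) are non-increasing and tend to 0, the value at the unique stationary point \(x = 0\).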

4 The Comparison Principle

We proceed with checking the comparison principle for equations of the type \(f(x) - \lambda H(x,\nabla f(x)) - h(x) = 0\). In other words, for subsolutions u and supersolutions v we need to check that \(u \le v\). We start with some known results. First of all, we give the main tool to construct sequences \(x_\alpha \) and \(y_\alpha \) that converge to a maximising point \(z \in E\) such that \(u(z) - v(z) = \sup _{z'\in E} u(z') - v(z')\). This result can be found for example as Proposition 3.7 in [12].

Lemma 4

Let E be a compact subset of \(\mathbb {R}^d\), let u be upper semi-continuous, v lower semi-continuous and let \(\Psi : E^2 \rightarrow \mathbb {R}^+\) be a lower semi-continuous function such that \(\Psi (x,y) = 0\) if and only if \(x = y\). For \(\alpha > 0\), let \(x_\alpha ,y_\alpha \in E\) such that

\[ u(x_\alpha) - v(y_\alpha) - \alpha \Psi(x_\alpha,y_\alpha) = \sup_{x,y \in E} \left\{ u(x) - v(y) - \alpha \Psi(x,y) \right\}. \]

Then the following hold:

(i) \(\lim _{\alpha \rightarrow \infty } \alpha \Psi (x_\alpha ,y_\alpha ) = 0\).

(ii) All limit points of \((x_\alpha ,y_\alpha )\) are of the form (z, z) and for these limit points we have \(u(z) - v(z) = \sup _{x \in E} \left\{ u(x) - v(x) \right\} \).
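The doubling-of-variables construction in Lemma 4 can be made concrete with a small grid search. The sketch below uses the hypothetical choices \(u(x) = x\), \(v(y) = 2y\) on \(E = [-1,1]\) and \(\Psi (x,y) = \frac{1}{2}(x-y)^2\); for these, a direct computation gives \(y_\alpha = -1\), \(x_\alpha = -1 + 1/\alpha \) and \(\alpha \Psi (x_\alpha ,y_\alpha ) = 1/(2\alpha )\).

```python
def u(x):
    return x          # hypothetical upper semi-continuous function

def v(y):
    return 2.0 * y    # hypothetical lower semi-continuous function

def psi(x, y):
    return 0.5 * (x - y) ** 2

def maximisers(alpha, m=400):
    """Grid search for (x_alpha, y_alpha) maximising u(x) - v(y) - alpha * psi(x, y) on [-1, 1]^2."""
    grid = [-1.0 + 2.0 * k / m for k in range(m + 1)]
    return max(((x, y) for x in grid for y in grid),
               key=lambda xy: u(xy[0]) - v(xy[1]) - alpha * psi(xy[0], xy[1]))

results = {alpha: maximisers(alpha) for alpha in (5, 10, 20, 40)}
for alpha, (x, y) in results.items():
    print(alpha, x, y, alpha * psi(x, y))
```

Both coordinates converge to \(z = -1\), the point where \(u - v\) attains its maximum on E, and the penalization term \(\alpha \Psi (x_\alpha ,y_\alpha )\) tends to 0, as stated in (i) and (ii).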

We say that \(\Psi : E^2 \rightarrow \mathbb {R}^+\) is a good penalization function if \(\Psi (x,y) = 0\) if and only if \(x = y\), it is continuously differentiable in both components and if \((\nabla \Psi (\cdot ,y))(x) = - (\nabla \Psi (x,\cdot ))(y)\) for all \(x,y \in E\). The next two results can be found as Lemma 9.3 in [22]. We will give the proofs of these results for completeness.

Proposition 2

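Let \((H,\mathcal {D}(H))\) be an operator such that \(\mathcal {D}(H) = C^{1}(E)\) of the form \(Hf(x) = H(x,\nabla f(x))\). Let u be a subsolution and v a supersolution to \(f(x) - \lambda H(x,\nabla f(x)) - h(x) = 0\), for some \(\lambda > 0\) and \(h \in C(E)\). Let \(\Psi \) be a good penalization function and let \(x_\alpha ,y_\alpha \) satisfy

\[ u(x_\alpha) - v(y_\alpha) - \alpha \Psi(x_\alpha,y_\alpha) = \sup_{x,y \in E} \left\{ u(x) - v(y) - \alpha \Psi(x,y) \right\}. \]

Suppose that

\[ \liminf_{\alpha \rightarrow \infty} H\left(x_\alpha, \alpha (\nabla \Psi(\cdot,y_\alpha))(x_\alpha)\right) - H\left(y_\alpha, \alpha (\nabla \Psi(\cdot,y_\alpha))(x_\alpha)\right) \le 0, \]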

then \(u \le v\). In other words, \(f(x) - \lambda H(x,\nabla f(x)) - h(x) = 0\) satisfies the comparison principle.

Proof

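Fix \(\lambda >0\) and \(h \in C(E)\). Let u be a subsolution and v a supersolution to

\[ f(x) - \lambda H(x,\nabla f(x)) - h(x) = 0. \tag{4.1} \]

We argue by contradiction and assume that \(\delta := \sup _{x \in E} u(x) - v(x) > 0\). For \(\alpha > 0\), let \(x_\alpha ,y_\alpha \) be such that

\[ u(x_\alpha) - v(y_\alpha) - \alpha \Psi(x_\alpha,y_\alpha) = \sup_{x,y \in E} \left\{ u(x) - v(y) - \alpha \Psi(x,y) \right\}. \]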

Thus Lemma 4 yields \(\alpha \Psi (x_\alpha ,y_\alpha ) \rightarrow 0\) and for any limit point z of the sequence \(x_\alpha \), we have \(u(z) - v(z) = \sup _{x \in E} u(x) - v(x) = \delta > 0\). It follows that for \(\alpha \) large enough, \(u(x_\alpha ) - v(y_\alpha ) \ge \frac{1}{2}\delta \).

For every \(\alpha > 0\), the map \(\Phi ^1_\alpha (x) := v(y_\alpha ) + \alpha \Psi (x,y_\alpha )\) is in \(C^{1}(E)\) and \(u(x) - \Phi ^1_\alpha (x)\) has a maximum at \(x_\alpha \). On the other hand, \(\Phi ^2_\alpha (y) := u(x_\alpha ) - \alpha \Psi (x_\alpha ,y)\) is also in \(C^{1}(E)\) and \(v(y) - \Phi ^2_\alpha (y)\) has a minimum at \(y_\alpha \). As u is a sub- and v a super solution to (4.1), we have

where the last equality follows as \(\Psi \) is a good penalization function. It follows that for \(\alpha \) large enough, we have

As h is continuous, we obtain \(\lim _{\alpha \rightarrow \infty } h(x_\alpha ) - h(y_\alpha ) = 0\). Together with the assumption of the proposition, we find that the \(\liminf \) as \(\alpha \rightarrow \infty \) of the third line in (4.2) is bounded above by 0, which contradicts the assumption that \(\delta > 0\). \(\square \)

The next lemma gives additional control on the sequences \(x_\alpha ,y_\alpha \).

Lemma 5

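Let \((H,\mathcal {D}(H))\) be an operator such that \(\mathcal {D}(H) = C^{1}(E)\) of the form \(Hf(x) = H(x,\nabla f(x))\). Let u be a subsolution and v a supersolution to \(f(x) - \lambda H(x,\nabla f(x)) - h(x) = 0\), for some \(\lambda > 0\) and \(h \in C(E)\). Let \(\Psi \) be a good penalization function and let \(x_\alpha ,y_\alpha \) satisfy

\[ u(x_\alpha) - v(y_\alpha) - \alpha \Psi(x_\alpha,y_\alpha) = \sup_{x,y \in E} \left\{ u(x) - v(y) - \alpha \Psi(x,y) \right\}. \]

Then we have that

\[ \sup_{\alpha} H\left(y_\alpha, \alpha (\nabla \Psi(\cdot,y_\alpha))(x_\alpha)\right) < \infty. \]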

Proof

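Fix \(\lambda > 0\), \(h \in C(E)\) and let u and v be sub- and super-solutions to \(f(x) - \lambda H(x,\nabla f(x)) - h(x) = 0\). Let \(\Psi \) be a good penalization function and let \(x_\alpha ,y_\alpha \) satisfy

\[ u(x_\alpha) - v(y_\alpha) - \alpha \Psi(x_\alpha,y_\alpha) = \sup_{x,y \in E} \left\{ u(x) - v(y) - \alpha \Psi(x,y) \right\}. \]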

As \(y_\alpha \) is such that

and v is a super-solution, we obtain

As \(\Psi \) is a good penalization function, we have \(- (\nabla \Psi (x_\alpha ,\cdot ))(y_\alpha ) = (\nabla \Psi (\cdot ,y_\alpha ))(x_\alpha )\). The boundedness of v now implies

\(\square \)

4.1 One-Dimensional Ehrenfest Model

To single out the important aspects of the proof of the comparison principle for Eq. (2.5), we start by proving it for the \(d=1\) case of Theorem 1.

Proposition 3

Let \(E = [-1,1]\) and let

\[ H(x,p) = v_+(x)\left[ e^{2p} - 1 \right] + v_-(x)\left[ e^{-2p} - 1 \right], \]

where \(v_+, v_-\) are continuous and satisfy the following properties:

(a) \(v_+(x) = 0\) for all x, or \(v_+\) satisfies the following properties:

(i) \(v_+(x) > 0\) for \(x \ne 1\).

(ii) \(v_+(1) = 0\) and there exists a neighbourhood \(U_{1}\) of 1 on which there exists a decomposition \(v_+(x) = v_{+,\dagger }(x)v_{+,\ddagger }(x)\) such that \(v_{+,\dagger }\) is decreasing and where \(v_{+,\ddagger }\) is continuous and satisfies \(v_{+,\ddagger }(1) \ne 0\).

(b) \(v_-(x) = 0\) for all x, or \(v_-\) satisfies the following properties:

(i) \(v_-(x) > 0\) for \(x \ne -1\).

(ii) \(v_-(-1) = 0\) and there exists a neighbourhood \(U_{-1}\) of \(-1\) on which there exists a decomposition \(v_-(x) = v_{-,\dagger }(x)v_{-,\ddagger }(x)\) such that \(v_{-,\dagger }\) is increasing and where \(v_{-,\ddagger }\) is continuous and satisfies \(v_{-,\ddagger }(-1) \ne 0\).

Let \(\lambda > 0\) and \(h \in C(E)\). Then the comparison principle holds for \(f(x) - \lambda H(x,\nabla f(x)) - h(x) = 0\).

Proof

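Fix \(\lambda > 0\), \(h \in C(E)\) and pick a sub-solution u and a super-solution v to \(f(x) - \lambda H(x,\nabla f(x)) - h(x) = 0\). We check the condition for Proposition 2. We take the good penalization function \(\Psi (x,y) = 2^{-1} (x-y)^2\) and let \(x_\alpha ,y_\alpha \) satisfy

\[ u(x_\alpha) - v(y_\alpha) - \frac{\alpha}{2} |x_\alpha - y_\alpha|^2 = \sup_{x,y \in E} \left\{ u(x) - v(y) - \frac{\alpha}{2} |x - y|^2 \right\}. \]

We need to prove that

\[ \liminf_{\alpha \rightarrow \infty} H(x_\alpha, \alpha(x_\alpha - y_\alpha)) - H(y_\alpha, \alpha(x_\alpha - y_\alpha)) \le 0. \tag{4.4} \]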

By Lemma 4, we know that \(\alpha |x_\alpha - y_\alpha |^2 \rightarrow 0\) as \(\alpha \rightarrow \infty \) and any limit point of \((x_\alpha , y_\alpha )\) is of the form (z, z) for some z such that \(u(z) - v(z) = \max _{z' \in E} u(z') - v(z')\). Restrict \(\alpha \) to the sequence \(\alpha \in \mathbb {N}\) and extract a subsequence, which we will also denote by \(\alpha \), such that, as \(\alpha \rightarrow \infty \), \(x_\alpha \) and \(y_\alpha \) converge to some z. The rest of the proof depends on whether \(z = -1\), \(z = 1\) or \(z \in (-1,1)\).

First suppose that \(z \in (-1,1)\). By Lemma 5, we have

As \(e^c -1 > -1\), we see that the \(\limsup \) of both terms of the sum individually are bounded as well. Using that \(y_\alpha \rightarrow z \in (-1,1)\), and the fact that \(v_+,v_-\) are bounded away from 0 on a closed interval around z, we obtain from the first term that \(\sup _\alpha \alpha (x_\alpha - y_\alpha ) < \infty \) and from the second that \(\sup _\alpha \alpha (y_\alpha - x_\alpha ) < \infty \). We conclude that \(\alpha (x_\alpha - y_\alpha )\) is a bounded sequence. Therefore, there exists a subsequence \(\alpha (k)\) such that \(\alpha (k)(x_{\alpha (k)} - y_{\alpha (k)})\) converges to some \(p_0\). We find that

We proceed with the proof in the case that \(x_\alpha ,y_\alpha \rightarrow z = -1\). The case where \(z = 1\) is proven similarly. Again by Lemma 5, we obtain the bounds

As \(v_+\) is bounded away from 0 near \(-1\), we obtain by the left hand bound that \(\sup _\alpha \alpha (x_\alpha - y_\alpha ) < \infty \). As in the proof above, it follows that if \(\alpha |x_\alpha - y_\alpha |\) is bounded, we are done. This leaves the case where there exists a subsequence of \(\alpha \), denoted by \(\alpha (k)\), such that \(\alpha (k)(y_{\alpha (k)} - x_{\alpha (k)}) \rightarrow \infty \). Then clearly, \(e^{2\alpha (k)(x_{\alpha (k)} - y_{\alpha (k)})}- 1\) is bounded and contains a converging subsequence. We obtain as in the proof where \(z \in (-1,1)\) that

Note that as \(\alpha (k)(y_{\alpha (k)} - x_{\alpha (k)}) \rightarrow \infty \), we have \(y_{\alpha (k)} > x_{\alpha (k)} \ge -1\), which implies \(v_-(y_{\alpha (k)}) > 0\). Also for k sufficiently large, \(y_{\alpha (k)}, x_{\alpha (k)} \in U_{-1}\). Thus, we can write

By the bound in (4.5), and the obvious lower bound, we see that the non-negative sequence

contains a converging subsequence \(u_{k'} \rightarrow c\). As \(y_{\alpha (k)} > x_{\alpha (k)}\) and \(v_{-,\dagger }\) is increasing:

As a consequence, we obtain

This concludes the proof of (4.4) for the case that \(z = -1\). \(\square \)

4.2 Multi-dimensional Ehrenfest Model

Proof of Theorem 3

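Let u be a subsolution and v a supersolution to \(f(x) - \lambda H(x,\nabla f(x)) - h(x) = 0\). As in the proof of Proposition 3, we check the condition for Proposition 2. Again, for \(\alpha \in \mathbb {N}\) let \(x_\alpha ,y_\alpha \) satisfy

\[ u(x_\alpha) - v(y_\alpha) - \frac{\alpha}{2} |x_\alpha - y_\alpha|^2 = \sup_{x,y \in E} \left\{ u(x) - v(y) - \frac{\alpha}{2} |x - y|^2 \right\} \]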

and without loss of generality let z be such that \(x_\alpha ,y_\alpha \rightarrow z\).

Denote with \(x_{\alpha ,i}\) and \(y_{\alpha ,i}\) the ith coordinate of \(x_\alpha \) respectively \(y_\alpha \). We prove

by constructing a subsequence \(\alpha (n) \rightarrow \infty \) such that the first term in the sum converges to 0. From this sequence, we find a subsequence such that the second term converges to zero, and so on.

Therefore, we will assume that we have a sequence \(\alpha (n) \rightarrow \infty \) for which the first \(i-1\) terms of the difference of the two Hamiltonians vanish and prove that we can find a subsequence for which the ith term

vanishes. This follows directly as in the proof of Proposition 3, arguing depending on the situation \(z_i \in (-1,1)\), \(z_i = -1\) or \(z_i = 1\). \(\square \)

4.3 Mean Field Markov Jump Processes on a Finite State Space

The proof of Theorem 4 follows along the lines of the proofs of Proposition 3 and Theorem 3. The proof however needs one important adaptation because of the appearance of the difference \(p_b - p_a\) in the exponents of the Hamiltonian.

Naively copying the proofs using the penalization function \(\Psi (\mu ,\nu ) = \frac{1}{2} \sum _{a} (\mu (a) - \nu (a))^2\) one obtains by Lemma 5, for suitable sequences \(\mu _\alpha \) and \(\nu _\alpha \), that

One sees that the control on the sequences \(\alpha (\nu _\alpha (a) - \mu _\alpha (a))\) obtained from this bound is not very good, due to the compensating term \(\alpha (\mu _\alpha (b) - \nu _\alpha (b))\).

The proof can be suitably adapted using a different penalization function. For \(x \in \mathbb {R}\), let \(x^- := x \wedge 0\) and \(x^+ = x \vee 0\). Define \(\Psi (\mu ,\nu ) = \frac{1}{2} \sum _{a} ((\mu (a) - \nu (a))^-)^2 = \frac{1}{2} \sum _{a} ((\nu (a) - \mu (a))^+)^2 \). Clearly, \(\Psi \) is differentiable in both components and satisfies \((\nabla \Psi (\cdot ,\nu ))(\mu ) = - (\nabla \Psi (\mu ,\cdot ))(\nu )\). Finally, using the fact that \(\sum _i \mu (i) = \sum _i \nu (i) = 1\), we find that \(\Psi (\mu ,\nu ) = 0\) implies that \(\mu = \nu \). We conclude that \(\Psi \) is a good penalization function.
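The properties of the one-sided penalization function can be checked numerically on a small example. The sketch below uses two hypothetical probability vectors on three states and compares the stated gradients with central finite differences; it is an illustration only, and the helper names are not from the text.

```python
def neg(x):
    return min(x, 0.0)   # x^- = x ∧ 0

def psi(mu, nu):
    """One-sided penalization: half the sum of squared negative parts of mu(a) - nu(a)."""
    return 0.5 * sum(neg(m - n) ** 2 for m, n in zip(mu, nu))

def grad_mu(mu, nu):
    """Gradient of psi in its first argument: component a equals (mu(a) - nu(a))^-."""
    return [neg(m - n) for m, n in zip(mu, nu)]

def grad_nu(mu, nu):
    """Gradient of psi in its second argument: component a equals -(mu(a) - nu(a))^-."""
    return [-neg(m - n) for m, n in zip(mu, nu)]

def finite_difference(fn, point, h=1e-6):
    """Central finite-difference gradient of fn at point."""
    out = []
    for a in range(len(point)):
        up = list(point)
        up[a] += h
        dn = list(point)
        dn[a] -= h
        out.append((fn(up) - fn(dn)) / (2.0 * h))
    return out

mu = [0.5, 0.3, 0.2]   # hypothetical probability vectors
nu = [0.2, 0.3, 0.5]

fd_mu = finite_difference(lambda m: psi(m, nu), mu)
fd_nu = finite_difference(lambda n: psi(mu, n), nu)
print(psi(mu, nu), psi(nu, mu), psi(mu, mu))
print(grad_mu(mu, nu), fd_mu)
print(grad_nu(mu, nu), fd_nu)
```

Because both vectors sum to 1, any difference between them forces \(\mu (a) < \nu (a)\) for at least one a, so \(\Psi (\mu ,\nu ) > 0\) whenever \(\mu \ne \nu \), even though only the negative parts are penalized.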

The bound obtained from Lemma 5 using this \(\Psi \) yields

We see that if \(\alpha \left( \left( \mu _\alpha (b) - \nu _\alpha (b)\right) ^- - \left( \mu _\alpha (a) - \nu _\alpha (a)\right) ^-\right) \rightarrow \infty \) it must be because \(\alpha (\nu _\alpha (a) - \mu _\alpha (a)) \rightarrow \infty \). This puts us in the position to use the techniques from the previous proofs.

Proof of Theorem 4

Set \(\Psi (\mu ,\nu ) = \frac{1}{2} \sum _{a} ((\mu (a) - \nu (a))^-)^2\), as above. We already noted that \(\Psi \) is a good penalization function.

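Let u be a subsolution and v be a supersolution to \(f(\mu ) - \lambda H(\mu ,\nabla f(\mu )) - h(\mu ) = 0\). For \(\alpha \in \mathbb {N}\), pick \(\mu _\alpha \) and \(\nu _\alpha \) such that

\[ u(\mu_\alpha) - v(\nu_\alpha) - \alpha \Psi(\mu_\alpha,\nu_\alpha) = \sup_{\mu,\nu \in E} \left\{ u(\mu) - v(\nu) - \alpha \Psi(\mu,\nu) \right\}. \]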

Furthermore, assume without loss of generality that \(\mu _\alpha ,\nu _\alpha \rightarrow z\) for some z such that \(u(z) - v(z) = \sup _{z'\in E} u(z') - v(z')\). By Proposition 2, we need to bound

As in the proof of Theorem 3, we will show that each term in the sum above can be bounded above by 0 separately. So pick some ordering of the ordered pairs (i, j), \(i,j \in \{1,\ldots ,d\}\) and assume that we have some sequence \(\alpha \) such that the \(\liminf _{\alpha \rightarrow \infty }\) of the first k terms in Eq. (4.7) are bounded above by 0. Suppose that (i, j) is the pair corresponding to the \((k+1)\)th term of the sum in (4.7).

Clearly, if \(v(i,j,\pi ) = 0\) for all \(\pi \) then we are done. Therefore, we assume that \(v(i,j,\pi ) \ne 0\) for all \(\pi \) such that \(\pi (i) > 0\).

In the case that \(\mu _\alpha , \nu _\alpha \rightarrow \pi ^*\), where \(\pi ^*(i) > 0\), we know by Lemma 5, using that \(v(i,j,\cdot )\) is bounded away from 0 on a neighbourhood of \(\pi ^*\), that

Picking a subsequence \(\alpha (n)\) such that this term above converges and using that \(\pi \rightarrow v(i,j,\pi )\) is uniformly continuous, we see

For the second case, suppose that \(\mu _\alpha (i),\nu _\alpha (i) \rightarrow 0\). By Lemma 5, we get

First of all, if \(\sup _\alpha \alpha \left( \left( \mu _\alpha (j) - \nu _\alpha (j)\right) ^- - \left( \mu _\alpha (i) - \nu _\alpha (i)\right) ^-\right) < \infty \), then the argument given above also takes care of this situation. So suppose that this supremum is infinite. Clearly, the contribution \(\left( \mu _\alpha (j) - \nu _\alpha (j)\right) ^-\) is non-positive, which implies that \(\sup _\alpha \alpha \left( \nu _\alpha (i) -\mu _\alpha (i)\right) ^+ = \infty \). This means that we can assume without loss of generality that

We rewrite the term \(a = i\), \(b = j\) in Eq. (4.7) as

The right hand side is bounded above by (4.8) and bounded below by \(-1\), so we take a subsequence of \(\alpha \), also denoted by \(\alpha \), such that the right hand side converges. Also note that for \(\alpha \) large enough the right hand side is non-negative. Therefore, it suffices to show that

which follows as in the proof of Proposition 3. \(\square \)

References

Adams, A., Dirr, N., Peletier, M., Zimmer, J.: Large deviations and gradient flows. Philos. Trans. R. Soc. Lond. A 371, 20120341 (2013)

Atar, R., Dupuis, P.: Large deviations and queueing networks: methods for rate function identification. Stoch. Process. Appl. 84(2), 255–296 (1999). doi:10.1016/S0304-4149(99)00051-4

Borkar, V.S., Sundaresan, R.: Asymptotics of the invariant measure in mean field models with jumps. Stoch. Syst. 2(2), 322–380 (2012). doi:10.1214/12-SSY064

Djehiche, B., Kaj, I.: The rate function for some measure-valued jump processes. Ann. Probab. 23(3), 1414–1438 (1995). http://www.jstor.org/stable/2244879

Budhiraja, A., Dupuis, P., Fischer, M.: Large deviation properties of weakly interacting processes via weak convergence methods. Ann. Probab. 40(1), 74–102 (2012). doi:10.1214/10-AOP616

Budhiraja, A., Dupuis, P., Fischer, M., Ramanan, K.: Limits of relative entropies associated with weakly interacting particle systems. Electron. J. Probab. 20(80), 1–22 (2015a). doi:10.1214/EJP.v20-4003. http://ejp.ejpecp.org/article/view/4003

Budhiraja, A., Dupuis, P., Fischer, M., Ramanan, K.: Local stability of Kolmogorov forward equations for finite state nonlinear Markov processes. Electron. J. Probab. 20(81), 1–30 (2015b). doi:10.1214/EJP.v20-4004. http://ejp.ejpecp.org/article/view/4004

Comets, F.: Nucleation for a long range magnetic model. Ann. Inst. H. Poincaré Probab. Stat. 23(2), 135–178 (1987)

Comets, F.: Large deviation estimates for a conditional probability distribution. Applications to random interaction Gibbs measures. Probab. Theory Relat. Fields 80(3), 407–432 (1989)

Crandall, M.G.: A generalization of Peano’s existence theorem and flow invariance. Proc. Am. Math. Soc. 36, 151–155 (1972)

Crandall, M.G., Liggett, T.M.: Generation of semi-groups of nonlinear transformations on general Banach spaces. Am. J. Math. 93(2), 265–298 (1971)

Crandall, M.G., Ishii, H., Lions, P.L.: User’s guide to viscosity solutions of second order partial differential equations. Bull. Am. Math. Soc. New Ser. 27(1), 1–67 (1992). doi:10.1090/S0273-0979-1992-00266-5

Dai Pra, P., den Hollander, F.: McKean–Vlasov limit for interacting random processes in random media. J. Stat. Phys. 84(3–4), 735–772 (1996). doi:10.1007/BF02179656

Dawson, D.A., Gärtner, J.: Large deviations from the McKean–Vlasov limit for weakly interacting diffusions. Stochastics 20(4), 247–308 (1987)

Dembo, A., Zeitouni, O.: Large Deviations Techniques and Applications, 2nd edn. Springer, New York (1998)

Deng, X., Feng, J., Liu, Y.: A singular 1-D Hamilton–Jacobi equation, with application to large deviation of diffusions. Commun. Math. Sci. 9(1), 289–300 (2011)

Dupuis, P., Ellis, R.S.: The large deviation principle for a general class of queueing systems. I. Trans. Am. Math. Soc. 347(8), 2689–2751 (1995). doi:10.2307/2154753

Dupuis, P., Ishii, H., Soner, H.M.: A viscosity solution approach to the asymptotic analysis of queueing systems. Ann. Probab. 18(1), 226–255 (1990). http://www.jstor.org/stable/2244236

Dupuis, P., Ramanan, K., Wu, W.: Large deviation principle for finite-state mean field interacting particle systems. Preprint (2016). arXiv:1601.06219

Ermolaev, V., Külske, C.: Low-temperature dynamics of the Curie–Weiss model: periodic orbits, multiple histories, and loss of Gibbsianness. J. Stat. Phys. 141(5), 727–756 (2010). doi:10.1007/s10955-010-0074-x

Ethier, S.N., Kurtz, T.G.: Markov Processes: Characterization and Convergence. Wiley, Hoboken (1986)

Feng, J., Kurtz, T.G.: Large Deviations for Stochastic Processes. American Mathematical Society, Providence (2006)

Feng, S.: Large deviations for empirical process of mean-field interacting particle system with unbounded jumps. Ann. Probab. 22(4), 2122–2151 (1994)

Freidlin, M., Wentzell, A.: Random Perturbations of Dynamical Systems, 2nd edn. Springer, Berlin (1998)

Kraaij, R.: Large deviations of the trajectory of empirical distributions of Feller processes on locally compact spaces. Preprint (2014). arXiv:1401.2802

Léonard, C.: Large deviations for long range interacting particle systems with jumps. Ann. Inst. H. Poincaré (B) Probab. Stat. 31(2), 289–323 (1995)

Mielke, A., Peletier, M., Renger, D.: On the relation between gradient flows and the large-deviation principle, with applications to Markov chains and diffusion. Potential Anal. 41(4), 1293–1327 (2014). doi:10.1007/s11118-014-9418-5

Stroock, D.W., Varadhan, S.R.S.: Multidimensional Diffusion Processes. Grundlehren der Mathematischen Wissenschaften [Fundamental Principles of Mathematical Sciences], vol. 233. Springer, Berlin (1979)

van Enter, A., Fernández, R., den Hollander, F., Redig, F.: A large-deviation view on dynamical Gibbs–non-Gibbs transitions. Moscow Math. J. 10, 687–711 (2010)

Acknowledgments

The author is supported by The Netherlands Organisation for Scientific Research (NWO), Grant No. 600.065.130.12N109. The author thanks Frank Redig and Christian Maes for helpful discussions. Additionally, the author thanks anonymous referees for suggestions that improved the text.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Kraaij, R. Large Deviations for Finite State Markov Jump Processes with Mean-Field Interaction Via the Comparison Principle for an Associated Hamilton–Jacobi Equation. J Stat Phys 164, 321–345 (2016). https://doi.org/10.1007/s10955-016-1542-8

DOI: https://doi.org/10.1007/s10955-016-1542-8

Keywords

- Large deviations

- Non-linear jump processes

- Hamilton–Jacobi equation

- Viscosity solutions

- Comparison principle