Abstract

In invasion percolation, the edges of successively maximal weight (the outlets) divide the invasion cluster into a chain of ponds separated by outlets. On the regular tree, the ponds are shown to grow exponentially, with law of large numbers, central limit theorem and large deviation results. The tail asymptotics for a fixed pond are also studied and are shown to be related to the asymptotics of a critical percolation cluster, with a logarithmic correction.


1 Introduction and Definitions

1.1 The Model: Invasion Percolation, Ponds and Outlets

Consider an infinite connected locally finite graph \({\mathcal{G}}\), with a distinguished vertex o, the root. On each edge, place an independent Uniform[0,1] edge weight, which we may assume (a.s.) to be all distinct. Starting from the subgraph \({\mathcal{C}}_{0}= \{ o \}\), inductively grow a sequence of subgraphs \({\mathcal{C}}_{i}\) according to the following deterministic rule. At step i, examine the edges on the boundary of \({\mathcal{C}}_{i-1}\), and form \({\mathcal{C}}_{i}\) by adjoining to \({\mathcal {C}}_{i-1}\) the edge whose weight is minimal. The infinite union

$$ {\mathcal{C}}=\bigcup_{i=0}^{\infty}{\mathcal{C}}_{i} $$(1.1)

is called the invasion cluster.
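The growth rule can be sketched in a few lines for the forward regular tree considered later in the paper. This is an illustrative sketch only: the function name `invade_tree`, the labelling of vertices by tuples of child indices, and the lazy sampling of edge weights (a weight is drawn when its edge first reaches the boundary) are assumptions made here, not constructions from the paper.

```python
import heapq
import random


def invade_tree(sigma, steps, seed=0):
    """Run `steps` invasion steps on the forward regular tree of degree sigma.

    Vertices are labelled by tuples of child indices (the root is ()).
    Each edge is identified with its child endpoint and receives its
    Uniform[0,1] weight the first time it appears on the boundary.
    Returns the list of (weight, child_vertex) in order of invasion.
    """
    rng = random.Random(seed)
    boundary = []  # min-heap of (weight, child_vertex) over boundary edges

    def add_children(v):
        # Invading v exposes the sigma edges leading to its children.
        for j in range(sigma):
            heapq.heappush(boundary, (rng.random(), v + (j,)))

    add_children(())
    invaded = []
    for _ in range(steps):
        weight, child = heapq.heappop(boundary)  # minimal boundary edge
        invaded.append((weight, child))
        add_children(child)
    return invaded
```

Because the graph is a tree, every boundary edge leads to a vertex that has not yet been invaded, so no cycle check is needed; on a general graph the boundary would have to be filtered for edges whose endpoints are both already in the cluster.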

Invasion percolation is closely related to ordinary (Bernoulli) percolation. For instance, if \({\mathcal{G}}\) is quasi-transitive, then for any \(p>p_{c}\), only a finite number of edges of weight greater than p are ever invaded (see [4] for \({\mathcal{G}}=\mathbb{Z}^{d}\); this was later greatly generalized by [11]). On the other hand, it is elementary to show that for any \(p<p_{c}\), infinitely many edges of weight greater than p must be invaded. In other words, writing \(\xi_{i}\) for the weight of the ith invaded edge, we have

$$ \limsup_{i\to\infty}\xi_{i}=p_{c} \quad\text{a.s.} $$(1.2)

So invasion percolation produces an infinite cluster using only slightly more than critical edges, even though there may be no infinite cluster at criticality. The fact that invasion percolation is linked to the critical value \(p_{c}\), even though it contains no parameter in its definition, makes it an example of self-organized criticality.

Under mild hypotheses (see Sect. 3.1), the invasion cluster has a natural decomposition into ponds and outlets. Let \(e_{1}\in {\mathcal{C}}\) be the edge whose weight \(Q_{1}\) is the largest ever invaded. For n>1, \(e_{n}\) is the edge in \({\mathcal{C}}\) whose weight \(Q_{n}\) is the highest among edges invaded after \(e_{n-1}\). We call \(e_{n}\) the nth outlet and \(Q_{n}\) the corresponding outlet weight. Write \(\hat{V}_{n}\) for the step at which \(e_{n}\) was invaded, with \(\hat{V}_{0}=0\). The nth pond is the subgraph of edges invaded at steps \(i\in(\hat{V}_{n-1}, \hat{V}_{n}]\).
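As a rough illustration of this definition, the successive maxima can be read off a list of invaded weights by scanning from the end. The helper name `outlets_from_prefix` is hypothetical. Note the caveat: the true outlets depend on the entire infinite invasion, so on a finite prefix only the records found well before the end of the list can be trusted; the last few are artifacts of truncation.

```python
def outlets_from_prefix(weights):
    """Return [(step, weight), ...] of successive suffix maxima.

    weights[i - 1] is the weight of the i-th invaded edge (steps are 1-based).
    The first entry is the largest weight overall, the second is the largest
    weight among edges invaded after it, and so on.
    """
    records = []
    best = float("-inf")
    # Scan backwards: an edge is a candidate outlet exactly when its weight
    # exceeds every weight invaded after it.
    for i in range(len(weights) - 1, -1, -1):
        if weights[i] > best:
            best = weights[i]
            records.append((i + 1, weights[i]))
    records.reverse()
    return records
```

Differences between consecutive recorded steps then give the (truncated) pond volumes.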

Suppose an edge e, with weight p, is first examined at step \(i\in (\hat{V}_{n-1}, \hat{V}_{n}]\). (That is, i is the first step at which e is on the boundary of \({\mathcal{C}}_{i-1}\).) Then we have the following dichotomy: either

- e will be invaded as part of the nth pond (if \(p\leq Q_{n}\)); or
- e will never be invaded (if \(p>Q_{n}\)).

This implies that the ponds are connected subgraphs and touch each other only at the outlets. Moreover, the outlets are pivotal in the sense that any infinite non-intersecting path in \({\mathcal{C}}\) starting at o must pass through every outlet. Consequently \({\mathcal {C}}\) is decomposed as an infinite chain of ponds, connected at the outlets.

In this paper we take \({\mathcal{G}}\) to be a regular tree and analyze the asymptotic behavior of the ponds, the outlets and the outlet weights. This problem can be approached in two directions: by considering the ponds as a sequence and studying the growth properties of that sequence; or by considering a fixed pond and finding its asymptotics. We will see that the sequence of ponds grows exponentially, with exact exponential constants. For a fixed pond, its asymptotics correspond to those of ordinary percolation with a logarithmic correction.

These computations are based on representing \({\mathcal{C}}\) in terms of the outlet weights \(Q_{n}\), as in [1]. Conditional on \((Q_{n})_{n=0}^{\infty}\), each pond is an independent percolation cluster with parameter related to \(Q_{n}\). In particular, the fluctuations of the ponds are a combination of fluctuations in \(Q_{n}\) and the additional randomness.

Surprisingly, in all but the large deviation sense, the asymptotic behavior of the ponds is controlled by the outlet weights alone: the remaining randomness after conditioning only on \((Q_{n})_{n=0}^{\infty}\) disappears in the limit, and the fluctuations are attributable solely to fluctuations of \(Q_{n}\).

1.2 Known Results

The terminology of ponds and outlets comes from the following description (see [17]) of invasion percolation. Consider a random landscape where the edge weights represent the heights of channels between locations. Pour water into the landscape at o; then as more and more water is added, it will flow into neighboring areas according to the invasion percolation mechanism. The water level at o, and throughout the first pond, will rise until it reaches the height of the first outlet. Once water flows over an outlet, however, it will flow into a new pond where the water will only ever rise to a lower height. Note that the water level in the nth pond is the height (edge weight) of the nth outlet.

The edge weights may also be interpreted as energy barriers for a random walker exploring a random energy landscape: see [15]. If the energy levels are highly separated, then (with high probability and until some large time horizon) the walker will visit the ponds in order, spending a long time in each pond before crossing the next outlet. In this interpretation the growth rate of the ponds determines the effect of entropy on this analysis. See the extended discussion in [15].

Invasion percolation is also related to the incipient infinite cluster (IIC), at least in the cases \({\mathcal{G}}=\mathbb{Z}^{2}\) [12] and \({\mathcal{G}}\) a regular tree: see, e.g., [1, 5, 12]. For a cylinder event E, the law of the IIC can be defined by

or by other limiting procedures, many of which can be proved to be equivalent to each other. Both the invasion cluster and the IIC consist of an infinite cluster that is “almost critical”, in view of (1.2) or (1.3) respectively. For \({\mathcal{G}}=\mathbb{Z}^{2}\) [12] and \({\mathcal{G}}\) a regular tree [1], the IIC can be defined in terms of the invasion cluster: if \(X_{k}\) denotes a vertex chosen uniformly from among the invaded vertices within distance k of o, and \(\tau_{X_{k}}E\) denotes the translation of E when o is sent to \(X_{k}\), then

Surprisingly, despite this local equivalence, the invasion cluster and the IIC are globally different: they are mutually singular and, at least on the regular tree, have different scaling limits, although they have the same scaling exponents.

The regular tree case, first considered in [16], was studied in great detail in [1]. Any infinite non-intersecting path from o must pass through every outlet; on a tree, this implies that there is a backbone, the unique infinite non-intersecting path from o. In [1] a description of the invasion cluster was given in terms of the forward maximal weight process, the outlet weights indexed by height along the backbone (see Sect. 3.2). This parametrization in terms of the external geometry of the tree allowed the calculation of natural geometric quantities, such as the number of invaded edges within a ball. In the following, we will see that when information about the heights is discarded, the process of edge weights takes an even simpler form.

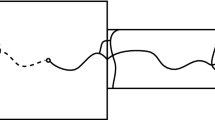

The detailed structural information in [1] was used in [2] to identify the scaling limit of the invasion cluster (again for the regular tree). Since the invasion cluster is a tree with a single infinite end, it can be encoded by its Lukaciewicz path or its height and contour functions. Within each pond, the scaling limit of the Lukaciewicz path is computed, and the different ponds are stitched together to provide the full scaling limit.

The two-dimensional case was also studied in a series of papers by van den Berg, Damron, Járai, Sapozhnikov and Vágvölgyi [5, 6, 17]. There they study, among other things, the probability that the nth pond extends a distance k from o, for n fixed. For n=1 this is asymptotically of the same order as the probability that a critical percolation cluster extends a distance k, and for n>1 there is a correction factor \((\log k)^{n-1}\). Furthermore an exponential growth bound for the ponds is given. The present work was motivated in part by the question of what the corresponding results would be for the tree. Quite remarkably, they are essentially the same, suggesting that a more general phenomenon may be involved.

In the results and proofs that follow, we shall see that the sequence of outlet weights plays a dominant role. Indeed, all of the results in Theorems 2.1–2.4 are proved first for \(Q_{n}\), then extended to other pond quantities using conditional tail estimates. Consequently, all of the results can be understood as consequences of the growth mechanism for the sequence \(Q_{n}\). On the regular tree, we are able to give an exact description of the sequence \(Q_{n}\) in terms of a sum of independent random variables (see Sect. 3.3). In more general graphs, this representation cannot be expected to hold exactly. However, the similarities between the pond behaviors, even on graphs as different as the tree and \(\mathbb{Z}^{2}\), suggest that an approximate analogue may hold. Such a result would provide a unifying explanation for both the exponential pond growth and the asymptotics of a fixed pond, even on potentially quite general graphs.

1.3 Summary of Notation

We will primarily consider the case where \({\mathcal{G}}\) is the forward regular tree of degree σ: namely, the tree in which the root o has degree σ and every other vertex has degree σ+1. The weight of the ith invaded edge is \(\xi_{i}\). The nth outlet is \(e_{n}\) and its edge weight is \(Q_{n}\). We may naturally consider \(e_{n}\) to be an oriented edge \(e_{n}=(\underline{v}_{n},\overline{v}_{n})\), where \(\underline{v}_{n}\) is invaded before \(\overline{v}_{n}\). The step at which \(e_{n}\) is invaded is denoted \(\hat{V}_{n}\) and the (graph) distance from o to \(\overline{v}_{n}\) is \(\hat{L}_{n}\). Setting \(\hat {V}_{0}=\hat{L}_{0}=0\) for convenience, we write \(V_{n}=\hat{V}_{n}-\hat {V}_{n-1}\) and \(L_{n}=\hat{L}_{n}-\hat{L}_{n-1}\).

There is a natural geometric interpretation of \(L_{n}\) as the length of the part of the backbone in the nth pond, and \(V_{n}\) as the volume (number of edges) of the nth pond. In particular \(\hat{V}_{n}\) is the volume of the union of the first n ponds.

\(R_{n}\) is the length of the longest upward-pointing path in the nth pond, and \(R'_{n}\) is the length of the longest upward-pointing path in the union of the first n ponds.

We shall later work with the quantity \(\delta_{n}\); for its definition, see (3.8).

We note the following elementary relations:

Probability laws will generically be denoted ℙ. For p∈[0,1], \(\mathbb{P}_{p}\) denotes the law of Bernoulli percolation with parameter p. For a set A of vertices, the event {x↔A} means that there is a path of open edges joining x to some point of A, and {x↔∞} means that there is an infinite non-intersecting path of open edges starting at x. We define the percolation probability \(\theta(p)=\mathbb{P}_{p}(o\leftrightarrow\infty)\) and \(p_{c}=\inf\{p:\theta(p)>0\}\). ∂B(k) denotes the vertices at distance exactly k from o.

For non-zero functions f(x) and g(x), we write f(x)∼g(x) if \(\lim\frac{f(x)}{g(x)}=1\); the point at which the limit is to be taken will usually be clear from the context. We write f(x)≍g(x) if there are constants c and C such that cg(x)≤f(x)≤Cg(x).

2 Main Results

2.1 Exponential Growth of the Ponds

Let \(\vec{Z}_{n}\) denote the 7-tuple

and write  .


Theorem 2.1

With probability 1,

Theorem 2.2

If \((B_{t})_{t\geq0}\) denotes a standard Brownian motion then

as N→∞, with respect to the metric of uniform convergence on compact intervals of t.

These theorems say that each component of \(\vec{Z}\) satisfies a law of large numbers and functional central limit theorem, with the same limiting Brownian motion for each component.

Theorem 2.2 shows that the logarithmic scaling in Theorem 2.1 cannot be replaced by a linear rescaling such as \(e^{n}(Q_{n}-p_{c})\). Indeed, \(\log((Q_{n}-p_{c})^{-1})\) has characteristic additive fluctuations of order \(\pm\sqrt{n}\), and therefore \(Q_{n}-p_{c}\) fluctuates by a multiplicative factor of the form \(e^{\pm\sqrt{n}}\). As n→∞ this will be concentrated at 0 and ∞, causing tightness to fail.

Theorem 2.3

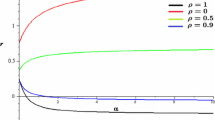

\(\frac{1}{n}\log ((Q_{n}-p_{c})^{-1} )\) satisfies a large deviation principle on [0,∞) with rate n and rate function

\(\frac{1}{n}\log L_{n}\), \(\frac{1}{n}\log R_{n}\) and \(\frac{1}{2n}\log V_{n}\) satisfy large deviation principles on [0,∞) with rate n and rate function ψ, where

It will be shown that ψ arises as the solution of the variational problem

2.2 Tail Behavior of a Pond

Theorems 2.1–2.3 describe the growth of the ponds as a sequence. We now consider a fixed pond and study its tail behavior.

Theorem 2.4

For n fixed and ϵ→0+, k→∞,

and

Using the well-known asymptotics

we may rewrite (2.7)–(2.10) as

Working in the case \({\mathcal{G}}=\mathbb{Z}^{2}\), [6] considers \(\tilde{R}_{n}\), the maximum distance from o to a point in the first n ponds, which is essentially \(R'_{n}\) in our notation. [6, Theorem 1.5] states that

and notes as a corollary

where \(\overset{i}{\leftrightarrow}\) denotes a percolation connection where up to i edges are allowed to be vacant (“percolation with defects”). (2.18) suggests the somewhat plausible heuristic of approximating the union of the first n ponds by the set of vertices reachable by critical percolation with at most n−1 defects. Indeed, the proof of (2.17) uses in part a comparison to percolation with defects. By contrast, on the tree the following result holds:

Theorem 2.5

For fixed n and k→∞,

The dramatic contrast between (2.18) and (2.19) can be explained in terms of the number of large clusters in a box. In \(\mathbb{Z}^{2}\), a box of side length S has generically only one cluster of diameter of order S. On the tree, by contrast, there are many large clusters. Indeed, a cluster of size N has of order N edges on its outer boundary, any one of which might be adjacent to another large cluster, independently of every other edge. Percolation with defects allows the best boundary edge to be chosen, whereas invasion percolation is unlikely to invade the optimal edge.

2.3 Outline of the Paper

Section 3.1 states a Markov property for the outlet weights that is valid for any graph. From Sect. 3.2 onwards, we specialize to the case where \({\mathcal{G}}\) is a regular tree. In Sect. 3.2 we recall results from [1] that describe the structure of the invasion cluster conditional on the outlet weights \(Q_{n}\). Section 3.3 analyzes the Markov transition mechanism of Sect. 3.1 and proves the results of Theorems 2.1–2.3 for \(Q_{n}\).

Section 4.1 states conditional tail bounds for \(L_{n}\), \(R_{n}\) and \(V_{n}\) given \(Q_{n}\). The rest of Sects. 4–6 use these tail bounds to prove Theorems 2.1–2.4. The proof of the bounds in Sect. 4.1 is given in Sect. 7. Finally, Sect. 8 gives the proof of Theorem 2.5.

3 Markov Structure of Invasion Percolation

In Sect. 3.1 we give sufficient conditions for the existence of ponds and outlets, and state a Markov property for the ponds, outlets and outlet weights. Section 3.2 summarizes some previous results from [1] concerning the structure of the invasion cluster. Finally in Sect. 3.3 we analyze the resulting Markov chain in the special case where \({\mathcal{G}}\) is a regular tree and prove the results of Theorems 2.1–2.3 for \(Q_{n}\).

3.1 General Graphs: Ponds, Outlets and Outlet Weights

The representation of an invasion cluster in terms of ponds and outlets is guaranteed to be valid under the following two assumptions:

and

(3.1) is known to hold for many graphs and is conjectured to hold for any transitive graph for which \(p_{c}<1\) ([3, Conjecture 4]; see also, for instance, [13, Sect. 8.3]). If the graph \({\mathcal {G}}\) is quasi-transitive, (3.2) follows from the general result [11, Proposition 3.1]. Both (3.1) and (3.2) hold when \({\mathcal {G}}\) is a regular tree.

The assumption (3.1) implies that w.p. 1,

since otherwise there would exist somewhere an infinite percolation cluster at level \(p_{c}\). We can then make the inductive definition

since (3.2) and (3.3) imply that the maxima are attained.

Condition on \(Q_{n}\), \(e_{n}\), and the union \(\tilde{{\mathcal{C}}}_{n}\) of the first n ponds. We may naturally consider \(e_{n}\) to be an oriented edge \(e_{n}=(\underline{v}_{n},\overline{v}_{n})\) where the vertex \(\underline{v}_{n}\) was invaded before \(\overline{v}_{n}\). The condition that \(e_{n}\) is an outlet, with weight \(Q_{n}\), implies that there must exist an infinite path of edges with weights at most \(Q_{n}\), starting from \(\overline{v}_{n}\) and remaining in \({\mathcal{G}}\backslash \tilde{{\mathcal{C}}}_{n}\). However, the law of the edge weights in \({\mathcal{G}}\backslash\tilde{{\mathcal{C}}}_{n}\) is not otherwise affected by \(Q_{n},e_{n},\tilde{{\mathcal{C}}}_{n}\). In particular we have

on the event \(\{q'\leq Q_{n}\}\). In (3.6) we can replace \({\mathcal{G}}\backslash\tilde {{\mathcal{C}}}_{n}\) by the connected component of \({\mathcal {G}}\backslash\tilde{{\mathcal{C}}}_{n}\) that contains \(\overline{e}_{n}\).

3.2 Geometric Structure of the Invasion Cluster: The Regular Tree Case

In [1, Sect. 3.1], the same outlet weights are studied, parametrized by height rather than by pond. \(W_{k}\) is defined to be the maximum invaded edge weight above the vertex at height k along the backbone.

A key point in the analysis in [1] is the observation that \((W_{k})_{k=0}^{\infty}\) is itself a Markov process. \(W_{k}\) is constant for long stretches, corresponding to k in the same pond, and the jumps of \(W_{k}\) occur when an outlet is encountered. The relation between the two processes is given by

From (3.7) we see that the \((Q_{n})_{n=0}^{\infty}\) are the successive distinct values of \((W_{k})_{k=0}^{\infty}\), and \(L_{n}=\hat {L}_{n}-\hat{L}_{n-1}\) is the length of time the Markov chain \(W_{k}\) spends in state \(Q_{n}\) before jumping to state \(Q_{n+1}\). In particular, \(L_{n}\) is geometric conditional on \(Q_{n}\), with some parameter depending only on \(Q_{n}\). As we will refer to it often, we define \(\delta_{n}\) to be that geometric parameter:

A further analysis (see [1, Sect. 2.1]) shows that the off-backbone part of the nth pond is a sub-critical Bernoulli percolation cluster with a parameter depending on \(Q_{n}\), independently in each pond. We summarize these results in the following theorem.

Theorem 3.1

[1, Sects. 2.1 and 3.1]

Conditional on \((Q_{n})_{n=1}^{\infty}\), the nth pond of the invasion cluster consists of

1. \(L_{n}\) edges from the infinite backbone, where \(L_{n}\) is geometric with parameter \(\delta_{n}\); and

2. emerging along the σ−1 sibling edges of each backbone edge, independent sub-critical Bernoulli percolation clusters with parameter

$$ p_c(1-\delta_n) $$(3.9)

Given \((Q_{n})_{n=0}^{\infty}\), the ponds are conditionally independent for different n. \(\delta_{n}\) is a continuous, strictly increasing function of \(Q_{n}\) and satisfies

as \(Q_{n}\rightarrow p_{c}^{+}\).

The meaning of (3.10) is that \(\delta_{n}=f(Q_{n})\) where \(f(q)\sim\frac{\sigma-1}{2\sigma}\theta(q)\sim\sigma (q-p_{c})\) as \(q\rightarrow p_{c}^{+}\).

It is not at first apparent that the geometric parameter \(\delta_{n}\) in (3.8) is the same quantity that appears in (3.9), and indeed [1] has two different notations for the two quantities: see [1, Eqs. (3.1) and (2.14)]. Combining Eqs. (2.3), (2.5), (2.14) and (3.1) of [1] shows that they are equivalent to

For σ=2 we can find explicit formulas for these parameters: \(p_{c}=\frac{1}{2}\), \(\theta(p)=p^{-2}(2p-1)\) for \(p\geq p_{c}\), \(\delta_{n}=2Q_{n}-1\) and \(p_{c}(1-\delta_{n})=1-Q_{n}\). However, all the information needed for our purposes is contained in the asymptotic relation (3.10).

3.3 The Outlet Weight Process

The representation (3.6) simplifies dramatically when \({\mathcal{G}}\) is a regular tree. Then the connected component of \({\mathcal{G}}\backslash\tilde{{\mathcal{C}}}_{n}\) containing \(\overline{e}_{n}\) is isomorphic to \({\mathcal{G}}\), with \(\overline {e}_{n}\) corresponding to the root. Therefore the dependence of \(Q_{n+1}\) on \(e_{n}\) and \(\tilde{{\mathcal{C}}}_{n}\) is eliminated and we have the following result.

Corollary 3.2

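On the regular tree, the process \((Q_{n})_{n=1}^{\infty}\) forms a time-homogeneous Markov chain with

$$ \mathbb{P}(Q_{1}<q)=\theta(q) $$(3.12)

and

$$ \mathbb{P}(Q_{n+1}<q'\,|\,Q_{n}=q)=\frac{\theta(q')}{\theta(q)} $$(3.13)

for \(p_{c}<q'<q\).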

Equations (3.12) and (3.13) say that, conditional on \(Q_{n}\), \(Q_{n+1}\) is chosen from the same distribution, conditioned to be smaller. In terms of \((W_{k})_{k=0}^{\infty}\), (3.13) describes the jumps of \(W_{k}\) when they occur, and indeed the transition mechanism (3.13) is implicit in [1].

Since θ is a continuous function, it is simpler to consider \(\theta(Q_{n})\): \(\theta(Q_{1})\) is uniform on [0,1] and

$$ \mathbb{P}\bigl(\theta(Q_{n+1})<u'\,\big|\,\theta(Q_{n})=u\bigr)=\frac{u'}{u} $$(3.14)

for 0<u′<u. But this is equivalent to multiplying \(\theta(Q_{n})\) by an independent Uniform[0,1] variable. Noting further that the negative logarithm of a Uniform[0,1] variable is exponential of mean 1, we have proved the following proposition.

Proposition 3.3

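Let \(U_{i}\), i∈ℕ, be independent Uniform[0,1] random variables. Then, as processes,

$$ \bigl(\theta(Q_{n})\bigr)_{n=1}^{\infty}\overset{d}{=} \Biggl(\prod_{i=1}^{n} U_{i}\Biggr)_{n=1}^{\infty} $$(3.15)

Equivalently, with \(E_{i}=\log U_{i}^{-1}\) independent exponentials of mean 1,

$$ \log\bigl(\theta(Q_{n})^{-1}\bigr)\overset{d}{=}\sum_{i=1}^{n} E_{i} $$(3.16)

jointly for all n.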
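For σ=2, where the explicit formula for θ from Sect. 3.2 is available, this representation gives a direct way to sample the outlet weights without simulating the invasion itself. The following is a minimal sketch under that assumption; the function names are hypothetical, and the inverse of θ is obtained here by solving the quadratic \(u q^{2}-2q+1=0\) for the root in (1/2, 1].

```python
import math
import random


def theta_binary(p):
    """Percolation probability on the forward binary tree, for p >= 1/2."""
    return (2.0 * p - 1.0) / (p * p)


def theta_binary_inverse(u):
    """Solve theta_binary(q) = u for q in (1/2, 1], given u in (0, 1]."""
    return 1.0 / (1.0 + math.sqrt(1.0 - u))


def sample_outlet_weights(n, seed=0):
    """Sample (Q_1, ..., Q_n) for sigma = 2 via sums of exponentials."""
    rng = random.Random(seed)
    log_inv_theta = 0.0  # running value of log(theta(Q_k)^{-1})
    weights = []
    for _ in range(n):
        log_inv_theta += rng.expovariate(1.0)  # add E_k, exponential of mean 1
        weights.append(theta_binary_inverse(math.exp(-log_inv_theta)))
    return weights
```

In floating point this is only usable for moderate n: once \(\theta(Q_{n})\) drops below machine precision the computed weight rounds to exactly 1/2, so for large n it is safer to work with the running sum `log_inv_theta` directly.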

Corollary 3.4

The triple

satisfies the conclusions of Theorems 2.1 and 2.2, and each component of \(\frac{1}{n}\vec{Z}'_{n}\) satisfies a large deviation principle with rate n and rate function

Proof

The conclusions about \(\log(\theta(Q_{n})^{-1})\) follow from the representation (3.16) in terms of a sum of independent variables; the rate function φ is given by Cramér’s theorem. The other results then follow from the asymptotic relation (3.10). □
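As a sanity check on the appeal to Cramér’s theorem, the rate function for sums of exponentials of mean 1 can be computed as a Legendre transform. The closed form \(u-\log u-1\) used below is the standard result of that computation and is supplied here as an assumption rather than quoted from the text; the sketch compares it against a brute-force maximization of \(\lambda u+\log(1-\lambda)\) over \(\lambda<1\).

```python
import math


def phi_closed_form(u):
    """Cramer rate function for exponential(1) summands: u - log(u) - 1, u > 0."""
    return u - math.log(u) - 1.0


def phi_numeric(u, grid=100_000):
    """Approximate sup over lam < 1 of lam*u + log(1 - lam) on a uniform grid.

    The log moment generating function of an exponential of mean 1 is
    -log(1 - lam) for lam < 1, so this is its Legendre transform at u.
    """
    best = 0.0  # lam = 0 always contributes the value 0
    for k in range(1, grid):
        lam = -50.0 + 51.0 * k / grid  # lam ranges over (-50, 1)
        best = max(best, lam * u + math.log(1.0 - lam))
    return best
```

The grid covers \(\lambda\in(-50,1)\), which contains the maximizer \(\lambda=1-1/u\) only for u larger than about 0.02; smaller u would need a wider range.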

4 Law of Large Numbers and Central Limit Theorem

4.1 Tail Bounds for Pond Statistics

Theorem 3.1 expressed \(L_{n}\), \(R_{n}\) and \(V_{n}\) as random variables whose parameters are given in terms of \(Q_{n}\). Their fluctuations are therefore a combination of fluctuations arising from \(Q_{n}\) and additional randomness. The following proposition gives bounds on the additional randomness.

Recall that \(\delta_{n}\) is a certain function of \(Q_{n}\) with \(\delta_{n}\sim\sigma(Q_{n}-p_{c})\): see Theorem 3.1.

Proposition 4.1

There exist positive constants \(C,c,s_{0},\gamma_{L},\gamma_{R},\gamma_{V}\) such that \(L_{n}\), \(R_{n}\) and \(V_{n}\) satisfy the conditional bounds

for all n and all S,s>0; and

for \(s\leq s_{0}\).

The proofs of (4.1)–(4.6), which involve random walk and branching process estimates, are deferred to Sect. 7.

4.2 A Uniform Convergence Lemma

Because Theorem 2.2 involves weak convergence of several processes to the same joint limit, it will be convenient to use Skorohod’s representation theorem and almost sure convergence. The following uniform convergence result will be used to extend convergence from one set of coupled random variables \((\delta_{n,N})\) to another \((X_{n,N})\): see Sect. 4.3.

Lemma 4.2

Suppose \(\{X_{n,N}\}_{n,N\in\mathbb{N}}\) and \(\{\delta_{n,N}\}_{n,N\in\mathbb{N}}\) are positive random variables such that \(\delta_{n,N}\) is decreasing in n for each fixed N, and for positive constants a, β and C,

for all S and s. Define

Then for any T>0 and α>0, w.p. 1,

Proof

Let ϵ>0 be given. For a fixed N, (4.7) implies

where we used \(\delta_{i,N}\geq\delta_{n,N}\) in the third inequality. But then, noticing that

the Borel-Cantelli lemma implies

a.s. Similarly, (4.8) implies

so that

a.s. Since ϵ>0 was arbitrary and \(X_{n,N}\leq\hat {X}_{n,N}\), (4.10) follows. □

4.3 Proof of Theorems 2.1–2.2

The conclusions about \(Q_{n}\) are contained in Corollary 3.4. The other conclusions will follow from Lemma 4.2. From Corollary 3.4, we may apply Skorohod’s representation theorem to produce realizations of the ponds for each N∈ℕ, coupled so that

a.s. as N→∞. Then the relation

shows that \(\frac{1}{a}\log X_{n}\) will satisfy a central limit theorem as well, with the same limiting Brownian motion. The same holds for \(\hat{X}_{n}\). We will successively set

The bounds (4.7)–(4.8) follow immediately from the bounds in Proposition 4.1. This proves Theorem 2.2 for \(L_{n}\) and \(V_{n}\). For \(R_{n}\), the quantity \(\hat{R}_{n}\) is not the one that appears in Theorem 2.2, but the bound \(R_{n}\leq R'_{n}\leq\hat{R}_{n}\) implies the result for \(R'_{n}\) as well.

The lemma also implies the law of large numbers results (2.2), by taking T=1 and using the same ponds for every N.

5 Large Deviations: Proof of Theorem 2.3

In this section we present a proof of the large deviation results in Theorem 2.3. As in Sect. 4, we prove a generic result using a variable \(X_{n}\) and tail estimates. Theorem 2.3 then follows immediately using Corollary 3.4 and Proposition 4.1.

Note that Proposition 5.1 uses the full strength of the bounds in Proposition 4.1.

Proposition 5.1

Suppose that \(\delta_{n}\) and \(X_{n}\) are positive random variables such that, for positive constants \(a,\beta,c,C,\gamma,s_{0}\),

for all S and s, and

on the event \(\{\delta_{n}^{a}<\gamma s \}\), for \(s\leq s_{0}\). Suppose also that \(\frac{1}{n}\log\delta_{n}^{-1}\) satisfies a large deviation principle with rate n on [0,∞) with rate function φ such that φ(1)=0, φ is continuous on (0,∞), and φ is decreasing on (0,1] and increasing on [1,∞). Then \(\frac{1}{an}\log X_{n}\) satisfies a large deviation principle with rate n on [0,∞) with rate function

Proof

It is easy to check that ψ is continuous, decreasing on [0,1] and increasing on [1,∞), ψ(1)=0, and ψ(u)=φ(u) for u≥1. So it suffices to show that

for u>0 and

for 0<u<1. For (5.5), let ϵ>0. Then

where we used (5.1) with \(S=e^{an\epsilon}\). The last term in (5.7) is super-exponentially small, so (5.7) and the large deviation principle for \(\frac{1}{n}\log\delta_{n}^{-1}\) imply

On the other hand,

using (5.2) with \(s=e^{-an\epsilon}\). So

Since φ is continuous and ϵ>0 was arbitrary, this proves (5.5).

For (5.6), let u∈(0,1) be given and choose v∈(u,1), ϵ∈(0,u). Then for n sufficiently large we have

Here we used (5.3) with \(s=e^{-an(v-u)}\). Note that if n is large enough then \(s\leq s_{0}\) and the condition \(\delta_{n}^{a}<\gamma s\) follows from \(v-\epsilon<\frac{1}{n}\log\delta_{n}^{-1}\). Therefore, since φ is decreasing on (0,1],

(5.12) was proved for u<v<1. However, since φ is continuous and the function −φ(v)−av is decreasing in v for v≥1, (5.12) holds for all v≥u. So take the supremum over v≥u to obtain

Finally

(using (5.2) with \(s=e^{an (u-\frac {1}{n}\log\delta_{n}^{-1} )}\)). Apply Varadhan’s lemma (see, e.g., [7, p. 32]) to the second term of (5.14):

Therefore

which completes the proof. □

6 Tail Asymptotics

In this section we prove the fixed-pond asymptotics from Theorem 2.4.

Proof of (2.7)

Recall from (3.16) that \(\log(\theta(Q_{n})^{-1})\) has the same distribution as a sum of n exponential variables of mean 1, i.e., a Gamma variable with parameters n,1. So

Make the substitution \(x=(1+u)\log\epsilon^{-1}\):

Then Watson’s lemma (see for instance (2.13) of [14]) implies that

and so (3.10) gives

□
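The Gamma representation also lends itself to a quick numerical check of the \((\log\epsilon^{-1})^{n-1}\) correction. The sketch below does not follow the Watson’s lemma route of the proof; instead it uses the standard closed form for the tail of a sum of n independent exponentials of mean 1 (an assumption brought in here, not taken from the text), written in terms of \(x=\log\epsilon^{-1}\), and compares it with its dominant term \(e^{-x}x^{n-1}/(n-1)!\). The function names are hypothetical.

```python
import math


def gamma_tail(n, x):
    """P(E_1 + ... + E_n > x) for independent exponentials of mean 1."""
    return math.exp(-x) * sum(x**j / math.factorial(j) for j in range(n))


def leading_term(n, x):
    """Dominant term of gamma_tail(n, x) as x -> infinity."""
    return math.exp(-x) * x ** (n - 1) / math.factorial(n - 1)
```

For n=3 the ratio `gamma_tail(3, x) / leading_term(3, x)` equals \(1+2/x+2/x^{2}\), so the approach to the asymptotic form is only of order \(1/\log\epsilon^{-1}\), which is worth keeping in mind when comparing simulations with the stated asymptotics.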

Combining (6.3) with (3.10) implies at once that

We use (6.5) to prove (2.9)–(2.10) using the following lemma.

Lemma 6.1

Let \(\delta_{n}\) be a random variable satisfying (6.5). Suppose a,β are positive constants such that aβ>1, and \(X_{n}\) is any positive random variable satisfying

for all S,n>0, and

for some \(s_{0},p_{0}>0\). Write \(\hat{X}_{n}=\sum_{i=1}^{n} X_{i}\). Then

as k→∞.

Proof

From (6.7) and (6.5), we have the lower bound

For the upper bound,

Use (6.5) and make the substitution r=k −1/a(1+u) to obtain

The last integral in (6.11) is bounded as k→∞ since aβ>1, which proves the upper bound for \(X_{n}\).

To extend (6.8) to \(\hat{X}_{n}\), assume inductively that we have the bound \(\mathbb{P}(\hat{X}_{n}>k)\asymp(\log k)^{n-1}/k^{1/a}\). (The case n=1 is already proved since \(\hat {X}_{1}=X_{1}\).) The bound \(\mathbb{P}(\hat{X}_{n+1}>k)\geq\mathbb {P}(X_{n+1}>k)\) is immediate, and we can estimate

where we set \(k'=\lfloor k/(\log k)^{a/2}\rfloor\). Then k−k′∼k and log(k−k′)∼logk′∼logk, so that

while

which is of lower order. This completes the induction. □

Proof of (2.9)–(2.10)

These relations follow immediately from (6.5) and Lemma 6.1; the bounds (6.6)–(6.7) are immediate consequences of Proposition 4.1. As in Sect. 4.3, the asymptotics for \(R'_{n}\) follow from the asymptotics for \(\hat{R}_{n}\) and the bound \(R_{n}\leq R'_{n}\leq\hat{R}_{n}\). □

Proof of (2.8)

For \(L_{n}\), we can use the exact formula \(\mathbb{P}(L_{n}>k\,|\,\delta_{n})=(1-\delta_{n})^{k}\). Write \(\delta_{n}=g(\theta(Q_{n}))\), where g(p) is a certain continuous and increasing function. By (3.10), \(g(p)\sim\frac{\sigma-1}{2\sigma} p\) as p→0+; we will use the bound g(p)≥cp for some constant c>0. Noting as above that \(\log\theta(Q_{n})^{-1}\) is a Gamma random variable, we compute

after the substitution \(e^{-x}=y/k\). But the integral in (6.15) converges to \(\int_{0}^{\infty}e^{-\frac{\sigma -1}{2\sigma}y} \,dy\,=\frac{2\sigma}{\sigma-1}\) as k→∞: pointwise convergence follows from \(g(p)\sim\frac{\sigma -1}{2\sigma} p\), and we can uniformly bound the integrand using

Lastly, a simple modification of the argument for \(\hat{X}_{n}\) extends (2.8) to \(\hat{L}_{n}\). □

7 Pond Bounds: Proof of Proposition 4.1

In this section we prove the tail bounds (4.1)–(4.6). Since the laws of \(L_{n}\), \(R_{n}\) and \(V_{n}\) do not depend on n except through the value of \(\delta_{n}\), we will omit the subscript in this section. For convenient reference we recall the structure of the bounds:

for all S and s, and

on the event \(\{\delta^{a}<\gamma s\}\), for \(s\leq s_{0}\). We have a=1 for X=L and X=R, and a=2 for X=V.

In (7.3) it is necessary to assume \(\delta^{a}<\gamma s\). This is due only to a discretization effect: if X is any ℕ-valued random variable, then necessarily \(\mathbb{P}(\delta^{a}X<s\,|\,\delta)=0\) whenever \(\delta^{a}\geq s\). Indeed, the bounds (4.4)–(4.6) can be proved with γ=1, although it is not necessary for our purposes.

Note that, by proper choice of C and \(s_{0}\), we can assume that S is large in (7.1) and s is small in (7.2) and (7.3). Since we only consider ℕ-valued random variables X, we can assume δ is small in (7.2), say δ<1/2 (otherwise take \(s<(1/2)^{a}\) without loss of generality). Moreover, Theorem 3.1 shows that L, R and V are all stochastically decreasing in δ. Consequently it suffices to prove (7.1) for δ small, say δ<1/2. Finally the constraint \(\delta^{a}<\gamma s_{0}\) makes δ small in (7.3) also.

We note for subsequent use the inequalities

$$ (1-x)^{y}\leq e^{-xy} $$(7.4)

for x∈(0,1), y>0 and

$$ (1-x)^{y}\geq e^{-2xy} $$(7.5)

for x∈(0,1/2), y>0, which follow from log(1−x)≤−x for x∈(0,1) and log(1−x)≥−2x for x∈(0,1/2).

7.1 The Backbone Length L: Proof of (4.1) and (4.4)

From Theorem 3.1, L is a geometric random variable with parameter δ. So

since δ≤1, proving (7.1). For (7.2) and (7.3), we have

For \(\delta\leq\frac{1}{2}\) we can use (7.5) to get

which proves (7.2). For (7.3), take \(\gamma_{L}=1/2\). Then on the event \(\{\delta<\gamma_{L}s\}\) we have ⌈s/δ⌉≥3 so that expanding (7.7) as a binomial series gives

for \(s\leq1=s_{0}\). So (7.3) holds.

7.2 The Pond Radius R: Proof of (4.2) and (4.5)

Conditional on δ and L, R is the maximum height of a percolation cluster with parameter \(p_{c}(1-\delta)\) started from a path of length L. We have R≥L so (7.2) follows immediately from the corresponding bound for L. R is stochastically dominated by

where \(\tilde{R}_{i}\) is the maximum height of a branching process with offspring distribution Binomial(σ, \(p_{c}(1-\delta)\)) started from a single vertex, independently for each i. Define

for k>0. Thus \(a_{k}\) is the probability that the branching process survives to generation k+1. By comparison with a critical branching process,

for some constant \(C_{0}\). On the other hand, \(a_{k}\) satisfies

where f(z) is the generating function for the offspring distribution of the branching process. (This is a reformulation of the well-known recursion for the extinction probability.) In particular, since \(f'(z)\leq f'(1)=\sigma p_{c}(1-\delta)=1-\delta\),

and taking k=⌈S/2δ⌉≥S/2δ, j=⌊S/δ⌋−⌈S/2δ⌉≥S/2δ−2,

Using this estimate we can compute

Similarly

provided δ is sufficiently small compared to s, i.e., provided \(\gamma_{R}\) is small enough.

7.3 The Pond Volume V: Proof of (4.3) and (4.6)

From Theorem 3.1, conditional on δ and L, V is the number of edges in a percolation cluster with parameter p c (1−δ), started from a path of length L and with no edges emerging from the top of the path. We can express V in terms of the return time of a random walk as follows.

Start with an edge configuration with L backbone edges marked as occupied. Mark as unexamined the (σ−1)L edges adjacent to the backbone, not including the edges emerging from the top. At each step, take an unexamined edge (if any remain) and either (1) with probability \(1-p_{c}(1-\delta)\), mark it as vacant; or (2) with probability \(p_{c}(1-\delta)\), mark it as occupied and mark its σ child edges as unexamined. Let \(N_{k}\) denote the number of unexamined edges after k steps. Then it is easy to see that \(N_{k}\) is a random walk \(N_{k}=N_{0}+\sum_{i=1}^{k} Y_{i}\) (at least until \(N_{k}=0\)) where \(N_{0}=(\sigma-1)L\) and

\[Y_{i}=\begin{cases}\sigma-1 & \text{with probability } p_{c}(1-\delta),\\ -1 & \text{with probability } 1-p_{c}(1-\delta).\end{cases}\]

Let T=inf{k:N k =0}. (T is finite a.s. since \(\mathbb {E}(Y_{i}| \delta)=-\delta<0\) and N k can jump down only by 1.) T counts the total number of off-backbone edges examined, namely the number of non-backbone edges in the cluster and on its boundary, not including the edges from the top of the backbone. Consequently

In order to apply random walk estimates we write \(X_{i}=Y_{i}+\delta\), \(Z_{k}=\sum_{i=1}^{k} X_{i}\), so that \(\mathbb{E}(X_{i}|\delta)=0\); … for universal constants …; and \(N_{k}=Z_{k}-k\delta+(\sigma-1)L\). Note that

so using, for instance, Freedman’s inequality [9, Proposition 2.1] leads after some computation to

if S 0≥3, say. Then, setting S 0=S/δL,

which proves (7.1).
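The exploration process that defines the walk N k in this subsection can be simulated directly. The following Python sketch runs the edge-by-edge exploration for a fixed backbone length L and records T and V; the function name `explore_pond` and the choice of random number generator are illustrative assumptions. In this bookkeeping every occupied off-backbone edge adds σ unexamined edges, so T=(σ−1)L+σ(V−L), that is, σV=T+L; the usage lines check this counting identity on one sample.

```python
import random


def explore_pond(sigma: int, delta: float, L: int, rng: random.Random) -> tuple[int, int]:
    """Explore the cluster attached to a backbone of L edges.

    Returns (T, V): T is the number of off-backbone edges examined,
    V is the number of edges in the cluster (backbone included).
    """
    p = (1.0 - delta) / sigma        # p_c (1 - delta), with sigma * p_c = 1
    unexamined = (sigma - 1) * L     # N_0: edges adjacent to the backbone
    steps = 0                        # becomes T when the loop ends
    occupied = 0                     # occupied off-backbone edges
    while unexamined > 0:
        steps += 1
        unexamined -= 1              # the examined edge leaves the pool
        if rng.random() < p:
            occupied += 1
            unexamined += sigma      # its sigma child edges become unexamined
    return steps, L + occupied


# Usage: one sample with sigma = 2, delta = 0.2, L = 5.
rng = random.Random(0)
T, V = explore_pond(sigma=2, delta=0.2, L=5, rng=rng)
assert 2 * V == T + 5
```

Because the walk has drift −δ, the loop terminates almost surely, but the running time grows like L/δ, so very small δ makes single samples slow.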

For (7.2), apply Freedman’s inequality again:

(where in the denominator of (7.24) we use L≤s/δ 2 since V≥L). So

The first term in (7.26) is at most C 7 s from (4.1) and will therefore be negligible compared to \(\sqrt{s}\). For the second term, note that for L≥σs/δ,

and so, with the substitution \(x\sqrt{s}=\delta l-\sigma s\),

which proves (7.2).

Finally, for (7.3), the Berry-Esseen inequality (see for instance [8, Theorem 2.4.9]) implies

where Φ(x)=ℙ(G<x) for G a standard Gaussian, and … is some absolute constant. In particular, using …,

for …. Choose …. Then we have \(s/\delta^{2}\geq 1\) (so we may bound \(\sigma s/\delta^{2}-\sqrt{s}/\delta\geq s/\delta^{2}\)); \(\sqrt{s}/\delta\leq\gamma_{L}\); and \(\lfloor s/\delta^{2}\rfloor\geq C_{11}\), so that

proving (7.3).

8 Percolation with Defects

In this section we prove

The case n=0 is a standard branching process result. For n>0, proceed by induction. Write C(o) for the percolation cluster of the root o. The lower bound follows from the following well-known estimate:

If \(|C(o)|>N\) then there are at least N vertices \(v_{1},\dotsc,v_{N}\) on the outer boundary of C(o), any one of which may have a connection to ∂B(k) with n−1 defects. As a worst-case estimate we may assume that \(v_{1},\dotsc,v_{N}\) are still at distance k from ∂B(k), so that by independence we have

for constants c 1,c 2. If we set \(N=k^{2^{-n+1}}\) then the second factor is of order 1, and the lower bound is proved. For the upper bound, use a slightly stronger form of (8.2) (see for instance [10, p. 260]):

Now if \(|C(o)|=N\), with N≤k/2, then there are at most σN vertices on the outer boundary of C(o), one of which must have a connection with n−1 defects of length at least k−N≥k/2. So

which proves the result (the first term is an error term if n≥2 and combines with the second if n=1).

References

Angel, O., Goodman, J., den Hollander, F., Slade, G.: Invasion percolation on regular trees. Ann. Probab. 36(2), 420–466 (2008)

Angel, O., Goodman, J., Merle, M.: Scaling limit of the invasion percolation cluster on a regular tree. Ann. Probab. doi:10.1214/11-AOP731. arXiv:0910.4205 [math.PR]

Benjamini, I., Schramm, O.: Percolation beyond \(\mathbb{Z}^{d}\), many questions and a few answers. Electron. Commun. Probab. 1, 71–82 (1996)

Chayes, J.T., Chayes, L., Newman, C.M.: The stochastic geometry of invasion percolation. Commun. Math. Phys. 101(3), 383–407 (1985)

Damron, M., Sapozhnikov, A.: Outlets of 2D invasion percolation and multiple-armed incipient infinite clusters. Probab. Theory Relat. Fields 150(1–2), 257–294 (2011)

Damron, M., Sapozhnikov, A., Vágvölgyi, B.: Relations between invasion percolation and critical percolation in two dimensions. Ann. Probab. 37(6), 2297–2331 (2009)

den Hollander, F.: Large Deviations. Fields Institute Monographs. Am. Math. Soc., Providence (2000)

Durrett, R.: Probability: Theory and Examples. Duxbury Advanced Series, 3rd edn. Duxbury, N. Scituate (2005)

Freedman, D.A.: On tail probabilities for martingales. Ann. Probab. 3(1), 100–118 (1975)

Grimmett, G.: Percolation. Grundlehren der Mathematischen Wissenschaften, 2nd edn. Springer, Berlin (1999)

Häggström, O., Peres, Y., Schonmann, R.H.: Percolation on transitive graphs as a coalescent process: relentless merging followed by simultaneous uniqueness. In: Perplexing Problems in Probability: Festschrift in honor of Harry Kesten, pp. 69–90. Birkhäuser, Basel (1999)

Járai, A.A.: Invasion percolation and the incipient infinite cluster in 2D. Commun. Math. Phys. 236(2), 311–334 (2003)

Lyons, R., Peres, Y.: Probability on trees and networks (2009, in preparation). See http://mypage.iu.edu/~rdlyons/prbtree/prbtree.html

Murray, J.D.: Asymptotic Analysis. Applied Mathematical Sciences, vol. 48. Springer, Berlin (1984)

Newman, C.M., Stein, D.L.: Broken ergodicity and the geometry of rugged landscapes. Phys. Rev. E 51(6), 5228–5238 (1995)

Nickel, B., Wilkinson, D.: Invasion percolation on the Cayley tree: exact solution of a modified percolation model. Phys. Rev. Lett. 51(2), 71–74 (1983)

van den Berg, J., Járai, A.A., Vágvölgyi, B.: The size of a pond in 2D invasion percolation. Electron. Commun. Probab. 12, 411–420 (2007)

Acknowledgements

The author thanks Gordon Slade for numerous discussions and his many useful suggestions. This research was supported in part by NSERC.

Open Access

This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License (https://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Goodman, J. Exponential Growth of Ponds in Invasion Percolation on Regular Trees. J Stat Phys 147, 919–941 (2012). https://doi.org/10.1007/s10955-012-0509-7
