Abstract

The mixed no-idle flowshop scheduling problem arises in modern industries including integrated circuits, ceramic frit and steel production, among others, and where some machines are not allowed to remain idle between jobs. This paper describes an exact algorithm that uses Benders decomposition with a simple yet effective enhancement mechanism that entails the generation of additional cuts by using a referenced local search to help speed up convergence. Using only a single additional optimality cut at each iteration, and combined with combinatorial cuts, the algorithm can optimally solve instances with up to 500 jobs and 15 machines that are otherwise not within the reach of off-the-shelf optimization software, and can easily surpass ad-hoc existing metaheuristics. To the best of the authors’ knowledge, the algorithm described here is the only exact method for solving the mixed no-idle permutation flowshop scheduling problem.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

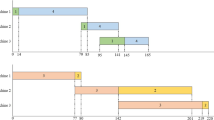

The permutation flowshop scheduling problem (PFSP) is concerned with sequencing a set N of n jobs on a set M of m machines in a sequential manner. Each job j has a known, fixed, nonnegative amount of processing time on machine i denoted by \(p_{ij}\) (\(i =1, \ldots , m\); \(j =1, \ldots , n\)). At any point in time, each job can be processed by at most one machine and each machine can process at most one job. When a machine starts processing a job, it must complete that job without interruption, as no preemption is allowed. The sequence of jobs to be processed is the same for each machine, implying that there are n! possible solutions of the problem. One extension of the PFSP is the no-idle permutation flowshop scheduling problem (NPFSP), where machines should run continuously from the time that they start the first job until they complete the last job, i.e., idle times are not allowed at any machine in between the processing of consecutive jobs. The problem arises in production environments where setup times or machine operating costs are significant, so it is not cost-effective to let the machines sit idle at any point during the production run (Pan and Ruiz 2014), such as foundry production (Saadani et al. 2003), fiber glass processing (Kalczynski and Kamburowski 2005), production of integrated circuits and in the steel industry (Pan and Ruiz 2014). In other real-life cases, and as pointed out by Ruiz et al. (2009), there might be technological constraints impeding idleness at machines such as high-temperature frit kilns, for example.

The first study on the NPFSP was by Adiri and Pohoryles (1982), defined on two machines with the objective of minimizing the sum of completion times. A more popular objective has been to minimize the total makespan, namely the sum of the completion times of the last job processed on each machine, for which the first study was contributed by Vachajitpan (1982), where mathematical models and branch-and-bound methods for solving small-scale instances were presented. One exact method using branch-and-bound was described by Baptiste and Hguny (1997). Comprehensive reviews on the problem can be found in Ruiz and Maroto (2005) and Goncharov and Sevastyanov (2009), which indicated an abundance of heuristic algorithms described to solve the problem, including discrete differential evolution and particle swarm optimization (Pan and Wang 2008a, b), iterated greedy search (Ruiz et al. 2009), variable neighborhood search (Tasgetiren et al. 2013) and memetic algorithms (Shao et al. 2017). To date, however, no effective exact approach has been proposed for NPFSP, and the attempts that exist can solve instances with only more than a handful of jobs (Pan and Ruiz 2014).

The mixed no-idle permutation flowshop scheduling problem (MNPFSP) arises as a more general case of the NPFSP when some machines are allowed to be idle, and others not. Examples can be found in the production of integrated circuits and ceramic frit, as well as in the steel industry (Pan and Ruiz 2014). In ceramic frit production, for example, only the central fusing kiln has the no-idle constraint. As an extension of the PFSP, which is known to be NP-hard for three or more machines (see, e.g., Röck 1984), the MNPFSP is also NP-hard in the strong sense (Pan and Ruiz 2014). The only study on this problem that minimizes makespan is that of Pan and Ruiz (2014), which describes a mixed integer programming model for the problem, an effective iterated greedy (IG) algorithm and enhancements to accelerate the calculation of insertions used within the local search. Computational results showed that, in comparison with the existing methods, the IG algorithm was able to identify solutions for the NPFSP instances that were 61% better, on average, with respect to the makespan. However, no exact method, to our knowledge, has been proposed to solve the MNPFSP, which is the aim of this paper. In particular, we contribute to the literature by describing an application of Benders decomposition that is enhanced with a referenced local search (RLS) that is used to generate additional cuts to accelerate the convergence of the algorithm. We propose and test three cut generating strategies and use combinatorial cuts to discard solutions already evaluated. The algorithms described in this paper are all exact, the performance of which we computationally assess on a test bed of literature instances, and compare with the commercial optimizer CPLEX and its automated Benders decomposition algorithm.

The remainder of this paper is structured as follows. Section 2 formally defines the problem and presents a formulation. The proposed algorithm is described in detail in Sect. 3. Computational results are presented in Sect. 4, followed by conclusions and future research in Sect. 5.

2 The mixed no-idle permutation flowshop scheduling problem

We denote by \(\pi _{(j)}\) the job occupying position j in a given permutation \(\pi \). The PFSP requires the condition \(c_{i,\pi _{(j)}} \ge c_{i,\pi _{(j-1)}}+p_{i,\pi _{(j)}}\) to hold for any two consecutive jobs in any permutation, where \(c_{i,j}\) is the completion time of task j at machine i. A key difference between the NPFSP and the PFSP is that the former forbids any idle time between any two consecutive tasks, thus transforming the previous inequality into an equality as \(c_{i,\pi _{(j)}} = c_{i,\pi _{(j-1)}}+p_{i,\pi _{(j)}}\). The mixed no-idle problem generalizes two problems in which there exists a subset \(M'\subseteq M\) of \(m'\) ‘no-idle’ machines, and it is only for those machines in \(M \setminus M'\) that any idle running is allowed. Apart from these key differences, the common PFSP assumptions hold (Baker 1974): (1) Jobs are independent from each other and are available for their processing from time 0; (2) machines are always available (no breakdowns); (3) machines can process only one task at any given time; (4) jobs can be processed by only one machine at all times; (5) tasks must be processed without interruptions once started and until their completion (no preemptions allowed); (6) setup times are either sequence-independent and can be directly included in the processing times, or are considered negligible and therefore ignored; and finally (7) there is an infinite in-process buffer capacity between any two machines in the shop.

In the remainder of the paper, we make use of the integer programming formulation below of the problem described in Pan and Ruiz (2014), provided here for the sake of completeness. In this formulation, a binary variable \(x_{jk}\) takes the value 1 if job j is in position k of the sequence, and 0 otherwise. Continuous variables \(c_{i,k}\) denote the completion time of job \(k = 1, \ldots , n\) on machine \(i = 1, \ldots , m\).

subject to

Objective function (1) minimizes the makespan among all jobs. In the PFSP and also for the MNPFSP, the makespan corresponds to the completion time of the last job in the permutation at the last machine of the shop (\(c_{m,n}\)). With constraints (2) and (3), we enforce that any job occupies one position in the permutation and also that each position at any permutation is occupied by one job. Constraints (4) control the completion time of the first job in the permutation. Constraints (5) enforce that the completion times of jobs on the second and subsequent machines are larger than the completion times of the preceding tasks of the same job on previous machines plus their processing time, effectively avoiding overlaps of the tasks of the same job. The key characteristic of the MNPFSP is shown in constraints (6) and (7). Constraint (6) forbids idle time at ‘no-idle’ machines by making the completion time of a job equal to the completion time of the previous job in the sequence plus its processing time. Inequality (7) is the usual PFSP constraint that forbids overlaps of jobs on the same machine and, at the same time, allows for idle time.

3 Benders decomposition

In this section, we first describe an application of the traditional Benders decomposition followed by the proposed cut generation strategy using a local search algorithm.

3.1 Application of Benders decomposition

Benders decomposition (Benders 1962) is a cutting plane algorithm to solve a Benders reformulation of a given model that enables it to be decomposed into two simpler formulations, namely the master problem and the subproblem. The master problem contains only a subset of the variables and of the constraints of the original model. The subproblem is the original model in which the master problem variables are fixed, and the solution of which yields either an optimality or a feasibility cut for the master problem (Costa et al. 2012). Benders reformulation is typically solved using a delayed constraint generation algorithm that iterates between the master and the subproblem, until an optimal solution is identified.

Let M(c, x) denote the formulation (1)–(9) where \(x = \{x_{jk} | j,k = 1, \ldots , n\}\) and \(c = \{c_{ik} | k = 1, \dots , n; i = 1, \ldots , m\}\) are the vectors of the decision variables. Let us suppose that variables x have been fixed as \(x = \hat{x} \in X = \{x | x\) satisfies (2), (3), (9)}. The resulting formulation, shown by \(M(c,\hat{x})\), consists only of the variables c, the constraints of which are assigned the dual variables \(\alpha \) and \(\beta \) corresponding to constraints (4) and (5), respectively, and \(\gamma \) corresponding to constraints (6) and (7), respectively. The dual \(D(\alpha ,\beta ,\gamma ,\hat{x})\) of \(M(c,\hat{x})\) formulation is given by the following:

subject to

The procedure to calculate the makespan we use here is the one proposed in Pan and Ruiz (2014), which calculates the start and completion times of the jobs in the order in which they appear in a given permutation, with the makespan being equal to the completion of the job in the last position. The procedure runs in \(\mathcal {O}(nm)\) time and implies that \(M(c,\hat{x})\) always admits a feasible solution for a given \(\hat{x} \in X\). This, in turn, means that \(D(\alpha ,\beta ,\gamma ,\hat{x})\) is always feasible for a given \(\hat{x} \in X\), and for an optimal solution \((\hat{\alpha }, \hat{\beta }, \hat{\gamma })\) of the dual problem, one obtains the following Benders optimality cuts:

where z is a lower bound on the optimal solution value of M(c, x), \(A_j = \sum ^{n}_{k=1} \hat{\alpha }_k p_{1j} + \sum ^{m}_{i=2} \hat{\beta }_{i1} p_{ij}\) and \(B_{jk} = \sum ^{m}_{i=2} \hat{\beta }_{ik} p_{ij} + \sum ^{m}_{i=1} \hat{\gamma }_{ik} p_{ij}\). Using this result, we are now ready to present the following reformulation of M(c, x), referred to as the master problem, constructed using the set \(P_D\) of extreme points of the polytope defined by the dual problem \(D(\alpha ,\beta ,\gamma ,\hat{x})\), and shown as MP(\(P_D\)) below:

subject to

As the MP includes a large number of optimality cuts, it can be solved using a cutting plane algorithm in practice, normally starting with MP(\(\varnothing \)) with no optimality cuts (26), and generating the cuts on an as-needed basis. The algorithm stops after solving a certain MP(P), where \(P \subseteq P_D\).

To help speed up the convergence of the algorithm, we also use the following combinatorial Benders cuts using any solution \(\hat{x} \in X\) in the master problem:

which can be used to cut solution \(\hat{x}\) off from the set of feasible solutions to M(c, x). In particular, after the addition of constraint (27), any solution obtained by the formulation will differ from solution \(\hat{x}\) with respect to the position of at least two jobs. This follows from the fact that changing the position of one job in a given sequence implicitly requires the change of position of another job in the sequence.

3.2 Referenced local search algorithm

Another ingredient of our algorithm is a referenced local search (RLS), proposed by Pan et al. (2008), which is initialized with a seed permutation \(\pi ^{\mathrm{ref}}\) obtained by a good constructive heuristic. In this paper, the reference permutation \(\pi ^{\mathrm{ref}}\) is taken from the master problem solution. Let \(C_{\max }(\pi )\) denote the makespan of permutation \(\pi \). The RLS procedure first finds the referenced job, which is determined by using the index i in the RLS procedure, in the current permutation \(\pi \). The referenced job is removed from \(\pi \) and inserted into all possible positions of \(\pi \). If this operation results in a permutation \(\pi ^*\) with a lower \(C_{\max }(\pi ^*)\) as compared to \(C_{\max }(\pi )\), then the current permutation is replaced with \(\pi ^*\) and the value of the counter controlling the number of runs in RLS is set to 1. Otherwise, the value of the counter is increased by one. This procedure is repeated until the value of the counter reaches the number of jobs in the problem. RLS keeps a list S with the best solutions found. The pseudocode of RLS is given in Algorithm 1. Note that the accelerated makespan calculations and speedups proposed in Pan and Ruiz (2014) are employed in the proposed RLS local search.

3.3 Cut generation using referenced local search

The choice of various ingredients used within a Benders decomposition can have a significant effect on the performance of the algorithm. These range from model selection (Magnanti and Wong 1981), cut improvement (e.g., Papadakos 2008; Saharidis and Ierapetritou 2013) and strengthening the master problem, through the addition of pre-generated valid inequalities (e.g., Cordeau et al. 2006). However, the choice of the integer solutions obtained from the master problem and the improvement thereof has received much less attention. In particular, the question around the effect of the quality of a feasible solution and the strength of the corresponding cut subsequently added to the master problem on the convergence of the Benders decomposition algorithm has not yet been fully investigated. Costa et al. (2012), for example, suggest the use of intermediate solutions within Benders decomposition, obtained either during the course of the branch-and-cut algorithm or through local search, and report encouraging results for the fixed-charge network design problem. In this paper, we seek to explore this question further and propose a Benders decomposition algorithm that embeds the bespoke RLS into the algorithm for solving the MNPFSP. The aim is to enhance the performance of the algorithm by generating extra cuts within the algorithm induced by the heuristic solutions. This section explains the details of the algorithm and describes and tests various strategies for an effective implementation.

The enhanced Benders decomposition is an iterative algorithm that generates optimality cuts (26) at each iteration on the basis of an optimal MP solution \(x^*\) and uses \(x^*\) as an input to the RLS to generate a user-defined number \(\sigma \) of neighbor solutions, each of which induces an additional optimality cut inserted into the master problem. The reason behind the choice of the RLS, instead of other heuristics and metaheuristics for the problem, is the simplicity of its implementation and the lack of any special input parameters. The RLS has been shown to work effectively for solving the PFSP (Pan et al. 2008), the NPFSP (Deng and Gu 2012) and the MNPFSP (Pan and Ruiz 2014). We propose three different strategies for generating the additional cuts, which are described below. Let \(S = \{x_1, x_2, \ldots , x_{|S|}\}\) be the set of solutions generated by RLS, and let v(x) denote the objective function value of a feasible solution x.

-

Elite: Choose the first \(\sigma \) solutions in S such that \(v(x_1) \ge v(x_2) \ge \cdots \ge v(x_{\sigma }) \ge v(x^*)\).

-

Highly elite: Choose the best \(\sigma \) solutions in S in terms of the objective value.

-

Random: Choose \(\sigma \) solutions in S at random.

The pseudocode of the proposed algorithm is given in Algorithm 2.

4 Computational results

This section presents a computational study to assess the performance of the Benders decomposition algorithm proposed in this paper. The algorithm and its variants are coded in Visual C++, using CPLEX 12.7.1 as the solver. We used an Intel Core i5-2450M computer with a 2.5 GHz CPU and 4 GB of memory. The tests were conducted on two sets of instances available at http://soa.iti.es/rruiz that were originally proposed in Ruiz et al. (2009) and extended in Pan and Ruiz (2014). The experiments were conducted in four sets, which will be explained below along with the results.

4.1 Performance of standard Benders decomposition implementations

In the first stage, we first compare our (deliberately) naive implementation of Benders decomposition (shown by BD) described in Sect. 3.1, the branch-and-cut algorithm of CPLEX 12.7.1 (shown by CPLEX) to solve the formulation of the problem shown in Sect. 2 and the automated Benders decomposition available within the software (shown by ABD). The aim is to show the performance of standard Benders decomposition implementations on the MNPFSP. For this purpose, we generate relatively small-size instances from the instance I_3_500_50_1 with 500 jobs and 50 machines available at the above website. A smaller instance with ten jobs and three machines, for example, is generated by using the first ten jobs and three machines within the larger instance. A total of 27 small-scale instances are formed with \(n \in \{10, 15, 20, 25, 30, 35, 40, 45, 50\}\) jobs and \(m \in \{3, 6, 9\}\) machines. A computational time limit of 7200 CPU seconds was imposed on the total run time of each algorithm. The results are given in Table 1, where the first three columns show the instance number as the identifier, number n of jobs and number m of machines. Then, for each of the three methods compared, we provide the value of the best integer solution (BI) identified within the time limit, the final optimality gap (GAP), in percent, the overall solution time (ST), in seconds, and the total number of iterations (NI) for ABD and BD.

As can be seen from Table 1, BD was able to solve 23 out of 27 instances to optimality, while CPLEX and ABD are able to solve all of the instances to optimality within the given time limit. In terms of the time to solve to optimality, CPLEX is the fastest in all instances except for instances 16, 22 and 23, for which ABD shows a better performance. BD is quicker than the other two for instances 4, 7 and 10. These results suggest that a standard implementation of Benders decomposition without any enhancements is highly ineffective and does not seem to provide encouraging results for the MNPFSP.

4.2 Effectiveness of the cut generation strategies

The second stage of the experiments numerically evaluates the cut generation strategies, and the effect of the number of additional cuts \(\sigma \) added at each iteration of the algorithm. We denote by \(\sigma ' = \sigma + 1\) the total number of cuts added at each iteration, where the ‘\(+\,1\)’ indicates the original optimality cut from the solution of the master problem, and test \(\sigma ' \in \{2,5,10,25,50\}\) in the experiments, resulting in a total of 15 combinations applied to the 27 instances described above. A computational time limit of 7200 CPU seconds also was imposed.

The results of the experiments are reported in Tables 2 and 3 for the elite and the highly elite strategies, respectively, denoted by the notation E\(\sigma '\) and HE\(\sigma '\) which also indicates the number of additional optimality cuts introduced. We do not report the results associated with the random strategy, as it consistently produced very poor quality solutions in all cases.

The findings indicate that the elite strategy has found the optimum solutions for all instances with all settings of \(\sigma '\), with the exception of instances 24 and 27 for E2, instances 18, 24, 26 and 27 for E5 and instances 18, 23 and 26 for E10. The highly elite strategy, on the other hand, has found all the optimum solutions for all values of \(\sigma '\), as can be seen from Table 3. Furthermore, the variant HE2 solves 19 (out of 27) instances to optimality quicker than the other two cut generation strategies. Comparing HE2 with the results of CPLEX and ABD reported in Table 1, we observe that it yields the best performance on 11 out of the 27 instances and is able to solve instances to optimality for which BD has failed to do so within the time limit.

4.3 Results on larger-scale instances

The third and last phase of the computational study compares the algorithms on medium- and large-scale instances. The former set includes a further 30 instances, generated in the same manner as the small-size instances by choosing n to range from 50 to 500 in increments of 50, and m as either 5, 10 and 15 from within the main instance I_3_500_50_1.

Having established the superiority of HE2 over other variants of the Benders decomposition algorithm in the previous section, we now employ two further enhancements to this algorithm. The first is the addition of combinatorial cuts (27) within the algorithm, shown by HE2, and the second is a further strengthening of the optimality cuts using the Pareto-optimal cut generation scheme described by Magnanti and Wong (1981), indicated by HE2 + PO. These two variants have also been tested by deactivating the combinatorial cuts, indicated using the same names but with the suffix ‘-CC.’ It should be noted with the addition of cardinality cuts, the optimality gaps are no longer valid, reasons for which we do not report here. The tests comparing the four variants have been conducted on a limited set of instances with n chosen as either 50, 100 or 150, and m equal to 5, 10 or 15. The results are reported in Table 4.

The results in Table 4 show that the variants of the algorithm, namely HE2 and HE2 + PO that use combinatorial cuts, always result in a superior performance over the cases where they are not used. However, a performance comparison between HE2 and HE2 + PO is not conclusive and seems to be instance-dependent. In particular, while HE2 is faster for instances 28, 29, 31, 34 and 35, instances 30 and 32 are solved significantly faster with HE2 + PO.

To further compare HE2 and HE2 + PO along with the CPLEX solver and the ABD, we provide further results on instances with up to 500 jobs in Table 5. These results are indicative of the superior performance of the two variants of the algorithm over CPLEX and ABD, in terms of both the computational solution time and solution quality. In particular, with the exception of one instance, one of the two variants always yields the best performance. More remarkably, while neither CPLEX nor ABD was able to identify a feasible solution for instances (\(n=150\), \(m=10\)), (\(n=300\), \(m=5\)), (\(n=350\), \(m=10\)), (\(n=500\), \(m=5\)) and (\(n=500\), \(m=5\)) within the allowed time limit, at least one of HE2 or HE2 + PO solved these instances to optimality.

4.4 Comparison with a state-of-the-art heuristic

The last set of experiments reported in this section are conducted to compare the algorithms we propose in this paper with a state-of-the-art heuristic described for the problem, namely the iterated greedy algorithm (IGA) of Pan and Ruiz (2014), in terms of the value of the solutions identified. These tests use the same set of instances shown in Table 5. In their study, Pan and Ruiz (2014) run the IGA under a time limit of \(n \times (m/2) \times \rho \) milliseconds, where \(\rho \in \{10, 20, 30, 60, 90\}\). In our experiments, we run the IGA five times, using the largest value for \(\rho = 90\) in setting the time limit for each of the five runs. The results are shown in Table 6, where the column titled BI corresponds to the best value found for the corresponding instance, and the column titled Method indicates which of the four algorithms of Table 5 was able to identify the best value. Columns five to seven report the minimum, maximum and the average solution values for each instance, respectively, across the five runs of the IGA, and the standard deviation. For reasons of fairness, we also run the IGA once for each instance, under the same time limit of 7200 s used for the exact algorithms, and report the value of the best solution identified in the last column of the table.

As the results in Table 6 indicate, the exact algorithms proposed in this paper, in particular HE2 and HE2 + PO, are highly competitive with the IGA for instances with \(n \le 250\), and are able to identify the same solution values in most cases. However, the exact algorithms often yield better quality solutions for larger instances in comparison, irrespective of whether the IGA is run under a 7200 second time limit or shorter. In particular, for instances with (\(n=350\), \(m=15\)), (\(n=400\), \(m=10\)), (\(n=400\), \(m=15\)), (\(n=450\), \(m=10\)), (\(n=450\), \(m=15\)), (\(n=500\), \(m=10\)) and (\(n=500\), \(m=15\)), the exact algorithms yield better quality solutions under the same time limit.

5 Conclusions and future research

This paper presented an enhancement to the traditional Benders decomposition by generating cuts using a local search algorithm for the mixed no-idle permutation flowshop scheduling problem. The latter algorithm was used as an ‘oracle’ to generate high-quality solutions, which in turn were used to construct additional cuts used within the iterative algorithm. Our findings indicate that such a strategy can substantially improve the performance of the algorithm, even with only a single additional cut added at each iteration, and that the quality of the solution used to generate the additional cut is of paramount importance. In particular, it is only high-quality solutions that help to improve the convergence; randomly generated solutions worsen the efficiency of the algorithm. Our algorithm also makes use of combinatorial cuts that eliminate feasible solutions from the search space, which leads to the solution of instances of up to 500 jobs and five machines that otherwise are not solved with a commercial solver. The results encourage the use of such a strategy on other types of problems, assuming that an ‘oracle’ is available to generate high-quality solutions within short computational times.

References

Adiri, I., & Pohoryles, D. (1982). Flowshop no-idle or no-wait scheduling to minimize the sum of completion times. Naval Research Logistics, 29(3), 495–504.

Baker, K. R. (1974). Introduction to sequencing and scheduling. New York: Wiley.

Baptiste, P., & Hguny, L. K. (1997). A branch and bound algorithm for the \(F\)/no-idle/\(C_{max}\). In Proceedings of the international conference on industrial engineering and production management, IEPM’97, Lyon, France (Vol. 1, pp. 429–438).

Benders, J. F. (1962). Partitioning procedures for solving mixed-variables programming problems. Numerische Mathematik, 4(1), 238–252.

Cordeau, J. F., Pasin, F., & Solomon, M. (2006). An integrated model for logistics network design. Annals of Operations Research, 144(1), 59–82.

Costa, A. M., Cordeau, J. F., Gendron, B., & Laporte, G. (2012). Accelerating benders decomposition with heuristic master problem solutions. Pesquisa Operacional, 32(1), 3–20.

Deng, G., & Gu, X. (2012). A hybrid discrete differential evolution algorithm for the no-idle permutation flow shop scheduling problem with makespan criterion. Computers & Operations Research, 39(9), 2152–2160.

Goncharov, Y., & Sevastyanov, S. (2009). The flow shop problem with no-idle constraints: A review and approximation. European Journal of Operational Research, 196(2), 450–456.

Kalczynski, P. J., & Kamburowski, J. (2005). A heuristic for minimizing the makespan in no-idle permutation flow shops. Computers & Industrial Engineering, 49(1), 146–154.

Magnanti, T. L., & Wong, R. T. (1981). Accelerating benders decomposition: Algorithmic enhancement and model selection criteria. Operations Research, 29(3), 464–484.

Pan, Q. K., & Ruiz, R. (2014). An effective iterated greedy algorithm for the mixed no-idle flowshop scheduling problem. Omega, 44(1), 41–50.

Pan, Q. K., Tasgetiren, M. F., & Liang, Y. C. (2008). A discrete differential evolution algorithm for the permutation flowshop scheduling problem. Computers & Industrial Engineering, 55(4), 795–816.

Pan, Q. K., & Wang, L. (2008a). No-idle permutation flow shop scheduling based on a hybrid discrete particle swarm optimization algorithm. International Journal of Advanced Manufacturing Technology, 39(7–8), 796–807.

Pan, Q. K., & Wang, L. (2008b). A novel differential evolution algorithm for no-idle permutation flow-shop scheduling problems. European Journal of Industrial Engineering, 2(3), 279–297.

Papadakos, N. (2008). Practical enhancements to the Magnanti–Wong method. Operations Research Letters, 36(4), 444–449.

Röck, H. (1984). The three-machine no-wait flow shop is NP-complete. Journal of the Association for Computing Machinery, 31(2), 336–345.

Ruiz, R., & Maroto, C. (2005). A comprehensive review and evaluation of permutation flowshop heuristics. European Journal of Operational Research, 165(2), 479–494.

Ruiz, R., Vallada, E., & Fernández-Martínez, C. (2009). Scheduling in flowshops with no-idle machines. In U. Chakraborty (Ed.), Computational intelligence in flow shop and job shop scheduling, chap 2 (pp. 21–51). New York: Springer.

Saadani, N. E. H., Guinet, A., & Moalla, M. (2003). Three stage no-idle flow-shops. Computers & Industrial Engineering, 44(3), 425–434.

Saharidis, G., & Ierapetritou, M. (2013). Speed-up Benders decomposition using maximum density cut (MDC) generation. Annals of Operations Research, 210, 101–123.

Shao, W., Pi, D., & Shao, Z. (2017). Memetic algorithm with node and edge histogram for no-idle flow shop scheduling problem to minimize the makespan criterion. Applied Soft Computing, 54, 164–182.

Tasgetiren, M. F., Buyukdagli, O., Pan, Q. K., & Suganthan, P. N. (2013). A general variable neighborhood search algorithm for the no-idle permutation flowshop scheduling problem. In B. K. Panigrahi, P. N. Suganthan, S. Das, & S. S. Dash (Eds.), Swarm, evolutionary, and memetic computing (pp. 24–34). Cham: Springer.

Vachajitpan, P. (1982). Job sequencing with continuous machine operation. Computers & Industrial Engineering, 6(3), 255–259.

Acknowledgements

This research project was partially supported by the Scientific and Technological Research Council of Turkey (TÜBITAK) under Grant 1059B191600107. While writing this paper, Dr Hamzadayı was a visiting researcher at the Southampton Business School at the University of Southampton. Rubén Ruiz is supported by the Spanish Ministry of Science, Innovation and Universities, under the Project ‘OPTEP-Port Terminal Operations Optimization’ (No. RTI2018-094940-B-I00) financed with FEDER funds. Thanks are due to two anonymous reviewers for their careful reading of the paper and helpful suggestions.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bektaş, T., Hamzadayı, A. & Ruiz, R. Benders decomposition for the mixed no-idle permutation flowshop scheduling problem. J Sched 23, 513–523 (2020). https://doi.org/10.1007/s10951-020-00637-8

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10951-020-00637-8