Abstract

Although research on seismic interferometry is now entering a phase of maturity, earthquakes are still the most troublesome issue plaguing the method in real applications. To address the problems that arise from spatially scattered and temporally transient enormous earthquakes, preference is usually given to time-dependent weights. However, small earthquakes can also disturb the accuracy of interpretations if they are persistently clustered right next to the perpendicular bisector of the line joining a station pair or in close proximity to one of the stations. For suppressing these cluster earthquakes, the solutions commonly used against monochromatic microseismic cluster events (e.g., applying a band-reject filter around a comparatively narrow frequency band or whitening the amplitude spectra before calculating the cross-spectrum between two signals) may not be efficient enough, since earthquake clusters are generally a collection of events with different magnitudes, each having its own frequency and energy content. Therefore, the only solution left in such a situation is to use stronger non-linear time-dependent weights (e.g., the square of the running average or one-bit normalization), which may cause Green’s function amplitude information to be lost. In this paper, by simulating the records of an MN 5.2 benchmark earthquake with the help of empirical Green’s functions (EGFs) obtained after the Ahar-Varzeghan Earthquake Doublet (MN 6.4 and MN 6.3), it is shown that the amplitude-unbiased phase cross-correlation is a relatively efficient approach in the face of the issues concerning long-standing cluster events.

1 Introduction

Historically speaking, seismologists have long dreamed of turning ambient seismic noise into useful signals. Since the beginning of the current century, a new field of research called seismic interferometry has provided an overarching theoretical framework to translate this ambition into a concrete reality. In brief, theoretical studies have shown that ensemble-averaging the cross-correlations of random seismic noise records over the available time windows is a straightforward way to retrieve the empirical Green’s functions (EGFs) between a pair of stations (for a comprehensive review, see Wapenaar et al. 2010a, b; Campillo et al. 2014; Nakata et al. 2019 and references therein), provided that certain conditions are fulfilled. The first precondition for the successful implementation of this method is that the noise sources are uncorrelated, such that the cross-correlations of signals coming from simultaneously acting sources located in different places (the “cross-terms”) cancel each other out (e.g., Snieder 2004; Weemstra et al. 2014; Medeiros et al. 2015). Another prerequisite is the availability of a diffuse seismic noise field. In strictly theoretical terms, a perfectly diffuse system is defined under the theorem of modal energy equipartitioning (e.g., Hodgson 1996; Hennino et al. 2001; Shapiro et al. 2000; Weaver and Lobkis 2006; Sánchez-Sesma et al. 2008). Fundamentally, a wavefield can be assumed to be equipartitioned when, in a given frequency band, all the modes of the system are statistically equiprobable, and the share of energy between these modes is balanced in such a way that the net flux becomes zero (e.g., Margerin et al. 2009; Margerin 2017). In general, the equipartitioning required for seismic interferometry is deemed to be fulfilled in a finite medium with an irregular bounding surface or at the stage of isotropically developed multiple scattering in a heterogeneous medium (e.g., Margerin et al. 2009; Pilz and Parolai 2014).

In the absence of a fully scattered wavefield, it is imperative that studies be carried out over a long time span to make sure that station pairs are sufficiently surrounded by a spatially homogeneous distribution of random noise sources (shown as black circles in Fig. 1). The rationale behind this idea is that if the azimuthal homogeneity of the incoming noise is met, the coherent energy sent from the sources located in the Fresnel zone, i.e., the endfire lobes along the line joining a station pair, contributes constructively to the cross-correlation at a given lag-time. On the other hand, in such conditions, most of the energy produced by the sources outside the Fresnel zone cancels out by destructive interference (see, e.g., Snieder and Hagerty 2004; Roux et al. 2005; Lin et al. 2008; Harmon et al. 2010).

Fig. 1 A schematic drawing illustrating the spatially scattered and temporally transient enormous events (the blue solid circles), the spatially localized and temporally persistent small events (a cluster of red solid circles), the line joining two stations (the horizontal black dashed line), the azimuthally homogeneous distribution of uncorrelated noise sources (the black solid circles), the perpendicular bisector of the line joining two stations (the perpendicular orange dashed line), and the endfire lobes. The series of temporally persistent events that are spatially localized along the iso-phase hyperbolas with foci at the two stations in the off-Fresnel zone are shown by the green solid circles.

The most important challenge that researchers face when attempting a study over a longer period of time is that it makes the correlation results more vulnerable to the disturbing influence of spatially scattered and temporally transient (SS-TT) high-energy impulsive sources (e.g., earthquakes, explosions) (shown as blue circles in Fig. 1). By imposing too much energy along certain azimuths, these events prevent the energy equipartitioning condition from being met. Matters become even more complicated when continually repeating events are grouped in clusters along the iso-phase hyperbolas with foci at the two stations in the off-Fresnel zone (shown as green circles in Fig. 1). In such cases, spatially localized and temporally persistent (SL-TP) small events may create a series of unwelcome bumps, sometimes called precursory noise (see Yanovskaya and Koroleva 2011; Ritzwoller and Feng 2018), in the earlier time-lags of the cross-correlation results if they occur right next to the perpendicular bisector of the line joining the two stations (shown as the perpendicular orange dashed line in Fig. 1). As the cluster events shift from the center toward one of the stations, they manifest their problematic effect in the form of a frequency-dependent asymmetry in the correlation results (Frank et al. 2009, see Fig. 8; Zheng et al. 2011). Contrary to the common view that averaging the causal and anticausal parts of correlation functions is a way to homogenize incoming noise azimuths (e.g., Lin et al. 2008), Zheng et al. (2011, Fig. 5) showed that this averaging not only fails to mitigate such frequency-dependent asymmetry but may also cause a significant bias in the group and phase velocity measurements.

In this paper, we simulated an MN 5.2 benchmark earthquake using the empirical Green’s functions (EGFs) obtained from the amplitude-unbiased phase cross-correlation (PCC) analysis (Schimmel 1999; Schimmel et al. 2011b; D’Hour et al. 2016; Schimmel et al. 2018; Ventosa et al. 2019) in a three-month seismic active timeframe after the Ahar-Varzeghan Earthquake Doublet (MN 6.4 and MN 6.3) to examine the effectiveness and efficiency of this method in mitigating the precursory noise created by cluster events when extracting coherent signals from uncorrelated ambient noise data. A comparison of the consistency of the results supports the claim that the PCC appears to be an optimal option for retrieving Green’s function information, even in the presence of long-standing cluster events.

2 Correlation techniques

Seismic interferometry offers an innovative basis to obtain an approximate assessment of the empirical Green’s function (i.e., EGF) between station pairs, simply by the ensemble average (i.e., stack) of cross-correlations of received uncorrelated random seismic noise. These correlations can be obtained by calculating the inverse Fourier transform of the cross-spectrum of two signals, \( {\varPhi}_{lm}^k\left({X}_A,{X}_B,\omega \right)\equiv {u}_l^k\left({X}_A,\omega \right){u}_m^k{\left({X}_B,\omega \right)}^{\ast } \), where \( {u}_l^k\left({X}_A,\omega \right) \) and \( {u}_m^k\left({X}_B,\omega \right) \) are, respectively, fixed-length signals (e.g., hourly data) recorded by component l at station \( {X}_A \) and component m at station \( {X}_B \) in the angular frequency domain, ω; k = 1, …, K denotes the index of the hourly time windows, and ∗ stands for the complex conjugate. In actual implementations, earthquakes are major barriers to achieving satisfactory results with this method. So far, various time-domain pre-processing approaches have been proposed to lessen the disturbing influence of SL-TP events (Ritzwoller and Feng 2018). In the case of monochromatic microseismic SL-TP events, it is also common practice to use a band-reject filter around a comparatively narrow frequency band (Schimmel et al. 2011b, see Subsection 3.1.2) or to whiten the amplitude spectra before calculating the cross-spectrum between two signals (Shapiro et al. 2006, see Discussion Section; Ritzwoller and Feng 2018). An advantage of spectral whitening is that it broadens the bandwidth of the estimated empirical Green’s functions (Seats et al. 2012). It should be borne in mind, however, that aftershock clusters cannot be viewed as monochromatic signals, since they are generally composed of a collection of events with different magnitudes, each having its own frequency and energy content. Therefore, the conventional pre-processing techniques may not be of much help against aftershock clusters, which may affect a broad frequency range of the correlation results.
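The cross-spectrum and stacking steps described above can be sketched in a few lines. This is only a minimal illustration under stated assumptions: the function name, the array layout (K windows of N samples each), and the zero-padding to twice the window length are choices made here for clarity and are not taken from the processing actually used in this study.

```python
import numpy as np

def stacked_cross_correlation(windows_a, windows_b):
    """Stack cross-spectra of K windows and return the lagged correlation.

    windows_a, windows_b: real arrays of shape (K, N), one row per time
    window (hypothetical layout chosen for this sketch).
    Returns (lags, cc), with lags in samples from -(N-1) to N-1.
    """
    n = windows_a.shape[1]
    nfft = 2 * n  # zero-pad so the circular correlation does not wrap around
    u_a = np.fft.rfft(windows_a, nfft, axis=1)
    u_b = np.fft.rfft(windows_b, nfft, axis=1)
    cross_spectrum = u_a * np.conj(u_b)   # u_A times conj(u_B), per window
    stack = cross_spectrum.mean(axis=0)   # ensemble average over k = 1..K
    cc = np.fft.irfft(stack, nfft)        # back to the lag domain
    # Reorder so that negative lags come first, then lag 0 and positive lags.
    cc = np.concatenate((cc[-(n - 1):], cc[:n]))
    lags = np.arange(-(n - 1), n)
    return lags, cc
```

With this sign convention, a signal that reaches station A a given number of samples after station B produces a peak at the corresponding positive lag.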

To make the frequency-domain whitening as adaptable as possible, frequency-dependent whitening approaches can be used, \( {\overset{\sim }{\varPhi}}_{lm}^k\left({X}_A,{X}_B,\omega \right)\equiv \tilde{u}_{l}^k\left({X}_A,\omega \right)\tilde{u}_{m}^k{\left({X}_B,\omega \right)}^{\ast } \), where \( \overset{\sim }{u}\left(\omega \right)=u\left(\omega \right)/N\left(\omega \right) \) is the spectrum of ground motion after normalization with a frequency-dependent real-valued function, N(ω). The particular case \( {N}_l^k\left({X}_A,\omega \right)={N}_m^k\left({X}_B,\omega \right)=\left\{\left|{u}_l^k\left(\omega \right)\right|\right\} \) is called the deconvolution method (e.g., Prieto et al. 2011; Viens et al. 2014, 2015, 2016, 2017)
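A regularized form consistent with these definitions can be written as follows. It assumes that the regularization enters as a water level added to the smoothed power in the denominator, which is a common convention in the cited literature but is an assumption here:

\[ {\overset{\sim }{\varPhi}}_{lm}^k\left({X}_A,{X}_B,\omega \right)=\frac{{u}_l^k\left({X}_A,\omega \right){u}_m^k{\left({X}_B,\omega \right)}^{\ast }}{{\left\{\left|{u}_l^k\left({X}_A,\omega \right)\right|\right\}}^2+{\delta}_{DEC}} \]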

where the operator || indicates the absolute value, the operator {} represents the smoothing of the spectra using a moving average over a predefined number of points, and δDEC is the regularization parameter that has to be added to prevent numerical instability. This regularization partly relaxes the dependence of the correlation analysis on the features of the excitations (Prieto and Beroza 2008; Prieto et al. 2011). By choosing \( {N}_l^k\left({X}_A,\omega \right)=\left\{\left|{u}_l^k\left(\omega \right)\right|\right\} \) and \( {N}_m^k\left({X}_B,\omega \right)=\left\{\left|{u}_m^k\left(\omega \right)\right|\right\} \), we arrive at the cross-coherency method (e.g., Prieto and Beroza 2008; Prieto et al. 2011)
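Under the same water-level assumption as for the deconvolution method (again an assumption made here, not a form stated in the text), the cross-coherency can be written as

\[ {\overset{\sim }{\varPhi}}_{lm}^k\left({X}_A,{X}_B,\omega \right)=\frac{{u}_l^k\left({X}_A,\omega \right){u}_m^k{\left({X}_B,\omega \right)}^{\ast }}{\left\{\left|{u}_l^k\left({X}_A,\omega \right)\right|\right\}\left\{\left|{u}_m^k\left({X}_B,\omega \right)\right|\right\}+{\delta}_{CCOH}} \]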

where δCCOH is the regularization parameter for the cross-coherency method.
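The two frequency-dependent whitening schemes can be illustrated with a short sketch. It assumes a water-level regularization added to the denominator and a boxcar moving average for the {} operator; the function names, the default smoothing length, and the default water levels are hypothetical choices for illustration (the 1% level for the deconvolution follows the choice reported in Section 4.3).

```python
import numpy as np

def smooth(amplitude, npts=11):
    """Moving average over npts spectral points (the {} operator in the text)."""
    kernel = np.ones(npts) / npts
    return np.convolve(amplitude, kernel, mode="same")

def deconvolution(u_a, u_b, npts=11, water_level=0.01):
    """Cross-spectrum normalized by the smoothed power of the source-side spectrum.

    delta_dec is set to a fraction of the average spectral power of u_a.
    """
    smooth_a = smooth(np.abs(u_a), npts)
    delta_dec = water_level * np.mean(np.abs(u_a) ** 2)
    return u_a * np.conj(u_b) / (smooth_a ** 2 + delta_dec)

def cross_coherency(u_a, u_b, npts=11, water_level=1e-4):
    """Cross-spectrum normalized by the smoothed amplitudes of both spectra.

    The relative water level used here is an assumed, much smaller value.
    """
    denominator = smooth(np.abs(u_a), npts) * smooth(np.abs(u_b), npts)
    delta_ccoh = water_level * np.mean(denominator)
    return u_a * np.conj(u_b) / (denominator + delta_ccoh)
```

Because both water levels are defined relative to the spectra themselves, the cross-coherency in this sketch is unchanged when either record is rescaled, whereas the deconvolution retains the amplitude of the second record relative to the first.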

By introducing the amplitude-unbiased PCC method, Schimmel (1999) contributed substantially to the new ways of thinking about calculating the coherence of two signals in terms of their instantaneous phase (Schimmel et al. 2011b). The PCC is obtained according to the following equation:
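In the notation of this section, and assuming a unit exponent (ν = 1 in the notation of Schimmel 1999) and a correlation window of T samples (both the exponent and the indexing are assumptions made here), the PCC can be written as

\[ {\theta}_{lm}^k\left({X}_A,{X}_B,t\right)=\frac{1}{2T}\sum_{\tau =1}^T\left(\left|{e}^{i{\theta}_l^k\left({X}_A,t+\tau \right)}+{e}^{i{\theta}_m^k\left({X}_B,\tau \right)}\right|-\left|{e}^{i{\theta}_l^k\left({X}_A,t+\tau \right)}-{e}^{i{\theta}_m^k\left({X}_B,\tau \right)}\right|\right) \]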

where θl(XA) and θm(XB) represent the phases at stations A and B, whose elements are the instantaneous phases of the three components at time t. It is important to note that in this equation, the results have been normalized to \( \left|{\theta}_{lm}^k\right|\le 1 \). Therefore, \( {\theta}_{lm}^k=1 \) indicates a perfect correlation between the two signals, and \( {\theta}_{lm}^k=-1 \) implies a perfect anticorrelation between them.
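A minimal sketch of a phase cross-correlation for a single component pair is given below. It assumes the unit-exponent form of Schimmel (1999), builds the instantaneous phase from an FFT-based analytic signal, and normalizes each lag by the number of overlapping samples; the function names and the lag convention are choices made here and not a description of the software used in this study.

```python
import numpy as np

def analytic_signal(x):
    """Analytic signal of a real trace via the FFT (Hilbert transform)."""
    n = x.size
    spectrum = np.fft.fft(x)
    weights = np.zeros(n)
    weights[0] = 1.0
    if n % 2 == 0:
        weights[n // 2] = 1.0
        weights[1:n // 2] = 2.0
    else:
        weights[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(spectrum * weights)

def unit_phasor(x, eps=1e-20):
    """exp(i * instantaneous phase): the analytic signal with amplitude removed."""
    z = analytic_signal(x)
    return z / np.maximum(np.abs(z), eps)

def phase_cross_correlation(x, y, max_lag):
    """PCC of two equal-length traces for lags -max_lag..max_lag (in samples)."""
    p_x, p_y = unit_phasor(x), unit_phasor(y)
    n = x.size
    lags = np.arange(-max_lag, max_lag + 1)
    pcc = np.empty(lags.size)
    for i, lag in enumerate(lags):
        if lag >= 0:
            a, b = p_x[lag:], p_y[:n - lag]
        else:
            a, b = p_x[:n + lag], p_y[-lag:]
        # |a + b| - |a - b| lies in [-2, 2] for unit phasors, hence the factor 2.
        pcc[i] = np.sum(np.abs(a + b) - np.abs(a - b)) / (2.0 * a.size)
    return lags, pcc
```

Since only unit phasors enter the sum, the amplitudes of the analytic signals do not weight the result, which is the sense in which the measure is amplitude-unbiased.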

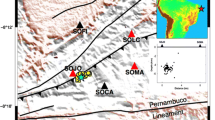

3 Region of study and data

In an attempt to evaluate the robustness of the correlation methods in retrieving the true Green’s function information in the presence of long-standing cluster events, we limit our focus to northwestern Iran, where the region has been in continuous vibration from sustained aftershock sequences since 11 August 2012, when the runaway release of stored elastic strain in the Ahar-Varzeghan Earthquake Doublet (MN 6.4 and 6.3; MN denotes Nuttli’s magnitude scale, Nuttli 1973) triggered a succession of localized aftershock sequences. In total, 869 aftershocks with magnitudes greater than MN 2.5 jolted this region in the period from the first of August 2012 to the end of August 2013. The yearly pattern of events occurring in a rectangular box bounded by latitudes 36.50° and 39° and longitudes 45.50° and 49°, together with their magnitude distribution, is shown in Fig. 2.

The data used in this study were recorded by three-component broad-band and short-period sensors in different-length Nanometrics Y-File and GCF fragments. The stations used in this study belong to the Iranian Seismological Centre, Institute of Geophysics, University of Tehran (IRSC-IGUT, http://irsc.ut.ac.ir), and the International Institute of Earthquake Engineering and Seismology (IIEES, http://www.iiees.ac.ir/en/). We applied a baseline correction by removing the mean and trend from all fragments before using them. Thereafter, after applying a cosine taper, the fragments were corrected to ground velocity (for the polarity analysis, see Section 4.1) and ground displacement (for the interferometry analysis, see Section 4.2) by removing the instrument responses. The different-length fragments were merged to obtain 1-h-long time series for all three-component records. All windows were visually inspected for any indication of recording failure or poor-quality data, and such windows were excluded from the processing cycle. Finally, the data were bandpass-filtered between 0.01 and 2 Hz and then decimated to a Nyquist frequency of 2 Hz.
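The baseline correction and tapering steps can be sketched as below. This is an illustrative outline only: it assumes a linear trend, a symmetric cosine taper over 5% of the fragment at each end, and hypothetical function names; instrument response removal, merging, filtering, and decimation are not shown.

```python
import numpy as np

def baseline_correct(x):
    """Remove the mean and a linear trend by subtracting a least-squares line."""
    t = np.arange(x.size)
    slope, intercept = np.polyfit(t, x, 1)
    return x - (slope * t + intercept)

def cosine_taper(n, fraction=0.05):
    """Window of length n that ramps from 0 to 1 over `fraction` of each end."""
    window = np.ones(n)
    m = int(fraction * n)
    if m > 0:
        ramp = 0.5 * (1.0 - np.cos(np.pi * np.arange(m) / m))
        window[:m] = ramp
        window[-m:] = ramp[::-1]
    return window

def prepare_fragment(x, fraction=0.05):
    """Baseline-correct a fragment and taper its ends."""
    return baseline_correct(x) * cosine_taper(x.size, fraction)
```

Tapering after the baseline correction avoids a step at the fragment edges, which would otherwise leak into the spectrum when the instrument response is removed.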

4 Method: comparison of EGFs obtained in two seismic active and quiescent timeframes

4.1 Background noise analysis

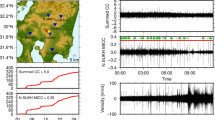

We selected the ZNJK station in northwestern Iran as our virtual source and made pairwise comparisons of the EGFs between the hourly three-component displacement noise data of this station and those of the other six stations. To investigate the effect of aftershock clusters on the cross-correlation results, we performed this comparison for two different timeframes: a 3-month seismic active timeframe and a 3-month quiescent timeframe. The seismic active timeframe was selected from 2 days after the occurrence of the doublet earthquake to 13 November 2012 (Fig. 3a). During this interval, the stations recorded 623 earthquakes with a magnitude larger than MN 2.5, of which 37 had a magnitude larger than or equal to MN 4. The other 3-month timeframe was selected from a seismic quiescent period, 01 May to 01 August 2013, in which only 26 events with magnitudes of MN 2.5–3.5 occurred (Fig. 3b).

Fig. 3 The configuration of sensors used in this study. This region has been in continuous vibration since 11 August 2012, when the Ahar-Varzeghan Earthquake Doublet (MN 6.4 and 6.3) hit the region. The green circles represent the events (> MN 2.5) that occurred in the period from the first of August 2012 to the end of August 2013. The broad-band ZNJK station is used as a virtual source. The red star corresponds to the benchmark earthquake that occurred near the virtual source on 27 May 2008. We simulate the records of the MN 5.2 benchmark earthquake (2008.05) with the help of the empirical Green’s functions obtained from the different correlation techniques in the (a) seismic active and (b) quiescent timeframes.

In this paper, the timescales of post-seismic healing after the occurrence of each event were considered very short. Additionally, it is assumed that the accumulation of stress before triggering an event does not affect the nature and content of the noise. Therefore, with such assumptions, the background noise received by the stations in the seismic active timeframe is expected to have the same pattern as the seismic quiescent timeframe, provided that the seasonal differences are negligible. We evaluated the validity of this assumption by the information obtained from instantaneous back azimuths (BAZs) of the elliptical motions as a function of time and frequency using frequency-dependent polarization analysis (FDPA) in the region (Schimmel and Gallart 2003, 2004; Schimmel et al. 2011a; Davy et al. 2015; Berbellini et al. 2019; Carvalho et al. 2019). In line with this strategy, an adaptive-length Gaussian-shaped sliding window moves along the three-component data vector. The length of the Gaussian window is inversely proportional to the frequency and used to construct a frequency-dependent cross-spectral matrix. Then, this matrix is decomposed into its eigenvalues and corresponding eigenvectors, helping us determine semi-major and semi-minor axes of an ellipse that best fits the ground motion. Using these results, in addition to the instantaneous BAZs, the variation of the instantaneous planarity vectors of polarized signals with respect to the mean direction of their vectors can be measured by a parameter called the degree-of-polarization (DOP). The planarity vector is the perpendicular vector to the instantaneous plane of the ground motion ellipse. It should be noted that the DOP changes in a range between 0 and 1. In the cases in which the semi-major axis direction is strongly varying, it is expected to have a smaller value for the DOP, while for a perfectly polarized motion the DOP will be 1.
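The eigen-decomposition step can be illustrated with the sketch below. It assumes that complex time-frequency coefficients of the three components are already available for one frequency and one window, and it recovers the ellipse axes by rotating the phase of the principal eigenvector until its real and imaginary parts are orthogonal. The function name and the component ordering (vertical, north, east) are hypothetical, and the DOP itself is not computed here.

```python
import numpy as np

def polarization_ellipse(coeffs):
    """Semi-major axis, semi-minor axis, and planarity vector of the motion.

    coeffs: complex array of shape (n_samples, 3) holding time-frequency
    coefficients of the three components within one window.
    """
    # 3 x 3 Hermitian cross-spectral matrix averaged over the window.
    spectral_matrix = coeffs.T @ np.conj(coeffs) / coeffs.shape[0]
    eigenvalues, eigenvectors = np.linalg.eigh(spectral_matrix)
    principal = eigenvectors[:, -1]  # eigh sorts eigenvalues in ascending order
    # Rotate the phase so that the real and imaginary parts become orthogonal,
    # with the real part being the longer of the two.
    phase = 0.5 * np.angle(np.sum(principal * principal))
    rotated = principal * np.exp(-1j * phase)
    semi_major, semi_minor = rotated.real, rotated.imag
    # The planarity vector is perpendicular to the plane of the ellipse.
    normal = np.cross(semi_major, semi_minor)
    length = np.linalg.norm(normal)
    planarity = normal / length if length > 0 else normal
    return semi_major, semi_minor, planarity
```

For purely linear motion the semi-minor axis vanishes and the planarity vector is undefined, which this sketch signals by returning a zero vector.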

We performed polarity analysis on both the seismic active and quiescent timeframes by setting the DOP in the range (0.7–1). This constraint allowed us to downweight less polarized signals and to increase the intensity of weakly coherent Rayleigh waves. We obtained the results by accumulating the outputs of polarity analysis for 180-s time segments in which no earthquake occurred. Bootstrap resample analysis was used before performing analysis on the time segments to reduce the effect of very small cluster earthquakes in space that were not visually recognizable, especially for seismic active intervals. Figure 4 shows the variations of the BAZ, as a function of frequency, at each seismic station. The number of signals has been normalized to 1 per day, and the color scale has been saturated to 0.5.

Fig. 4 Results of the polarity analysis for the seismic quiescent (first column) and active (second column) timeframes, obtained by setting the DOP in the range (0.7–1) for different stations in the frequency range of 0.1 to 0.5 Hz. The number of signals has been normalized to 1 per day, and the color scale has been saturated to 0.5. As can be seen, the coherent incoming waves enter the stations from all directions; nevertheless, a degree of directivity is also evident in the patterns of their azimuth-frequency distribution. Additionally, at most stations, this directivity is observed along the northeast and southwest, which may be attributed to the microseismic waves. However, the SRB station shows a predominant direction of elliptically polarized seismic waves in the southeast direction. Despite the complexities noted above, what matters is that the patterns are almost unchanged between the seismic active and quiescent timeframes.

Considering the results (Fig. 4), it is particularly evident that coherent noise in the lower-frequency band (0.1–0.5 Hz) entered the station from all directions; nevertheless, a degree of directivity is also evident in the patterns of their azimuth-frequency distribution. At most stations, this directivity is observed along the northeast and southwest, which may be attributed to secondary microseismic waves. However, the SRB station shows a predominant direction of elliptically polarized seismic waves in the southeast direction, which may be considered the effect of regional structures, especially the directivity due to the large-scale topography response (Burjánek et al. 2014; Massa et al. 2014). Despite the complexities noted above, what matters is that the patterns are almost unchanged in two seismic active and quiescent timeframes. By this token, it can be assumed that any difference in the results of correlation methods is most likely due to the presence of cluster earthquakes.

4.2 Efficiency of applying a time-domain binary filter to eliminate the precursory noise

When performing the correlation, we first selected hourly time intervals randomly from both the seismic active and quiescent timeframes so that the gradual decline in the rate of occurrence of aftershocks following the mainshock does not introduce a systematic bias into the results. After removing the effect of enormous spikes, glitches, and SS-TT events with a time-domain binary filter (i.e., setting 0 for time periods having peaks larger than a certain threshold value and 1 for other time periods) (e.g., Ritzwoller and Feng 2018), the remaining adverse effect on the correlation results is due only to the clusters of small earthquakes (Pedersen and Krüger 2007). To make it easier to extract the EGFs of Rayleigh and Love waves, we rotated the coordinate system from north-east-down coordinates to radial-transverse-down coordinates before calculating the correlation. To accelerate the convergence toward the Green’s function, we overlapped the time windows by 30 min (Seats et al. 2012).
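The time-domain binary filter described above can be sketched as follows. The segment length and threshold are free parameters whose values are not given in the text, and the function name is hypothetical.

```python
import numpy as np

def binary_filter(trace, window_len, threshold):
    """Zero every segment whose peak absolute amplitude exceeds the threshold.

    Returns the filtered trace (truncated to a whole number of segments)
    and the boolean keep-mask, one entry per segment.
    """
    n_win = trace.size // window_len
    segments = trace[: n_win * window_len].reshape(n_win, window_len)
    keep = np.max(np.abs(segments), axis=1) <= threshold  # weight 1 if kept
    return (segments * keep[:, None]).ravel(), keep
```

A segment containing a small event whose peak stays below the threshold receives a weight of 1 and therefore enters the stack unchanged.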

In Fig. 5, the EGFs obtained for the vertical-to-vertical cross-correlations are shown in the microseismic frequency range (0.01–0.3 Hz). The causal window is the interval between the times corresponding to group speeds of 2 and 5 km/s, and its counterpart at negative lags is the acausal window. For the ZNJN-MRD station pair, the SNR (i.e., the ratio of the maximum amplitude in the causal window to the RMS of the precursory noise) decreased from 2.09 in the seismic quiescent timeframe (Fig. 5a) to 0.27 in the active timeframe (Fig. 5b). As such, the time-domain binary filter does little to mitigate the harm posed by the aftershock clusters, particularly because the amplitudes of the signals generated by some of the earthquakes were much smaller than the selected threshold, so they were able to pass through the filter. In other words, because the filter zeroes only those time periods whose peaks exceed the threshold, aftershocks with amplitudes below the threshold escape it and become further magnified in the ensemble average (stacking) operation.
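The SNR defined above can be computed along the following lines. The sketch assumes that the precursory window spans all lags earlier than the fastest expected arrival (the exact extent of the gray box in Fig. 5 is not stated in the text), and the function and argument names are hypothetical.

```python
import numpy as np

def precursor_snr(cc, lags_s, distance_km, v_min=2.0, v_max=5.0):
    """Peak amplitude in the causal window divided by the precursory RMS.

    cc: correlation function; lags_s: lag times in seconds;
    distance_km: interstation distance; v_min, v_max: group speeds in km/s.
    """
    t_first = distance_km / v_max  # earliest expected surface-wave arrival
    t_last = distance_km / v_min   # latest expected surface-wave arrival
    causal = (lags_s >= t_first) & (lags_s <= t_last)
    precursory = np.abs(lags_s) < t_first  # assumed noise window
    peak = np.max(np.abs(cc[causal]))
    rms = np.sqrt(np.mean(cc[precursory] ** 2))
    return peak / rms
```

Measured this way, an SNR below 1 means that the precursory bumps are, on average, larger than the strongest arrival inside the causal window.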

Fig. 5 The vertical-to-vertical correlation results between ZNJN and six stations located in northwestern Iran obtained by the cross-correlation analysis in the seismic (a) quiescent and (b) active timeframes. (c) Results obtained by the deconvolution method in the seismic active timeframe, (d) results obtained by the cross-coherency method in the seismic active timeframe, and (e) results obtained by the PCC method in the seismic active timeframe. The signal-to-noise ratio (SNR) is given at the top left of each plot. In calculating the SNRs, we took the causal window as the signal window and the precursory window (the gray-colored box) as the noise window. A comparison of the results shows that the PCC method is better than the other methods at eliminating the impact of precursory noise in the active time interval (for details, see the main text).

Since the events are mostly clustered close to the perpendicular bisector of the line joining the ZNJN-MRD station pair, the precursory noise is more pronounced in the initial lags of their cross-correlation function. As shown in Fig. 3, however, the cluster events lie closer to one of the two stations in the cases of the ZNJN-TBZ and ZNJN-AZR station pairs. Because of that, the precursory noise in these two cases is seen in the later lags, and the SNR obtained for these station pairs decreases in the seismic active timeframe compared to the seismic quiescent timeframe (from 2.17 to 0.35 for the ZNJN-TBZ pair and from 2.15 to 0.66 for the ZNJN-AZR pair). In the cases of ZNJN-HRS, ZNJN-BST, and ZNJN-SRB, the clusters are mostly located outside the space between the station pairs. Therefore, the effect of the cluster events shows itself as a clear asymmetry in the correlation results rather than as precursory noise. Examples of such asymmetries have already been reported by studies of microseismic cluster events in the Gulf of Guinea (Shapiro et al. 2006), near Kyushu Island (Zeng and Ni 2010), and in the shallow waters of the Juan de Fuca plate (Tian and Ritzwoller 2015, 2017). As such, aftershock clusters are expected to bear a close resemblance to microseismic clusters, because these events continuously shake a limited area of space for months or years after the main shock.

4.3 Efficiency of applying a frequency-dependent whitening approach to eliminate the precursory noise

We now study the efficiency of the frequency-dependent whitening approaches in mitigating the effect of earthquake clusters. To this end, we choose the regularization parameter δDEC to be 1% of the average spectral power of ul(XA, ω) when applying the deconvolution method (Nakata et al. 2011). In the cross-coherency method, when the spectral amplitude is small, the numerator and denominator are both small. Therefore, the regularization parameter δCCOH can be selected to be much lower than δDEC. According to Fig. 5c and Fig. 5d, the deconvolution and cross-coherency methods both have a positive effect on the SNR of the correlation results for the ZNJN-HRS, ZNJN-BST, and ZNJN-SRB station pairs. However, these methods were not able to eliminate the impact of the precursory bumps and the asymmetry of the ZNJN-MRD, ZNJN-TBZ, and ZNJN-AZR results.

4.4 Evaluating the robustness of the PCC method in retrieving the true Green’s function by simulating a benchmark earthquake

Looking at the results in Fig. 5e, it seems that the PCC method can better overcome the impact of cluster events than other correlation methods because the vertical-to-vertical PCC results are less affected by precursory noise, and the asymmetry between the causal and acausal parts of the correlation results is insignificant. To more accurately assess the ability of this method, we try to simulate the records of a benchmark earthquake with the help of EGFs obtained from the PCC noise analysis. To this end, we first compute the pairwise PCC between the three components of the station pairs in random hourly time intervals in the active timeframe. If simulated signals are fitted with an acceptable degree of accuracy to the original records of the earthquake, it can be concluded that the PCC can retrieve a reliable estimate of Green’s function, even in the presence of cluster aftershocks.

4.4.1 Setting a benchmark

To put the idea described above into practice, it is necessary to establish an appropriate benchmark earthquake. Generally speaking, a benchmark event is a candidate earthquake whose epicenter lies very close to the location of the virtual source. As a rule of thumb, a benchmark earthquake should not be an event smaller than Mw 4, since smaller events usually do not have an adequate signal-to-noise ratio at longer periods. Furthermore, it should not be an event stronger than Mw 5, since the source time function of strong events is complex (i.e., the advantage of an Mw 4–5 earthquake is that it can be regarded as a point source at long periods, > 3 s). In line with these requirements, we selected an MN 5.2 event that occurred on 27 May 2008 at 06:18:08 as our benchmark earthquake (shown with a red star in Fig. 3). Table 1 shows information about the epicenter of this event reported by IRSC. From the inversion of the first pulse of the phase onset together with the sign information of the amplitudes recorded by the Northwest Iranian stations, an oblique-reverse mechanism was obtained for this earthquake. The seismic moment tensor analysis package, Hybrid (Kwiatek et al. 2016), was used to obtain this mechanism. Table 2 provides a concise description of the moment tensor solutions that we obtained for these events.

4.4.2 Converting EGFs obtained from noise to earthquake Earth Green’s response

Before proceeding further with the analysis, a set of corrections should be applied to the noise EGF tensors to make them comparable to a real earthquake Green’s response function. To this end, we first assume that there is no energy leakage to the off-diagonal entries of the EGF tensors due to Love and Rayleigh wave coupling. Based on this assumption, we calibrate the amplitude of the non-zero entries of the EGF tensors to that of the earthquake using a set of calibration factors obtained by the method described in Denolle et al. (2013).

Comparing the epicenter of this event with the location of the ZNJK station (36.67° N, 48.68° E) indicates that the two are only 4.93 km apart, which is negligible compared with the average interstation distance (on the order of 254 km). Due to the proximity of the epicenter of the benchmark earthquake to the location of the virtual source, we suppose that the attenuation and geometrical spreading effects for seismic waves propagating from benchmark-to-stations and virtual source-to-stations are almost identical. Furthermore, the arrival time differences between the simulated signals and the waves received from the benchmark earthquake were ignored. However, the difference between the excitation depth of the near-surface noise sources and the centroid depth of the benchmark earthquake remains a matter of serious concern, as the spectra of seismic waves strongly depend on their excitation depth (i.e., high-frequency surface waves are more likely to be excited by shallow events than by deep events). To address this difference, we followed the course of action outlined in Denolle et al. (2013).

The first thing needed for the depth correction is to determine the radial displacement eigenfunctions associated with Rayleigh waves, r1(ω), the vertical displacement eigenfunctions associated with Rayleigh waves, r2(ω), and the transverse displacement eigenfunctions associated with Love waves, l1(ω), for the region surrounding the ZNJN station, both at the centroid depth of the benchmark earthquake, h, and at the surface of the Earth (see Denolle et al. 2013, 2014). Figure 6 shows the ratios of these displacement eigenfunctions, which were obtained by the Chebyshev Spectral Collocation algorithm (Denolle et al. 2012, VEA Package). The velocity and density profiles used in calculating these parameters were adapted from the model obtained by Donner et al. (2013) (Table 3). Although this model was derived by analyzing the group-velocity dispersion curves of surface waves obtained in the Alborz mountains region, Donner et al. (2014, 2015) demonstrated that it is also applicable at a broad regional scale that includes NW Iran. In the next step, the effect of the radiation pattern of the benchmark earthquake was taken into account by inserting the moment tensor solutions into the surface-wave displacement equations (see Donner et al. 2013, Eqs. 13 to 15). This effect on the results can be seen by comparing the absolute values of these converting terms in the polar coordinate system at the periods 5, 7, and 10 s (Fig. 7).

Ratios of the radial (Rayleigh) r1(h)/r1(0), transverse (Love) l1(h)/l1(0), and vertical (Rayleigh) r2(h)/r2(0) displacement eigenfunctions at the source depth and at the surface for the region surrounding ZNJN station. These ratios were obtained from the velocity and density profiles beneath the ZNJN seismic station (Table 2)

The absolute values of the converting terms (see Section 6.2 in the main text) are shown in polar plots at periods T = 5 (red dashed line), 7 (orange dashed line), and 10 s (black dashed line). We obtained the factors at those periods by assuming a flat response for the moment-rate function. The moment tensors used to obtain these values are listed in Table 1. The maximum amplitude at an azimuth of 15° is also shown in these plots

An earthquake source is generally treated as a rupture propagating spontaneously along a fault. Therefore, convolving the modified noise displacement fields with the displacement source time function of the benchmark earthquake makes them more compatible with the benchmark earthquake records (Denolle et al. 2013, Eq. 17). Frequently, the source time function of a moderate event is taken to be a pulse of width T. This pulse width is inversely proportional to the corner frequency fc, T = 1/(2fc) (Udias 1999). The corner frequency is the threshold frequency beyond which the source spectrum falls off in proportion to f⁻², and it can be obtained from fc = 0.491 β (∆σ/M0)^(1/3), where M0 is the seismic moment and ∆σ is the stress drop (Hanks and Thatcher 1972). The stress drop is typically taken as ∆σ = 3 MPa and the shear velocity as β = 3 km/s. We obtained fc = 0.36 Hz for the corner frequency of the benchmark event. Finally, we allowed a conservative free phase shift of up to 2 s in the correlation results to account for possible uncertainties in the location of the benchmark event, its excitation depth, its fault plane solution, and so on.
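As a rough numerical check of the source-term relations in this paragraph, the sketch below evaluates the corner frequency and the corresponding pulse width in SI units. It is only an illustration: the function names are hypothetical, the seismic moment `M0_ASSUMED` is not given in this section and is chosen here simply because it yields a corner frequency close to the reported 0.36 Hz, and the boxcar shape of the pulse is an assumption.

```python
def corner_frequency(beta_m_s, stress_drop_pa, moment_nm):
    """Corner frequency fc = 0.491 * beta * (stress_drop / M0)**(1/3), SI units."""
    return 0.491 * beta_m_s * (stress_drop_pa / moment_nm) ** (1.0 / 3.0)


def pulse_width(fc_hz):
    """Source pulse width T = 1 / (2 * fc), in seconds."""
    return 1.0 / (2.0 * fc_hz)


def boxcar_stf(width_s, dt_s):
    """Unit-area boxcar source time function (assumed shape) sampled every dt_s."""
    n = max(1, round(width_s / dt_s))
    return [1.0 / (n * dt_s)] * n


def convolve(signal, kernel, dt_s):
    """Discrete approximation of the convolution integral (full length)."""
    out = [0.0] * (len(signal) + len(kernel) - 1)
    for i, s in enumerate(signal):
        for j, k in enumerate(kernel):
            out[i + j] += s * k * dt_s
    return out


BETA = 3000.0          # shear velocity in m/s (3 km/s, as in the text)
STRESS_DROP = 3.0e6    # stress drop in Pa (3 MPa, as in the text)
M0_ASSUMED = 2.0e17    # seismic moment in N*m (hypothetical, not from the paper)
DT = 0.1               # sampling interval in s (hypothetical)

fc = corner_frequency(BETA, STRESS_DROP, M0_ASSUMED)
width = pulse_width(0.36)          # pulse width for the reported fc = 0.36 Hz
stf = boxcar_stf(width, DT)
impulse_response = [0.0, 1.0, 0.0]  # stand-in for a noise-derived displacement trace
smoothed = convolve(impulse_response, stf, DT)
print(f"fc = {fc:.3f} Hz, T = {width:.2f} s, STF samples = {len(stf)}")
```

With these assumed inputs the corner frequency comes out near 0.36 Hz and the pulse width near 1.4 s; a different seismic moment or pulse shape would change both.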

4.4.3 The degree of consistency between the waveforms and amplitudes of simulated and observed records

Figure 8 shows the waveforms of the simulated signals together with the records of the benchmark earthquake. A visual comparison indicates a certain degree of consistency between the amplitude and frequency content of the two sets of records, but any such claim must rest on a quantitative basis. To evaluate how accurately the PCC method eliminates the disturbing impact of the persistent sequence of cluster aftershocks, we therefore used the following parameters to compare the waveform and amplitude information of the simulated and observed records: (1) A normalized correlation coefficient function (hereinafter called CCF), which provides a standard for evaluating the consistency between the travel time information of the two sets of data (Denolle et al. 2013; Viens et al. 2014):

$$\mathrm{CCF}(x, y) = \frac{\sum_{i=N_{2.5}}^{N_{92.5}} x_i\, y_i}{\sqrt{\sum_{i=N_{2.5}}^{N_{92.5}} x_i^{2}\; \sum_{i=N_{2.5}}^{N_{92.5}} y_i^{2}}}$$

where x is the Green’s function and y is the earthquake record in the time domain. In this equation, N2.5 and N92.5 stand for the samples at which 2.5% and 92.5% of the cumulative energy of both signals are reached, respectively. (2) Peak ground displacement (hereinafter called PGD) differences (in percentage), which quantitatively assess the accuracy of the retrieved amplitudes as:

$$\mathrm{PGD}_{\mathrm{diff}} = \frac{\mathrm{PGD}_{\mathrm{PCC}} - \mathrm{PGD}_{\mathrm{eq}}}{\mathrm{PGD}_{\max}} \times 100$$

where PGDPCC and PGDeq are the PGDs of the simulated and earthquake wavefields, respectively, and PGDmax = max(PGDnoise, PGDeq). The CCFs for the vertical component of the simulated results for the ZNJK-AZR and ZNJK-TBZ pairs are rather low (≤ 0.6) compared with those of the other pairs. In our view, these low CCF values may be related to the complexities created by the large-scale low-velocity heterogeneities that previous studies have reported at the eastern lower-crust margins of the Sahand inactive volcanic region (see Rezaeifar et al. 2016; Bavali et al. 2016). Because of these large-scale anomalies, it seems that the simple corrections described above did not fully compensate for the complexities of the propagation path or for the slight differences between the factors that control the propagation of seismic noise along the Earth’s surface and those that control the propagation of waves from the benchmark earthquake. Nevertheless, the comparisons make clear that the PCC method was able to simulate the amplitudes and waveforms of the benchmark earthquake at the SRB, BST, and MRD stations in a relatively acceptable way. The results obtained for the PGDmax values suggest that, although the amplitudes are matched less accurately than the phase information, the PCC method has resulted in better retrieval of amplitude information in some components of the station pairs.
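The two consistency metrics described in this subsection can be sketched as follows. This is a minimal illustration and not the authors’ code: it assumes a zero-lag correlation coefficient, an energy window derived from the summed energy of both traces, and a signed PGD difference normalized by the larger of the two PGDs. All function names are hypothetical.

```python
import math


def energy_window(x, y, lower=0.025, upper=0.925):
    """Sample indices at which the cumulative energy of x and y together
    first reaches the `lower` and `upper` fractions of its total."""
    energy = [a * a + b * b for a, b in zip(x, y)]
    total = sum(energy)
    if total == 0.0:
        raise ValueError("both traces are identically zero")
    running, start, stop = 0.0, None, len(energy) - 1
    for i, e in enumerate(energy):
        running += e
        if start is None and running >= lower * total:
            start = i
        if running >= upper * total:
            stop = i
            break
    return start, stop


def ccf(x, y, lower=0.025, upper=0.925):
    """Zero-lag normalized correlation coefficient inside the energy window."""
    i0, i1 = energy_window(x, y, lower, upper)
    xs, ys = x[i0:i1 + 1], y[i0:i1 + 1]
    num = sum(a * b for a, b in zip(xs, ys))
    den = math.sqrt(sum(a * a for a in xs) * sum(b * b for b in ys))
    return num / den if den > 0.0 else 0.0


def pgd(trace):
    """Peak ground displacement: largest absolute sample of the trace."""
    return max(abs(v) for v in trace)


def pgd_difference(simulated, observed):
    """Signed PGD difference in percent, normalized by the larger PGD."""
    p_sim, p_obs = pgd(simulated), pgd(observed)
    return 100.0 * (p_sim - p_obs) / max(p_sim, p_obs)


# Synthetic stand-ins for an observed record and a simulated one that has
# the right waveform but only 80% of the amplitude.
observed = [math.exp(-0.05 * k) * math.sin(0.4 * k) for k in range(200)]
simulated = [0.8 * v for v in observed]

cc_value = ccf(simulated, observed)
pgd_diff = pgd_difference(simulated, observed)
print(f"CCF = {cc_value:.2f}, PGD difference = {pgd_diff:.1f}%")
```

The example separates the two aspects of the comparison: a pure amplitude scaling leaves the correlation coefficient at one while the PGD difference records the 20% underestimate.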

Prediction of the 2008 MN 5.2 benchmark earthquake by the modified and calibrated noise impulse responses. Waveforms were filtered between 0.01 and 0.3 Hz. The radial, transverse, and vertical components of the simulated records are shown in red, while records of the benchmark earthquake are shown in blue. The normalized correlation coefficient function, CCF, and the percentage of retrieved amplitude errors, PGDdiff, are shown at the upper right and upper left of each subplot, respectively

5 Discussion and conclusion

When retrieving EGFs from noise data in the microseismic frequency range, the main focus is often on eliminating the damaging effects of very large earthquakes, while less attention is paid to small earthquakes. However, Jousset and Douglas (2007) showed that small earthquakes may also contribute substantially to the amplitude spectrum at longer periods. Moreover, we observed that the disturbing effect of temporally persistent small earthquakes may be much greater in two specific cases: (1) if these earthquakes are clustered around the perpendicular bisector of the line joining a station pair, they cause precursory noise at the early time lags of the cross-correlation results; (2) if these earthquakes are clustered in close proximity to one of the stations, they may lead to frequency-dependent asymmetry in the correlation results.
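The geometry behind these two cases can be illustrated with a simple travel-time calculation. The sketch below assumes straight rays, a flat Cartesian geometry, and a uniform propagation speed; the speed and the source positions are hypothetical, and only the 254 km spacing is taken from the average interstation distance quoted in the paper.

```python
import math


def distance(p, q):
    """Euclidean distance between two (x, y) points in km."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def expected_lag(source, station_a, station_b, velocity_km_s):
    """Lag (s) at which a single source maps into the A-B cross-correlation,
    taken here as (travel time to B) minus (travel time to A)."""
    return (distance(source, station_b) - distance(source, station_a)) / velocity_km_s


SPACING = 254.0    # km, average interstation distance quoted in the paper
VELOCITY = 3.0     # km/s, hypothetical uniform surface-wave speed
sta_a, sta_b = (-SPACING / 2, 0.0), (SPACING / 2, 0.0)

direct = SPACING / VELOCITY   # inter-station travel time

# Source on the perpendicular bisector: equal paths, so energy near zero lag.
on_bisector = expected_lag((0.0, 60.0), sta_a, sta_b, VELOCITY)
# Source in line with the pair, beyond station A: lag equals the direct time.
in_line = expected_lag((-300.0, 0.0), sta_a, sta_b, VELOCITY)
# Source 5 km from station A: lag close to the direct time, on one side only.
near_a = expected_lag((-SPACING / 2, 5.0), sta_a, sta_b, VELOCITY)

print(f"direct {direct:.1f} s, bisector {on_bisector:.1f} s, "
      f"in line {in_line:.1f} s, near A {near_a:.1f} s")
```

Under these assumptions a cluster on the bisector contributes energy well before the inter-station arrival, which is consistent with the precursory noise of case (1), while a cluster next to one station feeds only one side of the correlation, which is consistent with the asymmetry of case (2).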

Experience to date has shown that stronger non-linear operations such as the square of the running average (Yanovskaya and Koroleva 2011) or one-bit normalization (Ritzwoller and Feng 2018) may be useful in mitigating the adverse effects of clusters of small earthquakes. However, several studies have shown that the efficiency of these methods is mainly limited to cases where the goal is to extract information on seismic velocities (e.g., Sabra et al. 2005; Cupillard and Capdeville 2010; Cupillard et al. 2011), while some uncertainty, even controversy, surrounds their use for properly extracting Green’s function amplitudes (Weaver 2011; Prieto et al. 2011; Lawrence et al. 2013; Weemstra et al. 2014). In general, the retrieval of Green’s function amplitude information from noise correlation analysis is extremely important in applications such as ground motion simulation, attenuation estimation, evaluation of site amplification effects, and anisotropy analysis (e.g., Prieto et al. 2011). Therefore, there is widespread consensus in the literature that pre-processing should be kept to a minimum so that Green’s function amplitude information remains as intact as possible.
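To make the amplitude issue concrete, the following sketch applies two generic time-domain normalizations to a short trace containing one energetic sample. It is a simplified illustration rather than the formulation of the cited studies: the function names are hypothetical, and the `power` argument (with `power=2` as one possible reading of a squared running-average weight) is an assumption.

```python
def one_bit(trace):
    """Keep only the sign of each sample (+1, -1, or 0)."""
    return [(v > 0) - (v < 0) for v in trace]


def running_mean_normalize(trace, half_width, power=1.0):
    """Divide each sample by the local mean absolute amplitude raised to
    `power`; the window is clipped at the ends of the trace."""
    n = len(trace)
    out = []
    for i in range(n):
        lo, hi = max(0, i - half_width), min(n, i + half_width + 1)
        weight = sum(abs(v) for v in trace[lo:hi]) / (hi - lo)
        out.append(trace[i] / weight ** power if weight > 0.0 else 0.0)
    return out


# Background noise of unit amplitude with a single large "event" sample.
noise_with_event = [1.0, -1.0, 1.0, 100.0, -1.0, 1.0, -1.0]

bits = one_bit(noise_with_event)
weighted = running_mean_normalize(noise_with_event, half_width=1)
print(bits)
print([round(v, 3) for v in weighted])
```

Both operations suppress the energetic sample, but they do so by discarding or rescaling the original amplitudes, which is why their use is debated when Green’s function amplitudes are the target.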

Another important point to note is that all time-domain and frequency-domain normalization methods destroy the uniqueness of waveforms, which has two major drawbacks. First, they increase the time required to extract the signal in the stacking process. Second, they may worsen the situation regarding the destructive effect of the signal associated with spatially localized and temporally persistent small events due to the increased ambiguity between signals and noise (D’hour et al. 2016; Schimmel et al. 2018).

According to the results presented in this paper, the PCC appears to be a way to address the issues discussed above, perhaps because in this method waveform similarity is measured through the number of phase-coherent samples rather than the sum of amplitude products (Schimmel et al. 2011a, b). When the epicenters of the cluster earthquakes lie close to the perpendicular bisector of the line joining two stations, the waves they generate travel along different paths before reaching the stations. The recorded signals therefore have different coherence because of the different anomalies and heterogeneities along their paths. This can prevent the formation of precursory noise and frequency-dependent asymmetry in the correlation results. Additionally, the amplitude unbiasedness of the PCC allows it to be used under difficult circumstances with a high amplitude variability of signals and noise, without the need for pre-processing to mitigate the influence of energetic features such as strong earthquakes, sensor failures, and local episodic noise (Schimmel et al. 2018). Therefore, the PCC may partially address concerns regarding the retrieval of Green’s function amplitude information. Of course, a study based on a small number of stations cannot guarantee the PCC’s efficiency in this regard, so this conclusion must be examined by further studies. In addition, the fact that the PCC method does not require pre-processing can also be an advantage for accelerating signal extraction. The relatively satisfactory EGFs obtained from only 3 months of data in this study support this claim.
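The idea of measuring similarity through phase coherence can be sketched in a few lines. The code below is a toy version of the phase cross-correlation in the form given by Schimmel (1999) with exponent 1, evaluated at a single integer lag. It is not the implementation used in this study: the analytic signal is built with a naive DFT that is only practical for very short traces, the sum is normalized by the number of overlapping samples, and all function names are hypothetical.

```python
import cmath
import math


def analytic_signal(trace):
    """Analytic signal via a naive O(N^2) DFT; adequate only for short demos."""
    n = len(trace)
    spectrum = [sum(trace[k] * cmath.exp(-2j * math.pi * f * k / n) for k in range(n))
                for f in range(n)]
    for f in range(n):
        if 0 < f < n / 2:
            spectrum[f] *= 2.0      # double the positive frequencies
        elif f > n / 2:
            spectrum[f] = 0.0       # discard the negative frequencies
    return [sum(spectrum[f] * cmath.exp(2j * math.pi * f * k / n) for f in range(n)) / n
            for k in range(n)]


def unit_phasors(trace):
    """exp(i * instantaneous phase) for every sample of the trace."""
    out = []
    for z in analytic_signal(trace):
        mag = abs(z)
        out.append(z / mag if mag > 0.0 else 0j)
    return out


def pcc(x, y, lag=0):
    """Phase cross-correlation at an integer sample lag (exponent 1)."""
    px, py = unit_phasors(x), unit_phasors(y)
    total, count = 0.0, 0
    for t in range(len(py)):
        j = t + lag
        if 0 <= j < len(px):
            total += abs(px[j] + py[t]) - abs(px[j] - py[t])
            count += 1
    return total / (2.0 * count) if count else 0.0


# Four cycles of a sinusoid, a copy amplified 50 times, and a flipped copy.
x = [math.sin(2.0 * math.pi * 4.0 * k / 64.0) for k in range(64)]
amplified = [50.0 * v for v in x]
flipped = [-v for v in x]

same = pcc(x, x)
scaled = pcc(x, amplified)
opposite = pcc(x, flipped)
print(f"{same:.3f} {scaled:.3f} {opposite:.3f}")
```

Because only the instantaneous phase enters the sum, amplifying one trace leaves the result unchanged, whereas a conventional cross-correlation would scale with the amplitude. This is the property referred to above as amplitude unbiasedness.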

It should be noted that the performance of the PCC may also be affected by temporal variability in the seismic anisotropy of the background seismic noise caused by the alignment of fractures and cracks that accompanies major earthquakes (e.g., Saadé et al. 2017). This may reduce the efficiency of the PCC method, since in such cases signals may be less phase-coherent than the surrounding noise (Schimmel et al. 2018). This may have been the cause of the slight mismatch observed at some stations.

References

Campillo M, Roux P, Romanowicz B, Dziewonski A (2014) Seismic imaging and monitoring with ambient noise correlations. Treatise on Geophysics 1:256–271

Bavali K, Motaghi K, Sobouti F, Ghods A, Abbasi M, Priestley K, Mortezanejad G, Rezaeian M (2016) Lithospheric structure beneath NW Iran using regional and teleseismic travel-time tomography. Phys Earth Planet Inter 253:97–107

Berbellini A, Schimmel M, Ferreira A, Morelli A (2019) Constraining S-wave velocity using Rayleigh wave ellipticity from polarization analysis of seismic noise. Geophys J Int 216:1817–1830

Burjánek J, Edwards B, Fäh D (2014) Empirical evidence of local seismic effects at sites with pronounced topography: a systematic approach. Geophys J Int 197:608–619

Carvalho JF, Silveira G, Schimmel M, Stutzmann E (2019) Characterization of microseismic noise in Cape Verde. Bull Seismol Soc Am 109:1099–1109

Cupillard P, Capdeville Y (2010) On the amplitude of surface waves obtained by noise correlation and the capability to recover the attenuation: a numerical approach. Geophys J Int 181:1687–1700

Cupillard P, Stehly L, Romanowicz B (2011) The one-bit noise correlation: a theory based on the concepts of coherent and incoherent noise. Geophys J Int 184:1397–1414

D’hour V, Schimmel M, Do Nascimento AF, Ferreira JM, Neto HL (2016) Detection of subtle hydromechanical medium changes caused by a small-magnitude earthquake swarm in NE Brazil. Pure Appl Geophys 173:1097–1113

Davy C, Stutzmann E, Barruol G, Fontaine FR, Schimmel M (2015) Sources of secondary microseisms in the Indian Ocean. Geophys J Int 202:1180–1189

Denolle M, Dunham E, Beroza G (2012) Solving the surface-wave eigenproblem with Chebyshev spectral collocation. Bull Seismol Soc Am 102(3):1214–1223

Denolle M, Dunham E, Prieto G, Beroza G (2013) Ground motion prediction of realistic earthquake sources using the ambient seismic field. J Geophys Res Solid Earth 118(5):2102–2118

Denolle M, Dunham E, Prieto G, Beroza G (2014) Strong ground motion prediction using virtual earthquakes. Science 343(6169):399–403

Donner S, Krüger F, Rößler D, Ghods A (2014) Combined inversion of broadband and short-period waveform data for regional moment tensors: a case study in the Alborz Mountains, Iran. Bull Seismol Soc Am 104(3):1358–1373

Donner S, Rößler D, Krüger F, Ghods A, Strecker MR (2013) Segmented seismicity of the Mw 6.2 Baladeh earthquake sequence (Alborz Mountains, Iran) revealed from regional moment tensors. J Seismol 17(3):925–959

Donner S, Ghods A, Krüger F, Rößler D, Landgraf A, Ballato P (2015) The Ahar-Varzeghan earthquake doublet (Mw 6.4 and 6.2) of 11 August 2012: regional seismic moment tensors and a seismotectonic interpretation. Bull Seismol Soc Am 105(2A):791–807

Frank SD, Foster AE, Ferris AN, Johnson M (2009) Frequency-dependent asymmetry of seismic cross-correlation functions associated with noise directionality. Bull Seismol Soc Am 99:462–470

Hanks TC, Thatcher W (1972) A graphical representation of seismic source parameters. J Geophys Res 77(23):4393–4405

Harmon N, Rychert C, Gerstoft P (2010) Distribution of noise sources for seismic interferometry. Geophys J Int 183(3):1470–1484

Hennino R, Trégourès N, Shapiro N, Margerin L, Campillo M, Van Tiggelen B, Weaver R (2001) Observation of equipartition of seismic waves. Phys Rev Lett 86(15):3447–3450

Hodgson M (1996) When is diffuse-field theory applicable? Appl Acoust 49(3):197–207

Jousset P, Douglas J (2007) Long-period earthquake ground displacements recorded on Guadeloupe (French Antilles). Earthq Eng Struct Dyn 36(7):949–963

Kwiatek G, Martínez-Garzón P, Bohnhoff M (2016) HybridMT: A MATLAB/shell environment package for seismic moment tensor inversion and refinement. Seismol Res Lett 87(4):964–976

Lawrence JF, Denolle M, Seats KJ, Prieto GA (2013) A numeric evaluation of attenuation from ambient noise correlation functions. J Geophys Res Solid Earth 118(12):6134–6145

Lin FC, Moschetti MP, Ritzwoller MH (2008) Surface wave tomography of the western United States from ambient seismic noise: Rayleigh and Love wave phase velocity maps. Geophys J Int 173:281–298

Margerin L, Campillo M, Van Tiggelen B, Hennino R (2009) Energy partition of seismic coda waves in layered media: theory and application to Pinyon Flats Observatory. Geophys J Int 177(2):571–585

Margerin L (2017) Breakdown of equipartition in diffuse fields caused by energy leakage. Eur Phys J Spec Top 226(7):1353–1370

Massa M, Barani S, Lovati S (2014) Overview of topographic effects based on experimental observations: meaning, causes and possible interpretations. Geophys J Int 197:1537–1550

Medeiros WE, Schimmel M, Nascimento AF (2015) How much averaging is necessary to cancel out cross-terms in noise correlation studies? Geophys J Int 203:1096–1100

Nakata N, Snieder R, Tsuji T, Larner K, Matsuoka T (2011) Shear wave imaging from traffic noise using seismic interferometry by cross-coherence. Geophysics 76(6):SA97–SA106

Nakata N, Gualtieri L, Fichtner A (2019) Seismic ambient noise. Cambridge University Press

Nuttli OW (1973) Seismic wave attenuation and magnitude relations for eastern North America. J Geophys Res 78(5):876–885

Pedersen HA, Krüger F (2007) Influence of the seismic noise characteristics on noise correlations in the Baltic shield. Geophys J Int 168:197–210

Pilz M, Parolai S (2014) Statistical properties of the seismic noise field: influence of soil heterogeneities. Geophys J Int 199(1):430–440

Prieto GA, Beroza GC (2008) Earthquake ground motion prediction using the ambient seismic field. Geophys Res Lett 35(14)

Prieto GA, Denolle M, Lawrence JF, Beroza GC (2011) On amplitude information carried by the ambient seismic field. Compt Rendus Geosci 343(8-9):600–614

Rezaeifar M, Kissling E, Shomali ZH, Shahpasand-Zadeh M (2016) 3D crustal structure of the northwest Alborz region (Iran) from local earthquake tomography. Swiss J Geosci 109(3):389–400

Roux P, Sabra KG, Gerstoft P, Kuperman W, Fehler MC (2005) P-waves from cross-correlation of seismic noise. Geophys Res Lett 32

Ritzwoller MH, Feng L (2018) Overview of pre- and post-processing of ambient noise correlations. Seismic Ambient Noise

Sabra KG, Gerstoft P, Roux P, Kuperman W, Fehler MC (2005) Extracting time-domain Green’s function estimates from ambient seismic noise. Geophys Res Lett 32(3)

Saadé M, Montagner JP, Roux P, Shiomi K, Enescu B, Brenguier F (2017) Monitoring of seismic anisotropy at the time of the 2008 Iwate-Miyagi (Japan) earthquake. Geophys J Int 211:483–497

Sánchez-Sesma FJ, Pérez-Ruiz JA, Luzón F, Campillo M, Rodríguez-Castellanos A (2008) Diffuse fields in dynamic elasticity. Wave Motion 45(5):641–654

Schimmel M (1999) Phase cross-correlations: design, comparisons, and applications. Bull Seismol Soc Am 89(5):1366–1378

Schimmel M, Gallart J (2003) The use of instantaneous polarization attributes for seismic signal detection and image enhancement. Geophys J Int 155(2):653–668

Schimmel M, Gallart J (2004) Degree of polarization filter for frequency-dependent signal enhancement through noise suppression. Bull Seismol Soc Am 94(3):1016–1035

Schimmel M, Stutzmann E, Ardhuin F, Gallart J (2011a) Polarized Earth’s ambient microseismic noise. Geochem Geophys Geosyst 12

Schimmel M, Stutzmann E, Gallart J (2011b) Using instantaneous phase coherence for signal extraction from ambient noise data at a local to a global scale. Geophys J Int 184(1):494–506

Schimmel M, Stutzmann E, Ventosa S (2018) Low-frequency ambient noise autocorrelations: waveforms and normal modes. Seismol Res Lett 89:1488–1496

Seats KJ, Lawrence JF, Prieto GA (2012) Improved ambient noise correlation functions using Welch’s method. Geophys J Int 188:513–523

Shapiro N, Campillo M, Margerin L, Singh S, Kostoglodov V, Pacheco J (2000) The energy partitioning and the diffusive character of the seismic coda. Bull Seismol Soc Am 90(3):655–665

Shapiro NM, Ritzwoller M, Bensen G (2006) Source location of the 26 sec microseism from cross-correlations of ambient seismic noise. Geophys Res Lett 33

Snieder R, Hagerty M (2004) Monitoring change in volcanic interiors using coda wave interferometry: application to Arenal Volcano, Costa Rica. Geophys Res Lett 31(9)

Snieder R (2004) Extracting the Green’s function from the correlation of coda waves: a derivation based on stationary phase. Phys Rev E 69:046610

Tian Y, Ritzwoller MH (2015) Directionality of ambient noise on the Juan de Fuca plate: implications for source locations of the primary and secondary microseisms. Geophys J Int 201(1):429–443

Tian Y, Ritzwoller MH (2017) Improving ambient noise cross-correlations in the noisy ocean bottom environment of the Juan de Fuca plate. Geophys J Int 210(3):1787–1805

Udias A (1999) Principles of seismology. Cambridge University Press

Ventosa S, Schimmel M, Stutzmann E (2019) Towards the processing of large data volumes with phase cross-correlation. Seismol Res Lett 90:1663–1669

Viens L, Miyake H, Koketsu K (2015) Long-period ground motion simulation of a subduction earthquake using the offshore-onshore ambient seismic field. Geophys Res Lett 42(13):5282–5289

Viens L, Laurendeau A, Bonilla LF, Shapiro N (2014) Broad-band acceleration time histories synthesis by coupling low-frequency ambient seismic field and high-frequency stochastic modelling. Geophys J Int 199(3):1784–1797

Viens L, Koketsu K, Miyake H, Sakai S, Nakagawa S (2016) Basin-scale Green’s functions from the ambient seismic field recorded by MeSO-net stations. J Geophys Res Solid Earth 121(4):2507–2520

Viens L, Denolle M, Miyake H, Sakai S, Nakagawa S (2017) Retrieving impulse response function amplitudes from the ambient seismic field. Geophys J Int 210(1):210–222

Wapenaar K, Slob E, Snieder R, Curtis A (2010a) Tutorial on seismic interferometry: part 2—underlying theory and new advances. Geophysics 75(5):75A211–75A227

Wapenaar K, Draganov D, Snieder R, Campman X, Verdel A (2010b) Tutorial on seismic interferometry: part 1—basic principles and applications. Geophysics 75(5):75A195–75A209

Weaver RL, Lobkis OI (2006) Diffuse fields in ultrasonics and seismology. Geophysics 71(4):SI5–SI9

Weaver RL (2011) On the amplitudes of correlations and the inference of attenuations, specific intensities and site factors from ambient noise. Compt Rendus Geosci 343:615–622

Weemstra C, Westra W, Snieder R, Boschi L (2014) On estimating attenuation from the amplitude of the spectrally whitened ambient seismic field. Geophys J Int 197:1770–1788

Yanovskaya T, Koroleva TY (2011) Effect of earthquakes on ambient noise cross-correlation function. Izvestiya, Physics of the Solid Earth 47(9):747–756

Zeng X, Ni S (2010) A persistent localized microseismic source near the Kyushu Island, Japan. Geophys Res Lett 37(24)

Zheng Y, Shen W, Zhou L, Yang Y, Xie Z, Ritzwoller MH (2011) Crust and uppermost mantle beneath the North China Craton, northeastern China, and the Sea of Japan from ambient noise tomography. J Geophys Res Solid Earth 116

Acknowledgements

The authors would like to acknowledge the Iranian Seismological Centre, Institute of Geophysics, University of Tehran (IRSC-IGUT) and International Institute of Earthquake Engineering and Seismology, Tehran (IIEES) for providing access to metadata required in this study. The authors would like to thank the editor and two anonymous reviewers who took the time to review and made very constructive scientific suggestions to improve the paper.

Funding

Open access funding provided by Uppsala University.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Article highlights

• Spatially localized and temporally persistent small events have a destructive impact on correlation results in the seismic interferometry.

• Conventional pre-processing methods cannot completely eliminate these adverse impacts.

• Phase cross-correlation partially addresses concerns regarding these persistent cluster events.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hamed, A.A., Shomali, Z.H. & Moradi, A. On the strength of the phase cross-correlation in retrieving the Green’s function information in a region affected by persistent aftershock sequences. J Seismol 25, 987–1003 (2021). https://doi.org/10.1007/s10950-021-10008-1
