Abstract

Training data is crucial for any artificial intelligence model. Previous research has shown that various methods can be used to enhance and improve AI training data. Taking a step beyond previous research, this paper presents a method that uses AI techniques to generate CT training data, especially realistic, artificial, industrial 3D voxel data. This includes that material as well as realistic internal defects, like pores, are artificially generated. To automate the processes, the creation of the data is implemented in a 3D Data Generation, called SPARC (Synthetized Process Artificial Realistic CT data). The SPARC is built as a pipeline consisting of several steps where different types of AI fulfill different tasks in the process of generating synthetic data. One AI generates geometrically realistic internal defects. Another AI is used to generate a realistic 3D voxel representation. This involves a conversion from STL to voxel data and generating the gray values accordingly. By combining the different AI methods, the SPARC pipeline can generate realistic 3D voxel data with internal defects, addressing the lack of data for various applications. The data generated by SPARC achieved a structural similarity of 98% compared to the real data. Realistic 3D voxel training data can thus be generated. For future AI applications, annotations of various features can be created to be used in both supervised and unsupervised training.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Computed tomography (CT) is an established and important technology in medicine. In the industrial environment, CT is playing an increasingly important role in non-destructive testing (NDT) and evaluation (NDE) through the visualization of internal structures. High-resolution 3D CT scans have an enormous data size, which requires a high-performance hardware. The increase in performance of computer systems in recent years has made it possible to establish CT more profitably in industrial areas. Industrial CT is used, for example, for quality control, dimensional analysis, material analysis, reverse engineering, or defect analysis. CT is an important key technology for ensuring component quality in many industrial sectors such as automotive engineering, electronics, medical technology, and aerospace. Due to the size of the resulting 3D data, the analysis is very complex. There are various methods of analyzing industrial CT data. One method is manual inspection. For this, a specialist must consistently examine all 2D sections of the 3D data by hand and correctly assess the features. In the case of defect detection, a decision must be made as to where the defects are. With a 3D resolution of 30003voxels, this takes a very long time, is prone to errors, and is only reproducible to a limited extent. Algorithms offer an alternative, allowing the definition of rules and limits for automatic data analysis. Algorithms can be used to define rules and limits that automatically analyze the data. This method is expedient for homogeneous data and repetitive structures or properties. However, as real industrial CT data usually include artefacts to a certain extent, the data is not homogeneous, and the properties may vary from scan to scan. Therefore, algorithmic methods can only be used to a limited extent. An alternative method for analyzing large 3D voxel data is artificial intelligence (AI) and explicitly deep learning (DL) with an artificial neural network (ANN). They have the advantage of not analyzing properties and structures in images using rigid rules, but rather learning the characteristics of the properties and their features. In addition, ANNs have a certain degree of abstraction capability, which allows them to infer unknown knowledge from learnt information. The AI or the networks can be trained in a generalized or specified manner. This means that the AIs are explicitly trained on one feature in the images or on many features simultaneously. If the training data for an AI was created by several experts, the AI reflects the combined expertise of these experts and can also abstract from unseen aspects. However, AI methods also have disadvantages. In order to develop an AI, the developer must have a certain amount of expertise. Data, network architecture, and training must be fine-tuned. Although there are already toolboxes that make this work easier. Without extensive expertise in this area, it is difficult to optimize a system for the intended use or to evaluate the success of the training and the results. In addition, there is the problem of large voxel data, which makes the networks and the training so large that powerful hardware is required. The biggest issue, however, is the training data. An AI can only be customized and trained for an application as well as the training data in combination with the architecture and the training process allow. To do this, the training dataset must contain a sufficient number of images and at the same time represent a sufficient variance of the features to be learnt. In the case of CT data, this can lead to problems, as the availability of training data is very limited and there are no public databases for industrial CT data. Alternatively, training data could be created using own scans, but this is very time-consuming if several thousand scans have to be made and reconstructed before an AI can be trained. Simulation could be an option in this case. It could be used to generate artificial scan data to compensate for missing real data. However, a simulation must be parameterized in a complex way and requires an extensive variance of input data, which usually has to be available in the surface tessellation language (STL) file format. The STLs must also be able to exhibit a certain variance, which poses a particular difficulty for internal defects and their free-form surfaces. Creating a suitable variance for this is very time-consuming and the gap to reality is difficult to close. Simulation is therefore more of a tool for creating individual data, but not for a complete training dataset. There is the option to algorithmically generate geometries using CAD software with parameter variation and then scripting the software to create several samples. Nevertheless, it remains an algorithmic approach whose rules need to be defined. Another method could be direct algorithmic generation on a voxel basis. This is a fast method of generation, but there is also the problem of reflecting the reality. Real CT data is very multifaceted, and the properties created by the scanning and reconstruction process vary greatly. Creating a few geometries as well as a simple material flow algorithmically can still work, but as soon as artefacts or internal defects are added, it becomes difficult. The creation of the data becomes much more complex with each property that is added, especially because the properties are also interdependent. Algorithms can be used to create individual 3D CT data, but the algorithms required for this are very complex and reach their limits. This means it would be very challenging to define all the algorithms for the data generation, like generating the geometries, creating defects, generating a gray value distribution, etc. to create realistic representations. Algorithms are functions that are subject to rigid and clear rules. This means that every small property in the data must be precisely defined in the algorithm. In order to create a realistic dataset with a certain variance and sufficient data, algorithms are therefore not expedient, as the gap to reality can never be closed.

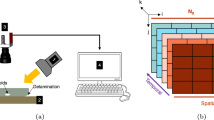

Since training data is of utmost importance for any AI, in this paper we pursue the synthetic generation of data with the help of an AI-supported pipeline. The advantage of using an AI is its ability to learn the real conditions through training data without having to formulate them into rigid algorithms. The aim of this work describes the generation of synthetic 3D voxel data with internal defects for different applications such as AI defect segmentation. Figure 1 illustrates the concept and rationale behind the SPARC idea (Synthetized Process Artificial Realistic CT data) and its potential applications.

Based on this concept, the SPARC AI Data Generation makes it possible to generate a desired number of realistic synthetic data from only One Real CT Voxel Data sample. The AI Data Generation is here visualized as a black box. Its internal structure is a pipeline which is explained in chapter 3 in more detail. This sample is fed in our AI Data Generation where the defects inside the component as well as the material characteristics are learned to generate synthetic 3D voxel data. These serve as training data for a subsequent AI Application. After the AI Application model is trained with the synthetic training data, it can process unseen Real 3D Voxel Data to deliver AI Application Results. An example application for the AI Application would be a defect segmentation in 3D voxel data. The SPARC pipeline is structured in such a way that several individual steps are carried out one after the other. For this we use the STL format as well as the voxel format. This enables us to obtain STL data in addition to 3D voxel data, which is of great importance for many applications such as FEM simulation. With this pipeline, we are able to quickly create large amounts of realistic STL and voxel data and define exactly what the inner characteristics of the data should look like in terms of defects and material representation.

2 Related Research

2.1 Data Synthetization

The demand for data is very high when it comes to deep learning. Since real industrial CT data is not freely available due to company confidentiality agreements, synthetic data is an alternative. Researchers state out that this is in fact a challenging situation, but it turns out that it is not enough to simply generate some data [1,2,3]. The quality of the data is of great importance, as it can only be used effectively for training AI systems if it represents reality well enough [1]. The probability of detection (PoD) of AI systems goes hand in hand with the quality of the data [2]. There are several approaches depending on the type of data needed. A general survey on methods for creating synthetic data for deep learning gives an overview of promising approaches [4]. In our case industrial 3D CT data is needed especially for training segmentation models. Synthetic industrial 3D CT data can be derived through one of the following approaches: (a) manual, (b) algorithmic and (c) AI based. (a) Synthetic 3D voxel data can generally be created manually using a slice wise approach giving each pixel in a 2D array a specific gray value for example using a drawing tool and then creating a stack of slices to form 3D voxel data. But this would be a very odd approach especially when there is a need for hundreds or thousands of 3D voxel data samples. Also, the reality gap between synthetic and real data would be very high. The (b) approach makes use of algorithms. After defining the dimensions of a 3D sample, the algorithm can randomly generate geometric structures and gray values within the empty volume. By specifying value ranges and using toolboxes to create common geometric 3D shapes, more realistic samples could be created. Therefore, the features of real CT data need to be analyzed and formed in rules and algorithms. Another also algorithmic approach is making use of CT simulation [5] and CT reconstruction [6] software to generate 3D voxel data. But there is still the need for feature variation, which is to be created before simulation, for example using computer aided design (CAD) software or also randomization. Approach (c) is based on generative AI models. These models are trained to break down the input information to a simplified representation and learn to recreate them for example using autoencoders (AE) [7]. Alternatively, based on another learning approach, generative adversarial networks (GANs) [8] are also able to create synthetic data which are close to real data or creating realistic variations.

2.2 Synthetic 3D-Geometries

In different publications [9, 10] a variation of 3D geometries is created using CAD software and scripting to vary parameters to procedurally generate synthetic 3D objects based on build rules. The approach [11, 12] utilizes a Deep Boltzmann Machine (DBM), a special type of recurrent neural network, to learn the surface points of structural elements of objects such as legs, seats, armrests, and backrests of e.g. chairs. It then iteratively deforms them to create variations of a given input object. In another work [13] a 3D-GAN is used to create 3D objects on a voxel basis. The generator transforms a latent space input to a 3D voxel model based on convolutional layers. The discriminator is built very similar to the generator but is mirrored and acts as a descriptor for 3D objects. After training, the generator is used independently. The authors evaluate the latent space to identify reasonable latent space vectors by computing the Gaussian distribution of the training inputs. The discriminator is then used as a classifier to evaluate the results by comparing them to objects of test data sets. Another study [14] utilizes combination of convolutional encoder-decoder networks and long short-term memory (LSTM) [15] to create 3D voxel data objects based on 2D input images and 3D voxel data ground truth. In our preliminary work, which is a feasibility study [16] we used an convolutional AE with a dense code layer to learn how to reconstruct defects that were extracted from a real CT scan of an industrial component. We used similar techniques [13] to evaluate meaningful latent space inputs after training the model which allows to create high variational synthetic defect geometries.

2.3 Synthetic RGB and Gray Values

Three approaches [2, 9, 10] are using an algorithmic solution for creating synthetic 3D voxel data by processing several STL models through CT simulation. The projections are then reconstructed into synthetic 3D voxel data. It is also possible to directly generate synthetic 3D voxel data using algorithms. Another algorithmic approach [1] makes use of a statistical measurement of key features like X-ray intensity distribution, and edge transitions in over 1000 real CT scans. The statistics define limits for an algorithmic randomized data generation. In other approaches [17, 18], data is generated using an algorithm that randomly creates 3D geometries (material) and different inner defects, including gray values on a voxel basis. The randomization is within defined rules and value ranges which allows to create data in large variety. Another work [19] uses a conditional GAN to learn generative variations of the MNIST [20] dataset. The model can generate large variations of handwritten digits as 2D images with gray values. The next solution [21] is creating an image-to-image translation using a CGAN. Instead of latent space vector input to the generator, a segmentation image is used. The generator model learns to predict pixel values (RGB/gray values) based on the input segmentation. This is used to predict image scenes, building faces, and translations from gray values to RGB or edges to RGB. There are additional image-to-image translation approaches [22, 23] focusing on multi domain image transfer using a second modality as input to the generator of the GAN. In another work [24] a GAN is used to create synthetic medical 3D voxel data from noisy and pixelated approximations of real 3D voxel data.

2.4 Proposed Approach

As shown in the previous subsections about data synthetization in general and for creating synthetic geometries and gray values there are relevant scientific works in those areas. The SPARC approach goes beyond that by showing a novel pipeline for an end-to-end synthetization of realistic industrial 3D voxel data specifically for setting up AI based automated quality inspections for production lines with only few initial data. None of the described approaches delivered a combination of realistic defect generation combined with also realistic 3D gray value data generation incorporating synthetic defects. There is no solution to solve the lack of data for various industrial, 3D CT AI applications. A direct generation of 3D voxel data might be possible but gives no control on characteristics of defects and defect distribution. The SPARC approach makes use of two individually learned AI models, one for defect synthetization and one for gray value synthetization packed in one pipeline, which offers most flexibility and control options through corresponding parameterization of the respective process step. Also, efficient data generation involves avoiding the complex and time-consuming process of creating realistic synthetic 3D voxel data from STL components with synthetic defects through CT simulation and reconstruction which increases usability of the data generation dramatically compared to our prior work. Additionally, the combined use of the STL and NIFTI data formats offer advantages due to their representation properties, which allows to use dedicated algorithms to realize tasks most efficiently. Our preliminary work [16] is a feasibility study which consists of two steps to generate synthetic defects (AI Defect Generation) and placing them (Defect Placement) in an STL model of a component. In this study several approaches were researched. The most promising approach will be further investigated for this work in order to enable application-oriented data generation together with the novel third step, the generation of artificial realistic gray values. This research includes the following advancements:

An overall upgrade of the entire data flow path to a new feature rich industrial component including noise, large number of defects, low contrast, and a high resolution of 1028 × 1028 × 751. Especially the resolution and the noise combined with low contrast is challenging but is often the case in industry which makes the use case for SPARC more realistic. Consequently, both the AI Defect Generation model and the Defect Placement is further researched accordingly. The architecture of the AI Defect Generation was changed by increasing the latent space to a size of ten. This offers more control over the AI Defect Generation by clustering the characteristics in more bins which leads to richer variation in synthetic defects by an increase of combinatorial possibilities with better differentiability of the characteristics. Introducing a larger latent space in the autoencoder the model must be retrained and the hyperparameter optimization must be applied. Additionally, other parameters must be optimized as well to fit the new latent space size and achieving higher generation quality. In comparison to our preliminary work the latent space is evaluated. For this purpose, the mean value, and the standard deviation for all nodes of the latent space are calculated with the help of the training data and then verified with a new evaluation tool. The evaluation tool has a graphical user interface which offers direct feedback on a specific latent space input. In our previous work, the Defect Placement algorithm only took care to place the defects within the component and without contact to other defects. In this work we added multiprocessing to increase efficiency and a lot of new parameters. The new parameters allow the user to distinguish between different defect characteristics of the defects to be placed. The algorithm was completely new developed to include the new features. In general, our approach solves the lack of realistic data for industrial quality inspection purposes.

3 Methods

The methodology of our SPARC pipeline consists of several steps. Step 1: AI Defect Generation; Step 2: Defect Placement; Step 3: AI Voxel Generation. An overview schematic is visualized in the Fig. 2 below. Each of the steps could be run individually or it could be run as an end-to-end pipeline. This offers the opportunity to generate different types of data depending on the application. Step 1 creates single defects which can be stored in a database depending on the volumetric size of the defects. By executing Step 1 and Step 2 one after the other, defects are created, stored in a database, selected from the database, and distributed in any STL component model. Executing all three steps creates 3D voxel data by converting the component STL with defects into synthetic 3D voxel data with AI generated gray values for surrounding air, material, and defects. In the following subsections the methods and processes of each step are explained in detail, whereby the Step 1 and the Step 2 originate from our feasibility study [16]. However, for this work the Steps 1 and 2 are further researched and developed as described in the chapter 2.4 Proposed Approach. Step 3 describes a novel approach for 3D voxel data gray value generation based on 2D image transfer AI models, optimized on fine grained details of material and defect representation in industrial CT data.

Two different servers were used to train the AI models and to conduct the experiments. Both servers have an AMD Epyc 7282 16-Core CPU, 128 GB RAM, one with the Nvidia A100 GPU (80 GB VRAM) and the other with the Nvidia Ada 6000 GPU (48 GB VRAM).

3.1 AI Defect Generation

The AI Defect Generation will be explained in two different phases. Since it makes use of AI, specifically deep neural networks, it is divided in the training and the application phase.

3.1.1 AI Defect Generation—Training

To generate synthetic defects, a 3D voxel data sample of a real component is taken. The process is visualized in Fig. 3. This component has several inner defects. In order to train an AI model to generate these, the 3D voxel data sample of the component is converted to STL using the marching cubes algorithm [25]. To accomplish this, a preliminary analysis of the gray values in the real 3D voxel data sample is made to determine a material threshold value and provide it to the algorithm. To perform the analysis, a histogram is created to identify the gray value range in the material. The center value in this range is used for the thresholding. This is an individual value for each part or scan where the generation is based on. An STL surface model of the component can now be derived, including inner defects. This conversion is helpful since the defects can be extracted from the STL using algorithms to separate closed surface objects. The single STL defects are stored in a database for later use. Depending on the geometrical complexity of each defect, the number of vertices and faces varies which is uncomfortable when it comes to input vectors of ANNs. Instead, we convert all defects also back to small 3D voxel data with the size of 253 using a binarization algorithm. The algorithm defines an overlaying 3D grid on the STL defect with a defined resolution. For every grid element it distinguishes, if this element is inside or outside the STL defect and therefore decides if the corresponding voxel must be zero or one. As datatype for the 3D voxel data, the NIFTI file type is used. A database of real defects is now available, separated into different sizes in both STL and NIFTI format. The AI Defect Generation model is then trained using defects represented as 3D voxel data in NIFTI format.

The ANN model is a convolutional autoencoder (AE) (Fig. 4). The encoder and the decoder are built as individual models. To train them together as a single model, the encoder and decoder model is wrapped in a superior model. During the training, the state of the encoder, the decoder and the superior model are saved as checkpoints. This enables individual execution of the tasks after training. The nodes in the code layer or latent space (layer “input_2) represent the geometrical features, such as size, sphericity, and spikiness, among others. After investigating different latent vector sizes, it was determined that a latent space of size ten provides sufficient capacity for variation while also allowing for acceptable training time. During training, the NIFTI defect dataset with 25,000 samples, which was previously extracted, is used as input. To get an optimal trained model the architecture like convolutional layers and their parameters are adjusted and hyperparameter optimization is applied. The progress of the training is tracked by the binary intersection over union (BIoU) metric, also known as Jaccard-Index [26]. To calculate the BIoU score the absolute of the intersection of ground truth \(GT\) with the prediction \(P\) is divided by the absolute of the union of those. It could also be represented as the true positives (\(TP\)) divided by the sum of the \(TP\), false positives (\(FP\)) and false negatives (\(FN\)) shown in equation (1).

3.1.2 AI Defect Generation—Application

Making use of the AE after training the encoder is detached from the decoder (Fig. 5). To evaluate the input to the latent space, all training data is applied to the encoder and the output is stored in an array to get a realistic latent space parameter field for each node similar to the approach [13] referenced in chapter 2.2. Since the training data consists of real defects, the evaluation of the latent space offers a parameter field in which real characteristics and conditions are represented. Then the mean and standard deviation over all samples along each node is calculated. To generate a synthetic defect, a random parameter variation for every node is chosen as input. A random variation within the parameter field has a high likelihood of resulting in a realistic defect but could only be evaluated qualitatively. For evaluating the parameter field, a new evaluation tool is developed. Using this tool, it is possible to parametrize every single node of the latent space and directly get a visualization of the resulting synthetic defect. As the weights in the model are fixed after training, executing the model with two times the same parameters lead to exactly reproducible defects. In order to finally generate a desired number of synthetic defects, the AE must be executed with realistic latent space parameters (Defect Characteristics) as often as required. The number of defects to be generated can be set by a user. The number is a freely chosen by a user or could be derived from the characteristics of the real manufactured component to be imitated synthetically. In general, if there is a high count of defects in the training data for a defect segmentation, the model might need less data samples to learn from. The generated synthetic defects are stored in a second database as NIFTI, and after a conversion also as STL.

3.2 Defect Placement

In the previous step a large database of realistic synthetic defects is created. To create a dataset of complete components with defect variations inside, the defects are placed in a component (Fig. 6). The input to this step is an STL surface model of any component. In this case the STL format is used, since in the STL format it is easy to determine if a random location is within the surface of a component our outside. The surface of the STL has a vector which determines the inside and outside of the surface. By calculating the distance from any point to the surface, it is possible to determine whether the point is located inside or outside a component. If the distance is negative the point is outside, otherwise its inside the component surface. To place defects a set of placement parameters is required, like how many defects should be placed, the number of defect variations per component, if an intersection with other defect structures is allowed, the average size of defects to be placed and a range the size may vary. Additionally, ranges for convexity, surface area, compactness, spikiness, sphericality, and lengthiness can be chosen by the user. The number of variations per component means how many different defect distributions should be created for the input STL component. The average size indicates how large the placed defects are on average. To determine a volumetric size, the defects extracted from the real component are measured at STL level. This allows an average size and range to be determined. Predefined functions for processing STL data can be used for the measurement. Since the defect placement takes a long time depending on the number of vertices and faces of the input STL, which increases the complexity of the distance calculation, multi-processing is introduced to have several workers to place defects in parallel. If multiple components with synthetic defects are required, the defect placement has to be executed once for each STL component. The output of this step is a third database of components with a high variation of defects as STL models.

3.3 AI Voxel Generation

The SPARC pipeline concludes with step 3, which involves generating synthetic 3D voxel data. Again, this step is divided into two phases since AI is used and the training as well as the application phase is explained here. To generate synthetic gray values in a 3D voxel geometry there are several approaches as stated out in the related work Sect. 2.3. Algorithmic approaches have several limitations. Algorithms can generate synthetic gray values, for example, by applying a noise pattern to a homogeneous material gray value. However, the gray value distribution of a CT scan material is complex and influenced by various factors, including material thickness, beam hardening, scattered radiation, electron noise, and detector discretization. Polychromatic X-ray sources are also important to consider. A more realistic approach is making use of CT simulation. The more realistic the simulation, the more realistic are the results after reconstruction of the x-ray projections received from the simulation. The closeness to reality comes with incorporating detailed modeling of the X-ray source and detector as well with the monte-carlo simulation. Creating a realistic simulation requires a high level of knowledge in physics. Also altering the parameters automatically to slightly vary the results for gaining large amounts of synthetic data would be challenging. Additionally, it is computational heavy and time-consuming to run all the simulations and the CT reconstruction afterwards. Another approach is to utilize AI, which has the ability to learn characteristics, patterns and features in data. Especially generative AI models can learn those features and resemble them to new variations. For this reason, Step 3 of the pipeline is realized with a generative AI approach. The pix2pix [21] approach was chosen as the generative AI. There are newer approaches for image-to-image translation [22, 23] which are focused on a multi domain and mixed multi domain translation with additional modalities as input to the generator. As only a single 3D voxel data sample is to be required as input, it is not necessary to introduce additional complexity. The base architecture of the generators of the other approaches are very similar to the architecture of the pix2pix generator. Applying pix2pix for generating industrial 3D voxel data by using the image-to-image translation ability to generate synthetic gray value distribution is a novel approach. Especially in the combination with a full end-to-end pipeline from generating defects, placing them in an STL component, calculating a binary segmentation by binarizing the STL to predict the gray values for the three classes (air, material and defects) is a new overall concept for generating synthetic 3D voxel data.

3.3.1 AI Voxel Generation—Training

To generate synthetic 3D voxel data, a single real 3D voxel data sample is used. This real data delivers information about the material properties in terms of gray values or in other words material density as well as for the surrounding air and the defects. To generate synthetic 3D voxel data, an AI model should learn these characteristics. Instead of a single ANN model, two models are used and trained as a GAN (Fig. 7). The image-to-image translation approach pix2pix is used and adjusted to our requirements as follows. The original approach is for 2D images, since we want to create 3D voxel data, we increased the dimension of the models and adjusted feature maps and the depth of the models. The first model, the generator, is a U-Net [27] like architecture without intermediate filtering at each depth level. Instead, the image matrix or the information in the downward part of the network is reduced by convolutions with a stride of two followed by a batch normalization and an activation layer. In the upward part of the network, the information is then expanded back to the original input dimension by transposed convolutions also followed by a batch normalization and an activation layer. The repetitive operations for going downwards or upwards are wrapped in a sequential block. Also, the layer activations are changed from LeakyReLU to ReLU and the output activation, of both models, from tanh to sigmoid, since our data is normalized between zero and one. The discriminator is a patch GAN discriminator which does not classify the inputs as true or false. Instead, it patch-wise compares the prediction with a ground truth, which are small subsections of the image. In the training process the generator produces outputs which are compared against the ground truth using the discriminator.

The training data will be explained here to better understand the training process and results. First, the real 3D voxel data is cut into chunks of 1283. This method is chosen due to hardware limitations, since a full 3D voxel data sample is too large to fit it in the model. During this process it is calculated if a reasonable amount of material of the component is within this chunk by giving a material gray value threshold, counting the number of voxels above this threshold and comparing to the overall number of voxels of this chunk. If a chunk has more than 30% of material inside, a feature segmentation of this chunk, where the surrounding air and the defects have the value zero and the material has the value one, is calculated. For the training of the GAN and the application of the AI Voxel Generation, the segmentation of the structures is crucial for the network to learn a successful generation. To cut out the chunks, a 1283 window is moved through the entire real component with a certain step size. A defined overlap of the chunks is derived from the step size. Depending on the step size or overlap, large amounts of data can be generated. The resulting binary chunks with a size of 1283 are the input to the generator which tries to predict or generate the gray values corresponding to air, material and defects to match the original gray values given as ground truth to the discriminator. Using jittering or also called augmentations, like rotation, flip, translate and sharpness- and contrast enhancement, additionally the variation of the data is increased. With the opportunity to increase the data variation it is possible to generate different CT representations with different material characteristics. The GAN model consists of a generator and a discriminator which are trained together. Based on the input feature segmentation the generator predicts the corresponding gray values while the discriminator compares the generator results to a ground truth. The training process is visualized in Fig. 8. In a post-pipeline application such as segmentation, different 3D voxel data samples with different scan parameters may occur. In order to be able to train this segmentation for different scan parameters, the variation is increased by these augmentations, thus creating the possibility of generating different data. During the training the progress is tracked using the structural similarity metric (SSIM) [28] by comparing the ground truth (3D) to the prediction (3D) of the generator. The SSIM is given in equation (2) were \(x\) and \(y\) are the compared images, \({\mu }_{x}\) and \({\mu }_{y}\) are the mean intensities and \({\sigma }_{x}\) and \({\sigma }_{y}\) are the mean contrast.

3.3.2 AI Voxel Generation—Application

After the training of the model, the generator can be run as a standalone model (Fig. 9). The input to the model is a binary segmentation of the material representing just the geometry of the component and the output is the same geometry with predicted gray values. To produce large amount of synthetic 3D voxel data the generated STL components with variations of synthetic defects inside are taken. Next these surface models are converted back to NIFTI 3D voxel data. To predict synthetic gray values using the generator on a whole component, a patching method is used. Patching is a common term, but rather describes the processing of 2D images, where a large image is cut down in smaller image parts to process them. In our case 3D parts or patches are created. Volumetric elements of a 3D grid are often referred to as chunks, but chunking is no common term in the field of image processing. Therefore, the term patching is still used, even though in this context it refers to the processing of 3D data piece by piece. For the patching procedure a chunk size of 1283 and an overlap of 50% is chosen. Each chunk is fed to the generator model predicting the gray values. After that, the process to reassemble the chunks to a full 3D volume is called depatching. Since inaccuracies occur at the edges, an overlap of 50% in combination with linear blending [29], expanded to 3D, is used to create synthetic 3D voxel data chunk by chunk. The AI considers only the information within each specific chunk when making predictions. However, due to the overlap, the gray values at the borders of one chunk may not align perfectly with those of the neighboring chunk. To illustrate, consider the AI predicts gray values for two adjacent chunks with a 50% overlap. As each chunk is processed separately, the AI may generate slightly different predictions for the overlapping area of each chunk. This discrepancy occurs because the model's understanding of the image context within each chunk may vary, leading to differences in predicted gray values. However, if there were no overlap between chunks, there would be a different problem. In this case, the gray values at the border of one chunk might not perfectly match those of the neighboring chunk. The absence of overlap between chunks may cause inconsistencies in predicted gray values, resulting in visible artifacts at chunk boundaries. Therefore, ensuring seamless transitions between adjacent chunks in terms of predicted gray values is challenging, whether using overlap or not. The 50% overlap approach aims to mitigate these challenges, but it may still result in discrepancies in predictions for overlapping areas between adjacent chunks. A realistic gray value distribution is continuous over the entire component, whereby grid effects lead to a reduction in realism. Therefore, a blending method is used to avoid discrepancies.

4 Results

Since our SPARC approach is a pipeline with several steps, described in the methods (chapter 3), we will present the results in the same manner.

4.1 AI Defect Generation

4.1.1 AI Defect Generation—Training

The first step in our pipeline involves training the AE on single defects derived from a real 3D voxel data sample. This sample is converted to STL format, where every defect is extracted and saved as separate STL file and as a converted NIFTI file. The results of the conversion processes are visualized in Fig. 10.

The BIoU metric is used to analyze the quality of the conversion of NIFTI voxel data to STL and back by comparing the NIFTI input data with the back-converted NIFTI output data. The average BIoU value over 11,000 defect samples is 95.62%, which means that, for example, for a defect size of 150 voxels, there are approximately 7 incorrect voxels. To illustrate this result, an input NIFTI image, the STL conversion and the output NIFTI image are shown in the Fig. 11 below.

During the training the binary intersection over union (BIoU) score is tracked, as shown in Fig. 12. After 41 epochs with 25 k samples the model converges to ~ 96.8% training BIoU and ~ 96.6% validation BIoU. The model can reconstruct the input with high average precision, indicating that it has learned the most relevant features of the input. Since we do not want to recreate the original data and instead generating synthetic variations, which are realistic, this result is promising.

4.1.2 AI Defect Generation—Application

After the model is trained, the decoder will be detached from the encoder. The latent space evaluation using the training data provides value ranges, including mean and standard deviation, for the decoder during the application for each node. An evaluation tool with graphical user interface is developed to vary the input of each latent space node (Fig. 13). If the model is executed with a specific latent space, the tool directly renders the generated defect, converted to STL. If the execution is started, it runs the model in an endless loop. This allows the user to see the effect of every adjustment of the values using the sliders. There is also a thresholding parameter. Since the model predicts defect geometries on a voxel basis with a specific likelihood of a voxel being part of the defect geometry or not, the threshold is used to binarize the results based on a user set probability limit. Additionally, the resolution for the STL conversion and the upper and lower bound of the node values can be adjusted. The generated defect and the corresponding latent space values can be exported. The tool helps to better understand the feature representation in the latent space as well as qualitatively evaluating the generated defects.

Combinations were randomized within the ranges determined by the mean and standard deviation to generate defects that have a realistic appearance, as shown in Table 1 (a). These defects are completely new and have not been previously trained. If values are out of range, we get mostly unrealistic synthetic defects like illustrated in the Table 1 (b), marked in bold. Therefore, it is crucial to choose or define the desired values within the calculated ranges. A value is out of range if it is outside the mean value ± standard deviation. A comparison of results is shown in Table 1, where the latent space input to the model is given along with the calculated mean and standard deviation, describing the allowed ranges. Therefore, only valid values are chosen in the application to generate realistic defects. Depending on the number of defects to be generated, the model has to be executed several times with changing input values. The output of the model is a 3D voxel data, stored as NIFTI, with single synthetic defect. They will be categorized and stored in a database along with their corresponding STL converted counterparts.

As there are never two identical defects in reality, it is not possible to prove whether the synthetic defects are realistic by one-to-one comparison. However, visual evaluations can be used to verify whether the essential characteristics are correctly reproduced. Figure 14 presents a comparison between the extracted real defects and the synthetically generated defects. This demonstrates that synthetic defects can be generated in a similar manner to real defects. Around 30,000 synthetic defects are generated to build a database for later placement. The synthetic defects are generated with higher resolution compared to the extracted ones to increase the possibilities of geometric variations. The shown generated defects have very realistic geometries, sizes, and features, like roundish or sharp edges, if we compare them with extracted defects, but they still have their own characteristic features. This proves, it is possible to generate defects very close to the real extracted ones. The aim of this method is not to generate identical copies to real extracted defects. The aim is to exploit a multiplication effect so that a much larger synthetic data set can be obtained from a real data set of defects by varying the properties. This means that a comparison is only possible on a qualitative level.

Along the realistic imitation we can also create realistic variations by changing the defect characteristics in the defined space. Figure 15 shows on the left side that small defects could be generated in contrast to the larger defects on the right side. At the same time, a high variety of geometries are shown.

4.2 Defect Placement

The defect placement, as next step in our pipeline, is fed with the created synthetic defects and the STL model of the component which should be populated with defects. For the placement, parameters such as number and size of defects, distance to the STL surface, distance between defects and more have to be set by the user. The results of placed defects in the STL are shown in Fig. 16. On the left side the component as target STL to place the defects inside and on the center and right side two different defect quantities and distributions of synthetically generated defects are shown.

4.3 AI Voxel Generation

4.3.1 AI Voxel Generation—Training

The AI Voxel Generation is a GAN, where two models are trained against each other. In the training phase we provided 3D voxel chunks of the one real 3D voxel data sample as ground truth and a binary segmentation counterpart as input to the generator. The training dataset consists of 3468 chunks of training data, 434 chunks of validation data and 433 chunks of test data. The generator learns to apply the gray values depending on the given segmentations of material, defect, or surrounding background. We experimented with 3 class segmentations where we have background, material and defects have their own class, but the results had no remarkable differences compared to only two classes (class 1: material; class 2: defects and background). For this reason, the binary segmentation as input is chosen, which is also faster and simpler to create. As described in the Sect. 3.1.1, data augmentation is used to increase the variation. Since rotation and flip should be known, Fig. 17 compares the two image enhancement augmentations applied to the ground truth. Only one enhancement is used per training, no mixture is applied. In this way, we can show that it is possible to introduce specific aspects into the synthetic data in a targeted manner and that these are also generated by AI. After successful training, data with different aspects such as sharpening, or contrast enhancement can be created as required. The use of other enhancements or a mixture of them is also possible but is not yet the subject of this work. Along with the ground truth, the corresponding binary segmentation is shown, which remains untouched.

The training with the real data took 164 epochs, the training for contrast enhanced data took 186 epochs and the training for sharpness enhanced data took 72 epochs. Each epoch in the training requires approx. two minutes of calculation time. For example, the training with the contrast-enhanced data set takes over six hours. During the training, we tracked the progress using the structural similarity index metric (SSIM) and achieved an average over all 434 chunks of the validation dataset of 98% for the training data without image enhancement augmentation, 82% for the contrast enhanced data and 89% for the sharpness enhancement data.

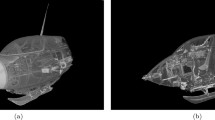

4.3.2 AI Voxel Generation—Application

To generate synthetic 3D voxel data, we convert the STL components with different defect variations to 3D voxel data. Through this process we directly receive binary data. The 3D voxel data is processed chunk-wise respectively patch-wise with the trained generator model. It predicts the gray values of material, background and defects of each chunk and blends the results of the overlapping areas to avoid possible edge transitions between the predicted patches. Without blending we experienced grid effects when depatching the volume. In Fig. 18 we show a comparison of real and synthetic 3D voxel data of a whole 3D volume with 1028×1028×751 as cropped slices generated with our AI Voxel Generation model. The gray values of the material and the transitions between material and defect as well as between material and air have a natural appearance. The synthetic defects in the sample are clearly visible. Approximately 1500 defects are placed in the STL model of the component. Fine grained details like the ring artifacts are missing. Those details are the small gap to 100% in structural similarity.

Comparison of 3 sample sections (a, b, c) of predicted synthetic 3D voxel data. (left column) Predicted sample slices trained with not enhanced data. (center column) Predicted sample slices trained on contrast enhanced data. (right column) Predicted sample slice trained on sharpness enhanced data. (Visualization created with ImageJ)

Figure 19 shows a more detailed comparison of two samples. On the left is a real sample and, on the right, a synthetic sample. Below each sample the corresponding line profiles are shown along the green selection, drawn in the samples. We can see that the gray values of the material and the gray values of the surrounding air are very similar, which shows that the quality of generating the gray values is successful compared to the real CT slice. The similarity of the curves is compared using different characteristics. First, it is checked whether the gradient of the material-air transition is comparable in both images. It is also analyzed how the gradient of the material transition flattens, in order to analyze how sharp or blurred a material transition edge is generated in the data. Among other parameters, the gray value ranges of the different materials or features, in this case air, material, and defect, are analyzed. The gray values of the material for the real sample, shown in Fig. 19 on the left, are approximately between 60.050 and 62.100. The material gray values for the synthetic sample, shown in Fig. 19 on the right, are approximately between 59,700 and 61,200. The gray value ranges are close together and have a large overlap. At the same time, they show that they are not identical, which should be the case for a new synthetic variations. Another parameter examined is the noise. Noise is reflected both in the air (background) and in the material. In both cases, it appears as a slight irregularity that causes the gray values in homogeneous areas to appear inhomogeneous. Noise occurs in real data for physical reasons. Therefore, it is important that the synthetically generated data also have a certain noise behavior. The noise can be seen in the line plots below as stochastic fluctuations in the signal. Both in the air and in the material, there is a kind of stochastic irregularity on the signal in the line plots that reflects the noise in that area. For the quantitative comparison of the two samples, the SSIM and the peak signal-to-noise ratio (PSNR) were calculated. The structural similarity of the two samples reaches a value of 98.88% and the PSNR reaches a value of 30.59 dB, which proves the similarity of the two images. The successful generation of the gray values can be seen in the similar characteristics of the curves, although a new synthetic sample is to be created at the same time. Therefore, although a clear similarity can be seen in the curves, the difference between the two image sections or line profiles can also be seen at the same time. Since the gray value characteristics were matched and a new sample was created at the same time, it can be said that AI-based gray value generation can successfully create new variants of the original sample.

Figure 20 visualizes a line profile comparison through a defect. This work is primarily concerned with creating a data generation pipeline that can generate 3D voxel data with defects. Accordingly, it is important that the defects are not only created realistically in their geometric characteristics, as explained in Step 1 of the pipeline, but also in their material density or their gray value representation. Both defects are different in their geometric characteristics and are located at different points in the component. However, it can be seen in the line profiles that the defect characteristics are very similar. In addition, the transition from material to defect is present in both images with a similar gradient. Only the width and depth of the peak and the height of the gray values are slightly different. This is due to the size and shape of the defect, which also results in a different attenuation of the rays in a CT scan. Each defect is unique in its characteristics, its position in the component and, in combination with CT scan parameters, also in its gray values. Nevertheless, a great similarity can be recognized by comparing the two line profiles. This shows that the AI-generated defect was created very realistically in terms of its geometric shape and gray values.

Defect characteristic comparison of sections between real 3D voxel data and synthetic one trained on data without enhancement. A profile is illustrated along the marked lines in each sample. Brightness and contrast are adjusted to improve the visibility of the features. (Visualization created with ImageJ)

4.4 SPARC: AI Data Generation

The SPARC pipeline, shown in this work, can be used to generate synthetic 3D voxel data in large quantities with high variance in defect geometries, distribution, and material gray value distribution. Each step has different interfaces like parameter and data to adjust the generated data as needed. To give an impression on the processing time of the pipeline in application phase, to produce one synthetic 3D voxel data sample, an example is given. The STL model of the bracket part has around 400,000 vertices and 150,000 faces. Placing 1,500 defects on our server with 20 processes running in parallel, it takes around 20–50 min, depending on the size of the defects. Additionally, the fluctuation in time is a result of the repetition of the placing attempts, whether the actual defect fits in the found location or not. But from one STL component with inserted defects several synthetic 3D voxel data samples can be derived by applying differently trained AI gray value generators. To compare our solution, the basis is the STL of a component with synthetic defects. This allows a direct comparison of state-of-the-art CT simulation followed by reconstruction with our Step 3 of the SPARC pipeline, since both methods require the same input. For the CT simulation and reconstruction approximately eleven minutes are needed on our hardware to create a synthetic voxel data sample with a resolution of 1028 × 1028 × 751, which corresponds to the real reconstructed data. Processing this STL into a synthetic 3D voxel data sample with the same resolution using SPARC Step 3 takes approximately seven minutes. Compared to the state-of-the-art CT simulation and reconstruction, our SPARC approach is the faster method to generate 3D voxel data. Especially if, for example, 1000 3D voxel data samples are required for a subsequent AI application, we can thus achieve a time saving of ~ 4000 min against the CT simulation with reconstruction. In addition to the generation speed, Fig. 21 compares a real 3D voxel data sample with three synthetic 3D voxel data samples to illustrate SPARC generation quality.

As a final target application an ANN model for defect segmentation in industrial components like this bracket can be trained supervised since we can create the corresponding segmentation to the synthetic 3D voxel data sample (ground truth) along with the sample (Fig. 22). It is a great advantage that accurate ground truth segmentation can be generated directly. Creating the ground truth for real scans is very time-consuming, as it has to be carried out by an experienced application engineer.

Additionally, to the final data, intermediate data outputs can be used for other applications. For example, STL components with inserted defects can be used for finite element analysis to evaluate the influence of defects on the structural sustainability under mechanical forces.

5 Conclusion and Discussion

Our solution presents a novel approach for quickly generating a substantial amount of authentic 3D voxel training data. The SPARC pipeline could have a high impact on industrial quality assessment of industrial components, automatically evaluated using CT. Imagine a production line where one specific part is manufactured in a metal casting process. After casting it gets picked up by robot and placed in a CT. By scanning one of those parts and creating training data for this component with our pipeline, an automatic quality assessment system could be built up by training an AI model with the data specific for this task. The whole data creation must first be set up but then could create large quantities of data. After creating for example 10,000 samples it starts automatically to train a segmentation AI model. A benefit of our SPRAC solution is that minimal expert knowledge for CT or material properties of the scanned component is required. It would even be conceivable for a setup wizard to help the user determine the necessary parameters for threshold values, for example, by automatically generating histograms of the input sample and suggesting a suitable threshold value to the user. Also compared to creating the synthetic 3D voxel data using CT simulation, like proposed in our feasibility study, where also CT expert knowledge is needed, our new solution simplifies a lot. In addition, the very time-consuming annotation of the defects in the data is no longer necessary, which in turn would be error-prone and could only be carried out with a certain amount of prior knowledge. Another significant advantage of SPARC is its high degree of variability. Through the use of AI, the pipeline can be trained for many application scenarios during the training process, making it universally applicable. The internal structures and component geometry can be modified without restriction. In conclusion, after the training, the SPARC pipeline addresses the lack of industrial 3D voxel data for various AI applications without a real CT scan or a simulation.

Another interesting example of the universal applicability of the method is its use for structural analysis of components. The SPRAC pipeline can generate many derivatives from one prototypically manufactured and CT scanned component, allowing for structural analyses to be carried out in advance. This helps to investigate the impact of various internal defects on the component and eliminate weak points in production, if necessary.

The next step is to incorporate specific CT artifacts into the pipeline. This will enable the generation of specific artifacts, such as realistic beam hardening and ring artifacts, in Step 3 of the pipeline. Thus, synthetic 3D voxel data with artifacts can be generated on demand, and the resulting data can be widely used for further AI applications such as defect segmentation. Such AI segmentation can be greatly improved by the artifact-containing synthetic data, as the AI networks learn the structure of the artifacts which reduces false predictions since the AI learns to differ between defects and artifacts. This means that incorrect detection of a defect in the artifacts can be reduced or, at best, prevented. AI defect segmentation could therefore be improved for real data containing artifacts. Besides new features such as CT artifacts, future work will also focus on improving individual steps, such as the acceleration of defect placement.

Data Availability

All of the material is owned by the authors and/or no permissions are required. The data will be shared on a reasonable request.

References

Hena, B., Wei, Z., Perron, L., Castanedo, C.I., Maldague, X.: Towards enhancing automated defect recognition (ADR) in digital X-ray radiography applications: synthesizing training data through X-ray intensity distribution modeling for deep learning algorithms. Information 15(1), 16 (2024). https://doi.org/10.3390/info15010016

Yosifov, M., et al.: Probability of detection applied to X-ray inspection using numerical simulations. Nondestruct. Test. Eval. 37(5), 536–551 (2022). https://doi.org/10.1080/10589759.2022.2071892

Fuchs, et al.: Generating meaningful synthetic ground truth for pore detection in cast aluminum parts. e- J. Nondestruct. Test. (eJNDT) 9, 1435–4934 (2019)

Nikolenko, S. I.: Synthetic data for deep learning. (2019). https://arxiv.org/pdf/1909.11512

aRTist - Analytical RT inspection simulation tool. https://artist.bam.de/. Accessed 25 Jul 2023

CERA - Innovative software for cone-beam CT imaging. https://www.oem-products.siemens-healthineers.com/software-components. Accessed 3 Jan 2024

Kingma, D. P., Welling, M.: Auto-encoding variational bayes. (2013). https://arxiv.org/pdf/1312.6114

Goodfellow, I. J., et al.: Generative adversarial networks. (2014). https://arxiv.org/pdf/1406.2661

Fuchs, P.: Efficient and accurate segmentation of defects in industrial CT scans, Heidelberg University Library, Heidelberg, (2021). https://archiv.ub.uni-heidelberg.de/volltextserver/29459/

Fuchs, P., Kröger, T., Garbe, C.S.: Defect detection in CT scans of cast aluminum parts: a machine vision perspective. Neurocomputing 453, 85–96 (2021). https://doi.org/10.1016/j.neucom.2021.04.094

Huang, H., Kalogerakis, E., Marlin, B.: Analysis and synthesis of 3D shape families via deep-learned generative models of surfaces: The Eurographics Association and John Wiley & Sons Ltd. (2015). https://diglib.eg.org/handle/10.1111/cgf12694

Kalogerakis, E., Chaudhuri, S., Koller, D., Koltun, V.: A probabilistic model for component-based shape synthesis. ACM Trans. Graph. 31(4), 1–11 (2012). https://doi.org/10.1145/2185520.2185551

Wu, J., Zhang, C., Xue, T., Freeman, W. T., Tenenbaum, J. B.: Learning a probabilistic latent space of object shapes via 3D generative-adversarial modeling. (2016). https://arxiv.org/pdf/1610.07584

Choy, C. B., Xu, D., Gwak, J., Chen, K., Savarese, S.: 3D-R2N2: a unified approach for single and multi-view 3D object reconstruction. (2016). https://arxiv.org/pdf/1604.00449

Hochreiter, S., Schmidhuber, J.: Long short-term memory. Neural Comput. 9(8), 1735–1780 (1997). https://doi.org/10.1162/neco.1997.9.8.1735

Tenscher-Philipp, R., Schanz, T., Wunderle, Y., Lickert, P., Simon, M.: Generative synthesis of defects in industrial computed tomography data, e-J. Nondestruct. Test. (2023). https://www.ndt.net/search/docs.php3?id=28078

Schanz, T., Tenscher-Philipp, R., Marschall, F., Simon, M.: AI-powered multi-class defect segmentation in industrial CT data. eJNDT (2023). https://doi.org/10.58286/27756

Schanz, T., Tenscher-Philipp, R., Marschall, F., Simon, M.: Deep learning approach for multi-class segmentation in industrial CT-data, e-J. Nondestruct. Test. https://www.ndt.net/search/docs.php3?id=28077

Mirza, M., Osindero, S.: Conditional generative adversarial nets. (2014). https://arxiv.org/pdf/1411.1784

MNIST handwritten digit database, Yann LeCun, Corinna Cortes and Chris Burges. http://yann.lecun.com/exdb/mnist/. Accessed 4 Jan 2024

Isola, P., Zhu, J.- Y., Zhou, T., Efros, A. A.: Image-to-image translation with conditional adversarial networks. (2016). https://arxiv.org/pdf/1611.07004

Choi, Y., Choi, M., Kim, M., Ha, J.- W., Kim, S., Choo, J.: StarGAN: unified generative adversarial networks for multi-domain image-to-image translation, IEEE Conference on Computer Vision and Pattern Recognition (CVPR). http://arxiv.org/pdf/1711.09020.pdf

Ko, K., Yeom, T., Lee, M.: SuperstarGAN: generative adversarial networks for image-to-image translation in large-scale domains. Neural Netw.: Off. J. Int. Neural Netw. Soc. 162, 330–339 (2023). https://doi.org/10.1016/j.neunet.2023.02.042

Mangalagiri, J., et al.: Toward generating synthetic CT volumes using a 3D-conditional generative adversarial network. (2021). https://arxiv.org/pdf/2104.02060

Lorensen, W.E., Cline, H.E.: Marching cubes: a high resolution 3D surface construction algorithm. SIGGRAPH Comput. Graph. 21(4), 163–169 (1987). https://doi.org/10.1145/37402.37422

Jaccard, P.: Lois de distribution florale dans la zone alpine, (1902). https://doi.org/10.5169/SEALS-266762.

Ronneberger, O., Brox, P. F. T.: U-Net: Convolutional networks for biomedical image segmentation. https://link.springer.com/chapter/10.1007/978-3-319-24574-4_28. Aaccessed 6 Dec 2022

Wang, Z., Bovik, A.C., Sheikh, H.R., Simoncelli, E.P.: Image quality assessment: from error visibility to structural similarity. IEEE Trans. Image Process.: Publ. IEEE Signal Process. Soc. 13(4), 600–612 (2004). https://doi.org/10.1109/Tip.2003.819861

Tomasi, C., Manduchi, R.: Bilateral filtering for gray and color images, in Sixth International Conference on Computer Vision (IEEE Cat. No.98CH36271), 1998, pp. 839–846.

Acknowledgements

This work was supported by the “Bundesministerium für Wirtschaft und Klimaschutz” (BMWK) with the funding program “Technologietransfer-Programm Leichtbau” (TTP LB) under the grant number [FKZ 03LB2041D].

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Contributions

All authors contributed to the study conception and design. Material preparation, data collection and analysis were performed by R.T. and T.S. The first draft of the manuscript was written by R.T. and T.S., and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Competing Interests

We declare that the authors have no competing interests as defined by Springer, or other interests that might be perceived to influence the results and/or discussion reported in this paper.

Ethical Approval

Not applicable.

Consent to Participate

Not applicable.

Consent for Publication

Not applicable.

Dual Publication

This work is a further research and development of a feasibility study that is marked in the reference list [16] and is therefore not a dual publication.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Tenscher-Philipp, R., Schanz, T., Harlacher, F. et al. AI-Driven Synthetization Pipeline of Realistic 3D-CT Data for Industrial Defect Segmentation. J Nondestruct Eval 43, 67 (2024). https://doi.org/10.1007/s10921-024-01080-x

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10921-024-01080-x