Abstract

Locating acoustic emission (AE) events is one of the main evaluation tools in AE analysis. Reliable location of AE sources enables accurate investigation of the mechanisms that led to a crack in the material. Location errors are known to be influenced by several factors, including the accuracy of the elastic-wave arrival-time picks, the geometric distribution of the AE sensors, and, most importantly, the physical properties of the propagation medium. The aim of this study is to apply a neural network to classify clustered AE events that were detected during six hydraulic fracturing tests in massive salt rock. A fully connected feedforward network was used for pattern recognition and classification of the input events according to target classes. As input data, the signal arrival time profiles of the longitudinal (L) and transversal (T) elastic waves were used to train, validate, and test the neural network. In total, 765 AE events were classified into six target classes. Receiver operating characteristic (ROC) analysis was applied to assess the result of the neural network approach. The neural network classified clustered events correctly, while a few spatially scattered events outside the region of a cluster could not be matched to any cluster. Bootstrap analysis showed that the results are robust and demonstrated the high potential of Deep Learning (DL) methods in the location of AE events.

1 Introduction

In acoustic emission analysis, evaluation methods in the time and amplitude domain have been developed and applied for many years. For a single-channel measurement, physical quantities such as counts, count rate, event count, RMS value, amplitude, and peak amplitude are determined. In a scientific sense, however, the most useful measure for characterizing damage processes is the energy released during their evolution. In the frequency domain, frequency analysis is suitable for recognizing AE signals of a certain cause, because individual noise groups of the same cause have a specific frequency content. In the case of single-channel registration of AE, the focus is usually on material studies. In the case of multi-channel registration, it is also possible to determine the location of AE events. The basis for locating an AE source is the measurement of time differences, often referred to as triangulation. With this method, the location of an AE source is calculated from the arrival-time differences of the AE signals detected at various sensors and from knowledge of the sensor distances and the wave velocity. In recent years, additional evaluation methods have been added to the above-mentioned established methods of AE signal parameterization and AE source localization. These include the moment tensor method, guided wave analysis, cluster analysis, and pattern recognition [1].

In AE analysis, pattern recognition and event classification with neural networks are increasingly used to find similarities in the waveforms and for source localization in geometrically complex structures. In this context, neural networks use many features to unambiguously assign similar signal types, even if these cannot be described by fixed feature boundaries. Early proposals were made to use neural networks in AE analysis and for source localization in complex metallic structures [2,3,4]. In such structures, conventional localization using arrival-time differences is very inaccurate, especially when the wave velocity varies within the structure. The neural network was trained using artificially generated AE events with known location, for example by breaking pencil leads or pulsing ultrasound signals at many positions on the surface of the object. In this case, artificial neural networks can be used to establish a relationship between the \(\varDelta t\) values and the source coordinates. However, this so-called \(\varDelta t\)-mapping requires good accessibility of the structure so that signals from test sources can be generated in all directions.

There has been a recent increase in the development of DL models for locating seismic events. Many solutions using DL approaches are still in an experimental state [3,5,6,7]. Using complete waveforms is more complex than using parameterized AE signals. As representatives of the many applications in recent years, only three recent publications are cited here [8,9,10]. In the first publication, fully convolutional neural networks (FCNN) are used for source localization of microseismic events in civil engineering. Here, original waveform data are used directly as input to the neural network to improve the localization accuracy. The proposed location method overcomes shortcomings of the conventional localization methods, such as the inaccuracy of the velocity model and of the arrival detection [8]. In the second publication, deep learning methods were used to classify rock fractures under different loading conditions in the laboratory. This involved converting AE waveforms into time-frequency images and then using multiple convolutional neural networks (CNNs) to determine the loading modes of rock fractures [9]. The third paper, from medical technology, reported the application of convolutional neural networks to electrocardiogram time series, which form structures analogous to seismic waveforms, for the predictive detection of myocardial scars [10].

In the present study, a DL algorithm was applied to 765 spatially clustered AE events generated by six hydraulic fracturing tests in salt rock, in order to use the capabilities of neural networks for the classification of located AE events [11,12,13]. In contrast to other works, the neural network was trained, tested, and validated using natural AE events. We evaluated the waveforms arriving at the sensors and extracted relevant numerical information as input for our model. Owing to the high-fidelity AE waveforms, the L- and T-wave onsets are clearly discernible. By reducing the input data to two parameters per trace, a feedforward neural network (FNN) structure that is much simpler than a CNN can be used to classify AE events. With this approach we constructed an FNN architecture that produces promising results.

2 Experiments and Data

2.1 Hydraulic Fracturing Experiments

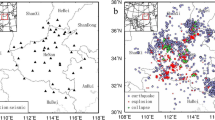

In the Bernburg salt mine in Germany, six hydraulic fracturing tests were carried out at a depth of 420 m in a horizontal injection borehole with a length of 12 m. The test site is shown in Fig. 1(a) in a perspective view. The 12 m long injection well with a diameter of 42 mm (red line) was drilled horizontally from an access drift (Gallery XVIII in Fig. 1(b)) [12]. This well is located in a barrier pillar, which separates two huge excavation chambers of 120 m length, 25 m width, and 20 m height. Due to the high degree of excavation, the barrier pillar is strongly loaded. The minimum and maximum stresses are 10 MPa and 25 MPa, respectively. Around the central injection well, four observation wells (length 10 m, diameter 100 mm) were drilled, each equipped with two AE sensors (blue arrows in Fig. 1(b)). With this sensor network, a cubic volume of approximately 10 m × 10 m × 10 m can be monitored (Fig. 1(b)). A total of six fracturing tests, each followed by a refracturing test, were carried out at borehole depths between 1.6 m and 9 m. During the test and the repetition test, an oil volume of 100 cm³ and 300 cm³, respectively, was injected at a high injection rate. One test lasted about 15 min.

Figure 1(c) shows the location of the events projected onto a vertical cross-sectional plane of the test site. The orientations of the macroscopic fracture planes indicated by the AE measurement were compared with independent stress calculations using the finite element method (FEM). The crosses in this figure indicate the directions of the minimum and maximum principal stresses. A total of 765 AE events were located using the P- and S-wave (i.e., L- and T-wave) onsets. These onsets are picked automatically after band-pass filtering of the traces by applying an adapted, speed-optimized short-term-average to long-term-average (STA-to-LTA) trigger algorithm [14]. After picking the onsets, a least-squares algorithm based on a gradient method is used to determine the location of the AE events. A location is valid if enough P- and S-wave onsets (at least 10) are used for source location and the localization error calculated from the travel-time residuals of the P and S waves is below 5 cm.
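The STA-to-LTA trigger mentioned above can be illustrated with a minimal Python sketch. It shows the textbook form of the idea (ratio of a short-term to a long-term average of the signal energy), not the adapted, speed-optimized variant used in the study; the function name, the window lengths, and the threshold are assumptions chosen for illustration only.

```python
def sta_lta_pick(trace, n_sta, n_lta, threshold):
    """Return the index of the first sample at which STA/LTA exceeds
    `threshold`, or None if the ratio never exceeds it.

    The short window holds the newest n_sta samples; the long window
    holds the n_lta samples immediately before it.
    """
    energy = [x * x for x in trace]
    # Prefix sums make every window average an O(1) lookup.
    prefix = [0.0]
    for e in energy:
        prefix.append(prefix[-1] + e)
    for i in range(n_lta + n_sta, len(trace) + 1):
        sta = (prefix[i] - prefix[i - n_sta]) / n_sta
        lta = (prefix[i - n_sta] - prefix[i - n_sta - n_lta]) / n_lta
        if lta > 0 and sta / lta > threshold:
            return i - 1  # newest sample of the short window
    return None
```

On a synthetic trace with weak noise followed by a strong signal, the function returns the first signal sample; on pure noise it returns None.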

Figure 2 gives an overview of the events located during all hydraulic fracturing and refracturing tests, projected onto the three coordinate planes (x-y plane: top view; x-z plane and y-z plane: lateral views). The red line indicates the injection well. The located events can be divided into clustered events (Fig. 2(a)) and scattered events (Fig. 2(b)). A total of 698 clustered AE events appear at various borehole depths along the y axis; they are attributed to elliptical fracture planes with diameters in the range of approximately 1.5 m to 1.8 m. Whereas at smaller borehole depths of up to 2 m the macroscopic fracture planes are perpendicular to the horizontal injection well, the fractures at greater depths strike in the y direction at approximately 63°. The orientation of the fracture planes as measured by AE (y-z plane in Fig. 2(a)) agrees remarkably well with the orientation of the calculated principal stresses (Fig. 1(c)). The direction of the fracture planes appears to coincide with the maximum principal stress [12]. At small borehole depths, the macroscopic fracture planes are parallel to the wall of the gallery. At larger borehole depths, the fracture planes are perpendicular to the minimum principal stress at about 63°.

Fig. 1 (a): Perspective view of the test site in the Bernburg salt mine with the injection well (red line) and the arrangement of the chambers at the 420-m level. (b): AE sensors (blue arrows) and injection well (red line). (c): Orientation of the principal stresses at the test site, calculated using the finite element method. The crosses indicate the directions of the minimum and maximum principal stresses, shown together with the access drift (Gallery XVIII) and the contour of the huge chamber (right) [12]

A total of 67 AE events could not be attributed to any cluster (Fig. 2(b)). These scattered events occurred at the surface of the access drift (coordinate y < 150 cm) in the so-called excavation disturbed zone (EDZ) and at greater depths well outside the injection intervals in the solid rock mass. These background events occur spontaneously due to microcracking in areas of high stress.

2.2 Parametrization of Data

As input data for the location of AE events, clearly discernible arrival times are used, e.g., those of the L and T waves. The location procedure implemented in the AE systems [15] is based on the principle of triangulation (see Fig. 3). An AE source at position Q emits elastic waves. The waves propagate in all directions and reach the sensors \({S}_{i}\) at different times and along different travel paths \({r}_{i}\) with \(i=1, 2, \dots , N\) (\(N\) is the number of sensors).

Fig. 2 Located AE events (small black dots) of hydraulic fracturing tests HF1 to HF6, projected onto the three coordinate planes. The red line and red dots indicate the injection well and the locations of the AE sensors, respectively [12]. (a): 698 clustered and (b): 67 scattered events

From the travel-time differences, e.g., of the first longitudinal pulse, the true location of the AE event can be determined iteratively. For most applications, sufficient location accuracy is achieved after 20 to 30 iterations. The remaining residual error is a measure of the location accuracy. In summary, the exact determination of the arrival times (signal start) is the decisive factor for the accuracy of localization algorithms that use time differences.
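The iterative location from arrival-time differences can be sketched as follows. This is an illustrative Gauss-Newton least-squares scheme under a constant-velocity assumption, not the algorithm implemented in the AE system; the function names, the start point at the sensor centroid, and the default of 30 iterations are assumptions. Removing the mean from the misfit eliminates the unknown origin time, so only time differences enter the position update.

```python
import math


def solve3(a, b):
    """Solve the 3x3 linear system a x = b by Cramer's rule."""
    def det(m):
        return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
                - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
                + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))
    d = det(a)
    x = []
    for k in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][k] = b[r]
        x.append(det(m) / d)
    return x


def locate(sensors, arrivals, velocity, n_iter=30):
    """Estimate source position, origin time, and RMS time residual."""
    n = len(sensors)
    q = [sum(s[k] for s in sensors) / n for k in range(3)]  # start at centroid
    for _ in range(n_iter):
        dists = [math.dist(q, s) for s in sensors]
        units = [[(q[k] - s[k]) / d for k in range(3)]
                 for s, d in zip(sensors, dists)]
        # Misfit in distance units; subtracting the mean removes origin time.
        raw = [velocity * t - d for t, d in zip(arrivals, dists)]
        mean_raw = sum(raw) / n
        res = [r - mean_raw for r in raw]
        mean_u = [sum(u[k] for u in units) / n for k in range(3)]
        jac = [[mean_u[k] - u[k] for k in range(3)] for u in units]
        # Gauss-Newton step from the normal equations (J^T J) step = -J^T res.
        jtj = [[sum(j[a] * j[b] for j in jac) for b in range(3)]
               for a in range(3)]
        jtr = [-sum(j[a] * r for j, r in zip(jac, res)) for a in range(3)]
        step = solve3(jtj, jtr)
        q = [q[k] + step[k] for k in range(3)]
    dists = [math.dist(q, s) for s in sensors]
    t0 = sum(t - d / velocity for t, d in zip(arrivals, dists)) / n
    rms = math.sqrt(sum((t - t0 - d / velocity) ** 2
                        for t, d in zip(arrivals, dists)) / n)
    return q, t0, rms
```

With noise-free synthetic arrival times for eight sensors at the corners of a 10 m cube, the sketch recovers the source position and origin time; with real picks, the returned RMS residual plays the role of the residual error described above.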

For the application of neural networks to event location, it should be considered that the arrival times depend on the hypocenter and on the trigger point in the time window. Therefore, the absolute arrival times are converted to parameters independent of material velocity and time scale. This parameterization of signal arrival times, the so-called signal arrival time profile (ATP), was first introduced in [5,6,7] and allows the application of neural networks to real structures. For this purpose, the ATP was calculated for each AE event to train and test the neural network. The ATP is a normalized vector \({p}_{i}\) with \(i=1, 2, \dots , N\) (again, \(N\) is the number of sensors) defined as:

\[{p}_{i}=\frac{{diff}_{i}}{norm} \qquad (1)\]

with \({diff}_{i}={T}_{i}-\frac{1}{N}{\sum }_{j=1}^{N}{T}_{j}\)

and \(norm=\frac{1}{N}{\sum }_{j=1}^{N}\left|{diff}_{j}\right|\).

\({T}_{i}=\left({t}_{i}-{t}_{0}\right)\) denotes the signal propagation time of the L or T wave from the source to Sensor \(i\). \({t}_{i}\) and \({t}_{0}\) are the arrival time of the L or T wave at Sensor \(i\) and the origin time of the Source \(Q\), respectively. The ATP is a normalization of the input data. In the first step, the mean value of the propagation times is subtracted from each \({T}_{i}\) (\({diff}_{i}\) in Eq. 1). These data are then normalized by the mean value of their absolute values (\(norm\) in Eq. 1).
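The two normalization steps described above (subtract the mean, then divide by the mean absolute deviation) can be written as a short Python sketch. The function name is hypothetical. The example also illustrates why the profile is independent of time scale: shifting or stretching all propagation times leaves it unchanged.

```python
def arrival_time_profile(travel_times):
    """Normalize propagation times T_i into an arrival time profile p_i."""
    n = len(travel_times)
    mean_t = sum(travel_times) / n
    diff = [t - mean_t for t in travel_times]   # step 1: remove the mean
    norm = sum(abs(d) for d in diff) / n        # mean absolute deviation
    return [d / norm for d in diff]             # step 2: normalize


# A constant shift (e.g., an unknown origin time) and a common scale factor
# (e.g., a different wave velocity) leave the profile unchanged.
base = arrival_time_profile([1.0, 2.0, 3.0, 4.0])
shifted_scaled = arrival_time_profile([10.0 + 2.0 * t for t in [1.0, 2.0, 3.0, 4.0]])
```

The sketch assumes that not all propagation times are equal; otherwise `norm` would be zero.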

3 Application of a Neural Network

3.1 Feature Extraction

For feature extraction, the AE events of the hydraulic fracturing tests were taken in the sequential order of these tests. The hydraulic fracturing tests were located at 1.6 m depth (149 events), 2.5 m depth (166 events), 3.4 m depth (88 events), 5.8 m depth (129 events), 7.2 m depth (150 events), and finally 9.0 m depth (83 events). Figure 4 shows the waveforms (left-hand side) of AE events with the calculated arrival times of the L (green lines) and T waves (red lines). The dashed black vertical line marks the origin time of the event. The green and red dashed lines mark the mean values of the L- and T-arrival times, respectively. The selected signals belong to representative events located during hydraulic fracturing tests HF1 to HF6 at well depths of 1.6 m, 2.5 m, 3.4 m, 5.8 m, 7.2 m, and 9.0 m (Fig. 4(a) to 4(f)), respectively. The corresponding arrival time profiles (ATPs) are shown as horizontal bars on the right-hand side. A comparison of Fig. 4(a) and 4(b) indicates similar ATP patterns for events at 1.6 m and 2.5 m depth. This is because the waves propagate along almost the same paths to the sensors. With constant propagation velocities of the L and T waves, the arrival times are then also approximately the same. At greater borehole depths, the ATPs differ more clearly from each other.

Fig. 4 Waveforms (left-hand side) of AE events with the calculated arrival times of the L (green lines) and T waves (red lines). The selected events originate approximately in the center of each cluster of HF1 to HF6. The dashed black vertical line marks the origin time of the event. The green and red dashed lines mark the average of the L- and T-arrival times, respectively. The selected events are from hydraulic fracturing tests HF1 to HF6 at well depths of 1.6 m, 2.5 m, 3.4 m, 5.8 m, 7.2 m, and 9.0 m ((a) to (f)). The corresponding ATPs are shown as horizontal bars on the right-hand side

3.2 Architecture of Neural Network

We defined our network model iteratively, guided by the goal of keeping the model as simple as possible. Therefore, a simple feedforward structure was chosen. The number of layers and units was set experimentally. This resulted in a two-layer feedforward network with a ReLU activation function in the hidden layer and a Softmax activation in the output layer. The number of hidden neurons is set to 10. The size of the output layer is determined by the number of classes. Finally, a manual search was applied to identify the hyperparameter settings, e.g., the learning rate. Figure 5 schematically displays the architecture of the neural network with input and output (green squares) and the hidden and output layers (blue squares). W and b are the network weights and biases, respectively. For details of the network mechanisms, we refer to the standard literature, e.g., [16].
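The forward pass of such a two-layer network can be sketched in plain Python. The sketch only illustrates the described structure (one hidden layer of 10 ReLU units, Softmax output over six classes); the input size of 16, the random initialization, and all function names are assumptions for illustration, and no training is performed.

```python
import math
import random


def dense(weights, biases, x):
    """Affine layer: one output per weight row, W x + b."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, biases)]


def relu(v):
    return [max(0.0, a) for a in v]


def softmax(v):
    """Numerically stable softmax; outputs are positive and sum to 1."""
    m = max(v)
    e = [math.exp(a - m) for a in v]
    s = sum(e)
    return [a / s for a in e]


def init_params(n_in=16, n_hidden=10, n_out=6, seed=0):
    """Small random weights and zero biases (initialization is an assumption)."""
    rng = random.Random(seed)
    w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
    w2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)] for _ in range(n_out)]
    return w1, [0.0] * n_hidden, w2, [0.0] * n_out


def forward(params, x):
    """Map an input vector to class probabilities."""
    w1, b1, w2, b2 = params
    hidden = relu(dense(w1, b1, x))
    return softmax(dense(w2, b2, hidden))
```

With these sizes the model has only 16·10 + 10 + 10·6 + 6 = 236 trainable parameters, which illustrates how small the network is compared with typical convolutional architectures.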

The input data for each event is a vector with 16 elements (eight sensors, two wave types). These elements \({p}_{i}\) are the arrival time profiles (see Eq. 1) of the L and T waves, which are comparable owing to the normalization of the time scale. As mentioned, the arrival time profile is independent of time scale and material, and it is more robust to input errors. By subtracting the arrival time averages, individual picking errors in Classes C1 to C6 are distributed evenly over all inputs. The target data are the six classes that can be assigned to the six fracturing tests HF1 to HF6, as seen in Fig. 2. In this context, the scattered events that cannot be unambiguously assigned spatially to one of these six clusters are classified in the order in which they were registered. The target data consist of vectors of all zero values except for a 1 in the element that represents the class.
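The target encoding described above is commonly called one-hot encoding. A minimal sketch, with hypothetical function names and zero-based class indices (Class C1 corresponds to index 0), is:

```python
def one_hot(class_index, n_classes=6):
    """Target vector: all zeros except a 1 at the position of the class."""
    target = [0] * n_classes
    target[class_index] = 1
    return target


def predicted_class(output):
    """Index of the largest element of a network output vector."""
    return max(range(len(output)), key=lambda k: output[k])
```

For example, `one_hot(2)` gives `[0, 0, 1, 0, 0, 0]` for Class C3, and `predicted_class` maps a network output back to a discrete class by taking its largest element.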

3.3 Training of Neural Network

When training Deep Learning models, it is general practice to divide the data into three subsets. The first subset is the training set, which is used for computing the gradient and updating the network weights and biases. The second subset is the validation set. The error on the validation set is monitored during the training process. The validation error normally decreases during the initial phase of training, as does the training set error. However, when the network begins to overfit the data, the error on the validation set typically begins to rise. The network weights and biases are saved at the minimum of the validation set error. The third subset is the test set, which is not used during training. The data are split into 70% for training, 15% for validating that the network is generalizing and for stopping training before overfitting, and 15% for independently testing network generalization. The division of the data is done randomly. Figure 6 shows the result of the test of the neural network after 27 epochs, applied to the 765 AE events of the six classes C1 to C6.
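The random 70/15/15 split and the selection of the weights at the minimum validation error can be sketched as follows. The function names, the rounding of the subset sizes, and the `patience` parameter (the number of epochs without improvement after which training stops) are assumptions for illustration; the source does not state how these details were handled.

```python
import random


def split_indices(n, frac_train=0.70, frac_val=0.15, seed=0):
    """Randomly split range(n) into training, validation, and test indices."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    n_train = int(frac_train * n)
    n_val = int(frac_val * n)
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]


def best_epoch(val_errors, patience=6):
    """Epoch with the lowest validation error, scanning until the error
    has not improved for `patience` consecutive epochs."""
    best, best_i = float("inf"), -1
    for i, err in enumerate(val_errors):
        if err < best:
            best, best_i = err, i
        elif i - best_i >= patience:
            break  # stop early; weights from epoch best_i would be restored
    return best_i


train_idx, val_idx, test_idx = split_indices(765)
```

With truncating rounding, 765 events are divided into 535 training, 114 validation, and 116 test events; other rounding conventions shift these counts by one.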

In Fig. 6(a), the elements of the output vector (values between 0 and 1) are plotted as green bars indicating the probability with which the event is predicted to belong to a specific class. The locations of the related AE events are shown in Fig. 6(b) in projection onto the x-y plane. Overall, about 91% of the events are assigned to the correct cluster. The mean output value of all AE events in Class C1 is about 0.8, and in Classes C2 to C6 it is 0.86, 0.89, 0.93, 0.89, and 0.87, respectively.

3.4 Confusion Matrix

For discrete class mapping, the largest value of the output vector is used. Thus, only the predicted class is specified for an event. To describe the performance of this discrete classification, a confusion matrix is commonly used. The confusion matrix itself is relatively simple to understand, but the associated terminology can be confusing. To create a confusion matrix for a given class, two possible actual classes are distinguished: positive “p” or negative “n”. A “p” means that the event belongs to the class; an “n” means that it does not. To distinguish between the actual class and the predicted class, the labels “Y” and “N” are used for the class predictions. “Y” means that the classifier assigns the event to the class (noted as positive), and “N” means that it does not (noted as negative). Thus, there are four possibilities. If the case is positive and classified as positive, it is counted as a true positive (TP); if it is classified as negative, it is counted as a false negative (FN). If the case is negative and classified as negative, it is counted as a true negative (TN); if it is classified as positive, it is counted as a false positive (FP). Given six classes, the confusion matrix has six-by-six elements. The equations for calculating the elements of the confusion matrix are given in the paper by Fawcett (2006) [17].

Figure 7 shows the confusion matrices for the training data set, the validation data set, and the testing data set. The sum of these matrices can be seen in the lower right corner of this figure. The rows of the confusion matrix correspond to the true class and the columns correspond to the predicted class. The sum of a class's column gives the number of events assigned to that class. Diagonal and off-diagonal elements correspond to correctly and incorrectly classified observations, respectively. In addition, Fig. 7 displays the number of correctly and incorrectly classified events for each true and predicted class as percentages of the number of events of the corresponding true and predicted class.
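How a multi-class confusion matrix with this layout (rows: true class, columns: predicted class) is built, and how the TP, FP, FN, and TN counts of a single class follow from it, can be sketched as below. The function names are hypothetical, class indices are zero-based, and the small label lists in the usage lines are toy data, not results from the study.

```python
def confusion_matrix(true_labels, pred_labels, n_classes):
    """m[t][p] counts events of true class t predicted as class p."""
    m = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(true_labels, pred_labels):
        m[t][p] += 1
    return m


def one_vs_rest_counts(m, k):
    """TP, FP, FN, TN for class k, treating all other classes as negative."""
    total = sum(sum(row) for row in m)
    tp = m[k][k]
    fn = sum(m[k]) - tp                  # rest of row k: missed events
    fp = sum(row[k] for row in m) - tp   # rest of column k: false alarms
    tn = total - tp - fn - fp
    return tp, fp, fn, tn


# Toy example with three classes.
toy = confusion_matrix([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], n_classes=3)
counts_class0 = one_vs_rest_counts(toy, 0)
```

The row percentage of a diagonal element, `m[k][k] / sum(m[k])`, is the fraction of events of true class k that were classified correctly.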

If the confusion matrix for all data is considered, 141 events (94.6%) of Class 1 are correctly classified. The remaining 5.4% are incorrectly assigned to Class 2 (4 events), Class 3 (1 event), Class 5 (1 event), and Class 6 (2 events). In Class 2, 95.2% of the events were classified correctly; 6 and 2 events are misclassified as Class 1 and Class 6, respectively. For Class 3, the predicted class matches the true class in 97.5% of the cases; only two events are not correctly classified. Of the 129 events in Class 4, 94.3% could be attributed to the true class. Similar results are obtained for Classes 5 and 6, with 89.3% and 94% correctly classified events, respectively. Most of their misclassified events (9 and 5, respectively) are predicted as Class 1. Only 5 events of Class 5 are incorrectly assigned to Class 6.

3.5 Receiver Operating Characteristic

One method for the graphical representation of classifier performance is the receiver operating characteristic (ROC) diagram. ROC diagrams are commonly used in medical decision making and in recent years have been increasingly used in machine learning and data mining research. An ROC diagram shows the relative trade-offs between benefits (true positives) and costs (false positives). ROC diagrams are two-dimensional graphs in which the TP rate is plotted on the y axis and the FP rate is plotted on the x axis. When creating an ROC diagram, the data are simply sorted in descending order by score and processed sequentially, updating the TP and FP values. Figure 8 shows the ROC diagram of the six classes of the testing dataset. The diagonal line indicates a random process: values near the diagonal mean an equal hit rate and false positive rate, which corresponds to the expected hit frequency of a random process. An ROC curve that remains significantly below the diagonal indicates that the values have been misinterpreted.

At the beginning, the curves, especially those of Classes 3 and 6, rise vertically and then turn horizontal at an almost constant value near one. As mentioned, the ROC curve is a two-dimensional representation of classifier performance. However, it may be useful to reduce classifier performance to a single scalar value. A common method is to calculate the area under the ROC curve, abbreviated as AUC [18, 19]. AUC is scale invariant: it measures how well the predictions are ranked, rather than their absolute values. Since the AUC is a portion of the area of the unit square, its value will always be between 0 and 1. Larger AUC values indicate better classifier performance. Since random processes are characterized by the diagonal, no realistic classifier should have an AUC of less than 0.5. The AUC of the six classes ranged from 0.92 (Class 5) to 1 (Classes 3 and 6), indicating a near-perfect prediction.
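The construction of an ROC curve by sorting the scores in descending order, and the AUC as the trapezoidal area under it, can be sketched for a single class in a one-vs-rest setting. The function names are hypothetical, labels are 1 for events of the class and 0 otherwise, and the scores in the usage lines are toy values, not outputs from the study.

```python
def roc_points(scores, labels):
    """(FP rate, TP rate) points obtained by lowering the decision
    threshold through the scores in descending order."""
    pos = sum(labels)
    neg = len(labels) - pos
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    points, tp, fp, prev = [], 0, 0, None
    for i in order:
        if scores[i] != prev:        # emit a point only when the score changes,
            points.append((fp / neg, tp / pos))  # so ties share one point
            prev = scores[i]
        if labels[i]:
            tp += 1
        else:
            fp += 1
    points.append((fp / neg, tp / pos))  # final point is (1, 1)
    return points


def auc(points):
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


perfect = auc(roc_points([0.9, 0.8, 0.7, 0.6], [1, 1, 0, 0]))
mixed = auc(roc_points([0.9, 0.8, 0.7, 0.6], [1, 0, 1, 0]))
```

In the first toy case every positive event scores higher than every negative one, so the curve rises vertically to a TP rate of one before moving right; in the second case one of the four positive-negative pairs is ranked in the wrong order, which lowers the area accordingly. The sketch assumes that each class has at least one positive and one negative event.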

3.6 Bootstrap Analysis

Since the test data were randomly selected, it cannot be assumed that all classes are equally represented. To determine the confidence interval of the whole data set with 765 classified events, a bootstrap analysis is performed. The advantage of bootstrapping is that this method makes no distributional assumption [20]. Bootstrapping is based on resampling, which means that samples are repeatedly extracted from the given test data. Figure 9 shows the bootstrap analysis of the value of the classification score obtained for 300 resamples.

The green vertical dashed lines in the graph of the probability distribution indicate lower and upper boundaries of the 95% confidence level (approximately 1.96 standard deviations). The red vertical dashed line shows the mean value of the output score. In this figure the density distribution of the classification score includes a superimposed normal distribution curve to illustrate normality (black line). The mean value of the dataset is 0.952. The bootstrap distributions appear to be normal and therefore, the bootstrap results can be trusted.
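A bootstrap estimate of this kind can be sketched as follows, assuming for illustration that the classification score is the fraction of correctly classified events; the source does not define the score in detail. The function name and the synthetic correctness flags are hypothetical. The interval is computed as the mean ± 1.96 standard deviations of the resampled scores, which presumes an approximately normal bootstrap distribution.

```python
import random
import statistics


def bootstrap_score(correct, n_resamples=300, seed=0):
    """Resample 0/1 correctness flags with replacement and return the mean
    score together with a normal-approximation 95% interval."""
    rng = random.Random(seed)
    n = len(correct)
    scores = []
    for _ in range(n_resamples):
        sample = [correct[rng.randrange(n)] for _ in range(n)]
        scores.append(sum(sample) / n)
    mean = statistics.mean(scores)
    sd = statistics.stdev(scores)
    return mean, (mean - 1.96 * sd, mean + 1.96 * sd)


# Synthetic flags for illustration only: 700 correct and 65 incorrect events.
flags = [1] * 700 + [0] * 65
mean_score, (low, high) = bootstrap_score(flags)
```

A percentile interval (the 2.5% and 97.5% quantiles of the resampled scores) is a common alternative that does not rely on normality.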

3.7 Visualizing Using t-SNE

Figure 10 displays a t-SNE plot of the input vectors. t-SNE (t-distributed Stochastic Neighbor Embedding) is a Machine Learning based algorithm that produces a representation of high-dimensional data in a space of lower dimension while preserving mutual neighborhood relations. Here we map the classified AE events into \({\mathbb{R}}^{2}\) so that the events can be visualized as a simple scatter plot, which allows neighboring data to be identified [21, 22]. While pure clustering methods provide clusters of data, it is far from trivial to graphically represent or analyze the relationships between clusters. In this context, t-SNE also lends itself as a downstream processing step to graphically represent clusters found by other algorithms. The figure shows clearly that the clusters are tightly confined to their groups. However, it is also noticeable that some individual events are associated with the wrong clusters.

4 Discussion

The results of applying a neural network to clustered AE events presented here show that approximately 91% of the events were matched to the correct class. The remaining 9% are attributed to more spontaneous acoustic emission activity that did not originate from the six clusters created during hydraulic fracturing. Nevertheless, these spatially dispersed events were also assigned to one of the six classes, namely the class of the closest cluster. In particular, the near-surface events originating from the EDZ are attributed to the classes of the clusters of the hydraulic fracturing tests at 1.6 m or 2.5 m borehole depth. This explains the relatively low probability with which events are predicted to belong to Classes 1 and 2. A major advantage of the arrival time profiles used for feature extraction is that, unlike the absolute arrival times from which they are derived, they are independent of material velocity and time scale. However, the location of the event must be known to determine the arrival time profile using the theoretical arrival times of the L and T waves.

5 Summary

In this study, the application of artificial intelligence to located AE events is presented. A pattern recognition network with one hidden layer was applied to 765 spatially clustered AE events that were generated during six hydraulic fracturing tests in salt rock. In order to use the capabilities of neural networks, the arrival times of the L and T waves were used to train, validate, and test the neural network. For this purpose, the arrival times were converted into time-independent input parameters, the arrival time profiles. In order to train the neural network, the characteristic arrival time profile of each cluster was selected. The application of the neural network shows that the clustered events are classified and assigned to the correct cluster, while a few events outside the region of a cluster could not be assigned. In this case, the event location does not coincide with the training dataset, and a correct classification is not achieved. However, the method can still be applied if the datasets selected for training have a structure similar to that of the experimental dataset. In contrast to other works, the neural network is trained with natural AE events.

The time-invariant arrival time profiles are characterized by their robustness against input errors. By subtracting the mean values of the arrival times, individual errors due to wrong picking of the arrival times are distributed to all sensors, which reduces the global input error. Moreover, if the requirements are met, the approach presented here can also be applied to any data set with other parameters, such as amplitudes of the first motion or even entire waveforms, to determine the mechanisms that cause acoustic emission. The use of neural networks for the further evaluation of acoustic emission data is therefore very promising.

Data Availability

Not applicable.

References

Grosse, C.U., Ohtsu, M., Aggelis, D.G., Shiotani, T. (eds.): Acoustic Emission Testing. Springer Nature Switzerland AG (2022). https://doi.org/10.1007/978-3-030-67936-1

Grabec, I., Sachse, W.: Synergetics of Measurement, Prediction and Control. Springer-Verlag, Berlin Heidelberg (1997). https://doi.org/10.1007/978-3-642-60336-5

Sause, M.G.R.: In situ Monitoring of fiber-reinforced Composites: Theory, Basic Concepts, Methods, and Applications. Springer Series in Materials Science, vol. 242. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-30954-5

Prevorovsky, Z., Landa, M., Blahacek, M., Varchon, D., Rousseau, J., Ferry, L., Perreux, D.: Ultrasonic scanning and acoustic emission of composite tubes subjected to multiaxial loading. Ultrasonics (1998). https://doi.org/10.1016/S0041-624X(97)00097-8

Chlada, M., Prevorovsky, Z., Blahacek, M.: Neural network AE source location apart from structure size and material. J. Acoust. Emiss. 28, 99–108 (2010)

Chlada, M., Prevorovsky, Z.: Remote AE Monitoring of Fatigue Crack Growth in Complex Aircraft Structures. In: 30th European Conference on Acoustic Emission Testing & 7th International Conference on Acoustic Emission, www.ndt.net/EWGAE-ICAE2012/ (2012)

Blahacek, M., Chlada, M., Prevorovsky, Z.: Acoustic emission source location based on signal features. Adv. Mater. Res. 13–14, 77–82 (2006)

Ma, K., Sun, X., Zhang, Z., Hu, J., Wang, Z.: Intelligent Location of microseismic events based on a fully convolutional neural network (FCNN). Rock. Mech. Rock. Eng. 55, 4801–4817 (2022). https://doi.org/10.1007/s00603-022-02911-x

Song, Z.L., Zhang, Z.G., Zhang, G.H., Huang, J., Wu, M.Y.: Identifying the types of Loading Mode for Rock Fracture via Convolutional neural networks. J. Geophys. Res. Sol Ea. 127(2) (2022). https://doi.org/10.1029/2021JB022532

Gumpfer, N., Grün, D., Hannig, J., Keller, T., Guckert, M.: Detecting myocardial scar using electrocardiogram data and deep neural networks. Biol. Chem. (2021). https://doi.org/10.1515/hsz-2020-0169

Manthei, G., Plenkers, K.: Review on in situ Acoustic Emission Monitoring in the context of Structural Health Monitoring in Mines. Appl. Sci. (2018). https://doi.org/10.3390/app8091595

Manthei, G., Eisenblätter, J., Dahm, T.: Moment Tensor evaluation of Acoustic Emission sources in Salt Rock. Constr. Build. Mater. 15, 297–309 (2001)

Dahm, T., Manthei, G., Eisenblätter, J.: Tectonophysics (1999). https://doi.org/10.1016/S0040-1951(99)00041-4

Allen, R.: Automatic phase pickers: their present use and future prospects. Bull. Seismol. Soc. Am. 72, 225–242 (1982)

Plenkers, K., Manthei, G., Kwiatek, G.: Underground In-situ Acoustic Emission in Study of Rock Stability and Earthquake Physics. In: Grosse CU, Ohtsu M, Aggelis DG, Shiotani T (eds) Acoustic Emission Testing, Springer Tracts in Civil Engineering, Springer Nature Switzerland (2022). https://doi.org/10.1007/978-3-030-67936-1_16

Goodfellow, I., Bengio, Y., Courville, A.: Deep Learning. MIT Press, Cambridge MA USA (2016)

Fawcett, T.: An introduction to ROC analysis. Pattern Recognit. Lett. (2006). https://doi.org/10.1016/j.patrec.2005.10.010

Bradley, A.P.: The use of the area under the ROC curve in the evaluation of machine learning algorithms. Pattern Recognit. (1997). https://doi.org/10.1016/S0031-3203(96)00142-2

Hanley, J.A., McNeil, B.J.: The meaning and use of the area under a receiver operating characteristic (ROC) curve. Radiology. 143, 29–36 (1982)

Efron, B.: Bootstrap Methods: Another Look at the Jackknife. Ann Statist (1979). https://doi.org/10.1214/aos/1176344552

van der Maaten, L.J.P., Hinton, G.E.: Visualizing data using t-SNE. J. Mach. Learn. Res. 9, 2579–2605 (2008)

van der Maaten, L.J.P.: Accelerating t-SNE using tree-based algorithms. J. Mach. Learn. Res. 15, 3221–3245 (2014)

Acknowledgements

Not applicable.

Funding

Not applicable.

Open Access funding enabled and organized by Projekt DEAL.

Ethics declarations

Ethics Approval and Consent to Participate

Not applicable.

Consent for Publication

Not applicable.

Competing Interests

Not applicable.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Manthei, G., Guckert, M. Classification of Located Acoustic Emission Events Using Neural Network. J Nondestruct Eval 42, 4 (2023). https://doi.org/10.1007/s10921-022-00913-x

DOI: https://doi.org/10.1007/s10921-022-00913-x