Abstract

Social resemblance, such as group membership or similar attitudes, increases mimicry of observed emotional facial displays. In this study, we investigate whether facial self-resemblance (manipulated by computer morphing) modulates emotional mimicry in a similar manner. Participants watched dynamic expressions of faces that either did or did not resemble their own, while their facial muscle activity was measured using EMG. After each presentation, participants also completed social evaluations of the faces they saw. The results show that self-resemblance evokes convergent facial reactions. More specifically, participants mimicked the happiness and, to a lesser extent, the anger of self-resembling faces. In contrast, the happiness of non-resembling faces was mimicked less than that of self-resembling faces, while their anger evoked a more divergent, smile-like response. Finally, social evaluations were generally higher for happiness displays but were not influenced by resemblance. Overall, the study demonstrates a novel phenomenon, namely that mimicry can be modified by relatively subtle cues of physical resemblance.

Introduction

Facial expressions are an essential source of inferences about emotional and motivational states, as well as social traits. An intriguing phenomenon associated with observing facial expressions is that people tend to imitate the displays they see (Dimberg et al., 2000). Mimicry, i.e., the replication of others’ actions, is a more general phenomenon that covers many types of behavior, e.g., foot-tapping and face touching (Chartrand & Bargh, 1999), bodily postures (Bavelas et al., 1986; Bernieri & Rosenthal, 1991), or repetition of spoken words (Goode & Robinson, 2013; Kulesza et al., 2014). In the case of emotional expressions, it is also referred to as emotional mimicry (Hess & Fischer, 2014). A common observation for both behavioral and emotional mimicry is that people are more likely to synchronize their behavior with socially resemblant others, i.e., those who belong to the same group or have similar attitudes or social characteristics (Guéguen & Martin, 2009; Stel et al., 2010; Van Der Schalk et al., 2011). Such sensitivity to social resemblance raises the question of whether mimicry is also influenced by physical resemblance. In the present study, we address this question and show that people have a greater tendency to mimic the emotional displays of self-resembling faces.

Emotional Mimicry of Socially Resemblant Others

A common way to observe emotional mimicry is to measure the activity of the muscles that produce convergent facial displays. For example, surface EMG makes it possible to detect changes in the activity of the Zygomaticus major (which pulls the lip corners up): the observer’s muscle contracts when they watch other people smile and is relatively relaxed when they watch anger. In contrast, the Corrugator supercilii (which lowers the eyebrows) typically contracts in response to anger and relaxes when the observer watches other people smile (Dimberg, 1982; Hess et al., 2017; Wingenbach et al., 2020).

The phenomenon is often considered the result of an automatic, perception–action mechanism (e.g., Dimberg, 1982) that also supports accurate emotion recognition through sensorimotor simulation (e.g., Wood et al., 2016). Importantly, other theoretical approaches emphasize that emotional mimicry is not pure imitation of facial movements but should rather be understood as a prosocial behavior that adapts to the context of various emotional states and social situations (Fischer & Hess, 2017; Hess, 2021; Winkielman, Coulson, & Niedenthal, 2018; Wróbel & Imbir, 2019). This means that mimicry is modulated by factors such as the meaning of the expression or the relationship between the displayer and the observer. Specifically, people tend to mimic mainly affiliative emotions (e.g., smiles), while antagonizing ones (e.g., disgust or anger) are less likely to be mimicked (Fischer et al., 2012). In a similar vein, hostile or competitive social situations reduce mimicry compared with cooperative situations (Hofree et al., 2018; Likowski et al., 2008; Weyers et al., 2009). Finally, smiles of individuals described as having traits representing high communion (which implies affiliative intent, e.g., being helpful, kind, non-egoistic, and selfless) were more readily mimicked than those of individuals whose traits represented low communion (which implies non-affiliative intent, e.g., being envious, malicious, stingy, and unfair; Wróbel et al., 2021).

Even more importantly, mimicry also increases toward socially resemblant others. For example, expressions of in-group members are imitated more frequently than those of out-group members (Peng et al., 2021; Van Der Schalk et al., 2011), while antagonizing emotions (e.g., anger) of out-group members often evoke a divergent facial reaction, i.e., fear (Van Der Schalk et al., 2011; however, a later replication did not confirm greater mimicry of fear and anger for in-group members; see Sachisthal et al., 2016). Likewise, people with similar social or political attitudes are mimicked more than those with opposing attitudes (Bourgeois & Hess, 2008). It has also been shown that teenagers mostly mimicked the facial displays of their peers, whereas adults mimicked the facial expressions of both adults and teenagers (Ardizzi et al., 2014). Moreover, based on their recent study, Forbes and colleagues (Forbes, Korb, Radloff, & Lamm, 2021) suggest that the self-relevance of a face also affects mimicry. They demonstrated that participants mimic smiles to a greater extent when shown faces previously associated, through conditioning, with winning or losing money for themselves than faces associated with outcomes for another person. As the authors conclude, the fact that participants also imitated the displays of faces that accompanied a loss indicates that face relevance, not just reward association, modulates mimicry. To summarize, these studies support the premise that the affiliative and prosocial context that often accompanies interactions with socially resemblant (or self-relevant) others increases emotional mimicry.

Facial Self-Resemblance

Faces that are similar to known faces evoke the same automatic evaluations as the actual faces they resemble (Gawronski & Quinn, 2013; Gunaydin et al., 2012; Kraus & Chen, 2010; Verosky & Todorov, 2010). For example, a study by Kraus and Chen (2010) has shown that faces whose features resemble those of one’s significant others are evaluated similarly to those of significant others. Moreover, Verosky and Todorov (2010) showed that novel faces morphed with faces previously associated with positive behaviors were rated more positively than novel faces morphed with faces associated with negative behaviors.

Crucially, though, people show a preference for self-resembling faces in many social contexts: they express more trust and cooperation in social-dilemma games (DeBruine, 2002; Krupp et al., 2008), and they attribute higher trustworthiness (DeBruine, 2004, 2005; Olszanowski, Parzuchowski, & Szymkow, 2019) and attractiveness (DeBruine et al., 2011; Kocsor et al., 2011) to them than to non-resembling faces. For example, when asked to choose the more trustworthy face from a pair, participants preferred faces that had been morphed with their own face (DeBruine, 2005). Such a preference appears early in development: even 5-year-old preschoolers favored individuals whose faces looked subtly like their own (i.e., an unknown face morphed with their own) over complete strangers (i.e., two unknown faces morphed together; Richter, Tiddeman, & Haun, 2016).

The exact processes underlying the preference for self-resembling faces are still under investigation; however, the phenomenon is often considered part of an evolved functional mechanism of phenotype matching that supports kin-favoring behavior (DeBruine, 2005; Hauber & Sherman, 2001; Lewis, 2011; Park et al., 2008). Other accounts suggest that the preference is a by-product of perceptual mechanisms, e.g., mere exposure, beauty-in-averageness, or processing fluency effects, as people generally favor stimuli that are familiar, close to a mental prototype (e.g., averaged), and easily and efficiently processed (Reber et al., 2004; Rhodes & Tremewan, 1996; Winkielman et al., 2006; Zajonc, 1968).

Irrespective of the true nature of the preference for self-resembling faces, it parallels the affiliative and prosocial motivation found for social resemblance. Thus, we could expect self-resembling faces to modulate the mimicry of displayed emotions in the same way as the faces of socially resemblant others. However, a key difference between these two kinds of resemblance should be highlighted. In most of the aforementioned studies, the information that a person belonged to the same or a different social group, or held similar social attitudes, was quite evident to participants, e.g., because a written description was presented before or along with the face. In other words, the impact of social resemblance on mimicry relied on relatively explicit contextual cues. Conversely, facial resemblance is conveyed by subtle cues, and observers are usually unaware of its nature (see DeBruine, 2004; Park et al., 2008). This is because the facial features used to assess similarity are processed rapidly and from a minimal amount of information (Lieberman et al., 2007; Platek et al., 2005, 2008, 2009). Thus, the present research addresses the novel question of whether mimicry adapts to implicitly perceived cues of resemblance.

Current Study

We hypothesize that observers mimic self-resembling faces more than non-resembling faces, that is, that facial self-resemblance increases emotional mimicry. Furthermore, we predict that affiliative emotions (e.g., happiness) are more likely to be mimicked than antagonizing ones (e.g., anger). It remains an open question how observers respond to the antagonistic emotions of non-resembling faces. Research indicates that reactions may be divergent (e.g., Van Der Schalk et al., 2011), may show no or only traces of convergent imitation (Ardizzi et al., 2014; Bourgeois & Hess, 2008), or, particularly when the context is not explicitly manipulated, may be congruent with the observed facial displays (Hühnel et al., 2014; Rymarczyk et al., 2016). To test the predicted mimicry pattern for self-resembling faces and to address how antagonistic displays of non-resembling faces are mimicked, we presented participants with videos of happiness or anger displayed by faces that either did or did not resemble their own.

Additionally, mainly to give participants a reason to watch the faces attentively, we asked them to evaluate each face on different social dimensions, i.e., trustworthiness, confidence, and attractiveness. As noted above, facial self-resemblance is frequently related to social preference, so such evaluations could provide additional insight into the relationship between automatic imitative behaviors and more deliberately constructed impressions. The first two evaluations, trustworthiness and confidence, relate to the basic dimensions of social trait inference, namely communion/trust (represented by trustworthiness judgments) and agency/dominance (represented by confidence judgments; Abele & Wojciszke, 2007, 2014; Todorov et al., 2008). Importantly, research indicates the primacy of communion over agency in judgments about unknown persons, as communal traits allow better prediction of the personal consequences of an interaction (Wojciszke & Abele, 2008; Ybarra et al., 2008). As such, trustworthiness evaluations should be more sensitive to facial features related to resemblance. In turn, attractiveness evaluations may indicate a general affective preference for stimuli that are more familiar or closer to a mental prototype (Halberstadt, 2006; Rhodes & Tremewan, 1996; Winkielman et al., 2006).

On the other hand, an emotional display signals the intention of the displayer (Horstmann, 2003). For example, happiness implies a positive attitude and increases trust as well as perceived dominance and attractiveness, while anger usually decreases such judgments (Hess, Adams, & Kleck, 2009; Winkielman et al., 2015). Moreover, studies indicate that inferences from facial expressions can override those based on phenotypic features; judgments may therefore be driven primarily by more explicitly accessible cues (Gill et al., 2014; Olszanowski et al., 2019). For this reason, one could expect the facial expression alone to be a sufficient basis for evaluation, with resemblance having only a minor or nonsignificant impact.

Method

Participants

Power analysis for a repeated-measures design, conducted using PANGEA v0.2 (Westfall, 2015), indicated that, given 12 “per-cell” observations, a sample of 50 or more would be sufficient to detect the interaction between emotional display and facial resemblance with an effect size of d = 0.25 (η² ≈ 0.015, i.e., a small effect as defined by Cohen, 1988), with power (1 − β) > 0.80 at α = 0.05. Anticipating some data loss, we recruited over 70 participants for the first part of the experiment (photo-taking), and 64 participants (36 females) took part in both parts of the study. Participants were undergraduate students of Caucasian origin aged 20–25 years. Data from four participants were discarded because they reported awareness of the experimental manipulation during the post-experimental debriefing. EMG recordings from five other participants could not be used for technical reasons, i.e., a high level of artifacts (three participants) or electrode detachment (two participants). Thus, the EMG analyses were conducted on the remaining 55 participants (30 females). Owing to a technical failure, evaluative responses were not recorded for five participants, so the behavioral analysis was conducted on data from 55 participants (31 females).
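As an arithmetic check on the figures above, the sketch below converts d = 0.25 to η² and reproduces the participant counts after exclusions. It assumes the simple two-condition conversion η² = d²/(d² + 4), which matches the quoted η² ≈ 0.015; it is not a reimplementation of PANGEA, whose mixed-model power computation is more involved. It also assumes that the five missing evaluation records come from the 60 participants who remained after the awareness exclusions.

```python
def d_to_eta_squared(d: float) -> float:
    """Convert Cohen's d to eta squared with the two-condition
    approximation eta^2 = d^2 / (d^2 + 4) (an assumption; PANGEA's own
    power computation is not reproduced here)."""
    return d ** 2 / (d ** 2 + 4)

# Participant flow as reported in the text.
completed_both_parts = 64
aware_of_manipulation = 4             # excluded after debriefing
emg_artifacts, emg_detached = 3, 2    # EMG-specific technical losses
eval_not_recorded = 5                 # evaluation-specific technical loss

eligible = completed_both_parts - aware_of_manipulation
n_emg = eligible - emg_artifacts - emg_detached
n_eval = eligible - eval_not_recorded

print(round(d_to_eta_squared(0.25), 3))  # 0.015
print(n_emg, n_eval)                     # 55 55
```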

Facial Stimuli

Facial resemblance was manipulated using digital morphing (FantaMorph 5 software) to combine same-sex faces of individuals unknown to the participant with either the participant’s face (for self-resembling faces) or a same-sex composite portrait (for other-resembling faces). In total, 24 video clips were used in the experimental procedure: 12 presenting participant-resembling faces (created individually for each participant) and 12 presenting other-resembling faces (the same for all participants).

Each video clip lasted 6 s and started with a neutral face that changed gradually, over 5 s, into an emotional one (angry: 12 videos, 6 self-resembling and 6 other-resembling; happy: 12 videos, 6 self-resembling and 6 other-resembling). After the apex (full emotional display) was reached, the video stopped, and a still image of the full emotional display remained visible for another 1 s.

Self-resembling faces To create video clips with emotional expressions of self-resembling faces, we used a two-step procedure: morphing facial features in step one and morphing facial expressions in step two. Before starting the procedure, approximately two weeks before the main experiment, we took pictures of the participants’ neutral faces under the guise of a pilot study on computer learning of facial features. Then, in the first step, a picture of each participant’s neutral display was morphed with pictures of six same-sex individuals unknown to the participant (pictures were taken from the WSEFEP database and showed Caucasian faces aged between 20 and 30; Olszanowski et al., 2015). Each unknown individual was represented by three facial displays, i.e., neutral, angry, and happy. Morphing the participant’s neutral display with an individual’s neutral display produced a neutral blend, while morphing it with one of the individual’s emotional displays produced an emotional blend. As a result, the first step yielded 18 facial blends for each participant: 6 with a neutral display, 6 with a display of anger, and 6 with a display of happiness. Morphing templates consisted of approximately 100 anatomically defined points, and each blend took 50% of its shape, texture, and color information from the participant’s face and 50% from the individual’s face. Distinctive facial marks of participants, such as scars or birthmarks, were digitally removed before morphing. The hair and neck regions of the composite face were masked (see Fig. 1 for an example).

The two-step procedure employed to create facial stimuli. In Step 1, the participant’s image or the composite portrait was morphed with an image of a same-sex individual displaying happiness, anger, or a neutral expression (in total, 18 images of 6 individuals were used for this purpose). The transformation contributed 50% of the participant’s (or composite portrait’s) face shape and color to the blend, resulting in either self-resembling or other-resembling blended faces with a neutral, angry, or happy display. In Step 2, neutral blends were morphed with blends displaying anger or happiness to create movies presenting dynamic facial expressions changing from neutral to emotional.
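The 50% blend of Step 1 can be illustrated, in a deliberately simplified form, as a weighted average of corresponding landmark coordinates (shape) and of pixel values (color and texture). This sketch assumes that both faces are annotated with the same ~100-point template in corresponding order and that the images are pre-aligned; morphing software additionally warps each image to the blended shape before mixing pixels, which is omitted here. The function name `blend_faces` is hypothetical and the inputs are toy arrays, not study stimuli.

```python
import numpy as np

def blend_faces(landmarks_a, landmarks_b, image_a, image_b, weight_a=0.5):
    """Linear blend of two faces (simplified: no mesh warping).

    landmarks_*: (n_points, 2) arrays of corresponding (x, y) coordinates.
    image_*: (h, w, 3) float arrays of pre-aligned images in [0, 1].
    weight_a: share contributed by face A (0.5 = the 50/50 blend in the text).
    """
    landmarks_a = np.asarray(landmarks_a, dtype=float)
    landmarks_b = np.asarray(landmarks_b, dtype=float)
    if landmarks_a.shape != landmarks_b.shape:
        raise ValueError("faces must share the same landmark template")
    w = float(weight_a)
    shape = w * landmarks_a + (1 - w) * landmarks_b          # blended geometry
    color = w * np.asarray(image_a, float) + (1 - w) * np.asarray(image_b, float)
    return shape, color

# Toy example: 100 landmarks and tiny 4x4 images.
rng = np.random.default_rng(0)
participant_pts = rng.uniform(0, 256, size=(100, 2))
stranger_pts = rng.uniform(0, 256, size=(100, 2))
participant_img = np.zeros((4, 4, 3))
stranger_img = np.ones((4, 4, 3))

shape, color = blend_faces(participant_pts, stranger_pts, participant_img, stranger_img)
print(color[0, 0])  # [0.5 0.5 0.5]
```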

In step two, given that dynamic facial expressions have greater potential to elicit mimicry (Rymarczyk et al., 2011), we further morphed facial blends in pairs (i.e., one neutral and one emotional display) to create movie clips (see Olszanowski et al., 2020; Wróbel & Olszanowski, 2019). For each video, the neutral face was used as the starting image and the emotional one (displaying either happiness or anger) as the final image.
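The timing of each clip (a 5 s transition from the neutral blend to the emotional blend, followed by a 1 s hold at the apex) can be expressed as a per-frame morph weight. This is a sketch under two assumptions not stated in the text: the transition is linear, and the clips run at 25 frames per second. `render_frame` is a hypothetical helper that only cross-dissolves pixels; a real morph also interpolates geometry between the two blends.

```python
import numpy as np

def morph_weights(transition_s=5.0, hold_s=1.0, fps=25):
    """Per-frame weight of the emotional end-image (0 = neutral, 1 = apex).

    Assumes a linear neutral-to-emotional transition (hypothetical; the
    easing is not stated in the text) followed by a static hold at the apex.
    """
    n_transition = int(round(transition_s * fps))
    n_hold = int(round(hold_s * fps))
    ramp = np.linspace(0.0, 1.0, n_transition)  # includes both endpoints
    hold = np.ones(n_hold)                      # frozen apex frames
    return np.concatenate([ramp, hold])

def render_frame(neutral, emotional, weight):
    """Cross-dissolve of two images that are assumed to be shape-aligned."""
    return (1 - weight) * neutral + weight * emotional

weights = morph_weights()
print(len(weights) / 25)  # 6.0 (seconds of video at the assumed 25 fps)
```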

Other-resembling faces The same two-step procedure was used to create a set of other-resembling faces. However, instead of participant pictures, we first created a same-sex (male or female) composite portrait, a blend of 14 individual faces unknown to participants. We used a composite portrait rather than images of single unknown individuals to balance potential fluency inferences arising from the averaging of physical properties in morphed images (Reber et al., 2004; Winkielman et al., 2006) and to minimize trait inferences related to individuals’ facial appearance (Todorov et al., 2015). More precisely, pictures of individuals (again taken from the WSEFEP database; Olszanowski et al., 2015) were blended based on templates of approximately 100 points describing face shape and texture, producing a single image of a face with averaged features, i.e., a composite portrait. None of the faces used to create the composite portrait were among those used to create the self- and other-resembling blends. Then, analogously to the preparation of self-resembling faces, the composite portrait was morphed with the faces of six individuals showing neutral, angry, or happy expressions, with blends containing 50% of the composite prototype. Subsequently, the facial blends were morphed in pairs of one neutral and one emotional display. Samples of the facial stimuli can be found at: https://osf.io/3h4r6/.
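At the level of geometry, building a composite portrait amounts to averaging landmark configurations across faces. A minimal sketch, assuming the 14 faces share the same template and are already aligned (e.g., by Procrustes superimposition, not shown); averaging pixel values after warping each face to the mean shape is omitted, the function name is hypothetical, and the faces are random toy data.

```python
import numpy as np

def composite_shape(landmark_sets):
    """Mean landmark configuration across faces.

    landmark_sets: (n_faces, n_points, 2) array of aligned coordinates.
    Returns the (n_points, 2) averaged shape used as the composite template.
    """
    sets = np.asarray(landmark_sets, dtype=float)
    if sets.ndim != 3 or sets.shape[2] != 2:
        raise ValueError("expected an array of shape (n_faces, n_points, 2)")
    return sets.mean(axis=0)

rng = np.random.default_rng(1)
faces = rng.normal(loc=100.0, scale=10.0, size=(14, 100, 2))  # 14 toy faces
prototype = composite_shape(faces)

# The composite lies closer to each face than the faces lie to one another,
# which is the sense in which its features are "averaged".
dist_to_prototype = np.mean([np.linalg.norm(f - prototype) for f in faces])
dist_between = np.mean([np.linalg.norm(faces[i] - faces[j])
                        for i in range(14) for j in range(i + 1, 14)])
print(dist_to_prototype < dist_between)  # True
```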

Facial EMG

Muscle activity was measured with 8 mm surface Ag/AgCl electrodes in bipolar placement on the left side of the face. A ground electrode was attached to the middle of the forehead, directly below the hairline. We measured the activity of the Corrugator supercilii and Zygomaticus major, placing the electrodes according to the guidelines of Tassinary et al. (2007). To reduce skin impedance to an acceptable level (below 20 kΩ), the sensor sites were cleaned and the electrodes were filled with conductive gel. EMG was recorded with a BioPac MP150, digitized at 24-bit resolution, sampled at 2 kHz, and stored on a PC. Raw data were filtered offline with a 20–400 Hz bandpass filter and a 50 Hz notch filter, smoothed with a moving average (time constant 50 ms), and rectified using ANSLAB software (Blechert et al., 2015). To remove artifacts, the data were first averaged within 200 ms epochs from stimulus onset up to 7 s (6 s of stimulus presentation plus a 1 s post-stimulus period that allowed better contrasting of muscle activity during facial display presentation), which resulted in 35 data points per trial, plus a 0.5 s baseline value. Each participant’s data were z-standardized using the mean and standard deviation computed from all collected data points. Data points above 3 standard deviations were removed (1.7% of all data points), as were trials containing more than five such data points or a baseline value above 3 SD (4% of all trials). The remaining raw data points were z-standardized again, baseline-corrected, and segmented into 1 s periods across the 6 s of stimulus presentation. The database containing the exported raw signal can be found at: https://osf.io/3h4r6/
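The artifact-rejection and epoching steps above can be sketched with NumPy for one muscle of one participant. This is an illustration under stated assumptions, not the ANSLAB pipeline: the input is taken to be already filtered, rectified, and smoothed, each trial is assumed to start 0.5 s before stimulus onset, and "above 3 SD" is interpreted as |z| > 3. The function names are hypothetical.

```python
import numpy as np

FS = 2000            # sampling rate (Hz), as reported
BIN_S = 0.2          # 200 ms epochs
BASELINE_S = 0.5     # pre-stimulus baseline (assumed to precede onset)
POST_ONSET_S = 7.0   # 6 s stimulus + 1 s post-stimulus

def bin_trials(signal):
    """Average a (n_trials, n_samples) signal into one baseline value
    plus 35 bins of 200 ms; column 0 of the result is the baseline."""
    n_base = int(round(BASELINE_S * FS))
    n_bin = int(round(BIN_S * FS))
    n_bins = int(round(POST_ONSET_S / BIN_S))  # 35
    baseline = signal[:, :n_base].mean(axis=1, keepdims=True)
    post = signal[:, n_base:n_base + n_bins * n_bin]
    bins = post.reshape(signal.shape[0], n_bins, n_bin).mean(axis=2)
    return np.hstack([baseline, bins])

def clean_and_segment(binned, z_crit=3.0, max_outliers=5):
    """Outlier rejection, re-standardization, baseline correction and
    1 s segmentation of binned data (one participant, one muscle)."""
    z = (binned - binned.mean()) / binned.std()   # z-score over all points
    outlier = np.abs(z) > z_crit                  # assumption: |z| > 3
    # Drop trials with too many outlying bins or an outlying baseline.
    keep = (outlier[:, 1:].sum(axis=1) <= max_outliers) & ~outlier[:, 0]
    data = np.where(outlier, np.nan, binned)[keep]
    data = (data - np.nanmean(data)) / np.nanstd(data)  # re-standardize
    corrected = data[:, 1:] - data[:, [0]]              # subtract baseline
    # Average 5 consecutive 200 ms bins into six 1 s segments (0-6 s).
    return np.nanmean(corrected[:, :30].reshape(-1, 6, 5), axis=2)

# Toy usage with random data (12 trials of 7.5 s each).
rng = np.random.default_rng(2)
n_samples = int(round((BASELINE_S + POST_ONSET_S) * FS))  # 15000
raw = np.abs(rng.normal(size=(12, n_samples)))
binned = bin_trials(raw)
segments = clean_and_segment(binned)
print(binned.shape, segments.shape)
```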

Procedure

The experiment was administered individually and presented as a study on social evaluations. After signing informed consent forms, participants were seated in front of a monitor connected to a PC. Before the experiment started, participants were told that they would evaluate faces on different social dimensions while electrodes attached to their faces measured the effort they put into the evaluations. After receiving instructions, participants completed three blocks of experimental trials, each presenting the 24 videos in random order (12 self-resembling faces + 12 prototype-resembling faces), for 72 trials in total. Each trial started with a fixation cross (duration varied between 1 and 3 s), followed by the video of a facial expression, 5 s of blank screen, and finally the evaluation screen. Participants answered three questions: “Is this person trustworthy?”, “Is this person confident?”, and “Is this person attractive?”. They marked their judgments on a horizontal axis (labeled “no” and “yes”) with a hidden 100-point scale, confirming each evaluation with a computer mouse. After completing the study, participants were fully debriefed and interviewed about their awareness of the experimental manipulation (i.e., whether they had recognized the similarity of the faces presented during the experiment).
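The trial structure can be sketched as a session generator: three blocks, each presenting all 24 clips (2 resemblance levels × 2 emotions × 6 blended individuals) in a fresh random order, with a jittered fixation period. The uniform distribution of the 1 to 3 s jitter, the `Trial` record, and the pixel-to-score mapping in `slider_to_score` are all hypothetical choices for illustration; the text reports only the range and the hidden 100-point scale.

```python
import random
from dataclasses import dataclass

@dataclass
class Trial:
    block: int
    resemblance: str   # "self" or "other"
    emotion: str       # "happy" or "angry"
    clip: int          # 1-6: which unknown individual was used in the blend
    fixation_s: float  # jittered fixation duration

def build_session(seed=None, n_blocks=3):
    """Three blocks, each presenting all 24 clips in a fresh random order."""
    rng = random.Random(seed)
    clips = [(r, e, i) for r in ("self", "other")
             for e in ("happy", "angry")
             for i in range(1, 7)]                 # 2 x 2 x 6 = 24 clips
    trials = []
    for block in range(1, n_blocks + 1):
        order = clips[:]
        rng.shuffle(order)
        for r, e, i in order:
            # Uniform 1-3 s jitter is an assumption; only the range is given.
            trials.append(Trial(block, r, e, i, rng.uniform(1.0, 3.0)))
    return trials

def slider_to_score(x_px, left_px, width_px):
    """Map a click on the 'no'...'yes' axis to the hidden 0-100 scale
    (hypothetical screen geometry)."""
    frac = min(max((x_px - left_px) / width_px, 0.0), 1.0)
    return round(100 * frac)

session = build_session(seed=42)
print(len(session))  # 72
```

Each trial would then run as fixation, 6 s video, 5 s blank screen, and the three rating questions.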

Results

Mimicry

Emotional displays can be understood as the joint movement of different facial muscles (see Hess & Blairy, 2001; Hess et al., 2017). Specifically, an anger display involves greater activation of the Corrugator supercilii than of the Zygomaticus major, whose activation is then often reduced. In turn, a happiness display results from higher activation of the Zygomaticus major than of the Corrugator supercilii. To test for mimicry of emotional displays, analyses were therefore based on a contrast between muscle activities, i.e., Corrugator minus Zygomaticus for anger displays and Zygomaticus minus Corrugator for happiness displays. The results can then be interpreted as follows: the further the values lie above 0, the stronger the mimicry, whereas values below 0 indicate a reversed pattern of facial responses, i.e., a more anger-like reaction to happiness or a more happiness-like reaction to anger. Additional analyses of the raw signals of individual muscles are presented in Supplementary Materials 1 (see Appendix).
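The contrast can be written as a small function that signs the muscle difference according to the observed emotion, so that positive values always mean a congruent response. The per-second values below are illustrative toy numbers, not data from the study.

```python
import numpy as np

def mimicry_index(corrugator, zygomaticus, emotion):
    """Contrast between z-scored muscle activities, signed so that positive
    values indicate a response congruent with the observed display.

    anger:     corrugator - zygomaticus
    happiness: zygomaticus - corrugator
    Values below 0 indicate a reversed (divergent) pattern.
    """
    corrugator = np.asarray(corrugator, dtype=float)
    zygomaticus = np.asarray(zygomaticus, dtype=float)
    if emotion == "anger":
        return corrugator - zygomaticus
    if emotion == "happiness":
        return zygomaticus - corrugator
    raise ValueError(f"unknown emotion: {emotion!r}")

# Toy z-scores for six 1 s epochs of a single trial.
corr = [0.0, 0.1, 0.2, 0.1, 0.0, -0.1]
zyg = [0.0, 0.2, 0.4, 0.5, 0.6, 0.6]
idx = mimicry_index(corr, zyg, "anger")
# Negative values here would indicate a smile-like reaction to anger.
print(idx)
```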

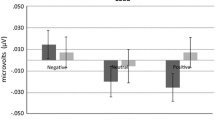

A 2 (emotional display: anger vs. happiness) × 2 (facial resemblance: self vs. other) × 6 (time epoch: 1, 2, 3, 4, 5, and 6 s) repeated-measures ANOVA was conducted with Greenhouse–Geisser corrections applied where necessary, using JASP ver. 0.15 (JASP Team, 2021). The analysis revealed significant main effects of facial resemblance, F(1, 54) = 20.85; p < 0.001, ηp² = 0.28 (participants mimicked self-resembling faces more than other-resembling faces), and emotional display, F(1, 54) = 11.00; p = 0.002, ηp² = 0.17 (participants mimicked happy displays more readily than angry displays), as well as a main effect of time, F(2.9, 156.38) = 14.13; p < 0.001, ηp² = 0.21 (mimicry increased over time, following the changes in the facial display presented in the movie). The increase in mimicry over time, together with the larger response to happy faces, resulted in a significant interaction of these two factors, F(2.35, 126.89) = 8.75; p < 0.001, ηp² = 0.14. Similarly, there was a significant interaction between facial resemblance and time, F(1.94, 104.54) = 13.75; p < 0.001, ηp² = 0.20. However, there was no significant interaction between emotional display and facial resemblance, F(1, 54) = 0.07; p = 0.791, ηp² = 0.001. As can be seen in Fig. 2, mimicry was stronger for happiness than for anger for both self- and other-resembling faces, as confirmed by simple main effect analyses, F(1, 54) = 8.62; p = 0.005 and F(1, 54) = 9.74; p = 0.003, respectively. In turn, mimicry of both anger and happiness was larger for self-resembling than for other-resembling faces: anger, F(1, 54) = 15.92; p < 0.001; happiness, F(1, 54) = 16.8; p < 0.001. Finally, the three-way interaction between all factors was marginal and did not reach significance, F(3.16, 170.48) = 2.33; p = 0.073, ηp² = 0.04.

Mimicry of emotional displays based on the calculated contrast between z-scored muscle activity, i.e., the difference between Corrugator and Zygomaticus for anger displays and the difference between Zygomaticus and Corrugator for happiness displays. The further the values lie above 0, the higher the level of mimicry, while values below 0 indicate a reversed pattern of facial responses. Error bars depict the SE of the mean.
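Because partial eta squared is a function of the F statistic and its degrees of freedom, ηp² = F·df_effect / (F·df_effect + df_error), the effect sizes reported for the omnibus ANOVA can be cross-checked from the F values alone. This is a reader-side consistency check using the standard formula, not the authors' JASP analysis; note that a Greenhouse–Geisser correction multiplies both degrees of freedom by the same ε and therefore leaves ηp² unchanged.

```python
def partial_eta_squared(f, df_effect, df_error):
    """Partial eta squared from a reported F statistic:
    eta_p^2 = F * df_effect / (F * df_effect + df_error)."""
    return f * df_effect / (f * df_effect + df_error)

reported = {
    # effect: (F, df_effect, df_error, reported eta_p^2)
    "resemblance": (20.85, 1, 54, 0.28),
    "emotion": (11.00, 1, 54, 0.17),
    "time": (14.13, 2.9, 156.38, 0.21),
    "emotion x time": (8.75, 2.35, 126.89, 0.14),
    "resemblance x time": (13.75, 1.94, 104.54, 0.20),
    "emotion x resemblance": (0.07, 1, 54, 0.001),
    "three-way": (2.33, 3.16, 170.48, 0.04),
}

for name, (f, df1, df2, eta) in reported.items():
    print(f"{name:>22}: computed {partial_eta_squared(f, df1, df2):.3f}, reported {eta}")
```

The same function applies to the evaluation ANOVAs reported below, e.g., F(1, 54) = 103.84 gives ηp² ≈ 0.66.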

To further decompose how the dynamics of emotional mimicry depended on facial resemblance, separate analyses were conducted for each emotional display. For happy expressions, there were significant main effects of facial resemblance, F(1, 54) = 16.8, p < 0.001, ηp² = 0.24, and time, F(2.48, 133.94) = 17.56, p < 0.001; ηp² = 0.25, as well as a significant interaction between these two factors, F(2.12, 114.32) = 11.62, p = 0.002, ηp² = 0.18. As shown in Fig. 2 (right panel), mimicry increased over time in both cases, which was confirmed by simple main effect analyses: for self-resembling faces, F(5, 270) = 23.39; p < 0.001, and for other-resembling faces, F(5, 270) = 3.16; p = 0.009. However, mimicry was larger for self-resembling than for other-resembling faces from the 3rd second onward (all Fs(1, 54) > 11.38, all ps < 0.002).

For angry displays, the main effect of facial resemblance was significant, F(1, 54) = 15.92; p < 0.001, ηp² = 0.23 (mimicry of anger was larger for self-resembling than for other-resembling faces), as was the interaction between facial resemblance and time, F(2.42, 130.39) = 9.17; p < 0.001, ηp² = 0.15. Simple main effect analyses showed that mimicry differed significantly between time periods for both other-resembling faces, F(5, 270) = 4.38; p < 0.001, and self-resembling faces, F(5, 270) = 5.10; p < 0.001. However, mimicry was larger for self-resembling than for other-resembling faces from the 2nd second onward (all Fs(1, 54) > 9.91; all ps < 0.003). As can be seen in Fig. 2 (left panel), for other-resembling faces the mimicry index falls below 0, indicating that the Zygomaticus response was larger than the Corrugator response and suggesting a more smile-like muscle activation. One-sample t-tests showed that the mean was significantly below 0 from the 2nd second onward (all ps < 0.026).

Evaluations

To assess the influence of facial emotional displays and facial resemblance on social evaluations, we conducted a 2 (emotional display: anger vs. happiness) × 2 (facial resemblance: self vs. other) ANOVA separately for trustworthiness, attractiveness, and confidence evaluations. The analysis revealed a robust main effect of the displayed emotion on all three dimensions: happy faces were rated as more trustworthy, F(1, 54) = 103.84, p < 0.001, ηp² = 0.66, more attractive, F(1, 54) = 103.45, p < 0.001, ηp² = 0.66, and more confident, F(1, 54) = 61.34, p < 0.001, ηp² = 0.53, than angry faces. However, facial resemblance neither differentiated evaluations nor interacted with emotional display (Fig. 3).

Relationship Between Facial Display, Mimicry and Evaluation

Mediation analyses using the MLmed macro for SPSS (Rockwood & Hayes, 2017) were additionally conducted to test whether mimicry predicts participants’ evaluations. Emotional display was set as the predictor (X), the mimicry index averaged between the 3rd and 6th second as the mediator (M), and evaluation as the dependent variable (Y). The model, estimated with maximum likelihood, included a fixed within-group effect of X and a random effect of X on M. The analysis confirmed a significant effect of emotional display on mimicry (a-path), B = 0.35, SE = 0.08, p < 0.001. For trustworthiness, the analysis also showed a direct effect of emotional display on evaluation (c’-path), B = 21.77, SE = 2.28, p < 0.001, whereas the relationship between mimicry and trustworthiness evaluation was not significant (b-path), B = 1.11, SE = 0.83, p = 0.18. Finally, the effect of display on evaluation remained almost unchanged when controlling for mimicry (c-path), B = 21.77, SE = 2.28, p < 0.001, and the indirect effect of display on evaluation through mimicry was not significant, M = 0.39, SE = 0.31, 95% CI [−0.18, 1.05]. The pattern of relationships between variables was virtually the same for the other two evaluations. For attractiveness: (b-path), B = 1.30, SE = 0.81, p = 0.11; (c’-path), B = 21.16, SE = 2.19, p < 0.001; (c-path), B = 21.16, SE = 2.19, p < 0.001; indirect effect, M = 0.45, SE = 0.31, 95% CI [−0.10, 1.11]. For confidence: (b-path), B = 0.87, SE = 0.78, p = 0.27; (c’-path), B = 15.89, SE = 2.17, p < 0.001; (c-path), B = 15.89, SE = 2.17, p < 0.001; indirect effect, M = 0.30, SE = 0.29, 95% CI [−0.24, 0.92].
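The indirect effect is the product of the a- and b-paths (0.35 × 1.11 ≈ 0.39 for trustworthiness), and its uncertainty is commonly assessed with a Monte Carlo confidence interval. The sketch below illustrates that idea from the reported coefficients and standard errors only. It assumes independent normal sampling distributions for a and b (their covariance is ignored), so it approximates, rather than reproduces, the interval reported above; the function name is hypothetical.

```python
import numpy as np

def monte_carlo_indirect(a, se_a, b, se_b, n_draws=100_000, seed=0):
    """Point estimate and 95% Monte Carlo CI for an indirect effect a*b.

    Draws a and b independently from normal distributions centered on the
    estimates (covariance ignored) and takes percentiles of their product.
    """
    rng = np.random.default_rng(seed)
    draws = rng.normal(a, se_a, n_draws) * rng.normal(b, se_b, n_draws)
    lo, hi = np.percentile(draws, [2.5, 97.5])
    return a * b, (lo, hi)

# a-path and trustworthiness b-path as reported in the text.
est, (lo, hi) = monte_carlo_indirect(a=0.35, se_a=0.08, b=1.11, se_b=0.83)
print(round(est, 2))  # 0.39
print(lo < 0 < hi)    # True: the interval includes zero
```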

Discussion

The present study aimed to assess whether emotional mimicry is influenced by facial self-resemblance. Overall, the results confirm our main prediction that self-resembling faces are more likely to be mimicked than other-resembling faces. More specifically, participants mimicked the smiles of self-resembling faces displaying happiness, i.e., we observed overall larger Zygomaticus than Corrugator activity (see e.g., Wingenbach et al., 2020). Likewise, self-resembling faces displaying anger provoked a convergent facial response, i.e., larger activation of the Corrugator than of the Zygomaticus, although these changes were relatively smaller than those for happiness. The pattern of responses to other-resembling faces was somewhat different. Here, participants also mimicked happiness, although their reactions were more subtle than those to the happiness of self-resembling faces. In addition, anger elicited a divergent, smile-like reaction, i.e., larger activity of the Zygomaticus than of the Corrugator.

These findings correspond with previous work showing that affiliative displays and social resemblance or self-relevance (e.g., belonging to the same social or age group, sharing similar attitudes) increase mimicry, while the displays of non-resembling others are frequently not mimicked or, particularly in the case of antagonizing emotions, can elicit divergent facial reactions (Ardizzi et al., 2014; Bourgeois & Hess, 2008; Forbes et al., 2021; Van Der Schalk et al., 2011). The findings also support the notion, expressed in most contemporary theoretical models of mimicry, that mimicry adapts to the situational context and is intensified by the affiliative attitudes that arise when interacting with someone psychologically similar or important (Arnold & Winkielman, 2020; Fischer & Hess, 2017; Wróbel & Imbir, 2019). If mimicry serves to achieve an affiliative outcome of the interaction, or at least helps to avoid antagonism, this may be particularly useful in explaining the smile-like response to anger in other-resembling faces. Here, the response can be interpreted as a reaction to threat (perhaps reactive rather than imitative in nature) that serves to appease the displayer and prevent antagonism (Fischer & Hess, 2017; Hess, 2021). In turn, mimicry of the anger of self-resembling faces may be due to the feeling of safety that the in-group provides to the individual with regard to emotional expression (Matsumoto et al., 2010). However, we did not collect data on how participants interpreted the meaning of the faces or what internal states the faces evoked. Future studies should include such measures to determine the nature of these muscle responses more precisely.

The key innovation of the current work lies in the degree to which contextual information about resemblance is subjectively accessible to the observer. More specifically, when the contextual manipulation relies on the displayer being socially similar or relevant (i.e., belonging to the same or a different social group, or being related to some meaningful event), the relationship between the displayer and the observer can be quite evident to the latter. Although the affective bias toward such resemblance may remain implicit (Otten & Moskowitz, 2000), knowledge about it is relatively accessible and thus may more directly influence the top-down regulation of mimicry (see Korb et al., 2019; Murata et al., 2016). In other words, the observer may have explicit access to information that affects their attitude toward the displayer. Conversely, discrimination of facial self-resemblance is assumed to result from a low-level process involved in face and self-recognition (Gobbini & Haxby, 2007; Platek et al., 2006, 2008). As a result, the observer is usually unaware of any resemblance and has little opportunity to discover the reasons for their own behavioral tendencies (Park et al., 2008). For example, in the present research, except for four excluded cases, none of the participants reported being aware of the experimental manipulation, and during the post-experimental debriefing most of them were surprised to learn that some of the faces included their own features. Therefore, the current data suggest that low-level perceptual features, such as physical resemblance, can change the contextual settings that modulate emotional mimicry. However, it is also important to consider that the morphing process itself can generate perceptual differences that affect how the stimuli are perceived. While efforts were made to minimize such differences when developing the material (e.g., both face types were created as morphs based on the same set of faces), it is possible that some of their properties, such as sharpness or brightness, may have indirectly contributed to the observed differences in mimicry. This potential confound should be taken into account in subsequent studies.

Although the results confirm general expectations regarding emotional mimicry, a few limitations must also be mentioned. As noted earlier, facial self-resemblance increases trust and cooperation in social dilemma games and leads to more favorable judgments (DeBruine et al., 2011; Giang et al., 2012; Kocsor et al., 2011). However, our research does not confirm this observation, as self-resembling faces were not rated as more trustworthy, confident, or attractive. Why did resemblance fail to affect judgments in this case? Possible explanations may lie in the adaptive nature of social behaviors such as facial displays. Although morphological facial features help people assess the social traits of others, e.g., trustworthiness or dominance (Hassin & Trope, 2000; Todorov et al., 2008; Zebrowitz & Montepare, 2008), numerous studies have also shown that people judge others according to their emotional facial expressions (e.g., Kaminska et al., 2020; Knutson, 1996; Montepare & Dobish, 2003; Morrison et al., 2013; Olszanowski et al., 2018; Winkielman et al., 2015). For example, a smile usually increases positive assessments of an individual’s trustworthiness, attractiveness, or confidence, whereas frowning decreases them in comparison to a neutral facial display. Importantly, expressing emotions can override the information conveyed by facial morphology, e.g., about trustworthiness or attractiveness. As a result, people can more or less voluntarily camouflage their phenotypic features to manipulate the impressions others form of them (Dijk et al., 2018; Ekman et al., 1988; Gill et al., 2014; Olszanowski et al., 2019). In fact, phenotypic features signaling resemblance may also be more difficult to detect explicitly when faces display emotional expressions. For example, the accuracy of relatedness judgments made on images of sibling or unrelated pairs was lower when the faces smiled than when they displayed neutral expressions (Fasolt et al., 2019).

Additionally, recent studies on social evaluations of trustworthiness have suggested that trait evaluations inferred from faces can be rapidly updated by diagnostic behavioral information (Shen et al., 2020). With regard to the current data, we speculate that the “camouflage” provided by the emotional display updated implicit impressions but did not affect the affiliative attitudes evoked by resemblance. As a result, participants were more likely to mimic self-resembling faces, but evaluated them based on more perceptually accessible cues derived from the facial expression. This interpretation is further supported by mediation analyses indicating a direct effect of emotion display on evaluations, with no indirect effect via mimicry. Nevertheless, the true nature of the preference for self-resembling faces in social evaluation, and its interplay with the emotional display of the evaluated person, should be investigated in further studies.
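As a reading aid for the distinction between direct and indirect effects, the standard single-level linear mediation decomposition is sketched below, with X standing for the emotion display, M for facial mimicry, and Y for the social evaluation. This is a simplified illustration under stated assumptions, not the fitted model: because trials are nested within participants, a multilevel variant (such as the MLmed macro cited in the references) is the more appropriate tool, and the exact additivity of the last line holds only for ordinary least-squares estimates of these two single-level equations.

```latex
\begin{aligned}
M &= i_M + a\,X + e_M \\
Y &= i_Y + c'\,X + b\,M + e_Y \\
\underbrace{c}_{\text{total effect}} &= \underbrace{c'}_{\text{direct effect}} + \underbrace{a\,b}_{\text{indirect effect}}
\end{aligned}
```

In these terms, the pattern described above corresponds to a reliable direct path c' from emotion display to evaluations, with an indirect effect ab (through mimicry) indistinguishable from zero.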

In conclusion, we suggest that this work demonstrates an interesting and novel phenomenon related to emotional mimicry. As previous research has shown, people are more likely to mimic socially resembling others. Our research extends these observations by showing that mimicry can also be modulated by subtle cues of physical resemblance. It is worth pointing out, however, that such modulation seems to be limited to behaviors of a latent nature, whereas explicit behaviors (e.g., evaluative judgments) are based on more readily available premises. Despite some limitations, we believe that this study contributes to a better understanding of emotional mimicry and of how it is modulated by the context in which an interaction takes place.

References

Abele, A. E., & Wojciszke, B. (2007). Agency and communion from the perspective of self versus others. Journal of Personality and Social Psychology, 93(5), 751–763. https://doi.org/10.1037/0022-3514.93.5.751

Abele, A. E., & Wojciszke, B. (2014). Communal and agentic content in social cognition: A dual perspective model. In Advances in experimental social psychology (Vol. 50, pp. 195–255). Academic Press.

Ardizzi, M., Sestito, M., Martini, F., Umiltà, M. A., Ravera, R., & Gallese, V. (2014). When age matters: Differences in facial mimicry and autonomic responses to peers’ emotions in teenagers and adults. PLoS ONE, 9(10), e110763.

Arnold, A. J., & Winkielman, P. (2020). The mimicry among us: Intra-and inter-personal mechanisms of spontaneous mimicry. Journal of Nonverbal Behavior, 44(1), 195–212.

Bavelas, J. B., Black, A., Lemery, C. R., & Mullett, J. (1986). “I show how you feel”: Motor mimicry as a communicative act. Journal of Personality and Social Psychology, 50(2), 322–329.

Bernieri, F. J., & Rosenthal, R. (1991). Interpersonal coordination: Behavior matching and interactional synchrony. In R. S. Feldman & B. Rimé (Eds.), Studies in emotion & social interaction. Fundamentals of nonverbal behavior (pp. 401–432). Cambridge University Press.

Blechert, J., Peyk, P., Liedlgruber, M., & Wilhelm, F. H. (2015). ANSLAB: Integrated multi-channel peripheral biosignal processing in psychophysiological science. Behavior Research Methods, 48(4), 1528–1545.

Bourgeois, P., & Hess, U. (2008). The impact of social context on mimicry. Biological Psychology, 77(3), 343–352.

Chartrand, T. L., & Bargh, J. A. (1999). The chameleon effect: The perception–behavior link and social interaction. Journal of Personality and Social Psychology, 76(6), 893–910.

Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd ed.). Erlbaum.

DeBruine, L. M. (2002). Facial resemblance enhances trust. Proceedings of the Royal Society of London. Series B: Biological Sciences, 269(1498), 1307–1312.

DeBruine, L. M. (2004). Resemblance to self increases the appeal of child faces to both men and women. Evolution and Human Behavior, 25(3), 142–154.

DeBruine, L. M. (2005). Trustworthy but not lust-worthy: Context-specific effects of facial resemblance. Proceedings of the Royal Society B: Biological Sciences, 272(1566), 919–922.

DeBruine, L. M., Jones, B. C., Watkins, C. D., Roberts, S. C., Little, A. C., Smith, F. G., & Quist, M. C. (2011). Opposite-sex siblings decrease attraction, but not prosocial attributions, to self-resembling opposite-sex faces. Proceedings of the National Academy of Sciences, 108(28), 11710–11714.

Dijk, C., Fischer, A. H., Morina, N., Van Eeuwijk, C., & Van Kleef, G. A. (2018). Effects of social anxiety on emotional mimicry and contagion: Feeling negative, but smiling politely. Journal of Nonverbal Behavior, 42(1), 81–99.

Dimberg, U. (1982). Facial reactions to facial expressions. Psychophysiology, 19(6), 643–647.

Dimberg, U., Thunberg, M., & Elmehed, K. (2000). Unconscious facial reactions to emotional facial expressions. Psychological Science, 11(1), 86–89.

Ekman, P., Friesen, W. V., & O’Sullivan, M. (1988). Smiles when lying. Journal of Personality and Social Psychology, 54(3), 414–420. https://doi.org/10.1037/0022-3514.54.3.414

Fasolt, V., Holzleitner, I. J., Lee, A. J., O’Shea, K. J., & DeBruine, L. M. (2019). Contribution of shape and surface reflectance information to kinship detection in 3D face images. Journal of Vision, 19(12), 1–9.

Fischer, A., & Hess, U. (2017). Mimicking emotions. Current Opinion in Psychology, 17, 151–155.

Fischer, A., Becker, D., & Veenstra, L. (2012). Emotional mimicry in social context: The case of disgust and pride. Frontiers in Psychology, 3, 475.

Forbes, P. A. G., Korb, S., Radloff, A., & Lamm, C. (2021). The effects of self-relevance vs. reward value on facial mimicry. Acta Psychologica, 212, 103193. https://doi.org/10.1016/j.actpsy.2020.103193

Gawronski, B., & Quinn, K. A. (2013). Guilty by mere similarity: Assimilative effects of facial resemblance on automatic evaluation. Journal of Experimental Social Psychology, 49(1), 120–125.

Giang, T., Bell, R., & Buchner, A. (2012). Does facial resemblance enhance cooperation? PLoS ONE, 7(10), e47809.

Gill, D., Garrod, O. G., Jack, R. E., & Schyns, P. G. (2014). Facial movements strategically camouflage involuntary social signals of face morphology. Psychological Science, 25(5), 1079–1086.

Gobbini, M. I., & Haxby, J. V. (2007). Neural systems for recognition of familiar faces. Neuropsychologia, 45(1), 32–41.

Goode, J., & Robinson, J. D. (2013). Linguistic synchrony in parasocial interaction. Communication Studies, 64(4), 453–466.

Guéguen, N., & Martin, A. (2009). Incidental similarity facilitates behavioral mimicry. Social Psychology, 40(2), 88.

Günaydin, G., Zayas, V., Selcuk, E., & Hazan, C. (2012). I like you but I don’t know why: Objective facial resemblance to significant others influences snap judgments. Journal of Experimental Social Psychology, 48(1), 350–353.

Halberstadt, J. B. (2006). The generality and ultimate origins of the attractiveness of prototypes. Personality and Social Psychology Review, 10, 166–183.

Hassin, R., & Trope, Y. (2000). Facing faces: Studies on the cognitive aspects of physiognomy. Journal of Personality and Social Psychology, 78(5), 837–852.

Hauber, M. E., & Sherman, P. W. (2001). Self-referent phenotype matching: Theoretical considerations and empirical evidence. Trends in Neurosciences, 24(10), 609–616.

Hess, U. (2021). Who to whom and why: The social nature of emotional mimicry. Psychophysiology, 58, e13675.

Hess, U., & Blairy, S. (2001). Facial mimicry and emotional contagion to dynamic emotional facial expressions and their influence on decoding accuracy. International Journal of Psychophysiology, 40(2), 129–141.

Hess, U., & Fischer, A. (2014). Emotional mimicry: Why and when we mimic emotions. Social and Personality Psychology Compass, 8(2), 45–57.

Hess, U., Adams, R. B., Jr., & Kleck, R. E. (2009). The categorical perception of emotions and traits. Social Cognition, 27(2), 320–326.

Hess, U., Arslan, R., Mauersberger, H., Blaison, C., Dufner, M., Denissen, J. J., & Ziegler, M. (2017). Reliability of surface facial electromyography. Psychophysiology, 54(1), 12–23.

Hofree, G., Ruvolo, P., Reinert, A., Bartlett, M. S., & Winkielman, P. (2018). Behind the robot’s smiles and frowns: In social context, people do not mirror android’s expressions but react to their informational value. Frontiers in Neurorobotics, 12, 14.

Horstmann, G. (2003). What do facial expressions convey: Feeling states, behavioral intentions, or action requests? Emotion, 3(2), 150–166.

Hühnel, I., Fölster, M., Werheid, K., & Hess, U. (2014). Empathic reactions of younger and older adults: No age related decline in affective responding. Journal of Experimental Social Psychology, 50, 136–143.

JASP Team. (2021). JASP (0.15) [Computer software]. https://jasp-stats.org

Kaminska, O. K., Magnuski, M., Olszanowski, M., Gola, M., Brzezicka, A., & Winkielman, P. (2020). Ambiguous at the second sight: Mixed facial expressions trigger late electrophysiological responses linked to lower social impressions. Cognitive, Affective, & Behavioral Neuroscience, 20(2), 441–454.

Knutson, B. (1996). Facial expressions of emotion influence interpersonal trait inferences. Journal of Nonverbal Behavior, 20(3), 165–182.

Kocsor, F., Rezneki, R., Juhász, S., & Bereczkei, T. (2011). Preference for facial self-resemblance and attractiveness in human mate choice. Archives of Sexual Behavior, 40(6), 1263–1270.

Korb, S., Goldman, R., Davidson, R. J., & Niedenthal, P. M. (2019). Increased medial prefrontal cortex and decreased Zygomaticus activation in response to disliked smiles suggest top-down inhibition of facial mimicry. Frontiers in Psychology, 10.

Kraus, M. W., & Chen, S. (2010). Facial-feature resemblance elicits the transference effect. Psychological Science, 21(4), 518–522.

Krupp, D. B., Debruine, L. M., & Barclay, P. (2008). A cue of kinship promotes cooperation for the public good. Evolution and Human Behavior, 29(1), 49–55.

Kulesza, W., Dolinski, D., Huisman, A., & Majewski, R. (2014). The echo effect: The power of verbal mimicry to influence prosocial behavior. Journal of Language and Social Psychology, 33(2), 183–201.

Lewis, D. M. (2011). The sibling uncertainty hypothesis: Facial resemblance as a sibling recognition cue. Personality and Individual Differences, 51(8), 969–974.

Lieberman, D., Tooby, J., & Cosmides, L. (2007). The architecture of human kin detection. Nature, 445(7129), 727–731.

Likowski, K. U., Mühlberger, A., Seibt, B., Pauli, P., & Weyers, P. (2008). Modulation of facial mimicry by attitudes. Journal of Experimental Social Psychology, 44(4), 1065–1072.

Matsumoto, D., Yoo, S. H., & Chung, J. (2010). The expression of anger across cultures. In International handbook of anger (pp. 125–137). Springer.

Montepare, J. M., & Dobish, H. (2003). The contribution of emotion perceptions and their overgeneralizations to trait impressions. Journal of Nonverbal Behavior, 27(4), 237–254.

Morrison, E. R., Morris, P. H., & Bard, K. A. (2013). The stability of facial attractiveness: Is it what you’ve got or what you do with it? Journal of Nonverbal Behavior, 37(2), 59–67.

Murata, A., Saito, H., Schug, J., Ogawa, K., & Kameda, T. (2016). Spontaneous facial mimicry is enhanced by the goal of inferring emotional states: Evidence for moderation of “automatic” mimicry by higher cognitive processes. PLoS ONE, 11(4), e0153128.

Olszanowski, M., Pochwatko, G., Kuklinski, K., Scibor-Rylski, M., Lewinski, P., & Ohme, R. K. (2015). Warsaw set of emotional facial expression pictures: A validation study of facial display photographs. Frontiers in Psychology, 5, 1516.

Olszanowski, M., Kaminska, O. K., & Winkielman, P. (2018). Mixed matters: Fluency impacts trust ratings when faces range on valence but not on motivational implications. Cognition and Emotion, 32(5), 1032–1051.

Olszanowski, M., Parzuchowski, M., & Szymków, A. (2019). When the smile is not enough: The interactive role of smiling and facial characteristics in forming judgments about trustworthiness and dominance. Roczniki Psychologiczne/Annals of Psychology, 22(1), 35–52.

Olszanowski, M., Wróbel, M., & Hess, U. (2020). Mimicking and sharing emotions: A re-examination of the link between facial mimicry and emotional contagion. Cognition and Emotion, 34(2), 367–376.

Otten, S., & Moskowitz, G. B. (2000). Evidence for implicit evaluative in-group bias: Affect-biased spontaneous trait inference in a minimal group paradigm. Journal of Experimental Social Psychology, 36(1), 77–89. https://doi.org/10.1006/jesp.1999.1399

Park, J. H., Schaller, M., & Van Vugt, M. (2008). Psychology of human kin recognition: Heuristic cues, erroneous inferences, and their implications. Review of General Psychology, 12(3), 215–235.

Peng, S., Zhang, L., & Hu, P. (2021). Relating self-other overlap to ingroup bias in emotional mimicry. Social Neuroscience, 16(4), 439–447.

Platek, S. M., Keenan, J. P., & Mohamed, F. B. (2005). Sex differences in the neural correlates of child facial resemblance: An event-related fMRI study. NeuroImage, 25(4), 1336–1344.

Platek, S. M., Loughead, J. W., Gur, R. C., Busch, S., Ruparel, K., Phend, N., Panyavin, I. S., & Langleben, D. D. (2006). Neural substrates for functionally discriminating self-face from personally familiar faces. Human Brain Mapping, 27(2), 91–98.

Platek, S. M., Krill, A. L., & Kemp, S. M. (2008). The neural basis of facial resemblance. Neuroscience Letters, 437(2), 76–81.

Platek, S. M., Krill, A. L., & Wilson, B. (2009). Implicit trustworthiness ratings of self-resembling faces activate brain centers involved in reward. Neuropsychologia, 47(1), 289–293.

Reber, R., Schwarz, N., & Winkielman, P. (2004). Processing fluency and aesthetic pleasure: Is beauty in the perceiver’s processing experience? Personality and Social Psychology Review, 8(4), 364–382.

Rhodes, G., & Tremewan, T. (1996). Averageness, exaggeration, and facial attractiveness. Psychological Science, 7(2), 105–110.

Richter, N., Tiddeman, B., & Haun, D. B. (2016). Social preference in preschoolers: Effects of morphological self-similarity and familiarity. PLoS ONE, 11(1), e0145443.

Rockwood, N. J., & Hayes, A. F. (2017). MLmed: An SPSS macro for multilevel mediation and conditional process analysis. Poster presented at the annual meeting of the Association of Psychological Science (APS), Boston, MA.

Rymarczyk, K., Biele, C., Grabowska, A., & Majczynski, H. (2011). EMG activity in response to static and dynamic facial expressions. International Journal of Psychophysiology, 79(2), 330–333.

Rymarczyk, K., Żurawski, Ł., Jankowiak-Siuda, K., & Szatkowska, I. (2016). Emotional empathy and facial mimicry for static and dynamic facial expressions of fear and disgust. Frontiers in Psychology, 7, 1853.

Sachisthal, M. S., Sauter, D. A., & Fischer, A. H. (2016). Mimicry of ingroup and outgroup emotional expressions. Comprehensive Results in Social Psychology, 1(1–3), 86–105.

Shen, X., Mann, T. C., & Ferguson, M. J. (2020). Beware a dishonest face?: Updating face-based implicit impressions using diagnostic behavioral information. Journal of Experimental Social Psychology, 86, 103888.

Stel, M., Van Baaren, R. B., Blascovich, J., Van Dijk, E., McCall, C., Pollmann, M. M., Van Leeuwen, M. L., Mastop, J., & Vonk, R. (2010). Effects of a priori liking on the elicitation of mimicry. Experimental Psychology, 57(6), 412–418.

Tassinary, L. G., Cacioppo, J. T., & Vanman, E. J. (2007). The skeletomotor system: Surface electromyography. In J. Cacioppo, L. G. Tassinary, & G. G. Berntson (Eds.), Handbook of psychophysiology (3rd ed., pp. 267–302). Cambridge University Press.

Todorov, A., Said, C. P., Engell, A. D., & Oosterhof, N. N. (2008). Understanding evaluation of faces on social dimensions. Trends in Cognitive Sciences, 12(12), 455–460.

Todorov, A., Olivola, C. Y., Dotsch, R., & Mende-Siedlecki, P. (2015). Social attributions from faces: Determinants, consequences, accuracy, and functional significance. Annual Review of Psychology, 66, 519–545.

Van Der Schalk, J., Fischer, A., Doosje, B., Wigboldus, D., Hawk, S., Rotteveel, M., & Hess, U. (2011). Convergent and divergent responses to emotional displays of ingroup and outgroup. Emotion, 11(2), 286–298.

Verosky, S. C., & Todorov, A. (2010). Differential neural responses to faces physically similar to the self as a function of their valence. NeuroImage, 49(2), 1690–1698.

Westfall, J. (2015). PANGEA: Power analysis for general ANOVA designs. Unpublished manuscript. Available at http://jakewestfall.org/publications/pangea.pdf.

Weyers, P., Mühlberger, A., Kund, A., Hess, U., & Pauli, P. (2009). Modulation of facial reactions to avatar emotional faces by nonconscious competition priming. Psychophysiology, 46(2), 328–335.

Wingenbach, T. S., Brosnan, M., Pfaltz, M. C., Peyk, P., & Ashwin, C. (2020). Perception of discrete emotions in others: Evidence for distinct facial mimicry patterns. Scientific Reports, 10, 4692.

Winkielman, P., Halberstadt, J., Fazendeiro, T., & Catty, S. (2006). Prototypes are attractive because they are easy on the mind. Psychological Science, 17(9), 799–806.

Winkielman, P., Olszanowski, M., & Gola, M. (2015). Faces in-between: Evaluations reflect the interplay of facial features and task-dependent fluency. Emotion, 15(2), 232–242.

Winkielman, P., Coulson, S., & Niedenthal, P. (2018). Dynamic grounding of emotion concepts. Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences, 373(1752), 20170127.

Wojciszke, B., & Abele, A. E. (2008). The primacy of communion over agency and its reversals in evaluations. European Journal of Social Psychology, 38, 1139–1147.

Wood, A., Rychlowska, M., Korb, S., & Niedenthal, P. M. (2016). Fashioning the face: Sensorimotor simulation contributes to facial expression recognition. Trends in Cognitive Sciences, 20(3), 227–240.

Wróbel, M., & Imbir, K. K. (2019). Broadening the perspective on emotional contagion and emotional mimicry: The correction hypothesis. Perspectives on Psychological Science, 14(3), 437–451.

Wróbel, M., & Olszanowski, M. (2019). Emotional reactions to dynamic morphed facial expressions: A new method to induce emotional contagion. Roczniki Psychologiczne/Annals of Psychology, 22(1), 91–102.

Wróbel, M., Piórkowska, M., Rzeczkowska, M., Troszczyńska, A., Tołopiło, A., & Olszanowski, M. (2021). The “Big Two” and socially induced emotions: Agency and communion jointly influence emotional contagion and emotional mimicry. Motivation and Emotion, 45, 683–704.

Ybarra, O., Chan, E., Park, H., Burnstein, E., Monin, B., & Stanik, C. (2008). Life’s recurring challenges and the fundamental dimensions: An integration and its implications for cultural differences and similarities. European Journal of Social Psychology, 38, 1083–1092.

Zajonc, R. B. (1968). Attitudinal effects of mere exposure. Journal of Personality and Social Psychology, 9, 1–27.

Zebrowitz, L. A., & Montepare, J. M. (2008). Social psychological face perception: Why appearance matters. Social and Personality Psychology Compass, 2(3), 1497–1517.

Funding

This work was supported by the National Science Centre in Poland under Grant No. 2016/21/HS6/01179 awarded to MO.

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Data Availability

Data and stimulus samples are available at https://osf.io/3h4r6/?view_only=d7905ca8c04e41dbb9590d802c986f76

Ethical Approval

The experimental procedure was conducted in accordance with the ethical guidelines of SWPS University of Social Sciences & Humanities and was approved by the local Ethics Committee.

Informed Consent

All participants provided written informed consent prior to the experimental procedure. All authors gave informed consent for manuscript publication.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Olszanowski, M., Lewandowska, P., Ozimek, A. et al. The Effect of Facial Self-Resemblance on Emotional Mimicry. J Nonverbal Behav 46, 197–213 (2022). https://doi.org/10.1007/s10919-021-00395-x

DOI: https://doi.org/10.1007/s10919-021-00395-x