Abstract

The human voice communicates emotion through two different types of vocalizations: nonverbal vocalizations (brief non-linguistic sounds like laughs) and speech prosody (tone of voice). Research examining recognizability of emotions from the voice has mostly focused on either nonverbal vocalizations or speech prosody, and included few categories of positive emotions. In two preregistered experiments, we compare human listeners’ (total n = 400) recognition performance for 22 positive emotions from nonverbal vocalizations (n = 880) to that from speech prosody (n = 880). The results show that listeners were more accurate in recognizing most positive emotions from nonverbal vocalizations compared to prosodic expressions. Furthermore, acoustic classification experiments with machine learning models demonstrated that positive emotions are expressed with more distinctive acoustic patterns for nonverbal vocalizations as compared to speech prosody. Overall, the results suggest that vocal expressions of positive emotions are communicated more successfully when expressed as nonverbal vocalizations compared to speech prosody.

Introduction

The human voice expresses a wealth of information, serving as an audible index of a person’s age, sex, identity, and emotional state (Kreiman & Sidtis, 2011). Among the features conveyed by the voice, an important element for everyday social interactions is the expression of emotions. Independently of semantic information (i.e., what is being said), the voice can express emotions via speech prosody (i.e., how it is being said), like speaking louder or softer. Emotion can also be expressed vocally via nonverbal vocalizations like screams and laughs. The voice can communicate discrete emotions such as anger, fear, and happiness to listeners via both nonverbal vocalizations and speech prosody (e.g., Banse & Scherer, 1996; Juslin & Laukka, 2001, 2003). However, it is poorly understood how the type of vocalization (nonverbal vocalizations vs speech prosody) influences listeners’ recognition of emotions. For example, is it easier to recognize amusement from a laugh as compared to a word spoken with amusement? Moreover, research to date has tended to include a limited number of positive emotions or to use a single positive emotion category, referred to as happiness or joy. In the present study, we examine recognizability of 22 positive emotions expressed via nonverbal vocalizations and speech prosody, and compare the accuracy levels between the two vocalization types.

Nonverbal Vocalizations Versus Speech Prosody

Nonverbal vocalizations are brief non-speech expressions of emotions like laughs, moans, sighs, and grunts. They exclude linguistic interjections (e.g., “Surprise!”) that form part of semantic speech content (e.g., Ameka, 1992). Nonverbal vocalizations are similar to affect bursts (Scherer, 1994), but they do not include emblematic affect expressions like “yuck!” and they do not have to include a change in facial expressions. Nonverbal vocalizations are considered ‘pure’ expressions of emotions in the sense that they closely reflect physiological and autonomic changes (Scherer, 1986). Speech prosody, on the other hand, refers to suprasegmental attributes of spoken language, such as intonation, that can communicate emotions concurrently with semantic content (Juslin & Laukka, 2003; Scott et al., 2010). Acoustic features mainly related to rhythm and melody in speech constitute the domain of prosody and are sometimes referred to as paralinguistic features (Gibbon, 2017). It has been proposed that nonverbal vocalizations might be easier to understand than speech prosody (Scott et al., 2010). The production of emotional speech prosody is constrained because during speech production, there can be conflicts between the prosodic features associated with an emotional state and the ones used to denote linguistic information. For instance, changes in pitch levels signaling emotional information can conflict with changes relating to linguistic stress in a sentence or the rising pitch of a question. Such conflicts may create ambiguity in the speech prosody, resulting in less discriminable emotional information compared to nonverbal vocalizations. Nonverbal vocalizations, on the other hand, are largely unconstrained by linguistic structure (e.g., Pell et al., 2015; Scott, Sauter, & McGettigan, 2010). They are produced at the glottal/subglottal level with reduced volitional control of the vocal tract configurations (Trouvain, 2014). A lack of volitional control and linguistic constraints on nonverbal vocalizations leads to greater acoustic variability in nonverbal expression of emotions compared to prosodic expressions of emotions (e.g., Jessen & Kotz, 2011; Lima et al., 2013). Such flexibility might lead to the expression of emotions with higher discriminability in terms of acoustic structures.

Some emotions are typically expressed by means of nonverbal vocalizations and rarely vocalized via speech (Banse & Scherer, 1996). This proposal (as yet untested) implies that the recognizability advantage of nonverbal vocalizations over speech prosody might differ per emotion. For example, relief is typically expressed with sighs and amusement is expressed with laughs, with such vocalizations also occurring in other mammals, like rats (Panksepp & Burgdorf, 2003; Soltysik & Jelen, 2005). These emotions might have more distinctive acoustic configurations when expressed via nonverbal vocalizations compared to speech prosody, leading to higher recognizability. However, it is also possible that some emotions, like feeling respected, do not have any prototypical nonverbal vocalizations and are more readily expressed through speech prosody. These emotions might have better differentiated acoustic profiles in speech prosody compared to nonverbal vocalizations.

Even though arguments for enhanced communication of emotions via nonverbal vocalizations as compared to speech prosody have been put forward, there is little research formally testing this notion. Studies conducted to date have found that negative emotions are recognized more accurately (Hawk et al., 2009; Lausen & Hammerschmidt, 2020; Sauter, 2007) and rapidly (Castiajo & Pinheiro, 2019; Pell et al., 2015; Schaerlaeken & Grandjean, 2018) from nonverbal vocalizations compared to speech prosody. For positive emotions, this perception advantage of nonverbal vocalizations has been tested for happiness/joy and pride, yet it is not established whether it generalizes to other positive emotions. In the present study, we compare human listeners’ recognition performance for nonverbal vocalizations and speech prosody for a wide range of different positive emotions.

Theorists have highlighted the need to differentiate between different positive emotions in vocal expressions for several decades. In an early review, Scherer (1986) lamented that such distinctions were rarely made in the literature and noted that it is therefore not clear what researchers refer to when they use the term ‘happiness,’ which makes it difficult to compare results between studies. Ekman (1992) suggested that ‘happiness’ should be replaced with several positive emotions and proposed that listeners might be able to differentiate these emotions from vocal expressions. The need to differentiate between positive emotions is further supported by an empirical study comparing more intense and less intense forms of emotional vocalizations (Banse & Scherer, 1996). The two positive emotions, elation and happiness, were rarely confused with each other, suggesting that they might be expressions of two distinct positive emotions.

In recent years, researchers examining vocal communication of emotions have increasingly differentiated between distinct positive emotional states. There is empirical evidence showing that several positive emotions are expressed with vocal expressions characterized by distinct acoustic patterns and that they can be recognized by naïve listeners (see Kamiloğlu et al., 2020 for a review). However, studies to date have tended to include only a few categories of positive emotions and have focused on either nonverbal vocalizations or speech prosody. In the present study, we test whether listeners can recognize 22 different positive emotions from nonverbal vocalizations and speech prosody. We then test the robustness of these findings by conducting an identical experiment in a second cultural context.

The Present Study

In the present study, we aim to compare recognition accuracy for nonverbal vocalizations to that from speech prosody for positive emotions. To do so, we first examined which of the 22 positive emotions could be recognized at better-than-chance levels for each type of vocalization. This allowed us to differentiate positive emotions that were not recognized from nonverbal vocalizations or speech prosody. We then tested the hypothesis that positive emotions are more accurately recognized when expressed as nonverbal vocalizations compared to speech prosody. We further sought to exploratorily examine recognition accuracy differences between the two vocalization types for each emotion. In order to be inclusive of a wide range of positive emotions, a total of 22 positive emotions that have been examined in the scientific literature were included: admiration, amae [presumption on others to be indulgent and accepting (Behrens, 2004)], amusement, awe, determination, elation, elevation, excitement, gratitude, hope, inspiration, interest, lust, moved, pride, relief, respected, schadenfreude, sensory pleasure, surprise, tenderness, and triumph (see Table 1 for definitions and examples).

In Experiment 1, naïve Dutch listeners were asked to complete a forced-choice emotion categorization task for vocal expressions produced by native Dutch speakers. Experiment 2 was a replication of Experiment 1 in which naïve Chinese listeners completed an identical forced-choice emotion categorization task with vocalizations of 22 positive emotions produced by native Chinese Mandarin speakers. The hypotheses, methods, and data analysis plan for both experiments were pre-registered on the Open Science Framework (https://osf.io/6c8v3/?view_only=) before data collection was commenced.

In order to compare the acoustic patterns of positive emotions expressed via nonverbal vocalizations with those of speech prosody, we conducted an acoustic analysis. Machine learning models were used to classify the nonverbal vocalizations and speech prosody stimuli from Experiments 1 and 2 based on their acoustic features. We hypothesized that acoustic classification accuracy of positive emotions would be higher for nonverbal vocalizations compared to speech prosody.

Experiment 1: Dutch Listeners’ Recognition of Positive Emotions from Dutch Vocalizations

In Experiment 1, we first examine whether naïve Dutch listeners would be able to recognize 22 positive emotions from vocal expressions produced by native Dutch speakers at levels significantly above chance. We then test whether recognition of positive emotions is better from nonverbal vocalizations as compared to speech prosody overall, and provide a breakdown of the results per emotion.

Method

Participants

We estimated the sample size through data simulation. A generalized linear mixed model was constructed to test whether participants would recognize 22 positive emotions at better-than-chance levels, with the dependent variable being a binary response (correct or incorrect). Positive emotion, a factor with 22 levels, was set as a fixed effect. Participant and vocalization IDs were entered as random factors accounting for participant and speaker variability. Chance level was set to 1/8, which is the chance of selecting the correct emotion category by random guessing in an 8-way forced-choice task. We computed the logit of the reference probability of 1/8, which was entered in the model as an offset term. The simulations indicated that a sample size of N = 200 would ensure that the experiment was well powered (80%) for testing whether recognition performance would be at better-than-chance levels. Simulations were run in R (version 1.1.383, www.r-project.org) using the lme4 package (Bates, Mächler, Bolker, & Walker, 2015). The simulation script is provided in Supplementary Materials Script 1S.

Two hundred native Dutch speakers (105 women, 92 men, 2 other, 1 preferred not to say; Mage = 21.75, SDage = 3.82, range = 18–40 years old) with no (self-reported) hearing impairments were recruited via the University of Amsterdam, Department of Psychology’s research pool, and by advertisements posted on Facebook. Participation in the study was compensated with course credit or monetary reward.

Materials and Procedure

Stimuli

Posed vocal expressions of positive emotions were recorded at the University of Amsterdam’s psychology laboratory. The walls of the laboratory were covered with high quality acoustic fabric to prevent echoes. Individuals whose native language was Dutch and who had never been diagnosed or treated for any voice, speech, hearing, or language disorder were considered eligible for participation in the study. Twenty participants (10 women, 10 men; Mage = 22.42, SDage = 2.64, range = 20–31 years old) were invited to the lab to record vocalizations.

Upon arriving at the lab, participants were seated in front of a lab computer, which displayed each emotion term in turn, together with its definition. Participants were then asked by the experimenter to describe the emotion in their own words to ensure that they understood the definition correctly. If needed, they were provided examples. Then, they read a situational example and were asked to imagine the situation as vividly as possible. They were then asked to produce a vocal expression of the corresponding emotion. The target emotions, accompanying definitions, and situational examples can be found in Table 1.

Participants were positioned approximately 30 cm from the microphone. They produced both nonverbal vocalizations and speech prosody for each of the 22 positive emotions. When producing nonverbal vocalizations, participants were asked to avoid actual words (e.g., “no,” “yes”) and vocalizations with conventionalized semantic meanings (e.g., “yuck,” “ouch”). For speech prosody, speakers were asked to produce the semantically neutral phrase “zeshonderd zevenenveertig” (from Dutch: six hundred forty-seven) in a way that expressed the target emotion. We chose to use a neutral phrase to make sure that vocal emotions would be communicated solely in terms of prosodic cues. Participants were instructed to avoid inserting any additional sounds such as laughs or sighs into their speech (e.g., Hawk et al., 2009). Each type of vocalization (nonverbal vocalizations and speech prosody) constituted a separate block. The order of the two blocks and the order of emotions in each block were randomized across participants. Participants were allowed to produce multiple vocal expressions for a given emotion. If they did, they were asked to choose the expression they thought best depicted the emotion they were trying to express. All stimuli were recorded using a high-quality microphone (Sennheiser MKE 600) and a Tascam DR-100 MK3 recorder.

In total, 880 vocalizations were collected. Average duration was 1.30 s (SD = 0.56) for nonverbal vocalizations, and 2.28 s (SD = 0.44) for speech prosody. All of the vocalizations were used in the recognition experiment without any preselection. Before the recognition experiment, recordings were digitized at a 44 kHz sampling rate (16 bit, mono) and normalized for peak amplitude using Audacity software (version 2.2.2, https://www.audacityteam.org). A representative vocalization for each positive emotion and vocalization type can be listened to at https://emotionwaves.github.io/dutch22/.
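To make the peak normalization step concrete, the following Python sketch rescales a clip so that its largest absolute sample reaches a target peak. The function name `normalize_peak`, the target peak of 1.0, and the example samples are assumptions made for illustration; the recordings themselves were normalized in Audacity, and the exact settings used there are not reported.

```python
def normalize_peak(samples, target_peak=1.0):
    """Scale samples so the largest absolute value equals target_peak.

    Hypothetical helper for illustration only; not the Audacity routine.
    """
    peak = max(abs(s) for s in samples)
    if peak == 0:
        return list(samples)  # silent clip: nothing to scale
    gain = target_peak / peak
    return [s * gain for s in samples]


# Made-up samples standing in for a quiet recording.
quiet_clip = [0.0, 0.1, -0.25, 0.2]
normalized = normalize_peak(quiet_clip)
```

Peak normalization applies a single gain to the whole clip, so relative loudness changes within a vocalization are preserved while peak levels are equated across clips.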

Experimental Procedure

The recognition study was run online using the Qualtrics (Provo, UT) survey tool. Before the experiment, participants were instructed to complete the experiment in a silent environment and to use headphones. After being informed about the general procedure and giving informed consent, they were provided with the definitions of 22 positive emotions (see Table 1). After reading the definitions, participants completed two practice trials, each of which played an emotional vocalization that was not included in the main experiment (taken from www.findsounds.com). After listening to each vocalization, they were asked to select the emotion they thought the individual was expressing, choosing from eight response options. During the practice trials, participants were asked to adjust to a comfortable sound level and to keep it constant for the rest of the study. After the practice trials, participants were presented with two screening questions. One of these played a bird sound and the other a car horn. On these trials, participants were asked to indicate what they heard, with “bird sound” and “car horn” as response options. These questions were used to make sure that participants were paying attention and listening to the stimuli. Participants who failed one or both of the screening questions were not able to continue to the main experiment.

After the practice and screening questions, participants were assigned to one of fourteen conditions, half of which contained speech prosody stimuli and the other half nonverbal vocalizations. In each condition, three stimuli from each emotion category (e.g., three nonverbal vocalizations expressing admiration) were presented. Each participant thus completed 66 trials (22 emotions × 3 vocalizations) in total. This way, each of the 880 stimuli was judged by at least one participant. After hearing each vocalization, participants were asked to make a forced-choice emotion categorization judgment, selecting from eight emotion categories (“Select the positive emotion you think the individual was expressing”). These response options included the target category (i.e., the emotion category of the stimulus on that given trial), and seven nontarget categories (emotion categories randomly selected from the remaining 21 positive emotions). Across all participants, all target response categories were paired with all nontarget response categories. For instance, across trials that included admiration vocalizations as stimuli, the nontarget response options included all of the other 21 emotion categories for some participant(s). The presentation order of stimuli was randomized for each participant, and the response options were presented in a randomized order on each trial. There was no time constraint on completing each trial, and participants were able to replay each stimulus as many times as needed to make a judgment.
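The trial structure described above can be sketched in Python as follows. This is a minimal illustration, not the Qualtrics implementation: the function names, the fixed random seed, and the use of simple random sampling for the nontarget options are assumptions. Random sampling alone does not guarantee that every target is paired with every nontarget across participants, which the actual design ensured.

```python
import random

EMOTIONS = [
    "admiration", "amae", "amusement", "awe", "determination", "elation",
    "elevation", "excitement", "gratitude", "hope", "inspiration", "interest",
    "lust", "moved", "pride", "relief", "respected", "schadenfreude",
    "sensory pleasure", "surprise", "tenderness", "triumph",
]


def response_options(target, rng, n_options=8):
    """Target plus seven randomly drawn nontargets, in shuffled order."""
    nontargets = rng.sample([e for e in EMOTIONS if e != target], n_options - 1)
    options = [target] + nontargets
    rng.shuffle(options)
    return options


def build_trials(rng, stimuli_per_emotion=3):
    """One participant's trial list: 22 emotions x 3 stimuli, shuffled."""
    trials = [(e, k) for e in EMOTIONS for k in range(stimuli_per_emotion)]
    rng.shuffle(trials)
    return [
        {"target": e, "stimulus_index": k, "options": response_options(e, rng)}
        for e, k in trials
    ]


rng = random.Random(0)  # fixed seed only so the example is repeatable
trials = build_trials(rng)
```

Because each trial offers eight options of which exactly one is correct, a listener guessing at random is expected to be right on 1/8 of trials, which is the chance level used in the analyses.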

Statistical Analysis

Statistical analysis and outlier detection were done based on the preregistered analysis plan. Before the analysis, data were checked for participants with exceptionally low performance, defined as 3 SD or more below the mean in terms of overall recognition performance. None of the participants met this criterion, and so all were retained in the analyses.
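The exclusion rule can be expressed as a short Python sketch. The function name `low_performers`, the use of the sample standard deviation, and the example accuracies are assumptions made for illustration; they are not taken from the preregistration.

```python
from statistics import mean, stdev


def low_performers(accuracy_by_participant, n_sd=3.0):
    """Return IDs whose accuracy is n_sd or more SDs below the group mean.

    Uses the sample standard deviation (an assumption of this sketch).
    """
    scores = list(accuracy_by_participant.values())
    cutoff = mean(scores) - n_sd * stdev(scores)
    return [pid for pid, acc in accuracy_by_participant.items() if acc <= cutoff]


# Made-up data: 29 typical listeners and one who is far below the rest.
typical = [0.38, 0.40, 0.42]
accuracies = {f"p{i + 1}": typical[i % 3] for i in range(29)}
accuracies["p30"] = 0.02
flagged = low_performers(accuracies)
```

With small groups a single participant can rarely fall 3 SD below the mean, because an extreme score also inflates the standard deviation; the rule is therefore conservative.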

We constructed a generalized linear mixed model (GLMM) to analyze whether listeners were able to categorize positive emotions at better-than-chance levels for nonverbal vocalizations and speech prosody. A GLMM was used because it can estimate fixed effects while also modeling random effects. Positive emotion was set as a fixed effect. Participant and vocalization IDs were entered as random factors to account for participant and speaker variability. Chance level was set to 1/8, which is the probability of selecting the correct emotion category by random guessing. We computed the logit of the reference probability of 1/8, which was entered into the model as an offset term. The dependent variable was a binary response (i.e., correct or incorrect response):

glmer(response ~ offset(logit(1/8)) + PositiveEmotion + (1|ParticipantID) + (1|VocalizationID), family = binomial)
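The role of the offset can be illustrated with a small Python sketch. Because the log-odds of chance performance are added to the linear predictor as a fixed constant, an estimate of zero corresponds to chance-level accuracy and positive estimates correspond to better-than-chance accuracy. The helper names below are assumptions, and the conversion is a simplification: it ignores the random effects, and depending on how the emotion factor is coded, a reported coefficient may need to be combined with the intercept before it is converted.

```python
import math


def logit(p):
    """Log-odds of a probability."""
    return math.log(p / (1 - p))


def inv_logit(x):
    """Probability corresponding to a log-odds value."""
    return 1 / (1 + math.exp(-x))


CHANCE = 1 / 8           # one correct option out of eight
OFFSET = logit(CHANCE)   # equals log(1/7), roughly -1.946


def implied_accuracy(estimate):
    """Accuracy implied by a log-odds estimate measured relative to chance."""
    return inv_logit(OFFSET + estimate)


at_chance = implied_accuracy(0.0)     # zero estimate -> chance level
above_chance = implied_accuracy(1.0)  # positive estimate -> above chance
```

Testing whether an estimate differs from zero is thus equivalent to testing whether recognition differs from the 12.5% expected under random guessing.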

To test our prediction that participants would recognize emotions from nonverbal vocalizations better than from speech prosody, we constructed a second GLMM. In this model, type of vocalization was set as fixed effect, and similarly to the previous model, participant and vocalization IDs were entered as random factors:

glmer(response ~ offset(logit(1/8)) + Type + (1|VocalizationID) + (1|ParticipantID), family = binomial)

All analyses were performed in R (version 1.1.383, www.r-project.org) using the lme4 package (Bates, Mächler, Bolker, & Walker, 2015).

Results

Confusion matrices for average recognition percentages for nonverbal vocalizations and speech prosody for each emotion are shown in Fig. 1. Recognition performance compared to the chance level per emotion is shown in Table 2.

Fourteen positive emotions were recognized at better-than-chance level when expressed as nonverbal vocalizations. These emotions, ordered by the size of the fixed-effect coefficients on the log-odds scale, were relief (Est. = 4.274, SE = 0.331), amusement (Est. = 2.484, SE = 0.378), tenderness (Est. = 2.194, SE = 0.339), admiration (Est. = 2.099, SE = 0.331), lust (Est. = 1.919, SE = 0.376), surprise (Est. = 1.671, SE = 0.187), sensory pleasure (Est. = 1.642, SE = 0.269), schadenfreude (Est. = 1.642, SE = 0.269), determination (Est. = 1.451, SE = 0.166), excitement (Est. = 1.308, SE = 1.308), interest (Est. = 1.233, SE = 0.244), awe (Est. = 1.170, SE = 0.330), being moved (Est. = 0.821, SE = 0.315), and inspiration (Est. = 0.628, SE = 0.244). These findings demonstrate that many positive emotions are recognizable from nonverbal vocalizations for naïve listeners.

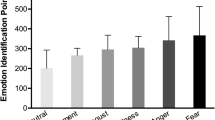

For speech prosody, 10 positive emotions were recognized better than would be expected by chance. These emotions, ordered by the size of the fixed-effect coefficients on the log-odds scale, were determination (Est. = 1.725, SE = 0.198), amusement (Est. = 1.401, SE = 0.453), surprise (Est. = 1.671, SE = 0.187), lust (Est. = 0.997, SE = 0.467), relief (Est. = 0.830, SE = 0.326), being respected (Est. = 0.771, SE = 0.247), admiration (Est. = 0.753, SE = 0.240), triumph (Est. = 0.626, SE = 0.254), excitement (Est. = 0.454, SE = 0.001), and being moved (Est. = 0.091, SE = 0.002). These results suggest that some positive emotions can be recognized from speech prosody. Figure 2 illustrates the estimates from the GLMMs. Full details of the GLMMs are provided in the Supplementary Materials, Tables S1 and S2.

Forest plots of estimates of the GLMMs. The x-axes represent estimates of the fixed effects as log-odds with standard error bars. A larger standard error bar indicates higher uncertainty about the coefficient point estimate. A zero estimate indicates chance-level recognition. Positive emotions recognized better than the chance level for the corresponding vocalization type in both cultural contexts are marked in bold.

We hypothesized that positive emotions would be recognized with higher accuracy rates from nonverbal vocalizations compared to speech prosody. The results showed that participants categorized nonverbal vocalizations of positive emotions significantly better than speech prosody overall (GLMM: z = − 8.599, p < 0.001). When performance accuracy was compared for each emotion separately, 14 positive emotions were recognized with significantly better accuracy from nonverbal vocalizations. In contrast, two emotions were recognized better from speech prosody than from nonverbal vocalizations: amae and feeling respected (see Table 3). Not all of these emotions were recognized at better-than-chance levels from both nonverbal vocalizations and speech prosody, suggesting that some emotions can only be recognized from one kind of vocal expression. Awe, inspiration, interest, schadenfreude, sensory pleasure, and tenderness were recognized better than expected by chance only when expressed via nonverbal vocalizations. In contrast, feeling respected was accurately recognized only from the prosodic expressions. This might be an emotion that is expressed by differentiable prosodic configurations in speech, but lacking a unique nonverbal vocalization. Even though amae was recognized better when expressed via speech prosody as compared to nonverbal vocalizations, it was not recognized from either vocalization type at above chance levels. In many cases, however, positive emotions were recognized from both nonverbal vocalizations and speech prosody at better-than-chance levels, with recognition being higher for nonverbal vocalizations compared to speech prosody. Figure 3a illustrates the comparisons of accurate responses across vocalization types per emotion. Random effects in the GLMMs are summarised in Supplementary Materials, Table S3.

Correct responses in percentages per emotion across vocalization types for Dutch and Chinese vocalizations. Bold text indicates recognition with above chance level accuracy tested with GLMM models. Significance levels comparing vocalization types: *** < 0.001, ** < 0.01, * < 0.05. Nv = Nonverbal vocalizations, Sp = Speech prosody

Taken together, the results from Experiment 1 showed that 16 of the 22 positive emotions were recognized better than would be expected by chance by naïve Dutch listeners from the vocal expressions of Dutch speakers. Moreover, 14 positive emotions were recognized better when expressed via nonverbal vocalizations compared to prosodic expressions, indicating superior recognition of most positive emotions from nonverbal vocalizations. As compared to speech prosody, nonverbal vocalizations of most positive emotions might have relatively distinctive acoustic profiles that are highly differentiated from those of other emotions, leading to higher recognition scores.

Experiment 2: Chinese Listeners’ Recognition of Positive Emotions from Chinese Vocalizations

Experiment 1 was conducted in a Dutch cultural context. In order to evaluate the robustness of the findings, we sought to repeat Experiment 1 in a distant cultural context. Languages are characterized by prosodic conventions, which might shape the communication of emotions via speech prosody. Choosing distant cultures with different prosodic conventions allows us to interrogate the robustness of the findings, making it unlikely that the same prosodic conventions shape the communication of positive emotions in our study. In Experiment 2, we test (1) whether Chinese listeners can recognize 22 positive emotions from nonverbal vocalizations and speech prosody produced by Chinese individuals; and (2) whether positive emotions would be better recognized from nonverbal vocalizations as compared to prosodic expressions in a Chinese cultural context as well.

Method

Participants

Sample size was determined in the same way as Experiment 1. Two hundred native Chinese Mandarin speakers (109 women, 90 men, 1 prefer not to say; Mage = 27.51, SDage = 4.50, range = 19–35 years old) with no (self-reported) hearing impairments were recruited via a Chinese online data collection platform, https://www.wjx.cn. Participation in the study was compensated with a monetary reward.

Materials and Procedure

Stimuli

Posed vocal expressions of positive emotions in Chinese Mandarin were recorded at the University of Amsterdam’s psychology laboratory, using the same procedure as the recordings of the Dutch vocalizations (see Experiment 1, Stimuli). Eligibility criteria for participating in the recordings were: (1) being a native Chinese Mandarin speaker, (2) having been in the Netherlands for no more than 3 months by the time of the recording, (3) having lived in China until the age of 18, and (4) never having lived outside of China for more than 2 years. Based on these criteria, twenty participants (10 women, 10 men; Mage = 23, SDage = 2.63, range = 19–31 years old) were invited to the laboratory to record vocalizations. Participants reported never having been diagnosed or treated for any voice, speech, hearing, or language disorder.

The experimenter was a Chinese Mandarin native speaker, and the entire recording procedure was in Chinese Mandarin. The target emotions, accompanying definitions, and situational examples (given in Table 1), as well as the neutral phrase used for recordings of speech prosody (“六百四十七” from Chinese Mandarin: six hundred forty-seven), were provided in Chinese Mandarin. All 880 recorded vocalizations were used as stimuli in Experiment 2. Average duration was 1.25 s (SD = 0.64) for nonverbal vocalizations, and 1.64 s (SD = 0.45) for speech prosody. An example of vocalizations for each positive emotion and vocalization type is available from https://emotionwaves.github.io/chinese22/.

Experimental Procedure

The experimental procedure was the same as in Experiment 1, except that the stimuli were from the Chinese Mandarin recordings.

Statistical Analysis

For data analysis and outlier detection, the preregistered plan was followed. Before data analysis, data were checked for participants whose overall recognition performance was 3 SD or more below the mean. Based on this criterion, one participant’s data were excluded from the analysis. The statistical analyses were identical to those employed in Experiment 1.

Results

Confusion matrices for average recognition percentages for nonverbal vocalizations and speech prosody are shown in Fig. 1. Comparisons of recognition performance to chance level per positive emotion for nonverbal vocalizations and speech prosody can be found in Table 2.

Sixteen positive emotions were recognized at better-than-chance level from nonverbal vocalizations. In order of coefficient size on the log-odds scale, these emotions were amusement (Est. = 3.587, SE = 0.324), relief (Est. = 2.924, SE = 0.338), schadenfreude (Est. = 2.494, SE = 0.282), amae (Est. = 2.319, SE = 0.410), determination (Est. = 2.123, SE = 0.322), interest (Est. = 1.931, SE = 0.264), surprise (Est. = 1.635, SE = 0.300), triumph (Est. = 1.613, SE = 0.330), sensory pleasure (Est. = 1.408, SE = 0.177), admiration (Est. = 0.821, SE = 0.275), elation (Est. = 0.762, SE = 0.259), inspiration (Est. = 0.751, SE = 0.238), elevation (Est. = 0.692, SE = 0.248), pride (Est. = 0.656, SE = 0.222), lust (Est. = 0.643, SE = 0.274), and excitement (Est. = 0.604, SE = 0.269). These findings show that nonverbal vocalizations are a highly effective means of conveying many positive emotions.

In contrast, only seven positive emotions were recognized better than would be expected by chance from speech prosody. In order of coefficient size on the log-odds scale, these emotions were amusement (Est. = 1.453, SE = 0.284), relief (Est. = 1.227, SE = 0.263), determination (Est. = 1.165, SE = 0.244), interest (Est. = 0.662, SE = 0.200), pride (Est. = 0.503, SE = 0.180), triumph (Est. = 0.479, SE = 0.200), and awe (Est. = 0.465, SE = 0.205). These results suggest that prosodic expressions are not very effective in conveying positive emotions, with recognizability highly dependent on the emotion expressed. Estimates from the GLMMs are visualised in Fig. 2. Full details of the GLMMs are provided in the Supplementary Materials, Tables S1 and S2.

As in the Dutch cultural context, we sought to test the hypothesis that positive emotions would be more accurately recognized from nonverbal vocalizations than from speech prosody. As predicted, participants categorized nonverbal vocalizations of positive emotions better than speech prosody overall (GLMM: z = − 10.69, p < 0.001). Next, we compared performance accuracy across vocalization types for each emotion, showing that 16 positive emotions were recognized with better accuracy from nonverbal vocalizations. None of the emotions was more accurately recognized from speech prosody (see Table 3). It is worth noting that not all of the 16 emotions that were recognized better from nonverbal vocalizations than speech prosody were recognized above chance levels for both kinds of expressions (see Fig. 3b). Admiration, amae, elation, elevation, excitement, inspiration, lust, surprise, sensory pleasure, and triumph were recognized at better-than-chance levels only when expressed as nonverbal vocalizations. These emotions might thus be expressed with unique nonverbal vocalizations, while they are not clearly communicated via speech prosody cues. These results suggest that recognizability of some positive emotions depends on the vocalization type through which the emotion is expressed. Summary of random effects in GLMM models can be found in Supplementary Materials, Table S3.

Experiment 2 revealed that naïve Chinese listeners recognized 17 out of 22 positive emotions better than expected by chance from vocal expressions of native Chinese Mandarin speakers. Moreover, 16 positive emotions were recognized with higher accuracy from nonverbal vocalizations compared to speech prosody, suggesting a communicative advantage for nonverbal vocalizations. When compared to nonverbal vocalizations, a relative lack of distinctive acoustic cues of positive emotions expressed via prosodic expressions might be leading to poorer recognizability.

Acoustic Classification Experiments

Machine learning approaches were employed to attempt to automatically classify the nonverbal vocalizations and speech prosody of 22 positive emotions based on their acoustic features. All stimuli collected from the Dutch speakers in Experiment 1 and the Chinese Mandarin speakers in Experiment 2 were used. We first extracted a large number of acoustic features for each audio clip and then performed discriminative classification experiments with machine learning algorithms to try to classify the 22 positive emotions based on the extracted acoustic features. If acoustic classification is higher for nonverbal vocalizations than for speech prosody, this might be one of the mechanisms contributing to better recognition of positive emotions from nonverbal vocalizations in Experiments 1 and 2. The acoustic characteristics of the vocalizations used in this study (duration, Rms amplitude, pitch mean, pitch standard deviation, spectral center of gravity, and spectral standard deviation values, extracted using Praat: Boersma & Weenink, 2011) are presented in Fig. 4.

Acoustic characteristics of the vocalizations used in this study. Larger circles signify higher values. Rms = root mean square, SCoG = spectral center of gravity; duration is in seconds, amplitude is in pascal, pitch and spectral measurements are in Hertz. Nv = nonverbal vocalization, Sp = Speech prosody

Feature Extraction

Using the openSMILE software (Eyben et al., 2013), we extracted acoustic features from the extended version of the Geneva Minimalistic Acoustic Parameter Set (eGeMAPS, see Eyben et al., 2016). GeMAPS is a standardized, open-source method for measurement of acoustic features for emotional voice analysis. The acoustic features covered the frequency, energy/amplitude, spectral balance, and temporal domains. Features of the frequency domain include aspects of fundamental frequency (correlated with the perceived pitch), as well as formant frequencies and bandwidths. Energy/amplitude features refer to the air pressure in the sound wave, and are perceived as loudness. Spectral balance parameters are influenced by laryngeal and supralaryngeal movements and are related to perceived voice quality. Lastly, features from the temporal domain reflect the duration and rate of voiced and unvoiced speech segments. We extracted 88 acoustic features in total from these four domains. For each stimulus, the feature vector was the mean of the whole audio clip.

Classification Experiments

We conducted acoustic classification experiments with four machine learning algorithms: support vector machines with a linear kernel (Linear SVM), a radial basis function kernel (RBF SVM), and a polynomial kernel (Poly SVM), as well as random forest. These are the most commonly used models for classification (Poria et al., 2017). Scikit-learn, a Python-based machine learning library, was used for machine learning evaluation (Pedregosa et al., 2011). For all of the machine learning models, we performed tenfold cross-validation and grid search to select the hyperparameters that produced the best results.

We tested classification of 8 positive emotions for each run in order to reflect the findings on human recognition performance in Experiments 1 and 2, in which participants had to select one of 8 emotion options in a forced-choice task. We performed three separate classification runs for all stimuli that had a specific emotion category, henceforth called “emotion category group”. There were 22 emotion category groups corresponding to the 22 emotion categories. First, we used each emotion category group’s actual category plus seven randomly selected emotion categories from the other 21 categories (i.e., excluding the target category). Next, we selected another seven random categories from the remaining 14 categories in addition to the emotion category group’s actual category. Finally, we used the last seven categories and the emotion category group’s actual category. Hence, eight categories were used for each classification run; all 22 categories were included by the end of the third run.
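The three-run scheme described above can be sketched as follows. This is a minimal illustration, not the authors' code: the category names are placeholders, the random seed is arbitrary, and the helper `runs_for_target` is hypothetical. For each target category, the other 21 categories are shuffled and divided into three disjoint sets of seven, and each set is paired with the target to give one eight-category run.

```python
import random

N_CATEGORIES = 22
N_FOILS_PER_RUN = 7


def runs_for_target(target, categories, rng):
    """Return three 8-category label sets for one emotion category group.

    The 21 non-target categories are shuffled and cut into three disjoint
    chunks of seven; the target is added to every chunk.
    """
    foils = [c for c in categories if c != target]
    rng.shuffle(foils)
    return [
        [target] + foils[start:start + N_FOILS_PER_RUN]
        for start in range(0, len(foils), N_FOILS_PER_RUN)
    ]


# Placeholder labels standing in for the 22 positive emotion categories.
categories = [f"emotion_{i:02d}" for i in range(1, N_CATEGORIES + 1)]
rng = random.Random(0)  # arbitrary seed, for reproducibility of the sketch
schedule = {target: runs_for_target(target, categories, rng) for target in categories}

for run_index, labels in enumerate(schedule["emotion_01"], start=1):
    print(f"run {run_index}: {labels}")
```

Because the three foil sets are disjoint and together contain all 21 non-target categories, every category has been paired with the target once by the end of the third run.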

To perform the classification during each run, we split the data into training and test sets using a 60:40 ratio. We optimized our machine learning models on the training set using a hyperparameter grid search. Next, we performed classification on the test set. We then combined the predictions for each of the 22 emotion category groups into one confusion matrix.
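A single classification run of this kind might look like the sketch below, written with scikit-learn. Several parts are assumptions rather than details from the study: the data are synthetic (generated with `make_classification` to mimic 8 labels and 88 features), the hyperparameter grids are invented for illustration, and the stratified split and feature standardization are choices made here, not steps described in the text.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score, confusion_matrix
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in for one run: 8 emotion labels, 88 features per clip.
X, y = make_classification(
    n_samples=320, n_features=88, n_informative=20,
    n_classes=8, n_clusters_per_class=1, random_state=0,
)

# 60:40 split; stratification (an assumption) keeps all labels in both sets.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.4, stratify=y, random_state=0
)

# Scaling is an assumption of this sketch; SVMs are sensitive to feature scale.
pipeline = Pipeline([("scale", StandardScaler()), ("clf", SVC())])

# Hypothetical grids covering the four model families named in the text.
param_grid = [
    {"clf": [SVC(kernel="linear")], "clf__C": [0.1, 1, 10]},
    {"clf": [SVC(kernel="rbf")], "clf__C": [0.1, 1, 10], "clf__gamma": ["scale", 0.01]},
    {"clf": [SVC(kernel="poly")], "clf__C": [0.1, 1, 10], "clf__degree": [2, 3]},
    {"clf": [RandomForestClassifier(random_state=0)], "clf__n_estimators": [50, 100]},
]

# Tenfold cross-validated grid search on the training set only.
search = GridSearchCV(pipeline, param_grid, cv=10, scoring="accuracy")
search.fit(X_train, y_train)

# Evaluate the selected model on the held-out 40%.
y_pred = search.predict(X_test)
test_accuracy = accuracy_score(y_test, y_pred)
cm = confusion_matrix(y_test, y_pred, labels=list(range(8)))

print("best model:", search.best_params_["clf"])
print(f"test accuracy: {test_accuracy:.3f}")
```

Confusion matrices from separate runs that share the same label ordering can be summed element-wise, which is one simple way to pool predictions across runs.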

Results

Classification accuracy for each machine learning model is summarized in Table 4; confusion matrices for the most accurate machine learning models for each group are shown in Fig. 5.

For both Dutch and Chinese stimuli, nonverbal vocalizations of all positive emotions except hope and inspiration were classified with above-chance accuracy (chance level = 12.5%, i.e., 1/8, given that there were eight emotion labels per run). The results revealed that the best classified positive emotions mapped onto the emotions that were well recognized from nonverbal vocalizations. For speech prosody, only eight positive emotions (admiration, amae, awe, excitement, gratitude, schadenfreude, and tenderness) were classified at above-chance levels. Across the machine learning models, nonverbal vocalizations were classified more accurately than speech prosody. When vocalization types were compared for each positive emotion, acoustic classification accuracy was higher for nonverbal vocalizations of 18 positive emotions, while none of the emotions were classified with better accuracy from speech prosody. These results illustrate the lower distinctiveness of the acoustic patterns of positive emotions expressed through prosodic expressions as compared to nonverbal vocalizations, providing a likely explanation for the better recognition of positive emotions from nonverbal vocalizations found in Experiments 1 and 2.
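To make the 12.5% chance level concrete, the sketch below computes an exact one-sided binomial tail probability for a classifier's hit count under pure guessing among eight labels. The text does not state how above-chance classification was assessed, so the choice of a binomial test is an assumption, and the counts `n_test_clips` and `n_correct` are hypothetical.

```python
from math import comb


def binomial_upper_tail(k, n, p):
    """Exact P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


CHANCE = 1 / 8  # eight response labels per run

# Hypothetical counts for one emotion in one test set.
n_test_clips = 40
n_correct = 11

p_value = binomial_upper_tail(n_correct, n_test_clips, CHANCE)
print(f"expected hits under chance: {n_test_clips * CHANCE:.1f}")
print(f"observed accuracy: {n_correct / n_test_clips:.3f}")
print(f"one-sided p-value: {p_value:.4f}")
```

With 40 clips, guessing yields five correct classifications on average, so accuracy has to be read against that baseline rather than against zero.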

Ancillary Acoustic Analyses

In order to better understand the acoustic distinctiveness of nonverbal expressions and speech prosody, we first visualized the acoustic similarity structure of positive emotions across the two vocalization types using t-distributed stochastic neighbor embedding (t-SNE; https://lvdmaaten.github.io/tsne/). In the resulting multidimensional scaling projection, the distance between elements (i.e., the acoustic structure of each vocalization) reflects their similarity, with more similar elements lying closer together (see Fig. 6). The similarity space for vocalizations across the two vocalization types derived by t-SNE revealed that nonverbal vocalizations and speech prosody form distinctive clusters.

The t-distributed stochastic neighbor embedding (t-SNE) multidimensional scaling projection of the acoustic structure of positive emotions for each vocalization type. T-SNE estimates local distances between data points without assuming linearity or discreteness. Acoustic structures that are similar are closer in the t-SNE space. Nv = nonverbal vocalization, Sp = Speech prosody

In order to better understand the acoustic characteristics of nonverbal expressions and speech prosody, we identified the five most important acoustic features based on feature weights. Feature weights represent how strongly each acoustic feature is used by the machine learning model in classifying emotions for nonverbal vocalizations and speech prosody produced by Dutch and Mandarin Chinese speakers. Table 5 lists these parameters together with their definitions, feature weights, and standard deviations for nonverbal vocalizations and speech prosody, separately. These calculations highlight that feature weights, in general, were higher for nonverbal vocalizations compared to speech prosody; that is, acoustic features were more influential in the classification of nonverbal vocalizations than of speech prosody. Moreover, pitch cues were among the most important cues for speech prosody but not for nonverbal vocalizations, while loudness and spectral-balance cues were among the most important features for both vocalization types. Temporal cues were important in vocal expressions produced by Chinese Mandarin speakers, but not in those produced by Dutch speakers. This might reflect differences in linguistic structures across these languages. Specifically, Chinese Mandarin is a syllable-timed language (spacing syllables equally across an utterance) while Dutch is a stress-timed language (emphasizing particular stressed syllables at regular intervals; e.g., Benton et al., 2007). Most, but not all, of the acoustic features have more variation in nonverbal vocalizations compared to speech prosody. This could be due to linguistic constraints in the production of speech prosody. The production of nonverbal vocalizations, unlike speech, does not require precise movements of articulators, because such vocalizations are not constrained by linguistic codes (Scott et al., 2010; see General Discussion).

We further performed cross-classification analyses in order to test whether producers’ emotion encoding strategies overlap between nonverbal vocalizations and speech prosody, and whether the encoding strategies are shared across Dutch and Chinese Mandarin speaking participants. We thus conducted two types of cross-classification analyses: (1) training models on nonverbal vocalizations and testing them on speech prosody, and vice versa; (2) training models on vocalizations produced by Dutch-speaking participants and testing them on vocalizations produced by Chinese Mandarin speaking participants, and vice versa. The accuracies of all models are shown in Table 6.
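The logic of cross-classification, fitting a model on one domain and scoring it on another in both directions, can be illustrated with a deliberately simple stand-in. The sketch below does not use the study's models or features: it swaps in a nearest-centroid classifier written in plain Python, and the two "domains" are toy two-dimensional data with four well-separated classes and a small shift between domains. All names and values are hypothetical.

```python
import random
from statistics import fmean

N_CLASSES = 4


def make_domain(rng, n_per_class, shift):
    """Toy data: class k is centred at (10k + shift, 10k) with unit noise."""
    data = []
    for label in range(N_CLASSES):
        for _ in range(n_per_class):
            point = (10 * label + shift + rng.gauss(0, 1),
                     10 * label + rng.gauss(0, 1))
            data.append((point, label))
    return data


def fit_centroids(data):
    """Training step: compute the mean feature vector of each class."""
    centroids = {}
    for label in {lab for _, lab in data}:
        points = [pt for pt, lab in data if lab == label]
        centroids[label] = tuple(fmean(dim) for dim in zip(*points))
    return centroids


def predict(centroids, point):
    """Assign the class whose centroid is closest in squared Euclidean distance."""
    return min(
        centroids,
        key=lambda lab: sum((a - b) ** 2 for a, b in zip(point, centroids[lab])),
    )


def accuracy(centroids, data):
    hits = sum(predict(centroids, pt) == lab for pt, lab in data)
    return hits / len(data)


rng = random.Random(0)
domain_a = make_domain(rng, 30, shift=0.0)  # e.g., one vocalization type or culture
domain_b = make_domain(rng, 30, shift=1.0)  # e.g., the other type or culture

# Train on one domain, test on the other, in both directions.
acc_a_to_b = accuracy(fit_centroids(domain_a), domain_b)
acc_b_to_a = accuracy(fit_centroids(domain_b), domain_a)
print(f"A -> B: {acc_a_to_b:.2f}, B -> A: {acc_b_to_a:.2f}, chance: {1 / N_CLASSES:.2f}")
```

Above-chance accuracy in both directions is what would indicate that the class structure learned in one domain carries over to the other; in real data the transfer is far less clean than in this toy example.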

The results show that classification models in each of the cross-classification types performed statistically better than chance, indicating shared encoding strategies used in the production of emotional vocalizations. In cross-vocalization type evaluations, performance was nearly equivalent for training and testing in both directions for the Dutch vocal expressions. However, for the vocalizations produced by Chinese Mandarin speakers, the accuracies were slightly higher for training on speech prosody and testing on nonverbal vocalizations as compared to the reverse. In cross-cultural evaluations, training on the Dutch vocalizations and testing on Chinese vocalizations performed similarly to training on the Chinese vocalizations and testing on Dutch vocalizations. Cross-cultural classification performance was better for nonverbal vocalizations compared to speech prosody, suggesting more robust differentiation of positive emotions based on acoustic configurations across cultures when expressed via nonverbal vocalizations. Cross-classification evaluations demonstrate that encoding strategies used in the production of emotional vocalizations are shared across vocalization types as well as across speakers from the two cultures.

General Discussion

The current study demonstrates that nonverbal vocalizations expressing a wide range of positive emotions hold a communicative advantage over prosodic expressions. We examined recognition of 22 positive emotions from nonverbal vocalizations and speech prosody in two distant cultural contexts. The results showed differential accuracy depending on the vocalization type through which positive emotions were expressed. In particular, listeners recognized emotions better from nonverbal vocalizations compared to speech prosody. This pattern was found for many but not all positive emotions, and so the superiority of nonverbal vocalizations is influenced by the specific positive emotion expressed in the voice.

Recognition of Positive Emotions from Nonverbal Vocalizations Versus Speech Prosody

The current study adds to the scant knowledge available on differences in emotion recognition between types of vocal expressions. Our results show that most positive emotions are better recognized from brief nonverbal vocalizations than from speech prosody. This demonstrates that nonverbal vocalizations can communicate positive emotions more successfully than speech prosody, even though nonverbal vocalizations are considerably shorter in duration. Brief nonverbal vocalizations are more densely packed with emotional information compared to speech prosody. Previous research has reported better recognition from nonverbal vocalizations as compared to speech prosody for several negative emotions and for happiness/joy (e.g., Hawk et al., 2009). Our study, for the first time, provides comparisons of recognition for different vocalization types for a wide range of positive emotions. The results imply that nonverbal vocalizations may be richer and more nuanced than previously thought, given the wide range of positive emotions that could be clearly conveyed via such cues.

The results of cross-classification analysis with machine learning models show shared encoding strategies in production of emotional vocalizations across vocalization types and cultures. Dutch and Chinese Mandarin speakers employed shared mechanisms in production of both nonverbal vocalizations and speech prosody. Furthermore, cross-cultural classification evaluations show that differentiation of positive emotions based on acoustic features was more robust across cultures when expressed as nonverbal vocalizations compared to speech prosody. Indeed, the results of acoustic analysis with machine learning models demonstrate that acoustic configurations of discrete positive emotions were highly differentiated from those of other emotions when expressed through nonverbal vocalizations but less so for speech prosody. Discriminability of acoustic patterns in nonverbal expressions of positive emotions paralleled human listeners’ patterns of recognition accuracy. One possibility is that the superiority of nonverbal vocalization in recognition of positive emotions might be due to more distinctive acoustic patterns.

Communicating Positive Emotions via Nonverbal Vocalizations

Both Dutch and Chinese nonverbal vocalizations were highly effective means of communicating 11 different positive emotions. These results are in line with previous research showing that amusement, interest, lust, relief, and surprise are well-recognized from nonverbal vocalizations (e.g., Cordaro et al., 2016; Cowen et al., 2019; Laukka et al., 2013). In addition to these emotions, the current investigation showed that nonverbal vocalizations can reliably communicate admiration, determination, excitement, inspiration, schadenfreude, and sensory pleasure. The recognition scores for nonverbal vocalizations of schadenfreude and sensory pleasure are particularly notable, given the low recognition rates of these emotions from speech prosody. Nonverbal vocalizations of these positive emotions appear to map onto distinct, recognizable vocal signatures. Within a functional framework, different positive emotions serve adaptive functions relating to different types of opportunities, like affiliation and cooperation (e.g., Fredrickson, 1998; Griskevicius et al., 2010; Keltner et al., 2006; Shiota et al., 2004, 2014). For instance, schadenfreude has been proposed to serve a social affiliation function by strengthening ingroup bonds (Yam, 2017), and sensory pleasure motivates an individual to pursue reward necessary for fitness (Berridge & Kringelbach, 2015). Based on the highest recognition accuracies of positive emotions in both cultures (see Fig. 1), the most clearly recognized nonverbal vocalizations are those of amusement, relief, schadenfreude, sensory pleasure, and surprise.

Communicating Positive Emotions via Speech Prosody

For speech prosody, participants were able to recognize only amusement, determination, relief, and triumph with above-chance accuracy in both cultural contexts. These emotions might be associated with prosodic configurations in running speech that are highly differentiable from other positive emotions. For instance, when expressing amusement via speech prosody, we might produce salient prosodic expressions which might signal cooperative intent to others. The accuracy rate of successfully recognized positive emotions, as well as the overall recognition rate, were lower for speech prosody compared to nonverbal vocalizations. Prosodic expressions require more complex coordination of articulators with greater volitional control due to linguistic structures in speech. In contrast, nonverbal vocalizations are produced with less volitional control while articulators are mostly in their resting positions. The lack of constraints on nonverbal expressions allows more flexibility in the expression of emotions, avoiding the linguistic constraints that exist in prosodic expressions. Despite the lower recognition accuracy, specific positive emotions could be recognized from speech prosody in both languages. Previous literature has shown that emotions like anger, sadness, and fear can be recognized from speech prosody across languages, and certain acoustic features such as speaker fundamental frequency have great importance in signaling these emotions (e.g., Paulmann & Uskul, 2014; Pell et al., 2009). Our results suggest that prosodic expressions of amusement, determination, and relief are well-recognized, based on their high recognition accuracies in both cultures (see Fig. 1).

Previous research on emotional speech prosody has found higher levels of recognition accuracy compared to those in the present study (e.g., Hawk et al., 2009; Pell et al., 2009; Scherer et al., 1991). There are several potential reasons for this difference. One possibility is that discrete positive emotions might be recognized with lower accuracy levels from speech prosody than the primarily negative emotions studied in previous research. Previous studies mostly included a general positive emotion category (i.e., happiness/joy) and discrete negative emotions like anger, fear, and sadness. It is likely that comparing these emotions with each other was easier for participants than the comparisons required in our study. In addition, fewer emotion categories were included in most previous studies. Given that participants compared eight positive emotions in our study, the difficulty of the emotion recognition task could explain the lower recognition accuracy in our study. Methodological differences may also have contributed to these differences in recognition accuracy. The present study included all stimuli, whereas previous studies have pre-selected stimuli based on listeners’ judgments. For instance, in the study of Pell et al. (2009), only stimuli that were recognized at a minimum of three times chance performance were included in the analysis of overall recognition levels. In the study of Hawk et al. (2009), two raters evaluated all stimuli and selected the ones for which there was good correspondence with the target emotion. Applying such pre-selection criteria is certain to inflate emotion recognition accuracy.

Cultural Differences in Recognition of Positive Emotions

The recognizability of some positive emotions from nonverbal vocalizations and speech prosody differed between the two cultural contexts. For instance, amae was recognized from Chinese but not Dutch nonverbal vocalizations. Amae is an emotion originating in an East Asian context (Doi, 2005), loosely translated as attachment love in English. One possibility is that amae may have normative vocal expressions in Chinese culture, but not in the Dutch cultural context. For speech prosody, we found that being respected was recognized only by Dutch listeners, while awe was recognized only in the Chinese culture. These findings suggest that prototypical prosodic expressions can exist in one culture without necessarily occurring in other cultural contexts.

The present study only assessed within-culture recognition, that is, producer and perceiver came from the same culture. Repeating the experiment in two cultural contexts served to test whether the findings would be replicable. However, this approach precludes the investigation of cross-cultural recognition of positive emotions. Findings from previous studies point to impairments in cross-cultural recognition of happiness from vocal expressions (see Laukka & Elfenbein, 2020 for a meta-analysis). These challenges are more pronounced than in the cross-cultural communication of vocal expressions of negative emotions, suggesting that positive vocalizations might be particularly susceptible to cultural differences. For instance, Pell and colleagues (2009) showed that, across four languages, acoustic features extracted from prosodic expressions of happiness were more variable than those of negative emotions like disgust and fear. Sauter et al. (2010) suggested that vocal signals of negative emotions might be less influenced by cultural learning compared to positive emotions. Negative signals may be more related to biological reactions to immediate dangers, while communication of positive emotions might facilitate social capacities that promote adaptation (e.g., Fredrickson, 1998; Nesse, 1990). Further work is needed to test the extent to which the challenges with cross-cultural communication of vocal signals apply to the wide range of positive emotions examined in the present study. Some evidence indicates that laughter is well recognized as communicating amusement across cultural groups (Sauter et al., 2010), suggesting that there is likely considerable variability across positive emotions and expression types. Research that includes the production and perception of a wide range of positive emotions will be needed to establish this empirically.

Limitations and Future Research Suggestions

Our study has several limitations that merit consideration. One point is that we used a forced-choice design to assess recognition. Forced-choice tasks provide a convenient way to collect and analyze categorical data. However, they might potentially inflate perceiver accuracy because participants can use guessing strategies that are informed by the available response alternatives (e.g., Russell, 1994). Most studies testing recognition of emotions from nonverbal expressions used forced-choice methodology and included a relatively small number of response alternatives (e.g., four: Cordaro et al., 2020; Scherer et al., 2001). In such tasks, comparing a small number of emotions to make a judgment might artificially inflate recognition rates by enabling informed guessing strategies. Increasing the number of alternatives in a forced-choice task might reduce the guessing rate, but there is also a point at which the number of alternatives becomes too large for perceivers (Vancleef et al., 2018). In the present study, we considered that it would be too cumbersome for participants to choose between 22 response options. We, therefore, opted to let participants select from eight positive emotion categories, with response options differing across trials. Generalized linear mixed models with responses to such a task allowed us to assess recognition of 22 positive emotions, while keeping the number of response options at a manageable level. However, we cannot rule out that participants may have been able to make use of elimination strategies to help guide their responses on some trials.

Another limitation is that we used posed expressions of positive emotions. The vocalizations were produced by untrained individuals who were asked to produce vocal expressions of specific emotions on demand. Posed vocalizations provide better sound quality since they can be recorded with high-quality equipment in the lab, while it is typically challenging to record good-quality audio from spontaneous vocal expressions in real-world contexts. In addition to better sound quality, posed vocalizations also afford certainty about the intended emotion being expressed. We provided definitions and situational examples in order to ensure that the expressions were targeting the intended emotions. However, the producers did not experience those emotions when producing the vocalizations. In contrast, spontaneous vocalizations occurring in real-world settings are more natural and thus have the advantage of reflecting genuinely felt emotions (Williams & Stevens, 1981). Previous research points to some differences in acoustic properties of spontaneous and posed emotional vocalizations (e.g., Anikin & Lima, 2018). For instance, spontaneous laughter typically has higher pitch and shorter burst duration compared to volitional laughter (e.g., Bryant & Aktipis, 2014). For emotional speech, most acoustic features show similar patterns for spontaneous and posed speech, while some subtle acoustic differences have been found in measures of frequency and temporal features (e.g., Juslin et al., 2017). It is thus possible that some acoustic characteristics of the vocalizations used in our study differ from those of spontaneous vocalizations of the same emotions, and recognizability of positive emotions might even be stronger for spontaneous vocalizations (Anikin & Lima, 2018, but see Sauter & Fischer, 2018). 
In order to investigate the acoustic profiles and recognition accuracy of positive emotions in vocal expressions that are higher in ecological validity, future research should aim to collect high-quality recordings of spontaneous vocalizations of different positive emotions in real life (e.g., Anderson et al., 2018).

Examining (filtered versions of) spontaneously produced emotional speech would allow researchers to avoid the potential artifact of imposing standardized utterances. Encoding and decoding of emotions in speech prosody might be influenced by the use of standardized semantic content. The use of standard utterances such as names and pseudo-speech is common in the study of emotional prosody (see Juslin & Laukka, 2003 for a review). In our study, producing a number, “six hundred forty-seven,” in an emotionally inflected way might have felt unnatural and thus been difficult for speakers. Similarly, recognition of emotions from such stimuli might have been an unfamiliar, and thus challenging task for the listeners. The use of a standardized utterance may have hampered the production and perception of emotional speech, which could have contributed to the poorer recognition of emotions from speech prosody compared to nonverbal vocalizations (although producing nonverbal vocalizations on demand may have felt unnatural for participants too). Future research could examine the distinctiveness of acoustic features of emotions in spontaneous speech. Additionally, the emotion recognition ability of listeners who are from a close culture but do not understand the language spoken could be employed to address the contribution of difficulties in producing semantically constrained speech.

In producing nonverbal vocalizations of positive emotions, encoders sometimes used emblems like “wow” that are culturally shaped, conventionalized vocalizations (Scherer, 1994; see https://emotionwaves.github.io/dutch22/ to listen to some examples). Since emblematic vocalizations are culturally bound and convey a symbolic meaning, it may be plausible to expect that emblematic vocalizations are more accurately recognized than raw vocalizations (when producer and perceiver are from the same culture). Previous research, however, has shown higher decoding accuracy for both raw and emblematic vocalizations compared to speech prosody (e.g., compare Schröder, 2003 with Banse & Scherer, 1996; Hawk et al., 2009). Moreover, the distinction between raw and emblematic vocalizations is far from clear-cut. While nonverbal vocalizations like laughs and screams are considered relatively raw expressions that naturally occur, emblematic expressions are more likely to be produced when the communication is intentional (Buck, 1984). However, affective vocal signals conveyed in everyday life are suggested to fall somewhere on a continuum between raw and emblematic, rather than being one or the other. The distinction between raw and emblematic expressions is likely even less evident when considering real-world vocalizations of emotions because “all sorts of mixtures” occur (e.g., Banse & Scherer, 1996; Scherer, 1994; Schröder, 2003). The setup of our study further blurs the line between these expressions, because not only the emblematic expressions, but all of the nonverbal vocalizations were produced intentionally. In order to draw firmer conclusions about what constitutes emblematic vocalizations of positive emotions and the proportional use of emblems in vocal expression, future research should gather more emblematic vocalizations, perhaps across different cultures.

The acoustic classification performed slightly worse than the human listeners overall. One reason for the poorer classification accuracy might be the small dataset in our study. Further approaches can be explored in the future to improve the machine learning results, such as deep neural networks in which the network is first trained on a different but related task that has a large number of examples (i.e., transfer learning). Another reason contributing to the slightly worse performance of the acoustic classification might be the features used in the training of the classifier. The dataset used in our study mostly involved the arithmetic mean of the extracted features. Classifiers trained on datasets that include temporal information characterizing vocal expressions might provide better performance.

Conclusions

In conclusion, we provide evidence for systematic differences between different kinds of vocal expressions of positive emotions. Overall, the results of this study demonstrate the superiority of nonverbal vocalizations over speech prosody for recognition of many positive emotions. We demonstrate that positive emotions are also expressed with more distinctive acoustic patterns in nonverbal vocalizations as compared to speech prosody. Finally, our results show that human listeners can accurately perceive a wide range of positive emotions from nonverbal vocalizations but only a few from speech prosody.

Availability of Data, Code, and Material

Data and code are available from https://osf.io/djgq9/?view_only=6ba8056f2f564d4b9d9d21b9c4014de4.

References

Ameka, F. (1992). Interjections: The universal yet neglected part of speech. Journal of Pragmatics, 18, 101–118. https://doi.org/10.1016/0378-2166(92)90048-G

Anderson, C. L., Monroy, M., & Keltner, D. (2018). Emotion in the wilds of nature: The coherence and contagion of fear during threatening group-based outdoors experiences. Emotion, 18, 355. https://doi.org/10.1037/emo0000378

Anikin, A., & Lima, C. F. (2018). Perceptual and acoustic differences between authentic and acted nonverbal emotional vocalizations. The Quarterly Journal of Experimental Psychology, 71, 1–21. https://doi.org/10.1080/17470218.2016.1270976

Banse, R., & Scherer, K. R. (1996). Acoustic profiles in vocal emotion expression. Journal of Personality and Social Psychology, 70, 614. https://doi.org/10.1037/0022-3514.70.3.614

Bates, D., Mächler, M., Bolker, B., & Walker, S. (2015). Fitting linear mixed-effects models using lme4. Journal of Statistical Software. https://doi.org/10.18637/jss.v067.i01

Behrens, K. Y. (2004). A multifaceted view of the concept of amae: Reconsidering the indigenous Japanese concept of relatedness. Human Development, 47, 1–27. https://doi.org/10.1159/000075366

Benton, M., Dockendorf, L., Jin, W., Liu, Y., & Edmondson, J. A. (2007). The continuum of speech rhythm: Computational testing of speech rhythm of large corpora from natural Chinese and English speech. The 16th ICPhS (pp. 1269–1272).

Berridge, K. C., & Kringelbach, M. L. (2015). Pleasure systems in the brain. Neuron, 86, 646–664. https://doi.org/10.1016/j.neuron.2015.02.018

Boersma, P., & Weenink, D. (2011). Praat: Doing phonetics by computer. Retrieved from http://www.praat.org/.

Bryant, G. A., & Aktipis, C. A. (2014). The animal nature of spontaneous human laughter. Evolution and Human Behavior, 35, 327–335. https://doi.org/10.1016/j.evolhumbehav.2014.03.003

Buck, R. (1984). The communication of emotion. Guilford Press.

Castiajo, P., & Pinheiro, A. P. (2019). Decoding emotions from nonverbal vocalizations: How much voice signal is enough? Motivation and Emotion, 43, 803–813. https://doi.org/10.1007/s11031-019-09783-9

Cordaro, D. T., Keltner, D., Tshering, S., Wangchuk, D., & Flynn, L. M. (2016). The voice conveys emotion in ten globalized cultures and one remote village in Bhutan. Emotion, 16, 117. https://doi.org/10.1037/emo0000100

Cordaro, D. T., Sun, R., Kamble, S., Hodder, N., Monroy, M., Cowen, A., Bai, Y., & Keltner, D. (2020). The recognition of 18 facial-bodily expressions across nine cultures. Emotion, 20(7), 1292–1300. https://doi.org/10.1037/emo0000576

Cowen, A. S., Elfenbein, H. A., Laukka, P., & Keltner, D. (2019). Mapping 24 emotions conveyed by brief human vocalization. American Psychologist, 74, 698. https://doi.org/10.1037/amp0000399

Doi, T. (2005). Understanding amae: The Japanese concept of need-love. Kent: Global Orient.

Ekman, P. (1992). An argument for basic emotions. Cognition and Emotion, 6, 169–200. https://doi.org/10.1080/02699939208411068

Eyben, F., Scherer, K. R., Schuller, B. W., Sundberg, J., André, E., Busso, C., Devillers, L. Y., Epps, J., Laukka, P., Narayanan, S. S., & Truong, K. P. (2016). The Geneva Minimalistic acoustic parameter set (GeMAPS) for voice research and affective computing. IEEE Transactions on Affective Computing, 7, 190–202. https://doi.org/10.1109/TAFFC.2015.2457417

Eyben, F., Weninger, F., Gross, F., & Schuller, B. (2013). Recent developments in openSMILE, the Munich open-source multimedia feature extractor. In A. Jaimes, N. Sebe, N. Boujemaa, D. Gatica-Perez, D. A. Shamma, M. Worring, & R. Zimmermann (Eds.), Proceedings of the 21st association for computing machinery international conference on multimedia (pp. 835–838). New York, NY: Association for Computing Machinery. https://doi.org/10.1145/2502081.2502224

Fredrickson, B. L. (1998). What good are positive emotions? Review of General Psychology, 2, 300. https://doi.org/10.1037/1089-2680.2.3.300

Gibbon, D. (2017). Prosody: Rhythms and melodies of speech. Retrieved from https://arxiv.org/pdf/1704.02565.pdf

Griskevicius, V., Shiota, M. N., & Neufeld, S. L. (2010). Influence of different positive emotions on persuasion processing: A functional evolutionary approach. Emotion, 10, 190–206. https://doi.org/10.1037/a0018421

Hawk, S. T., Van Kleef, G. A., Fischer, A. H., & Van Der Schalk, J. (2009). “Worth a thousand words”: Absolute and relative decoding of nonlinguistic affect vocalizations. Emotion, 9, 293. https://doi.org/10.1037/a0015178

Jessen, S., & Kotz, S. A. (2011). The temporal dynamics of processing emotions from vocal, facial, and bodily expressions. NeuroImage, 58, 665–674. https://doi.org/10.1016/j.neuroimage.2011.06.035

Juslin, P. N., & Laukka, P. (2001). Impact of intended emotion intensity on cue utilization and decoding accuracy in vocal expression of emotion. Emotion, 1, 381–412. https://doi.org/10.1037/1528-3542.1.4.381

Juslin, P. N., & Laukka, P. (2003). Communication of emotions in vocal expression and music performance: Different channels, same code? Psychological Bulletin, 129, 770–814. https://doi.org/10.1037/0033-2909.129.5.770

Juslin, P. N., Laukka, P., & Bänziger, T. (2017). The mirror to our soul? Comparisons of spontaneous and posed vocal expression of emotion. Journal of Nonverbal Behavior, 42, 1–40. https://doi.org/10.1007/s10919-017-0268-x

Kamiloğlu, R. G., Fischer, A. H., & Sauter, D. A. (2020). Good vibrations: A review of vocal expressions of positive emotions. Psychonomic Bulletin & Review, 27, 237–265. https://doi.org/10.3758/s13423-019-01701-x

Keltner, D., Haidt, J., & Shiota, M. N. (2006). Social functionalism and the evolution of emotions. In M. Schaller, J. A. Simpson, & D. T. Kenrick (Eds.), Evolution and social psychology (pp. 115–142). Psychosocial Press.

Kreiman, J., & Sidtis, D. (2011). Foundations of voice studies: An interdisciplinary approach to voice production and perception. Hoboken: Wiley.

Laukka, P., & Elfenbein, H. A. (2020). Cross-cultural emotion recognition and in-group advantage in vocal expression: A meta-analysis. Emotion Review. https://doi.org/10.1177/1754073919897295

Laukka, P., Elfenbein, H. A., Söder, N., Nordström, H., Althoff, J., Iraki, F. K. E., Rockstuhl, T., & Thingujam, N. S. (2013). Cross-cultural decoding of positive and negative non-linguistic emotion vocalizations. Frontiers in Psychology, 4, 353. https://doi.org/10.3389/fpsyg.2013.00353

Lausen, A., & Hammerschmidt, K. (2020). Emotion recognition and confidence ratings predicted by vocal stimulus type and prosodic parameters. Humanities and Social Sciences Communications, 7, 1–17. https://doi.org/10.1057/s41599-020-0499-z

Lima, C. F., Castro, S. L., & Scott, S. K. (2013). When voices get emotional: A corpus of nonverbal vocalizations for research on emotion processing. Behavior Research Methods, 45, 1234–1245. https://doi.org/10.3758/s13428-013-0324-3

Nesse, R. M. (1990). Evolutionary explanations of emotions. Human Nature, 1, 261–289.

Pajupuu, H., Altrov, R., & Pajupuu, J. (2019). Towards a vividness in synthesized speech for audiobooks. Eesti ja soome-ugri keeleteaduse ajakiri. Journal of Estonian and Finno-Ugric Linguistics, 10, 167–190. https://doi.org/10.12697/jeful.2019.10.1.09

Panksepp, J., & Burgdorf, J. (2003). “Laughing” rats and the evolutionary antecedents of human joy? Physiology & Behavior, 79, 533–547.

Paulmann, S., & Uskul, A. K. (2014). Cross-cultural emotional prosody recognition: Evidence from Chinese and British listeners. Cognition and Emotion, 28, 230–244. https://doi.org/10.1080/02699931.2013.812033

Pedregosa, F., Varoquaux, G., Gramfort, A., Michel, V., Thirion, B., Grisel, O., Blondel, M., Prettenhofer, P., Weiss, R., Dubourg, V., & Vanderplas, J. (2011). Scikit-learn: Machine learning in Python. The Journal of Machine Learning Research, 12, 2825–2830.

Pell, M. D., Paulmann, S., Dara, C., Alasseri, A., & Kotz, S. A. (2009). Factors in the recognition of vocally expressed emotions: A comparison of four languages. Journal of Phonetics, 37, 417–435. https://doi.org/10.1016/j.wocn.2009.07.005

Pell, M. D., Rothermich, K., Liu, P., Paulmann, S., Sethi, S., & Rigoulot, S. (2015). Preferential decoding of emotion from human non-linguistic vocalizations versus speech prosody. Biological Psychology, 111, 14–25. https://doi.org/10.1016/j.biopsycho.2015.08.008

Poria, S., Cambria, E., Bajpai, R., & Hussain, A. (2017). A review of affective computing: From unimodal analysis to multimodal fusion. Information Fusion, 37, 98–125. https://doi.org/10.1016/j.inffus.2017.02.003

Russell, J. A. (1994). Is there universal recognition of emotion from facial expression? A review of the cross-cultural studies. Psychological Bulletin, 115, 102–141. https://doi.org/10.1037/0033-2909.115.1.102

Sauter, D. (2007). An investigation into vocal expressions of emotions: The roles of valence, culture, and acoustic factors (Doctoral dissertation, University of London).

Sauter, D. A., Eisner, F., Ekman, P., & Scott, S. K. (2010). Cross-cultural recognition of basic emotions through nonverbal emotional vocalizations. Proceedings of the National Academy of Sciences of the United States of America, 107, 2408–2412. https://doi.org/10.1073/pnas.0908239106

Sauter, D. A., & Fischer, A. H. (2018). Can perceivers recognize emotions from spontaneous expressions? Cognition and Emotion, 32, 504–515. https://doi.org/10.1080/02699931.2017.1320978

Schaerlaeken, S., & Grandjean, D. (2018). Unfolding and dynamics of affect bursts decoding in humans. PLoS ONE, 13, e0206216. https://doi.org/10.1371/journal.pone.0206216

Scherer, K. R. (1986). Vocal affect expression: A review and a model for future research. Psychological Bulletin, 99, 143–165. https://doi.org/10.1037/0033-2909.99.2.143

Scherer, K. R. (1994). Affect bursts. In S. H. M. van Goozen, N. E. Van de Poll, & J. A. Sergeant (Eds.), Emotions: Essays on emotion theory (pp. 161–196). Erlbaum.

Scherer, K. R., Banse, R., & Wallbott, H. G. (2001). Emotion inferences from vocal expression correlate across languages and cultures. Journal of Cross-Cultural Psychology, 32, 76–98. https://doi.org/10.1177/0022022101032001009

Scherer, K. R., Banse, R., Wallbott, H. G., & Goldbeck, T. (1991). Vocal cues in emotion encoding and decoding. Motivation and Emotion, 15, 123–148. https://doi.org/10.1007/BF00995674

Schröder, M. (2003). Experimental study of vocal affect bursts. Speech Communication, 40, 99–116. https://doi.org/10.1016/S0167-6393(02)00078-X

Scott, S. K., Sauter, D., & McGettigan, C. (2010). Brain mechanisms for processing perceived emotional vocalizations in humans. In Handbook of behavioral neuroscience (Vol. 19, pp. 187–197). Elsevier.

Shiota, M. N., Campos, B., Keltner, D., & Hertenstein, M. (2004). Positive emotion and the regulation of interpersonal relationships. In P. Phillipot & R. Feldman (Eds.), Emotion regulation (pp. 127–156). Erlbaum.

Shiota, M. N., Neufeld, S. L., Danvers, A. F., Osborne, E. A., Sng, O., & Yee, C. I. (2014). Positive emotion differentiation: A functional approach. Social and Personality Psychology Compass, 8, 104–117. https://doi.org/10.1111/spc3.12092

Soltysik, S., & Jelen, P. (2005). In rats, sighs correlate with relief. Physiology & Behavior, 85, 598–602. https://doi.org/10.1016/j.physbeh.2005.06.008

Trouvain, J. (2014). Laughing, breathing, clicking—The prosody of nonverbal vocalizations. Speech Prosody, 2014, 598–602.

Vancleef, K., Read, J. C., Herbert, W., Goodship, N., Woodhouse, M., & Serrano-Pedraza, I. (2018). Two choices good, four choices better: For measuring stereoacuity in children, a four-alternative forced-choice paradigm is more efficient than two. PLoS ONE, 13, e0201366. https://doi.org/10.1371/journal.pone.0201366

Williams, C. E., & Stevens, K. N. (1981). Vocal correlates of emotional states. In J. K. Darby (Ed.), Speech evaluation in psychiatry (pp. 221–240). New York, NY: Grune and Stratton.

Yam, P. C. (2017). The social functions of intergroup schadenfreude (Doctoral dissertation, University of Oxford).

Funding

R.G.K. and D.A.S. are supported by ERC Starting grant no. 714977 awarded to D.A.S.

Ethics declarations

Conflict of interest

The authors declare that they had no conflicts of interest with respect to their authorship or the publication of this article.

Ethics Approval

The Faculty Ethics Review Board of the University of Amsterdam, Amsterdam, the Netherlands, approved the collection of Dutch vocalizations (project number 2019-SP-10142), the collection of Chinese vocalizations (project number 2019-SP-11306), and the recognition experiments (project number 2019-SP-11716).

Consent to Participate

All participants were asked to provide (electronic) informed consent before participation.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kamiloğlu, R.G., Boateng, G., Balabanova, A. et al. Superior Communication of Positive Emotions Through Nonverbal Vocalisations Compared to Speech Prosody. J Nonverbal Behav 45, 419–454 (2021). https://doi.org/10.1007/s10919-021-00375-1

DOI: https://doi.org/10.1007/s10919-021-00375-1