Abstract

Background

Paediatric emergencies are challenging for healthcare workers, first aiders, and parents waiting for emergency medical services to arrive. With the expected rise of virtual assistants, people will likely seek help from such digital AI tools, especially in regions lacking emergency medical services. Large Language Models like ChatGPT proved effective in providing health-related information and are competent in medical exams but are questioned regarding patient safety. Currently, there is no information on ChatGPT’s performance in supporting parents in paediatric emergencies requiring help from emergency medical services. This study aimed to test 20 paediatric and two basic life support case vignettes for ChatGPT and GPT-4 performance and safety in children.

Methods

We provided the cases three times each to two models, ChatGPT and GPT-4, and assessed the diagnostic accuracy, emergency call advice, and the validity of advice given to parents.

Results

Both models recognized the emergency in the cases, except for septic shock and pulmonary embolism, and identified the correct diagnosis in 94%. However, ChatGPT/GPT-4 reliably advised to call emergency services only in 12 of 22 cases (54%), gave correct first aid instructions in 9 cases (45%) and incorrectly advised advanced life support techniques to parents in 3 of 22 cases (13.6%).

Conclusion

Considering these results of the recent ChatGPT versions, the validity, reliability and thus safety of ChatGPT/GPT-4 as an emergency support tool is questionable. However, whether humans would perform better in the same situation is uncertain. Moreover, other studies have shown that human emergency call operators are also inaccurate, partly with worse performance than ChatGPT/GPT-4 in our study. However, one of the main limitations of the study is that we used prototypical cases, and the management may differ from urban to rural areas and between different countries, indicating the need for further evaluation of the context sensitivity and adaptability of the model. Nevertheless, ChatGPT and the new versions under development may be promising tools for assisting lay first responders, operators, and professionals in diagnosing a paediatric emergency.

Trial registration

Not applicable.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Background/Rationale

The early recognition of life-threatening situations and high-quality first aid until emergency medical services (EMS) arrive is lifesaving and highly relevant to the prognosis and neurological outcome in cardiopulmonary resuscitation [1]. In most European countries, EMS are present within a few minutes, ensuring high-quality medical care for victims in arrest or peri-arrest situations. However, medical care might be delayed in rural or remote areas or with a possible future shortage of EMS [2] due to demographic developments. This puts lay helpers in a crucial position impacting the prognosis depending on the quality of first aid until EMS arrives.

Complex medical conditions and the expected increasing shortage of educational staff make it unlikely for lay persons to be empowered to manage difficult cases without guidance. A selection of paediatric emergencies that may be challenging even for experts are addressed in the American Heart Association course for Paediatric Advanced Life Support (PALS). PALS covers eight general conditions (four respiratory and four cardiovascular) with one to four specific conditions that cause paediatric cardiac arrest and death [3].

In recent years, lay people’s health information-seeking behaviour has gradually shifted as they increasingly turn to online sources and digital services [4]. This trend has been accompanied by the development of numerous mobile applications (“apps”) that provide health-related services, ranging from fitness trackers to tools for self-diagnosis and symptom checking [5]. Thanks to the widespread use of smartphones, such health apps are easily accessible to many (e.g., via Google Play or Apple App Store).

Symptom checkers are one type of health app (or online tool) that provides a personal assessment of a medical complaint and offers triage advice through chatbot-based interfaces. While these apps are frequently used for common conditions such as viral infections, studies suggest that some symptom checkers also have high diagnostic and triage accuracy in medical emergencies [6]. Yet other apps provide first aid advice or resuscitation instructions for bystanders or first responders. However, the quality of freely available apps can vary substantially, and due to a lack of quality control, concerns arise about their accuracy, reliability, and safety [7]. For instance, some cardiopulmonary resuscitation apps provide inaccurate information [8], and some symptom checkers have been shown to lack accuracy and tend to be overly risk-averse [9,10,11,12].

Although some health apps already incorporate artificial intelligence (AI), many tools, such as symptom checkers, rely on narrowly scoped models or simple decision tree systems. Recent advancements in generative AI have opened the door for using powerful deep learning techniques that could be used in health-related contexts and potentially support health information-seeking [13].

One type of generative AI that has recently gained immense interest is the Large Language Model (LLM), with OpenAI’s system ChatGPT, based on the GPT 3.5 (Generative Pre-trained Transformer) architecture, being one of the most popular. ChatGPT provides a free and easy-to-use chatbot released in November 2022 and has since attracted millions of users, making it one of the fastest-growing internet applications in history. In March 2023, OpenAI unrolled an advanced chatbot for paying user, based on an early version of GPT-4. In response to the hype around ChatGPT, Microsoft integrated a GPT-4-based chatbot into its search engine Bing, and Google released a first version of a similar system named Bard, based on its LLM called LaMDA. Given the power of these and other LLMs, the public and economic interest, and the integration into everyday used products such as search engines, smartphones, or virtual assistants, it is likely that we will see a rise in AI-based chatbot that are used for various purposes including healthcare and medicine [14].

While first LLMs have been specifically trained for medical uses (e.g. Med-PaLM2 or BioGPT), ChatGPT or GPT-4 were not initially developed for healthcare or health research use. Nevertheless, researchers have recently explored various potential applications of ChatGPT in medicine and healthcare during the last few months [15]. Studies have investigated whether GPT-based models can pass medical licensing examinations [16, 17] or life support exams [18], with most finding that their performance falls below professional benchmarks. Despite this, the results show the promising potential of the model [19]. Recent research found that ChatGPT “achieves impressive accuracy in clinical decision making” [20] and could provide a powerful tool to assist in diagnosis and self-triage for individuals without medical training [21], outperforming lay individuals [22]. It has also been suggested that ChatGPT’s responses are being perceived by help-seekers as more empathic than those of physicians [23]. Consequently, there is speculation about the potential for conversational AI to provide laypeople with assistance in medical contexts [24], including emergency situations.

While models of ChatGPT or GPT-4 have been tested in various medical settings [15, 25], to our knowledge, no specific targeted assessment has yet been performed on medical emergencies in children [18] – the most feared situation for bystanders involving high emotionality and exposure to become traumatized as a “second victim” [26]. The use of AI-based chatbots in such high-stakes contexts is restricted due to their limitations and potential for harm [27,28,29]. Despite the proficiency of LLMs in multiple domains, they are still susceptible to error and “hallucinations” (plausible text generated that is not based on reality or fact) [25]. Concerns have been raised that using LLMs like ChatGPT may compromise patient safety in emergency situations, leading to various ethical and legal questions [29]. When answering to health-related questions, ChatGPT sometimes provides a disclaimer that it is not a qualified medical diagnosis but continues offering advice after this caveat. Despite such warnings and the fact that ChatGPT is not designed to aid in emergencies, there is a high likelihood that lay people will use popular AI tools in unforeseen ways and turn to AI-based chatbots when seeking help during medical emergencies [30]. Therefore, it is crucial to investigate the capabilities and safety of such LLMs in high-stake scenarios.

Objectives

The objective of this study was to test the hypothesis that ChatGPT and GPT-4 are capable of correctly identifying emergencies requiring EMS (hypothesis 1), identifying the correct diagnosis (hypothesis 2), and correctly advising on further actions to lay rescuers (hypothesis 3) depending on prototypical case vignettes based on Basic Life Support (BLS) and PALS scenarios. As the PALS cases are life-threatening paediatric conditions, we expected a 95% accuracy (accounting for typical alpha errors) for all hypotheses. Depending on the study design with six iterations per case, this equals a zero-error tolerance.

Methods

Study Design and Setting

We conducted a cross-sectional explorative evaluation of the capabilities of OpenAI’s ChatGPT and GPT-4 using 22 case vignettes based on 2 BLS and the 20 core PALS scenarios [3]. Five emergency physicians developed and validated these vignettes (see Table 1) for face and content validity. All physicians (MB, SB, StB, JB, JG) are active in clinical practice, with four in leadership positions specialized in critical care medicine, emergency medicine, and anaesthesiology. In addition, three of them (SB, StB, MB) are licensed instructors of the American Heart Association for Basic Life Support, Advanced Cardiovascular Life Support for Experienced Providers, and Paediatric Advanced Life Support.

The vignettes comprised 20 prototypical PALS emergencies: three for upper airway (foreign body aspiration, croup, anaphylaxis), two for lower airway (asthma, bronchiolitis), one for lung tissue disease (viral pneumonia), three for disordered control of breathing (raised intracranial pressure, intoxication, neuromuscular disease), two for hypovolaemic shock (non-haemorrhagic, haemorrhagic), three for distributive shock (septic, anaphylactic, neurogenic), two for cardiogenic shock (arrhythmia, myocarditis), and four for obstructive shock (tension pneumothorax, pericardial tamponade, pulmonary embolism, ductal-depended heart disease).

Additionally, we added two BLS cases (cardiac arrest in adults with and without AED).

Both ChatGPT as well as GPT-4 were exposed to each case at least three times. In all PALS cases, the program was asked, “What is the diagnosis?” and “What can I do?”. In four cases, we included that EMS was already called.

Participants

The study did not involve any human subjects.

Variables

Hypothesis 1

was tested by the variable if a correct call for medical professionals in indicated situations was advised (“CALL” for medical help). This variable was considered correct whenever EMS was advised to be called (e.g., by “911”) in life-threatening situations, such as respiratory distress or decompensated shock, or contact to emergency systems (e.g., driving to the hospital, calling the general practitioner) in compensated situations was instructed.

Hypothesis 2

was tested by a qualitative analysis of the variable “DIAGNOSIS”, which was considered to be correct whenever the program mentioned the diagnosis.

Hypothesis 3

was tested by the qualitative analysis of correct “ADVICE” to first aiders and the analysis of “ALS-ADVICE” coded for situations in which ChatGPT/GPT-4 would suggest PALS or ACLS treatments that are recommended for professionals only.

All variables, except “ALS ADVICE”, were defined as “1” for correct and “0” for incorrect. “ALS-ADVICE” was an inverted item (“0” = correct, “1” = incorrect).

The secondary variables were:

-

a)

ALTERNATIVE DIAGNOSIS: Correct alternative diagnosis mentioned by ChatGPT/GPT-4?

-

b)

DISCLAIMER: Correct mentioning that ChatGPT/GPT-4 is not a substitute for assistance by health care professionals?

-

c)

PATIENT SAFETY: Subjective impression of patient safety violation by combining the other parameters (emergency call, first aid advice, no professional advice)?

We performed the binomial test to examine the probability of successfully classifying the following variables: CALL, DIAGNOSIS, ADVICE, ALS ADVICE, ALTERNATIVE DIAGNOSIS, DISCLAIMER, and PATIENT SAFETY as correct or incorrect if lower than 95% of all 132 cases and iterations.

Data Sources/ Measurement

Our data sources were ChatGPT (OpenAI, San Francisco, USA) on Google Chrome (Version 111.0.5563.111) using an Acer Aspire tabletop PC and GPT-4 (OpenAI, San Francisco, USA) on Google Chrome (Version 109.0.5414.119) using a MacBook Pro, M1, 2020. Default Model (GPT-3.5) and Model GPT-4 on ChatGPT Plus were used, version number March 23 (2023). The data was collected between the 29th of March and the 10th of April 2023.

Study Size

Each case (n = 22) was presented to ChatGPT and GPT-4 three times, resulting in the analysis of 132 cases.

Statistical Methods

We used SPSS 29.0 by IBM for the descriptive and analytic statistics. Aside from descriptive and explorative data analysis, intra-rater reliability for every variable mentioned above was assessed using the Fleiss’ kappa to evaluate the degree of concord among the three iterations of the 22 cases rated by ChatGPT and GPT-4. Inter-rater reliability was assessed using the interclass correlation (ICC) to evaluate the degree of agreement between ChatGPT and GPT-4, comparing the mean percentage of correctly classified answers of three iterations for every variable mentioned above. For binominal tests, we assumed significancy for p < 0.05 (one-sided).We did not use SI units in variable and case descriptions to simulate a setting for lay rescuers. There were two exceptions: body temperature (fever) was described in degrees Celsius, and a medication dose of ibuprofen in milligrams. Both are common units assumed to be used by lay rescuers who would consult an LLM in an emergency.

Results

Participants

Not applicable.

Descriptive Data

Altogether 132 core cases were analysed.

Main Results

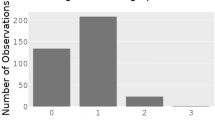

All results are presented in Table 2. ChatGPT/GPT-4 responses are available in detail in supplement S1. In all cases, all models advised contacting medical professionals. Calling EMS was advised in 94 cases (71.2%). Considering the six iterative presentations, ChatGPT/GPT-4 correctly identified 12 of 22 scenarios (54.5%) as emergencies of high urgency with the correct activation of the emergency response chain.

In case 17, the medical staff assessed the patient before contacting the AI. ChatGPT/GPT-4 ignored this in all six iterations and advised the emergency call. The cases with poor activation of the EMS were non-haemorrhagic shock, septic shock, supraventricular tachycardia, myocarditis, and pulmonary embolism.

Valid advice to first aiders was correct in 83 of 132 cases (62.9%) and considering the iterative approach in 10 of 22 scenarios (45.5%). The worst performance in advice could be detected in choking (ChatGPT/GPT-4 advises infant treatments, the Heimlich manoeuvre only once), opioid intoxication, Duchenne muscular dystrophy, haemorrhagic shock (advising a torniquet), supraventricular tachycardia (advising carotid pressure), and pericardial tamponade. In case 2 (airway swelling in anaphylaxis), ChatGPT-GPT-4 decided twice for hemodynamic anaphylactic shock treatment (lay down, legs raised) instead of respiratory treatment (elevated positioning).

The correct diagnosis was made in 124 of 132 cases (93.94%). The binomial test revealed that the observed proportion of correct diagnoses was not statistically lower than 95% considering all scenarios and iterations together (one-tailed p = 0.49). In three of 22 scenarios (13.6%), the diagnoses could not be made consistently. These were septic shock, pulmonary embolism, and pericardial tamponade. All other scenarios were identified correctly.

For the six other variables (CALL, ADVICE, DIAGNOSIS, ALTERNATIVE DIAGNOSIS, DISCLAIMER, PATIENT SAFETY), the observed proportion of correctly classified answers was significantly lower than 95% (one-tailed p < 0.05) throughout all cases and iterations. Only the variable ALS ADVICE did not show an observed proportion of correct answers lower than 95% (one-tailed p = 0.49).

A Fleiss’ Kappa of 0.73 showed a high degree of concordance of the iterations for GPT-4 and ChatGPT for the variable CALL medical professionals. For the variable ADVICE, GPT-4 showed a higher Fleiss’ Kappa value of 0.67 as opposed to ChatGPT (Fleiss’ Kappa = 0.48). The same applies to the variable ALS ADVICE (GPT-4: Fleiss’ Kappa = 0.47 vs. ChatGPT: Fleiss’ Kappa = 0.30). The degree of agreement regarding the correct DIAGNOSIS among the iterations was higher for ChatGPT (Fleiss’ Kappa = 0.3) than for GPT-4 (Fleiss’ Kappa = 0.2). There was no difference between the models for the variable ALTERNATIVE DIAGNOSIS (Fleiss’ Kappa = 0.39) and PATIENT SAFETY (Fleiss’ Kappa = 0.63). In contrast, there was practically no agreement among the iterations regarding the variable DISCLAIMER for both models (Fleiss’ Kappa < 0). In conclusion, the intra-rater reliability was poor for both models except for the variables CALL medical professionals and PATIENT SAFETY.

The inter-rater reliability between ChatGPT and GPT-4 measured by ICC was poor for the variables DIAGNOSIS, ALTERNATIVE DIAGNOSIS, and PATIENT SAFETY (average measure = 0.2–0.4) and acceptable for the variables CALL, ADVICE, ADVICE ALS, and DISCLAIMER (average measure > 0.7).

Discussion

Key Results

To our knowledge, this is the first work to investigate the capabilities of ChatGPT and GPT-4 on PALS core cases in the hypothetical scenario that laypersons would use the chatbot for support until EMS arrive.

However, our results clearly show that ChatGPT/GPT-4 was not consistent in activating the correct emergency response (hypothesis 1) and advising correct first aid actions (hypothesis 3). Therefore, the only hypothesis we could confirm is DIAGNOSIS (hypothesis 2), as ChatGPT/GPT-4 mostly provided the correct diagnosis.

Additional analyses showed that when combining all parameters, the model failed to obtain safe support to first aiders in nearly half the cases, especially in the shock cases (see Table 2). Despite our high expectations, the use of recent ChatGPT/GPT-4 in medical emergencies must be questioned for patient safety.

Recent research on health apps (preceding LLMs) regarding their validity and accuracy could show a wide range of quality as many apps failed the quality standards [7, 31]. However, some useful apps can be used in paediatric emergencies [32], but these would have to be downloaded before or during the incident. Other apps mainly focus on health care providers, specific aspects of resuscitation [33,34,35], or function as educational tools [36]. Concerning LLMs, early studies showed satisfactory accuracy of ChatGPT answering multidisciplinary medical questions [22, 37] and a capability to pass older BLS, PALS and ACLS exams [18].

However, lay rescuers face different barriers in emergencies. Concerning cardiopulmonary arrest, resuscitation attempts and competencies are still far below desirable [38] and may overwhelm bystanders. Furthermore, health literacy among parents is low [39,40,41], again showing the demand for education on cardiac arrest prevention, but also on emergency management itself, including improved advice by EMS operators.

However, even emergency call centre operators or telephone-triage nurses and general practitioners show limited accuracy in emergencies below 95%, partially even below 60% sensitivity or specificity [42,43,44]. Additionally, they may be biased by language barriers [45]. This insight reduces the argument of our high-performance expectation of ChatGPT/GPT-4 of 95%, which was not met in our study. Nonetheless, the LLMs show better, and thus promising results compared to humans’ competence in emergency situations that might be optimized in future research, development, and training of LLMs.

Limitations

Studying LLMs such as ChatGPT or GPT-4 presents several challenges, partly due to the opacity of these models and the limited knowledge of their specific training data. For example, when using standard scenarios (e.g., based on PALS) to investigate the diagnostic and triage capacities, it is difficult to discern whether the models perform beyond mere “memorization” of correct answers, as it is likely that they have been trained on these specific vignettes. To avoid this shortcoming, we modified the wording of standard scenarios [46].

Furthermore, the output generated from LLMs is highly sensitive to the input prompts, and different prompting strategies can significantly impact the model’s abilities and performance. Complex language models have the capacity for in-context learning, e.g., through demonstrations of a few training examples of the relevant task [47]. Chain-of-thought technique, which demonstrates step-by-step reasoning in the prompts [48], has been suggested as a promising strategy in the context of complex medical or health-related questions [49]. In our study, instead of providing ChatGPT/GPT-4 with input-output training examples or chain-of-thoughts, we intentionally used a simple prompting strategy (“zero-shot”), that we believe imitates laypersons’ interaction with the chatbot. Hence, the prompts contained only a case description and the questions “What is the diagnosis?” and “What can I do?”. It remains an area for further research to test different prompting strategies and their impact on diagnostic and triage accuracy.

Our study faces further limitations: First, there is no comparative group of lay persons, first responders, EMS operators, or other medical staff that would be asked the same questions. However, this was not the aim of our study, leaving opportunities for future work on human-LLM inter-reliability, accuracy, and results on hybrid human/AI cooperation on emergency calls.

Second, selection bias might still be present. As ChatGPT/GPT-4 uses answer structures that differ between entries, perhaps six iterations are insufficient, indicating the need to analyse more iterations and differing prompts.

Third, we used English cases created by B2/C1 level speakers, not native speakers. However, different language proficiencies (e.g., due to migration) are realistic and were therefore left as is. Further evaluations of different language proficiencies and barriers and the ability of ChatGPT/GPT-4 to deal with this issue creates a future research opportunity [45].

Fourth, some cases included typical phrases for illnesses, e.g., “barking cough” in the croup case. Consequently, we reduced the vignettes and eliminated the pathognomonic “clues” for specific diseases that probably would not be mentioned by lay persons consulting ChatGPT/GPT-4. In this case, ChatGPT/GPT-4 identified croup in 50% after the terminology modification. For future research, these cases should be reduced, or lay persons should describe the simulated cases to be evaluated.

Further, we used European standards to determine patient safety and correct EMS activation. As ChatGPT is accessible worldwide through the internet, expected answers may differ regionally, especially in regions without access to EMS. For example, in case 14, we treated the transport to a hospital as incorrect due to missed spine immobilization, but in rural areas this may be the only chance to survive, as there is no EMS. Consequently, LLMs should be evaluated context-sensitively to local and national medical resources and recommendations.

Conclusion

While our results show that recent ChatGPT/GPT-4 models may perform better than humans in certain medical emergencies, we must express reluctance in recommending the use as a device for diagnostic advice at this time. Nevertheless, these are very promising results for the next AI generations. The future potential to improve the efficiency and delivery of emergency management and services in times of resource shortages and longer waiting times (especially in rural areas) is exciting, especially when combined with human medical professionals. Consequently, further evaluation and experiments with LLMs compared to humans and hybrid models of AI and humans should be the aim of future studies in emergency medicine.

Data Availability

Data is included in Supplemental 1.

Abbreviations

- ACLS:

-

Advanced Cardiovascular Life Support

- AI:

-

Artificial Intelligence

- BLS:

-

Basic Life Support

- ChatGPT:

-

Chat Generative Pre-trained Transformer

- GPT:

-

Generative Pre-trained Transformer

- EMS:

-

Emergency Medical Service

- ICC:

-

Intra Class Correlation

- LLM:

-

Large Language Model

- PALS:

-

Pediatric Advanced Life Support

References

Vadakkencherry Ramaswamy, V., et al., A comparative evaluation and appraisal of 2020 American Heart Association and 2021 European Resuscitation Council neonatal resuscitation guidelines. Resuscitation, 2021. 167: p. 151–159.

Eiche, C., et al., Job Satisfaction and Performance Orientation of Paramedics in German Emergency Medical Services-A Nationwide Survey. Int J Environ Res Public Health, 2021. 18(23).

AHA, Pediatric Advanced Life Support Instructor Manual, International English eBook edition. 2020, American Heart Association.

Mirzaei, A., et al., Predictors of Health Information-Seeking Behavior: Systematic Literature Review and Network Analysis. J Med Internet Res, 2021. 23(7): p. e21680.

Klasnja, P. and W. Pratt, Healthcare in the pocket: mapping the space of mobile-phone health interventions. J Biomed Inform, 2012. 45(1): p. 184–98.

Fraser, H.S., et al., Evaluation of Diagnostic and Triage Accuracy and Usability of a Symptom Checker in an Emergency Department: Observational Study. JMIR mHealth and uHealth, 2022. 10(9): p. e38364.

Grundy, Q., A Review of the Quality and Impact of Mobile Health Apps. Annual Review of Public Health, 2022. 43(1): p. 117–134.

Metelmann, B., et al., Medical Correctness and User Friendliness of Available Apps for Cardiopulmonary Resuscitation: Systematic Search Combined With Guideline Adherence and Usability Evaluation. JMIR Mhealth Uhealth, 2018. 6(11): p. e190.

Semigran, H.L., et al., Evaluation of symptom checkers for self diagnosis and triage: audit study. bmj, 2015. 351.

Schmieding, M.L., et al., Triage accuracy of symptom checker apps: 5-year follow-up evaluation. Journal of Medical Internet Research, 2022. 24(5): p. e31810.

Ceney, A., et al., Accuracy of online symptom checkers and the potential impact on service utilisation. PLoS One, 2021. 16(7): p. e0254088.

Wallace, W., et al., The diagnostic and triage accuracy of digital and online symptom checker tools: a systematic review. NPJ Digital Medicine, 2022. 5(1): p. 118.

Moor, M., et al., Foundation models for generalist medical artificial intelligence. Nature, 2023. 616(7956): p. 259–265.

Lee, P., S. Bubeck, and J. Petro, Benefits, Limits, and Risks of GPT-4 as an AI Chatbot for Medicine. N Engl J Med, 2023. 388(13): p. 1233–1239.

Li, J., et al., ChatGPT in Healthcare: A Taxonomy and Systematic Review medRxiv, 2023: p. 2023.03. 30.23287899.

Kung, T.H., et al., Performance of ChatGPT on USMLE: Potential for AI-assisted medical education using large language models. PLoS digital health, 2023. 2(2): p. e0000198.

Gilson, A., et al., How does CHATGPT perform on the United States Medical Licensing Examination? the implications of large language models for medical education and knowledge assessment. JMIR Medical Education, 2023. 9(1): p. e45312.

Fijačko, N., et al., Can ChatGPT pass the life support exams without entering the American heart association course? Resuscitation, 2023. 185.

Lee, P., Goldberg, C., & Kohane, I., The AI Revolution in Medicine: GPT-4 and Beyond Vol. 1. 2023: Pearson.

Rao, A., et al., Assessing the Utility of ChatGPT Throughout the Entire Clinical Workflow: Development and Usability Study. J Med Internet Res, 2023. 25: p. e48659.

Mehrotra, A. Symptom Checkers & ChatGPT. 2023 [cited 2023 16.04.2023]; Available from: https://scholar.harvard.edu/mehrotra/symptom-checkers.

Levine, D.M., et al., The Diagnostic and Triage Accuracy of the GPT-3 Artificial Intelligence Model medRxiv, 2023: p. 2023.01. 30.23285067.

Ayers, J.W., et al., Comparing Physician and Artificial Intelligence Chatbot Responses to Patient Questions Posted to a Public Social Media Forum. JAMA Intern Med, 2023.

Reardon, S. AI Chatbots Can Diagnose Medical Conditions at Home. How Good Are They? 2023 [cited 2023 16.04.2023]; Available from: https://www.scientificamerican.com/article/ai-chatbots-can-diagnose-medical-conditions-at-home-how-good-are-they/.

Sallam, M., ChatGPT Utility in Healthcare Education, Research, and Practice: Systematic Review on the Promising Perspectives and Valid Concerns. Healthcare (Basel), 2023. 11(6).

Wu, A.W., Medical error: the second victim. The doctor who makes the mistake needs help too. Bmj, 2000. 320(7237): p. 726–7.

Bender, E.M., et al. On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?? in Proceedings of the 2021 ACM conference on fairness, accountability, and transparency. 2021.

Weidinger, L., et al. Taxonomy of risks posed by language models. in 2022 ACM Conference on Fairness, Accountability, and Transparency. 2022.

Li, H., et al., Ethics of large language models in medicine and medical research. Lancet Digit Health, 2023.

Haltaufderheide, J. and R. Ranisch, Tools, Agents or Something Different? – The Importance of Techno- Philosophical Premises in Analyzing Health Technology. American Journal of Bioethics, 2023. 23(5): p. 19–22.

Müller, R., et al., Ethical, legal, and social aspects of symptom checker applications: a scoping review. Medicine, Health Care and Philosophy, 2022. 25(4): p. 737–755.

Born, J., et al., Great Help for Small People - The Development of a Children’s Emergency App. Stud Health Technol Inform, 2020. 270: p. 1297–1298.

Rose, C., et al., Utilizing Lean Software Methods To Improve Acceptance of Global eHealth Initiatives: Results From the Implementation of the Basic Emergency Care App. JMIR Form Res, 2021. 5(5): p. e14851.

Gálvez, J.A., et al., Interactive pediatric emergency checklists to the palm of your hand - How the Pedi Crisis App traveled around the world. Paediatr Anaesth, 2017. 27(8): p. 835–840.

Siebert, J.N., et al., Effect of a Mobile App on Prehospital Medication Errors During Simulated Pediatric Resuscitation: A Randomized Clinical Trial. JAMA Netw Open, 2021. 4(8): p. e2123007.

Doucet, L., et al., App-based learning as an alternative for instructors in teaching basic life support to school children: a randomized control trial. Acta Clin Belg, 2019. 74(5): p. 317–325.

Johnson, D., et al., Assessing the Accuracy and Reliability of AI-Generated Medical Responses: An Evaluation of the Chat-GPT Model. Res Sq, 2023.

Gräsner, J.T., et al., Survival after out-of-hospital cardiac arrest in Europe - Results of the EuReCa TWO study. Resuscitation, 2020. 148: p. 218–226.

Morrison, A.K., A. Glick, and H.S. Yin, Health Literacy: Implications for Child Health. Pediatr Rev, 2019. 40(6): p. 263–277.

DeWalt, D.A. and A. Hink, Health literacy and child health outcomes: a systematic review of the literature. Pediatrics, 2009. 124 Suppl 3: p. S265-74.

Sanders, L.M., et al., Literacy and child health: a systematic review. Arch Pediatr Adolesc Med, 2009. 163(2): p. 131–40.

Morreel, S., et al., How accurate is telephone triage in out-of-hours care? An observational trial in real patients. Acta Clin Belg, 2022. 77(2): p. 301–306.

Giesen, P., et al., Safety of telephone triage in general practitioner cooperatives: do triage nurses correctly estimate urgency? Qual Saf Health Care, 2007. 16(3): p. 181–4.

Huibers, L., et al., Safety of telephone triage in out-of-hours care: a systematic review. Scand J Prim Health Care, 2011. 29(4): p. 198–209.

Meischke, H.W., et al., The effect of language barriers on dispatching EMS response. Prehosp Emerg Care, 2013. 17(4): p. 475–80.

Hagendorff, T., Machine Psychology: Investigating Emergent Capabilities and Behavior in Large Language Models Using Psychological Methods arXiv preprint arXiv:2303.13988, 2023.

Brown, T., et al., Language models are few-shot learners. Advances in neural information processing systems, 2020. 33: p. 1877–1901.

Wei, J., et al., Chain of thought prompting elicits reasoning in large language models arXiv preprint arXiv:2201.11903, 2022.

Singhal, K., et al., Large language models encode clinical knowledge. Nature, 2023. 620(7972): p. 172–180.

Acknowledgements

None declared.

Funding

MTK was funded by the Messmer-Foundation Radolfzell, Germany, and Training Center for Emergency Medicine (NOTIS) Engen, Germany. RR work is a part of the Digital Ethics Forsight Lab in the Digital Medical Ethics Network (DiMEN) funded by the VolkswagenStiftung. SB, StB, MB, BG and JG declare no financial support or sponsorship.

Author information

Authors and Affiliations

Contributions

The authors contributed to the study as follows:

S Bushuven: conceptualization, case development, coding, ChatGPT 3.5 data, primary manuscript

J Bansbach: validation (critical care), primary draft and supervision

J Ganter: validation (emergency medicine), primary draft

M Bentele: validation (PALS, BLS), primary draft, and supervision

S Bentele: validation (PALS, BLS), primary draft, and supervision

B Gerber: validation (PALS, BLS), primary draft, English native speaker

M Trifunovic-Koenig: inferential statistical analysis, psychology, and primary draft.

R Ranisch: conceptualization, GPT 4 data, primary manuscript

All authors approved the final version of the manuscript.

Corresponding author

Ethics declarations

Ethical Approval

Not applicable. According to the national regulations in Germany, no ethical approval was required for this study.

Consent for Publication

Not applicable.

Competing Interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic Supplementary Material

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bushuven, S., Bentele, M., Bentele, S. et al. “ChatGPT, Can You Help Me Save My Child’s Life?” - Diagnostic Accuracy and Supportive Capabilities to Lay Rescuers by ChatGPT in Prehospital Basic Life Support and Paediatric Advanced Life Support Cases – An In-silico Analysis. J Med Syst 47, 123 (2023). https://doi.org/10.1007/s10916-023-02019-x

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10916-023-02019-x