Abstract

Gait dysfunctions and balance impairments are key fall risk factors and are associated with reduced quality of life in individuals with Parkinson’s disease (PD). Smartphone-based assessments show potential to expand remote monitoring of the disease. This review aimed to summarize the validity, reliability, and discriminative abilities of smartphone applications to assess gait, balance, and falls in PD. Two independent reviewers screened articles systematically identified through PubMed, Web of Science, Scopus, CINAHL, and SPORTDiscus. Studies that used smartphone-based gait, balance, or fall applications in PD were retrieved. The validity, reliability, and discriminative abilities of the smartphone applications were summarized and discussed qualitatively. The methodological quality of the studies was appraised using the Quality Assessment Tool for Observational Cohort and Cross-Sectional Studies. Thirty-one articles were included in this review, and most presented a low risk of bias. In total, 52% of the studies reported validity, 23% reported reliability, and 55% reported discriminative abilities of smartphone applications to evaluate gait, balance, and falls in PD. These studies reported strong validity, good to excellent reliability, and good discriminative properties of smartphone applications. Only 19% of the studies formally evaluated the usability of their smartphone applications. The current evidence supports the use of smartphones to assess gait and balance and to detect freezing of gait in PD. More studies are needed to explore the use of smartphones to predict falls in this population. Further studies are also warranted to evaluate the usability of smartphone applications to improve remote monitoring in this population.

Registration: PROSPERO CRD 42020198510


Introduction

Parkinson’s disease (PD) is the second most common neurodegenerative disorder in the United States of America, after Alzheimer’s disease [1]. It is associated with four well-known cardinal motor symptoms: resting tremor, bradykinesia, postural instability, and musculoskeletal stiffness [2]. These motor symptoms lead to gait dysfunctions and balance impairments, which have been linked to an increased risk of falls [3], mortality, and morbidity in individuals diagnosed with PD [4]. Given these consequences, accurate assessments that are widely available to a variety of clinicians and healthcare providers are critical for monitoring the overall progression of the disease and providing targeted care.

Clinicians and researchers have devoted significant effort to the accurate and valid measurement of gait and balance in individuals with PD. Commonly used performance-based clinical measures include the Timed Up and Go (TUG) test, Berg Balance Scale (BBS), Mini Balance Evaluation Systems Test (Mini-BESTest), and Functional Gait Assessment (FGA) [5,6,7]. These clinical outcomes are quick, easy to administer, and suitable for clinical practice. However, their scoring criteria are often subjective, and it is recommended that trained healthcare professionals such as physical and occupational therapists administer the tests [8]. In addition, clinical outcomes are often insensitive to subtle dysfunctional changes and have poor reliability [9]. In laboratory settings, gait alterations and balance impairments have been accurately evaluated using motion capture, wearable sensor systems, or force plates [10,11,12]. These technologies require specialized equipment and advanced knowledge to interpret their results. Because of their high cost and the specialized training required to implement them and interpret the data, they are not always suitable for clinical practice [8, 13]. Additionally, clinical and laboratory-based assessments require in-person contact, which is not always possible during a global health crisis such as the COVID-19 pandemic [14].

With the progress of technology, smartphones can serve as an alternative to the aforementioned expensive and complex technologies. Smartphones are ubiquitous, portable, and affordable. They also offer the potential to perform remote assessments in home environments, giving more insight into an individual’s true functional abilities and limitations. Smartphones are equipped with triaxial accelerometers and gyroscopes whose signals can be recorded and analyzed by applications installed on the device. This technology has been used to assess gait and balance in various clinical populations, including older adults [15], non-ambulatory individuals [16], individuals with traumatic brain injury [17], and people with multiple sclerosis [18]. Previous systematic reviews by Linares-Del Rey et al. [19] and Zapata et al. [20] indicated that smartphone and mHealth applications have the potential to be used to monitor medication and provide PD-related information. However, these reviews did not synthesize the validity, reliability, and discriminative abilities of smartphone applications to assess gait and balance in this population. Therefore, the primary purpose of this systematic review was to synthesize the current state of smartphone applications to evaluate gait and balance in individuals with PD. Specifically, this review summarized the validity, reliability, and discriminative abilities of smartphone applications in PD. As a secondary aim, given the relationship between gait, balance, and falls, this review also aimed to report on the ability of smartphone applications to predict future falls in PD.

Methods

Review Protocol and Registration

The systematic review was conducted in accordance with the Cochrane Handbook [21], and its reporting followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines [22]. The review protocol was pre-registered with PROSPERO, the International Prospective Register of Systematic Reviews (CRD: 42020198510).

Data Sources and Searches

The following databases were searched from inception to February 2021: PubMed/Medline, Scopus, Web of Science, CINAHL, and SPORTDiscus. The search strategies were adapted to each database, and filters were added to exclude irrelevant records such as animal studies. The search algorithm combined the terms "smartphone", "cell phone", or "mobile phone" with "gait", "ambulation", "standing", "fall", "postural control", or "balance", and with "Parkinson’s Disease". The search strategies developed for each database are presented in Appendix A.

Two review authors (EW and SMD) independently examined the titles and abstracts of articles retrieved from the databases to identify potential articles for this review, and each compiled a list of potentially eligible studies. The full texts of the studies on both lists were retrieved, and both review authors independently screened them against the eligibility criteria. A manual search of the reference lists of included articles (forward and backward searches) was conducted to identify other relevant studies. Any discrepancies were discussed with a third author (LA) until consensus was reached.

Study Selection

Studies meeting the eligibility criteria were included in the systematic review. The eligibility criteria were developed based on the populations, interventions or exposures, comparators, outcomes, and study designs (PICOS). Participants: adults (over 18 years of age) diagnosed with Parkinson’s disease; Interventions or Exposures: not applicable; Comparators: data collected with a smartphone and compared with any clinical gait and balance outcome measure or with any validated technology such as a standalone accelerometer, inertial measurement unit (IMU), 3-D motion capture, or force plate; Outcomes: gait measures such as the 10-m Walking Test (10MWT), Freezing of Gait (FoG) measures, and TUG; balance measures such as the BBS and Mini-BESTest; and prospective falls; Study designs: cross-sectional and prospective cohort observational studies and intervention studies. Studies were excluded if they were non-human studies, reviews, abstracts, conference proceedings, case–control studies, or protocol papers without data collection. Studies published in languages other than English, French, Spanish, Italian, or Portuguese would have been excluded because the research team could not fully understand their content.

Data Extraction and Analysis

Two review authors (EW and JP) independently extracted the following data: authors, publication year, study design, participant characteristics (Hoehn and Yahr stage, percentage of males, and disease duration), mean age of participants, sample size, smartphone outcome measures, type of smartphone, gait and balance outcome measures, measures of validity, reliability, sensitivity, and specificity, main results, smartphone wear site, intended user (i.e., clinicians or individuals with PD), and location of assessment. The different smartphone applications based on accelerometers and gyroscopes were also extracted. Any discrepancies between the authors during data extraction were resolved through discussion with a third author (LA).

Methodological Quality Assessment

The methodological quality of the included studies was assessed independently by two review authors (JP and RA) using the Quality Assessment Tool for Observational Cohort and Cross-Sectional Studies developed by the National Institutes of Health (NIH) [23]. This tool includes 14 criteria, each rated with one of three responses: Yes, No, or Other (cannot determine, not reported, or not available). The 14 criteria are listed and defined in Appendix B. Overall, studies are rated as having good, fair, or poor methodological quality [23]. Studies with 8 or more criteria (or more than 50% of the applicable criteria) answered “yes” were considered good quality; studies with 5–7 criteria (or less than 50% of the applicable criteria) answered “yes” were considered fair quality; and studies with fewer than 5 criteria answered “yes” were considered poor quality [24]. Any discrepancies between the authors during methodological quality assessment were resolved through discussion with a third author (LA).
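The count-based rating rule described above can be expressed as a small function. This is a minimal sketch, not part of the NIH tool itself: it assumes that only “yes” answers count, that “Other” and not-applicable criteria never count toward the rating, and it models only the count thresholds (8 or more, 5–7, fewer than 5), not the alternative percentage-based wording. The function name `rate_study_quality` and the response labels (including "na" for not applicable) are hypothetical.

```python
from collections import Counter

# Hypothetical response labels; "na" marks a criterion that is not applicable.
VALID_RESPONSES = {"yes", "no", "other", "na"}


def rate_study_quality(responses: list[str]) -> str:
    """Hypothetical helper: rate one study from its 14 criterion responses.

    Only "yes" answers count toward the rating; "no", "other" (cannot
    determine / not reported / not available) and "na" do not.
    """
    if len(responses) != 14:
        raise ValueError("the NIH tool has 14 criteria")
    normalized = [r.strip().lower() for r in responses]
    unknown = set(normalized) - VALID_RESPONSES
    if unknown:
        raise ValueError(f"unexpected responses: {sorted(unknown)}")
    yes_count = Counter(normalized)["yes"]
    if yes_count >= 8:
        return "good"
    if yes_count >= 5:  # 5-7 "yes" answers
        return "fair"
    return "poor"       # fewer than 5 "yes" answers


# Example: two criteria not applicable, 9 "yes", 2 "no", 1 "other".
example = ["yes"] * 9 + ["no"] * 2 + ["other"] + ["na"] * 2
print(rate_study_quality(example))  # prints "good"
```

A boundary case shows why the percentage wording needs a convention: 7 “yes” answers out of 12 applicable criteria is “fair” by count but more than 50% by proportion.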

Results

Study Selection

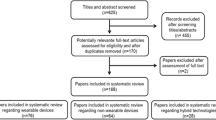

The database search yielded 376 articles, and 4 additional articles were found through forward and backward searches. After duplicates were removed, 252 articles underwent title and abstract screening. Following this initial screening, 71 articles were retrieved for full-text review. Forty articles were excluded after full-text review for the following reasons: did not use a smartphone to assess falls, gait, or balance (n = 19), did not include individuals with PD (n = 17), or were protocol papers (n = 4). Finally, 31 articles were included in this review. Figure 1 presents a detailed illustration of the study selection in accordance with the PRISMA guidelines.
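The counts reported above can be checked for internal consistency with a few lines of arithmetic. The sketch below assumes that the 4 records from forward and backward searches were pooled with the database records before duplicates were removed; the number of duplicates and of records excluded at title and abstract screening are derived from the reported counts, not stated in the source. The helper `prisma_flow` is hypothetical.

```python
def prisma_flow(database_records: int, other_records: int, screened: int,
                full_text: int, full_text_exclusions: dict[str, int]) -> dict[str, int]:
    """Hypothetical helper: derive the implied count at each PRISMA stage."""
    identified = database_records + other_records
    excluded_full_text = sum(full_text_exclusions.values())
    return {
        "identified": identified,
        "duplicates_removed": identified - screened,      # derived, not reported
        "excluded_at_screening": screened - full_text,    # derived, not reported
        "excluded_at_full_text": excluded_full_text,
        "included": full_text - excluded_full_text,
    }


flow = prisma_flow(
    database_records=376,
    other_records=4,
    screened=252,
    full_text=71,
    full_text_exclusions={
        "no smartphone assessment of falls, gait, or balance": 19,
        "no individuals with PD": 17,
        "protocol paper": 4,
    },
)
print(flow)
# {'identified': 380, 'duplicates_removed': 128, 'excluded_at_screening': 181,
#  'excluded_at_full_text': 40, 'included': 31}
```

The three exclusion reasons sum to the 40 full-text exclusions, and the derived total of 31 matches the number of included studies.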

Study Analysis

Table 1 presents the characteristics of the studies included in this review. Sample sizes ranged from 9 to 334 participants. Nine studies were prospective cohort studies, and the remaining 22 were cross-sectional studies of individuals with PD. The mean age of participants ranged from 50.7 to 77.0 years. Hoehn and Yahr (H&Y) stages, which grade the level of disability and the progression of PD symptoms, ranged from 1 to 4 across the studies; ten studies did not report the H&Y stage of their participants. Most participants with PD in the studies were males, with the percentage of males ranging from 43 to 86%; six studies did not report the gender of the participants. The mean duration of disease ranged from 3.5 to 15.5 years; seven studies did not report it.

Table 2 presents the details of the smartphone outcome measures, models of smartphone used, gait and balance outcome measures, measures of validity, reliability, sensitivity, and specificity of the smartphone measures, the main results, as well as the intended users of the smartphone, and locations of assessment. Only one of the included studies used a smartphone application to specifically predict future falls among individuals with PD [8], four studies used smartphone applications to specifically evaluate balance impairments [25,26,27,28], and 13 studies used smartphone applications to specifically assess gait including freezing of gait (FoG) [9, 29,30,31,32,33,34,35,36,37,38,39,40]. In addition, 11 studies used smartphone applications to assess gait and balance simultaneously [41,42,43,44,45,46,47,48,49,50,51] and two studies used smartphone applications to assess gait, balance, and predict future falls among individuals with PD [52, 53].

Gait and balance measurements through clinical and biomechanical outcomes differed greatly between the studies. The most common gait and balance outcome measures included the gait, FoG, and postural stability items of the Unified Parkinson’s Disease Rating Scale-III (UPDRS-III), FoG, TUG, 10MWT, and Six-Minute Walking Test (6MWT). Seventeen studies (55%) completed the smartphone assessments in laboratory settings, ten studies (32%) in home settings, and four (13%) in both home and laboratory settings. Smartphone models also varied greatly and included both iOS and Android devices. iOS models included the iPod Touch (4th and 5th generation) and iPhone 5, 6, and 6 Plus, and Android models included the Samsung S3, S5, S8, and J7, LG, LG Optimus, Sony Xperia, Google Nexus, Huawei Ascend, and Moto G4. Six studies did not specify the smartphone models used [26, 28, 34, 38, 50, 51]. Nineteen (61%) of the 31 studies included in this review reported individuals with PD as their intended users, seven (23%) reported clinicians, and five (16%) reported both individuals with PD and clinicians. Although most of the studies mentioned their intended users, only six of the 31 included studies formally evaluated the usability, feasibility, or satisfaction of their smartphone applications [27, 35, 38, 44, 46, 50]. Smartphone wear sites during gait and balance assessments also differed greatly among the studies: the device was secured to the chest, placed in the front pocket, or mounted at the hip, navel, ankle, thigh, or lower back. Two studies did not specify the smartphone wear site during their assessments [29, 46].

Validity and Reliability of Smartphone Applications

Sixteen (52%) of the 31 studies included in this review evaluated the validity of smartphone assessments by comparing their results with gait and balance clinical tests, standalone accelerometers, or force plates (see Table 2). Fiems et al. [8, 25] reported concurrent validity through significant correlations between assessments performed with the Sway Balance™ smartphone application (balance and fall protocols) and IMUs placed at the pelvic and thoracic regions (ρ = –0.61 to –0.92, p < 0.001). Su et al. [9] found significant correlations between a customized smartphone application and mobility laboratory measures, including stride time and stride variability, and with total UPDRS-III (r = 0.98–0.99, p < 0.001 and β = 0.37–0.39, p < 0.001). Similarly, Borzì et al. [41] reported significant correlations between a smartphone-derived quality of movement (QoM) index, the 6MWT, and the gait and postural stability items of the UPDRS-III (r = 0.4–0.61, p < 0.0001). Ellis et al. [36] reported concurrent validity of the SmartMOVE application against step durations and step displacements. Capecci et al. [37] reported significant correlations between a FoG detection smartphone application and step cadence. Chen et al. [43] and Lipsmeier et al. [45] reported that gait and balance severity assessments from the Roche PD application correlated significantly with the gait and postural stability items of the UPDRS (r = 0.54, p < 0.001). Additionally, Clavijo-Buendía et al. [44] reported construct validity of the RUNZI application for gait measurements but not for balance assessments (p > 0.05). Elm et al. [46] found significant correlations between the Fox Wearable Companion (FWC) application paired with a smartwatch and the balance and walking items of the UPDRS (r = 0.43–0.54, p’s < 0.01). Tang et al. [40] reported strong correlations between a customized smartphone application and a research-grade accelerometer for gait measurement and FoG detection (r = 0.86–0.97, p < 0.05). Yahalom et al. [48, 49] reported discriminant validity of EncephaLog application measures between individuals with PD and healthy controls. In addition, the authors reported significant correlations between EncephaLog application measures and the 4-axial motor UPDRS and the UPDRS items for arising from a chair, posture, and gait (r = 0.14–0.46, p < 0.05) [49]. Borzì et al. [26] reported a high correlation between a SensorLog application and the UPDRS postural instability clinical score (r = 0.76, p < 0.0001). Similarly, Ozinga et al. [28] reported high agreement between mobile device center of mass (COM) acceleration and NeuroCom force plate measures, with a difference close to 0. Finally, Zhan et al. [29] reported correlations between the Hopkins PD application and the UPDRS, TUG test, and H&Y (r = 0.72–0.91, p’s < 0.01).
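Most of the validity results above are correlation coefficients: Pearson’s r for linear association and Spearman’s ρ for rank (monotonic) association. The sketch below computes both in plain Python to show how they differ; the function names are hypothetical, the paired values in the example are illustrative rather than data from the included studies, and p-values are not computed.

```python
from math import sqrt


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation: strength of the linear association between x and y."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant variable")
    return sxy / sqrt(sxx * syy)


def average_ranks(values: list[float]) -> list[float]:
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1  # extend the block of tied values
        for m in range(i, j + 1):
            ranks[order[m]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman_rho(x: list[float], y: list[float]) -> float:
    """Spearman correlation: Pearson correlation of the ranks."""
    return pearson_r(average_ranks(x), average_ranks(y))


smartphone = [1, 2, 3, 4]   # illustrative smartphone-derived values
reference = [1, 4, 9, 16]   # illustrative reference-system values
print(round(pearson_r(smartphone, reference), 3))  # prints 0.984
print(spearman_rho(smartphone, reference))         # prints 1.0
```

Because ρ depends only on ranks, a monotonic but non-linear relationship yields ρ = 1 while r stays below 1; a negative coefficient, as reported by Fiems et al., indicates an inverse relationship between the two measures.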

Seven (23%) of the 31 studies included in this review evaluated some measure of reliability using a smartphone. Fiems et al. [8, 25], Clavijo-Buendía et al. [44], Lipsmeier et al. [45], and Ozinga et al. [28] reported good to excellent test–retest reliability (ICC = 0.64–0.98) of smartphone applications (Sway Balance™, the RUNZI application, the Roche PD application, and mobile device COM acceleration) to assess gait and balance in individuals with PD. Additionally, Tang et al. [40] reported good reliability for FoG detection (ICC = 0.82) using a customized smartphone-based assessment. Finally, Serra-Añó et al. [51] reported good reliability for postural control (ICC = 0.62–0.71) and excellent reliability for gait (ICC = 0.89–0.92) using the FallSkip application.
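Test–retest ICCs like those above are computed from a participants × sessions table. The ICC form used by each study is not stated here; the sketch below assumes ICC(2,1) (two-way random effects, absolute agreement, single measurement, in Shrout and Fleiss’s notation). The function name `icc_2_1` and the data are hypothetical.

```python
def icc_2_1(scores: list[list[float]]) -> float:
    """ICC(2,1): two-way random effects, absolute agreement, single measurement.

    scores[i][j] is the value for participant i in session j
    (n participants x k sessions).
    """
    n = len(scores)
    if n < 2:
        raise ValueError("need at least 2 participants")
    k = len(scores[0])
    if k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need a rectangular table with at least 2 sessions")

    grand_mean = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]

    # Sums of squares for participants (rows), sessions (columns), and total.
    ss_rows = k * sum((m - grand_mean) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand_mean) ** 2 for m in col_means)
    ss_total = sum((x - grand_mean) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    # Mean squares from the two-way ANOVA decomposition.
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Hypothetical data: 3 participants, 2 sessions; session 2 is shifted by +1.
print(round(icc_2_1([[1, 2], [2, 3], [3, 4]]), 3))  # prints 0.667
```

The constant +1 shift leaves the participants’ ranking unchanged but lowers the absolute-agreement ICC to about 0.67; a consistency-type ICC such as ICC(3,1) would return 1 for the same data, so the ICC form matters when comparing values across studies.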

Discriminative abilities of Smartphone Applications

Seventeen (55%) of the 31 studies included in this review evaluated some discriminative ability of smartphone applications to assess falls, gait, and/or balance among individuals with PD. Fiems et al. [8] indicated that the Sway Balance™ smartphone application had an accuracy of 0.65 to predict future falls, which is lower than the prediction performance of the ABC (0.76), Mini-BESTest (0.72), MDS-UPDRS (0.66), and fall history (0.83) [8]. In contrast, Lo et al. [52] reported a high accuracy (0.94) of a customized smartphone-based assessment to predict future falls, as well as accuracies of 0.95 and 0.90 to predict FoG and postural instability, respectively, in individuals with PD. Similarly, Pepa et al. [34, 39], Borzì et al. [31], Capecci et al. [37], Tang et al. [40], Abujrida et al. [47], and Kim et al. [30] reported good abilities of smartphone applications to detect FoG (sensitivity: 0.70–0.96, specificity: 0.85–0.99, accuracy: 0.81–0.99).

Additionally, Borzì et al. [41], Arora et al. [32, 53], and Abujrida et al. [47] reported good to excellent ability of smartphone applications to discriminate gait and postural instability between individuals with PD and healthy controls (sensitivity: 0.85–1, specificity: 0.88–1). Also, Borzì et al. [31, 41], Chomiak et al. [33], Chen et al. [43], Lipsmeier et al. [45], and Abujrida et al. [47] indicated good to excellent ability of smartphone applications to discriminate between individuals with PD with different levels of postural stability, dynamic gait instability, gait cycle breakdown, resting tremor, dexterity, and leg dexterity (sensitivity: 0.58–1, specificity: 0.79–1, accuracy: 0.92–0.97). Finally, Fiems et al. [25] and Bayés et al. [38] reported moderate to good ability of smartphone applications to predict H&Y levels (sensitivity: 0, specificity: 1, accuracy: 0.57) and to recognize on–off motor states (sensitivity: 0.97, specificity: 0.88), respectively.
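Sensitivity, specificity, and accuracy are all derived from the same 2 × 2 confusion matrix. The sketch below computes them from binary labels (1 = event, e.g., an annotated FoG episode; 0 = no event). The function name `diagnostic_metrics` and the labels are hypothetical.

```python
def diagnostic_metrics(y_true: list[int], y_pred: list[int]) -> dict[str, float]:
    """Sensitivity, specificity, and accuracy for binary labels (1 = positive)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equal in length")
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("y_true must contain both positive and negative cases")
    return {
        "sensitivity": tp / (tp + fn),  # share of true events detected
        "specificity": tn / (tn + fp),  # share of non-events correctly rejected
        "accuracy": (tp + tn) / len(y_true),
    }


# Detector that misses one event and raises one false alarm.
m1 = diagnostic_metrics([1, 1, 1, 1, 0, 0, 0, 0, 0, 0],
                        [1, 1, 1, 0, 0, 0, 0, 0, 0, 1])
print({k: round(v, 2) for k, v in m1.items()})
# {'sensitivity': 0.75, 'specificity': 0.83, 'accuracy': 0.8}

# Classifier that never predicts the positive class.
m2 = diagnostic_metrics([1, 1, 1, 0, 0, 0, 0], [0] * 7)
print({k: round(v, 2) for k, v in m2.items()})
# {'sensitivity': 0.0, 'specificity': 1.0, 'accuracy': 0.57}
```

The second example shows that a sensitivity of 0 together with a specificity of 1 can only arise when no case is predicted positive, in which case accuracy simply equals the proportion of negative cases. If the H&Y result above comes from a binary classification, this is the pattern its figures imply.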

Methodological Quality Assessment

Appendix C presents the details of the methodological quality assessment of the included studies. Twenty-eight (90%) of the 31 studies had good methodological quality, indicating a low risk of bias. The criteria on different levels of exposure and blinding of outcome assessors were not applicable to any of the included studies and were not accounted for in the overall quality rating. Lack of sample size justification was the most common deficiency in the included studies.

Discussion

The purpose of this study was to synthesize the current evidence on smartphone applications to assess gait, balance, and falls among individuals with PD. Smartphone-based assessments may be an appropriate alternative to conventional gait and balance analysis methods when such methods are cost prohibitive or not feasible. In addition, the importance of remote monitoring and assessment has become clear in light of the COVID-19 pandemic. This systematic review found that smartphone applications present strong validity, reliability, and discriminative abilities to evaluate gait and balance and to detect FoG among individuals with PD. The ability of smartphone applications to predict future falls in this population remains inconclusive and deserves further exploration.

Within this review, sixteen studies evaluated the validity of smartphone applications against clinical and biomechanical measures to assess gait and balance in individuals with PD. The results indicate strong concurrent and discriminant validity of smartphone applications in detecting FoG, gait alterations, and postural instability in this population. The results also indicate that smartphone applications can validly differentiate gait and postural instability between healthy controls and individuals with PD. Only one study reported low correlations between cadence derived from the EncephaLog application and the arising and gait axial motor UPDRS measures [49]. These low correlations may be explained by measurement errors of the EncephaLog application, which needs to be refined to appropriately reflect gait constructs in individuals with PD; the application was not fully automated, and some human preprocessing was still required before every analysis [49]. In summary, the validity of 13 smartphone applications to evaluate gait and/or balance in PD has been reported in the literature. Sway Balance™ [8, 25], the SensorLog application [26], and an unnamed smartphone-based accelerometer application [28] have been validated to assess postural instability in PD. The SmartMOVE application [36], RUNZI application [44], Hopkins PD application [29], a fuzzy logic algorithm embedded in a smartphone [39], and two other unnamed smartphone-based accelerometer applications [9, 40] have been validated to assess gait alterations and FoG in PD. Finally, the Roche PD application [43, 45], Fox Wearable Companion application [46], EncephaLog application [49], and the FallSkip system based on an accelerometer and gyroscope [51] have been validated to evaluate both gait and postural instability in this population.

As with validity, the seven studies that evaluated the reliability of smartphone applications indicated good to excellent reliability to assess gait and balance and to detect FoG among individuals with PD. Although these studies reported high reliability, which indicates small measurement error between assessments, only 23% of the studies included in this review evaluated reliability. This is consistent with previous reviews indicating that the reliability of smartphone applications has been evaluated in only a few studies of older adults [15] and people with multiple sclerosis [18]. To increase the use of smartphone applications within clinical settings and provide appropriate remote monitoring of gait and balance in individuals with PD, more studies are warranted to explore the reliability of smartphone applications in PD.

Additionally, smartphone applications showed good to excellent abilities to discriminate gait and postural instability between healthy controls and individuals with PD. Several of the included studies also reported that smartphone applications could appropriately discriminate between individuals with PD with different levels of postural stability, dynamic gait instability, gait cycle breakdown, resting tremor, dexterity, and leg dexterity. These results are consistent with those reported in older adults [15] and people with multiple sclerosis [18], indicating that smartphone applications are sensitive, specific, and accurate in discriminating gait and postural control within subgroups of individuals with PD and between healthy controls and individuals with PD. However, the ability of smartphone applications to predict falls is inconclusive. While Fiems et al. [8] indicated that the Sway Balance™ mobile application is less accurate than fall history and other clinical measures, including the ABC and Mini-BESTest, in predicting falls and should not be used as an alternative, Lo et al. [52] reported a high accuracy of a customized smartphone-based assessment to predict future falls. These contradictory results should be clarified by prospective studies that use smartphone applications to track falls. Gait dysfunctions, FoG, and balance impairments are the main fall risk factors among individuals with PD [54]; therefore, their relationship with falls should be explored using smartphone-based assessments. This could increase the clinical use of smartphone applications among people with PD.

The types of smartphone applications (e.g., RUNZI, EncephaLog, Roche PD, FWC) and smartphone models (e.g., iOS-based and Android-based smartphones) differed greatly among the studies included in this review. This may influence the validity, reliability, and discriminative properties described in this review. Although no comparisons can be made from the included studies because of their different data collection conditions, future studies should investigate the differences between smartphone applications and/or smartphone models. The smartphone wear sites during gait and balance evaluation also varied greatly among the studies reviewed. In most studies, participants carried smartphones in their front trouser pocket or had them secured at the chest or waist. Smartphone placement has been reported as an important factor during gait and balance assessments in people with multiple sclerosis [55]. In individuals with PD, Kim et al. [30] compared different smartphone placements during FoG detection and reported that smartphone location did not influence the results: smartphones mounted at the ankle, waist, or pocket provided similar FoG detection results in this population [30]. Since only one study has investigated the influence of smartphone location on gait and balance assessments in PD, this topic also deserves further exploration.

Lastly, only 19% of the included studies formally evaluated the usability, feasibility, or satisfaction of their smartphone applications, and 32% completed the assessments in home settings. In general, these studies reported feasibility, good acceptability, and high satisfaction with the smartphone applications in unsupervised home settings [27, 35, 38, 44, 46, 50]. However, in individuals with advanced PD, common motor features including tremor, bradykinesia, rigidity, and postural instability [2] are likely to be exacerbated, which may make smartphone technology more difficult to use. Given the importance of intended users in the development of smartphone applications for remote monitoring [20], more studies are needed to investigate the usability of smartphone applications to assess gait and balance in individuals with PD. Adapting smartphone applications to users’ needs may help overcome the challenge of incorporating them into clinical practice and clinical decision making.

Limitations

There are some limitations to this systematic review that should be taken into consideration when interpreting the results. First, the validity and discriminative results reported in this review are each based on only about half of the included studies; the remaining studies did not report validity or discriminative results for their smartphone applications. Similarly, approximately 80% of the included studies did not report any reliability results for smartphone applications in PD. Given the importance of the psychometric properties of an outcome measure with regard to measurement error and the construct measured, the results presented may be overstated. More studies comparing smartphone application outcomes with clinical outcomes and/or biomechanical measures are essential to corroborate the results presented in this review. Additionally, none of the included studies performed a sample size calculation before recruiting participants. This is a crucial limitation, as the statistical power of the included studies may be insufficient. Finally, our review did not specify which smartphone applications are most appropriate to assess gait and balance according to the stage and severity of PD, mainly because most of the included studies did not differentiate smartphone-based gait and balance assessments by participant characteristics. We recommend that future studies use smartphone applications to explore differences across the stages and severity of PD to facilitate their incorporation into clinical decision making.

Conclusion

This review provides strong evidence supporting the potential use of smartphone applications to assess gait and balance among individuals with PD at home or in the laboratory. The results indicate that smartphone applications present strong validity, reliability, and discriminative abilities to monitor gait dysfunctions and balance impairments and to detect FoG in individuals with PD. This review also highlights the need for further use of smartphone applications to monitor fall risk factors in this population. Additionally, most studies did not formally investigate the usability of smartphone applications. Given the importance of acceptability and satisfaction in these assessments, further studies are warranted to investigate the usability of smartphone applications to assess gait and balance in PD. This will help make smartphone applications an efficient, unobtrusive technology for assessing gait and balance during the daily activities of individuals with PD in their habitual settings. In turn, remote monitoring of PD may help provide targeted care to individuals with PD.

Availability of Data and Material

Not applicable.

Code Availability

Not applicable.

References

Kowal SL, Dall TM, Chakrabarti R, Storm MV, Jain A. The current and projected economic burden of Parkinson's disease in the United States. Mov Disord. 2013;28(3):311-8.

Tysnes OB, Storstein A. Epidemiology of Parkinson's disease. J Neural Transm (Vienna). 2017;124(8):901-5.

Hoskovcová M, Dušek P, Sieger T, Brožová H, Zárubová K, Bezdíček O, et al. Predicting Falls in Parkinson Disease: What Is the Value of Instrumented Testing in OFF Medication State? PLoS One. 2015;10(10):e0139849.

Peterson DS, Mancini M, Fino PC, Horak F, Smulders K. Speeding Up Gait in Parkinson's Disease. J Parkinsons Dis. 2020;10(1):245-53.

Leddy AL, Crowner BE, Earhart GM. Functional Gait Assessment and Balance Evaluation System Test: Reliability, Validity, Sensitivity, and Specificity for Identifying Individuals With Parkinson Disease Who Fall. Phys Ther. 2011;91(1):102-13.

Flynn A, Preston E, Dennis S, Canning CG, Allen NE. Home-based exercise monitored with telehealth is feasible and acceptable compared to centre-based exercise in Parkinson's disease: A randomised pilot study. Clin Rehabil. 2020:269215520976265.

Lazaro R. The Immediate Effect of Trunk Weighting on Balance and Functional Measures of People with Parkinson's Disease: A Feasibility Study. J Allied Health. 2021;50(1):38-46.

Fiems CL, Miller SA, Buchanan N, Knowles E, Larson E, Snow R, et al. Does a Sway-Based Mobile Application Predict Future Falls in People With Parkinson Disease? Archives of Physical Medicine and Rehabilitation. 2020;101(3):472-8.

Su D, Liu Z, Jiang X, Zhang F, Yu W, Ma H, et al. Simple Smartphone-Based Assessment of Gait Characteristics in Parkinson Disease: Validation Study. JMIR Mhealth Uhealth. 2021;9(2):e25451.

Ilha J, Abou L, Romanini F, Dall Pai AC, Mochizuki L. Postural control and the influence of the extent of thigh support on dynamic sitting balance among individuals with thoracic spinal cord injury. Clin Biomech (Bristol, Avon). 2020;73:108-14.

Romijnders R, Warmerdam E, Hansen C, Welzel J, Schmidt G, Maetzler W. Validation of IMU-based gait event detection during curved walking and turning in older adults and Parkinson's Disease patients. J Neuroeng Rehabil. 2021;18(1):28.

Bonora G, Mancini M, Carpinella I, Chiari L, Horak FB, Ferrarin M. Gait initiation is impaired in subjects with Parkinson's disease in the OFF state: Evidence from the analysis of the anticipatory postural adjustments through wearable inertial sensors. Gait Posture. 2017;51:218-21.

Abou L, de Freitas GR, Palandi J, Ilha J. Clinical Instruments for Measuring Unsupported Sitting Balance in Subjects with Spinal Cord Injury: A Systematic Review. Top Spinal Cord Inj Rehabil. 2018;24(2):177-93.

Prvu Bettger J, Thoumi A, Marquevich V, De Groote W, Rizzo Battistella L, Imamura M, et al. COVID-19: maintaining essential rehabilitation services across the care continuum. BMJ Glob Health. 2020;5(5).

Roeing KL, Hsieh KL, Sosnoff JJ. A systematic review of balance and fall risk assessments with mobile phone technology. Arch Gerontol Geriatr. 2017;73:222-6.

Frechette ML, Abou L, Rice LA, Sosnoff JJ. The Validity, Reliability, and Sensitivity of a Smartphone-Based Seated Postural Control Assessment in Wheelchair Users: A Pilot Study. Front Sports Act Living. 2020;2:540930.

Howell DR, Lugade V, Taksir M, Meehan WP, 3rd. Determining the utility of a smartphone-based gait evaluation for possible use in concussion management. Phys Sportsmed. 2020;48(1):75-80.

Abou L, Wong E, Peters J, Dossou MS, Sosnoff JJ, Rice LA. Smartphone applications to assess gait and postural control in people with multiple sclerosis: A systematic review. Mult Scler Relat Disord. 2021;51:102943.

Linares-Del Rey M, Vela-Desojo L, Cano-de la Cuerda R. Mobile phone applications in Parkinson's disease: A systematic review. Neurologia. 2019;34(1):38-54.

Zapata BC, Fernández-Alemán JL, Idri A, Toval A. Empirical studies on usability of mHealth apps: a systematic literature review. J Med Syst. 2015;39(2):1.

Higgins J, Thomas J, Chandler J, Cumpston M, Li T, Page M, et al. Cochrane Handbook for Systematic Reviews of Interventions Version 6.0 (updated July 2019). The Cochrane Collaboration. 2019.

Moher D, Shamseer L, Clarke M, Ghersi D, Liberati A, Petticrew M, et al. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. Systematic Reviews. 2015;4(1):1.

National Institutes of Health. Study quality assessment tools [web page]. USA: NIH; 2014. Available from: https://www.nhlbi.nih.gov/health-topics/study-quality-assessment-tools.

Abou L, Alluri A, Fliflet A, Du Y, Rice LA. Effectiveness of Physical Therapy Interventions in Reducing Fear of Falling Among Individuals With Neurologic Diseases: A Systematic Review and Meta-analysis. Archives of Physical Medicine and Rehabilitation. 2021;102(1):132-54.

Fiems CL, Dugan EL, Moore ES, Combs-Miller SA. Reliability and validity of the Sway Balance mobile application for measurement of postural sway in people with Parkinson disease. NeuroRehabilitation. 2018;43(2):147-54.

Borzì L, Fornara S, Amato F, Olmo G, Artusi CA, Lopiano L. Smartphone-Based Evaluation of Postural Stability in Parkinson’s Disease Patients During Quiet Stance. Electronics. 2020;9(6):919.

Fung A, Lai EC, Lee BC. Usability and Validation of the Smarter Balance System: An Unsupervised Dynamic Balance Exercises System for Individuals With Parkinson's Disease. IEEE Trans Neural Syst Rehabil Eng. 2018;26(4):798-806.

Ozinga SJ, Linder SM, Alberts JL. Use of Mobile Device Accelerometry to Enhance Evaluation of Postural Instability in Parkinson Disease. Arch Phys Med Rehabil. 2017;98(4):649-58.

Zhan A, Mohan S, Tarolli C, Schneider RB, Adams JL, Sharma S, et al. Using Smartphones and Machine Learning to Quantify Parkinson Disease Severity: The Mobile Parkinson Disease Score. JAMA Neurol. 2018;75(7):876-80.

Kim H, Lee HJ, Lee W, Kwon S, Kim SK, Jeon HS, et al. Unconstrained detection of freezing of Gait in Parkinson's disease patients using smartphone. Annu Int Conf IEEE Eng Med Biol Soc. 2015;2015:3751-4.

Borzì L, Varrecchia M, Olmo G, Artusi CA, Fabbri M, Rizzone MG, et al. Home monitoring of motor fluctuations in Parkinson’s disease patients. Journal of Reliable Intelligent Environments. 2019;5(3):145-62.

Arora S, Venkataraman V, Zhan A, Donohue S, Biglan KM, Dorsey ER, et al. Detecting and monitoring the symptoms of Parkinson's disease using smartphones: A pilot study. Parkinsonism & Related Disorders. 2015;21(6):650-3.

Chomiak T, Xian W, Pei Z, Hu B. A novel single-sensor-based method for the detection of gait-cycle breakdown and freezing of gait in Parkinson's disease. J Neural Transm (Vienna). 2019;126(8):1029-36.

Pepa L, Verdini F, Capecci M, Maracci F, Ceravolo MG, Leo T. Predicting Freezing of Gait in Parkinson’s Disease with a Smartphone: Comparison Between Two Algorithms. In: Andò B, Siciliano P, Marletta V, Monteriù A, editors. Ambient Assisted Living: Italian Forum 2014. Cham: Springer International Publishing; 2015. p. 61-9.

Mazilu S, Blanke U, Dorfman M, Gazit E, Mirelman A, Hausdorff JM, et al. A Wearable Assistant for Gait Training for Parkinson’s Disease with Freezing of Gait in Out-of-the-Lab Environments. ACM Trans Interact Intell Syst. 2015;5(1):Article 5.

Ellis RJ, Ng YS, Zhu S, Tan DM, Anderson B, Schlaug G, et al. A Validated Smartphone-Based Assessment of Gait and Gait Variability in Parkinson’s Disease. PLOS ONE. 2015;10(10):e0141694.

Capecci M, Pepa L, Verdini F, Ceravolo MG. A smartphone-based architecture to detect and quantify freezing of gait in Parkinson's disease. Gait Posture. 2016;50:28-33.

Bayés À, Samá A, Prats A, Pérez-López C, Crespo-Maraver M, Moreno JM, et al. A “HOLTER” for Parkinson’s disease: Validation of the ability to detect on-off states using the REMPARK system. Gait & Posture. 2018;59:1-6.

Pepa L, Capecci M, Andrenelli E, Ciabattoni L, Spalazzi L, Ceravolo MG. A fuzzy logic system for the home assessment of freezing of gait in subjects with Parkinsons disease. Expert Systems with Applications. 2020;147:113197.

Tang S-T, Tai C-H, Yang C-Y, Lin J-H. Feasibility of Smartphone-Based Gait Assessment for Parkinson’s Disease. Journal of Medical and Biological Engineering. 2020;40(4):582-91.

Borzì L, Olmo G, Artusi CA, Fabbri M, Rizzone MG, Romagnolo A, et al. A new index to assess turning quality and postural stability in patients with Parkinson's disease. Biomedical Signal Processing and Control. 2020;62:102059.

Orozco-Arroyave JR, Vásquez-Correa JC, Klumpp P, Pérez-Toro PA, Escobar-Grisales D, Roth N, et al. Apkinson: the smartphone application for telemonitoring Parkinson's patients through speech, gait and hands movement. Neurodegener Dis Manag. 2020;10(3):137-57.

Chen OY, Lipsmeier F, Phan H, Prince J, Taylor KI, Gossens C, et al. Building a Machine-Learning Framework to Remotely Assess Parkinson's Disease Using Smartphones. IEEE Trans Biomed Eng. 2020;67(12):3491-500.

Clavijo-Buendía S, Molina-Rueda F, Martín-Casas P, Ortega-Bastidas P, Monge-Pereira E, Laguarta-Val S, et al. Construct validity and test-retest reliability of a free mobile application for spatio-temporal gait analysis in Parkinson’s disease patients. Gait & Posture. 2020;79:86-91.

Lipsmeier F, Taylor KI, Kilchenmann T, Wolf D, Scotland A, Schjodt-Eriksen J, et al. Evaluation of smartphone-based testing to generate exploratory outcome measures in a phase 1 Parkinson's disease clinical trial. Mov Disord. 2018;33(8):1287-97.

Elm JJ, Daeschler M, Bataille L, Schneider R, Amara A, Espay AJ, et al. Feasibility and utility of a clinician dashboard from wearable and mobile application Parkinson’s disease data. npj Digital Medicine. 2019;2(1):95.

Abujrida H, Agu E, Pahlavan K. Machine learning-based motor assessment of Parkinson's disease using postural sway, gait and lifestyle features on crowdsourced smartphone data. Biomed Phys Eng Express. 2020;6(3):035005.

Yahalom H, Israeli-Korn S, Linder M, Yekutieli Z, Karlinsky KT, Rubel Y, et al. Psychiatric Patients on Neuroleptics: Evaluation of Parkinsonism and Quantified Assessment of Gait. Clin Neuropharmacol. 2020;43(1):1-6.

Yahalom G, Yekutieli Z, Israeli-Korn S, Elincx-Benizri S, Livneh V, Fay-Karmon T, et al. Smartphone Based Timed Up and Go Test Can Identify Postural Instability in Parkinson's Disease. The Israel Medical Association journal: IMAJ. 2020;22(1):37-42.

Ferreira JJ, Godinho C, Santos AT, Domingos J, Abreu D, Lobo R, et al. Quantitative home-based assessment of Parkinson's symptoms: the SENSE-PARK feasibility and usability study. BMC Neurol. 2015;15:89.

Serra-Añó P, Pedrero-Sánchez JF, Inglés M, Aguilar-Rodríguez M, Vargas-Villanueva I, López-Pascual J. Assessment of Functional Activities in Individuals with Parkinson's Disease Using a Simple and Reliable Smartphone-Based Procedure. Int J Environ Res Public Health. 2020;17(11).

Lo C, Arora S, Baig F, Lawton MA, El Mouden C, Barber TR, et al. Predicting motor, cognitive & functional impairment in Parkinson's. Ann Clin Transl Neurol. 2019;6(8):1498-509.

Arora S, Baig F, Lo C, Barber TR, Lawton MA, Zhan A, et al. Smartphone motor testing to distinguish idiopathic REM sleep behavior disorder, controls, and PD. Neurology. 2018;91(16):e1528.

Shen X, Wong-Yu IS, Mak MK. Effects of Exercise on Falls, Balance, and Gait Ability in Parkinson's Disease: A Meta-analysis. Neurorehabil Neural Repair. 2016;30(6):512-27.

Shanahan CJ, Boonstra FMC, Cofré Lizama LE, Strik M, Moffat BA, Khan F, et al. Technologies for Advanced Gait and Balance Assessments in People with Multiple Sclerosis. Front Neurol. 2017;8:708.

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Author information

Contributions

LA was responsible for designing the study, registering the protocol, mediating any data screening and extraction discrepancies, interpreting the results, and writing the initial manuscript. JP, EW, SMD, and RA were responsible for screening the studies, extracting the data, and assessing the methodological quality of the included studies at different levels. JJS and LAR contributed to the study design and provided feedback on the initial review protocol and the manuscript.

Ethics declarations

Ethical Approval

Not required since this article is a review.

Consent to Participate

Not applicable since this article is a review.

Consent for Publication

Not applicable since no identifying information is included in this article.

Conflict of Interest

Jacob J. Sosnoff is principal owner of Sosnoff Technologies LLC.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This article is part of the Topical Collection on Mobile & Wireless Health.

Electronic Supplementary Material

Supplementary file 2 (10916_2021_1760_MOESM2_ESM.docx, DOCX 23 KB): Supplementary Appendix B. Criteria and definitions of the items of the Quality Assessment Tool for Observational Cohort and Cross-Sectional Studies.

About this article

Cite this article

Abou, L., Peters, J., Wong, E. et al. Gait and Balance Assessments using Smartphone Applications in Parkinson’s Disease: A Systematic Review. J Med Syst 45, 87 (2021). https://doi.org/10.1007/s10916-021-01760-5

DOI: https://doi.org/10.1007/s10916-021-01760-5