Abstract

This contribution presents substantial computational advancements for comparing measures, even with varying masses. Specifically, we utilize the nonequispaced fast Fourier transform to accelerate the radial kernel convolution in the Sinkhorn-based approximation of unbalanced optimal transport. We also present accelerated schemes for maximum mean discrepancies involving kernels. Our approaches reduce the arithmetic operations needed to compute distances from \({{\mathcal {O}}}\left( n^{2}\right) \) to \({{{\mathcal {O}}}}\left( n \log n \right) \), which makes it possible to handle large and high-dimensional datasets efficiently. Furthermore, we establish robust connections between transportation problems, encompassing the Wasserstein distance and unbalanced optimal transport, and maximum mean discrepancies. These connections give practitioners a sound rationale for choosing flexible distances.

1 Introduction

Many formulations have been presented to address the problem of comparing probability measures. In popular domains like data science, the restriction to formulations that can only handle measures of equal mass (probability measures, for example) is cumbersome. Some formulations have been proposed to handle unbalanced measures; however, their numerical acceleration has received little attention in the literature. This paper focuses on the numerical acceleration of prominent formulations that enable the comparison of two measures with possibly different masses. Additionally, we provide theoretical bounds and elucidate relationships within the taxonomy of distances presented below. Fast computations together with these bounds allow practitioners to select flexible distances tailored to their specific requirements.

Optimal transport (OT) in its standard formulation builds on efficient ways to rearrange masses between two given probability distributions. Such approaches commonly relate to the Wasserstein or Monge–Kantorovich distance. We refer to the monograph Villani [54] for an extensive discussion of the OT problem. A pivotal prerequisite of the standard OT problem formulation is that the input measures are normalized to unit mass, that is, they are probability (or balanced) measures. This assumption is not feasible for problems that need to handle arbitrary, though positive, measures.

Unbalanced optimal transport (UOT) has been established to overcome this drawback by allowing mass variation in the transportation problem (cf. Benamou [6]). This problem is stated as a generalization of the Kantorovich formulation (cf. Kantorovich [21]) that additionally penalizes deviations of the marginals from the given measures.

On the other hand, kernel techniques have led to many mathematical and computational advancements. One popular instance is the reproducing kernel Hilbert space (RKHS), which is predominantly used as an efficient tool to generalize linear statistical approaches to non-linear settings, e.g., via the kernel trick. Utilizing the distinct mathematical properties of RKHSs, Gretton et al. [18] propose a distance known as the maximum mean discrepancy (MMD). Maximum mean discrepancies (MMDs) are kernel-based distances between unbalanced and/or probability measures, based on embedding the measures in an RKHS. We refer to Muandet et al. [30] for a textbook reference. Remarkably, these kernel-based distances provide meaningful metrics for unbalanced measures as well.

Numerical computation and applications of UOT and MMD. Traditional choices for solving the transportation problem are discrete combinatorial algorithms that rely on its finite-dimensional linear programming formulation. Notable among these are the Hungarian method, the auction algorithm, and the network simplex, see Luenberger and Ye [28], Ahuja et al. [1]. However, their scalability diminishes notably for large, dense problems. Entropy regularization of the standard OT problem is an important milestone that improves on the scalability of these traditional methods (cf. Cuturi [12]). The Sinkhorn algorithm solves the entropy-regularized OT problem by alternating optimization; it is also known as the Iterative Proportional Fitting Procedure (IPFP) (cf. Sinkhorn [48]). Unbalanced OT (UOT) problems readily adopt the entropy regularization technique, which improves their scalability as well.

Today’s data-driven world, which is dominated by rapidly growing machine learning (ML) techniques, utilizes the entropy regularized UOT algorithm for many applications. These include, among various others, domain adaptation (cf. Fatras et al. [13]), crowd counting (cf. Ma et al. [29]), bioinformatics (cf. Schiebinger et al. [42]), and natural language processing (cf. Wang et al. [56]).

MMD is considered to be an important framework for many problems in machine learning and statistics. Intelligent fault diagnosis (cf. Li et al. [25]), two-sample testing (cf. Gretton et al. [18]), feature selection (cf. Song et al. [50]), density estimation (cf. Song et al. [49]), and kernel Bayes’ rule (cf. Fukumizu et al. [14]) are applicable fields based on MMD. In the MMD framework, the choice of the kernel plays a vital role, and it depends on the nature of the problem. The forthcoming sections explicitly explain the efficient computational approach for prominent kernels.

Related Works. Considering unbalanced measures (that is, measures of possibly different total mass) requires extending divergences from probability measures to more general measures. We build our formulations on Bregman divergences. This approach generalizes the popular Kullback–Leibler divergence, which captures deviations between probability measures only.

Many algorithms have been proposed to solve UOT problems efficiently, including the entropy regularization approach. Chizat et al. [11] investigate some prominent numerical approaches. Carlier et al. [9] theoretically study a method for fast Sinkhorn iterations that involve only Gaussian convolutions. The core idea of this approach is that each step (i.e., each iteration) can be computed on a regular (equispaced) grid, which is faster than the standard Sinkhorn iteration. However, this approach relies on equispaced convolutions, which is a drawback in a wide range of applications (cf. Platte et al. [36], Potts et al. [39, Sect. 1]), and it comes at the expense of approximation accuracy. Furthermore, low-rank approximation methods, specifically Nyström methods, have been studied to enhance scalability (cf. Altschuler et al. [2]). Moreover, von Lindheim and Steidl [55] emphasize the need to address the increased computational complexity and memory requirements of regularized UOT problems.

Despite their powerful statistical properties, MMD frameworks suffer from computational burdens. Unlike for OT and UOT problems, only a few contributions address the computational burden of MMD problems, and these improve the computational process at the price of reduced approximation accuracy (cf. Zhao and Meng [58], Le et al. [24]). Furthermore, some recent approaches, focusing on the Gaussian kernel, utilize the low-rank Nyström method to mitigate the computational burden (cf. Cherfaoui et al. [10]).

One of the top ten algorithms of the twentieth century is the fast Fourier transform (FFT), which relies on equispaced data points. The FFT has been generalized to non-equispaced data points; this generalization is known as the non-equispaced fast Fourier transform (NFFT). In contrast to the FFT, the NFFT is an approximate algorithm, although it provides stable computations with the same order of arithmetic operations as the FFT. The fast summation method employed in the forthcoming algorithms has been applied to the standard entropy-regularized OT problem, see Lakshmanan et al. [23]. Remarkably, it retains the accuracy of the standard numerical algorithms while significantly improving time and memory requirements. This method can also be utilized for multi-marginal OT, a generalization of standard OT (cf. Ba and Quellmalz [4]). Notably, the NFFT fast summation method has not been explored in either a UOT or an MMD setup. A further departure from the existing literature on NFFT approximation is our implementation of the three-dimensional approximation regime of the NFFT. Furthermore, one-, two-, and three-dimensional approximations of MMD, notably for the inverse multi-quadratic and energy kernels, are absent from the current literature.

Contributions and outline. To address the formidable challenges posed by UOT and MMDs, we introduce efficient methods and robust bounds. More precisely, the paper addresses unbalanced problems with the following key approaches:

(i) We establish bounds for the UOT problem in terms of the Wasserstein distance, which applies exclusively to probability measures, and of Bregman divergences, which apply to unbalanced measures.

(ii) We relate MMD to the Wasserstein distance as well as to optimal transport for unbalanced measures, recognizing that the genuine Wasserstein distance and UOT are often computationally out of reach.

(iii) We develop NFFT-based fast computation methods for regularized UOT and MMD.

First, we conduct a comprehensive theoretical exploration, establishing metric inequalities and further relationships between the Wasserstein distance, UOT, and MMDs. Thus, this paper not only addresses computational hurdles, but also deepens the understanding of the connections within these frameworks.

Below, we provide an outline of the paper.

(i) Sections 2.4 and 2.5 present upper bounds for both the standard UOT problem and the regularized UOT problem. These bounds do not require any sophisticated optimization routine for their computation. Moreover, Sect. 2.4 demonstrates a bound for UOT by leveraging the Wasserstein distance.

(ii) In Sect. 2.7, we provide robust inequalities that describe the relationship between MMD and the Wasserstein distance. Additionally, we extend these inequalities to the unbalanced setting, specifically examining the connection between MMD and UOT.

(iii) Section 4 introduces the fast summation technique based on the NFFT, enabling fast matrix–vector operations. This section presents the NFFT-accelerated implementation of regularized UOT and MMDs, ensuring fast and stable computations. For fast MMD approaches, we present inverse multiquadratic and energy kernels in addition to Gaussian and Laplace kernels, as these kernels are in demand in the literature, see Hagemann et al. [19], Ji et al. [20], Zhao et al. [59]. Furthermore, we state the number of arithmetic operations associated with these problems. By combining our fast summation method with the bounds of Sects. 2.4, 2.5 and 2.7, we obtain the desired quantities efficiently and precisely.

(iv) Section 5 substantiates the performance of our proposed approaches using both synthetic and real datasets. Furthermore, we analyze the differences among our established bounds. Additionally, we discuss the advantages and trade-offs of prominent existing approaches.

2 Preliminaries and Taxonomy of Wasserstein Distances

In this section, we provide necessary definitions and properties to facilitate the upcoming discussion. Moreover, we introduce robust relations between MMD and Wasserstein distance, and upper bounds for the UOT and regularized UOT.

2.1 Bregman Divergence

In various domains, the Bregman divergence (cf. Bregman [8]) is utilized as a generalized measure of the difference between two points (cf. Lu et al. [27], Nielsen [34]). Here, we consider the deviation with respect to a strictly convex function on the set of non-negative Radon measures \({\mathcal {M}} _+({{\mathcal {X}}})\), where \({{\mathcal {X}}} \subset {{\mathbb {R}}}^d\).

Definition 2.1

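(Bregman divergence) For an \({{\mathbb {R}}}\)-valued, convex function \(\Phi :{\mathcal {M}} _+({{\mathcal {X}}})\rightarrow {{\mathbb {R}}}\), the Bregman divergence is

\[ {\textrm{D}}(\nu \Vert \mu ) {:}{=} \Phi (\nu )-\Phi (\mu )-F_\mu (\nu ), \]

where

\[ F_\mu (\nu ) {:}{=} \lim _{t\searrow 0}\frac{\Phi \big (\mu +t(\nu -\mu )\big )-\Phi (\mu )}{t} \]

is the directional derivative of the convex function \(\Phi \) at \(\mu \) in direction \(\nu -\mu \).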

In statistics, \(F_\mu \) is also called von Mises derivative or the influence function of \(\Phi \) at \(\mu \). Note that the Bregman divergence exists (possibly with values \(\pm \infty \)), and it is non-negative (that is, \({\textrm{D}}(\nu \Vert \mu )\ge 0\)) even for unbalanced measures \(\mu \) and \(\nu \), as the function \(\Phi \) is convex by assumption and \(\Phi (\nu )\ge \Phi (\mu )+ F_\mu (\nu )\). Uniform convexity ensures definiteness (that is, \({\textrm{D}}(\nu \Vert \mu )=0\) if and only if \(\nu =\mu \)), while the Bregman divergence is, in general, not symmetric.

The Bregman divergence is notably defined here for general measures \(\mu \) and \(\nu \) in the domain of \(\Phi \), including unbalanced measures (i.e., \(\mu ({{\mathcal {X}}})\ne \nu ({{\mathcal {X}}})\)); the Bregman divergence is particularly not restricted to probability measures.

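Important examples for the Bregman divergence involve a non-negative reference measure \(\mu \) and a convex function \(\varphi \), and build on

\[ \Phi (\nu ) {:}{=} \int _{{\mathcal {X}}} \varphi \big (Z_\nu (\xi )\big )\,\mu ({\textrm{d}}\xi ), \]

where \(Z_\nu \) is the Radon–Nikodým derivative of \(\nu \) with respect to \(\mu \); that is, \(\nu ({\textrm{d}}\xi )= Z_\nu (\xi )\,\mu ({\textrm{d}}\xi )\). As \(\varphi \) is convex and the measure \(\mu \) positive, it follows from

\[ \Phi \big ((1-t)\nu _0+t\nu _1\big )= \int _{{\mathcal {X}}} \varphi \big ((1-t)Z_{\nu _0}+tZ_{\nu _1}\big )\,{\textrm{d}}\mu \le (1-t)\,\Phi (\nu _0)+t\,\Phi (\nu _1),\qquad 0\le t\le 1, \]

that \(\Phi \) is convex as well. For sufficiently smooth functions \(\varphi \), it follows with the Taylor series expansion \(\varphi (1+z)= \varphi (1)+ \varphi ^{\prime }(1)\,z+{\mathcal {O}}(z^2)\) (note that \(\mu +t(\nu -\mu )\) has density \(1+t(Z_\nu -1)\) with respect to \(\mu \), and \(\Phi (\mu )=\varphi (1)\,\mu ({\mathcal {X}})\)) that

\[ F_\mu (\nu )= \lim _{t\searrow 0}\frac{1}{t}\int _{{\mathcal {X}}} \Big (\varphi \big (1+t(Z_\nu -1)\big )-\varphi (1)\Big )\,{\textrm{d}}\mu = \varphi ^{\prime }(1)\,\big (\nu ({\mathcal {X}})-\mu ({\mathcal {X}})\big ), \]

so that the Bregman divergence associated with \(\Phi \) is

\[ {\textrm{D}}(\nu \Vert \mu )= \int _{{\mathcal {X}}} \Big (\varphi (Z_\nu )-\varphi (1)-\varphi ^{\prime }(1)\,(Z_\nu -1)\Big )\,{\textrm{d}}\mu . \]

Using this general formulation, the Bregman divergence corresponding to \(\varphi (z)= z\log z\) (for which \(\varphi (1)=0\) and \(\varphi ^{\prime }(1)=1\)) is

\[ {\textrm{D}}(\nu \Vert \mu )= \int _{{\mathcal {X}}} \log \frac{{\textrm{d}}\nu }{{\textrm{d}}\mu }\,{\textrm{d}}\nu -\nu ({\mathcal {X}})+\mu ({\mathcal {X}}), \]

which generalizes the Kullback–Leibler divergence to general (unbalanced) measures \(\nu \), provided that \(\mu \) is a positive measure.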
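To make the generalized Kullback–Leibler divergence concrete, here is a minimal Python sketch for discrete measures given by non-negative weight vectors on a common finite support (an assumption made for illustration; the helper name `generalized_kl` is hypothetical). It illustrates the properties stated above: non-negativity for unbalanced measures, vanishing for identical measures, and the lack of symmetry.

```python
import numpy as np

def generalized_kl(nu, mu):
    """Generalized KL divergence D(nu || mu) for non-negative weight vectors.

    Discrete version of  int log(dnu/dmu) dnu - nu(X) + mu(X)  with the
    convention 0 log 0 = 0; returns +inf if nu is not absolutely continuous
    with respect to mu.
    """
    nu = np.asarray(nu, dtype=float)
    mu = np.asarray(mu, dtype=float)
    support = nu > 0
    if np.any(mu[support] == 0):
        return np.inf
    log_term = np.sum(nu[support] * np.log(nu[support] / mu[support]))
    return float(log_term - nu.sum() + mu.sum())

mu = np.array([0.2, 0.5, 0.3])   # total mass 1.0
nu = np.array([0.4, 0.4, 0.6])   # total mass 1.4 (unbalanced)

print(generalized_kl(nu, mu) >= 0)                      # non-negative despite unequal masses
print(generalized_kl(mu, mu))                           # zero for identical measures
print(generalized_kl(nu, mu), generalized_kl(mu, nu))   # not symmetric
```

The mass terms \(-\nu ({\mathcal {X}})+\mu ({\mathcal {X}})\) are what keep the divergence non-negative when the total masses differ; without them, the plain Kullback–Leibler sum can become negative for unbalanced inputs.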

2.2 OT Problem

The standard (i.e., balanced) OT problem is a linear optimization problem that reveals the minimal cost to transport masses from one probability measure to some other probability measure. The optimal cost is popularly known as the Wasserstein distance.

Definition 2.2

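(r-Wasserstein distance) The Wasserstein distance of order \(r\ge 1\) of the probability measures P, \({{\tilde{P}}}\in {\mathcal {P}}({\mathcal {X}})\) for a given cost or distance function \(d:{{\mathcal {X}}}\times {{\mathcal {X}}}\rightarrow [0,\infty ]\) is

\[ w_r(P,{\tilde{P}}) {:}{=} \Big (\inf _{\pi } \iint _{{\mathcal {X}}\times {\mathcal {X}}} d(x,{\tilde{x}})^r\,\pi ({\textrm{d}}x,{\textrm{d}}{\tilde{x}})\Big )^{1/r}, \tag{2.2a} \]

where the infimum is among all bivariate probability measures \(\pi \in {\mathcal {P}}({\mathcal {X}}\times {\mathcal {X}})\) satisfying the marginal constraints below. Here, \(\pi \) has marginal measures P and \({{\tilde{P}}}\), that is,

\[ \tau _{1\#}\pi = P, \tag{2.2b} \]

\[ \tau _{2\#}\pi = {\tilde{P}}, \tag{2.2c} \]

where \(\tau _1(x,{{\tilde{x}}}){:}{=} x\) (\(\tau _2(x,{\tilde{x}}){:}{=} {\tilde{x}}\), resp.) is the coordinate projection and \(\tau _{1\#}\pi {:}{=} \pi \circ \tau _1^{-1}\) (\(\tau _{2\#}\pi {:}{=} \pi \circ \tau _2^{-1}\), resp.) the pushforward measure.

More explicitly, the marginal constraints (2.2b)–(2.2c) are

\[ \pi (A\times {\mathcal {X}})= P(A)\quad \text{and}\quad \pi ({\mathcal {X}}\times B)= {\tilde{P}}(B), \]

where A and \(B\subset {{\mathcal {X}}}\) are measurable sets. We shall also write

\[ \langle d^r,\pi \rangle {:}{=} \iint _{{\mathcal {X}}\times {\mathcal {X}}} d(x,{\tilde{x}})^r\,\pi ({\textrm{d}}x,{\textrm{d}}{\tilde{x}}) \]

when averaging the function \(d^r\) with respect to the measure \(\pi \) as in (2.2a).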
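For discrete measures, (2.2a)–(2.2c) is a finite linear program. The sketch below sets it up directly, assuming SciPy's `scipy.optimize.linprog` with the HiGHS solver and a Euclidean cost \(d(x,{\tilde{x}})=\Vert x-{\tilde{x}}\Vert \); `wasserstein_lp` is a hypothetical helper, and this dense formulation with \(n\cdot m\) variables is only meant for small examples, not as the computational approach of this paper.

```python
import numpy as np
from scipy.optimize import linprog

def wasserstein_lp(x, p, y, q, r=1.0):
    """w_r(P, Q) for P = sum_i p_i delta_{x_i} and Q = sum_j q_j delta_{y_j}.

    Solves the linear program (2.2a)-(2.2c) with Euclidean cost; the plan pi is
    flattened row-major, i.e. pi[i, j] is variable number i * m + j.
    """
    x = np.asarray(x, dtype=float).reshape(len(p), -1)
    y = np.asarray(y, dtype=float).reshape(len(q), -1)
    n, m = len(p), len(q)
    cost = np.linalg.norm(x[:, None, :] - y[None, :, :], axis=-1) ** r   # d^r
    A_rows = np.kron(np.eye(n), np.ones((1, m)))   # sum_j pi[i, j] = p_i   (2.2b)
    A_cols = np.kron(np.ones((1, n)), np.eye(m))   # sum_i pi[i, j] = q_j   (2.2c)
    res = linprog(cost.ravel(), A_eq=np.vstack([A_rows, A_cols]),
                  b_eq=np.concatenate([p, q]), bounds=(0, None), method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    return max(res.fun, 0.0) ** (1.0 / r), res.x.reshape(n, m)

w1, plan = wasserstein_lp([0.0, 1.0, 2.0], [1/3] * 3, [0.5, 1.5, 2.5], [1/3] * 3, r=1)
print(round(w1, 6))   # 0.5
```

In one dimension the optimal plan matches sorted points, so the example can be checked by hand: shifting three equally weighted points by 0.5 gives \(w_1=w_2=0.5\).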

2.3 UOT Problem

The marginal measures in (2.2b) and (2.2c) necessarily share the same mass, as \(\tau _{1\#} \pi ({{\mathcal {X}}})= \pi ({{\mathcal {X}}}\times {{\mathcal {X}}})= \tau _{2\#} \pi ({{\mathcal {X}}})\). The core principle of the UOT problem is to relax the hard marginal constraints (2.2b) and (2.2c) to soft constraints, which enables mass variation. More precisely, the soft constraints enter the objective as divergences of the marginals of \(\pi \) from the given measures \(\mu \) and \(\nu \).

In our research, we consider Bregman divergences as the soft constraints or marginal discrepancy function.

Definition 2.3

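(Unbalanced optimal transport problem) Let \(\mu \), \(\nu \in {\mathcal {M}} _+({{\mathcal {X}}})\) and \(d:{{\mathcal {X}}}\times {{\mathcal {X}}}\rightarrow [0,\infty )\). Let \({\textrm{D}}(\cdot \Vert \cdot )\) be the Bregman divergence as in Definition 2.1. The generalized unbalanced transport cost is

\[ \textrm{UOT}_{r;\eta }(\mu ,\nu ) {:}{=} \inf _{\pi \in {\mathcal {M}}_+({\mathcal {X}}\times {\mathcal {X}})} \langle d^r,\pi \rangle + \eta _1\,{\textrm{D}}(\tau _{1\#}\pi \Vert \,\mu )+ \eta _2\,{\textrm{D}}(\tau _{2\#}\pi \Vert \,\nu ), \tag{2.3} \]

where \(r\ge 1\); the parameter \(\eta _1\ge 0\) (\(\eta _2\ge 0\), resp.) is a regularization parameter, which emphasizes the importance assigned to the marginal measures \(\mu \) (\(\nu \), resp.).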

The measure \(\pi \in {\mathcal {M}} _+({{\mathcal {X}}}\times {{\mathcal {X}}})\) in (2.3) satisfies the relation \(\tau _{1\#}\pi ({{\mathcal {X}}})= \tau _{2\#}\pi ({{\mathcal {X}}})\), although \(\mu ({{\mathcal {X}}}) \ne \nu ({{\mathcal {X}}})\) in general. The optimal measure in (2.3) thus constitutes a compromise between the total masses of \(\mu \) and \(\nu \). Note as well that the measure \(\mu \otimes \nu \) is feasible in (2.3), with total mass \((\mu \otimes \nu )({{\mathcal {X}}}\times {{\mathcal {X}}})=\mu ({{\mathcal {X}}})\cdot \nu ({{\mathcal {X}}})\).

Remark 2.4

(Marginal regularization parameters) For parameters \(\eta _1= \eta _2= 0\) (or \(\eta _1= \eta _2\searrow 0\), resp.), the explicit solution of problem (2.3) is \(\pi =0\) (\(\pi \searrow 0\), resp.). That is, no transportation takes place at all in this special case.

For \(\eta _1\nearrow \infty \) and \(\eta _2\nearrow \infty \), problem (2.3) enforces the marginals increasingly strictly. More precisely, for measures with equal mass, the solution \(\pi \) tends to the solution of the standard optimal transport problem. In addition, as \(\eta _1\) and \(\eta _2\) increase from 0 to \(\infty \), the total mass of the optimal solution of (2.3) increases from \(\pi ({{\mathcal {X}}}\times {{\mathcal {X}}})=0\) to \(\pi ({{\mathcal {X}}}\times {{\mathcal {X}}})= \mu ({{\mathcal {X}}})= \nu ({{\mathcal {X}}})\) (not \(\mu ({{\mathcal {X}}})\cdot \nu ({{\mathcal {X}}})\)). For measures with unequal mass, \(\mu ({{\mathcal {X}}})\ne \nu ({{\mathcal {X}}})\), the optimal transportation plan is a compromise between the measures \(\mu \) and \(\nu \).

For a discussion on the minimizing measure and its existence we may refer to Liero et al. [26, Theorem 3.3].

2.4 Upper Bounds for the Unbalanced Optimal Transport

If the marginals \(\tau _{1\#}\pi \) and \(\tau _{2\#}\pi \) were known, problem (2.3) would reduce to a Wasserstein problem between these marginals. For this reason, we may consider the Wasserstein problem with normalized marginals to develop an upper bound for the unbalanced optimal transport problem.

Proposition 2.5

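Let \(\pi \) be a bivariate probability measure, feasible for the Wasserstein problem with normalized marginals, that is,

\[ \tau _{1\#}\pi = \frac{\mu }{\mu ({\mathcal {X}})}\quad \text{and}\quad \tau _{2\#}\pi = \frac{\nu }{\nu ({\mathcal {X}})}. \]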

Then

is an upper bound for the unbalanced optimal transport problem (2.3) with Kullback–Leibler divergences, and the constant in (2.5) is optimal among all measures of the form \(c\cdot \pi \), where \(c\ge 0\).

Proof

See Appendix A.1\(\square \)

The following proposition delineates a bound for UOT by leveraging the Wasserstein distance and Bregman divergences.

Proposition 2.6

Let \(\mu \), \(\nu \in {\mathcal {M}} _+({{\mathcal {X}}})\), and define probability measures \(P(\cdot ){:}{=} {\mu (\cdot ) \over \mu ({{\mathcal {X}}})}\) and \(Q(\cdot ){:}{=} {\nu (\cdot ) \over \nu ({{\mathcal {X}}})}\). It holds that

where \(u:=\mu ({{\mathcal {X}}})\cdot \nu ({{\mathcal {X}}})\) and \({\textrm{D}}(\cdot \Vert \cdot )\) is the Bregman divergence.

For probability measures P and Q on a discrete space \({\mathcal {X}}\), it holds in addition that

where \(\Vert d^r\Vert _F\) is the Frobenius norm of the distance matrix.

Proof

For probability measures P and Q as defined above, we have that

as the terms \(u\cdot {\tilde{\eta }}_1\,{\textrm{D}}\left( {\tau _{1\#}{\pi } \over u}\Vert \,P\right) \) and \(u\cdot {\tilde{\eta }}_2\,{\textrm{D}}\left( {\tau _{2\#}{\pi } \over u}\Vert \,Q\right) \) are non-negative for \({{\tilde{\eta }}}_1\ge 0\) and \({{\tilde{\eta }}}_2\ge 0\). Employing the optimal measure \(\pi ^{*}_{{\tilde{\eta }}}\) of the UOT problem associated with the probability measures P and Q, it follows from the right-hand side of (2.6) that

Taking the limit on the right-hand side, i.e., \({\tilde{\eta }}_1,{\tilde{\eta }}_2\rightarrow \infty \), we obtain that (cf. Remark 2.4)

which is the first assertion. Now, by the Cauchy–Schwarz inequality, we have

where \({\tilde{\pi }}\) is the optimal measure of \(w_{r}(P,Q)\), and \(\Vert {\tilde{\pi }}\Vert _F\le 1\) by Hölder’s inequality. Then, it follows from (2.7) and (2.8) for probability measures P and Q that

and thus the second assertion. \(\square \)
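The estimate \(\Vert {\tilde{\pi }}\Vert _F\le 1\) used above can be spelled out as follows. This is a short worked derivation assuming that \({\tilde{\pi }}=({\tilde{\pi }}_{ij})\) is the discrete transport matrix with non-negative entries summing to one; it applies Hölder's inequality with exponents \(\infty \) and 1, and then Cauchy–Schwarz for the transport cost:

\[ \Vert {\tilde{\pi }}\Vert _F^2=\sum _{i,j}{\tilde{\pi }}_{ij}^2\le \Big (\max _{i,j}{\tilde{\pi }}_{ij}\Big )\sum _{i,j}{\tilde{\pi }}_{ij}\le 1\cdot 1=1, \qquad \langle d^r,{\tilde{\pi }}\rangle =\sum _{i,j}d_{ij}^r\,{\tilde{\pi }}_{ij}\le \Vert d^r\Vert _F\,\Vert {\tilde{\pi }}\Vert _F\le \Vert d^r\Vert _F . \]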

In the following Sect. 2.5, we discuss the regularized UOT problem, and its properties.

2.5 Entropy Regularized UOT

The generic UOT problem is computationally challenging. An entropy regularization approach has therefore been proposed, which enables efficient computation at a small loss of accuracy that is controlled by the regularization parameter.

Definition 2.7

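The entropy regularized, unbalanced optimal transport problem is

\[ \textrm{UOT}_{r;\eta ;\lambda }(\mu ,\nu ) {:}{=} \inf _{\pi } \langle d^r,\pi \rangle + \frac{1}{\lambda }\,{\textrm{D}}(\pi \Vert \,\mu \otimes \nu )+ \eta _1\,{\textrm{D}}(\tau _{1\#}\pi \Vert \,\mu )+ \eta _2\,{\textrm{D}}(\tau _{2\#}\pi \Vert \,\nu ), \tag{2.9} \]

where \(\pi \) is a non-negative, bivariate measure on \({{\mathcal {X}}}\times {{\mathcal {X}}}\); the parameter \(r\ge 1\) is the order of the Wasserstein distance, \(\lambda >0\) accounts for the entropy regularization and \(\eta _1 \ge 0\), \(\eta _2\ge 0\) are the marginal regularization parameters.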

Remark 2.8

It is notable that the constants in (2.9) regularizing the marginals are \(\eta _1\) and \(\eta _2\), while the constant for the discrepancy of the entropy is \(\nicefrac 1{\lambda }\) (and not \(\lambda \)). We maintain the constant \(1\over \lambda \) instead of \(\lambda \) to stay consistent with preceding literature. To recover the initial Wasserstein problem (2.2), it is essential that \(\eta _1\) and \(\eta _2\), as well as \(\lambda \) are large or tend to \(+\infty \).
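The Sinkhorn algorithm mentioned in the introduction extends to (2.9) with Kullback–Leibler marginal penalties by damping each scaling update with the exponent \(\eta /(\eta +1/\lambda )\), a standard scaling scheme for KL-penalized UOT. The sketch below is a minimal dense NumPy version under our own assumptions (finitely supported \(\mu =\sum _i a_i\delta _{x_i}\), \(\nu =\sum _j b_j\delta _{y_j}\), a precomputed cost matrix \(d^r\), hypothetical function names); it is not the NFFT-accelerated implementation of this paper. Each iteration is dominated by the two products with the kernel matrix, which cost \({\mathcal {O}}(nm)\) operations here and are the radial-kernel convolutions that a fast summation would replace.

```python
import numpy as np

def generalized_kl(p, q):
    """Generalized KL divergence sum(p log(p/q)) - sum(p) + sum(q), with 0 log 0 = 0."""
    p, q = np.ravel(p), np.ravel(q)
    s = p > 0
    return float(np.sum(p[s] * np.log(p[s] / q[s])) - p.sum() + q.sum())

def uot_sinkhorn(a, b, cost, eta1, eta2, lam, n_iter=1000):
    """Dense scaling iterations for the entropy regularized UOT problem (2.9).

    The plan is pi = diag(u) K diag(v) with Gibbs kernel K_ij = a_i b_j exp(-lam * cost_ij),
    so that the entropy term is measured relative to mu (x) nu as in (2.9).
    """
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    eps = 1.0 / lam                                     # weight of the entropy term
    K = np.outer(a, b) * np.exp(-cost / eps)
    u, v = np.ones_like(a), np.ones_like(b)
    e1, e2 = eta1 / (eta1 + eps), eta2 / (eta2 + eps)   # exponents -> 1: balanced Sinkhorn
    for _ in range(n_iter):
        u = (a / (K @ v)) ** e1                         # O(nm) kernel product per update
        v = (b / (K.T @ u)) ** e2
    pi = u[:, None] * K * v[None, :]
    value = (np.sum(cost * pi) + eps * generalized_kl(pi, np.outer(a, b))
             + eta1 * generalized_kl(pi.sum(axis=1), a)
             + eta2 * generalized_kl(pi.sum(axis=0), b))
    return pi, float(value)

x = np.linspace(0.0, 1.0, 5)
y = x + 0.1
cost = (x[:, None] - y[None, :]) ** 2                   # d^r with r = 2
a = np.full(5, 0.2)                                     # mu(X) = 1
pi, value = uot_sinkhorn(a, 2 * a, cost, eta1=10.0, eta2=10.0, lam=10.0)
print(pi.sum())                                         # between 1 and 2
```

With large \(\eta _1=\eta _2\) and equal masses, the marginals of the computed plan approach \(\mu \) and \(\nu \), in line with Remark 2.8; for \(\nu =2\mu \), the total mass of the plan settles strictly between 1 and 2, reflecting the compromise between the masses described after Definition 2.3. For \(\eta _1=\eta _2=0\), the exponents vanish and the plan reduces to the Gibbs kernel itself.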

As in Proposition 2.5 above, we can establish the following optimal compromise between the measures \(\mu \) and \(\nu \).

Proposition 2.9

(Optimal independent measure) When restricted to measures \(c\cdot \mu \otimes \nu \), \(c\ge 0\), the measure

with constant

is optimal for the entropy (Kullback–Leibler) regularized, unbalanced optimal transport problem (2.9) with Kullback–Leibler divergences.

Proof

See Appendix A.2\(\square \)

Remark 2.10

(Upper bounds) The determination of the optimal quantities \(c^{*}_{r;\eta ;\lambda }\) and \(c^{*}_{r;\eta }\) does not necessitate intricate optimization algorithms. Furthermore, the upper bounds associated with these constants (cf. Propositions 2.5 and 2.9),

are cheap to evaluate and serve as robust initial values for iterative procedures in practical implementations. Using these optimal quantities, we establish non-trivial connections within a particular class of distances in the following Sect. 2.7.
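As an illustration of why no optimization routine is needed, the following sketch derives a closed-form constant for measures \(c\cdot \mu \otimes \nu \), assuming the objective (2.9) with the generalized Kullback–Leibler divergences of Sect. 2.1 and discrete measures with weights a and b. Substituting \(\pi =c\,\mu \otimes \nu \) and setting the derivative in c to zero gives \(\log c^{*}=-\big (\langle d^r,\mu \otimes \nu \rangle /(\mu ({\mathcal {X}})\nu ({\mathcal {X}}))+\eta _1\log \nu ({\mathcal {X}})+\eta _2\log \mu ({\mathcal {X}})\big )/\big (1/\lambda +\eta _1+\eta _2\big )\). This formula is our own derivation, to be compared with the constant of Proposition 2.9, and the function names are hypothetical.

```python
import numpy as np

def product_objective(c, a, b, cost, eta1, eta2, lam):
    """Objective of (2.9), generalized KL divergences, at pi = c * (mu x nu), c > 0."""
    ma, mb = a.sum(), b.sum()
    A = a @ cost @ b                              # <d^r, mu x nu>
    h = lambda s: s * np.log(s) - s + 1.0         # D(s * rho || rho) = rho(X) * h(s)
    return (c * A + h(c) * ma * mb / lam
            + eta1 * ma * h(c * mb)               # tau_1# pi = c * nu(X) * mu
            + eta2 * mb * h(c * ma))              # tau_2# pi = c * mu(X) * nu

def optimal_product_constant(a, b, cost, eta1, eta2, lam):
    """Closed-form minimizer in c, obtained by setting the derivative of the objective to zero."""
    ma, mb = a.sum(), b.sum()
    A = a @ cost @ b
    log_c = -(A / (ma * mb) + eta1 * np.log(mb) + eta2 * np.log(ma)) / (1.0 / lam + eta1 + eta2)
    return float(np.exp(log_c))

x = np.linspace(0.0, 1.0, 4)
cost = np.abs(x[:, None] - x[None, :]) ** 2
a = np.array([0.1, 0.2, 0.3, 0.4])                # mu(X) = 1
b = np.full(4, 0.5)                               # nu(X) = 2
c_star = optimal_product_constant(a, b, cost, eta1=1.0, eta2=1.0, lam=5.0)
print(c_star, product_objective(c_star, a, b, cost, 1.0, 1.0, 5.0))
```

Every term of the objective is convex in c, so the stationary point is the global minimizer over \(c>0\); since \(c^{*}\mu \otimes \nu \) is feasible in (2.9), the printed objective value is an upper bound for \(\textrm{UOT}_{r;\eta ;\lambda }(\mu ,\nu )\).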

Remark 2.11

(Sinkhorn divergence) Adding a regularization term accelerates the computation of both OT and UOT problems. However, the transportation costs of the entropy regularized OT and UOT problems violate the definiteness axiom of a distance, as they do not vanish for identical measures in general. The technique of entropy debiasing was initially proposed for the regularized OT problem by Ramdas et al. [41]; it is formulated as

where \(w_{r;\lambda }(P,{{\tilde{P}}}) {:}{=} \min _{\pi \in {{\mathcal {P}}}({\mathcal {X}} \times {\mathcal {X}})}\langle d^r,\pi \rangle + \frac{1}{\lambda }{\textrm{D}}\big (\pi \Vert P \otimes {\tilde{P}}\big )\). Here, \(\pi \) has marginal measures P and \({{\tilde{P}}}\) as required in (2.2b)–(2.2c), \(r\ge 1\), and \(\lambda >0\) is an entropy regularization parameter.

Similarly, to remove the bias, one can state the debiased regularized UOT problem as

This entropy debiasing technique for regularized OT and UOT problems is adapted from the computation of kernel-based distances, i.e., MMD. The resulting formulations are called Sinkhorn divergences (cf. Genevay et al. [15]).

Remark 2.12

(entropy upper bounds) For probability measures P and \({\tilde{P}}\), as a natural consequence of the non-negative entropy penalty term \({\textrm{D}}(\cdot \Vert \cdot )\ge 0\), we have \(w_r(P,{\tilde{P}}) \le w_{r;\lambda }(P,{\tilde{P}})\). Similarly, for the unbalanced case, we have \(\textrm{UOT}_{r;\eta }(\mu ,\nu ) \le \textrm{UOT}_{r;\eta ;\lambda }(\mu ,\nu )\).

2.6 Maximum Mean Discrepancies

Maximum mean discrepancy (MMD) embeds measures into a reproducing kernel Hilbert space (RKHS). This embedding gives rise to a distance on general, unbalanced measures.

In what follows, we provide conditions so that the embedding is continuous with respect to the Wasserstein distance. As well, we demonstrate the fast computation of the new distance on unbalanced measures.

Definition 2.13

(Positive semi-definite) The function \(k:{{\mathcal {X}}} \times {{\mathcal {X}}} \rightarrow {{\mathbb {R}}}\) is a symmetric, positive semi-definite kernel, if \(k(x,y)=k(y,x)\) for all \(x,y\in {{\mathcal {X}}}\) and

\[ \sum _{i=1}^{n}\sum _{j=1}^{n} c_i\,c_j\,k(x_i,x_j)\ge 0 \]

for any scalars \((c_1,\ldots ,c_n)\in {{\mathbb {R}}}^{n}\) and \((x_1,\ldots ,x_n)\in {{\mathcal {X}}}^{n}\) and \(n\in {{\mathbb {N}}}\).
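The condition in Definition 2.13 says that every Gram matrix \((k(x_i,x_j))_{i,j}\) is positive semi-definite, which can be checked numerically through its smallest eigenvalue. The sketch below does so for radial Gaussian, Laplace and inverse multi-quadratic kernels; the parametrizations (all with bandwidth 1) are assumptions for illustration and may differ from those in Table 1.

```python
import numpy as np

def gram(kernel, x):
    """Gram matrix K[i, j] = kernel(|x_i - x_j|) of a radial kernel for points x of shape (n, d)."""
    dist = np.linalg.norm(x[:, None, :] - x[None, :, :], axis=-1)
    return kernel(dist)

# Assumed radial profiles, written as functions of r = |x - y|
kernels = {
    "Gauss": lambda r: np.exp(-r**2 / 2.0),
    "Laplace": lambda r: np.exp(-r),
    "IMQ": lambda r: 1.0 / np.sqrt(1.0 + r**2),
}

rng = np.random.default_rng(0)
x = rng.normal(size=(50, 3))
for name, k in kernels.items():
    smallest = np.linalg.eigvalsh(gram(k, x)).min()
    print(f"{name}: smallest Gram eigenvalue {smallest:.2e}")   # >= 0 up to round-off
```

A non-negative smallest eigenvalue is equivalent to the quadratic form in Definition 2.13 being non-negative for all coefficient vectors at these points; the check is of course only a numerical sanity test, not a proof.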

Definition 2.14

(Reproducing kernel Hilbert space, cf. Berlinet and Thomas-Agnan [7]) A Hilbert space \({{\mathcal {H}}}\) of functions from \({{\mathcal {X}}}\rightarrow {{\mathbb {R}}}\) (with inner product \(\left\langle \cdot ,\cdot \right\rangle _{{\mathcal {H}}}\)) is a RKHS, if there is a positive semi-definite kernel \(k:{{\mathcal {X}}}\times {{\mathcal {X}}}\rightarrow {{\mathbb {R}}}\), such that

-

(i)

\(k_x(\cdot ){:}{=} k(\cdot ,x)\in {{\mathcal {H}}}\) for all \(x\in {{\mathcal {X}}}\), and

-

(ii)

\(\left\langle k_x, \varphi \right\rangle _{{\mathcal {H}}} = \varphi (x)\) for all \(\varphi \in {{\mathcal {H}}}\) and \(x\in {{\mathcal {X}}}\) (that is, the evaluation of the function \(\varphi \in {{\mathcal {H}}}\) is a continuous, linear functional).

k is called the kernel of \({{\mathcal {H}}}\) (cf. Aronszajn [3]).

Definition 2.15

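(MMD) Let \({{\mathcal {H}}}_k\) be an RKHS with kernel k. The mean embedding is

\[ \iota (\mu ) {:}{=} \int _{{\mathcal {X}}} k(x,\cdot )\,\mu ({\textrm{d}}x)\in {\mathcal {H}}_k . \]

The RKHS distance between the mean embeddings of given measures \(\mu \) and \(\nu \) is defined as

\[ \textrm{MMD}_k(\mu ,\nu ) {:}{=} \Vert \iota (\mu )-\iota (\nu )\Vert _{{\mathcal {H}}_k}. \tag{2.13} \]

This RKHS distance is popularly known as MMD. It satisfies the axioms of a pseudo-metric, and it is a metric whenever the embedding \(\iota \) is injective. Further, given the elementary function \(f(\cdot )= \sum _{j=1}^{n} w_j\,k(x_j,\cdot )\in {{\mathcal {H}}}_k\), then \(\iota (\mu )= f\) for the discrete measure \(\mu (\cdot )= \sum _{j=1}^{n} w_j\,\delta _{x_j}\).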

In other words, the mean embedding offers an RKHS-based interface between kernel methods and given measures. The inner product \(\langle \iota (\mu ),\iota (\nu )\rangle _{{\mathcal {H}}_k}\) of two mean embeddings is also known as the mean map kernel.
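For discrete measures \(\mu =\sum _i w_i\delta _{x_i}\) and \(\nu =\sum _j v_j\delta _{y_j}\), the reproducing property gives \(\textrm{MMD}_k(\mu ,\nu )^2=\sum _{i,i'}w_iw_{i'}k(x_i,x_{i'})-2\sum _{i,j}w_iv_jk(x_i,y_j)+\sum _{j,j'}v_jv_{j'}k(y_j,y_{j'})\). The NumPy sketch below evaluates this with dense kernel matrices, that is, with \({\mathcal {O}}(n^2)\) operations, assuming a Gaussian kernel with bandwidth \(\sigma \) (a hypothetical parametrization). The weights need not sum to one, so the same code handles unbalanced measures.

```python
import numpy as np

def gaussian_kernel(x, y, sigma=1.0):
    """Kernel matrix exp(-|x_i - y_j|^2 / (2 sigma^2)) for x of shape (n, d), y of shape (m, d)."""
    sq = np.sum((x[:, None, :] - y[None, :, :]) ** 2, axis=-1)
    return np.exp(-sq / (2.0 * sigma**2))

def mmd(x, w, y, v, kernel=gaussian_kernel):
    """MMD_k(mu, nu) for mu = sum_i w_i delta_{x_i}, nu = sum_j v_j delta_{y_j} (any masses)."""
    sq = w @ kernel(x, x) @ w - 2.0 * (w @ kernel(x, y) @ v) + v @ kernel(y, y) @ v
    return float(np.sqrt(max(sq, 0.0)))           # clip tiny negative round-off

rng = np.random.default_rng(0)
x, y = rng.normal(size=(100, 2)), rng.normal(loc=0.5, size=(80, 2))
w = np.full(100, 1.0 / 100)                       # mu(X) = 1
v = np.full(80, 1.5 / 80)                         # nu(X) = 1.5
print(mmd(x, w, y, v))
print(mmd(x, w, x, 2 * w))                        # equals ||iota(mu)||: masses are distinguished
```

Since \(\iota (2\mu )-\iota (\mu )=\iota (\mu )\), the second value equals \(\Vert \iota (\mu )\Vert _{{\mathcal {H}}_k}\), so MMD distinguishes measures that differ only in their total mass.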

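The critical relation to Wasserstein (Kantorovich) distances is given by the reproducing property,

\[ \langle \iota (\mu ),\varphi \rangle _{{\mathcal {H}}_k}= \int _{{\mathcal {X}}} \langle k_x,\varphi \rangle _{{\mathcal {H}}_k}\,\mu ({\textrm{d}}x)= \int _{{\mathcal {X}}}\varphi \,{\textrm{d}}\mu ,\qquad \varphi \in {\mathcal {H}}_k, \]

so that

\[ \langle \iota (\mu )-\iota (\nu ),\varphi \rangle _{{\mathcal {H}}_k}= \int _{{\mathcal {X}}}\varphi \,{\textrm{d}}\mu -\int _{{\mathcal {X}}}\varphi \,{\textrm{d}}\nu \]

and

\[ \textrm{MMD}_k(\mu ,\nu )= \sup _{\varphi \in B_k} \int _{{\mathcal {X}}}\varphi \,{\textrm{d}}\mu -\int _{{\mathcal {X}}}\varphi \,{\textrm{d}}\nu , \tag{2.14} \]

where the supremum is among all functions \(\varphi \in {{\mathcal {H}}}_k\) in the unit ball \(B_k\subset {{\mathcal {H}}}_k\) (\(\Vert \varphi \Vert _{{{\mathcal {H}}}_k}\le 1\)). By the Kantorovich–Rubinstein theorem (cf. Rachev and Rüschendorf [40]), equation (2.14) with the unit ball replaced by the set of all Lipschitz-1 functions \(\varphi \) serves as a definition of the Wasserstein distance of order \(r=1\).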

Remark 2.16

(MMD upper bound) In general, it holds that

As a consequence of the triangle inequality and (2.15), we have

which is the elementary upper bound.

2.7 Relations Between MMD and Transportation Problems

In what follows, we establish connections between MMD and transportation problems, encompassing both probability and non-probability measures. To this end, consider first the measure \(P= \delta _x\) (\(Q=\delta _y\), resp.), the Dirac measure located at \(x\in {{\mathcal {X}}}\) (\(y\in {{\mathcal {X}}}\), resp.). Assuming that the kernel k is bounded by C (\(k\le C\)), say, then

\[ \textrm{MMD}_k(\delta _x,\delta _y)= \Vert k_x-k_y\Vert _{{\mathcal {H}}_k}= \sqrt{k(x,x)-2k(x,y)+k(y,y)}\le 2\sqrt{C}, \]

as \(\vert k(x,y)\vert \le \sqrt{k(x,x)\,k(y,y)}\le C\) for positive semi-definite kernels, while

\[ w_r(\delta _x,\delta _y)= d(x,y), \]

as \(\delta _{(x,y)}\) is the only measure with marginals \(\delta _x\) and \(\delta _y\). It follows that the transportation problems, in general, cannot be bounded by MMD on support sets \({{\mathcal {X}}}\) with unbounded diameter \(\sup _{x,y\in {{\mathcal {X}}}}d(x,y)\). Note as well that \(d_k(x,y){:}{=} \Vert \delta _x-\delta _y\Vert _{{{\mathcal {H}}}_k}= \sqrt{k(x,x)-2k(x,y)+k(y,y)}\) defines a pseudo-metric on \({{\mathcal {X}}}\).
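The contrast between a bounded MMD and an unbounded transport cost for Dirac measures can be seen numerically. The sketch below assumes a Gaussian kernel with bandwidth 1 (bounded by \(C=1\)) and a Euclidean cost; `kernel_distance` is a hypothetical name for the pseudo-metric \(d_k\).

```python
import numpy as np

def kernel_distance(k, x, y):
    """d_k(x, y) = ||delta_x - delta_y||_{H_k} = sqrt(k(x,x) - 2 k(x,y) + k(y,y))."""
    return np.sqrt(k(x, x) - 2.0 * k(x, y) + k(y, y))

# Assumed Gaussian kernel with bandwidth 1, bounded by C = 1
gauss = lambda x, y: np.exp(-np.sum((np.asarray(x) - np.asarray(y)) ** 2) / 2.0)

for t in [0.1, 1.0, 10.0, 1000.0]:
    x, y = np.zeros(2), np.array([t, 0.0])
    mmd_dirac = kernel_distance(gauss, x, y)   # never exceeds sqrt(2) <= 2 sqrt(C)
    w_dirac = np.linalg.norm(x - y)            # w_r(delta_x, delta_y) = d(x, y) = t
    print(f"d(x,y) = {w_dirac:7.1f}   MMD = {mmd_dirac:.4f}")
```

As t grows, the transport cost grows without bound while the MMD saturates at \(\sqrt{2}\), which is why no bound of the transportation problems by MMD can hold on unbounded domains.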

The following results provide conditions under which the embedding \(\iota :{\mathcal {M}} \hookrightarrow {{\mathcal {H}}}_k\) is (Hölder-)continuous. They slightly generalize Vayer and Gribonval [53, Proposition 2] to kernels for which the unit ball in \({{\mathcal {H}}}_k\) is not necessarily uniformly Lipschitz, such as the Laplace kernel.

The subsequent Lemma 2.17 collects the essential estimates for relating MMD and transportation problems.

Lemma 2.17

Let \(\mu \), \(\nu \in {\mathcal {M}} _+({{\mathcal {X}}})\), and define probability measures \(P(\cdot ){:}{=} {\mu (\cdot ) \over \mu ({{\mathcal {X}}})}\) and \(Q(\cdot ){:}{=} {\nu (\cdot ) \over \nu ({{\mathcal {X}}})}\). Additionally, consider functions \(\varphi \in {{\mathcal {H}}}_k\) in the unit ball \(B_k\subset {{\mathcal {H}}}_k\). It holds that

where \({\tilde{\pi }}\) has marginals P and Q. Furthermore, for unbalanced measures \(\mu \) and \(\nu \) with \(\eta _1 > 0\), \(\eta _2 > 0\) and \(\alpha >0\), \(c>0\), it holds that

here, \(\pi \) represents the optimal measure of the problem \(\textrm{UOT}_{2\alpha ;\nicefrac {\eta }{c^2}}(\mu ,\nu )\) and \(u^{*}= \pi ({\mathcal {X}}\times {\mathcal {X}})\).

Proof

First, assume that \(\Vert \varphi \Vert _{{{\mathcal {H}}}_k}\le 1\), then

Then, it follows with Jensen’s inequality that

where the measure \({{\tilde{\pi }}}\) has marginals \(P\) and \(Q\). By substituting (2.18) in (2.19), we obtain the first assertion.

Now, similarly to the first assertion, we have, with Jensen’s inequality,

for the optimal measure \(\pi \) as defined above and \(u^*=\pi ({\mathcal {X}}\times {\mathcal {X}})\). Expressed with the marginals \(\mu ^*:=\tau _{1\#}\pi \) and \(\nu ^*:=\tau _{2\#}\pi \), which share the total mass \(\mu ^*({\mathcal {X}})=\nu ^*({\mathcal {X}})=u^*\), this quantity is \(u^{*}=\sqrt{\mu ^{*}({{\mathcal {X}}})\cdot \nu ^{*}({{\mathcal {X}}})}\). Finally, we obtain

as \({\textrm{D}}(\tau _{1\#}\pi \Vert \,\mu )\ge 0\) and \({\textrm{D}}(\tau _{2\#}\pi \Vert \,\nu )\ge 0\), which is the second assertion. This completes the proof. \(\square \)

In the following result, we establish Hölder continuity of the MMD distance for probability measures with respect to the Wasserstein distance.

Proposition 2.18

Let P and Q be probability measures. Suppose that

for some \(c>0\) and \(\alpha \ge 1/2\). Then it holds that

Proof

It follows with (2.16) that

where we have used (2.20). Maximizing the left-hand side with respect to all functions \(\varphi \in {{\mathcal {H}}}_k\) with \(\Vert \varphi \Vert _{{{\mathcal {H}}}_k}\le 1\) using (2.14) and minimizing its right-hand side with respect to all measures \(\pi \) with marginals P and Q reveals that

Hence, the assertion. \(\square \)
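A numerical sanity check of bounds of this type is easy in one dimension. Assuming the parametrizations \(k(x,y)=\exp (-\vert x-y\vert ^2/(2\sigma ^2))\) and \(k(x,y)=\exp (-\vert x-y\vert /\sigma )\) (which may differ from Table 1), elementary estimates give \(\Vert k_x-k_y\Vert _{{\mathcal {H}}_k}^2\le \vert x-y\vert ^2/\sigma ^2\) for the Gaussian kernel (\(c=1/\sigma \), \(\alpha =1\)) and \(\Vert k_x-k_y\Vert _{{\mathcal {H}}_k}^2\le 2\vert x-y\vert /\sigma \) for the Laplace kernel (\(c=\sqrt{2/\sigma }\), \(\alpha =1/2\)). Jensen's inequality then yields \(\textrm{MMD}_k(P,Q)\le c\,w_{2\alpha }(P,Q)^{\alpha }\). The sketch uses equally weighted samples of equal size, for which the sorted matching is an optimal coupling; all names are hypothetical.

```python
import numpy as np

def mmd_1d(x, y, k):
    """MMD between the empirical measures of two 1D samples; k acts on differences."""
    kxx = k(x[:, None] - x[None, :])
    kxy = k(x[:, None] - y[None, :])
    kyy = k(y[:, None] - y[None, :])
    return np.sqrt(max(kxx.mean() - 2 * kxy.mean() + kyy.mean(), 0.0))

def wasserstein_1d(x, y, r):
    """w_r for equal-size, equally weighted 1D samples: match sorted points."""
    return np.mean(np.abs(np.sort(x) - np.sort(y)) ** r) ** (1.0 / r)

sigma = 0.5
gauss = lambda t: np.exp(-t**2 / (2 * sigma**2))    # assumed: c = 1/sigma, alpha = 1
laplace = lambda t: np.exp(-np.abs(t) / sigma)      # assumed: c = sqrt(2/sigma), alpha = 1/2

rng = np.random.default_rng(1)
for shift in [0.05, 0.5, 2.0]:
    x = rng.normal(size=200)
    y = rng.normal(loc=shift, size=200)
    lhs_g, rhs_g = mmd_1d(x, y, gauss), wasserstein_1d(x, y, 2) / sigma
    lhs_l, rhs_l = mmd_1d(x, y, laplace), np.sqrt(2 / sigma) * wasserstein_1d(x, y, 1) ** 0.5
    print(f"shift={shift}: Gauss {lhs_g:.3f} <= {rhs_g:.3f}, Laplace {lhs_l:.3f} <= {rhs_l:.3f}")
```

The Laplace case illustrates the Hölder (square-root) rather than Lipschitz behavior for \(\alpha =1/2\): for small shifts the right-hand side decays only like the square root of \(w_1\).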

The Wasserstein distance imposes fixed marginals in (2.2b) and (2.2c). In contrast, the unbalanced problem relaxes these constraints by incorporating soft marginal constraints in (2.3). Furthermore, the maximum mean discrepancy (cf. (2.13)) accommodates even unbalanced measures.

The subsequent result establishes a connection between MMD and UOT for general, unbalanced measures. The quantities \(\textrm{MMD}_k(\mu ,\mu ^*)\) and \(\textrm{MMD}_k(\nu ,\nu ^*)\) in (2.23) below account for the varying masses of the measures. These quantities vanish as \(\eta _1\nearrow \infty \) and \(\eta _2\nearrow \infty \), provided that \(\mu \) and \(\nu \) are probability (or, more generally, balanced) measures.

Proposition 2.19

Suppose that

for some \(c>0\) and \(\alpha \ge 1/2\). Let \(\mu \), \(\nu \in {\mathcal {M}} _+({{\mathcal {X}}}),\) and \(\pi \) be the optimal measure of the problem \(\textrm{UOT}_{2\alpha ;\nicefrac {\eta }{c^2}}(\mu ,\nu )\) with marginals \(\mu ^*:=\tau _{1\#}{\pi }\) and \(\nu ^*:=\tau _{2\#}{\pi }\). Then, it holds that

where \(u^{*}=\sqrt{\mu ^{*}({{\mathcal {X}}})\cdot \nu ^{*}({{\mathcal {X}}})}\).

Proof

Consider the optimal measure \(\pi \) of the problem \(\textrm{UOT}_{2\alpha ;\nicefrac {\eta }{c^2}}(\mu ,\nu )\), which has marginals \(\mu ^{*}\) and \(\nu ^{*}\) and note that \(\mu ^*({\mathcal {X}})=\nu ^*({\mathcal {X}})\). Utilizing (2.17) and (2.22), we have

Maximizing the left-hand side with respect to all functions \(\varphi \in {{\mathcal {H}}}_k\) with \(\Vert \varphi \Vert _{{{\mathcal {H}}}_k}\le 1\) using (2.14), we obtain

By adding \(\textrm{MMD}_k(\nu ,\nu ^{*})\) to both sides of (2.24) and applying the triangle inequality \(\textrm{MMD}_k(\mu ^{*},\nu ) \le \textrm{MMD}_k(\mu ^{*},\nu ^{*})+ \textrm{MMD}_k(\nu ,\nu ^{*})\) on the left-hand side, we obtain

Repeating the same procedure by adding \(\textrm{MMD}_k(\mu ,\mu ^{*})\) to both sides of (2.25), we obtain

which is the assertion. \(\square \)

The kernels we consider below for fast summation techniques satisfy the general relation (2.20) with either \(\alpha =1\) or \(\alpha =1/2\). Note that, for \(\alpha =1/2\), the relations in (2.21) and (2.23) reveal Hölder (and not Lipschitz) continuity of the embedding.

Prominent kernels. In applications, the choice of the kernel function is important and depends on the problem of interest. The most prominent kernels used in MMDs are the Gaussian (Gauss), inverse multiquadric (IMQ), Laplace (Lap) and energy (E) kernels. Table 1 lists these kernels together with the constants in the preceding Proposition 2.18.
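Since Table 1 is not reproduced in this text, the following sketch writes out one common parametrization of the four radial kernels as functions of the distance \(r=\Vert x-y\Vert \). The length scale `ell` and the IMQ shift `c` are assumed textbook choices and may differ from the constants of Table 1; only the energy kernel \(k^{\text {e}}(x,y)=-\Vert x-y\Vert \) is fixed by the text (cf. Sect. 4).

```python
import numpy as np

# Radial kernels k(x, y) = K(r) with r = ||x - y||.  The length scale `ell`
# and the IMQ shift `c` are assumed parametrizations, not those of Table 1.

def gauss(r, ell=1.0):
    """Gaussian kernel exp(-r^2 / (2 ell^2))."""
    return np.exp(-r**2 / (2.0 * ell**2))

def laplace(r, ell=1.0):
    """Laplace kernel exp(-r / ell)."""
    return np.exp(-r / ell)

def imq(r, c=1.0):
    """Inverse multiquadric kernel (c^2 + r^2)^(-1/2)."""
    return 1.0 / np.sqrt(c**2 + r**2)

def energy(r):
    """Energy kernel -r; only conditionally positive definite."""
    return -r

def pairwise_dist(X, Y):
    """Euclidean distances between the rows of X (n x d) and Y (m x d)."""
    return np.linalg.norm(X[:, None, :] - Y[None, :, :], axis=-1)

X = np.array([[0.0, 0.0], [3.0, 4.0]])
R = pairwise_dist(X, X)                  # [[0., 5.], [5., 0.]]
for name, K in [("Gauss", gauss), ("Lap", laplace), ("IMQ", imq), ("E", energy)]:
    print(name, K(R))
```

All four kernels act only on the distance matrix, which is the radial structure that the NFFT fast summation exploits.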

The discrepancy obtained with the energy kernel \(k^{\text {e}}\) is known as the energy distance. It was originally proposed by Székely et al. [51] and is occasionally referred to as Harald Cramér's distance. In general, the energy kernel is not positive definite; for compact spaces \({{\mathcal {X}}}\), however, it is positive definite after a minor modification, see Neumayer and Steidl [33, Proposition 3.2]. See also Gräf [17, Corollary 2.15] for an explicit proof of the conditional positive definiteness of the energy kernel \(k^{\text {e}}(\cdot ,\cdot )\). The equivalence of the energy distance and Cramér's distance does not extend to higher dimensions because, unlike the energy distance, Cramér's distance is not rotation invariant.
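The one-dimensional equivalence can be checked numerically. The sketch below evaluates the squared MMD with the energy kernel \(k^{\text {e}}(x,y)=-|x-y|\) for two empirical measures with uniform weights and compares it with \(2\int (F-G)^2\,\textrm{d}t\), where F and G are the empirical distribution functions; this identity is a standard property of the energy distance on the real line. The sample sizes, the shift and the function names are illustrative.

```python
import numpy as np

def energy_mmd2(x, y):
    """Squared MMD with the energy kernel k(s, t) = -|s - t|, uniform weights."""
    dxy = np.abs(x[:, None] - y[None, :]).mean()
    dxx = np.abs(x[:, None] - x[None, :]).mean()
    dyy = np.abs(y[:, None] - y[None, :]).mean()
    # MMD^2 = E k(X,X') + E k(Y,Y') - 2 E k(X,Y) with k = -|.|
    return 2.0 * dxy - dxx - dyy

def cramer2(x, y):
    """2 * integral of (F - G)^2 for the empirical CDFs F of x and G of y."""
    t = np.sort(np.concatenate([x, y]))
    # F and G are constant on each interval [t_k, t_{k+1})
    F = np.searchsorted(np.sort(x), t[:-1], side="right") / len(x)
    G = np.searchsorted(np.sort(y), t[:-1], side="right") / len(y)
    return 2.0 * np.sum((F - G) ** 2 * np.diff(t))

rng = np.random.default_rng(0)
x, y = rng.random(50), rng.random(70) + 0.2
print(energy_mmd2(x, y), cramer2(x, y))   # the two values agree up to rounding
```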

2.8 Convergence of 1-UOT to MMD Energy Distance

The following results revisit the interconnection between OT and MMD for the energy kernel, elucidating the correspondence between UOT and MMD.

Lemma 2.20

Let P and \({{\tilde{P}}}\) be probability measures and \(\lambda >0\) be the regularization parameter. Then the following relationships between standard OT and the energy distance, using the debiased regularized OT \({\textrm{sd}}_{1;\lambda }\),

hold true.

Proof

See Ramdas et al. [41] and Neumayer and Steidl [33, Proposition 2]. \(\square \)

The following corollary discloses relationships between debiased regularized UOT and \(\textrm{MMD}_{k^\text {e}}(P,{{\tilde{P}}})\) for probability measures P and \({{\tilde{P}}}\).

Corollary 2.21

Let \(\lambda >0\) be the entropy regularization parameter and \(\eta >0\) be the marginal regularization parameter. Then, the relationships between OT with relaxed marginal constraints and energy distance using debiased regularized UOT

hold true for probability measures P and \({\tilde{P}}\).

Proof

See Appendix A.3. \(\square \)

Up to this stage, we have established relationships and bounds between MMD and UOT through Propositions 2.5, 2.6, 2.9, 2.18, 2.19, and Corollary 2.21. These connections provide practitioners with theoretical guarantees for choosing distances that are computationally feasible. For instance, while computing the MMD is generally straightforward, computing the Wasserstein distance or UOT can be demanding. By leveraging the Hölder continuity results, practitioners can therefore opt for the MMD with confidence in a wide range of applications.

3 Distances in Discrete Framework

Computational aspects of the problems predominantly build on discrete measures, which motivates us to investigate faster and more stable computational methods. To this end, we reformulate the aforementioned problems in a discrete setting. More precisely, the unbalanced optimal transport problem must be stated in its dual form to accommodate the fast summation technique presented below, whereas the maximum mean discrepancy formulation does not require any modification. The discrete measures considered in the following sections are \(\mu =\sum _{i=1}^{n} \mu _i\,\delta _{x_i}\) and \(\nu = \sum _{j=1}^{{\tilde{n}}} \nu _j\,\delta _{{{\tilde{x}}}_j}\).

3.1 Unbalanced Optimal Transport

To reformulate problem (2.9), we introduce the distance or cost matrix \(d \in {{\mathbb {R}}}^{n\times {{\tilde{n}}}}\) with entries \(d_{ij}{:}{=} d(x_i, {\tilde{x}}_j)\). With that, the discrete optimization problem (2.9) is

Here, the matrix \(\pi \in {{\mathbb {R}}}^{n\times {{\tilde{n}}}}\) with positive entries represents the transportation plan, and the parameters are as defined in Definition 2.7.

The dual formulation is the foundation of our accelerated algorithm for the UOT problem. The following statement provides the dual of the (primal) optimization problem (2.9); the proof builds on the Fenchel–Rockafellar theorem, see Chizat et al. [11, Theorem 3.2].

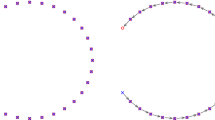

Transportation (indicated by green lines) of measures (circles and triangles) with equal mass. The optimal transportation plan bypasses the outliers for smaller parameters \(\eta \), and appraises for larger parameters \(\eta \), cf. Remark 2.4 (Color figure online)

Theorem 3.1

(Dualization of (2.9)) For \(\lambda > 0\), \(\eta _1> 0\) and \(\eta _2> 0\), the dual function of entropy-regularized UOT (2.9) is

which is the objective of a strictly concave, smooth, and unconstrained maximization problem. Strong duality holds for \(\eta _1>0\) and \(\eta _2>0\).

The duality result (3.1) gives rise to the well-known Sinkhorn algorithm. The unique maximizer \((\beta ^{*},\gamma ^{*})\) is characterized by the first-order optimality conditions, and alternating optimization (i.e., an iterative procedure) yields a sequence \(\gamma ^{0}, \beta ^{1},\gamma ^{1},\beta ^{2},\ldots \) as

where \(\Delta \in {{\mathbb {N}}}\) is the iteration count, \(\odot \) denotes the elementwise (Hadamard) product, \(k{:}{=} e^{-\lambda \,d^r}\) (entrywise), and we may set \(\gamma ^{(0)}{:}{=} {{\textbf{0}}}_{{{\tilde{n}}}}\). This is the basis of Sinkhorn's iteration for regularized UOT. Sinkhorn's theorem ensures that this alternating optimization routine converges to the optimal solution (cf. Sinkhorn [48]).
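The alternating updates can be sketched in NumPy as follows. Since the displayed updates (3.3) and (3.4) are not reproduced here, the sketch assumes the widely used scaling form of unbalanced Sinkhorn iterations with entropic weight \(1/\lambda \) (so that the Gibbs kernel is \(k=e^{-\lambda d^r}\)) and KL marginal penalties \(\eta _1,\eta _2\), which damps each update by the exponent \(\eta _i/(\eta _i+1/\lambda )\); it is written in the log domain for numerical stability. The function name `uot_sinkhorn`, the stopping rule and the iteration count are illustrative assumptions, not a transcription of Algorithm 1.

```python
import numpy as np

def logsumexp(a, axis):
    """Numerically stable log(sum(exp(a))) along one axis."""
    m = np.max(a, axis=axis, keepdims=True)
    return np.squeeze(m, axis=axis) + np.log(np.sum(np.exp(a - m), axis=axis))

def uot_sinkhorn(mu, nu, cost, lam, eta1, eta2, n_iter=2000, tol=1e-12):
    """Alternating dual updates for entropy-regularized UOT (assumed scaling form).

    cost is the matrix d^r and eps = 1/lam is the entropic weight.
    Returns the dual variables (beta, gamma) and the plan pi.
    """
    eps = 1.0 / lam
    s1 = eta1 / (eta1 + eps)   # damping exponent from the KL penalty on mu
    s2 = eta2 / (eta2 + eps)   # damping exponent from the KL penalty on nu
    beta = np.zeros(len(mu))
    gamma = np.zeros(len(nu))  # gamma^(0) = 0
    for _ in range(n_iter):
        beta_old = beta
        # beta_i = s1 * eps * (log mu_i - log sum_j exp((gamma_j - cost_ij) / eps))
        beta = s1 * eps * (np.log(mu) - logsumexp((gamma[None, :] - cost) / eps, axis=1))
        # gamma_j = s2 * eps * (log nu_j - log sum_i exp((beta_i - cost_ij) / eps))
        gamma = s2 * eps * (np.log(nu) - logsumexp((beta[:, None] - cost) / eps, axis=0))
        if np.max(np.abs(beta - beta_old)) < tol:
            break
    # primal plan pi_ij = exp((beta_i + gamma_j - cost_ij) / eps)
    pi = np.exp((beta[:, None] + gamma[None, :] - cost) / eps)
    return beta, gamma, pi

rng = np.random.default_rng(1)
x, y = rng.random(5), rng.random(6)
mu, nu = np.full(5, 0.2), np.full(6, 1 / 6)
cost = np.abs(x[:, None] - y[None, :])             # r = 1
beta, gamma, pi = uot_sinkhorn(mu, nu, cost, lam=1.0, eta1=1e3, eta2=1e3)
print(pi.sum(axis=1), pi.sum(axis=0))              # close to mu and nu for large eta
```

Each sweep evaluates the Gibbs kernel against the current potentials in both directions, the dense \({\mathcal {O}}(n\tilde{n})\) step per iteration. In the exponential (scaling) form, these are the kernel matrix–vector products that the NFFT fast summation of Sect. 4 targets.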

Algorithm 1 summarizes the computational process and provides the optimal dual variables \(\beta ^{\Delta ^{*}}\) and \(\gamma ^{\Delta ^{*}}\), from which the desired quantities can be computed. For instance, the approximate solution of the regularized UOT problem (2.9) is

Remark 3.2

(Hyperparameter tuning and outliers) In practice, we often fix \(\eta _1 = \eta _2 =:\eta \) to reduce the number of hyperparameters to tune.

From Remark 2.4, we also deduce that, for finite values of \(\eta \), problem (2.9) tends to ignore larger entries of the distance matrix d. That is, the optimal plan tends not to transport the outliers of the measures. Figure 1 illustrates the impact of the parameter \(\eta \) on the transportation of outliers. Furthermore, mass conservation of \(\mu \) (\(\nu \), resp.) can be strengthened by increasing the marginal parameter \(\eta _1\) (\(\eta _2\), resp.).

Arithmetic complexity. Even though the entropy regularization reduces the computational burden, each iteration still requires the matrix–vector products in (3.3) and (3.4), which cost \({\mathcal {O}}(n^2\, d)\) arithmetic operations. The complete iteration routine of Algorithm 1 requires approximately

arithmetic operations (cf. Pham et al. [35]) to achieve machine precision, where d denotes the dimension.

The fast summation techniques in Sect. 4 below exploit the specific structure of the matrix \(k=e^{-\lambda \,d^r}\) in (3.3) and (3.4), which is known as the Gibbs kernel or kernel matrix.

3.2 Computational Aspects of MMDs

For discrete measures \(\mu \) and \(\nu \) as above, the numerical computation of \(\textrm{MMD}^2_k(\mu ,\nu )\) in terms of the corresponding kernel function k is based on

The important observation here is that the individual terms in (3.5) are matrix–vector multiplications with matrices of the same shape as in (3.2), cf. also Algorithm 1.
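For weighted point sets, the standard expansion \(\textrm{MMD}^2_k(\mu ,\nu )=\sum _{i,i'}\mu _i\mu _{i'}k(x_i,x_{i'})-2\sum _{i,j}\mu _i\nu _j k(x_i,{\tilde{x}}_j)+\sum _{j,j'}\nu _j\nu _{j'}k({\tilde{x}}_j,{\tilde{x}}_{j'})\) consists of three kernel matrix–vector products. The sketch below evaluates it densely in \({\mathcal {O}}(n^2 d)\) operations, the baseline that the NFFT fast summation accelerates; the Gaussian kernel and its length scale `ell` are assumed choices.

```python
import numpy as np

def gauss_kernel(X, Y, ell=1.0):
    """Gaussian kernel matrix exp(-||x - y||^2 / (2 ell^2)) (assumed parametrization)."""
    sq = np.sum((X[:, None, :] - Y[None, :, :]) ** 2, axis=-1)
    return np.exp(-sq / (2.0 * ell**2))

def mmd2(X, mu, Y, nu, kernel=gauss_kernel):
    """Squared MMD between sum_i mu_i delta_{x_i} and sum_j nu_j delta_{y_j}.

    The weights need not sum to one, so unbalanced measures are allowed.
    Each term is a quadratic form built from one kernel matrix-vector product.
    """
    t_xx = mu @ (kernel(X, X) @ mu)
    t_xy = mu @ (kernel(X, Y) @ nu)
    t_yy = nu @ (kernel(Y, Y) @ nu)
    return t_xx - 2.0 * t_xy + t_yy

rng = np.random.default_rng(2)
X, Y = rng.random((100, 2)), rng.random((80, 2)) + 0.1
mu, nu = rng.random(100), rng.random(80)     # unbalanced weights
print(mmd2(X, mu, Y, nu))
```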

Arithmetic complexity. In order to compute \(\textrm{MMD}^2_k(\mu ,\nu )\) or \(\textrm{MMD}^2_k(P,{{\tilde{P}}})\), the standard implementation (3.5) requires \({\mathcal {O}}(n^2 d)\) arithmetic operations, where d is the dimension and \(n\approx {{\tilde{n}}}\).

4 Fast Summation Method Based on Nonequispaced FFT

This section succinctly describes the fast summation technique based on the nonequispaced fast Fourier transform (NFFT). In a nutshell, this technique reduces the computational burden of matrix–vector multiplications, which otherwise require \({\mathcal {O}}(n^2)\) arithmetic operations. The forthcoming discussion explicitly states the arithmetic complexity of our fast algorithms for entropy-regularized UOT and MMDs.

For equispaced points, the kernel matrix is a Toeplitz matrix, so the kernel matrix–vector product is a Toeplitz matrix–vector product. One can exploit this structure to reduce \({\mathcal {O}}(n^2)\) to \({\mathcal {O}}( n\log n)\) arithmetic operations using a fast algorithm (cf. Plonka et al. [37, Theorem 3.31]). More precisely, the fast algorithm embeds the kernel matrix into a circulant matrix, a special case of a Toeplitz matrix, which is diagonalized by the Fourier matrix. The speed-up is then realized by the standard FFT (cf. Plonka et al. [37, pp. 141–142]).
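The circulant embedding can be sketched in a few lines: an \(n\times n\) Toeplitz matrix T, given by its first column `c` and first row `r`, is embedded into a \(2n\times 2n\) circulant matrix whose action is diagonalized by the FFT, so \(Tv\) costs \({\mathcal {O}}(n\log n)\) instead of \({\mathcal {O}}(n^2)\). This covers the equispaced case only; the function name and the Laplace-type kernel on a grid are illustrative.

```python
import numpy as np

def toeplitz_matvec(c, r, v):
    """Compute T @ v for the Toeplitz matrix T with first column c and first row r.

    T is embedded into a circulant matrix of size 2n, whose eigenvalues are the
    FFT of its first column; the product then costs O(n log n).
    """
    n = len(v)
    # first column of the circulant embedding: [c_0..c_{n-1}, 0, r_{n-1}..r_1]
    col = np.concatenate([c, [0.0], r[:0:-1]])
    v_pad = np.concatenate([v, np.zeros(n)])
    w = np.fft.ifft(np.fft.fft(col) * np.fft.fft(v_pad))   # circular convolution
    return w[:n].real

n, h = 512, 0.01
grid_kernel = np.exp(-h * np.arange(n))     # k(x_i - x_j) = exp(-|i - j| h) on a grid
v = np.random.default_rng(3).random(n)
fast = toeplitz_matvec(grid_kernel, grid_kernel, v)
T = np.exp(-h * np.abs(np.subtract.outer(np.arange(n), np.arange(n))))
print(np.max(np.abs(fast - T @ v)))         # rounding-level difference
```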

Despite this speed-up, the standard FFT is restricted to equispaced points (\(x_i\), \({\tilde{x}}_j\)). The NFFT extends the approach to arbitrary points, see Plonka et al. [37, Chapter 7]. We refer to Keiner et al. [22] for a detailed description of the associated software.

4.1 NFFT Fast Summation

The fast summation method, which is based on NFFT, makes use of the radial distance

which builds on the difference of x and \({{\tilde{x}}}\); the distance matrix \(d \in {\mathbb {R}}^{n\times {\tilde{n}}}\) is

Remark 4.1

(Radial cost functions) The fast summation technique presented below crucially builds on radial cost functions. The cost functions considered here are increasing functions of the genuine distance d, for example \(d^r\). This type of cost function is commonly referred to as a radial transport cost.

The core concept of fast summation is to efficiently approximate the radial kernels, i.e.,

where \(y :=x - {\tilde{x}}\) (\(\ell >0\) and \(\textrm{c}>0\)). The fast summation approach, which performs matrix–vector operation, computes sums of the form

with coefficients \(\tilde{\alpha }_j\in {{\mathbb {C}}}\) for all j.

4.2 Approximation Aspects of Kernel Approximation

The central idea of the NFFT-based fast summation is to efficiently approximate \({{\mathcal {K}}}(y)\) by an h-periodic trigonometric polynomial \({{\mathcal {K}}}_{RK}(y)\). To illustrate, we approximate a univariate kernel by a trigonometric polynomial in one dimension via

where \(b_{{\textrm{k}}}\in {{\mathbb {C}}}\) are appropriate Fourier coefficients, \(y=x-{\tilde{x}}\), and the bandwidth is \(N \in 2{{\mathbb {N}}}\). To efficiently compute the matrix–vector products (4.3), which are the bottleneck of UOT and MMDs, the NFFT-based fast summation approximates \({\mathcal {K}}\) by the trigonometric polynomial \({{\mathcal {K}}}_{RK}\) up to machine precision.

Note that the kernels of interest are non-periodic functions. Hence, an approximation by a trigonometric polynomial is not straightforward. To obtain an h-periodic smooth kernel function \(\tilde{{\mathcal {K}}}(y)\), we regularize \({{\mathcal {K}}}(y)\) as follows. If the argument y satisfies \(\Vert y\Vert \le L\), we choose \(h\ge 2L\). For a good approximation, we embed \({\mathcal {K}}(y)\) into a smooth periodic function by

where \({\mathcal {K}}_\textrm{B}\) is a univariate polynomial, which matches the derivatives of \({\mathcal {K}}(y)\) up to a certain degree. This makes its periodic continuation \(\tilde{{\mathcal {K}}}(y)\) smooth. We refer to Plonka et al. [37, Chapter 7.5] for more mathematical details.

In the one-dimensional (univariate) case, we obtain the fast kernel matrix–vector product by replacing the kernel \({\mathcal {K}}\) in (4.3) with its Fourier representation (4.4). More specifically,

with scaled nodes \({\bar{x}}_i :=h^{-1}x_i \in {\mathbb {T}}^d\), \(\bar{{\tilde{x}}}_j :=h^{-1}{\tilde{x}}_j \in {\mathbb {T}}^d.\) Here, the torus \({\mathbb {T}}\) is

In this setup, the inner sum in (4.6) is computed by the NFFT in \({\mathcal {O}}(d\,N\log N +{\tilde{n}})\) arithmetic operations and the outer sum in \({\mathcal {O}}(d\,N\log N + n)\). This ansatz generalizes to the multivariate setting.
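The algebra behind (4.6) can be checked in one dimension with a small sketch: a kernel narrow enough that its periodization on \([-1/2,1/2)\) is numerically smooth is sampled on N equispaced points, its Fourier coefficients \(b_k\) are obtained by an FFT, and \(f(x_i)=\sum _j \alpha _j{\mathcal {K}}(x_i-{\tilde{x}}_j)\) is evaluated as an outer sum over frequencies of an inner sum over the source nodes. For simplicity, both sums are computed by direct nonequispaced DFTs in \({\mathcal {O}}(N(n+{\tilde{n}}))\) operations rather than by the NFFT, and the boundary regularization \({\mathcal {K}}_\textrm{B}\) of (4.5) is omitted; the width `sigma`, the node range and all names are assumptions of this illustration.

```python
import numpy as np

def fourier_coefficients(K, N):
    """Coefficients b_k of the trigonometric interpolant of the 1-periodic K,
    sampled at y_l = l/N (wrapped into [-1/2, 1/2)); returned in FFT order
    together with their integer frequencies k in {-N/2, ..., N/2 - 1}."""
    y = np.arange(N) / N
    y = np.where(y >= 0.5, y - 1.0, y)
    b = np.fft.fft(K(y)) / N
    freqs = np.fft.fftfreq(N, d=1.0 / N)
    return freqs, b

def fast_sum_1d(x, xt, alpha, K, N=64):
    """Approximate f(x_i) = sum_j alpha_j K(x_i - xt_j) through the Fourier form.

    All nodes are assumed to lie in [-1/4, 1/4), so that every difference lies
    in [-1/2, 1/2). Both sums are direct NDFTs; an NFFT would replace them.
    """
    freqs, b = fourier_coefficients(K, N)
    # inner sum over sources: a_k = sum_j alpha_j exp(-2 pi i k xt_j)
    a = np.exp(-2j * np.pi * np.outer(freqs, xt)) @ alpha
    # outer sum over targets: f(x_i) = sum_k b_k a_k exp(2 pi i k x_i)
    f = np.exp(2j * np.pi * np.outer(x, freqs)) @ (b * a)
    return f.real

def narrow_gauss(y, sigma=0.05):
    """Narrow Gaussian whose periodization on [-1/2, 1/2) is numerically smooth."""
    return np.exp(-(y / sigma) ** 2)

rng = np.random.default_rng(4)
x, xt = rng.uniform(-0.25, 0.25, 300), rng.uniform(-0.25, 0.25, 200)
alpha = rng.random(200)
approx = fast_sum_1d(x, xt, alpha, narrow_gauss, N=64)
exact = narrow_gauss(x[:, None] - xt[None, :]) @ alpha
print(np.max(np.abs(approx - exact)) / np.max(np.abs(exact)))
```

The separation into an inner sum that depends only on the sources and an outer sum that depends only on the targets is what removes the \(n\times {\tilde{n}}\) matrix.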

Curse of dimensionality. Despite the robustness of the NFFT, the evaluation is expensive in higher dimensions (\({{\mathcal {X}}}={{\mathbb {R}}}^d\) with \(d\ge 4\)). More specifically, the index set of the grid

where \(N=(N_1,N_2,N_3)\in 2{\mathbb {N}}^3\), grows exponentially with the dimension, which makes the evaluation expensive. Several works mitigate this burden, predominantly based on mutual information scores and the analysis of variance (ANOVA) decomposition (cf. Nestler et al. [32], Potts and Schmischke [38]).
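To make the exponential growth concrete, the short sketch below counts the grid points \(N^d\) for the common choice \(N=2^8\) per direction (cf. Remark 4.4), assumed equal in every direction, and the memory of one complex double-precision array on that grid. The NFFT additionally oversamples its grid internally, so these numbers are lower bounds; the helper name is hypothetical.

```python
def grid_memory(N, d, bytes_per_value=16):
    """Number of grid points N**d and GiB for one complex128 array on the grid."""
    points = N**d
    return points, points * bytes_per_value / 2**30

for d in range(1, 5):
    points, gib = grid_memory(2**8, d)
    print(f"d={d}: {points:,} grid points, {gib:.4f} GiB")
# d=1: 256 grid points, 0.0000 GiB
# d=2: 65,536 grid points, 0.0010 GiB
# d=3: 16,777,216 grid points, 0.2500 GiB
# d=4: 4,294,967,296 grid points, 64.0000 GiB
```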

In our context, we restrict our focus to at most three dimensions, which is the most prevalent scenario across a wide array of applications. For more detailed specifications of the approximations in two and three dimensions, we refer to Nestler [31, p. 98].

Remark 4.2

(Arithmetic complexity) Evaluating (4.3) with the Gaussian kernel \({\mathcal {K}}^{\text {Gauss}}\) requires \({\mathcal {O}} (n\,d)\) arithmetic operations; with the Laplacian \({{\mathcal {K}}}^{\text {Lap}}\), inverse multiquadric \({\mathcal {K}}^{\text {IMQ}}\) and absolute value kernel \({{\mathcal {K}}}^{\text {e}}\), \({\mathcal {O}} (d\,n\log n)\) arithmetic operations are required. We refer to Plonka et al. [37, Chapter 7.5] for a comprehensive discussion.

Remark 4.3

(Heuristic stabilization) The kernel approximation is tailored to a fixed domain, see (4.7). This restriction may impose constraints on the problem of interest. If the kernel needs to be resolved on a narrower or wider interval, that is, if the node differences exceed the admissible boundary, it is beneficial to introduce the scaling factor h described above. The scaling effectively truncates the summation (4.3) to the admissible domain and thereby keeps the approximation consistent with the desired outcome.

Remark 4.4

(NFFT parameters) The accuracy of the approximation in (4.5) depends on various parameters, including the grid size or bandwidth N. We set these parameters according to the specifications in Keiner et al. [22] to achieve machine precision. A common choice for the grid size is \(N=2^8\) (see Footnote 1).

Arithmetic complexity of Algorithm 2. For the sake of simplicity, we assume \(n={\tilde{n}}\). If \(r=1\) (Laplacian kernel), Algorithm 2 requires

arithmetic operations to achieve machine accuracy. If \(r=2\) (Gaussian kernel), it requires

arithmetic operations (cf. Remark 4.2).

Arithmetic complexity of NFFT-accelerated MMD. Computing the MMD with the Gaussian kernel \( {\mathcal {K}}^{\text {Gauss}}\) requires

arithmetic operations, and computing the MMD with the Laplace \({\mathcal {K}}^{\text {Lap}}\), inverse multiquadric \({\mathcal {K}}^{\text {IMQ}}\) and absolute value \({\mathcal {K}}^{\text {abs}}\) kernels requires

arithmetic operations.

Arithmetic complexity of the upper bounds for standard and regularized UOT. The bottleneck in computing the upper bounds of Sects. 2.4 and 2.5 is the inner product \(\langle \mu \otimes \nu |\,d^r\rangle \), which demands \({\mathcal {O}}(n^2d)\) arithmetic operations. For \(r=1\), however, it holds that \(d(x,y)=-k^{\text {e}}(x,y)\), where \(k^{\text {e}}\) is the energy kernel. We can thus compute the inner product in

arithmetic operations using the fast absolute value \({{\mathcal {K}}}^{\text {abs}}\) kernel, cf. (4.2).
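In one dimension, the inner product \(\langle \mu \otimes \nu |\,d\rangle =\sum _{i,j}\mu _i\nu _j|x_i-{\tilde{x}}_j|\) can also be computed exactly in \({\mathcal {O}}((n+{\tilde{n}})\log {\tilde{n}})\) operations by sorting and prefix sums, which is a convenient way to check a result obtained with the absolute value kernel. This sorting approach is a swapped-in technique for illustration, not the NFFT method described here, and it does not extend directly to \(d\ge 2\).

```python
import numpy as np

def abs_inner_product_1d(x, mu, xt, nu):
    """sum_{i,j} mu_i nu_j |x_i - xt_j| in O((n + m) log m) via sorting."""
    order = np.argsort(xt)
    xs, ws = xt[order], nu[order]
    W = np.concatenate([[0.0], np.cumsum(ws)])        # prefix sums of weights
    M = np.concatenate([[0.0], np.cumsum(ws * xs)])   # prefix sums of weight * position
    k = np.searchsorted(xs, x, side="right")          # number of xt_j <= x_i
    left = x * W[k] - M[k]                            # sum over xt_j <= x_i of nu_j (x_i - xt_j)
    right = (M[-1] - M[k]) - x * (W[-1] - W[k])       # sum over xt_j > x_i of nu_j (xt_j - x_i)
    return mu @ (left + right)

rng = np.random.default_rng(5)
x, xt = rng.random(1000), rng.random(800)
mu, nu = rng.random(1000), rng.random(800)
dense = mu @ np.abs(x[:, None] - xt[None, :]) @ nu
print(abs_inner_product_1d(x, mu, xt, nu), dense)
```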

5 Numerical Exposition of the NFFT Accelerated UOT and MMD

In this section, we present numerical results for the approaches and methods considered in the preceding sections. The corresponding implementations are available online (Footnote 2). The NFFT techniques are implemented with the freely available repository ‘NFFT3.jl’ (Footnote 3). All experiments were performed on a standard desktop computer with an Intel(R) Core(TM) i7-7700 CPU and 15.0 GB of RAM. We emphasize that our proposed methods require neither expensive hardware nor access to supercomputers, which makes them accessible for a wide range of low-threshold applications.

5.1 UOT Acceleration

In this section, we demonstrate the enhanced performance and accuracy of regularized UOT using synthetic and benchmark data.

5.1.1 Empirical Measures

We demonstrate the efficiency (in terms of time and memory allocations) of our proposed Algorithm 2 using synthetic data.

Consider the unbalanced measures

on \({\mathbb {R}}\times {\mathbb {R}}\), where \(U^1_i\), \(U^2_i\), \({{\tilde{U}}}_j^1\), \({{\tilde{U}}}_j^2\), as well as the weights \(\mu _i\) and \(\nu _j\), are independent samples from the uniform distribution on [0, 1] (\(i=1,\ldots ,n\), \(j=1,\dots ,{\tilde{n}}\)).

Table 2 displays computation times and memory allocations of UOT Sinkhorn's Algorithm 1 and UOT NFFT Sinkhorn's Algorithm 2. Our NFFT-accelerated algorithm significantly outperforms the standard algorithm. Notably, our proposed algorithm easily handles problem sizes that are completely inaccessible to standard implementations.

5.1.2 Accuracy Analysis with Benchmark Datasets

To assess the accuracy of our proposed algorithm, we perform an accuracy analysis using benchmark datasets.

We employ the DOTmark dataset, which is explicitly designed to validate the performance and accuracy of new OT techniques and algorithms (cf. Schrieber et al. [45]). The DOTmark dataset consists of 10 subsets of grayscale images with resolutions ranging from \(32 \times 32\) to \(512 \times 512\).

Transformation of images to (unbalanced) vectors. The grayscale image is given as a matrix, with each entry representing the intensity of a pixel in the range [0, 1] (black: 0, white: 1). The standard OT problem would, inappropriately, normalize the matrix, whereas our approach to UOT does not require normalizing the measure. The cost between pixels i and j is the \(\ell _1\) distance between their positions on the respective grid \((32 \times 32, \dots , 512 \times 512)\).
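A minimal sketch of this transformation, assuming pixel centres at integer grid coordinates and intensities already scaled to [0, 1]: the image is flattened into an unnormalized weight vector, and the cost between two pixels is the \(\ell _1\) distance of their grid positions. The function names are hypothetical, and the dense cost matrix is only feasible for small grids (for a \(512\times 512\) image it would have about \(6.9\cdot 10^{10}\) entries).

```python
import numpy as np

def image_to_measure(img):
    """Pixel positions (row, col) and unnormalized weights of a grayscale image."""
    rows, cols = np.indices(img.shape)
    positions = np.column_stack([rows.ravel(), cols.ravel()]).astype(float)
    weights = img.ravel().astype(float)     # intensities in [0, 1], not normalized
    return positions, weights

def l1_cost(P, Q):
    """Pairwise l1 (city-block) distances between two sets of pixel positions."""
    return np.abs(P[:, None, :] - Q[None, :, :]).sum(axis=-1)

img = np.array([[0.0, 0.5],
                [1.0, 0.25]])
pos, w = image_to_measure(img)
print(w.sum())              # 1.75: the total mass is kept as is
print(l1_cost(pos, pos))
```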

We compute the residual,

where \(\beta ^{*}\) and \(\gamma ^{*}\) are the optimal dual variables of UOT Sinkhorn's Algorithm 1 and \(\beta ^{*}_{\text {NFFT}} \) and \(\gamma ^{*}_{\text {NFFT}}\) are the optimal dual variables of UOT NFFT Sinkhorn's Algorithm 2, to substantiate the accuracy of our proposed algorithm.

Table 3 summarizes the results of our accuracy analysis. The results show that our proposed algorithm attains machine accuracy as promised. For brevity, we omit the individual results and instead report the average residual. We emphasize, however, that our proposed algorithm handles the full range of DOTmark problems, from smooth to rough images.

Remark 5.1

(Regularization parameter \(\lambda \)) In the experiment above, we observe stable computation of NFFT-accelerated regularized UOT using Algorithm 2 across the range \(\lambda \in (0, 200]\). As mentioned earlier, this range of \(\lambda \) is adequate to obtain the approximate optimal solution within machine accuracy.

Remark 5.2

(New initialization of Sinkhorn's algorithm) The UOT experiments use the regularized UOT upper bound \(c^{*}\) for the initialization \(\gamma ^{(0)} = c^{*}\cdot {\textbf{1}}_{{{\tilde{n}}}}\) in Algorithm 2, deviating from the conventional choice \(\gamma ^{(0)} = {{\textbf{0}}}_{{{\tilde{n}}}}\). This departure yielded a slight but discernible improvement over the standard choice. A recent study by Thornton and Cuturi [52] also examines the initialization of Sinkhorn's algorithm and reports promising results; notably, their approach involves data-dependent initializers, which distinguishes it from our methodology.

5.1.3 \(\lambda \)-Scaling

A robust heuristic for overcoming the loss of spectral information and mitigating the slowdown of convergence is \(\lambda \)-scaling (also known as deformed iteration) (cf. Schmitzer [44, Sect. 3], Sharify et al. [47, Sect. 3]). This method solves the regularized problem repeatedly for progressively increasing values of the regularization parameter \(\lambda \in \Lambda \subset {{\mathbb {R}}}_+\).
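The \(\lambda \)-scaling loop can be sketched as follows, under the assumption of a log-domain unbalanced Sinkhorn iteration with entropic weight \(1/\lambda \) and KL marginal penalties \(\eta _1=\eta _2=\eta \): each \(\lambda \) of an increasing schedule is processed for a fixed number of sweeps, and the dual variables are warm-started from the previous \(\lambda \). The warm start, the schedule, the sweep count and the function names are illustrative assumptions rather than the settings of the experiments.

```python
import numpy as np

def _lse(a, axis):
    """Numerically stable log-sum-exp along one axis."""
    m = np.max(a, axis=axis, keepdims=True)
    return np.squeeze(m, axis=axis) + np.log(np.sum(np.exp(a - m), axis=axis))

def uot_sweeps(mu, nu, cost, lam, eta, beta, gamma, n_iter):
    """n_iter alternating log-domain UOT updates at fixed lam, starting from (beta, gamma)."""
    eps = 1.0 / lam
    s = eta / (eta + eps)          # damping exponent for eta_1 = eta_2 = eta
    for _ in range(n_iter):
        beta = s * eps * (np.log(mu) - _lse((gamma[None, :] - cost) / eps, axis=1))
        gamma = s * eps * (np.log(nu) - _lse((beta[:, None] - cost) / eps, axis=0))
    return beta, gamma

def uot_lambda_scaling(mu, nu, cost, lambdas, eta, n_iter=200):
    """Process an increasing sequence of lam, warm-starting the dual variables."""
    beta, gamma = np.zeros(len(mu)), np.zeros(len(nu))
    for lam in lambdas:
        beta, gamma = uot_sweeps(mu, nu, cost, lam, eta, beta, gamma, n_iter)
    eps = 1.0 / lambdas[-1]
    pi = np.exp((beta[:, None] + gamma[None, :] - cost) / eps)
    return beta, gamma, pi

rng = np.random.default_rng(6)
x, y = rng.random(20), rng.random(25)
mu, nu = np.full(20, 1 / 20), np.full(25, 1 / 25)   # probability measures
cost = (x[:, None] - y[None, :]) ** 2               # r = 2
beta, gamma, pi = uot_lambda_scaling(mu, nu, cost, [1.0, 10.0, 100.0, 1000.0], eta=10.0)
print(pi.sum())
```

Because the potentials are kept in cost units, they remain meaningful when \(\lambda \) changes, which is what makes the warm start reasonable.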

The following experiment showcases the adaptability of our proposed method with this \(\lambda \)-scaling technique.

The experimental setup follows the simulation depicted in Fig. 3a. For this investigation, we focus on probability measures, a special case of UOT also known as robust OT, see Balaji et al. [5].

Figure 3 presents heat maps of the optimal matrices \(\pi ^{*}\) along with the total number of iterations for each experiment. It demonstrates that the NFFT-accelerated UOT Sinkhorn's Algorithm 2 with the \(\lambda \)-scaling technique converges faster than Algorithm 2 without it. The compatibility with this technique further enhances the performance of our proposals.

5.2 MMDs Accelerations

This section demonstrates the performance and accuracy of NFFT accelerated MMDs. We perform the experiments using the measures \(\mu \) and \(\nu \) as described in Sect. 5.1.1 above. For the accuracy analysis, we conduct experiments using probability vectors and unbalanced vectors. To validate the quality of our NFFT MMDs, we compute the errors

From Table 4, we infer that the quality of the approximation is stable, and the errors are negligible.

We then illustrate the performance of NFFT-accelerated MMDs. Table 5 (below) compares the performance of the standard MMDs and the NFFT-accelerated MMDs for different problem sizes and dimensions \((d=1,2,3)\). It confirms that our NFFT-accelerated MMDs perform significantly better than the standard computations. Nevertheless, we notice that the NFFT-accelerated computations are slightly more expensive in run time for problem size \(n={\tilde{n}}=1000\) in dimension \(d=3\). However, this small run-time overhead is negligible compared to the memory allocations of the standard computations.

5.2.1 Convergence of 1-UOT to Energy Distance

We now empirically validate Corollary 2.21 using synthetic data. For this experiment, we fix \(\lambda =0.001\) and consider empirical probability measures P and \({\tilde{P}}\) built from uniform samples on the closed interval [0, 1].

Figure 4. \({\textrm{sd}}_{1;\eta ;\lambda }(P,{\tilde{P}})\) approaches \(\textrm{MMD}_{k^{\text {e}}}(P,{\tilde{P}})\) for increasing penalization parameters \(\eta \), cf. (2.12) (\(\lambda =0.001\)).

Figure 4 illustrates \({\textrm{sd}}_{1;\eta ;\lambda }(P,{\tilde{P}})\) and \(\textrm{MMD}_{k^{\text {e}}}(P,{\tilde{P}})\) for various values of the marginal regularization parameter \(\eta \). This visualization corroborates Corollary 2.21.

5.3 Disparity Between MMD and Transportation Problems

Propositions 2.18 and 2.19 establish Hölder continuity relations between the MMD and transportation problems (the Wasserstein distance and UOT). This section examines the gaps in these inequalities across different parameter settings.

Probability measures, cf. Proposition 2.18. We consider empirical probability measures P and Q on the closed interval [0, 1] following a uniform distribution. The average disparities observed over 1000 simulations in Table 6 confirm inequality (2.21) in Proposition 2.18.

Unbalanced measures, cf. Proposition 2.19. For synthetic data as described in Sect. 5.1.1, Table 7 displays the average disparities observed over 1000 simulations with fixed parameters. The results confirm inequality (2.23).

Remark 5.3

The disparity analysis aims to uncover the gap between the MMD, the Wasserstein distance and UOT for standard parameter choices. Note, however, that the average disparity in Tables 6 and 7 diminishes as \(\ell \nearrow \infty \) (cf. Table 1), which is typically not the most relevant regime.

Remark 5.4

(Proposition 2.6) The experiments agree with the first assertion of Proposition 2.6: as \(\eta _1= \eta _2\searrow 0\), the Wasserstein distance is recovered, while as \(\eta _1= \eta _2\nearrow \infty \), the gap between \(\textrm{UOT}_{r;\eta }(\mu ,\nu )\) and \(u\cdot w_{r}(P,Q) + \eta _1\,{\textrm{D}}(P\Vert \,\mu )+ \eta _2\,{\textrm{D}}(Q\Vert \,\nu )\) increases. Nevertheless, the experiments for both assertions consistently confirm the theory across the range of parameters.

5.4 Existing Approaches: A Comparative Exploration

The subsequent discussion compares state-of-the-art algorithms and other prominent fast algorithms with our NFFT-accelerated UOT Sinkhorn algorithm and MMD.

5.4.1 State-of-the-Art Algorithms

Existing literature reports remarkable achievements in solving transportation problems by advancing standard optimization techniques (cf. Gottschlich and Schuhmacher [16], Schmitzer [43]). For concise implementations of such approaches, we refer to the R package of Schuhmacher et al. [46].

Table 8 presents a comparative analysis of time and memory performance between the unbalanced function from the R package and our proposed Algorithm 2.

The results demonstrate the computational superiority of our approach. The state-of-the-art algorithms from the R package, which employ network flow and revised simplex methods, are designed to solve the standard UOT problem without regularization. This can be advantageous for small- and intermediate-scale problems.

The computational aspects of MMD have not been studied as extensively as those of UOT. The forthcoming discussion theoretically compares the prominent approaches with our proposed approach based on NFFT.

5.4.2 Nyström Approximation

Among computational methodologies, the Nyström approximation is widely used to approximate large matrices in diverse machine learning and data analysis applications (cf. Yang et al. [57]). The Nyström method builds on a low-rank approximation of the kernel matrix and is attractive whenever computational efficiency is paramount. It has been applied to regularized OT and MMD with good results (cf. Altschuler et al. [2], Cherfaoui et al. [10]). Nevertheless, the Nyström approach within the framework of regularized OT and MMD has limitations, which include the following:

- Loss of spectral information: Nyström approximation involves selecting a subset of data points to construct a low-rank approximation. This process may lead to a loss of spectral information in the kernel matrix.

- Computational complexity: It still involves matrix inversion and multiplication, which can be computationally expensive for large datasets.

- Sensitivity to subset selection: The performance of the Nyström approximation is sensitive to the choice of the subset of data points used for the low-rank approximation. In certain cases, a suboptimal selection of these points results in a less accurate representation of the original kernel matrix.

- Applicability to non-uniform distributions: The method may not perform optimally for datasets with non-uniformly distributed points.
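For comparison, a minimal sketch of the Nyström approximation \(K\approx C W^{+} C^{\top }\), where C collects the kernel columns of a landmark subset S and W is the landmark block. The Gaussian kernel, its length scale and the landmark choices are assumptions; with S equal to all points the factorization reproduces the exact product, while a sparse landmark set illustrates the sensitivity to subset selection listed above.

```python
import numpy as np

def gauss_kernel(X, Y, ell=0.1):
    """Gaussian kernel matrix exp(-||x - y||^2 / (2 ell^2)) (assumed parametrization)."""
    sq = np.sum((X[:, None, :] - Y[None, :, :]) ** 2, axis=-1)
    return np.exp(-sq / (2.0 * ell**2))

def nystrom_matvec(X, v, landmarks, kernel=gauss_kernel):
    """Approximate K(X, X) @ v by the Nystrom factorization C W^+ C^T."""
    C = kernel(X, X[landmarks])                 # n x s block of the kernel matrix
    W = kernel(X[landmarks], X[landmarks])      # s x s landmark block
    # O(n s) per product once C and the pseudo-inverse of W (O(s^3)) are formed
    return C @ (np.linalg.pinv(W) @ (C.T @ v))

g = np.linspace(0.0, 1.0, 5)
X = np.array([(a, b) for a in g for b in g])    # 25 points on a regular grid
v = np.random.default_rng(7).random(len(X))
exact = gauss_kernel(X, X) @ v
full = nystrom_matvec(X, v, np.arange(len(X)))          # all points as landmarks
subset = nystrom_matvec(X, v, np.arange(0, len(X), 3))  # every third point
print(np.max(np.abs(full - exact)), np.max(np.abs(subset - exact)))
```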

5.4.3 Random Fourier Features

Random Fourier features (RFF) have also been considered for faster MMD computation (cf. Zhao and Meng [58]), where they show improved performance. However, RFF also have limitations in the MMD setup:

- Loss of spatial structure: RFF-based approximations may not fully capture the spatial structure of the original data, which is especially problematic in MMD, where the spatial arrangement of features is crucial.

- Quality of approximation: RFF rely on random projections to approximate feature maps; while this offers computational efficiency for MMD problems, the random nature of the projections may lead to suboptimal approximations.

- Increased computational cost: RFF introduce additional computational costs, particularly in matrix–vector multiplications, due to the need to compute the random feature mappings for each data point.

- Suboptimal for sparse data: RFF may not be the most efficient choice for sparse data matrices, as the random features approach might not fully exploit the sparsity inherent in the data during matrix–vector multiplications.
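Similarly, a sketch of the RFF estimate of the squared MMD for the Gaussian kernel \(\exp (-\Vert x-y\Vert ^2/(2\ell ^2))\): frequencies \(w\sim {\mathcal {N}}(0,\ell ^{-2}I)\) and phases \(b\sim U[0,2\pi )\) define features \(z(x)=\sqrt{2/D}\cos (w^\top x+b)\) with \({\mathbb {E}}[z(x)^\top z(y)]=k(x,y)\), so the squared MMD is approximated by the squared norm of the difference of the weighted mean embeddings. The number of features D, the seed and the names are assumptions; the random error of order \(D^{-1/2}\) is the approximation-quality limitation noted above.

```python
import numpy as np

def rff_features(X, W, b):
    """Random Fourier features z(x) = sqrt(2/D) cos(W x + b), one row per point."""
    return np.sqrt(2.0 / W.shape[0]) * np.cos(X @ W.T + b)

def mmd2_rff(X, mu, Y, nu, ell=1.0, D=4000, seed=0):
    """RFF estimate of the squared MMD for the Gaussian kernel with length scale ell."""
    rng = np.random.default_rng(seed)
    W = rng.normal(scale=1.0 / ell, size=(D, X.shape[1]))   # w ~ N(0, ell^-2 I)
    b = rng.uniform(0.0, 2.0 * np.pi, size=D)               # b ~ U[0, 2 pi)
    diff = mu @ rff_features(X, W, b) - nu @ rff_features(Y, W, b)
    return diff @ diff       # squared norm of the difference of mean embeddings

def mmd2_exact(X, mu, Y, nu, ell=1.0):
    """Dense O(n^2 d) reference value for comparison."""
    def k(A, B):
        return np.exp(-np.sum((A[:, None, :] - B[None, :, :]) ** 2, axis=-1) / (2.0 * ell**2))
    return mu @ k(X, X) @ mu - 2.0 * mu @ k(X, Y) @ nu + nu @ k(Y, Y) @ nu

rng = np.random.default_rng(8)
X, Y = rng.random((200, 2)), rng.random((150, 2)) + 0.3
mu, nu = np.full(200, 1 / 200), np.full(150, 1 / 150)
print(mmd2_rff(X, mu, Y, nu), mmd2_exact(X, mu, Y, nu))
```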

5.4.4 NFFT Based Fast Summation

Our NFFT-based methodology for regularized UOT and MMD theoretically assures efficient performance without the limitations mentioned above, see Plonka et al. [37, Chapter 7.2]. Its main advantages are the following:

- Efficiency in large-scale computations: The NFFT fast summation technique is known for its efficiency in large-scale computations, which makes it well suited for datasets with a substantial number of data points in regularized UOT and MMD. NFFT can outperform Nyström and RFF on extensive datasets, as it offers computational advantages in terms of both time and memory.

- Improved accuracy in approximation: NFFT often provides a more accurate approximation of kernelized functions than Nyström and random Fourier features. This improved accuracy in approximating the feature space leads to a better representation of complex relationships within the data.

- Preservation of structural information: NFFT is designed to preserve the structural information present in the data, which makes it particularly advantageous for regularized UOT and MMD, where capturing intricate details in the feature space is crucial. Unlike RFF methods, NFFT aims to maintain the essential characteristics of the data during the approximation process.

- Utilization of nonequispaced data: The ability of NFFT to handle nonequispaced data contributes to its effectiveness and makes it flexible and adaptable to a wide range of applications.

Remark 5.5

(Three-dimensional approximation) The existing methodologies supporting one- and two-dimensional NFFT approximations for standard OT and multi-marginal settings can be adapted to the three-dimensional NFFT approximation.

6 Summary

Our work delivers significant advancements in comparing unbalanced measures (i.e., nonnegative measures that need not be probability measures). We have introduced robust inequalities that elucidate the relationships between the Wasserstein distance, UOT, and MMD for both probability and non-probability measures.

Furthermore, we study the convergence of regularized UOT to the energy distance (MMD).

By introducing a fast summation technique based on the NFFT, our approach enables fast matrix–vector multiplications, significantly accelerating the computation of regularized UOT and MMDs while maintaining computational stability; see Table 9. Several kernels are covered, including the inverse multiquadric and energy kernels.
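To indicate where the fast matrix–vector operations enter regularized UOT, the following sketch runs the well-known scaling iterations for entropic UOT with Kullback–Leibler marginal penalties in the form of Chizat et al. [11], namely \(u \leftarrow (a \oslash Kv)^{\rho/(\rho+\varepsilon)}\) and \(v \leftarrow (b \oslash K^\top u)^{\rho/(\rho+\varepsilon)}\) with \(K_{ij}=\exp(-|x_i-y_j|^2/\varepsilon)\). The penalty weight \(\rho\), the squared-distance cost, the data, and all function names are illustrative assumptions and may differ from the exact formulation used in this paper. Each iteration accesses the kernel only through the products \(Kv\) and \(K^\top u\), which is where a fast summation would replace the dense products below.

```python
import math


def matvec(M, v):
    """Dense matrix-vector product; the step an NFFT-based fast summation would replace."""
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in M]


def uot_sinkhorn(a, b, K, eps, rho, iters=500):
    """Scaling iterations for entropic UOT with KL-penalized marginals (weight rho)."""
    Kt = [list(col) for col in zip(*K)]  # transpose, used for K^T u
    f = rho / (rho + eps)  # exponent; f -> 1 (rho -> infinity) gives balanced Sinkhorn
    u = [1.0] * len(a)
    v = [1.0] * len(b)
    for _ in range(iters):
        u = [(ai / kv) ** f for ai, kv in zip(a, matvec(K, v))]
        v = [(bj / ku) ** f for bj, ku in zip(b, matvec(Kt, u))]
    return u, v


x = [0.0, 0.1, 0.2]
y = [0.05, 0.15, 0.25, 0.3]
eps, rho = 0.1, 1.0
K = [[math.exp(-((xi - yj) ** 2) / eps) for yj in y] for xi in x]
a = [0.5, 0.3, 0.2]        # total mass 1.0
b = [0.4, 0.4, 0.3, 0.4]   # total mass 1.5, so the problem is unbalanced
u, v = uot_sinkhorn(a, b, K, eps, rho)
plan = [[ui * kij * vj for kij, vj in zip(row, v)] for ui, row in zip(u, K)]
print("transported mass:", sum(map(sum, plan)))
```

Because the marginal constraints are only penalized, the total mass of the resulting plan need not match either input mass.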

We again emphasize that these inequalities, together with fast computation, significantly enhance both the theoretical and computational frameworks, enabling effective quantification of discrepancies between probability measures and nonnegative (unbalanced) measures.
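For the MMD, the reduction to kernel sums also holds for measures of different total mass: for \(\mu=\sum_i\alpha_i\delta_{x_i}\) and \(\nu=\sum_j\beta_j\delta_{y_j}\), \(\mathrm{MMD}_K^2(\mu,\nu)=\alpha^\top K_{xx}\alpha-2\alpha^\top K_{xy}\beta+\beta^\top K_{yy}\beta\), so three kernel matrix–vector products suffice. The sketch below assumes the inverse multiquadric kernel \(K(x,y)=(|x-y|^2+c^2)^{-1/2}\) on the real line with an arbitrarily chosen \(c=0.5\); the function names and data are hypothetical, and the direct sums stand in for the fast summation.

```python
import math


def imq_kernel(p, q, c=0.5):
    """Inverse multiquadric kernel (|p - q|^2 + c^2)^(-1/2), positive definite."""
    return 1.0 / math.sqrt((p - q) ** 2 + c * c)


def kernel_sums(targets, sources, weights, kernel):
    """g(t) = sum_j w_j K(t, s_j) for every target t (direct; fast summation replaces this)."""
    return [sum(w * kernel(t, s) for w, s in zip(weights, sources)) for t in targets]


def mmd_squared(x, alpha, y, beta, kernel=imq_kernel):
    """Squared MMD of sum_i alpha_i delta_{x_i} and sum_j beta_j delta_{y_j}.

    The weights are nonnegative but need not sum to one or to a common mass.
    """
    xx = sum(a * g for a, g in zip(alpha, kernel_sums(x, x, alpha, kernel)))
    xy = sum(a * g for a, g in zip(alpha, kernel_sums(x, y, beta, kernel)))
    yy = sum(b * g for b, g in zip(beta, kernel_sums(y, y, beta, kernel)))
    return xx - 2.0 * xy + yy


x, alpha = [0.0, 0.4, 1.0], [0.2, 0.5, 0.3]  # total mass 1.0
y, beta = [0.1, 0.7], [0.9, 0.6]             # total mass 1.5
print("MMD^2:", mmd_squared(x, alpha, y, beta))
```

Since the inverse multiquadric kernel is positive definite, the expression is the squared RKHS norm of the difference of the two kernel mean embeddings and is therefore nonnegative even when the masses differ.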

Finally, we present numerical illustrations of our method that validate its robustness and stability as well as the properties established in the theoretical results. These experiments provide empirical evidence that further substantiates the efficacy of our proposals.

The corresponding implementations are available in the following GitHub repository:

Data Availability

Enquiries about data availability should be directed to the authors.

Notes

The internal window cutoff parameter m plays a crucial role in the approximation accuracy, which does not depend on n (\(\tilde{n}\), resp.); the typical choice \(m=8\) yields machine precision.

References

Ahuja, R., Magnanti, T., Orlin, J.: Network Flows: Theory, Algorithms, and Applications. Prentice Hall, Essex (1993)

Altschuler, J., Bach, F., Rudi, A., Niles-Weed, J.: Massively scalable Sinkhorn distances via the Nyström method. Adv. Neural Inf. Process. Syst. 32 (2019)

Aronszajn, N.: Theory of reproducing kernels. Trans. Am. Math. Soc. 68(3), 337–404 (1950). https://doi.org/10.1090/s0002-9947-1950-0051437-7

Ba, F.A., Quellmalz, M.: Accelerating the Sinkhorn algorithm for sparse multi-marginal optimal transport via fast Fourier transforms. Algorithms 15(9), 311 (2022). https://doi.org/10.3390/a15090311

Balaji, Y., Chellappa, R., Feizi, S.: Robust optimal transport with applications in generative modeling and domain adaptation. Adv. Neural Inf. Process. Syst. 33, 12934–12944 (2020)

Benamou, J.-D.: Numerical resolution of an “unbalanced’’ mass transport problem. ESAIM Math. Modell. Numer. Anal. 37(5), 851–868 (2003). https://doi.org/10.1051/m2an:2003058

Berlinet, A., Thomas-Agnan, C.: Reproducing Kernel Hilbert Spaces in Probability and Statistics. Springer, Heidelberg (2004)

Bregman, L.M.: The relaxation method of finding the common point of convex sets and its application to the solution of problems in convex programming. USSR Comput. Math. Math. Phys. 7(3), 200–217 (1967). https://doi.org/10.1016/0041-5553(67)90040-7

Carlier, G., Duval, V., Peyré, G., Schmitzer, B.: Convergence of entropic schemes for optimal transport and gradient flows. SIAM J. Math. Anal. 49(2), 1385–1418 (2017)

Cherfaoui, F., Kadri, H., Anthoine, S., Ralaivola, L.: A discrete RKHS standpoint for Nyström MMD. working paper or preprint, (2022). https://hal.science/hal-03651849/

Chizat, L., Peyré, G., Schmitzer, B., Vialard, F.-X.: Scaling algorithms for unbalanced optimal transport problems. Math. Comput. 87(314), 2563–2609 (2018). https://doi.org/10.1090/mcom/3303

Cuturi, M.: Sinkhorn distances: Lightspeed computation of optimal transport. Adv. Neural Inf. Process. Syst. 26, 2292–2300 (2013)

Fatras, K., Séjourné, T., Flamary, R., Courty, N.: Unbalanced minibatch optimal transport; applications to domain adaptation. In: Meila, M., Zhang, T. editors, Proceedings of the 38th International Conference on Machine Learning, volume 139 of Proceedings of Machine Learning Research, pp. 3186–3197. PMLR, (2021). https://proceedings.mlr.press/v139/fatras21a.html

Fukumizu, K., Song, L., Gretton, A.: Kernel Bayes’ rule: Bayesian inference with positive definite kernels. J. Mach. Learn. Res. 14(1), 3753–3783 (2013). https://doi.org/10.5555/2567709.2627677

Genevay, A., Peyré, G., Cuturi, M.: Learning generative models with Sinkhorn divergences. In: International Conference on Artificial Intelligence and Statistics, pp. 1608–1617. PMLR, (2018). https://proceedings.mlr.press/v84/genevay18a.html

Gottschlich, C., Schuhmacher, D.: The shortlist method for fast computation of the earth mover’s distance and finding optimal solutions to transportation problems. PLoS ONE 9(10), e110214 (2014). https://doi.org/10.1371/journal.pone.0110214

Gräf, D.-M. M.: Efficient algorithms for the computation of optimal quadrature points on Riemannian manifolds. PhD thesis, TU Chemnitz, (2013). https://core.ac.uk/reader/153229370

Gretton, A., Borgwardt, K.M., Rasch, M.J., Schölkopf, B., Smola, A.: A kernel two-sample test. J. Mach. Learn. Res. 13(1), 723–773 (2012). https://doi.org/10.5555/2188385.2188410

Hagemann, P., Hertrich, J., Altekrüger, F., Beinert, R., Chemseddine, J., Steidl, G.: Posterior sampling based on gradient flows of the MMD with negative distance kernel. arXiv preprint arXiv:2310.03054 (2023)

Ji, F., Zhang, X., Zhao, J.: \(\alpha \)-EGAN: \(\alpha \)-energy distance GAN with an early stopping rule. Comput. Vis. Image Understand. 234, 103748 (2023). https://doi.org/10.1016/j.cviu.2023.103748

Kantorovich, L.V.: On the translocation of masses. J. Math. Sci. 133(4), 1381–1382 (2006). https://doi.org/10.1007/s10958-006-0049-2

Keiner, J., Kunis, S., Potts, D.: Using NFFT 3–a software library for various nonequispaced fast Fourier transforms. ACM Trans. Math. Softw. 36(4), 1–30 (2009). https://doi.org/10.1145/1555386.1555388

Lakshmanan, R., Pichler, A., Potts, D.: Nonequispaced fast Fourier transform boost for the Sinkhorn algorithm. Electron. Trans. Numer. Anal. 58, 289–315 (2023). https://doi.org/10.1553/etna_vol58s289

Le, Q., Sarlós, T., Smola, A. et al.: Fastfood-approximating kernel expansions in loglinear time. In: Proceedings of the International Conference on Machine Learning, vol. 85, p. 8, (2013). http://proceedings.mlr.press/v28/le13-supp.pdf

Li, Y., Song, Y., Jia, L., Gao, S., Li, Q., Qiu, M.: Intelligent fault diagnosis by fusing domain adversarial training and maximum mean discrepancy via ensemble learning. IEEE Trans. Ind. Inf. 17(4), 2833–2841 (2021). https://doi.org/10.1109/TII.2020.3008010

Liero, M., Mielke, A., Savaré, G.: Optimal entropy-transport problems and a new Hellinger-Kantorovich distance between positive measures. Invent. Math. 211(3), 969–1117 (2018). https://doi.org/10.1007/s00222-017-0759-8

Lu, F., Raff, E., Ferraro, F.: Neural Bregman divergences for distance learning, (2022)

Luenberger, D.G., Ye, Y.: Linear and Nonlinear Programming. Springer, Cham (1984)

Ma, Z., Wei, X., Hong, X., Lin, H., Qiu, Y., Gong, Y.: Learning to count via unbalanced optimal transport. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol. 35, pp. 2319–2327. (2021). https://ojs.aaai.org/index.php/AAAI/article/view/16332

Muandet, K., Fukumizu, K., Sriperumbudur, B., Schölkopf, B., et al.: Kernel mean embedding of distributions: a review and beyond. Found. Trends® Mach. Learn. 10(1–2), 1–141 (2017). https://doi.org/10.1561/2200000060

Nestler, F.: Efficient Computation of Electrostatic Interactions in Particle Systems Based on Nonequispaced Fast Fourier Transforms. Universitätsverlag Chemnitz, Chemnitz (2018)

Nestler, F., Stoll, M., Wagner, T.: Learning in high-dimensional feature spaces using ANOVA-based fast matrix-vector multiplication. arXiv preprint arXiv:2111.10140 (2021). https://doi.org/10.3934/fods.2022012

Neumayer, S., Steidl, G.: From optimal transport to discrepancy. In: Handbook of Mathematical Models and Algorithms in Computer Vision and Imaging: Mathematical Imaging and Vision, pp. 1–36, (2021). https://doi.org/10.1007/978-3-030-03009-4_95-1

Nielsen, F.: Statistical divergences between densities of truncated exponential families with nested supports: Duo Bregman and duo Jensen divergences. Entropy 24(3), 421 (2022). https://doi.org/10.3390/e24030421

Pham, K., Le, K., Ho, N., Pham, T., Bui, H.: On unbalanced optimal transport: an analysis of Sinkhorn algorithm. In: International Conference on Machine Learning, pp. 7673–7682. PMLR, (2020). https://proceedings.mlr.press/v119/pham20a.html

Platte, R.B., Trefethen, L.N., Kuijlaars, A.B.: Impossibility of fast stable approximation of analytic functions from equispaced samples. SIAM Rev. 53(2), 308–318 (2011). https://doi.org/10.1137/090774707

Plonka, G., Potts, D., Steidl, G., Tasche, M.: Numerical Fourier Analysis. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-04306-3

Potts, D., Schmischke, M.: Approximation of high-dimensional periodic functions with Fourier-based methods. SIAM J. Numer. Anal. 59(5), 2393–2429 (2021). https://doi.org/10.1137/20M1354921

Potts, D., Steidl, G., Tasche, M.: Fast Fourier transforms for nonequispaced data: a tutorial. In: Modern Sampling Theory, pp. 247–270 (2001). https://doi.org/10.1007/978-1-4612-0143-4_12

Rachev, S.T., Rüschendorf, L.: Mass Transportation Problems Volume I: Theory, Volume II: Applications. Probability and Its Applications. Springer, New York (1998)

Ramdas, A., García Trillos, N., Cuturi, M.: On Wasserstein two-sample testing and related families of nonparametric tests. Entropy 19(2), 47 (2017)

Schiebinger, G., Shu, J., Tabaka, M., Cleary, B., Subramanian, V., Solomon, A., Gould, J., Liu, S., Lin, S., Berube, P., et al.: Optimal-transport analysis of single-cell gene expression identifies developmental trajectories in reprogramming. Cell 176(4), 928–943 (2019). https://doi.org/10.1016/j.cell.2019.01.006

Schmitzer, B.: A sparse multiscale algorithm for dense optimal transport. J. Math. Imag. Vis. 56, 238–259 (2016). https://doi.org/10.1007/s10851-016-0653-9

Schmitzer, B.: Stabilized sparse scaling algorithms for entropy regularized transport problems. SIAM J. Sci. Comput. 41(3), A1443–A1481 (2019). https://doi.org/10.1137/16M1106018

Schrieber, J., Schuhmacher, D., Gottschlich, C.: DOTmark – a benchmark for discrete optimal transport. IEEE Access 5, 271–282 (2017)

Schuhmacher, D., Bähre, B., Gottschlich, C., Hartmann, V., Heinemann, F., Schmitzer, B.: transport: computation of Optimal Transport Plans and Wasserstein Distances, (2023). R package version 0.14-6

Sharify, M., Gaubert, S., Grigori, L.: Solution of the optimal assignment problem by diagonal scaling algorithms. arXiv preprint (2011). arXiv:1104.3830

Sinkhorn, R.: Diagonal equivalence to matrices with prescribed row and column sums. Am. Math. Mon. 74(4), 402 (1967). https://doi.org/10.2307/2314570

Song, L., Zhang, X., Smola, A., Gretton, A., Schölkopf, B.: Tailoring density estimation via reproducing kernel moment matching. In: Proceedings of the 25th International Conference on Machine Learning, pp. 992–999 (2008). https://doi.org/10.1145/1390156.1390281

Song, L., Smola, A., Gretton, A., Bedo, J., Borgwardt, K.: Feature selection via dependence maximization. J. Mach. Learn. Res. 13(5), (2012)

Székely, G.J., Rizzo, M.L., et al.: Testing for equal distributions in high dimension. InterStat 5, 1249–1272 (2004)

Thornton, J., Cuturi, M.: Rethinking initialization of the Sinkhorn algorithm. In: Ruiz, F., Dy, J., van de Meent, J.-W. editors, Proceedings of The 26th International Conference on Artificial Intelligence and Statistics, volume 206 of Proceedings of Machine Learning Research, pp. 8682–8698. PMLR, (2023). https://proceedings.mlr.press/v206/thornton23a.html

Vayer, T., Gribonval, R.: Controlling Wasserstein distances by kernel norms with application to compressive statistical learning (2023). arXiv:2112.00423

Villani, C.: Topics in Optimal Transportation. Graduate Studies in Mathematics, vol. 58. American Mathematical Society, Providence, RI (2003)

von Lindheim, J., Steidl, G.: Generalized iterative scaling for regularized optimal transport with affine constraints: application examples (2023). arXiv:2305.07071

Wang, Z., Zhou, D., Yang, M., Zhang, Y., Rao, C., Wu, H.: Robust document distance with Wasserstein-Fisher-Rao metric. In: Pan, S.J., Sugiyama, M. editors, Proceedings of The 12th Asian Conference on Machine Learning, volume 129 of Proceedings of Machine Learning Research, pp. 721–736. PMLR, (2020). https://proceedings.mlr.press/v129/wang20c.html

Yang, T., Li, Y.-f., Mahdavi, M., Jin, R., Zhou, Z.-H.: Nyström method vs random Fourier features: a theoretical and empirical comparison. Adv. Neural Inf. Process. Syst. 25 (2012). https://proceedings.neurips.cc/paper_files/paper/2012/file/621bf66ddb7c962aa0d22ac97d69b793-Paper.pdf

Zhao, J., Meng, D.: FastMMD: ensemble of circular discrepancy for efficient two-sample test. Neural Comput. 27(6), 1345–1372 (2015)

Zhao, J., Xiao, W., Chen, J., Shen, Y., Lv, L.: Validation metric of multi-output model based on energy distance. J. Phys. Conf. Ser. 2599(1), 012043 (2023). https://doi.org/10.1088/1742-6596/2599/1/012043

Acknowledgements

We would like to thank Franziska Nestler for helping with the implementation of fast energy kernel.

Funding

Open Access funding enabled and organized by Projekt DEAL. This work was funded by the DFG (German Research Foundation), Project-ID 416228727, SFB 1410.


Ethics declarations

Conflict of interest