Abstract

This paper studies a statistical learning model where the model coefficients have a pre-determined non-overlapping group sparsity structure. We consider a combination of a loss function and a regularizer to recover the desired group sparsity patterns, which can embrace many existing works. We analyze directional stationary solutions of the proposed formulation, obtaining a sufficient condition for a directional stationary solution to achieve optimality and establishing a bound of the distance from the solution to a reference point. We develop an efficient algorithm that adopts an alternating direction method of multiplier (ADMM), showing that the iterates converge to a directional stationary solution under certain conditions. In the numerical experiment, we implement the algorithm for generalized linear models with convex and nonconvex group regularizers to evaluate the model performance on various data types, noise levels, and sparsity settings.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Statistical learning models have been extensively used for data interpretations and predictions in many fields collecting a large amount of data, such as computer vision and computational biology. Given a dataset, a statistical learning model is utilized to estimate unknown model coefficients by minimizing a certain loss function such as mean squared error and cross-entropy [17]. With a wide range of features, only a small subset of them actually corresponds to nonzero model coefficients; as a result, fitting a model by blindly incorporating all the available features may cause a misleading interpretation of contributing features and an inaccurate prediction. In an attempt to select meaningful features automatically, feature selection techniques [4, 16, 21] have been developed to produce a sparsely fitted model with many zero coefficients. For example, regularization methods, appending a penalty function to the loss function, discard insignificant features by setting small model coefficients to zero. A well-known regularization term is the \(\ell _1\)-norm of the model coefficients, known as least absolute shrinkage and selection operator (LASSO) [53]. LASSO has been widely used in diverse settings to tackle practical problems such as forecasting corporate bankruptcy and assessing drug efficacy [52, 54]. However, the convex \(\ell _1\) penalty may mistakenly suppress large coefficients while shrinking small coefficients to zero, which is referred to as biasedness [14]. To alleviate this issue, nonconvex regularization functions have been proposed, including smoothly clipped absolute deviation (SCAD) [14], minimax concave penalty (MCP) [68], transformed \(\ell _1\) (TL1) [37, 70], \(\ell _{1/2}\) penalty [26, 65], scale-invariant \(\ell _1\) [45, 56], and logarithm penalty [9]. These nonconvex regularizers try to retain the large model coefficients while applying a similar shrinkage of smaller coefficients as the convex \(\ell _1\)-norm.

Group selection is a variant of feature selection when features have a group structure; specifically, each feature belongs to one or more groups. A group structure is non-overlapping if each feature is assigned to exactly one group. In the non-overlapping group selection, the features in the same group are required to have the corresponding coefficients altogether nonzero or zero, which is called group sparsity. The group structure of the features is reasonable in many applications. For example, a categorical variable should be converted to a group of several binary dummy variables prior to model fitting. Group sparsity can be enforced into a model by extending regularization techniques to a group setting. Group LASSO [35, 67] achieves group sparsity with the convex \(\ell _{2,1}\) norm, i.e., the sum of the \(\ell _2\) norms of the coefficients from the same group. Exploiting the convexity, many algorithms were applied and developed to solve for the group LASSO, including the gradient descent [72], the coordinate descent [25, 46], the second-order cone program [28], the semismooth Newton’s method [71], the subspace acceleration method [11], and the alternating direction method of multipliers (ADMM) [6, 12]. However, group LASSO may inherently have bias in the same way as LASSO [21]. Consequently, nonconvex regularizers such as \(\ell _{0,2}\) penalty [22], group capped-\(\ell _1\) [38, 44], group MCP [21], group SCAD [57], and group LOG [23], were introduced. A variety of numerical algorithms are considered to solve for the nonconvex optimization, including primal dual active set algorithm [22], smoothing penalty algorithm [38], difference-of-convex algorithm [44], coordinate descent algorithms [42, 60], group descent algorithm [8], coordinate majorization descent algorithm [59], and iterative reweighted algorithm [23].

We propose a generalized formulation for fitting a statistical learning model with a non-overlapping group structure. For example, we establish the connection of loss functions under our setting to generalized linear models (GLMs) [34]. Many existing regularizations can be regarded as special cases of our generalized framework; we consider the group variants of \(\ell _1\) penalty, SCAD, MCP, and TL1 as case studies. Our optimization problem is nonconvex if we use nonconvex regularization terms. Instead of a global optimum that might be hard to obtain, our analysis is based on the directional stationary solution, which can be provably achieved by using our algorithm. Directional stationary solutions were studied for nonconvex programming analyses, especially for the difference of convex (DC) problems [33, 39, 55]. In the DC literature, it has been shown that such stationarity is a necessary condition for local optimality [39]. Furthermore, the directional stationary solution can achieve local and even global optimality [3] under suitable conditions. In this paper, we identify such conditions based on a restricted strong convexity (RSC) assumption [18]. RSC was originally analyzed for the convex LASSO problem where the global minimizer is compared to the ground-truth. We relax the requirement of having the ground-truth vector by a reference point that can be tied to the ground-truth in a probabilistic interpretation. We further provide a bound for the distance from the stationary solution obtained by our approach to this reference point.

To solve our proposed model, we design an iterative scheme based on the ADMM framework, which is an efficient method for solving large-scale optimization problems in statistics, machine learning, and related fields [10, 15, 49, 63, 73]. Although ADMM is originally designed for convex optimization, it can be extended to nonconvex problems, and its global convergence can be proved under certain conditions [19, 40, 58]. In our problem formulation, ADMM provides an iterative scheme that involves two subproblems that can be minimized sequentially. One subproblem involving the loss function is assumed to be convex, and hence it can be solved efficiently by closed-form solution or iterative methods. The other subproblem is related to the nonconvex regularization terms. We rely on proximal operators [41] to produce a stationary solution and characterize conditions for the global optimality of the directional stationary solutions. Theoretically, we prove the subsequence convergence of the ADMM framework. In other words, the sequence generated by the ADMM has a subsequence convergence to a directional stationary point of our proposed model.

We conduct in-depth experiments on various datasets under different learning settings. We use synthetic datasets for linear regression and Poisson regression, and one real dataset for logistic regression. With hyperparameters tuned by cross-validation, our framework with various group regularization terms is applied to those datasets. An overall evaluation indicates that nonconvex penalty functions consistently outperform convex penalty functions.

We summarize our major contributions as follows,

-

(1)

We introduce a generalized formulation for a non-overlapping group sparsity problem that can be reduced to many existing works;

-

(2)

We investigate properties of the directional stationary solutions of the problem to achieve optimality as well as the bound on the distance from the solution to a reference point which is closely related to the optima of the loss function;

-

(3)

We prove the subsequence convergence of ADMM iterates to a directional stationary point of our proposed model;

-

(4)

Our numerical experiments on various models under different group structure settings evaluate the performance of existing group sparsity functions under the proposed formulation, showing the advantages of nonconvex penalties over convex ones.

The rest of the paper is organized as follows. In Sect. 2, we introduce a generalized formulation for non-overlapping group selection. Section 3 analyzes the conditions for global optimality of directional stationary solutions to our optimization problem and their properties under the RSC assumption on the loss function. In Sect. 4, we present the ADMM framework for minimizing the proposed model with convergence analysis. In Sect. 5, we present in-depth numerical experiments on both synthetic and real datasets for linear, Poisson, and logistic regressions. Section 6 concludes the paper.

2 The Proposed Framework

We consider a statistical learning problem defined by a coefficient vector \(\textbf{x} \in \mathbb {R}^d\) with a pre-determined group structure. We restrict our attention to a non-overlapping group selection by assuming that components of the variable \(\textbf{x}\) are from m non-overlapping groups, denoted by \(\mathcal {G}_k\) for \(k \in \{1, \dots , m\}.\) Specifically \(\mathcal {G}_k\) is a set of indices of \(\textbf{x}\) that belong to the kth group. The setting of non-overlapping groups implies that all \(\mathcal {G}_k\)’s are mutually exclusive. The notation \(| \mathcal {G}_k |\) represents the cardinality of the set \(\mathcal {G}_k\), and \(\textbf{x}_{\mathcal {G}_k}\in \mathbb {R}^{|\mathcal {G}_k|}\) is defined as a subvector of \(\textbf{x}\) that only consists of the coefficients in the group \(\mathcal {G}_k\). For the rest of the paper, we denote \(\mathcal {G}^{\max }\triangleq \max \limits _{1 \le k \le m} \sqrt{| \mathcal {G}_k|}\).

We aim to penalize the complexity of the group structure while minimizing the loss of the model simultaneously. To this end, we propose a general framework

where the loss function \(L(\cdot )\) measures the fit of the model to the observed data, the regularizer \(P_k(\cdot )\) determines the group complexity for the kth group of the model coefficients, and a hyperparameter \(\lambda > 0\) balances between the model fitting and the group complexity. Note that \(P_k:\mathbb {R}^{|\mathcal {G}_k|} \rightarrow \mathbb {R}\) is a composite function consisting of a univariate sparsity function \(p: \mathbb {R} \rightarrow \mathbb {R}\) and the norm of the coefficients corresponding to \(\mathcal {G}_k\). The following set of assumptions is considered on \(p(\cdot )\):

-

(P1)

. p is symmetric about zero on \(\mathbb {R}\), i.e., \(p(t) = p(-t),\) concave and non-decreasing on \([0,\infty )\) with \(p(0) = 0.\)

-

(P2)

. The derivative of p is well-defined except at 0, finite with \(p'(t) \ge 0\), and \(u \triangleq \sup \limits _{t} p'(t)\) for any \(t > 0\).

-

(P3)

. There exists a constant \(\textrm{Lip}_p > 0 \) such that

$$\begin{aligned} |p'(t_1) - p'(t_2) | \le \mathrm{Lip_p} | t_1-t_2 | \quad \forall \ t_1, t_2 > 0. \end{aligned}$$

It is straightforward to verify that many popular sparsity-promoting functions, including SCAD [14], MCP [68], transformed \(\ell _1\) [37, 70], and logarithm penalty [9], satisfy all the assumptions. We assume (P1) throughout this paper and impose (P2) or (P3) wherever needed.

The assumptions on the loss function are summarized as follows.

-

(A1)

. \(L(\cdot )\) is lower-bounded.

-

(A2)

. There exists a constant \(\textrm{Lip}_L > 0\) such that

$$\begin{aligned} \Vert \nabla L(\textbf{x}_1) - \nabla L(\textbf{x}_2) \Vert _2 \le \mathrm{Lip_L} \Vert \textbf{x}_1- \textbf{x}_2 \Vert _2 \quad \forall \textbf{x}_1, \, \textbf{x}_2. \end{aligned}$$ -

(A3)

. \(L(\cdot )\) is convex with modulus \(\sigma ,\) i.e., there exists a constant \(\sigma \ge 0\) such that

$$\begin{aligned} L(\textbf{x}_1) - L(\textbf{x}_2) - \langle \nabla L(\textbf{x}_2), \textbf{x}_1- \textbf{x}_2\rangle \ge \displaystyle {\frac{\sigma }{2}} \Vert \textbf{x}_1- \textbf{x}_2 \Vert _2^2 \quad \forall \textbf{x}_1, \, \textbf{x}_2. \end{aligned}$$If \(\sigma \) is strictly positive, \(L(\cdot )\) is strongly convex.

Some sparsity functions in the literature have been written as DC functions [3, 32, 66, 70]. Using a unified DC representation [3] of p with a convex loss, the problem (1) can be written as a DC formulation, e.g., \(F_\lambda (\textbf{x}) = g(\textbf{x}) - h(\textbf{x})\) where both g and h are convex functions. Subsequently, existing algorithms [24, 27, 29, 43] can be applied to compute a critical point, e.g., \(\bar{\textbf{x}}\) with \(\textbf{0} \in \partial F_\lambda (\bar{\textbf{x}}\)). Our approach bypasses the use of a DC representation of (1) and introduces a numerical method that computes a directional stationary point.

The loss function used in our optimization problem (1) can be specified by generalized linear models (GLMs) that are widely used for supervised learning, where input features explain a response [34]. GLMs are an extension of ordinary linear regression beyond Gaussian response variables, where the responses \(b_i\), \(i=1,\ldots ,n\), follow any exponential family distribution with a parameter \(\theta _i\). Specifically, the probability density function of \(b_i\) takes the following canonical form:

where \(\psi \) is a cumulant function and \(\phi \) is a function that is independent of \(\theta _i\) [34, 64]. Both \(\psi \) and \(\phi \) are given functions by the exponential family distribution in consideration, and the first-order derivative of the cumulant function \(\psi '(\theta _i)\) is the expected value of \(b_i\) [30, 34]. Taking a Gaussian distribution with mean \(\theta _i\) and variance 1, for example, one sets \( \psi (\theta _i)=\frac{\theta _i^2}{2}\) and \(\phi (b_i)= \frac{\exp (-{b_i^2}/{2})}{\sqrt{2\pi }}\).

Denote the ith observation of the input features by a row vector \(A_i \in \mathbb {R}^d\) of the matrix \(A \in \mathbb {R}^{n \times d}\). A GLM associates \(\psi '(\theta _i)\) with a linear function of the features \(A_i \textbf{x}\) such that \(\theta _i=A_i \textbf{x}\). Omitting \(\phi (b_i)\) that is free of \(\theta _i\), the loss function L for the GLM is written by summing the negative exponent in (2) for all the observations \((A_i, b_i)\), \(i=1,\ldots ,n\), i.e.,

For example, the logistic regression models binary responses \(b_i \in \{0, 1\}\) by

The Poisson regression for count data \(b_i \in \{0, 1, 2, \ldots \}\) has \(\psi (\theta _i)=\exp (\theta _i)=\exp (A_i\textbf{x})\). Under the assumptions that the second-order derivative \(\psi ''\) is continuous, positive, and bounded above by a constant, we establish the Lipschitz continuity of the gradient of the loss function in (A2) and the convexity in (A3). The boundedness assumption on \(\psi ''\) holds for loss functions of most GLMs such as ordinary linear regression, logistic regression, and multinomial regression [30], but not Poisson regression.

3 Theoretical Analysis

We present two theoretical results of the proposed model (1). Specifically, Sect. 3.1 establishes the global optimality of a directional stationary solution of (1) when the loss function is strongly convex. In Sect. 3.2, we define restricted strong convexity (RSC), under which the loss function is strongly convex over a subset of the feasible space, followed by providing an upper bound of the distance from the directional stationary solution to a reference point which we define in Sect. 3.2.

3.1 Optimality of Directional Stationary Solutions

Notations: For a function \(f: \mathbb {R} \rightarrow \mathbb {R}\), its derivative at a point t is denoted as \(f'(t)\). The notation \(f'(t^+)\) represents the right-side derivative at t, i.e.,

The notation \(F'(\textbf{x}; \textbf{d})\), for \(F: \mathbb {R}^d \rightarrow \mathbb {R}\), is the directional derivative of F at a point \(\textbf{x}\) along the direction \(\textbf{d},\) which is formally defined in Definition 1.

Definition 1

Given a function \(F: \mathbb {R}^d \rightarrow \mathbb {R}\), the directional derivative of F at point \(\textbf{x} \in \mathbb {R}^d\) along direction \(\textbf{d} \in \mathbb {R}^d\) is denoted as \(F'(\textbf{x}; \textbf{d})\) and defined by

Next, we provide the definition of a directional stationary point used in this paper.

Definition 2

The point \(\textbf{x}^*\) be a directional stationary point of an unconstrained optimization problem with an objective function \(F:\mathbb {R}^d \rightarrow \mathbb {R}\) if \(F'(\textbf{x}^*;\textbf{x} - \textbf{x}^*) \ge 0, \ \forall \textbf{x} \in \mathbb {R}^d\).

Our analysis is built on the directional stationary solutions. Hereafter, we simply denote a directional stationary solution as a stationary solution. It has been shown that directional stationarity is a necessary condition for a point to be a local minimum for certain DC programs [39].

We establish in Theorem 2 that a stationary solution of (1) is a global minimizer under certain conditions. The proof of Theorem 2 requires Lemma 1.

Lemma 1

If p satisfies Assumption (P3), we have

where \(P(\textbf{x}) \triangleq p(\Vert \textbf{x}\Vert _2)\) and n is an ambient dimension of \(\textbf{x}\) and \(\textbf{y}\).

Proof

Let \(G(\textbf{x}) \triangleq p(\Vert \textbf{x} \Vert _2) + \displaystyle \frac{\mathrm{Lip_p}}{2}\Vert \textbf{x}\Vert _2^2\). Observe that \(G(\textbf{x}) = G_1 \circ G_2 (\textbf{x})\) where \(G_1(t) \triangleq p (t) + \displaystyle \frac{\mathrm{Lip_p}}{2} t^2\) and \(G_2(\textbf{x}) \triangleq \Vert \textbf{x}\Vert _2\). Then for any \(0 < t_1 \le t_2\), we have

which implies that \(G'_1(t)\) is monotonically non-decreasing on \((0,\infty ).\) By choosing sufficiently small \(0 < t_1 \le t_2\) and taking limit on \(t_1\) to 0, we obtain \(|p'(0^+) - p'(t_2)| \le \mathrm{Lip_p} \, t_2\) from (P3). This implies \(G_1'(0^+) = p'(0^+) \le p'(t_2) + {\mathrm{Lip_{p}}} \, t_2 = G_2'(t_2)\). Therefore, \(G_1(t)\) is a convex function on the interval \([0,\infty )\). Since \(G_1, G_2\) are both convex and \(G_1\) is non-decreasing on \([0,\infty )\) by (P1), we conclude that their composite function \(G(\textbf{x})\) is also a convex function. Using the first order condition, i.e., \(G(\textbf{y}) \ge G(\textbf{x}) + G'(\textbf{x}; \textbf{y} - \textbf{x})\), we have

After simple manipulations, we deduce the desired inequality (3). \(\square \)

Theorem 2

Let Assumptions (P3) and (A3) hold with \(\sigma > 0\). If \(\sigma \ge \lambda \textrm{Lip}_p \mathcal {G}^{\max }\), then any stationary solution of (1) is a global minimizer.

Proof

Denote \(\textbf{x}^*\) as a stationary solution of (1). By Assumption (A3) and applying Lemma 1, we have

Due to the stationarity (Definition 2), \(\textbf{x}^*\) satisfies

Consequently, the inequality (4) can be simplified as

where we define \(\zeta \triangleq \sigma - \lambda \textrm{Lip}_p \mathcal {G}^{\max }.\) If \(\sigma -\lambda \textrm{Lip}_p \mathcal {G}^{\max }\ge 0,\) then \(\zeta \ge 0\) and hence \(F_\lambda (\textbf{x})\ge F_\lambda (\textbf{x}^*)\ \forall \textbf{x},\) which implies that any stationary solution \(\textbf{x}^*\) is a global minimizer. \(\square \)

Our result identifies the condition on the hyperparamter \(\lambda \) which guarantees the global optimality of any stationary solutions. This is a generalization of [23, Theorem 2.2] which is for a special case of the group-LOG regularizer.

3.2 Stationarity Under Restricted Strong Convexity

Before discussing the concept of restricted strong convexity, we first consider two related problems of (1). In the first problem, we assume there exist data points \(\tilde{\varvec{\xi }}\) that are generated by an unknown distribution \(\mathcal {D}\). The following problem minimizes the loss of the model associated with the model coefficient \(\textbf{x}\) with respect to the data distribution:

With a set of observed data points \(\{ \varvec{\xi }_i \}_{i=1}^{n}\), we can also minimize the loss over the observed data points by solving the following sample average approximation problem:

Some works investigate the relationships between (5) and (6). For example, [48, Theorem 7.77] states that under suitable conditions, for any \(\epsilon ' > 0\), there exists positive constants \(\alpha \) and \(\beta \) independent of n such that

for any given vector \(\bar{\textbf{x}}\). The problem (1) is considered as sample average approximation which employs regularizers to help recover groupwise sparsity structure in the variables. The connection between the sample average approximation and the population risk motivates our analysis. If the ground-truth is the unique solution for (5), then the gradient of \(\tilde{L}\) at that point is zero, and the above result yields a probability involving the gradient of the sample average approximation function \(\widehat{L}_n\) at the ground-truth. However, since the ground-truth is unlikely to be attained in practice, we consider a reference point, denoted as \(\textbf{x}^\epsilon \), which satisfies

for a given \(\epsilon >0\). Our analysis is based on the reference point and we compare it to the stationary solutions of (1).

The assumption of strong convexity may not hold for some loss functions, e.g., ordinary linear regression with an under-determined system of linear equations. As a remedy, we consider a setting where strong convexity only holds over a smaller region rather than the entire domain of the loss function. Since the scope of the strong convexity is limited, such an assumption is referred to as the restricted strong convexity (RSC) [18]. In statistics, the RSC condition for a subset of possible vector differences between the ground-truth and an estimator is imposed [36].

We first derive a region for RSC to hold, then provide a bound of the distance from a stationary solution to a reference point. Let S be the group-wise support set of the reference point \(\textbf{x}^\epsilon \), i.e., \(S \triangleq \{\,k \in \{ 1,\dots , m \} \ | \ \Vert \textbf{x}_{\mathcal {G}_k}^\epsilon \Vert _2 \ne 0\}\). Given \(\delta >0\), define the set \(\mathcal {V}_\delta (S)\) as

It is not difficult to verify that \(\mathcal {V}_\delta (S)\) is a cone; i.e., if \(\textbf{x} \in \mathcal {V}_\delta (S)\) then \(\alpha \textbf{x} \in \mathcal {V}_\delta (S)\) for any \(\alpha \ge 0\). Furthermore, the set is a nonconvex cone. For example, let \(\delta = 0.5, \mathcal {G}_1 = \{1,2\},\mathcal {G}_2 = \{3,4\}\) and \(S=\{2\}\). For two points \(\nu _1 = (1,1,1,3)^T\) and \(\nu _2 =(1,2,4,2)^T\), we have \(\nu _1, \nu _2 \in \mathcal {V}_\delta (S)\) but \(0.5\nu _1+0.5\nu _2 \notin \mathcal {V}_\delta (S)\).

Given a reference point \(\textbf{x}^\epsilon \), there exists a region that includes the vector differences between the stationary solutions of (1) and \(\textbf{x}^\epsilon \) under certain conditions; refer to the following lemma.

Lemma 3

Let Assumptions (A3) and (P2) hold. Let \(\textbf{x}^\epsilon \) be a vector such that, for a given \(\epsilon > 0\), \(\Vert \nabla L(\textbf{x}^\epsilon )\Vert _2 \le \epsilon \). If \(\textbf{x}^*\) is a stationary solution of (1) with

then there exists \(\delta ^* >0\) such that \((\textbf{x}^\epsilon - \textbf{x}^*) \in \mathcal {V}_{\delta ^*}(S)\).

Proof

For any stationary solution \(\textbf{x}^*\), one has

Since the support of \(\textbf{x}^\epsilon \) may not be the same as that of \(\textbf{x}^*\), the four possible cases for each summand of (9) are considered. If \(\textbf{x}_{\mathcal {G}_k}^* \ne \textbf{0}\), we use (P2) to obtain

If \(\textbf{x}_{\mathcal {G}_k}^* = \textbf{0}\), it follows from the definition of the directional derivative that

The above captures the cases \(k \in S\) and \(k \notin S\). By (7) and the convexity of L, we obtain

Substituting (10)-(12) into (9) yields

By the assumption (P2) where u is defined, we deduce

The condition (8) guarantees the nonnegativity of the left-hand side of the inequality, which validates the existence of \(\delta ^*\). \(\square \)

Lemma 3 can be interpreted as, if there is a stationary solution that is sufficiently close to \(\textbf{x}^\epsilon \), then there exists a nonconvex cone which includes the direction \(\textbf{x}^\epsilon - \textbf{x}^*\). This is because a stationary solution \(\textbf{x}_{\mathcal {G}_k}^*\) is more likely to meet the condition (8) if \(\Vert \textbf{x}_{\mathcal {G}_k}^* \Vert _2\) is near the origin whenever the corresponding subvector of \(\textbf{x}_{\mathcal {G}_k}^\epsilon \) is zero. We note that the scale of \(\delta ^*\) in the analysis is related to the model parameters and the stationary solution which may lead to a strong assumption on the RSC.

We define the RSC assumption of L over the set \(\mathcal {V}_{\delta ^*}(S)\):

-

(A4)

. There exists \(\sigma > 0\) such that \(L(\textbf{x}_1) - L(\textbf{x}_2) - \langle \nabla L(\textbf{x}_2), \textbf{x}_1- \textbf{x}_2\rangle \ge \displaystyle {\frac{\sigma }{2}} \Vert \textbf{x}_1- \textbf{x}_2 \Vert _2^2 \quad \forall (\textbf{x}_1 - \textbf{x}_2) \in \mathcal {V}_{\delta ^*}(S)\).

Under Assumption (A4), we provide a bound of the distance between a stationary solution of (1) and the reference point \(\textbf{x}^\epsilon \).

Theorem 4

Let Assumptions (A4) and (P2) hold. Let \(\textbf{x}^\epsilon \) be a vector such that \(\Vert \nabla L(\textbf{x}^\epsilon )\Vert _2 \le \epsilon \) for a given \(0< \epsilon < \lambda u \min \limits _{1\le k\le m} \sqrt{|\mathcal {G}_k|}\). Let \(\textbf{x}^*\) be a stationary solution of (1) with

Suppose (A4) holds over the set \(\mathcal {V}_{\delta ^*}(S) \ni (\textbf{x}^\epsilon - \textbf{x}^*)\). We have a bound

Proof

By the RSC assumption (A4), there exists \(\sigma >0\) such that

The last inequality is obtained by applying (10)-(12). Due to (13), the first term of the right-hand side of the inequality is negative and \(\epsilon < \lambda u\sqrt{|\mathcal {G}_k| }\), leading to

By dividing \(\Vert \textbf{x}^\epsilon -\textbf{x}^*\Vert _2\) on both sides and substituting \(| \mathcal {G}_S | = \sum \limits _{k \in S} | \mathcal {G}_k|\), we complete the proof. \(\square \)

Theorem 3 is a generalization of existing bounds shown for individual sparsity problems (without group structure) [2, 18], for which every group is a singleton such that \(\mathcal {G}_1 = \{ 1 \}, \dots , \mathcal {G}_d = \{ d \}\). For example, if we let \(p(t) = |t|\), the problem (1) becomes LASSO regularization, and Theorem 3 exactly recovers the bound on the distance between the optimal solution of LASSO and the ground-truth shown in [18, Theorem 11.1]. The result also extends the bound derived in [2, Theorem 1] for nonconvex sparsity functions such as SCAD, MCP, and transformed \(\ell _1\).

4 Our Algorithm

We adopt the alternating direction method of multipliers (ADMM) [6] to minimize the problem (1). Specifically, we introduce an auxiliary variable \(\textbf{z}\) and rewrite (1) equivalently as

The corresponding augmented Lagrangian function is

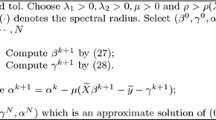

where \(\textbf{v}\) is a Lagrangian multiplier (or dual variable) and \(\rho \) is a positive parameter. We consider a scaled form [6] in (15) by multiplying \(\rho \) in front of \(\langle \textbf{v}, \textbf{x}-\textbf{z}\rangle .\) Consequently, ADMM iterations proceed as follows:

where \(\tau \) indexes the iteration number. The \(\textbf{z}\)-subproblem is written as

which is convex under Assumption (A3) and hence can be solved efficiently by existing convex programming algorithms. For example, a closed-form solution can be derived if the loss function is the least squares for linear regression. In Appendix A, we provide details on how the \(\textbf{z}\)-subproblem is solved for various GLM loss functions that are considered in the numerical study in Sect. 5. In Sect. 4.1, we elaborate on how to solve \(\textbf{x}\)-subproblem in (16), and the convergence analysis of the ADMM scheme (16) is conducted in Sect. 4.2.

4.1 \(\textbf{x}\)-subproblem

The \(\textbf{x}\)-subproblem can be decomposed into groups such that for each \(k\in \{1,\cdots , m\}\),

It is nonconvex due to Assumption (P1), by which a (global) optimal solution may be difficult to obtain. Corollary 5 characterizes conditions under which a stationary solution of (17) achieves the global optimality.

Corollary 5

Let Assumption (P3) hold. If \(\rho \ge \lambda \textrm{Lip}_p \mathcal {G}^{\max }\), then any stationary solution of (17) is a global minimizer.

Proof

Since \(\rho \) is the strong convexity modulus of the second term in (17), the statement follows from Theorem 2. \(\square \)

To solve (17), we introduce a general update scheme based on a proximal operator [5, Chapter 6], then discuss the stationarity of the obtained solution. The proximal operator of a function f is defined by

where f is a univariate function and \(\mu \) is a positive parameter. The notation argmin we use in (17) and (18) denotes a set of ordinary stationary solutions instead of the (global) optimal solutions. Here, we define \(\bar{x}\) as an ordinary stationary solution to (18) if there exists \(\bar{v} \in \partial f(\bar{x}) = \{ v \, | \, f(z) \ge f(\bar{x}) + v(z-\bar{x}), \ \forall z \ \}\) such that \(\mu \bar{v} + (\bar{x}-y) = 0.\) It has been shown that (18) yields a global solution for certain sparsity functions such as transformed \(\ell _1\) function [69, Theorem 3.1]. Multiple stationary solutions are possible for \(\ell _1\)-\(\ell _2\) function [31].

We aim to find a closed-form solution to (17). By applying the change of variable \(\textbf{x} = c(\textbf{z}_{\mathcal {G}_k}^\tau -\textbf{v}_{\mathcal {G}_k}^\tau )\), we rewrite the problem (17) to the following univariate problem:

The problem (19) can be solved by a proximal operator, i.e.,

where \(c^*\) is an ordinary stationary solution of (19). Applying the above to the \(\textbf{x}\)-subproblem yields our \(\textbf{x}\)-update:

In the proposition below, we show that the solution (21) is a stationary solution of (17).

Proposition 6

The solution \(\textbf{x}_{\mathcal {G}_k}^{\tau +1}\) in (21) is a stationary solution of the \(\textbf{x}\)-subproblem (17).

Proof

We discuss the cases that \(\textbf{x}_{\mathcal {G}_k}^{\tau +1}\) to be zero and nonzero separately. Let \(c^*\) be a solution given by (20). If \(c^*\ne 0,\) then it satisfies

which implies that \(0< c^* < 1\). The corresponding \(\textbf{x}^* = c^*(\textbf{z}_{\mathcal {G}_k}^\tau -\textbf{v}_{\mathcal {G}_k}^\tau )\) satisfies the stationary condition of (17):

In the case of \(c^* = 0\), there exists \(\bar{u} \in \partial p(0)\) such that \(\lambda \sqrt{|\mathcal {G}_k|} \bar{u} - \rho \Vert \textbf{z}_{\mathcal {G}_k}^\tau -\textbf{v}_{\mathcal {G}_k}^\tau \Vert _2 = 0\). Here, the set \(\partial p(0)\) is nonempty with 0 belonging to the set. To see this, recall the definition of the subgradient: \(v \in \partial p(0)\) if \(p(y) \ge p(0) + v(y-0)\) for all y, which is equivalent to \(p(y) \ge v \, y\). Since \(p(y) \ge 0\) for any y by (P1), we have \(0 \in \partial p(0)\). Next, we observe the directional derivative of \(H_{\lambda , \rho }\) in (17) at \(\textbf{x}^*\) in the direction \(\textbf{x} - \textbf{x}^*\) for any \(\textbf{x},\) i.e.,

Letting \(\textbf{x}^* = \textbf{0}\) with \(p(0)=0\), we have

Combining (22) with the stationary condition of \(c^*\) provides

It remains to show that \(p'(0^+) \ge u\) for all \(u \in \partial p(0)\). Suppose there exists \(\hat{u} \in \partial p(0)\) such that \(p'(0^+) < \hat{u}\). From the property of the subgradient, we have \(p(t) \ge p(0) + \hat{u} (t-0)\) for all \(t \in \mathbb {R}\). If we choose a strictly positive \(\hat{t}\), then we must have \(p(\hat{t}) \ge p(0) + \hat{u}(\hat{t}-0) > p(0) + p'(0^+)(\hat{t}-0)\). This contradicts the concavity of p on the domain \([0,\infty )\). Hence we conclude that if \(c^* = 0\) is a stationary solution to (19), then \(H_{\lambda , \rho }'(\textbf{0}; \textbf{x}) \ge 0\) for any \(\textbf{x}\). \(\square \)

Proposition 6 and Corollary 5 indicate that if Assumption (P3) holds with \(\rho \ge \lambda \textrm{Lip}_p \mathcal {G}^{\max },\) then \(\textbf{x}_{\mathcal {G}_k}^{\tau +1}\) is a global minimizer to the problem (17).

4.2 Convergence Analysis

The ADMM framework for minimizing (14) that involves both \(\textbf{x}\)- and \(\textbf{z}\)-subproblems is described in Algorithm 1, where \(\tau _{\max }\) is the maximal number of iterations. In this section, we present its convergence analysis. We first show that each \(\textbf{x}\)- and \(\textbf{z}\)-update decreases its objective value, followed by monotontic decreasing of \(\{\mathcal {L}(\textbf{x}^\tau ,\textbf{z}^\tau ;\textbf{v}^\tau )\}\); refer to Lemmas 7, 8, and 10, respectively.

Lemma 7

Let Assumption (P3) hold. If \(\rho >\lambda \mathrm{Lip_{p}} \mathcal {G}^{\max },\) then for any \(\textbf{x}^{\tau +1}\) given by (16), there exists \(\bar{c}_1 > 0\) independent of \(\tau \) such that

Proof

By Proposition 6, \(\textbf{x}^{\tau +1}\) is a stationary solution to (16) such that

It follows from Lemma 1 that

Simple calculations lead to

If \(\rho >\lambda \mathrm{Lip_{p}} \mathcal {G}^{\max },\) we choose \(\bar{c}_1 = \frac{1}{2} (\rho -\lambda \mathrm{Lip_{p}}\mathcal {G}^{\max })>0\) such that (23) holds. \(\square \)

Lemma 8

Let Assumption (A3) hold. There exists \(\bar{c}_2 > 0\) independent of \(\tau \) such that

Proof

The optimality condition of \(\textbf{z}^{\tau +1}\) is

It follows from Assumption (A3) that

As \(\rho >0\) and \(\sigma \ge 0,\) we choose \(\bar{c}_2=\rho +\sigma >0\) that completes the proof. \(\square \)

Lemma 9

Let Assumption (A2) hold. We have

Proof

Based on (26) and \(\textbf{v}\)-update formula \(\textbf{v}^{\tau +1} = \textbf{v}^\tau + \textbf{x}^{\tau +1}- \textbf{z}^{\tau +1}\), we obtain

or equivalently \( \textbf{v}^{\tau +1} = \frac{\nabla L(\textbf{z}^{\tau +1})}{\rho }.\) Similarly, we have \(\textbf{v}^\tau = \frac{\nabla L(\textbf{z}^\tau )}{\rho }\). It follows from Assumption (A2) that

\(\square \)

Combining Lemmas 7-9, we shown in Lemma 10 that every triplet \((\textbf{x}^{\tau +1},\textbf{z}^{\tau +1};\textbf{v}^{\tau +1})\) produced by (16) sufficiently decreases the objective value of (15).

Lemma 10

(sufficient descent) Let Assumptions (P3), (A2) and (A3) hold. If \(\rho \) in (15) satisfies \(\rho > \max \left\{ \lambda {\textrm{Lip}}_p {\mathcal {G}^{\max }}, \frac{\sqrt{\sigma ^2+8\mathrm{Lip_L}^2}-\sigma }{2} \right\} \), then there exist two constants \(c_1>0\) and \(c_2>0\) such that

Proof

It follows from \(\textbf{v}\)-update formula \(\textbf{v}^{\tau +1}-\textbf{v}^\tau = \textbf{x}^{\tau +1}- \textbf{z}^{\tau +1}\) that

where the last inequality holds by Lemma 9. By applying Lemmas 7-8, we achieve the desired inequality (29) with \(c_1 = \frac{\bar{c}_1}{2}\) and \(c_2 = \frac{\bar{c}_2}{2} - \frac{\mathrm{Lip_L}^2}{\rho }\), where \(\bar{c}_1\) and \(\bar{c}_2\) are defined in Lemma 7 and Lemma 8, respectively. The condition \(\rho > \lambda {\textrm{Lip}}_p {\mathcal {G}^{\max }}\) is required for Lemma 7. Additionally we require \(\rho > \frac{\sqrt{\sigma ^2+8\mathrm{Lip_L}^2}-\sigma }{2}\) such that \(c_2 >0\). \(\square \)

Theorem 11 establishes the subsequence convergence of the iterates

under an assumption that the objective function \(F_\lambda (\cdot )\) is coercive; refer to Definition 3. If either the regularization function P or the loss function L is coercive, then the objective function is coercive, which guarantees the boundedness of the minimizing sequence.

Definition 3

A function \(f(\cdot )\) is coercive if \(f(\textbf{x}) \rightarrow \infty \) as \(\Vert \textbf{x}\Vert _2 \rightarrow \infty \).

Theorem 11

(convergence) Let Assumptions (P3), (A1)-(A3) hold. If either P or L is coercive, and \(\rho \) in (15) satisfies \(\rho > \max \left\{ \lambda {\textrm{Lip}}_p {\mathcal {G}^{\max }}, \frac{\sqrt{\sigma ^2+8\mathrm{Lip_L}^2}-\sigma }{2} \right\} \), then the sequence \(\{(\textbf{x}^\tau , \textbf{z}^\tau ,\textbf{v}^\tau )\}_{\tau =1}^\infty \) generated by (16) has a convergent subsequence. Moreover, its limit point is a stationary solution of the problem (1).

Proof

We first show the convergence of the sequence \(\{(\textbf{x}^\tau , \textbf{z}^\tau ,\textbf{v}^\tau )\}_{\tau =1}^\infty \). By telescoping summation of (29) from \(\tau =0\) to T, we have

which implies that the sequence \(\{\mathcal {L}(\textbf{x}^\tau ,\textbf{z}^\tau ;\textbf{v}^\tau )\}_{\tau = 0}^\infty \) is upper-bounded. On the other hand, we can estimate a lower bound for \(\forall \, T = 0,1,\dots \)

where we use (27) and Assumption (A2). It follows from (31) that the sequence {\(F_\lambda (\textbf{x}^\tau )\}_{\tau = 0}^\infty \) is upper-bounded if \(\rho > \mathrm{Lip_L}\) and thus {\(F_\lambda (\textbf{x}^\tau )\}_{\tau = 0}^\infty \) is bounded by Assumption (A1). Note that \(\mathrm{Lip_L} \le \frac{\sqrt{\sigma ^2+8\mathrm{Lip_L}^2}-\sigma }{2}\), a constant stated in Lemma 10. Since \(F_\lambda (\cdot )\) is coercive by the coerciveness of P or L, \(\{\textbf{x}^\tau \}_{\tau = 1}^\infty \) is bounded, so is \(\{\textbf{z}^\tau \}_{\tau = 1}^\infty \) by (31). To show the boundedness of \(\{\textbf{v}^\tau \}_{\tau = 1}^\infty \), we consider

which can be obtained similarly to (28). This implies that

Together with the boundedness of \(\{\textbf{z}^\tau \}_{\tau = 1}^\infty ,\) we have \(\{\textbf{v}^\tau \}_{\tau = 1}^\infty \) bounded. By Bolzano-Weierstrass theorem, the bounded sequence \(\{(\textbf{x}^\tau , \textbf{z}^\tau , \textbf{v}^\tau )\}_{\tau =1}^\infty \) has a convergent subsequence, denoted by \((\textbf{x}^{\tau _j}, \textbf{z}^{\tau _j}, \textbf{v}^{\tau _j}) \rightarrow (\textbf{x}^*, \textbf{z}^*, \textbf{v}^*)\) as \(\tau _j \rightarrow \infty \).

With the boundedness, \(\mathcal {L}(\textbf{x}^\tau ,\textbf{z}^\tau ;\textbf{v}^\tau )\) converges due to the monotonic decreasing property shown in Lemma 10. By letting \(T\rightarrow \infty \) in (30), we have \(\sum _{\tau = 0}^\infty \Vert \textbf{x}^{\tau +1}-\textbf{x}^\tau \Vert _2^2\) and \(\sum _{\tau = 0}^\infty \Vert \textbf{z}^{\tau +1}-\textbf{z}^\tau \Vert _2^2\) are finite. Therefore, \(\textbf{x}^{\tau +1}-\textbf{x}^\tau \rightarrow 0\) and \(\textbf{z}^{\tau +1}-\textbf{z}^\tau \rightarrow 0\) as \(\tau \rightarrow \infty \). It further follows from Lemma 9 that \(\textbf{v}^{\tau +1}-\textbf{v}^\tau \rightarrow 0\) as well. As \((\textbf{x}^{\tau _j}, \textbf{z}^{\tau _j}, \textbf{v}^{\tau _j}) \rightarrow (\textbf{x}^*, \textbf{z}^*, \textbf{v}^*)\), we have \((\textbf{x}^{\tau _j+1}, \textbf{z}^{\tau _j+1}, \textbf{v}^{\tau _j+1}) \rightarrow (\textbf{x}^*, \textbf{z}^*, \textbf{v}^*)\) and \(\textbf{x}^*=\textbf{z}^*\) due to the \(\textbf{v}\)-update.

We next show that \((\textbf{x}^*, \textbf{z}^*, \textbf{v}^*)\) is a stationary solution of (1). By the iterative scheme (16), we have

Let \(\tau _j \rightarrow \infty \), we have \(\mathcal {L}(\textbf{x}^*,\textbf{z}^*,\textbf{v}^*) \le \mathcal {L}(\textbf{x},\textbf{z}^*,\textbf{v}^*),\, \forall \textbf{x}\) and \(\mathcal {L}(\textbf{x}^*,\textbf{z}^*,\textbf{v}^*) \le \mathcal {L}(\textbf{x}^*,\textbf{z},\textbf{v}^*),\, \forall \textbf{z}\), which implies that

and

Let us fix \(\textbf{x}\) in (33), and let \(\textbf{z} = \textbf{x}\) in (34). As \(\textbf{x}^*=\textbf{z}^*\), combining (33) and (34) yields

for any \(\textbf{x}\). Define \(\widehat{F}_{\lambda }(\textbf{x}) \triangleq F_\lambda (\textbf{x}) + \rho \Vert \textbf{x} - \textbf{x}^*\Vert _2^2\). It follows from (35) that

implying \(\textbf{x}^*\) is a global minimum for \(\widehat{F}_{\lambda }(\textbf{x})\) and hence a stationary point. We show \(F_\lambda \) and \(\widehat{F}_{\lambda }\) have the same directional derivative at the point \(\textbf{x} = \textbf{x}^*\) by the following calculations,

Since \(\textbf{x}^*\) is a stationary point of \(\widehat{F}_{\lambda }(\textbf{x}),\) it is also a stationary point of \(F_\lambda (\textbf{x})\). \(\square \)

If neither L nor P is coercive, then we need to assume the sequence generated by the ADMM framework is bounded. In other words, the sequence either diverges or has a subsequence convergent to a stationary point; see Theorem 12.

Theorem 12

(convergence without coerciveness) Let Assumptions (P3), (A1)-(A3) hold. Let \(\rho \) satisfy the condition given in Theorem 11. If the sequence \(\{(\textbf{x}^\tau , \textbf{z}^\tau , \textbf{v}^\tau )\}_{\tau =1}^\infty \) is bounded, then it has a subsequence convergent to a stationary point of problem (1).

Proof

If \(\{\textbf{x}^\tau \}_{\tau =1}^\infty \) is bounded, then \(\{\textbf{z}^\tau \}_{\tau =1}^\infty \) and \(\{\textbf{v}^\tau \}_{\tau =1}^\infty \) are bounded due to (31) and (32), respectively. The rest of the proof is along the same lines as Theorem 11, thus is omitted. \(\square \)

Note that the Poisson regression does not have a global Lipschitz constant owing to \(\psi ''(\theta _i)=\exp (\theta _i)\), and hence Assumption (A2) does not hold. As a result, Theorems 11-12 are not applicable to Poisson regression. Fortunately, we observe in the empirical studies of Sect. 5 that ADMM with the Poisson loss function generally converges.

5 Numerical Experiments

In the numerical experiments, we examine group regularization methods that are widely used, including group LOG, group MCP, group SCAD, group transformed \(\ell _1\), and group LASSO. The definitions of the corresponding univariate regularization functions and the references for their proximal operators are listed in Table 1.

A comparison to LASSO is added as a baseline method for feature selection without the group structure. We consider three loss functions for linear regression, Poisson regression, and logistic regression in Sects. 5.1-5.3, respectively. We generate synthetic data for linear regression and Poisson regression, while applying logistic regression on a real dataset that involves prostate cancer gene expression levels.

5.1 Synthetic Data for Linear Regression

We generate 50 triplets of a dataset that consists of 200 features and 200 observations \((A,\textbf{x}^*, \textbf{b})\) for linear regression, where \(A \in \mathbb {R}^{200 \times 200}\) is called the feature matrix, \(\textbf{x}^* \in \mathbb {R}^{200}\) is the ground-truth vector, and \(\textbf{b} \in \mathbb {R}^{200}\) is the response vector. Each row of the feature matrix A, denoted by \(A_{i } \in \mathbb {R}^{200}\), is randomly generated from multivariate Gaussian distribution with zero mean \(\textbf{0} \in \mathbb {R}^{200}\) and covariance matrix \(\varSigma \in \mathbb {R}^{200 \times 200}\) independently, i.e, \(A_{i} {\mathop {\sim }\limits ^{iid}} \mathcal {N}(\textbf{0}, \varSigma )\). We set the variances of all the features to be 1, i.e. \(\varSigma _{jj} = 1\), \(\forall j\). The off-diagonal elements of the covariances \(\varSigma _{jj'}\), \(j\ne j'\) are set as one of the following cases:

-

Case 1. \(\varSigma _{jj'}=0\).

-

Case 2. \(\varSigma _{jj'}=0.2\) when \(j,j' \in \mathcal {G}_k\), \(\varSigma _{jj'}=0\) otherwise.

-

Case 3. \(\varSigma _{jj'}=0.5\) when \(j,j' \in \mathcal {G}_k\), \(\varSigma _{jj'}=0.2\) otherwise.

The three cases for the covariance matrix \(\varSigma \) consider various levels of correlations among the features. Specifically, Case 1 considers features that are completely independent of each other, while Cases 2-3 consider the features positively correlated in part or in whole. Positive correlations are introduced within a group in Case 2. In Case 3, all feature pairs have positive correlations, and the within-group correlations are stronger than the across-group correlations.

The ground-truth \(\textbf{x}^*\in \mathbb {R}^{d}\) (\(d=200\)) consists of 40 equal-size groups with 5 coefficients in each group that are simultaneously zero or nonzero. We assume without loss of generality that \(x^*_1, \ldots , x^*_s\) are nonzero coefficients \((s<d)\), whose indices \(1, \ldots , s\) are grouped into the first \(m'\) distinct groups \(\mathcal {G}_1, \ldots ,\mathcal {G}_{m'}\) \((m'<m)\). The indices in \(\mathcal {G}_{m'+1}, \ldots ,\mathcal {G}_{m}\) correspond to zero coefficients. We set the number of nonzero groups to be one, three, or five for Cases 1-3. The coefficients in the nonzero group(s) are randomly generated from uniform distribution between \(-5\) and 5 independently, i.e, \(\textbf{x}^*_j {\mathop {\sim }\limits ^{iid}} U[-5,5]\). The response vector \(\textbf{b}\) is generated by a linear regression model

where the noise \(\textbf{e} \in \mathbb {R}^{200}\) follows Gaussian distribution, \(e_i {\mathop {\sim }\limits ^{iid}} \mathcal {N}(0,\tilde{\sigma }^2)\). Here, \(\tilde{\sigma }^2\) is the empirical version of \(\text {Var}(A \textbf{x}^*)\) such that \(\tilde{\sigma }^2 =\sum _i( A_{i}\textbf{x}^*-\bar{A}\textbf{x}^*)^2/199\), where \(\bar{A}=\sum _i A_{i}/200\).

Let \(\hat{\textbf{x}} \in \mathbb {R}^d\) be a reconstructed solution from any method with its support \(\hat{\mathcal {S}}=\{j: \hat{x}_j \ne 0\}\). The complement of the support is denoted as \(\hat{\mathcal {S}}^c=\{j: \hat{x}_j = 0\}\). Let \(\hat{\mathcal {M}}=\{k: \hat{x}_j \ne 0, \forall j \in \mathcal {G}_k \}\) and \(\hat{\mathcal {N}}=\{k: \hat{x}_j = 0, \forall j \in \mathcal {G}_k \}\) denote the index set of groups in which coefficients being estimated as nonzero and the index set of groups whose coefficients being estimated as zero, respectively. To quantitatively evaluate the performance of each regularization method, we consider the following standard metrics:

-

1.

Relative error of \(\hat{\textbf{x}} \triangleq \displaystyle \frac{\Vert \hat{\textbf{x}}-\textbf{x}^*\Vert _2}{\Vert \textbf{x}^*\Vert _2}\).

-

2.

Precision of \(\hat{\textbf{x}}\triangleq \displaystyle \frac{|\hat{\mathcal {S}} \cap \{1,\ldots ,s\}| }{|\hat{\mathcal {S}}|}\).

-

3.

Recall of \(\hat{\textbf{x}}\triangleq \displaystyle \frac{|\hat{\mathcal {S}} \cap \{1,\ldots , s\}| }{s}\).

-

4.

Element accuracy of \(\hat{\textbf{x}} \triangleq \displaystyle \frac{|\hat{\mathcal {S}} \cap \{1,\ldots ,s\}|+|\hat{\mathcal {S}}^c \cap \{s+1,\ldots ,d\}|}{d}\).

-

5.

Group accuracy of \(\hat{\textbf{x}} \triangleq \displaystyle \frac{|\hat{\mathcal {M}} \cap \{1,\ldots ,m'\}|+|\hat{\mathcal {N}} \cap \{m'+1,\ldots ,m\}|}{m}\).

For tuning the hyperparameter \(\lambda \), we split the dataset \((A, \textbf{b})\) into two equal-size datasets: a training dataset \((A_{tr}, \textbf{b}_{tr}) \in \mathbb {R}^{100 \times 200} \times \mathbb {R}^{100}\) and a validation dataset \((A_v, \textbf{b}_v) \in \mathbb {R}^{100 \times 200} \times \mathbb {R}^{100}\). We solve the following optimization problem with the training dataset for different penalty functions \(p(\cdot )\):

For the linear regression problem (37), there is a closed-form solution for the \(\textbf{z}\)-subproblem as detailed in Appendix A. The hyperparameter settings for the different penalty functions are summarized in Table 2. Specifically for group LOG, group transformed \(\ell _1\), group LASSO, and LASSO, we tune the hyperparameter \(\lambda \) with 50 logarithmically spaced values (generated by Matlab function logspace) from \(10^{-4}\) to 10. For group MCP and group SCAD, we tune the log-spaced hyperparameter \(\tilde{\lambda }\) from \(10^{-4}\) to 10 with \(\lambda = 1\). By fixing \(\lambda =1\) for group MCP and group SCAD, we conform to their standard formulations found in [14, 68]. Although we do not have the intercept \(x_0\) in generating data from (36), we include it in the estimation to mimic the reality with no prior information about the intercept value. The numerical experiments are performed without the regularization on the intercept to have straightforward interpretations of the estimated solution. We may regularize the intercept term by treating it as a standalone group, for which the convergence theory is easily extended. Given a specific value of hyperparameters, we obtain an estimated vector \(\hat{\textbf{x}}\) and an intercept \(\hat{x}_0\) that can be used to compute the mean squared error (MSE) on the validation set, \(\text {MSE}_{\text {lin}} \triangleq \Vert A_{v} \hat{\textbf{x}} + \hat{x}_0 \textbf{1} - \textbf{b}_{v}\Vert _2^2/100\). The optimal value of the hyperparameter can be found with the smallest MSE among the preset range of the hyperparameters. We do not tune the algorithmic parameter \(\rho \), as it only affects the convergence speed but not the performance. Generally, a larger value of \(\rho \) results in a longer computation time. On the other hand, a very small of \(\rho \) may cause our algorithm to be divergent, as the \(\textbf{x}\)-subproblem is nonconvex and thus ill-posed. In practice, we choose \(\rho = 1\) for group LASSO, and \(\rho = 2\lambda \mathrm{Lip_{p}} \mathcal {G}^{\max }\) for group LOG, MCP, SCAD, and TL1, which ensures the \(\textbf{x}\)-subproblem is convex.

Table 3 summarizes the results of all the eight methods (TL1 stands for transformed \(\ell _1\)) for the datasets with Case 1 covariance. Note that the overall performance of the various models does not depend on the number of nonzero groups. We additionally consider the oracle method that sets the zero coefficients in \(\textbf{x}^*\) to be zero a priori and obtains the solution by least-squares minimization without any regularization. The nonconvex regularizers outperform the convex regularizers such as group LASSO and LASSO. The average relative errors of the nonconvex regularizers are very close to those of the oracle method. In particular, group LOG is successful in recovering the sparsity and group structure and has smaller relative errors than the oracle method on average. Although the sparsity and group structure of the oracle method perfectly aligns with the ground-truth, the group LOG outperforms the oracle solution which is undermined by a large signal-to-noise ratio. Both LASSO and group LASSO have low average precision values but high average recall values, which indicates that both methods tend to incorrectly estimate the zero coefficients at the ground-truth as nonzero. LASSO is not successful in recovering the group structure as the group structure is not incorporated in the model formulation. Note that the union of the group index sets \(\hat{\mathcal {M}}\) and \(\hat{\mathcal {N}}\) for the group regularizers and the oracle method is the whole group index set \(\{1,\ldots , m\}\) while LASSO may have some group indices not in any of the two sets. The estimation with a larger number of nonzero groups is a more challenging task, as indicated by the increase in the relative errors and the decrease in the precision, accuracy, and group accuracy metrics. Similar patterns are obtained in the results with Case 2 and Case 3 covariances. Refer to Tables 6–7 in Appendix C for more details.

We compare the ADMM to a block successive upper-bound minimization (BSUM) method [20] and an iteratively reweighted algorithm in [23, Algorithm 1] for the group LOG penalty. The last algorithm is a special case of the successive upper-bound minimization algorithm [47] that solves a sequence of convex subproblems involving the weight of the current iterate; we refer to the algorithm as the iteratively reweighted algorithm. We note that iterates of the algorithms are shown to converge to the same type of stationary solution. We implement the algorithms on an identical computation environment with covariance matrix \(\varSigma _{ij'}=0\) (Case 1) and three nonzero groups in ground-truth. Figure 1 plots the decrease in the objective values and the relative errors of the iterates with respect to computation time. Although the methods reach the same level of objective value and relative error in the end, Fig. 1 indicates that the ADMM converges faster than the iteratively reweighted algorithm when both subproblems in (16) have closed-form solutions. Both ADMM and iterative algorithm are much faster compared to BSUM.

5.2 Synthetic Data for Poisson Regression

Poisson regression is a generalized linear model that takes count data as its response. We generate 50 triples of \((A,\textbf{x}^*, \textbf{b})\), where \(\textbf{x}^* \in \mathbb {R}^{200}\) is the ground-truth vector and \(\textbf{b} \in \mathbb {R}^{200}\) is the response vector generated by Poisson distribution, i.e.,

The feature matrix \(A \in \mathbb {R}^{200 \times 200}\) is generated in the same manner as in Sect. 5.1 with Case 1, 2, 3 covariance settings. One, three, and five groups of 5 coefficients in the ground-truth \(\textbf{x}^*\) are nonzero and the rest of the coefficients are zero. The nonzero coefficients are randomly generated from uniform distribution between \(-0.5\) and 0.5, i.e., \(\textbf{x}^*_j {\mathop {\sim }\limits ^{iid}} U[-0.5,0.5]\). Same as in Sect. 5.1, we randomly split the dataset \((A, \textbf{b})\) into training and validation sets. For the training dataset \((A_{tr}, \textbf{b}_{tr})\), we consider the following optimization problem with different penalty functions \(p(\cdot )\):

We adopt Newton’s method, described in Appendix A, to solve for the \(\textbf{z}\)-subproblem. The Newton’s method terminates if the distance between the current iterate and the previous iterate is less than \(10^{-3}\). Note that our empirical study shows that the stopping tolerance in the subproblem does not have a significant influence on the final results. We use 50 different hyperparameter values from \(10^{-4}\) to 10 (\(\lambda \) for group LOG, group transformed \(\ell _1\), group LASSO, LASSO, and \(\tilde{\lambda }\) for group MCP, group SCAD). Given a specific hyperparameter value, we obtain the estimated coefficients \(\hat{\textbf{x}}\) and the intercept \(\hat{x}_0\). For hyperparameter selection, we use mean squared error (MSE) on the validation set \((A_v, \textbf{b}_v)\) for Poisson regression defined as

where the \(\exp \) of a vector is a componentwise operation. The hyperparameter value giving the smallest MSE is chosen. Note that there are alternatives to MSE for Poisson regression, such as deviance and mean squared error of log response, to alleviate the sensitivity to large predicted values. With the known ground-truth, the evaluation metrics are the same as in linear regression.

Table 4 summarizes the results of the eight approaches with Case 1 covariance setting. The five nonconvex regularizers show great performance, among which group LOG attains the smallest relative error. Group LASSO and LASSO show poor performance in terms of relative errors, precision, and group accuracy. Tables 8–9 in Appendix C present the results for the Case 2 and Case 3 covariance settings, reporting a similar conclusion.

5.3 Prostate Cancer Gene Expression Dataset for Logistic Regression

Prostate cancer is the most commonly diagnosed non-skin cancer and the second leading cause of cancer death among men in the United States [7]. In order to identify prostate cancer risk genes, we analyze gene expression levels of prostate samples collected from 102 study participants [50]. Genetic expression levels of 6033 genes were measured from 52 prostate cancer patients and 50 normal controls. For our model fittings, we choose the top 50 genes with large median absolute deviation in the participants’ gene expression levels. The response vector consists of binary values representing 0 = cancer patient and 1 = normal control. We generate a feature matrix of dimension \(102 \times 150\) for a cubic B-spline basis function of the 50 genes, where each row is for one man and each of the three columns corresponds to one gene.

To evaluate the performance of the seven regularization methods for the logistic regression model, we perform 50 random splits of the dataset into a training set \((A_{tr}, \textbf{b}_{tr} ) \in \mathbb {R}^{82 \times 150} \times \mathbb {R}^{82}\) and test set \((A_{test}, \textbf{b}_{test} ) \in \mathbb {R}^{20 \times 150} \times \mathbb {R}^{20}\). The model coefficients \( \hat{\textbf{x}}\) and \(\hat{x}_0\) are estimated from the logit loss function with regularization, i.e.,

As the Hessian matrix for this problem is nearly singular, we apply the standard gradient descent for solving the \(\textbf{z}\)-subproblem. The gradient descent terminates if the distance between the current iterate and the previous iterate is less than \(10^{-3}\). The oracle method is not available for this experiment since the ground-truth is not known. The performance metrics of each method are given by

-

1.

Prediction error \( \triangleq \displaystyle { \frac{1}{20} \sum _{i=1}^{20} \Big | \textbf{1}\left( (\hat{b}_{test})_i > 0.5\right) - (b_{test})_{i}\Big |}, \), where

$$\begin{aligned} (\hat{b}_{test})_i = \frac{1}{1+\exp (-\hat{x}_0- ( A_{test})_{i} \hat{\textbf{x}})} \end{aligned}$$and \(\textbf{1}(\varOmega )\) is the indicator function that returns 1 if condition \(\varOmega \) holds and 0 otherwise.

-

2.

Area under a receiver operating characteristic curve (AUC) \( \displaystyle \triangleq \int _{0}^{1} TPR(t) \ dt \), where

$$\begin{aligned}&TPR(t) \triangleq \frac{TP(t)}{TP(t)+FN(t)},\\&TP(t) \triangleq \sum _{i=1}^{20} \textbf{1}\left( (\hat{b}_{test})_i > t\right) (b_{test})_i, \text { and }\\&FN(t) \triangleq \sum _{i=1}^{20} \textbf{1}\left( (\hat{b}_{test})_i \le t\right) (b_{test})_i. \end{aligned}$$ -

3.

Coefficient selection rate \(\triangleq \displaystyle {\frac{1}{d} \sum \limits _{j=1}^{d} \textbf{1}(\hat{x}_j \ne 0)}\).

-

4.

Group selection rate \(\triangleq \displaystyle {\frac{1}{m} \sum \limits _{k=1}^{m} \textbf{1}(\hat{\textbf{x}}_\mathcal {G_k} \ne \varvec{0})}\), where \(\hat{\textbf{x}}_\mathcal {G_k}\) is a coefficient subvector corresponding to the k-th group.

For all methods, we perform 5-fold cross-validation on the 50 training sets to tune the hyperparameters (\(\lambda \) for group LOG, group transformed \(\ell _1\), group LASSO, LASSO, and \(\tilde{\lambda }\) for group MCP, group SCAD) by maximizing AUC. The other parameters are fixed as in Table 2, except for \(\varepsilon = \texttt {1e-4}\) of group LOG. For group LOG, we tune the log-spaced hyperparameter \(\lambda \) from \(10^{-4}\) to \(10^{-2}\). For group transformed \(\ell _1\) and group LASSO, we tune the log-spaced hyperparameter \(\lambda \) from \(10^{-3}\) to \(10^{-1}\). For group MCP and group SCAD, we tune the log-spaced hyperparameter \(\tilde{\lambda }\) from \(10^{-3}\) to \(10^{-1}\) while fixing the hyperparameter \(\lambda = 1\). With the selected hyperparamter by cross-validation, the model coefficients \( \hat{\textbf{x}}\) and \(\hat{x}_0\) are estimated on the whole training set and their performances are evaluated on the corresponding test set.

Table 5 exhibits the performance of the seven regularization methods with logistic regression. The nonconvex group penalties except for group transformed \(\ell _1\) have smaller prediction errors and higher AUCs than group LASSO. As seen in the coefficient and group selection rates, both group MCP and group SCAD result in non-sparse coefficients with marginally higher AUC values. LASSO, on average, has the smallest coefficient selection rate, but it cannot retain the group structure by nature. Taking both prediction accuracy and group sparsity recovery into consideration, group LOG shows satisfactory results compared to the other methods.

6 Conclusion

We study a generalized formulation of the non-overlapping group selection problem, which encompasses many existing works by choosing a specific set of loss functions and sparsity-promoting functions. We analyzed the properties of a directional stationary solution to our proposed model, demonstrating its global optimality under certain conditions and providing a bound of its distance to a reference point which is a proxy of the ground-truth in the view of probability. We applied the ADMM framework with a proximal operator to iteratively minimize the generalized formulation that is commonly nonconvex and nonsmooth. We also proved the subsequence convergence of the algorithm to a stationary point. The global convergence and inexact ADMM [61, 62] (when the subproblems are solved approximately), which requires more conditions on the loss function, will be left for future work. In numerical experiments, we tested our algorithm on synthetic datasets with linear and Poisson regression analysis, showing that nonconvex group regularization methods often outperform the convex approaches with respect to the recovery of the ground-truth. The analysis of prostate cancer gene expression data confirmed that a solution with group sparsity structure is successfully produced by our proposed model, in which nonconvex group regularization methods outperform group LASSO.

Data Availability

The datasets generated during and/or analysed during the current study will be available at GitHub.

References

Abramowitz, M., Stegun, I.A.: Handbook of Mathematical Functions with Formulas, Graphs, and Mathematical Tables, vol. 55. US Government Printing Office, Washington, D.C (1964)

Ahn, M.: Consistency bounds and support recovery of d-stationary solutions of sparse sample average approximations. J. Glob. Optim. 78(3), 397–422 (2019)

Ahn, M., Pang, J.S., Xin, J.: Difference-of-convex learning: directional stationarity, optimality, and sparsity. SIAM J. Optim. 27(3), 1637–1665 (2017)

Bach, F., Jenatton, R., Mairal, J., Obozinski, G., et al.: Optimization with sparsity-inducing penalties. Found. Trends Mach. Learn. 4(1), 1–106 (2012)

Beck, A.: First-Order Methods in Optimization. Society for Industrial and Applied Mathematics, Philadelphia (2017)

Boyd, S., Parikh, N., Chu, E.: Distributed Optimization and Statistical Learning via the Alternating Direction Method of Multipliers. Now Publishers Inc., Hanover (2011)

Brawley, O.W.: Trends in prostate cancer in the United States. J. Natl. Cancer Inst. Monogr. 45, 152–156 (2012)

Breheny, P., Huang, J.: Group descent algorithms for nonconvex penalized linear and logistic regression models with grouped predictors. Stat. Comput. 25(2), 173–187 (2015)

Candés, E.J., Wakin, M.B., Boyd, S.: Enhancing sparsity by reweighted \(\ell _{1}\) minimization. J. Fourier Anal. Appl. 14(5–6), 877–905 (2008)

Chartrand, R., Wohlberg, B.: A nonconvex ADMM algorithm for group sparsity with sparse groups. In: 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, pp. 6009–6013

Curtis, F.E., Dai, Y., Robinson, D.P.: A subspace acceleration method for minimization involving a group sparsity-inducing regularizer (2020). arXiv preprint arXiv:2007.14951

Deng, W., Yin, W., Zhang, Y.: Group sparse optimization by alternating direction method. In: Wavelets and Sparsity XV, vol. 8858, p. 88580R. International Society for Optics and Photonics, Bellingham (2013)

Euler, L.: Of a new method of resolving equations of the fourth degree. In: Elements of Algebra, pp. 282–288. Springer, Cham (1972)

Fan, J., Li, R.: Variable selection via nonconcave penalized likelihood and its oracle properties. J. Am. Stat. Assoc. 96(456), 1348–1360 (2001)

Gu, Y., Fan, J., Kong, L., Ma, S., Zou, H.: ADMM for high-dimensional sparse penalized quantile regression. Technometrics 60(3), 319–331 (2018)

Guyon, I., Elisseeff, A.: An introduction to variable and feature selection. J. Mach. Learn. Res. 3(Mar), 1157–1182 (2003)

Hastie, T., Tibshirani, R., Friedman, J.H., Friedman, J.H.: The Elements of Statistical Learning: Data Mining, Inference, and Prediction, vol. 2. Springer (2009)

Hastie, T., Tibshirani, R., Wainwright, M.: Statistical Learning with Sparsity: The Lasso and Generalizations. RC Press Taylor & Francis Group, Florida (2015)

Hong, M., Luo, Z.Q., Razaviyayn, M.: Convergence analysis of alternating direction method of multipliers for a family of nonconvex problems. SIAM J. Optim. 26(1), 337–364 (2016)

Hong, M., Wang, X., Razaviyayn, M., Luo, Z.Q.: Iteration complexity analysis of block coordinate descent methods. Math. Program. 163(1–2), 85–114 (2016)

Huang, J., Breheny, P., Ma, S.: A selective review of group selection in high-dimensional models. Stat. Sci. (2012). https://doi.org/10.1214/12-STS392

Jiao, Y., Jin, B., Lu, X.: Group sparse recovery via the \(l_0(l_2)\) penalty: theory and algorithm. IEEE Trans. Signal Process. 65(4), 998–1012 (2016)

Ke, C., Ahn, M., Shin, S., Lou, Y.: Iteratively reweighted group lasso based on log-composite regularization. SIAM J. Sci. Comput. 43(5), S655–S678 (2021)

Khamaru, K., Wainwright, M.J.: Convergence guarantees for a class of non-convex and non-smooth optimization problems. J. Mach. Learn. Res. 20(154), 1–52 (2019)

Kronvall, T., Jakobsson, A.: Hyperparameter selection for group-sparse regression: a probabilistic approach. Signal Process. 151, 107–118 (2018)

Lai, M.J., Xu, Y., Yin, W.: Improved iteratively reweighted least squares for unconstrained smoothed \(l_{q}\) minimization. SIAM J. Numer. Anal. 51(2), 927–957 (2013)

Lanckriet, G., Sriperumbudur, B.K.: On the convergence of the concave-convex procedure. Adv. Neural Inf. Process. Syst. 22, 1759–1767 (2009)

Lauer, F., Ohlsson, H.: Finding sparse solutions of systems of polynomial equations via group-sparsity optimization. J. Glob. Optim. 62(2), 319–349 (2015)

Lipp, T., Boyd, S.: Variations and extension of the convex–concave procedure. Optim. Eng. 17(2), 263–287 (2015)

Loh, P.L., Wainwright, M.J.: Regularized M-estimators with nonconvexity: statistical and algorithmic theory for local optima. J. Mach. Learn. Res. 16(1), 559–616 (2015)

Lou, Y., Yan, M.: Fast \(\ell _1-\ell _2\) minimization via a proximal operator. J. Sci. Comput. 74(2), 767–785 (2018)

Lou, Y., Yin, P., He, Q., Xin, J.: Computing sparse representation in a highly coherent dictionary based on difference of \(\ell _1\) and \(\ell _2\). J. Sci. Comput. 64(1), 178–196 (2015)

Lu, Z., Zhou, Z., Sun, Z.: Enhanced proximal DC algorithms with extrapolation for a class of structured nonsmooth DC minimization. Math. Program. 176(1–2), 369–401 (2018)

McCullagh, P., Nelder, J.A.: Generalized Linear Models. Routledge, New York (2019)

Meier, L., Van De Geer, S., Bühlmann, P.: The group lasso for logistic regression. J. R. Stat. Soc. Ser. B 70(1), 53–71 (2008)

Negahban, S.N., Ravikumar, P., Wainwright, M.J., Yu, B.: A unified framework for high-dimensional analysis of \( m \)-estimators with decomposable regularizers. Stat. Sci. 27(4), 538–557 (2012)

Nikolova, M.: Local strong homogeneity of a regularized estimator. SIAM J. Appl. Math. 61(2), 633–658 (2000)

Pan, L., Chen, X.: Group sparse optimization for images recovery using capped folded concave functions. SIAM J. Imaging Sci. 14(1), 1–25 (2021)

Pang, J.S., Razaviyayn, M., Alvarado, A.: Computing b-stationary points of nonsmooth DC programs. Math. Oper. Res. 42(1), 95–118 (2017)

Pang, J.S., Tao, M.: Decomposition methods for computing directional stationary solutions of a class of nonsmooth nonconvex optimization problems. SIAM J. Optim. 28(2), 1640–1669 (2018)

Parikh, N., Boyd, S.: Proximal algorithms. Found. Trends Optim. 1(3), 127–239 (2014)

Peng, B., Wang, L.: An iterative coordinate descent algorithm for high-dimensional nonconvex penalized quantile regression. J. Comput. Graph. Stat. 24(3), 676–694 (2015)

Pham Dinh, T., Le Thi, H.: Convex analysis approach to DC programming: Theory, algorithms and applications. Acta Math. Vietnam 22(1), 289–355 (1997)

Phan, D.N., Le Thi, H.A.: Group variable selection via \(l_{p,0}\) regularization and application to optimal scoring. Neural Netw. 118, 220–234 (2019)

Rahimi, Y., Wang, C., Dong, H., Lou, Y.: A scale-invariant approach for sparse signal recovery. SIAM J. Sci. Comput. 41(6), A3649–A3672 (2019)

Rakotomamonjy, A.: Surveying and comparing simultaneous sparse approximation (or group-lasso) algorithms. Signal Process. 91(7), 1505–1526 (2011)

Razaviyayn, M., Hong, M., Luo, Z.Q.: A unified convergence analysis of block successive minimization methods for nonsmooth optimization. SIAM J. Optim. 23(2), 1126–1153 (2013)

Shapiro, A., Dentcheva, D., Ruszczynski, A.: Lectures on Stochastic Programming: Modeling and Theory. SIAM Publications, Philadelphia (2009)

Shen, X., Chen, L., Gu, Y., So, H.C.: Square-root lasso with nonconvex regularization: an ADMM approach. IEEE Signal Process. Lett. 23(7), 934–938 (2016)

Singh, D., et al.: Gene expression correlates of clinical prostate cancer behavior. Cancer Cell 1(2), 203–209 (2002)

Spiegel, M.R.: Mathematical Handbook of Formulas and Tables. McGraw-Hill, New York (1968)

Tian, S., Yu, Y., Guo, H.: Variable selection and corporate bankruptcy forecasts. J. Bank. Financ. 52, 89–100 (2015)

Tibshirani, R.: Regression shrinkage and selection via the lasso. J. R. Stat. Soc. Ser. B 58(1), 267–288 (1996)

Tibshirani, R.: The lasso method for variable selection in the cox model. Stat. Med. 16(4), 385–395 (1997)

van Ackooij, W., Demassey, S., Javal, P., Morais, H., de Oliveira, W., Swaminathan, B.: A bundle method for nonsmooth DC programming with application to chance-constrained problems. Comput. Optim. Appl. 78(2), 451–490 (2020)

Wang, C., Yan, M., Rahimi, Y., Lou, Y.: Accelerated schemes for the \(l1/l2 \) minimization. IEEE Trans. Signal Process. 68, 2660–2669 (2020)

Wang, L., Chen, G., Li, H.: Group SCAD regression analysis for microarray time course gene expression data. Bioinformatics 23(12), 1486–1494 (2007)

Wang, Y., Yin, W., Zeng, J.: Global convergence of ADMM in nonconvex nonsmooth optimization. J. Sci. Comput. 78(1), 29–63 (2019)

Wang, Y., Zhu, L.: Coordinate majorization descent algorithm for nonconvex penalized regression. J. Stat. Comput. Simul. 91(13), 1–15 (2021)

Wei, F., Zhu, H.: Group coordinate descent algorithms for nonconvex penalized regression. Comput. Stat. Data Anal. 56(2), 316–326 (2012)

Xie, J.: On inexact admms with relative error criteria. Comput. Optim. Appl. 71(3), 743–765 (2018)

Xie, J., Liao, A., Yang, X.: An inexact alternating direction method of multipliers with relative error criteria. Optim. Lett. 11(3), 583–596 (2017)

Xie, Y., Shanbhag, U.V.: Tractable ADMM schemes for computing KKT points and local minimizers for \(\ell _0\)-minimization problems. Comput. Optim. Appl. 78(1), 43–85 (2020)

Xu, J., Chi, E., Lange, K.: Generalized linear model regression under distance-to-set penalties. Adv. Neural Inf. Process. Syst. 30, 1385–1395 (2017)

Xu, Z., Chang, X., Xu, F., Zhang, H.: \( l_{1/2} \) regularization: a thresholding representation theory and a fast solver. IEEE Trans. Neural Netw. Learn. Syst. 23(7), 1013–1027 (2012)

Yin, P., Lou, Y., He, Q., Xin, J.: Minimization of \(\ell _{1-2}\) for compressed sensing. SIAM J. Sci. Comput. 37(1), A536–A563 (2015)

Yuan, M., Lin, Y.: Model selection and estimation in regression with grouped variables. J. R. Stat. Soc. Ser. B 68(1), 49–67 (2006)

Zhang, C.H.: Nearly unbiased variable selection under minimax concave penalty. Ann. Stat. 38(2), 894–942 (2010)

Zhang, S., Xin, J.: Minimization of transformed \(\ell _1\) penalty: closed form representation and iterative thresholding algorithms. Commun. Math. Sci. 15, 511–537 (2017). https://doi.org/10.4310/CMS.2017.v15.n2.a9

Zhang, S., Xin, J.: Minimization of transformed \(\ell _1\) penalty: theory, difference of convex function algorithm, and robust application in compressed sensing. Math. Program. 169(1), 307–336 (2018)

Zhang, Y., Zhang, N., Sun, D., Toh, K.C.: An efficient hessian based algorithm for solving large-scale sparse group lasso problems. Math. Program. 179(1), 223–263 (2020)

Zhou, Y., Han, J., Yuan, X., Wei, Z., Hong, R.: Inverse sparse group lasso model for robust object tracking. IEEE Trans. Multimed. 19(8), 1798–1810 (2017)

Zhu, Y.: An augmented ADMM algorithm with application to the generalized lasso problem. J. Comput. Graph. Stat. 26(1), 195–204 (2017)

Funding

Open access funding provided by SCELC, Statewide California Electronic Library Consortium. Ahn and Ke were supported by NSF grant CRII IIS-1948341. Shin was supported in part by NSF grant DMS-2113674, the Korean National Research Foundation (NRF) grant funded by the Korea government(MSIT) (RS-2023-00243012, RS-2023-00219980), POSTECH Basic Science Research Institute Fund (NRF-2021R1A6A1A10042944), and POSCO HOLDINGS grant 2023Q033. Lou was partially supported by NSF grant DMS-1846690.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix

Common approaches to solving the z-subproblem

This section details three approaches regarding how to solve for the z-subproblem in (16).

-

A closed-form solution can be derived for the least squares loss

$$\begin{aligned} L^\textrm{lin}(\textbf{z}) \triangleq \frac{1}{n}\sum _{i=1}^n \left( b_i - A_i\textbf{z} \right) ^2. \end{aligned}$$We shall consider an intercept, denoted by \(x_0,\) and hence the least squares loss can be expressed as

$$\begin{aligned} L^\textrm{lin}(\textbf{z},x_0) \triangleq \frac{1}{n}\sum _{i=1}^n \left( b_i -A_i\textbf{z} -x_0\right) ^2. \end{aligned}$$The \(\textbf{z}\)-update is given by

$$\begin{aligned} \textbf{z}^{\tau +1} = \Big (\frac{1}{n} A^TA + \rho I_d\Big )^{-1} \Big (\frac{1}{n} A^T (x_0^{\tau } \textbf{1}+\textbf{b}) + \rho (\textbf{x}^{\tau +1}+\textbf{v}^\tau )\Big ), \end{aligned}$$where \(\textbf{1}\) denotes the all-ones vector and \(I_d\) is the \(d \times d\) identity matrix. The \(x_0\)-update is made by

$$\begin{aligned} x_0^{\tau +1} = \frac{1}{n} \sum _{i=1}^n (b_i-A_i\textbf{z}^{\tau +1}). \end{aligned}$$ -

The Newton’s method is often used when any GLM loss has a continuous second-order derivative. It is especially useful when there is no closed-form solution of \(\textbf{z}\), such as logistic regression and Poisson regression. The Newton’s method at the s-

$$\begin{aligned} \textbf{z}_{s+1}=\textbf{z}_s-&\delta _s \Big \{\nabla ^2_{\textbf{z}_s}L^\textrm{glm}(\textbf{z}_s)+ \rho I_d\Big \}^{-1}\nonumber \\&\Big \{\nabla _{\textbf{z}_s}L^\textrm{glm}(\textbf{z}_s)+\rho (\textbf{z}_s-\textbf{x}^{\tau +1}-\textbf{v}^\tau )\Big \},\\ =\textbf{z}_s-&\delta _s \Big \{\psi ^{''}(A_i\textbf{z}_s)A_i^T A_i+ \rho I_d\Big \}^{-1}\nonumber \\&\Big [\{\psi ^{'}(A_i\textbf{x})-b_i\} A_i^T+\rho (\textbf{z}_s-\textbf{x}^{\tau +1}-\textbf{v}^\tau )\Big ], \end{aligned}$$where \(\delta _s>0\) is a step size. We define the logit loss as follows,

$$\begin{aligned} L^\textrm{logit}(\textbf{x},x_0) \triangleq \frac{1}{n}\sum _{i=1}^n \left[ \log \left\{ 1+\exp (A_i\textbf{x} + x_0)\right\} - b_i (A_i \textbf{x}+x_0) \right] . \end{aligned}$$Its first and second derivatives with respect to each component of \(\textbf{x}\) can be obtained by

$$\begin{aligned} \begin{aligned} \frac{\partial L^\textrm{logit}}{\partial x_j}&=\frac{1}{n}\sum _{i=1}^n\left[ \frac{a_{ij} \exp (A_i\textbf{x} + x_0)}{1+\exp (A_i\textbf{x} + x_0)} - b_i a_{ij}\right] ,\\ \frac{\partial ^2L^\textrm{logit}}{\partial x_j\partial x_k}&=\frac{1}{n}\sum _{i=1}^n\left[ \frac{a_{ij}a_{ik}\exp (A_i\textbf{x} + x_0)}{1+\exp (A_i\textbf{x} + x_0)}-a_{ij}a_{ik}\left( \frac{\exp (A_i\textbf{x} + x_0)}{1+\exp (A_i\textbf{x} + x_0)}\right) ^2\right] , \end{aligned} \end{aligned}$$for \(j,k=1,\ldots ,d\). Its derivatives with respect to \(x_0\) are

$$\begin{aligned} \begin{aligned} \frac{\partial L^\textrm{logit}}{\partial x_0}&=\frac{1}{n}\sum _{i=1}^n\left[ \frac{ \exp (A_i\textbf{x} + x_0)}{1+\exp (A_i\textbf{x} + x_0)} - b_i \right] ,\\ \frac{\partial ^2L^\textrm{logit}}{\partial x_0^2}&=\frac{1}{n}\sum _{i=1}^n\left[ \frac{\exp (A_i\textbf{x} + x_0)}{1+\exp (A_i\textbf{x} + x_0)}-\left( \frac{\exp (A_i\textbf{x} + x_0)}{1+\exp (A_i\textbf{x} + x_0)}\right) ^2\right] , \end{aligned} \end{aligned}$$For the Poisson loss, which is defined by

$$\begin{aligned} L^{\textrm{pois}}(\textbf{x}) \triangleq -\frac{1}{n} \sum _{i=1}^n \left\{ b_i (A_i \textbf{x}+x_0) - \exp (A_i \textbf{x} + x_0)\right\} , \end{aligned}$$its first and second derivatives with respect to each component of \(\textbf{x}\) are

$$\begin{aligned}&\frac{\partial L^\textrm{pois}}{\partial x_j} = \frac{1}{n} \sum _{i=1}^n \left[ a_{ij} \exp (A_i \textbf{x}+x_0) - b_i a_{ij} \right] \\&\frac{\partial ^2L^\textrm{pois}}{\partial x_j\partial x_k} = \frac{1}{n} \sum _{i=1}^n a_{ij} a_{ik} \exp (A_i \textbf{x}+x_0). \end{aligned}$$Its first and second derivatives with respect to \(x_0\) are given by

$$\begin{aligned} \begin{aligned} \frac{\partial L^\textrm{pois}}{\partial x_0}&=\frac{1}{n} \sum _{i=1}^n \left[ \exp (A_i \textbf{x}+x_0) - b_i \right] ,\\ \frac{\partial ^2L^\textrm{pois}}{\partial x_0^2}&=\frac{\partial ^2L^\textrm{pois}}{\partial x_j\partial x_k} = \frac{1}{n} \sum _{i=1}^n \exp (A_i \textbf{x}+x_0) , \end{aligned} \end{aligned}$$ -

Gradient descent is considered when computing the Hessian matrix is infeasible or inefficient. It is useful for analysis of high dimensional datasets or employing loss functions that are not twice differentiable. The gradient descent at the s-th inner iteration is given by