Abstract

We present an acceleration method for sequences of large-scale linear systems, such as the ones arising from the numerical solution of time-dependent partial differential equations coupled with algebraic constraints. We discuss different approaches to leverage the subspace containing the history of solutions computed at previous time steps in order to generate a good initial guess for the iterative solver. In particular, we propose a novel combination of reduced-order projection with randomized linear algebra techniques, which drastically reduces the number of iterations needed for convergence. We analyze the accuracy of the initial guess produced by the reduced-order projection when the coefficients of the linear system depend analytically on time. Extending extrapolation results by Demanet and Townsend to a vector-valued setting, we show that the accuracy improves rapidly as the size of the history increases, a theoretical result confirmed by our numerical observations. In particular, we apply the developed method to the simulation of plasma turbulence in the boundary of a fusion device, showing that the time needed for solving the linear systems is significantly reduced.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The numerical solution of time-dependent partial differential equations (PDEs) often leads to sequences of linear systems of the form

where \(t_{0}< t_1<t_2 < \cdots \) is a discretization of time t, and both the system matrix \(A(t_{i}) \in {\mathbb {R}}^{n \times n}\) and the right-hand side \({\varvec{b}}(t_{i}) \in {\mathbb {R}}^n\) depend on time. Typically, the systems (1) are available only consecutively. Such sequences of linear systems can arise in a number of applications, including implicit time stepping schemes for the solution of PDEs or iterative solutions of non-linear equations and optimization problems. A relevant example is given by time-dependent PDEs solved in presence of algebraic constraints. In this case, even when an explicit time stepping method is used to evolve the nonlinear PDE, the discretization of the algebraic constraints leads to linear systems that need to be solved at every (sub-)timestep. This is the case of the simulation of turbulent plasma dynamics [10], where a linear constraint (Maxwell equations) is imposed upon the plasma dynamics described by a set of non linear fluid or kinetics equations. The linear systems resulting from the discretized algebraic constraints may feature millions of degrees of freedom, hence their solution is often computationally very expensive.

One usually expects that the linear system (1) changes slowly in subsequent time steps. This work is focused on exploiting this property to accelerate iterative solvers, such as CG [17] for symmetric positive definite matrices and GMRES [24] for general matrices. An obvious way to do so is to supply the iterative solver for the timestep \(t_{i+1}\) with the solution of (1) at timestep \(t_{i}\), as initial guess. As a more advanced technique, in the context of Krylov subspace methods, subspace recycling methods [26] such as GCROT [7] and GMRES-DR [21] have been proposed. Such methods have been developed in the case of a single linear system, to enrich the information when restarting the iterative solver. The idea behind is often to accelerate the convergence by suppressing parts of the spectrum of the matrix, including the corresponding approximate invariant subspace in the Krylov minimization subspace. GCROT and GMRES-DR have then been adapted to sequences of linear systems in [22], recycling selected subspaces from one system to the next. For this class of methods to be efficient, it is necessary that the sequence of matrices undergoes local changes only, that is, the difference \(A(t_{i+1})- A(t_{i})\) is computationally cheap to apply. For example, one can expect this difference matrix to be sparse when time dependence is restricted to a small part of the computational domain, e.g., through time-dependent boundary conditions. We refer to [26] for a more complete survey of subspace recycling methods and their applications. In [5], subspace recycling was combined with goal-oriented POD (Proper Orthogonal Decomposition) in order to limit the size of the subspaces involved in an augmented CG approach. Simplifications occur when the matrices \(A(t_i)\) are actually a fixed matrix A shifted by different scalar multiples of the identity matrix, because Krylov subspaces are invariant under such shifts. In the context of subspace recycling, this property has been exploited in, e.g., [27], and in [25] it is shown how a smoothly varying right-hand side can be incorporated.

When \(A(t_i)\) and \({{\textbf {b}}}(t_i)\) in (1) are samples of smooth matrix/vector-valued functions, one expects that the subspace of the previously computed solutions contains a very good approximation of the current one. This can be exploited to construct a better initial guess, either explicitly through (polynomial) extrapolation, or implicitly through projection techniques. Examples of the extrapolation approach include polynomial POD extrapolation [14], weighted group extrapolation methods [30] and a stabilized, least-squares polynomial extrapolation method [1], for the case that only the right-hand side evolves in time. For the same setting, projection techniques have been introduced by Fischer [11]. Following this first work, several approaches have been developed to extract an initial guess from the solution of a reduced-order model, constructed from projecting the problem to a low-dimensional subspace spanned by previous solutions. In [28], such an approach is applied to fully implicit discretizations of nonlinear evolution problems, while [20] applies the same idea to the so called IMPES scheme used for simulating two-phase flows through heterogeneous porous media.

In this paper, we develop a new projection technique for solving sequences of linear systems that combines projection with randomized linear algebra techniques, leading to considerably reduced cost. Moreover, a novel convergence analysis of the algorithm is carried out to show its efficiency. This is also proved numerically by applying the algorithm to the numerical simulation of turbulent plasma in the boundary of a fusion device.

The rest of this paper is organized as follows. In Sect. 2, we first discuss general subspace acceleration techniques based on solving a projected linear system and then explain how randomized techniques can be used to speed up existing approaches. In Sect. 3, a convergence analysis of these subspace acceleration techniques is presented. In Sect. 4 we first discuss numerical results for a test case to demonstrate the improvements that can be attained by the new algorithm in a somewhat idealistic setting. In Sect. 5 our algorithm is applied to large-scale turbulent simulation of plasma in a tokamak, showing a significant reduction of computational time.

2 Algorithm

The algorithm proposed in this work for accelerating the solution of the sequence of linear systems (1) uses randomized techniques to lower the cost of a POD-based strategy, such as the one proposed in [20]. Recall that we aim at solving the linear systems \(A(t_{i}) {\varvec{x}}(t_{i}) = {\varvec{b}}(t_{i})\) consecutively for \(i = 0,1,\cdots \). We make no assumption on the symmetry of \(A(t_{i}) \in {\mathbb {R}}^{n \times n}\) and thus GMRES is an appropriate choice for solving each linear system. Supposing that, at the ith timestep, M previous solutions are available, we arrange them into the history matrix

where the notation on the right-hand side indicates the concatenation of columns. Instead of using the complete history, which may contain redundant information, one usually selects a subspace \({\mathcal {S}} \subset \textrm{span}(X)\) of lower dimension \(m\le M\). Then, the initial guess for the ith linear system is obtained from choosing the element of \({\mathcal {S}}\) that minimizes the residual:

where the columns of \(Q \in {\mathbb {R}}^{n\times m}\) contain an orthonormal basis of \({\mathcal {S}}\). We use \(\Vert \cdot \Vert _2\) to denote the Euclidean norm for vectors and the spectral norm for matrices. The described approach is summarized in Algorithm 1, which is a template that needs to be completed by an appropriate choice of the subspace \({\mathcal {S}}\), in Sects. 2.1 and 2.2.

If the complete history is used, \({\mathcal {S}} = \textrm{span}(X)\), then computing Q via a QR decomposition [13], as required in Step 2, costs \({\mathcal {O}}(M^2 n)\) operations. In addition, setting up the linear least-squares problem in Step 3 of Algorithm 1 requires M (sparse) matrix–vector products in order to compute \(A(t_{i}) Q\). The standard approach for solving the linear least-squares problem proceeds through the QR decomposition of that matrix and costs another \({\mathcal {O}}(M^3 + M^2 n)\) operations. This strong dependence of the cost on M effectively forces a rather small choice of M, neglecting relevant components of the solutions that could be contained in older solutions only. In the following, we discuss two strategies to overcome this problem.

2.1 Proper Orthogonal Decomposition

An existing strategy [20] to arrive at a low-dimensional subspace \({\mathcal {S}} \subset \textrm{span}(X)\) uses a POD approach [19] and computes the orthonormal basis Q for \({\mathcal {S}}\) through a truncated SVD (Singular Value Decomposition) of X; see Algorithm 2. Note that only the first m left singular vectors \(\varvec{\Psi }_{1}, \cdots , \varvec{\Psi }_{m}\) need to be computed in Step 2.

Thanks to basic properties of the SVD, the basis \(Q_{{\textsf{POD}}}\) enjoys the following optimality property [29]:

where \(\Vert \cdot \Vert _F\) denotes the Frobenius norm and \(\sigma _1 \ge \sigma _2 \ge \cdots \ge \sigma _M \ge 0\) are the singular values of X. In words, the choice \(Q_{{\textsf{POD}}}\) minimizes the error of orthogonally projecting the columns of X onto an m–dimensional subspace. The relation to the singular values of X established in (2) also allows one to choose m adaptively, e.g., by choosing m such that most of the variability in the history matrix X is captured.

At every time step, the history matrix X gets modified by removing its first column and appending a new last column. The most straightforward implementation of Algorithm 2 would compute the SVD needed of Step 2 from scratch at every time step, leading to a complexity of \({\mathcal {O}}(nM^2)\) operations. In principle, SVD updating techniques, such as the ones presented in [4] and [6], could be used to reduce this complexity to \(O(mn + m^3)\) for every time step. However, in the context of our application, there is no need to update a complete SVD (in particular, the right singular vectors are not needed) and the randomized techniques discussed in the next section seem to be preferable.

2.2 Randomized Range Finder

In this section, an alternative to the POD method (Algorithm 1) for generating the low-dimensional subspace \({\mathcal {S}} \subset \textrm{span}(X)\) is presented, relying on randomized techniques. The randomized SVD from [16] applied to the \(n\times M\) history matrix X proceeds as follows. First, we draw an \(M\times m\) Gaussian random matrix Z, that is, the entries of Z are independent and identically distributed (i.i.d) standard normal variables. Then the so-called sketch

is computed, followed by a reduced QR decomposition \(\Omega = QR\). This only involves the \(n\times m\) matrix \(\Omega \), which for \(m\ll M\) is a significant advantage compared to Algorithm 2, which requires the SVD of an \(n\times M\) matrix. The described procedure is contained in lines 2–4 and 11 of Algorithm 3 below.

According to [16, Theorem 10.5], the expected value (with respect to Z) of the error returned by the randomized SVD satisfies

where we partition \(m = r + p\) for a small oversampling parameter \(p\ge 2\). Also, the tail bound from [16, Theorem 10.7] implies that it is highly unlikely that the error is much larger than the upper bound (4). Comparing (4) with the error (2), we see that the randomized method is only a factor \(\sqrt{2}\) worse than the optimal basis of roughly half the size produced by POD. As we also see in our experiments of Sect. 4, this bound is quite pessimistic and usually the randomized SVD performs nearly as good as POD using bases of the same size.

Instead of performing the randomized SVD from scratch in every timestep, one can easily exploit the fact that only a small part of the history matrix is modified. To see this, let us consider the sketch from the previous timestep:

Comparing with (3), we see that the sketch \(\Omega \) of the current timestep is obtained by removing the contribution from the solution \({\varvec{x}}(t_{i-M-1})\) and adding the contribution of \({\varvec{x}}(t_{i-1})\). The removal is accomplished in line 6 of Algorithm 3 by a rank-one update:

By a cyclic permutation, we can move the zero column to the last column, \(\left[ {\varvec{x}}(t_{i-M})\,|\, \cdots \,|\, {\varvec{x}}(t_{i-2}) \,|\, {\varvec{0}}\right] \), updating Z as in line 7 of Algorithm 3. Finally, the contribution of the latest solution is incorporated by adding the rank-one matrix \({\varvec{x}}(t_{i-1}) {{\varvec{z}}_M}^T\), where \({\varvec{z}}_M\) \( \in {\mathbb {R}}^m\) is a newly generated Gaussian random vector that is stored in the last row of Z. Under the (idealistic) assumption that all solutions are exactly computed (and hence deterministic), the described progressive updating procedure is mathematically equivalent to computing the randomized SVD from scratch. In particular, the error bound (4) continues to hold.

Lines 6–9 of Algorithm 3 require \({\mathcal {O}}(nm)\) operations. When using standard updating procedures for QR decomposition [13], line 11 has the same complexity. This compares favorably with the \({\mathcal {O}}(nM^2)\) operations needed by Algorithm 2 per timestep.

When performing the progressive update of \(\Omega \) over many timesteps, one can encounter numerical issues due to numerical cancellation in the repeated subtraction and addition of contributions to the sketch matrix. To avoid this, the progressive update is carried out only for a fixed number of timesteps, after which a new random matrix Z is periodically generated and \(\Omega \) is computed from scratch.

3 Convergence Analysis

We start our convergence analysis of the algorithms from the preceding section by considering analytical properties of the history matrix \(X = [{\varvec{x}}(t_{i-M})\,|\,\cdots \,|\,{\varvec{x}}(t_{i-1})]\). After reparametrization, we may assume without loss of generality that each of the past timesteps is contained in the interval \([-1,1]\):

For notational convenience, we define

where \({\varvec{x}}(t)\) satisfies the (parametrized) linear system

that is, each entry of A and \({\varvec{b}}\) is a scalar function on the interval \([-1,1]\). Indeed, for the convergence analysis, we assume that each linear system of the sequence in (1) is obtained by sampling the parametrized system in (7) in \(t_i \in [-1,1]\). In many practical applications, like the one described in Sect. 5, the time dependence in (7) arises from time-dependent coefficients in the underlying PDEs. Frequently, this dependence is real analytic, which prompts us to make the following smoothness assumption on A, \({\varvec{b}}\).

Assumption 1

Consider the open Bernstein ellipse \(E_{\rho } \subset {\mathbb {C}}\) for \(\rho > 1\), that is, the open ellipse with foci \(\pm 1\) and semi-minor/-major axes summing up to \(\rho \). We assume that \(A: \left[ -1, 1 \right] \rightarrow {\mathbb {C}}^{n \times n}\) and \( {\varvec{b}}: \left[ -1, 1 \right] \rightarrow {\mathbb {C}}^{n}\) admit extensions that are analytic on \(E_{\rho }\) and continuous on \(\bar{E_{\rho }}\) (the closed Bernstein ellipse), such that A(t) is invertible for all \(t\in \bar{E_{\rho }}\). In particular, this implies that \({\varvec{x}}(t) = A^{-1}(t) {\varvec{b}}(t)\) is analytic on \(E_{\rho }\) and \(\kappa _\rho := \max _{t \in \partial E_{\rho }} \Vert {\varvec{x}}(t) \Vert _2\) is finite.

3.1 Compressibility of the Solution Time History

The effectiveness of POD-based algorithms relies on the compressibility of the solution history, that is, the columns of X can be well approximated by an m–dimensional subspace with \(m \ll M\). According to (2), this is equivalent to stating that the singular values of X decrease rapidly to zero. Indeed, this property is implied by Assumption 1 as shown by the following result, which was stated in [18] in the context of low-rank methods for solving parametrized linear systems.

Theorem 2

([18, Theorem 2.4]) Under Assumption 1, the kth largest singular value \(\sigma _k\) of the history matrix \(X({\varvec{t}})\) from (6) satisfies

Combined with (2), Theorem 2 implies that the POD basis \(Q_{{\textsf{POD}}} \in {\mathbb {R}}^{n\times m}\) satisfies the error bound

3.2 Quality of Prediction Without Compression

Algorithm 1 determines the initial guess \({\varvec{s}}^*\) for the next time step \(t_{i} > t_{i-1} = 1\) by solving the minimization problem

In this section, we will assume, additionally to Assumption (1), that \({\mathcal {S}} = \textrm{span}(X({\varvec{t}}))\), that is, \(X({\varvec{t}})\) is not compressed. Our analysis focuses on uniform timesteps \(\varvec{t_{{\textsf{equi}}}}= \left[ t_{i-M},\cdots , t_{i-1}\right] \) defined by

Note that the next timestep \(t_{i} = 1 + \Delta t\) satisfies \(t_{i} \in E_\rho \) if and only if \(\rho > t_{i} + \sqrt{t_{i}^2-1} \approx 1 + \sqrt{2 \Delta t}\). The following result shows how the quality of the initial guess rapidly improves (at a square root exponential rate, compared to the exponential rate of Theorem 2) as M, the number of previous time steps in the history, increases.

Theorem 3

Under Assumption (1), the initial guess constructed by Algorithm 1 with \({\mathcal {S}} = \textrm{span}(X)\) satisfies the error bound

with \(C(M,R) = 5 \sqrt{5} \sqrt{2R+1} \sqrt{M} / \sqrt{2(M-1)}\), for any \(R \le \frac{1}{2} \sqrt{M-1}\), \(r = (t_{i} + \sqrt{t_{i}^{2} -1})/ \rho < 1\).

3.2.1 Proof of Theorem 3

The rest of this section is concerned with the proof of Theorem 3. We establish the result by making a connection to vector-valued polynomial extrapolation and extending results by Demanet and Townsend [8] on polynomial extrapolation to the vector-valued setting.

Let \({\mathbb {P}}_{R} \subset {\mathbb {R}}^n[t]\) denote the subspace of vector-valued polynomials of length n and degree at most R for some \(R \le M-1\). We recall that any \({{\textbf {v}}} \in {\mathbb {P}}_{R}\) takes the form \({{\textbf {v}}}(t) = {{\textbf {v}}}_0 + {{\textbf {v}}}_1 t + \cdots + {{\textbf {v}}}_R t^R\) for constant vectors \({{\textbf {v}}}_0, \cdots , {{\textbf {v}}}_R \in {\mathbb {R}}^n\). Equivalently, each entry of \({{\textbf {v}}}\) is a (scalar) polynomial of degree at most R. In our analysis we consider vector-valued polynomials of the particular form

for a vector-valued polynomial \({\varvec{y}}(t)\) of length M. A key observation is that the evaluation of \({\varvec{p}}\) in the next timestep \(t_{i}\) satisfies \({\varvec{p}}(t_{i}) \in \textrm{span}(X(\varvec{t_{{\textsf{equi}}}})) = {\mathcal {S}}\). According to (8), \(\mathbf {s^*}\) minimizes the residual over \({\mathcal {S}}\). Hence, the residual can only increase when we replace \(\mathbf {s^*}\) by \({\varvec{p}}(t_{i})\) in

Thus, it remains to find a polynomial of the form (9) for which we can establish convergence of the extrapolation error \( \Vert {\varvec{p}}(t_{i})- {\varvec{x}} (t_{i}) \Vert _2\). For this purpose, we will choose \({\varvec{p}}_R \in {\mathbb {P}}_{R}\) to be the least-squares approximation of the M function samples contained in \(X(\varvec{t_{{\textsf{equi}}}})\):

We will represent the entries of \({\varvec{p}}_{R}\) in the Chebyshev polynomial basis:

where \({\varvec{c}}_{k,p} \in {\mathbb {R}}^n\) and \(q_{k}\) denotes the Chebyshev polynomial of degree k, that is, \(q_{k}(t) = \cos (k \cos ^{-1}t)\) for \(t\in [-1,1]\). Setting

we can express (12) more compactly as \({\varvec{p}}_{R}(t) = C_{p} {\varvec{q}}_{R}(t)\). Thus,

In view of (11), the matrix of coefficients \(C_{p}\) is determined by minimizing \(\Vert X(\varvec{t_{{\textsf{equi}}}}) - C_{p} Q_R(\varvec{t_{{\textsf{equi}}}})\Vert _{F}\). Because \(R \le M-1\), the matrix \(Q_R(\varvec{t_{{\textsf{equi}}}})\) has full row rank and thus the solution of this least-squares problem is given by \(C_{p} = X(\varvec{t_{{\textsf{equi}}}})Q_{R}(\varvec{t_{{\textsf{equi}}}})^{\dagger }\) with \(Q_{R}(\varvec{t_{{\textsf{equi}}}})^{\dagger } = Q_{R}(\varvec{t_{{\textsf{equi}}}})^{T} (Q_{R}(\varvec{t_{{\textsf{equi}}}}) Q_{R}(\varvec{t_{{\textsf{equi}}}})^{T})^{-1}\). In summary, we obtain that

which is of the form (9) and thus contained in \(\textrm{span}(X(\varvec{t_{{\textsf{equi}}}}))\), as desired.

In order to analyze the convergence of \({\varvec{p}}_{R}(t)\), we relate it to Chebyshev polynomial interpolation of \({\varvec{x}}\). The following lemma follows from classical approximation theory, see, e.g., [18, Lemma 2.2].

Lemma 4

Let \({\varvec{q}}_{R}(t) \in {\mathbb {R}}^{R+1}\) be defined as in (13), containing the Chebyshev polynomials up to degree R. Under Assumption 1 there exists an approximation of the form

such that \( \Vert {\varvec{c}}_{k,x} \Vert _2 \le 2 \kappa _{\rho } \rho ^{-k} \) and

Following the arguments in [8] for scalar functions, Lemma 4 allows us to estimate the extrapolation error for \({\varvec{p}}_{R}(t)\) if \(R \sim \sqrt{M}\).

Theorem 5

Suppose that Assumption 1 holds and \(R \le \frac{1}{2}\sqrt{M-1}\). Then the vector-valued polynomial \({\varvec{p}}_{R} \in {\mathbb {P}}_R\) defined in (14) satisfies for every \(t \in (1, (\rho + \rho ^{-1})/2)\) the error bound

with \(r = (t + \sqrt{t^{2} -1})/ \rho < 1\) and C(M, R) defined as in Theorem 3.

Proof

Letting \({\varvec{x}}_{R}\) be the polynomial from Lemma 4, we write

To treat the second term in (15), first note that, by definition, we have

and hence \(C_x = X_R(\varvec{t_{{\textsf{equi}}}}) Q_R(\varvec{t_{{\textsf{equi}}}})^\dagger \). Setting \(\sigma := \sigma _{\min }(Q_R(\varvec{t_{{\textsf{equi}}}})) = 1/\Vert Q_R(\varvec{t_{{\textsf{equi}}}})^\dagger \Vert _2\), we obtain

where we used Lemma 4 in the last inequality. Applying, once more, Lemma 4 to the first term in (15) gives

Because \(|q_{k}(t)| \le (t + \sqrt{t^{2}-1})^{k} \le \rho ^k r^k\) for \(t>1\), we have that

Inserted into (16), this gives

The proof is completed by inserting the lower bound

which holds when \(R \le \frac{1}{2}\sqrt{M-1}\) according to [8, Theorem 4]. \(\square \)

Using Theorem 5 with \(t=t_{i}\) and inserting the result in (10), we have proven the statement of Theorem 3.

3.3 Optimality of the Prediction with Compression

When the matrix \(X({\varvec{t}})\) is compressed via POD (Algorithm 2) or the randomized range finder (Algorithm 3), the orthonormal basis \(Q \in {\mathbb {R}}^{n \times m}\) used in Algorithm 1 spans a lower-dimensional subspace \({\mathcal {S}} \subseteq \textrm{span}(X)\).

Corollary 6

Suppose that Algorithm 1 is used with an orthonormal basis satisfying \(\Vert (QQ^{T} -I) X(\varvec{t_{{\textsf{equi}}}}) \Vert _{2} \le \varepsilon \) for some tolerance \(\varepsilon > 0\). Under Assumption 1, the initial guess \({\varvec{s}}^{*}\) constructed by the algorithm satisfies the error bound

for any \(R \le \frac{1}{2} \sqrt{M-1}\).

Proof

Let \({\varvec{p}}_{R}(t) = X(\varvec{t_{{\textsf{equi}}}}) Q_{R}(\varvec{t_{{\textsf{equi}}}})^{\dagger } {\varvec{q}}_{R}(t)\) be the polynomial constructed in (14). Using that \({\varvec{s}}^{*}\) satisfies the minimization problem (8) and \(QQ^T {\varvec{x}}(t_{i}) \in {\mathcal {S}} = \textrm{span}(Q)\), we obtain:

The first term is bounded using Theorem 5 with \(t = t_i\). For the second term, we use the bound in (17) on \(\Vert {\varvec{q}}_{R}(t_{M+1})\Vert \) to obtain

with \(\sigma := \sigma _{\min }(Q_R(\varvec{t_{{\textsf{equi}}}}))\). The proof is completed using the lower bound (18) on \(\sigma \). \(\square \)

4 Numerical Results: Test Case

To test the subspace acceleration algorithms proposed in Sect. 2, we first consider a simplified setting, an elliptic PDE with an explicitly given time- and space-dependent coefficient \(a({\varvec{x}},t)\) and source term \(g({\varvec{x}},t)\):

We consider the domain \(\Omega = \left[ 0,1\right] ^{2} \subset {\mathbb {R}}^{2}\) and discretize (19) on a uniform two-dimensional Cartesian grid using a centered finite difference scheme of order 4. This leads to a linear system for the vector of unknowns \(\varvec{{f}}(t)\), for which both the matrix and the right-hand side depend on t:

We discretize the time variable on the interval \(\left[ t_{0}, \, t_{f} \right] \) with a uniform timestep \(\Delta t\) on \(N_{t}\) points, such that \(t_{f} = t_{0} + N_{t} \Delta t\). Evaluating (20) in these \(N_{t}\) instants, we obtain a sequence of linear systems of the same type as (1).

We set \(a({\varvec{x}},t) = \exp ^{\left[ -(x-0.5)^{2} - (y-0.5)^{2}\right] } \cos (tx) +2.1\) and choose the right-hand side \(g({\varvec{x}},t)\) such that

is the exact solution of (19). The tests are performed using MATLAB 2023a on an M1 MacbookPro. We employ GMRES as iterative solver for the linear system, with tolerance \(10^{-7}\) and incomplete LU factorization as preconditioner. We start the simulations at \(t_{0}= 2.3\, s\) and perform \(N_{t} = 200\) timesteps.

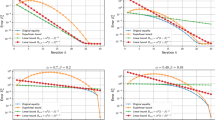

GMRES iterations per timestep when solving Eq. (20) with different initial guesses

The results reported in Fig. 1 use a spatial grid of dimension \(100 \times 100\), leading to linear systems of size \(n = 10000\). Different values of M, the number of previous solutions retained in the history matrix X, and m, the dimension of the reduced-order model, were tested. We found that the choices \(M = 20, \, m = 10\) and \(M = 35,\, m = 20\) lead to good performance for \(\Delta t = 10^{-5}\) and \(\Delta t = 10^{-3}\), respectively. The baseline is (preconditioned) GMRES with the previous solution used as initial guess; the resulting number of iterations is indicated with the solid blue line (“Baseline”) in Fig. 1. This is compared to the number of iterations obtained by applying GMRES when Algorithm 1 is employed to compute the initial guess, in combination with both the POD basis in Algorithm 2 (“POD” in the graph) and the Randomized Range Finder in Algorithm 3 (“RAND” in the graph). For the Randomized Range Finder algorithm, the matrix \(\Omega \) is computed from scratch only every 50 timesteps, while in the other timesteps is updated as described in Algorithm 3, resulting in a computationally efficient version of the algorithm. Both the POD and Randomized versions of the acceleration method give a remarkable gain in computational time with respect to the baseline.

When employing \(\Delta t = 10^{-5}\), in Fig. 1a, the number of iterations computed by the linear solver vanishes most of the time, since the initial residual computed with the new initial guess is already below the tolerance, set to \(10^{-7}\) in this case. It is worth noticing that the new randomized method gives an acceleration comparable to the existing POD one, but it requires a much lower computational cost, as described in Sect. 2.

The results obtained for larger timesteps, in Fig. 1b, are slightly worse, as expected, since it is less easy to predict new solutions using the previous ones when they are further apart in time. Nevertheless, the gain of the acceleration method is still visible, obtaining always less than half iterations with respect to the baseline and adding the solution of a reduced-order system of dimension \(m= 20 \) only, compared to the full solution of dimension 10000. The resulting advantage of the new method can indeed be observed in Fig. 2, which compares the computational time needed by the solver using the baseline approach with the one obtained by using the new guess (this includes the time employed to compute the guess). The timings showed are the ones needed to produce the results in Fig. 1. The time employed by the POD method has not been included since it is significantly higher than the baseline, as predicted by the analysis in Sect. 2.1.

Computational time per timestep corresponding to Fig. 1a and b. The average speedup per iteration of the randomized method with respect to the baseline is a factor 9 for \(\Delta t = 10^{-5} \) and a factor 10 for \(\Delta t = 10^{-3}\)

5 Numerical Results: Plasma Simulation

In this Section, we apply the subspace acceleration method to the numerical simulation of plasma turbulence in the outermost plasma region of tokamaks, where the plasma enters in contact with the surrounding external solid walls, resulting in strongly non-linear phenomena occurring on a large range of time and length scales.

In this work, we consider GBS (Global Braginskii Solver) [12, 23], a three-dimensional, flux-driven, two-fluid code developed for the simulation of the plasma dynamics in the boundary of a fusion device. GBS implements the Braginskii two-fluid model [3], which describes a quasi-neutral plasma through the conservation of density, momentum, and energy. This results in six coupled three-dimensional time-evolving non-linear equations which evolve the plasma dynamics in \(\Omega \), a 3D toroidal domain with rectangular poloidal cross section, as represented in Fig. 3. The fluid equations are coupled with Maxwell equations, specifically Poisson and Ampére, elliptic equations for the electromagnetic variables of the plasma. In the limit considered here the elliptic equations reduce to a set of two-dimensional algebraic constraints decoupled along the toroidal direction, therefore to be satisfied independently on each poloidal plane. The differential equations are spatially discretized on a uniform Cartesian grid employing a finite difference method, resulting in a system of differential-algebraic equations of index one [15]:

where \(\varvec{{\mathcal {Y}}}({\varvec{f}}(t),{\varvec{x}}(t))\) is a non-linear, 6-dimensional differential operator and

are the vector of, respectively, the electromagnetic and fluid quantities solved for by GBS, where the solutions of all the \(N_{Z}\) poloidal planes are stacked together. More precisely, the time evolution of the fluid variables, \({\varvec{f}}\), is coupled with the set of linear systems \(A_{k}({\varvec{f}}(t)) {\varvec{x}}_{k}(t) = {\varvec{b}}_{k}({\varvec{f}}(t))\) which result from the discretization of Maxwell equations. Indeed, the matrix \(A_{k} \in {\mathbb {R}}^{N_{R}N_{Z} \times N_{R}N_{Z} }\) and right-hand side \(\varvec{b_{k}} \in {\mathbb {R}}^{N_{R}N_{Z}}\) depend on time through \({\varvec{f}}\).

In GBS, system (21) is integrated using a Runge–Kutta scheme of order four, on the discrete times \(\left\{ t_{i} \right\} _{i=1}^{N_{t}}\), with step-size \(\Delta t\). Given \({\varvec{f}}^{i}\) and \({\varvec{x}}^{i}\), the value of \({\varvec{f}}\) and \({\varvec{x}}\) at time \(t_{i}\), the computation of \(\varvec{ {f}}^{i+1}\), requires performing three intermediate substeps where the quantities \(\varvec{ {f}}^{i+1, j}\) for \( j =1,2,3\) are computed. To guarantee the consistency and convergence of the Runge–Kutta integration method [15], the algebraic constraints are solved at every substep, computing \(\varvec{ {x}}^{i+1,j}_{k}\) for \( j =1,2,3\) and for each \(k-\)th poloidal plane. As a consequence, the linear systems \(A_{k}({\varvec{f}}(t)) {\varvec{x}}_{k}(t) = {\varvec{b}}_{k}({\varvec{f}}(t))\) are assembled and solved four times for each of the \(N_{\varphi }\) poloidal planes, to advance the full system (21) by one timestep. Since the timestep \(\Delta t\) is constrained to be small from the stiff nature of the GBS model, the solution of the linear systems is among the most computationally expensive part of GBS simulations.

In GBS, the linear system is solved using GMRES, with the algebraic multigrid preconditioner boomerAMG from the HYPRE library [9], a choice motivated by previous investigations [12]. The subspace acceleration algorithm proposed in Sect. 2 is implemented in the GBS code and, given the results shown in Sect. 4, the randomized version of the algorithm is chosen. The results reported are obtained from GBS simulations on one computing node. The poloidal planes of the computational domain are distributed among 16 cores, specifically of type Intel(R) Core i7-10700F CPU at 2.90GHz. GBS is implemented in Fortran 90, and relies on the PETSc library [2] for the linear solver and Intel MPI 19.1 for the parallelization.

We consider the simulation setting described in [12], taking as initial conditions the results of a simulation in a turbulent state. We use a Cartesian grid of size of \(N_{R} = 150,\, N_{Z} = 300\) and \(N_{\varphi }=64\), with additional 4 ghost points in the Z and R directions. Therefore, the imposed algebraic constraints result in 64 sequences of linear systems of dimension \( N_{R} N_{Z} \times N_{R} N_{Z} = 46816 \times 46816\). The timestep employed is \(\Delta t = 0.7 \times 10^{-5}\). The sequence of linear systems we consider represents the solution of the Poisson equation on one fixed poloidal plane, but the same considerations apply to the discretization of Ampére equation.

In Fig. 4a the number of iterations obtained with the method proposed in Sect. 2, denoted as “RAND” is compared with the ones obtained using the previous step solution as initial guess, depicted in blue as “Baseline”. We notice that, employing the acceleration method, the number of GMRES iterations needed for each solution of the linear system is reduced by a factor 2.9, on average, at the cost of computing a solution of an \(m \times m \) reduced-order system. In Fig. 4b the wall clock time required for the solution of the systems is shown. The baseline approach is compared to the accelerated method, where we also take into account the cost of computing the initial guess. Thanks to the randomized method employed, the process of generating the guess is fast enough to provide a time speed up of a factor of 6.5 per iteration.

The employed values of \(M=15\), the number of previous solutions retained, and \(m=10\), the dimension of the reduced-order model, are the ones found to give a good balance between the decrease in the number of iterations and the computational cost of the reduced-order model. In Table 1 the results for different values of M and m are reported. It is worth noticing that an average number of GMRES iterations per timestep smaller than one implies that often the initial residual obtained with the initial guess is below the tolerance set for the solver. It is possible to notice that higher values of m lead to very small number of iterations, but the overall time speedup is reduced since the computation of the guess becomes more expensive.

6 Conclusions

In this paper, we propose a novel approach for accelerating the solution of a sequence of large-scale linear systems that arises from, e.g., the discretization of time-dependent PDEs. Our method generates an initial guess from the solution of a reduced-order model, obtained by extracting relevant components of previously computed solutions using dimensionality reduction techniques. Starting from an existing POD-like approach, we accelerate the process by employing a randomized algorithm. A convergence analysis is performed, which applies to both approaches, POD and the randomized algorithm and shows how the accuracy of the method increases with the history size. A test case displays how POD leads to a noticeable decrease in the number of iterations, but at the same time a nearly equal decrease is achieved by the cheaper randomized method, that leads to a time speedup per iteration of a factor 9. In real applications such as the plasma simulations described in Sect. 5, the speedup is more modest, given the stiff nature of the problem which constrains the timestep of the explicit integration method to be very small, but still practically relevant.

Data Availability

The data that support the findings of this study are available upon reasonable request from the authors.

References

Austin, A.P., Chalmers, N., Warburton, T.: Initial guesses for sequences of linear systems in a GPU-accelerated incompressible flow solver. SIAM J. Sci. Comput. 43(4), C259–C289 (2021). https://doi.org/10.1137/20M1368677

Balay, S., et al: PETSc, the portable, extensible toolkit for scientific computation. Vol. 2. 17. Argonne National Laboratory, (1998)

Braginskii, S.I.: Transport Processes in a Plasma. Rev. Plasma Phys. 1, 205 (1965)

Brand, M.: Fast low-rank modifications of the thin singular value decomposition. Linear Algebra Appl. 415(1), 20–30 (2006). https://doi.org/10.1016/j.laa.2005.07.021

Carlberg, K., Forstall, V., Tuminaro, R.: Krylov-subspace recycling via the POD-augmented conjugate-gradient method. SIAM J. Matrix Anal. Appl. 37(3), 1304–1336 (2016). https://doi.org/10.1137/16M1057693

Chen, G., Zhang, Y., Zuo, D.: An Incremental SVD Method for Non-Fickian Flows in Porous Media: Addressing Storage and Computational Challenges. (2023). arXiv:2308.15409 [math.NA]

De Sturler, E.: Truncation strategies for optimal Krylov subspace methods. SIAM J. Numer. Anal. 36(3), 864–889 (1999). https://doi.org/10.1137/S0036142997315950

Demanet, L., Townsend, A.: Stable extrapolation of analytic functions. Found. Comput. Math. 19(2), 297–331 (2019). https://doi.org/10.1007/s10208-018-9384-1

Falgout, R.D., Yang, U.M.: hypre: A Library of High Performance Preconditioners. In: L Peter, MA Sloot et al. (eds.) International Conference on computational science ICCS 2002. Springer, Berlin, 632–641 (2002)

Fasoli, A., et al.: Computational challenges in magnetic-confinement fusion physics. Nat. Phys. 12(5), 411–423 (2016). https://doi.org/10.1038/NPHYS3744

Fischer, P.F.: Projection techniques for iterative solution of Ax = b with successive right-hand sides. Comput. Methods Appl. Mech. Eng. 163(1–4), 193–204 (1998). https://doi.org/10.1016/S0045-7825(98)00012-7

Giacomin, M., et al.: The GBS code for the self-consistent simulation of plasma turbulence and kinetic neutral dynamics in the tokamak boundary. J. Comput. Phys. 463, 111294 (2022). https://doi.org/10.1016/j.jcp.2022.111294

Golub, G.H., Van L., Charles F.: Matrix computations. Fourth. Johns Hopkins Studies in the Mathematical Sciences. Johns Hopkins University Press, Baltimore, MD, (2013)

Grinberg, L., Karniadakis, G.E.: Extrapolation-based acceleration of iterative solvers: application to simulation of 3D flows. Commun. Comput. Phys. 9(3), 607–626 (2011). https://doi.org/10.4208/cicp.301109.080410s

Hairer, E., Lubich, C., Roche, M.: The numerical solution of differential-algebraic systems by Runge–Kutta methods. Vol. 1409. Lecture Notes in Mathematics. Springer-Verlag, Berlin, pp. viii+139 (1989) https://doi.org/10.1007/BFb0093947

Halko, N., Martinsson, P.G., Tropp, J.A.: Finding structure with randomness: probabilistic algorithms for constructing approximate matrix decompositions. SIAM Rev. 53(2), 217–288 (2011). https://doi.org/10.1137/090771806

Hestenes, M.R., Stiefel, E.: Methods of conjugate gradients for solving linear systems. J. Res. Natl. Bur. Stand. 49(6), 409 (1952). https://doi.org/10.6028/jres.049.044

Kressner, D., Tobler, C.: Low-rank tensor Krylov subspace methods for parametrized linear systems. SIAM J. Matrix Anal. Appl. 32(4), 1288–1316 (2011). https://doi.org/10.1137/100799010

Kunisch, K., Volkwein, S.: Control of the Burgers equation by a reduced-order approach using proper orthogonal decomposition. J. Optim. Theory Appl. 102(2), 345–371 (1999). https://doi.org/10.1023/A:1021732508059

Markovinović, R., Jansen, J.D.: Accelerating iterative solution methods using reduced-order models as solution predictors. Int. J. Numer. Methods Eng. 68(5), 525–541 (2006). https://doi.org/10.1002/nme.1721

Morgan, R.B.: Implicitly restarted GMRES and Arnoldi methods for nonsymmetric systems of equations. SIAM J. Matrix Anal. Appl. 21(4), 1112–1135 (2000). https://doi.org/10.1137/S0895479897321362

Parks, M.L., et al.: Recycling Krylov subspaces for sequences of linear systems. SIAM J. Sci. Comput. 28(5), 1651–1674 (2006). https://doi.org/10.1137/040607277

Ricci, P., et al.: Simulation of plasma turbulence in scrape-off layer conditions: the GBS code, simulation results and code validation. Plasma Phys. Control. Fusion (2012). https://doi.org/10.1088/0741-3335/54/12/124047

Saad, Y., Schultz, M.H.: GMRES: A Generalized Minimal Residual Algorithm for Solving Nonsymmetric Linear Systems. SIAM J. Sci. Stat. Comput. 7(3), 856–869 (1986). https://doi.org/10.1137/0907058

Soodhalter, K.M.: Block Krylov subspace recycling for shifted systems with unrelated right-hand sides. SIAM J. Sci. Comput. 38(1), A302–A324 (2016). https://doi.org/10.1137/140998214

Soodhalter, K.M., de Sturler, E., Kilmer, M.E.: A survey of subspace recycling iterative methods. GAMM-Mitt 43(4), e202000016 (2020). https://doi.org/10.1002/gamm.202000016

Soodhalter, K.M., Szyld, D.B., Xue, F.: Krylov subspace recycling for sequences of shifted linear systems. Appl. Numer. Math. 81, 105–118 (2014). https://doi.org/10.1016/j.apnum.2014.02.006. arXiv:1301.2650

Tromeur-Dervout, D., Vassilevski, Y.: Choice of initial guess in iterative solution of series of systems arising in fluid flow simulations. J. Comput. Phys. 219(1), 210–227 (2006). https://doi.org/10.1016/j.jcp.2006.03.014

Volkwein, S.: Proper orthogonal decomposition: Theory and reduced-order modelling. Lect. Notes, Univ. Konstanz 4, p. 4 (2013)

Ye, S., et al.: Improving initial guess for the iterative solution of linear equation systems in incompressible flow. Mathematics 8(1), 1–20 (2020). https://doi.org/10.3390/math8010119

Acknowledgements

The authors thank the anonymous reviewers for helpful feedback. This work has been carried out within the framework of the EUROfusion Consortium, via the Euratom Research and Training Programme (Grant Agreement No 101052200 - EUROfusion) and funded by the Swiss State Secretariat for Education, Research and Innovation (SERI). Views and opinions expressed are however those of the author(s) only and do not necessarily reflect those of the European Union, the European Commission, or SERI. Neither the European Union nor the European Commission nor SERI can be held responsible for them.

Funding

Open access funding provided by EPFL Lausanne The authors have not disclosed any funding.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors have not disclosed any competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Guido, M., Kressner, D. & Ricci, P. Subspace Acceleration for a Sequence of Linear Systems and Application to Plasma Simulation. J Sci Comput 99, 68 (2024). https://doi.org/10.1007/s10915-024-02525-1

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10915-024-02525-1