Abstract

An auxiliary optimal control problem is formulated whose unique solution provides a continuous representation of the global error of a numerical approximation to the solution of a linear quadratic optimal control problem. The resulting error functions are characterized as the unique solutions to an optimality system that is reformulated as a boundary value problem. With this formulation, reliable pointwise error estimates can be generated using well-established techniques of defect control. A novel algorithm based on defect correction and defect control is presented that generates pointwise approximations to the global error of numerical optimal control solutions on a uniform grid. It is proven and numerically validated that this algorithm can generate pointwise estimates that approximate the true global error with a prescribed accuracy.

1 Introduction

This paper is devoted to the pointwise quantification of errors of discrete-time approximations to solutions of certain classes of linear quadratic optimal control (LQOC) problems. We extend for the first time the approach initiated by Zadunaisky [1, 2] for continuous-in-time estimates of errors in numerical approximations of Cauchy and boundary value problems with ordinary differential equations (ODE) to the field of optimal control problems governed by linear ODE models. Our purpose is to derive a continuous representation of the discretization errors of the numerical state and control functions approximating the solution to optimal control problems of the form

where \(\alpha \ge 0\), \(c\in {\mathbb R}^n\), \(A\in {\mathbb R}^{n\times n}\), \(B\in {\mathbb R}^{n\times m}\), \(x^0 \in {\mathbb R}^n\), \(x(t) \in {\mathbb R}^n\) for all \(t\in [t_0,T]\), and \(|\cdot |\) denotes the standard euclidean norm. For the weight matrices, it is supposed that \(R\in {\mathbb R}^{m\times m}\) is symmetric positive definite and \(Q\in {\mathbb R}^{n\times n}\) is symmetric positive semi-definite.

This work considers two cases for \(U_{ad}\). If the set of admissible controls for (1) is bounded and given by

where \({{\mathcal {U}}}:= [ \underline{u}, \overline{u} ]^m\), \(\underline{u} \le 0 \le \overline{u}\); we refer to (1)–(2) as the LQOC problem.

In the case without control constraints, that is, if the optimal control is sought in the set

we refer to (1) with (3) as the linear quadratic regulator (LQR) problem.

In both cases, \(U_{ad}\) is closed and convex in \(L^2(t_0,T;{\mathbb R}^m)\) and the optimal control problems (1)–(2) and (1) with (3) admit unique solutions, which are denoted by (x, u).

Since the fundamental works by Kalman [3, 4], linear quadratic control problems play an important role in optimal control theory and see extensive use in engineering applications. This is also because the LQR setting allows for the analysis of optimal control problems with nonlinear dynamics by providing a local approximation of the optimal control solution. In some cases, non-linear control problems can be solved directly using a linear model [5]. Applications of LQR problems involve the construction of trajectory controllers by linearization around the desired trajectory of a nonlinear system or for deriving feedback controls for the stabilization of nonlinear systems in equilibrium points. The input-constrained case (1)–(2) is a natural extension of LQR problems and the constraints on the inputs can model limitations of the hardware implementing the controller. However, despite its simple form, in general, no exact solution to (1) is available except for a few cases. Hence, in practice, (1) must be solved using computational methods that involve time-discretization of some form. A prominent approach is the so-called direct approach of optimal control, where the optimal control problem is recast into a finite-dimensional optimization problem by time-discretization of the system dynamics and the objective functional J. Another approach for finding approximations to the optimal control is the indirect approach, where an approximate solution to the system of necessary conditions arising from the Pontryagin maximum principle (PMP) [6] is sought. The PMP states that given an optimal control \(u \in U_{ad}\) and the corresponding state x, there exists an adjoint state p such that

where \(H(x,u,p) = p^T(Ax + Bu) - \frac{1}{2} (x^T Q x + u^T R u )\) is the Hamilton-Pontryagin (HP) function and it holds:

In the case of LQR problems, from (4) the relation \(u(t) = R^{-1}B^T p(t)\) follows and the optimality system described by the PMP can be reduced to an initial-terminal-value problem of the form

with the initial and terminal conditions given by

where

and \(\mathbb {1}_n \in {\mathbb R}^{n\times n}\) denotes the unit matrix. In the following, we refer to initial-terminal-value problems as boundary-value problems (BVP).
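
For orientation, the reduced system can be sketched directly from the HP function stated above. The block below is a reconstruction under stated assumptions, not a quotation of (7)–(8): it inserts \(u = R^{-1}B^T p\) into the canonical equations \(\dot{x} = \partial _p H\), \(\dot{p} = -\partial _x H\) and assumes that the terminal cost enters the objective as \(\frac{\alpha }{2}|x(T)-c|^2\).

```latex
\begin{aligned}
\dot{x} &= \partial_p H = A x + B R^{-1} B^T p, & x(t_0) &= x^0, \\
\dot{p} &= -\partial_x H = Q x - A^T p,         & p(T)   &= \alpha \bigl(c - x(T)\bigr).
\end{aligned}
```

The terminal condition can equivalently be written as \(\alpha x(T) + p(T) = \alpha c\), which couples both components of the unknown at \(t = T\).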

Computational methods for solving LQR problems mostly make use of the fact that the optimal control is given in the form of the feedback law \(u(t) = R^{-1}B^T Y(t) x(t)\) [7]. The construction of such a feedback law requires the solution Y(t) of a matrix Riccati differential equation which needs to be solved numerically using a suitable integration scheme [8,9,10,11]. Alternatively, in the unconstrained case, finding an approximation to the optimal control solution involves solving the BVP (7)–(8) directly using appropriate solvers [12, 13].
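
As a minimal illustration of the direct BVP route, the following sketch treats a scalar LQR problem (\(n = m = 1\)). It assumes that the reduced system reads \(\dot{x} = a x + (b^2/r)\,p\), \(\dot{p} = q x - a p\) with \(x(t_0) = x^0\) and \(\alpha x(T) + p(T) = \alpha c\), which is what the HP function above yields if the terminal cost is \(\frac{\alpha }{2}|x(T)-c|^2\). The solver is plain linear shooting with a classical RK4 integrator, chosen for brevity; it is not the defect-controlled BVP software of [12, 13], and all function names are hypothetical.

```python
def rk4(f, y, t0, t1, steps):
    """Integrate y' = f(t, y) from t0 to t1 with the classical RK4 scheme."""
    h = (t1 - t0) / steps
    t = t0
    for _ in range(steps):
        k1 = f(t, y)
        k2 = f(t + h / 2, [yi + h / 2 * ki for yi, ki in zip(y, k1)])
        k3 = f(t + h / 2, [yi + h / 2 * ki for yi, ki in zip(y, k2)])
        k4 = f(t + h, [yi + h * ki for yi, ki in zip(y, k3)])
        y = [yi + h / 6 * (s1 + 2 * s2 + 2 * s3 + s4)
             for yi, s1, s2, s3, s4 in zip(y, k1, k2, k3, k4)]
        t += h
    return y


def solve_scalar_lqr(a, b, q, r, alpha, c, x0, t0, T, steps=200):
    """Return (p(t0), x(T), p(T)) for the assumed scalar LQR boundary value problem."""
    def rhs(t, y):
        x, p = y
        return [a * x + (b * b / r) * p, q * x - a * p]

    # The system is linear, so y = y1 + s * y2 with
    # y1(t0) = (x0, 0) and y2(t0) = (0, 1); s plays the role of p(t0).
    x1, p1 = rk4(rhs, [x0, 0.0], t0, T, steps)
    x2, p2 = rk4(rhs, [0.0, 1.0], t0, T, steps)
    # Choose s so that the terminal condition alpha*x(T) + p(T) = alpha*c holds.
    s = (alpha * c - alpha * x1 - p1) / (alpha * x2 + p2)
    return s, x1 + s * x2, p1 + s * p2
```

Under these assumptions the initial control value follows as \(u(t_0) = (b/r)\,p(t_0)\). Linear shooting can become ill-conditioned on long time horizons, which is one reason why dedicated BVP solvers are preferred in practice.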

For our investigation, independently of the underlying solution method, we assume that the resulting numerical approximations to x, u, and p are available and defined on the uniform grid given by

with mesh width \(h = \frac{T-t_0}{L}\) and \(L \in {\mathbb N}\). Moreover, we suppose that these approximations are represented by well-defined grid functions as follows

where \(x_l:= x_h(t_l) \approx x(t_l)\), \(u_l:= u_h(t_l) \approx u(t_l)\) and \(p_l:= p_h(t_l) \approx p(t_l)\) hold for \(l = 0,\ldots ,L\). Certainly, \(x_h\), \(u_h\) and \(p_h\) are only relevant if they provide a reliable representation of the true solution to the problem (1) on the grid \(\varDelta _h\), that is, if the norm of the global error vectors with components

for all \(l = 0,\ldots , L\), is sufficiently small. For this reason, a substantial amount of research has been devoted to the question of convergence and convergence rates of numerical approximations to the continuous solutions of (1); see, e.g., [14,15,16,17]. In particular, considering the class of Runge–Kutta (RK) methods for time-discretization in optimal control, Hager and Dontchev [18, 19] proved, for a large class of optimal control problems with and without control constraints, that for all \(h>0\) sufficiently small, a constant \(C>0\) exists, such that the following holds:

where \(u_l = {{\,\mathrm{arg\,max}\,}}_{v \in {{\mathcal {U}}}} H(x_{l},v,p_{l})\) is assumed for all \(l = 0,\ldots , L\) and the order k depends on both the smoothness of the solution to (1) and the order of accuracy of the Runge–Kutta scheme used for the discretization of the state equation.

In [18, 19], the pair \((x_{h},u_{h})\) is the strict local minimizer of a discrete counterpart to the optimal control problem corresponding to (1), where integration is replaced by a numerical quadrature consistent with the RK scheme applied to the state equation. Hager proved [19] that, in the case of unconstrained optimal control problems, the usual order conditions for RK discretization of ODEs lead to (10) at least for \(k = 1\) and \(k = 2\). For the cases \(k = 3\) and \(k = 4\), and if control constraints are active, additional conditions on the RK coefficients are necessary; we refer to the original papers [18] and [19] for a detailed discussion. These results ensure convergence of the approximations to the solution of (1) as \(h \rightarrow 0\). However, for any fixed grid \(\varDelta _h\), the estimate (10) does not give insight into the distribution of the global error over the entire interval of integration.
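
A bound of the form (10) is typically checked numerically by comparing the maximal grid error on two grids. The sketch below does this for a scalar test equation integrated with the explicit Euler method; the ODE, the integrator, and the function names are stand-ins chosen for this illustration and are not the RK discretization of an optimal control problem analysed in [18, 19]. It also records the error at every node, which is the pointwise information that an order bound alone does not provide.

```python
import math


def euler_grid_errors(L):
    """Errors |x(t_l) - x_l| of explicit Euler for x' = -x, x(0) = 1 on [0, 1]."""
    h = 1.0 / L
    x = 1.0
    errors = [0.0]  # the initial value is exact
    for l in range(1, L + 1):
        x += h * (-x)
        errors.append(abs(math.exp(-l * h) - x))
    return errors


def observed_order(L):
    """Estimate k in max_l |x(t_l) - x_l| <= C h^k from grids with L and 2L cells."""
    e_coarse = max(euler_grid_errors(L))
    e_fine = max(euler_grid_errors(2 * L))
    return math.log2(e_coarse / e_fine)
```

For this first-order method, `observed_order(100)` should come out close to one, while the list returned by `euler_grid_errors` shows how the error is distributed over the grid.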

In this paper, a continuous representation of the global error is developed, which is new to the field of optimal control, allowing the quantification of pointwise errors in the approximation \((x_{h},u_{h})\) to the solution of our control problems where the admissible sets are defined by either (2) or (3). Therefore, on the interval \([t_0,T]\), continuous error functions are defined by \(e_x\) and \(e_u\), such that their evaluation on the grid \(\varDelta _h\) exactly gives the global error of the underlying numerical solutions \(x_h\) and \(u_h\), respectively. To construct these error functions, we extend the strategy of Zadunaisky [1, 2] originally applied to BVPs and initial value problems (IVP) to the framework of optimal control problems.

In the next section, our extension of Zadunaisky’s strategy to the framework of optimal control is discussed. An auxiliary optimal control problem is derived that has as its unique solution the error functions \(e_x\) and \(e_u\) which in turn are characterized by the solution of a BVP derived from the PMP. To construct the auxiliary problem, appropriate interpolation operators are defined, to achieve continuous extensions for \(x_h\) and \(u_h\) to the interval \([t_0,T]\). Assuming that the global errors of \(x_h\) and \(u_h\) are of a certain order in the sense of (10), we provide conditions for the interpolation operator that guarantee that the uniform convergence of \(e_x\) and \(e_u\) to zero is of the same order as the convergence of the underlying approximations on \(\varDelta _h\) to x and u as \(h \rightarrow 0\). Section 3 is devoted to the discussion of numerical procedures for generating reliable approximations of the desired error functions. Therein, a novel iterative procedure for generating error estimates in the LQR setting is proposed. This method successively generates improved error estimates using the symplectic Euler method and is inspired by the iterated defect correction (IDeC) schemes of Stetter [20, 21] and Frank et al. [22,23,24]. To ensure the reliability of the approximations to \(e_x\) and \(e_u\), a defect control-based strategy is utilized similar to [12, 13]. It is proved that with this algorithm a continuous approximation to the error functions \(e_x\) and \(e_u\) can be computed that is arbitrarily close to the global error of \(x_h\) and \(u_h\) on the grid \(\varDelta _h\). The findings of this work are supported with results of numerical experiments presented in Sect. 4, where a numerical order analysis of numerical approximations to \(e_x\) and \(e_u\) is discussed.
In particular, an application of the proposed methodology to LQR problems arising from a Petrov-Galerkin approximation of controlled convection-diffusion problems is presented, together with a discussion of the resulting computational complexity. Moreover, in the Appendix we present a cyclic reduction-based direct method that takes advantage of the special structure of the linear systems arising from the application of the symplectic Euler method to the auxiliary optimality systems. A section of conclusion completes this work.

2 The Construction of Continuous Error Functions

The strategy of Zadunaisky was initially developed to construct global error estimates of a given approximation to solutions of BVPs and IVPs [1, 2]. Notice that, in the case of LQR problems, the optimal pair (x, u) is uniquely defined by the solution of the BVP (7)–(8), together with the relation \(u = R^{-1}B^T p\). Hence, by assuming that the approximations \((x_h,p_h)\) to the solution of (7)–(8) are available, the method of Zadunaisky can be readily extended to the optimal control framework. That is, first an auxiliary BVP is constructed having as its unique solution the error functions

where \({{\mathcal {I}}}\) is an appropriate interpolating polynomial. An error function for the controls can be constructed similarly as

In the second step, the solution to the auxiliary problem is approximated using an appropriate solver returning the estimates \(e_{x,h}\), \(e_{p,h}\). Then, if \(u_h = R^{-1}B^T p_h\) holds, the error of the control approximation is given by \(e_{u,h} = R^{-1}B^T e_{p,h}\). While this approach is applicable in the LQR setting, the general LQOC case (1)–(2), where control bounds are active, does not allow a direct application of Zadunaisky’s approach to the optimality system. Instead, it is more advantageous to directly elaborate our methodology with the cost function and the system dynamics. In this way, an auxiliary optimal control problem can be constructed which has as its unique solution the error functions \((e_x,e_u)\) and can be constructed using the approximations \((x_h,u_h)\). This approach makes it possible to extend Zadunaisky’s strategy to a large range of numerical optimal control settings.

For the construction of the auxiliary optimal control problem, we define the vector space

together with the corresponding subsets

and

If LQR problems are considered and thus \((x_h,u_h)\) is an approximate solution to (1) with (3), we will always assume that \(U^h_{ad} = Z^{h,m}\). Moreover, we define the restriction operator \( {{\mathcal {R}}}^h: W^{1,\infty }(t_0,T;{\mathbb R}^r) \rightarrow Z^{h,1} \) with \({{\mathcal {R}}}^h(z)(t_l) = z(t_l)\), for all \(t_l \in \varDelta _h\) and any \(z \in W^{1,\infty }(t_0,T;{\mathbb R}^r)\). Restrictions of the above-defined error functions to the global errors of \((x_h,u_h)\in X^h \times U_{ad}^h\) are then given by

To extend the definition of the error functions \(e_x\) and \(e_u\) to the framework of LQOC problems, appropriate requirements for the interpolation operators applied to \(x_h\) and \(u_h\) are necessary. We make the following remark about the regularity of solutions to (1):

Remark 1

In the unconstrained case where we have (1) with (3), the optimal control and the corresponding state share the same regularity class. In particular, one can prove by using a bootstrap argument on the optimality system (4)–(6), that \(x \in C^{\infty }(t_0,T;{\mathbb R}^n)\) and \(u \in C^{\infty }(t_0,T;{\mathbb R}^m)\).

On the other hand, in the case of active box constraints (2), and since R is symmetric and positive definite, the component functions \(u_i\), with \(i = 1,\ldots ,m\), of the optimal control have an explicit form:

where \(w_i\) denotes the i-th component of \(w(t) = R^{-1}B^T p(t)\) [5]. Since \(u\in L^2(t_0,T;{\mathbb R}^m)\), the optimal state x is absolutely continuous and, in turn, the solution to the adjoint system (6) is continuously differentiable. With respect to \(w_i\) we have that \(\min \{w_i,\overline{u}\} \in W^{1,\infty }({\mathbb R})\). Then Lemma 7.6 in [25] guarantees that with \(w_i \in C^1([t_0,T])\) it holds \(\min (w_i(\cdot ),\overline{u}) \in W^{1,\infty }([t_0,T])\). A repeated application of this reasoning applied to the maximum function guarantees that the functions (13) are bounded Lipschitz functions and hence \(u \in W^{1,\infty }(t_0,T;{\mathbb R}^m)\), such that \(x \in W^{2,\infty }(t_0,T;{\mathbb R}^n)\).

By the above remark, we have that the continuous extensions of \(u_h\) and \(x_h\) are best chosen from the class of bounded and Lipschitz functions or a suitable subset. The following definition makes use of this fact.

Definition 1

(Interpolation operator) Let \(\alpha _i: Z^{h,1} \mapsto {\mathbb R}\) define a linear form for all \(i = 1,\ldots , N \), and choose \(\{\beta _i\}_{i = 1, \ldots N}\) as the basis of a finite-dimensional subspace \(W_N \subset W^{1,\infty }(t_0,T)\). Then the mapping

is called interpolation operator if \({{\mathcal {I}}}(z_h)(t_l) = z_h(t_l)\) holds for all \(l = 0,\ldots ,L\).

An interpolation operator is accurate of order \(k_z>0\) with respect to some function \(z \in W^{1,\infty }(t_0,T)\), if there exists some constant \(C>0\), such that for all \(h>0\) sufficiently small, it holds

In the case of an unconstrained control variable, where the optimal state and control are members of the same regularity class, one can use the same interpolation operator to generate both the continuous extension to \(x_h\) and to \(u_h\). However, if control bounds are active a further distinction between the interpolation operators for the discrete states and discrete controls is necessary. For generalization and in line with Definition 1, the following assumptions are made for an arbitrary function \(z \in W^{1,\infty }(t_0,T)\) with corresponding approximation \(z_h \in Z^{h,1}\), where \(z_h(t_l) \approx z(t_l)\) for all \(t_l \in \varDelta _h\). We have

(A1) Assume \(z(t)\in [\underline{u},\overline{u}]\), holds for all \(t \in [t_0,T]\) and \( z_h(t_l)\in [\underline{u},\overline{u}]\), holds for all \(t_l \in \varDelta _h\), then \({{\mathcal {I}}}(z_h)(t)\in [\underline{u},\overline{u}]\) must be satisfied for all \(t \in [t_0,T]\).

(A2) If \(z \in W^{2,\infty }(t_0,T)\), then it must hold \({{\mathcal {I}}}(z_{h}) \in W^{2,\infty }(t_0,T)\).

(A3) Assume that there exists a \(k \in {\mathbb N}\) and a constant \(C>0\), such that \(\max _{l = 0, \ldots L} | z(t_l) - z_h(t_l) | \le C h^k\) holds for all \(h>0\) sufficiently small. Then there exists another constant \(\tilde{C}>0\), such that \(\Vert ({{\mathcal {I}}}\circ {{\mathcal {R}}}^h)(z) - {{\mathcal {I}}}(z_h) \Vert _{\infty } \le \tilde{C}h^k\) is satisfied for all \(h>0\) sufficiently small.

Assumption (A3) guarantees that an interpolating function \({{\mathcal {I}}}(z_h)\) does reflect the behaviour of the global error in \(z_h\) at least asymptotically as \(h \rightarrow 0\). That is, any sequence of interpolating functions \(({{\mathcal {I}}}(z_h))_{h>0}\) converging pointwise on the interval \([t_0, T]\) with order k, due to the convergence properties of the sequence \((z_h)_{h>0}\), converges uniformly to z with the same order. A well-known example of an interpolation operator satisfying this assumption together with Assumption (A2) is the cubic spline interpolation operator with not-a-knot boundary conditions.

Remark 2

The Assumption (A2) is satisfied by construction since for cubic splines it holds \({{\mathcal {I}}}(z_h) \in C^2(t_0,T)\). We show the validity of (A3) in the case of cubic splines with not-a-knot boundary conditions. Therefore, we define \(z_l:= z_h(t_l)\) for all \(l = 1,\ldots ,L \), and require for all \(h>0\) sufficiently small there exists a \(C>0\), such that it holds

For each \(t \in [t_l,t_{l+1}]\) a cubic spline is defined by

with the coefficients

Together with the not-a-knot end conditions for the interpolatory cubic polynomial, the coefficients \(\mu _0,\ldots ,\mu _L\) are uniquely determined by the linear equations

for \(l = 1,\ldots ,L-1\) as well as

for \(l =0 \) and \(l = L\). As a result, each coefficient \(\mu _l\) is given as the linear combination of divided differences

and using the requirement (15), it holds

for some \(C_1,C_2,C_3 > 0\) and \(h>0\) sufficiently small. Together with this result, there exists a \(\tilde{C} > 0\) such that

holds for a sufficiently small \(h>0\). Since this estimate holds on any subinterval \([t_l,t_{l+1}]\), the interpolating function \({{\mathcal {I}}}({{\mathcal {R}}}^h(z) - z_h )\) converges uniformly to zero with order k on the whole interval \([t_0,T]\) as \(h\rightarrow 0\). The validity of (A3) now is a direct consequence of the linearity of the spline interpolation with respect to the interpolation nodes.

When interpolation of discrete controls \(u_h \in U^h_{ad}\) is desired, and control bounds are active, a restriction to a smaller class of interpolation operators is necessary to guarantee (A1). This is tied to the fact that the possible oscillatory behaviour of higher-degree polynomials like cubic splines can lead to a violation of requirement (A1). The following remark proves that the linear spline interpolation operator is a suitable choice for the interpolation of \(u_h \in U^h_{ad}\) as it not only satisfies (A3) but also (A1).

Remark 3

To construct a linear spline interpolation operator for an approximation \(u_h \in U^h_{ad}\) in the case \(m = 1\), the basis functions are chosen to be the hat functions:

with \(\beta _l \in W^{1,\infty }(t_0,T)\), for all \(l = 0,1,\ldots ,L\). The linear forms within (14) are defined such that

Hence, due to the property of the basis functions \(\{\beta _l\}_{l = 0,\ldots ,L}\) as a partition of unity it follows

A similar reasoning is applied when proving that the lower bound is satisfied for \({{\mathcal {I}}}(u_h)\). As a result, assumption (A1) is satisfied for the linear spline interpolation operator. The assumption (A3) is easily verified for a linear spline by using the linearity of \(\alpha _l\) and the property of the basis functions \(\{\beta _l\}_{l = 0,\ldots ,L}\) as a partition of unity.
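
The argument of Remark 3 can be tried out numerically. The sketch below assumes the usual hat functions on the uniform grid and takes \(\alpha _l(u_h) = u_h(t_l)\) as the linear forms, which is the standard choice for linear splines; the function names and the sample data are hypothetical.

```python
def hat(l, t, t0, h):
    """Hat function beta_l: equal to 1 at t_l = t0 + l*h and 0 at all other nodes."""
    return max(0.0, 1.0 - abs(t - (t0 + l * h)) / h)


def linear_spline(u_nodes, t, t0, h):
    """Evaluate I(u_h)(t) = sum_l u_h(t_l) * beta_l(t) for t in [t0, t0 + L*h]."""
    return sum(u_l * hat(l, t, t0, h) for l, u_l in enumerate(u_nodes))
```

For nodal values in \([\underline{u},\overline{u}]\), for example `[-1.0, 0.5, 1.0, -0.25, 1.0]` with bounds \(-1\) and \(1\), every evaluation of `linear_spline` is a convex combination of two neighbouring nodal values and therefore stays within the bounds, which is the content of (A1).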

For the entirety of the upcoming discussion, we will use the following convention: For any \(z_h \in Z^{h,r}\) with \(r \in {\mathbb N}\), the corresponding interpolating function, defined using an interpolation operator \({{\mathcal {I}}}\), will be given by

where \(z_{h,i}\in Z^{h,1}\) denotes the i-th component function of \(z_h\). With this, the definition of interpolation operators and the requirements (A1) - (A3) in mind, we define continuous extensions of the global errors (11)–(12) as follows.

Definition 2

(Continuous error extensions) Let (x, u) denote the unique solution to the optimal control problem (1) and \((x_h,u_h) \in X_{x^0}^h \times U^h_{ad}\) its corresponding approximate solution on the grid \(\varDelta _h\). Moreover, define

as appropriate interpolation operators satisfying Assumptions (A1) - (A3). Then continuous extensions for the global errors of \(x_h\) and \(u_h\) are defined as follows:

and

respectively. A continuous error extension will be called consistent with the global error of the underlying approximation if it is uniformly convergent to zero in the same order as the global error of the underlying approximation.

The choice of the interpolation operator has a decisive influence on the quality of the continuous error extension. In particular, it is a reasonable prerequisite to aim for a continuous error representation that reflects the convergence behaviour of the global error not only at the interpolation nodes but on the entirety of \([t_0,T]\). The following Lemma provides possible criteria for the choice of interpolation operators, such that the functions \(e_x\) and \(e_u\) are consistent with the global error of the approximations \(x_h\) and \(u_h\).

Lemma 1

Let \(z\in W^{1,\infty }(t_0,T)\), with corresponding approximation \(z_h \in Z^{h,1}\). Assume that there exists a constant \(C>0\) such that, for all \(h>0\) sufficiently small,

holds for a fixed \(k \in {\mathbb N}\) and define \(e_{z} = z - {{\mathcal {I}}}(z_h)\). Moreover, let \({{\mathcal {I}}}\) denote an interpolation operator which is accurate of order \(k_z\) with respect to z and satisfies (A3). Then, there exists a constant \(\tilde{C}>0\) such that

is satisfied for all \(h>0\) sufficiently small.

Proof

It appears that the size of \( \Vert z - {{\mathcal {I}}}(z_h) \Vert _{\infty } \) is governed by two sources, the error of interpolation itself and an error due to the interpolation with approximate rather than exact values. Moreover, since \({{\mathcal {I}}}\) is an interpolation operator that is accurate of order \(k_z\) and due to Assumption (A3), there exist constants \(C_1,C_2>0\) such that

Thus the interpolating function \({{\mathcal {I}}}(z_h)\) is uniformly convergent to z of order \(\min \{k,k_z\}\) as \(h \rightarrow 0\), and the Lemma is proved. \(\square \)
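
The two error sources named in the proof can be separated by a triangle inequality. The following lines are a sketch of that step, assuming that accuracy of order \(k_z\) means \(\Vert z - ({{\mathcal {I}}}\circ {{\mathcal {R}}}^h)(z) \Vert _{\infty } \le C_1 h^{k_z}\) and that \(h \le 1\), so that both powers of h can be bounded by the smaller exponent.

```latex
\begin{aligned}
\Vert z - \mathcal{I}(z_h) \Vert_{\infty}
  &\le \Vert z - (\mathcal{I}\circ\mathcal{R}^h)(z) \Vert_{\infty}
     + \Vert (\mathcal{I}\circ\mathcal{R}^h)(z) - \mathcal{I}(z_h) \Vert_{\infty} \\
  &\le C_1 h^{k_z} + C_2 h^{k}
   \le (C_1 + C_2)\, h^{\min\{k,\,k_z\}} .
\end{aligned}
```

The first term is the pure interpolation error for exact nodal values; the second term is controlled by (A3) and measures the effect of interpolating approximate nodal values.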

Corollary 1

Assume that \({{\mathcal {I}}}_x\) and \({{\mathcal {I}}}_u\) are interpolation operators that fulfil assumption (A3) and are accurate of order \(k_x\) and \(k_u\) with respect to x and u respectively. Then if the estimate (10) is satisfied for all \(h>0\) sufficiently small, there exists a constant \(\tilde{C}>0\) such that

holds for all \(h>0\) sufficiently small.

To obtain continuous extensions \(e_u\) and \(e_x\) that reflect the convergence behaviour of their discrete counterparts (11)–(12), interpolation operators must be selected that have at least the same order of accuracy as the underlying method for generating \(x_h\) and \(u_h\). If the control bounds are not active, both the control and the corresponding state are arbitrarily smooth. Therefore, in this case, and by a restriction to piecewise polynomial interpolation operators, the continuous extensions \(e_u\) and \(e_x\) are consistent with the underlying global error if one chooses polynomial pieces with a sufficiently high polynomial degree [26, 27]. However, if the control bounds are active, the consistency of the continuous extensions in the sense of Definition 2 is not always easy to achieve. In the following remark, the consistency of \(e_u\) and \(e_x\) with respect to the underlying global error for cubic and linear spline interpolation polynomials is discussed.

Remark 4

The cubic spline interpolation operator, as described in Remark 2, defines an interpolation operator that is exactly of order four with respect to any function \(z \in C^4(t_0,T)\) [28, 29]. Thus, if no control constraints are active, the continuous error extensions \(e_x\) and \(e_u\) with cubic splines are consistent in the sense of Definition 2 as long as the size of the global errors in \(x_h\) and \(u_h\) is of order \(O(h^k)\) with \(k\le 4\). If the control constraints are active, only a weaker result is possible, since both the control u and the associated state x are not arbitrarily smooth; see Remark 1. In this case, the cubic spline interpolation to the state x in \(W^{2,\infty }(t_0,T)\) defines an interpolation operator that is exactly of order two [30]. On the other hand, the linear spline interpolation operator of Remark 3 is exactly of order one with respect to the control \(u\in W^{1,\infty }(t_0,T)\). The continuous error extensions \(e_x\) and \(e_u\) are therefore only consistent in the sense of Definition 2 if the size of the global error in \(x_h\) is \(O(h^k)\) with \(k\le 2\) and the size of the global error in \(u_h\) is equal to O(h).
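
The first-order statement for controls in \(W^{1,\infty }\) can be illustrated with a clipped function, which is Lipschitz but has kinks, much like a control with active bounds. For a function with Lipschitz constant \(\mathrm{Lip}\), piecewise linear interpolation of exact nodal values satisfies \(|z(t) - ({{\mathcal {I}}}\circ {{\mathcal {R}}}^h)(z)(t)| \le \mathrm{Lip}\, h/2\), and the sketch below samples this error. The test function and all names are assumptions made for this illustration.

```python
import math


def clipped(t):
    """A Lipschitz function with kinks: sin(6t) clipped to [-0.5, 0.5], Lip = 6."""
    return max(-0.5, min(math.sin(6.0 * t), 0.5))


def max_interp_error(z, L, samples_per_cell=20):
    """Sampled max error of piecewise linear interpolation of z on [0, 1], L cells."""
    h = 1.0 / L
    worst = 0.0
    for l in range(L):
        z_left, z_right = z(l * h), z((l + 1) * h)
        for j in range(samples_per_cell + 1):
            lam = j / samples_per_cell
            t = (l + lam) * h
            worst = max(worst, abs(z(t) - ((1 - lam) * z_left + lam * z_right)))
    return worst
```

Evaluating `max_interp_error(clipped, L)` for increasing `L` stays below \(6h/2\) with \(h = 1/L\). How close the sampled error comes to this bound on a particular grid depends on where the kinks fall relative to the nodes.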

The definition of the continuous error extensions \(e_x\) and \(e_u\) allows one to represent the unique solution to (1) as the sum of an unknown part, the continuous error extension, and a known part, given by the continuous approximations \({{\mathcal {I}}}_x(x_h)\) and \({{\mathcal {I}}}_u(u_h)\). Utilizing this representation of the optimal solution to (1)–(2), we can construct an auxiliary problem having as its solution exactly the continuous error extensions given in Definition 2.

Lemma 2

Let \((x_h,u_h) \in X_{x^0}^h \times U^h_{ad}\), with corresponding interpolating functions \({{\mathcal {I}}}_x(x_h)\) and \({{\mathcal {I}}}_u(u_h)\). The tuple \((e_x,e_u)\) of continuous error extensions in the sense of Definition 2, where (x, u) solves (1)–(2), is the unique solution to the following optimal control problem

where

and the set of admissible controls is given by

where \({{\mathcal {E}}}_t = [ \underline{u} - {{\mathcal {I}}}_{u}(u_h)(t), \overline{u} - {{\mathcal {I}}}_{u}(u_h)(t)]^m\).

Proof

It is first proved that (18) admits a unique solution in \(E_{ad}\). By Definition 1 it follows \(D_{xu} \in L^1(t_0,T;{\mathbb R}^n)\). Now, as \(\upsilon \in L^2(t_0,T;{\mathbb R}^m)\), the Carathéodory theorem guarantees the existence and uniqueness of a solution to \(\dot{\xi } = A \xi + B \upsilon - D_{xu}\) with \(\xi (t_0) = 0\). This solution takes the form

where \(X(t) = e^{At}\). As a result of the boundedness of X(t) on \([t_0,T]\), and assuming \(\upsilon \in E_{ad}\), it follows that \(\xi \in AC(t_0,T;{\mathbb R}^n)\). Hence, (20) defines a control-to-state map for the control system involved in (18). This map is given by \({{\mathcal {C}}}: E_{ad} \rightarrow AC(t_0,T;{\mathbb R}^n)\), \(\upsilon \mapsto {{\mathcal {C}}}(\upsilon )\), which is an affine function.

Now, define \(\hat{{{\mathcal {J}}}}(\upsilon ):= {{\mathcal {J}}}({{\mathcal {C}}}(\upsilon ),\upsilon )\) as the reduced cost functional of \({{\mathcal {J}}}\), which is strictly convex. Since \({{\mathcal {E}}}_t\) is compact if both \(\underline{u}\) and \(\overline{u}\) are finite, the set \(E_{ad}\) is convex, closed, and bounded, such that in this case (18) admits a unique solution. In the LQR case where \(U_{ad} = L^2(t_0,T;{\mathbb R}^m)\), we have \(E_{ad}= L^2(t_0,T;{\mathbb R}^m)\) and the existence of a unique solution to (18) follows directly from the positive definiteness of R and the fact that \(\hat{{{\mathcal {J}}}}\) is coercive. To show that this solution is given by the tuple \((e_x,e_u)\), we first prove that \(e_u\) is an admissible control and \(e_x\) its corresponding state, i.e. \({{\mathcal {C}}}(e_u) = e_x\). Define by \( C: U_{ad} \rightarrow AC(t_0,T;{\mathbb R}^n)\), the control-to-state map for (1) (see [31]). By Definition 2, \(e_u \in E_{ad}\), using the relation \(C(u) = x\) and the fact that the function \({{\mathcal {I}}}_x(x_h)\) is the unique solution to an ODE problem of the form

it follows:

for all \(t \in [t_0,T]\). In order to see that \(e_u\) is a minimizer for \(\hat{{{\mathcal {J}}}}\) on \(E_{ad}\), consider the correspondence

for all \(u \in U_{ad}\) and \(\upsilon \in E_{ad}\) such that \(u(t) = \upsilon (t) + {{\mathcal {I}}}_u(u_h)(t)\) a.e. in \((t_0,T)\). This fact at hand, the minimizing property of \(e_u\) is an immediate consequence of Definition 2 and the optimality of u. Thus the tuple \((e_x,e_u)\) is the unique solution to the optimal control problem (18). \(\square \)
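
The state equation of the auxiliary problem can be traced back to the original dynamics in a few lines. The sketch below assumes that the state equation in (1) is \(\dot{x} = Ax + Bu\) with \(x(t_0) = x^0\), as suggested by the HP function, that \(x_h(t_0) = x^0\) for \(x_h \in X_{x^0}^h\), and that the defect is \(D_{xu} = \frac{d}{dt}{{\mathcal {I}}}_x(x_h) - A{{\mathcal {I}}}_x(x_h) - B{{\mathcal {I}}}_u(u_h)\). This form of \(D_{xu}\) is reconstructed here rather than quoted; it is consistent with the equation \(\dot{\xi } = A \xi + B \upsilon - D_{xu}\), \(\xi (t_0) = 0\), used in the proof.

```latex
\begin{aligned}
\dot{e}_x &= \dot{x} - \tfrac{d}{dt}\mathcal{I}_x(x_h)
           = A x + B u - \tfrac{d}{dt}\mathcal{I}_x(x_h) \\
          &= A\bigl(e_x + \mathcal{I}_x(x_h)\bigr)
           + B\bigl(e_u + \mathcal{I}_u(u_h)\bigr)
           - \tfrac{d}{dt}\mathcal{I}_x(x_h) \\
          &= A e_x + B e_u - D_{xu}, \\
e_x(t_0)  &= x^0 - \mathcal{I}_x(x_h)(t_0) = x^0 - x_h(t_0) = 0 .
\end{aligned}
```

The defect \(D_{xu}\) thus measures how far the interpolated pair \(({{\mathcal {I}}}_x(x_h),{{\mathcal {I}}}_u(u_h))\) is from satisfying the original state equation.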

Due to the formulation of the continuous error extension as a unique solution of an optimal control problem, the PMP [6] is applied to obtain \(e_x\) and \(e_u\) from the solution of a BVP.

Theorem 1

Let \((x_h,u_h) \in X_{x^0}^h \times U^h_{ad}\), with corresponding interpolating functions \({{\mathcal {I}}}_x(x_h)\) and \({{\mathcal {I}}}_u(u_h)\). Moreover, define \(y:= (e_x,p)^T\) with the error extension \(e_x\) given as in Lemma 2, p characterized by (6) and let

with each component of \(\pi \) defined by

where \(i = 1,\ldots ,m\). Then y is the unique solution to the non-linear BVP

where \(b = (0,\alpha (c-{{\mathcal {I}}}_x(x_h)(T)))^T\). Moreover, the continuous error extension \(e_u\) for the global errors in \(u_h\), is uniquely determined by \(e_u = \pi (p)\).

Proof

By Lemma 2 the functions \((e_x,e_u)\) are the unique solution to the optimal control problem (18). Since \({{\mathcal {I}}}_x(x_h) \in W^{2,\infty }(t_0,T;{\mathbb R}^n)\) and \({{\mathcal {I}}}_u(u_h) \in W^{1,\infty }(t_0,T;{\mathbb R}^m)\), the PMP can be applied in that case as well. We define the following HP function

corresponding to (18). The PMP guarantees that, for \(e_u\) and the corresponding trajectory \(e_x\), there exists an associated function \(q\in W^{2,\infty }(t_0,T;{\mathbb R}^n)\), such that \(e_x, e_u\) and q satisfy the optimality system

together with

for all \(v\in {{\mathcal {E}}}_t\) and any \(t\in [t_0,T]\). Due to Definition 2 and (26), one can identify q with the adjoint state of the original problem (1)–(2) and set \(q = p\). In the following let

Then (27) is equivalent to

where \(\langle \cdot ,\cdot \rangle \) denotes the standard inner product over \({\mathbb R}\). Since R is symmetric and positive definite, there exists an orthogonal matrix \(P\in {\mathbb R}^{m\times m}\) such that \(P^TRP\) is a diagonal matrix with the eigenvalues of R and it holds

By (28) the function \(\varphi \) attains its minimum at \(e_u\) and since the eigenvalues of R are all positive, for any \(t\in [t_0,T]\) one obtains

where \({{\mathcal {E}}}_{t,i}:= [\underline{u} - {{\mathcal {I}}}_{u,i}(u_h)(t), \overline{u} - {{\mathcal {I}}}_{u,i}(u_h)(t)]\). As a result, the continuous error extension for the control is uniquely defined by \(e_u = \pi (p)\) with its components given as in (22). Due to the definiteness requirements on R and Q, the HP function \({{\mathcal {H}}}\) is jointly concave in z and v. Together with the convexity of \(\varPhi \) and \({{\mathcal {E}}}_t\) for all \(t \in [t_0,T]\), we have that the conditions of the PMP are also sufficient [32]. A consequence of this fact and of the unique solvability of (18) is that \(e_x\) and p are the unique solutions to the HP system (23). \(\square \)

Corollary 2

Assume that (x, u) is the unique solution to (1) with (3) and p is defined by (6). Moreover, let \((x_h,u_h) \in X_{x^0}^h \times U^h_{ad}\) define approximations to (x, u) with corresponding interpolating functions \({{\mathcal {I}}}_x(x_h)\) and \({{\mathcal {I}}}_u(u_h)\). Then \(y = (e_x,p)^T\), with \(e_x\) as the continuous extension of the global errors of \(x_h\), is the unique solution to the ODE

with forcing function \(F = (-D_x,Q{{\mathcal {I}}}_x(x_h))^T\), \(D_x = \frac{d}{dt}{{\mathcal {I}}}_x(x_h) - A {{\mathcal {I}}}_x(x_h)\) and boundary conditions as in (23). Moreover, the continuous error extension \(e_u\) for the global errors of \(u_h\) is uniquely determined by

When considering error extensions \(e_x\) and \(e_u\) as solutions to (18) with \(E_{ad} = L^2(t_0,T;{\mathbb R}^m)\), a feedback control can be derived. Notice that (18) can be interpreted as a general linear tracking problem, where a feedback law is easily constructed just as in the classical setting of LQR problems. Thus the error functions \(e_x\) and \(e_u\) are related through the following equation

where \(G(t) = R^{-1}B^T Y(t)\) is the gain feedback matrix for the original problem (1) and \(V(t) = R^{-1}B^T v(t) - {{\mathcal {I}}}_u(u_h)(t)\) is a new command signal arising from the presence of \({{\mathcal {I}}}_u(u_h)\) and \({{\mathcal {I}}}_x(x_h)\) in (18). The functions Y and v are solutions to the following differential equations

and

As a result, the function \(e_x\) is obtained, given v and Y, by integrating forward in time the matrix IVP as follows:

Then \(e_u\) is obtained by substituting \(e_x\) into the feedback law (30).

3 Numerical Approximation of Continuous Error Functions

The main result of the last section is that the continuous extensions \(e_x\) and \(e_u\), defined in Definition 2, are characterized by the auxiliary problem (18). Theorem 1 guarantees that the solution to this auxiliary control problem is uniquely identified by the following optimality system

This system renders not only necessary but sufficient conditions that need to be satisfied by the functions defined in Definition 2. Hence, solving (31) numerically with an appropriate method will provide pointwise estimates for the global error functions \(e_x\) and \(e_u\) and in turn estimates for the global errors (11)–(12). As the auxiliary problem (18) defines a new optimal control problem it can be solved independently of the initial method or grid used to solve (1). In fact, in general, the grid used for solving (18) can be chosen to be much denser than that used for the approximation of x and u. In this case, for solving (18) numerically, a stepsize \(\eta > 0\) is chosen such that \(\varDelta _{h} \subseteq \varDelta _{\eta }\) holds, where \(\varDelta _{\eta }\) defines a new grid in the sense of (9). For the following discussion on the choice of appropriate numerical methods to generate pointwise approximations to continuous error functions \(e_x\) and \(e_u\), the main focus is on LQR problems, where the resulting auxiliary problem is given by (18) with \(E_{ad} = L^2(t_0,T;{\mathbb R}^m)\). In this framework, the linear algebraic system (31) is equivalent to the BVP formulated in Corollary 2.

The method presented in the following is new to the field of optimal control and arises from combining, for the first time, IDeC with a symplectic Euler method. This algorithm is thus closely related to the concept of acceleration methods but, in contrast to methods like Richardson extrapolation, requires only a single mesh to improve the rate of convergence of an underlying grid function. The original form of IDeC goes back to Stetter [20, 21] and was later theoretically investigated by Frank, Ueberhuber, and others [24, 33]. A recent review of existing defect correction methods for ODEs can be found in [34].

Before going into further detail, the general idea of the upcoming method is described. In the first step, an appropriate one-step method is applied to the ODE (29) with boundary conditions as in (23), to generate approximations \(e_{x,l}\approx e_x(t_l)\) and \(p_{l} \approx p(t_l)\) for all \(l = 0,\ldots ,L\). With these approximations at hand, one can achieve an estimate for the error in the controls on the grid \(\varDelta _{h}\) by extracting the appropriate values and setting \(e_{u,l} = \pi (p_l) \approx e_u(t_l)\) for all \(l = 0,\ldots ,L\) [35]. Similar to before, we represent the generated approximations using the grid functions \(e_{x,\eta }\in X_0^{\eta }\), \(p_{\eta }\in Z^{\eta ,n}\) and \(e_{u,\eta }\in Z^{\eta ,m}\), where we define \(e_{x,l}:=e_{x,\eta }(t_l)\), \(p_{l}:=p_{\eta }(t_l)\) and \(e_{u,l}:=e_{u,\eta }(t_l)\) for all \(t_l \in \varDelta _{\eta }\). The accuracy of these pointwise error estimates is then evaluated utilizing ideas of defect control [12, 13] that are transferred to the framework of optimal control. Until a desired level of accuracy for \(e_{x,\eta }\) and \(e_{u,\eta }\) with respect to \(e_x\) and \(e_u\) is achieved, the initial approximations are corrected successively. Therefore in the second step, and with similar reasoning as in Sect. 2, an auxiliary problem is constructed and solved to estimate the deviation of \(e_{x,\eta }\) and \(e_{u,\eta }\) from \(e_x\) and \(e_u\), respectively. The process is stopped as soon as a desired level of accuracy for the approximations to \(e_x\) and \(e_u\) is achieved.

To select a suitable method for determining \(e_{x,\eta }\) and \(e_{u,\eta }\), it must be taken into account that the system (29) is a canonical Hamiltonian system. This property becomes apparent by introducing the Hamiltonian \(\hat{{{\mathcal {H}}}}(t,e_x,p):= {{\mathcal {H}}}(t,e_x,p,\pi (p))\), where \({{\mathcal {H}}}\) is defined by (24). With this in mind, the system (29) with boundary conditions given as in (23) is equivalent to the following system

Thus, the solution \(y:=(e_x,p)\) is characterized by a symplecticity property [35] and it is reasonable to discretize the involved equations using a symplectic integrator. According to the results of [36], for non-autonomous Hamiltonian systems, the symplectic Euler method is appropriate. For the given Hamiltonian system an application of this method leads to the following equations

for each \(l = 0,\ldots ,L-1\), where \(F_1 = -D_x\) and \(F_2 = Q \, {{\mathcal {I}}}_x(x_h)\). We associate the solution to (32)–(33) with the grid function \(y_{\eta }:= (e_{x,\eta },p_{\eta })\) and define the corresponding realizations by \(y_l:= (e_{x,\eta }(t_l),p_{\eta }(t_l))^T\) for any \(t_l \in \varDelta _\eta \).

Remark 5

According to results in [37], the symplectic Euler scheme is a consistent and convergent first-order, one-step method if \(e_x,p \in C^{2}(t_0,T;{\mathbb R}^n)\). The regularity requirements for this method are fulfilled if at least a cubic spline, as defined in Remark 2, is chosen for the interpolation of \(x_h\).
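The first-order behaviour stated in Remark 5 can be observed on a toy problem. The following is a minimal sketch, not the scheme (32)–(33) itself: it assumes the harmonic oscillator \(\dot{q} = p\), \(\dot{p} = -q\) as a stand-in Hamiltonian system, posed as an initial value problem, and the function names are hypothetical. Halving the step size should roughly halve the end-point error.

```python
import math

def symplectic_euler_oscillator(n_steps, t_end=1.0):
    """Integrate q' = p, p' = -q from (q, p) = (1, 0) with symplectic Euler.

    Toy stand-in for a Hamiltonian system; not the paper's system (29).
    """
    eta = t_end / n_steps
    q, p = 1.0, 0.0
    for _ in range(n_steps):
        p = p - eta * q  # momentum update uses the old position
        q = q + eta * p  # position update uses the new momentum
    return q, p

def end_point_error(n_steps, t_end=1.0):
    """Error at t_end against the exact solution (cos t, -sin t)."""
    q, p = symplectic_euler_oscillator(n_steps, t_end)
    return abs(q - math.cos(t_end)) + abs(p + math.sin(t_end))

err_coarse = end_point_error(100)  # eta = 0.01
err_fine = end_point_error(200)    # eta = 0.005
# Observed order from one halving of the step size.
observed_order = math.log(err_coarse / err_fine, 2)
print(err_coarse, err_fine, observed_order)
```

An observed order close to one on this toy problem is consistent with the first-order statement of the remark; it does not replace the regularity assumptions made there.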

Once we have pointwise estimates \(e_{x,\eta }\) and \(e_{u,\eta } = \pi (p_{\eta })\) for the continuous error extensions \(e_x\) and \(e_u\) on a given grid \(\varDelta _{\eta }\), we need to ensure that these estimates adequately reflect the global errors of the approximations \(x_h\) and \(u_h\). Therefore, a reliable and computable bound for the global error of \(e_{x,\eta }\) and \(e_{u,\eta }\) is necessary. For this reason, similar to Sect. 2, a suitably accurate interpolation operator in the sense of Definition 1 is chosen so that assumptions (A2) and (A3) are fulfilled. This interpolation operator, denoted \({{\mathcal {I}}}_{y}\), is then applied to the solution of (48) to determine the continuous extension \({{\mathcal {I}}}_y(y_{\eta }):=({{\mathcal {I}}}(e_{x,\eta }), {{\mathcal {I}}}(p_{\eta }))^T\). Following the idea of Zadunaisky [1, 2], a natural measure of the quality of this continuous approximation to y is available through the defect, given by

In fact, the interpolating function \({{\mathcal {I}}}_y(y_{\eta })\) satisfies a perturbed problem given by

together with the boundary conditions in (23). This perturbed BVP is close to the original (23) if the norm of (34) is small. In this case, and if the underlying BVP is sufficiently well conditioned, the solution of the neighbouring problem (35) is also close to that of (23). In turn, the norm of the global errors

is of small size as well. This fact suggests that the norm of (34) establishes a computable quantity for evaluating the quality of \(e_{x,\eta }\) and \(p_{\eta }\).

For the following, we define the continuous error extensions of the global errors of \(e_{x,\eta }\), \(p_{\eta }\) and \(e_{u,\eta }\) by

and

respectively, where in (38) it is taken into account that the relations \(e_u = \pi (p)\) and \(e_{u,\eta } = \pi (p_\eta )\) hold. With the definitions (36)–(38) at hand, the defect is established as a computable upper bound for the global errors of the approximations \(e_{u,\eta }\) and \(e_{x,\eta }\).
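The role of the defect in the upcoming bound can be made explicit with a short worked step. It is a sketch under two assumptions that are inferred from the surrounding text rather than quoted from it: the defect (34) is read as \(D = \frac{d}{dt}{{\mathcal {I}}}_y(y_{\eta }) - M\,{{\mathcal {I}}}_y(y_{\eta }) - F\), and the error function is read as \(\delta _y = y - {{\mathcal {I}}}_y(y_{\eta })\), where M denotes the system matrix of the linear ODE \(\dot{y} = My + F\).

```latex
\[
\begin{aligned}
\dot{\delta}_y &= \dot{y} - \tfrac{d}{dt}\,\mathcal{I}_y(y_\eta) \\
               &= (M y + F) - \bigl(M\,\mathcal{I}_y(y_\eta) + F + D\bigr) \\
               &= M\,\delta_y - D .
\end{aligned}
\]
```

Under these assumptions the error function solves the same linear ODE as y with the forcing term replaced by \(-D\). If the interpolant also satisfies the boundary conditions in (23), then by linearity \(\delta _y\) satisfies the corresponding homogeneous boundary conditions, so a small defect implies a small error whenever the BVP is well conditioned.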

Theorem 2

Let the assumptions of Corollary 2 hold and assume that the interpolation operator \({{\mathcal {I}}}\) used to define (36)–(38) satisfies (A2). Moreover, suppose that the approximate solutions \(e_{x,\eta }\) and \(p_{\eta }\) to the linear algebraic system (31) satisfy the conditions

Then for every \(r\in {\mathbb N}\cup \{\infty \}\), there exists a \(C >0\) such that

where D is given by (34), with \({{\mathcal {I}}}_y(y_{\eta })=({{\mathcal {I}}}(e_{x,\eta }), {{\mathcal {I}}}(p_{\eta }))^T\).

Proof

By Corollary 2, the function \(y = (e_x,p)^T\) is the unique solution to the linear ODE

with boundary conditions as in (23) and \(F \in W^{1,\infty }(t_0,T;{\mathbb R}^{2n})\). The defect of this ODE, with respect to the interpolated approximation \({{\mathcal {I}}}_y(y_{\eta })\) is given by (34), and due to the assumptions (39), this interpolating function satisfies the boundary conditions in (23). By Assumption (A2) we have that \( D \in W^{1,\infty }(t_0,T;{\mathbb R}^{2n})\), such that together with (39), the function \({{\mathcal {I}}}_y(y_{\eta })\) uniquely satisfies

with the boundary conditions in (23). As a result, the error function \(\delta _y:= (\delta _x, \delta _p)^T\) uniquely satisfies

By standard results [38], the solution \(\delta _y\) can be represented in terms of an appropriately chosen Green’s function \(G(t,\tau )\) such that

Hence, the maximum of \(\delta _y\) can be explicitly bounded by the defect. Therefore the Hölder inequality is applied to the above integral representation of \(\delta _y\) to obtain:

for an appropriate r and s such that \(\frac{1}{r} + \frac{1}{s} = 1\), where for \(r = \infty \), we set \(s = 1\). Due to this result and since \(\Vert \delta _y \Vert _{\infty } = \max _{t \in [t_0,T]} ( | \delta _x(t) | + | \delta _p(t) |)\) we obtain

Since \(e_u = \pi (p)\) holds due to Corollary 2, we have

where \(\Vert \cdot \Vert \) denotes an appropriate matrix norm. Taking this fact into account, we obtain the estimate

where \(C = \kappa (1 + \Vert R^{-1}B^T\Vert )\). \(\square \)

Corollary 3

Let the assumptions of Theorem 2 be satisfied and \( e_{u,\eta } = \pi (p_{\eta })\). Then for each \(r \in {\mathbb N}\cup \{\infty \}\) there exists a \(C >0\) such that

where D denotes the defect (34).

According to Corollary 3, the defect provides in particular an upper bound for the global error of the approximation provided by (32)–(33). By Remark 5, the error of the approximations \(e_{x,\eta }\) and \(e_{u,\eta }\) is of size \(O(\eta )\). To establish the defect (34) not only as an upper bound for the global error but also as a reliable estimate for the size of the global error, at least asymptotically, we need \(D = O(\eta )\). The following lemma guarantees that this property is fulfilled for the symplectic Euler method if a suitable interpolation operator for \(x_h\) and \(y_{\eta }\) is chosen.

Lemma 3

Let the interpolation operator \({{\mathcal {I}}}_x\) in Definition 2 be chosen such that \(e_x \in C^{2}(t_0,T;{\mathbb R}^n)\). Moreover, choose \({{\mathcal {I}}}_y\) in (34) such that \({{\mathcal {I}}}_y(y_{\eta }) \in C^{2}(t_0,T;{\mathbb R}^{2n})\), where \(y_{\eta }\) is uniquely defined by the solution of (48). Then for the defect (34), it holds \(\Vert D\Vert _{\infty } = O(\eta )\).

Proof

One integration step of the symplectic Euler method applied to the BVP (23) is given by

with

Since \(e_x,p \in C^{2}(t_0,T;{\mathbb R}^n)\) and due to Remark 5 it holds:

and hence

The application of Taylor’s formula allows for the substitution

such that

The latter equation is equivalent to

Due to the regularity of \({{\mathcal {I}}}_y(y_\eta )\) it must hold \(\Vert \dot{y}-\frac{d}{dt}{{\mathcal {I}}}_y(y_\eta )\Vert _{\infty } = O(1)\) and thus it follows \(|D(t_l)| = O(\eta )\). Moreover, for each \(t \in [t_l,t_{l+1}]\) it holds

Hence, we obtain

Together with the boundedness of \(\Vert \dot{D}\Vert _{\infty }\), due to \(\dot{D}\in C^0(t_0,T;{\mathbb R}^{2n})\), it then follows \(|D(t)| = O(\eta )\) for any \(t \in [t_l,t_{l+1}]\). Since the choice of the sub-interval was arbitrary, the lemma is proved. \(\square \)

If highly accurate approximations for \(e_x\) and \(e_u\) are desired, the determination of a solution of (32)–(33) is associated with high computational costs since \(\eta > 0\) must be chosen sufficiently small. These costs increase considerably if the variables x(t) and u(t) are high-dimensional. It, therefore, makes sense to strive for an improvement in the convergence rate of the approximations \(e_{x,\eta }\) and \(e_{u,\eta }\) before refining the grid \(\varDelta _{\eta }\). To achieve an improvement in the convergence rate of the initial approximations, the deviations \(\delta _x\) and \(\delta _u\) must be investigated. By the reasoning applied in Theorem 2, these functions are related to \(\delta _y\), which is the unique solution to the BVP

By applying similar reasoning as initially for (31) an estimate for the solution to (42) can be determined with the symplectic Euler method. The resulting approximation on the grid \(\varDelta _{\eta }\) is defined by the grid function

and the boundary conditions in (42). Here and in the following, \(\delta _{x,\eta }\) and \(\delta _{p,\eta }\) denote the approximations of \(\delta _x\) and \(\delta _p\) on \(\varDelta _{\eta }\) using the symplectic Euler scheme (32)–(33), where the defect D assumes the role of the forcing term.

With the estimate of \(\delta _y\) on a sufficiently dense grid at hand, an improvement of the initial approximations to \(e_x\) and p is obtained by setting

The corrected approximations satisfy (39) and are thus approximations to the solution of the linear ODE (29) with boundary conditions in (23). Hence, Theorem 2 applies, when defining \(y^{(1)}_{\eta }:= (e^{(1)}_{x,\eta },p^{(1)}_{\eta })^T\) and \(e^{(1)}_{u,\eta } = \pi (p^{(1)}_{\eta })\), together with the defect

To achieve a further improvement of the initial approximation, this correction procedure can be continued iteratively by starting with the grid function \(y^{(1)}_{\eta }\). For each improved iteration, generated by this procedure, the following corollary of Theorem 2 applies.

Corollary 4

Let the assumptions of Theorem 2 hold and assume that \(\delta ^{(j)}_{x,\eta }\) and \(\delta ^{(j)}_{p,\eta }\), for all \(j \in {\mathbb N}_0\) are defined such that

Moreover, define

and for \(j = 0,1,\ldots \) set

such that the function

is the unique solution to \(\dot{\delta }^{(j)}_y = M \delta ^{(j)}_y - D^{(j)}\) with boundary conditions given in (42) and forcing term

Then, there exists a constant \(C>0\) independent of the current iteration j, such that it holds

with \( \delta ^{(j)}_u = e_u -\pi ( p -\delta ^{(j)}_p)\).

Remark 6

For each iteration, we have \(C = \kappa (1+\Vert R^{-1}B^{T}\Vert )\), where the constant \(\kappa >0\) is referred to as the conditioning number of the underlying BVP. Its magnitude depends on the fundamental solution of the homogeneous BVP corresponding to (42) and the boundary condition matrices \(b_{t_0}\) and \(b_{T}\). The size of this constant, in particular, affects the reliability of the defect as a measure of the quality of an approximation. It is therefore advantageous to estimate the conditioning number of the original BVP (7)–(8) from the LQR problem (1) a priori. A discussion on the estimation of condition numbers can be found in [38] and [39].
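The mechanics of one correction step (compute the defect of an interpolant, solve the error equation with the same scheme, add the result) can be illustrated on a scalar toy problem. The sketch below is hedged in several ways that are assumptions and not taken from the paper: it uses an initial value problem \(\dot{y} = \lambda y\) instead of the BVP, explicit Euler instead of the symplectic Euler scheme, and the nodal derivative of a local quadratic interpolant instead of a spline. All function names are hypothetical.

```python
import math

def euler(rhs, y_start, n_steps, eta):
    """Explicit Euler for y' = rhs(l, y), where l is the grid index."""
    y = [y_start]
    for l in range(n_steps):
        y.append(y[l] + eta * rhs(l, y[l]))
    return y

def nodal_derivative(y, eta):
    """Derivative of the local quadratic interpolant at every grid point."""
    n = len(y) - 1
    d = [(-3.0 * y[0] + 4.0 * y[1] - y[2]) / (2.0 * eta)]
    d += [(y[l + 1] - y[l - 1]) / (2.0 * eta) for l in range(1, n)]
    d.append((3.0 * y[n] - 4.0 * y[n - 1] + y[n - 2]) / (2.0 * eta))
    return d

def corrected_solution(n_steps, corrections, lam=-1.0, t_end=1.0):
    """Euler solve of y' = lam*y, y(0) = 1, followed by defect corrections."""
    eta = t_end / n_steps
    y = euler(lambda l, v: lam * v, 1.0, n_steps, eta)
    for _ in range(corrections):
        dy = nodal_derivative(y, eta)
        # Defect of the interpolant at the grid points: D = I' - lam * I.
        defect = [dy[l] - lam * y[l] for l in range(n_steps + 1)]
        # Error equation delta' = lam * delta - D with delta(0) = 0.
        delta = euler(lambda l, v: lam * v - defect[l], 0.0, n_steps, eta)
        y = [y[l] + delta[l] for l in range(n_steps + 1)]
    return y, eta

def max_error(n_steps, corrections):
    """Maximum grid error against the exact solution exp(-t)."""
    y, eta = corrected_solution(n_steps, corrections)
    return max(abs(y[l] - math.exp(-l * eta)) for l in range(n_steps + 1))

err_base = max_error(100, 0)            # plain Euler, eta = 0.01
err_corrected = max_error(100, 1)       # one correction, eta = 0.01
err_corrected_fine = max_error(200, 1)  # one correction, eta = 0.005
print(err_base, err_corrected, err_corrected / err_corrected_fine)
```

In this toy setting one correction raises the observed order from one to two. Because the quadratic interpolant limits the accuracy of the nodal derivative, further corrections are not expected to raise the order here, which mirrors the role of the interpolation order discussed in the text.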

By (46), the norm of the defect \(D^{(j)}\) provides an upper bound for the size of the global error for each corrected approximation. Thus, to monitor whether a correction step of the form (45) leads to an improvement of the original approximation, the norms of two consecutive defects \(D^{(j)}\) and \(D^{(j-1)}\) are compared based on the results of the following lemma.

Lemma 4

Assume that the interpolation operator \({{\mathcal {I}}}_y\) is accurate of order k with respect to the solution y of the ODE (29) with the boundary conditions in (23). Suppose that there exists a constant \(C_0>0\) such that it holds

for all \(\eta >0\) sufficiently small. Moreover, in addition to (A3), assume that for \(y^{(j)}_{\eta }\) and \({{\mathcal {I}}}_y\) there exist \(C_1,C_2>0\) for all \(\eta >0\) sufficiently small such that the following implication holds

Then there exists \(C>0\) for all \(\eta >0\) sufficiently small such that the following relation holds:

Proof

Due to the linearity of \(D^{(j)}\) with respect to the interpolating function \({{\mathcal {I}}}_y\) and the fact that y satisfies \(\dot{y} = M y + F\), the following result holds for all \(\eta >0\) sufficiently small:

\(\square \)

Remark 7

The assumptions of Lemma 4 are rather natural for interpolation operators utilizing piecewise interpolating polynomials. The cubic spline defined in Remark 2 is an example of an interpolation operator satisfying these prerequisites.

Lemma 4 provides two pieces of information for the construction of a reliable and efficient algorithm with correction iterations of the form (45). First, it allows the definition of a criterion to check whether a correction step leads to an improvement of the initial approximation \(y_{\eta }\), and second, it provides an upper bound for the maximum number of correction steps. For the first case, it should be noted that for a sufficiently small step size \(\eta > 0\) and a suitable interpolation operator, the condition \(\Vert D^{(j)}\Vert _{L^r} \le \Vert D^{(j-1)} \Vert _{L^r}\) must be fulfilled if the correction (45) provides an improvement in the order of convergence of \(y_{\eta }\). Thus, if \(\Vert D^{(j)}\Vert _{L^r} > \Vert D^{(j-1)}\Vert _{L^r}\) holds, the grid \(\varDelta _{\eta }\) might be too coarse and needs to be refined for the correction procedure to work. The second conclusion of Lemma 4 is related to Remark 4 and the regularity of the function y one wishes to approximate. The maximum achievable order of accuracy depends not only on the choice of \({{\mathcal {I}}}_y\), but also on the choice of the interpolation operator used to interpolate the approximate state \(x_h\) of the original LQR problem given by (1) with (3). In general, the maximum number of corrections is defined by \(j_{max} = k-1\), see Lemma 4.

Remark 8

Using the cubic spline interpolation operator defined in Remark 2, we obtain \(y \in W^{3,\infty }(t_0,T;{\mathbb R}^{2n})\) and thus \(k=3\) for Lemma 4 according to the results in [30]. Consequently, the defect would not be able to adequately reflect the accuracy order of \(y_{\eta }\) if \(j>2\), which suggests setting the maximum number of correction steps to \(j_{max} = 2\).

It remains to determine a suitable criterion so that the algorithm stops automatically as soon as the generated approximations to the global errors are sufficiently accurate. Based on the results of Lemma 3, Corollary 4 and Lemma 4, a suitable criterion is defined by adding the condition \(\Vert D^{(j)}\Vert _{L^r} \le \theta \). The above results guarantee that there is a step size \(\eta >0\), so that this condition is fulfilled. To find the required step size, the grid \(\varDelta _{\eta }\) is refined and a new correction cycle is initialized when the maximum number of corrections \(j_{max}\) is reached and the desired tolerance has not yet been obtained. As soon as the specified tolerance or a desired number of correction cycles is reached, the process is stopped and an estimate of the continuous error extension \(e_u\) is provided by \(\pi ({{\mathcal {I}}}(p^{(j)}_{\eta }))\). The pseudocode for the described method is given in Algorithm 1. The following theorem guarantees the well-definedness of the integrated termination criterion for the algorithm. Since it is a direct conclusion from the above findings, this result is stated without proof.
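The refinement and stopping logic described in this paragraph can be sketched as a small driver. This is a hedged illustration of the control flow only and not a transcription of Algorithm 1: the callables `solve_base`, `correct` and `defect_norm`, the refinement factor `gamma` and the cap `max_cycles` are hypothetical names, and the early exit on a growing defect norm follows the monitoring criterion discussed after Lemma 4.

```python
def run_correction_cycles(solve_base, correct, defect_norm,
                          eta0, gamma, theta, j_max, max_cycles):
    """Refine the step size until the defect norm of an iterate is below theta.

    solve_base(eta)     -> initial grid approximation for step size eta
    correct(y, eta)     -> iterate after one defect correction step
    defect_norm(y, eta) -> norm of the defect of the interpolated iterate
    """
    if max_cycles < 1:
        raise ValueError("max_cycles must be at least 1")
    for cycle in range(max_cycles):
        eta = eta0 * gamma ** cycle  # grid refinement between cycles
        y = solve_base(eta)
        d = defect_norm(y, eta)
        j = 0
        while d > theta and j < j_max:
            y_new = correct(y, eta)
            d_new = defect_norm(y_new, eta)
            if d_new > d:
                break  # no improvement: grid presumably too coarse
            y, d, j = y_new, d_new, j + 1
        if d <= theta:
            return {"y": y, "eta": eta, "j": j, "defect": d, "converged": True}
    return {"y": y, "eta": eta, "j": j, "defect": d, "converged": False}

# Synthetic stand-in: the "iterate" is just its correction count, and the
# defect norm is modelled as 2 * eta**(count + 1). Purely illustrative.
result = run_correction_cycles(
    solve_base=lambda eta: 0,
    correct=lambda y, eta: y + 1,
    defect_norm=lambda y, eta: 2.0 * eta ** (y + 1),
    eta0=0.05, gamma=0.5, theta=1e-3, j_max=2, max_cycles=5)
print(result)
```

In an actual implementation the three callables would wrap the linear solve, the correction (45) and the evaluation of \(\Vert D^{(j)}\Vert _{L^r}\); the synthetic defect model above only exercises the loop.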

Theorem 3

Let the conditions of Theorem 2 and Lemma 3 be satisfied. Then for any tolerance \(\theta > 0\) and a sufficiently accurate interpolation operator \({{\mathcal {I}}}\), there exists an iteration \(0 \le j\le j_{max}\) with stepsize \(\eta > 0\), such that for the error estimates \(e^{(j)}_{x,\eta }\) and \(e^{(j)}_{u,\eta }\) in Corollary 4, it holds:

An important computational aspect of Algorithm 1 is the choice of the procedure for determining the approximations \(y^{(0)}_{\eta }\) and each \( \delta ^{(j)}_{y} \). Since every correction cycle requires the solution of multiple systems like (32)–(33), it is important to aim for a method that allows for efficient execution of the correction steps. To achieve this goal, we exploit the linearity of the involved differential equation and represent the solution to (32)–(33) equivalently as the solution to the linear system of equations

with \(\vec {y}_{\eta } = \left( p_{\eta }(t_0), y_{\eta }(t_1),\ldots , y_{\eta }(t_{L-1}), e_{x,\eta }(t_L)\right) ^T \in {\mathbb R}^{2nL}\), where

and

with \( b_2 = \alpha (c-{{\mathcal {I}}}_x(x_h)(T))\). The involved system matrix has the advantageous structure of a block tridiagonal, Toeplitz matrix as follows

where

Together with the involutory property of \({\textbf{M}}_0\), the system (48) is especially well-suited for the application of a one-step block cyclic reduction approach [40]. As a consequence of this approach, only a reduced system of equations needs to be solved, which is half the size of the original one. A variety of methods can be invoked to determine a solution to the reduced system. For medium-sized problems a block LU factorization-based algorithm [41] is appropriate, also known as the block Thomas algorithm, which is a variation of block Gaussian elimination without pivoting and takes advantage of the block tridiagonal structure of \({\textbf{M}}_{\eta }\). In its non-block form, this algorithm was first presented in [42]. At each correction step the approximations \(\delta ^{(j)}_{y,\eta }\) are determined using the linear system (48) with the right-hand side updated to

and resulting in the solution vector

Consequently, at each correction step (45) with \(\eta > 0\) fixed, a linear system with system matrix \({\textbf{M}}_{\eta }\) is solved. The cyclic reduction and the required LU decomposition, or a suitable preconditioner for an iterative method, therefore need to be determined only once for each choice of \(\eta > 0\). This, in turn, allows for an efficient execution of each correction step. A detailed description of a direct method, combining one-step cyclic reduction and LU decomposition for determining \( y^{(0)}_{\eta } \) and each \(\delta ^{(j)}_{y,\eta }\), is provided in the Appendix.

Besides solving the linear system (48) for determining the desired grid functions, another crucial part of Algorithm 1 is the construction of each defect and thus of the interpolating functions \({{\mathcal {I}}}(y^{(j)}_{{\eta }})\). In particular, when piecewise polynomials like splines are used, the required interpolating functions are determined by solving a number of linear systems equal to the spatial dimension 2n of the function y. Since there exists no coupling between the component functions of \(y^{(j)}_{{\eta }}\), parallelization of the interpolation process is possible, which reduces the computational cost. In Sect. 4, a numerical investigation of Algorithm 1 and its time complexity is provided.

Moreover, the experiments described in Sect. 4 reveal that by a successive correction with (45) the order of convergence of the approximations \(e_{x,\eta }\) and \(e_{u,\eta }\) is improved by a whole number. This fact is consistent with the results on IDeC given in, e.g., [24]. The convergence analysis for these methods, however, is not straightforward and usually requires the existence of asymptotic expansions of the global error of the underlying scheme [33]. It is well known that these expansions exist for RK-methods with constant step-size [37], providing evidence that a similar result can be achieved for the symplectic Euler method presented here. An extensive discussion of this matter is out of the scope of this paper and will thus not be given within this work.
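The point that the factorization is computed once per step size and reused for every right-hand side can be illustrated with the non-block Thomas algorithm. The sketch below is a simplified stand-in under stated assumptions: it treats a scalar tridiagonal matrix instead of the block tridiagonal \({\textbf{M}}_{\eta }\), omits the cyclic reduction step, and uses hypothetical function names and a hypothetical test matrix.

```python
def factor_tridiagonal(lower, diag, upper):
    """LU factorization without pivoting of a tridiagonal matrix.

    lower[i] is entry (i+1, i), diag[i] is entry (i, i), upper[i] is
    entry (i, i+1). Returns the multipliers and the pivots.
    """
    pivots = [diag[0]]
    mult = []
    for i in range(1, len(diag)):
        m = lower[i - 1] / pivots[i - 1]
        mult.append(m)
        pivots.append(diag[i] - m * upper[i - 1])
    return mult, pivots

def solve_factored(mult, pivots, upper, rhs):
    """Forward and backward substitution with a stored factorization."""
    n = len(pivots)
    z = [rhs[0]]
    for i in range(1, n):
        z.append(rhs[i] - mult[i - 1] * z[i - 1])
    x = [0.0] * n
    x[n - 1] = z[n - 1] / pivots[n - 1]
    for i in range(n - 2, -1, -1):
        x[i] = (z[i] - upper[i] * x[i + 1]) / pivots[i]
    return x

# Hypothetical 4x4 example: tridiag(-1, 2, -1).
lower = [-1.0, -1.0, -1.0]
diag = [2.0, 2.0, 2.0, 2.0]
upper = [-1.0, -1.0, -1.0]
mult, pivots = factor_tridiagonal(lower, diag, upper)  # done once

# The same factorization serves several right-hand sides, as it would
# for the base solve and for each correction step.
x_first = solve_factored(mult, pivots, upper, [0.0, 0.0, 0.0, 5.0])
x_second = solve_factored(mult, pivots, upper, [1.0, 0.0, 0.0, 1.0])
print(x_first, x_second)
```

Each additional right-hand side costs only the two substitution sweeps, which is the reason the correction steps are cheap once the factorization for a given \(\eta \) is available.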

So far, the numerical error estimates for LQR problems have been discussed. To complete this section, the estimation of continuous error functions with active control constraints is discussed below. For this case, a solution of the optimal control problem (18)–(19) is sought, where the mapping \(\pi \) defined by (22) is a projection of the linear affine transformed co-state p onto the set of feasible control values. Therefore, the BVP (23), which results from the linear algebraic system (31), is nonlinear, so that Algorithm 1 is not readily applicable. However, since the HP system (31) is uniquely solvable and the associated maximization of the Hamiltonian function (4) can be solved analytically, the use of a forward-backward iteration is suitable for the approximation of \(e_x\) and \(e_u\). Prominent examples of these methods are the projected gradient approach [43], as well as methods of successive approximation [44,45,46]. Once estimates for the continuous error extension on the grid \(\varDelta _{\eta }\) are available, an estimate for the quality of the approximations \(e_{x,\eta }\) and \(e_{u,\eta }\) is desired. Therefore, similar to before, the quantities (36)–(38) are defined. Since \(\pi \), as defined by (22), is a projection of p(t) onto the compact set \({{\mathcal {E}}}_t\) and hence Lipschitz continuous, it holds:

Consequently, the investigation of the error in the approximation \(e_{u,\eta }\) to the function \(e_u\) can also be reduced to an investigation of the error function \(\delta _p\) in the case of active control constraints. According to Theorem 1, the functions \(e_x\) and p are solutions to the BVP (23). We suppose that the numerical solution to the system (31) satisfies

Then, just as in the case of LQR problems, the resulting interpolated approximation \({{\mathcal {I}}}_y(y_{\eta }) = ({{\mathcal {I}}}(e_{x,\eta }), {{\mathcal {I}}}(p_{\eta }))\) satisfies the perturbed problem

with boundary conditions as in (23), the defect (34) and \({{\mathcal {L}}}\) given by (21). Intuitively, one expects that the same reasoning as applied in the unconstrained setting holds for the defect. That is, if the maximum norm of the defect is sufficiently small, the perturbed and the original BVP (23) are closely related, and thus the approximations \(e_{x,\eta }\) and \(p_{\eta }\) on the given grid \(\varDelta _{\eta }\) are sufficiently close to \(e_x\) and p. In turn, by (50), \(e_{u,\eta }\) should also represent a suitable approximation to \(e_u\). However, in contrast to the LQR case, an exact bound as stated in Theorem 2 does not hold. Instead, the defect rather serves as an asymptotically correct upper bound for the maximum norm of the function \(\delta _y\). To substantiate this statement, a reasoning similar to [13] is applied. To this end, we assume that the approximations \(e_{x,\eta }\) and \(p_{\eta }\) are accurate of order k in the sense of (10) and the interpolation operator \({{\mathcal {I}}}_y\) satisfies (A3) and is chosen such that \(\Vert \delta _y\Vert _{\infty } = O(\eta ^k)\). We rewrite the defect as follows:

Then integration over the sub-interval \([t_l,t]\) with \(t \in (t_l,t_{l+1}]\), results in

Due to the Lipschitz properties of \(\pi \) and the assumption \(\Vert \delta _y\Vert _{\infty } = O(\eta ^k)\), we have

for all \(t \in (t_l,t_{l+1}]\). This correspondence provides essentially two pieces of information about the asymptotic behaviour of the defect. First, in the worst case, for the defect it holds \(D(t) = O(\eta ^{k-1})\) and, secondly, for \(\eta \) sufficiently small the quantity \(\eta \Vert D\Vert _{\infty }\) can serve as an upper bound for the global error of our approximations \(e_{x,\eta }\) and \(p_{\eta }\). In turn, \(\eta \Vert R^{-1}B^T\Vert \; \Vert D\Vert _{\infty }\) can at least asymptotically be seen as an upper bound for the global error of the approximation \(e_{u,\eta }\). However, since this relation holds only asymptotically, the defect in the control-constrained case is a less reliable measure for the quality of our approximations \({{\mathcal {I}}}(e_{x,\eta })\) and \(\pi ({{\mathcal {I}}}(p_{\eta }))\) than it is in the unconstrained case.
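The Lipschitz property of the projection used in this argument can be checked numerically in a scalar setting. The following sketch assumes a single control (\(m = 1\)), scalar R and B, and the reading that \(\pi (p)\) clips the unconstrained error control \(R^{-1}B^Tp - {{\mathcal {I}}}_u(u_h)(t)\) to the interval \({{\mathcal {E}}}_{t}\); since (22) is not restated here, this form and the name `error_control` are assumptions.

```python
def error_control(p, r, b, u_interp, u_low, u_high):
    """Scalar sketch of e_u = pi(p).

    Clips r^{-1} * b * p - u_interp to [u_low - u_interp, u_high - u_interp].
    Assumed form; scalar control and scalar weights only.
    """
    unconstrained = b * p / r - u_interp
    return min(max(unconstrained, u_low - u_interp), u_high - u_interp)

# Hypothetical data: r = 2, b = 1, interpolated control value 0.3, bounds [-1, 1].
r, b, u_interp, u_low, u_high = 2.0, 1.0, 0.3, -1.0, 1.0
active = error_control(10.0, r, b, u_interp, u_low, u_high)   # upper bound active
inactive = error_control(0.4, r, b, u_interp, u_low, u_high)  # no bound active

# Check |pi(p1) - pi(p2)| <= |b / r| * |p1 - p2| on a few sample pairs.
samples = [-10.0, -3.0, -0.5, 0.0, 0.4, 1.7, 2.6, 10.0]
lipschitz_ok = all(
    abs(error_control(p1, r, b, u_interp, u_low, u_high)
        - error_control(p2, r, b, u_interp, u_low, u_high))
    <= abs(b / r) * abs(p1 - p2) + 1e-12
    for p1 in samples for p2 in samples)
print(active, inactive, lipschitz_ok)
```

The Lipschitz constant \(|R^{-1}B^T|\) observed here is the scalar counterpart of the factor \(\Vert R^{-1}B^T\Vert \) appearing in the bound for the control error.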

4 Numerical Experiments

To demonstrate the findings of our work, results of numerical experiments are presented. For validation purposes, in the first subsection, small problems with known analytical solutions are considered. In the second subsection an application of Algorithm 1 to an LQR problem is presented that arises from the finite element Petrov-Galerkin approximation of a controlled convection-diffusion equation.

4.1 Numerical Validation with Small LQR and LQOC Problems

As a first example, an LQR problem is considered with the specifications \(Q = 0\), \(R = 0.005\), \(\alpha = 10\), and \(c = (-2,-2)^T\). The governing ODE model is given by

where \(t \in [0,10]\). The true solution (x, u) is obtained by solving the corresponding BVP (7)–(8) using the MATLAB Symbolic Math Toolbox and setting \(u = R^{-1}B^T p\). To generate the approximations \((x_h,u_h)\) on the equidistant grid with step-size \(h = 0.5\), a direct method of optimal control is applied. Here, a coarse grid is chosen for the initial approximation to better visualize the error functions and approximations. The system dynamics (53) are approximated using the second-order accurate Crank-Nicolson method. Consistent with this choice, the integral in the cost functional (1) is approximated using the trapezoidal rule. The resulting nonlinear programming problem is then given by

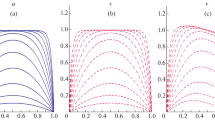

where we suppose \(x_l:= x_h(t_l)\) and \(u_l:= u_h(t_l)\) for all \(l = 0,\ldots ,L\). The finite-dimensional optimization problem (54) is solved numerically using a second-order interior point optimization technique [47]. For constructing the continuous extensions in the sense of Definition 2, the interpolation operator \({{\mathcal {I}}}\) is chosen to be the cubic spline interpolation with not-a-knot boundary condition, as described in Remark 2. For constructing approximations \(e_{x,\eta }\) and \(e_{u,\eta }\) to the continuous error functions \(e_x\) and \(e_u\), we apply Algorithm 1 with the tolerance \(\theta = 10^{-3}\), maximum number of corrections \(j_{\max } = 2\), initial step-size \(\eta = 0.05\) and \(\gamma = 0.5\). The \(L^{\infty }\)-error of the approximations \(e^{(j)}_{x,\eta }\) and \(e^{(j)}_{u,\eta }\), measured by the maximum norm of the functions \(\delta _x^{(j)}\) and \(\delta _u^{(j)}\), respectively, is provided in Table 1. For the corresponding estimated upper bounds \(\Vert D^{(j)}\Vert _{L^r}\) we set \(r = \infty \) and report them in Table 3. Comparing the results in Tables 1 and 3 shows that the maximum norm of the defect provides an upper bound for the approximation error with respect to the error functions \(e_x\) and \(e_u\). This agrees with Theorem 2 for \(r = \infty \). Hence, for the given problem, the defect is an appropriate indicator for the reliability of the continuous error estimates \({{\mathcal {I}}}(e_{x,\eta })\) and \({{\mathcal {I}}}(e_{u,\eta })\).

The resulting trajectories of the continuous error estimates and the continuous extensions of the numerical approximations are shown in Fig. 1. Due to the use of the trapezoidal rule when constructing the discrete-time problem (54), less weight is put on the optimization of the discrete controls at the boundaries of \([t_0,T]\). Thus, the global error in \(u_h\) and \(x_h\) is largest close to \(t_0\) and \(T\). As visible in Fig. 1, this behaviour is reflected by the error functions \(e_x\) and \(e_u\) as well as by their approximations generated by Algorithm 1.

For a numerical order analysis, we additionally investigate for each iteration \(j = 0,1,2\) the asymptotic behaviour of the defect \(D^{(j)}\) as well as that of the continuous error estimates \({{\mathcal {I}}}(e^{(j)}_{x,\eta })\) and \({{\mathcal {I}}}(e^{(j)}_{u,\eta })\). To this end, multiple approximations \(e_{x,\eta }\) and \(e_{u,\eta }\) are generated using Algorithm 1 with the specification \(\theta = 2\cdot 10^{-6}\) and \(\eta = 0.0625\). With these approximations and the corresponding defect values at hand for each iterate of Algorithm 1, a least-squares fit of the generated values to a function of the form \(C\eta ^k\) is applied. The corresponding log-log plot of the results is provided in Fig. 2, and the estimated orders of \(\delta _{\eta }^{(j)}\) for \(j = 0,1,2\) are given in Table 2. The estimated orders of \(D^{(j)}\) for \(j = 0,1,2\) are presented in Table 4. Consistent with the framework of IDeC [24], Table 2 shows that for each iteration \(j = 0,1,2\) of Algorithm 1 the corrections defined by (45) lead to an improvement in the order of accuracy of the initial estimates \({{\mathcal {I}}}(e_{x,\eta })\) and \({{\mathcal {I}}}(e_{u,\eta })\). This is closely connected to the ability of the defect to reflect the asymptotic behaviour of the underlying global error. From Table 4 it is apparent that the defect is asymptotically equivalent to the global error of the corrected approximations \(e^{(j)}_{x,\eta }\) and \(e^{(j)}_{u,\eta }\) for \(j < 2\).

However, for the last approximation with \(j = 2\), an order reduction appears in the defect \( D^{(2)} \). While a cubic spline interpolant satisfies Assumption (A3), as shown in Remark 2, its derivative is not able to reflect the asymptotic behaviour of the underlying approximation \(e_{x,\eta }\). More precisely, in the given case it can be proved that \(\frac{d}{dt}{{\mathcal {I}}}(e^{(2)}_{x,\eta }) = O(\eta ^{2})\) holds. Taking into account the relation (47), it appears that in the given case the size of the defect cannot be improved beyond this worst-case bound. To overcome this order reduction in the defect, we apply Lemma 4 and choose an interpolation operator with a higher order of accuracy. Using a quartic instead of a cubic spline for the interpolation operator \({{\mathcal {I}}}\), we obtain the results presented in Tables 5 and 6. With the higher-order spline, the order reduction in the defect of the last iterate no longer occurs.
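The order estimation described above can be illustrated with a short sketch. The text does not state how the least-squares fit to \(C\eta ^k\) is computed, so the sketch assumes the common choice of a linear regression in log-log coordinates. The function name `fit_power_law` and the data are hypothetical and serve only as an illustration; they are not the values behind Tables 2 and 4.

```python
import math

def fit_power_law(etas, errors):
    """Least-squares fit of errors ~ C * eta**k in log-log coordinates.

    Taking logarithms turns the power law into the straight line
    log(error) = log(C) + k * log(eta), which is fitted by ordinary
    linear regression. Returns the pair (C, k).
    """
    xs = [math.log(eta) for eta in etas]
    ys = [math.log(err) for err in errors]
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    k = sxy / sxx                      # slope = estimated order
    C = math.exp(y_mean - k * x_mean)  # intercept gives the constant
    return C, k

# Synthetic data (assumption): errors that decay exactly like 3 * eta**2
etas = [0.0625 * 0.5 ** i for i in range(5)]
errors = [3.0 * eta ** 2 for eta in etas]
C, k = fit_power_law(etas, errors)
```

For measured errors, the slope `k` plays the role of the estimated order, and the fitted line corresponds to the straight lines one would expect in a log-log plot such as Fig. 2.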

Trajectories of the true solution and the continuous extensions of the corresponding numerical approximations to the first test problem based on (54), together with the corresponding continuous error estimates

Log-log plot of the \(L^{\infty }\)-error of the estimates \({{\mathcal {I}}}(e_{x,\eta })\) for \(x_h\) (a) and \({{\mathcal {I}}}(e_{u,\eta })\) for \(u_h\) (b) with respect to the step-size \(\eta \). The corresponding estimated orders are reported in Table 2

Our next test problem is taken from [18] and specified by the parameters \(Q = 2\), \(R = 2\), \(\alpha = 0\). In this case, the Cauchy problem is given by

where \(t \in [0,1]\). Moreover, it is required that \(u(t) \in {{\mathcal {U}}}= (-\infty ,1]\) for all \(t \in [0,1]\). The exact optimal solution (x, u) is given by

and

To generate an approximation \((x_h,u_h)\) to the above functions, the indirect approach of optimal control is applied. To this end, the HP system (4)–(6) is solved by successive iteration. The symplectic Euler method with step size \(h = 0.1\) is used to construct a discrete HP system, which is then solved using the projected gradient method. To determine \(e_{x,\eta }\) and \(e_{u,\eta }\), the auxiliary HP system (31) is discretized using the symplectic Euler method on a uniform grid with step size \(\eta = 0.01\). The resulting system is then solved using the sequential quadratic Hamiltonian algorithm [46]. The continuous extensions for \(x_h\) and \(e_{x,\eta }\) are constructed using the cubic spline of Remark 2. For the interpolation of \(u_h\) and \(e_{u,\eta }\), the linear spline described in Remark 3 is utilized. The resulting trajectories, together with those of the true solutions \(x,u,e_x\) and \(e_u\), are shown in Fig. 3. As long as the control bounds are active for both the discrete control \(u_h\) and the optimal control \(u\), the approximation of the state is exact by construction. This is in line with the behaviour of the error function \(e_x\) and its continuous approximation \({{\mathcal {I}}}_x(e_{x,\eta })\). As soon as the control constraints are inactive, the global error of the approximation \(p_h\) to the co-state impacts the global error of \(u_h\). In Fig. 3b one can see that the global error of the discrete control is at its maximum where the control constraints on \(u\) turn from active to inactive. Even though the global error in \(u_h\) decays linearly, the construction of the forward Euler method causes the global error in the controls, and thus in \(x_h\), to accumulate linearly, which is consistent with the trajectory of \(e_x\) and its continuous approximation \({{\mathcal {I}}}_x(e_{x,\eta })\), see Fig. 3a.

Figure 3b illustrates the impact of the choice of a linear interpolation operator on the regularity of the continuous error function \(e_u\). Due to the non-linearity of (57), non-differentiable points occur at the grid points for \(0.5\le t \le 1\). Nevertheless, as noted in Remark 4, the continuous error function \(e_u\) is consistent with the global error of \(u_h\). To see this, notice that \(\Vert e_u\Vert _{\infty }\) has an estimated order of uniform convergence of \(k \approx 1.01113\) for \(h \rightarrow 0\). Moreover, it is well known that the Euler method as applied to the system (4)–(6) is first-order accurate. Taken together, these facts imply the consistency of the continuous error estimate \(e_u\). A similar result is obtained for \(e_x\), where the estimated order of uniform convergence as \(h \rightarrow 0\) is \( k\approx 0.99262 \).
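As a hedged illustration of how an order of uniform convergence close to one can be observed for an Euler-type method, the following sketch uses a simple scalar model problem, \(x' = -x\), \(x(0) = 1\) on \([0,1]\), which is an assumption made for illustration and is not the test problem above. It compares the maximum grid error for step sizes \(h\) and \(h/2\). The function names are hypothetical.

```python
import math

def euler_max_error(n_steps):
    """Maximum grid error of the explicit Euler method for x' = -x, x(0) = 1 on [0, 1]."""
    h = 1.0 / n_steps
    x, err = 1.0, 0.0
    for l in range(1, n_steps + 1):
        x += h * (-x)                              # one explicit Euler step
        err = max(err, abs(x - math.exp(-l * h)))  # compare with the exact solution
    return err

def observed_order(n_steps):
    """Estimate the convergence order from the errors for step sizes h and h/2."""
    e_h = euler_max_error(n_steps)
    e_h2 = euler_max_error(2 * n_steps)
    return math.log(e_h / e_h2) / math.log(2.0)

k = observed_order(100)  # expected to be close to 1 for a first-order method
```

Halving the step size roughly halves the maximum error, so the observed order is close to one. The same two-grid ratio can be applied to \(\Vert e_u\Vert _{\infty }\) or \(\Vert e_x\Vert _{\infty }\) when these are available for several step sizes.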

4.2 An Application to a PDE Optimal Control Problem

In the following, we apply Algorithm 1 to derive continuous error extensions for numerical solutions of LQR problems governed by a convection-diffusion equation approximated using the finite element Petrov-Galerkin scheme [48]. For the sake of completeness and to make this investigation within the paper self-contained, we provide a derivation of the relationship between LQR problems and numerical approximations to finite-time horizon regulator problems of the form

where \(\varOmega = (0,1)\) denotes the domain of the space variable, \(\mu > 0\), \(\nu > 0\) and \(b \in L^2(\varOmega )\) as well as \({{\mathcal {Q}}}: L^2(\varOmega ) \rightarrow L^2(\varOmega )\) are suitably chosen bounded linear operators. Here and in the following \(\langle \cdot , \cdot \rangle \) denotes the standard \(L^2\)-inner product on \(L^2(\varOmega )\) and we suppose \(\nu = 1\). The basic idea of the Petrov-Galerkin approximation applied to (59)–(61) relies on the weak formulation of the convection-diffusion equation. For this purpose, we multiply (59) by a test function \(\varphi \in W_0^{1,2}(\varOmega )\) and then integrate over the domain \(\varOmega \). After functional manipulation, we obtain

for any \(t \in (0,T]\) and \(u \in L^2(0,T)\). The problem (59)–(61) in its weak formulation is now to find, for any fixed \(t \in (0,T]\) and \(u \in L^2(0,T)\), a so-called weak solution \(w(t, s)\) that satisfies the variational equation (62) for all test functions \(\varphi \in W_0^{1,2}(\varOmega )\) together with \(w(0,s) = w_0(s)\) almost everywhere on \(\varOmega \). The Petrov-Galerkin approach aims to construct an approximation to this weak solution by projecting the weak formulation onto a suitable subspace of \(W_0^{1,2}(\varOmega )\).

Now, let \(\varOmega \) be equally partitioned by the gridpoints \(s_i = \frac{i}{n+1}\), where \(i = 0,\ldots , n+1\). For the resulting grid, a suitable basis of piecewise trial functions \(\{\beta _i\}_{i = 1,\ldots , n}\) is chosen from a finite element space \(W_n \subset W_0^{1,2}(\varOmega )\) to approximate \(w(t, s)\) using the ansatz

\[ w^n(t,s) := \sum_{i=1}^{n} x_i(t)\, \beta _i(s). \qquad (63) \]

Additionally, restricting the choice of test functions to a set \(\{\phi _j\}_{j = 1,\ldots ,n}\) of appropriate basis functions selected from a possibly different finite element space \(V_n \subset W_0^{1,2}(\varOmega )\), the weak formulation (62) is reduced to a problem in a finite-dimensional vector subspace, called the Petrov-Galerkin formulation of (59)–(61): Given \(u \in L^2(0,T)\) and for any \(t \in (0,T]\), find \(w^n(t,\cdot ) \in W_n\) such that

holds for all \(j = 1,\ldots ,n\) and \(w^n(0,s) = w^n_0(s)\), where \(w^n_0(s)\) is a suitable projection of \(w_0(s)\) onto \(W_n\). For the following, we suppose that this projection is achieved by pointwise interpolation at the gridpoints \(s_i\) and thus define \(x_i(0) = w_0(s_i)\) for all \(i = 1,\ldots ,n \). With this in mind and substituting \(w^n(t,s)\) into the Petrov-Galerkin formulation (64), it appears that the coefficient functions \(x_1(t), \ldots , x_n(t)\) for (63) are characterized by a first-order system of linear ordinary differential equations with respect to time and the initial condition \(x^0:= (w_0(s_1),\ldots ,w_0(s_n))^T\). That is, we have

where \(x(t):= (x_1(t),\ldots ,x_n(t))^T\), \(b^n:= (\langle b, \phi _1 \rangle , \ldots , \langle b, \phi _n \rangle )^T\) and the matrices

are the mass and stiffness matrices of the underlying Petrov-Galerkin approximation. By the above derivation, controlling the approximate solution \(w^n(t,s)\) using the control function \(u(t)\) with respect to the time variable is equivalent to controlling the coefficient functions \(x(t)\) using the correspondence (65). Thus, by substituting the finite element formulation (63) into (58), one can see that determining an optimal control for the Petrov-Galerkin formulation of the controlled convection-diffusion equation in the finite element space \(V_n\) reduces to an LQR problem of the form (1). That is, an approximate solution to (58) can be determined by first solving numerically the problem

where we set

followed by substitution of the results into the ansatz function (63).
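To make the role of the mass and stiffness matrices more concrete, the following sketch assembles them for the simplest special case. It assumes piecewise linear hat functions on the uniform grid \(s_i = \frac{i}{n+1}\) and identical trial and test functions, \(\phi _j = \beta _j\), that is, a plain Galerkin scheme without upwinding. Only the mass matrix \(\langle \beta _i, \beta _j\rangle \) and the diffusion part \(\langle \beta _i', \beta _j'\rangle \) of the stiffness matrix are computed; the convection term and the coefficients of (59) are not included. All function names are hypothetical.

```python
import math

def hat(i, s, h):
    """Piecewise linear hat function centred at the gridpoint s_i = i*h."""
    return max(0.0, 1.0 - abs(s - i * h) / h)

def hat_prime(i, s, h):
    """Derivative of the hat function (evaluated away from the gridpoints)."""
    if (i - 1) * h < s < i * h:
        return 1.0 / h
    if i * h < s < (i + 1) * h:
        return -1.0 / h
    return 0.0

def inner(f, g, n):
    """L2 inner product on (0, 1) via 2-point Gauss quadrature on each grid cell.

    The rule is exact for the piecewise quadratic integrands arising here.
    """
    h = 1.0 / (n + 1)
    nodes = (0.5 - 0.5 / math.sqrt(3.0), 0.5 + 0.5 / math.sqrt(3.0))
    total = 0.0
    for cell in range(n + 1):
        for xi in nodes:
            s = (cell + xi) * h
            total += 0.5 * h * f(s) * g(s)
    return total

def assemble(n):
    """Return (M, K) with M[j][i] = <beta_i, beta_j> and K[j][i] = <beta_i', beta_j'>."""
    h = 1.0 / (n + 1)
    idx = range(1, n + 1)
    M = [[inner(lambda s: hat(i, s, h), lambda s: hat(j, s, h), n)
          for i in idx] for j in idx]
    K = [[inner(lambda s: hat_prime(i, s, h), lambda s: hat_prime(j, s, h), n)
          for i in idx] for j in idx]
    return M, K

M, K = assemble(3)  # n = 3 interior gridpoints, h = 0.25
```

Under these assumptions both matrices are tridiagonal, with mass entries \(2h/3\) and \(h/6\) and diffusion entries \(2/h\) and \(-1/h\), where \(h = \frac{1}{n+1}\). With an upwinding test space, the inner products would be taken against the modified test functions instead, and the matrices would in general lose their symmetry.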

Notice that the quality of the approximation to \(w(t, s)\) and its corresponding optimal control depends on two factors: the choice of the trial space \(W_n\) and the test space \(V_n\), and the choice of the method used to solve (66). As a result, two error sources occur, the first corresponding to the Petrov-Galerkin approximation and the second to the accuracy of the method used to solve (66). Our investigation in this section is devoted to the quantification of errors due to the latter source and the application of Algorithm 1 to numerical solutions of (66). Thus, all continuous error estimates are related to approximations to \(w^n(t,s)\) and the corresponding optimal control, in the following denoted by \(u^n(t)\). A discussion of continuous error representations with respect to the true solution \(w(t, s)\) to (58) and its corresponding optimal control is omitted here, as it is beyond the scope of this paper. Instead, we utilize a well-tested Petrov-Galerkin method for the controlled convection-diffusion equation to generate A, B, and Q, such that the effect of numerical instabilities due to the discretization with respect to the space variable is mitigated. In the case of finite element methods, the functions \(\{\beta _i\}_{i = 1,\ldots ,n}\) and \(\{\phi _i\}_{i = 1,\ldots ,n}\) are commonly selected from piecewise polynomial spaces [49]. A standard choice for \(V_n\) and \(W_n\) is the space of piecewise linear functions, where a basis can be constructed by choosing appropriate hat functions as defined in Remark 3. Specifically, we choose

Using these basis functions in the construction of (63) and setting \(\phi _j = \beta _j\) for all \(j = 1, \ldots , n\), one arrives at the standard linear finite element Galerkin approximation of the convection-diffusion equation. While this choice is easily accessible, it has been observed that this basic approach may introduce instabilities to the numerical approximation of problems like (58)–(61), see [48]. Thus, to improve the accuracy of the finite element approximation, we utilize the upwinding scheme proposed in [48]. That is, for our numerical experiments, we set

where

and \(\epsilon > 0\) is a stabilization parameter. This parameter depends on the grid used to generate the finite element solution and serves as a control mechanism for the error with respect to the space variable. As specifications for the optimal control problem (58)–(61) we set