Abstract

A convergent numerical method for \(\alpha \)-dissipative solutions of the Hunter–Saxton equation is derived. The method is based on applying a tailor-made projection operator to the initial data, and then solving exactly using the generalized method of characteristics. The projection step is the only step that introduces any approximation error. It is therefore crucial that its design ensures not only a good approximation of the initial data, but also that errors due to the energy dissipation at later times remain small. Furthermore, it is shown that the main quantity of interest, the wave profile, converges in \(L^{\infty }\) for all \(t \ge 0\), while a subsequence of the energy density converges weakly for almost every time.

1 Introduction

In this article, we present a numerical algorithm for \(\alpha \)-dissipative solutions of the Cauchy problem for the Hunter–Saxton (HS) equation

The equation was derived as an asymptotic model of the director field of a nematic liquid crystal [19], and possesses a rich mathematical structure. We mention a few of its properties here: it is bi-Hamiltonian and admits a Lax pair [20], it can be interpreted as a geodesic flow [23, 24], and it admits numerous extensions and generalizations [22, 26, 31].

Much of the interest in (1.1) stems from the fact that weak solutions in general develop singularities in finite time and, consequently, are not unique, see [9, 13, 19, 21]. This phenomenon is known as wave breaking. In particular, \(u_{x} \rightarrow -\infty \) pointwise, while the energy \(\Vert u_x (t, \cdot )\Vert _2\) remains uniformly bounded and the solution u itself remains Hölder continuous. Furthermore, at wave breaking, energy concentrates on a set of measure zero, so the energy density is in general not absolutely continuous. To overcome this problem, it is common to augment the solution with a finite, positive Radon measure \(\mu \) describing the energy density, see [9, 13, 18]. In particular, \(\mu \) coincides with the usual kinetic energy density \(u_x^2\) in regions where there is no wave breaking, that is, \(d\mu _{\text {ac}}=u_x^2dx\). The cumulative energy is then described by \(F(x) = \mu ((-\infty , x))\).

Weak solutions can be extended past wave breaking in various ways, see for instance [3, 9, 13, 21]. The two most prominent notions in the literature are that of a dissipative solution, where one removes all the concentrated energy from the system, and that of a conservative solution, where one reinserts the concentrated energy. In this work, we consider the concept of \(\alpha \)-dissipative solutions, first introduced in [12] for the related Camassa–Holm equation, and in [13] for (1.1). Instead of removing all or none of the concentrated energy, an \(\alpha \)-fraction, where \(\alpha \in [0, 1]\), is removed. In this way, the notion of \(\alpha \)-dissipative solutions acts as a continuous interpolant between the two extreme cases, \(\alpha =0\) corresponding to conservative solutions and \(\alpha =1\) corresponding to dissipative solutions. Thus, the notion of \(\alpha \)-dissipative solutions allows for a uniform treatment of weak solutions with nonincreasing energy. The existence of \(\alpha \)-dissipative solutions was established in [13] for the two-component Hunter–Saxton system, which generalizes (1.1), including the more general setting where \(\alpha \in W^{1, \infty }(\mathbb {R}, [0, 1)) \cup \{1 \}\).

A common approach for solving the Cauchy problem (1.1) is to use a generalized method of characteristics, see for instance [9, 13], and we follow this approach here. In particular, given an \(\alpha \)-dissipative solution, the corresponding Lagrangian coordinates are governed by a linear system of differential equations whose right-hand side has discontinuities at fixed times. These times can be computed a priori from the initial data. Hence, if the initial data in Lagrangian coordinates is piecewise linear, the Lagrangian coordinates remain piecewise linear at all later times, an important property which we take advantage of in this work.

Despite receiving considerable attention from a theoretical perspective, only a few numerical schemes have been proposed for the HS equation. In [17], several finite difference schemes for dissipative solutions were proved to converge. Also for dissipative solutions, [32] introduces a convergent discontinuous Galerkin method. Furthermore, in [30] a finite difference scheme on a periodic domain for a modified HS equation was derived and proven to converge towards conservative solutions. More recently, a Godunov-inspired scheme [25, Chp. 12.1] for conservative solutions, based on tracking the solution along characteristics, was introduced in [14]. The scheme was proved to converge, and a convergence rate prior to wave breaking was derived.

We contribute to this line of research by introducing a numerical algorithm well-suited for \(\alpha \)-dissipative solutions. This algorithm is based on applying a tailor-made projection operator to the initial data and then solving exactly along characteristics. Thus, the projection step is the only step that introduces any approximation error, and it is therefore of particular importance that it not only yields a good approximation of the initial data, but also ensures that the additional errors arising later from the energy dissipation remain small and hence do not prevent convergence.

To highlight the importance of choosing the projection operator correctly, we compare our projection operator with the one introduced in [14]. Motivated by the fact that a piecewise linear structure of the initial data is preserved at all later times, see [1, 20], the numerical scheme in [14] uses a standard piecewise linear projection operator in Eulerian coordinates at every timestep. In between timesteps, the numerical solution is evolved exactly along characteristics. This projection operator treats u and F completely independently, although u and \(\mu \) are strongly connected through the absolutely continuous part. Consequently, a deviation is introduced in the sense that \(d\mu _{\Delta x, \textrm{ac}} \ne u_{\Delta x, x}^2dx\), where \(\Delta x\) denotes the spatial discretization parameter. Thus, as pointed out in [14], one is no longer dealing with the Hunter–Saxton equation, but rather with a reformulated version of the two-component Hunter–Saxton system [28], which is accompanied by a density \(\rho \). In other words, the projection operator maps into the Eulerian set for the two-component Hunter–Saxton system, see [28, Def. 2.2], for which it has been established that \(\rho \) has a regularizing effect. In particular, if \(\rho > 0\) in some interval \([x_1, x_2]\), then the solution will never experience wave breaking in the interval spanned by the characteristics emanating from \([x_1, x_2]\), see [11, Thm 7.1]. While this works out neatly in the setting of conservative solutions, since no energy is lost, for \(\alpha \)-dissipative solutions we rely on wave breaking actually occurring in order to remove concentrated energy. Thus, more care is needed to derive a suitable projection operator. This is why we introduce a piecewise linear projection operator \(P_{\Delta x}\) which, in contrast to the one in [14], ensures that the projected initial data satisfies \(d\mu _{\Delta x, \textrm{ac}} = u_{\Delta x, x}^2dx\).

We accompany the numerical algorithm with a convergence analysis, which shows that the limit is a weak \(\alpha \)-dissipative solution. In particular, we obtain that \(u_{\Delta x} \rightarrow u\) in \(L^{\infty }(\mathbb {R})\) for all \(t \ge 0\), which is the main quantity of interest in practice, while a subsequence of the energy measure \(\mu _{\Delta x}(t)\) converges weakly for almost every time.

The outline of the paper is as follows. In Sect. 2 we set the stage for the Cauchy problem of (1.1) by defining the Eulerian set \(\mathcal {D}\), the Lagrangian set \(\mathcal {F}\), the mappings L and M between them and the notion of an \(\alpha \)-dissipative solution. Then in Sect. 3 we focus on deriving and motivating our choice of the projection operator \(P_{\Delta x}\), before we discuss the practical implementation of our numerical algorithm. In Sect. 4 we conduct the convergence analysis. In the last section, Sect. 5, we provide a few numerical experiments to illustrate the theoretical results and to go beyond the theory established here by investigating the convergence rate.

2 Preliminaries

In this section, we set the stage for the numerical algorithm by defining the sets we are working in, as well as recalling the construction of \(\alpha \)-dissipative solutions by using a generalized method of characteristics, as introduced in [13] and used in [15].

Assume that \(\alpha \in [0,1]\) and denote by \(\mathcal {M}^+(\mathbb {R})\) the space of positive, finite Radon measures on \(\mathbb {R}\). To define the set of Eulerian coordinates, we first need to recall some important spaces from [13] and [15].

Introduce

which is a Banach space when equipped with the norm

Furthermore, define

and introduce a partition of unity \(\chi ^+\) and \(\chi ^-\) on \(\mathbb {R}\), i.e., a pair of functions \(\chi ^+, \chi ^- \in C^{\infty }(\mathbb {R})\) such that

- \(\chi ^+ + \chi ^- = 1\),
- \(0 \le \chi ^{\pm } \le 1\),
- \(\text {supp}(\chi ^+) \subset (-1, \infty )\) and \(\text {supp}(\chi ^-) \subset (-\infty , 1)\).
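The definition only requires that such a pair exists. For illustration, the following Python sketch builds one concrete choice from the classical bump \(e^{-1/s}\); the transition zone \([-\frac{1}{2}, \frac{1}{2}]\) and the function names are our own, hypothetical choices, and any other smooth pair with the three properties above would serve equally well, since \(E_1\) and \(E_2\) do not depend on it.

```python
import math


def _f(s: float) -> float:
    """exp(-1/s) for s > 0 and 0 otherwise; this function is C-infinity on R."""
    return math.exp(-1.0 / s) if s > 0 else 0.0


def chi_plus(x: float) -> float:
    """Smooth step: 0 for x <= -1/2, 1 for x >= 1/2, increasing in between.

    Hence supp(chi_plus) = [-1/2, inf), which lies inside (-1, inf).
    """
    s = x + 0.5                 # map the transition zone [-1/2, 1/2] onto [0, 1]
    a, b = _f(s), _f(1.0 - s)   # a + b > 0 for every real s
    return a / (a + b)


def chi_minus(x: float) -> float:
    """Complement of chi_plus; its support (-inf, 1/2] lies inside (-inf, 1)."""
    return 1.0 - chi_plus(x)
```

By construction \(\chi ^+ + \chi ^- = 1\) and \(0 \le \chi ^{\pm } \le 1\), and the point \(x = 0\) sits exactly in the middle of the transition, where \(\chi ^+(0) = \frac{1}{2}\).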

Then, we can define the following mappings

which are linear, continuous, and injective, see [4]. Based on those, we introduce the Banach spaces \(E_1\) and \(E_2\) as the images of \(H_1^1(\mathbb {R})\) and \(H_2^1(\mathbb {R})\), respectively, that is,

and endow them with the norms

By construction the spaces \(E_1\) and \(E_2\) do not rely on the particular choice of \(\chi ^+\) and \(\chi ^-\), cf. [10]. Furthermore, observe that the mapping \(R_1\) is also well-defined for functions in \(L_1^2(\mathbb {R}) = L^2(\mathbb {R}) \times \mathbb {R}\) and therefore, we set

and equip this space with the norm

At last, we can define the set of Eulerian coordinates, \(\mathcal {D}\), in which we also seek numerical solutions.

Definition 2.1

The space \(\mathcal {D}\) consists of all triplets \((u, \mu , \nu )\) such that

- (i) \(u \in E_2\),
- (ii) \(\mu \le \nu \in \mathcal {M}^{+}(\mathbb {R})\),
- (iii) \(\mu _{\textrm{ac}}\le \nu _{\textrm{ac}}\),
- (iv) \(d\mu _{\textrm{ac}} = u_x^2dx\),
- (v) \(\mu \left( (-\infty , \cdot ) \right) \in E_1^0\),
- (vi) \(\nu \left( (-\infty , \cdot ) \right) \in E_1^0\),
- (vii) If \(\alpha = 1\), then \(d\nu _{\textrm{ac}}=d\mu = u_x^2dx\),
- (viii) If \(\alpha \in [0, 1)\), then \(\frac{d\mu }{d\nu }(x) \in \{1 - \alpha , 1 \}\), and \(\frac{d\mu _{\textrm{ac}}}{d\nu _{\textrm{ac}}}(x) = 1\) if \(u_x(x) < 0\).

As \(\mu \), \(\nu \in \mathcal {M}^+(\mathbb {R})\), we can define the primitive functions \(F(x) = \mu ((-\infty , x))\) and \(G(x) = \nu ((- \infty , x))\). These are bounded, increasing, left-continuous and satisfy

We will interchangeably use the notation (u, F, G) and \((u, \mu , \nu )\) to refer to the same triplet in \(\mathcal {D}\), since by [7, Thm. 1.16], there is a one-to-one correspondence between (F, G) and \((\mu , \nu )\).

Moreover, for practical purposes, we will often restrict the initial data to belong to

Let \(B=E_2 \times E_2 \times E_1 \times E_1\) endowed with the norm

then the set of Lagrangian coordinates, \(\mathcal {F}\), is defined as follows.

Definition 2.2

The set \(\mathcal {F}\) consists of all quadruplets \(X = (y, U, V, H)\) with \((y-\text { {id}}, U, V, H) \in B\) such that

- (i) \((y-\text { {id}}, U, V, H) \in \big [W^{1, \infty }(\mathbb {R}) \big ]^4\),
- (ii) \( y_{\xi }, H_{\xi } \ge 0\) and there exists \(c > 0\) such that \(y_{\xi } + H_{\xi } \ge c \) holds a.e.,
- (iii) \(y_{\xi }V_{\xi }= U_{\xi }^2 \) a.e.,
- (iv) \(0 \le V_{\xi } \le H_{\xi }\) a.e.,
- (v) If \(\alpha = 1\), then \(y_{\xi }(\xi )=0\) implies that \(V_{\xi }(\xi )=0\), and \(y_{\xi }(\xi ) > 0\) implies that \(V_{\xi }(\xi ) = H_{\xi }(\xi )\) a.e.,
- (vi) If \(\alpha \in [0, 1)\), then there exists a function \(\kappa : \mathbb {R}\rightarrow \{1 - \alpha , 1 \}\) such that \(V_{\xi }(\xi ) = \kappa (\xi )H_{\xi }(\xi )\) a.e., and \(\kappa (\xi )=1\) whenever \(U_{\xi }(\xi ) < 0\).

In the convergence analysis, the following subsets of \(\mathcal {F}\) will play an important role

and

Constructing the \(\alpha \)-dissipative solution by a generalized method of characteristics means studying the time evolution in Lagrangian rather than in Eulerian coordinates; the mappings between \(\mathcal {D}\) and \(\mathcal {F}\) are therefore an essential ingredient.

Definition 2.3

Let \(L: \mathcal {D}\rightarrow \mathcal {F}_0\) be defined by \(L \left( (u, \mu , \nu ) \right) = (y, U, V, H)\), where

Next we introduce the mapping taking us from Lagrangian to Eulerian coordinates. To this end, recall that the pushforward of a Borel measure \(\lambda \) by a measurable function f is the measure \(f_{\#}\lambda \) defined for all Borel sets \(A\subset \mathbb {R}\) by

\[ f_{\#}\lambda (A) = \lambda \big (f^{-1}(A)\big ). \]

Definition 2.4

Define \(M: \mathcal {F}\rightarrow \mathcal {D}\) by \(M((y, U, V, H)) = (u, \mu , \nu )\), where

For proofs that these mappings are well-defined we refer to [29, Prop. 2.1.5 and 2.1.7]. Furthermore, it should be noted that the triplets \((u,\mu ,\nu )\) are mapped to quadruplets (y, U, V, H) and hence there cannot be a one-to-one correspondence between Eulerian and Lagrangian coordinates. However, as pointed out in [29], one can identify equivalence classes in Lagrangian coordinates, so that each equivalence class corresponds to exactly one element in Eulerian coordinates.

Moreover, it should be pointed out that all the important information in Eulerian coordinates is encoded in the pair \((u,\mu )\), and hence contained in the triplet (y, U, V) in Lagrangian coordinates. In contrast, the mapping L relies heavily on \(\nu \) and hence changing \(\nu \) changes not only H, but also (y, U, V).

Finally, we can turn our attention to the time evolution. The \(\alpha \)-dissipative solution in Lagrangian coordinates, \(X(t) = (y, U, V, H)(t)\), with initial data \(X(0)=X_0\in \mathcal {F}\), is the unique solution to the following system of differential equations

Here \(\tau :\mathbb {R}\rightarrow [0, \infty ]\) is the wave breaking function given by

and \(V_{\infty }(t) = \lim _{\xi \rightarrow \infty }V(t, \xi )\) denotes the total Lagrangian energy at time t.

For a proof of the uniqueness of the solution to (2.2), we refer to [13, Lem. 2.3]. Furthermore, it should be pointed out that the solution operator respects equivalence classes in the following sense: If \(X_{A,0}\) and \(X_{B,0}\) belong to the same equivalence class, then also \(X_A(t)\) and \(X_B(t)\) belong to the same equivalence class for all \(t\ge 0\), see [13, Prop. 3.7].

Based on (2.2) we now define the solution operator \(S_t\) in Lagrangian coordinates.

Definition 2.5

For any \(t\ge 0\) and \(X_0\in \mathcal {F}\) define \(S_t(X_0)=X(t)\), where X(t) denotes the unique \(\alpha \)-dissipative solution to (2.2) with initial data \(X(0)=X_0\).

To finally obtain the \(\alpha \)-dissipative solution in Eulerian coordinates, we combine the solution operator \(S_t\) with the mappings L and M as follows.

Definition 2.6

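For any \(t\ge 0\) and \((u_0,\mu _0,\nu _0)\in \mathcal {D}\) the \(\alpha \)-dissipative solution at time t is given by

\[ (u,\mu ,\nu )(t) = T_t\big ((u_0,\mu _0,\nu _0)\big ) = M\Big (S_t\big (L((u_0,\mu _0,\nu _0))\big )\Big ). \]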

As mentioned earlier, \(L((u,\mu ,\nu ))\) is heavily influenced by the choice of \(\nu \) in the triplet \((u,\mu ,\nu )\). Nevertheless, it has been shown in [16, Lem. 2.13] that the choice of \(\nu \) has no influence on the solution in the following sense. Given any two triplets of initial data \((u_{A,0}, \mu _{A,0}, \nu _{A,0})\) and \((u_{B,0}, \mu _{B,0}, \nu _{B,0})\) in \(\mathcal {D}\) such that

then

As a consequence of this result, we will from now on restrict ourselves to initial data \((u_0,\mu _0,\nu _0)\in \mathcal {D}_0\), which means in particular that \(\mu _0=\nu _0\).

Furthermore, for conservative and dissipative solutions, the uniqueness of weak solutions has been established in [9] and [6], respectively, by showing that if a weak solution of the Hunter–Saxton equation satisfies certain properties, which depend heavily on the class of solutions under consideration, then it can be computed using Definition 2.6. For the remaining values of \(\alpha \), i.e., \(\alpha \in (0,1)\), this is still an open question.

3 The Numerical Algorithm

This section is devoted to presenting our numerical algorithm, which combines a projection operator \(P_{\Delta x}\) with the solution operator \(T_t\) as follows.

Definition 3.1

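We define the numerical solution \((u_{\Delta x}, F_{\Delta x}, G_{\Delta x})\) for \(t \in [0,T]\) by

\[ (u_{\Delta x}, F_{\Delta x}, G_{\Delta x})(t) = T_t\Big (P_{\Delta x}\big ((u_0, F_0, G_0)\big )\Big ) \]

for any \((u_0, F_0, G_0) \in \mathcal {D}_0\).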

As the projection operator \(P_{\Delta x}\) is the only part that actually introduces any error, its construction is the crucial step. In particular, \(P_{\Delta x}\) must preserve key properties of \(\alpha \)-dissipative solutions, such as the total energy \(\mu ({\mathbb {R}})\) and Definition 2.1 (iv). After constructing \(P_{\Delta x}\), we discuss the implementation of our algorithm at the end of this section.

3.1 The Projection Operator

Any \(\mu \in \mathcal {M}^+(\mathbb {R})\) can be split into an absolutely continuous part \(\mu _{\text {ac}}\) and a singular part \(\mu _{\text {sing}}\) with respect to the Lebesgue measure, see [27, Prop. 9.8], i.e.,

\[ \mu = \mu _{\text {ac}} + \mu _{\text {sing}}. \]

Thus \(F(x)=\mu ((-\infty ,x))\) can be written as

\[ F(x) = F_{\textrm{ac}}(x) + F_{\textrm{sing}}(x), \]

where \(F_{\textrm{ac}}(x) = \mu _{\textrm{ac}}((-\infty , x))\) and \(F_{\textrm{sing}}(x) = \mu _{\textrm{sing}}((- \infty , x))\). Thus a projection operator acting on F(x) can be a combination of two projections, one for \(F_{\textrm{ac}}(x)\) and another one for \(F_{\textrm{sing}}(x)\). As we will see, this is how we define \(P_{\Delta x}\).

To derive \(P_{\Delta x}\), let \(\{x_j \}_{j \in {\mathbb {Z}}}\) be a uniform discretization of \(\mathbb {R}\), where \(x_j = j \Delta x\) for \(j \in {\mathbb {Z}}\) and \(\Delta x > 0\) is fixed. Furthermore, set \(P_{\Delta x}\left( (u, F, G)\right) = (u_{\Delta x}, F_{\Delta x}, G_{\Delta x})\). To ensure that \((u_{\Delta x}, F_{\Delta x}, G_{\Delta x})\) satisfies Definition 2.1 (iv), the projection operator is defined over two grid cells at a time, i.e., over \([x_{2j}, x_{2j+1}] \cup [ x_{2j+1}, x_{2j+2}]\). Furthermore, to preserve the continuity of \((u, F_{\textrm{ac}})\) and the total energy, we require that \(\left( u_{\Delta x}, F_{\Delta x, \text {ac}} \right) \) coincides with \((u, F_{\textrm{ac}})\) at every other gridpoint, i.e.,

To interpolate \(u_{\Delta x}\) and \(F_{\Delta x}\) between the gridpoints \(\{x_{2j} \}_{j \in {\mathbb {Z}}}\), we fit two lines, \(p_1\) and \(p_2\), such that the resulting wave profile

is continuous, \((u_{\Delta x}, F_{\Delta x, \textrm{ac}})\) satisfies (3.2), and

For an arbitrary \(j \in {\mathbb {Z}}\) these constraints then read

Introducing the operators

for any sequence \(\{f_j \}_{j \in {\mathbb {Z}}}\) and solving (3.3a)–(3.3d), we end up with

where

Note that (3.4) is well-defined, as we have by the Cauchy–Schwarz inequality
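Since the explicit formulas (3.4) are not reproduced here, we sketch the two-cell fit in our own notation, which need not match the operators introduced above. Let \(s_1, s_2\) be the slopes of \(u_{\Delta x}\) on \([x_{2j}, x_{2j+1}]\) and \([x_{2j+1}, x_{2j+2}]\). Matching u at \(x_{2j}\) and \(x_{2j+2}\) gives \(s_1+s_2 = 2\,Du\) with \(Du = (u(x_{2j+2})-u(x_{2j}))/(2\Delta x)\), while \(d\mu _{\Delta x,\textrm{ac}} = u_{\Delta x,x}^2dx\) together with matching \(F_{\textrm{ac}}\) gives \(s_1^2+s_2^2 = 2\,DF\) with \(DF = (F_{\textrm{ac}}(x_{2j+2})-F_{\textrm{ac}}(x_{2j}))/(2\Delta x)\). Hence \(s_1 = Du \mp q\) and \(s_2 = Du \pm q\) with \(q = \sqrt{DF-(Du)^2}\), in agreement with the slopes \(Du_{2j}\mp q_{2j}\) and \(Du_{2j}\pm q_{2j}\) used in Sect. 3.2.2. The radicand is nonnegative because \(\big (\int u_x\,dx\big )^2 \le 2\Delta x\int u_x^2\,dx\) over the two cells. The function names below are hypothetical.

```python
import math


def two_cell_slopes(u_left, u_right, Fac_left, Fac_right, dx, sign=-1):
    """Slopes (s1, s2) of u_dx on [x_{2j}, x_{2j+1}] and [x_{2j+1}, x_{2j+2}].

    sign = -1 gives s1 = Du - q, s2 = Du + q; sign = +1 the opposite choice.
    """
    du = (u_right - u_left) / (2 * dx)        # average slope over both cells
    dF = (Fac_right - Fac_left) / (2 * dx)    # average of u_x^2 over both cells
    disc = dF - du * du                       # >= 0 by Cauchy-Schwarz
    if disc < 0:
        if disc > -1e-12 * max(1.0, dF):      # tolerate round-off only
            disc = 0.0
        else:
            raise ValueError("data violate (int u_x)^2 <= 2*dx*int u_x^2")
    q = math.sqrt(disc)
    return du + sign * q, du - sign * q


def project_pair(x, x0, u_left, Fac_left, s1, s2, dx):
    """Evaluate (u_dx, F_dx_ac) at x in [x0, x0 + 2*dx], using F_dx_ac' = u_dx'^2."""
    if x <= x0 + dx:
        t = x - x0
        return u_left + s1 * t, Fac_left + s1 * s1 * t
    t = x - (x0 + dx)
    return (u_left + s1 * dx + s2 * t,
            Fac_left + s1 * s1 * dx + s2 * s2 * t)
```

For instance, \(u(x) = |x-1|\) and \(F_{\textrm{ac}}(x) = x\) on \([0,2]\) with \(\Delta x = 1\) give \(Du = 0\) and \(q = 1\); the choice `sign=-1` reproduces u exactly, whereas the opposite sign produces a tent with its peak at \(x = 1\). This illustrates why the sign choice matters.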

For the singular part, we set

where the last equality follows from (3.1). Hence, all the discontinuities of \(F_{\Delta x, \text {sing}}(x)\) are located within the countable set \(\{x_{2j} \}_{j \in {\mathbb {Z}}}\). Finally, introduce

which is left-continuous. Then we can associate to \(F_{\Delta x}\) a positive and finite Radon measure \(\mu _{\Delta x}\) through

and, by construction,

To summarize, the projection operator \(P_{\Delta x}\) is defined as follows.

Definition 3.2

(Projection operator) We define the projection operator \(P_{\Delta x}:\mathcal {D}_0\rightarrow \mathcal {D}_0\) by \(P_{\Delta x} \left( (u,F,G) \right) =(u_{\Delta x},F_{\Delta x}, G_{\Delta x})\), where

and

for all \(x \in \mathbb {R}\). The absolutely continuous part of \(F_{\Delta x}\) is given by

and the singular part is defined by

Finally, let \(\mu _{\Delta x} ((- \infty , x))=F_{\Delta x}(x)\) and \(\nu _{\Delta x}((- \infty , x)) = G_{\Delta x}(x)\) be the unique, finite and positive Radon measures associated with \(F_{\Delta x}\) and \(G_{\Delta x}\), respectively.

Remark 3.3

The projection operator \(P_{\Delta x}\) in Definition 3.2 is only defined for \((u,\mu ,\nu )\in \mathcal {D}_0\) due to Definition 3.1. In this case \(\mu =\nu \) and by construction \(\mu _{\Delta x}= \nu _{\Delta x}\). Nevertheless, \(P_{\Delta x}\) can be extended to a projection operator \({\tilde{P}}_{\Delta x}\), which is well-defined for any \((u,\mu ,\nu ) \in \mathcal {D}\) as follows. We keep \(u_{\Delta x}\) and \(\mu _{\Delta x}((-\infty ,x))=F_{\Delta x}(x) \) given by (3.5)–(3.7), but \(\nu _{\Delta x}((-\infty ,x))=G_{\Delta x}(x)\) needs to be adjusted:

Following the same idea as for \(F_{\Delta x, \textrm{sing}}\), we set

Since we now have \(d\mu _{\textrm{ac}} = u_{x}^2dx\) and \(\mu _{\textrm{ac}} \le \nu _{\textrm{ac}}\), which implies that \(d\nu _{\textrm{ac}} = f dx \) for some \(f \ge u_x^2\), we approximate the deviation \((f - u_x^2)\) with the integral average over two grid cells. Following these lines, we end up with

for \(x\in (x_{2j}, x_{2j+1})\cup (x_{2j+1}, x_{2j+2})\). Computing \(G_{\Delta x, \textrm{ac}}(x)=\nu _{\Delta x, \textrm{ac}}((-\infty ,x))\), yields \(G_{\Delta x, \textrm{ac}}(x_{2j}) = G_{\textrm{ac}}(x_{2j})\), and

for \(x \in (x_{2j}, x_{2j+2}]\). Finally, we define \(\nu _{\Delta x}\) implicitly by

Note, however, that \(\tilde{P}_{\Delta x}\) has one drawback. It preserves all properties in Definition 2.1 when \(d\mu _{\textrm{ac}} = d\nu _{\textrm{ac}}\). However, in the case \(d\mu _{\textrm{ac}} \ne d\nu _{\textrm{ac}}\), the Radon–Nikodym derivative \(\frac{d\mu _{\Delta x}}{d\nu _{\Delta x}}\) belongs to the interval \([1-\alpha , 1]\) rather than the set \(\{1-\alpha , 1 \}\), and hence the relation \(\frac{d\mu _{\textrm{ac}}}{d\nu _{\textrm{ac}}}(x) =1\) whenever \(u_x(x) < 0\) in Definition 2.1 (viii) is in general not satisfied. Therefore, \({\tilde{P}}_{\Delta x}\) maps into a larger space \(\hat{\mathcal {D}} \supset \mathcal {D}\). Nevertheless, \({\tilde{P}}_{\Delta x}\) is relevant for numerical algorithms that apply the projection operator after each time step \(\Delta t\) for \(\alpha \not =0\).
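The adjustment of \(G_{\Delta x, \textrm{ac}}\) in Remark 3.3 can be sketched on one pair of cells. We assume that the projected density of \(\nu _{\Delta x,\textrm{ac}}\) equals \(u_{\Delta x,x}^2\) plus the integral average of \(f - u_x^2\) over \([x_{2j}, x_{2j+2}]\), i.e., plus \(\big [(G_{\textrm{ac}}-F_{\textrm{ac}})(x_{2j+2})-(G_{\textrm{ac}}-F_{\textrm{ac}})(x_{2j})\big ]/(2\Delta x)\). This is our reading of the construction, since the explicit formulas are not reproduced here. The function name and argument layout are hypothetical.

```python
def g_ac_pair(x, x0, dx, s1, s2, Fac_l, Fac_r, Gac_l, Gac_r):
    """Projected G_dx_ac(x) for x in [x0, x0 + 2*dx] (sketch of Remark 3.3).

    s1, s2: slopes of u_dx on the two cells, with s1^2 + s2^2 = (Fac_r - Fac_l)/dx;
    *_l / *_r: values of F_ac and G_ac at x0 = x_{2j} and x0 + 2*dx = x_{2j+2}.
    """
    # integral average of the deviation f - u_x^2 over the two cells
    dev = ((Gac_r - Gac_l) - (Fac_r - Fac_l)) / (2 * dx)
    if x <= x0 + dx:
        return Gac_l + (s1 * s1 + dev) * (x - x0)
    return Gac_l + (s1 * s1 + dev) * dx + (s2 * s2 + dev) * (x - x0 - dx)
```

Because \(s_1^2 + s_2^2 = (F_{\textrm{ac}}(x_{2j+2})-F_{\textrm{ac}}(x_{2j}))/\Delta x\), the sketch returns \(G_{\textrm{ac}}\) at both \(x_{2j}\) and \(x_{2j+2}\). If \(G_{\textrm{ac}} = F_{\textrm{ac}}\), the deviation vanishes and one recovers \(\nu _{\Delta x} = \mu _{\Delta x}\), consistent with \(P_{\Delta x}\) on \(\mathcal {D}_0\).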

A closer look at (3.5) and (3.6) reveals that there are two possible sign choices on each interval \([x_{2j}, x_{2j+2}]\). Especially on a coarse grid, it is vital to make the right choice, as the following example shows.

Example 3.4

Consider the tuple (u, F, G), where

This models a scenario where wave breaking takes place at \(x=0\).

We discretize the domain \([-\frac{1}{2}, \frac{5}{2}]\) with \(\Delta x = \frac{1}{2}\), but for convenience we use \(x_j = - \frac{1}{2} + j \Delta x\) as our gridpoints. Using (3.5) and (3.6) we find

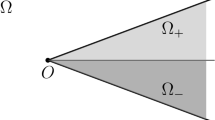

A comparison of the tuple (u, F, G) from Example 3.4 with the projected data \((u_{\Delta x}, F_{\Delta x}, G_{\Delta x})\) for different sign-choices for \(\alpha =\frac{1}{2}\) on a grid with \(\Delta x = \frac{1}{2}\) (x on the horizontal axis)

There are several possible ways to choose the signs in (3.8)–(3.9), some of which are shown in Fig. 1: \(u_{\Delta x, 1}\) uses the minus sign over \([x_{2j}, x_{2j+1}]\) and the plus sign over \([x_{2j+1}, x_{2j+2}]\), while \(u_{\Delta x, 2}\) uses the plus sign over \([x_{2j}, x_{2j+1}]\) and the minus sign over \([x_{2j+1}, x_{2j+2}]\). By carefully choosing the signs, \(u_{\Delta x}\) fully overlaps with the exact solution. This choice, which we denote by \(u_{\Delta x, \text {opt}}\), is given by

and equals \((u, F_{\textrm{ac}})\). The singular parts are approximated by

As the above example shows, choosing the right sign on each of the two grid cells in \([x_{2j}, x_{2j+2}]\) is vital, since it significantly affects the accuracy of the projection step, and hence of the whole algorithm, especially on a coarse grid. Rather than relying on trial and error, which is only suitable for illustrative purposes, we impose a selection criterion. In the implementation, we choose the sign over \([x_{2j}, x_{2j+1}]\) that minimizes the distance between \(u_{\Delta x}(x_{2j+1})\) and \(u(x_{2j+1})\), which can be formalized as follows. Introduce \(m \in \{0, 1\}\) such that for \(x \in [x_{2j}, x_{2j+1}]\) we may write (3.5) as

Subtracting \(u_{\Delta x}(x_{2j+1})\) from \(u(x_{2j+1})\), and finding the m that minimizes the distance can then be expressed as

Note that the sign over \([x_{2j+1}, x_{2j+2}]\) will be \((-1)^{k_{2j}+1}\).
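The selection rule can be sketched as follows, assuming that on \([x_{2j}, x_{2j+1}]\) the projected profile reads \(u_{\Delta x}(x) = u(x_{2j}) + (Du_{2j} + (-1)^m q_{2j})(x - x_{2j})\), which is consistent with the slopes \(Du_{2j}\mp q_{2j}\) stated in Sect. 3.2.2. The helper name is hypothetical, and ties are broken in favour of \(m = 0\).

```python
def choose_sign(u_left, u_mid, du, q, dx):
    """Return k in {0, 1} minimising |u(x_{2j+1}) - u_dx(x_{2j+1})|.

    The slope on [x_{2j}, x_{2j+1}] is then du + (-1)**k * q, and the slope on
    [x_{2j+1}, x_{2j+2}] is du + (-1)**(k + 1) * q.
    """
    def err(m):
        return abs(u_mid - (u_left + (du + (-1) ** m * q) * dx))

    # min returns the first minimiser, so m = 0 wins in case of a tie
    return min((0, 1), key=err)
```

For \(u(x) = |x-1|\) on \([0,2]\) with \(\Delta x = 1\), we have \(Du = 0\) and \(q = 1\), and the rule returns \(k = 1\), i.e., the minus sign on the first cell, which recovers u exactly.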

3.2 Numerical Implementation of \(T_t\)

Since \(T_t\) maps piecewise linear initial data to a solution that is again piecewise linear at every time t, our numerical implementation of \(T_t\) yields the exact solution corresponding to the projected initial data.

Fix a discretization parameter \(\Delta x > 0\) and an initial datum \((u, F, G)(0) \in \mathcal {D}_0\). Following Definition 3.1, the numerical Lagrangian initial data is given by

\[ X_{\Delta x}(0) = L\Big (P_{\Delta x}\big ((u, F, G)(0)\big )\Big ), \]

and we therefore first focus on the numerical implementation of L. We will observe that each component of \(X_{\Delta x}(0)\) is again a piecewise linear function. Moreover, the associated Lagrangian grid is non-uniform and possibly has more gridpoints than the original Eulerian grid.

3.2.1 Implementation of L

To avoid any ambiguity between breakpoints of a function and points of wave breaking, we denote the former as nodes in the following.

By construction, \(x+G_{\Delta x}(0, x)\) is an increasing, piecewise linear function with nodes situated at the points \(\{x_j\}_{j\in {\mathbb {Z}}}\), and hence \(y_{\Delta x}(0,\xi )\) is again an increasing and piecewise linear function due to Definition 2.3. Furthermore, \(y_{\Delta x}(0,\xi )\) is continuous and its nodes can be identified by finding all \(\xi \) which satisfy \(y_{\Delta x}(0,\xi )=x_j\) for some \(j\in {\mathbb {Z}}\) as follows. By Definition 2.3

and, due to \(X_{\Delta x}(0)\in \mathcal {F}_0\), we have

Since \(G_{\Delta x}(0, x)\) is continuous except possibly at the points \(\{x_{2j} \}_{j \in {\mathbb {Z}}}\), to every \(x_{2j+1}\) with \(j\in {\mathbb {Z}}\), there exists a unique \(\xi _{2j+1}\) such that \(y_{\Delta x}(0, \xi _{2j+1})=x_{2j+1}\) and, using (3.11), \(\xi _{2j+1}\) is given by

At the points \(\{x_{2j}\}_{j\in {\mathbb {Z}}}\), the function \(G_{\Delta x}(0, x)\) might have a jump. Therefore, there exists a maximal interval \(I_{2j}=[\xi _{2j}^{l}, \xi _{2j}^{r}]\) such that \(y_{\Delta x}(0,\xi )=x_{2j}\) for all \(\xi \in I_{2j}\), and using once more (3.11), \(\xi _{2j}^{l}\) and \(\xi _{2j}^{r}\) are given by

Note that \(\xi _{2j}^{l}=\xi _{2j}^{r}\) if and only if \(G_{\Delta x}(0, x)\) has no jump at \(x=x_{2j}\).

Set

Then the nodes of \(y_{\Delta x} (0,\xi )\) are situated at the points \(\{{\hat{\xi }}_{j}\}_{j\in {\mathbb {Z}}}\), as shown in Fig. 2. As mentioned earlier \(y_{\Delta x}(0)\) is piecewise linear and continuous, and hence also \(U_{\Delta x}(0)\), \(V_{\Delta x}(0)\), and \(H_{\Delta x}(0)\) are piecewise linear and continuous. Moreover, the nodes of \(X_{\Delta x}(0)\) are located at \(\{{\hat{\xi }}_{j}\}_{j\in {\mathbb {Z}}}\), since the nodes of \((u_{\Delta x}, F_{\Delta x}, G_{\Delta x})(0)\) are situated at \(x=x_{j}\), \(j\in {\mathbb {Z}}\). Thus, once the nodes are identified and the values of \(X_{\Delta x}(0)\) at these nodes are determined using Definition 2.3, the value of \(X_{\Delta x}(0,\xi )\) can be computed at any point \(\xi \).

Furthermore, L maps each pair of grid cells \([x_{2j}, x_{2j+1}],[x_{2j+1}, x_{2j+2}]\) to the three grid cells \([{\hat{\xi }}_{3j}, {\hat{\xi }}_{3j+1}], [{\hat{\xi }}_{3j+1}, {\hat{\xi }}_{3j+2}], [{\hat{\xi }}_{3j+2}, {\hat{\xi }}_{3j+3}]\). In addition, while the Eulerian discretization is uniform with size \(\Delta x\), we now have

and hence the Lagrangian discretization is non-uniform.
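The node computation can be sketched as follows. We assume, in line with (3.11) for \(X_{\Delta x}(0) \in \mathcal {F}_0\), that a gridpoint x at which \(G_{\Delta x}(0,\cdot )\) is continuous corresponds to the Lagrangian point \(\xi = x + G_{\Delta x}(0,x)\), while a jump J at \(x_{2j}\) yields the interval \(I_{2j} = [x_{2j} + G_{\Delta x}(0,x_{2j}),\, x_{2j} + G_{\Delta x}(0,x_{2j}) + J]\). This is our reconstruction of the omitted formulas, and the function and argument names are hypothetical.

```python
def lagrangian_nodes(x, G_left, G_jump):
    """Lagrangian nodes hat_xi for an Eulerian grid x_0 < x_1 < ... < x_{2n}.

    G_left[j]: G(x_j) (G is left-continuous); G_jump[j]: G(x_j+) - G(x_j),
    which may be nonzero only for even j. Each even gridpoint x_{2j} yields the
    two nodes xi^l_{2j}, xi^r_{2j}; each odd gridpoint x_{2j+1} yields xi_{2j+1}.
    """
    if len(x) % 2 == 0:
        raise ValueError("expected an odd number of gridpoints x_0, ..., x_{2n}")
    nodes = []
    for j, (xj, g, jump) in enumerate(zip(x, G_left, G_jump)):
        if j % 2 == 0:
            xi_l = xj + g                   # first xi with y(xi) = x_{2j}
            nodes += [xi_l, xi_l + jump]    # y equals x_{2j} on [xi_l, xi_r]
        else:
            if jump != 0:
                raise ValueError("G may only jump at even gridpoints")
            nodes.append(xj + g)            # unique xi with y(xi) = x_{2j+1}
    return nodes
```

The returned list is ordered as \({\hat{\xi }}_{3j} = \xi ^l_{2j}\), \({\hat{\xi }}_{3j+1} = \xi ^r_{2j}\), \({\hat{\xi }}_{3j+2} = \xi _{2j+1}\). The spacing between consecutive nodes equals the jump size on the collapsed cells and \(\Delta x\) plus the increase of \(G_{\Delta x}(0,\cdot )\) on the remaining ones, which is why the Lagrangian grid is non-uniform.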

3.2.2 The Wave Breaking Function

The next step is to compute the numerical wave breaking function \(\tau _{\Delta x}: \mathbb {R}\rightarrow [0, \infty ]\), using (2.3). For \(\xi \in [{\hat{\xi }}_{3j}, {\hat{\xi }}_{3j+1}]\), we have \(y_{\Delta x, \xi }(0,\xi ) = U_{\Delta x, \xi } (0,\xi )= 0\) and hence \(\tau _{\Delta x}(\xi )=0\) by (2.3). For \(\xi \in ({\hat{\xi }}_{3j+1}, {\hat{\xi }}_{3j+2})\), note that \(U_{\Delta x,\xi }(0,\xi )\) has the same sign as \(u_{\Delta x,x}(0, x)= Du_{2j}\mp q_{2j}\) in \((x_{2j}, x_{2j+1})\). Likewise, for \(\xi \in ({\hat{\xi }}_{3j+2}, {\hat{\xi }}_{3j+3})\), \(U_{\Delta x,\xi }(0,\xi )\) has the same sign as \(u_{\Delta x,x}(0, x)= Du_{2j}\pm q_{2j}\) in \((x_{2j+1}, x_{2j+2})\). Furthermore, \(U_{\Delta x,\xi }(0,\xi )=u_{\Delta x,x}(0, y_{\Delta x}(0,\xi ))y_{\Delta x,\xi }(0,\xi )\) for all \(\xi \in ({\hat{\xi }}_{3j+1}, {\hat{\xi }}_{3j+3})\backslash \{ {\hat{\xi }}_{3j+2}\}\). Therefore, introducing \(\tau _{3j+ \frac{3}{2}}\) and \(\tau _{3j+ \frac{5}{2}}\) as

the wave breaking function \(\tau _{\Delta x}\) for \(\xi \in [{\hat{\xi }}_{3j}, {\hat{\xi }}_{3j+3})\) is given by

Figure 3 illustrates the relation between the slopes of \(U_{\Delta x}(0)\) and the value attained by \(\tau _{\Delta x}\). Note that an interval where \(U_{\Delta x}(0)\) is strictly increasing leads to an interval where \(\tau _{\Delta x}=\infty \), i.e., where no wave breaking occurs.
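A minimal sketch of the cellwise wave breaking times, assuming that (2.3) takes the usual form \(\tau (\xi ) = 0\) if \(y_{\xi }(0,\xi ) = U_{\xi }(0,\xi ) = 0\), \(\tau (\xi ) = -2y_{\xi }(0,\xi )/U_{\xi }(0,\xi )\) if \(U_{\xi }(0,\xi ) < 0\), and \(\tau (\xi ) = \infty \) otherwise. Since \(U_{\Delta x,\xi } = u_{\Delta x,x}(0,y_{\Delta x})\,y_{\Delta x,\xi }\) on the non-collapsed cells, the quotient reduces to \(-2/s\), where s is the slope of \(u_{\Delta x}(0)\) on the corresponding Eulerian cell. The function names are hypothetical.

```python
import math


def tau_cell(y_xi, U_xi):
    """Wave breaking time on one Lagrangian cell with constant (y_xi, U_xi)."""
    if y_xi == 0 and U_xi == 0:
        return 0.0          # collapsed cell, e.g. [hat_xi_{3j}, hat_xi_{3j+1}]
    if U_xi < 0:
        return -2.0 * y_xi / U_xi
    return math.inf         # U nondecreasing on the cell: no wave breaking


def tau_from_slope(s):
    """The same quantity expressed through the Eulerian slope s of u_dx(0)."""
    return -2.0 / s if s < 0 else math.inf
```

For example, the slope \(s = -1\) corresponds, when \(dG_{\Delta x} = u_{\Delta x,x}^2dx\) on the cell, to \(y_{\xi } = 1/(1+s^2) = \frac{1}{2}\) and \(U_{\xi } = s/(1+s^2) = -\frac{1}{2}\), and both functions give \(\tau = 2\).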

3.2.3 Implementation of \(S_t\)

We proceed by considering \(X_{\Delta x}(t)=S_t(X_{\Delta x}(0))\). Introduce the function

\[ \zeta _{\Delta x}(t,\xi ) = y_{\Delta x}(t,\xi ) - \xi , \]

which we prefer to work with, as \(\zeta _{\Delta x}\in L^{\infty }(\mathbb {R})\) in contrast to \(y_{\Delta x}\), see Definition 2.2. Furthermore, let

It then turns out to be advantageous to compute the time evolution of \({\hat{X}}_{\Delta x,\xi }\) rather than that of \({\hat{X}}_{\Delta x}\), due to possible drops in \(V_{\Delta x,\xi }\), cf. (2.2c). However, this forces us to slightly change our point of view, since by differentiating (3.10) we find that \({\hat{X}}_{\Delta x, \xi }(0)\) is a piecewise constant function whose discontinuities are situated at the nodes \({\hat{\xi }}_j\), where \(j\in {\mathbb {Z}}\). Therefore, we associate to each \(j \in {\mathbb {Z}}\),

This sequence is evolved numerically according to,

The above system is obtained by differentiating (2.2) with respect to \(\xi \). Since computing \({\hat{X}}_{\Delta x, \xi }(t,\cdot )\) exactly yields a piecewise constant function whose discontinuities are again located at the nodes \(\{{\hat{\xi }}_j\}_{j \in {\mathbb {Z}}}\), working with the sequence \(\{{\hat{X}}_{j, \xi }(t)\}_{j \in {\mathbb {Z}}}\) does not introduce any additional error. Furthermore, the exact \({\hat{X}}_{\Delta x, \xi }(t,\cdot )\) can be read off from \(\{{\hat{X}}_{j, \xi }(t)\}_{j \in {\mathbb {Z}}}\).
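To illustrate why the evolution of a single cell can be carried out exactly, we give a one-cell sketch. It assumes that differentiating (2.2) yields \(y_{\xi ,t} = U_{\xi }\), \(U_{\xi ,t} = \frac{1}{2}V_{\xi }\) and \(H_{\xi ,t} = 0\), the \(\xi \)-independent term involving \(V_{\infty }\) dropping out, and that (2.2c) multiplies \(V_{\xi }\) by \(1-\alpha \) at the breaking time. Both are our reading of the \(\alpha \)-dissipative system, which is not reproduced above. With \(y_{\xi }V_{\xi } = U_{\xi }^2\), one has \(y_{\xi }(t) = y_{\xi } + tU_{\xi } + \frac{t^2}{4}V_{\xi } = (y_{\xi } + \frac{t}{2}U_{\xi })^2/y_{\xi }\), which vanishes exactly at \(\tau = -2y_{\xi }/U_{\xi }\). The sketch only covers cells with \(y_{\xi }(0) > 0\), and the function name is hypothetical.

```python
import math


def evolve_cell(y0, U0, V0, H0, alpha, t):
    """Exact (y_xi, U_xi, V_xi, H_xi) at time t for one cell with y0 > 0.

    Assumes y0 * V0 == U0**2 (Definition 2.2 (iii)). Before breaking,
    y_xi(t) = y0 + t*U0 + t^2*V0/4 and U_xi(t) = U0 + t*V0/2. At
    tau = -2*y0/U0 (only if U0 < 0) both vanish and V_xi drops to (1 - alpha)*V0.
    """
    if y0 <= 0:
        raise ValueError("sketch only covers cells with y0 > 0")
    tau = -2.0 * y0 / U0 if U0 < 0 else math.inf
    if t < tau:
        return y0 + t * U0 + 0.25 * t * t * V0, U0 + 0.5 * t * V0, V0, H0
    V1 = (1.0 - alpha) * V0   # energy kept after wave breaking (post-breaking value at t = tau)
    s = t - tau               # restart from y_xi = U_xi = 0 at t = tau
    return 0.25 * s * s * V1, 0.5 * s * V1, V1, H0
```

After breaking, the cell again satisfies \(y_{\xi }V_{\xi } = U_{\xi }^2\), now with the reduced energy \((1-\alpha )V_{\xi }(0)\), so the piecewise constant structure of \({\hat{X}}_{\Delta x,\xi }\) is preserved.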

Finally, we can exactly recover \({\hat{X}}_{\Delta x}(t)\), which is continuous with respect to \(\xi \), from \(\{{\hat{X}}_{j,\xi }(t)\}_{j \in {\mathbb {Z}}}\). Since the asymptotic behavior of \({\hat{X}}_{\Delta x}(t,\xi )\) as \(\xi \rightarrow \pm \infty \) changes in accordance with (2.2), this must also be taken into account by our algorithm. The fact that the initial data is in the space \({\mathcal {D}}\) combined with (2.2c) implies

where the abbreviation

has been introduced to ease the notation. Hence, for all \(j\in {\mathbb {Z}}\),

From (2.2d) it follows that

For \(U_{\Delta x}(t,\xi )\), we have, combining (2.2b) and Fubini’s theorem,

and for all \(j\in {\mathbb {Z}}\)

For \(\zeta _{\Delta x}(t,\xi )\), we find, using (2.2a) and \(\zeta _{\Delta x,-\infty }(0) = 0\),

and for all \(j\in {\mathbb {Z}}\),

Recalling that \({{\hat{X}}}_{\Delta x}(t)\) is piecewise linear and continuous, with nodes situated at \({\hat{\xi }}_j\) with \(j\in {\mathbb {Z}}\), we can now recover \({{\hat{X}}}_{\Delta x}(t,\xi )\) for all \(\xi \in {\mathbb {R}}\). Using that \(y_{\Delta x}(t)= \zeta _{\Delta x}(t)+ \text {id}\), we end up with \(X_{\Delta x}(t,\xi )\) for all \(\xi \in {\mathbb {R}}\).
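The recovery step amounts to integrating a piecewise constant derivative over the cells. The Python sketch below assumes that the value at the leftmost node is supplied by the caller. Examples are \(\zeta _{\Delta x}\) at \(-\infty \), or the time-dependent asymptotic value of \(U_{\Delta x}\) dictated by (2.2). Both helper names are hypothetical.

```python
from bisect import bisect_right
from itertools import accumulate


def nodal_values_from_slopes(xi_nodes, slopes, left_value):
    """Rebuild f(xi_0), ..., f(xi_n) for a continuous piecewise linear f.

    xi_nodes   : nondecreasing nodes xi_0 <= ... <= xi_n (hypothetical input)
    slopes     : slopes[j] is the constant value of f' on [xi_j, xi_{j+1}]
    left_value : f(xi_0), assumed known from the asymptotic behaviour
    """
    if len(slopes) != len(xi_nodes) - 1:
        raise ValueError("need exactly one slope per cell")
    # f(xi_{j+1}) = f(xi_j) + slope_j * (xi_{j+1} - xi_j)
    increments = [s * (b - a) for s, a, b in zip(slopes, xi_nodes[:-1], xi_nodes[1:])]
    return list(accumulate(increments, initial=left_value))


def evaluate_piecewise_linear(xi, xi_nodes, values):
    """Evaluate the continuous piecewise linear interpolant at xi in [xi_0, xi_n]."""
    if not xi_nodes[0] <= xi <= xi_nodes[-1]:
        raise ValueError("xi outside the node range")
    # Index j with xi_{j-1} <= xi <= xi_j (clamped at the last node).
    j = min(bisect_right(xi_nodes, xi), len(xi_nodes) - 1)
    a, b = xi_nodes[j - 1], xi_nodes[j]
    if b == a:
        return values[j]
    w = (xi - a) / (b - a)
    return (1 - w) * values[j - 1] + w * values[j]
```

For \(y_{\Delta x}\), one would apply the first helper to \(\zeta _{\Delta x}\) and then add \(\hat{\xi }_j\) at each node, since \(y_{\Delta x}= \zeta _{\Delta x}+\text {id}\).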

In practice, we have to limit the numerical approximation of the Cauchy problem (1.1) to initial data where \(u_{x}(0)\) and \(\mu _{\textrm{sing}}(0)\) have compact support. For such initial data there exists \(N \in {\mathbb {N}}\) such that

and by (3.14) we have

3.2.4 Implementation of M

Finally, to recover the solution in Eulerian coordinates we apply the mapping M to \(X_{\Delta x}(t)\), i.e.,

Here it is important to note that \(X_{\Delta x}(t)\) is piecewise linear and continuous. Thus \(u_{\Delta x}(t,\cdot )\) is also piecewise linear and continuous, while \(F_{\Delta x}(t, \cdot )\) and \(G_{\Delta x}(t, \cdot )\) are piecewise linear, increasing and in general only left-continuous. Furthermore, their nodes are situated at the points \(\{y_{\Delta x}(t, {\hat{\xi }}_j)\}_{j \in {\mathbb {Z}}}\). Numerically, we therefore apply a piecewise linear reconstruction.

Given \(x \in \mathbb {R}\), there exists \(j \in {\mathbb {Z}}\) such that \(x \in (y_{\Delta x}(t, \hat{\xi }_j), y_{\Delta x}(t, \hat{\xi }_{j+1})]\).

If \(x=y_{\Delta x}(t, \hat{\xi }_{j+1})\), we have, by the definition of M,

This also covers the case \(\hat{\xi }_j = \hat{\xi }_{j+1}\), as well as the case where wave breaking occurs, i.e., \(y_{\Delta x}(t, \hat{\xi }_j) = y_{\Delta x}(t, \hat{\xi }_{j+1})\).

If \(x\in (y_{\Delta x}(t, \hat{\xi }_j), y_{\Delta x}(t, \hat{\xi }_{j+1}))\), observe that

and

Therefore, for \(x\in (y_{\Delta x}(t, \hat{\xi }_j), y_{\Delta x}(t, \hat{\xi }_{j+1}))\) the linear interpolation, which coincides with \(M\left( X_{\Delta x}(t) \right) \), is given by

where \(D_+^{\xi }y_{\Delta x}(t, \hat{\xi }_j) = y_{\Delta x}(t, \hat{\xi }_{j +1 }) - y_{\Delta x}(t, \hat{\xi }_{j})\). Finally, note that the Eulerian grid changes significantly over time: the number of grid cells varies, and the grid does not remain uniform.
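The reconstruction described above can be sketched in Python as follows. The sketch makes two assumptions:

- The node positions \(y_{\Delta x}(t,\hat{\xi }_j)\) are given as a nondecreasing list, with repeated entries where wave breaking has occurred.
- Outside the outermost nodes, the end values are used. This reflects the compact support of \(u_x(0)\) and \(\mu _{\textrm{sing}}(0)\) assumed above, under which u and F are constant there.

The function name is hypothetical. When x coincides with one or more nodes, taking the leftmost one yields a left-continuous result, as required for \(F_{\Delta x}\) and \(G_{\Delta x}\).

```python
from bisect import bisect_left


def reconstruct_at(x, y_nodes, values):
    """Piecewise linear reconstruction at x from Lagrangian nodal data.

    Hypothetical sketch. y_nodes are the nondecreasing positions
    y(t, xi_j) (repeated at wave breaking); values are the matching nodal
    values, e.g. U(t, xi_j) for u or the cumulative energy for F.
    """
    k = bisect_left(y_nodes, x)  # first index with y_nodes[k] >= x
    if k == 0:
        return values[0]         # at or left of the first node
    if k == len(y_nodes):
        return values[-1]        # right of the last node
    if y_nodes[k] == x:
        # x is a node; the leftmost node at this position gives a
        # left-continuous result, matching F(x) = mu((-inf, x)).
        return values[k]
    y_left, y_right = y_nodes[k - 1], y_nodes[k]  # y_left < x < y_right
    w = (x - y_left) / (y_right - y_left)
    return (1 - w) * values[k - 1] + w * values[k]
```

With nodes `[0.0, 1.0, 1.0, 3.0]` and cumulative energies `[0.0, 0.5, 1.5, 2.0]`, the point x = 1 carries a concentrated mass of 1. The sketch returns 0.5 at x = 1, which excludes the atom, and 1.75 at x = 2.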

4 Convergence of the Numerical Method

In this section we prove that our family of approximations \(\{ \left( u_{\Delta x}, F_{\Delta x}, G_{\Delta x} \right) \}_{\Delta x > 0}\) converges to the \(\alpha \)-dissipative solution of (1.1). We start by proving convergence of the projected initial data in Eulerian coordinates. Thereafter, we show that this induces convergence, initially and at later times, towards the unique \(\alpha \)-dissipative solution in Lagrangian coordinates. Finally, we investigate in what sense convergence in Lagrangian coordinates carries over to Eulerian coordinates.

4.1 Convergence of the Initial Data in Eulerian Coordinates

Let \(P_{\Delta x}\) be the projection operator given by Definition 3.2. We have the following result.

Proposition 4.1

For \((u,\mu , \nu ) \in \mathcal {D}_0\), let \((u_{\Delta x}, F_{\Delta x}, G_{\Delta x})=P_{\Delta x} \left( (u,F, G) \right) \). Then

Proof

Let \(x \in [x_{2j}, x_{2j+1}]\). By (3.5), we have

where \(q_{2j}\) is defined by (3.4). The second term is bounded by

The first term can be estimated as follows:

where we applied the Cauchy–Schwarz inequality. Combining (4.2) and (4.3) yields

By a similar argument for \(x \in [x_{2j+1}, x_{2j+2}]\), (4.1a) is established.
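The Cauchy–Schwarz step in this argument is of the standard Hölder-\(\frac{1}{2}\) type. As a sketch, assume the grid spacing satisfies \(x_{2j+1}-x_{2j}=\Delta x\); this is an assumption about Definition 3.2, which is not reproduced here. Then for \(x \in [x_{2j}, x_{2j+1}]\),

```latex
|u(x) - u(x_{2j})|
  \le \int_{x_{2j}}^{x} |u_x(s)|\,ds
  \le (x - x_{2j})^{1/2} \Big( \int_{x_{2j}}^{x} u_x^2(s)\,ds \Big)^{1/2}
  \le \Delta x^{1/2}\, \|u_x\|_{L^2(\mathbb{R})} .
```

This bound illustrates why, when only \(u_x \in L^2(\mathbb {R})\) is known, an order of \(\frac{1}{2}\) in \(\Delta x\) is the natural general rate for the wave profile.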

Using (4.4) we get

which yields (4.1b).

Next, we show (4.1c). For the \(L^1(\mathbb {R})\)-estimate, we have

and for the \(L^2(\mathbb {R})\)-estimate,

To prove (4.1e), it suffices to show that \(\mu _{\Delta x} \rightarrow \mu \) vaguely as \(\Delta x \rightarrow 0\), see [7, Prop. 7.19]. That is,

for all \(\phi \in C_0(\mathbb {R})\), where \(C_0(\mathbb {R})\) denotes the space of continuous functions vanishing at infinity. Furthermore, \(C_c^{\infty }(\mathbb {R})\) is dense in \(C_0(\mathbb {R})\), cf. [7, Prop. 8.17]. The total masses \(\mu _{\Delta x}(\mathbb {R})\) are also bounded uniformly in \(\Delta x\), since the projection preserves the total energy. It therefore suffices to show that (4.5) holds for all \(\phi \in C_c^{\infty }(\mathbb {R})\).

Let \(\phi \in C_c^{\infty }(\mathbb {R})\). Combining integration by parts and (4.1c) with \(p=1\), we find

and consequently \(\mu _{\Delta x} \rightarrow \mu \) vaguely as \(\Delta x\rightarrow 0\).
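Written out, the integration-by-parts step has the following form. This is a sketch using \(F(x)=\mu ((-\infty ,x))\) and the analogous identity for \(F_{\Delta x}\). No boundary terms appear because \(\phi \) has compact support.

```latex
\Big| \int_{\mathbb{R}} \phi \, d\mu_{\Delta x} - \int_{\mathbb{R}} \phi \, d\mu \Big|
  = \Big| \int_{\mathbb{R}} \phi'(x) \big( F_{\Delta x}(x) - F(x) \big)\, dx \Big|
  \le \|\phi'\|_{L^\infty(\mathbb{R})}\, \|F_{\Delta x} - F\|_{L^1(\mathbb{R})} .
```

By (4.1c) with \(p=1\), the right-hand side tends to zero.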

Since \(F=G\) and \(F_{\Delta x}=G_{\Delta x}\) by assumption, (4.1d) and (4.1f) hold. \(\square \)

Next we establish convergence of the spatial derivative \(u_{\Delta x, x}\).

Lemma 4.2

Let \((u,F,G)\in \mathcal {D}_0\) and \((u_{\Delta x}, F_{\Delta x}, G_{\Delta x})=P_{\Delta x} \left( (u,F, G) \right) \), then

Proof

We apply the Radon–Riesz theorem. Thus, we have to show that \(\Vert u_{\Delta x, x} \Vert _2 \rightarrow \Vert u_x \Vert _2\) and \(u_{\Delta x, x} \rightharpoonup u_x\) in \(L^2(\mathbb {R})\). A direct calculation, using (3.5), yields

For the weak convergence, it suffices to consider test functions \(\phi \in C_c^\infty ({\mathbb {R}})\), as \(C_c^{\infty }(\mathbb {R})\) is dense in \(L^2(\mathbb {R})\). Let \(\phi \in C_c^{\infty }(\mathbb {R})\). Integration by parts combined with the Cauchy–Schwarz inequality and (4.1b) yields

Thus \(u_{\Delta x, x} \rightharpoonup u_{x}\) in \(L^2(\mathbb {R})\) as \(\Delta x\rightarrow 0\). \(\square \)
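The Radon–Riesz argument, used here and again in the proof of Proposition 4.7, rests on the following identity in the real Hilbert space \(L^2(\mathbb {R})\). Norm convergence controls the first term, and weak convergence controls the cross term:

```latex
\|u_{\Delta x,x} - u_x\|_2^2
  = \|u_{\Delta x,x}\|_2^2 - 2\,\langle u_{\Delta x,x}, u_x \rangle + \|u_x\|_2^2
  \;\longrightarrow\; \|u_x\|_2^2 - 2\,\|u_x\|_2^2 + \|u_x\|_2^2 = 0 .
```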

The auxiliary function f(Z) will play an essential role in the upcoming convergence analysis, where we relate convergence in Eulerian coordinates to convergence in Lagrangian coordinates. It is defined as

where

and

Recalling that \(d\mu _{\textrm{ac}}=u_x^2dx\) by Definition 2.1 (iv), and \(\mu _{\textrm{ac}}=\nu _{\textrm{ac}}\) for \((u, \mu , \nu ) \in \mathcal {D}_0\), we establish the following \(L^1(\mathbb {R})\)-estimate.

Lemma 4.3

Let \((u,F,G)\in \mathcal {D}_0\) and \((u_{\Delta x}, F_{\Delta x}, G_{\Delta x})=P_{\Delta x} \left( (u,F, G) \right) \). Then f(Z), \(f(Z_{\Delta x}) \in L^1({\mathbb {R}})\), and

Proof

We only show that \(f(Z)\in L^1(\mathbb {R})\), since the argument for \(f(Z_{\Delta x})\) is exactly the same. By construction \(f(Z)(x)\le u_x^2(x)\) for all \(x\in \mathbb {R}\) and, since \(u_x\in L^2(\mathbb {R})\), it follows that

and hence \(f(Z)\in L^1(\mathbb {R})\).

For (4.7), we split according to the sets \({\tilde{\Omega }}_d\) and \({\tilde{\Omega }}_c\) associated with Z and \(Z_{\Delta x}\). If \(x \in \tilde{\Omega }_{d}(Z)\cap {\tilde{\Omega }}_{d}(Z_{\Delta x})\), then

and, by the Cauchy–Schwarz inequality and (4.8), we obtain

We can proceed similarly for \(x \in {\tilde{\Omega }}_c(Z) \cap \tilde{\Omega }_c(Z_{\Delta x})\).

Finally, consider \(x \in \left( {\tilde{\Omega }}_c(Z)\cap \tilde{\Omega }_d(Z_{\Delta x})\right) \cup \left( {\tilde{\Omega }}_d(Z)\cap {\tilde{\Omega }}_c(Z_{\Delta x})\right) \). By symmetry, it is sufficient to consider \(x \in {\tilde{\Omega }}_c(Z)\cap {\tilde{\Omega }}_d(Z_{\Delta x})\), for which

since \(u_{x}(x) \ge 0\) and \(u_{\Delta x, x}(x) <0\). Therefore, by the Cauchy–Schwarz inequality,

Combining (4.9) and (4.10) with analogous estimates for the other cases yields (4.7). \(\square \)

4.2 Convergence in Lagrangian Coordinates

We start by showing convergence of the initial data in Lagrangian coordinates.

Lemma 4.4

Given \((u, \mu , \nu ) \in \mathcal {D}_0\), let \(X = \left( y, U, V, H \right) = L \left( (u, \mu , \nu ) \right) \) and \(X_{\Delta x}= (y_{\Delta x}, U_{\Delta x}, V_{\Delta x}, H_{\Delta x}) = L \circ P_{\Delta x} \left( (u, \mu , \nu ) \right) \), then

Proof

Given \(\xi \in \mathbb {R}\), there exists \(j \in {\mathbb {Z}}\) such that \(\xi \in [\hat{\xi }_{3j}, \hat{\xi }_{3j+3})\). Moreover, by construction, y and \(y_{\Delta x}\) are continuous, increasing and satisfy

as discussed in Sect. 3.2. Thus

and, as X and \(X_{\Delta x}\) belong to \(\mathcal {F}_0\), we have shown both (4.11a) and (4.11c).

To prove (4.11b), note that by (2.1a), for any \(x \in {\mathbb {R}}\) there exist characteristic variables \(\xi _{\Delta x}\) and \(\xi \) such that \(y_{\Delta x}( \xi _{\Delta x}) = x=y(\xi )\), and hence

By (2.1b), we have \(u(y(\xi )) = U(\xi )\) and \(u_{\Delta x}(y_{\Delta x}(\xi )) = U_{\Delta x}(\xi )\). Therefore, by rearranging (4.13), we obtain

The first term is bounded by (4.1a), while for the second term we apply the Cauchy–Schwarz inequality, yielding

Thus,

\(\square \)

For the initial derivatives we have the following convergence result.

Lemma 4.5

Given \((u, \mu , \nu ) \in \mathcal {D}_0\), let \(X= (y, U, V, H) = L \left( (u, \mu , \nu ) \right) \) and \(X_{\Delta x} = \left( y_{\Delta x}, U_{\Delta x}, V_{\Delta x}, H_{\Delta x} \right) = L \circ P_{\Delta x} \left( (u, \mu , \nu ) \right) \), then as \({\Delta x}\rightarrow 0\),

Proof

For a proof of \(H_{\Delta x, \xi } \rightarrow H_{\xi }\) and \(U_{\Delta x, \xi } \rightarrow U_{\xi }\) in \(L^2(\mathbb {R})\) we refer to [8, Sec. 5]. As \(X\in \mathcal {F}_0^0\) and, in particular, \(U_{\xi }^2 = y_{\xi }H_{\xi }\), we have

Since (4.15) also holds for \(X_{\Delta x}\), we obtain, using the Cauchy–Schwarz inequality,

and \(H_{\Delta x, \xi } \rightarrow H_\xi \) in \(L^1(\mathbb {R})\). Lastly, (4.14a) follows from (4.14c), as \(X_{\Delta x}, X \in \mathcal {F}_0^0\). \(\square \)

We proceed by introducing the stability function g(X), which is the key ingredient in showing convergence at later times in Lagrangian coordinates. In particular, it describes the loss of energy at wave breaking in a continuous way, in contrast to the actual energy density \(V_{\xi }\), which drops abruptly at wave breaking.

Let

where

Note that for each \(\xi \in \Omega _c\) no wave breaking occurs in the future and hence \(V_\xi (t,\xi )\) is continuous forward in time, while for \(\xi \in \Omega _d\) wave breaking occurs in the future and hence \(V_\xi (t,\xi )\) might be discontinuous forward in time.

The function g(X) is then defined as

Note that the Eulerian counterpart of g(X) is the previously defined function f(Z), cf. (4.6). The relation between the two functions is clarified in the following remark.

Remark 4.6

If \((u,\mu ,\nu )\in \mathcal {D}_0\), \(\nu \) is purely absolutely continuous, and \(X=L((u,\mu ,\nu ))\), the functions (4.17) and (4.6) are related through

The following result is the last one concerning convergence of the initial data in Lagrangian coordinates.

Proposition 4.7

Given \((u, \mu , \nu ) \in \mathcal {D}_0\), let \(X= (y, U, V, H) = L \left( (u, \mu , \nu ) \right) \) and \(X_{\Delta x} = \left( y_{\Delta x}, U_{\Delta x}, V_{\Delta x}, H_{\Delta x} \right) = L \circ P_{\Delta x} \left( (u, \mu , \nu ) \right) \), then as \({\Delta x}\rightarrow 0\),

Proof

To begin with observe that for any \(X\in \mathcal {F}_0^0\)

since \(0\le V_\xi (\xi )=H_\xi (\xi )\le 1\) for all \(\xi \in \mathbb {R}\), which follows from (2.1c) and the fact that both y and H are increasing functions. We can therefore apply the Radon–Riesz theorem to establish \(g(X_{\Delta x})\rightarrow g(X)\) in \(L^2(\mathbb {R})\). Accordingly, we split the proof of (4.18) into three parts, i) \(L^2\)-norm convergence, ii) weak \(L^2\)-convergence, and iii) \(L^1\)-convergence.

i) Verification of \(\Vert g(X_{\Delta x}) \Vert _2 \rightarrow \Vert g(X) \Vert _{2}\). Note that since \(X_{\Delta x}\) and X belong to \(\mathcal {F}_0^0\), we have

where we used (4.15) in the last step.

To estimate (4.19a) and (4.19b), introduce

and \(B=y({\mathcal {S}})\). It is shown in the proof of [12, Thm. 27] that

where \(\mu \vert _B\) denotes the restriction of \(\mu \) to B, that is \(\mu \vert _B(E)=\mu (E\cap B)\) for any Borel set E. Thus

Along the same lines, by defining

we have

Since \(\Omega _d(X)=\Omega _d(X)\cap {\mathcal {S}}^c\) and \(\Omega _d(X_{\Delta x})=\Omega _d(X_{\Delta x})\cap {\mathcal {S}}^c_{\Delta x}\), due to Definition 2.2 (iii), (4.21) implies that

Furthermore, \(U_{\xi }(\xi ) = u_x (y(\xi ))y_{\xi }(\xi )\) for almost every \(\xi \in \Omega _d(X)\), and likewise for \(U_{\Delta x,\xi }\). Hence, in the above integrals we can replace \(y\left( \Omega _d(X) \right) \) and \(y_{\Delta x}\left( \Omega _d(X_{\Delta x}) \right) \) by \({\tilde{\Omega }}_d(Z)\) and \({\tilde{\Omega }}_{d}(Z_{\Delta x})\), respectively, and end up with

The terms on the right hand side can be estimated using the same approach as in the proof of Lemma 4.3, which yields

For (4.19b), we get

The two terms in (4.26a) have a similar structure and we therefore only consider the first one. We have

since \(U_{\xi }^2 = y_{\xi }V_{\xi }\) and \(0 \le y_{\xi } \le 1\). Estimating (4.26b) in much the same way yields

Finally, combining (4.19), (4.25) and (4.27), we have shown that

As \(H_{\Delta x, \xi } \rightarrow H_{\xi }\) and \(U_{\Delta x, \xi } \rightarrow U_{\xi }\) in \(L^2(\mathbb {R})\) by Lemma 4.5 and \(u_{\Delta x, x} \rightarrow u_{x}\) in \(L^2(\mathbb {R})\) by Lemma 4.2, it holds that \(\Vert g(X_{\Delta x})\Vert _2 \rightarrow \Vert g(X) \Vert _2\).

ii) We show that \(g(X_{\Delta x}) \rightharpoonup g(X)\) in \(L^2(\mathbb {R})\). To that end, we interpret \(g(X_{\Delta x})\) and g(X) as positive Radon measures with the associated functions

If we show that \({\hat{G}}_{\Delta x} \rightarrow {\hat{G}}\) pointwise, then [7, Prop. 7.19] implies that \(g(X_{\Delta x}) \rightarrow g(X)\) vaguely, and as a consequence, \(g(X_{\Delta x}) \rightharpoonup g(X)\) in \(L^2(\mathbb {R})\).

Let \(\xi \in [\hat{\xi }_{3j}, \hat{\xi }_{3j+3})\), and note that due to Definition 3.2, Definition 2.3, (3.12)–(3.13), and (4.21),

Thus,

For \(I_1\) note that using the same argument as the one leading to (4.24), we obtain

and due to Lemma 4.3,

The term \(I_2\) can be estimated as follows,

The first term is bounded from above by \(\Vert H - H_{\Delta x} \Vert _{\infty } \le 2\Delta x\), due to (4.11c). For the second term, let \(x= y(\xi )\) and \(x_{\Delta x}=y_{\Delta x}(\xi )\), which both belong to \([x_{2j}, x_{2j+2}]\). Using once more the same argument as the one leading to (4.24), \(\nu _{\text {ac}}=u_x^2\), and \(\nu _{\Delta x, \text {ac}}=u_{\Delta x, x}^2\), we end up with

and therefore

Thus,

By Lemma 4.2, \(u_{\Delta x, x} \rightarrow u_x\) in \(L^2(\mathbb {R})\), and, since \(F_{\text {ac}}\) is continuous, also the third term tends to zero as \(\Delta x \rightarrow 0\). Therefore, (4.28) implies that \({\hat{G}}_{\Delta x} \rightarrow {\hat{G}}\) pointwise, which gives, see [7, Prop. 7.19], that

Since g(X) and \(g(X_{\Delta x})\) belong to \(L^2(\mathbb {R})\), with \(\Vert g(X_{\Delta x})\Vert _2\) bounded uniformly in \(\Delta x\) by i), and \(C_c^\infty (\mathbb {R})\) is a dense subset of \(L^2(\mathbb {R})\), (4.29) holds for all \(\phi \in L^2(\mathbb {R})\). Hence \(g(X_{\Delta x})\rightharpoonup g(X)\) in \(L^2(\mathbb {R})\).

Consequently, combining i) and ii), the conditions of the Radon–Riesz theorem are met and \(g(X_{\Delta x})\rightarrow g(X)\) in \(L^2(\mathbb {R})\).

iii) It remains to show that \(g(X_{\Delta x}) \rightarrow g(X)\) in \(L^1(\mathbb {R})\). Again we will use a splitting based on the sets \({\mathcal {S}}\) and \({\mathcal {S}}_{\Delta x}\) defined in (4.20) and (4.22), respectively.

Since \(g(X_{\Delta x})(\xi ) = g(X) (\xi )= 1\) for all \(\xi \in {\mathcal {S}}\cap {\mathcal {S}}_{\Delta x}\), we have

By the Cauchy–Schwarz inequality and (4.23), (4.30a) satisfies

and we treat the first term in (4.30) the same way.

The term (4.30b), on the other hand, requires a bit more work. Introduce the set \(A = \{\xi : H_{\xi }(\xi ) \ge \frac{1}{2}\}\), which satisfies, due to Chebyshev’s inequality with \(p=1\),

Furthermore, let \(E_{\Delta x} = {\mathcal {S}}^c \cap {\mathcal {S}}_{\Delta x}^c\), so that (4.30b) can be written as

For (4.33), the Cauchy–Schwarz inequality and (4.32) imply

Combining (4.30), (4.31), (4.33), and (4.34) and recalling that \(g(X_{\Delta x})\rightarrow g(X) \) in \(L^2(\mathbb {R})\), it remains to show that (4.33) tends to zero as \(\Delta x\rightarrow 0\). We follow the proof of [11, Lem. 7.3] closely. For almost every \(\xi \in E_{\Delta x}\), we have

and

Thus combining (4.35) and (4.36),

Concerning the integral of (4.37a), note that \(f(Z) \in L^1(\mathbb {R})\) by Lemma 4.3. Thus, given \(\epsilon > 0\) there exists \(\psi \in C_c(\mathbb {R})\) such that \(\Vert f(Z) - \psi \Vert _{L^1(\mathbb {R})} \le \epsilon \), since \(C_c(\mathbb {R})\) is dense in \(L^1(\mathbb {R})\), and

since \(0\le y_\xi \), \(y_{\Delta x,\xi }\le 1\). As \(y_{\Delta x} \rightarrow y\) in \(L^{\infty }(\mathbb {R})\) by (4.11a), the support of \(\psi (y_{\Delta x})\) is contained inside some compact set which can be chosen independently of \(\Delta x\). Therefore, by the Lebesgue dominated convergence theorem, \(\psi (y_{\Delta x}) \rightarrow \psi (y)\) in \(L^1(\mathbb {R})\). Consequently, the left hand side of (4.38) vanishes as \(\epsilon \rightarrow 0\) and \(\Delta x \rightarrow 0\).

For the term (4.37b) we use the change of variables \(x=y_{\Delta x}(\xi )\), \(0 \le y_{\xi } \le 1\), and Lemma 4.3 to deduce that

For (4.37c) note that

which implies

Since \(u_x^2 \in L^1(\mathbb {R})\), an argument similar to the one for the integrals of (4.37a) and (4.37b) shows that the term on the left hand side tends to zero as \(\Delta x\rightarrow 0\).

Finally, for (4.37d), we observe that

and hence (4.37d) is bounded from above by

which is a combination of the integrals of (4.37a) and (4.37b), both of which tend to zero as \({\Delta x}\rightarrow 0\). This finishes the proof of \(g(X_{\Delta x}) \rightarrow g(X)\) in \(L^1(\mathbb {R})\). \(\square \)

To establish convergence at later times in Lagrangian coordinates, we equip the set \(\mathcal {F}\) with the following metric, which has been introduced in [13, Def. 4.6].

Definition 4.8

Let \(d: \mathcal {F}\times \mathcal {F}\rightarrow [0, \infty )\) be defined by

This metric has one major drawback: it separates solutions in Lagrangian coordinates belonging to the same equivalence class. Nevertheless, it is well suited for us, since we are only interested in comparing \(X_{\Delta x}(t) = S_t (X_{\Delta x}(0))\) with \(X(t) = S_t (X(0))\) and not their respective equivalence classes.

Theorem 4.9

Given \((u_0, \mu _0, \nu _0) \in \mathcal {D}_0\) and \(t\ge 0\), let \(X(t) = S_t \circ L \left( (u_0, \mu _0, \nu _0 )\right) \) and \(X_{\Delta x}(t) = S_{t} \circ L \circ P_{\Delta x} \left( (u_0, \mu _0, \nu _0) \right) \), then as \({\Delta x}\rightarrow 0\),

Furthermore,

Proof

Let \(X(0)=L((u_0, \mu _0,\nu _0))\) and \(X_{\Delta x}(0)= L\circ P_{\Delta x}((u_0,\mu _0,\nu _0))\). Combining Lemma 4.4, Lemma 4.5, and Proposition 4.7 yields \(d(X(0), X_{\Delta x}(0)) \rightarrow 0\). Furthermore, see [13, Thm. 4.18],

and hence (4.39a)–(4.39e) hold.

Since \(g(X)(t,\cdot )-g(X_{\Delta x})(t,\cdot )\) and \(H(t,\cdot )-H_{\Delta x}(t,\cdot )\) are time-independent, (4.39f) and (4.40a) follow immediately from Proposition 4.7 and (4.11c).

It remains to show (4.40b). Recalling (4.16), introduce

Using Fubini’s theorem, we write

Since no wave breaking occurs for \(\xi \in \Omega _{c,c}(0)\),

The terms (4.41a) and (4.41b) can be treated similarly, so we only consider (4.41a). Since any \(\xi \in \Omega _d(X_{\Delta x}(0))\) might enter \(\Omega _c\) within the time interval [0, t], we have

where we used in the last step that X(0) and \(X_{\Delta x}(0)\) belong to \(\mathcal {F}_0^0\).

For (4.41c) we use a similar decomposition, yielding

For the first term we have

For (4.44a), recalling (4.17) and that X(0) and \(X_{\Delta x}(0)\) belong to \(\mathcal {F}_0^0\), we have

For the last term in the above inequality, observe that \(U_\xi (t,\xi )\) and \(U_{\Delta x, \xi }(t,\xi )\) are increasing functions, cf. (2.2b), which equal zero at \(t= \tau (\xi )\) and \(t= \tau _{\Delta x}(\xi )\), respectively, for \(\xi \in \Omega _{d, d}(0)\). Furthermore, (2.2b) implies

and thus

The term (4.44b) is bounded similarly.

To estimate (4.44c), we split \(\Omega _{d, d}(0) \cap \Omega _{c, c}(t)\) into two sets,

where

We only present the details for the integral over A(t), since the other one can be treated similarly. Following closely the argument leading to (4.46), we get

Combining (4.42)–(4.43), (4.45), (4.46)–(4.47), and analogous estimates for the remaining cases, yields

where

Furthermore, \(\textrm{meas} \! \left( \{\xi \in \mathbb {R}: \tau (\xi ) \le t \}\right) \le (1 + \frac{1}{4}t^2)H_{\infty }(0)\), and likewise for the set \(\{\xi : \tau _{\Delta x}(\xi ) \le t \}\), by [13, Cor. 2.4]. Hence

Thus, by applying the Cauchy–Schwarz inequality to the last term in (4.48) and inserting (4.49), we obtain (4.40b). \(\square \)
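The measure bound quoted from [13, Cor. 2.4] can be made plausible by a pointwise argument. As a sketch, we assume the following at \(t=0\); these are our assumptions and are not taken from [13]:

- \(y_\xi + H_\xi = 1\), \(V_\xi = H_\xi \), and \(U_\xi ^2 = y_\xi V_\xi \);
- \(\tau (\xi ) = -2y_\xi (0)/U_\xi (0)\) whenever \(U_\xi (0)<0\);
- \(H(0,-\infty )=0\).

Then for \(\xi \) with \(\tau (\xi )\le t\) and \(y_\xi (0)>0\), with all quantities evaluated at \(t=0\):

```latex
\[
\tau(\xi) \le t
  \;\Longrightarrow\; 2\,y_\xi \le t\,|U_\xi|
  \;\Longrightarrow\; 4\,y_\xi^2 \le t^2 U_\xi^2 = t^2 y_\xi H_\xi
  \;\Longrightarrow\; 4\,y_\xi \le t^2 H_\xi
  \;\Longrightarrow\; 1 = y_\xi + H_\xi \le \big(1 + \tfrac{1}{4}t^2\big) H_\xi ,
\]
\[
\operatorname{meas}\big(\{\xi : \tau(\xi) \le t\}\big)
  \le \big(1 + \tfrac{1}{4}t^2\big) \int_{\mathbb{R}} H_\xi(0,\xi)\,d\xi
  = \big(1 + \tfrac{1}{4}t^2\big) H_\infty(0) .
\]
```

If \(y_\xi (0)=0\), then \(H_\xi (0)=1\), and the pointwise inequality holds trivially.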

Corollary 4.10

Let \((u_0, \mu _0, \nu _0) \in \mathcal {D}_0\) and \(t \ge 0\). Set \(X(t) = S_t \circ L \left( (u_0, \mu _0, \nu _0 ) \right) \) and \(X_{\Delta x}(t) = S_{t} \circ L \circ P_{\Delta x} \left( (u_0, \mu _0, \nu _0 )\right) \). Then there exists a subsequence \(\{\Delta x_m \}_{ m \in {\mathbb {N}}}\) with \(\Delta x_m \rightarrow 0\) as \(m \rightarrow \infty \), such that for a.e. \(t \in [0, \infty )\) we have

Proof

By (4.40b) and [7, Cor. 2.32], there exists a subsequence \(\{V_{\Delta x_m, \xi }\}_{m \in {\mathbb {N}}}\) such that \(V_{\Delta x_m, \xi }(t,\cdot ) \rightarrow V_{\xi }(t,\cdot )\) in \(L^1(\mathbb {R})\) for a.e. \(t \in [0, \infty )\). Let \(N \subset [0, \infty )\) be the null set of times for which the convergence does not hold. Thus, for \(t \in N^c\) we have

\(\square \)

Observe that the times for which the convergence in Corollary 4.10 fails depend on the particular subsequence chosen. Therefore, there is no natural way to extend this convergence to the whole sequence.

4.3 Convergence of the \(\alpha \)-Dissipative Solution in Eulerian Coordinates

Finally, we can examine in what sense convergence in Lagrangian coordinates, given by Theorem 4.9 and Corollary 4.10, carries over to Eulerian coordinates.

Lemma 4.11

Given \((u_0, \mu _0, \nu _0) \in \mathcal {D}_0\), let \((u, \mu , \nu )(t)= T_t \left( (u_0, \mu _0, \nu _0 )\right) \) and \((u_{\Delta x}, \mu _{\Delta x}, \nu _{\Delta x})(t) = T_t \circ P_{\Delta x} \left( (u_0, \mu _0, \nu _0 )\right) \) for \(t \in [0, \infty )\). Then

Proof

The same line of reasoning as in the proof of (4.11b) yields (4.51).

Before proving (4.52), recall that by Definition 2.4, we have

and likewise for \(G_{\Delta x}(t,x)\). Thus, the change of variables \(x=y(t,\xi )\) yields

Following the steps in the proof of [13, (2.15)], we can establish

for almost every \(\xi \in \mathbb {R}\), which implies that

where we used (4.40a) and (4.12).

For (4.53a), note that, by construction, \(y_{\Delta x}(t,\xi )\) and \(H_{\Delta x}(t,\xi )\) are piecewise linear functions with nodes at the points \({\hat{\xi }}_j\) with \(j\in {\mathbb {Z}}\). Furthermore, \(H_{\Delta x}(t, \xi ) - G_{\Delta x}(t, y_{\Delta x}(t, \xi ))>0\) on an interval I if and only if \(y_{\Delta x}(t,\xi ) \) is constant on this interval. Assuming that such an interval I exists, an upper bound on its length can be established as follows. Observe that

and hence, due to (4.54),

Thus, it holds that

To estimate (4.53b), we exploit that \(G_{\Delta x}(t)\in \text {BV}(\mathbb {R})\), since it is monotonically increasing and bounded. Setting \(\Theta = \Vert y(t) - y_{\Delta x}(t) \Vert _{\infty }\), we then obtain

where we applied Tonelli’s theorem to exchange the order of the sum and integral.
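The Tonelli argument rests on the following basic identity. As a sketch, let G be bounded, increasing and left-continuous, with associated measure dG, so that \(G(x+\Theta )-G(x) = dG([x, x+\Theta ))\), and let \(\Theta \ge 0\). Exchanging the order of integration gives

```latex
\int_{\mathbb{R}} \big( G(x+\Theta) - G(x) \big)\, dx
  = \int_{\mathbb{R}} \int_{\mathbb{R}} \mathbf{1}_{\{x \le s < x+\Theta\}}\, dG(s)\, dx
  = \int_{\mathbb{R}} \Big( \int_{s-\Theta}^{s} dx \Big)\, dG(s)
  = \Theta \,\big( G(\infty) - G(-\infty) \big) .
```

Hence the \(L^1(\mathbb {R})\)-distance between G and its translate is controlled by \(\Theta \) times the total variation of G.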

Combining (4.53), (4.55), (4.56), and (4.57), we end up with (4.52). \(\square \)

Theorem 4.12

Given \((u_0, \mu _0, \nu _0) \in \mathcal {D}_0\), let \((u, \mu , \nu )(t)= T_t \left( (u_0, \mu _0, \nu _0)\right) \) and \((u_{\Delta x}, \mu _{\Delta x}, \nu _{\Delta x})(t) = T_t \circ P_{\Delta x} \left( (u_0, \mu _0, \nu _0)\right) \) for \(t \in [0, \infty )\). Then, as \(\Delta x\rightarrow 0\),

Furthermore, there exists a subsequence \(\{\Delta x_m \}_{m \in {\mathbb {N}}}\) with \(\Delta x_m \rightarrow 0\) as \(m \rightarrow \infty \), and a null set \(N \subset [0, \infty )\) such that for all \(t \in N^c\) we have as \(\Delta x_m\rightarrow 0\)

Note that in the case of conservative solutions, \(F_{\Delta x} = G_{\Delta x}\) and hence (4.52) implies that we have \(L^1(\mathbb {R})\)-convergence of \(F_{\Delta x}(t)\) for all \(t \ge 0\).

Proof

Note that \(U_{\Delta x}(t) \rightarrow U(t)\) and \(y_{\Delta x}(t) \rightarrow y(t)\) in \(L^{\infty }(\mathbb {R})\) as \(\Delta x\rightarrow 0\) by Theorem 4.9, which combined with (4.51) yields (4.58).

To prove (4.59), observe that for any \(\psi \in C_c^{\infty }(\mathbb {R})\),

and hence, using (4.58), the right hand side tends to zero as \(\Delta x\rightarrow 0\). Recall that \(C_c^\infty (\mathbb {R})\) is a dense subset of \(L^2(\mathbb {R})\). Moreover, \(\Vert u_{\Delta x, x}(t)\Vert _2\) is bounded uniformly in \(\Delta x\), by the total energy. We therefore end up with \(u_{\Delta x, x}(t) \rightharpoonup u_x(t)\) in \(L^2(\mathbb {R})\) as \(\Delta x \rightarrow 0\).

Next, note that \(y_{\Delta x}(t) \rightarrow y(t)\) in \(L^{\infty }(\mathbb {R})\) and \(y_{\Delta x,\xi }(t) \rightarrow y_\xi (t)\) in \(L^{2}(\mathbb {R})\) as \(\Delta x\rightarrow 0\) by Theorem 4.9, and hence (4.60) follows from (4.52).

To prove (4.61), let \(\phi \in C_b(\mathbb {R})\). Then, by Definition 2.4, we have

Since \(y_{\Delta x}(t) \rightarrow y(t)\) in \(L^{\infty }(\mathbb {R})\) by (4.39a) and \(\phi \in C_b(\mathbb {R})\), it follows that \(\phi (y_{\Delta x}(t)) \rightarrow \phi (y(t))\) pointwise a.e. Furthermore, \(|\phi (y(t)) - \phi (y_{\Delta x}(t))|H_{\xi }(t) \le 2\Vert \phi \Vert _{\infty }H_{\xi }(t)\) and hence, by the dominated convergence theorem, (4.65a) vanishes as \(\Delta x \rightarrow 0\).

For (4.65b) on the other hand, we observe that \(\phi (y_{\Delta x}(t))\in L^{\infty }(\mathbb {R})\) and \(H_{\Delta x, \xi }(t)\rightarrow H_{\xi }(t)\) in \(L^1(\mathbb {R})\), see (4.39e), imply

as \(\Delta x\rightarrow 0\). Thus the left hand side of (4.65) tends to zero as \(\Delta x \rightarrow 0\) for any \(\phi \in C_b(\mathbb {R})\) and we have shown (4.61).

As \(g(X_{\Delta x}(t)) \rightarrow g(X(t))\) in \(L^1(\mathbb {R})\) by (4.39f), the proof of (4.62) is completely analogous to the one of (4.61).

To show the remaining part of the theorem, recall Corollary 4.10. It ensures the existence of a subsequence \(\{\Delta x_m \}_{m \in {\mathbb {N}}}\) with \(\Delta x_m \rightarrow 0\) as \(m \rightarrow \infty \), such that \(V_{\Delta x_m, \xi } (t) \rightarrow V_{\xi }(t)\) in \(L^1(\mathbb {R})\) for all \(t \in N^c\), where \(N\subset [0, \infty )\) is a null set. Therefore, by an argument similar to the one used to prove (4.61), we can establish that \(\mu _{\Delta x_m}(t) \Longrightarrow \mu (t)\) for all \(t\in N^c\). Choosing \(\phi = 1 \in C_b(\mathbb {R})\), we obtain (4.64). Furthermore, as \(C_0(\mathbb {R}) \subset C_b(\mathbb {R})\), we have \(\mu _{\Delta x_m}(t) \rightarrow \mu (t)\) vaguely for all \(t \in N^c\), and (4.63) holds by [7, Prop. 7.19]. \(\square \)

Remark 4.13

For each \(t\in [0,T]\) and \(\Delta x>0\), \(F_{\Delta x}(t)\in \text {BV}(\mathbb {R})\). Thus, by Helly’s selection principle, see [2, App. II], for every \(t \in N\) there exists a subsequence \(\{F_{\Delta x_k}(t) \}_{k \in {\mathbb {N}}}\) such that \(F_{\Delta x_k}(t)\) converges pointwise almost everywhere to a function of bounded variation.

Remark 4.14

The projection operator \(P_{\Delta x}\) is constructed with focus on accurately approximating the wave profile u, preserving the total energy and ensuring that

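Therefore, we have only limited control over the size of two sets. The first is \(\{x: u_x(x) \le - \frac{2}{t}< u_{\Delta x, x}(x) < 0\}\) and the second is \(\{x: u_{\Delta x, x}(x) \le - \frac{2}{t}< u_{x}(x) < 0\}\). These are the points where wave breaking has occurred by time t for one of the two solutions but not yet for the other. As a consequence, convergence of the energy density \(\mu _{\Delta x}\) cannot be guaranteed for every fixed time.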

5 Numerical Experiments

In this section we present three examples that highlight different challenges for the numerical algorithm. The first two examples are also considered in [5, 14] and display solutions with multipeakon and cusp initial data, respectively.

In the first example, wave breaking happens twice, initially and at a later time. At each occurrence a finite amount of energy concentrates on a set of Lebesgue measure zero. In the second example, on the other hand, the wave breaking times accumulate in the interval [0, 3], but at every time only an infinitesimal amount of energy concentrates. The behavior of this latter example is thoroughly studied in [5, Sec. 5.3]. In both cases, closed-form expressions for the exact solutions in Eulerian coordinates are available.

In the third example, we consider a cosine wave profile as initial data. Here, an expression for the Lagrangian solution is known, but the exact expression for u in Eulerian coordinates is not available for comparison. The exact solution possesses accumulating wave breaking times, and, similarly to the cusp initial data, experiences wave breaking continuously in time, but now over the unbounded interval \([\frac{2}{\pi }, \infty )\). Furthermore, in contrast to the cusp example, the rate at which the total energy dissipates is nonlinear, making this an interesting challenge for the algorithm.

We present plots of the time evolution and of the errors \(\sup _{t \in [0,T]}\Vert u(t) - u_{\Delta x}(t) \Vert _{\infty }\) and \(|F_{\infty }(T) - F_{\Delta x, \infty }(T)|\) for a chosen time T. In the first two examples, the errors are computed by comparing the exact and numerical solutions at the gridpoints of a uniform mesh that refines the finest mesh used for the numerical approximations. The values of \(u_{\Delta x}\) at these gridpoints are computed using (3.15). In the third example, Example 5.3, no closed-form expression for the exact solution in Eulerian coordinates is available, so we instead use

to measure the error introduced by the projection operator \(P_{{\Delta x}}\). Note that due to (4.51), this is an upper bound for the \(L^\infty \)-error of \(u_{\Delta x}\) in Eulerian coordinates.

Example 5.1

(Multipeakon initial data) Consider the Cauchy problem from Example 3.4 with \(\alpha =\frac{1}{2}\), but now set \(\nu _0 = \mu _0\), i.e.,

The exact solution experiences wave breaking at \(t=2\).

In Fig. 4 the numerical solutions \((u_{\Delta x}, F_{\Delta x})\) with \(\Delta x = 2.35 \cdot 10^{-1}\) and \(\Delta x = 8.02 \cdot 10^{-3}\) are compared to the exact solution (u, F) at \(t=0\), at the wave breaking time \(t=2\), and at \(t=4\).

Figure 5 displays the numerically computed errors. In this case the numerically computed convergence order, which is \(\ge 0.99\) for both u and F, is greater than the general order of the initial approximation error, see Proposition 4.1. We also computed the errors using (5.1), which led to an order of 0.56. Thus, the estimate in (4.51) is not optimal for this example.
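Convergence orders like those reported above can be estimated by a least-squares fit of \(\log (\text {error})\) against \(\log (\Delta x)\). The paper does not state which fitting procedure was used. The following Python function, with the hypothetical name `observed_order`, is therefore only one common choice.

```python
import math


def observed_order(dx, err):
    """Least-squares slope of log(err) against log(dx).

    Generic sketch for estimating a convergence order from (dx, err) pairs;
    it is not necessarily how the orders quoted in the text were obtained.
    """
    if len(dx) != len(err) or len(dx) < 2:
        raise ValueError("need at least two (dx, err) pairs")
    xs = [math.log(h) for h in dx]
    ys = [math.log(e) for e in err]
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return sxy / sxx
```

For errors behaving like \(C\Delta x^{p}\), the fitted slope recovers p. For instance, `observed_order([0.2, 0.1, 0.05], [0.4, 0.2, 0.1])` returns 1 up to rounding.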

Time evolution of u (top row, dashed red line) and F (bottom row, dashed red line), and \(u_{\Delta x}\) (top row) and \(F_{\Delta x}\) (bottom row) for \(\Delta x = 2.35 \cdot 10^{-1}\) (blue dashed line) and \(\Delta x = 8.02 \cdot 10^{-3}\) (black solid line) in Example 5.1. The times from left to right are \(t=0\), 2, 4, and \(\alpha =\frac{1}{2}\) (Color figure online)

The errors \(\sup _{t \in [0,4]}\Vert u(t) - u_{\Delta x}(t) \Vert _{\infty }\) (left) and \(|F_{\infty }(T) - F_{\Delta x, \infty }(T)|\) at \(T=4\) (right) plotted against the mesh size \(\Delta x\) in Example 5.1

Time evolution of u (top row, dashed red line) and F (bottom row, dashed red line), and \(u_{\Delta x}\) (top row) and \(F_{\Delta x}\) (bottom row) for \(\Delta x = 1.76 \cdot 10^{-1}\) (blue dashed line) and \(\Delta x = 6.01 \cdot 10^{-3}\) (black solid line) in Example 5.2. The times from left to right are \(t=0, \frac{3}{2},3\), and \(\alpha =\frac{2}{5}\) (Color figure online)

Fig. 7. The errors \(\sup _{t \in [0,3]}\Vert u(t) - u_{\Delta x}(t) \Vert _{\infty }\) (left) and \(|F_{\infty }(T) - F_{\Delta x, \infty }(T)|\) at \(T=3\) (right) plotted against the mesh size \(\Delta x\) in Example 5.2.

Fig. 8. Time evolution of the exact solution u (top row) and F (bottom row), shown as dashed red lines, together with \(u_{\Delta x}\) (top row) and \(F_{\Delta x}\) (bottom row) for \(\Delta x = 9.59 \cdot 10^{-2}\) (blue dashed line) and \(\Delta x = 5.88 \cdot 10^{-4}\) (black solid line) in Example 5.3 with \(\alpha =\frac{3}{5}\). From left to right: \(t=0, \frac{2}{\pi }, \frac{4}{\pi }\).

Example 5.2

(Cusp initial data) Let \(\alpha =\frac{2}{5}\) and consider the initial data

The wave breaking times accumulate: at each \(t \in [0, 3]\), an infinitesimal amount of energy concentrates. Furthermore, \(u_{0,x}\) is not in \(L^{\infty }(\mathbb {R})\).

In Fig. 6, the numerical solutions \((u_{\Delta x}, F_{\Delta x})\) with \(\Delta x = 1.76 \cdot 10^{-1}\) and \(\Delta x = 6.01 \cdot 10^{-3}\) are displayed together with the exact solution at \(t=0\), \(t=\frac{3}{2}\), and \(t=3\). Figure 7 shows the errors as the mesh is refined. We also computed the errors using (5.1), which yielded an order of 0.24. Thus, in this case the estimate in (4.51) is optimal.

As Fig. 7 shows, the convergence order in Example 5.2 is lower than in Example 5.1, suggesting that accumulating wave breaking times degrade the convergence rate of the numerical method. However, the next example indicates that the regularity of the initial data matters more than the accumulation of wave breaking times.

Example 5.3

(Cosine wave initial data) Let \(\alpha =\frac{3}{5}\) and consider the initial data

Note that, in contrast to the previous example, the derivative \(u_{0, x}\) is Lipschitz continuous. Moreover, for each \(t \in \left( \tfrac{2}{\pi }, \infty \right) \), wave breaking occurs at four distinct isolated points in both Eulerian and Lagrangian coordinates, and it happens continuously in time on the interval \([\frac{2}{\pi }, \infty )\). Both the solution in Lagrangian coordinates and the total energy \(F_{\infty }(t)\) can be computed exactly for all \(t \ge 0\). The Lagrangian solution is then mapped numerically into Eulerian coordinates and compared with two different numerical approximations at the times \(t=0\), \(\frac{2}{\pi }\), and \(\frac{4}{\pi }\) in Fig. 8.
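The step of mapping the exact Lagrangian solution into Eulerian coordinates can be sketched as follows. We assume that, at a fixed time, the Lagrangian solution is available as samples \(y_i = y(t, \xi _i)\), nondecreasing in \(\xi \), and \(U_i = U(t, \xi _i)\), with \(u(t, y(t, \xi )) = U(t, \xi )\). Where y is constant on an interval, that is, where a whole interval in Lagrangian coordinates is mapped to one Eulerian point (for instance where the energy measure has an atom), we assume U is constant as well, so that u is well defined. The function name and the use of linear interpolation are our own illustrative choices, not necessarily the procedure used for Fig. 8.

```python
import numpy as np


def lagrangian_to_eulerian(x, y, U):
    """Evaluate u(x) from sampled Lagrangian data (y_i, U_i) (hypothetical helper).

    Assumes y is nondecreasing in the Lagrangian variable and that U is constant
    on any interval where y is constant, so u(x) = U(xi) for any xi with
    y(xi) = x is well defined. Between nodes, u is linearly interpolated in x.
    """
    y = np.asarray(y, dtype=float)
    U = np.asarray(U, dtype=float)
    if np.any(np.diff(y) < 0):
        raise ValueError("y must be nondecreasing")
    # Collapse plateaus of y to a single node, keeping the first U value there;
    # np.unique returns the sorted distinct values and their first indices.
    y_nodes, first = np.unique(y, return_index=True)
    U_nodes = U[first]
    # np.interp requires increasing nodes; outside [y_min, y_max] it returns the
    # boundary values, i.e. u is extended by constants.
    return np.interp(x, y_nodes, U_nodes)


if __name__ == "__main__":
    xi = np.linspace(-1.0, 1.0, 2001)  # illustrative Lagrangian grid
    y, U = xi**3, xi                   # toy data for which u(x) = cbrt(x)
    x = np.linspace(-0.9, 0.9, 7)
    print(np.max(np.abs(lagrangian_to_eulerian(x, y, U) - np.cbrt(x))))
```

The toy data are chosen only because the exact Eulerian function is known; for the actual example, y and U would come from the exact Lagrangian solution.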

Figure 9 shows the approximation errors. We observe that the convergence order is higher than in Example 5.2, although the wave breaking times still accumulate.

Data Availability

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

Notes

We say that \(\eta _{\Delta x} \Longrightarrow \eta \) if \(\int _{\mathbb {R}}\phi d\eta _{\Delta x} \rightarrow \int _{\mathbb {R}}\phi d \eta \) for all \(\phi \in C_b(\mathbb {R}):= C(\mathbb {R}) \cap L^{\infty }(\mathbb {R})\).
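This notion of convergence allows absolutely continuous measures to converge to a measure that is not absolutely continuous, which is exactly what happens when energy concentrates at wave breaking. As a small numerical illustration (not part of the method in this paper), consider the hypothetical probability measures \(d\eta _n = n \mathbb {1}_{[0, 1/n]}(x)\,dx\), which converge in the above sense to the Dirac measure at 0: for every \(\phi \in C_b(\mathbb {R})\), \(\int _{\mathbb {R}}\phi \, d\eta _n \rightarrow \phi (0)\). The sketch approximates these integrals by the midpoint rule; the test function and all names are our own choices.

```python
import numpy as np


def integrate_against_box(phi, n, m=10_000):
    """Approximate the integral of phi against eta_n, d(eta_n) = n * 1_[0, 1/n](x) dx.

    eta_n is a probability measure spread uniformly over [0, 1/n]. With the
    midpoint rule on m equal cells of width h = 1/(n*m), each cell carries the
    weight n*h = 1/m, so the integral is the mean of phi at the midpoints.
    """
    h = 1.0 / (n * m)
    midpoints = (np.arange(m) + 0.5) * h
    return float(np.mean(phi(midpoints)))


if __name__ == "__main__":
    # A bounded continuous test function, i.e. an element of C_b(R).
    phi = lambda x: np.cos(x) + np.arctan(x)
    for n in (1, 10, 100, 1000):
        value = integrate_against_box(phi, n)
        # The gap to phi(0) shrinks as n grows, consistent with eta_n => delta_0.
        print(n, value, abs(value - phi(0.0)))
```

The densities \(n \mathbb {1}_{[0, 1/n]}\) are unbounded as \(n \rightarrow \infty \) and have no limit in \(L^1(\mathbb {R})\), yet the integrals against every bounded continuous function converge.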

References

Beals, R., Sattinger, D.H., Szmigielski, J.: Inverse scattering solutions of the Hunter–Saxton equation. Appl. Anal. 78(3–4), 255–269 (2001)

Billingsley, P.: Convergence of Probability Measures. Wiley, New York-London-Sydney (1968)

Bressan, A., Constantin, A.: Global solutions of the Hunter–Saxton equation. SIAM J. Math. Anal. 37(3), 996–1026 (2005)

Bressan, A., Holden, H., Raynaud, X.: Lipschitz metric for the Hunter–Saxton equation. J. Math. Pures Appl. 94(1), 68–92 (2010)

Christiansen, T.: Traveling wave solutions for the Hunter–Saxton equation. Master’s thesis, Norwegian University of Science and Technology (NTNU) (2021)

Dafermos, C.M.: Generalized characteristics and the Hunter–Saxton equation. J. Hyperb. Differ. Equ. 8(1), 159–168 (2011)

Folland, G.B.: Real Analysis: Modern Techniques and Their Applications, 2nd edn. Pure and Applied Mathematics (New York). A Wiley-Interscience Publication. John Wiley & Sons, Inc., New York (1999)

Grasmair, M., Grunert, K., Holden, H.: On the equivalence of Eulerian and Lagrangian variables for the two-component Camassa–Holm system. In: Current Research in Nonlinear Analysis, Springer Optim. Appl., vol. 135, pp. 157–201. Springer, Cham (2018)

Grunert, K., Holden, H.: Uniqueness of conservative solutions for the Hunter–Saxton equation. Res. Math. Sci. 9(2), 19 (2022)

Grunert, K., Holden, H., Raynaud, X.: Global conservative solutions to the Camassa–Holm equation for initial data with nonvanishing asymptotics. Discret. Contin. Dyn. Syst. 32(12), 4209–4227 (2012)

Grunert, K., Holden, H., Raynaud, X.: Global dissipative solutions of the two-component Camassa–Holm system for initial data with nonvanishing asymptotics. Nonlinear Anal. Real World Appl. 17, 203–244 (2014)

Grunert, K., Holden, H., Raynaud, X.: A continuous interpolation between conservative and dissipative solutions for the two-component Camassa–Holm system. Forum Math. Sigma 3, Paper No. e1, 73 pp. (2015)

Grunert, K., Nordli, A.: Existence and Lipschitz stability for \(\alpha \)-dissipative solutions of the two-component Hunter–Saxton system. J. Hyperb. Differ. Equ. 15(3), 559–597 (2018)

Grunert, K., Nordli, A., Solem, S.: Numerical conservative solutions of the Hunter–Saxton equation. BIT 61(2), 441–471 (2021)

Grunert, K., Tandy, M.: Lipschitz stability for the Hunter–Saxton equation. J. Hyperb. Differ. Equ. 19(2), 275–310 (2022)

Grunert, K., Tandy, M.: A Lipschitz metric for \(\alpha \)-dissipative solutions to the Hunter–Saxton equation. arXiv:2302.07150

Holden, H., Karlsen, K.H., Risebro, N.H.: Convergent difference schemes for the Hunter–Saxton equation. Math. Comp. 76(258), 699–744 (2007)

Holden, H., Raynaud, X.: Global conservative solutions of the Camassa–Holm equation: a Lagrangian point of view. Comm. Part. Differ. Equ. 32(10–12), 1511–1549 (2007)

Hunter, J.K., Saxton, R.: Dynamics of director fields. SIAM J. Appl. Math. 51(6), 1498–1521 (1991)

Hunter, J.K., Zheng, Y.: On a completely integrable nonlinear hyperbolic variational equation. Phys. D 79(2–4), 361–386 (1994)

Hunter, J.K., Zheng, Y.: On a nonlinear hyperbolic variational equation. I. Global existence of weak solutions. Arch. Ration. Mech. Anal. 129(4), 305–353 (1995)

Khesin, B., Lenells, J., Misiołek, G.: Generalized Hunter–Saxton equation and the geometry of the group of circle diffeomorphisms. Math. Ann. 342(3), 617–656 (2008)

Lenells, J.: The Hunter–Saxton equation describes the geodesic flow on a sphere. J. Geom. Phys. 57(10), 2049–2064 (2007)

Lenells, J.: The Hunter–Saxton equation: a geometric approach. SIAM J. Math. Anal. 40(1), 266–277 (2008)

LeVeque, R.J.: Finite Volume Methods for Hyperbolic Problems. Cambridge Texts in Applied Mathematics. Cambridge University Press, Cambridge (2002)

Liu, J., Yin, Z.: Global weak solutions for a periodic two-component \(\mu \)-Hunter–Saxton system. Monatsh. Math. 168(3–4), 503–521 (2012)

McDonald, J.N., Weiss, N.A.: A Course in Real Analysis, 2nd edn. Academic Press Inc., San Diego, CA (2013)

Nordli, A.: A Lipschitz metric for conservative solutions of the two-component Hunter–Saxton system. Methods Appl. Anal. 23(3), 215–232 (2016)

Nordli, A.: On the two-component Hunter–Saxton system. PhD thesis, Norwegian University of Science and Technology (NTNU) (2017)

Sato, S.: Stability and convergence of a conservative finite difference scheme for the modified Hunter–Saxton equation. BIT 59(1), 213–241 (2019)

Wunsch, M.: The generalized Hunter–Saxton system. SIAM J. Math. Anal. 42(3), 1286–1304 (2010)

Yan, X., Shu, C.-W.: Dissipative numerical methods for the Hunter–Saxton equation. J. Comput. Math. 28(5), 606–620 (2010)

Funding

Open access funding provided by NTNU Norwegian University of Science and Technology (incl. St. Olavs Hospital – Trondheim University Hospital). The funding was provided by Norges Forskningsråd (Grant numbers: 286822, 250070).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Research supported by the grants Waves and Nonlinear Phenomena (WaNP) and Wave Phenomena and Stability — a Shocking Combination (WaPheS) from the Research Council of Norway.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Christiansen, T., Grunert, K., Nordli, A. et al. A Convergent Numerical Algorithm for \(\alpha \)-Dissipative Solutions of the Hunter–Saxton Equation. J Sci Comput 99, 14 (2024). https://doi.org/10.1007/s10915-024-02479-4

DOI: https://doi.org/10.1007/s10915-024-02479-4

Keywords

- Hunter–Saxton equation

- Projection operator

- Conservative solutions

- Numerical method

- Convergence

- \(\alpha \)-Dissipative solutions