Abstract

The Wasserstein–Fisher–Rao (WFR) distance is a family of metrics that gauge the discrepancy between two Radon measures, taking into account both transportation and weight change. The spherical WFR distance is a projected version of the WFR distance for probability measures, so that the space of Radon measures equipped with WFR can be viewed as a metric cone over the space of probability measures equipped with spherical WFR. Compared with the Wasserstein distance, geodesics under the spherical WFR metric are less well understood and remain an active research topic. In this paper, we develop a deep learning framework to compute geodesics under the spherical WFR metric; the learned geodesics can be used to generate weighted samples. Our approach is based on a Benamou–Brenier type dynamic formulation for spherical WFR. To overcome the difficulty of enforcing the boundary constraint brought by the weight change, a Kullback–Leibler divergence term based on the inverse map is introduced into the cost function. Moreover, a new regularization term using the particle velocity is introduced as a substitute for the Hamilton–Jacobi equation for the potential in the dynamic formulation. When used for sample generation, our framework can be beneficial for applications with given weighted samples, especially in Bayesian inference, compared with sample generation by previous flow models.

Data Availability

The datasets generated during the current study are available from the corresponding author on reasonable request.

Notes

https://www.csie.ntu.edu.tw/~cjlin/libsvmtools/datasets/binary.html

References

Ambrosio, L., Gigli, N., Savaré, G.: Gradient Flows: in Metric Spaces and in the Space of Probability Measures. Springer (2005)

Apte, A., Hairer, M., Stuart, A.M., Voss, J.: Sampling the Posterior: An Approach to Non-Gaussian Data Assimilation. Physica D: Nonlinear Phenomena, 230(1–2), 50–64 (2007)

Arjovsky, M., Chintala, S., Bottou, L.: Wasserstein generative adversarial networks. In: International Conference on Machine Learning, pp. 214–223 (2017)

Braides, A.: Gamma-Convergence for Beginners, vol. 22. Clarendon Press (2002)

Brenier, Y., Vorotnikov, D.: On optimal transport of matrix-valued measures. SIAM J. Math. Anal. 52(3), 2849–2873 (2020)

Chen, R.T.Q., Rubanova, Y., Bettencourt, J., Duvenaud, D.K.: Neural ordinary differential equations. Adv. Neural Inf. Process. Syst. 31 (2018)

Chizat, L., Peyré, G., Schmitzer, B., Vialard, F.-X.: An interpolating distance between optimal transport and Fisher–Rao metrics. Found. Comput. Math. 18(1), 1–44 (2018)

Chizat, L., Peyré, G., Schmitzer, B., Vialard, F.-X.: Unbalanced optimal transport: dynamic and Kantorovich formulations. J. Funct. Anal. 274(11), 3090–3123 (2018)

Chwialkowski, K., Strathmann, H., Gretton, A.: A kernel test of goodness of fit. In: International Conference on Machine Learning, pp. 2606–2615 (2016)

De Giorgi, E.: New Problems on Minimizing Movements. Ennio de Giorgi: Selected Papers, pp. 699–713 (1993)

Devlin, J., Chang, M.-W., Lee, K., Toutanova, K.: BERT: pre-training of deep bidirectional transformers for language understanding. In: Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), pp. 4171–4186. Association for Computational Linguistics (2019)

Evans, L.C.: An Introduction to Mathematical Optimal Control Theory, Version 0.2. Lecture notes available at http://math.berkeley.edu/~evans/control.course.pdf (1983)

Finlay, C., Jacobsen, J.-H., Nurbekyan, L., Oberman, A.: How to train your neural ODE: the world of Jacobian and kinetic regularization. In: International Conference on Machine Learning, pp. 3154–3164 (2020)

Galichon, A.: A survey of some recent applications of optimal transport methods to econometrics. Econom. J. 20(2), C1–C11 (2017)

Galichon, A.: Optimal Transport Methods in Economics. Princeton University Press (2018)

Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., Bengio, Y.: Generative adversarial nets. Adv. Neural Inf. Process. Syst. 27 (2014)

Gorham, J., Mackey, L.: Measuring sample quality with kernels. In: International Conference on Machine Learning, pp. 1292–1301 (2017)

Gretton, A., Borgwardt, K.M., Rasch, M.J., Schölkopf, B., Smola, A.: A kernel two-sample test. J. Mach. Learn. Res. 13(1), 723–773 (2012)

Gulrajani, I., Ahmed, F., Arjovsky, M., Dumoulin, V., Courville, A.C.: Improved Training of Wasserstein GANs. Adv. Neural Inf. Process. Syst. 30 (2017)

He, K., Zhang, X., Ren, S., Sun, J.: Deep Residual Learning for Image Recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 770–778 (2016)

Hu, T., Chen, Z., Sun, H., Bai, J., Ye, M., Cheng, G.: Stein neural sampler. arXiv preprint arXiv:1810.03545 (2018)

Johnson, R., Zhang, T.: A framework of composite functional gradient methods for generative adversarial models. IEEE Trans. Pattern Anal. Mach. Intell. 43(1), 17–32 (2019)

Jordan, R., Kinderlehrer, D., Otto, F.: The variational formulation of the Fokker–Planck equation. SIAM J. Math. Anal. 29(1), 1–17 (1998)

Kingma, D.P., Ba, J.: Adam: a method for stochastic optimization. In: International Conference on Learning Representations (2015)

Kingma, D.P., Welling, M.: Auto-encoding variational bayes. In: International Conference on Learning Representations (2014)

Kingma, D.P., Welling, M., et al.: An introduction to variational autoencoders. Found. Trends® Mach. Learn. 12(4), 307–392 (2019)

Kondratyev, S., Monsaingeon, L., Vorotnikov, D.: A new optimal transport distance on the space of finite Radon measures. Adv. Differ. Equ. 21(11/12), 1117–1164 (2016)

Kondratyev, S., Vorotnikov, D.: Spherical Hellinger–Kantorovich gradient flows. SIAM J. Math. Anal. 51(3), 2053–2084 (2019)

Laschos, V., Mielke, A.: Geometric properties of cones with applications on the Hellinger–Kantorovich space, and a new distance on the space of probability measures. J. Funct. Anal. 276(11), 3529–3576 (2019)

Li, W., Lee, W., Osher, S.: Computational mean-field information dynamics associated with reaction–diffusion equations. J. Comput. Phys., p. 111409 (2022)

Li, W., Ryu, E.K., Osher, S., Yin, W., Gangbo, W.: A parallel method for Earth Mover’s distance. J. Sci. Comput. 75(1), 182–197 (2018)

Li, Y., Swersky, K., Zemel, R.: Generative moment matching networks. In: International Conference on Machine Learning, pp. 1718–1727 (2015)

Liero, M., Mielke, A., Savaré, G.: Optimal entropy-transport problems and a new Hellinger–Kantorovich distance between positive measures. Inventiones Math. 211(3), 969–1117 (2018)

Liu, Q.: Stein variational gradient descent as gradient flow. Adv. Neural Inf. Process. Syst. 30 (2017)

Liu, Q., Lee, J., Jordan, M.: A kernelized Stein discrepancy for goodness-of-fit tests. In: International Conference on Machine Learning, pp. 276–284 (2016)

Liu, Q., Wang, D.: Stein variational gradient descent: a general purpose Bayesian inference algorithm. Adv. Neural Inf. Process. Syst. 29 (2016)

Metropolis, N., Rosenbluth, A.W., Rosenbluth, M.N., Teller, A.H., Teller, E.: Equation of state calculations by fast computing machines. J. Chem. Phys. 21(6), 1087–1092 (1953)

Monge, G.: Mémoire sur la théorie des déblais et des remblais. Mem. Math. Phys. Acad. Royale Sci., pp. 666–704 (1781)

Müller, T., McWilliams, B., Rousselle, F., Gross, M., Novák, J.: Neural importance sampling. ACM Trans. Graphics (ToG) 38(5), 1–19 (2019)

Onken, D., Fung, S.W., Li, X., Ruthotto, L.: OT-Flow: Fast and accurate continuous normalizing flows via optimal transport. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol. 35, pp. 9223–9232 (2021)

Papamakarios, G., Nalisnick, E., Rezende, D.J., Mohamed, S., Lakshminarayanan, B.: Normalizing flows for probabilistic modeling and inference. J. Mach. Learn. Res. 22(1), 2617–2680 (2021)

Pele, O., Werman, M.: A linear time histogram metric for improved sift matching. In: European Conference on Computer Vision, pp. 495–508. Springer (2008)

Peyré, G., Cuturi, M.: Computational optimal transport: with applications to data science. Found. Trends® Mach. Learn. 11(5–6), 355–607 (2019)

Rezende, D., Mohamed, S.: Variational inference with normalizing flows. In: International Conference on Machine Learning, pp. 1530–1538 (2015)

Rubner, Y., Guibas, L.J., Tomasi, C.: The Earth Mover’s distance, multi-dimensional scaling, and color-based image retrieval. In: Proceedings of the ARPA Image Understanding Workshop, vol. 661, p. 668 (1997)

Rubner, Y., Tomasi, C., Guibas, L.J.: The Earth Mover’s distance as a metric for image retrieval. Int. J. Comput. Vis. 40(2), 99–121 (2000)

Ruthotto, L., Osher, S.J., Li, W., Nurbekyan, L., Fung, S.W.: A machine learning framework for solving high-dimensional mean field game and mean field control problems. Proc. Natl. Acad. Sci. 117(17), 9183–9193 (2020)

Salimans, T., Zhang, H., Radford, A., Metaxas, D.: Improving GANs using optimal transport. In: International Conference on Learning Representations (2018)

Santambrogio, F.: Optimal Transport for Applied Mathematicians. Birkhäuser, NY 55(58–63), 94 (2015)

Schiebinger, G., Shu, J., Tabaka, M., Cleary, B., Subramanian, V., Solomon, A., Gould, J., Liu, S., Lin, S., Berube, P., et al.: Optimal-transport analysis of single-cell gene expression identifies developmental trajectories in reprogramming. Cell 176(4), 928–943 (2019)

Tabak, E.G., Vanden-Eijnden, E.: Density estimation by dual ascent of the log-likelihood. Commun. Math. Sci. 8(1), 217–233 (2010)

Theis, L., Oord, A.V.D., Bethge, M.: A note on the evaluation of generative models. In: International Conference on Learning Representations (2016)

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A.N., Kaiser, Ł., Polosukhin, I.: Attention is all you need. Adv. Neural Inf. Process. Syst. 30 (2017)

Vidal, A., Wu Fung, S., Tenorio, L., Osher, S., Nurbekyan, L.: Taming hyperparameter tuning in continuous normalizing flows using the JKO scheme. Sci. Rep. 13(1), 4501 (2023)

Villani, C.: Optimal Transport: Old and New, vol. 338. Springer (2009)

Wang, Z., Zhou, D., Yang, M., Zhang, Y., Rao, C., Wu, H.: Robust document distance with Wasserstein–Fisher–Rao metric. In: Asian Conference on Machine Learning, pp. 721–736 (2020)

Wu, J., Wen, L., Green, P.L., Li, J., Maskell, S.: Ensemble Kalman filter based sequential Monte Carlo sampler for sequential Bayesian inference. Stat. Comput. 32(1), 1–14 (2022)

Xiong, Z., Li, L., Zhu, Y.-N., Zhang, X.: On the convergence of continuous and discrete unbalanced optimal transport models. SIAM J. Numer. Anal. To appear. arXiv preprint arXiv:2303.17267 (2023)

Yang, K.D., Damodaran, K., Venkatachalapathy, S., Soylemezoglu, A.C., Shivashankar, G.V., Uhler, C.: Predicting cell lineages using autoencoders and optimal transport. PLoS Comput. Biol. 16(4), e1007828 (2020)

Zhou, D., Chen, J., Wu, H., Yang, D., Qiu, L.: The Wasserstein–Fisher–Rao metric for waveform based earthquake location. J. Comput. Math. 41(3), 417–438 (2023)

Funding

This work is partially supported by the National Key R&D Program of China Nos. 2020YFA0712000 and 2021YFA1002800. The work of L. Li was partially supported by Shanghai Municipal Science and Technology Major Project 2021SHZDZX0102, NSFC 12371400 and 12031013, and Shanghai Science and Technology Commission Grant No. 21JC1402900.

Author information

Contributions

All authors contributed equally.

Ethics declarations

Competing interests

The authors have no relevant financial or non-financial interests to disclose.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A: Implementation Details for Solving SWFR Distance with Optimization Method

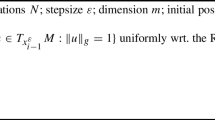

We can apply a primal-dual hybrid algorithm to compute the SWFR distance. The same algorithm is used to compute the earth mover’s distance in [31]. To begin with, we reformulate the optimization problem (3.4) in convex form:

Then we solve the corresponding min-max problem:
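To illustrate how a primal-dual hybrid iteration proceeds on a saddle-point problem, the following minimal sketch runs a generic three-step iteration (primal update, extrapolation, dual update) on a toy problem. The toy objective, the function name `pdhg_toy`, and the step sizes are assumptions chosen for illustration; this is not the SWFR problem or the update rule of this appendix.

```python
def pdhg_toy(b, c, tau=0.5, sigma=0.5, iters=200):
    """Toy primal-dual hybrid iteration (illustrative only).

    Solves  min_x 0.5*|x - b|^2  subject to  x[0] + x[1] = c
    through the saddle-point form
        min_x max_y 0.5*|x - b|^2 + y * (x[0] + x[1] - c).
    The linear operator is K = [1, 1], so |K|^2 = 2 and the step sizes
    must satisfy tau * sigma * |K|^2 < 1.
    """
    x = [0.0, 0.0]
    y = 0.0
    for _ in range(iters):
        # Step 1 (primal): proximal step on f(x) = 0.5*|x - b|^2 taken from
        # the point x - tau * K^T y; the prox has a closed form here.
        x_new = [(x[i] - tau * y + tau * b[i]) / (1.0 + tau) for i in range(2)]
        # Step 2 (extrapolation): over-relaxed primal point.
        x_bar = [2.0 * x_new[i] - x[i] for i in range(2)]
        # Step 3 (dual): ascent step on the multiplier, evaluated at x_bar.
        y = y + sigma * (x_bar[0] + x_bar[1] - c)
        x = x_new
    return x, y


if __name__ == "__main__":
    x, y = pdhg_toy(b=[1.0, 2.0], c=1.0)
    print(x, y)
```

For `b = [1, 2]` and `c = 1`, the constrained minimizer is the projection of `b` onto the line `x[0] + x[1] = 1`, so the iterates should approach `x = [0, 1]` with multiplier `y = 1`.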

We consider the space domain \(\Omega =[0,1]^{d}\) and the time domain [0, 1] for simplicity. Let \(\Omega _{h}\) be the discrete mesh grid of \(\Omega \) with step size h, i.e. \(\Omega _{h}=\{0,h,2h,\cdots ,1\}^d\). The time domain [0, 1] is discretized with step size \(\Delta t\). Let \(N_x=1/h\) be the space grid size and \(N_t=1/\Delta t\) be the time grid size. All optimization variables (\(\rho \), \(\xi \), m, \(\phi \) and \(\lambda \)) are defined on the grid.

We employ the same discretization of the divergence operator and boundary conditions as in [58]. We give some definitions on the discrete space \(\Omega _h\):

where \(D_{h,i}\) denotes the discrete differential operator, with step size h, acting on the i-th component along the i-th dimension:

Then the discretization of (A.2) can be written as:

From the discretized form, we can state the size of each optimization variable. The discretized variables have sizes \((N_t+1) \times (N_x+1)^d\) for \(\rho \), \(N_t\times (N_x+1)^{d} \times d\) for m, \(N_t\times (N_x)^{d}\) for \(\xi \) and \(\phi \), and \(N_t\) for \(\lambda \). The boundary conditions are \(\rho _1=\mu \), \(\rho _{N_t+1}=\nu \) and \(m(x)=0\) for all \(x \in \partial \Omega _h\). The primal-dual hybrid algorithm then reads as follows for the variables with superscript k:
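The variable sizes listed above can be tabulated with a small helper, which is convenient when allocating arrays for a given grid. This is a minimal sketch; the function name `swfr_variable_shapes` and the argument names `n_t`, `n_x`, `d` are hypothetical and simply mirror \(N_t\), \(N_x\) and d.

```python
def swfr_variable_shapes(n_t, n_x, d):
    """Array shapes of the discretized variables, as stated in the text.

    n_t : number of time steps (N_t = 1 / dt)
    n_x : number of space steps per dimension (N_x = 1 / h)
    d   : space dimension
    """
    nodes = (n_x + 1,) * d   # grid points {0, h, ..., 1} in each dimension
    cells = (n_x,) * d       # one entry per grid cell in each dimension
    return {
        "rho": (n_t + 1,) + nodes,   # density at N_t + 1 time levels
        "m": (n_t,) + nodes + (d,),  # momentum with d components
        "xi": (n_t,) + cells,        # source term
        "phi": (n_t,) + cells,       # dual variable
        "lambda": (n_t,),            # one multiplier per time step
    }


def num_entries(shape):
    """Total number of scalar entries in an array of the given shape."""
    total = 1
    for s in shape:
        total *= s
    return total


if __name__ == "__main__":
    for name, shape in swfr_variable_shapes(n_t=4, n_x=8, d=2).items():
        print(name, shape, num_entries(shape))
```

With `n_t = 4`, `n_x = 8` and `d = 2`, for instance, `rho` has shape `(5, 9, 9)` and `m` has shape `(4, 9, 9, 2)`. The storage grows like \(N_t N_x^d\), which limits grid-based solvers of this kind to low dimensions.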

The first step of the algorithm is equivalent to solving the following system:

where \(\text {div}_{h}^{*}\) represents the conjugate (adjoint) operator of the discrete divergence operator. By the definition of the conjugate operator, we have

Thus \(\text {div}_{h}^{*}=-\nabla _{h}\) and

where each \(\partial _{h,i} \) denotes the discrete differential operator in the i-th dimension with step size h:
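The relation \(\text {div}_{h}^{*}=-\nabla _{h}\) can be checked numerically. The sketch below does this in one space dimension under assumed stencils: a forward difference taking the \(N_x+1\) node values of m to \(N_x\) cell values, and a backward difference taking the \(N_x\) cell values of \(\phi \) to node values. These stencils match the variable sizes stated earlier but are an assumption, not necessarily the exact scheme of [58]. The names `div_h`, `grad_h` and `adjoint_gap` are hypothetical.

```python
import random


def div_h(m, h):
    """Discrete divergence in 1D: node values (n + 1) -> cell values (n)."""
    return [(m[i + 1] - m[i]) / h for i in range(len(m) - 1)]


def grad_h(phi, h):
    """Discrete gradient in 1D: cell values (n) -> node values (n + 1).

    phi is extended by zero outside the grid. Because m vanishes on the
    boundary nodes, this extension does not affect the pairing below.
    """
    ext = [0.0] + list(phi) + [0.0]
    return [(ext[i + 1] - ext[i]) / h for i in range(len(phi) + 1)]


def adjoint_gap(n, seed=0):
    """Return <div_h m, phi> - <m, -grad_h phi> for random m and phi."""
    rng = random.Random(seed)
    h = 1.0 / n
    # m satisfies the boundary condition m = 0 on the boundary nodes.
    m = [0.0] + [rng.uniform(-1.0, 1.0) for _ in range(n - 1)] + [0.0]
    phi = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    lhs = h * sum(a * b for a, b in zip(div_h(m, h), phi))
    rhs = h * sum(a * (-b) for a, b in zip(m, grad_h(phi, h)))
    return lhs - rhs


if __name__ == "__main__":
    print(adjoint_gap(16))
```

The printed gap should be zero up to floating-point round-off, which is the discrete summation-by-parts identity behind the adjoint relation.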

We also introduce a natural boundary condition \(\phi _0=0\), which follows from the optimality condition for the update of \(\rho \). Solving system (A.5) requires finding the roots of a third-order polynomial, and \(\rho ^{\star }\) should be the largest real root. The third step of the algorithm is to update the dual variables:
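Selecting the largest real root of a cubic can be done in closed form, which avoids an iterative solve at every grid point. The sketch below uses Cardano's formula when there is a single real root and the trigonometric formula when all three roots are real. The function name and the generic coefficients `a, b, c, d` are hypothetical; the actual coefficients come from system (A.5), which is not reproduced here.

```python
import math


def _cbrt(v):
    """Real cube root that also handles negative arguments."""
    return math.copysign(abs(v) ** (1.0 / 3.0), v)


def largest_real_root_cubic(a, b, c, d):
    """Largest real root of a*x^3 + b*x^2 + c*x + d = 0, with a != 0."""
    if a == 0:
        raise ValueError("leading coefficient must be nonzero")
    # Normalize to a monic cubic x^3 + b x^2 + c x + d.
    b, c, d = b / a, c / a, d / a
    # Substitute x = t - b/3 to get the depressed cubic t^3 + p t + q = 0.
    shift = b / 3.0
    p = c - b * b / 3.0
    q = 2.0 * b ** 3 / 27.0 - b * c / 3.0 + d
    disc = (q / 2.0) ** 2 + (p / 3.0) ** 3
    if disc > 0:
        # Exactly one real root: Cardano's formula with real cube roots.
        s = math.sqrt(disc)
        t = _cbrt(-q / 2.0 + s) + _cbrt(-q / 2.0 - s)
    elif p == 0:
        # disc == 0 and p == 0 force q == 0: triple root at t = 0.
        t = 0.0
    else:
        # Three real roots (p < 0): trigonometric form; k = 0 is the largest.
        r = 2.0 * math.sqrt(-p / 3.0)
        arg = max(-1.0, min(1.0, 3.0 * q / (p * r)))
        t = r * math.cos(math.acos(arg) / 3.0)
    return t - shift


if __name__ == "__main__":
    # (x - 1)(x - 2)(x - 3) = x^3 - 6x^2 + 11x - 6
    print(largest_real_root_cubic(1.0, -6.0, 11.0, -6.0))
```

In a grid-based solver the same routine would be applied independently at each grid point, so a vectorized variant may be preferable in practice.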

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Jing, Y., Chen, J., Li, L. et al. A Machine Learning Framework for Geodesics Under Spherical Wasserstein–Fisher–Rao Metric and Its Application for Weighted Sample Generation. J Sci Comput 98, 5 (2024). https://doi.org/10.1007/s10915-023-02396-y

Keywords

- Unbalanced optimal transport

- Wasserstein distances

- Weighted samples

- Normalizing flows

- Kullback–Leibler divergence

- Bayesian inference