Abstract

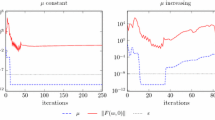

In this paper, we propose a unified primal-dual algorithm framework based on the augmented Lagrangian function for composite convex problems with conic inequality constraints. The new framework is highly versatile. First, it not only covers many existing algorithms, such as PDHG, Chambolle–Pock, GDA, OGDA and linearized ALM, but also guides us in designing a new efficient algorithm called Semi-OGDA (SOGDA). Second, it enables us to study the role of the augmented penalty term in the convergence analysis. Interestingly, a properly selected penalty not only improves the numerical performance of the above methods, but also theoretically enables the convergence of algorithms such as PDHG and SOGDA. With properly designed step sizes and penalty term, our unified framework preserves the \({\mathcal {O}}(1/N)\) ergodic convergence rate without requiring any prior knowledge of the magnitude of the optimal Lagrange multiplier. A linear convergence rate for affine equality-constrained problems is also obtained under appropriate conditions. Finally, numerical experiments on linear programming, \(\ell _1\) minimization, and multi-block basis pursuit problems demonstrate the efficiency of our methods.


References

Bauschke, H.H., Combettes, P.L.: Convex Analysis and Monotone Operator Theory in Hilbert Spaces, vol. 408. Springer, New York (2011)

Bertsekas, D.: Convex Optimization Algorithms. Athena Scientific, Nashua (2015)

Cai, X., Han, D., Yuan, X.: On the convergence of the direct extension of ADMM for three-block separable convex minimization models with one strongly convex function. Comput. Optim. Appl. 66(1), 39–73 (2017)

Chambolle, A., Pock, T.: A first-order primal-dual algorithm for convex problems with applications to imaging. J. Math. Imaging Vis. 40(1), 120–145 (2011)

Chambolle, A., Pock, T.: On the ergodic convergence rates of a first-order primal-dual algorithm. Math. Program. 159(1), 253–287 (2016)

Chen, C., He, B., Ye, Y., Yuan, X.: The direct extension of ADMM for multi-block convex minimization problems is not necessarily convergent. Math. Program. 155(1), 57–79 (2016)

Chen, C., Shen, Y., You, Y.: On the convergence analysis of the alternating direction method of multipliers with three blocks. In: Abstract and Applied Analysis, vol. 2013. Hindawi (2013)

Daskalakis, C., Ilyas, A., Syrgkanis, V., Zeng, H.: Training GANs with optimism. arXiv preprint arXiv:1711.00141 (2017)

De Marchi, A., Jia, X., Kanzow, C., Mehlitz, P.: Constrained composite optimization and augmented Lagrangian methods. Math. Program., pp. 1–34 (2023)

Deng, W., Lai, M.J., Peng, Z., Yin, W.: Parallel multi-block ADMM with \(o (1/k)\) convergence. J. Sci. Comput. 71(2), 712–736 (2017)

Eckstein, J., Bertsekas, D.P.: On the Douglas–Rachford splitting method and the proximal point algorithm for maximal monotone operators. Math. Program. 55(1), 293–318 (1992)

Gao, X., Xu, Y., Zhang, S.: Randomized primal-dual proximal block coordinate updates. J. Oper. Res. Soc. China 7(2), 205–250 (2019)

Gay, D.M.: Electronic mail distribution of linear programming test problems. Math. Program. Soc. COAL Newsl. 13, 10–12 (1985)

Gurobi Optimization, LLC: Gurobi Optimizer Reference Manual (2021). https://www.gurobi.com

Hamedani, E.Y., Aybat, N.S.: A primal-dual algorithm with line search for general convex-concave saddle point problems. SIAM J. Optim. 31(2), 1299–1329 (2021)

He, B., You, Y., Yuan, X.: On the convergence of primal-dual hybrid gradient algorithm. SIAM J. Imaging Sci. 7(4), 2526–2537 (2014)

Kong, W., Melo, J.G., Monteiro, R.D.: Iteration complexity of a proximal augmented Lagrangian method for solving nonconvex composite optimization problems with nonlinear convex constraints. Math. Oper. Res. 48(2), 1066–1094 (2023)

Li, M., Sun, D., Toh, K.C.: A convergent 3-block semi-proximal ADMM for convex minimization problems with one strongly convex block. Asia-Pac. J. Oper. Res. 32(04), 1550024 (2015)

Liang, T., Stokes, J.: Interaction matters: a note on non-asymptotic local convergence of generative adversarial networks. In: The 22nd International Conference on Artificial Intelligence and Statistics, pp. 907–915. PMLR (2019)

Lin, T., Ma, S., Zhang, S.: On the global linear convergence of the ADMM with multiblock variables. SIAM J. Optim. 25(3), 1478–1497 (2015)

Lin, T., Ma, S., Zhang, S.: On the sublinear convergence rate of multi-block ADMM. J. Oper. Res. Soc. China 3(3), 251–274 (2015)

Luo, H.: Accelerated primal-dual methods for linearly constrained convex optimization problems. arXiv preprint arXiv:2109.12604 (2021)

Malitsky, Y., Tam, M.K.: A forward-backward splitting method for monotone inclusions without cocoercivity. SIAM J. Optim. 30(2), 1451–1472 (2020)

Milzarek, A., Ulbrich, M.: A semismooth Newton method with multidimensional filter globalization for \(\ell _1\)-optimization. SIAM J. Optim. 24(1), 298–333 (2014)

Mokhtari, A., Ozdaglar, A., Pattathil, S.: A unified analysis of extra-gradient and optimistic gradient methods for saddle point problems: proximal point approach. In: International Conference on Artificial Intelligence and Statistics, pp. 1497–1507. PMLR (2020)

Mokhtari, A., Ozdaglar, A.E., Pattathil, S.: Convergence rate of \({\mathcal {O}}(1/k)\) for optimistic gradient and extragradient methods in smooth convex-concave saddle point problems. SIAM J. Optim. 30(4), 3230–3251 (2020)

Moreau, J.J.: Décomposition orthogonale d’un espace hilbertien selon deux cônes mutuellement polaires. C. R. Hebd. Seances Acad. Sci. 255, 238–240 (1962)

Rockafellar, R.T., Wets, R.J.B.: Variational Analysis, vol. 317. Springer Science & Business Media, New York (2009)

Uzawa, H.: Iterative methods for concave programming. Stud. Linear Nonlinear Program. 6, 154–165 (1958)

Wei, C.Y., Lee, C.W., Zhang, M., Luo, H.: Linear last-iterate convergence in constrained saddle-point optimization. In: International Conference on Learning Representations (2020)

Xu, Y.: First-order methods for constrained convex programming based on linearized augmented Lagrangian function. INFORMS J. Optim. 3(1), 89–117 (2021)

Yang, J., Yuan, X.: Linearized augmented Lagrangian and alternating direction methods for nuclear norm minimization. Math. Comput. 82(281), 301–329 (2013)

Yang, J., Zhang, Y.: Alternating direction algorithms for \(\ell _1\)-problems in compressive sensing. SIAM J. Sci. Comput. 33(1), 250–278 (2011)

Ye, J., Ye, X.: Necessary optimality conditions for optimization problems with variational inequality constraints. Math. Oper. Res. 22(4), 977–997 (1997)

Yuan, X., Zeng, S., Zhang, J.: Discerning the linear convergence of ADMM for structured convex optimization through the lens of variational analysis. J. Mach. Learn. Res. 21, 83–1 (2020)

Zhang, J., Hong, M., Zhang, S.: On lower iteration complexity bounds for the convex concave saddle point problems. Math. Program., pp. 1–35 (2021)

Zhang, J., Wang, M., Hong, M., Zhang, S.: Primal-dual first-order methods for affinely constrained multi-block saddle point problems. arXiv preprint arXiv:2109.14212 (2021)

Zhu, M., Chan, T.: An efficient primal-dual hybrid gradient algorithm for total variation image restoration. UCLA CAM Report 08-34 (2008)

Funding

Dr. Zaiwen Wen was supported by the National Natural Science Foundation of China under grant number 11831002, and Dr. Junyu Zhang was supported by the Ministry of Education, Singapore, under WBS numbers A-0009530-04-00 and A-0009530-05-00.


Ethics declarations

Conflict of interest

The authors have no relevant financial interest to disclose.


Appendix


Theorem 7

(Moreau’s decomposition theorem [27]) Let \({\mathcal {K}}\subset {\mathbb {R}}^n\) be a closed convex cone and \({\mathcal {K}}^\circ \) be its polar cone. For \(x,y,z\in {\mathbb {R}}^n\), the following statements are equivalent:

- \(z=x+y\), \(x\in {\mathcal {K}}\), \(y\in {\mathcal {K}}^\circ \), and \(\langle x,y\rangle =0\);
- \(x={\mathcal {P}}_{{\mathcal {K}}}(z)\) and \(y={\mathcal {P}}_{{\mathcal {K}}^\circ }(z)\).

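The proofs of the basic lemmas are presented below. Here \({\mathcal {P}}_{+}={\mathcal {P}}_{{\mathcal {K}}^\circ }\) and \({\mathcal {P}}_{-}=-{\mathcal {P}}_{{\mathcal {K}}}\), so that \(w={\mathcal {P}}_{+}\left( w\right) -{\mathcal {P}}_{-}\left( w\right) \) for every \(w\) by Theorem 7. Note that when \({\mathcal {K}}={\mathbb {R}}^{n}_{\le 0}\), \({\mathcal {P}}_{+}\left( \cdot \right) =\left[ \cdot \right] _{+}\) is the standard entrywise positive part and \({\mathcal {P}}_{-}\left( \cdot \right) =\left[ \cdot \right] _{-}\) is the entrywise negative part, where \([t]_{-}=\max \{-t,0\}\).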
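As a quick numerical illustration of Theorem 7, the sketch below checks that \(z={\mathcal {P}}_{{\mathcal {K}}}(z)+{\mathcal {P}}_{{\mathcal {K}}^\circ }(z)\) with \(\langle {\mathcal {P}}_{{\mathcal {K}}}(z),{\mathcal {P}}_{{\mathcal {K}}^\circ }(z)\rangle =0\) for two cones: the nonpositive orthant \({\mathbb {R}}^{n}_{\le 0}\) used in this appendix and, as an extra example not taken from the paper, the second-order cone, which is self-dual, so its polar is its negative. The function names are illustrative assumptions, and the code is a sanity check rather than part of the analysis.

```python
import numpy as np


def proj_nonpos(z: np.ndarray) -> np.ndarray:
    """Projection onto K = R^n_{<=0}: entrywise min(z, 0)."""
    return np.minimum(z, 0.0)


def proj_nonneg(z: np.ndarray) -> np.ndarray:
    """Projection onto the polar cone K° = R^n_{>=0}: entrywise [z]_+."""
    return np.maximum(z, 0.0)


def proj_soc(z: np.ndarray) -> np.ndarray:
    """Projection onto the second-order cone {(t, u) : ||u|| <= t}, with t = z[0]."""
    t, u = z[0], z[1:]
    r = np.linalg.norm(u)
    if r <= t:  # z already lies in the cone
        return z.copy()
    if r <= -t:  # z lies in the polar cone, so it projects to the apex
        return np.zeros_like(z)
    alpha = 0.5 * (t + r)  # otherwise project onto the boundary of the cone
    return np.concatenate(([alpha], alpha * u / r))


def proj_soc_polar(z: np.ndarray) -> np.ndarray:
    """The SOC is self-dual, so its polar is -SOC and P_{-K}(z) = -P_K(-z)."""
    return -proj_soc(-z)


def moreau_residuals(z, proj_K, proj_polar):
    """Return (||z - (x + y)||, |<x, y>|) for x = P_K(z) and y = P_{K°}(z)."""
    x, y = proj_K(z), proj_polar(z)
    return float(np.linalg.norm(z - (x + y))), abs(float(x @ y))


if __name__ == "__main__":
    z = np.random.default_rng(0).standard_normal(6)
    print(moreau_residuals(z, proj_nonpos, proj_nonneg))  # both zero
    print(moreau_residuals(z, proj_soc, proj_soc_polar))  # both zero up to rounding
```

For the orthant the two projections simply split \(z\) into its nonpositive and nonnegative entries, which is exactly the identity \(w=[w]_+-[w]_-\) used in the proofs.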

Proof of Lemma 6

By the definition of \({\mathcal {P}}_{+}\left( \cdot \right) \) and \({\mathcal {P}}_{-}\left( \cdot \right) \), we have

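where the second equality is due to the fact that \(\left\langle {{\mathcal {P}}_{+}\left( w\right) },{{\mathcal {P}}_{-}\left( w\right) }\right\rangle =\left\langle {{\mathcal {P}}_{+}\left( w'\right) },{{\mathcal {P}}_{-}\left( w'\right) }\right\rangle =0\). Hence, the case \(a>b\) is proven by noting that \(\left\langle {{\mathcal {P}}_{+}\left( w\right) },{{\mathcal {P}}_{-}\left( w'\right) }\right\rangle \ge 0\) and \(\left\langle {{\mathcal {P}}_{+}\left( w'\right) },{{\mathcal {P}}_{-}\left( w\right) }\right\rangle \ge 0\), which hold because \({\mathcal {P}}_{+}\left( \cdot \right) \in {\mathcal {K}}^\circ \) and \(-{\mathcal {P}}_{-}\left( \cdot \right) \in {\mathcal {K}}\), so each inner product is the negative of an inner product between an element of \({\mathcal {K}}^\circ \) and an element of \({\mathcal {K}}\). As for the case \(a\ge b\), we have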

which completes the proof of the first inequality.

For the second inequality, we consider

Lemma 10

When \({\mathcal {K}}=\{x|x\le 0\}\) and \(\rho >0\), for any \(i\in [m]\), the dual update rule of (14) is

Proof

According to the dual update rule in (14), \(y^{k+1}\) is the solution of the following equation:

We solve the equation by case analysis:

1. If \(\nu _i\ge y_i\), then \(\left[ \nu _i-y_i\right] _-=0\), and hence \(y_i = -\left[ \omega _i\right] _-\).
2. If \(\nu _i<y_i\), the equation becomes \(y_i = -\left[ \omega _i+\kappa (\nu _i-y_i)\right] _-\). We consider two subcases:
   - When \(\omega _i+\kappa \nu _i\ge 0\): since \(\kappa \ge 0\) and \(y_i\le 0\), we have \(\omega _i+\kappa (\nu _i-y_i)\ge \omega _i+\kappa \nu _i\ge 0\). Thus, \(y_i=0\).
   - When \(\omega _i+\kappa \nu _i< 0\): we must have \(\omega _i+\kappa (\nu _i-y_i)\le 0\), since otherwise the right-hand side vanishes, giving \(y_i=0\) and hence \(\omega _i+\kappa \nu _i=\omega _i+\kappa (\nu _i-y_i)>0\), a contradiction. Therefore \(y_i = \omega _i+\kappa (\nu _i-y_i)\), whose solution is \(y_i=\frac{\omega _i+\kappa \nu _i}{\kappa +1}\).

By further simplification, we get (64). \(\square \)
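The case analysis above can be sketched as a scalar solver. The sketch assumes, as inferred from the two cases in the proof, that the per-coordinate equation has the form \(y_i=-\left[ \omega _i-\kappa \left[ \nu _i-y_i\right] _-\right] _-\) with \([t]_-=\max \{-t,0\}\) and \(\kappa \ge 0\); the quantities \(\omega _i,\nu _i,\kappa \) come from (14) and are treated here as given numbers. The names `dual_update_entry` and `residual` are hypothetical, and the code does not reproduce the closed form (64) itself.

```python
def neg_part(t: float) -> float:
    """Scalar negative part [t]_- = max(-t, 0), so that t = [t]_+ - [t]_-."""
    return max(-t, 0.0)


def dual_update_entry(omega: float, nu: float, kappa: float) -> float:
    """Solve y = -[omega - kappa * [nu - y]_-]_- for scalar y, with kappa >= 0.

    The right-hand side is nonincreasing in y, so the equation has exactly one
    root; trying Case 1 first and falling back to Case 2 therefore suffices.
    """
    if kappa < 0:
        raise ValueError("kappa must be nonnegative")
    # Case 1 (nu >= y): [nu - y]_- = 0, so the candidate is y = -[omega]_-.
    y = -neg_part(omega)
    if nu >= y:
        return y
    # Case 2 (nu < y): the equation reads y = -[omega + kappa * (nu - y)]_-.
    s = omega + kappa * nu
    if s >= 0:
        return 0.0  # subcase omega + kappa * nu >= 0
    return s / (kappa + 1.0)  # subcase omega + kappa * nu < 0


def residual(y: float, omega: float, nu: float, kappa: float) -> float:
    """Absolute violation of the equation at y; zero at the solution."""
    return abs(y + neg_part(omega - kappa * neg_part(nu - y)))


if __name__ == "__main__":
    y = dual_update_entry(1.0, -3.0, 2.0)  # Case 2, second subcase
    print(y, residual(y, 1.0, -3.0, 2.0))
```

Plugging the returned value back into `residual` is a cheap way to confirm that each branch matches the corresponding case of the proof.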

Lemma 11

For any \(a\in \mathbb {R}^n\) and \(b\in -{\mathcal {K}}\), it holds that \(\Vert {\mathcal {P}}_{+}\left( a+b\right) \Vert \ge \Vert {\mathcal {P}}_{+}\left( a\right) \Vert \).

Proof

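Write \(c={\mathcal {P}}_{+}\left( a+b\right) \in {\mathcal {K}}^{\circ }\) and \(d={\mathcal {P}}_{-}\left( a+b\right) \in -{\mathcal {K}}\), so that \(a+b=c-d\). Since \(b,d\in -{\mathcal {K}}\) and \({\mathcal {K}}\) is a convex cone, \(-b-d\in {\mathcal {K}}\). By the definition of \({\mathcal {P}}_{{\mathcal {K}}}\) as the Euclidean projection onto \({\mathcal {K}}\), we have

\[\left\| a-{\mathcal {P}}_{{\mathcal {K}}}(a)\right\| \le \left\| a-(-b-d)\right\| =\left\| a+b+d\right\| =\Vert c\Vert .\]

Hence, \(\left\| {\mathcal {P}}_{+}\left( a\right) \right\| =\left\| a+{\mathcal {P}}_{-}\left( a\right) \right\| =\left\| a-{\mathcal {P}}_{{\mathcal {K}}}(a)\right\| \le \Vert c\Vert =\left\| {\mathcal {P}}_{+}\left( a+b\right) \right\| \). \(\square \)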
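Lemma 11 can also be checked numerically. For \({\mathcal {K}}={\mathbb {R}}^{n}_{\le 0}\) the claim is immediate, since \(b\ge 0\) gives \([a+b]_+\ge [a]_+\) entrywise, so the sketch below instead uses the second-order cone, a choice made only for illustration. It samples \(a\) at random, builds \(b\in -{\mathcal {K}}\) by projecting a random vector onto \({\mathcal {K}}\) and negating it, and reports the smallest observed value of \(\Vert {\mathcal {P}}_{+}(a+b)\Vert -\Vert {\mathcal {P}}_{+}(a)\Vert \), which the lemma predicts is nonnegative up to rounding. The helper names are hypothetical.

```python
import numpy as np


def proj_soc(z: np.ndarray) -> np.ndarray:
    """Projection onto K = {(t, u) : ||u|| <= t} (second-order cone), t = z[0]."""
    t, u = z[0], z[1:]
    r = np.linalg.norm(u)
    if r <= t:
        return z.copy()
    if r <= -t:
        return np.zeros_like(z)
    alpha = 0.5 * (t + r)
    return np.concatenate(([alpha], alpha * u / r))


def p_plus(z: np.ndarray) -> np.ndarray:
    """P_+ = P_{K°}; the SOC is self-dual, so K° = -K and P_{-K}(z) = -P_K(-z)."""
    return -proj_soc(-z)


def worst_gap(n_trials: int = 2000, dim: int = 4, seed: int = 0) -> float:
    """Smallest observed ||P_+(a + b)|| - ||P_+(a)|| over random a and b in -K."""
    rng = np.random.default_rng(seed)
    gap = np.inf
    for _ in range(n_trials):
        a = rng.standard_normal(dim)
        b = -proj_soc(rng.standard_normal(dim))  # projecting then negating gives b in -K
        gap = min(gap, np.linalg.norm(p_plus(a + b)) - np.linalg.norm(p_plus(a)))
    return float(gap)


if __name__ == "__main__":
    print(worst_gap())  # Lemma 11 predicts a value >= 0 up to floating-point error
```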

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhu, Z., Chen, F., Zhang, J. et al. A Unified Primal-Dual Algorithm Framework for Inequality Constrained Problems. J Sci Comput 97, 39 (2023). https://doi.org/10.1007/s10915-023-02346-8


DOI: https://doi.org/10.1007/s10915-023-02346-8