Abstract

Thanks to their universal approximation properties and new efficient training strategies, Deep Neural Networks are becoming a valuable tool for the approximation of mathematical operators. In the present work, we introduce Mesh-Informed Neural Networks (MINNs), a class of architectures specifically tailored to handle mesh based functional data, and thus of particular interest for reduced order modeling of parametrized Partial Differential Equations (PDEs). The driving idea behind MINNs is to embed hidden layers into discrete functional spaces of increasing complexity, obtained through a sequence of meshes defined over the underlying spatial domain. The approach leads to a natural pruning strategy which enables the design of sparse architectures that are able to learn general nonlinear operators. We assess this strategy through an extensive set of numerical experiments, ranging from nonlocal operators to nonlinear diffusion PDEs, where MINNs are compared against more traditional architectures, such as classical fully connected Deep Neural Networks, but also more recent ones, such as DeepONets and Fourier Neural Operators. Our results show that MINNs can handle functional data defined on general domains of any shape, while ensuring reduced training times, lower computational costs, and better generalization capabilities, thus making MINNs very well-suited for demanding applications such as Reduced Order Modeling and Uncertainty Quantification for PDEs.


1 Introduction

Deep Neural Networks (DNNs) are one of the fundamental building blocks in modern Machine Learning. Originally developed to tackle classification tasks, they have become extremely popular after reporting striking achievements in fields such as computer vision [34] and language processing [50]. In addition, their approximation properties have been investigated in depth over the last decade [6, 11, 17, 25, 29]. In particular, DNNs have been recently employed for learning (nonlinear) operators in high-dimensional spaces [12, 22, 33, 40], because of their unique properties, such as the ability to blend theoretical and data-driven approaches. Additionally, the interest in using DNNs to learn high-dimensional operators arises from the potential repercussions that these models would have on fields such as Reduced Order Modeling.

Consider for instance a parameter dependent PDE problem, where each parameter instance \(\varvec{\mu }\) leads to a solution \(u_{\varvec{\mu }}\). In this framework, multi-query applications such as optimal control and statistical inference are prohibitive to implement, as they imply repeated queries to expensive numerical solvers. Then, learning the operator \(\varvec{\mu }\rightarrow u_{\varvec{\mu }}\) becomes of key interest, as it allows one to replace numerical solvers with much cheaper surrogates. To this end, DNNs can be a valid and powerful alternative, as they were recently shown capable of either comparable or superior results with respect to other state-of-the-art techniques, e.g. [4, 19, 20]. More generally, other works have recently exploited physics-informed machine learning for efficient reduced order modeling of parametrized PDEs [10, 49]. Also, DNN models have the practical advantage of being highly versatile as, unlike other techniques such as splines and wavelets, they can easily adapt to both high-dimensional inputs, as in image recognition, and high-dimensional outputs, as in the so-called generative models.

However, when the dimensions at play become very high, there are some practical issues that hinder the use of as-is DNN models. In fact, classical dense architectures tend to have too many degrees of freedom, which makes them harder to train, computationally demanding and prone to overfitting [2]. As a remedy, alternative architectures such as Convolutional Neural Networks (CNNs) and Graph Neural Networks (GNNs) have been employed over the years. These architectures can handle very efficiently data defined respectively over hypercubes (CNNs) or graphs (GNNs). Nevertheless, these models do not provide a complete answer, especially when the high-dimensionality arises from the discretization of a functional space such as \(L^{2}(\varOmega )\), where \(\varOmega \subset {\mathbb {R}}^{d}\) is some bounded domain, possibly nonconvex. In fact, CNNs cannot handle general geometries and they might become inappropriate as soon as \(\varOmega \) is not a hypercube, although some preliminary attempts to generalize CNNs in this direction have recently appeared [21]. Conversely, GNNs have the benefit of considering their inputs and outputs as defined over the vertices of a graph [47]. This appears to be a promising feature, since a classical way to discretize spatial domains is to use meshing strategies, and meshes are ultimately graphs. However, GNNs are heavily based on the graph representation itself, and their construction does not exploit the existence of an underlying spatial domain. In particular, GNNs were not constructed to operate at different levels of resolution: still, in a context in which the discretization is ultimately fictitious, this would be a desirable property.

Inspired by these considerations, we introduce a novel class of sparse architectures, which we refer to as Mesh-Informed Neural Networks (MINNs), to tackle the problem of learning a (nonlinear) operator

$$\begin{aligned} {\mathcal {G}}:V_{1}\rightarrow V_{2}, \end{aligned}$$

where \(V_{1}\) and \(V_{2}\) are some functional spaces, e.g. \({V_{1}=V_{2}}\subseteq L^{2}(\varOmega )\). The definition of \({\mathcal {G}}\) may involve both local and nonlocal operations, such as derivatives and integrals, and it may as well imply the solution to a Partial Differential Equation (PDE).

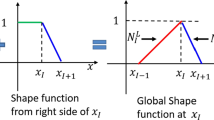

We cast the above problem in a setting that is more familiar to the Deep Learning literature by introducing a form of high-fidelity discretization. This is an approach that has become widely adopted by now, and it involves the discretization of the functional spaces along the same lines as Finite Element methods, as in [4, 19, 36]. In short, one introduces a mesh having vertices \(\{{\textbf{x}}_{i}\}_{i=1}^{N_{h}}\subset {\overline{\varOmega }}\), and defines \(V_{h}\subset L^{2}(\varOmega )\) as the subspace of piecewise linear Lagrange polynomials, where \(h>0\) is the stepsize of the mesh (the idea can be easily generalized to higher order Finite Elements, as we show later on). Since each \(v\in V_{h}\) is uniquely identified by its nodal values, we have \(V_{h}\cong {\mathbb {R}}^{N_{h}}\), and the original operator to be learned can be replaced by

$$\begin{aligned} {\mathcal {G}}_{h}:V_{h}\cong {\mathbb {R}}^{N_{h}}\rightarrow V_{h}\cong {\mathbb {R}}^{N_{h}}. \end{aligned}$$

The idea is now to approximate \({\mathcal {G}}_{h}\) by training some DNN \(\varPhi :{\mathbb {R}}^{N_{h}}\rightarrow {\mathbb {R}}^{N_{h}}\). As we argued previously, dense architectures are unsuited for such a purpose because of their prohibitive computational cost during training, which is mostly caused by: i) the computational resources required for the optimization, ii) the amount of training data needed to avoid overfitting.

To overcome this bottleneck, we propose Mesh-Informed Neural Networks. These are ultimately based on an a priori pruning strategy, whose purpose is to inform the model with the geometrical knowledge coming from \(\varOmega \). As we will demonstrate later in the paper, despite their simple implementation, MINNs show reduced training times and better generalization capabilities, making them a competitive alternative to other operator learning approaches, such as DeepONets [40] and Fourier Neural Operators [39]. Also, they allow for a novel interpretation of the so-called hidden layers, in a way that may simplify the practical problem of designing DNN architectures.

The rest of the paper is devoted to the presentation of MINNs and it is organized as follows. In Sect. 2, we set some notation and formally introduce Mesh-Informed Neural Networks from a theoretical point of view. There, we also discuss their implementation and comment on the parallelism between MINNs and other emerging approaches such as DeepONets [40] and Neural Operators [33]. We then devote Sects. 3, 4 and 5 to the numerical experiments, addressing a different scientific question in each Section. More precisely: in Sect. 3, we provide empirical evidence that the pruning strategy underlying MINNs is powerful enough to resolve the issues of dense architectures; in Sect. 4, we showcase the flexibility of MINNs in handling complex nonconvex domains; finally, in Sect. 5, we compare the performances of MINNs with those of other state-of-the-art Deep Learning algorithms, namely DeepONets and Fourier Neural Operators. Following the numerical experiments, in Sect. 6, we take the chance to present an application where MINNs are employed to answer a practical problem of Uncertainty Quantification related to the delivery of oxygen in biological tissues. Finally, we draw our conclusions and discuss future developments in Sect. 7.

2 Mesh-Informed Neural Networks

In this Section we introduce Mesh-Informed Neural Networks, a novel class of architectures specifically built to handle discretized functional data defined over meshes, and thus of particular interest for PDE applications. Before doing so, we set some notation and recall some of the basic concepts behind classical DNNs.

2.1 Notation and Preliminaries

Deep Neural Networks are a powerful class of approximators that is ultimately based on the composition of affine and nonlinear transformations. Here, we focus on DNNs having a feedforward architecture. We report below some basic definitions.

Definition 1

Let \(m,n\ge 1\) and \(\rho :{\mathbb {R}}\rightarrow {\mathbb {R}}\). A layer with activation \(\rho \) is a map \(L:{\mathbb {R}}^{m}\rightarrow {\mathbb {R}}^{n}\) of the form \(L({{\textbf {v}}})=\rho \left( {\textbf{W}}{{\textbf {v}}}+{\textbf{b}}\right) \), for some \({\textbf{W}}\in {\mathbb {R}}^{n\times m}\) and \({\textbf{b}}\in {\mathbb {R}}^{n}\).

In the literature, \({\textbf{W}}\) and \({\textbf{b}}\) are usually referred to as the weight and the bias of the layer, respectively. Note that Definition 1 contains an abuse of notation, as \(\rho \) is evaluated over an n-dimensional vector: we understand the latter operation componentwise, that is \(\rho ([x_{1},\ldots ,x_{n}]):=[\rho (x_{1}),\ldots ,\rho (x_{n})]\).

Definition 2

Let \(m,n\ge 1\). A neural network of depth \(l\ge 0\) is a map \(\varPhi :{\mathbb {R}}^{m}\rightarrow {\mathbb {R}}^{n}\) obtained via composition of \(l+1\) layers, \(\varPhi =L_{l+1}\circ \dots \circ L_{1}\).

The layers of a neural network do not need to share the same activation function and usually the output layer, \(L_{l+1}\), does not have one. Architectures with \(l=1\) are known as shallow networks, while the adjective deep is used when \(l\ge 2\). We also allow for the degenerate case in which the network reduces to a single layer (\(l=0\)). The classical pipeline for building a neural network model starts by fixing the architecture, that is the number of layers and their input–output dimensions. Then, the weights and biases of all layers are tuned according to some procedure, which typically involves the optimization of a loss function computed over a given training set: for further details, the reader can refer to [27].
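To make Definitions 1 and 2 concrete, the following is a minimal NumPy sketch of a layer and of a network obtained by composition. The function names (`layer`, `network`, `leaky_relu`), the layer sizes and the random initialization are illustrative assumptions, not part of the original work.

```python
import numpy as np

def layer(W, b, rho=None):
    """Return the map v -> rho(W v + b); rho acts componentwise (Definition 1)."""
    def L(v):
        z = W @ v + b
        return z if rho is None else rho(z)
    return L

def network(layers):
    """Compose layers so that Phi = L_{l+1} o ... o L_1 (Definition 2)."""
    def Phi(v):
        for L in layers:
            v = L(v)
        return v
    return Phi

def leaky_relu(z, alpha=0.1):
    # Componentwise activation: identity for z > 0, slope alpha otherwise.
    return np.where(z > 0, z, alpha * z)

rng = np.random.default_rng(0)
m, hidden, n = 3, 8, 5  # illustrative dimensions

# Shallow network (l = 1): one hidden layer plus an output layer without activation.
Phi = network([
    layer(rng.normal(size=(hidden, m)), np.zeros(hidden), leaky_relu),
    layer(rng.normal(size=(n, hidden)), np.zeros(n)),
])
out = Phi(np.ones(m))
print(out.shape)
```

Here the weights are only initialized, not trained; tuning them would require a loss function and an optimizer, as described in the text.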

2.2 Mesh-Informed Layers

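We consider the following framework. We are given a bounded domain \(\varOmega \subset {\mathbb {R}}^{d}\), not necessarily convex, and two meshes (see Definition 3 right below) having respectively stepsizes \(h,h'>0\) and vertices

$$\begin{aligned} \{{\textbf{x}}_{j}\}_{j=1}^{N_{h}},\;\{{\textbf{x}}_{i}'\}_{i=1}^{N_{h'}}\subset {\overline{\varOmega }}. \end{aligned}$$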

The two meshes can be completely different and they can be either structured or unstructured. To each mesh we associate the corresponding space of piecewise linear Lagrange polynomials, namely \(V_{h},V_{h'}\subset L^{2}(\varOmega )\). Our purpose is to introduce a suitable notion of mesh-informed layer \(L: V_{h}\rightarrow V_{h'}\) that exploits the a priori existence of \(\varOmega \). In analogy to Definition 1, L should have \(N_{h}\) neurons at input and \(N_{h'}\) neurons at output, since \(V_{h}\cong {\mathbb {R}}^{N_{h}}\) and \(V_{h'}\cong {\mathbb {R}}^{N_{h'}}\). However, thinking of the state spaces as either comprised of functions or vectors is fundamentally different: while we can describe the objects in \(V_{h}\) as regular, smooth or noisy, these notions have no meaning in \({\mathbb {R}}^{N_{h}}\), and similarly for \(V_{h'}\) and \({\mathbb {R}}^{N_{h'}}\). Furthermore, in the case of PDE applications, we are typically not interested in all the elements of \(V_{h}\) and \(V_{h'}\), rather we focus on those that present spatial correlations coherent with the underlying physics. Starting from these considerations, we build a novel layer architecture that can meet our specific needs. In order to provide a rigorous definition, and directly extend the idea to higher order Finite Element spaces, we first introduce some preliminary notation. For the sake of simplicity, we will restrict to simplicial Finite Elements [13].

Definition 3

Let \(\varOmega \subset {\mathbb {R}}^{d}\) be a bounded domain. Let \({\mathcal {M}}\) be a collection of d-simplices in \(\varOmega \), so that each \(K\in {\mathcal {M}}\) is a closed subset of \({\overline{\varOmega }}\). For each element \(K\in {\mathcal {M}}\), define the quantities

We say that \({\mathcal {M}}\) is an admissible mesh of stepsize \(h>0\) over \(\varOmega \) if the following conditions hold.

1. The elements are exhaustive, that is

$$\begin{aligned} \text {dist}\left( {\overline{\varOmega }}, \bigcup _{K\in {\mathcal {M}}}K\right) \le h \end{aligned}$$

where \(\text {dist}(A,B)=\sup _{x\in A}\inf _{y\in B}|x-y|\) is the distance between A and B.

2. Any two distinct elements \(K,K'\in {\mathcal {M}}\) have disjoint interiors. Also, their intersection is either empty or results in a common face of dimension \(s<d\).

3. The elements are non degenerate and their maximum diameter equals h, that is

$$\begin{aligned} \min _{K\in {\mathcal {M}}}\;R_{K}>0\quad \text {and}\quad \max _{K\in {\mathcal {M}}}\;h_{K}=h. \end{aligned}$$

In that case, the quantity

is said to be the aspect-ratio of the mesh.

Definition 4

Let \(\varOmega \subset {\mathbb {R}}^{d}\) be a bounded domain and let \({\mathcal {M}}\) be a mesh of stepsize \(h>0\) defined over \(\varOmega \). For any positive integer q, we write \(X_{h}^{q}({\mathcal {M}})\) for the Finite Element space of piecewise polynomials of degree at most q, that is

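Let \(N_{h}=\dim (X_{h}^{q}({\mathcal {M}}))\). We say that a collection of nodes \(\{{\textbf{x}}_{i}\}_{i=1}^{N_{h}}\subset \varOmega \) and a sequence of functions \(\{\varphi _{i}\}_{i=1}^{N_{h}}\subset X_{h}^{q}({\mathcal {M}})\) define a Lagrangian basis of \(X_{h}^{q}({\mathcal {M}})\) if

$$\begin{aligned} \varphi _{j}({\textbf{x}}_{i})=\delta _{i,j}\quad \forall i,j=1,\dots ,N_{h}. \end{aligned}$$

We write \(\varPi _{h,q}({\mathcal {M}}):X_{h}^{q}({\mathcal {M}})\rightarrow {\mathbb {R}}^{N_{h}}\) for the function-to-nodes operator,

$$\begin{aligned} \varPi _{h,q}({\mathcal {M}}):\;v\mapsto [v({\textbf{x}}_{1}),\dots ,v({\textbf{x}}_{N_{h}})], \end{aligned}$$

whose inverse is

$$\begin{aligned} \varPi _{h,q}^{-1}({\mathcal {M}}):\;{\textbf{c}}\mapsto \sum _{i=1}^{N_{h}}c_{i}\varphi _{i}. \end{aligned}$$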

We now have all we need to introduce our concept of mesh-informed layer.

Definition 5

(Mesh-informed layer) Let \(\varOmega \subset {\mathbb {R}}^{d}\) be a bounded domain and \(\mathsf {d}:\varOmega \times \varOmega \rightarrow [0,+\infty )\) a given distance function. Let \({\mathcal {M}}\) and \({\mathcal {M}}'\) be two meshes of stepsizes h and \(h'\), respectively. Let \(V_{h}=X_{h}^{q}({\mathcal {M}})\) and \(V_{h'}=X_{h'}^{q'}({\mathcal {M}}')\) be the input and output spaces, respectively. Denote by \(\{{\textbf{x}}_{j}\}_{j=1}^{N_{h}}\) and \(\{{\textbf{x}}_{i}'\}_{i=1}^{N_{h'}}\) the nodes associated to a Lagrangian basis of \(V_{h}\) and \(V_{h'}\) respectively. A mesh-informed layer with activation function \(\rho :{\mathbb {R}}\rightarrow {\mathbb {R}}\) and support \(r>0\) is a map \(L:V_{h}\rightarrow V_{h'}\) of the form

$$\begin{aligned} L=\varPi _{h',q'}^{-1}({\mathcal {M}}')\circ {\tilde{L}}\circ \varPi _{h,q}({\mathcal {M}}), \end{aligned}$$

where \({\tilde{L}}:{\mathbb {R}}^{N_{h}}\rightarrow {\mathbb {R}}^{N_{h'}}\) is a layer with activation \(\rho \) whose weight matrix \({\textbf{W}}\) satisfies the additional sparsity constraint below,

$$\begin{aligned} {\textbf{W}}_{i,j}=0\quad \text {if}\quad \mathsf {d}({\textbf{x}}_{j},{\textbf{x}}_{i}')>r. \end{aligned}$$

Comparison of a dense layer (cf. Definition 1) and a mesh-informed layer (cf. Definition 5). The dense model features 3 neurons at input and 5 at output. All the neurons communicate: consequently the weight matrix of the layer has 15 active entries. In the mesh-informed counterpart, neurons become vertices of two meshes (resp. in green and red) defined over the same spatial domain \(\varOmega \). Only nearby neurons are allowed to communicate. This results in a sparse model with only 9 active weights

The distance function \(\mathsf {d}\) in Definition 5 can be any metric over \(\varOmega \). For instance, one may choose to consider the Euclidean distance, \(\mathsf {d}({\textbf{x}},{\textbf{x}}'):=|{\textbf{x}}-{\textbf{x}}'|\). However, if the geometry of \(\varOmega \) becomes particularly involved, better choices of \(\mathsf {d}\) might be available, such as the geodesic distance. The latter quantifies the distance of two points \({\textbf{x}},{\textbf{x}}'\in \varOmega \) by measuring the length of the shortest path within \(\varOmega \) between \({\textbf{x}}\) and \({\textbf{x}}'\), namely

$$\begin{aligned} \mathsf {d}({\textbf{x}},{\textbf{x}}'):=\inf \left\{ \int _{0}^{1}|\gamma '(t)|\,dt\;:\;\gamma \in C^{1}([0,1];\varOmega ),\;\gamma (0)={\textbf{x}},\;\gamma (1)={\textbf{x}}'\right\} . \end{aligned}$$

It is worth pointing out that, as a matter of fact, the projections \(\varPi _{h,q}({\mathcal {M}})\) and \(\varPi _{h',q'}^{-1}({\mathcal {M}}')\) have the sole purpose of making Definition 5 rigorous. What actually defines the mesh-informed layer L are the sparsity patterns imposed on \({\tilde{L}}\). In fact, the idea is that these constraints should help the layer in producing outputs that are more coherent with the underlying spatial domain (cf. Fig. 1). In light of this intrinsic duality between L and \({\tilde{L}}\), we will refer to the weights and biases of L as those that are formally defined in \({\tilde{L}}\). Also, for better readability, from now on we will use the notation

$$\begin{aligned} L: V_{h}\xrightarrow []{\;r\;} V_{h'} \end{aligned}$$

to emphasize that L is a mesh-informed layer with support r. We note that dense layers can be recovered by letting \(r\ge \sup \{\mathsf {d}({\textbf{x}},{\textbf{x}}')\;|\;{\textbf{x}},{\textbf{x}}'\in \varOmega \}\), while lighter architectures are obtained for smaller values of r. The following result provides an explicit upper bound on the number of nonzero entries in a mesh-informed layer. For the sake of simplicity, we restrict to the case in which \(\mathsf {d}\) is the Euclidean distance.

Theorem 1

Let \(\varOmega \subset {\mathbb {R}}^{d}\) be a bounded domain and \(\mathsf {d}\) the Euclidean distance. Let \({\mathcal {M}}\) and \({\mathcal {M}}'\) be two meshes having respectively stepsizes \(h,h'\) and aspect-ratios \(\sigma ,\sigma '\). Let

$$\begin{aligned} h_{\min }:=\min _{K\in {\mathcal {M}}}h_{K},\quad h'_{\min }:=\min _{K'\in {\mathcal {M}}'}h_{K'} \end{aligned}$$

be the smallest diameters within the two meshes respectively. Let \(L: V_{h}\xrightarrow []{\;r\;} V_{h'}\) be a mesh-informed layer of support \(r>0\), where \(V_{h}:=X_{h}^{q}({\mathcal {M}})\) and \(V_{h'}:=X_{h'}^{q'}({\mathcal {M}}')\). Then,

where \(\Vert {\textbf{W}}\Vert _{0}\) is the number of nonzero entries in the weight matrix of the layer L, while \(C=C(\varOmega ,d,q,q')>0\) is a constant depending only on \(\varOmega \), d, q and \(q'\).

Proof

Let \(N_{h}:=\text {dim}(V_{h})\), \(N_{h'}:=\text {dim}(V_{h'})\) and let \(\{{\textbf{x}}_{j}\}_{j=1}^{N_{h}},\{{\textbf{x}}_{i}'\}_{i=1}^{N_{h'}}\) be the Lagrangian nodes in the two meshes, respectively. Let \(\omega :=|B({\textbf{0}},1)|\) be the volume of the unit ball in \({\mathbb {R}}^{d}\). Since \(\min _{K'}R_{K'}\ge h'_{\min }/\sigma '\), the volume of an element in the output mesh is at least

It follows that, for any \({\textbf{x}}\in \varOmega \), the ball \(B({\textbf{x}},r)\) can contain at most

elements of the output mesh. Therefore, the number of indices i such that \(|{\textbf{x}}_{i}'-{\textbf{x}}_{j}|\le r\) is at most \(n_{\text {e}}(r,h')c(d,q')\), where \(c(d,q'):=(d+q')!/(q'!d!)\) bounds the number of degrees of freedom within each element. Finally,

\(\square \)

Starting from here, we define MINNs by composition, with a possible interchange of dense and mesh-informed layers. Consider for instance the case in which we want to define a Mesh-Informed Neural Network \(\varPhi : {\mathbb {R}}^{p}\rightarrow V_{h}\cong {\mathbb {R}}^{N_{h}}\) that maps a low-dimensional input, say \(p\ll N_{h}\), to some functional output. Then, using our notation, one possible architecture could be

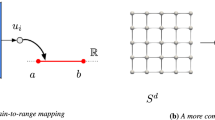

The above scheme defines a MINN of depth \(l=2\), as it is composed of 3 layers. The first layer is dense (Definition 1) and has the purpose of preprocessing the input while mapping the data onto a coarse mesh (stepsize 4h). Then, the remaining two layers perform local transformations in order to return the desired output. Note that the three meshes need not satisfy any hierarchy, see e.g. Fig. 2. Also, the corresponding Finite Element spaces need not share the same polynomial degree. Clearly, (1) can be generalized by employing any number of layers, as well as any sequence of stepsizes \(h_{1},\dots ,h_{n}\) and supports \(r_{1},\dots ,r_{n-1}\). Similarly, as we shall demonstrate in our experiments, one may also modify (1) to handle functional inputs, e.g. by introducing a mesh-informed layer at the beginning of the architecture. The choice of the hyperparameters remains problem specific, but a good rule of thumb is to decrease the supports as the mesh becomes finer, so that the network complexity is kept under control (cf. Theorem 1).
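The rule of thumb on the supports can be illustrated by counting the active weights of a three-layer architecture of this kind. The sketch below is only indicative: the unit-square grids, the intermediate stepsize 2h, the input dimension p and the supports r are all assumed values chosen for illustration, and `grid` and `active_weights` are hypothetical helper names.

```python
import numpy as np

def grid(n):
    """Vertices of a uniform n x n grid on the unit square (illustrative mesh)."""
    t = np.linspace(0.0, 1.0, n)
    return np.stack(np.meshgrid(t, t), axis=-1).reshape(-1, 2)

def active_weights(X_in, X_out, r):
    """Number of pairs (i, j) with |X_out[i] - X_in[j]| <= r, i.e. unpruned weights."""
    D = np.linalg.norm(X_out[:, None, :] - X_in[None, :, :], axis=-1)
    return int((D <= r).sum())

p = 4                                                # assumed input dimension
coarse, medium, fine = grid(6), grid(11), grid(21)   # stepsizes 4h, 2h, h with h = 0.05

# Dense first layer, then two mesh-informed layers with shrinking supports.
sparse = [p * len(coarse),
          active_weights(coarse, medium, r=0.4),
          active_weights(medium, fine, r=0.2)]

# Same architecture with all three layers dense, for comparison.
dense = [p * len(coarse), len(coarse) * len(medium), len(medium) * len(fine)]

print("mesh-informed:", sparse, "dense:", dense)
```

Halving the support when moving to the finer pair of meshes keeps the number of connections per output node roughly comparable across layers, which is the effect the rule of thumb aims for.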

MINNs operate at different resolution levels to enforce local properties. Here, three meshes of a vertical brain slice represent three different hidden states in the pipeline of a suitable MINN architecture, where neurons are identified with mesh vertices. Due to the sparsity constraint (Definition 5), the red neuron only fires information to those in the highlighted region (second mesh); in turn, these can only communicate with the neurons in the third mesh that are sufficiently close (orange region). In this way, despite relying on local operations, MINNs can eventually spread information all over the domain by exploiting the composition of multiple layers

2.3 Implementation Details

For simplicity, let us first focus on the case in which distances are evaluated according to the Euclidean metric, \(\mathsf {d}({\textbf{x}},{\textbf{x}}'):=|{\textbf{x}}-{\textbf{x}}'|\). In this case, the practical implementation of mesh-informed layers is straightforward and can be done as follows, cf. Fig. 3. Given \(\varOmega \subset {\mathbb {R}}^{d}\), \(h,h'>0\), let \({\textbf{X}}\in {\mathbb {R}}^{N_{h}\times d}\) and \({\textbf{X}}'\in {\mathbb {R}}^{N_{h'}\times d}\) be the matrices containing the degrees of freedom associated to the chosen Finite Element spaces, that is \({\textbf{X}}_{j,.}:=[X_{j,1},\dots ,X_{j,d}]\) are the coordinates of the jth node in the input mesh, and similarly for \({\textbf{X}}'\). In order to build a mesh-informed layer of support \(r>0\), we first compute all the pairwise squared distances \(D_{i,j}:=|{\textbf{X}}_{j,.}-{\textbf{X}}'_{i,.}|^{2}\) among the nodes in the two meshes. This can be done efficiently using tensor algebra, e.g.

$$\begin{aligned} {\textbf{D}}=\sum _{l=1}^{d}\left( e_{N_{h'}}\otimes {\textbf{X}}_{.,l}-{\textbf{X}}'_{.,l}\otimes e_{N_{h}}\right) ^{\circ 2}, \end{aligned}$$

where \({\textbf{X}}_{.,l}\) is the lth column of \({\textbf{X}}\), \(e_{k}:=[1,\dots ,1]\in {\mathbb {R}}^{k}\), \(\otimes \) is the tensor product and \(\circ 2\) is the Hadamard power. We then extract the indices \(\{(i_{k}, j_{k})\}_{k=1}^{\text {dof}}\) for which \(D_{i_{k},j_{k}}\le r^{2}\) and initialize a weight vector \({\textbf{w}}\in {\mathbb {R}}^{\text {dof}}\). This allows us to declare the weight matrix \({\textbf{W}}\) in sparse format by letting the nonzero entries be equal to \(w_{k}\) at position \((i_{k},j_{k})\), so that \(\Vert {\textbf{W}}\Vert _{0}=\text {dof}\). Preliminary to the training phase, we fill the entries of \({\textbf{w}}\) with random values sampled from a suitable Gaussian distribution. The optimal choice for such distribution may be problem dependent. Empirically, we see that good results can be achieved by sampling the weights \(w_{1},\dots ,w_{\text {dof}}\) independently from a centered normal distribution with variance \(1/\text {dof}\). Otherwise, if the architecture is particularly deep, another possibility is to consider an adaptation of the He initialization [31]. In the case of \(\alpha \)-leakyReLU nonlinearities, the latter suggests sampling \(w_{i}\) from a centered Gaussian distribution with variance

$$\begin{aligned} \frac{2}{(1+\alpha ^{2})\,s_{i}}, \end{aligned}$$(2)

where \(s_{i}\) is the cardinality of the set \(\{j\;|\;D_{i,j}\le r^{2}\}\), that is, the number of input neurons that communicate with the ith output neuron. Finally, in analogy to [31], if the layer has no activation then one may just set \(\alpha =1\) in Eq. (2).
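The construction described above can be sketched in a few lines of NumPy. This is an illustrative sketch under stated assumptions rather than the authors' implementation: the function names, the 1D test meshes and the support value are hypothetical, the squared distances are computed by broadcasting instead of explicit tensor products, and the weights use the centered normal initialization with variance \(1/\text {dof}\) mentioned in the text.

```python
import numpy as np

def mesh_informed_layer(X_in, X_out, r, rng):
    """Sparse layer data for input nodes X_in (N_h x d) and output nodes X_out (N_h' x d)."""
    # Squared pairwise distances D[i, j] = |X_in[j] - X_out[i]|^2 via broadcasting.
    D = ((X_out[:, None, :] - X_in[None, :, :]) ** 2).sum(axis=-1)
    rows, cols = np.nonzero(D <= r ** 2)      # active index pairs (i_k, j_k)
    dof = rows.size                           # number of nonzero weights
    w = rng.normal(0.0, np.sqrt(1.0 / dof), size=dof)  # variance 1/dof
    b = np.zeros(X_out.shape[0])
    return rows, cols, w, b

def apply_layer(rows, cols, w, b, v, rho=None):
    """Compute rho(W v + b) using only the active entries of W."""
    z = b.copy()
    np.add.at(z, rows, w * v[cols])           # z[i] += W[i, j] * v[j] for active (i, j)
    return z if rho is None else rho(z)

rng = np.random.default_rng(0)
X_in = np.linspace(0.0, 1.0, 11)[:, None]     # 1D input mesh, stepsize 0.1
X_out = np.linspace(0.0, 1.0, 21)[:, None]    # 1D output mesh, stepsize 0.05
rows, cols, w, b = mesh_informed_layer(X_in, X_out, r=0.15, rng=rng)
out = apply_layer(rows, cols, w, b, np.ones(11))
print(rows.size, "active weights out of", 21 * 11)
```

In a deep learning framework the vector `w` would be the trainable parameter, while `rows` and `cols` stay fixed, so that pruned connections never receive gradient updates.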

Implementation of a mesh-informed layer. Each panel reports a representation of the input/output meshes (top row), the network architecture (bottom left) and the corresponding weight matrix (bottom right). The degrees of freedom at the input/output meshes are associated to the input/output neurons of a reference dense layer (left panel). For each input node, the neighbouring nodes at output are highlighted (center panel). Then, all the remaining connections are pruned, and the final model is left with a sparse weight matrix (right panel)

The above reasoning can be easily adapted to the general case, provided that one is able to compute efficiently all the pairwise distances between the nodes of the two meshes. Of course, the actual implementation will then depend on the specific choice of the distance function. Since the case of geodesic distances can be of particular interest in certain applications, we shall briefly discuss it below. In this setting, the main difficulty arises from the fact that, in general, we are required to compute distances between points of different meshes. Additionally, if we consider Finite Element spaces of degree \(q>1\), not all the Lagrangian nodes will be placed over the mesh vertices, meaning that we cannot exploit the graph structure of the mesh to calculate shortest paths.

To overcome these drawbacks, we propose the introduction of an auxiliary coarse mesh \({\mathcal {M}}_{0}:=\{K_{i}\}_{i=1}^{m}\), whose sole purpose is to capture the geometry of the domain. We use this mesh to build another graph, \({\mathscr {G}}\), which describes the location of the elements \(K_{i}\). More precisely, let \({\textbf{c}}_{i}\) be the centroid of the element \(K_{i}\). We let \({\mathscr {G}}\) be the weighted graph having vertices \(\{{\textbf{c}}_{i}\}_{i=1}^{m}\), where we link \({\textbf{c}}_{i}\) with \({\textbf{c}}_{j}\) if and only if the elements \(K_{i}\) and \(K_{j}\) are adjacent. Then, to weight the edges, we use the Euclidean distance between the centroids. Once \({\mathscr {G}}\) is constructed, we use Dijkstra’s algorithm to compute all the shortest paths along the graph. This leaves us with an estimated geodesic distance \(g_{i,j}\) for each pair of centroids \(({\textbf{c}}_{i},{\textbf{c}}_{j})\), which we can precompute and store in a suitable matrix. Then, we approximate the geodesic distance of any two points \({\textbf{x}},{\textbf{x}}'\in \varOmega \) as

where the index function maps each point to an element containing it, see Fig. 4. In other words,

If the auxiliary mesh does not fill \(\varOmega \) completely, the relaxed version below may be used as well

In general, evaluating the index function can be done in O(m) time. In particular, going back to our original problem, we can approximate all the pairwise distances between the nodes of the input and the output spaces in \(O(mN_{h}+mN_{h'}+m^{2})\) time. In fact, we can run Dijkstra’s algorithm once and for all with a computational cost of \(O(m^{2})\). Then, we just need to evaluate the index function for all the Lagrangian nodes \(\{{\textbf{x}}_{j}\}_{j=1}^{N_{h}}\) and \(\{{\textbf{x}}_{i}'\}_{i=1}^{N_{h'}}\), which takes respectively \(O(mN_{h})\) and \(O(mN_{h'})\).
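The procedure based on the auxiliary coarse mesh can be sketched as follows, using only the Python standard library. Several ingredients are assumptions made for illustration: the toy U-shaped chain of five elements, the adjacency list, and in particular the index function, which is replaced here by a nearest-centroid search (a hypothetical stand-in that also runs in O(m) time per point).

```python
import heapq
import math

def centroid_graph(centroids, adjacency):
    """Weighted graph on centroids; edges join adjacent elements, weighted by Euclidean distance."""
    graph = {i: [] for i in range(len(centroids))}
    for i, j in adjacency:
        w = math.dist(centroids[i], centroids[j])
        graph[i].append((j, w))
        graph[j].append((i, w))
    return graph

def dijkstra(graph, source):
    """Shortest-path lengths from source to every vertex of the graph."""
    dist = {v: math.inf for v in graph}
    dist[source] = 0.0
    heap = [(0.0, source)]
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist[u]:
            continue  # stale heap entry
        for v, w in graph[u]:
            if d + w < dist[v]:
                dist[v] = d + w
                heapq.heappush(heap, (d + w, v))
    return dist

def nearest_centroid(x, centroids):
    """Assumed index function: O(m) scan for the closest centroid."""
    return min(range(len(centroids)), key=lambda i: math.dist(x, centroids[i]))

# Toy U-shaped domain: five elements arranged in a chain (illustrative data).
centroids = [(0.0, 1.0), (0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (2.0, 1.0)]
adjacency = [(0, 1), (1, 2), (2, 3), (3, 4)]
graph = centroid_graph(centroids, adjacency)
g = [dijkstra(graph, i) for i in range(len(centroids))]  # precomputed table g[i][j]

def approx_geodesic(x, y):
    """Approximate geodesic distance via the precomputed centroid-to-centroid table."""
    return g[nearest_centroid(x, centroids)][nearest_centroid(y, centroids)]

x, y = (0.0, 1.1), (2.0, 0.9)
print(approx_geodesic(x, y), math.dist(x, y))
```

The two tips of the U are close in the Euclidean sense but far apart along the domain, which is the situation where a geodesic support is expected to prune connections more sensibly.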

2.4 A Supervised Learning Approach Based on MINNs

Once a mesh-informed architecture has been constructed, we learn the operator of interest through a standard supervised approach. Let \({\mathcal {G}}_{h}:\varTheta \rightarrow V_{h}\) be the high-fidelity discretization of a suitable operator, whose pointwise evaluations may as well involve the numerical solution to a PDE. Here, we allow the input space, \(\varTheta \), to either consist of vectors, \(\varTheta \subset {\mathbb {R}}^{p}\), or (discretized) functions, e.g. \(\varTheta =V_{h}.\) Let \(\varPhi :\varTheta \rightarrow V_{h}\) be a given MINN architecture: in general, depending on the input type, the latter will consist of both dense and mesh-informed layers. We assume that \(\varPhi \) has already been initialized according to some procedure, such as those reported in Sect. 2.3, and that it is ready for training.

We make direct use of the forward operator to compute a suitable collection of training pairs (also referred to as training samples or snapshots, following the classical terminology adopted in the Reduced Order Modeling literature),

$$\begin{aligned} \{f_{i},u_{h}^{i}\}_{i=1}^{N_{\text {train}}}\subset \varTheta \times V_{h}, \end{aligned}$$

with \(u_{h}^{i}:={\mathcal {G}}_{h}(f_{i}).\) Here, with little abuse of notation, we write \(f\in \varTheta \), that is, we assume to be in the case of functional inputs. If not so, the reader may simply replace the \(f_{i}\)’s with suitable \(\varvec{\mu }_{i}\)’s. The training pairs are typically chosen at random, i.e. by equipping the input space with a probability distribution \({\mathbb {P}}\) and by computing \(N_{\text {train}}\) independent samples.

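We then train \(\varPhi \) by minimizing the mean squared \(L^{2}\)-error, that is, by optimizing the following loss function

\[\hat{{\mathscr {L}}}(\varPhi ):=\frac{1}{N_{\text {train}}}\sum _{i=1}^{N_{\text {train}}}\Vert u_{h}^{i}-\varPhi (f_{i})\Vert _{L^{2}(\varOmega )}^{2}, \tag{4}\]

which acts as the empirical counterpart of

\[{\mathscr {L}}(\varPhi ):={\mathbb {E}}_{f\sim {\mathbb {P}}}\Vert {\mathcal {G}}_{h}(f)-\varPhi (f)\Vert _{L^{2}(\varOmega )}^{2}.\]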

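This allows one to actually tune the model parameters (i.e. all layer weights and biases), and obtain a suitable approximation \(\varPhi \approx {\mathcal {G}}_{h}.\) Then, multiple metrics can be used to evaluate the quality of such an approximation. In this work, we shall often consider the average relative \(L^{2}\)-error, which we estimate thanks to a precomputed test set

\[{\mathscr {E}}(\varPhi ):=\frac{1}{N_{\text {test}}}\sum _{i=1}^{N_{\text {test}}}\frac{\Vert u_{h,\text {test}}^{i}-\varPhi (f_{i}^{\text {test}})\Vert _{L^{2}(\varOmega )}}{\Vert u_{h,\text {test}}^{i}\Vert _{L^{2}(\varOmega )}}. \tag{5}\]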

The test set \(\{f_{i}^{\text {test}},u_{h,\text {test}}^{i}\}_{i=1}^{N_{\text {test}}}\) is constructed independently of the training set, but still relying on the high-fidelity operator \({\mathcal {G}}_{h}\) and the probability distribution \({\mathbb {P}}\).

If the approximation is considered satisfactory, then the expensive operator \({\mathcal {G}}_{h}\) can be replaced with the cheaper MINN surrogate \(\varPhi \), whose outputs can be evaluated with little to no computational cost.

Before concluding this paragraph, it is worth emphasizing a couple of things. First of all, we highlight the fact that both the loss and the error function, respectively \(\hat{{\mathscr {L}}}\) and \({\mathscr {E}}\), require the computation of integral norms. However, this is not an issue: since both \(u_{h}^{i}\) and \(\varPhi (f_{i})\) lie in \(V_{h}\), we can compute these norms by relying on the mass matrix \({\textbf{M}}\in {\mathbb {R}}^{N_{h}\times N_{h}}\), which can be precomputed and stored once and for all. We recall in fact that the latter is a highly sparse matrix defined in such a way that

\[\Vert v\Vert _{L^{2}(\varOmega )}^{2}={\textbf{v}}^{\top }{\textbf{M}}{\textbf{v}}\quad \text {for all}\;v\in V_{h},\quad \text {with}\;{\textbf{v}}:=[v({\textbf{x}}_{1}),\dots ,v({\textbf{x}}_{N_{h}})]^{\top },\]

where \(\{{\textbf{x}}_{i}\}_{i=1}^{N_{h}}\) are the nodes corresponding to the degrees of freedom in \(V_{h}\), cf. function-to-nodes operator in Definition 4.
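To make the role of the mass matrix concrete, the following Python sketch evaluates the mean squared \(L^{2}\)-error and the average relative \(L^{2}\)-error through a precomputed sparse mass matrix. It is only an illustration under simplifying assumptions: a one-dimensional uniform mesh with P1 elements replaces the actual Finite Element spaces, the matrix is stored as a plain dictionary, and all function names are hypothetical.

```python
def p1_mass_matrix_1d(n_nodes, length=1.0):
    """Tridiagonal P1 mass matrix on a uniform 1D mesh, stored as {(i, j): value}."""
    h = length / (n_nodes - 1)
    M = {}
    for e in range(n_nodes - 1):  # assemble element by element on [x_e, x_{e+1}]
        for a, b, val in ((e, e, h / 3), (e + 1, e + 1, h / 3),
                          (e, e + 1, h / 6), (e + 1, e, h / 6)):
            M[(a, b)] = M.get((a, b), 0.0) + val
    return M

def l2_norm_sq(v, M):
    """Squared L2 norm of a P1 function from its nodal values: v^T M v."""
    return sum(val * v[i] * v[j] for (i, j), val in M.items())

def mse_l2(preds, targets, M):
    """Mean squared L2 error over a collection of nodal vectors."""
    errs = [l2_norm_sq([p - t for p, t in zip(pr, tg)], M)
            for pr, tg in zip(preds, targets)]
    return sum(errs) / len(errs)

def mean_relative_l2(preds, targets, M):
    """Average relative L2 error over a collection of nodal vectors."""
    rel = [(l2_norm_sq([p - t for p, t in zip(pr, tg)], M)
            / l2_norm_sq(tg, M)) ** 0.5
           for pr, tg in zip(preds, targets)]
    return sum(rel) / len(rel)

# Toy usage on [0, 1] with 11 nodes.
n = 11
x = [i / (n - 1) for i in range(n)]
M = p1_mass_matrix_1d(n)
target = x                      # nodal values of u(x) = x
pred = [2.0 * xi for xi in x]   # a deliberately poor "prediction"
print(l2_norm_sq(target, M))                  # integral of x^2 over [0, 1]
print(mean_relative_l2([pred], [target], M))  # error as large as the target itself
```

Since the mass matrix only couples neighbouring nodes, each norm costs a number of operations proportional to the number of nonzero entries rather than \(N_{h}^{2}\).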

Finally, we remark that the MINN architecture is trained in a purely supervised fashion. Even if the definition of the operator \({\mathcal {G}}_{h}\) might involve a PDE or any other physical law, none of this knowledge is imposed over \(\varPhi \). Similarly, we do not impose boundary conditions, mass conservation, or other constraints, on the outputs of the MINN model: in principle, these should be learned implicitly. Nonetheless, we recognize that MINNs should benefit from the integration of such additional knowledge, as this is what other researchers have already observed for other approaches in the literature [10, 21, 49]. As of now, we leave these considerations for future work.

2.5 Relationship to Other Deep Learning Techniques

It is worth commenting on the differences and similarities that MINNs share with other Deep Learning approaches. We discuss them below.

2.5.1 Relationship to CNNs and GNNs

Mesh-Informed architectures can operate at different levels of resolution, in a way that is very similar to CNNs. However, their construction comes with multiple advantages. First of all, Definition 5 adapts to any geometry, while convolutional layers typically operate on square or cubic inputs and outputs. Furthermore, convolutional layers use weight sharing, meaning that all parts of the domain are treated in the same way. This may not be an optimal choice in some applications, such as those involving PDEs, as we may want the model to behave differently over different parts of \(\varOmega \) (for instance near or far away from the boundaries).

Conversely, MINNs share with GNNs the ability to handle general geometries. As a matter of fact, we mention that these architectures have been recently applied to mesh-based data, see e.g. [15, 28, 45, 51]. With respect to GNNs, the main advantage of MINNs lies in their capacity to work at different resolutions. This fact, which essentially comes from the presence of an underlying spatial domain, has at least two advantages: it provides a direct way for either reducing or increasing the dimensionality of a given input, and it increases the interpretability of hidden layers (now the number of neurons is not arbitrary but comes from the chosen discretization). In a way, mesh-informed architectures are similar to hierarchical GNNs; however, their construction is fundamentally different. While GNNs use aggregation strategies to collapse neighbouring information, MINNs differentiate their behavior depending on the nodes that are being involved. This difference makes the two approaches better suited for different applications. For instance, GNNs can be of great interest when dealing with geometric variability, as their construction allows for a single architecture to operate over completely different domains, see e.g. [23]. Conversely, if the shape of the domain is fixed, then MINNs may grant a higher expressivity, as they are ultimately obtained by pruning dense feedforward models. In this sense, MINNs only exploit meshing strategies as auxiliary tools, and they appear to be a natural choice for learning discretized functional outputs.

2.5.2 Relationship to DeepONets and Neural Operators

Recently, some new DNN models have been proposed for operator learning. One of these is DeepONets [40], a mesh-free approach that is based on an explicit decoupling between the input and the space variable. More precisely, DeepONets consider a representation of the following form

\[({\mathcal {G}}_{h}f)({\textbf{x}})\approx \varPsi (f)\cdot \phi ({\textbf{x}}),\]

where \(\cdot \) is the dot product, \(\varPsi : V_{h}\rightarrow {\mathbb {R}}^{m}\) is the branch-net, and \(\phi : \varOmega \rightarrow {\mathbb {R}}^{m}\) is the trunk-net. DeepONets have been shown capable of learning nonlinear operators and are now being extended to include a priori physical knowledge, see e.g. [49]. We consider MINNs and DeepONets as two fundamentally different approaches that answer different questions. DeepONets were originally designed to process input data coming from sensors and, being mesh-free, they are particularly suited for those applications where the output is only partially known or observed. In contrast, MINNs are rooted in the presence of a high-fidelity model \({\mathcal {G}}_{h}\) and are thus better suited for tasks such as reduced order modeling. Another difference lies in the fact that DeepONets explicitly include the dependence on the space variable \({\textbf{x}}\): because of this, suitable strategies are required in order for them to deal with complex geometries or incorporate additional information, such as boundary data, see e.g. [24]. Conversely, MINNs can easily handle these kinds of issues thanks to their global perspective.

In this sense, MINNs are much closer to the so-called Neural Operators, a novel class of DNN models first proposed by Kovachki et al. in [33]. Neural Operators are an extension of classical DNNs that was developed to operate between infinite dimensional spaces. These models are ultimately based on Hilbert-Schmidt operators, meaning that their linear part, that is ignoring activations and biases, is of the form

\[W: f\rightarrow \int _{\varOmega }k(\cdot ,{\textbf{x}})f({\textbf{x}})d{\textbf{x}}, \tag{6}\]

where \(k:\varOmega \times \varOmega \rightarrow {\mathbb {R}}\) is some kernel function that has to be identified during the training phase. Clearly, the actual implementation of Neural Operators is carried out in a discrete setting and integrals are replaced with suitable quadrature formulas. We mention that, among these architectures, Fourier Neural Operators (FNO) are possibly the most popular ones: we shall discuss more about the comparison between MINNs and FNOs in Sect. 5.

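The construction of Neural Operators is very general, to the point that other approaches, such as ours, can be seen as a special case. Indeed, a rough Monte Carlo-type estimate of (6) would yield

\[(Wf)({\textbf{x}}'_{i})\approx \frac{|\varOmega |}{N_{h}}\sum _{j=1}^{N_{h}}k({\textbf{x}}'_{i},{\textbf{x}}_{j})f({\textbf{x}}_{j}).\]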

If we let \(\{{\textbf{x}}_{j}\}_{j}\) and \(\{{\textbf{x}}'_{i}\}_{i}\) be the nodes in the two meshes, then the constraint in Definition 5 becomes equivalent to the requirement that k is supported somewhere near the diagonal, that is \(\text {supp}(k)\subseteq \{({\textbf{x}},{\textbf{x}}+\mathbf {\varepsilon })\;\text {with}\;|\mathbf {\varepsilon }|\le r\}\).
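The near-diagonal support condition can be pictured as a binary mask on the weight matrix of a layer. The Python sketch below is a minimal illustration, assuming that the constraint amounts to setting to zero every weight that connects an input node and an output node farther apart than \(r\); Definition 5 is not reproduced here, the Euclidean distance is used for simplicity, and the names `support_mask` and `masked_linear` are hypothetical.

```python
import math

def support_mask(out_nodes, in_nodes, radius):
    """mask[i][j] = 1 if input node j lies within `radius` of output node i, else 0."""
    return [[1 if math.dist(xo, xi) <= radius else 0 for xi in in_nodes]
            for xo in out_nodes]

def masked_linear(W, mask, b, v):
    """Linear layer whose weights are forced to zero outside the mask."""
    return [sum(w * m * x for w, m, x in zip(Wi, Mi, v)) + bi
            for Wi, Mi, bi in zip(W, mask, b)]

# Toy meshes on a segment (hypothetical): 5 input nodes, 3 output nodes.
in_nodes = [(0.0, 0.0), (0.25, 0.0), (0.5, 0.0), (0.75, 0.0), (1.0, 0.0)]
out_nodes = [(0.0, 0.0), (0.5, 0.0), (1.0, 0.0)]

sparse = support_mask(out_nodes, in_nodes, radius=0.3)
dense = support_mask(out_nodes, in_nodes, radius=1.0)  # radius = diameter

print(sum(map(sum, sparse)), "active weights out of", 3 * 5)
print(sum(map(sum, dense)), "active weights out of", 3 * 5)

W = [[1.0] * 5 for _ in range(3)]
b = [0.0] * 3
print(masked_linear(W, sparse, b, [1.0] * 5))  # each output sums only nearby inputs
```

When the radius reaches the diameter of the domain, the mask is full and the layer coincides with a dense one; swapping `math.dist` for a geodesic distance would change the mask but not the mechanism.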

We believe that these parallels are extremely valuable, as they indicate the existence of a growing scientific community with common goals and interests. Furthermore, they all contribute to the enrichment of the operator learning literature, providing researchers with multiple alternatives from which to choose: in fact, as we shall see in Sect. 5, depending on the problem at hand one methodology might be better suited than the others.

3 Numerical Experiments: Effectiveness of the Pruning Strategy

We provide empirical evidence that the sparsity introduced by MINNs can be of great help in learning maps that involve functional data, such as nonlinear operators, showing a reduced computational cost and better generalization capabilities. We first detail the setting of each experiment alone, specifying the corresponding operator to be learned and the adopted MINN architecture. Then, at the end of the current Section, we discuss the numerical results.

Throughout all our experiments, we adopt a standardized approach for designing and training the networks. In general, we always employ the 0.1-leakyReLU activation for all the hidden layers, while we do not use any activation at the output. Every time a mesh-informed architecture is introduced, we also consider its dense counterpart, obtained without imposing the sparsity constraints. Both networks are then trained following the same criteria, so that a fair comparison can be made. As loss function, we always consider the mean square error computed with respect to the \(L^{2}\) norm, cf. Eq. (4).

The optimization of the loss function is performed via the L-BFGS optimizer, with learning rate always equal to 1 and no batching. What may change from case to case are the network architecture, the number of epochs, and the size of the training set. After training, we compare mesh-informed and dense architectures by evaluating their performance on 500 unseen samples (test set), which we use to compute an unbiased estimate of the average relative error, cf. Eq. (5).

All the code was written in Python 3, mainly relying on the FEniCS and mshr libraries for the construction of Finite Element spaces and the numerical solution of PDEs. The implementation of neural network models, instead, was handled in PyTorch, and their training was carried out on an NVIDIA Tesla V100 GPU (32GB of RAM).

Fig. 5 Domains considered for the numerical experiments in Sect. 3. Panel a up to boundaries, \(\varOmega \) is the difference of two circles, \(B({{\textbf {0}}}, 1)\) and \(B({\textbf{x}}_{0},0.7)\), where \({\textbf{x}}_{0}=(-0.75, 0)\). Panel b A polygonal domain obtained by removing the rectangles \([-0.75, 0.75]\times [0.5, 1.5]\) and \([-0.75,0.75]\times [-1.5,-0.5]\) from \((-2,2)\times (-1.5, 1.5)\). Panel c the unit circle \(B({{\textbf {0}}},1)\). Panel d \(\varOmega \) is obtained by removing a square, namely \([-0.4,0.4]^{2}\), from the unit circle \(B({{\textbf {0}}},1)\)

3.1 Description of the Benchmark Operators

3.1.1 Learning a Parametrized Family of Functions

Let \(\varOmega \) be the domain defined as in Fig. 5a. For our first experiment, we consider a variation of a classical problem concerning the calculation of the signed distance function of \(\varOmega \). Functions of this kind are often encountered in areas such as computer vision [43] and real-time rendering [1]. In particular, we consider the following operator,

where \(\varvec{\mu }=(\mu _1,\mu _2,\mu _3)\) is a finite dimensional vector, and \({\textbf{A}}_{\mu _3} = \text {diag}(1,\mu _{3})\). In practice, the value of \(u_{\varvec{\mu }}({\textbf{x}})\) corresponds to the (weighted) distance between the dilated point \({\textbf{A}}_{\mu _{3}}{\textbf{x}}\) and the truncated boundary \(\partial \varOmega \cap \{{\textbf{y}}:\;y_{2}>\mu _{1}\}\).

As input space we consider \(\varTheta :=[0,1]\times [-1,1]\times [1,2]\), endowed with the uniform probability distribution. Since the input is finite-dimensional, we can think of \({\mathcal {G}}\) as the parametrization of a 3-dimensional hypersurface in \(L^{2}(\varOmega )\). We discretize \(\varOmega \) using P1 triangular Finite Elements with mesh stepsize \(h=0.02\), resulting in the high-fidelity space \(V_{h}\cong {\mathbb {R}}^{13577}\). To learn the discretized operator \({\mathcal {G}}_{h}\), we employ the following MINN architecture

where the supports are defined according to the Euclidean distance. The corresponding dense counterpart, which serves as a benchmark, is obtained by removing the sparsity constraints (equivalently, by letting the supports go to infinity). We train the networks on 50 samples and for a total of 50 epochs.

3.1.2 Learning a Local Nonlinear Operator

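As a second experiment, we learn a nonlinear operator that is local with respect to the input. Let \(\varOmega \) be as in Fig. 5b. We consider the infinitesimal area operator \({\mathcal {G}}: H^{1}(\varOmega )\rightarrow L^{2}(\varOmega )\),

\[{\mathcal {G}}: u\rightarrow \sqrt{1+|\nabla u|^{2}}.\]

Note in fact that, if we associate to each \(u\in H^{1}(\varOmega )\) the Cartesian surface

\[S_{u}:=\{({\textbf{x}},u({\textbf{x}}))\;|\;{\textbf{x}}\in \varOmega \}\subset {\mathbb {R}}^{3},\]

then \({\mathcal {G}}u\) yields a measure of the area that is locally spanned by that surface, in the sense that

\[\int _{S_{u}}\phi \,ds=\int _{\varOmega }\phi ({\textbf{x}},u({\textbf{x}}))\,({\mathcal {G}}u)({\textbf{x}})\,d{\textbf{x}}\]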

for any continuous map \(\phi :S_{u}\rightarrow {\mathbb {R}}.\) Over the input space \(\varTheta :=H^{1}(\varOmega )\) we consider the probability distribution \({\mathbb {P}}\) induced by a Gaussian process with mean zero and covariance kernel

We discretize \(\varOmega \) using a triangular mesh of stepsize \(h=0.045\) and P1 Finite Elements, which results in a total of \(N_{h}= 11266\) vertices. To sample from the Gaussian process, we truncate its Karhunen–Loève expansion at \(k=100\), as that suffices to capture the complexity of the input field (actually, we mention that 40 modes would be enough to explain 95% of the field variance: here, we set \(k=100\) so as not to oversimplify). Conversely, the output of the operator is computed numerically by exploiting the high-fidelity mesh as a reference.
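The choice of a truncation index can be phrased as a simple rule on the eigenvalues of the covariance operator: keep the smallest number of modes whose eigenvalues add up to a prescribed fraction of the total variance. The Python sketch below illustrates this rule only; the eigenvalues are synthetic (a geometric sequence chosen for illustration, not those of the kernel used in the experiments) and the function name `truncation_index` is hypothetical.

```python
def truncation_index(eigenvalues, target_fraction, total_variance=None):
    """Smallest k such that the k largest eigenvalues explain `target_fraction`
    of the variance. If `total_variance` is None, the sum of the supplied
    eigenvalues is used as the total."""
    eigs = sorted(eigenvalues, reverse=True)
    total = sum(eigs) if total_variance is None else total_variance
    cumulative = 0.0
    for k, lam in enumerate(eigs, start=1):
        cumulative += lam
        if cumulative >= target_fraction * total:
            return k
    return len(eigs)  # target not reached: keep every available mode

# Synthetic spectrum (assumption): lambda_j = 0.5 ** j, j = 0, ..., 19.
eigs = [0.5 ** j for j in range(20)]
k95 = truncation_index(eigs, 0.95)
k99 = truncation_index(eigs, 0.99)
print(k95, k99)
```

Passing `total_variance` explicitly is useful when the total is known in closed form, for example as the integral of the pointwise variance over the domain, while only the leading eigenvalues have been computed.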

To learn \({\mathcal {G}}_{h}\) we use the MINN architecture below,

where the supports are intended with respect to the Euclidean metric. We train our model over 500 snapshots and for a total of 50 epochs.

3.1.3 Learning a Nonlocal Nonlinear Operator

Since MINNs are based on local operations, it is of interest to assess whether they can also learn nonlocal operators. To this end, we consider the problem of learning the Hardy-Littlewood Maximal Operator \({\mathcal {G}}: L^{2}(\varOmega )\rightarrow L^{2}(\varOmega )\),

which is known to be a continuous nonlinear operator from \(L^{2}(\varOmega )\) onto itself [42]. Here, we let \(\varOmega :=B({\textbf{0}},1)\subset {\mathbb {R}}^{2}\) be the unit circle. Over the input space \(\varTheta :=L^{2}(\varOmega )\) we consider the probability distribution \({\mathbb {P}}\) induced by a Gaussian process with mean zero and covariance kernel

As a high-fidelity reference, we consider a discretization of \(\varOmega \) via P1 triangular Finite Elements of stepsize \(h=0.033\), resulting in a state space \(V_{h}\) with \(N_{h}=7253\) degrees of freedom. As for the previous experiment, we sample from \({\mathbb {P}}\) by considering a truncated Karhunen–Loève expansion of the Gaussian process (100 modes). Conversely, the true output \(u\rightarrow {\mathcal {G}}_{h}(u)\) is computed approximately by replacing the supremum over r with a maximum across 50 equally spaced radii in [h, 2]. To learn \({\mathcal {G}}_{h}\) we use a MINN of depth 2 with a dense layer in the middle,

The idea is that nonlocality can still be enforced through the use of fully connected blocks, but these are only inserted at the lower fidelity levels to reduce the computational cost. We train the architectures over 500 samples and for a total of 50 epochs. Also in this case, we build the mesh-informed layers upon the Euclidean distance.
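The approximation of the maximal operator described above, where the supremum over \(r\) is replaced by a maximum across 50 equally spaced radii in \([h,2]\), can be sketched as follows in Python. This is only an illustration under stated assumptions: the domain is replaced by a weighted point cloud, averages are taken over the portion of each ball that lies inside the sampled domain, and quadrature is reduced to a weighted sum of nodal values; none of these choices is confirmed by the text. The names `maximal_operator` and `equispaced` are hypothetical.

```python
import math

def equispaced(a, b, n):
    """n equally spaced values in [a, b], endpoints included."""
    return [a + (b - a) * k / (n - 1) for k in range(n)]

def maximal_operator(values, nodes, weights, radii):
    """Discrete maximal function: at each node, the largest weighted average
    of |f| over the balls B(x, r), for r ranging in `radii`."""
    out = []
    for x in nodes:
        dists = [math.dist(x, y) for y in nodes]
        best = 0.0
        for r in radii:
            den = sum(w for w, d in zip(weights, dists) if d <= r)
            if den > 0:
                num = sum(w * abs(f)
                          for f, w, d in zip(values, weights, dists) if d <= r)
                best = max(best, num / den)
        out.append(best)
    return out

# Toy setting (hypothetical): 21 nodes on the segment [0, 1], uniform weights.
h = 0.05
nodes = [(k * h,) for k in range(21)]
weights = [h] * len(nodes)
radii = equispaced(h, 2.0, 50)

f = [math.sin(2 * math.pi * x[0]) for x in nodes]
Mf = maximal_operator(f, nodes, weights, radii)
print(max(Mf) <= max(abs(v) for v in f) + 1e-12)  # averages never exceed max |f|
```

Each output value depends on input values over balls of large radius, which is what makes the operator nonlocal and motivates the dense block in the architecture.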

3.1.4 Learning the Solution Operator of a Nonlinear PDE

For our final experiment, we consider the case of a parameter dependent PDE, which is a framework of particular interest in the literature of Reduced Order Modeling. In fact, learning the solution operator of a PDE model by means of neural networks allows one to replace the original numerical solver with a much cheaper and more efficient surrogate, which enables expensive multi-query tasks such as PDE constrained optimal control, Uncertainty Quantification or Bayesian Inversion.

Here, we consider a steady version of the porous media equation, defined as follows

The PDE is understood in the domain \(\varOmega \) defined in Fig. 5d, and it is complemented with homogeneous Neumann boundary conditions. We define \({\mathcal {G}}\) to be the data-to-solution operator that maps \(f\rightarrow u\). This time, we endow the input space with the push-forward distribution \(\#{\mathbb {P}}\) induced by the square map \(f\rightarrow f^{2}\), where \({\mathbb {P}}\) is the probability distribution associated to a Gaussian random field with mean zero and covariance kernel

To sample from the latter distribution we exploit a truncated Karhunen–Loève expansion of the random field. This time, we set the truncation index to \(k=20\), as that is sufficient to capture 99.71% of the variance of the Gaussian random field. Indeed, the total variance of the latter is

while, by direct computation, the first 20 eigenvalues of the covariance operator sum up to 2.49458. For the high-fidelity discretization, we consider a mesh of stepsize \(h=0.03\) and P1 Finite Elements, resulting in \(N_{h}=5987\). Finally, we employ the MINN architecture below,

where the supports are defined according to the Euclidean distance. We train our model over 500 snapshots and for a total of 100 epochs. Note that, as in our third experiment, we employ a dense block at the center of the architecture. This is because the solution operator to a boundary value problem is typically nonlocal (consider, for instance, the Green formula for the Poisson equation).

3.2 Numerical Results

Table 1 reports the numerical results obtained across the four experiments. In general, MINNs perform better than their dense counterparts, with relative errors that are always below 5%. As the operator to be learned becomes more and more involved, fully connected DNNs begin to struggle, eventually reaching an error of 15% in the PDE example. In contrast, MINNs are able to keep up and maintain their performance. This is also due to the fact that, having fewer parameters, MINNs are unlikely to overfit; instead, they can generalize well even in poor data regimes (cf. last column of Table 1). Consider for instance the first experiment, which counted as few as 50 training samples. There, the dense model returns an error of 4.78%, of which 4.07% is due to the generalization gap. This means that the DNN model actually surpassed the MINN performance over the training set, as their training errors are respectively of \(0.71\%\) and \(1.06\%\). However, the smaller generalization gap allows the sparse architecture to perform better over unseen inputs.

Figures 6, 7, 8 and 9 report some examples of approximation on unseen input values. There, we note that dense models tend to have noisy outputs (Figs. 7 and 9) and often miscalculate the range of values spanned by the output (Figs. 6 and 8). Conversely, MINNs always manage to capture the main features present in the actual ground truth. This goes to show that MINNs are built upon an effective pruning strategy, thanks to which they are able to overcome the limitations entailed by dense architectures. This phenomenon can be further appreciated in the plot reported in Fig. 10. The latter refers to the nonlinear PDE example, Eq. (7), where our MINN architecture was of the form

with \(\delta =0.4\). Figure 10 shows how the choice of the support size, \(\delta \), affects the performance of the MINN model. Since, here, \(\varOmega \) has a diameter of 2, for \(\delta =4\) the architecture is formally equivalent to a dense DNN. As the support decreases, the first and the last layer become sparser, and, in turn, the architecture starts to generalize more, ultimately reducing the test error by nearly 11%. However, if the support is too small, e.g. \(\delta \le 0.125\), this may have a negative effect on the expressivity of the architecture, which now has too few degrees of freedom to properly approximate the operator of interest. In general, the best support size is to be found in the middle, \(0\le \delta ^{*}\le \text {diam}(\varOmega )\): of note, in this case, our original choice happens to be nearly optimal.

Fig. 6 Comparison of DNNs and MINNs when learning a low-dimensional manifold \(\varvec{\mu }\rightarrow u_{\varvec{\mu }}\in L^{2}(\varOmega )\), cf. Sect. 3.1. The reported results correspond to the approximations obtained on an unseen input value \(\varvec{\mu }^{*}=[0.42, 0.04, 1.45].\)

Fig. 7 Comparison of DNNs and MINNs when learning the local operator \(u\rightarrow \sqrt{1+|\nabla u|^{2}}\), cf. Sect. 3.1. The reported results correspond to the approximations obtained for an input instance outside of the training set

Fig. 8 Comparison of DNNs and MINNs when learning the Hardy-Littlewood Maximal Operator, cf. Sect. 3.1. The pictures correspond to the results obtained for an unseen input instance

Fig. 9 Comparison of DNNs and MINNs when learning the solution operator \(f\rightarrow u\) of a nonlinear PDE, cf. Sect. 3.1. The reported results correspond to the approximations obtained for an input instance f outside of the training set

Fig. 10 Relationship between model accuracy and support size in mesh-informed layers. Case study: nonlinear PDE, see Eq. (7); MINN architecture: see Eq. (9). Red dots correspond to different choices for the support of the mesh-informed layers; cubic splines are used to draw the general trend (red dashed line). In blue, our original choice for the architecture, see Eq. (8). Errors are computed according to the \(L^{2}\)-norm. The x-axis is reported in logarithmic scale (Color figure online)

Aside from the improvement in performance, Mesh-Informed Neural Networks also allow for a significant reduction in the computational cost (cf. Table 2). In general, MINNs are ten to a hundred times lighter than fully connected DNNs. While this is not particularly relevant once the architecture is trained (the heaviest DNN weighs as little as 124 Megabytes), it makes a huge difference during the training phase. In fact, additional resources are required to optimize a DNN model, as one needs to keep track of all the operations and gradients in order to perform the so-called backpropagation step. This poses a significant limitation to the use of dense architectures, as the entailed computational cost can easily exceed the capacity of modern GPUs. For instance, in our experiments, fully connected DNNs required more than 2 GB of memory during training, while, depending on the operator to be learned, 10 to 250 MB were sufficient for MINNs. Clearly, one could also alleviate the computational burden by exploiting cheaper optimization routines, such as first order optimizers and batching strategies; however, this typically prevents the network from actually reaching the global minimum of the loss function. In fact, we recall that the optimization of a DNN architecture is, in general, a non-convex and ill-posed problem. Of note, despite being 10 to 100 times lighter, MINNs are only 2 to 4 times faster during training. We believe that these results can be improved, possibly by optimizing the code used to implement MINNs.

4 Numerical Experiments: Handling General Nonconvex Domains

In the previous Section, when building our mesh-informed architectures, we always referred to the Euclidean distance. This is because, for our analysis, we considered spatial domains that were still quite simple in terms of shape and topology. In this sense, while most of those domains were nonconvex, the Euclidean metric was still satisfactory for quantifying distances.

In this Section, we would like to investigate this further, by testing MINNs over geometries that are far more complicated. We shall provide two examples: one concerning the flow of a Newtonian fluid through a system of channels, and one describing a reaction-diffusion equation solved on a 2D section of the human brain. In both cases, we shall not provide comparisons with other Deep Learning techniques: the only purpose for this Section is to assess the ability of MINNs in handling complicated geometries.

4.1 Stokes Flow in a System of Channels

As a first example, we consider the solution operator to the following parametrized (stationary) Stokes equation,

where p and \({\textbf{u}}\) are respectively the fluid pressure and velocity, while \(\varOmega \subset {\mathbb {R}}^{2}\) is the domain depicted in Fig. 11. The PDE depends on five scalar parameters. The first one, \(y\in [0.1, 0.8]\) is used to parametrize the location of the inflow condition, which is given as

In other words, \({\textbf{f}}_{y}\) describes a jet flow centered at y and directed towards the right. Vice versa, the remaining four parameters, \(a_{1},\dots ,a_{4}\in [0,1]\), are used to model the presence of possible leaks at the corresponding regions \(\varGamma _{1},\dots ,\varGamma _{4}\). In particular, for each \(i=1,\dots ,4\),

where \({\textbf{n}}\) is the external normal, while the \(\eta _{i}\)’s are suitable affine transformations from \({\mathbb {R}}\rightarrow {\mathbb {R}}\) such that \(\eta _{i}(\{x_{1}\;|\;{\textbf{x}}\in \varGamma _{i}\})=[0,1].\) Our output of interest, instead, is the velocity vector field \({\textbf{u}}:\varOmega \rightarrow {\mathbb {R}}^{2}\), meaning that the operator under study is

Fig. 11 Spatial domain for the Stokes flow example, Sect. 4.1

As high-fidelity reference, we consider a discretized setting where the domain \(\varOmega \) is endowed with a triangular mesh of stepsize \(h=0.08,\) the pressure p is sought in the Finite Element space of piecewise linear polynomials, \(Q_{h}=X_{h}^{1}\), while the velocity \({\textbf{u}}\) is found in the space \(V_{h}\) of P1-Bubble vector elements [53], i.e. \(X_{h}^{1}\times X_{h}^{1} \subset V_{h}\subset X_{h}^{3}\times X_{h}^{3}\). This is done in order to ensure the numerical stability of classical Finite Element schemes associated to (10). Then, our purpose is to approximate the operator

with a suitable MINN surrogate, which we build as

Note that, even for the hidden state at the third/fourth layer, we consider a space comprised of Bubble elements (however, this is not mandatory). Here, in order to handle the fact that \(V_{h}\) consists of 2-dimensional vector fields, each Lagrangian node is counted twice during the construction of the mesh-informed layers. As a consequence, the mesh-informed layers will allow information to travel locally in space but also interact in between dimensions.
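The idea of counting each Lagrangian node twice can be sketched in a few lines of Python. The example assumes, for illustration only, that the degrees of freedom are ordered by interleaving the two components at each node and that supports are defined through the Euclidean distance; the actual ordering and the treatment of the bubble degrees of freedom are not specified here, and the function names are hypothetical.

```python
import math

def support_mask(out_nodes, in_nodes, radius):
    """mask[i][j] = 1 if input location j lies within `radius` of output location i."""
    return [[1 if math.dist(xo, xi) <= radius else 0 for xi in in_nodes]
            for xo in out_nodes]

def vector_dof_locations(nodes, n_components=2):
    """One location per degree of freedom: each node is repeated once per
    component (interleaved ordering u1(x0), u2(x0), u1(x1), ... is assumed)."""
    return [x for x in nodes for _ in range(n_components)]

# Two nodes that are too far apart to interact for radius = 0.5.
nodes = [(0.0, 0.0), (1.0, 0.0)]
dofs = vector_dof_locations(nodes)
mask = support_mask(dofs, dofs, radius=0.5)
for row in mask:
    print(row)
```

The resulting mask has full \(2\times 2\) blocks on the diagonal: the two components at the same node are always connected, while distant nodes remain decoupled.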

As usual, we employ the 0.1-leakyReLU nonlinearity at the internal layers, while we do not use any activation at output. We train our architecture over 450 randomly sampled snapshots, and for a total of 50 epochs. Following the same criteria as in Sect. 3, we train the network by optimizing the average squared error with respect to the \(L^{2}\)-norm, cf. Eq. (4), without using batching strategies and by relying on the L-BFGS optimizer. This time, however, in order to account for the complicated shape of the spatial domain \(\varOmega \), we impose the sparsity constraints in the mesh-informed layers according to the geodesic distance. We compute the latter as in Sect. 2.3, where we exploit a coarse mesh of stepsize \(h^{*}=0.2\) to approximate distances.

After training, our MINN surrogate reported an average \(L^{2}\)-error of 5.39% when tested over fifty new parameter instances, a performance that we consider to be satisfactory. In particular, as can be further appreciated from Fig. 12, the proposed MINN architecture succeeded in learning the main characteristics of the operator under study. Of note, we mention that, in this case, implementing the dense counterpart of architecture (11), as we did back in Sect. 3, is computationally prohibitive. In fact, the latter would have \(\approx 300\cdot 10^{6}\) degrees of freedom, which, for instance, our GPU cannot handle.

Fig. 12 Ground truth vs MINN approximation for the Stokes flow example, Sect. 4.1. The solutions reported refer to two different configurations of the parameters (not seen during training), respectively on the left/right

4.2 Brain Damage Recovery

As a second example we consider a problem concerning a nonlinear diffusion reaction of Fisher-Kolmogorov–Petrovsky–Piskunov (F-KPP) type [16] in a two-dimensional domain representing a vertical section of a human brain across the sagittal plane. Specifically, we consider the following time-dependent PDE

In the literature, researchers have used the F-KPP equation to model a multitude of biological phenomena, including cell proliferation and wound healing, see e.g. [26, 48]. In this sense, we can think of (12) as an equation that models the recovery of a damaged human brain, even though much more sophisticated models would be required to properly describe such a phenomenon [46].

We thus interpret the solution to Eq. (12), \(u:\varOmega \times [0,+\infty )\rightarrow [0,1]\), as a map that describes the level of healthiness of the brain at a given location at a given time (0 = highly damaged, 1 = full health). Then, the dynamics induced by the F-KPP equation resembles that of a healing process: in fact, for any initial profile \(\varphi :\varOmega \rightarrow [0,1]\), later modeled as a random field, one has \(u({\textbf{x}},t)\rightarrow 1\) for \(t\rightarrow +\infty \), where the speed of such convergence depends both on \(\varphi \) and on the point \({\textbf{x}}\). In light of this, we introduce an additional map, \(\tau :\varOmega \rightarrow [0,+\infty )\), which we define as

In other words, \(\tau ({\textbf{x}})\) corresponds to the amount of time required by the healing process in order to achieve a suitable recovery at the point \({\textbf{x}}\in \varOmega \). Our objective is to learn the operator

which maps any given initial condition to the corresponding time-to-recovery map. To tackle this problem, we first discretize \(\varOmega \) through a triangular mesh of stepsize \(h=0.0213\), over which we define the state space of continuous piecewise linear Finite Elements, \(V_{h}\), with \(\text {dim}(V_{h})=2414\). Then, for any fixed initial condition \(\varphi \), we solve (12) numerically by employing the Finite Element method in space and the backward Euler scheme in time (\(\varDelta t=10^{-4}\)). In particular, in order to compute an approximation of \(\tau \) at the mesh vertices, we evolve the PDE up until the first time at which all nodal values of the solution are above 0.9. In this way, for each \(\varphi \in V_{h}\) we are able to compute a suitable \(\tau \in V_{h}\), with \(\tau \approx {\mathcal {G}}(\varphi )\). Precisely, in order to guarantee that \(\varphi \) always takes values in [0,1], and that its realizations have an underlying spatial correlation coherent with the geometry of \(\varOmega \), we cast our learning problem in a probabilistic setting where we endow the input space with the push-forward measure \(\#{\mathbb {P}}\) induced by the map

where \({\mathbb {P}}\) is the probability law of a centered Gaussian random field g defined over \(\varOmega \) with covariance kernel

being the geodesic distance across \(\varOmega \).
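The computation of the time-to-recovery map described earlier in this subsection, where the PDE is evolved until all nodal values of the solution are above 0.9, can be sketched as a post-processing step on the time snapshots. The Python example below assumes that \(\tau ({\textbf{x}})\) is the first time at which \(u({\textbf{x}},t)\) reaches the threshold 0.9, which is an interpretation of the text rather than the stated definition. To keep the sketch self-contained, the snapshots are generated with a closed-form logistic growth at each node, without diffusion, instead of a Finite Element solver; the function names and data are hypothetical.

```python
import math

def time_to_recovery(snapshots, dt, threshold=0.9):
    """snapshots[k][i] is the nodal value at node i and time k * dt.
    Returns, for each node, the first sampled time at which the value
    reaches `threshold` (None if it never does)."""
    n_nodes = len(snapshots[0])
    tau = [None] * n_nodes
    for k, u in enumerate(snapshots):
        for i, value in enumerate(u):
            if tau[i] is None and value >= threshold:
                tau[i] = k * dt
        if all(t is not None for t in tau):
            break  # every node has recovered: no need to look further
    return tau

def logistic(u0, t):
    """Closed-form solution of u' = u (1 - u) with u(0) = u0 (stand-in dynamics)."""
    return u0 * math.exp(t) / (1.0 - u0 + u0 * math.exp(t))

# Toy data (assumption): three nodes with different initial health levels.
dt = 0.01
initial = [0.95, 0.5, 0.1]
snapshots = [[logistic(u0, k * dt) for u0 in initial] for k in range(600)]

tau = time_to_recovery(snapshots, dt)
print(tau)  # lower initial health leads to a longer recovery time
```

In the actual problem, diffusion couples neighbouring nodes, so the recovery time at a point also depends on the state of its surroundings and not only on its own initial value.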


As model surrogate, we consider the MINN architecture reported below,

which we train over 900 random snapshots for a total of 300 epochs. As in our previous test cases, we use the 0.1-leakyReLU activation at the internal layers and optimize the weights by minimizing the mean squared \(L^{2}\)-error; to do so, we rely on the L-BFGS optimizer, with default learning rate and no mini-batching. Note that, as in Sect. 4.1, the supports of the mesh-informed layers are computed following the geodesic distance (stepsize of the auxiliary coarse mesh: \(h^{*}=0.107\)). However, unlike the previous test case, we only employ mesh-informed layers. This is because, intuitively, we expect the phenomenon under study to be mostly local in nature.

After training, our architecture reported an average \(L^{2}\)-error of 7.40% (computed on 100 randomly sampled unseen instances). Figure 13 shows the initial condition of the brain for a given realization of the random field \(\varphi \), together with its high-fidelity approximation for \(\tau \) and the corresponding MINN prediction. Once again, we see a good agreement between the actual ground truth and the neural network output. For instance, the proposed MINN model succeeds in recognizing a fundamental feature of the healing process, that is: despite reporting an equivalent damage, two different regions of the domain may require completely different times for their recovery; in particular, those regions that are more isolated will take longer to heal.

Initial condition (left), ground truth of the time-to-recovery map (center) and corresponding MINN approximation (right) for an unseen instance of the brain damage recovery example, Sect. 4.2. Note: a different colorbar is used for the initial condition and the time-to-recovery map, respectively (Color figure online)

5 Numerical Experiments: Comparison with DeepONets and Fourier Neural Operators

Our purpose for the current Section is to compare the performances of MINN architectures with those of two other popular Deep Learning approaches to operator learning, namely DeepONets and Fourier Neural Operators (FNO). To start with, we compare the three methodologies on a benchmark case study, where the performances of DeepONets and FNO have already been reported [41]. We then move on to consider two additional problems which, despite their simplicity, we find to be of remarkable interest. These concern a dynamical system with chaotic trajectories, and an advection-dominated problem, two notoriously challenging scenarios when it comes to surrogate and reduced order modeling. Here, we shall exploit these problems to showcase the possible advantages that MINNs may offer with respect to the state-of-the-art.

For the sake of simplicity, we shall restrict our analysis to either 1D domains or 2D squares, which we always discretize through uniform grids. This is to ensure a fair comparison between MINNs and other approaches, such as FNOs, whose generalization to more complex geometries is still under development (see, e.g., the recently proposed Laplace Neural Operators, [7, 9]). We report our results in the subsections below. Before that, however, it may be worth recalling the fundamental ideas behind DeepONets and FNOs.

Let \({\mathcal {G}}_{h}:\varTheta \rightarrow V_{h}\) be our operator of interest, where \(\varTheta \) is some input space, while \(V_{h}\cong {\mathbb {R}}^{N_{h}}\) is a given Finite Element space of dimension \(N_{h}\), here consisting, for simplicity, of piecewise linear polynomials. As we mentioned in Sect. 2.5.2, DeepONets are grounded on a representation of the form

where \(\varPsi : \varTheta \rightarrow {\mathbb {R}}^{m}\) and \(\phi : \varOmega \rightarrow {\mathbb {R}}^{m}\) are the branch and the trunk nets, respectively. Let now \(\{{\textbf{x}}_{j}\}_{j=1}^{N_{h}}\) be the nodes of the mesh at output, and let \({\textbf{V}}\in {\mathbb {R}}^{N_{h}\times m}\) be the matrix

where \(\phi _{i}({\textbf{x}})\) denotes the ith component of \(\phi ({\textbf{x}})\). We note that the map

formally returns the DeepONet approximation across the overall output mesh. In particular, this opens the possibility of directly replacing the trunk net with a suitable projection matrix \({\textbf{V}}\). As a matter of fact, this is the strategy proposed by Lu et al. in [41], where the authors exploit Proper Orthogonal Decomposition (POD) [52] to compute the projection matrix \({\textbf{V}}\) in an empirically optimal way. This technique, which the authors call POD-DeepONet, is usually better performing than the vanilla implementation of DeepONet, but it requires fixing the mesh discretization at output. Since the latter condition is always true within our framework, we shall restrict our attention to POD-DeepONet for our benchmark analysis.
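To make the role of the projection matrix concrete, the following sketch builds a POD basis from a snapshot matrix via the singular value decomposition and expands a coefficient vector on it. It is only an illustration under stated assumptions: the helper names `pod_basis` and `pod_deeponet_output` are hypothetical, the data are synthetic, the branch net is replaced by an orthogonal projection, and any mean-centering of the snapshots is omitted.

```python
import numpy as np

def pod_basis(snapshots, m):
    """Return the first m POD modes of a snapshot matrix.

    snapshots: array of shape (N_h, N_train), one nodal vector per column.
    The modes are the leading left singular vectors, stored as the
    columns of V (shape (N_h, m)); the singular values are returned too.
    """
    U, s, _ = np.linalg.svd(snapshots, full_matrices=False)
    return U[:, :m], s

def pod_deeponet_output(V, branch_coeffs):
    """Expand branch-net coefficients (shape (m,)) on the fixed basis V."""
    return V @ branch_coeffs

rng = np.random.default_rng(0)
# Synthetic rank-3 snapshots (hypothetical data): N_h = 50, N_train = 20.
S = rng.standard_normal((50, 3)) @ rng.standard_normal((3, 20))
V, s = pod_basis(S, m=3)

# Stand-in for the branch net output: the orthogonal projection
# coefficients, i.e. the best a trained branch net could achieve.
u = S[:, 0]
coeffs = V.T @ u
u_rec = pod_deeponet_output(V, coeffs)
```

Since the synthetic snapshots have rank 3, three modes reproduce them exactly; with real data, the decay of the singular values `s` indicates how many modes are needed.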

As for FNOs, the fundamental building block of these architectures is the Fourier layer. Similarly to convolutional layers, Fourier layers are defined to accept inputs with multiple channels or features. In our setting, for a given feature dimension c, a Fourier layer is a map \(L:(V_{h})^{c}\rightarrow (V_{h})^{c}\) of the form

where

- \({\mathcal {F}}:(V_{h})^{c}\rightarrow {\mathbb {R}}^{m\times c}\) is the truncated Fourier transform, which takes the c signals at input, computes the Fourier transform of each of them and only keeps the first m coefficients;

- \({\mathcal {F}}^{-1}:{\mathbb {R}}^{m\times c}\rightarrow (V_{h})^{c}\) is the inverse Fourier transform, defined for each channel separately;

- \({\textbf{R}}=(r_{i,j,k})_{i,j,k}\in {\mathbb {R}}^{m\times m\times c}\) is a learnable tensor that performs a linear transformation in the Fourier space. For any given input \({\textbf{A}}=(a_{j,k})_{j,k}\in {\mathbb {R}}^{m\times c}\), the action of the latter is given by

$$\begin{aligned} {\textbf{R}}\cdot {\textbf{A}}:=\left( \sum _{j=1}^{m}r_{i,j,k}a_{j,k}\right) _{i,k}\in {\mathbb {R}}^{m\times c}; \end{aligned}$$

- \(W:(V_{h})^{c}\rightarrow (V_{h})^{c}\) is a linear map whose action is given by

$$\begin{aligned} (W{\varvec{v}})({\textbf{x}}):={\textbf{W}}\cdot {\varvec{v}}({\textbf{x}}), \end{aligned}$$

where \({\textbf{W}}\in {\mathbb {R}}^{c\times c}\) is a learnable weight matrix;

- \(\rho \) is the activation function.
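The ingredients listed above can be assembled as in the following sketch for a 1D uniform grid. We assume here the usual combination \(\rho (W{\varvec{v}}+{\mathcal {F}}^{-1}({\textbf{R}}\cdot {\mathcal {F}}{\varvec{v}}))\); the function names, the use of NumPy's real FFT (whose coefficients are complex), and the zero-padding of the discarded modes are our own illustrative choices and need not match any reference implementation.

```python
import numpy as np

def leaky_relu(x, alpha=0.1):
    return np.where(x > 0, x, alpha * x)

def fourier_layer(v, R, W, alpha=0.1):
    """One Fourier layer on a 1D uniform grid (illustrative sketch).

    v: (n, c) array, c channels sampled at n grid points
    R: (m, m, c) tensor acting on the first m Fourier coefficients
    W: (c, c) matrix applied point-wise across channels
    Requires m <= n // 2 + 1.
    """
    n, c = v.shape
    m = R.shape[0]
    A = np.fft.rfft(v, axis=0)[:m]                # truncated transform, (m, c)
    RA = np.einsum("ijk,jk->ik", R, A)            # (R . A)_{i,k} = sum_j r_{ijk} a_{jk}
    padded = np.zeros((n // 2 + 1, c), dtype=complex)
    padded[:m] = RA                               # discarded modes stay at zero
    spectral = np.fft.irfft(padded, n=n, axis=0)  # back to the grid, (n, c)
    local = v @ W.T                               # (W v)(x) = W . v(x)
    return leaky_relu(local + spectral, alpha)

# Sanity check on a band-limited signal: with R acting as the identity on
# each channel and W = 0, the layer reduces to the activation applied to v.
n, c, m = 64, 4, 12
x = np.arange(n) / n
v = np.tile(np.cos(2 * np.pi * 3 * x)[:, None], (1, c))
R_id = np.stack([np.eye(m)] * c, axis=-1)         # shape (m, m, c)
W_zero = np.zeros((c, c))
out = fourier_layer(v, R_id, W_zero)
```

The check at the end only confirms that the truncation and the inverse transform are mutually consistent; in a trained model both `R` and `W` would be learned.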

In practice, when approximating a given operator \({\mathcal {G}}_{h}:\varTheta \rightarrow V_{h}\), the implementation of a complete FNO architecture is often of the form

Here, \(\varPhi \) is a preliminary block, possibly consisting of dense layers, that maps the input from \(\varTheta \) to \(V_{h}\). The FNO then exploits a lifting layer, E, to increase the number of channels, from \(V_{h}\) to \((V_{h})^{c}\). Such lifting is typically performed by composing the signal at input with a shallow (dense) network, \(e:{\mathbb {R}}\rightarrow {\mathbb {R}}^{c}\), so that \(E:v({\textbf{x}})\rightarrow e(v({\textbf{x}}))\). The model then implements l Fourier layers, which constitute the core of the FNO architecture. Finally, a projection layer, P, is used to map the output back from \((V_{h})^{c}\) to \(V_{h}\). As for the lifting operator, this is achieved through the introduction of a suitable projection network, \(p:{\mathbb {R}}^{c}\rightarrow {\mathbb {R}}\), acting node-wise, i.e. \(P:{\varvec{v}}({\textbf{x}})\rightarrow p({\varvec{v}}({\textbf{x}}))\).
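The node-wise nature of the lifting and projection steps can be illustrated as follows. The sketch applies one shallow network to each nodal value to obtain c channels, and a second one to bring the c channels back to a single value per node. The weights are random and untrained, and the hidden width, the helper name `shallow_net` and the test signal are hypothetical choices made only for the example.

```python
import numpy as np

def shallow_net(x, W1, b1, W2, b2, alpha=0.1):
    """One-hidden-layer dense network applied to the last axis of x."""
    h = x @ W1 + b1
    h = np.where(h > 0, h, alpha * h)   # 0.1-leakyReLU
    return h @ W2 + b2

rng = np.random.default_rng(0)
n, c, width = 100, 32, 16               # grid points, channels, hidden width

# Lifting network (1 -> c) and projection network (c -> 1).
e_params = (rng.standard_normal((1, width)), np.zeros(width),
            rng.standard_normal((width, c)), np.zeros(c))
p_params = (rng.standard_normal((c, width)), np.zeros(width),
            rng.standard_normal((width, 1)), np.zeros(1))

v = np.sin(np.linspace(0.0, 2.0 * np.pi, n))        # nodal values of a signal
lifted = shallow_net(v[:, None], *e_params)         # (n, c): each node lifted separately
projected = shallow_net(lifted, *p_params)[:, 0]    # (n,): back to one channel

# Node-wise action: reordering the nodes reorders the output in the same way.
lifted_reversed = shallow_net(v[::-1, None], *e_params)
```

Because both maps act on one node at a time, they carry no information about the grid; all spatial coupling is left to the Fourier layers in between.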

5.1 Benchmark Problem: Diffusion Equation with Random Coefficients

To start, we consider a test case where the performances of DeepONets and FNOs have already been reported, see, respectively [41] and [39]. Let \(\varOmega =(0,1)^{2}\). We wish to learn the solution operator \({\mathcal {G}}:L^{\infty }(\varOmega )\rightarrow L^{2}(\varOmega )\) of the boundary value problem below

where \({\mathcal {G}}: a\rightarrow u\). As usual, we cast our learning problem in a probabilistic setting by endowing the input space, \(L^{\infty }(\varOmega )\), with a suitable probability distribution \({\mathbb {P}}\). In short, the latter is built so that a satisfies

meaning that the PDE is characterized by a random permeability field with piecewise constant realizations. For a more detailed description of the construction of such \({\mathbb {P}}\), we refer to [39, 41].

Following the same lines of [41], we discretize the spatial domain with a uniform \(29\times 29\) grid, which we can equivalently think of as a triangular rectangular mesh, \({\mathcal {M}}=\{{\textbf{x}}_{i}\}_{i=1}^{841}\), of uniform stepsize \(h=\sqrt{2}/28\approx 0.05\). Both for training and testing, we refer to the dataset made available by the authors in [41], which consists of 1000 training snapshots and 200 testing instances, respectively. As detailed in [39], the latter were obtained by repeatedly solving Problem (20) via finite-differences. Consequently, for each random realization of the permeability field, we have access to the nodal values \({\textbf{a}}=[a({\textbf{x}})]_{{\textbf{x}}\in {\mathcal {M}}}\in {\mathbb {R}}^{N_{h}}\) of the random field, and the corresponding approximations of the PDE solution, \({\textbf{u}}\approx [u({\textbf{x}})]_{{\textbf{x}}\in {\mathcal {M}}}\in {\mathbb {R}}^{N_{h}}\), where \(N_{h}=841\). In this sense, we can think of the discrete operator as \({\mathcal {G}}_{h}:V_{h}\rightarrow V_{h}\), with \(V_{h}=X_{h}^{1}\), even though it would be more natural to have \(X_{h}^{0}\) as input space to account for the discontinuities in a.

It is also in light of these considerations that we propose to learn the operator with the MINN architecture below,

The idea is that the first two layers should act as a preprocessing of the input. In particular, aside from the dimensionality reduction, they serve the additional purpose of recasting the original signal over the space of P0 Finite Elements, which we find to be better suited for representing permeability fields.

This time, we employ the 0.3-leakyReLU activation for all the layers, including the last one. This allows us to enforce, at least in a relaxed fashion, the fact that any PDE solution to (20) should be nonnegative. We train our model for 300 epochs by minimizing the mean squared \(L^{2}\)-error through the L-BFGS optimizer (no batching, default learning rate), which on our GPU takes only 1 min and 56 s.

After training, our model reports an average \(L^{2}\)-error of 2.78%, which we find to be satisfactory as it compares very well with both DeepONets and FNOs (cf. Table 3). In general, as can be observed from Fig. 14, the proposed MINN architecture reproduces the overall behavior of the PDE solutions fairly well, but it fails to capture some of their local properties. Considering that our model is twice as accurate over the training snapshots as it is over the test data (average relative error: 1.38% vs 2.78%), this might be due to the limited number of training instances.
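For reference, an average relative error of the kind quoted above can be computed from nodal values as in the sketch below. Here we assume that plain Euclidean norms of the nodal vectors are an acceptable proxy for the \(L^{2}\) norms on a uniform grid; an exact Finite Element computation would weight the nodal values through the mass matrix instead. The function name and the toy data are illustrative.

```python
import numpy as np

def mean_relative_l2_error(u_true, u_pred):
    """Average of ||u_true - u_pred|| / ||u_true|| over a batch.

    Both arrays have shape (n_samples, N_h). Euclidean norms of the
    nodal vectors are used as a proxy for the L2 norms (assumption).
    """
    num = np.linalg.norm(u_true - u_pred, axis=1)
    den = np.linalg.norm(u_true, axis=1)
    return float(np.mean(num / den))

# Two toy samples with relative errors 1/5 and 1/2.
u_true = np.array([[3.0, 4.0], [0.0, 2.0]])
u_pred = np.array([[3.0, 3.0], [0.0, 1.0]])
err = mean_relative_l2_error(u_true, u_pred)
```

Evaluating the same function on the training and on the test snapshots gives the two figures whose gap is discussed above.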

Nonetheless, our performances remain comparable with those achieved by the state of the art. Here, DeepONets attain the best accuracy thanks to their direct usage of the POD projection, which is known to be particularly effective for elliptic problems [52]. Indeed, as shown in [41], the same approach yields an average \(L^{2}\)-error of 2.91% if one replaces the POD basis with a classical trunk-net architecture. FNOs, instead, report the worst performance, but their accuracy can be easily increased if one exploits suitable strategies such as output normalization. The latter consists in the construction of a surrogate model of the form

where \({\tilde{\varPhi }}\) is an FNO architecture to be learned, while

are the pointwise average and variance of the solution field, respectively (both to be estimated directly from the training data). Then, this trick allows one to obtain a better FNO surrogate with a relative error of 2.41% [41].
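A minimal sketch of output normalization is given below. The inner model is trained on standardized targets and its predictions are mapped back to the physical scale afterwards. Note that the sketch rescales by the pointwise standard deviation and adds a small `eps` to protect nodes where the solution is constant (e.g. on a Dirichlet boundary); both are assumptions on our part, as are the class name and the synthetic data.

```python
import numpy as np

class OutputNormalizer:
    """Point-wise standardization of solution snapshots (illustrative)."""

    def __init__(self, train_outputs, eps=1e-8):
        # train_outputs: (n_samples, N_h); statistics are taken node by node
        self.mean = train_outputs.mean(axis=0)
        self.std = train_outputs.std(axis=0) + eps   # eps guards constant nodes

    def encode(self, u):
        """Targets seen by the inner model during training."""
        return (u - self.mean) / self.std

    def decode(self, z):
        """Map the inner model's output back to the physical scale."""
        return self.mean + self.std * z

rng = np.random.default_rng(0)
U_train = 5.0 + 2.0 * rng.standard_normal((1000, 841))   # synthetic stand-in data
norm = OutputNormalizer(U_train)
Z = norm.encode(U_train)      # what the inner model would be trained to predict
U_back = norm.decode(Z)       # decoding recovers the original snapshots
```

The statistics are estimated once from the training set and then kept fixed, so that the same `decode` step is applied to predictions on unseen inputs.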

5.2 Dealing with Chaotic Trajectories: The Kuramoto–Sivashinsky Equation

As a second example, we test the three methodologies in the presence of chaotic behaviors, here arising from the Kuramoto–Sivashinsky equation, a time-dependent nonlinear PDE that was first introduced to model thermal instabilities and flame propagation [35]. More precisely, we consider the periodic boundary value problem below,

where \(\ell =T=100\) and \(u_{0}(x):=\pi +\cos (2\pi x/\ell )+0.1\cos (4\pi x/\ell )\) are given, while \(\nu \in [1,3.5]\) is a parameter. We wish to learn the operator \({\mathcal {G}}\) that maps \(\nu \) onto the corresponding PDE solution \(u=u(x,t)\). To this end, it is convenient to define the spacetime domain \(\varOmega :=(0,\ell )\times (0,T)\), which we equip with a uniform grid of dimension \(100\times 100\). As a high-fidelity reference, we then consider a spectral method combined with a modified Crank-Nicolson scheme for time integration (\(\varDelta t = 5\cdot 10^{-4}\); trajectories are later subsampled to fit the uniform grid over \(\varOmega \)). This allows us to define the discrete operator as

where \(\varTheta :=[1,3.5]\) and \(V_{h}:=X^{1}_{h}(\varOmega )\), so that \(\dim (X^{1}_{h})=N_{h}=n_{h}^{2}=10{,}000\). We use the numerical solver to compute a total of \(N=1000\) PDE solutions, \(\{(\nu _{i},u_{h}^{i})\}_{i=1}^{N}\), of which \(N_{\text {train}}=500\) are used for training and \(N_{\text {test}}=500\) for testing.

We train all the models according to the loss function below

which we minimize iteratively, for a total of 500 epochs, using the L-BFGS optimizer. Similarly, in order to emphasize the difference between the space and the time dimension, we evaluate the accuracy of the models in terms of the relative error below,

where norms are intended in the Bochner sense [14]. For the implementation of the three approaches, we proceed as follows:

(i) POD-DeepONet: we exploit the training data to construct a POD basis \({\textbf{V}}\) consisting of \(m=200\) modes, as those should be sufficient for capturing most of the variability in the solution space. We then construct the branch net as a classical DNN from \({\mathbb {R}}\rightarrow {\mathbb {R}}^{m}\), with 3 hidden layers of width 500, each implementing the 0.1-leakyReLU activation;

(ii) FNO: we use a combined architecture with a dense block at the beginning and three Fourier layers at the end. The dense block consists of 2 hidden layers of width 500, and an output layer with \(N_{h}\) neurons, all complemented with the 0.1-leakyReLU activation. Then, the model is followed by three Fourier layers, which, for simplicity, are identical in structure. In particular, following the same rule of thumb proposed by the authors [39], we implement a Fourier block with \(c=32\) features and \(m=12\) Fourier modes per layer. Once again, all layers (except for the last one) use the 0.1-leakyReLU as nonlinearity;

(iii)