Abstract

Getting an efficient neural network can be a very difficult task for engineers and researchers because of the huge number of hyperparameters to tune and their interconnections. To make the tuning step easier and more understandable, this work focuses on what is probably one of the most important levers for improving neural network efficiency: the optimizer. In recent years, a great number of algorithms have been developed, but they need accurate tuning to be efficient. To get rid of this long and experimental step, we look for generic and desirable properties for non-convex optimization. For this purpose, the optimizers are reinterpreted or analyzed as discretizations of continuous dynamical systems. This continuous framework offers many mathematical tools, such as Lyapunov stability, to interpret the sensitivity of the optimizer with respect to the initial guess. By enforcing the discrete decrease of Lyapunov functionals, new robust and efficient optimizers are designed. They also considerably simplify the tuning of hyperparameters (learning rate, momentum, etc.). These Lyapunov-based algorithms outperform several state-of-the-art optimizers on different benchmarks from the literature. Drawing its inspiration from the numerical analysis of PDEs, this paper emphasizes the essential role of some hidden energy/entropy quantities for machine learning tasks.


Data availability

The implementation of the optimizers in C/C++ and the Python (matplotlib) script to generate the graphics can be found on the git repository: https://github.com/bbensaid30/NN_Levenberg_Marquardt/tree/shaman.

Notes

The value 0.01 only serves to skip the iterations in which the increase is negligible; it has no real consequence on the results.

TensorFlow documentation: link

References

Attouch, H., Peypouquet, J.: The rate of convergence of Nesterov's accelerated forward-backward method is actually \(o(k^{-2})\). SIAM J. Optim. (2015). https://doi.org/10.1137/15M1046095

Barakat, A., Bianchi, P.: Convergence and dynamical behavior of the Adam algorithm for nonconvex stochastic optimization. SIAM J. Optim. 31(1), 244–274 (2021). https://doi.org/10.1137/19M1263443

Behery, G., El-Harby, A., El-Bakry, M.: Reorganizing neural network system for two spirals and linear low-density polyethylene copolymer problems. Appl. Comput. Intell. Soft Comput. (2009). https://doi.org/10.1155/2009/721370

Berner, J., Grohs, P., Kutyniok, G., Petersen, P.: The Modern Mathematics of Deep Learning (2021). https://doi.org/10.48550/ARXIV.2105.04026

Bof, N., Carli, R., Schenato, L.: Lyapunov theory for discrete time systems (2018). https://doi.org/10.48550/ARXIV.1809.05289

Bourriaud, A., Loubère, R., Turpault, R.: A priori neural networks versus a posteriori mood loop: a high accurate 1d fv scheme testing bed. J. Sci. Comput. (2020). https://doi.org/10.1007/s10915-020-01282-1

Chalup, S.K., Wiklendt, L.: Variations of the two-spiral task. Connect. Sci. 19(2), 183–199 (2007). https://doi.org/10.1080/09540090701398017

Chartier, P., Hairer, E., Vilmart, G.: Numerical integrators based on modified differential equations. Math. Comput. 76, 1941–1953 (2007). https://doi.org/10.1090/S0025-5718-07-01967-9

Chetaev, N.G.: The Stability of Motion, 2nd rev. edn. Translated from the Russian by Morton Nadler; translation editors A.W. Babister and J. Burlak. Pergamon Press, New York (1961)

Das, H., Reza, N.: On Gröbner basis and their uses in solving system of polynomial equations and graph coloring. J. Math. Stat. 14, 175–182 (2018). https://doi.org/10.3844/jmssp.2018.175.182

Demeure, N.: Compromise between precision and performance in high performance computing. Theses, École Normale supérieure Paris-Saclay (2021). https://tel.archives-ouvertes.fr/tel-03116750

Després, B., Jourdren, H.: Machine learning design of volume of fluid schemes for compressible flows. J. Comput. Phys. 408, 109275 (2020). https://doi.org/10.1016/j.jcp.2020.109275

Glorot, X., Bengio, Y.: Understanding the difficulty of training deep feedforward neural networks. In: AISTATS (2010)

Griffiths, D., Sanz-Serna, J.: On the scope of the method of modified equations. SIAM J. Sci. Stat. Comput. 7, 994–1008 (1986). https://doi.org/10.1137/0907067

Hagan, M., Menhaj, M.: Training feedforward networks with the marquardt algorithm. IEEE Trans. Neural Netw. 5(6), 989–993 (1994). https://doi.org/10.1109/72.329697

Kalkbrener, M.: Solving systems of algebraic equations by using Gröbner bases. In: EUROCAL (1987)

Kelley Pace, R., Barry, R.: Sparse spatial autoregressions. Stat. Probab. Lett. 33(3), 291–297 (1997). https://doi.org/10.1016/S0167-7152(96)00140-X

Khalil, H.K.: Nonlinear Systems, 3rd edn. Prentice-Hall, Upper Saddle River, NJ (2002). https://cds.cern.ch/record/1173048

Kingma, D., Ba, J.: Adam: a method for stochastic optimization. International Conference on Learning Representations (2014). https://doi.org/10.48550/ARXIV.1412.6980

Kluth, G., Humbird, K.D., Spears, B.K., Peterson, J.L., Scott, H.A., Patel, M.V., Koning, J., Marinak, M., Divol, L., Young, C.V.: Deep learning for nlte spectral opacities. Phys. Plasmas 27(5), 052707 (2020). https://doi.org/10.1063/5.0006784

Krizhevsky, A., Hinton, G.: Learning multiple layers of features from tiny images. Tech. Rep. 0, University of Toronto, Toronto, Ontario (2009)

Lamy, C., Dubroca, B., Nicolaï, P., Tikhonchuk, V., Feugeas, J.L.: Modeling of electron nonlocal transport in plasmas using artificial neural networks. Phys. Rev. E 105, 055201 (2022). https://doi.org/10.1103/PhysRevE.105.055201

LaSalle, J.: Stability theory for ordinary differential equations. J. Differen. Equ. 4(1), 57–65 (1968). https://doi.org/10.1016/0022-0396(68)90048-X

Lazard, D.: Solving zero-dimensional algebraic systems. J. Symb. Comput. 13, 117–132 (1992)

Lecun, Y., Bottou, L., Bengio, Y., Haffner, P.: Gradient-based learning applied to document recognition. Proc. IEEE 86, 2278–2324 (1998). https://doi.org/10.1109/5.726791

Li, C., Miao, Y., Maio, Q.: A method to judge the stability of dynamical system. IFAC Proc. Vol. 28(16), 101–105 (1995). https://doi.org/10.1016/S1474-6670(17)45161-5

Liu, L., Jiang, H., He, P., Chen, W., Liu, X., Gao, J., Han, J.: On the variance of the adaptive learning rate and beyond. p. 13. ICLR (2020). https://doi.org/10.48550/ARXIV.1908.03265

Lydia, A., Francis, S.: Adagrad - an optimizer for stochastic gradient descent. Int. J. Inf. Comput. Sci. 6, 566–568 (2019)

Mo, W., Luo, X., Zhong, Y., Jiang, W.: Image recognition using convolutional neural network combined with ensemble learning algorithm. J. Phys: Conf. Ser. 1237, 022026 (2019). https://doi.org/10.1088/1742-6596/1237/2/022026

Nesterov, Y.: A method for solving the convex programming problem with convergence rate \(O(1/k^2)\). Proc. USSR Acad. Sci. 269, 543–547 (1983)

Novello, P., Poëtte, G., Lugato, D., Congedo, P.M.: Explainable Hyperparameters Optimization using Hilbert-Schmidt Independence Criterion (2021). https://hal.archives-ouvertes.fr/hal-03128298. Accepted in Journal of Sci. Comput

Novello, P., Poëtte, G., Lugato, D., Peluchon, S., Congedo, P.M.: Accelerating hypersonic reentry simulations using deep learning-based hybridization (with guarantees) (2022). https://arxiv.org/abs/2209.13434.

Polyak, B.: Some methods of speeding up the convergence of iteration methods. USSR Comput. Math. Math. Phys. 4(5), 1–17 (1964). https://doi.org/10.1016/0041-5553(64)90137-5

Raissi, M., Perdikaris, P., Karniadakis, G.: Physics-informed neural networks: a deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J. Comput. Phys. 378, 686–707 (2019). https://doi.org/10.1016/j.jcp.2018.10.045

Reddi, S.J., Kale, S., Kumar, S.: On the convergence of Adam and beyond. In: International Conference on Learning Representations (2018). https://doi.org/10.48550/ARXIV.1904.09237

Richardson, F., Reynolds, D., Dehak, N.: Deep neural network approaches to speaker and language recognition. IEEE Signal Process. Lett. 22(10), 1671–1675 (2015). https://doi.org/10.1109/LSP.2015.2420092

Ripoll, J.F., Kluth, G., Has, S., Fischer, A., Mougeot, M., Camporeale, E.: Exploring pitch-angle diffusion during high speed streams with neural networks. In: 2022 3rd URSI Atlantic and Asia Pacific Radio Science Meeting (AT-AP-RASC), pp. 1–4 (2022). https://doi.org/10.23919/AT-AP-RASC54737.2022.9814235

Rouche, N., Habets, P., Laloy, M.: Stability Theory by Liapunov’s Direct Method. Applied Mathematical Sciences. 3Island Press (1977). https://books.google.fr/books?id=yErqoQEACAAJ

Shi, B., Du, S., Jordan, M., Su, W.: Understanding the acceleration phenomenon via high-resolution differential equations. Math. Program. (2021). https://doi.org/10.1007/s10107-021-01681-8

Smith, L.: Cyclical learning rates for training neural networks. In: IEEE winter conference on applications of computer vision, pp. 464–472 (2017)

Su, W., Boyd, S., Candès, E.J.: A differential equation for modeling Nesterov's accelerated gradient method: theory and insights. J. Mach. Learn. Res. 17(153), 1–43 (2016)

Tian, D., Liu, Y., Wang, J.: Fuzzy neural network structure identification based on soft competitive learning. Int. J. Hybrid Intell. Syst. 4, 231–242 (2007). https://doi.org/10.3233/HIS-2007-4403

Tieleman, T., Hinton, G., et al.: Lecture 6.5-rmsprop: divide the gradient by a running average of its recent magnitude. COURSERA Neural Netw. Mach. Learn. 4(2), 26–31 (2012)

Wibisono, A., Wilson, A.C., Jordan, M.I.: A variational perspective on accelerated methods in optimization. Proc. Natl. Acad. Sci. 113(47), E7351–E7358 (2016). https://doi.org/10.1073/pnas.1614734113

Wilkinson, J.H.: Modern error analysis. SIAM Rev. 13(4), 548–568 (1971). https://doi.org/10.1137/1013095

Wilson, A.C., Recht, B., Jordan, M.I.: A Lyapunov analysis of accelerated methods in optimization. J. Mach. Learn. Res. 22, 5040–5073 (2021)

Xu, Z., Dai, A.M., Kemp, J., Metz, L.: Learning an adaptive learning rate schedule. NeurIPS, arXiv (2019). https://doi.org/10.48550/ARXIV.1909.09712

You, K., Long, M., Wang, J., Jordan, M.: How does learning rate decay help modern neural networks (2019)

Zeiler, M.: ADADELTA: an adaptive learning rate method (2012). https://doi.org/10.48550/ARXIV.1212.5701

Funding

This work was supported by CEA/CESTA and LRC Anabase.


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

A Analytical benchmarks and their subtleties

All the analytical examples of this section are based on the cost function (7), recalled here:

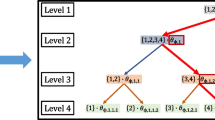

Their general structures are the same, namely \({\mathcal {N}}=(1)\) and \({\mathcal {G}}=(g)\), but they are built with different activation functions. Each new activation function in this section leads to a more difficult learning problem. Here, when Q is said to be a good approximation of H, it means that \(Q(\theta ^*)=H(\theta ^*)\). Otherwise, it means that \( \displaystyle {\lim _{\theta \rightarrow \theta ^*}}\Vert \frac{Q(\theta )}{S(\theta )}\Vert = \infty \). In this appendix, several benchmarks are presented with their corresponding properties, which are summarized in Tables 5, 6, 7, 8 and 9. Recall that Q does not involve any second-order derivatives, see (1), contrary to S, see (2). The following points summarize the subtleties of each new analytical benchmark we introduce:

- Benchmark #1 admits four global minima, and the quasi-Hessian is sufficient to describe the curvature of the surface around them. It is an ideal case.

- Benchmark #2 presents one global minimum and three local minima of different values. In the neighborhood of the global minimum, the quasi-Hessian is a good approximation of the Hessian (this is called the LM hypothesis). However, the curvature at the other minima involves the second-order derivatives of u (the network output). This example helps to identify the influence of the curvature of u on the attractive power of the minima. But since the best minimum is the only one that satisfies the LM hypothesis, its attractive power may also be affected by the fact that it is global. So if the region of attraction of a minimum is larger, it is hard to tell whether this is due to the second-order information or to its training performance.

- With this in mind, benchmark #4 presents global and local minima that are on an equal footing with respect to the LM hypothesis. Along the same lines, benchmark #5 satisfies this assumption around the local minimum but not in the vicinity of the global one. A comparison with benchmark #2 therefore gives an idea of the role of H(u) on the proportions of trajectories reaching each minimum for a given algorithm. Some minima of these examples have a non-invertible or zero Hessian, which allows a possible study of the influence of singular curvature.

- Finally, in benchmark #3, the curvature at all four global minima involves the curvature of u. Moreover, this example seems hard to train with classical algorithms because of its stiffness, see Table 2.

All the above characteristics of the benchmarks are summarized in Tables 5, 6, 7, 8 and 9. The term “order of dominating terms” refers to the asymptotic expansion of \({\mathcal {R}}(\theta )-{\mathcal {R}}(\theta ^*)\) around a minimum \(\theta ^*\). The values of the minima \(\theta ^*\), i.e. \({\mathcal {R}}(\theta ^*)\), are given in the same order as the set of minima.
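To make the distinction between Q and H concrete, here is a minimal sketch. Since the cost (7) and the definitions (1) and (2) are not reproduced in this appendix, it assumes a least-squares cost \({\mathcal {R}}(\theta )=\frac{1}{2}\sum _i r_i(\theta )^2\), for which the classical Gauss-Newton/Levenberg-Marquardt split is \(H=Q+S\) with \(Q=J^TJ\) (first derivatives only) and \(S=\sum _i r_i\nabla ^2 r_i\). The one-neuron model \(u(x)=g(wx+b)\), the activation \(g(z)=z^2-1\) and the data points \(x\in \{0,1\}\) with zero targets are hypothetical choices, not the paper's benchmarks. At a zero-residual minimum S vanishes and the LM hypothesis holds exactly, whereas at a critical point with non-zero residuals Q may miss the curvature entirely.

```python
import numpy as np

# Hypothetical activation and its derivatives (illustration only).
def g(z):
    return z**2 - 1.0

def dg(z):
    return 2.0 * z

def d2g(z):
    return 2.0

# Hypothetical data: inputs x_i with zero targets, so r_i(w, b) = g(w * x_i + b).
X = np.array([0.0, 1.0])

def residuals(theta):
    w, b = theta
    return g(w * X + b)

def cost(theta):
    """Least-squares cost R = 0.5 * sum(r_i**2)."""
    return 0.5 * np.sum(residuals(theta) ** 2)

def hessian_split(theta):
    """Return (Q, S) such that the Hessian of `cost` is H = Q + S."""
    w, b = theta
    z = w * X + b
    J = np.stack([dg(z) * X, dg(z)], axis=1)  # row i = gradient of r_i w.r.t. (w, b)
    Q = J.T @ J                                # quasi-Hessian: first derivatives only
    S = np.zeros((2, 2))
    for x_i, z_i, r_i in zip(X, z, residuals(theta)):
        v = np.array([x_i, 1.0])
        S += r_i * d2g(z_i) * np.outer(v, v)   # residual-weighted second derivatives
    return Q, S

Q_min, S_min = hessian_split((0.0, 1.0))  # zero-residual global minimum: S = 0, so Q = H
Q_max, S_max = hessian_split((0.0, 0.0))  # local maximum with r = (-1, -1): Q = 0, H = S
print(Q_min, S_min, Q_max, S_max, sep="\n")
```

At \((w,b)=(0,0)\) the Jacobian vanishes, so all the curvature (here negative definite) is carried by S, which is exactly the situation where the LM hypothesis fails.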

Benchmark #1 The activation function is simply set to:

Benchmark #2 In this case:

Benchmark #3 In this case:

Benchmark #4 In this case:


Benchmark #5 In this case:

B Proof of the stability/attractivity theorem 1

In order to prove the stability/attractivity theorem 1, we rely on classical results: the Lyapunov, LaSalle and Salvadori theorems to study the behavior in the vicinity of minima, and the Chetaev and Salvadori theorems to study maxima and saddle points. These theorems are usually formalized for a general ODE of the form:

$$\begin{aligned} Y'(t) = F(Y(t)), \end{aligned}$$

with unknown \(Y(t)\in {\mathbb {R}}^n\) and \(F:{\mathbb {R}}^n \rightarrow {\mathbb {R}}^n\). The theorems used below share a common feature: they all require finding functionals V that satisfy certain assumptions. Such a functional comes with an additional, redundant scalar equation of the form

$$\begin{aligned} {\dot{V}}(t) = \frac{\textrm{d}}{\textrm{d}t}V(Y(t)) = \nabla V(Y(t))\cdot F(Y(t)). \end{aligned}$$

Depending on the properties satisfied by V (positive definiteness, negative definiteness, etc.), we conclude on the stability/attractivity in the vicinity of the minima, maxima and saddle points. Note that the functions V(t) defined in Sect. 4 are not, strictly speaking, Lyapunov functions because \(V(\theta ^*)\ne 0\). To turn them into Lyapunov functions, it is necessary and sufficient to subtract \({\mathcal {R}}(\theta ^*)\) from them.

In what follows, we denote by \({\mathcal {Z}}=\{Y\in {\mathbb {R}}^n, {\dot{V}}(Y)=0\}\) the set of points of \({\mathbb {R}}^n\) at which \({\dot{V}}=V'\) vanishes, and by \({\mathcal {M}}\) the largest invariant set included in \({\mathcal {Z}}\).

Proof

(Theorem 1 for GD) Let us choose \(V(\theta ) = {\mathcal {R}}(\theta ) - {\mathcal {R}}(\theta ^*)\) where \(\theta ^*\) is a stationary point. For GD, we have

For GD, \({\dot{V}}\) as computed above is negative definite in the vicinity of an isolated critical point. We immediately have:

- If \(\theta ^*\) is an isolated local minimum, then V is positive definite.

- If \(\theta ^*\) is an isolated local maximum, then V is negative definite.

- If \(\theta ^*\) is an isolated saddle point, then V is indefinite.

The stability/instability result of theorem 1 for GD then follows from the Lyapunov theorem and an extension of the Chetaev theorem. For the attractivity of GD, i.e. the convergence towards \({\mathcal {E}}\), it suffices to note that \({\mathcal {Z}}={\mathcal {E}}\) and to apply LaSalle’s theorem. \(\square \)
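For reference, here is a short derivation of \({\dot{V}}\) for GD. It is a sketch that assumes the continuous model of GD is the gradient flow \(\theta '(t)=-\nabla {\mathcal {R}}(\theta (t))\), since the ODE is not reproduced in this proof. Along trajectories, the chain rule gives

$$\begin{aligned} {\dot{V}}(\theta ) = \nabla {\mathcal {R}}(\theta )\cdot \theta '(t) = -\Vert \nabla {\mathcal {R}}(\theta )\Vert ^2 \le 0, \qquad {\dot{V}}(\theta ) = 0 \iff \nabla {\mathcal {R}}(\theta ) = 0. \end{aligned}$$

Hence \({\mathcal {Z}}={\mathcal {E}}\), and in a neighborhood of an isolated critical point \(\theta ^*\), \({\dot{V}}\) vanishes only at \(\theta ^*\), which is why it is negative definite there.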

Proof

(Theorem 1 for Momentum) For Momentum, function F, for all \((v,\theta ) \in {\mathbb {R}}^N\times {\mathbb {R}}^N\), is given by:

The stationary points, i.e. \((v,\theta )\in {\mathbb {R}}^N\times {\mathbb {R}}^N\) such that \(F(v,\theta )=0\), are of the form \((0,\theta ^*)\) where \(\theta ^*\) is a critical point of \({\mathcal {R}}\). Set

then we have

The difficulty for Momentum (as opposed to GD) is that \({\dot{V}}\) is only negative semi-definite, so it is impossible to apply, for example, the extension of the Chetaev theorem. The trick is to see Momentum as a dissipative Lagrangian system. Let us define:

- a kinetic energy \(T(v)=\frac{\Vert v\Vert ^2}{2}\),

- a potential energy \(\Pi (\theta ) = \bar{\beta _1}{\mathcal {R}}(\theta )\),

- and a dissipative term \(Q(v) = -\bar{\beta _1}v\).

The Lagrangian is \(L=T-\Pi \) and the equation of motion is:

With the above definitions of \(T, \Pi ,Q\), the above equation corresponds to the Momentum ODE.
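As a consistency check, here is a sketch that assumes the standard Lagrange equation with a generalized dissipative force, \(\frac{\textrm{d}}{\textrm{d}t}\frac{\partial L}{\partial \theta '}-\frac{\partial L}{\partial \theta }=Q(\theta ')\), and writes \(v=\theta '\). With \(L=T-\Pi \) as above:

$$\begin{aligned} \theta '' + \bar{\beta _1}\nabla {\mathcal {R}}(\theta ) = -\bar{\beta _1}\theta ', \quad \text {i.e.} \quad \theta ' = v, \quad v' = -\bar{\beta _1}v - \bar{\beta _1}\nabla {\mathcal {R}}(\theta ), \end{aligned}$$

which is the Momentum vector field used in the attractivity argument. The mechanical energy \(E(v,\theta )=T(v)+\Pi (\theta )-\Pi (\theta ^*)\) then satisfies

$$\begin{aligned} {\dot{E}} = v\cdot v' + \bar{\beta _1}\nabla {\mathcal {R}}(\theta )\cdot \theta ' = -\bar{\beta _1}\Vert v\Vert ^2 - \bar{\beta _1}v\cdot \nabla {\mathcal {R}}(\theta ) + \bar{\beta _1}\nabla {\mathcal {R}}(\theta )\cdot v = Q(v)\cdot v = -\bar{\beta _1}\Vert v\Vert ^2 \le 0, \end{aligned}$$

so \({\dot{E}}\) vanishes exactly on \(\{0\}\times {\mathbb {R}}^N\). This E is one natural candidate for V; it is not claimed to be the exact functional of the proof.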

It suffices to check the assumptions of the Salvadori theorem (Theorem 5.2, p. 114 of [38]):

1. If \(\theta ^*\) is a minimum of \({\mathcal {R}}\), then \(\theta ^*\) is a minimum of \(\Pi \) (since \(\bar{\beta _1}>0\)). The same holds for maxima and saddle points.

2. \(\theta ^*\) is an isolated point.

3. The dissipation is complete, that is to say:

$$\begin{aligned} Q(v) \cdot v = -\bar{\beta _1} \Vert v\Vert ^2 \le -a(\Vert v\Vert ), \end{aligned}$$where \(\left( a: x\in {\mathbb {R}}^+ \mapsto \bar{\beta _1}x^2\right) \in {\mathcal {K}}\). Recall that, classically in stability theory, \({\mathcal {K}}\) denotes the set of continuous, strictly increasing functions from \({\mathbb {R}}^+\) to \({\mathbb {R}}^+\) such that \(a(0)=0\).

The stability/instability result of theorem 1 for Momentum is established.

It remains to prove the convergence towards \({\mathcal {E}}\) by means of the invariance principle [38]. First, note that \({\mathcal {Z}}=\{0\} \times {\mathbb {R}}^N\). Take a solution \((v(t),\theta (t))\) starting in the largest invariant set \({\mathcal {M}}\) included in \({\mathcal {Z}}\). Since \({\mathcal {M}}\) is invariant, \(v(t)=0\) for all \(t\ge 0\). From the Momentum vector field (32), we have \(\theta '(t)=v(t)=0\), so the trajectory \(\theta (t)\) is constant. Since \(v\equiv 0\), we also have \(v'\equiv 0\), and the second equation \(v'(t) = -\bar{\beta _1}v(t)-\bar{\beta _1} \nabla {\mathcal {R}}(\theta (t))\) gives \(\nabla {\mathcal {R}}(\theta (t))=0\), i.e. \(\theta (t)=\theta ^* \in {\mathcal {E}}\) for all \(t\ge 0\). As a consequence, \({\mathcal {M}}=\{0\} \times {\mathcal {E}}\), and we conclude with LaSalle’s invariance principle. \(\square \)
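The LaSalle argument can be illustrated numerically. The sketch below integrates the Momentum ODE \(\theta '=v\), \(v'=-\bar{\beta _1}v-\bar{\beta _1}\nabla {\mathcal {R}}(\theta )\) (the vector field used above) with a classical RK4 scheme on a hypothetical one-dimensional double-well cost \({\mathcal {R}}(\theta )=(\theta ^2-1)^2/4\), whose critical points are \(-1\), 0 and 1. It records the energy \(\frac{1}{2}v^2+\bar{\beta _1}{\mathcal {R}}(\theta )\) (here \({\mathcal {R}}(\theta ^*)=0\)) and checks that it does not increase while the trajectory settles at a point of \(\{0\}\times {\mathcal {E}}\). The cost, \(\bar{\beta _1}=1\), the step size and the initial condition are illustrative assumptions.

```python
def R(theta):
    # Hypothetical double-well cost with critical points -1, 0 and 1.
    return (theta**2 - 1.0) ** 2 / 4.0

def grad_R(theta):
    return theta * (theta**2 - 1.0)

def momentum_field(state, beta1):
    """Momentum ODE: v' = -beta1 * v - beta1 * R'(theta), theta' = v."""
    v, theta = state
    return (-beta1 * v - beta1 * grad_R(theta), v)

def rk4_step(state, h, beta1):
    def shifted(k, c):
        return (state[0] + c * k[0], state[1] + c * k[1])
    k1 = momentum_field(state, beta1)
    k2 = momentum_field(shifted(k1, 0.5 * h), beta1)
    k3 = momentum_field(shifted(k2, 0.5 * h), beta1)
    k4 = momentum_field(shifted(k3, h), beta1)
    return tuple(state[j] + h * (k1[j] + 2 * k2[j] + 2 * k3[j] + k4[j]) / 6.0
                 for j in range(2))

def energy(state, beta1):
    v, theta = state
    return 0.5 * v**2 + beta1 * R(theta)  # T(v) + Pi(theta) - Pi(theta*), with R(theta*) = 0

beta1, h = 1.0, 0.01
state = (0.0, 1.3)  # E(0) = R(1.3) < R(0) = 0.25: the trajectory cannot leave the right well
energies = [energy(state, beta1)]
for _ in range(4000):  # integrate up to t = 40
    state = rk4_step(state, h, beta1)
    energies.append(energy(state, beta1))
v_final, theta_final = state
print(f"theta = {theta_final:.8f}, v = {v_final:.2e}, energy = {energies[-1]:.2e}")
```

Because the initial energy is below the barrier \({\mathcal {R}}(0)=1/4\) and the energy never increases, the trajectory stays in the basin of \(\theta =1\) and converges to \((0,1)\in \{0\}\times {\mathcal {E}}\), as predicted by the invariance principle.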

Proof

(Theorem 1 for AWB) For AWB, function F is given by \((v,s,\theta ) \in {\mathbb {R}}^N \times {\mathbb {R}}_+^N \times {\mathbb {R}}^N\):

In order to apply LaSalle’s invariance principle, the domain of definition of F has to be an open connected set. First, note that for \(t>0\), \(\theta (0)\notin {\mathcal {E}}\) implies \(s(t)>0\), see (14). Since we establish an asymptotic property, we can therefore restrict the study to \({\mathbb {R}}^N \times {\mathbb {R}}_+^N\setminus \{0\} \times {\mathbb {R}}^N\). The stationary points are of the form \((0,0,\theta ^*)\), where \(\theta ^*\) is a critical point of \({\mathcal {R}}\). Let us set:

Its derivative is:

Noting that \(\frac{s_i}{s_i+\epsilon _a}<1\) as \(s_i \ge 0\) we have:

Since \(\bar{\beta _2} < 4 \bar{\beta _1}\), \({\dot{V}}\) is negative semi-definite.

We now apply LaSalle’s invariance principle. From the above inequality, \({\mathcal {Z}}=\{(0,s,\theta ), (s,\theta ) \in {\mathbb {R}}^{2N}\}\). Take a solution \((v(t),s(t),\theta (t))\) starting in the largest invariant set \({\mathcal {M}}\) included in \({\mathcal {Z}}\). Since \({\mathcal {M}}\) is invariant, \(v(t)=0\) for all \(t\ge 0\). From (11), we deduce several properties:

- From \(\theta '(t) = -\frac{v(t)}{\sqrt{s(t)+\epsilon _a}}\), we obtain, for all \(t \ge 0\):

$$\begin{aligned} \theta '(t)=0. \end{aligned}$$

- From the equation \(v'(t) = -\bar{\beta _1}v(t)+\bar{\beta _1} \nabla {\mathcal {R}}(\theta (t))\) and \(v\equiv 0\) (hence \(v'\equiv 0\)), we deduce that, for all \(t\ge 0\):

$$\begin{aligned} \nabla {\mathcal {R}}(\theta (t))=0. \end{aligned}$$

- From the equation \(s'(t) = -\bar{\beta _2}s(t)+\bar{\beta _2} \nabla {\mathcal {R}}(\theta (t))^2\) and the previous point, we deduce that:

$$\begin{aligned} s(t)=s(0)e^{-\bar{\beta _2}t}. \end{aligned}$$

Hence for all \(t \ge 0\):

Therefore the following equality holds:

According to LaSalle’s invariance principle [23], all bounded trajectories \((v(t),s(t),\theta (t))\) converge to the set \({\mathcal {M}}\). The inclusion proved above shows that:

so the attractivity of AWB is proved. \(\square \)

Proof

(Theorem 1 for Adam) For Adam, F is given by \((t,v,s,\theta ) \in {\mathbb {R}}^+ \times {\mathbb {R}}^N \times {\mathbb {R}}_+^N \times {\mathbb {R}}^N\):

Let us recall that f(t) is defined in 4.

For the same reason as in the previous proof for AWB, we can restrict the study to \({\mathbb {R}}^+\setminus \{0\} \times {\mathbb {R}}^N \times {\mathbb {R}}_+^N\setminus \{0\} \times {\mathbb {R}}^N\). The stationary points are of the form \((0,0,\theta ^*)\) where \(\theta ^*\) is a critical point of \({\mathcal {R}}\).

Let us set:

Its derivative is:

Now, in order to prove the attractivity of Adam, it remains to check the hypotheses of Sect. 5.9 (p. 206, Chapter 8) of [38], together with Corollary 4.15. These assumptions are recalled below: there exist a continuous function \(\psi \) and, for every compact set \(K \subset {\mathbb {R}}^N \times {\mathbb {R}}_+^N \times {\mathbb {R}}^N\), constants \(A \in {\mathbb {R}}\) and \(B>0\) such that:

i. F is bounded on K:

$$\begin{aligned} \forall t\in {\mathbb {R}}_+, \forall (v,s,\theta )\in K, \Vert F(t,v,s,\theta )\Vert \le A. \end{aligned}$$

ii. V is bounded from below:

$$\begin{aligned} \forall t\in {\mathbb {R}}_+, \forall (v,s,\theta )\in K, V(t,v,s,\theta )\ge -B. \end{aligned}$$

iii. \({\dot{V}}\) is bounded from above independently of time:

$$\begin{aligned} \forall t\in {\mathbb {R}}_+, \forall (v,s,\theta )\in K, {\dot{V}}(t,v,s,\theta ) \le -\psi (v,s,\theta ) \le 0. \end{aligned}$$

iv. The limit set is compact.

v. The ODE is asymptotically autonomous: the translated field \(F_a(t,v,s,\theta ) {:}{=}F(t+a,v,s,\theta )\) converges as a tends to \(\infty \), and we denote the limit function by \(F^*\).

Denoting by \({\mathcal {Z}}{:}{=}\{(v,s,\theta ) \in {\mathbb {R}}^N \times {\mathbb {R}}_+^N \times {\mathbb {R}}^N, \psi (v,s,\theta )=0\}\), the theorem claims that the solution converges towards the largest invariant set included in \({\mathcal {Z}}\) for the limit equation:

In order to prove point iii, we need an upper bound on \({\dot{V}}\). It is obtained by showing that \(g(t) = 2f'(t) + (\bar{\beta _2}-4\bar{\beta _1})f(t)\) is negative on \({\mathbb {R}}_+\) if \(\bar{\beta _2} < \bar{\beta _1}\), which yields the desired bound on \({\dot{V}}\). We can rewrite g as:

where:

As for all \(t\ge 0\), \(e^{-(\bar{\beta _1}+\bar{\beta _2})t} \le e^{-\bar{\beta _1}t}\):

Note that P(0) = 0. Therefore g is negative on \({\mathbb {R}}_+\).

Let us analyse the function f to obtain a time-independent bound. It is straightforward that \(\displaystyle {\lim _{t \rightarrow 0}} f(t) = \frac{\bar{\beta _2}}{\bar{\beta _1}}\) and \(\displaystyle {\lim _{t\rightarrow +\infty }} f(t) = 1\). Since f is continuous on \({\mathbb {R}}_+^*\) and has finite limits at 0 and \(+\infty \), f is bounded on \({\mathbb {R}}_+\). From now on, we consider the continuous extension of f to \({\mathbb {R}}_+\) and, with a slight abuse of notation, still call it f. Denoting by \(m>0\) the lower bound of f on \({\mathbb {R}}_+\) (f is strictly positive, with positive limits at 0 and \(+\infty \)), the following inequality holds (assumption iii):

The positivity of \({\mathcal {R}}\) gives the second hypothesis ii:

As f is bounded we deduce that for every compact \(K \subset {\mathbb {R}}^+ \times {\mathbb {R}}^N \times {\mathbb {R}}_+^N \times {\mathbb {R}}^N\), there exists \(A \in {\mathbb {R}}\) (a continuous function is bounded on a compact set) such that (assumption i):

Since the assumptions (36), (35) and (34) of Corollary 4.15 are verified (the \({\mathcal {C}}^1\) regularity of F and V is straightforward), it remains to prove that the ODE is asymptotically autonomous (assumption v). A simple calculation gives:

Consider a bounded trajectory \((v(t),s(t),\theta (t))\). It is a classical result (see Theorem 4, p. 364, Appendix 2 of [38]) that its limit set is compact (assumption iv). The theorem of Sect. 5.9 then states that the trajectory converges towards the largest invariant set of the limit ODE

included in the set:

Note that this limit equation is exactly the ODE associated with AWB, so the study has already been done. \(\square \)

Remark

This methodology applies to many NN optimizers not studied in this paper. For instance, it can be applied to RMSProp [43]. The following choices of V are possible (with the same notation as for Adam):

or
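As an illustration only, and not necessarily one of the choices above, assume that the continuous RMSProp model keeps the AWB notation without momentum, namely, componentwise, \(s' = -\bar{\beta _2}s + \bar{\beta _2}\nabla {\mathcal {R}}(\theta )^2\) and \(\theta ' = -\frac{\nabla {\mathcal {R}}(\theta )}{\sqrt{s+\epsilon _a}}\). Then the simplest candidate \(V(s,\theta ) = {\mathcal {R}}(\theta )-{\mathcal {R}}(\theta ^*)\) satisfies

$$\begin{aligned} {\dot{V}} = \nabla {\mathcal {R}}(\theta )\cdot \theta ' = -\sum _{i=1}^N \frac{\left( \partial _i {\mathcal {R}}(\theta )\right) ^2}{\sqrt{s_i+\epsilon _a}} \le 0, \end{aligned}$$

since \(s_i\ge 0\) and \(\epsilon _a>0\). Equality holds if and only if \(\nabla {\mathcal {R}}(\theta )=0\), as for GD.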

C Tests on analytical examples: supplement

In this appendix, we give more quantitative results than in Sect. 3.3 on benchmark #2 (activation (27)) with the classical optimizers and the new ones developed in this paper. These results are summarized in Table 10. The default settings for the parameters of the classical optimizers are the same as in Sect. 6.1. First, Table 10 shows that the observed performances are not specific to one example: the algorithms have been tested on all the benchmarks of Appendix A. Contrary to benchmark #3 of Sect. 6.1, this benchmark makes it possible to see whether the global minimum \((0,-1)\) has an advantage or not. AWB is very troubling because it strongly favours the worst minimum (0, 1), contrary to Adam: a direct advantage of the bias-corrected steps of Adam appears clearly. A very promising insight is that the algorithms that enforce energy decrease promote the global minimum: for EGD, half of the trajectories converge to \((0,-1)\).
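The idea of enforcing energy decrease can be sketched in a few lines. The following is not the authors' EGD algorithm, whose update rule is not reproduced in this appendix; it is a hypothetical backtracking variant of gradient descent that halves the learning rate until the cost strictly decreases, i.e. it enforces the discrete decrease of the simplest Lyapunov functional \({\mathcal {R}}(\theta )-{\mathcal {R}}(\theta ^*)\). The stiff quadratic test cost is also an assumption, chosen so that plain GD with learning rate 1 diverges.

```python
import numpy as np

def energy_decreasing_gd(cost, grad, theta0, eta0=1.0, n_iter=500, eta_min=1e-12):
    """Hypothetical sketch: GD whose step is halved until cost(theta) strictly decreases."""
    theta = np.asarray(theta0, dtype=float)
    history = [cost(theta)]
    eta = eta0
    for _ in range(n_iter):
        g = grad(theta)
        if np.linalg.norm(g) < 1e-12:          # (numerically) stationary point reached
            break
        accepted = False
        while eta > eta_min:
            candidate = theta - eta * g
            if cost(candidate) < history[-1]:  # discrete energy decrease enforced
                accepted = True
                break
            eta *= 0.5                          # reject the step and halve the learning rate
        if not accepted:
            break
        theta = candidate
        history.append(cost(theta))
        eta = min(2.0 * eta, eta0)              # let the learning rate grow again
    return theta, history

# Hypothetical stiff quadratic: plain GD with eta = 1 diverges since |1 - 100| > 1.
A = np.array([100.0, 1.0])

def cost(theta):
    return 0.5 * np.sum(A * theta**2)

def grad(theta):
    return A * theta

theta_final, history = energy_decreasing_gd(cost, grad, [1.0, 1.0])
print(len(history), history[-1])
```

The decrease condition removes the need to tune the learning rate by hand: starting from \(\eta =1\), the rule automatically settles on step sizes for which the iteration is stable.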

D Tests on machine learning tasks: supplement

The default settings are the same as in Sect. 6.2. Table 11 concerns the square function \((x,y) \mapsto (x^2,y^2)\) using 50 training/testing points uniformly sampled on \([-1,1]^2\). The architecture is \({\mathcal {N}}=\left( 2,15,2\right) \) and \({\mathcal {G}}=\left( reLU,linear\right) \), so \(N=77\). Here the assumptions of Propositions 1 and 4 are not verified. However, the differences between the classical methods and the adaptive ones are even more striking.
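A minimal sketch of this setting checks the parameter count \(N=77\) of the \((2,15,2)\) reLU/linear network (\(2\times 15+15=45\) for the hidden layer and \(15\times 2+2=32\) for the output layer) and draws points uniformly in \([-1,1]^2\) with targets \((x^2,y^2)\). The random seed, the initialization and the forward-pass code are assumptions for illustration; the actual experiments use the authors' C/C++ implementation mentioned in the data availability statement.

```python
import numpy as np

def count_parameters(layer_sizes):
    """Weights plus biases of a fully connected network with the given layer widths."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]))

def square_dataset(n_points, rng):
    """Inputs uniform on [-1, 1]^2 and targets (x^2, y^2)."""
    x = rng.uniform(-1.0, 1.0, size=(n_points, 2))
    return x, x**2

def init_params(layer_sizes, rng):
    # Hypothetical initialization; any scheme would do for this shape check.
    return [(rng.normal(0.0, 1.0 / np.sqrt(n_in), size=(n_in, n_out)), np.zeros(n_out))
            for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])]

def forward(params, x):
    (W1, b1), (W2, b2) = params
    hidden = np.maximum(0.0, x @ W1 + b1)  # reLU hidden layer
    return hidden @ W2 + b2                 # linear output layer

rng = np.random.default_rng(0)
sizes = (2, 15, 2)
params = init_params(sizes, rng)
x_train, y_train = square_dataset(50, rng)
print(count_parameters(sizes))  # 77
```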

E Proofs of propositions 5 and 6

The proofs consist in checking the three points of Sect. 7 using Taylor expansions.

Proof

(Proposition 5) Since \(\nabla {\mathcal {R}}\) is Lipschitz-continuous, the explicit Euler scheme is 0-stable. The second point is easy to check: the initial condition is exact, with \(l_0=\theta (0)-\theta _0=0\). Assume that \(\theta \) is the solution of the modified equation (22). The local error is given by:

Using equation (22) and Taylor formula, there exists \(t_n \le \alpha _n \le t_{n+1}\) such that:

Let us denote by \(\nabla ^3 {\mathcal {R}}\) the tensor of the third derivatives. By differentiation of (22), we get (omitting the \(\theta (t_n)\) in the right term):

Rearranging the terms it follows:

By the regularity theorem for parameter-dependent equations, \((\eta ,t) \mapsto \theta _{\eta }(t)\) is \({\mathcal {C}}^{\infty }([0,1] \times [0,T])\). Therefore the coefficient of \(\eta ^2\) is uniformly bounded in \(\eta \) in a neighborhood of the origin. This ends the proof. \(\square \)
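The order of the local error can be checked numerically. This sketch assumes that the modified equation (22) is the classical first-order modified equation of gradient descent, \(\theta ' = -\nabla {\mathcal {R}}(\theta ) - \frac{\eta }{2}\nabla ^2{\mathcal {R}}(\theta )\nabla {\mathcal {R}}(\theta )\) (see Griffiths and Sanz-Serna, and Chartier et al., in the references); the one-dimensional cost \({\mathcal {R}}(\theta )=\theta ^4/4\) and the initial point \(\theta _0=1\) are hypothetical. One GD step of size \(\eta \) is compared with the exact flows, approximated by a fine RK4 integration, of the gradient flow and of the modified equation over a time \(\eta \).

```python
def grad_R(theta):
    # Hypothetical test cost R(theta) = theta**4 / 4.
    return theta**3

def hess_R(theta):
    return 3.0 * theta**2

def rk4(field, y0, t_end, n_sub=1000):
    """Approximate the exact flow of y' = field(y) on [0, t_end] with fine RK4 steps."""
    h = t_end / n_sub
    y = y0
    for _ in range(n_sub):
        k1 = field(y)
        k2 = field(y + 0.5 * h * k1)
        k3 = field(y + 0.5 * h * k2)
        k4 = field(y + h * k3)
        y += h * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0
    return y

def local_errors(eta, theta0=1.0):
    gd = theta0 - eta * grad_R(theta0)  # one GD (explicit Euler) step
    gradient_flow = rk4(lambda th: -grad_R(th), theta0, eta)
    modified_flow = rk4(lambda th: -grad_R(th) - 0.5 * eta * hess_R(th) * grad_R(th),
                        theta0, eta)
    return abs(gd - gradient_flow), abs(gd - modified_flow)

e_plain_1, e_mod_1 = local_errors(0.02)
e_plain_2, e_mod_2 = local_errors(0.01)
ratio_plain = e_plain_1 / e_plain_2
ratio_mod = e_mod_1 / e_mod_2
print(f"error ratio when halving eta: {ratio_plain:.2f} (gradient flow), "
      f"{ratio_mod:.2f} (modified equation)")
```

Halving \(\eta \) divides the first error by roughly 4 and the second by roughly 8, consistent with a local error of order \(\eta ^2\) with respect to the gradient flow and of order \(\eta ^3\) with respect to the modified equation.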

Proof

(Proposition 6) The 0-stability has already been discussed in Remark 3. The second point holds since \(l_0=\theta (0)-\theta _0=0\). By performing the change of variable \(\bar{\beta _1} = \frac{\beta _1}{\eta }\), we can write the algorithm as the following finite difference scheme:

With this modification, the ODE (23) can be written as:

By Taylor formula and after some manipulations (using the equation above), there exists \(t_n \le \alpha _n^0 \le t_{n+1}\), \(t_{n-1} \le \alpha _n^1 \le t_n\) and \(t_{n-1} \le \alpha _n^2 \le t_n\) such that:

We conclude as in the proof of Proposition 5, using the regularity theorem. \(\square \)

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Bensaid, B., Poëtte, G. & Turpault, R. Deterministic Neural Networks Optimization from a Continuous and Energy Point of View. J Sci Comput 96, 14 (2023). https://doi.org/10.1007/s10915-023-02215-4


DOI: https://doi.org/10.1007/s10915-023-02215-4