Abstract

Tackling new machine learning problems with neural networks always means optimizing numerous hyperparameters that define their structure and strongly impact their performances. In this work, we study the use of goal-oriented sensitivity analysis, based on the Hilbert–Schmidt independence criterion (HSIC), for hyperparameter analysis and optimization. Hyperparameters live in spaces that are often complex and awkward. They can be of different natures (categorical, discrete, boolean, continuous), interact, and have inter-dependencies. All this makes it non-trivial to perform classical sensitivity analysis. We alleviate these difficulties to obtain a robust analysis index that is able to quantify hyperparameters’ relative impact on a neural network’s final error. This valuable tool allows us to better understand hyperparameters and to make hyperparameter optimization more interpretable. We illustrate the benefits of this knowledge in the context of hyperparameter optimization and derive an HSIC-based optimization algorithm that we apply on MNIST and Cifar, classical machine learning data sets, but also on the approximation of Runge function and Bateman equations solution, of interest for scientific machine learning. This method yields neural networks that are both competitive and cost-effective.

Similar content being viewed by others

Materials Availability

The source code is available at https://github.com/paulnovello/goal-oriented-ho.

References

Goodfellow, I., Bengio, Y., Courville, A.: Deep Learning (2016). http://www.deeplearningbook.org

Gretton, A., Bousquet, O., Smola, A., Schölkopf, B.: Measuring statistical dependence with Hilbert–Schmidt norms. In: Proceedings of the 16th International Conference on Algorithmic Learning Theory. ALT’05, pp. 63–77. Springer, Berlin, Heidelberg (2005). https://doi.org/10.1007/11564089_7

Gretton, A., Borgwardt, K., Rasch, M., Schölkopf, B., Smola, A.J.: A kernel method for the two-sample-problem. In: Schölkopf, B., Platt, J.C., Hoffman, T. (eds.) Advances in Neural Information Processing Systems 19 (2007). http://papers.nips.cc/paper/3110-a-kernel-method-for-the-two-sample-problem.pdf

Ioffe, S., Szegedy, C.: Batch normalization: accelerating deep network training by reducing internal covariate shift. In: Bach, F., Blei, D. (eds.) Proceedings of the 32nd International Conference on Machine Learning. Proceedings of Machine Learning Research, vol. 37, pp. 448–456. PMLR, Lille, France (2015). https://proceedings.mlr.press/v37/ioffe15.html

Kingma, D.P., Ba, J.: Adam: A method for stochastic optimization. In: ICLR (Poster) (2015). arXiv:1412.6980

Tan, M., Le, Q.: EfficientNet: rethinking model scaling for convolutional neural networks. In: Chaudhuri, K., Salakhutdinov, R. (eds.) Proceedings of the 36th International Conference on Machine Learning. Proceedings of Machine Learning Research, vol. 97, pp. 6105–6114. PMLR, Long Beach, California, USA (2019). http://proceedings.mlr.press/v97/tan19a.html

Bergstra, J., Bengio, Y.: Random search for hyper-parameter optimization. J. Mach. Learn. Res. 13(10), 281–305 (2012)

Jamieson, K., Talwalkar, A.: Non-stochastic best arm identification and hyperparameter optimization. In: Gretton, A., Robert, C.C. (eds.) Proceedings of the 19th International Conference on Artificial Intelligence and Statistics. Proceedings of Machine Learning Research, vol. 51, pp. 240–248. PMLR, Cadiz, Spain (2016). http://proceedings.mlr.press/v51/jamieson16.html

Li, L., Jamieson, K., DeSalvo, G., Rostamizadeh, A., Talwalkar, A.: Hyperband: a novel bandit-based approach to hyperparameter optimization. J. Mach. Learn. Res. 18(185), 1–52 (2018)

Mockus, J.: On Bayesian methods for seeking the extremum. In: Proceedings of the IFIP Technical Conference, pp. 400–404. Springer, Berlin, Heidelberg (1974)

Shahriari, B., Swersky, K., Wang, Z., Adams, R.P., de Freitas, N.: Taking the human out of the loop: a review of Bayesian optimization. Proc. IEEE 104, 148–175 (2016)

Snoek, J., Larochelle, H., Adams, R.P.: Practical Bayesian optimization of machine learning algorithms. In: Proceedings of the 25th International Conference on Neural Information Processing Systems, Vol. 2. NIPS’12, pp. 2951–2959. Curran Associates Inc., Red Hook (2012)

Bergstra, J.S., Bardenet, R., Bengio, Y., Kégl, B.: Algorithms for hyper-parameter optimization. In: Shawe-Taylor, J., Zemel, R.S., Bartlett, P.L., Pereira, F., Weinberger, K.Q. (eds.) Advances in Neural Information Processing Systems 24 (2011). http://papers.nips.cc/paper/4443-algorithms-for-hyper-parameter-optimization.pdf

Snoek, J., Rippel, O., Swersky, K., Kiros, R., Satish, N., Sundaram, N., Patwary, M., Prabhat, M., Adams, R.: Scalable Bayesian optimization using deep neural networks. In: Bach, F., Blei, D. (eds.) Proceedings of the 32nd International Conference on Machine Learning. Proceedings of Machine Learning Research, vol. 37, pp. 2171–2180. PMLR, Lille, France (2015). http://proceedings.mlr.press/v37/snoek15.html

Chollet, F.: Xception: deep learning with depthwise separable convolutions. CoRR (2016). arXiv:1610.02357

Stanley, K.O., Miikkulainen, R.: Evolving neural networks through augmenting topologies. Evol. Comput. 10(2), 99–127 (2002). https://doi.org/10.1162/106365602320169811

Kandasamy, K., Neiswanger, W., Schneider, J., Póczos, B., Xing, E.P.: Neural architecture search with Bayesian optimisation and optimal transport. In: Proceedings of the 32nd International Conference on Neural Information Processing Systems. NIPS’18, pp. 2020–2029. Curran Associates Inc., Red Hook, NY, USA (2018)

Pham, H., Guan, M., Zoph, B., Le, Q., Dean, J.: Efficient neural architecture search via parameters sharing. In: Proceedings of Machine Learning Research, vol. 80, pp. 4095–4104. PMLR, Stockholmsmässan, Stockholm Sweden (2018). http://proceedings.mlr.press/v80/pham18a.html

Tan, M., Chen, B., Pang, R., Vasudevan, V., Le, Q.V.: Mnasnet: platform-aware neural architecture search for mobile. CoRR (2018). arXiv:1807.11626

Elsken, T., Metzen, J.H., Hutter, F.: Neural architecture search: a survey. J. Mach. Learn. Res. 20(55), 1–21 (2019)

Razavi, S., Jakeman, A., Saltelli, A., Prieur, C., Iooss, B., Borgonovo, E., Plischke, E., Lo Piano, S., Iwanaga, T., Becker, W., Tarantola, S., Guillaume, J.H.A., Jakeman, J., Gupta, H., Melillo, N., Rabitti, G., Chabridon, V., Duan, Q., Sun, X., Smith, S., Sheikholeslami, R., Hosseini, N., Asadzadeh, M., Puy, A., Kucherenko, S., Maier, H.R.: The future of sensitivity analysis: an essential discipline for systems modeling and policy support. Environ. Model. Softw. 137, 104954 (2021). https://doi.org/10.1016/j.envsoft.2020.104954

Sobol, I.M.: Sensitivity estimates for nonlinear mathematical models. MMCE 1, 407–414 (1993)

Fort, J.-C., Klein, T., Rachdi, N.: New sensitivity analysis subordinated to a contrast. Commun. Stat. Theory Methods 45(15), 4349–4364 (2016). https://doi.org/10.1080/03610926.2014.901369

Borgonovo, E.: A new uncertainty importance measure. Reliab. Eng. Syst. Saf. 92(6), 771–784 (2007). https://doi.org/10.1016/j.ress.2006.04.015

Saltelli, A.: Making best use of model evaluations to compute sensitivity indices. Comput. Phys. Commun. 145(2), 280–297 (2002). https://doi.org/10.1016/S0010-4655(02)00280-1

Da Veiga, S.: Global sensitivity analysis with dependence measures. J. Stat. Comput. Simul. (2013). https://doi.org/10.1080/00949655.2014.945932

Csizar, I.: Information-type measures of difference of probability distributions and indirect observation. Stud. Sci. Math. Hung. 2, 229–318 (1967)

Müller, A.: Integral probability metrics and their generating classes of functions. Adv. Appl. Probab. 29(2), 429–443 (1997). https://doi.org/10.2307/1428011

Spagnol, A., Riche, R.L., Da Veiga, S.: Global sensitivity analysis for optimization with variable selection. SIAM/ASA J. Uncertain. Quantif. 7, 417–443 (2018)

Fukumizu, K., Gretton, A., Lanckriet, G.R., Schölkopf, B., Sriperumbudur, B.K.: Kernel choice and classifiability for rkhs embeddings of probability distributions. In: Bengio, Y., Schuurmans, D., Lafferty, J.D., Williams, C.K.I., Culotta, A. (eds.) Advances in Neural Information Processing Systems 22 (2009). http://papers.nips.cc/paper/3750-kernel-choice-and-classifiability-for-rkhs-embeddings-of-probability-distributions.pdf

Kruskal, J.B.: Multidimensional scaling by optimizing goodness of fit to a nonmetric hypothesis. Psychometrika 29(1), 1–27 (1964). https://doi.org/10.1007/BF02289565

Gillespie, D.T.: A general method for numerically simulating the stochastic time evolution of coupled chemical reactions. J. Comput. Phys. 22(4), 403–434 (1976). https://doi.org/10.1016/0021-9991(76)90041-3

Falkner, S., Klein, A., Hutter, F.: BOHB: Robust and efficient hyperparameter optimization at scale. In: Proceedings of Machine Learning Research, vol. 80, pp. 1437–1446. PMLR, Stockholmsmässan, Stockholm Sweden (2018). http://proceedings.mlr.press/v80/falkner18a.html

Song, L., Smola, A., Gretton, A., Borgwardt, K.M., Bedo, J.: Supervised feature selection via dependence estimation. In: Proceedings of the 24th International Conference on Machine Learning. ICML ’07, pp. 823–830. Association for Computing Machinery, New York, NY, USA (2007). https://doi.org/10.1145/1273496.1273600

Funding

The research has been supported by the institutes where the authors are affiliated: the CEA, Inria and the Ecole Polytechnique.

Author information

Authors and Affiliations

Contributions

All authors whose names appear on the submission. Made substantial contributions to the conception or design of the work; or the acquisition, analysis, or interpretation of data; or the creation of new software used in the work. Drafted the work or revised it critically for important intellectual content. Approved the version to be published. Agree to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Corresponding author

Ethics declarations

Conflict of interest

All authors certify that they have no affiliations with or involvement in any organization or entity with any financial interest or non-financial interest in the subject matter or materials discussed in this manuscript.

Ethical Approval and Consent to Participate

All authors approve the Committee on Publication Ethics guidelines. They consent to participate.

Consent for Publication

All authors give explicit consent to submit and they obtained consent from the responsible authorities at the institutes where the work has been carried out, the CEA, Inria and the Ecole Polytechnique.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A Hyperparameters Spaces

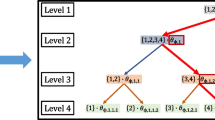

In this section, we describe hyperparameters spaces used for each problem in this chapter. Note that hyperparameter n_seeds denotes the number of random repetitions of the training for each hyperparameter configuration. If a conditional hyperparameter \(X_j\) is only involved for some specific values of a main hyperparameter \(X_i\), it is displayed with an indent on tab lines below that of \(X_i\), with the value of \(X_i\) required for \(X_j\) to be involved in the training.

1.1 Runge and MNIST

For Runge and MNIST, only fully connected Neural Networks are trained, and the width (n_units) is the same for every layer (Tables ).

Conditional Groups: (see (iii) of Sect. 4.3) \(\mathcal {G}_0\) and \(\mathcal {G}_{\texttt {dropout\_rate}}\)

1.2 Bateman

For Bateman, only fully connected Neural Networks are trained, and the width (n_units) is the same for every layer (Table ).

Conditional groups: (see (iii) of Sect. 4.3) \(\mathcal {G}_0\), \(\mathcal {G}_{\texttt {dropout\_rate}}\), \(\mathcal {G}_{\texttt {amsgrad}}\), \(\mathcal {G}_{\texttt {centered}}\), \(\mathcal {G}_{\texttt {nesterov}}\), \(\mathcal {G}_{\texttt {momentum}}\) and \(\mathcal {G}_{(\texttt {1st\_moment}, \texttt {2nd\_moment})}\)

1.3 Cifar10

For Cifar10, we use Convolutional Neural Networks, whose width increases with the depth according to hyperparameters stages and stage_mult. The first layer has width n_filters, and then, \(\texttt {stages} - 1\) times, the network is widen by a factor stage_mult. For instance, a neural network with \(\texttt {n\_filters} = 20\), \(\texttt {n\_layers}=3\), \(\texttt {stages}=3\) and \(\texttt {stage\_mult}=2\) will have a first layer with 20 filters, a second layer with \(\texttt {n\_filters} \times {stage\_mult} = 40\) filters, and a third layer with \(\texttt {n\_filters} \times \texttt {stage\_mult}^{\texttt {stages}-1} = 60\) filters (Table ).

Conditional groups: (see (iii) of Sect. 4.3) \(\mathcal {G}_0\), \(\mathcal {G}_{\texttt {dropout\_rate}}\), \(\mathcal {G}_{\texttt {amsgrad}}\), \(\mathcal {G}_{\texttt {centered}}\), \(\mathcal {G}_{\texttt {nesterov}}\), \(\mathcal {G}_{\texttt {momentum}}\) and

\(\mathcal {G}_{(\texttt {1st\_moment}, \texttt {2nd\_moment})}\)

Appendix B HSICs for Conditional Hyperparameters

1.1 B.1 MNIST

For MNIST, there is only one conditional hyperparameter, dropout_rate, so only one conditional group to consider in order to assess the importance of conditional hyperparameters (Fig. ).

1.2 B.2 Bateman

For Bateman, there are seven conditional hyperparameter, amsgrad, 1st_moment (beta_1), 2nd_moment (beta_2), dropout_rate, centered, momentum, and nesterov. Six conditional groups, specified in Fig. , have to be considered in order to assess their importance.

a \(\mathcal {G}_{\texttt {amsgrad}}\). b \(\mathcal {G}_{(\texttt {1st\_moment}, \texttt {2nd\_moment})}\) c \(\mathcal {G}_{\texttt {centered}}\) d \(\mathcal {G}_{\texttt {dropout\_rate}}\) f \(\mathcal {G}_{\texttt {momentum}}\) g \(\mathcal {G}_{\texttt {nesterov}}\) HSICs for conditional groups of Bateman hyperparameter analysis. a amsgrad is not impactful (it is in the estimation noise), b 1st_moment is not impactful but 2nd_moment is the fourth most impactful hyperparameter of this group, c centered is the second most impactful hyperparameter of this group, d dropout_rate is not impactful, e momentum is not impactful, f nesterov is the most impactful hyperparameter of this group

1.3 B. 3 Cifar10

For Cifar10, there are seven conditional hyperparameter, amsgrad, 1st_moment (beta_1), 2nd_moment (beta_2), dropout_rate, centered, momentum, and nesterov. Six conditional groups, specified in Fig. , have to be considered in order to assess their importance.

a \(\mathcal {G}_{\texttt {amsgrad}}\) b \(\mathcal {G}_{(\texttt {1st\_moment}, \texttt {2nd\_moment})}\) c \(\mathcal {G}_{\texttt {centered}}\) d \(\mathcal {G}_{\texttt {dropout\_rate}}\) e \(\mathcal {G}_{\texttt {momentum}}\) f \(\mathcal {G}_{\texttt {nesterov}}\). HSICs for conditional groups of cifar10 hyperparameter analysis. a amsgrad is not impactful, b 1st_moment, 2nd_moment are not impactful, c centered is the third most impactful hyperparameter of this group, d dropout_rate is not impactful, e momentum is not impactful, f nesterov is not impactful

Appendix C Construction of Bateman Data Set

Bateman data set is based on the resolution of the Bateman equations, which is an ODE system modeling multi species reactions:

and \(\varvec{\eta }\in (\mathbb {R}^{+})^M\), \(\varvec{\Sigma }_r \in \mathbb {R}^{M\times M}\). Here, \(f: ( \varvec{\eta _0}, t) \rightarrow \varvec{\eta }(t)\), and we are interested in \(\varvec{\eta }(t)\), which is the concentration of each of the species \(S_k\), with \(k \in \{1,\ldots ,M\}\). For physical applications, M ranges from tens to thousands. We consider the particular case \(M=11\). Matrix \(\varvec{\Sigma _r}\big (\varvec{\eta }(t)\big )\) depends on reaction constants. Here, 4 reactions are considered and each reaction p has constant \(\sigma _p\).

with \(\sigma _1=1\), \(\sigma _2=5\), \(\sigma _3=3\) and \(\sigma _4=0.1\). To obtain \(\varvec{\Sigma _r}\big (\varvec{\eta }(t)\big )\), the species have to be considered one by one. Here we give an example of how to construct the second row of \(\varvec{\Sigma _r}\big (\varvec{\eta }(t)\big )\). The other rows are built the same way. Given the reaction equations :

because \(S_2\) disappears in reactions (1) and (3) involving \(S_1\) and \(S_{11}\) as other reactants at rate \(\sigma _1\) and \(\sigma _3\), respectively, and appears in reactions (2) and (4) involving \(S_3\), \(S_4\) and \(S_3\), \(S_{11}\) as reactants, at rate \(\sigma _2\) and \(\sigma _4\) respectively. Hence, the second row of \(\varvec{\Sigma _r}\big (\varvec{\eta }(t)\big )\) is

with \(\varvec{\eta }(t)\) denoted by \(\varvec{\eta }\) to simplify the equation and \(\eta _i\) the i-th component of \(\varvec{\eta }\). To construct the training, validation and test data sets, we sample uniformly \(( \varvec{\eta }_0, t) \in [0,1]^{12} \times [0,5]\) 130000 times. We denote these samples \(( \varvec{\eta }_0, t)_i \) for \(i \in \{1,\ldots ,130000\}\). Then, we apply a first order Euler solver with a time step of \(10^{-3}\) to compute \(f(( \varvec{\eta }_0, t)_i)\). As a result, neural network’s input is \(( \varvec{\eta }_0, t)\) and neural network’s output is \(f(( \varvec{\eta }_0, t))\).

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Novello, P., Poëtte, G., Lugato, D. et al. Goal-Oriented Sensitivity Analysis of Hyperparameters in Deep Learning. J Sci Comput 94, 45 (2023). https://doi.org/10.1007/s10915-022-02083-4

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10915-022-02083-4