Abstract

Conservation properties of iterative methods applied to implicit finite volume discretizations of nonlinear conservation laws are analyzed. It is shown that any consistent multistep or Runge-Kutta method is globally conservative. Further, it is shown that Newton’s method, Krylov subspace methods and pseudo-time iterations are globally conservative while the Jacobi and Gauss-Seidel methods are not in general. If pseudo-time iterations using an explicit Runge-Kutta method are applied to a locally conservative discretization, then the resulting scheme is also locally conservative. However, the corresponding numerical flux can be inconsistent with the conservation law. We prove an extension of the Lax-Wendroff theorem, which reveals that numerical solutions based on these methods converge to weak solutions of a modified conservation law where the flux function is multiplied by a particular constant. This constant depends on the choice of Runge-Kutta method but is independent of both the conservation law and the discretization. Consistency is maintained by ensuring that this constant equals unity and a strategy for achieving this is presented. Simulations show that this strategy improves the convergence rate of the pseudo-time iterations. Experiments with GMRES suggest that it also suffers from inconsistency but that this is automatically accounted for after some finite number of iterations. Similar experiments with coarse grid corrections based on agglomeration indicate no inconsistency.

1 Introduction

Conservation laws arise ubiquitously in the modelling of physical phenomena and their discretizations have been the subject of intense study; see e.g. [17, Chapter 1] and [18, Chapter 1]. They derive their name from the fact that they describe the conservation of some quantities of interest over time. In computational fluid dynamics (CFD), these quantities are usually mass, momentum and energy. For convenience we always refer to the conserved quantity as the "mass" in this paper.

Many numerical schemes have been designed to mimic mass conservation. Throughout, we refer to such schemes as globally conservative. As a special case, locally conservative schemes (typically referred to simply as "conservative schemes" in the literature) dictate that local variations in the mass propagate from one computational cell to neighboring ones without "skipping" any cells. In particular, finite volume methods are built in part on this principle, although many other schemes possess the same property [13]. A major result on the topic is the Lax-Wendroff theorem, see e.g. [16], which provides sufficient conditions for numerical solutions of convergent and locally conservative discretizations to converge to weak solutions of the corresponding conservation law.

For stiff problems, e.g. the simulation of wall bounded viscous flows [23], implicit time stepping methods are used. Such discretizations result in systems of linear or nonlinear equations. In the context of fluid flow simulations, these systems are sparse and very large. Their solutions are approximated using iterative methods, see e.g. [1, 2, 4–8, 10–12] and the references therein.

It is natural to ask whether such approximate solutions satisfy the conservation properties upon which the discretizations are based, and in particular, whether a Lax-Wendroff type result is available. It is typically claimed that this is not the case, e.g. in [15], where a version of a Newton-Jacobi iteration was noted to violate global conservation. In this paper we set out to answer this question in greater detail for some well-known (families of) iterative methods. We consider conservative implicit space-time discretizations whose solutions in each time step are approximated by a fixed number of iterations with different iterative methods. In practice, iterative methods are terminated using tolerances on the residual, set to match some predefined error tolerance on the entire scheme. Hence, the number of iterations is not known a priori. Nonetheless, in the pursuit of designing efficient iterative methods, it is of interest to understand their behaviour when terminated well before convergence. To this end, the assumption of a small number of iterations is beneficial.

After introducing relevant concepts and definitions in Sect. 2, we investigate the global conservation of iterative methods in Sect. 3. It is shown that Newton’s method, Krylov subspace methods and pseudo-time iterations using Runge-Kutta (RK) methods are globally conservative if the initial guess has correct mass. However, the Jacobi and Gauss-Seidel methods in general are not. In Sect. 4 we focus on pseudo-time iterations using explicit RK methods. We show that such methods are locally conservative. By fixing the number of iterations and considering the limit of infinitesimal space-time increments, an extension of the Lax-Wendroff theorem is given. It turns out that the numerical solution in general converges to a weak solution of a modified conservation law for which the flux function is multiplied by a particular constant. An expression for this modification constant is given that only depends on the choice of Runge-Kutta method and the selected pseudo-time steps. A technique for ensuring that the constant equals one is presented, thereby ensuring consistency of the resulting scheme. Experiments indicate that the new technique improves the convergence rate of the pseudo-time iterations. Numerical tests corroborate these findings and further suggest that the results hold for systems of conservation laws in multiple dimensions. Further, experiments with GMRES suggest that it suffers from a similar modification as pseudo-time iterations, but that this is automatically accounted for after some finite number of iterations. Similar experiments with agglomeration coarse grid corrections indicate no presence of such modifications. Conclusions are drawn in Sect. 5.

Throughout the paper we separate quantities that belong to a spatial discretization from those that do not. Any vector living on a grid with m cells is denoted by a lower case bold letter, e.g. \(\mathbf {u} \in {\mathbb {R}}^m\). Similarly, any matrix operating on such a vector is represented by a bold upper case letter, e.g. \(\mathbf {A} \in {\mathbb {R}}^{m \times m}\). In Sect. 4, we consider s-stage Runge-Kutta methods. Vector quantities spanning these stages are denoted by bold and underlined lower case letters, e.g. \(\underline{\mathbf {b}} \in {\mathbb {R}}^s\). Matrices operating on the stages are represented by bold and underlined upper case letters e.g. \(\underline{\mathbf {A}} \in {\mathbb {R}}^{s \times s}\).

2 Conservation and Conservative Discretizations

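In this paper we consider numerical methods for conservation laws, meaning partial differential equations of the form

$$\begin{aligned} u_t + \nabla \cdot f(u) = 0, \quad x \in \Omega \subset {\mathbb {R}}^d, \quad t \in (t_0, t_e], \end{aligned} \tag{1}$$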

possibly with additional boundary conditions. Here, the kth component \(u_k\) of the vector \(u \in {\mathbb {R}}^q\) represents the concentration of a quantity, i.e. the total amount of that quantity in a domain is given by \(\int _{\Omega } u_k \text {d}x\). The conservation law states that this amount is only changed by flow across the boundary of the domain. Assuming that the net flow across the boundary is zero, we thus have for all \(t \in (t_0,t_e]\) that

$$\begin{aligned} \int _{\Omega } u(x,t) \, \text {d}x = \int _{\Omega } u(x,t_0) \, \text {d}x. \end{aligned} \tag{2}$$

In this paper we focus on scalar conservation laws in 1D, i.e. the case when \(d = q = 1\) in (1). Numerical experiments in Sect. 4 suggest that the results of the paper can be generalized to systems in multiple dimensions; however, this task is deferred to future work. Further, we restrict our analysis to the Cauchy problem for (1), or the problem with periodic boundary conditions. Thus, the conservation law of interest becomes

$$\begin{aligned} u_t + f(u)_x = 0, \quad x \in \Omega , \end{aligned} \tag{3}$$

where either \(\Omega = (-\infty , \infty )\) or \(\Omega = (a,b]\) and \(u(a) = u(b)\). Which case is considered will be clear from context.

A very successful line of research in computational fluid dynamics is to construct numerical methods that respect (2) on a discrete level. Herein, we predominantly consider finite volume methods that utilize the implicit Euler method as temporal discretization. Let the computational grid be given by \((x_i, t_n) = (i \Delta x, n \Delta t)\) with \(n \ge 0\). This grid may be either infinite or finite and periodic in space, i.e. \(x_{i+m} = x_i\) for some positive integer m. We consider discretizations of the form

$$\begin{aligned} \frac{u_i^{n+1} - u_i^n}{\Delta t} + \frac{{\hat{f}}_{i+\frac{1}{2}}(\mathbf {u}) - {\hat{f}}_{i-\frac{1}{2}}(\mathbf {u})}{\Delta x} = 0. \end{aligned} \tag{4}$$

Here, \(u_i^n\) approximates the solution \(u(x,t_n)\) of (3) in the computational cell \(\Omega _i\). The notation \({\hat{f}}_{i+\frac{1}{2}}(\mathbf {u})\) is used as a placeholder for a generic numerical flux of the form \({\hat{f}}(u_{i-p}^{n+1},\dots ,u_{i+q}^{n+1})\), where \(p,q \in {\mathbb {N}}\) determine the bandwidth of the stencil. Multiplying (4) by \(\Delta x\) and summing over all i reveals that

$$\begin{aligned} \Delta x \sum _i u_i^{n+1} = \Delta x \sum _i u_i^n. \end{aligned} \tag{5}$$

Comparing this with (2) shows that the finite volume scheme discretely mimics conservation of mass.
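The telescoping argument can be checked numerically. The following is a minimal sketch, assuming the linear advection equation \(u_t + u_x = 0\) on a periodic grid with the upwind flux \({\hat{f}}_{i+\frac{1}{2}} = u_i^{n+1}\); the function name `implicit_euler_upwind_step` and all parameter values are illustrative choices, not taken from the text.

```python
import numpy as np

def implicit_euler_upwind_step(u, dt, dx):
    """One implicit Euler step for u_t + u_x = 0 with upwind flux f_{i+1/2} = u_i."""
    m = u.size
    shift = np.roll(np.eye(m), 1, axis=0)  # periodic shift: (shift @ u)[i] = u[i-1]
    # u_i^{n+1} + (dt/dx) * (u_i^{n+1} - u_{i-1}^{n+1}) = u_i^n
    system = np.eye(m) + (dt / dx) * (np.eye(m) - shift)
    return np.linalg.solve(system, u)

# Illustrative setup (assumed values): 20 periodic cells on [0, 1).
m = 20
dx = 1.0 / m
x = dx * np.arange(m)
u_old = np.exp(-100.0 * (x - 0.5) ** 2)
u_new = implicit_euler_upwind_step(u_old, dt=0.05, dx=dx)

# Discrete mass before and after the step; the difference should be at rounding level.
mass_error = dx * (u_new.sum() - u_old.sum())
print(f"mass error after one step: {mass_error:.2e}")
```

Every column of the system matrix sums to one, which is the matrix counterpart of the telescoping flux differences: the solution changes, but its discrete mass does not.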

2.1 Local Conservation

It is the telescoping nature of the spatial terms in (4) that leads to the preceding result. In the finite volume community, this property is typically referred to simply as conservation. However, to distinguish it from other mass conservative schemes, we will henceforth refer to this property as local conservation:

Definition 1

A discretization of (1) that can be written in the form (4) is said to be locally conservative.

Local conservation plays a central role in the Lax-Wendroff theorem, which considers the Cauchy problem for (3). First, the notion of consistency must be specified:

Definition 2

A numerical flux \({\hat{f}}\) is consistent with f if it is Lipschitz continuous in each argument and if \({\hat{f}}(u,\dots ,u) = f(u)\).

A numerical flux function is inconsistent if it fails to satisfy Definition 2. We will see examples of this in Sect. 4.

The Lax-Wendroff theorem applies to the Cauchy problem for (3) and considers locally conservative discretizations with consistent numerical flux. If the numerical solution of such a scheme converges to a function u in the limit of vanishing \(\Delta x\) and \(\Delta t\), the theorem provides sufficient conditions for u to be a weak solution of the conservation law (1) [17, Chapter 12]. More precisely, consider a sequence of grids \((\Delta x_\ell , \Delta t_\ell )\) such that \(\Delta x_\ell , \Delta t_\ell \rightarrow 0\) as \(\ell \rightarrow \infty \). Let \({\mathscr {U}}_\ell (x,t)\) denote the piecewise constant function that takes the solution value \(u_i^n\) in \((x_i, x_{i+1}] \times (t_{n-1}, t_n]\) on the \(\ell \)th grid. We make the following assumptions:

Assumption 1

1. There is a function u(x, t) such that over every bounded set \(\Omega = [a,b] \times [0,T]\) in x-t space,

$$\begin{aligned} \Vert {\mathscr {U}}_\ell (x,t) - u(x,t) \Vert _{1,\Omega } \rightarrow 0 \quad \text {as} \quad \ell \rightarrow \infty . \end{aligned}$$

2. For each T there is a constant \(R > 0\) such that

$$\begin{aligned} TV({\mathscr {U}}_\ell (\cdot ,t)) < R \quad \text {for all} \quad 0 \le t \le T, \quad \ell = 1, 2, \dots \end{aligned}$$

The first of these assumptions asserts that the numerical solution is convergent in the \(L^1\)-norm with limit u. The second one states that the total variation of the numerical solution remains bounded independently of the grid. The Lax-Wendroff theorem may now be stated as follows:

Theorem 1

(Lax-Wendroff) Consider the locally conservative discretization (4), suppose that the numerical flux \({\hat{f}}\) is consistent and that Assumption 1 is satisfied. Then, u(x, t) is a weak solution of (1).

The theorem and its assumptions require that the linear or nonlinear systems arising in (4) are solved exactly. However, in practice these systems will be solved approximately using iterative methods. The natural question that now arises is: Do iterative solvers maintain the convergence to weak solutions?

2.2 Global Conservation

To answer this question we must establish if iterative methods preserve local conservation. However, local conservation is a special case of the more general notion of global conservation. Let us therefore consider a finite and periodic grid. Discretizing (3) in space on a mesh with cells \(\Omega _i\) of volume \(|\Omega _i|\), we arrive at an initial value problem

with \(\mathbf {u}(t), \hat{\mathbf {f}} \in {\mathbb {R}}^m\).

Definition 3

A discretization \(\hat{\mathbf {f}} = ({\hat{f}}_1(\mathbf {u}), \dots , {\hat{f}}_m(\mathbf {u}))^\top \) is globally conservative if

$$\begin{aligned} \sum _{i=1}^m |\Omega _i| {\hat{f}}_i(\mathbf {u}) = 0. \end{aligned}$$

Global conservation implies that (2) is fulfilled on a semidiscrete level by (6):

Suppose next that a time integration method is used to solve (6).

Definition 4

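A time integration method applied to the conservative semidiscretization (6) is globally conservative if

$$\begin{aligned} \sum _{i=1}^m |\Omega _i| u_i^{n+1} = \sum _{i=1}^m |\Omega _i| u_i^n \end{aligned}$$

holds for each time step \(n=0,1,\dots \).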

Note that the conservation property (5) derived for the locally conservative finite volume discretization (4) is a special case of Definition 4, where \(|\Omega _i| = \Delta x\) for every i. Global conservation is thus necessary for local conservation, which in turn is necessary for the Lax-Wendroff Theorem.

In the CFD community, one hears from time to time the claim that implicit methods are not conservative. However, this is not true: All consistent linear multistep methods are globally conservative. To see this, consider a generic s-step linear multistep method

where \(a_j\) and \(b_j\) are given method dependent coefficients. Suppose that \(\sum _{i=1}^m |\Omega _i| u_i^{n+j} = \sum _{i=1}^m |\Omega _i| u_i^0\) for \(j = 0, \dots , s-1\). Multiplying (7) by \(|\Omega _i|\) and summing over all cells gives

Consistent multistep methods satisfy \(a_s = 1\) and \(a_0 + \dots + a_{s-1} = -1\). Hence, global conservation is ensured.

Similarly, all Runge-Kutta (RK) methods are globally conservative. Consider an s-stage RK method

where \(a_{j,l}\) and \(b_j\) are given by the method. Since \(\hat{\mathbf {f}}\) is globally conservative it follows from Definition 3 that the mass of \(\mathbf {k}_j\) is zero and therefore that

Hence, RK methods are globally conservative.

Exponential integrators have been proven to be conservative as well [9], essentially due to the following useful lemma:

Lemma 1

For any \(\mathbf {y} \in {\mathbb {R}}^m\) the Jacobian \(\hat{\mathbf {f}}'(\mathbf {u}) = \frac{\partial \hat{\mathbf {f}}}{\partial \mathbf {u}} \in {\mathbb {R}}^{m \times m}\) of the globally conservative discretization \(\hat{\mathbf {f}}(\mathbf {u})\) satisfies

$$\begin{aligned} \sum _{i=1}^m |\Omega _i| \left( \hat{\mathbf {f}}'(\mathbf {u}) \mathbf {y} \right) _i = 0. \end{aligned}$$

Proof

See [27]. \(\square \)

In the remainder we also need the following result:

Lemma 2

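Let \(\hat{\mathbf {f}}'(\mathbf {u}) \in {\mathbb {R}}^{m \times m}\) be the Jacobian of a conservative discretization \(\hat{\mathbf {f}}(\mathbf {u})\) and let \(\alpha \) be any scalar. The solution \(\mathbf {x}\) of a linear system of the form

$$\begin{aligned} \left( \mathbf {I} - \alpha \hat{\mathbf {f}}'(\mathbf {u}) \right) \mathbf {x} = \mathbf {b} \end{aligned} \tag{10}$$

satisfies

$$\begin{aligned} \sum _{i=1}^m |\Omega _i| x_i = \sum _{i=1}^m |\Omega _i| b_i. \end{aligned}$$

Proof

Multiplying the ith element of (10) by \(|\Omega _i|\) and summing over all i gives

$$\begin{aligned} \sum _{i=1}^m |\Omega _i| x_i = \sum _{i=1}^m |\Omega _i| b_i + \alpha \sum _{i=1}^m |\Omega _i| \left( \hat{\mathbf {f}}'(\mathbf {u}) \mathbf {x} \right) _i. \end{aligned}$$

By Lemma 1 the final term on the right-hand side is zero. \(\square \)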

3 Globally Conservative Iterative Solvers

We begin by asking: Do iterative solvers respect global conservation? Since global conservation is necessary for local conservation, which in turn is used in Theorem 1, a negative answer immediately rules out an extension of the Lax-Wendroff theorem to that particular iterative method.

Discretizing (6) in time using the implicit Euler method results in a nonlinear equation system of the form

We concern ourselves with a set of iterates \(\mathbf {u}^{(k)}\) with limit \(\mathbf {u}^{n+1}\) as \(k \rightarrow \infty \).

Definition 5

An iterative method with iterates \(\mathbf {u}^{(k)}\) is said to be globally conservative if

In the following subsections we investigate the conservation properties of some of the most common iterative methods.

3.1 Newton’s Method

Newton’s method finds an approximate solution to (11) by solving the sequence of linear systems

for \(k = 0, 1,\dots \) Since \(\hat{\mathbf {f}}\) is conservative it has zero mass by Definition 3. If \(\mathbf {u}^{(k)}\) and \(\mathbf {u}^n\) have the same mass, the entire right-hand side of the first equation in (12) has zero mass. Hence, by Lemma 2, the increment \(\Delta \mathbf {u}\) also has zero mass. Thus, the mass of \(\mathbf {u}^{(k+1)}\) is the same as that of \(\mathbf {u}^{(k)}\), which by assumption is the same as that of \(\mathbf {u}^n\). Hence, we have shown:

Proposition 1

Newton’s method is globally conservative.
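As an illustration of Proposition 1, the following sketch applies Newton's method with exact linear solves to an implicit Euler discretization of Burgers' equation and records the mass of every iterate. The upwind flux \(u_i^2/2\), the uniform periodic grid, the initial data and the helper names (`burgers_residual`, `burgers_jacobian`, `newton_masses`) are assumptions made for this example only.

```python
import numpy as np

def burgers_residual(u, u_old, dt, dx):
    """Implicit Euler residual for u_t + (u^2/2)_x = 0 with upwind flux u_i^2 / 2."""
    flux_difference = (u**2 - np.roll(u, 1) ** 2) / (2.0 * dx)
    return u - u_old + dt * flux_difference

def burgers_jacobian(u, dt, dx):
    """Jacobian of burgers_residual with respect to u on a periodic grid."""
    m = u.size
    shift = np.roll(np.eye(m), 1, axis=0)  # (shift @ u)[i] = u[i-1]
    return np.eye(m) + (dt / dx) * (np.eye(m) - shift) @ np.diag(u)

def newton_masses(u_old, dt, dx, iterations=3):
    """Run Newton with exact linear solves and record the mass of each iterate."""
    u = u_old.copy()  # initial guess u^(0) = u^n has the correct mass
    masses = [dx * u.sum()]
    for _ in range(iterations):
        increment = np.linalg.solve(burgers_jacobian(u, dt, dx),
                                    -burgers_residual(u, u_old, dt, dx))
        u = u + increment
        masses.append(dx * u.sum())
    return np.array(masses)

# Illustrative setup (assumed values): 40 periodic cells, positive initial data.
m = 40
dx = 1.0 / m
x = dx * np.arange(m)
u_old = 1.5 + np.sin(2.0 * np.pi * x)
masses = newton_masses(u_old, dt=0.01, dx=dx)
mass_drift = np.max(np.abs(masses - masses[0]))
print(f"largest mass drift over the Newton iterates: {mass_drift:.2e}")
```

The drift stays at rounding level for every iterate, not only for the converged solution, since each increment has zero mass.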

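In large scale practical applications, each linear system in (12) is solved approximately using other iterative methods. In the following, we consider a generic linear system

$$\begin{aligned} (\mathbf {I} - \alpha \mathbf {A}) \mathbf {u} = \mathbf {b} \end{aligned} \tag{13}$$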

that may either represent a Newton iteration or a linear discretization of a conservation law. In line with Lemma 1, it is assumed that the mass of \(\mathbf {A} \mathbf {y}\) is zero for any vector \(\mathbf {y} \in {\mathbb {R}}^m\).

3.2 Stationary Linear Methods

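Any iterative method for solving (13) that can be written in the form

$$\begin{aligned} \mathbf {u}^{(k+1)} = \mathbf {M} \mathbf {u}^{(k)} + \mathbf {N}^{-1} \mathbf {b} \end{aligned} \tag{14}$$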

for some matrices \(\mathbf {M}\) and \(\mathbf {N}\), is termed a stationary linear method. Well known examples include the Richardson, Jacobi and Gauss-Seidel iterations.

3.2.1 Richardson Iteration

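For the Richardson iteration, \(\mathbf {M} = \mathbf {I} - \theta (\mathbf {I} - \alpha \mathbf {A})\) and \(\mathbf {N}^{-1} = \theta \mathbf {I}\), where \(\theta \in {\mathbb {R}}\) is a fixed parameter. With some minor rearrangements, the iteration can thus be expressed as

$$\begin{aligned} \mathbf {u}^{(k+1)} = \mathbf {u}^{(k)} + \theta \left( \mathbf {b} - \mathbf {u}^{(k)} \right) + \theta \alpha \mathbf {A} \mathbf {u}^{(k)}. \end{aligned}$$

Multiplying the ith element by \(|\Omega _i|\) and summing over i results in

$$\begin{aligned} \sum _{i=1}^m |\Omega _i| u_i^{(k+1)} = \sum _{i=1}^m |\Omega _i| u_i^{(k)} + \theta \sum _{i=1}^m |\Omega _i| \left( b_i - u_i^{(k)} \right) + \theta \alpha \sum _{i=1}^m |\Omega _i| \left( \mathbf {A} \mathbf {u}^{(k)} \right) _i. \end{aligned}$$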

By assumption, the matrix-vector product \(\mathbf {A} \mathbf {u}^{(k)}\) has zero mass, hence the final term vanishes. Further, if \(\mathbf {u}^{(k)}\) has the same mass as \(\mathbf {b}\), then the second term on the right-hand side vanishes. By induction we therefore conclude:

Proposition 2

The Richardson iteration is globally conservative.

3.2.2 Jacobi Iteration

Consider again the linear system (13). With the matrix decomposition \(\mathbf {I}-\alpha \mathbf {A} = \mathbf {I} - \alpha (\mathbf {D} + \mathbf {L} + \mathbf {U})\), where \(\mathbf {D}\), \(\mathbf {L}\) and \(\mathbf {U}\) are the diagonal, lower and upper triangular parts of \(\mathbf {A}\) respectively, the Jacobi iteration is obtained by setting \(\mathbf {M} = \alpha (\mathbf {I} - \alpha \mathbf {D})^{-1} (\mathbf {L} + \mathbf {U})\) and \(\mathbf {N}^{-1} = (\mathbf {I} - \alpha \mathbf {D})^{-1}\). Inserting this into (14), multiplying by \(\mathbf {I} - \alpha \mathbf {D}\), then adding and subtracting \(\alpha \mathbf {D} \mathbf {u}^{(k)}\), gives after some rearrangement

Multiplying the ith element by \(|\Omega _i|\) and summing over i results in

The final term vanishes by assumption. However, the second term on the right-hand side is in general non-zero and thus introduces a conservation error

Proposition 3

The Jacobi iteration is not globally conservative in general.

However, consider the special case when the diagonal \(\mathbf {D}\) of \(\mathbf {A}\) is a scalar multiple of the identity matrix, say \(\mathbf {D} = a \mathbf {I}\). Then \(a_{i,i} = a\) for \(i = 1, \dots , m\). If \(\mathbf {u}^{(k)}\) has the same mass as \(\mathbf {b}\) we find, after rearrangement of (15), that

Thus, \(\mathbf {u}^{(k+1)}\) has correct mass, at least as long as \(\alpha a \ne 1\). Hence, the Jacobi iteration is conservative in this special case.

3.2.3 Gauss-Seidel Iteration

The Gauss-Seidel iteration is defined by \(\mathbf {M} = \alpha (\mathbf {I} - \alpha (\mathbf {D} + \mathbf {L}))^{-1} \mathbf {U}\) and \(\mathbf {N}^{-1} = (\mathbf {I} - \alpha (\mathbf {D} + \mathbf {L}))^{-1}\). Inserting this into (14), multiplying by \(\mathbf {I} - \alpha (\mathbf {D} + \mathbf {L})\), then adding and subtracting \(\alpha \mathbf {U} \mathbf {u}^{(k+1)}\) gives

Following the same procedure as previously, we multiply the ith element by \(|\Omega _i|\) and sum over i to obtain

By assumption, the final term vanishes. However, the second term on the right-hand side is in general non-zero, and introduces a conservation error given by

Proposition 4

The Gauss-Seidel iteration is not globally conservative in general.

However, note that for problems where \(\mathbf {A}\) is lower triangular the Gauss-Seidel iteration is indeed conservative. In fact, for such problems the method is by definition exact.
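Propositions 2–4 can be illustrated with a small experiment. The sketch below performs a single sweep of each stationary method on a system \((\mathbf {I} - \alpha \mathbf {A}) \mathbf {u} = \mathbf {b}\) and reports the resulting mass error. The matrix \(\mathbf {A}\) (a periodic three-point operator scaled column-wise by weights `w`, so that every column sums to zero), the unit cell volumes, the right-hand side and the parameter values are all assumptions chosen to keep the example small; they are not the discretizations used elsewhere in the paper.

```python
import numpy as np

def stationary_mass_errors(w, alpha=0.5, theta=0.5):
    """Mass error after one Richardson, Jacobi and Gauss-Seidel sweep for (I - alpha*A) u = b."""
    m = w.size
    identity = np.eye(m)
    shift = np.roll(identity, 1, axis=0)                 # (shift @ u)[i] = u[i-1]
    A = (shift + shift.T - 2.0 * identity) @ np.diag(w)  # every column of A sums to zero
    B = identity - alpha * A
    b = np.zeros(m)
    b[0] = 1.0
    u0 = b.copy()                                        # initial guess with the mass of b
    D = np.diag(np.diag(B))
    iterates = {
        "richardson": u0 + theta * (b - B @ u0),
        "jacobi": np.linalg.solve(D, b - (B - D) @ u0),
        "gauss_seidel": np.linalg.solve(np.tril(B), b - np.triu(B, k=1) @ u0),
    }
    # Unit cell volumes are assumed, so the mass is the plain sum of the entries.
    return {name: u1.sum() - b.sum() for name, u1 in iterates.items()}

varying = stationary_mass_errors(np.array([1.0, 2.0, 3.0, 4.0]))  # non-constant diagonal
constant = stationary_mass_errors(np.ones(4))                     # diagonal D = a*I
for name in varying:
    print(f"{name:13s} varying: {varying[name]: .3e}   constant: {constant[name]: .3e}")
```

With the non-constant diagonal, only the Richardson sweep preserves the mass. With the constant diagonal, the Jacobi sweep becomes conservative as well, in line with the special case discussed after Proposition 3, while the Gauss-Seidel sweep still does not.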

3.3 Krylov Subspace Methods

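Given an initial guess \(\mathbf {u}^{(0)}\) for the solution of the linear system (13), the kth iteration of a Krylov subspace method belongs to the space

$$\begin{aligned} \mathbf {u}^{(0)} + \text {span} \left\{ \mathbf {r}^{(0)}, (\mathbf {I} - \alpha \mathbf {A}) \mathbf {r}^{(0)}, \dots , (\mathbf {I} - \alpha \mathbf {A})^{k-1} \mathbf {r}^{(0)} \right\} , \end{aligned}$$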

where \(\mathbf {r}^{(0)} = \mathbf {b} - (\mathbf {I} - \alpha \mathbf {A}) \mathbf {u}^{(0)}\) is the initial residual. Examples of Krylov subspace methods are GMRES, CG and BICGSTAB [24, Chapters 6–7].

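We may express the kth iteration \(\mathbf {u}^{(k)}\) as

$$\begin{aligned} \mathbf {u}^{(k)} = \mathbf {u}^{(0)} + \sum _{l=0}^{k-1} c_l (\mathbf {I} - \alpha \mathbf {A})^l \mathbf {r}^{(0)} \end{aligned}$$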

for some coefficients \(c_l, \, l=0,\dots ,k-1\) that depend on, and are chosen by the method. It follows that

The final term vanishes by assumption. Note that the mass of \(\mathbf {r}^{(0)}\) is zero if the mass of \(\mathbf {u}^{(0)}\) equals that of \(\mathbf {b}\). Thus, the second term on the right-hand side also vanishes. We thus conclude:

Proposition 5

All Krylov subspace methods are globally conservative when the initial guess has the same mass as the right-hand side.

Typically a preconditioner \(\mathbf {P}^{-1}\) will pre- or post-multiply \(\mathbf {A}\) in Krylov subspace methods. We will not delve into this subject here but merely observe that if \(\mathbf {y}\) and \(\mathbf {P}^{-1} \mathbf {y}\) have the same mass for any choice of \(\mathbf {y}\), then the above analysis applies without modification.
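Proposition 5 can be checked with a deliberately simple Krylov method. The sketch below minimizes the residual over the Krylov space using an explicit monomial basis and a least-squares solve. In exact arithmetic this yields the same iterate as GMRES, but it is not a robust implementation and is only meant for very small k. The test matrix, the right-hand side and the function name `krylov_min_residual` are assumptions for this example.

```python
import numpy as np

def krylov_min_residual(B, b, u0, k):
    """Minimize ||b - B u|| over u0 + span{r0, B r0, ..., B^(k-1) r0}."""
    r0 = b - B @ u0
    basis = [r0]
    for _ in range(k - 1):
        basis.append(B @ basis[-1])
    K = np.column_stack(basis)
    # Least-squares coefficients c_l of the Krylov expansion of u^(k) - u^(0).
    coefficients = np.linalg.lstsq(B @ K, r0, rcond=None)[0]
    return u0 + K @ coefficients

# Illustrative system (assumed): B = I - alpha*A, where the columns of A sum to zero.
m = 6
identity = np.eye(m)
shift = np.roll(identity, 1, axis=0)
w = np.linspace(1.0, 2.0, m)
A = (shift + shift.T - 2.0 * identity) @ np.diag(w)
B = identity - 0.5 * A
b = np.arange(1.0, m + 1.0)

u_matched = np.full(m, b.mean())  # initial guess with the same mass as b
u_k = krylov_min_residual(B, b, u_matched, k=3)
mass_error = u_k.sum() - b.sum()
residual_drop = np.linalg.norm(b - B @ u_k) / np.linalg.norm(b - B @ u_matched)
print(f"mass error: {mass_error:.2e}, relative residual: {residual_drop:.3f}")
```

The mass is preserved after only three iterations, well before convergence, because every basis vector of the Krylov space has zero mass when the initial residual does.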

3.4 Multigrid Methods

Multigrid methods combine two methods, namely a smoother and a coarse grid correction (CGC). The idea is to separate the residual into high and low frequency components. As a smoother, an iterative method is applied, designed to effectively damp the high frequency components.

The role of the CGC is to remove the low frequency components of the residual. The residual is mapped to a coarser grid using a restriction operator, \(\mathbf {R}\). Smoothing is then applied and a prolongation operator \(\mathbf {P}\) is used to reconstruct the residual on the fine grid. Finally, the fine grid solution is updated by adding the correction to the previous iterate. The procedure can be applied to a hierarchy of grids, thereby resulting in a multigrid method. If we consider only two grid levels and assume that the system is solved exactly on the coarser one, then the coarse grid correction for the linear problem (13) has the form

Here, the notation \((\cdot )_\ell \) and \((\cdot )_{\ell -1}\) denote matrices operating on the fine and the coarse grid respectively.

The easiest way to make a multigrid method globally conservative is to choose its components to be globally conservative. The smoother can be any of the globally conservative iterations discussed so far. By Lemma 2, the inverted matrices in (16) are mass conserving. Thus, it remains to choose globally conservative restriction and prolongation operators \(\mathbf {R}\) and \(\mathbf {P}\). This is achieved using agglomeration. The restriction is performed by agglomerating a number of neighboring cells by constructing a volume weighted average of fine grid values. Conversely, the prolongation is done by injecting coarse grid values at multiple fine grid points. In fact, this is the standard choice of multigrid method in CFD since it results in faster convergence than other alternatives. For details, see [3].
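The mass-preserving property of agglomeration can be made concrete in 1D. The following sketch assumes a uniform grid with an even number of cells and pairwise agglomeration, so that each coarse cell has twice the volume of a fine cell. The function name `agglomeration_operators` and the sample data are illustrative choices.

```python
import numpy as np

def agglomeration_operators(m_fine):
    """Restriction R and prolongation P for pairwise agglomeration on a uniform 1D grid."""
    m_coarse = m_fine // 2
    # Prolongation: inject each coarse value into its two fine cells.
    P = np.kron(np.eye(m_coarse), np.ones((2, 1)))
    # Restriction: volume-weighted average of the two agglomerated fine cells.
    R = 0.5 * P.T
    return R, P

m_fine, dx = 8, 0.125
R, P = agglomeration_operators(m_fine)
u_fine = np.arange(1.0, m_fine + 1.0)
u_coarse = R @ u_fine

fine_mass = dx * u_fine.sum()                 # fine cells have volume dx
coarse_mass = 2.0 * dx * u_coarse.sum()       # coarse cells have volume 2*dx
prolonged_mass = dx * (P @ u_coarse).sum()
print(fine_mass, coarse_mass, prolonged_mass)
```

Both transfers leave the mass unchanged, provided the mass on each level is measured with the cell volumes of that level.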

3.5 Pseudo-Time Iterations

Pseudo-time iterations are obtained by adding a pseudo-time derivative term to the algebraic equations (11) (or (13)),

Here, \(\mathbf {u}_0\) is initial data that must be provided, e.g. \(\mathbf {u}_0 = \mathbf {u}^n\). The idea is that the system eventually should reach a steady state as \(\tau \rightarrow \infty \) where the pseudo-time derivative vanishes [14], resulting in a solution to the original nonlinear system (11). Any time integration method can in principle be applied to the initial value problem (17). Explicit RK methods are globally conservative by construction. Implicit methods result in new systems of equations whose solutions are once again approximated using iterative methods [5]. Whether the resulting approximation is conservative depends on the choice of method. Any one of the conservative methods discussed so far can be applied in principle. We will return to pseudo-time iterations in Sect. 4, where more details are provided.

3.6 Numerical Validation

To validate the results of the preceding section we consider two simple experiments. The first is the linear advection equation with periodic boundary conditions,

We discretize using the finite volume scheme (4) with a central numerical flux \({\hat{f}}_{i+\frac{1}{2}} = (u_{i+1}^{n+1} + u_i^{n+1})/2\). This results in a linear system of the form (13) with \(\alpha = -\Delta t/ \Delta x\) and \(\mathbf {A} = \text {Tridiag} \left( -\frac{1}{2}, 0, \frac{1}{2} \right) \). Here, \(\text {Tridiag}(\cdot )\) refers to a tridiagonal matrix with periodic wrap-around. Note that the diagonal of \(\mathbf {A}\) is constant. We therefore expect the Jacobi method to be conservative for this particular discretization.

The second experiment is Burgers’ equation with periodic boundary conditions,

Again, we use the finite volume scheme (4), however with the upwind flux \({\hat{f}}_{i+\frac{1}{2}} = \left( u_i^{n+1} \right) ^2/2\). The result is a nonlinear system in the form (11) with \(\alpha = -\Delta t\) and \({\hat{f}}_i(\mathbf {u}^{n+1}) = \left( (u_i^{n+1})^2 - (u_{i-1}^{n+1})^2 \right) /(2\Delta x)\). The Jacobian of this discretization is lower triangular except for a single element in the top right corner. Thus, we expect the Gauss-Seidel method to be nearly conservative if the grid is sufficiently fine.

Burgers’ equation (19) is solved using Newton’s method with initial guess \(\mathbf {u}^n\). The resulting linear systems are either solved exactly or using the following iterative methods with \(\mathbf {0}\) as initial guess:

- (R): The Richardson iteration using \(\theta = 0.5\).
- (J): The Jacobi method.
- (GS): The Gauss-Seidel method.
- (GM): GMRES without restarts or preconditioning.
- (CGC): A two-level coarse grid correction.
- (H): Pseudo-time iterations using Heun’s method with \(\Delta \tau = 0.5\).

As prolongation and restriction operators for the CGC, agglomeration gives

The same methods are used directly on the advection problem (18) with \(\mathbf {u}^n\) as initial guess.

First we consider very coarse discretizations with \(\Delta x= \Delta t= 0.5\) for both problems. A single iteration is used with each method and the mass error and residual are computed after one time step. The results are shown in Table 1. Here, any error smaller than \(10^{-15}\) is reported as zero. The Richardson iteration, GMRES, CGC and the pseudo-time iterations are conservative for both problems. Similarly, the exact Newton method (Exact) is conservative. As expected, the Jacobi method is conservative for the advection problem but not for Burgers’ equation. Gauss-Seidel is not conservative for either of the two problems.

Next, we repeat the experiment with well resolved discretizations. For the advection problem we choose \(\Delta t= \Delta x= 0.006\) and for Burgers’ equation \(\Delta t= \Delta x= 0.003\). In the latter case, two Newton iterations are used per time step. For each of the other methods, 5 iterations are used except for CGC where a single iteration is performed.

The total mass is computed in each time step and compared with the mass of the initial data. Figure 1 shows the results. The main difference from the previous test is that Gauss-Seidel has an undetectable mass error for Burgers’ equation. This is in line with our expectations since the Jacobian only has a single nonzero element above the main diagonal.

4 Local Conservation of Pseudo-Time Iterations

Having covered global conservation in the previous section, we now switch focus to local conservation. The Jacobi and Gauss-Seidel methods cannot be locally conservative since they are not globally conservative in general. For the other methods considered so far, the question remains open.

In what follows, we restrict our attention to the Cauchy problem for (3). We focus on pseudo-time iterations, show that these methods are locally conservative and prove an extension of the Lax-Wendroff theorem. Since the Richardson iteration is equivalent to pseudo-time iterations with the explicit Euler method, it is also covered by this analysis.

4.1 Pseudo-Time Iterations in Locally Conservative Form

We once again consider the finite volume method (4) where the implicit Euler method is used as time discretization. In order to apply pseudo-time iterations to this scheme, we introduce a pseudo-time derivative,

where the nonlinear function \(g_i\) is given by

Several different methods are available for iterating in pseudo-time [5, 28]. Herein, we use an explicit s-stage Runge-Kutta method (ERK). Let \((\underline{\mathbf {A}},\underline{\mathbf {b}},\underline{\mathbf {c}})\) denote the coefficient matrix and vectors of the ERK method. We denote the kth pseudo-time iterate by \(u_{i}^{(k)}\). The subsequent iterate \(u_{i}^{(k+1)}\) is computed from \(u_{i}^{(k)}\) as

where the stage vectors \(\mathbf {U}_j^{(k)}\), \(j = 1, \dots , s\) have elements

As previously, p and q determine the bandwidth of the finite volume stencil.

In the remainder we always use a fixed number N of iterations. A step in physical time is taken by setting \(u_i^{n+1} = u_{i}^{(N)}\). Throughout, \(u_{i}^{(0)} = u_i^n\) is chosen as initial iterate.

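Recall that the stability function \(\phi (z)\) of an RK method \((\underline{\mathbf {A}},\underline{\mathbf {b}},\underline{\mathbf {c}})\) is given by (see e.g. [31, Chapter IV.3])

$$\begin{aligned} \phi (z) = 1 + z \underline{\mathbf {b}}^\top (\underline{\mathbf {I}} - z \underline{\mathbf {A}})^{-1} \underline{\mathbf {1}}, \end{aligned} \tag{24}$$

where \(\underline{\mathbf {I}}\) is the \(s \times s\) identity matrix and \(\underline{\mathbf {1}} \in {\mathbb {R}}^s\) is the vector of ones. The stability region of the RK method is defined as the subset of the complex plane for which \(|\phi (z)| < 1\). The proof of the following lemma is rather lengthy and is therefore deferred to Appendix A.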

Lemma 3

For each pseudo-time iteration \(k = 0, \dots , N-1\), let \(\mu _k = \Delta \tau _k/\Delta t\) and set \(u_{i}^{(0)} = u_i^n\). Then, for any \(N \ge 1\), the elements of the pseudo-time iterate \(u_{i}^{(N)}\) satisfy the relation

where the flux \({\hat{h}}_{i+\frac{1}{2}}^{(N)}\) is given by

and \(\hat{\underline{\mathbf {f}}}_{i+\frac{1}{2}}^{(k)} = \left( {\hat{f}}_{i+\frac{1}{2}}\left( \mathbf {U}_1^{(k)} \right) , \dots , {\hat{f}}_{i+\frac{1}{2}} \left( \mathbf {U}_s^{(k)} \right) \right) ^\top \).

Remark 1

The product in (26) is empty when \(k = N-1\). To handle this case we use the convention

It follows immediately from Lemma 3 that the locally conservative nature of the discretization (4) is preserved by the pseudo-time iterations, albeit with the new numerical flux \({\hat{h}}_{i+\frac{1}{2}}^{(N)}\) in place of \({\hat{f}}_{i+\frac{1}{2}}\). The question of which flux \({\hat{h}}_{i+\frac{1}{2}}^{(N)}\) is consistent with is answered in the following theorem:

Theorem 2

Let N be given and let \(\mu _k = \Delta \tau _k/\Delta t\) for \(k = 0, \dots , N-1\). Set \(u_{i}^{(0)} = u_i^n\) and terminate the pseudo-time iteration after N steps. Then the resulting scheme can be written in the locally conservative form

The numerical flux \({\hat{h}}_{i+\frac{1}{2}}^{(N)}\), given by (26), is consistent with the flux \(c(\mu _0,\dots ,\mu _{N-1}) f\), where

such that (27) is a consistent discretization of the conservation law

Thus, the scheme is consistent with (3) if and only if \(c(\mu _0,\dots ,\mu _{N-1}) = 1\).

Proof

Firstly, the locally conservative form (27) follows from setting \(u_i^{n+1} = u_{i}^{(N)}\) in (25). Secondly we recall that the numerical flux \({\hat{f}}_{i+\frac{1}{2}}\) depends on \(p+q+1\) parameters, e.g. \({\hat{f}}_{i+\frac{1}{2}}(\mathbf {w}) = {\hat{f}}(w_{i-p}, \dots , w_{i+q})\). Inspecting the flux \({\hat{h}}_{i+\frac{1}{2}}^{(N)}\) we see that it is additionally dependent on \(u_i^n\) so that we may write \({\hat{h}}_{i+\frac{1}{2}}^{(N)}(\mathbf {w}) = {\hat{h}}^N(w_{i-p}, \dots , w_{i+q}; u_i^n)\). To establish the consistency we must therefore show that \({\hat{h}}^N(u, \dots , u; u) = c f(u)\). To do this, we first note from (21) that \(g_i(u,\dots ,u; u) = 0\) due to the consistency of \({\hat{f}}_{i+\frac{1}{2}}\). Using the fact that \(\underline{\mathbf {A}}\) is lower triangular for any ERK method, it follows from (22) and (23) that \(U_{j_\iota }^{(k)} = u_{i}^{(k)} = u\) for every j, k and \(\iota = i-p, \dots , i+q\). Consequently, the vector \(\hat{\underline{\mathbf {f}}}_{i+\frac{1}{2}}^{(k)}\) becomes

where the consistency of \({\hat{f}}\) has been used in the final equality. Inserting this into the flux function \({\hat{h}}_{i+\frac{1}{2}}^{(N)}\) in (26) and using (24) gives

where we have utilized the fact that the sum in the third equality is telescoping. Finally, note that \({\hat{h}}_{i+\frac{1}{2}}^{(N)}\) is formed by a linear combination of evaluations of \({\hat{f}}_{i+\frac{1}{2}}\) and is therefore Lipschitz continuous. \(\square \)

The fact that \({\hat{h}}_{i+\frac{1}{2}}^{(N)}\) is inconsistent with f when \(c \ne 1\) is a manifestation of the error associated with the pseudo-time iterations. This has important implications for the convergence of the resulting scheme. What follows is an extension of the Lax-Wendroff theorem (see [17, Chapter 12]) pertaining to ERK pseudo-time iterations:

Theorem 3

Consider a sequence of grids \((\Delta x_\ell , \Delta t_\ell )\) such that \(\Delta x_\ell , \Delta t_\ell \rightarrow 0\) as \(\ell \rightarrow \infty \). Fix N independently of \(\ell \), set \(u_{i}^{(0)} = u_i^n\) and terminate the pseudo-time iterations after N steps. Let \(\Delta \tau _{k,\ell } / \Delta t_\ell = \mu _{k,\ell } = \mu _k\) be constants independent of \(\ell \) for each \(k=0,\dots ,N-1\). Suppose that the numerical flux \({\hat{f}}\) in (4) is consistent with f and that Assumption 1 is satisfied. Then, u(x, t) is a weak solution of the conservation law

\[ u_t + c(\mu _0,\dots ,\mu _{N-1}) f(u)_x = 0. \]

Proof

We follow the proof of the Lax-Wendroff theorem given in [17, Chapter 12] with changes and details added where necessary. Throughout the proof we let \({\mathscr {U}}_\ell (x,t)\) denote the piecewise constant function that takes the solution value \(u_i^n\) in \((x_i, x_{i+1}] \times (t_{n-1}, t_n]\) on the \(\ell \)th grid. Similarly, for \(j = 1,\dots ,s\) we let \({\mathscr {U}}_{j_\ell }^{(k)}(x,t)\) be the piecewise constant function that takes the value \(U_{j_i}^{(k)}\) in \((x_i, x_{i+1}] \times (t_{n-1}, t_n]\).

The discretization (27) can equivalently be expressed as

Let \(\varphi \in C_0^1\) be a compactly supported test function. Multiply (30) by \(\varphi (x_i,t_n)\) and sum over all n and i. Due to the compact support of \(\varphi \), these sums can be extended arbitrarily beyond the bounds of x and t, hence we obtain

At this point we will use summation by parts on the sums in (31). Recall that for two sequences \((a_i)\) and \((b_i)\), the summation by parts formula can be expressed as

Applied to the n-sum in the first term and to the i-sum in the second term in (31), this results in

Here we have used the fact that \(\varphi \) has compact support in order to eliminate all boundary terms except the one at \(n=0\).

We now let \(\ell \rightarrow \infty \) and investigate the convergence of the terms arising in (32). The first and third terms are identical to those in the proof of the Lax-Wendroff theorem [17, Chapter 12]. Thus, it can immediately be concluded that the first term converges to

and the third one to

It remains to investigate the second term in (32).

Expanding \({\hat{h}}_{i+\frac{1}{2}}^{(N)}\) using (26) yields for the second term in (32)

To this expression, add and subtract

in order to obtain

The bracketed part of this expression evaluates to \(c(\mu _0,\dots ,\mu _{N-1})\), as in the proof of Theorem 2. Thus, the first half of this expression equals

Apart from the factor \(c(\mu _0,\dots ,\mu _{N-1})\), which is independent of \(\ell \), this term appears identically in the proof of the Lax-Wendroff theorem [17, Chapter 12] and converges to

It remains to show that

vanishes in the limit \(\ell \rightarrow \infty \). Since \({\mathscr {U}}_\ell \) and \({\mathscr {U}}_{j_\ell }^{(k)}\) are both constant in \((x_i,x_{i+1}] \times (t_{n-1},t_n]\), we can rewrite (33) as

Here, \(\hat{\underline{\mathbf {f}}}_{i+\frac{1}{2}}^{(k)}(x,t)\) is placeholder notation for the vector whose jth element is

To establish that this term indeed vanishes, it suffices to show that

tends to zero for almost every x. By the Cauchy-Schwarz and triangle inequalities, (34) is bounded by

where the Euclidean norm in \({\mathbb {R}}^s\) is used. The only term in (35) that depends on \(\ell \) is \(\left\| \left( \hat{\underline{\mathbf {f}}}_{i+\frac{1}{2}}^{(k)}(x,t) - \underline{\mathbf {1}} f({\mathscr {U}}_\ell (x,t)) \right) \right\| \) and it therefore suffices to show that this term vanishes for almost every x.

Recall that \(f({\mathscr {U}}_\ell ) = {\hat{f}}_{i+\frac{1}{2}}({\mathscr {U}}_\ell , \dots , {\mathscr {U}}_\ell )\) by consistency. By the equivalence of norms in finite dimensions, there is a constant C such that

Since \({\hat{f}}_{i+\frac{1}{2}}\) is Lipschitz continuous in each argument there is a constant L such that the final expression is bounded by

The second of these terms appears in the proof of the Lax-Wendroff theorem [17, Chapter 12] and vanishes in the limit for almost every x due to the bounded total variation of \({\mathscr {U}}_\ell \). Further, from (23) and (22) and the fact that \(u_{i}^{(0)} = u_i^n\) it follows that for every \(j=1,\dots ,s\) and \(k \ge 0\), \(U_{j_\iota }^{(k)} = u_\iota ^n\) for each \(\iota = i-p, \dots , i+q\) in the limit of vanishing \(\Delta \tau _\ell \). Consequently, \(\left| {\mathscr {U}}_{j_\ell }^{(k)}(\cdot ,t) - {\mathscr {U}}_\ell (\cdot ,t) \right| \) vanishes identically as \(\ell \rightarrow \infty \). \(\square \)

A few remarks about Theorem 3 are in order. First, we demand from a useful iterative method that it converges to the correct solution as \(N \rightarrow \infty \). Thus, the pseudo-time steps should be chosen in a way that ensures that \(c \rightarrow 1\), or equivalently,

\[ \prod _{l=0}^{N-1} \phi (-\mu _{l}) \rightarrow 0 \quad \text {as } N \rightarrow \infty . \]

The simplest way to do this is to choose each pseudo-time step so that \(|\phi (-\mu _{l})| < 1\), i.e. to stay within the stability region of the RK method.

Secondly, observe that if \(\phi (-\mu _{l}) = 0\) for any l, then \(c=1\) irrespective of how many further iterations are carried out. For some RK methods such a root exists while for others it does not. For instance, the explicit Euler method has stability function \(\phi (-\mu ) = 1 - \mu \), hence \(\mu = 1\) is a root of \(\phi \). On the other hand, Heun’s method has stability function \(\phi (-\mu ) = 1 - \mu + \mu ^2 /2 > 0\) for all \(\mu \in {\mathbb {R}}\) and therefore does not have any real roots. A strategy is thus to choose an RK method with a root, begin the pseudo-time iterations with a step that corresponds to this root, and then resort to a conventional method for choosing the remaining pseudo-time steps. The initial iteration will not change the limit as \(N \rightarrow \infty \) if the remaining pseudo-time steps are selected within the stability region.
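
The two remarks can be illustrated with a few lines of Python. This is a minimal sketch, assuming the expression \(c = 1 - \prod _l \phi (-\mu _l)\) for the modification constant; the function names are illustrative and do not come from the paper.

```python
# Sketch: modification constant c = 1 - prod_l phi(-mu_l) for two ERK methods.
# All helper names are hypothetical and chosen for this illustration only.

def phi_euler(mu):
    """Stability function of the explicit Euler method evaluated at -mu."""
    return 1.0 - mu


def phi_heun(mu):
    """Stability function of Heun's method evaluated at -mu."""
    return 1.0 - mu + mu**2 / 2.0


def modification_constant(phi, mus):
    """Return c(mu_0, ..., mu_{N-1}) = 1 - prod_l phi(-mu_l)."""
    prod = 1.0
    for mu in mus:
        prod *= phi(mu)
    return 1.0 - prod


# First remark: with steps inside the stability region, c tends to 1 as N grows.
for n_iter in (1, 5, 20, 80):
    print(n_iter, modification_constant(phi_heun, [0.25] * n_iter))

# Second remark: for explicit Euler, an initial step at the root mu = 1
# gives c = 1 regardless of the steps that follow.
c_root_first = modification_constant(phi_euler, [1.0] + [0.25] * 3)

# Heun: mu^2/2 - mu + 1 has discriminant (-1)^2 - 4 * (1/2) * 1 = -1 < 0,
# so there is no real root to exploit.
heun_discriminant = (-1.0) ** 2 - 4.0 * 0.5 * 1.0
print(c_root_first, heun_discriminant)
```

Placing the root step first is a choice made here for illustration; since the product vanishes as soon as any factor is zero, the position of the root step does not affect the value of \(c\) in this formula.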

4.2 Numerical Results

Next we validate Theorem 3 by numerically solving a series of linear and nonlinear conservation laws.

4.2.1 Linear Advection

The first setting is the linear advection Eq. (18). The computational domain is \(x \in (-1,1]\), \(t \in (0,0.25]\) and periodic boundary conditions are used. The upwind flux \({\hat{f}}_{i+\frac{1}{2}} = u_{i}^{n+1}\) is used for the spatial discretization. The resulting finite volume method becomes

Throughout the experiments the temporal and spatial increments are chosen to be equal: \(\Delta t= \Delta x\).

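We validate Theorem 3 by studying the convergence of the numerical scheme to the solution of the original advection problem (18) as well as to the solution of the modified version \(u_t + c u_x = 0\). As pseudo-time iterations we use the explicit Euler method, Heun’s method and the third order strong stability preserving RK method SSPRK3 [26]. Theorem 3 predicts that these methods respectively will modify the propagation speed by the factor

\[ \begin{aligned} c_{\text {Euler}} &= 1 - \prod _{l=0}^{N-1} \left( 1 - \mu _l \right) , \\ c_{\text {Heun}} &= 1 - \prod _{l=0}^{N-1} \left( 1 - \mu _l + \frac{\mu _l^2}{2} \right) , \\ c_{\text {SSPRK3}} &= 1 - \prod _{l=0}^{N-1} \left( 1 - \mu _l + \frac{\mu _l^2}{2} - \frac{\mu _l^3}{6} \right) . \end{aligned} \]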

Here, we fix \(N=4\) and consider two different sequences of pseudo-time steps, one with constant and one with variable step sizes. The first is given by \(\mu _{l} = 1/20\) and the second by \(\mu _{l} = 2^{-l}\) for \(l = 0, \dots , 3\). The corresponding modification constants \(c(\mu _0, \dots , \mu _3)\) are shown in Table 2. Note that with the second sequence, \(c=1\) for the explicit Euler method. This is due to the fact that \(\mu _0\) is a root of the stability polynomial in this case.
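
The modification constants for the two step sequences can be reproduced with a short script. This is a sketch under the assumption that \(c = 1 - \prod _l \phi (-\mu _l)\), with the standard stability polynomials of the three methods; it is not the code used to produce Table 2, and the dictionary layout is purely illustrative.

```python
# Sketch: modification constants for N = 4 and the two step sequences in the text.
# Stability functions are evaluated at -mu; the names are hypothetical.

def c_constant(phi, mus):
    """c(mu_0, ..., mu_{N-1}) = 1 - prod_l phi(-mu_l)."""
    prod = 1.0
    for mu in mus:
        prod *= phi(mu)
    return 1.0 - prod


methods = {
    "explicit Euler": lambda mu: 1 - mu,
    "Heun": lambda mu: 1 - mu + mu**2 / 2,
    "SSPRK3": lambda mu: 1 - mu + mu**2 / 2 - mu**3 / 6,
}

constant_steps = [1 / 20] * 4                   # mu_l = 1/20
variable_steps = [2.0 ** (-l) for l in range(4)]  # mu_l = 2^{-l}

table = {
    name: (c_constant(phi, constant_steps), c_constant(phi, variable_steps))
    for name, phi in methods.items()
}

for name, (c_const, c_var) in table.items():
    print(f"{name:15s} constant: {c_const:.6f}  variable: {c_var:.6f}")
```

For the explicit Euler method the variable sequence starts with \(\mu _0 = 1\), so the product contains a zero factor and the script returns \(c = 1\) exactly.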

The advection problem is solved on a sequence of grids with grid spacing \(\Delta x= 2 / (40 \times 2^j)\) for \(j = 1, \dots , 12\). The \(L^2\) error is calculated with respect to the exact solution of the original and modified equations. The results are shown in Fig. 2. When constant pseudo-time steps are used (Fig. 2a), the lines overlap. None of the methods converge to the solution of the original problem since the numerical solution is moving with the incorrect speed. Instead, all three schemes converge to the solution of their respective modified conservation law. Similar results are seen when variable pseudo-time steps are used (Fig. 2b).

Solutions of the advection Eq. (18) propagate from left to right at unit speed. In this setting it follows from Theorem 3 that the numerical solution will propagate with the speed \(c(\mu _0,\dots ,\mu _{N-1})\) in the limit \(\Delta x, \Delta t\rightarrow 0\). Thus, if \(c \ne 1\) in each physical time step, the numerical solution will drift out of phase. However, it should be noted that Theorem 3 is an asymptotic result and does not necessarily imply that \(c(\mu _0, \dots , \mu _{N-1})\) alone captures the propagation speed error on coarse grids. In fact, we may generally expect a contribution to the speed from dispersion errors built into the discretization; see e.g. [19,20,21,22, 29, 30] for details and remedies.
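
The mechanism behind the speed modification can be seen in a small experiment. The sketch below assumes an explicit Euler pseudo-time iteration applied to the residual \(g_i(\mathbf {u}) = (u_i - u_i^n)/\Delta t + (u_i - u_{i-1})/\Delta x\) of the implicit Euler upwind scheme; the Gaussian pulse, the grid size and all function names are hypothetical choices. It checks that the total mass is preserved and that a single pseudo-time step coincides with an explicit upwind step for \(u_t + \mu _0 u_x = 0\).

```python
import math


def pseudo_time_step(u_old, mus, nu):
    """Approximate one implicit Euler/upwind step for u_t + u_x = 0 (periodic)
    with explicit Euler pseudo-time iterations.

    nu = dt/dx and mus[k] = dtau_k/dt. The initial guess is u^(0) = u^n.
    """
    u = list(u_old)
    n = len(u)
    for mu in mus:
        # dt * g_i(u) = (u_i - u_i^n) + nu * (u_i - u_{i-1}); u[-1] wraps around.
        dt_g = [(u[i] - u_old[i]) + nu * (u[i] - u[i - 1]) for i in range(n)]
        u = [u[i] - mu * dt_g[i] for i in range(n)]
    return u


def explicit_upwind_step(u_old, speed, nu):
    """One explicit Euler/upwind step for u_t + speed * u_x = 0 (periodic)."""
    n = len(u_old)
    return [u_old[i] - speed * nu * (u_old[i] - u_old[i - 1]) for i in range(n)]


n_cells = 80
dx = 2.0 / n_cells
nu = 1.0                                        # dt = dx
x = [-1.0 + (i + 1) * dx for i in range(n_cells)]
u0 = [math.exp(-50.0 * xi**2) for xi in x]      # hypothetical initial pulse

# Global conservation: the sum over all cells is unchanged by the iterations.
u_n4 = pseudo_time_step(u0, [0.05] * 4, nu)
mass_drift = abs(sum(u_n4) - sum(u0))

# N = 1: the scheme is an explicit upwind step with speed c = mu_0.
mu0 = 0.3
u_one = pseudo_time_step(u0, [mu0], nu)
u_ref = explicit_upwind_step(u0, mu0, nu)
max_diff = max(abs(a - b) for a, b in zip(u_one, u_ref))

print(mass_drift, max_diff)
```

The second check makes the result of Theorem 3 concrete for \(N = 1\): the pulse is advected with speed \(\mu _0\) rather than unit speed.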

In the next experiment we verify that c depends on N as predicted by Theorem 3 by measuring the propagation speed \({\bar{c}}\) of the numerical solution while varying N. To measure \({\bar{c}}\) we track the x-coordinate of the maximum of the propagating pulse through time. To get accurate measurements we extend the computational domain to \(x \in (-1/5, 1/5]\), \(t \in (0,6]\).

For this experiment we fix \(\Delta x= 0.003\) and set \(\mu _{l} = \mu \) to be fixed for each \(l=0,\dots ,N-1\). The measured propagation speed errors \(1 - {\bar{c}}\) for different \(\mu \) are shown in Fig. 3a for Heun’s method and in Fig. 3b for SSPRK3. The solid lines show the theoretically predicted error,

\[ 1 - c = \phi (-\mu )^N. \]

The measured and theoretical speed errors agree well for \(\mu = 0.05\) and \(\mu = 0.2\). For \(\mu = 0.5\) a discrepancy between theory and measurement is seen for small errors. This suggests that the dispersion error intrinsic to the finite volume scheme is starting to dominate the propagation speed error.
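
The predicted error curve is cheap to evaluate. The sketch below assumes a constant ratio \(\mu \), so that the predicted speed error is \(\phi (-\mu )^N\), and additionally estimates how many iterations are needed to push this error below a given tolerance; the tolerance value and the function names are hypothetical.

```python
import math


def phi_heun(mu):
    """Stability function of Heun's method evaluated at -mu."""
    return 1 - mu + mu**2 / 2


def phi_ssprk3(mu):
    """Stability function of SSPRK3 evaluated at -mu."""
    return 1 - mu + mu**2 / 2 - mu**3 / 6


def speed_error(phi, mu, n_iter):
    """Predicted propagation speed error 1 - c = phi(-mu)^N for constant mu."""
    return phi(mu) ** n_iter


def iterations_for_tolerance(phi, mu, tol):
    """Smallest N with |phi(-mu)|^N <= tol, assuming 0 < |phi(-mu)| < 1."""
    return math.ceil(math.log(tol) / math.log(abs(phi(mu))))


for mu in (0.05, 0.2, 0.5):
    errors = [speed_error(phi_heun, mu, n) for n in (1, 10, 50)]
    n_needed = iterations_for_tolerance(phi_heun, mu, 1e-3)
    print(mu, errors, n_needed)

n_heun = iterations_for_tolerance(phi_heun, 0.5, 1e-3)
n_ssprk3 = iterations_for_tolerance(phi_ssprk3, 0.5, 1e-3)
```

Since the error decays geometrically in \(N\), larger values of \(\mu \) within the stability region reach a given tolerance in fewer iterations.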

4.2.2 Burgers’ Equation

Next we consider a triangular shock wave propagating under the 1D Burgers’ equation with periodic boundary conditions:

The exact solution to this problem is given by

Modifying the conservation law to \(u_t + (c u^2/2)_x = 0\) while retaining the same initial condition modifies the exact solution to

For this problem we therefore expect the pseudo-time iterations to affect both the speed and the amplitude of the shock front.

As for the advection problem, we investigate the \(L^2\) convergence of the numerical scheme to the original and modified conservation laws. We once again use an upwind numerical flux and implicit Euler in time:

We run the simulation to time \(t=0.1\) with \(\Delta t= \Delta x\) using the sequence of grids \(\Delta x= 1/(25 \times 2^j)\) for \(j=1,\dots ,15\). The explicit Euler method is used as pseudo-time iterator with \(N=12\) and \(\mu _l = 1/4\) for \(l=0,\dots ,11\). The corresponding modification constant is \(c \approx 0.9683\). Figure 4a shows that the numerical solution converges to the solution of the modified conservation law as expected.
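
The quoted modification constant can be checked by hand, assuming the expression \(c = 1 - \prod _l \phi (-\mu _l)\) with the explicit Euler stability function \(\phi (-\mu ) = 1 - \mu \) discussed after Theorem 3:

\[ c = 1 - \left( 1 - \frac{1}{4} \right) ^{12} = 1 - \left( \frac{3}{4} \right) ^{12} = 1 - \frac{531441}{16777216} \approx 1 - 0.0317 = 0.9683. \]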

Fixing \(\Delta x= 0.004\) and setting \(\mu _l = \mu = 1/4\), we run the simulation to time \(t=1\) with different choices of N. Figure 5 shows the numerical solutions together with the initial data (dashed line) and the exact solution (dotted line). The locations of the tips of the shock waves as predicted by Theorem 3 are indicated as crosses in the figure. There is good agreement between theory and experiment, although the shocks appear slightly smeared due to the built-in dissipation in the numerical scheme.

Fig. 5 Numerical solutions and predicted shock locations (crosses) for the solution of Burgers’ Eq. (19) using different numbers of pseudo-time iterations, N. The dotted line indicates the exact solution. The dashed line shows the initial data

Next, we repeat the experiments but change the initial data to a step function,

Since this data is not periodic, we impose the boundary condition \(u(0,t) = 1\). Theorem 3 does not treat boundary conditions and it is therefore interesting to see if it still provides useful predictions of the behaviour of the numerical solution in this setting.

The exact solution is the initial step function travelling to the right. The shock speed is given by the Rankine-Hugoniot condition as

\[ s = \frac{f(u_l) - f(u_r)}{u_l - u_r} = \frac{u_l + u_r}{2} = \frac{1}{2}, \]

where \(u_{l,r}\) denote left and right states of the discontinuity respectively. The shock speed of the modified conservation law is instead c/2.

We use the exact same grids and pseudo-time iterations as for the triangular shock and measure the \(L^2\) error with respect to the exact and modified conservation laws. The results are shown in Fig. 4b. Convergence is once again seen towards the modified equation, thus verifying that Theorem 3 predicts the propagation speed error correctly despite the added boundary condition.

Next we extend the time domain to \(t \in (0,1]\), fix \(\Delta x= \Delta t= 4 \Delta \tau = 1/100\) and vary the number of iterations, N. The computed solutions using \(N = 1\), \(N = 3\) and \(N = 12\) are shown in Fig. 6 together with the predicted shock locations (dashed lines). Once again, there is good agreement between prediction and experiment, although numerical dissipation smears the shock fronts somewhat.

Fig. 6 Numerical solutions and predicted shock locations (dashed lines) for the solution of Burgers’ Eq. (19) using different numbers of pseudo-time iterations, N

As mentioned previously, the shock speed error can be eliminated entirely for the explicit Euler method by noting that \(\mu = 1\) is a root of the stability polynomial \(1 - \mu \). To highlight the effect of this, we introduce two strategies for choosing the pseudo-time steps:

- Strategy 1: Use \(N=12\) iterations with \(\Delta \tau _{0,\dots ,11} = \Delta t/4\).

- Strategy 2: Use \(N=9\) iterations with \(\Delta \tau _0 = \Delta t\) and \(\Delta \tau _{1,\dots ,8} = \Delta t/4\).

The first strategy is the same that we have used in the experiments so far. The second one ensures that \(c=1\) by taking a large initial pseudo-time step. Note that both strategies correspond to integration in pseudo-time to the same point; \(\tau = 3 \Delta t\).

The relative residuals of the pseudo-time iterates in the first physical time step are shown in Fig. 7 for the two strategies. The large initial pseudo-time step in Strategy 2 results in a considerably greater residual reduction than the corresponding iteration using Strategy 1. Interestingly, subsequent iterations yield faster convergence of the residual using Strategy 2 as seen by the steeper gradient. This suggests that the incorrect shock speed makes the dominant and slowest converging contribution to the residual for this problem when using Strategy 1. We also conclude that the point to which we march in pseudo-time, here \(\tau = 3 \Delta t\), has less of an impact on the convergence than the choice of pseudo-time steps used to reach this point.
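
A residual comparison of this kind can be set up in a few lines. The following is a sketch only: it assumes the upwind flux \({\hat{f}}_{i+\frac{1}{2}} = u_i^2/2\) (appropriate for non-negative data), periodic boundaries, \(\Delta t = \Delta x\), and a hypothetical triangular initial profile that need not match the one used in the paper. It records the relative residual after each explicit Euler pseudo-time step for both strategies.

```python
import math


def scaled_residual(u, u_old, nu):
    """dt * g_i(u) for implicit Euler with the upwind flux u_i^2 / 2 (u >= 0)."""
    n = len(u)
    return [
        (u[i] - u_old[i]) + nu * (0.5 * u[i] ** 2 - 0.5 * u[i - 1] ** 2)
        for i in range(n)
    ]


def norm(v):
    """Euclidean norm of a list of floats."""
    return math.sqrt(sum(x * x for x in v))


def residual_history(u_old, mus, nu):
    """Run explicit Euler pseudo-time steps with ratios mus[k] = dtau_k / dt.

    Returns the final iterate and the residual norms relative to the initial one.
    """
    u = list(u_old)
    r = scaled_residual(u, u_old, nu)
    r0 = norm(r)
    history = [1.0]
    for mu in mus:
        u = [u[i] - mu * r[i] for i in range(len(u))]
        r = scaled_residual(u, u_old, nu)
        history.append(norm(r) / r0)
    return u, history


n_cells = 100
dx = 1.0 / n_cells
nu = 1.0                                       # dt = dx
x = [(i + 1) * dx for i in range(n_cells)]
u0 = [xi if xi <= 0.5 else 0.0 for xi in x]    # hypothetical triangular profile

strategy_1 = [0.25] * 12                        # N = 12, dtau = dt / 4
strategy_2 = [1.0] + [0.25] * 8                 # N = 9, first step dtau = dt

u_1, hist_1 = residual_history(u0, strategy_1, nu)
u_2, hist_2 = residual_history(u0, strategy_2, nu)

print("Strategy 1:", ["%.2e" % h for h in hist_1])
print("Strategy 2:", ["%.2e" % h for h in hist_2])
```

Both step sequences sum to \(3 \Delta t\) in pseudo-time, so any difference in the printed histories is due to the distribution of the steps rather than the total distance marched.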

Fig. 7 Relative residuals of pseudo-time iterates applied to the discretization (38) of Burgers’ equation using Strategy 1 and Strategy 2

4.2.3 The Euler Equations

As a second nonlinear problem we consider the 2D compressible Euler equations,

posed on the domain \((x,y) \in (-5, 15] \times (-5, 5]\). Here, \(\rho , u, v, E\) and p respectively denote density, horizontal and vertical velocity components, total energy per unit mass and pressure. The pressure is related to the other variables through the equation of state

where \(\gamma = 1.4\) and \(e = E - (u^2 + v^2)/2\) is the internal energy density. The domain is taken to be periodic in both spatial coordinates. The setting is the isentropic vortex problem [25] with initial conditions

where \(r = 1 - x^2 - y^2\). Here, \(\epsilon = 5\) is the circulation and \(M_\infty = 0.5\) is the Mach number. As the solution evolves in time, the initial vortex propagates in the horizontal direction with unit speed.

As in previous experiments, we use implicit Euler in time, yielding a finite volume scheme of the form

Along the x-coordinate we use a fourth order centered flux

and similarly along the y-coordinate. With this choice, the resulting problem violates the assumptions of Theorem 3 in three ways: (i) It is 2D, (ii) it is a system of equations, and (iii) the scheme is not total variation bounded.

The exact solution of the isentropic vortex problem is given by the initial data (centred at the origin) translated to the point \((x,y) = (T,0)\), where T is the end point of the time domain. However, the exact solution of the modified conservation law is instead centred at \((x,y) = (cT,0)\).

The explicit Euler method is again used as pseudo-time iteration. We study the convergence of the numerical solution to the exact solutions of the original and modified conservation laws by measuring the \(L^2\) error of the density component. The discretizations are chosen such that \(\Delta x= \Delta y= 4\Delta t\) on all grids. Two different strategies for choosing the pseudo-time steps are considered:

- Strategy 1: Use \(N=9\) iterations with \(\Delta \tau _{0,\dots ,8} = 0.2 \Delta t\).

- Strategy 2: Use \(N=5\) iterations with \(\Delta \tau _0 = \Delta t\) and \(\Delta \tau _{1,\dots ,4} = 0.2 \Delta t\).

Both strategies integrate in pseudo-time to the point \(\tau = 1.8 \Delta t\) in each time step. However, Theorem 3 predicts that Strategy 1 will give a speed modification \(c(\mu _0, \dots , \mu _8) \approx 0.866\). On the other hand, Strategy 2 will give \(c(\mu _0, \dots , \mu _4) = 1\), i.e. the correct propagation speed, due to the large initial pseudo-time step. Figure 8 shows the numerical solutions at time \(T=10\) for the case where \(\Delta x= \Delta y= 0.2\), \(\Delta t= 0.05\). The predicted and observed vortex locations agree very well.
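
The numbers in this paragraph follow from short calculations, again assuming \(c = 1 - \prod _l \phi (-\mu _l)\) with \(\phi (-\mu ) = 1 - \mu \) for the explicit Euler method. The pseudo-time reached by the two strategies is

\[ \tau _{\text {S1}} = 9 \times 0.2 \, \Delta t = 1.8 \, \Delta t, \qquad \tau _{\text {S2}} = \Delta t + 4 \times 0.2 \, \Delta t = 1.8 \, \Delta t, \]

and the corresponding modification constants are

\[ c_{\text {S1}} = 1 - 0.8^{9} = 1 - 0.134217728 \approx 0.866, \qquad c_{\text {S2}} = 1 - (1 - 1) \cdot 0.8^{4} = 1. \]

At \(T = 10\) the modified conservation law therefore places the vortex centre at \(x = c_{\text {S1}} T \approx 8.66\) for Strategy 1 and at \(x = 10\) for Strategy 2.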

Fig. 8 Computed density of the isentropic vortex at \(t = 10\) using Strategy 1 and Strategy 2. Dashed lines mark the vortex locations predicted by Theorem 3

Figure 9a shows the convergence of the numerical solutions, measured at time \(T=1\). Convergence to the correct solution is observed for Strategy 2 (S2) but not for Strategy 1 (S1). This is expected due to the incorrect location of the vortex in the latter case. However, convergence to the solution of the modified conservation law is seen (S1 mod). Thus, Theorem 3 accurately predicts the behavior of the numerical solution despite the violated assumptions.

In practical applications, the pseudo-time iterations are terminated when the residual has decreased beneath some tolerance. It is interesting to see in what way the convergence is affected by the choice of strategy. Returning to the setting in Fig. 8, the residuals in each pseudo-time iteration for all 200 physical time steps are shown for the two strategies in Fig. 9b. The 200 lines overlap nearly perfectly, suggesting that the residual behaves similarly in each physical time step. The initial iteration in Strategy 2 evidently reduces the residual by more than all the other iterations put together. The remaining iterations appear to reduce the residual by comparable amounts for the two strategies, as seen by the similar gradients. In contrast to the shock problem considered previously, this suggests that the convergence rate of the residual is dictated by factors other than the propagation speed for this particular problem. Nonetheless, a correct propagation speed is visibly very beneficial, here with a drop in relative residual of more than an order of magnitude.

4.2.4 Experiments with GMRES and Agglomeration CGC

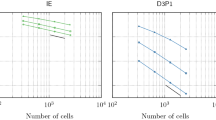

The analysis and experiments with pseudo-time iterations reveal that iterative methods can cause (predictable) alterations to the physics described by a conservation law, even if conservation is preserved. Although we have not presented a similar analysis for multigrid and Krylov subspace methods, it is interesting to experimentally investigate whether they display similar modifications to the propagation speed. To this end, we return to the linear advection problem in Sect. 4.2.1. Throughout we set \(\Delta t= 4\Delta x\) and solve the linear system in each time step either with an agglomeration based coarse grid correction or with GMRES. The initial guess is taken to be the solution in the previous time step. The prolongation and restriction operators used are given in (20).

Figure 10a shows the \(L^2\) error with respect to the exact solution. The solutions to the linear systems have been approximated either with one coarse grid correction (CGC) or with a fixed number of GMRES iterations per time step, N. The errors appear to converge both for the coarse grid correction and for GMRES with \(N=10\). However, with \(N=1\) and \(N=3\), convergence is lost as the grid is refined. Figure 10b shows the numerical solutions computed with \(N \in \{ 1,3,10 \}\) in the subdomain \(x \in [0,1]\) at time \(t=0.25\), here with \(\Delta x= 1/5120\). The exact and numerical solution computed with \(N=10\) overlap, with the pulse centred at \(x=0.5\). However, the solutions computed with \(N=1\) and \(N=3\) are seen at incorrect locations, suggesting reduced propagation speeds.

The results suggest that, for this problem, agglomeration coarse grid corrections retain consistency with the conservation law. GMRES, on the other hand, behaves similarly to pseudo-time iterations in the sense that it slows down the propagation of the solution. However, this problem appears to resolve itself automatically after some finite number of iterations.
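
The behaviour for \(N = 1\) can be examined without a full GMRES implementation. The sketch below is not the code behind Fig. 10. It assumes the system matrix \(A = I + \nu (I - S)\) of the implicit Euler upwind scheme with periodic shift \(S\) and \(\nu = \Delta t/\Delta x\), and uses the fact that one GMRES iteration started from \(x_0\) returns \(x_0 + \alpha r_0\) with \(\alpha \) minimizing \(\Vert r_0 - \alpha A r_0 \Vert _2\). The initial pulse and all names are hypothetical.

```python
import math


def apply_A(v, nu):
    """A v for A = I + nu * (I - S): implicit Euler with periodic upwind differences."""
    return [v[i] + nu * (v[i] - v[i - 1]) for i in range(len(v))]


def dot(a, b):
    """Euclidean inner product of two lists of floats."""
    return sum(x * y for x, y in zip(a, b))


def gmres_one_iteration(u_old, nu):
    """One GMRES iteration for A u = u_old with initial guess x0 = u_old.

    The Krylov space is span{r0}, so the iterate is x0 + alpha * r0, where
    alpha = <r0, A r0> / <A r0, A r0> minimizes the residual norm.
    """
    Ax0 = apply_A(u_old, nu)
    r0 = [b - a for b, a in zip(u_old, Ax0)]    # r0_i = -nu * (u_i - u_{i-1})
    Ar0 = apply_A(r0, nu)
    alpha = dot(r0, Ar0) / dot(Ar0, Ar0)
    return [x + alpha * r for x, r in zip(u_old, r0)], alpha


n_cells = 160
dx = 2.0 / n_cells
nu = 4.0                                        # dt = 4 dx, as in the text
x = [-1.0 + (i + 1) * dx for i in range(n_cells)]
u0 = [math.exp(-50.0 * xi**2) for xi in x]      # hypothetical initial pulse

u1, alpha = gmres_one_iteration(u0, nu)
mass_drift = abs(sum(u1) - sum(u0))
print(alpha, mass_drift)
```

Because \(r_0 = -\nu (I - S) u^n\), the iterate equals \(u^n - \alpha \nu (I - S) u^n\), that is, an explicit upwind step with the speed scaled by the data-dependent factor \(\alpha \). For this matrix \(0 < \alpha \le 1\), which is at least consistent with the reduced propagation speed observed for small \(N\), while the column sums of \(A\) being one ensures that the mass is unchanged.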

5 Summary and Conclusions

In this paper we have studied conservation properties of a selection of iterative methods applied to 1D scalar conservation laws. The fact that conservation is a design principle behind many numerical schemes motivates such a study. We have established that Newton’s method, the Richardson iteration, Krylov subspace methods, coarse grid corrections using agglomeration, as well as ERK pseudo-time iterations preserve the global conservation of a given scheme if the initial guess has correct mass. However, the Jacobi and Gauss-Seidel methods do not, unless the linear system being solved possesses particular properties.

The stronger requirement of local conservation has been investigated for ERK pseudo-time iterations. We have shown that local conservation is preserved for finite volume schemes that employ the implicit Euler method in time. However, the resulting modified numerical flux may be inconsistent with the governing conservation law. An extension of the Lax-Wendroff theorem shows that this inconsistency leads to convergence to a weak solution of a conservation law modified by a particular constant. We give an exact expression for the modification constant, which depends only on the stability function of the ERK method and the pseudo-time steps. Depending on the problem solved, this modification can alter both the propagation speed and amplitude of the numerical solution if the constant differs from unity. We present a strategy for ensuring that the constant equals one and show numerically that the strategy results in faster convergence.

Numerical tests corroborate the theoretical findings and suggest that the results hold even for systems of conservation laws in multiple dimensions. Further, experiments with GMRES suggest that it suffers from a similar modification as pseudo-time iterations, but that this is automatically accounted for after a finite number of iterations. Similar experiments with coarse grid corrections based on agglomeration indicate no presence of such modifications.

Availability of Data and Material

N/A

References

1. Bassi, F., Ghidoni, A., Rebay, S.: Optimal Runge-Kutta smoothers for the p-multigrid discontinuous Galerkin solution of the 1D Euler equations. J. Comput. Phys. 230(11), 4153–4175 (2011)

2. Bassi, F., Rebay, S.: GMRES discontinuous Galerkin solution of the compressible Navier-Stokes equations. In: Discontinuous Galerkin Methods, 197–208. Springer (2000)

3. Birken, P.: Numerical methods for the unsteady compressible Navier–Stokes equations. Habilitation thesis, University of Kassel, Kassel (2012)

4. Birken, P.: Numerical Methods for Unsteady Compressible Flow Problems. Chapman and Hall/CRC (to appear) (2021)

5. Birken, P., Bull, J., Jameson, A.: Preconditioned smoothers for the full approximation scheme for the RANS equations. J. Sci. Comput. 78(2), 995–1022 (2019)

6. Birken, P., Gassner, G., Haas, M., Munz, C.D.: Preconditioning for modal discontinuous Galerkin methods for unsteady 3D Navier-Stokes equations. J. Comput. Phys. 240, 20–35 (2013)

7. Birken, P., Gassner, G.J., Versbach, L.M.: Subcell finite volume multigrid preconditioning for high-order discontinuous Galerkin methods. Int. J. Comput. Fluid Dyn. 33(9), 353–361 (2019)

8. Birken, P., Jameson, A.: On nonlinear preconditioners in Newton-Krylov methods for unsteady flows. Int. J. Numer. Meth. Fluids 62(5), 565–573 (2010)

9. Birken, P., Meister, A., Ortleb, S., Straub, V.: On stability and conservation properties of (s)EPIRK integrators in the context of discretized PDEs. In: XVI International Conference on Hyperbolic Problems: Theory, Numerics, Applications, 617–629. Springer (2016)

10. Birken, P., Tebbens, J.D., Meister, A., Tůma, M.: Preconditioner updates applied to CFD model problems. Appl. Numer. Math. 58(11), 1628–1641 (2008)

11. Blom, D.S., Birken, P., Bijl, H., Kessels, F., Meister, A., van Zuijlen, A.H.: A comparison of Rosenbrock and ESDIRK methods combined with iterative solvers for unsteady compressible flows. Adv. Comput. Math. 42(6), 1401–1426 (2016)

12. Cockburn, B., Kanschat, G., Schötzau, D.: A locally conservative LDG method for the incompressible Navier-Stokes equations. Math. Comput. 74(251), 1067–1095 (2005)

13. Fisher, T.C., Carpenter, M.H.: High-order entropy stable finite difference schemes for nonlinear conservation laws: Finite domains. J. Comput. Phys. 252, 518–557 (2013)

14. Jameson, A., Schmidt, W., Turkel, E.: Numerical solution of the Euler equations by finite volume methods using Runge Kutta time stepping schemes. In: 14th Fluid and Plasma Dynamics Conference, 1259 (1981)

15. Junqueira-Junior, C., Scalabrin, L.C., Basso, E., Azevedo, J.L.F.: Study of conservation on implicit techniques for unstructured finite volume Navier-Stokes solvers. J. Aerosp. Technol. Manag. 6(3), 267–280 (2014)

16. Lax, P., Wendroff, B.: Systems of conservation laws. Tech. rep., Los Alamos National Lab, NM (1959)

17. LeVeque, R.J.: Numerical methods for conservation laws, vol. 3. Springer, Basel (1992)

18. LeVeque, R.J.: Finite volume methods for hyperbolic problems, vol. 31. Cambridge University Press (2002)

19. Linders, V., Carpenter, M.H., Nordström, J.: Accurate solution-adaptive finite difference schemes for coarse and fine grids. J. Comput. Phys. 410, 109393 (2020)

20. Linders, V., Kupiainen, M., Frankel, S.H., Delorme, Y., Nordström, J.: Summation-by-parts operators with minimal dispersion error for accurate and efficient flow calculations. In: 54th AIAA Aerospace Sciences Meeting, 1329 (2016)

21. Linders, V., Kupiainen, M., Nordström, J.: Summation-by-parts operators with minimal dispersion error for coarse grid flow calculations. J. Comput. Phys. 340, 160–176 (2017)

22. Linders, V., Nordström, J.: Uniformly best wavenumber approximations by spatial central difference operators. J. Comput. Phys. 300, 695–709 (2015)

23. Miranker, W.L.: Numerical methods of boundary layer type for stiff systems of differential equations. Computing 11(3), 221–234 (1973)

24. Saad, Y.: Iterative methods for sparse linear systems. SIAM (2003)

25. Shu, C.W.: Essentially non-oscillatory and weighted essentially non-oscillatory schemes for hyperbolic conservation laws. In: Advanced numerical approximation of nonlinear hyperbolic equations, 325–432. Springer (1998)

26. Shu, C.W., Osher, S.: Efficient implementation of essentially non-oscillatory shock-capturing schemes. J. Comput. Phys. 77(2), 439–471 (1988)

27. Straub, V., Ortleb, S., Birken, P., Meister, A.: Adopting (s)EPIRK schemes in a domain-based IMEX setting. In: AIP Conference Proceedings, vol. 1863, 410008. AIP Publishing LLC (2017)

28. Swanson, R.C., Turkel, E., Rossow, C.C.: Convergence acceleration of Runge-Kutta schemes for solving the Navier-Stokes equations. J. Comput. Phys. 224(1), 365–388 (2007)

29. Tam, C.K., Webb, J.C.: Dispersion-relation-preserving finite difference schemes for computational acoustics. J. Comput. Phys. 107(2), 262–281 (1993)

30. Trefethen, L.N.: Group velocity in finite difference schemes. SIAM Rev. 24(2), 113–136 (1982)

31. Wanner, G., Hairer, E.: Solving ordinary differential equations II. Springer, Berlin Heidelberg (1996)

Funding

Open access funding provided by Lund University.

Contributions

Philipp Birken and Viktor Linders both contributed to the conceptualization of this article and to the mathematical analysis. Viktor Linders was the main contributor to the numerical experiments and production of the graphics. Philipp Birken and Viktor Linders jointly contributed in writing the article.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Code availability

N/A

Proof of Lemma 3

The purpose of this appendix is to provide a detailed proof of Lemma 3.

Proof

Note first that (22)-(23) can be equivalently written in the form

where \(\underline{\mathbf {1}} = (1,\dots ,1)^\top \in {\mathbb {R}}^s\). Here, we use the notation \(\underline{\mathbf {g}}_i(\underline{\mathbf {\mathbf {U}}}^k) = \left( g_i(\mathbf {U}_1^{(k)}), \dots , g_i(\mathbf {U}_s^{(k)}) \right) ^\top \).

Suppose that for some \(N \ge 1\) the relation

holds. We will show that it also holds for \(N+1\). From (21), (25) and (41) it follows that

Solving for \(\underline{\mathbf {U}}_i^{(N)}\) gives

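Note that \((\underline{\mathbf {I}} + \mu _N \underline{\mathbf {A}})^{-1}\) exists since \(\underline{\mathbf {A}}\) is strictly lower triangular for any ERK method, so that \(\underline{\mathbf {I}} + \mu _N \underline{\mathbf {A}}\) is lower triangular with unit diagonal.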

Evaluating \(\underline{\mathbf {g}}_i \left( \underline{\mathbf {\mathbf {U}}}^{(N)} \right) \) using (21) gives

In the last equality we have used the fact that

Inserting the above expression for \(\underline{\mathbf {g}}_i \left( \underline{\mathbf {\mathbf {U}}}^{(N)} \right) \) into \(u_{i}^{(N+1)}\) as given in (41) leads to

Rearranging, using the induction hypothesis and the expression (26) for \({\hat{h}}^{(N)}\) results in

It remains to show that the lemma holds when \(N=1\). To this end, recall that \(u_{i}^{(0)} = u_i^n\) and note from (21) and (41) that

Solving for \(\underline{\mathbf {U}}_i^{(0)}\) gives

Using (21) it follows that

Here we have once again used (42) in the final equality. Thus, \(u_{i}^{(1)}\) can be evaluated using (41) as

Division by \(\Delta t\) and rearrangement shows that the lemma holds when \(N=1\). By induction it holds for all \(N \ge 1\). \(\square \)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Birken, P., Linders, V. Conservation Properties of Iterative Methods for Implicit Discretizations of Conservation Laws. J Sci Comput 92, 60 (2022). https://doi.org/10.1007/s10915-022-01923-7

DOI: https://doi.org/10.1007/s10915-022-01923-7

Keywords

- Iterative methods

- Conservation laws

- Conservative numerical methods

- Pseudo-time iterations

- Lax-Wendroff theorem