Abstract

We develop a new finite difference method for the wave equation in second order form. The finite difference operators satisfy a summation-by-parts (SBP) property. With boundary conditions and material interface conditions imposed weakly by the simultaneous-approximation-term (SAT) method, we derive energy estimates for the semi-discretization. In addition, error estimates are derived by normal mode analysis. The proposed method is termed energy-based because of its similarity with the energy-based discontinuous Galerkin method. When imposing the Dirichlet boundary condition and material interface conditions, the traditional SBP-SAT discretization uses a penalty term with a mesh-dependent parameter, which is not needed in our method. Furthermore, numerical dissipation can be added to the discretization through the boundary and interface conditions. We present numerical experiments that verify convergence and robustness of the proposed method.

1 Introduction

Many wave phenomena are governed by second order hyperbolic partial differential equations (PDEs), such as the wave equation, the elastic wave equation and Einstein’s equations of general relativity. Also, equations that are formulated in first order form, such as Maxwell’s equations, can often be reformulated in second order form [13]. When a second order formulation is available, it typically involves fewer variables and fewer derivative operators, which can be exploited in the design of faster numerical methods. Many physical models are derived using the Euler-Lagrange formalism starting from an energy, and it is natural to look for numerical methods that track the dynamics of this energy. We have considered such energy-based numerical methods in the context of discontinuous Galerkin discretizations [3, 4, 6]. Here we generalize those ideas to high order finite difference methods.

The classical dispersion error analysis by Kreiss and Oliger [15] predicts that high order methods are more efficient than low order methods for the simulation of wave propagation problems with smooth coefficients. High order finite difference methods are computationally efficient for solving hyperbolic PDEs, and they are also easy to implement when the simulation domain is not too complex.

In the past it was difficult to construct stable and high order accurate finite difference methods, but this challenge has now largely been overcome through the use of derivative approximations with the summation-by-parts (SBP) property [12], together with boundary and interface conditions enforced either through ghost points [22, 23] or by the simultaneous-approximation-term (SAT) method [7].

Summation-by-parts operators for the second derivative \(\frac{d^2}{dx^2}\) and their extension to variable coefficients can be found in [17, 20]. When these SBP operators are used together with the SAT method to impose boundary or interface conditions for the wave equation in a form that is second order in both time and space, the resulting discretization bears similarity to the symmetric interior penalty discontinuous Galerkin method (SIPDG) [11]. As with SIPDG, coercivity requires that such SBP-SAT methods use a mesh-dependent penalty term. This penalty parameter, which depends on the material properties and the SBP operator, must be large enough for the method to be stable [1, 5, 18, 19]. Precise bounds on this penalty parameter may not be easy to determine a priori [8, 26], especially in the presence of grid interface conditions [18], curvilinear grids [25] and nonconforming grid interfaces [2, 26, 29].

In this paper, we present an energy-based discretization in the SBP-SAT framework. Here, energy-based refers to the design principle advocated in [3, 4, 6], namely to construct the semi-discretization from the sum of the kinetic and potential energy; in the discontinuous Galerkin setting this principle led to a new class of DG methods. For problems with only Neumann boundary conditions, the proposed method is the same as the traditional SBP-SAT discretization in [20]. However, for Dirichlet boundary conditions and grid interface conditions, our method differs from the traditional SBP-SAT methods in [5, 19] and [18], respectively, because it does not use any mesh-dependent parameter. The method discretizes the wave equation in velocity-displacement form, i.e. as a system with first order derivatives in time and second order derivatives in space. The resulting system of ordinary differential equations can then be evolved by a Runge-Kutta time integrator or a Taylor series method. As mentioned above, the method is inspired by our energy-based discontinuous Galerkin (DG) method [3, 4, 6]. We show that, just as for the energy-based DG method, numerical dissipation can naturally be included in the method. We also present a general framework for deriving error estimates by normal mode analysis and perform the detailed analysis for a fourth order discretization of the Dirichlet problem. Using the same approach as in [27], we prove a fourth order convergence rate for the dissipative discretization and a third order convergence rate for the energy-conserving discretization, in good agreement with our numerical experiments.

The outline of the paper is as follows. We introduce the SBP concepts in Sect. 2. In Sect. 3, we construct our new SBP-SAT discretization for problems with boundaries and grid interfaces. Stability analysis and a priori error estimates by normal mode analysis are then derived. In Sect. 4, we present an efficient implementation of the method for higher dimensional problems. Numerical experiments verifying accuracy and robustness are presented in Sect. 5. We end with concluding remarks in Sect. 6.

2 Preliminaries

Let P and Q be twice continuously differentiable functions on [0, 1]. We define the standard inner product and norm in \(L^2([0, 1])\) as

\[ (P,Q) = \int _0^1 PQ\, dx, \qquad \Vert P\Vert ^2 = (P,P). \]

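The integration-by-parts principle reads

\[ \left( P, (bQ_x)_x\right) = -\left( P_x, bQ_x\right) + \left[ P\, b\, Q_x\right] _{x=0}^{x=1}, \tag{1} \]
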
where b is a continuously differentiable function on [0, 1]. It can be used to derive energy estimates for the wave equation

\[ U_{tt} = \left( b(x)U_x\right) _x, \qquad x\in [0,1],\ t>0, \tag{2} \]

with the energy

\[ E(t) = \Vert U_t\Vert ^2 + \left( U_x, bU_x\right) , \tag{3} \]

consisting of a kinetic and a potential part. To make matters concrete, consider (2) with a Dirichlet boundary condition at \(x=0\) and a Neumann boundary condition at \(x=1\),

\[ U(0,t) = f(t), \qquad U_x(1,t) = g(t), \tag{4} \]

supplemented with compatible and smooth initial conditions for U and \(U_t\). Also assume that the wave speed \(c(x) \equiv \sqrt{b(x)}\) is smooth and positive. Then, multiplying (2) by \(U_t\), integrating in space and applying the integration-by-parts principle (1) leads to

\[ \frac{d}{dt}E = 2\left[ b\, U_t U_x\right] _{x=0}^{x=1}. \tag{5} \]

With homogeneous boundary conditions, the boundary contribution in (5) vanishes and the continuous energy estimate states that the energy of (2) is constant in time.

2.1 Summation-by-Parts Finite Difference Operators

Consider the one dimensional domain [0, 1] discretized by an equidistant grid \(\varvec{x} = [x_1,x_2,\cdots ,x_n]^T\) with grid spacing \(h=1/(n-1)\). The SBP finite difference operator for the approximation of the second derivative on the grid \(\varvec{x}\) is defined as follows.

Definition 1

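A difference operator \(D^{(b)}\approx \frac{d}{dx}\left( b(x)\frac{d}{dx}\right) \) with \(b(x)>0\) is an SBP operator if it can be decomposed as

\[ D^{(b)} = H^{-1}\left( -A^{(b)} - b_1\varvec{e_1}\varvec{d}_{\varvec{1}}^T + b_n\varvec{e_n}\varvec{d}_{\varvec{n}}^T\right) , \tag{6} \]
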
where H is diagonal and positive definite, \(A^{(b)}\) is symmetric and positive semidefinite, \(\varvec{e_1}=[1,0,\cdots ,0,0]^T\), \(\varvec{e_n}=[0,0,\cdots ,0,1]^T\). The column vectors \(\varvec{d_1}\) and \(\varvec{d_n}\) contain coefficients for the approximation of the first derivative at the boundaries. The coefficients \(b_1\) and \(b_n\) are the function b(x) evaluated at the boundaries.

Let \(\varvec{p}\) and \(\varvec{q}\) be grid functions on \(\varvec{x}\). The operators H and \(A^{(b)}\) define a weighted discrete \(L^2\) norm \(\Vert \varvec{p}\Vert ^2_H = \varvec{p}^TH\varvec{p}\) and seminorm \(\Vert \varvec{p}\Vert ^2_{A^{(b)}}=\varvec{p}^TA^{(b)}\varvec{p}\). The SBP property (6) is a discrete analogue of the integration-by-parts principle,

\[ \varvec{p}^T H D^{(b)}\varvec{q} = -\varvec{p}^T A^{(b)}\varvec{q} - b_1 p_1 \varvec{d}_{\varvec{1}}^T\varvec{q} + b_n p_n \varvec{d}_{\varvec{n}}^T\varvec{q}. \tag{7} \]

If \(\varvec{p}\), \(\varvec{q}\) are P(x), Q(x) evaluated on the grid, then the left-hand side of (7) approximates the left-hand side of (1) because H is a quadrature [14]. On the right-hand side of (6), the term \(-H^{-1}A^{(b)}\) is a discrete approximation of the operator \(\frac{d}{dx}\left( b\frac{d}{dx}\right) \) with homogeneous Neumann boundary conditions. In (7), the term \(\varvec{p^T}A^{(b)} \varvec{q}\approx \int _{0}^{1} bP_x Q_x dx\), which vanishes when P is constant. Therefore, the vector of constants, \([a,a,\cdots ,a]\in {\mathbf {R}}^n\) for any \(a\in {\mathbf {R}}\), is in the null space of \(A^{(b)}\). We make the following assumption on the operator \(A^{(b)}\).
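Because H acts as a quadrature, a quick numerical check is instructive. The sketch below assumes the diagonal norm of the (4,2) operator from [20], whose first four boundary weights 17/48, 59/48, 43/48, 49/48 are quoted in Sect. 3.2, mirrored at the right boundary and equal to 1 in the interior (all scaled by h). It compares \(\varvec{1}^T H \varvec{p}\) with \(\int _0^1 x^k dx\) for \(\varvec{p}=x^k\) on the grid. The helper name `diag_norm_42` is hypothetical.

```python
import numpy as np

def diag_norm_42(n):
    """Diagonal of H for the (4,2) SBP operator on [0, 1]; hypothetical helper.

    Interior weights are h; the four boundary weights are mirrored at x = 1.
    Requires n >= 8 so that the two boundary closures do not overlap.
    """
    if n < 8:
        raise ValueError("need at least 8 grid points")
    h = 1.0 / (n - 1)
    w = np.ones(n)
    closure = np.array([17.0, 59.0, 43.0, 49.0]) / 48.0
    w[:4] = closure            # left boundary closure
    w[-4:] = closure[::-1]     # mirrored right boundary closure
    return h * w

n = 21
x = np.linspace(0.0, 1.0, n)
H = diag_norm_42(n)
for k in range(5):
    # 1^T H p approximates the integral of x^k over [0, 1] when p = x^k on the grid
    error = abs(H @ x**k - 1.0 / (k + 1))
    print(f"degree {k}: quadrature error {error:.2e}")
```

Polynomials up to degree three are integrated to round-off, while the degree-four error is nonzero and shrinks like \(h^4\), consistent with a fourth order accurate quadrature.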

Assumption 2

In Definition 1, the rank of \(A^{(b)}\) is \(n-1\) with any vector of constants in its null space.

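For a constant coefficient, normalized to \(b\equiv 1\) (the superscript b is then dropped), the matrix hA corresponding to the second order accurate SBP operator takes the form

\[ hA = \begin{bmatrix} 1 & -1 & & & \\ -1 & 2 & -1 & & \\ & \ddots & \ddots & \ddots & \\ & & -1 & 2 & -1\\ & & & -1 & 1 \end{bmatrix} \in {\mathbf {R}}^{n\times n}, \]

with eigenvalues

\[ \uplambda _i = 2-2\cos \left( \frac{(i-1)\pi }{n}\right) = 4\sin ^2\left( \frac{(i-1)\pi }{2n}\right) , \qquad i=1,2,\cdots ,n. \]
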
We observe that all eigenvalues are distinct. Since \(\uplambda _1 = 0\) and all other eigenvalues are positive, the rank of A is \(n-1\), and Assumption 2 holds. For the second and fourth order SBP operators with constant coefficient, Assumption 2 is proved in [10] using the result from [9], where explicit formulas for the Moore-Penrose inverse of A are also derived.
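The eigenvalue formula and Assumption 2 for the second order operator are easy to confirm numerically. The sketch assumes \(b\equiv 1\) and that hA is the tridiagonal matrix with diagonal \((1,2,\cdots ,2,1)\) and off-diagonal entries \(-1\); the helper name `scaled_A2` is hypothetical.

```python
import numpy as np

def scaled_A2(n):
    """hA for the second order SBP operator with b = 1 (hypothetical helper):
    tridiagonal, diagonal (1, 2, ..., 2, 1), off-diagonals -1."""
    hA = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
    hA[0, 0] = hA[-1, -1] = 1.0
    return hA

n = 12
hA = scaled_A2(n)
computed = np.linalg.eigvalsh(hA)                  # eigenvalues in ascending order
i = np.arange(1, n + 1)
formula = 2.0 - 2.0 * np.cos((i - 1) * np.pi / n)  # also ascending in i

print(np.max(np.abs(computed - formula)))          # agreement up to round-off
print(np.linalg.matrix_rank(hA))                   # n - 1
print(np.abs(hA @ np.ones(n)).max())               # constants lie in the null space
```

Since the formula gives distinct values with only \(\uplambda _1\) equal to zero, the rank is \(n-1\) and the null space is spanned by the constant vector.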

2.2 Accuracy of SBP Operators

Standard central finite difference stencils are used in the interior of the computational domain. To satisfy the SBP property, special non-centered difference stencils are used on a few grid points close to the boundaries. In the interior, where the central stencils are used, the truncation error is of even order, often denoted by 2p with \(p=1,2,3,\cdots \). On the few grid points with non-centered boundary stencils, the truncation error can at best be of order p when H is diagonal. We denote the accuracy of such SBP operators by (2p, p). Note that it is also common to refer to the operator and the scheme as \(2p^{th}\) order accurate; it is then important to be specific about the precise truncation error and convergence rate of the discretization.

Although the boundary truncation error is of order p, the convergence rate of the underlying numerical scheme can be higher in certain cases. This is in part because the number of grid points with the less accurate boundary stencils is independent of the grid spacing. The precise convergence rate depends on the equation, the spatial discretization and the numerical boundary conditions; see the detailed error analysis in [27, 28]. Below, in Sect. 3.2, we derive error estimates for the proposed scheme and show that the choice of the SAT affects the convergence rate.
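The (2p, p) behaviour can be observed directly for \(p=1\). The sketch below assembles the second order operator through the decomposition \(D = H^{-1}(-A - \varvec{e_1}\varvec{d}_{\varvec{1}}^T + \varvec{e_n}\varvec{d}_{\varvec{n}}^T)\) with \(b\equiv 1\). The concrete pieces are assumptions chosen for illustration: \(H = h\,\mathrm{diag}(1/2,1,\cdots ,1,1/2)\), the tridiagonal A, and second order one-sided boundary derivatives \(\varvec{d_1}\), \(\varvec{d_n}\). It then estimates the observed order of the truncation error of \(D e^x\) at the first grid point and in the interior. The helper names are hypothetical.

```python
import numpy as np

def sbp2_operator(n):
    """Second order SBP approximation of d^2/dx^2 on [0, 1] with b = 1, built from
    D = H^{-1}(-A - e1 d1^T + en dn^T).  Hypothetical helper."""
    h = 1.0 / (n - 1)
    H = h * np.ones(n)
    H[0] = H[-1] = h / 2                                          # diagonal norm
    A = (2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)) / h
    A[0, 0] = A[-1, -1] = 1.0 / h                                 # symmetric, A @ 1 = 0
    d1 = np.zeros(n); d1[:3] = np.array([-1.5, 2.0, -0.5]) / h    # approximates U_x(0)
    dn = np.zeros(n); dn[-3:] = np.array([0.5, -2.0, 1.5]) / h    # approximates U_x(1)
    e1 = np.zeros(n); e1[0] = 1.0
    en = np.zeros(n); en[-1] = 1.0
    D = (-A - np.outer(e1, d1) + np.outer(en, dn)) / H[:, None]
    return np.linspace(0.0, 1.0, n), D

def truncation_errors(n):
    """Truncation error of D applied to exp(x): first grid point and interior maximum."""
    x, D = sbp2_operator(n)
    err = np.abs(D @ np.exp(x) - np.exp(x))
    return err[0], err[1:-1].max()

b_coarse, i_coarse = truncation_errors(51)    # h = 1/50
b_fine, i_fine = truncation_errors(101)       # h = 1/100
print("boundary order:", np.log2(b_coarse / b_fine))   # close to p = 1
print("interior order:", np.log2(i_coarse / i_fine))   # close to 2p = 2
```

The boundary row reduces to \((u_1-2u_2+u_3)/h^2\), which is centred at \(x_2\) rather than \(x_1\) and is therefore only first order accurate at \(x_1\).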

3 An Energy-Based SBP-SAT Finite Difference Method

In this section, we derive the energy-based SBP-SAT discretization of the wave Eq. (2). First, we consider boundary conditions in Sect. 3.1. We show that our method is equivalent to that in [19] for Neumann boundary conditions, but is different from the discretization in [19] for Dirichlet boundary conditions. We then derive error estimates in Sect. 3.2 and consider grid interface conditions in Sect. 3.3.

3.1 The Boundary Conditions

Our SBP-SAT discretization is based on the approximation of the unknown variable U and its time derivative \(U_t\). Therefore, we rewrite Eq. (2) as a system that is first order in time,

\[ U_t = V, \qquad V_t = \left( bU_x\right) _x. \tag{8} \]

The energy-based SBP-SAT finite difference approximation of (8) with the boundary condition (4) is

where \(\varvec{u}\) and \(\varvec{v}\) are grid functions that approximate U and V, respectively. On the right-hand side of (9), the first term weakly imposes the time derivative of the Dirichlet boundary condition, \(U_t(0, t)=f_t(t)\) (note that the constant level of the solution is uniquely determined by the initial data and, being a constant, is not affected by this boundary condition). Because of the weights in \(\varvec{d_1}\), this penalty term affects the stencils on a few grid points near the left boundary. The second term is a dissipative term controlled by \(\alpha \) and contributes to a few grid points near the right boundary. On the left-hand side, the symmetric positive semidefinite matrix \(A^{(b)}\) multiplies \(\varvec{u}_t-\varvec{v}\), where \(\varvec{u}_t\) is approximately equal to \(\varvec{v}\). In the traditional way of imposing the Dirichlet boundary condition in the SBP-SAT finite difference method, the corresponding penalty term is based on \(U(0, t)=f(t)\) and involves a mesh-dependent penalty parameter [19]. Such mesh-dependent parameters are not needed in our energy-based discretization.

The last term on the right-hand side of (10) is a dissipation term that contributes only at the first grid point and is controlled by the parameter \(\beta \). We note that the Neumann boundary condition is imposed weakly in the same way as in [20]. To see this, consider (9)-(10) without the terms for the Dirichlet boundary condition and the dissipation. Then, (9) reduces to \(\varvec{u}_{tt}=\varvec{v}_t\). Replacing \(\varvec{v}_t\) by \(\varvec{u}_{tt}\) in (10) gives a formulation equivalent to that in [20].

The stability property of the semi-discretization is stated in the following theorem.

Theorem 1

With homogeneous boundary conditions, the energy-based SBP-SAT discretization (9)-(10) with \(\alpha \le 0\) and \(\beta \le 0\) satisfies

The discrete energy \(E_H\) is defined as

and is the discrete analogue of the continuous energy (3).

Proof

Consider homogeneous boundary conditions with \(f=g=0\) in (4). We multiply (9) from the left by \(\varvec{u}^T\) and (10) from the left by \(\varvec{v}^T H\), add the results, and obtain

if \(\alpha \le 0\) and \(\beta \le 0\). \(\square \)

If \(\alpha < 0\) and \(\beta < 0\), the discrete energy \(E_H\) is dissipated even though the continuous energy is conserved. With \(\alpha =\beta =0\), the discrete energy is constant in time. None of the four penalty terms in (9)-(10) contains a mesh-dependent parameter.
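The cancellation behind Theorem 1 can be checked numerically without solving for \(\varvec{u}_t\), because \(\varvec{u}^TA^{(b)}\varvec{u}_t = \varvec{u}^TA^{(b)}\varvec{v} + \varvec{u}^T(\text{right-hand side of (9)})\). Since the display of (9)-(10) is not reproduced in this text, the sketch assumes, for \(b\equiv 1\) and homogeneous data \(f=g=0\), the form \(A(\varvec{u}_t-\varvec{v}) = \varvec{d_1} v_1 + \alpha \varvec{d_n}(\varvec{d}_{\varvec{n}}^T\varvec{u})\) and \(H\varvec{v}_t = -A\varvec{u} - \varvec{e_1}\varvec{d}_{\varvec{1}}^T\varvec{u} + \beta \varvec{e_1} v_1\) (the weakly imposed homogeneous Neumann condition cancels the \(\varvec{e_n}\varvec{d}_{\varvec{n}}^T\varvec{u}\) part of \(HD\varvec{u}\)). This form matches the verbal description of the penalty terms and the steps of the proof, but the exact scaling used in the paper may differ. Under this assumption, \(\frac{d}{dt}E_H = 2\alpha (\varvec{d}_{\varvec{n}}^T\varvec{u})^2 + 2\beta v_1^2\). The second order SBP pieces and helper names are illustrative.

```python
import numpy as np

def sbp2_pieces(n):
    """H (diagonal, as a vector), A, d1, dn of a second order SBP operator, b = 1 (assumed)."""
    h = 1.0 / (n - 1)
    H = h * np.ones(n)
    H[0] = H[-1] = h / 2
    A = (2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)) / h
    A[0, 0] = A[-1, -1] = 1.0 / h
    d1 = np.zeros(n); d1[:3] = np.array([-1.5, 2.0, -0.5]) / h
    dn = np.zeros(n); dn[-3:] = np.array([0.5, -2.0, 1.5]) / h
    return H, A, d1, dn

def energy_rate(u, v, alpha, beta):
    """dE_H/dt with E_H = u^T A u + v^T H v for the ASSUMED homogeneous form of (9)-(10)."""
    _, A, d1, dn = sbp2_pieces(len(u))
    # Assumed (9): A (u_t - v) = d1 * v_1 + alpha * dn * (dn^T u)
    rhs9 = d1 * v[0] + alpha * dn * (dn @ u)
    # Assumed (10): H v_t = -A u - e1 (d1^T u) + beta * e1 * v_1
    Hvt = -(A @ u)
    Hvt[0] += -(d1 @ u) + beta * v[0]
    # u^T A u_t = u^T A v + u^T rhs9, so u_t itself is never formed
    return 2.0 * (u @ (A @ v) + u @ rhs9) + 2.0 * (v @ Hvt)

rng = np.random.default_rng(0)
n = 21
u, v = rng.standard_normal(n), rng.standard_normal(n)
alpha, beta = -0.3, -0.7
_, _, d1, dn = sbp2_pieces(n)
rate = energy_rate(u, v, alpha, beta)
predicted = 2 * alpha * (dn @ u) ** 2 + 2 * beta * v[0] ** 2
print(rate, predicted)   # equal up to round-off and non-positive
```

With \(\alpha =\beta =0\) the same function returns zero up to round-off, which is the energy-conserving case.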

In the first semi-discretized Eq. (9), the time derivative of the unknown variable \(\varvec{u}\) is given implicitly, since \(\varvec{u}_t\) is multiplied by \(A^{(b)}\). The matrix \(A^{(b)}\) is banded and symmetric positive semi-definite, with null space consisting of vectors of constants. Since both \(\varvec{d_1}\) and \(\varvec{d_n}\) are consistent finite difference stencils for the first derivative, their elements sum to zero; the right-hand side of (9) is therefore orthogonal to the null space of the symmetric matrix \(A^{(b)}\) and always lies in its range. In other words, a solution exists but is unique only up to a constant. A unique solution can be obtained with an additional constraint. Here, we require that the sum of all elements in \(\varvec{u}_t-\varvec{v}\) is zero, consistent with the equation \(U_t=V\). Numerically, this constraint can be taken into account by the Lagrange multiplier technique. With the new variables

Equation (9) is replaced by

which is nonsingular. The auxiliary variables \(\mu \) and \(\nu \) satisfy \(\mu _t\approx \nu \). Alternatively, we can use the pseudoinverse of \(A^{(b)}\). Since the right-hand side of (9) is a linear combination of \(\varvec{d_1}\) and \(\varvec{d_n}\), each of which has only a few nonzero elements, only a few columns of the pseudoinverse of \(A^{(b)}\) are needed; for constant coefficient problems and \(p=2\) or 4, they can be computed with the analytical formulas in [9, 10]. The resulting system of first order ordinary differential equations can then be advanced explicitly in time by standard time integrators, for example Runge-Kutta methods.
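A minimal sketch of the Lagrange multiplier idea, for \(b\equiv 1\) and a second order SBP matrix A: the singular system \(A\varvec{w}=\varvec{r}\) for \(\varvec{w}=\varvec{u}_t-\varvec{v}\) is bordered with a row and a column of ones, which enforces \(\sum _j w_j = 0\) and makes the system nonsingular. As an illustrative assumption, \(\varvec{r}\) is taken to be a combination of \(\varvec{d_1}\) and \(\varvec{d_n}\), as in (9). Because the null space of A consists of constants, the bordered solution coincides with the minimum-norm (pseudoinverse) solution, which gives a convenient check. The names `bordered_solve` and `lam` are hypothetical.

```python
import numpy as np

def second_order_A_d(n):
    """A, d1, dn of a second order SBP operator on [0, 1] with b = 1 (assumed)."""
    h = 1.0 / (n - 1)
    A = (2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)) / h
    A[0, 0] = A[-1, -1] = 1.0 / h
    d1 = np.zeros(n); d1[:3] = np.array([-1.5, 2.0, -0.5]) / h
    dn = np.zeros(n); dn[-3:] = np.array([0.5, -2.0, 1.5]) / h
    return A, d1, dn

def bordered_solve(A, r):
    """Solve [A 1; 1^T 0][w; lam] = [r; 0], i.e. A w + lam * 1 = r with sum(w) = 0."""
    n = A.shape[0]
    ones = np.ones((n, 1))
    K = np.block([[A, ones], [ones.T, np.zeros((1, 1))]])
    sol = np.linalg.solve(K, np.append(r, 0.0))
    return sol[:n], sol[n]

n = 20
A, d1, dn = second_order_A_d(n)
r = 0.7 * d1 - 0.3 * dn                 # illustrative right-hand side in the range of A
w, lam = bordered_solve(A, r)
print(np.abs(A @ w - r).max(), w.sum(), lam)           # all at round-off level
w_pinv = np.linalg.pinv(A, rcond=1e-10) @ r            # minimum-norm solution
print(np.abs(w - w_pinv).max())                        # the two solutions agree
```

Since \(\varvec{r}\) lies in the range of A, the multiplier `lam` comes out as zero, which mirrors the relation \(\mu _t\approx \nu \) for the auxiliary variables.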

Remark 1

At first glance, it appears that the formulation (9) would have a higher computational complexity than comparable methods but, as we show in Sect. 4, for constant coefficient systems there is a fast direct algorithm that results in a linear (in the number of degrees of freedom) complexity. For variable coefficients, we will illustrate by numerical examples that the preconditioned conjugate gradient method only requires a very small number of iterations per timestep to converge.

3.2 Error Estimates

As discussed in Sect. 2.2, the \(2p^{th}\) order accurate SBP operators with diagonal norms are only \(p^{th}\) order accurate on a few grid points near boundaries. In this section, we derive error estimates and analyze the effect of the \(p^{th}\) order truncation error on the overall convergence rate of the discretization. We note that the energy-based discretization for the Neumann problem is the same as the traditional SBP-SAT method [20], and for this problem the error estimates derived in [27] already apply. Below, we consider the problem with Dirichlet boundary conditions by first outlining the general approach [12] for error analysis and then specializing to the case \(p=2\). As will be seen, dissipation at the Dirichlet boundary affects the overall convergence rate. We note that the influence of dissipation for a discretization of the wave equation is also considered in [24].

Consider the following half line problem

in the domain \(x\in [0,\infty )\) with the Dirichlet boundary condition \(U(0,t)=f(t)\) and \(t\in [0,t_f]\) for some final time \(t_f\). The corresponding energy-based discretization is

When \(\beta \le 0\), the discretization satisfies an energy estimate as in Theorem 1. We will see below that the energy-dissipative discretization with \(\beta <0\) gives a higher convergence rate than the energy-conserving discretization with \(\beta =0\).

Let \(\varvec{\xi }=[\xi _{1}, \xi _{2}, \cdots ]^T\) and \(\varvec{\zeta }=[\zeta _{1}, \zeta _{2}, \cdots ]^T\) be the pointwise error vectors with \(\xi _{j}=u_j(t)-U(x_j,t)\) and \(\zeta _{j}=v_j(t)-V(x_j,t)\). We then have the error equations

where \(\varvec{T}\) is the truncation error vector. Note that there is no truncation error in the first equation, because the equation is satisfied exactly by the true solution on the grid. We partition the truncation error into two parts, the boundary truncation error \(\varvec{T^B}\) and the interior truncation error \(\varvec{T^I}\) such that \(\varvec{T}=\varvec{T^B}+\varvec{T^I}\). The only nonzero elements of \(\varvec{T^B}\) are the first r elements corresponding to the boundary stencil of D and are of order \({\mathcal {O}}(h^p)\), where r depends on p but not h. In \(\varvec{T^I}\), the first r elements are zero and the rest are of order \({\mathcal {O}}(h^{2p})\) corresponding to the interior stencil of D.

We partition the error as \(\varvec{\xi }=\varvec{\xi ^I}+\varvec{\xi ^B}\) and \(\varvec{\zeta }=\varvec{\zeta ^I}+\varvec{\zeta ^B}\). The first terms \(\varvec{\xi ^I},\varvec{\zeta ^I}\sim {\mathcal {O}}(h^{2p})\) are caused by the interior truncation error \(\varvec{T^I}\) and can be estimated by the energy technique for SBP methods. It is often the second part, \(\varvec{\xi ^B},\varvec{\zeta ^B}\), caused by the boundary truncation error \(\varvec{T^B}\), that determines the overall convergence rate of the scheme. We note that \(\varvec{\xi ^B},\varvec{\zeta ^B}\) satisfy the error equations

For convenience, we define the h-independent quantities

and the new variables

We have the relation \(\varvec{\varepsilon }_t = (\varvec{\xi ^B})_t- \varvec{\zeta ^B}\).

Next, we take the Laplace transform of the error Eqs. (16)-(17) in time. With exact initial data, we obtain the difference equations

where \(\widehat{\varvec{\varepsilon }}\) and \(\widehat{\varvec{\delta }}\) are the Laplace transforms of \({\varvec{\varepsilon }}\) and \({\varvec{\delta }}\), respectively. We also use the notation \(\widetilde{s}=sh\), where s is the dual variable of time. Note that when deriving (20), we have used (19) and the identity \(D=H^{-1}(-A-\varvec{e_1}\varvec{d}_{\varvec{1}}^T)\), which holds for the half-line problem since there is no right boundary.

In Laplace space, we solve the difference Eqs. (19)-(20) and use (18) to derive an error estimate for \(\widehat{\varvec{\xi ^B}}\). The corresponding error estimate for \(\varvec{\xi ^B}\) in physical space can then be obtained by Parseval’s relation. The precise estimate depends on the operators in (19)-(20). Below we consider the SBP operator with accuracy \((2p,p)=(4,2)\) constructed in [20]. The accuracy analysis follows the same procedure when other SBP operators are used.

Theorem 2

With the SBP operator of accuracy order (4,2) from [20], the method (14)-(15) has convergence rate four with a dissipative discretization \(\beta <0\). For the energy-conserving discretization with \(\beta =0\), the convergence rate is three.

Proof

In this case, \(\widehat{\varvec{T^B}}\) in (20) is, to leading order,

In (19), the matrix \(\overline{A}\) has boundary stencils in the first four rows and repeated interior stencil from row five. The only nonzeros of \(\overline{\varvec{d_1}} \varvec{e}_{\varvec{1}}^T\) are in the first four rows. Therefore, from row five the difference equation takes the form

The corresponding characteristic equation

has four solutions \(7-4\sqrt{3}\approx 0.0718\), \(7+4\sqrt{3}\approx 13.9282\), and a double root 1. The only admissible solution satisfying \(|\uplambda |<1\) is \(\uplambda =7-4\sqrt{3}\). As a consequence, the elements of the vector \(\widehat{\varvec{\varepsilon }}\) can be written as

with four unknowns \(\widehat{\varepsilon }_1\), \(\widehat{\varepsilon }_2\), \(\widehat{\varepsilon }_3\) and \(\sigma \). Note that it is also possible to use three unknowns \(\widehat{\varepsilon }_1, \widehat{\varepsilon }_2, \sigma \). We formulate the linear system with four unknowns to match the number of equations. In this case, we have the relation \(\widehat{\varepsilon }_3=\sigma \uplambda ^{-1}\). These four unknowns, together with the unknowns in \(\widehat{\varvec{\delta }}\), are involved in the first four equations of (19). To solve for them, we also need to consider (20).
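The roots quoted in the text can be reproduced with a few lines. The sketch assumes that the row-five equation of (19) is, to leading order, the interior stencil of the h-independent matrix \(\overline{A}\), namely \((1,-16,30,-16,1)/12\) (minus \(h^2\) times the standard fourth order central stencil for \(d^2/dx^2\)), acting on \(\widehat{\varvec{\varepsilon }}\); this assumption is consistent with the roots stated above. Inserting \(\widehat{\varepsilon }_j=\uplambda ^j\) gives a quartic characteristic polynomial.

```python
import numpy as np

# Assumed interior stencil of the scaled matrix for the (4,2) operator:
# minus h^2 times the fourth order central stencil (-1, 16, -30, 16, -1)/12.
stencil = np.array([1.0, -16.0, 30.0, -16.0, 1.0]) / 12.0

# Inserting eps_j = lam**j into sum_k stencil[k] * eps_{j+k-2} = 0 and multiplying
# by 12 * lam**(2 - j) gives lam^4 - 16 lam^3 + 30 lam^2 - 16 lam + 1 = 0.
char_poly = 12.0 * stencil
roots = np.roots(char_poly)

# The quartic factors as (lam - 1)^2 (lam^2 - 14 lam + 1).
factored = np.polymul([1.0, -2.0, 1.0], [1.0, -14.0, 1.0])

# Admissible roots satisfy |lam| < 1; the double root 1 is only perturbed by round-off.
admissible = roots[np.abs(roots) < 1.0 - 1e-6]
print(admissible.real, 7.0 - 4.0 * np.sqrt(3.0))
```

The margin \(10^{-6}\) in the admissibility test guards against the round-off splitting of the double root at 1, which `numpy.roots` typically returns as two values within about \(10^{-8}\) of 1.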

The difference equation from row five of (20) takes the form

and has two admissible roots

We note that the second admissible root \(\kappa _{2}\) corresponds to a slowly decaying component as \(\widetilde{s}\rightarrow 0^+\). The vector \(\widehat{\varvec{\delta }}\) can then be written as

with four unknowns \(\widehat{\delta }_1\), \(\widehat{\delta }_2\), \(\sigma _{1}\) and \(\sigma _{2}\).

The first four equations of (19) and the first four equations of (20) lead to the eight-by-eight boundary system

where the unknown vector \({\varvec{z}}\) and the right-hand side vector \(T_{uv}\) are

The nonzeros of \({\hat{T}}_{uv}\) are the nonzeros of \(\widehat{\varvec{T^B}}\) scaled by the first four diagonal elements of \(\overline{H}\), i.e. \(\frac{17}{48}, \frac{59}{48}, \frac{43}{48}, \frac{49}{48}\). From (18), we have

which depends on all the eight unknowns in the vector \({\varvec{z}}\).

To analyze convergence rate, we shall consider the solution to the boundary system in the vicinity of \(\widetilde{s}\rightarrow 0^+\) corresponding to the asymptotic region when \(h\rightarrow 0\). When the scheme is stable with \(\beta \le 0\), the boundary system is non-singular for all \(Re(\widetilde{s})>0\) [12]. However, the solution to the boundary system may depend on h, and the precise dependence is important to the convergence rate. To this end, we analyze the inverse of \(C(\widetilde{s},\beta )\), and the components of \({\varvec{z}}\) and \(\widehat{\varvec{\xi ^B}}\) in the vicinity of \(\widetilde{s}\rightarrow 0^+\).

We start by considering the boundary system (21) with \(\widetilde{s}=0\). Here, the matrix \(C(0):=C(0,\beta )\) is independent of \(\beta \) and takes the form

It is singular with one eigenvalue equal to zero, i.e. the so-called determinant condition is not satisfied. Since the matrix \(C(\widetilde{s}, \beta )\) cannot be inverted at \(\widetilde{s}=0\), we take a similar approach as in [21, 27] and consider \(Re(\widetilde{s})=\eta h\) for a small constant \(\eta >0\) independent of h. We also refer to [12] for this technique.

The Taylor series of \(C(\widetilde{s}, \beta )\) at \(\widetilde{s}=0\) can be written as

where \(C'(0,\beta )\) and \(C''(0,\beta )\) are the first and second derivative of \(C(\widetilde{s},\beta )\) with respect to \(\widetilde{s}\) at \(\widetilde{s}=0\), respectively. We perform a singular value decomposition of the singular matrix \(C(0)=M\varSigma N^*\) with two unitary matrices M and N. Substituting into the Taylor series, we obtain the boundary system to the leading order

where \(\bar{{\varvec{z}}}= N^* {\varvec{z}}\) and \(C_\beta =M^* C'(0,\beta ) N\). The solution can be written as

and consequently we have

We note that \(\varSigma \) is a diagonal matrix whose first seven diagonal components are nonzero and whose last diagonal component equals zero. The last diagonal element of \(C_\beta \), denoted by \((C_\beta )_{88}\), is crucial. We find that \((C_\beta )_{88}\) is zero when \(\beta =0\) and nonzero when \(\beta <0\). We analyze these two cases separately.

When \(\beta <0\), we have \((C_\beta )_{88}\ne 0\). In this case, we have

We use Gaussian elimination to reduce the linear system (25) to a triangular form. The resulting upper triangular matrix has diagonal elements \({\mathcal {O}}(1)\), except the last diagonal element \({\mathcal {O}}(\widetilde{s})\). Using that all elements of \(M^* {\hat{T}}_{uv}\) are \({\mathcal {O}}(h^4)\), the backward substitution procedure gives the solution \(\bar{{\varvec{z}}}\) in the form such that its first seven elements are \({\mathcal {O}}(h^4)\) and the last element is \({\mathcal {O}}({\widetilde{s}}^{-1} h^4)\sim {\mathcal {O}}(h^3)\).

The dominating error component \({\mathcal {O}}(h^3)\) in \(\bar{{\varvec{z}}}\) is a potential source of accuracy reduction. To analyze its effect on the convergence rate, we only consider this dominating component in \(\bar{{\varvec{z}}}\), that is \([0,0,0,0,0,0,0,1]^T Kh^3\) for some constant K. By the relation \({\varvec{z}}=N\bar{{\varvec{z}}}\), the corresponding part of \({\varvec{z}}\) can be computed as \({\varvec{z}}=N [0,0,0,0,0,0,0,1]^T Kh^3\). A direct calculation of \({\varvec{z}}\) shows that its components satisfy the following relations,

The last relation \(\sigma _{2}=0\) is important because in the error vector (23), the variable \(\sigma _{2}\) is multiplied with the slowly decaying component \(\kappa _{2}\), which is now eliminated. By using the first relation in (26) and the relation \(\uplambda =\kappa _{1}+{\mathcal {O}}({\widetilde{s}}^2)\), the error vector (23) becomes

Therefore, the dominating error component \({\mathcal {O}}(h^3)\) in \(\bar{{\varvec{z}}}\) does not lead to a convergence rate reduction. As a consequence, the error \(\widehat{\varvec{\xi ^B}}\) is determined by the first seven elements of \(\bar{{\varvec{z}}}\sim {\mathcal {O}}(h^4)\). It is then straightforward to compute

for some constant \(\widetilde{K}\). By using Parseval’s relation, we have

for some constant K. In the above, we have used the argument “future cannot affect past” [12, pp. 294]. Finally, the overall error \(\varvec{\xi }\) is in fact determined by the interior scheme. We conclude that the scheme has a fourth order convergence rate when \(\beta <0\).

Now, we consider the case \(\beta =0\). Since \((C_\beta )_{88}=(M^*C'(0,\beta )N)_{88}=0\), it is necessary to include the quadratic term of the Taylor series (24) in the boundary system analysis. A direct calculation gives \((M^*C''(0,\beta )N)_{88}\ne 0\). The solution \(\bar{{\varvec{z}}}\) to the boundary system is then in the form such that its first seven components are \({\mathcal {O}}(h^3)\) and the last component is \({\mathcal {O}}(h^2)\). The first seven components \({\mathcal {O}}(h^3)\) lead to \(\Vert \widehat{\varvec{\xi ^B}}\Vert _h \le K_3 h^{3} |\widehat{U}_{xxxx}(0,t)|\) for some constant \(K_3\), and the dominating error component \({\mathcal {O}}(h^2)\) does not lead to a further reduction in convergence rate for the same reason as in the case \(\beta <0\). Hence, the convergence rate is three when \(\beta =0\). Note that in this case, the slowly decaying component \(\kappa _{2}\) does not vanish in \(\widehat{\varvec{\xi ^B}}\). This concludes the proof. \(\square \)
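The mechanism in this proof, where the determinant condition fails at \(\widetilde{s}=0\) and the growth of the solution is decided by the last diagonal entry of the first-order Taylor term, can be illustrated on a toy \(2\times 2\) system that is already written in the singular-value basis, so that \(C(0)=\varSigma =\mathrm{diag}(1,0)\). The matrices below are hypothetical and are not the eight-by-eight boundary matrix of the proof. When the (2,2) entry d of the linear term is nonzero (the analogue of \(\beta <0\)), the last solution component grows like \(\widetilde{s}^{-1}\) while the first stays \({\mathcal {O}}(1)\). When \(d=0\) (the analogue of \(\beta =0\)), a quadratic term \(c\widetilde{s}^2\) is needed and the components grow like \(\widetilde{s}^{-1}\) and \(\widetilde{s}^{-2}\). With \(\widetilde{s}\sim h\) and an \({\mathcal {O}}(h^4)\) right-hand side, these exponents correspond to the orders discussed above.

```python
import numpy as np

def toy_solution(s, d, c):
    """Solve (C0 + s*C1 + s**2*C2) z = t for a hypothetical 2x2 toy system with
    C0 = diag(1, 0), C1 = [[0, 1], [1, d]], C2 = [[0, 0], [0, c]]."""
    C = np.array([[1.0, s],
                  [s, d * s + c * s * s]])
    t = np.array([1.0, 2.0])   # O(1) stand-in for the scaled truncation error
    return np.linalg.solve(C, t)

def growth_exponents(d, c, s1=1e-3, s2=1e-4):
    """Estimate q in |z_k| ~ s**q for each component from two values of s."""
    z1 = np.abs(toy_solution(s1, d, c))
    z2 = np.abs(toy_solution(s2, d, c))
    return np.log(z2 / z1) / np.log(s2 / s1)

e_diss = growth_exponents(d=1.0, c=0.0)   # nonzero linear entry: about [0, -1]
e_cons = growth_exponents(d=0.0, c=2.0)   # vanishing linear entry: about [-1, -2]
print(e_diss, e_cons)
```

In the toy, \(\det C = d\widetilde{s}+{\mathcal {O}}(\widetilde{s}^2)\) when \(d\ne 0\) but \(\det C=(c-1)\widetilde{s}^2\) when \(d=0\), which is why the quadratic Taylor term must be retained in the second case.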

3.3 Interface Conditions

In heterogeneous materials, a multi-block finite difference discretization can be advantageous. Different grid spacings can be used in different blocks to adapt to the velocity structure of the material. If the material property is discontinuous, the material interfaces can be aligned with block boundaries so that high order accurate discretization can be constructed in each block.

As a model problem, we again consider the wave Eq. (8), now in the domain [−1, 1]. The parameter b(x) is smooth in \((-1,0)\) and (0, 1), but discontinuous at \(x=0\). In the stability analysis, we omit terms corresponding to the boundaries \(x=\pm 1\) and focus on the interface contribution. The energy (3) is conserved in time if we impose the interface conditions

\[ U(0^-,t)=U(0^+,t), \qquad b(0^-)U_x(0^-,t)=b(0^+)U_x(0^+,t). \]

In this case, at \(x=0\) the solution is continuous but its derivative is discontinuous.

We proceed by deriving an energy-based SBP-SAT discretization. To distinguish notations in the two subdomains, we use a tilde symbol on top of the variables and operators in [0, 1]. The semi-discretization reads

in the subdomain \([-1,0]\), and

in the subdomain [0, 1]. Similar to the Dirichlet boundary condition, we impose continuity of the time derivative of the solution, instead of continuity of the solution itself. Unlike in [25], no mesh-dependent parameter is needed to impose interface conditions by the SAT method. The stability property is summarized in the following theorem.

Theorem 3

The semi-discretization (29)-(32) satisfies

for any \(\tau \) and any \(\gamma \le 0\), where the discrete energy is defined as \(E_H \equiv \Vert \varvec{u}\Vert _{A^{(b)}}^2 + \Vert \varvec{v}\Vert _H^2 + \Vert \varvec{{{\tilde{u}}}}\Vert _{{{\tilde{A}}}^{(b)}}^2 + \Vert \varvec{{{\tilde{v}}}}\Vert _{{{\tilde{H}}}}^2\).

Proof

We multiply (29) by \(\varvec{u}^T\), (30) by \(\varvec{v}^TH\), (31) by \(\varvec{{{\tilde{u}}}}^T\), and (32) by \(\tilde{\varvec{v}}^T{{\tilde{H}}}\). Adding the four resulting equations, we obtain

where the discrete energy is \(E_H = \Vert \varvec{u}\Vert _{A^{(b)}}^2 + \Vert \varvec{v}\Vert _H^2 + \Vert \varvec{{{\tilde{u}}}}\Vert _{{{\tilde{A}}}^{(b)}}^2 + \Vert \varvec{{{\tilde{v}}}}\Vert _{{{\tilde{H}}}}^2\). \(\square \)

The penalty parameters \(\tau \) and \(\gamma \) do not depend on the mesh size. We have \(\frac{d}{dt}E_H\le 0\) when \(\gamma \le 0\); in particular, if \(\gamma =0\), the discrete energy is conserved in time. We note that the linear systems involving \(A^{(b)}\) and \({{\tilde{A}}}^{(b)}\) can be solved separately in each subdomain, thus resulting in a linear computational complexity with respect to the number of degrees of freedom.

4 Discretization in Higher Space Dimensions

The one-dimensional discretization technique can be generalized to higher dimensional problems by tensor products. As an example, we consider the wave equation in two space dimensions (2D)

in the domain \(\varOmega =[0,1]^2\) with Dirichlet boundary conditions

and a forcing function F. We discretize \(\varOmega \) by a Cartesian grid with \(n_x\) points in x and \(n_y\) points in y. The semi-discretization can be written as

The operator \({\mathbf {D}}\) in (35) approximates the second derivative with variable coefficients in 2D and is defined as

where \(D^{a_{:,i}}\) approximates \(\frac{\partial }{\partial x}\left( a(x,y_i)\frac{\partial }{\partial x}\right) \) and the only nonzero element in the \(n_y\) by \(n_y\) matrix \(E_y^i\) is \(E_y^i(i,i) = 1\). The operators corresponding to the term in the y-direction are defined similarly. The operator \({\mathbf {H}}=H_x\otimes H_y\) defines the 2D SBP norm and quadrature. In addition, we also have in (34) that

where \(A^{a_{:,i}}\) is the symmetric positive semidefinite matrix associated with \(D^{a_{:,i}}\). The terms on the right-hand side of (34) are SATs imposing the Dirichlet boundary conditions. We define

where \(d^{a_{1,i}}\) approximates the first derivative \(a(x_1,y_i)\frac{\partial }{\partial x}\) and is associated with \(D^{a_{:,i}}\). The \(n_y\) by 1 vector \(\varvec{f_{tW}}\) is the time derivative of the Dirichlet boundary data evaluated on the grid of the left boundary \(x=0\). We use the following operators to select the numerical solutions on the boundary

Finally, the third term on the right-hand side of (35) corresponds to numerical dissipation, and the admissible values of the parameter \(\theta \) follow from the energy analysis. The grid function \(\varvec{F}\) is the forcing function F evaluated on the grid.
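To illustrate the tensor-product construction, the Python sketch below assembles \({\mathbf {H}}=H_x\otimes H_y\) and a matrix \({\mathbf {A}}\) line by line. It rests on stated assumptions: the helper names `sbp2_1d` and `assemble_2d` are hypothetical, the one-dimensional blocks come from a simple second order accurate operator (not the high order operators of [17]), and we assume the tensor-product form \({\mathbf {A}}=\sum _i A^{a_{:,i}}\otimes H_yE_y^i+\sum _j H_xE_x^j\otimes A^{b_{j,:}}\), which is consistent with the definitions above. The checks confirm symmetry, semidefiniteness, the constant null vector, and the reduction to \(aA_x\otimes H_y+bH_x\otimes A_y\) for constant coefficients.

```python
import numpy as np


def sbp2_1d(n, coef):
    """Second order SBP pieces on [0, 1] with n points (illustration only).

    coef holds the coefficient at the grid points; midpoint values are averages.
    Returns the diagonal norm H and a symmetric positive semidefinite A such that
    w^T A w approximates the integral of coef * (dw/dx)^2.
    """
    h = 1.0 / (n - 1)
    H = h * np.eye(n)
    H[0, 0] = H[-1, -1] = h / 2
    G = (np.eye(n, k=1) - np.eye(n))[:-1] / h   # forward differences, (n-1) x n
    c_half = 0.5 * (coef[:-1] + coef[1:])       # coefficient at cell midpoints
    A = G.T @ np.diag(h * c_half) @ G
    return H, A


def assemble_2d(a, b):
    """Assemble H = Hx kron Hy and A from coefficient arrays a, b of shape (nx, ny).

    Unknowns are ordered with the x index varying slowest, matching Hx kron Hy.
    """
    nx, ny = a.shape
    Hx, _ = sbp2_1d(nx, np.ones(nx))
    Hy, _ = sbp2_1d(ny, np.ones(ny))
    A2 = np.zeros((nx * ny, nx * ny))
    for i in range(ny):                         # x-direction term on the line y = y_i
        _, Aa = sbp2_1d(nx, a[:, i])
        Ey = np.zeros((ny, ny))
        Ey[i, i] = 1.0
        A2 += np.kron(Aa, Hy @ Ey)
    for j in range(nx):                         # y-direction term on the line x = x_j
        _, Ab = sbp2_1d(ny, b[j, :])
        Ex = np.zeros((nx, nx))
        Ex[j, j] = 1.0
        A2 += np.kron(Hx @ Ex, Ab)
    return np.kron(Hx, Hy), A2


nx, ny = 9, 7
X, Y = np.meshgrid(np.linspace(0.0, 1.0, nx), np.linspace(0.0, 1.0, ny), indexing="ij")
H2, A2 = assemble_2d(1.0 + X**2, 2.0 + np.sin(Y))

# Constant coefficients a = 2, b = 3 should reduce to 2 Ax kron Hy + 3 Hx kron Ay.
_, Ac = assemble_2d(np.full((nx, ny), 2.0), np.full((nx, ny), 3.0))
Hx1, Ax1 = sbp2_1d(nx, np.ones(nx))
Hy1, Ay1 = sbp2_1d(ny, np.ones(ny))
A_const = 2.0 * np.kron(Ax1, Hy1) + 3.0 * np.kron(Hx1, Ay1)
```

Dense storage is used only to keep the sketch short; a practical implementation would store these matrices in sparse form.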

Theorem 4

The semi-discretization (34)-(35) satisfies

if \(\theta \le 0\), where the discrete energy is \(E_H\equiv \varvec{u}^T {\mathbf {A}} \varvec{u}+\varvec{v}^T{\mathbf {H}}\varvec{v}\).

Proof

Consider homogeneous boundary and forcing data. Multiplying (34) by \(\varvec{u}^T\), we have

Similarly, we multiply (35) by \(\varvec{v}^T{\mathbf {H}}\) and obtain

We then add (36) and (37) to obtain

Therefore, the discrete energy \(E_H\equiv \varvec{u}^T {\mathbf {A}} \varvec{u}+\varvec{v}^T{\mathbf {H}}\varvec{v}\) decays in time if \(\theta <0\) and is conserved in time if \(\theta =0\). \(\square \)

To advance the two-dimensional semi-discretization (34)-(35) in time, we need to isolate the \(\mathbf{u}_t\) term. In Sect. 4.1 we show that when the problem has constant coefficients, this can be done efficiently with the diagonalization technique first proposed in [16] and more recently used in [30] for a Galerkin difference method. In Sect. 4.2 we illustrate that the variable coefficient case can be handled by iterative solvers.

4.1 Constant Coefficient Problems

Now consider the case \(a(x,y)\equiv a\) and \(b(x,y)\equiv b\), where a, b are positive constants. The semi-discretized equation is the same as (34)-(35), but the operators \({\mathbf {D}}\), \({\mathbf {A}}\) and \(d_{W,E,S,N}\) take the simpler form

where \(D_x=H_x^{-1}(-A_x-\varvec{e_{1x}}\varvec{d}^T_{\varvec{1x}}+\varvec{e_{nx}}\varvec{d}^T_{\varvec{nx}})\) approximates \(\partial ^2/\partial x^2\) and the operators with subscript y are defined analogously. We further define

and consider the eigendecomposition of \(\widetilde{A_x}\) and \(\widetilde{A_y}\),

Here, \(\varLambda _{x}\) is a diagonal matrix with the eigenvalues of \(\widetilde{A_{x}}\) as the diagonal entries. Since \(\widetilde{A_{x}}\) is real and symmetric, the eigenvectors can be chosen to be orthonormal, so that \(Q_{x}^T = Q_{x}^{-1}\). The operators \(Q_y\) and \(\varLambda _y\) are defined analogously. The operator \(\widetilde{{\mathbf {A}}}\) can be diagonalized as

where the orthogonal matrix \({\mathbf {Q}}=Q_x\otimes Q_y\) and the diagonal matrix \(\mathbf {\varLambda }=\varLambda _x\otimes I_y+I_x\otimes \varLambda _y\).
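The Kronecker structure of this diagonalization can be checked numerically. The Python sketch below assumes \(\widetilde{A_x}=H_x^{-\frac{1}{2}}(aA_x)H_x^{-\frac{1}{2}}\) and \(\widetilde{A_y}=H_y^{-\frac{1}{2}}(bA_y)H_y^{-\frac{1}{2}}\) built from a second order SBP operator (an illustrative stand-in; the helper `scaled_1d` is hypothetical). It verifies that \({\mathbf {Q}}=Q_x\otimes Q_y\) is orthogonal, that \({\mathbf {Q}}^T(\widetilde{A_x}\otimes I_y+I_x\otimes \widetilde{A_y}){\mathbf {Q}}=\mathbf {\varLambda }\), and that \(\mathbf {\varLambda }\) has a single zero entry, which comes first when the one-dimensional eigenvalues are sorted in ascending order.

```python
import numpy as np


def scaled_1d(n, coef):
    """Return H^{-1/2} (coef * A) H^{-1/2} for a second order SBP operator on [0, 1]."""
    h = 1.0 / (n - 1)
    Hdiag = h * np.ones(n)
    Hdiag[0] = Hdiag[-1] = h / 2
    A = (coef / h) * (2 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1))
    A[0, 0] = A[-1, -1] = coef / h
    s = 1.0 / np.sqrt(Hdiag)
    return s[:, None] * A * s[None, :]


nx, ny, a, b = 12, 10, 2.0, 3.0
At_x, At_y = scaled_1d(nx, a), scaled_1d(ny, b)

# One-dimensional eigenproblems: eigh returns ascending eigenvalues, orthonormal vectors.
lam_x, Q_x = np.linalg.eigh(At_x)
lam_y, Q_y = np.linalg.eigh(At_y)

Q = np.kron(Q_x, Q_y)                      # formed densely here only for checking
Lam = np.kron(lam_x, np.ones(ny)) + np.kron(np.ones(nx), lam_y)   # diagonal of Lambda
At = np.kron(At_x, np.eye(ny)) + np.kron(np.eye(nx), At_y)

scale = np.abs(Lam).max()
n_zero = np.sum(np.abs(Lam) < 1e-8 * scale)   # number of (numerically) zero eigenvalues
```

Only the two small eigenproblems are solved; in an actual time stepper \({\mathbf {Q}}\) would be applied through its factors rather than formed.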

Next, we define \(\widetilde{\varvec{u}}\) and \(\widetilde{\varvec{v}}\) such that they satisfy

respectively. Substituting the new variables into (34), we obtain

We multiply the above equation from the left by \(({\mathbf {H}}^{-\frac{1}{2}}{\mathbf {Q}})^T\), and obtain

We note that the diagonal matrix \(\mathbf {\varLambda }\) has exactly one eigenvalue equal to zero, since \(\varLambda _x\) and \(\varLambda _y\) are nonnegative and each has a single zero entry. If we order the eigenvalues such that the first diagonal entry of \(\mathbf {\varLambda }\) is zero, then the first equation of (40) is \((\widetilde{u}_1)_t=\widetilde{v}_1\).

In the same way, we can substitute the new variables \(\widetilde{\varvec{u}}\) and \(\widetilde{\varvec{v}}\) into (35) and obtain

We then multiply the above equation from the left by \({\mathbf {Q}}^T {\mathbf {H}}^{\frac{1}{2}}\) and obtain

The transformed difference operator \({\mathbf {Q}}^T {\mathbf {H}}^{\frac{1}{2}} {\mathbf {D}}{\mathbf {H}}^{-\frac{1}{2}}{\mathbf {Q}}\) can be simplified by using the relation

to obtain

The operator \({\mathbf {A}}\), which is the volume part of \({\mathbf {D}}\), is diagonalized to \(\mathbf {\varLambda }\). For the boundary parts, we do not need to use the \(n_xn_y\)-by-\(n_xn_y\) dense matrix \({\mathbf {Q}}\). As an example, for the term \({\mathbf {Q}}^T {\mathbf {H}}^{-\frac{1}{2}} e_W H_y d_W^T {\mathbf {H}}^{-\frac{1}{2}}{\mathbf {Q}}\), we have

Furthermore, the rank of the \(n_x\)-by-\(n_x\) matrix \(e_{1x} ad_{1x}^T\) is 1. Hence, the product of the \(n_xn_y\)-by-\(n_xn_y\) matrix with the vector \(\widetilde{{\mathbf {u}}}\) can be computed in \({\mathcal {O}}(n_xn_y)\) operations. The other boundary terms in (40) and (41) are computed in the same way. Finally, the solution to the original problem is recovered from (39).
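Assuming \(e_W=e_{1x}\otimes I_y\) and \(d_W=a\,d_{1x}\otimes I_y\) in the constant coefficient case, and using \(H_y^{-\frac{1}{2}}H_yH_y^{-\frac{1}{2}}=I_y\) and \(Q_y^TQ_y=I_y\), the boundary term factors as \(\left( Q_x^TH_x^{-\frac{1}{2}}e_{1x}\,a\,d_{1x}^TH_x^{-\frac{1}{2}}Q_x\right) \otimes I_y=(pq^T)\otimes I_y\) with \(p=Q_x^TH_x^{-\frac{1}{2}}e_{1x}\) and \(q=aQ_x^TH_x^{-\frac{1}{2}}d_{1x}\). The Python sketch below applies such a term in \({\mathcal {O}}(n_xn_y)\) operations; the function name is hypothetical and random vectors stand in for p and q.

```python
import numpy as np


def apply_rank1_kron(p, q, u, ny):
    """Return ((p q^T) kron I_y) @ u in O(nx * ny) operations.

    u is ordered with the x index varying slowest, so u.reshape(nx, ny) puts the
    x index in rows; for this ordering (P kron I_y) vec(U) = vec(P U).
    """
    U = u.reshape(-1, ny)
    return np.outer(p, q @ U).ravel()      # P U = p (q^T U), never forming P


rng = np.random.default_rng(0)
nx, ny = 30, 20
p = rng.standard_normal(nx)   # placeholder for Q_x^T H_x^{-1/2} e_{1x}
q = rng.standard_normal(nx)   # placeholder for a Q_x^T H_x^{-1/2} d_{1x}
u_tilde = rng.standard_normal(nx * ny)

fast = apply_rank1_kron(p, q, u_tilde, ny)
dense = np.kron(np.outer(p, q), np.eye(ny)) @ u_tilde   # O((nx ny)^2) reference
```

In practice p and q would be computed once, before time stepping, from the one-dimensional eigenvectors.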

4.2 Variable Coefficient Problems

The above diagonalization procedure does not generalize easily to problems with variable coefficients (originating either from heterogeneous material properties or from grid transformations). Instead, we may solve the linear system for \(\mathbf{u}_t\) in each time step by an iterative method. Since \(\mathbf{A} \) is symmetric positive semidefinite and the right-hand side is always in the range of \(\mathbf{A}\), the method of choice is the preconditioned conjugate gradient method. In Sect. 5.2 we show that when an incomplete Cholesky preconditioner is used together with the initial guess \(\varvec{u}_t\approx \varvec{v}\), only a small number of iterations is needed to meet a tolerance that scales with the order of the method.
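A small Python sketch of this approach follows. Several assumptions apply: a dense second order operator stands in for the high order SBP operators of [17]; the coefficient is the layered profile \(0.5(\tanh (k(R-0.25))+3)\) used in Sect. 5.2 with k = 10; the preconditioner is a zero fill-in incomplete Cholesky factorization (IC(0)) of the matrix with its diagonal increased by 1%, which mimics the diagonal shift described in Sect. 5.2 but uses no drop tolerance; and the names `assemble_A`, `ichol0` and `pcg` are hypothetical. The right-hand side is constructed to lie in the range of \(\mathbf{A}\), as it does in the scheme.

```python
import numpy as np
from scipy.linalg import solve_triangular


def assemble_A(n, coef):
    """Second order 2D matrix for (c u_x)_x + (c u_y)_y on [0,1]^2 (illustration only).

    coef has shape (n, n) with coef[j, i] at (x_j, y_i); x index varies slowest.
    """
    h = 1.0 / (n - 1)
    Hd = h * np.ones(n)
    Hd[0] = Hd[-1] = h / 2
    G = (np.eye(n, k=1) - np.eye(n))[:-1] / h         # forward differences
    A = np.zeros((n * n, n * n))
    for i in range(n):
        E = np.zeros((n, n))
        E[i, i] = Hd[i]                                # quadrature weight of line i
        cx = 0.5 * (coef[:-1, i] + coef[1:, i])        # x-midpoints on line y = y_i
        cy = 0.5 * (coef[i, :-1] + coef[i, 1:])        # y-midpoints on line x = x_i
        A += np.kron(G.T @ np.diag(h * cx) @ G, E)
        A += np.kron(E, G.T @ np.diag(h * cy) @ G)
    return A


def ichol0(A, shift=0.01):
    """Zero fill-in incomplete Cholesky factor L of A + shift * diag(A) (dense storage)."""
    L = np.tril(A + shift * np.diag(np.diag(A)))
    pattern = A != 0
    for k in range(A.shape[0]):
        L[k, k] = np.sqrt(L[k, k])
        L[k + 1:, k] /= L[k, k]
        col = L[k + 1:, k]
        # update only entries that are nonzero in A, so no fill-in is created
        L[k + 1:, k + 1:] -= np.tril(np.outer(col, col) * pattern[k + 1:, k + 1:])
    return L


def pcg(A, rhs, x0, L, tol=1e-10, maxiter=500):
    """Preconditioned CG with preconditioner (L L^T)^{-1}; returns (x, iterations)."""
    def precond(r):
        return solve_triangular(L.T, solve_triangular(L, r, lower=True), lower=False)

    x = x0.copy()
    r = rhs - A @ x
    z = precond(r)
    p = z.copy()
    rz = r @ z
    target = tol * np.linalg.norm(rhs)
    for it in range(1, maxiter + 1):
        Ap = A @ p
        alpha = rz / (p @ Ap)
        x += alpha * p
        r -= alpha * Ap
        if np.linalg.norm(r) <= target:
            return x, it
        z = precond(r)
        rz_new = r @ z
        p = z + (rz_new / rz) * p
        rz = rz_new
    return x, maxiter


n, k = 20, 10.0
s = np.linspace(0.0, 1.0, n)
X, Y = np.meshgrid(s, s, indexing="ij")
R = (X - 0.5) ** 2 + (Y - 0.5) ** 2
A = assemble_A(n, 0.5 * (np.tanh(k * (R - 0.25)) + 3.0))

rng = np.random.default_rng(1)
ut_ref = rng.standard_normal(n * n)
rhs = A @ ut_ref                                  # consistent: rhs lies in range(A)
v = ut_ref + 1e-3 * rng.standard_normal(n * n)    # initial guess u_t ~ v
ut, iters = pcg(A, rhs, v, ichol0(A))
```

Because the system is singular but consistent, CG only drives the residual to zero; the component of \(\mathbf{u}_t\) in the null space is inherited from the initial guess. A production code would use sparse storage for both the matrix and the factor.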

5 Numerical Experiments

We present numerical examples in Sect. 5.1 to verify the convergence properties of the proposed method. In all experiments, the classical Runge-Kutta method is used for time integration. The \(L_2\) errors at the final time are computed as

where \(\varvec{u_h}\) is the numerical solution, \(\varvec{u_{ex}}\) is the manufactured solution restricted to the grid, h is the grid spacing and d is the spatial dimension. The convergence rates for grids refined by a factor of two are estimated by
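A minimal Python sketch of these two quantities, assuming the error is the scaled grid norm \(\sqrt{h^d\sum (\varvec{u_h}-\varvec{u_{ex}})^2}\) and the rate between consecutive grids is \(\log _2(e_{2h}/e_h)\). The function names are hypothetical, and the check uses synthetic errors proportional to \(h^4\), not results from this paper.

```python
import numpy as np


def l2_error(u_h, u_ex, h, d):
    """Discrete L2 error sqrt(h^d * sum((u_h - u_ex)^2)) over all grid points."""
    diff = np.asarray(u_h, dtype=float) - np.asarray(u_ex, dtype=float)
    return np.sqrt(h**d * np.sum(diff**2))


def observed_rates(errors):
    """Rates log2(e_coarse / e_fine) for a sequence of grids, each refined by 2."""
    e = np.asarray(errors, dtype=float)
    return np.log2(e[:-1] / e[1:])


# Synthetic check: errors behaving like C h^4 give rates equal to 4.
hs = np.array([0.1, 0.05, 0.025])
rates = observed_rates(3.0 * hs**4)
err = l2_error(np.ones(4), np.zeros(4), h=0.5, d=1)
```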

In Sect. 5.2, we test the preconditioned conjugate gradient method for solving the 2D wave equation with variable coefficients.

5.1 Examples in One Space Dimension

We start with a verification of the convergence rate for the wave equation \(U_{tt}=U_{xx}\) in the domain \(x\in [-\pi /2,\pi /2]\) and \(t\in [0,2]\). We impose Dirichlet boundary conditions at \(x=-\pi /2\) and \(x=\pi /2\). The boundary data are obtained from the manufactured solution \(U=\cos (10x+1)\cos (10t+2)\).

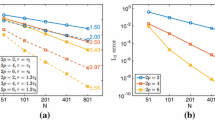

We construct the semi-discretization (9)-(10) using the fourth and sixth order accurate SBP operators of [20], and explicitly compute the pseudoinverse of A. We are interested in how the dissipative term affects the accuracy of the numerical solution. To this end, we set the parameter \(\beta \) to 0 (no dissipation) or \(-1\) (dissipation). The \(L_2\) errors and the corresponding rates of convergence are presented in Table 1 for the fourth order method and Table 2 for the sixth order method.
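The pseudoinverse can be formed once, before time stepping. The Python sketch below does this for a second order SBP matrix A on the grid of this example (an illustrative stand-in for the fourth and sixth order operators of [20]) via a symmetric eigendecomposition, one of several ways to form it. It assumes, hypothetically, that only the columns of \(A^+\) multiplying the boundary vectors \(e_1\) and \(e_n\) are needed inside the time loop; it does not reproduce (9)-(10).

```python
import numpy as np

n = 41
h = np.pi / (n - 1)                                   # grid spacing on [-pi/2, pi/2]
A = (2 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)) / h
A[0, 0] = A[-1, -1] = 1.0 / h                         # second order SBP matrix (illustration)

# Pseudoinverse from the eigendecomposition: invert only the nonzero eigenvalues.
lam, V = np.linalg.eigh(A)
inv_lam = np.zeros_like(lam)
nonzero = lam > 1e-10 * lam.max()
inv_lam[nonzero] = 1.0 / lam[nonzero]
A_pinv = (V * inv_lam) @ V.T

# Columns of A^+ associated with the boundary unit vectors e_1 and e_n (hypothetical use).
boundary_cols = A_pinv[:, [0, n - 1]]

# For a right-hand side in the range of A, A^+ gives the minimum-norm solution,
# which is orthogonal to the constant null vector.
rhs = A @ np.sin(np.linspace(-np.pi / 2, np.pi / 2, n))
w = A_pinv @ rhs
```

Storing only a few columns keeps the per-step cost linear in n, whereas applying the full dense \(A^+\) would cost \({\mathcal {O}}(n^2)\) per step.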

We observe that the parameter \(\beta \) affects both the numerical errors and the convergence rates. For the fourth order method, a fourth order convergence rate is obtained when \(\beta =-1\). However, when \(\beta =0\) the convergence rate drops by one order, to three. This agrees with the error estimate in Sect. 3.2. For the sixth order method, the choice \(\beta =-1\) leads to a super-convergence of order 5.5. The same convergence rate is observed and proved in [27] for the traditional sixth order SBP-SAT discretization of the Dirichlet problem. With a careful analysis of the solution to the boundary system, it was shown that the coefficient multiplying the slowly decaying component of the error in Laplace space is zero. This leads to an additional gain of half an order in the convergence rate. Without dissipation from the Dirichlet boundary (\(\beta =0\)), however, the convergence rate is five.

Next, we test the numerical interface treatment (29)-(32), and consider the same problem as above but with a grid interface at \(x=0\) and interface conditions (27)-(28). To eliminate any influence from the boundaries, we impose periodic boundary conditions at \(x=\pm \pi /2\). For the interface conditions, we choose either \(\gamma =-1\) or \(\gamma =0\), corresponding to an interface treatment with or without dissipation, respectively. The \(L_2\) errors and the corresponding rates of convergence are presented in Table 3 for the fourth order method and Table 4 for the sixth order method.

For the fourth order method, both \(\gamma =0\) and \(\gamma =-1\) lead to fourth order convergence, and for the same mesh resolution the L\(_2\) errors are almost identical. For the sixth order method, the two choices of \(\gamma \) give different rates of convergence: the rate is 5.5 with \(\gamma =-1\), but drops to between 5 and 5.5 when \(\gamma =0\). On the finest mesh, the L\(_2\) error of the dissipative discretization is about half of that of the energy-conserving discretization. We note that with the traditional sixth order SBP-SAT discretization of the interface problem, the convergence rate is 5.5 [27], the same as for the dissipative energy-based SBP-SAT discretization.

5.2 Examples in Two Space Dimensions

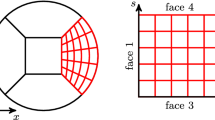

We consider the variable coefficient problem (33) with \(a(x,y)=0.5(\tanh (k(R-0.25))+3)\) and \(b(x,y)=0.5(\tanh (k(R-0.25))+3)\), where \(R=(x-0.5)^2+(y-0.5)^2\). The coefficients a and b model heterogeneous material properties of a layered structure, with k controlling the width of the transition between the two layers. A few examples of a(x, y) with different values of k are shown in Fig. 1. We see that for larger k, the transition zone between the two materials becomes narrower. We choose the forcing function F so that the manufactured solution \(U=\cos (2x+\pi /2)\cos (2y+\pi /2)\cos (2\sqrt{2}t+3)\) satisfies the equations.
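The forcing F is not written out in the text. Assuming the governing equation has the form \(U_{tt}=(aU_x)_x+(bU_y)_y+F\), F can be evaluated analytically with the chain rule through R, using \(a=b\) as stated above. The Python sketch below does this (the function names are hypothetical) and cross-checks the result at one point against a compact centered difference of the fluxes.

```python
import numpy as np


def coef(x, y, k):
    """a(x, y) = b(x, y) = 0.5 (tanh(k (R - 0.25)) + 3), R = (x-0.5)^2 + (y-0.5)^2."""
    R = (x - 0.5) ** 2 + (y - 0.5) ** 2
    return 0.5 * (np.tanh(k * (R - 0.25)) + 3.0)


def coef_grad(x, y, k):
    """Analytic (a_x, a_y) via the chain rule through R."""
    R = (x - 0.5) ** 2 + (y - 0.5) ** 2
    da_dR = 0.5 * k / np.cosh(k * (R - 0.25)) ** 2
    return da_dR * 2.0 * (x - 0.5), da_dR * 2.0 * (y - 0.5)


def exact(x, y, t):
    return np.cos(2 * x + np.pi / 2) * np.cos(2 * y + np.pi / 2) * np.cos(2 * np.sqrt(2) * t + 3)


def forcing(x, y, t, k):
    """F = U_tt - (a U_x)_x - (b U_y)_y for the manufactured U (with a = b)."""
    cx, cy = np.cos(2 * x + np.pi / 2), np.cos(2 * y + np.pi / 2)
    sx, sy = np.sin(2 * x + np.pi / 2), np.sin(2 * y + np.pi / 2)
    ct = np.cos(2 * np.sqrt(2) * t + 3)
    U = cx * cy * ct
    Ux, Uy = -2 * sx * cy * ct, -2 * cx * sy * ct
    a = coef(x, y, k)
    ax, ay = coef_grad(x, y, k)
    # For this U: U_tt = -8 U and U_xx = U_yy = -4 U.
    return -8 * U - (ax * Ux + a * (-4 * U)) - (ay * Uy + a * (-4 * U))


# Cross-check at one point with a compact difference of the flux (moderate k).
x0, y0, t0, k, e = 0.3, 0.7, 0.4, 5.0, 1e-3
flux_x = (coef(x0 + e / 2, y0, k) * (exact(x0 + e, y0, t0) - exact(x0, y0, t0))
          - coef(x0 - e / 2, y0, k) * (exact(x0, y0, t0) - exact(x0 - e, y0, t0))) / e**2
flux_y = (coef(x0, y0 + e / 2, k) * (exact(x0, y0 + e, t0) - exact(x0, y0, t0))
          - coef(x0, y0 - e / 2, k) * (exact(x0, y0, t0) - exact(x0, y0 - e, t0))) / e**2
F_fd = -8 * exact(x0, y0, t0) - flux_x - flux_y
F_exact = forcing(x0, y0, t0, k)
```

For large k the coefficient varies rapidly, so a difference-based check of this kind needs a correspondingly smaller step.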

The equations are discretized in space by the scheme (34)-(35) with the variable coefficient second derivative SBP operators constructed in [17]. The classical Runge-Kutta method is used to advance the semi-discretization in time. At each Runge-Kutta stage, the linear system is solved by a preconditioned conjugate gradient (PCG) method, where the preconditioner is obtained by the incomplete Cholesky (ICHOL) factorization. Since the matrix \({\mathbf {A}}\) is only positive semidefinite, we increase its diagonal elements in the ICHOL process by \(1\%\) and \(0.01\%\) for the fourth and sixth order methods, respectively. In addition, to keep the factorized matrix sparse, we use a drop tolerance of \(10^{-4}\) and \(10^{-6}\) for the fourth and sixth order methods, respectively. Table 5 shows the number of iterations (averaged over the four Runge-Kutta stages in all time steps) for different material properties and mesh resolutions. In all cases we have tested, PCG converges in fewer than four iterations.

6 Conclusion

We have developed an energy-based SBP-SAT discretization of the wave equation. Compared with the traditional SBP-SAT discretization, an advantage of the proposed method is that no mesh-dependent parameter is needed to impose Dirichlet boundary conditions and material interface conditions. Our stability analysis shows that the discretization can be either energy conserving or dissipative. In addition, we have presented a general framework for deriving error estimates by normal mode analysis and carried out the accuracy analysis in detail for a fourth order discretization.

In numerical experiments, we have further examined the effect of dissipation on the convergence rate. For the fourth order method with Dirichlet boundary conditions, the energy conserving discretization converges with third order and the dissipative version with fourth order, in agreement with our error analysis. At a grid interface, both dissipative and energy-conserving interface couplings lead to fourth order convergence. For the sixth order method, dissipation at a Dirichlet boundary increases the convergence rate from 5 to 5.5, and a similar improvement is observed at a grid interface.

For the energy-based discretization to be efficient, the vector \(\mathbf{u}_t\) must be isolated at low cost, and we have demonstrated that this is possible. For problems in one space dimension, this can be done by explicitly or implicitly forming the pseudoinverse and extracting a few of its columns. For problems in multiple dimensions with constant coefficients, we have leveraged the diagonalization technique from [30]. This technique gives an algorithm with the same cost as a traditional method of lines discretization after a pre-computation step that only involves solving one-dimensional eigenvalue problems. The same procedure does not generalize to problems with variable coefficients. However, our numerical experiments have demonstrated that the corresponding linear system can be solved efficiently by the conjugate gradient method with an incomplete Cholesky preconditioner. The iterative solver converges fast and is not very sensitive to the material properties or the mesh resolution. Here, we have only considered two-dimensional problems, and we observed that the time-to-solution of our method and that of the traditional SBP discretization of the wave equation are roughly comparable. In three dimensions, the preconditioning and iterative solution for obtaining \(\mathbf{u}_t\) may be less efficient, and it remains to be explored whether the method presented here can be competitive with the traditional approach.

Availability of Data and Material

The data generated or analyzed in this work are available from the corresponding author upon request.

References

Almquist, M., Dunham, E.M.: Non-stiff boundary and interface penalties for narrow-stencil finite difference approximations of the Laplacian on curvilinear multiblock grids. J. Comput. Phys. 408, 109294 (2020)

Almquist, M., Wang, S., Werpers, J.: Order-preserving interpolation for summation-by-parts operators at nonconforming grid interfaces. SIAM J. Sci. Comput. 41, A1201–A1227 (2019)

Appelö, D., Hagstrom, T.: A new discontinuous Galerkin formulation for wave equations in second-order form. SIAM J. Numer. Anal. 53, 2705–2726 (2015)

Appelö, D., Hagstrom, T.: An energy-based discontinuous Galerkin discretization of the elastic wave equation in second order form. Comput. Methods Appl. Mech. Engrg. 338, 362–391 (2018)

Appelö, D., Kreiss, G.: Application of a perfectly matched layer to the nonlinear wave equation. Wave Motion 44, 531–548 (2007)

Appelö, D., Wang, S.: An energy based discontinuous Galerkin method for coupled elasto-acoustic wave equations in second order form. Int. J. Numer. Meth. Eng. 119, 618–638 (2019)

Carpenter, M.H., Gottlieb, D., Abarbanel, S.: Time-stable boundary conditions for finite-difference schemes solving hyperbolic systems: methodology and application to high-order compact schemes. J. Comput. Phys. 111, 220–236 (1994)

Duru, K., Virta, K.: Stable and high order accurate difference methods for the elastic wave equation in discontinuous media. J. Comput. Phys. 279, 37–62 (2014)

Eriksson, S.: Inverses of SBP-SAT finite difference operators approximating the first and second derivative. J. Sci. Comput. 89, 30 (2021)

Eriksson, S., Wang, S.: Summation-by-parts approximations of the second derivative: Pseudoinverse and revisitation of a high order accurate operator. SIAM J. Numer. Anal., 2669–2697 (2021)

Grote, M.J., Schneebeli, A., Schötzau, D.: Discontinuous Galerkin finite element method for the wave equation. SIAM J. Numer. Anal. 44, 2408–2431 (2006)

Gustafsson, B., Kreiss, H.O., Oliger, J.: Time-dependent problems and difference methods. John Wiley & Sons (2013)

Henshaw, W.D.: A high-order accurate parallel solver for Maxwell’s equations on overlapping grids. SIAM J. Sci. Comput. 28, 1730–1765 (2006)

Hicken, J.E., Zingg, D.W.: Summation-by-parts operators and high-order quadrature. J. Comput. Appl. Math. 237, 111–125 (2013)

Kreiss, H.O., Oliger, J.: Comparison of accurate methods for the integration of hyperbolic equations. Tellus 24, 199–215 (1972)

Lynch, R.E., Rice, J.R., Thomas, D.H.: Direct solution of partial difference equations by tensor product methods. Numer. Math. 6(1), 185–199 (1964)

Mattsson, K.: Summation by parts operators for finite difference approximations of second-derivatives with variable coefficient. J. Sci. Comput. 51, 650–682 (2012)

Mattsson, K., Ham, F., Iaccarino, G.: Stable and accurate wave-propagation in discontinuous media. J. Comput. Phys. 227, 8753–8767 (2008)

Mattsson, K., Ham, F., Iaccarino, G.: Stable boundary treatment for the wave equation on second-order form. J. Sci. Comput. 41, 366–383 (2009)

Mattsson, K., Nordström, J.: Summation by parts operators for finite difference approximations of second derivatives. J. Comput. Phys. 199, 503–540 (2004)

Nissen, A., Kreiss, G., Gerritsen, M.: Stability at nonconforming grid interfaces for a high order discretization of the Schrödinger equation. J. Sci. Comput. 53, 528–551 (2012)

Petersson, N.A., Sjögreen, B.: Stable grid refinement and singular source discretization for seismic wave simulations. Commun. Comput. Phys. 8, 1074–1110 (2010)

Sjögreen, B., Petersson, N.A.: A fourth order accurate finite difference scheme for the elastic wave equation in second order formulation. J. Sci. Comput. 52, 17–48 (2012)

Svärd, M., Nordström, J.: On the convergence rates of energy-stable finite-difference schemes. J. Comput. Phys. 397, 108819 (2019)

Virta, K., Mattsson, K.: Acoustic wave propagation in complicated geometries and heterogeneous media. J. Sci. Comput. 61, 90–118 (2014)

Wang, S.: An improved high order finite difference method for non-conforming grid interfaces for the wave equation. J. Sci. Comput. 77, 775–792 (2018)

Wang, S., Kreiss, G.: Convergence of summation-by-parts finite difference methods for the wave equation. J. Sci. Comput. 71, 219–245 (2017)

Wang, S., Nissen, A., Kreiss, G.: Convergence of finite difference methods for the wave equation in two space dimensions. Math. Comp. 87, 2737–2763 (2018)

Wang, S., Virta, K., Kreiss, G.: High order finite difference methods for the wave equation with non-conforming grid interfaces. J. Sci. Comput. 68, 1002–1028 (2016)

Zhang, L., Appelö, D., Hagstrom, T.: Energy-based discontinuous Galerkin difference methods for second-order wave equations. Commun. Appl. Math. Comput. (2021)

Open Access

This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Funding

Open access funding provided by Umeå University. This work was supported by NSF Grant DMS-1913076 (Appelö). Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the National Science Foundation.

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Code Availability

The codes used to generate the results in the numerical experiments are available from the corresponding author upon request.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, S., Appelö, D. & Kreiss, G. An Energy-Based Summation-by-Parts Finite Difference Method For the Wave Equation in Second Order Form. J Sci Comput 91, 52 (2022). https://doi.org/10.1007/s10915-022-01829-4

DOI: https://doi.org/10.1007/s10915-022-01829-4