Abstract

The main purpose of this work is to provide an efficient scheme for constructing kernel-based reduced interpolation models. In the existing literature such problems are mainly addressed via the well-established knot insertion or knot removal schemes. Such iterative strategies are usually quite demanding from a computational point of view and our goal is to study an efficient implementation for data removal approaches, namely efficient reduced basis algorithm (ERBA). Focusing on kernel-based interpolation, the algorithm makes use of two iterative rules for removing data. The former, called ERBA-r, is based on classical residual evaluations. The latter, namely ERBA-p, is independent of the function values and relies on error bounds defined by the power function. In both cases, inspired by the so-called extended Rippa’s algorithm, our ERBA takes advantage of a fast implementation.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

We introduce the kernel-based scattered data interpolation problem following [1, 2]. Let  and \( {{{\mathcal {X}}}} = \{ {\varvec{x}}_i, \; i = 1, \ldots , n\} \subset \varOmega \) be a set of distinct nodes, \(n\in {\mathbb {N}}\), where \({\varvec{x}}_i=(x_{i,1},\ldots ,x_{i,d})^{\intercal }\), \(i=1,\ldots ,n\). The scattered data interpolation problem consists in recovering an unknown function \(f: \varOmega \longrightarrow {\mathbb {R}}\) given its values at \({{{\mathcal {X}}}}\), i.e. \(\varvec{f}=\varvec{f}_{|\mathcal{X}}=(f(\varvec{x}_1),\ldots ,f(\varvec{x}_n))^{\intercal }=(f_1,\ldots ,f_n)^{\intercal }\). This can be achieved by imposing the interpolation conditions at \({{{\mathcal {X}}}}\). In particular, for kernel-based interpolation, the approximating function assumes the form:

and \( {{{\mathcal {X}}}} = \{ {\varvec{x}}_i, \; i = 1, \ldots , n\} \subset \varOmega \) be a set of distinct nodes, \(n\in {\mathbb {N}}\), where \({\varvec{x}}_i=(x_{i,1},\ldots ,x_{i,d})^{\intercal }\), \(i=1,\ldots ,n\). The scattered data interpolation problem consists in recovering an unknown function \(f: \varOmega \longrightarrow {\mathbb {R}}\) given its values at \({{{\mathcal {X}}}}\), i.e. \(\varvec{f}=\varvec{f}_{|\mathcal{X}}=(f(\varvec{x}_1),\ldots ,f(\varvec{x}_n))^{\intercal }=(f_1,\ldots ,f_n)^{\intercal }\). This can be achieved by imposing the interpolation conditions at \({{{\mathcal {X}}}}\). In particular, for kernel-based interpolation, the approximating function assumes the form:

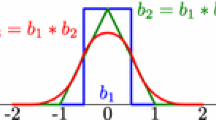

where \(\varvec{c}=(c_1,\ldots ,c_n)^{\intercal }\in {\mathbb {R}}^n\) and \(\kappa _{\varepsilon }:\varOmega \times \varOmega \longrightarrow {\mathbb {R}}\) is a strictly positive definite kernel depending on a shape parameter \(\varepsilon >0\). In addition, \(\kappa _{\varepsilon }\) is supposed to be radial, i.e. there exists a univariate function \(\varphi _{\varepsilon }:{\mathbb {R}}_{\ge 0}\longrightarrow {\mathbb {R}}\) such that \(\kappa _{\varepsilon }(\varvec{x},\varvec{y})=\varphi _{\varepsilon }(r)\), with \(r:=\Vert \varvec{x}-\varvec{y}\Vert _2\). As a consequence, such a setting is commonly referred to as Radial Basis Function (RBF) interpolation. With this clarification and with abuse of notation, we might write simply \(\kappa \) instead of \(\kappa _{\varepsilon }\). The interpolation conditions are satisfied by finding the unique vector \(\varvec{c}\) so that

where \({\mathsf {A}}=({\mathsf {A}}_{i,j})=\kappa (\varvec{x}_i,\varvec{x}_j)\), \(i,j=1,\ldots ,n\), is the so-called interpolation (or collocation or simply kernel) matrix. The uniqueness of the solution of (1) is guaranteed as long as \(\kappa \) is strictly positive definite. For a more general formulation of the interpolant that involves conditionally positive definite kernels, and for a complete overview concerning kernel-based approximation, we refer the reader e.g. to [2].

The interpolant \(S_{f,{\mathcal {X}}}\) belongs to the space

which, equipped with the bilinear form \((\cdot ,\cdot )_{{\mathcal {H}}_{\kappa }}\), is a pre-Hilbert space with reproducing kernel \(\kappa \). Moreover, the completion of \({\mathcal {H}}_{\kappa }\) with respect to the norm \(\Vert \cdot \Vert _{{\mathcal {H}}_{\kappa }}=\sqrt{(\cdot ,\cdot )_{{\mathcal {H}}_{\kappa }}}\) is the so-called native space \({\mathcal {N}}_{\kappa }\) associated to \(\kappa \). Many upper bounds for the interpolation error, e.g. in terms of the fill-distance [1, Theorem 14.5, p. 121] and using sampling inequalities [3, 4], are available. Here we focus on the following pointwise error bound [1, Theorem 14.2, p. 117]:

where \(P_{\kappa ,{\mathcal {X}}}\) is the so-called power function. For the purposes of this paper, we directly define the power function as [5, p. 116, §14]:

where

Note that (2) splits the error into two terms. The former, i.e. the power function, only depends on the nodes and on the kernel, while the latter takes into account the function f.

As a consequence, the power function gives information about how the interpolation error relates to the node distributions. Indeed, as for polynomial and spline interpolation, the approximation quality strongly depends on the distribution of the scattered data. In view of this, starting with an initial set of nodes, many adaptive strategies have been studied in order to construct well-behaved interpolation designs, i.e. interpolation sets which provide an accurate reconstruction and, preferably, affordable computational costs. In particular, the so-called greedy approaches match such purposes by iteratively adding new points to the interpolation set. The iterative rule is based on minimizing a pointwise upper bound for the interpolant. Precisely, those strategies rely on the residual of the interpolant (f-greedy) or on the value of the power function (p-greedy); refer to [6,7,8,9] for a general overview. Despite the fact that we only focus on these two schemes, other strategies that combine the two above, known as \(f \cdot p\) and f/p greedy, are available; we refer the reader to [10,11,12]. These methods fall under the context of knot insertion algorithms, which have been studied also in the context of adaptive least-squares approximation [1, §21].

Alternatively, as in our work, one could start by taking a large interpolation set, which provides an accurate approximation, and iteratively remove nodes until the resulting interpolation error does not exceed a fixed tolerance (see e.g. [13] for a general overview). These kinds of knot removal approaches aim to provide reduced models by neglecting as many points as possible from the initial set while preserving a target accuracy. However, they are usually computationally expensive, since many interpolants built upon large sets of nodes need to be constructed [14].

In this paper, in order to overcome the limitations related to the high computational complexity for reduced basis models, we take advantage of the Extension of Rippa’s Algorithm (ERA) recently provided in [15]; see also [16]. More precisely, classical reduced basis iterative strategies are usually quite demanding (about \({{{\mathcal {O}}}}(n^4)\) operations) and our goal is to study an efficient implementation for data removal approaches, namely Efficient Reduced Basis Algorithm (ERBA), which only requires about \({{{\mathcal {O}}}}(n^3)\) operations. Since n might be large, we should call the algorithm more efficient RBA. However, with abuse of language, we simply refer to the algorithm as efficient RBA. For the ERBA scheme, besides the residual-based rule, that leads to the ERBA-r, and that can be derived from the ERA, we provide an efficient scheme for computing the power function as well. The resulting ERBA-p is a reduced model that takes advantage of being accurate, fast and easy to implement.

The paper is organised as follows. In Sect. 2, we fix some notations and we introduce the Reduced Basis Algorithm (RBA) based on knot removal strategies. Its efficient implementation, i.e. the ERBA scheme, and theoretical results are then provided in Sect. 3, where a detailed analysis of the computational complexity of the proposed schemes is also included. Numerical tests that confirm the theoretical findings are presented in Sect. 4. The conclusions are offered in Sect. 5.

2 The Reduced Basis Algorithm (RBA)

In what follows, we introduce our schemes for reduced basis models based on both the residual and on the power function minimization. The latter algorithm is quasi optimal in the sense that, referring to (2), we only take into account the power function, neglecting the term involving the native space norm of the sought function. This should not be seen as a drawback. Indeed, we are able to compute a quasi-optimal subset of points independently of the function values. As a consequence, it might be relevant if one has to deal with many measurements sampled at the same points. We further point out that the residual-based scheme has strong similarities with the knot removal algorithm shown in [1, §21]. We now present the RBA scheme and in the next section we focus on its fast implementation.

Let \( {{{\mathcal {X}}}} = \{ {\varvec{x}}_i, \; i = 1, \ldots , n\} \subset \varOmega \) be the initial set of nodes, let \( {{{\mathcal {X}}}}_{s-1} = \{ {\varvec{x}}_i, \; i = 1, \ldots , n_{s-1}\} \subset \varOmega \) be the reduced set at the \((s-1)\)-th step of the algorithm (\( {{{\mathcal {X}}}}_{0} = {{{\mathcal {X}}}}\)), and let \(\tau \in {\mathbb {R}}_{>0}\) be a fixed tolerance. Choosing \(\rho \in {\mathbb {N}}\), \(\rho <n\), and letting \(\ell = \lfloor n_{s-1} /\rho \rfloor \), the s-th step of the iterative scheme, \(s \ge 1\), is as follows.

-

1.

Partition \({\mathcal {X}}_{s-1}\) into \(\ell \) test sets \({\mathcal {X}}_{s-1}^1,\ldots ,{\mathcal {X}}_{s-1}^{\ell }\), \(|{\mathcal {X}}_{s-1}^j|=\rho ^j\) with \(\rho ^j \in \{\rho ,\ldots ,2\rho -1\}\), \(\rho ^j\le \max (2\rho -1,n_{s-1})\). Moreover, let \(\overline{{\mathcal {X}}}_{s-1}^j:={\mathcal {X}}_{s-1}\setminus {\mathcal {X}}_{s-1}^j\) be the training sets, \(j=1,\ldots ,\ell \).

-

2.

For each \({\mathcal {X}}_{s-1}^j\), \(j=1,\ldots ,\ell \), compute:

-

2a.

for the residual-based scheme:

$$\begin{aligned} w^j = \dfrac{1}{\sqrt{\rho ^j}}\Vert \varvec{f}^j-\varvec{S}^j \Vert _2, \end{aligned}$$(4)where \(\varvec{f}^j:=\varvec{f}_{|_{{\mathcal {X}}_{s-1}^j}}\) and \({\varvec{S}}^j :=S_{f,\overline{{\mathcal {X}}}_{s-1}^j}({\mathcal {X}}_{s-1}^j)\) is the evaluation vector on \({\mathcal {X}}_{s-1}^j\) of the interpolant \(S_{f,\overline{{\mathcal {X}}}_{s-1}^j}\). Here, we need to solve an \((n_{s-1}-\rho ^j)\times (n_{s-1}-\rho ^j)\) linear system for each \(j=1,\ldots ,\ell \) (see (1));

-

2b.

for the power-based scheme:

$$\begin{aligned} w^j = \dfrac{1}{\sqrt{\rho ^j}} \Vert \varvec{P}^j\Vert _2, \end{aligned}$$where \(\varvec{P}^j:=P_{\kappa ,\overline{{\mathcal {X}}}_{s-1}^j}({\mathcal {X}}_{s-1}^j)\) is the evaluation vector on \({\mathcal {X}}_{s-1}^j\) of the power function \(P_{\kappa ,\overline{{\mathcal {X}}}_{s-1}^j}\). Here, we need to invert an \((n_{s-1}-\rho ^j)\times (n_{s-1}-\rho ^j)\) matrix for each \(j=1,\ldots ,\ell \) (see (3)).

-

2a.

-

3.

Choose

$$\begin{aligned} j^{\star }=\mathop {\mathrm {argmin}}\limits _{j\in \{1,\ldots ,\ell \}}{w^j}. \end{aligned}$$ -

4.

Let \(r_{s-1} = w^{j^{\star }}\):

-

4a.

if \(r_{s-1}\le \tau \), define \({\mathcal {X}}_{s} = {\mathcal {X}}_{s-1}\setminus {\mathcal {X}}_{s-1}^{j^{\star }}\) and proceed iteratively with the s-th step;

-

4b.

if \(r_{s-1}>\tau \), \({\mathcal {X}}_{s} = {\mathcal {X}}_{s-1}\) is the final interpolation set obtained by the procedure.

-

4a.

Remark 1

In the presented RBA algorithm, at each iteration, the data set is partitioned randomly. As also confirmed by the numerical experiments carried out in Sect. 4, since \(\rho \ll n\), such randomness does not influence the algorithm in selecting meaningful designs of interpolation node sets according to the chosen approach. Nevertheless, if relevant, particular configurations of subsets can be taken into account (e.g. nearest neighbors).

The convergence of the residual-based rule has been studied in many context and for many basis functions, see e.g. [13] for a general overview. As far as the convergence of the proposed power function-based scheme is concerned, it is a consequence of [7, Lemma 2.3], precisely:

being \( {\mathcal {X}}_{s} \subset {\mathcal {X}}_{s-1} \) nested sets.

From now on, in analyzing the computational complexity, we assume without loss of generality that \(\rho \) divides \(n_s\) at each step of the algorithm, and thus \(\rho _j\equiv \rho \) holds true. Therefore, at each step, a classical implementation of this method requires to solving \(n_s\) different \((n_s-\rho )\times (n_s-\rho )\) linear systems, in the residual-based case, or the inversion of an \((n_s-\rho )\times (n_s-\rho )\) matrix in the power-based setting. Hence, in both situations, each step is computationally demanding. In [1, §21], a similar residual-based approach has been speeded up by using Rippa’s algorithm in the case \(\rho =1\). In the next section, inspired by ERA [15], which speeds up the computational complexity for any \(\rho \ge 1\), we provide a fast implementation of the proposed algorithm.

3 Efficient Reduced Basis Algorithm (ERBA)

To study the computational complexity of the proposed schemes, we have to focus on the calculation of the residuals (or of the power function) that is performed at each iteration. Then, fixed a certain step s, with \(s=1,2,\ldots \), let us introduce the following notations: \({\mathcal {X}}_s:={\mathcal {X}}\), \(n_s:=n\), and let \(\varvec{p}:=(p_1,\ldots ,p_{\rho })^{\intercal }\) be the vector of \(\rho \) indices related to the elements of the subset \({\mathcal {X}}_s^j:={\mathcal {V}}\), \(|{\mathcal {X}}_s^j|=\rho \). We also adopt the following notations:

In the following we explain our efficient strategy for the implementation of both the residual and power-based schemes, i.e. we speed up Step 2. of the RBA algorithm.

3.1 The ERBA-r

In step 2a. for the computation of \({\varvec{S}}^j\) in Eq. (4), we need to compute the interpolant and hence solve a system that leads to a matrix inversion. Precisely, let us consider

where \(\overline{{{\mathcal {V}}}}={\mathcal {X}}\setminus {\mathcal {V}}\) and \(\varvec{c}^{(\varvec{p})}:=\big (c^{(\varvec{p})}_{i}\big )_{i\notin \varvec{p}}\) is determined by solving

We are interested in computing the residual

being \(\varvec{k}^{\varvec{p}}({\mathcal {V}})=(\kappa (\varvec{x}_i,\varvec{x}_j))_{i\in \varvec{p},j\notin \varvec{p}}\) an \((n-\rho )\times \rho \) matrix. Supposing \(n/\rho =\ell \in {\mathbb {N}}\), a classic approach would lead to the resolution of \(\ell \) different \((n-\rho )\times (n-\rho )\) linear systems, and thus, since usually \(n \gg \rho \), to a computational cost of about \({\mathcal {O}}(n^4)\). In case \(\rho =1\) one could use the Rippa’s algorithm reducing the complexity to \({\mathcal {O}}(n^3)\). In case of more folds, i.e. \(\rho >1\), we take advantage of the ERA scheme [15] for which (5) can be computed as

Doing in this way, we need to invert an \(n\times n\) matrix and then to solve \(\ell \) different \(\rho \times \rho \) linear systems. Therefore, the computational cost is about \({\mathcal {O}}(n^3)\), leading to a significant saving (cf. [15, §2.2]).

3.2 The ERBA-p

Here, we focus on the computation of the power function vector (see (3) and step 2b. of the algorithm presented in Sect. 2)

where \(\mathrm {diag}(\cdot )\) is the diagonal operator. The vector \(\varvec{P}^{{\mathcal {V}}}\) is usually computed by means of a for-loop over the elements of \({\mathcal {V}}\) (see [1, Program 17.1]). Hence, supposing again \(n/\rho =\ell \in {\mathbb {N}}\), we need to invert \(\ell \) matrices of dimension \((n-\rho )\times (n-\rho )\), leading to a computational cost of about \({\mathcal {O}}(\ell (n-\rho )^3)={\mathcal {O}}(n(n-\rho )^3/\rho )\). Since \(n \gg \rho \), the cost for each step of the algorithm is approximately \({\mathcal {O}}(n^4)\).

To provide a fast computation of the power function vector \(\varvec{P}^{{\mathcal {V}}}\), we first recall that, letting \({\mathsf {R}} \in {\mathbb {R}}^{n_1\times n_2}\) and \({\mathsf {S}} \in {\mathbb {R}}^{n_2\times n_1}\), we have that

where \(\odot \) denotes the Hadamard (or pointwise) product. Then, we obtain the following result.

Theorem 1

The vector (7) can be computed as

where \(\mathrm {sum}(\cdot )\) denotes the sum-by-rows operator.

Proof

Putting together (5) and (6), we have that

Since \(\varvec{f}\) is arbitrary if the function f is not fixed, we can substitute it with \(\varvec{k}^{\varvec{p}}({\mathcal {V}})\) in the equation. Then,

Hence, recalling (7), we get

Finally, by applying (8) to \(({\mathsf {A}}^{-1}_{\varvec{p},\varvec{p}})^{-1}{\mathsf {A}}^{-1}_{\varvec{p},:}\) and \(\varvec{k}({\mathcal {V}})\), we conclude the proof. \(\square \)

By adopting the scheme proposed in Theorem 1, the computational cost at each step is about \({\mathcal {O}}(n^3)\), indeed we need to invert a unique \(n\times n\) matrix and to perform other minor calculations that are negligible as long as \(n \gg \rho \). The proposed strategy is therefore faster than the classical framework, as confirmed by the experiments carried out in the next section.

4 Numerics

In what follows, we perform some numerical experiments to prove the efficiency of the proposed ERBA. The tests have been carried out in Matlab on a Intel(R) Core(TM) i7-1165G7 CPU@2.80 GHz processor. The software is available for the scientific community at

In Sect. 4.1 we compare our efficient implementation with the classical one. In doing so, we take both smooth and non-smooth target functions and different kernel bases. A focus on the CPU times is shown in Sect. 4.2, where we let both n and \(\rho \) vary. In Sect. 4.3, we analyze a stationary setting where the shape parameter varies according to the interpolation set. Finally, we present a test comparing the ERBA method and greedy schemes (which might be thought as forward RBA approaches).

4.1 ERBA versus RBA

Let \(\varOmega =[-1,1]^2\). In this subsection, we take the strictly positive definite kernel

and we set \(\varepsilon = 1\). As interpolation data set \({\mathcal {X}} \subset \varOmega \), we consider an \(n\times n\) grid, with \(n=25\). Moreover, we take an \(m\times m\) evaluation grid \(\varXi \) with \(m=60\).

4.1.1 Regular target function

Let \(f:\varOmega \longrightarrow {\mathbb {R}}\) be defined as

The associated RMSE computed via the whole data set \({\mathcal {X}}\) and evaluated on \(\varXi \) is \(e_{{\mathcal {X}}}=9.69\mathrm{E}{-}05\). In Fig. 1, we plot the function f and the pointwise relative error between f and the interpolant \(S_{f,{\mathcal {X}}}\) evaluated on \(\varXi \).

For testing the proposed strategy, we set \(\rho =3\) and the tolerance \(\tau _r = 2 {e}_{{\mathcal {X}}}\) for ERBA-r, while for ERBA-p we take \(\tau _p = 2\Vert \varvec{P}^{\varXi } \Vert _{2}/m\), being \(\varvec{P}^{\varXi }\) the power function vector constructed via the nodes \({\mathcal {X}}\) and evaluated on \(\varXi \). Denoting by \({\mathcal {X}}_s \subset {\mathcal {X}}\) the set of resulting reduced interpolation nodes, in Tables 1 and 2 we respectively compare the CPU times of ERBA-r and ERBA-p with their classical implementation. Moreover, the so-constructed reduced data sets, as well as the pointwise relative error between f and the interpolants constructed on \({\mathcal {X}}_s\), are depicted in Fig. 2.

The efficiency of our ERBA scheme is clearly deduced by Tables 1 and 2. Furthermore, as expected, we note that the ERBA-p tends to extract almost uniformly distributed nodes, while ERBA-r cluster the points where the target function has steep gradients. Such a behaviour will be amplified in the next experiment, which is carried out with a discontinuous target function.

4.1.2 Discontinuous target function

Let \(g:\varOmega \longrightarrow {\mathbb {R}}\) be defined as

The associated RMSE computed on \(\varXi \) is \(e_{{\mathcal {X}}}=1.14\mathrm{E}{-}01\). In Fig. 3, we plot the function g and the pointwise relative error between g and the interpolant \(S_{g,{\mathcal {X}}}\) evaluated on \(\varXi \).

Here, we keep \(\rho =3\) and we set the tolerance \(\tau _r = (3/2) {e}_{{\mathcal {X}}}\) for ERBA-r, while for ERBA-p we take \(\tau _p = (3/2)\Vert \varvec{P}^{\varXi } \Vert _{2}/m\), being \(\varvec{P}^{\varXi }\) the power function vector constructed via the nodes \({\mathcal {X}}\) and evaluated on \(\varXi \). As in the previous test, we report the CPU times of ERBA-r and ERBA-p (in Tables 3, 4) and the reduced data sets together with the pointwise relative errors in Fig. 4. From these results with the discontinuous target function, we recover the same patterns of the previous experiments, i.e. the efficiency of the ERBA methods and the clustering effect of the ERBA-r.

4.2 Varying n and \(\rho \)

This subsection is dedicated to test how the efficacy of our schemes depends on n and \(\rho \).

4.2.1 A focus on n

As a further experiment, always with the aim of comparing the complexity of ERBA and RBA, we consider the setting of Sect. 4.1 (with target function f) and we compare the CPU times of the algorithms by varying the grid size of the initial data set \({\mathcal {X}}\). Precisely, we take \(n=15+3k\), \(k=0,\ldots ,7\). The results are reported in Fig. 5. We note that the saving in terms of computational complexity is relevant for each choice of n.

4.2.2 A focus on \(\rho \)

Here, always in the same setting of Sect. 4.1 (but with target function g), our purpose is to show how the CPU times and the number of reduced nodes provided by ERBA vary with the parameter \(\rho \). Thus, we take \(\rho =2k\), \(k=1,\ldots ,7\), and we display the results in Fig. 6. We observe that the number of reduced nodes is almost constant, especially as long as \(\rho \) is small. As a consequence, the CPU times decrease with \(\rho \), indeed less steps of the algorithm are performed.

4.3 Stationary ERBA

In this subsection, we investigate the role of the shape parameter. We take the kernel

As interpolation set, we consider \(n^2\) quasi-random Halton points [17] \({\mathcal {H}}\) in \(\varOmega \), with \(n=37\), while we keep \(\varXi \) as evaluation grid. Here, we focus on a stationary interpolation approach: fixed an initial \(\varepsilon _0>0\), at each iteration of our method, the shape parameter is set as \(\varepsilon =\varepsilon _0/h_{{\mathcal {H}}_{s-1},\varXi }\), where \(h_{{\mathcal {H}}_{s-1},\varXi }\) is the fill-distance at the s-th step of the algorithm, i.e.,

In the following, we fix \(\rho =7\) and we consider the target function f introduced in Sect. 4.1. In Fig. 7, we plot the pointwise relative errors between such function and the interpolant constructed on \({\mathcal {H}}\). The associated RMSE computed on \(\varXi \) is \(e_{{\mathcal {H}},f}=1.23\mathrm{E}{-}06\).

We now fix \(\varepsilon _0=0.1\) and we set \(\tau _r = 1\mathrm{E}{+}04 r_{0}\) (for ERBA-r) and \(\tau _p = (6/5) r_{0}\) (for ERBA-p), being \(r_{0}\) the value produced at the first iteration of ERBA scheme as outlined in the algorithm described in Sect. 2. Then, the results of the stationary ERBA implementation are presented in Fig. 8. We point out that, for infinitely smooth kernels, such a stationary strategy becomes essential to partially avoid the typical ill-conditioning of the kernel matrices.

4.4 ERBA versus Greedy

As a final test, we carry out a comparison between our ERBA scheme and a greedy scheme that works in a specular manner with respect to RBA. For the sake of a clearer presentation, we detail it in the Appendix. We take the compactly-supported kernel

with \(\varepsilon =0.1\). As interpolation data set \({\mathcal {Y}} \subset \varOmega \), we consider an \(n\times n\) grid, with \(n=40\), while we keep \(\varXi \) as evaluation grid. Moreover, we consider the target function \(h:\varOmega \longrightarrow {\mathbb {R}}\) defined as

The associated RMSE computed on \(\varXi \) is \(e_{{\mathcal {Y}}}=3.94\mathrm{E}-06\). In Fig. 9, we plot the function h and the pointwise relative error between h and the interpolant \(S_{h,{\mathcal {Y}}}\) evaluated on \(\varXi \).

For both the ERBA and greedy algorithms we take \(\rho =5\). For ERBA-r and greedy-r, we set \(\tau _r= 1\mathrm{E}{-}06\), while we take \(\tau _p = 5\Vert \varvec{P}^{\varXi } \Vert _{2}/m\), being \(\varvec{P}^{\varXi }\) the power function vector constructed via the nodes \({\mathcal {Y}}\) and evaluated on \(\varXi \), for ERBA-p and greedy-p. The initial set \({\mathcal {X}}_0\) for the greedy schemes is a \(5\times 5\) equispaced grid in \(\varOmega \), which is nested in \({{\mathcal {X}}}\). In Figs. 10 and 11 we report the achieved results.

These experiments point out that in some cases, as when the number of nodes to remove is not too large, the ERBA is faster than the greedy approach. Indeed, the cost of greedy schemes increase when the number of the iterations grows.

5 Discussion and Conclusions

We have investigated a fast computation of knot removal approaches. We have implemented two different strategies: the first one in based on classical residual-based schemes while the second one relies on power function error bounds. The latter is independent of the function values and tends to return quasi-uniform data (cf. [18] and [11, Theorem 15 & 19 & 20]), while the former, as expected, keeps more points where the corresponding function has steep gradients. In both cases we are able to significantly speed up the algorithm thanks to our implementation.

Work in progress consists in extending the proposed tool to other bases, e.g. splines [19] and to variably scaled kernels [20, 21]. Moreover, it can be helpful for validating the shape parameter in RBF interpolation; refer e.g. to [22].

Data availability

Data sharing is not applicable to this article as no data sets were generated or analyzed.

Code availability

The software is available for the scientific community at https://github.com/cesc14/ERBA.

Change history

23 July 2022

The Missing Open Access funding information has been added in the Funding Note.

References

Fasshauer, G.E.: Meshfree Approximations Methods with Matlab. World Scientific, Singapore (2007)

Wendland, H.: Scattered Data Approximation, Cambridge Monogr. Appl. Comput. Math., vol. 17, Cambridge University Press, Cambridge (2005)

Fuselier, E., Wright, G.: Scattered data interpolation on embedded submanifolds with restricted positive definite kernels: Sobolev error estimates. SIAM J. Numer. Anal. 50(3), 1753–1776 (2012)

Rieger, C.: Sampling Inequalities and Applications. Disseration, Göttingen (2008)

Fasshauer, G.E., McCourt, M.J.: Kernel-Based Approximation Methods Using Matlab. World Scientific, Singapore (2015)

Driscoll, T.A., Heryudono, A.R.H.: Adaptive residual subsampling methods for radial basis function interpolation and collocation problems. Comput. Math. Appl. 53, 927–939 (2007)

Santin, G., Haasdonk, B.: Convergence rate of the data-independent \(P\)-greedy algorithm in kernel-based approximation. Dolomites Res. Notes Approx. 10, 68–78 (2017)

Wirtz, D., Karajan, N., Haasdonk, B.: Surrogate modelling of multiscale models using kernel methods. Int. J. Numer. Methods Eng. 101, 1–28 (2015)

Wirtz, D., Haasdonk, B.: A vectorial kernel orthogonal greedy algorithm. Dolomites Res. Notes Approx. 6, 83–100 (2013)

Dutta, S., Farthing, M.W., Perracchione, E., Savant, G., Putti, M.: A greedy non-intrusive reduced order model for shallow water equations. J. Comput. Phys. 439, 110378 (2021)

Wenzel, T., Santin, G., Haasdonk, B.: A novel class of stabilized greedy kernel approximation algorithms: convergence, stability and uniform point distribution. J. Approx. Theory 262, 105508 (2021)

Wenzel, T., Santin, G., Haasdonk, B.: Analysis of target data-dependent greedy kernel algorithms: convergence rates for \(f\)-, \(f\cdot P\)- and \(f/P\)-greedy. arXiv: 2105.07411 (2021)

Lyche, T.: Knot removal for spline curves and surfaces. In: Cheney, E.W. et al (eds.), Approximation Theory, pp. 207–226 (1992)

Fasshauer,G.E.: Adaptive least squares fitting with radial basis functions on the sphere. In: Daehlen, M. et al. (eds.), Vanderbilt University Press (Nashville), pp. 141–150

Marchetti, F.: The extension of Rippa’s algorithm beyond LOOCV. Appl. Math. Lett. 120, 107262 (2021)

Rippa, S.: An algorithm for selecting a good value for the parameter in radial basis function interpolation. Adv. Comput. Math. 11, 193–210 (1999)

Halton, J.H.: On the efficiency of certain quasi-random sequences of points in evaluating multi-dimensional integrals. Numer. Math. 2, 84–90 (1960)

De Marchi, S., Schaback, R., Wendland, H.: Near-optimal data-independent point locations for radial basis function interpolation. Adv. Comput. Math. 23, 317–330 (2005)

Campagna, R., Conti, C., Cuomo, S.: Smoothing exponential–polynomial splines for multiexponential decay data. Dolomites Res. Notes Approx. 12, 86–100 (2018)

Campi, C., Marchetti, F., Perracchione, E.: Learning via variably scaled kernels. Adv. Comput. Math. 47, 51 (2021)

Perracchione, E., Massone, A.M., Piana, M.: Feature augmentation for the inversion of the Fourier transform with limited data. Inverse Probl. 37, 105001 (2021)

Cavoretto, R., De Rossi, A., Mukhametzhanov, M.S., Sergeyev, Y.D.: On the search of the shape parameter in radial basis functions using univariate global optimization methods. J. Glob. Optim. 79, 305–327 (2021)

Acknowledgements

We sincerely thank the reviewers for helping us to significantly improve the manuscript. This research has been accomplished within Rete ITaliana di Approssimazione (RITA) and the UMI Group TAA Approximation Theory and Applications.

Funding

Open access funding provided by Università degli Studi di Padova within the CRUI-CARE Agreement. This research has been partially funded by GNCS-IN\(\delta \)AM and by the ASI-INAF grant “Artificial Intelligence for the analysis of solar FLARES data (AI-FLARES)”.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix: Greedy Algorithms

Appendix: Greedy Algorithms

Let \( {{{\mathcal {X}}}} = \{ {\varvec{x}}_i, \; i = 1, \ldots , n\} \subset \varOmega \) be the initial set of nodes, let \( {{{\mathcal {X}}}}_{s-1} = \{ {\varvec{x}}_i, \; i = 1, \ldots , n-n_{s-1}\} \subset \varOmega \) be the extracted set at the \((s-1)\)-th step of the algorithm. The set \( {{{\mathcal {X}}}}_{0} \subset {{{\mathcal {X}}}}\) is chosen taking \(n_0 \ll n\). Let \(\tau \in {\mathbb {R}}_{>0}\) be a fixed tolerance. Choosing \(\rho \in {\mathbb {N}}\), \(\rho <n\), and letting \(\ell = \lfloor n-n_{s-1} /\rho \rfloor \), the s-th step of the iterative scheme, \(s \ge 1\), is as follows.

-

1.

Partition \({\mathcal {X}}\setminus {\mathcal {X}}_{s-1}\) into \(\ell \) test sets \({\mathcal {X}}_{s-1}^1,\ldots ,{\mathcal {X}}_{s-1}^{\ell }\), \(|{\mathcal {X}}_{s-1}^j|=\rho ^j\) with \(\rho ^j \in \{\rho ,\ldots ,2\rho -1\}\), \(\rho ^j\le \max (2\rho -1,n-n_{s-1})\).

-

2.

For each \({\mathcal {X}}_{s-1}^j\), \(j=1,\ldots ,\ell \), compute:

-

2a.

for the residual-based scheme (greedy-r):

$$\begin{aligned} w^j = \dfrac{1}{\sqrt{\rho ^j}}\Vert \varvec{f}^j-\varvec{S}^j \Vert _2, \end{aligned}$$where \(\varvec{f}^j:=\varvec{f}_{|_{{\mathcal {X}}_{s-1}^j}}\) and \({\varvec{S}}^j :=S_{f,{\mathcal {X}}_{s-1}}({\mathcal {X}}_{s-1}^j)\) is the evaluation vector on \({\mathcal {X}}_{s-1}^j\) of the interpolant \(S_{f,{\mathcal {X}}_{s-1}}\);

-

2b.

for the power-based scheme (greedy-p):

$$\begin{aligned} w^j = \dfrac{1}{\sqrt{\rho ^j}} \Vert \varvec{P}^j\Vert _2, \end{aligned}$$where \(\varvec{P}^j:=P_{\kappa ,{\mathcal {X}}_{s-1}}({\mathcal {X}}_{s-1}^j)\) is the evaluation vector on \({\mathcal {X}}_{s-1}^j\) of the power function \(P_{\kappa ,{\mathcal {X}}_{s-1}}\);

-

2a.

-

3.

Choose

$$\begin{aligned} j^{\star }=\mathop {\mathrm {argmax}}\limits _{j\in \{1,\ldots ,\ell \}}{w^j}. \end{aligned}$$ -

4.

Let \(r_{s-1} = w^{j^{\star }}\):

-

4a.

if \(r_{s-1}>\tau \), define \({\mathcal {X}}_{s} = {\mathcal {X}}_{s-1}\cup {\mathcal {X}}_{s-1}^{j^{\star }}\) and proceed iteratively with the s-th step;

-

4b.

if \(r_{s-1}\le \tau \), \({\mathcal {X}}_{s} = {\mathcal {X}}_{s-1}\) is the final interpolation set obtained by the procedure.

-

4a.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Marchetti, F., Perracchione, E. Efficient Reduced Basis Algorithm (ERBA) for Kernel-Based Approximation. J Sci Comput 91, 41 (2022). https://doi.org/10.1007/s10915-022-01818-7

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10915-022-01818-7