Abstract

A continuous Galerkin time stepping method is introduced and analyzed for a subdiffusion problem in an abstract setting. The approximate solution is sought as a continuous piecewise linear function in time t, and the test space consists of discontinuous piecewise constant functions. We prove that the proposed time stepping method has the convergence order \(O(\tau ^{1+ \alpha }), \, \alpha \in (0, 1)\) for general sectorial elliptic operators and nonsmooth data by using the Laplace transform method, where \(\tau \) is the time step size. This convergence order is higher than the convergence orders of the popular convolution quadrature methods (e.g., Lubich’s convolution quadrature methods) and L-type methods (e.g., the L1 method), which have only \(O(\tau )\) convergence for nonsmooth data. Numerical examples are given to verify the robustness of the time discretization schemes with respect to data regularity.

1 Introduction

Consider the following subdiffusion problem, with \(\alpha \in (0, 1)\),

where \(u_{0}\) is the initial value and f is the source function which will be specified later and where \( \, _{0}^{C}D_{t}^{\alpha } u(t)\) denotes the Caputo fractional derivative defined by, see [7],

and \(A: {\mathcal {D}} (A) \subset {\mathcal {H}} \rightarrow {\mathcal {H}}\) denotes the elliptic operator satisfying the following resolvent estimate, with some \(\pi /2< \theta < \pi \), see, e.g., [25, 37],

Here \({\mathcal {H}}\) denotes a suitable Hilbert space. For example, \({\mathcal {H}} = L_{2}(\varOmega )\) and \(A= - \varDelta \) with definition domain \(D(A) = H^{2}(\varOmega ) \cap H_{0}^{1}(\varOmega )\). Here \(L_{2}(\varOmega ), H_{0}^{1}(\varOmega )\) and \(H^{2}(\varOmega )\) denote the standard Sobolev spaces and \(\varOmega \subset {\mathbb {R}}^{d}, d=1, 2, 3\) is a domain with smooth boundary \(\partial \varOmega \).
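For orientation, the objects referred to above as (1), (2), the Caputo derivative and the resolvent estimate (3) can be sketched in their standard forms as follows. This is a reconstruction under the usual conventions of the cited literature (the sector notation \(\varSigma _{\theta }\) is taken from the text that follows), and the exact displays of the original may differ in detail.

```latex
\[
  {}_{0}^{C}D_{t}^{\alpha} u(t) + A u(t) = f(t), \quad 0 < t \le T,
  \qquad u(0) = u_{0},
\]
\[
  {}_{0}^{C}D_{t}^{\alpha} u(t)
    = \frac{1}{\Gamma(1-\alpha)} \int_{0}^{t} (t-s)^{-\alpha} u'(s) \, \mathrm{d}s,
  \qquad 0 < \alpha < 1,
\]
\[
  \big\| (z I + A)^{-1} \big\| \le C |z|^{-1},
  \qquad z \in \varSigma_{\theta} = \{ z \ne 0 : |\arg z| < \theta \}.
\]
```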

Note that \(z^{\alpha } \in \varSigma _{\theta ^{\prime }}\) with \( \theta ^{\prime } = \alpha \theta < \pi \) for all \( z \in \varSigma _{\theta }\) which implies that, by (3), with some \(\pi /2< \theta < \pi \),

Let \(S_{h} \subset H_{0}^{1}(\varOmega )\) denote the piecewise linear finite element space defined on the triangulation of \(\varOmega \). The finite element method of (1), (2) is to find \(u_{h}(t) \in S_{h}\) such that for \(u_{0} \in L_{2}(\varOmega ), f(t) \in L_{2}(\varOmega )\) with \(f_{h}(t) = P_{h} f (t)\),

where \(A_{h}: S_{h} \rightarrow S_{h}\) denotes the discrete analogue of the elliptic operator A defined by, see Thomée [37, Chapter 12],

where \(A(\cdot , \cdot )\) denotes the bilinear form associated with A. Here \(P_{h}: L_{2}(\varOmega ) \rightarrow S_{h}\) denotes the \(L_{2}\) projection operator defined by

Since \(S_{h}\) is a subspace of \(L_{2}(\varOmega )\), the minimal eigenvalue of \(A_{h}\) is bounded below by that of A. Hence we have, with some \(\pi /2< \theta < \pi \), see Lubich et al. [25, page 6],

Let \( v_{h}(t) = u_{h}(t) - u_{0h}\). Then (5), (6) can be written equivalently as

In this work, we shall construct and analyze a continuous Galerkin time stepping method for solving (8), (9). It is more convenient to analyze the time stepping method for (8), (9) than for (5), (6), see Lubich et al. [25].

Many application problems are modeled by using (1), (2), see, e.g., [1, 9, 10, 32], etc. In general, it is not possible to find the analytic solution of (1), (2). Therefore we need to design and analyze numerical methods for solving (1), (2). Note that the Caputo fractional derivative is a nonlocal operator, which makes the numerical analysis of the subdiffusion equation (1), (2) more difficult than that of the diffusion equation with the integer order derivative. There are two popular ways to approximate the Caputo fractional derivative in the literature. One way is to use the convolution quadrature formula to approximate the Caputo fractional derivative, see, e.g., [25, 26, 46]. Another way is to use the L-type schemes to approximate the Caputo fractional derivative, see, e.g., [16, 20,21,22,23, 34, 45], etc. Under the assumption that the solutions of (1), (2) are sufficiently smooth, the numerical methods constructed based on both convolution quadrature and L-type schemes have the optimal convergence orders, see [23, 34], etc. However, Stynes et al. [36] and Stynes [35] showed that in general the solutions of (1), (2) have limited regularity and behave as \(O(t^{\alpha })\) even for smooth initial data, which implies that the solutions of (1), (2) are not in \(C^{1}[0, T]\), see also [33]. Hence the higher order numerical methods constructed by convolution quadrature and L-type schemes for solving (1), (2) have only first order \(O(\tau )\) convergence for both smooth and nonsmooth data, see, e.g., [11, 41], etc. To obtain the optimal convergence orders of the higher order numerical methods for solving (1), (2), one may use the corrected schemes to correct the weights of the starting steps of the numerical methods, see, e.g., [12, 42], etc., or use the graded meshes to capture the singularities of the solutions of the subdiffusion problems, see, e.g., [16, 36, 45], etc.
Discontinuous Galerkin methods are also well studied for solving fractional subdiffusion equations, see, e.g., [29,30,31] and the references therein. There are other numerical methods for solving fractional partial differential equations, see, e.g., [2,3,4,5,6, 8, 13,14,15, 24, 27, 28, 39, 43, 44, 46], etc.

Recently, Li et al. [17] analyzed the L1 scheme for solving the superdiffusion problem with the fractional order \(\alpha \in (1, 2)\) based on the Petrov–Galerkin method. This scheme was first proposed and analyzed by Sun and Wu [34] in the framework of the finite difference method under the assumption that the solution of the problem is sufficiently smooth. Li et al. [17] proved that, without any regularity assumptions on the solution of the problem and without using corrections of the weights or graded meshes, the L1 scheme has the convergence order \(O(\tau ^{3-\alpha }), \alpha \in (1,2 )\) for both smooth and nonsmooth data when the elliptic operator A is assumed to be self-adjoint, positive semidefinite and densely defined in a suitable Hilbert space, see also [18, 19], etc.

The purpose of this paper is to consider the continuous Galerkin method for solving the subdiffusion problem with \(\alpha \in (0, 1)\) by using an argument similar to that in Li et al. [17] for the superdiffusion problem with \(\alpha \in (1,2)\). We prove that, without any regularity assumptions on the solution of the problem and without using corrections of the weights or graded meshes, the proposed time stepping method has the convergence order \(O(\tau ^{1+\alpha }), \, \alpha \in (0, 1)\) for general sectorial elliptic operators satisfying the resolvent estimate (3).

The main contributions of this paper are the following:

1. A continuous Galerkin time stepping method for solving the subdiffusion problem is introduced for general sectorial elliptic operators satisfying the resolvent estimate (3).

2. The convergence order \(O(\tau ^{1+\alpha }), \alpha \in (0, 1) \) of the proposed time stepping method for solving the homogeneous subdiffusion problem is proved by using the Laplace transform method.

3. The convergence order \(O(\tau ^{1+\alpha }), \alpha \in (0, 1) \) of the proposed time stepping method for solving the inhomogeneous subdiffusion problem is also proved by using the Laplace transform method.

The paper is organized as follows. In Sect. 2, we introduce the continuous Galerkin time stepping method for solving the subdiffusion problem. In Sect. 3, the error estimates of the proposed time stepping method are proved by using the discrete Laplace transform method for the subdiffusion problems. In Sect. 4, some numerical examples for both fractional ordinary differential equations and subdiffusion problems are given to verify the theoretical results. An Appendix with three lemmas is given in Sect. 5.

By C we denote a positive constant independent of the functions and parameters concerned, but not necessarily the same at different occurrences. We assume that \(\alpha \in (0, 1)\) in this paper and, for simplicity, we will not explicitly write this assumption in many places.

2 A Continuous Galerkin Time Stepping Method for (8), (9)

In this section, we shall introduce a continuous Galerkin time stepping method for solving (8), (9).

Let \(0 = t_{0}< t_{1}< \dots < t_{N}=T\) be a partition of [0, T] and \(\tau \) the time step size. We define the following trial space, with \(k=0, 1, 2, \dots , N-1\) and \(q=2\),

and the test space

respectively. We remark that the trial space \(W^{1}\) is a space of functions continuous in time and the test space \(W^{0}\) is a space of discontinuous piecewise constant functions with respect to time t. Because of the continuity requirement, the polynomial degree of the trial space \(W^{1}\) is one higher than that of the test space \(W^{0}\).

Let \(u_{0} \in L_{2}(\varOmega )\) and \( f \in L^{2}(0, T; L_{2}(\varOmega ))\). The continuous Galerkin time stepping method for solving (8), (9) is to find \(V_{h} \in W^{1}\) with \(V_{h}(0)=0\), such that

where \(\, _{0}^{R} D_{t} ^{\alpha } V_{h} (t)\) denotes the Riemann-Liouville fractional derivative and \((\cdot , \cdot )\) denotes the inner product in \(L_{2}(\varOmega )\).

Let \(V_{h}^{k} = V_{h}(t_{k}), \, k=0, 1, 2, \dots , N\) denote the approximate solution of \(v_{h}(t_{k})\) in (8), (9). We shall show that the solutions \(V_{h}^{k}, k=0, 1, 2, \dots , N\) of (10) satisfy the following abstract operator form: with \(V_{h}^{0}= V_{h}(0)=0\),

where

In fact, we have, on each \((t_{k}, t_{k+1}), \; k=0, 1, 2, \dots , N-1\),

On each subinterval \((t_{k}, t_{k+1}), k=1, 2, \dots , N-1\), (similarly we may consider the subinterval \((t_{0}, t_{1})\)), we may write, for \(\forall \chi \in W^{0}\),

Note that \(V_{h} \in W^{1}\) and therefore \( V_{h}(t) = V_{h}(t_{k}) + \big (V_{h}(t_{k+1}) - V_{h}(t_{k}) \big ) \frac{t- t_{k}}{\tau }\) on \( t \in (t_{k}, t_{k+1}), \, k=0, 1, \dots , N-1\). Hence we have, for \(\forall \chi \in W^{0}\), with \(k=1, 2, \dots , N-1\),

and, with \(l=2, 3, \dots , k\),

and

Hence we have

where \(b_{k}, k=0, 1, 2, \dots , N\) are defined in (13).

Further we have, with \(k=0, 1, 2, \dots , N-1\),

Combining these identities, we obtain the time stepping method (11), (12).
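The key step in the derivation above is that the Riemann-Liouville derivative of a continuous piecewise linear function with \(V_{h}(0)=0\) can be integrated exactly over each subinterval. A minimal Python sketch of this computation for a scalar function on a uniform grid follows. It does not reproduce the weights \(b_{k}\) of (13); the function name `cell_integrals_rl` and the scalar setting are assumptions made only for illustration. It uses the fact that, for \(V(0)=0\), the integral of the derivative over \((t_{k}, t_{k+1})\) equals the increment of the fractional integral of order \(2-\alpha \) of the piecewise constant slope \(V'\).

```python
import math


def cell_integrals_rl(values, tau, alpha):
    """Exact integrals over each cell (t_k, t_{k+1}) of the Riemann-Liouville
    derivative of order alpha (0 < alpha < 1) of the continuous piecewise
    linear interpolant through values[0..N] on a uniform grid of step tau.
    Assumes values[0] == 0, so the derivative equals I^{1-alpha} applied to V'."""
    n_cells = len(values) - 1
    # V' is piecewise constant: one slope per cell.
    slopes = [(values[l + 1] - values[l]) / tau for l in range(n_cells)]
    scale = tau ** (2 - alpha) / math.gamma(3 - alpha)

    def frac_integral(m):
        # (I^{2-alpha} V')(t_m), evaluated in closed form cell by cell.
        return scale * sum(
            slopes[l] * ((m - l) ** (2 - alpha) - (m - l - 1) ** (2 - alpha))
            for l in range(m)
        )

    # Integral over (t_k, t_{k+1}) is the increment of I^{2-alpha} V'.
    return [frac_integral(k + 1) - frac_integral(k) for k in range(n_cells)]


# Check against V(t) = t, whose derivative of order alpha is t^{1-alpha}/Gamma(2-alpha).
tau, alpha = 0.1, 0.5
nodal_values = [k * tau for k in range(6)]
integrals = cell_integrals_rl(nodal_values, tau, alpha)
```

For \(V(t)=t\) the result can be compared with \((t_{k+1}^{2-\alpha } - t_{k}^{2-\alpha })/\varGamma (3-\alpha )\), which is the closed-form cell integral of \(t^{1-\alpha }/\varGamma (2-\alpha )\).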

Remark 1

The time stepping method (11), (12) has a form similar to that of the time discretization scheme introduced in [17, (4)] for the superdiffusion problem with \(1< \alpha <2\).

3 Error Estimates of the Time Stepping Method (11), (12)

In this section, we will show the error estimates of the abstract time stepping method (11), (12) by using the Laplace transform method proposed originally by Lubich et al. [25] and developed by Jin et al. [11, 12], Yan et al. [42] and Wang et al. [38], etc.

3.1 The Homogeneous Case with \(f=0\) and \(u_{0} \ne 0\)

In this subsection, we will consider the error estimates of the time stepping method (11), (12) with \(f=0\) and \(u_{0} \ne 0\). We thus consider the following homogeneous problem, with \(v_{h}(0)=0\) and \(u_{0h} = P_{h} u_{0}, \, u_{0} \in L_{2}(\varOmega )\),

The abstract time stepping method (11), (12) for solving (14) is now reduced to, with \(V_{h}^{0}=0\),

We then have the following theorem:

Theorem 1

Let \(v_{h}(t_{n})\) and \(V_{h}^{n}, \; n=0, 1, 2, \dots , \) be the solutions of (14) and (15), (16), respectively. Assume that \(u_{0h} = P_{h} u_{0}, \, u_{0} \in L_{2}(\varOmega )\). Then we have

Proof

Step 1: Find the exact solution of (14). Let \({\hat{v}}_{h}(z)\) denote the Laplace transform of \(v_{h}(t)\). Taking the Laplace transform in (14), we have

which implies that, by using the inverse Laplace transform, with \(n=1, 2, \dots ,\)

where, with some \(\pi /2< \theta < \pi \),

with \(\mathfrak {I}z\) running from \(-\infty \) to \(\infty \).

Taking the variable change \(z= {\bar{z}}/\tau \), we may write (17) as

For simplicity of the notations, we replace \({\bar{z}}\) by z in (19), then (17) can be written as

Step 2: Find the approximate solutions \(V_{h}^{n}, n=1, 2, \dots \) of (15), (16).

Denote

where

Here \( {\tilde{b}} (z) = \sum _{j=0}^{\infty } b_{j} e^{-jz}\) with \(b_{j}, j=0, 1, 2, \dots , \) defined by (13), denotes the discrete Laplace transform of \(\{ b_{j} \}_{j=0}^{\infty }\).

By Lemma 1, we see that \((z_{\tau }^{\alpha } + \tau ^{\alpha } A_{h})^{-1}\) is well defined. Further we shall prove that the solutions \(V_{h}^{n}, n=1, 2, \dots \) of (15), (16) take the following forms:

where, with \(\varGamma \) defined by (18),

In fact, multiplying the \((k+1)\)th equation in (15), (16) by \(e^{-kz}, \; k=0, 1, 2, \dots \), we obtain

Summing the equations in (25), (26) from \(k=0\) to \(k=\infty \), we get

We remark that the second summation in (27) starts from \(k=1\) since the left hand side of (25) only has two terms. Note that, since \(b_{0}=0\),

Let \({\widetilde{V}}_{h} (z) = \sum _{n=0}^{\infty } V_{h}^{n} e^{-nz}\) denote the discrete Laplace transform of \(\{ V_{h}^{n} \}_{n=0}^{\infty }\). We have, after some simple calculations,

Further we have

Thus we get

which implies that, with \(z_{\tau }\) defined by (21),

With \(\zeta = e^{-z}\), we may write \({\widetilde{V}}_{h} (z) = \sum _{n=0}^{\infty } V_{h}^{n} e^{-nz} = \sum _{n=0}^{\infty } V_{h}^{n} \zeta ^{n}\). Using the Taylor expansion of the analytic function around the origin, we have, for \(\rho \) small enough, see Lubich et al. [25, (3.9)] and Jin et al. [11],

where the contour \(\varGamma ^{0} := \{ z = - \ln (\rho ) + i y: \; | y | \le \pi \}\) is oriented counterclockwise. By deforming the contour \(\varGamma ^{0}\) to \(\varGamma _{\tau }\) defined by (24) and using the periodicity of the exponential function, we obtain (23).
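The recovery of \(V_{h}^{n}\) from its discrete Laplace transform by a contour integral over \(\varGamma ^{0}\) can be illustrated numerically for a scalar sequence. The sketch below is only an illustration under simplifying assumptions: the sequence \(0.5^{n}\), the function names and the trapezoidal quadrature are all hypothetical choices, and no contour deformation to \(\varGamma _{\tau }\) is attempted. On \(z = -\ln (\rho ) + i y\) we have \(\mathrm {d}z = i \, \mathrm {d}y\), so the factor \(1/(2 \pi i)\) reduces to an average over equispaced values of y.

```python
import cmath
import math


def discrete_laplace(sequence, z):
    """Discrete Laplace transform sum_n V^n e^{-n z} of a finite sequence."""
    return sum(v * cmath.exp(-n * z) for n, v in enumerate(sequence))


def recover_coefficient(transform, n, rho=0.5, n_quad=64):
    """Approximate V^n = (1/(2*pi*i)) * int_{Gamma^0} e^{n z} Vtilde(z) dz on
    Gamma^0 = {z = -ln(rho) + i*y, |y| <= pi} with the trapezoidal rule.
    The integrand is 2*pi-periodic in y, so equispaced points suffice."""
    a = -math.log(rho)
    total = 0j
    for j in range(n_quad):
        y = -math.pi + 2.0 * math.pi * j / n_quad
        z = complex(a, y)
        total += cmath.exp(n * z) * transform(z)
    return (total / n_quad).real


# Hypothetical test sequence V^n = 0.5^n, truncated to 40 terms.
sequence = [0.5 ** n for n in range(40)]
recovered = [
    recover_coefficient(lambda z: discrete_laplace(sequence, z), n)
    for n in range(6)
]
```

Because the truncated transform is a polynomial in \(\zeta = e^{-z}\) of degree below the number of quadrature points, the trapezoidal rule reproduces the coefficients up to rounding error in this example.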

Subtracting (23) from (19), we have

where

For \(I_{1}\), we have, by the resolvent estimate (7), with some constant \(c>0\),

where we use the variable change \( z= r e^{i \theta }\) in the second inequality above.

For \(I_{2}\), we have, by Lemma 2,

Together these estimates complete the proof of Theorem 1. \(\square \)

Remark 2

In (28), we follow the approach in Lubich et al. [25, (3.9)] and Jin et al. [11] and obtain the formula for \(V_{h}^{n}\) by using the Laplace transform method. By Lemma 1, we may show that \(V_{h}^{n}\) is well defined since \(z_{\tau }^{\alpha } \in \varSigma _{\theta }\) for some \(\theta \in (\pi /2, \pi )\). An alternative approach for obtaining the formula of \(V_{h}^{n}\) is given in Li et al. [17], where the authors do not apply the Taylor expansion of the analytic function around the origin, and instead directly calculate the inverse Laplace transform \(V_{h}^{n} = \frac{1}{2 \pi i} \int _{a - i \pi }^{a+i \pi } e^{nz} {\widetilde{V}}_{h} (z) \, \mathrm {d} z\) for some \(a>0\), see [17, Lemma 3.6]. In particular, they obtain the stability estimate of \(V_{h}^{n}\), i.e., [17, Lemma 3.1], which implies that \({\widetilde{V}}_{h}(z) = \sum _{n=0}^{\infty } V_{h}^{n} e^{-nz}\) is well defined for \(z \in {\mathbb {C}}^{+}\).

Remark 3

In the above estimates for \(\Vert I_{1} \Vert \) and \( \Vert I_{2}\Vert \), the constants c and C depend on the angle \(\theta \) of the integral path \(\varGamma \). Moreover, the constant C will tend to \(\infty \) as \(\theta \rightarrow \pi /2\). In our proof of Lemma 1, we require that \(\theta \) is sufficiently close to \(\pi /2\), so that the constant in Theorem 1 could be very large. A similar remark is also valid for the error estimates in Theorem 2 for the inhomogeneous case in the next subsection.

3.2 The Inhomogeneous Case with \(f \ne 0\) and \(u_{0}=0\)

In this subsection, we will consider the error estimates of the time stepping method (11), (12) with \(f \ne 0\) and \(u_{0}=0\). We thus consider the following inhomogeneous problem, with \(v_{h}(0)=0\),

The abstract time stepping method (11), (12) for solving (29) is now reduced to, with \(V_{h}^{0}=0\),

We then have the following theorem:

Theorem 2

Let \(v_{h}(t_{n})\) and \(V_{h}^{n}, \; n=0, 1, 2, \dots , \) be the solutions of (29) and (30), (31), respectively. Assume that \(\int _{0}^{t} (t-s)^{-1+\epsilon } \Vert f^{\prime } (s) \Vert \, \mathrm {d}s < \infty \) for any \(t>0\) and \(\epsilon >0\). Then we have

Proof

Step 1: Find the exact solution of (29). Taking the Laplace transform in (29), we have

which implies that, by using the inverse Laplace transform, with \(n=1, 2, \dots ,\)

where, with \(\varGamma \) defined by (18),

Step 2: Find the approximate solutions \(V_{h}^{n}, n=1, 2, \dots \) of (30), (31). Denote, with \(z_{\tau }^{\alpha }\) and \(\varGamma _{\tau }\) defined by (21) and (24), respectively,

we shall show that the solutions \(V_{h}^{n}, n=1, 2, \dots \) of (30), (31) have the following form:

where

In fact, multiplying the \((k+1)\)th equation in (30), (31) by \(e^{-kz}, \; k=0, 1, 2, \dots \), we have

Summing the equations in (36), (37) from \(k=0\) to \(k=\infty \), we get

Using an argument similar to that for the discrete solutions of the homogeneous problem in the previous subsection, we obtain

By using the inverse discrete Laplace transform, we get

We shall prove that, for \(j \ge n\) with any fixed \(n=1, 2, \dots \),

Assuming (39) holds at the moment, we then have, by (38),

which shows (34).

It remains to prove (39). We shall follow the idea of the proof of [17, Lemma 3.6]. By the Cauchy integral formula, for any real number \( a >0\), we have

To estimate the integral, we need to consider the bound of \(\Big \Vert \big (z_{\tau }^{\alpha } + \tau ^{\alpha } A_{h} \big )^{-1} \Big ( \frac{2}{e^{z}+1} \Big ) \Big \Vert \). We have, by the resolvent estimate (7),

where \(z_{\tau }^{\alpha }\) and \(\psi \) are defined in (21) and (22), respectively.

Note that, by (22)

where \(Li_{\alpha -2}(z)\) denotes the polylogarithm function. By the singular expansion of the function \(Li_{p}(e^{-z}), \, p \in {\mathbb {C}}, \, p \ne 1, 2, \dots \), we have, see Jin et al. [11, Lemma 3.2],

where \(\zeta \) is the Riemann zeta function. Thus we have, with some suitable constants \(c_{0}, c_{1}, \dots \),

which implies that \( \lim _{z \rightarrow 0} \frac{z^{\alpha }}{\psi (z)} = 1\).

Further we observe that \(\lim _{z \rightarrow \infty } \frac{z^{\alpha }}{\psi (z)} =0\). Hence we get

which implies that, by (41),

Therefore, by (40),

Note that \( \lim _{a \rightarrow \infty } e^{(n-j)a} a^{-\alpha } =0\) for \(j \ge n\) with any fixed \(n=1, 2, \dots \), which implies that (39) holds.
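The singular expansion of \(Li_{p}(e^{-z})\) used in the argument above has, in its standard form, the leading term \(\varGamma (1-p) z^{p-1}\) as \(z \rightarrow 0\), followed by a power series in z with coefficients given by values of the Riemann zeta function. The following Python sketch checks only this leading behaviour for \(p = \alpha - 2\) by summing the defining series directly. The function names and the chosen values of \(\alpha \) and z are assumptions for illustration, and the sketch does not evaluate the function \(\psi \) of (22).

```python
import math


def polylog_series(p, x, n_terms=4000):
    """Truncated defining series Li_p(x) = sum_{k>=1} x^k / k^p, for 0 < x < 1."""
    return sum(x ** k / k ** p for k in range(1, n_terms + 1))


def leading_singular_term(p, z):
    """Leading term Gamma(1 - p) * z^(p - 1) of Li_p(e^{-z}) as z -> 0+."""
    return math.gamma(1 - p) * z ** (p - 1)


alpha = 0.5
p = alpha - 2.0
z = 0.05
ratio = polylog_series(p, math.exp(-z)) / leading_singular_term(p, z)
```

For small positive z the ratio is close to 1, since the remaining terms of the expansion stay bounded while the leading term grows like \(z^{\alpha -3}\).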

We next consider the error estimates \(v_{h}(t_{n})- V_{h}^{n}\). Subtracting (34) from (32), we have

where

By Lemma 3, we have, with any small \(\epsilon >0\),

Together these estimates complete the proof of Theorem 2. \(\square \)

4 Numerical Simulations

In this section, we will consider some numerical examples for solving both fractional ordinary differential equations and subdiffusion problems by using the time stepping method (11), (12).

4.1 Fractional Ordinary Differential Equation

In this subsection, we shall consider the numerical simulations for solving the following fractional ordinary differential equation, with \(0< \alpha <1\),

where \(g: {\mathbb {R}} \rightarrow {\mathbb {R}}\) is a suitable function, \(y_{0} \in {\mathbb {R}}\) is the initial value and \(\lambda >0\).

Let \( 0 = t_{0}< t_{1}< \dots < t_{N}=T\) be a partition of [0, T] and \( \tau \) the step size. Let \(Y^{j} \approx y(t_{j}), \, j=0, 1, \dots , N\) denote the approximations of \(y(t_{j})\). The time stepping method (12) for solving (46), (47) can be written as

where the weights \(w_{j}, j=0, 1, 2, \dots , N\) are defined as below.

For \(n=1\), the time stepping method (48), (49) is reduced to

where, with \(b_{j}, j=0, 1\) defined by (13),

For \(n=2\), the time stepping method (48), (49) is reduced to

where, with \(b_{j}, j=0, 1, 2\) defined by (13),

For \(n=3\), the time stepping method (48), (49) is reduced to

where, with \(b_{j}, j=0, 1, 2, 3\) defined by (13),

For \(n \ge 4\), the time stepping method (48), (49) is reduced to

where, with \(b_{j}, j=0, 1, 2, \dots , n\) defined by (13),

Example 1

Our first example is a homogeneous problem. Choose \(g(t) =0\) in (46) and the initial value \(y_{0}=1\) in (47). In this case the problem has the following exact solution, with \(\alpha \in (0, 1)\),

where \(E_{\alpha , 1} (z)\) denotes the Mittag–Leffler function.

We choose \(T=2\) and \(\lambda =1\). The exact solution can be calculated by using the MATLAB function mlf.m. We obtain the approximate solutions with the different step sizes \(\tau =1/20, 1/40, 1/80, 1/160\). In Table 1, we observe that the experimentally determined convergence order of the time stepping method (48), (49) is about \(O(\tau ^{2})\), which is better than the theoretical convergence order \(O(\tau ^{1+\alpha })\) for small \(\alpha \in (0, 1)\). In Table 1, we also compare the errors and convergence orders of the time stepping method (48), (49) with the popular L1 scheme [11] and the modified L1 scheme [42]. It is well known that the L1 scheme has only \(O(\tau )\) convergence due to the singularity of the solution of the fractional differential equation [11]. After correcting the starting step, the modified L1 scheme has the optimal convergence order \(O(\tau ^{2- \alpha })\) [42]. We observe that the time stepping method (48), (49) indeed captures the singularities of the problem more accurately than the L1 and modified L1 schemes. We remark that the modified L1 scheme has the convergence order \(O(\tau ^{2- \alpha }), \alpha \in (0, 1)\); however, in order to observe this convergence order for small \(\alpha \), we need to consider the error at sufficiently large T. For example, we indeed observe the convergence order \(O(\tau ^{2-\alpha })\) of the modified L1 scheme for \(\alpha =0.3\) when we choose \(T \ge 10\). In Table 1, the convergence order of the modified L1 scheme is not close to \(O(\tau ^{2-\alpha })\) for \(\alpha =0.3\) since \(T=2\) is not big enough to observe the expected convergence order. Since the purpose of this paper is to show the convergence orders of the time stepping method (48), (49), we will not study further the numerical behavior of the modified L1 scheme.
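The reference solution in this example is a Mittag–Leffler function evaluated at a moderate negative argument. As an illustration only, and not a replacement for the MATLAB routine mlf.m used in the experiments, the following Python sketch evaluates \(E_{\alpha , 1}(z)\) by its truncated power series \(\sum _{k} z^{k}/\varGamma (\alpha k+1)\). The function names are hypothetical, and the form \(y(t) = E_{\alpha ,1}(-\lambda t^{\alpha }) y_{0}\) of the exact solution is assumed here as the standard solution of the homogeneous problem. The series suffers from cancellation for large \(|z|\), so the sketch is meant only for arguments of the size used in this example.

```python
import math


def mittag_leffler(alpha, z, tol=1e-16, max_terms=500):
    """Truncated power series E_{alpha,1}(z) = sum_k z^k / Gamma(alpha*k + 1)
    for real z. Suitable for moderate |z| only (cancellation for large |z|)."""
    total = 1.0  # k = 0 term
    if z == 0.0:
        return total
    log_abs_z = math.log(abs(z))
    for k in range(1, max_terms):
        # Work with logarithms so that Gamma(alpha*k + 1) cannot overflow.
        magnitude = math.exp(k * log_abs_z - math.lgamma(alpha * k + 1.0))
        sign = -1.0 if (z < 0.0 and k % 2 == 1) else 1.0
        total += sign * magnitude
        if magnitude < tol:
            break
    return total


def homogeneous_solution(t, alpha, lam=1.0, y0=1.0):
    """Assumed exact solution y(t) = E_{alpha,1}(-lam * t^alpha) * y0."""
    return y0 * mittag_leffler(alpha, -lam * t ** alpha)


value_at_T = homogeneous_solution(2.0, 0.5)
```

Two classical special cases give a quick sanity check: \(E_{1,1}(z) = e^{z}\) and \(E_{1/2,1}(-x) = e^{x^{2}} \, \mathrm {erfc}(x)\).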

Example 2

Our second example is an inhomogeneous problem with initial value \(y_{0}=0\). We choose \(\lambda =1\) and assume that the exact solution of (46) is \(y(t) =t^{\beta }, \, \beta >0\) and

We choose \(T=1\) and obtain the approximate solutions with the different step sizes \(\tau =1/10, 1/20, 1/40, 1/80, 1/160\). In Table 2, we show the experimentally determined orders of convergence with \(\beta =1.1\) for the time stepping method (48), (49). We again observe that the convergence orders are about \(O(\tau ^{2})\), which is better than the theoretical convergence order \(O(\tau ^{1+\alpha })\) for small \(\alpha \in (0, 1)\).
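The source term for a prescribed solution \(y(t) = t^{\beta }\) follows from the Caputo derivative of a power function, \(\varGamma (\beta +1)/\varGamma (\beta +1-\alpha ) \, t^{\beta -\alpha }\). A minimal Python sketch is given below, assuming that (46) has the form \({}_{0}^{C}D_{t}^{\alpha } y(t) + \lambda y(t) = g(t)\), which is consistent with the exact solution of Example 1. The function names are hypothetical.

```python
import math


def caputo_of_power(beta, alpha, t):
    """Caputo derivative of order alpha in (0, 1) of t^beta, for beta > 0."""
    return math.gamma(beta + 1.0) / math.gamma(beta + 1.0 - alpha) * t ** (beta - alpha)


def source_term(t, alpha, beta, lam=1.0):
    """g(t) such that y(t) = t^beta solves D^alpha y + lam * y = g (assumed form)."""
    return caputo_of_power(beta, alpha, t) + lam * t ** beta


g_values = [source_term(0.1 * k, alpha=0.5, beta=1.1) for k in range(1, 11)]
```

For \(\beta =1\), \(\alpha =1/2\) and \(t=1\), the derivative part reduces to \(1/\varGamma (3/2) = 2/\sqrt{\pi }\), which gives a simple check of the formula.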

Example 3

The final example for the fractional ordinary differential equation is an inhomogeneous problem with nonzero initial value. We choose \(\lambda =1\) and assume that the exact solution of (46) is \(y(t) =t^{\beta } +1, \, \beta >0\). The initial value \(y_{0} =1\) and

We choose \(T=1\) and obtain the approximate solutions with the different step sizes \(\tau =1/10, 1/20, 1/40, 1/80, 1/160\). In Table 3, we show the experimentally determined orders of convergence with \(\beta =1.1\) for the time stepping method (48), (49). We again observe that the convergence orders are about \(O(\tau ^{2})\), which is better than the theoretical convergence order \(O(\tau ^{1+\alpha })\) for small \(\alpha \in (0, 1)\).
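The experimentally determined orders of convergence reported in these examples are conventionally computed from errors at successively halved step sizes as \(\log _{2}(e_{\tau }/e_{\tau /2})\). A short Python sketch of this calculation follows. The function name and the synthetic error values are assumptions for illustration and are not data from the tables.

```python
import math


def observed_orders(errors, ratio=2.0):
    """Observed convergence orders from errors at step sizes tau, tau/ratio, ..."""
    return [
        math.log(errors[i] / errors[i + 1]) / math.log(ratio)
        for i in range(len(errors) - 1)
    ]


# Synthetic errors behaving exactly like C * tau^1.7 (not measured data).
step_sizes = [0.1 / 2 ** i for i in range(5)]
synthetic_errors = [3.0 * tau ** 1.7 for tau in step_sizes]
orders = observed_orders(synthetic_errors)
```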

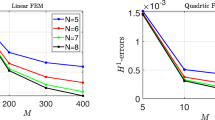

4.2 Subdiffusion Problem

Now we turn to the numerical examples for solving the following subdiffusion problem, with \( 0< \alpha <1\),

Let \(0 = t_{0}< t_{1}< \dots < t_{N}=T\) be a partition of the time interval [0, T] and \(\tau \) the time step size. Let \( 0= x_{0}< x_{1}< \dots < x_{M}=1\) be a partition of the space interval [0, 1] and h the space step size. Let \(S_{h} \subset H_{0}^{1}(0, 1)\) be the piecewise linear finite element space defined by

The finite element method of (50), (52) is to find \(u_{h} (t) \in S_{h}\) such that

where \(P_{h}: L_{2}(0,1) \rightarrow S_{h}\) denotes the \(L_{2}\) projection operator.

Let \(U^{n} \approx u_{h} (t_{n}), n=0, 1, \dots , N\) be the approximation of \(u_{h} (t_{n})\). We define the following time discretization scheme for solving \(U^{n} \in S_{h}\), with \(n=1, 2, \dots , N\), and for \(\forall \, \chi \in S_{h}\),

where \(w_{j}, j=0, 1, 2, \dots , N\) are defined as in Sect. 4.1.

Let \(\varphi _{1}(x), \varphi _{2}(x), \dots , \varphi _{M-1} (x)\) be the linear finite element basis functions defined by, with \(j=1, 2, \dots , M-1\),

To find the solution \(U^{n} \in S_{h}, \; n=0, 1, \dots , N\), we assume that

for some coefficients \(\alpha _{k}^{n},\, k=1, 2, \dots , M-1\). Choosing \(\chi = \varphi _{l}, \, l=1, 2, \dots , M-1\) in (55), we have

Denote

and

Further we denote the mass and stiffness matrices by

and

respectively. Then the scheme (57), (58) can be written into the following matrix form

which can be solved by using the similar MATLAB programs as for solving (48), (49).
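For a uniform partition of [0, 1] and the hat functions \(\varphi _{j}\), the mass and stiffness matrices have the familiar tridiagonal forms \(\frac{h}{6} \, \mathrm {tridiag}(1, 4, 1)\) and \(\frac{1}{h} \, \mathrm {tridiag}(-1, 2, -1)\). The Python sketch below assembles them as plain nested lists. The function name and the uniform-mesh assumption are illustrative choices, and the sketch covers only the spatial matrices, not the time stepping itself.

```python
def fem_matrices_1d(n_intervals):
    """Mass and stiffness matrices for piecewise linear elements on a uniform
    partition of [0, 1] with homogeneous Dirichlet boundary conditions.
    Returns two (M-1) x (M-1) nested lists, where M = n_intervals."""
    h = 1.0 / n_intervals
    size = n_intervals - 1  # number of interior nodes
    mass = [[0.0] * size for _ in range(size)]
    stiff = [[0.0] * size for _ in range(size)]
    for i in range(size):
        mass[i][i] = 4.0 * h / 6.0   # integral of phi_i^2
        stiff[i][i] = 2.0 / h        # integral of (phi_i')^2
        if i + 1 < size:
            mass[i][i + 1] = mass[i + 1][i] = h / 6.0      # integral of phi_i * phi_{i+1}
            stiff[i][i + 1] = stiff[i + 1][i] = -1.0 / h   # integral of phi_i' * phi_{i+1}'
    return mass, stiff


n_intervals = 8
mass, stiff = fem_matrices_1d(n_intervals)
h = 1.0 / n_intervals
nodal_u0 = [(i * h) * (1.0 - i * h) for i in range(1, n_intervals)]  # u_0(x) = x(1 - x)
stiff_times_u0 = [sum(row[j] * nodal_u0[j] for j in range(len(nodal_u0))) for row in stiff]
```

Applied to the nodal values of \(x(1-x)\), each entry of the stiffness product equals 2h, which matches \(\int _{0}^{1} 2 \varphi _{l} \, \mathrm {d}x\) because \(-u_{0}'' = 2\).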

Example 4

In this example, we shall consider a homogeneous subdiffusion problem. We choose \(f(t, x)=0\) and the initial value \(u_{0}(x) = x(1-x)\) in (50), (52). In this case, the exact solution is

where \(E_{\alpha ,1}(z)\) is the Mittag–Leffler function and \(A= \frac{\partial ^2}{\partial x^2}\) with \(D(A) = H_{0}^{1}(0, 1) \cap H^2 (0, 1)\).

In our numerical simulation, we let \(T=2\). Choosing the space step size \(h= 2^{-10}\) and the different time step sizes \(\tau =1/10, 1/20, 1/40, 1/80, 1/160\), we get the different approximate solutions. The exact solution is calculated by using the MATLAB function mlf.m.

We observe that, in Table 4, the experimentally determined convergence orders are better than the theoretical convergence orders \(O(\tau ^{1+\alpha })\). For \(\alpha > 0.5\), the table shows a second order convergence rate. We also compare the errors and convergence orders of the method (48), (49) with the popular L1 scheme [11] and the modified L1 scheme [42]. We see that the time stepping method (48), (49) captures the singularities of the problem more accurately than the L1 and modified L1 schemes.

Example 5

Consider an inhomogeneous subdiffusion problem. Assume that the exact solution of (50)–(52) is \( u(t, x) =t^{\beta } x(1-x), \, \beta >0\) and

The initial value \(u_{0}(x) = 0\).

We choose \(T=2\) and \(\beta = \alpha \) which implies that the solution \(u(\cdot , x) \in C[0, T]\), but \(u(\cdot , x) \notin C^{1}[0, T]\) for any fixed x. We choose the space step size \(h= 2^{-10}\) and the different time step sizes \(\tau =1/10, 1/20, 1/40, 1/80, 1/160\) to get the approximate solutions. In Table 5, we observe that the experimentally determined convergence orders are higher than the theoretical convergence orders \(O(\tau ^{1+\alpha })\).

Example 6

Consider an inhomogeneous subdiffusion problem with nonzero initial value. Assume that the exact solution of (50)–(52) is \( u(t, x) =(t^{\beta }+1) x(1-x), \, \beta >0\) and

The initial value \(u_{0}(x) = x(1-x)\).

We observe that the experimentally determined convergence orders depend on the smoothness of the solution with respect to the time variable t. In our numerical simulation, we choose \(\beta \in (1, 2)\) which implies that \(u(\cdot , x) \in C^{1}[0, T]\), but \(u(\cdot , x) \notin C^{2}[0, T]\) for any fixed x. We choose \(T=2\) and the space step size \(h= 2^{-10}\) and the different time step sizes \(\tau =1/10, 1/20, 1/40, 1/80, 1/160\) to get the approximate solutions. In Table 6, we show the experimentally determined orders of convergence with \( \beta =1.1\), and we see that the convergence orders are consistent with our theoretical results.

Example 7

Consider an inhomogeneous subdiffusion problem in the two-dimensional case. With \(x= (x_1, x_2), \; x_{1} \in [0, 1], \, x_{2} \in [0, 1]\), assume that the exact solution of (50)–(52) is \( u(t, x) =(t^{\beta }+1) x_{1} (1-x_{1}) x_{2} (1-x_{2}), \, \beta >0\) and

The initial value \(u_{0}(x) = x_{1} (1-x_{1}) x_{2} (1-x_{2})\).

We use the same parameters and the time and space step sizes as in Example 6 except in this example we need space partitions in both \(x_{1}\) and \(x_{2}\) directions. In Table 7, we show the experimentally determined convergence orders with \(\beta =1.1\). We see that the numerical results are consistent with the theoretical results for this example.

Data Availability

Data sharing not applicable to this article as no datasets were generated or analyzed during the current study.

References

Adams, E.E., Gelhar, L.W.: Field study of dispersion in a heterogeneous aquifer: 2. Spatial moments analysis. Water Resour. Res. 28, 3293–3307 (1992)

Al-Maskari, M., Karaa, S.: The lumped mass FEM for a time-fractional cable equation. Appl. Numer. Math. 132, 73–90 (2018)

Cao, J., Li, C., Chen, Y.: High-order approximation to Caputo derivatives and Caputo-type advection-diffusion equation (II). Fract. Calc. Appl. Anal. 18, 735–761 (2015)

Chen, F., Xu, Q., Hesthaven, J.S.: A multi-domain spectral method for time-fractional differential equations. J. Comput. Phys. 293, 157–172 (2015)

Chen, S., Shen, J., Wang, L.-L.: Generalized Jacobi functions and their applications to fractional differential equations. Math. Comp. 85, 1603–1638 (2016)

Chen, X., Zeng, F., Karniadakis, G.E.: A tunable finite difference method for fractional differential equations with non-smooth solutions. Comput. Methods Appl. Mech. Engrg. 318, 193–214 (2017)

Diethelm, K.: The Analysis of Fractional Differential Equations: An Application-Oriented Exposition Using Differential Operators of Caputo Type. Lecture Notes in Mathematics, vol. 2004. Springer, Berlin (2010)

Du, R., Yan, Y., Liang, Z.: A high-order scheme to approximate the Caputo fractional derivative and its application to solve the fractional diffusion wave equation. J. Comput. Phys. 376, 1312–1330 (2019)

Gorenflo, R., Mainardi, F.: Random walk models for space fractional diffusion processes. Fract. Calc. Appl. Anal. 1, 167–191 (1998)

Hatano, Y., Hatano, N.: Dispersive transport of ions in column experiments: an explanation of long-tailed profiles. Water Resour. Res. 34, 1027–1033 (1998)

Jin, B., Lazarov, R., Zhou, Z.: An analysis of the L1 scheme for the subdiffusion equation with nonsmooth data. IMA J. Numer. Anal. 36, 197–221 (2016)

Jin, B., Li, B., Zhou, Z.: Correction of high-order BDF convolution quadrature for fractional evolution equations. SIAM J. Sci. Comput. 39, A3129–A3152 (2017)

Jin, B., Yan, Y., Zhou, Z.: Numerical approximation of stochastic time-fractional diffusion. ESAIM: M2AN 53, 1245–1268 (2019)

Karaa, S., Mustapha, K., Pani, A.K.: Optimal error analysis of a FEM for fractional diffusion problems by energy arguments. J. Sci. Comput. 74, 519–535 (2018)

Karaa, S., Pani, A.K.: Error analysis of a FVEM for fractional order evolution equations with nonsmooth initial data. ESAIM: M2AN 52, 773–801 (2018)

Kopteva, N.: Error analysis of the L1 method on graded and uniform meshes for a fractional-derivative problem in two and three dimensions. Math. Comp. 88, 2135–2155 (2019)

Li, B., Wang, T., Xie, X.: Analysis of the L1 scheme for fractional wave equations with nonsmooth data. Comput. Math. Appl. 90, 1–12 (2021)

Li, B., Luo, H., Xie, X.: Analysis of a time-stepping scheme for time fractional diffusion problems with nonsmooth data. SIAM J. Numer. Anal. 57, 779–798 (2019)

Li, B., Wang, T., Xie, X.: Numerical analysis of two Galerkin discretizations with graded temporal grids for fractional evolution equations. J. Sci. Comput. 85, 59 (2020)

Li, C., Ding, H.: Higher order finite difference method for the reaction and anomalous-diffusion equation. Appl. Math. Model. 38, 3802–3821 (2014)

Liao, H., Li, D., Zhang, J.: Sharp error estimate of the nonuniform L1 formula for linear reaction-subdiffusion equations. SIAM J. Numer. Anal. 56, 1112–1133 (2018)

Liao, H., McLean, W., Zhang, J.: A discrete Grönwall inequality with applications to numerical schemes for subdiffusion problems. SIAM J. Numer. Anal. 57, 218–237 (2019)

Lin, Y., Xu, C.: Finite difference/spectral approximations for the time-fractional diffusion equation. J. Comput. Phys. 225, 1533–1552 (2007)

Liu, Y., Du, Y., Li, H., Liu, F., Wang, Y.: Some second-order \(\theta \) schemes combined with finite element method for nonlinear fractional Cable equation. Numer. Algor. 80, 533–555 (2019)

Lubich, C., Sloan, I.H., Thomée, V.: Nonsmooth data error estimate for approximations of an evolution equation with a positive-type memory term. Math. Comp. 65, 1–17 (1996)

Cuesta, E., Lubich, C., Palencia, C.: Convolution quadrature time discretization of fractional diffusion-wave equations. Math. Comp. 75, 673–696 (2006)

Lv, C., Xu, C.: Error analysis of a high order method for time-fractional diffusion equations. SIAM J. Sci. Comput. 38, A2699–A2724 (2016)

McLean, W., Mustapha, K.: Time-stepping error bounds for fractional diffusion problems with non-smooth initial data. J. Comput. Phys. 293, 201–217 (2015)

Mustapha, K.: Time-stepping discontinuous Galerkin methods for fractional diffusion problems. Numer. Math. 130, 497–516 (2015)

Mustapha, K.: An L1 approximation for a fractional reaction-diffusion equation, a second-order error analysis over time-graded meshes. SIAM J. Numer. Anal. 58, 1319–1338 (2020)

Mustapha, K., Abdallah, B., Furati, K.M.: A discontinuous Petrov-Galerkin method for time-fractional diffusion equations. SIAM J. Numer. Anal. 52, 2512–2529 (2014)

Nigmatulin, R.: The realization of the generalized transfer equation in a medium with fractal geometry. Phys. Stat. Sol. B 133, 425–430 (1986)

Sakamoto, K., Yamamoto, M.: Initial/boundary value problems for fractional diffusion-wave equations and applications to some inverse problems. J. Math. Anal. Appl. 382, 426–447 (2011)

Sun, Z.-Z., Wu, X.: A fully discrete scheme for a diffusion wave system. Appl. Numer. Math. 56, 193–209 (2006)

Stynes, M.: Too much regularity may force too much uniqueness. Fract. Calc. Appl. Anal. 19, 1554–1562 (2016)

Stynes, M., O’Riordan, E., Gracia, J.L.: Error analysis of a finite difference method on graded meshes for a time-fractional diffusion equation. SIAM J. Numer. Anal. 55, 1057–1079 (2017)

Thomée, V.: Galerkin Finite Element Methods for Parabolic Problems. Springer-Verlag, Berlin (2007)

Wang, Y., Yan, Y., Yan, Y., Pani, A.K.: Higher order time stepping methods for subdiffusion problems based on weighted and shifted Grünwald-Letnikov formulae with nonsmooth data. J. Sci. Comput. 83, 40 (2020)

Wang, Y., Yan, Y., Yang, Y.: Two high-order time discretization schemes for subdiffusion problems with nonsmooth data. Fract. Calc. Appl. Anal. 23, 1349–1380 (2020)

Wood, D.: The computation of polylogarithms, Technical Report 15–92*. University of Kent, Computing Laboratory, Canterbury, UK (1992). (http://www.cs.kent.ac.uk/pubs/1992/110)

Xing, Y., Yan, Y.: A higher order numerical method for time fractional partial differential equations with nonsmooth data. J. Comput. Phys. 357, 305–323 (2018)

Yan, Y., Khan, M., Ford, N.J.: An analysis of the modified scheme for the time-fractional partial differential equations with nonsmooth data. SIAM J. Numer. Anal. 56, 210–227 (2018)

Yuste, S.B., Acedo, L.: An explicit finite difference method and a new von Neumann-type stability analysis for fractional diffusion equation. SIAM J. Numer. Anal. 42, 1862–1874 (2005)

Zayernouri, M., Karniadakis, G.E.: Fractional spectral collocation method. SIAM J. Sci. Comput. 36, A40–A62 (2014)

Zhang, H., Yang, X., Xu, D.: An efficient spline collocation method for a nonlinear fourth-order reaction subdiffusion equation. J. Sci. Comput. 85, 7 (2020)

Zeng, F., Li, C., Liu, F., Turner, I.: The use of finite difference/element approaches for solving the time-fractional subdiffusion equation. SIAM J. Sci. Comput. 35, A2976–A3000 (2013)

Acknowledgements

We express our gratitude to the referees for their valuable suggestions and comments. The first and third authors acknowledge the support of the National Natural Science Foundation of China (11801214). The fourth author thanks Dr. Bangti Jin for providing, in a private communication, a sketch of the proof of Lemma 1.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

In this Appendix, we shall prove the following three lemmas.

Lemma 1

Let \(\theta > \frac{\pi }{2}\) be close to \(\frac{\pi }{2}\). Let \(z_{\tau }\) be defined by (21), that is

where \(\psi (z) \) is defined by (22) and \(\varGamma _{\tau }\) is defined by (24). Then there exists some \(\theta _{0} \in (\frac{\pi }{2}, \pi )\), such that

Proof

We first note that, after a direct calculation, with \(\zeta = e^{-z}\),

where \(Li_{\alpha -2}\) is the polylogarithm function, see Jin et al. [11].

We divide the proof of this lemma into the following three steps.

Step 1. Choose z with \(\arg z = \pm \frac{\pi }{2}\) and \(0< | \mathfrak {I}z | < \pi \). We only consider the case \(0< \mathfrak {I}z < \pi \), that is, \(\arg z = \frac{\pi }{2}\); the case \( -\pi< \mathfrak {I}z < 0\) can be treated similarly. Thus we have, with \(0< \mathfrak {I}z < \pi \),

Step 2. We shall prove that \(z_{\tau }^{\alpha }\) satisfies

which implies that there exists \(\theta _{0} \in (\frac{\pi }{2}, \pi )\) such that

To prove this, we shall find the arguments of the terms \( \frac{(1-\zeta )^2}{\zeta }\), \(\frac{1- \zeta }{1+ \zeta }\), and \(Li_{\alpha -2} (\zeta )\) in (62) for \(\zeta = e^{-z}\) with \(z: \, \arg z = \frac{\pi }{2}, \; 0< \mathfrak {I}z < \pi \), respectively.

Note that, with \(\psi \in (0, \pi )\),

which implies that

Further we note that, with \(\psi \in (0, \pi )\),

which implies that

Now we consider the argument of the term \(Li_{\alpha -2} (\zeta )\). By Wood [40, (13.1)], we have, with \( p \ne 1, 2, \dots \),

Choosing \(p=\alpha -2\) in (65), we have, with \(\zeta = e^{-i \psi }, \, \psi \in (0, \pi )\), see Wang et al. [38],

where

Both series converge for \(\alpha \in (0, 1)\). Since for \(\psi \in (0, \pi )\), we have \( \Big ( k + \frac{\psi }{2 \pi } \Big )^{\alpha -3}> \Big ( k+1 - \frac{\psi }{2 \pi } \Big )^{\alpha -3} >0, \) there holds

Since \(\cos \big ( (3- \alpha ) \frac{\pi }{2} \big ) = - \sin \big ( \frac{\alpha \pi }{2} \big ) <0\) and \( \sin \big ( (3- \alpha ) \frac{\pi }{2} \big ) = - \cos \big ( \frac{\alpha \pi }{2} \big ) <0 \; \text{ for } \; \alpha \in (0, 1)\), we have

which implies that, since \(\arctan (x)\) is increasing on \( x \in (-\infty , \infty )\),

Hence we get, by (63), (64), (67),

that is,

which implies that, for some \(\theta _{0} \in (\frac{\pi }{2}, \pi )\),

Step 3. By the continuity of \(z_{\tau }^{\alpha }\) with respect to z, we see that there exists \(\theta _{0} \in (\frac{\pi }{2}, \pi )\) such that

where \(\varGamma _{\tau }\) is defined by (24) with \(\theta >\frac{\pi }{2}\) sufficiently close to \(\frac{\pi }{2}\).

The proof of Lemma 1 is complete. \(\square \)
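The argument computations in Step 2 can be checked numerically. The following is a minimal sketch, not part of the original proof: it evaluates \(Li_{\alpha -2}(e^{-i \psi })\) through the standard Hurwitz-zeta representation of the polylogarithm on the unit circle (assumed here to coincide with the expansion quoted from Wood [40]), together with the two elementary terms \( \frac{(1-\zeta )^2}{\zeta }\) and \(\frac{1- \zeta }{1+ \zeta }\). The function names `hurwitz_zeta`, `polylog_unit_circle` and `elementary_terms`, and the truncation parameter `n_terms`, are illustrative choices.

```python
import cmath
import math


def hurwitz_zeta(s, a, n_terms=64):
    """Approximate sum_{k>=0} (k + a)**(-s) for real s > 1 and a > 0."""
    head = sum((k + a) ** (-s) for k in range(n_terms))
    x = n_terms + a
    # Leading Euler-Maclaurin terms for the tail sum_{k>=n_terms} (k + a)**(-s).
    tail = x ** (1 - s) / (s - 1) + 0.5 * x ** (-s) + s * x ** (-s - 1) / 12
    return head + tail


def polylog_unit_circle(alpha, psi):
    """Li_{alpha-2}(exp(-1j*psi)) for 0 < psi < pi (Hurwitz-zeta representation)."""
    v = 3.0 - alpha                    # v = 1 - p with p = alpha - 2
    x = psi / (2.0 * math.pi)          # 0 < x < 1/2
    a_sum = hurwitz_zeta(v, x)         # sum_k (k + psi/(2*pi))**(alpha - 3)
    b_sum = hurwitz_zeta(v, 1.0 - x)   # sum_k (k + 1 - psi/(2*pi))**(alpha - 3)
    phase = cmath.exp(1j * math.pi * v / 2.0)   # i**v on the principal branch
    scale = math.gamma(v) / (2.0 * math.pi) ** v
    return scale * (phase * b_sum + phase.conjugate() * a_sum)


def elementary_terms(psi):
    """Return (1 - zeta)**2 / zeta and (1 - zeta) / (1 + zeta) for zeta = exp(-1j*psi)."""
    zeta = cmath.exp(-1j * psi)
    return (1 - zeta) ** 2 / zeta, (1 - zeta) / (1 + zeta)


if __name__ == "__main__":
    # Sample a few (alpha, psi) pairs and print the three quantities.
    for alpha in (0.2, 0.5, 0.8):
        for psi in (0.5, 1.5, 3.0):
            li = polylog_unit_circle(alpha, psi)
            t1, t2 = elementary_terms(psi)
            print(alpha, psi, li, t1, t2)
```

For the sampled values, \(Li_{\alpha -2}(e^{-i \psi })\) has negative real part and positive imaginary part, the first elementary term equals the negative real number \(-4 \sin ^{2}(\psi /2)\), and the second equals \(i \tan (\psi /2)\). As a consistency check of the sketch itself, setting \(\alpha = 0\) reproduces the closed form \(Li_{-2}(\zeta ) = \zeta (1+\zeta )/(1-\zeta )^{3}\).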

Lemma 2

Let \(z_{\tau }\) be defined by (21), that is

where \(\psi (z) \) is defined by (22) and \(\varGamma _{\tau }\) is defined by (24). Then we have, with \(z \in \varGamma _{\tau }\),

and

Proof

For (68), we have

Note that

We then have

which implies that, for small \( \epsilon >0\),

For large z with \(z \in \varGamma _{\tau }\), by continuity of \(\frac{z_{\tau }^{\alpha } - z^{\alpha }}{z^{\alpha +2}} = ( \frac{2 \psi (z)}{e^{z} +1} - z^{\alpha } ) \frac{1}{z^{\alpha +2}}\), we see that

Thus we show that

Now we turn to (69). We have

Note that, by the resolvent estimate (7) and the mean value theorem,

Further we have, with some suitable constant \(d_{1}\),

which implies that

For large z with \(z \in \varGamma _{\tau }\), by continuity of \( \frac{z^{-1} - \frac{2}{e^{z} +1} ( \sum _{j=0}^{\infty } e^{-jz})}{z}, \) we see that

Thus we have

Hence we obtain, by (70),

Together these estimates complete the proof of Lemma 2. \(\square \)
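As a brief sanity check on the boundedness near the origin used for (69), the following expansion is our own sketch. It takes the quotient exactly as it is displayed in the continuity argument above and assumes \(\mathfrak {R}z > 0\), so that the geometric series converges; the resulting identity then extends by analyticity.

\[
\frac{2}{e^{z}+1} \sum _{j=0}^{\infty } e^{-jz} = \frac{2}{(e^{z}+1)(1-e^{-z})} = \frac{2 e^{z}}{e^{2z}-1} = \frac{1}{\sinh z},
\qquad
\frac{1}{z} \Big ( z^{-1} - \frac{1}{\sinh z} \Big ) = \frac{1}{6} - \frac{7 z^{2}}{360} + O(z^{4}) \quad (z \rightarrow 0).
\]

In particular, the quotient has a removable singularity at \(z=0\) with limit \(\frac{1}{6}\), which is consistent with treating small and large z separately in the proof.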

Lemma 3

Let \(0= t_{0}< t_{1}< \dots< t_{n} < \dots \) be the time partition of \([0, \infty )\) and \(\tau \) the time step size. Let \({\mathcal {E}}_{h} (t)\) be defined by (44). We then have

and, with \(\epsilon >0\),

Proof

We follow the idea of the proof of [17, Lemma 3.9]. We first show (72). By (33) and (35), we have

Note that, see [17, Lemma 3.9],

and

where \(\varGamma \) and \(\varGamma _{\tau }\) are defined by (18) and (24), respectively. We remark that if the integral over \(\varGamma \) diverges because of the singularity of the integrand near the origin, then \(\varGamma \) should be deformed so that the origin lies to its left. We then have

For \(I_{1}\), we have, by the resolvent estimate (7), with some constant \(c>0\),

For \(I_{2}\), we have, with some constant \(c>0\),

where we have used the following estimate

which can be proved in the same way as (69). Thus we get

which shows (72).

We now turn to the proof of (73). We first consider the case with \( t \in (t_{n}, t_{n+1}), \; n=1, 2, \dots \). By (33) and (35), we have

Hence we have, by (75) and (76),

For \(II_{1}\), we have, by using the resolvent estimate (7), with some constants \(c>0\) and \(\epsilon >0\),

For \(II_{2}\), by (77), we have, with some constants \(c>0\) and \(\epsilon >0\), noting that \(t \in (t_{n}, t_{n+1}), \, n=1, 2, \dots \),

For \(II_{3}\), we have

Following the proof of (69), we may show that, with \(z \in \varGamma _{\tau }\),

Combining this estimate with (79) and using an argument similar to that for the estimate of \(II_{2}\), we get, noting that \( t \in (t_{n}, t_{n+1}), \, n=1, 2, \dots \),

Thus we get

which shows (73) for the case \( t \in (t_{n}, t_{n+1}), \; n=1, 2, \dots \).

Finally, we show (73) for the case \( t \in (0, t_{1}]\). In this case, the estimate of \( \Vert II_{3}\Vert \) reduces to, noting that \( t \in (0, t_{1}] = (0, \tau ]\),

Together these estimates complete the proof of Lemma 3. \(\square \)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yan, Y., Egwu, B.A., Liang, Z. et al. Error Estimates of a Continuous Galerkin Time Stepping Method for Subdiffusion Problem. J Sci Comput 88, 68 (2021). https://doi.org/10.1007/s10915-021-01587-9

DOI: https://doi.org/10.1007/s10915-021-01587-9

Keywords

- Subdiffusion problem

- Continuous Galerkin time stepping method

- Laplace transform

- Caputo fractional derivative