Abstract

We perform a comprehensive numerical study of the effect of approximation-theoretical results for neural networks on practical learning problems in the context of numerical analysis. As the underlying model, we study the machine-learning-based solution of parametric partial differential equations. Here, approximation theory for fully-connected neural networks predicts that the performance of the model should depend only very mildly on the dimension of the parameter space and is determined by the intrinsic dimension of the solution manifold of the parametric partial differential equation. We use various methods to establish comparability between test-cases by minimizing the effect of the choice of test-cases on the optimization and sampling aspects of the learning problem. We find strong support for the hypothesis that approximation-theoretical effects heavily influence the practical behavior of learning problems in numerical analysis. Turning to practically more successful and modern architectures, at the end of this study we derive improved error bounds by focusing on convolutional neural networks.

1 Introduction

This work studies the problem of numerically solving a specific parametric partial differential equation (PPDE) by training and applying neural networks (NNs). The central goal of the following exposition is to identify those key aspects of a parametric problem that render the problem harder or simpler to solve for methods based on NNs.

The underlying mathematical problem, the solution of PPDEs, is a standard problem in applied sciences and engineering. In this model, certain parts of a PDE such as the boundary conditions, the source terms, or the shape of the domain are controlled through a set of parameters, e.g., [30, 56]. In some applications where PDEs need to be evaluated very often or in real-time, individually solving the underlying PDEs for each choice of parameters becomes computationally infeasible. In this case, it is advisable to invoke methods that leverage the joint structure of all the individual problems. A typical approach is that of constructing a reduced basis associated with the problem. With respect to this basis, the computational complexity of solving the PPDE is then significantly reduced, e.g., [30, 49, 56, 62].

Recently, as an alternative or to augment the reduced basis method, approaches were introduced that attempt to learn the parameter-to-solution map through methods of machine learning. We will provide a comprehensive overview of related approaches in Sect. 1.5. One approach is to train a NN to fit the discretized parameter-to-solution map, i.e., a map taking a parameter to a finite-element discretization of the solution of the associated PDEs. This approach has already been analyzed theoretically in [37] where it was shown from an approximation-theoretical point of view that the hardness of representing the parameter-to-solution map by NNs is determined by a highly problem-specific notion of complexity that depends (in some cases) only very mildly on the dimension of the parameter space.

In this work, we study the problem of learning the discretized parameter-to-solution map in practice. We hypothesize that the approximation-theoretical capacity of a NN architecture is one of the central factors in determining the practical difficulty level of the learning problem.

The motivation for this analysis is twofold: first, we regard this as a general analysis of the feasibility of approximation-theoretical arguments in the study of deep learning. Second, specifically for the problem of numerical solution of PPDEs, we consider it important to identify which characteristics of a parametric problem determine its practical hardness. This is especially relevant to identify in which areas the application of this model is appropriate. We outline these two points of motivation in Sect. 1.1. The design of the numerical experiment based on fully-connected neural networks is presented in Sect. 1.2, whereas we focus on convolutional neural networks in Sect. 1.3. Afterwards, we give a high-level report of our findings in Sect. 1.4.

1.1 Motivation

Below, we describe the two main reasons that motivate this numerical study.

1.1.1 Understanding of Deep Learning in General

A typical learning problem consists of an unknown data model, a hypothesis class, and an optimization procedure to identify the best fit in the hypothesis class to the observed (sampled) data, e.g., [17, 18]. In a deep learning problem, the hypothesis class is the set of NNs with a specific architecture.

The approximation-theoretical point of view analyzes the trade-off between the capacity of the hypothesis class and the complexity of the data model. In this sense, this point of view describes only one aspect of the learning problem.

In the framework of approximation theory, there are precise ways to assess the hardness of an underlying problem. Concretely, this is done by identifying the rate by which the misfit between the hypothesis class and the data model decreases for sequences of growing hypothesis classes. For example, one common theme in the literature is the observation that for certain function classes NNs do not admit a curse of dimension, i.e., their approximation rates do not deteriorate exponentially fast with increasing input dimension, e.g., [7, 54, 67]. Another theme is that classes of smooth functions can be approximated more efficiently than classes of rougher functions, e.g., [45, 46, 50, 52, 75].

While these results offer some interpretation of why a certain problem should be harder or simpler, it is not clear how relevant these results are in practice. Indeed, there are at least three issues that call the approximation-theoretical explanation for a practical learning problem into question:

- Tightness of the upper bounds: Approximation-theoretical bounds usually describe worst-case error estimates for whole classes of functions. For individual functions or subsets of these function classes, there is no guarantee that one could not achieve a significantly better approximation rate.

- Optimization and sampling prevent approximation-theoretical effects from materializing: As explained at the beginning of this section, the learning problem consists of multiple aspects, one of which is the ability of the hypothesis class to describe the data. Two further aspects are how well the sampling of the data model describes the true model and how well the optimization procedure performs in finding the best fit to the sampled data. Since the underlying optimization problem of deep learning is in general non-convex, it is conceivable that, while there theoretically exists a very good approximation of a function by a NN, finding it in practice is highly unlikely. Moreover, it is certainly possible that the sampled data do not contain sufficient information to guarantee that the optimization routine will identify the theoretically best approximation.

- Asymptotic estimates: All approximation-theoretical results mentioned so far, and almost all in the literature, describe the capacity of NNs to represent functions approximately with accuracy \(\varepsilon \) for sufficiently large architectures only in a regime where \(\varepsilon \) tends to zero and the size of the architecture grows accordingly. The associated approximation rates may contain arbitrarily large implicit constants, and therefore it is entirely unclear if changes to the trade-off between the complexity of the data model and the size of the architecture have the theoretically predicted impact for moderately-sized practical learning problems.

We believe that, to understand the effect of approximation-theoretical capacities of NNs in practical learning scenarios, the learning problem associated with the parameter-to-solution map in a PPDE occupies a special role: It is, in essence, a high-dimensional approximation problem of a function that has a very strong low-dimensional, but highly non-trivial structure. What is more, one can, to a certain extent, control the complexity of the problem, as we have seen in [37]. In this context, we can ask ourselves the following questions: Do we observe a curse of dimensionality in the practical solution of the problem? If not, how does the difficulty in practice scale with the parameter dimension? On which characteristics of the problem does the hardness of its practical solution depend?

If we study these questions numerically, then the answers can be compared with the predictions from approximation-theoretical considerations. If the predictions coincide with the observed behavior and other causes, such as artefacts from the optimization and sampling procedure, can be ruled out, then we can view these experiments as a strong support for the practical relevance of approximation-theoretical arguments.

Because of this, we study the aforementioned questions in an extensive numerical experiment that will be described in Sect. 1.2 below.

1.1.2 Feasibility of the Machine-Learning-Based Solution of Parametric PDEs

The method (as described in [37]) of learning the parameter-to-solution map has at least two major advantages over classical approaches to solve PPDEs: First of all, the setup is completely independent of the underlying PPDE. This versatility of NNs could be quite desirable in an environment where many substantially different PPDEs are treated. Second, because this approach is fully data-driven, we do not require any knowledge of the underlying PDE. Indeed, as long as sufficiently many data points are supplied, for example, from a physical experiment, the approach could be feasible under high uncertainty of the model.

The main drawback of the method is the lack of theoretical understanding thereof. Moreover, for the theoretical results that do exist, we lack any evaluation of how pertinent the theoretical observations are for practical behavior. Most importantly, we do not have an a priori assessment for the practical feasibility of certain problems.

In [37], we observed that the complexity of the solution manifold, i.e., the set of all solutions of the PDE, is a central quantity involved in upper bounding the hardness of approximating the parameter-to-solution map with a NN. In practice, it is unclear to what extent this notion is appropriate and if the complexity of the solution manifold influences the performance of the method at all.

In the numerical experiment described in the next section, we explore the performance of the learning approach for various test-cases with different intrinsic complexities and observe the sensitivity of the method to the different setups.

1.2 The Experiment

To analyze the approximation-theoretical effect of the architecture on the overall performance of the learning problem in practice, we train a fully connected NN as considered in [37] on a variety of datasets stemming from different parameter choices of the parametric diffusion equation. The design of the data sets is such that we vary the relationship between the capacity of the architecture and the complexity of the data and report the effect on the overall performance.

In designing such an experiment, we face three fundamental challenges hindering the comparability between test-cases:

- Effect of the optimization procedure: Since the algorithms used to train neural networks are not guaranteed to converge to the optimal solution, there may be a significant effect of the chosen optimization procedure on the performance of the model. The success of the optimization routine may depend on the complexity of the data model, and this interplay may be a much stronger factor in the performance of the method than the capacity of the architecture to fit the data model. In addition, the optimization routine may be affected by random aspects of the set-up, such as the initialization, which may obfuscate the effect of the complexity of the solution manifold.

- Effect of the sampling procedure: We train our network based on a finite number of samples of the true solution. The number and choice of samples could have a non-negligible effect on the overall performance and potentially affect some test-cases more than others.

- Quantification of the intrinsic complexity: While we have theoretically established that the complexity of the solution manifold is the main factor in upper-bounding the hardness of the problem in the approximation-theoretical framework, we cannot, in practice, quantify this complexity.

In view of the aforementioned obstacles, the design of a meaningful experiment is a significant challenge. To overcome the issues described above, we introduce the following measures:

- Keeping the architecture fixed: An approximation-theoretical result on NNs is based on three ingredients: a function class \({\mathcal {C}}\), a worst-case accuracy \(\varepsilon >0\), and the size of the architecture.

  Whenever one of these hyper-parameters (the function class, the accuracy, or the architecture) is fixed, one can theoretically describe how changing a second one influences the third. For example, for fixed \({\mathcal {C}}\), an approximation-theoretical statement yields an estimate of the necessary size of the architecture to achieve an accuracy of \(\varepsilon \).

  Because of the potentially strong impact of the architecture on the optimization procedure, we expect that the most sensible point of view to test numerically is that where the architecture remains fixed while we vary the function class \({\mathcal {C}}\) and observe \(\varepsilon \). This way, we can guarantee that the influence of the architecture on the optimization procedure is the same between test-cases.

- Averaging over test-cases: We perform each experiment multiple times and compare the results. This way, we can see how sensitive the experiment is to random initialization of the model and if certain results are due to the optimization routine getting stuck in local minima.

- Analyzing the convergence behavior a posteriori: We are not aware of any method to guarantee a priori that the choice of the data model would not influence the convergence behavior. We do, however, analyze the convergence after the experiment to see if there are fundamental differences between our test-cases. This analysis reveals no significant differences between the setups and therefore indicates that the effect of the data model on the optimization procedure is very similar between test-cases.

- Establishing independence of sample generation: We run the experiment multiple times for various numbers of training samples N chosen in the same way (uniformly at random) in every test-case. Between the choices of N, we observe a linear dependence of the achieved accuracy on N. This indicates that the influence of the number of training samples N on the performance of the method is the same for all test-cases.

- Design of semi-ordered test-cases: While we are not able to assess the intrinsic complexity exactly, it is straightforward to construct series of test-cases with increasing complexity. In this sense, we can introduce a semi-ordering of test-cases according to their complexity and observe to what extent the performance of the method follows this ordering.

  In addition, we can measure the intrinsic complexity of the parametric problem via a heuristic. Here we quantify, via the singular value decomposition, how well a random point cloud of solutions of the problem can be represented by a linear model.
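The singular-value heuristic mentioned in the last item can be illustrated as follows. This is only a minimal sketch under stated assumptions: the function names, the tolerance `tol`, the absence of centering, and the synthetic point cloud are all hypothetical choices made for illustration and are not taken from the study.

```python
import numpy as np


def singular_value_decay(snapshots: np.ndarray) -> np.ndarray:
    """Singular values of a snapshot matrix, normalized by the largest one.

    `snapshots` has shape (N, D): N sampled solutions, each stored as a
    D-dimensional coefficient vector.
    """
    s = np.linalg.svd(snapshots, compute_uv=False)
    return s / s[0]


def linear_dimension(snapshots: np.ndarray, tol: float = 1e-6) -> int:
    """Number of normalized singular values above `tol` (assumed threshold)."""
    return int(np.sum(singular_value_decay(snapshots) > tol))


# Synthetic stand-in for a point cloud of solutions (NOT data from the study):
# N points that lie exactly in an r-dimensional linear subspace of R^D.
rng = np.random.default_rng(0)
N, D, r = 200, 50, 3
cloud = rng.standard_normal((N, r)) @ rng.standard_normal((r, D))

rank_estimate = linear_dimension(cloud)
```

A fast decay of the normalized singular values indicates that a low-dimensional linear model already captures the point cloud well; a slow decay indicates higher complexity with respect to linear models.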

We present the construction of the test-cases in Sect. 4 and discuss the measures taken to remove effects caused by the optimization and sampling procedures in greater detail in “Appendix A”. All of our test-cases consider the following parametric diffusion equation

\[ -\nabla \cdot \left( a_y \nabla u_y \right) = f \quad \text{in } \Omega , \qquad u_y|_{\partial \Omega } = 0, \]

where \(f\in L^2(\Omega )\) and \(a_y \in L^{\infty }(\Omega )\) is a diffusion coefficient depending on a parameter \(y \in {\mathcal {Y}}\). In our test-cases below, we learn a discretization of the map \( {\mathbb {R}}^p \supset {\mathcal {Y}} \ni y \mapsto u_y\), where \(p \in {\mathbb {N}}\), for various choices of parametrizations

\[ {\mathcal {Y}} \ni y \mapsto a_y \in L^{\infty }(\Omega ). \tag{1.1} \]

Concretely, we vary the following characteristics of the parametrizations and observe the effect on the overall performance of the learning problem:

- Type of parametrization: We choose test-cases which differ with respect to the following characteristics: First, we study parametrizations (1.1) of various degrees of smoothness. Second, we study test-cases where the parametrization (1.1) is affine-linear or non-linear. Third, we consider cases where \(a_y = \sum _{i=1}^p {\widetilde{a}}_{y_i}\) for \({\widetilde{a}}_{y_i} \in L^{\infty }(\Omega )\) and where the supports of \(({\widetilde{a}}_{y_i})_{i=1}^p\) overlap or have various degrees of separation.

- Dimension of parameter space: The discretization of our solution space is done on the maximal computationally feasible grid (with respect to our workstation). We have chosen the dimensions p of the parameter spaces in such a way that the resolutions of the parametrized solutions are still meaningful with respect to the underlying discretization.

- Complexity hyper-parameters: To generate comparable test-cases with increasing complexities, we include two types of hyper-parameters into the data-generation process: one that directly influences the ellipticity of the problem, and another that introduces a weighting of the parameter values.

We expect that these tests yield answers to the following questions: How versatile is the approach? Does it perform well only for special types of parametrizations or is it generally applicable? Do we observe a curse of dimensionality and how much does the performance of the learning method depend on the dimension of the parameter space? How strongly does the performance of the learning method depend on the intrinsic complexity of the data?

1.3 Modern Architectures

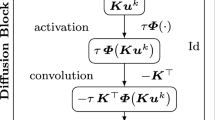

The previously described experiment aims at understanding whether the theoretically predicted effect of Kutyniok et al. [37] persists in practice. In many practical applications, it is, however, common to use much more refined architectures than the fully-connected architecture studied in [37]. To understand if and to what extent our findings also affect more modern architectures, we perform a second set of experiments using deep convolutional neural networks [39].

Convolutional neural networks are built by alternating convolutional blocks with pooling blocks. A convolutional block can be interpreted as a regular fully-connected block whose weight matrices are, however, required to be block-circulant matrices. A pooling block acts as a form of subsampling. Depending on the pooling operation, we can consider convolutional neural networks as special fully-connected neural networks [53, 77]. The restriction is, however, so strong that the estimates of Kutyniok et al. [37] do not extend to general convolutional neural networks.
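The interpretation of a convolution as a structured fully-connected layer can be checked numerically. The sketch below is a simplification made for illustration: it assumes a one-dimensional, single-channel convolution with periodic boundary conditions, so that the weight matrix is a plain circulant matrix rather than a block matrix; the helper `circulant` and the example kernel are hypothetical.

```python
import numpy as np


def circulant(kernel: np.ndarray, n: int) -> np.ndarray:
    """n x n matrix C with (C x)[i] = sum_k kernel[k] * x[(i - k) % n]."""
    c = np.zeros((n, n))
    for i in range(n):
        for k, w in enumerate(kernel):
            c[i, (i - k) % n] += w
    return c


rng = np.random.default_rng(0)
n = 8
x = rng.standard_normal(n)
kernel = np.array([0.5, -1.0, 0.25])  # 3 free parameters

# Fully-connected view: a dense n x n weight matrix with tied entries.
C = circulant(kernel, n)
via_matrix = C @ x

# Convolution view: circular convolution computed with the FFT.
padded = np.zeros(n)
padded[: len(kernel)] = kernel
via_fft = np.fft.ifft(np.fft.fft(padded) * np.fft.fft(x)).real

same_output = np.allclose(via_matrix, via_fft)
```

In this toy setting the dense matrix has 64 entries but only 3 free parameters, which illustrates how strongly the convolutional structure restricts the set of admissible weight matrices.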

The impact of this second experiment is expected to be twofold: First, if the results mirror the outcome of the first experiment, then we have grounds to believe that also the performance of modern architectures solving parametric problems is critically determined by the intrinsic dimension of the solution manifold. Second, if the effect of the intrinsic dimension is similar to the first experiment, then we have observed the same effect over multiple modalities. This serves as an additional validation that the effect observed is not based on the optimization procedure since this should affect substantially different architectures very differently.

1.4 Our Findings

In the numerical experiments, which we report in Sects. 4.3 and 5 and evaluate in Sects. 4.4 and 5.2, respectively, we find that the proposed method is very sensitive to the underlying type of test-case. Indeed, we observe qualitatively different scaling behaviors of the achieved error with the dimension p of the parameter space between different test-cases. Concretely, we observe the following asymptotic behavior of the errors in different test-cases: \({\mathcal {O}}(1), {\mathcal {O}}(\log (p))\) and \({\mathcal {O}}(p^k)\) for \(p \rightarrow \infty \) and \(k > 0\), where k depends on one of the complexity hyper-parameters. Notably, we do not observe a scaling according to the curse of dimensionality, i.e., an error scaling exponentially with p, in any of the test-cases. We also observe that the achieved errors obey the semi-ordering of complexities of the test-cases and are compatible with a heuristic complexity measure. This shows that the method is very versatile and can be applied for various settings. Moreover, the complexity of the solution manifold appears to be a sensible predictor for the efficiency of the method.
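To illustrate how a polynomial scaling \({\mathcal {O}}(p^k)\) can be told apart from an exponential one, the following sketch fits a line to the errors once in log-log and once in semi-log coordinates. The error values are synthetic and generated from an assumed power law with \(k = 0.5\); they are not results of the study, and the helper `fit_line` is a hypothetical name.

```python
import numpy as np


def fit_line(x: np.ndarray, y: np.ndarray):
    """Least-squares fit y ~ m * x + c; returns (m, sum of squared residuals)."""
    m, c = np.polyfit(x, y, 1)
    return float(m), float(np.sum((m * x + c - y) ** 2))


# Synthetic errors (assumption for illustration): error = 0.01 * p^0.5.
p = np.array([2.0, 4.0, 8.0, 16.0, 32.0])
errors = 0.01 * p ** 0.5

# Power law: log(error) is linear in log(p); the slope estimates k.
k_estimate, residual_power = fit_line(np.log(p), np.log(errors))

# Exponential law: log(error) would be linear in p itself.
_, residual_exponential = fit_line(p, np.log(errors))
```

For data following a power law, the log-log fit has a much smaller residual than the semi-log fit, and its slope recovers the exponent \(k\).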

In addition, we observe that the numerical results agree with the predictions that can be made via approximation-theoretical considerations. Due to the careful design of this experiment, we can exclude effects associated with the optimization and sampling procedures. In addition, we even observed the described effect in a very practical regime, where the theoretical analysis is not yet available. This indicates the practical relevance of approximation-theoretical results for this particular problem and for deep learning problems in general.

1.5 Related Works

The practical application of NNs in the context of PDEs dates back to the 1990s [38]. However, in recent years the topic again gained traction in the scientific community, driven by the ever-increasing availability of computational power. Much of this research can be condensed into three main directions: learning the solution of a single PDE, system identification, and goal-oriented approaches. The first of these directions uses NNs to directly model the solution of a (in some cases user-specified) single PDE [42, 58, 63, 73, 74], an SDE [8, 72], or even the joint solution for multiple boundary conditions [68]. These methods mostly rely on the differential operator of the PDE to evaluate the loss, but other approaches do exist [27]. In system identification, one tries to discover an underlying physical law from data by reverse-engineering the PDE. This can be done by attempting to uncover a hidden parameter of a known equation [59], or by modeling physical relations [11, 57]. Conversely, goal-oriented approaches try to infer a quantity of interest stemming from the solution of an underlying PDE. For example, NNs can be used as a surrogate model to directly learn the quantity of interest and thereby circumvent the necessity of explicitly solving the equation [35]. A practical example for this is given by the ground state energy of a molecule, which is derived from the solution of the electronic Schrödinger equation. This task has been efficiently solved by graph NNs [22, 43, 66] or hybrid approaches [28]. Furthermore, building a surrogate model can be especially useful in uncertainty quantification [69]. NNs can also aid classical methods in solving goal-oriented tasks [15, 44]. In addition to the aforementioned research directions, further work has been done on fusing NNs with classical numerical methods to assist, for example, in model-order reduction [40, 61].

Our work focuses on PPDEs and more specifically we are interested in learning the mapping from the parameter to the coefficients of the high-fidelity solution. Related but different approaches were analyzed in [19, 31, 69], where the solution of the PPDE is learned in an already precomputed reduced basis or at point evaluations in fixed spatial coordinates. The recent work [2] performs a comprehensive numerical study in the context of PPDEs that sheds light on the influence of the size of the training set. The work [64] employs NNs for model-order reduction in the context of transient flows.

On the theoretical side, the majority of works analyzing the power of NNs for the solution of (parametric) PDEs is concerned with an approximation-theoretical approach. Notable examples of such works include [8, 9, 12, 21, 24, 25, 26, 33, 34, 72], in which it is shown that NNs can overcome the curse of dimensionality in the approximate solution of some specific single PDE. In the same framework, it was shown in [12] how estimates on the approximation error imply bounds on the generalization error. Concerning the theoretical analysis of PPDEs, we mention [13, 29, 37, 47, 51, 65]. We will describe the results of the first two works in more detail in Sect. 3.2. The work [29] is concerned with an efficient approximation of a map that takes a noisy solution of a PDE as an input and returns a quantity of interest.

Additionally, we wish to mention that there exists a multitude of approaches (which are not necessarily directly RBM- or NN-related) that study the approximation of the parameter-to-solution map of PPDEs. These include methods based on sparse polynomials (see for instance [16, 32] and the references therein), tensors (see for instance [6, 20] and the references therein) and compressed sensing (see for instance [60, 71] and the references therein).

Parametric PDEs also appear in the context of stochastic PDEs or PDEs with random coefficients (see for instance [55]) and have been theoretically examined under the perspective of uncertainty quantification. For the sake of brevity, we only mention [16] and the references therein.

Finally, we mention that a comprehensive numerical study analyzing to what extent the approximation-theoretical findings on NNs (not in the context of PPDEs) are visible in practice has been carried out in [3]. Similarly, in [23], a numerical algorithm that reproduces certain approximation-theoretically established exponential convergence rates of NNs was studied. The approximation rates of [14] were also numerically reproduced in that paper.

1.6 Outline

We start by describing the parametric diffusion equation and how we discretize it in Sect. 2. Then, we provide a formal introduction to NNs and a review of the approximation-theoretical results of NNs for parameter-to-solution maps in Sect. 3. In Sect. 4, we describe our numerical experiment based on fully-connected NNs. We start by stating three hypotheses underlying the examples in Sect. 4.1, before describing the set-up of our experiments in Sect. 4.2. After that, we present the results of the experiments in Sect. 4.3 and evaluate the observations in Sect. 4.4. Finally, in Sect. 5 we augment our results by repeating the experiments with a more modern CNN architecture and compare its performance to the fully-connected NN in Sect. 5.2. In “Appendix A”, we present additional measures taken to ensure comparability between test-cases and to remove all artefacts stemming from the optimization procedure.

2 The Parametric Diffusion Equation

In this section, we will introduce the abstract setup and necessary notation that we will consider throughout this paper. First of all, we will introduce the parameter-dependent diffusion equation in Sect. 2.1. Afterwards, in Sect. 2.2, we recapitulate some basic facts about high-fidelity discretizations and introduce the discretized parameter-to-solution map.

2.1 The Parametric Diffusion Equation

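Throughout this paper, we will consider the parameter-dependent diffusion equation with homogeneous Dirichlet boundary conditions

\[ -\nabla \cdot \left( a \nabla u_a \right) = f \quad \text{in } \Omega , \qquad u_a|_{\partial \Omega } = 0, \tag{2.1} \]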

where \(f\in L^2(\Omega )\) is the parameter-independent right-hand side, \(a\in {\mathcal {A}}\subset L^{\infty }(\Omega ),\) and \({\mathcal {A}}\) constitutes some compact set of parametrized diffusion coefficients. In the following, we will examine different varieties of parametrized diffusion coefficient sets \({\mathcal {A}}\). Following [16] (by restricting ourselves to the case of finite-dimensional parameter spaces), we will always describe the elements of \({\mathcal {A}}\) by elements in \({\mathbb {R}}^p\) for some \(p\in {\mathbb {N}}.\) To be more precise, we will assume that

\[ {\mathcal {A}} = \left\{ a_y \,:\, y \in {\mathcal {Y}} \right\} , \tag{2.2} \]

where \({\mathcal {Y}}\subset {\mathbb {R}}^p\) is the compact parameter space.

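A common assumption on the set \({\mathcal {A}}\), present in the first test-cases which we will describe below and especially convenient for the theoretical analysis of the problem, is given by affine parametrizations of the form

\[ {\mathcal {A}} = \left\{ a_y = a_0 + \sum _{i=1}^p y_i a_i \,:\, y = (y_i)_{i=1}^p \in {\mathcal {Y}} \right\} , \tag{2.3} \]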

where the functions \((a_i)_{i=0}^p\subset L^{\infty }(\Omega )\) are fixed.
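An affine parametrization of this kind can be sketched on a grid as follows. The grid resolution, the constant \(a_0 \equiv 1\), and the choice of the \(a_i\) as indicator functions of the four quadrants of the unit square are hypothetical and serve only as an illustration; they are not the test-cases of this paper.

```python
import numpy as np


def affine_coefficient(y, a0: np.ndarray, basis: np.ndarray) -> np.ndarray:
    """Evaluate a_y = a0 + sum_i y[i] * basis[i] on a grid.

    a0 has shape (n, n); basis has shape (p, n, n); y has length p.
    """
    y = np.asarray(y, dtype=float)
    return a0 + np.tensordot(y, basis, axes=1)


n, p = 16, 4
xs = (np.arange(n) + 0.5) / n  # cell midpoints in (0, 1)
X1, X2 = np.meshgrid(xs, xs, indexing="ij")

a0 = np.ones((n, n))  # assumed offset, keeps a_y >= 1 for y >= 0
basis = np.stack([
    (X1 < 0.5) & (X2 < 0.5),
    (X1 < 0.5) & (X2 >= 0.5),
    (X1 >= 0.5) & (X2 < 0.5),
    (X1 >= 0.5) & (X2 >= 0.5),
]).astype(float)

y = np.array([0.0, 0.5, 1.0, 2.0])
a_y = affine_coefficient(y, a0, basis)
```

With non-negative parameters and \(a_0 \equiv 1\), the resulting coefficient stays bounded away from zero, which is what a uniform coercivity assumption requires in this toy setting.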

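After reparametrization, we consider the following problem, given in its variational formulation:

\[ b_y\left( u_y, v\right) = f(v) \quad \text{for all } v\in {\mathcal {H}}, \tag{2.4} \]

where

\[ b_y{:}\,{\mathcal {H}}\times {\mathcal {H}}\rightarrow {\mathbb {R}}, \quad (u,v)\mapsto \int _{\Omega } a_y({\mathbf {x}}) \nabla u({\mathbf {x}}) \cdot \nabla v({\mathbf {x}})~\mathrm {d}{\mathbf {x}}, \qquad f{:}\,{\mathcal {H}}\rightarrow {\mathbb {R}}, \quad v\mapsto \int _{\Omega } f({\mathbf {x}}) v({\mathbf {x}})~\mathrm {d}{\mathbf {x}}, \]

and \(u_y\in {\mathcal {H}}{:}{=}H_0^1(\Omega )\) is the solution (see Footnote 1).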

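We will consider experiments in which the involved bilinear forms are uniformly continuous and uniformly coercive in the sense that there exist \(C_{\mathrm {cont}},C_{\mathrm {coer}} > 0\) with

\[ \left| b_y(u,v)\right| \le C_{\mathrm {cont}} \left\Vert u\right\Vert _{{\mathcal {H}}} \left\Vert v\right\Vert _{{\mathcal {H}}}, \qquad b_y(u,u) \ge C_{\mathrm {coer}} \left\Vert u\right\Vert _{{\mathcal {H}}}^2, \qquad \text{for all } u,v\in {\mathcal {H}},~ y\in {\mathcal {Y}}. \]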

By the Lax–Milgram lemma (see [56, Lemma 2.1]), the problem of (2.4) is well-posed, i.e., for every \(y\in {\mathcal {Y}}\) there exists exactly one \(u_y\in {\mathcal {H}}\) such that (2.4) is satisfied and \(u_y\) depends continuously on f.

2.2 High-Fidelity Discretizations

In practice, one cannot hope to solve (2.4) exactly for every \(y\in {\mathcal {Y}}\). Instead, if we assume for the moment that y is fixed, a common approach towards the calculation of an approximate solution of (2.4) is given by the Galerkin method, which we will describe briefly below following [30, Appendix A] and [56, Chapter 2.4]. In this framework, instead of solving (2.4), one solves a discrete scheme of the form

\[ b_y\left( u^{\mathrm {h}}_y, v\right) = f(v) \quad \text{for all } v\in U^{\mathrm {h}}, \tag{2.5} \]

where \(U^{\mathrm {h}}\subset {{\mathcal {H}}}\) is a subspace of \({{\mathcal {H}}}\) with \(\mathrm {dim}\left( U^{\mathrm {h}} \right) < \infty \) and \(u^{\mathrm {h}}_y\in U^{\mathrm {h}}\) is the solution of (2.5). Let us now assume that \(U^\mathrm {h}\) is given. Moreover, let \(D{:}{=}\mathrm {dim}\left( U^{\mathrm {h}}\right) \), and let \(\left( \varphi _{i} \right) _{i=1}^D\) be a basis for \(U^{\mathrm {h}}\). Then the stiffness matrix \({\mathbf {B}}^{\mathrm {h}}_y{:}{=}(b_y( \varphi _{j},\varphi _{i}) )_{i,j=1}^D\) is non-singular and positive definite. The solution \(u^{\mathrm {h}}_y\) of (2.5) satisfies

\[ u^{\mathrm {h}}_y = \sum _{i=1}^D \left( {\mathbf {u}}^{\mathrm {h}}_y\right) _i \varphi _i, \]

where \( {\mathbf {u}}^{\mathrm {h}}_y{:}{=}({\mathbf {B}}^{\mathrm {h}}_y)^{-1}{\mathbf {f}}^{\mathrm {h}}_y \in {\mathbb {R}}^D\) and \({\mathbf {f}}^{\mathrm {h}}_y{:}{=}\left( \int _{\Omega }f({\mathbf {x}})\varphi _i({\mathbf {x}})~\mathrm {d}{\mathbf {x}} \right) _{i=1}^D\in {\mathbb {R}}^D\). By Céa’s Lemma (see [56, Lemma 2.2]), \(u^{\mathrm {h}}_y\) is, up to a universal constant, a best approximation of \(u_y\) in \(U^{\mathrm {h}}.\)
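The Galerkin procedure described above (assemble the stiffness matrix and load vector, then solve the linear system) can be sketched in a strongly simplified setting. The sketch assumes a one-dimensional domain \((0,1)\), piecewise-linear hat functions on a uniform mesh, a coefficient that is constant on each mesh element, and a constant right-hand side; the paper itself works on \(\Omega = (0,1)^2\), and the function `galerkin_1d` is a hypothetical helper, not the discretization used in the experiments.

```python
import numpy as np


def galerkin_1d(a_elem: np.ndarray, f_const: float = 1.0):
    """Solve -(a u')' = f on (0, 1) with u(0) = u(1) = 0 using hat functions.

    a_elem holds the coefficient on each of the D + 1 uniform mesh elements.
    Returns the interior nodes x, the coefficient vector u, and the stiffness
    matrix B, where u solves B u = f_vec.
    """
    n_elem = len(a_elem)
    D = n_elem - 1          # number of interior nodes = dimension of U^h
    h = 1.0 / n_elem

    # Stiffness matrix B[i, j] = b(phi_j, phi_i): tridiagonal for hat functions.
    B = np.zeros((D, D))
    for i in range(D):
        B[i, i] = (a_elem[i] + a_elem[i + 1]) / h
        if i + 1 < D:
            B[i, i + 1] = B[i + 1, i] = -a_elem[i + 1] / h

    # Load vector: the integral of a hat function equals h, and f is constant.
    f_vec = np.full(D, f_const * h)

    u = np.linalg.solve(B, f_vec)
    x = h * np.arange(1, D + 1)
    return x, u, B


# Constant coefficient a = 1 and f = 1: the exact solution is x (1 - x) / 2.
x, u, B = galerkin_1d(np.ones(11))
exact = x * (1.0 - x) / 2.0
```

In this particular one-dimensional example the discrete solution coincides with the exact solution at the mesh nodes, which gives a convenient sanity check for the assembly.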

In this framework, we can now define the central object of interest which is the map taking an element from the parameter space \({\mathcal {Y}}\) to the discretized solution \({\mathbf {u}}^{\mathrm {h}}_y\).

Definition 2.1

Let \(\Omega = (0,1)^2\), \(U^{\mathrm {h}} \subset {\mathcal {H}}\) be a finite dimensional space, \({\mathcal {A}}\subset L^{\infty }(\Omega )\) with \({\mathcal {Y}} \subset {\mathbb {R}}^p\) for \(p \in {\mathbb {N}}\) be as in (2.2). Then we define the discretized parameter-to-solution map (DPtSM) by

\[ {\mathcal {P}}{:}\,{\mathcal {Y}} \rightarrow {\mathbb {R}}^D, \quad y \mapsto {\mathbf {u}}^{\mathrm {h}}_y. \]

Remark 2.2

The DPtSM \({\mathcal {P}}\) is a potentially nonlinear map from a p-dimensional set to a D-dimensional space. Therefore, without using the information that \({\mathcal {P}}\) has a very specific structure described through \({\mathcal {A}}\) and the PDE (2.1), a direct approximation of \({\mathcal {P}}\) as a high-dimensional smooth function will suffer from the curse of dimensionality [10, 48].

Before we continue, let us introduce some crucial notation. Later, we need to compute the Sobolev norms of functions \(v\in {\mathcal {H}}.\) This will be done via a vector representation \({\mathbf {v}}\) of v with respect to the high-fidelity basis \((\varphi _i)_{i=1}^D.\) We denote by \({\mathbf {G}}{:}{=}\left( \langle \varphi _i,\varphi _j\rangle _{{\mathcal {H}}}\right) _{i,j=1}^{D}\in {\mathbb {R}}^{D\times D}\) the symmetric, positive definite Gram matrix of the basis functions \((\varphi _i)_{i=1}^D.\) Then, for any \(v\in U^{\mathrm {h}}\) with coefficient vector \({\mathbf {v}}\) with respect to the basis \((\varphi _i)_{i=1}^{D}\) we have (see Footnote 2 and [56, Equation 2.41]) \( \left| {\mathbf {v}}\right| _{{\mathbf {G}}}{:}{=}\left| {\mathbf {G}}^{1/2}{\mathbf {v}}\right| = \left\Vert v\right\Vert _ {{\mathcal {H}}}. \) In particular, \(\left\Vert u_y^{\mathrm {h}}\right\Vert _{{{\mathcal {H}}}} = |{\mathbf {u}}^{\mathrm {h}}_y |_{{\mathbf {G}}}\), for all \(y\in {\mathcal {Y}}\).
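The identity \(\left| {\mathbf {v}}\right| _{{\mathbf {G}}} = \left| {\mathbf {G}}^{1/2}{\mathbf {v}}\right| \) also shows how the norm can be evaluated without forming a matrix square root, since \(\left| {\mathbf {G}}^{1/2}{\mathbf {v}}\right| ^2 = {\mathbf {v}}^T {\mathbf {G}} {\mathbf {v}}\). The sketch below checks this numerically. The Gram matrix used here is an assumption for illustration: it belongs to one-dimensional hat functions with the inner product \(\int u' v'\), not to the two-dimensional basis of the paper, and both helper functions are hypothetical.

```python
import numpy as np


def gram_norm(G: np.ndarray, v: np.ndarray) -> float:
    """|v|_G = sqrt(v^T G v) for a symmetric positive definite matrix G."""
    return float(np.sqrt(v @ G @ v))


def sqrt_spd(G: np.ndarray) -> np.ndarray:
    """Symmetric square root G^{1/2} via an eigendecomposition of G."""
    w, Q = np.linalg.eigh(G)
    return (Q * np.sqrt(w)) @ Q.T


# Assumed example: Gram matrix of 1D hat functions w.r.t. the inner product
# <u, v> = int u' v' on a uniform mesh with D interior nodes.
D = 10
h = 1.0 / (D + 1)
G = (2.0 * np.eye(D) - np.eye(D, k=1) - np.eye(D, k=-1)) / h

rng = np.random.default_rng(0)
v = rng.standard_normal(D)

norm_quadratic = gram_norm(G, v)
norm_via_sqrt = float(np.linalg.norm(sqrt_spd(G) @ v))
```

Both evaluations agree up to rounding; in practice the quadratic form is the cheaper option because it only needs one matrix-vector product.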

3 Approximation of the Discretized Parameter-to-Solution Map by Realizations of Neural Networks

In this section, we describe the approximation-theoretical motivation for the numerical study performed in this paper. We present a formal definition of NNs below. In Question 3.5, we present the underlying approximation-theoretical question of the considered learning problem. Thereafter, we recall the results of Kutyniok et al. [37] showing that one can upper bound the approximation rates that NNs obtain when approximating the DPtSM through an implicit notion of complexity of the DPtSM.

3.1 Neural Networks

NNs describe functions of compositional form that result from repeatedly applying affine linear maps and a so-called activation function. From an approximation-theoretical point of view, it is sensible to count the number of active parameters of a NN. To associate a meaningful and mathematically precise notion of the number of parameters to a NN, we differentiate here between neural networks which are sets of matrices and vectors, essentially describing the parameters of the NN, and realizations of neural networks which are the associated functions. Concretely, we make the following definition:

Definition 3.1

Let \(n, L\in {\mathbb {N}}\). A neural network \(\Phi \) with input dimension n and L layers is a sequence of matrix-vector tuples

where \(N_0 = n\) and \(N_1, \dots , N_{L} \in {\mathbb {N}}\), and where each \({\mathbf {A}}_\ell \) is an \(N_{\ell } \times N_{\ell -1}\) matrix, and \({\mathbf {b}}_\ell \in {\mathbb {R}}^{N_\ell }\).

If \(\Phi \) is a NN as above, \(K \subset {\mathbb {R}}^n\), and if \(\varrho {:}\,{\mathbb {R}}\rightarrow {\mathbb {R}}\) is arbitrary, then we define the associated realization of \(\Phi \) with activation function \(\varrho \) over K (in short, the \(\varrho \)-realization of \(\Phi \) over K) as the map \({\mathrm {R}}_{\varrho }^K(\Phi ){:}\,K \rightarrow {\mathbb {R}}^{N_L}\) such that \({\mathrm {R}}_{\varrho }^K(\Phi )({\mathbf {x}}) = {\mathbf {x}}_L\), where \({\mathbf {x}}_L\) results from the following scheme:

and where \(\varrho \) acts componentwise, that is, \(\varrho ({\mathbf {v}}) {:}{=}(\varrho ({v}_1), \dots , \varrho ({v}_s))\) for all \({\mathbf {v}} = ({v}_1, \dots , {v}_s) \in {\mathbb {R}}^s\).

We call \(N(\Phi ) {:}{=}n + \sum _{j = 1}^L N_j\) the number of neurons of the NN \(\Phi \) and L the number of layers. We call \(M(\Phi ) {:}{=}\sum _{\ell =1}^L \Vert {\mathbf {A}}_\ell \Vert _0 + \Vert {\mathbf {b}}_\ell \Vert _0\) the number of non-zero weights of \(\Phi \). Moreover, we refer to \(N_L \) as the output dimension of \(\Phi \). Finally, we refer to \((N_0,\dots ,N_L)\) as the architecture of \(\Phi .\)
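To make the distinction between a NN (a list of matrix-vector tuples) and its realization (a function) concrete, the following sketch evaluates a small network and computes \(N(\Phi )\), \(M(\Phi )\) and the architecture. It assumes the common convention that the activation function is applied after every layer except the last one; the function names are illustrative only.

```python
import numpy as np

def realization(phi, x, rho):
    """Evaluate the rho-realization of phi = [(A_1, b_1), ..., (A_L, b_L)] at x.

    Assumption: rho is applied after every layer except the last one.
    """
    for A, b in phi[:-1]:
        x = rho(A @ x + b)
    A, b = phi[-1]
    return A @ x + b

def architecture(phi):
    """Return (N_0, ..., N_L)."""
    return (phi[0][0].shape[1],) + tuple(A.shape[0] for A, _ in phi)

def num_neurons(phi):
    """N(phi) = n + sum_j N_j."""
    return sum(architecture(phi))

def num_weights(phi):
    """M(phi): number of non-zero entries of all A_l and b_l."""
    return sum(int(np.count_nonzero(A)) + int(np.count_nonzero(b)) for A, b in phi)

def relu(z):
    return np.maximum(z, 0.0)

# A toy NN with input dimension 2 and L = 2 layers.
phi = [
    (np.array([[1.0, 0.0], [0.0, -1.0], [1.0, 1.0]]), np.array([0.0, 0.0, -1.0])),
    (np.array([[1.0, 1.0, 0.0]]), np.array([0.5])),
]
y = realization(phi, np.array([2.0, 3.0]), relu)
```

Note that zero entries of the matrices and vectors do not contribute to \(M(\Phi )\), which is why \(M(\Phi )\) can be much smaller than the number of entries stored.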

We consider the following family of activation functions:

Definition 3.2

For \(\alpha \in [0,1)\), the \(\alpha \)-leaky rectified linear unit (\(\alpha \)-LReLU) is defined by \(\varrho _\alpha (x) {:}{=}\max \{x,\alpha x\}\) for \(x \in {\mathbb {R}}\). The activation function \(\varrho _0(x) = \max \{x,0\}\) is called the rectified linear unit (ReLU).

Remark 3.3

For every \(\alpha \in (0,1)\) it holds that for all \(x \in {\mathbb {R}}\)

Hence, for every \(\alpha \in (0,1)\), we can represent the ReLU as the sum of two rescaled \(\alpha \)-LReLUs and vice versa. If we define for \(n\in {\mathbb {N}}\)

then, for NNs

we have that for \(K \subset {\mathbb {R}}^n\) it holds that \({\mathrm {R}}_{\varrho _0}^K(\Phi _1) = {\mathrm {R}}_{\varrho _\alpha }^K(\Phi _3)\) and \( {\mathrm {R}}_{\varrho _\alpha }^K(\Phi _1) = {\mathrm {R}}_{\varrho _0}^K(\Phi _2)\). Moreover, it is not hard to see that \(M(\Phi _1) \le M(\Phi _2), M(\Phi _3)\) and \(M(\Phi _2), M(\Phi _3) \le 4 M(\Phi _1)\). Therefore, we have that for every \(\alpha _1, \alpha _2 \in [0,1)\) and every function \(f: {\mathbb {R}}^n \rightarrow {\mathbb {R}}^{N_L}\) of a function space X such that

for a NN \(\Phi \) implies that there exists another NN \(\widetilde{\Phi }\) with \(L(\widetilde{\Phi }) = L(\Phi )\) and \(M(\widetilde{\Phi }) \le 16 M(\Phi )\) such that

In other words, up to a multiplicative constant the parameter \(\alpha \) of the \(\alpha \)-LReLU does not influence the approximation properties of realizations of NNs.
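The mutual representability of the ReLU and the \(\alpha \)-LReLU can be checked numerically. The sketch below uses one pair of identities that can be verified by hand, namely \(\varrho _0(x) = (\varrho _\alpha (x) + \alpha \varrho _\alpha (-x))/(1-\alpha ^2)\) and \(\varrho _\alpha (x) = \varrho _0(x) - \alpha \varrho _0(-x)\); that these are the identities intended in Remark 3.3 is an assumption.

```python
import numpy as np

def lrelu(x, alpha):
    """alpha-LReLU: max{x, alpha * x}; alpha = 0 gives the ReLU."""
    return np.maximum(x, alpha * x)

def relu_from_lrelu(x, alpha):
    """ReLU as a sum of two rescaled alpha-LReLUs (requires 0 < alpha < 1)."""
    return (lrelu(x, alpha) + alpha * lrelu(-x, alpha)) / (1.0 - alpha**2)

def lrelu_from_relu(x, alpha):
    """alpha-LReLU as a sum of two rescaled ReLUs."""
    return lrelu(x, 0.0) - alpha * lrelu(-x, 0.0)

x = np.linspace(-3.0, 3.0, 13)
alpha = 0.2
relu_direct = lrelu(x, 0.0)
relu_rebuilt = relu_from_lrelu(x, alpha)
lrelu_direct = lrelu(x, alpha)
lrelu_rebuilt = lrelu_from_relu(x, alpha)
```

Each identity replaces one activation by two, which is consistent with the constant-factor increase in the number of weights stated in the remark.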

Remark 3.4

While Remark 3.3 shows that all \(\alpha \)-LReLUs yield, in principle, the same approximation behavior, these activation functions still display quite different behavior during the training phase of NNs, where a non-vanishing parameter \(\alpha \) can help avoid the occurrence of dead neurons.

3.2 Approximation of the Discretized Parameter-to-Solution Map by Realizations of Neural Networks

We can quantify the capability of NNs to represent the DPtSM by answering the following question:

Question 3.5

Let \(p, D \in {\mathbb {N}}\), \(\alpha \in [0,1)\), \(\Omega = (0,1)^2\), \(U^{\mathrm {h}} \subset {\mathcal {H}}\) be a D-dimensional space, \({\mathcal {A}}=\{a_y{:}~y\in {\mathcal {Y}}\} \subset L^{\infty }(\Omega )\) be compact with \({\mathcal {Y}} \subset {\mathbb {R}}^p\) as in (2.2). We consider the following equivalent questions:

-

For \(\varepsilon > 0\), how large do \(M_\varepsilon , L_\varepsilon \in {\mathbb {N}}\) need to be to guarantee that there exists a NN \(\Phi \) that satisfies

(1) \(\sup _{y \in {\mathcal {Y}}}|{\mathcal {P}}(y) - {\mathrm {R}}^{{\mathcal {Y}}}_{\varrho _\alpha }(\Phi )(y)|_{{\mathbf {G}}} \le \varepsilon ,\)

(2) \(M(\Phi ),N(\Phi ) \le M_\varepsilon \) and \(L(\Phi ) \le L_\varepsilon \)?

-

For \(M, L \in {\mathbb {N}}\), how small can \(\varepsilon _{L,M}>0\) be chosen so that there exists a NN \(\Phi \) that satisfies

(1) \(\sup _{y \in {\mathcal {Y}}}|{\mathcal {P}}(y) - {\mathrm {R}}^{{\mathcal {Y}}}_{\varrho _\alpha }(\Phi )(y)|_{{\mathbf {G}}} \le \varepsilon _{L,M},\)

(2) \(M(\Phi ),N(\Phi ) \le M\) and \(L(\Phi ) \le L\)?

Remark 3.6

-

(i)

Conditions (1) in both instances of Question 3.5 are trivially equivalent to

$$\begin{aligned} \sup _{y\in {\mathcal {Y}}} \left\| \sum _{j=1}^D \left( ({\mathcal {P}}(y))_j - \left( {\mathrm {R}}^{{\mathcal {Y}}}_{\varrho _\alpha }(\Phi )(y)\right) _j \right) \varphi _j\right\| _{{\mathcal {H}}}\le \varepsilon \text {~~or~~} \varepsilon _{L,M}. \end{aligned}$$

-

(ii)

The results to follow measure the necessary sizes of the NNs in terms of the numbers of non-zero weights \(M(\Phi )\). However, from a practical point of view, we are also interested in the number of necessary neurons \(N(\Phi )\). Invoking a variation of [52, Lemma G.1.] shows that similar rates to the ones below are valid for the number of neurons \(N(\Phi ).\)

If the regularity of \({\mathcal {P}}\) is known, then a straightforward bound on \(M_\varepsilon \) and \(L_\varepsilon \) can be found in [75]. Indeed, if \({\mathcal {P}}\in C^{s}({\mathcal {Y}}; {\mathbb {R}}^D)\) with \(\Vert {\mathcal {P}}\Vert _{C^s} \le 1\), then one can choose

In other situations, e.g., if \(L_\varepsilon \) is permitted to grow faster than \(\log _2(1/\varepsilon ),\) one can even replace s by 2s in (3.1), see [41, 76].

This rate of (3.1) uses the smoothness of \({\mathcal {P}}\) only and does not take into account the underlying structure stemming from the PDE (2.1) and the choice of \({\mathcal {A}}\). As a result, we find this rate to be significantly suboptimal.

In [37], it was shown that \({\mathcal {P}}\) can be approximated in the sense of Question 3.5 with

where \(d(\varepsilon )\) is a certain intrinsic dimension (see Footnote 3) of the problem, essentially reflecting the size of a reduced basis required to sufficiently approximate \(S({\mathcal {Y}}).\) In many cases, especially those discussed in this manuscript, one can theoretically establish the scaling behavior of \(d(\varepsilon )\) for \(\varepsilon \rightarrow 0\). For instance, if \({\mathcal {A}}\) is as in (2.3), then (see [5, Equation (3.17)])

Applied to (3.2) this yields that

for some \(c\ge 1.\) We also mention a similar approximation result, not of the discretized parametric map \({\mathcal {P}}\) but of the parametrized solution \((y,{\mathbf {x}}) \mapsto u_{a_y}({\mathbf {x}})\), where \(u_{a_y}\) is as in (2.1) for \(a=a_y\). In this situation, and for specific parametrizations of \({\mathcal {A}}\), [65, Theorem 4.8] shows that this map can be approximated by the realization of a NN using the ReLU activation function up to an error of \(\varepsilon \) with a number of weights that essentially scales like \(\varepsilon ^{-r}\) where r depends on the summability of the (in this case potentially infinite) sequence \((a_i)_{i=1}^{\infty }\) such that \(a_y= a_0 + \sum _{i=1}^\infty y_i a_i\) for a coefficient vector \( y=(y_i)_{i=1}^{\infty } \). Here r can be very small if \(\Vert a_i\Vert _{L^\infty (\Omega )}\) decays quickly for \(i \rightarrow \infty \). This leads to very efficient approximations.

While the aforementioned results all examine the approximation-theoretical properties of realizations of NNs with respect to the uniform approximation error, they trivially imply the same rates if we examine the average errors

which are often used in practice. Here, \(1\le p<\infty \) and \(\mu \) is an arbitrary probability measure on \({\mathcal {Y}}.\) In this paper, we examine the discrete counterpart of the mean relative error

where \(\mu \) denotes the uniform probability measure on \({\mathcal {Y}}\).

In view of the aforementioned theoretical results, it is clear that a parameter that is not the dimension of the parameter space \({\mathcal {Y}}\) but a problem-specific notion of complexity determines the hardness of the approximation problem of Question 3.5. To what extent this theoretical observation influences the hardness of the practical learning problem will be analyzed in the numerical experiment presented in the next section.

4 Numerical Survey of Approximability of Discretized Parameter-to-Solution Maps

As outlined in Sect. 3, the theoretical hardness of the approximation problem of Question 3.5 is determined by an intrinsic notion of complexity that potentially differs substantially from the dimension of the parameter space.

To test how this intrinsic complexity affects the practical machine-learning-based solution of (2.1), we perform a comprehensive study where we train NNs to approximate the DPtSM \({\mathcal {P}}\) for various choices of \({\mathcal {A}}\). Here, we are especially interested in the performance of the learned approximation of \({\mathcal {P}}\) for varying complexities of \({\mathcal {A}}\). In this context, we test the hypotheses listed in the following Sect. 4.1. The remainder of this section is structured as follows: in Sect. 4.2, we introduce the concrete setup of parametrized diffusion coefficient sets, NN architecture, and optimization procedure and explain how the choice of test-cases is related to our hypotheses. Afterwards, in Sect. 4.3, we report the results of our numerical experiments. Section 4.4 is devoted to an evaluation and interpretation of these results in view of the hypotheses of Sect. 4.1.

4.1 Hypotheses

- [H1] :

-

The performance of learning the DPtSM does not suffer from the curse of dimensionality

The theoretical results of Kutyniok et al. [37] show that the dimension of the parameter space p is not the main factor in determining the hardness of the underlying approximation-theoretical problem. As already outlined in the introduction, it is by no means clear that this effect is visible in a practical learning problem.

We expect that after accounting for effects stemming from optimization and sampling to promote comparability between test-cases in a way described in “Appendix A”, the performance of the learning method will scale only mildly with the dimension of the parameter space.

- [H2] :

-

The performance of learning the DPtSM is very sensitive to parametrization

We expect that, within the framework of Question 3.5, there are still extreme differences of intrinsic complexities for different choices of parametrizations for the diffusion coefficient sets \({\mathcal {A}} \subset L^\infty (\Omega )\) as defined in (2.2). However, it is not clear to what extent NNs are capable of resolving the low-dimensional sub-structures generated by various choices of \({\mathcal {A}} \subset L^\infty (\Omega )\).

Since realizations of NNs are a very versatile function class, we expect the degree to which the performance of a trained NN depends on the number of parameters to vary strongly over the choice of \((a_i)_{i=1}^p\).

- [H3] :

-

Learning the DPtSM is efficient also for non-affinely parametrized problems

The analysis of PPDEs often relies on affine parametrizations as in (2.3) or smooth variations thereof.

We expect the overall theme that NNs perform according to an intrinsic complexity of the problem depending only weakly on the parameter dimension to hold in more general cases.

4.2 Setup of Experiments

To test the hypotheses [H1], [H2], and [H3] of Sect. 4.1, we consider the following setup.

4.2.1 Parameterized Diffusion Coefficient Sets

We perform training of NNs for different instances of the approximation problem of Question 3.5. Here, we always assume the right-hand side to be fixed as \(f({\mathbf {x}})= 20+10x_1-5x_2\), for \({\mathbf {x}} = (x_1,x_2) \in \Omega \), and we vary the parametrized diffusion coefficient set \({\mathcal {A}}\).

We consider four different parametrized diffusion coefficient sets as described in the test-cases [T1]–[T4] (for a visualization of [T2] and [T3] see Fig. 1). [T1], [T2] and [T3-F] are affinely parametrized whereas the remaining parametrizations are non-affine.

-

[T1] Trigonometric Polynomials In this case, the set \({\mathcal {A}}\) consists of trigonometric polynomials that are weighted according to a scaling coefficient \(\sigma \). To be more precise, we consider

$$\begin{aligned} {\mathcal {A}}^{\mathrm {tp}}(p, \sigma ) {:}{=}\left\{ \mu + \sum _{i=1}^p y_i \cdot i^{\sigma } \cdot (1+a_i){:}~y\in {\mathcal {Y}}=[0,1]^p \right\} , \end{aligned}$$

for some fixed shift \(\mu >0\) and a scaling coefficient \(\sigma \in {\mathbb {R}}\). Here \(a_i({\mathbf {x}})=\sin \left( \left\lfloor \frac{i+2}{2}\right\rfloor \pi x_1 \right) \sin \left( \left\lceil \frac{i+2}{2}\right\rceil \pi x_2\right) ,\) for \(i=1,\dots , p.\)

We analyze the cases \(p=2, 5, 10, 15, 20\) and, for each p, the scaling coefficients \(\sigma =-1,0,1\). As a shift we always choose \(\mu = 1\).

-

[T2] Chessboard Partition Here, we assume that \(p=s^2\) for some \(s\in {\mathbb {N}}\) and we consider (see Footnote 4)

$$\begin{aligned} {\mathcal {A}}^{\mathrm {cb}}(p,\mu ) {:}{=}\left\{ \mu + \sum _{i=1}^p y_i{\mathcal {X}}_{\Omega _i}{:}~y\in {\mathcal {Y}}= [0,1]^p \right\} , \end{aligned}$$

where \((\Omega _i)_{i=1}^p\) forms an \(s\times s\) chessboard partition of \((0,1)^2\) and \(\mu >0\) is a fixed shift.

We examine this test-case for the shifts \(\mu =10^{-1}, 10^{-2}, 10^{-3}\), and, for each \(\mu \) we consider \(s=2,3,4,5\) which yields \(p=4,9,16,25\), respectively.

-

[T3] Cookies In this test-case we differentiate between two sub-cases:

-

[T3-F] Cookies with Fixed Radii In this setting, we assume that \(p=s^2\) for some \(s\in {\mathbb {N}}\) and we consider

$$\begin{aligned} {\mathcal {A}}^{\mathrm {cfr}}(p,\mu ) {:}{=}\left\{ \mu + \sum _{i=1}^{p} y_i{\mathcal {X}}_{\Omega _{i}}{:}\,y\in {\mathcal {Y}}= [0,1]^p \right\} , \end{aligned}$$

for some fixed shift \(\mu >0\) where the \(\Omega _{i}\) are disks with centers \(((2k+1)/(2s),(2\ell -1)/(2s))\), where \(i= ks+\ell \) for uniquely determined \(k\in \{0,\dots ,s-1\}\) and \(\ell \in \{ 1,\dots , s\}\). The radius is set to r/(2s) for some fixed \(r\in (0,1]\).

We examine this test-case for fixed \(\mu =10^{-4},\) \(r=0.8\) and \(s=2,3,4,5,6\) which yields parameter dimensions \(p=4,9,16,25,36,\) respectively.

-

[T3-V] Cookies with Variable Radii Here, we additionally assume that the radii of the involved disks are not fixed anymore. To be more precise, for \(s\in {\mathbb {N}}\) and every \(i=1,\dots ,s^2,\) we are given disks \(\Omega _{i, y_{i+s^2}}\) with center as before and radius \(y_{i+s^2}/(2s)\) for \(y_{i+s^2}\in [0.5,0.9],\) so that \({\mathcal {Y}}=[0,1]^{s^2}\times [0.5,0.9]^{s^2} \subset {\mathbb {R}}^{p}\) with \(p=2s^2\). We define

$$\begin{aligned} {\mathcal {A}}^{\mathrm {cvr}}(p,\mu ) {:}{=}\left\{ \mu + \sum _{i=1}^{s^2} y_i{\mathcal {X}}_{\Omega _{i, y_{i+s^2}}}{:}~y\in {\mathcal {Y}}= [0,1]^{s^2}\times [0.5,0.9]^{s^2}\right\} . \end{aligned}$$

Note that \({\mathcal {A}}^{\mathrm {cvr}}(p,\mu )\) is not an affine parametrization.

We consider the shifts \(\mu =10^{-4}\) and \(\mu =10^{-1},\) and, for each \(\mu \), we consider the cases \(s=2,3,4,5\) which yields the parameter dimensions \(p= 8,18,32,50,\) respectively.

-

-

[T4] Clipped Polynomials Let

$$\begin{aligned} {\mathcal {A}}^{\mathrm {cp}}(p,\mu ) {:}{=}\left\{ \max \left\{ \mu ,\sum _{i=1}^p y_i m_i\right\} {:}\, (y_i)_{i=1}^p \in {\mathcal {Y}}= [-1,1]^p \right\} , \end{aligned}$$

where \(\mu >0\) is the fixed clipping value and \((m_i)_{i=1}^p\) is the monomial basis of the space of all two-variate polynomials of degree \(\le k\). Therefore \(p= \left( {\begin{array}{c}2+k\\ 2\end{array}}\right) .\)

We examine this test-case for fixed shift \(\mu =10^{-1}\) and for \(k=2,3,5,8,12\) which yields parameter dimensions \(p=6,10,21,45,91,\) respectively.
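The function-based test-cases [T1] and [T4] can be sketched by evaluating a sampled coefficient \(a_y\) on a uniform grid. The code below is a minimal illustration rather than the dataset-generation code of the paper; the grid evaluation, the ordering of the monomials, and all function names are assumptions.

```python
import numpy as np

def grid(n=101):
    """Uniform n x n grid on [0,1]^2."""
    t = np.linspace(0.0, 1.0, n)
    return np.meshgrid(t, t, indexing="ij")

def trig_coefficient(y, sigma, mu=1.0, n=101):
    """[T1]: mu + sum_i y_i * i^sigma * (1 + a_i) on the grid."""
    x1, x2 = grid(n)
    a = np.full_like(x1, mu)
    for i, yi in enumerate(y, start=1):
        f1 = np.floor((i + 2) / 2)
        f2 = np.ceil((i + 2) / 2)
        a_i = np.sin(f1 * np.pi * x1) * np.sin(f2 * np.pi * x2)
        a += yi * float(i) ** sigma * (1.0 + a_i)
    return a

def monomial_exponents(k):
    """Exponents (e1, e2) with e1 + e2 <= k; the ordering is an assumption."""
    return [(d - e2, e2) for d in range(k + 1) for e2 in range(d + 1)]

def clipped_poly_coefficient(y, k, mu=0.1, n=101):
    """[T4]: max{mu, sum_i y_i m_i} on the grid."""
    x1, x2 = grid(n)
    exps = monomial_exponents(k)
    if len(y) != len(exps):
        raise ValueError("y must have binom(k + 2, 2) entries")
    poly = np.zeros_like(x1)
    for yi, (e1, e2) in zip(y, exps):
        poly += yi * x1**e1 * x2**e2
    return np.maximum(mu, poly)

dims = [len(monomial_exponents(k)) for k in (2, 3, 5, 8, 12)]
a_tp = trig_coefficient(np.ones(5), sigma=-1)
a_cp = clipped_poly_coefficient(-np.ones(6), k=2)
```

Since \(1+a_i\ge 0\) and \(y_i \ge 0\), every coefficient in [T1] is bounded below by \(\mu \), whereas in [T4] the lower bound is enforced by the clipping.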

Fig. 1 Partition of \(\Omega \) as in test-case [T2] (left) for \(p=9\) (the red and blue areas indicate the \(\Omega _i\)), test-case [T3-F] (middle) for \(p=4\) (the red areas indicate the \(\Omega _{i}\)) and test-case [T3-V] (right) for \(p=8\) (the red areas indicate the \(\Omega _{i,y_{i+s^2}}\)) (Color figure online)
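The indicator-based test-cases [T2] and [T3] can be sketched in the same spirit. In the code below, the row-major numbering of the chessboard cells, the treatment of grid points on cell boundaries, and the use of closed disks are assumptions; the disk centers follow the formula given for [T3-F].

```python
import numpy as np

def grid(n=101):
    """Uniform n x n grid on [0,1]^2."""
    t = np.linspace(0.0, 1.0, n)
    return np.meshgrid(t, t, indexing="ij")

def chessboard_coefficient(y, mu, n=101):
    """[T2]: mu + sum_i y_i * indicator(Omega_i) for an s x s partition."""
    s = int(round(np.sqrt(len(y))))
    if s * s != len(y):
        raise ValueError("len(y) must be a square number")
    x1, x2 = grid(n)
    row = np.minimum((x1 * s).astype(int), s - 1)
    col = np.minimum((x2 * s).astype(int), s - 1)
    return mu + np.asarray(y, dtype=float)[row * s + col]

def cookie_centers(s):
    """Centers ((2k+1)/(2s), (2l-1)/(2s)) for k = 0..s-1 and l = 1..s."""
    return [((2 * k + 1) / (2 * s), (2 * l - 1) / (2 * s))
            for k in range(s) for l in range(1, s + 1)]

def cookie_coefficient(y, mu, radii, n=101):
    """[T3]: radii is a scalar r ([T3-F]) or one value per disk ([T3-V]).

    Each disk has radius radii_i / (2s).
    """
    s = int(round(np.sqrt(len(y))))
    x1, x2 = grid(n)
    radii = np.broadcast_to(np.asarray(radii, dtype=float), (s * s,))
    a = np.full_like(x1, mu)
    for yi, ri, (c1, c2) in zip(y, radii, cookie_centers(s)):
        inside = (x1 - c1) ** 2 + (x2 - c2) ** 2 <= (ri / (2 * s)) ** 2
        a[inside] += yi
    return a

a_cb = chessboard_coefficient([0.1, 0.2, 0.3, 0.4], mu=0.01)
a_fixed = cookie_coefficient(np.ones(4), mu=1e-4, radii=0.8)
a_var = cookie_coefficient(np.ones(4), mu=1e-4, radii=[0.5, 0.9, 0.5, 0.9])
```

For [T3-V], the first \(s^2\) entries of the parameter vector would be passed as `y` and the remaining \(s^2\) entries as `radii`.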

4.2.2 Complexities of the Test-Cases

To understand the different complexity levels of the test-cases and to compare them with each other, we apply two methods:

-

There is an intrinsic semi-ordering of complexities within the test-cases. In [T1] we have that \({\mathcal {A}}^{\mathrm {tp}}(p, \sigma ) \subset {\mathcal {A}}^{\mathrm {tp}}(p, \sigma ')\) if \(\sigma < \sigma '\) which shows that the problem gets more complex with increasing \(\sigma \). The influence of the shift \(\mu \) is of a somewhat different type. Higher values of \(\mu \) make the underlying problem more elliptic. This can be seen in Fig. 3: For a small value of \(\mu \), the impacts of the individual values on the chessboard-pieces on the solution appear to be almost completely decoupled. On the other hand, in the more elliptic case, the solution appears more smoothed out, and therefore each parameter value also influences the solution more globally. This implies a stronger coupling of the parameters and at least intuitively indicates a reduced intrinsic dimensionality for higher values of \(\mu \). The effect of \(\mu \) thus describes the ordering of complexities that exists within [T2] and [T3].

-

In addition to these non-quantitative comparisons of complexities, we offer the following heuristic measure of complexity: We solve the diffusion equation for 5000 i.i.d. samples each on a \(101\times 101\) grid with the finite element method as described in detail in Sect. 4.2.3. Afterwards, we compute the singular values of the set of these solutions with respect to the \(H_0^1(\Omega )\)-norm on the finite element space. The resulting decay plots, shown in Fig. 2, serve as a heuristic to judge whether a problem can be efficiently solved by a linear reduced-order model (see [56, Sec. 6.7]).

The different decay behavior between the test-cases suggests a significant variation in complexity between the corresponding parameter-to-solution maps. We observe that, independent of \(\sigma \), [T1] exhibits the fastest decay among all cases, which indicates that this might be the easiest scenario. Even more interestingly, [T2] strongly differs from all other test-cases: First and foremost, the parameter dimension p has by far the largest impact for [T2]. Secondly, contrary to [T3-V], the impact of the ellipticity \(\mu \) varies between different parameter dimensions. Lastly, we note that [T4] has the smoothest decay among all test-cases and its decay rate appears to be independent of p.
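The singular value heuristic described above can be sketched as follows. We assume that "singular values with respect to the \(H_0^1(\Omega )\)-norm" means the singular values of \({\mathbf {G}}^{1/2}{\mathbf {U}}\), where the columns of \({\mathbf {U}}\) are the coefficient vectors of the sampled solutions; these coincide with the singular values of \({\mathbf {L}}^T{\mathbf {U}}\) for a Cholesky factorization \({\mathbf {G}}={\mathbf {L}}{\mathbf {L}}^T\). The snapshot matrix and the Gram matrix below are synthetic stand-ins, not data from the experiments.

```python
import numpy as np

def weighted_singular_values(snapshots, G):
    """Singular values of G^{1/2} U, computed via the Cholesky factor of G.

    With G = L L^T, the matrices (L^T U) and (G^{1/2} U) have the same
    Gram matrix U^T G U and hence the same singular values.
    """
    L = np.linalg.cholesky(G)
    return np.linalg.svd(L.T @ snapshots, compute_uv=False)

# Synthetic example: rank-3 snapshot matrix and a small SPD stand-in for G.
rng = np.random.default_rng(0)
D, N, r = 20, 50, 3
G = 2.0 * np.eye(D) - np.eye(D, k=1) - np.eye(D, k=-1)
snapshots = rng.standard_normal((D, r)) @ rng.standard_normal((r, N))

sv = weighted_singular_values(snapshots, G)
normalized_decay = sv / sv[0]   # the quantity one would plot on a log scale
```

A fast drop of `normalized_decay` indicates that few basis vectors capture the sampled solutions well, which is the sense in which the decay plots are read above.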

4.2.3 Setup of Neural Networks and Training Procedure

Our experiments are implemented using Tensorflow [1] for the learning procedure and FEniCS [4] as FEM solver. The code used for dataset generation of all considered test-cases is made publicly available at www.github.com/MoGeist/diffusion_PPDE. To be able to compare different test-cases and remove all effects stemming from the optimization procedure, we train almost the same model for all parameter spaces. The only—to a certain extent inevitable—change that we allow between test-cases is that the input dimension of the NN changes to that of the parameter space. Concretely, we consider the following setup:

-

(1)

The finite element space \(U^{\mathrm {h}}\) resulting from a triangulation of \(\Omega =[0,1]^2\) with \(101\times 101= 10201\) equidistant grid points and first-order Lagrange finite elements. This space shall serve as a discretized version of the space \(H^1(\Omega ).\) We denote by \(D=10201\) its dimension and by \((\varphi _i)_{i=1}^D\) the corresponding finite element basis.

-

(2)

The (fully-connected) neural network architecture \(S=(p,300,\dots ,300,10201)\) with \(L=11\) layers, where p is test-case-dependent and the weights and biases are initialized according to a normal distribution with mean 0 and standard deviation 0.1.

-

(3)

The activation function is the 0.2-LReLU of Definition 3.2.

-

(4)

The loss function is the relative error on the finite-element discretization of \({\mathcal {H}}\)

$$\begin{aligned} {\mathcal {L}}{:}\,{\mathbb {R}}^D\times ({\mathbb {R}}^D\setminus \{0\})\rightarrow {\mathbb {R}},\quad ({\mathbf {x}}_1,{\mathbf {x}}_2)\mapsto \frac{\left| {\mathbf {x}}_1-{\mathbf {x}}_2\right| _{{\mathbf {G}}}}{\left| {\mathbf {x}}_2\right| _{{\mathbf {G}}}}. \end{aligned}$$

-

(5)

The training set \((y^{i,\mathrm {tr}})_{i=1}^{N_{\mathrm {train}}}\subset {\mathcal {Y}}\) consists of \(N_{\mathrm {train}} {:}{=}20000\) i.i.d. parameter samples, drawn with respect to the uniform probability measure on \({\mathcal {Y}}.\)

-

(6)

The test set \((y^{i,\mathrm {ts}})_{i=1}^{N_{\mathrm {test}}}\subset {\mathcal {Y}}\) consists of \(N_{\mathrm {test}} {:}{=}5000\) i.i.d. parameter samples, drawn with respect to the uniform probability measure on \({\mathcal {Y}}.\)
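The size of the architecture in item (2) can be computed directly. The sketch below reads \(L=11\) in the sense of Definition 3.1, that is, as 11 affine maps with 10 hidden layers of width 300; this reading and the function names are assumptions.

```python
def architecture(p, width=300, output_dim=10201, num_layers=11):
    """Return (N_0, ..., N_L) = (p, 300, ..., 300, 10201) with L = num_layers."""
    return (p,) + (width,) * (num_layers - 1) + (output_dim,)

def count_parameters(arch):
    """Total number of weights and biases of a fully-connected NN."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(arch[:-1], arch[1:]))

arch = architecture(p=4)
total = count_parameters(arch)
# Only the first layer depends on p: each extra input adds `width` weights.
increment = count_parameters(architecture(p=5)) - total
```

Under this reading, the last layer (300 to 10201) accounts for most of the parameters, so the parameter count is nearly identical across test-cases.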

In our experiments, we aim at finding a NN \(\Phi \) with architecture S such that the mean relative training error

is minimized. We then test the accuracy of our NN by computing the mean relative test error

Here, we use the mean relative error instead of the mean absolute error in order to establish comparability of our results between different sets \({\mathcal {A}}\), allowing us to put our results into context. In order to further limit the impact of the optimization procedure, each experiment is performed three times with varying initializations and train-test splits. We report the average error over these three runs. In “Impact of Initialization and Sample Selection” in the appendix, we analyze the variance of the three training runs in more detail to ensure that they do not influence our final results.
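The mean relative error can be sketched as the average of the loss \({\mathcal {L}}\) over a set of samples, with the NN prediction as first argument and the finite element solution as second argument. This averaging is our reading of the training and test errors; the identity matrix below is a placeholder for \({\mathbf {G}}\) and the data are made up for illustration.

```python
import numpy as np

def relative_error(x1, x2, G):
    """Loss L(x1, x2) = |x1 - x2|_G / |x2|_G for a nonzero reference x2."""
    diff = x1 - x2
    return float(np.sqrt(diff @ G @ diff) / np.sqrt(x2 @ G @ x2))

def mean_relative_error(predictions, targets, G):
    """Average of the relative errors over all samples (rows)."""
    errors = [relative_error(p, t, G) for p, t in zip(predictions, targets)]
    return float(np.mean(errors))

# Toy data with G = identity (placeholder for the Gram matrix).
G = np.eye(2)
targets = np.array([[3.0, 4.0], [1.0, 0.0]])
predictions = np.array([[3.0, 4.5], [1.0, 0.0]])
err = mean_relative_error(predictions, targets, G)
```

Because each sample is normalized by the norm of its own reference solution, samples with small solutions are weighted as heavily as samples with large ones.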

The optimization is done through mini-batch gradient descent. To ensure further comparability between the different setups, the hyper-parameters in the optimization procedure are kept fixed: Training is conducted with batches of size 256 using the ADAM optimizer [36] with hyper-parameters \(\alpha = 2.0\times 10^{-4}\), \( \beta _{1}=0.9\), \(\beta _{2}=0.999\) and \(\varepsilon =1.0\times 10^{-8}\) (with the exception of the learning rate \(\alpha \), these are the defaults of the ADAM implementation in Tensorflow). Training is stopped after reaching 40,000 epochs. Having trained the NN, for some new input \(y\in {\mathcal {Y}},\) the computation of the approximate discretized solution \({\mathrm {R}}_\varrho ^{{\mathcal {Y}}}(\Phi )(y)\) is done by a simple forward pass.
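For orientation, a single ADAM update with the hyper-parameters stated above can be written in a few lines. This is a NumPy sketch of the textbook update rule of [36], not the Tensorflow implementation used in the experiments, which may differ in details; keeping the final partial batch of each epoch is likewise an assumption.

```python
import math
import numpy as np

def adam_step(theta, grad, m, v, t, lr=2.0e-4, beta1=0.9, beta2=0.999, eps=1.0e-8):
    """One ADAM update; t is the 1-based step counter."""
    m = beta1 * m + (1.0 - beta1) * grad          # first-moment estimate
    v = beta2 * v + (1.0 - beta2) * grad**2       # second-moment estimate
    m_hat = m / (1.0 - beta1**t)                  # bias corrections
    v_hat = v / (1.0 - beta2**t)
    theta = theta - lr * m_hat / (np.sqrt(v_hat) + eps)
    return theta, m, v

# Number of updates per epoch for 20000 samples and batch size 256,
# assuming the final partial batch is kept.
steps_per_epoch = math.ceil(20000 / 256)

theta0 = np.array([1.0, -2.0])
grad0 = np.array([0.5, -4.0])
theta1, m1, v1 = adam_step(theta0, grad0, np.zeros(2), np.zeros(2), t=1)
```

In the first step the bias-corrected update has magnitude close to the learning rate in every coordinate, independent of the size of the gradient.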

4.2.4 Relation to Hypotheses

The test-cases [T1]–[T4] are designed to test the hypotheses [H1]–[H3] in the following way:

-

Enabling comparability between test-cases We implement several measures to produce a uniform influence of the optimization and sampling procedure in all test-cases. First, we only change the architecture in the minimally required way between test-cases so as not to alter the optimization behavior. Second, we average our results over multiple initializations. Third, we analyze a posteriori the optimization behavior to see if there are qualitative differences between test-cases. Fourth, we choose the number of training samples in such a way that neither a moderate increase nor a moderate decrease of the number of training samples affects the outcome of the experiments. We describe these measures in detail in “Appendix A”.

-

Relation to Hypothesis [H1] To test if the learning method suffers from the curse of dimensionality or if the prediction of Kutyniok et al. [37] that its complexity is determined only by some intrinsic complexity of the function class holds, we run all test-cases [T1]–[T4] for various values of the dimension of the parameter space, and study the resulting scaling behavior.

-

Relation to Hypothesis [H2] To understand the extent to which the NN model is sufficiently versatile to adapt to various types of solution sets, we study four commonly considered parametrized diffusion coefficient sets which also include multiple subproblems described via the hyper-parameters \(\sigma \) and \(\mu \). The parametrized sets exhibit the following different characteristics:

- [T1]:

-

The parameter-dependence in this case is affine (i.e., the forward-map \(y\mapsto b_y(u,v)\) depends affinely on y for all \(u,v\in {\mathcal {H}}\)), and the functions \((a_i)_{i=1}^p\) are analytic in the spatial variable. To vary the difficulty of the problem at hand, we consider different instances of the scaling coefficient \(\sigma \) which put different emphasis on the high-frequency components of the functions \((a_i)_{i=1}^p\). In particular, if \(\sigma >0,\) a higher weight is put on the high-frequency components than on the low-frequency ones whereas the opposite is true for \(\sigma <0.\)

- [T2]:

-

The parameter-dependence in this case is affine again, whereas the spatial regularity of the \(({\mathcal {X}}_{\Omega _i})_{i=1}^p\) is very low. To vary the difficulty of the problem, we consider different instances of shifts \(\mu \). The higher the shift is, the more elliptic the problem becomes.

- [T3]:

-

[T3-F] again exhibits affine parameter-dependence and the same regularity properties as test-case [T2]. However, this problem is considered to be easier than test-case [T2] since the \(\overline{\Omega _i}\) do not intersect each other.

For test-case [T3-V], the geometric properties of the domain partition are additionally encoded via a parameter, thereby rendering the problem non-affine.

- [T4]:

-

In this case, the parameter-dependence is non-affine and has low regularity due to the clipping procedure. Additionally, the spatial regularity of the functions \(a_y\) is comparatively low in general.

A visualization highlighting the versatility of our test-cases can be seen when comparing the FE solutions in Fig. 3 (test-case [T2]) with the FE solutions in Fig. 4 (test-case [T4]).

-

Relation to Hypothesis [H3]: The test-cases [T3-V] and [T4] are non-affinely parametrized.

4.3 Numerical Results

In this subsection, we report the results of the test-cases announced in the previous subsection.

4.3.1 [T1] Trigonometric Polynomials

We observe the following mean relative test errors for the sets \({\mathcal {A}}^{\mathrm {tp}}(p, \sigma )\).

4.3.2 [T2] Chessboard Partition

We observe the following mean relative test errors for the sets \({\mathcal {A}}^{\mathrm {cb}}(p,\mu )\).

In Fig. 3, we show samples from the test set for different values of \(\mu \). Here we always depict one average performing test-case and one with poor performance. These figures offer a potential explanation of why the scaling with p is qualitatively different for different values of \(\mu \). This seems to be because for lower \(\mu \) the effect of the individual parameters on the solution seems to be much more local than for higher \(\mu \). This appears to lead to a higher intrinsic dimensionality of the problem.

Fig. 3 Comparison of the ground truth solution and the one predicted by the NN for an average (left) and a poorly performing (right) test sample for \(p=16\) and \(\mu =10^{-1}\) (top) and \(\mu =10^{-3}\) (bottom) for test-case [T2]. The percentage in brackets represents the relative test error for this particular sample

4.3.3 [T3] Cookies with Fixed and Variable Radii

We start with one experiment where the radii of the cookies are fixed to 0.8/(2s).

Moreover, we find for the sets of cookies with variable radii \({\mathcal {A}}^{\mathrm {cvr}}(p,\mu )\) the following mean relative test errors.

4.3.4 [T4] Clipped Polynomials

For the set \({\mathcal {A}}^{\mathrm {cp}}(p,10^{-1})\), we obtain the following mean relative test errors when varying p.

4.4 Evaluation and Interpretation of Experiments

We make the following observations about the numerical results of Sect. 4.3.

- [O1]:

-

Our test-cases show that the error rate achieved by NN approximations for varying parameter sizes differs strongly and qualitatively between different test-cases. In Figs. 5, 6, 7, and 8 we depict the different scaling behaviors of the test-cases [T1], [T2], [T3], and [T4]. For [T1] and \(\sigma = -1\), the error appears to be almost independent of p for \(p \rightarrow \infty \). In contrast to that, we observe for \(\sigma = 1\) a linear scaling in the loglog plot implying a polynomial dependence of the error on p.

For test-case [T2], we observe that the error scales linearly in the loglog scale of Fig. 6. We conclude that for \({\mathcal {A}}^{\mathrm {cb}}(p,\mu ),\) the error scales polynomially with p.

The errors of the test-cases associated with [T3] seem to scale linearly with p in the loglog scale depicted in Fig. 7. This implies that for [T3] the error scales polynomially in p with the same exponent.

The semilog plot of Fig. 8 shows that for test-case [T4] with the sets \({\mathcal {A}}^{\mathrm {cp}}(p,10^{-1}),\) the growth of the error is logarithmic in p.

In total, we observed scaling behaviors of \({\mathcal {O}}(1), {\mathcal {O}}(\log (p))\) and \({\mathcal {O}}(p^k)\) for \(k>0\) and for \(p \rightarrow \infty \). Notably, none of the test-cases exhibited an exponential dependence of the error on p.

- [O2]:

-

The choice of the hyper-parameters \(\sigma \) and \(\mu \) in the test-cases [T1], [T2], [T3] influences the scaling behavior according to its effect on the complexity of the parametrized diffusion coefficient set.

The observed scaling behavior closely reflects the complexity of the solution manifolds as characterized by the singular value decay depicted in Fig. 2. For [T1], the plot suggests this to be the easiest case. Moreover, the impact of the scaling parameter \(\sigma \) was supposed to simplify the parametric problem for smaller values of \(\sigma \). This is precisely what we observe in Table 1 and Fig. 5.

Table 1 Mean relative test error averaged over three training runs for test-case [T1] and different parameter dimensions p, different scaling parameters \(\sigma \) and shift \(\mu =1\) Accordingly, we see for [T2] in Table 2 and Fig. 6 and for [T3-V] in Table 4 and Fig. 7 that the parameter \(\mu \) influences the scaling behavior of the method with p. Both cases get harder by lowering \(\mu \) and overall exhibit error scaling on the order \({\mathcal {O}}(p^k)\). However, for [T2] \(\mu \) directly impacts the scaling exponent k while for [T3-V] it stays the same. This again resembles the singular value decay depicted in Fig. 2 (Table 3).

Table 2 Mean relative test error averaged over three training runs for test-case [T2] and parameter dimensions \(p=s^2\)

Table 3 Mean relative test error averaged over three training runs for test-case [T3-F] and different parameter dimensions \(p=s^2\) with shift \(\mu =10^{-4}\) and radius 0.8/(2s)

Table 4 Mean relative test error averaged over three training runs for test-case [T3-V] and different parameter dimensions \(p=2s^2\)

Concluding, we can see that the approximation of the DPtSM by NNs appears to be very sensitive to these parameters, especially to the ellipticity \(\mu \), and that the scaling behavior is accurately predicted by the complexity of the solution manifold in regard to its singular value decay (Table 5).

Table 5 Mean relative test error averaged over three training runs for test-case [T4] with clipping value \(\mu =10^{-1}\) and different parameter dimensions p

- [O3]:


We observe no fundamentally worse scaling behavior for non-affinely parametrized test-cases compared to test-cases with an affine parametrization. In test-case [T3], we do observe that the non-linearly parametrized problem appears to be more challenging overall, while the scaling behavior is the same as for the affinely parametrized problem. In test-case [T4], which is the test-case with the highest number of parameters p, we observe only a very mild (in fact logarithmic) dependence of the error on p.

From these observations we draw the following conclusions for our hypotheses:

Hypothesis [H1] In observation [O1], we saw that several types of scaling of the error with the dimension of the parameter space occur over a wide variety of test-cases. None of them exhibits exponential scaling. In fact, the behavior of the errors seems to be determined by an intrinsic complexity of the problems.

Hypothesis [H2] Comparing performance both between test-cases (observation [O1]) and within test-cases (observation [O2]) leads us to conclude that there exist strong differences in the performance of learning the DPtSM. Using NNs with precisely the same architecture, we observed (see [O2]) considerably different scaling behaviors across the test-cases [T1]–[T4]: depending on the test-case, the error grows polynomially, grows logarithmically, or stays constant as the parameter dimension p changes (described in [O1]). According to [O2], the overall level of the errors and the type of scaling for increasing p follow the semi-ordering of complexities of the test-cases, in the sense that more complex parametrized sets yield higher errors, whereas simpler sets or spaces with intuitively lower intrinsic dimensionality yield smaller errors (test-cases [T1] and [T2]).

Therefore, we conclude that the approximation theoretical intrinsic dimension of the parametric problem is a main factor in determining the hardness of learning the DPtSM.

Hypothesis [H3] In support of [H3], we found no fundamental difference in the performance of the NN model for non-affinely parametrized problems (see [O3]).

In conclusion, we found support for all the hypotheses [H1]–[H3]. We consider this result a validation of the importance of approximation-theoretical results for practical learning problems, especially in the application of deep learning to problems of numerical analysis.

5 An Empirical Study Beyond the Theoretical Analysis

The design of our numerical study as presented in the previous section was chosen to closely resemble the approximation-theoretical framework of Kutyniok et al. [37]. Therefore, the underlying network model was chosen as a standard fully-connected neural network. In modern applications, more refined and performance-driven architectures are typically used. To take a further step towards the practical relevance of approximation theory, we accompany the previous study by an experiment in a more practical and realistic setting.

Fig. 9 CNN architecture used for experiments. Below each convolutional layer the number of filters is listed. The shrinking height and width of the blocks symbolize the lowered resolution after the red pooling layers. The visualization is created using the tools of www.github.com/HarisIqbal88/PlotNeuralNet

Because our problem is set up on a rectangular domain, it is a natural choice to encode the network inputs, i.e. the parametrized diffusion coefficients, as an image resulting from discretization on a fixed grid. This allows us to incorporate convolutional layers into our network architecture. For a meaningful comparison, we chose a CNN of similar depth to the previously used fully-connected NN: we use six convolutional layers followed by four fully connected layers, the last of which is the output layer. The first two convolutional layers contain 128 filters of size \(2\times 2\) while the other four only contain 64. They all utilize zero padding to preserve the input dimension. Moreover, after layers two and four, \(2\times 2\) max pooling is performed to down-sample the input. The fully connected layers contain 500 neurons each. All layers except the output are equipped with the 0.2-LReLU activation function. The architecture is visualized in Fig. 9.
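To make the effect of the padding and pooling choices concrete, the following minimal sketch traces the feature-map shapes through the six convolutional layers described above. It rests on assumptions that are not stated in the text: a single input channel, one bias per filter, and pooling that rounds odd sizes down. The function name is hypothetical.

```python
def trace_cnn_shapes(resolution, in_channels=1):
    """Trace (height, width, channels) through the convolutional part of the CNN.

    Assumptions (not from the text): `in_channels` input channels, one bias per
    filter, and 2x2 max pooling that floors odd spatial sizes.
    """
    filters = [128, 128, 64, 64, 64, 64]  # filters per convolutional layer
    pool_after = {2, 4}                   # 2x2 max pooling after these layers
    kernel = 2                            # filters are of size 2x2

    height = width = resolution
    channels = in_channels
    conv_params = 0
    shapes = []
    for layer, n_filters in enumerate(filters, start=1):
        # Weights plus biases of this convolutional layer.
        conv_params += kernel * kernel * channels * n_filters + n_filters
        channels = n_filters  # zero padding preserves height and width
        shapes.append((f"conv{layer}", height, width, channels))
        if layer in pool_after:
            height //= 2
            width //= 2
            shapes.append((f"pool{layer}", height, width, channels))

    flattened = height * width * channels  # input size of the first dense layer
    return shapes, flattened, conv_params
```

Under these assumptions, a \(20\times 20\) input reaches the first fully connected layer as a vector of length \(5\cdot 5\cdot 64 = 1600\), and a \(50\times 50\) input as a vector of length \(12\cdot 12\cdot 64 = 9216\).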

We again use the ADAM optimizer [36] with hyper-parameters \(\alpha = 1.0\times 10^{-4}\), \( \beta _{1}=0.9\), \(\beta _{2}=0.999\) and \(\varepsilon =1.0\times 10^{-8}\) for training. However, the batch size is reduced to 32 and we employ early stopping based on a separate validation set of size \(N_{\mathrm {val}} {:}{=}1000\) to prevent overfitting. The maximum number of epochs is limited to 3000.
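For orientation, the two training ingredients named above can be sketched in a few lines. The first function is the standard ADAM update with the stated hyper-parameters as defaults. The second is one common form of early stopping; the patience value is a hypothetical choice, since the text does not specify the stopping criterion. This is an illustrative sketch, not the training code of the study.

```python
import math


def adam_step(theta, grad, m, v, t, alpha=1.0e-4, beta1=0.9, beta2=0.999, eps=1.0e-8):
    """Perform one ADAM update on the parameter list `theta` at step t >= 1."""
    m = [beta1 * mi + (1.0 - beta1) * g for mi, g in zip(m, grad)]
    v = [beta2 * vi + (1.0 - beta2) * g * g for vi, g in zip(v, grad)]
    # Bias-corrected first and second moment estimates.
    m_hat = [mi / (1.0 - beta1 ** t) for mi in m]
    v_hat = [vi / (1.0 - beta2 ** t) for vi in v]
    theta = [
        th - alpha * mh / (math.sqrt(vh) + eps)
        for th, mh, vh in zip(theta, m_hat, v_hat)
    ]
    return theta, m, v


def early_stopping(val_losses, patience=3, max_epochs=3000):
    """Return (best_epoch, stop_epoch) for a sequence of validation losses.

    Training stops once the validation loss has not improved for `patience`
    consecutive epochs (hypothetical criterion) or after `max_epochs` epochs.
    """
    best_loss = math.inf
    best_epoch = -1
    for epoch, loss in enumerate(val_losses[:max_epochs]):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return best_epoch, epoch
    return best_epoch, min(len(val_losses), max_epochs) - 1
```

In the first step the bias correction makes the update magnitude approximately \(\alpha \) per parameter, independent of the gradient scale, which is why the step size \(\alpha \) directly controls how far each parameter can move per iteration.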

We noted earlier and described in detail in “Appendix A” that the impact of the number of samples seems to be independent of the scaling behavior. To emphasize this point, we cut the number of training samples in half to \(N_{\mathrm {train}} {:}{=}10{,}000\). In contrast to our earlier experiments, encoding the parameters as images now allows us to keep the architecture completely fixed throughout all test-cases, including the input layer. However, the resolution of the input becomes a new hyper-parameter. In general, increasing the input resolution enhances the performance but comes at significant additional computational cost and an elevated risk of overfitting. To balance this trade-off, we settled on a resolution of \(20\times 20\) for all test-cases, except [T3-V], which required a resolution of \(50\times 50\) for an accurate representation. Note that even for inputs of size \(20\times 20\) the diffusion coefficients are heavily over-parametrized, which leads to a considerable increase in the required storage.
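The image encoding can be illustrated for a piecewise constant coefficient on a chessboard partition. The sketch below assumes, for illustration only, that the domain is the unit square split into \(s\times s\) equal cells, that the coefficient on cell i equals \(\mu + y_i\), that the parameters are stored row by row, and that each pixel takes the value at its center. These conventions and the function name are hypothetical and may differ from the discretization used in the experiments.

```python
def rasterize_chessboard(y, s, mu, resolution=20):
    """Encode a piecewise constant coefficient as a resolution x resolution image.

    y  : list of s*s parameter values, stored row by row (assumed ordering)
    s  : number of cells per side of the chessboard partition
    mu : shift added to every cell value
    """
    if len(y) != s * s:
        raise ValueError("expected s*s parameter values")
    image = []
    for i in range(resolution):
        # Index of the cell containing the center of pixel row i.
        row_cell = min(int((i + 0.5) / resolution * s), s - 1)
        row = []
        for j in range(resolution):
            col_cell = min(int((j + 0.5) / resolution * s), s - 1)
            row.append(mu + y[row_cell * s + col_cell])
        image.append(row)
    return image
```

With \(s=2\) the parameter vector has \(p=4\) entries, whereas the \(20\times 20\) image has 400 entries; this is the over-parametrization mentioned above.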

5.1 Numerical Results for CNNs

We tested the above network configuration for all cases besides [T1] and [T3-F], which could already easily be learned by the fully-connected NN. This yielded the following results.

5.1.1 [T2] Chessboard Partition

We observe the following mean relative test errors for the sets \({\mathcal {A}}^{\mathrm {cb}}(p,\mu )\) (Table 6).

5.1.2 [T3-V] Cookies with Variable Radii

For the variable cookies \({\mathcal {A}}^{\mathrm {cvr}}(p,\mu )\) we achieve the following mean relative test errors (Table 7).

5.1.3 [T4] Clipped Polynomials

For the set \({\mathcal {A}}^{\mathrm {cp}}(p,10^{-1})\), we obtain the following mean relative test errors (Table 8).
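For readers who wish to reproduce numbers of this kind, a mean relative test error can be computed as sketched below. The sketch makes two assumptions that should be checked against the definition of the error used in this study: predictions and reference solutions are given as coefficient vectors, and the Euclidean norm stands in for the norm on \({\mathcal {H}}\). All function names are hypothetical.

```python
import math


def relative_error(pred, true):
    """Relative error of one prediction (Euclidean norm used as a stand-in)."""
    diff = math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, true)))
    return diff / math.sqrt(sum(t ** 2 for t in true))


def mean_relative_test_error(preds, trues):
    """Average the relative error over all test samples."""
    errors = [relative_error(p, t) for p, t in zip(preds, trues)]
    return sum(errors) / len(errors)


def average_over_runs(run_errors):
    """Average the mean relative test errors of several training runs."""
    return sum(run_errors) / len(run_errors)
```

Averaging over independent training runs, as done for Tables 1 to 5, reduces the influence of the random initialization on the reported value.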

5.2 Evaluation and Comparison to First Experiment

Similar to the results obtained in the previous section, we examine the scaling behavior with regard to the parameter dimension p in Fig. 10. We notice that, although the CNN significantly outperforms the simple fully-connected NN, the scaling behavior remains nearly identical. The most notable difference between the two networks can be observed for [T2], where the error was reduced by an order of magnitude. This is especially noteworthy because, as mentioned earlier, only half the number of samples was used during training. Nonetheless, the similar scaling behavior (cf. Figs. 6, 7, 8 with Fig. 10) implies that the rates obtained in the previous section still accurately reflect the underlying hardness of the problem. This indicates that the relatively high relative error is a result of insufficient model capacity rather than an artifact of the optimization procedure.

Given that the experiments based on convolutional neural networks yielded lower overall errors with less training data, we identify two promising directions of future work. First, the fact that convolutional neural networks outperform their fully connected counterparts requires a theoretical analysis and justification. Second, it appears worthwhile to search for additional architectural refinements that could reduce the overall error even further.

Notes

Throughout this paper, we denote by \({\mathcal {H}}\) the space \(H_0^1(\Omega ){:}{=}\{u\in H^1(\Omega ){:}~u|_{\partial \Omega }=0\}\), where \(H^1(\Omega ){:}{=}W^{1,2}(\Omega )\) is the first-order Sobolev space and where \(\partial \Omega \) denotes the boundary of \(\Omega \). On this space, we consider the norm \(\Vert u\Vert _{{\mathcal {H}}}=\Vert u\Vert _{H_0^1(\Omega )}{:}{=}\Vert u\Vert _{H^1(\Omega )} = \left( \sum _{|{\mathbf {a}}|\le 1} \Vert D^{{\mathbf {a}}}u \Vert _{L^2(\Omega )}^2\right) ^{1/2}.\)

In this paper, \(|{\mathbf {x}}|\) denotes the Euclidean norm of \({\mathbf {x}}\in {\mathbb {R}}^n\).

derived from bounds on the Kolmogorov N-width of \(S({\mathcal {Y}})\)

\({\mathcal {X}}_A\) denotes the indicator function of A.

References

Abadi, M., Agarwal, A., Barham, P., Brevdo, E., Chen, Z., Citro, C., Corrado, G.S., Davis, A., Dean, J., Devin, M., Ghemawat, S., Goodfellow, I., Harp, A., Irving, G., Isard, M., Jia, Y., Jozefowicz, R., Kaiser, L., Kudlur, M., Levenberg, J., Mané, D., Monga, R., Moore, S., Murray, D., Olah, C., Schuster, M., Shlens, J., Steiner, B., Sutskever, I., Talwar, K., Tucker, P., Vanhoucke, V., Vasudevan, V., Viégas, F., Vinyals, O., Warden, P., Wattenberg, M., Wicke, M., Yu, Y., Zheng, X.: TensorFlow: Large-Scale Machine Learning on Heterogeneous Systems (2015). Software available from tensorflow.org

Adcock, B., Brugiapaglia, S., Dexter, N., Moraga, S.: Deep neural networks are effective at learning high-dimensional Hilbert-valued functions from limited data. arXiv preprint arXiv:2012.06081 (2020)

Adcock, B., Dexter, N.: The gap between theory and practice in function approximation with deep neural networks. arXiv preprint arXiv:2001.07523 (2020)

Alnæs, M.S., Blechta, J., Hake, J., Johansson, A., Kehlet, B., Logg, A., Richardson, C., Ring, J., Rognes, M.E., Wells, G.N.: The FEniCS Project Version 1.5. Arch. Numer. Softw. 3(100) (2015)

Bachmayr, M., Cohen, A.: Kolmogorov widths and low-rank approximations of parametric elliptic PDEs. Math. Comput. 86(304), 701–724 (2017)

Bachmayr, M., Cohen, A., Dahmen, W.: Parametric PDEs: sparse or low-rank approximations? IMA J. Numer. Anal. 38(4), 1661–1708 (2018)

Barron, A.: Universal approximation bounds for superpositions of a sigmoidal function. IEEE Trans. Inf. Theory 39(3), 930–945 (1993)

Beck, C., Becker, S., Grohs, P., Jaafari, N., Jentzen, A.: Solving stochastic differential equations and Kolmogorov equations by means of deep learning. arXiv preprint arXiv:1806.00421 (2018)

Beck, C., Weinan, E., Jentzen, A.: Machine learning approximation algorithms for high-dimensional fully nonlinear partial differential equations and second-order backward stochastic differential equations. J. Nonlinear Sci. 29, 1563–1619 (2019)

Bellman, R.: On the theory of dynamic programming. Proc. Natl. Acad. Sci. U.S.A. 38(8), 716 (1952)

Berg, J., Nyström, K.: Data-driven discovery of PDEs in complex datasets. J. Comput. Phys. 384, 239–252 (2019)

Berner, J., Grohs, P., Jentzen, A.: Analysis of the generalization error: empirical risk minimization over deep artificial neural networks overcomes the curse of dimensionality in the numerical approximation of Black-Scholes partial differential equations. arXiv preprint arXiv:1809.03062 (2018)

Bhattacharya, K., Hosseini, B., Kovachki, N.B., Stuart, A.M.: Model reduction and neural networks for parametric PDEs. arXiv preprint arXiv:2005.03180 (2020)

Bölcskei, H., Grohs, P., Kutyniok, G., Petersen, P.C.: Optimal approximation with sparsely connected deep neural networks. SIAM J. Math. Data Sci. 1, 8–45 (2019)

Brevis, I., Muga, I., van der Zee, K.G.: Data-driven finite elements methods: machine learning acceleration of goal-oriented computations. arXiv preprint arXiv:2003.04485 (2020)

Cohen, A., DeVore, R.: Approximation of high-dimensional parametric PDEs. Acta Numer. 24, 1–159 (2015)

Cucker, F., Smale, S.: On the mathematical foundations of learning. Bull. Am. Math. Soc. 39, 1–49 (2002)

Cucker, F., Zhou, D.-X.: Learning Theory: An Approximation Theory Viewpoint, Cambridge Monographs on Applied and Computational Mathematics. Cambridge University Press (2007)

Dal Santo, N., Deparis, S., Pegolotti, L.: Data driven approximation of parametrized PDEs by Reduced Basis and Neural Networks. arXiv preprint arXiv:1904.01514 (2019)

Eigel, M., Schneider, R., Trunschke, P., Wolf, S.: Variational Monte Carlo-bridging concepts of machine learning and high dimensional partial differential equations. Adv. Comput. Math. 45, 2503–2532 (2019)

Elbrächter, D., Grohs, P., Jentzen, A., Schwab, C.: DNN expression rate analysis of high-dimensional PDEs: application to option pricing. arXiv preprint arXiv:1809.07669 (2018)

Faber, F.A., Hutchison, L., Huang, B., Gilmer, J., Schoenholz, S.S., Dahl, G.E., Vinyals, O., Kearnes, S., Riley, P.F., von Lilienfeld, O.A.: Prediction errors of molecular machine learning models lower than hybrid DFT error. J. Chem. Theory Comput. 13(11), 5255–5264 (2017)