Abstract

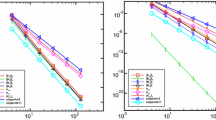

We analyze the superconvergence properties of ultra-weak discontinuous Galerkin (UWDG) methods with various choices of flux parameters for one-dimensional linear Schrödinger equation. In our previous work (Chen et al. in J Sci Comput 78(2):772–815, 2019), stability and optimal convergence rate are established for a large class of flux parameters. Depending on the flux choices and if the polynomial degree k is even or odd, in this paper, we prove 2k or \((2k-1)\)th order superconvergence rate for cell averages and numerical flux of the function, as well as \((2k-1)\) or \((2k-2)\)th order for numerical flux of the derivative. In addition, we prove superconvergence of \((k+2)\) or \((k+3)\)th order of the DG solution towards a special projection. At a class of special points, the function values and the first and second order derivatives of the DG solution are superconvergent with order \(k+2, k+1, k\), respectively. The proof relies on the correction function techniques initiated in Cao et al. (SIAM J Numer Anal 52(5):2555–2573, 2014), and applied to Cao et al. (Numer Methods Partial Differ Equ 33(1):290–317, 2017) for direct DG (DDG) methods for diffusion problems. Compared with Cao et al. (2017), Schrödinger equation poses unique challenges for superconvergence proof because of the lack of the dissipation mechanism from the equation. One major highlight of our proof is that we introduce specially chosen test functions in the error equation and show the superconvergence of the second derivative and jump across the cell interfaces of the difference between numerical solution and projected exact solution. This technique was originally proposed in Cheng and Shu (SIAM J Numer Anal 47(6):4044–4072, 2010) and is essential to elevate the convergence order for our analysis. Finally, by negative norm estimates, we apply the post-processing technique and show that the accuracy of our scheme can be enhanced to order 2k. Theoretical results are verified by numerical experiments.

Similar content being viewed by others

References

Adjerid, S., Devine, K.D., Flaherty, J.E., Krivodonova, L.: A posteriori error estimation for discontinuous Galerkin solutions of hyperbolic problems. Comput. Methods Appl. Mech. Eng. 191(11–12), 1097–1112 (2002)

Adjerid, S., Massey, T.C.: Superconvergence of discontinuous Galerkin solutions for a nonlinear scalar hyperbolic problem. Comput. Methods Appl. Mech. Eng. 195(25–28), 3331–3346 (2006)

Bona, J., Chen, H., Karakashian, O., Xing, Y.: Conservative, discontinuous Galerkin-methods for the generalized Korteweg-de Vries equation. Math. Comput. 82(283), 1401–1432 (2013)

Bramble, J.H., Schatz, A.H.: Higher order local accuracy by averaging in the finite element method. Math. Comput. 31(137), 94–111 (1977)

Cao, W., Li, D., Yang, Y., Zhang, Z.: Superconvergence of discontinuous Galerkin methods based on upwind-biased fluxes for 1d linear hyperbolic equations. ESAIM: Math. Model. Numer. Anal. 51(2), 467–486 (2017)

Cao, W., Liu, H., Zhang, Z.: Superconvergence of the direct discontinuous Galerkin method for convection-diffusion equations. Numer. Methods Partial Differ. Equ. 33(1), 290–317 (2017)

Cao, W., Shu, C.-W., Yang, Y., Zhang, Z.: Superconvergence of discontinuous Galerkin method for scalar nonlinear hyperbolic equations. SIAM J. Numer. Anal. 56(2), 732–765 (2018)

Cao, W., Zhang, Z., Zou, Q.: Superconvergence of discontinuous Galerkin methods for linear hyperbolic equations. SIAM J. Numer. Anal. 52(5), 2555–2573 (2014)

Cessenat, O., Despres, B.: Application of an ultra weak variational formulation of elliptic PDEs to the two-dimensional helmholtz problem. SIAM J. Numer. Anal. 35(1), 255–299 (1998)

Chen, A., Li, F., Cheng, Y.: An ultra-weak discontinuous Galerkin method for Schrödinger equation in one dimension. J. Sci. Comput. 78(2), 772–815 (2019)

Cheng, Y., Shu, C.-W.: A discontinuous Galerkin finite element method for time dependent partial differential equations with higher order derivatives. Math. Comput. 77(262), 699–730 (2008)

Cheng, Y., Shu, C.-W.: Superconvergence of discontinuous Galerkin and local discontinuous Galerkin schemes for linear hyperbolic and convection-diffusion equations in one space dimension. SIAM J. Numer. Anal. 47(6), 4044–4072 (2010)

Cockburn, B., Luskin, M., Shu, C.-W., Süli, E.: Enhanced accuracy by post-processing for finite element methods for hyperbolic equations. Math. Comput. 72(242), 577–606 (2003)

Cockburn, B., Shu, C.-W.: Runge–Kutta discontinuous Galerkin methods for convection-dominated problems. J. Sci. Comput. 16(3), 173–261 (2001)

Davis, P.J.: Circulant Matrices. Wiley, New York (1979)

Ji, L., Xu, Y., Ryan, J.K.: Negative-order norm estimates for nonlinear hyperbolic conservation laws. J. Sci. Comput. 54(2), 531–548 (2013)

Liang, X., Khaliq, A.Q.M., Xing, Y.: Fourth order exponential time differencing method with local discontinuous Galerkin approximation for coupled nonlinear Schrödinger equations. Commun. Comput. Phys. 17(2), 510–541 (2015)

Lu, W., Huang, Y., Liu, H.: Mass preserving discontinuous Galerkin methods for Schrödinger equations. J. Comput. Phys. 282, 210–226 (2015)

Meng, X., Ryan, J.K.: Discontinuous Galerkin methods for nonlinear scalar hyperbolic conservation laws: divided difference estimates and accuracy enhancement. Numer. Math. 136(1), 27–73 (2017)

Mock, M.S., Lax, P.D.: The computation of discontinuous solutions of linear hyperbolic equations. Commun. Pure Appl. Math. 31(4), 423–430 (1978)

Reed, W.H., Hill, T.: Triangular mesh methods for the neutron transport equation. Technical report, Los Alamos Scientific Lab., N. Mex. (USA) (1973)

Ryan, J., Shu, C.-W., Atkins, H.: Extension of a post processing technique for the discontinuous Galerkin method for hyperbolic equations with application to an aeroacoustic problem. SIAM J. Sci. Comput. 26(3), 821–843 (2005)

Shen, J., Tang, T., Wang, L.-L.: Spectral Methods: Algorithms, Analysis and Applications, vol. 41. Springer, Berlin (2011)

Shu, C.-W.: Discontinuous Galerkin methods for time-dependent convection dominated problems: Basics, recent developments and comparison with other methods. In: Barrenechea, A.C.G.R., Brezzi, F., Georgoulis, E. (eds.) Building Bridges: Connections and Challenges in Modern Approaches to Numerical Partial Differential Equations, vol. 114, pp. 369–397. Springer, Switzerland (2016)

Xu, Y., Shu, C.-W.: Local discontinuous Galerkin methods for nonlinear Schrödinger equations. J. Comput. Phys. 205(1), 72–97 (2005)

Xu, Y., Shu, C.-W.: Optimal error estimates of the semidiscrete local discontinuous Galerkin methods for high order wave equations. SIAM J. Numer. Anal. 50(1), 79–104 (2012)

Yang, Y., Shu, C.-W.: Analysis of optimal superconvergence of discontinuous Galerkin method for linear hyperbolic equations. SIAM J. Numer. Anal. 50(6), 3110–3133 (2012)

Zhou, L., Xu, Y., Zhang, Z., Cao, W.: Superconvergence of local discontinuous Galerkin method for one-dimensional linear schrödinger equations. J. Sci. Comput. 73(2–3), 1290–1315 (2017)

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Yingda Cheng: Research is supported by NSF Grants DMS-1453661 and DMS-1720023.

Mengping Zhang: Research supported by NSFC Grant 11871448.

Appendix

Appendix

1.1 Collections of Intermediate Results

In this section, we list some results that will be used in the rest of the appendix. First, we gather some results from [10]. (59)–(63) in [10] yields

where \(r_j\) has been defined in (17). (59) and (71) and the first equation in A.3.3 in [10] yields

Next, we provide estimates of the Legendre coefficients in neighboring cells of equal size.

If \(u \in W^{k+2+n,\infty }(I)\), then expand \({\hat{u}}_j(\xi )\) at \(\xi = -1\) in (9) by Taylor series, we have for \(m \ge k+1\), \(\exists z\in [-1,1]\), s.t.

where \(\theta _l\) are constants independent of u and \(h_j\).

Therefore, when \(h_j = h_{j+1}\), we use Taylor expansion again, and compute the difference of two \(u_{j, m}\) from neighboring cells

Then we obtain the estimates

where \(\mu _s\) are constants independent of u and \(h_j\).

1.1.1 Two Convolution-Like Operators

In the proof of Lemmas 3.8 and 3.9 in [10], we used Fourier analysis for error analysis. Now we extract the main ideas and generalize the results to facilitate the proof of superconvergence results in Lemmas 3.6 and 4.2.

We define two operators on a periodic functions u in \(L^2(I)\):

where \(L = b-a\) is the size of I.

Expand u by Fourier series, i.e., \(u(x) = \sum _{n=-\infty }^{\infty }{\hat{f}}(n) e^{2\pi inx/L}\), we have

In addition, we can apply the operator on the same function recursively, we have

As shown in the proof of Lemmas 3.8 and 3.9 in [10], if \(\lambda _i, i \le n\) is a complex number with \(|\lambda _i| = 1\), independent of h, then

1.2 Proof of Lemma 3.2

Proof

where

Therefore,

where \(D_1 = \frac{(-1)^kh_j}{2k(k + (-1)^k)} ( (-1)^k \Gamma _j + \Lambda _j)\) is bounded by definitions of \(\Gamma _j, \Lambda _j\) and mesh regularity condition. Then

and

By mesh regularity condition, \(\exists \sigma _1, \sigma _2, s.t., \sigma _1 h_j \le h \le \sigma _2 h_j\) and the proof is complete. \(\square \)

1.3 Proof of Lemma 3.3

By Definition 3.1, \(P^\star _hu |_{I_j}= \sum _{m=0}^{k-2} u_{j,m} L_{j,m} + \acute{u}_{j,k-1}L_{j,k-1} + \acute{u}_{j,k}L_{j,k}.\) We solve the two coefficients \(\acute{u}_{j,k-1}, \acute{u}_{j,k}\) on every cell \(I_j\) according to definition (14).

If assumption A1 is satisfied, it has been shown in Lemma 3.1 in [10] that (14) is equivalent to (21). Substitute u and \(u_x\) by (8), we obtain the following equation

the existence and uniqueness of the system above is ensured by assumption A1, that is, \(\det (A_j+B_j) = 2(-1)^k \Gamma _j \ne 0\). Thus, (22) is proven.

If any of the assumptions A2/A3 is satisfied, we obtain

which can be solved by a global linear system with coefficient matrix M. The solution is

where \(r_N = Q^N (I_2 - Q^N)^{-1} = r_0 - I_2 \) is used in the third equality. Therefore, (23) is proven. The proof of (24) is given in Lemmas 3.2, 3.4, 3.8, 3.9 in [10].

If \(h_j = h_{j+1}\), denote

then by (22) and (23), we have

When assumption A1 is satisfied (25) is a direct result of (69).

When assumption A2 is satisfied, we have

where (66), (69) and the fact that \(V_{1,m}, V_{2,m}, \forall m \ge 0\) are constant matrices independent of h are used in above inequalities.

When assumption A3 is satisfied, we perform more detailed computation of \({\mathcal {S}}_j\) and use Fourier analysis to bound it by utilizing the smoothness and periodicity. If \(u \in W^{k+2+n, \infty }(I)\),

When \(\frac{|\Gamma |}{|\Lambda |} < 1\), \(Q=-A^{-1}B\) has two imaginary eigenvalues \(\lambda _1, \lambda _2\) with \(|\lambda _1| = |\lambda _2| =1\). By (59) of [10], we have \(r_l= \frac{\lambda _1^l}{1-\lambda _1^N} Q_1 + \frac{\lambda _2^l}{1-\lambda _2^N} (I_2 - Q_1)\), where \(Q_1\) is a constant matrix independent of h, and defined in (60) and (61) in [10].

By (72), we have

When \(\frac{|\Gamma |}{|\Lambda |} =1\), \(Q=-A^{-1}B\) has two repeated eigenvalues. By (71) of [10], we have \(r_l = \frac{(-1)^l}{2} I_2 + (-1)^l \frac{-N+2l}{4\Gamma } Q_2\), where \(Q_2/ \Gamma \) is a constant matrix, we estimate \({\mathcal {U}}_j\) by the same procedure as previous case and obtain

Finally, the estimates for \({\mathcal {U}}_j\) is complete for all assumptions and (25) is proven.

1.4 Proof of Lemma 3.6

Proof

By the definition of \(P^\dagger _h\), the solution of \(\grave{u}_{j, k-1}, \grave{u}_{j, k}\) has similar linear algebraic system as (22). That is, under assumption A2 or A3, the existence and uniqueness condition is \(\det (A+B) = 2( (-1)^k \Gamma + \Lambda ) \ne 0\). Thus,

And then, by (19) and (10), (28) is proven.

If any of the assumptions A2/A3 is satisfied, then the difference can be written as

The properties of \(P^\star _hu\) and \(P^\dagger _hu\) yield the following coupled system

where the second equality was obtained by the definition of \(P^\dagger _hu\) (27b).

Gather the relations above for all j results in a large \(2N \times 2N\) linear system with block circulant matrix M, defined in (15), as coefficient matrix, then the solution is

where by periodicity, when \(l+j> N\), \(\tau _{l+j} = \tau _{l+j-N}, \iota _{l+j} = \iota _{l+j-N}\).

On uniform mesh, by the definition of \(R_{j,m}\) in (31), \(R_{j,m}(1)\) and \((R_{j,m})_x(1)\) are independent of j, we denote the corresponding values as \(R_m(1)\) and \((R_m)_x(1)\) and let \(R^-_{m} = [R_m(1), (R_m)_x(1)]^T\). By (30), we have

and

We can estimate \({\mathcal {S}}_j\) by the same lines as the estimation of \({\mathcal {U}}_j\) in Appendix A.3 and (29) is proven. \(\square \)

1.5 Proof of Lemma 4.1

Proof

By error equation, the symmetry of \(A(\cdot , \cdot )\) and the definition of \(s_h,\) we have

Now, we are going to choose three special test functions to extract superconvergence properties (35)–(37) about \(\zeta _h.\) We first prove (35). Due to the invertibility of the coefficient matrix M, there exists a nontrivial function \(v_1 \in V_h^k\), such that \(\forall j \in {\mathbb {Z}}_N, v_1|_{I_j} = \alpha _{j,k-1}L_{j,k-1} + \alpha _{j,k} L_{j,k} + \overline{(\zeta _h)_{xx}}\), \(\int _{I_j}v_1 (\zeta _h)_{xx} dx = \Vert (\zeta _h)_{xx}\Vert _{L^2(I_j)}^2\), \({\hat{v}}_1 |_{{j+\frac{1}{2}}} = 0\) and \(\widetilde{(v_1)_x}|_{{j+\frac{1}{2}}} = 0\). Thus \(A(v_h, \zeta _h) = \Vert (\zeta _h)_{xx}\Vert ^2\). Let \(v_h=v_1,\) then (76) becomes

Hence \(\Vert (\zeta _h)_{xx}\Vert ^2 \le \Vert s_h + (\zeta _h)_t\Vert \cdot \Vert v_1\Vert .\) In order to show the estimates for \(\Vert (\zeta _h)_{xx}\Vert ,\) it remains to estimate \(\Vert v_1\Vert .\)

When the assumption A1 holds, the definition of \(v_1\) yields the following local system for each pair of \(\alpha _{j,k-1}\) and \(\alpha _{j,k}\),

By simple algebra

By orthogonality of Legendre polynomials, it follows that

where Lemma 3.2, trace inequalities and inverse inequalities are used in above inequality. Therefore, (35) is proven when assumption A1 is satisfied.

Similarly, we define \(v_2 \in V_h^k\), such that \(\forall j \in {\mathbb {Z}}_N, v_2|_{I_j} = \alpha _{j,k-1}L_{j,k-1} + \alpha _{j,k} L_{j,k}\), \(\int _{I_j}v_2 (\zeta _h)_{xx} dx = 0\), \({\hat{v}}_2 |_{{j+\frac{1}{2}}} = 0\) and \(\widetilde{(v_2)_x}|_{{j+\frac{1}{2}}} = \overline{[\zeta _h]}|_{{j+\frac{1}{2}}}\). Thus \(A(v_h, \zeta _h) = -\sum _{j=1}^N|[\zeta _h]|_{j+\frac{1}{2}}^2\). When assumption A1 is satisfied, this definition yields the following local system for each pair of \(\alpha _{j,k-1}\) and \(\alpha _{j,k}\),

By same algebra as above, we have

By Lemma 3.2, it follows directly that

Plug \(v_2\) in (76), we obtain

Therefore, (36) is proven when assumption A1 is satisfied.

Finally, we can also choose \(v_3 \in V_h^k\), such that \(\forall j \in {\mathbb {Z}}_N, v_3|_{I_j} = \alpha _{j,k-1}L_{j,k-1} + \alpha _{j,k} L_{j,k}\) such that \(\int _{I_j}v_3 (\zeta _h)_{xx} dx = 0\), \({\hat{v}}_3 |_{{j+\frac{1}{2}}} = \overline{[(\zeta _h)_x]}|_{{j+\frac{1}{2}}}\) and \(\widetilde{(v_3)_x}|_{{j+\frac{1}{2}}} = 0\). Thus \(A(v_h, \zeta _h) = \sum _{j=1}^N|[(\zeta _h)_x]|_{j+\frac{1}{2}}^2\). Follow the same lines as the estimates for \(v_2\), we end up with the estimates

Plug \(v_3\) in (76), we obtain (37) when assumption A1 is satisfied.

Under assumption A2, we need to compute \(\sum _{j=1}^N(|\alpha _{j,k-1}|^2 + |\alpha _{j,k}|^2)\) to estimate \(\Vert v_1 \Vert ^2\). The definition of \(v_1\) yields the following coupled system

Write it in matrix form

where M is defined in (15) and

Multiply \(A^{-1}\) from the left in (78), we get an equivalent system

and \((M')^{-1} = circ(r_0, \ldots , r_{N-1})\). By Theorem 5.6.4 in [15] and similar to the proof in Lemma 3.1 in [6],

where \({\mathcal {F}}_{N}\) is the \(N \times N\) discrete Fourier transform matrix defined by \(({\mathcal {F}}_N)_{ij} = \frac{1}{\sqrt{N}} {\overline{\omega }}^{(i-1)(j-1)}, \omega = e^{i\frac{2\pi }{N}}.\)\({\mathcal {F}}_{N}\) is symmetric and unitary and

The assumption \(\frac{\left| \Gamma \right| }{\left| \Lambda \right| } > 1\) in A2 ensures that the eigenvalues of \(Q = -A^{-1}B\) (see (52) in [10]) are not 1, thus \(I_2 + \omega ^j A^{-1}B, \forall j,\) is nonsingular and \(\varvec{\Omega }\) is invertible. Then

Therefore,

Since \(A^{-1}G \begin{bmatrix} 1 &{} 0 \\ 0 &{} \frac{1}{h} \end{bmatrix}, A^{-1}H \begin{bmatrix} 1 &{} 0 \\ 0 &{} \frac{1}{h} \end{bmatrix}\) are constant matrices, we have

where inverse inequality is used to obtain the last inequality. Finally, we obtain the estimate

where inverse inequality is used to obtain the last inequality. Then the estimates for (35) hold true. (36) and (37) can be proven by the same procedure when assumption A2 is satisfied, and the steps are omitted for brevity. \(\square \)

Remark A.1

When assumption A3 is satisfied, the eigenvalues of Q are two complex number with magnitude 1, then a constant bound for \(\rho ((M')^{-1})\) as in (79) is not possible. Therefore, we cannot obtain similar results for assumption A3.

1.6 Proof for Lemma 4.2

Proof

Since \(w_q \in V_h^k\), we have

Let \(v_h = D^{-2} L_{j,m}, m \le k - 2\) in (38a), we obtain

Since \(D^{-2} L_{j,m} \in P_c^{m+2}(I_j)\), by the property \(u - P^\star _hu \perp V_h^{k-2}\) in the \(L^2\) inner product sense, we have

By induction using (80), (81), (82), for \(0 \le m \le k - 2 - 2q\), \(c_{j,m}^q = 0.\)

Furthermore, the first nonzero coefficient can be written in a simpler form related to \(u_{j,k-1}\) by induction.

When \(q=1\), we compute \(c_{j, k-3}^1\) by (82) and the definition of \(w_0\). That is

Suppose \(c_{k+1-2q}^{q-1} = Ch_j^{2q-2}\partial _t^{q-1} (u_{j,k-1} - \acute{u}_{j,k-1})\), then

The induction is completed and (42) is proven when \(r=0\).

Next, we begin estimating the coefficient \(c_{j, m}^q.\) By Holder’s inequality and (81), we have the estimates for \(c_{j, m}^q, k-1-2q \le m \le k-2\),

To estimate the coefficients \(c_{j, k-1}^q, c_{j, k}^q\), we need to discuss it by cases. If assumption A1 is satisfied, meaning (38b) and (38c) can be decoupled and therefore \(w_q\) is locally defined by (38). By (39) and following the same algebra of solving the kth and \((k+1)\)th coefficients in (73),

By (19), for all \(j \in {\mathbb {Z}}_N\),

If one of assumption A2/A3 is satisfied, (39) defines a coupled system. From the same lines for obtaining (23) in Appendix A.3, the solution for \( c_{j,k-1}^q, c_{j,k}^q\) is

Under assumption A2, we can estimate \(c_{j,m}^q, m = k - 1, k,\) using (66), that is

Under assumption A3, \(\sum _{l=0}^{N-1}\Vert r_l\Vert _\infty \) is unbounded. Thus we use Fourier analysis to bound the coefficients utilizing the smoothness and periodicity by similar idea in [10]. In the rest of the proof, we make use of two operators \(\boxtimes \) and \(\boxplus \), which are defined in (71a) and (71b).

When \(\frac{|\Gamma |}{|\Lambda |} < 1\), \(Q=-A^{-1}B\) has two imaginary eigenvalues \(\lambda _1, \lambda _2\) with \(|\lambda _1| = |\lambda _2| =1\). By (59) of [10], we have \(r_l= \frac{\lambda _1^l}{1-\lambda _1^N} Q_1 + \frac{\lambda _2^l}{1-\lambda _2^N} (I_2 - Q_1)\), where \(Q_1\) is a constant matrix independent of h, and defined in (60) and (61) in [10]. We perform more detailed computation of the coefficients. In (82), plug in (23), for \(m = k-3, k-2\), when \(u_t \in W^{k+2+n,\infty }(I)\),

where \(F_{p,m}^\nu = \frac{2}{h} \int _{I_j}[L_{j,k-1}, L_{j,k}] V_{\nu ,p} D^{-2} L_{j,m} dx, \nu = 1, 2,\) are constants independent of h and (68) is used in the third equality.

Plug the formula above into (83), by similar computation, we have

By (72), we have

Therefore,

By induction and similar computation, we can obtain the formula for \(c_{j, m}^q\). For brevity, we omit the computation and directly show the estimates

When \(\frac{|\Gamma |}{|\Lambda |} =1\), \(Q=-A^{-1}B\) has two repeated eigenvalues. By (71) of [10], we have \(r_l = \frac{(-1)^l}{2} I_2 + (-1)^l \frac{-N+2l}{4\Gamma } Q_2\), where \(Q_2/ \Gamma \) is a constant matrix, then by (23) and (68). For \(m = k-3, k-2\), when \(u_t \in W^{k+2+n,\infty }(I)\), we compute \(c_{j, m}^1\) by the same procedure as previous case and obtain

Plug formula above into (83), we have

By (72), we have

and

By induction and similar computation, we can obtain the formula for \(c_{j, m}^q\). For brevity, we omit the computation and directly show the estimates

All the analysis above works when we change definition of \(w_q\) to \(\partial _t^r w_q\) (and change \((w_{q-1})_t\) to \(\partial _t^{r+1} w_{q-1}\) accordingly) in (38). Summarize the estimates for \(c_{j, m}^q\) under all three assumptions, for \(1 \le q \le \lfloor \frac{k-1}{2} \rfloor \), we have

Then (42), (43) is proven. And (44) is a direct result of above estimate and (41). \(\square \)

Rights and permissions

About this article

Cite this article

Chen, A., Cheng, Y., Liu, Y. et al. Superconvergence of Ultra-Weak Discontinuous Galerkin Methods for the Linear Schrödinger Equation in One Dimension. J Sci Comput 82, 22 (2020). https://doi.org/10.1007/s10915-020-01124-0

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10915-020-01124-0