Abstract

This article is a brief survey of Saul Abarbanel’s research career. We shall describe the main contributions and present the results in some of his articles. For each field of his interest a few introductary remarks are included with the intention of describing the history and the state of existing numerical methods at the time.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

This article is a brief survey of Saul Abarbanel’s researh career that stretched over more than half a century. His production was huge and has had great impact in the field of numerical analysis and its applications. He started out with work that can be characterized as applied mathematics in the classical sense with focus on aerodynamics. However, he soon switched to numerical methods, and his main research area came to be finite difference methods for the solution of partial differential equations. He worked on several different types of problems with particular emphasis on high order methods based on compact implicit difference operators, and on problems requiring artificial boundaries.

The list of publications at the end is far from complete, it includes essentially those articles mentioned in this presentation. He continued working with his students a long time after their graduation and many of these are coauthors on his articles. There are certainly many others who worked with him who should be mentioned in a more complete biography.

The presentation of his work on difference methods is divided into four parts corresponding to Saul’s main areas of interest:

Lax–Wendroff type difference methods.

High–order difference methods.

Navier–Stokes equations.

Artificial boundaries and PML–methods.

For each field a few introductary notes are included with the intention of describing the history and the state of existing numerical methods for the particular problems that caught Saul’s interest at the time.

2 The Early Years

Saul was born in Montclair, NJ, USA in 1931, but in 1933 his family moved back to Tel Aviv, where Saul finished high scool at the age 16. At this time the Jewish community was under various threats and Saul joined the underground resistence organization Palmach which later became part of the Israel defence forces. The war of independence took place 1947–1949; Saul served initially in the heavy mortar unit and later as a radio operator in the Air Force. This experience, obtained as a young teenager with high risk of being killed, is quite unusual among scientists in general.

In 1951 Saul moved to Boston where he started his academic studies at MIT, where he got his B.Sc. and M.Sc. in Aerodynamics and Astronautics. His Ph.D. thesis presented in 1959 was in theoretical aerodynamis with a study of heat transfer in various types of flow. Actually, the degree Sc.D. would have been more natural at this department, but the Ph.D. degree was used because of the theoretical mathematical character of the thesis. The work can be classified as applied mathematics with derivation of analytic solutions to certain mathematical models in physics. No numerical methods were used.

After completing the Ph.D. thesis at MIT he moved back to Israel and spent a year as post doc. at the Weizmann Institute. After that he got a position as Assistant Professor at Tel Aviv University where he began his academic career, and where he stayed until his death except for numerous visits as guest professor in United States.

Saul’s first published papers were [1] (1960) and [2] (1961), both of them about heat transfer. These were followed by a number of articles treating various flow problems, in particular those involving shocks. During the first decade his research was still applied mathematics with a number of different physical and technical applications. Some of his results were published in Israel Journal of Technology, for example the article [3] with the title The deflection of confining walls by explosive loads. Another one has the title The motion of shock waves and products of detonation confined between a wall and a rigid piston with an abstract which contains the sentence “\(\ldots \)a detailed analytical solution of the piston motion and flow field is carried out\(\ldots \)”, see [19]. This is a good illustration of the character of his work at this time; a mathematical model for the physical behaviour is found, and then an analytical solution is derived. The analysis is often very complicated, but Saul had an excellent technical skill when it came to handling the necessary mathematical tools. One can wonder whether his choice of topics had something to do with the problems encountered by the Israeli weapon industry.

3 Lax–Wendroff Type Difference Methods

Late in the 1960s Saul’s work shifted towards numerical methods, mainly difference methods for partial differential equations. At this time the approximation of nonlinear PDE was a big issue, the main problem being hyperbolic equations containing discontinuities in the form of contact discontinuities and shocks. The definition of unique solutions is not a trivial matter, but it was resolved in the seminal article [28] by Peter Lax in 1954. There the definition of shocks for the equation

was given as the limit of the perturbed equation

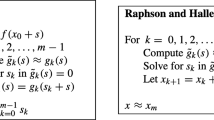

as \(\varepsilon \rightarrow 0\). For any fixed \(\varepsilon \) this equation is parabolic and has smooth solutions. The idea was introduced by von Neumann and Richtmyer already in 1950 as a method for solving (1), see [34]. Many versions based on this idea were developed, they were later called Lax-Wendroff type methods after the basic method given in the article [29]. It became the standard type for solution of more general shock problems even if Godunov methods, upwind methods and shock fitting began emerging. However, there is a problem with the Lax–Wendroff method since oscillations occur near the shock no matter how fine the grid is chosen. In the paper [18] this problem is resolved by modifying the scheme to an iterative version

where Q represents the Law–Wendroff operator. The intention is to use a low number of iterations, and it turns out that \(k=1\) and \(k=2\) eliminates the oscillations completely, giving a monotone profile. The method is used for various applications, see for example [10].

This work is typical for much of Saul’s work throughout the years. He picks up some method that has the potential of being effective but has certain flaws. Then he analyzes it to find the reason for the problem, and when understanding it he comes up with a cure that improves the performance considerably.

Another example of this is the article [9] published in 1986. For steady state problems several methods existed at this time, some of them being based on the time-dependent PDE that after integration in time leads to a steady state. Implicit methods allow for large time-steps without sacrificing stability, and ADI-methods simplify the solution of the large algebraic systems that occur at each time-step. One such method was introduced by Beam and Warming in 1976; for the simple heat equation

it is

for equal step-size h in both space directions. Here \(\delta _x^2\) and \(\delta _y^2\) are the standard second order difference operators, \(\lambda =\Delta t/h^2\) and \(\alpha \) are constants that may be chosen in different ways for different versions. Saul and his coworkers investigate the rate of convergence to steady state as \(n\rightarrow \infty \) and develop a general type of convergence analysis based on the size of the residual at each iteration. In this way the convergence rate is derived and shown to depend on \(\lambda \). To improve the method, an extra term

is added to the right hand side. In this way the convergence rate is significantly improved, furthermore it is independet of \(\lambda \) over a large range of values. Not only that, it largely removes the effect of grid stretching and seems to be very robust in general.

Finally it should be mentioned that higher order accurate versions of the Lax-Wendroff scheme was constructed in an early work with Zwas, see [36].

Typically for Saul, the results are based on a thorough analysis, partly containing a new type of approach. When the mechanism is understood, modifications are made resulting in a significant improvement of the algorithm.

4 High-Order Difference Methods

Beginning in 1971 pseudospectral methods were emerging, see [32] and [26]. These methods have optimal accuracy, i.e., the order is \({{\mathcal {O}}}(N)\), where N is the number of grid-ponts in space. However, with trigonometric basis functions allowing for the use of FFT, the application of the method is limited to problems with periodic solutions. Even if other basis functions like Chebyshev polynomials are used, the presence of boundaries causes trouble.

Traditionally, the order of accuracy for any method had been defined by truncated Taylor expansions and the form of the remainder. However, in [26] another and more precise study of the accuracy is introduced. A Fourier transformation is made, and the number of grid-points per wave-length to achieve a certain prescribed error is determined. The results show that high order methods are more effective even if more grid-points are included for approximation of derivatives at each grid-point. This created a new interest in high order difference methods. These have wide stencils and also here there are difficulties near the boundaries.

The method of lines is often used for PDE, i.e., the discretization is first done in space and then an ODE-solver is applied to the resulting system of ordinary differential equations. A Runge–Kutta method is often used for the time discretization, but the problem with wide computational stencils is then even more severe than for other direct PDE-methods, in particular for 3D-problems. The reason is that each step requires a number of intermediate stages and this creates difficulties when constructing numerical boundary conditions.

In [33] a modified version of the 4th order Runge–Kutta method is constructed and applied to the 2D conservation law

where \({\mathbf{u\,,f}}\) and \({\mathbf{g}}\) are vectors. The standard formulas for each stage is substituted by an advanced form of the Lax–Wendroff principle. In this way the computational domain is reduced, see Fig. 1, and already in two space dimensions the size reduces from 269 to 16 points, in 3D the reduction is enormous.

Actually Saul and his coworkers also worked out new boundary conditions for the original Runge–Kutta method, which eliminates problems with accuracy and stability for various conditions that are often used, see [24]. They find that by using extrapolation from inner points at the intermediate stages and imposing the exact condition \(u(0,t)=g(t)\) at the end of each complete step, the size of the time-step is reduced significantly. On the other hand, if the exact boundary values are prescribed at each stage, the step-size is not affected, but the accuracy is reduced to second order independently of the order of the basic Runge–Kutta scheme. Several other conditions are suggested for the intermediate stages and a detailed analysis shows that the drawbacks sometimes are eliminatad, sometimes they are not. For example, if the exact values at each stage is substituted by a Taylor expansion such that the boundary value at the first stage is given as

and similarly for the remaining stages, then full accuracy is retained for the linear case, while the accuracy in the nonlinear case is reduced to third order. This deficiency is removed by using the SAT tecnique where a penalty term is added to the approximation, see [14].

One way to overcome the difficulties with numerical boundary conditions is to construct high order methods by using a narrower computational stencil. The question then is if it is possible to retain the high order accuracy including the boundaries. Saul and his coworkers showed that it is.

As the basic method they used implicit compact difference methods, and the results were presented in a series of articles, see [4, 5, 21,22,23]. The principle for implicit compact difference operators goes back to approximations of functions by rational funcions introduced by Henri Padé already 1890. This idea was used by Collatz and later by Lele [30] as a basis for a new type of difference operators, where the difference approximation v of \(\partial u/\partial x\) has the form

where \(P_r\) and \(Q_r\) are difference operators such that the approximation is of order r.

Lele’s stability analysis is limited to a numerical computation of the eigenvalues of the matrix corresponding to the difference operator, and this does not guarantee stability in the true sense. Saul and some of his colleagues decided to do something about it. They considered the scalar hyperbolic equation

with the semidiscrete approximation

The method of analysis is based on normal mode analysis leading to so called GKS stability. The definition of stability allows for a bounded growth in time which is a disadvantage in many cases. If this growth is eliminated the result is a strictly stable scheme, also called time-stable or asymptotically stable. This property requires that the eigenvalues of the difference operator in space are located in the left halfplane, or in the fully discrete case that they are inside the unit circle.

Boundary conditions with various order of accuracy are constructed and analyzed with respect to stability. There are three main parts in the article: investigation of GKS stability, investigation of strict stability by using eigenvalue computation and finally numerical experiments for verification of the theoretical results. The GKS analysis requires the solution of polynomial equations. Increasing the order of accuracy increases the degree of the polynomials, and as a consequence it becomes more difficult to find the zeros. In these cases numerical methods are used for finding the roots to machine accuracy.

The results of this thorough and extensive analysis is the construction of GKS stable and strictly stable schemes with boundary conditions of up to sixth order of accuracy.

The boundary conditions are based on SBP operators, i.e., a scalar product is constructed such that by using summation by parts it can be shown that the corresponding norm \(||\cdot ||\) satifies

for all t. Clearly stability in the usual sense as well as strict stability holds in this case. These operators were invented by Heinz Kreiss and his student Godela Scherer in 1974, see [27], and many generalizations were made after that. However, for compact difference schemes not much had been done. Saul and his colleuges closed this gap by constructing new SBP operators which satisfies the proper conditions for the scalar case. However, for systems of PDE they also found a counter example which is not strictly stable. As an alternative they considered a SAT implementation mentioned above, and in this way stable and strictly stable methods were obtained.

The work on boundary conditions for implicit compact difference schemes is an extensive and important part of Saul’s work. The results have had a significant impact which is confirmed by the large numbers of citations.

Finally we would like to mention the article [6] where a multidimensional problem on an irregular domain is considered, see Fig. 2. The construction of stable boundary conditions is complicated when it comes to finite difference methods, but here the nonsymmetric operators near the boundary are constructed for a 4th order method, and shown to be stable using an energy estimate.

5 Navier–Stokes Equations

Solving the Navier–Stokes equations for viscous flow is a challenge since the equations are nonlinear and require a fine mesh for realistic applications. During the 1970s certain progress had been made, and the MacCormack–Baldwin scheme, see [31], quickly became popular. It has a split character allowing for convenient implementation, but a precise stability condition governing the choice of the time-step was missing. Furthermore, the estimated time-step depends on the step-size in all space directions (2 or 3).

Saul together with David Gottlieb proposed a new method of splitting which allows for maximal time-step in all directions, see [11]. The equations are written in the form

where \({\mathbf{F}}_H\) contains zero order terms, \({\mathbf{F}}_P\) contains first order x-derivatives, while \({\mathbf{F}}_M\) contains first order derivatives with respect to x, y, z. The vectors \({\mathbf{G}}\) and \(\mathbf{H}\) are defined in analogy with \({\mathbf{F}}\). The approximation is

where the L-operators denote the solvers corresponding to the one-dimensional terms in the differential equation while \(L_{xyz}\) solves the 3D-equation

The corresponding constant coefficient linear scalar model equation is shown to be stable under the one-dimensional conditions on the time-step corresponding to the one-dimensional operators. For the mixed operator the condition is \(\Delta t_{xyz}\le \Delta t_x\), which does not add any extra restriction.

The challenge is now to generalize this result to the linearized system with matrix coefficients. The central problem is to construct a similarity transformation that symmetrizes all the matrices, because then stability follows with the coefficients of the scalar equation substituted by the eigenvalues of the matrices. The authors manage to find this transformation, which is a great achievement. It leads to the first complete stability analysis of any difference approximation for the Navier–Stokes equations. Actually, in an interview by Philip Davis in 2003, Saul thought that this paper, written together with David Gottlieb, was his most important one.

Low Mach number flow is characterized by low fluid velocity compared to the speed of sound leading to a stiff system, and this creates difficulties when constructing numerical methods. Various splitting methods have been proposed, but they have not performed well in certain situations. This problem is considered in [8], and the first part of the paper contains an explanation of this behaviour. The basic source of the ill-posedness is that the underlying form of the differential equation has matrix coefficients that cannot be simultainously symmetrized, and in this case it was very natural for the authors to use the technique that was developed in [11]. The stiffness can of course not be removed without a fundamental change of the differential equations. However, Saul and his coworkers constructed a new splitting that allows for symmetrization, and as a consequence a well posed system with a stable difference method. Furthermore, the stiffness is isolated to a linear part of the approximation which “may be solved implicitly with ease” as expressed by the authors.

6 Artificial Boundaries and PML–Methods

For PDE problems defined on infinite domains, numerical methods based on a computational grid require a finite domain with an artificial boundary. The boundary conditions should be such that they affect the true solution as little as possible. The first attempt to handle this problem was done in 1977 by Björn Enquist and Andy Majda, who considered the wave equation and the problem of letting the waves through the artificial boundary without any reflections, see [25]. They Fourier transformed the wave equation, made an approximation annilihating the ingoing wave and then transformed back to physical space. This procedure results in high order derivatives as boundary conditions. Other types of conditions were constructed during the following years, but the real breakthrough came 1994 when Jean–Pierre Berenger presented the Perfectly Matched Layers technique (PML) for the Maxwell equations, see [20]. The idea is to add a layer around the computational domain, where the PDE is changed such that the solution is damped out before reaching the outer boundary, see Fig. 3. This technique has later been generalized and is now the most common method for problems of this type.

It turns out that the method works fine for many problems, but not for others. Saul and David Gottlieb decided to find out why this happens, see [12], and they did this by analyzing the PDE itself without any discretization. The Maxwell equations in 2D are

where the vector \({\mathbf{W}}=[E_x,\,E_y,\,H_z]^T\) represents the electric and magnetic field. In the extra layer the magnetic field is split such that the vector

satisfies

The authors note that the coefficient matrices in the original form can be symmetrized, while in the second version they cannot. The pure initial value problem for the second version is analyzed by Fourier transforming the equations. It is shown that the problem is not well posed and as a consequence certain perturbations may result in an unbounded exponential growth. This means of course that no consistent approximation can be stable.

The question is now how to modify the equations such that they become well posed. The answer is presented in the follow up article [13], where a modified set of equations is proposed. It has the form

Here \(P=J+\sigma E_x\), where J is a polarization current introduced by Ziolkowski, see [35]. One finesse with these equations is that no analysis is required to prove wellposedness since the principle part, where the lower order terms are disregarded, is the original Maxwell equations known to be well posed. Furthermore, the extra equation is an ODE which doesn’t add much to the computational work. A careful analysis shows that all the necessary properties hold, including continuity across the internal boundary separating the extra layer from the original domain.

Still another modification of the equations to be applied in the extra layer is proposed. It is constructed from a pure mathematical derivation, and is shown to have essentially the same properties as the previous model.

PML methods is a major part of Saul’s scientific production, the articles [15, 16] and [17] are further examples of this. The results are certainly very important, they contributed a lot to the understanding of this class of methods, and led to extensions with significant improvements.

7 Conclusions

Saul Abarbanel’s research career is remarkable. His first publication occurred in 1960 and his last one in 2015 when he was 84 years old, see [7]. All of the articles were published in well known journals and most of them are frequently cited. He advanced the development of new numerical methods for partial differential equations significantly, all being based on strict analysis securing stability and high order of accuracy. In typical cases he picks up some recent method that has been shown to fail for many applications. He analyzes it, often using som new approach, and finds the cure for it resulting in a well performing method. He had an extraordinary skill in using advanced mathematics leading to new insight in difficult problems. His development of new high order difference methods based on compact implicit methods has had a strong impact in the computational community, in particular the construction of stable and high order boundary conditions. Another important and difficult problem is the handling of artificial boundaries, and in this case he came up with new well working methods for general problems based on the PML-technique.

Much of Saul’s work was carried out jointly with others. It goes without saying that he attracted the very best PhD students, and he continued working with many of them until the end.

He had many commissions of trust. Already in 1964 he became head of the Applied Mathematics department at Tel Aviv University, later Dean of Science and finally Rector. After that he was appointed as Chairman of the National Research Council, and finally as Director of the Sackler Institute of Advanced Studies. Not surprisingly he handled these commissions successfully, and he did it in the typical informal way that characterized his personality. It is highly remarkable that he was able to do this while at the same time carrying out all the high level research described above.

Saul was an extremely nice and happy person, always available for discussions with others, not only about scientific matters but also about every day matters. He was one of the few that seems to have been liked by everybody.

References

Abarbanel, S.: Time dependent temperature distribution in radiating solids. J. Math. Phys. 29, 246–257 (1960)

Abarbanel, S.: Radiative heat transfer in free-molecule flow. J. Aerosp. Sci. 28, 299–307 (1961)

Abarbanel, S.: The distribution of flow properties behind a spherical detonation wave. Isr. J. Technol. 4, 82–86 (1966)

Abarbanel, S., Chertock, A.: Strict stability of high-order compact implicit finite-difference schemes: the role of boundary conditions for hyperbolic PDEs, I. J. Comput. Phys. 160, 42–66 (2000)

Abarbanel, S., Chertock, A., Yefet, A.: Strict stability of high-order compact implicit finite-difference schemes: the role of boundary conditions for hyperbolic PDEs, II. J. Comput. Phys. 160, 67–87 (2000)

Abarbanel, S., Ditkowski, A.: Asymptotically stable fourth-order accurate schemes for the diffusion equation on complex shapes. J. Comput. Phys. 133, 279–288 (1997)

Abarbanel, S., Ditkowski, A.: Wave propagation in advected acoustics within a non-uniform medium under the effect of gravity. Appl. Numer. Math. 93, 61–68 (2015)

Abarbanel, S., Duth, P., Gottlieb, D.: Splitting methods for low Mach number Euler and Navier–Stokes equations. Comput. Fluids 17, 1–12 (1989)

Abarbanel, S., Dwoyer, D., Gottlieb, D.: Improving the convergence rate to steady state of parabolic ADI methods. J. Comput. Phys. 67, 1–18 (1986)

Abarbanel, S., Goldberg, M.: Numerical solution of quasi-conservative hyperbolic systems—the cylindrical shock problem. J. Comput. Phys. 10, 1–21 (1972)

Abarbanel, S., Gottlieb, D.: Optimal time splitting for two- and three-dimensional Navier–Stokes equations with mixed derivatives. J. Comput. Phys. 41, 1–33 (1981)

Abarbanel, S., Gottlieb, D.: A mathematical analysis of the PML method. J. Comput. Phys. 134, 357–363 (1997)

Abarbanel, S., Gottlieb, D.: On the construction and analysis of absorbing layers in CEM. Appl. Numer. Math. 27, 331–340 (1998)

Abarbanel, S., Gottlieb, D., Carpenter, M.: On the removal of boundary errors caused by Runge–Kutta integration of nonlinear partial differential equations. SIAM J. Sci. Comput. 17, 777–782 (1996)

Abarbanel, S., Gottlieb, D., Hesthaven, J.: Well-posed perfectly matched layers for advective acoustics. J. Comput. Phys. 154, 266–283 (1999)

Abarbanel, S., Gottlieb, D., Hesthaven, J.: Long time behavior of the perfectly matched layer equations in computational electromagnetics. J. Sci. Comput. 17, 405–422 (2002)

Abarbanel, S., Gottlieb, D., Hesthaven, J.: Non-linear PML equations for time dependent electromagnetics in three dimensions. J. Sci. Comput. 28, 125–137 (2006)

Abarbanel, S., Zwas, G.: An iterative finite-difference method for hyperbolic systems. Math. Comput. 23, 549–565 (1969)

Abarbanel, S., Zwas, G.: The motion of shock waves and products of detonation confined between a wall and a rigid piston. J. Math. Anal. Appl. 28, 517–544 (1969)

Berenger, J.: A perfectly matched layer for the absorbtion of electromagnetic waves. J. Comput. Phys. 114, 185–200 (1994)

Carpenter, M., Gottlieb, D., Abarbanel, S.: The stability of numerical boundary treatments for compact high-order finite-difference schemes. J Comput. Phys. 108, 272–295 (1993)

Carpenter, M., Gottlieb, D., Abarbanel, S.: Stable and accurate boundary treatments for compact, high-order finite-difference schemes. Appl. Numer. Math. 12, 55–87 (1993)

Carpenter, M., Gottlieb, D., Abarbanel, S.: Time-stable boundary conditions for finite-difference schemes solving hyperbolic systems: methodology and application to high-order compact schemes. J Comput. Phys. 111, 220–236 (1994)

Carpenter, M., Gottlieb, D., Abarbanel, S., Don, W.-S.: The theoretical accuracy of Runge-Kutta time discretizations for the initial boundary value problem: a study of the boundary error. SIAM J. Sci. Comput. 16, 1241–1252 (1995)

Engquist, B., Majda, A.: Absorbing boundary conditions for the numerical simulation of waves. Math. Comput. 31, 629–651 (1977)

Kreiss, H.-O., Oliger, J.: Comparison of accurate methods for the integration of hyperbolic problems. Tellus 24, 199–215 (1972)

Kreiss, H.-O., Scherer, G.: Finite Element Andfinite Difference Methods for Hyperbolic Partial Differential Equations, Mathematical Aspects of Finite Element And Finite Difference Methods For Hyperbolic Partial Differential Equations. Academic Press, New York (1974)

Lax, P.: Weak solutions of nonlinear hyperbolic equations and their numerical computation. Commun. Pure Appl. Math. 7, 159–193 (1954)

Lax, P., Wendroff, B.: Systems of conservation laws. Commun. Pure Appl. Math. 13, 217–237 (1960)

Lele, S.: Compact finite difference schemes with spectral-like resolotion. J. Comput. Phys. 103, 16–42 (1992)

MacCormack, R., Baldwin, B.: A numerical method for solving the Navier–Stokes equations with application to shock-boundary layer interaction. AIAA paper 75–1 (1975)

Orszag, S.: Numerical simulation of incompressible flows within simple boundaries. I. Galerkin (spectral) representations. Stud. Appl. Math. 50, 293–327 (1971)

Turkel, E., Abarbanel, S., Gottlieb, D.: Multidimensional difference schemes with fourth order accuracy. J. Comput. Phys. 21, 85–113 (1976)

von Neumann, J., Richtmyer, R.: A method for numerical calculation of hydrodynamic shocks. J. Appl. Phys. 21, 232–237 (1950)

Ziolkowski, R.: Time-derivative Lorentz-material model-based absorbing boundary condition. IEEE Trans. Antennas Propag. 45, 1530–1535 (1997)

Zwas, G., Abarbanel, S.: Third and fourth order accurate schemes for hyperbolic equations of conservation law form. Math. Comput. 25, 229–236 (1971)

Acknowledgements

I would like to thank Adi Ditkowski for providing some facts on Saul’s youth and some material concerning his early scientific work.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Gustafsson, B. Saul Abarbanel; Half a Century of Scientific Work. J Sci Comput 81, 1124–1135 (2019). https://doi.org/10.1007/s10915-019-00932-3

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10915-019-00932-3