Abstract

In this work, a novel artificial viscosity method is proposed using smooth and compactly supported viscosities. These are derived by revisiting the widely used piecewise constant artificial viscosity method of Persson and Peraire as well as the piecewise linear refinement of Klöckner et al. with respect to the fundamental design criteria of conservation and entropy stability. Further investigating the method of modal filtering in the process, it is demonstrated that this strategy has inherent shortcomings, which are related to problems of Legendre viscosities to handle shocks near element boundaries. This problem is overcome by introducing certain functions from the fields of robust reprojection and mollifiers as viscosity distributions. To the best of our knowledge, this is proposed for the first time in this work. The resulting \(C_0^\infty \)artificial viscosity method is demonstrated to provide sharper profiles, steeper gradients, and a higher resolution of small-scale features while still maintaining stability of the method.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In the last decades, great efforts have been made to develop accurate and stable numerical methods for time dependent partial differential equations (PDEs), especially for hyperbolic conservation laws. Traditionally, low order numerical schemes, have been used to solve hyperbolic conservation laws, in particular in industrial applications. But since they become quite costly for high accuracy or long time simulations, there is a rising demand for high order methods. Such high order methods, like flux reconstruction [42, 68] or several Discontinuous Galerkin schemes [20, 27, 38], typically use polynomial approximations to the solution. At least for smooth solutions, they are capable of reaching spectral orders of accuracy. Yet, special care has to be taken to the fact that solutions of hyperbolic conservation laws might form spontaneous discontinuities. Due to the Gibbs phenomenon, polynomial approximations of jump functions typically show spurious oscillations and yield the underlying numerical scheme to break down. While low-order methods add too much dissipation to the numerical solution, hence smearing smaller scaled features, high-order methods add too little. Therefore, in recent hp-methods, the idea is to add artificial dissipation in elements where discontinuities arise. Such procedures are known as shock capturing techniques.

In this work, a novel artificial viscosity method is proposed for sub-cell shock capturing in Discontinuous Galerkin and related methods. This new artificial viscosity method, referred to as the \(C_0^\infty \)artificial viscosity method, essentially relies on the idea to replace commonly used viscosities in the artificial viscosity method [47, 58] by certain weight functions from the field of robust reprojection [25] and mollifiers [66].

Further, the novel \(C_0^\infty \) artificial viscosity method is derived by revisiting the most commonly used existing viscosity methods, such as the piecewise constant artificial viscosity method of Persson and Peraire [58] as well as the piecewise linear method of Klöckner et al. [47], with regard to the fundamental design criteria of conservation and entropy stability. To the best of the authors’ knowledge, this is the first work to investigate these methods in a strictly analytical sense. In the process, we are able to pinpoint certain drawbacks of these methods, formulate precise criteria on the viscosity terms for certain properties to hold, and thus construct novel ones with favorable properties.

It should be noted that the related strategy of modal filtering is addressed, which is a widely used tool [8, 28, 30, 37], since it promises to be somewhat of a more efficient and easy to implement formulation of the artificial viscosity method. We will show, however, that modal filtering has inherent drawbacks which can not be overcome. This will be demonstrated by showing that modal filtering (at least for exponential filters) corresponds to specific Legendre or more general Jacobi artificial viscosities which again are highlighted to perform poor when shock discontinuities form near element boundaries. To the best of our knowledge, this is the first time modal filtering (by exponential filters) is exposed to hold such a shortcoming.

The rest of this work is organised as follows. In Sect. 2, a short description of the Discontinuous Galerkin method is given. Section 3 exposes the essential design criteria of conservation and entropy stability for hyperbolic conservation laws on which the subsequent theoretical investigation of different artificial viscosity methods are based. The artificial viscosity method is then introduced in Sect. 4 and the most widely used versions of Persson and Peraire as well as of Klöckner et al. are revisited. In this section it is further proved that conservation holds for the viscosity extension of a conservation law, once the artificial viscosity is continuous and compactly supported. In Sect. 5 this discussion is extended to entropy stability of viscosity extensions, where it is shown that the artificial viscosity in addition has to be nonnegative for entropy stability to carry over. Moreover pinpointing the crucial drawbacks of modal filtering, we utilize the acquired design criteria for artificial viscosity terms to propose novel ones. Section 6 introduces the shock sensor which will be used for the subsequent numerical tests in order to steer the location and strength of dissipation added by the novel artificial viscosity method. Numerical tests demonstrating the performance of the proposed novel strategies—also in comparison with the commonly used one of Klöckner et al.—are provided in Sect. 7 for the system of Euler equations. The work closes in Sect. 8 by summarizing the characteristic features of the proposed new artificial viscosity methods and discussing possible future applications.

2 Discretization

Traditionally, the DG approach combines ideas from Finite Volume (FV) and Spectral Element Methods (SEM). When space and time are decoupled by the method of lines [51], the DG method is designed as a semidiscretization of hyperbolic systems of conservation laws,

with appropriate initial and boundary conditions on a physical domain \(\Omega \), which is decomposed into I disjoint, face-conforming elements \(\Omega _i\), \(\Omega = \bigcup _{i=1}^I \Omega _i\).

The DG method in one space dimension uses a nodal or modal polynomial basis of order p in a reference element \(\Omega _\textit{ref} = [-\,1,1]\). The I elements are mapped to this reference element, where all computations are performed then. The extension to multiple dimensions can be achieved by tensor products. Thus, in the following, just this one dimensional case is briefly revised. For a more detailed as well as more general description of DG schemes, see for instance the works [38, 45] and references cited therein.

In one space dimension, the DG approach uses polynomial approximations \(u_p,f_p \in \mathbb {P}_p([-1,1])\) to the solution u(t, x) respectively the flux f(u) at time t. It is constructed by the approach of the residual

to vanish in the sense that it is orthogonal to all local test functions \(\psi \in \mathbb {P}_p([-1,1])\), i.e.

By applying integration by parts once, the locally defined weak form

is recovered, and by applying integration by parts a second time, the locally defined strong form

arises. Here, \(f^\mathrm {num}\) is a suitably chosen numerical flux. Representing the numerical solution \(u_p\), the flux (reconstruction) \(f_p\), and the test functions \(\psi \) all with respect to the same basis \(\{ \varphi _k \}\),

\(p+1\) equations

for \(j=0,\dots ,p\), are obtained for the \(p+1\) unknown coefficients \(\hat{u}_k\) of the numerical solution on each element.

By utilizing the mass, stiffness, differentiation, restriction (interpolation), and boundary integral matrices

the DG projection (7) can be rewritten in its matrix form

Here, \(\underline{u},\underline{f}\) are the vectors containing the coefficients of \(u_p, f_p\) and \(\underline{f}^\mathrm {num}\) is the vector containing the values of the numerical flux at the element boundaries.

The resulting system of ordinary differential equations for the solution coefficients \(\underline{u}\), is integrated in time using for instance a Runge–Kutta method then. See the extensive literature [31,32,33, 46, 52]. In this work, the later numerical tests are obtained by the explicit strong stability preserving (SSP) Runge–Kutta (RK) method of third order using three stages (SSPRK(3,3)) or of forth order using five stages (SSPRK(4,5)) given by Gottlieb and Shu in [32] and Hesthaven and Warburton in [38], respectively.

3 Design Principles and Equations

When space and time are decoupled by the method of lines, numerical methods for hyperbolic conservation laws \(\partial _t u = -\, \partial _x f(u)\) are essentially designed as semidiscretisations of the right hand side \(-\,\partial _x f(u)\). In the DG approach described in Sect. 2, this semidiscretisation is given by

But how does one construct such a semidiscretisation? Besides consistency with the underlying differential equation, numerical methods typically aim to mimic certain properties of the (unknown) analytical solution. In this work, the focus will be given to the very essential properties of conservation and entropy stability as design principles for numerical schemes. In fact, a broad class of artificial viscosity terms for sub-cell shock capturing methods will be rejected, since they already violated conservation. Furthermore, every hyperbolic conservation law provides its very own entropy (criterion), which is then used to select solutions of physical significance. In this section, the most important equations and associated entropy functions are briefly revisited.

3.1 Design Principles

Conservation laws

ensure that the rate of change of the total amount of a particular measurable property u in a fixed domain \(\Omega \) is equal to the flux of that property across the boundary of \(\Omega \), i.e.

Recalling [50] that a solution of (12) may develop spontaneous jump discontinuities (shock waves) in finite time and even for smooth initial condition, the more general class of weak solutions has to be permitted. However, since there are many possible weak solutions then, the one of physical significance must be selected. This is done by augmenting the conservation law (12) with an additional entropy condition which requires

U(u) and F(u) are any strictly convex entropy function and corresponding entropy-flux associated with the conservation law (12) in the sense that they have to satisfy \(U'(u) f'(u) = F'(u)\). A strict inequality in (14) reflects the existence of physically relevant shock waves in the solution of the system (12), (14) then. Similar to the associated conservation law, the entropy condition (14) ensures that the rate of change of the total amount of entropy U in a fixed domain \(\Omega \) is equal or less to the entropy-flux across the boundary of \(\Omega \), i.e.

Especially for periodic boundary conditions, the two mayor design principles,

which will be further utilized in Sects. 4 and 5, arise.

3.2 Linear Advection and Euler Equations

At the very simple end of the spectrum of hyperbolic conservation laws lies the linear advection equation,

with constant velocity. Equation (18) transports its initial condition in time with speed 1. Thus, when the equation is augmented with an initial condition \(u_0(x)\), the exact solution is simply given by \(u(t,x) = u_0(x-t)\). For the linear advection equation, one has \(f'(u) = 1\) and hence \(U'(u) = F'(u)\) for a pair (U, F) of a strictly convex entropy U and an entropy-flux F. One such pair associated to the linear advection equation, is therefore already given by

which is the so called \(L^2\)entropy. In fact, for scalar conservation laws, any strictly convex function U is an entropy [29]. Note that it is much more difficult to find an entropy U in the general case of systems. In fact, the existence of entropy functions is a special property of the system. However, in all practical examples derived from Mechanics or Physics, finding an entropy which has a physical meaning is possible.

Such a practical example are the Euler equations

of gas dynamics. Here, \(\rho \) is the density, v is the velocity, E is the total energy, \(m = q \rho \) is the momentum, \(P = (\gamma - 1)(E-\frac{1}{2}v^2 \rho )\) is the pressure, and \(\gamma \) is the ratio of specific heats. Euler equations broadly apply to compressible, inviscid flow problems. An entropy-entropy flux pair (U, F) associated to the Euler equations (20) is given by

where s is the physical entropy

with constant term \(C \in \mathbb {R}\), which can be ignored. In fact, there are many possible choices for the entropy function, such as \(U = \rho \mu (s)\) for any convex function \(\mu \), see [35]. The above choice, however, is the only one which is consistent with the entropy condition from thermodynamics [41] in the presence of heat transfer.

4 Motivation for \(C^0\) Artificial Viscosity

Spontaneous development of jump discontinuities in solutions of hyperbolic conservation laws is not only a challenge from a theoretical point of view but also from a numerical. In addition to selecting physical significant solutions, spurious oscillations arise in the numerical solution when shock waves are present. Especially high order schemes, where the ’wiggles’ stem from the Gibbs phenomenon [39], often become unstable and might finally break down. This is illustrated in Fig. 1 for the linear advection equation (18) with a square wave as initial condition.

Further, a numerical full upwind flux \(f^\mathrm {num}(u_-,u_+) = u_-\) and time integration by the third order SSPRK method using three stages—SSPRK(3,3)—by Gottlieb and Shu [32] has been used. It is known [13] that for stability to hold the time step size \(\varDelta t\) should be bounded as \(\varDelta t \le C \frac{h}{(2p+1) \lambda _{\text {max}}}\), where \(\lambda _{\text {max}} = 1\) is the magnitude of the velocity and \(h=1/I\) is the (local) mesh size. Using 1000 time steps, the CFL number C has been chosen as \(C = 0.38\).

In [49], it is suggested that the linear advection equation is highly suited to test shock capturing schemes for several reasons:

-

1.

The linear advection equation is the simplest partial differential equation that can feature discontinuous solutions. Thus, the (shock capturing) method can be observed in a well-understood setting, isolated from nonlinear effects.

-

2.

The knowledge of the exact solution \(u(t,x) = u_0(x-t)\), in particular, allows to suspend the discussion of shock senors. By eliminating potential shortcomings of a shock sensor, one is able to solely examine the behavior of the shock capturing method.

-

3.

At the same time, the linear advection equation provides a most challenging example to be treated. Similar to contact discontinuities in the Euler equations, discontinuities are not self-steeping. Once such a discontinuity is smeared by the method, nothing will recover it to its original sharp shape.

Furthermore, the \(L^2\) entropy U associated to (18) remains constant for the exact solution. While the entropy stable numerical flux introduces dissipation at the element boundaries, and thus in the spatial semidiscretization, the SSPRK method does in the time integration. As a consequence, the entropy in Fig. 1b slightly decreases. Both mechanisms alone, entropy stable (dissipative) numerical fluxes and time integration, have serious drawbacks however. First, (\(L^2\)) stability of SSPRK schemes is only ensured when the simple forward Euler method \(u^{n+1} = u^n + \varDelta t \partial _t u^n\) already provides (\(L^2\)) stability [33]. Yet, the forward Euler method is most often not stable, since

where the second term might be estimated due to a stable semidisretisation, while the last term is non-negative and might render the forward Euler method unstable. See [32, 33, 46, 52, 60] for more details on SSP time discretization methods. Second, and even more serious, are the shortcomings of shock capturing just by dissipative numerical fluxes. When dissipation is added just at the element boundaries, no further resolution can be obtained near shock discontinuities when the polynomial degree is increased. The same holds for increasing the number of elements, while keeping the polynomial degree fixed.Footnote 1 Both cases are illustrated in Fig. 2. Sub-cell resolution can neither be enhanced by increasing the polynomial degree nor by refining the mesh.

In both cases, the spurious oscillations are just closer to the discontinuity but will not vanish. A common approach therefore is to identify the elements lying in the shock region and to reduce the order of approximation there, see for instance [6, 9] and references therein. Since this increases inter-element jumps and thus the amount of dissipation naturally added by the DG method, at latest for piecewise constant approximations, the method should be able to handle any shock. Yet, it should be stressed that decreasing the order of approximation is equivalent to adding dissipation proportional to \(\mathcal {O}(\varDelta x)\). Clearly, the accuracy will be reduced. Thus, a widely accepted observation is that the solution, in fact, can be at most first order accurate near shocks. A common idea to bypass this problem is to adaptively refine the mesh in regions of discontinuity. Shocks, however, are lower dimensional objects and strongly anisotropic. An effective strategy for mesh adaptation therefore needs to incorporate some degree of directionality, especially in three dimensions. See for instance [17] and references therein.

In this work a simpler approach will be exploited. Sub-cell resolution will be enhanced by an improvement of the artificial viscosity method,

4.1 Vanishing and Artificial Viscosity

Originally, the idea of artificial viscosity stems from the vanishing viscosity method to show existence of entropy solutions, see [50]. There, entropy solutions are constructed as \(L^1\) limits of solutions \(u_\varepsilon \) of the parabolic equation

for \(\varepsilon \rightarrow 0\). Thus, another way to characterise physically realizable solutions is to identify them as limits of solutions of equations in which a small dissipative mechanism has been added.

In their pioneering work [69], von Neumann and Richtmyer revised this idea to construct stable FD schemes for the equations of hydrodynamics by including artificial viscosity terms. As they pointed out, when viscosity is taken into account, shocks are seen to be smeared out, so that the mathematical surfaces of discontinuity are replaced by thin layers in which pressure, density, temperature, etc. vary rapidly but continuously. The overall concept is to approximate (discontinuous) entropy solutions by smoother solutions of a parabolic equation and to apply the numerical method to this new equation, where shocks are now replaced by thin but continuous layers. Often, the smooth approximation \(u_\varepsilon \) is also called a viscose profile to the entropy solution u.

4.2 A Local Artificial Viscosity

Considering a viscose profile over the whole domain \(\Omega \), shocks might be spread over several elements if not even the whole domain. Furthermore, also other (especially small-scale) features of the original solution away from shocks typically get smeared by a global artificial viscosity, i.e. \(\varepsilon = \textit{const}\) on \(\Omega \). Breaking new ground in [58], Persson and Peraire therefore proposed a local artificial viscosity in the framework of DG methods. By locally adapting the viscosity strength \(\varepsilon \), artificial viscosity is just added in the elements where shocks arise. Thus, the now piecewise constant function \(\varepsilon \) is chosen \(\varepsilon = 0\) away from discontinuities and \(\varepsilon > 0\) in elements with shocks. Discontinuities that may appear in the original solution will spread over layers of thickness \(\mathcal {O}(\varepsilon )\) in the solution of the modified equation. Hence, Persson and Peraire more precisely suggest that \(\varepsilon \) should be chosen as a function of the resolution available in the approximating space. Since this resolution given by a piecewise polynomial of order p and element width h scales like \(\frac{h}{p}\), values \(\varepsilon \in \mathcal {O}\left( \frac{h}{p} \right) \) are taken near shocks.

While there are works of Barter and Darmofal [4] as well as Klöckner, Warburton, and Hesthaven [47] that emphasize certain drawbacks of a piecewise constant artificial viscosity, the present work is the first to decline this approach based on a strictly theoretical analysis. In [4], the authors already observed oscillations in state gradients which pollute the downstream flow for element-to-element variations in the artificial viscosity. In the following however, it is demonstrated that already the very first design principle (13) of conservation fails for a just piecewise continuous artificial viscosity.

Decomposing the domain \(\Omega \) into disjoint, face-conforming elements \(\Omega _i\), one has

in every element, since \(\varepsilon \) is piecewise constant with value \(\varepsilon _i\) on the i-th element. Now putting the elements together, the rate of change of the total amount of u is given by

Assuming a sufficiently smooth solution and therefore \(u_- = u_+\) at the element boundaries, this reduces to

Note that especially for periodic boundary conditions one thus has

which typically is not equal to zero and thus violates the design principle (16) of conservation. Demonstrated by this analysis, a just piecewise constant artificial viscosity admittedly has some critical drawbacks and thus should be rejected.

4.3 Recent Continuous Refinements: Smoothing the Artificial Viscosity

In [4], Barter and Darmofal took up the work [58] of Persson and Peraire and further improved their ideas. Clear numerical shortcomings of a piecewise constant artificial viscosity are stressed in their work. In particular, they note that the element-to-element variations induce oscillations in state gradients, which pollute the downstream flow. While numerical oscillations are damped, oscillations remain in the derivative \(\partial _x u\). Even though they seem to miss the violation of conservation, they clearly point out the missing smoothness of the viscosity as the crucial problem. Thus, in [4] a smooth artificial viscosity was developed by employing an artificial viscosity PDE model which is appended to the system of governing equations. Effectively, their idea to enhance smoothness of the artificial viscosity is “diffusing the diffusivity”.

Without separately discussing the drawbacks of this particular approach (which will be stressed later), we now analyze the consequences of a ’sufficiently smooth’ artificial viscosity. Working with the more general viscosity term \(\left( \partial _x \varepsilon (x) \partial _x \right) u\) this time, an analogous analysis to the one before yields

Again assuming a periodic behavior, now also for \(\varepsilon \), this time

arises, which in fact satisfies the design principle (16) of conservation. Furthermore, the general design principle of conservation for arbitrary boundary conditions (13) can be satisfied by enforcing a compactly supported viscosity \(\varepsilon (x)\) on \(\Omega \). By noting that in the above analysis, a continuous viscosity \(\varepsilon (x)\) is already ’sufficiently smooth’, the following Theorem is immediately proven.

Theorem 1

Augmenting a conservation law \(\partial _t u + \partial _x f(u) = 0\) with a continuous and on \(\Omega \) compactly supported artificial viscosity \(\left( \partial _x \varepsilon (x) \partial _x \right) u\) on the right hand side, preserves conservation. I.e.

holds for solutions of \(\partial _t u + \partial _x f(u) = \left( \partial _x \varepsilon (x) \partial _x \right) u\).

In accordance to Theorem 1, in [47], Klöckner et al. numerically observed that there seems to be no advantage in having viscosities \(\varepsilon \in C^k\) for \(k > 0\).Footnote 2 Thus, they have formulated an algorithm to enforce continuity of the viscosity—as the authors think, more efficient than the one of Barter and Darmofal—by simple linear interpolation. Building up on a given piecewise constant viscosity, they propose the following steps:

-

1.

At each vertex, collect the maximum viscosity occurring in each of the adjacent elements.

-

2.

Propagate the resulting maximum back to each element adjoining vertex.

-

3.

Use a linear (\(P^1\)) interpolant to extend the values at the vertices into a viscosity on the entire element.

While the above algorithm works perfectly fine in regards to enforce continuity of the artificial viscosity, it inherits a critical disadvantage, which is also shared by the approach of Barter and Damofal [4]. As Sheshadri and Jameson already stated in [63], enforcement of continuity of the artificial viscosity \(\varepsilon \) across element boundaries increases the footprint of the added dissipation again. This is noticed immediately when consulting Fig. 3, where the algorithm of Klöckner et al. is illustrated for a simple example.

First introducing local artificial viscosity to resolve shock discontinuities within a single element, yet increasing the footprint again by enforcing continuity, the circle closes.

5 Modal Filtering and Novel \(C^\infty _0\) Artificial Viscosities

When enforcing continuity of the viscosity, and therefore ensuring conservation, the initial localization of the artificial viscosity proposed by Persson and Peraire [58] is destroyed again. Shocks are spread over several cells and sub-cell resolution is prevented.

Yet, another drawback arises from the artificial viscosity method. Augmenting the original conservation law with a parabolic term \(\left( \partial _x \varepsilon \partial _x \right) u\) significantly decreases the efficiency of the numerical scheme. In addition to the typical time step restriction \(\varDelta t \sim \varDelta x\) an additional restriction \(\varDelta t \sim \frac{\left( \varDelta x \right) ^2 }{ \Vert \varepsilon \Vert _\infty }\) of an explicit time step arises for the artificial viscosity extension \(\partial _t u + \partial _x f(u) = \left( \partial _x \varepsilon \partial _x \right) u\). This second restriction is potentially more harsh and might reduce the global time step considerably. Note that also methods such as the local DG method of Cockburn and Shu [15], where the viscosity extension is rewritten as a system of first order hyperbolic equations

by introducing the auxiliary flux variable q, just shift the problem. The efficiency still is reduced, now by the increased complexity of the system under consideration.

To sum up, there are two major problems which have to be addressed:

-

1.

Spreading of shocks over several elements by enforcement of continuity.

-

2.

Decreased efficiency of the method by introducing second (or higher) order terms of the artificial viscosity.

The first problem will be overcome by introducing \(C^\infty _0\) artificial viscosities, which are compactly supported on the corresponding element. A well-known representative of this class of viscosities is the so called Legendre artificial viscosity, \(\partial _x \left( 1 - x^2 \right) \partial _x u \), which will be briefly revised in Sect. 5.1. This viscosity is often used since it corresponds to certain modal exponential filters. Using these modal filters instead of the original artificial viscosity method, the second problem of additional time step restrictions can also be overcome. Yet, in Sect. 5.1 the Legendre artificial viscosity, and therefore modal filtering in the Legendre basis, is declined due to serious shortcomings of the corresponding viscosity function. To the best of the authors’ knowledge, this work is the first to address this particular problem of the Legendre artificial viscosity and corresponding modal filters. By an argument of Bochner [7], the same modal filters will even be rejected for all orthogonal bases of Jacobi polynomials.

In order to fill the void that results from rejecting the state of the art viscosities, novel \(C^\infty _0\) artificial viscosities are proposed in Sect. 5.2. To the best of the authors’ knowledge, these viscosities are proposed for the first time.

5.1 Legendre Artificial Viscosity and Modal Filtering

A commonly utilized approach to overcome Problem 1, i.e. an increased footprint of the viscosity caused by enforcement of continuity, is to use the Legendre viscosity defined on the reference element \([-\,1,1]\) by

where \(\varepsilon \in \mathbb {R}_0^+\) is called the viscosity strength and \(\nu (x) =\left( 1 - x^2 \right) \) is referred to as the viscosity distribution.

Using the Legendre artificial viscosity, shocks are again resolved within a single element, where now conservation is satisfied by Theorem 1 and entropy stability is further ensured by the subsequent Theorem 2. The Legendre viscosity is such a widespread tool because it enables one to bypass Problem 2 in a certain sense. Here, a procedure first proposed by Majda et al. [53] as well as by Kreiss and Oliger [48] is utilized. Also see the monograph [30].

Applying a first order operator splitting in time, solving the artificial viscosity extension \(\partial _t u + \partial _x f(u) = \varepsilon \left( \partial _x \nu \partial _x \right) u\) is divided into two steps:

Applying a numerical solver to this approach, going forward in time is done by alternately integrating (35) and (36). This can for instance be done by an explicit Runge–Kutta method. Note that in this approach additional time step restrictions are still present for the parabolic equation (36). When adjusting the artificial viscosity to the modal basis in which the numerical solution is expressed, however, Eq. (36) can be solved exactly. The exact solution is obtained by applying a modal exponential filter to the numerical solution of Eq. (35) then.

The Legendre viscosity, in particular, is used so often, since the orthogonal Legendre polynomials \(\{P_k\}\) arise as solutions of the corresponding Legendre differential equation

of a Sturm–Liouville type for eigenvalues \(-\lambda _k = -k(k+1)\). Thus, expressing the numerical solution \(u_p\) with respect to the commonly used orthogonal basis of Legendre polynomials, \(u_p(t,x) = \sum _{k=0}^p \hat{u}_k(t) P_k(x)\), Eq. (36) reads

Thereby, the last equation follows from the eigenvalue equation (37) for the Legendre polynomials. A simple comparison of the time dependent coefficients results in a system of \(p+1\) decoupled ODEs

with solutions given by

After one time step \(\varDelta t = t^{n+1} - t^n\), the coefficients \(\hat{u}_k (t^{n+1})\) are hence given by

Thus, solving the parabolic equation (36) for the numerical solution \(u_p\) is equivalent to multiplying the coefficients \(\hat{u}_k\) with the function \(\sigma (k) = e^{-\varepsilon \varDelta t \lambda _k}\). In order to speak of a modal filter for \(\sigma (k) = \exp ( -\varepsilon \varDelta t \lambda _k )\), the exponent has to be rewritten as

Only now, \(\sigma : [0,1] \rightarrow [0,1]\) can be seen as an exponential filter of order 2 with filter strength\(\alpha := \varepsilon p^2 \varDelta t\). See for instance the work [37] of Hesthaven and Kirby, as well as references therein.

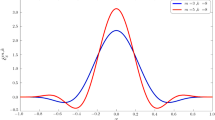

By now, modal filtering is an established shock-capturing tool and was applied in a great number of works, [8, 26, 28, 30, 37, 40, 44, 54,55,56, 71]. In our numerical tests however, we observed the Legendre artificial viscosity as well as the corresponding modal filter applied in a Legendre basis to perform poorer than for instance the artificial viscosity method of Klöckner et al. In Fig. 4, this is demonstrated for the linear advection equation.

While there are nearly no oscillations present anymore in the numerical solution obtained by the \(C^0\) artificial viscosity of Klöckner et al. (Fig. 4b), the numerical solution obtained by the Legendre artificial viscosity still shows spurious oscillations (Fig. 4c). This is illustrated in greater detail in Fig. 4d, where the numerical solutions for the \(C^0\) and Legendre artificial viscosity method are compared to the right of the jump discontinuity. By running various numerical tests, the particular viscosity distribution of the Legendre artificial viscosity was explored to be the determining factor for the spurious oscillations in the corresponding numerical solution. Further illustrated by the subsequent Fig. 5a, the Legendre viscosity rapidly vanishes away from the element center. Thus, if a shock discontinuity performs near a cell boundary, nearly no—in fact arbitrary little—dissipation is added there by the Legendre viscosity. This observation can be made whenever shocks perform near element boundaries and yield to the conclusion that the Legendre artificial viscosity method as well as corresponding modal filters should be rejected.

Naturally, the question arises if this bottleneck might be overcome by utilizing more suitable viscosities, which then again should correspond to modal filters in a proper basis of orthogonal polynomials. While the first attempt to construct more appropriate viscosity distributions will be tackled in Sect. 5.2 by constructing novel viscosity distributions, the possibility of still finding corresponding modal filters can immediately be rejected. The decisive property of the Legendre artificial viscosity is that the orthogonal Legendre polynomials occur as eigenfunctions of the Sturm–Liouville operator \(Ly = \left( (1 - x^2) y' \right) '\) with eigenvalues \(-\lambda _k = -k(k+1)\), i.e.

with \(a(x) = 1 - x^2, b(x) = 2x\) and \(c(x) = 0\) for the Legendre artificial viscosity. Yet, following a simple argument of Bochner [7, 62], for every Sturm–Liouville operator featuring polynomials as eigenfunctions, the corresponding coefficients a, b and c must be polynomials of degree 2, 1 and 0, respectively. Further keeping in mind that the resulting viscosity needs to vanish at the boundaries to ensure conservation and should be positive (see Theorem 2), up to a positive constant, \(a(x) = 1-x^2\) is the very only choice. Note that every modal filter \(\sigma _k = \exp ( - \alpha k(k+1+\alpha +\beta ) )\) applied in the associated basis of orthogonal Jacobi polynomials \(\{ P_k^{(\alpha ,\beta )} \}\) is equivalent to artificial viscosity of the form

by a first order operator splitting in time, since the Jacobi polynomials are eigenfunctions of the more general Sturm–Liouville operator \(Ly = \left( (1 - x^2) y' \right) ' + \left( \beta - \alpha - ( \alpha + \beta ) x \right) y'\). While the dissipative term \(\partial _x \left( 1 - x^2 \right) \partial _x\) is still the same, in this case also undesired dispersion would be added to the equation.

5.2 Novel \(C^\infty _0\) Artificial Viscosities

To the best of the authors’ knowledge, this work is the first to decline the whole class of Jacobi viscosities and their corresponding modal filters. In order to somewhat fill the resulting void in sub-cell shock capturing methods for spectral schemes, novel viscosity distributions will be proposed in this subsection in order to overcome the shortcomings of the Legendre and Jacobi ones.

Our idea is to construct more general smooth viscosities

which still vanish at the element boundaries and have their peak at the element center. Conservation of the resulting \(C^\infty _0\)artificial viscosities\(\varepsilon \left( \partial _x \nu (x) \partial _x \right) u \) is again ensured by Theorem 1. The crucial requirement is for the viscosity distribution \(\nu (x)\) to vanish at the element boundaries. Thus, other viscosity distributions than the Legendre one are obviously possible. Yet, non-negativity of these is mandatory to carry the design criteria of entropy stability (15) over to the artificial viscosity extension

This is further summarized in

Theorem 2

Augmenting a conservation law \(\partial _t u + \partial _x f(u) = 0\) with a \(C^\infty _0\) artificial viscosity \(\varepsilon \left( \partial _x \nu (x) \partial _x \right) u \) with positive viscosity strength \(\varepsilon \) and viscosity distribution \(\nu \ge 0\), again results in entropy stability. I.e.

holds for solutions of \(\partial _t u + \partial _x f(u) = \varepsilon \left( \partial _x \nu (x) \partial _x \right) u \) and an associated entropy-entropy flux pair (U, F).

Proof

Multiplying the viscosity extension (46) by an entropy gradient \(U'\), i.e. satisfying \(U'(u) f'(u) = F'(u)\), one obtains an entropy equation

Since \(\nu (x) \ge 0\) and U is convex, the inequality

holds, and thus the entropy inequality

follows. Finally, for the rate of change of entropy in the element \(\Omega _i\) under consideration,

is proven, which ensures the design criteria of entropy stability (15).

Note that both, the local artificial viscosity of Persson and Peraire [58] using a piecewise constant viscosity as well as the continuous refinement and Klöckner et al. [47] yield entropy stability. In fact, the proof of Theorem 2 easily adapts to continuous artificial viscosities over several cells as long as they are compactly supported on \(\Omega \). This is furthermore in accordance with the well-known effect of dissipative mechanisms on shocks, such as heat conduction and more general viscosity terms [69].

Remark 3

In Sect. 4, \(C^0\) has been referred to the viscosity being globally \(C^0\), i.e. continuous on the whole domain \(\Omega \). In the present section, however, \(C^\infty _0\) refers to the viscosity being locally \(C^\infty _0\), i.e. smooth and compactly supported in every element \(\Omega _i\). Globally, the viscosity will still be only continuous, if no additional restrictions at the boundaries are enforced for the viscosity.

Finally, to overcome the drawback of the Legendre viscosity, novel viscosity distributions \(\nu \) are proposed with \(\nu \approx 1\) over more of the reference element \([-\,1,1]\):

-

1.

The Gegenbauer viscosity

$$\begin{aligned} \nu (x) = \left( 1 - x^2 \right) ^\lambda \end{aligned}$$(55)for \(\lambda > 0\) is somewhat the ‘natural’ generalisation of the Legendre viscosity. Choosing small values for \(\lambda \), the Gegenbauer viscosity becomes \(\nu \approx 1\) over most of the interval, therefore fulfilling our devise for the novel viscosities. This is further illustrated in Fig. 5a for \(\lambda =1\) and \(\lambda =1/10\).

-

2.

The super Gaussian viscosity

$$\begin{aligned} \nu (x) = e^{-\alpha x^{2\lambda }}, \end{aligned}$$(56)where \(\alpha = - \ln \varepsilon _M\) with \(\varepsilon _M\) representing machine precision,

-

3.

as well as the Gevrey viscosity

$$\begin{aligned} \nu (x) = {\left\{ \begin{array}{ll} \exp \left( \frac{x^2}{\lambda (x^2 - 1)} \right) , &{} \quad 0 \le |x| < 1, \\ 0 , &{} \quad |x| \ge 1, \end{array}\right. }, \end{aligned}$$(57)

are inspired by corresponding weight functions, which were for instance studied in the context of Fourier (pseudo-)spectral reprojection [25] as well as spectral mollifiers [66, 67]. Yet, this is the first time, they are proposed as viscosity distributions in an artificial viscosity method. Examples for both viscosities are illustrated in Fig. 5b.

Remark 4

It should be stressed that in principle \(C_0^\infty \) viscosities yield the same time step restrictions as common viscosities. While this drawback might be overcome by modal filtering, it was shown in Sect. 5.1 that modal filtering corresponds to a Legendre viscosity, which in general is inappropriately distributed and was demonstrated in Fig. 4 to result in undesired numerical solutions. Yet, the compact nature of the new \(C_0^\infty \) viscosities allow a more efficient treatment when, for instance, implicit-explicit (IMEX) time integration schemes [3] are applied to bypass too harsh (explicit) time step restrictions, since they are applied to less elements.

Remark 5

Implementation of the \(C_0^\infty \) viscosity (45) is done in the very same way as for usual viscosities, i.e. by a local DG method (33), see [15], or by direct calculation of second derivatives as done in [61]. Thus, no additional code is necessary when going over from usual viscosities to \(C_0^\infty \) viscosities. In fact, \(C_0^\infty \) viscosities are easier to implement from scratch, since no additional ‘smoothing procedure’ [4, 47] as described in Sect. 4.3 is needed. In our implementation, we have used the local DG method.

Last but not least, the question of how to choose the viscosity strength \(\varepsilon \) has to be addressed. It is evident that the strength should again be strictly greater than 0 in elements where a shock is present and equal to 0 everywhere else. The next section thus aims at a more precise scaling of the viscosity strength in the presence of discontinuities in the solution.

6 Shock Sensor

In this section, we describe the shock sensor which is utilized in the our subsequent numerical tests. The shock detection algorithm is based on the one of Persson and Peraire [58] and does not just flag troubled cells but also steers the viscosity strength \(\varepsilon \).

Further, the sensor is based on the rate of decay of the expansion coefficients of the polynomial approximation \(u_p\) when represented with respect to a hierarchical orthonormal basis \(\{ \varphi _k \}_{k=0}^{K(p)}\) of the approximation space \(\mathbb {P}_p\left( \Omega _\textit{ref} \right) \). Here, \(\Omega _\textit{ref}\) denotes a reference element in which all computations are performed. Typical choices are \(\Omega _\textit{ref}=[-1,1]\) in one dimension and, for instance, in two dimensions \(\Omega _\textit{ref} = [-1,1]^2\) when quadrilateral elements are used or \(\Omega _\textit{ref} = \mathbb {T} := \{(x,y) \in \mathbb {R}^2| x\ge 0,y\ge 0, x+y\le 1\}\) when triangular elements are used. Furthermore, \(K(p)+1\) denotes the number of basis elements needed to generate the approximation space. Thus, when expressed in a hierarchical orthonormal basis \(\{ \varphi _k \}_{k=0}^{K(p)}\), the numerical solution reads \(u_p = \sum _{k=0}^{K(p)} \hat{u}_k \varphi _k\) on every element. The truncated series of reduced degree \(p-1\) is represented by \(u_{p-1} = \sum _{k=0}^{K(p-1)} \hat{u}_k \varphi _k\).

The shock detector is now based on

on every element. Numerical experiments indicate that a hierarchical orthogonal basis is sufficient to define the operator S. By assuming analogy to Fourier expansions, S measures the degree of smoothness and is expected to scale like

for continuous u for which \(u_p\) is the orthogonal projection into \(\mathbb {P}_p\left( \Omega _\textit{ref} \right) \). Thus, artificial viscosity should be activated for \(S>p^{-4}\), since a discontinuity is anticipated then. Applying the logarithm on both terms, the above condition can also be written as

While the reference value \(s_\textit{ref}\) for continuous u remains constant, the sensor value s linearly increases for increasing smoothness and decreases for decreasing smoothness of u.

Once a shock has been sensed with the help of (60), and artificial viscosity is activated, the amount of viscosity, i.e. the viscosity strength \(\varepsilon \) in (45), is determined by the smooth function

with maximal viscosity strength\(\varepsilon _{max} \sim \frac{h}{p}\) and a problem dependent ramp parameter\(\kappa > 0\). In this work, following [47], the ramp parameter is chosen as \(\kappa = 1\), while, for scalar problems, the maximal viscosity strength is chosen as

Further, following [4], we made a slight modification of the sensor by going over to use

and \(s_\textit{ref} = -2.0\) instead of (60), where c is a suitably chosen parameter defined to control the sensor sensitiveness. This parameter becomes the only problem dependent variable and, in general, the stronger the nonlinearity of the underlying equations is the higher the value of c becomes.

Closing this section, we want to note that several other shock sensors have been proposed for the selective application of artificial viscosity as well as mesh refinement. These shock detection algorithms, for instance, use information on the \(L^2\) norm of the residual of the variational form [5, 43], the primary orientation of the discontinuity [36], magnitude of the facial inter-element jumps [4, 19] or entropy pairs [34]. Yet, for most of these sensors it is typically unclear which value indicates a shock discontinuity or other features of instability. A shortcoming of these methods thus is that a variety of scaling choices on a case-by-case basis have to be proposed, where no assignment of the scaled quantity to an explicit meaning is clear anymore.

The above sensor of Persson and Peraire, however, has the advantage of a proper scaling: Most of the listed quantities essentially relate to the smoothness of the underlying solution u, and thus how well-resolved it is by a polynomial (best) approximation. There are also enhancements of the sensor of Persson and Peraire, see for instance the recommended work [47], which would exceed the scope of this work. Since we want to focus on the advantage of using novel viscosities rather than on the underlying shock detection algorithm, the subsequent numerical tests are performed by using the basic but fairly reliable sensor (61).

7 Extension to the Euler Equations of Gas Dynamics

In this section, the prior theoretical investigations and results are demonstrated for the system of Euler equations. The extension of the proposed artificial viscosity method to the system of Euler equations (or any other system) is straightforward and described in Sect. 7.1. Numerical tests for the problems of Sod’s shock tube as well as Shu and Osher’s shock tube are presented. In order to keep the presentation compact, we have decided to demonstrate the subsequent tests just for the super Gaussian viscosity (56) as a prototype of the proposed \(C^0\) viscosities.

The numerical tests demonstrate that the proposed \(C_0^\infty \) viscosities provide observable sharper profiles and steeper gradients than the usual \(C^0\) viscosity. Shu and Osher’s shock tube—especially designed for high-order methods—further illustrates that the new \(C_0^\infty \) artificial viscosity method, compared to the \(C^0\) artificial viscosity method, is capable of an advanced representation of wave amplitudes of high frequency features.

7.1 Sod’s Shock Tube

We now extend the artificial viscosity method to the Euler equations of gas dynamics

where \(\rho \) is the density, v is the velocity, E is the total energy, \(m = \rho v\) is the momentum, \(P = (\gamma - 1)(E-\frac{1}{2}v^2 \rho )\) is the pressure, and \(\gamma = 1.4\) is taken for the ratio of specific heat. The Euler equations express the conservation of mass, momentum, and energy for a perfect gas. The extension of the artificial viscosity is straightforward, since the method and the prior sensor is applied to the density variable \(u_1 = \rho \) and, once the shock is detected, the viscosity is added to all conserved variables.

Figure 6 reports the results of this procedure for the standard test case of Sod’s shock tube

on \(\Omega =[0,1]\) at time \(t = 0.2\).

The numerical solutions were obtained using \(I=40\) equidistant elements and polynomial degree \(p=5\). We decided for Sod’s shock tube as a first test case since this is a relatively mild test case for which an analytical reference solution can still be determined, [65]. The profiles for density, pressure, and velocity are illustrated in Fig. 6 by (black) dotted lines and consist of a left rarefaction, a contact, and a right shock.

At the same time, the profiles of numerical solutions are realized by (blue) straight lines for the \(C^0\) artificial viscosity method and by (red) dashed lines for the super Gaussian \(C_0^\infty \) artificial viscosity method. For the super Gaussian viscosity (56) the parameters \(\lambda = 100\) and \(\alpha = -\ln \left( 10^{-16}\right) \) were chosen. We made no effort to optimise these parameters, which appeared to be fairly robust in our tests. More suitable choices of parameters, yielding to further enhanced results, thus seem possible. The numerical solution obtained by the super Gaussian \(C_0^\infty \) artificial viscosity method shows a sharper profile and steeper gradients; particularly visible in the density profile at the contact discontinuity around \(x\approx 0.68\), see Fig. 6b.

7.2 Shu and Osher’s Shock Tube

The test case of Sod’s shock tube (65) is reasonable when it is intended to demonstrate how a method handles different types of discontinuities. Yet, no small-scale features were present. A more challenging test case to observe if a method is able to capture both, shocks as well as small-scale smooth flows, is Shu and Osher’s shock tube

for the Euler equations (64) on \(\Omega = [-5,5]\), which was proposed in [64]. Figure 7 shows the profiles of the numerical solutions for density, pressure, and velocity at time \(t=1.8\).

The numerical solutions by \(C^0\) artificial viscosity—(blue) straight line—and by super Gaussian \(C_0^\infty \) artificial viscosity—(red) dashed line—are both computed using \(I=80\) equidistant elements and polynomial approximations of degree \(p=5\). The reference solution—(black) dotted line—is computed with polynomial degree \(p=1\), \(I =10{,}000\) elements, and the generalized slope limiter, see [10,11,12,13,14, 16] or [38, page 152]. We decided for the test case of Shu and Osher’s shock tube, since it demonstrates the advantage of a reasonable chosen amount of dissipation, when the problem involved has some structure. In fact, we can observe an enhanced representation of the small-scale wave amplitudes, especially left from the shock discontinuity in the profile of the density, for the novel super Gaussian \(C_0^\infty \) artificial viscosity method compared to the usual \(C^0\) artificial viscosity method. This is illustrated in greater detail in Fig. 7b. For instance, in long time or large eddy simulations, the preservation of such small-scale features is highly desired.

Remark 6

The above results raise the question of the effect of the proposed viscosity on the spectral resolution of the method, in particular compared to usual viscosities. Future work will investigate these spectral properties by means of a (nonlinear) spectral analysis as described, for instance, in [18, 59, 70]. We think it would be of interest to have a more rigorous study on how the spectral resolution of the artificial viscosity method depends on the viscosity function.

8 Summary and Conclusions

In this work, a novel artificial viscosity method has been introduced utilizing smooth and compactly supported viscosity distributions. In order to derive them, widely used artificial viscosity methods, such as the ones of Persson and Peraire as well as Klöckner et al., were analytically revisited with respect to the essential design criteria of conservation and entropy stability. It was proved for the viscosity extension that conservation carries over if the viscosity is continuous and compactly supported, while entropy stability already holds for positive viscosities.

Further investigating the method of modal filtering, it was demonstrated that this strategy has inherent shortcomings, which are related to the nature of Legendre and more general Jacobi viscosities. Since these have their peak in the middle of elements and rapidly decrease away from the element center, problems arise for shock discontinuities near element boundaries.

Overcoming this drawback, the new \(C_0^\infty \) were constructed such that they are approximately constant over nearly the whole element. Smooth and compactly supported functions with \(\approx 1\) over most of an element can be found in the field of robust reprojection as well as mollifiers and were proposed as viscosity distributions for the first time in this work.

Numerical tests for the Euler equations demonstrated the novel (super Gaussian) \(C_0^\infty \) artificial viscosity to provide sharper profiles, steeper gradients, and a higher resolution of small-scale features while still maintaining stability of the method.

Since all artificial viscosity methods heavily rely on trustworthy detection of discontinuities, further research on shock sensor strategies is mandatory. It is our opinion that especially shock sensors which are capable of detecting the precise location and strength of jump discontinuities, such as the concentration method of Gelb and Tadmor [21,22,23,24, 57] or polynomial annihilation [1, 2], seem highly promising. This would also allow to moreover adapt the artificial viscosity method in every element to the exact location of a shock. Dissipation would just be added where it is needed.

Notes

Which would also contradict the idea of high order methods.

\(\varepsilon \in C^k\) for \(k > 0\) is required for higher order viscosities, for instance \(\left( \partial _{xx} \nu \partial _{xx} \right) u\). Then, \(\frac{{\text {d}}^k \nu }{{\text {d}}t^k} (\pm 1) = 0\) is further required for conservation.

References

Archibald, R., Gelb, A., Yoon, J.: Polynomial fitting for edge detection in irregularly sampled signals and images. SIAM J. Numer. Anal. 43(1), 259–279 (2005)

Archibald, R., Gelb, A., Yoon, J.: Determining the locations and discontinuities in the derivatives of functions. Appl. Numer. Math. 58(5), 577–592 (2008)

Ascher, U.M., Ruuth, S.J., Wetton, B.T.: Implicit-explicit methods for time-dependent partial differential equations. SIAM J. Numer. Anal. 32(3), 797–823 (1995)

Barter, G.E., Darmofal, D.L.: Shock capturing with pde-based artificial viscosity for dgfem: Part I. Formulation. J. Comput. Phys. 229(5), 1810–1827 (2010)

Bassi, F., Rebay, S.: Accurate 2D Euler computations by means of a high order discontinuous finite element method. In: Fourteenth International Conference on Numerical Methods in Fluid Dynamics, pp. 234–240. Springer (1995)

Baumann, C.E., Oden, J.T.: A discontinuous hp finite element method for convection–diffusion problems. Comput. Methods Appl. Mech. Eng. 175(3–4), 311–341 (1999)

Bochner, S.: Über Sturm–Liouvillesche polynomsysteme. Math. Z. 29(1), 730–736 (1929)

Boyd, J.P.: Chebyshev and Fourier Spectral Methods. Courier Corporation, Chelmsford (2001)

Burbeau, A., Sagaut, P., Bruneau, C.H.: A problem-independent limiter for high-order Runge–Kutta discontinuous Galerkin methods. J. Comput. Phys. 169(1), 111–150 (2001)

Cockburn, B.: Discontinuous Galerkin methods for convection-dominated problems. In: High-Order Methods for Computational Physics, pp. 69–224. Springer (1999)

Cockburn, B., Hou, S., Shu, C.W.: The Runge–Kutta local projection discontinuous Galerkin finite element method for conservation laws. IV. The multidimensional case. Math. Comput. 54(190), 545–581 (1990)

Cockburn, B., Lin, S.Y., Shu, C.W.: TVB Runge–Kutta local projection discontinuous Galerkin finite element method for conservation laws III: one-dimensional systems. J. Comput. Phys. 84(1), 90–113 (1989)

Cockburn, B., Shu, C.W.: TVB Runge–Kutta local projection discontinuous Galerkin finite element method for conservation laws. II. General framework. Math. Comput. 52(186), 411–435 (1989)

Cockburn, B., Shu, C.W.: The Runge–Kutta local projection \(p^1\)-discontinuous-Galerkin finite element method for scalar conservation laws. ESAIM Math. Model. Numer. Anal. 25(3), 337–361 (1991)

Cockburn, B., Shu, C.W.: The local discontinuous Galerkin method for time-dependent convection–diffusion systems. SIAM J. Numer. Anal. 35(6), 2440–2463 (1998)

Cockburn, B., Shu, C.W.: The Runge–Kutta discontinuous Galerkin method for conservation laws V: multidimensional systems. J. Comput. Phys. 141(2), 199–224 (1998)

Dervieux, A., Leservoisier, D., George, P.L., Coudière, Y.: About theoretical and practical impact of mesh adaptation on approximation of functions and PDE solutions. Int. J. Numer. Methods Fluids 43(5), 507–516 (2003)

Fauconnier, D., Dick, E.: On the spectral and conservation properties of nonlinear discretization operators. J. Comput. Phys. 230(12), 4488–4518 (2011)

Feistauer, M., Kučera, V.: On a robust discontinuous Galerkin technique for the solution of compressible flow. J. Comput. Phys. 224(1), 208–221 (2007)

Gassner, G.J.: A skew-symmetric discontinuous Galerkin spectral element discretization and its relation to sbp-sat finite difference methods. SIAM J. Sci. Comput. 35(3), A1233–A1253 (2013)

Gelb, A., Cates, D.: Detection of edges in spectral data III—refinement of the concentration method. J. Sci. Comput. 36(1), 1–43 (2008)

Gelb, A., Tadmor, E.: Detection of edges in spectral data. Appl. Comput. Harmon. Anal. 7(1), 101–135 (1999)

Gelb, A., Tadmor, E.: Detection of edges in spectral data II. Nonlinear enhancement. SIAM J. Numer. Anal. 38(4), 1389–1408 (2000)

Gelb, A., Tadmor, E.: Adaptive edge detectors for piecewise smooth data based on the minmod limiter. J. Sci. Comput. 28(2), 279–306 (2006)

Gelb, A., Tanner, J.: Robust reprojection methods for the resolution of the gibbs phenomenon. Appl. Comput. Harmon. Anal. 20(1), 3–25 (2006)

Giraldo, F.X., Hesthaven, J.S., Warburton, T.: Nodal high-order discontinuous Galerkin methods for the spherical shallow water equations. J. Comput. Phys. 181(2), 499–525 (2002)

Glaubitz, J., Öffner, P.: A novel discontinuous Galerkin method using the principle of discrete least squares. MPIM preprint series: 2017-63. Submitted (2017)

Glaubitz, J., Öffner, P., Sonar, T.: Application of modal filtering to a spectral difference method (2016). ArXiv preprint arXiv:1604.00929

Godlewski, E., Raviart, P.: Hyperbolic systems of conservation laws. Mathématiques & applications. Ellipses. https://books.google.de/books?id=hzvvAAAAMAAJ (1991)

Gottlieb, D., Hesthaven, J.S.: Spectral methods for hyperbolic problems. J. Comput. Appl. Math. 128(1), 83–131 (2001)

Gottlieb, S., Ketcheson, D.I., Shu, C.W.: Strong Stability Preserving Runge–Kutta and Multistep Time Discretizations. World Scientific, Singapore (2011)

Gottlieb, S., Shu, C.W.: Total variation diminishing Runge–Kutta schemes. Math. Comput. Am. Math. Soc. 67(221), 73–85 (1998)

Gottlieb, S., Shu, C.W., Tadmor, E.: Strong stability-preserving high-order time discretization methods. SIAM Rev. 43(1), 89–112 (2001)

Guermond, J.L., Pasquetti, R.: Entropy-based nonlinear viscosity for fourier approximations of conservation laws. C. R. Math. 346(13–14), 801–806 (2008)

Harten, A.: On the symmetric form of systems of conservation laws with entropy. J. Comput. Phys. 49(1), 151–164 (1983)

Hartmann, R.: Adaptive discontinuous Galerkin methods with shock-capturing for the compressible Navier–Stokes equations. Int. J. Numer. Methods Fluids 51(9–10), 1131–1156 (2006)

Hesthaven, J., Kirby, R.: Filtering in legendre spectral methods. Math. Comput. 77(263), 1425–1452 (2008)

Hesthaven, J.S., Warburton, T.: Nodal Discontinuous Galerkin Methods: Algorithms, Analysis, and Applications. Springer, Berlin (2007)

Hewitt, E., Hewitt, R.E.: The Gibbs-Wilbraham phenomenon: an episode in Fourier analysis. Arch. Hist. Exact Sci. 21(2), 129–160 (1979)

Hu, F.Q.: On absorbing boundary conditions for linearized euler equations by a perfectly matched layer. J. Comput. Phys. 129(1), 201–219 (1996)

Hughes, T.J., Franca, L., Mallet, M.: A new finite element formulation for computational fluid dynamics: I. Symmetric forms of the compressible Euler and Navier–Stokes equations and the second law of thermodynamics. Comput. Methods Appl. Mech. Eng. 54(2), 223–234 (1986)

Huynh, H.T.: A flux reconstruction approach to high-order schemes including discontinuous Galerkin methods. AIAA Paper, vol. 4079 (2007)

Jaffre, J., Johnson, C., Szepessy, A.: Convergence of the discontinuous Galerkin finite element method for hyperbolic conservation laws. Math. Models Methods Appl. Sci. 5(03), 367–386 (1995)

Kanevsky, A., Carpenter, M.H., Hesthaven, J.S.: Idempotent filtering in spectral and spectral element methods. J. Comput. Phys. 220(1), 41–58 (2006)

Karniadakis, G., Sherwin, S.: Spectral/hp Element Methods for Computational Fluid Dynamics. Oxford University Press, Oxford (2013)

Ketcheson, D.I.: Highly efficient strong stability-preserving Runge–Kutta methods with low-storage implementations. SIAM J. Sci. Comput. 30(4), 2113–2136 (2008)

Klöckner, A., Warburton, T., Hesthaven, J.S.: Viscous shock capturing in a time-explicit discontinuous Galerkin method. Math. Model. Nat. Phenom. 6(3), 57–83 (2011)

Kreiss, H.O., Oliger, J.: Stability of the Fourier method. SIAM J. Numer. Anal. 16(3), 421–433 (1979)

Kuzmin, D., Löhner, R., Turek, S.: Flux-Corrected Transport: Principles, Algorithms, and Applications. Scientific Computation. Springer, Berlin. https://books.google.de/books?id=bZj8pI3ioOwC (2006)

Lax, P.D.: Hyperbolic Systems of Conservation Laws and the Mathematical Theory of Shock Waves. SIAM, Philadelphia (1973)

LeVeque, R.J.: Finite Volume Methods for Hyperbolic Problems, vol. 31. Cambridge University Press, Cambridge (2002)

Levy, D., Tadmor, E.: From semidiscrete to fully discrete: stability of Runge–Kutta schemes by the energy method. SIAM Rev. 40(1), 40–73 (1998)

Majda, A., McDonough, J., Osher, S.: The Fourier method for nonsmooth initial data. Math. Comput. 32(144), 1041–1081 (1978)

Meister, A., Ortleb, S., Sonar, T.: Application of spectral filtering to discontinuous Galerkin methods on triangulations. Numer. Methods Partial Differ. Equ. 28(6), 1840–1868 (2012)

Meister, A., Ortleb, S., Sonar, T., Wirz, M.: An extended discontinuous Galerkin and spectral difference method with modal filtering. J. Appl. Math. Mech. Zeitschrift für Angewandte Mathematik und Mechanik (ZAMM) 93(6–7), 459–464 (2013)

Öffner, P., Glaubitz, J., Ranocha, H.: Stability of correction procedure via reconstruction with summation-by-parts operators for Burgers’ equation using a polynomial chaos approach (2017). ArXiv preprint arXiv:1703.03561

Öffner, P., Sonar, T., Wirz, M.: Detecting strength and location of jump discontinuities in numerical data. Appl. Math. 4(12), 1 (2013)

Persson, P.O., Peraire, J.: Sub-cell shock capturing for discontinuous Galerkin methods. In: 44th AIAA Aerospace Sciences Meeting and Exhibit, p. 112 (2006)

Pirozzoli, S.: On the spectral properties of shock-capturing schemes. J. Comput. Phys. 219(2), 489–497 (2006)

Ranocha, H., Glaubitz, J., Öffner, P., Sonar, T.: Time discretisation and \( l\_2 \) stability of polynomial summation-by-parts schemes with Runge–Kutta methods (2016). ArXiv preprint arXiv:1609.02393

Ranocha, H., Glaubitz, J., Öffner, P., Sonar, T.: Stability of artificial dissipation and modal filtering for flux reconstruction schemes using summation-by-parts operators. Appl. Numer. Math. 128, 1–23 (2018). https://doi.org/10.1016/j.apnum.2018.01.019. See also arXiv:1606.00995 [math.NA] and arXiv:1606.01056 [math.NA]

Routh, E.J.: On some properties of certain solutions of a differential equation of the second order. Proc. Lond. Math. Soc. 1(1), 245–262 (1884)

Sheshadri, A., Jameson, A.: Shock detection and capturing methods for high order discontinuous-Galerkin finite element methods. In: 32nd AIAA Applied Aerodynamics Conference, p. 2688 (2014)

Shu, C.W., Osher, S.: Efficient implementation of essentially non-oscillatory shock-capturing schemes II. J. Comput. Phys. 83(1), 32–78 (1989)

Sod, G.A.: A survey of several finite difference methods for systems of nonlinear hyperbolic conservation laws. J. Comput. Phys. 27(1), 1–31 (1978)

Tadmor, E., Tanner, J.: Adaptive mollifiers for high resolution recovery of piecewise smooth data from its spectral information. Found. Comput. Math. 2(2), 155–189 (2002)

Tanner, J.: Optimal filter and mollifier for piecewise smooth spectral data. Math. Comput. 75(254), 767–790 (2006)

Vincent, P.E., Castonguay, P., Jameson, A.: A new class of high-order energy stable flux reconstruction schemes. J. Sci. Comput. 47(1), 50–72 (2011)

VonNeumann, J., Richtmyer, R.D.: A method for the numerical calculation of hydrodynamic shocks. J. Appl. Phys. 21(3), 232–237 (1950)

Wheatley, V., Jeltsch, R., Kumar, H.: Spectral performance of RKDG methods. Math. Balk. 25(3), 257–276 (2011)

Yang, B., Gottlieb, D., Hesthaven, J.S.: Spectral simulations of electromagnetic wave scattering. J. Comput. Phys. 134(2), 216–230 (1997)

Acknowledgements

Open access funding provided by Max Planck Society. This work developed during a two-moth stay of the first author at the Max Planck Institute for Mathematics (MPIM) in Bonn during summer of 2017. He would like to express his gratitude for the generous financial support by the MPIM as well as the warm and inspiring research atmosphere provided by its staff. Further, we would like to thank the anonymous referees for many helpful suggestions.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Glaubitz, J., Nogueira, A.C., Almeida, J.L.S. et al. Smooth and Compactly Supported Viscous Sub-cell Shock Capturing for Discontinuous Galerkin Methods. J Sci Comput 79, 249–272 (2019). https://doi.org/10.1007/s10915-018-0850-3

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10915-018-0850-3

Keywords

- Hyperbolic conservation laws

- Euler equations

- Discontinuous Galerkin methods

- Shock capturing

- Artificial viscosity