Abstract

We consider multigrid methods for finite volume discretizations of the Reynolds averaged Navier–Stokes equations for both steady and unsteady flows. We analyze the effect of different smoothers based on pseudo time iterations, such as explicit and additive Runge–Kutta (AERK) methods. Furthermore, by deriving them from Rosenbrock smoothers, we identify some existing schemes as a class of additive W (AW) methods. This gives rise to two classes of preconditioned smoothers, preconditioned AERK and AW, which are implemented in exactly the same way but have different parameters and properties. This derivation allows some of these parameters to be chosen based on results for time integration methods. As preconditioners, we consider SGS preconditioners based on flux vector splitting discretizations with a cutoff function for small eigenvalues. We compare these methods based on a discrete Fourier analysis. Numerical results on pitching and plunging airfoils identify AW3 as the best smoother regarding overall efficiency. Specifically, for the NACA 64A010 airfoil, steady-state convergence rates as low as 0.85 were achieved, or a reduction of 6 orders of magnitude in approximately 25 pseudo-time iterations. Unsteady convergence rates as low as 0.77 were achieved, or a reduction of 11 orders of magnitude in approximately 70 pseudo-time iterations.



1 Introduction

We are interested in numerical methods for compressible wall bounded turbulent flows, as they appear in many industrial problems. Both steady and unsteady flows are therefore considered. Numerically, these flows are characterized by strong nonlinearities and a large number of unknowns, due to the requirement of resolving the boundary layer. High fidelity approaches such as Direct Numerical Simulation (DNS) or Large Eddy Simulation (LES) are slowly coming within reach through improvements in high order discretization methods. Nevertheless, these approaches are, and will remain for the foreseeable future, far too costly to be standard tools in industry.

However, low fidelity turbulence modeling based on the Reynolds Averaged Navier–Stokes (RANS) equations, discretized using second order finite volume methods, is a good choice for many industrial problems where turbulence matters. For steady flows, this comes down to solving one nonlinear system. In the unsteady case, the time discretization has to be at least partially implicit due to the extremely fine grids in the boundary layer, which requires solving one or more nonlinear systems per time step. The main candidates for solving these systems are Jacobian-free Newton–Krylov (JFNK) methods with appropriate preconditioners and nonlinear multigrid methods (Full Approximation Scheme, FAS) with appropriate smoothers; see [3] for an overview.

In this article, we focus on improving the convergence rate of agglomeration multigrid methods [23], which are the standard in the aeronautical industry. For the type of problems considered here, two aspects have been identified that affect solver efficiency. Firstly, the flow is convection dominated. Secondly, the grid has high aspect ratio cells. It is important to note that the viscous terms in the RANS equations do not pose problems in themselves. Instead, the problem is that they give rise to the boundary layer, thus making high aspect ratio grids necessary. Both aspects are also present when the Euler equations are solved on such grids, meaning that solvers developed for one set of equations may also be effective for the other.

With regard to convection dominated flows, smoothers such as Jacobi or Gauß–Seidel do not perform well, in particular when the flow is aligned with the grid [24]. One idea has been to adjust multigrid restriction and prolongation by using directional or semi coarsening that respects the flow direction [25]. However, this approach is significantly more complicated to implement than standard agglomeration. The alternative is thus to adjust the smoother. As it turned out, symmetric Gauß–Seidel (SGS) is an excellent smoother for the Euler equations even for grid aligned flow [7], simply because it takes into account the propagation of information both in the flow direction and backwards.

However, when the Euler equations are discretized on high aspect ratio grids suitable for wall bounded viscous flows, this smoother does not perform well. During the last ten years, the idea of preconditioned pseudo time iterations has garnered interest [5, 6, 14, 17,18,19,20,21, 27,28,29, 31, 32]. This goes back to the additive Runge–Kutta (ARK) smoothers originally introduced in [8] and, independently, in a multigrid setting in [11]. On their own, these smoothers converge slowly, but when combined with a preconditioner, they result in methods that work well for high Reynolds number RANS simulations on high aspect ratio grids.

The preconditioned RK smoother suggested in [28, 32] was rederived in [18] by starting from a Rosenbrock method and then approximating the system matrix in the arising linear systems. Such a method is called a W method in the literature on ordinary differential equations. Consequently, we now identify the two classes of preconditioned additive W methods and preconditioned additive explicit Runge–Kutta methods and derive both from time integration methods. This allows us to identify the roles the preconditioners have to play and aids in choosing good parameters. As for the preconditioners themselves, it turns out that, again, SGS is a very good choice, as reported in [18, 32].

The specific convergence rate attainable depends on the discretization, in particular the flux function. Here, we consider the Jameson–Schmidt–Turkel (JST) scheme for structured grids in its latest version [15]. We perform a discrete Fourier analysis of the smoother for the linearized Euler equations on Cartesian grids with variable aspect ratios. This is justified, since the core issues of convection and high aspect ratio grids are present in this problem.

A convenient truth is that if we have a fast steady-state solver then it can be used to build a fast unsteady solver via dual timestepping. However, there are subtle differences that affect convergence and stability. In particular, the eigenvalues of the amplification matrix are scaled and shifted in the unsteady case relative to the steady case. For a fuller discussion of these issues we refer to our earlier work [5, 6] and to [2]. We compare the analytical behavior and numerical performance of iterative smoothers for steady and unsteady problems.

The article is structured as follows. We first present the governing equations and the discretization, then we describe multigrid methods and at length the smoothers considered. Then we present a Fourier analysis based on the Euler equations and finally numerical results for airfoil test cases.

2 Discretization

We consider the two dimensional compressible (U)RANS equations, where the vector of conservative variables is \((\rho , \rho v_1, \rho v_2, \rho E)^T\) and the convective and viscous fluxes are given by

where we used the Einstein notation.

Here, \(\rho \) is the density, \(v_i\) the velocity components and E the total energy per unit mass. The enthalpy is given by \(H = E + p/\rho \), with \(p = (\gamma -1)\rho (E-1/2 v_k v_k)\) being the pressure and \(\gamma =1.4\) the adiabatic index for an ideal gas. Furthermore, \(\tau _{ij}\) is the sum of the viscous and Reynolds stress tensors, \(q_j\) the sum of the diffusive and turbulent heat fluxes, \(\mu \) the dynamic viscosity, \(\mu _t\) the turbulent viscosity, and \(Pr\) and \(Pr_t\) the laminar and turbulent Prandtl numbers.

As a turbulence model, we use the 0-equation Baldwin–Lomax model [1], for two reasons. Firstly, it performs well for the flows around airfoils that serve as our primary motivation. Secondly, 1- or 2-equation models introduce additional difficulties in implementation, analysis and convergence behavior. We believe that these have to be looked at systematically, but separately from this investigation.

The equations are discretized using a finite volume method on a structured mesh and the JST scheme as flux function. There are many variants of this method, see e.g. [15]. Here, we use the following, for simplicity written as if for a one dimensional problem:

Here, \(\mathbf{f}^R(\bar{\mathbf{u}})\) is the difference of the convective and the viscous fluxes, \(\mathbf{u}\in \mathbf{R}^m\) is the vector of all discrete unknowns and \(\bar{\mathbf{u}}=(\rho , \rho v, \rho E)\) is the vector of conservative variables. The artificial viscosity terms are given by

with \(\Delta _j\) being the forward difference operator and the vector \(\mathbf{w}\) being \(\bar{\mathbf{u}}\) where in the last component, the energy density has been replaced by the enthalpy density.

The scalar coefficient functions \(\epsilon ^{(2)}_{j+1/2}\) and \(\epsilon ^{(4)}_{j+1/2}\) are given by

and

Here, the entropy sensor is \(s_{j+1/2}=\min (0.25,\max (s_j, s_{j+1}))\), with \(s_j\) given via

with \(S = p/\rho ^{\gamma }\). For the Euler equations, it is suggested to instead use a corresponding pressure sensor.

Furthermore, \(r_{i+1/2}\) is the scalar diffusion coefficient, given by

It approximates the spectral radius and is chosen instead of a matrix valued diffusion as in other versions of this scheme. The specific choice of \(r_j\) is important with respect to stability and the convergence speed of the multigrid method. Here, we use the locally largest eigenvalue \(r_j = |v_{n_j}|+a_j\) as a basis, where a is the speed of sound. In the multidimensional case, this is further modified to be [22]:

where \(r_i\) corresponds to the x direction and \(r_j\) to the y direction.

Additionally, to obtain the velocity and temperature gradients needed for the viscous fluxes, we exploit that we have a cell centered method on a structured grid and use dual grids around vertices to avoid checkerboard effects [13, p. 364].

For boundary conditions, we use the no slip condition at fixed walls and far field conditions at outer boundaries. These are implemented using Riemann invariants [13, p. 362].

In time, we use BDF-2 with a fixed time step \(\Delta t\), resulting at time \(t_{n+1}\) in an equation system of the form

Here, \(\mathbf{f}(\mathbf{u})\) describes the spatial discretization, whereas \(\mathbf{\Omega }\) is a diagonal matrix with the volumes of the mesh cells as entries. We thus obtain

For a steady state problem, we just have \(\mathbf{F}(\mathbf{u}) := \mathbf{f}(\mathbf{u}) = \mathbf{0}\).
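To make the structure of the unsteady system concrete, here is a minimal Python sketch for a scalar model problem. It assumes the standard BDF-2 form \(\mathbf{F}(\mathbf{u}) = \mathbf{\Omega}(3\mathbf{u} - 4\mathbf{u}^n + \mathbf{u}^{n-1})/(2\Delta t) + \mathbf{f}(\mathbf{u})\) and a hypothetical linear spatial operator \(f(u) = \lambda u\), for which each step can be solved in closed form; all function names are illustrative only. Halving \(\Delta t\) should reduce the global error by a factor of about four, reflecting second order accuracy.

```python
import math


def bdf2_residual(u_new, u_n, u_nm1, dt, omega, f):
    """Residual of one BDF-2 step, assuming the standard form
    F(u) = Omega * (3u - 4u^n + u^{n-1}) / (2 dt) + f(u)."""
    return omega * (3.0 * u_new - 4.0 * u_n + u_nm1) / (2.0 * dt) + f(u_new)


def bdf2_step_linear(u_n, u_nm1, dt, omega, lam):
    """Solve F(u) = 0 in closed form for the hypothetical linear model f(u) = lam * u."""
    rhs = omega * (4.0 * u_n - u_nm1) / (2.0 * dt)
    return rhs / (3.0 * omega / (2.0 * dt) + lam)


def bdf2_error(dt, omega=1.0, lam=1.0, t_end=1.0):
    """Global error at t_end for Omega * u' = -lam * u, u(0) = 1."""
    exact = lambda t: math.exp(-lam * t / omega)
    n_steps = round(t_end / dt)
    u_nm1, u_n = exact(0.0), exact(dt)  # exact start-up value for the two-step method
    for _ in range(n_steps - 1):
        u_nm1, u_n = u_n, bdf2_step_linear(u_n, u_nm1, dt, omega, lam)
    return abs(u_n - exact(n_steps * dt))
```

For example, `bdf2_error(0.01) / bdf2_error(0.005)` evaluates to roughly 4. In the actual solver, \(\mathbf{F}(\mathbf{u}) = \mathbf{0}\) is of course not solved in closed form but by the FAS iteration described next.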

3 The Full Approximation Scheme

As mentioned in the introduction, we use an agglomeration FAS to solve Eqs. (4) and (5). To employ a multigrid method, we need a hierarchical sequence of grids, with the coarsest grid denoted by level \(l=0\). This is obtained by agglomerating 4 neighboring cells into one, which is straightforward for structured grids. On the coarse grids, the problem is discretized using a first order version of the JST scheme that does not use fourth differences or an entropy sensor.

The iteration is performed as a W-cycle, where on the coarsest grid, one smoothing step is performed. This gives the following pseudo code:

Function FAS-W-cycle\((\mathbf{u}_l, \mathbf{s}_l, l)\)

- \(\mathbf{u}_l = \mathbf{S}_l^{\nu _1}(\mathbf{u}_l,\mathbf{s}_l)\) (Presmoothing)
- if \((l>0)\)
  - \(\mathbf{r}_l=\mathbf{s}_l-\mathbf{F}_l(\mathbf{u}_l)\)
  - \({\tilde{\mathbf{u}}}_{l-1} = \mathbf{R}_{l-1,l} \mathbf{u}_l\) (Restriction of solution)
  - \(\mathbf{s}_{l-1}=\mathbf{F}_{l-1}({\tilde{\mathbf{u}}}_{l-1}) + \mathbf{R}_{l-1,l}\mathbf{r}_l\) (Restriction of residual)
  - \(\mathbf{u}_{l-1} = {\tilde{\mathbf{u}}}_{l-1}\) (Initial guess on the coarse grid)
  - For \(j=1,2\): call FAS-W-cycle\((\mathbf{u}_{l-1}, \mathbf{s}_{l-1},l-1)\) (Computation of the coarse grid correction)
  - \(\mathbf{u}_l = \mathbf{u}_l + \mathbf{P}_{l,l-1}(\mathbf{u}_{l-1}-{\tilde{\mathbf{u}}}_{l-1})\) (Correction via Prolongation)
- end if

The restriction \(\mathbf{R}_{l-1,l}\) is an agglomeration that weights components by the volumes of their cells and divides by the total volume. The prolongation \(\mathbf{P}_{l,l-1}\) uses a bilinear weighting [12].
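The W-cycle above can be sketched in Python on a one dimensional model problem. This is not the authors' solver; it assumes a hypothetical cell centered discretization of \(-u'' + u^3 = s\) on \([0,1]\) with zero Dirichlet data, a damped nonlinear Jacobi smoother written as explicit Euler in pseudo time with a local pseudo time step, agglomeration of 2 cells (instead of 4 in two dimensions) with volume averaging, and linear interpolation as the one dimensional analogue of the bilinear prolongation. The choice \(\nu_1 = 2\) on every level, including the coarsest, is also an assumption of this sketch.

```python
import math


def apply_F(u, h):
    """F(u)_i = (2u_i - u_{i-1} - u_{i+1}) / h^2 + u_i^3 on a cell centered grid.
    Zero Dirichlet data enter through ghost values u_ghost = -u_boundary."""
    n = len(u)
    out = []
    for i in range(n):
        left = u[i - 1] if i > 0 else -u[0]
        right = u[i + 1] if i < n - 1 else -u[-1]
        out.append((2.0 * u[i] - left - right) / h**2 + u[i] ** 3)
    return out


def diag_F(u, h, i):
    """Diagonal entry of dF/du; boundary cells pick up 1/h^2 from the ghost cell."""
    base = 3.0 if i in (0, len(u) - 1) else 2.0
    return base / h**2 + 3.0 * u[i] ** 2


def smooth(u, s, h, nu, cfl=2.0 / 3.0):
    """nu explicit Euler steps in pseudo time for u' = s - F(u), with the local
    pseudo time step cfl / diag_F (i.e. damped nonlinear Jacobi)."""
    for _ in range(nu):
        F = apply_F(u, h)
        u = [u[i] + cfl / diag_F(u, h, i) * (s[i] - F[i]) for i in range(len(u))]
    return u


def restrict(v):
    """Agglomerate pairs of equal-volume cells: volume weighted average."""
    return [0.5 * (v[2 * I] + v[2 * I + 1]) for I in range(len(v) // 2)]


def prolongate(c):
    """Linear interpolation to the two child cells (odd reflection at the walls)."""
    n = len(c)
    fine = []
    for I in range(n):
        left = c[I - 1] if I > 0 else -c[0]
        right = c[I + 1] if I < n - 1 else -c[-1]
        fine += [0.75 * c[I] + 0.25 * left, 0.75 * c[I] + 0.25 * right]
    return fine


def fas_w_cycle(u, s, level, h, nu1=2):
    u = smooth(u, s, h, nu1)                              # presmoothing
    if level > 0:
        F = apply_F(u, h)
        r = [s[i] - F[i] for i in range(len(u))]          # fine grid residual
        u_tilde = restrict(u)                             # restriction of solution
        H = 2.0 * h
        Fc = apply_F(u_tilde, H)
        s_c = [f + rc for f, rc in zip(Fc, restrict(r))]  # restriction of residual
        u_c = list(u_tilde)                               # coarse initial guess
        for _ in range(2):                                # two calls: W-cycle
            u_c = fas_w_cycle(u_c, s_c, level - 1, H, nu1)
        corr = prolongate([a - b for a, b in zip(u_c, u_tilde)])
        u = [a + b for a, b in zip(u, corr)]              # correction via prolongation
    return u


def residual_norm(u, s, h):
    F = apply_F(u, h)
    return math.sqrt(h * sum((si - fi) ** 2 for si, fi in zip(s, F)))


levels = 5                    # finest level: 2 * 2**5 = 64 cells, coarsest: 2 cells
n = 2 * 2**levels
h = 1.0 / n
s, u = [1.0] * n, [0.0] * n
r0 = residual_norm(u, s, h)
for _ in range(15):
    u = fas_w_cycle(u, s, levels, h)
print(residual_norm(u, s, h) / r0)
```

With these settings, 15 cycles starting from zero should reduce the residual norm by at least three orders of magnitude. Note that, following the pseudocode, no postsmoothing is performed; the high frequency errors introduced by the prolongation are removed by the presmoothing of the next cycle.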

On the finest level, the smoother is applied to Eq. (4) or (5), respectively. On the coarser levels, it is instead used to solve

with

4 Preconditioned Smoothers

All smoothers we use have a pseudo time iteration as a basis. These are iterative methods for the nonlinear equation \(\mathbf{F}(\mathbf{u})=\mathbf{0}\) that are obtained by applying a time integration method to the initial value problem

For convenience, we have dropped the subscript l that denotes the multigrid level.

4.1 Preconditioned Additive Runge–Kutta Methods

We start by splitting \(\mathbf{F}(\mathbf{u})\) into a convective and a diffusive part

Here, \(\mathbf{f}^c\) contains the physical convective fluxes, as well as the discretized time derivative and the multigrid source terms, whereas \(\mathbf{f}^v\) contains both the artificial dissipation and the discretized second order terms of the Navier–Stokes equations.

An additive explicit Runge–Kutta (AERK) method is then implemented in the following form:

where

The second to last line implies that \(\beta _1=1\). Here, \(\Delta t^*\) is a local pseudo time step, meaning that it depends on the specific cell and the multigrid level. It is obtained by choosing \(c^*\), a CFL number in pseudo time, and then computing \(\Delta t^*\) based on the local mesh width \(\Delta x_{k_l}\). On an equidistant mesh, this comes down to:

This implies larger time steps on coarser cells, in particular on coarser grids.

As for the coefficients, several schemes have been designed to have good smoothing properties in a multigrid method for convection dominated model equations. The 3-stage scheme AERK3J has its origins in [35], with the \(\beta \) coefficients being derived in [30]. AERK5J was designed by Jameson using linear advection with a fourth order diffusion term, see [11]. The 5-stage schemes AERK51 and AERK52 are from [35], and AERK52 is employed in [32]. Coefficients for the 3- and 5-stage schemes can be found in Table 1. All of these schemes are first order, except for AERK52, which has order two, as indicated by the last digit of its name. In the original publication, AERK51 and AERK52 are not additive. When using these within an additive method, we use the \(\beta \) coefficients from AERK5J. For current research into improving these coefficients, we refer to [2, 4].

Setting \(\beta _i=1\) for all i gives an unsplit low storage explicit Runge–Kutta method that does not treat convection and diffusion differently. We refer to these schemes as ERK methods, e.g. ERK3J or ERK51.

To precondition this scheme, a preconditioner \(\mathbf{P}^{-1} \in \mathbf{R}^{m\times m}\) is applied to the equation system (4) or (5) by multiplying it from the left, resulting in the equation

In a pseudo-time iteration for the new equation, all function evaluations have to be adjusted. In the above algorithm, this is realized by replacing the term \(\alpha _i \Delta t^*(\mathbf{f}^{c,(i-1)} + \mathbf{f}^{v,(i-1)})\) with \(\alpha _i \Delta t^*\mathbf{P}^{-1}(\mathbf{f}^{c,(i-1)} + \mathbf{f}^{v,(i-1)})\). We discuss the role of the preconditioner in more detail in Sect. 4.3.
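The low storage structure can be illustrated with a short Python sketch for scalar states. It assumes the common Jameson-type form in which each stage restarts from \(\mathbf{u}^{(0)}\), \(\mathbf{u}^{(i)} = \mathbf{u}^{(0)} - \alpha_i\Delta t^*\mathbf{P}^{-1}(\mathbf{f}^{c,(i-1)} + \mathbf{f}^{v,(i-1)})\), and the dissipative term is blended as \(\mathbf{f}^{v,(i-1)} = \beta_i\mathbf{f}^v(\mathbf{u}^{(i-1)}) + (1-\beta_i)\mathbf{f}^{v,(i-2)}\), which requires \(\beta_1 = 1\). The preconditioner is passed as a function `prec` that applies \(\mathbf{P}^{-1}\). The names and the coefficients used in the check are hypothetical and are not those of Table 1.

```python
def aerk_step(u0, f_c, f_v, dt, alpha, beta, prec=lambda r: r):
    """One pseudo time step of a low storage additive explicit RK smoother.

    alpha, beta: stage coefficients (beta[0] must be 1).
    f_c, f_v: convective and dissipative parts of F.
    prec: applies the preconditioner P^{-1} (identity by default).
    """
    if beta[0] != 1.0:
        raise ValueError("beta_1 must be 1")
    u, fv = u0, None
    for i, (a, b) in enumerate(zip(alpha, beta)):
        if i == 0:
            fv = f_v(u)                           # first stage: full evaluation
        elif b != 0.0:
            fv = b * f_v(u) + (1.0 - b) * fv      # blend new and old dissipation
        # for b == 0, the dissipative term of the previous stage is reused
        u = u0 - a * dt * prec(f_c(u) + fv)       # every stage restarts from u0
    return u
```

With all \(\beta_i = 1\) and linear scalar fluxes \(f^c(u) = \lambda_c u\), \(f^v(u) = \lambda_v u\), one 3-stage step from \(u_0 = 1\) returns the stability polynomial \(1 + \alpha_3 z + \alpha_3\alpha_2 z^2 + \alpha_3\alpha_2\alpha_1 z^3\) with \(z = -\Delta t^*(\lambda_c + \lambda_v)\), in line with the polynomial form discussed in Sect. 4.3. The same code works elementwise for NumPy arrays.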

4.2 Additive W-Methods

In [28, 32], so-called RK/implicit methods were suggested with great success. In effect, these use the method from above, but with an approximation of the matrix \(\mathbf{I}+\alpha \frac{\partial \mathbf{F}}{\partial \mathbf{u}}\) in place of \(\mathbf{P}\), where \(\alpha \) is a parameter. To bring these approaches together, Langer presented an alternative way of deriving these methods in [17]. He uses the term preconditioned implicit smoothers and derives them from specific singly diagonally implicit RK (SDIRK) methods. SDIRK methods require the solution of a nonlinear system at each stage, which he approximates with one Newton step per stage and then simplifies by always using the Jacobian from the first stage. This is known as a special choice of a Rosenbrock method in the literature on differential equations [10, p. 102]. To arrive at a preconditioned method, Langer then replaces the system matrix with an approximation, for example one originating from a preconditioner as known from linear algebra. In fact, this type of method is called a W-method in the ODE community [10, p. 114].

We now extend the framework from [17] to additive Runge–Kutta methods. For clarity we repeat the derivation, but start from the split equation

as described in (7). To this equation, we apply an additive SDIRK method with coefficients given in Tables 2 and 3:

Here, the vectors \(\mathbf{k}\) are called stage derivatives and we have \(\mathbf{k} = \mathbf{k}^c + \mathbf{k}^v\) according to the splitting (7). Thus, we have to solve s nonlinear equation systems for the stage derivatives \(\mathbf{k}_i\).

To obtain an additive Rosenbrock method, these are solved approximately using one Newton step each with initial guess zero, changing the stage values to

where \(\mathbf{J}_i = \frac{\partial \mathbf{F}_i(\mathbf{0})}{\partial \mathbf{k}}\), with \(\mathbf{F}_i(\mathbf{k}) := \mathbf{F}\left(\mathbf{u}^n + \Delta t^*\left( \sum _{j=1}^{i-1}(a^c_{ij}\mathbf{k}^c_j + a^v_{ij}\mathbf{k}^v_j)+ \eta \mathbf{k}\right)\right) \). Thus, we now have to solve a linear system at each stage. This type of scheme is employed by Swanson et al. [32]. They refer to the factor \(\eta \) as \(\epsilon \) and provide a discrete Fourier analysis of this factor.

As a final step, we approximate the system matrices \(\mathbf{I}+\eta \Delta t^*\mathbf{J}_i\) by a matrix \(\mathbf{W}\). This gives us a new class of schemes, which we call additive W (AW) methods, with stage derivatives given by:

In both additive Rosenbrock and additive W methods, Eq. (16) remains unchanged.

Finally, after some algebraic manipulations, this method can be rewritten in the same form as the low storage preconditioned AERK methods presented earlier:

where

As for the explicit methods, one recovers an unsplit scheme for \(\beta _i=1\) for all i and we refer to these methods as SDIRK, Rosenbrock and W methods.

4.3 Comparison

To get a better understanding for the different methods, it is illustrative to consider the linear case. Then, these methods are iterative schemes to solve a linear equation system \((\mathbf{A} + \mathbf{B})\mathbf{x} = \mathbf{b}\) and can be written as

The matrix \(\mathbf{M}\) is the iteration matrix; for pseudo time iterations, it is given by the stability function S of the time integration method. This is a polynomial \(P_s\) of degree s in \(\Delta t^*(\mathbf{A} + \mathbf{B})\) for an s stage ERK method and a bivariate polynomial \(P_s\) of degree s in \(\Delta t^*\mathbf{A}\) and \(\Delta t^*\mathbf{B}\) for an s stage AERK method. When preconditioning is added, this results in \(P_s(\Delta t^*\mathbf{P}^{-1}(\mathbf{A}+\mathbf{B}))\) and \(P_s(\Delta t^*\mathbf{P}^{-1}\mathbf{A},\Delta t^*\mathbf{P}^{-1}\mathbf{B})\), respectively.

For the implicit schemes, we obtain a rational function of the form \(Q_s(\mathbf{I}+\eta \Delta t^*(\mathbf{A} + \mathbf{B}))^{-1}P_s(\Delta t^*(\mathbf{A} + \mathbf{B}))\) for an s stage SDIRK or Rosenbrock method. Here, \(Q_s\) is a second polynomial of degree s. Finally, for an s-stage additive W method, we obtain a function of the form \(Q_s(\mathbf{W})^{-1}P_s(\Delta t^*\mathbf{A},\Delta t^*\mathbf{B})\). Due to the specific construction, the inverse can simply be moved from the left into the argument, which gives \(P_s(\Delta t^*\mathbf{W}^{-1}\mathbf{A},\Delta t^*\mathbf{W}^{-1}\mathbf{B})\). Note that this is the same as for the preconditioned AERK method, except for the preconditioner.

The additive W method and the preconditioned AERK method have three main differences. First of all, there is the role of \(\mathbf{P}\) in the AERK method versus the matrix \(\mathbf{W}\). In the W method \(\mathbf{W}\approx \mathbf{I}+\eta \Delta t^*\mathbf{J}_i\), whereas in the AERK scheme \(\mathbf{P} \approx \mathbf{J}_i\). Second, the admissible pseudo time step is that of an explicit ARK method in the one case and that of an implicit method in the other. The latter, in its SDIRK or Rosenbrock form, is A-stable. However, approximating the Jacobian can cause the stability region to become finite. Finally, the W method has an additional parameter \(\eta \) that needs to be chosen. On the other hand, its large stability region makes the choice of \(\Delta t^*\) easy for the additive W method (very large), whereas it has to be a small value for the preconditioned AERK scheme.
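The effect of approximating the Jacobian in \(\mathbf{W}\) is visible already in the simplest case, a one-stage unsplit W method (linearly implicit Euler) applied to the scalar test problem \(u' = -\lambda u\). With \(z = \Delta t^*\lambda\), \(W = 1 + \eta z_W\) and \(z_W = \Delta t^*\lambda_W\), the amplification factor is \(g = 1 - z/(1+\eta z_W)\). For the exact Jacobian (\(z_W = z\)), one finds \(|g| < 1\) for all \(\mathrm{Re}\, z > 0\) whenever \(\eta \ge 1/2\), while a poor approximation \(\lambda_W\) makes the stability region finite. The eigenvalue \(1+5i\) and the choice \(\lambda_W = \mathrm{Re}\,\lambda\) below are hypothetical, chosen only to mimic a convection dominated mode; this scalar sketch is not the AW3 scheme itself.

```python
def w_euler_amplification(z, z_w, eta):
    """Amplification factor of a one-stage W method (linearly implicit Euler).

    Test problem u' = -lam * u with z = dt* * lam. The stage solves
    W k = -lam * u0 with W = 1 + eta * z_w, where z_w = dt* * lam_w uses an
    approximation lam_w of lam; z_w = z gives the Rosenbrock method.
    """
    return 1.0 - z / (1.0 + eta * z_w)


lam = 1.0 + 5.0j  # hypothetical convection dominated eigenvalue
for dt in (1.0, 1e2, 1e6):
    z = dt * lam
    exact_w = abs(w_euler_amplification(z, z, 0.8))               # W from exact Jacobian
    approx_w = abs(w_euler_amplification(z, dt * lam.real, 0.8))  # W ignores Im(lam)
    print(dt, exact_w, approx_w)
```

As \(\Delta t^* \to \infty\) with the exact Jacobian, \(g \to (\eta - 1)/\eta\), which has modulus below one exactly for \(\eta > 1/2\); this is one way to see why \(\eta\) cannot be chosen arbitrarily small.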

4.4 SGS Preconditioner

The basis of our method is the preconditioner suggested by Swanson et al. in [32] and modified by Jameson in [16]. In effect, this is a choice of a \(\mathbf{W}\) matrix in the framework just presented. We now repeat the derivation of this preconditioner in our notation.

The first step is to approximate the Jacobian by using a different, first order linearized discretization. It is based on a splitting \(\mathbf{A} = \mathbf{A}^+ + \mathbf{A}^-\) of the flux Jacobian. This is evaluated at the average of the values on both sides of the interface, which deviates from [32]. The split Jacobians correspond to positive and negative eigenvalues:

Alternatively, these can be written in terms of the matrix of right eigenvectors \(\mathbf{R}\) as

where \(\Lambda ^{\pm }\) are diagonal matrices containing the positive and negative eigenvalues, respectively.

As noted in [16, 33], it is crucial to apply a cutoff function to the eigenvalues beforehand, to bound them away from zero. We use a parabolic function which kicks in when the modulus of the eigenvalue \(\lambda \) is smaller than or equal to a fraction \(ad\) of the speed of sound a, with free parameter \(d \in [0,1]\):

With this, an upwind discretization is given in cell i by

Here, \(e_{ij}\) is the edge between cells i and j, N(i) is the set of cells neighboring i and \(\mathbf{n}_{ij}\) the unit normal vector from i to j.
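A minimal sketch of the eigenvalue cutoff and the resulting split, assuming the common parabolic (Harten-type) form \(|\lambda| \mapsto (\lambda^2 + (ad)^2)/(2ad)\) for \(|\lambda| \le ad\). This joins \(|\lambda|\) continuously at \(|\lambda| = ad\) and never drops below \(ad/2\). The function names are illustrative, and the split \(\lambda^\pm = (\lambda \pm |\lambda|_{\text{cut}})/2\) is applied here to the scalar eigenvalues \(v_n - a\), \(v_n\), \(v_n + a\) with hypothetical values \(v_n = 0.8\), \(a = 1\).

```python
def cutoff_abs(lam, a, d):
    """|lam| with an assumed parabolic cutoff for |lam| <= d * a."""
    delta = d * a
    if delta <= 0.0 or abs(lam) > delta:
        return abs(lam)
    return (lam * lam + delta * delta) / (2.0 * delta)


def split_eigenvalue(lam, a, d):
    """Split lam = lam_plus + lam_minus with lam_plus >= 0 >= lam_minus."""
    lam_abs = cutoff_abs(lam, a, d)
    return 0.5 * (lam + lam_abs), 0.5 * (lam - lam_abs)


v_n, a, d = 0.8, 1.0, 0.5
for lam in (v_n - a, v_n, v_n + a):
    print(lam, split_eigenvalue(lam, a, d))
```

Since \((\lambda^2 + \delta^2)/(2\delta) \ge |\lambda|\), the split parts keep their signs, and the cutoff only affects the slow acoustic eigenvalue \(v_n - a = -0.2\), whose split parts become approximately \(0.045\) and \(-0.245\) instead of \(0\) and \(-0.2\).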

For the unsteady equation (4), we obtain instead

The corresponding approximation of the Jacobian is then used to construct a preconditioner. Specifically, we consider the block SGS preconditioner

where \(\mathbf{L}\), \(\mathbf{D}\) and \(\mathbf{U}\) are block matrices with \(4\times 4\) blocks. This preconditioner would look different if several SGS steps were performed. However, a second step increases the cost of the whole method by 50% without a commensurate increase in convergence rate. Therefore, it should only be used if one step does not give convergence.

We now have two cases. In the AERK framework, \(\mathbf{L} + \mathbf{D} + \mathbf{U} = \mathbf{J}\) and we arrive at

respectively

in the unsteady case, where we have assumed a Cartesian grid to simplify notation.

In the additive W framework, \(\mathbf{L} + \mathbf{D} + \mathbf{U} = \mathbf{I} + \eta \Delta t^*\mathbf{J}\) and we obtain

or in the unsteady case

Applying this preconditioner requires solving small \(4\times 4\) systems coming from the diagonal. We use Gaussian elimination for this. A fast implementation is obtained by transforming first to a certain set of symmetrizing variables, see [16].
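Applying \(\mathbf{P}^{-1} = (\mathbf{D} + \mathbf{L})^{-1}\mathbf{D}(\mathbf{D} + \mathbf{U})^{-1}\) amounts to a backward sweep, a multiplication with the block diagonal and a forward sweep, each of which only needs solves with the small diagonal blocks. The Python sketch below shows this for a hypothetical one dimensional chain of cells with dense blocks of arbitrary size (4 for the Euler equations); on a two dimensional structured grid, \(\mathbf{L}\) and \(\mathbf{U}\) would couple each cell to two neighbors instead of one. Plain Gaussian elimination with partial pivoting is used for the block solves, without the symmetrizing variable transformation of [16].

```python
import random


def solve_dense(A, b):
    """Gaussian elimination with partial pivoting for a small dense block."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for k in range(n):
        p = max(range(k, n), key=lambda r: abs(M[r][k]))
        M[k], M[p] = M[p], M[k]
        for r in range(k + 1, n):
            fac = M[r][k] / M[k][k]
            for c in range(k, n + 1):
                M[r][c] -= fac * M[k][c]
    x = [0.0] * n
    for k in range(n - 1, -1, -1):
        x[k] = (M[k][n] - sum(M[k][c] * x[c] for c in range(k + 1, n))) / M[k][k]
    return x


def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def sgs_apply(D, L, U, r):
    """Apply P^{-1} = (D + L)^{-1} D (D + U)^{-1} to r for a 1D chain of cells.

    D[i]: diagonal block of cell i; L[i]: coupling of cell i to i-1 (L[0] unused);
    U[i]: coupling of cell i to i+1 (U[-1] unused). Blocks are lists of lists.
    """
    n = len(D)
    y = [None] * n
    for i in range(n - 1, -1, -1):                # backward sweep: (D + U) y = r
        rhs = list(r[i])
        if i < n - 1:
            rhs = [a - b for a, b in zip(rhs, matvec(U[i], y[i + 1]))]
        y[i] = solve_dense(D[i], rhs)
    z = [matvec(D[i], y[i]) for i in range(n)]    # multiply with D
    x = [None] * n
    for i in range(n):                            # forward sweep: (D + L) x = z
        rhs = list(z[i])
        if i > 0:
            rhs = [a - b for a, b in zip(rhs, matvec(L[i], x[i - 1]))]
        x[i] = solve_dense(D[i], rhs)
    return x
```

A quick consistency check is to multiply the result by \((\mathbf{D}+\mathbf{U})\mathbf{D}^{-1}(\mathbf{D}+\mathbf{L})\), which should reproduce \(\mathbf{r}\) up to rounding errors.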

5 Discrete Fourier Analysis

We now perform a discrete Fourier analysis of the preconditioned AERK method for the two dimensional Euler equations using the JST scheme. For a description of this technique, also called local Fourier analysis (LFA) in the multigrid community, we refer to [9, 34]. The rationale is that the core convergence problems of multigrid methods for viscous flow problems stem from the convective terms and the high aspect ratio grids. The viscous terms are of comparatively minor importance. Here, we do not take into account the coarse grid correction. Thus, our aim is to obtain amplification and smoothing factors for the smoother. The latter is given by

where \(\lambda _{HF}\) denotes the high frequency eigenvalues. Since the eigenfunctions of first order hyperbolic differential operators are of the form \(e^{i\phi x}\), the high frequencies correspond to phase angles \(\phi \) in \([-\pi ,-\pi /2]\) and \([\pi /2,\pi ]\).
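For a scalar one dimensional model, the amplification and smoothing factors reduce to maxima of the modulus of a Fourier symbol over all phase angles or over the high frequency ones only. The Python sketch below approximates these maxima by sampling the angles. As a reference it reproduces the classical smoothing factor 1/3 of damped Jacobi (\(\omega = 2/3\)) for the one dimensional Laplacian; it then evaluates a 3-stage low storage ERK step for first order upwind advection with hypothetical coefficients \(\alpha = (1/3, 1/2, 1)\) and pseudo CFL number 1.

```python
import cmath
import math


def high_frequency_angles(n=200):
    """Sample the phase angles in [pi/2, pi] and [-pi, -pi/2]."""
    pos = [math.pi / 2 + k * (math.pi / 2) / n for k in range(n + 1)]
    return pos + [-t for t in pos]


def all_angles(n=400):
    """Sample the phase angles in [-pi, pi]."""
    return [-math.pi + k * 2.0 * math.pi / n for k in range(n + 1)]


def smoothing_factor(symbol, n=200):
    return max(abs(symbol(t)) for t in high_frequency_angles(n))


def amplification_factor(symbol, n=400):
    return max(abs(symbol(t)) for t in all_angles(n))


def damped_jacobi_laplace(omega):
    """Symbol of damped Jacobi for the 1D Laplacian (classical reference case)."""
    return lambda t: 1.0 - omega * (1.0 - math.cos(t))


def erk_upwind(alpha, cfl):
    """Symbol of a low storage ERK pseudo time step for first order upwind advection."""
    def g(t):
        z = -cfl * (1.0 - cmath.exp(-1j * t))  # dt* times the symbol of -a * (upwind difference)
        u = 1.0 + 0j
        for a in alpha:                        # u^(i) = 1 + alpha_i * z * u^(i-1)
            u = 1.0 + a * z * u
        return u
    return g


rk3 = erk_upwind((1.0 / 3.0, 0.5, 1.0), cfl=1.0)  # hypothetical coefficients
print(smoothing_factor(damped_jacobi_laplace(2.0 / 3.0)))
print(amplification_factor(rk3), smoothing_factor(rk3))
```

The ERK step has amplification factor 1, attained by the mode \(\phi = 0\), but a smoothing factor clearly below 1: it damps high frequencies well while leaving the convergence to the coarse grid correction.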

We now consider a linearized version of the underlying equation with periodic boundary conditions on the domain \(\Omega = [0,1]^2\):

with \(\mathbf{A} = \frac{\partial \mathbf{f}_1}{\partial \mathbf{u}}\) and \(\mathbf{B} = \frac{\partial \mathbf{f}_2}{\partial \mathbf{u}}\) being the Jacobians of the Euler fluxes at a fixed state \(\hat{\mathbf{u}}\), to be specified later.

5.1 JST Scheme

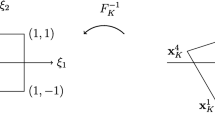

We discretize (32) on a Cartesian mesh with mesh width \(\Delta x\) in the x-direction and \(\Delta y = \text {AR}\Delta x\) in the y-direction (\(\hbox {AR}=\hbox {aspect ratio}\)), resulting in an \(n_x\times n_y\) mesh. A cell centered finite volume method with the JST flux is employed. We denote the shift operators in the x and y directions by \(E_x\) and \(E_y\). Cells are indexed lexicographically by a double index ij, as is canonical for a Cartesian mesh. In cell ij, we write the discretization as

with

respectively in the unsteady case,

For \(\mathbf{H}_v\), the starting point is that the pressure in conservative variables is

In the fraction, all potential shift operators cancel out. Thus, for the second differences in both directions,

For the fourth difference, there is a corresponding identity. Furthermore, applying the second or fourth difference to \(\rho H_j = \rho E_j + p_j\) results in

This gives

with

For the coefficient functions \(\epsilon ^{(2)}\) and \(\epsilon ^{(4)}\) [see (1) and (2)], we first look at the shock sensor \(s_{j+1/2}\). Here, we use the version for the Euler equations based on pressure. Straightforward calculations give

Thus

For simplicity, we now assume that \(\max (s_j,s_{j+1}) = s_j\). Thus,

For the spectral radius, we note that in the speed of sound \(a_j=\sqrt{\gamma p_j/\rho _j}\), possible shift operators cancel out as well, implying that it is constant over the mesh. This gives

Regarding the maxima, we have \(r_j=r_{j+1} =: r\) and correspondingly for the y direction with \(r_i\). Thus,

and

5.2 Preconditioner

With regards to the SGS preconditioner \(\mathbf{P}^{-1} = (\mathbf{D} + \mathbf{L})^{-1} \mathbf{D} (\mathbf{D} +\mathbf{U})^{-1}\), the different discretization based on the flux splitting (20) with cutoff function (19) gives [see (23)–(26)]

We now get two different operators for the diagonal part for the steady and for the unsteady case. We have

for the steady case, whereas for the unsteady case there is

With these, the preconditioner (22) is formed. For the W methods, these matrices need to be adjusted slightly, compare (27)–(30).

We make one simplification in the analysis: we assume the matrices to be evaluated with the value of the respective cell and not with the average as in the actual method.

As an example, the application of the 3-stage AERK scheme results in the following operator, where we write \(\bar{\mathbf{H}}_c:=\mathbf{P}^{-1}\mathbf{H}_c\), \(\bar{\mathbf{H}}_v:=\mathbf{P}^{-1}\mathbf{H}_v\) and \({\bar{\alpha }}_i = \Delta t^*\alpha _i\):

For other smoothers, we have to use the appropriate stability functions, as discussed in Sect. 4.3. As the relation between \(\Delta t^*\) and \(c^*\), we use

5.3 Amplification and Smoothing Factors

We are now interested in the amplification factor of the corresponding method for different values of \(\Delta x\) and \(\Delta y\). Working with \(\mathbf{G}\) directly would require assembling a large matrix in \(\mathbf{R}^{4n_xn_y\times 4n_xn_y}\). Instead, we perform a discrete Fourier transform. In Fourier space, the transformed operator is block diagonal, which allows us to work with the much smaller matrices \({\hat{\mathbf{G}}}\in \mathbf{C}^{4\times 4}\). Thus, we replace \(\mathbf{u}_{ij}\) by its discrete Fourier series

and analyze

When applying a shift operator to one of the exponentials, we obtain

and similar for \(E_y\). Defining the phase angles

the Fourier transformed shift operators are

and we can replace the dependence on the wave numbers with a dependence on the phase angles.

To compute the spectral radius of \(\mathbf{G}\), we now only need the maximum of the spectral radius of \({\hat{\mathbf{G}}}_{\Theta _x,\Theta _y} = {\hat{\mathbf{G}}}_{k_x,k_y}\) over all phase angles \(\Theta _x\) and \(\Theta _y\) between \(-\pi \) and \(\pi \). Furthermore, this allows us to compute the smoothing factor (31) as well, by instead taking the maximum over the high frequency phase angles between \(-\pi \) and \(-\,\pi /2\), as well as between \(\pi /2\) and \(\pi \).
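The same procedure in two dimensions can be sketched for a scalar model problem: the first order upwind discretization of \(u_t + u_x + u_y = 0\) on a grid with \(\Delta y = \text{AR}\,\Delta x\), smoothed by a 3-stage low storage ERK step with hypothetical coefficients \(\alpha = (1/3, 1/2, 1)\). The shift operators become \(e^{-i\Theta_x}\) and \(e^{-i\Theta_y}\), and a mode counts as high frequency if \(|\Theta_x|\) or \(|\Theta_y|\) is at least \(\pi/2\). The local pseudo time step \(\Delta t^* = c^*/(1/\Delta x + 1/\Delta y)\) is an assumption of this sketch. It is not the \(4\times 4\) Euler/JST analysis of this section, but it reproduces the qualitative effect of high aspect ratios on explicit smoothers.

```python
import cmath
import math


def erk_symbol_2d(alpha, cfl, ar, theta_x, theta_y):
    """Fourier symbol of a low storage ERK pseudo time step for 2D first order
    upwind advection u_t + u_x + u_y = 0 on a grid with dy = ar * dx.

    With the assumed local step dt* = cfl / (1/dx + 1/dy), the directional
    Courant numbers are nu_x = cfl*ar/(1+ar) and nu_y = cfl/(1+ar).
    """
    nu_x = cfl * ar / (1.0 + ar)
    nu_y = cfl / (1.0 + ar)
    # the shift operators E_x^{-1}, E_y^{-1} become exp(-i theta_x), exp(-i theta_y)
    z = -(nu_x * (1.0 - cmath.exp(-1j * theta_x)) + nu_y * (1.0 - cmath.exp(-1j * theta_y)))
    u = 1.0 + 0j
    for a in alpha:
        u = 1.0 + a * z * u
    return u


def factors_2d(alpha, cfl, ar, n=64):
    """Return (amplification factor, smoothing factor) sampled on a grid of phase angles."""
    angles = [-math.pi + k * 2.0 * math.pi / n for k in range(n + 1)]
    amp = smooth = 0.0
    for tx in angles:
        for ty in angles:
            g = abs(erk_symbol_2d(alpha, cfl, ar, tx, ty))
            amp = max(amp, g)
            if max(abs(tx), abs(ty)) >= math.pi / 2 - 1e-12:  # high frequency in x or y
                smooth = max(smooth, g)
    return amp, smooth


rk3 = (1.0 / 3.0, 0.5, 1.0)  # hypothetical coefficients
for ar in (1.0, 100.0, 1000.0):
    print(ar, factors_2d(rk3, 1.0, ar))
```

For \(\text{AR} = 1\), the smoothing factor of this model is well below 1, while for large AR it approaches 1: modes that oscillate only in the y direction see the tiny Courant number \(\nu_y\) and are hardly damped.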

5.4 Results

We evaluate the matrices in the points

and

We use an \(8 \times (8\cdot AR)\) grid with different aspect ratios (AR), namely \(\hbox {AR}=1\), \(\hbox {AR}=100\) and \(\hbox {AR}=10{,}000\). To determine the physical time step, a CFL number of \(c=200\) is chosen. All results were obtained using a Python script, which can be accessed at http://www.maths.lu.se/philipp-birken/rksgs_fourier.zip.

5.4.1 The Explicit Schemes

Results for the explicit schemes for different test cases are shown in Table 4. As can be seen, these methods have very poor convergence rates, but are good smoothers on equidistant meshes. For meshes with high aspect ratio cells, this is no longer the case, which explains the poor performance of these methods for viscous flow problems.

5.4.2 Preconditioned AERK

We now consider preconditioned AERK3J with SGS and exact preconditioning. The Mach number is set to 0.8 and the angle of attack to zero degrees, which is the most difficult test case of the ones considered. Even so, it is possible to achieve convergence at all aspect ratios with a large physical CFL number \(c=200\). With regard to stability, we show the maximal possible \(c^*\) in Table 5. We can see that this is dramatically improved compared to the unpreconditioned method, but it remains finite, as predicted by the theory. We furthermore notice that the choice of d in the cutoff function (19) is important. In particular, the smaller we choose d, meaning the smaller we allow eigenvalues to be, the less stable the method will be. The maximal \(c^*\) is approximately proportional to the aspect ratio and to d. The eigenvalues and contours of the smoothing factor for \(d=0.5\) are also illustrated in Fig. 1 for aspect ratios 1 and 100, respectively. Clustering of the eigenvalues along the real axis is observed, indicating good convergence.

For each value of d considered, \(c^*\) was optimised (\(c^*\) opt) to minimise the smoothing factor (SM fct. opt). The results are shown in Table 6. Optimal smoothing factors improve as d is increased. Preconditioning with the exact inverse affords better smoothing factors than SGS preconditioning. In general, optimal \(c^*\) is close to maximal \(c^*\).

5.4.3 Additive W Methods

Results for AW3 with SGS and exact preconditioning are shown in Tables 7 and 8. Again, the Mach number is set to 0.8, the physical CFL number to \(c=200\) and the angle of attack to zero degrees. We set \(\eta =0.8\). An entry A in the tables means that no bound on \(c^*\) was observed. As we can see, as long as d is chosen sufficiently large, the methods are practically A-stable, as suggested by the theory. Surprisingly, for small d, stability is worse than for the preconditioned AERK methods. This is also illustrated in Fig. 2 for Mach 0.5 and aspect ratios 1 and 100, respectively. As with AERK3J, the eigenvalues are clustered along the real axis.

A slightly more complex picture emerges when the optimal smoothing factor is considered. At \(\hbox {AR}=1\), the AW3 scheme attains very low optimal smoothing factors of around 0.3 at all values of d, while the smoothing factors of the AERK3J scheme improve with increasing d. Comparing SGS preconditioning in both schemes, the optimal smoothing factors obtained by AW3 are slightly higher than those of AERK3J. Using exact preconditioning in both schemes at \(\hbox {AR}=100\) and 10,000, AW3 and AERK3J obtain comparable smoothing factors. The optimal \(c^*\) is generally lower than with AERK3J, except for \(d \ge 0.5\) and AR\(>1\).

5.4.4 Comparison of AW Schemes and Choice of \(\eta \)

One important question is the optimal choice of the additional parameter \(\eta \) in the W methods. Based on the AW3 results in Table 7, we focus on two values of d: \(d=0.1\), where limited stability was observed, and \(d=0.5\), where A-stability was observed. Only SGS preconditioning is used. For each W scheme and value of d, the optimal values of \(c^*\) and \(\eta \), together with the corresponding amplification and smoothing factors, were determined. They are presented in Table 9 for initial conditions \(\hbox {Mach}=0.8\), \(\alpha =0^{\circ }\) and in Table 10 for initial conditions \(\hbox {Mach}=0.8\), \(\alpha =45^{\circ }\).

In Table 9, the optimal value of \(\eta \) is low when \(d=0.5\), either 0.4 or 0.5 (with one case of 0.7). When \(d=0.1\), the optimal \(\eta \) depends on the aspect ratio: for \(\hbox {AR}=1\) it is 0.5 or 0.6, and for \(\hbox {AR}=100\) and 10,000 it is higher, mostly 0.8. In Table 10, the optimal value of \(\eta \) is independent of d and of the choice of scheme, but not of the aspect ratio. The optimal \(\eta \) thus appears to depend somewhat on the initial conditions and the other free parameters, but not on the specific W scheme. Furthermore, the optimisation process showed (not all results are shown for brevity) that all W schemes are stable within a range of about \(\eta \in [0.5,0.9]\), although the maximal \(c^*\) varies with \(\eta \) within this range. As shown in Table 8, fixing \(\eta =0.8\) across all tests results in a stable but sub-optimal scheme. Comparing the different W schemes in Tables 9 and 10, they all obtain similar optimal smoothing factors at similar \(c^*\) values. Since the cost of an iteration grows with the number of stages, AW3 is the best scheme, as it uses only three stages.
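How \(\eta \) enters stability can be seen in the simplest possible W-type stage. The sketch below is not AW3. It uses a single linearly implicit stage with exact Jacobian for the test equation \(u'=\lambda u\), \(z=h\lambda \). This stage is equivalent to the \(\theta \)-method with \(\theta =\eta \), and the factor \(1-\eta z\) plays the role of \({\mathbf {I}}+\eta \Delta t^* {\mathbf {J}}\), assuming \({\mathbf {J}}\) is the Jacobian of a residual with \(u'=-R(u)\). The code samples \(|R(z)|\) over part of the left half-plane. It stays bounded by one for \(\eta \ge 1/2\) and exceeds one for \(\eta <1/2\). The multistage AW schemes with approximate (SGS) preconditioning do not automatically inherit this bound, so read the sketch only as an illustration.

```python
def stability_function(z, eta):
    """R(z) = 1 + z / (1 - eta*z): one linearly implicit stage with exact Jacobian,
    i.e. u1 = u0 + (1 - eta*z)^(-1) * z * u0 for u' = lambda*u, z = h*lambda."""
    return (1 + (1 - eta) * z) / (1 - eta * z)


def max_amplification(eta, radius=1000.0, n=200):
    """Largest |R(z)| sampled on the rectangle [-radius, 0] x [-radius, radius]
    of the left half-plane (a finite sample, not a proof of A-stability)."""
    best = 0.0
    for i in range(n + 1):
        x = -radius * i / n
        for j in range(n + 1):
            y = radius * (2 * j / n - 1)
            best = max(best, abs(stability_function(complex(x, y), eta)))
    return best


for eta in (0.4, 0.5, 0.8):
    print(eta, round(max_amplification(eta), 3))
```

For \(\eta <1/2\) the limit \(R(z)\rightarrow -(1-\eta )/\eta \) as \(z\rightarrow -\infty \) has modulus greater than one, which is why large pseudo time steps become unstable in this model.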

The discrete Fourier analysis suggests that the preconditioned AERK3J and additive W schemes should theoretically achieve very good smoothing factors under challenging flow conditions and on high aspect ratio grids. Moreover, in the W schemes the eigenvalue limiting parameter d plays an important role: for \(d \ge 0.5\) and \(AR>1\) the allowable \(c^*\) is unlimited, while for smaller d or \(AR=1\) the optimal \(c^*\) is finite and smaller than that found for preconditioned AERK3J.

6 Numerical Results

We now proceed to tests on the RANS equations, using an FAS multigrid scheme as the iterative solver. We employ the Fortran code uflo103 to compute flows around pitching airfoils. All computations are run under Ubuntu 16.04 on a single core of an Intel i7-3770 CPU (4 cores, 8 threads) at 3.40 GHz with 8 GB of memory.

C-type grids are employed, in which the half of the cells closest to the boundary in the y-direction receive a special boundary-layer scaling. To obtain initial conditions for the unsteady simulation, a steady state is computed starting from far-field values. The first unsteady time step uses implicit Euler instead of BDF-2 as a startup for the multistep method; from then on, BDF-2 is employed. We use the startup phase to evaluate the performance of steady-state computations and the second overall time step, i.e. the first BDF-2 step, to evaluate performance for the unsteady case.
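The time-stepping structure can be illustrated on the scalar model problem \(du/dt=-\lambda u\). Each physical time step defines an unsteady residual \(R^*(u)\) (implicit Euler on the first step, BDF-2 afterwards), and this residual is driven to zero in pseudo time. In this minimal sketch, a plain explicit Euler pseudo-time iteration stands in for the preconditioned multigrid smoother, and its pseudo time step is chosen from the exact derivative of the linear model. The code therefore shows only the structure of dual time stepping, not the solver used in uflo103.

```python
def unsteady_residual(u, history, dt, lam, first_step):
    """Residual of the implicit time step for du/dt = -lam*u:
    implicit Euler on the first step, BDF-2 afterwards."""
    if first_step:
        return (u - history[-1]) / dt + lam * u
    u_nm1, u_n = history[-2], history[-1]
    return (3.0 * u - 4.0 * u_n + u_nm1) / (2.0 * dt) + lam * u


def solve_step(history, dt, lam, first_step, tol=1e-12, max_iter=1000):
    """Drive the unsteady residual to zero by explicit Euler iterations in pseudo time."""
    a0 = (1.0 if first_step else 1.5) / dt + lam   # dR*/du for the linear model
    dtau = 0.5 / a0                                # error contracts by 0.5 per pseudo step
    u = history[-1]                                # previous time level as initial guess
    for _ in range(max_iter):
        r = unsteady_residual(u, history, dt, lam, first_step)
        if abs(r) < tol:
            break
        u -= dtau * r
    return u


def integrate(u0, dt, lam, nsteps):
    """Physical time loop: implicit Euler startup, then BDF-2."""
    history = [u0]
    for n in range(nsteps):
        history.append(solve_step(history, dt, lam, first_step=(n == 0)))
    return history


sol = integrate(1.0, 0.1, 1.0, 5)
print(sol)
```

After convergence in pseudo time, each step reproduces the closed-form implicit Euler and BDF-2 updates of the linear model, which is a convenient check on the residual definitions.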

As a first test case, we consider the flow around the NACA 64A010 pitching and plunging airfoil at a Mach number of 0.796. The grid is illustrated in Fig. 3. For the pitching motion, we use a frequency of 0.202 and an amplitude of \(1.01^{\circ }\), with 36 time steps per cycle (pstep). The Reynolds number is \(10^6\) and the Prandtl number is 0.75. The grid is a C-mesh with \(512\times 64\) cells and a maximum aspect ratio of \(6.31\times 10^{6}\). As a second test case, we consider the pitching RAE 2822 airfoil at a Mach number of 0.75, whose grid is also illustrated in Fig. 3. Again, we use a pitching frequency of 0.202, an amplitude of \(1.01^{\circ }\) and \(\hbox {pstep}=36\). This grid has \(320\times 64\) cells and a maximum aspect ratio of \(8.22\times 10^{6}\).
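To show what "36 time steps per cycle" implies for the physical time step, the sketch below computes \(\Delta t\) and the angle-of-attack history for sinusoidal pitching. Two things are assumptions, not taken from the text: the frequency 0.202 is interpreted as a reduced frequency \(k=\omega c/(2U_\infty )\), a common convention for pitching airfoil cases, and the mean angle is set to zero. Time is measured in units of chord over free-stream speed.

```python
import math


def pitching_schedule(k, amplitude_deg, pstep, mean_deg=0.0, chord=1.0, u_inf=1.0):
    """Physical time step and angle-of-attack samples over one pitching cycle,
    assuming the reduced frequency is defined as k = omega * chord / (2 * u_inf)."""
    omega = 2.0 * k * u_inf / chord          # angular frequency
    period = 2.0 * math.pi / omega           # duration of one cycle
    dt = period / pstep                      # pstep time steps per cycle
    alphas = [mean_deg + amplitude_deg * math.sin(omega * n * dt)
              for n in range(pstep + 1)]
    return dt, alphas


dt, alphas = pitching_schedule(k=0.202, amplitude_deg=1.01, pstep=36)
print(f"dt = {dt:.4f} chord lengths per free-stream speed, max alpha = {max(alphas):.2f} deg")
```

Under a different frequency convention (e.g. \(\omega c/U_\infty \)), the time step would change by a factor of two, so the definition should be checked against the original case description.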

The results of the Fourier analysis suggest that the most interesting schemes are SGS preconditioned AERK3J and the various AW schemes. Note first that, due to nonlinear effects, the schemes have to be adjusted when moving from the linear to the nonlinear case. In particular, it is necessary to start with a reduced pseudo CFL number \(c^*\): we restrict it to 20 for the first two iterations.
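The startup restriction on \(c^*\) amounts to a simple schedule. In this minimal sketch, only the cap of 20 for the first two iterations comes from the text. The abrupt switch to the target value afterwards, the 0-based iteration counter and the use of `min` for targets below 20 are assumptions, and the function name `pseudo_cfl` is hypothetical.

```python
def pseudo_cfl(iteration, target, start_value=20.0, n_start=2):
    """Pseudo CFL number c* for a 0-based nonlinear iteration index:
    capped at start_value for the first n_start iterations, then the target."""
    if iteration < n_start:
        return min(start_value, target)
    return target


schedule = [pseudo_cfl(i, target=100.0) for i in range(5)]
print(schedule)   # [20.0, 20.0, 100.0, 100.0, 100.0]
```

A gradual ramp (e.g. geometric growth towards the target) would be an equally plausible alternative; the text does not say which is used after the second iteration.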

6.1 Choice of Parameters

In the AW methods, there are three interdependent parameters to choose: \(\eta \), d and the pseudo-time CFL number \(c^*\). We start by fixing d. Choosing \(d=0\) does not cause instability per se, but the iteration stalls before reaching the solution. The convergence rates for \(d=0.05\), \(d=0.1\) and \(d=0.5\) for the NACA and RAE test cases are given in Tables 11, 12, 13, 14, 15 and 16. The largest \(c^*\) tried is 10,000 in all cases; if a smaller value is reported, it is the largest for which the method converged. A number in parentheses after the convergence rate, e.g. (90), means that the rate was calculated over the first 90 iterations, after which convergence stalled. For brevity, only stable values of \(\eta \) are reported. The schemes are stable within the range \(0.5 \le \eta \le 0.9\), which agrees with the Fourier analysis results.
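The section does not define the reported convergence rate. A common choice is the geometric mean reduction per iteration, \((r_n/r_0)^{1/n}\), and this sketch assumes that definition. It shows how a rate restricted to a window, as in the "(90)" entries, differs from one taken over a history that includes a stall. The residual history is synthetic.

```python
def average_rate(residuals, window=None):
    """Geometric-mean convergence rate (r_n / r_0)**(1/n) over the first `window`
    iterations, or over the whole history if window is None."""
    n = len(residuals) - 1 if window is None else window
    if n < 1 or n >= len(residuals):
        raise ValueError("window must satisfy 1 <= window < len(residuals)")
    return (residuals[n] / residuals[0]) ** (1.0 / n)


# Synthetic history: rate 0.8 for 90 iterations, then a stall for 30 more.
history = [0.8 ** k for k in range(91)] + [0.8 ** 90] * 30
print(average_rate(history, window=90))   # the windowed rate, as in an entry marked (90)
print(average_rate(history))              # the stall inflates the full-history average
```
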

Qualitatively, we observe the following behavior:

- Increasing d makes the schemes slower to converge but more stable.
- This effect is stronger for the unsteady system.
- If \(\eta \) is too small, the iteration becomes unstable.
- Decreasing \(\eta \) within the stable region improves the convergence rate.

We thus suggest two different modes of operation:

1. The robust mode: choose \(d=0.5\), \(\eta =0.5\) and a very large \(c^*\) (10,000 in the tests below).
2. The fast mode: choose \(d=0.05\), \(\eta =0.5\) and \(c^*=100\).

The robust mode trades some convergence rate for more robustness.
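The two modes can be recorded as parameter sets, together with a check against the empirically observed stable range of \(\eta \) and the observation that \(d=0\) stalls the iteration. The class and function names below are hypothetical and are not part of uflo103. The value \(c^*=10{,}000\) for the robust mode is the one used in the comparisons of this section.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AWSettings:
    """One parameter set for a preconditioned AW smoother (illustrative names)."""
    d: float       # eigenvalue cutoff coefficient of the preconditioner
    eta: float     # W-method parameter
    c_star: float  # pseudo-time CFL number


ROBUST = AWSettings(d=0.5, eta=0.5, c_star=10_000.0)
FAST = AWSettings(d=0.05, eta=0.5, c_star=100.0)


def within_tested_range(s: AWSettings, eta_range=(0.5, 0.9)) -> bool:
    """True if eta lies in the empirically stable range, d > 0 (d = 0 was
    observed to stall the iteration) and c* is positive."""
    return eta_range[0] <= s.eta <= eta_range[1] and s.d > 0.0 and s.c_star > 0.0


print(within_tested_range(ROBUST), within_tested_range(FAST))
```

A passing check does not guarantee stability. For example, the RAE case required reducing \(c^*\) below 100 for some schemes in the fast mode.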

The optimal values of \(\eta \) found in the numerical experiments differ somewhat from those found in the discrete Fourier analysis. Possible reasons for the discrepancies include the linearisations used in the discrete Fourier analysis and the non-Cartesian meshes used in the numerical experiments.

6.2 Comparison of Schemes

The linear analysis suggests that preconditioned AERK3 is competitive with the preconditioned W methods in terms of smoothing. However, it requires choosing \(c^*\) within a stability limit, whereas the W methods are A-stable for a certain range of d. To test the stability limit of the AERK schemes, we apply preconditioned AERK3 and AERK51 to the pitching NACA airfoil test case. AERK3 becomes unstable for \(c^*>1\), whereas AERK51 can be run with \(c^*=3\). However, both methods are completely uncompetitive, with convergence rates of 0.999. Hereafter we compare only the AW schemes.

We compare AW3, AW51, AW52 and AW5J for the two airfoils and the two modes of operation: \(d=0.05\) and \(c^*=100\) versus \(d=0.5\) and \(c^*=10{,}000\). The relative residuals for the initial steady-state computation are plotted in Fig. 4 for \(d=0.05\) and \(c^*=100\) and in Fig. 5 for \(d=0.5\) and \(c^*=10{,}000\). The residual histories of the same tests for the second unsteady time step are shown in Figs. 6 and 7.

The convergence rates and CPU times are summarized in Table 17 for the NACA64A010 airfoil and in Table 18 for the RAE 2822 airfoil. The numbers after the scheme names are the values of \(c^*\) used in the steady iterations. Faster convergence is obtained with \(d=0.05\) for all schemes except AW5J.

As an immediate conclusion, the different schemes have similar convergence rates. Thus, AW3 performs best in terms of CPU time, since it is a three-stage smoother, as opposed to the five-stage smoothers. In the fast mode, we obtain a convergence rate for the unsteady case of 0.77 for the NACA profile and 0.8 for the RAE profile. However, for the RAE profile, \(c^*\) has to be reduced below 100 for 3 of the 4 schemes to prevent instability. With the convergence rate obtained, 20–30 iterations are sufficient to reduce the residual by five orders of magnitude. This takes a matter of seconds and is sufficient for most applications. Note, however, that much lower residuals are desirable in some situations, for example when assessing the quality of different turbulence models. In the robust mode, the convergence rate deteriorates to 0.8 for the NACA profile and 0.84 for the RAE profile.
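The CPU-time argument can be made explicit with a simple work measure: stage evaluations needed per order of magnitude of residual reduction. This is a minimal sketch under the assumption that the cost of one iteration is proportional to the number of stages. It ignores multigrid transfer and other per-iteration overheads, so real CPU-time ratios will differ somewhat.

```python
import math


def work_per_decade(rate, stages):
    """Stage evaluations per order of magnitude of residual reduction, assuming
    the cost of an iteration is proportional to its number of stages."""
    if not 0.0 < rate < 1.0:
        raise ValueError("rate must lie in (0, 1)")
    return stages / -math.log10(rate)


# Same convergence rate, three versus five stages.
ratio = work_per_decade(0.8, 3) / work_per_decade(0.8, 5)
print(round(ratio, 3))   # 0.6
```

At equal convergence rates the three-stage scheme needs about 60% of the work of a five-stage scheme, which is consistent with AW3 having the lowest CPU times.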

In the steady-state cases, the convergence rate declines after 20 to 30 iterations. This explains why the steady convergence rates are significantly worse than the unsteady ones. In the first phase, a convergence rate of about 0.7 is obtained and the norm of the residual is reduced by a factor of about \(10^6\).

We attribute this decline in convergence to nonlinear effects or to the boundary conditions. One point of future investigation is whether weak boundary conditions would be a better choice [26].

6.3 Different Meshes

To assess the mesh dependence of the solver, we run the pitching NACA 64A010 airfoil with AW3 and \(d=0.5\) in robust mode on coarse (\(256\times 32\)), medium (\(512\times 64\)) and fine (\(1024\times 128\)) meshes. With d set to 0.05, the simulations on the coarse mesh diverged, an example where the robust mode is indeed more robust. We use seven multigrid levels for the finest mesh. Table 19 shows the results. The convergence of the preconditioned AW schemes deteriorates on the finest mesh, because the residual stalls after 50 iterations, once it has been reduced by five orders of magnitude. To fix this, the solver has to be adjusted: the last line shows a computation with \(\eta =0.8\) and two SGS steps instead of one, which increases the computational effort but recovers the convergence rate. On even finer meshes, we again observe the stall after five orders of magnitude of residual reduction.
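To give a sense of how deep a seven-level hierarchy on the \(1024\times 128\) mesh is, the sketch below lists the cell counts per level. The text states only the number of levels. Standard coarsening by a factor of two in each direction is an assumption here, not a description of the agglomeration used in uflo103.

```python
def level_sizes(nx, ny, levels):
    """Cell counts on each multigrid level (finest first), assuming coarsening
    by a factor of two in each direction."""
    sizes = []
    for level in range(levels):
        factor = 2 ** level
        if nx % factor or ny % factor:
            raise ValueError("mesh cannot be coarsened this many times")
        sizes.append((nx // factor, ny // factor))
    return sizes


print(level_sizes(1024, 128, 7))   # coarsest level: (16, 2)
```

Under this assumption the coarsest level has only two cells across the C-mesh in the wall-normal direction, so the coarse meshes cannot be coarsened much further.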

6.4 Effect of Flow Angle

In the Fourier analysis, grid-aligned flow was found to be potentially problematic. We therefore consider angles of attack \(\alpha \) of 0, 1, 2 and 4 degrees, both for the steady-state computation and for the second time step of the unsteady computation. Table 20 shows the convergence rates in fast mode (\(d=0.05\)). Essentially, the convergence rate is unaffected by the angle of attack. However, in two cases the iteration stalls after 30 and 65 iterations, at relative residuals of \(10^{-4}\) and \(10^{-5}\), respectively.

7 Conclusions

We considered preconditioned pseudo-time iterations for agglomeration multigrid schemes for the steady and unsteady RANS equations. As the discretization, the JST scheme was used as the flux function in a finite volume method. Building on previous work by other authors, we derived AW methods, as well as preconditioned AERK methods, from time integration schemes. Both are implemented in exactly the same way; they differ only in how the preconditioner and the pseudo time step size are chosen. For preconditioned AERK, the preconditioner has to approximate the Jacobian \({\mathbf {J}}\), whereas for the AW method, it has to approximate \({\mathbf {I}}+\eta \Delta t^* {\mathbf {J}}\). The derivation from time integration methods shows that preconditioned AERK has a finite stability region, whereas AW allows for possibly unbounded pseudo time steps. However, AW introduces an additional parameter \(\eta \), which currently must be chosen empirically. As the preconditioner, we use a flux vector splitting with a cutoff of small eigenvalues controlled by the free parameter d.

To compare the different methods, we used a discrete Fourier analysis of the linearized Euler equations. Numerical results show that AW3, AW51 and AW52 have similar convergence rates, so that AW3 performs best, since it uses two fewer stages. The parameter \(\eta \) can be chosen with some freedom within a stable range (about [0.5, 0.9]), although in some cases the optimal value depends on the initial conditions, d and the aspect ratio. Fixing \(\eta =0.8\) is an acceptable simplification in the cases tested. The most significant parameter affecting stability and convergence is the eigenvalue cutoff coefficient d in the numerical flux function. The W schemes were found to be A-stable for \(d \ge 0.5\) and to have lower stability limits than the preconditioned AERK schemes for \(d < 0.5\). Finally, the pseudo CFL number \(c^*\) was tuned for optimal performance. Different optimal values were obtained for different aspect ratios, but as long as \(c^*\) was within the stability limit, good convergence was achieved. This is useful since the aspect ratios in practical meshes vary considerably.

Simulations of the pitching and plunging NACA 64A010 and RAE 2822 airfoils in high Reynolds number flow at Mach 0.796 and 0.75, respectively, were performed using the 2D URANS code uflo103. The preconditioned AERK schemes were completely uncompetitive, with convergence rates of around 0.999. The additive W schemes, on the other hand, achieved convergence rates as low as 0.85 for the initial steady-state iteration and 0.77 for the unsteady iterations. Slightly different optimal values of \(\eta \) and \(c^*\) were found, although the behaviour of the schemes was qualitatively similar to that predicted by the linear analysis. We emphasise two modes of operation for the AW schemes: a fast mode, \(d=0.05\), \(\eta =0.5\) and \(c^*=100\), and a robust mode, \(d=0.5\), \(\eta =0.5\) and \(c^*=10{,}000\). Unsteady convergence rates in the robust mode were higher, i.e. slower, than in the fast mode, but still competitive. Steady-state convergence stalled to varying degrees in all tests after around 20 iterations, by which point the residuals had already fallen by 6 orders of magnitude.

In summary, the new additive W schemes achieve excellent performance as smoothers in the agglomeration multigrid method applied to 2D URANS simulations of high Reynolds number transonic flows. The stiffness associated with very high aspect ratio grids is counteracted by highly tuned preconditioning. The underlying aim of this paper was to present a complete analysis of why such preconditioned iterative smoothers are effective, so that their high performance can be replicated. Two parameters resisted analysis and had to be tuned empirically: \(\eta \) and d. Nevertheless, we consider this a significant improvement. Future work will examine these parameters in more detail. In addition, the influence of boundary conditions on convergence speed should be investigated.

References

Baldwin, B.S., Lomax, H.: Thin layer approximation and algebraic model for separated turbulent flows. AIAA Paper 78–257 (1978)

Bertaccini, D., Donatelli, M., Durastante, F., Serra-Capizzano, S.: Optimizing a multigrid Runge–Kutta smoother for variable-coefficient convection–diffusion equations. Linear Algebra Appl. 533, 507–535 (2017)

Birken, P.: Numerical methods for the unsteady compressible Navier–Stokes equations. Habilitation Thesis. University of Kassel (2012)

Birken, P.: Optimizing Runge–Kutta smoothers for unsteady flow problems. ETNA 39, 298–312 (2012)

Birken, P., Bull, J., Jameson, A.: A note on terminology in multigrid methods. PAMM 16, 721–722 (2016)

Birken, P., Bull, J., Jameson, A.: A study of multigrid smoothers used in compressible CFD based on the convection diffusion equation. In: Papadrakakis, M., Papadopoulos, V., Stefanou, G., Plevris, V. (eds.) ECCOMAS Congress 2016, VII European Congress on Computational Methods in Applied Sciences and Engineering, vol. 2, pp. 2648–2663. Crete Island, Greece (2016)

Caughey, D., Jameson, A.: How many steps are required to solve the Euler equations of steady compressible flow: In search of a fast solution algorithm. AIAA Paper 2001–2673 (2001)

Cooper, G.J., Sayfy, A.: Additive methods for the numerical solution of ordinary differential equations. Math. Comput. 35(152), 1159–1172 (1980)

Gustafsson, B.: High Order Difference Methods for Time Dependent PDE. Springer, Berlin (2008)

Hairer, E., Wanner, G.: Solving Ordinary Differential Equations II, 2nd edn. Springer, Berlin (2002)

Jameson, A.: Transonic flow calculations for aircraft. In: Brezzi, F. (ed.) Numerical Methods in Fluid Dynamics, Lecture Notes in Mathematics, pp. 156–242. Springer, Berlin (1985)

Jameson, A.: Multigrid algorithms for compressible flow calculations. In: Hackbusch, W., Trottenberg, U. (eds.) 2nd European Conference on Multigrid Methods, vol. 1228, pp. 166–201. Springer (1986)

Jameson, A.: Aerodynamics. In: Stein, E., de Borst, R., Hughes, T.J.R. (eds.) Encyclopedia of Computational Mechanics, vol. 3: Fluids, chap. 11, pp. 325–406. Wiley (2004)

Jameson, A.: Application of dual time stepping to fully implicit Runge Kutta schemes for unsteady flow calculations. In: 22nd AIAA Computational Fluid Dynamics Conference, 22–26 June 2015, Dallas, TX, AIAA Paper 2015–2753 (2015)

Jameson, A.: The origins and further development of the Jameson–Schmidt–Turkel (JST) scheme. In: 33rd AIAA Applied Aerodynamics Conference, 22–26 June 2015, Dallas, TX, AIAA Paper 2015–2718 (2015). https://doi.org/10.2514/6.2015-2718

Jameson, A.: Evaluation of fully implicit Runge Kutta schemes for unsteady flow calculations. J. Sci. Comput. (2017). https://doi.org/10.1007/s10745-006-9094-1

Langer, S.: Investigation and application of point implicit Runge–Kutta methods to inviscid flow problems. Int. J. Numer. Methods Fluids 69(2), 332–352 (2012). https://doi.org/10.1002/fld

Langer, S.: Application of a line implicit method to fully coupled system of equations for turbulent flow problems. Int. J. CFD 27(3), 131–150 (2013). https://doi.org/10.1080/10618562.2013.784902

Langer, S.: Agglomeration multigrid methods with implicit Runge–Kutta smoothers applied to aerodynamic simulations on unstructured grids. J. Comput. Phys. 277, 72–100 (2014). https://doi.org/10.1016/j.jcp.2014.07.050

Langer, S., Li, D.: Application of point implicit Runge–Kutta methods to inviscid and laminar flow problems using AUSM and AUSM+ upwinding. Int. J. CFD 25(5), 255–269 (2011). https://doi.org/10.1080/10618562.2011.590801

Langer, S., Schwöppe, A., Kroll, N.: Investigation and comparison of implicit smoothers applied in agglomeration multigrid. AIAA J. 53(8), 2080–2096 (2015)

Martinelli, L.: Calculations of viscous flows with a multigrid method. Ph.D. thesis, Princeton University (1987)

Mavriplis, D.J., Venkatakrishnan, V.: Agglomeration multigrid for two-dimensional viscous flows. Comput. Fluids 24(5), 553–570 (1995). https://doi.org/10.1016/0045-7930(95)00005-W

Mulder, W.A.: A new multigrid approach to convection problems. J. Comput. Phys. 83, 303–323 (1989)

Mulder, W.A.: A high-resolution Euler solver based on multigrid semi-coarsening, and defect correction. J. Comput. Phys. 100, 91–104 (1992)

Nordström, J., Forsberg, K., Adamsson, C., Eliasson, P.: Finite volume methods, unstructured meshes and strict stability for hyperbolic problems. Appl. Numer. Math. 45(4), 453–473 (2003). https://doi.org/10.1016/S0168-9274(02)00239-8

Peles, O., Turkel, E.: Acceleration methods for multi-physics compressible flow. J. Comput. Phys. 358, 201–234 (2018). https://doi.org/10.1016/j.jcp.2017.10.011

Rossow, C.C.: Convergence acceleration for solving the compressible Navier–Stokes equations. AIAA J. 44, 345–352 (2006)

Rossow, C.C.: Efficient computation of compressible and incompressible flows. J. Comput. Phys. 220(2), 879–899 (2007). https://doi.org/10.1016/j.jcp.2006.05.034

Swanson, R.C., Rossow, C.C.: An efficient solver for the RANS equations and a one-equation turbulence model. Comput. Fluids 42(1), 13–25 (2011). https://doi.org/10.1016/j.compfluid.2010.10.010

Swanson, R.C., Turkel, E.: Analysis of a fast iterative method in a dual time algorithm for the Navier–Stokes equations. In: Eberhardsteiner, J. (ed.) European Congress on Computational Methods and Applied Sciences and Engineering (ECCOMAS 2012) (2012)

Swanson, R.C., Turkel, E., Rossow, C.C.: Convergence acceleration of Runge–Kutta schemes for solving the Navier–Stokes equations. J. Comput. Phys. 224(1), 365–388 (2007). https://doi.org/10.1016/j.jcp.2007.02.028

Swanson, R.C., Turkel, E., Yaniv, S.: Analysis of a RK/implicit smoother for multigrid. In: Kuzmin, A. (ed.) Computational Fluid Dynamics 2010. Springer, Berlin (2011)

Trottenberg, U., Oosterlee, C.W., Schüller, A.: Multigrid. Elsevier Academic Press, Cambridge (2001)

van Leer, B., Tai, C.H., Powell, K.G.: Design of optimally smoothing multi-stage schemes for the Euler equations. In: AIAA 89-1933-CP, pp. 40–59 (1989)

Acknowledgements

We would like to thank Charlie Swanson for interesting discussions and sharing some code with us.

Funding

Funding was provided by Kungliga Fysiografiska Sällskapet i Lund, Vetenskapsrådet (the Swedish Research Council) via grant 2015–04133 and the Air Force Office of Scientific Research via grant FA9550-14-1-0186 under the direction of Fariba Fahroo.


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Birken, P., Bull, J. & Jameson, A. Preconditioned Smoothers for the Full Approximation Scheme for the RANS Equations. J Sci Comput 78, 995–1022 (2019). https://doi.org/10.1007/s10915-018-0792-9

