Abstract

In this work we have applied the computational methodology based on Artificial Neural Networks (ANN) to the kinetic study of distinct reaction mechanisms to determine different types of parameters. Moreover, the problems of ambiguity or equivalence are analyzed in the set of parameters to determine in different kinetic systems when these parameters are from different natures. The ambiguity in the set of parameters show the possibility of existence of two possible set of parameter values that fit the experimental data. The deterministic analysis is applied to know beforehand if this problem occurs when rate constants of the different stages of the mechanism and the molar absorption coefficients of the species participating in the reaction are obtained together. Through the deterministic analysis we will analyze if a system is identifiable (unique solution or finite number of solutions) or if it is non-identifiable if it possesses infinite solutions. The determination of parameters of different nature can also present problems due to the different magnitude order, so we must analyze in each case the necessity to apply a second method to improve the values obtained through ANN. If necessary, an optimization mathematical method for improving the values of the parameters obtained with ANN will be used. The complete process, ANN and mathematical optimizations constitutes a hybrid algorithm ANN-MATOPT. The procedure will be applied first for the treatment of synthetic data with the purpose of checking the applicability of the method and after, it will be used in the case of experimental kinetic data.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The application of computational techniques to the study of reaction kinetic systems has been very important since it has been possible to determine kinetic, analytic and/or thermodynamic parameters, allowing also, the discrimination between the different mechanisms that could be responsible of the course of the reaction.

The computational techniques used are numerous and are based in very diverse methodologies such as non-linear regression methods [1,2,3,4,5], curve resolution techniques [6, 7] or techniques that use methodologies based in Artificial Neural Networks [8,9,10,11].

In a previous work we have used the ANN methodology for the determination of rate constants from kinetic data in the case of a system of consecutive irreversible first-order reactions [11]. The ANN method was applied for the study of the reaction that takes place between 2-mercaptoethanol with Carbonyl cyanide 3-chlorophenylhydrazone, from experimental kinetic data the determination of the reaction rate constants was carried out, performing first an experimental design of the type Central Star Composite Experimental Design (CSCED) and then, the prediction of the rate constant through ANN.

In later works a hybrid algorithm, ANN-AGDC, was developed, consisting of the sequential application of two methods: Artificial Neural Network method (ANN) and mathematical optimization algorithm AGDC. This hybrid algorithm has been used for the treatment of kinetic data for the determination of rate constants and for the discrimination between reaction mechanisms [12]. The application of hybrid algorithm is a great advantage since the AN0N, that is applied first, is a treatment that does not need initial estimates, so that the obtained results can be used as initial estimates for the second method (mathematical optimization) that does require the use of previous values for the parameters to determine.

The first version of hybrid algorithm ANN-AGDC was applied to determine Activation Thermodynamic Parameters (ATP) from the treatment of non-isothermal kinetic data coming from diverse reaction mechanisms [13,14,15]. In the first two works the applicability of hybrid algorithm ANN-AGDC is checked for the determinations of ATP from non-isothermal kinetic data, directly, without the need to determine the kinetic constants in the previous step. In these works, the ANN methodology was first applied and the need to apply the second process, the mathematical optimization algorithm AGDC, was checked. Subsequently, this methodology was used for the treatment of experimental non-isothermal kinetic data from the isomerization reaction of the steroid 5-cholesten-3-ona to 4-cholesten-3-ona catalyzed by sodium ethoxide and from the kinetics for the breakdown of the trinuclear chromium acetate cluster with a series of monoprotic and diprotic amino acid ligands [15].

In this work we have used a new hybrid algorithm ANN-MATOPT for the determination of rate constants and molar absorption coefficients simultaneously, in the case of different reactions mechanisms whose stages are of first order. The studied mechanisms are the following:

-

Model I: Simple irreversible reaction in which a reactant gives a product through a single step.

-

Model II: System formed by two irreversible reactions in which two reactants give the same product.

-

Model III: Mechanisms in which the same reactant gives two different products through two irreversible reactions.

-

Model IV: System of consecutive irreversible reactions.

When parameters of different nature are determined jointly and simultaneously, there can be problems of ambiguity or equivalence in the set of parameters to determine, which indicates the possible existence of two set of values of the parameters that fit the experimental data [4, 5, 16,17,18]. Through the deterministic analysis it can be known beforehand if a model is identifiable with a unique solution or with a finite number of solutions, or non-identifiable with infinite solutions [16]. First, by means of deterministic analysis, for each one of the mechanisms, we will assess if ambiguity occurs when the rate constants of the different stages of the mechanism and the molar absorption coefficients of the participating species in the reaction are jointly determined. Subsequently, we will check in the case of situations where there is ambiguity if the application of ANN leads us to an unique solution or to any of the mathematically acceptable solutions.

The problem of the ambiguity in the parameters does not have a solution from a mathematical point of view so we apply the ANN methodology, either individually or in conjunction with a mathematical optimization algorithm, for situations in which this problem is found and we will check if the obtained results lead us to an unique solution or to all of those mathematically accepted.

The determination of parameters of different nature can also present problems due to their different magnitude order, so we need to analyze in each case the need to apply a second method (mathematical optimization) to improve the values obtained through ANN.

In the case of models I and II, whichever are the set of parameters to determine, exists an unique solution, so the models are identifiable. In model III depending on the set of parameters determined, the model can be identifiable with an unique solution or non-identifiable with infinite solutions.

In the case of model IV formed by two irreversible first order consecutive reactions, when the joint determination of rate constants and the molar absorption coefficient of the reactant or the final product is carried out, there exists a unique solution, so the system is identifiable in both cases. In case that the rate constants and the molar absorption coefficient of the intermediate species are jointly determined, an ambiguity problem occurs in the solution, finding two possible solutions that cannot be distinguished from the mathematical point of view [4, 5]. This aspect is clearly displayed in the case of the determination of these parameters through mathematical optimization procedures.

2 The oretical aspects

2.1 Chemical kinetics aspects

Each one of the nr chemical elementary reaction that can occur in a chemical system in which a number of species nc are present, can be written using the following equation [19]:

nc = number of chemical species involved in the mechanism. nr = number of chemical elementary reaction of the mechanism. \({\text{k}}_{{\text{r}}}\) = kinetic rate constant for each elementary reaction r (\({\text{r }}\) = 1,…, nr). \({\text{B}}_{{\text{j}}}\) = chemical species involved in the reaction mechanism (\({\text{j }}\) = 1,…, nc). \({\upnu }_{{{\text{j}},{\text{r}}}}\) = stoichiometric coefficient of the specie Bj in the reaction r (\({\upnu }_{{{\text{j}},{\text{r}}}}\) < 0 for the reagents and \({\upnu }_{{{\text{j}},{\text{r}}}}\) > 0 for the products).

The elementary reaction r occurs at a rate vr:

where Bc are the species that act as reagents in the elementary reaction r, \({\upnu }\) c,r is the stoichiometric coefficient of the species Bc in the reaction r (\({\upnu }\) c,r < 0 for reagents), [Bc] is the concentration of the species Bc and \({\text{k}}_{{\text{r}}}\) is the kinetic rate constant for each elementary reaction r.

The variation of the concentration of species Bj in each of the r elementary reactions, in a time interval, is equal to:

The total variation of the concentration is expressed through the following differential equation:

The change in the concentration of each of the nc species involved in the mechanism is given by d[Bj]/dt, so we will have a system of differential rate equations whose resolution provides the concentration of each specie in the considered time interval, \(\left( {\left[ {{\text{B}}_{{\text{j}}} } \right]_{{{\text{t}}_{{\text{i}}} }} } \right)\).

The reaction mechanisms studied in this work are the following:

-

1.

Model I \({\text{B}}_{1} \xrightarrow{{\text{k}}}{\text{B}}_{2}\)

-

2.

Model II \({\text{B}}_{2} \xrightarrow{{{\text{k}}_{2} }}{\text{B}}_{1}\) \({\text{B}}_{3} \xrightarrow{{{\text{k}}_{3} }} {\text{B}}_{1}\)

-

3.

Model III \({\text{B}}_{1} \xrightarrow{{{\text{k}}_{2} }} {\text{B}}_{2}\) \({\text{B}}_{1} \xrightarrow{{{\text{k}}_{3} }} {\text{B}}_{3}\)

-

4.

Model IV \({\text{ B}}_{1} \xrightarrow{{{\text{k}}_{1} }} {\text{B}}_{2} \xrightarrow{{{\text{k}}_{2} }} {\text{B}}_{3}\)

Table 1 shows the systems of differential rate equations obtained for each one of the mechanisms and the resulting expressions for the concentration of each species obtained from the resolution of the system of differential rate equations.

If the development of the chemical reaction is followed spectrophotometrically measuring the total absorbance of the reacting mixture and in the event that the optical path length 1 = 1 cm, according to the Lambert–Beer-Bouguer`s Law at time i and at wavelength λ, we obtain:

where nc is the number of species that absorb at the wavelength λ, Ai,λ is the total absorbance of the reacting mixture at time i and at wavelength λ, εj,λ is the molar absorption coefficient of the specie j at wavelength λ and [Bj]i the molar concentration of the specie j at time i.

2.2 Hybrid algorithm

A hybrid algorithm is a combination of different techniques that solve the same problem [20]. The hybrid algorithm used in this work is formed by a combination of two algorithms based in different mathematical principles and they are applied sequentially. In the first stage the Artificial Neural Network (ANN) method is applied for the determination of the individual rate constants and the molar absorption coefficients. The values of the parameters obtained through the application of ANN are used as initial estimates of a mathematical optimization algorithm applied in the second stage with the objective of improving the final values of the parameters.

2.2.1 Artificial neural networks (ANN)

The Artificial Neural Network computational method, ANN, is a systematic procedure of data processing formed by a large number of simple elements (nodes or artificial neurons) interconnected with each other [21,22,23]. Each neuron receives multiple input signals and sends a single output signal for which it contains two algorithms [23], one of them calculates the weighted sum of the values that are received by the input connections (xi) and the other one generates a response or output (xj) that is transferred to other neurons. The neurons network learns through the adjustment of the weights (wji) of the connections between neurons until it provides predictions with enough precision.

Each neuron determines the net input value, \({\text{ S}}_{{\text{j}}} ,\) from the weighted sum of the input values \(\left( {{\text{S}}_{{\text{j}}} = \mathop \sum \limits_{{\text{i}}} {\text{ x}}_{{\text{i}}} \,{\text{w}}_{{{\text{ji}}}} } \right)\), later it is transformed into the activation value, aj, (aj (k) = Fi (aj (k − 1), Sj(k))), where aj (k) is the actual activation value, aj (k − 1) the previous activation value and Sj(k) the net input value.

The output value is determined by applying the output function (transfer function), in most cases the activation and the net input are identical, so xj = fj (aj) = fj (Sj).

In an ANN the neurons are connected and organized in levels or layers (architecture);

-

Input layer where data is presented to the network.

-

Output layer where the network response is provided.

-

Hidden layer formed by those neurons whose inputs come from previous layers and whose outputs pass to neurons from subsequent layers.

The simplest network (monolayer network) consists of a group of organized neurons in one layer, between which lateral, crossed and recurrent connections are established. The multilayer networks consist of clustered sets of neurons in various levels or layers where the output of one layer is the input of the next one. The neurons of one layer receive input signals from a previous layer and send output signals to a following layer (feedforward) or the output of the neurons from posterior layers can be connected to the input of preceding layers, (feedback).

From a data set, training data set, the ANN learns to calculate the correct output for each input (training), during this process, the weights of the ANN connections suffer modifications in response to the input information (wji(k + 1) = wji(k) + Δwji(k)). The ANN has learnt when the values of the weights remain stable (dwji/dt = 0).

The goal of the training of an ANN is achieving that for a set of inputs the desired or consistent set of outputs is produced.

2.2.2 Mathematical optimization

The mathematical optimization methods usually use the criteria of least squares for the determination of parameters from experimental data. The procedure consists of minimizing the error expressed as the sum of the squares of the differences between the values of the experimentally observed magnitude and those calculated using the equation of the proposed mechanism or model (sum of the quadratic deviation function, SQD) [24, 25]. In the case that the observed magnitude is the total absorbance of the reacting mixture, the SQD function has the form:

nd = number of pairs of the absorbance/time data. \(\left( {{\text{A}}_{{\text{i}}} } \right)_{{\text{E}}}\) = experimental absorbance data. \(\left( {{\text{A}}_{{\text{i}}} } \right)_{{\text{C}}}\) = calculated absorbance data. t = values for the independent variable (time). X = vector that contains the parameters to be determines (X1, X2,…,Xp).It is possible to fit the function \(y = \left( {{\text{A}}_{{\text{i}}} } \right)_{{\text{C}}} \left( {{\mathbf{t}},{\mathbf{X}}} \right)\) to the experimental data (Ai)E, minimizing the SQD function (mathematical optimization) and adjusting the parameters X1, X2,…, Xp to obtain the minimum of SQD [24, 25]. The mathematical optimization is an iterative process so in order to initiate the process, it is necessary a first estimate of the values of the parameter, X(0), and with them the first value of the function SQD, SQD(0), will be obtained. When the optimization is finished the values of the parameters, X*, that provide the smallest value of SQD, SQD*, will be obtained.

Gradient methods (Gauss–Newton, Levenberg-Mardquardt, Davidon-Fletcher-Powell, etc., …) are often used to carry out the optimization, these methods are based on the Taylor series development of the SQD function [24, 25].

X = vector that contains the parameters to be determines. ΔX = vector constituted by the variations of the parameter values. g = Jacobian gradient vector of the SQD function (gT = transposed vector) whose components are the derivatives of the SQD function with respect to one of the parameters XP. H = Hessian matrix of the SQD function (symmetric matrix npxnp containing the second-order partial derivatives of the SQD function).

In Eq. (5) we can despise the terms in which the derivatives higher than the first order (first order gradient methods) or the terms in which the derivatives higher than the second order intervene (second order gradient methods). From the calculation of the derivative of the SQD function with respect to each of the parameters, its cancellation and the resolution of the equations obtained, the point in which the function SQD has a minimum value (X*) is reached, Eqs. (7) and (8) for first and second order gradient methods respectively:

The equation that provides the value X* can be used to move iteratively towards the SQD minimum starting from an initial estimate X(0) and generating values X(m) at each iteration, so that the SQD value is less than from the previous iteration.

The movement vector will be, ΔX(m) = – g (X(m)) for first order methods and ΔX(m) = – H(X(m))−1 g (X(m)) for the second ones, where m = 0, 1, … is the corresponding iteration.

In each iteration the vector ΔX(m) must provide a point X(m+1) that gives rise to a lower value of the SQD function, avoiding divergence. The first order method always evolves towards the minimum, but it is excessively slow. Second-order methods, due to the approximations that are imposed and to the not strictly quadratic condition of the SQD function, present problems that can lead to the divergence of the process. There are modifications of the Gauss–Newton method that improve the results: Levenberg-Mardquardt method that modifies the vector X(m+1) by introducing a parameter λ, Davidon-Fletcher-Powell method that determines an inverse matrix approximated by successive approximations or the AGDC that introduces control devices to avoid divergence [3,4,5, 24, 25].

The hybrid algorithm used in this work uses the values of the parameters obtained with ANN as initial estimates (X(0)) for the process of mathematical optimization (MATOPT).

2.3 Ambiguity or equivalence in the parameters

When the treatment of kinetic data corresponding to a certain reaction mechanism is carried out in order to determine a set of parameters, there might be one or more sets of parameters (X = (X1, …, Xp), X′ = (X′1, …,X′p), etc,..) that fit the experimental data: ambiguity or equivalence in the set of parameters [4, 5, 16,17,18]. This problem has been studied through different methods, one of them consists of the application of the deterministic analysis [16] that allows to detect it beforehand and determine the relationship between the solutions X and X′.

In a chemical reaction whose development is experimentally followed measuring the total absorbance, it might happen that the molar absorption coefficients of some species involved in the reaction \(\left( {\upvarepsilon _{{{\text{B}}_{{\text{j}}} }} } \right)\) are unknown parameters and it is necessary to determine them jointly with the rate constants, X = (k1, …,kr, \(\upvarepsilon _{{{\text{B}}_{{1}} }} ,..,\upvarepsilon _{{{\text{B}}_{{\text{j}}} }}\)).

In the case of reaction mechanism of first order, we consider the following vectors and the corresponding components: x0(X), initial concentrations; x(t,X), concentrations; x'(t,X), rate differential equations and y(t,X), absorbance values (linear function that depends of the parameters X and the concentrations). According to the deterministic analysis two sets of the parameters values, X and X' (X ≠ X') are not differentiable among them if:

According to this equation, the following situations can be given:

-

If X' = X, the solution is unique, the model is only identifiable in X.

-

If there is a finite number of distinct solutions, X' ≠ X, the model is identifiable in X, but the solution is not unique.

-

If there is an infinite number of solutions, the model is non-identifiable.

The approximation that applies the Laplace transform [16] to the differential rate equations and to the equations that relates the absorbance and the concentration, is one of the most used mathematical methods to analyze these problems. Through this method we obtain the transform of the concentration vector, Z(s, X) (s is a complex argument) and the Laplace transform of the absorbance\(, {\text{Y}}\left( {{\text{s}},\,{\mathbf{X}}} \right).\) Each component Yi(s,X) of \({\text{ Y}}\left( {{\text{s}},{\mathbf{X}}} \right)\) is a rational function with the form:

The coefficients \(\phi_{{\text{j}}}^{{\text{i}}}\) depend on X and x0(i) and the vector Φ will be formed by all the coefficients Y1(s,X)… Ym(s,X).

Considering another set of parameters \({\mathbf{X}}^{\prime } = \left( {{\text{k}}_{1}^{\prime } , \ldots ,{\text{k}}_{{\text{r}}}^{\prime } ,{\upvarepsilon }_{{{\text{B}}_{{\text{1}}} }}^{\prime } , \ldots ,{\upvarepsilon }_{{{\text{B}}_{{\text{j}}} }}^{\prime } } \right)\) whose Laplace transform is \({\text{Y}}\left( {{\text{s}},{\mathbf{X}}^{{\prime }} } \right)\), the Eq. (10) is satisfied if and only if: \({\text{Y}}\left( {{\text{s}},{\mathbf{X}}^{{\prime }} } \right) = {\text{Y}}\left( {{\text{s}},{\mathbf{X}}} \right)\). This relationship is true if and only if \({{\varvec{\Phi}}}\left( {{\mathbf{X^{\prime}}}} \right) = {{\varvec{\Phi}}}\left( {\mathbf{X}} \right)\), from which a system of polynomial equations is obtained, establishing the number of solutions of the system of equations we can establish if a reaction model possess more than one solution, meaning that it is identifiable.

If np is the number of parameters to optimize and ne is the number of polynomial equations that can be established, the following situations can occur:

-

np < ne then the system is identifiable and the solution is unique.

-

np = ne then the system is identifiable and the solution is not unique.

-

np > ne then the system is not identifiable, it has infinite solutions.

3 Computational aspects

3.1 ANN computational application

In this work, the applications provided by MATLAB® [26] are used for the design and training of the ANN and subsequent prediction of the parameters. All the applications are available in the Neural Network Toolbox™, since a set of parameters have been determined from kinetic data, the application that allows to adjust functions, Fitting a Function, has been used. In order to use this application, it is necessary to provide to the ANN a data set (training data set) to use them in the training of the ANN, this set needs to be formed by a inputs matrix that contains data corresponding to different kinetic experiences and a matrix (targets) consisting of the parameters corresponding to the data of the inputs matrix. The data is generated synthetically for each specific case and in the training the ANN learns to establish relationships between the inputs and targets matrixes.

3.1.1 Generation of data

The synthetic total absorbance/time data for the training of the ANN are generated computationally through the executable programs (type “.m”) of MATLAB, considering the initial concentration conditions, the time interval in which the reaction takes place, the rate constant values (kr) and the molar absorption coefficients \(\left( {\upvarepsilon _{{{\text{B}}_{{\text{j}}} }} } \right)\) obtained from the corresponding experimental desing. The concentration of each one of the chemical species in the time interval considered, is determined from the expressions that result from the resolution of the system of rate differential equations (Table 1) and the values of the absorbance from the Lambert-Beer-Bouguer`s law (Eq. 3). The absorbance data generated will be distributed in the inputs matrix and the values of the parameters with which they have been generated (constants and molar absorption coefficients) in the targets matrix. The dimensions of the inputs and targets matrixes, number of rows x number of columns, are the following:

-

Inputs: number of rows = number of data from each experience, number of columns = number of kinetic curves.

-

Targets: number of rows = number of parameters, number of columns = number of kinetic curves.

3.1.2 Design and training of the ANN

In each case studied it is necessary to find the optimal architecture of the ANN, that is, the optimal number of layers and nodes of each one of the hidden layers. The procedure to perform is the subsequent:

-

Desing of an ANN formed by n layers ((n − 1) Hidden + 1 Output):

-

Establish the number of layers (numLayer = n).

-

Establish the number of neurons in the hidden layers (numHiddenNeurons1 = Integer number, numHiddenNeurons2 = Integer number,..)

-

Create the network (net = newfit(inputs,targets,[numHiddenNeurons1,..]))

-

Training of the ANN: net = train(net,inputs,targets)

The training of each one of the ANNs designed, is carried out with the data corresponding to the training data set. These data is divided into three groups: experiences for the training (TR), experiences to validate the network (VL) and those that are used as an independent test (TS). The program automatically establishes the percentages for the curves for TR, VL and TS, but can be modified by the used to improve the results. MATLAB applies by default the Levenberg–Marquardt algorithm to carry out the training, although it is possible to choose another algorithm among others provided by MATLAB.

The result of the training can be considered acceptable if a series of requisites are met: the mean square errors (MSE) is significantly small, the VL and TS errors have similar characteristics, there is no overfitting and the linear regression between the corresponding outputs and targets for TR, VL and TS provide values for the correlation coefficient, R, close to 1.

If the training of the ANN has been carried out correctly, it could be considered suitable to carry out prediction tasks, otherwise we can improve the results: reestablish the initial ANN and train again (retrain), increase the number of neurons in the hidden layers, increase the number of hidden layers, modify the percentage of experiences for TR, VL and TS or use another optimization algorithm that minimizes the difference of the values of the output and targets.

3.1.3 Prediction

The optimal ANN can be used to determine unknown parameters (prediction), carrying out the following steps:

-

1.

Provide data to the ANN of the experiences whose parameters are unknown, inputs: Nº of rows = Nº data of each experience (it should match with the nº of rows of the inputs matrix with which the ANN has been trained) and Nº columns = Nº of experiences whose parameters are unknown.

-

2.

Prediction with the optimal ANN previously trained (net) through the command: [Y] = sim(net,inputs) (Y = values of the parameters predicted by the ANN).

3.2 Mathematical optimization

To carry out the mathematical optimization process the tool Optimization Toolbox™ from MATLAB® has been used. Among all the possibilities that MATLAB offers, we have used the lsqcurvefit function, that used different gradient methods to perform the optimization process, Levenberg–Marquardt, Gauss–Newton, etc., … that can be chosen by the user.

From the input data, xdata and ydata, the function lsqcurvefit finds the coefficients x that best fit the equation F(x, xdata):

where xdata is the vector that contains the time value considered (ti), ydata is the vector that contains the absorbance experimental data and F(x, xdata) is the vector that contains the values calculated for the property measured (absorbance).

The sequence of commands is the following:

-

Initial estimations of the parameters (values obtained with ANN): × 0 = [Par1, Par2,…]

-

Options for the optimization according to the manual specifications: options = optimset('MaxFunEvals',1e8,'TolFun',1e-18,'TolX',1e-12,'MaxIter',1e4)

-

Order to carry out the optimization: [x,resnorm] = lsqcurvefit(function, × 0,xdata,ydata,0,1000,options)

x = vector that contains the optimized parameters; resnorm = values of the sum of the quadratic deviations; function = name of the subprogram that needs to be created to calculate the absorbance data with the values estimated initially for the parameters and the ones that are been determined through the optimization process.

4 Results and discussion

In this work a hybrid algorithm (ANN-MATOPT) is applied to different reaction mechanisms to jointly determine parameters of different nature, specifically, rate constants (kr) and molar absorption coefficients (εj). This hybrid algorithm consists of a first stage where the ANN methodology is used to predict the values of kr and εj and a second stage where a mathematical optimization method is applied to the results obtained through ANN to improve them. In each reaction mechanism the deterministic analysis is applied to learn beforehand the existence of ambiguity and equivalence in the set of parameters to determine and it is checked if the hybrid algorithm ANN-MATOPT leads us to the true solution or the different set of parameters that fit the experimental data are obtained.

4.1 Experimental desing

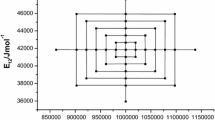

The absorbance data set with which it is performed the ANN training consists of a set of total absorbance/time kinetic data (inputs) generated with the group (targets) of constants (kr) and the molar absorption coefficients (εj), organized according to the corresponding experimental design (ED) [27]. The ED used can be two or three dimensional depending on the number of factors involved, an example for each case is shown in Figs. 1 and 2.

4.2 ANN training: optimal architecture

Once the kinetic data of total absorbance/time have been generated (input matrix) according to the ED (targets matrix), Fig. 3, we carry out the ANN training in order to find the most suitable to determine the parameters, kr y εj, in each case. The set of data are divided in three groups to carry out the tasks of training, validation and testing (TR/TS/VL) in all the cases studied in this work, the optimal percentage is 80/10/10.

The optimal architecture of the ANN is determined using the trial and error method that consists of designing different ANNs with different number of hidden layers and different number of neurons in each one of them to carry out the training process for each ANN. The training is an iterative process in which in each iteration new values for the parameters are obtained (outputs) and it is controlled through the validation process, for which the program calculates the value of the mean square error (MSE) as follows:

where nc is the number of kinetic curves, np number of parameters, \({b}_{ij}^{output}\) and \({b}_{ij}^{target}\) are the components of the outputs and targets matrixes.

The program selects the minimum MSE value of VL reached (Fig. 4) that corresponds to the MSE value of the overall training process. The TS process is similar to the VL, it compares the elements of the outputs and targets matrixes in each time unit for the set of curves selected for the processes of TS and VL. The TS and VL curves must always run very close (Fig. 4), this should be a criterion to consider the correct training process.

Once the ANN training process has been completed, the minimum MSE value and the values of the regression parameters of the overall training process and those of the TR, VL and TS groups are obtained. It can be considered that the training has been carried out correctly if the MSE value is small and if in the outputs/targets linear regressions (Fig. 5) the equations are linear (slope ≈ 1, intercept ≈ 0) and the regression coefficient is R≈1.

When the optimal network is selected, the final results of the training are analyzed and it is verified that overfitting does not occur, verifying that the performance of the training and test set is good, that is, that the results obtained in the training process are acceptable in both cases (Table 2).

4.3 Prediction of parameters in different reaction mechanisms

In all the reaction mechanisms studied we have considered that the different stages are of order 1 and that the initial concentration ([Bj]0) of the reagents is different from 0 and that of the products is null. Besides, \({\mathbf{X}} = \left( {{\text{k}}_{1} , \ldots ,{\text{ k}}_{{\text{r}}} ,\upvarepsilon _{{{\text{B}}_{{1}} }} , \ldots ,\upvarepsilon _{{{\text{B}}_{{\text{j}}} }} } \right)\)) and \({\mathbf{X}}^{{\prime }} = \left( {{\text{k}}_{1}^{\prime } , \ldots ,{\text{k}}_{{\text{r}}}^{\prime } ,\upvarepsilon _{{{\text{B}}_{1} }}^{\prime } , \ldots ,\upvarepsilon _{{{\text{B}}_{{\text{j}}} }}^{\prime } } \right)\) are the sets of parameters that fit the experimental data, being \({\text{Y}}\left( {{\text{s}},{\mathbf{X}}} \right){ }\) and \({\text{Y}}\left( {{\text{s}},{\mathbf{X}}^{\prime } } \right)\) the Laplace transforms of the absorbance for X and X′.

In all of the cases, we proceed as follow:

-

Establishment and resolution of the system of differential rate equations.

-

Calculation of the Laplace transforms of the absorbance for X and X′ (\({\text{Y}}\left( {{\text{s}},{\mathbf{X}}} \right)\) and \({\text{Y}}\left( {{\text{s}},{\mathbf{X}}^{\prime } } \right)\)).

-

\({\text{Y}}\left( {{\text{s}},{\mathbf{X}}} \right) = {\text{Y}}\left( {{\text{s}},{\mathbf{X}}^{\prime } } \right)\) → We obtain a system of polynomial equations.

-

Solving the system of polynomial equations → Knowledge beforehand of the identifiability of the system.

-

Experimental design in each case studied and generation of the AT/t data for training the Artificial Neural Network (ANN).

-

Desing of the ANNs.

-

Training of the ANNs and analysis of the results → Optimal ANN

-

Prediction of the parameters in experiences in which their value is not known

-

Verification of the agreement of the results with that predicted by the deterministic analysis → Identifiable system with a unique solution or with a finite number of solutions, non-identifiable system with an infinite number of solutions.

-

Verification of the need to apply a mathematical optimization method to improve the results → Hybrid algorithm ANN-MATOPT.

-

4.3.1 Model I \({\mathbf{B}}_{1} \xrightarrow{{\mathbf{k}}} {\mathbf{B}}_{2}\)

For the case of this simple mechanism, the determination of the following groups of parameters are analyzed: \({\mathbf{X}} = \left( {{\text{k}}, \upvarepsilon _{2} } \right)\), \({\mathbf{X}} = \left( {{\text{k}}, \upvarepsilon _{1} } \right)\) and \({\mathbf{X}} = \left( {{\text{k}},\upvarepsilon _{1} , \upvarepsilon _{2} } \right)\). The Laplace transform of the total absorbance for this case is expressed as:

For another set X′ parameters, the Laplace transform is \({\text{Y}}\left( {{\text{s}},{\mathbf{X}}^{\prime } } \right)\), according to the deterministic analysis \(\left( {{\text{Y}}\left( {{\text{s}},{\mathbf{X}}} \right) = {\text{Y}}\left( {{\text{s}},{\mathbf{X}}^{\prime } } \right)} \right)\) the following relationships are obtained: \(\upvarepsilon _{{{\text{B}}_{1} }} =\upvarepsilon _{{{\text{B}}_{1} }}^{\prime } ,\) \(\upvarepsilon _{{{\text{B}}_{2} }} =\upvarepsilon _{{{\text{B}}_{2} }}^{\prime }\) and \({\text{k}}_{1} = {\text{k}}_{1}^{\prime }\). Thus, the system is identifiable and the solution is unique in any of the cases. The ANN application for the prediction of the different group of parameters support the conclusions from the deterministic analysis, since in all of the cases the system is identifiable and the solution in unique.

Consider for example the prediction of \(\left( {{\mathbf{X}} = {\text{k}}, \upvarepsilon _{2} } \right)\), after performing the corresponding ED, the training is carried out obtaining that for this case the optimal ANN consists of one hidden layer with 4 neurons. Then, a series of AT/t curves are generated with certain values of k and \(\upvarepsilon _{2}\) that later we will assume that they are unknown and whose values are determined with the optimal ANN. In all the cases a solution is obtained and it is also observed the difference between the values of the parameters with which they have been generated and those calculated is minimal, so, it is no necessary to subsequently apply a mathematical optimization process.

4.3.2 Model II \({\mathbf{B}}_{2} \xrightarrow{{{\mathbf{k}}_{2} }} {\mathbf{B}}_{1}\) \({\mathbf{B}}_{3} \xrightarrow{{{\mathbf{k}}_{3} }} {\mathbf{B}}_{1}\)

For this mechanism, we study the determination of the following set of parameters: \({\mathbf{X}} = \left( {{\text{k}}_{2} ,{\text{k}}_{3} ,\upvarepsilon _{{{\text{B}}_{{1}} }} } \right)\), \({\mathbf{X}} = \left( {{\text{k}}_{2} ,{\text{k}}_{3} ,\upvarepsilon _{{{\text{B}}_{{2}} }} } \right)\) and \({\mathbf{X}} = \left( {{\text{k}}_{2} ,{\text{k}}_{3} ,\upvarepsilon _{{{\text{B}}_{{3}} }} } \right)\). In this case the Laplace transform of the total absorbance is:

As in the previous case, considering another set of parameters X′, the following relationships are obtained:

Solving these equations, it is concluded that in all cases, the system is identifiable with a unique solution.

In order to check that the application of the ANN methodology leads us to these results in the case of the prediction of \(\left( {{\text{k}}_{2} ,{\text{k}}_{3} ,\upvarepsilon _{{{\text{B}}_{{1}} }} } \right)\), \(\left( {{\text{k}}_{2} ,{\text{k}}_{3} ,\upvarepsilon _{{{\text{B}}_{{2}} }} } \right)\) or \(\left( {{\text{k}}_{2} ,{\text{k}}_{3} ,\upvarepsilon _{{{\text{B}}_{{3}} }} } \right)\), 43 AT/t curves are generated from the values of the parameters obtained from the corresponding ED. The training of the ANN is performed with these curves, obtaining as optimal networks the one formed by 2 hidden layers with 1 neuron in the 1st layer and 2 in the 2nd layer (experience 1) or, 3 hidden layers with 4 neurons in each of the layers (experience 2).

Next, an AT/t curve is generated for the following values: k1 = 0.1 min−1, k2 = 0.05 min−1, \(\upvarepsilon _{{{\text{B}}_{{1}} }}\) = 600 M−1 cm−1, \(\upvarepsilon _{{{\text{B}}_{{2}} }}\) = 900 M−1 cm−1, \(\upvarepsilon _{{{\text{B}}_{{3}} }}\) = 700 M−1 cm−1. Subsequently, the different groups of parameters kr, \(\upvarepsilon _{{{\text{B}}_{{\text{j}}} }}\) are assumed unknown and the prediction is performed with the optimal ANN. The results obtained corroborate the conclusions obtained by the deterministic analysis, but in this mechanism, the difference between the values of the parameters with which the data had been generated and those calculated by ANN are significantly large. In this case, the values determined with ANN are used as initial estimates for the application of the mathematical optimization algorithm, which considerably improves the results (Table 3). The sequential application of both methods, ANN and mathematical optimization, constitutes the hybrid algorithm (ANN-MATOPT). Table 3 shows the obtained results using optimal ANNs in the prediction of \({\mathbf{X}} = \left( {{\text{k}}_{2} ,{\text{k}}_{3} ,\upvarepsilon _{{{\text{B}}_{{1}} }} } \right)\), \({\mathbf{X}} = \left( {{\text{k}}_{2} ,{\text{k}}_{3} ,\upvarepsilon _{{{\text{B}}_{{2}} }} } \right)\) and \({\mathbf{X}} = \left( {{\text{k}}_{2} ,{\text{k}}_{3} ,\upvarepsilon _{{{\text{B}}_{{3}} }} } \right)\) and the results obtained after subsequent mathematical optimization.

4.3.3 Model III \({\mathbf{B}}_{1} \xrightarrow{{{\mathbf{k}}_{2} }} {\mathbf{B}}_{2}\) \({\mathbf{B}}_{1} \xrightarrow{{{\mathbf{k}}_{3} }} {\mathbf{B}}_{3}\)

In the case of this mechanism, the determination of the following parameters is studied: \({\mathbf{X}} = \left( {{\text{k}}_{2} ,{\text{k}}_{3} ,\upvarepsilon _{{{\text{B}}_{{1}} }} } \right)\), \({\mathbf{X}} = \left( {{\text{k}}_{2} ,{\text{k}}_{3} ,\upvarepsilon _{{{\text{B}}_{{2}} }} } \right)\) and \({\mathbf{X}} = \left( {{\text{k}}_{2} ,{\text{k}}_{3} ,\upvarepsilon _{{{\text{B}}_{{3}} }} } \right)\). The Laplace transform of the total absorbance in the system is given by:

The following relationships between the parameters corresponding to two solutions X and X′ are obtained from the deterministic analysis:

Solving these equations, the subsequent conclusions are obtained: if the parameters to determine are \(\left( {{\text{k}}_{2} ,{\text{k}}_{3} ,\upvarepsilon _{{{\text{B}}_{{1}} }} } \right)\) a unique solution is obtained and if \(\left( {{\text{k}}_{2} ,{\text{k}}_{3} ,\upvarepsilon _{{{\text{B}}_{{2}} }} } \right)\) or \(\left( {{\text{k}}_{2} ,{\text{k}}_{3} ,\upvarepsilon _{{{\text{B}}_{{3}} }} } \right)\) are determined, the system in non-identifiable since it has infinite solutions.

As in the previous mechanisms, in each of the cases to study the corresponding ED and the ANN training is carried out and the prediction process is performed in each of the cases. The optimal ANN for predicting the three sets of parameters are those consisting of 2 hidden layers with 6 neurons in the 1st layer and 7 neurons in the 2nd layer and 3 hidden layers with 4 neurons in each layer.

To carry out the prediction, an AT/t curve is generated with the following parameter values: k1 = 0.1 min−1, k2 = 0.05 min−1, \(\upvarepsilon _{{{\text{B}}_{{1}} }}\) = 900 M−1 cm−1, \(\upvarepsilon _{{{\text{B}}_{{2}} }}\) = 600 M−1 cm−1, \(\upvarepsilon _{{{\text{B}}_{{3}} }}\) = 700 M−1 cm−1. Subsequently, different groups of parameters are assumed unknown and they are determined through the application of the hybrid algorithm ANN-MATOPT. Table 4 shows the results obtained for the prediction of \(\left( {{\text{k}}_{2} ,{\text{k}}_{3} ,\upvarepsilon _{{{\text{B}}_{{1}} }} } \right)\), \(\left( {{\text{k}}_{2} ,{\text{k}}_{3} ,\upvarepsilon _{{{\text{B}}_{{2}} }} } \right)\) and \(\left( {{\text{k}}_{2} ,{\text{k}}_{3} ,\upvarepsilon _{{{\text{B}}_{{3}} }} } \right)\) for experiences performed with ANN of different architecture experiences and the results obtained after subsequent mathematical optimization.

The results obtained are in agreement with the deductions from the deterministic analysis, in the case of the prediction of de \(\left( {{\text{k}}_{2} ,{\text{k}}_{3} ,\upvarepsilon _{{{\text{B}}_{{1}} }} } \right)\) in all of the experiences performed a unique solution is obtained and in the prediction of \(\left( {{\text{k}}_{2} ,{\text{k}}_{3} ,\upvarepsilon _{{{\text{B}}_{{2}} }} } \right)\) or \(\left( {{\text{k}}_{2} ,{\text{k}}_{3} ,\upvarepsilon _{{{\text{B}}_{{3}} }} } \right)\) it can be verified that the application of ANN and the subsequent optimization leads us to infinite solutions so the system is non-identifiable.

4.3.4 Model IV \({\mathbf{B}}_{1} \xrightarrow{{{\mathbf{k}}_{1} }} {\mathbf{B}}_{2} \xrightarrow{{{\mathbf{k}}_{2} }} {\mathbf{B}}_{3}\)

In the case of first-order consecutive irreversible reaction system, the prediction using ANN and subsequent optimization (hybrid algorithm ANN-MATOPT) of the following set of parameters are studied: \({\mathbf{X}} = \left( {{\text{k}}_{2} ,{\text{k}}_{3} ,\upvarepsilon _{{{\text{B}}_{{1}} }} } \right)\), \({\mathbf{X}} = \left( {{\text{k}}_{2} ,{\text{k}}_{3} ,\upvarepsilon _{{{\text{B}}_{{2}} }} } \right)\) and \({\mathbf{X}} = \left( {{\text{k}}_{2} ,{\text{k}}_{3} ,\upvarepsilon _{{{\text{B}}_{{3}} }} } \right)\).

The Laplace transform for the total absorbance and the corresponding relationships between the different set of parameters in this case are:

Solving these equations for different situations allows us to conclude that if the set of parameters to determine are \({\mathbf{X}} = \left( {{\text{k}}_{1} ,{\text{k}}_{2} ,\upvarepsilon _{{{\text{B}}_{{1}} }} } \right)\) or \({\mathbf{X}} = \left( {{\text{k}}_{1} ,{\text{k}}_{2} ,\upvarepsilon _{{{\text{B}}_{{3}} }} } \right)\), the system is identifiable and the solution is unique. In the case that the set of parameters to determine is \({\mathbf{X}} = \left( {{\text{k}}_{1} ,{\text{k}}_{2} ,\upvarepsilon _{{{\text{B}}_{{2}} }} } \right)\) a three-equation system is obtained:

Since the number of parameters to determine is three, the system is identifiable, but the solution is not unique. Two solutions are obtained, \({\mathbf{X}} = \left( {{\text{k}}_{1} ,{\text{k}}_{2} ,\upvarepsilon _{{{\text{B}}_{{2}} }} } \right)\) and \({\mathbf{X}}^{\prime } = \left( {{\text{k}}_{1}^{\prime } ,{\text{k}}_{2}^{\prime } ,\upvarepsilon _{{{\text{B}}_{3} }}^{\prime } } \right)\) being the relationship between the two:

In the same way as in the previous cases to study, the corresponding ED is performed, the ANN training is carried out and the prediction process is realized with the ANN that provide better results.

In the case that the parameters to determine are \(\left( {{\text{k}}_{1} ,{\text{k}}_{2} ,\upvarepsilon _{{{\text{B}}_{1} }} } \right)\) the most appropriate ANN for the prediction are: 1 hidden layer with 10 neurons, 1 hidden layer with 15 neurons, 2 hidden layers with 15 neurons in each layer and 2 hidden layers with 20 neurons in each layer (experiences 1, 2, 3 and 4 respectively). If the prediction of \(\left( {{\text{k}}_{1} ,{\text{k}}_{2} ,\upvarepsilon _{{{\text{B}}_{3} }} } \right)\) is performed, the most appropriate ANN for the prediction are: 1 hidden layer with 15 neurons, 2 hidden layers with 5 neurons in each one, 2 hidden layers with 7 neurons in the first layer, 5 in the second one and 2 hidden layers with 20 neurons in each layer (experiences 1, 2, 3 and 4 respectively).

In both cases, in order to carry out the prediction, an AT/t curve is generated with the following values k1 = 0.1 min−1, k2 = 0.05 min−1, \({\upvarepsilon }_{{\mathrm{B}}_{1}}\)= 900 M−1 cm−1, \({\upvarepsilon }_{{\mathrm{B}}_{2}}\)Lo=600 M−1 cm−1, \({\upvarepsilon }_{{\mathrm{B}}_{3}}\)=700 M−1 cm−1. Then, (k1, k2, \({\upvarepsilon }_{{\mathrm{B}}_{1}}\)) or (k1, k2, \({\upvarepsilon }_{{\mathrm{B}}_{3}}\)) are assumed unknown and through the application of the different ANN the prediction of the parameters is performed. Subsequently, the mathematical optimization is applied to improve the results obtained through ANN, the results obtained lead us to the same conclusions as the deterministic analysis; when determining the parameters (k1, k2, \({\upvarepsilon }_{{\mathrm{B}}_{1}}\)) or (k1, k2, \({\upvarepsilon }_{{\mathrm{B}}_{3}}\)) the system is identifiable, obtaining a unique solution (Table 5).

Lastly, we have studied the joint determination of (k1, k2, \(\upvarepsilon _{{{\text{B}}_{2} }}\)), according to the deterministic analysis when these parameters are jointly determined from total absorbance kinetic data, the system is identifiable but the solution is not unique, obtaining two solutions related to each other by the Eq. (17). Following the same procedures as in the previous cases, the corresponding ED is carried out and a series of AT/t curves are generated with which the training that provides the optimal networks is performed, a network formed by 2 hidden layers with 4 neurons in each layer. With the optimal ANN, considering \(\upvarepsilon _{{{\text{B}}_{1} }}\) and \(\upvarepsilon _{{{\text{B}}_{3} }}\) as known values, we carry out the prediction of k1, k2 and \(\upvarepsilon _{{{\text{B}}_{2} }}\).

Considering the point 1 of Fig. 6 that is found inside the 3D figure of the corresponding ED and which corresponds to the values k1 = 0.1 min−1, k2 = 0.05 min−1, \(\upvarepsilon _{{{\text{B}}_{2} }}\) = 600 M−1 cm−1, a curve AT/t is generated with these values and considering that \(\upvarepsilon _{{{\text{B}}_{1} }}\) = 900 M−1 cm−1 and \(\upvarepsilon _{{{\text{B}}_{3} }}\) = 700 M−1 cm−1. If k1, k2 and \(\upvarepsilon _{{{\text{B}}_{2} }}\) are unknown, according to the deterministic analysis, there exists two sets of parameters that can fit the data AT/t: X = (k1 = 0.1 min−1, k2 = 0.05 min−1, \(\upvarepsilon _{{{\text{B}}_{2} }}\) = 600 M−1 cm−1) and X′ = (\({\text{k}}_{1}^{\prime }\) = 0.05 min−1, \({\text{k}}_{2}^{\prime }\) = 0.1 min−1, \(\upvarepsilon _{{{\text{B}}_{2} }}^{\prime }\) = 300 M−1 cm−1). The results obtained in the prediction of these parameters through the application of ANN and the subsequent mathematical optimization are shown in Table 6. The values provided by ANN are far from the real values, so we use the vales as initial estimates for the mathematical optimization process. The results obtained through the application of the hybrid algorithm ANN-MATOPT show that two solution that are mathematically possible are obtained. The values obtained for \(\upvarepsilon _{{{\text{B}}2}}\) by ANN is the one that conditions that the mathematical optimization process leads us to one or another solution, the two solution are mathematically acceptable, performing the statistical analysis of residuals there are no appreciable differences between the two.

Points 2 and 3 of Fig. 6 are outside the ED and correspond respectively to the parameter values (k1 = 0.15 min−1, k2 = 0.01 min−1, \(\upvarepsilon _{{{\text{B}}_{{2}} }}\) = 100 M−1 cm−1) and (k1 = 0.15 min−1, k2 = 0.01 min−1, \(\upvarepsilon _{{{\text{B}}_{{2}} }}\) = 950 M−1 cm−1), in the case of the jointly determination of de k2, k3 and \(\upvarepsilon _{{{\text{B}}_{{2}} }}\) from the AT/t data, in each case there is another possible set of parameters that represent the system \({\text{k}}_{1}^{\prime }\) = 0.01 min−1, \({\text{k}}_{2}^{\prime }\) = 0.15 min−1, \(\upvarepsilon _{{{\text{B}}_{2} }}^{\prime }\) = − 11,000 M−1 cm−1) and (\({\text{k}}_{1}^{\prime }\) = 0.01 min−1, \({\text{k}}_{2}^{\prime }\) = 0.15 min−1, \(\upvarepsilon _{{{\text{B}}_{2} }}^{\prime }\) = 1650 M−1 cm−1).

Table 7 collects the most significant results obtained after the application of the hybrid algorithm in these cases, which confirms the applicability of the ANN-MATOPT algorithm for the determination of k1, k2 and \(\upvarepsilon _{{{\text{B}}_{2} }}\), although the values of these parameters are outside of the ED, it is also checked that the two solutions obtained are acceptable from a mathematical point of view although not from a chemical point of view \(\left( {\upvarepsilon _{{{\text{B}}_{{2}} }}^{\prime } < 0} \right)\).

Finally, the proposed methodology (ANN-MATOPT) has been used to process experimental kinetic data from the reaction between carbonyl cyanide 3-chlorophenylhydrazone (B1) with 2-mercaptoethanol (C), whose kinetics has been studied in different works [28,29,30].

The mechanism of this reaction consists of two consecutive irreversible reactions, in the first step, an intermediate adduct (B2) is formed, which is then hydrolyzed in an intramolecular reaction to give the product 3-chlorophenylhydrazonocyanoacetamide, (B3) and the subproduct ethylene sulphide (D).

The reaction is carried out in an excess of the reagent C, so a pseudo first order can be assumed and it is possible to express it schematically according to the IUPAC norms [19]:

Chau et al. [29] have performed a classic kinetic study at temperature of 20.0° C and pH = 4.3, following the evolution of the reaction by measuring the total absorbance (AT) at different wavelengths. The values obtained by these authors for the rate constants are: k1 = 0.33600 min−1 and k2 = 0.02004 min−1. We use the experimental data obtained when the reaction is followed at λ = 375 nm to determine by the hybrid algorithm ANN-MATOPT the kinetic constants and the molar absorption coefficients at that λ. The values of the molar absorption coefficients of each of the species at λ = 375 nm are: \({\upvarepsilon }_{{{\text{B}}_{1} }}\) = 1.63 × 104 mol−1 cm−1L, \({\upvarepsilon }_{{{\text{B}}_{2} }}\) = 2.00 × 104 mol−1 cm−1L and \({\upvarepsilon }_{{{\text{B}}_{3} }}\) = 1.48 × 104 mol−1 cm−1L.

Based on the values found in the bibliography, the interval variation of the parameters is established to perform the ED: [0.35–0.02 min−1] for the constants (kr) and [3 × 104–1 × 104 Lmol−1 cm−1] for the molar absorption coefficients (εj). Once the corresponding ED has been performed, 51 kinetic curves AT/t were generated with which the ANN training has been carried out and the optimal network has been obtained with which the prediction of the following parameters has been carried out: \(\left( {{\text{k}}_{1} ,{\text{k}}_{2} ,\upvarepsilon _{{{\text{B}}_{{1}} }} } \right)\), \(\left( {{\text{k}}_{1} ,{\text{k}}_{2} ,\upvarepsilon _{{{\text{B}}_{{3}} }} } \right)\) and \(\left( {{\text{k}}_{1} ,{\text{k}}_{2} ,\upvarepsilon _{{{\text{B}}_{2} }} } \right)\). Table 8 shows the results obtained in different experiences after the application of the hybrid algorithm ANN-MATOPT for the prediction of the different set of parameters. As it occurs in the treatment of synthetic data, when \(\left( {{\text{k}}_{1} ,{\text{k}}_{2} ,\upvarepsilon _{{{\text{B}}_{{1}} }} } \right)\) or \(\left( {{\text{k}}_{1} ,{\text{k}}_{2} ,\upvarepsilon _{{{\text{B}}_{{3}} }} } \right)\) are determined the system is identifiable with a unique solution and when the parameters to determine are k1, k2 and \(\upvarepsilon _{{{\text{B}}_{{1}} }}\) the system is identifiable, but the solution is not unique, obtaining two solutions that are mathematically acceptable. Table 8 shows the two solutions, the real one and the other one that is mathematically acceptable (indicated in italics).

5 Conclusion

In this work the hybrid algorithm ANN-MATOPT has been used to determine in different reaction mechanisms, the rate constants (kr) of the different stages and the molar absorption coefficients (εj) of the different species participating in the reaction.

In a first stage the ANN methodology is applied, the analysis of the parameters given by ANN (regressions outputs/targets, MSE, etc,..) show that in some of the cases, the obtained results are unacceptable, telling the need to apply, in the second step, a mathematical optimization method to improve the results obtained with ANN and that originate the hybrid algorithm ANN-MATOPT.

The advantage of applying the ANN methodology over other parameter determination methods, is that it is a treatment that does not need to start from initial estimates, so it is not necessary to know the magnitude order of the parameters to determine. The mathematical optimization methods need estimated values of the parameters and it is also convenient that these estimations are close to the real values for the optimization to be carried out correctly. In the proposed methodology, ANN is applied initially, so that the results obtained can be used as initial estimates of the second method (mathematical optimization) that does require the use of previous values for the parameters to determine. It is observed that if the mechanism is simple (model I) the result provided by ANN are acceptable and in no case is necessary to apply subsequently the mathematical optimization method. For more complex mechanisms (II, III and IV) in all situations, it is necessary the application of the mathematical optimization so that the results can be considered acceptable.

When parameters of distinct nature are determined from kinetic data from the measurement of a total property, problems of ambiguity on the prediction may arise, that is, there is more than one group of parameters that represents the experimental data. The application of the Laplace transform has allowed us to detect beforehand the existence of ambiguity for the studied mechanism. The application of the ANN methodology in the case of situations where there is ambiguity or equivalence in the parameters, leads us to the same conclusions as the deterministic analysis and the mathematical optimization methods. ANN leads us to any of the acceptable solution from the mathematical point of view. In the cases of mechanisms I and II, a group of parameters is obtained that fits the kinetic data in all cases, while for mechanism III, it depends on the parameters that are determined. If \(\left( {{\text{k}}_{2} ,{\text{k}}_{3} ,\upvarepsilon _{{{\text{B}}_{{1}} }} } \right)\) are determined, a group of parameters is obtained and for \(\left( {{\text{k}}_{2} ,{\text{k}}_{3} ,\upvarepsilon _{{{\text{B}}_{{2}} }} } \right)\) and \(\left( {{\text{k}}_{2} ,{\text{k}}_{3} ,\upvarepsilon _{{{\text{B}}_{{3}} }} } \right)\), an infinite number of parameters that fit the kinetic data, non-identifiable system is obtained.

In the mechanism IV, it happens the same as the previous case, in the determination of \(\left( {{\text{k}}_{1} ,{\text{k}}_{2} ,\upvarepsilon _{{{\text{B}}_{{1}} }} } \right)\) and \(\left( {{\text{k}}_{1} ,{\text{k}}_{2} ,\upvarepsilon _{{{\text{B}}_{{3}} }} } \right)\) a unique solution is obtained, while when determining \(\left( {{\text{k}}_{1} ,{\text{k}}_{2} ,\upvarepsilon _{{{\text{B}}_{{2}} }} } \right)\) we find two indiscernible solutions from each other, equally valid from a mathematical point of view. It is worth to point out the influence of the molar absorption coefficient of the intermediate species obtained with ANN since its value has a decisive influence on the hybrid algorithm ANN-MATOPT leading us to one or the other solution.

The application of deterministic analysis in the mechanisms studied indicates that in the special case ki = kj there is no “slow-fast” ambiguity in the determination of parameters.

In all cases in which there is ambiguity, that is, in those systems identifiable with a finite number of solutions or non-identifiable with infinite solutions, all of them are acceptable from a mathematical point of view, but not so from a chemical point of view. All the sets of parameters fit the experimental data, but only one of them contains the true parameters. In these cases, all the solutions are mathematically acceptable, performing a statistical analysis of residuals, there are no appreciable differences found between them, in order to discern which is the true solution we must resort to other considerations different from the mathematical or statistical, such as those of the strictly chemical type. The molar absorption coefficient of a certain specie at a wavelength λ(εj), is a parameter that has a characteristic value and can be used for the chemical identification of that specie. Similarly, the value of the rate constants of the different stages of a reaction mechanism (ki), have a determined value under certain reaction conditions (temperature, pH,…). For this reason, only a certain set of ki and εj values are chemically true, although there is more than one set of parameters that fit the time total absorbance data and leads to a minimum value of the sum of the quadratic deviations (SQD).

Change history

04 November 2021

The original article has been updated due to update in funding note.

References

F. Pérez Pla, J.F. Bea Redón, R. Valero, Chemom. Intell. Lab. Syst. 53, 1 (2000)

B. Svir, O.V. Klymenco, M.S. Platz, Comput. Chem. 26, 379 (2002)

M.M. Canedo, J.L. González-Hernández, Chemom. Intell. Lab. Syst. 66, 63 (2003)

M.M. Canedo, J.L. González-Hernández, J. Math. Chem. 49, 163 (2011)

M.M. Canedo, J.L. González-Hernández, S. Encinar del Dedo, App. Math. and Comp. 219, 7089 (2013)

E. Bezemer, S.C. Rutan, Chemom. Intell. Lab. Syst. 59, 19 (2001)

S. Bijlsma, H. Boelens, H. Hoefsloot, A.K. Smilde, Anal. Chim. Acta 419, 197 (2000)

B. Kovacs, J. Tóth, Int. J. Appl. Math. Comput. Sci. 4, 7 (2007)

N.H.T. Lemes, E. Borges, J.P. Braga, Chemom. Intell. Lab. Syst. 96, 84 (2009)

F. Amato, J.L. González-Hernández, J. Havel, Talanta 93, 72 (2012)

M.M. Canedo, J.L. González-Hernández, S. Encinar del Dedo, J. Math. Chem. 51, 1634 (2013)

J.L. González-Hernández, M.M. Canedo Alonso, S. Encinar del Dedo, MATCH Commun. Math. Comput. Chem. 79, 619 (2018)

S. Encinar del Dedo, J.L. González-Hernández, M.M. Canedo, MATCH Commun. Math. Comput. Chem. 72, 427 (2014)

S. Encinar del Dedo, J.L. González-Hernández, M.M. Canedo, D. Juanes, J. Math. Chem. 53, 1080 (2015)

J.L. González-Hernández, M.M. Canedo Alonso, S. Encinar del Dedo, MATCH Commun. Comput. Chem. 83, 295 (2020)

S. Vadja, H. Rabitz, J. Phys. Chem. 98, 5265 (1994)

A. Balogh, G. Lente, J. Kalmár, I. Fábián, Int. J. Chem. Kinet. 47, 773 (2015)

A.I. Petrov, V.D. Dergachev, Int. J. Chem. Kinet. 49, 494 (2017)

K.J. Laidler, Pure Appl. Chem. 68, 149 (1996)

A. Tarek, E.A. Hopgood, L. Nolle, A. Battersby, Eng. Lett. 13(2), 124 (2006)

A. Freeman, D.M. Skapura, Neural Networks, Algorithms, Applications and Programming Techniques, 1st edn. (Adinson-Wesley, Massachusetts, 1991)

J. Zupan, J. Gasteiger, Neural Networks for Chemists. An Introduction, 1st edn. (VCH Weinheim, New York, 1993)

J.R. Hilera, V.J. Martínez, Redes Neuronales Artificiales: Fundamentos, Modelos y Aplicaciones, 1st edn. (Alfaomega, Madrid, 2000)

P.R. Abdy, M.A.H. Dempster, Introduction to Optimization Methods, 1st edn. (Chapman and Hall, Cambridge, 1974)

M.A. Wolfe, Numerical Methods for Unconstrained Optimization: An Introduction, 1st edn. (Van Nostrand Reinhold, New York, 1978)

MATLAB & Simulink, © 1994–2021, The MathWorks, Inc

P. Gemperline, Practical Guide to Chemometrics, 2nd edn. (CRC Press, Boca Raton, 2006)

R.H. Bisby, E.W.K. Thomas, J. Chem. Ed. 63(11), 990 (1986)

F.T. Chau, K.W. Mok, Comput. Chem. 16, 239 (1992)

S. Bijlsma, D.J. Louwerse, A.K. Smilde, J. Chemom. 13, 311 (1999)

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Canedo Alonso, M.M., González Cuadra, J. & González-Hernández, J.L. ANN-MATOPT hybrid algorithm: determination of kinetic and non-kinetic parameters in different reaction mechanisms. J Math Chem 59, 2021–2048 (2021). https://doi.org/10.1007/s10910-021-01275-x

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10910-021-01275-x