Abstract

We present a novel relaxation for general nonconvex sparse MINLP problems, called overlapping convex hull relaxation (CHR). It is defined by replacing all nonlinear constraint sets by their convex hulls. If the convex hulls are disjunctive, e.g. if the MINLP is block-separable, the CHR is equivalent to the convex hull relaxation obtained by (standard) column generation (CG). The CHR can be used for computing an initial lower bound in the root node of a branch-and-bound algorithm, or for computing a start vector for a local-search-based MINLP heuristic. We describe a dynamic block and column generation (DBCG) MINLP algorithm to generate the CHR by dynamically adding aggregated blocks. The idea of adding aggregated blocks in the CHR is similar to the well-known cutting plane approach. Numerical experiments on nonconvex MINLP instances show that the duality gap can be significantly reduced with the results of CHRs. DBCG is implemented as part of the CG-MINLP framework Decogo, see https://decogo.readthedocs.io/en/latest/index.html.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Column generation is a classical technique in mathematical optimization, see e.g. the Dantzig–Wolfe algorithm [1]. It is used in integer and continuous optimization problems to solve large problems in an elegant way. Solutions of sub-problems are combined in a master problem which provides both, a direction to search for further solutions of sub-problems, and a (primal) estimation of a solution point. The concept makes use of a (sparse) structure of problems where an optimization problem consists of a number of blocks that are linked.

The idea of a block-separable structure got attention in theoretical literature, see e.g. [2] for an overview in convex optimization. Furthermore, it has found practical applications in solving large-scale scheduling problems. Many engineering problems consist of several modules and can be modelled into a block-separable structure. [3] exploit this structure for optimizing decentralized energy supply systems (DESS). Such systems typically combine several modules to be optimized as a whole. In fact, most real-world Mixed Integer Nonlinear Programming (MINLP) models are sparse, e.g. instances of the MINLPLib [4]. These models can be defined by low-dimensional sub-problems coupled by a limited number of linear constraints. The sub-problems can potentially be solved in parallel, see [5,6,7]. The third paper investigated a column generation approach for general sparse nonconvex MINLP problems.

Our research question here is, whether it is worthwhile to combine blocks in an aggregation way to compute an overlapping convex hull relaxation, so that the result shows a reduced duality gap. To answer this question, we present a new algorithm to dynamically aggregate blocks for tightening the relaxation of MINLPs and execute numerical experiments over test instances. This paper is organised as follows. Section 2 introduces the notation of MINLP formulations with a block structure and the convex hull relaxation. In Sect. 3, we describe and illustrate, how aggregation of blocks helps to tighten convex hull relaxations. Section 4 presents a resource-constrained reformulation of an MINLP and describes a dynamic-block version of a column generation algorithm. In Sect. 5, we perform experiments on a set of instances with dynamic block aggregation and compare them to state-of-the-art MINLP solvers. Section 6 summarizes the findings of our investigation on block aggregation.

2 Block formulation and overlapping convex hull relaxation

MINLPLib [4], the library of MINLP models for real-world instances, shows that many models have a sparse block structure. A sparse block structure allows the variables to be grouped into many smaller blocks such that the Hessian of the nonlinear model functions has an almost block-diagonal structure. In this section, we describe MINLPs with a sparse block structure and present related overlapping convex hull relaxations.

2.1 Sparse MINLPs

Consider the following generic MINLP formulation. The linear constraints are captured by set

where the index set \(M_1\) refers to the inequality constraints and \(M_2\) refers to equality constraints. The variable indices are captured in set N with \(|N|=n\) elements. The complex constraint set is defined as

where \(I\subseteq {N}\) represents the set of indices for integer variables. The set G is defined as

where \(\underline{x}\) and \(\overline{x}\) are vectors in \(\mathbb {R}^{n}\) representing the lower and upper bounds on the variables, respectively. We assume that the constraint functions, \(g_{j}: \mathbb {R}^{n}\rightarrow \mathbb {R}\) are continuously differentiable within the set \([\underline{x}, \overline{x}]\). The complete problem is then written as

Each MINLP can be represented with a linear objective function vector c. The objective and linear constraints in P are not required to be sparse; they may involve many or all variables in N, making them dense. The nonlinear functions, in many instances, are mainly supported by small subsets of the variables, which promotes considering a block structure. To do so, we define a support \({{\,\textrm{supp}\,}}(g)\subseteq {N}\) of function g as the index set of variables that occur in g. Note that linear constraints may also be supported by a small group of variable. We can take these small support linear constraints into account in a block structure considering them as a function g rather than being captured in P. We will call MINLP (4) sparse if

It is worth noting that, without loss of generality, problems with nonlinear objective functions can be linearized by introducing new variables and corresponding nonlinear constraints. However, to maintain sparsity, the support of the nonlinear objective function should remain small, without violating (5). The sparsity described in Eq. (5) can be leveraged to decompose the feasible set of Eq. (4) into sub-domains characterized by a (relatively) small number of variables and constraints. To facilitate this decomposition, we introduce blocks to serve as containers for variables and constraints within each sub-domain. In this context, we use \(k\in K\) as the block index, \(B_k\subset {N}\) as the set of variable indices, and \(J_k\subset J\) as the set of constraint indices for block k. In a block-separable structure, the constraint blocks \(J_k\) partition set J. The variables within block k are defined by the vector \(x_{B_k}:=(x_i)_{i\in B_k}\). The set of constraint indices J can be decomposed as \(J=\bigcup _{k\in K} J_k\), where each \(J_k\) corresponds to a block with its variable indices \(B_k:=\bigcup _{j\in J_k} {{\,\textrm{supp}\,}}(g_j)\). The (local) constraints of block k are defined by

In fact, expression (7) requires the interpretation that \(g_j\) takes its arguments from variables in block \(B_k\) and the other variable values are irrelevant. Note that \(G_k\) may also contain linear constraints as long as their (small) support is included in \(B_k\), whereas the other large support linear constraints stay in P. This gives the following reformulation of problem (4):

If the blocks in MINLP (8) are disjoint, meaning \(B_k\cap B_\ell =\emptyset \) for \(k,\ell \in K\) and \(k\ne \ell \), then (8) is referred to as block-separable or quasi-separable. On the other hand, if the blocks overlap in their variable indices, the block structure is called block-overlapping. Although block aggregation can be applied in general for sparse models with overlapping blocks, we will focus on a block-separable structure.

2.2 Convex hull relaxation and inner approximation

A convex hull relaxation (CHR) of (8) is defined by replacing the local feasible set \(X_k\) with its convex hull, i.e.

The quality of relaxation (9) can be presented by the duality gap, defined by

that is, a smaller duality gap indicates a tighter relaxation of (4). Note that CHR (9) can be defined for a general overlapping block structure, i.e. \(B_k\cap B_\ell \not =\emptyset \) for some \(k,\ell \in K, k\ne \ell \). The algorithms we investigate use the notion of the so-called inner approximation, conv\((S_k)\), of \({{\,\textrm{conv}\,}}(X_k)\) by a finite set of feasible points \(S_k\subset X_k\) for block k, see Sect. 4.2. The generation of such points is based on a linear objective function, such that the inner points are typically extreme points of \(X_k\).

3 Tightening the convex hull relaxation by aggregating blocks

One essential observation is that good relaxation quality (measured by a small duality gap) relies on how the overlapping blocks are defined. In this section, we present a new approach of refining (strengthening) CHR (9) by dynamically adding overlapping aggregated blocks. These aggregated blocks, denoted by \(B_k=B_\ell \cup B_s\) and \(J_k=J_\ell \cup J_s\), where \(\ell \) and s are the indices of the original blocks in (8), play a key role in our approach. We introduce convex hull constraints \({x_{B_k}}\in {{\,\textrm{conv}\,}}({X_k})\) for each aggregated block into (9), effectively acting as cutting planes to enhance the CHR. This approach resembles the well-known cut generation technique used to refine outer approximations.

The research question focuses on how to efficiently generate overlapping blocks dynamically, in order to obtain an effective algorithm of generating a good convex hull relaxation. There is a trade-off between the computational cost and the quality of the relaxation. If the block becomes too large (in the extreme case, applying convex hull relaxation on the original problem instead of the block formulation), we can guarantee a better relaxation, but it requires solving many difficult MINLP sub-problems. So, we aim to keep relatively small size blocks. Under this circumstance, we look into how to generate low-dimensional overlapping blocks that provide better results in (9).

3.1 Block-aggregation

Aggregating existing blocks in (8) can be approached in multiple ways, allowing for flexibility in determining the number of aggregated blocks, their sizes, and the sequence in which they are introduced. We aim to select aggregated blocks that are promising in tightening the result of the CHR and that have relatively small size. To expand the set of blocks K, we define \(K_1\) as the index set of blocks from the original formulation (8). The aggregated blocks, denoted by \(K_2\), are then added, so \(K=K_1\cup K_2\). An aggregated block is characterized by its variable indices \(B_k = B_\ell \cup B_s\), where \(\ell , s \in K_1\). Aggregation of two blocks is of interest if the support of an existing linear constraint from P is contained in \(B_k\), but not in \(B_\ell \) and \(B_s\), i.e

where \(a_m: = [a_{mi}]_{i \in N} \in \mathbb {R}^n \) represents the left-hand side coefficient vector of a linear constraint in P, with each \(a_{mi}\) as its element; \({{\,\textrm{supp}\,}}(a_m)\) is defined as the set of indices with non-zero entries:

A linear constraint in P satisfying (11) is called a coupling constraint. The feasible domain associated with the linear constraints, where the supports are contained within \(B_k\) of an aggregated block, is defined as follows:

Here, \(a_{m,B_k}\) represents a vector of coefficients in \(a_m\) that are associated with the variables in \({B_k}\). Consider the (disjoint) original blocks \(B_\ell \) and \(B_s\) once more. The feasible domain of the aggregated block k is defined by

Example 1

(copy-constraints) A copy-constraint is a coupling constraint satisfying (11) defined by \(x_{i}-x_{j}=0\), where \(i\in B_\ell \), \(j\in B_s\) and \(\ell \not =s\). This type of constraints appears frequently in block-separable formulations. We can define an aggregated block \(B_k=B_\ell \cup B_s\) satisfying (11) regarding a copy-constraint with support in \(B_\ell \cup B_s\). Assuming the copyconstraint is the only linear constraint associated with the aggregated block, its feasible domain can be expressed as:

Proposition 1 shows that adding an aggregated block containing the support of a coupling constraint satisfying (11) may tighten the convex hull relaxation of (8).

Proposition 1

Consider a new aggregated block \(B_k=B_s\cup B_\ell \) satisfying (11). Let \(K_1\cup \{k\}\) be the index set of blocks in the extended CHR, \({{\,\textrm{gap}\,}}(K_1\cup \{k\})\) denote the corresponding duality gap of CHR (9) and \({{\,\textrm{gap}\,}}(K_1)\) that of the original blocks \(K_1\). Then we have \({{\,\textrm{gap}\,}}(K_1\cup \{k\})\le {{\,\textrm{gap}\,}}(K_1)\). Moreover, if the solution \(x^*\) of (9) associated with \(K_1\) is unique and \(x_{B_s\cup B_\ell }^*\not \in {{\,\textrm{conv}\,}}(X_k)\), then we have \({{\,\textrm{gap}\,}}((K_1\cup \{k\})< {{\,\textrm{gap}\,}}(K)\).

Proof

Let \(B_k=B_\ell \cup B_s, \ell , s\in K\), satisfying (11). This means that at least one linear constraint is included in \(P_k\) which was not included in \(P_\ell \) and \(P_s\) individually. We have

Let \(\Omega _1\) and \(\Omega _2\) represent the feasible sets of (9) associated with \(K_1\) and \(K_1\cup \{k\}\), respectively. As a consequence of (15), we have \(\Omega _2\subseteq \Omega _1\). This inclusion relationship implies that \({{\,\textrm{gap}\,}}(K_1\cup \{k\})\le {{\,\textrm{gap}\,}}(K_1)\). Moreover, if \(x_{B_k}^*\not \in {{\,\textrm{conv}\,}}(X_k)\), then we can deduce that \(x^*\in \Omega _2{\setminus } \Omega _1\). Since \(x^*\) is the unique solution, we can conclude that \({{\,\textrm{gap}\,}}(K_1\cup \{k\})< {{\,\textrm{gap}\,}}(K_1)\).

\(\square \)

We provide an illustrative example of tightening the convex hull relaxation using an aggregated block defined by a copy-constraint.

3.2 Illustrative example

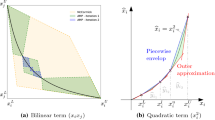

We showcase how an aggregated block derived from a copy-constraint helps tightening the relaxation. We use two-dimensional blocks presented in the original formulation by \((x_1,y_1)\in X_1\) and \((x_2,y_2)\in X_2\). The domain of the two blocks is illustrated in Fig. 1 with their feasible region and NLP relaxation. The convex hull of the inner points becomes the convex hull relaxation for each block as presented in Fig. 2. In this example, the linear constrained area related to the blocks consists of copy-constraint \(x_1 - x_2 = 0\). The copy-constraint is illustrated in Fig. 2 and cuts off the shaded area marked as region 1 in the convex hull relaxation of Block 2, which is not feasible according to Block 1 and the copy-constraint. The resulting inner approximation of the two blocks remains to be further tightened.

By incorporating the copy-constraint, we aggregate the two blocks into a new block with index 3. Figure 3 illustrates the resulting feasible region \(X_3\). Figure 4 depicts the resulting convex hull relaxation of the aggregated block mapped in two blocks. The new relaxation tightens the convex hull relaxation of Block 2 in Fig. 2 by cutting off infeasible shaded region 1 and 2. It is a block-aggregation cut for the convex hull relaxation of the MINLP problem.

4 A dynamic block and column generation algorithm for block-separable MINLPs

This section introduces a novel algorithm called dynamic block and column generation (DBCG) for efficiently generating aggregated blocks and obtaining tightened convex hull relaxations. The algorithm is designed to solve block-separable MINLPs, as represented by formulation (8). DBCG is an extension of the MINLP-CG algorithm proposed in [3], and it incorporates dynamic generation of aggregated blocks within the column generation framework to refine the convex hull relaxation.

We first present a reformulation of the MINLP problem as a resource-constrained formulation and then introduce the basic concept of column generation for computing an inner approximation and solving (9). Subsequently, we describe the methodology for dynamic block generation. Finally, we provide an overview of the DBCG algorithm. The algorithms are presented in a simplified manner, and further details can be found in Appendix A.

4.1 Resource-constrained reformulation

The resource-constrained reformulation of an optimization problem was firstly presented in [8] for decomposable integer programming. It facilitates a multi-objective vision on the linear constraints and a potential dimension reduction of the problems under consideration.

The resource-constrained reformulation works with variables \(w_k\) for each linear constraint and block. This means, the original variables are transformed linearly into resource variables in a matrix vector product \(w_k=A_kx_{B_k}\). This idea has been exploited in [7] for block-separable MINLPs. Considering the aggregation of blocks, we have that the number of transformed variables is not increasing when new (aggregated) blocks are added to the formulation. This can be realized using sets \(C_k\) defined as follows:

Here, \(\ell ,s \in K_1\) are the indices of original blocks combined in aggregated block \(k\in K_2\). So, \(C_k\) represents the index set of the original blocks in block k. In the reformulation of (8), the variables \(x_{B_k}\) of block k are replaced by vector \(w_{C_k}=(w_\ell )_{\ell \in C_k}\) of resource variables. This means that \(w_{C_k}=w_k\) for the original blocks \(k\in K_1\) and \(w_{C_k}=[w_{\ell }^\top , w_{s}^\top ]^\top \) for aggregated block \(C_k=\{\ell , s\}, k\in K_2\). The transformation is based on a matrix–vector multiplication where \(A_{\ell }\in \mathbb {R}^{(|M|+1)\times |B_{\ell }|}\) is a coefficient matrix for block \(\ell \in K_1\) with its row element \(A_{\ell ,m}\) defined as follows:

Here, the vectors \(c_{B_k}, a_{m,B_k} \in \mathbb {R}^{|B_{\ell }|} \) represent the coefficients in c and \(a_m\) associated with the variables in \(x_{B_k}\). For the aggregated blocks we define

as a block-diagonal matrix, where \(A_{\ell }\) represents the nonzero diagonal element. This extended coefficient matrix representation allows for the expression of matrix–vector multiplication with the corresponding variable vector. Consequently, we can express \(w_{C_k}\) as

Therefore, the transformed local feasible set for block \(k\in K,\) is represented as

The transformed objective function becomes

and the transformed linear feasible set is

The resource-constrained version of (8) is given by

Note that the relaxation (9) is equivalent to

4.2 Inner approximation

An inner approximation of \(X_k\) is defined by a finite set \(S_k\) of locally feasible points \(y \in X_k\). To transform these inner points into a column set, we define:

Notice that \(r\in R_k \subset \mathbb {R}^{|M|+1}\) for original blocks \(k\in K_1\) and \(r\in R_k \subset \mathbb {R}^{2(|M|+1)}\) for aggregated blocks \(k\in K_2\). The column set defines an inner approximation of (21), called IA, given by

Using an index j for the columns in \(R_k\), an LP formulation of (22), called LP-IA, is described by

In (23), the variables \(z_{kj}\) represent the weights of the column \(r_{kj} \in R_k\). Typically, \(\Delta _p \subset \mathbb R^{p}\) denotes the standard simplex with p vertices:

4.3 Column generation for solving the CHR

The CG solves (9) by alternatively computing a dual solution \(\mu \in \mathbb {R}^{|M|}\) corresponding to the linear constraints in \(w\in H\) in LP-IA (23), also called inner LP master problem, and solving pricing problems, also called MINLP sub-problems, in an iterative manner,

For each block \(k \in K\), we add the column \(r_k = A_{k}y_k \in \mathbb {R}^{(|M| +1)|C_k|}\) to the column set \(R_k\).

The convergence of CG iterations is measured by checking the reduced cost \(\delta _{k}\) for all blocks \(k\in K\) regarding a solution \(y_k\) of (25)

If \(\delta _{k}<\epsilon \) for all blocks \(k\in K\) with a small tolerance \(\epsilon >0\), the objective value of (23) cannot be further decreased and the CG iterations terminate, see [9]. In order to speed up the CG algorithm, columns are generated only for a subset \(\tilde{K}\subset K\) of blocks that exhibit a relatively large reduced cost \(\delta _k\). These blocks are referred to as active blocks. The CG algorithm is further described in Algorithm 1. It uses the following methods:

-

solveInnerLP(R, K) solves inner LP master problem (23) and returns the optimal resource vector \(\check{w}\), the weights z of the columns and the dual values for the linear resource constraints \(\mu \).

-

getActiveBlocks(d, R, K) takes the dual value \(d = (1, \mu ^\top )\) for selecting a subset of so-called active blocks \(\tilde{K}{:=\{k\in K: \delta _k \gg 0\}}\) from block set K with a relatively large reduced cost \(\delta _k\), as defined in (26). This speeds up the algorithm convergence when Algorithm 1 only solves sub-problems for \(k \in \tilde{K}\) and generates columns for these active blocks. The convergence of CG iterations using active blocks is not changed, since the reduced cost diminishes iteratively.

-

solveMinlpSubProblem(d, k) solves pricing problem (25) regarding a search direction \(d=(1,\mu ^T)\) and computes the reduced cost \(\delta _{k}\) as in (26). The resulting solution \(y_k\) is added to the column set \(S_k\).

4.4 Dynamic block generation

We describe a method to tighten the convex hull relaxation (9) by strategically creating aggregated blocks. Let

be the set of original blocks, which are coupled by linear constraint m. We call a linear constraint \(m\in M\) a coupling constraint, if \(|\Gamma _m|\ge 2\). The index set of two-block coupling constraints, including copy-constraints, is defined by

The goal is to carefully select coupling constraints \(m\in M_c\) and to create corresponding aggregated blocks \(B_k=\bigcup _{\ell \in \Gamma _m}B_\ell \) that effectively tighten the CHR. We examine the impact on the duality gap (10) of the optimal value of the convex hull relaxation (9) when an aggregated block \(B_k\) is added. This ana-lysis allows us to determine the effectiveness of including specific aggregated blocks in reducing the duality gap. Consider the Lagrangian of the resource-constrained formulation (20) with respect to linear constraints \(w\in H\)

Let \(w^*\) be a solution of the resource-constrained formulation (20), and \({\breve{w}}\) and \(\mu \) be a primal and dual solution of the convex hull relaxation (21). Since \(w^*\in H\), the duality gap of the convex hull relaxation is given by

where

The value of \(u_m^*\) corresponds to the potential contribution of a linear constraint in P to a gap reduction by moving it into a local domain \(X_k\) (14). Therefore, a larger value of \(u_m^*\) indicates a better gap reduction potential of the corresponding linear constraint with the resulting aggregated block.

We consider selecting coupling constraints \(m\in M_c\) that have a large gap reduction value (27) for creating aggregated blocks. The procedures of doing so are described in Algorithm 2. Let \({\check{w}}\) and z be a solution and the related weights of the master problem (23), i.e.

Let \(z_{kj}\) be the largest weight for a given aggregated block \(k\in K\), and \(r_{kj}\) the related dominant column of block k. Then, \(\tilde{w}_{C_k}=r_{kj}\) is a partial feasible solution of (20) and it is used to approximate \(w^*_{C_k}\). Furthermore, \(\check{w}\) is the solution of the inner approximation (23) and it converges to the solution of (21) after a number of CG iterations. Thus, (27) can be approximated by

Line 4 in the first repeat loop of Algorithm 2 determines the aggregated block and the column index (k, j) with the largest weight value \(\max \{z_{kj},\, \forall j \in [R_k], k\in \hat{K}\}\). The corresponding dominant column \(r_{kj}\) is then taken in a resource solution \(\tilde{w}_{C_k}\), and the aggregated blocks that overlap with \(C_{k}\) are removed from \(\hat{K}\). Approximation \(\tilde{w}:= (\tilde{w}_k)_{k \in {K_1}}\) is obtained after running this loop until \(\hat{K} = \emptyset \). Set \(\hat{M}\) contains coupling constraints which are not included in any existing aggregated block, see Line 10. The size of aggregated blocks is determined by the support of these linear constraints in \(\hat{M}\). It is necessary to create relatively small-size aggregated blocks; one example is creating aggregated blocks from the copy-constraints. The second repeat loop of Algorithm 2 selects linear constraints with maximum values \(u_m\) over \(\hat{M}\), and it creates new aggregated blocks based on the selected constraints. It is possible to generate multiple aggregated blocks by running more iterations of Line 12, while one can put early termination criterion on the loop, such as creating maximum N aggregated block and return the corresponding indices in Algorithm 2.

4.5 Main algorithm

Algorithm 3 describes the DBCG method for refining and solving CHR (21) and computing a set of solution candidates \(X^*\) of a block-separable MINLP (8). It performs the following steps:

-

1.

Compute column set \(R_k, \, \forall k\in {K_1}\) for the originally defined blocks, initial primal and dual solution \(\{\tilde{w}, \mu \}\) of the inner LP master problem (23) and \(X^*\) of solution candidates using initCG, see Appendix A.1.

-

2.

Create blocks and columns by iteratively performing steps of loop 1:

-

(a)

create aggregated blocks by createAggBlocks in Line 4 using primal and dual solution \(\{\check{w}, z, \mu \}\) of (23) and existing blocks K (Sect. 4.4).

-

(b)

compute columns \(R_k\) for new blocks using set \(X^*\) of solution candidates.

-

(c)

perform fast CG without a master problem for new (Loop 2) and active (Loop 3) blocks using Frank–Wolfe CG, fwColGen (Appendix A.2). Since it is sufficient to compute high-quality local feasible solutions for the pricing problems, a heuristic procedure is applied to find a local optimum of sub-problem (25) to generate columns in fwColGen, see Appendix A.3.

-

(d)

perform standard CG using colGen after convergence in fwColGen, i.e. \(\delta _{k}\) becomes very small, see Sect. 4.3. An MINLP solver is used to generate a globally optimal solution of (25).

-

(e)

find a solution candidate \(w^*\) in the neighborhood of the CHR solution \(\tilde{w}\) using findSolution, see Appendix A.4.

-

(a)

5 Numerical results

This section presents numerical results of Algorithm 3. We implemented the algorithm in Python with Pyomo 6.0.1 [10, 11], an optimization modeling language. This implementation is part of MINLP solver Decogo [12]. The solver uses SCIP 7.0.0 [13] for solving MINLP sub-problems, Gurobi 9.0.1 [14] for solving MIP/LP master problems and aggregated sub-problems. IPOPT 3.12.13 is applied for running NLP local-search in master and sub-problems. Computational experiments were performed using a computer with AMD Ryzen Threadripper 1950X 16-Core 3.4 GHz CPU and 128 GB RAM.

The following algorithm settings are used in the numerical experiments. In Algorithm 3, the time limit is set to 12 h, and the iteration limit in initCG is 20 iterations. The iteration limit for refining inner approximation (Loop 1) is set to five iterations and the iteration limit of Loop 2 and Loop 3 are set to eight iterations. The settings are also adapted for large-size instances and are described together with the presenting results. The fast CG is run up to five iterations. The standard CG is run up to three iterations. The iteration limit of the second loop in Algorithm 2 determines the number of aggregated blocks added in each iteration of Loop 1 in Algorithm 3. It is set according to the total number of the original blocks in the instances so that all possible non-overlapping aggregated blocks can be added in five iterations of Loop 1.

5.1 DESS model instances

For the numerical experiments, we used instances of decentralized energy supply systems (DESS). A DESS consists of energy conversion units and energy supply and demand forms which are integrated into a complex system. The optimization task is to identify simultaneously a plant design and a suitable plant schedule to optimize an economic objective function. MINLP DESS models typically have binary variables representing the facility selection and the operation states of selected units. Continuous variables model input and output streams of the model components as well as component costs. Nonlinear functions describe input–output dynamics and component costs of the DESS.

Goderbauer et al. [15] presented a DESS MINLP formulation. DESSLib is a published library of DESS models involving model instances of diverse complexity [16]. Three properties characterize the instances:

-

1.

maximum number of units of all component types

-

2.

number of load cases

-

3.

energy demand values

As an example, instance S12L16-1 refers to a DESS model consisting of maximum 12 units (S12) for the four component types, boiler, engine, turbo-chiller and absorption chiller. Each component type symmetrically has at most three units. The instance has 16 load cases (L16), and the energy demand values are taken from an instance indexed as one. The units of the DESS instances can be used in a natural way to generate blocks in a block-separable MINLP formulation. The implementation uses the component Block of Pyomo as a container for variables and constraints. For example, component variables and the associated nonlinear investment cost functions of each unit are taken as one block. Furthermore, this block is decomposed into smaller blocks according to the number of load cases. The load-case dependent variables and constraints in the smaller block represent operation status variables, input and output flow variables, the nonlinear performance constraints and load and unit decision constraints. These instances demonstrate good examples of block-structured formulations that enables the creation of aggregated blocks and the tightening of the convex hull relaxation, which is the central idea of our approach. Table 1 summarizes the characteristics of selected DESSlib instances used in the numerical experiments, including the number of original blocks in its block-separable formulation.

5.2 Improving the dual bound by aggregated block convex hull relaxation

This section showcases the steps of dynamic block aggregation and the effect of a tighter CHR by aggregating blocks on the dual bound. A DESSLib instances S12L4-1 is solved using Algorithm 3. Figure 5 visualizes the series of inner approximation values that are generated during the iterations when solving S12L4-1. The x-axis represents the iteration index of Loop 1 in Algorithm 3. Iterations zero generate initial columns for the original blocks \(K_1 = \{0,1,2,...,24\}\). The curve trend in each iteration illustrates how the inner approximation converges. The last objective value of the inner approximation in each iteration is taken as the updated dual value. Starting Loop 1, two aggregated blocks are created according to the supports of selected coupling constraints (copy-constraints): \(C_{25} = \{0, 12\}, C_{26} = \{9, 21\}\). Columns of the aggregated blocks 25 and 26 are generated and added to the inner approximation. The IA objective value initially decreases from the last dual value and then increases as aggregated block columns are added, eventually converging to the updated dual value. After five iterations of Loop 1, ten aggregated blocks \(K_2 = \{25, 26,..., 34\}\) and corresponding columns have been added to the inner approximation. The dual value shows a decreasing trend (maximization objective function). The best primal solution is depicted as a green line in Fig. 5 to illustrate the improvement of the duality gap from 24.3 to 4.2% during refinement of the inner approximation.

5.3 Comparison to other solvers

This section describes and compares the performance of applying the DBCG Algorithm to several DESSlib instances with state-of-art MINLP solvers. The selected instances have a varying number of components and a varying number of load cases resulting in diverse problem size and number of original blocks, as detailed in Table 1. The results of Algorithm 3 are compared to the results obtained by the column generation method presented in Muts et al. [3]. The results provide an idea of the effect of the refinement of the inner approximation due to adding aggregated blocks in the CG method for solving MINLPs. The DBCG Algorithm 3 is compared to state-of-art MINLP solver BARON 20.4.14 [17] on the DESSlib instances.

Goderbauer et al. [15] presented an adaptive discretization MINLP algorithm specifically for the DESSlib instances. The primal bounds of the DESSlib instances in that paper are taken as the best known primal bound among multiple solvers. However, we cannot directly compare the performance between the mentioned algorithm and Algorithm 3 since the implementation of the former one is not publicly available. On the other hand, the algorithm in Goderbauer et al. [15] does not aim at general MINLPs. Such a comparison is out of the scope of this paper. For the sake of brevity, we will use DBCG to refer to Algorithm 3, and CG to refer to the MINLP algorithm described in Muts et al. [3] throughout the remainder of the paper.

The solution time limit of all the MINLP algorithms are set to 12 h. Additionally, DBCG follows a termination criterion where the computation stops after five main iterations, while CG terminates once it obtains a positive reduced cost within a limit of 20 iterations, as described in Muts et al. [3]. These termination criteria are in place to prevent unnecessary iterations that do not significantly improve the results avoiding excessive computational costs. To showcase the solution quality of DBCG on large-size instances, we extend the time limit to 24 h specifically for instance S16L24-1. The computational performance is provided by Table 2, where gap values in bold indicate the smallest ones reached by the corresponding method for each instance. The iteration number of DBCG refers to the number of finished iterations in Loop 1 of Algorithm 3 (main iteration). The runs of DBCG on instances except S16L16-1 and S16L24-1 finished five iterations within 12 h. The additional DBCG run on S16L24-1 with 24-h time limit reached four iterations, while the one with 12-h time limit only finished two iterations. For DBCG, the main solution time is spent on solving the pricing problems, while the primal heuristics used in the algorithm contribute to a substantial portion, ranging from 30 to 50% in each main iteration. It is important to note that the solution time presented in Table 2 for DBCG and CG is influenced by the termination criteria and should not be considered as a direct indicator of algorithm performance. Our objective is to evaluate the dual and primal results of these MINLP algorithms under comparable settings. Our focus is on assessing their performance based on their ability to achieve desirable dual/primal results rather than solely comparing their solution time.

The dual bounds obtained by the MINLP algorithms is compared to the best known primal bounds obtained by and reported in Goderbauer et al. [15] in order to calculate the “absolute”duality gap. A comparison of the results is given in Table 2. The smallest duality gap for each instance is highlighted. The results show that the reached duality gap by DBCG is smaller than that by CG on the selected DESSlib instances. Moreover, the duality gap is improved more for instances with more original blocks (\(|K_1|\)). For instance S16L24-1, the run of DBCG with four iterations improved the duality gap from 31.55 to 23.04% compared to the one with two iterations. This shows that DBCG can further improve the duality gap when more solution time is used for large-problem-size instances. BARON closes the duality gap in a short time for small instance S4L16-1, see Table 2. For larger size instances, BARON did not close the gap within a 12-h time limit. Moreover, the duality gap reaches values up to 48%.

Table 3 summarizes the primal results of MINLP algorithm runs on the selected DESSlib instances; gap values in bold indicate the smallest ones reached by the corresponding method for each instance. The values of the primal results are presented in the form of a gap between the primal bound and the aforementioned best known primal bound of the instance:

In general, the primal bounds of DBCG and CG are comparable in a way that both are using the same primal heuristics, while DBCG provides a better dual solution as a starting point to search for primal solutions. As a result, both DBCG and CG for DESSlib instances provide good primal solutions for relatively large problem size instances. They are mostly better than that of BARON except instances S12L16-1 and S16L4-1. Moreover, the run of DBCG on instance S16L24-1 computed the best known primal solution after four iterations.

6 Conclusion

The paper presents a novel relaxation for nonconvex MINLPs, called the overlapping convex hull relaxation (CHR). There are several possibilities to create and solve an CHR. Here we present a way to generate the CHR by dynamic aggregation of blocks according to specific coupling constraints, such as copy-constraints. The results of DESSlib MINLP instances with up to 3000 variables show that the duality gap can be significantly reduced using this approach. The CHR can be used to compute an initial lower bound at the root node of a branch-andbound algorithm, or to compute a starting vector for a local search-based MINLP heuristic. Moreover, for problems where an initial (rough) model is dynamically changed during the solution process, such as PDE-based optimization or stochastic optimization, the columns of an inner approximation of the CHR can be efficiently adapted to model changes.

In the future, it would be interesting to make experiments with a direct generation of the CHR for sparse MINLPs with overlapping blocks without using copy-constraints and defining blocks according to groups of constraints involving a similar subset of variables. It is also possible to decompose an additive coupling constraint with a large support into several coupling constraints with a small support, which can be integrated into new aggregated blocks, in a similar way as we did for copy-constraints.

Data availability

The manuscript has no associated data. The used test instances are referenced in the text.

References

Dantzig, G.B., Wolfe, P.: Decomposition principle for linear programs. Oper. Res. 8, 101–111 (1960)

Rockafellar, R.T.: Problem decomposition in block-separable convex optimization: ideas old and new. J. Nonlinear Convex Anal. 19(9), 1459–1474 (2018)

Muts, P., Bruche, S., Nowak, I., Wu, O., Hendrix, E.M., Tsatsaronis, G.: A column generation algorithm for solving energy system planning problems. Optim. Eng. 24, 317–351 (2023)

Vigerske, S.: MINLPLib. http://minlplib.org/index.html (2018)

Muts, P., Nowak, I., Hendrix, E.M.T.: The decomposition-based outer approximation algorithm for convex mixed-integer nonlinear programming. J. Global Optim. 77(1), 75–96 (2020)

Muts,P., Nowak,I., Hendrix,E.M.T.: A resource constraint approach for one global constraint MINLP. In: Computational Science and Its Applications - ICCSA 2020, pp. 590–605. Springer (2020). https://doi.org/10.1007/978-3-030-58808-3_43

Muts, P., Nowak, I., Hendrix, E.M.: On decomposition and multiobjective-based column and disjunctive cut generation for MINLP. Optim. Eng. 22(3), 1389–1418 (2021)

Bodur, M., Ahmed, S., Boland, N., Nemhauser, G.L.: Decomposition of loosely coupled integer programs: a multiobjective perspective. http://www.optimization-online.org/DB_FILE/2016/08/5599.pdf (2016)

Nowak, I.: Relaxation and Decomposition Methods for Mixed Integer Nonlinear Programming. Birkhäuser, Basel (2005)

Hart, W.E., Watson, J.-P., Woodruff, D.L.: Pyomo: modeling and solving mathematical programs in python. Math. Program. Comput. 3(3), 219–260 (2011)

Bynum, M.L., Hackebeil, G.A., Hart, W.E., Laird, C.D., Nicholson, B.L., Siirola, J.D., Watson, J.-P., Woodruff, D.L.: Pyomo-optimization modeling in python, vol. 67, 3rd edn. Springer, Berlin (2021)

Muts,P., Wu,O., Nowak,I.: Decogo - decomposed-based approximation framework for global optimization. https://github.com/ouyang-w-19/decogo (2020)

Gamrath, G., Anderson, D., Bestuzheva, K., Chen, W.-K., Eifler, L., Gasse, M., Gemander, P., Gleixner, A., Gottwald, L., Halbig, K., Hendel, G., Hojny, C., Koch, T., Le Bodic, P., Maher, S.J., Matter, F., Miltenberger, M., Mühmer, E., Müller, B., Pfetsch, M.E., Schlösser, F., Serrano, F., Shinano, Y., Tawfik, C., Vigerske, S., Wegscheider, F., Weninger, D., Witzig, J.: The SCIP Optimization Suite 7.0. Technical report, Optimization Online, March 2020. http://www.optimization-online.org/DB_HTML/2020/03/7705.html

Gurobi Optimization, LLC. Gurobi Optimizer Reference Manual (2021). https://www.gurobi.com

Goderbauer, S., Bahl, B., Voll, P., Lübbecke, M., Bardow, A., Koster, A.: An adaptive discretization MINLP algorithm for optimal synthesis of decentralized energy supply systems. Comput. Chem. Eng. 95, 38–48 (2016)

Bahl, B., Goderbauer, S., Arnold, F., Voll, P., Lübbecke, M., Bardow, A., Koster, A.M.: DESSLib—Benchmark Instances for Optimization of Decentralized Energy Supply Systems. Technical report, Technische Universität Aachen, 2016. URL http://www.math2.rwth-aachen.de/DESSLib/

Sahinidis,N.V.: BARON 21.1.14: Global Optimization of Mixed-Integer Nonlinear Programs, User’s Manual, http://www.minlp.com/ (2020)

Nesterov, Y.E.: A method for solving the convex programming problem with convergence rate O(\(1/k^2\)). Dokl. Akad. Nauk SSSR 269, 543–547 (1983)

Acknowledgements

This work has been funded by grant 03ET4053B of the German Federal Ministry for Economic Affairs and Energy and Grant PID2021-123278OB-I00 funded by MCIN/AEI/ 10.13039/501100011033 and by “ERDF A way of making Europe”. We thank the anonymous referees for their valuable comments and suggestions.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A: Algorithmic details of DBCG

Appendix A: Algorithmic details of DBCG

For the sake of completeness we describe here the methods used in the DBCG Algorithm 3, which are derived from [3].

1.1 A.1 Initial column generation (initCG)

The method initCG, described in Algorithm 4, is used in the first step of is of the DBCG Algorithm 3 for computing initial columns. It performs the following steps:

-

1.

Compute initial columns by performing the subgradient method for maximizing the dual of (4) regarding coupling constraints as described in Muts et al. [3].

-

2.

Perform CG by iteratively

-

(a)

performing fast CG using fwColGen, see Section A.2.

-

(b)

solving the LP-IA (22) for computing an approximate solution \(\tilde{w}\) of the convex hull relaxation (9),

-

(c)

projecting \(\tilde{w}\) onto the feasible set finding an initial solution candidate w using findSolutionInit, see Appendix A.4.

-

(a)

1.2 A.2 Fast column generation (fwColGen)

The fast CG method fwColGen [3], described in Algorithm 5, is an alternative to the standard CG without solving the inner LP master problem (23). It is used initially in Algorithm 3 when the LP master problem (23) has non-zero slacks due to lack of columns R. The standard CG takes the search direction from the dual solution of the inner LP master problem (23). This can be inefficient for generating columns for slack elimination and primal improvement. The alternative Algorithm 5 is based on solving the following convex quadratic reformulation of the LP master problem (23) using the Frank Wolfe method:

where, \(Q(w,\sigma ):=F(w)+ \sum _{m\in M} \sigma _m \left( \sum _{k\in K_1} w_{km} -b_m\right) ^2\), and \(\sigma \in \mathbb {R}^{|M|}_+\) is a vector of penalty weights. It uses the Nesterov direction update rule proposed in [18], applied in Lines 12 to 14, for acceleration.

1.3 A.3 Fast sub-problem solving in CG (fastSolveMinlpSubproblem)

The method fastSolveMinlpSubproblem is a primal heuristic for fast computing a solution candidate \(\tilde{x}_{k}\) of the MINLP sub-problem (25) during Algorithm 5. The method can be used for blocks \(k\in K_1\) and \(k\in K_2\). For a block \(B_k=B_\ell \cup B_s, k\in K_2,\) we define integer/NLP relaxation of \(X_k\) by

This method applies to aggregated blocks and performs the following steps. Notice that the mentioned problems are solved locally by an NLP solver.

-

1.

Solve the resource projection problem (30) to obtain \(\check{x}\) as a solution in the original space.

$$\begin{aligned} \check{x}= \mathop {\textrm{argmin}}\limits \Vert A_{k}x - \check{w}_{C_k}\Vert _2^2 {{\,\mathrm{\quad s.t. \,\, }\,}}x \in G_k \end{aligned}$$(30)where \(\check{w}_{C_k}\) is an estimated solution of the CHR (9), obtained during the iterations of Algorithm 5.

-

2.

Perform a local search using \(\check{x}\) and a search direction d to compute a local minimizer of the integer relaxed sub-problem (31) with \(\check{x}\) as the starting point.

$$\begin{aligned} \tilde{y} = \mathop {\textrm{argmin}}\limits \, d^\top A_{k} x {{\,\mathrm{\quad s.t. \,\, }\,}}x\in G_k \end{aligned}$$(31) -

3.

Round the integer variables of \(\tilde{y}\) to obtain a rounded solution \(\hat{x}\). Use the values of the continuous variables in \(\hat{x}\) as the starting point for solving the NLP problem:

$$\begin{aligned} \tilde{x}=\mathop {\textrm{argmin}}\limits \,\,&d^\top A_{k} x {{\,\mathrm{\quad s.t. \,\, }\,}}x\in G_k, \quad x_{i}=\hat{x}_{i}, \,\,\forall i\in {B_k\cap I.} \end{aligned}$$(32)We acknowledge that rounding \(\tilde{y}\) can potentially lead to infeasible solutions for (32), though we did not encounter this in our numerical experiment. To address this issue, we employ a backtrack heuristic that allows us to obtain alternative rounded solutions for \(\tilde{y}\), ensuring the feasibility of the solution for (32). This heuristic enables us to navigate the solution space effectively and find feasible solutions that satisfy the constraints of the problem.

1.4 A.4 A primal heuristic for finding solution candidates (findSolution)

The methods findSolutionInit and findSolution are heuristic procedures for computing solution candidates in Algorithm 3. Method findSolutionInit is used in the beginning of Algorithm 4 for quickly finding a solution candidate, in order to eliminate slacks in LP-IA master problem (22). It performs the following steps:

-

1.

Perform an NLP local search solution of the following integer relaxed resource-projection NLP master problem.

$$\begin{aligned} \begin{aligned} {\tilde{y}=\mathop {\textrm{argmin}}\limits }&\sum _{k\in K_1} \Vert A_{k}x_{B_k}-\check{w}_k\Vert ^2,\\ {{\,\mathrm{\quad s.t. \,\, }\,}}&x\in P,\quad x_{B_k}\in G_k,\quad \forall k\in K_1,\\ \end{aligned} \end{aligned}$$(33)where \(\check{w}\) is the solution of the LP-IA master problem (22).

-

2.

Compute an integer globally feasible solution \(\hat{y}\) by solving the MIP-projection master problem(34),where \(\tilde{y}\) is a fractional solution of (33).

$$\begin{aligned} \begin{aligned} \hat{y} = \mathop {\textrm{argmin}}\limits \,\,&\sum _{k\in K_1}\Vert x_{B_k}-\tilde{y}_{B_k}\Vert _\infty \\ {{\,\mathrm{\quad s.t. \,\, }\,}}&x\in P,\,\, \, x_{B_k}\in Y_k,\, \forall k\in K_1.\\ \end{aligned} \end{aligned}$$(34) -

3.

Perform an NLP local search, where integer variables are fixed starting from \(\hat{y}\), using the integer globally feasible solution \(\hat{y}\).

$$\begin{aligned} \begin{aligned} x^*=\mathop {\textrm{argmin}}\limits \,\,&c^\top x + \sum _{m\in M_1} \theta _m s_m + \sum _{m\in M_2} \theta _m (s_{m1}+s_{m2})\\ {{\,\mathrm{\quad s.t. \,\, }\,}}&\sum _{k\in K_1} A_{k}x_{B_k} \le b_m+ s_m, \, \, m\in M_1,\\&\sum _{k\in K_1} A_{k}x_{B_k}= b_m+ s_{m1}-s_{m2}, \, m\in M_2,\\&x_{B_k}\in G_k, \, x_{i}=\hat{y}_{i},\, i\in {I_k}, \,k\in K_1. \end{aligned} \end{aligned}$$(35)

The method findSolution computes a solution candidate of MINLP problem (4) at the end of Algorithm 3 by performing the following steps:

-

1.

Compute a solution \(\check{w}\) of the LP-IA(22).

-

2.

Determine a solution pool \(\widehat{Y}\) of the best solutions of the MIP projection problem (34) of size N, using point \(\check{w}\).

-

3.

For each point \(\hat{y}\) of the solution pool \(\widehat{Y}\), perform an NLP local search over the global space, defined in (35) by fixing the integer valued variables, like in findSolutionInit.

More details of the primal heuristics can be found in Muts et al. [3].

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wu, O., Muts, P., Nowak, I. et al. On the use of overlapping convex hull relaxations to solve nonconvex MINLPs. J Glob Optim (2024). https://doi.org/10.1007/s10898-024-01376-2

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10898-024-01376-2