Abstract

This paper presents a new algorithm based on interval methods for rigorously constructing inner estimates of feasible parameter regions together with enclosures of the solution set for parameter-dependent systems of nonlinear equations in low (parameter) dimensions. The proposed method allows one to explicitly construct feasible parameter sets around a regular parameter value and, simultaneously, to rigorously enclose a particular solution curve (resp. manifold) by a union of inclusion regions. The method is based on the calculation of inclusion and exclusion regions for zeros of square nonlinear systems of equations. Starting from an approximate solution at a fixed set p of parameters, the new method provides an algorithmic concept for constructing a box \({\mathbf {s}}\) around p such that for each element \(s\in {\mathbf {s}}\) in the box the existence of a solution can be proved within certain error bounds.

1 Introduction

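In this paper we consider parameter-dependent nonlinear systems of equations

$$H(x,s) = 0 \qquad (1.1)$$

with solutions \(x = x(s) \in {\mathbb {R}}^n\) depending on a set of parameters \(s\in {\mathbb {R}}^p\). Here, we assume

$$H:X\times S\rightarrow {\mathbb {R}}^n,\qquad X\subseteq {\mathbb {R}}^n,\quad S\subseteq {\mathbb {R}}^p,$$

to be differentiable with Lipschitz continuous derivative.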

Branch and bound methods for finding all zeros of a (square) nonlinear system of equations in a box frequently have the difficulty that subboxes containing no solution cannot be eliminated if there is a nearby zero outside the box. This results in the so-called cluster effect, i.e., the creation of many small boxes by repeated splitting, whose processing may dominate the total work spent on the global search. Schichl and Neumaier [34] presented a method for reducing the cluster effect for nonlinear \(n\times n\)-systems of equations by computing so-called inclusion and exclusion regions around an approximate zero, with the property that a true solution lies in the inclusion region and no other solution lies in the corresponding exclusion region, which thus can be safely discarded.

In the parameter-dependent case, it would be convenient to show the existence of such inclusion regions for a whole set of parameter values in order to rigorously identify feasible parameter boxes \(\mathbf {s}\) where for all \(s\in \mathbf {s}\) solutions x(s) of (1.1) exist. Thus, we extend the method from Schichl and Neumaier [34] to this problem class, and show how to compute parameter boxes \(\mathbf {s}\subseteq S\) such that for each parameter set \(s \in \mathbf {s}\) the existence of a solution \(x(s) \in \mathbf {x}\subseteq X\) of (1.1) within a narrow inclusion box can be guaranteed.

The procedure for computing such a feasible parameter box \(\mathbf {s}\) consists of three main steps: (i) solve (1.1) for a fixed parameter \(p\in \mathbf {s}\) and compute a pair of inclusion and exclusion regions for a corresponding approximate zero \(z\approx x(p)\) as described in Schichl and Neumaier [34], (ii) consider an approximation function \({\hat{x}}(s):S\rightarrow X\) for the solution curve, and (iii) extend the estimates and bounds from step (i) using slope forms in order to calculate a feasible parameter box \(\mathbf {s}\) around p such that for all \(s\in \mathbf {s}\) the existence of a solution \(x^*(s)\) of (1.1) can be proved.

Other known approaches. Parameter-dependent systems of equations can be solved by continuation methods (e.g., [1, 2]) which trace a particular solution curve or a solution manifold, if \(p>1\) in (1.1). More recently, Martin et al. [17] presented a new rigorous continuation method for 1-manifolds (improving the method proposed by Kearfott and Xing [13]) which utilizes parallelotopes (as defined in [9]) to enclose consecutive portions of the followed manifold. Another approach for parametric polynomial systems is to use Gröbner bases (e.g., [12, 21]).

Neumaier [23, Thm. 5.1.3] formulated a semilocal version of the implicit function theorem and provided a tool [23, Prop. 5.5.2] for constructing an enclosure of the solution set of (1.1) with parameters varying in narrow intervals. Furthermore, Neumaier [22] performed a rigorous sensitivity analysis for parameter-dependent systems of equations and proved a quadratic approximation property of a slope-based enclosure. A parametric Krawczyk operator was proposed in Rump [29]; a comparison of this Newton-like operator with a parametric Hansen–Sengupta operator [6] was presented in Goldsztejn [7]. In [9], Goldsztejn and Granvilliers extended the preconditioned interval Newton operator to underconstrained systems of equations by generalizing the domain from boxes to parallelepipeds.

Kolev and Nenov [15] proposed an iterative method to construct a linear interval enclosure of the solution set of (1.1) over a given parameter interval. Goldsztejn [5] used a weak version of the parametric Miranda theorem (see [23], Thm. 5.3.7) to verify the existence of solutions over a given parameter interval and to compute a reliable inner estimate of the feasible parameter region. Independently of the work of Goldsztejn [5], the authors recently pursued a similar approach and proposed some tools utilizing Miranda’s theorem and centered forms for rigorously solving parameter-dependent systems of equations [27].

In addition, several recent contributions are concerned with rigorously solving problems arising in the field of robotics. For example, Tannous et al. [38] proposed an interval linearization method to enclose the solution set of a system (1.1) over a small parameter interval given a nominal approximate solution in order to provide verified results for the sensitivity analysis of serial and parallel manipulators; Caro et al. [3] compute a verified enclosure of n-manifolds for computing generalized aspects (connected components of a set of nonsingular reachable configurations) of parallel robots; Goldsztejn et al. [8] use a parametric version of the Kantorovich theorem for proving hypotheses regarding the maximal pose error of parallel manipulators, and computing upper bounds on the pose error in a safe way.

Other contributions regarding parametric interval methods for solving systems of equations are, e.g., [36, 37] for robust simulation and design of chemical processes, or [39] for infinite dimensional systems of equations (PDE).

Outline. The paper is organized as follows: in Sect. 2 we review some central results about rigorously computing solutions of square nonlinear systems of equations. Additionally, we summarize basic definitions and known results about slope forms, which will be an important tool when extending the exclusion region concept from Schichl and Neumaier [34] to the parameter-dependent case. In Sect. 3 we outline the method introduced by Schichl and Neumaier [34], as it is the starting point for the new method. In Sect. 4 we then state and prove the main results of this paper and describe how to extend the inclusion/exclusion region concept to the parameter-dependent case. In Sect. 5 the new method is illustrated with several numerical examples, and, additionally, some preliminary computational results for rigorously computing an inner approximation of the feasible solution region over an initial box \(\mathbf {s}\) are presented. Section 6 provides a discussion of the proposed method as well as an outlook on future work.

Notation. Throughout the paper, we will use the following notation: For a matrix \(A\in {\mathbb {R}}^{n\times m}\) we denote by \(A_{: K}\) the \(n\times k\) submatrix consisting of the \(k=|K|\) columns with indices in \(K\subseteq \lbrace {1,\dots ,m}\rbrace \), and, similarly, \(A_{K:}\) denotes the \(k\times m\) submatrix with row indices in \(K\subseteq \lbrace 1,\dots ,n\rbrace \). Let \(F:{\mathbb {R}}^m\rightarrow {\mathbb {R}}^n\). If \(y = (x,s)^T\in {\mathbb {R}}^m\) is a partition of y with \(x=y_I\), \(s=y_J\), where I, J are index sets with \(I\cap J=\emptyset \) and \(I\cup J=\lbrace {1,\dots ,m}\rbrace \), the Jacobian of F with respect to x is

$$F'_x(y) \mathrel {\mathop :}=F'(y)_{:I}.$$

A slope of F with center z at y is written as \({F}\!\left[ z,y\right] \); a slope with respect to x then is

$${F_x}\!\left[ z,y\right] \mathrel {\mathop :}={F}\!\left[ z,y\right] _{:I}.$$

Since second order slopes (resp. first order slopes of the Jacobian) are third order tensors, we use the following multiplication rules (see [33]) for a 3-tensor \({\mathcal {T}} \in {\mathbb {R}}^{n \times m\times r}\), a vector \(v \in {\mathbb {R}}^r\), and matrices \(C\in {\mathbb {R}}^{s\times n}\), \(B\in {\mathbb {R}}^{r \times s}\):

Additionally, we define for a vector \(v\in {\mathbb {R}}^n\) and a 3-tensor \({\mathcal {T}}\in {\mathbb {R}}^{n\times n\times n}\) the product

2 Preliminaries and known results

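Consider a twice continuously differentiable function \(F:D \subseteq {\mathbb {R}}^n \rightarrow {\mathbb {R}}^n\). We can always write (see [34])

$$F(x) = F(z) + {F}\!\left[ z,x\right] (x-z) \qquad (2.1)$$

for any two points z, \(x\in D\) and a suitable matrix \({F}\!\left[ z,x\right] \in {\mathbb {R}}^{n\times n}\), a so-called slope matrix for F with center z at x. While in the multivariate case the slope matrix is not uniquely determined, we always have by differentiability

$${F}\!\left[ z,z\right] = F'(z). \qquad (2.2)$$

Assuming that the slope matrix is continuously differentiable in both points, we can write similarly

$${F}\!\left[ z,x\right] = {F}\!\left[ z,z'\right] + {F}\!\left[ z,z',x\right] (x-z'),$$

which simplifies for \(z=z'\) to

$${F}\!\left[ z,x\right] = F'(z) + {F}\!\left[ z,z,x\right] (x-z), \qquad (2.3)$$

where the second order slopes \({F}\!\left[ z,z',x\right] \), \({F}\!\left[ z,z,x\right] \), respectively, are continuous in z, \(z'\) and x. If F is quadratic, the first order slopes are linear, and thus the second order slope matrices are constant. Let z be a fixed center in the domain of F. Having a slope \({F}\!\left[ z,x\right] \) for all \(x \in \mathbf {x}\) we get

$$F(\mathbf {x}) \subseteq F(z) + {F}\!\left[ z,\mathbf {x}\right] (\mathbf {x}-z) \qquad (2.4)$$

and, analogously,

$$F(\mathbf {x}) \subseteq F(z) + \bigl (F'(z) + {F}\!\left[ z,z,\mathbf {x}\right] (\mathbf {x}-z)\bigr )(\mathbf {x}-z). \qquad (2.5)$$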

Hence, the first and second order slope forms given in (2.4) and (2.5), respectively, provide enclosures for the true range of the function F over an interval \(\mathbf {x}\). There are recursive procedures to calculate slopes, given x and z (see [14, 16, 30, 35]). A Matlab implementation for first order slopes is in Intlab [31]; also, the Coconut environment [32] provides algorithms.
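To make the enclosure (2.4) concrete, the following minimal Python sketch evaluates the first order slope form for the scalar function \(f(x)=x^2-2x\), whose slope \(f[z,x]=x+z-2\) follows from \(f(x)-f(z)=(x+z-2)(x-z)\). The small `Interval` class is a hypothetical helper written only for this illustration: it uses plain floating point without outward rounding, so, unlike Intlab, it is not rigorous.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interval:
    """Closed interval [lo, hi] in plain floating point (no outward rounding)."""
    lo: float
    hi: float

    def __add__(self, other):
        other = as_interval(other)
        return Interval(self.lo + other.lo, self.hi + other.hi)

    __radd__ = __add__

    def __sub__(self, other):
        other = as_interval(other)
        return Interval(self.lo - other.hi, self.hi - other.lo)

    def __rsub__(self, other):
        return as_interval(other) - self

    def __mul__(self, other):
        other = as_interval(other)
        products = (self.lo * other.lo, self.lo * other.hi,
                    self.hi * other.lo, self.hi * other.hi)
        return Interval(min(products), max(products))

    __rmul__ = __mul__


def as_interval(value):
    """Promote a real number to a degenerate interval."""
    return value if isinstance(value, Interval) else Interval(value, value)


def f(x):
    return x * x - 2 * x


def slope_f(z, x):
    # f(x) - f(z) = (x + z - 2)(x - z), hence f[z, x] = x + z - 2
    return x + z - 2


def first_order_slope_form(z, box):
    # Enclosure (2.4): f(box) is contained in f(z) + f[z, box] (box - z)
    return f(z) + slope_f(z, box) * (box - z)


box = Interval(0.0, 2.0)
print(first_order_slope_form(1.0, box))  # Interval(lo=-2.0, hi=0.0)
print(f(box))                            # Interval(lo=-4.0, hi=4.0)
```

Over \([0,2]\) the true range of f is \([-1,0]\); the slope form \([-2,0]\) contains it and is considerably tighter than the naive interval evaluation \([-4,4]\), which suffers from the dependency problem.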

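Similarly to derivatives, slopes obey a sort of chain rule. Consider \(F :{\mathbb {R}}^m\rightarrow {\mathbb {R}}^p\) and \(g:{\mathbb {R}}^n\rightarrow {\mathbb {R}}^m\). Then we have

$$F(g(x)) = F(g(z)) + {F}\!\left[ g(z),\,g(x)\right] \bigl (g(x)-g(z)\bigr ) = F(g(z)) + {F}\!\left[ g(z),\,g(x)\right] \;{g}\!\left[ z,x\right] (x-z), \qquad (2.6)$$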

i.e., \({F}\!\left[ g(z),\,g(x)\right] \;{g}\!\left[ z,x\right] \) is a slope matrix for \(F\circ g\).
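A scalar sanity check of the chain rule (2.6), using the illustrative choices \(f(u)=u^2\), for which \(f[a,b]=a+b\), and the affine map \(g(x)=3x+1\), whose slope is the constant 3. Exact rational arithmetic from Python's `fractions` module makes the identity \((f\circ g)(x) = (f\circ g)(z) + (f\circ g)[z,x]\,(x-z)\) checkable with `==`; the function names are hypothetical.

```python
from fractions import Fraction


def f(u):
    return u * u


def slope_f(a, b):
    # f(b) - f(a) = (a + b)(b - a), so f[a, b] = a + b
    return a + b


def g(x):
    return 3 * x + 1


def slope_g(z, x):
    # g is affine, so every slope of g equals its constant derivative 3
    return 3


def slope_composite(z, x):
    # Chain rule (2.6): f[g(z), g(x)] * g[z, x] is a slope of f o g
    return slope_f(g(z), g(x)) * slope_g(z, x)


def slope_identity_holds(z, x):
    # Check (f o g)(x) == (f o g)(z) + (f o g)[z, x] (x - z) in exact arithmetic
    return f(g(x)) == f(g(z)) + slope_composite(z, x) * (x - z)


print(slope_composite(1, 2))  # 33
points = [Fraction(k, 7) for k in range(-7, 8)]
print(all(slope_identity_holds(z, x) for z in points for x in points))  # True
```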

Exclusion regions for \(n\times n\)-systems are usually constructed using uniqueness tests based on the Krawczyk operator (see [23]) or the Kantorovich theorem (see [4, 11, 25]), which both provide existence and uniqueness regions for zeros of square systems of equations. Kahan [10] used the Krawczyk operator to make existence statements. An important advantage of the Krawczyk operator is that it only needs first order information. Together with later improvements using slopes, his result is contained in the following statement.

Theorem 2.1

(Kahan) Let \(F:{\mathbb {R}}^n\rightarrow {\mathbb {R}}^n\) be as before and let \(z\in \mathbf {z}\subseteq \mathbf {x}\). If there is a matrix \(C\in {\mathbb {R}}^{n\times n}\) such that the Krawczyk operator

$$\text {K}({\mathbf {z}, \mathbf {x}}) \mathrel {\mathop :}=\mathbf {z} - CF(\mathbf {z}) - \bigl (C{F}\!\left[ \mathbf {z},\mathbf {x}\right] - I\bigr )(\mathbf {x}-\mathbf {z}) \qquad (2.7)$$

satisfies \(\text {K}({\mathbf {z}, \mathbf {x}}) \subseteq \mathbf {x}\), then \(\mathbf {x}\) contains a zero of F. Moreover, if \(\text {K}({\mathbf {x}, \mathbf {x}})\subseteq {{\,\mathrm{int}\,}}(\mathbf {x})\), then \(\mathbf {x}\) contains a unique zero.
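A one-dimensional sketch of the existence part of Theorem 2.1: for \(f(x)=x^2-2\) with slope \(f[z,x]=x+z\), the point center \(z=1.4\), the box \(\mathbf{x}=[1.3,1.5]\) and \(C=1/f'(z)\), it evaluates (2.7) and checks \(\text{K}(z,\mathbf{x})\subseteq \mathbf{x}\). The `Interval` helper and the function names are hypothetical, and unrounded floating point is used, so this illustrates the test but is not a rigorous proof; the uniqueness test \(\text{K}(\mathbf{x},\mathbf{x})\subseteq \mathrm{int}(\mathbf{x})\) is not shown.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interval:
    """Closed interval [lo, hi]; plain floating point, not outwardly rounded."""
    lo: float
    hi: float

    def __add__(self, other):
        other = as_interval(other)
        return Interval(self.lo + other.lo, self.hi + other.hi)

    __radd__ = __add__

    def __sub__(self, other):
        other = as_interval(other)
        return Interval(self.lo - other.hi, self.hi - other.lo)

    def __rsub__(self, other):
        return as_interval(other) - self

    def __mul__(self, other):
        other = as_interval(other)
        products = (self.lo * other.lo, self.lo * other.hi,
                    self.hi * other.lo, self.hi * other.hi)
        return Interval(min(products), max(products))

    __rmul__ = __mul__

    def subset_of(self, other):
        return other.lo <= self.lo and self.hi <= other.hi


def as_interval(value):
    return value if isinstance(value, Interval) else Interval(value, value)


def krawczyk(f, slope, z, box, C):
    """Scalar slope Krawczyk operator (2.7) with a point center z."""
    # K(z, x) = z - C f(z) - (C f[z, x] - 1)(x - z)
    return z - C * f(z) - (C * slope(z, box) - 1) * (box - z)


def f(x):
    return x * x - 2


def slope(z, x):
    # f(x) - f(z) = (x + z)(x - z), so f[z, x] = x + z
    return x + z


z, box = 1.4, Interval(1.3, 1.5)
K = krawczyk(f, slope, z, box, C=1 / (2 * z))  # C approximates f'(z)^{-1}
print(K.subset_of(box))  # True: box contains a zero of f (up to rounding)
```

With center 1.0 and box \([0.9,1.1]\), which contains no zero of f, the same operator yields approximately \([1.495,1.505]\not\subseteq [0.9,1.1]\), so no existence statement results.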

Neumaier and Zuhe [24] proved that the Krawczyk operator with slopes always provides existence regions which are at least as large as those computed by Kantorovich’s theorem. Based on a more detailed analysis of the properties of the Krawczyk operator, Schichl and Neumaier [34] provided componentwise and affine invariant existence, uniqueness, and nonexistence regions given a zero or any other point in the search region. More recently, this concept was extended to optimization problems (see [33]).

3 Inclusion/exclusion regions for a fixed parameter

We consider the nonlinear system of equations (1.1) at a fixed parameter value p,

$$H(x,p) = 0. \qquad (3.1)$$

Let \(z\approx x(p)\) be an approximate solution of (3.1), i.e.,

$$H(z,p)\approx 0. \qquad (3.2)$$

Our first aim is the verification of a true solution \(x^*\) of system (3.1) in a neighbourhood of z by computing an inclusion (resp. exclusion) region around z as described by Schichl and Neumaier [34]. Assuming regularity of the Jacobian \(H'_x(z, p)\) we take

$$C \mathrel {\mathop :}=H'_x(z,p)^{-1} \qquad (3.3)$$

as a fixed preconditioning matrix and compute the componentwise bounds

where the second order slopes \(H_{xx}\) are fixed. Throughout the paper, we assume \(z \approx x(p)\in \mathbf {x}\) as fixed center and the bounds from (3.4) valid for all \(x\in \mathbf {x}\), where \(\mathbf {x}\subseteq X\) is chosen appropriately (see below). Following [34, Thm. 4.3], we choose a suitable vector \(0<v\in {\mathbb {R}}^n\), which basically determines the scaling of the inclusion/exclusion regions, and set

Supposing

for all \(j=1,\ldots ,n\), we define

If \(\lambda ^e>\lambda ^i\), then there is at least one zero \(x^*\) of (3.1) in the inclusion region \(\mathbf {R}^{i}_0\) and the zeros in this region are the only zeros of (3.1) in the interior of the exclusion region \(\mathbf {R}^{e}_0\) with

In the important special case where H(x, p) is quadratic in x, the first order slope matrix \({H}\!\left[ (z, p), (x,p)\right] \) is linear in x. Hence, all second order slope matrices are constant in x. Therefore, the upper bounds \(B(x)=B\) are constant as well. Thus, we can set \(\overline{B} = B\) and the estimate from (3.4) becomes valid everywhere. Otherwise, an appropriate choice of \(\mathbf {x}\subseteq X\) is crucial in order to keep the bounds \(\overline{B}\) on the second order slopes small.
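The quantities of this section can be sketched in a few lines of Python. Since the displays (3.4) to (3.6) are not reproduced in this text, the sketch assumes the formulas of [34, Thm. 4.3] as obtained from the componentwise quadratic condition \(a_j\lambda ^2 - w_j\lambda + \overline{b}_j \le 0\), with \(w=(I-B_0)v\), \(a_j = v^T\overline{B}_j v\) (\(\overline{B}_j\) the jth slice of \(\overline{B}\)) and \(D_j = w_j^2-4a_j\overline{b}_j\): \(\lambda ^e_j\) and \(\lambda ^i_j\) are the larger and the smaller root, \(\lambda ^e=\min _j \lambda ^e_j\) and \(\lambda ^i=\max _j\lambda ^i_j\). The function name and the nested-list data layout are hypothetical, and plain floating point is used, so the result is not rigorous.

```python
import math


def inclusion_exclusion_parameters(b_bar, B0, B_bar, v):
    """Return (lambda_i, lambda_e) for the regions z + [-1, 1] * lambda * v, or None.

    Assumed formulas (cf. [34, Thm. 4.3]):
      w = (I - B0) v,  a_j = v^T B_bar[j] v,  D_j = w_j**2 - 4 a_j b_bar_j.
    b_bar: list of n bounds, B0: n x n nested list,
    B_bar: n slices, each an n x n nested list with nonnegative entries, v: positive list.
    """
    n = len(v)
    lam_i, lam_e = 0.0, math.inf
    for j in range(n):
        w_j = v[j] - sum(B0[j][k] * v[k] for k in range(n))
        a_j = sum(B_bar[j][k][l] * v[k] * v[l] for k in range(n) for l in range(n))
        D_j = w_j * w_j - 4.0 * a_j * b_bar[j]
        if D_j <= 0.0 or w_j <= 0.0:
            return None  # positivity requirement violated (or roots not positive)
        root = math.sqrt(D_j)
        # Larger root of a_j*lam**2 - w_j*lam + b_bar_j = 0 (unbounded if a_j == 0)
        lam_e_j = (w_j + root) / (2.0 * a_j) if a_j > 0.0 else math.inf
        # Smaller root in cancellation-free form, equal to b_bar_j / (a_j * lam_e_j)
        lam_i_j = 2.0 * b_bar[j] / (w_j + root)
        lam_i, lam_e = max(lam_i, lam_i_j), min(lam_e, lam_e_j)
    return (lam_i, lam_e) if lam_e > lam_i else None


# Hypothetical data with b_bar = 0 and B0 = 0 (as for an exact zero), v = (1, 1)
B_bar = [[[0.5, 0.0], [0.0, 0.0]], [[0.25, 0.0], [0.0, 0.0]]]
zero = [[0.0, 0.0], [0.0, 0.0]]
print(inclusion_exclusion_parameters([0.0, 0.0], zero, B_bar, [1.0, 1.0]))  # (0.0, 2.0)
```

When \(\overline{b}=0\), the inclusion parameter collapses to 0 and the exclusion parameter is \(\min _j 1/a_j\), which is the behaviour one expects at an exact zero.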

4 Parameter-dependent problem

Let (z, p) be an approximate solution of (1.1) for which a pair of inclusion and exclusion regions can be computed as described in Sect. 3. In addition, we assume the bounds from (3.4) are valid for \(\mathbf {x}\subseteq X\) with \(z\in \mathbf {x}\). We aim to prove the existence of a solution of (1.1) for every \(s\in \mathbf {s}\subseteq S\). Therefore, we first extend the results from Schichl and Neumaier [34] to the parameter-dependent case. In Theorem 4.3 we then state a method to explicitly construct such a parameter interval \(\mathbf {s}\). As a by-product we get an outer enclosure of a solution region \(\mathbf {x}(\mathbf {s})\subseteq \mathbf {x}\) over the parameter set \(\mathbf {s}\).

We consider any box \(\mathbf {s}\subseteq S\subseteq {\mathbb {R}}^p\) with \(p\in \mathbf {s}\) and a continuously differentiable approximation function

$${\hat{x}}:S\rightarrow X,\quad s\mapsto {\hat{x}}(s), \qquad (4.1)$$

which satisfies \({\hat{x}}(p) = z\) and \({\hat{x}}(s)\in \mathbf {x}\) for all \(s\in \mathbf {s}\), and prove for every \(s\in \mathbf {s}\) the existence of an inclusion box

In principle, the approximation function (4.1) can be chosen arbitrarily. The easiest choice is a linear approximation of the solution x(s). Another possible approach is to use higher-order Taylor expansions of the solution. For computational reasons, expansions of order at most 2 are most useful, since higher-order approximations lead to more overestimation. Note that the choice of the approximation function may greatly influence the quality, i.e., the radius, of the parameter interval \(\mathbf {s}\) (see Fig. 2).
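For the linear choice mentioned above, one natural option (our assumption; the text does not prescribe it) is the tangent predictor \({\hat{x}}(s) = z + x'(p)(s-p)\) with \(x'(p) = -H'_x(z,p)^{-1}H'_s(z,p)\) from the implicit function theorem. The scalar sketch below, with the hypothetical helper `tangent_approximation` and the toy problem \(H(x,s)=x^2-s\), satisfies \({\hat{x}}(p)=z\) by construction.

```python
import math


def tangent_approximation(z, p, dH_dx, dH_ds):
    """Linear approximation s -> z + x'(p) (s - p) for a scalar problem H(x, s) = 0.

    x'(p) = -H'_s(z, p) / H'_x(z, p) by the implicit function theorem (scalar case).
    """
    derivative = -dH_ds(z, p) / dH_dx(z, p)
    return lambda s: z + derivative * (s - p)


# Toy problem (illustrative only): H(x, s) = x**2 - s, exact solution x(s) = sqrt(s)
x_hat = tangent_approximation(2.0, 4.0,
                              dH_dx=lambda x, s: 2 * x,
                              dH_ds=lambda x, s: -1.0)
print(x_hat(4.0))                     # 2.0, i.e. x_hat(p) = z
print(x_hat(4.41), math.sqrt(4.41))  # roughly 2.1025 and 2.1
```

In the vector case the division becomes a linear solve with \(H'_x(z,p)\), whose factorization is already available from computing C in (3.3).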

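We define

$$g(s) \mathrel {\mathop :}=\begin{pmatrix} {\hat{x}}(s)\\ s\end{pmatrix} \in {\mathbb {R}}^{n+p}. \qquad (4.2)$$

With \({{\hat{x}}}\!\left[ p, s\right] \) denoting a slope matrix for \({\hat{x}}\) with center p at s, a slope matrix for g is given by

$${g}\!\left[ p, s\right] = \begin{pmatrix} {{\hat{x}}}\!\left[ p, s\right] \\ I\end{pmatrix}\in {\mathbb {R}}^{(n+p)\times p}, \qquad (4.3)$$

where I denotes the \(p\times p\) identity matrix, since

$$g(s) = \begin{pmatrix} {\hat{x}}(p) + {{\hat{x}}}\!\left[ p, s\right] (s-p)\\ p + (s-p)\end{pmatrix} = g(p) + {g}\!\left[ p, s\right] (s-p).$$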

Let C be the fixed preconditioning matrix from (3.3). For each \(s \in \mathbf {s}\) we define similar bounds as in (3.4)

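and calculate estimates on the bounds from (4.4a) and (4.4b) with respect to the bounds from (3.4) using first order slope approximations. Applying the chain rule (2.6) to \(H({\hat{x}}(s),s) = (H\circ g)(s)\) we get

$$H(g(s)) = H(g(p)) + {H}\!\left[ g(p), g(s)\right] \,{g}\!\left[ p,s\right] (s-p), \qquad (4.5)$$

and, similarly, we estimate the first derivative of H with respect to x by

$$H'_x(g(s)) = H'_x(g(p)) + {(H'_x)}\!\left[ g(p),\; g (s)\right] \,{g}\!\left[ p,s\right] (s-p),$$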

where the 3-tensor \({(H'_x)}\!\left[ g(p),\; g (s)\right] \in {\mathbb {R}}^{n\times n \times (n+p)}\) is a slope for \(H'_x\).

By taking absolute values we get with \(\widetilde{y} \mathrel {\mathop :}=\left| {s-p}\right| \) and (3.4)

Hence, we define

and

Note that \(A(s) \in {\mathbb {R}}^{n\times n\times p}\) results from multiplying a 3-tensor by an \(((n+p)\times p)\)-matrix; it is therefore computed by the appropriate multiplication rule from (1.2).
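The multiplication rules (1.2) are not reproduced in this text. Assuming the convention suggested by the stated dimensions (a matrix on the left acts on the first index of a 3-tensor, a matrix on the right contracts the third index), the product defining A(s) can be sketched as follows. The function names and the numeric entries are hypothetical, and the absolute values taken in the definition of A(s) are omitted to isolate the tensor product itself.

```python
def matrix_times_tensor(C, T):
    """(C T)_{ijk} = sum_l C_{il} T_{ljk}; C is s x n, T is n x m x r."""
    if len(C[0]) != len(T):
        raise ValueError("inner dimensions must agree")
    return [[[sum(C[i][l] * T[l][j][k] for l in range(len(T)))
              for k in range(len(T[0][0]))]
             for j in range(len(T[0]))]
            for i in range(len(C))]


def tensor_times_matrix(T, B):
    """(T B)_{ijk} = sum_l T_{ijl} B_{lk}; T is n x m x r, B is r x q."""
    if len(T[0][0]) != len(B):
        raise ValueError("inner dimensions must agree")
    return [[[sum(T[i][j][l] * B[l][k] for l in range(len(B)))
              for k in range(len(B[0]))]
             for j in range(len(T[0]))]
            for i in range(len(T))]


def shape(T):
    return len(T), len(T[0]), len(T[0][0])


# n = 2 variables, p = 1 parameter: a slope tensor of size n x n x (n + p)
# times a slope g[p, s] of size (n + p) x p gives a tensor of size n x n x p
T = [[[1.0, 2.0, 3.0], [0.0, 1.0, 0.0]],
     [[2.0, 0.0, 1.0], [1.0, 1.0, 1.0]]]
g_slope = [[0.5], [-1.0], [1.0]]  # hypothetical: rows of x_hat[p, s], then the identity row
A = tensor_times_matrix(T, g_slope)
print(shape(A))  # (2, 2, 1)
```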

Proposition 4.1

Let \((z,p)\in \mathbf {x}\times \mathbf {s}\subseteq X\times S\), where \(\mathbf {x}\), \(\mathbf {s}\) are any subboxes of X and S containing (z, p) (from (3.2)) such that the bounds (3.4) hold for all \(x\in \mathbf {x}\). Additionally, let \(s\in \mathbf {s}\) be an arbitrary parameter value, and \({\hat{x}} \mathrel {\mathop :}={\hat{x}}(s)\in {{\,\mathrm{int}\,}}(\mathbf {x})\) be the function value of the approximation function from (4.1) at s. Further, let \(0<v\in {\mathbb {R}}^n\) and \(\lambda ^e\) as in (3.6). Then for a true solution \(x=x(s)\) of (1.1) at s with \(\left| {x-z}\right| \le \lambda ^e\,v\) the deviation

satisfies

with \(\overline{{\mathfrak {b}}}(s)\), \({\mathfrak {B}}_0(s)\), and \({\mathfrak {B}}(x,s)\) as defined in (4.6), (4.7), and (4.4c), respectively.

Proof

Let \((x^1, s^1)\) be an arbitrary point in the domain of definition of H. Then we have by (2.1)

since x is a solution of (1.1) at s. This simplifies for \((x^1, s^1)= ({\hat{x}}, s)\) and g(s) as in (4.2) to

where we calculate H(g(s)) by (4.5) with respect to (z, p) as

with \( {(H\circ g)}\!\left[ p, s\right] \mathrel {\mathop :}={H}\!\left[ g(p), g(s)\right] \, {g}\!\left[ p,s\right] \), and \({H_x}\!\left[ g(s), (x,s)^T\right] \) by (2.3) as

with g(s), \({g}\!\left[ p, s\right] \) as in (4.2) and (4.3).

Now we consider the deviation between the approximate and a true solution and get with (4.9)

which extends by (4.11) to

Taking absolute values, we get by (4.4c), (4.6), and (4.7)

\(\square \)

Using this result, we are able to formulate a first criterion for existence regions.

Theorem 4.1

Let again \(s\in \mathbf {s}\) with corresponding function value \({\hat{x}} \mathrel {\mathop :}={\hat{x}}(s)\in {{\,\mathrm{int}\,}}(\mathbf {x})\) of the approximation function from (4.1) at s. In addition to the assumptions from Proposition 4.1 let \(0<u\in {\mathbb {R}}^{n}\) be such that

with \({\mathfrak {B}}(s)\ge \, {\mathfrak {B}}(x,s)\) for all x in \(M_u(s)\), where

Then (1.1) has a solution \(x(s) \in M_u(s)\).

Proof

For arbitrary x in the domain of definition of H we define

For \(x\in M_u(s)\) we get with (4.9) and (4.11)

Taking absolute values we get

by assumption (4.12). Thus, \(\text {K}_s(x) \in M_u(s)\) for all \(x \in M_u(s)\). Further, (4.14) shows that \(\text {K}_s(x)\) is equal to the Krawczyk operator (2.7) for a fixed parameter s. Hence, we get by Theorem 2.1, or, equivalently, by direct application of Brouwer’s fixed point theorem, that there exists a solution of (1.1) in \(M_u(s)\). \(\square \)

Based on the above results, the following theorem provides a way of constructing inclusion and exclusion regions for an approximate solution \({\hat{x}}(s)\).

Theorem 4.2

In addition to the assumptions from Proposition 4.1 and Theorem 4.1, we take

For \(0<v\in {\mathbb {R}}^n\) we define

If

for all \(j=1,\dots ,n\), we define

and

If \(\lambda _s^e > \lambda _s^i\) and

then there exists at least one zero \(x^*\) of (1.1) for a parameter set s (i.e., \(H(x^*,s)=0\)) in the inclusion region

and these zeros are the only zeros of H at s in the interior of the exclusion region

Proof

We set \(u=\lambda v\) with arbitrary \(0<v\in {\mathbb {R}}^{n}\), and check for which \(\lambda =\lambda (s) \in {\mathbb {R}}_+\) the vector u satisfies property (4.12). We get

which leads to the sufficient condition

The jth component of this inequality requires \(\lambda \) to be between the solutions of the quadratic equation

which are exactly \(\lambda _j^i(s)\) and \(\lambda _j^e(s)\). Since \(D_j(s)>0\) for all j by assumption, the interval \(\left[ \lambda _s^i,\; \lambda _s^e\right] \) is nonempty. Thus, for all \(\lambda (s) \in \left[ \lambda _s^i,\; \lambda _s^e\right] \), the vector u satisfies (4.12).

It remains to check whether the solution(s) in \({\mathbf {R}}^i_s\) are the only ones in \({\mathbf {R}}^e_s\). Assume that x is a solution of (1.1) at s with \(x \in {{\,\mathrm{int}\,}}({\mathbf {R}}^e_s)\setminus {\mathbf {R}}^i_s\), and let \(\lambda =\lambda (s)\) be minimal with \(\left| {x-{\hat{x}}}\right| \le \lambda v\). By construction, we have \(\lambda _s^i< \lambda < \lambda _s^e\). In the proof of Theorem 4.1 we got for the Krawczyk operator (2.7)

since x is a solution of (1.1) at s. Thus, we get by the same considerations as in the proof of Theorem 4.1 from (4.15)

since \(\lambda > \lambda _s^i\). Since this contradicts the minimality of \(\lambda \), there is no solution of (1.1) at s in \({{\,\mathrm{int}\,}}({\mathbf {R}}^e_s)\setminus {\mathbf {R}}^i_s\). So, if (4.19) is satisfied for all j, there exists at least one solution \(x^*\) of (1.1) at s in the inclusion box \({\mathbf {R}}^i_s\) and there are no other solutions in \({{\,\mathrm{int}\,}}({\mathbf {R}}^e_s)\setminus {\mathbf {R}}^i_s\). \(\square \)

The final step is now to compute a feasible parameter \(0<\mu \in {\mathbb {R}}\) such that Theorem 4.2 holds for all

$$s\in \widetilde{\mathbf {s}} \mathrel {\mathop :}=\left[ p-\mu y,\; p+\mu y\right] $$

with an arbitrary positive scaling vector \(0<y\in {\mathbb {R}}^p\). Assume \(\widetilde{\mathbf {s}}\subseteq \mathbf {s}\subseteq S\), where \(\mathbf {s}\) is an arbitrary box containing p. We compute a lower bound on each component

from the positivity requirement (4.16) of Theorem 4.2 over the box \(\mathbf {s}\). For the bounds from (4.6) and (4.7) we compute upper bounds

Since by construction \(\overline{G_0}\ge G_0(s)\) and \(\overline{A}\ge A(s)\) for all \(s\in \mathbf {s}\), we have

for all \(s\in \mathbf {s}\). By computing an upper bound over an appropriate box \(\mathbf {x}\subseteq X\) with \(z\in \mathbf {x}\) (e.g., take \(\mathbf {x} = k\, \mathbf {R}_0^e\) with \(k\in {\mathbb {R}}_+,\, k \le 1\)) we get upper bounds on the second order slopes from (4.4c)

which satisfy

Hence, the lowest values of the discriminant D from (4.16) are obtained by

with \(\overline{{\mathfrak {b}}}\) from (4.21) and

Considering \(\underline{D}_j = \underline{D}_j(\mu )\), we get

with

and \(w_j\) and \(\overline{b}_j\) from (3.4). Solving each quadratic equation

for \(\mu \), if \(\alpha _j \ne 0\), we get

Since \(H(z,p)\approx 0\), the discriminant in (4.28) is smaller than \(\beta _j^2\); indeed, we have

with \(\overline{b}_j\) being an upper bound for the function value at (z, p), and thus close to zero. Hence, both solutions of (4.27) are positive. In order to obtain numerically stable results, we compute these solutions by

and set

since we need \(\mu \in [0,\, \underline{\mu }_j]\) in order to meet the positivity requirement (4.16) in the j-th component.
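The numerically stable evaluation mentioned above can be illustrated with the classical cancellation-free quadratic formula. The coefficients of (4.27) are not reproduced here, so the sketch (hypothetical function names) uses the generic form \(\alpha \mu ^2 + \beta \mu + \gamma = 0\) with \(\alpha \ne 0\); we do not claim that (4.29) coincides with it in every detail. The second function returns the smallest positive root, which is one way to obtain an upper limit such as \(\underline{\mu }_j\).

```python
import math


def stable_quadratic_roots(alpha, beta, gamma):
    """Real roots of alpha*mu**2 + beta*mu + gamma = 0 (alpha != 0), sorted ascending.

    Uses q = -(beta + sign(beta) sqrt(disc)) / 2 and the roots q / alpha, gamma / q,
    which avoids subtracting nearly equal numbers. Returns None for complex roots.
    """
    disc = beta * beta - 4.0 * alpha * gamma
    if disc < 0.0:
        return None
    q = -0.5 * (beta + math.copysign(math.sqrt(disc), beta))
    if q == 0.0:  # only possible if beta == gamma == 0
        return (0.0, 0.0)
    return tuple(sorted((q / alpha, gamma / q)))


def smallest_positive_root(alpha, beta, gamma):
    """Smallest positive root, or None if there is none."""
    roots = stable_quadratic_roots(alpha, beta, gamma)
    if roots is None:
        return None
    positive = [r for r in roots if r > 0.0]
    return min(positive) if positive else None


# Two positive roots of very different size, approximately 1e-8 and 1e8
print(stable_quadratic_roots(1.0, -1e8, 1.0))
```

The naive formula \((-\beta - \sqrt{\beta ^2-4\alpha \gamma })/(2\alpha )\) would lose most significant digits of the small root in this example, which is exactly the regime \(\overline{b}_j\approx 0\) described above.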

Now we are able to state and prove the main result of this section.

Theorem 4.3

Let \(\mathbf {s}\subseteq S\) with \(p\in \mathbf {s}\), \(\mathbf {x}\subseteq X\) with \(z\in \mathbf {x}\) as above. In addition to the assumptions of Theorem 4.2 we assume upper bounds

(i) on the first order slope of H(g(s)),

$$\begin{aligned} \overline{G}_0 \mathrel {\mathop :}=\overline{G_0(\mathbf {s})} = \overline{\left| {{CH}\!\left[ g\left( p\right) , g(\mathbf {s})\right] }\right| \, \left| {{g}\!\left[ p,\; \mathbf {s}\right] }\right| } , \end{aligned}\qquad (4.30)$$

(ii) on the slope of the first derivative of H(g(s)) wrt. x,

$$\begin{aligned} \overline{A} \mathrel {\mathop :}=\overline{A(\mathbf {s})} = \overline{\left| C{(H'_x)}\!\left[ g(p),\; g(\mathbf {s})\right] \right| \, \left| {g}\!\left[ p,\; \mathbf {s}\right] \right| }, \end{aligned}\qquad (4.31)$$

(iii) and on the second order slopes of H(g(s)) wrt. x,

$$\begin{aligned} \overline{{\mathfrak {B}}}\mathrel {\mathop :}=\overline{\left| {C{H_{xx}}\!\left[ g(\mathbf {s}),\; g(\mathbf {s}),\; (\mathbf {x},\mathbf {s})^T\right] }\right| } \end{aligned}$$

which hold for all \(s\in \mathbf {s}\), \(x\in \mathbf {x}\). Let further \(0<y\in {\mathbb {R}}^{p}\), \(0<v\in {\mathbb {R}}^{n}\) as before,

with \(\alpha \), \(\beta \), \(\gamma \) as defined in (4.24) and (4.26), respectively, and

Let \(\eta \in [0, \underline{\mu }]\) be maximal such that

where

with \({\mathfrak {w}}_j(\eta )\), \(\overline{{\mathfrak {b}}}_j(\eta )\), \(\overline{{\mathfrak {a}}}\) and \(D_j(\eta )\) as defined in (4.24), (4.25). Further, let \(\sigma \in \left[ 0,\underline{\mu }\right] \) be the largest value such that for all \(j=1,\dots ,n\)

for \(\mathbf {s}_{\sigma } \mathrel {\mathop :}=\left[ p-\sigma y, \, p+\sigma y\right] \). If

then for all \(s\in {{\,\mathrm{int}\,}}(\widetilde{\mathbf {s}})\cap \mathbf {s}\) with

there exists at least one solution x of (1.1) which lies inside the inclusion box \({\mathbf {R}}^i_s\) (as defined in (4.20)) and there are no solutions in \(\cup _{s\in \mathbf {s}}{{\,\mathrm{int}\,}}({\mathbf {R}}^e_s)\setminus \cup _{s\in \mathbf {s}}({\mathbf {R}}^i_s)\).

Proof

Without loss of generality, let \(s=p+ \nu \, y\in {{\,\mathrm{int}\,}}(\widetilde{\mathbf {s}})\cap \mathbf {s}\), i.e., \(0<\nu <\mu \). In order to meet all requirements from Theorem 4.2, which provides the result about the inclusion/exclusion regions at s, we have to check that the following three conditions hold:

(i) \(D_j(s)>0\) for all \(j=1,\dots ,n\), with \(D_j\) as in (4.16) (positivity requirement).

(ii) \(\lambda _s^i< \lambda _s^e\) (monotonicity of the inclusion/exclusion parameters).

(iii) \(\left( {\hat{x}}_j(s) +\left[ -1,\, 1\right] \, \lambda ^i_s\,\,v_j\right) \subseteq \mathbf {x}_j\) \(\forall \, j=1, \dots ,n\) (feasibility of the inclusion region \({\mathbf {R}}_s^i\)).

Condition (i) is satisfied by construction, since we get for \(D_j(s)\) as in (4.16) by the calculations preceding the statement of the theorem

for all \(s\in {{\,\mathrm{int}\,}}(\widetilde{\mathbf {s}})\cap \mathbf {s}\).

For (ii) we consider \(\lambda ^e_j\), \(\lambda ^i_j\) componentwise as functions in \(\nu \), i.e.,

Since by construction \(\underline{{\mathfrak {w}}}\le {\mathfrak {w}}(s)\), \(\overline{{\mathfrak {b}}}\ge \overline{{\mathfrak {b}}}(s)\), \(\overline{{\mathfrak {a}}}\ge {\mathfrak {a}}(s)\), we have

with \(\lambda _j^e(s)\), \(\lambda _j^i(s)\) as in (4.17). Both \(\lambda ^e_j\left( \nu \right) \) and \(\lambda ^i_j\left( \nu \right) \) depend continuously on \(\nu \). In particular, for increasing \(\nu \), \(\lambda ^e_j(\nu )\) is monotonically decreasing and \(\lambda ^i_j(\nu )\) is monotonically increasing. Hence, \(\lambda ^e_{\nu } = \min _j \lambda _j^e(\nu )\) and \(\lambda ^i_{\nu } = \max _j \lambda _j^i(\nu )\) show the same monotonicity behaviour, since the minimum (resp. maximum) of monotonically decreasing (resp. increasing) functions is again monotonically decreasing (resp. increasing). By computing \(\underline{\mu }\) from (4.32), we get a lower and an upper bound on the exclusion and inclusion parameters \(\lambda ^e\) and \(\lambda ^i\), respectively, since \(\underline{D}_k\bigl (\underline{\mu }\bigr ) = 0\) implies \(\lambda _k^e\bigl (\underline{\mu }\bigr )=\lambda _k^i\bigl (\underline{\mu }\bigr )\) for some \(k\in \lbrace 1,\dots ,n\rbrace \). Since we choose \(\eta \in \bigl [0,\, \underline{\mu }\bigr ]\) in such a way that \(\lambda ^e_{\eta }>\lambda ^i_{\eta }\), we have in particular

for all \(j = 1,\dots ,n\). By monotonicity, we have

Taking the minimum (resp. maximum) over all j, we get by (4.38), (4.39) and assumption (4.33)

hence, condition (ii) is satisfied.

Finally, we have for \(\nu \le \sigma \) and by assumption (4.35)

since \({\hat{x}}(s)\in {\hat{x}}(\mathbf {s}_{\nu })\), and \(\lambda _s^i\le \lambda _{\nu }^i\) by (ii). Hence, condition (iii) is satisfied as well, which concludes the proof. \(\square \)

As in the non-parametric case, the above considerations simplify if the system (1.1) is quadratic in both x and s. Since the first order slopes are then linear in x and s, all second order slopes are constant. Hence the estimates \({\mathfrak {B}}(x,s)\) become valid everywhere in the domain of definition of H, i.e., \(\overline{{\mathfrak {B}}}={\mathfrak {B}}(x,s)=\overline{B}\). If the approximation function (4.1) is linear, its first order slope (2.1) is constant, which simplifies the bounds in (4.30) and (4.31). In particular, if (1.1) is quadratic in both x and s and the approximation function \({\hat{x}}\) is linear in s, \(\overline{A}\) from (4.31) is constant.

5 Numerical results

The proposed method is an integral tool in the new solver rPSE for rigorously solving Parameter-dependent Systems of Equations. The solver uses a branch-and-bound approach for computing an inner approximation of the feasible parameter region in a given initial box together with an enclosure of the solution set over the regions found. Additionally, infeasible parameter regions are identified. In the following, the main steps of a generic implementation of the algorithm are described. A variant of the solver, \({{{\texttt {\textsc {rPSE}}}}_{q}}\) for quadratic problems, is currently implemented in Matlab, utilizing the interval toolbox Intlab [31]. In the quadratic case, the second order slopes needed for the calculations in (4.21) and (4.22) are constant. All computations were performed on a laptop equipped with a 2.40 GHz Intel Core i7-5500U CPU and 32 GB RAM. A comprehensive description of the solver together with numerical results and a thorough comparison with other existing methods is provided in Ponleitner [26].

In the following, the new method is demonstrated on three examples. Example 5.1 illustrates the computation of feasible parameter regions as proposed in Sect. 4, i.e., performing approxSol, inExPoint, and inExParam. Examples 5.2 and 5.3 provide computational results from applying a basic implementation of \({{{\texttt {\textsc {rPSE}}}}_{q}}\). There, in Step 4 (inExParam) of rPSE we use a linear approximation function \({\hat{x}}(s)\), in splitBox we perform splits only in the parameter space, and for the generic feasibility tests in boxFeasCheck we utilize centered forms. Since the examples serve to present the new method, we exclusively used the new parametric inclusion-exclusion region method for computing feasible parameter regions, and refrained from applying additional parametric methods, more sophisticated heuristics, or constraint propagation for improving the enclosures of the solution curves.

Main Steps of the solver rPSE. The generic implementation of the solver performs the following steps for rigorously computing an inner approximation of the feasible parameter region for (1.1) in an initial box \(\mathbf {s}\):

-

Input:

-

initial box \((\mathbf {x}, \mathbf {s})\),

-

model equations for (1.1),

-

accuracy-thresholds \(\epsilon _x\), \(\epsilon _s\)

-

Initialize:

$$\begin{aligned} \begin{array}{ll} {\mathcal {L}}\mathrel {\mathop :}=\{(\mathbf {x},\mathbf {s})\}\quad &{} \text {list of unprocessed boxes}\\ {\mathcal {B}}_{sol}\mathrel {\mathop :}=\emptyset \quad &{} \text {list of } feasible \text { parameter boxes together with solution enclosures}\\ {\mathcal {B}}_{undec}\mathrel {\mathop :}=\emptyset \quad &{} \text {list of } undecided \text { boxes (neither proven feasible, nor found infeasible)}\\ {\mathcal {B}}_{infeas}\mathrel {\mathop :}=\emptyset \quad &{} \text {list of } infeasible \text { parameter intervals together with corr. variable boxes}\\ \end{array} \end{aligned}$$ -

while \({\mathcal {L}}\ne \emptyset \) do

-

Step 1: \(B = {\mathcal {L}}_1\), \({\mathcal {L}} = {\mathcal {L}}\setminus \{ B\}\)

-

Step 2: approxSol: compute approx. solution z(p) at inital set of parameters \(p\in B_S\)

-

Step 3: inExPoint: compute inclusion/exclusion region at z

-

if successful \(\rightarrow \) goTo Step 4: inExParam

-

else \(\rightarrow \) do splitBox

-

Step 4: inExParam: compute \(\mu \), \(\widetilde{\mathbf {s}}\), check resulting solution enclosure \(\hat{\mathbf {x}}\) for feasibility

-

if successful \(\rightarrow \) update \({\mathcal {B}}_{sol} = {\mathcal {B}}_{sol}\cup \bigl (\hat{\mathbf {x}},\,\widetilde{\mathbf {s}}\bigr )\), \(\rightarrow \) do cutBox

-

else \(\rightarrow \) do splitBox

-

cutBox: compute \(\{B_i\} = B\setminus \{B_{sol}\}\), \(\rightarrow \) do boxFeasCheck for the remaining boxes \(B_i\)

-

splitBox:

-

if \(\max {{{\,\mathrm{rad}\,}}(\mathbf {s}_B)} > \epsilon _s\) (or \(\max {{{\,\mathrm{rad}\,}}(\mathbf {x})} > \epsilon _x\)): split the current box according to some heuristics, \(\rightarrow \) do boxFeasCheck for all new boxes \(B_i\)

-

else update \({\mathcal {B}}_{undec} = {\mathcal {B}}_{undec}\cup \{B\}\), \(\rightarrow \) goTo Step 1

-

boxFeasCheck: check all boxes \(B_i\) for feasibility:

-

foreach \(B_i\) do

-

if \(B_i\) feasible \(\rightarrow \) update \({\mathcal {L}} = {\mathcal {L}}\cup \{B_i\}\)

-

else (\(B_i\) infeasible) \(\rightarrow \) update \({\mathcal {B}}_{infeas} = {\mathcal {B}}_{infeas}\cup \{B_i\}\)

-

-

\(\rightarrow \) goTo Step 1

-

Output: \({\mathcal {B}}_{sol}\), \({\mathcal {B}}_{infeas}\), \({\mathcal {B}}_{undec}\)
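The list handling of the steps above can be sketched in Python for a one-dimensional parameter space, with splits only in the parameter space as in Examples 5.2 and 5.3. This is a minimal sketch under explicit assumptions: Steps 2–4 (approxSol, inExPoint, inExParam) are bundled into a hypothetical callback `prove_feasible` that returns either a proven sub-interval \(\widetilde{\mathbf {s}}\) together with a solution enclosure, or `None`; boxFeasCheck is a hypothetical callback `maybe_feasible`; and splitBox simply bisects. The toy oracle (`toy_prove`, `F_LO`, `F_HI`, `MIN_STEP`) solves no equation at all; it only mimics a verifier whose step size shrinks near the boundary of a known feasible set.

```python
from collections import deque
from typing import Callable, List, Optional, Tuple

Box = Tuple[float, float]                 # parameter interval [lo, hi]
Proof = Optional[Tuple[Box, object]]      # (s_tilde, solution enclosure) or None


def rad(box: Box) -> float:
    return 0.5 * (box[1] - box[0])


def cut_box(box: Box, inner: Box) -> List[Box]:
    """cutBox in 1-D: the (at most two) parts of box outside inner."""
    parts = []
    if inner[0] > box[0]:
        parts.append((box[0], inner[0]))
    if inner[1] < box[1]:
        parts.append((inner[1], box[1]))
    return parts


def split_box(box: Box) -> List[Box]:
    """splitBox, assumed here to be plain bisection of the parameter interval."""
    mid = 0.5 * (box[0] + box[1])
    return [(box[0], mid), (mid, box[1])]


def rpse_sketch(s_box: Box,
                prove_feasible: Callable[[Box], Proof],
                maybe_feasible: Callable[[Box], bool],
                eps_s: float):
    todo = deque([s_box])                           # list L of unprocessed boxes
    feasible, undecided, infeasible = [], [], []    # B_sol, B_undec, B_infeas

    def feas_check(boxes: List[Box]) -> None:       # boxFeasCheck
        for b in boxes:
            if maybe_feasible(b):
                todo.append(b)
            else:
                infeasible.append(b)

    while todo:
        box = todo.popleft()                        # Step 1
        proof = prove_feasible(box)                 # Steps 2-4 (hypothetical callback)
        if proof is not None:
            s_tilde, enclosure = proof
            feasible.append((enclosure, s_tilde))
            feas_check(cut_box(box, s_tilde))       # cutBox
        elif rad(box) > eps_s:
            feas_check(split_box(box))              # splitBox
        else:
            undecided.append(box)
    return feasible, undecided, infeasible


# Hypothetical toy oracle: the "true" feasible parameter set is [1, 3].
F_LO, F_HI = 1.0, 3.0
MIN_STEP = 1e-6     # below this radius the toy "proof" is treated as failed


def toy_prove(box: Box) -> Proof:
    mid = 0.5 * (box[0] + box[1])
    r = 0.5 * min(mid - F_LO, F_HI - mid)   # shrinks near the boundary of [1, 3]
    if r < MIN_STEP:
        return None                          # also covers mid outside [1, 3]
    if r >= rad(box):
        return box, None                     # the whole box is "proven"
    return (mid - r, mid + r), None


def toy_maybe_feasible(box: Box) -> bool:
    return box[1] >= F_LO and box[0] <= F_HI


feasible, undecided, infeasible = rpse_sketch(
    (0.0, 4.0), toy_prove, toy_maybe_feasible, eps_s=0.01)
print(len(feasible), len(undecided), len(infeasible))
```

All rigor lives in the callbacks; the driver only guarantees that the three output lists partition the initial parameter interval, with undecided boxes of radius at most \(\epsilon _s\).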

Example 5.1

Illustration of the algorithmic concept from Sect. 4 for computing a feasible parameter interval, given an approximate solution z(p) of (1.1) at the center p.

Step 1: Inclusion/exclusion region for fixed parameter p. We consider the system of equations

for \(s\in \mathbf {s} = [0,\;2]\), \(x \in \mathbf {x}= [0,\;5]\times [0,\; 5]\). We set \(p = 1\) and compute a corresponding solution \(z = (3,4)^T\). A slope for H with center (z, p) can be computed as

We get for the solution from above

and for the Jacobian of H wrt. x at (z, p)

For the preconditioning matrix C we take

The slope from (5.2) can be put in form (2.3) with

Thus, we get for the bounds from (3.4)

where \(\overline{b}\) and \(B_0\) both vanish, since z happens to be an exact zero of (5.1) and we computed without roundoff errors. With \(v=(1,1)^T\) we get from (3.5)

and with \(D_1 = D_2 = 1\) we get from (3.6)

Hence, we get

Step 2: Construction of a feasible parameter interval. Since (5.1) is a quadratic problem, we consider a linear approximation function

with \(\varTheta \in {\mathbb {R}}^{2\times 1}\). To illustrate the influence of the approximation function on the radius of the resulting feasible parameter box, we compute the parameter interval \(\widetilde{\mathbf {s}}\) from Theorem 4.3 for two different linear approximations,

(i) a tangent \({\hat{x}}^{tan}\) at (z, p) with

$$\begin{aligned} \varTheta ^{tan} = -(H'_x(z,\, p))^{-1}\,(H'_s(z, p)) = -\frac{1}{7}\begin{pmatrix}1\\ 1 \end{pmatrix}, \end{aligned}\tag{5.6}$$

(ii) a secant \({\hat{x}}^{sec}\) through the center (z, p) and a second point \(x^1=(\sqrt{13},\sqrt{13})^T\) at \(s^1=0\) with

$$\begin{aligned} \varTheta ^{sec} = \left( x^1-z\right) \, \left( s^1-p\right) ^{-1} = \begin{pmatrix} 3-\sqrt{13}\\ 4-\sqrt{13} \end{pmatrix}. \end{aligned}\tag{5.7}$$
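The two slopes can be checked numerically. This sketch assumes that the linear approximation function has the form \({\hat{x}}(s) = z + \varTheta\,(s-p)\), which is consistent with a secant through the center (z, p); the names `theta_tan`, `theta_sec`, and `x_hat` are illustrative only. It confirms that \(\varTheta^{sec}\) from (5.7) equals \((3-\sqrt{13},\,4-\sqrt{13})^T\approx(-0.6056,\,0.3944)^T\) and that the secant passes through \(x^1\) at \(s^1 = 0\).

```python
import math

z = (3.0, 4.0)                            # solution at the center p
p = 1.0
x1 = (math.sqrt(13.0), math.sqrt(13.0))   # second point of the secant
s1 = 0.0

theta_tan = (-1.0 / 7.0, -1.0 / 7.0)                              # (5.6)
theta_sec = tuple((x1[i] - z[i]) / (s1 - p) for i in range(2))    # (5.7)


def x_hat(theta, s):
    """Assumed linear approximation x_hat(s) = z + theta * (s - p)."""
    return tuple(z[i] + theta[i] * (s - p) for i in range(2))


print(theta_sec)              # about (-0.6056, 0.3944)
print(x_hat(theta_sec, s1))   # recovers x1 = (sqrt(13), sqrt(13))
# Tangent approximation at the approximate bounds of s_tilde^tan = [0.657, 1.343]:
print(x_hat(theta_tan, 0.657), x_hat(theta_tan, 1.343))
```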

Thus, we have

In order to apply Theorem 4.3 we compute the upper bounds \(\overline{G}_0\) and \(\overline{A}\) from (4.30) and (4.31), respectively. A slope for \(H'_x\) is

since

With the preconditioning matrix C from (5.3), we get

for the tangent \({\hat{x}}^{tan}\) and the secant \({\hat{x}}^{sec}\), respectively. Here, the tensor-vector product in the formula for \(\overline{A}\) is computed using the tensor rules (1.2). Since the problem is quadratic, the second-order slopes are constant for all \(x \in \mathbf {x}\), \(s\in \mathbf {s}\), and thus \(\overline{{\mathfrak {B}}}= \overline{B}\) from (3.4). With these preparations, we can compute \(\mu \). Taking \(y = 1\), we get from (4.26)

(i) for the tangent \({\hat{x}}^{tan}\):

$$\begin{aligned} \alpha ^{tan} = \frac{2}{7}\begin{pmatrix}1\\ 1\end{pmatrix},\quad \beta ^{tan}=\frac{1}{49}\begin{pmatrix}65\\ 72\end{pmatrix},\quad \gamma ^{tan}=\begin{pmatrix}1\\ 1\end{pmatrix}, \end{aligned}$$

(ii) and for the secant \({\hat{x}}^{sec}\):

$$\begin{aligned} \alpha ^{sec} = \begin{pmatrix}1\\ 1\end{pmatrix},\quad \beta ^{sec}=\frac{1}{7}\begin{pmatrix}28\sqrt{13}-77\\ 123-28\sqrt{13}\end{pmatrix},\quad \gamma ^{sec}=\begin{pmatrix}1\\ 1\end{pmatrix}, \end{aligned}$$

which results in

Two solution curves (solid and dashed lines) for \(x_1\) over \(\mathbf {s}\), approximation function \({\hat{x}}^{tan}\), together with inclusion regions (dash-dotted curves expanding from center z) and exclusion regions (dotted curves, contracting towards the solid solution curve); the vertical dash-dotted lines represent the respective inclusion regions for \({\hat{x}}^{tan}(\underline{s})\) at the lower bound of \(\widetilde{\mathbf {s}}^{tan}\) and for \({\hat{x}}^{tan}(s_1)\) (\(s_1\in \widetilde{\mathbf {s}}^{tan}\)), the vertical dotted lines represent the respective exclusion regions at \(s_1\) and at the center p; the points \(+\) mark the intersection of the inclusion and exclusion regions at the boundaries of \(\widetilde{\mathbf {s}}^{tan}\)

The respective inclusion and exclusion parameters from (4.34) are

so the monotonicity requirement (4.33) for \(\mu \) holds for both approximation functions. It remains to check the feasibility condition (4.35) of the inclusion region for the parameter intervals

For both intervals the feasibility requirement is met, since

Hence, for both parameter intervals from (5.8), the existence of at least one solution of (5.1) is guaranteed. The boxes from (5.9) provide a first outer approximation of the solution set. As this low-dimensional example already shows, the choice of the approximation function strongly influences the size of the computed parameter box as well as the quality of the enclosure of the solution set. A good choice of \({\hat{x}}(s)\) is therefore important and may require a closer analysis of the problem at hand.

Inclusion (a) and exclusion (b) regions over \(\widetilde{\mathbf {s}}^{tan}\approx [0.657,\,1.343]\) for approx. function \({\hat{x}}^{tan}\) (computed with upper bounds from (4.21) and (4.22)), and a comparison (c) with the respective regions for approx. function \({\hat{x}}^{sec}\) with \(\widetilde{\mathbf {s}}^{sec}\approx [0.851,\,1.149]\)

Fig. 1 shows two solution curves for \(x_1\) over the initial interval \(\mathbf {s}\), together with the tangent approximation \({\hat{x}}^{tan}(s)\) at (z, p) and the corresponding inclusion and exclusion regions. The dash-dotted curves show the development of the inclusion regions, and the dotted curves the development of the exclusion regions, expanding from center z and calculated using upper bounds over the initial interval \(\mathbf {s}\), i.e., with \(\overline{G_0}\) and \(\overline{A}\) from (4.21) and (4.22). Since the monotonicity requirement (4.33) is satisfied, the inclusion and exclusion curves intersect at the boundary of \(\widetilde{\mathbf {s}}_{\mu }\) (marked by ’\(+\)’ in Fig. 1). The vertical dash-dotted and dotted lines represent the respective inclusion and exclusion regions for center z at p, for \({\hat{x}}(s_1)\) at a parameter \(s_1\in \widetilde{\mathbf {s}}_{\mu }^{tan}\), and for \({\hat{x}}(\underline{s})\) at the lower boundary of the computed parameter interval. Fig. 2 depicts these inclusion and exclusion regions and compares them with the respective regions for the two linear approximations (tangent and secant). The parameter interval computed with the secant as approximation function is much smaller than the one computed with the tangent at (z, p): its radius is about 0.149 instead of about 0.343.

Close to the boundaries of the feasible parameter region, a phenomenon known as the cluster effect can be observed: the step size \(\mu \), and thus the radii of the parameter boxes, become smaller and smaller (see Fig. 3). The cluster effect occurs because, at the boundary of the feasible parameter region, small changes in the parameter space may cause large changes in the solution space. Hence, a rigorous numerical proof of feasibility fails at the boundary of the feasible parameter region. Of course, in this case it would be possible to exchange the roles of x and s in order to compute a valid inclusion of the one-dimensional manifold and thus avoid the cluster effect. In the multidimensional setting, however, this becomes less clear.
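The mechanism behind the shrinking step sizes can be seen on a hypothetical scalar toy problem that is not taken from the examples: for \(H(x,s) = x^2 - s\) with solution branch \(x(s)=\sqrt{s}\), the feasible parameter set \(s\ge 0\) has its boundary at \(s=0\), where \(H'_x = 2x\) becomes singular. The tangent slope \(-(H'_x)^{-1}H'_s = 1/(2x)\), formed as in (5.6), blows up there, so a parameter step that keeps the linearized change of x below a fixed tolerance `delta` shrinks like \(2\,\delta\sqrt{s}\). This is only a linearized heuristic, not the step size \(\mu \) from Theorem 4.3; `tangent_slope` and `delta` are illustrative names.

```python
import math


def tangent_slope(x: float) -> float:
    """-(H'_x)^{-1} H'_s for the toy problem H(x, s) = x**2 - s."""
    dh_dx = 2.0 * x      # H'_x, singular at x = 0, i.e. at s = 0
    dh_ds = -1.0         # H'_s
    return -dh_ds / dh_dx


delta = 0.01  # hypothetical tolerance on the change of x
for s in (1.0, 0.1, 0.01, 0.0001):
    x = math.sqrt(s)                 # solution branch x(s) = sqrt(s)
    slope = tangent_slope(x)
    step = delta / slope             # linearized parameter step with |dx| <= delta
    print(f"s = {s:g}: slope = {slope:.3g}, step = {step:.3g}")
```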

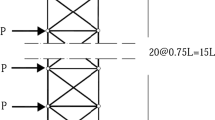

Several parameter intervals \(\widetilde{\mathbf {s}}_i\) (\(i=1,2,\ldots ,13\); bold horizontal lines) computed for (5.1) from various centers, together with inclusion (dash-dotted lines) and exclusion (dotted lines) regions over the respective interval; the closer to the boundary of \(\mathbf {s}\), the smaller the radius of the parameter interval (cluster effect)

Example 5.2

Numerical results I: 1-dimensional parameter space. We consider the system of equations \(H:{\mathbb {R}}^4\rightarrow {\mathbb {R}}^3\) with

for \(s\in \mathbf {s} = [5,\; 8]\), \(x \in \mathbf {x}= [5.5, \; 9]\times [2,\; 10]\times [1.5,\;5]\) and coefficients

with \(\varphi = \frac{\pi }{9}\), and compute a feasible parameter region using a basic implementation of solver \({{{\texttt {\textsc {rPSE}}}}_{q}}\), which performs the steps described above.

The three plots in Fig. 4 show an exemplary enclosure for each variable \(x_i\) (\(i=1,2,3\)) computed by \({{{\texttt {\textsc {rPSE}}}}_{q}}\) with accuracy threshold \(\epsilon _s = 0.05\), i.e., boxes with parameter intervals \(\mathbf {s}\) with \({{\,\mathrm{rad}\,}}({\mathbf {s}}) < \epsilon _s\) were marked as undecided and not processed further during the branch-and-bound process. For elements of \({\mathcal {B}}_{undec}\), the algorithm can prove neither feasibility nor infeasibility. The computation terminated after 27 iterations (computation time 2.27 s), and the computed feasible parameter region covers about 89.5 % (ratio 0.8954) of the true feasible area. Table 1 displays computational results for several accuracy values \(\epsilon _s\); there, \(\overline{\mathbf {s}_{feas}}\) denotes the average ratio of the size of the computed feasible parameter region \(\mathbf {s}^C_{feas}\) to the size of the true feasible parameter region \(\mathbf {s}_{feas}\).
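The coverage ratio quoted above is the size of the computed feasible region divided by the size of the true feasible region. For a one-dimensional parameter space, a minimal sketch of this computation merges the computed feasible parameter intervals (which may touch or overlap) and sums their lengths. The function names and the intervals in the usage lines are hypothetical and are not the data of Example 5.2; Table 1 reports an average of this ratio, whereas the sketch computes it for a single run.

```python
from typing import Iterable, List, Tuple

ParamInterval = Tuple[float, float]


def union_length(intervals: Iterable[ParamInterval]) -> float:
    """Total length of a union of closed intervals; overlaps count once."""
    total = 0.0
    cur_lo = cur_hi = None
    for lo, hi in sorted(intervals):
        if cur_hi is None or lo > cur_hi:      # start a new connected component
            if cur_hi is not None:
                total += cur_hi - cur_lo
            cur_lo, cur_hi = lo, hi
        else:                                  # touching or overlapping: extend
            cur_hi = max(cur_hi, hi)
    if cur_hi is not None:
        total += cur_hi - cur_lo
    return total


def coverage_ratio(computed: List[ParamInterval],
                   true_region: List[ParamInterval]) -> float:
    """Size of the computed feasible region relative to the true feasible region."""
    return union_length(computed) / union_length(true_region)


# Hypothetical data, not the results of Example 5.2:
computed = [(5.8, 6.4), (6.4, 7.1), (7.0, 7.3)]
true_region = [(5.7, 7.4)]
print(f"coverage: {coverage_ratio(computed, true_region):.4f}")
```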

Enclosures of the solution curves (dash-dotted lines) of (5.10) for \(x_i\) (\(i=1,2,3\)) over \(\mathbf {s} = [5,\;8]\); the dark grey areas at the left and right boundaries of the interval represent infeasible regions, the grey area in the middle represents feasible areas (steps 1–4 of the algorithm were performed successfully), and the light grey areas in between show the undecided regions

Example 5.3

Numerical results II: 2-dimensional parameter space. We consider the system of equations \(H:{\mathbb {R}}^5\rightarrow {\mathbb {R}}^3\) with

for \(s\in \mathbf {s} = [2,\; 3.5]\times [4.5,\;6.8]\), \(x \in \mathbf {x}= [5.5, \; 7]\times [6,\; 10]\times [1.5,\;5]\), and

with \(\varphi = \frac{\pi }{9}\), and compute a feasible parameter region using a basic implementation of solver \({{{\texttt {\textsc {rPSE}}}}_{q}}\). The feasible parameter region is an intersection of annular regions, bounded by arcs that depend on the lower and upper bounds of the bounding boxes for the variables \(x_i\). Fig. 5 shows the feasible and infeasible parameter regions computed by \({{{\texttt {\textsc {rPSE}}}}_{q}}\), together with the true bounds of the feasible region. The boxes with status undecided along the boundary of the feasible region are also visible. As in Example 5.1, the cluster effect appears close to the boundary of the feasible region. The example was computed with accuracy threshold \(\epsilon _s=0.01\). The algorithm terminated after \(168\,s\) and 1478 iterations; the computed feasible area is \(A_{sol}=1.4517\), the infeasible area \(A_{infeas}=1.8486\), and the remaining undecided part \(A_{undec} = 0.1497\). The radius of the largest feasible box computed by \({{{\texttt {\textsc {rPSE}}}}_{q}}\) was \(\mu \approx 0.264\). Table 2 summarizes results for different accuracy values \(\epsilon _s\).
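As a consistency check on the reported numbers, the three areas should partition the initial parameter box \(\mathbf {s} = [2,\,3.5]\times [4.5,\,6.8]\), whose area is \(1.5\cdot 2.3 = 3.45\). The following lines only redo this arithmetic with the values stated above:

```python
import math

s_area = (3.5 - 2.0) * (6.8 - 4.5)                  # area of the initial box s
a_sol, a_infeas, a_undec = 1.4517, 1.8486, 0.1497   # areas reported in Example 5.3

total = a_sol + a_infeas + a_undec
print(f"box area {s_area:.4f}, sum of the three parts {total:.4f}")
print(math.isclose(total, s_area))                  # True: the parts cover the box
for name, area in (("feasible", a_sol), ("infeasible", a_infeas),
                   ("undecided", a_undec)):
    print(f"{name}: {area / s_area:.1%}")
```

The parts indeed sum to 3.45; about 42.1 % of the box is proven feasible, 53.6 % infeasible, and about 4.3 % remains undecided.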

Feasible and infeasible regions for (5.11) for parameters \(s\in \mathbf {s} = [2,\; 3.5]\times [4.5,\;6.8]\) together with the true bounds (white dashed line) and an undecided area (accuracy \(\epsilon _s=0.01\)) around the boundary of the feasible region; in (b) the lower right corner of the initial interval is enlarged in order to show a boundary of the feasible region in more detail

6 Conclusion

Most interval methods for rigorously enclosing the solution set of a system of equations require a reasonable initial estimate of the interval hull of the solution set. The newly proposed method allows one to explicitly compute feasible areas \(\widetilde{\mathbf {s}}\) in the parameter space, given an initial approximate solution z(p) for a set p of parameters, and provides an initial rigorous enclosure of the solution set of (1.1) for all parameters in the computed parameter boxes. The novelty of the method is the use of first- and second-order slope approximations for constructing such feasible parameter boxes. The new method is an integral part of rPSE, a solver currently under development for rigorously solving nonlinear parameter-dependent systems of equations in a branch-and-bound setting. The numerical results obtained with a generic implementation of a variant of the solver, \({{{\texttt {\textsc {rPSE}}}}_{q}}\) for quadratic problems, demonstrate the practical applicability of the theoretical results. However, like other interval-based branch-and-bound methods, the new approach suffers from a form of cluster effect (see Fig. 3) when approaching the boundaries of the feasible parameter regions, i.e., the step size \(\mu \), and thus the radii of the parameter boxes, become smaller and smaller. This problem may be tackled by extending the method to more general polytopes instead of boxes (as suggested, e.g., in Goldsztejn and Granvilliers [9]) or by using an extension of Miranda’s theorem, and is addressed in Ponleitner [26]. The proposed algorithm \({{{\texttt {\textsc {rPSE}}}}_{q}}\) globally identifies all feasible parameter regions within an initial box, and thus provides an inner approximation of the set of feasible parameters.

The purpose of the basic implementation of the solver demonstrated here is to illustrate the newly proposed method. Hence, the solver (i) does not claim to be competitive, and (ii) has certain limitations in terms of performance. The presented examples are chosen such that, on the one hand, the correctness of the results can easily be verified and, on the other hand, additional algorithmic challenges, such as the separation of multiple solutions in the variable domain, do not arise. Clearly, the problems from Examples 5.2 and 5.3 could be solved using techniques dedicated to single-variable equations, since each equation depends on one variable only. Since this version of the solver relies solely on the new method for constructing and verifying feasible parameter regions, it is computationally expensive, as Tables 1 and 2 show. Future implementations will address these limitations and use additional parametric interval methods and heuristics to reduce computational costs. Despite the simplicity of the problems, the results of Examples 5.2 and 5.3 suggest that the computational effort increases significantly with each additional parameter dimension. Hence, the new method seems most promising for problems in low parameter dimensions.

An application of the new method is, for example, the workspace computation of parallel manipulators (see, e.g., [19]). In particular, computing the total orientation workspace requires solving a parameter-dependent system of nonlinear equations. To the authors’ knowledge, only a few results address this problem using rigorous methods (e.g., [18, 20]). Therefore, future work will, among other things, be concerned with applying the new method to the workspace problem [26, 28].

Data availability

There is no additional raw data included. All sample data was produced by the algorithm in Sect. 5 and is available from the corresponding author on reasonable request.

References

[1] Allgower, E.L., Georg, K.: Numerical path following. Handb. Numer. Anal. 5(3), 207 (1997)

[2] Allgower, E.L., Schmidt, P.H.: An algorithm for piecewise-linear approximation of an implicitly defined manifold. SIAM J. Numer. Anal. 22(2), 322–346 (1985)

[3] Caro, S., Chablat, D., Goldsztejn, A., Ishii, D., Jermann, C.: A branch and prune algorithm for the computation of generalized aspects of parallel robots. Artif. Intell. 211, 34–50 (2014)

[4] Deuflhard, P., Heindl, G.: Affine invariant convergence theorems for Newton’s method and extensions to related methods. SIAM J. Numer. Anal. 16(1), 1–10 (1979)

[5] Goldsztejn, A.: Verified projection of the solution set of parametric real systems. In: Proceedings of 2nd International Workshop on Global Constrained Optimization and Constraint Satisfaction (COCOS’03), Lausanne, Switzerland, vol. 28 (2003)

[6] Goldsztejn, A.: A branch and prune algorithm for the approximation of non-linear AE-solution sets. In: Proceedings of the 2006 ACM Symposium on Applied computing, pp. 1650–1654 (2006)

[7] Goldsztejn, A.: Sensitivity analysis using a fixed point interval iteration. arXiv preprint arXiv:0811.2984 (2008)

[8] Goldsztejn, A., Caro, S., Chabert, G.: A three-step methodology for dimensional tolerance synthesis of parallel manipulators. Mech. Mach. Theory 105, 213–234 (2016)

[9] Goldsztejn, A., Granvilliers, L.: A new framework for sharp and efficient resolution of NCSP with manifolds of solutions. Constraints 15(2), 190–212 (2010)

[10] Kahan, W.: A more complete interval arithmetic. Lecture notes for a summer course at University of Michigan (1968)

[11] Kantorovich, L.V.: Functional analysis and applied mathematics. Uspekhi Mat. Nauk 3, 89–185 (1948). (in Russian). Translated by C. D. Benster, Nat. Bur. Stand. Rep. 1509, Washington, DC, 1952

[12] Kapur, D.: An Approach for Solving Systems of Parametric Polynomial Equations. In: Saraswat, V., Van Hentenryck, P. (eds.) Principles and Practice of Constraint Programming, pp. 217–244. MIT Press (1995)

[13] Kearfott, R.B., Xing, Z.: An interval step control for continuation methods. SIAM J. Numer. Anal. 31(3), 892–914 (1994)

[14] Kolev, L.V.: Use of interval slopes for the irrational part of factorable functions. Reliab. Comput. 3(1), 83–93 (1997)

[15] Kolev, L.V., Nenov, I.P.: Cheap and tight bounds on the solution set of perturbed systems of nonlinear equations. Reliab. Comput. 7(5), 399–408 (2001)

[16] Krawczyk, R., Neumaier, A.: Interval slopes for rational functions and associated centered forms. SIAM J. Numer. Anal. 22(3), 604–616 (1985)

[17] Martin, B., Goldsztejn, A., Granvilliers, L., Jermann, C.: Certified parallelotope continuation for one-manifolds. SIAM J. Numer. Anal. 51(6), 3373–3401 (2013)

[18] Merlet, J.P.: Determination of 6D workspaces of a Gough-type 6 DOF parallel manipulator. In: 12th RoManSy, Paris, pp. 261–268 (1998)

[19] Merlet, J.P.: Parallel Robots, 2nd edn. Springer (2006)

[20] Merlet, J.P., Gosselin, C.M., Mouly, N.: Workspaces of planar parallel manipulators. Mech. Mach. Theory 33(1–2), 7–20 (1998)

[21] Montes, A., Wibmer, M.: Gröbner bases for polynomial systems with parameters. J. Symb. Comput. 45(12), 1391–1425 (2010)

[22] Neumaier, A.: Rigorous sensitivity analysis for parameter-dependent systems of equations. J. Math. Anal. Appl. 144(1), 16–25 (1989)

[23] Neumaier, A.: Interval Methods for Systems of Equations, vol. 37. Cambridge University Press (1991)

[24] Neumaier, A., Zuhe, S.: The Krawczyk operator and Kantorovich’s theorem. J. Math. Anal. Appl. 149(2), 437–443 (1990)

[25] Ortega, J.M., Rheinboldt, W.C.: Iterative Solution of Nonlinear Equations in Several Variables. SIAM (2000)

[26] Ponleitner, B.: Rigorous techniques for solving parameter-dependent systems of equations. Ph.D. Thesis, University of Vienna (2021)

[27] Ponleitner, B., Schichl, H.: Feasible parameter sets and rigorous solutions of parameter-dependent systems of equations. Technical Report (2018a)

[28] Ponleitner, B., Schichl, H.: Rigorous methods for computing the workspace of parallel manipulators. Technical Report (2018b)

[29] Rump, S.: Rigorous sensitivity analysis for systems of linear and nonlinear equations. Math. Comput. 54(190), 721–736 (1990)

[30] Rump, S.: Expansion and estimation of the range of nonlinear functions. Math. Comput. Am. Math. Soc. 65(216), 1503–1512 (1996)

[31] Rump, S.: INTLAB - INTerval LABoratory. In: Csendes, T. (ed.) Developments in Reliable Computing, pp. 77–104. Kluwer Academic Publishers, Dordrecht (1999)

[32] Schichl, H., Márkót, M.: Algorithmic differentiation techniques for global optimization in the COCONUT environment. Optim. Methods Softw. 27(2), 359–372 (2012)

[33] Schichl, H., Márkót, M., Neumaier, A.: Exclusion regions for optimization problems. J. Glob. Optim. 59(2–3), 569–595 (2014)

[34] Schichl, H., Neumaier, A.: Exclusion regions for systems of equations. SIAM J. Numer. Anal. 42(1), 383–408 (2005)

[35] Schichl, H., Neumaier, A.: Interval analysis on directed acyclic graphs for global optimization. J. Glob. Optim. 33(4), 541–562 (2005)

[36] Stuber, M.D.: Evaluation of process systems operating envelopes. Ph.D. Thesis, Massachusetts Institute of Technology (2013)

[37] Stuber, M.D., Barton, P.I.: Robust simulation and design using parametric interval methods. In: 4th International Workshop on Reliable Engineering Computing (REC 2010), pp. 536–553. Citeseer (2010)

[38] Tannous, M., Caro, S., Goldsztejn, A.: Sensitivity analysis of parallel manipulators using an interval linearization method. Mech. Mach. Theory 71, 93–114 (2014)

[39] Van Den Berg, J.B., Lessard, J.P.: Chaotic braided solutions via rigorous numerics: Chaos in the Swift–Hohenberg equation. SIAM J. Appl. Dyn. Syst. 7(3), 988–1031 (2008)

Open Access

This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.


Funding

Open access funding provided by University of Vienna.


This work was funded by the uni:docs fellowship programme for Doctoral Candidates at the University of Vienna.

Original article has been revised: Funding note “Open access funding provided by University of Vienna.” has been updated in the article.


About this article

Cite this article

Ponleitner, B., Schichl, H. Exclusion regions for parameter-dependent systems of equations. J Glob Optim 81, 621–644 (2021). https://doi.org/10.1007/s10898-021-01082-3


Keywords

- Zeros

- Parameter-dependent systems of equations

- Rigorous enclosures

- Inclusion region

- Exclusion region

- Feasible parameter region

- Branch and bound