Abstract

We show that a linear Young differential equation generates a topological two-parameter flow, thus the notions of Lyapunov exponents and Lyapunov spectrum are well-defined. The spectrum can be computed using the discretized flow and is independent of the driving path for triangular systems which are regular in the sense of Lyapunov. In the stochastic setting, the system generates a stochastic two-parameter flow which satisfies the integrability condition, hence the Lyapunov exponents are random variables of finite moments. Finally, we prove a Millionshchikov theorem stating that almost all, in a sense of an invariant measure, linear nonautonomous Young differential equations are Lyapunov regular.

1 Introduction

In this article we study the Lyapunov spectrum of the nonautonomous linear Young differential equation (abbreviated YDE)

$$\begin{aligned} dx(t) = A(t)x(t)dt + C(t)x(t)d\omega (t), \quad x(t_0)=x_0\in {\mathbb {R}}^d,\ t\ge t_0, \end{aligned}\tag{1.1}$$

where A, C are continuous matrix valued functions on \([0,\infty )\), and \(\omega \) is a continuous path on \([0,\infty )\) having finite p-th variation on each compact interval of \([0,\infty )\), for some \(p \in (1,2)\). Such a system appears, for instance, when considering the linearization of the autonomous Young differential equation

along any reference solution \(y(t, y_0,\omega )\). An example is when we would like to solve in the pathwise sense stochastic differential equations driven by fractional Brownian motions with Hurst index \(H \in (\frac{1}{2},1)\) defined on a complete probability space \((\Omega ,{{\mathcal {F}}},{{\mathbb {P}}})\) [24]. In fact it follows from [5] that (1.2) under the stochastic setting also satisfies the integrability condition.

Equation (1.1) can be rewritten in the integral form

$$\begin{aligned} x(t) = x_0 + \int _{t_0}^t A(s)x(s)ds + \int _{t_0}^t C(s)x(s)d\omega (s), \quad t\ge t_0, \end{aligned}\tag{1.3}$$

where the second integral is understood in the Young sense [28], which can also be presented in terms of fractional derivatives [29]. Under some mild conditions, the unique solution of (1.1) generates a two-parameter flow \(\Phi _\omega (t_0,t)\), as seen in [9]. Under a certain stochastic setting, (1.1) actually generates a stochastic two-parameter flow in the sense of Kunita [16].

Our aim is to study the Lyapunov exponents and Lyapunov spectrum of the linear two-parameter flow generated by the Young Eq. (1.1). Notice that the Lyapunov spectrum and its splitting are the main content of the celebrated multiplicative ergodic theorem (MET) of Oseledets [25]. They were also investigated by Millionshchikov in [18,19,20,21] for linear nonautonomous differential equations. In the stochastic setting, the MET is also formulated for random dynamical systems in [1, Chapter 3]. Further investigations can be found in [6,7,8, 10] for stochastic flows generated by nonautonomous linear stochastic differential equations driven by standard Brownian motion.

For Young equations, we show that Lyapunov exponents can be computed based on the discretization scheme. Moreover, if the driving path \(\omega \) satisfies certain conditions, the Lyapunov spectrum can be computed independently of \(\omega \) for triangular systems (i.e. both A, C are upper triangular matrices) which are Lyapunov regular.

One important issue is the non-randomness of Lyapunov exponents when the system is considered under a certain stochastic setting, namely if the driving path \(\omega \) is a realization of a certain stochastic noise. In case the system is driven by standard Brownian noises, a filtration of independent \(\sigma \)-algebras can be constructed and the argument of Kolmogorov’s zero-one law can be applied to prove the non-randomness of Lyapunov exponents, which are measurable with respect to tail events, see [7].

In general, the stochastic noise might be a fractional Brownian motion which is not Markov, hence it is difficult to construct such a filtration and to apply Kolmogorov’s zero-one law. The question of non-randomness of the Lyapunov spectrum is therefore still open. However, the answer is affirmative for some special cases. For example, autonomous and periodic systems can generate random dynamical systems satisfying the integrability condition, thus the Lyapunov spectrum is non-random by the multiplicative ergodic theorem [1]. Our investigation shows that the Lyapunov spectrum of triangular systems that are Lyapunov regular is also non-random. In general, we expect that the statement of non-randomness of the Lyapunov spectrum is still true for any Lyapunov regular system, although finding a counter-example of a nonautonomous system with random Lyapunov spectrum also attracts our interest.

The paper is organized as follows. In Sect. 2, we prove in Proposition 2.4 the generation of a two-parameter flow from the unique solution of (1.1). The concepts of Lyapunov exponents and Lyapunov spectrum of system (1.1) are then defined in Sect. 3. Under the assumptions on the driving path \(\omega \) and on the coefficient functions, we prove in Theorem 3.3 that the Lyapunov spectrum can be computed using the discretized flow, and we give an explicit formula for the spectrum in Theorem 3.7 in the case of triangular systems which are regular in the sense of Lyapunov. Theorem 3.11 provides a criterion for a triangular system of YDEs to be Lyapunov regular. In Sect. 4, we consider the system from a random perspective in which the driving path acts as a realization of a stationary stochastic process in a function space equipped with a probabilistic framework. The system is then proved to generate a stochastic two-parameter flow which satisfies the integrability condition, hence the Lyapunov exponents are proved in Theorem 4.3 to be random variables of finite moments. Section 4.2 is devoted to studying the regularity of the system, where we prove a Millionshchikov Theorem 4.6 stating that almost all, in the sense of an invariant measure, nonautonomous linear Young differential equations are Lyapunov regular. We end with a discussion on the non-randomness of the Lyapunov spectrum in some special cases, and raise this interesting question in general.

2 Preliminary

In this section we present some well-known facts about Young differential equations and two-parameter flows. Let \(0 \le T_1< T_2 < \infty \). Denote by \({\mathcal {C}}([T_1,T_2], {\mathbb {R}}^{d\times d})\) the Banach space of continuous matrix-valued functions on \([T_1,T_2]\) equipped with the sup norm \(\Vert \cdot \Vert _{\infty ,[T_1,T_2]}\), by \({\mathcal {C}}^{r\mathrm{-var}}([T_1,T_2],{\mathbb {R}}^d)\) the Banach space of bounded \(r-\)variation continuous functions on \([T_1,T_2]\) having values in \({\mathbb {R}}^d\) with the norm

$$\begin{aligned} \Vert u\Vert _{r\mathrm{-var},[T_1,T_2]} := |u(T_1)| + \left| \left| \left| u \right| \right| \right| _{r\mathrm{-var},[T_1,T_2]}, \end{aligned}$$

in which \(|\cdot |\) is the Euclidean norm and \(\left| \left| \left| \cdot \right| \right| \right| _{r\mathrm{-var},[T_1,T_2]}\) is the seminorm defined by

$$\begin{aligned} \left| \left| \left| u \right| \right| \right| _{r\mathrm{-var},[T_1,T_2]} := \left( \sup _{\Pi (T_1,T_2)} \sum _{i=0}^{n-1} |u(t_{i+1})-u(t_i)|^r \right) ^{1/r}, \end{aligned}$$

where the supremum is taken over the whole class of finite partitions \(\Pi (T_1,T_2)=\{ T_1= t_0<t_1<\cdots < t_n=T_2\}\) of \([T_1,T_2]\). For each \(0<\alpha <1\), we denote by \({\mathcal {C}}^{\alpha \mathrm {-Hol}}([T_1,T_2],{\mathbb {R}}^d)\) the space of \(\alpha -\)Hölder continuous functions on \([T_1,T_2]\) equipped with the norm

$$\begin{aligned} \Vert u\Vert _{\alpha \mathrm{-Hol},[T_1,T_2]} := \Vert u \Vert _{\infty ,[T_1,T_2]} + \left| \left| \left| u \right| \right| \right| _{\alpha \mathrm{-Hol},[T_1,T_2]}, \end{aligned}$$

in which \(\Vert u \Vert _{\infty ,[T_1,T_2]}:= \sup _{t\in [T_1,T_2]}|u(t)|\) and \(\left| \left| \left| u \right| \right| \right| _{\alpha \mathrm{-Hol},[T_1,T_2]} = \sup _{T_1\le s<t\le T_2}\frac{|u(t)-u(s)|}{(t-s)^\alpha }\). It is obvious that for all \(u\in {\mathcal {C}}^{\alpha \mathrm {-Hol}}([T_1,T_2],{\mathbb {R}}^d)\),

$$\begin{aligned} \left| \left| \left| u \right| \right| \right| _{r\mathrm{-var},[T_1,T_2]} \le (T_2-T_1)^\alpha \left| \left| \left| u \right| \right| \right| _{\alpha \mathrm{-Hol},[T_1,T_2]} \end{aligned}$$

with \(\alpha =1/r\). Moreover, we have the following estimate, whose proof follows directly from the definitions of the \(q-\)var seminorm and the sup norm and will be omitted here.

Lemma 2.1

Let \(t_0\ge 0\) and \(T>0\) be arbitrary. If \(C\in {\mathcal {C}}^{q\mathrm{-var}}([t_0,t_0+T],{\mathbb {R}}^{d\times d})\), \( x\in {\mathcal {C}}^{q\mathrm {\mathrm{-var}}}([t_0,t_0+T],{\mathbb {R}}^{d})\), then for all \(s<t\) in \([t_0,t_0+T]\),

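Now consider \(x\in {\mathcal {C}}^{q\mathrm {-}\mathrm {var}}([T_1,T_2],{\mathbb {R}}^{d\times m})\) and \(\omega \in {\mathcal {C}}^{p\mathrm {-}\mathrm {var}}([T_1,T_2],{\mathbb {R}}^m)\), where \(p,q \ge 1\) and \(\frac{1}{p}+\frac{1}{q} > 1\). The Young integral \(\int _{T_1}^{T_2}x(t)d\omega (t)\) can be defined as

$$\begin{aligned} \int _{T_1}^{T_2}x(t)d\omega (t) := \lim _{|\Pi | \rightarrow 0} \sum _{i=0}^{n-1} x(t_i)\big (\omega (t_{i+1})-\omega (t_i)\big ), \end{aligned}$$

where the limit is taken on all finite partitions \(\Pi = \{T_1 = t_0< t_1< \ldots < t_n = T_2\}\) with \(|\Pi |:= \displaystyle \max \nolimits _{0\le i \le n-1} |t_{i+1}-t_i|\) (see [28, p. 264–265]). This integral is additive by construction and satisfies the so-called Young–Loeve estimate [12, Theorem 6.8, p. 116]

$$\begin{aligned} \Big | \int _s^t x(u)d\omega (u) - x(s)[\omega (t)-\omega (s)] \Big | \le K \left| \left| \left| x \right| \right| \right| _{q\mathrm{-var},[s,t]} \left| \left| \left| \omega \right| \right| \right| _{p\mathrm{-var},[s,t]}, \quad T_1\le s\le t\le T_2, \end{aligned}$$

where

$$\begin{aligned} K := \left( 1-2^{1-\theta }\right) ^{-1}, \quad \theta := \frac{1}{p}+\frac{1}{q} > 1. \end{aligned}\tag{2.2}$$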
Now for any \(\omega \in {\mathcal {C}}^{p\mathrm{-var}}([t_0,t_0+T],{\mathbb {R}})\) with some \(1< p<2 \), we consider the deterministic Young equation

in which \(A\in {\mathcal {C}}([t_0,t_0+T],{\mathbb {R}}^{d\times d}),C\in {\mathcal {C}}^{q\mathrm{-var}}([t_0,t_0+T],{\mathbb {R}}^{d\times d})\) with \( q > p\) and \(\frac{1}{q}+\frac{1}{p}>1\). We first show that under mild conditions on coefficient functions A, C, (2.3) has a unique solution in \( {\mathcal {C}}^{q\mathrm{-var}}([t_0,t_0+T],{\mathbb {R}}^{d})\).

Proposition 2.2

Fix \([t_0,t_0+T]\) and consider \(\omega \) varying as an element of the Banach space \({\mathcal {C}}^{p\mathrm{-var}}([t_0,t_0+T])\). Assume that \(A\in {\mathcal {C}}([t_0,t_0+T],{\mathbb {R}}^{d\times d}),C\in {\mathcal {C}}^{q\mathrm{-var}}([t_0,t_0+T],{\mathbb {R}}^{d\times d})\) with \(q>p\) and \(\frac{1}{q}+\frac{1}{p}>1\). Then Eq. (2.3) has a unique solution \(x (\cdot ,t_0,x_{0},\omega )\) in the space \({\mathcal {C}}^{p\mathrm{-var}}([t_0,t_0+T],{\mathbb {R}}^d)\) which satisfies

where

K is defined in (2.2), \(\mu \) is a constant such that \(0<\mu <\min \{1,M^*\}\) and \(\eta = -\,\log (1-\mu )\). In addition, the solution mapping

is continuous w.r.t \((x_0,\omega )\).

Proof

See the “Appendix”. \(\square \)
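To make the solution of the Young equation concrete, the following is a minimal numerical sketch, not the construction used in the proof. It applies a first-order Euler scheme to a scalar linear YDE and compares the result with the exponential solution formula for the one-dimensional case used in Sect. 3. The function name `euler_linear_yde`, the constant coefficients and the driving path \(\omega (t)=t^{0.75}\) are all illustrative assumptions.

```python
import math

def euler_linear_yde(a, c, omega, z0, t0, t1, n):
    """First-order Euler scheme for dz = a(t) z dt + c(t) z d(omega(t)).

    Illustrative only: the paper proves existence by a different argument.
    """
    h = (t1 - t0) / n
    z = z0
    for i in range(n):
        s = t0 + i * h
        d_omega = omega(s + h) - omega(s)  # increment of the driving path
        z += a(s) * z * h + c(s) * z * d_omega
    return z

# Hypothetical test data: constant coefficients, omega(t) = t**0.75
# (monotone, hence of finite p-variation for every p >= 1).
a = lambda t: -0.5
c = lambda t: 1.0
omega = lambda t: t ** 0.75

approx = euler_linear_yde(a, c, omega, z0=1.0, t0=0.0, t1=1.0, n=10000)
# Closed form for constant a, c and z0 = 1: exp(a*(t1-t0) + c*(omega(t1)-omega(t0))).
exact = math.exp(-0.5 * 1.0 + 1.0 * (omega(1.0) - omega(0.0)))
print(approx, exact)
```

With a finer grid the two printed values should move closer together; how fast depends on the regularity of the driving path.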

Remark 2.3

(i) Fix \([t_0,t_0+T]\). By considering the backward equation similar to that of [9], we can draw the same conclusions on the existence and uniqueness of the solution for the backward equation at an arbitrary point \(a\in [t_0,t_0+T]\). Moreover, it can be proved that the solution mapping X is continuous with respect to \((a,x_0,\omega ) \in [t_0,t_0+T]\times {\mathbb {R}}^d\times {\mathcal {C}}^{p\mathrm{-var}}([t_0,t_0+T],{\mathbb {R}})\).

(ii) If \(\omega \in {\mathcal {C}}^{1/p\mathrm {-Hol}}([t_0,t_0+T],{\mathbb {R}})\subset {\mathcal {C}}^{p\mathrm{-var}}([t_0,t_0+T],{\mathbb {R}})\) then similar arguments prove that the solution is \(1/p-\)Hölder continuous and the solution mapping X is continuous with respect to \((a,x_0,\omega ) \in [t_0,t_0+T]\times {\mathbb {R}}^d\times {\mathcal {C}}^{1/p\mathrm {-Hol}}([t_0,t_0+T],{\mathbb {R}})\).

For any \(t_0\le t_1\le t_2\le t_0+ T\) the Cauchy operator \(\Phi _\omega (t_1,t_2): {\mathbb {R}}^d \rightarrow {\mathbb {R}}^d\) of the YDE (1.1) is defined as \(\Phi _{\omega }(t_1,t_2)x_{t_1}:= x(t_2,t_1,x_{t_1},\omega )\) for any vector \(x_{t_1}\in {\mathbb {R}}^d\).

Following [1, p. 551], a family of mappings \(X_{s,t}: {\mathbb {R}}^d \rightarrow {\mathbb {R}}^d\) depending on two real variables \(s,t\in [a,b] \subset {\mathbb {R}}\) is called a two-parameter flow of homeomorphisms of \({\mathbb {R}}^d\) on [a, b] if the mapping \(X_{s,t}\) is a homeomorphism on \({\mathbb {R}}^d\); \(X_{s,s} = id\); \(X_{s,t}^{-1} = X_{t,s}\) and \(X_{s,t} = X_{u,t}\circ X_{s,u}\) for any \(s,t,u\in [a,b]\). If in addition, \(X_{s,t}\) is a linear operator for all \(s,t \in [a,b]\), then the family \(X_{s,t}\) is called a two-parameter flow of linear operators of \({\mathbb {R}}^d\) on [a, b].
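The defining properties of a two-parameter flow of linear operators can be checked on a toy discrete example. The sketch below is an illustration under assumptions, not part of the paper: the helper names (`matmul`, `inverse`, `make_flow`, `close`) and the \(2\times 2\) one-step matrices are hypothetical. It builds \(X_{s,t}\) on an integer time grid from invertible one-step maps and verifies \(X_{s,s}=id\), \(X_{s,t}^{-1}=X_{t,s}\) and \(X_{s,t}=X_{u,t}\circ X_{s,u}\) for all grid times, in any order.

```python
def matmul(P, Q):
    """Product of two 2x2 matrices given as nested lists."""
    return [[sum(P[i][k] * Q[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def inverse(P):
    """Inverse of an invertible 2x2 matrix."""
    det = P[0][0] * P[1][1] - P[0][1] * P[1][0]
    return [[P[1][1] / det, -P[0][1] / det],
            [-P[1][0] / det, P[0][0] / det]]

IDENTITY = [[1.0, 0.0], [0.0, 1.0]]

def make_flow(steps):
    """steps[i] is the invertible one-step map from grid time i to i + 1."""
    def X(s, t):
        if s > t:
            return inverse(X(t, s))      # backward map is the inverse
        M = IDENTITY
        for i in range(s, t):
            M = matmul(steps[i], M)      # later steps act on the left
        return M
    return X

def close(P, Q, tol=1e-9):
    return all(abs(P[i][j] - Q[i][j]) < tol for i in range(2) for j in range(2))

# Hypothetical upper triangular one-step maps on the grid 0, 1, ..., 6.
steps = [[[1.0 + 0.1 * i, 0.5], [0.0, 1.0 / (1.0 + 0.05 * i)]] for i in range(6)]
X = make_flow(steps)

grid = range(7)
flow_ok = all(close(X(s, t), matmul(X(u, t), X(s, u)))
              for s in grid for t in grid for u in grid)
inverse_ok = all(close(matmul(X(s, t), X(t, s)), IDENTITY)
                 for s in grid for t in grid)
print(flow_ok, inverse_ok)
```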

Proposition 2.4

Suppose that the assumptions of Proposition 2.2 are satisfied. Then the Eq. (1.1) generates a two-parameter flow of linear operators of \({\mathbb {R}}^d\) by means of its Cauchy operators.

Proof

First note that the same method as in the proof of Proposition 2.2 can be applied to prove the existence and uniqueness of the solution \(\Phi _\omega (t_0,t)\) of the matrix-valued differential equation

It is easy to show that the solution \(\Phi _\omega (\cdot ,\cdot ): \Delta ^2 \rightarrow {\mathbb {R}}^{d\times d}\), with \(\Delta ^2 :=\{(s,t) \in [t_0,t_0+T] \times [t_0,t_0+T]: s\le t\}\), has properties that \(\Phi _\omega (s,s)= I_{d\times d}\) for all \(s\ge 0\) and

The solution \(\Phi _\omega (\cdot ,\cdot )\) is the mapping along trajectories of (2.3) in forward time since YDE is directed. Like the ODE case, in our setting, the solution of the matrix Eq. (2.7) is the Cauchy operator of the vector Eq. (2.3).

Next, consider the adjoint matrix-valued pathwise differential equation

with initial value \(\Psi (t_0,t_0) =I\), and \(A^T(\cdot ), C^T(\cdot )\) are the transpose matrices of \(A(\cdot )\) and \(C(\cdot )\), respectively. By similar arguments we can prove that there exists a unique solution \(\Psi _\omega (t_0,t)\) of (2.9). Introduce the transformation \(u(t) = \Psi _\omega (t_0,t)^T x(t)\). By the formula of integration by parts (see [12, Proposition 6.12 and Exercise 6.13] or a fractional version in Zähle [29]), we conclude that

In other words, \(u(t) = u(t_0)=x(t_0)=x_0\) or equivalently \(\Psi _\omega (t_0,t)^T x(t) = x_0\). Combining with \(\Phi \) in Eq. (2.7) we conclude that \(\Psi _\omega (t_0,t)^T \Phi _\omega (t_0,t)x_0 = x_0\) for all \(x_0 \in {\mathbb {R}}^d\), hence there exists \(\Phi _\omega (t_0,t)^{-1}\) and \(\Phi _\omega (t_0,t)^{-1} = \Psi _\omega (t_0,t)^T\). As a result, for any \(x_0 \ne 0\) we have \(\Phi _\omega (t_0,t)x_0 \ne 0 \) for all \(t\ge t_0\). Thus we showed that the linear operator \(\Phi _\omega (t_0,t)\), \(t\ge t_0\), is nondegenerate. Similarly, for all \(t_0\le s\le t\le t_0+T\) the operator \(\Phi _\omega (s,t)\) is nondegenerate and \(\Phi _\omega (s,t)^{-1} = \Psi _\omega (s,t)^T\). Putting \(\Phi _\omega (t,s) := \Psi _\omega (s,t)^T\) for \(t_0\le s\le t\le t_0+T\) we have defined the family \(\Phi _\omega (t,s)\) for all \(s,t\in [t_0,t_0+T]\), and it is clearly a continuous two-parameter flow generated by (2.3). \(\square \)

Remark 2.5

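Using the solution formula for the one-dimensional system as in Sect. 3, one derives a Liouville-like formula as follows

$$\begin{aligned} \det \Phi _\omega (t_0,t) = \exp \left\{ \int _{t_0}^t \mathrm{trace}\, A(s)ds + \int _{t_0}^t \mathrm{trace}\, C(s)d\omega (s) \right\} , \end{aligned}$$

which also proves the invertibility of \(\Phi _\omega (t_0,t)\).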

3 Lyapunov Spectrum for Nonautonomous Linear System of YDEs

The classical Lyapunov spectrum of linear systems of ordinary differential equations (henceforth abbreviated ODEs) is a powerful tool for investigating the qualitative behavior of the system, see e.g. [4, 23]. Since (1.3) generates a two-parameter flow of homeomorphisms, we can instead study the Lyapunov spectrum of the flow generated by the equation.

3.1 Exponents and Spectrum

We aim to follow the technique in [7, 18, 19]. From now on, let us consider the following assumptions on the coefficients of (1.3).

(\({\mathbf{H }}_1\)) \({\hat{A}}:=\Vert A\Vert _{\infty ,{\mathbb {R}}^+} < \infty .\)

(\({\mathbf{H }}_2\)) For some \(\delta >0\), \({\hat{C}}:=\Vert C\Vert _{q\mathrm{-var},\delta ,{\mathbb {R}}^+}:= \displaystyle \sup \nolimits _{0\le t-s \le \delta } \Vert C\Vert _{q\mathrm{-var},[s,t]}< \infty \).

In (\({\mathbf{H }}_2\)) we can assume, without loss of generality that \(\delta = 1\). Put

where K is given by (2.2). It is obvious from (2.6) that, for any \(t_0\in {\mathbb {R}}^+\),

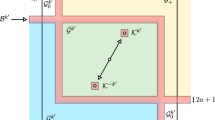

Note that conditions (\({\mathbf{H }}_1\)), (\({\mathbf{H }}_2\)) and Proposition 2.2 assure the existence and uniqueness of the solution of (1.3) on \({\mathbb {R}}^+\). Moreover, Proposition 2.4 asserts that (1.3) generates a two-parameter flow on \({\mathbb {R}}^d\) by means of its Cauchy operators \(\Phi _\omega (\cdot ,\cdot )\), and \(\Phi _\omega (s,t)x_0\) represents the value at time \(t\in {\mathbb {R}}^+\) of the solution of (1.3) started at \(x_0\in {\mathbb {R}}^d\) at time \(s\in {\mathbb {R}}^+\). Following [7], we first introduce the notion of Lyapunov exponents of a two-parameter flow of linear operators, and then use it to define the Lyapunov exponents of the equation. We shall denote by \({{\mathcal {G}}}_k\) the Grassmannian manifold of all linear k-dimensional subspaces of \({\mathbb {R}}^d\).

Recall that for a function \(h: {\mathbb {R}}^+\rightarrow {\mathbb {R}}^d\) the Lyapunov exponent of h is the number (which could be \(\infty \) or \(-\infty \))

$$\begin{aligned} \chi (h(t)) := \limsup _{t\rightarrow \infty } \frac{1}{t} \log |h(t)|. \end{aligned}$$

(We make the convention that \(\log \) is the logarithm of natural base and \(\log 0 := -\,\infty \).)

Definition 3.1

(i) Given a two-parameter flow \(\Phi _\omega (s,t)\) of linear operators of \({\mathbb {R}}^d\) on the time interval \([t_0,\infty )\), the extended-real numbers (real numbers or the symbols \(\infty \) or \(-\infty \))

$$\begin{aligned} \lambda _k(\omega ) := \inf _{V\in {{\mathcal {G}}}_{d-k+1}} \sup _{y\in V} \limsup _{t\rightarrow \infty } \frac{1}{t} \log |\Phi _ \omega (t_0,t)y|, \quad k=1,\ldots , d, \end{aligned}\tag{3.2}$$

are called Lyapunov exponents of the flow \(\Phi _\omega (s,t)\). The collection \(\{ \lambda _1(\omega ),\ldots , \lambda _d(\omega )\}\) is called the Lyapunov spectrum of the flow \(\Phi _\omega (s,t)\).

(ii) For any \(u\in [t_0,\infty )\) the linear subspaces of \({\mathbb {R}}^d\)

$$\begin{aligned} E_k^u(\omega ) := \big \{ y\in {\mathbb {R}}^d\bigm | \limsup _{t\rightarrow \infty } \frac{1}{t} \log |\Phi _\omega (u,t)y| \le \lambda _k(\omega ) \big \}, \quad k=1,\ldots ,d, \end{aligned}\tag{3.3}$$

are called Lyapunov subspaces at time u of the flow \(\Phi _\omega (s,t)\). The flag of nonincreasing linear subspaces of \({\mathbb {R}}^d\)

$$\begin{aligned} {\mathbb {R}}^d = E_1^u(\omega ) \supset E_2^u(\omega ) \supset \cdots \supset E_d^u(\omega ) \supset \{0\} \end{aligned}$$

is called the Lyapunov flag at time u of the flow \(\Phi _\omega (s,t)\).

(iii) The Lyapunov spectrum, Lyapunov exponents and Lyapunov subspaces of the linear YDE (1.3) are those of the two-parameter flow \(\Phi _\omega (s,t)\) generated by (1.3).

It is easily seen that the Lyapunov exponents in Definition 3.1 are independent of \(t_0\), and are ordered:

$$\begin{aligned} \lambda _1(\omega ) \ge \lambda _2(\omega ) \ge \cdots \ge \lambda _d(\omega ). \end{aligned}$$

Moreover, due to [7, Theorems 2.5, 2.7, 2.8], for any \(u\in [t_0,\infty )\) and \(k=1,\ldots , d\), the Lyapunov subspaces \(E_k^u(\omega )\) are invariant with respect to the flow in the following sense

$$\begin{aligned} \Phi _\omega (s,t)E_k^s(\omega ) = E_k^t(\omega ) \quad \text {for all } s,t \in [t_0,\infty ). \end{aligned}$$

The classical definition of the Lyapunov spectrum of a linear system of ODEs is based on the normal basis of the solution of the system (see [11]). Millionshchikov [18] pointed out that these definitions are equivalent. In the following remark we restate some facts in [11].

Remark 3.2

(i) For every invertible matrix \(B(\omega )\), the matrix \(\Phi _\omega (t_0,t)B(\omega )\) satisfies

$$\begin{aligned} \sum _{i=1}^d \alpha _i(\omega )\ge \sum _{i=1}^d\lambda _i(\omega ) \end{aligned}$$

where \(\alpha _i(\omega ) \) is the Lyapunov exponent of its \(i\mathrm{th}\) column.

(ii) Furthermore, we have the Lyapunov inequality

$$\begin{aligned} \sum _{i=1}^d\lambda _i \ge \displaystyle \limsup _{t\rightarrow \infty } \frac{1}{t}\log |\det \Phi _{\omega }(t_0,t)|. \end{aligned}$$

Note that if the Lyapunov exponents \(\{\alpha _i(\omega ), i=1,\ldots ,d\}\) of the columns of the matrix \(\Phi _\omega (t_0,t)B(\omega )\) satisfy the equality \(\sum _{i=1}^d\alpha _i(\omega ) =\limsup \nolimits _{t\rightarrow \infty } \frac{1}{t}\log |\det \Phi _{\omega }(t_0,t)|\) then \(\{\alpha _1(\omega ),\ldots , \alpha _d(\omega )\} \) is the spectrum of the flow \(\Phi _{\omega }(s,t)\), i.e.

$$\begin{aligned} \{\alpha _i(\omega ),i=1,\ldots ,d\} = \{\lambda _i(\omega ),i=1,\ldots ,d\}, \end{aligned}$$

(but the converse is not true).

Now let us consider the following assumptions on the driving path \(\omega \).

(\({\mathbf{H }}_3\)) \(\lim \nolimits _{\begin{array}{c} n \rightarrow \infty \\ n\in {\mathbb {N}} \end{array}} \frac{1}{n} \left| \left| \left| \omega \right| \right| \right| ^p_{p-\mathrm{var},[n,n+1]} = 0.\)

(\({\mathbf{H }}_3^\prime \)) \(\lim \nolimits _{\begin{array}{c} n \rightarrow \infty \\ n\in {\mathbb {N}} \end{array}} \frac{1}{n} \sum _{k =0}^{n-1}\left| \left| \left| \omega \right| \right| \right| ^p_{p-\mathrm{var},[k,k+1]} = \Gamma _p(\omega ) < \infty .\)

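It is easy to see that assumption (\({\mathbf{H }}_3^\prime \)) implies (\({\mathbf{H }}_3\)): writing \(S_n := \sum _{k =0}^{n-1}\left| \left| \left| \omega \right| \right| \right| ^p_{p-\mathrm{var},[k,k+1]}\), we have \(\frac{1}{n} \left| \left| \left| \omega \right| \right| \right| ^p_{p-\mathrm{var},[n,n+1]} = \frac{n+1}{n}\cdot \frac{S_{n+1}}{n+1} - \frac{S_n}{n} \rightarrow \Gamma _p(\omega ) - \Gamma _p(\omega ) = 0\) as \(n\rightarrow \infty \). We formulate below the first main result of this paper on the Lyapunov spectrum of Eq. (1.3).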

Theorem 3.3

Let \(\Phi _{\omega }(s,t)\) be the two-parameter flow generated by (1.3) and \(\{\lambda _1(\omega ),\ldots ,\lambda _d(\omega )\}\) be the Lyapunov spectrum of the flow \(\Phi _{\omega }(s,t)\), hence of Eq. (1.3). Then under assumptions (\({\mathbf{H }}_1\)), (\({\mathbf{H }}_2\)), (\({\mathbf{H }}_3\)), the Lyapunov exponents \(\lambda _k(\omega ), k=1,\ldots ,d,\) can be computed via a discrete-time interpolation of the flow, i.e.

In addition, if condition (\({\mathbf{H }}_3^\prime \)) is satisfied, then

where \(M_0\) is determined by (3.1), \(0<\mu <\min \{1,M_0\}\) and \(\eta = -\,\log (1-\mu )\).

Proof

Recall from (2.4) that for each \(s\in {\mathbb {R}}^+\)

Fix \(k\in \{1,\ldots ,d\}\) and \(y\in {\mathbb {R}}^d\). Suppose \(0\le t_0<t_1<t_2<t_3\cdots \) is an increasing sequence of positive real numbers on which the upper limit

is realized, i.e.,

Let \(n_m\) denote the largest natural number which is smaller than or equal to \(t_m\). Using the flow property of \(\Phi _{\omega }(s,t)\) and assumption (\({\mathbf{H }}_3\)) we have

On the other hand,

Consequently, for all \(k\in \{1,\ldots ,d\}\) and \(y\in {\mathbb {R}}^d\), we have the equality

which proves (3.4).

Next, assume condition (\({\mathbf{H }}_3^\prime \)) is satisfied. Then

Since \(\Phi _\omega (s,t) = (\Psi _\omega (s,t)^T)^{-1}\) where \(\Psi \) is the solution matrix of the adjoint Eq. (2.9), it follows that

Hence, either

or

which yields

where the last inequality can be proved similarly to the one in (3.7). Hence (3.5) holds. \(\square \)

Remark 3.4

The discretization scheme in Theorem 3.3 can be formulated for any step size \(h>0\).
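As an illustration of computing an exponent from the discretized flow, the sketch below estimates the top Lyapunov exponent \(\lambda _1\) from a sequence of one-step maps \(\Phi (k,k+1)\) by accumulating \(\frac{1}{n}\log |\Phi (0,n)y|\) with renormalisation at each step. This is a standard numerical device, not the argument of Theorem 3.3. The function `top_exponent`, the constant step matrix and the starting vector are hypothetical choices, and a generic \(y\) is assumed so that the growth rate picks up the largest exponent.

```python
import math

def top_exponent(step_maps, y):
    """Estimate (1/n) log |Phi(0,n) y| from 2x2 one-step maps Phi(k,k+1)."""
    norm0 = math.hypot(y[0], y[1])
    y = [y[0] / norm0, y[1] / norm0]      # start from a unit vector
    log_growth = 0.0
    n = 0
    for M in step_maps:
        y = [M[0][0] * y[0] + M[0][1] * y[1],
             M[1][0] * y[0] + M[1][1] * y[1]]
        norm = math.hypot(y[0], y[1])
        log_growth += math.log(norm)      # log of the one-step stretching
        y = [y[0] / norm, y[1] / norm]    # renormalise to avoid overflow
        n += 1
    return log_growth / n

# Hypothetical example: a constant upper triangular step map with
# diagonal entries e and 1/e, so the exponents are 1 and -1.
M = [[math.e, 1.0], [0.0, 1.0 / math.e]]
estimate = top_exponent([M] * 500, [1.0, 1.0])
print(estimate)
```

The estimate approaches the logarithm of the larger diagonal entry as the number of steps grows.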

3.2 Lyapunov Spectrum of Triangular Systems

It is well known in the theory of ODEs that a linear triangular system can be solved successively and its Lyapunov spectrum is easily computed via its coefficients. In this subsection we present a similar result for linear triangular systems of YDEs, under additional assumptions. Let us consider the system

$$\begin{aligned} dX(t) = A(t)X(t)dt + C(t)X(t)d\omega (t), \end{aligned}\tag{3.8}$$

in which \(X= (x_1,x_2,\ldots ,x_d)\), \(A = (a_{ij}(t)),C=(c_{ij}(t))\) are \(d\times d\) upper triangular matrices of coefficient functions satisfying conditions (\({\mathbf{H }}_1\)), (\(\mathbf{H }_2\)), the driving path \(\omega \) satisfies (\({\mathbf{H }}_3\)) and also the additional assumption

(\({\mathbf{H }}_4\)) \(\displaystyle \lim \nolimits _{\begin{array}{c} n\rightarrow \infty \\ n \in {\mathbb {N}} \end{array}} \dfrac{\Big |\int _0^n c_{ii}(s)d\omega (s)\Big |}{n} = 0\) for any elements \(c_{ii}(t),\; i=1,\ldots , d\) in the diagonal of C.

To motivate this assumption, note that (\({\mathbf{H }}_4\)) is satisfied for almost all realizations \(\omega \) of a fractional Brownian motion (see Lemma 5.3 in Sect. 5 for the proof and [22] for details on fractional Brownian motions). Another situation satisfying (\({\mathbf{H }}_4\)) is the case in which \(\omega (t) = t^{\alpha }\) with \(0<\alpha <1\) and \(C(\cdot )\) is continuous and bounded.
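For the power-path example, the verification can be sketched as follows. The computation assumes that, for the absolutely continuous path \(\omega (t)=t^\alpha \) and continuous \(c_{ii}\), the Young integral coincides with the Lebesgue integral against \(\omega '(s)=\alpha s^{\alpha -1}\); this identification is our assumption for the sketch and is not spelled out in the text.

```latex
\begin{aligned}
\Big| \int_0^n c_{ii}(s)\, d\omega(s) \Big|
  &= \Big| \int_0^n c_{ii}(s)\, \alpha s^{\alpha-1}\, ds \Big|
   \le \Vert c_{ii} \Vert_{\infty,\mathbb{R}^+} \int_0^n \alpha s^{\alpha-1}\, ds
   = \Vert c_{ii} \Vert_{\infty,\mathbb{R}^+}\, n^{\alpha}, \\
\frac{1}{n}\Big| \int_0^n c_{ii}(s)\, d\omega(s) \Big|
  &\le \Vert c_{ii} \Vert_{\infty,\mathbb{R}^+}\, n^{\alpha-1}
   \longrightarrow 0 \quad (n \to \infty), \qquad \text{since } 0<\alpha<1 .
\end{aligned}
```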

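To see how assumption (\({\mathbf{H }}_4\)) is applied, we first consider Eq. (3.8) in the one-dimensional case

$$\begin{aligned} dz(t) = a(t)z(t)dt + c(t)z(t)d\omega (t), \quad z(0)=z_0. \end{aligned}\tag{3.9}$$

Thanks to the integration by parts formula (see Zähle [29, Theorem 3.1]), (3.9) can be solved explicitly as

$$\begin{aligned} z(t) = z_0 \exp \left\{ \int _0^t a(s)ds + \int _0^t c(s)d\omega (s) \right\} . \end{aligned}$$

Moreover, we have the following lemma.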

Lemma 3.5

The following estimates hold for any nontrivial solution \(z\not \equiv 0\) of (3.9)

(i) \(\chi (z(t)) = {\overline{a}}\),

(ii) \(\chi (\left| \left| \left| z\right| \right| \right| _{q\mathrm{-var},[t,t+1]}) \le {\overline{a}}\),

where \({\overline{a}}:=\displaystyle \limsup _{\begin{array}{c} n\rightarrow \infty \\ n\in {\mathbb {N}} \end{array}}\frac{1}{n} \int _0^na(s)ds \).

Proof

(i) The statement is evident under the assumption (\(\mathbf{H }_4\)). Namely,

(ii) Due to linearity it suffices to prove for \(z_0=1\). Introduce the notations \(f(t)= \int _0^ta(s)ds,\;\; g(t) = \int _0^tc(s)d\omega (s)\), then \(z(t) = e^{f(t)+g(t)}\). We have

and

For given \(\varepsilon >0\), there exists \(D_1\) such that

Hence, for any \(t_0\ge 0\), the estimates

hold for \(D_2=\max \{D_1, D_1e^{{\overline{a}}+\varepsilon /3}\}\). On the other hand, due to the inequality \(|e^a-e^b|\le |a-b|e^{\max \{a,b\}}\) for all \(a,b\in {\mathbb {R}}\) we have

which yields

Similarly,

For \(s,t\in [t_0,t_0+1]\),

hence by using Minkowski inequality we get

Note that condition (\({\mathbf{H }}_3\)) implies the boundedness of \(\frac{\left| \left| \left| \omega \right| \right| \right| _{p\mathrm{-var},[t_0,t_0+1]}}{t_0}\), \(t_0\in {\mathbb {R}}^+\). Therefore, there exists a constant \(D_3\) such that

which proves (ii). \(\square \)
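Part (i) of Lemma 3.5 can be illustrated numerically on a case where both integrals are available in closed form. The data below are hypothetical: \(a(t)=a_0+\cos t\), constant \(c(t)=c_0\), \(\omega (t)=t^{\alpha }\) with \(0<\alpha <1\), and \(z_0=1\), so that \(\log z(n) = a_0 n + \sin n + c_0 n^{\alpha }\) and \({\overline{a}}=a_0\). The function name `log_z_over_n` is ours.

```python
import math

def log_z_over_n(n, a0=0.3, c0=2.0, alpha=0.5):
    """Return (1/n) log z(n) for the hypothetical scalar example above."""
    f = a0 * n + math.sin(n)   # int_0^n a(s) ds with a(s) = a0 + cos(s)
    g = c0 * n ** alpha        # int_0^n c0 d(omega)(s) = c0 * (omega(n) - omega(0))
    return (f + g) / n

for n in (10, 1000, 100000):
    print(n, log_z_over_n(n))
```

The printed values drift toward \(a_0\): the contribution of the driving path is of order \(n^{\alpha -1}\), which is exactly what assumption (\({\mathbf{H }}_4\)) requires to vanish.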

Next we will show by induction that the Lyapunov spectrum of system (3.8) is \(\{{\overline{a}}_{kk}, 1\le k\le d\}\) with \({\overline{a}}_{kk}:=\lim \nolimits _{t\rightarrow \infty }\frac{\int _0^ta_{kk}(s)ds}{t}\), provided that the limit is well-defined and exact.

The following lemma is a modified version of Demidovich [11, Theorem 1, p. 127].

Lemma 3.6

Assume that \(g^i:{\mathbb {R}}^+\rightarrow {\mathbb {R}}\), \(i=1,\ldots , n\), are continuous functions of finite q-variation norm on any compact interval of \({\mathbb {R}}^+\), which satisfy

$$\begin{aligned} \chi \left( g^i(t)\right) ,\;\chi \left( \left| \left| \left| g^i\right| \right| \right| _{q\mathrm{-var},[t,t+1]}\right) \le \lambda _i, \quad i=1,\ldots ,n. \end{aligned}$$

Then

(i) \( \chi \left( \sum _{i=1}^n g^i(t)\right) ,\;\chi \left( \left| \left| \left| \sum _{i=1}^n g^i\right| \right| \right| _{q\mathrm{-var},[t,t+1]}\right) \le \max _{1\le i\le n}\lambda _i,\)

(ii) \( \chi \left( \prod _{i=1}^n g^i(t)\right) ,\;\chi \left( \left| \left| \left| \prod _{i=1}^n g^i\right| \right| \right| _{q\mathrm{-var},[t,t+1]}\right) \le \sum _{i=1}^n\lambda _i.\)

Proof

(i) The proof is similar to [11, Theorem 1, p. 127], noting that

$$\begin{aligned} \left| \left| \left| \sum _{i=1}^ng^i\right| \right| \right| _{q\mathrm{-var},[t,t+1]}\le \sum _{i=1}^n \left| \left| \left| g^i\right| \right| \right| _{q\mathrm{-var},[t,t+1]}. \end{aligned}$$

(ii) The first inequality is known due to [11, Theorem 2, p. 19]. For the second one, it suffices to prove the case \(n=2\), since the general case is obtained by induction. It follows from Lemma 2.1 that

$$\begin{aligned} \left| \left| \left| g^1g^2\right| \right| \right| _{q\mathrm{-var},[t,t+1]}&\le \left( \Vert g^1\Vert _{\infty ,[t,t+1]}+ \left| \left| \left| g^1\right| \right| \right| _{q\mathrm{-var},[t,t+1]}\right) \left( \Vert g^2\Vert _{\infty ,[t,t+1]} + \left| \left| \left| g^2\right| \right| \right| _{q\mathrm{-var},[t,t+1]}\right) \\&\le 4 \left( \Vert g^1(t)\Vert + \left| \left| \left| g^1\right| \right| \right| _{q\mathrm{-var},[t,t+1]}\right) \left( \Vert g^2(t)\Vert + \left| \left| \left| g^2\right| \right| \right| _{q\mathrm{-var},[t,t+1]}\right) . \end{aligned}$$

Therefore the second inequality follows from the first one and (i). \(\square \)

By similar arguments using the integration by parts formula, the non-homogeneous one-dimensional linear equation

can be solved explicitly as

provided that \(h_1,h_2\) are in \({\mathcal {C}}^{q\mathrm {-var}}([0,t],{\mathbb {R}})\) for all \(t>0\). This allows us to solve triangular systems by substitution, as seen in the following theorem.

Theorem 3.7

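Under assumptions (\({\mathbf{H }}_1\)) – (\({\mathbf{H }}_4\)), if there exist the exact limits

$$\begin{aligned} {\overline{a}}_{kk} := \lim _{t\rightarrow \infty }\frac{1}{t}\int _0^t a_{kk}(s)ds, \quad k=1,\ldots ,d, \end{aligned}$$

then the spectrum of system (3.8) is given by

$$\begin{aligned} \{\lambda _1,\ldots ,\lambda _d\} = \{{\overline{a}}_{11},{\overline{a}}_{22},\ldots ,{\overline{a}}_{dd}\}. \end{aligned}$$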
Proof

For all \(k=1,2,\ldots ,d\), put \(Y_k(t)=e^{ \int _0^t a_{kk}(s)ds +\int _0^t c_{kk}(s)d\omega (s)} \). Then due to Lemma 3.5

We construct a fundamental solution matrix \(X(t)=\left( x_{ij}(t)\right) _{d\times d}\) of (3.8) as follows.

in which, \(t_{ik}= {\left\{ \begin{array}{ll} 0,\;\;\mathrm{if}\;\; {\overline{a}}_{kk}-{\overline{a}}_{ii}\ge 0\\ +\infty ,\;\;\mathrm{if}\;\; {\overline{a}}_{kk}-{\overline{a}}_{ii}< 0. \end{array}\right. } \)

Now we consider the \(d\mathrm{th}\) column of X and prove by induction that

First, by Lemma 3.5 the statement is true for \(j=d\). Assume that \(\chi (x_{jd}(t)), \; \chi (\left| \left| \left| x_{jd}\right| \right| \right| _{q{{\mathrm{-var}}},[t,t+1]})\le {\overline{a}}_{dd}\) for all \(i+1\le j\le d\), we will prove that

Put

then

Since A is bounded, we apply [11, Corollary of Theorem 2, p. 129] to get

Therefore, \(\chi \left( Y^{-1}_i(s) \sum _{j=i+1}^{d} a_{ij}(s)x_{jd}(s)\right) \le {\overline{a}}_{dd}-{\overline{a}}_{ii}\). Due to [11, Theorem 4,p. 131] we obtain

On the other hand, the following estimate holds

Indeed, with \(I(t) = \int _0^t k(s)ds \) and \(\chi (k(s))\le \lambda \), we have for \(u,v\in [t,t+1]\),

This implies \(\left| \left| \left| I\right| \right| \right| _{q\mathrm{-var},[t,t+1]}\le D(\varepsilon )e^{(\lambda +\varepsilon )t}\). The proof for the case \(I(t) = \int _t^\infty k(s)ds \) is similar.

Next, \(\chi (Y^{-1}_i(t)),\chi (\left| \left| \left| Y^{-1}_i\right| \right| \right| _{q{\mathrm{-var}},[t,t+1]})\le - {\overline{a}}_{ii}\) and C satisfies (\({\mathbf{H }}_2\)), i.e \(\chi (C(t)),\;\chi (\left| \left| \left| C\right| \right| \right| _{q\mathrm{-var},[t,t+1]})\le 0\). Together with the induction hypothesis that

and Lemma 3.6 we obtain

Again, we apply Lemma 3.6 for \(Y_i\), I and J to get

Hence, the Lyapunov exponent of the \(d\mathrm{th}\) column \(X_d\) of the matrix X does not exceed \({\overline{a}}_{dd}\), while \(\chi (x_{dd}(t)) = {\overline{a}}_{dd}\). This proves \(\chi (X_d(t))={\overline{a}}_{dd}\).

Similarly, \(\chi (X_i(t))={\overline{a}}_{ii}\) for \(i=1,2,\ldots ,d\), in which \(X_i\) is the \(i\mathrm{th}\) column of X. Finally, since \(\sum _{i=1}^d{\overline{a}}_{ii} = \lim _{t\rightarrow \infty }\frac{1}{t}\log |\det X(t)|\), X(t) is a normal matrix solution to (3.8) and the Lyapunov spectrum of (3.8) is \(\{{\overline{a}}_{11},{\overline{a}}_{22},\ldots ,{\overline{a}}_{dd}\}\). \(\square \)

Remark 3.8

In the theory of ODEs, Perron's theorem states that a linear equation can be reduced to a linear triangular system (see [11, p. 180]). However, we do not know whether this is true for linear Young differential equations. The reason is that a linear YDE has, besides the drift term A corresponding to dt, also the diffusion term C corresponding to \(d\omega \). Hence it is difficult to transform the original system to a triangular form whose coefficient matrices depend only on t.

3.3 Lyapunov Regularity

The concept of regularity was introduced by Lyapunov for linear ODEs, and has since attracted considerable interest (see e.g. [1, Chapter 3, p. 115], [8, 20], or [3, Section 1.2]). For a linear YDE, we define the concept of Lyapunov regularity via the generated two-parameter flow.

Definition 3.9

Let \(\Phi _{\omega }(s,t)\) be a two-parameter flow of linear operators of \({\mathbb {R}}^d\) and \(\{\lambda _1(\omega ),\ldots , \lambda _d(\omega )\}\) be the Lyapunov spectrum of \(\Phi _{\omega }(s,t)\). Then the non-negative \({{\bar{{\mathbb {R}}}}}\)-valued random variable

is called coefficient of nonregularity of the two-parameter flow \(\Phi _{\omega }(s,t)\).

The coefficient of nonregularity of the linear YDE (1.3) is, by definition, the coefficient of nonregularity of the two-parameter flow generated by (1.3).

A two-parameter flow is called Lyapunov regular if its coefficient of nonregularity equals 0 identically. A linear YDE is called Lyapunov regular if its coefficient of nonregularity equals 0.

It follows from [7] that if a two-parameter linear flow \(\Phi _{\omega }(s,t)\) is Lyapunov regular then its determinant \(\det \Phi _{\omega }(s,t)\) as well as any trajectory have exact Lyapunov exponents, i.e. the limit in (3.2) is exact.

We define the adjoint equation of (1.1) [and also of the equivalent integral Eq. (1.3)] by

$$\begin{aligned} dy(t) = -A^T(t)y(t)dt - C^T(t)y(t)d\omega (t). \end{aligned}\tag{3.14}$$

The following lemma is a version of the Perron theorem from the classical ODE case.

Lemma 3.10

(Perron Theorem) Let \(\alpha _1\ge \cdots \ge \alpha _d\) and \(\beta _1\le \cdots \le \beta _d\) be the Lyapunov spectrum of (1.3) and (3.14) respectively. Then (1.3) is Lyapunov regular if and only if \(\alpha _i + \beta _{i}=0\) for all \(i=1,\ldots ,d\).

Proof

The proof follows line by line the ODE version in Demidovich [11, p. 170–173]. \(\square \)

Theorem 3.11

(Lyapunov theorem on regularity of triangular system) Suppose that the matrices A(t), C(t) are upper triangular and satisfy (\({\mathbf{H }}_1\)) – (\({\mathbf{H }}_4\)). Then system (3.8) is Lyapunov regular if and only if there exists \(\lim \nolimits _{t\rightarrow \infty } \frac{1}{t}\int _{t_0}^t a_{kk}(s)ds, \; k=\overline{1,d}\).

Proof

The implication that existence of the limits yields regularity is proved in Theorem 3.7. For the converse, the proof is similar to [11, p. 174]. Indeed, based on the normal basis of \({\mathbb {R}}^d\) which forms the unit matrix, we construct a fundamental basis \( {\tilde{X}}\) of the system, which is an upper triangular matrix whose diagonal entries are

where \(Y_{k}\) are defined in Theorem 3.7.

We choose an upper triangular matrix \(D=D(\omega )\) whose diagonal elements are 1, such that \(X:={\tilde{X}}D\) is a normal basis of (1.3) with column vectors \(x^i\) (see also Remark 3.2). Putting \(Y=(y_{ij})=(X^{-1})^T\) and repeating the arguments in Lemma 3.10 under the regularity assumption, it follows that Y is a normal basis of (3.14). Moreover, \( y_{kk}= Y_{k}^{-1}\) and

Hence

and similarly,

Therefore,

which implies that there exists the limit \(\lim _{t\rightarrow \infty }\frac{1}{t}\int _{t_0}^ta_{kk}(s)ds, \;\; k = 1,\ldots , d\). \(\square \)
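
The criterion of Theorem 3.11 involves only the time averages of the diagonal entries \(a_{kk}\). The following minimal sketch compares two hypothetical diagonal coefficients (both are our own illustrations, not taken from the text): one whose time average converges, and the classical choice \(\sin (\log s)+\cos (\log s)\), whose antiderivative is \(s\sin (\log s)\), so that the time average oscillates like \(\sin (\log t)\) and has no limit. The integral is approximated with a plain trapezoid rule.

```python
import math

def time_average(a, t0, t, n=200_000):
    """Approximate (1/t) * integral_{t0}^{t} a(s) ds by the composite trapezoid rule."""
    h = (t - t0) / n
    total = 0.5 * (a(t0) + a(t))
    for i in range(1, n):
        total += a(t0 + i * h)
    return total * h / t

def a_regular(s):
    # hypothetical diagonal entry: the time average converges to -1
    return -1.0 + math.cos(s)

def a_irregular(s):
    # hypothetical diagonal entry: antiderivative is s*sin(log s),
    # so the time average behaves like sin(log t) and does not converge
    return math.sin(math.log(s)) + math.cos(math.log(s))

t0 = 1.0
avg_reg = [time_average(a_regular, t0, t) for t in (1e2, 1e3)]

# times at which sin(log t) equals +1 and -1, respectively
t_plus = math.exp(math.pi / 2 + 2 * math.pi)
t_minus = math.exp(3 * math.pi / 2 + 2 * math.pi)
avg_plus = time_average(a_irregular, t0, t_plus)
avg_minus = time_average(a_irregular, t0, t_minus)

print(avg_reg, avg_plus, avg_minus)
```

Along the two subsequences of times the averages of the second coefficient stay near +1 and -1, so a triangular system with such a diagonal entry cannot satisfy the limit condition of the theorem.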

4 Lyapunov Spectrum for Linear Stochastic Differential Equations

In this section, we investigate the same question from the random perspective, i.e. the driving path \(\omega \) is a realization of a stochastic process Z with stationary increments. System (1.1) can then be embedded into a stochastic differential equation, or more precisely a random differential equation which can be solved in the pathwise sense. Such a system generates a stochastic two-parameter flow, hence it makes sense to study its Lyapunov spectrum and also to raise the question of the non-randomness of the spectrum.

4.1 Generation of Stochastic Two-Parameter Flows

More precisely, recall that \({\mathcal {C}}^{0,p-\mathrm {var}}([a,b],{\mathbb {R}}^d)\) is the closure of smooth paths from [a, b] to \({\mathbb {R}}^d\) in \(p-\) variation norm. It is well known (see e.g. [12, Proposition 5.36, p. 98]) that \({\mathcal {C}}^{0,p-\mathrm {var}}([a,b],{\mathbb {R}}^d)\) is a separable Banach space and moreover

for all \(\alpha >1/p\). Denote by \({\mathcal {C}}^{0,p-\mathrm {var}}({\mathbb {R}},{\mathbb {R}}^d)\) the space of all \(x: {\mathbb {R}}\rightarrow {\mathbb {R}}^d\) such that \(x|_I \in {\mathcal {C}}^{0,p-\mathrm {var}}(I, {\mathbb {R}}^d)\) for each compact interval \(I\subset {\mathbb {R}}\). Then equip \({\mathcal {C}}^{0,p-\mathrm {var}}({\mathbb {R}},{\mathbb {R}}^d)\) with the compact open topology given by the \(p-\)variation norm, i.e. the topology generated by the metric:

Assign

Note that for \(x\in {\mathcal {C}}^{0,p-\mathrm {var}}_0({\mathbb {R}},{\mathbb {R}}^d)\), \(\left| \left| \left| x\right| \right| \right| _{p\mathrm{-var},I} \) and \(\Vert x\Vert _{p\mathrm{-var},I}\) are equivalent norms for every compact interval I containing 0.

Let us consider a stochastic process \({\bar{Z}}\) defined on a probability space \(({\bar{\Omega }},\bar{{\mathcal {F}}},{\bar{{\mathbb {P}}}})\) with realizations in \(({\mathcal {C}}^{0,p-\mathrm {var}}_0({\mathbb {R}},{\mathbb {R}}), {\mathcal {B}})\), where \({\mathcal {B}}\) is the Borel \(\sigma -\)algebra. Denote by \(\theta \) the Wiener shift on \(({\mathcal {C}}^{0,p-\mathrm {var}}_0({\mathbb {R}},{\mathbb {R}}), {\mathcal {B}})\)

Due to [2, Theorem 5], \(\theta \) forms a measurable dynamical system \((\theta _t)_{t\in {\mathbb {R}}}\) on \(({\mathcal {C}}^{0,p-\mathrm {var}}_0({\mathbb {R}},{\mathbb {R}}), {\mathcal {B}})\) (see also [9]). Moreover, because of its definition the Young integral satisfies the shift property with respect to \(\theta \), i.e.

Namely,

Assume further that \({\bar{Z}}\) has stationary increments. It follows, as the simplest version of the rough cocycle result in [2, Theorem 5] applied to Young integrals, that there exist a probability \({\mathbb {P}}\) on \((\Omega , {\mathcal {F}}) = ({\mathcal {C}}^{0,p-\mathrm {var}}_0({\mathbb {R}},{\mathbb {R}}), {\mathcal {B}})\) that is invariant under \(\theta \), and the so-called diagonal process \(Z: {\mathbb {R}}\times \Omega \rightarrow {\mathbb {R}}, Z(t,{\tilde{\omega }}) = {\tilde{\omega }}(t)\) for all \(t\in {\mathbb {R}}, {\tilde{\omega }} \in \Omega \), such that Z has the same law as \({\bar{Z}}\) and satisfies the helix property:

Such a stochastic process Z also has stationary increments and almost all of its realizations belong to \({\mathcal {C}}^{0,p-\mathrm {var}}_0({\mathbb {R}},{\mathbb {R}})\). It is important to note that the existence of \({\bar{Z}}\) is necessary to construct the diagonal process Z. For example, if \({\bar{Z}}\) is a fractional Brownian motion then the corresponding probability space \(({\bar{\Omega }},\bar{{\mathcal {F}}},{\bar{{\mathbb {P}}}})\) can be constructed explicitly as in [13]. The fact that almost all realizations of a fractional Brownian motion are Hölder continuous is a direct consequence of the Kolmogorov continuity theorem.
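
The Wiener shift and the shift property of the integral can be checked numerically on Riemann–Stieltjes sums. The sketch below assumes the usual form of the shift, \(\theta _t\omega (\cdot ) = \omega (t+\cdot )-\omega (t)\), and the usual form of the shift property, \(\int _a^b x(u)d\omega (u) = \int _{a-r}^{b-r} x(u+r)d\theta _r\omega (u)\); the test path, the integrand and all function names are hypothetical choices made for illustration.

```python
import math

def wiener_shift(omega, t):
    """Return the shifted path theta_t(omega)(s) = omega(t + s) - omega(t)."""
    return lambda s: omega(t + s) - omega(t)

def stieltjes_sum(x, omega, points):
    """Left-point Riemann-Stieltjes sum of x against omega over a partition."""
    return sum(x(u) * (omega(v) - omega(u)) for u, v in zip(points, points[1:]))

# hypothetical smooth test path with omega(0) = 0, and a test integrand
omega = lambda s: math.sin(s) + 0.3 * s
x = lambda s: math.cos(2.0 * s)

a, b, r, n = 0.5, 2.0, 0.7, 1000
grid = [a + (b - a) * i / n for i in range(n + 1)]
shifted_grid = [u - r for u in grid]

# the two sides of the shift property, evaluated on matching partitions
lhs = stieltjes_sum(x, omega, grid)
rhs = stieltjes_sum(lambda u: x(u + r), wiener_shift(omega, r), shifted_grid)

# flow property of the shift: theta_t(theta_s(omega)) = theta_{t+s}(omega)
s, t, u = 0.4, 1.1, 0.9
flow_lhs = wiener_shift(wiener_shift(omega, s), t)(u)
flow_rhs = wiener_shift(omega, s + t)(u)

print(lhs - rhs, flow_lhs - flow_rhs)
```

Both differences vanish up to rounding because the identities already hold at the level of the approximating sums, which is why they pass to the Young integral in the limit.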

Next, we consider the stochastic differential equation

where the second differential is understood in the pathwise sense as a Young differential. Under the assumptions in Proposition 2.2, there exists, for almost all \(\omega \in \Omega \), a unique solution to (4.3) in the pathwise sense with the initial value \(x_0\in {\mathbb {R}}^d\). Moreover, the solution \(X:[t_0,t_0+T]\times [t_0,t_0+T]\times {\mathbb {R}}^d \times \Omega \rightarrow {\mathbb {R}}^d\) satisfies: (i) for almost all \(\omega \in \Omega \), \(X(\cdot ,a,x_0,\omega ) \in {\mathcal {C}}^{0,q\mathrm{-var}}([t_0,t_0+T],{\mathbb {R}}^d)\), and (ii) \(X(t,\cdot ,\cdot ,\cdot )\) is measurable w.r.t. \((a,x_0,\omega )\). As a result, the two-parameter flow \(\Phi _{\omega }(s,t): {\mathbb {R}}^d \rightarrow {\mathbb {R}}^d\) generated in the pathwise sense in Proposition 2.4 is also a stochastic two-parameter flow (see the definition in [16, p. 114]).

Proposition 4.1

(i) The Lyapunov exponents \(\lambda _k(\omega )\), \(k=1,\ldots , d\), of \(\Phi _\omega (s,t)\) are measurable functions of \(\omega \in \Omega \).

(ii) For any \(u\in [t_0,\infty )\), the Lyapunov subspaces \(E_k^u(\omega )\), \(k=1,\ldots , d\), of \(\Phi _\omega (s,t)\) are measurable with respect to \(\omega \in \Omega \), and invariant with respect to the flow in the following sense

$$\begin{aligned} \Phi _\omega (s,t) E_k^s (\omega ) = E_k^t(\omega ),\qquad \hbox {for all}\; s,t\in [t_0,\infty ), \omega \in \Omega , k=1,\ldots ,d. \end{aligned}$$

Proof

The proof of Proposition 4.1 is similar to the one in [7, Theorems 2.5, 2.7, 2.8]. \(\square \)

Lemma 4.2

(Integrability condition) Assume that there exists a function \(H(\cdot ,\cdot )\) which is increasing in the second variable, such that for any \(r \ge 0\)

Then under assumptions (\({\mathbf{H }}_1\)) and (\({\mathbf{H }}_2\)), \(\Phi _\omega \) satisfies the following integrability condition for any \(t_0\ge 0\)

where \(M_0\) is determined by (3.1), \(0<\mu <\min \{1,M_0\}\) and \(\eta = -\,\log (1-\mu )\), and we use the notation

Proof

The proof follows directly from (3.6) for \(\Phi \) and \(\Psi \), and from (4.4), noting that for the inverse flow \(\Psi _\omega (s,t)^{\mathrm{T}} = \Phi _\omega (s,t)^{-1}\)

and that (4.4) is still satisfied for all \(s,t\in [t_0,t_0+1]\) due to the stationarity of the increments of Z. \(\square \)

Notice that condition (4.4) implies (\(\mathbf{H }_3^\prime \)) for almost all driving paths \(\omega \) due to the Birkhoff ergodic theorem. Moreover, \(\Gamma _p(\omega )\) is a random variable in \(L^r(\Omega ,{\mathcal {F}},{\mathbb {P}})\) for all \(r>0\). If the metric dynamical system \((\Omega , {\mathcal {F}}, {\mathbb {P}}, (\theta _t)_{t\in {\mathbb {R}}})\) is ergodic, it is known that \(\Gamma _p(\omega )=E\left| \left| \left| Z \right| \right| \right| ^p_{p\mathrm{-var},[0,1]} \) almost surely. As a result, the estimate (3.5) implies the following theorem.

Theorem 4.3

Under assumptions (\({\mathbf{H }}_1\)) and (\({\mathbf{H }}_2\)) and condition (4.4), for each \(k=1,\ldots , d\) the Lyapunov exponent \(\lambda _k(\omega )\) is of finite moments of any order \(r>0\). More precisely,

In particular, if the metric dynamical system \((\Omega , {\mathcal {F}}, {\mathbb {P}}, (\theta _t)_{t\in {\mathbb {R}}})\) is ergodic, the Lyapunov spectrum is bounded a.s. by non-random constants as follows:

Remark 4.4

Assumption (4.4) is satisfied in case Z is a fractional Brownian motion with \(H> \frac{1}{2}\), see [22, Corollary 1.9.2]. Indeed, applying the Garsia–Rodemich–Rumsey inequality, see [24, Lemma 7.3, Lemma 7.4], we see that for any fixed \(r\ge 1\) and \(\frac{1}{2}<\nu < H\)

Moreover, it is known in [13] that Z can be defined on a metric dynamical system \((\Omega , {\mathcal {F}}, {\mathbb {P}}, (\theta _t)_{t\in {\mathbb {R}}})\) which is ergodic.

4.2 Almost Sure Lyapunov Regularity

In this subsection, for simplicity of presentation we consider all the equations on the whole time line \({\mathbb {R}}\). The half-line case \({\mathbb {R}}^+\) can be easily treated in a similar manner.

We start the subsection with a very special situation in which the coefficient functions are autonomous, i.e. \(A(\cdot ) \equiv A, C(\cdot ) \equiv C\). In this case, the stochastic two-parameter flow \(\Phi _\omega (s,t)\) of (4.3) generates a linear random dynamical system \(\Phi ^\prime \) (see e.g. Arnold [1, Chapter 1] for the definition of random dynamical systems). Indeed, from (4.1) and the fact that

it follows due to the autonomy that \(\Phi _\omega (s,t)= \Phi (t-s,\theta _s \omega )\). Hence \(\Phi ^\prime (t,\omega ):= \Phi _\omega (0,t)\) satisfies the cocycle property

Setting \(t_0 = 0\), it follows from (4.5) that

By applying the multiplicative ergodic theorem (see Oseledets [25] and Arnold [1, Chapter 3]) for \(\Phi ^\prime \) generated from (4.3), there exists a Lyapunov spectrum consisting of exact Lyapunov exponents provided by the multiplicative ergodic theorem and it coincides with the Lyapunov spectrum defined in Definition 3.1. In addition, the flag of Oseledets’ subspaces coincides with the flag of Lyapunov spaces.
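
The autonomous case can be made concrete in dimension one. Since Young integration obeys the classical chain rule, the scalar equation \(dx = ax\,dt + cx\,d\omega \) with \(\omega (0)=0\) has the explicit solution operator \(\exp (at + c\,\omega (t))\). The sketch below uses this formula to check the cocycle identity in its usual form \(\Phi ^\prime (t+s,\omega ) = \Phi ^\prime (t,\theta _s\omega )\Phi ^\prime (s,\omega )\) and to evaluate a finite-time Lyapunov exponent; the driving path, the coefficients a, c and the function names are hypothetical choices made for illustration.

```python
import math

A_COEF, C_COEF = -0.5, 2.0  # hypothetical constant coefficients a and c

def omega(s):
    # hypothetical driving path with omega(0) = 0 and omega(t)/t -> 0 as t -> infinity
    return math.sin(s) + math.log1p(abs(s))

def shift(path, s):
    """Wiener shift: theta_s(path)(u) = path(s + u) - path(s)."""
    return lambda u: path(s + u) - path(s)

def log_phi(t, path):
    """Logarithm of the scalar solution operator of dx = a*x dt + c*x d(path) on [0, t]."""
    return A_COEF * t + C_COEF * path(t)

def phi(t, path):
    return math.exp(log_phi(t, path))

# cocycle identity phi(t + s, omega) = phi(t, theta_s omega) * phi(s, omega)
s, t = 1.7, 3.2
lhs = phi(t + s, omega)
rhs = phi(t, shift(omega, s)) * phi(s, omega)

# finite-time Lyapunov exponent (1/T) log|phi(T, omega)|, computed on the log scale
T = 1.0e6
exponent = log_phi(T, omega) / T

print(lhs, rhs, exponent)
```

For this path the exponent approaches a, because \(\omega (t)/t \rightarrow 0\); working with the logarithm avoids floating-point underflow at large T.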

The same conclusions hold if the system is periodic with period r, i.e. \(A(\cdot + r) = A(\cdot ), C(\cdot +r) = C(\cdot )\). In fact, we can prove that \(\Phi _\omega (s,t) = \Phi _{\theta _r \omega }(s-r,t-r)\) and

In this case, (4.3) generates a discrete random dynamical system \(\{\Phi (nr,\omega )\}_{n\in {\mathbb {Z}}}\) which satisfies the integrability condition (4.5). Hence all the conclusions of the MET hold and almost every pathwise system is Lyapunov regular.

In general, it might not be true that system (4.3) is regular for almost all \(\omega \). However, under further assumptions on A and C, we can construct a linear random dynamical system such that almost all the pathwise systems are Lyapunov regular. The construction uses the so-called Bebutov flow, as investigated by Millionshchikov [20, 21] (see also [14, 26, 27]). Specifically, assume that A satisfies a stronger condition that

Consider the shift dynamical system \(S^A_t(A)(\cdot ):= A(\cdot +t)\) in the space \({\mathcal {C}}^b = {\mathcal {C}}^b({\mathbb {R}},{\mathbb {R}}^{d \times d})\) of bounded and uniformly continuous matrix-valued functions on \({\mathbb {R}}\) with the supremum norm. The closed hull \({\mathcal {H}}^A:=\overline{\cup _t S^A_t(A)}\) in \({\mathcal {C}}^b\) is then compact, hence we can construct on \({\mathcal {H}}^A\) a probability structure such that \(({\mathcal {H}}^A,{\mathcal {F}}^A,\mu ^A, S^A)\) is a probability space where \(\mu ^A\) is an \(S^A\)-invariant probability measure, see e.g. [15, Theorem 4.9, p. 63].

When applying Millionshchikov’s approach of using Bebutov flows to our system (4.3), we need to construct not only \(({\mathcal {H}}^A,{\mathcal {F}}^A,\mu ^A, S^A)\), but also \(({\mathcal {H}}^C,{\mathcal {F}}^C,\mu ^C, S^C)\), with a slightly stronger regularity condition for C. Recall that \({\mathcal {C}}^{0,\alpha -\mathrm{Hol}}([a,b],{\mathbb {R}}^{d \times d})\) is the closure of smooth paths from [a, b] to \({\mathbb {R}}^{d \times d}\) in \(\alpha \)-Hölder norm and \({\mathcal {C}}^{0,\alpha -\mathrm{Hol}}({\mathbb {R}},{\mathbb {R}}^{d \times d})\) is the space of all \(x: {\mathbb {R}}\rightarrow {\mathbb {R}}^{d \times d}\) such that \(x|_I \in {\mathcal {C}}^{0,\alpha -\mathrm{Hol}}(I,{\mathbb {R}}^{d \times d})\) for each compact interval \(I\subset {\mathbb {R}}\), equipped with the compact open topology given by the Hölder norm, i.e. the topology generated by the metric

Following [15, Chapter 2, p. 62], for any \(c \in {\mathcal {C}}^{0,\alpha -\mathrm{Hol}}({\mathbb {R}},{\mathbb {R}}^{d \times d})\), any interval [a, b] and \(\delta >0\), we define the \(\alpha \)-Hölder modulus on [a, b]:

By the same arguments as in [15, Theorems 4.9, 4.10, pp. 62–64] we get the following result, of which the proof is given in the “Appendix”.

Lemma 4.5

A set \({\mathcal {H}}\subset {\mathcal {C}}^{0,\alpha -\mathrm{Hol}}({\mathbb {R}},{\mathbb {R}}^k)\) has a compact closure if and only if the following conditions hold:

To construct a Bebutov flow for C, assume that there exists \(\alpha > \frac{1}{q}\) such that \(C \in {\mathcal {C}}^{0,\alpha -\mathrm{Hol}}({\mathbb {R}}, {\mathbb {R}}^{d\times d})\) satisfies a condition stronger than (\(\mathbf{H }_2\)):

Consider the set of translations \(C_r (\cdot ):= C(r + \cdot ) \in {\mathcal {C}}^{0,\alpha -\mathrm{Hol}}({\mathbb {R}}, {\mathbb {R}}^{d\times d})\). Under conditions (4.8), Lemma 4.5 implies that the closure \({\mathcal {H}}^C:=\overline{\{C_r: r\in {\mathbb {R}}\}}\) is compact in the separable completely metrizable topological space \({\mathcal {C}}^{0,\alpha -\mathrm{Hol}}({\mathbb {R}},{\mathbb {R}}^{d \times d})\) (the separability of \({\mathcal {C}}^{0,\alpha -\mathrm{Hol}}({\mathbb {R}},{\mathbb {R}}^{d \times d})\) comes from the separability of \({\mathcal {C}}^{0,\alpha -\mathrm{Hol}}([-m,m],{\mathbb {R}}^{d \times d})\) [12, Proposition 5.36, p. 98] and the characteristics of the metric d(x, y), see also [2, Proposition 1]); in fact \(\theta _t\) also preserves the norm on \({\mathcal {C}}^{0,\alpha -\mathrm{Hol}}({\mathbb {R}},{\mathbb {R}}^{d \times d})\). The shift dynamical system \(S^C_t c(\cdot ) = c(t + \cdot )\) maps \({\mathcal {H}}^C\) into itself, hence by the Krylov–Bogoliubov theorem [23, Chapter VI, §9], there exists at least one probability measure \(\mu ^C\) on \({\mathcal {H}}^C\) that is invariant under \(S^C\), i.e. \(\mu ^C (S^C_t \cdot ) = \mu ^C(\cdot )\), for all \(t \in {\mathbb {R}}\).

It makes sense then to construct the product probability space \({\mathbb {B}} = {\mathcal {H}}^A \times {\mathcal {H}}^C \times \Omega \) with the product sigma field \({\mathcal {F}}^A \times {\mathcal {F}}^C \times {\mathcal {F}}\), the product measure \(\mu ^{{\mathbb {B}}}:=\mu ^A \times \mu ^C \times {\mathbb {P}}\) and the product dynamical system \(\Theta = S^A \times S^C \times \theta \) given by

Now for each point \(b = ({\tilde{A}},{\tilde{C}},\omega ) \in {\mathbb {B}}\), the fundamental (matrix) solution \(\Phi ^*(t,b)\) of the equation

defined by \(\Phi ^*(t,b)x_0 := x(t)\), satisfies the cocycle property due to the existence and uniqueness theorem and the fact that

Therefore the nonautonomous linear YDE (4.9) generates a cocycle (random dynamical system) \(\Phi ^*: {\mathbb {R}}\times {\mathbb {B}} \times {\mathbb {R}}^d \rightarrow {\mathbb {R}}^d\) over the metric dynamical system \(({\mathbb {B}},\mu ^{{\mathbb {B}}})\). Thus, starting from the investigation of one linear stochastic nonautonomous YDE (4.3), we consider it \(\omega \)-wise and embed it into a Bebutov flow using Millionshchikov’s approach [21], and hence construct a random dynamical system over the product probability space for which the following statement holds.

Theorem 4.6

(Millionshchikov theorem) Under assumptions (\({\mathbf{H }}_1^\prime \)), (\({\mathbf{H }}_2^\prime \)) and (4.4), the nonautonomous linear stochastic (\(\omega \)-wise) Young Eq. (4.9) is Lyapunov regular for almost all \(b \in {\mathbb {B}}\) in the sense of the probability measure \(\mu ^{{\mathbb {B}}}\).

Proof

The integrability condition for the product probability measure \(\mu ^{\mathbb {B}}\) is a direct consequence of (4.5). Hence all the conclusions of the multiplicative ergodic theorem hold for almost all \(b \in {\mathbb {B}}\), which implies the Lyapunov regularity of (4.9) for almost all \(b\in {\mathbb {B}}\) in the sense of the probability measure \(\mu ^{{\mathbb {B}}}\). \(\square \)

Remark 4.7

(i) In [20, 21], Millionshchikov proved the Lyapunov regularity (almost surely with respect to an arbitrary invariant measure of the Bebutov flow on \({\mathcal {H}}^A\) generated by the ordinary differential equation \({\dot{x}} = A(t)x\)), using the triangularization scheme provided by the Perron theorem for ordinary differential equations. In other words, Millionshchikov obtained an alternative proof of the multiplicative ergodic theorem (see also Arnold [1, p. 112], Johnson et al. [14]). In fact, Millionshchikov proved a property slightly stronger than Lyapunov regularity, namely that almost all such systems are statistically regular.

(ii) Theorem 4.6 can be viewed as a version of the multiplicative ergodic theorem for a nonautonomous linear stochastic Young differential equation which combines the approach of Millionshchikov [21] (topological setting using the Bebutov flow for differential equations) with that of Oseledets [25] (measurable setting with probability space \((\Omega ,{\mathcal {F}},{\mathbb {P}})\)).

(iii) It is important to note that, although for almost all \(b\in {\mathbb {B}}\) the nonautonomous linear stochastic (\(\omega \)-wise) Young Eq. (4.9) is Lyapunov regular, it does not follow that the original system (4.3) is Lyapunov regular.

4.3 Discussions on the Non-randomness of Lyapunov Exponents

Since we are dealing with the stochastic YDE (4.3), it is important and interesting to know whether its Lyapunov spectrum is nonrandom. We give here a brief discussion of this problem.

We remind the reader of the non-randomness of Lyapunov exponents \(\lambda _1(\omega ),\ldots , \lambda _d(\omega )\) for systems driven by standard Brownian noises (see e.g. [7, 10]). Since Theorem 3.3 still holds in that situation and the definition of Lyapunov exponent does not depend on the initial time \(t_0\), it follows that \(\lambda _k(\omega )\) is measurable with respect to the sigma algebra generated by \(\{W(n+1)-W(n): n\ge m\}\) for any \(m\ge 0\), thus measurable w.r.t. the tail sigma field \(\cap _m \sigma (\{W(n+1)-W(n): n\ge m\})\). Due to the independence of the variables \(W(n+1)-W(n)\), one can apply Kolmogorov’s zero-one law [15] to conclude that the Lyapunov exponents are in fact non-random constants. Thus we have nonrandomness of the Lyapunov spectrum in the case of nonautonomous linear stochastic differential equations driven by standard Brownian motions. Note that here the Lyapunov exponents of the systems can be nonexact.

In general, a stochastic process Z does not have independent increments, thus it is difficult to construct such a filtration and to apply Kolmogorov’s zero-one law. However, a second case of nonrandom Lyapunov spectrum is that of autonomous or periodic linear stochastic Young equations, discussed at the beginning of Sect. 4.2, where we may apply the classical Oseledets MET by exploiting the autonomy or periodicity of the system. Note that in this case the probability measure is the probability measure of the process Z and the Lyapunov exponents of the systems are exact.

The third case is that of triangular nonautonomous linear stochastic Young differential equations treated in Sect. 3. In this case, due to the triangular form of the system we may solve it successively and use the explicit formula of the solution to derive Theorem 3.7, showing that the Lyapunov spectrum consists of exact Lyapunov exponents and is nonrandom. Note that in this case the system is nonautonomous, the measure is the probability measure of the process Z and the Lyapunov exponents of the systems are exact.

For a general system (4.3) which satisfies assumptions (\({\mathbf{H }}_1^\prime \)), (\({\mathbf{H }}_2^\prime \)) and (4.4), the statement on the non-randomness of the Lyapunov spectrum depends on whether the product dynamical system \(\Theta \) is ergodic with respect to the product probability measure \(\mu ^{\mathbb {B}}\), as a consequence of the Birkhoff ergodic theorem. The answer is then affirmative in case \(S^A\) and \(S^C\) are weakly mixing and \(\theta \) is ergodic, i.e. \(S^A\) (respectively \(S^C\)) satisfies the condition

(respectively for \(S^C\)). It is well known (see e.g. Mañé [17, p. 147]) that the weak mixing of \(S^A\) and \(S^C\) implies the weak mixing of the product dynamical system \(S^A \times S^C\) which, together with the ergodicity of \(\theta \), implies the ergodicity of the product flow \(\Theta \). The problem on non-randomness of Lyapunov spectrum can therefore be translated into the question on the weak-mixing of dynamical systems \(S^A\) and \(S^C\).
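
For reference, the usual formulation of weak mixing for a measure-preserving flow can be written as follows. This is the standard textbook definition, given here in our own notation (the measurable sets U, V are not named in the text), and is meant only as a reminder:

$$\begin{aligned} \lim _{T\rightarrow \infty } \frac{1}{T}\int _0^T \Big | \mu ^A\big (S^A_{-t}U \cap V\big ) - \mu ^A(U)\mu ^A(V)\Big | dt = 0 \qquad \hbox {for all}\; U,V \in {\mathcal {F}}^A. \end{aligned}$$

Ergodicity corresponds to the weaker requirement that the same limit holds without the absolute value inside the integral.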

References

Arnold, L.: Random Dynamical Systems. Springer, Berlin (1998)

Bailleul, I., Riedel, S., Scheutzow, M.: Random dynamical systems, rough paths and rough flows. J. Differ. Equ. 262(12), 5792–5823 (2017)

Barreira, L.: Lyapunov Exponents. Birkhäuser, Basel (2017)

Bylov, B.F., Vinograd, R.E., Grobman, D.M., Nemytskii, V.V.: Theory of Lyapunov Exponents. Nauka, Moscow (1966). in Russian

Cass, T., Litterer, C., Lyons, T.: Integrability and tail estimates for Gaussian rough differential equations. Ann. Probab. 41(4), 3026–3050 (2013)

Cong, N.D.: On central and auxiliary exponents of linear equations with coefficients perturbed by a white noise. Differentsial’nye Uravneniya 26, 420–427 (1990). English transl. in Differential Equations 26(3), 307–313 (1990)

Cong, N.D.: Lyapunov spectrum of nonautonomous linear stochastic differential equations. Stoch. Dyn. 1(1), 1–31 (2001)

Cong, N.D.: Almost all nonautonomous linear stochastic differential equations are regular. Stoch. Dyn. 4(3), 351–371 (2004)

Cong, N.D., Duc, L.H., Hong, P.T.: Young differential equations revisited. J. Dyn. Differ. Equ. 30(4), 1921–1943 (2018)

Cong, N.D., Quynh, N.T.T.: Lyapunov exponents and central exponents of linear Ito stochastic differential equations. Acta Math. Vietnam. 36, 35–53 (2011)

Demidovich, B.P.: Lectures on Mathematical Theory of Stability. Nauka, Moscow (1967). In Russian

Friz, P., Victoir, N.: Multidimensional stochastic processes as rough paths: theory and applications. Cambridge Studies in Advanced Mathematics, 120. Cambridge University Press, Cambridge (2010)

Garrido-Atienza, M.J., Schmalfuß, B.: Ergodicity of the infinite dimensional fractional Brownian motion. J. Dyn. Differ. Equ. 23, 671–681 (2011). https://doi.org/10.1007/s10884-011-9222-5

Johnson, R.A., Palmer, K.J., Sell, G.R.: Ergodic properties of linear dynamical systems. SIAM J. Math. Anal. 18(1), 1–33 (1987)

Karatzas, I., Shreve, S.: Brownian Motion and Stochastic Calculus, 2nd edn. Springer, Berlin (1991)

Kunita, H.: Stochastic Flows and Stochastic Differential Equations. Cambridge University Press, Cambridge (1990)

Mañé, R.: Ergodic Theory and Differentiable Dynamics. Springer, Berlin (1987)

Millionshchikov, V.M.: Formulae for Lyapunov exponents of a family of endomorphisms of a metrized vector bundle. Mat. Zametki, 39, 29–51. English translation in Math. Notes, 39, 17–30 (1986)

Millionshchikov, V.M.: Formulae for Lyapunov exponents of linear systems of differential equations. Trans. I. N. Vekua Institute of Applied Mathematics, 22, 150–179 (1987)

Millionshchikov, V.M.: Statistically regular systems. Math. USSR-Sbornik 4(1), 125–135 (1968)

Millionshchikov, V.M.: Metric theory of linear systems of differential equations. Math. USSR-Sbornik 4(2), 149–158 (1968)

Mishura, Y.: Stochastic calculus for fractional Brownian motion and related processes. Lecture notes in Mathematics, Springer, Berlin (2008)

Nemytskii, V.V., Stepanov, V.V.: Qualitative theory of differential equations. Princeton University Press, English translation (1960)

Nualart, D., Răşcanu, A.: Differential equations driven by fractional Brownian motion. Collect. Math. 53(1), 55–81 (2002)

Oseledets, V.I.: A multiplicative ergodic theorem. Lyapunov characteristic numbers for dynamical systems. Trans. Moscow Math. Soc. 19, 197–231 (1968)

Sacker, R.J., Sell, G.: Lifting properties in skew-product flows with applications to differential equations. Memoirs of the American Mathematical Society, 11(190) (1977)

Sell, G.: Nonautonomous differential equations and topological dynamics. I. The basic theory. Trans. Am. Math. Soc. 127(2), 241–262 (1967)

Young, L.C.: An inequality of the Hölder type, connected with Stieltjes integration. Acta Math. 67, 251–282 (1936)

Zähle, M.: Integration with respect to fractal functions and stochastic calculus. I. Probab. Theory Relat. Fields 111(3), 333–374 (1998)

Acknowledgements

Open access funding provided by Max Planck Society. This research is partly funded by Vietnam National Foundation for Science and Technology Development (NAFOSTED) under Grant Number FWO.101.2017.01.

In memory of V. M. Millionshchikov.

Appendix

Proof of Proposition 2.2

The proof follows the same techniques in [24] and in [9] with some modifications. First, consider \(x\in {\mathcal {C}}^{q\mathrm{-var}}([a,b],{\mathbb {R}}^d)\) with some \([a,b] \subset [t_0,t_0+T]\). Define the mapping given by

Then \(F(x)\in {\mathcal {C}}^{p\mathrm{-var}}([a,b],{\mathbb {R}}^{d})\) and direct computations show that for every \([s,t]\subset [a,b]\)

where

Similar to [9], for a given \(0<\mu <\min \{1,M^*\}\), where \(M^*\) is defined by (2.6), we construct the sequence of strictly increasing greedy times \(\tau _n\) with \(\tau _0 = 0\) satisfying

Then \(\tau _k\rightarrow \infty \) as \(k\rightarrow \infty \) (see the proof in [9]). Denote by \(N(a,b,\omega )\) the number of \(\tau _k\) in the finite interval (a, b], then from [9]

Without loss of generality assume that \(t_0=0\). Define the set

It is easy to check that B is a closed ball in Banach space \( {\mathcal {C}}^{q\mathrm{-var}}([\tau _0,\tau _1],{\mathbb {R}}^d)\). Using (5.2), (5.4) and the fact that \(p<q\), we have

Hence, \(F:B\rightarrow B\). On the other hand, by (5.3) and (5.4), for any \(x,y\in B\)

Since \(\mu <1\), F is a contraction mapping on B. We conclude that there exists a unique fixed point of F in B, i.e. there exists a local solution of (2.3) on \([\tau _0,\tau _1]\). By induction we obtain the solution on \([\tau _i,\tau _{i+1}]\) for \(i=1,\ldots ,N(0,T,\omega )-1\) and finally on \([\tau _{N(0,T,\omega )-1},T]\). The global solution of (2.3) then exists uniquely. From (5.2) it is obvious that the solution is in \( {\mathcal {C}}^{p\mathrm{-var}}([t_0,t_0+T],{\mathbb {R}}^d)\). Estimate (2.4) can then be derived using similar arguments to [9, Remark 3.4iii]. In fact,

thus by induction, it is evident that

Combining this with (5.5), we obtain (2.4).
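
The greedy times used in this construction can be illustrated on a discretized path. The sketch below is only an illustration under stated assumptions: it assumes that the stopping criterion has the form \((t-\tau _n) + \left| \left| \left| \omega \right| \right| \right| _{p\mathrm{-var},[\tau _n,t]} \ge \mu \) (the exact criterion is the one fixed in the proof), it computes the p-variation over grid partitions only, which gives a lower bound for the p-variation of a continuous path, and the function names are hypothetical.

```python
def p_var_norm(path, i, j, p):
    """p-variation seminorm of the discrete path on indices i..j (O(n^2) dynamic programme)."""
    best = [0.0] * (j - i + 1)  # best[k]: sup over partitions of i..i+k of sum |increment|^p
    for k in range(1, j - i + 1):
        best[k] = max(best[m] + abs(path[i + k] - path[i + m]) ** p for m in range(k))
    return best[-1] ** (1.0 / p)

def greedy_times(times, path, p, mu):
    """Grid indices at which (t - tau) + p-variation seminorm on [tau, t] first reaches mu."""
    taus, start = [0], 0
    for j in range(1, len(times)):
        if (times[j] - times[start]) + p_var_norm(path, start, j, p) >= mu:
            taus.append(j)
            start = j  # restart the criterion from the new greedy time
    return taus

# hypothetical test path omega(t) = t on a dyadic grid of [0, 1]
n = 64
times = [k / n for k in range(n + 1)]
path = list(times)
taus = greedy_times(times, path, p=1.5, mu=0.49)
print([times[k] for k in taus])
```

For a monotone path the p-variation seminorm on an interval equals the absolute increment, so in this test case the greedy times are equally spaced; for a rougher path they cluster where the path oscillates strongly, which is what controls the number \(N(a,b,\omega )\) of greedy times in an interval.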

Since

for all \(s<t\in [t_0, t_0+T]\), arguing as in [9, Corollary 3.5] we obtain

Next, fix \((x_0,\omega )\) and consider \((x_0',\omega ')\) in the ball centered at \((x_0,\omega )\) with radius 1.

Put \(x(\cdot ) = x(\cdot , t_0,x_0,\omega )\), \(x'(\cdot ) = x(\cdot , t_0,x'_0,\omega ')\) and \(y(\cdot ) =x(\cdot )-x'(\cdot ) \). By (2.4) and (2.5), we can choose a positive number \(D_1\) (depending on \(M^*,x_0,\omega \)) such that

We have

which yields

By applying [9, Corollary 3.5], we obtain

where \(D_2, D_3\) are constants depending on x and \(M^*\). Therefore

with some constant \(D_4\), which proves the continuity of X. \(\square \)

1.1 Young Integral on Infinite Domain

Consider \(f:{\mathbb {R}}^+\rightarrow {\mathbb {R}}\) such that \(\int _a^b f(s)d\omega (s)\) exists for all \(a<b\in {\mathbb {R}}^+\). Fixing \(t_0\ge 0\), we define \(\int _{t_0}^\infty f(s)d\omega (s)\) as the limit \(\displaystyle \lim _{t\rightarrow \infty }\int _{t_0}^{t}f(s)d\omega (s)\) if the limit exists and is finite. In this case,

By assumption (\({\mathbf{H }}_3\)) the sequence \(\Big \{\frac{ \left| \left| \left| \omega \right| \right| \right| _{p\mathrm{-var},[k,k+1]}}{k},\; k\ge 1 \Big \}\) is bounded.

Lemma 5.1

Consider \(G(t) = \int _0^tg(s)d\omega (s)\), where g is a function of bounded \(q-\)variation on every compact interval. If \(\chi (g(t)),\;\chi (\left| \left| \left| g\right| \right| \right| _{q\mathrm{-var},[t,t+1]})\le \lambda \in [0,+\infty )\) then

Proof

Since \(\chi (g(t)),\;\chi (\left| \left| \left| g\right| \right| \right| _{q\mathrm{-var},[t,t+1]})\le \lambda \), for any \(\varepsilon >0\), there exists \(D_1=D_1(\varepsilon )\) such that \(|g(s)|\le D_1e^{(\lambda +\varepsilon /2)s}\), \(\left| \left| \left| g\right| \right| \right| _{q\mathrm{-var},[s,s+1]}\le D_1e^{(\lambda +\varepsilon /2)s}\) for all \(s>0\). Then

where \(D_2\) is a generic constant depending on \(\varepsilon \). This yields \(\chi (G(t))\le \lambda \).

Next, fix \(t_0> 0\) then \([t_0,t_0+1]\subset [n_0,n_0+2]\) with some \(n_0\in {\mathbb {N}}\). For each \(s,t\in [t_0,t_0+1]\) we have

Hence

which implies \(\chi (\left| \left| \left| G\right| \right| \right| _{q\mathrm{-var},[t,t+1]})\le \lambda \). \(\square \)

Lemma 5.2

Let g be a function of bounded \(q-\)variation on every compact interval, satisfying \(\chi (g(t)),\;\chi (\left| \left| \left| g\right| \right| \right| _{q\mathrm{-var},[t,t+1]})\le -\lambda \in (-\infty ,0)\). Then the integral \(G(t) := \int _t^\infty g(s)d\omega (s)\) exists for all \(t\in {\mathbb {R}}^+\) and

Proof

For each \(\varepsilon >0\) such that \(2\varepsilon <\lambda \), there exists a constant \(D_1\) such that

Now fix \(t_0 \ge 0\). We first prove the existence and finiteness of \(\lim \nolimits _{\begin{array}{c} n\in {\mathbb {N}}\\ n\rightarrow \infty \end{array}}\int _{t_0}^ng(s)d\omega (s)\). For all \(n<m\in {\mathbb {N}}\) we have

which converges to zero as \(n,m \rightarrow \infty \) since \(\frac{ \left| \left| \left| \omega \right| \right| \right| _{p\mathrm{-var},[k,k+1]}}{k}\) are bounded and the series \(\sum _{k=0}^{\infty }ke^{-k\varepsilon /2}\) converges. Therefore \(\lim \nolimits _{\begin{array}{c} n\in {\mathbb {N}}\\ n\rightarrow \infty \end{array}}\int _{t_0}^ng(s)d\omega (s)<\infty \). Moreover, for \(t>t_0\) by a similar estimate we have

as \(t\rightarrow \infty .\) This implies the existence of \(\int _{t_0}^\infty g(s)d\omega (s) \). Moreover, \(|G(t)|\le C(\varepsilon )e^{(-\lambda +2\varepsilon )t}\) which yields \(\chi (G(t))\le -\lambda \).

The second conclusion can be proved similarly to Lemma 5.1. \(\square \)

The following lemma shows that the condition (\({\mathbf{H }}_4\)) is satisfied for almost all realizations \(\omega \) of a fractional Brownian motion \(B^H_t(\omega )\) (see [22] for the definition and details on fractional Brownian motions).

Lemma 5.3

Assume that \(c_0:=\Vert c\Vert _{\infty ,{\mathbb {R}}^+} < \infty \) and the integral

exists for all \(t\in {\mathbb {R}}^+\). Then \(\lim \nolimits _{\begin{array}{c} n\rightarrow \infty \\ n\in {\mathbb {N}} \end{array}} \frac{X(n,\cdot )}{n} = \lim \limits _{\begin{array}{c} n\rightarrow \infty \\ n\in {\mathbb {N}} \end{array}} \ \frac{\int _0^nc(s)dB^H_s}{n} = 0,\ \text {a.s.} \)

Proof

Fix \(T>0\), and assume that \(\pi _n\) is a sequence of partitions of [0, T] such that \(\mathrm{mesh}(\pi _n) \rightarrow 0\) as \(n\rightarrow \infty \). Denote

Then \(X_n(t,\omega ) \rightarrow X(t,\omega )\) as \(n\rightarrow \infty \). It is evident that \(X_n\) is a Gaussian random variable with mean zero. Since

for all \(v<u\le s<t\) (see [22, pp. 7–8]), we have

where D(H) is a generic constant depending on H.

Since \(X_n \rightarrow X\) a.s., \(X(t,\cdot )\) is a centered normal random variable with \(V(X(t,\cdot ))\le D(H)c^2_0t^{2H}\). It follows that \(EX(t,\cdot )^{2k}\le D(H,k,c_0) t^{2kH}\), where \(D(H,k,c_0)\) is a constant depending on \(H,k,c_0\). Fix \(0<\varepsilon < 1-H\) and choose k large enough so that \(k(1-\varepsilon -H) \ge 1\). We then have

Using the Borel–Cantelli lemma, we conclude that \(\frac{X(n,\cdot )}{n} \rightarrow 0\) as \(n\rightarrow \infty \) almost surely. \(\square \)
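The variance estimate \(V(X_n(t,\cdot ))\le D(H)c_0^2t^{2H}\) used in the proof above can be checked numerically, because the variance of a Riemann sum against a fractional Brownian motion is an explicit double sum of increment covariances. The sketch below is only an illustration under stated assumptions: it uses the standard covariance \(E[B^H_tB^H_s]=\frac{1}{2}(t^{2H}+s^{2H}-|t-s|^{2H})\), a uniform partition with left-endpoint evaluation, and the test integrand \(c=\cos \) (so \(c_0=1\)); none of these choices, nor the function names, are taken from the paper.

```python
import math

def fbm_increment_cov(a, b, c, d, H):
    """E[(B_b - B_a)(B_d - B_c)] for a fractional Brownian motion with Hurst index H."""
    p = 2 * H
    return 0.5 * (abs(a - d) ** p + abs(b - c) ** p
                  - abs(b - d) ** p - abs(a - c) ** p)

def riemann_sum_variance(c, T, n, H):
    """Exact variance of sum_i c(t_i) (B_{t_{i+1}} - B_{t_i}) on a uniform partition of [0, T]."""
    t = [T * i / n for i in range(n + 1)]
    var = 0.0
    for i in range(n):
        for j in range(n):
            var += (c(t[i]) * c(t[j])
                    * fbm_increment_cov(t[i], t[i + 1], t[j], t[j + 1], H))
    return var

H, T = 0.7, 5.0                 # illustrative Hurst index and horizon
bound = T ** (2 * H)            # c_0^2 * T^{2H} with c_0 = 1
var_cos = riemann_sum_variance(math.cos, T, 200, H)
var_const = riemann_sum_variance(lambda s: 1.0, T, 200, H)  # telescopes to Var(B_T)
print(var_cos, var_const, bound)
```

For \(H>\frac{1}{2}\) increments over non-overlapping intervals are non-negatively correlated, so in this particular setup the double sum is dominated by its value for \(c\equiv c_0\), which telescopes to \(c_0^2T^{2H}\). The constant in the paper's estimate may be obtained differently.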

Proof of Lemma 4.5

The if part is obvious since it can be proved that

which shows the continuity of m on \({\mathcal {C}}^{0,\alpha -\mathrm{Hol}}({\mathbb {R}},{\mathbb {R}}^k)\). Hence m is uniformly continuous on a compact set, which shows (4.6) and (4.7).

To be more precise, denote by \({\tilde{C}}\) the space \({\mathcal {C}}^{0,\alpha -\mathrm{Hol}}({\mathbb {R}},{\mathbb {R}}^k)\). Assume that \({\mathcal {H}}\) is compact in \({\tilde{C}}\); we prove that (4.6) and (4.7) are fulfilled. For each \(n\in {\mathbb {N}}^*\), put

Then \(G_n\) is open in \({\tilde{C}}\).

Since \({\overline{{\mathcal {H}}}} \subset \bigcup _{n=1}^{\infty }G_n\) and \(G_n\) is an increasing sequence of open sets, there exists \(n_0\) such that \({\mathcal {H}}\subset G_{n_0}\), which proves (4.6).

To prove (4.7), first note that for each \(c\in {\tilde{C}}\) and \([a,b] \subset {\mathbb {R}}\), \(\lim \nolimits _{\delta \rightarrow 0} m^{[a,b]}(c,\delta ) =0\) (see [12, Theorem 5.31, p. 96]). Secondly

Indeed, due to the definition of \(m^{[a,b]}(c,\delta )\), for any \(\varepsilon >0\) there exist two points \(s_0,t_0\in [a,b]\), \(0<|s_0-t_0|\le \delta \), such that

On the other hand, \(m^{[a,b]}(c',\delta )\ge \frac{|c'(t_0)-c'(s_0)|}{|t_0-s_0|^\alpha }\), which yields

Exchanging the roles of c and \(c'\) we obtain

since \(\varepsilon \) is arbitrary.

We now prove the continuity of the map

In fact, fix n such that \([-n,n]\) contains [a, b]. For each \(c_0\in {\tilde{C}}\) and \(\varepsilon \in (0,1)\) choose \(\eta =\varepsilon /2^n\). If \(d(c,c_0)<\eta \) we have \(\Vert c-c_0\Vert _{\alpha ,[-n,n]}\wedge 1\le 2^nd(c,c_0)< \varepsilon \). Therefore

Next, fix \(\varepsilon >0\) and define the set

Then \(K_{\delta }\) is closed for all \(\delta \). Due to the fact that \(\lim \nolimits _{\delta \rightarrow 0} m^{[a,b]}(c,\delta ) =0\) for all \(c\in {\tilde{C}}\), we have \(\displaystyle \bigcap _{\delta >0}K_{\delta }=\emptyset \). Then there exists \(\delta =\delta (\varepsilon )>0\) such that \(K_{\delta }=\emptyset \), which proves (4.7).

For the “only if” part, assume that (4.6) and (4.7) hold; we are going to prove the compactness of \({\bar{{\mathcal {H}}}}\). Since \({\tilde{C}}\) is a complete metric space, it suffices to prove that every sequence \(\{c_n\}_{n=1}^\infty \subset {\mathcal {H}}\) has a convergent subsequence. Following the arguments of [15, Theorem 4.9, p. 63] line by line, we can construct a subsequence \(\{{\tilde{c}}_n\}_{n=1}^\infty \) by the “diagonal sequence” argument such that \({\tilde{c}}_n(r) \rightarrow c(r)\) as \(n\rightarrow \infty \) for any rational number \(r \in {\mathbb {Q}}\). With (4.6) and (4.7), \({\mathcal {H}}\) satisfies the condition in [15, Theorem 4.9, p. 63], hence \({\tilde{c}}_n\) converges uniformly to a continuous function c on every \([a,b]\subset {\mathbb {R}}\).

Fix [a, b]. By (4.7), for each \(\varepsilon >0\) there exists \(\delta _0>0\) such that if \(\delta \le \delta _0\), then \(\sup \nolimits _{\begin{array}{c} s,t\in [a,b] \\ |s-t|\le \delta \end{array}}\frac{|{\tilde{c}}_n(t)-{\tilde{c}}_n(s)|}{|t-s|^{\alpha }}\le \varepsilon \) for all n. Hence

thus \(c\in {\tilde{C}}\). Finally, we prove that \({\tilde{c}}_n\) converges to c in the Hölder seminorm on every compact interval [a, b]. Namely, with \(\varepsilon , \delta _0\) given, there exists \(n_0\) such that for all \(n\ge n_0\), \(\Vert {\tilde{c}}_n-c\Vert _{\infty ,[a,b]}\le \delta ^{\alpha }_0\varepsilon \). Then for \(n\ge n_0\)

which implies that \(\left| \left| \left| {\tilde{c}}_n-c\right| \right| \right| _{\alpha \mathrm{-Hol},[a,b]}\) converges to 0 as \(n\rightarrow \infty \). This completes the proof. \(\square \)
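The quantity \(m^{[a,b]}(c,\delta )\) that drives the proof above can be approximated on a grid. The sketch below assumes, from the way the proof uses it, that \(m^{[a,b]}(c,\delta )=\sup _{s,t\in [a,b],\,0<|s-t|\le \delta }\frac{|c(t)-c(s)|}{|t-s|^{\alpha }}\); the grid, the exponent \(\alpha =0.5\), the two test paths and the function name are illustrative assumptions. It shows the modulus shrinking as \(\delta \rightarrow 0\) and the gap between the moduli of two paths being controlled by the modulus of their difference.

```python
import math

def holder_modulus(values, step, alpha, delta):
    """Grid approximation of sup_{0 < |s-t| <= delta} |c(t) - c(s)| / |t - s|**alpha."""
    max_lag = int(delta / step + 1e-9)  # number of grid steps allowed by delta
    best = 0.0
    for lag in range(1, max_lag + 1):
        scale = (lag * step) ** alpha
        for i in range(len(values) - lag):
            best = max(best, abs(values[i + lag] - values[i]) / scale)
    return best

step, alpha = 0.01, 0.5
grid = [i * step for i in range(101)]                    # uniform grid on [0, 1]
c1 = list(grid)                                          # c(t) = t
c2 = [t + 0.1 * math.sin(5 * t) for t in grid]           # a perturbed path
diff = [x - y for x, y in zip(c1, c2)]                   # c - c'

deltas = [0.5, 0.25, 0.04, 0.01]
moduli = [holder_modulus(c1, step, alpha, d) for d in deltas]  # about sqrt(delta) for c(t) = t
gap = abs(holder_modulus(c1, step, alpha, 0.25) - holder_modulus(c2, step, alpha, 0.25))
control = holder_modulus(diff, step, alpha, 0.25)
print(moduli, gap, control)
```

For the Lipschitz path \(c(t)=t\) the grid modulus equals \(\delta ^{1-\alpha }\) up to rounding, which illustrates why \(m^{[a,b]}(c,\delta )\rightarrow 0\) for paths in the closure of smooth functions.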

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.


Cong, N.D., Duc, L.H. & Hong, P.T. Lyapunov Spectrum of Nonautonomous Linear Young Differential Equations. J Dyn Diff Equat 32, 1749–1777 (2020). https://doi.org/10.1007/s10884-019-09780-z
