Abstract

Scalar difference equations \(x_{k+1}=f(x_k,x_{k-d})\) with delay \(d\in {\mathbb {N}}\) are well-motivated from applications e.g. in the life sciences or discretizations of delay-differential equations. We investigate their global dynamics by providing a (nontrivial) Morse decomposition of the global attractor. Under an appropriate feedback condition on the second variable of f, our basic tool is an integer-valued Lyapunov functional.


1 Introduction

This paper studies the global dynamics of scalar difference equations

\[x_{k+1}=f(x_k,x_{k-d}) \tag{1.1}\]

involving an arbitrary delay \(d\in {\mathbb {N}}\), where f fulfills a suitable positive or negative feedback condition in the second variable. Such problems are of interest not only as time-discretizations of delay-differential equations, but they also intrinsically arise in a multitude of models in the life sciences (see [8, 9] for references). Particularly in the latter applications, much work so far has concentrated on providing (sufficient) conditions for the global asymptotic stability of a positive equilibrium of (1.1) (cf. [9, 11, 12]). Nevertheless, since (1.1) is a discrete-time model, it is no surprise that much more complicated dynamics can be expected.

Under quite natural and frequently met assumptions the delay-difference equations (1.1) are dissipative. This means their forward dynamics eventually enters a bounded subset of the state space. Hence, a global attractor \({\mathcal A}\) exists, which contains all bounded entire solutions and therefore all dynamically relevant objects, like for instance equilibria, periodic solutions, as well as homo- or heteroclinics. The dynamics on the attractor itself can be highly nontrivial due to e.g. a cascade of period doubling bifurcations and even chaotic dynamics might arise [13]. Beyond the pure existence of a global attractor, one is rather interested in as detailed a picture as possible of its interior structure. An adequate tool for this endeavor is a Morse decomposition of \({\mathcal A}\) for the following reasons:

- It allows to disassemble an attractor into finitely many invariant, compact subsets (the Morse sets) and their connecting orbits,
- the recurrent dynamics in \({\mathcal A}\) occurs entirely in the Morse sets,
- outside the Morse sets the dynamics of (1.1) on \({\mathcal A}\) is gradient-like.

On the one hand, identifying a nontrivial Morse decomposition of the attractor (along with the connecting orbits) provides a more detailed picture of the long-term behavior of (1.1), since every solution is attracted by exactly one Morse set. On the other hand, obtaining a Morse decomposition is a difficult task and requires further tools. In our case, this is an integer-valued (or discrete) Lyapunov functional, which roughly speaking counts the number of sign changes and decreases along solutions. This allows to quantize solutions to (1.1) in terms of their oscillation rates. Such a concept is not new and actually turned out to be very fruitful to understand the global behavior of other finite and infinite dimensional dynamical systems. For instance, discrete Lyapunov functionals are used to obtain convergence to equilibria in tridiagonal ODEs [24] and scalar parabolic equations [18], but also a Poincaré–Bendixson theory for a class of ODEs [14] in \({\mathbb {R}}^n\), \(n>2\), reaction-diffusion equations [4] and delay-differential equations [16]. Finally, a Morse decomposition of global attractors for delay-differential equations is constructed in [15, 19]. In conclusion, the existence of such a discrete Lyapunov functional imposes a serious constraint on the possible long-term behavior of various systems.

All the above applications have in common that they address problems in continuous time, that is, differential equations. A first approach to tackle difference equations via discrete Lyapunov functionals is due to Mallet-Paret and Sell [17]. In showing that such an integer-valued functional V decreases along forward solutions, they lay the foundations of our present work. Yet, [17] is primarily motivated by time-discretizations of delay-differential equations, while we are furthermore interested in applications that are time-discrete right from the beginning, by means of models originating e.g. in the life sciences. Note that [17] prove the decay of V for a larger class of difference equations than (1.1), which combines (1.1) with (cyclic) tridiagonal systems (see also our Remark 3.4). Nonetheless, to our knowledge, this paper is the first contribution using a discrete Lyapunov functional to actually understand the dynamics of discrete-time models.

Our detailed setting is as follows: The right-hand side \(f:J^2\rightarrow J\) of (1.1) is assumed to be of class \(C^1\) with a closed interval \(J\subseteq {\mathbb {R}}\). Since (1.1) is equivalent to the first order difference equation

\[y_{k+1}=F(y_k),\qquad F(y):=\bigl (y(1),\ldots ,y(d),f(y(d),y(0))\bigr ), \tag{1.2}\]

the natural state space for (1.1) is the Cartesian product \(J^{d+1}\subseteq {\mathbb {R}}^{d+1}\). Indeed, the mapping \(F:J^{d+1}\rightarrow J^{d+1}\) generates a discrete semi-dynamical system via its iterates \(F^k\), \(k\ge 0\). It is one-to-one, if and only if

and \(F:J^{d+1}\rightarrow J^{d+1}\) is onto, if every \(f(x,\cdot ):J\rightarrow J\), \(x\in J\), has this property. An entire solution to (1.2) is a sequence \((y_k)_{k\in {\mathbb {Z}}}\) in \(J^{d+1}\) satisfying the identity \(y_{k+1}\equiv F(y_k)\) on \({\mathbb {Z}}\), and we speak of an entire solution through \(\eta \in J^{d+1}\), provided also \(y_0=\eta \) holds.
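The passage from the scalar delay equation to the first order system can be checked numerically. The following is a minimal sketch, assuming the state convention \(y_k=(x_{k-d},\ldots ,x_k)\) and the shift-type map \(F(y)=(y(1),\ldots ,y(d),f(y(d),y(0)))\), which is how the componentwise notation used later in the paper reads. The right-hand side `f_example` and the helper names are hypothetical and chosen only for illustration.

```python
import math


def f_example(x, z):
    # Hypothetical right-hand side (not from the paper): increasing in x,
    # negative feedback in the delayed argument z.
    return 0.5 * x - math.tanh(z)


def make_F(f):
    """Return the map F on J^{d+1} induced by x_{k+1} = f(x_k, x_{k-d}).

    Assumed convention: y = (x_{k-d}, ..., x_k), so y[-1] is the newest value.
    """
    def F(y):
        return y[1:] + (f(y[-1], y[0]),)
    return F


def iterate_scalar(f, history, steps):
    """Iterate the scalar equation directly; history = (x_{-d}, ..., x_0)."""
    xs = list(history)
    for _ in range(steps):
        xs.append(f(xs[-1], xs[-len(history)]))
    return xs


d = 3
history = (0.3, -0.2, 0.1, 0.4)   # d + 1 initial values
F = make_F(f_example)

y = history
for _ in range(10):
    y = F(y)

xs = iterate_scalar(f_example, history, 10)
print(y == tuple(xs[-(d + 1):]))  # both descriptions produce the same states
```

Storing the state as a tuple of length \(d+1\) keeps the map `F` a pure function, so its iterates \(F^k\) are literally repeated function application.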

Under appropriate conditions on the function f, the equation (1.2) is dissipative, i.e. there exists a bounded subset \(A\subseteq J^{d+1}\) (the absorbing set) such that for every bounded \(B\subseteq J^{d+1}\) there exists a \(K=K(B)\ge 0\) with

\[F^k(B)\subseteq A\quad \text {for all }k\ge K.\]

The global attractor \({\mathcal A}\subseteq \overline{A}\) for (1.2) is a compact, invariant and nonempty set attracting all bounded \(B\subseteq J^{d+1}\). In our case, the existence of the global attractor is equivalent to the existence of a bounded absorbing set [22]. The global attractor is unique and allows the dynamical characterization

(cf. [22, Lemma 2.18]). It is invariantly connected (cf. [22, Proposition 2.20]). In case \(J={\mathbb {R}}\), the state space \({\mathbb {R}}^{d+1}\) of (1.2) is a Banach space, \({\mathcal A}\) is connected (cf. [22, Proposition 2.19]) and contains a fixed point (cf. [22, Theorem 2.29]).
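Dissipativity is easy to observe in a concrete case. The sketch below uses a hypothetical right-hand side \(f(x,z)=\tfrac{1}{2}x-\tanh z\) (not taken from the paper) and the assumed state convention \(y=(x_{k-d},\ldots ,x_k)\). Since \(|f(x,z)|\le \tfrac{1}{2}|x|+1\), the box \([-2.01,2.01]^{d+1}\) is forward invariant, so the first entry time into it is a valid \(K\) for the given initial state.

```python
import math


def F(y):
    # Hypothetical example: x_{k+1} = 0.5*x_k - tanh(x_{k-d}),
    # with y = (x_{k-d}, ..., x_k).
    return y[1:] + (0.5 * y[-1] - math.tanh(y[0]),)


def entry_time(y, radius=2.01, max_steps=10_000):
    """First k such that every component of F^k(y) lies in [-radius, radius].

    For this particular F the box is forward invariant (0.5*2.01 + 1 < 2.01),
    so the orbit stays inside once it has entered.
    """
    for k in range(max_steps + 1):
        if max(abs(c) for c in y) <= radius:
            return k
        y = F(y)
    raise RuntimeError("did not enter the candidate absorbing set")


y0 = (1000.0, -500.0, 250.0)   # d = 2, far away from the origin
K = entry_time(y0)
print("entered the box after", K, "steps")
```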

The bounded entire solutions constituting the global attractor \({\mathcal A}\) are uniquely determined by the initial state \(\xi \in {\mathcal A}\) under the injectivity condition (1.3) and thus the continuous bijection \(F|_{{\mathcal A}}:{\mathcal A}\rightarrow {\mathcal A}\) is a homeomorphism on the compact set \({\mathcal A}\). Hence, the iterates \(F|_{{\mathcal A}}^k\), \(k\in {\mathbb {Z}}\), are a dynamical system on \({\mathcal A}\) and for each \(\xi \in {\mathcal A}\) the corresponding \(\alpha \)- and \(\omega \)-limit sets are denoted by \(\alpha (\xi ),\omega (\xi )\subseteq {\mathcal A}\). In the general, non-invertible case, for a bounded entire solution \((y_k)_{k\in {\mathbb {Z}}}\) of (1.2), we introduce the \(\alpha \)-limit set of y, defined by

Coming to our central notion, following [1], a Morse decomposition of the global attractor \({\mathcal A}\) is a finite ordered collection

of pairwise disjoint, compact and invariant Morse sets \({\mathcal M}_1,\ldots ,{\mathcal M}_m\subseteq {\mathcal A}\) such that for all \(\xi \in {\mathcal A}\) and all bounded entire solutions \((y_k)_{k\in {\mathbb {Z}}}\) through \(\xi \) it holds that there exist \(i\ge j\) with

- \((m_1)\): \(\alpha (y)\subseteq {\mathcal M}_i\) and \(\omega (\xi )\subseteq {\mathcal M}_j\),
- \((m_2)\): \(i=j\Rightarrow \xi \in {\mathcal M}_i\) (thus, \(y_k\in {\mathcal M}_i\) for every \(k\in {\mathbb {Z}}\)).

The connecting orbits constitute the connecting sets

Together with the Morse sets \({\mathcal M}_1,\ldots ,{\mathcal M}_m\) they form a partition of the global attractor \({\mathcal A}\). Moreover, since \({\mathcal A}\) includes every limit point of an equation, due to \((m_1)\) indeed the Morse sets contain all limit sets.

A simple illustration is given in the following example:

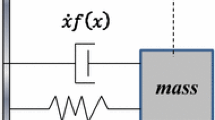

Fig. 1 The mappings f and g from Example 1.1

Example 1.1

Consider the scalar difference equation (without delay)

\[x_{k+1}=f(x_k),\qquad f(x):=\frac{2x}{\sqrt{1+3x^2}},\]

having the equilibria \(-1,0,1\) and the global attractor \([-1,1]\). Using graphical iteration (see Fig. 1 (left)) or from the explicit solution representation \(f^k(x)=\frac{2^kx}{\sqrt{1+(4^k-1)x^2}}\) one readily obtains the Morse sets and connecting sets

\[{\mathcal M}_1=\{-1,1\},\qquad {\mathcal M}_2=\{0\},\qquad {\mathcal C}_1^2=(-1,0)\cup (0,1).\]

The components of \({\mathcal M}_1\) and \({\mathcal C}_1^2\) are invariant; \({\mathcal M}_1\) is not minimal. The slightly modified difference equation (see Fig. 1 (right))

has the same global attractor, Morse and connecting sets. Now the Morse set \({\mathcal M}_1\) is a 2-periodic orbit, while \((-1,0)\), (0, 1) fail to be invariant.
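The first map of Example 1.1 can be explored numerically. In the sketch below, `f` is taken to be the \(k=1\) instance of the explicit solution formula \(f^k(x)=2^kx/\sqrt{1+(4^k-1)x^2}\), that is \(f(x)=2x/\sqrt{1+3x^2}\); the function names are illustrative. The code compares direct iteration with the closed form and shows that nonzero initial values are driven towards \(\pm 1\), while \(0\) stays fixed.

```python
import math


def f(x):
    # k = 1 instance of the closed form f^k(x) = 2^k x / sqrt(1 + (4^k - 1) x^2)
    return 2.0 * x / math.sqrt(1.0 + 3.0 * x * x)


def f_iter(x, k):
    """Apply f to x exactly k times."""
    for _ in range(k):
        x = f(x)
    return x


def f_closed(x, k):
    """Explicit solution representation quoted in Example 1.1."""
    return 2.0 ** k * x / math.sqrt(1.0 + (4.0 ** k - 1.0) * x * x)


for x0 in (-0.5, -1e-3, 0.0, 1e-3, 0.5):
    print(x0, f_iter(x0, 40), f_closed(x0, 40))
```

Orbits starting in \((0,1)\) leave every neighbourhood of \(0\) and approach \(1\), which is the gradient-like behaviour between Morse sets described above.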

The paper is organized as follows: In many cases the discrete Lyapunov functional is applied to a variational equation. This allows to argue that it decreases along the difference of two solutions. Hence, the next two sections include both preparatory work and crucial related results on linear difference equations; for instance Lemma 2.1 provides a description of the eigenvalue distribution for the linearized equation. In Sect. 3 we formulate our main assumptions, the feedback conditions and introduce an integer-valued Lyapunov functional, whose properties are given by Proposition 3.2 and Theorem 3.3. Our main result is Theorem 4.1, which yields a Morse decomposition of the global attractor of (1.2) (and in turn (1.1)) under appropriate assumptions on the right-hand side f. Some of the arguments are given only in the negative feedback case, which seems to have more applications and requires slightly more involved proofs. For the convenience of the reader, the proofs for positive feedback are given in the Appendix. Section 5 contains applications of the theory, mainly from the life sciences, which underline that both the positive and negative feedback case are relevant. Finally some open questions and perspectives are addressed in Sect. 6.

We conclude with our notation: A discrete interval \({\mathbb {I}}\) is the intersection of a real interval with the integers and \({\mathbb {I}}':=\{k\in {\mathbb {I}}:\,k+1\in {\mathbb {I}}\}\). Special cases are the positive half-axis and the negative half-axis.

2 Spectrum of the Linearization

Let us consider an autonomous linear delay-difference equation

\[x_{k+1}=ax_k+bx_{k-d} \tag{2.1}\]

with real coefficients \(a>0\), \(b\ne 0\). Our further analysis requires a detailed understanding of the associated characteristic equation

\[\lambda ^{d+1}-a\lambda ^d-b=0, \tag{2.2}\]

whose solutions occur as complex-conjugated pairs \(\lambda _j,\overline{\lambda _j}\), where for real roots, clearly \(\lambda _j=\overline{\lambda _j}\). By convention, suppose that \({\text {Im}}\lambda _j\ge 0\).

The subsequent lemma thoroughly describes the distribution of the roots of (2.2) based on the following sectors of the complex plane:

and

Lemma 2.1

(Huszár [6]) Let  .


- (a) If \(b>0\), then for all \(j\in {\mathbb {N}}\), \(j< \frac{d+1}{2}\), there exists exactly one pair of characteristic roots \(\lambda _j, \overline{\lambda _j}\) of (2.2) with \(\lambda _j\in S^+_j\), and all other roots are real. For even d there exists a unique real root, namely \(\lambda _+>0\) and for odd d there exist exactly two real roots, namely \(\lambda _-<0<\lambda _+\).
- (b) If \(b<0\), then for all \(j\in {\mathbb {N}}\), \(j< \frac{d}{2}\), there exists exactly one pair of characteristic roots \(\lambda _j, \overline{\lambda _j}\) of (2.2), such that \(\lambda _j\in S^-_j\).
  - (1) If \(m_0\le b<0\), then all other roots are real. Exactly two of those are positive and they are denoted by \(0<\lambda _{+,2}\le \lambda _{+,1}\).
  - (2) If \(b<m_0\), then there is also a pair of complex roots \(\lambda _0,\overline{\lambda _0}\), such that \(\lambda _0\in S^-_0\), and then there exists no positive characteristic root.
  - (3) If d is odd, then there is no negative real root, otherwise there is exactly one, which is denoted by \(\lambda _-\).
- (c) If \(b\ne 0\), and \(z_1=r_1 e^{i\varphi _1}\) and \(z_2=r_2 e^{i\varphi _2}\) are two distinct roots of (2.2) such that \(0\le \varphi _1 < \varphi _2 \le \pi \) holds, then \(r_1>r_2\).

Note that the above lemma also implies that the only multiple root that can occur is a double root, namely when the two positive roots \(\lambda _{+,1}\) and \(\lambda _{+,2}\) coincide. All the other roots are simple. We refer to Figs. 2 and 3, in which the number and sign of the real roots of (2.2) are presented for all possible cases.
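The statements of Lemma 2.1 about real roots can be probed numerically. The following sketch assumes that (2.2) has the form \(\lambda ^{d+1}-a\lambda ^d-b=0\), which is what the ansatz \(x_k=\lambda ^k\) yields for a linear equation \(x_{k+1}=ax_k+bx_{k-d}\). It locates simple real roots by a sign scan followed by bisection; the function names, the grid size and the sample parameters are illustrative choices. The two negative values of b are picked on either side of the value at which the minimum of the polynomial on the positive axis crosses zero.

```python
def char_poly(lam, a, b, d):
    """Assumed form of (2.2): p(lam) = lam^(d+1) - a*lam^d - b."""
    return lam ** (d + 1) - a * lam ** d - b


def real_roots(a, b, d, samples=20_000, tol=1e-12):
    """Locate simple real roots by a sign scan plus bisection.

    All roots of the monic polynomial satisfy |lam| < 1 + max(|a|, |b|)
    (Cauchy bound). Roots of even multiplicity are not detected.
    """
    R = 1.0 + max(abs(a), abs(b))
    grid = [-R + 2.0 * R * i / samples for i in range(samples + 1)]
    roots = []
    for lo, hi in zip(grid, grid[1:]):
        p_lo, p_hi = char_poly(lo, a, b, d), char_poly(hi, a, b, d)
        if p_lo == 0.0:
            roots.append(lo)
        elif p_lo * p_hi < 0.0:
            while hi - lo > tol:
                mid = 0.5 * (lo + hi)
                if char_poly(lo, a, b, d) * char_poly(mid, a, b, d) <= 0.0:
                    hi = mid
                else:
                    lo = mid
            roots.append(0.5 * (lo + hi))
    return roots


def signs(a, b, d):
    """Return (number of negative real roots, number of positive real roots)."""
    rs = real_roots(a, b, d)
    return sum(r < 0 for r in rs), sum(r > 0 for r in rs)


for d in (2, 3):
    for b in (0.5, -0.1, -0.5):
        print("d =", d, "b =", b, "(negative, positive) =", signs(1.0, b, d))
```

For these parameters the counts agree with the pattern in the lemma: one positive root (plus one negative root for odd d) when \(b>0\), and either two or no positive roots when \(b<0\), with a negative root only for even d.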

Proof

By means of the variable change \(\lambda =ax\) one transforms the characteristic equation (2.2) into the trinomial equation

Then one applies [6, 1. \(\S \)] to obtain the parts of statements (a) and (b) concerning the distribution of complex roots. Moreover, according to 8. \(\S \) of the same paper there is no multiple complex root. This determines also the number of real roots (counting multiplicity) in each case. Then applying Descartes’ sign rule yields immediately our conclusion about real roots. From [6, 2. §] we readily have statement (c). \(\square \)
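The change of variables used at the start of the proof can be written out explicitly. The short computation below is a sketch under the assumption that (2.2) reads \(\lambda ^{d+1}-a\lambda ^d-b=0\); since \(a>0\), dividing by \(a^{d+1}\) is allowed.

```latex
\begin{aligned}
(ax)^{d+1}-a\,(ax)^{d}-b
  &= a^{d+1}\bigl(x^{d+1}-x^{d}\bigr)-b = 0 \\
\Longleftrightarrow\qquad
x^{d+1}-x^{d}-\frac{b}{a^{d+1}} &= 0 .
\end{aligned}
```

Under this assumption the rescaled equation depends on the coefficients only through the single parameter \(b/a^{d+1}\), and the roots of (2.2) are recovered as \(\lambda =ax\).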

3 A Lyapunov Functional

Our overall analysis is based on the following assumptions on the right-hand side \(f:J\times J\rightarrow J\) of (1.1):

- (\(H_1\)): f is continuously differentiable and 0 is an inner point of J;
- (\(H_2\)): \(D_1 f(x_d,x_0)>0\) for all \(x_d, x_0 \in J\);

moreover there exists a \(\delta ^*\in \left\{ -1,1\right\} \), fixed from now on, such that:

- (\(H_3\)): \(\delta ^*D_2f(0,0)>0\), \(\delta ^*f(0,x_0)x_0>0\) for all \(x_0\in J\setminus \{0\}\).

Note that for \(\delta ^*=1\) one speaks of positive feedback, while \(\delta ^*=-1\) means negative feedback in (1.1).

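It is central to have an ambient integer-valued Lyapunov functional for a variational equation along solutions to (1.1) at hand. For sequences \((a_k)_{k\in {\mathbb {Z}}}\), \((b_k)_{k\in {\mathbb {Z}}}\) in \({\mathbb {R}}\) we consider the nonautonomous linear delay-difference equation

\[x_{k+1}=a_kx_k+b_kx_{k-d} \tag{3.1}\]

as well as the associated first order system

\[y_{k+1}=\begin{pmatrix} 0 & 1 & & & \\ & 0 & 1 & & \\ & & \ddots & \ddots & \\ & & & 0 & 1\\ b_k & 0 & \cdots & 0 & a_k \end{pmatrix} y_k \tag{3.2}\]

or equivalently,

\[y_{k+1}(j)=y_k(j+1)\quad \text {for }0\le j<d,\qquad y_{k+1}(d)=a_ky_k(d)+b_ky_k(0). \tag{3.3}\]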

Remark 3.1

The assumptions (\(H_1\))–(\(H_3\)) allow to write every nonlinear difference equation (1.1) in the form (3.1) with

Moreover, for an entire solution \((x_k)_{k\in {\mathbb {Z}}}\) of (1.1), \(a_k>0\) holds for all \(k \in {\mathbb {Z}}\) (for which \(a_k\) is defined), and as \(b_k=f(0,x_{k-d})/x_{k-d}\) for any \(x_{k-d}\ne 0\) and \(b_k=D_2f(0,0)\) for \(x_{k-d}=0\), one has \(\delta ^*b_k>0\) for all \(k\in {\mathbb {Z}}\).

Analogously, every equation (1.2) can be rewritten in the form (3.2) with

where \(a_k>0\), \(\delta ^*b_k>0\) hold for all \(k\in {\mathbb {Z}}\) from the domain of the solution y.
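The rewriting in Remark 3.1 can be illustrated on a concrete example. The sketch below uses a hypothetical right-hand side \(f(x,z)=\tfrac{1}{2}x-\tanh z\) with negative feedback (\(\delta ^*=-1\)). The coefficient \(b_k\) follows the formula stated in the remark; for \(a_k\) the difference quotient \((f(x_k,x_{k-d})-f(0,x_{k-d}))/x_k\) is assumed, which equals \(\int _0^1D_1f(sx_k,x_{k-d})\,\mathrm {d}s\) by the fundamental theorem of calculus.

```python
import math

D2F_00 = -1.0   # derivative of z -> f(0, z) = -tanh(z) at z = 0 (this example only)
D1F = 0.5       # D_1 f is constant for this example


def f(x, z):
    # Hypothetical right-hand side with negative feedback (delta* = -1).
    return 0.5 * x - math.tanh(z)


def coefficients(x_k, x_kd):
    """Return (a_k, b_k) with f(x_k, x_kd) = a_k*x_k + b_k*x_kd."""
    # b_k as in Remark 3.1: f(0, x_{k-d}) / x_{k-d}, or D_2 f(0, 0) at zero.
    b_k = f(0.0, x_kd) / x_kd if x_kd != 0.0 else D2F_00
    # a_k as a difference quotient in the first argument (assumed form).
    a_k = (f(x_k, x_kd) - f(0.0, x_kd)) / x_k if x_k != 0.0 else D1F
    return a_k, b_k


for x_k, x_kd in [(0.3, -1.2), (-1.2, 2.0), (2.0, 0.0), (0.0, 0.7)]:
    a_k, b_k = coefficients(x_k, x_kd)
    print(a_k > 0, b_k < 0, a_k * x_k + b_k * x_kd, f(x_k, x_kd))
```

The difference quotient is numerically fragile when \(|x_k|\) is tiny compared with \(|f(0,x_{k-d})|\); for such inputs an integral or derivative formula would be the safer choice.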

Before proceeding, we abbreviate \({\mathbb {R}}^n_*:={\mathbb {R}}^n\setminus \{0\}\) for \(n\in {\mathbb {N}}\). It is crucial to note that \({\mathbb {R}}^{d+1}_*\) is positively invariant under the matrix (3.2) provided \(a_k>0\) and \(\delta ^*b_k>0\) hold on \({\mathbb {Z}}\). Let us also define the function

where \({{\mathrm{sc}}}y\) is the number of sign changes in \(y=(y(0), y(1),\ldots ,y(n))\in {\mathbb {R}}^{n+1}_*\), that is

Also, by definition, set \({{\mathrm{sc}}}y:=0\), if there is no such \(\ell \). Based on this, we define the integer-valued functions

and

Finally we introduce the sets \(\mathcal {R^+}\) resp. \(\mathcal {R^-}\) of regular points via

for the positive (resp. negative) feedback case.
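The objects just introduced are straightforward to compute. The sketch below is written under assumptions inferred from how the functionals are used in the proofs: \({{\mathrm{sc}}}\) counts sign changes while ignoring zero entries, \(V^+\) is the smallest even and \(V^-\) the smallest odd integer not below \({{\mathrm{sc}}}\), and in the negative feedback case a zero component \(y(j)\) is regular when \(y(j-1)y(j+1)<0\) with the conventions \(y(-1)=-y(d)\) and \(y(d+1)=-y(0)\). All function names are illustrative.

```python
def sc(y):
    """Number of sign changes in y, ignoring zero entries."""
    signs = [1 if c > 0 else -1 for c in y if c != 0]
    return sum(s != t for s, t in zip(signs, signs[1:]))


def V_plus(y):
    """Assumed V^+: smallest even integer >= sc(y)."""
    s = sc(y)
    return s if s % 2 == 0 else s + 1


def V_minus(y):
    """Assumed V^-: smallest odd integer >= sc(y)."""
    s = sc(y)
    return s if s % 2 == 1 else s + 1


def is_regular_minus(y):
    """Regularity test for negative feedback.

    Assumed conventions y(-1) = -y(d) and y(d+1) = -y(0): every zero entry
    must sit between two entries of strictly opposite sign.
    """
    ext = (-y[-1],) + tuple(y) + (-y[0],)
    return all(ext[j - 1] * ext[j + 1] < 0
               for j in range(1, len(ext) - 1) if ext[j] == 0)


for y in [(1, 0, -1, 2), (1, -1), (1, 1), (1, 0, 1), (1, 1, 0)]:
    print(y, sc(y), V_plus(y), V_minus(y), is_regular_minus(y))
```

With these conventions a vector with \(y(0)=y(d)=0\) is never regular, in line with the remark in the proof of Proposition 3.2.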

The subsequent Proposition 3.2 and Theorem 3.3 give some nice properties of the functionals \(V^\pm \) that will be essential in the proof of our main result. We state them for both positive and negative feedback, however, we only present the proofs for the negative feedback case \(\delta ^*=-1\). For positive feedback, the proofs are rather similar to the ones presented below, and require only straightforward modifications in the arguments (cf. Appendix).

Proposition 3.2

If \((y^n)_{n\in {\mathbb {N}}}\) is a sequence in \({\mathbb {R}}_*^{d+1}\) with limit \(y\in {\mathbb {R}}_*^{d+1}\), then the following statements hold:

- (a) \(V^\pm (y)\le \liminf _{n\rightarrow \infty }V^\pm (y^n)\),
- (b) \(V^\pm (y)=\lim _{n\rightarrow \infty }V^\pm (y^n)\), if \(y\in \mathcal {R^\pm }\).

Proof

- (a) follows from the lower semi-continuity of the function \({{\mathrm{sc}}}\) on \(\mathbb {R}_*^{d+1}\).
- (b) is shown only in the negative feedback case, since the positive feedback case can be handled by straightforward modifications in the argument.

First note that there exists \(n_0\in {\mathbb {N}}\) such that for \(n>n_0\), \(y^n(j)\) has the same sign as y(j), where \(j\in \{0,\ldots ,d\}\) is an index for which \(y(j)\ne 0\). Moreover, from \(y\in \mathcal {R^-}\) it follows that for \(j\in \{1,\ldots , d-1\}\) with \(y(j)=0\),

and

holds for all \(n>n_0\). This in turn yields that for \(n>n_0\) and \(0\le i<j\le d\) such that \(y(i)\ne 0 \ne y(j)\) one has

which in particular means that \({{\mathrm{sc}}}y^n={{\mathrm{sc}}}y\) and thus also \(V^-(y)=V^-(y^n)\) hold whenever \(y(0)\ne 0 \ne y(d)\).

Since \(y\in \mathcal {R^-}\) excludes the possibility of y(0) and y(d) both being 0 at once, there remains the case when exactly one of them is 0. We may assume \(y(d)=0\) (the case \(y(0)=0\) is analogous). Again, by regularity of y we have that \(y(0)y(d-1){{}>0}\), which together with (3.5) yields that on the one hand

holds for \(n>n_0\) and on the other hand this number is even, say 2m. It is now clear that \({{\mathrm{sc}}}y={2m} \le {{\mathrm{sc}}}y^n\le {2m+1}\) and therefore \(V^-(y)=V^-(y^n)\) is satisfied for all \(n>n_0\), which completes the proof. \(\square \)

Theorem 3.3

If \((y_k)_{k\in {\mathbb {I}}}\) is a solution of (3.2) with \(a_k>0\) and \(\delta ^*b_k > 0\) for all \(k\in {\mathbb {I}}\), then

- (a) \(V^\pm (y_{k+1})\le V^\pm (y_k)\) holds for any \(k\in {\mathbb {I}}'\),
- (b) if \(k\in {\mathbb {I}}\) is such that \(k+4d+2\in {\mathbb {I}}\) and moreover \(y_k\ne 0\) and \(V^\pm (y_k)=V^\pm (y_{k+4d+2})\) hold, then \(y_{k+4d+2}\in \mathcal {R^\pm }\).

According to Remark 3.1 this applies to solutions of (1.2) as well. We additionally remark that Theorem 3.3 remains valid in the nonautonomous situation when f depends on k and our assumptions hold for every \(k\in {\mathbb {I}}\).
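Statement (a) of Theorem 3.3 lends itself to a quick numerical experiment in the negative feedback case. The sketch below assumes the componentwise form \(y_{k+1}(j)=y_k(j+1)\) for \(0\le j<d\) and \(y_{k+1}(d)=a_ky_k(d)+b_ky_k(0)\) of (3.2), and takes \(V^-\) to be the smallest odd integer not below the number of sign changes. The coefficient ranges, the seed handling and the function names are arbitrary illustrative choices.

```python
import random


def sc(y):
    """Number of sign changes in y, ignoring zero entries."""
    signs = [1 if c > 0 else -1 for c in y if c != 0]
    return sum(s != t for s, t in zip(signs, signs[1:]))


def V_minus(y):
    """Assumed V^-: smallest odd integer >= sc(y)."""
    s = sc(y)
    return s if s % 2 == 1 else s + 1


def step(y, a, b):
    """One step of the assumed componentwise form of (3.2)."""
    return y[1:] + (a * y[-1] + b * y[0],)


def V_minus_along_orbit(d, steps, seed=0):
    """Values of V^- along a solution with random a_k > 0 and b_k < 0."""
    rng = random.Random(seed)
    y = tuple(rng.uniform(-1.0, 1.0) for _ in range(d + 1))
    values = [V_minus(y)]
    for _ in range(steps):
        a = rng.uniform(0.1, 1.0)     # a_k > 0
        b = -rng.uniform(0.1, 1.0)    # b_k < 0 (negative feedback)
        y = step(y, a, b)
        values.append(V_minus(y))
    return values


vals = V_minus_along_orbit(d=8, steps=200, seed=1)
print(vals[0], vals[-1], all(u >= v for u, v in zip(vals, vals[1:])))
```

Such an experiment is no substitute for the proof, but it is a cheap sanity check when adapting the functional to a modified equation.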

The next proof is valid for any \(d\in {\mathbb {N}}\) (using notations  where necessary in the \(d=1\) case). However, it is worth mentioning that Theorem 3.3 almost trivially holds for \(d=1\), and some parts of the following proof can be omitted even in the case \(d=2\).

Proof of Theorem 3.3

For better readability, we give the proof for negative feedback \(\delta ^*=-1\), the positive feedback case can be found in the Appendix.

(a) Since the last d components of \(y_k \in \mathbb {R}_*^{d+1}\) are the same as the first d coordinates of \(y_{k+1}\), clearly \({{\mathrm{sc}}}y_{k+1}\le {{\mathrm{sc}}}y_k+1\) holds. Assuming now to the contrary that \(V^-(y_{k+1})>V^-(y_k)\) holds for some k implies that \({{\mathrm{sc}}}y_{k+1}={{\mathrm{sc}}}y_k+1\) and \({{\mathrm{sc}}}y_k\) is odd. The former combined with \(a_k>0\) yields that \(y_k(0)\ne 0\) and the oddness of \({{\mathrm{sc}}}y_k\) implies that the last nonzero coordinate of \(y_k\) (say \(y_k(j)\), \({1}\le j\le d\)) has the opposite sign as \(y_k(0)\). We may assume w.l.o.g. that \(y_k(j)<0<y_k(0)\) (the other case is analogous). In particular this implies \(y_k(d)\le 0\). Using \(a_k>0\) and \(b_k<0\) one obtains readily from (3.3) that \(y_{k+1}(d)=a_k y_k(d) + b_k y_k(0)\) is negative. All these together give

where the last inequality is due to \({\text {sgn}}y_k(j)={\text {sgn}}y_{k+1}(d)\). This is a contradiction, which proves statement (a).

(b) Before we prove the statement, we need to introduce some auxiliary notions. Let us say that a component y(j) \((0\le j \le d)\) of a vector \(y\in {\mathbb {R}}^{d+1}\) is irregular if \(y(j)=0\), and  . Using this terminology, \(y\in {\mathcal {R^-}}\) (i.e. y is regular) holds if and only if y has no irregular component. Furthermore, we call a vector \((y(i),\ldots ,y(j))\) with \(0\le i\le j\le d\) an irregular block (of zeros) in \(y\in {\mathbb {R}}^{d+1}\) if \(y(i)=\cdots =y(j)=0\), and moreover it has maximal length in the sense that either \(i=0\) or \(i\ge 1\) and \(y(i-1)\ne 0\) hold, and similarly either \(j=d\) or \(j<d\) and \(y(j+1)\ne 0\) hold. The dimension of the block will be regarded as the length of it. Note also that consecutive zero components are irregular by definition. The proof of (b) consists of several, yet elementary, steps. From now on we always assume that \(V^-(y_k)=V^-(y_{k+4d+2})\), which implies, in the light of statement (a), that \(V^-(y_k)=V^-(y_{k+\ell })\) holds for all \(0\le \ell \le 4d+2\).

(I) If for some index k, \(y_{k+1}(j)=0\) is irregular (\(0\le j<d\)), then \(y_{k}(j+1)=0\) is irregular as well.

This is trivial in case \(1\le j\le d-2\).

If \(y_{k+1}(d-1)=0\) is an irregular component of \(y_{k+1}\) (i.e. \(y_{k+1}(d-1)=0\) and \(y_{k+1}(d-2)y_{k+1}(d)\ge 0\) hold), then on the one hand \(y_k(d)=0\) holds and on the other hand, \(b_k<0\) implies \({\text {sgn}}y_{k+1}(d)=-{\text {sgn}}y_k(0)\). Combining this with \(y_k(d-1)=y_{k+1}(d-2)\) yields \({\text {sgn}}(y_k(d-1)y_k(d+1))=-{\text {sgn}}(y_k(d-1)y_k(0))={\text {sgn}}(y_{k+1}(d-2)y_{k+1}(d))\), meaning \(y_k(d)\) is also irregular in \(y_k\).

For the case when \(y_{k+1}(0)=0\) is irregular, assume to the contrary that \(y_{k}(1)=0\) is regular, i.e. \(y_k(0)y_k(2)<0\). W.l.o.g. we may assume \(y_k(0)<0<y_k(2)\). Irregularity of \(y_{k+1}(0)\) combined with \(y_{k+1}(1)=y_k(2)>0\) yields \(y_{k+1}(d)\le 0\). Now, from \(a_k>0\) and \(b_k<0\) it follows that \(y_k(d)<0\). These all together imply that \({{\mathrm{sc}}}y_k\) is an even number, moreover \({{\mathrm{sc}}}y_k={{\mathrm{sc}}}y_{k+1}+1\), which gives \(V^-(y_k)=V^-(y_{k+1})+2\), a contradiction.

(II) If \(y_{k+1}(d)=0\), then there are two possibilities. Either \(y_k(0)=y_k(d)=0\), or \(y_k(0)y_k(d)>0\). Assume now that the latter holds and let \(0\le j<d\) be the smallest integer such that \(y_k(j+1)\ne 0\). As \(y_k(0)y_k(d)>0\), hence \({{\mathrm{sc}}}y_k\) is even, so \(V^-(y_k)=V^-(y_{k+1})\) holds only if \({\text {sgn}}y_k(j+1)={\text {sgn}}y_k(0)\), which in turn yields also

If in addition \(y_{k+1}(d)=0\) is irregular, then \({0\ge {}} y_{k+1}(0)y_{k+1}(d-1)=y_k(1)y_k(d)\) holds, which in the light of (3.6) yields that \(j\ne 0\), or what is equivalent, \(y_k(1)=0\).

To sum up, if \(y_{k+1}(d)=0\) is irregular, then either \(y_k(0)=y_{k}(d)=0\) or there exists some \(1\le j\le {d-1}\), such that

hold, which in particular means that \(y_k(0)\) is followed by an irregular block of zeros of length \(j\ge 1\).

(III) As a consequence of the first two steps, if \(y_k\in {\mathcal {R^-}}\) and \(V^-(y_k)=V^-(y_{k+1})\), then \(y_{k+1}\in {\mathcal {R^-}}\). Therefore, in order to prove the statement, it is sufficient to show that there exists \(0\le \ell \le 4d+2\) such that \(y_{k+\ell }\in {\mathcal {R^-}}\).

(IV) Note that if \(y_k(d)=0\) and \(y_k\ne 0\in {\mathbb {R}}^{d+1}\), then since \(a_j>0\) for all \(j\ge k\), there exists \(k+1\le k_1 \le k+d\) such that \(y_{k_1}(d) \ne 0\). Thus we may assume that \(k_1\), \(k\le k_1\le k+d\) is such that \(y_{k_1}(d)\ne 0\).

(V) This in particular implies that \(y_{k_1}(d)\) is regular. The rest of the vector \(y_{k_1}\) may contain several irregular blocks, which are separated by at least one regular coordinate from each other. The coordinate \(y_{k_1}(0)\) may also be zero, or even irregular.

Next we will study how the irregular blocks of \(y_{k_1+d+1}\) can be described by the irregular blocks of \(y_{k_1}\). Let us use notation \(k_2=k_1+d+1\).

(V.1) If there exists \(1\le j \le d\) so that \(y_{k_1}(0)=\cdots = y_{k_1}(j-1)=0\) and \(y_{k_1}(j)\ne 0\), then, as \(a_j>0\) for all \(j\ge k\), \({\text {sgn}}y_{k_1+j}(d)=\cdots ={\text {sgn}}y_{k_1+j}(d-j)={\text {sgn}}y_{k_1}(d)\) holds, and consequently one has \({\text {sgn}}y_{k_2}(0)=\cdots ={\text {sgn}}y_{k_2}(j-1)={\text {sgn}}y_{k_1}(d)\ne 0\).

(V.2) If \(y_{k_1}(i)=\cdots =y_{k_1}(j)=0\) is an irregular block with \(1\le i< j<d\) such that \(y_{k_1}(i-1)y_{k_1}(j+1)<0\), then for \(k'=k_1+i-1\) one has that \(y_{k'}(1)=\cdots =y_{k'}(j-i+1)= 0\) and \(y_{k'}(0)y_{k'}(j-i+2)<0\) hold. Due to the argument seen in (II), and that neither \(y_{k'}(d)=y_{k'}(0)=0\), nor (3.7) holds (with \(k=k'\)), we obtain that \(y_{k'+1}(d)\) is regular, which implies in this case that it is also nonzero. Going a bit further, to \(k''=k'+j-i+2=k_1+j+1\), one infers that \({\text {sgn}}y_{k''}(d)=\cdots ={\text {sgn}}y_{k''}(d-j+i-1)\ne 0\). Then it is straightforward that \({\text {sgn}}y_{k_2}(i)=\cdots ={\text {sgn}}y_{k_2}(j)\ne 0\) holds. Observe that in case \(i=j\), \(y_{k_1}(i)=0\) would not be irregular due to assumption \(y_{k_1}(i-1)y_{k_1}(i+1)<0\).

(V.3) Thus it remains to consider the case when \(y_{k_1}(i)=\cdots =y_{k_1}(j)=0\) is an irregular block with \(1\le i\le j<d\) such that \(y_{k_1}(i-1)y_{k_1}(j+1)>0\). Choosing \(k'=k_1 +i-1\) just as in the previous step one obtains \(y_{k'}(1)=\cdots =y_{k'}(j-i+1)= 0\) and \(y_{k'}(0)y_{k'}(j-i+2)>0\).

Now there are two possibilities. Either \(y_{k'+1}(d)\ne 0\) and then we have the same situation as in (V.2), i.e. \({\text {sgn}}y_{k_2}(i)=\cdots ={\text {sgn}}y_{k_2}(j)\ne 0\), or \(y_{k'+1}(d)= 0\). In the latter case one can easily see that \({\text {sgn}}y_{k_2}(i-1)=\cdots ={\text {sgn}}y_{k_2}(j)= 0\), moreover, if \(i\ge 2\), then \(y_{k_2}(i-2)y_{k_2}(j+1)<0\) holds. Note that the index of the last components of the corresponding irregular blocks in \(y_{k_1}\) and \(y_{k_2}\) coincide (it is j).

(VI) Conversely, Steps (I), (II) and (V) combined show that if \(y_{k_2}\) has an irregular block, whose last component is at index j (with \(0\le j<d\)), i.e. \(y_{k_2}(j)=0\) is irregular and \(y_{k_2}(j+1)\ne 0\), then \(y_{k_1}(j)=0\) is irregular and \(y_{k_1}(j+1)\ne 0\).

Our aim is to apply the arguments presented in (V) now for \(y_{k_3}\), where \(k_3\) is to be defined soon. For this reason we need to distinguish two cases with respect to the regularity of \(y_{k_2}(d)\).

If it is regular, then it may be zero or nonzero. If it is nonzero, then (V) shows that all irregular blocks of \(y_{k_2}\) are of the type considered in (V.1) and (V.2) (now with \(k_2\) instead of \(k_1\)). If \(y_{k_2}(d)=0\), then from its regularity it follows that \(y_{k_2}(0)\ne 0\), so all irregular blocks of zeros are of the type studied in (V.2). Note that in that step we did not use that \(y_{k_1}(d)\ne 0\). For regular \(y_{k_2}(d)\) let us define \(k_3=k_2\).

If \(y_{k_2}(d)=0\) is irregular, then \(y_{k_2-1}(0)=y_{k_1}(d)\ne 0\) implies that (3.7) holds with \(k={k_2-1}\) and some \(1\le j\le {d-1}\). Then for \(k_3=k_2+j+1\le {k_2+d}\) one infers that \(y_{k_3}(d)\ne 0\), and all irregular blocks are of the types handled in (V.1) and (V.2).

Applying now the arguments of (V.1) and (V.2) for \(y_{k_3}\) instead of \(y_{k_1}\) we obtain that, for \(k_4=k_3+d+1\), \(y_{k_4}(i)\) is regular for all \(0\le i<d\). We claim that the last coordinate \(y_{k_4}(d)\) is also regular. Arguing by way of contradiction, if \(y_{k_4}(d)\) is irregular, then according to (II) there are two possibilities: either \(y_{k_4-1}(0)=y_{k_4-1}(d)=0\) or (3.7) holds for \(k=k_4-1\). In the former case \(y_{k_4}(d-1)=y_{k_4}(d)=0\) are both irregular, which is a contradiction. If the latter holds, then \(y_{k_4-1}(1)=0\) is irregular and \(y_{k_4-1}(0)\ne 0\), thus from (I) we obtain that \(y_{k_3+1}(d)=y_{k_4-1-(d-1)}(d)=0\) is also irregular and \(y_{k_3+1}(d-1)=y_{k_4-1}(0)\ne 0\). Thus, (3.7) must hold for \(k=k_3\), as well, which contradicts the fact that every irregular block of \(y_{k_3}\) is of the types seen in (V.1) and (V.2). This proves that \(y_{k_4}(d)\) is regular.

Thus, we proved that \(y_{k_4}\in {\mathcal {R^-}}\) for some \(k\le k_4\le k+4d+2\). From (III) we obtain that \(y_{k+4d+2}\in {\mathcal {R^-}}\). \(\square \)

Assertion (a) of Theorem 3.3 is also true, if we extend \(V^\pm \) into the origin as \(V^+(0)=0\) resp. \(V^-(0)=1\). In this case we may also allow \(a_k\ge 0\) and \(\delta ^*b_k\ge 0\) instead of strict inequalities. The proof of this slightly modified statement is essentially identical to the one presented above.

Remark 3.4

With the modified assumptions, Theorem 3.3 (a) is a special case of a general result by Mallet-Paret and Sell [17, Theorem 2.1]. Yet, there are two reasons for presenting an independent proof: First, our simpler situation allows a much shorter argument. Second, in the more general setting of [17] extra (smallness) conditions on the sequences \(a_k\) and \(b_k\) are necessary. As their motivation was to give the Lyapunov property for time-discretization of delay-differential equations and tridiagonal ODEs, those extra conditions were always met for sufficiently small step-sizes. However, our motivation comes not only from time-discretization of continuous-time equations, therefore it is important that we can omit such extra conditions.

4 The Morse Decomposition

From now on, we suppose that the conditions (\(H_1\))–(\(H_3\)) are fulfilled. Furthermore, (1.2) is assumed to possess a global attractor \({\mathcal A}\).

If \(a:=D_1f(0,0)\) and \(b:=D_2f(0,0)\), then (\(H_1\))–(\(H_3\)) imply \(a,\delta ^*b>0\).

Throughout this section we will treat the positive and negative feedback cases in a parallel way. Both statements and their proofs are formulated in this manner. The only exception in this regard is the proof of Proposition 4.2, whose positive feedback case is postponed to the Appendix.

Before stating our main theorem we introduce some notations concerning the linearization of equation (1.2), which becomes

or what is equivalent,

Denote by \(M^*\) the number of eigenvalues (counting multiplicities) of A with absolute values strictly greater than 1. Moreover, let

and

Our main result is the next theorem:

Theorem 4.1

(Morse decomposition) If (1.2) has a global attractor \({\mathcal A}\) and the assumptions (\(H_1\))–(\(H_3\)) hold, then the following collection of sets is a Morse decomposition of \({\mathcal A}\):

for \(0\le n\le d+1\), \(n\in 2{\mathbb {N}}_0 \setminus \{N^*_+\}\) (resp. \(n\in (2{\mathbb {N}}_0 +1)\setminus \{N^*_-\}\)).

Note that due to definitions of \(V^\pm \) and \(N^*_\pm \), \({\mathcal M}_{N^*_\pm }\ne \{0\}\) can only occur if the origin is non-hyperbolic or \(N^*_\pm =d+1\), and d is odd (resp. even) in the positive (resp. negative) feedback case.

The proof of Theorem 4.1 is based on several tools, whose logical structure is borrowed from [19]. However, there are certain differences: For instance, the finite-dimensional state space of (1.2) allows us to simplify various arguments based on the Arzelà–Ascoli theorem. Another simplification in our finite-dimensional setting is the nonexistence of super-exponentially decaying solutions established in Lemma 4.5. On the flip side, the non-connectedness of orbits gives rise to technical difficulties in some arguments.

The next two results play a significant role in the sequel and show how bounded solutions (on either of the half-axes) can be characterized with the aid of \(N^*_\pm \) and the functional \(V^\pm \). Although they correspond to continuous-time results in [15, 19], we give detailed proofs.

Proposition 4.2

-

(a)

If \((y_k)_{k\le 0}\) is a nontrivial, bounded solution of (4.1) in \({\mathbb {R}}^{d+1}\), then \(V^\pm (y_k)\le N^*_\pm \) for all \(k\le 0\).

-

(b)

If \((y_k)_{k\ge 0}\) is a nontrivial, bounded solution of (4.1) in \({\mathbb {R}}^{d+1}\), then \(V^\pm (y_k)\ge N^*_\pm \) for all \(k\ge 0\).

Proof

We present only the proof of (a) in the negative feedback case, i.e. when (\(H_1\))–(\(H_3\)) hold with \(\delta ^*=-1\). The proof of (b) is analogous. The proof for the positive feedback case is also rather similar, but simpler (see Appendix), as there are fewer cases for the distribution of eigenvalues of (4.1) (cf. Lemma 2.1).

The eigenvalues of (4.1) are precisely the solutions of the characteristic equation (2.2). If \(M^*\) is equal to 0, 1 or \(d+1\), then the statement is trivial. Otherwise, according to Lemma 2.1, \(M^*\) is an even number, say \(M^*=2n'+2\), where \(0\le n'\le \frac{d-2}{2}\).
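For concrete parameters, \(M^*\) can be computed without solving for the eigenvalues. The sketch below assumes that the characteristic equation (2.2), which is not reproduced in this section, has the form \(\lambda ^{d+1}=a\lambda ^d+b\), as one obtains from the linear equation \(x_{k+1}=ax_k+bx_{k-d}\). It counts the roots inside the unit disk via the winding number of the characteristic polynomial along the unit circle, and it presupposes that no root lies on the circle (hyperbolic case). The function name and the sample count are illustrative choices.

```python
import cmath
import math


def count_unstable(a, b, d, samples=20000):
    """Number of roots of p(z) = z^(d+1) - a*z^d - b with |z| > 1.

    Assumes p has no root on the unit circle. The winding number of p
    along the unit circle equals the number of roots inside the disk.
    """
    def p(z):
        return z ** (d + 1) - a * z ** d - b

    total = 0.0
    prev = p(1 + 0j)
    for i in range(1, samples + 1):
        cur = p(cmath.exp(2j * math.pi * i / samples))
        total += cmath.phase(cur / prev)  # small phase increment per step
        prev = cur
    inside = round(total / (2 * math.pi))
    return (d + 1) - inside


print(count_unstable(0.5, -0.2, 1))  # roots of z^2 - 0.5 z + 0.2
print(count_unstable(0.5, -2.0, 1))  # roots of z^2 - 0.5 z + 2
print(count_unstable(2.0, 0.5, 1))   # roots of z^2 - 2 z - 0.5
```

For \(d=1\) the three calls can be checked by hand: the roots have moduli \(\sqrt{0.2}\) (twice), \(\sqrt{2}\) (twice), and \(1\pm \sqrt{1.5}\) in absolute value, respectively.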

We use the notations and ordering for eigenvalues introduced in Lemma 2.1. For a non-real eigenvalue \(\lambda _j=r_j e^{i\varphi _j}\in {S^-_j}\) (with \(\varphi _j\in (0,\pi )\), \(j\in {\mathbb {Z}}_+,\ j<\frac{d}{2}\)) any linear combination of the real eigensolutions corresponding to \(\lambda _j\) can be written in the form \(c_j z_j\), where \(c_j\in {\mathbb {R}}\) and

with some \(\omega _j\in [0,2\pi )\). From \(\varphi _j\in (0,\pi )\) it is clear that \({{\mathrm{sc}}}z_{j,k}\) equals the number of sign changes the sine function has on the interval \((k\varphi _j+\omega _j,(k+d)\varphi _j+\omega _j)\). According to the definition of the sector \(S^-_j\), the length of this interval is \(d\varphi _j \in (2j\pi , \frac{d}{d+1}(2j+1)\pi )\). Now, from the definition of \(V^-\) we obtain that \(V^-(z_{j,k})=2j+1\) for all \(k\in {\mathbb {Z}}_-\).
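The counting argument can be checked on a sampled sine. In the sketch below only the sign pattern matters, so the factor \(r_j^k\) is dropped and the window consists of the values \(\sin ((k+\ell )\varphi +\omega )\), \(\ell =0,\ldots ,d\). It is assumed here that \({{\mathrm{sc}}}\) ignores zero entries and that \(V^-\) is the smallest odd integer not below \({{\mathrm{sc}}}\); the chosen values \(d=4\), \(j=1\), \(\varphi =1.7\), \(\omega =0.3\) are arbitrary test data.

```python
import math


def sign_changes(y):
    """Number of sign changes in y, ignoring zero entries (assumed definition of sc)."""
    signs = [1 if v > 0 else -1 for v in y if v != 0]
    return sum(1 for s, t in zip(signs, signs[1:]) if s != t)


def v_minus_of_sine_window(phi, omega, k, d):
    """V^- of the window (sin((k + l) * phi + omega))_{l = 0..d}."""
    window = [math.sin((k + l) * phi + omega) for l in range(d + 1)]
    sc = sign_changes(window)
    return sc if sc % 2 == 1 else sc + 1


d, j = 4, 1
phi, omega = 1.7, 0.3
# d * phi must lie in (2 j pi, d (2 j + 1) pi / (d + 1)), as in the text.
assert 2 * j * math.pi < d * phi < d * (2 * j + 1) * math.pi / (d + 1)

values = {v_minus_of_sine_window(phi, omega, k, d) for k in range(-200, 1)}
print(values)
```

Since the interval length \(d\varphi \) lies strictly between \(2j\pi \) and \((2j+1)\pi \), every window has either \(2j\) or \(2j+1\) sign changes, so the only value that should occur is \(2j+1=3\).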

As for the eigensolutions corresponding to real eigenvalues, let us first recall that, depending on the value of b, either there are exactly two positive eigenvalues of (4.1) (\(\lambda _{+,2}\le \lambda _{+,1}\)), in which case there is no eigenvalue in the sector \(S^-_0\), or there are no positive eigenvalues and there exists a pair of complex eigenvalues \(\lambda _0,\overline{\lambda _0}\), where \(\lambda _0\in S^-_0\). In the former case let \(z_{0}^1\), \(z_0^2\) and \(z_{0}^3\) be defined by \(z_{0,k}^1(j)=\lambda _{+,1}^{k+j}\), \(z_{0,k}^2(j)=\lambda _{+,2}^{k+j}\) and \(z_{0,k}^3(j)=(k+j)\lambda _{+,2}^{k+j}\) for all \(k\in {\mathbb {Z}}_-\) and \(j\in {\mathbb {N}}_0,\ j\le d\), respectively. Clearly, for any \(i=1,2,3\) and for all \(k\in {\mathbb {Z}}_-,\ k< -d\), every component of \(z_{0,k}^i\) has the same sign, and in particular \(V^-(z_{0,k}^i)=1\) for all \(k< -d\). Concerning negative eigenvalues, if d is even, say \(d=2\ell \) for some \(\ell \in {\mathbb {N}}\), then any eigensolution corresponding to the unique negative eigenvalue \(-r_\ell \) can be written in the form \(c_\ell z_\ell \), where \(c_\ell \in {\mathbb {R}}\) and \(z_{\ell ,k}(j)=(-r_\ell )^{k+j}\) for all \(k\in {\mathbb {Z}}_-\) and \(j\in {\mathbb {N}}_0,\ j\le d\). Obviously \({{\mathrm{sc}}}z_{\ell ,k}=d\) and therefore also \(V^-(z_{\ell ,k})=d+1=2\ell +1\) hold for all \(k\in {\mathbb {Z}}_-\). According to Lemma 2.1 there are no other real eigenvalues.


First, let us consider the hyperbolic case. Assume that \(M^*=2n'+2\) for some integer \(0\le n' \le \frac{d-2}{2}\). Then \(N^*_-=M^*=2n'+2\). Let y be an arbitrary solution of (4.2) which is bounded in backward time. This means that there exist an integer \(0\le n\le n'\) and appropriate constants \(\omega _j\in [0,2\pi )\), \(c_{0,1},c_{0,2},c_{0,3},c_j\in {\mathbb {R}}\) with \(j=0 ,\ldots ,n\), such that

where coefficients corresponding to eigensolutions that do not exist in the given case (e.g. when there is no positive eigenvalue) are understood to be zero.

We claim that \(V^-(y_{k})\le V^-(z_{n,k})=2n+1< 2n'+2=N^*_-\) holds for all \(k\le k_1\), where \(k_1\) will be defined below. Then, by the monotonicity of \(V^-\) (proved in Theorem 3.3), we get statement (a). If \(c_0,\dots , c_n\) are all zero, then the claim trivially holds. Otherwise we may assume w.l.o.g. that \(c_n\ne 0\).

If \(c_0 \ne 0\), then there are no positive eigenvalues of (4.1), so the first sum in (4.4) is zero, and we readily obtain that for \(n=0\), \(V^-(y_{k})= V^-(z_{n,k})=1< 2n'+2=N^*_-\) holds.

Thus we may assume \(n\ge 1\). From Lemma 2.1 one can easily obtain that there exists \(\vartheta _n>0\), sufficiently small, such that the inequalities

hold. Moreover, by Lemma 2.1 (c) we can choose \(k_0<0\) so small that

holds for all \(k\le k_0\). Let \(k_1=k_0-d\).

For brevity, let us say that the \(\ell \)-th component of \(z_{n,k}\), i.e. \(z_{n,k}(\ell )=r_n^{k}\sin ((k+\ell )\varphi _n+ \omega _n)\) is small if \(|\sin ((k+\ell )\varphi _n+ \omega _n)|\le \sin \vartheta _n\), otherwise the component will be said to be big. Inequality (4.8) yields that if \(z_{n,k}(\ell )\) is big for some \(\ell =0,1,\ldots ,d\) and \(k\le k_1\), then \({\text {sgn}}y_k(\ell )={\text {sgn}}z_{n,k}(\ell )\ne 0\) holds. Note that inequality (4.5) guarantees that there is no \(k\le k_1\) and component \(0\le \ell <d\) such that \(z_{n,k}(\ell )\) and \(z_{n,k}(\ell +1)\) are both small. Similarly, (4.6)–(4.7) imply that \(z_{n,k}(0)\) and \(z_{n,k}(d)\) cannot be small at the same time. Moreover, by (4.5) one obtains that if \(z_{n,k}(\ell )\) is small for some \(k\le k_1\) and \(\ell \in \{1,\ldots , d-1\}\), then \({\text {sgn}}z_{n,k}(\ell -1)=-{\text {sgn}}z_{n,k}(\ell +1)\).

Combining these observations, we see that if \(k\le k_1\) and \(z_{n,k}(0)\) and \(z_{n,k}(d)\) are both big, then \({{\mathrm{sc}}}y_k={{\mathrm{sc}}}z_{n,k}\) holds, and \(V^-(y_k)=V^-(z_{n,k})=2n+1<N^*_-\) follows.

There remains the case when exactly one of \(z_{n,k}(0)\) and \(z_{n,k}(d)\) is small. W.l.o.g. we may assume that \(z_{n,k}(d)\) is small and \(z_{n,k}(0)\) is big and positive. Then, as \(z_{n,k}(d-1)\) is also big,

follows. Thus either \(V^-(y_k)\le V^-(z_{n,k})\) holds or \({{\mathrm{sc}}}y_k = {{\mathrm{sc}}}z_{n,k}+1\) is even. We show that the latter case leads to a contradiction. Should it hold, then \(y_k(0)\) and \(z_{n,k}(0)\) would be both positive, \(y_k(d-1)\) and \(z_{n,k}(d-1)\) would be both negative, while \(y_k(d)>0\) and \(z_{n,k}(d)\le 0\). Now, since \(z_{n,k}(d)\le 0\) is small, \(((k+d)\varphi _n+\omega _n) \in (-\vartheta _n,0] \cup [\pi ,\pi +\vartheta _n)\) mod \(2\pi \) holds. On the other hand, from \(z_{n,k}(d-1)<0\) and (4.5) it follows that \(((k+d)\varphi _n+\omega _n) \in (-\vartheta _n,0]\) mod \(2\pi \), which implies by inequalities (4.6) and (4.7) that \(z_{n,k}(0)<0\). This is a contradiction, so our proof is complete for the hyperbolic case.

If the origin is non-hyperbolic and \(M^*\ge 2\), then \({N^*_-}=M^*+1\). Keeping our notations from above, there is a pair of eigenvalues \(\lambda _{n'+1},\overline{\lambda _{n'+1}}\) on the unit circle, or \(-1\) is a simple eigenvalue (if d is even and \(M^*=d\)). In the latter case, statement (a) of the proposition is trivial. In the former case all backward bounded solutions of (4.2) can be written as (4.4), this time with \(n\le n'+1\), and an argument similar to the one applied in the hyperbolic case shows that \(V^-(y_k)=2n+1\le 2n'+3=N^*_-\) for all \(k\in {\mathbb {Z}}_-\). \(\square \)

Proposition 4.3

There exists an open neighborhood \(U\subseteq J^{d+1}\) of 0, such that for all nontrivial solutions \((y_k)_{k\in {\mathbb {Z}}}\) in \({\mathbb {R}}^{d+1}\) of the nonlinear equation (1.2) the following statements hold:

-

(a)

If \(y_k\in \overline{U}\cap {\mathcal A}\) for all \(k\le 0\), then \(V^\pm (y_k)\le N^*_\pm \) for all \(k\in {\mathbb {Z}}\).

-

(b)

If \(y_k\in \overline{U}\cap {\mathcal A}\) for all \(k\ge 0\), then \(V^\pm (y_k)\ge N^*_\pm \) for all \(k\in {\mathbb {Z}}\).

Proof

The proof relies mainly on Proposition 4.2. We only prove statement (a), since the proof of part (b) is analogous.

We argue by way of contradiction. If the statement is not true, then there exists a sequence of nontrivial, bounded entire solutions \(y^n:{\mathbb {Z}}\rightarrow J^{d+1}\) of equation (1.2) such that \(\sup _{k\le 0}\Vert y_k^n\Vert \rightarrow 0\), as \(n\rightarrow \infty \) and \(V^\pm (y_{k_n}^n)>N^*_\pm \) holds for some \(k_n\in {\mathbb {Z}}\).

Since \(y^n\) is bounded, there exists an integer \(j_n\le k_n\), such that \(\Vert y^n_k\Vert <2\Vert y^n_{j_n}\Vert \) holds for all integers \(n\in {\mathbb {Z}}_+\) and \(k\le k_n\).

As equation (1.2) is autonomous and \(V^\pm \) is monotone non-increasing, we may assume w.l.o.g. that \(j_n=0\) for all \(n\in {\mathbb {Z}}_+\). Recall that equation (1.2) can be written in the form (3.2), (3.4) and set

Keeping the notations of Remark 3.1, \(y^n\) is a bounded entire solution of (3.2), (3.4) for all \(n\in {\mathbb {Z}}_+\), where y, A, a and b should be replaced throughout by \(y^n,A^n,a^n\) and \(b^n\), respectively. From the linearity of equation (3.2) one obtains that \(z^n\) is an entire solution of

By definition, \(\Vert z^n_k\Vert \le 2\) holds for all \(n\in {\mathbb {Z}}_+\), so by the Cantor diagonalization argument, there exists a subsequence \((n_\ell )_{\ell =0}^\infty \) and a bounded sequence \((z_k)_{k=-\infty }^0\) such that \(z^{n_\ell }_k \rightarrow z_k\), as \(\ell \rightarrow \infty \), for all integers \(k\le 0\).

On the other hand, by our assumptions, for any fixed integer \(k\le 0\), \(y^{n_\ell }_k\rightarrow 0\), as \(\ell \rightarrow \infty \). Thus \(a^{n_\ell }_k\rightarrow a=D_1f(0,0)\) and \(b^{n_\ell }_k\rightarrow b=D_2f(0,0)\) hold as \(\ell \rightarrow \infty \).

It follows then that \((z_k)_{k\le 0}\) is a bounded solution of the linear equation

where A is defined by (4.1), moreover it is nontrivial, since \(\Vert z_0\Vert =\Vert z^n_0\Vert =1\) for all \(n\in {\mathbb {Z}}_+\). Thus Proposition 4.2 can be applied to obtain that \(V^\pm (z_k)\le N^*_\pm \) holds for all \(k\le 0\). Furthermore, Theorem 3.3 (b) yields that there exists \(k_1\le 0\) such that \(z_k \in \mathcal {R^\pm }\) for all \(k\le k_1\). Finally, since \(z^{n_\ell }_{k_1}\rightarrow z_{k_1}\), as \(\ell \rightarrow \infty \), it follows from Proposition 3.2 (b) that

contradicting our assumption \(N^*_\pm <V^\pm (y^{n_\ell }_k)=V^\pm (z^{n_\ell }_k)\) for all \(k\le 0\). \(\square \)

From Proposition 4.3 we immediately infer the next result.

Corollary 4.4

The following holds for solutions of (1.2):

-

(a)

If \(N^*_\pm >1\), then there exists no heteroclinic solution towards the trivial solution.

-

(b)

If there exists a homoclinic solution \((\tilde{y}_k)_{k\in {\mathbb {Z}}}\) to the trivial equilibrium, then \(V^\pm (\tilde{y}_k)\equiv N^*_\pm \) on \({\mathbb {Z}}\).

-

(c)

In particular, if the trivial solution is hyperbolic, then there exists no homoclinic solution to it in the positive feedback case, while in case of negative feedback it may only exist if \(M^*=N^*_-=1\).

Proof

-

(a)

Fixed points \(y^*\ne 0\) of (1.2) have equal, nonzero components, so \(V^\pm (y^*)\le 1\) and \(y^*\in \mathcal {R^\pm }\). Assume now to the contrary that \((\tilde{y}_k)_{k\in {\mathbb {Z}}}\) is an entire solution with \(\lim _{k\rightarrow \infty }\tilde{y}_k=0\) and \(\lim _{k\rightarrow -\infty }\tilde{y}_k=y^*\). Then from Proposition 3.2 (b) we get \(\lim _{k\rightarrow -\infty }V^\pm (\tilde{y}_k)\le 1\), while Proposition 4.3 (b) yields \(\lim _{k\rightarrow \infty }V^\pm (\tilde{y}_k)\ge N^*_\pm >1\), a contradiction to the monotonicity in Theorem 3.3 (a).

- (b)

-

(c)

Let the trivial solution be hyperbolic. If \(M^*\) equals 0 or \(d+1\), then the statement holds trivially since either the local unstable or the local stable manifold is trivial. Otherwise, except the case of negative feedback with \(M^*=N^*_-=1\), \(N^*_\pm =M^*\) is an odd (resp. even) number in the positive (resp. negative) feedback case. Now, recall that \(V^\pm \) takes on only even (resp. odd) values, then apply (b) to conclude the proof.\(\square \)

The next technical lemma shows that solutions of (1.2) can neither grow nor decrease faster than exponentially in some neighborhood of the origin.

Lemma 4.5

Let \((y_k)_{k\in {\mathbb {I}}}\) be a solution of (3.2). If \(\tilde{a}_1,\tilde{b}_0\) and \(\tilde{b}_1\) are positive reals with \(0<a_k < \tilde{a}_1\) and \(\tilde{b}_0<|b_k|<\tilde{b}_1\) for all \(k\in {\mathbb {I}}\), then there exist positive constants c and C (that may depend on \(\tilde{a}_1, \tilde{b}_0\) and \(\tilde{b}_1\)) such that

Proof

Without loss of generality we may use the \(\Vert \cdot \Vert _1\)-norm. Since \(A_k\) is invertible for all \(a_k\ne 0\ne b_k\), and

and

hold, thus

are appropriate choices. \(\square \)

Remark 4.6

From the above proof and the \(C^1\)-smoothness of f it is clear that for any solution \((y_k)_{k\in {\mathbb {I}}}\) of (3.2), (3.4) on some discrete interval \({\mathbb {I}}\) and any compact set \(K\subset {\mathbb {R}}^{d+1}\), there exists \(C>0\), such that \(y_k\in K\) for some \(k\in {\mathbb {I}}\) implies \(\Vert y_{k+1}\Vert \le C \Vert y_k\Vert \). Moreover, if K is a sufficiently small neighborhood of 0, then there exists also \(c>0\) such that \(c\Vert y_k\Vert \le \Vert y_{k+1}\Vert \).

We combine the next three lemmas with Proposition 4.3 in order to prove that the family of sets \({\mathcal M}_n\) fulfills the “Morse properties” (\(m_1\)) and (\(m_2\)).

Lemma 4.7

Suppose that \((y_k)_{k\in {\mathbb {Z}}}\) is a bounded entire solution of (1.2) through \(\xi \in {\mathcal A}\).

-

(a)

If \(\lim _{k\rightarrow \infty }V^\pm (y_k)=N\), then \(V^\pm (\eta )=N\) for any \(\eta \in \omega (\xi )\setminus \{0\}\).

-

(b)

If \(\lim _{k\rightarrow -\infty }V^\pm (y_k)=K\), then \(V^\pm (\eta )=K\) for any \(\eta \in \alpha (y)\setminus \{0\}\).

Proof

We only prove statement (a), since (b) can be shown analogously.

For an arbitrary \(\eta \in \omega (\xi )\setminus \{0\}\), there exists a monotone sequence \(k_n\rightarrow \infty \), as \(n \rightarrow \infty \), such that \(y_{k_n}\rightarrow \eta \), and – by the invariance of \(\omega (\xi )\setminus \{0\}\) – there exists a bounded entire solution \((z_k)\) of (1.2) such that \(z_0=\eta \) and \(z_k \in \omega (\xi )\setminus \{0\}\) holds for all \(k\in {\mathbb {Z}}\). By Theorem 3.3 (b), there exist integers \(\ell _1<0 < \ell _2\) such that \(z_{\ell _1}\) and \(z_{\ell _2}\) both belong to \(\mathcal {R^\pm }\). Since \(z_{\ell _1}\in \omega (\xi )\setminus \{0\}\), there exists a monotone sequence \(k'_n\rightarrow \infty \), as \(n \rightarrow \infty \), such that \(y_{k'_n}\rightarrow z_{\ell _1}\), and from the continuity of the right-hand side of equation (1.2) one infers that \(y_{k_n+\ell _2}\rightarrow z_{\ell _2}\), as \(n \rightarrow \infty \).

Combining these with Proposition 3.2 (b) and with the assumption \(\lim _{k\rightarrow \infty }V^\pm (y_k)=N\) we get that

Using that \(V^\pm \) is non-increasing and that \(\ell _1< 0 < \ell _2\), and \(z_0=\eta \) hold, \(V^\pm (\eta )=N\) is established. \(\square \)

Lemma 4.8

Suppose that \((y_k)_{k\in {\mathbb {Z}}}\) is a bounded entire solution of (1.2) through \(\xi \in {\mathcal A}\).

-

(a)

If \(\lim _{k\rightarrow \infty }V^\pm (y_k)\ne N^*_\pm \), then either \(\omega (\xi )=\{0\}\) or \(0 \notin \omega (\xi )\).

-

(b)

If \(\lim _{k\rightarrow -\infty }V^\pm (y_k)\ne N^*_\pm \), then either \(\alpha (y)=\{0\}\) or \(0 \notin \alpha (y)\).

Proof

Again, we only prove part (a). Let us assume that \(0\in \omega (\xi )\) but \(\omega (\xi )\ne \{0\}\), and let U be defined as in Proposition 4.3. Then there exists \(U_1\), an open neighborhood of 0 in \({\mathcal A}\) such that \(U_1\subset U\) and \(\omega (\xi )\not \subseteq \overline{{U}_1}\). This, together with \(0\in \omega (\xi )\), implies that \(y_k\) enters and leaves \(\overline{U_1}\) infinitely many times as \(k\rightarrow \infty \). Thus there exist positive integer sequences \(k_n, \sigma _n, \tau _n\) (\(n\ge 0\)), such that

and

hold for all \(n\ge 0\). By Remark 4.6 we get \(\tau _{n}\rightarrow \infty \) and \(\sigma _n\rightarrow \infty \), as \(n\rightarrow \infty \).

Now, let

Using the compactness of \({\mathcal A}\) and applying a Cantor diagonalization argument one obtains that there exist a subsequence \((n_\ell )_{\ell \in {\mathbb {N}}_0}\) and bounded entire solutions \((z_k)\) and \((w_k)\) of (1.2), such that \(z^{n_\ell }_k\rightarrow z_k\) and \(w^{n_\ell }_k \rightarrow w_k\) hold for all \(k\in {\mathbb {Z}}\), as \(\ell \rightarrow \infty \). Solutions z and w are nontrivial, since both \(z_{-1}\) and \(w_1\) are from \({\mathcal A}\setminus U_1\). On the other hand, \(z_k\in \overline{U_1}\subset \overline{U}\) and \(w_{-k}\in \overline{U_1}\subset \overline{U}\) hold for \(k\in {\mathbb {Z}}_+\). Thus by Proposition 4.3 it follows that

Observe that \(z_0=\lim _{\ell \rightarrow \infty }y_{k_{n_\ell }-\sigma _{n_\ell }}\), hence \(z_0\in \omega (\xi )\setminus \{0\}\), and similarly \(w_0\in \omega (\xi )\setminus \{0\}\). Then, from Lemma 4.7 it follows that

This combined with (4.9) (for \(k=0\)) leads to \(\lim _{k\rightarrow \infty }V^\pm (y_k)=N^*_\pm \). \(\square \)

Lemma 4.9

Suppose that \((y_k)_{k\in {\mathbb {Z}}}\) is a bounded entire solution of (1.2) through \(\xi \in {\mathcal A}\setminus \left\{ 0\right\} \).

-

(a)

If \(\lim _{k\rightarrow \infty }V^\pm (y_k)=N\ne N^*_\pm \), then either \(\omega (\xi )=\{0\}\) or \(\omega (\xi )\subseteq {\mathcal M}_N\).

-

(b)

If \(\lim _{k\rightarrow -\infty }V^\pm (y_k)=K\ne N^*_\pm \), then either \(\alpha (y)=\{0\}\) or \(\alpha (y)\subseteq {\mathcal M}_K\).

Proof

In order to prove assertion (a), let us assume that \(\omega (\xi )\ne \{0\}\) and that \(\lim _{k\rightarrow \infty }V^\pm (y_k)=N\ne N^*_\pm \). According to Lemma 4.8, \(0\notin \omega (\xi )\). Let \(\eta \in \omega (\xi )\) be arbitrarily chosen, then using the invariance of \(\omega (\xi )\), there exists a bounded entire solution \((z_k)\), such that \(z_0=\eta \) and \(z_k\in \omega (\xi )\) holds for all \(k\in {\mathbb {Z}}\). From the compactness of \(\omega (\xi )\), \(\alpha (z)\cup \omega (\eta )\subseteq \omega (\xi )\) follows. This implies that \(0\notin \alpha (z)\cup \omega (\eta )\). On the other hand, \(z_k\in \omega (\xi )\) and Lemma 4.7 together ensure that \(V^\pm (z_k)=N\) for all \(k\in {\mathbb {Z}}\), meaning that \(\eta \in {\mathcal M}_N\) holds.


The proof of statement (b) is analogous. \(\square \)

The next lemma is a step towards the compactness proof of Morse sets.

Lemma 4.10

For every \(N\in 2{\mathbb {N}}_0\setminus \{N^*_+\}\) (resp. \(N\in (2{\mathbb {N}}_0+1)\setminus \{N^*_-\}\)) there exists an open neighborhood \(\tilde{U}\) in \({\mathcal A}\) of the origin, such that \({\mathcal M}_N\cap \tilde{U}=\emptyset \).

Proof

Assume to the contrary that there is a non-negative even (resp. odd) \(N\ne N^*_\pm \) and a sequence \((\xi ^n)_{n\ge 0}\) in \({\mathcal M}_N\) such that \(\xi ^n\rightarrow 0\) as \(n\rightarrow \infty \). For each \(n \ge 0\) let \(y^n\) be a bounded entire solution such that \(y^n_0=\xi ^n\), \(0\notin \alpha (y^n)\cup \omega (\xi ^n)\) and \(V^\pm (y^n_k)=N\) hold for all \(k\in {\mathbb {Z}}\).

We claim that \(\omega (\xi ^n)\) cannot be a subset of \(\overline{U}\), where U is from Proposition 4.3. To see this, suppose that \(\omega (\xi ^n)\subseteq \overline{U}\). Let \(\eta \in \omega (\xi ^n)\). By invariance of \(\omega (\xi ^n)\) there exists a bounded entire solution \((z_k)\) through \(\eta \), such that \(z_k\in \omega (\xi ^n)\subseteq \overline{U}\) holds for all \(k\in {\mathbb {Z}}\). Thus Proposition 4.3 implies that \(V^\pm (z_k)=N^*_\pm \) holds for all \(k\in {\mathbb {Z}}\). However, \(V^\pm (\eta )=N\) should also hold by Lemma 4.7, which is a contradiction, so \(\omega (\xi ^n)\not \subseteq \overline{U}\) for all \(n\ge 0\).

Assume for definiteness that \(N>N^*_\pm \). Since \(\lim _{n \rightarrow \infty }\xi ^n=0\), there exists a sequence of non-negative integers \((\sigma _n)_{n\in {\mathbb {N}}_0}\), such that

holds for all \(n\ge 0\). By Remark 4.6, it follows that \(\sigma _n\rightarrow \infty \), as \(n\rightarrow \infty \). Letting \(z^n_k:=y^n_{\sigma _n+k}\) for \(k\in {\mathbb {Z}}\) and applying a Cantor diagonalization argument one obtains that there is a subsequence \((z^{n_\ell })_{\ell \in {\mathbb {N}}_0}\) and an entire solution \((z_k)\) of (1.2) such that \(z^{n_\ell }_k\rightarrow z_k\) holds for all \(k\in {\mathbb {Z}}\), as \(\ell \rightarrow \infty \). Solution \((z_k)\) is nontrivial because \(z_1\notin U\). On the other hand, \(z_k \in \overline{U}\) holds for all \(k\le 0\), so Proposition 4.3 yields that \(V^\pm (z_k)\le N^*_\pm \) holds for all \(k\in {\mathbb {Z}}\).


Finally, Theorem 3.3 (b) ensures that there exists some \(k'> 0\), so that \(z_{k'}\in \mathcal {R^\pm }\). As \(y^{n_\ell }_{\sigma _{n_\ell }+k'}=z^{n_\ell }_{k'}\rightarrow z_{k'}\) (\(\ell \rightarrow \infty \)), we obtain

from Proposition 3.2 (b), which is a contradiction that proves our statement.

The proof for the case \(N<N^*_\pm \) is analogous. \(\square \)

Lemma 4.11

The set \({\mathcal M}_N\) is closed for all \(N\in 2{\mathbb {N}}_0\cup \{N^*_+\}\) (resp. all \(N\in (2{\mathbb {N}}_0+1)\cup \{N^*_-\}\)).

Proof

Let \((\xi ^n)_{n\ge 0}\) be a sequence in \({\mathcal M}_N\) for some \(N\in 2{\mathbb {N}}_0 \cup \{N^*_+\}\) (resp. \(N\in (2{\mathbb {N}}_0+1)\cup \{N^*_-\}\)), such that \(\lim _{n \rightarrow \infty }\xi ^n=\xi \) for some \(\xi \in {\mathcal A}\). We claim that \(\xi \in {\mathcal M}_N\).

If \(\xi =0\), then Lemma 4.10 yields that \(N=N^*_\pm \). By definition of \({\mathcal M}_{N^*_\pm }\), \(0\in {\mathcal M}_{N^*_\pm }\) also holds, so the statement is proved in this particular case.

Assume now that \(\xi \ne 0\). By definition of \({\mathcal M}_N\) and using \(\xi ^n\in {\mathcal M}_N\), there exist bounded entire solutions \((y^n_k)\) of (1.2), such that \(y^n_0=\xi ^n\), \(y^n_k\in {\mathcal M}_N\) and \(V^\pm (y^n_k)=N\) hold for all \(n\ge 0\) and \(k\in {\mathbb {Z}}\). The compactness of \({\mathcal A}\) and a Cantor diagonalization argument lead to a subsequence \((y^{n_\ell })_{\ell \in {\mathbb {N}}}\) and a solution \((y_k)_{k\in {\mathbb {Z}}}\) of (1.2), so that \(y^{n_\ell }_k \rightarrow y_k\) holds for every \(k\in {\mathbb {Z}}\), as \(\ell \rightarrow \infty \).

Necessarily, \(y_0=\xi \) holds. This combined with the compactness of \({\mathcal A}\) yields that \(y_k\in {\mathcal A}\setminus \{0\}\) for all \(k\in {\mathbb {Z}}\). Applying Theorem 3.3 (b) we obtain integers \(\ell _1<0<\ell _2\) such that \(y_{\ell _1}\) and \(y_{\ell _2}\) are from \(\mathcal {R^\pm }\), and that

hold. On the other hand, \(y^{n_\ell }_{\ell _1}\rightarrow y_{\ell _1}\) and \(y^{n_\ell }_{\ell _2}\rightarrow y_{\ell _2}\) hold as \(\ell \rightarrow \infty \), so Proposition 3.2 (b) assures that \(V^\pm (y_{\ell _1})=V^\pm (y_{\ell _2})=N\). This combined with (4.10) yields that \(V^\pm (y_k)=N\) for all \(k\in {\mathbb {Z}}\). In case \(N=N^*_\pm \), \(\xi \in {\mathcal M}_N\) is readily established. Otherwise, it remains to prove that \(0\notin \alpha (y)\cup \omega (\xi )\). In this case Lemma 4.10 gives an open neighborhood \(\tilde{U}\) of 0 in \({\mathcal A}\), such that \(y^n_k\in {\mathcal A}\setminus \tilde{U}\) holds for all \(k\in {\mathbb {Z}}\) and \(n\in {\mathbb {N}}_0\). Hence,

holds for all \(k\in {\mathbb {Z}}\), which leads to \(\alpha (y)\cup \omega (\xi )\subseteq {\mathcal A}\setminus \tilde{U}\). This shows that \(0\notin \alpha (y)\cup \omega (\xi )\) and completes the proof. \(\square \)

Now, we are finally in the position to prove our main theorem.

Proof of Theorem 4.1

By definition, the sets \({\mathcal M}_n\) are pairwise disjoint and invariant. Since \({\mathcal A}\) is compact, Lemma 4.11 yields that \({\mathcal M}_n\) is compact for all possible n.

It remains to prove that these sets fulfill the “Morse properties” (\(m_1\)) and (\(m_2\)), i.e. for all \(\xi \in {\mathcal A}\) and any bounded entire solution \((y_k)_{k\in {\mathbb {Z}}}\) for which \(y_0=\xi \) holds, there exist \(i\ge j\) with \(\alpha (y)\subseteq {\mathcal M}_i\) and \(\omega (\xi )\subseteq {\mathcal M}_j\), and in case \(i=j\), then \(\xi \in {\mathcal M}_i\) (thus, \(y_k\in {\mathcal M}_i\) for every \(k\in {\mathbb {Z}}\)).

In order to show this, let \(\xi \in {\mathcal A}\setminus \{0\}\) be arbitrary (the statement is trivial for \(\xi =0\)), and \((y_k)_{k\in {\mathbb {Z}}}\) be a bounded entire solution of (1.2) for which \(y_0=\xi \) holds. Furthermore, define \(N:=\lim _{k\rightarrow \infty }V^\pm (y_k)\) and \(K:=\lim _{k\rightarrow -\infty }V^\pm (y_k)\).

Note that from monotonicity of \(V^\pm \) we get that \(N\le K\).

First, observe that if \(N=N^*_\pm \), then \(\omega (\xi )\subseteq {\mathcal M}_{N^*_\pm }\). In order to prove this, choose \(\eta \in \omega (\xi )\) arbitrarily. If \(\eta =0\), then \(\eta \in {\mathcal M}_{N^*_\pm }\) holds by definition, thus we may assume now that \(\eta \ne 0\). By Lemma 4.7 we obtain that \(V^\pm (\eta )=N^*_\pm \). Moreover, by the invariance of \(\omega (\xi )\setminus \{0\}\) there exists an entire solution \((z_k)\) in \(\omega (\xi )\setminus \{0\}\), such that \(z_0=\eta \). Thus Lemma 4.7 yields that \(V^\pm (z_k)=N^*_\pm \) for all \(k\in {\mathbb {Z}}\), meaning that \(\eta \in {\mathcal M}_{N^*_\pm }\) holds.

A similar argument can be applied to prove that \(K=N^*_\pm \) implies that \(\alpha (y)\subseteq {\mathcal M}_{N^*_\pm }\) holds.

We will distinguish four cases in terms of the values of N and K.

Case 1 If \(N=K=N^*_\pm \), then \(\alpha (y)\cup \omega (\xi ) \subseteq {\mathcal M}_{N^*_\pm }\) holds by our previous observation. Moreover, from the monotonicity of \(V^\pm \) it follows that \(V^\pm (y_k)\equiv N^*_\pm \) on \({\mathbb {Z}}\). This implies \(\xi \in {\mathcal M}_{N^*_\pm }\), thus both (\(m_1\)) and (\(m_2\)) hold.

Case 2 \(N=N^*_\pm < K\). As already shown, \(\omega (\xi )\subseteq {\mathcal M}_{N^*_\pm }\) holds in this case.

Moreover, observe that \(\alpha (y)\ne \{0\}\). Otherwise Proposition 4.3 would imply \(V^\pm (y_k)\le N^*_\pm \) for \(k\in {\mathbb {Z}}\), and thus \(K\le N^*_\pm =N\), which is impossible.

Therefore, Lemma 4.9 can be applied to obtain \(\alpha (y)\subseteq {\mathcal M}_K\), so property (\(m_1\)) is fulfilled. Note that (\(m_2\)) holds automatically, since the two Morse sets in question, i.e. \({\mathcal M}_{N^*_\pm }\) and \({\mathcal M}_K\), are different.

Case 3 A similar argument applies in the case when \(N<N^*_\pm =K\).

Case 4 If \(N\ne N^*_\pm \ne K\), then Lemma 4.9 yields that either \(\omega (\xi )=\{0\}\) or \(\omega (\xi )\subseteq {\mathcal M}_N\). Similarly, either \(\alpha (y)=\{0\}\) or \(\alpha (y)\subseteq {\mathcal M}_K\) holds. Note that \(\omega (\xi )\) and \(\alpha (y)\) cannot be both \(\{0\}\) in this case, because then Proposition 4.3 would imply \(V^\pm (y_k)\equiv N^*_\pm \) on \({\mathbb {Z}}\), contradicting assumption \(N\ne N^*_\pm \ne K\).

If \(\omega (\xi )\ne \{0\} \ne \alpha (y)\), then Lemma 4.9 yields that \(\omega (\xi )\subseteq {\mathcal M}_N\) and \(\alpha (y)\subseteq {\mathcal M}_K\) hold, so (\(m_1\)) is fulfilled. If \(K=N\), then their definitions imply that \(V^\pm (y_k)=N=K\) for all \(k \in {\mathbb {Z}}\). Since the limit sets are now also assumed to be nontrivial, Lemma 4.8 ensures that \(0\notin \alpha (y)\cup \omega (\xi )\), and thus \(y_k\in {\mathcal M}_N\) also holds for all \(k\in {\mathbb {Z}}\). This establishes property (\(m_2\)).

If \(\omega (\xi )=\{0\}\ne \alpha (y)\), then \(\omega (\xi )\subseteq {\mathcal M}_{N^*_\pm }\) holds by definition. Furthermore, Proposition 4.3 implies that \(V^\pm (y_k)\ge N^*_\pm \) holds for all \(k\in {\mathbb {Z}}\), and consequently \(N^*_\pm < N\le K\). On the other hand Lemma 4.9 yields that \(\alpha (y)\subseteq {\mathcal M}_K\), so (\(m_1\)) holds. Property (\(m_2\)) is fulfilled automatically.

An analogous argument applies for the case when \(\omega (\xi )\ne \{0\}=\alpha (y)\). We have taken all possible cases into consideration, so our proof is complete. \(\square \)

5 Applications

Since the existence of a global attractor is assumed in Theorem 4.1, we begin with a condition for dissipativity:

Lemma 5.1

Suppose \(A\in {\mathbb {R}}^{d\times d}\) and \(H:{\mathbb {R}}^d\rightarrow {\mathbb {R}}^d\) satisfy:

-

(i)

There exist reals \(a\in (0,1)\), \(K\ge 1\) with \(\left\| A^k\right\| \le Ka^k\) for all \(k\in {\mathbb {Z}}_+\),

-

(ii)

there exist reals \(\beta _0,\beta _1\ge 0\) so that \(\left\| H(y)\right\| \le \beta _0+\beta _1\left\| y\right\| \) for all \(y\in {\mathbb {R}}^d\).

If \(\beta _1\in [0,\tfrac{1-a}{K})\) and \(R>\tfrac{K\beta _0}{1-a-K\beta _1}\), then \(B_R(0)\) is an absorbing set for

Proof

Due to the variation of constants formula (cf. [21, p. 100, Theorem 3.1.16(a)]) the semigroup induced by (5.1) satisfies

and our assumptions yield

for \(k\in {\mathbb {Z}}_+\). With the formula for the geometric series in mind, this implies

using the Grönwall inequality [21, p. 348, Proposition A.2.1(a)] we obtain

for all \(k\in {\mathbb {Z}}_+\), and \(a+K\beta _1\in [0,1)\) leads to \(\limsup _{k\rightarrow \infty }\left\| \phi (k;\xi )\right\| \le \tfrac{K\beta _0}{1-a-K\beta _1}\). \(\square \)
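The chain of estimates in this proof can be traced with a short comparison argument. The following is only a sketch, under the assumption that (5.1) reads \(y_{k+1}=Ay_k+H(y_k)\) with general solution \(\phi (k;\xi )\). The auxiliary sequence \(w_k\) is introduced here for illustration and replaces the Grönwall step by a direct recursion.

```latex
\begin{align*}
\phi(k;\xi) &= A^k\xi+\sum_{j=0}^{k-1}A^{k-j-1}H(\phi(j;\xi)),\\
\|\phi(k;\xi)\| &\le Ka^k\|\xi\|
  +K\sum_{j=0}^{k-1}a^{k-j-1}\bigl(\beta_0+\beta_1\|\phi(j;\xi)\|\bigr)=:w_k,\\
w_{k+1} &= a\,w_k+K\bigl(\beta_0+\beta_1\|\phi(k;\xi)\|\bigr)
  \le (a+K\beta_1)\,w_k+K\beta_0,\qquad w_0=K\|\xi\|,\\
\|\phi(k;\xi)\| \le w_k &\le (a+K\beta_1)^k K\|\xi\|
  +K\beta_0\,\frac{1-(a+K\beta_1)^k}{1-a-K\beta_1}.
\end{align*}
```

Since \(a+K\beta _1<1\), the first term tends to zero as \(k\rightarrow \infty \) and the second one increases to \(\tfrac{K\beta _0}{1-a-K\beta _1}\), which is the threshold for \(R\) in the lemma.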

5.1 Life Sciences

Applications from the life sciences typically require solutions with values in \({\mathbb {R}}_+=[0,\infty )\). We thus present some dissipative delay-difference equations

\[ x_{k+1}=f_0(x_k,x_{k-d}) \tag{5.2} \]

with \(C^1\)-right-hand side \(f_0:{\mathbb {R}}_+\times {\mathbb {R}}_+\rightarrow {\mathbb {R}}_+\) fitting into our setting. They commonly have a unique equilibrium \(x_*>0\). Hence, the shifted equation

\[ x_{k+1}=f(x_k,x_{k-d}),\qquad f(x,y):=f_0(x+x_*,y+x_*)-x_*, \]

with \(f:J\times J\rightarrow J\) and the closed interval \(J:=[-x_*,\infty )\) possesses the trivial equilibrium. Under dissipativity conditions on (5.2) we obtain absorbing sets of the form \([0,R]^{d+1}\) with \(R>R_0\) for some \(R_0>0\) and consequently global attractors \({\mathcal A}\subseteq [-x_*,R-x_*]^{d+1}\) for (1.2). For brevity, let us introduce \(\underline{x}_*:=(x_*,\ldots ,x_*)\in {\mathbb {R}}^{d+1}\).

The literature often provides sufficient conditions for global asymptotic stability of \(x_*\) (cf., for example, [11, 12]). In this case, Theorem 4.1 yields only one Morse set, namely \({\mathcal M}_{N^*_\pm }\). Let us consequently list several dissipative difference equations that fulfill the assumptions of our main theorem, i.e. (\(H_1\))–(\(H_3\)). Violating the conditions for global asymptotic stability might indicate bifurcations which lead to more complex dynamics and possibly further Morse sets.

Throughout, suppose that \(\alpha \in (0,1)\), \(\beta >0\).

5.1.1 Clark-Type Models

Given a \(C^1\)-function \(h:{\mathbb {R}}_+\rightarrow {\mathbb {R}}_+\) we refer to difference equations

\[ x_{k+1}=\alpha x_k+h(x_{k-d}) \tag{5.3} \]

as Clark’s delayed recruitment models. For such problems with \(h\in C^3\) it was recently shown in [8] that even if the Schwarzian

\[ Sh(x):=\frac{h'''(x)}{h'(x)}-\frac{3}{2}\left( \frac{h''(x)}{h'(x)}\right) ^2 \]

is negative, supercritical Neimark–Sacker bifurcations can occur. Thus, an invariant circle around the nontrivial equilibrium contributes to \({\mathcal A}\).

For bounded functions h one obtains the following result on dissipativity.

Proposition 5.2

(Dissipativity for (5.3), cf. [20]) If there exists a \(K^+\ge 0\) such that \(h(x)\le K^+\) for all \(x\in {\mathbb {R}}_+\), then (5.3) is dissipative and \(A=[0,R]\) is absorbing for every \(R>\tfrac{K^+}{1-\alpha }\).

Example 5.3

(Mackey–Glass equation I) Let \(p>0\). For the Mackey–Glass equation (5.3) one considers

\[ h(x):=\frac{\beta }{1+x^p} \]

and the injectivity condition (1.3) holds. Moreover, \(Sh(x)<0\) is true on the interval \((0,\infty )\). There exists a unique equilibrium \(x_*>0\) and Proposition 5.2 guarantees the absorbing set specified in Table 1. Global asymptotic stability of \(x_*\) for \(p\le 1\) is shown in [7]. Shifting \(x_*\) into the origin yields a right-hand side

\[ f(x,y)=\alpha x+\frac{\beta }{1+(y+x_*)^p}-\frac{\beta }{1+x_*^p} \]

which satisfies (\(H_1\))–(\(H_3\)) (negative feedback) with \(a=\alpha \), \(b=-\frac{\beta p x_*^{p-1}}{(1+x_*^p)^2}<0\). Its global attractor \({\mathcal A}\) consists of entire solutions that are uniquely defined in backward time. Note that, according to [7], for \(p>1\) and \(\beta >\bigl (\tfrac{s}{s-1}\bigr )^{1+1/s}\) there exists an \(\alpha _0>0\) such that the positive equilibrium of (5.3) is unstable for \(\alpha \in (0,\alpha _0]\) and that there exists a nontrivial, hyperbolic periodic solution.
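The absorbing set from Proposition 5.2 can be observed numerically. The following is a minimal sketch, assuming the nonlinearity \(h(x)=\beta /(1+x^p)\) (the form consistent with the value of \(b\) stated above), so that \(K^+=\beta \). The parameter values, the initial history and the function name `mackey_glass_orbit` are illustrative choices and not taken from the paper.

```python
def mackey_glass_orbit(alpha, beta, p, d, history, steps):
    """Iterate x_{k+1} = alpha*x_k + beta/(1 + x_{k-d}**p).

    `history` holds the d+1 initial values x_{-d}, ..., x_0 (all >= 0).
    """
    xs = list(history)
    assert len(xs) == d + 1
    for _ in range(steps):
        x_now, x_delayed = xs[-1], xs[-1 - d]
        xs.append(alpha * x_now + beta / (1.0 + x_delayed ** p))
    return xs


# Illustrative (assumed) parameters with alpha in (0,1), beta > 0.
alpha, beta, p, d = 0.5, 2.0, 3.0, 2
bound = beta / (1.0 - alpha)      # K^+/(1-alpha) with K^+ = beta
R = 1.01 * bound                  # any R strictly above the bound

orbit = mackey_glass_orbit(alpha, beta, p, d, [50.0, 40.0, 30.0], 200)
entry = next(k for k, x in enumerate(orbit) if x <= R)   # first index inside [0, R]
tail_inside = all(0.0 <= x <= R for x in orbit[entry:])  # stays inside afterwards
print(entry, tail_inside)
```

Once a value satisfies \(x_k\le R\), the estimate \(x_{k+1}\le \alpha R+\beta <R\) keeps all later values in \([0,R]\), which is what the final check reflects.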

Our next example requires some dissipativity criterion which applies even though h is unbounded.

Proposition 5.4

(Dissipativity for (5.3)) Let \(\beta _0,\beta _1\ge 0\) be reals satisfying \(0\le \beta _1<\alpha ^d(1-\alpha )\). If \(h:{\mathbb {R}}_+\rightarrow {\mathbb {R}}_+\) fulfills

\[ h(x)\le \beta _0+\beta _1x\quad \text{for all }x\in {\mathbb {R}}_+, \tag{5.5} \]

then (5.3) is dissipative and \(A=[0,R]\) is absorbing for every \(R>\tfrac{\beta _0}{\alpha ^d(1-\alpha )-\beta _1}\).

Proof

We equip \({\mathbb {R}}^{d+1}\) with the \(\max \)-norm and formulate (5.3) as first order system (5.1) in \({\mathbb {R}}^{d+1}\). Then it is not difficult to see that the assumptions of Lemma 5.1 hold with \(K=\alpha ^{-d}\) and \(a=\alpha \), so that \(\tfrac{1-a}{K}=\alpha ^d(1-\alpha )\), and Lemma 5.1 implies the assertion. \(\square \)

Example 5.5

(Mackey–Glass equation II) Let \(p\in (0,1]\). A further difference equation attributed to Mackey and Glass is of the form (5.3) with

\[ h(x):=\frac{\beta x}{1+x^p} \]

and the injectivity condition (1.3) holds. On the one hand, due to [12, Theorem 2] the trivial solution is globally asymptotically stable for \(\alpha +\beta <1\). On the other hand, for \(\alpha +\beta >1\) there bifurcates a unique positive equilibrium \(x_*\) given in Table 1. As shown in [3, Theorem 4.1(i)], \(x_*\) is globally asymptotically stable with respect to solutions starting at positive initial values.

-

For \(p<1\) choose \(\beta _1\in (0,\alpha ^d(1-\alpha ))\) and from \(h'(y)=\frac{\beta (1+(1-p)y^p)}{(1+y^p)^2}\rightarrow 0\) as \(y\rightarrow \infty \) there is an \(\eta >0\) such that \( h'(\eta )=\beta _1\). Hence, (5.5) holds with \(\beta _0=h(\eta )-\beta _1\eta >0\) and Proposition 5.4 yields that \([0,R]\) is absorbing for \(R>\tfrac{h(\eta )-\beta _1\eta }{\alpha ^d(1-\alpha )-\beta _1}\).

-

For \(p=1\) the function \(y\mapsto \frac{\beta y}{1+y}\) is bounded and strictly increasing to \(\beta \), so we derive from Proposition 5.2 that \([0,R]\) is absorbing for \(R>\frac{\beta }{1-\alpha }\).
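The construction of \(\eta \) and \(\beta _0\) in the case \(p<1\) can be sketched numerically. The code below assumes \(h(y)=\beta y/(1+y^p)\), uses the formula for \(h'\) given above, and additionally assumes \(\beta _1<\beta =h'(0)\) so that a tangent point exists. The bisection routine `tangent_point`, the parameter values and the grid check are illustrative and not part of the paper.

```python
def h(y, beta, p):
    """Mackey-Glass II nonlinearity h(y) = beta*y/(1 + y**p)."""
    return beta * y / (1.0 + y ** p)


def h_prime(y, beta, p):
    """Derivative h'(y) = beta*(1 + (1-p)*y**p)/(1 + y**p)**2."""
    return beta * (1.0 + (1.0 - p) * y ** p) / (1.0 + y ** p) ** 2


def tangent_point(beta, p, beta1):
    """Solve h'(eta) = beta1 by bisection.

    For 0 < p < 1 the derivative decreases from beta (at 0) to 0,
    so a solution exists whenever 0 < beta1 < beta.
    """
    assert 0.0 < p < 1.0 and 0.0 < beta1 < beta
    lo, hi = 0.0, 1.0
    while h_prime(hi, beta, p) > beta1:   # enlarge the bracket
        hi *= 2.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if h_prime(mid, beta, p) > beta1:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Illustrative (assumed) parameters.
alpha, beta, p, d = 0.5, 1.0, 0.5, 2
threshold = alpha ** d * (1.0 - alpha)    # = 0.125
beta1 = 0.1                               # chosen in (0, threshold)
eta = tangent_point(beta, p, beta1)
beta0 = h(eta, beta, p) - beta1 * eta     # intercept of the tangent line
R_min = beta0 / (threshold - beta1)       # [0, R] is absorbing for R > R_min

# Grid check of h(x) <= beta0 + beta1*x (the tangent lies above the concave h).
grid_ok = all(h(0.5 * i, beta, p) <= beta0 + beta1 * 0.5 * i + 1e-9
              for i in range(2001))
print(eta, beta0, R_min, grid_ok)
```

The affine bound is the tangent to \(h\) at \(\eta \); it dominates \(h\) on \({\mathbb {R}}_+\) because \(h'\) is decreasing for \(p<1\).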

The resulting right-hand side \(f(x,y)=\alpha x+\frac{\beta (y+x_*)}{1+(y+x_*)^p}-\frac{\beta x_*}{1+x_*^p}\) fulfills (\(H_1\))–(\(H_3\)) (positive feedback) with \(a=\alpha \), \(b=\beta \frac{1+(1-p)x_*^p}{(1+x_*^p)^2}>0\). Whence, a global attractor \({\mathcal A}\) containing the equilibria \(-\underline{x}_*\), 0 exists and consists of bounded entire solutions that are unique in backward time.

A series of further sufficient conditions guaranteeing global asymptotic stability of \(x_*>0\) is given in [3, Theorem 4.1], some of which address, for instance, the situation \(p>1\).

Example 5.6

(Wazewska–Lasota equation) Let W denote the Lambert-W-function. We investigate (5.3) with

\[ h(x):=\beta e^{-x}. \]

Note that (1.3) holds and that the Schwarzian \(Sh\) is negative. On the one hand, the equilibrium \(x_*>0\) is globally asymptotically stable for \(\tfrac{\beta }{1-\alpha }\le e\) (cf. [3, Theorem 4.5]). On the other hand, one observes a subcritical flip bifurcation in the equation \(x_{k+1}=\alpha x_k+h(x_k)\) for critical parameters \(\alpha \) such that \(\tfrac{1+\alpha }{1-\alpha }=W(\tfrac{\beta }{1-\alpha })\). Using Proposition 5.2 we obtain a concrete absorbing set from Table 1, which also lists the unique positive equilibrium \(x_*>0\). The right-hand side

\[ f(x,y)=\alpha x+\beta e^{-(y+x_*)}-\beta e^{-x_*} \]

fulfills (\(H_1\))–(\(H_3\)) (negative feedback) with \(a=\alpha \), \(b=-\beta e^{-x_*}<0\) and a global attractor \({\mathcal A}\) containing unique backward solutions.
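For concrete parameters the equilibrium and the coefficient \(b\) can be computed directly. The sketch below assumes \(h(x)=\beta e^{-x}\), so that the fixed point equation \((1-\alpha )x=\beta e^{-x}\) gives \(x_*=W(\tfrac{\beta }{1-\alpha })\). The Newton iteration `lambert_w` is a hand-written helper for positive arguments (not a library routine), and the parameter values are illustrative.

```python
import math


def lambert_w(z, tol=1e-14, max_iter=100):
    """Principal branch W(z) for z > 0 via Newton's method on w*exp(w) = z."""
    assert z > 0.0
    w = math.log(1.0 + z)                  # positive starting guess
    for _ in range(max_iter):
        e = math.exp(w)
        step = (w * e - z) / (e * (w + 1.0))
        w -= step
        if abs(step) < tol * (1.0 + abs(w)):
            break
    return w


# Illustrative (assumed) parameters with alpha in (0,1), beta > 0.
alpha, beta = 0.5, 3.0
z = beta / (1.0 - alpha)
x_star = lambert_w(z)                      # positive equilibrium of (5.3)
b = -beta * math.exp(-x_star)              # feedback coefficient b < 0
gas_condition = z <= math.e                # sufficient condition quoted from [3]
flip_gap = (1.0 + alpha) / (1.0 - alpha) - x_star  # zero at the flip bifurcation

print(x_star, b, gas_condition, flip_gap)
```

Here `flip_gap` vanishes exactly for the critical parameters named in the example, so its sign indicates on which side of the flip bifurcation of the undelayed equation the chosen parameters lie.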

Additional conditions for global asymptotic stability of \(x_*>0\), which however depend on the delay d, can be found in [3, Theorem 4.5].

5.1.2 Models of Hutchinson-Type

Let \(F:{\mathbb {R}}_+\rightarrow (0,\infty )\) be of class \(C^1\). Delay-difference equations

\[ x_{k+1}=x_kF(x_{k-d}) \tag{5.8} \]

are said to be of Hutchinson type.

Example 5.7

(Pielou equation) The equation (5.8) with

is dissipative by [10, Theorem 3.1]. Its unique equilibrium \(x_*>0\) is asymptotically stable for \(\cos \tfrac{d\pi }{2d+1}>\tfrac{1-\alpha }{2}\). A sufficient condition for global asymptotic stability is given in Table 2. Both of the above sufficient conditions for asymptotic stability fail for large delays, but when \(\tfrac{d^d}{(d+1)^{d+1}}<1-\alpha \), nontrivial solutions oscillate about \(x_*\) (see [9, pp. 68–69, Theorem 3.4.2(c)]). Furthermore, the shifted equation with

fulfills (\(H_1\))–(\(H_3\)) (negative feedback) with \(a=1\), \(b=\alpha -1<0\) and a global attractor \({\mathcal A}\) containing the equilibria \(-\underline{x}_*,0\).
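The dependence of the two explicit conditions in this example on the delay can be tabulated. The following sketch only evaluates the inequalities \(\cos \tfrac{d\pi }{2d+1}>\tfrac{1-\alpha }{2}\) and \(\tfrac{d^d}{(d+1)^{d+1}}<1-\alpha \) as stated above; the function names and the value \(\alpha =0.5\) are illustrative assumptions.

```python
import math


def stability_condition(d, alpha):
    """Sufficient condition for asymptotic stability quoted in the example."""
    return math.cos(d * math.pi / (2 * d + 1)) > (1.0 - alpha) / 2.0


def oscillation_condition(d, alpha):
    """Condition under which nontrivial solutions oscillate about x_*."""
    return d ** d / (d + 1) ** (d + 1) < 1.0 - alpha


alpha = 0.5   # illustrative value in (0, 1)
table = [(d, stability_condition(d, alpha), oscillation_condition(d, alpha))
         for d in range(1, 7)]
for row in table:
    print(row)
```

Since \(\cos \tfrac{d\pi }{2d+1}\) decreases to 0 as \(d\) grows, the first condition fails from some delay on, in line with the remark on large delays.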

Example 5.8

(Ricker equation) For the delayed Ricker equation (5.8) with

\[ F(x):=e^{\beta -x} \tag{5.10} \]

dissipativity was shown in [25, Theorem 3.1] for a more general equation. A sufficient condition for global asymptotic stability of the nontrivial equilibrium is given in Table 2. Moreover, the shifted equation with

\[ f(x,y):=(x+\beta )e^{-y}-\beta \tag{5.11} \]

fulfills (\(H_1\))–(\(H_3\)) (negative feedback) with \(a=1\), \(b=-\beta <0\) and a global attractor \({\mathcal A}\subseteq [-x_*,R-x_*]^{d+1}\) containing the fixed points \(-\underline{x}_*,0\).

Example 5.9

(Nonempty Morse sets) Let \(d=mp\) for some \(m,p\in {\mathbb {N}}\), \(p\ge 2\). We utilize the simple observation that \(\tilde{x}^p\) is a p-periodic solution of equation (1.1) if and only if it is a p-periodic solution of the corresponding undelayed equation \(x_{k+1}=f(x_k,x_k)\).

It is well known that the undelayed Ricker equation \(x_{k+1}=x_k e^{\beta -x_k}\) admits a 2-periodic solution if and only if \(\beta >2\), and that this solution oscillates about \(x_*=\beta \) (see [23, Proposition 3]). Now, let us fix \(\beta >2\). Then for any even d there exists a 2-periodic solution \((\tilde{x}^2_k)_{k\in {\mathbb {Z}}}\) of (5.10). Thus the corresponding first order, \(d+1\) dimensional equation (1.2) with (5.11) has a 2-periodic solution \((\tilde{y}^2_k)_{k\in {\mathbb {Z}}}\). Moreover, it follows immediately from the oscillation of \((\tilde{x}^2_k)_{k\in {\mathbb {Z}}}\) that \(V^-(\tilde{y}^2_k)\equiv d+1\) holds on \({\mathbb {Z}}\). In particular, the corresponding Morse set \({\mathcal M}_{d+1}\) contains a 2-periodic orbit. Note that in this case \(d+1=N^*_-\) holds, meaning that \({\mathcal M}_{N^*_-}\) is nontrivial.
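The observation on periodic solutions can be checked numerically for the Ricker map. The sketch below approximates the 2-cycle of \(x_{k+1}=x_k e^{\beta -x_k}\) by forward iteration, which assumes that the cycle is attracting for the chosen \(\beta \) (the value \(\beta =2.3\) is an illustrative choice), and then verifies that the 2-periodic sequence also satisfies the delayed equation \(x_{k+1}=x_k e^{\beta -x_{k-d}}\) for an even delay. All names are hypothetical.

```python
import math


def ricker(x, beta):
    """Undelayed Ricker map x -> x*exp(beta - x)."""
    return x * math.exp(beta - x)


def two_cycle(beta, x0=1.0, burn_in=20000):
    """Approximate the 2-cycle by iterating until transients have decayed."""
    x = x0
    for _ in range(burn_in):
        x = ricker(x, beta)
    return x, ricker(x, beta)


beta, d = 2.3, 4                     # beta > 2, even delay d
u, v = two_cycle(beta)

# 2-periodic sequence, long enough to test the delayed recursion.
seq = [u if k % 2 == 0 else v for k in range(d + 12)]

# Residual of x_{k+1} = x_k * exp(beta - x_{k-d}) along the sequence.
residual = max(abs(seq[k + 1] - seq[k] * math.exp(beta - seq[k - d]))
               for k in range(d, len(seq) - 1))

oscillates = (u - beta) * (v - beta) < 0.0   # values on both sides of x_* = beta
print(u, v, residual, oscillates)
```

Because \(d\) is a multiple of the period, \(x_{k-d}=x_k\) along the sequence, so the delayed and the undelayed recursion coincide there.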

Now assume that \(\beta >1+\ln 9\) and let \(d=3m\) for some \(m\in {\mathbb {N}}\). Then according to [23, Proposition 5] and the comment subsequent to it, there exists a 3-periodic solution \((\tilde{x}^3_k)_{k\in {\mathbb {Z}}}\) of \(x_{k+1}=x_k e^{\beta -x_k}\) (by Šarkovs′kiĭ’s Theorem [26], n-periodic solutions then also exist for any \(n\in {\mathbb {N}}\)). It is easy to see that \((\tilde{x}^3_k)_{k\in {\mathbb {Z}}}\) oscillates about \(x_*=\beta \). Then one readily obtains that the corresponding \(d+1\) dimensional equation (1.2) with (5.11) has a 3-periodic solution \((\tilde{y}^3_k)_{k\in {\mathbb {Z}}}\), for which \(V^-(\tilde{y}^3_k)\equiv 2m+1\) holds on \({\mathbb {Z}}\), and thus the corresponding Morse set \({\mathcal M}_{2m+1}\) contains a 3-periodic orbit.

As a consequence, if \(\beta >1+\ln 9\) and \(d=6n\) for some \(n\in {\mathbb {N}}\), then the Morse set \({\mathcal M}_{6n+1}={\mathcal M}_{N^*_-}\) is nontrivial and \({\mathcal M}_{4n+1}\) is nonempty for the \(d+1\) dimensional Ricker map (1.2) with (5.11).

Note that although one can similarly find oscillatory n-periodic solutions for \(nm+1\) dimensional Ricker maps with \(\beta >1+\ln 9\), it is nontrivial to determine the number of sign changes they have on one period, and therefore it is not clear in which Morse set they are contained.

The above example is closely related to the topic of the first open problem presented in Sect. 6.

5.2 Discretizations

We consider the scalar delay-differential equation

\[ \dot{x}(t)=g(x(t),x(t-r)) \tag{5.12} \]

with a continuously differentiable right-hand side \(g:{\mathbb {R}}\times {\mathbb {R}}\rightarrow {\mathbb {R}}\) and delay \(r>0\). Its forward Euler discretization of stepsize \(\tfrac{r}{d}\), \(d\in {\mathbb {N}}\), becomes

\[ x_{k+1}=x_k+\tfrac{r}{d}g(x_k,x_{k-d}) \tag{5.13} \]

and is of the form (1.1) with \(J={\mathbb {R}}\).

The Krisztin–Walther equation \(\dot{x}(t)=-ax(t)+h(x(t-r))\) is a special case with a \(C^1\)-function \(h:{\mathbb {R}}\rightarrow {\mathbb {R}}\) satisfying

In this situation, the discretization is of Clark-type

whenever \(a\tfrac{r}{d}<1\) holds.

Proposition 5.10

(Dissipativity, cf. [20]) If there exist \(K^-,K^+\ge 0\) such that \(-K^-\le h(x)\le K^+\) for \(x\in {\mathbb {R}}\), then (5.14) is dissipative and every \(A=[R^-,R^+]\) with \(R^+>K^+\) and \(R^-<-K^-\) is an absorbing set.

As a prototype for equation (5.14) we study the following example.

Example 5.11

Let \(J={\mathbb {R}}\) and \(\alpha \in (0,1)\), \(\beta \in {\mathbb {R}}\setminus \left\{ 0\right\} \). Consider the delay-difference equation

which according to [12, Theorem 2] has a globally asymptotically stable trivial solution for \(\alpha +\left| \beta \right| <1\). The injectivity condition (1.3) holds and using Proposition 5.10 we see that \([-R,R]\) is an absorbing set when \(R>\tfrac{\left| \beta \right| }{1-\alpha }\) and the backward solutions on the global attractor of (5.15) are unique. One has positive feedback for \(\beta >0\), negative feedback for \(\beta <0\) and it is

In case \(\tfrac{\beta }{1-\alpha }\in (0,1]\) there exists the unique fixed point 0, while for \(\tfrac{\beta }{1-\alpha }>1\) a symmetric pair of fixed points \(x_-<0<x_+\) bifurcates. In the positive feedback case, note that shifting (5.15) into one of the nontrivial equilibria \(x_\pm \) might yield another Morse decomposition.

As a closing remark we point out that the existence of the global attractor for (5.3) (and therefore for Examples 5.4, 5.7 and 5.11), as well as for Examples 5.9 and 5.10, also follows from [5, Theorem 3.1].

6 Perspectives

We conclude this paper by raising some open questions and indicating possible future research directions:

-

Which Morse sets are nonempty? First note that the Morse set \({\mathcal M}_{N^*_\pm }\) is obviously nonempty, as the trivial solution is contained in it. In Example 5.9 we showed one possible method which can provide nonempty Morse sets different from \({\mathcal M}_{N^*_\pm }\). However, it seems to be a challenging problem whether one can show (maybe under further assumptions on (1.1)) that \({\mathcal M}_n\ne \emptyset \) holds for all \(n<N^*_\pm \), as was proved by Mallet-Paret in the continuous time case with negative feedback [15].

-

Using a generalization of Huszár’s Lemma 2.1 by Egerváry [2], there is hope that similar results can be shown for delay-difference equations