Abstract

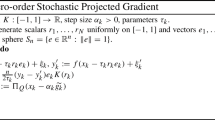

The online optimization model was first introduced in research on machine learning problems (Zinkevich, Proceedings of ICML, 928–936, 2003). It is a powerful framework that combines the principles of optimization with the challenges of online decision-making. Existing research mainly considers the case in which the revealed objective functions are convex or submodular. In this paper, we focus on the online maximization problem for a special objective function \(\varPhi (x):[0,1]^n\rightarrow \mathbb {R}_{+}\) that satisfies the inequality \(\frac{1}{2}\langle u^{T}\nabla ^{2}\varPhi (x),u\rangle \le \sigma \cdot \frac{\Vert u\Vert _{1}}{\Vert x\Vert _{1}}\langle u,\nabla \varPhi (x)\rangle \) for any \(x,u\in [0,1]^n, x\ne 0\). Such a function is called a one sided \(\sigma \)-smooth (OSS) function. We obtain two results. First, under the assumption that the gradient of the OSS function is L-smooth, we propose a \((1-\exp ((\theta -1)(\theta /(1+\theta ))^{2\sigma }))\)-approximation algorithm with an \(O(\sqrt{T})\) regret upper bound, where T is the number of rounds of the online algorithm and \(\theta , \sigma \in \mathbb {R}_{+}\) are parameters. Second, if the gradient of the OSS function is not L-smooth, we provide a \(\left( 1+((\theta +1)/\theta )^{4\sigma }\right) ^{-1}\)-approximation projected gradient algorithm and prove that its regret upper bound is \(O(\sqrt{T})\). We believe this research offers new ideas for online non-convex and non-submodular learning.
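To make the two approximation ratios concrete, the following minimal Python sketch evaluates them for given \(\theta \) and \(\sigma \), and also shows a generic online projected gradient ascent loop on the box \([0,1]^n\). The function names, the per-round gradient oracles, and the fixed step size are illustrative assumptions; the loop is a textbook projected gradient scheme and is not claimed to reproduce the algorithms analyzed in the paper.

```python
import math


def ratio_smooth_gradient(theta, sigma):
    """Ratio 1 - exp((theta - 1) * (theta / (1 + theta))^(2 sigma)) from the abstract."""
    return 1.0 - math.exp((theta - 1.0) * (theta / (1.0 + theta)) ** (2.0 * sigma))


def ratio_projected_gradient(theta, sigma):
    """Ratio (1 + ((theta + 1) / theta)^(4 sigma))^(-1) from the abstract."""
    return 1.0 / (1.0 + ((theta + 1.0) / theta) ** (4.0 * sigma))


def project_to_box(x):
    """Euclidean projection onto [0, 1]^n is coordinate-wise clipping."""
    return [min(1.0, max(0.0, xi)) for xi in x]


def online_projected_gradient_ascent(gradients, x0, step):
    """Generic sketch (assumption, not the paper's method).

    gradients: one callable per round, mapping the played point to the
               gradient of that round's revealed objective (hypothetical oracle).
    Returns the list of points played in each round.
    """
    x = project_to_box(x0)
    played = []
    for grad in gradients:
        played.append(x)          # commit to x before the objective is revealed
        g = grad(x)               # gradient of the revealed objective at x
        x = project_to_box([xi + step * gi for xi, gi in zip(x, g)])
    return played


if __name__ == "__main__":
    print(ratio_smooth_gradient(0.5, 1.0))
    print(ratio_projected_gradient(0.5, 1.0))
    T = 4
    rounds = [lambda x: [1.0, -1.0]] * T   # toy linear objectives
    print(online_projected_gradient_ascent(rounds, [0.5, 0.5], 1.0 / math.sqrt(T)))
```

A step size proportional to \(1/\sqrt{T}\) is the usual choice behind \(O(\sqrt{T})\) regret bounds for projected gradient methods; whether the paper uses exactly this schedule is not stated in the abstract.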

Data Availability

Enquiries about data availability should be directed to the authors.

References

Abbassi Z, Mirrokni V, Thakur M (2013) Diversity maximization under matroid constraints. In: Proceedings of ACM SIGKDD, pp 32–40

Bian A, Levy K, Krause A, Buhmann J (2017) Continuous dr-submodular maximization: structure and algorithms. In: Proceedings of NeurIPS, pp 486–496

Carbonell J, Goldstein J (1998) The use of mmr, diversity-based reranking for reordering documents and producing summaries. In: Proceedings of ACM SIGIR, pp 335–336

Chandra C, Quanrud K (2019) Submodular function maximization in parallel via the multilinear relaxation. In: Proceedings of SODA, pp 303–322

Chen L, Harshaw C, Hassani H, Karbasi A (2018a) Projection-free online optimization with stochastic gradient: From convexity to submodularity. In: Proceedings of ICML, pp 814–823

Chen L, Hassani H, Karbasi A (2018b) Online continuous submodular maximization. In: Proceedings of ICAIS, pp 1896–1905

Elad H (2016) Introduction to online convex optimization. Foundations and Trends® in Optimization 2:157–325

Garber D, Korcia G, Levy K (2020) Online convex optimization in the random order model. In: Proceedings of ICML, pp 3387–3396

Ghadiri M, Schmidt M (2019) Distributed maximization of submodular plus diversity functions for multilabel feature selection on huge datasets. In: Proceedings of AISTATS, pp 2077–2086

Hazan E, Agarwal A, Kale S (2007) Logarithmic regret algorithms for online convex optimization. Mach Learn 69:169–192

Kivinen J, Warmuth M (1999) Averaging expert predictions. In: Proceedings of EuroCOL, pp 153–167

Mehrdad G, Richard S, Bruce S (2021) Beyond submodular maximization via one sided \(\sigma \)-smoothness. In: Proceedings of SODA, pp 1006–1025

Nesterov Y (2003) Introductory lectures on convex optimization: a basic course. Springer Science and Business Media

Radlinski F, Dumais S (2006) Improving personalized web search using result diversification. In: Proceedings of ACM SIGIR, pp 691–692

Streeter M, Golovin D (2020) An online algorithm for maximizing submodular functions. In: Proceedings of NeurIPS, pp 1577–1584

Thang N, Srivastav A (2021) Online non-monotone dr-submodular maximization. In: Proceedings of AAAI, pp 9868–9876

Xin D, Cheng H, Yan X, Han J (2006) Extracting redundancy-aware top-\(k\) patterns. In: Proceedings of ACM SIGKDD, pp 444–453

Zadeh S, Ghadiri M, Mirrokni V, Zadimoghaddam M (2017) Scalable feature selection via distributed diversity maximization. In: Proceedings of AAAI, pp 2876–2883

Zhang H, Xu D, Gai L, Zhang Z (2022) Online one sided \(\sigma \)-smooth function maximization. In: Proceedings of COCOON, pp 177–185

Zinkevich M (2003) Online convex programming and generalized infinitesimal gradient ascent. In: Proceedings of ICML, pp 928–936

Acknowledgements

The first, second, and fourth authors are supported by National Natural Science Foundation of China (No. 12131003). The third author is supported by National Natural Science Foundation of China (No. 11201333).

Funding

This research was supported by NNSF of China (Grant Nos. 12131003 and 11201333).

Ethics declarations

Conflict of interest

The authors have not disclosed any conflict of interest.

Additional information

This is an extended version of a paper (Zhang et al. 2022) presented at the 26th International Computing and Combinatorics Conference.

About this article

Cite this article

Zhang, H., Xu, D., Gai, L. et al. Online learning under one sided \(\sigma \)-smooth function. J Comb Optim 47, 76 (2024). https://doi.org/10.1007/s10878-024-01174-2

DOI: https://doi.org/10.1007/s10878-024-01174-2